95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Neuroinform. , 02 December 2021

Volume 15 - 2021 | https://doi.org/10.3389/fninf.2021.738342

This article is part of the Research Topic Open-Source Software for Neurodata Curation and Analysis View all 14 articles

Ruslan Masharipov

Ruslan Masharipov Irina Knyazeva

Irina Knyazeva Yaroslav Nikolaev

Yaroslav Nikolaev Alexander Korotkov

Alexander Korotkov Michael Didur

Michael Didur Denis Cherednichenko

Denis Cherednichenko Maxim Kireev*

Maxim Kireev*Classical null hypothesis significance testing is limited to the rejection of the point-null hypothesis; it does not allow the interpretation of non-significant results. This leads to a bias against the null hypothesis. Herein, we discuss statistical approaches to ‘null effect’ assessment focusing on the Bayesian parameter inference (BPI). Although Bayesian methods have been theoretically elaborated and implemented in common neuroimaging software packages, they are not widely used for ‘null effect’ assessment. BPI considers the posterior probability of finding the effect within or outside the region of practical equivalence to the null value. It can be used to find both ‘activated/deactivated’ and ‘not activated’ voxels or to indicate that the obtained data are not sufficient using a single decision rule. It also allows to evaluate the data as the sample size increases and decide to stop the experiment if the obtained data are sufficient to make a confident inference. To demonstrate the advantages of using BPI for fMRI data group analysis, we compare it with classical null hypothesis significance testing on empirical data. We also use simulated data to show how BPI performs under different effect sizes, noise levels, noise distributions and sample sizes. Finally, we consider the problem of defining the region of practical equivalence for BPI and discuss possible applications of BPI in fMRI studies. To facilitate ‘null effect’ assessment for fMRI practitioners, we provide Statistical Parametric Mapping 12 based toolbox for Bayesian inference.

In the neuroimaging field, it is a common practice to identify statistically significant differences in local brain activity using the general linear model approach for mass-univariate null hypothesis significance testing (NHST) (Friston et al., 1994). NHST considers the probability of obtaining the observed data, or more extreme data, given that the null hypothesis of no difference is true. This probability, or p-value, of 0.01, means that, on average, in one out of 100 ‘hypothetical’ replications of the experiment, we find a difference no less than the one found under the null hypothesis. We conventionally suppose that this is unlikely, therefore, we ‘reject the null’; that is, NHST employs ‘proof by contradiction’ (Cohen, 1994). Conversely, when the p-value is large, it is tempting to ‘accept the null.’ However, the absence of evidence is not evidence of absence (Altman and Bland, 1995). Using NHST, we can only state that we have ‘failed to reject the null.’ Therefore, in the classical NHST framework, the question of interpreting non-significant results remains.

The most pervasive misinterpretation of non-significant results is that they provide evidence for the null hypothesis that there is no difference, or ‘no effect’ (Nickerson, 2000; Greenland et al., 2016; Wasserstein and Lazar, 2016). In fact, non-significant results can be obtained in two cases (Dienes, 2014): (1) the data are insufficient to distinguish the alternative from the null hypothesis, or (2) an effect is indeed null or trivial. To date, the extent to which the problem of making ‘no effect’ conclusions from non-significant results have affected the field of neuroimaging remains unclear, particularly in functional magnetic resonance imaging (fMRI) studies1. Regarding other fields of science such as psychology, neuropsychology, and biology, it was found that in 38–72% of surveyed articles, the null hypothesis was accepted based on non-significant results only (Finch et al., 2001; Schatz et al., 2005; Fidler et al., 2006; Hoekstra et al., 2006; Aczel et al., 2018).

Not mentioning non-significant results at all is another problem. Firstly, some authors may consider non-significant results disappointing or not worth publishing. Secondly, papers with non-significant results are less likely to be published. This publishing bias is also known as the ‘file-drawer problem’ (Rosenthal, 1979; Ioannidis et al., 2014; de Winter and Dodou, 2015; for evidence in fMRI studies, see Jennings and Van Horn, 2012; Acar et al., 2018; David et al., 2018; Samartsidis et al., 2020). Prejudice against the null hypothesis systematically biases our knowledge of true effects (Greenwald, 1975).

This problem is further compounded by the fact that NHST is usually based on the point-null hypothesis, that is, the hypothesis that the effect is exactly zero. However, the probability thereof is zero (Meehl, 1967; Friston et al., 2002a). This means that studies with a sufficiently large sample size will find statistically significant differences even when the effect is trivial or has no practical significance (Cohen, 1965, 1994; Serlin and Lapsley, 1985; Kirk, 1996).

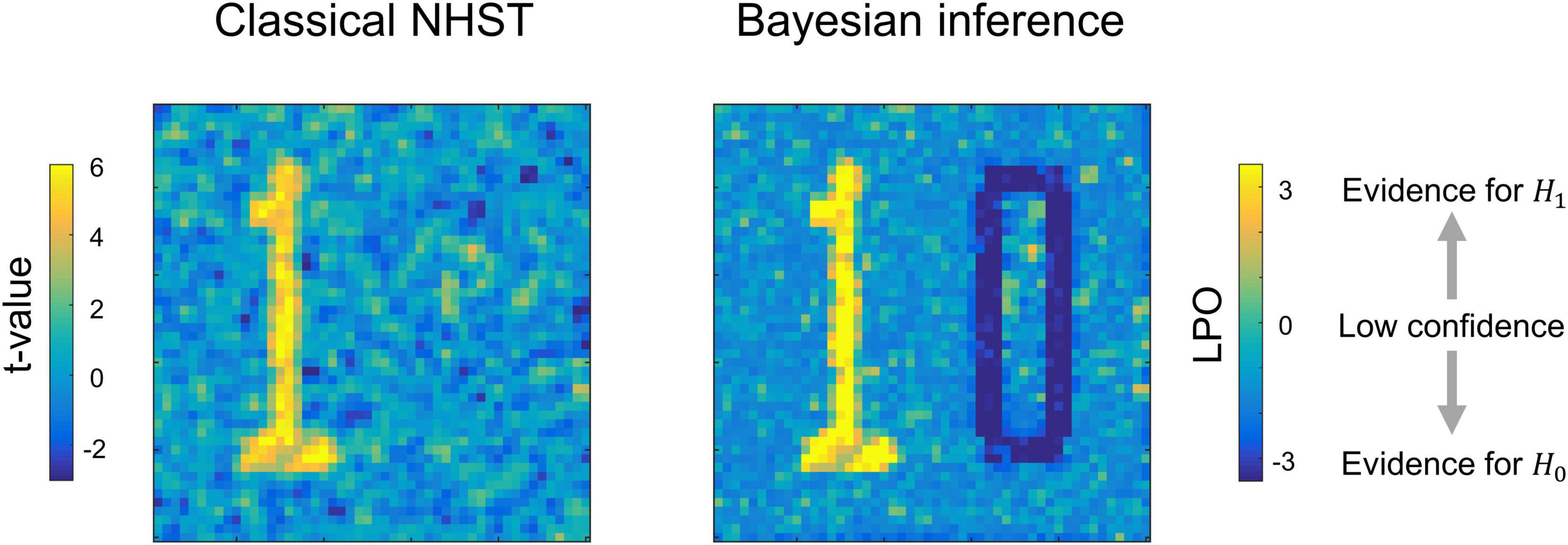

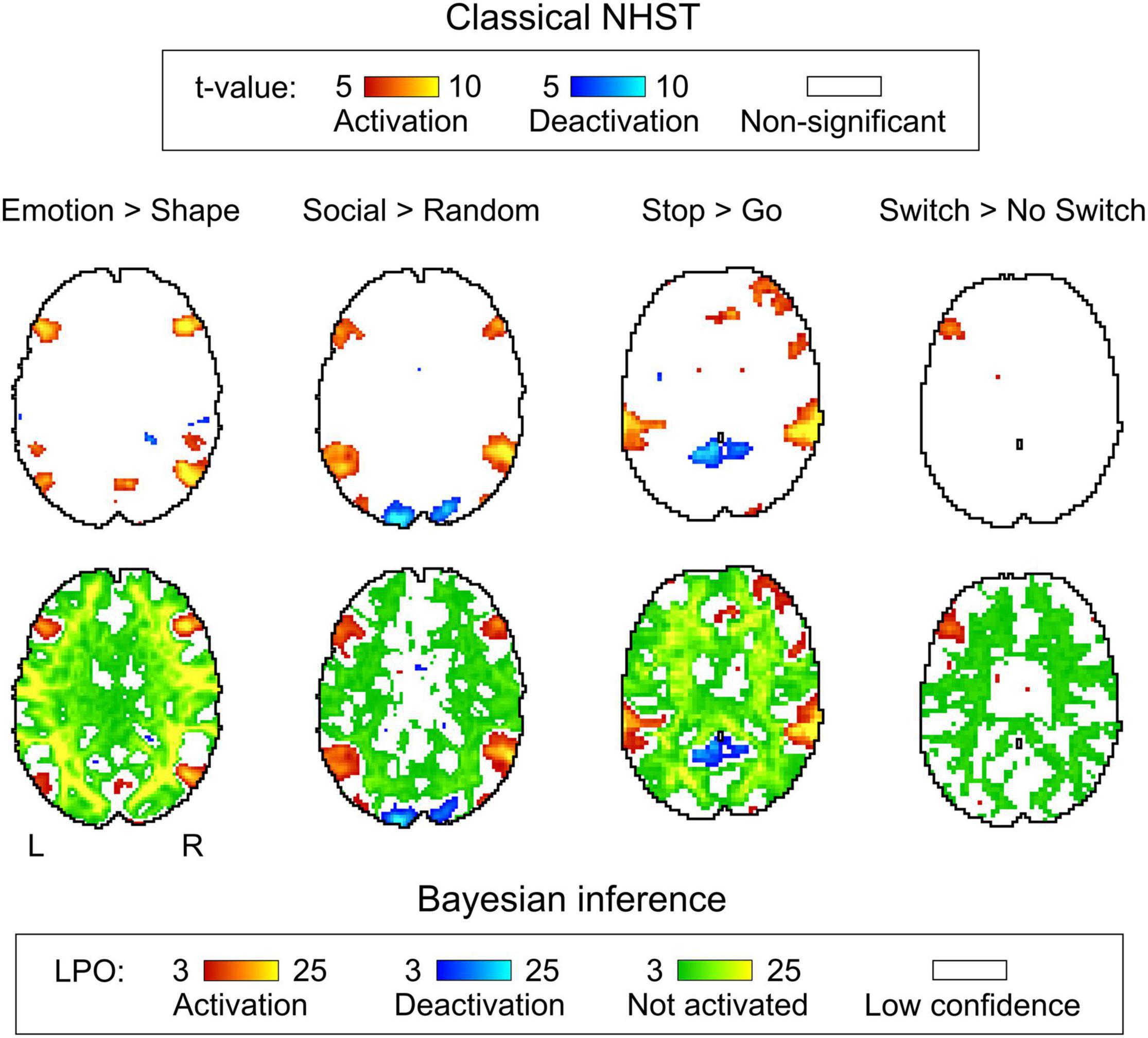

Having the means to assess non-significant results would mitigate these problems. To this end, two main alternatives are available: Firstly, there are frequentist approaches that shift from point-null to interval-null hypothesis testing, for example, equivalence testing based on the two one-sided tests (TOST) procedure (Schuirmann, 1987; Wellek, 2010). Secondly, Bayesian approaches that are based on posterior parameter distributions (Lindley, 1965; Greenwald, 1975; Kruschke, 2010) and Bayes factors (Jeffreys, 1939/1948; Kass and Raftery, 1995; Rouder et al., 2009). The advantage of frequentist approaches is that they do not require a substantial paradigm shift (Lakens, 2017; Campbell and Gustafson, 2018). However, it has been argued that Bayesian approaches may be more natural and straightforward than frequentist approaches (Edwards et al., 1963; Lindley, 1975; Friston et al., 2002a; Wagenmakers, 2007; Rouder et al., 2009; Dienes, 2014; Kruschke and Liddell, 2017b). It has long been noted that we tend to perceive lower p-values as stronger evidence for the alternative hypothesis, and higher p-values as evidence for the null, i.e., the ‘inverse probability’ fallacy as it is referred to by Cohen (1994). This is what we obtain in Bayesian approaches by calculating posterior probabilities. Instead of considering infinite ‘hypothetical’ replications and employing probabilistic ‘proof by contradiction,’ Bayesian approaches directly provide evidence for the null and alternative hypotheses given the data, updating our prior beliefs in light of new relevant information. Bayesian inference allows us to ‘reject and accept’ the null hypothesis on an equal footing. Moreover, it allows us to talk about ‘low confidence,’ indicating the need to either accumulate more data or revise the study design (see Figure 1).

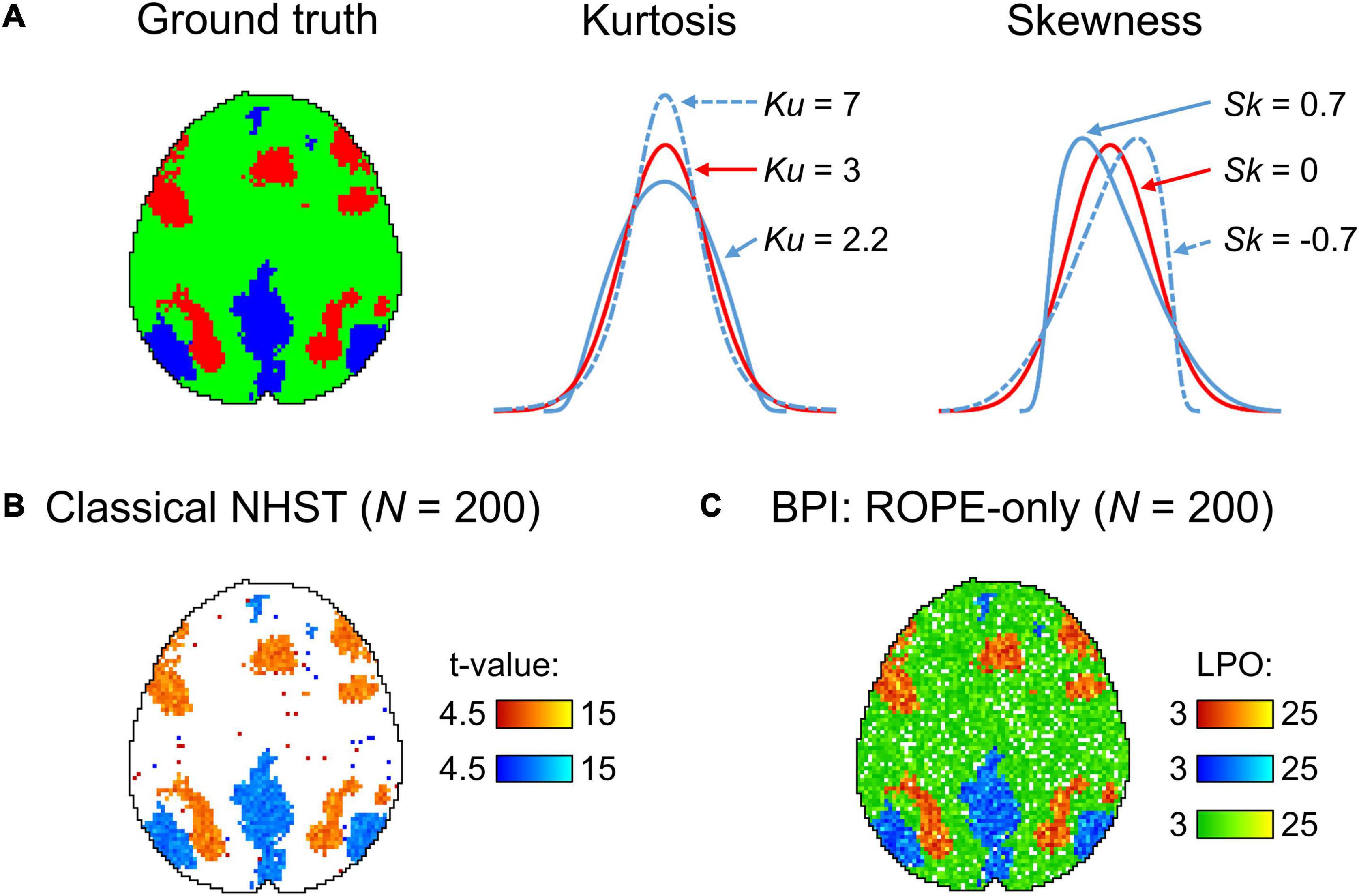

Figure 1. Possible results for the same data, obtained using classical NHST and Bayesian parameter inference. Classical NHST detects only areas with a statistically significant difference (‘number one’). Bayesian parameter inference based on the logarithm of posterior probability odds (LPO) provides us with additional information that is not available in classical NHST: (1) it provides relative evidence for the null (H0) and alternative (H1) hypotheses, (2) it detects areas with a trivial effect size (‘number zero’), (3) it indicates ‘low confidence’ areas surrounding the ‘number one’ and ‘number zero.’ To make this conceptual illustration, we generated 100 images consisted of 50 × 50 voxels smoothed by 2 voxel full width at half maximum (FWHM) Gaussian kernel. Data were drawn from normal distributions with different mean, m, and standard deviation, SD. For the ‘number one,’ m = 0.1, SD = 0.37. For the ‘number zero,’ m = 0, SD = 0.6. For the ‘low confidence’ area, m = 0.01, SD = 0.37. LPOs were calculated using an effect size threshold of 0.02. The code to recreate the illustration is available online https://github.com/Masharipov/BPI_2021/tree/main/conceptual_illustration.

Despite the importance of this issue, and the high level of theoretical elaboration and implementation of Bayesian methods in common neuroimaging software programs, for example, Statistical Parametric Mapping 12 (SPM12) and FMRIB’s Software Library (FSL), to date, only a few fMRI studies implemented the Bayesian inference to assess ‘null effects’ (for example, see subject-level analysis in Magerkurth et al., 2015, group-level analysis in Dandolo and Schwabe, 2019; Feng et al., 2019). Therefore, this study is intended to introduce fMRI practitioners to the methods for assessing ‘null effects.’ In particular, we focus on Bayesian parameter inference (Friston and Penny, 2003; Penny and Ridgway, 2013), as implemented in SPM12. Although Bayesian methods have been described elsewhere, the distinguishing feature of this study is that we aim to demonstrate the practical implementation of Bayesian inference to the assessment of ‘null effects,’ and reemphasize its contributions over and above those of classical NHST. We deliberately aim to avoid mathematical details, which can be found elsewhere (Genovese, 2000; Friston et al., 2002a,2007; Friston and Penny, 2003; Penny et al., 2003, 2005, 2007; Penny and Ridgway, 2013; Woolrich et al., 2004). Firstly, we briefly review the frequentist and Bayesian approaches for the assessment of the ‘null effects.’ Next, we compare the classical NHST and Bayesian parameter inference using the Human Connectome Project (HCP) and the UCLA Consortium for Neuropsychiatric Phenomics datasets, focusing on group-level analysis. We then consider the choice of the threshold of the effect size for Bayesian parameter inference and estimate the typical effect sizes in different fMRI task designs. To demonstrate how the common sources of variability in empirical data influence NHST and Bayesian parameter inference, we examined their behavior for different sample sizes and spatial smoothing. We also used simulated data to assess BPI performance under different effect sizes, noise levels, noise distributions and sample sizes. Finally, we discuss practical research and clinical applications of Bayesian inference.

In this section, we briefly describe the classical NHST framework and review statistical methods which can be used to assess the ‘null effect.’ We also considered two historical trends in statistical analysis: the shift from point-null hypothesis testing to interval estimation and interval-null hypothesis testing (Murphy and Myors, 2004; Wellek, 2010; Cumming, 2013), and the shift from frequentist to Bayesian approaches (Kruschke and Liddell, 2017b).

Most task-based fMRI studies rely on the general linear model approach (Friston et al., 1994; Poline and Brett, 2012). It provides a simple way to separate blood-oxygenated-level dependent (BOLD) signals associated with particular task conditions from nuisance signals and residual noise when analyzing single-subject data (subject-level analysis). At the same time, it allows us to analyze mean BOLD signals within one group of subjects or between different groups (group-level analysis). Firstly, we must specify a general linear model and estimate its parameters:

where Y are the data (further, D), X is the design matrix, which includes regressors of interest and nuisance regressors, β are the model parameters (‘beta values’), and ε is residual noise or error, which is assumed to have a zero-mean normal distribution. At the subject level of analysis, the data are BOLD-signals. At the group level, the data are linear contrasts of parameters estimated at the subject level, which typically reflect individual subject amplitudes of BOLD responses evoked in particular task conditions. In turn, the parameters of the group-level general linear model reflect the group mean BOLD responses evoked in particular task conditions and groups of subjects. The linear contrast of these parameters, θ = cβ, represents the experimental effect of interest (hereinafter ‘the effect’), expressed as the difference between conditions or groups of subjects.

Next, we test the effect against the point-null hypothesis, H0: θ = γ (usually, θ = 0). To do this, we use test statistics that summarize the data in a single value, for example, the t-value. For the one-sample case, the t-value is the ratio of the discrepancy of the estimated effect from the hypothetical null value to its standard error. Finally, we calculate the probability of obtaining the observed t-value or a more extreme value, given that the null hypothesis is true (p-value). This is also commonly formulated as the probability of obtaining the observed data or more extreme data, given that the null hypothesis is true (Cohen, 1994). It can be simply written as a conditional probability P(D+|H0), where ‘D +’ denotes the observed data or more extreme data which can be obtained in infinite ‘hypothetical’ replications under the null (Schneider, 2014, 2018). If this probability is lower than some conventional threshold, or alpha level (for example, α = 0.05), then we can ‘reject the null hypothesis’ and state that we found a statistically significant effect. When this procedure is repeated for a massive number of voxels, it is referred to as ‘mass-univariate analysis.’ However, if we consider m = 100 000 voxels with no true effect and repeat significance testing for each voxel at α = 0.05, we would expect to obtain 5000 false rejections of the null hypothesis (false positives). To control the number of false positives, we must reduce the alpha level for each significance test by applying the multiple comparison correction (Genovese et al., 2002; Nichols and Hayasaka, 2003; Nichols, 2012).

To date, the classical NHST has been the most widely used statistical inference method in neuroscience, psychology, and biomedicine (Szucs and Ioannidis, 2017, 2020; Ioannidis, 2019). It is often criticized for the use of the point-null hypothesis (Meehl, 1967), also known as the ‘nil null’ (Cohen, 1994) or ‘sharp null’ hypothesis (Edwards et al., 1963). It was argued that the point-null hypothesis could be appropriate only in hard sciences such as physics, but it is always false in soft sciences; this problem is sometimes known as the Meehl’s paradox (Meehl, 1967, 1978; Serlin and Lapsley, 1985, 1993; Cohen, 1994; Kirk, 1996). In the case of fMRI research, we face complex brain activity which is influenced by numerous psychophysiological factors. This means that with a large amount of data, we find a statistically significant effect in all voxels for any linear contrast (Friston et al., 2002a). For example, Gonzalez-Castillo et al. (2012) showed a statistically significant difference between simple visual stimulation and rest in over 95% of the brain when averaging single-subject data from 100 runs (approximately 8 h of scanning), which consisted of five blocks of stimulation (20 s of visual stimulation, 40 s of rest). Approximately half of the brain areas showed statistically significant positive effects or ‘activations,’ whereas the other half showed statistically significant negative effects or ‘deactivations.’

Whole-brain ‘’activations/deactivations’ can also be found when analyzing large datasets such as the HCP (N > 1000) or UK Biobank (N > 10 000) datasets. For example, Smith and Nichols (2018) showed significant positive and negative effects for the emotion processing task (‘Emotional faces vs. Shapes’ contrast) in 81% of voxels using data from UK Biobank (N = 12 600) and conservative Bonferroni multiple comparison correction. When we increase the sample size, the effect estimate does not change much. Still, the standard error in the denominator of the t-value becomes increasingly smaller, resulting in negligible effects becoming statistically significant. Thus, the classical NHST ignores the magnitude of the effect. Attempts to overcome this problem led to the proposal of making a distinction between ‘statistical significance’ and ‘material significance’ (Hodges and Lehmann, 1954) or ‘practical significance’ (Cohen, 1965; Kirk, 1996). That is, we can test whether the effect size is larger or smaller than some practically meaningful value using interval-null hypothesis testing (Friston et al., 2002a,b; Friston, 2013). In this case, we use the terms ‘activations’ and ‘deactivations’ for those voxels that show a practically significant positive or negative effect.

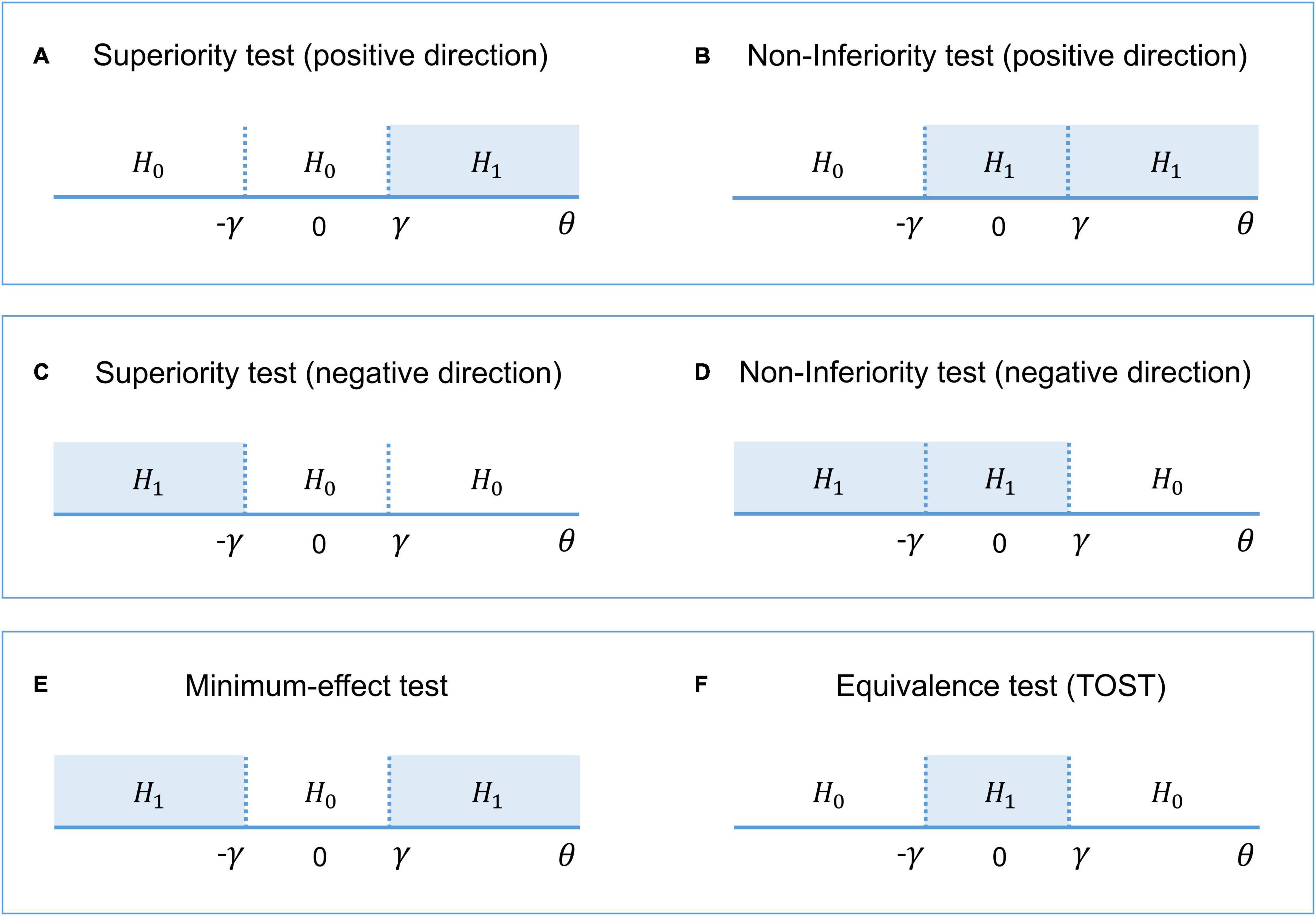

Interval-null hypothesis testing is widely used in medicine and biology (Meyners, 2012). Consider, for example, a pharmacological study designed to compare a new treatment with an old treatment that has already shown its effectiveness. Let βnew be the mean effect on brain activity of the new treatment and βold the mean effect of the old treatment. Then, θ = (βnew – βold) is the relative effect of the new treatment. The practical significance is defined by the effect size (ES) threshold γ. If a larger effect on brain activity is preferable, then we can test whether there is a practically meaningful difference in a positive direction (H1: θ > γ vs. H0: θ ≤ γ). This procedure is known as the superiority test (see Figure 2A). We can also test whether the effect of the new treatment is no worse (practically smaller) than the effect of the old treatment (H1: θ > –γ vs. H0: θ ≤ –γ). This procedure is sometimes known as the non-inferiority test (see Figure 2B). If a smaller effect on brain activity is preferable, we can use the superiority or non-inferiority test in the opposite direction (see Figures 2C,D). The combination of these two superiority tests allows us to find a practically meaningful difference in both directions (H1: θ > γ and θ < –γ vs. H0: –γ ≤ θ ≤ γ), that is, the minimum-effect test (see Figure 2E). The combination of the two non-inferiority tests allows us to reject the hypothesis of practically meaningful differences in any direction (H1: –γ ≤ θ ≤ γ vs. H0: θ > γ and θ < –γ). This is the most widely used approach to equivalence testing, known as the two one-sided tests (TOST) procedure (see Figure 2F). For more details on the superiority and minimum-effect tests, see Serlin and Lapsley (1985, 1993), Murphy and Myors (1999, 2004). For more details on the non-inferiority test and TOST procedure see Schuirmann (1987), Rogers et al. (1993), Wellek (2010), Meyners (2012), Lakens (2017)).

Figure 2. The alternative (H1) and null (H0) hypotheses for different types of interval-null hypotheses tests. (A,B) One-sided tests in the positive direction (‘the larger is better’). (C,D) One-sided tests in the negative direction (‘the smaller is better’). (E) Combination of both superiority tests. (F) Combination of both non-inferiority tests.

The interval [–γ; γ] defines trivially small effect sizes that we consider to be equivalent to the ‘null effect’ for practical purposes. This interval is also known as the ‘equivalence interval’ (Schuirmann, 1987) or ‘region of practical equivalence (ROPE)’ (Kruschke, 2011). The TOST procedure, in contrast to classical NHST, allows us to assess the ‘null effects.’ If we reject the null hypothesis of a practically meaningful difference, we can conclude that the effect is trivially small. The TOST procedure can also be intuitively related to frequentist interval estimates, known as confidence intervals (‘confidence interval approach,’ Westlake, 1972; Schuirmann, 1987). Confidence intervals reflect the uncertainty in the point estimation of the parameters defined by its standard error. The confidence level of (1 – α) means that among infinite ‘hypothetical’ replications, (1 – α)% of the confidence intervals will contain the true effect under the null. Therefore, the TOST procedure is operationally identical to considering whether the (1 – 2α)% confidence interval falls entirely into the ROPE, as it uses two one-sided tests with an alpha level of α.

Interval-null hypothesis testing can be used in fMRI studies not only to compare the effects of different treatments. For example, we can apply superiority tests in the positive and negative directions to detect ‘activated’ and ‘deactivated’ voxels and additionally apply the TOST procedure to detect ‘not activated’ voxels. However, even though we can solve the Meehl’s paradox and assess the ‘null effects’ by switching from point-null to interval-null hypothesis testing within the frequentist approach, this approach still has fundamental philosophical and practical difficulties which can be effectively addressed using Bayesian statistics.

The pitfalls of the frequentist approach have been actively discussed by statisticians and researchers for decades. Here, we briefly mention a few of the main problems associated with the frequency approach.

(1) NHST is a hybrid of Fisher’s approach that focuses on the p-value (thought to be a measure of evidence against the null hypothesis), and Neyman-Pearson’s approach that focuses on controlling false positives with the alpha level while maximizing true positives in long-run replications. These two approaches are argued to be incompatible and have given rise to several misinterpretations among researchers, for example, confusing the meaning of p-values and alpha levels (Edwards et al., 1963; Gigerenzer, 1993; Goodman, 1993; Royall, 1997; Finch et al., 2001; Berger, 2003; Hubbard and Bayarri, 2003; Turkheimer et al., 2004; Schneider, 2014; Perezgonzalez, 2015; Szucs and Ioannidis, 2017; Greenland, 2019).

(2) The logical structure of NHST is the same as that of ‘proof by contradiction’ or ‘indirect proof,’ which becomes formally invalid when applied to probabilistic statements (Pollard and Richardson, 1987; Cohen, 1994; Falk and Greenbaum, 1995; Nickerson, 2000; Sober, 2008; Schneider, 2014, 2018; Wagenmakers et al., 2017; but see Hagen, 1997). Valid ‘proof by contradiction’ can be expressed in syllogistic form as: (1) ‘If A, then B’ (Premise No 1), (2) ‘Not B’ (Premise No 2), (3) ‘Therefore not A’ (Conclusion). Probabilistic ‘proof by contradiction’ in relation to NHST can be formulated as: (1) ‘If H0 is true, then D+ are highly unlikely, (2) ‘D+ was obtained,’ (3) ‘Therefore H0 is highly unlikely.’ This problem is also referred to as the ‘illusion of probabilistic proof by contradiction’ (Falk and Greenbaum, 1995). To illustrate the fallacy of such logic, consider the following example from Pollard and Richardson (1987): (1) ‘If a person is an American (H0), then he is most probably not a member of Congress,’ (2) ‘The person is a member of Congress,’ (3) ‘Therefore the person is most probably not an American.’ Based on this, one ‘rejects the null’ and makes an obviously wrong inference, as only American citizens can be a member of Congress. At the same time, using Bayesian statistics, we can show that the null hypothesis (‘the person is an American’) is true (see the Bayesian solution of the ‘Congress example’ in the Supplementary Materials). The ‘illusion of probabilistic proof by contradiction’ leads to widespread confusion between the probability of obtaining the data, or more extreme data, under the null P(D+|H0) and the probability of the null under the data P(H0|D) (Pollard and Richardson, 1987; Gigerenzer, 1993; Cohen, 1994; Falk and Greenbaum, 1995; Nickerson, 2000; Finch et al., 2001; Hoekstra et al., 2006; Goodman, 2008; Greenland et al., 2016; Wasserstein and Lazar, 2016; Amrhein et al., 2017). The latter is a posterior probability calculated based on Bayes’ rule. The fact that researchers usually treat the p-value as a continuous measure of evidence (the Fisherian interpretation) only exacerbates this problem. ‘The lower the p-value, the stronger the evidence against the null’ statement can be erroneously transformed to statements such as ‘the lower the p-value, the stronger the evidence for the alternative’ or ‘the higher the p-value, the stronger the evidence for the null.’ NHST can only provide evidence against, but never for, a hypothesis. In contrast, posterior probability provides direct evidence for a hypothesis; hence, it has a simple intuitive interpretation.

(3) The p-value is not a plausible measure of evidence (Berger and Berry, 1988; Berger and Sellke, 1987; Cornfield, 1966; Goodman, 1993; Hubbard and Lindsay, 2008; Johansson, 2011; Royall, 1986; Wagenmakers, 2007; Wagenmakers et al., 2008, 2017; Wasserstein and Lazar, 2016; bet see Greenland, 2019). The frequentist approach considers infinite ‘hypothetical’ replications of the experiment (sampling distribution); that is, the p-value depends on unobserved (‘more extreme’) data. One of the most prominent theorists of Bayesian statistics, Harold Jeffreys, put it as follows: ‘What the use of P implies, therefore, is that a hypothesis that may be true may be rejected because it has not predicted observable results that have not occurred’ (Jeffreys, 1939/1948, p. 357). In turn, the sampling distribution depends on the researcher’s intentions. These intentions may include different kinds of multiplicities, such as multiple comparisons, double-sided comparisons, secondary analyses, subgroup analyses, exploratory analyses, preliminary analyses, and interim analyses of sequentially obtained data with optional stopping (Gopalan and Berry, 1998). Two researchers with different intentions may obtain different p-values based on the same dataset. The problem is that these intentions are usually unknown. When null findings are considered disappointing, it is tempting to increase the sample size until one obtains a statistically significant result. However, a statistically significant result may arise when the null is, in fact, true, which can be shown by Bayesian statistics. That is, the p-value usually exaggerates evidence against the null hypothesis. The discrepancy that may arise between frequentist and Bayesian inference is also known as the Jeffreys–Lindley paradox (Jeffreys, 1939/1948; Lindley, 1957). In addition, it is argued that a consistent measure of evidence should not depend on the sample size (Cornfield, 1966). However, identical p-values provide different evidence against the null hypothesis for small and large sample sizes (Wagenmakers, 2007). In contrast, evidence provided by posterior probabilities and Bayes factors depends only on the exact observed data and the prior, and does not depend on the testing or stopping intentions or the sample size (Wagenmakers, 2007; Kruschke and Liddell, 2017b).

(4) Although frequentist interval estimates (Cohen, 1990, 1994; Cumming, 2013) and interval-based hypothesis testing (Murphy and Myors, 2004; Wellek, 2010; Meyners, 2012; Lakens, 2017) greatly facilitate the mitigation of the abovementioned pitfalls in data interpretation, they are still subject to some of the same types of problems as the p-values and classic NHST (Cortina and Dunlap, 1997; Nickerson, 2000; Belia et al., 2005; Wagenmakers et al., 2008; Hoekstra et al., 2014; Morey et al., 2015; Greenland et al., 2016; Kruschke and Liddell, 2017a). Confidence intervals also depend on unobserved data and the intentions of the researcher. Moreover, the meaning of confidence intervals seems counterintuitive to many researchers. For example, one of the most common misinterpretations of the (1 – α)% confidence interval is that the probability of finding an effect within the confidence interval is (1 – α)%. In fact, it is a Bayesian interval estimate known as a credible interval.

Nevertheless, we would like to emphasize that we do not advocate abandoning the frequency approach. Correctly interpreted frequentist interval-based hypothesis testing with a priori power analysis defining the sample size and proper multiplicity adjustments often lead to conclusions similar to those of Bayesian inference (Lakens et al., 2018). However, it may be logically and practically difficult to carry out an appropriate power analysis and make multiplicity adjustments (Berry and Hochberg, 1999; Cramer et al., 2015; Streiner, 2015; Schönbrodt et al., 2017; Sjölander and Vansteelandt, 2019). These procedures may be even more complicated in fMRI research than in psychological or social studies (see discussion on power analysis in Mumford and Nichols, 2008; Joyce and Hayasaka, 2012; Mumford, 2012; Cremers et al., 2017; Poldrack et al., 2017; multiple comparisons in Nichols and Hayasaka, 2003; Nichols, 2012; Eklund et al., 2016; and other types of multiplicities in Turkheimer et al., 2004; Chen et al., 2018, 2019, 2020; Alberton et al., 2020). For example, at the beginning of a long-term study, one may want to check whether stimulus onset timings are precisely synchronized with fMRI data collection and perform preliminary analysis on the first five subjects. The question of whether the researcher must make an adjustment for this technical check when reporting the results for the final sample become important in the frequentist approach. Such preliminary analyses (or other forms of interim analyses) are generally not considered a source of concern in Bayesian inference because posterior probabilities do not depend on the sampling plan (for discussion, see Berry, 1988; Berger and Berry, 1988; Edwards et al., 1963; Wagenmakers, 2007; Kruschke and Liddell, 2017b; Rouder, 2014; Schönbrodt et al., 2017). Or, for example, one may want to find both ‘activated/deactivated’ and ‘not activated’ brain areas and use two superiority tests in combination with the TOST procedure. It is not trivial to make appropriate multiplicity adjustments in this case. In contrast, Bayesian inference suggests a single decision rule without the need for additional adjustments. Moreover, to our knowledge, practical implementations of superiority tests and the TOST procedure in common software for fMRI data analysis do not yet exist. At the same time, Bayesian analysis has already been implemented in SPM122 and is easily accessible to end-users. It consists of two steps: Bayesian parameter estimation and Bayesian inference. In general, it is not necessary to use Bayesian analysis at the subject level of analysis to apply it at the group level. One can combine computationally less demanding frequentist parameter estimation for single subjects with Bayesian estimation and inference at the group level. In the next sections, we consider the group-level Bayesian analysis implemented in SPM12.

Bayesian statistics is based on Bayes’ rule:

where P(H|D) is the probability of the hypothesis given the obtained data or posterior probability. P(D|H) is the probability of obtaining the exact data given the hypothesis or the likelihood (notice the difference from P(D+|H), which includes more extreme data). P(H) is the prior probability of the hypothesis (our knowledge of the hypothesis before we obtain the data). P(D) is a normalizing constant ensuring that the sum of posterior probabilities over all possible hypotheses equals one (marginal likelihood). In the case of mutually exclusive hypotheses, the denominator of Bayes’s rule is the sum of the probabilities of obtaining the data under any of the possible hypotheses, multiplied by its prior probability. For example, if we consider two mutually exclusive hypotheses H0 and H1, then P(D) = P(D|H0) P(H0) + P(D|H1)P(H1) and P(H0|D) + P(H1|D) = 1. When we consider continuous hypotheses, the denominator is obtained by integrating over all hypotheses (parameter spaces). For relatively simple models, these integrals can be solved analytically. However, for more complex models, the integrals become analytically intractable. In this case, there are two main approaches to obtain the posterior probability: (1) use computationally demanding numerical integration (Markov chain Monte Carlo methods); (2) use less accurate but computationally efficient analytical approximations to the posterior distribution (e.g., Expectation Maximization or Variational Bayes techniques). Describing these procedures go beyond the scope of this paper and described elsewhere (for their implementations in fMRI analysis, see Genovese, 2000; Friston et al., 2002a,2007; Friston and Penny, 2003; Penny et al., 2003, 2005, 2007; Penny and Ridgway, 2013; Woolrich et al., 2004).

In verbal form, Bayes’ rule can be expressed as:

This means that we can update our prior beliefs about the hypothesis based on the obtained data.

One of the main difficulties in using Bayesian statistics, in addition to the computational complexity, is the choice of appropriate prior assumptions. The prior can be chosen based on theoretical arguments or from independent experimental data (full Bayes approach). At the same time, if the data are organized hierarchically, which is the case for neuroimaging data, priors can be specified based on the obtained data itself using an empirical Bayes approach. The lower level of the hierarchy corresponds to the experimental effects at any given voxel, and the higher level of the hierarchy comprises the effect over all voxels. Thus, the variance of the experimental effect over all voxels can be used as the prior variance of the effect at any given voxel. This approach is known as the parametric empirical Bayes (PEB) with the ‘global shrinkage’ prior (Friston and Penny, 2003). The prior variance is estimated from the data under the assumption that the prior probability density corresponds to a Gaussian distribution with zero mean. In other words, a global experimental effect is assumed to be absent. An increase in local activity can be detected in some brain areas; a decrease can be found in others, but the total change in neural metabolism in the whole brain is approximately zero. This is a reasonable physiological assumption because studies of brain energy metabolism have shown that the global metabolism is ‘remarkably constant despite widely varying mental and motoric activity’ (Raichle and Gusnard, 2002), and ‘the changes in the global measurements of blood flow and metabolism’ are ‘too small to be measured’ by functional imaging techniques such as PET and fMRI (Gusnard and Raichle, 2001).

Now, we can rewrite Bayes’ rule (eq. 2) for the effect θ = cβ:

In the process of Bayesian updating with the ‘global shrinkage’ prior, the effect estimate ‘shrinks’ toward zero. The greater the uncertainty of the effect estimate (variability) in a particular voxel, the less confidence in this estimate, and the more it shrinks (see Figure 3).

The assumption of a Gaussian prior, likelihood, and posterior essentially reduces computational demands for Bayesian analysis. However, the normality assumption can be violated for empirical data. For example, violations can be observed in the presence of outliers, particularly with small sample sizes or unbalanced designs, which diminishes the validity of the statistical analysis. This problem is not specific to Bayesian analysis but is inherent to all group-level analyses that assume a normal distribution of the effect. Nevertheless, in fMRI studies, the most common approach is to use the Gaussian general linear models (Poline and Brett, 2012), which have been shown to be robust against violations of the normality assumption (Knief and Forstmeier, 2021). Still, we need to be ensured that these assumptions are not violated substantially. If that is the case, one can use Bayesian estimation based on non-Gaussian distributions. In this work, we consider Bayesian estimation with Gaussian ‘global shrinkage’ prior implemented in SPM12.

After Bayesian parameter estimation, we can apply one of the two main types of Bayesian inference (Penny and Ridgway, 2013): Bayesian parameter inference (BPI) or Bayesian model inference (BMI). BPI is also known as Bayesian parameter estimation (Kruschke and Liddell, 2017b). However, we deliberately separate these two terms, as they correspond to two different steps of data analysis in SPM12. BMI is also known as Bayesian model comparison, Bayesian model selection, or Bayesian hypothesis testing (Kruschke and Liddell, 2017b). We chose the term BMI as it is consonant with the term BPI.

The BPI is based on the posterior probability of finding the effect within or outside the ROPE. Let effects larger than the ES threshold γ be ‘activations,’ those smaller than –γ be ‘deactivations,’ and those falling within the ROPE [–γ; γ] be ‘no activations.’ Then, we can classify voxels as ‘activated,’ ‘deactivated,’ or ‘not activated’ if:

where Pthr is the posterior probability threshold (usually Pthr = 95%). Note that Pact + Pdeact + Pnull = 1.

If none of the above criteria are satisfied, the data in a particular voxel are insufficient to distinguish voxels that are ‘activated/deactivated’ from those that are ‘not activated.’ Hereinafter, we refer to them as ‘low confidence’ voxels (Magerkurth et al., 2015). This decision rule is also known as the ‘ROPE-only’ rule (Kruschke and Liddell, 2017a), see also Greenwald (1975); Wellek (2010), Liao et al. (2019). To the best of our knowledge, the application of this decision rule to neuroimaging data was pioneered by Friston et al. (2002a; 2002b; Friston and Penny, 2003). For convenience and visualization purposes, we can use the natural logarithm of the posterior probability odds (LPO), for example:

This allows us to more effectively discriminate voxels with a posterior probability close to unity (Penny and Ridgway, 2013). LPOnull > 3 corresponds to Pnull > 95%. In addition, LPO also allows us to identify the connection between BPI and BMI. The maps of the LPO are termed posterior probability maps (PPMs) in SPM12.

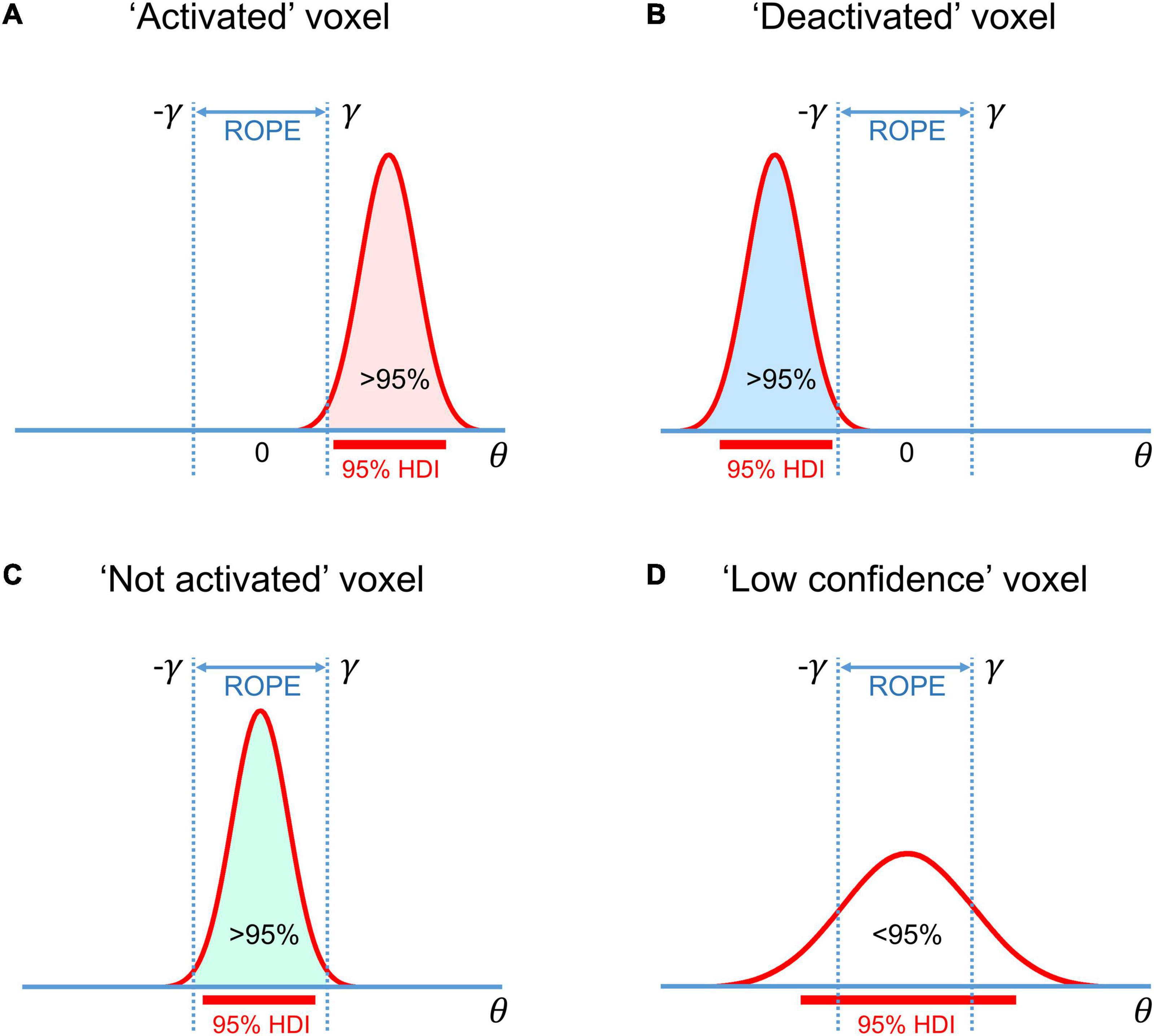

Another possible decision rule considers the overlap between ROPE and the 95% highest density interval (HDI). HDI is a type of credible interval (Bayesian analog of the confidence interval), which contains only the effects with the highest posterior probability density. If the HDI falls entirely inside the ROPE, we can classify voxels as ‘not activated.’ In contrast, if the HDI lies completely outside the ROPE, we can classify voxels as either ‘activated’ or ‘deactivated.’ If the HDI overlaps with the ROPE, we cannot make a confident decision (we can consider them to be ‘low confidence’ voxels). This decision rule is known as the ‘HDI+ROPE’ rule (Kruschke and Liddell, 2017a). It is more conservative than the ‘ROPE-only’ rule because it does not consider the effects from the low-density tails of the posterior probability distribution. Differences between the ‘HDI+ROPE’ rule and the ‘ROPE-only’ are most evident for strongly skewed distributions. In such cases, the ROPE may contain more than 95% of the posterior probability distribution, but the 95% HDI may overlap with the ROPE. In the case of a Gaussian posterior probability distribution, both decision rules should produce similar results. The ‘HDI+ROPE rule is advocated by Kruschke and Liddell (2017a) and the ‘ROPE-only’ rule is preferred by Friston et al. (2002a; 2002b; Friston and Penny, 2003), Wellek (2010); Liao et al. (2019). These decision rules are illustrated in Figure 4.

Figure 4. Possible variants of the posterior probability distributions of the effect θ = cβ in (A) ‘activated’ voxels, (B) ‘deactivated’ voxels, (C) ‘not activated’ voxels, (D) ‘low confidence’ voxels. The ‘ROPE only’ rule considers only the colored parts of the distributions. The ‘HDI+ROPE’ rule considers overlap between the ROPE and 95% HDI.

With BPI, we consider the posterior probabilities of the linear contrast of parameters θ = cβ. Instead, we can consider models using BMI.

Let Halt and Hnull be two non-overlapping hypotheses represented by models Malt and Mnull. These models are defined by two parameter spaces: (1) Malt: θ > γ and θ < –γ, and (2) Mnull: –γ ≤ θ ≤ γ.

Now, we can rewrite Bayes’ rule (eq. 2) for Malt and Mnull

If we divide equation (6.1) by (6.2), P(D) is canceled out, and we obtain:

In verbal form equation (7) can be expressed as:

Posterior Odds = Bayes Factor × Prior Odds

The Bayes factor (BF) is a multiplier that converts prior model probability odds to posterior model probability odds. It indicates the relative evidence for one model against another. For example, if , then the observed data are twice as likely under the null model than under the alternative.

A connection exists between the BPI (eq. 3–5), and BMI (eq. 7) (see Morey and Rouder, 2011; Liao et al., 2019):

or, in verbal form:

For convenience, BF may also be expressed in the form of a natural logarithm:

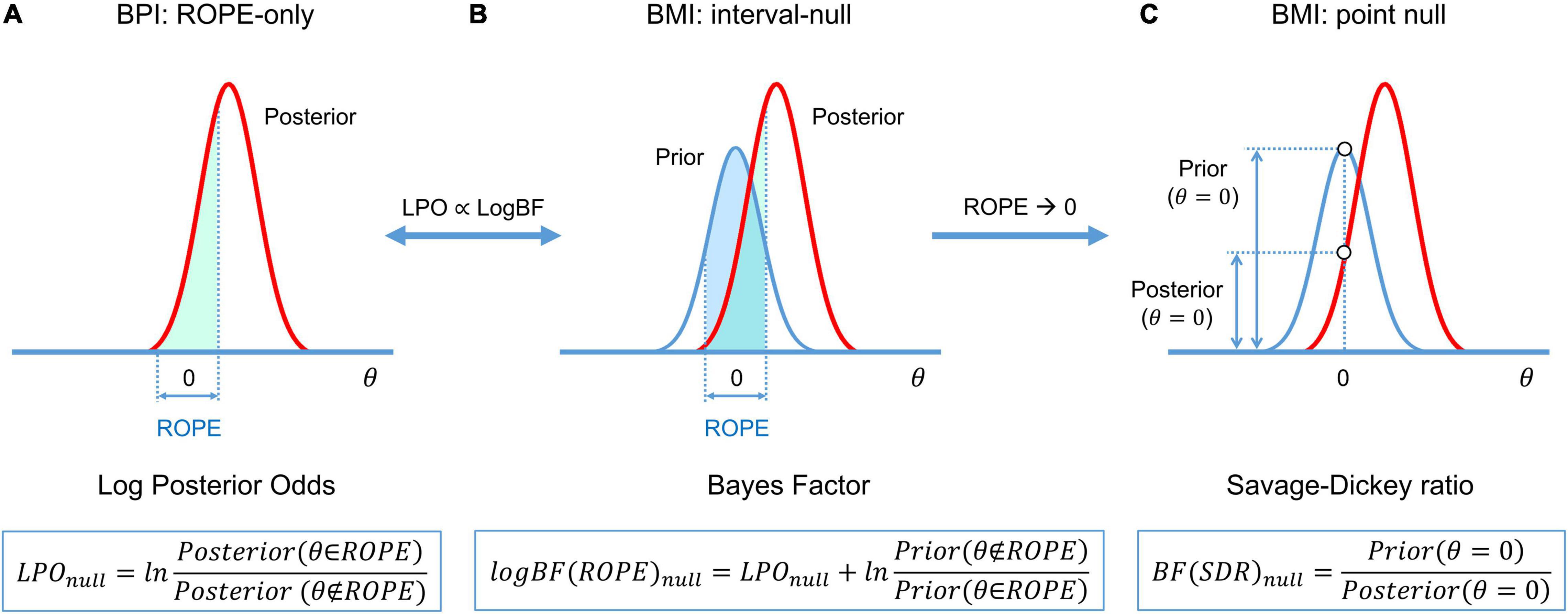

The calculation of BF may be computationally challenging, as it requires integration over the parameter space. However, if the ROPE has zero width (point-null hypothesis), then the BF has an analytical solution known as the Savage–Dickey ratio (SDR) (Wagenmakers et al., 2010; Friston and Penny, 2011; Rosa et al., 2012; Penny and Ridgway, 2013). BF(SDR)null is calculated by dividing the prior probability density by the posterior probability density at θ = 0. The interpretation of the BF(SDR)null is simple: if the effect size is less likely to equal zero after obtaining the data than before, then BF(SDR)null < 1: that is, we have more evidence for Malt. See schematic illustration of BMI based on interval-null and point-null hypotheses and its relation to BPI in Figure 5.

Figure 5. Schematic of BFs used in BMI and their relation to LPO used in BPI. (A) BPI based on the ‘ROPE-only’ decision rule. (B) BF(ROPE) is related to the areas under the functions of the posterior and prior probability densities inside and outside the ROPE. (C) BF(SDR) is the relation between the posterior and prior probability at θ = 0. LPOs and BFs provide relative evidence for the null and alternative hypotheses.

Now we can point out the conceptual links between the frequentist and Bayesian approaches.

(1) Parameter estimation: When we have no prior information, that is, all parameter values are a priori equally probable (‘flat’ prior), the PEB estimation reduces to the frequentist parameter estimation (maximum likelihood estimation; Friston et al., 2002a).

(2) Multiplicity adjustments: One of the major concerns in frequentist inference is the multiplicity problem. In general, after the Bayesian parameter estimation, it is not necessary to classify any voxel as ‘activated/deactivated ‘ or ‘not activated.’ If we consider unthresholded maps of posterior probabilities, LPOs, or LogBFs, the multiple comparisons problem does not arise (Friston and Penny, 2003). However, if we apply a decision rule to classify voxels, we should control for wrong decisions across multiple comparisons (Woolrich et al., 2009, see also possible loss functions in Muller et al., 2006; Kruschke and Liddell, 2017a). The advantage of PEB with the ‘global shrinkage’ prior is that it automatically accounts for multiple comparisons without the need for ad hoc multiplicity adjustments (Berry, 1988; Friston and Penny, 2003; Gelman et al., 2012). The frequentist approach processes every voxel independently, whereas the PEB algorithm considers joint information from all voxels. Frequentist inference uncorrected for multiple independent comparisons is prone to label noise-driven, random extremes as ‘statistically significant.’ Bayesian analysis specifies that extreme values are unlikely a priori, and thus they shrink toward a common mean (Lindley, 1990; Westfall et al., 1997; Berry and Hochberg, 1999; Friston et al., 2002a,b; Gelman et al., 2012; Kruschke and Liddell, 2017b). If we consider thresholded maps of posterior probabilities, for example, Pact > 95%, then as many as 5% of ‘activated’ voxels could be falsely labeled so. This is conceptually similar to the false discovery rate (FDR) correction (Berry and Hochberg, 1999; Friston et al., 2002b; Friston and Penny, 2003; Storey, 2003; Muller et al., 2006; Schwartzman et al., 2009). In practice, BPI with γ = 0 should produce similar results (in terms of the number of ‘activated/deactivated’ voxels) as classical NHST with FDR correction. If we increase the ES threshold, fewer voxels will be classified as ‘activated/deactivated,’ and at some γ value, BPI will produce results similar to the more conservative Family Wise Error (FWE) correction3.

(3) Interval-based hypothesis testing: Frequentist interval-based hypothesis testing is conceptually connected with BPI, particularly, the ‘HDI+ROPE’ decision rule. The former considers the intersection between ROPE and the confidence intervals. The latter considers the intersection between ROPE and the HDI (credible intervals).

(4) BPI and BMI: BMI based on BF(ROPE) is conceptually linked to BPI based on the ‘ROPE-only’ decision rule. The interval-based Bayes factor BF(ROPE) is proportional to the posterior probability odds. When ROPE is infinitesimally narrow, BF can be approximated using the SDR. Note that even though BF(SDR) is based on the point-null hypothesis, it can still provide evidence for the null hypothesis, in contrast to BPI with γ = 0. However, BF(SDR) in PEB settings has not yet been tested using empirical fMRI data. Because the point-null hypothesis is always false (Meehl, 1967), BPI and BF(ROPE) may be preferred over BF(SDR).

The main difficulty in applying interval-based methods is the choice of the ES threshold γ. To date, only a few studies have been devoted to determining the minimal relevant effect size. One of them suggested a method to objectively define γ at the subject level of analysis which was calibrated by clinical experts and may be implemented for pre-surgical planning (Magerkurth et al., 2015). At the same time, the problem of choosing the ES threshold γ for the group-level Bayesian analysis remains unresolved.

Several ways in which to define the ES threshold are available. Firstly, we can conduct a pilot study to determine the expected effect sizes. Secondly, we can use data from the literature to determine the typical effect sizes for the condition of interest. Thirdly, we can use the default ES thresholds that are commonly accepted in the field. One of the first ES thresholds proposed in the neuroimaging literature was γ = 0.1% (Friston et al., 2002b). This is the default ES threshold for the subject-level BPI in SPM12. For the group-level BPI, the default ES threshold is one prior standard deviation of the effect γ = 1 prior SDθ (Friston and Penny, 2003). Fourthly, γ can be selected in such a way as to ensure maximum similarity of the activation patterns revealed by classical NHST and Bayesian inference. This would allow us to reanalyze the data using Bayesian inference, reveal similar activation patterns as was previously the case for classic inference, and detect the ‘not activated’ and ‘low confidence’ voxels. Lastly, we can consider the posterior probabilities at multiple ES thresholds or compute the ROPE maps (see below).

The ES threshold can be expressed as unstandardized (raw β values or percent signal change) and standardized values (for example, Cohen’s d). Raw β values calculated by SPM12 at the first level of analysis represent the BOLD signal in arbitrary units. However, they can be scaled to a more meaningful unit, the BOLD percent signal change (PSC) (Poldrack et al., 2011; Chen et al., 2017). Unstandardized and standardized values have disadvantages and advantages. Different ways exist in which to scale β values to PSC (Pernet, 2014; Chen et al., 2017), which is problematic when comparing the results of different studies. Standardized values represent the effect size in terms of the standard deviation units, which supposedly facilitate the comparison of results between different experiments. However, standardized values are relatively more unstable between measurements and less interpretable (Baguley, 2009; Chen et al., 2017). Moreover, Cohen’s d is closely related to the t-value (for one sample case, ) and may share some drawbacks with t-values. Reimold et al. (2005) showed that spatial smoothing has a nonlinear effect on voxel variance. Using t-values or Cohen’s d for inference in neuroimaging may lead to spatially inaccurate results (spatial shift of local maxima in t-maps or Cohen’s d maps compared to PSC-maps). In this study, we focused on PSCs.

It is also important to note that effect sizes (both BOLD PSC and Cohen’s d) depend on the MRI scanner (e.g., field strength, coil sensitivity), acquisition parameters (e.g., echo time, spin echo vs. gradient echo sequences) and field inhomogeneity (UIudag et al., 2009). For example, the effect sizes may be underestimated in brain areas near air–tissue interfaces because of field inhomogeneities. This fact further complicates the selection of the ES threshold. However, this does not mean that we should ignore the effect size and return to the point-null hypothesis. One may choose different ES thresholds for different regions of interest, scanners or acquisition parameters.

Seven block-design tasks were considered from the HCP dataset, including working memory, gambling, motor, language, social cognition, relation processing, and emotion processing tasks (Barch et al., 2013). Two event-related tasks, including the stop-signal and task-switching tasks were considered from the UCLA dataset (Poldrack et al., 2016). The length, conditions, and number of scans of the tasks are provided in the Supplementary Materials (Supplementary Table 1). A subset of 100 unrelated subjects (S1200 release) was selected from the HCP dataset (54 females, 46 males, mean age = 29.1 ± 3.7 years) for assessment. A total of 115 subjects from the UCLA dataset were included in the analysis (55 females, 60 males, mean age = 31.7 ± 8.9 years) after removing subjects with no data for the stop-signal task, a high level (>15%) of errors in the Go-trials, and those of which the raw data were reported to be problematic (Gorgolewski et al., 2017). See the fMRI acquisition parameters in the Supplementary Materials, Par. 1.

The minimal preprocessing pipelines for the HCP and UCLA datasets were described by Glasser et al. (2013) and Gorgolewski et al. (2017), respectively. Spatial smoothing was applied to the preprocessed images with a 4 mm full width at half maximum (FWHM) Gaussian smoothing kernel. Additionally, to compare the extent to which the performance of classical NHST and BPI depended on the smoothing, we applied 8 mm FWHM smoothing to the emotion processing task. Spatial smoothing was performed using SPM12. The results are reported for the 4 mm FWHM smoothing filter, unless otherwise specified.

Frequentist parameter estimation was applied at the subject level of analysis. A detailed description of the general linear models for each task design is available in the Supplementary Materials, Par. 2. Fixation blocks and null events were not modeled explicitly in any of the tasks. Twenty-four head motion regressors were included in each subject-level model (six head motion parameters, six head motion parameters one time point before, and 12 corresponding squared items) to minimize head motion artifacts (Friston et al., 1996). Raw β values were converted to PSC relative to the mean whole-brain ‘baseline’ signal (Supplementary Materials, Par. 3). The linear contrasts of the β values were calculated to describe the effects of interest θ = cβ in different tasks. The sum of positive terms in the contrast vector, c, is equal to one. The list of contrasts calculated in the current study to explore typical effect sizes is presented in Supplementary Table 1. At the group level of analysis, the Bayesian parameter estimation with the ‘global shrinkage’ prior was applied using SPM12 (v6906). We performed a one-sample test on the linear contrasts created at the subject level of analysis.

Classical inference was performed using voxel-wise FWE correction with α = 0.05. This is the default SPM threshold and is known to be conservative and to guarantee protection from false positives (Eklund et al., 2016). Although voxel-wise FWE correction may be too conservative for small sample sizes, it is recommended when large sample sizes are available (Woo et al., 2014).

Bayesian parameter inference, accessible via the SPM12 GUI, allows the user to declare only whether the voxels are ‘activated’ or ‘deactivated.’ The classification of voxels as being either ‘not activated’ or ‘low confidence’ requires the posterior mean and variance. At the group level of analysis, SPM12 does not save the posterior variance image. However, the posterior variance can be reconstructed from the image of the noise hyperparameter using a first-order Taylor series approximation (Penny and Ridgway, 2013). Therefore, in the current study, BPI was performed using the developed SPM12-based toolbox4. For the ‘ROPE-only’ rule, the posterior probability threshold was Pthr = 95% (LPO > 3). For the ‘HDI+ROPE’ rule, we used the 95% HDI.

We compared the number of ‘activated’ voxels (as a percentage of the total number of voxels) detected by Bayesian and classical inference. We also compared the number of ‘activated,’ ‘deactivated,’ and ‘not activated’ voxels detected using BPI with the ‘ROPE-only’ and ‘HDI+ROPE’ decision rules and different ES thresholds. To estimate the influence of the sample size on the results, all the above-mentioned analyses were performed with samples of different sizes: 5 to 100 subjects from the HCP dataset (the emotion processing task, ‘Emotion > Shape’ contrast) and 5 to 115 subjects from the UCLA dataset (the stop signal task, ‘Correct Stop > Go’ contrast), in steps of 5 subjects. Ten random groups were sampled for each step.

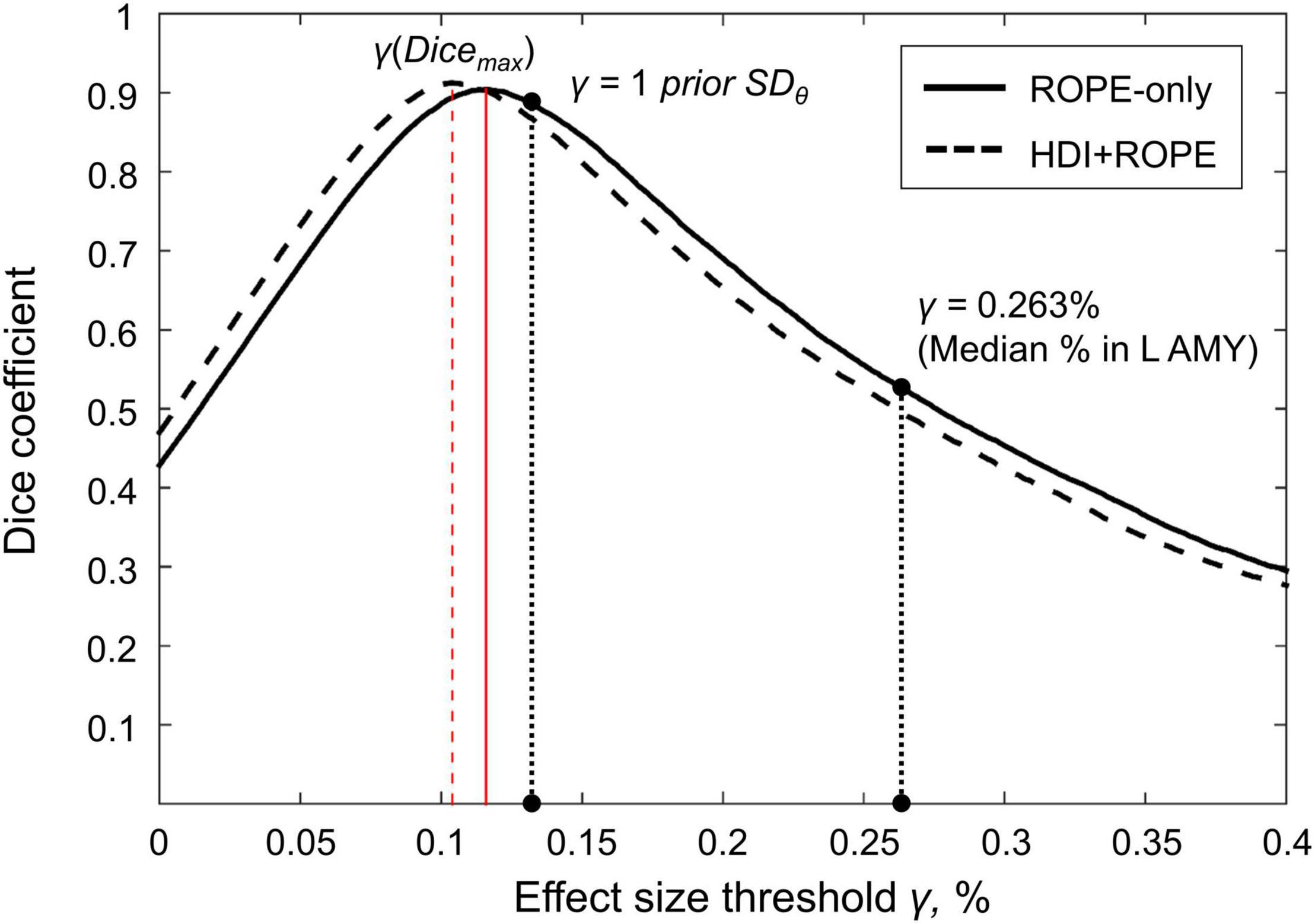

We considered three ES thresholds: firstly, the default ES threshold for the subject-level γ = 0.1% (BOLD PSC); secondly, the default ES threshold for the group-level γ = 1 prior SDθ; thirdly, the γ(Dicemax) threshold, which ensures maximum similarity of the activation patterns revealed by classical NHST and BPI. The similarity was assessed using the Dice coefficient:

where Vclassic is the number of ‘activated’ voxels detected using classical NHST, Vbayesian(γ) is the number of ‘activated’ voxels detected using BMI with the ES threshold γ, and Voverlap is the number of ‘activated’ voxels detected by both methods. A Dice coefficient of 0 indicates no overlap between the patterns, and 1 indicates complete overlap. Dice coefficients were calculated for γ ranging from 0 to 0.4% in steps of 0.001%.

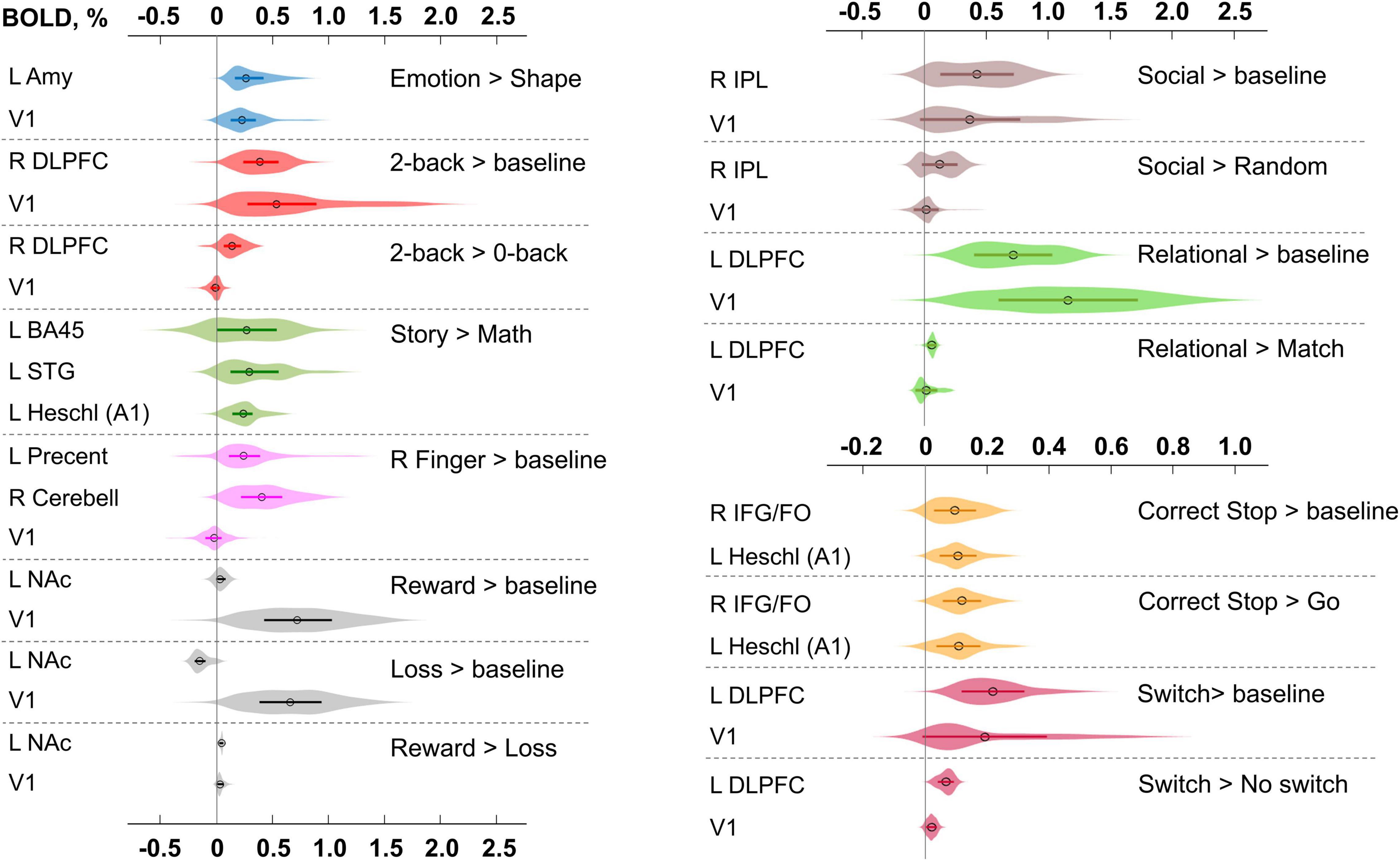

In the current study, we aimed to provide a reference set of typical effect sizes for different task designs (block and event-related) and different contrasts (‘task-condition > control-condition,’ ‘task-condition > baseline,’) in a set of a priori defined regions of interest (ROI). Effect sizes were expressed in PSC and Cohen’s d. ROI masks were defined using anatomical and a priori functional masks. For more details, see Supplementary Materials, Par. 4.

Bayesian parameter inference should be able to detect the ‘null effect’ in the majority of voxels when comparing samples with no expected practically significant difference. For example, there may be two groups of healthy adult subjects performing the same task or two sessions with the same task instructions. To test this, we used fMRI data from the emotion processing task. To emulate two ‘similar’ independent samples, 100 healthy adult subjects’ contrasts (‘Emotion > Shape’) were randomly divided into two groups of 50 subjects. A two-sample test comparing the ‘Group #1’ and ‘Group #2’ was performed with the assumption of unequal variances between the groups (SPM12 default option). To emulate ‘similar’ dependent samples, we randomized ‘Emotion > Shape’ contrasts from right-to-left (RL) and left-to-right (LR) phase encoding sessions in the ‘Session #1’ and ‘Session #2’ samples. Each sample consisted of 50 contrasts from the RL session and 50 from the LR session. A paired test designed to compare ‘Session #1’ and ‘Session #2’ was equivalent to the one-sample test on 50 ‘RL > LR session’ and 50 ‘LR > RL session’ contrasts.

To check for violations of the normality assumption we performed Shapiro-Wilk test (Shapiro and Wilk, 1965) for each voxel for one block-design task (‘Emotion >Shape’ contrast) and one event-related task (‘Correct Stop > Go’ contrast). We reported the number of voxels that were significantly non-Gaussian (using α = 0.001 uncorrected for multiple comparisons and α = 0.05 with Bonferroni correction). We also calculated median kurtosis and skewness across voxels. Kurtosis is a measure of the heaviness of the tails. Skewness is a measure of asymmetry of distribution.

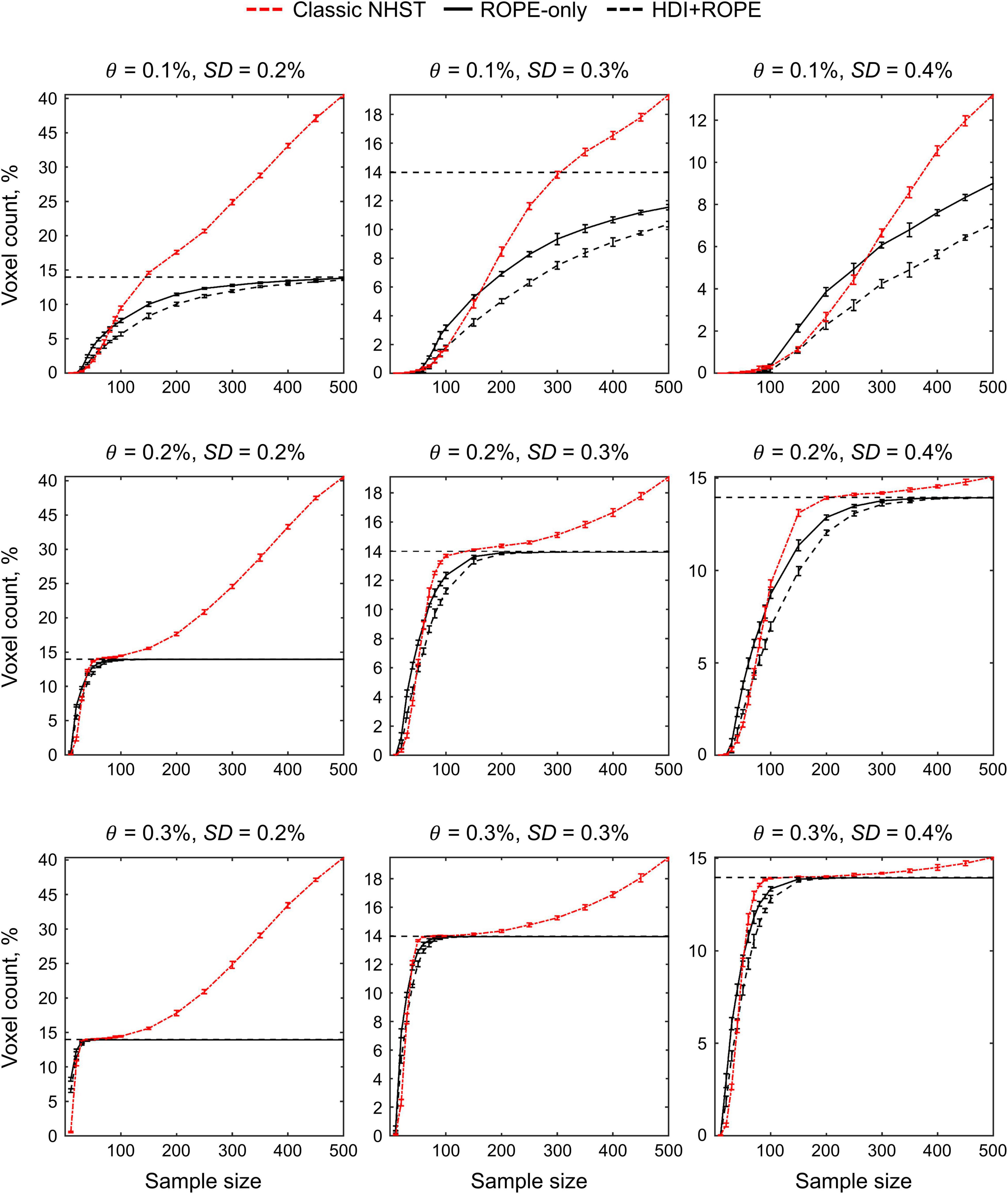

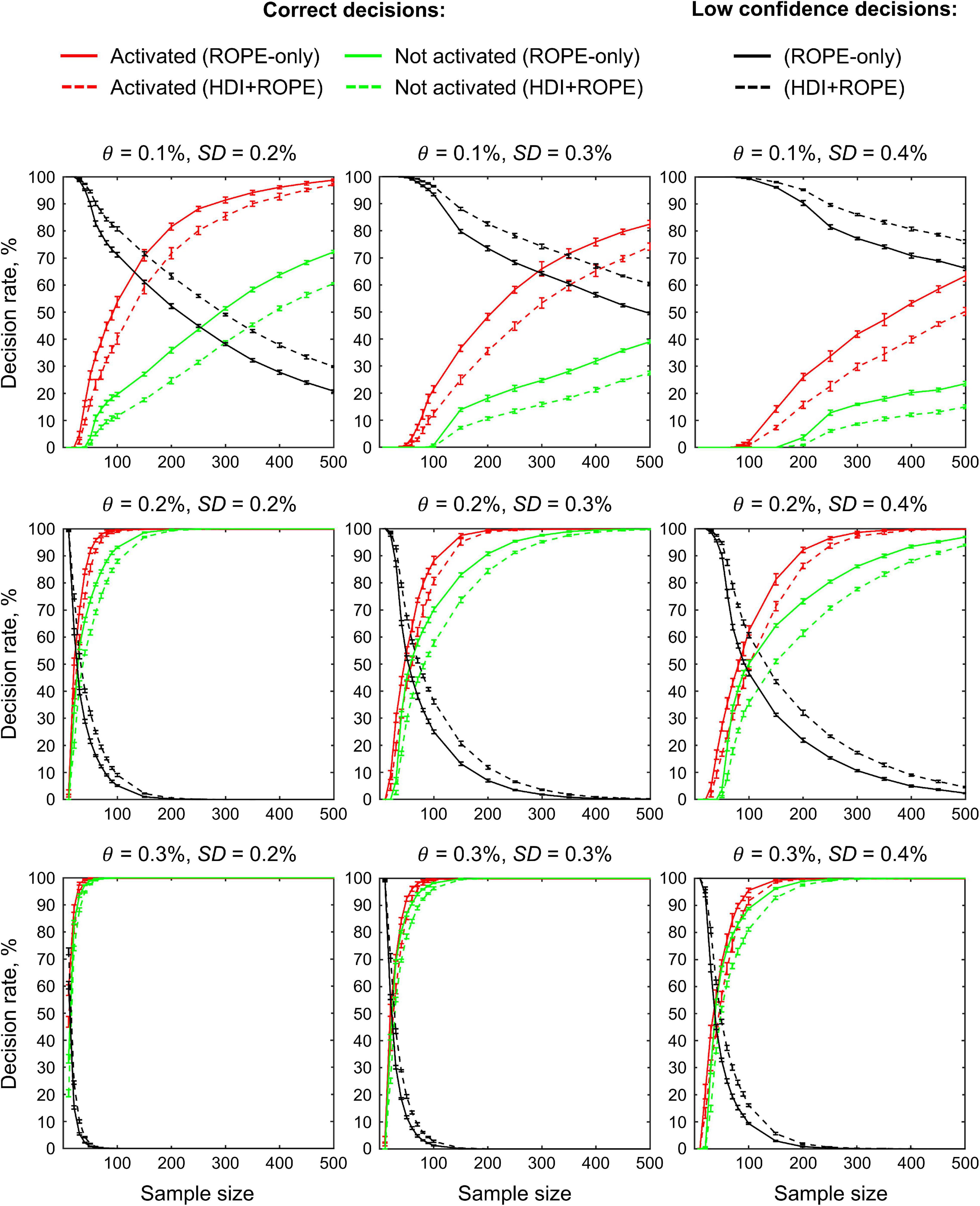

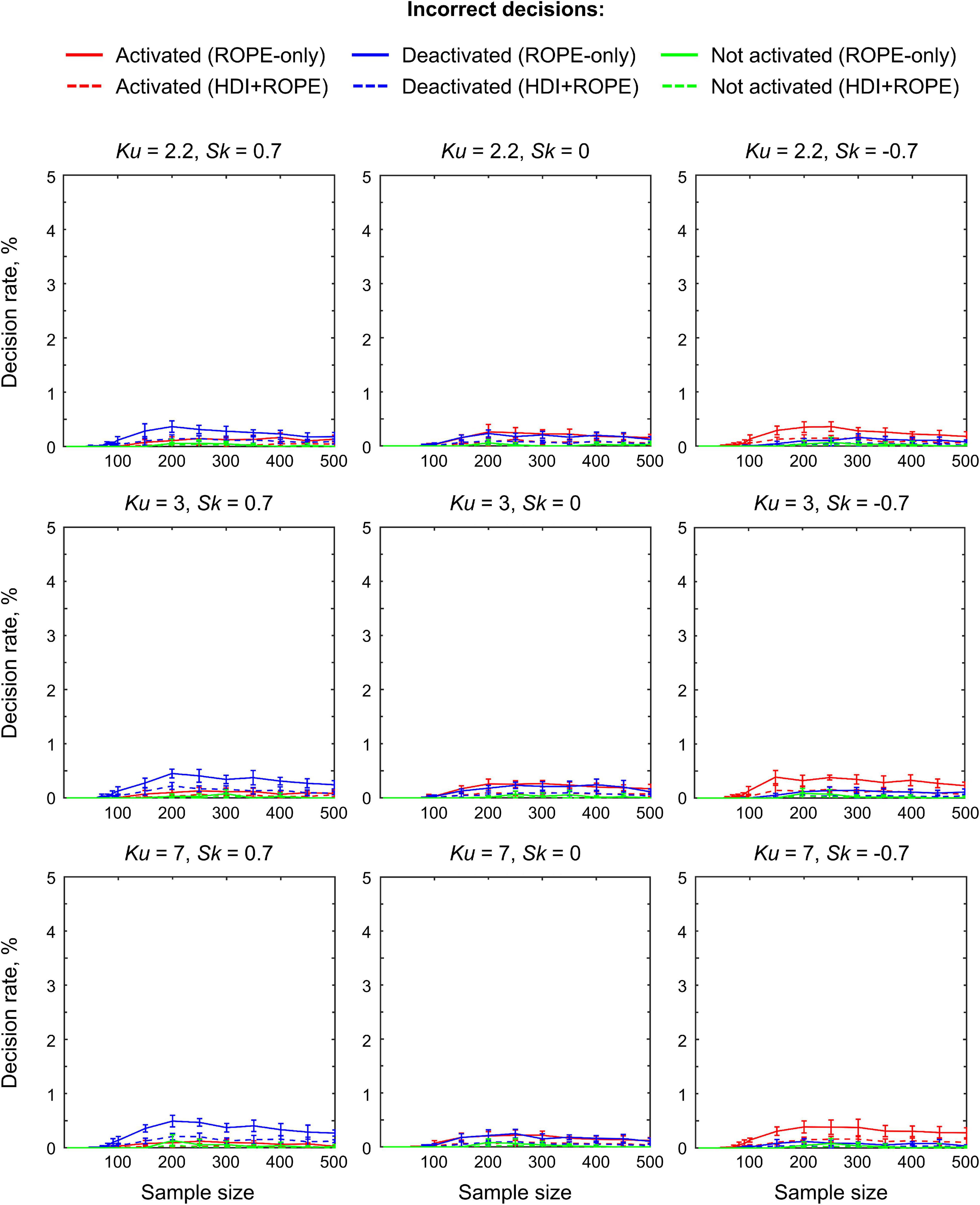

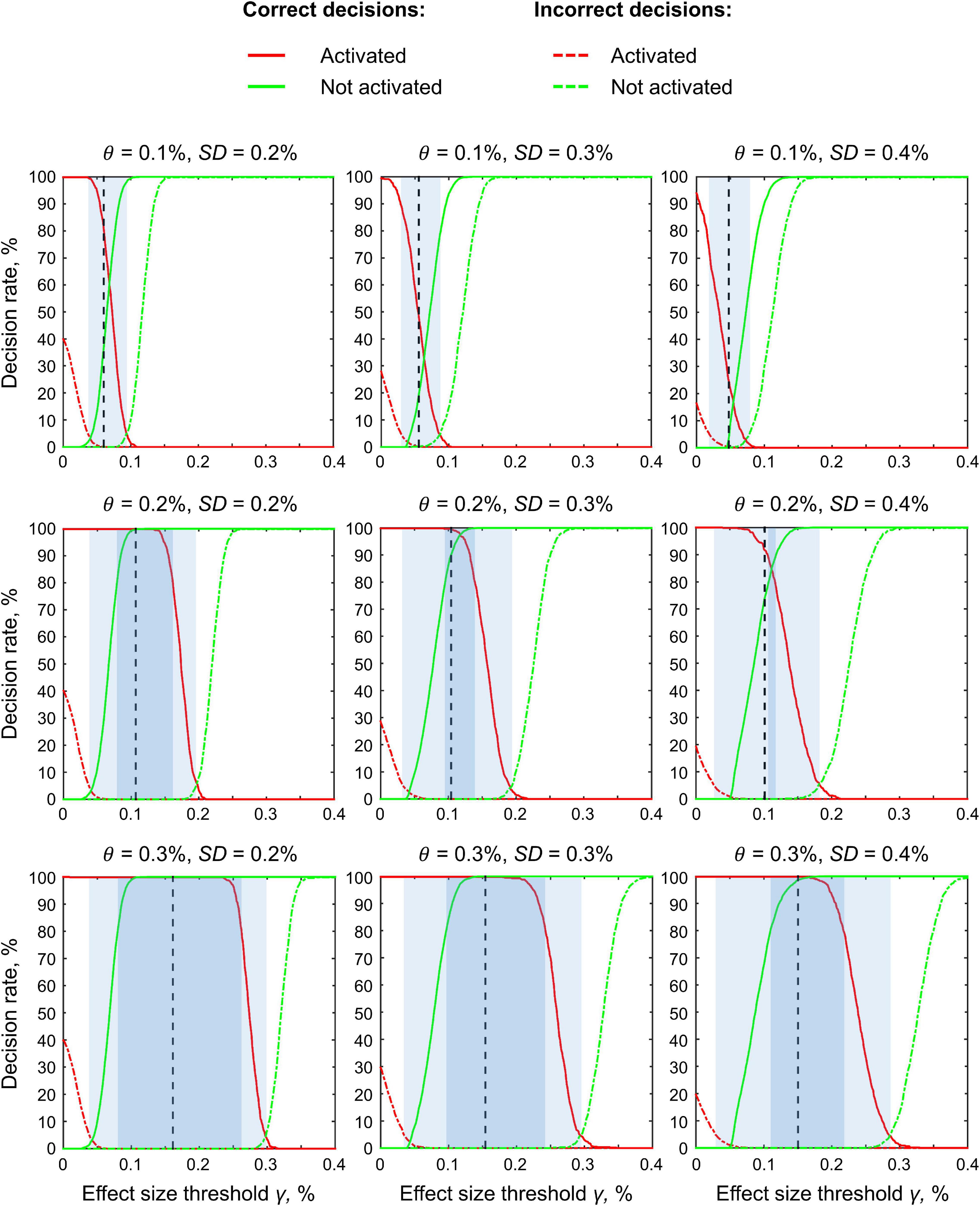

The main limitation of using empirical data to assess the performance of statistical methods lies in the lack of knowledge of the ground truth. Therefore, we performed group-level simulations to better understand how the sample size and effect size threshold affect BPI results given different known effect sizes and noises. Simulations also allowed us to assess the robustness of BPI to the violations of the normality assumption. We generated the parameter maps (contrast images) similar to Nichols and Hayasaka (2003); Schwartzman et al. (2009) and Cremers et al. (2017). Each contrast image consisted of ‘activated’ and ‘deactivated’ voxels and ‘trivial’ background voxels surrounding them. Locations of ‘activated’ and ‘deactivated’ voxels were specified based on the NeuroSynth meta-analysis results (Yarkoni et al., 2011) obtained using the search terms ‘task’ and ‘default,’ respectfully (association test, α = 0.01 with FDR correction). Data were drawn from the Pearson system distribution (Johnson et al., 1994) with kurtosis, Ku = 2.2, 3, 7 and skewness, Sk = −0.7, 0, 0.7. The normal distribution corresponds to Ku = 3 and Sk = 0. Other combinations of Ku and Sk resulted in four-parameter beta distributions. The mean effect in practically significant (‘activated’ and ‘deactivated’) voxels was θ = ± 0.1, 0.2, 0.3%. For practically non-significant or ‘trivial’ voxels, the mean effect was θ = ± 0.04%, which can be considered equivalent to the null value for practical purposes (‘not activated’ voxels). Noise standard deviation was SD = 0.2, 0.3, 0.4%. The mean effect size and noise were consistent with those observed in the empirical data (see Supplementary Tables 11–19). Contrast-to-noise ratio was varied from 0.25 to 1.5. For each combination of the Pearson system distribution parameters, we generated 1000 images.

To evaluate sample size dependencies, we randomly drawn images from the full sample (N = 1000) ranging from N = 10 to 100 (with step 10) and from N = 150 to 500 (step 50). This procedure was repeated ten times for each step. The analysis was limited to the single axial slice (z = 36 mm) containing 579 ‘activated’ voxels, 500 ‘deactivated’ voxels and 3067 ‘trivial’ or ‘not activated’ voxels. For classical NHST and BPI, we calculated the number of ‘activated’ voxels in relation to the total number of voxels. For BPI, we additionally calculated:

(1) Correct decision rate. The number of correctly classified ‘activated,’ ‘deactivated,’ and ‘not activated’ voxels to its true number (c.f. ‘hit rate’ in detection theory or ‘true positive rate’ in frequentist framework).

(2) Incorrect decision rate. The number of voxels incorrectly classified as ‘activated,’ ‘deactivated,’ and ‘not activated’ to the true number of voxels not belonging to ‘activated,’ ‘deactivated,’ and ‘not activated’ categories, respectfully (c.f. ‘false alarm rate’ in detection theory or ‘false positive rate’ in frequentist framework);

(3) Low confidence decision rate. The number of ‘low confidence’ voxels to the total number of voxels.

The code for the simulations is available online5.

Classical NHST did not show a significant difference between ‘Group #1’ and ‘Group #2’ (see Supplementary Figure 1). BPI with the ‘ROPE-only’ decision rule and default ES threshold γ = 1 prior SDθ = 0.190% classified 83.4% of voxels as having ‘no difference’ in which the null hypothesis was accepted (see Supplementary Figure 1). The ‘HDI+ROPE’ rule classified 76.2% of voxels as having ‘no difference.’

Classical NHST did not reveal a significant difference between ‘Session #1’ and ‘Session #2’ (see Supplementary Figure 2). The prior SDθ was 0.005%. In this case, using the default ES threshold γ = 1 prior SDθ did not allow the detection of any ‘no difference’ voxels, because the ROPE was unreasonably narrow. The ‘null effect’ was detected in all voxels beginning with a γ = 0.013% threshold using the ‘ROPE-only’ and ‘HDI+ROPE’ decision rules (see Supplementary Figure 2).

In this way, when comparing two ‘similar’ independent samples (two groups of healthy subjects performing the same task), BPI with the default group-level threshold (one prior SDθ) allowed us to correctly label voxels as having ‘no difference’ for the majority of the voxels of the brain. However, when comparing two ‘similar’ dependent samples (two sessions from the same task), the one prior SDθ threshold became inadequately small.

Therefore, the default one prior SDθ threshold is not suitable when the difference between dependent conditions is very small (paired sample test or one-sample test). In such cases, one can use an a priori defined ES threshold based on previously reported effect sizes or provide an ES threshold at which most of the voxels can be labeled as having ‘no difference,’ allowing the critical reader to decide whether this speaks in favor of the absence of differences.

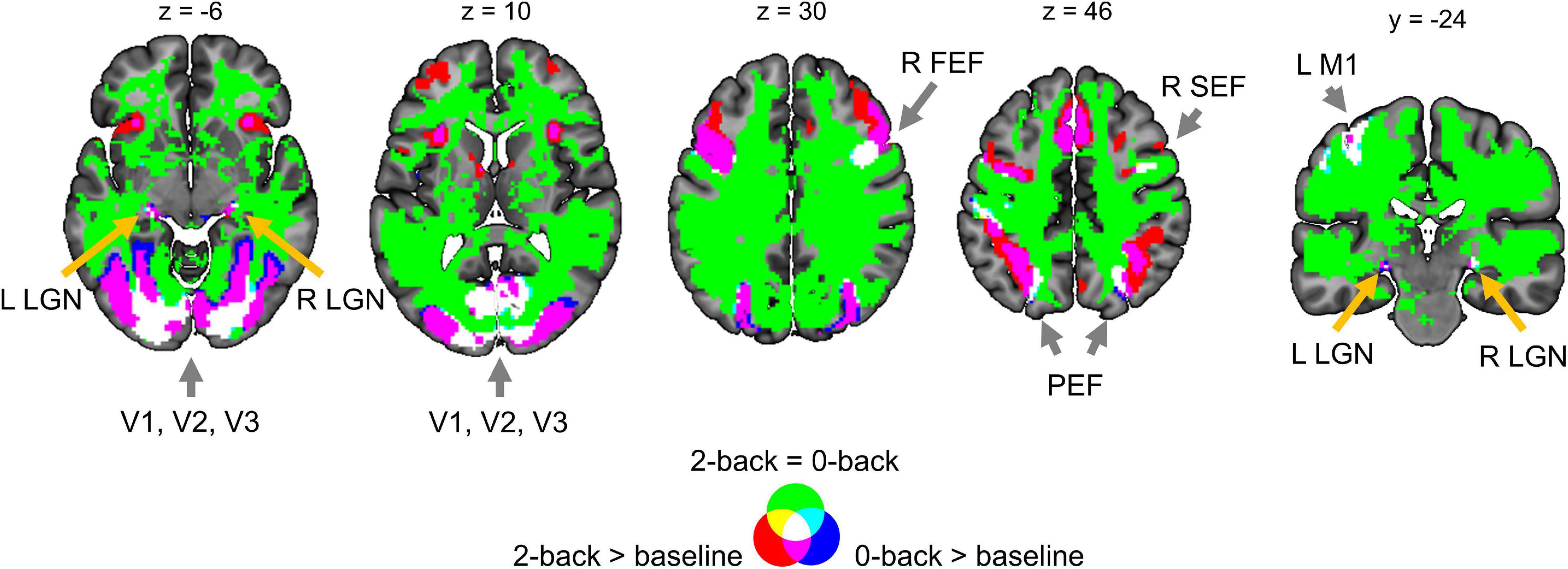

Generally, classical NHST with voxel-wise FWE correction and BPI with the ‘ROPE-only’ decision rule and default group-level ES threshold γ = 1 prior SDθ revealed similar (de)activation patterns in all considered contrasts (see Figure 6, Table 1, and Supplementary Tables 2–10). The median ES threshold based on Dicemax and median default group-level ES threshold across all considered contrasts were close in magnitude to the default subject-level ES threshold γ = 0.1%: γ(Dicemax) = 0.118% and γ = 1 prior SDθ = 0.142%. The median Dicemax across all the considered contrasts reached 0.904. At the same time, BPI allowed us to classify ‘non-significant’ voxels as ‘not activated’ or ‘low confidence.’ As it can be clearly seen from Figure 6, areas with ‘non-activated’ voxels surround clusters with ‘activated/deactivated’ voxels. Both are separated by areas comprising ‘low confidence’ voxels.

Figure 6. Examples of results obtained with classical NHST and BPI. Four contrasts were chosen for the illustration purposes (two event-related and two block-design tasks). Classical NHST was implemented using voxel-wise FWE correction (α = 0.05). BPI was implemented using the ‘ROPE-only’ decision rule, Pthr = 95% (LPO > 3) and γ = 1 prior SDθ. Axial slice z = 18 mm (MNI152 standard space).

As expected, compared with the ‘HDI+ROPE’ rule, using the ‘ROPE-only’ rule slightly increases the number of ‘activated/deactivated’ and ‘not activated’ voxels (see Table 1 and Supplementary Tables 2–10). The ‘HDI+ROPE’ rule labeled more voxels as ‘low confidence.’

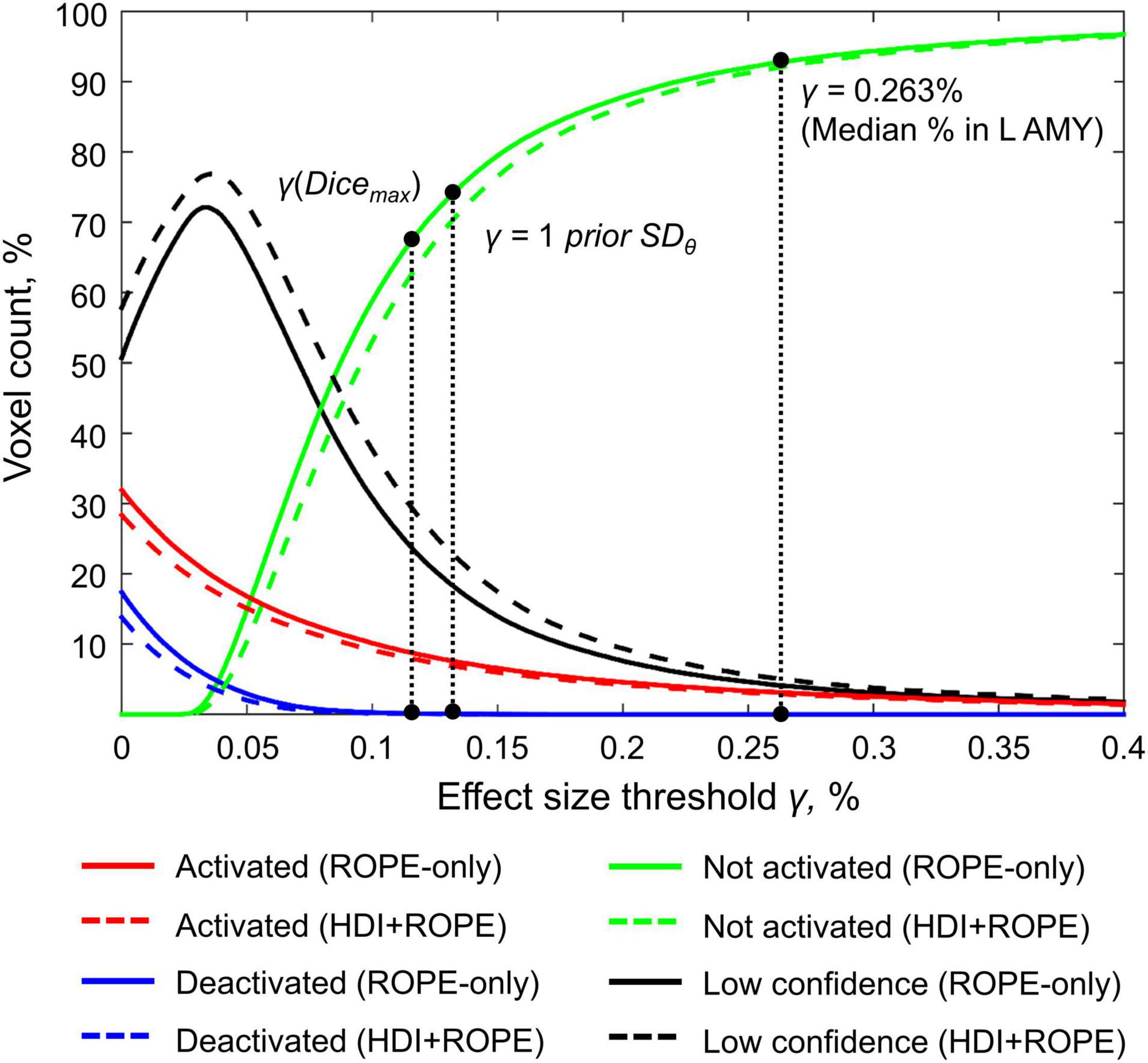

Here, we focus on the ‘ROPE-only’ rule. We first consider the results for the emotional processing task and then consider other tasks. Using the default single-subject ES threshold γ = 0.1% for the emotional processing task (‘Emotion > Shape’ contrast), 58.8% of all voxels can be classified as ‘not activated,’ 30.8% as ‘low confidence,’ and 10.1% as ‘activated’ (see Figure 7 and Supplementary Table 2). The default group-level ES threshold γ = 1 prior SDθ = 0.135% allowed us to classify 75.0% of all voxels as ‘non-activated,’ 17.5% as ‘low confidence,’ and 7.4% as ‘activated’ (see Figure 7 and Supplementary Table 2). Both types of thresholds were comparable to those of classical NHST for the detection of ‘activated’ voxels. The maximum overlap between ‘activations’ patterns revealed by classical NHST and BPI was observed at γ(Dicemax) = 0.116% (see Figure 8 and Table 1).

Figure 7. Number of voxels classified into the four categories depending on the ES threshold γ. The results for the emotion processing task (‘Emotion>Shape’ contrast) are presented for illustration. L AMY, left amygdala.

Figure 8. Dependence of the Dice coefficient on the ES threshold γ. Results for the emotion processing task (‘Emotion>Shape’ contrast). The red lines denote γ(Dicemax). L AMY, left amygdala.

For the ‘2-back > 0-back,’ ‘Left Finger > baseline,’ ‘Right Finger > baseline,’ and ‘Social > Random’ contrasts, the three ES thresholds that were considered—0.1%, one prior SDθ, γ(Dicemax)—produced similar results (see Table 1 and Supplementary Tables 3, 5, 7). For the event-related stop-signal task (‘Correct Stop > baseline’ and ‘Correct Stop > Go’ contrasts), one prior SDθ and γ(Dicemax) were close in terms of their values but smaller than 0.1% (see Table 1). Block designs tend to evoke higher BOLD PSC than event-related designs; therefore, a lower prior SDθ should be expected for event-related designs and higher prior SDθ for block designs. Within a single design, in contrasts such as ‘task-condition > baseline,’ higher BOLD PSC and prior SDθ would be expected than in contrasts in which the experimental conditions are compared directly. For example, the contrast ‘2-back > baseline’ has prior SDθ = 0.325% and contrast ‘2-back > 0-back’ has prior SDθ = 0.089%.

As previously noted, some contrasts did not elicit robust activations: ‘Reward > Loss,’ ‘Relational > Match,’ (Barch et al., 2013) and ‘Switch > No switch’ (Gorgolewski et al., 2017). The corresponding γ(Dicemax) thresholds were 0.044, 0.073, and 0.037% (see Table 1 and Supplementary Tables 6, 8, 10). The prior SDθ were 0.032, 0.051, and 0.030%. Correspondingly, BPI with the γ = 1 prior SDθ threshold classified 0, 18.4, and 42.2% of voxels as ‘not activated.’ This demonstrates that when we compare conditions with similar neural activity and minor differences, it becomes more difficult to separate ‘activations/deactivations’ from the ‘null effects’ using the γ = 1 prior SDθ threshold.

A complete list of effect sizes (BOLD PSC and Cohen’s d) estimated for different tasks and a priori defined ROIs is presented in the Supplementary Materials (Supplementary Tables 11–19). Here, we focus only on the BOLD PSC. The violin plots for some of these are shown in Figure 9.

Figure 9. Typical BOLD PSC in fMRI studies. The box plots inside the violins represent the first and third quartile, and the black circles represent median values. Contrasts from the same task are indicated in one color. L/R, left/right; AMY, amygdala; V1, primary visual cortex; DLPFC, dorsolateral prefrontal cortex; BA, Brodmann area; STG, superior temporal gyrus; A1, primary auditory cortex; NAc, nucleus accumbens; IPL, inferior parietal lobule; IFG/FO, inferior frontal gyrus/frontal operculum.

For example, the median BOLD PSC in the left amygdala ROI, one of the key brain areas for emotional processing, was 0.263%, which is approximately twice as large as one prior SDθ (see Figure 7). Thus, using this PSC as the ES threshold in future studies may cause the ROPE to become too wide compared to the effect sizes typical for tasks with such designs. Therefore, such a threshold can be used to detect large and highly localized effects. However, it may fail to detect small but widely distributed effects previously described for HCP data (Cremers et al., 2017).

In general, median PSCs within ROIs were up to 1% for block designs and 0.5% for event-related designs. The maximum PSCs reached 2.5% and were usually observed in the primary visual cortex (V1) for visual tasks comparing experimental conditions with baseline activity. For ‘moderate’ physiological effects, PSC varied in the range 0.1−0.2%, for example, for the ‘2-back > 0-back’ contrast, the median PSC in the right dorsolateral prefrontal cortex (R DLPFC in Figure 9) was 0.137%. Likewise, for the ‘Social > Random’ contrast, the right inferior parietal lobule (R IPL) median PSC was 0.137%, for the ‘Correct Stop > Go,’ the right inferior frontal gyrus/frontal operculum (R IFG/FO) median PSC was 0.120%. For more ‘strong’ physiological effects, the PSC was in the range 0.2−0.3%, for example, for the ‘Emotion > Shape’ contrast, the median PSC in the left amygdala was 0.263%, and for the ‘Story > Math’ contrast, the median PSC in the left Brodmann area 45 (Broca’s area) was 0.269%. For the motor activity, for example the ‘Right Finger > baseline’ contrast, the median PSC in the left precentral gyrus was 0.239%, in the left postcentral gyrus was 0.362%, in the left putamen was 0.290%, and in the right cerebellum was 0.401%. For the contrasts that did not elicit robust activations (Barch et al., 2013), the PSC was approximately 0.05–0.1%; for example, for the ‘Reward > Loss’ contrast, the median PSC in the left nucleus accumbens was 0.043%, and for the ‘Relational > Match’ contrast, the median PSC in the left dorsolateral prefrontal cortex was 0.062%.

We considered BPI with two consecutive thresholding steps: (1) calculate the LPOs (or PPMs) with a selected ES threshold γ, (2) apply the posterior probability threshold pth = 95% or consider the overlap between the 95% HDI and ROPE. We can reverse the thresholding sequence and calculate the ROPE maps.

For the ‘activated/deactivated’ voxels, the ROPE map contains the maximum ES thresholds that allow voxels to be classified as ‘activated/deactivated’ based on the ‘ROPE-only’ or ‘HDI+ROPE’ decision rules. For the ‘not activated’ voxels, the map contains the minimum effect size thresholds that allow voxels to be classified as ‘not activated.’

The procedure for calculating the ROPE map can be performed as follows. Let us consider a gradual increase in the ROPE radius (i.e., the half-width of ROPE or the ES threshold γ) from zero to the maximum effect size in observed volume. (1) For voxels in which PSC is close to zero, at a certain ROPE radius, the posterior probability of finding the effect within the ROPE becomes higher than 95%. This width is indicated on the ROPE map for ‘not activated’ voxels. (2) For voxels in which the PSC deviates from zero, at a certain ROPE radius, the posterior probability of finding the effect outside the ROPE becomes lower than 95%. This width is indicated on the ROPE map for ‘activated/deactivated’ voxels. The same maps can be calculated for the ‘HDI+ROPE’ decision rule.

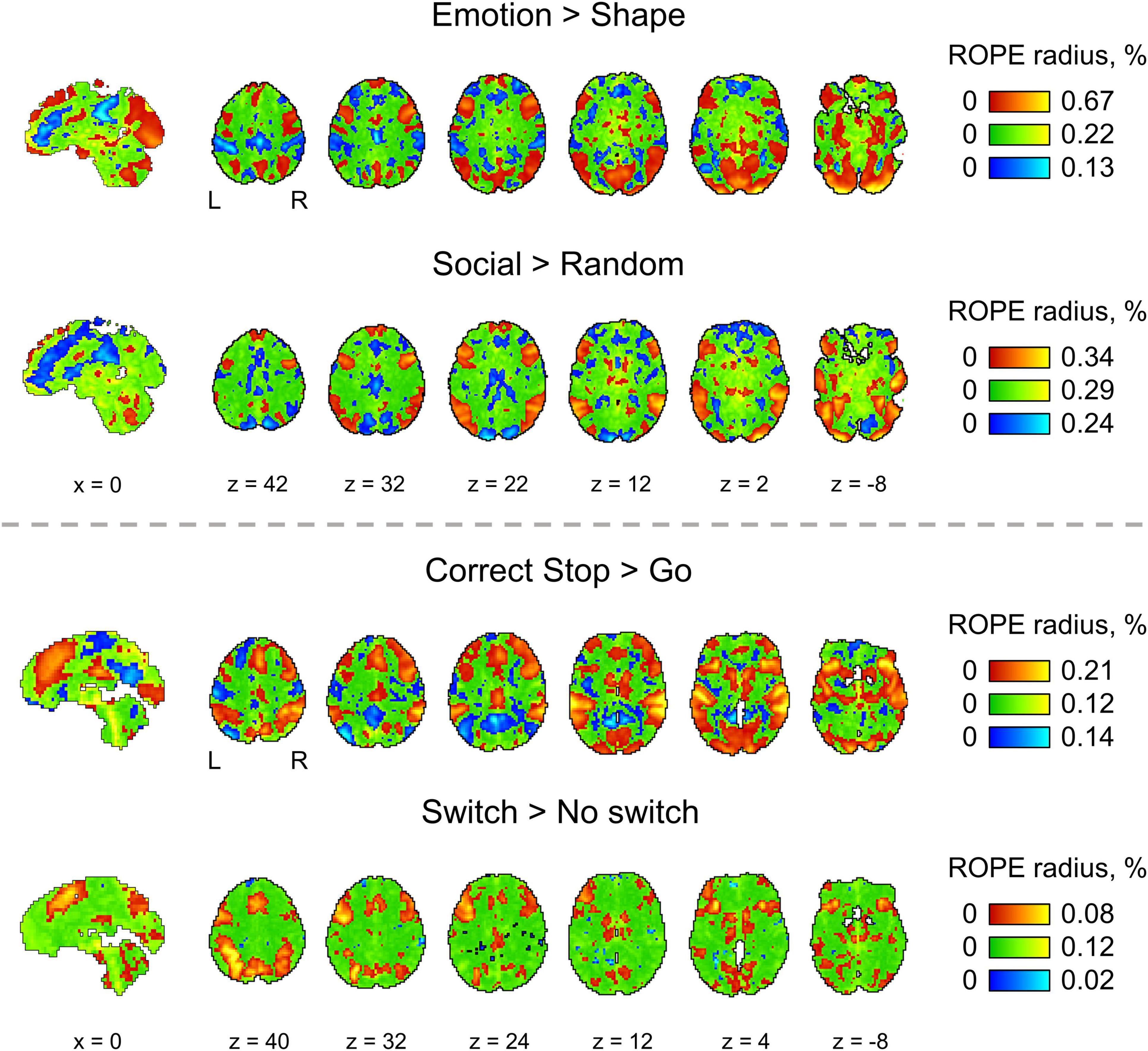

Examples of the ROPE maps are shown in Figure 10. From our point of view, ROPE maps, as well as unstandardized effect size (PSC) maps, may facilitate an intuitive understanding of the spatial distribution of a physiological effect under investigation (Chen et al., 2017). They can also be a valuable addition to standard PPMs, allowing researchers to flexibly choose the ES threshold based on expected effect size for specific experimental conditions, ROIs and MR acquisition parameters. The default ES thresholds may be more conservative to brain areas near air–tissue interfaces due to signal dropout. The researcher may choose a lower ES threshold to increase sensitivity to these brain areas.

Figure 10. The ROPE maps. Four contrasts were chosen for the illustration purposes (two event-related and two block-design tasks). The ROPE maps are presented using different colors for the ‘activated,’ ‘deactivated,’ and ‘not activated’ voxels. The green bars represent the minimum ROPE radii at which voxels with a PSC close to zero can be classified as ‘not activated’ based on the ‘ROPE-only’ decision rule. The red and blue bars represent the maximum ROPE radii at which voxels of which the PSC deviates from zero can be classified as ‘activated’ and ‘deactivated,’ respectively.

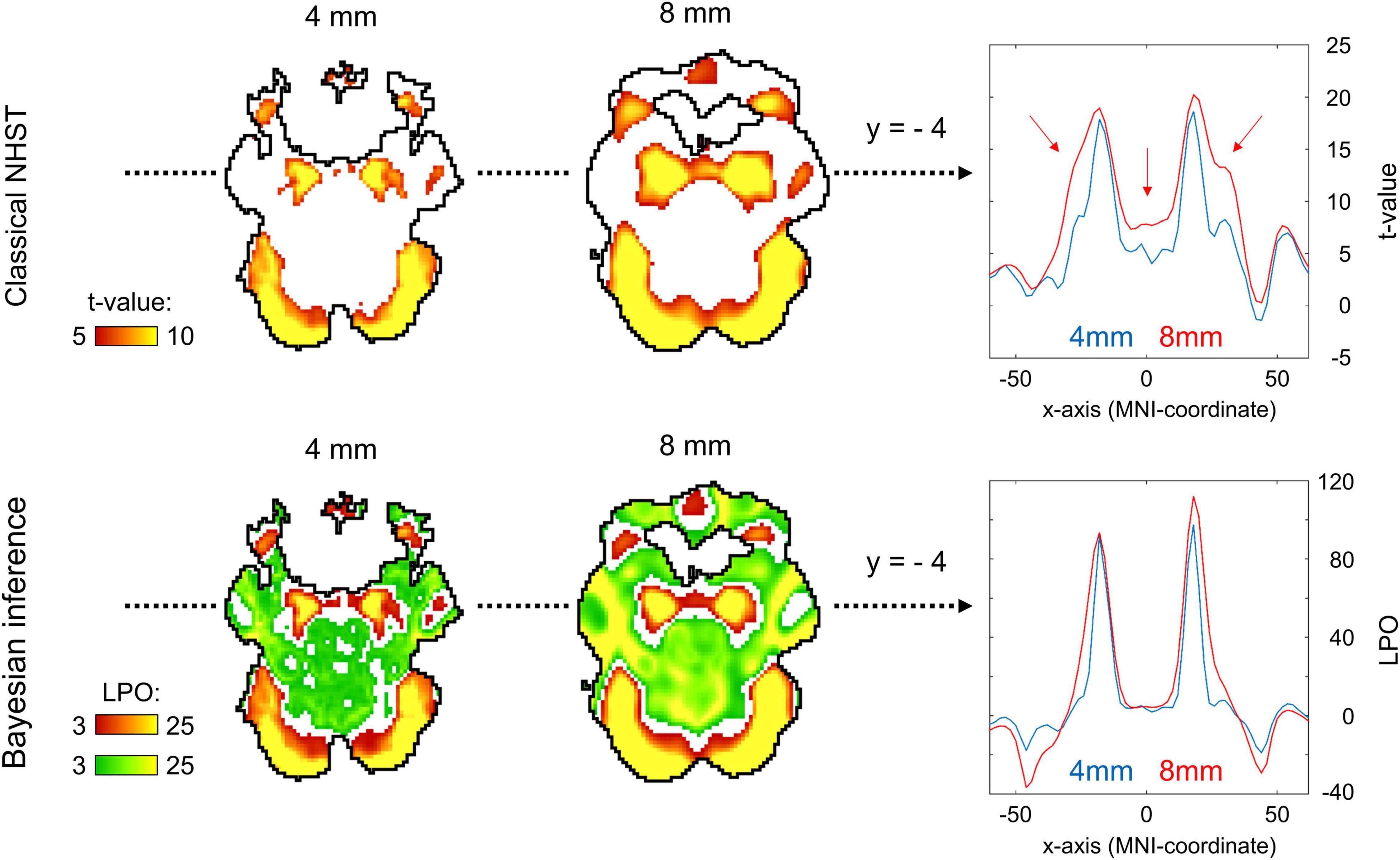

Two main effects of spatial smoothing were identified. Firstly, higher spatial smoothing increased the number of both ‘activated/deactivated’ and ‘not activated’ voxels classified by BPI, reducing the number of ‘low confidence’ voxels. Secondly, higher smoothing blurred the spatial localisation of local maxima of t-maps and PPMs (LPO-maps) to a different extent. Consider, for example, the emotion processing task (‘Emotion > Shape’ contrast). The broadening of two peaks in the left and right amygdala was more noticeable on the t-map than on the PPM (see Figure 11).

Figure 11. Influence of spatial smoothing on classical NHST and BPI: results for the emotion processing task (‘Emotion > Shape’ contrast). Classical NHST was implemented using voxel-wise FWE correction (α = 0.05). BPI was implemented using the ‘ROPE-only’ decision rule, Pthr = 95% (LPO > 3) and γ = 1 prior SDθ = 0.135%. Axial slice z = –14 mm (MNI152 standard space). Slice images have different outlines due to spatial smoothing (higher spatial smoothing increases the size of implicit masks for single subjects and group of subjects). In the panels on the right, 1-D images are presented for t-values and LPOs along the x-axis for y = –4 mm. The red arrows indicate a noticeable broadening of two peaks of local maxima (left and right amygdala) at higher smoothing.

Smoothing was previously shown to have a nonlinear effect on the voxel variances and thus to affect more t-maps than β value maps, sometimes leading to counterintuitive artifacts (Reimold et al., 2005). This is especially noticeable at the border between two different tissues or between the two narrow peaks of the local maxima. If the peak is localized close to white matter voxels with low variability, then smoothing can shift the peak to the white matter. If low-variance white matter voxels separate two close peaks, then after smoothing, they may serve as a ‘bridge’ between the two peaks. To avoid this problem, Reimold et al. (2005) recommended using masked β value maps. In the present study, we suggest that PPMs based on BOLD PSC thresholding can mitigate this problem. Importantly, smoothing artifacts can also arise on Cohen’s d maps. Therefore, PPMs based on PSC thresholding may be preferable to PPMs based on Cohen’s d thresholding.

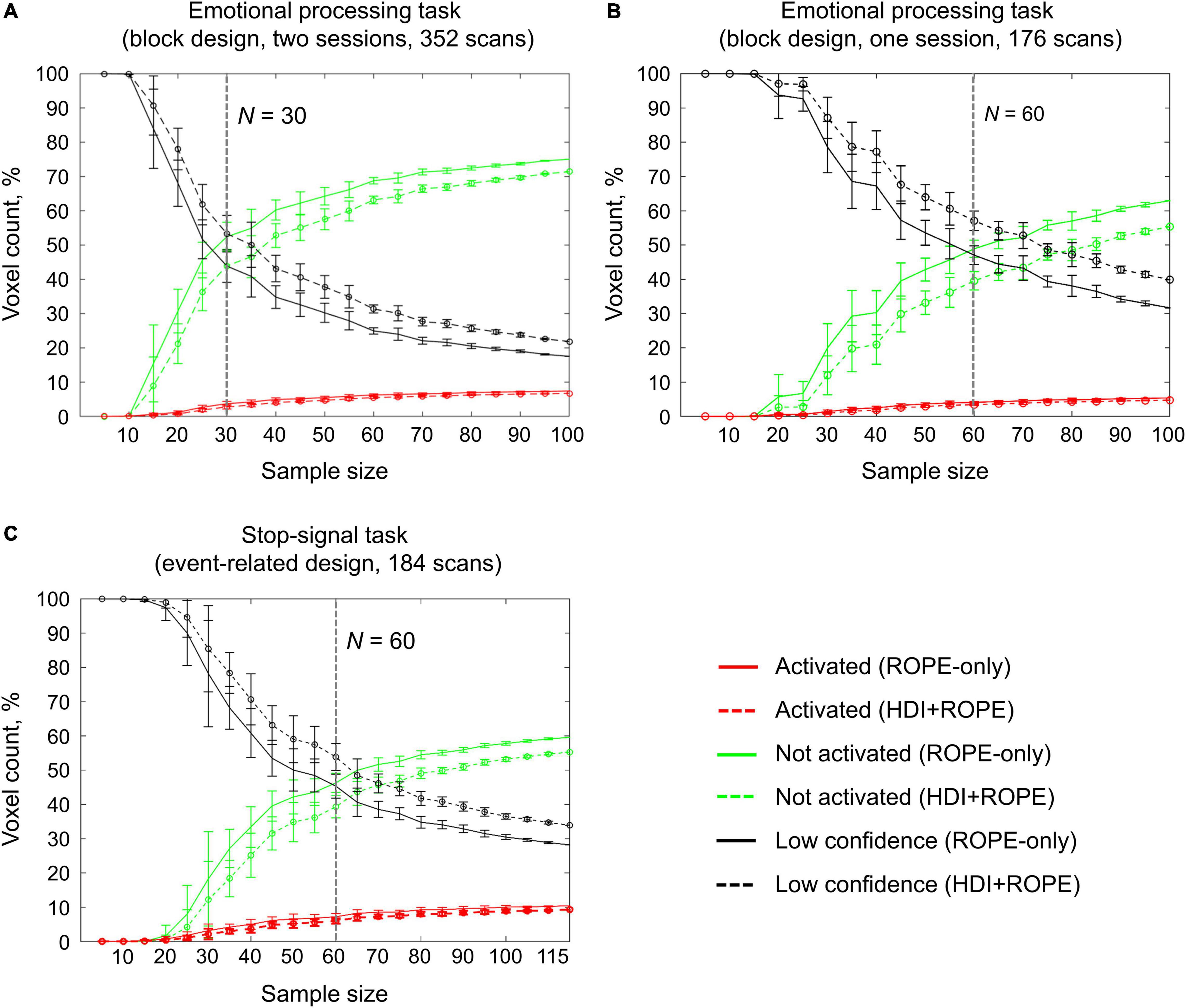

An enlargement of the sample size led to an increase in the number of ‘activated’ and ‘not activated’ voxels, and a decrease in the number of ‘low confidence’ voxels. This is due to a decrease in the posterior variance. The curve of the ‘activated’ voxels rose much slower than that of the ‘not activated’ voxels. For the emotion processing task (‘Emotion > Shape’ contrast, block-design, two sessions, 352 scans), the largest gain in the number of ‘activated’ and ‘not activated’ voxels can be noted from 20 to 30 subjects (see Figure 12A). With a sample size of N > 30, the number of ‘activated’ and ‘not activated’ voxels increased less steeply. The ‘not activated’ and ‘low confidence’ voxels curves intersected at N = 30 subjects. After the intersection point, the graphs reached a plateau.

Figure 12. Dependencies of the number of ‘activated,’ ‘not activated,’ and ‘low confidence’ voxels on the sample size. BPI was implemented using γ = 1 prior SDθ. (A) The emotional processing task (‘Emotion > Shape’ contrast, two sessions). (B) The emotional processing task (‘Emotion > Shape’ contrast, one session). (C) The stop-signal task (‘Correct Stop > Go’ contrast). The error bars represent the mean and standard deviation across ten random groups.

Considering only half of the emotional processing task data (one session, 176 scans), the intersection point shifted from N = 30 to N = 60 (see Figure 12B). For the event-related task (‘Correct Stop > Go’ contrast, the stop-signal task, 184 scans), all considered dependencies had the same features as for the block-design task, and the point of intersection was at N = 60 subjects (see Figure 12C). For the fixed ES threshold, the moment at which the graphs reach a plateau depends on task design, data quality and the amount of data at the subject level, that is, on the number of scans, blocks, and events. The task designs from the HCP and UCLA datasets have relatively short durations (for example, the stop-signal task has approximately 15 ‘Correct Stop’ trials per subject). Studies with a shorter scanning time generally require a larger sample size to enable inferences to be made with confidence. Lowering the ES threshold would also require larger sample size to reach a plateau.

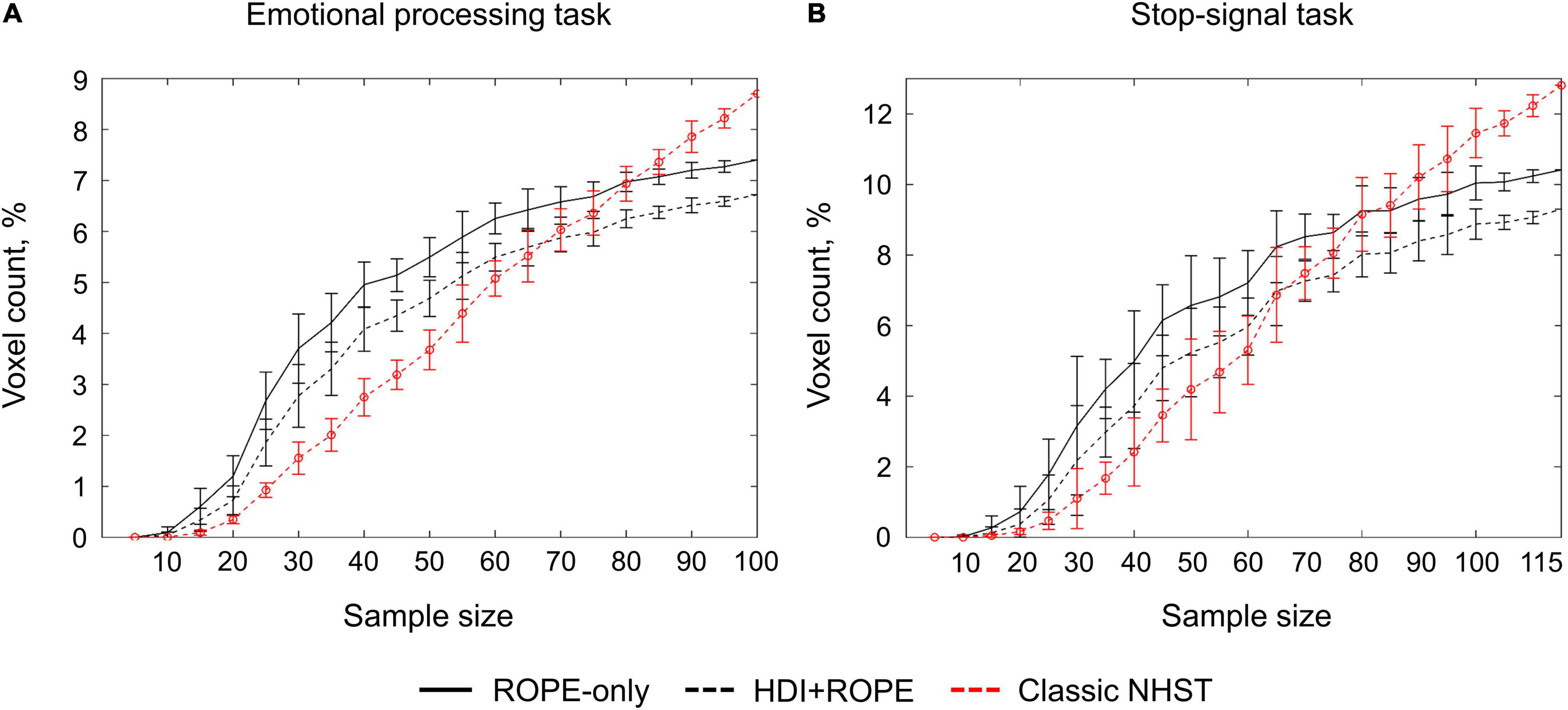

Classical NHST with the voxel-wise FWE correction showed a steady linear increase in the number of ‘activated’ voxels with increasing sample size (see Figure 13). With a further increase in the sample size, the number of statistically significant voxels revealed by classical NHST is expected to approach 100% (see, for example, Gonzalez-Castillo et al., 2012; Smith and Nichols, 2018). In contrast, the BPI with the γ = 1 prior SDθ threshold demonstrated hyperbolic dependencies. We observed a steeper increase at small and moderate sample sizes (N = 15−60). The curve of the ‘activated’ voxels flattened at large sample sizes (N > 80). BPI offers protection against the detection of ‘trivial’ effects that can appear as a result of an increased sample size if classical NHST with the point-null hypothesis is used (Friston et al., 2002a; Friston, 2012; Chen et al., 2017). This is achieved by the ES threshold γ, which eliminates physiologically (practically) negligible effects. Figure 13 presents an illustration of the Jeffreys-Lindley paradox, that is, the discrepancy between results obtained using classical and Bayesian inference, which is usually manifested at higher sample sizes (Jeffreys, 1939/1948; Lindley, 1957; Friston, 2012).

Figure 13. Dependencies of the number of ‘activated’ voxels on the sample size. Classical NHST was implemented using FWE correction (α = 0.05). BPI was implemented using γ = 1 prior SDθ. (A) The emotional processing task (block design, ‘Emotion > Shape’ contrast). (B) The stop-signal task (event-related design, ‘Correct Stop > Go’ contrast). The error bars represent the mean and standard deviation across ten random groups.

For the block-design task (‘Emotion > Shape’ contrast), the number of significantly non-Gaussian voxels was 17% with αuncorr = 0.001 and 2% with αBonf = 0.05. The median kurtosis and skewness across voxels was Ku = 3.77 and Sk = 0.05. For the event-related task (‘Correct Stop > Go’ contrast), the number of significantly non-Gaussian voxels was 19% with αuncorr = 0.001 and 4% with αBonf = 0.05. The median kurtosis and skewness across voxels was Ku = 3.77 and Sk = 0.05. In general, the data are consistent with the normality assumption, though some voxels violate it.

The simulations results reproduced the results obtained from the empirical data (see Figure 14 for an overview of the simulations). Further, they allowed us to demonstrate how various factors affect BPI performance with the known ground truth.