95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

TECHNOLOGY REPORT article

Front. Neuroinform. , 05 April 2019

Volume 13 - 2019 | https://doi.org/10.3389/fninf.2019.00020

High frequency oscillations (HFOs) are electroencephalographic correlates of brain activity detectable in a frequency range above 80 Hz. They co-occur with physiological processes such as saccades, movement execution, and memory formation, but are also related to pathological processes in patients with epilepsy. Localization of the seizure onset zone, and, more specifically, of the to-be resected area in patients with refractory epilepsy seems to be supported by the detection of HFOs. The visual identification of HFOs is very time consuming with approximately 8 h for 10 min and 20 channels. Therefore, automated detection of HFOs is highly warranted. So far, no software for visual marking or automated detection of HFOs meets the needs of everyday clinical practice and research. In the context of the currently available tools and for the purpose of related local HFO study activities we aimed at converging the advantages of clinical and experimental systems by designing and developing a comprehensive and extensible software framework for HFO analysis that, on the one hand, focuses on the requirements of clinical application and, on the other hand, facilitates the integration of experimental code and algorithms. The development project included the definition of use cases, specification of requirements, software design, implementation, and integration. The work comprised the engineering of component-specific requirements, component design, as well as component- and integration-tests. A functional and tested software package is the deliverable of this activity. The project MEEGIPS, a Modular EEG Investigation and Processing System for visual and automated detection of HFOs, introduces a highly user friendly software that includes five of the most prominent automated detection algorithms. Future evaluation of these, as well as implementation of further algorithms is facilitated by the modular software architecture.

Pathological interictal high frequency oscillations (HFO) are activity in the electroencephalogram (EEG) exceeding 80 Hz. They were found to delineate the seizure onset zone in patients with epilepsy largely independently of their co-occurrence with epileptic spikes, and the resulting localization was reported to be more specifically and accurately than epileptic spikes (Jacobs et al., 2008; Andrade-Valenca et al., 2011). HFOs are said to point to the seizure onset zone more reliably than an underlying, potentially non-congruent lesion (Jacobs et al., 2009). This clinical potential was specifically emphasized for HFOs in higher frequency bands (fast ripples, 250–500 Hz; as compared to ripples, 80–250 Hz; Jacobs et al., 2008).

Apart from the considerable amount of time it takes even an expert neurologist to identify and categorize HFOs, the process is obviously prone to subjective perception and bias (von Ellenrieder et al., 2012). During recent years a number of studies elaborated on this topic and proposed concepts and algorithms for automated detection of HFOs (Kobayashi et al., 2009; Zelmann et al., 2009, 2010; Jacobs et al., 2010; Dümpelmann et al., 2012). Due to important factors, such as cost or inherent risk of invasive procedures, the question whether high-frequency oscillations are detectable using scalp EEG has been steadily moving into the focus of research. Detection of scalp HFOs is much more time consuming, error-prone and difficult than detection of HFOs in invasive recordings. Therefore, automated detection is highly warranted (Höller et al., 2018). A number of recent studies set out to detect fast oscillations non-invasively in the magnetoencephalogram (MEG) (e.g., Papadelis et al., 2016; Pellegrino et al., 2016; van Klink et al., 2016; von Ellenrieder et al., 2016; Migliorelli et al., 2017; Tamilia et al., 2017). MEG is associated with high costs and long-term or bed-side recordings are not possible. High-density scalp EEG remains an open and demanding field when it comes to analyzing actual patient data. MEG is associated with high-costs and long-term or bed-side recordings are not possible, but it offers a localization accuracy of few mm (Leahy et al., 1998; Papadelis et al., 2009). The assumed small size of cortical generators as well as the, relative to invasive data, poor signal-to-noise ratio are frequently stated as reasons for unsatisfactory HFO analysis results in scalp recordings. These factors affect the success of visual identification as well as the set of wide-spread analytical detection strategies.

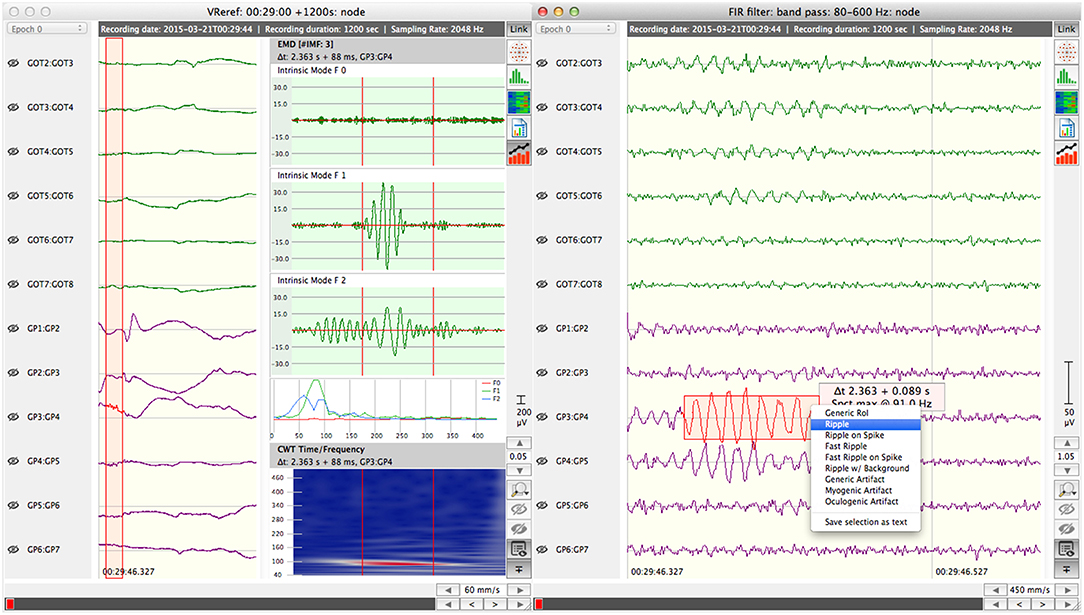

Analyzing HFOs in these different types of brain signals—EEG and MEG—requires a level software support that is depending on the analysis approach. Even visual assessment based on raw waveform representation mandates as a minimum suitable bandpass filter mechanisms as well as time- and amplitude-wise scaling of data display.

So far, software support for HFO detection has been emerging mainly from two opposed directions. A number of commercial software systems are available for EEG review and analysis in clinical settings. These systems are either part of a complete EEG system from a particular manufacturer (such as “SystemPLUS Evolution”1 or “NetStation”2) or are marketed as manufacturer-independent third-party software solutions (e.g., “BESA Epilepsy”3 or “CURRY”4). The primary purpose of these systems is to assist neurologists in visually reviewing EEG recordings for pathological patterns. Most of them offer supportive tools, such as configurable frequency filters, spectral decomposition of the signal, time-frequency plots, or automated detection of epileptic spikes. Advanced functions may include the automated localization of signal sources on the basis of spikes (EGI “GeoSource”), dipole representation of electrical potentials on the scalp (“BESA”), or super-imposing implantation schemes and electrode positions upon patient MRI images (“CURRY”). Essentially, these commercial systems are tailored to integrate well with the clinical environment. The set of functions as well as the user interfaces are optimized to meet the requirements of the day-to-day workflow. Presentations of commercial products during the Freiburg-Workshop on High-frequency Oscillations in March 2016 confirmed that support for HFO analysis in these systems is typically limited to specific filter presets (e.g., band pass 80–500 Hz) and optimized data display settings. Comprehensive HFO analysis support seemed out of reach at that time.

On the other side, numerous experimental HFO analysis systems have been developed by different research and focus groups. Virtually all of them are based on “Matlab”5 and are frequently the results of individual or series of related studies. Consequently, development of these systems concentrated on the specific research questions, supporting the validation of concrete hypotheses, rather than on providing universal HFO research tools. Thus, the majority of these experimental approaches cannot be considered integrated systems but are actually loosely coupled sets of Matlab scripts, each of which implements a specific algorithm. “RippleLab” (Navarrete et al., 2016) which emerged into a framework that experiences a somewhat wider distribution within the research community should be mentioned as an exception.

Accordingly, the user interfaces of these systems are typically minimalistic and often reduced to the Matlab console. In order to execute a complex, multi-stage process upon the data, small programmes (scripts) have to be invoked in sequence or compiled together by means of some additional top-level script. Use and operation of these software packages is, thus, fundamentally different from commercial systems for clinical use. Usage often requires a certain level of expertise in the hosting run-time environment. Usability aspects are not within the scope of these experimental packages. Moreover, Matlab, as a domain-specific high-level environment, provides a considerable level of flexibility and a rich set of stable functional primitives at the same time, which is clearly an asset for rapidly prototyping and experimenting with new algorithms.

In view of the constant advances in understanding origin and function of high-frequency oscillations (Menendez de la Prida et al., 2015; Jacobs et al., 2016a,b; Bruder et al., 2017; Pail et al., 2017; von Ellenrieder et al., 2017) and an increasing confidence in their clinical value (Frauscher et al., 2017). It is foreseeable that all major manufacturers will integrate HFO detection and classification functionality within their commercial EEG software systems. Nevertheless, Matlab-based experimental systems will continue to play the main role in the research context, as commercial systems are usually closed and are not meant to be extended by own, experimental code and algorithms. At most they offer a limited application programming interface (API), allowing external code to interact with certain parts of the system, or data import/export functions.

In the context of the currently available tools and for the purpose of related local HFO study activities we aimed at proceeding along the path of convergence of clinical and experimental systems. We designed and developed a comprehensive and extensible software framework for HFO analysis. This framework focuses on the requirements of clinical application and facilitates the integration of experimental code and algorithms. One specific requirement was that the software is not limited to the analysis of invasive EEG, but could be used for scalp recordings, as well. Thus, the software framework should allow to analyse: (i) invasive EEG; (ii) standard low-density scalp-EEG; and (iii) high-density scalp-EEG recordings. Furthermore, a general flexibility to integrate magnetoencephalographic (MEG) data should be given.

The development project included the definition of use cases, specification of requirements, software design, implementation, and integration. The work comprised the engineering of component-specific requirements, component design, as well as component- and integration-tests. A functional and tested software package is the deliverable of this activity.

To this end, we reviewed the current literature on HFO detection in general and implemented published algorithms as modules that can be plugged into the software framework.

In the following sections we will delineate the software project MEEGIPS, Modular EEG Investigation and Processing System for visual and automated detection of HFOs. The project resulted in a highly user friendly modular software framework that is suited for both, visual and automated detection of HFOs. To date, it integrates five of the most prominent automated detection algorithms, and it can be easily extended to include newly developed algorithms. Comparing algorithms to each other was done previously (Salami et al., 2012; Zelmann et al., 2012) and is beyond the scope of this manuscript. Here, we rather focus on the framework to which algorithms can be added. The software package can be obtained via email request tobWVlZ2lwc0BwbXUuYWMuYXQ=. It is provided as binary application package for Mac OS under GNU Lesser General Public License v.3 (“LGPL”).

Elaborating on the primary aim of the software framework a number of high-level use cases have been identified. In software engineering terms, a “use case” represents a defined scenario of interaction between an “actor” and the system in order to achieve a particular goal. Figure S1 depicts the interaction scenarios of our software framework by means of Unified Modeling Language (UML, Object Management Group Inc., 2015) and indicates that two “actors,” a “Neuroscientist” and a “clinical EEG specialist,” are using the system in disjoint sets of use cases. The neuroscientist and the clinical EEG specialist play specific roles. In general, “Actors” in UML define the roles that are taken on by persons or external systems when interacting with the system. They do not refer to particular user, groups of users, or professions.

While the primary intention of the EEG specialist is to compile a comprehensive report about HFO occurrence and characteristics within an EEG recording, the scientist is interested in injecting, analyzing, and optimizing HFO detection and classification strategies. Compiling an HFO report requires the EEG recording to be analyzed for HFO events, either visually or by means of automated detection mechanisms. Either approach, in turn, requires to import the recording into the system. Likewise, generating machine-learning based detection models implies the definition of suitable feature sets and the systematic analysis of detection performance during that process. Figure S1 indicates serialization of use cases as stereotyped (“include”) dependencies. As all subordinate steps can also be executed independently they are rendered as separate use cases.

Although not formalized, the use case diagram indicates that the two actors, EEG specialist and neuroscientist, are not only interacting with the system but are using the system to interact with each other. The use cases of the neuroscientist aim at establishing the most suitable system configuration that allows the EEG specialist to generate an accurate and detailed HFO report.

Summarizing the application scenarios reflected in Figure S1 we identify two actors, EEG specialist and neuroscientist, and three principal use cases:

• UC.1 “Generate HFO report.” The EEG specialist imports an EEG recording and analyses it for HFO events, either visually or by means of analytical or machine-learning based algorithms.

• UC.2 “Define analytical HFO detection process.” The neuroscientist defines or re-implements, integrates, and possibly optimizes an analytical HFO detection and classification algorithm. The tuned and configured algorithm is made available to the EEG specialist.

• UC.3 “Generate machine-learning model.” The neuroscientist selects a machine-learning technique and defines an appropriate feature set. Similar to UC.2, the resulting HFO classification model is made available to the EEG specialist.

While the scope of this work does not permit to reproduce the full requirements engineering process, we elaborate on the set of fundamental properties of the software framework which have been defined in accordance with the use cases determined in section 2. These design objectives serve as guidelines throughout the system design and development process.

• Adaptability is one of the key drivers for the in-house development. It mandates a modular architectural design, ensuring the extensibility of the system by integrating functional modules which implement particular algorithms or individual steps of staged detection procedures without the need to modify the core system. The system shall support the aggregation of functional modules into complex HFO detection and classification processes and shall allow their parameterization via store- and recallable configurations sets. For the neuroscientist this is the foundation for experimenting with and optimizing detection approaches.

• Interoperability. Most commercial EEG systems store EEG data in their own, proprietary file formats. In order to facilitate migration of data between systems we require that our framework is able to import EEG data produced by the locally used clinical EEG recording systems (i.e., invasive EEG, scalp EEG, low- or high-density recordings.

• Usability is considered the further important design objective. It refers to appropriateness of graphical user interface (GUI) as well as to clarity and comprehensibleness of activities required to trigger a particular process or achieve a certain goal. Both aspects require a balance of specificity—functionality and user interface should be tailored to the core purpose of the system, HFO detection and classification—and intuitiveness and cross-system consistency of user interfaces. The system shall not require a radical reorientation with regard to usage patterns in comparison to systems in day-to-day clinical use. As little system-specific expert knowledge as possible shall be required to efficiently use the software framework.

• In order to achieve this goal, it is essential to closely interact with the prospective user community, physicians as well as scientific personnel, throughout the development process. Incremental process models (details in section 4), early prototypes, and user involvement generating qualified feedback have proven indispensable for a system that is perceived as appropriate and useful (Eckkrammer et al., 2010).

• Efficiency in terms of both, execution time and development effort, is determined as a further objective. Execution time is a critical issue as high-frequency oscillations in scalp EEG reportedly occur at a very low rate (Andrade-Valenca et al., 2011), requiring extended recording times. In addition, high-density EEG supports up to 256 channels recorded with a sampling rate of at least 1 kHz. Predictive models produced by machine-learning techniques typically benefit from a reasonably large training set. The accordingly large number of patients contributes to the huge volumes of data to be processed as an additional factor.

There are several measures to more or less accurately assess the complexity of a software system and the effort required to develop it (Sneed, 2010). Obviously, the considerable number of data processing functions and the graphical user interface are the main contributors in this case. Third party software libraries provide mathematical or signal processing related functions which are well tested and established throughout the scientific community. In order to constrain the development effort without sacrificing relevant functionality these libraries shall be integrated.

• Platform independency. As a last and subordinate requirement the software framework should be platform independent and should not require to be executed on any specific operating system.

In combination with the set of use cases these design objectives are the basis for deriving the user and system requirements.

Use case-driven high-level structuring has proven beneficial particularly for planning and implementing larger scale and more complex software development projects (Tiemeyer, 2010, own experience). A phased approach supports the definition of intermediate milestones, early releases of mature precursor products with limited functional scope, and facilitates the familiarization and integration process with the operational environment.

The considered use cases of the HFO detection software framework suggest a partitioning of development activities into three successive phases, each extending the software framework by a set of related functions. Figure S1 illustrates the association of principal use cases and development phases:

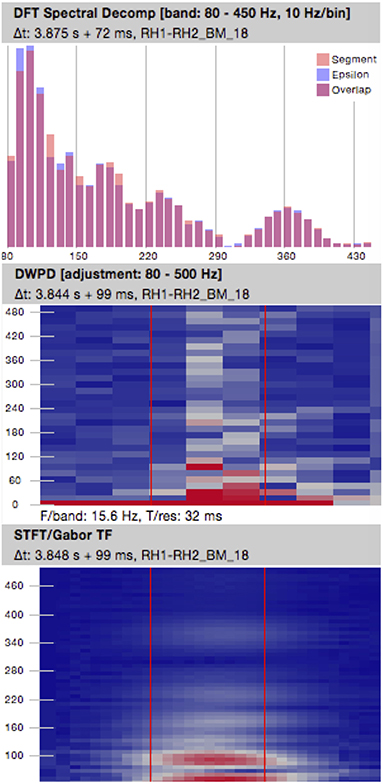

• Compiling a comprehensive report on the analysis of an EEG recording with respect to HFO occurrences (UC.1) is the key use case from the perspective of the EEG specialist and, therefore, has to be supported as of phase 1 of the development. The means of generating the essential information for the report are based on the analysis and classification of visually identified data fragments by human perception and interpretation in this phase. Automation support is limited to transforming the data of the selected fragment to time-frequency representation or spectral decomposition.

Nevertheless, the outcome of this phase is essential for subsequent phases. It already implements functions that are fundamental also for advanced processing techniques introduced in later phases, such as importing EEG recordings created by EEG systems in clinical use, or extracting/filtering specific frequency bands from the data. In addition, the system resulting from phase 1 is needed to establish the “ground truth,” i.e. a sufficiently large, representative, and expert-validated set of HFO events within a diversified set of recordings, which serve as a reference and basis for validation of automated detection strategies.

• Phase 2 provides initial coverage of use cases from the neuroscientist's perspective. The software system resulting from this phase allows the neuroscientist to implement and test analytical, automatic HFO detection and classification strategies (UC.2). These additional capabilities are a precondition for the subordinate use case that focuses on analysis and comparison of detection performance of different detection algorithms. Moreover, phase 2 extends UC.1, report generation, by making the tested and optimized algorithms available to the EEG specialist for determining HFO report data.

• The final phase 3 introduces automatic HFO detection and classification based on machine learning techniques. It focuses on generating validated machine learning models for the various types of EEG data, invasively recorded, classical scalp EEG, and scalp-HD-EEG, including the definition and optimization of suitable feature sets. The required infrastructure for assessing the detection performance is already put in place during phase 2. In terms of use cases, phase 3 covers use case UC.3, machine learning based detection strategies from the neuroscientist's point of view. Furthermore, it extends UC.1, allowing the EEG specialist to utilize machine learning models for generating HFO reports.

The development phases are basically executed sequentially. Marginal overlaps, however, allow the inception of the subsequent phase in parallel to deployment of and user training for the previous one.

Within each single phase of development, which extends from requirements specification to deployment of the intermediate product, an incremental development process model is applied. In contrast to pure sequential models that follow a strictly forward-oriented flow of activities, incremental approaches cyclically iterate the sequence of development steps. The overall functional scope of the system is divided into smaller subsets of functions each of which is specified in detail, implemented, verified and validated, and integrated with the output of previous turns, within a single iteration. The rationale of these types of process models allows to start with the design and implementation already at an early stage of the project. Even though some portions of the expected functionality may be unclear or vaguely defined, a first iteration can focus on those aspects that are agreed upon and which are fully understood by all stake-holders. In the meantime, requirements and expectations for other functions can evolve and mature in order to be processed in a subsequent iteration. Apart from being robust with regard to late or changing requirements, incremental models allow to flexibly include or exclude system functions throughout the proceeding development.

Figure S2 details the steps of the incremental process: on the basis of the applicable use cases, the subset of functions that shall be implemented in the particular iteration (the “increment”) is defined in the first step. Obviously, in the first iterations these are the functions which are well understood a priori or that are, due to technical risks, critical for the success of the entire project. The subsequent step, specification, leads to the set of requirements, which serve as the basis for the following design, implementation, and validation steps.

Each iteration results in an incomplete but functional prototype, which is made available also to the prospective users of the system for testing and evaluation purposes. These early and successively refined and extended prototypes allow a strong involvement of the targeted user community. Therefore, they allow a timely discovery of misunderstandings of use cases or requirements. The outcome of the evaluation and the resulting feedback is considered in the subsequent iterations.

After all applicable use cases of the current development phase have been covered to the agreed extent the iterative process ends with the deployment of an intermediate release of the system with a limited set of functions, integrable with the operational environment.

The system architecture denotes the basic structuring of the system in terms of its subsystems, interfaces between subsystems, as well as data structures that are exchanges using these interfaces. A system's architecture can be described on different levels of detail and may comprise hardware as well as software building blocks. In the scope of this project only software components are relevant. Architectural decisions are influenced by a number of parameters, such as properties of the underlying hardware platform, the operational environment, and, most important, the fundamental requirements it has to comply with. Among the requirements for this HFO detection framework, outlined in section 3, adaptability, interoperability, and usability are the key drivers for the architectural design.

Figure 1 depicts the six primary constituents of the software system from the architectural perspective. All subsystems rely on the “Base” facility which provides fundamental data structures representing concepts such as “sessions” or “data nodes” (section 6.1). Like the “Core” subsystem which is the second central unit of the framework, it is a fixed part of the main component. The “Core” subsystem is responsible for subject and session management and coordinates the interaction among all further functional modules. As such it e.g., invokes and controls data processing operations whenever requested by an operator via the user interface subsystem.

All other subsystem are built upon modules that are loaded into the software system at runtime during the system's initialization phase as required. Each module implements a particular type of function in a module-specific way (polymorphism) and pertains to one of the following subsystems:

• In compliance with the requirement of interoperability with external EEG recording systems the software framework must be capable of handling proprietary data formats. The “Data I/O” subsystem consists of modules to import raw EEG data or MRI image sets produced by external systems.

• The “Processing” subsystem provides EEG data processing and manipulation functions. Modules of this subsystem implement data filtering or segmentation functions as well as all HFO-related processing, such as event-of-interest detection or event classification according to the various supported approaches.

• Usability is a crucial design criterion of the system and is primarily depending on the means for an operator to interact with the system. The “User Interface” subsystem enables to comprehensively control the system via a GUI. While the foundations for the user interface are built into the main component of the system, specific views onto or representation modes of the data are implemented in loadable modules.

• Functions to summarize and format information resulting from data processing activities are provided by the “Reporting” subsystem. The current system specification supports a single type of report termed “HFO Report.”

The outlined subsystems are not one-to-one representing separate software components, i.e., loadable modules or executable programmes. Although it is reasonable for the architectural approach to be reflected in the components structuring, multiple components may constitute a particular subsystem, such as in the systems's activities and processes concept (section 7.2). Similarly, several subsystem may reside within the same software component, such as “Base” and “Core” subsystems, both hosted in the main executable.

Interoperability with EEG recording systems in local clinical use is a critical aspect in order to facilitate the integration of the software framework with the every-day work-flow. The capability to import externally generated EEG recordings without prior format conversion is therefore an essential requirement. Moreover, direct access to clinical data considerably increases the amount of data instantaneously available for the purpose of testing and validating software functions.

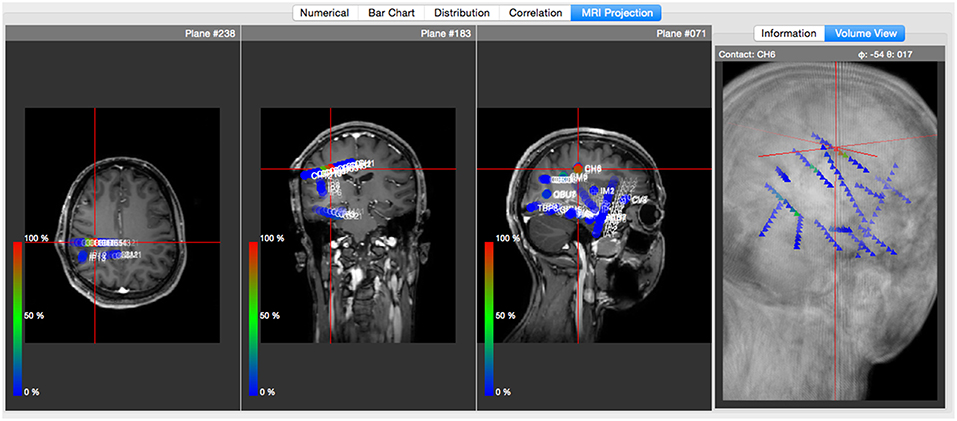

Likewise, the software framework supports the importing of structural patient MRI (magnetic resonance imaging) images stored in NIfTI format (Neuroimaging Informatics Technology Initiative6), which can be linked to EEG recordings in order to define and visualize anatomic electrode coordinates.

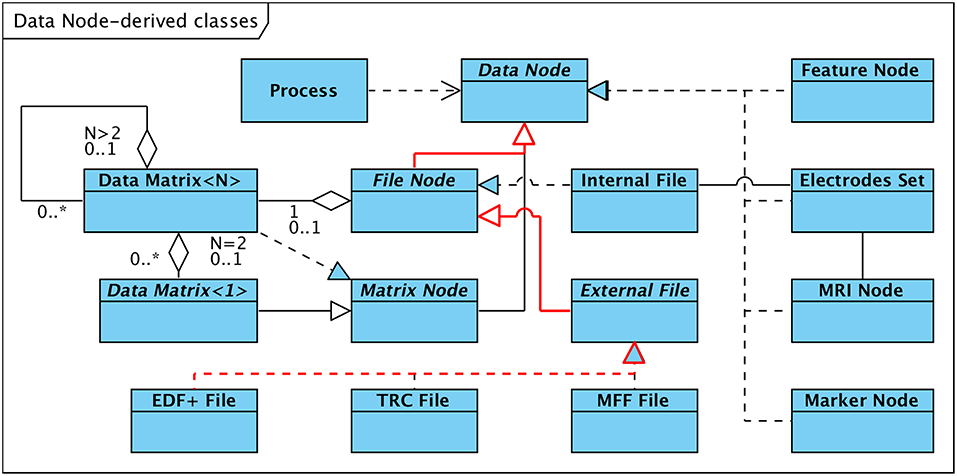

The provision of a unified interface to data structures representing different types of data is referred to as “subtype polymorphism” and is a key concept in object-oriented software engineering (Cardelli and Wegner, 1985; Gamma et al., 1995). Our HFO software framework incorporates this technique e.g., to facilitate the implementation of interoperability in terms of reading and interpreting externally generated data. Figure 2 depicts the hierarchical organization of interfaces with the abstract class “Data Node” as the root. “Data Node” represents the top-level and most generic interface to access any kind of supported data structure, such as EEG recordings or MRI data sets. It defines the methods (i.e., operations) that other parts of the software, such as processing or visualization components, have to use in order to access data, without implementing any data-type specific mechanisms (thus the term “abstract”).

Figure 2. Data Structures derived from abstract Class “Data Node.” Arrows between classes denote “generalization” in the arrow direction and “specialization” in the opposite direction. The inheritance path for the “EDF+ File” class is highlighted in red.

Subclasses of the abstract root class either specialize the interface by defining additional methods more trimmed to the type of data they represent, or implement (or “realize”) the interface, i.e. provide the actual data manipulation mechanisms and data-type specific code.

Figure 2 details the hierarchy on the example of externally generated EEG recordings in EDF+ (European Data Format7) format; the relevant dependencies are highlighted in red: A set of specialized subclasses of “Data Node” represent the first specialization step. The subclass “File Node” extends the generic “Data Node” interface by methods that are more specialized to access EEG data that is persistently stored in a file. “File Node” allows users of this interface to access/read EEG data without the need to know the details of how signal and meta data are structured and arranged within the file. The next level of specialization distinguishes between “Internal File,” a “realization” implementing read and write access to EEG data stored by the software framework in its internal data format, and “External File”. “External File,” in turn, extends the “File Node” interface by methods to read meta information that is not part of internal data files, such as subject, epoch, trigger, or event data associated with the recording. It defines the most specific interface for accessing EEG data stored in external data formats. Currently the following three realizations of the “External File” interface are available (see also section 6.1):

• The EDF+ File class discussed in the example above implements read-only access to EEG data stored in either type (binary and textual) of EDF+ file.

• Long-term EEG recording in the local epilepsy monitoring unit (EMU) is based on a system manufactured by Micromed, Italy. The system stores EEG data and associated meta information in files according to a proprietary format (TRC, “TRaCe file”). Support for read-only access to TRC (version 4) formatted data is provided via the TRC File class.

• Read-only access to MFF (“Meta File Format”) formatted EEG data generated by EGI (Electrical Geodesics Inc.) high-density EEG systems is implemented in the MFF File realization of the “External File” interface.

The obvious advantage of the hierarchical data abstraction is that software entities can access EEG data in a generic way without needing to know the actual structure and format of the file the data is stored in. This way it is possible to add support for additional external data formats without impacting portions of the software that access the data using the generic interface.

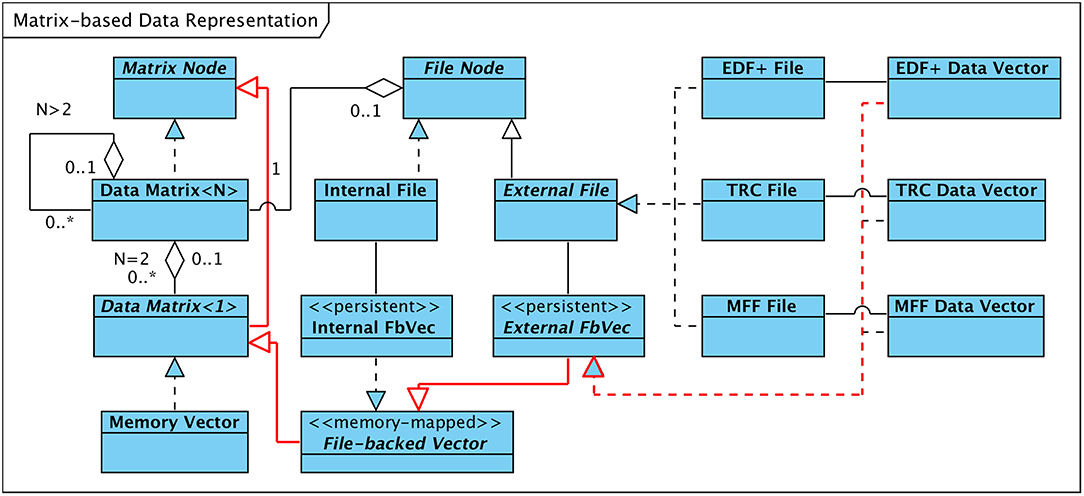

A critical aspect with respect to interoperability is the encapsulation of EEG data within constructs that are independent of the format in which the data are physically stored on the persistent medium (typically hard disk), while at the same time accurately reflecting the logical structure of the data, i.e., its partitioning into channels and epochs. Moreover, in order to ensure efficient processing of the data, a direct and random access to individual data elements (samples or measurement values of a certain channel at a particular point in time) has to be facilitated.

For that purpose the internal data storage concept of the software framework is based on virtual matrices of arbitrary dimension. Successive samples of a single channel are stored as a matrix of dimension (or order) one, that is, a contiguous vector or array of data values. In case of multiple simultaneously recorded channels the dimension of the matrix is increased to accommodate the set of channels. The typical representation of a recording covering a single epoch therefore results in a matrix of dimension two. A crucial precondition for the organization of individual channels within a single matrix is that their samples cover the same span of time. Whereas, the sampling rate and, thus, the number of samples per channel may differ.

Recordings that consist of multiple epochs require the extension of the matrix to an order of three, with the third dimension covering the epochs. The order of the data matrices can be set as required. For example a set of recordings of the same subject, each consisting of multiple epochs and multiple channels would be organized as a single four-dimensional matrix.

Figure 3 visualizes the concept. An instance of the template class “Data Matrix” of dimension N holds a vector of instances of the template class of dimension N−1 and may or may not have an association with an object of type “File Node” (indicated in the figure as a containment or “part-of” relationship of multiplicity 0 or 1). Matrices of dimension one (i.e., vectors) require special consideration since they are responsible for adapting the format-dependent physical data storage to the uniform vector-based structuring. Accordingly, these vectors are implemented by a set of classes. They either represent an array of measurement data that is available in the working memory of the computer, denoted “Memory Vector” in Figure 3, or enable access to data stored in internally or externally generated persistent data files (“File-backed Vector”).

Figure 3. Descendants of abstract Classes “Matrix Node” and “File Node.” Arrows between classes denote “generalization” and “specialization” relationships. Inheritance Tree for EDF+ Data Vector Class in red.

As outlined in Figure 3 a specific implementation of the “File-backed Vector” interface manages read/write access to data stored in internally generated EEG data files (“Internal FbVec”). For data files in proprietary third-party formats, a more specialized interface is derived from “File-backed Vector,” labeled “External FbVec”. This interface is realized by three classes, each of which transforms the vendor-specific layout of the EEG data of the individual channels stored within EDF+ files, TRC files, or MFF files, respectively, into a linear, contiguous data vector. Figure 3 highlights in red the exemplary inheritance hierarchy for the “EDF+ Data Vector” case.

In both cases, “Internal FbVec” implementation or realizations of “External FbVec,” the data that are physically kept in files on the hard disk are mapped into the logical address space of the process executing the HFO software framework using the computer's Memory Management Unit (MMU). This technique, called “memory-mapping,” allows a process to access the data as if it were available in the computer's main memory, regardless whether the data are actually in memory or on disk. As a major benefit memory-mapping enables direct access to data values at arbitrary positions without prior allocation of buffer storage that needs to be filled with the proper portion of data loaded from the file. Buffer management and optimization is entirely left to the operating system.

An aspect substantially driving the in-house development of a software framework for HFO detection is the high level of flexibility which is required for experimenting with, testing, and validating novel HFO detection and classification strategies. Tools that enable the definition, revision, and parameterization of detection processes are an important requirement and precondition for an adaptable framework. A comprehensive concept of configurable processes aids in achieving this flexibility.

In general, HFO detection and classification strategies, regardless whether following analytical approaches or utilizing machine-learning techniques, can be broken up into sets of sequentially executed processing steps (see also Zijlmans et al., 2017). The data flow diagramme in Figure 4 illustrates the typical functional decomposition that is followed by our software framework and indicates the data items that are passed between consecutive processing steps.

In the preprocessing stage the original EEG recording is band-pass or high-pass filtered and potentially subject to artifact detection and removal. If only particular portions of the EEG data shall be further processed, preprocessing may include a segmentation of data according to defined types of associated events or specified time-ranges. The preprocessed data is passed on the next step, “event of interest” (EOI) detection. Depending on the algorithm, EOI detection may additionally rely on the original (unfiltered) EEG signal, which is provided as auxiliary input data. Detection algorithms that are well-balanced in terms of sensitivity and specificity may already yield an acceptable fast oscillation detection performance. However, the purpose of the EOI detection steps enclosed in the process definitions of our software framework is to preselect potential HFO occurrences by looking at morphological (time-domain) and spectral (frequency-domain) properties of the preprocessed and optionally raw signal data. The scope of subsequent processing steps is reduced from the entire recording (or selected segments thereof) to a set of time-bounded fragments of data. That is, it is reduced from continuous data to discrete sections of short duration, significantly decreasing the computational effort required by feature extraction and classification operations.

The set of EOIs serves as a database of time/channel records for the following steps. The feature extraction stage separately analyses the referenced sections of data with respect to time-domain or frequency-domain characteristics (features), using preprocessed and/or the original signal data. The resulting output, a map that associates each event of interest with the extracted properties at the specified section of data, is forwarded to the classification step. Classification evaluates the features either analytically or utilizing machine-learning techniques and classifies the events accordingly into fast oscillations or artifacts.

Although this theoretical concept considers detection and classification as separate stages, process definition and parameterization requires a sound understanding of the inter-dependency of the implemented algorithms. The upper bound for the sensitivity of the entire process, for instance, is obviously predetermined by the detection step; the classifier has no means to compensate for a high type II error (false negatives) introduced by the detecting stage.

A process in terms of our software framework is composed of a sequence of individual processing steps (“activities”) that are executed upon the data in a well-defined order and which incorporate each a specific algorithm or part thereof. Each processing step is implemented as a loadable and exchangeable software module and can be initialized with either pre-defined or operator-defined parameters. In this manner complex processes can be defined and parameterized based on an extensible set of simple and combinable steps.

Figure 5 visualizes the concept. Each process is subject to a process-lifecycle that is controlled by the process framework and which is in charge of instantiating, parameterizing, invoking, and removing processes. A process comprises at least a single “Activity.” All activities of a process are executed in a defined sequence and are provided with the applicable set of activity-specific parameters, which has been previously loaded or defined by the operator.

The primary data set that shall be processed by an activity as well as possible auxiliary data elements required in support of the operation to be performed are provided to the activity by means of a list of input data items. These data items may be of any type that is derived from the “Data Node” class (see also Figure 2), i.e., raw or preprocessed EEG data, sets of events or markers, or extracted features. The initial list of input data nodes is created by the process framework upon process initialization and contains data nodes which either reside in working memory or are stored on the hard disk. In the latter case, the “Data I/O” subsystem, one of the major architectural building blocks (ref. section 5), is utilized to access the data.

New data that may be generated by the executed activity is as well encapsulated in data structures subclassed from “Data Node” and are returned to the invoking process as a list of output data nodes. The process appends the list of output data nodes received from the activity to the list of input data nodes passed on to subsequent activities, enabling activities to use data structures created by preceding activities. The general approach is to not modify input data nodes in the course of activity execution but to create new (output) data nodes holding the modified input data.

After the process has completed (i.e., all activities finished successfully) the process framework adds all or a subset of the newly generated data nodes to the list of sub-nodes of the primary input data node—as per process definition. By means of the Data I/O subsystem these new nodes are copied to persistent data storage.

Processes are aggregations of activities that are executed strictly in sequence. The set of activities and their order is statically defined in the “process definition file.” At system startup the process framework parses the process definition file and instantiates the processes in accordance with their “building plans.” Apart from a process' structure this file also defines the set of input data nodes each of its activities receives upon execution. Figure 6 gives an overview of processes, activities, and the defining structures residing in the system's “configuration repository.”

The process definition file is based on XML8 and internally structured as detailed in Figure S3. The first section of the file (“moduleList”) holds the list of activity-modules that shall be dynamically loaded during the software framework's initialization phase. Each module is described by a textual identifier (“moduleName”) via which it can be referenced from within activity definitions and the module's “path” (i.e., its storage location on the hard disk). The subsequent section contains the definitions of the individual processes. Apart from an element that can be used to refer to literature the process is based upon (“reference”), each process definition holds the sequence of associated activities, identified by their names, as well as a (possibly empty) list of “Data Node XML” items (“outputList”) that shall be stored as the process' final output. Each of these data node items refers to a specific type of result of a particular activity. In accordance with data node inheritance tree outlined in section 6.1 data nodes can be classified as either signal data nodes, markers or events nodes, or feature nodes.

Activities are defined by “Activity XML” elements. Such element contains the activity's textual identifier (“activityName”), a reference to the software module that implements the activity, and a list of “Data Node XML” items (“inputList”) that specify the data nodes the activity receives as input.

Most activities of a process can be configured through an arbitrarily large set of parameters that can be adjusted within the respective ranges of values. Adjustment of parameters is conducted by the user by means of the software framework's graphical user interface. For this purpose, each activity module provides a custom dialogue page (“Activity Configuration Widget,” see Figure 6) which lists the parameters of the implemented processing step and their current values. The dialogue pages of all activities of a process are aggregated by the “user interface” subsystem (see section 5) into an interactive dialogue (“Process Parameter Dialog”) in order to provide a comprehensive overview of the parameter set and to allow the user to modify the parameters' values within their respective boundaries.

So as to have a reasonable baseline for the parameterization of processes, default parameter sets that are typically derived from the values used in the literature are prepared for all defined processes and are pre-stored in the Configuration Repository of the Software Framework. In addition, a complete set of modified parameters can be persistently stored and recalled for subsequent use. After parameter adjustment or recall is completed a “Parameter Set” object is generated from the current values, which is made available to the process and its activities during execution.

Although modules implementing activities follow a unified technical structure and are not differentiated by design, activities can be classified according to their purpose, type of operation, or stage within an embracing process. The type of output or result that is generated by an activity can be considered as an additional discriminating criterion. Figure 7 gives an overview of a logical classification of activities with particular focus on HFO detection.

In accordance with Figure 4 activities can be classified as follows:

• The class of preprocessing activities comprises all operations that prepare or preprocess the input signal data in a way required or suitable for the subsequent processing steps. This class of activities includes e.g., filtering or data segmentation operations. The generated output is typically a modified version of the input signal data, thus, again a “Matrix Node.”

• Activities that analyse and scan the input data for events of interest can be classified as event detection activities, with a context-specific definition of EOI. In the scope of HFO detection, EOIs are defined as signal data fragments that possibly contain high-frequency oscillations. The result of a detection activity is a data node that contains a list of event markers (“Marker Node”), each identifying a detected event of interest.

• Feature extraction denotes the class of activities that analyse those fragments of the input signal data that have previously been marked as events of interest. Feature extraction operations explore the signal with respect to specific properties, either based e.g., on a morphological analysis, a spectral decomposition, or a time-frequency transform of the signal. The analysis results in an arbitrarily large set of properties or features per EOI. These feature sets are encapsulated in an output element of type “Feature Node.”

• Event classification operations review events of interest detected by an event detection activity and classify the events according to a defined scheme. In the framework of HFO detection, classification activities differentiate EOIs between ripples, fast ripples, and artifacts.

As outlined in Figure 7 classification activities can be further grouped into analytical methods and machine-learning based approaches. Analytical algorithms analyse and classify EOIs on an individual basis. The signal characteristics considered depend on the implemented algorithms and may include time-domain as well as frequency-domain aspects. Classification is typically performed according to some theoretically founded thresholds.

Machine-learning classifiers, in contrast, classify events based on a previously generated “prediction model” in combination with the set of properties extracted by preceding feature extraction activities.

All activity modules have to implement the “Activity” interface in order to provide to the process or process framework a generic means of controlling the operational state of an activity and to supply it with the required set of parameters.

This classification of activities is in line with the conceptual process decomposition presented in Figure 4. Nevertheless, separate processing steps are not necessarily contained in dedicated modules. As implemented in the current system, analytical classifiers combine their own feature extraction operations and the actual classification steps in integrated modules.

Table 1 details a selection of available activity modules. These modules have been implemented in course of reviewing relevant publications on automated HFO detection strategies. They either represent the building-blocks of algorithms that, according to the respective authors, produced promising results in comparison to an expert-validated ground truth, or are the results of experimental optimizations and research into complementary methods.

Further implemented activity modules not listed in the table include test signal generators, event matching and reclassification operations, or signal segmentation modules.

On the basis of the implemented activity modules, a set of HFO detection and classification processes has been defined, either in strict accordance with the reviewed publications or as recombinations of processing steps suggested in the literature. The selection of analytical detection algorithms is primarily influenced by comparative benchmarks performed in Zelmann et al. (2010); Chaibi et al. (2013), and Burnos et al. (2014).

Table 2 lists the predefined processes and their constituting activities. These processes together with default sets of parameters are ready to be applied to input EEG data in one of the supported recording formats (see section 6.1).

Behavioral models describe the dynamic aspects of a software system: its activities, communication via internal and external interfaces, or state machines of the entire system and its constituting components. UML provides a class of diagrams supporting behavioral analysis and dynamic modeling as observed from different perspectives and with focus on particular facets.

The activity diagram in Figure S4 details the flows of control and data during the execution of a defined process consisting of a sequence of activities. It can be considered as complementary to Figure 5, which elaborates on the structural aspects of a process while the Figure S4 details the generic behavior of a process being executed. The activity (“Process::execute”) commences with checking whether the “Input Data Node” received from the process framework is a valid data matrix and setting up of the “Input Matrix Vector” with that initial element. In the case of HFO detection the input data node is the recording to be analyzed with respect to HFO occurrences.

The core of process execution is the iterative invocation of its activities according to the defined sequence (“Chain of Activities”). Before an activity is actually executed, it is attached to the “Progress Monitor” which allows the software framework, and the system's user, to keep track of the status of the activity. Each activity internally follows the classical IPO (input – processing – output) model and generates output data by processing its input data in a specific way. If the activity completes successfully the produced output (“Output Data Node” in Figure S4) is appended to the input matrix vector in order to be used by subsequent activities. The loop ends either after the last iteration, i.e., execution of the last activity in the sequence completed, or as soon as an activity failed to successfully execute. In the latter case an “Error Vector” is assembled that summarizes the reasons for failure and provides respective feedback to the user. If, in contrast, the entire process completed successfully the resulting “Output Data Node” is handled according to the process definition (“outputList” element in Figure S3). It may be copied to persistent storage and is either registered as a new sub-node of the input data node in case the output is of type data matrix, or is associated to the input node as a set of event markers, or both.

Additionally, the process framework allows to store intermediate results of a process, i.e., output data nodes created by other than the last activity in the sequence, in order to make them accessible for display and review. An option that can be configured by means of the process definition.

One of the key assets of the software framework is its support for extending (HFO detection and classification) processes by new activities and for defining entirely new processes and activity sequences. Activities in terms of the software system are implemented in dedicated software modules (dynamic libraries) that are loaded on demand, i.e., in case the implemented activity is referenced by any defined process, at system startup time.

Figure S5 illustrates the fundamental structure an activity module has to comply with in order to be integrated with the software framework. Primary constituent of the module is its main activity class, denoted “Activity A” in this exemplary outline, a concrete realization of the “Activity” interface. From the set of methods that have to be backed by activity-specific implementations, “execute” and “parameterTab” deserve a close look. The “parameterTab” method instantiates and provides a configuration object, derived from the “Activity Configuration Widget,” that lists all parameters relevant to the implemented algorithm for review and modification by the user. The methods it provides are used to convert the activity-related section of a “Parameter Set” (see Figure 6) to and from a set of Graphical User Interface (GUI) elements.

The “execute” method is invoked by the hosting process. It actually performs the implemented operation on the input data, using the previously adjusted parameters. There is no interaction between activity and process framework, and in particular no concurrent access to the processed data from outside the activity, until the “execute” method has completed and returns control to the embracing process. Thus, the activity can autonomously schedule and organize access to the data as best suited for the applied algorithm. While, for instance, frequency-band filter activities are likely to work on a per channel basis, some artifact detection techniques may require to access data across channels. This opens up the possibility to delegate processing of defined portions of the data, e.g., channels, to a pool of concurrent threads (“Channel Processing” class in Figure S5). Depending on the hosting hardware platform, this may lead to a considerable reduction of execution time.

The classes stereotyped “Qt” are base classes provided by the embedding cross-platform application framework and link the elements of the activity module into the framework (see section 9 for more information). Modules are independently constructed and compiled and represent separate binary components which can be modified without impact on other components of the HFO detection software framework. Eventually, this enables interested external institutions to extend the HFO detection framework by their own algorithms and experiments.

In most general terms machine learning can be described as the capability of a technical system (i.e., a computer programme in this context) to adapt its behavior or decision making according to a history of input data—it aims at generalizing on the basis of provided examples or, less technically, it “learns” from “experience.” Machine learning algorithms typically analyse training data sets for intrinsic patterns or rules and construct predictive models under the assumption that discovered patterns are valid for the entire population.

Machine learning techniques can be a valid alternative to direct, analytical programme design in case of ill-posed problems. Ill-posed problems defy an exhaustive and precise description by analytical algorithms and can be better explained by means of representative examples. A frequent inherent characteristic of such problems is a high level of complexity, a high number of degrees of freedom, and an insufficient understanding of their interrelationship. A further, related indication for machine learning approaches is the analysis of data for structure-defining features that are obscured by more prominent properties or the sheer volume of data (see also Nilsson, 1998; Shalev-Shwartz and Ben-David, 2014). Indeed, most of these factors apply to the problem of HFO detection in invasive and particularly scalp EEG recordings, not least reflected in the poor inter-rater agreement rates reported e.g., by Blanco et al. (2010), rendering HFO detection a candidate for the potentially successful utilization of a machine learning-based approach.

Machine learning techniques can be typed based on a number of aspects, among which the provision of the correct function values or labels associated with the training data, i.e., supervised vs. unsupervised learning, is the most distinctive one. Supervised learning aims at evaluating properties of the training data in order to support the underlying hypothesis that is represented in the association of provided training samples and provided values or labels. The learning process establishes a relation between given properties of the data and given labels. Unsupervised learning, in contrast, analyses the training data without any a-priori knowledge of the structure of the data. The unsupervised learner is provided the (number of) labels only without association to training data samples and autonomously attempts to discover intrinsic structural characteristics in order to partition the data into meaningful clusters.

A large class of applications of supervised machine learning is the automatic classification of data instances. The classification of EOIs into ripples, fast ripples, and artifacts in course of HFO detection in EEG recordings is one such application. Considering the data flow diagramme in Figure 4, classification of detected events is the last stage of the conceptual detection and classification process. Accordingly, in our software framework machine learning-based classification can be configured as the final step (“Event Classification”, see Figure 7), as a particular type of classifier, of an HFO detection and classification process.

The publications on machine learning in HFO detection use four different techniques to approach the problem (see Figure S6). Studies going for a supervised method apparently favor support vector machines (SVM), while only a single publication is using k-nearest-neighbor (k-NN) and logistic regression models in addition.

Support vector machines have been preferred over k-NN as a machine learning classifier model for integration with our HFO detection software framework for mainly two reasons: As detailed in Kim et al. (2012) and Kaushik and Singh (2013) k-NN models are very sensitive to the choice of the parameter “k” and the used measure of “distance” between any two samples. In applications resulting in higher-dimensional feature spaces k-NN performance deteriorates in terms of both, classification and execution time. In addition, outliers tend to excessively influence the classification accuracy as all data values contribute to the result to the same extent. In particular, for a large number of features even in combination with a small number of samples SVM have proven to yield better classification results as long as the margin (i.e., the geometric “distance”) between classes is sufficiently large. The second reason for a decision in favor of SVM is the availability of open-source SVM libraries (“LIBSVM” and “LIBLINEAR,” see Chang and Lin, 2011) that can be easily linked into a classification module/activity.

Besides the decision for a suitable machine learning model, the diligent construction of the feature vector is of critical importance for the success of the learning algorithm. The careful selection of those input data properties which are assumed to have the most discriminative power with regard to the intended classification considerably influences the quality of the resulting predictive model. Not even the best type of learner is able to compensate for poorly chosen features. Selecting reasonable features requires a certain understanding of the nature and characteristics of the input data. This type of a priori information, also called “bias” or “prior knowledge,” is a vital prerequisite for any useful learning process (Nilsson, 1998; Shalev-Shwartz and Ben-David, 2014).

The events of interest to be classified in our application are fragments of short duration of a digitized, that is, a quantized and discretized EEG signal. Such signal can be analyzed with regard to its morphological, time domain properties (observing the signal as a function of amplitude over time), as well as considering its spectral composition (in terms of proportional contributions of oscillations of distinct frequencies to the original signal). In addition to the review of features used in earlier studies using machine learning, the in-depth assessment of published analytical approaches is of particular advantage for the feature vector definition. Analytical methods are basically considering the same distinctive signal characteristics, only the processing strategy is entirely different.

The HFO detection software framework implements the extraction of time domain features within a dedicated module (class “feature extraction” in Figure 7) that can be registered as one step of a process' sequence of activities. The time domain feature extraction module receives a data matrix node, i.e., the high- or band-pass filtered EEG signal, and a list of events of interest to be processed as input data. Each event is analyzed with respect to the signal characteristics detailed in Table 3.

The feature extraction module allows to selectively include subsets of the listed features in the feature vector, depending on the configuration of the extraction step within the hosting process. In order to extract specific properties from the output of distinct preprocessing stages, e.g., from high-pass filtered, low-pass filtered, or unfiltered EEG data, it is possible to include the same feature extraction step multiple times within a single process, with each instance receiving a different input data set.

Events of interest can be analyzed with regard to their characteristics in the spectral representation. Frequency domain analysis in our software framework is currently limited to a static spectral decomposition. We use Fast Fourier Transform with a Tukey window and α = 0.5, the ratio of the cosine-tapered length of the full window, applied upfront. The resulting magnitude values are aggregated into frequency sub-bands (bins) of defined width. Lower and upper frequency limits, as well as the sub-band width can be specified. The calibrated magnitude of each sub-band is added to the feature vector as a separate feature. Optionally, the generated spectrum can be normalized to the value range [0..1]. The computed scaling factor is considered as a further feature in this case.

A more elaborate spectral analysis could generate further potentially useful and distinctive features, such as the spectral centroid (Blanco et al., 2010; Matsumoto et al., 2013; Pearce et al., 2013) of the segment. In particular, a time-frequency analysis could yield interesting details about the development of the signal's spectrum throughout the event. Systematic tests would be necessary to assess the added value of these properties in addition to the static spectrum.

Depending on the number of observed input data properties and their numerical representations, feature extraction may result in feature vectors of considerable length, i.e., in high-dimensional feature spaces. Not all of these elements, particularly of high-dimensional feature vectors, contribute equally to the quality of the constructed predictive model. The longer a feature vector, the higher the chance that some features convey redundant information or are insignificant or even irrelevant with regard to the targeted classification (Dash and Liu, 1997; Guyon and Elisseeff, 2003). Obviously, this may significantly increase computational cost in terms of both, memory usage and CPU time, for no benefit.

The second aspect mandating dimensionality reduction is the risk of poor generalizability of the resulting model in case the extracted features are adapted too closely to the training data set. This problem is known as “overfitting” (Shalev-Shwartz and Ben-David, 2014). Generally, the training data samples are assumed to be selected independently and identically distributed from and, thus, to be representative for the underlying distribution (universe). In that case the ideal strategy would be to minimize the empirical error, i.e., the error in classifying the training data set [“Empirical Risk Minimization,” see Shalev-Shwartz and Ben-David (2014)]. Since EEG recordings differ from patient to patient and are influenced by a number of factors, such as circadian rhythm, received medication, activity, or exogenous artifacts, this assumption is not valid for the HFO classification case. The better the classifier performs on the training data set, the higher the risk of overfitting and failing on previously unseen recordings, i.e. the “true error” increases with a decreasing empirical error. Reducing the number of features according to a suitable algorithm may also decrease the risk of overfitting by reducing the susceptibility of the constructed model to (potentially exceptional) peculiarities of the training data set (Nilsson, 1998).

A third reason calling for a reduction of feature vector length is an improved understandability of how characteristics of the data are related to the classification outcome. Knowing which properties are the most influential and discriminating may be of interest also for the advancement of analytical HFO detection algorithms.

Feature subset selection addresses the issue of finding the smallest subset of features that yields the best classification performance. This problem raises two questions: how to select a particular subset? And how to assess its performance? The conceptually simplest solution is to minimize the true error using all possible combinations of features and to validate each combination using samples not in the training data set (Guyon and Elisseeff, 2003). Considering the exponential complexity requiring 2|F| iterations, with |F| the dimension of the feature space, this approach is computationally not feasible.

The algorithm implemented in our SVM classifier module iteratively partitions the provided training data set into a training and a validation subset (resampling with replacement) and assesses the predictive power of selected feature subsets by creating a new model in each iteration. Technically, this so-called “wrapper” method is agnostic with respect to the particular type of machine learner and could be encapsulated in a dedicated module in order to reuse it with potential future classifiers. The encapsulating procedure of the feature subset selector is depicted in Figure S7. The procedure receives the full set of features and the training data set as input items. In an outer loop the training data set is randomly shuffled and partitioned into a training subset and a validation subset (“hold-out” samples) using the GSL implementation of the MT19937 random number generator (Galassi et al., 2017).

Subsequently, ANOVA is performed separately on each feature in order to reorder them according to their discriminative power with regard to the classes to be distinguished (ripple, fast ripple, artifact). ANOVA maintains the functional dependency between data and labels and, thus, satisfies this requirement for a suitable variable ranking function (see Song et al., 2012). To avoid a bias by the validation subset, only the training subset is considered in this step. The inner loop, detailed in Figure S8, derives a (locally) optimized subset of features based on the reordered set of features and the disjoint training and validation data subsets. The resulting feature subsets of all iterations of this procedure are aggregated into a feature frequency histogramme. The number of iterations of this outer loop depends on the configured ratio (r) of the validation subset size with respect to the total training set size and is calculated as n = ⌊1/r⌋. The final step of the procedure generates an optimized feature subset using only those features that appeared in at least two locally optimized feature subsets.

The inner loop (Figure S8) uses the “greedy forward selection” algorithm, also referred to as “hill climbing” (Guyon and Elisseeff, 2003), to create a locally optimized subset of features on the basis of the provided training and validation data subsets. The algorithm iteratively adds features, taken from the ordered input feature vector, to the (initially empty) local feature set. This local feature set is used for model generation, using the training subset, and validation, using the validation subset. After each iteration, the feature that resulted in the highest improvement of classification accuracy is permanently added to the local feature set. The loop terminates as soon as no improvement can be made by adding further features. Adding only features that improve the outcome also reduces redundancy.

As the input feature vector is ordered according to the discriminative power of the features, the algorithm tends to converge after a small number of iterations. A further speedup is achieved by removing from the ordered feature set all features that resulted in a classification accuracy when added, causing them to be ignored in subsequent iterations.

Here, we provide an example based on 19 invasive recordings with macro electrodes (Montreal reference data set) and a sampling rate of 2,000 samples/s. The total number of events was 11434 (avg. 600 events/recording), with a total duration of events of 80,5426 ms (equivalent to 161,0852 samples). The average event duration was 70.5 ms (141 samples), standard deviation of 43.9 ms and median 62 ms. The selection starts with 85 spectral features (i.e., spectral bins). The algorithm proceeds as follows:

• Feature node 1: 11434 instances, 9 features (on high pass filtered data)

• Feature node 2: 11434 instances, 6 features (on low pass filtered data)

• MgpActClsSVM: concatenated 100 features from 3 feature nodes for each of 11434 instances

• MgpActClsSVM: random number generator

• MgpActClsSVM: runs: 10, training set size: 10291, validation set size: 1143, classes: 3

• MgpActClsSVM: Instance count: class[ [EOI] ] = 1379

• MgpActClsSVM: Instance count: class[ [F] ] = 688

• MgpActClsSVM: Instance count: class[ [R] ] = 9367

• […]

• All features that occurred (i.e. were part of the computed subset) at least in 2 of the performed 10 runs were selected for the final subset:

• MgpActClsSVM: Feature vector: len: 6: [42 43 90 91 93 95 ]

• MgpActClsSVM: Avg accuracy: 0.973561

The execution time for feature subset selection from 100 to 6 features was in total 137 minutes on a Apple Mac Pro “Eight Core” 2.66, 16 GB RAM, Solid-state disk.

Having large and varying ranges of values for different features may negatively affect the classification performance, as those features with the largest value ranges may govern the model generation process and may be a source of overfitting the model to the training set (Lin, 2015). This is of particular concern in geometrical machine learning models. A commonly used technique to mitigate this problem is to scale (or normalize) the features to some common value range (Nilsson, 1998): , with xmin and xmax the minimum and maximum of the original range and m and M the normalized minimum and maximum (setting m = 0 and M = 1 is termed “unity-based normalization”). The implemented SVM classifier module allows to specify m and M.

During training, the SVM module derives the scaling factors for each feature within each iteration of the outer feature subset selection loop (Figure S7), considering the random training data subset only. Feature scaling is then performed for all samples using the calculated factors. Before concluding a learning process and building the final prediction model, feature scaling is re-performed using scaling factors calculation over the entire training set.

SVM technique partitions the instance (sample) space by a hyperplane (the class or decision boundary) that is defined by its support vectors (those samples of the training set that are closest to the boundary) and the “margin” between hyperplane and support vectors. Since HFO classification involves three classes, ripples, fast ripples, and artifacts, the problem is internally broken down in LIBSVM into a set of three models (n(n−1)/2 for n classes), each distinguishing between two classes (Chang and Lin, 2011).

According to Cortes and Vapnik (1995), what they term “soft margin hyperplane” can be found by minimizing the functional , with wT the transpose of the (weight) vector w defining the hyperplane and the sum of training errors. A training error ξi is defined such that with ξi>0, xi an element of the training set, ϕ a feature space mapping function (see below), yi the respective class label (1 or −1), and b, bias, a scalar. (The optimal class-separating hyperplane would imply ξi = 0 for all samples of the training set, while 0 < ξi < 1 results in a margin violation and for ξi>1 the corresponding sample xi is misclassified Zisserman, 2015.)

LIBSVM allows to adjust the regularization (or misclassification penalty) parameter C which controls the emphasis that is put on misclassifications during training, i.e., how hard the learner shall try to avoid misclassifications of training samples. A smaller C results in a wider margin and a reduced risk of overfitting the model to the training data, at the cost of possibly misclassifying some training samples.

Support vector machines can transfer the feature vectors into a higher-dimensional feature space in case the data is not separable in the original feature space, enabling non-linear decision boundaries in the original feature space. This process requires the computation of the inner product of the mapping function ϕ: 〈ϕ(xi), ϕ(x)〉 which can be efficiently calculated (without knowing ϕ) using a suitable “kernel function” K(xi, x) with xi a single vector of the training set and x the sample under test (Jordan, 2004).

The implemented SVM classifier module configures LIBSVM to use the Gaussian radial basis function (RBF) as a kernel function. It is defined as with γ≥0 the weight associated with the distance ||xi−x||. K(xi, x), thus, gives a weighted measure of distance or “closeness” of the values xi and x and converges toward 0 for increasing values of γ and toward 1 for decreasing values (Shalev-Shwartz and Ben-David, 2014). From a simplifying perspective, this metric can be seen as a measure of complexity of the hyperplane or “peakedness” toward its support vectors. A large γ trims the model more tightly to the support vectors increasing the risk of overfitting, while a small γ may not be able to reflect the complexity of the data (Keerthi and Lin, 2003; Ansari and Ahmadi-Nedushan, 2016). LIBSVM allows to adjust γ to tune the predictive model as required.

Since the parameters C and γ mutually influence each other the SVM classifier module optimizes them in a grid (quadratic loop) fashion. The configurable ranges for both parameters are exhaustively probed, computing the accuracy via cross-validation over the training set for each combination of values. A clear disadvantage of this method is the quadratic complexity O(n2). More elaborate parameter optimization algorithms, such as proposed by Keerthi et al. (2007), could be considered in the future in order to reduce the computational cost.

The flow of data items, from the provision of raw EEG input data to the output of classified HFO markers, and the life-cycles of necessary temporary data nodes depend on the configuration of the process. Figure S9 illustrates the movement of information within a HFO detection process based on SVM classification. The process comprises four stages, arranged horizontally from left to right in the figure, which are traversed sequentially (see also Figure 7).

• In the preprocessing stage a “FIR filter” activity creates a high-pass and a low-pass filtered version of the raw EEG recording it receives as input.

• The “RMS-based event detection” activity in the EOI detection stage receives the high-pass filtered EEG and analyses it for occurrences of potential high-frequency oscillations which are compiled into a list of event markers.

• The feature extraction stage hosts two activities. The “Frequency-domain feature extraction” step uses the EOI list generated by the detector to create a feature vector from the normalized spectral decomposition of the high-pass filtered EEG data segment corresponding to each listed event. The second activity, “Time domain feature extraction,” extracts morphological properties from both, the low-pass and the high-pass filtered versions of the EEG recording. Again, the EOI list defines the data segments to be analyzed.

• The “classification” stage is the final stage of the process. It hosts an instance of the SVM-based classifier which relabels the events in the EOI list into “ripple,” “fast ripple,” and optionally “artifact,” based on the aggregated feature vector associated with each event. In validation mode (see below) the activity generates a classification performance report, informing about the achieved level of accuracy.

The implemented SVM classifier module can be operated in three distinct modes that depend on the context in which the hosting process is executed.