- Program of Computational and Data Science, Department of Mathematical Sciences, Middle Tennessee State University, Murfreesboro, TN, United States

Functional magnetic resonance imaging (fMRI)-based studies of functional connections in the brain have recently been highlighted by numerous human and animal studies, which have provided significant information for explaining a wide range of pathological conditions and behavioral characteristics. In this paper, we propose using graphSAGE, a graph neural network (deep learning) technique, to investigate resting-state fMRI (rs-fMRI) and extract the default mode network (DMN). Compared with typical methods such as seed-based correlation, independent component analysis, and dictionary learning, graphSAGE was more robust and reliable in real data experiments and defined clearer regions of interest. In addition, graphSAGE requires fewer and less restrictive assumptions, and it handles single-subject and group-level analysis simultaneously.

1. Introduction

Functional connectivity is usually studied with one of two approaches: task-based fMRI analysis or resting-state fMRI analysis. Task-based fMRI depends not only on the subjects' ability to follow the task procedure but also on a good experimental design, especially in clinical applications (Amaro and Barker, 2006; Daliri and Behroozi, 2014; Zhang et al., 2016). In contrast, resting-state fMRI measures spontaneous fluctuations in the human brain and reflects the relationships among different networks (Biswal et al., 1995; Fox and Raichle, 2007). Therefore, rs-fMRI is applicable in situations where task-based fMRI provides insufficient information or cannot be performed (Shimony et al., 2009). At rest, the brain remains constantly active, its regions are linked by intrinsic connections, and some of these connections become even stronger during certain tasks. It is therefore reasonable to use resting-state data to study basic functional connections, especially to identify intrinsic connectivity networks or resting-state networks (RSNs) (Greicius et al., 2003; Beckmann et al., 2005; De Luca et al., 2006; Seeley et al., 2007; Canario et al., 2021; Morris et al., 2022). As several studies have noted (Beckmann et al., 2005; De Luca et al., 2006; Fox and Raichle, 2007; Smith et al., 2009; Cole et al., 2010), RSNs are located in the gray matter of the human brain and reflect the core perceptual and cognitive processes of functional brain systems.

Several common RSNs (Cole et al., 2010; Lee et al., 2013) are often identified in resting-state fMRI studies. The most fundamental RSN is the default mode network (DMN) (Greicius et al., 2003; Damoiseaux et al., 2006; Van Den Heuvel M. et al., 2008; van den Heuvel M. P. et al., 2008; Thomas Yeo et al., 2011; Andrews-Hanna et al., 2014; Shafiei et al., 2019), which is active when the brain is at rest, without any external or attention-demanding task. It mainly includes the posterior cingulate cortex (PCC) and precuneus, the medial prefrontal cortex (MPFC), and the inferior parietal cortex.

The somatomotor network (SMN) includes the somatosensory and motor regions involved in motor tasks (Biswal et al., 1995; Chenji et al., 2016). The visual network (VIN) includes most of the occipital cortex and is involved in visual processing (De Luca et al., 2006; Smith et al., 2009; Power et al., 2011; Thomas Yeo et al., 2011; Hendrikx et al., 2019). The dorsal attention network (DAN), also known as the visuospatial attention network, orients attention and participates in externally directed tasks; it is located primarily in the intraparietal sulcus and at the junction of the precentral and superior frontal sulci (Vincent et al., 2008; Lei et al., 2014; Vossel et al., 2014; Bell and Shine, 2015; Hutton et al., 2019; Shafiei et al., 2019). The salience network (SAN) mainly evaluates external stimuli and internal events and helps direct attention by switching between the relevant processing systems.

Several techniques, such as the seed-based correlation method, independent component analysis (ICA), and dictionary learning, can identify the DMN from rs-fMRI; they are briefly reviewed in Section 2. In Section 3, we propose using graphSAGE (Hamilton et al., 2017), a graph neural network and deep learning technique, to extract the DMN from resting-state fMRI. In Section 4, we perform data analysis experiments and comparisons on two real data sets, and Section 5 presents the discussion and conclusions.

2. Common methods review

For comparison purposes, we briefly review several typical methods in rs-fMRI data studies, including the seed-based correlation method, independent component analysis (ICA), and the dictionary learning method.

2.1. Seed-based correlation

The seed-based correlation (SBC) method, introduced by Biswal et al. (1995), was the first method used to identify resting-state networks. It computes the Pearson correlation coefficient between two voxels or regions of interest (ROIs) from their time series, denoted $x = [x_1, x_2, \dots, x_n]$ and $y = [y_1, y_2, \dots, y_n]$:

$$r_{xy} = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}},$$

where $\bar{x}$ and $\bar{y}$ are the mean values of x and y, respectively.

A whole-brain scan can contain up to roughly one million voxels, depending on the voxel size and the extent of the scanned brain. Although it is possible to compute the correlation between every pair of voxels, this produces an enormous number of comparisons (N(N - 1)/2 pairs for N voxels). More often, the method uses a preselected seed or ROI as a reference and computes its correlation with all other brain voxels. A high correlation value indicates stronger functional connectivity between the two seeds or ROIs. This method has been applied in many studies to identify functional connectivity from rs-fMRI. Fox et al. (2006) identified a bilateral dorsal attention system and a right-lateralized ventral attention system, and detected a potential mediating role of the prefrontal cortex. Fox et al. (2005) identified two opposing brain networks based on correlations within each network and anticorrelations between them: one associated with task-related activation and the other with task-related deactivation. De Oliveira et al. (2019) used the sensorimotor network as the seed and extracted RSNs from rs-fMRI that were similar to those obtained from finger-tapping task fMRI in eight healthy volunteers. In addition to a preselected seed or ROI, this method also needs a threshold to determine which voxels are significantly correlated with the seed or ROI. The main advantages of SBC are its algorithmic simplicity and straightforward interpretation, which have made it important in the study of functional connectivity (FC). Its main disadvantage is that it only captures relationships between the preselected seeds or ROIs and the remaining voxels of the brain. As a univariate method, it ignores possible relationships among other seeds, so significant information between other voxels is usually lost. This restricts the detection of other possible networks in FC, and the choice of seed, including its size and position, directly determines the interpretation of the results (Buckner et al., 2008; Cole et al., 2010).
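The seed-to-voxel step can be vectorized so that the correlation formula above is evaluated for every voxel in one pass. The sketch below assumes the data are already arranged as a (time points x voxels) array and that the seed time series is, for example, the mean signal of a PCC ROI; the threshold value `r_thresh` is a hypothetical choice for illustration, not the statistical threshold used later in Section 4.

```python
import numpy as np

def seed_correlation_map(data, seed_ts):
    """Pearson r between one seed time series and every voxel.

    data:    array of shape (t, n), time points x voxels
    seed_ts: array of shape (t,), e.g. the mean time series of a seed ROI
    """
    xc = data - data.mean(axis=0)            # center each voxel's time series
    s = seed_ts - seed_ts.mean()             # center the seed time series
    num = s @ xc                             # sum_i (x_i - x_bar)(y_i - y_bar), per voxel
    den = np.sqrt(np.sum(s ** 2) * np.sum(xc ** 2, axis=0))
    return num / den                         # shape (n,)

def threshold_map(r, r_thresh):
    """Boolean mask of voxels whose |r| exceeds a chosen (hypothetical) threshold."""
    return np.abs(r) > r_thresh

# Toy usage: 120 time points, 1,000 "voxels", seed taken from voxel 0.
rng = np.random.default_rng(0)
data = rng.standard_normal((120, 1000))
data[:, 1] = 0.8 * data[:, 0] + 0.2 * rng.standard_normal(120)  # a voxel coupled to the seed
r = seed_correlation_map(data, data[:, 0])
mask = threshold_map(r, r_thresh=0.5)
```

The cost is linear in the number of voxels, which is why seed-based maps remain cheap even when all-pairs correlation is impractical.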

2.2. Independent component analysis

Independent component analysis (ICA) is a data-driven technique and one of the most popular decomposition methods for analyzing fMRI data. Since it was first applied to fMRI (McKeown et al., 1998), it has been used in many studies. ICA assumes that the observed data are a linear combination of statistically independent sources (Brown et al., 2001).

ICA extracts independent components by optimizing a criterion, such as maximum likelihood estimation (MLE) (Stone, 2004), minimization of the mutual information between sources (InfoMax) (Bell and Sejnowski, 1995), or maximization of the non-Gaussianity of the sources (FastICA) (Hyvärinen and Oja, 2000).

Because ICA looks for non-Gaussian sources, most ICA algorithms first apply principal component analysis (PCA) (Tharwat, 2016) to whiten the observed data and reduce its dimension. The difference between the two is that ICA seeks components that are as statistically independent as possible, whereas PCA seeks uncorrelated, orthogonal components that successively maximize variance. Because fMRI data are four-dimensional, they must be reshaped into a two-dimensional matrix before ICA or PCA is applied. There are two types of ICA analysis, spatial ICA and temporal ICA, and the type determines how the data are reshaped. To detect spatially independent components, the columns of the matrix are voxels and the rows are time points; to detect temporally independent components, the columns are time points and the rows are voxels. Spatial ICA is typically used because it can produce as many components as there are time points (McKeown et al., 1998; Calhoun et al., 2001).
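The reshaping described above can be sketched with NumPy boolean indexing. This assumes the 4-D data are stored as an (x, y, z, t) array and that a 3-D boolean brain mask is available; the function names are hypothetical helpers, not part of any toolbox.

```python
import numpy as np

def to_spatial_ica_matrix(data4d, mask):
    """(x, y, z, t) data + boolean brain mask (x, y, z) -> t x n matrix (rows = time points)."""
    return data4d[mask].T          # data4d[mask] has shape (n_voxels, t)

def to_temporal_ica_matrix(data4d, mask):
    """Same data arranged as n x t (rows = voxels) for temporal ICA."""
    return data4d[mask]

def to_volume(values, mask):
    """Write one value per in-mask voxel (e.g. a spatial component) back into 3-D."""
    vol = np.zeros(mask.shape)
    vol[mask] = values
    return vol

rng = np.random.default_rng(0)
data4d = rng.standard_normal((4, 5, 6, 10))   # toy 4 x 5 x 6 grid, 10 time points
mask = np.ones((4, 5, 6), dtype=bool)
mask[0] = False                               # pretend the first slab lies outside the brain
X_spatial = to_spatial_ica_matrix(data4d, mask)    # shape (10, 90)
X_temporal = to_temporal_ica_matrix(data4d, mask)  # shape (90, 10)
```

`to_volume` is the inverse step used to display a row of the spatial source matrix as a brain map.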

The basic algorithm of spatial ICA is as follows (McKeown et al., 1998; Calhoun et al., 2001). Let $X \in \mathbb{R}^{t \times n}$ be the data matrix, where t is the number of time points and n is the number of voxels. The goal is to find W and S such that

$$X = WS,$$

where $W \in \mathbb{R}^{t \times p}$ is the mixing coefficient matrix, $S \in \mathbb{R}^{p \times n}$ is the source matrix, and the rows of S are the spatially independent components. The basic algorithm of temporal ICA is similar (McKeown et al., 1998; Calhoun et al., 2001), except that the data matrix $\tilde{X} \in \mathbb{R}^{n \times t}$ has voxels as rows and time points as columns. The goal is to find $\tilde{W}$ and $\tilde{S}$ such that

$$\tilde{X} = \tilde{W}\tilde{S},$$

where $\tilde{W} \in \mathbb{R}^{n \times p}$ is the mixing coefficient matrix, $\tilde{S} \in \mathbb{R}^{p \times t}$ is the source matrix, and the rows of $\tilde{S}$ are the temporally independent time courses.
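To make the spatial model $X = WS$ concrete, the following is a minimal NumPy sketch of spatial ICA, assuming PCA whitening followed by a symmetric FastICA fixed-point iteration with the tanh (log-cosh) nonlinearity. It is an illustrative implementation under these assumptions, not the group ICA procedure of the CONN toolbox used in Section 4. Voxels are treated as samples, so the recovered rows of S are spatial maps and the columns of W are their time courses.

```python
import numpy as np

def spatial_ica(X, p, n_iter=200, tol=1e-6, seed=0):
    """Spatial ICA sketch: X (t x n) ~ W (t x p) @ S (p x n), rows of S spatially independent."""
    t, n = X.shape
    Xc = X - X.mean(axis=1, keepdims=True)          # center each time point across voxels
    eigval, eigvec = np.linalg.eigh(Xc @ Xc.T / n)  # t x t covariance
    top = np.argsort(eigval)[::-1][:p]              # keep the p leading directions (PCA)
    K = (eigvec[:, top] / np.sqrt(eigval[top])).T   # p x t whitening matrix
    Z = K @ Xc                                      # p x n, with Z @ Z.T / n = I

    rng = np.random.default_rng(seed)
    B, _ = np.linalg.qr(rng.standard_normal((p, p)))  # rows are unmixing vectors
    for _ in range(n_iter):
        g = np.tanh(B @ Z)
        g_prime = 1.0 - g ** 2
        # FastICA fixed-point update for every row b: E[z g(b^T z)] - E[g'(b^T z)] b
        B_new = g @ Z.T / n - g_prime.mean(axis=1, keepdims=True) * B
        u, _, vt = np.linalg.svd(B_new)             # symmetric decorrelation:
        B_new = u @ vt                              # B <- (B B^T)^(-1/2) B
        converged = np.max(np.abs(np.abs(np.sum(B_new * B, axis=1)) - 1.0)) < tol
        B = B_new
        if converged:
            break

    S = B @ Z                                       # p x n spatial components
    W = np.linalg.pinv(B @ K)                       # t x p mixing matrix (time courses)
    return W, S

# Toy usage: two super-Gaussian "spatial maps" mixed over 20 time points.
rng = np.random.default_rng(1)
S_true = rng.laplace(size=(2, 5000))
W_true = rng.standard_normal((20, 2))
X = W_true @ S_true
W_est, S_est = spatial_ica(X, p=2)
```

As with any ICA, the components come back in arbitrary order, sign, and scale, which is one reason ICs are hard to rank.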

Many techniques have been applied to ICA, and most algorithms fall into one of three groups. The first is based on projection pursuit (Stone, 2004), which extracts one component at a time. The second is based on infomax (Bell and Sejnowski, 1995), which extracts multiple components simultaneously in parallel. The third is based on maximum likelihood estimation (Stone, 2004), a statistical tool for estimating the mixing coefficient matrix that best fits the observed data. Many practical methods have been used in the literature. For example, Beckmann et al. (2005) used probabilistic independent component analysis (PICA), which, unlike typical noise-free ICA, estimates the independent components in the presence of additive noise. Hyvärinen and Oja (2000) described FastICA, a widely used method that can use kurtosis as its cost function. Beckmann et al. (2009) combined multi-subject ICA with dual regression for group comparisons. To date, ICA still plays an important role in resting-state analysis.
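Kurtosis works as a contrast for ICA because a linear mixture of independent non-Gaussian sources is closer to Gaussian than the sources themselves. The short sketch below, using synthetic Laplace sources as an illustrative assumption, computes the sample excess kurtosis of a Gaussian sample, a Laplace source, and an equal-weight mixture of two Laplace sources. The theoretical values are 0, 3, and 1.5, respectively, so searching for unmixing directions with maximal |kurtosis| pulls the estimate back toward the original sources.

```python
import numpy as np

def excess_kurtosis(y):
    """Sample excess kurtosis: 0 for a Gaussian, > 0 super-Gaussian, < 0 sub-Gaussian."""
    y = (y - y.mean()) / y.std()
    return float(np.mean(y ** 4) - 3.0)

rng = np.random.default_rng(0)
n = 200_000
s1, s2 = rng.laplace(size=n), rng.laplace(size=n)   # two independent super-Gaussian sources
mixture = (s1 + s2) / np.sqrt(2.0)                  # an equal-weight linear mixture

for name, y in [("gaussian", rng.standard_normal(n)),
                ("laplace source", s1),
                ("mixture of two sources", mixture)]:
    print(f"{name:>24s}: {excess_kurtosis(y):.2f}")
```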

Unlike PCA, which orders principal components by explained variance, ICA does not order the independent components (ICs), which makes it hard to tell which IC is more important. To estimate the mixing coefficient matrix W and the sources S, ICA also relies on prior assumptions, such as the statistical independence and non-Gaussianity of the sources. Moreover, the number of ICs is difficult to determine, and a poor choice can cause over-fitting or under-fitting.

2.3. Dictionary learning

Dictionary learning (DL) is a linear decomposition technique for extracting fundamental components. Unlike ICA, which focuses on the independence of components, or PCA, which focuses on orthogonal components, DL emphasizes sparsity. The idea is to decompose the observed data into two matrices: a dictionary D, which is a collection of atoms, and the corresponding sparse coefficient matrix A. The basic algorithm of dictionary learning (Olshausen and Field, 1996, 1997; Kreutz-Delgado et al., 2003; Mairal et al., 2009; Joneidi, 2019) is as follows. Let $Y = [y_1, y_2, \dots, y_n] \in \mathbb{R}^{m \times n}$ be the observed data with $y_i \in \mathbb{R}^{m}$, let $D = [d_1, d_2, \dots, d_k] \in \mathbb{R}^{m \times k}$ be the dictionary, and let $A = [a_1, a_2, \dots, a_n] \in \mathbb{R}^{k \times n}$ be the corresponding sparse coefficient matrix. The number of columns k of D is the number of atoms. D and A are found by solving

$$\min_{D,\,A} \; \|Y - DA\|_F^2 \quad \text{subject to} \quad \|a_i\|_0 \le C, \quad i = 1, \dots, n,$$

where C controls the sparsity of each column of A, that is, the maximum number of its nonzero entries.

Several improved methods have been proposed to solve this optimization problem. For example, Aharon and Elad (2008) used stochastic gradient descent, and Lee et al. (2007) exploited Lagrange duality. A widely used method is K-SVD (Aharon et al., 2006), which generalizes K-means clustering and uses singular value decomposition (SVD) to update the dictionary atoms. It is an iterative process that alternately updates the sparse coefficient matrix and the dictionary matrix.
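The alternating structure of K-SVD can be sketched in a few dozen lines of NumPy. This is a minimal illustration under stated assumptions: sparse coding uses orthogonal matching pursuit (OMP) with at most C atoms per column, atoms are initialized from randomly chosen data columns, and each atom is refit by a rank-1 SVD of the residual restricted to the signals that use it. It is not the Nilearn implementation used in Section 4, which solves a different (penalized) formulation.

```python
import numpy as np

def omp(D, y, C):
    """Orthogonal matching pursuit: approximate y using at most C columns (atoms) of D."""
    residual, support = y.copy(), []
    coef = np.zeros(0)
    for _ in range(C):
        j = int(np.argmax(np.abs(D.T @ residual)))   # atom most correlated with the residual
        if j in support:
            break
        support.append(j)
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    a = np.zeros(D.shape[1])
    a[support] = coef
    return a

def ksvd(Y, k, C, n_iter=20, seed=0):
    """Minimal K-SVD for min ||Y - D A||_F^2 s.t. ||a_i||_0 <= C for every column a_i."""
    n = Y.shape[1]
    rng = np.random.default_rng(seed)
    D = Y[:, rng.choice(n, size=k, replace=False)].astype(float)  # init atoms from data
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_iter):
        # 1) sparse coding step with D fixed
        A = np.column_stack([omp(D, Y[:, i], C) for i in range(n)])
        # 2) dictionary update: refit each atom and its coefficients by a rank-1 SVD
        for j in range(k):
            users = np.nonzero(A[j])[0]                # signals that currently use atom j
            if users.size == 0:
                continue
            E = Y[:, users] - D @ A[:, users] + np.outer(D[:, j], A[j, users])
            U, s, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, j] = U[:, 0]
            A[j, users] = s[0] * Vt[0]
    return D, A

# Toy usage: 300 signals, each a combination of 3 out of 12 unknown atoms.
rng = np.random.default_rng(0)
m, k, n, C = 16, 12, 300, 3
D_true = rng.standard_normal((m, k))
D_true /= np.linalg.norm(D_true, axis=0)
A_true = np.zeros((k, n))
for i in range(n):
    A_true[rng.choice(k, size=C, replace=False), i] = rng.standard_normal(C)
Y = D_true @ A_true
D, A = ksvd(Y, k=k, C=C)
```

The SVD step only touches coefficients that are already nonzero, so the sparsity constraint from the sparse coding step is preserved.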

3. Graph neural network

Brain connectivity patterns derived from fMRI data are usually classified either as statistical dependencies among neural units, called functional connectivity, or as causal interactions, called effective connectivity. Investigating the human brain through its connectivity patterns reveals important information about its structural, functional, and causal organization. Graph theory-based methods have recently played a significant role in understanding brain connectivity architecture. A graph neural network (GNN) is a type of deep neural network applied to graph-structured data. Many GNN variants, such as graph convolution networks (GCN), graph attention networks (GAT), and graph autoencoders, have been used successfully in fields including computer vision and recommender systems. Yao et al. (2019) used GCN for text classification in natural language processing. Kosaraju et al. (2019) proposed a GAT-based method to predict the future trajectories of multiple interacting pedestrians. Pan et al. (2018) proposed a novel graph autoencoder framework to reconstruct graph structure. Yao et al. (2021) developed a mutual multi-scale triplet graph convolutional network (MMTGCN) for classifying brain disorders from fMRI or diffusion MRI data. Yao et al. (2020) proposed a temporal-adaptive graph convolution network (TAGCN) to mine spatial and temporal information from rs-fMRI time series. TAGCN can exploit both spatial and temporal information from resting-state functional connectivity (FC) patterns and time series, and it can also explicitly characterize the subject-level specificity of FC patterns.

In graph theory, an undirected, unweighted graph is represented by G(V, E), where V is the set of vertices (nodes) and E is the set of edges, each connecting two vertices (Butts, 2008; van den Heuvel M. P. et al., 2008). In fMRI data analysis, G represents a brain network, V is the set (or a subset) of voxels or ROIs, and E is the set of functional connections, or correlations, between elements of V, especially between ROIs.

An adjacency matrix A of dimension $|V| \times |V|$ describes this kind of graph: entry $A_{ij}$ is one if there is an edge between nodes i and j, and zero otherwise. A matrix $X \in \mathbb{R}^{m \times |V|}$ often holds the attributes or features of the graph, where m is the number of features of each node (ROI) and |V| is the number of nodes (ROIs).
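As a sketch of how A and X can be built from rs-fMRI, the code below averages per-subject ROI correlation matrices, sets average correlations below 0.1 to zero (the rule used in Section 4), and reads off a binary adjacency matrix. The helper name and the choice of each ROI's row of the averaged correlation matrix as its feature vector are assumptions for illustration only.

```python
import numpy as np

def build_roi_graph(subject_ts, threshold=0.1):
    """Group-average correlation graph over ROIs.

    subject_ts: list of arrays, each (time points x ROIs) for one subject
    Returns the averaged correlation matrix, a 0/1 adjacency matrix, and the edge count.
    """
    mats = [np.corrcoef(ts, rowvar=False) for ts in subject_ts]  # ROIs x ROIs per subject
    R = np.mean(mats, axis=0)                                     # average across subjects
    R = (R + R.T) / 2.0                                           # guard exact symmetry
    A = (R >= threshold).astype(int)                              # drop weak/negative edges
    np.fill_diagonal(A, 0)                                        # no self-loops
    n_edges = int(A.sum()) // 2                                   # undirected: counted twice
    return R, A, n_edges

# Toy usage: 3 subjects, 50 time points, 6 ROIs.
rng = np.random.default_rng(0)
subjects = [rng.standard_normal((50, 6)) for _ in range(3)]
R, A, n_edges = build_roi_graph(subjects)
# Hypothetical feature choice: each ROI's row of R, so X = R.T has shape (m, |V|) with m = |V|.
X = R.T
```

Without thresholding, a graph on |V| nodes has |V|(|V| - 1)/2 edges, which for 800 ROIs gives the 319,600 edges mentioned in Section 4.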

GraphSAGE (sample and aggregate) (Hamilton et al., 2017) is an inductive learning technique for generating node embeddings, and it can be used for both unsupervised and supervised learning. For node embedding in fMRI analysis specifically, an unsupervised GraphSAGE procedure can be implemented as follows:

1) Given a graph G(V, E) and a feature matrix $X \in \mathbb{R}^{m \times |V|}$, each node $v \in V$ has an m-dimensional feature vector $x_v$.

2) Set up a neural network with K layers, and let $h_v^{0} = x_v$ denote the initial embedding of each node $v \in V$, that is, its original m-dimensional feature (attribute) vector.

3) For the first layer (k = 1) and for each $v \in V$:

(a) Let $\mathcal{N}(v)$ denote the local neighborhood of v. Information from all neighbors is aggregated by a function f:

$$h_{\mathcal{N}(v)}^{1} = f\left(\{h_u^{0}, \forall u \in \mathcal{N}(v)\}\right)$$

The aggregate function f can be one of three: (i) the mean aggregator, which takes the element-wise average of the neighbor embeddings $h_u^{0}$; (ii) the pooling aggregator, which applies an element-wise max-pooling operation over the neighbor set; (iii) the long short-term memory (LSTM) aggregator, which applies an LSTM to a random permutation of the neighbor set.

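(b) After the neighborhood information has been summarized by the aggregate function, the embedding of node v is

$$h_v^{1} = \sigma\left(W_1 \cdot \mathrm{CONCAT}\left(h_v^{0}, h_{\mathcal{N}(v)}^{1}\right)\right),$$

where σ is a nonlinear activation function, $W_1$ is the weight matrix of the first layer, and CONCAT denotes vector concatenation.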

4) After all nodes have gone through parts (a) and (b) of step 3), each first-layer embedding is normalized:

$$h_v^{1} \leftarrow h_v^{1} / \|h_v^{1}\|_2, \quad \forall v \in V.$$

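5) For layers $2 \le k \le K$, steps 3) and 4) are repeated. The embedding of each node is

$$h_v^{k} = \sigma\left(W_k \cdot \mathrm{CONCAT}\left(h_v^{k-1}, h_{\mathcal{N}(v)}^{k}\right)\right),$$

and the general form of the aggregate function is

$$h_{\mathcal{N}(v)}^{k} = f_k\left(\{h_u^{k-1}, \forall u \in \mathcal{N}(v)\}\right).$$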

6) The embedding of node v after layer K is

$$z_v = h_v^{K}, \quad \forall v \in V.$$

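7) The parameters are trained iteratively to minimize the unsupervised loss

$$J_G(z_u) = -\log\left(\sigma\left(z_u^{\top} z_v\right)\right) - Q \cdot \mathbb{E}_{v_n \sim P_n(v)} \log\left(\sigma\left(-z_u^{\top} z_{v_n}\right)\right),$$

where σ is the sigmoid function, $z_u$ is the embedding of node u, $z_v$ is the embedding of a node v near u (one that co-occurs with u on a fixed-length random walk), Q is the number of negative samples (non-neighbor nodes) of u, and $v_n$ is drawn from the negative sampling distribution $P_n(v)$.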
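The steps above can be sketched as a forward pass in NumPy. This is a simplified, full-graph illustration under several assumptions: node embeddings are stored as rows (the transpose of the $m \times |V|$ convention used above), the mean aggregator is used, σ is ReLU, neighbors are uniformly subsampled per layer, and the weights are random rather than trained. The expectation in the loss is estimated with Q drawn negatives, so the Q·E term becomes a sum. Training itself (gradient descent on the loss) is omitted; in Section 4 it is handled by StellarGraph.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sage_layer(H, neighbors, W, num_samples, rng):
    """One GraphSAGE layer with a mean aggregator. H: |V| x d, rows are h_v^{k-1}."""
    rows = []
    for v, nbrs in enumerate(neighbors):
        nbrs = np.asarray(nbrs, dtype=int)
        if nbrs.size > num_samples:                       # uniform neighbor sampling
            nbrs = rng.choice(nbrs, size=num_samples, replace=False)
        h_nbr = H[nbrs].mean(axis=0) if nbrs.size else np.zeros(H.shape[1])
        rows.append(np.concatenate([H[v], h_nbr]))        # CONCAT(h_v^{k-1}, h_N(v)^k)
    Hk = np.maximum(np.stack(rows) @ W, 0.0)              # sigma = ReLU; W is 2d x d_out
    norms = np.linalg.norm(Hk, axis=1, keepdims=True)
    return Hk / np.maximum(norms, 1e-12)                  # h_v^k / ||h_v^k||_2

def graphsage_embed(X, neighbors, weights, num_samples, seed=0):
    """Apply K layers; returns z_v = h_v^K as the rows of the output."""
    rng = np.random.default_rng(seed)
    H = X
    for W, s in zip(weights, num_samples):
        H = sage_layer(H, neighbors, W, s, rng)
    return H

def unsupervised_loss(Z, u, v, negatives):
    """-log sigma(z_u.z_v) - sum over Q negatives of log sigma(-z_u.z_vn)."""
    pos = -np.log(sigmoid(Z[u] @ Z[v]))
    neg = -np.log(sigmoid(-(Z[negatives] @ Z[u]))).sum()
    return float(pos + neg)

# Toy usage: 8-node ring graph, 4 input features, two layers of widths 6 and 3.
rng = np.random.default_rng(0)
neighbors = [[(i - 1) % 8, (i + 1) % 8] for i in range(8)]
X = rng.standard_normal((8, 4))
weights = [rng.standard_normal((8, 6)), rng.standard_normal((12, 3))]
Z = graphsage_embed(X, neighbors, weights, num_samples=[2, 2])
loss = unsupervised_loss(Z, u=0, v=1, negatives=[4, 5])
```

Each layer's weight matrix has twice as many rows as the previous embedding width because of the concatenation step.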

A characteristic of GNNs is that they provide a learning procedure that efficiently identifies relationships between nodes under relatively relaxed restrictions and assumptions. The following applications of graphSAGE to extracting the default mode network show that it defines ROIs more clearly and yields more robust and reliable results than the traditional methods.

4. Data analysis

In this section, the methods described above are applied to two real data sets to extract the default mode network, and their results are compared.

Both data sets were obtained from the 1000 Functional Connectomes Project, an openly shared neuroimaging data resource (http://fcon_1000.projects.nitrc.org/fcpClassic/FcpTable.html).

4.1. Case one

The first data set contains 84 subjects (43 males and 41 females) aged 7 to 49 years. The repetition time (TR) is 2 s, each volume has 39 slices, and each scan has 192 time points.

Data preprocessing is an important step before any statistical analysis, and several software packages handle fMRI preprocessing. For this data set, DPABI (Yan et al., 2016), a MATLAB toolbox, is used, and the first ten time points are removed. The preprocessing pipeline is as follows: first, slice timing correction is performed; this is followed by realignment and co-registration between the T1-weighted and functional images; the Friston 24-parameter head motion model is used as covariates to reduce head motion effects (Friston et al., 1996); nuisance regression with a linear trend and the average cerebrospinal fluid (CSF) and white matter (WM) signals as regressors is used to reduce respiratory and cardiac effects; and finally, the images are normalized to the MNI template. Temporal band-pass filtering is set to 0.01 to 0.1 Hz, and spatial smoothing uses a 6 mm FWHM kernel.
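For readers reproducing the temporal steps outside DPABI, the sketch below drops the first ten volumes and applies an ideal (brick-wall) FFT band-pass of 0.01 to 0.1 Hz with TR = 2 s. The exact filter implementation inside DPABI is not described here, so this is an assumed, simplified stand-in; with TR = 2 s the Nyquist frequency is 0.25 Hz, so the pass band lies well within the sampled range.

```python
import numpy as np

def bandpass_fft(ts, tr, low=0.01, high=0.1):
    """Ideal band-pass filter along axis 0.

    ts: array (time points,) or (time points, voxels); tr: repetition time in seconds.
    """
    n = ts.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr)                  # Hz; Nyquist = 1 / (2 * tr)
    spec = np.fft.rfft(ts - ts.mean(axis=0), axis=0)  # remove the mean, then transform
    spec[(freqs < low) | (freqs > high)] = 0          # zero everything outside [low, high]
    return np.fft.irfft(spec, n=n, axis=0)

# Toy usage mirroring Case one: TR = 2 s, 192 volumes, first ten discarded.
tr = 2.0
raw = np.random.default_rng(0).standard_normal((192, 3))  # 3 toy voxels
ts = raw[10:]                                             # 182 remaining time points
filtered = bandpass_fft(ts, tr)
```

An ideal filter can introduce ringing at the band edges; smoother filters (for example Butterworth) are a common alternative.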

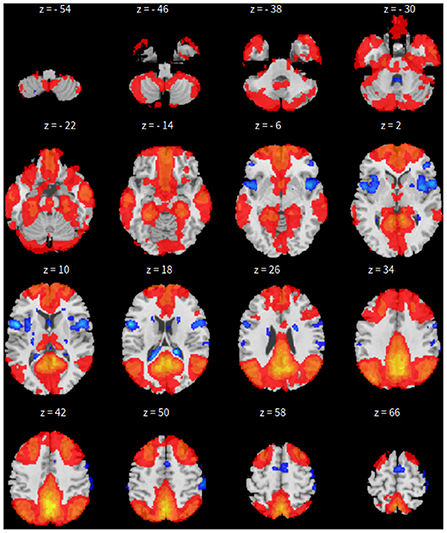

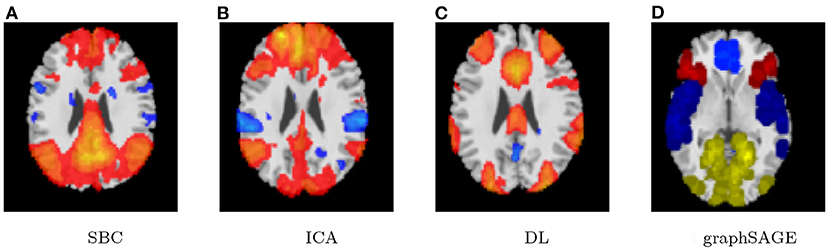

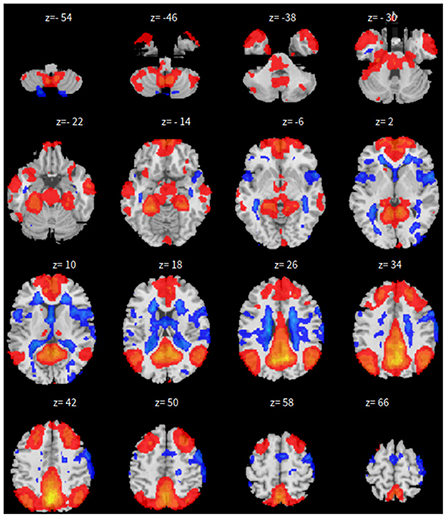

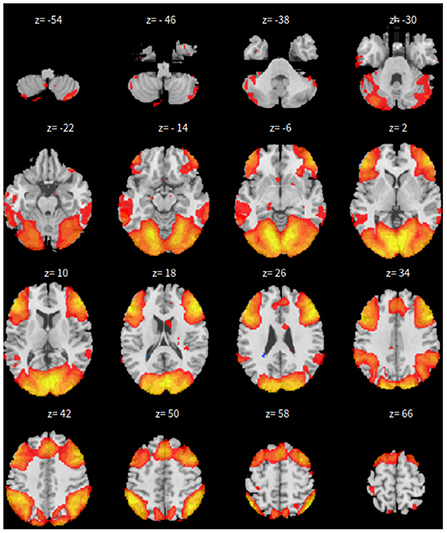

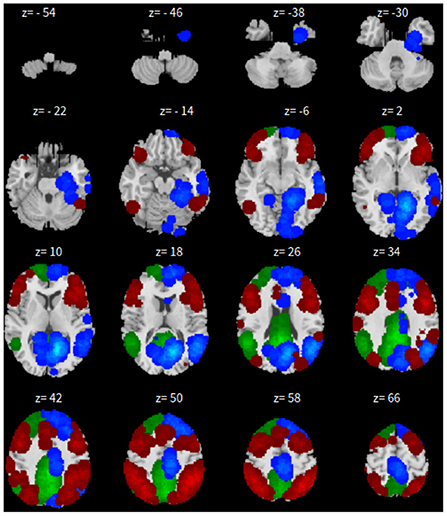

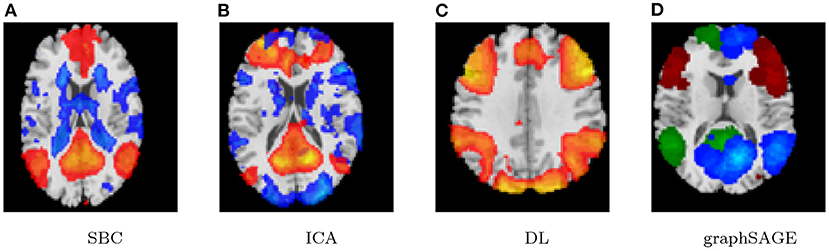

First, SBC is performed with the CONN toolbox (Whitfield-Gabrieli and Nieto-Castanon, 2012) in MATLAB. The voxel-level threshold is set to uncorrected p < 0.0001, and the family-wise error rate (FWER) is controlled at 0.01 to limit the probability of false positives. For a two-sided test with 83 degrees of freedom, this voxel threshold corresponds to |T| > 4.09. Figure 1 shows the results for a seed in the posterior cingulate cortex (PCC) of the default mode network.
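The |T| > 4.09 cutoff follows from the voxel-level p-value and the degrees of freedom. The sketch below assumes a one-sample t-test across subjects on Fisher z-transformed seed correlations, a common second-level approach; CONN's internal procedure and its FWER correction are not reproduced here. With 84 subjects (83 degrees of freedom) and a two-sided p < 0.0001, the critical value comes out at about 4.09.

```python
import numpy as np
from scipy import stats

def group_seed_tmap(r_maps, p_voxel=1e-4):
    """r_maps: subjects x voxels array of seed correlations (one SBC map per subject)."""
    z = np.arctanh(np.clip(r_maps, -0.999999, 0.999999))   # Fisher r-to-z transform
    t, _ = stats.ttest_1samp(z, popmean=0.0, axis=0)       # one-sample t-test per voxel
    df = r_maps.shape[0] - 1
    t_crit = stats.t.isf(p_voxel / 2, df)                  # two-sided critical value
    return t, t_crit, np.abs(t) > t_crit

# Toy usage: 84 subjects, 50 voxels; voxel 0 is consistently correlated with the seed.
rng = np.random.default_rng(0)
r_maps = rng.normal(0.0, 0.1, size=(84, 50))
r_maps[:, 0] += 0.5
t, t_crit, mask = group_seed_tmap(r_maps)
```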

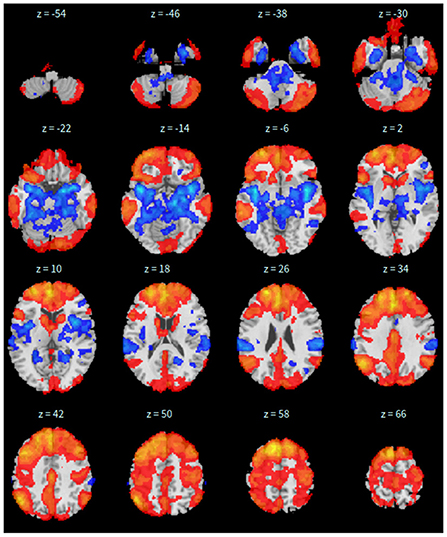

Second, group fastICA is also performed in CONN (Whitfield-Gabrieli and Nieto-Castanon, 2012), with the number of independent components set to 10. All other parameters are the same as for SBC. Figure 2 shows the results.

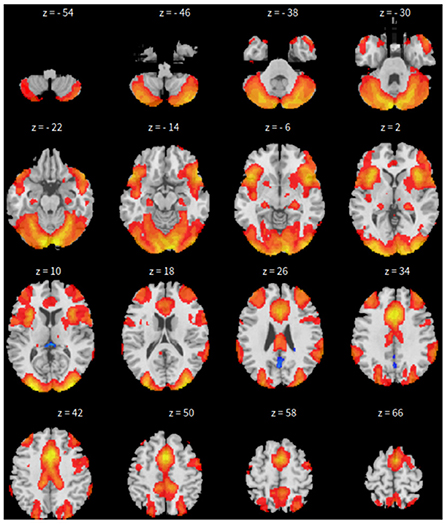

Third, dictionary learning is performed in Python with the Nilearn package (Abraham et al., 2014). As mentioned in Section 2.3, dictionary learning emphasizes sparsity. The number of components is set to 10 and the sparsity parameter to 15. Figure 3 shows the results.

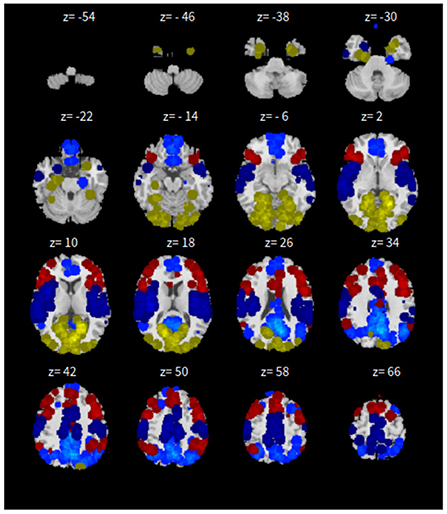

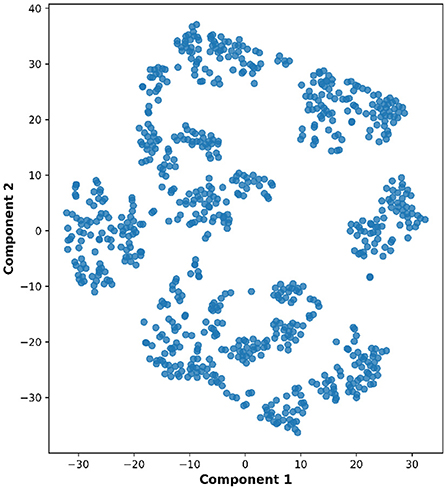

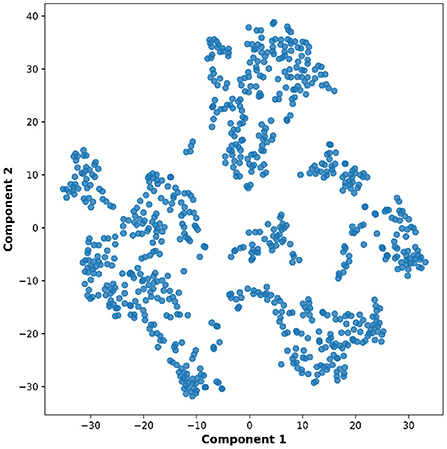

Lastly, the graph neural network method GraphSAGE is implemented with the StellarGraph package (Data61, 2018) in Python; see Figure 4. Because 800 brain ROIs are used, the graph has 800 nodes, and connecting every pair of nodes gives 800 × 799 / 2 = 319,600 edges. After the first ten volumes are removed, each subject has 182 time points, so each ROI of each subject has 182 values. For each subject, the correlation between every pair of ROIs is computed from these values, giving an 800 × 800 correlation matrix per subject and 84 correlation matrices in total. To reduce complexity and noise, all average correlation values below 0.1 are set to zero, and the associated edges are dropped. Each node's correlations are used as its features in the subsequent analysis. A two-layer graph network is used with the following settings: 10 walks per node; walk length 5; batch size 10; 50 sampled 1-hop neighbors and 10 sampled 2-hop neighbors per node; 200 hidden units in the first layer and 100 in the second; learning rate 0.01; 100 epochs; and dropout rate 0.15. After training, each node is represented by a 100-dimensional embedding vector. To visualize the node embeddings, PCA retaining 95% of the variance is applied, and the first two components are plotted; Figure 5 shows seven groups. The K-means clustering algorithm (Lloyd, 1982) is then applied to the principal components to group the ROIs into seven clusters. Figure 6 compares the DMN extracted by all algorithms.
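The post-processing of the embeddings (PCA keeping 95% of the variance, then K-means) can be sketched in NumPy as follows. The sketch assumes the embeddings are stored as a (nodes x dimensions) array; it uses Lloyd iterations as in Lloyd (1982), but the k-means++ seeding is our own addition for stability, and the synthetic well-separated clusters stand in for real node embeddings.

```python
import numpy as np

def pca_scores(Z, var_kept=0.95):
    """Project rows of Z onto the fewest principal components explaining var_kept."""
    Zc = Z - Z.mean(axis=0)
    _, s, Vt = np.linalg.svd(Zc, full_matrices=False)
    ratio = np.cumsum(s ** 2) / np.sum(s ** 2)        # cumulative explained variance
    q = int(np.searchsorted(ratio, var_kept) + 1)     # smallest q reaching var_kept
    return Zc @ Vt[:q].T                              # nodes x q scores

def kmeans(P, k, n_iter=100, seed=0):
    """Lloyd's K-means with k-means++ seeding (the seeding is an added assumption)."""
    rng = np.random.default_rng(seed)
    centers = [P[rng.integers(len(P))]]
    for _ in range(1, k):                             # k-means++: sample far-away points
        d2 = np.min(((P[:, None, :] - np.array(centers)[None]) ** 2).sum(axis=2), axis=1)
        centers.append(P[rng.choice(len(P), p=d2 / d2.sum())])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((P[:, None, :] - centers[None]) ** 2).sum(axis=2).argmin(axis=1)
        new = np.array([P[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers

# Toy usage: 120 "nodes" with 100-dimensional embeddings drawn around 3 centers.
rng = np.random.default_rng(0)
means = 100 * np.eye(3, 100)
Z = np.vstack([c + rng.standard_normal((40, 100)) for c in means])
P = pca_scores(Z, 0.95)
labels, _ = kmeans(P, k=3)
```

For the paper's setting, `k` would be 7 and `Z` the 800 x 100 embedding matrix; the first two columns of `P` give the kind of 2-D plot shown in Figure 5.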

4.2. Case two

The second data set contains 46 subjects (15 men and 31 women) aged 44 to 65 years. The repetition time (TR) is 2 s, each volume has 64 slices, and each scan has 175 time points.

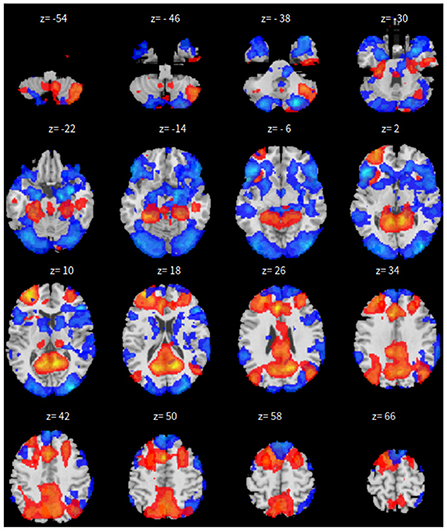

For this data set, the fMRIPrep package (Esteban et al., 2017) is used for preprocessing, and the first ten time points are removed. The preprocessing pipeline and the data processing procedure are analogous to those in Case one.

The main differences are that only 165 time points (175 minus the 10 removed) are available for computing the correlation between every pair of ROIs, and that there are 46 correlation matrices, one per subject, which are used to train the neural network. All other parameters are the same except the dropout rate, which is changed to 0.05 for this experiment. The results for SBC, fastICA, dictionary learning, and GraphSAGE are shown in Figures 7–10, respectively. The seven-cluster node embedding from GraphSAGE is shown in Figure 11, and Figure 12 compares the DMN extracted by the four algorithms.

5. Discussion and conclusion

Noninvasive neuroimaging techniques such as fMRI have broadened and deepened our understanding of brain development and function. Resting-state fMRI has gained popularity for studying the brain's functional architecture and assessing its neural networks because it measures resting-state functional connectivity across the whole brain without relying on any explicit task. It therefore offers an ideal means of examining possible changes in whole-brain network organization during development. Graph neural networks can be an effective framework for representation learning of functional connections. Farahani et al. (2019) provided a systematic review of applications of graph theory for identifying connectivity patterns in human brain networks. Machine learning on graph-structured network data has proliferated in a number of important applications. Graph-based network analysis reveals meaningful information about the topological architecture of brain networks and may provide novel insights into the biological mechanisms underlying human cognition, health, and disease. Many graph neural networks achieve state-of-the-art results on node and graph classification tasks, but the mathematical understanding of GNNs in general remains limited. Xu et al. (2019) presented a theoretical framework for analyzing the expressive power of GNNs to capture different graph structures.

As neuroimaging methods became more accurate, accumulating data suggested that activity during resting states follows a consistent pattern. The default mode network refers to the brain areas that are most active during these rest states (Raichle et al., 2001). Developmental research on the DMN has shown that brain development is characterized by reduced segregation (i.e., local clustering) between spatially adjacent regions and increased integration of distant regions (Fair et al., 2007; Ma and Ma, 2018). Knowledge of the normal development of brain connectivity, including the DMN and FC, could therefore also provide important insight into the emergence, course, and severity of development-related brain disorders.

GraphSAGE is a framework for inductive representation learning on large graphs. It generates low-dimensional vector representations of nodes and is especially useful for graphs with rich node attribute information. Oh et al. (2019) proposed a data-driven sampling algorithm, trained with reinforcement learning, to replace the subsampling step in graphSAGE. Transfer learning has proven successful for traditional deep learning problems, and Kooverjee et al. (2022) recently demonstrated that it is also effective with GNNs, comparing the performance of graph convolution networks (GCN) and graphSAGE.

In this paper, we proposed using a graph neural network to extract DMN functional connectivity from rs-fMRI data, evaluated on two data sets from the openly shared 1000 Functional Connectomes Project resource (http://fcon_1000.projects.nitrc.org/fcpClassic/FcpTable.html).

As this study shows, the seed-based method can find the default mode network in both cases, with a strong correlation to the preselected PCC seed. However, its results depend on the chosen seed, so other possible networks are often ignored. ICA and dictionary learning can also find the default mode network in both cases, but they also produce other networks that depend on prior assumptions; some of these networks are difficult to interpret, and the results usually depend on the number of components.

The proposed graph neural network technique, graphSAGE, extracted the functional connectivity of the DMN well in both rs-fMRI data experiments. Furthermore, compared with the seed-based method, ICA, and DL, graphSAGE gave more robust results. In addition, the three comparison methods require prior p-value thresholds for single-subject and group-level analysis, and ICA and dictionary learning also require the number of components to be fixed beforehand. Their results therefore depend on these prior assumptions and are more or less subjective. In contrast, graphSAGE can be applied under relaxed restrictions and assumptions, and it considers single-subject and group-level analysis simultaneously. It can therefore give more reliable results that depend less on subjective choices.

It is sometimes difficult to integrate the reported findings on pathological brain networks because results from different studies often disagree. More consistent comparisons should therefore be made across studies. In addition, future projects could use statistical learning methods for longitudinal high-dimensional data (Chen et al., 2014) and longitudinal study designs to monitor topological changes in brain networks under different therapeutic strategies over longer periods (Mears and Pollard, 2016).

Many existing studies characterize only static properties of FC patterns and ignore time-varying dynamic information. Yao et al. (2020) proposed a temporal-adaptive graph convolution network (TAGCN) to mine spatial and temporal information from rs-fMRI time series. Textual descriptive information in the data can help improve classification and prediction, but exploiting it requires natural language processing (NLP) tools. For instance, in a recent work, Xu et al. (2022) used BERT, a newly developed NLP tool, to incorporate textual data in predictive modeling, and Yao et al. (2019) used GCN for text classification. Incorporating textual data with GNNs or GCNs in fMRI data analysis could be a challenging but promising direction.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: 1000 Functional Connectomes Project (FCP), http://fcon_1000.projects.nitrc.org/fcpClassic/FcpTable.html.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the patients/participants or patients/participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

DW mainly carried out the computing tasks and implemented the algorithm. All authors contributed equally to the design of the research, to the analysis of the results, and to the writing of the manuscript.

Acknowledgments

The authors gratefully thank the editor and referees for their constructive comments and suggestions, which helped improve the quality of the paper. DH acknowledges NIA award support from MTSU.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abraham, A., Pedregosa, F., Eickenberg, M., Gervais, P., Mueller, A., Kossaifi, J., et al. (2014). Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 8, 14. doi: 10.3389/fninf.2014.00014

Aharon, M., Elad, M. (2008). Sparse and redundant modeling of image content using an image-signature-dictionary. SIAM J. Imaging Sci. 1, 228–247. doi: 10.1137/07070156X

Aharon, M., Elad, M., Bruckstein, A. (2006). K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54, 4311–4322. doi: 10.1109/TSP.2006.881199

Amaro Jr, E., Barker, G. J. (2006). Study design in fMRI: basic principles. Brain Cogn. 60, 220–232. doi: 10.1016/j.bandc.2005.11.009

Andrews-Hanna, J. R., Smallwood, J., Spreng, R. N. (2014). The default network and self-generated thought: component processes, dynamic control, and clinical relevance. Ann. N. Y. Acad. Sci. 1316, 29. doi: 10.1111/nyas.12360

Beckmann, C. F., DeLuca, M., Devlin, J. T., Smith, S. M. (2005). Investigations into resting-state connectivity using independent component analysis. Philos. Trans. R. Soc. B Biol. Sci. 360, 1001–1013. doi: 10.1098/rstb.2005.1634

Beckmann, C. F., Mackay, C. E., Filippini, N., Smith, S. M. (2009). Group comparison of resting-state fMRI data using multi-subject ICA and dual regression. Neuroimage 47(Suppl. 1), S148. doi: 10.1016/S1053-8119(09)71511-3

Bell, A. J., Sejnowski, T. J. (1995). An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7, 1129–1159. doi: 10.1162/neco.1995.7.6.1129

Bell, P. T., Shine, J. M. (2015). Estimating large-scale network convergence in the human functional connectome. Brain Connect. 5, 565–574. doi: 10.1089/brain.2015.0348

Biswal, B., Zerrin Yetkin, F., Haughton, V. M., Hyde, J. S. (1995). Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magn. Reson. Med. 34, 537–541. doi: 10.1002/mrm.1910340409

Brown, G. D., Yamada, S., Sejnowski, T. J. (2001). Independent component analysis at the neural cocktail party. Trends Neurosci. 24, 54–63. doi: 10.1016/S0166-2236(00)01683-0

Buckner, R. L., Andrews-Hanna, J. R., Schacter, D. L. (2008). The brain's default network: anatomy, function, and relevance to disease. Ann. N. Y. Acad. Sci. 1124, 1–38. doi: 10.1196/annals.1440.011

Butts, C. T. (2008). Social network analysis: a methodological introduction. Asian J. Soc. Psychol. 11, 13–41. doi: 10.1111/j.1467-839X.2007.00241.x

Calhoun, V. D., Adali, T., Pearlson, G., Pekar, J. J. (2001). Spatial and temporal independent component analysis of functional MRI data containing a pair of task-related waveforms. Hum. Brain Mapp. 13, 43–53. doi: 10.1002/hbm.1024

Canario, E., Chen, D., Biswal, B. (2021). A review of resting-state fMRI and its use to examine psychiatric disorders. Psychoradiology 1, 42–53. doi: 10.1093/psyrad/kkab003

Chen, S., Grant, E., Wu, T. T., Bowman, F. D. (2014). Some recent statistical learning methods for longitudinal high-dimensional data. Wiley Interdisc. Rev. Comput. Stat. 6, 10–18. doi: 10.1002/wics.1282

Chenji, S., Jha, S., Lee, D., Brown, M., Seres, P., Mah, D., et al. (2016). Investigating default mode and sensorimotor network connectivity in amyotrophic lateral sclerosis. PLoS ONE 11, e0157443. doi: 10.1371/journal.pone.0157443

Cole, D. M., Smith, S. M., Beckmann, C. F. (2010). Advances and pitfalls in the analysis and interpretation of resting-state fMRI data. Front. Syst. Neurosci. 4, 8. doi: 10.3389/fnsys.2010.00008

Daliri, M. R., Behroozi, M. (2014). Advantages and disadvantages of resting state functional connectivity magnetic resonance imaging for clinical applications. OMICS J. Radiol. 3, e123. doi: 10.4172/2167-7964.1000e123

Damoiseaux, J. S., Rombouts, S., Barkhof, F., Scheltens, P., Stam, C. J., Smith, S. M., et al. (2006). Consistent resting-state networks across healthy subjects. Proc. Natl. Acad. Sci. U.S.A. 103, 13848–13853. doi: 10.1073/pnas.0601417103

CSIRO's Data61 (2018). StellarGraph Machine Learning Library. Available online at: https://github.com/stellargraph/stellargraph.

De Luca, M., Beckmann, C. F., De Stefano, N., Matthews, P. M., Smith, S. M. (2006). fMRI resting state networks define distinct modes of long-distance interactions in the human brain. Neuroimage 29, 1359–1367. doi: 10.1016/j.neuroimage.2005.08.035

de Oliveira, B. G., Alves Filho, J. O., Esper, N. B., de Azevedo, D. F. G., Franco, A. R. (2019). “Automated mapping of sensorimotor network for resting state fMRI data with seed-based correlation analysis,” in XXVI Brazilian Congress on Biomedical Engineering (Armação de Buzios: Springer), 537–544.

Esteban, O., Blair, R., Markiewicz, C. J., Berleant, S. L., Moodie, C., Ma, F., et al. (2017). poldracklab/fmriprep: 1.0.0-rc5. Zenodo. doi: 10.5281/zenodo.996169

Fair, D., Dosenbach, N. U. F., Church, J., Cohen, A. L., Brahmbhatt, S., Miezin, F. M., et al. (2007). Development of distinct control networks through segregation and integration. Proc. Natl. Acad. Sci. U.S.A. 104, 13507–13512. doi: 10.1073/pnas.0705843104

Farahani, F., Karwowski, W., Lighthall, N. (2019). Application of graph theory for identifying connectivity patterns in human brain networks: a systematic review. Front. Neurosci. 13, 585. doi: 10.3389/fnins.2019.00585

Fox, M. D., Corbetta, M., Snyder, A. Z., Vincent, J. L., Raichle, M. E. (2006). Spontaneous neuronal activity distinguishes human dorsal and ventral attention systems. Proc. Natl. Acad. Sci. U.S.A. 103, 10046–10051. doi: 10.1073/pnas.0604187103

Fox, M. D., Raichle, M. E. (2007). Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat. Rev. Neurosci. 8, 700–711. doi: 10.1038/nrn2201

Fox, M. D., Snyder, A. Z., Vincent, J. L., Corbetta, M., Van Essen, D. C., Raichle, M. E. (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc. Natl. Acad. Sci. U.S.A. 102, 9673–9678. doi: 10.1073/pnas.0504136102

Friston, K. J., Williams, S., Howard, R., Frackowiak, R. S., Turner, R. (1996). Movement-related effects in fMRI time-series. Magn. Reson. Med. 35, 346–355. doi: 10.1002/mrm.1910350312

Greicius, M. D., Krasnow, B., Reiss, A. L., Menon, V. (2003). Functional connectivity in the resting brain: a network analysis of the default mode hypothesis. Proc. Natl. Acad. Sci. U.S.A. 100, 253–258. doi: 10.1073/pnas.0135058100

Hamilton, W., Ying, Z., Leskovec, J. (2017). “Inductive representation learning on large graphs,” in Advances in Neural Information Processing Systems (Long Beach, CA), 1024–1034.

Hendrikx, D., Smits, A., Lavanga, M., De Wel, O., Thewissen, L., Caicedo, A., et al. (2019). Measurement of neurovascular coupling in neonates. Front. Physiol. 10, 65. doi: 10.3389/fphys.2019.00065

Hutton, J. S., Dudley, J., Horowitz-Kraus, T., DeWitt, T., Holland, S. K. (2019). Functional connectivity of attention, visual, and language networks during audio, illustrated, and animated stories in preschool-age children. Brain Connect. 9, 580–592. doi: 10.1089/brain.2019.0679

Hyvärinen, A., Oja, E. (2000). Independent component analysis: algorithms and applications. Neural Networks 13, 411–430. doi: 10.1016/S0893-6080(00)00026-5

Joneidi, M. (2019). Functional brain networks discovery using dictionary learning with correlated sparsity. arXiv preprint arXiv:1907.03929. doi: 10.48550/arXiv.1907.03929

Kooverjee, N., James, S., van Zyl, T. (2022). Investigating transfer learning in graph neural networks. arXiv:2022.00740v1 [cs.LG]. doi: 10.20944/preprints202201.0457.v1

Kosaraju, V., Sadeghian, A., Martín-Martín, R., Reid, I., Rezatofighi, H., Savarese, S. (2019). “Social-BiGAT: multimodal trajectory forecasting using Bicycle-GAN and graph attention networks,” in Neural Information Processing Systems 32 (Vancouver, BC).

Kreutz-Delgado, K., Murray, J. F., Rao, B. D., Engan, K., Lee, T.-W., Sejnowski, T. J. (2003). Dictionary learning algorithms for sparse representation. Neural Comput. 15, 349–396. doi: 10.1162/089976603762552951

Lee, H., Battle, A., Raina, R., Ng, A. Y. (2007). “Efficient sparse coding algorithms,” in Advances in Neural Information Processing Systems (Vancouver, BC), 801–808.

Lee, M. H., Smyser, C. D., Shimony, J. S. (2013). Resting-state fMRI: a review of methods and clinical applications. Am. J. Neuroradiol. 34, 1866–1872. doi: 10.3174/ajnr.A3263

Lei, X., Wang, Y., Yuan, H., Mantini, D. (2014). Neuronal oscillations and functional interactions between resting state networks. Hum. Brain Mapp. 35, 3517–3528. doi: 10.1002/hbm.22418

Lloyd, S. (1982). Least squares quantization in PCM. IEEE Trans. Inf. Theory 28, 129–137. doi: 10.1109/TIT.1982.1056489

Ma, Z., Ma, Y., Zhang, N. (2018). Development of brain-wide connectivity architecture in awake rats. Neuroimage 176, 380–389. doi: 10.1016/j.neuroimage.2018.05.009

Mairal, J., Bach, F., Ponce, J., Sapiro, G. (2009). “Online dictionary learning for sparse coding,” in Proceedings of the 26th Annual International Conference on Machine Learning (Montreal, QC), 689–696.

McKeown, M. J., Makeig, S., Brown, G. G., Jung, T.-P., Kindermann, S. S., Bell, A. J., et al. (1998). Analysis of fMRI data by blind separation into independent spatial components. Hum. Brain Mapp. 6, 160–188. doi: 10.1002/(SICI)1097-0193(1998)6:3<160::AID-HBM5>3.0.CO;2-1

Mears, D., Pollard, H. B. (2016). Network science and the human brain: using graph theory to understand the brain and one of its hubs, the amygdala, in health and disease. J. Neurosci. Res. 94, 590–605. doi: 10.1002/jnr.23705

Morris, T., Kucyi, A., Anteraper, S., Geddes, M. R., Nieto-Castañon, A., Burzynska, A., et al. (2022). Resting state functional connectivity provides mechanistic predictions of future changes in sedentary behavior. Scientific Rep. 12, 940. doi: 10.1038/s41598-021-04738-y

Oh, J., Cho, K., Bruna, J. (2019). Advancing GraphSAGE with a data-driven node sampling. arXiv:1904.12935v1 [cs.LG]. doi: 10.48550/arXiv.1904.12935

Olshausen, B. A., Field, D. J. (1996). Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381, 607–609. doi: 10.1038/381607a0

Olshausen, B. A., Field, D. J. (1997). Sparse coding with an overcomplete basis set: a strategy employed by V1? Vision Res. 37, 3311–3325. doi: 10.1016/S0042-6989(97)00169-7

Pan, S., Hu, R., Long, G., Jiang, J., Yao, L., Zhang, C. (2018). Adversarially regularized graph autoencoder for graph embedding. arXiv preprint arXiv:1802.04407. doi: 10.24963/ijcai.2018/362

Power, J. D., Cohen, A. L., Nelson, S. M., Wig, G. S., Barnes, K. A., Church, J. A., et al. (2011). Functional network organization of the human brain. Neuron 72, 665–678. doi: 10.1016/j.neuron.2011.09.006

Raichle, M., MacLeod, A., Snyder, A., Powers, W., Gusnard, D., Shulman, G. (2001). A default mode of brain function. Proc. Natl. Acad. Sci. U.S.A. 98, 676–682. doi: 10.1073/pnas.98.2.676

Seeley, W. W., Menon, V., Schatzberg, A. F., Keller, J., Glover, G. H., Kenna, H., et al. (2007). Dissociable intrinsic connectivity networks for salience processing and executive control. J. Neurosci. 27, 2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007

Shafiei, G., Zeighami, Y., Clark, C. A., Coull, J. T., Nagano-Saito, A., Leyton, M., et al. (2019). Dopamine signaling modulates the stability and integration of intrinsic brain networks. Cereb. Cortex 29, 397–409. doi: 10.1093/cercor/bhy264

Shimony, J. S., Zhang, D., Johnston, J. M., Fox, M. D., Roy, A., Leuthardt, E. C. (2009). Resting-state spontaneous fluctuations in brain activity: a new paradigm for presurgical planning using fMRI. Acad. Radiol. 16, 578–583. doi: 10.1016/j.acra.2009.02.001

Smith, S. M., Fox, P. T., Miller, K. L., Glahn, D. C., Fox, P. M., Mackay, C. E., et al. (2009). Correspondence of the brain's functional architecture during activation and rest. Proc. Natl. Acad. Sci. U.S.A. 106, 13040–13045. doi: 10.1073/pnas.0905267106

Stone, J. V. (2004). Independent Component Analysis: A Tutorial Introduction. MIT Press. doi: 10.7551/mitpress/3717.001.0001

Tharwat, A. (2016). Principal component analysis: an overview. Int. J. Appl. Pattern Recognit. 3, 197–240. doi: 10.1504/IJAPR.2016.10000630

Thomas Yeo, B., Krienen, F. M., Sepulcre, J., Sabuncu, M. R., Lashkari, D., Hollinshead, M., et al. (2011). The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 106, 1125–1165. doi: 10.1152/jn.00338.2011

van den Heuvel, M., Mandl, R., Hulshoff Pol, H. (2008). Normalized cut group clustering of resting-state fMRI data. PLoS ONE 3, e2001. doi: 10.1371/journal.pone.0002001

van den Heuvel, M. P., Stam, C. J., Boersma, M., Hulshoff Pol, H. E. (2008). Small-world and scale-free organization of voxel-based resting-state functional connectivity in the human brain. Neuroimage 43, 528–539. doi: 10.1016/j.neuroimage.2008.08.010

Vincent, J. L., Kahn, I., Snyder, A. Z., Raichle, M. E., Buckner, R. L. (2008). Evidence for a frontoparietal control system revealed by intrinsic functional connectivity. J. Neurophysiol. 100, 3328–3342. doi: 10.1152/jn.90355.2008

Vossel, S., Geng, J. J., Fink, G. R. (2014). Dorsal and ventral attention systems: distinct neural circuits but collaborative roles. Neuroscientist 20, 150–159. doi: 10.1177/1073858413494269

Whitfield-Gabrieli, S., Nieto-Castanon, A. (2012). CONN: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2, 125–141. doi: 10.1089/brain.2012.0073

Xu, K., Hu, W., Leskovec, J., Jegelka, S. (2019). How powerful are graph neural networks? arXiv:1810.00826v3 [cs.LG]. doi: 10.48550/arXiv.1810.00826

Xu, S., Zhang, C., Hong, D. (2022). BERT-based NLP techniques for classification and severity modeling in basic warranty data study. Insurance Math. Econ. 107, 57–67. doi: 10.1016/j.insmatheco.2022.07.013

Yan, C.-G., Wang, X.-D., Zuo, X.-N., Zang, Y.-F. (2016). DPABI: data processing & analysis for (resting-state) brain imaging. Neuroinformatics 14, 339–351. doi: 10.1007/s12021-016-9299-4

Yao, D., Sui, J., Wang, M., Yang, E., Jiaerken, Y., Luo, N., et al. (2021). A mutual multi-scale triplet graph convolutional network for classification of brain disorders using functional or structural connectivity. IEEE Trans. Med. Imaging 40, 1279–1289. doi: 10.1109/TMI.2021.3051604

Yao, D., Sui, J., Yang, E., Yap, P.-T., Shen, D., Liu, M. (2020). “Temporal-adaptive graph convolutional network for automated identification of major depressive disorder using resting-state fMRI,” in International Workshop on Machine Learning in Medical Imaging (Lima: Springer), 1–10.

Yao, L., Mao, C., Luo, Y. (2019). Graph convolutional networks for text classification. Proc. AAAI Conf. Artif. Intell. 33, 7370–7377. doi: 10.1609/aaai.v33i01.33017370

Keywords: default mode network (DMN), graph neural network, graphSAGE, independent component analysis (ICA), resting-state fMRI (rs-fMRI)

Citation: Wang D, Wu Q and Hong D (2022) Extracting default mode network based on graph neural network for resting state fMRI study. Front. Neuroimaging 1:963125. doi: 10.3389/fnimg.2022.963125

Received: 07 June 2022; Accepted: 08 August 2022;

Published: 07 September 2022.

Edited by:

Shuo Chen, University of Maryland, United States

Reviewed by:

Fengqing Zhang, Drexel University, United States
Dongren Yao, Harvard Medical School, United States

Copyright © 2022 Wang, Wu and Hong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Don Hong, don.hong@mtsu.edu
