ORIGINAL RESEARCH article

Front. Neuroergonomics, 14 July 2023

Sec. Neurotechnology and Systems Neuroergonomics

Volume 4 - 2023 | https://doi.org/10.3389/fnrgo.2023.1189179

This article is part of the Research Topic: Neurotechnology for sensing the brain out of the lab: methods and applications for mobile functional neuroimaging

We have all experienced the sense of time slowing down when we are bored or speeding up when we are focused, engaged, or excited about a task. In virtual reality (VR), perception of time can be a key aspect related to flow, immersion, engagement, and ultimately, to overall quality of experience. While several studies have explored changes in time perception using questionnaires, few studies have attempted to characterize them objectively. In this paper, we propose the use of a multimodal biosensor-embedded VR headset capable of measuring electroencephalography (EEG), electrooculography (EOG), electrocardiography (ECG), and head movement data while the user is immersed in a virtual environment. Eight gamers were recruited to play a commercial action game comprising puzzle-solving tasks and first-person shooting and combat. After gameplay, ratings were given across multiple dimensions, including (1) the perception of time flowing differently than usual and (2) the gamers losing sense of time. Several features were extracted from the biosignals, ranked based on a two-step feature selection procedure, and then mapped to a predicted time perception rating using a Gaussian process regressor. Top features were found to come from all four signal modalities, and the two regressors, one for each time perception scale, were shown to achieve results significantly better than chance. An in-depth analysis of the top features is presented with the hope that the insights can be used to inform the design of more engaging and immersive VR experiences.

The use of virtual reality (VR) has increased significantly in recent years due to its numerous applications in various fields such as gaming, education, training, and therapy (Xie et al., 2021; Ding and Li, 2022). VR provides immersive and interactive environments that can simulate different scenarios and offer the user a unique experience. As new VR technologies emerge, the measurement of the quality of immersive experiences has become crucial (Perkis et al., 2020). For example, researchers and developers have become interested in understanding the psychological and physiological factors that influence the users' immersive quality of experiences, such as the sense of presence, immersion, engagement, and perception of time (Moinnereau et al., 2022a). While substantial work has been reported on presence, immersion, and engagement (e.g., Berkman and Akan, 2019; Muñoz et al., 2020; Rogers et al., 2020), very little work has been presented to date on characterizing the user's perception of time and the role it has on overall immersive media quality of experience.

Time perception is a complex cognitive process that allows individuals to estimate the duration and timing of events. It plays a crucial role in several aspects of human life, such as decision-making, memory, and attention (Wittmann and Paulus, 2008; Block and Gruber, 2014). Measuring a user's perception of time in VR can be challenging due to potential confounds with sense of presence and flow (Mullen and Davidenko, 2021). Existing methods of measuring time perception in VR commonly rely on self-reports and questionnaires, such as the Metacognitive Questionnaire on Time (Lamotte et al., 2014). While these methods have provided valuable insights into the mechanisms of time perception, they have several limitations. For instance, they are prone to response biases and are influenced by various cognitive and contextual factors, such as attention and arousal (Askim and Knardahl, 2021). Furthermore, these methods may not capture the dynamic nature of time perception, as they depend on the individual's ability to accurately perceive and report time. Moreover, questionnaires are often presented post-experiment, thus providing little insight for adapting the environment during the experiment to maximize the user experience.

Recently, there has been a push to use wearables and biosignals to measure, in real-time, cognitive and affective states of users while immersed in VR experiences (Moinnereau et al., 2022b,c,d). For example, electroencephalography (EEG) signals have been used to study engagement correlates within virtual reality experiences (Muñoz et al., 2020; Rogers et al., 2020). Electrocardiograms (ECG) have been used to uncover the relationship between valence and heart rate variability (HRV) (Maia and Furtado, 2019; Abril et al., 2020), while the research by Lopes et al. (2022) has established connections between relaxation, heart rate, and breathing patterns while the user is immersed in a virtual forest. Electrodermal activity (EDA), in turn, has been investigated to gain insights into the user's sense of presence and relaxation in virtual environments (Terkildsen and Makransky, 2019; Salgado et al., 2022). Furthermore, other studies have explored head movements to better comprehend users' reported levels of valence and arousal, as well as emotional states (Li et al., 2017; Xue et al., 2021). Eye movement has also been examined to assess factors such as immersion, concentration levels, and cybersickness in VR settings (Ju et al., 2019; Chang et al., 2021). The work by Moinnereau et al. (2022d) explored several neuro-psychological features as correlates of different user emotional states, engagement levels, and arousal-valence dimensions.

Despite all of these advances, limited research exists on the use of biosignals to monitor correlates of time perception while the user is immersed in VR. This is precisely the gap that the current study aims to address. In particular, two research questions are addressed: (1) what modalities and features provide the most important cues for time perception modeling and (2) how well can we objectively measure time perception. To achieve this goal, we build on the work from Moinnereau et al. (2022d) and show the importance of different modalities, including features extracted from EEG, eye gaze patterns derived from electro-oculography (EOG) signals, heart rate measures computed from ECG, and head movement information extracted from tri-axis accelerometer signals from the headset, for time perception monitoring. It is important to emphasize that given the exploratory nature of this study and the limited number of participants, our findings should be considered preliminary and indicative of the feasibility for objective time perception monitoring. Despite these limitations, this study contributes to the development of new methods to monitor time perception from biosignals, thus opening the door for future adaptive systems that maximize user experience on a per-user basis.

The remainder of this paper is organized as follows: Section 2 provides a review of the relevant literature in the field. Section 3 delves into the experimental procedures and details the bio-signal feature extraction pipelines utilized in this study. Section 4 presents the experimental results and contextualizes them within existing works. Finally, Section 5 offers concluding remarks.

Previous studies have investigated the connection between the brain and time perception, revealing that the frontal and parietal cortices, basal ganglia, cerebellum, and hippocampus are critical brain regions involved in the perception of time (Fontes et al., 2016). Specifically, the right dorsolateral prefrontal cortex has been identified as a significant region involved in time perception. EEG, a non-invasive technique that measures the electrical activity of the brain, has been a common method used to study the neural correlates of temporal processing. It is important to note that time perception involves various psychological constructs, including attention, engagement, arousal, and even the influence of emotional stimuli. Each of these factors can modulate our perception of time in different ways, making time perception a multifaceted and dynamic process (Buhusi and Meck, 2005; Zakay, 2014). This complexity is reflected in the diverse range of methods and measures used to study time perception, as we will discuss in the following sections.

The work of Johari et al. (2023) examined semantic processing of time using EEG and found that the right parietal electrodes showed an event-related potential (ERP) response specific to the perception of event duration, with stronger alpha/beta band desynchronization. The work of Vallet et al. (2019) investigated the mechanisms involved in time perception related to emotional stimuli with equal valence and arousal levels using electrophysiological data. The results show that the ability to estimate time is influenced by various cognitive and emotional factors. Specifically, valence and arousal can modulate time perception, thus altering perceived duration. This highlights the importance of considering these factors when studying time perception, and the need for measures that can capture these dynamics.

In the work of Silva et al. (2022), in turn, the effects of oral bromazepam (a drug used for short-term treatment of anxiety by generating calming effects) on time perception were explored. The study monitored the EEG alpha asymmetry in electrodes associated with the frontal and motor cortex. The study found that bromazepam modulated the EEG alpha asymmetry in cortical areas during time judgment, with greater left hemispheric dominance during a time perception task. Moreover, the study of Ghaderi et al. (2018) explored the use of EEG absolute power and coherence as neural correlates of time perception. They found that participants who overestimated time exhibited lower activity in the beta band (18–30 Hz) at several electrode sites. The study suggested that although beta amplitude in central regions is important for timing mechanisms, its role may be more complex than previously thought. Lastly, the work by Kononowicz and van Rijn (2015) investigated correlates of time perception and reported on the importance of beta and theta frequency subbands.

Moreover, eye movements have become a valuable tool to investigate temporal processing and its relation to attention and eye gaze dynamics. Recent studies, for example, have highlighted the close link between eye movement and time perception, revealing that time compression could be due to the lack of catch-up saccades (Huang et al., 2022). Other works have linked saccade and microsaccade misperceptions (Yu et al., 2017), as well as their role in visual attention and, consequently, on time perception (Cheng and Penney, 2015). Finally, it has been reported that when short intervals between two successive perisaccadic visual stimuli are underestimated, a compression of time is perceived (Morrone et al., 2005). These findings emphasize the importance of eye movements in understanding temporal processing, its connection to visual perception, and how our perception of time is influenced not only by our cognitive and emotional state but also by our visual attention and gaze dynamics.

Physiological measures, such as heart rate variability (HRV) and skin conductance, have also been used to explore the mechanisms underlying time perception. A study found that low-frequency components of HRV were associated with less accurate time perception, suggesting that the autonomic nervous system function may play a crucial role in temporal processing (Fung et al., 2017). Another study showed that increased HRV was linked to higher temporal accuracy (Cellini et al., 2015). Additionally, changes in the sympathetic nervous system (SNS) activity have been found to affect time perception, with research showing that increased SNS activity, indicated by elevated heart rate and frequency of phasic skin conductance response, was linked to the perception of time-passing more quickly (Ogden et al., 2022). These findings highlight the importance of physiological measures in understanding the complex interaction between the body and time perception.

As can be seen, while numerous studies exist exploring the use of psychophysiological measures to characterize the perception of time, their measurement in virtual reality has remained a relatively unexplored area of research. Perception of time in VR has relied mostly on participant-provided ratings of judgement of time, usually provided post-experiment (Volante et al., 2018). Being able to track time perception objectively in real time could be extremely useful for immersive experiences. While time compression has been linked with high levels of engagement and attentional resources (Read et al., 2022), time elongation could also be linked to boredom (Igarzábal et al., 2021). As such, time perception monitoring could be extremely useful for user experience assessment. In the next section, the materials and method used to achieve this goal are described.

In this section, we detail the experimental protocol followed, including the dataset used, signal pre-processing, feature extraction and selection methods, and the regression method used.

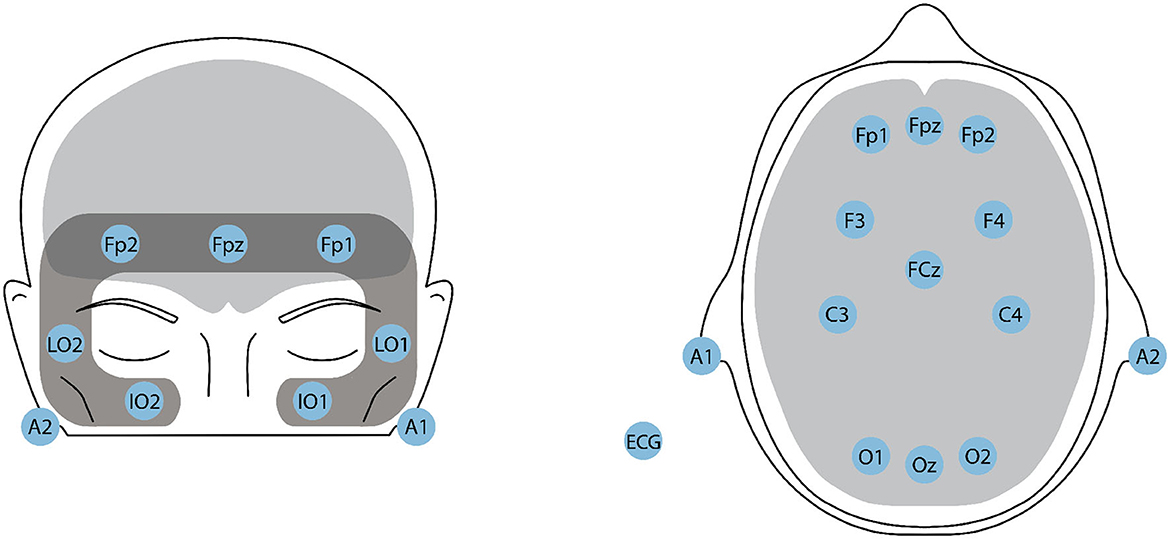

The experimental protocol followed in this study was designed to ensure the collection of high-quality data while minimizing experimenter intervention. The dataset used in this study has been described in detail by Moinnereau et al. (2022d). Here, we provide a summary of the data and the experimental procedure; the interested reader is referred to Moinnereau et al. (2022d) for more details. An instrumented headset was created following advice by Cassani et al. (2020); Figure 1 (left) shows the instrumented HTC VIVE Pro Eye headset equipped with 16 ExG sensors. All data were recorded using the OpenBCI system (OpenBCI, United States) with a sampling rate of 125 Hz, ensuring synchronization across all signals. Data were collected during the COVID-19 lockdown. The instrumented headset along with the necessary accessories (e.g., laptops, controllers) was dropped off at participants' homes and later picked up and sanitized following protocols approved by the authors' institution. Eight participants (five male and three female, 28.9 ± 2.9 years of age), all of whom were students at the authors' institution, consented to participate in the experiment, which involved playing the VR game Half-Life: Alyx, as shown in Figure 1 (right). The game is a first-person shooter combining elements of exploration, puzzle-solving, combat, and story. During the action parts, players need to get supplies, use interfaces, throw objects, and engage in combat.

Figure 1. (Left) 16 ExG biosensor-instrumented VR headset used to collect data; (Right) Participant's view of a scene played in the VR game Half-Life: Alyx.

Participants were given instructions remotely (via videoconference) on how to place the headset and minimal experimenter intervention was needed. The experimenter would show participants how to assess signal quality utilizing in-house developed software. When signal quality was deemed acceptable, participants could play the game at their own pace and the videoconference call was terminated. In the experiment, participants engaged in two distinct tasks, referred to as “baseline” and “fighting/shooting.” The “baseline” task corresponds to the initial two chapters of the game (~30 min of gameplay), during which the player becomes familiar with the game storyline, learns navigation techniques, and practices manipulating in-game objects. The “fighting/shooting” task, which follows the “baseline” task, involves more complex gameplay, including puzzle-solving and combat challenges (~1 h of gameplay). Participants played on average a total of 1.5 h, resulting in close to 10 h of physiological data recordings. At the end of each condition, participants responded to the unified experience questionnaire that combines items compiled from 10 different scales, including sense of presence, engagement, immersion, flow, usability, skill, emotion, cybersickness, judgement, and technology adoption, all using a 10-point Likert scale (Tcha-Tokey et al., 2016). The work by Moinnereau et al. (2022d) compared the changes reported by the participants between the baseline and the fighting/shooting tasks. Here, we combine both tasks and explore the use of the biosignals in monitoring the two ratings provided to two questions related to time perception: Q1—“Time seemed to flow differently than usual” and Q2—“I lost the sense of time”.

To answer our first research question, we extracted several features from the EEG, EOG, ECG, and accelerometer data. These features were selected based on their potential relevance to time perception and cognitive processing in VR. For a comprehensive understanding of our methodology, including feature extraction and subsequent steps, please refer to the processing pipeline illustrated in Figure 2. In the following sections, we provide a detailed description of the different features we used and how they were extracted from the physiological signals. All of these features are summarized in Table 1.

In this study, the roughly 1.5 h of data collected per subject was divided into 5-min windows. To prepare the EEG signals for feature extraction, we first applied a finite impulse response band-pass filter with a range of 0.5–45 Hz using the EEGLAB toolbox in MATLAB. Next, we utilized the artifact subspace reconstruction (ASR) algorithm, from the EEGLAB plugin with default parameters, to enhance the signal quality and remove motion artifacts (Mullen et al., 2015). These parameters were shown in our previous work (Moinnereau et al., 2022d) to accurately remove artifacts from the EEG signals. This pre-processing step ensured that the EEG signals were of high quality and suitable for feature extraction. Note that the initial loading time of the game, before the beginning of each game session, was used as the reference for calibrating the ASR algorithm for each participant. Lastly, each 5-min window was further segmented into 2-s epochs with a 50% overlap for feature extraction.
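The epoching step can be illustrated with a minimal sketch. It assumes the 125 Hz sampling rate stated earlier; the function name `epoch_bounds` and its parameters are hypothetical and are not taken from the authors' MATLAB pipeline.

```python
def epoch_bounds(n_samples, fs=125, epoch_s=2.0, overlap=0.5):
    """Return (start, stop) sample indices of overlapping epochs.

    Assumption: epochs that would run past the end of the window are dropped.
    """
    length = int(round(epoch_s * fs))           # samples per epoch
    step = int(round(length * (1.0 - overlap)))  # hop between epoch starts
    if length <= 0 or step <= 0:
        raise ValueError("epoch length and step must be positive")
    return [(s, s + length) for s in range(0, n_samples - length + 1, step)]


window_samples = 5 * 60 * 125  # one 5-min window at 125 Hz
bounds = epoch_bounds(window_samples)
print(len(bounds), bounds[0], bounds[-1])
```

Under these assumptions, each 5-min window holds 37,500 samples and yields 299 epochs of 250 samples, each starting 125 samples after the previous one.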

Next, the power distribution of the five EEG frequency bands was computed using the Welch method: delta (1–4 Hz), theta (4–8 Hz), alpha (8–12 Hz), beta (12–30 Hz), and gamma (30–45 Hz). For each frequency band, the average power was calculated across all EEG channels, and the ratio of each band's average power to that of each of the other four bands was then computed, resulting in 20 features. Indeed, previous research has shown that certain EEG band ratios, such as delta-beta coupled oscillations, play a crucial role in temporal processing (Arnal et al., 2014). These features capture the distribution of power across the EEG frequency bands, which is known to be associated with various cognitive and emotional states.
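One reading of the 20 band-ratio features is that each of the five channel-averaged band powers is divided by each of the other four. The sketch below illustrates that reading only; the power values are made up and `band_ratios` is a hypothetical helper.

```python
from itertools import permutations

BANDS = ("delta", "theta", "alpha", "beta", "gamma")


def band_ratios(power):
    """Map channel-averaged band powers to all ordered pairwise ratios."""
    return {f"{a}/{b}": power[a] / power[b] for a, b in permutations(BANDS, 2)}


# Hypothetical channel-averaged band powers (arbitrary units).
example_power = {"delta": 8.0, "theta": 4.0, "alpha": 2.0, "beta": 1.0, "gamma": 0.5}
ratios = band_ratios(example_power)
print(len(ratios), ratios["delta/beta"])
```

With five bands there are 5 × 4 = 20 ordered pairs, which matches the feature count reported in the text.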

In addition to the EEG band ratio features, we extracted four indexes to further explore the participants' mental states during the task, namely engagement, arousal, valence, and frontal alpha asymmetry. These measures have been shown to be related to cognitive and emotional processes that are involved in time perception, such as attention, motivation, and affective valence (Yoo and Lee, 2015; Polti et al., 2018; Read et al., 2021). The engagement score (ES), for instance, is a measure of the participant's attention and involvement in the task, which can directly influence their perception of time. Similarly, arousal and valence indexes (AI and VI respectively) provide insights into the participant's emotional state during the task, which has been shown to affect time perception. The frontal alpha asymmetry index (FAA), on the other hand, is a measure of the balance of power between the left and right frontal cortex, which has been linked to emotional valence and motivation, both of which can influence time perception.

To calculate ES, we first computed the relative powers by summing the absolute power across the delta, theta, and alpha bands, dividing each individual band's absolute power by the total power, and expressing it as a percentage in the Fp1 channel. AI and VI were calculated using the F3 and F4 channels. Finally, FAA was calculated by subtracting the log-power of the alpha EEG band in electrode F4 from the log-power of the alpha band from electrode F3. The electrode locations are illustrated in Figure 3. Additionally, the skewness and kurtosis of each of these four indexes were also calculated, resulting in a total of 12 additional features for EEG modality. More details about these features can be found in Moinnereau et al. (2022d).
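As an illustration of the FAA definition above and of the skewness and kurtosis descriptors, here is a minimal sketch. It assumes natural logarithms and population (biased) moments, neither of which is specified in the text, and the per-epoch alpha powers are invented for the example.

```python
import math


def frontal_alpha_asymmetry(alpha_f3, alpha_f4):
    """FAA as worded in the text: log alpha power at F3 minus log alpha power at F4."""
    return math.log(alpha_f3) - math.log(alpha_f4)


def central_moment(values, order):
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    m2 = central_moment(values, 2)
    if m2 == 0:
        raise ValueError("skewness is undefined for a constant series")
    return central_moment(values, 3) / m2 ** 1.5


def kurtosis(values):
    """Non-excess kurtosis (a normal distribution gives 3)."""
    m2 = central_moment(values, 2)
    if m2 == 0:
        raise ValueError("kurtosis is undefined for a constant series")
    return central_moment(values, 4) / m2 ** 2


# Hypothetical per-epoch alpha powers (arbitrary units) within one window.
f3 = [2.0, 2.5, 3.0, 2.2]
f4 = [2.0, 2.0, 2.4, 2.6]
faa_per_epoch = [frontal_alpha_asymmetry(a, b) for a, b in zip(f3, f4)]
summary = (
    sum(faa_per_epoch) / len(faa_per_epoch),
    skewness(faa_per_epoch),
    kurtosis(faa_per_epoch),
)
print(summary)
```

Applying the same three descriptors to each of the four indexes gives 4 × 3 = 12 features, in line with the count stated above.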

Figure 3. Headplots showcasing the positioning of the 16-ExG electrodes on the HTC VIVE Pro Eye VR headset. (Left) The 7-channel EOG montage, with LO1 and LO2 for horizontal EOG (left and right canthus, respectively), and Fp1, Fpz, Fp2 (above superior orbit), IO1, and IO2 (below inferior orbit) for vertical EOG. (Right) The 11-channel EEG montage, with electrodes placed in the prefrontal, frontal, central, and occipital areas. A1 and A2 serve as references. An additional sensor is placed on the left collarbone for ECG recording.

To measure the connectivity between cortical regions, we used the Phase and Magnitude Spectral Coherence (PMSC) features, represented in Table 1 by phc-band and msc-band for phase coherence and magnitude spectral coherence, respectively. These features measure the co-variance of the phase and magnitude between two signals. The motivation for the inclusion of connectivity measures as possible features is based on the hypothesis that the level of connectivity could potentially indicate changes in time perception within the immersive virtual environment (Lewis and Miall, 2003). In our case, with the 11-channel EEG montage (Figure 3), we computed PMSC for all possible pairs of electrodes for each of the five sub-bands (δ, θ, α, β, γ). Given that there are 55 unique electrode pairs, 5 sub-bands, and two coherence measures (phase and magnitude), this results in a total of 550 PMSC features. For the computation of PMSC, including the complex coherency function and cross-spectral densities, we followed the method detailed in Aoki et al. (1999). PMSC allowed us to examine the functional connectivity between different brain regions, which could provide insights into how individuals perceive time in virtual environments and could potentially help identify the neural mechanisms underlying accurate time perception in immersive virtual experiences.
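The PMSC feature count can be checked with a short calculation. This sketch assumes that the 55 pairs come from the 11-channel EEG montage shown in Figure 3 and that both the phase and the magnitude measure are computed per pair and sub-band; the helper name is hypothetical.

```python
from itertools import combinations


def n_coherence_features(n_channels, n_bands=5, n_measures=2):
    """Count unordered channel pairs and the resulting coherence features."""
    n_pairs = len(list(combinations(range(n_channels), 2)))
    return n_pairs, n_pairs * n_bands * n_measures


pairs, features = n_coherence_features(11)
print(pairs, features)  # 55 550
```

Eleven channels give 11 × 10 / 2 = 55 unordered pairs, and 55 × 5 × 2 = 550 features.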

Moreover, we utilized the Phase and Magnitude Spectral Coherence of Amplitude Modulation (PMSC-AM) features, which extend the capacity of PMSC features to amplitude modulations and provide information about the rate-of-change of specific frequency sub-bands. These interactions are represented by the notation band_mband and reveal interactions between different brain processes and long-range communication. These features have proven to be useful in predicting arousal and valence by Clerico et al. (2018). Given the established relationship between arousal and valence and time perception (Yoo and Lee, 2015; Jeong-Won et al., 2021), here we explore the potential use of these features as correlates of time perception. The PMSC-AM features were calculated based on the modulated signals of each band, resulting in a total of fifteen signals per channel. The magnitude spectral coherence and phase coherence were then computed for all channel pairs, resulting in a total of 1,540 features. More details about the PMSC and PMSC-AM features can be found in Clerico et al. (2018).

The EOG signal has a frequency range of 0.1–50 Hz and amplitude between 100 and 3,500 μV (López et al., 2017). In this study, we extracted eye blink and saccades measures from the 5-min windows using the EOG event recognizer toolbox in Matlab (Toivanen et al., 2015), providing information on blink duration, blink count, saccade duration, and saccade count. These metrics have been shown to have a correlation with time perception (Morrone et al., 2005; Huang et al., 2022).

Next, we extracted time-domain and frequency-domain features from the EOG signals using the signal processing toolbox in MATLAB. These features were extracted from 2-s epochs of the EOG signals, with a 50% overlap between consecutive intervals. The time-domain features include measures such as mean, standard deviation, skewness, and kurtosis, while the frequency-domain measures include mean frequency, median frequency, peak amplitude, and frequency location of the peak amplitude. These features provide information on the distribution of power across different frequency bands in the EOG signals and can reveal patterns related to eye movement. We also calculated statistical features such as interquartile range, variance, and energy. In total, we extracted 31 features from the EOG signals, providing valuable information on eye movements during gameplay.

In addition to the previously mentioned EOG features, we also extracted eye movement features related to the number of times eye movements shifted from the upper to the lower quadrant, as well as from the left to the right quadrant in the field of view of the VR headset. To this end, we employed a Support Vector Machine (SVM) classifier, as proposed by Moinnereau et al. (2020). This classifier was trained on a separate dataset where eye direction was tracked across 36 distinct points within the visual field, each separated by an angle of 10 degrees. For each 500 ms window within the seven EOG signals recorded by the instrumented headset from electrode locations Fp1, Fpz, Fp2, horizontal EOG right, horizontal EOG left, vertical EOG right, and vertical EOG left (namely, the sensors placed on the faceplate of the headset, as shown in Figure 1), we calculated the signal slope and input it into the SVM classifiers. These classifiers were designed to discern between up-down and left-right eye movements. The output of the classifier provided the eye's direction, and we subsequently calculated the number of gaze shifts between the quadrants of interest. These shifts were then incorporated as two additional features within our EOG feature set.
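The slope input and the gaze-shift count can be sketched as follows. The text does not define how the slope was computed, so a least-squares fit over the window is assumed here; the SVM itself is not reproduced, and the label sequence is a made-up stand-in for its per-window output.

```python
def window_slope(samples, fs):
    """Least-squares slope (signal units per second) over one window.

    Assumption: a linear fit; the source only says "signal slope".
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    times = [i / fs for i in range(n)]
    t_mean = sum(times) / n
    x_mean = sum(samples) / n
    num = sum((t - t_mean) * (x - x_mean) for t, x in zip(times, samples))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


def count_shifts(labels):
    """Number of times consecutive window labels differ."""
    return sum(1 for a, b in zip(labels, labels[1:]) if a != b)


# Hypothetical classifier output for five consecutive 500-ms windows.
vertical_labels = ["up", "up", "down", "down", "up"]
print(count_shifts(vertical_labels))  # 2
```

Running the same count on the left-right labels would give the second gaze-shift feature.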

Both heart rate (HR) and HRV have been linked to time perception, with studies suggesting that fluctuations in these physiological markers can influence the experience of time (Meissner and Wittmann, 2011; Pollatos et al., 2014). Therefore, we extracted ECG features from the 5-min windows using an open-source MATLAB toolbox to gather 15 features that relate to HR and HRV.1 The analysis of HRV can be categorized into three methods: time-domain, frequency-domain, and nonlinear methods. The time-domain features measured the variation in time between two successive heartbeats, or interbeat intervals (IBI). We extracted the average IBI, the standard deviation of NN intervals (SDNN), the root mean square of successive RR interval differences (RMSSD), and the number and percentage of pairs of successive RR intervals that differ by more than 50 ms (NN50 and pNN50, respectively). Frequency-domain analysis focuses on the power spectral density of the RR time series. We obtained the relative power of the low-frequency (LF) band (0.04–0.15 Hz) and high-frequency (HF) band (0.15–0.4 Hz), as well as their percentages. We also calculated the ratio of LF to HF and the total power, which is the sum of the four spectral bands: LF, HF, the absolute power of the ultra-low-frequency (ULF) band (≤0.003 Hz), and the absolute power of the very-low-frequency (VLF) band (0.0033–0.04 Hz). Finally, nonlinear measurements were used to assess the unpredictability of the time series. We extracted the Poincaré plot standard deviation perpendicular to the line of identity (SD1) and along the line of identity (SD2). Together, these features characterize HR and its variability during the VR gameplay session.
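The time-domain and Poincaré measures listed above follow standard definitions, sketched below for a short series of RR intervals in milliseconds. This is not the toolbox used by the authors; the function name is hypothetical, sample (n − 1) variances are an assumption, and the RR values are invented.

```python
import math


def hrv_time_domain(rr_ms):
    """Standard time-domain and Poincare HRV measures from RR intervals (ms)."""
    n = len(rr_ms)
    if n < 3:
        raise ValueError("need at least three RR intervals")
    mean_ibi = sum(rr_ms) / n
    sdnn = math.sqrt(sum((r - mean_ibi) ** 2 for r in rr_ms) / (n - 1))
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    nn50 = sum(1 for d in diffs if abs(d) > 50)
    pnn50 = 100.0 * nn50 / len(diffs)
    mean_d = sum(diffs) / len(diffs)
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (len(diffs) - 1)
    sd1 = math.sqrt(0.5 * var_d)  # spread perpendicular to the identity line
    # Clamp at zero: very short series can make the difference slightly negative.
    sd2 = math.sqrt(max(2.0 * sdnn ** 2 - 0.5 * var_d, 0.0))
    return {"mean_ibi": mean_ibi, "sdnn": sdnn, "rmssd": rmssd,
            "nn50": nn50, "pnn50": pnn50, "sd1": sd1, "sd2": sd2}


hrv = hrv_time_domain([800, 810, 790, 860, 800])  # hypothetical RR intervals
print(hrv)
```

In practice these measures would be computed over all beats detected in each 5-min window, which contains far more intervals than this toy series.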

The OpenBCI bioamplifier includes an accelerometer, which was placed toward the back of the VR headset. This allows for the analysis of head motion and orientation, which is crucial as head movements have been associated with influencing temporal perception and varying based on arousal and valence states (Behnke et al., 2021). In fact, the speed and nature of head movements can affect the perception of simultaneity between sensory events (Sachgau et al., 2018; Allingham et al., 2020) and can lead to recalibration of time perception in a virtual reality context (Bansal et al., 2019). To capture these dynamics, we extracted features from the x, y, and z signals of the accelerometer data, segmented into 2-s epochs with a 50% overlap. Specifically, we extracted statistical measures (i.e., mean, standard deviation, skewness, kurtosis, and energy) for acceleration, velocity, and displacement along the x, y, and z axes. This resulted in a total of 117 features that provide valuable information about the user's head movements during the VR experience.
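The text does not say how velocity and displacement were derived from the accelerometer signal. One plausible approach, shown here purely as an assumption, is cumulative trapezoidal integration followed by per-epoch statistics; the sample values and helper names are hypothetical.

```python
import math


def cumulative_trapezoid(samples, fs):
    """Running integral of a uniformly sampled signal, starting at zero."""
    dt = 1.0 / fs
    integral = [0.0]
    for previous, current in zip(samples, samples[1:]):
        integral.append(integral[-1] + 0.5 * (previous + current) * dt)
    return integral


def epoch_statistics(samples):
    """Mean, sample standard deviation, and energy of one epoch."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in samples) / (n - 1))
    energy = sum(s * s for s in samples)
    return {"mean": mean, "std": std, "energy": energy}


fs = 125
accel_x = [0.0, 0.1, 0.2, 0.1, 0.0, -0.1]  # hypothetical x-axis samples
velocity_x = cumulative_trapezoid(accel_x, fs)
displacement_x = cumulative_trapezoid(velocity_x, fs)
features = {
    name: epoch_statistics(signal)
    for name, signal in (("acc_x", accel_x), ("vel_x", velocity_x), ("disp_x", displacement_x))
}
print(features["vel_x"])
```

Integrating raw accelerometer data accumulates drift, so a real pipeline would likely detrend or high-pass filter before integrating; that step is omitted here.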

Feature selection is an important step in classification tasks that involves the removal of irrelevant or redundant features, thus providing dimensionality reduction prior to classification. In our study, given the relatively small amount of data collected, feature selection is particularly important. We performed a two-step process for feature selection using built-in functions in MATLAB: first, we applied the Spearman correlation coefficient to identify features with a medium to high correlation with the ratings from Q1 and Q2; second, we applied the minimum redundancy maximum relevance (mRMR) algorithm to these selected features. The Spearman correlation coefficient measures the strength and direction of the relationship between the physiological features extracted and the ratings of the two questions on time perception. Specifically, we performed a Spearman correlation between all the 2,279 features extracted from each 5-min window of the entire dataset, which includes all recordings from all participants, and the ratings of the two questions Q1 and Q2. To align the number of ratings with the number of 5-min windows, we replicated the same rating for each window within a single recording. This approach allowed us to assess the relationship between each feature and the time perception ratings across all 5-min windows and all participants. Spearman correlation is a non-parametric measure that assesses the monotonic relationship between two variables (Schober et al., 2018); hence, it does not assume linearity, making it suitable for our analysis. Only features with a Spearman correlation coefficient >0.3 or < −0.3 were retained, indicating a medium to high correlation.
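The first selection step can be sketched as a rank-correlation filter. The implementation below is a plain-Python stand-in for the MATLAB built-ins mentioned above, using average ranks for ties (which matter here because ratings are replicated across windows); the feature names and values are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # assumption: treat constant inputs as uncorrelated
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def select_features(features, ratings, threshold=0.3):
    """Keep features whose |Spearman rho| with the ratings exceeds the threshold."""
    return [name for name, column in features.items()
            if abs(spearman(column, ratings)) > threshold]


# Hypothetical data: two recordings of three windows each, rating replicated per window.
ratings = [7, 7, 7, 9, 9, 9]
features = {"feat_a": [1, 2, 3, 4, 5, 6], "feat_flat": [1, 1, 1, 1, 1, 1]}
print(select_features(features, ratings))  # ['feat_a']
```

Because windows from the same recording share one rating, they are not independent observations, which is worth keeping in mind when interpreting the correlation values.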

Next, we applied the minimum redundancy maximum relevance (mRMR) algorithm (Peng et al., 2005) on the physiological features that showed significant Spearman correlations with the ratings from Q1 and Q2. The mRMR method finds the most relevant features for the task and removes features with high mutual information to minimize redundancy. This approach helps to reduce the dimensionality of the data, improve performance, and avoid overfitting. This selection method has been shown to be very useful for biosignal data (Clerico et al., 2018; Jesus et al., 2021; Rosanne et al., 2021). As our dataset is small, we used five-fold cross-validation in our analysis. In this process, the dataset was divided into five subsets. For each fold, 80% of the data was used to calculate the mRMR, and the top features were recorded. This process was repeated five times, each time with a different subset held out. At the end, we compared the top features across the five folds and used those that appeared consistently as candidate features for time perception monitoring. With this analysis, a total of 18 top features were found to be present in at least two of the five folds.
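The cross-fold consistency check can be sketched as a simple vote over the per-fold feature lists. The mRMR ranking itself is not implemented here; the per-fold lists and feature names below are hypothetical placeholders for its output.

```python
from collections import Counter


def stable_features(per_fold_top, min_folds=2):
    """Return features that appear in the top list of at least `min_folds` folds."""
    counts = Counter(name for fold in per_fold_top for name in set(fold))
    return sorted(name for name, count in counts.items() if count >= min_folds)


# Hypothetical top features recorded for each of the five folds.
per_fold_top = [
    ["feat_a", "feat_b", "feat_c"],
    ["feat_a", "feat_d"],
    ["feat_b", "feat_e"],
    ["feat_a", "feat_f"],
    ["feat_g"],
]
print(stable_features(per_fold_top))  # ['feat_a', 'feat_b']
```

Raising `min_folds` makes the selection stricter at the cost of fewer candidate features.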

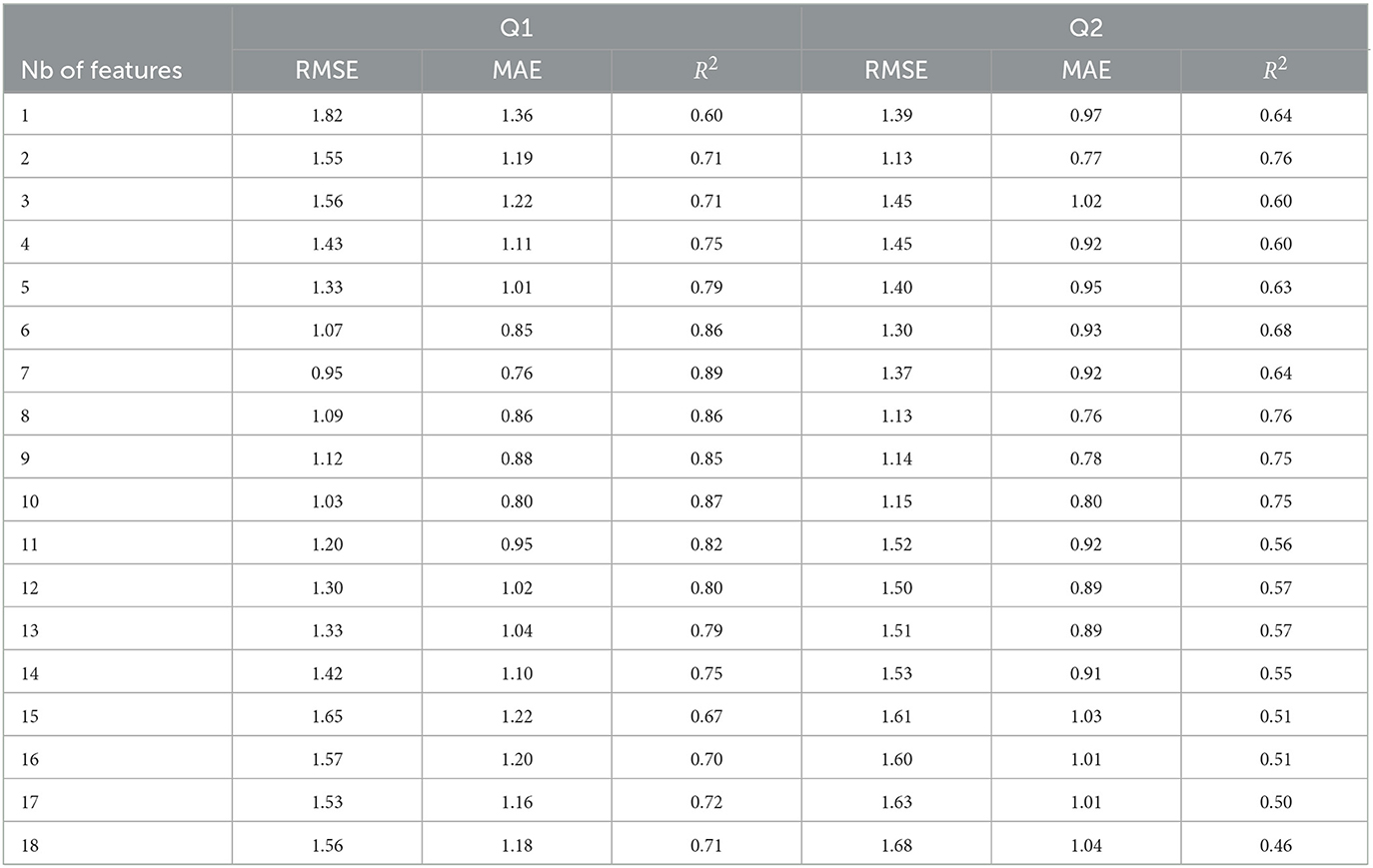

With the top-18 ranked features found, we employed a Gaussian process regressor (GPR) with a rational quadratic kernel to predict the two time perception ratings (Williams and Rasmussen, 1995) with the aim of answering our research question #2. This process was implemented using the Regression Learner toolbox in MATLAB. To find the optimal number of features to use, top-ranked features were added one by one and three figures-of-merit were used, namely root mean square error (RMSE), mean absolute error (MAE), and the R-squared (R2). Both RMSE and MAE provide insights into the overall error distribution of the predictor, with RMSE placing greater emphasis on larger errors. In both cases, lower values are better. The R-squared measure, in turn, measures the goodness of fit of the data to the regression model; higher values are desired. These three figures-of-merit are widely used in regression to assess the performance of the model.
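
For reference, the three figures-of-merit can be written in a few lines of Python. This is a sketch using the standard definitions (with R-squared taken as one minus the ratio of residual to total sum of squares); the exact conventions used by the MATLAB toolbox are not stated in the text, and the toy ratings below are hypothetical.

```python
from math import sqrt


def rmse(y_true, y_pred):
    """Root mean square error: penalizes large errors more heavily."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error: every error contributes in proportion to its size."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical ratings: three good predictions and one error of 3 points.
y_true = [2.0, 7.0, 8.0, 9.0]
y_pred = [3.0, 7.0, 8.0, 6.0]
print(rmse(y_true, y_pred), mae(y_true, y_pred), r_squared(y_true, y_pred))
```

In this toy case the single 3-point error pushes the RMSE above the MAE, which illustrates why RMSE is the more sensitive of the two to occasional large misses.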

For the analysis, a bootstrap testing methodology was followed where the data was randomly partitioned into 80% for training and 20% for testing and this partitioning was repeated 100 times. Lastly, to gauge if the obtained results were significantly better than chance, a “random regressor” was used. With this regressor, the same bootstrap testing setup was used, but instead of training the regressor with the true ratings reported by the participants, random ratings between 1 and 10 were assigned. To test for significance, a Kruskal–Wallis test was used (Kruskal and Wallis, 1952) for each of the metrics (RMSE, MAE, and R2).
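
The significance test can be illustrated with a small Python sketch of the Kruskal–Wallis H statistic for the two-group case (proposed vs. random regressor). This is not the authors' code: the per-run RMSE values below are synthetic numbers drawn purely for illustration, and the function handles only two groups, for which the chi-square reference distribution has one degree of freedom.

```python
import random
from math import erfc, sqrt


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) for two groups, with tie correction.

    Assumes the pooled values are not all identical.
    """
    pooled = [(v, 0) for v in a] + [(v, 1) for v in b]
    pooled.sort(key=lambda pair: pair[0])
    n = len(pooled)
    rank_sums = [0.0, 0.0]
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        # Extend j to the end of the current run of tied values.
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        t = j - i + 1
        tie_term += t ** 3 - t
        for k in range(i, j + 1):
            rank_sums[pooled[k][1]] += mean_rank
        i = j + 1
    h = 12.0 / (n * (n + 1)) * (
        rank_sums[0] ** 2 / len(a) + rank_sums[1] ** 2 / len(b)
    ) - 3 * (n + 1)
    h /= 1.0 - tie_term / (n ** 3 - n)
    h = max(h, 0.0)  # guard against tiny negative values from rounding
    # With 1 degree of freedom, the chi-square survival function
    # reduces to erfc(sqrt(h / 2)).
    return h, erfc(sqrt(h / 2))


# Synthetic per-run RMSE values for 100 bootstrap runs (illustrative only).
rng = random.Random(0)
proposed_rmse = [rng.gauss(1.0, 0.2) for _ in range(100)]
chance_rmse = [rng.gauss(3.0, 0.4) for _ in range(100)]
h_stat, p_value = kruskal_wallis_two_groups(proposed_rmse, chance_rmse)
print(f"H = {h_stat:.2f}, p = {p_value:.3g}")
```

As a point of reference, when two groups of 100 values each are completely separated, H is about 149.25 and the corresponding p-value is on the order of 10^-34, which is the smallest value this test can return for that sample size.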

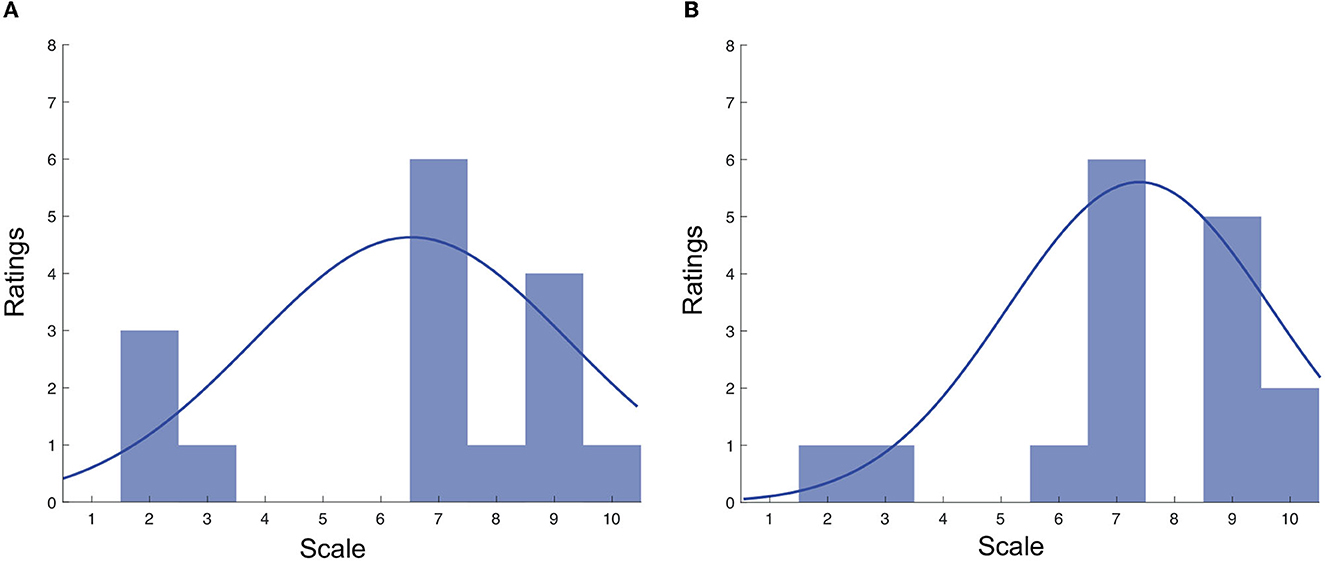

Figures 4A, B display the distribution of the Q1 and Q2 ratings, respectively. For Q1, the ratings ranged from 2 to 10, with the majority being in the range of 7–9. The mean rating for Q1 was 6.40 (SD = 2.78). Similar findings were seen for Q2, with a mean rating of 7.37 (SD = 2.27).

Figure 4. (A) Distribution of Q1 ratings across both testing conditions; (B) Distribution of Q2 ratings across both testing conditions.

A total of 2,279 features were extracted from the EEG, EOG, ECG, and accelerometer signals. After passing through the two feature ranking steps mentioned in Section 3.3, 18 top features were found for each of the two time perception ratings. Figures 5A, B display the selected features for Q1 and Q2, respectively, arranged in ascending order of importance given by the mRMR selection algorithm. As can be seen, for both Q1 (“Time seemed to flow differently than usual”) and Q2 (“I lost the sense of time”), head movement and HRV-related measures corresponded to the top-two most important features. The majority of the other top features are related to EEG measures of coherence between different electrode sites. For Q1, two EOG measures stood out, one of them corresponding to a number of shifts from the top-bottom quadrants based on outputs from the EOG-based classifier described by Moinnereau et al. (2020).

Table 2 reports the impact that including these top features one-by-one has on regressor accuracy. The goal of this analysis is to explore the optimal number of features for time perception monitoring. As can be seen from the table, there is an elbow point for Q1 at seven features and at eight features for Q2. In fact, for Q2, the accuracy achieved with just two features was very close to that achieved with eight. For Q1, the top seven features were: IQR-acc-x, SDNN, median-acc-x, msc-beta-mbeta-Fpz-Fp2, gamma-theta ratio, msc-beta-F1-F2, and UP-DOWN. For Q2, the top eight features were: IQR-acc-x, SDNN, pNN50, msc-beta-Fp1-Fpz, msc-alpha-Fp1-Fpz, msc-beta-mtheta-Fp1-Fpz, msc-beta-mbeta-Fpz-Fp2, and msc-beta-F1-F2.

Table 2. Figures-of-merit as a function of number of features used to train the regressor for Q1 and Q2 ratings.

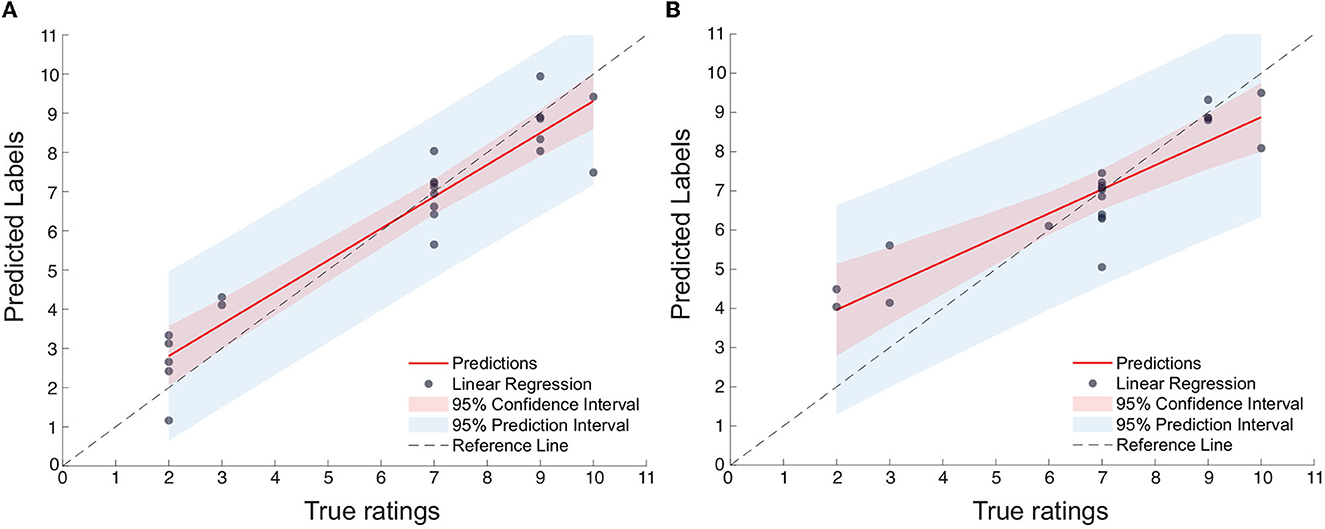

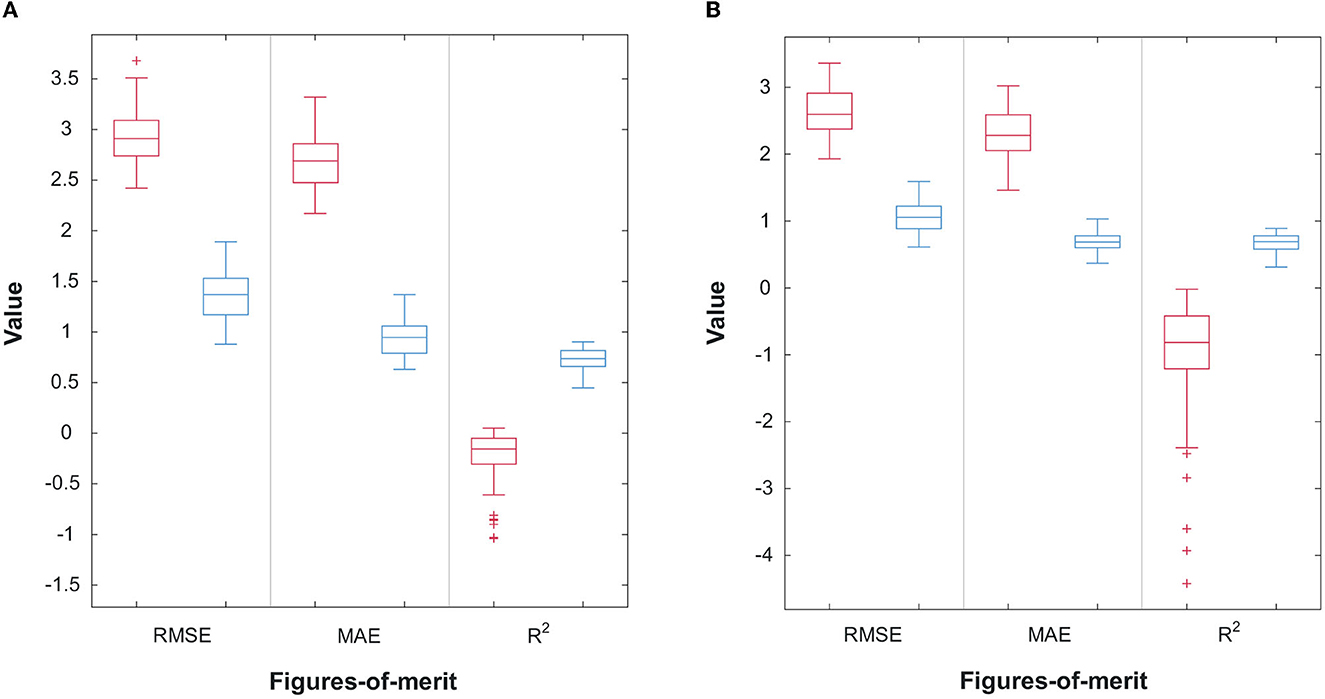

Figures 6A, B display the scatterplots, including confidence intervals, of predicted vs. true subjective ratings for Q1 and Q2, respectively, using the top-7 and top-8 features mentioned above for one of the bootstrap runs. The reference, perfect-correlation line is included for comparison. For Q1, a significant correlation of 0.95 can be seen between predicted and true ratings. For Q2, in turn, a significant correlation of 0.90 is observed. To test if these results are significantly better than chance, the prediction task is repeated 100 times using a bootstrap method. Figures 7A, B show the boxplots of the figures-of-merit achieved with the chance regressor and the proposed regressors for 100 bootstrap runs. As can be seen, the error-based measures from the chance regressor show similar trends and are almost three times higher than those of the proposed method. Lastly, the Kruskal–Wallis test was performed over all 100 bootstrap trials and showed a significant difference (p-value = 10⁻³⁴) for all three figures-of-merit.

Figure 6. (A) Scatterplot of predicted vs. true ratings for Q1 (number of features = 7); (B) Scatterplot of predicted vs. true ratings for Q2 (Number of features = 8).

Figure 7. (A) Performance comparison of 100 random bootstrap trials for the random (red) and proposed (blue) regressors for Q1; (B) Performance comparison of 100 random bootstrap trials for the random (red) and proposed (blue) regressors for Q2.

Our findings suggest that the majority of the participants experienced altered time perception during the experiment, with the majority strongly agreeing that time seemed to flow differently than usual for them and that they lost the sense of time while in gameplay. This is consistent with previous research on time perception in VR, which has demonstrated that the sense of time passing can be significantly influenced by the level of immersion and engagement with the virtual environment (Mullen and Davidenko, 2021; Read et al., 2022). The VR environment used in our study was a highly-rated videogame known for its immersive qualities. This emphasizes the importance of examining the relationship between immersion and time perception in VR settings.

To empirically investigate this relationship, we conducted a correlation analysis between the Q1 ratings and the ratings from the seven items in the immersion scale. A significant correlation of 0.81 was found, particularly with the item “I felt physically fit in the virtual environment”. This strong correlation suggests that the more participants felt physically fit and comfortable in the virtual environment, the more they experienced altered time perception. This provides empirical support for the link between immersion and time perception, reinforcing the idea that immersion can significantly influence how individuals perceive time in VR. Interestingly, lower values were observed during the puzzle-solving tasks, as shown in Figure 4, which were rated by the participants as being less engaging than the shooting tasks. This further corroborates the link between engagement, immersion, and altered time perception in VR. The immersive qualities of the VR environment, combined with the engaging nature of the tasks, appear to have a significant impact on participants' perception of time.

The identified features provide valuable insights into the neural, physiological, and behavioral correlates of time perception in VR. The prominence of head movement and HRV-related measures among the top features for both Q1 and Q2 underscores the integral role of physical engagement and physiological responses in shaping time perception in VR. The acceleration of head movements along the x-axis may be indicative of embodiment while in gameplay, as players often move their head sideways to avoid shots fired by the enemy. Indeed, levels of embodiment have been linked to altered perceptions of time (Charbonneau et al., 2017; Unruh et al., 2021, 2023). In a similar vein, the Up-Down shifts feature, present in Q1, suggests that the players are continuously scanning the scene and engaged. This is expected for fully immersed gamers, as players need to get supplies, which could be on the ground, pick up objects and throw them, and engage in combat with enemies appearing, for example, on floors below the player, as shown in Figure 1. In contrast, less immersive screen-based first-person shooter games have shown eye gaze patterns to be mostly in the center of the screen, where the aiming reticle is usually placed (Kenny et al., 2005; El-Nasr and Yan, 2006). SDNN, in turn, characterizes the heart rate variability of the players and has been linked to emotional states (Shi et al., 2017), stress (Wu and Lee, 2009; Pereira et al., 2017), and mental load (Hao et al., 2022), which in turn, have also been shown to modulate the perception of time. This suggests that physiological responses, as indicated by HRV measures, could also significantly influence time perception in VR.

For the top EEG measures, two out of three top measures corresponded to coherence measures in the beta frequency band. The electrodes showing the strongest involvement were located over the pre-frontal cortex areas. The pre-frontal cortex has been linked with temporal processing, and activation in the right pre-frontal cortex has been reported during time perception tasks (Üstün et al., 2017). The inter-hemispheric PMSC measure msc-beta-F1-F2 may be quantifying this activation. Moreover, beta-band activation has also been linked with perception of time across multiple studies (e.g., Ghaderi et al., 2018; Damsma et al., 2021). The work by Li and Kim (2021) also showed that beta band activity around the Fpz region could be linked to task complexity, which in turn, was shown to also modulate time perception. In a similar vein, the work by Wiener et al. (2018) showed that transcranial alternating current stimulation over the fronto-central cortex at beta frequency could shift the perception of time to make stimuli seem longer in duration. Such properties could be captured by the PMSC-AM feature msc-beta-mbeta-Fpz-Fp2. Lastly, cortical gamma-theta coupling has been linked to mental workload (Baldauf et al., 2009; Gu et al., 2022), whereas theta-gamma coupling has been linked to working memory (Lisman and Jensen, 2013; Park et al., 2013), which itself has been shown to modulate perception of time (Pan and Luo, 2011). As the puzzle-solving tasks often require the use of short-term working memory, this feature is likely quantifying this aspect.

For Q2, four of the top eight features (i.e., IQR-acc-x, SDNN, msc-beta-F1-F2, and msc-beta-mbeta-Fpz-Fp2) overlap with those seen with Q1, suggesting their importance for time perception monitoring and the need for a multimodal system to combine information from EEG, ECG and head movements. The other four provide alternate views of HRV and EEG modulations. For example, pNN50 has been shown to be an HRV correlate of focus (Won et al., 2018). High levels of focus and attention have been shown to be a driving factor for losing sense of time in VR (Winkler et al., 2020). In turn, inter-hemispheric differences in alpha band have also been linked to time perception (Anliker, 1963; Contreras et al., 1985). Lastly, several previous works have linked the theta-beta ratio to attentional control (Putman et al., 2013; Morillas-Romero et al., 2015; Angelidis et al., 2016, 2018). While the ratio is usually computed from band powers estimated over a certain analysis window, the PMSC-AM feature msc-beta-mtheta-Fp1-Fpz captures the temporal dynamics of this ratio over the window, and thus may capture temporal attention changes more reliably. As with focus, high attention levels have been linked to losing sense of time in VR (Winkler et al., 2020).

The high correlation coefficients observed between the predicted and true subjective ratings for Q1 and Q2, as shown in Figures 6A, B, are not only indicative of the accuracy of the proposed feature selection but also highlight the potential of using such features to effectively characterize time perception in VR. The fact that the error-based measures from the chance regressor were almost three times higher than those from the proposed method underscores the importance of careful feature selection and the use of machine learning techniques in predicting time perception. The significant difference in figures-of-merit between the chance regressor and the proposed regressors, as revealed by the Kruskal–Wallis test, further emphasizes the effectiveness of the proposed approach. This finding is particularly encouraging as it suggests that the proposed method could be used to enhance the design and evaluation of VR experiences by providing a more nuanced understanding of how users perceive time in VR. Moreover, the overlap of top features between Q1 and Q2 suggests that there are common underlying mechanisms in different aspects of time perception in VR, reinforcing the need for a multimodal system that combines information from EEG, EOG, ECG, and head movements.

The experiments described herein have shown the importance of a multimodal system to characterize time perception while immersed in VR. To characterize aspects of time flowing differently, features from four modalities—EEG, ECG, EOG, and accelerometry—were shown to be crucial, thus signaling the importance of an instrumented headset. The aspect of time flowing differently also showed significant correlations with aspects related to immersion, thus suggesting that the developed instrumented headset could provide useful insights for overall monitoring of immersive media quality of experience. If interested in monitoring only aspects of the users losing sense of time while in VR, our results suggest that head movement features and HRV measures can achieve reliable results. Such findings could potentially be achieved with accelerometer data already present in VR headsets and with a heart rate monitor. Additionally, other performance measures such as gameplay duration and score could also provide additional support to the self-assessed ratings of time perception.

The instrumented headset, nonetheless, could provide neural correlates of additional factors related to the overall experience and other aspects of time perception. In fact, one modality that was not explored here was EDA. Electrodermal activity has been used in VR to measure the immersive experience (Egan et al., 2016) as well as player arousal states (Klarkowski et al., 2016). As arousal has been linked to attention and emotional resources, it has also been linked to time perception (Angrilli et al., 1997; Mella et al., 2011). As such, future studies could explore the use of EDA-derived features. Recent innovations in VR headset development are already exploring the inclusion of such sensors directly on the headset (Bernal et al., 2022).

While this study provides promising results, some limitations should be acknowledged. First, as the study was conducted amidst the first COVID-19 lockdown, it has a small number of participants, thus limiting the generalizability of our findings. Moreover, the presence of potential multicollinearity among the predictors in our multiple regression model could affect the interpretability of our findings. Despite these limitations, this study should be considered a feasibility study, exploring the potential of a multimodal system to characterize time perception while immersed in VR. Increasing the sample size in future studies would improve the statistical power and strengthen the validity of our results. Moreover, future studies should consider using a wider range of stimuli to investigate better the relationship between immersion, presence, and time perception in VR, as well as their role in overall quality of experience.

In this study, we examined time perception in a highly immersive VR environment using a combination of physiological signals, including head movement, heart rate variability, EEG, and EOG measured from sensors embedded directly into the VR headset. Experimental results show that participants experienced a high degree of time distortion when playing the game. Top features were found and used to characterize the gamers' sense of time perception using a simple Gaussian process regressor. An in-depth analysis of these top features was performed. Results showed that the proposed models were able to characterize the gamers' perception of time significantly better than chance. Ultimately, it is hoped that the insights and models shown herein can be used by the community to understand better the relationship between immersion and time perception in virtual environments, thus leading to improved immersive media experiences.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by INRS Ethics Committee. The patients/participants provided their written informed consent to participate in this study.

M-AM conducted the experiments, analyzed the data, and wrote the first draft of the paper. All authors conceived and designed the study and the experiment analysis, contributed to manuscript revisions, approved the final version of the manuscript, and agree to be held accountable for the content therein.

The authors acknowledge funding from the Natural Sciences and Engineering Research Council of Canada (RGPIN-2021-03246).

TF declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^https://www.mathworks.com/matlabcentral/fileexchange/84692-ecg-class-for-heart-rate-variability-analysis

Abril, J. D., Rivera, O., Caldas, O. I., Mauledoux, M., and Avilés, Ó. (2020). Serious game design of virtual reality balance rehabilitation with a record of psychophysiological variables and emotional assessment. Int. J. Adv. Sci. Eng. Inf. Technol. 10, 1519–1525. doi: 10.18517/ijaseit.10.4.10319

Allingham, E., Hammerschmidt, D., and Wöllner, C. (2020). Time perception in human movement: effects of speed and agency on duration estimation. Q. J. Exp. Psychol. 74, 559–572. doi: 10.1177/1747021820979518

Angelidis, A., Hagenaars, M., van Son, D., van der Does, W., and Putman, P. (2018). Do not look away! spontaneous frontal EEG theta/beta ratio as a marker for cognitive control over attention to mild and high threat. Biol. Psychol. 135, 8–17. doi: 10.1016/j.biopsycho.2018.03.002

Angelidis, A., van der Does, W., Schakel, L., and Putman, P. (2016). Frontal EEG theta/beta ratio as an electrophysiological marker for attentional control and its test-retest reliability. Biol. Psychol. 121, 49–52. doi: 10.1016/j.biopsycho.2016.09.008

Angrilli, A., Cherubini, P., Pavese, A., and Manfredini, S. (1997). The influence of affective factors on time perception. Percept. Psychophys. 59, 972–982. doi: 10.3758/BF03205512

Anliker, J. (1963). Variations in alpha voltage of the electroencephalogram and time perception. Science 140, 1307–1309. doi: 10.1126/science.140.3573.1307

Aoki, F., Fetz, E., Shupe, L., Lettich, E., and Ojemann, G. (1999). Increased gamma-range activity in human sensorimotor cortex during performance of visuomotor tasks. Clin. Neurophysiol. 110, 524–537. doi: 10.1016/S1388-2457(98)00064-9

Arnal, L. H., Doelling, K. B., and Poeppel, D. (2014). Delta–beta coupled oscillations underlie temporal prediction accuracy. Cereb. Cortex 25, 3077–3085. doi: 10.1093/cercor/bhu103

Askim, K., and Knardahl, S. (2021). The influence of affective state on subjective-report measurements: evidence from experimental manipulations of mood. Front. Psychol. 12, 601083. doi: 10.3389/fpsyg.2021.601083

Baldauf, D., Burgard, E., and Wittmann, M. (2009). Time perception as a workload measure in simulated car driving. Appl. Ergon. 40, 929–935. doi: 10.1016/j.apergo.2009.01.004

Bansal, A., Weech, S., and Barnett-Cowan, M. (2019). Movement-contingent time flow in virtual reality causes temporal recalibration. Sci. Rep. 9, 1–13. doi: 10.1038/s41598-019-40870-6

Behnke, M., Bianchi-Berthouze, N., and Kaczmarek, L. (2021). Head movement differs for positive and negative emotions in video recordings of sitting individuals. Sci. Rep. 11, 7405. doi: 10.1038/s41598-021-86841-8

Berkman, M. I., and Akan, E. (2019). “Presence and immersion in virtual reality,” in Encyclopedia of Computer Graphics and Games, ed N. Lee (Cham: Springer), 1–10. doi: 10.1007/978-3-319-08234-9_162-1

Bernal, G., Hidalgo, N., Russomanno, C., and Maes, P. (2022). “GALEA: a physiological sensing system for behavioral research in virtual environments,” in IEEE Conference on Virtual Reality and 3D User Interfaces, VR 2022, March 12-16, 2022 (Christchurch: IEEE), 66–76. doi: 10.1109/VR51125.2022.00024

Block, R. A., and Gruber, R. P. (2014). Time perception, attention, and memory: a selective review. Acta Psychol. 149, 129–133. doi: 10.1016/j.actpsy.2013.11.003

Buhusi, C. V., and Meck, W. H. (2005). What makes us tick? Functional and neural mechanisms of interval timing. Nat. Rev. Neurosci. 6, 755–765. doi: 10.1038/nrn1764

Cassani, R., Moinnereau, M.-A., Ivanescu, L., Rosanne, O., and Falk, T. (2020). Neural interface instrumented virtual reality headset: toward next-generation immersive applications. IEEE Syst. Man Cybern. Mag. 6, 20–28. doi: 10.1109/MSMC.2019.2953627

Cellini, N., Mioni, G., Levorato, I., Grondin, S., Stablum, F., Sarlo, M., et al. (2015). Heart rate variability helps tracking time more accurately. Brain Cogn. 101, 57–63. doi: 10.1016/j.bandc.2015.10.003

Chang, E., Kim, H. T., and Yoo, B. (2021). Predicting cybersickness based on user's gaze behaviors in HMD-based virtual reality. J. Comput. Des. Eng. 8, 728–739. doi: 10.1093/jcde/qwab010

Charbonneau, P., Dallaire-Côté, M., Côté, S. S.-P., Labbe, D. R., Mezghani, N., Shahnewaz, S., et al. (2017). “GAITZILLA: exploring the effect of embodying a giant monster on lower limb kinematics and time perception,” in 2017 International Conference on Virtual Rehabilitation (ICVR) (Montreal, QC: IEEE), 1–8. doi: 10.1109/ICVR.2017.8007535

Cheng, X., and Penney, T. (2015). Modulation of time perception by eye movements. Front. Hum. Neurosci. 9, 49. doi: 10.3389/conf.fnhum.2015.219.00049

Clerico, A., Tiwari, A., Gupta, R., Jayaraman, S., and Falk, T. (2018). Electroencephalography amplitude modulation analysis for automated affective tagging of music video clips. Front. Comput. Neurosci. 11, 115. doi: 10.3389/fncom.2017.00115

Contreras, C. M., Mayagoitia, L., and Mexicano, G. (1985). Interhemispheric changes in alpha rhythm related to time perception. Physiol. Behav. 34, 525–529. doi: 10.1016/0031-9384(85)90044-7

Damsma, A., Schlichting, N., and van Rijn, H. (2021). Temporal context actively shapes EEG signatures of time perception. J. Neurosci. 41, 4514–4523. doi: 10.1523/JNEUROSCI.0628-20.2021

Ding, X., and Li, Z. (2022). A review of the application of virtual reality technology in higher education based on web of science literature data as an example. Front. Educ. 7, 1048816. doi: 10.3389/feduc.2022.1048816

Egan, D., Brennan, S., Barrett, J., Qiao, Y., Timmerer, C., Murray, N., et al. (2016). “An evaluation of heart rate and electrodermal activity as an objective qoe evaluation method for immersive virtual reality environments,” in Eighth International Conference on Quality of Multimedia Experience, QoMEX 2016, June 6-8, 2016 (Lisbon: IEEE), 1–6. doi: 10.1109/QoMEX.2016.7498964

El-Nasr, M. S., and Yan, S. (2006). “Visual attention in 3D video games,” in Proceedings of the 2006 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology (New York, NY: Association for Computing Machinery), ACE '06, 22. doi: 10.1145/1178823.1178849

Fontes, R., Ribeiro, J., Gupta, D. S., Machado, D., Lopes-Júnior, F., Magalhães, F., et al. (2016). Time perception mechanisms at central nervous system. Neurol. Int. 8, 5939. doi: 10.4081/ni.2016.5939

Fung, B. J., Crone, D. L., Bode, S., and Murawski, C. (2017). Cardiac signals are independently associated with temporal discounting and time perception. Front. Behav. Neurosci. 11, 1. doi: 10.3389/fnbeh.2017.00001

Ghaderi, A. H., Moradkhani, S., Haghighatfard, A., Akrami, F., Khayyer, Z., and Balcı, F. (2018). Time estimation and beta segregation: an EEG study and graph theoretical approach. PLoS ONE 13, 1–16. doi: 10.1371/journal.pone.0195380

Gu, H., Chen, H., Yao, Q., Wang, S., Ding, Z., Yuan, Z., et al. (2022). Cortical theta-gamma coupling tracks the mental workload as an indicator of mental schema development during simulated quadrotor uav operation. J. Neural Eng. 19, 066029. doi: 10.1088/1741-2552/aca5b6

Hao, T., Zheng, X., Wang, H., Xu, K., and Chen, S. (2022). Linear and nonlinear analyses of heart rate variability signals under mental load. Biomed. Signal Process. Control 77, 103758. doi: 10.1016/j.bspc.2022.103758

Huang, Z., Luo, H., and Zhang, H. (2022). Time compression induced by voluntary-action and its underlying mechanism: the role of eye movement in intentional binding (IB). J. Vis. 22, 3042–3042. doi: 10.1167/jov.22.14.3042

Igarzábal, F. A., Hruby, H., Witowska, J., Khoshnoud, S., and Wittmann, M. (2021). What happens while waiting in virtual reality? A comparison between a virtual and a real waiting situation concerning boredom, self-regulation, and the experience of time. Technol. Mind Behav. 2. doi: 10.1037/tmb0000038

Jeong-Won, C., Lee, G.-E., and Jang-Han, L. (2021). The effects of valence and arousal on time perception in depressed patients. Psychol. Res. Behav. Manag. 14, 17–26. doi: 10.2147/PRBM.S287467

Jesus, B. Jr., Cassani, R., McGeown, W. J., Cecchi, M., Fadem, K., Falk, T. H., et al. (2021). Multimodal prediction of Alzheimer's disease severity level based on resting-state eeg and structural mri. Front. Hum. Neurosci. 15, 700627. doi: 10.3389/fnhum.2021.700627

Johari, K., Lai, V. T., Riccardi, N., and Desai, R. H. (2023). Temporal features of concepts are grounded in time perception neural networks: an EEG study. Brain Lang. 237, 105220. doi: 10.1016/j.bandl.2022.105220

Ju, Y. S., Hwang, J. S., Kim, S. J., and Suk, H. J. (2019). “Study of eye gaze and presence effect in virtual reality,” in HCI International 2019 - Posters - 21st International Conference, HCII 2019, Orlando, FL, USA, July 26-31, 2019. Proceedings, Part II, volume 1033 of Communications in Computer and Information Science, ed C. Stephanidis (Cham: Springer), 446–449. doi: 10.1007/978-3-030-23528-4_60

Kenny, A., Koesling, H., Delaney, D. T., McLoone, S. C., and Ward, T. E. (2005). “A preliminary investigation into eye gaze data in a first person shooter game,” in 19th European Conference on Modelling and Simulation (ECMS 2005) (Riga), 733–740.

Klarkowski, M., Johnson, D., Wyeth, P., Phillips, C., and Smith, S. (2016). “Psychophysiology of challenge in play: EDA and self-reported arousal,” in Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, CHI EA '16 (New York, NY: Association for Computing Machinery), 1930–1936. doi: 10.1145/2851581.2892485

Kononowicz, T. W., and van Rijn, H. (2015). Single trial beta oscillations index time estimation. Neuropsychologia 75, 381–389. doi: 10.1016/j.neuropsychologia.2015.06.014

Kruskal, W. H., and Wallis, W. A. (1952). Use of ranks in one-criterion variance analysis. J. Am. Stat. Assoc. 47, 583–621. doi: 10.1080/01621459.1952.10483441

Lamotte, M., Chakroun, N., Droit-Volet, S., and Izaute, M. (2014). Metacognitive questionnaire on time: feeling of the passage of time. Timing Time Percept. 2, 339–359. doi: 10.1163/22134468-00002031

Lewis, P. A., and Miall, R. C. (2003). Distinct systems for automatic and cognitively controlled time measurement: evidence from neuroimaging. Curr. Opin. Neurobiol. 13, 250–255. doi: 10.1016/S0959-4388(03)00036-9

Li, B. J., Bailenson, J. N., Pines, A., Greenleaf, W. J., and Williams, L. M. (2017). A public database of immersive VR videos with corresponding ratings of arousal, valence, and correlations between head movements and self report measures. Front. Psychol. 8, 2116. doi: 10.3389/fpsyg.2017.02116

Li, J., and Kim, J.-E. (2021). The effect of task complexity on time estimation in the virtual reality environment: an EEG study. Appl. Sci. 11, 9779. doi: 10.3390/app11209779

Lisman, J., and Jensen, O. (2013). The theta-gamma neural code. Neuron 77, 1002–1016. doi: 10.1016/j.neuron.2013.03.007

Lopes, M. K. S., de Jesus, B. J., Moinnereau, M.-A., Gougeh, R. A., Rosanne, O. M., Schubert, T. H., et al. (2022). “Nat(UR)e: quantifying the relaxation potential of ultra-reality multisensory nature walk experiences,” in 2022 IEEE International Conference on Metrology for Extended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE) (Rome: Italy), 459–464. doi: 10.1109/MetroXRAINE54828.2022.9967576

López, A., Martín, F. J. F., Yangüela, D., Álvarez, C., and Postolache, O. (2017). Development of a computer writing system based on EOG. Sensors 17, 1505. doi: 10.3390/s17071505

Maia, C. L. B., and Furtado, E. S. (2019). An approach to analyze user's emotion in HCI experiments using psychophysiological measures. IEEE Access 7, 36471–36480. doi: 10.1109/ACCESS.2019.2904977

Meissner, K., and Wittmann, M. (2011). Body signals, cardiac awareness, and the perception of time. Biol. Psychol. 86, 289–297. doi: 10.1016/j.biopsycho.2011.01.001

Mella, N., Conty, L., and Pouthas, V. (2011). The role of physiological arousal in time perception: psychophysiological evidence from an emotion regulation paradigm. Brain Cogn. 75, 182–7. doi: 10.1016/j.bandc.2010.11.012

Moinnereau, M.-A., Alves de Oliveira, A. Jr., and Falk, T. H. (2022a). Immersive media experience: a survey of existing methods and tools for human influential factors assessment. Qual. User Exp. 7, 5. doi: 10.1007/s41233-022-00052-1

Moinnereau, M.-A., de Oliveira, A. A. Jr., and Falk, T. H. (2022b). “Measuring human influential factors during VR gaming at home: towards optimized per-user gaming experiences,” in Human Factors in Virtual Environments and Game Design. AHFE (2022) International Conference. AHFE Open Access, Vol 50 (New York, NY: AHFE International), 15.

Moinnereau, M.-A., Oliveira, A., and Falk, T. H. (2020). “Saccadic eye movement classification using exg sensors embedded into a virtual reality headset,” in 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (Toronto, ON: IEEE), 3494–3498. doi: 10.1109/SMC42975.2020.9283019

Moinnereau, M.-A., Oliveira, A., and Falk, T. H. (2022c). “Human influential factors assessment during at-home gaming with an instrumented VR headset,” in 2022 14th International Conference on Quality of Multimedia Experience (QoMEX) (Lippstadt), 1–4. doi: 10.1109/QoMEX55416.2022.9900912

Moinnereau, M.-A., Oliveira, A. A., and Falk, T. H. (2022d). Instrumenting a virtual reality headset for at-home gamer experience monitoring and behavioural assessment. Front. Virtual Real. 3, 156. doi: 10.3389/frvir.2022.971054

Morillas-Romero, A., Tortella-Feliu, M., Bornas, X., and Putman, P. (2015). Spontaneous EEG theta/beta ratio and delta-beta coupling in relation to attentional network functioning and self-reported attentional control. Cogn. Affect. Behav. Neurosci. 15, 598–606. doi: 10.3758/s13415-015-0351-x

Morrone, M. C., Ross, J., and Burr, D. (2005). Saccadic eye movements cause compression of time as well as space. Nat. Neurosci. 8, 950–954. doi: 10.1038/nn1488

Mullen, G., and Davidenko, N. (2021). Time compression in virtual reality. Timing Time Percept. 9, 1–16. doi: 10.1163/22134468-bja10034

Mullen, T. R., Kothe, C. A. E., Chi, Y. M., Ojeda, A., Kerth, T., Makeig, S., et al. (2015). Real-time neuroimaging and cognitive monitoring using wearable dry EEG. IEEE Trans. Biomed. Eng. 62, 2553–2567. doi: 10.1109/TBME.2015.2481482

Muñoz, J. E., Quintero, L., Stephens, C. L., and Pope, A. (2020). A psychophysiological model of firearms training in police officers: a virtual reality experiment for biocybernetic adaptation. Front. Psychol. 11, 683. doi: 10.3389/fpsyg.2020.00683

Ogden, R. S., Dobbins, C., Slade, K., McIntyre, J., and Fairclough, S. (2022). The psychophysiological mechanisms of real-world time experience. Sci. Rep. 12, 12890. doi: 10.1038/s41598-022-16198-z

Pan, Y., and Luo, Q.-Y. (2011). Working memory modulates the perception of time. Psychon. Bull. Rev. 19, 46–51. doi: 10.3758/s13423-011-0188-4

Park, J. Y., Jhung, K., Lee, J., and An, S. K. (2013). Theta-gamma coupling during a working memory task as compared to a simple vigilance task. Neurosci. Lett. 532, 39–43. doi: 10.1016/j.neulet.2012.10.061

Peng, H., Long, F., and Ding, C. (2005). Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27, 1226–1238. doi: 10.1109/TPAMI.2005.159

Pereira, T., Almeida, P. R., Cunha, J. P., and Aguiar, A. (2017). Heart rate variability metrics for fine-grained stress level assessment. Comput. Methods Programs Biomed. 148, 71–80. doi: 10.1016/j.cmpb.2017.06.018

Perkis, A., Timmerer, C., Baraković, S., Barakovic, J., Bech, S., Bosse, S., et al. (2020). Qualinet white paper on definitions of immersive media experience (IMEx). arXiv. [preprint]. doi: 10.48550/arXiv.2007.07032

Pollatos, O., Laubrock, J., and Wittmann, M. (2014). Interoceptive focus shapes the experience of time. PLoS ONE 9, e86934. doi: 10.1371/journal.pone.0086934

Polti, I., Martin, B., and van Wassenhove, V. (2018). The effect of attention and working memory on the estimation of elapsed time. Sci. Rep. 8, 6690. doi: 10.1038/s41598-018-25119-y

Putman, P., Verkuil, B., Arias-Garcia, E., Pantazi, I., and van Schie, C. (2013). Erratum to: EEG theta/beta ratio as a potential biomarker for attentional control and resilience against deleterious effects of stress on attention. Cogn. Affect. Behav. Neurosci. 14, 1165. doi: 10.3758/s13415-014-0264-0

Read, T., Sanchez, C. A., and De Amicis, R. (2022). The influence of attentional engagement and spatial characteristics on time perception in virtual reality. Virtual Real. 27, 1–8. doi: 10.1007/s10055-022-00723-6

Read, T., Sanchez, C. A., and De Amicis, R. (2021). “Engagement and time perception in virtual reality,” in Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 65 (Los Angeles, CA: SAGE Publications), 913–918. doi: 10.1177/1071181321651337

Rogers, J., Jensen, J., Valderrama, J., Johnstone, S., and Wilson, P. (2020). Single-channel EEG measurement of engagement in virtual rehabilitation: a validation study. Virtual Real. 25, 357–366. doi: 10.1007/s10055-020-00460-8

Rosanne, O., Albuquerque, I., Cassani, R., Gagnon, J.-F., Tremblay, S., Falk, T. H., et al. (2021). Adaptive filtering for improved EEG-based mental workload assessment of ambulant users. Front. Neurosci. 15, 611962. doi: 10.3389/fnins.2021.611962

Sachgau, C., Chung, W., and Barnett-Cowan, M. (2018). Perceived timing of active head movement at different speeds. Neurosci. Lett. 687, 253–258. doi: 10.1016/j.neulet.2018.09.065

Salgado, D. P., Flynn, R., Naves, E. L. M., and Murray, N. (2022). “A questionnaire-based and physiology-inspired quality of experience evaluation of an immersive multisensory wheelchair simulator,” in MMSys '22, 13th ACM Multimedia Systems Conference, June 14–17, 2022, eds N. Murray, G. Simon, M. C. Q. Farias, I. Viola, and M. Montagud (Athlone: ACM), 1–11. doi: 10.1145/3524273.3528175

Schober, P., Boer, C., and Schwarte, L. A. (2018). Correlation coefficients: appropriate use and interpretation. Anesth. Analg. 126, 1763–1768. doi: 10.1213/ANE.0000000000002864

Shi, H., Yang, L., Zhao, L., Su, Z., Mao, X., Zhang, L., et al. (2017). Differences of heart rate variability between happiness and sadness emotion states: a pilot study. J. Med. Biol. Eng. 37, 527–539. doi: 10.1007/s40846-017-0238-0

Silva, P. R., Marinho, V., Magalhães, F., Farias, T., Gupta, D. S., Barbosa, A. L. R., et al. (2022). Bromazepam increases the error of the time interval judgments and modulates the EEG alpha asymmetry during time estimation. Conscious. Cogn. 100, 103317. doi: 10.1016/j.concog.2022.103317

Tcha-Tokey, K., Christmann, O., Loup-Escande, E., and Richir, S. (2016). Proposition and validation of a questionnaire to measure the user experience in immersive virtual environments. Int. J. Virtual Real. 16, 33–48. doi: 10.20870/IJVR.2016.16.1.2880

Terkildsen, T., and Makransky, G. (2019). Measuring presence in video games: an investigation of the potential use of physiological measures as indicators of presence. Int. J. Hum. Comput. Stud. 126, 64–80. doi: 10.1016/j.ijhcs.2019.02.006

Toivanen, M., Pettersson, K., and Lukander, K. (2015). A probabilistic real-time algorithm for detecting blinks, saccades, and fixations from EOG data. J. Eye Mov. Res. 8. doi: 10.16910/jemr.8.2.1

Unruh, F., Landeck, M., Oberdörfer, S., Lugrin, J., and Latoschik, M. E. (2021). The influence of avatar embodiment on time perception - towards VR for time-based therapy. Front. Virtual Real. 2, 658509. doi: 10.3389/frvir.2021.658509

Unruh, F., Vogel, D., Landeck, M., Lugrin, J.-L., and Latoschik, M. E. (2023). Body and time: virtual embodiment and its effect on time perception. IEEE Trans. Vis. Comput. Graph. 29, 1–11. doi: 10.1109/TVCG.2023.3247040

Üstün, S., Kale, E. H., and Çiçek, M. (2017). Neural networks for time perception and working memory. Front. Hum. Neurosci. 11, 83. doi: 10.3389/fnhum.2017.00083

Vallet, W., Laflamme, V., and Grondin, S. (2019). An EEG investigation of the mechanisms involved in the perception of time when expecting emotional stimuli. Biol. Psychol. 148, 107777. doi: 10.1016/j.biopsycho.2019.107777

Volante, W., Cruit, J., Tice, J., Shugars, W., and Hancock, P. (2018). Time flies: investigating duration judgments in virtual reality. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 62, 1777–1781. doi: 10.1177/1541931218621403

Wiener, M., Parikh, A., Krakow, A., and Coslett, H. (2018). An intrinsic role of beta oscillations in memory for time estimation. Sci. Rep. 8, 7992. doi: 10.1038/s41598-018-26385-6

Williams, C. K. I., and Rasmussen, C. E. (1995). “Gaussian processes for regression,” in Advances in Neural Information Processing Systems 8, NIPS, November 27–30 (Denver, CO: MIT Press), 514–520.

Winkler, N., Roethke, K., Siegfried, N., and Benlian, A. (2020). “Lose yourself in VR: exploring the effects of virtual reality on individuals' immersion,” in 53rd Hawaii International Conference on System Sciences, HICSS 2020, January 7-10 (Maui, Hawaii: ScholarSpace), 1–10. doi: 10.24251/HICSS.2020.186

Wittmann, M., and Paulus, M. P. (2008). Decision making, impulsivity and time perception. Trends Cogn. Sci. 12, 7–12. doi: 10.1016/j.tics.2007.10.004

Won, L., Park, S., and Whang, M. (2018). The recognition method for focus level using ECG (electrocardiogram). J. Korea Contents Assoc. 18, 370–377. doi: 10.5392/JKCA.2018.18.02.370

Wu, W., and Lee, J. (2009). “Improvement of HRV methodology for positive/negative emotion assessment,” in The 5th International Conference on Collaborative Computing: Networking, Applications and Worksharing, CollaborateCom 2009, November 11-14, 2009, eds J. B. D. Joshi, and T. Zhang (Washington, DC: ICST/IEEE), 1–6. doi: 10.4108/ICST.COLLABORATECOM2009.8296

Xie, B., Liu, H., Alghofaili, R., Zhang, Y., Jiang, Y., Lobo, F. D., et al. (2021). A review on virtual reality skill training applications. Front. Virtual Real. 2, 645153. doi: 10.3389/frvir.2021.645153

Xue, T., Ali, A. E., Ding, G., and César, P. (2021). “Investigating the relationship between momentary emotion self-reports and head and eye movements in HMD-based 360° VR video watching,” in CHI '21: CHI Conference on Human Factors in Computing Systems, Virtual Event/Yokohama Japan, May 8-13, 2021. Extended Abstracts (Yokohama: ACM), 353:1–353:8. doi: 10.1145/3411763.3451627

Yoo, J.-Y., and Lee, J.-H. (2015). The effects of valence and arousal on time perception in individuals with social anxiety. Front. Psychol. 6, 1208. doi: 10.3389/fpsyg.2015.01208

Yu, G., Yang, M., Yu, P., and Dorris, M. C. (2017). Time compression of visual perception around microsaccades. J. Neurophysiol. 118, 416–424. doi: 10.1152/jn.00029.2017

Keywords: physiological signals, virtual reality, features selection, remote experiment, machine learning

Citation: Moinnereau M-A, Oliveira AA and Falk TH (2023) Quantifying time perception during virtual reality gameplay using a multimodal biosensor-instrumented headset: a feasibility study. Front. Neuroergon. 4:1189179. doi: 10.3389/fnrgo.2023.1189179

Received: 18 March 2023; Accepted: 29 June 2023;

Published: 14 July 2023.

Edited by:

Frederic Dehais, Institut Supérieur de l'Aéronautique et de l'Espace (ISAE-SUPAERO), France

Reviewed by:

Mathias Vukelić, Fraunhofer Institute for Industrial Engineering, Germany

Copyright © 2023 Moinnereau, Oliveira and Falk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marc-Antoine Moinnereau

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
