- 1 Department of Ultrasound, Shenzhen People’s Hospital, The Second Clinical College of Jinan University, Shenzhen, China

- 2 Department of Gastroenterology, The First Affiliated Hospital of Guangdong Pharmaceutical University, Guangzhou, China

- 3 Department of Applied Mathematics and Theoretical Physics, University of Cambridge, Cambridge, United Kingdom

- 4 School of Science and Technology, The Open University of Hong Kong, Hong Kong, China

- 5 Department of Neurosurgery, Sun Yat-sen Memorial Hospital, Sun Yat-sen University, Guangzhou, China

- 6 Guangdong Provincial Key Laboratory of Malignant Tumor Epigenetics and Gene Regulation, Sun Yat-Sen Memorial Hospital, Sun Yat-Sen University, Guangzhou, China

- 7 Department of Computing and Decision Sciences, Lingnan University, Hong Kong, China

- 8 Department of Mathematics and Information Technology, The Education University of Hong Kong, Hong Kong, China

- 9 The First Affiliated Hospital of Southern University of Science and Technology, Shenzhen, China

Automatic and accurate segmentation of breast lesion regions from ultrasonography is an essential step for ultrasound-guided diagnosis and treatment. However, developing a reliable segmentation method is very difficult due to strong imaging artifacts, e.g., speckle noise, low contrast and intensity inhomogeneity, in breast ultrasound images. To solve this problem, this paper proposes a novel boundary-guided multiscale network (BGM-Net) to boost the performance of breast lesion segmentation from ultrasound images based on the feature pyramid network (FPN). First, we develop a boundary-guided feature enhancement (BGFE) module to enhance the feature map for each FPN layer by learning a boundary map of breast lesion regions. The BGFE module improves the boundary detection capability of the FPN framework so that weak boundaries in ambiguous regions can be correctly identified. Second, we design a multiscale scheme to leverage the information from different image scales in order to tackle ultrasound artifacts. Specifically, we downsample each testing image into a coarse counterpart, and both the testing image and its coarse counterpart are input into BGM-Net to predict a fine and a coarse segmentation map, respectively. The segmentation result is then produced by fusing the fine and the coarse segmentation maps, so that breast lesion regions are accurately segmented from ultrasound images and false detections are effectively removed owing to boundary feature enhancement and multiscale image information. We validate the performance of the proposed approach on two challenging breast ultrasound datasets, and experimental results demonstrate that our approach outperforms state-of-the-art methods.

1 Introduction

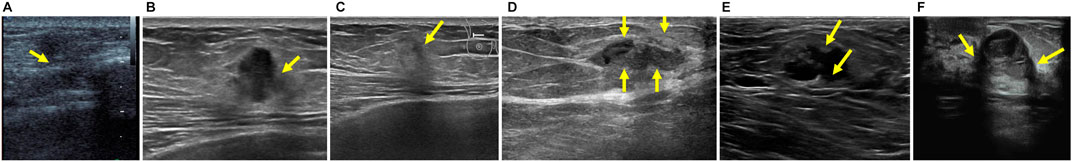

Breast cancer is the most commonly occurring cancer in women and is also the second leading cause of cancer death Siegel et al. (2017). Ultrasonography has been an attractive imaging modality for the detection and analysis of breast lesions because of its various advantages, e.g., safety, flexibility and versatility Stavros et al. (1995). However, clinical diagnosis of breast lesions based on ultrasound imaging generally requires well-trained and experienced radiologists as ultrasound images are hard to interpret and quantitative measurements of breast lesion regions are tedious and difficult tasks. Thus, automatic localization of breast lesion regions will facilitate the process of clinical detection and analysis, making the diagnosis more efficient, as well as achieving higher sensitivity and specificity Yap et al. (2018). Unfortunately, accurate breast lesion segmentation from ultrasound images is very challenging due to strong imaging artifacts, e.g., speckle noise, low contrast and intensity inhomogeneity. Please refer to Figure 1 for some ultrasound samples.

FIGURE 1. Examples of breast ultrasound images. (A–C) Ambiguous boundaries due to similar appearance between lesion and non-lesion regions. (D–F) Intensity inhomogeneity inside lesion regions. Note that the green arrows are marked by radiologists.

To solve this problem, we propose a boundary-guided multiscale network (BGM-Net) to boost the performance of breast lesion segmentation from ultrasound images based on the feature pyramid network (FPN) Lin et al. (2017). Specifically, we first develop a boundary-guided feature enhancement (BGFE) module to enhance the feature map for each FPN layer by learning a boundary map of breast lesion regions. This step is particularly important for the performance of the proposed network because it improves the capability of the FPN framework to detect the boundaries of breast lesion regions in low-contrast ultrasound images, reducing boundary leakages in ambiguous regions. Then, we design a multiscale scheme to leverage the information from different image scales in order to tackle ultrasound artifacts, where the segmentation result is produced by fusing a fine and a coarse segmentation map predicted from the testing image and its coarse counterpart, respectively. The multiscale scheme can effectively remove false detections that result from strong imaging artifacts. We demonstrate the superiority of the proposed network over state-of-the-art methods on two challenging breast ultrasound datasets.

2 Related Work

In the literature, algorithms for breast lesion segmentation from ultrasound images have been extensively studied. Early methods Boukerroui et al. (1998), Madabhushi and Metaxas (2002), Madabhushi and Metaxas (2003), Shan et al. (2008), Shan et al. (2012), Xian et al. (2015), Gómez-Flores and Ruiz-Ortega (2016) mainly exploit hand-crafted features to construct segmentation models that infer the boundaries of breast lesion regions. According to Xian et al. (2018), they can be divided into three categories: region growing methods Kwak et al. (2005), Shan et al. (2008), Shan et al. (2012), deformable models Yezzi et al. (1997), Chen et al. (2002), Chang et al. (2003), Madabhushi and Metaxas (2003), Gao et al. (2012), and graph models Ashton and Parker (1995), Chiang et al. (2010), Xian et al. (2015).

Region growing methods start the segmentation from a set of manually or automatically selected seeds, which gradually expand to capture the boundaries of target regions according to predefined growing criteria. Shan et al. (2012) developed an efficient method to automatically generate a region of interest (ROI) for breast lesion segmentation, while Kwak et al. (2005) utilized common contour smoothness and region similarity (mean intensity and size) to define the growing criteria.

Deformable models first construct an initial model and then deform the model to reach object boundaries according to internal and external energies. Madabhushi and Metaxas (2003) initialized the deformable model using boundary points and employed balloon forces to define the external energy, while Chang et al. (2003) applied the stick filter to reduce speckle noise in ultrasound images before deforming the model to segment breast lesion regions.

Graph models perform breast lesion segmentation with efficient energy optimization by using a Markov random field or graph cut framework. Chiang et al. (2010) employed a pre-trained Probabilistic Boosting Tree (PBT) classifier to determine the data term of the graph cut energy, while Xian et al. (2015) formulated the energy function by modeling the information from both frequency and space domains. Although many a priori models have been designed to assist breast lesion segmentation, these methods have limited capability to capture the high-level semantic features needed to identify weak boundaries in ambiguous regions, leading to boundary leakages in low-contrast ultrasound images.

In contrast, learning-based methods utilize a set of manually designed features to train a classifier for segmentation tasks Huang et al. (2008), Lo et al. (2014), Moon et al. (2014), Othman and Tizhoosh (2011). Liu et al. (2010) extracted 18 local image features to train an SVM classifier to segment breast lesion regions, and Jiang et al. (2012) utilized 24 Haar-like features and trained an AdaBoost classifier for breast tumor segmentation. Recently, convolutional neural networks (CNNs) have been demonstrated to achieve excellent performance in many medical applications by building a series of deep convolutional layers to learn high-level semantic features from labeled data. Inspired by this, several CNN frameworks Yap et al. (2018), Xu et al. (2019) have been developed to segment breast lesion regions from ultrasound images. For example, Yap et al. (2017) investigated the performance of three networks for breast lesion detection: a patch-based LeNet, a U-Net, and a transfer learning approach with a pretrained FCN-AlexNet. Lei et al. (2018) proposed a deep convolutional encoder-decoder network equipped with deep boundary supervision and adaptive domain transfer for the segmentation of breast anatomical layers. Hu et al. (2019) combined a dilated fully convolutional network with an active contour model to segment breast tumors. Although CNN-based methods improve the performance of breast lesion segmentation in low-contrast ultrasound images, they still suffer from strong artifacts of speckle noise and intensity inhomogeneity, which typically occur in clinical scenarios, and tend to generate inaccurate segmentation results.

3 Our Approach

3.1 Overview

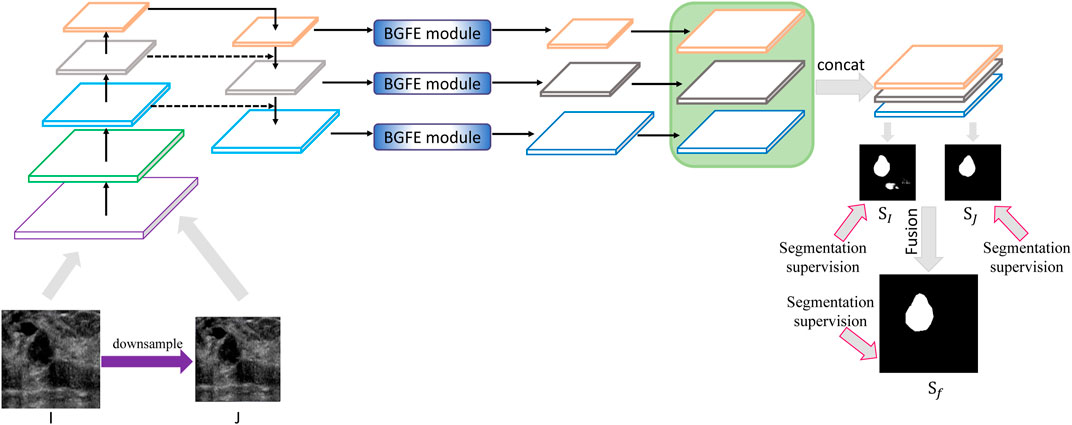

Figure 2 illustrates the architecture of the proposed approach. Given a testing breast ultrasound image I, we first downsample I into a coarse counterpart J, and then input both I and J into the feature pyramid network to obtain a set of feature maps with different spatial resolutions. After that, a boundary-guided feature enhancement module is developed to enhance the feature map for each FPN layer by learning a boundary map of breast lesion regions. All of the refined feature maps are then upsampled and concatenated to predict a fine segmentation map from I and a coarse segmentation map from J, which are fused to produce the final segmentation result.

FIGURE 2. Schematic illustration of the proposed approach for breast lesion segmentation from ultrasound images. Please refer to Figure 3 for BGFE module. Best viewed in color.

3.2 Boundary-Guided Feature Enhancement

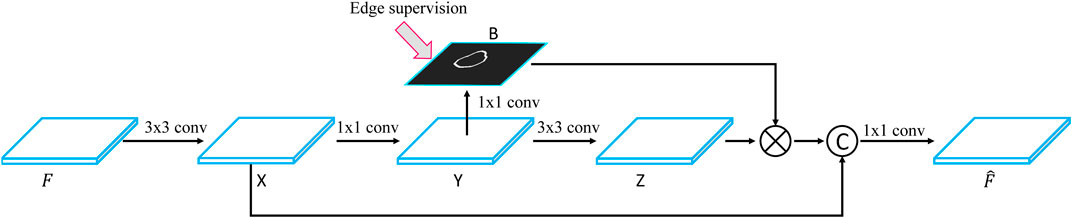

The FPN framework first uses a convolutional neural network to extract a set of feature maps with different spatial resolutions and then iteratively merges two adjacent layers from the last layer to the first layer. Although FPN improves the performance of breast lesion segmentation, it still suffers from the inaccuracy of boundary detection because of strong ultrasound artifacts. To solve this problem, we develop a boundary-guided feature enhancement module to improve the boundary detection capability of the feature map for each FPN layer by learning a boundary map of breast lesion regions.
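The top-down merging described above can be illustrated with a minimal sketch. It assumes single-channel feature maps stored as nested lists, a fixed factor of two between adjacent levels, nearest-neighbour upsampling and merging by element-wise addition; the lateral convolutions of a real FPN are left out, and the names `upsample2x` and `top_down_merge` are hypothetical.

```python
from typing import List

Grid = List[List[float]]  # one single-channel feature map, indexed [row][col]


def upsample2x(grid: Grid) -> Grid:
    """Nearest-neighbour upsampling by a factor of two in both directions."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]  # repeat every column twice
        out.append(wide)
        out.append(list(wide))                     # repeat every row twice
    return out


def top_down_merge(levels: List[Grid]) -> List[Grid]:
    """Merge adjacent pyramid levels from the last (coarsest) to the first.

    levels[0] is the finest map; each later level has half the height and width.
    Assumption: merging is plain element-wise addition, without lateral convolutions.
    """
    merged = [None] * len(levels)
    merged[-1] = levels[-1]
    for i in range(len(levels) - 2, -1, -1):
        up = upsample2x(merged[i + 1])
        merged[i] = [
            [a + b for a, b in zip(row_fine, row_up)]
            for row_fine, row_up in zip(levels[i], up)
        ]
    return merged


# Toy pyramid with three levels: 4x4, 2x2 and 1x1.
levels = [
    [[1.0] * 4 for _ in range(4)],
    [[2.0] * 2 for _ in range(2)],
    [[3.0]],
]
merged = top_down_merge(levels)
```

In this toy pyramid the coarsest level is passed through unchanged, and every finer level accumulates the upsampled content of all coarser levels.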

Figure 3 shows the flowchart of the BGFE module. Given a feature map F, we first apply a 3×3 convolutional layer on F to obtain the first intermediate image X, followed by a 1×1 convolutional layer to obtain the second intermediate image Y, which will be used to learn a boundary map B of breast lesion regions. Then, we apply a 3×3 convolutional layer on Y to obtain the third intermediate image Z, and multiply each channel of Z with B in an element-wise manner. Finally, we concatenate X and Z, followed by a 1×1 convolutional layer, to obtain the enhanced feature map $\hat{F}$:

$$\hat{F} = f_{1\times 1}\big(\mathrm{concat}(X,\; Z \otimes B)\big)$$

where $f_{1\times 1}$ denotes the 1×1 convolutional layer, $\mathrm{concat}$ denotes concatenation along the channel dimension, and $\otimes$ denotes the element-wise multiplication of each channel of Z with B.
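The last steps of the BGFE module (gating Z with the boundary map B, concatenating with X, and applying a 1×1 convolution) can be sketched in plain Python as follows. This is only an illustration under stated assumptions: feature maps are nested lists indexed `[channel][row][col]`, X, Z and B are taken as given rather than learned, the 1×1 convolution has no bias, and all function names and weights are hypothetical.

```python
from typing import List

Map2D = List[List[float]]
FeatureMap = List[Map2D]  # indexed [channel][row][col]


def gate_with_boundary(z: FeatureMap, b: Map2D) -> FeatureMap:
    """Multiply every channel of z element-wise by the boundary map b."""
    return [
        [[zv * bv for zv, bv in zip(z_row, b_row)] for z_row, b_row in zip(channel, b)]
        for channel in z
    ]


def concat_channels(x: FeatureMap, z: FeatureMap) -> FeatureMap:
    """Concatenate two feature maps along the channel axis."""
    return x + z


def conv1x1(fm: FeatureMap, weights: List[List[float]]) -> FeatureMap:
    """1x1 convolution without bias: weights[o][c] mixes input channel c into output o."""
    height, width = len(fm[0]), len(fm[0][0])
    return [
        [
            [sum(w_out[c] * fm[c][i][j] for c in range(len(fm))) for j in range(width)]
            for i in range(height)
        ]
        for w_out in weights
    ]


# Toy inputs: one channel each for X and Z, and a 2x2 boundary map B.
x = [[[1.0, 1.0], [1.0, 1.0]]]
z = [[[2.0, 4.0], [6.0, 8.0]]]
b = [[1.0, 0.0], [0.5, 0.0]]

gated = gate_with_boundary(z, b)
enhanced = conv1x1(concat_channels(x, gated), weights=[[1.0, 1.0]])
```

With the toy weights `[[1.0, 1.0]]`, the single output channel is simply X plus the gated Z, so positions where B is zero keep only the contribution of X.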

FIGURE 3. Flowchart of the BGFE module.

3.3 Multiscale Scheme

After the BGFE module, all of the refined feature maps are upsampled and concatenated to predict the segmentation map of the input image. To account for various ultrasound artifacts, we design a multiscale scheme to produce the final segmentation result by fusing the information from different image scales. In our experiment, the training images are all resized to the resolution of 416×416 according to previous experience, and thus each testing image is also resized to the same resolution. For each testing breast ultrasound image, we then downsample it into a coarse counterpart with the resolution of 320×320. Both the testing image and its coarse counterpart are input into the proposed network to predict a fine and a coarse segmentation map, respectively. Finally, the segmentation result is produced by fusing the fine and the coarse segmentation maps so that false detections from the fine scale can be counteracted by the information from the coarse scale, leading to an accurate segmentation of breast lesion regions.
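A minimal sketch of fusing a fine and a coarse segmentation map is given below. The fusion rule is an assumption made for illustration only: the coarse probability map is brought to the fine resolution by nearest-neighbour resizing, the two maps are averaged, and the result is thresholded at 0.5. The function names are hypothetical, and the toy maps are 4×4 and 2×2 rather than 416×416 and 320×320.

```python
from typing import List

Map2D = List[List[float]]


def resize_nearest(grid: Map2D, out_h: int, out_w: int) -> Map2D:
    """Nearest-neighbour resize of a 2-D map to out_h x out_w."""
    in_h, in_w = len(grid), len(grid[0])
    return [
        [grid[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def fuse_maps(fine: Map2D, coarse: Map2D, threshold: float = 0.5) -> List[List[int]]:
    """Average the two probability maps at the fine resolution, then binarize.

    Assumption: averaging followed by thresholding stands in for the fusion step.
    """
    h, w = len(fine), len(fine[0])
    coarse_up = resize_nearest(coarse, h, w)
    return [
        [1 if (f + c) / 2.0 > threshold else 0 for f, c in zip(f_row, c_row)]
        for f_row, c_row in zip(fine, coarse_up)
    ]


# The fine map contains an isolated high response at row 0, column 3.
fine = [
    [0.9, 0.9, 0.1, 0.8],
    [0.9, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
coarse = [
    [0.9, 0.1],
    [0.1, 0.1],
]
fused = fuse_maps(fine, coarse)
```

In this toy case the isolated response in the fine map is not supported by the coarse map, so it falls below the threshold after averaging, while the lesion block in the top-left corner is kept.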

3.4 Loss Function

In our study, there is an annotated mask of breast lesion regions for each training image, which serves as the ground truth for breast lesion segmentation. In addition, we apply a Canny detector Canny (1986) to the annotated mask to obtain a boundary map of breast lesion regions, which serves as the ground truth for boundary detection. Based on the two ground truths, we combine a segmentation loss and a boundary detection loss to compute the total loss function.
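How a boundary ground truth and a combined loss might fit together is sketched below. Every specific choice here is an assumption, not the formulation of this paper: the boundary map is derived with a simple 4-neighbour rule as a stand-in for the Canny detector, both loss terms are taken to be mean binary cross-entropy, and the weight `boundary_weight` is a hypothetical parameter.

```python
import math
from typing import List

Mask = List[List[int]]
Prob = List[List[float]]


def boundary_from_mask(mask: Mask) -> Mask:
    """Mark foreground pixels that touch background (4-neighbourhood).

    Stand-in for an edge detector; pixels outside the image count as background.
    """
    h, w = len(mask), len(mask[0])

    def is_bg(i: int, j: int) -> bool:
        return not (0 <= i < h and 0 <= j < w) or mask[i][j] == 0

    return [
        [
            1 if mask[i][j] and any(
                is_bg(i + di, j + dj) for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1))
            ) else 0
            for j in range(w)
        ]
        for i in range(h)
    ]


def bce(pred: Prob, target: Mask, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between a probability map and a binary map."""
    total, count = 0.0, 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
            total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count


def total_loss(seg_pred: Prob, seg_gt: Mask, bnd_pred: Prob, bnd_gt: Mask,
               boundary_weight: float = 1.0) -> float:
    """Segmentation loss plus weighted boundary loss (assumed form)."""
    return bce(seg_pred, seg_gt) + boundary_weight * bce(bnd_pred, bnd_gt)


# A 5x5 mask with a 3x3 lesion in the centre.
mask = [[1 if 1 <= i <= 3 and 1 <= j <= 3 else 0 for j in range(5)] for i in range(5)]
boundary = boundary_from_mask(mask)

perfect = total_loss([[float(v) for v in r] for r in mask], mask,
                     [[float(v) for v in r] for r in boundary], boundary)
uncertain = total_loss([[0.5] * 5 for _ in range(5)], mask,
                       [[0.5] * 5 for _ in range(5)], boundary)
```

For the 3×3 lesion, the derived boundary is the ring of eight pixels around the centre pixel, and a prediction that matches both ground truths gives a much smaller total loss than an uninformative prediction of 0.5 everywhere.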

3.5 Training and Testing Strategies

Training Parameters

We initialize the parameters of the basic convolutional neural network with a DenseNet-121 Huang et al. (2017) pre-trained on ImageNet, while the other parameters are trained from scratch. The breast ultrasound images in our training dataset are randomly rotated, cropped, and horizontally flipped for data augmentation. We use the Adam optimizer to train the whole framework for 10,000 iterations. The learning rate is initialized as 0.0001 and reduced to 0.00001 after 5,000 iterations. We implement our BGM-Net in Keras and run it on a single GPU with a mini-batch size of 8.
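The learning-rate schedule described above amounts to a single step decay. A minimal sketch, assuming zero-based iteration indices so that iterations 0 to 4,999 use the initial rate (the function and constant names are hypothetical):

```python
TOTAL_ITERATIONS = 10_000   # training length stated in the text
DROP_AFTER = 5_000          # the rate is reduced after this many iterations
INITIAL_LR = 1e-4
REDUCED_LR = 1e-5


def learning_rate(iteration: int) -> float:
    """Return the learning rate for a zero-based training iteration."""
    if not 0 <= iteration < TOTAL_ITERATIONS:
        raise ValueError("iteration outside the training schedule")
    return INITIAL_LR if iteration < DROP_AFTER else REDUCED_LR
```

Such a function could be handed to a scheduler callback of a training framework; how the original implementation wires it into Keras is not stated in the text.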

Inference

We take

4 Experiments

This section conducts extensive experiments, as well as an ablation study, to evaluate the performance of the proposed approach for breast lesion segmentation from ultrasound images.

4.1 Dataset

Two challenging breast ultrasound datasets are utilized for the evaluation. The first dataset (denoted as BUSI; Al-Dhabyani et al., 2020) is from the Baheya Hospital for Early Detection and Treatment of Women's Cancer (Cairo, Egypt). BUSI includes 780 tumor images from 600 patients. We randomly select 661 images as the training dataset and the remaining 119 images serve as the testing dataset. The second dataset (denoted as BUSZPH) includes 632 breast ultrasound images collected from Shenzhen People’s Hospital, where informed consent was obtained from all patients. We randomly select 500 images as the training dataset and the remaining 132 images serve as the testing dataset. The breast lesion regions in all the images are manually segmented by experienced radiologists, and each annotation result is confirmed by three clinicians.
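A random split with the sizes given above can be sketched as follows. The seed, the use of integer indices in place of image files, and the function name `split_dataset` are assumptions for illustration; the text does not state how the random selection was implemented.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[int], n_train: int, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle the items with a fixed seed and split them into training and testing parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Image indices stand in for the actual image files.
busi_train, busi_test = split_dataset(range(780), n_train=661)
buszph_train, buszph_test = split_dataset(range(632), n_train=500)
```

Fixing the seed keeps the split reproducible across runs, which matters when several methods are compared on the same testing images.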

4.2 Evaluation Metric

We adopt five widely used metrics for quantitative comparison, including Dice Similarity Coefficient (Dice), Average Distance between Boundaries (ADB, in pixels), Jaccard, Precision, and Recall. Please refer to Chang et al. (2009), Wang et al. (2018) for more details about these metrics. Dice and Jaccard measure the similarity between the segmentation result and the ground truth. ADB measures the pixel distance between the boundaries of the segmentation result and the ground truth. Precision and Recall compute pixel-wise classification accuracy to evaluate the segmentation result. Overall, a good segmentation result should have a low ADB value and high values for the other four metrics.
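The four overlap-based metrics can be computed from pixel-wise counts of true positives, false positives and false negatives, using their standard definitions. The sketch below assumes binary masks stored as nested lists and returns 0.0 when a denominator is zero, which is a convention chosen here rather than one taken from the text; ADB is not covered.

```python
from typing import Dict, List, Tuple

Mask = List[List[int]]


def confusion_counts(pred: Mask, gt: Mask) -> Tuple[int, int, int]:
    """Count true positives, false positives and false negatives pixel by pixel."""
    tp = fp = fn = 0
    for p_row, g_row in zip(pred, gt):
        for p, g in zip(p_row, g_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
    return tp, fp, fn


def overlap_metrics(pred: Mask, gt: Mask) -> Dict[str, float]:
    """Dice, Jaccard, Precision and Recall for a pair of binary masks."""
    tp, fp, fn = confusion_counts(pred, gt)

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0  # assumed convention for empty masks

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": ratio(tp, tp + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
    }


pred = [[1, 1, 0], [0, 1, 0]]
gt = [[1, 0, 0], [0, 1, 1]]
scores = overlap_metrics(pred, gt)
```

In this example there are two true positives, one false positive and one false negative, so Jaccard is 2/4 and Dice, Precision and Recall are all 2/3.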

4.3 Segmentation Performance

Comparison Methods

We validate the proposed approach by comparing it with five state-of-the-art methods, including U-Net Ronneberger et al. (2015), U-Net++ Zhou et al. (2018), feature pyramid network (FPN) Lin et al. (2017), DeeplabV3+ Chen et al. (2018) and ConvEDNet Lei et al. (2018). For a consistent comparison, we obtain the segmentation results of the five methods using the public code (if available) or our own implementation, tuned for the best result.

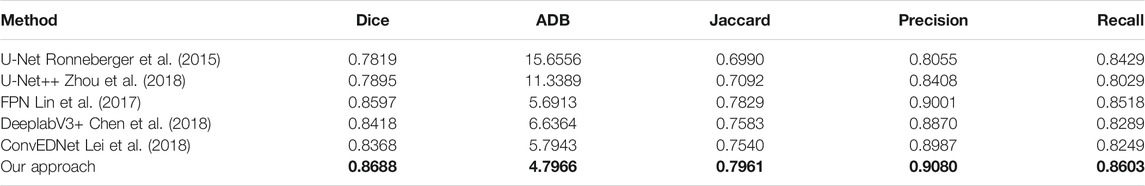

Quantitative Comparison

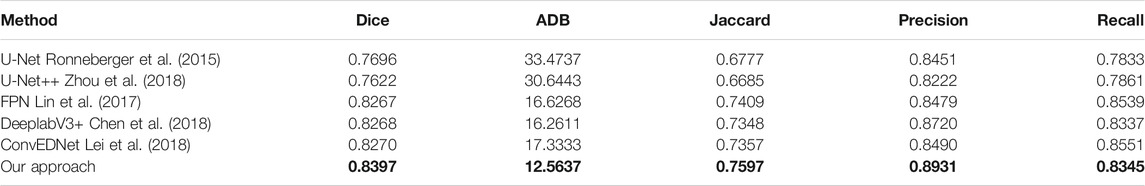

Tables 1, 2 present the measurement results of different segmentation methods on the two datasets, respectively. Our approach achieves higher values on the Dice, Jaccard, Precision and Recall measurements and a lower value on the ADB measurement, demonstrating the high accuracy of the proposed approach for breast lesion segmentation from ultrasound images.

TABLE 1. Measurement results of different segmentation methods on the BUSZPH dataset. Our results are highlighted in bold.

TABLE 2. Measurement results of different segmentation methods on the BUSI dataset. Our results are highlighted in bold.

Visual Comparison

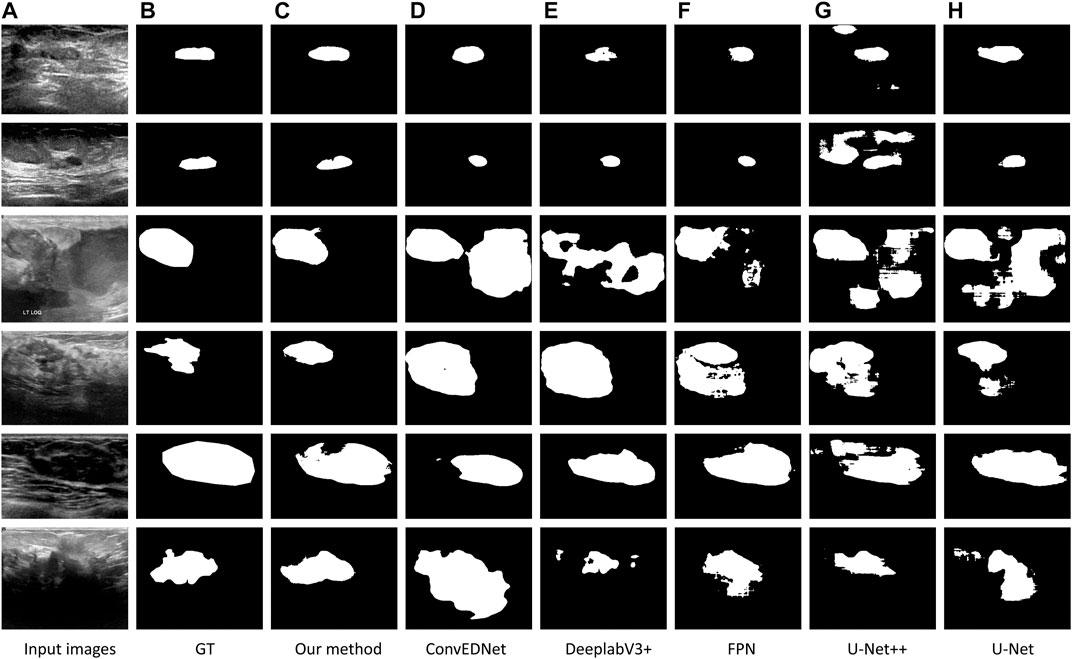

Figure 4 visually compares the segmentation results obtained by our approach and the other five segmentation methods. As shown in the figure, our approach precisely segments the breast lesion regions from ultrasound images despite severe artifacts, while the other methods tend to generate over- or under-segmentation results as they wrongly classify some non-lesion regions or miss parts of lesion regions. In the first and second rows, where high speckle noise is present, our result shows the highest similarity to the ground truth. This is because the boundary detection loss in our loss function explicitly regularizes the boundary shape of the detected regions using the boundary information in the ground truth. In addition, non-lesion regions are largely removed even though there are ambiguous regions with weak boundaries (see the third and fourth rows), since the multiscale scheme in our approach effectively fuses the information from different image scales. Moreover, our approach accurately locates the boundaries of breast lesion regions in inhomogeneous ultrasound images owing to the boundary feature enhancement of the BGFE module (see the fifth and sixth rows). In contrast, segmentation results from the other methods are inferior as these methods have limited capability to cope with strong ultrasound artifacts.

FIGURE 4. Comparison of breast lesion segmentation among different methods. (A) Testing images. (B) Ground truth (denoted as GT). (C–H): Segmentation results obtained by our approach (BGM-Net), ConvEDNet Lei et al. (2018), DeeplabV3+ Chen et al. (2018), FPN Lin et al. (2017), U-Net++ Zhou et al. (2018), and U-Net Ronneberger et al. (2015), respectively. Note that the images in the first three rows are from BUSZPH, while the images in the last three rows are from BUSI.

4.4 Ablation Study

Network Design

We conduct an ablation study to evaluate the key components of the proposed approach. Specifically, three baseline networks are considered and their quantitative results on the two datasets are reported in comparison with our approach. The first baseline network (denoted as “Basic”) removes both the BGFE modules and multiscale scheme from our approach, meaning that both boundary feature enhancement and multiscale fusing are disabled and the proposed approach degrades to the FPN framework. The second baseline network (denoted as “Basic + Multiscale”) removes the BGFE modules from our approach, meaning that boundary feature enhancement is disabled while multiscale fusing is enabled. The third baseline network (denoted as “Basic + BGFE”) removes the multiscale scheme from our approach, meaning that multiscale fusing is disabled while boundary feature enhancement is enabled.

Quantitative Comparison

Tables 3, 4 present the measurement results of different baseline networks on the two datasets, respectively. As shown in the tables, both “Basic + BGFE” and “Basic + Multiscale” perform better than “Basic” by showing higher values on the Dice, Jaccard, Precision and Recall measurements and a lower value on the ADB measurement. This clearly demonstrates the benefits of the BGFE module and the multiscale scheme. In addition, our approach achieves the best result compared with the three baseline networks, which validates the advantage of combining boundary feature enhancement and multiscale fusing in a unified framework.

TABLE 3. Measurement results of different baseline networks on the BUSZPH dataset. Our results are highlighted in bold.

TABLE 4. Measurement results of different baseline networks on the BUSI dataset. Our results are highlighted in bold.

Visual Comparison

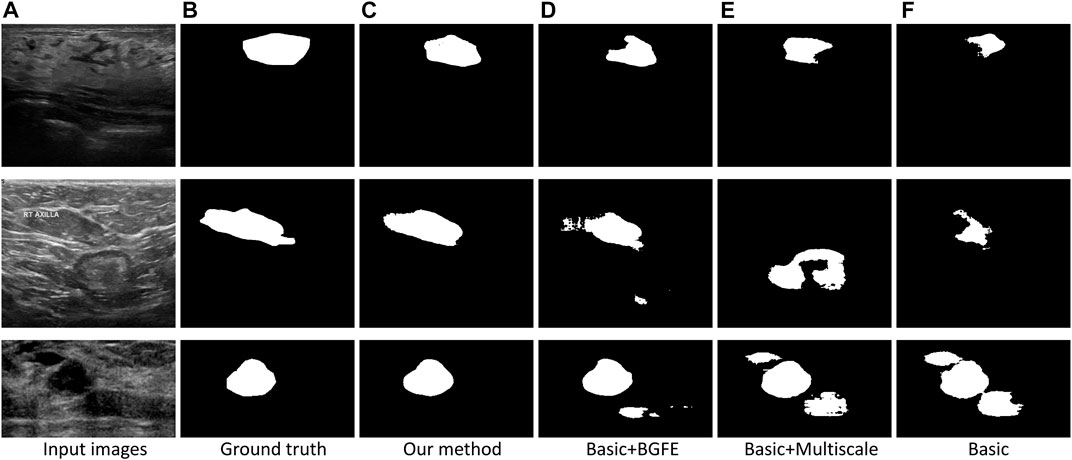

Figure 5 visually compares the segmentation results obtained by our approach and the three baseline networks. Our approach segments breast lesion regions better than the three baseline networks. False detections resulting from speckle noise are observed in the result of “Basic + BGFE”, while “Basic + Multiscale” wrongly classifies a large part of non-lesion regions due to unclear boundaries in ambiguous regions. In contrast, our approach accurately locates the boundaries of breast lesion regions by learning an enhanced boundary map using the BGFE module. Moreover, false detections are effectively removed owing to the multiscale scheme. Thus, our result achieves the highest similarity to the ground truth.

FIGURE 5. Comparison of breast lesion segmentation between our approach (C) and the three baseline networks (D–F) against the ground truth (B).

5 Conclusion

This paper proposes a novel boundary-guided multiscale network to boost the performance of breast lesion segmentation from ultrasound images based on the FPN framework. By combining boundary feature enhancement and multiscale image information into a unified framework, the boundary detection capability of the FPN framework is greatly improved so that weak boundaries in ambiguous regions can be correctly identified. In addition, the segmentation accuracy is notably increased as false detections resulting from strong ultrasound artifacts are effectively removed owing to the multiscale scheme. Experimental results on two challenging breast ultrasound datasets demonstrate the superiority of our approach compared with state-of-the-art methods. However, similar to previous work, our approach relies on labeled data to train the network, which limits its application in scenarios where only unlabeled data are available. Thus, future work will consider the adaptation from labeled data to unlabeled data in order to improve the generalization of the proposed approach.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation. The data presented in the study are deposited in https://sites.google.com/site/indexlzhu/datasets.

Author Contributions

YW, RZ, LZ, and WW designed the convolutional neural network and drafted the paper. SW, XH, and HZ collected the data and pre-processed the original data. HX, GC, and FL prepared the quantitative and qualitative comparisons and revised the paper.

Acknowledgments

This paper was supported by Natural Science Foundation of Shenzhen city (No. JCYJ20190806150001764), Natural Science Foundation of Guangdong province (No. 2020A1515010978), The Sanming Project of Medicine in Shenzhen training project (No. SYJY201802), National Natural Science Foundation of China (No. 61802072), General Research Fund (No. 18601118) of Research Grants Council of Hong Kong SAR, One-off Special Fund from Central and Faculty Fund in Support of Research from 2019/20 to 2021/22 (MIT02/19-20), Research Cluster Fund (RG 78/2019-2020R), Dean's Research Fund 2019/20 (FLASS/DRF/IDS-2) of The Education University of Hong Kong, and the Faculty Research Grant (DB21B6) of Lingnan University, Hong Kong.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Al-Dhabyani, W., Gomaa, M., Khaled, H., and Fahmy, A. (2020). Dataset of Breast Ultrasound Images. Data in Brief 28, 104863.

Ashton, E. A., and Parker, K. J. (1995). Multiple Resolution Bayesian Segmentation of Ultrasound Images. Ultrason. Imaging 17, 291–304. doi:10.1177/016173469501700403

Boukerroui, D., Basset, O., Guérin, N., and Baskurt, A. (1998). Multiresolution Texture Based Adaptive Clustering Algorithm for Breast Lesion Segmentation. Eur. J. Ultrasound 8, 135–144. doi:10.1016/s0929-8266(98)00062-7

Canny, J. (1986). A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. PAMI-8, 679–698. doi:10.1109/tpami.1986.4767851

Chang, H.-H., Zhuang, A. H., Valentino, D. J., and Chu, W.-C. (2009). Performance Measure Characterization for Evaluating Neuroimage Segmentation Algorithms. Neuroimage 47, 122–135. doi:10.1016/j.neuroimage.2009.03.068

Chang, R.-F., Wu, W.-J., Moon, W. K., Chen, W.-M., Lee, W., and Chen, D.-R. (2003). Segmentation of Breast Tumor in Three-Dimensional Ultrasound Images Using Three-Dimensional Discrete Active Contour Model. Ultrasound Med. Biol. 29, 1571–1581. doi:10.1016/s0301-5629(03)00992-x

Chen, C.-M., Lu, H. H.-S., and Huang, Y.-S. (2002). Cell-based Dual Snake Model: a New Approach to Extracting Highly Winding Boundaries in the Ultrasound Images. Ultrasound Med. Biol. 28, 1061–1073. doi:10.1016/s0301-5629(02)00531-8

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., and Adam, H. (2018). “Encoder-decoder with Atrous Separable Convolution for Semantic Image Segmentation,” in ECCV (Springer), 801–818.

Chiang, H.-H., Cheng, J.-Z., Hung, P.-K., Liu, C.-Y., Chung, C.-H., and Chen, C.-M. (2010). “Cell-based Graph Cut for Segmentation of 2d/3d Sonographic Breast Images,” in ISBI (IEEE), 177–180.

Gao, L., Liu, X., and Chen, W. (2012). Phase-and Gvf-Based Level Set Segmentation of Ultrasonic Breast Tumors. J. Appl. Math. 2012. doi:10.1155/2012/810805

Gómez-Flores, W., and Ruiz-Ortega, B. A. (2016). New Fully Automated Method for Segmentation of Breast Lesions on Ultrasound Based on Texture Analysis. Ultrasound Med. Biol. 42, 1637–1650. doi:10.1016/j.ultrasmedbio.2016.02.016

Hu, Y., Guo, Y., Wang, Y., Yu, J., Li, J., Zhou, S., et al. (2019). Automatic Tumor Segmentation in Breast Ultrasound Images Using a Dilated Fully Convolutional Network Combined with an Active Contour Model. Med. Phys. 46, 215–228. doi:10.1002/mp.13268

Huang, G., Liu, Z., Van Der Maaten, L., and Weinberger, K. Q. (2017). “Densely Connected Convolutional Networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4700–4708.

Huang, S.-F., Chen, Y.-C., and Moon, W. K. (2008). “Neural Network Analysis Applied to Tumor Segmentation on 3d Breast Ultrasound Images,” in IEEE International Symposium on Biomedical Imaging: From Nano to Macro, 1303–1306.

Jiang, P., Peng, J., Zhang, G., Cheng, E., Megalooikonomou, V., and Ling, H. (2012). “Learning-based Automatic Breast Tumor Detection and Segmentation in Ultrasound Images,” in ISBI (IEEE), 1587–1590.

Kwak, J. I., Kim, S. H., and Kim, N. C. (2005). “Rd-based Seeded Region Growing for Extraction of Breast Tumor in an Ultrasound Volume,” in International Conference on Computational and Information Science (Springer), 799–808. doi:10.1007/11596448_118

Lei, B., Huang, S., Li, R., Bian, C., Li, H., Chou, Y.-H., et al. (2018). Segmentation of Breast Anatomy for Automated Whole Breast Ultrasound Images with Boundary Regularized Convolutional Encoder-Decoder Network. Neurocomputing 321, 178–186. doi:10.1016/j.neucom.2018.09.043

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., and Belongie, S. (2017). “Feature Pyramid Networks for Object Detection,” in CVPR, 2117–2125.

Liu, B., Cheng, H. D., Huang, J., Tian, J., Tang, X., and Liu, J. (2010). Fully Automatic and Segmentation-Robust Classification of Breast Tumors Based on Local Texture Analysis of Ultrasound Images. Pattern Recognition 43, 280–298. doi:10.1016/j.patcog.2009.06.002

Lo, C., Shen, Y.-W., Huang, C.-S., and Chang, R.-F. (2014). Computer-aided Multiview Tumor Detection for Automated Whole Breast Ultrasound. Ultrason. Imaging 36, 3–17. doi:10.1177/0161734613507240

Madabhushi, A., and Metaxas, D. (2002). “Automatic Boundary Extraction of Ultrasonic Breast Lesions,” in Proceedings IEEE International Symposium on Biomedical Imaging, 601–604.

Madabhushi, A., and Metaxas, D. N. (2003). Combining Low-, High-Level and Empirical Domain Knowledge for Automated Segmentation of Ultrasonic Breast Lesions. IEEE Trans. Med. Imaging 22, 155–169. doi:10.1109/tmi.2002.808364

Moon, W. K., Lo, C.-M., Chen, R.-T., Shen, Y.-W., Chang, J. M., Huang, C.-S., et al. (2014). Tumor Detection in Automated Breast Ultrasound Images Using Quantitative Tissue Clustering. Med. Phys. 41, 042901. doi:10.1118/1.4869264

Othman, A. A., and Tizhoosh, H. R. (2011). “Segmentation of Breast Ultrasound Images Using Neural Networks,” in Engineering Applications of Neural Networks (Springer), 260–269. doi:10.1007/978-3-642-23957-1_30

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-net: Convolutional Networks for Biomedical Image Segmentation,” in International Conference on Medical Image Computing and Computer Assisted Interventions (MICCAI) (Springer), 234–241. doi:10.1007/978-3-319-24574-4_28

Shan, J., Cheng, H.-D., and Wang, Y. (2008). “A Novel Automatic Seed point Selection Algorithm for Breast Ultrasound Images,” in International Conference on Pattern Recognition, 1–4.

Shan, J., Cheng, H. D., and Wang, Y. (2012). Completely Automated Segmentation Approach for Breast Ultrasound Images Using Multiple-Domain Features. Ultrasound Med. Biol. 38, 262–275. doi:10.1016/j.ultrasmedbio.2011.10.022

Siegel, R. L., Miller, K. D., and Jemal, A. (2017). Cancer Statistics, 2017. CA: A Cancer J. Clinicians 67, 7–30. doi:10.3322/caac.21387

Stavros, A. T., Thickman, D., Rapp, C. L., Dennis, M. A., Parker, S. H., and Sisney, G. A. (1995). Solid Breast Nodules: Use of Sonography to Distinguish between Benign and Malignant Lesions. Radiology 196, 123–134. doi:10.1148/radiology.196.1.7784555

Wang, Y., Deng, Z., Hu, X., Zhu, L., Yang, X., Xu, X., et al. (2018). “Deep Attentional Features for Prostate Segmentation in Ultrasound,” in MICCAI (Springer), 523–530. doi:10.1007/978-3-030-00937-3_60

Xian, M., Zhang, Y., Cheng, H.-D., Xu, F., Huang, K., Zhang, B., et al. (2018). A Benchmark for Breast Ultrasound Image Segmentation (BUSIS). arXiv:1801.03182.

Xian, M., Zhang, Y., and Cheng, H. D. (2015). Fully Automatic Segmentation of Breast Ultrasound Images Based on Breast Characteristics in Space and Frequency Domains. Pattern Recognition 48, 485–497. doi:10.1016/j.patcog.2014.07.026

Xu, Y., Wang, Y., Yuan, J., Cheng, Q., Wang, X., and Carson, P. L. (2019). Medical Breast Ultrasound Image Segmentation by Machine Learning. Ultrasonics 91, 1–9. doi:10.1016/j.ultras.2018.07.006

Yap, M. H., Pons, G., Martí, J., Ganau, S., Sentís, M., Zwiggelaar, R., et al. (2018). Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 22, 1218–1226. doi:10.1109/JBHI.2017.2731873

Yezzi, A., Kichenassamy, S., Kumar, A., Olver, P., and Tannenbaum, A. (1997). A Geometric Snake Model for Segmentation of Medical Imagery. IEEE Trans. Med. Imaging 16, 199–209. doi:10.1109/42.563665

Keywords: breast lesion segmentation, boundary-guided feature enhancement, multiscale image analysis, ultrasound image segmentation, deep learning

Citation: Wu Y, Zhang R, Zhu L, Wang W, Wang S, Xie H, Cheng G, Wang FL, He X and Zhang H (2021) BGM-Net: Boundary-Guided Multiscale Network for Breast Lesion Segmentation in Ultrasound. Front. Mol. Biosci. 8:698334. doi: 10.3389/fmolb.2021.698334

Received: 21 April 2021; Accepted: 14 June 2021;

Published: 19 July 2021.

Edited by:

William C. Cho, Queen Elizabeth Hospital, China

Reviewed by:

Nawab Ali, University of Arkansas at Little Rock, United States

Kenneth S. Hettie, Stanford University, United States

Copyright © 2021 Wu, Zhang, Zhu, Wang, Wang, Xie, Cheng, Wang, He and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xingxiang He, hexingxiang@gdpu.edu.cn; Hai Zhang, szzhhans.scc.jnu@foxmail.com

†These authors share first authorship

ORCID: Hai Zhang, orcid.org/0000-0002-9018-1858

Yunzhu Wu1†, Lei Zhu, Haoran Xie, Xingxiang He