ORIGINAL RESEARCH article

Front. Med., 12 March 2025

Sec. Pathology

Volume 12 - 2025 | https://doi.org/10.3389/fmed.2025.1550512

Zhaochen Liu1,2†, Peiyan Wang1,2†, Nian Deng3, Hui Zhang1,2, Fangjie Xin4, Xiaofei Yu1,2, Mujie Yuan1,2, Qiyue Yu5, Yuhao Tang5, Keke Dou2, Jie Zhao2, Bing He4*, Jing Deng1,6*

This study aimed to evaluate the feasibility of applying deep learning combined with a super-resolution scanner for the digital scanning and diagnosis of oral epithelial dysplasia (OED) slides. A model of a super-resolution digital slide scanning system based on deep learning was built and trained using 40 pathological slides of oral epithelial tissue. Two hundred slides with definite OED diagnoses were scanned into digital slides by the DS30R and Nikon scanners, and the scanner parameters were obtained for comparison. With diagnosis under an optical microscope taken as the gold standard, the sensitivity and specificity of OED pathological feature recognition by the same pathologist when reading images from the different scanners were evaluated. Furthermore, the consistency of whole-slide diagnoses obtained by pathologists using the different digital scanning imaging systems was assessed. Together, these comparisons were used to evaluate the feasibility of the deep-learning-based super-resolution digital slide scanning system for the pathological diagnosis of OED. The DS30R scanner processes an entire slide in a single layer within 0.25 min, occupying 0.35 GB of storage. In contrast, the Nikon scanner requires 15 min for scanning and uses 0.5 GB of storage. Following model training, the system enhanced the imaging clarity of pathological sections of oral epithelial tissue. Both the DS30R and Nikon scanners demonstrated high sensitivity and specificity for detecting structural features in OED pathological images; however, the DS30R performed better at identifying certain cellular features. The agreement in whole-section diagnostic conclusions reached by the same pathologist using different imaging systems was exceptionally high, with kappa values of 0.969 for DS30R-optical microscope and 0.979 for DS30R-Nikon-optical microscope. The deep-learning-based super-resolution microscopic imaging system thus showed improved performance. It preserves the diagnostic information of OED and addresses the shortcomings of existing digital scanners, such as slow imaging speed, large data volumes, and difficulty in rapid transmission and sharing. These high-quality super-resolution images lay a solid foundation for the future popularization of artificial intelligence (AI) technology and will aid AI in the accurate diagnosis of oral potential malignant diseases.

Oral potential malignant diseases (OPMDs) are a general term for a class of diseases with the potential for malignant transformation occurring in the oral mucosal membrane, including oral leukoplakia, oral erythema, oral submucous fibrosis, etc. Current research shows that the overall malignant transformation rate of OPMDs is between 2.6 and 7.9% (1–5). Once OPMDs become malignant into oral squamous cell carcinoma, the patient’s 5-year survival rate and quality of life are significantly reduced. Pathological diagnosis often directly guides clinical intervention measures in the management of OPMDs patients. Oral epithelial dysplasia (OED) is the primary predictor of malignant transformation risk. The gold standard for tumor discrimination and grading is histopathological examination (6, 7). However, due to the diversity of pathological manifestations of OED and the subjective differences among pathologists, the diagnostic consistency for OED grading is low (8–10). Considering these issues in the pathological diagnosis of OED, numerous studies have indicated that consulting different pathologists on challenging cases can enhance the accuracy of the diagnosis (11, 12). Furthermore, with the advancement of computer technology, AI-assisted diagnosis has emerged as a prominent research focus in the pathological diagnosis of OED. Adeoye et al. (13) developed an OED malignant transformation prediction system that integrates clinical photographs of oral leukoplakia with deep learning algorithms, which achieved favorable calibration and discrimination. However, researchers have argued that this system cannot replace conventional biopsy for oral leukoplakia assessment. Consequently, recent investigations have explored the integration of digital pathology techniques, advanced imaging technologies, and deep learning methodologies to achieve precise diagnosis of OED (14).

Digital pathology technology utilizes advanced digital scanners to capture slices, transforming slice information into digital data that can be stored, analyzed, and shared. This advancement significantly enhances the efficiency of remote consultations between hospitals and establishes the groundwork for using AI technology in OED diagnosis (15, 16). The digital scanning of slices is an important aspect of the application of digital pathology technology. However, traditional scanning technologies encounter several problems. When a high-magnification objective lens is used to scan clear, high-resolution images, the scanning efficiency, equipment stability, and single-scanning success rate of traditional scanning systems must be improved. In addition, the large storage capacity of digital images and the high cost of scanning equipment limit the use of digital slice scanning and remote diagnosis in primary medical institutions and pose a great burden to individuals or small and medium-sized scientific research teams. To meet these challenges and make the obtained higher-definition cell microstructure images easy for clinical application, many optical super-resolution technologies have been developed, such as structured illumination microscopy, photoactivated localization microscopy (17), and sub-diffraction-limit imaging using the stochastic optical reconstruction microscopy technique (18). Single-image super-resolution (SISR) technology, an image-transformation method, reconstructs high-resolution (HR) images from degraded low-resolution (LR) images (19). Recently, SISR technology, enhanced by deep learning, has been extensively utilized by researchers in digital pathology and clinically verified. This technology has been applied to the pathological diagnosis of leiomyosarcoma of the ovary and uterus. It was confirmed that both whole and local features were preserved during the reconstruction of high-resolution images using deep learning. 
Additionally, 20× whole slide images (WSIs) were converted into 40× WSIs (20). By maintaining image clarity, super-resolution scanning technology significantly accelerates scanning speeds compared to previous technologies, facilitating the widespread adoption and application of digital pathology in clinical settings (21). In this study, we integrated a digital pathological scanner with super-resolution technology and developed a digital slice optical scanner using an array objective lens (DS30R DAKEWE). We utilized images of OED slides as training data for deep learning. By combining it with SISR technology, the OED slide imaging algorithm was established. Given the pathological characteristics of oral tissues, this integration enables clearer and more detailed microscopic imaging, enhancing the accuracy of observations of dense cells in oral epithelial tissues and their nuclear morphological features.

This is a cross-sectional diagnostic study. The dataset included 240 hematoxylin and eosin (H&E)-stained WSIs of OPMDs with biopsy-proven dysplasia and clinical presentation as homogeneous and non-homogeneous leukoplakias at one or more sites, diagnosed between 2018 and 2023. This study adhered to the Declaration of Helsinki and received approval from the ethics committee of The Affiliated Hospital of Qingdao University (registration number: QDFYWZLL29058). All patients were required to have at least 1 year of follow-up, with comprehensive photography and documentation. Patients with oral lichen planus were excluded, while those presenting with simple hyperplasia and mild, moderate, or severe dysplasia/carcinoma in situ were included (7). All pathological sections had previously passed quality control checks. The fixation time for the samples was uniformly maintained between 6 and 24 h, and the thickness of all sections was consistently within the range of 3–5 μm. The slides were scanned and stored using a DS30R scanner and a Nikon scanner. Of these slides, 40 were used for super-resolution model training, and 200 were used for diagnosis and verification. The proposed super-resolution deep learning model is deployed locally on the DS30R product, without connecting to the Internet or being accessible from the Internet. The pathology slides used for training and evaluation were sourced from the Affiliated Hospital of Qingdao University. The digital images are stored in private storage at the Affiliated Hospital of Qingdao University. The storage and use of digital images are conducted in an environment with data network security and under the authorization of relevant regulations. The super-resolution deep learning model and software system in the DS30R comply with the standards of IEC/TR 80001-2-2 and ISO/IEC 81001-5-1, as well as the YY/T 1843-2022 standard.

We used SISR technology, which maps an LR image to an HR image, to realize super-resolution imaging. Built on the SISR framework, the model used multiscale fully convolutional networks and conditional generative adversarial networks. With a stochastic curriculum learning training strategy, the complexity of the data pairs was gradually increased during training to push the model to capture more complex image details, thus achieving imaging clarity equivalent to that of a 20× NA0.8 objective lens.

Forty oral tissue slides were scanned using a DS30R scanner equipped with a 20× array objective lens to generate low-resolution WSIs. Comparative high-resolution acquisitions were performed through a Nikon scanner under dual configurations: 20× NA0.4 optical parameters (designated HR01) and 20× NA0.8 specifications (designated HR02). The resultant dataset comprising 150,000 LR-HR01-HR02 triplets was partitioned through stratified randomization into training (n = 120,000), testing (n = 15,000), and validation (n = 15,000) subsets, maintaining an 8:1:1 allocation ratio.
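The 8:1:1 partition described above can be illustrated with a minimal sketch. It assumes a plain seeded shuffle over triplet indices; the stratification variable used in the study is not stated, so stratification is not reproduced here, and the function name `split_triplets` and the seed are hypothetical.

```python
import random

def split_triplets(n_items, ratios=(8, 1, 1), seed=0):
    """Shuffle item indices and cut them into train/test/validation lists.

    Assumption: a simple random shuffle stands in for the stratified
    randomization mentioned in the text.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    total = sum(ratios)
    n_train = n_items * ratios[0] // total
    n_test = n_items * ratios[1] // total
    train = indices[:n_train]
    test = indices[n_train:n_train + n_test]
    validation = indices[n_train + n_test:]  # remainder goes to validation
    return train, test, validation

train, test, validation = split_triplets(150_000)
print(len(train), len(test), len(validation))  # 120000 15000 15000
```

Each index would refer to one LR-HR01-HR02 triplet, so the three images of a field of view always land in the same subset.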

HR01 images, acquired through the 20× NA0.4 objective lens, served as the ground-truth reference for establishing baseline optical parameters. These were paired with array-derived LR images to computationally model a point spread function (PSF) and imaging resolution parameters equivalent to conventional optical microscopy. Subsequent super-resolution learning employed stochastic curriculum learning (SCL) with progressive difficulty escalation, wherein HR02 images (20× NA0.8 acquisitions) were integrated as advanced training targets to enhance imaging fidelity.

Two distinct training paradigms were implemented:

Dataset A: HR01 (20× NA0.4) ↔ LR (array-based) mapping.

Dataset B: HR02 (20× NA0.8) ↔ LR (array-based) mapping.

Critical implementation consideration: Spatial registration between Datasets A and B was rigorously maintained through field-of-view alignment, effectively mitigating catastrophic forgetting during incremental model training. The flow chart of the collection and preparation of super-resolution image data sets is shown in Figure 1.

Figure 1. Preparation process of super-resolution data set. Image data were acquired from H&E-stained oral pathology slides using three objectives: (i) DAKEWE DS30R array objective 20×, (ii) NIKON CFI Plan Apochromat 20× NA0.8, and (iii) NIKON CFI Plan Achromat 20× NA0.4. Step 1 Cross-high-resolution registration: Scale-Invariant Feature Transform (SIFT) feature matching achieved subpixel alignment between HR01 and HR02 (error < 1px), with adaptive color normalization to minimize brightness differences (Δ grayscale ≤ ±2). Step 2 Cross-scale precise registration: A novel combination of Template Matching and SIFT algorithms aligned low-resolution WSI to HR02 (error < 1px), followed by adaptive color normalization (Δ grayscale ≤ ±2). Step 3 Dataset creation: Cropped images to 256 × 256 pixels, generating two datasets: A (LR-HR01 pairs) and B (LR-HR02 pairs).

In the OED slide dataset, the scaling factor between the LR and HR images is 6, and SISR with this and other large scaling factors presents significant challenges. Consequently, adopting a curriculum learning mechanism proves advantageous for training the SISR model to handle large scaling factors. Initially, the model is trained using simple samples, and progressively more complex samples are incorporated into the training set. This strategy enhances the model's ability to restore low-resolution images to high-resolution ones. Using the curriculum learning mechanism, we therefore divided model training into two distinct stages:

Stage 1: Training begins on an untrained model using Dataset-A, with super-resolution training aimed at matching the imaging clarity of 20× NA0.4.

Stage 2: Building on the model trained in the first stage, further super-resolution training is conducted using Dataset-B, aiming to achieve the imaging clarity of 20× NA0.8 and meet diagnostic definition requirements.
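The control flow of this two-stage schedule can be sketched as follows. This is only an outline under stated assumptions: `Stage`, `run_curriculum`, and `toy_train_stage` are hypothetical names, and the toy training function merely records which dataset was used instead of updating a real network.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str
    dataset: str  # which LR-HR pairing the stage trains on
    target: str   # optical quality the stage aims to match

STAGES = [
    Stage("Stage 1", "Dataset-A (LR-HR01)", "20x NA0.4"),
    Stage("Stage 2", "Dataset-B (LR-HR02)", "20x NA0.8"),
]

def run_curriculum(stages, train_stage, initial_state=None):
    """Train stage by stage; each stage starts from the previous result."""
    state = initial_state  # None stands for an untrained model
    for stage in stages:
        state = train_stage(state, stage)
    return state

def toy_train_stage(state, stage):
    """Placeholder for real training: append the dataset name to a history."""
    history = [] if state is None else list(state)
    history.append(stage.dataset)
    return history

final_state = run_curriculum(STAGES, toy_train_stage)
print(final_state)  # ['Dataset-A (LR-HR01)', 'Dataset-B (LR-HR02)']
```

The point of the structure is that Stage 2 receives the output of Stage 1 rather than a fresh model, which mirrors the easier-to-harder progression described in the text.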

The OED pathological tissue samples from our slide database were randomly selected based on their positions within the epithelium. Images in the same field of vision were captured using a DS30R scanner, Nikon scanner, and optical microscope, comprising 200 low magnification and 200 high magnification images. The images were categorized into groups A (DS30R 10×), B (Nikon 10×), C (optical microscope 10×), D (DS30R 20×), E (Nikon 20×), and F (optical microscope 20×). Groups A, B, and C focused on identifying structural features, while groups D, E, and F targeted cellular features. Upon acquiring the images, a senior oral pathologist randomly reviewed and annotated each group based on the pathological features defined in the WHO classification standard (2022) for OED (22). The results from the optical microscope groups (C and F) served as the gold standard. Subsequently, the sensitivity and specificity of feature recognition in groups A, B, D, and E were calculated. The calculation formulas for sensitivity and specificity are as follows:

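$$\text{Sensitivity} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}}$$

$$\text{Specificity} = \frac{\text{True Negatives}}{\text{True Negatives} + \text{False Positives}}$$

These metrics represent fundamental statistical parameters, where:

True Positives = number of features present under the gold standard that were correctly identified as present.

False Negatives = number of features present under the gold standard that were incorrectly identified as absent.

True Negatives = number of features absent under the gold standard that were correctly identified as absent.

False Positives = number of features absent under the gold standard that were incorrectly identified as present.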
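As a worked illustration of these definitions, the short sketch below counts the four outcomes from paired annotations and derives both metrics. The function name and the example annotations are hypothetical and are not data from this study; the gold-standard list plays the role of the optical microscope reading.

```python
def sensitivity_specificity(gold, observed):
    """Return (sensitivity, specificity) for paired boolean annotations.

    gold[i] is the gold-standard call for feature i (True = present),
    observed[i] is the call made on the scanner image.
    A metric is None when its denominator is zero.
    """
    if len(gold) != len(observed):
        raise ValueError("annotation lists must have the same length")
    tp = sum(1 for g, o in zip(gold, observed) if g and o)
    fn = sum(1 for g, o in zip(gold, observed) if g and not o)
    tn = sum(1 for g, o in zip(gold, observed) if not g and not o)
    fp = sum(1 for g, o in zip(gold, observed) if not g and o)
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity

# Hypothetical example: 8 image fields scored for one feature.
gold = [True, True, True, False, False, False, False, True]
scanner = [True, True, False, False, False, True, False, True]
print(sensitivity_specificity(gold, scanner))  # (0.75, 0.75)
```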

A senior oral pathologist successively read the whole sections using a DS30R scanner, a Nikon scanner, and an optical microscope. The order of the sections was randomized. The pathologist reached a diagnostic conclusion for each slide and recorded it. All slide samples were grouped according to the original diagnostic conclusion, and the agreement rate between the pathologist's diagnostic conclusions in each group and the original diagnostic conclusion was calculated. The formula used is as follows:

$$\text{Agreement rate} = \frac{\text{number of slides in the group whose diagnosis matched the original diagnosis}}{\text{total number of slides in the group}} \times 100\%$$

The consistency of the diagnoses made using the three instruments was compared pairwise (Nikon-optical microscope, DS30R-optical microscope, and Nikon-DS30R) and across all three instruments treated as three evaluators (23, 24). Consistency was assessed using the kappa test.

The collected data were analyzed using the Statistical Package for the Social Sciences (SPSS) for Windows, version 22.0. The weighted kappa test was used to assess consistency between two methods, and Fleiss' kappa test was used to assess consistency among three. A kappa coefficient greater than 0 indicates meaningful consistency, and a larger coefficient denotes better consistency. A kappa coefficient of 0.00–0.20 indicates low consistency, 0.21–0.40 indicates general consistency, 0.41–0.60 indicates moderate consistency, 0.61–0.80 indicates high consistency, and 0.81–1.00 indicates very high consistency.
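For readers who want to reproduce these statistics outside SPSS, the following is a minimal pure-Python sketch of both coefficients and of the interpretation bands listed above. It assumes linear disagreement weights for the weighted kappa (the text does not say whether linear or quadratic weights were used), ordinal categories coded as integers from 0, and hypothetical function names; the example ratings are invented.

```python
def weighted_kappa(ratings_a, ratings_b, n_categories):
    """Cohen's weighted kappa with linear weights (an assumption)."""
    n = len(ratings_a)
    if n == 0 or n != len(ratings_b):
        raise ValueError("need two non-empty rating lists of equal length")
    k = n_categories
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(ratings_a, ratings_b):
        observed[a][b] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    disagree_obs = disagree_exp = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # 0 on the diagonal, 1 at the extremes
            disagree_obs += weight * observed[i][j]
            disagree_exp += weight * row[i] * col[j]
    # Undefined (division by zero) if every rating falls in one category.
    return 1.0 - disagree_obs / disagree_exp

def fleiss_kappa(table):
    """Fleiss' kappa; table[i][j] = raters assigning subject i to category j."""
    n_subjects = len(table)
    n_raters = sum(table[0])
    n_cats = len(table[0])
    p_cat = [sum(r[j] for r in table) / (n_subjects * n_raters) for j in range(n_cats)]
    p_subj = [(sum(c * c for c in r) - n_raters) / (n_raters * (n_raters - 1)) for r in table]
    p_bar = sum(p_subj) / n_subjects
    p_exp = sum(p * p for p in p_cat)
    return (p_bar - p_exp) / (1.0 - p_exp)

def interpret_kappa(kappa):
    """Map a coefficient to the consistency bands given in the text."""
    if kappa <= 0:
        return "no meaningful consistency"
    for upper, label in ((0.20, "low"), (0.40, "general"), (0.60, "moderate"), (0.80, "high")):
        if kappa <= upper:
            return label
    return "very high"

# Hypothetical grades (0 = mild, 1 = moderate, 2 = severe) from two methods.
method_1 = [0, 1, 2, 0, 1, 2]
method_2 = [0, 1, 2, 0, 1, 1]
print(round(weighted_kappa(method_1, method_2, 3), 3))  # 0.8
print(interpret_kappa(0.969))  # very high
```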

When preparing the super-resolution dataset, we used the DS30R and Nikon scanners to scan the same slice data and save them as digital data. The operating parameters of the two scanning devices are listed in Table 1.

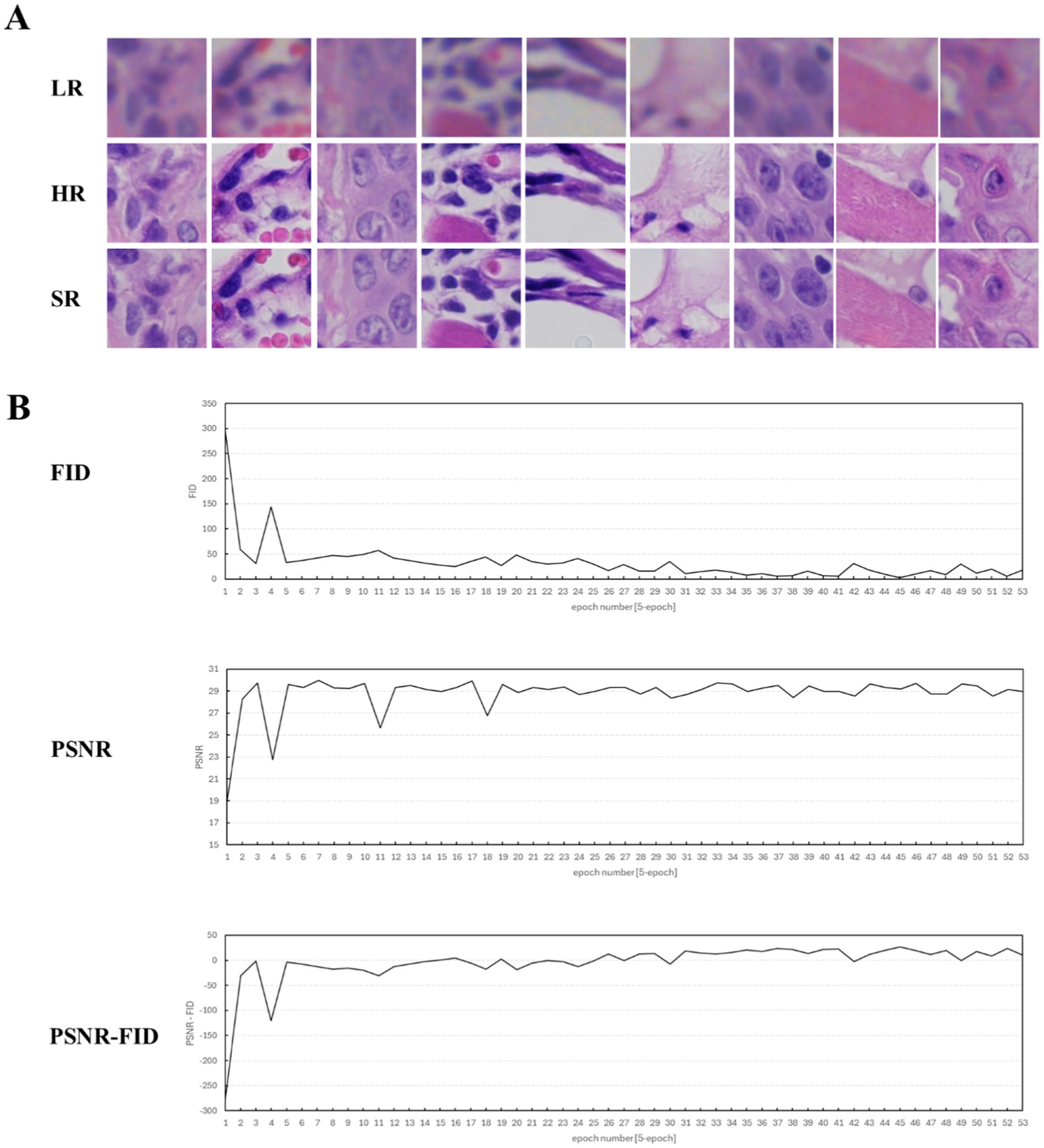

The DS30R scanner can automatically focus the slide and scan it in single or multiple layers through parallel array scanning. It requires 0.25 min to scan a slide in a single layer, with a data storage volume of 0.35 GB for the entire slide. The Nikon scanner, which uses area scanning, took 15 min per slide, with a storage volume of 0.5 GB. In the deep learning framework, the critical hyperparameters were configured as follows: the model underwent 900 training epochs with a generator learning rate of 1 × 10⁻⁴ and a discriminator learning rate of 1 × 10⁻⁵, while the adversarial loss coefficient was maintained at 0.9 throughout the training process. We employed two metrics, the peak signal-to-noise ratio (PSNR) and the Fréchet inception distance (FID), to assess the model's performance and produce a super-resolution image, as depicted in Figure 2. Throughout the training, we examined the image data and determined that the PSNR primarily assessed image clarity, whereas the FID gauged image fidelity. In the SISR model training, these two metrics produced divergent outcomes; the peak PSNR did not guarantee the lowest FID. This divergence arises because the PSNR measures only image clarity and not fidelity. An overly high PSNR may indicate that an image is either too smooth or distorted, leading to a feature distribution that does not align with the actual image, thereby elevating the FID. To obtain a comprehensive evaluation index that considers both definition and fidelity, we introduced the PSNR-FID difference. A greater difference suggests that the model output is more balanced in terms of definition and fidelity, offering a more thorough evaluation of the model's performance. Pivotal images from the SISR model training are illustrated in Figure 2A. After super-resolution training, the images became clearer, and intracellular granularity was enhanced.
As shown in Figure 2B, the deep learning model underwent progressive optimization (manifested by a reduction in the FID metric) and enhanced processing performance (demonstrated by an elevation in the PSNR values) during training, ultimately stabilizing at equilibrium. The maximal discrepancy between the PSNR and FID metrics served as the determinant for optimal training termination, thereby achieving an optimal equilibrium between the model optimization (fidelity preservation) and processing efficacy (output clarity). The full dataset is available in the Supplementary material (Sections S1–S3).
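The stopping rule described above can be sketched in a few lines. The PSNR formula is the standard one for 8-bit images; the FID values are taken as precomputed inputs because computing FID requires an Inception network. The function names and the per-epoch numbers are hypothetical and are not the training curves of this study.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("pixel lists must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

def select_epoch(psnr_by_epoch, fid_by_epoch):
    """Return the 1-based epoch with the largest PSNR minus FID difference."""
    differences = [p - f for p, f in zip(psnr_by_epoch, fid_by_epoch)]
    return differences.index(max(differences)) + 1

# Hypothetical per-epoch values: PSNR rises, FID falls and then creeps up.
psnr_curve = [20.0, 25.0, 27.0, 28.0]
fid_curve = [30.0, 12.0, 9.0, 11.0]
print(select_epoch(psnr_curve, fid_curve))  # 3
```

In this toy example the highest PSNR occurs at epoch 4, yet epoch 3 is selected because its FID is lower, which is the kind of divergence between the two metrics that the text describes. PSNR is expressed in dB and FID is unitless, so the difference is best read as a heuristic index.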

Figure 2. Display of training process data and key image results (epoch = 1–165). (A) Key H&E-stained image results of the training. LR, the original image captured by the DS30R array objective lens. HR, the image captured by the microscope using a Nikon 20× NA0.8 objective lens. SR, the super-resolution image obtained by processing the LR image with the super-resolution deep learning model. (B) Evaluation curves of the training results during super-resolution model training. The evaluation metrics were FID, PSNR, and the difference between FID and PSNR.

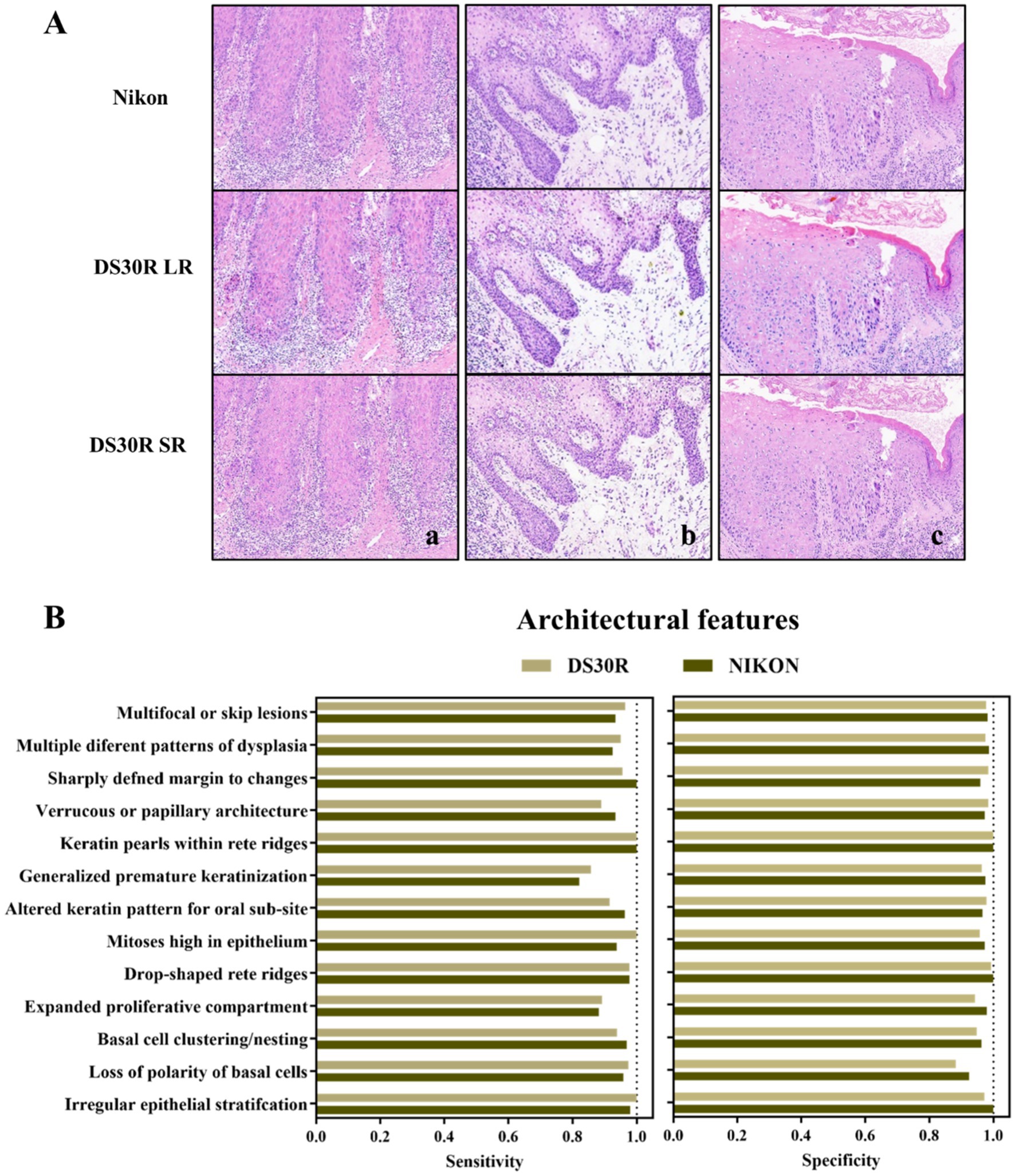

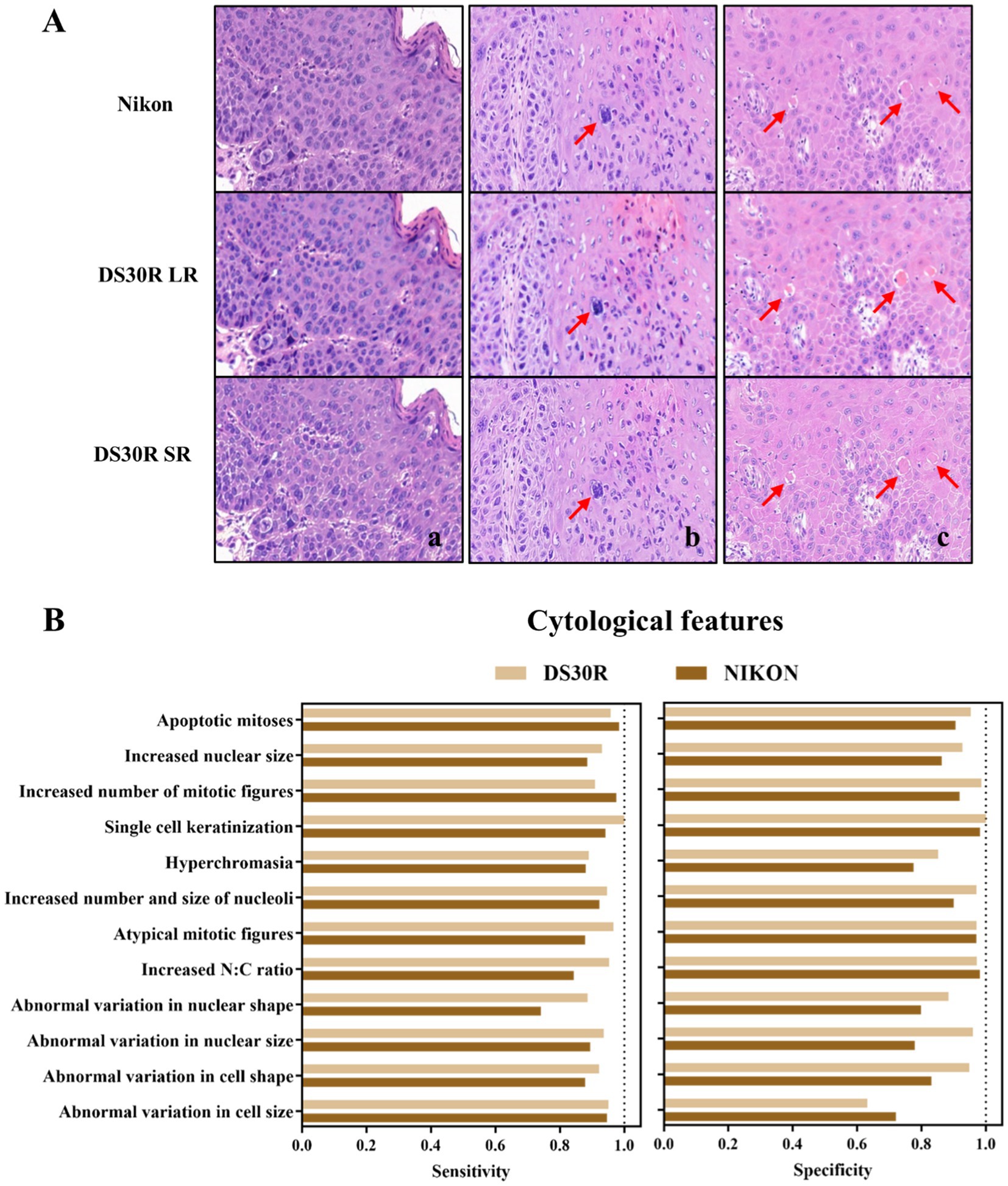

Oral pathologists examined the characteristic pathological images of each group and identified the information they contained. As shown in Figure 3A, the histopathological analysis of OED lesions revealed characteristic architectural disturbances. Figure 3Aa shows the disorganization of epithelial stratification accompanied by the loss of basal cell polarity and irregular cellular alignment. Figure 3Ab illustrates the well-defined epithelial drop-shaped rete ridges, which are a hallmark of early dysplastic progression. Figure 3Ac shows abrupt transition zones between orthokeratotic and parakeratotic keratinization patterns, indicative of advanced epithelial maturation abnormalities. Notably, these diagnostic morphological signatures were preserved with high fidelity following computational enhancement using the DS30R super-resolution imaging system. Moreover, the color information of the H&E-stained slices was closer to the actual values. In the structural feature group, as illustrated in Figure 3, the sensitivity and specificity of the feature recognition were high, particularly when the specificity exceeded 95%. The sensitivity and specificity of image recognition using the DS30R and Nikon scanners closely matched. Detailed data are presented in Supplementary Table S1. Figure 4A shows the three characteristic cellular features observed in OED. Figure 4Aa shows an increased nuclear-to-cytoplasmic (N/C) ratio, accompanied by nuclear abnormalities, such as nucleolar enlargement and hyperchromasia, indicative of enhanced cellular proliferation and elevated mitotic activity. Figure 4Ab shows a distinct abnormal mitotic pattern with concomitant nuclear enlargement and chromatin condensation. Figure 4Ac shows multiple single-cell keratinization patterns, a hallmark of dysplastic epithelial differentiation. Initial low-resolution scanning using the DS30R system yielded suboptimal image clarity for these subtle cytological features compared to the Nikon scanner. 
However, following the application of super-resolution reconstruction algorithms optimized with a generative adversarial network loss function, the DS30R SR output exhibited significantly enhanced image quality. The processed images showed improved spatial resolution, optical translucency, and intracellular granularity. As shown in Figure 4, the sensitivity for the identification of cellular features was high, although the specificity was somewhat lower than that of the structural feature group. Specificity diminished when the size and morphology of the cells and nuclei were recognized. With the exception of cell size, the DS30R’s specificity for identifying cellular characteristics surpassed that of the Nikon scanner. Further details are provided in Supplementary Table S2.

Figure 3. Structure characteristics in OED slides. (A) Examples of OED structural feature images: (a) irregular epithelial stratification, (b) drop-shaped rete ridges, and (c) altered keratin pattern for oral sub-site. Images at 10× magnification were obtained from H&E-stained tissue sections scanned using DS30R and Nikon. (B) Sensitivity and specificity of structural features recognition.

Figure 4. Cell morphological characteristics in OED slides. (A) Examples of OED cell feature images: (a) increased N/C ratio, (b) atypical mitotic figures, (c) single cell keratinization. Images at 20× magnification were obtained from H&E-stained tissue sections scanned using DS30R and Nikon. (B) Sensitivity and specificity of cell morphological characteristics recognition.

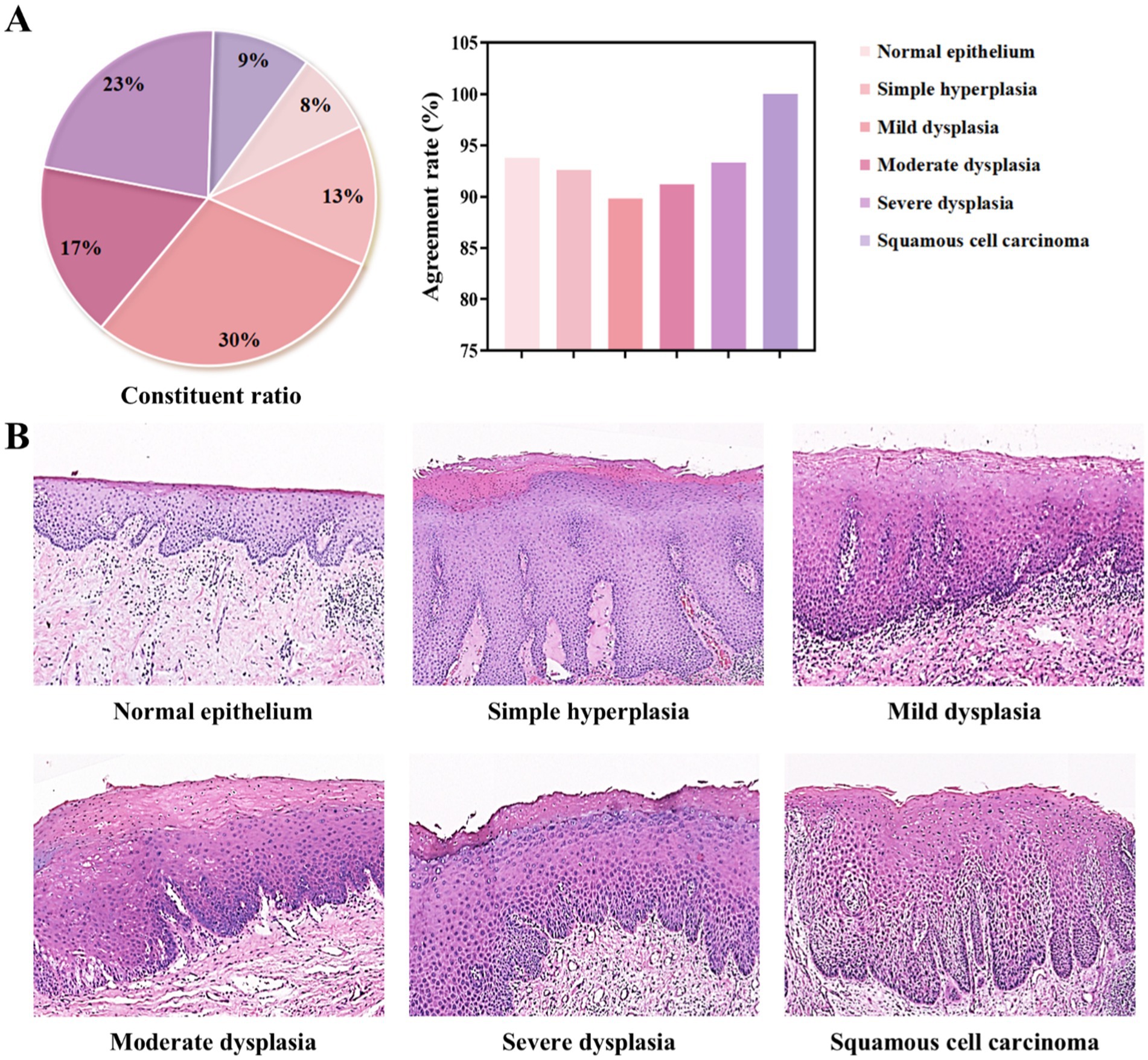

Based on the original diagnoses, the 200 oral histopathological sections selected in this study were classified as normal epithelium, simple hyperplasia, mild dysplasia, moderate dysplasia, severe dysplasia, or squamous cell carcinoma (Figure 5A and Supplementary Table S3). The coincidence rates between the diagnostic conclusion under light microscopy and the historical diagnostic conclusion were as follows: normal, 93.8%; simple hyperplasia, 92.6%; mild dysplasia, 89.8%; moderate dysplasia, 91.2%; severe dysplasia, 93.3%; and cancer, 100%. The DS30R scanning images corresponding to each diagnosis are shown in Figure 5B. These images were generated using super-resolution technology after scanning with a 20× array objective lens using a DS30R pathological section scanner. This demonstrated the characteristic features of OED across different histopathological grades. The key diagnostic criteria include the extent of epithelial involvement due to dysplastic changes and the presence of localized cellular atypia, such as nuclear pleomorphism, loss of polarity, and abnormal mitotic activity. These features are critical for distinguishing mild, moderate, and severe dysplasia. For example, in Figure 5B, normal or hyperplastic epithelial tissues showed no cellular or structural atypia. In mild dysplasia, atypical hyperplasia is confined to the lower third of the epithelial layer. Moderate dysplasia exhibits features extending to the middle third of the epithelial layer, whereas severe dysplasia demonstrates characteristics involving almost the entire epithelial layer and/or shows significant cellular atypia. The pathologists used a DS30R scanner, a Nikon scanner, and a light microscope to examine the entire section and establish a definitive diagnosis. The consistencies in the diagnoses made using the three methods were calculated and compared (Supplementary Table S4 and Section S4). 
The kappa values were 0.981 for the Nikon-optical microscope (p < 0.05), 0.969 for the DS30R-optical microscope (p < 0.05), and 0.988 for the Nikon-DS30R (p < 0.05). The consistency test among the three evaluators was conducted using Fleiss’ kappa test, with a value of 0.979 (p < 0.05), indicating very high consistency among the evaluators.

Figure 5. Classification of oral epithelial tissues with different grading of dysplasia degree. (A) Composition ratios of each diagnostic category and their agreement rates in diagnostic outcomes. (B) Representative pathological H&E-stained images for each category, all acquired using the DS30R scanner at 10× objective magnification.

To the best of our knowledge, this study is the first to use deep-learning-based super-resolution technology with the morphological features of oral epithelial tissues through methodological refinement for OED imaging. Employing H&E-stained slides of oral mucosal epithelial tissue for machine learning and continuously adjusting parameters, such as granularity, during training enhances image clarity and suitability for oral histopathological diagnosis. Our research confirmed that scanning the images of OED slides with this system effectively preserved the characteristics of tissue structure and cells. By incorporating a deep learning algorithm, the final generated image exhibits high sensitivity and specificity for identifying lesion features, particularly for imaging fine cellular structures. Compared with the regional scanning mode of the Nikon scanner, our system significantly enhanced the scanning speed and reduced the storage volume of the image files. These results indicate that the array objective scanning imaging system embodied by the DS30R combined with deep learning has significant application value in the histopathological diagnosis of OED.

To overcome the diffraction limit and achieve higher-resolution imaging of cellular microstructures, various microscopic cellular imaging techniques have been developed since 2000, including super-resolution technologies based on the structured illumination microscopy (SIM) principles (25) and photoactivated localization microscopy (PALM) developed by Betzig et al. (17), which utilize fluorescent molecules combined with proteins. However, these techniques require expensive optical equipment and involve complex procedures. The cellular imaging technology employed in this study adopts a pyramid architecture based on convolutional neural networks, referencing the SISR framework proposed by Li et al. in 2020 (26). This is one of the few clinically validated methodologies, and our findings demonstrate its applicability for the histopathological diagnosis of OED using H&E-stained slides.

Some limitations exist in this study. In OPMDs histopathological slides, epithelial tissue alterations such as inflammatory infiltration and ulceration - which frequently coexist with OED - may concurrently influence cellular and architectural characteristics, yet these confounding factors were not systematically addressed. Regarding OED diagnosis and intervention, immunohistochemical staining is occasionally required to assess the risk of malignant transformation (27). However, such analyses were not performed in this investigation. Therefore, future research should evaluate the efficacy of this technology for interpreting immunohistochemically stained specimens.

Moreover, different pathologists noted certain discrepancies in the diagnoses of the same tissue sections. This aligns with the findings of Allard et al. (28), namely that despite clear standards for identifying pathological features, subjective variation among diagnosing pathologists is inevitable in OED grading. Although the grading criteria specify the boundary in terms of the epithelial layers involved, the exact indicators for pathological features remain vague, leading to a grading system that assesses the overall morphology of several attributes (29, 30). Owing to the challenges in diagnosing this disease, particularly in difficult cases, consultation with a more experienced physician may be required to confirm the diagnosis. In such instances, the utility of DS30R slide scanning data becomes evident. These histopathological data can be quickly shared among physicians, enhancing collaboration and facilitating educational sessions. With advances in computer science and technology, an increasing number of researchers are creating more objective systems for evaluating pathological sections using AI (31–33). Gupta et al. (34) used a deep learning algorithm to categorize the severity of epithelial dysplasia in 52 histopathological sections of potentially malignant oral conditions, achieving an accuracy of 89.3%. Employing AI to develop a computer-aided diagnostic system offers the benefits of high precision, time and labor savings, and comprehensive analyses (35, 36). Compared to traditional scanners, the DS30R scanning system, which is enhanced with super-resolution imaging technology, shows significant potential for AI-assisted diagnosis owing to its faster imaging and lower storage and operational requirements. In future studies, we plan to further assess the effectiveness of AI-assisted diagnosis of oral diseases.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the ethics committee of the Affiliated Hospital of Qingdao University. The studies were conducted in accordance with the local legislation and institutional requirements. The human samples used in this study were primarily isolated as part of a previous study for which ethical approval was obtained. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

ZL: Conceptualization, Investigation, Writing – original draft. PW: Conceptualization, Investigation, Writing – original draft. ND: Investigation, Software, Writing – original draft. HZ: Funding acquisition, Supervision, Writing – original draft. FX: Investigation, Software, Writing – original draft. XY: Investigation, Software, Writing – original draft. MY: Investigation, Software, Writing – original draft. QY: Data curation, Investigation, Writing – original draft. YT: Data curation, Investigation, Writing – original draft. KD: Formal analysis, Validation, Writing – original draft. JZ: Formal analysis, Validation, Writing – original draft. BH: Supervision, Writing – review & editing. JD: Supervision, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the National Natural Science Foundation of China (82401111) and the Peak-Climbing Project in stomatology of Qingdao.

We thank our colleagues in the Department of Stomatology, The Affiliated Hospital of Qingdao University, for their valuable efforts on this article. We also thank the personnel of Dakewe (Shenzhen) Medical Equipment Co., Ltd. for their technical support.

QY and YT were employed by Dakewe (Shenzhen) Medical Equipment Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declare that no Gen AI was used in the creation of this manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2025.1550512/full#supplementary-material

1. Chiu, SF, Ho, CH, Chen, YC, Wu, LW, Chen, YL, Wu, JH, et al. Malignant transformation of oral potentially malignant disorders in Taiwan: an observational nationwide population database study. Medicine (Baltimore). (2021) 100:e24934. doi: 10.1097/md.0000000000024934

2. Iocca, O, Sollecito, TP, Alawi, F, Weinstein, GS, Newman, JG, De Virgilio, A, et al. Potentially malignant disorders of the oral cavity and oral dysplasia: a systematic review and meta-analysis of malignant transformation rate by subtype. Head Neck. (2020) 42:539–55. doi: 10.1002/hed.26006

3. Chuang, SL, Wang, CP, Chen, MK, Su, WW, Su, CW, Chen, SL, et al. Malignant transformation to oral cancer by subtype of oral potentially malignant disorder: a prospective cohort study of Taiwanese nationwide oral cancer screening program. Oral Oncol. (2018) 87:58–63. doi: 10.1016/j.oraloncology.2018.10.021

4. Wang, YY, Tail, YH, Wang, WC, Chen, CY, Kao, YH, Chen, YK, et al. Malignant transformation in 5071 southern Taiwanese patients with potentially malignant oral mucosal disorders. BMC Oral Health. (2014) 14:99. doi: 10.1186/1472-6831-14-99

5. Warnakulasuriya, S, Kovacevic, T, Madden, P, Coupland, VH, Sperandio, M, Odell, E, et al. Factors predicting malignant transformation in oral potentially malignant disorders among patients accrued over a 10-year period in south East England. J Oral Pathol Med. (2011) 40:677–83. doi: 10.1111/j.1600-0714.2011.01054.x

6. Reichart, P, Sciubba, JJ, and Philipsen, HP. Splitters or lumpers: the 2017 WHO classification of head and neck Tumours. J Am Dent Assoc. (2018) 149:567–71. doi: 10.1016/j.adaj.2018.03.029

7. Sarradin, V, Siegfried, A, Uro-Coste, E, and Delord, JP. WHO classification of head and neck tumours 2017: Main novelties and update of diagnostic methods. Bull Cancer. (2018) 105:596–602. doi: 10.1016/j.bulcan.2018.04.004

8. Kujan, O, Khattab, A, Oliver, RJ, Roberts, SA, Thakker, N, and Sloan, P. Why oral histopathology suffers inter-observer variability on grading oral epithelial dysplasia: an attempt to understand the sources of variation. Oral Oncol. (2007) 43:224–31. doi: 10.1016/j.oraloncology.2006.03.009

9. Fleskens, S, and Slootweg, P. Grading systems in head and neck dysplasia: their prognostic value, weaknesses and utility. Head Neck Oncol. (2009) 1:11. doi: 10.1186/1758-3284-1-11

10. Shubhasini, AR, Praveen, BN, Usha, H, Uma, K, Shubha, G, Keerthi, G, et al. Inter- and intra-observer variability in diagnosis of Oral dysplasia. Asian Pac J Cancer Prev. (2017) 18:3251–4. doi: 10.22034/apjcp.2017.18.12.3251

11. Wang, C, Liddy, C, Afkham, A, Islam, S, Shehata, F, Posner, G, et al. Pathology perspective on gynaecologic malignancy screening questions in electronic consultation. J Telemed Telecare. (2021) 27:123–30. doi: 10.1177/1357633x19864825

12. Rocha, R, Vassallo, J, Soares, F, Miller, K, and Gobbi, H. Digital slides: present status of a tool for consultation, teaching, and quality control in pathology. Pathol Res Pract. (2009) 205:735–41. doi: 10.1016/j.prp.2009.05.004

13. Adeoye, J, Chaurasia, A, Akinshipo, A, Suleiman, IK, Zheng, LW, Lo, AWI, et al. A deep learning system to predict epithelial dysplasia in Oral leukoplakia. J Dent Res. (2024) 103:1218–26. doi: 10.1177/00220345241272048

14. Confer, MP, Falahkheirkhah, K, Surendran, S, Sunny, SP, Yeh, K, Liu, YT, et al. Rapid and label-free histopathology of Oral lesions using deep learning applied to optical and infrared spectroscopic imaging data. J Pers Med. (2024) 14:304. doi: 10.3390/jpm14030304

15. Madabhushi, A, and Lee, G. Image analysis and machine learning in digital pathology: challenges and opportunities. Med Image Anal. (2016) 33:170–5. doi: 10.1016/j.media.2016.06.037

16. Frei, AL, Merki, S, Henke, MJ, Wey, N, Moch, H, Mertz, KD, et al. Future medicine: digital pathology. Ther Umsch. (2019) 76:404–8. doi: 10.1024/0040-5930/a001108

17. Betzig, E, Patterson, GH, Sougrat, R, Lindwasser, OW, Olenych, S, Bonifacino, JS, et al. Imaging intracellular fluorescent proteins at nanometer resolution. Science. (2006) 313:1642–5. doi: 10.1126/science.1127344

18. Rust, MJ, Bates, M, and Zhuang, X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat Methods. (2006) 3:793–6. doi: 10.1038/nmeth929

19. Shin, M, Seo, M, Lee, K, and Yoon, K. Super-resolution techniques for biomedical applications and challenges. Biomed Eng Lett. (2024) 14:465–96. doi: 10.1007/s13534-024-00365-4

20. Deng, Y, Feng, M, Jiang, Y, Zhou, Y, Qin, H, Xiang, F, et al. Development of pathological reconstructed high-resolution images using artificial intelligence based on whole slide image. MedComm. (2020) 1:410–7. doi: 10.1002/mco2.39

21. Jiang, S, Guo, C, Song, P, Wang, T, Wang, R, Zhang, T, et al. High-throughput digital pathology via a handheld, multiplexed, and AI-powered ptychographic whole slide scanner. Lab Chip. (2022) 22:2657–70. doi: 10.1039/d2lc00084a

22. Muller, S, and Tilakaratne, WM. Update from the 5th edition of the World Health Organization classification of head and neck tumors: Tumours of the Oral cavity and Mobile tongue. Head Neck Pathol. (2022) 16:54–62. doi: 10.1007/s12105-021-01402-9

23. McHugh, ML. Interrater reliability: the kappa statistic. Biochem Med. (2012) 22:276–82. doi: 10.11613/BM.2012.031

24. Gustafsson, MG. Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy. J Microsc. (2000) 198:82–7. doi: 10.1046/j.1365-2818.2000.00710.x

25. Novack, R, Chapman, E, Gao, J, Basil, H, Lynn, NH, Tony, LN, et al. Utilization of p53 and p16 immunohistochemistry in the classification of human papillomavirus-associated, p53 wild-type, and p53 abnormal Oral epithelial dysplasia. Mod Pathol. (2023) 36:100348. doi: 10.1016/j.modpat.2023.100348

26. Li, B, Keikhosravi, A, Loeffler, AG, and Eliceiri, KW. Single image super-resolution for whole slide image using convolutional neural networks and self-supervised color normalization. Med Image Anal. (2021) 68:101938. doi: 10.1016/j.media.2020.101938

27. Gisev, N, Bell, JS, and Chen, TF. Interrater agreement and interrater reliability: key concepts, approaches, and applications. Res Social Adm Pharm. (2013) 9:330–8. doi: 10.1016/j.sapharm.2012.04.004

28. Allard, FD, Goldsmith, JD, Ayata, G, Challies, TL, Najarian, RM, Nasser, IA, et al. Intraobserver and Interobserver variability in the assessment of dysplasia in Ampullary mucosal biopsies. Am J Surg Pathol. (2018) 42:1095–100. doi: 10.1097/pas.0000000000001079

29. Peng, JK, Dan, HX, Xu, H, Zeng, X, and Chen, Q. Agreement evaluation of the severity of oral epithelial dysplasia in oral leukoplakia. Zhonghua Kou Qiang Yi Xue Za Zhi. (2022) 57:921–6. doi: 10.3760/cma.j.cn112144-20211206-00537

30. Odell, E, Kujan, O, Warnakulasuriya, S, and Sloan, P. Oral epithelial dysplasia: recognition, grading and clinical significance. Oral Dis. (2021) 27:1947–76. doi: 10.1111/odi.13993

31. Thalakottor, LA, Shirwaikar, RD, Pothamsetti, PT, and Mathews, LM. Classification of histopathological images from breast Cancer patients using deep learning: a comparative analysis. Crit Rev Biomed Eng. (2023) 51:41–62. doi: 10.1615/CritRevBiomedEng.2023047793

32. Katayama, A, Aoki, Y, Watanabe, Y, Horiguchi, J, Rakha, EA, and Oyama, T. Current status and prospects of artificial intelligence in breast cancer pathology: convolutional neural networks to prospective vision transformers. Int J Clin Oncol. (2024) 29:1648–68. doi: 10.1007/s10147-024-02513-3

33. Tsuji, K, Kaneko, M, Harada, Y, Fujihara, A, Ueno, K, Nakanishi, M, et al. A fully automated artificial intelligence system to assist Pathologists’ diagnosis to predict histologically high-grade urothelial carcinoma from digitized urine cytology slides using deep learning. Eur Urol Oncol. (2024) 7:258–65. doi: 10.1016/j.euo.2023.11.009

34. Gupta, RK, Kaur, M, and Manhas, JS. Tissue level based deep learning framework for early detection of dysplasia in Oral squamous epithelium. J Multim Inf Syst. (2019) 6:81–6. doi: 10.33851/JMIS.2019.6.2.81

35. Cong, L, Feng, W, Yao, Z, Zhou, X, and Xiao, W. Deep learning model as a new trend in computer-aided diagnosis of tumor pathology for lung Cancer. J Cancer. (2020) 11:3615–22. doi: 10.7150/jca.43268

Keywords: oral epithelial dysplasia, deep learning, pathological diagnosis, digital pathology, artificial intelligence

Citation: Liu Z, Wang P, Deng N, Zhang H, Xin F, Yu X, Yuan M, Yu Q, Tang Y, Dou K, Zhao J, He B and Deng J (2025) Feasibility study of single-image super-resolution scanning system based on deep learning for pathological diagnosis of oral epithelial dysplasia. Front. Med. 12:1550512. doi: 10.3389/fmed.2025.1550512

Received: 03 January 2025; Accepted: 19 February 2025; Published: 12 March 2025.

Edited by:

Gabriela Anaya-Saavedra, Metropolitan Autonomous University, Mexico

Reviewed by:

Jessica Maldonado-Mendoza, Metropolitan Autonomous University, Mexico

Copyright © 2025 Liu, Wang, Deng, Zhang, Xin, Yu, Yuan, Yu, Tang, Dou, Zhao, He and Deng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bing He, aGViaW5nOTAxMEAxMjYuY29t; Jing Deng, ZGVuZ2ppbmczMzMzQDEyNi5jb20=

†These authors have contributed equally to this work and share first authorship

