- 1College of Information and Communication Engineering, Harbin Engineering University, Harbin, China

- 2Key Laboratory of Advanced Marine Communication and Information Technology, Ministry of Industry and Information Technology, Harbin Engineering University, Harbin, China

- 3School of Public Health, Harbin Medical University, Harbin, China

- 4School of Medical Humanity, Harbin Medical University, Harbin, China

- 5Department of Ophthalmology, The Second Affiliated Hospital of Harbin Medical University, Harbin Medical University, Harbin, China

Introduction: Pterygium, a prevalent ocular disorder, requires accurate severity assessment to optimize treatment and alleviate patient suffering. The growing patient population and limited ophthalmologist resources necessitate efficient AI-based diagnostic solutions. This study aims to develop an automated grading system combining deep learning and image processing techniques for precise pterygium evaluation.

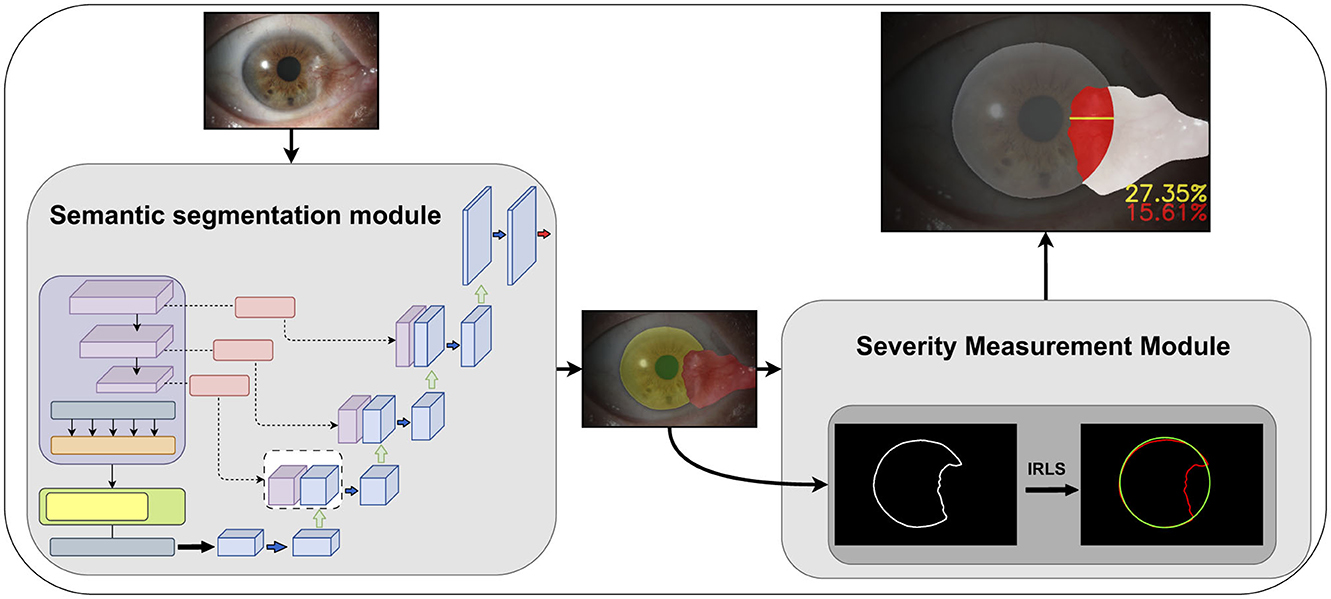

Methods: The proposed system integrates two modules: 1) A semantic segmentation module utilizing an improved TransUnet architecture for pixel-level pterygium localization, trained on annotated slit-lamp microscope images from clinical datasets. 2) A severity assessment module employing enhanced curve fitting algorithms to quantify pterygium invasion depth in critical ocular regions. The framework merges deep learning with traditional computational methods for comprehensive analysis.

Results: The semantic segmentation model achieved an average Dice coefficient of 0.9489 on the test set (0.9041 for the pterygium class). In clinical validation, the system attained a grading accuracy of 0.9360 and a weighted F1 score of 0.9363. It also showed strong agreement with expert evaluations (Kappa coefficient: 0.8908), supporting its diagnostic reliability.

Discussion: By integrating semantic segmentation with curve fitting, the proposed AI-based method grades pterygium automatically, and its results are highly consistent with physicians' clinical evaluations. The quantitative evaluation framework established in this study is expected to meet multiple clinical needs beyond basic diagnosis. Construction of the dataset should be further optimized in future studies.

1 Introduction

Pterygium is an abnormal growth of conjunctival tissue toward the cornea (1), typically pink and wedge-shaped. Most lesions grow from the inner canthus toward the cornea; a minority grow from the outer canthus, or from both canthi, toward the cornea (2). In its early stages, it can cause eye fatigue and dryness (3). As the condition worsens, the abnormal tissue invades further into the cornea and may reach the pupil region. In the most severe cases, it can cause blindness because the tissue obstructs the passage of light through the pupil. The global prevalence of pterygium is 12%, ranging from 0.04% in Saudi Arabia to 53% in Taiwan, China (4).

In the early stages of pterygium, patients can suppress inflammation with medicated eye drops or slow the invasion of abnormal tissue into the cornea by reducing exposure to light (5). Beyond a certain point, surgical intervention becomes necessary. Accurate assessment of the condition allows excision to be performed at the right time, minimizing surgical damage and reducing recurrence rates (6). People who work outdoors for long periods and are exposed to solar radiation, such as farmers, fishermen, and other outdoor workers, constitute a high-risk group for pterygium (4). Moreover, this population often has limited access to medical resources and therefore may not receive an accurate diagnosis or timely treatment.

With the rapid development of deep learning in recent years, the integration of ophthalmology and artificial intelligence has deepened significantly (7). Many studies have advanced the use of deep learning models to assist in diagnosing eye diseases, substantially improving diagnostic and treatment efficiency (8). In the auxiliary diagnosis of pterygium, deep neural networks are mainly used for three objectives: (1) image classification, including distinguishing normal eyes from eyes with pterygium (9–14) and categorizing different types of pterygium (15–19); (2) object detection to locate lesion tissue (11, 20); and (3) pixel-level semantic segmentation of lesion tissue (21–23). In particular, Liu et al. (18) and Wan et al. (19) built multi-stage auxiliary diagnostic systems in which the severity of pterygium is assessed and graded on the basis of semantic segmentation results.

The main contributions of this article are summarized as follows:

1. Anterior segment images with pterygium were collected by slit-lamp photography. Three regions in each image, namely the cornea, the pupil, and the pterygium, were annotated to construct a three-category semantic segmentation dataset, which provides a data foundation for the auxiliary diagnosis of pterygium.

2. A pterygium assessment and grading system is proposed. It uses a semantic segmentation network that combines convolutional and Vision Transformer architectures to segment the three target regions in anterior segment images, extracts the contours of the corneal and pupil regions, and estimates the depth and area of pterygium invasion into these critical regions using an improved curve fitting algorithm. The results are then graded according to clinical standards.

2 Materials and methods

Figure 1 shows the workflow of the proposed automated pterygium grading system based on anterior segment images. The semantic segmentation module extracts the corneal, pupil, and pterygium regions from the images. The severity assessment module then processes these segmented regions with traditional image processing techniques to (1) quantify the area and depth (each expressed as a ratio) of pterygium invasion within the corneal region and (2) determine whether the pterygium covers the pupil region. The Iteratively Reweighted Least Squares (IRLS) method is used to fit and reconstruct the corneal and pupil contours because it is more robust to outliers than ordinary least squares. The final grade is assigned by applying clinical grading criteria to these results.

Figure 1. Workflow of the proposed automated pterygium grading system based on anterior segment images.

2.1 Dataset

The images used in this study were collected from the Ophthalmology Department of the Second Affiliated Hospital of Harbin Medical University and consist of 434 anterior segment images showing pterygium of varying severity. All images have a uniform aspect ratio of 3:2 and a resolution of 2,256 × 1,504 pixels. Anterior segment images are taken with a slit-lamp microscope in diffuse illumination mode and are included in the electronic medical records for ophthalmic diseases. The data were used in this study with patient consent, and all patient-identifying information was removed to protect privacy.

2.2 Segmentation module–Transunet with CSAM

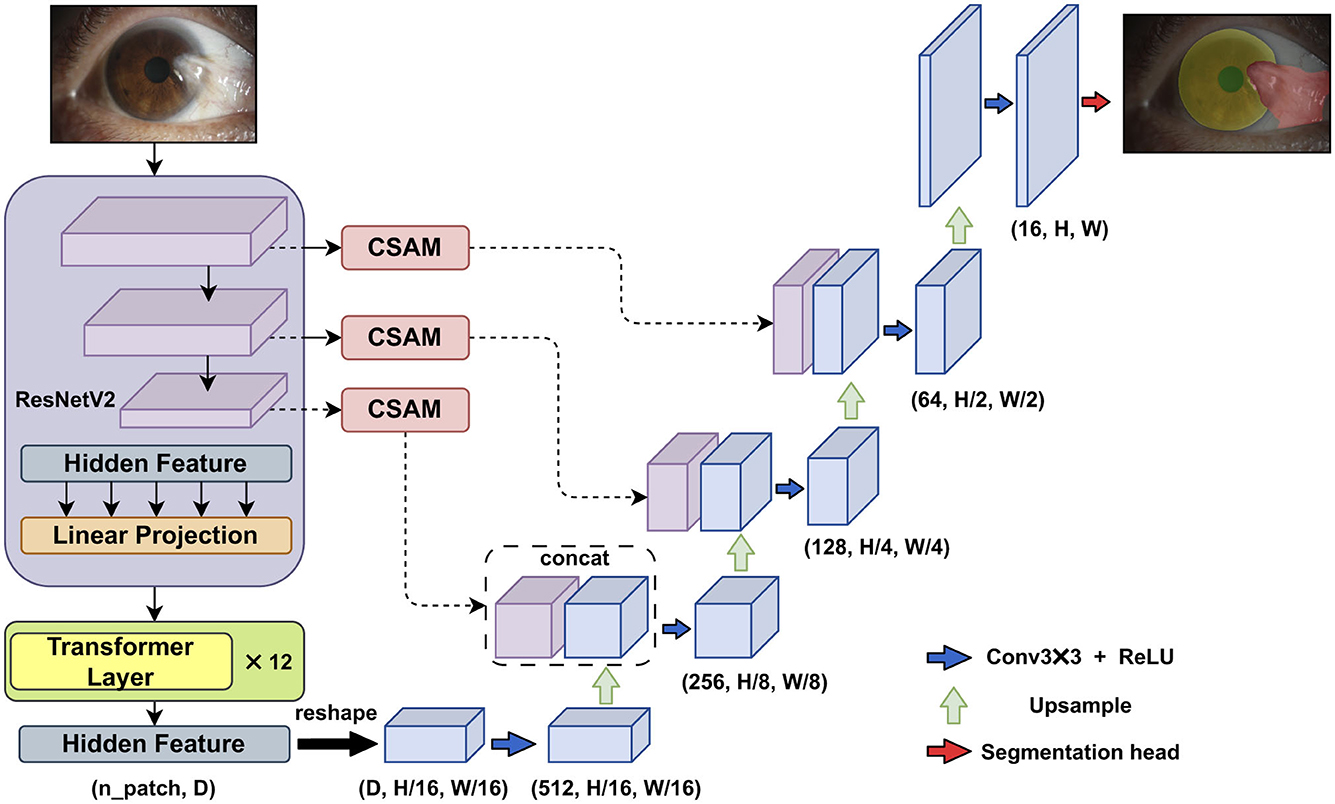

In the first module of the algorithm's workflow, we improved the Transunet architecture by incorporating the channel and shape attention module (CSAM) into the skip connections, as shown in Figure 2.

Figure 2. The improved Transunet incorporates a channel and shape attention module (CSAM) into the skip connections.

The original Transunet architecture includes several main components: ResNetV2 (the first stage of the encoder), Transformer layers (the second stage of the encoder), a convolution-based decoder, and skip connections between the different parts of the network.

In the first stage of the encoder, ResNetV2 is used to downsample the input, generating multi-stage feature maps. The second part of the encoder consists of multiple connected Transformer encoders. The core of each Transformer encoder is the multi-head self-attention mechanism, enabling the Vision Transformer (ViT) to effectively capture long-range semantic relationships within the image.
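For readers less familiar with Transformers, a minimal single-head sketch of the scaled dot-product self-attention at the core of each Transformer layer may help. It uses plain Python lists; the projection matrices `w_q`, `w_k`, and `w_v` are illustrative stand-ins for learned weights, and multi-head attention would run several such heads on lower-dimensional projections and concatenate their outputs. This is a generic illustration, not the Transunet implementation.

```python
import math

def matmul(a, b):
    """Nested-list matrix product: a (n x m) @ b (m x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(scores):
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(q[0]))
    out = []
    for qi in q:
        # Each token scores every other token in the image, which is what gives
        # the ViT encoder its long-range (image-wide) context.
        attn = softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        out.append([sum(attn[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return out
```

With a zero query projection every score is equal, so each token receives the plain average of all value vectors; learned projections replace this uniform mixing with content-dependent weights.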

In the decoder, the low-resolution encoded feature maps are progressively upsampled back to the original input size, while skip connections supply higher-resolution features from the encoder.

2.2.1 Channel and shape attention module (CSAM)

Numerous studies have demonstrated the effectiveness of attention mechanisms in enhancing neural network performance. In computer vision, attention mechanisms improve model performance by guiding the network to focus on the more important features within an image. SENet (24) and CBAM (25) are two representative works in this area.

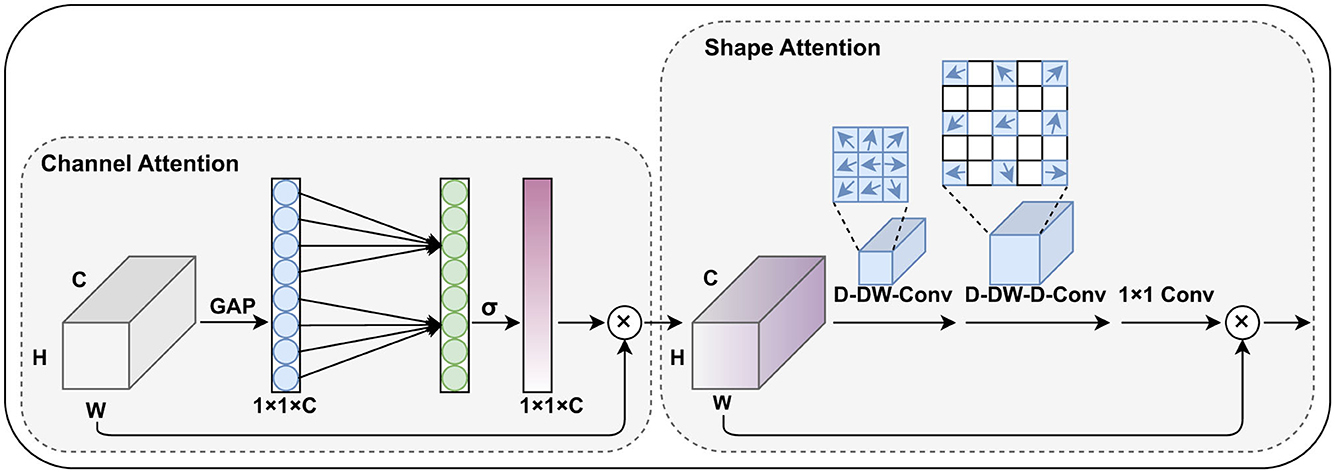

Building on the idea of generating weights for different channels and spatial positions, we improve the CBAM module and propose the channel and shape attention module (CSAM), as illustrated in Figure 3.

Figure 3. The proposed channel and shape attention module (CSAM) inherits the design principles of CBAM and includes both channel attention and spatial (shape) attention mechanisms.

Similar to CBAM, the CSAM is also divided into two parts: the channel attention module and the spatial attention module. In the channel weight generation stage, we adopted the lightweight design from Wang et al. (26), replacing the fully connected layers with 1D convolutions to capture the relationships between each channel and its neighboring channels. This approach reduces the number of parameters while preserving global information.
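The channel branch can be sketched as follows, assuming it follows ECA-Net (26): global average pooling, a 1-D convolution across neighboring channels, and a sigmoid. The kernel values passed in are hand-set placeholders for learned weights, and the helper `eca_kernel_size` reproduces ECA-Net's adaptive kernel-size rule; whether CSAM uses that rule or a fixed size is an assumption. The parameter saving comes from replacing SE's two fully connected layers (about 2C²/r weights for C channels and reduction ratio r) with a single k-tap convolution.

```python
import math

def eca_kernel_size(channels, gamma=2, b=1):
    """ECA-Net adaptive kernel size: int(|(log2(C) + b) / gamma|), bumped to odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1

def eca_channel_weights(feature_map, kernel):
    """Return one attention weight in (0, 1) per channel.

    feature_map: list of C channels, each an H x W nested list.
    kernel: odd-length list of 1-D conv weights shared by all channels
            (learned in a real network; hand-set here for illustration).
    """
    # 1) Global average pooling: one scalar descriptor per channel.
    desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    # 2) 1-D convolution along the channel axis with zero padding, so each
    #    channel interacts only with its k nearest neighbors (no FC layers).
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + desc + [0.0] * pad
    mixed = [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(desc))]
    # 3) Sigmoid maps each mixed descriptor to a weight in (0, 1).
    return [1.0 / (1.0 + math.exp(-z)) for z in mixed]

def apply_channel_attention(feature_map, kernel):
    """Rescale every channel of the feature map by its attention weight."""
    weights = eca_channel_weights(feature_map, kernel)
    return [[[v * w for v in row] for row in ch] for ch, w in zip(feature_map, weights)]
```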

In our task, pterygium has a relatively clear shape prior, and the feature maps generated by the shallow encoder layers contain strong structural information about the target's shape. Studies such as Guo et al. (27) and Dai et al. (28) have shown that large convolutional kernels can effectively expand a network's receptive field and that deformable convolutions can effectively capture the shape of the target. Therefore, the spatial attention module combines large kernels and deformable convolutions to generate spatial position weights for the feature maps; specifically, it comprises a deformable depthwise convolution, a deformable depthwise dilated convolution, and a 1 × 1 convolution. This part can be formulated as:

F′ represents the feature maps from the skip connections at each stage.
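To make the spatial branch concrete, here is a hedged sketch that assumes it mirrors the large-kernel attention (LKA) of Guo et al. (27), with the two depthwise convolutions replaced by the deformable versions named above. The operator names DDW-Conv (deformable depthwise convolution) and DDW-D-Conv (deformable depthwise dilated convolution), the output symbol F″, and the absence of a sigmoid (as in LKA) are our assumptions rather than details stated for CSAM:

$$
\begin{aligned}
\mathrm{Attention} &= \mathrm{Conv}_{1\times 1}\Big(\mathrm{DDW\text{-}D\text{-}Conv}\big(\mathrm{DDW\text{-}Conv}(F')\big)\Big) \\
F'' &= \mathrm{Attention} \otimes F'
\end{aligned}
$$

where ⊗ denotes element-wise multiplication. In the original LKA, a 5 × 5 depthwise convolution, a 7 × 7 depthwise convolution with dilation 3, and a 1 × 1 convolution together approximate a 21 × 21 convolution; the kernel sizes used in CSAM are not given in this section.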

2.3 Severity measurement module

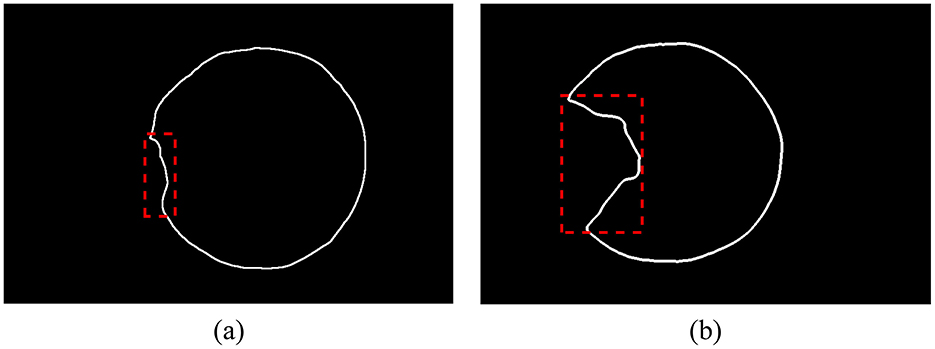

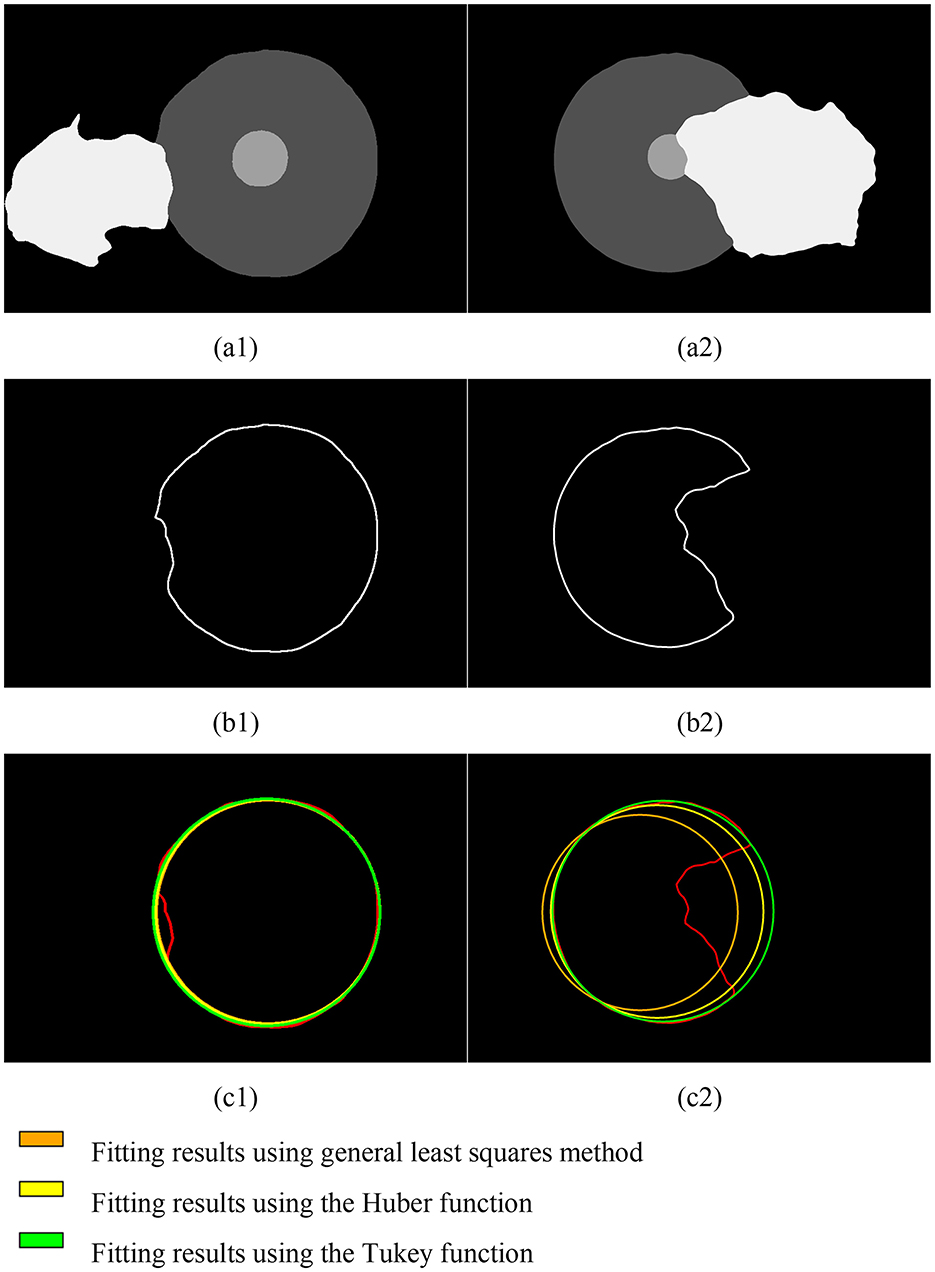

The contour of the corneal region (or pupil region) is extracted from the semantic segmentation results and then fitted for subsequent calculations. With ordinary least squares, the part of the contour deformed by the pterygium acts as outliers, so the fitting result depends on the degree of invasion, as shown in Figure 4.

Figure 4. The corneal contour extracted from the segmentation result, and the outliers are shown in the dotted box. (A) Pterygium slightly invades the cornea, the proportion of outliers is small. (B) Pterygium severely invades the cornea, the proportion of outliers is large.

The IRLS method introduces a residual-based weighting function that assigns a weight to each point. Over successive iterations, the weights of outliers gradually decrease, reducing their influence on the fit. The weights can be represented as a diagonal matrix whose diagonal entries are the weights of the individual samples.

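The Huber function and the Tukey function are both widely used weighting functions, given in Equations 4 and 5, respectively, where δ is the residual of a data point and γ is a hyperparameter:

$$w_{\mathrm{Huber}}(\delta) = \begin{cases} 1, & |\delta| \le \gamma \\ \dfrac{\gamma}{|\delta|}, & |\delta| > \gamma \end{cases} \tag{4}$$

$$w_{\mathrm{Tukey}}(\delta) = \begin{cases} \left[1 - \left(\dfrac{\delta}{\gamma}\right)^{2}\right]^{2}, & |\delta| \le \gamma \\ 0, & |\delta| > \gamma \end{cases} \tag{5}$$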
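The fitting procedure can be illustrated with a short pure-Python sketch. It assumes an algebraic (Kåsa) circle model x² + y² + Dx + Ey + F = 0, consistent with the circular corneal approximation mentioned in the Discussion; geometric residuals δ = (distance to the center) − radius; a fixed γ in pixels; and ordinary least squares (all weights 1) as the starting point. The function names, γ = 10 pixels, and the synthetic contour in the usage note are illustrative, not the authors' settings. Each iteration solves the weighted normal equations AᵀWAβ = AᵀWb, where W is the diagonal weight matrix described above, and the loop runs ten iterations to mirror Section 3.2.

```python
import math

def huber_weight(residual, gamma):
    """Huber weight: 1 inside |residual| <= gamma, gamma / |residual| outside."""
    r = abs(residual)
    return 1.0 if r <= gamma else gamma / r

def tukey_weight(residual, gamma):
    """Tukey biweight: (1 - (r / gamma)^2)^2 inside the band, 0 outside."""
    r = abs(residual)
    return (1.0 - (r / gamma) ** 2) ** 2 if r <= gamma else 0.0

def _solve3(a, b):
    """Solve a 3 x 3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda i: abs(m[i][col]))
        m[col], m[piv] = m[piv], m[col]
        for i in range(col + 1, 3):
            f = m[i][col] / m[col][col]
            for j in range(col, 4):
                m[i][j] -= f * m[col][j]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (m[i][3] - sum(m[i][j] * x[j] for j in range(i + 1, 3))) / m[i][i]
    return x

def weighted_circle_fit(points, weights):
    """Weighted algebraic circle fit; returns (center_x, center_y, radius)."""
    mx = sum(p[0] for p in points) / len(points)
    my = sum(p[1] for p in points) / len(points)
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for (x, y), w in zip(points, weights):
        u, v = x - mx, y - my              # shift to the centroid for conditioning
        row = (u, v, 1.0)                  # unknowns: D, E, F
        rhs = -(u * u + v * v)
        for i in range(3):
            atb[i] += w * row[i] * rhs
            for j in range(3):
                ata[i][j] += w * row[i] * row[j]
    d, e, f = _solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx + mx, cy + my, math.sqrt(cx * cx + cy * cy - f)

def irls_circle(points, weight_fn=tukey_weight, gamma=10.0, n_iter=10):
    """IRLS circle fit: start from ordinary least squares, then reweight by residual."""
    cx, cy, r = weighted_circle_fit(points, [1.0] * len(points))
    for _ in range(n_iter):
        residuals = [math.hypot(x - cx, y - cy) - r for x, y in points]
        weights = [weight_fn(res, gamma) for res in residuals]
        cx, cy, r = weighted_circle_fit(points, weights)
    return cx, cy, r
```

On a synthetic contour of radius 50 pixels in which one sixth of the points are pulled inward to radius 30 (mimicking a pterygium notch), ordinary least squares shifts the center by several pixels, whereas the Tukey-weighted fit gives those points zero weight after the first pass and recovers the true circle. With `huber_weight`, the notch points keep a positive weight, so some bias remains, which is one way to see why a redescending function such as Tukey's can suit this task.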

The proportion of the invaded region is calculated as follows:

$$\text{Invaded area ratio} = \frac{A_p}{A_c} \times 100\%$$

where Ap is the area of the pterygium covering the corneal (or pupil) region and Ac is the area of the corneal (or pupil) region in the same image. Both Ap and Ac are obtained by counting pixels in the semantic segmentation and curve fitting results.
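Pixel counting for Ap and Ac can be sketched with boolean masks. This assumes the fitted circle is rasterized into a disc mask (a pixel belongs to the region if its center lies within the fitted radius) and that Ap counts lesion pixels inside that disc; `disc_mask` and `invaded_area_ratio` are hypothetical helper names, and nested Python lists stand in for image arrays.

```python
def disc_mask(height, width, cx, cy, radius):
    """Rasterize a fitted circle: True where the pixel center lies inside the disc."""
    return [[(x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 for x in range(width)]
            for y in range(height)]

def invaded_area_ratio(lesion_mask, region_mask):
    """A_p / A_c as a fraction (multiply by 100 for a percentage)."""
    a_p = sum(1 for lrow, rrow in zip(lesion_mask, region_mask)
              for lesion, region in zip(lrow, rrow) if lesion and region)
    a_c = sum(1 for rrow in region_mask for region in rrow if region)
    return a_p / a_c if a_c else 0.0

# Example: a disc of radius 5 px on a 21 x 21 grid, lesion in the columns right of center.
cornea = disc_mask(21, 21, 10, 10, 5)
lesion = [[x > 10 for x in range(21)] for _ in range(21)]
ratio = invaded_area_ratio(lesion, cornea)   # 35 / 81, about 43%
```

Using the fitted disc rather than the raw segmentation for Ac matters here: the segmented cornea is missing exactly the part the pterygium covers, so counting it directly would understate Ac.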

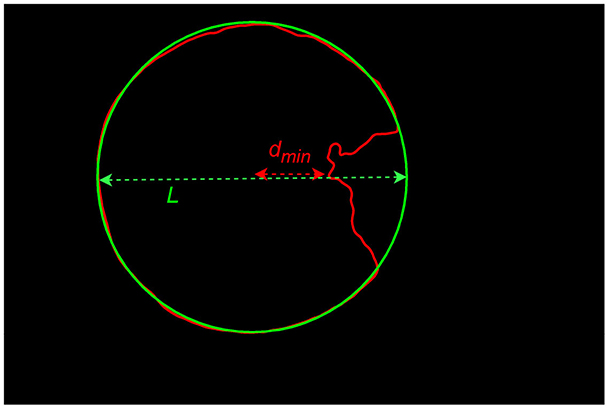

The formula for calculating the invasion depth is as follows:

where dmin is the minimum distance from the center of the corneal region (obtained from the fitting results) to a point on the incomplete corneal contour, and the corneal diameter measured along the direction between these two points is used as the reference length, as illustrated in Figure 5.
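The following is a hedged sketch of one plausible reading of this definition: the invasion depth is taken as the fitted radius minus dmin, normalized by the fitted diameter, and optionally converted to millimeters with an assumed reference corneal diameter. The function names and this exact normalization are assumptions, not the authors' equation.

```python
import math

def invasion_depth_ratio(center, radius, contour_points):
    """Hypothetical depth measure (assumed form, not the paper's equation).

    center, radius: fitted corneal circle, e.g. from an IRLS fit.
    contour_points: (x, y) points of the incomplete corneal contour.
    Returns (radius - d_min) / diameter, clipped at 0.
    """
    cx, cy = center
    # d_min: closest approach of the incomplete contour to the fitted center.
    d_min = min(math.hypot(x - cx, y - cy) for x, y in contour_points)
    # Under the circular model, the diameter is 2 * radius in every direction.
    diameter = 2.0 * radius
    return max(0.0, radius - d_min) / diameter

def depth_in_mm(depth_ratio, reference_diameter_mm=12.0):
    """Scale the ratio by an assumed physical corneal diameter in mm."""
    return depth_ratio * reference_diameter_mm

# Example: a contour of radius 50 px whose deepest point lies 30 px from the center.
contour = [(50 * math.cos(math.radians(t)), 50 * math.sin(math.radians(t))) for t in range(0, 300, 5)]
contour.append((30.0, 0.0))
ratio = invasion_depth_ratio((0.0, 0.0), 50.0, contour)   # (50 - 30) / 100 = 0.2
```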

2.4 Experiment

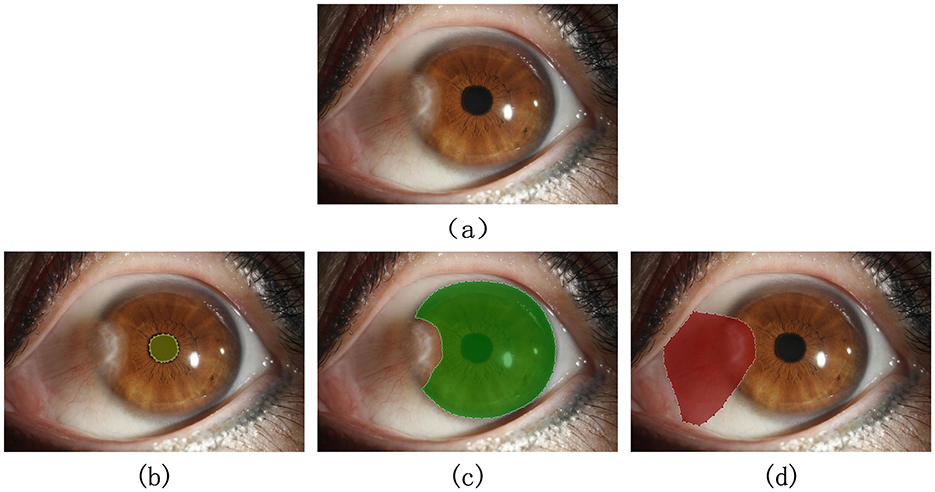

To train the semantic segmentation network, the external contours of the pupil region, corneal region, and pterygium tissue were manually annotated in the original images under the guidance of professional physicians, forming a three-category semantic segmentation dataset, as shown in Figure 6.

Figure 6. Qualified image and its annotation example: (A) original image. (B–D) Show the correct marking of the pupil region, corneal region, and the pterygium region, respectively.

The dataset was randomly divided into training and testing sets at a ratio of 85:15. The training set consists of 368 images, while the testing set contains 66 images.
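A reproducible 85:15 split can be sketched as below; the fixed seed and the function name are illustrative assumptions, since the seed used in the study is not stated. Truncating 434 × 0.85 = 368.9 to 368 reproduces the reported 368/66 partition.

```python
import random

def split_dataset(items, train_fraction=0.85, seed=0):
    """Shuffle with a fixed seed, then cut into training and test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)             # local RNG: no global state touched
    n_train = int(len(items) * train_fraction)     # 434 * 0.85 = 368.9 -> 368
    return items[:n_train], items[n_train:]

train_ids, test_ids = split_dataset(range(434))
```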

We applied data augmentation, including random vertical and horizontal flips and color space transformations. During training, all images were resized to 512 × 512 pixels. The backbone of the semantic segmentation network was initialized with the pre-trained weights provided by the original authors of Transunet. Training was conducted on an NVIDIA RTX 2080 GPU under Windows, using the PyTorch framework and the Adam optimizer, with an initial learning rate of 0.0001 for 150 epochs.
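One practical detail of flip augmentation for segmentation is that the same geometric transform must be applied to the image and its label mask, whereas color space transformations should change only the image. A minimal sketch with nested lists (the function names and the flip probability p = 0.5 are assumptions):

```python
import random

def hflip(grid):
    """Mirror left-right."""
    return [row[::-1] for row in grid]

def vflip(grid):
    """Mirror top-bottom."""
    return grid[::-1]

def random_flip_pair(image, mask, rng, p=0.5):
    """Apply identical random flips to an image and its label mask.

    Color jitter (not shown) would be applied to `image` only, because
    label values must not change.
    """
    if rng.random() < p:
        image, mask = hflip(image), hflip(mask)
    if rng.random() < p:
        image, mask = vflip(image), vflip(mask)
    return image, mask
```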

The training loss combines the cross-entropy loss and the Dice loss, as given by the following formula:
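The weighting of the two terms is not specified in this passage. As a hedged sketch, the snippet below computes cross-entropy and a soft, class-averaged Dice loss over flattened pixels and combines them with equal weights (w_ce = w_dice = 0.5, an assumed choice); the smoothing constant `eps` and the function name are likewise illustrative.

```python
import math

def ce_dice_loss(probs, labels, num_classes, w_ce=0.5, w_dice=0.5, eps=1e-6):
    """probs: per-pixel class probabilities (each list already sums to 1).
    labels: integer ground-truth class per pixel (same length as probs)."""
    n = len(labels)
    # Cross-entropy: mean negative log-probability of the true class.
    ce = -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / n
    # Soft Dice per class, averaged over classes; eps avoids 0/0 for absent classes.
    dice_sum = 0.0
    for c in range(num_classes):
        inter = sum(p[c] for p, y in zip(probs, labels) if y == c)
        pred_mass = sum(p[c] for p in probs)
        true_count = sum(1 for y in labels if y == c)
        dice_sum += (2.0 * inter + eps) / (pred_mass + true_count + eps)
    dice_loss = 1.0 - dice_sum / num_classes
    return w_ce * ce + w_dice * dice_loss
```

Cross-entropy gives stable per-pixel gradients, while the Dice term directly rewards region overlap, which helps smaller structures such as the pterygium compete with the large background.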

3 Experimental results analysis

3.1 Evaluation of the image segmentation module

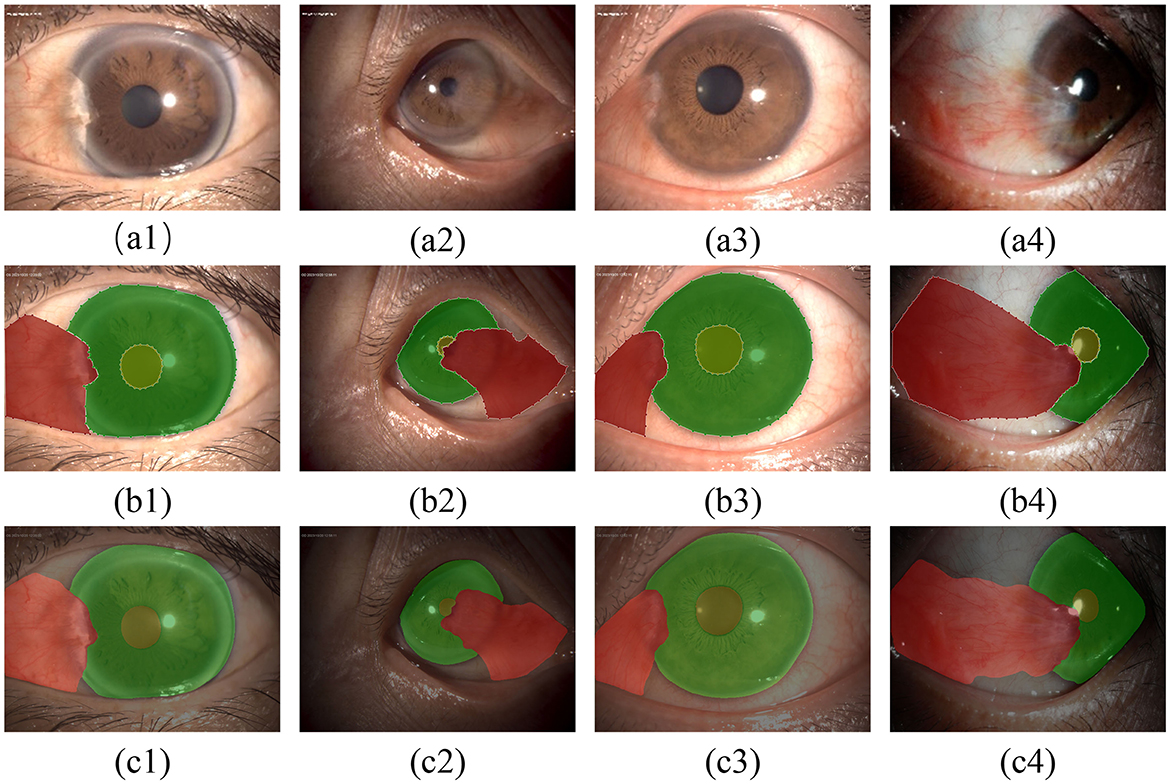

In this study, semantic segmentation was performed on the three types of targets present in anterior segment images. Some results are presented in Figure 7.

Figure 7. Qualitative evaluation of the segmentation model: (A1–A4) show the original images; (B1–B4) show the results of annotations under the guidance of experts; (C1–C4) show the segmentation results of the model.

The test set contains 66 images. We evaluated the segmentation module on it using a comprehensive set of metrics: Intersection over Union (IoU), the Dice similarity coefficient (DSC), Precision, and Recall, calculated for each category as follows:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}, \qquad \mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

where TP is the true-positive region of the category, FP the false-positive region, and FN the false-negative region (each counted in pixels).
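These per-category metrics can be computed directly from flattened label maps, as in the sketch below (the function name and the zero-denominator convention are illustrative choices). For a single category in a single image, Dice and IoU are linked by DSC = 2·IoU / (1 + IoU), so they rank results in the same order; this identity does not necessarily hold for averages taken over many images.

```python
def per_class_metrics(pred, gt, cls):
    """IoU, Dice, Precision, Recall for one class from flattened label maps."""
    tp = sum(1 for p, g in zip(pred, gt) if p == cls and g == cls)
    fp = sum(1 for p, g in zip(pred, gt) if p == cls and g != cls)
    fn = sum(1 for p, g in zip(pred, gt) if p != cls and g == cls)

    def ratio(num, den):
        return num / den if den else 0.0   # convention: 0 when the class is absent

    return {
        "IoU": ratio(tp, tp + fp + fn),
        "Dice": ratio(2 * tp, 2 * tp + fp + fn),
        "Precision": ratio(tp, tp + fp),
        "Recall": ratio(tp, tp + fn),
    }

# Example: TP = 2, FP = 1, FN = 1 for class 1.
m = per_class_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1], cls=1)
```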

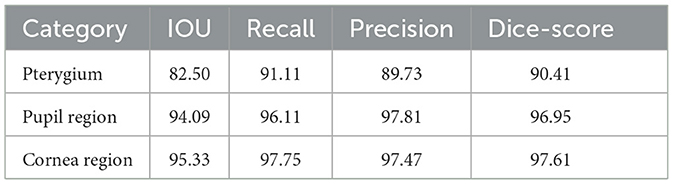

Table 1 reports the quantitative results of the segmentation module on the test set in terms of these metrics.

Because CSAM serves as an attention module that enhances the features in the skip connections, we compared it with other classic attention modules commonly used in medical image segmentation, as shown in Table 2 (the IoU for each category is listed under the category name).

Compared with the other attention modules, the proposed CSAM yields greater improvements.

According to Tables 1, 2 and Figure 7, the model has achieved high accuracy in segmenting the pupil and corneal regions, while the segmentation performance for pterygium is influenced by its specific morphology. Pterygium that is raised with well-defined edges yields better segmentation results, whereas the performance is poorer for less distinct edges, as shown in Figure 7C4.

For the portion of the pterygium invading the corneal limbus, where the edges are clearer, the segmentation results are more accurate. This clarity is beneficial for the post-processing of the segmentation results.

3.2 Severity assessment module analysis

In this study, we used the Iteratively Reweighted Least Squares (IRLS) method to fit and restore the incomplete corneal region extracted via semantic segmentation. We tested two weight functions, the Huber function and the Tukey function, each with ten iterations, and compared the results with those of ordinary least squares. The results are shown in Figure 8.

Figure 8. Fitting results of the corneal contour based on semantic segmentation for pterygium of different severity levels. (A1, A2) Results of semantic segmentation; (B1, B2) corneal contours extracted based on semantic segmentation results; (C1, C2) fitting results.

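Across the varying depths of pterygium invasion into the corneal limbus, IRLS with the Tukey weight function achieved the best overall fitting results. A plausible explanation lies in the form of the two functions: the Tukey function assigns zero weight to points whose residuals exceed γ, whereas the Huber function only down-weights them.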

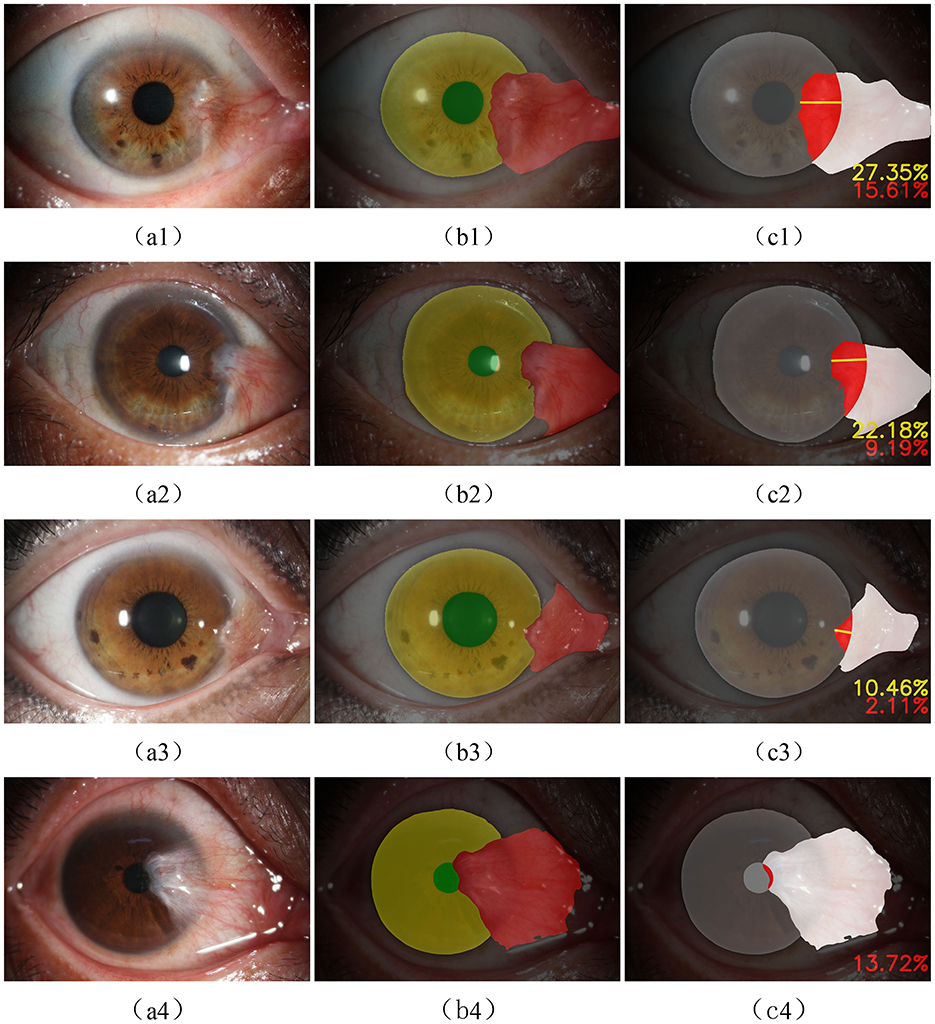

The output of the evaluation module based on IRLS with the Tukey weight function is shown in Figure 9. Figures 9A1–A3 depict typical cases in which the pterygium covers the corneal surface but does not obscure the pupil; the outputs give the depth and area (as percentages) of its invasion into the corneal region. Figure 9A4 presents a more severe case in which the pterygium already covers the pupil (the invaded area percentage is also calculated). All of these results are derived from images in the test set.

Figure 9. (A1–A4) the original anterior segment image, (B1–B4) the result of semantic segmentation, and (C1–C4) the outputs of the evaluation module.

3.3 Overall reliability analysis of the system

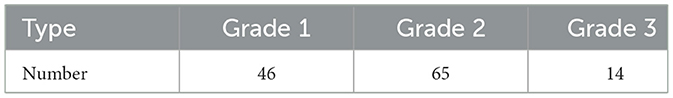

Clinically, pterygium can be classified into three grades. Grade 1: pterygium invades the cornea ≤ 3 mm; Grade 2: pterygium invades the cornea >3 mm but does not reach the pupil margin; Grade 3: pterygium has covered the pupil (as shown in Figure 9A4).

Externally, the system therefore behaves as a three-class classification model. To evaluate the reliability of the proposed method for pterygium grading, we additionally collected anterior segment images containing pterygium from 125 cases. These images were graded by experienced ophthalmologists, and the number of cases at each severity level is shown in Table 3.

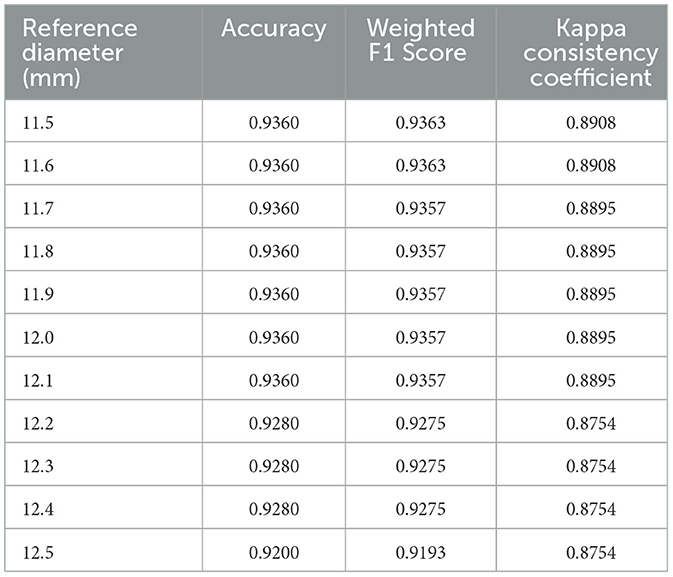

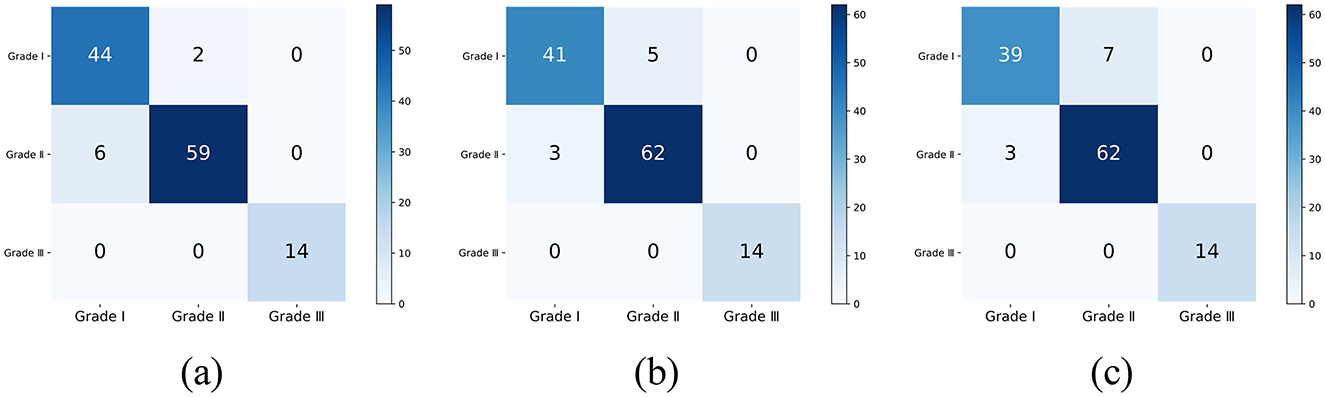

The common range of adult corneal diameters is 11.5 mm to 12.5 mm. Within this range, we evaluated reference diameters at 0.1 mm intervals. The confusion matrices of the classification results for reference diameters L of 11.5 mm, 12.0 mm, and 12.5 mm are shown in Figure 10 (the reference diameter mainly affects the distinction between Grade 1 and Grade 2).

Figure 10. The classification confusion matrices under different reference diameters: (A) L = 11.5 mm, (B) L = 12.0 mm, (C) L = 12.5 mm.
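The grading rule and the role of the reference diameter L can be sketched as follows, assuming the measured depth ratio is converted to millimeters by multiplying it by L; `grade_pterygium` is a hypothetical helper, not the authors' code. The example shows why L mainly affects the Grade 1/Grade 2 boundary: a depth ratio of 0.25 corresponds to 2.875 mm with L = 11.5 mm (Grade 1) but 3.125 mm with L = 12.5 mm (Grade 2), while Grade 3 depends only on pupil coverage.

```python
def grade_pterygium(depth_ratio, covers_pupil, reference_diameter_mm):
    """Map measurements to the three clinical grades (hypothetical helper)."""
    if covers_pupil:
        return 3                                   # Grade 3: pupil covered
    depth_mm = depth_ratio * reference_diameter_mm
    return 1 if depth_mm <= 3.0 else 2             # Grade 1: <= 3 mm; Grade 2: > 3 mm

# Reference diameters swept in 0.1 mm steps over the adult range 11.5-12.5 mm.
REFERENCE_DIAMETERS = [round(11.5 + 0.1 * i, 1) for i in range(11)]

for L in (11.5, 12.5):
    print(L, grade_pterygium(0.25, covers_pupil=False, reference_diameter_mm=L))
# 11.5 1
# 12.5 2
```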

We use accuracy to measure overall classification performance, and the weighted F1 score and the Kappa coefficient to account for class imbalance and chance agreement. They are calculated as follows:

$$F1_{\mathrm{weighted}} = \sum_{i} w_i \, F1_i$$

where F1_i is the F1 score of the i-th class and w_i is the proportion of samples in that class.

$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

where P_o is the observed classification accuracy and P_e is the agreement expected by chance.
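Accuracy, weighted F1, and Kappa can all be computed from a confusion matrix. In the sketch below, rows are the physicians' grades and columns the system's grades (an assumed convention), and the function name is illustrative; the example matrix is made up for demonstration and is not the study's data.

```python
def classification_metrics(cm):
    """cm[i][j]: number of cases graded i by physicians and j by the system.
    Returns (accuracy, weighted F1, Kappa)."""
    k = len(cm)
    n = sum(map(sum, cm))
    true_tot = [sum(cm[i]) for i in range(k)]                       # row sums
    pred_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums
    po = sum(cm[i][i] for i in range(k)) / n                        # observed agreement
    pe = sum(true_tot[i] * pred_tot[i] for i in range(k)) / (n * n) # chance agreement
    kappa = (po - pe) / (1 - pe)
    # Per-class F1 = 2TP / (2TP + FP + FN) = 2TP / (predicted + actual).
    f1s = [2 * cm[i][i] / (true_tot[i] + pred_tot[i]) if true_tot[i] + pred_tot[i] else 0.0
           for i in range(k)]
    weighted_f1 = sum(f1 * t / n for f1, t in zip(f1s, true_tot))   # w_i = class share
    return po, weighted_f1, kappa

acc, wf1, kappa = classification_metrics([[20, 5], [10, 15]])
```

Here P_o = 0.7 and P_e = 0.5, so κ = 0.4: the agreement is well above chance but far from perfect, even though raw accuracy alone might look acceptable.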

The classification results for all reference diameters are shown in Table 4.

For the dataset used in this study, 11.5 mm appears to be the optimal reference diameter, yielding an accuracy of 0.9360, a weighted F1 score of 0.9363, and a Kappa coefficient of 0.8909. These results indicate a high level of agreement between the grades produced by the proposed method and those assigned by physicians.

The proposed method quantifies the extent of pterygium invasion into critical regions. Based on semantic segmentation and curve fitting, it performs well in determining the size and location of the pterygium and assessing its severity. In primary healthcare units and rural regions, clinicians can use it as an auxiliary tool to quantify pterygium progression and standardize severity evaluation. Combined with clinicians' experience and patients' medical histories, it may improve the accuracy of pterygium diagnosis.

4 Discussion

In this study, we aimed to use a deep learning model trained on anterior segment images, supplemented by traditional image analysis methods, to achieve automated measurement of pterygium progression.

The key to training a deep learning model lies in acquiring a high-quality dataset. The slit lamp, an effective tool for ophthalmic examination, can capture high-resolution images with rich detail. However, this does not remove all limitations in dataset construction. In this study, all anterior segment images were obtained with the same device model, and most subjects came from the same region, which may limit the model's generalizability. Future research should enrich the dataset, for example by collecting images from patients in diverse regions and incorporating images captured by smartphones and other cameras. In studies (21–23), the Intersection over Union (IoU) for pterygium segmentation was 83.8%, 86.4%, and 78.1%, respectively; however, because the datasets differ, these results cannot be compared objectively with ours. This method also has other limitations. Unlike Hilmi et al. (31), who considered the degree of redness of the pterygium, this study used only the size and location of the targets in anterior segment images, without incorporating other valuable features such as the color and transparency of the pterygium. Patient medical history was also not used, which further limits the reliability of the auxiliary diagnosis.

This study relies on medical prior knowledge: it assumes a fixed reference corneal diameter of about 12 mm to calculate the actual invasion depth, and it approximates the corneal region as a circle without considering curvature or other shape parameters. Although this simplification facilitates computation, it introduces additional error. Future work should seek more accurate area calculation methods and account for individual differences among patients to optimize the diagnostic logic.

5 Conclusion

Pterygium is a common ocular surface disorder characterized by abnormal tissue growth from the conjunctiva toward the cornea. Surgical removal is required when the pterygium encroaches significantly onto the corneal region. Accurate assessment of its pathological progression is essential for determining treatment strategies. This paper proposes an automated pterygium evaluation method that integrates deep learning models with traditional image processing algorithms, aiming to optimize diagnostic workflows and enhance efficiency. We utilized patient images captured by slit-lamp microscopy from the Ophthalmology Department of the Second Affiliated Hospital of Harbin Medical University, annotated as a dataset for model training and testing. The system was further evaluated on additional patient images to validate its effectiveness.

This study establishes a standardized quantitative assessment framework for pterygium, which is expected to address multiple clinical needs beyond basic diagnosis: (1) Longitudinal monitoring of disease progression. This is particularly crucial in telemedicine scenarios for determining the timing of surgical intervention and monitoring treatment efficacy; (2) Automated measurement significantly reduces inter-observer variability inherent in manual assessments, ensuring consistency in evaluation; and (3) By enabling digital recording and standardized grading criteria, the framework supports large-scale screening programs, facilitates extensive epidemiological studies, and allows for cross-population analysis of disease progression characteristics.

Although slit-lamp examination remains the gold standard for diagnosis, our automated system specifically addresses challenges in quantitative analysis and assessment standardization, proving particularly valuable in telemedicine settings and regions with limited specialist resources.

Data availability statement

The data analyzed in this study is subject to the following licenses/restrictions: the dataset images are exclusively sourced from the Second Affiliated Hospital of Harbin Medical University, which may limit the generalizability of the findings to other regions or institutions. The dataset contains anterior segment images with varying degrees of pterygium, which might limit the statistical power and robustness of models trained on this dataset, particularly for rare or extreme cases. All images were captured using a slit-lamp microscope in diffuse illumination mode. This specific imaging condition may restrict the applicability of the dataset to other imaging techniques or settings. The segmentation labels for pupil, cornea, and pterygium regions were manually annotated under the guidance of professional ophthalmologists. Although expert-guided, manual annotation can introduce subjectivity and potential inter-observer variability, affecting the consistency of the dataset. While patient information was removed to protect privacy, the dataset is limited to cases where patient consent was obtained. This might exclude certain patient demographics and could introduce selection bias. Requests to access these datasets should be directed to Lijun Qu, qulijun@hrbmu.edu.cn.

Ethics statement

The studies involving human participants were reviewed and approved by Medical Ethics Committee of the Second Affiliated Hospital of Harbin Medical University. All participants provided their written informed consent to participate in this study.

Author contributions

QJ: Formal analysis, Methodology, Supervision, Writing – review & editing. WL: Methodology, Resources, Supervision, Writing – review & editing. QM: Data curation, Software, Writing – original draft, Validation. LQ: Data curation, Resources, Writing – review & editing, Investigation, Supervision. LZ: Data curation, Resources, Writing – review & editing. HH: Data curation, Resources, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported by the Beijing Medical Award Foundation (YXJL-2021-0815-0547) and the Key Projection of the “Twelfth Five-Year-Plan” for Educational Science in Heilongjiang Province (GBB1211035).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Chu WK, Choi HL, Bhat AK, Jhanji V. Pterygium: new insights. Eye. (2020) 34:1047–50. doi: 10.1038/s41433-020-0786-3

2. Wang F, Ge QM, Shu HY, Liao XL, Liang RB, Li QY, et al. Decreased retinal microvasculature densities in pterygium. Int J Ophthalmol. (2021) 14:1858. doi: 10.18240/ijo.2021.12.08

3. Cornejo CAM, Levano ERM. Correlation between pterygium and dry eye: diagnostics and risk factors not considered. Arq Bras Oftalmol. (2022) 85:649–51. doi: 10.5935/0004-2749.2022-0240

4. Rezvan F, Khabazkhoob M, Hooshmand E, Yekta A, Saatchi M, Hashemi H. Prevalence and risk factors of pterygium: a systematic review and meta-analysis. Surv Ophthalmol. (2018) 63:719–35. doi: 10.1016/j.survophthal.2018.03.001

5. Fonseca EC, Rocha EM, Arruda GV. Comparison among adjuvant treatments for primary pterygium: a network meta-analysis. Br J Ophthalmol. (2018) 102:748–56. doi: 10.1136/bjophthalmol-2017-310288

6. Shahraki T, Arabi A, Feizi S. Pterygium: an update on pathophysiology, clinical features, and management. Therap Adv Ophthalmol. (2021) 13:25158414211020152. doi: 10.1177/25158414211020152

7. Benet D, Pellicer-Valero OJ. Artificial intelligence: the unstoppable revolution in ophthalmology. Surv Ophthalmol. (2022) 67:252–70. doi: 10.1016/j.survophthal.2021.03.003

8. Abràmoff MD, Lavin PT, Birch M, Shah N, Folk JC. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digital Med. (2018) 1:39. doi: 10.1038/s41746-018-0040-6

9. Lopez YP, Aguilera LR. Automatic classification of pterygium-non pterygium images using deep learning. In: VipIMAGE 2019: Proceedings of the VII ECCOMAS Thematic Conference on Computational Vision and Medical Image Processing, October 16-18, 2019, Porto, Portugal. Springer (2019). p. 391–400. doi: 10.1007/978-3-030-32040-9_40

10. Abdani SR, Zulkifley MA, Hussain A. Compact convolutional neural networks for pterygium classification using transfer learning. In: 2019 IEEE International Conference on Signal and Image Processing Applications (ICSIPA). IEEE (2019). p. 140–143. doi: 10.1109/ICSIPA45851.2019.8977757

11. Zulkifley MA, Abdani SR, Zulkifley NH. Pterygium-Net: a deep learning approach to pterygium detection and localization. Multimed Tools Appl. (2019) 78:34563–84. doi: 10.1007/s11042-019-08130-x

12. Zamani NSM, Zaki WMDW, Huddin AB, Hussain A, Mutalib HA, Ali A. Automated pterygium detection using deep neural network. IEEE Access. (2020) 8:191659–72. doi: 10.1109/ACCESS.2020.3030787

13. Fang X, Deshmukh M, Chee ML, Soh ZD, Teo ZL, Thakur S, et al. Deep learning algorithms for automatic detection of pterygium using anterior segment photographs from slit-lamp and hand-held cameras. Br J Ophthalmol. (2022) 106:1642–7. doi: 10.1136/bjophthalmol-2021-318866

14. Gan F, Chen WY, Liu H, Zhong YL. Application of artificial intelligence models for detecting the pterygium that requires surgical treatment based on anterior segment images. Front Neurosci. (2022) 16:1084118. doi: 10.3389/fnins.2022.1084118

15. Xu W, Jin L, Zhu PZ, He K, Yang WH, Wu MN. Implementation and application of an intelligent pterygium diagnosis system based on deep learning. Front Psychol. (2021) 12:759229. doi: 10.3389/fpsyg.2021.759229

16. Hung KH, Lin C, Roan J, Kuo CF, Hsiao CH, Tan HY, et al. Application of a deep learning system in pterygium grading and further prediction of recurrence with slit lamp photographs. Diagnostics. (2022) 12:888. doi: 10.3390/diagnostics12040888

17. Zheng B, Liu Y, He K, Wu M, Jin L, Jiang Q, et al. Research on an intelligent lightweight-assisted pterygium diagnosis model based on anterior segment images. Dis Markers. (2021) 2021:7651462. doi: 10.1155/2021/7651462

18. Liu Y, Xu C, Wang S, Chen Y, Lin X, Guo S, et al. Accurate detection and grading of pterygium through smartphone by a fusion training model. Br J Ophthalmol. (2024) 108:336–42. doi: 10.1136/bjo-2022-322552

19. Wan C, Shao Y, Wang C, Jing J, Yang W. A novel system for measuring pterygium's progress using deep learning. Front Med. (2022) 9:819971. doi: 10.3389/fmed.2022.819971

20. Saad A, Zamani N, Zaki W, Huddin A, Hussain A. Automated pterygium detection in anterior segment photographed images using deep convolutional neural network. Int J Adv Trends Comput Sci Eng. (2019) 8:225–232. doi: 10.30534/ijatcse/2019/3481.62019

21. Abdani SR, Zulkifley MA, Moubark AM. Pterygium tissues segmentation using densely connected deeplab. In: 2020 IEEE 10th symposium on computer applications & industrial electronics (ISCAIE). IEEE (2020). p. 229–232. doi: 10.1109/ISCAIE47305.2020.9108822

22. Abdani SR, Zulkifley MA, Zulkifley NH. Group and shuffle convolutional neural networks with pyramid pooling module for automated pterygium segmentation. Diagnostics. (2021) 11:1104. doi: 10.3390/diagnostics11061104

23. Zhu S, Fang X, Qian Y, He K, Wu M, Zheng B, et al. Pterygium screening and lesion area segmentation based on deep learning. J Healthc Eng. (2022) 2022:3942110. doi: 10.1155/2022/3942110

24. Hu J, Shen L, Sun G. Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. (2018). p. 7132–7141. doi: 10.1109/CVPR.2018.00745

25. Woo S, Park J, Lee JY, Kweon IS. CBAM: convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV). (2018). p. 3–19. doi: 10.1007/978-3-030-01234-2_1

26. Wang Q, Wu B, Zhu P, Li P, Zuo W, Hu Q. ECA-Net: efficient channel attention for deep convolutional neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. (2020). p. 11534–11542. doi: 10.1109/CVPR42600.2020.01155

27. Guo MH, Lu CZ, Liu ZN, Cheng MM, Hu SM. Visual attention network. Comput Visual Media. (2023) 9:733–52. doi: 10.1007/s41095-023-0364-2

28. Dai J, Qi H, Xiong Y, Li Y, Zhang G, Hu H, et al. Deformable convolutional networks. In: Proceedings of the IEEE International Conference on Computer Vision. (2017). p. 764–773. doi: 10.1109/ICCV.2017.89

29. Chen J, Lu Y, Yu Q, Luo X, Adeli E, Wang Y, et al. TransUNet: transformers make strong encoders for medical image segmentation. arXiv preprint arXiv:2102.04306. (2021).

30. Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, et al. Attention U-Net: learning where to look for the pancreas. arXiv preprint arXiv:1804.03999. (2018).

Keywords: pterygium, semantic segmentation, deep learning, curve fitting, AI-based diagnostic

Citation: Ji Q, Liu W, Ma Q, Qu L, Zhang L and He H (2025) A semantic segmentation-based automatic pterygium assessment and grading system. Front. Med. 12:1507226. doi: 10.3389/fmed.2025.1507226

Received: 22 January 2025; Accepted: 18 February 2025;

Published: 13 March 2025.

Edited by:

Weihua Yang, Southern Medical University, China

Reviewed by:

Xiaoqun Dong, Michigan State University, United States

Baikai Ma, Peking University Third Hospital, China

Copyright © 2025 Ji, Liu, Ma, Qu, Zhang and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lijun Qu, qulijun@hrbmu.edu.cn

†These authors have contributed equally to this work and share first authorship

Qingbo Ji1,2†
