- 1German Research Center for Artificial Intelligence GmbH, Kaiserslautern, Germany

- 2Intelligentx GmbH (intelligentx.com), Kaiserslautern, Germany

- 3Department of Computer Science, Technical University of Kaiserslautern, Kaiserslautern, Germany

Deoxyribonucleic acid (DNA) serves as fundamental genetic blueprint that governs development, functioning, growth, and reproduction of all living organisms. DNA can be altered through germline and somatic mutations. Germline mutations underlie hereditary conditions, while somatic mutations can be induced by various factors including environmental influences, chemicals, lifestyle choices, and errors in DNA replication and repair mechanisms which can lead to cancer. DNA sequence analysis plays a pivotal role in uncovering the intricate information embedded within an organism's genetic blueprint and understanding the factors that can modify it. This analysis helps in early detection of genetic diseases and the design of targeted therapies. Traditional wet-lab experimental DNA sequence analysis through traditional wet-lab experimental methods is costly, time-consuming, and prone to errors. To accelerate large-scale DNA sequence analysis, researchers are developing AI applications that complement wet-lab experimental methods. These AI approaches can help generate hypotheses, prioritize experiments, and interpret results by identifying patterns in large genomic datasets. Effective integration of AI methods with experimental validation requires scientists to understand both fields. Considering the need of a comprehensive literature that bridges the gap between both fields, contributions of this paper are manifold: It presents diverse range of DNA sequence analysis tasks and AI methodologies. It equips AI researchers with essential biological knowledge of 44 distinct DNA sequence analysis tasks and aligns these tasks with 3 distinct AI-paradigms, namely, classification, regression, and clustering. It streamlines the integration of AI into DNA sequence analysis tasks by consolidating information of 36 diverse biological databases that can be used to develop benchmark datasets for 44 different DNA sequence analysis tasks. To ensure performance comparisons between new and existing AI predictors, it provides insights into 140 benchmark datasets related to 44 distinct DNA sequence analysis tasks. It presents word embeddings and language models applications across 44 distinct DNA sequence analysis tasks. It streamlines the development of new predictors by providing a comprehensive survey of 39 word embeddings and 67 language models based predictive pipeline performance values as well as top performing traditional sequence encoding-based predictors and their performances across 44 DNA sequence analysis tasks.

1 Introduction

Deoxyribonucleic acid (DNA) functions as the blueprint of life as it contains essential instructions for the development, operation, growth, and reproduction of all living organisms (1). Organisms utilize cell division process to grow from fertilized egg to a multicellular adult. Throughout an organism's lifespan, the health of tissues and organs is maintained through a continuous cycle of cell replacement. In this cycle, worn-out or damaged cells are systematically replaced with new, healthy cells. When a cell divides, each new cell requires an exact copy of the DNA to function correctly (1). DNA replication and repair processes ensure that each daughter cell receives the same genetic information as the parent cell, which is essential for the survival and proper functioning of all living organisms (2). DNA sequence changes occur through two fundamental mechanisms: germline mutations inherited from parents and somatic mutations acquired during an individual's lifetime (3). Germline mutations are present in all cells and can be passed to offspring, underlying hereditary conditions. Somatic mutations occur post-conception and can be caused by various factors including internal factors such as cellular metabolites, replication errors, and spontaneous chemical changes and external factors such as ionizing radiation, chemical mutagens, environmental pollutants, and lifestyle factors (3, 4). Understanding these distinct mutation types is crucial as they require different analytical approaches. Germline mutation analysis typically involves comparing an individual's sequence to population databases, while somatic mutation analysis often requires comparing affected tissue to unaffected tissue from the same individual. Regardless of type, mutations in genetic information can lead to complex diseases and disorders such as cancer (1). To detect susceptibility, initiation, and progression of such diseases at early stages, scientists perform large-scale DNA sequence analysis (5). Through DNA sequence analysis, scientists can decode the intricate genetic data by uncovering the origins of genetic mutations and disorders (6). In addition, this analysis is crucial for the development of targeted therapies and the advancement of personalized medicine (1).

DNA sequence analysis through traditional wet-lab experiments is expensive and time-consuming (7, 8). This is because wet-lab experiments require specialized equipment, e.g., PCR machines, and costly reagents (e.g., enzymes and chemicals). Detailed experiments on multiple patient samples may take weeks or even months. Moreover, experimentation requires careful execution and validation to prevent incorrect interpretations of genetic mutations due to errors or inconsistencies. The influx of next-generation sequencing and high-throughput approaches has given rise to huge sequences data. This abundance of genomic information has created both opportunities and challenges for comprehensive analysis. To expedite genomics sequence analysis, researchers are analyzing publicly available sequences data by harnessing the capabilities of Artificial Intelligence (AI) methods. It is important to mention that AI approaches serve to augment rather than replace experimental methods in DNA sequence analysis. For example, in precision medicine, AI models trained on large genomic databases can help to interpret patient-specific data by identifying relevant patterns and potential functional impacts. However, patient-specific experimental data remain essential, particularly for understanding unique aspects of individual cases such as tumor mutations. Thus, AI methods provide a valuable tool for generating hypotheses and guiding experimental design while working in concert with traditional molecular biology approaches.

While DNA sequence analysis encompasses a broad range of computational approaches in bioinformatics, from genome assembly and variant detection to evolutionary analysis and microbiome studies, this review focuses specifically on DNA sequence analysis tasks that involve pattern recognition and prediction, where artificial intelligence approaches can be effectively applied. These tasks include predicting functional elements, identifying regulatory regions, and classifying sequence types applications where AI can learn complex sequence patterns that may not be apparent through traditional computational methods.

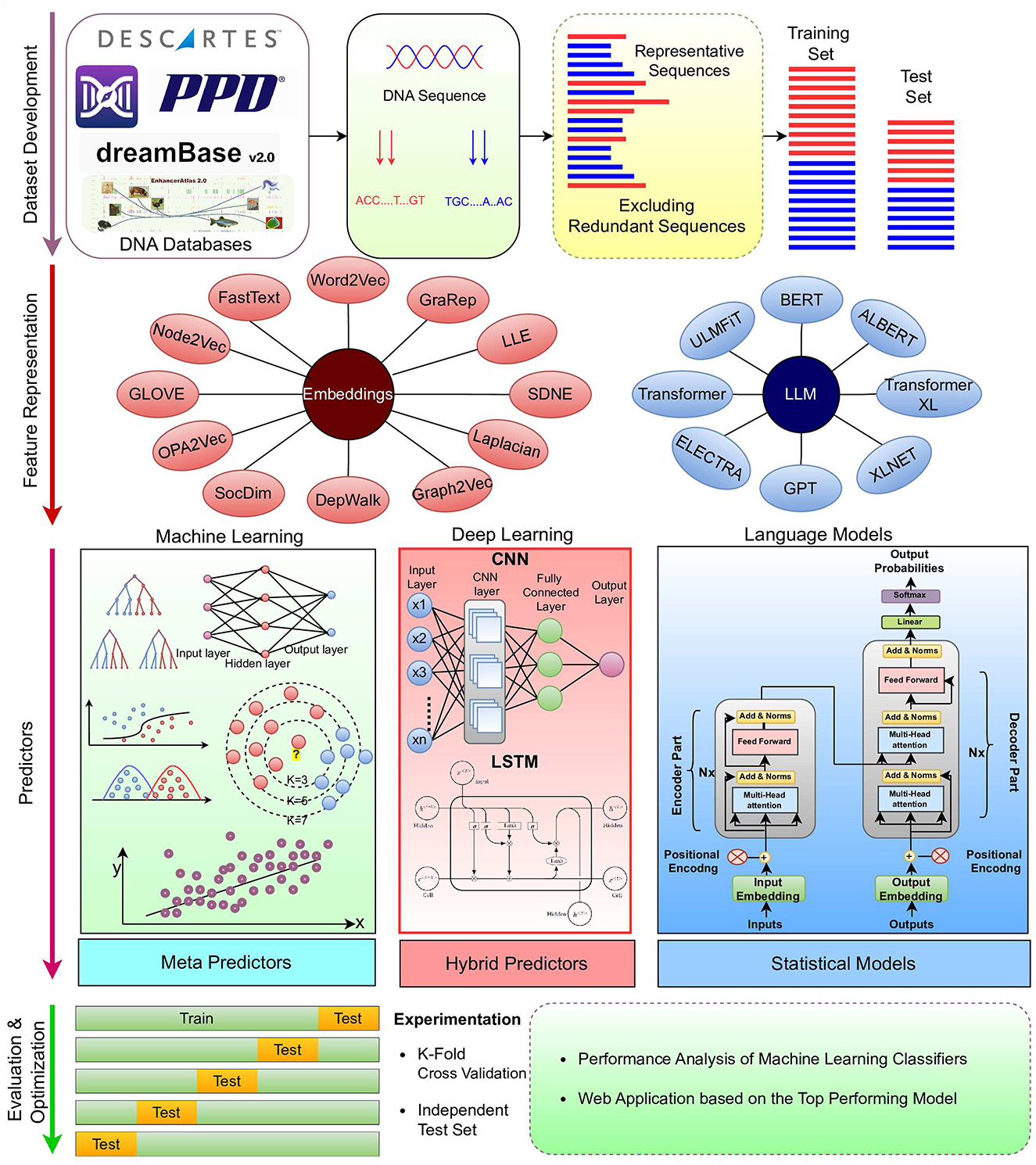

Most of the AI-based genomics sequence analysis methods fall under the hood of regression and classification paradigms (9–11). Figure 1 illustrates a unified workflow of AI-based predictive pipelines for genomics sequence analysis tasks. It is evident in the Figure that, overall, AI predictive pipelines can be divided into 4 different stages (12). First stage emphasizes on the collection and development of quality benchmark datasets using public databases (13). Second stage focuses on the characterization of raw DNA sequences in terms of statistical vectors using different kinds of sequence encoders (14–16). This is primarily done to address the inherent dependency of AI predictive pipelines on statistical vectors (17–19). In entire predictive pipeline, this stage is the most crucial one because highly informative and discriminative statistical vectors help the predictors to learn comprehensive useful patterns for accurate prediction (14–16). It is widely accepted that with quality statistical vectors, even simple machine learning predictors can produce promising performance. On contrary, with less informative and discriminative statistical representations, even sophisticated deep learning predictors fail to produce decent performance (17–19).

There is a marathon of developing powerful sequence encoders for generating highly informative and discriminative statistical vectors of raw sequences. To date, hundreds of sequence encoding methods have been developed (12) that can be broadly classified into four categories: Physico-chemical properties based methods, statistical methods (12, 20), neural word embedding methods (21), and language models (22). While physico-chemical properties based methods generate statistical vectors of raw sequences using pre-computed physical and chemical values of nucleotides, statistical methods rely on occurrence frequencies of individual or group of nucleotides with DNA sequences (12). Physico-chemical properties based and statistical methods capture the intrinsic characteristics of biological sequences, such as nucleotide composition and distributional information. However, these methods lack to capture complex relationships of nucleotides such as long range interactions of nucleotides in the sequences (12, 23). In addition, these methods may not fully capture the semantic and functional similarities between sequences (12, 23). Neural word embedding methods learn distributed representations of nucleotides in the continuous vector space. These methods capture the syntactic and semantic similarities of nucleotides by mapping them to vectors in a high-dimensional space. This enables the representation of residues with similar contexts to be closer together in the vector space. Neural word embeddings methods efficiently capture semantic and contextual information of nucleotides. However, these methods lack to efficiently handle different contexts of same nucleotides (21). Language models also learn representation of individual nucleotides or groups of nucleotides (k-mers) in an unsupervised fashion by predicting masked nucleotides based on the context of surrounding nucleotides. Language models based methods capture complex nucleotide relations; however, these methods require large amount of sequence data for training and hyperparameter optimization (22).

Third stage includes predictors that make best use of statistical vectors produced by second stage to extract informative patterns for creating decision boundaries. Overall, these predictors can be classified into two categories: machine learning and deep learning (12). Machine learning predictors require less data and computational power for training. However, these predictors lack to capture comprehensive complex relationships of nucleotide (12), whereas deep learning predictors (24) are capable to learn highly complex relationships of nucleotide. However, these predictors require a huge amount of training data and computational power (12). In fourth stage, comprehensive evaluation of predictors using different experimental settings and evaluation measures is performed (24).

AI researchers have been endeavoring to complement wet-lab-based DNA sequence analysis methods by incorporating more innovative sequence encoders at second stage and predictors at third stage of predictive pipeline. However, there is still ample room for the development of more powerful predictive pipelines. Different fields such as Natural Language Processing (NLP), Energy, and Computer Vision have seen substantial progress in the development of diverse predictive pipelines. Whereas, the DNA sequence analysis field is known for its wide range of tasks, still the progress of AI applications in this area is hindered mainly due to the lack of integration between molecular biologist and AI experts. For instance, the field of NLP has made strides with multi-task learning predictors. However, the DNA sequence analysis field lags behind due to AI experts limited understanding of the diverse range of DNA analysis tasks that could support the development of multi-task learning predictors. Furthermore, the efficacy of AI applications hinges on the availability of benchmark datasets. Although developing datasets in DNA sequence analysis is relatively straightforward due to abundance of public databases which contain raw biological sequences along with associated labels, there is a tendency among researchers to overlook existing benchmark datasets, develop new benchmark datasets, and neglect comprehensive performance comparisons with existing predictors. This oversight often complicates the determination of the most effective predictors for specific tasks. For example, up to date, according to our best of knowledge, approximately 127 predictive models have been developed and published in 59 different conferences and journals for widely studied 44 different DNA sequence analysis tasks. To enhance the performance of predictive models developed for diverse DNA sequence analysis tasks, researchers need to conduct a comprehensive examination of existing literature to find most effective algorithms for different stages of new predictive pipelines. With an aim to expedite progress in the development of fair and robust AI applications for DNA sequence analysis, numerous review articles have emerged. However, these reviews typically focus on isolated tasks rather than providing a holistic overview. Considering the need and significance of a comprehensive study that bridges the gap between AI specialists and biologists, this paper makes manifold contributions:

• It bridges the gap between DNA sequence analysis and artificial intelligence fields by presenting a diverse range of DNA analysis tasks and AI methodologies.

• It empowers AI researchers by equipping them with essential biological knowledge related to 44 distinct DNA sequence analysis tasks. It categorizes 44 different DNA sequence analysis tasks into 8 different categories on the basis of sequence analysis goals. This categorization provides a structured overview to biologists and AI researchers in navigating the complex landscape of genomics studies more efficiently.

• It streamlines the integration of AI into DNA sequence analysis by consolidating information of 36 diverse biological databases being used to develop benchmark datasets for 44 different DNA sequence analysis tasks.

• It sheds light on the nature of 44 different DNA sequence analysis tasks and categorizes them into three primary categories: regression, classification, and clustering, and three secondary categories: binary classification, multi-class classification, and multi-label classification. This categorization assists computer scientists in efficient selection of most suitable algorithms for each task category, development of more effective and specialized computational frameworks, and to significantly accelerate advancements in AI-driven genomic research.

• It provides insights of 140 benchmark datasets related to 44 distinct DNA sequence analysis tasks to ensure performance comparisons between new and existing AI predictors.

• It presents word embeddings and language models applications for 44 distinct DNA sequence analysis tasks.

• It streamlines the development of new predictors by providing a comprehensive survey of current top predictors, their performances across 44 DNA sequence analysis tasks, and their public accessibility. This comprehensive overview serves as a valuable resource for researchers developing and validating predictive pipelines in computational genomics.

It is important to note that our categorization of 44 DNA sequence analysis tasks emerges from the AI and computational biology literature rather than representing a definitive biological taxonomy. We have organized these tasks into biologically relevant groupings based on their functional and analytical similarities, while recognizing that many tasks span multiple biological domains. This organization aims to bridge the gap between computational methodologies and biological applications, although we acknowledge that future refinements with deeper domain expert input would further enhance this framework.

2 Research methodology

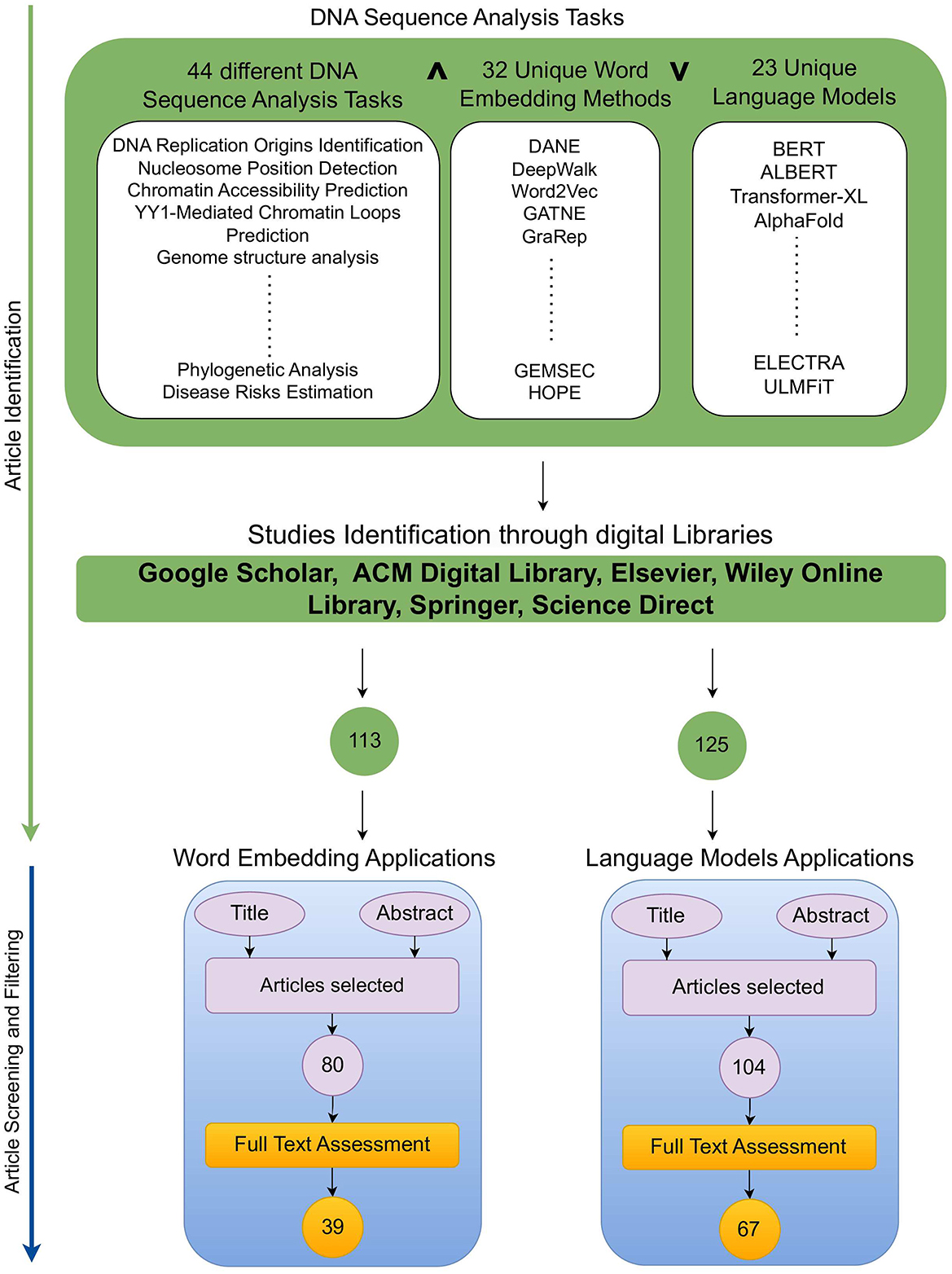

This section provides a detailed overview of the research methodology used to identify articles focused on word embeddings and large language models applications in DNA sequence analysis landscape (10, 11). Figure 2 illustrates two stage processes for article identification and selection.

2.1 Article searching

To identify a wide range of relevant scholarly articles, initial stage involves formulation of quality search queries using different keywords. In Figure 2, article identification module contains keywords cell of three different categories, namely, DNA tasks, word embedding methods, and Language models. To formulate quality search queries, keywords within same category are combined using OR ∨ operator, while keywords of different categories are combined using AND ∧ operator. For instance, few sample search queries include DNA Replication Origins Identification using BERT language model, DNA Replication Origins Identification using DeepWalk word embedding method, etc. To acquire relevant papers, formulated search queries are executed on academic search engines such as Google Scholar,1 ACM Digital Library,2 Elsevier,3 IEEEXplore,4 Wiley Online Library,5 Springer,6 and ScienceDirect.7 In addition, snowballing method is employed to explore sources referenced in extracted papers to identify more research articles. This technique is particularly useful in research contexts where access to resources is limited, such as niche topics or hard-to-reach communities, as it expands the pool of resources for a study. Execution of queries across multiple academic databases acquired approximately 238 research articles which are screened and filtered in second stage.

2.2 Article screening and filtering

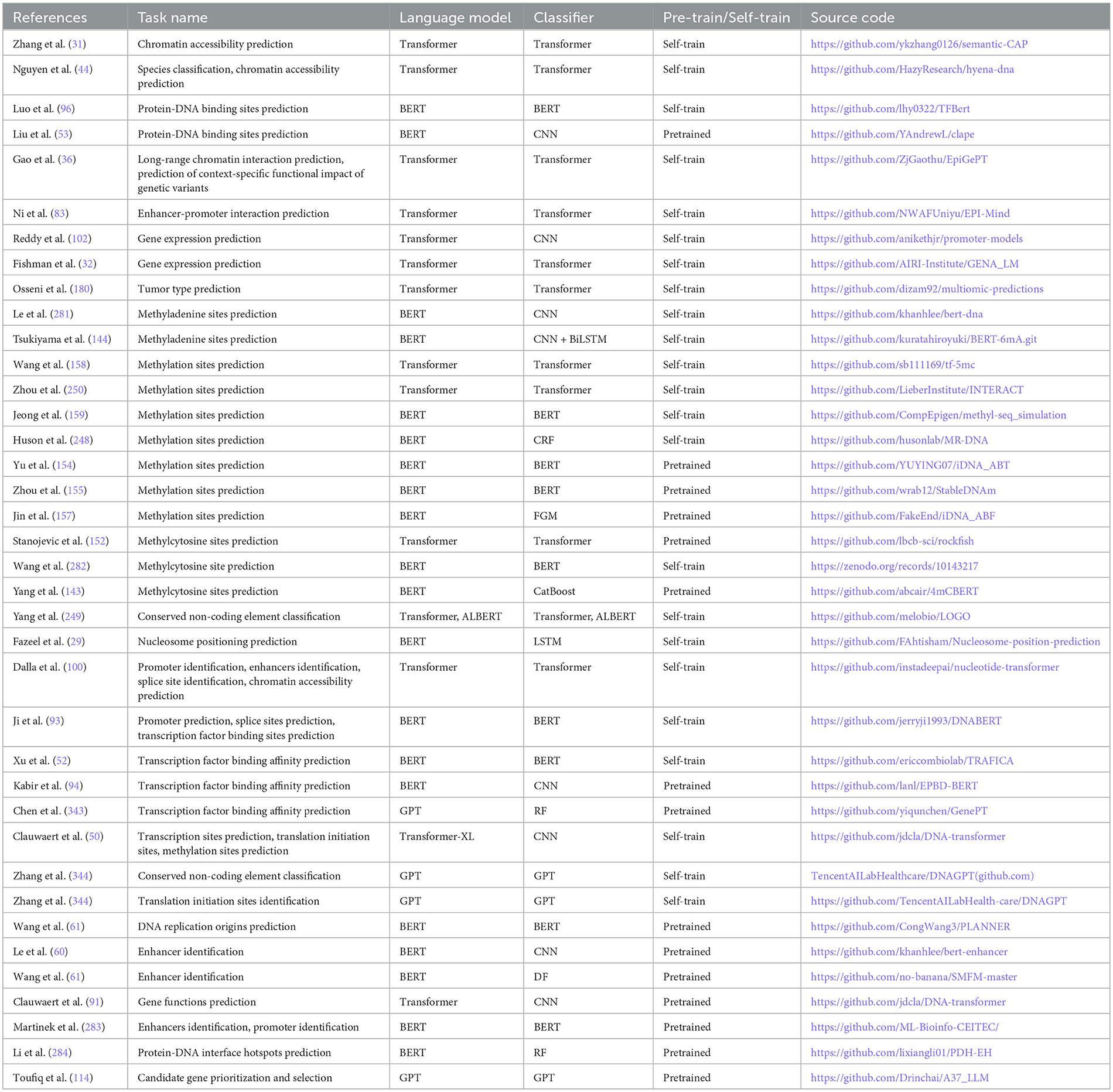

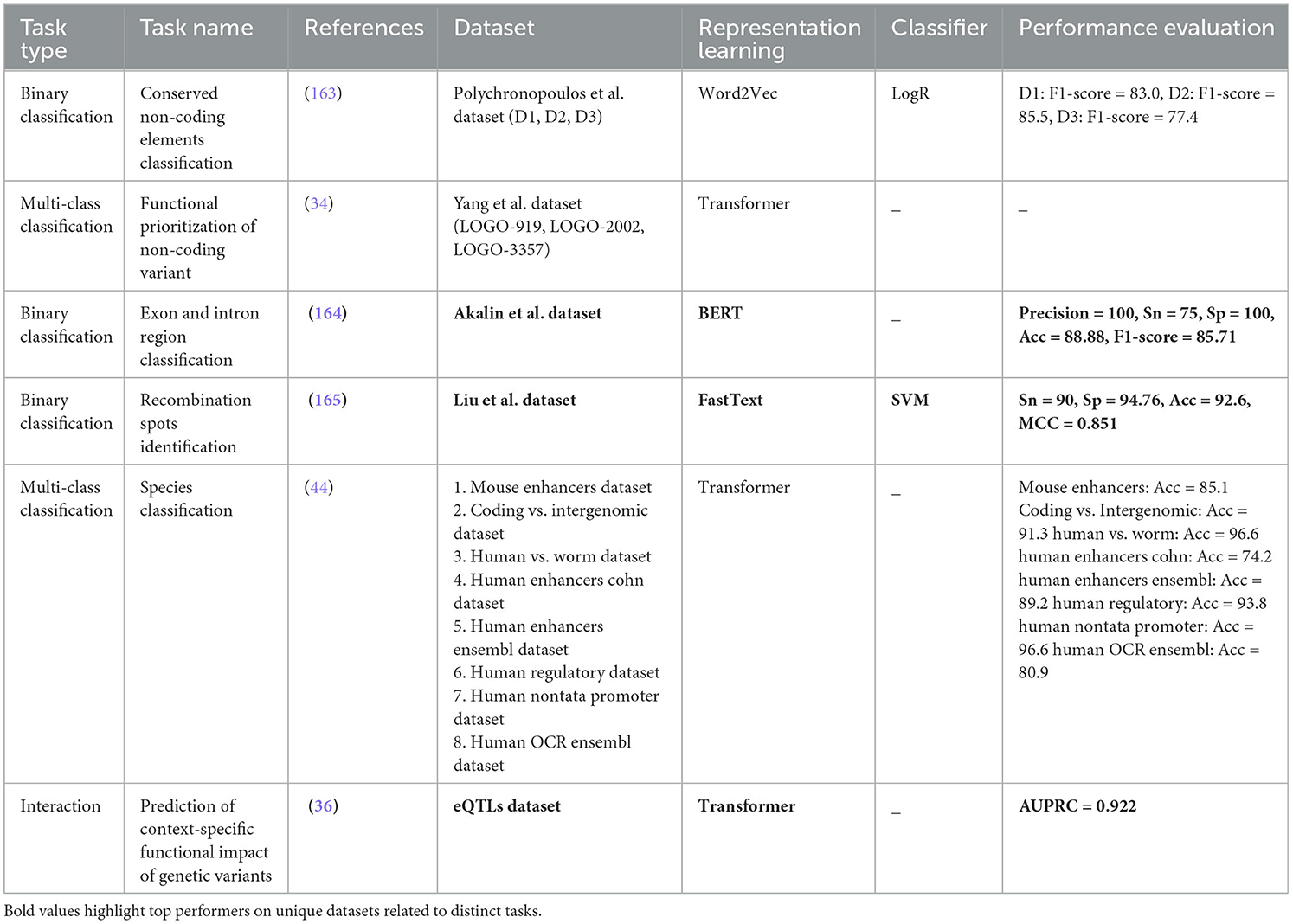

Second stage selects most relevant articles in two steps. In the first step, titles and abstracts of 113 word embeddings and 125 large language models related studies were reviewed. This review analysis identified 80 word embeddings and 104 language models related relevant articles. Second step involves full-text assessment of articles selected in first step, resulting in 39 word embeddings and 67 language models related articles.

Our selection criteria focused on DNA sequence analysis tasks where (1) raw DNA sequence data serve as the primary input, (2) AI methods extract patterns from these sequences, and (3) the analysis predicts specific biological properties or functions. This allowed us to examine AI's impact on genomic sequence interpretation while acknowledging that bioinformatics encompasses many other types of analyses not covered here.

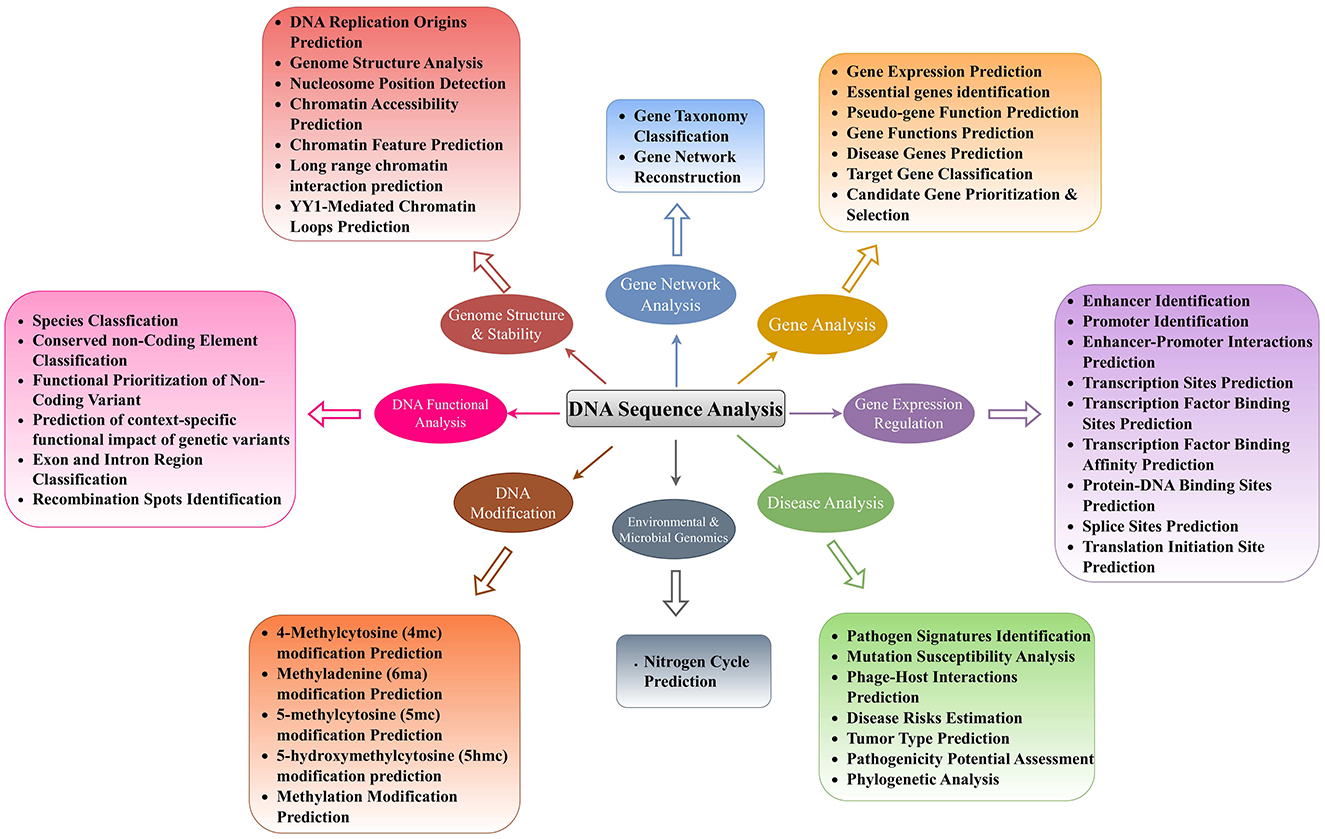

3 Biological foundations of DNA sequence analysis goals and tasks

With an aim to find molecular basis of diseases initiation and progression, their effective detection at early stages, and development of potent drugs, researchers are trying to understand DNA sequence language by performing a variety of sequence analysis tasks. Every unique DNA sequence analysis task aims to enhance the understanding of one specific aspect of DNA, and a bunch of tasks can enhance the understanding of specific major biological goal. To summarize the biological background of 44 distinct DNA sequence analysis tasks, we have categorized them into 8 major biological goals. Figure 3 depicts the biological categorization of 44 unique DNA sequences analysis tasks into 8 different goals, namely, genome structure and stability, gene expression regulation, gene analysis, gene network analysis, DNA modification prediction, DNA functional analysis, environmental and microbial genomics, and disease analysis. This biologically informed organization was developed by analyzing both the computational biology literature and aligning with biological processes in genomics research. While computational researchers often approach these tasks through the lens of AI methodologies, we have endeavored to categorize them according to their biological relevance and function. Our categorization into 8 major biological goals represents an attempt to bridge computational approaches with biological understanding. Although we recognize the inherent complexity and interconnectedness of biological systems which indicates that many tasks could reasonably be classified in multiple categories, thus, this categorization represents one of several possible ways to organize these tasks. This categorization reflects the diverse biological applications where AI-based sequence analysis has made significant contributions. However, we recognize that DNA sequence analysis in bioinformatics extends beyond these pattern recognition tasks to include other critical applications such as genome assembly, variant detection, and population genetics studies. We specifically examine how modern AI approaches are transforming our ability to extract meaningful biological insights from sequence data through pattern-based prediction tasks.

Figure 3. Precise classification of 44 unique DNA sequence analysis tasks in 8 major biological goals.

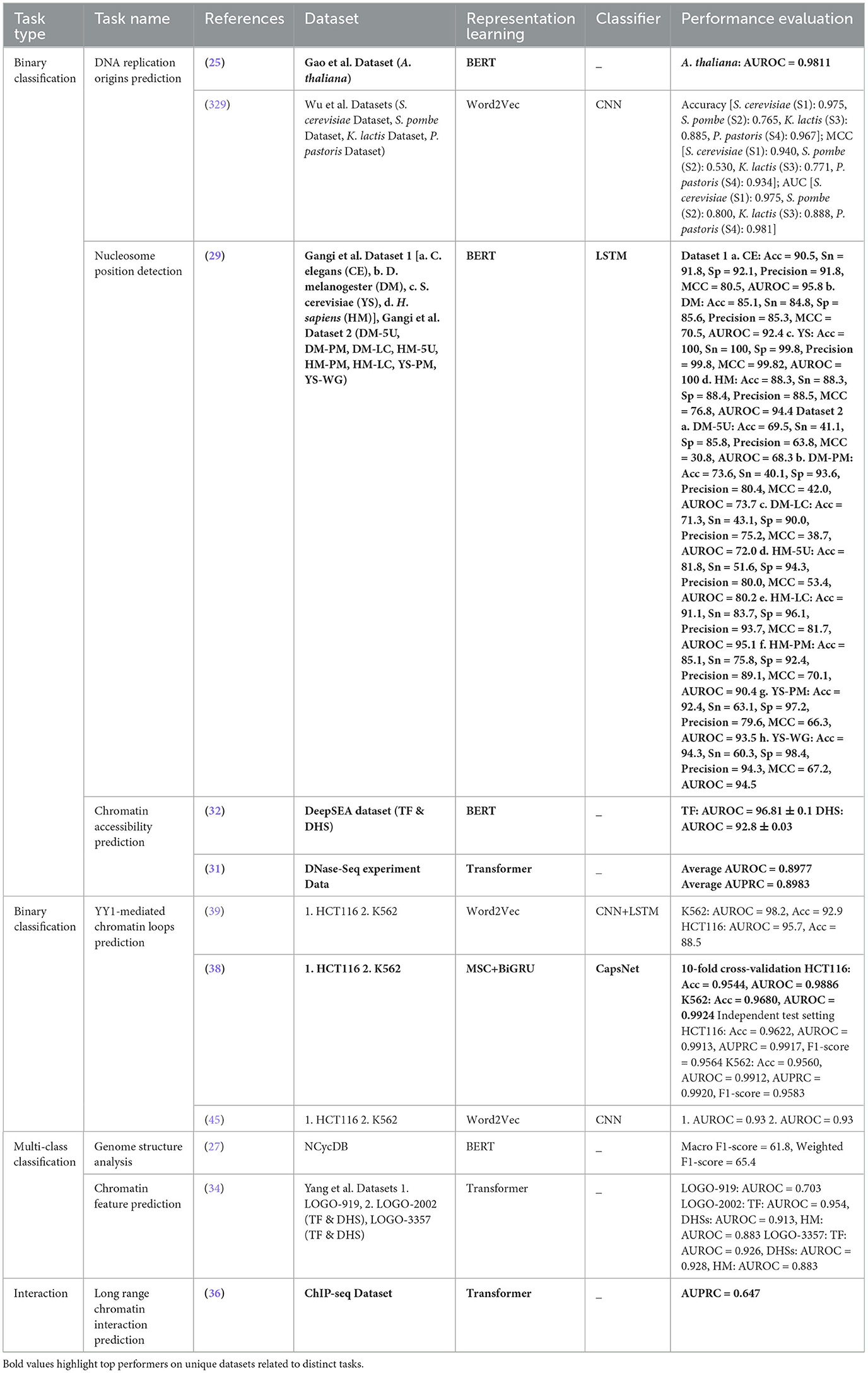

In living organisms, DNA is packaged at multiple levels to condense vast genetic information into a well-organized structure within the cell nucleus (1). At the first level, DNA is wrapped around histone octamers also known as nucleosomes. These nucleosomes further assemble into chromatin, which then folds and condenses into an even more compact structure known as the genome (1). The exploration of genome structure and stability is pivotal in understanding the biological intricacies and potential therapeutic avenues. Genome structure can affect how genes are accessed and used. Disruptions in this structure, such as missing or misplaced DNA sections, or changes in how tightly DNA is wrapped around histone octamers, or irregularities in nucleosomes positions can lead to genes being turned on or off at the wrong times or in the wrong amounts (1). This can cause various diseases and biological disorders. DNA is an instruction manual that controls biological functioning within living organisms. If genome gets unstable, the manual gets messed up such as typos and missing sections. It can lead to uncontrolled growth of the cells (cancer) and improper working of the genes (many diseases) (1). In a nutshell, a stable genome possesses clear, complete instruction manual, essential for keeping biological functions working smooth. To better understand genome structure and stability, it is essential to explore various tasks such as DNA Replication Origins Prediction (25, 26), Genome Structure Analysis (27, 28), Nucleosome Position Detection (29, 30), Chromatin Accessibility Prediction (31–33), Chromatin Feature Prediction (31, 34, 35), Long-range Chromatin Interaction Prediction (36, 37), and YY1-Mediated Chromatin Loops Prediction (38, 39). These tasks are crucial for comprehending the intricate mechanisms governing genetic information processing and regulation within cells (40).

DNA replication origin prediction is fundamental as accurate replication of the genome is vital for maintaining genomic stability (25). The prediction of replication origins involves calculating DNA structural properties to identify sites crucial for initiating DNA replication (25). Understanding where these sites are located and how they are specified is essential for comprehending DNA replication and ensuring genome integrity (41). Genome structure analysis plays a pivotal role in deciphering the organization and arrangement of genetic material within the cell (27). By analyzing the structural features of the genome, researchers can gain insights into the functional and spatial organization of chromosomes, aiding in the identification of genomic elements involved in gene regulation and phenotypic variations (27, 42). Furthermore, nucleosome position detection is essential for understanding how nucleosomes, the basic units of genome, are arranged along the DNA strand (29, 43). This information is crucial for elucidating gene regulation mechanisms and chromatin dynamics within the cell (29, 43). Chromatin accessibility prediction is a key task that involves determining the regions of chromatin that are accessible for transcription factors and other regulatory proteins to bind (31–33). Prediction of chromatin accessibility across different cellular contexts provides valuable insights into gene regulation and chromatin dynamics (31–33). Chromatin feature prediction complements accessibility prediction by identifying specific chromatin features and epigenetic markers that influence gene expression and regulatory processes (31, 34, 35, 44). These features include transcription factor (TF) binding sites, DNase I-hypersensitive sites (DHS), and histone marks (HM). By understanding these features, researchers can unravel the mechanisms underlying chromatin regulation and gene expression (34). Long-range chromatin interactions make bridges between distant enhancers and promoters. These interactions enable interactions between enhancers and promoters by bringing them closer to each other (36, 37). YY1-mediated chromatin loop prediction provides comprehensive understanding about gene regulation (38, 39, 45). YY1 is a protein that makes loop between enhancers and promoters. These loops are essential for gene regulation, and by predicting these loops, we can see which genes can be controlled through YY1 protein (38, 39, 45). This knowledge is valuable for understanding diseases where gene regulation goes wrong. To sum up, only through multi-dimensional exploration of genome structure and stability, researchers can discriminate healthy cellular processes from malfunctioned processes, find the root causes of diseases, and develop potent therapies.

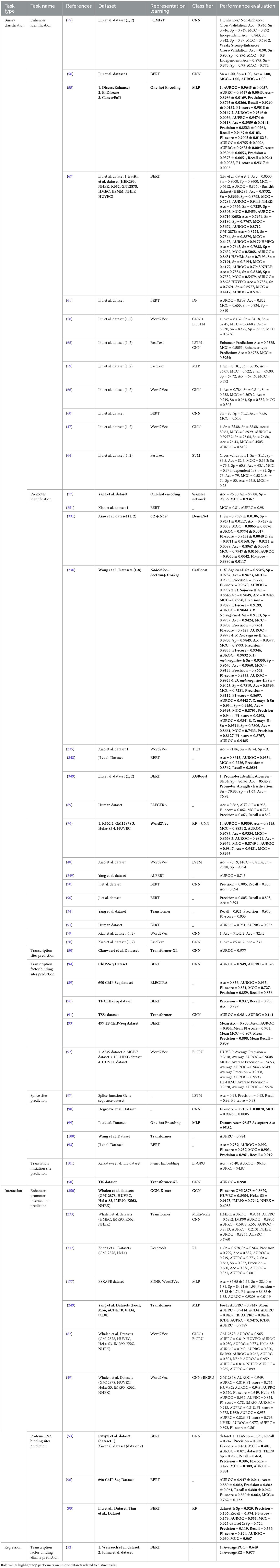

Another major goal of molecular biologists behind is gene expression regulation. Gene expression regulation provides fundamental insights into how genes are activated or repressed in response to various cellular cues (46). Specifically, researchers are trying to unravel the intricate mechanisms that control when and up to what extent specific genes are turned on or off in different cells and tissues (46). This knowledge forms the basis for understanding the functional behavior of genes in different biological contexts and sets the stage for further analyses. Hence, it holds immense promise for scientists and pharmaceutical industries. This helps scientists to detect irregularities in normal gene expression regulation, the way diseases develop at the molecular level, and identify potential drug targets (46). Furthermore, this understanding can assist pharmaceutical industries to develop improved diagnostic tools, innovative personalized therapies, and targeted interventions, which will ultimately contribute to advancements in personalized healthcare (46). In addition, it can provide a deeper understanding of biological systems which can lead to breakthroughs in biotechnology (46). For better understanding of gene expression regulation, researchers are performing nine different DNA sequence analysis tasks, namely, enhancer identification (47), promoter identification (48), enhancer-promoter interactions prediction (49), transcription site prediction (50), transcription factor binding site prediction (51), transcription factor binding affinity prediction (52), protein-DNA binding site prediction (53), splice site prediction (53), and translation initiation site prediction (54). Enhancers (47, 55–75) and promoters identification (48, 76–81), along with their interactions (82–86) prediction are important to decipher a complex control panel for gene expression (47–49). Enhancers are known as distant switches of genes, while promoters are the landing sites where gene activation starts. Identification of these elements and predicting how they loop together provide a comprehensive understanding of gene regulation, including which genes are activated or repressed, the intensity of their expression, and the specific cell types involved (87, 88). This knowledge reveals the intricate regulatory code that governs gene expression and offers valuable insights into the mechanisms underlying normal cellular function as well as the dysregulation that may contribute to various diseases.

Furthermore, prediction of different genomic sites including transcription sites (50), transcription factor binding sites (89–93), transcription factor binding site affinity (52), protein-DNA binding site (53, 94–96), splice site (93, 97–100), and translation initiation site (50, 101) provide deep insights into gene expression regulation. A transcription site refers to the specific location on the DNA where the process of transcription takes place. Transcription is the synthesis of RNA from a DNA template, and the transcription site represents the region where the RNA polymerase enzyme binds and initiates the transcription process, whereas transcription factor binding sites are specific DNA sequences where transcription factors (proteins), that regulate gene expression, bind. These binding sites are typically located near the transcription start site and are recognized by transcription factors to control the initiation or repression of transcription. In contrast, transcription factor binding site affinity refers to the strength or affinity with which a transcription factor binds to its specific binding site on DNA. It represents the likelihood of a transcription factor binding to its target site and influencing gene expression. A protein-DNA binding site refers to any region on the DNA where a protein binds. This can include transcription factors, as mentioned earlier, as well as other proteins involved in various cellular processes such as DNA replication, repair, and chromatin remodeling. Splice sites are specific sequences within a gene's DNA that mark the boundaries of introns and exons. During the process of RNA splicing, introns are removed from the pre-mRNA molecule, and exons are joined together to form the mature mRNA. Splice sites are essential for the accurate and precise splicing of RNA. Translation initiation site (TIS) is the specific location on the mRNA molecule where the process of translation begins. TIS prediction seems like a RNA sequence analysis task; however, in molecular biology research, to study gene expression, researchers are synthesizing complementary DNA (cDNA) data from messenger RNA (mRNA) template through a process called reverse transcription. In the context of cDNA data, the translation initiation site (TIS) represents the position where the ribosome, the cellular machinery responsible for protein synthesis, binds to the mRNA to initiate translation. The TIS is typically identified by the presence of specific start codons, such as AUG, which serve as signals for the ribosome to start protein synthesis.

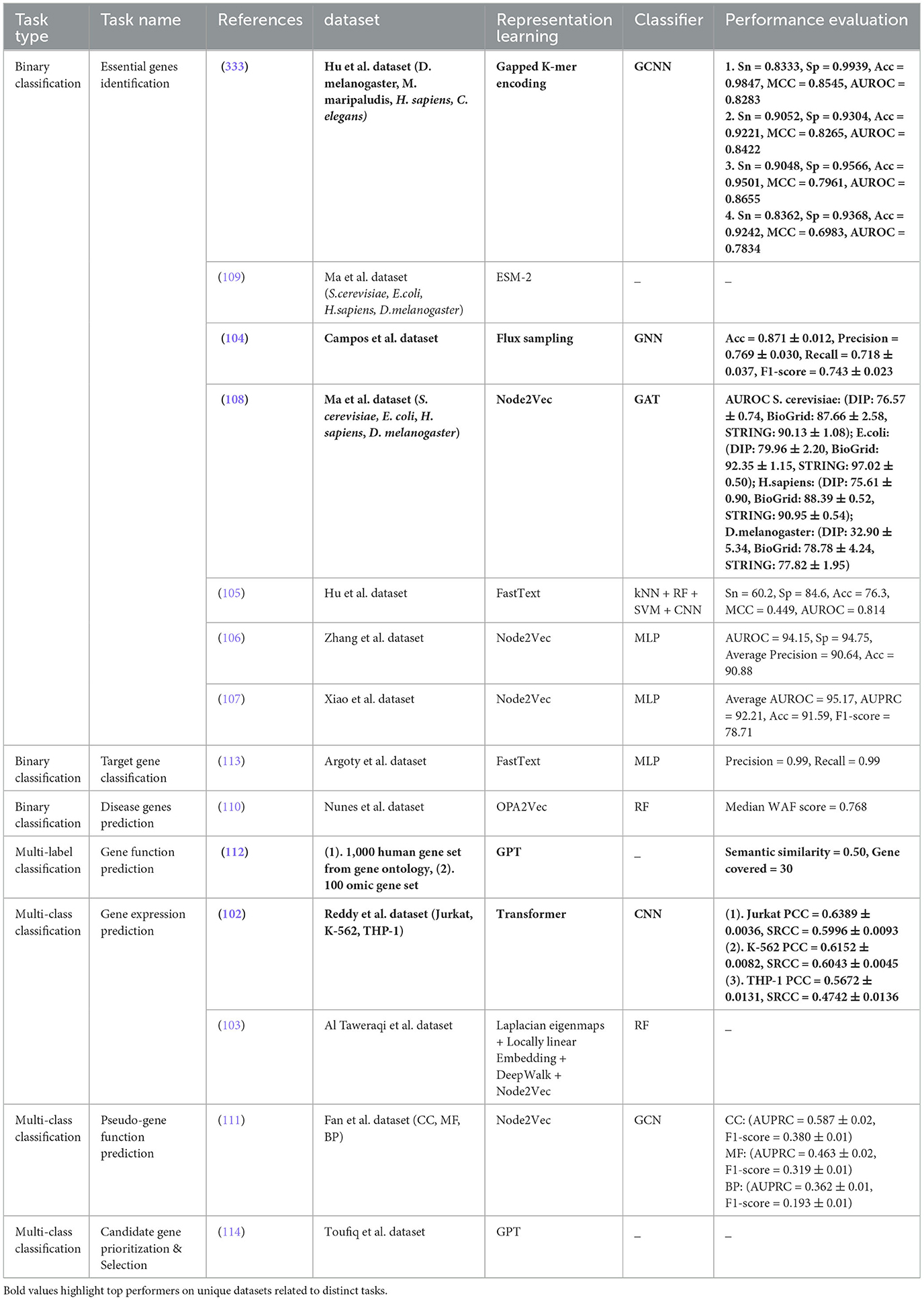

To better understand gene functions and their roles in disease initiation, researchers are exploring various aspects such as gene expression prediction (102, 103), identification of essential (104–109) and disease-specific genes (110), gene function prediction (111, 112), pseudo-gene function prediction (111), target gene classification (113), and candidate gene prioritization (114). Overall together, these tasks provide a comprehensive platform for disease diagnosis and development of treatment strategies by uncovering disease mechanisms, identifying potential therapeutic targets, and organizing genes into functional categories. Specifically, gene expression prediction provides useful information about the level of gene activity in different cells or tissues (115). This task is vital for understanding the molecular mechanisms underlying complex diseases such as cancer and identifying potential therapeutic targets. Essential gene identification is another critical task in gene analysis that helps researchers pinpoint genes that are crucial for an organism's survival and development (116, 117). This task is particularly important in understanding gene function and the genetic basis of various disorders. Gene function prediction elucidates the roles of genes in different pathways and biological processes and provides valuable insights into disease mechanisms and potential therapeutic interventions.

Apart from gene function prediction, pseudo-gene function prediction has gained a lot of attention as a critical task in gene analysis (111). Pseudogenes were once thought to be useless DNA because they cannot code for proteins due to mutations that happened over time. However, recent studies have shown that pseudogenes actually play important roles in controlling genes, especially in cancer. For instance, the pseudogene PTENP1 helps to regulate the tumor suppressor gene PTEN in various cancer conditions, showing that pseudogenes can have important functions. Pseudogene function prediction offers numerous advantages, including better understanding of gene regulation, disease mechanisms, evolutionary biology, and the potential for new biomarkers and drug targets. In addition, disease gene prediction is a pivotal task in gene analysis that focuses on identifying genes associated with specific diseases or disorders (118). By pinpointing disease-related genes, researchers can unravel the genetic basis of diseases, discover novel biomarkers for diagnosis and prognosis, and develop targeted therapies. This task is instrumental in precision medicine approaches, where understanding the genetic underpinnings of diseases is crucial for personalized treatment strategies. Target gene classification involves categorizing genes based on their functions, interactions, or regulatory mechanisms (119). By classifying target genes, researchers can better understand gene networks, signaling pathways, and biological processes. This task is essential for deciphering the complex relationships between genes and their roles in health and disease. Candidate gene prioritization and selection are critical tasks in gene analysis that aim to identify genes with the highest likelihood of being involved in a particular biological process or disease (120). By prioritizing candidate genes, researchers can focus their efforts on studying genes that are most likely to have significant effects, accelerating the discovery of novel gene functions and disease mechanisms. This task is crucial for efficiently allocating research resources and maximizing the impact of genetic studies. Aforementioned seven DNA sequence analysis tasks are essential for advancing our understanding of genes and their roles in health and disease. By leveraging these tasks, researchers can unravel the complexities of the genome, uncover novel gene functions, and pave the way for innovative diagnostic and therapeutic strategies in various fields of biology and medicine.

Furthermore, gene network analysis is a promising goal that seeks to comprehend the intricate interactions and relationships between genes within a biological system. Two primary tasks within Gene Network Analysis are Gene Taxonomy Classification and Gene Network Reconstruction. Gene Taxonomy Classification (121–123) involves categorizing genes based on their evolutionary relationships and functional similarities, providing a structured framework for organizing genetic information. Gene Taxonomy Classification plays a crucial role in gene network analysis by offering a foundational structure for understanding the evolutionary history and functional relationships between genes. By classifying genes into taxonomic groups based on shared characteristics and evolutionary relatedness, researchers can infer valuable insights into the origins and evolutionary trajectories of genes within a network (124). This classification allows for the identification of core genes that have remained conserved throughout evolution, providing a basis for inferring phylogenetic relationships and understanding the fundamental building blocks of gene networks. Moreover, Gene Taxonomy Classification enables researchers to utilize existing knowledge about gene functions and evolutionary relationships to guide Gene Network Reconstruction. By categorizing genes into taxonomic groups, researchers can pinpoint gene clusters with similar functions or evolutionary origins, facilitating the identification of modules within gene networks that exhibit coordinated activity (125). This classification serves as a roadmap for exploring the functional roles of genes within a network and understanding how these roles have evolved over time. On the other hand, Gene Network Reconstruction (126–128) involves creating a detailed map of the interactions and regulatory relationships between genes within a cell or an organism. The primary input for gene network reconstruction is gene expression data obtained through high-throughput techniques such as RNA sequencing (RNA-seq) or microarrays. This task is pivotal for understanding how genes work together to control various biological functions and processes (129). By reconstructing gene networks, researchers can uncover key regulatory hubs involving highly connected genes, clusters of closely interacting genes, pathways, and interactions that steer cellular functions and responses to external stimuli (130).

DNA modification prediction is also a crucial goal where researchers aim is to decipher how tiny tweaks to the DNA code can lead to big changes in cellular functions (131–133). In DNA modifications, distinct chemical groups are added to specific locations on the DNA molecule. These additions do not change the actual sequence of nucleotides (A, C, G, T) but can alter the physical properties of DNA sequence. Understanding these modifications, such as 4-Methylcytosine (4mc) (134–143), Methyladenine (6ma) (144–151), 5-methylcytosine (5mc) (152, 153), 5-hydroxymethylcytosine (5hmc) (154–157), and methylation modifications (146, 154–159), are essential for advancing our comprehension of epigenetic regulation (160–162). Specifically, methylation modifications that occur due to the addition of methyl groups to DNA molecules play a pivotal role in regulating gene expression and maintaining genomic integrity. Similarly, methyladenine modifications, such as DNA N6-methyladenine (6mA), occur due to the addition of a methyl group to the adenine base of DNA. DNA 6mA modifications dynamically influence DNA thermal stability, curvature, and transcription factor interactions, impacting gene expression in a heritable manner. Understanding the prediction of 6mA sites is pivotal for both basic and clinical research as it aids in the identification of gene expression patterns and potential epigenetic changes induced by environmental factors. These predictions enhance our ability to study the role of 6mA modifications in diseases and could lead to improved therapeutic strategies, highlighting the relevance of accurate prediction methods in unraveling the complexities of DNA modifications. Moreover, 5-methylcytosine (5mc) modification occurs due to the addition of a methyl group to the cytosine base of DNA, whereas 5-hydroxymethylcytosine (5hmc) modification is an oxidized derivative of 5mc, where an additional hydroxyl group (-OH) is added to the methyl group of 5mc. Prediction of 5-methylcytosine (5mc) and 5-hydroxymethylcytosine (5hmc) modifications is essential for decoding their roles in gene regulation, developmental processes, and disease states. These critical epigenetic modifications are dynamically regulated by enzymes and influence gene expression crucial for neuronal differentiation and cellular proliferation. Abnormal levels of these modifications have been linked to diseases such as cancer. Precise prediction of 5mc and 5hmc sites is useful for the development of targeted therapies and improved prognostic assessments.

Functional genomics is also a critical goal that encompasses multiple sub-tasks including species classification (44), conserved non-coding element (NCE) classification (163), functional prioritization of non-coding variants (34), prediction of context specific functional impact of genetic variants (36), exon and intron region classification (164), and recombination spots identification (165). Each of these tasks plays a vital role in unraveling the complexities of genetic regulation and molecular mechanisms within the genome. In biomedical research, understanding the genetic similarities and differences between humans and other species is crucial for modeling diseases and studying genetic disorders. Majority of the genome is conserved across different species which makes it difficult to distinguish humans and non-human species. Despite very high genetic similarity across species (< 10% sequence divergence), small differences are extremely valuable and they have significant biological implications. Species classification determines the source species of genetic sequences based on such differences and pave way for better modeling diseases and studying genetic disorders (44). Conserved non-coding element classification is another critical task in functional genomics that focuses on identifying and understanding non-coding regions of the genome that are evolutionarily conserved across different species (163). It is essential for advancing our understanding of gene regulation, evolutionary biology, and the genetic basis of diseases. By elucidating the functions of these non-coding regions, researchers can gain insights into the intricate regulatory networks that govern gene expression and cellular processes and contribute to the development of targeted therapies.

Functional prioritization of non-coding variants (34) is another crucial task for making sense of the vast amount of genetic data generated by modern sequencing technologies. By identifying which variants have significant biological impacts, researchers can gain a deeper understanding of the genetic architecture of complex diseases, uncover novel therapeutic targets, and advance the field of precision medicine. This prioritization is essential for translating genomic research into practical health benefits and ultimately improving patient outcomes and advancing our knowledge of human biology (34). As functional prioritization of non-coding variants task involves identifying which non-coding variants among millions are likely to have functional consequences, it does not account for the specific context in which these variants might exert their effects, whereas prediction of context-specific functional impact of genetic variants aims to provide a detailed understanding of how specific variants influence gene function in different contexts (e.g., specific tissue) (36). This is particularly important for genetic studies that seek to uncover the mechanisms by which variants contribute to disease phenotypes. Unlike functional prioritization of non-coding variants task which only filters the variants that are most likely to have functional significance. Prediction of context-specific functional impact of genetic variants provides a finer level of detail by predicting the actual effect of a variant on gene expression or other functional outcomes in specific tissues. This granularity is essential for precisely understanding the specific biological mechanisms and for developing targeted therapies (36).

Exon and intron region classification is crucial for understanding gene structure and function within the genome. Exons are coding regions that are translated into proteins, while introns are non-coding regions that are spliced out during mRNA processing. By classifying exons and introns, researchers can describe gene boundaries, identify functional elements, and elucidate the mechanisms of gene expression regulation (166). This task is essential for deciphering the genetic code and unraveling the complexities of gene regulation in health and disease. Recombination spots identification is a pivotal task in functional genomics that focuses on mapping regions of the genome where genetic recombination events occur. Genetic recombination is a natural process where DNA segments are exchanged between two chromosomes during cell division. Recombination plays a vital role in generating genetic diversity, ensuring proper chromosome segregation, and driving evolution (167). By identifying recombination hot spots, researchers can gain insights into the mechanisms underlying genetic diversity and genome evolution, shedding light on the processes that shape genetic variation and adaptation in populations. In conclusion, the tasks related to functional genomics, including species classification, conserved non-coding element classification, functional prioritization of non-coding variant, prediction of context-specific functional impact of genetic variants, exon and intron region classification, and recombination spots identification, are essential for advancing our understanding of genetic regulation, molecular mechanisms, and disease pathogenesis. By delving into these tasks, researchers can unravel the complexities of the genome, decipher the genetic basis of diseases, and pave the way for precision medicine and personalized healthcare interventions tailored to an individual's genetic profile.

Another goal of researchers is to study overlap between two distinct fields namely environmental science and microbial genomics (27). This interdisciplinary study enables researchers to explore how environmental factors such as pollution, climate change, and agricultural practices affect on function and diversity of microbial communities (27). A key area of focus in this field is the nitrogen cycle prediction. By examining the genomes of microbes involved in nitrogen fixation, nitrification, and denitrification, scientists can predict how these processes might respond to environmental changes (168). This prediction provides understanding about potential impacts of environmental shifts on ecosystem health (169) and nitrogen availability, which are essential for plant growth and overall biogeochemical cycles (170).

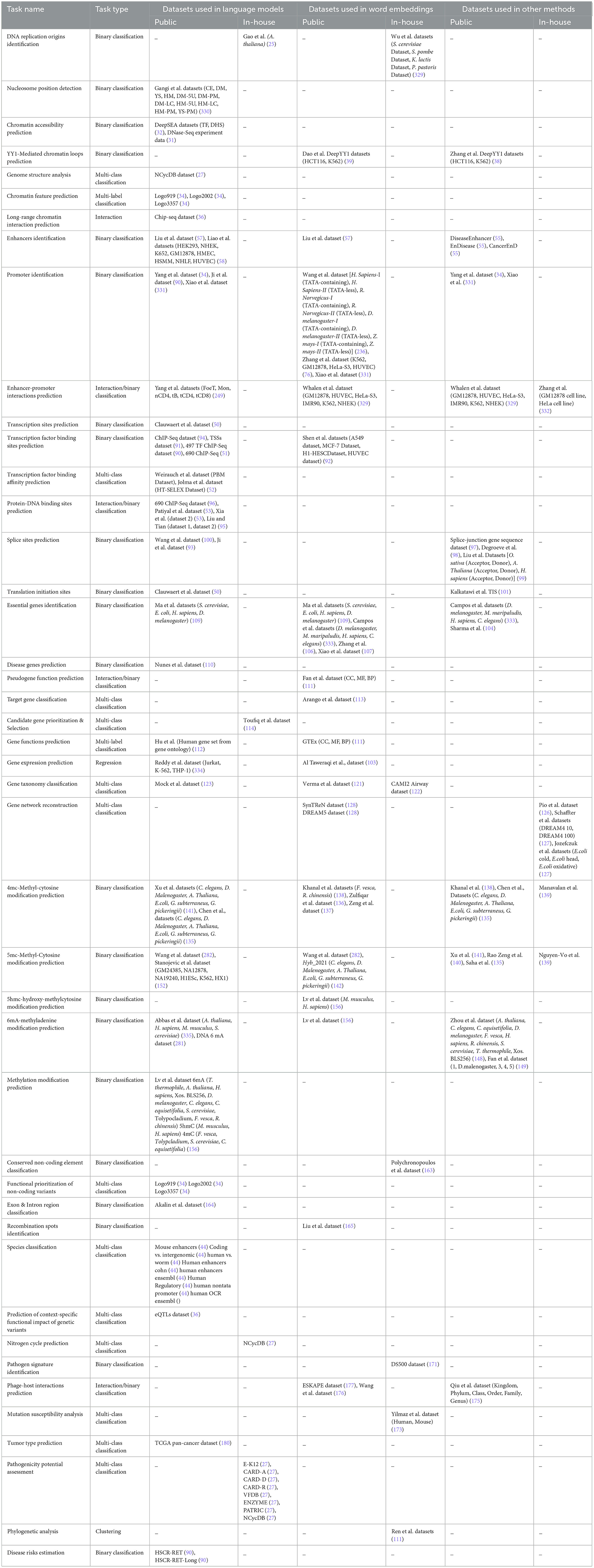

From all eight different biological goals, disease analysis goal has received huge attention in scientific community as it aims to understand, diagnose, and treat various illnesses. Within this field, several tasks play a vital role in enhancing our comprehension of diseases. One such task is Pathogen Signatures Identification (171), which involves identifying specific markers or characteristics of pathogens that can aid in their detection and classification (172). By pinpointing these signatures, researchers can develop targeted diagnostic tools and therapies, ultimately improving disease management and control. Mutation Susceptibility Analysis (173) is another essential task in disease analysis. This task focuses on investigating the genetic variations that make individuals more prone to developing certain diseases (174). Understanding mutation susceptibility can aid in personalized medicine approaches, where individuals at higher risk can be identified early for preventive interventions or closer monitoring. Phage-Host Interactions Prediction (175–177) is a task that delves into the relationships between bacteriophages and their host bacteria (178). By predicting these interactions, researchers can gain insights into how phages influence bacterial populations, which is crucial for developing phage-based therapies to combat bacterial infections and antibiotic resistance. Disease Risks Estimation (90) is a fundamental aspect of disease analysis that involves assessing the likelihood of an individual developing a particular condition based on various factors such as genetics, lifestyle, and environmental exposures (179). Accurately estimating disease risks enables healthcare providers to offer targeted interventions and counseling to high-risk individuals, potentially preventing the onset or progression of diseases. Tumor Type Prediction (180) is a significant task in disease analysis that focuses on identifying the specific type of tumor a patient may have based on various characteristics such as genetic markers, imaging features, and histopathological findings (181). Predicting tumor types is essential for determining the most effective treatment strategies and prognostic outcomes for patients with cancer. Pathogenicity Potential Assessment (27) is a critical task that involves evaluating the ability of pathogens to cause disease in a host. By assessing the pathogenicity potential of different microorganisms, researchers can prioritize the development of interventions against the most virulent pathogens, thereby improving disease prevention and control strategies. Phylogenetic Analysis (21) is a key component of disease analysis that involves studying the evolutionary relationships between different strains of pathogens or tumor cells. Phylogenetic analysis provides insights into the origins, spread, and diversification of diseases, aiding in the development of targeted interventions and understanding disease transmission dynamics.

4 A look on DNA sequence analysis tasks from the perspective of computer scientists

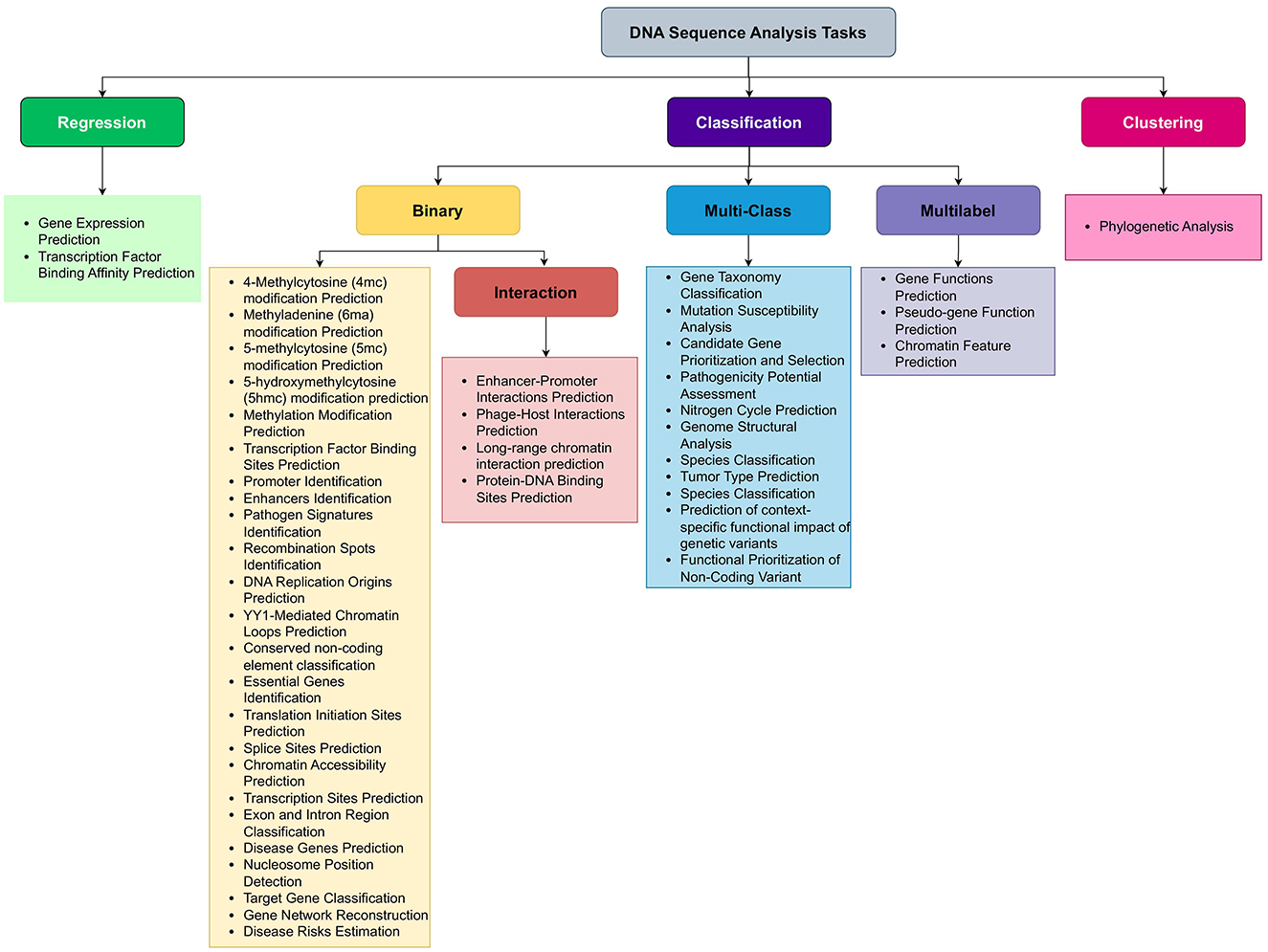

While Section 3 presents a biologically motivated categorization of DNA sequence analysis tasks, this section reframes these same tasks from a computational perspective. This dual categorization approach (biological and computational) aims to facilitate interdisciplinary understanding between life scientists and AI researchers. With the influx of biological data and rise of AI, researchers are increasingly applying AI in diverse areas of molecular biology. Development of large scale AI applications requires a good understanding of variety of sequence analysis tasks. However, there exist a huge domain gap between computer scientists and molecular biologists. Molecular biologists know the need, biological importance, and pharmaceutical worth of different sequence analysis tasks. However, they do not know which machine or deep learning models are most appropriate to use to either replace or complement experimental work. Similarly, computer scientists know which Artificial Intelligence predictive pipeline can potentially perform better with specific type of data; however, they do not know the nature of biological sequence analysis tasks. For instance, DNA sequence analysis tasks such as gene function prediction, gene network reconstruction, gene expression prediction, and disease risk estimation can be challenging for computer scientists to grasp. However, a comprehensive literature review that explains the basics of these tasks can bridge this gap. For example, gene function prediction is a multi-label classification tasks, gene expression prediction is a regression task, while gene network reconstruction and disease risk estimation are binary classification tasks. With this foundational understanding, computer scientists can more easily develop predictive pipelines for these binary, multi-label classification, and regression tasks. To empower AI experts, we have presented 44 DNA sequence analysis tasks in computer scientist language in Figure 4. A simple look on Figure 4 reveals that nature of DNA sequence analysis tasks can be categorized into three primary types: regression, clustering, and classification where classification can be further divided into three secondary types: binary classification, multi-class classification, and multi-label classification. Let us mathematically formulate the possible natures of DNA sequence analysis tasks.

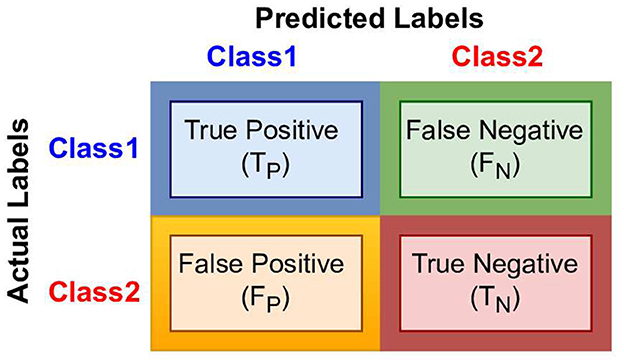

In binary classification, researchers aim to predict the outcome of a binary variable (0 or 1). Given a dataset with features X ∈ ℝnxd, binary labels y ∈ 0, 1, and training dataset (x1, y1), (x2, y2), …, our goal is to learn a decision function f:X → Y that maps inputs to binary outputs 0, 1 on the basis of hypothesis function h(x) learned from the training data.

In multi-class classification, researchers aim to predict the outcome from more than two classes. Specifically, given a dataset having sequences X ∈ ℝnxd, labels y ∈ 1, 2, …, K where K is the number of classes, and training dataset (x1, y1), (x2, y2), …, (xn, yn) where xi ∈ X and yi ∈ Y, our goal is to learn a decision function f:X→Y that assigns inputs to one of the classes.

where hk(x) is the hypothesis function for class k learned from the training data. On the other hand, in multi-label classification, each input can be assigned to multiple classes simultaneously. Given a dataset with features X ∈ ℝnxd, labels y ∈ 1, 2, …, K where K is the number of classes, and training dataset (x1, y1, y2, ..), (x2, y1, y4, …), …, (xn, y5, yn, ….) where xi ∈ X and yi ∈ Y, our goal is to learn a decision function f:X → 0, 1K that assigns inputs to multiple classes simultaneously using hypothesis function hk(x) for class k learned from the training data.

Furthermore, in regression, researchers goal is to predict a continuous outcome variable. Given a dataset with sequences X ∈ ℝnxd, labels y ∈ ℝ, and training dataset (x1, y1), (x2, y2), …, (xn, yn) where xi ∈ X and yi ∈ Y, our goal is to learn a function f:X → ℝ that predicts continuous outputs using hypothesis function h(x) learned from the training data.

In clustering, the goal is to group similar data points into same clusters. Given a dataset with data points X = x1, x2, …, xn, where each , our goal is to find a partition of the data into clusters C = C1, C2, …, CK. This is done on the basis of a distance metric d(x, μc) between data point x and the centroid μc of cluster c.

5 DNA sequence analysis databases

This section provides a comprehensive overview of various databases employed to develop benchmark datasets for development of AI-based applications for 44 distinct DNA sequence analysis tasks. A total of 45 DNA sequence databases have been identified from 127 existing studies. Among these, 36 databases are publicly accessible, while the remaining 9 databases are either inaccessible or no longer exist. To ease the lives of researchers and practitioners, Table 1 summarizes accessible databases in terms of their release year, types of inherent genetic data (DNA, RNA, protein), details of species and organisms, statistics of raw sequences, and supported data formats.

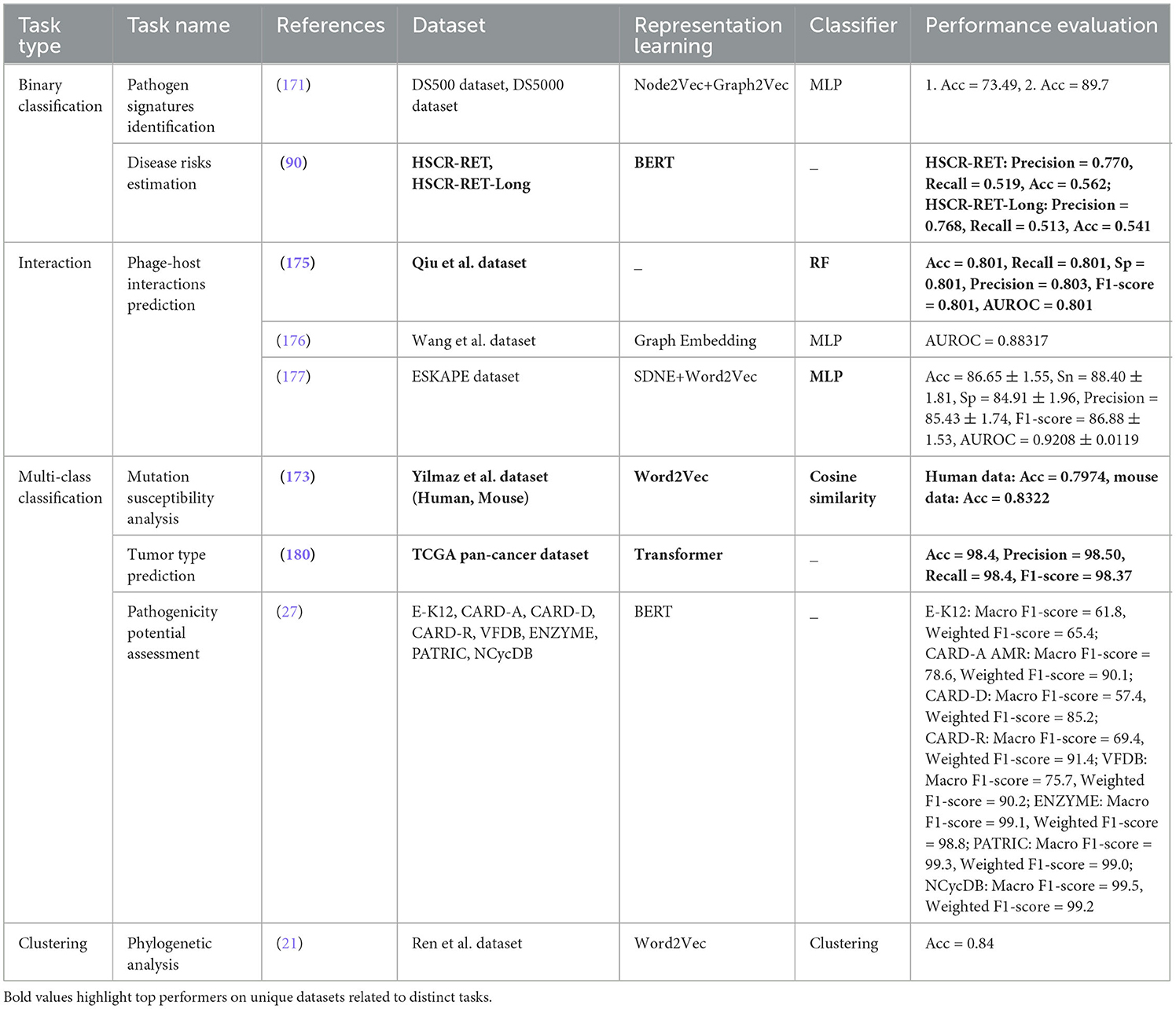

Table 1. Summary of publicly accessible biological databases, their inherent data types, species diversity, and statistics of raw sequences related to different genomic and proteomic data.

A holistic view of the Table 1 reveals that 12 databases provide RNA and protein sequences as well in addition to providing DNA sequences. As word embeddings methods and large language models are trained in unsupervised fashion and when they are trained on large sequence data usually, they produce better representations. To efficiently train word embedding methods and large language models, raw data can be acquired from these databases. To facilitate researchers, we have categorized 36 databases into three different categories on the basis of volume of raw sequences: low sequence facilitators, medium sequence facilitators, and high sequence facilitators. Specifically, 13 low sequence facilitators, namely, HOCOMOCO Human v11 database (182), Consensus Coding Sequence Database (183), MSigDB (184), Broad DepMap (185), JASPAR (186), Database of Essential Genes (187), ENCODE (188), MGC (189), Exon-Intron Database (190), Ensembl (191), RegulonDB (192), EPD2 (193), offer up to 100,000 DNA sequences each, while 9 medium sequence facilitators, namely, PPD (194), DREAM (195), EmExplorer database (196), GenomAD (197), DeOri (198), BioLip (199), DeOri6.0 (198), GWAS (200), Eukaryotic Promoter Database (193), provide up to 1 million DNA sequences. In contrast, 13 high sequence facilitators such as Descartes (201), EnhancerAtlas 2.0 (202), COSMIC (203), DisGeNet (204), ClinVar (205), CCLE (206), GENCODE (207), Gene Ontology (208), DataBase of Transcriptional Start Sites (209), GEO (210), KEGG (211), NCBI (212), GenBank (213), and dbSNP (214, 215) offer more than 1 million DNA sequences each. These databases predominantly house DNA sequences from a diverse array of species, including humans, mice, plants, bacteria, and fungi. A comprehensive analysis reveals that approximately 22 databases, namely, Descartes (201), DREAM (195), EmExplorer database (196), COSMIC (203), DisGeNet (204), GenomAD (197), ClinVar (205), HOCOMOCO Human v11 database (182), DeOri (198), BioLip (199), GWAS (200), Broad DepMap (185), CCLE (206), GENCODE (207), Consensus Coding Sequence Database (183), MSigDB (184), ENCODE (188), DataBase of Transcriptional Start Sites (209), MGC (189), GEO (210), Ensembl (191), and OMIM (216), focus on animal DNA sequences, 4 databases including PPD (194), Database of Essential Genes (187), RegulonDB and (192) on bacterial sequences, and JASPAR (186) on plant DNA sequences. EnhancerAtlas 2.0 (202) is the only database that facilitates with both animal and bacterial DNA sequences, while 4 databases namely DeOri6.0 (198), Exon-Intron Database (190), EPD2 (193), and Eukaryotic Promoter Database (193) focus on animal and plant DNA sequences, whereas Gene Ontology (208), KEGG (211), and GenBank (213) provide DNA sequences for animal, plant, and bacteria. In addition, sequences from other organisms such as eukaryotes, invertebrates, fungi, and various microorganisms are also well-represented. Some databases encompass a broad spectrum of species. For instance, the EDP2 (193) database includes genomics data for 139 species, GenBank (213) houses sequences for 557,000 species, and PPD (194) has genomics data of 63 species.

Moreover, Table 1 includes data formats utilized by various databases to manage and provide access to DNA sequences. TXT and FASTA format are universally accepted by almost all DNA sequence analysis programs. Each entry in both format types contains at least two lines: First line or header includes accession number, species name, or identification details, while next line contains nucleotide sequences. CSV and TSV are text-based formats in which values in rows are separated by commas or tabs, respectively. In both file formats, first row specifies headers which defines names of columns (“SeqID”, “SeqName”, “Type”, “Function”) and subsequent rows represent data. In VCF format, first row specifies headers which defines names of columns, but this format is specifically used to store genetic variation data including single nucleotide polymorphisms (SNPs), insertions, deletions, and structural variants. In addition, XLSX formats represent complex datasets that contain information computed with various formulas across multiple columns, whereas EMBL format includes structured sections for sequence data, feature annotations (genes and other biological features), organism information, references, and other details. An extensive analysis of Table 1 reveals that most widely used data formats are FASTA, TXT, CSV, XLSX, and EMBL in DNA sequence analysis.

A rigorous analysis of Table 1 reveals that out of 36 publicly accessible databases, several key categories of data emerge. Four databases, namely, Broad DepMap (185), genomAD, COSMIC, and MGC, provide data for DNA functional analysis tasks such as prediction of context-specific functional impact of genetic variants and conserved non-coding element classification. Seven databases, namely, BioLip, HOCOMOCO Human v11, GWAS, EnhancerAtlas 2.0, DataBase of Transcriptional Start Sites, Exon-Intron Database, and Eukaryotic Promoter Database, offer data on gene expression regulation. Three databases, namely, PPD, CCLE, and EmExplorer, focus on DNA modification data including methylcytosine and methyladenine modifications. In addition, DeOri, Descartes, DeOri6.0, and JASPAR provide information on gene structure and stability, including chromatin accessibility prediction, YY1-mediated chromatin loop identification, and DNA replication origins identification. GENCODE, Consensus Coding Sequence Database, MSigDB, Gene Ontology, DisGeNet, Database of Essential Genes, KEGG, and NCBI offer comprehensive gene analysis data. Furthermore, eight other databases, namely, EPD, ENCODE, RegulonDB, GEO, Ensembl, ClinVar, GenBank, and OMIM, provide a range of data on gene expression regulation, DNA modification prediction, genome structure and stability, DNA functional analysis, disease information, and gene analysis.

6 DNA sequence analysis benchmark datasets

The quality and quantity of datasets utilized in AI-driven DNA sequence analysis applications are vital determinants of their effectiveness and functionality. This section aims to provide a comprehensive overview of datasets relevant to 44 distinct DNA sequence analysis tasks. Overall, these datasets fall into two primary categories: publicly available datasets and in-house datasets. This categorization serves to illuminate the significance of dataset accessibility and its implications for the advancement of AI-driven DNA sequence analysis. Specifically, publicly available datasets are accessible to the wider research community and are commonly employed in the development of AI-based predictive models. They serve as foundational resources that facilitate the advancement of AI-driven DNA sequence analysis pipelines by ensuring accessibility, reusability, and transparency in research endeavors. Furthermore, the utilization of publicly available datasets fosters collaboration and knowledge exchange within the scientific community, thereby contributing to the overall progress of the field. In contrast, in-house datasets are proprietary in nature and are developed within specific research laboratories or institutions. These datasets often contain sensitive data tailored to particular research objectives. As in-house datasets cannot be shared publicly, their proprietary nature may limit broader access, reproducibility, and applicability of findings.

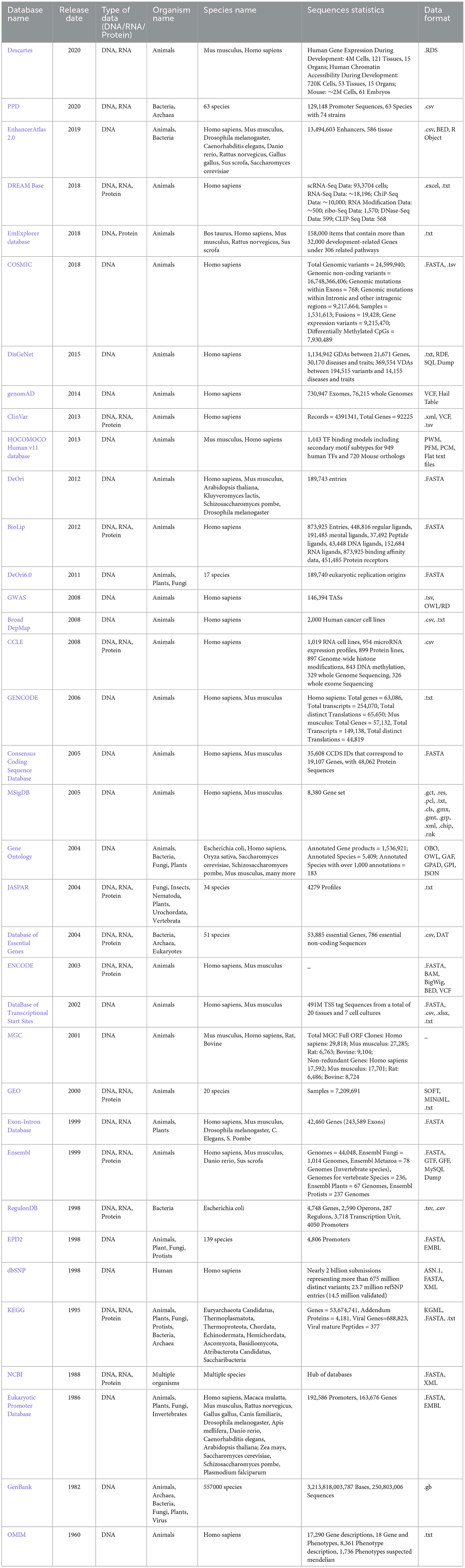

Rigorous assessment of 127 existing studies reveals that a total of 242 benchmark datasets related to 44 distinct DNA sequence analysis tasks are constructed or acquired from existing literature. Specifically, among these 242 benchmark datasets, 199 are publicly available and 43 are in-house datasets. Table 2 provides the distribution of public and in-house datasets for 44 distinct DNA sequence analysis tasks. It provides information about which of these datasets are used by word embeddings, large language models, nucleotide composition, and positional information-based predictive pipelines.

Table 2. Overview of 199 public and 43 in-house datasets used across 44 different DNA sequence analysis tasks.

For each DNA sequences analysis task, public and in-house datasets are distributed as DNA Replication Origins Identification (0, 5), Nucleosome Position Detection (11, 0), Chromatin Accessibility Prediction (2, 0), YY1-Mediated Chromatin Loop Prediction (4, 0), Genome structure analysis (0, 1), Chromatin Feature Prediction (3, 0), Long-range chromatin interaction prediction (1, 0), Enhancers Identification (12, 0), Promoter Identification (15, 0), Enhancer-Promoter Interactions Prediction (18, 2), Transcription Site Prediction (1, 0), Transcription Factor Binding Site Prediction (4, 4), Transcription Factor Binding Affinity Prediction (2, 0), Protein-DNA Binding Site Prediction (5, 0), Splice Site Prediction (10, 0), Translation Initiation Sites (1, 1), Essential Gene Identification (6, 5), Disease Gene Prediction (1, 0), Pseudogene Function Prediction (3, 0), Target Gene Classification (1, 0), Candidate Gene Prioritization/ Identification (0, 1), Gene Function Prediction (4, 0), Gene Expression Prediction (4, 0), Gene Taxonomy Classification (2, 1), Gene Network Reconstruction (2, 6), 4mc-Methylcytosine Site Prediction (16, 0), 6mA-Methyladenine Site Prediction (5, 0), 5mc-Methylcytosine Site Prediction (24, 1), 5hmc-Methylcytosine Site Prediction (2, 0), Methylation Site Prediction (17, 0), Conserved Non-Coding Elements Classification (0, 1), Functional Priorizitation of non-coding variants (3, 0), Exon and Intron Region Classification (0, 1), Recombination Spots Identification (1, 0), Species Classification (8, 0), Prediction of context-specific functional impact of genetic variant (1, 0), Nitrogen Cycle Prediction (0, 1), Pathogen Signatures Identification (0, 1), Phage-Host Interactions Prediction (8, 0), Mutation Susceptibility Analysis (0, 2), Tumor Type Prediction (1, 0), Pathogenicity Potential Assessment (0, 8), Phylogenetic Analysis (0, 1), and Disease Risks Estimation (2, 0). First entry in brackets refers to count of public datasets, and second entry indicates total number of in-house datasets for a particular task. For example, in “Essential Gene Identification (6, 5)” task, 6 refers to public datasets while 5 represents in-house datasets.

A holistic view of Table 2 reveals 110 public and 18 in-house datasets are employed to develop both word embeddings and language models based predictive pipelines for 12 DNA sequence analysis tasks, namely, DNA replication origins identification, enhancers identification, promoters identification, enhancer-promoter interaction prediction, transcription factor binding site prediction, essential gene identification, gene function prediction, gene expression prediction, gene taxonomy classification, 4mC-methyl cytosine modification prediction, 5mC-methl cytosine modification, and 6mA-methyl modification prediction. Notably, both types of predictive pipelines have utilized 1 common dataset to evaluate the performance of predictive models developed for three tasks, namely, enhancer identification, essential gene identification, and 5mC-methyl cytosine modification prediction.

Furthermore, 112 public and 15 in-house datasets are used to develop both word embedding and nucleotide compositional and positional information-based predictive pipelines for 11 DNA sequence analysis tasks including essential gene identification, gene network reconstruction, 4mC-methyl cytosine modification prediction, 5mC-modification prediction, 6mA-methyl adenine modification prediction, and phage-host interaction prediction. However, both predictive pipelines have used 9 common pubic dataset for only three tasks. Specifically, six public datasets for enhancer-promoter interactions prediction, one public data for essential gene identification, and two public datasets for 4mC-Methyl cytosine modification prediction are commonly employed by both predictive pipelines.

Moreover, Table 2 highlights that 107 public and 9 in-house datasets are utilized by 9 DNA sequence analysis tasks, namely, enhancers identification, promoters identification, enhancer-promoter interaction prediction, splice site prediction, translation initiation sites identification, essential gene identification, 4mC-methyl cytosine modification prediction, 5mC-methl cytosine modification, and 6mA-methyl modification prediction for developing both language models and nucleotide compositional and positional information-based predictive pipelines. Merely, 7 public datasets are used commonly by both predictive pipelines for two tasks: one for promoter identification and six for 4mC-methyl cytosine modification prediction.

Although all three different types of representation learning-based predictive pipelines are employed across six different DNA sequence analysis tasks, namely, enhancers identification, promoters identification, enhancer-promoter interaction prediction 4mC-methyl cytosine modification prediction, 5mC-methl cytosine modification, and 6mA-methyl modification prediction, only on one task, namely, promoters identification, all three kinds of predictive pipelines are evaluated on one common dataset. These statistics reveal that researchers have focused on creating new datasets for each kind of predictive pipelines instead of using existing datasets. Consequently, this domain lacks a fair performance comparison between different kinds of predictive pipelines.

7 A brief look on representation learning methods and predictors used in DNA sequence analysis predictive pipelines

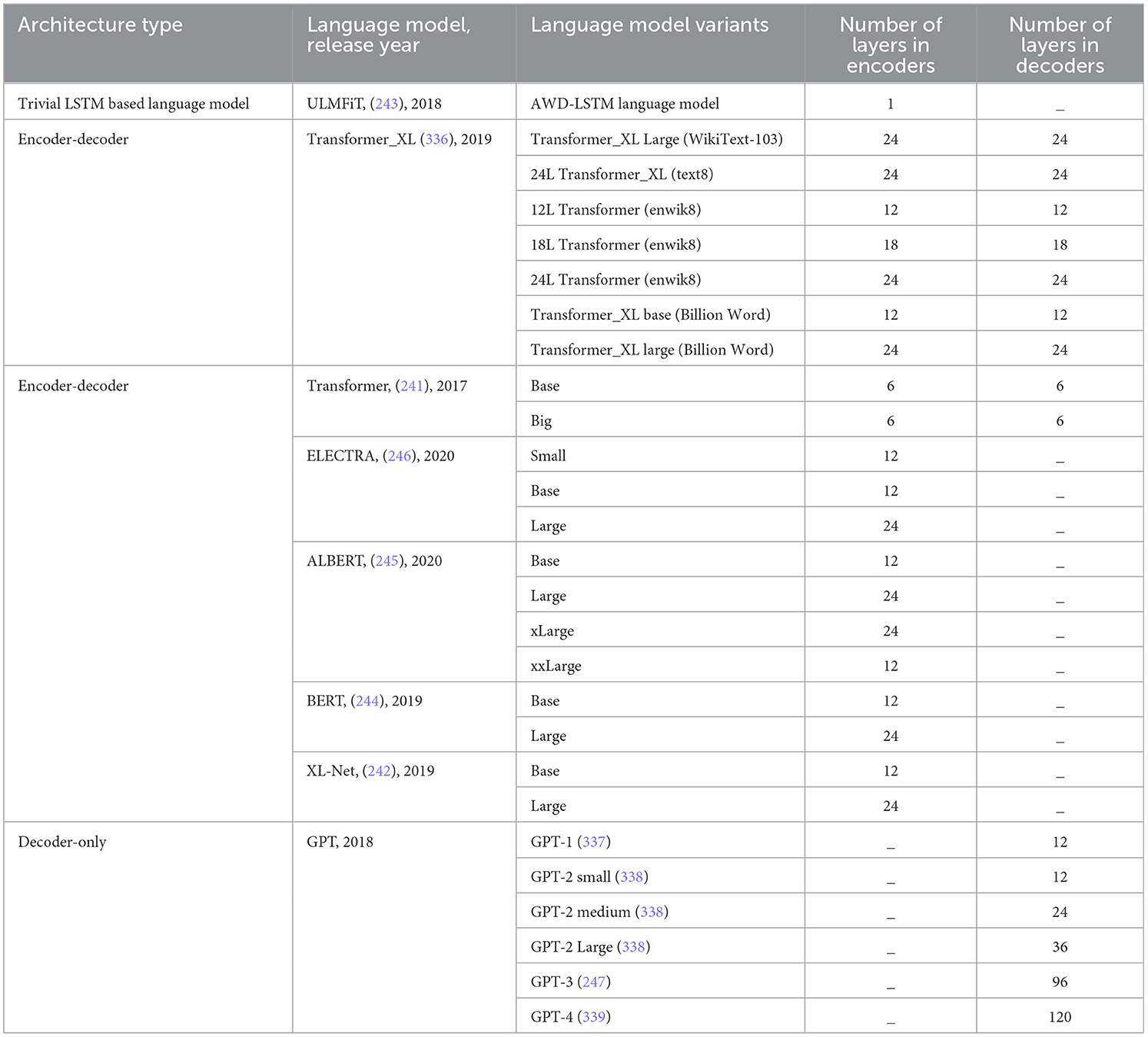

This section dives into 12 most commonly used word embedding approaches, 8 large language models, 9 machine learning, 8 deep learning, and 3 statistical algorithms that are used in development of predictive pipelines for 44 different DNA sequence analysis tasks.

7.1 DNA sequence representation learning using word embeddings

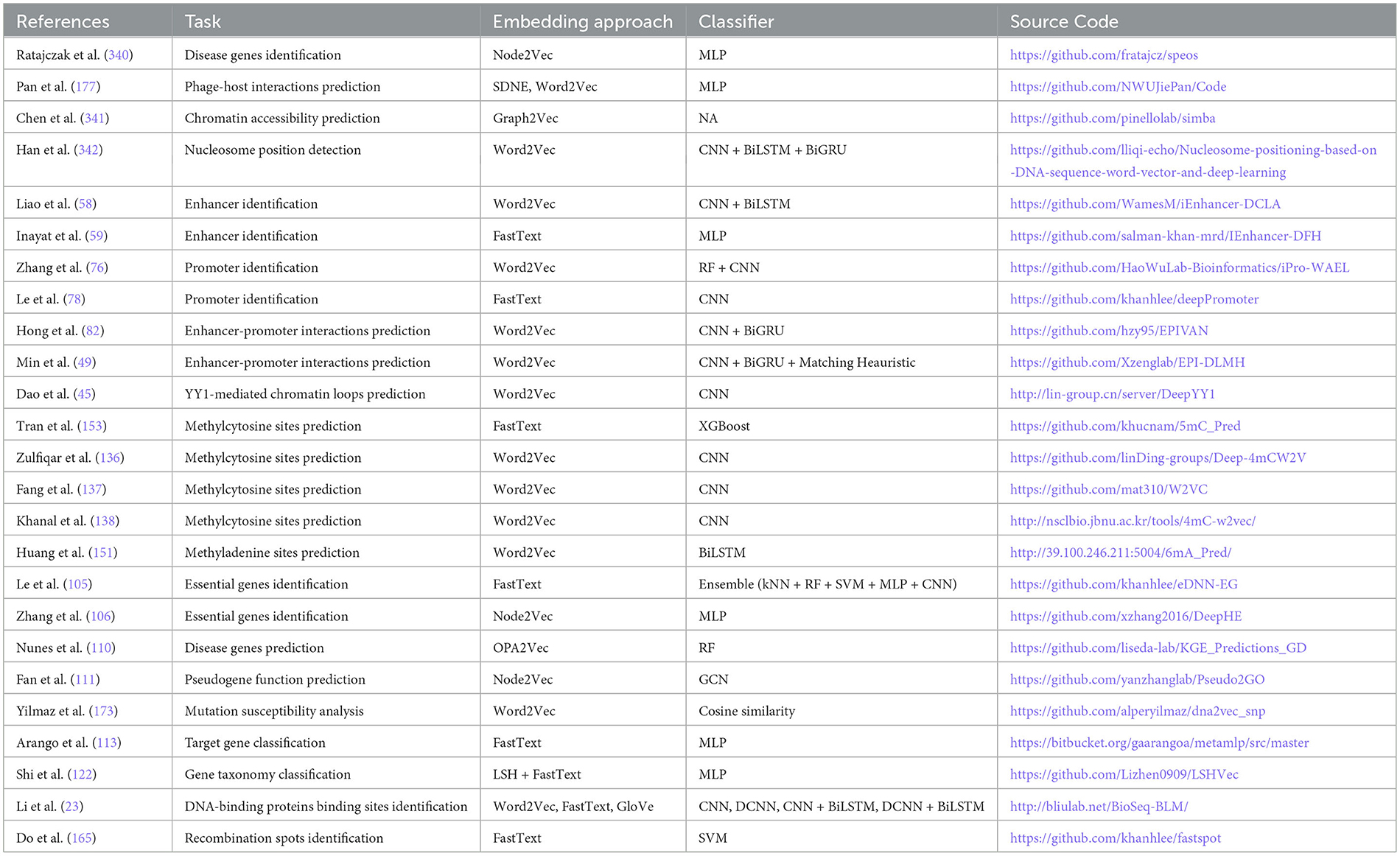

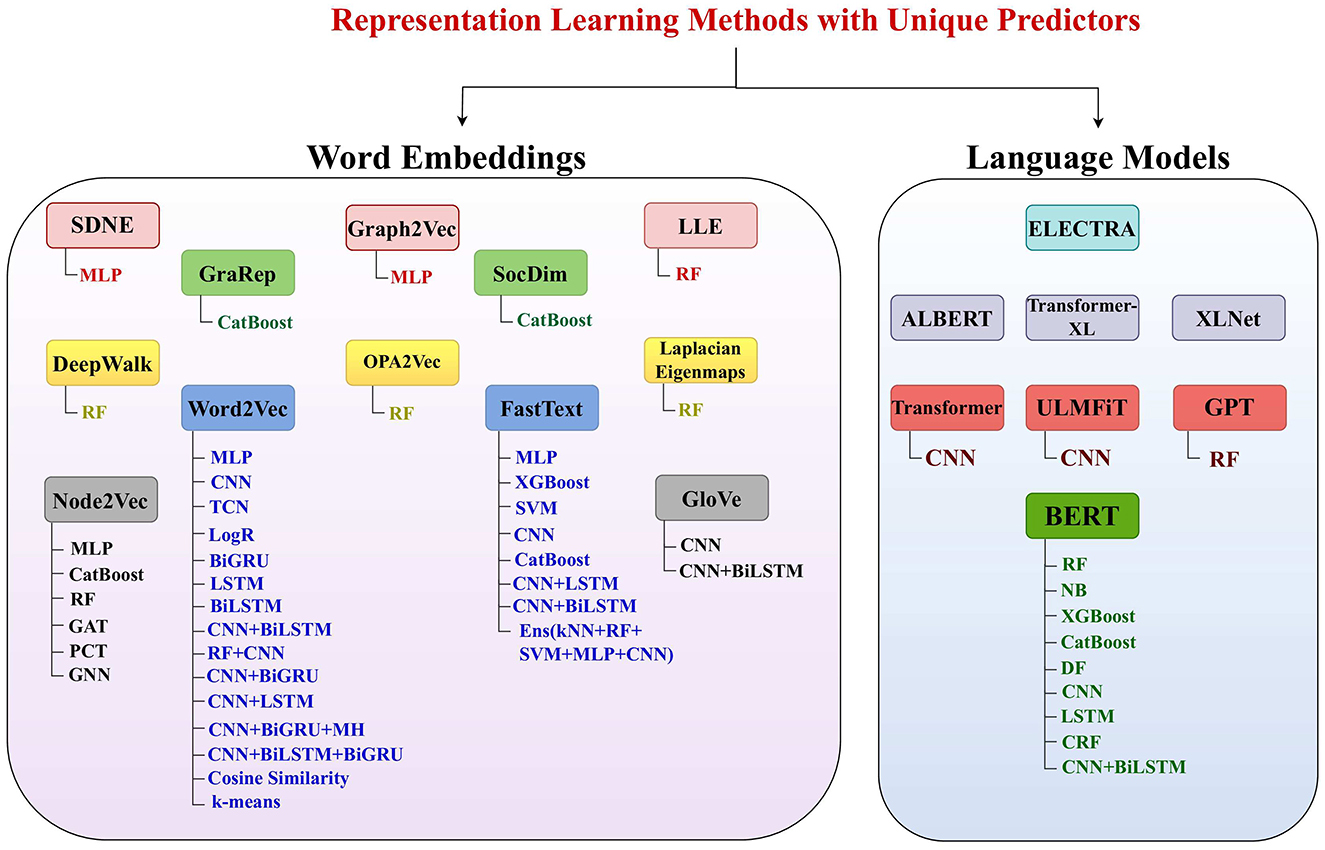

In the domain of natural language processing (NLP), the introduction of word embedding techniques represented a significant advancement by enabling the development of more accurate machine and deep learning predictive models. These approaches assign statistical vectors to words by capturing contextual representations of words within extensive, unlabelled corpora (217, 218). The primary objective is to assign comparable vectors to semantically similar words and distinct vectors to dissimilar words (217, 218). Leveraging transfer learning strategies, these contextual word representations have empowered data-hungry deep learning models to achieve exceptional performance, even with limited training data. Following the success of word embeddings in various NLP tasks (217–220), researchers have adopted these approaches for genomic and proteomic sequence analysis tasks, which share similarities with NLP tasks. This section offers a comprehensive overview of 12 distinct word embedding approaches that are utilized in DNA sequence analysis predictive pipelines. Figure 5 visually illustrates the utilization of various word embedding methods in conjunction with different machine and deep learning algorithms.

Figure 5. Utilization of 12 different word embedding approaches and 8 large language models in diverse DNA sequence analysis pipelines based on a variety of machine and deep learning predictors such that RF, random forest; DF, deep forest; SVM, support vector machine; LogR, logistic regression; NB, Naive Bayes; kNN, k-nearest neighbors; MLP, multilayer perceptron; CNN, convolutional neural network; GNN, graph neural network; GCN, graph convolutional network; TCN, temporal convolutional network; GAT, graph attention network; LSTM, long short-term memory; BiLSTM, bidirectional Llong short-term memory; BiGRU, bidirectional gated recurrent unit; PCT, predictive clustering tree; CRF, conditional random field; FGM, fast gradient method. All language models are used with self-classifiers and few language models like transformer, ULMFiT, GPT, and BERT are also used with separate standalone or hybrid algorithms.

These word embedding approaches leveraged for DNA sequence analysis tasks can be categorized into two types: (1) non-graph-based methods and (2) graph-based methods. Non-graph-based methods segregate DNA sequences into overlapping or non-overlapping k-mers. Specifically, overlapping k-mers are generated by sliding a fixed-size window over sequence with a smaller stride as compared to window size. For instance, for ACGTG sequence with a window size of 4 and a stride of 1, the k-mers generated are ACGT and CGTA. Alternatively, in non-overlapping k-mers generation, window and stride size must be equal in size. For same sequence used in the overlapping case, this non-overlapping approach generates only one k-mer, such as ACGT. The length of the k-mer is determined by the window size. Researchers often create pre-trained embeddings with different k-mer sizes and then select the size which yields best performance in downstream tasks. Once k-mers are generated, these k-mers sequences are passed to traditional word embedding models (Word2vec, FastText, GloVe) to generate representation.

A high level overview of Figure 5 indicates that various studies have explored the potential of the Word2Vec embedding method in combination with 13 different machine and deep learning algorithms as well as 2 statistical algorithms. The era of word embedding approaches begin in 2013 with introduction of Word2Vec (221). Word2vec has two different embeddings generation paradigm: (1) SkipGram and (2) Continuous Bag of Words (CBoW). SkipGram learns representations of k-mers by predicting surrounding k-mers for every k-mers of corpus. The number of surrounding k-mers is a hyper-parameter that can be adjusted according to available data. Contrarily, CBoW model learns k-mers representations by predicting single k-mer based on the context of its surrounding k-mers. Similar to SkipGram model, here context of surrounding k-mers is a hyper-parameter. Word2vec architecture is comprised of input layer, hidden layer, and an output layer. At input layer, a random d-dimensional vector is initialized for each k-mer, while the hidden layer extracts relationships between k-mers. These relationships are further passed to output layer, which predicts probabilities of output k-mers based on the context of input k-mers. The predicted probabilities are passed to loss function which computes loss value. To facilitate readers, Equation 10 embodies mathematical expressions for computing loss values of both variants.

In above expression, N refer to number of k-mers, wi indicates target k-mers, wj is one of k-mers within contextual window, and Ws refers to set of k-mers in contextual windows of k-mers wi. After computing loss, weights are updated during back propagation which eventually helps in generating similar vectors for similar k-mers and distinct vectors for dissimilar k-mers.

Pennington et al. (222) proposed another k-mers embedding approach named Global Vectors (GloVe) which generates k-mers vectors by capturing both global and local contextual information of k-mer within corpora. It can be seen in Figure 5, in the context of DNA sequence analysis, the potential of Glove k-mers embedding method is explored with two distinct deep learning methods. Primarily, this embedding generation method computes local and global contextual information by incorporating occurrence frequencies of k-mer pairs into an objective function shown in Equation 7.

In above expression, wi and wj are k-mers within a pair, bi and bj are corresponding biases, and f(Cij) is a weighted function to normalize co-occurrence matrix values and eradicate biases and their impact of noise on k-mers embeddings.

Figure 5 shows that Word2Vec is the most commonly explored word embedding method, followed by FastText. Mikolov et al. (223) proposed FastText approach by extending the working paradigm of Word2Vec model. Primarily, this approach handles out-of-vocabulary (OOV) k-mers by discretizing k-mers into sub k-mers. After generating sub k-mers. it takes average of sub k-mers vectors to generate k-mers vectors and passed them to word2vec model. During back propagation, it updates vectors of both k-mers and sub k-mers. Through this strategy, vectors are generated for both k-mers and sub k-mers.

Furthermore, in NLP domain, with an aim to generate more comprehensive vectors of k-mers by capturing k-mers informative patterns from textual corpora, researchers have proposed different graph-based methods. These approaches include DeepWalk (224), Node2Vec (225), Graph2Vec (226), SDNE (227), SocDim (228), GraRep (229), Laplacian Eigenmaps (230), Locally Linear Embedding (231), and OPA2Vec (232). Figure 5 highlights that within the context of DNA sequence analysis, the potential of the 7 graph-based methods is less explored compared to the two foundational word embedding methods, Word2vec and FastText. In addition, among the graph-based methods, Node2Vec (225) has been investigated more extensively than DeepWalk (224), Graph2Vec (226), SDNE (227), SocDim (228), GraRep (229), Laplacian Eigenmaps (230), Locally Linear Embedding (231), and OPA2Vec (232). Similar to non-graph-based methods, graph-based methods segregate sequences into k-mers and generate k-mers pairs by sliding a 2 size window over k-mers sequences. By using k-mers paris, a graph is formed where nodes represent k-mers, and edges represent relationships between the k-mers. For example, to generate a graph from the DNA sequence ACTGCA with k = 3, first, overlapping k-mers (ACT, CTG, TGC, GCA) are generated. By sliding a window of size 2 over these k-mers sequence, k-mers pairs [(ACT, CTG), (CTG, TGC), and (TGC, GCA)] are created. These pairs form edges of graph, with k-mers serving as nodes. Perrozi et al. (224) proposed DeepWalk approach that utilizes graphical space to generate new sequences by capturing extensive relationships between k-mers. After generating new sequences, it makes use of Word2Vec model for generation of k-mers vectors. In contrast, Grover et al. (225) proposed Node2Vec approach that utilizes a distinct strategy for generation of new sequences. Primarily, Node2Vec employs second order random walk sampling strategy which reaps the benefits of breath first search (BFS) and depth first search (DFS) algorithms. This strategy computes probability of visiting next node depending on the previously visited nodes rather than just randomly selecting one of neighboring nodes. Naeayanan et al., (226) introduced another embedding generation approach namely Graph2Vec. It extracts root node, its sub-graph, and degree of intended sub-graph to generate a sorted list of nodes which is then passed to SkipGram with negative sampling (SGNS) model.

Matrix factorization embedding approaches extend graph-based embedding approaches by using adjacency matrix rather than generating new sequences directly from graph. Adjacency matrix encodes the relationships between nodes within the graph which is then decomposed using matrix factorization methods namely SVD and NMF. These approaches also decompose adjacency matrix of graph into lower-dimensional matrices which represents node embeddings. These embeddings extract nodes latent features and relationships between them. Mainly, matrix factorization methods aim to minimize reconstruction error between original adjacency matrix and reconstructed matrix from node embeddings. These methods include Laplacian Eigenmaps (230), Locally Linear Embedding (231), SDNE (227), SocDim (228), GraRep (229), and OPA2Vec (232). A closer view of Figure 5 indicates that 6 matrix factorization embedding approaches method are least explored as compared to foundational word embedding methods (Word2vec, FastText) and graph-based methods.

Laplacian Eigenmaps (230) approach derives degree matrix from adjacency matrix and computes graph Laplacian matrix by computing the difference between degree matrix and adjacency matrix. Next, it computes eigen values and constructs eigenvectors corresponding to smallest non-zero eigenvalues which results in generating lower-dimensional k-mer embeddings and preserving local k-mers relationships. Another matrix representation approach graph representations (GraRep) (229) make use of adjacency (Ai, j) and degree (Di, j) matrices driven from nodes and edges of graph. Equation 8 depicts mathematical expression for computing proximity matrix from Ai, j and Di, j matrices.