- 1 School of Health Sciences, Swinburne University of Technology, Hawthorn, VIC, Australia

- 2 School of Medicine and Dentistry, Griffith Health, Griffith University, Southport, QLD, Australia

- 3 Centre for Health and Social Practice and Centre for Sports Science and Human Performance, Waikato Institute of Technology, Hamilton, Waikato, New Zealand

- 4 College of Health, Medicine and Wellbeing, The University of Newcastle, Callaghan, NSW, Australia

- 5 Faculty of Health and Environmental Sciences, Auckland University of Technology, Auckland, Auckland, New Zealand

- 6 School of Health Sciences, Faculty of Health, University of Canberra, Canberra, ACT, Australia

- 7 Division of Health, University of Waikato, Hamilton, Waikato, New Zealand

- 8 School of Nursing, Midwifery and Paramedicine, University of the Sunshine Coast, Maroochydore, QLD, Australia

Introduction: Healthcare professionals are expected to demonstrate competence in the effective management of chronic disease and long-term health and rehabilitation needs. Care provided by groups of collaborating professionals is currently well recognized as a more effective way to support people living with these conditions than routine, single-profession clinical encounters. Clinical learning contexts provide hands-on opportunities to develop the interprofessional competencies essential for health professional students in training; however, suitable assessment tools are needed to support student attainment of interprofessional competencies with self-assessment espoused as an important component of learning.

Method: A structured approach was taken to locate and review existing tools used for the self-assessment and peer assessment of students’ competencies relevant to interprofessional practice.

Results: A range of self- and/or peer assessment approaches are available, including formally structured tools and less structured processes inclusive of focus groups and reflection.

Discussion: The identified tools will usefully inform discussion regarding interprofessional competency self- and peer assessment options by healthcare students participating in a broad range of clinical learning contexts.

Conclusion: Self- and/or peer assessment is a useful approach for those seeking to effectively enhance interprofessional learning and measure the attainment of related competencies.

1 Introduction

An increasing focus on interprofessional education is needed as student health professionals prepare for a context of increasing health complexity, non-communicable disease, co-morbid conditions, and aging populations (1, 2). Programs of care provided by professionals working together are currently well recognized as a more effective way to support people living with these conditions than routine, single-profession clinical encounters. Patients increasingly expect a broader and more coordinated approach to their care (2, 3). Recognition of the need for team-based collaborative care and interprofessional education is not new as shown by documents such as the World Health Organization (2010) Framework for Action on Interprofessional Education and Collaborative Practice (4). Subsequently, curriculum content focused on the development of interprofessional competencies is an increasingly expected component of health professional education (5, 6). Interprofessional competencies form the basis of safety, quality, and patient-centeredness in team collaboration contexts (7, 8). These competencies include team participation, leadership, and communication (9). Interprofessional competence also includes soft skills such as attitudes, values, ethics, and teamwork, facilitating difficult conversations, multi-party communication, and trust building (8, 10, 11). Interprofessional competence is required for effective modern healthcare practice but all too often, various barriers get in the way of teaching interprofessional (IP) competencies, given that training usually involves professionals working in isolation using their own discipline knowledge base (1, 12).

Clinical learning environments, or contexts in which health professional programs are taught and practice placements occur, provide hands-on opportunities to support student attainment of IP competencies. Best practices in clinical education involve continuous feedback as a critical link between teaching and assessment and essential in supporting the educational process (13, 14) with self- and peer assessment espoused and regularly used as an important component of the learning sequence (15, 16). Twenty years ago, Ward et al. (2002) reported that reflection on practice using self- and peer assessment is not without difficulties, raising concerns such as issues in objectivity and reliability of students assessing their own performance (17), and debates have persisted since that time (18). Despite these concerns, self-assessment is widely implemented as an educational learning process (16). In the face of concerns, suitably validated self- and peer assessment tools are needed to guide best practices, complement faculty assessment processes, and effectively maximize learning (19).

Previous reviews identifying interprofessional assessment tools for use with prelicensure students have focused on post-placement or post-intervention assessment of IP competency (20) or the identification of tools for use by faculty in the assessment of student IP development (21). This inquiry aimed to locate assessment tools and assessment processes used by prelicensure healthcare students for the self- and peer assessment of IP competency attainment in clinical learning contexts, including the potential for use within an interprofessional student-led clinic. Student-led clinics (SLCs) are a unique option for the provision of practice placements in health professional programs (22). They are used with increasing frequency to enhance the opportunity and experience for prelicensure students in hands-on practice, especially in primary healthcare settings, while also providing benefits to service users and communities (22–24). SLCs may involve students from single professions (22) or may be interprofessional in nature (25, 26). Within both general clinical learning contexts and SLCs, tools may be used to assess either individuals or whole teams in interprofessional competencies.

This study sought to understand what assessment tools and self/peer assessment processes have been used by prelicensure healthcare students during interprofessional self-assessment and peer assessment processes in clinical learning environments with two or more health professionals working together. In developing this search, we noted that “tools,” “techniques,” “instruments,” and “scales” are terms frequently used interchangeably in the literature (27–29). Definitions are closely aligned and often contradictory (30, 31). For this review, the term ‘tool’ is used for consistency. Consistent with our research question, we also report processes that did not utilize formally developed ‘tools’ but instead used other means, such as self- or peer reflection and focus group discussions, to measure, assess, or reflect on interprofessional competency development.

The inquiry focused on student self- and peer assessment versus assessment undertaken by teaching faculty and on the self-assessment of interprofessional competencies versus profession-specific competencies. The review aimed to answer the following questions:

• What tools and self-/peer assessment processes have been used by prelicensure healthcare students to undertake self- and/or peer assessment of interprofessional competencies in an interprofessional clinical learning context (contexts in which health professional programs are taught and practice placements occur) with two or more health professions working together?

2 Method

2.1 Reporting guideline

A scoping review was considered most appropriate for investigating the research question as this topic has not yet been comprehensively reviewed. In such instances, scoping reviews are suitable to provide a general overview of available evidence (assessment tools) as a precursor to more detailed inquiry (32). A scholarly approach was undertaken in conducting the review using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR) statement (33).

2.2 Eligibility criteria

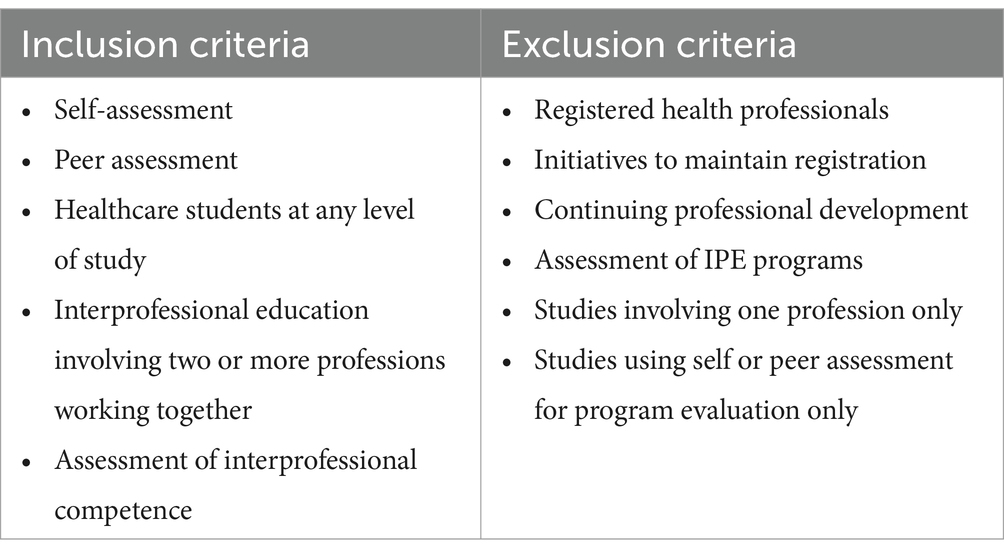

This review sought primary studies using qualitative, quantitative, or mixed methods for assessing interprofessional competencies. Specifically, we searched for studies involving healthcare students (from two or more professions working together) at any level of study, participating in interprofessional education activities, and utilizing tools to self-assess interprofessional competence or peer assess other students. The search focused on prelicensure students. Publications in which participants included registered health professionals and those with initiatives to maintain registration or undertake continuing professional development were excluded. Studies that assessed IPE programs more broadly and in which tools were used for the primary purpose of program evaluation, as opposed to specifically assessing student IPE competencies as a result of such programs, were excluded. The selection criteria are summarized in Table 1.

2.3 Information sources

The literature search was completed in May 2023 and updated in November 2023 followed by analysis and write-up. Four electronic databases, ProQuest, ERIC, Medline, and Embase, were searched for literature published in the 25 years preceding the search date. By focusing on the last 25 years, the review aligns with the transfer in various nations of hospital-based education to university-based education and captures the most relevant and impactful developments in the field of Interprofessional Education and Collaborative Practice (IPECP). This approach allowed us to concentrate on the period during which these concepts gained significant traction, thereby providing a more focused and pertinent analysis.

2.4 Search process

The search strategy was guided by the research question and the inclusion/exclusion criteria, focusing on three broad concepts: healthcare student, peer- and self-assessment, and interprofessional competence, with refinement through MeSH headings in Medline. The initial search in ERIC used the following keywords: [(Pre-registration OR Pre-licensure) AND (Healthcare student OR Healthcare student) AND (postgraduate OR undergraduate) AND (Evaluate OR Assessment OR assessing OR assess OR outcome OR outcomes OR examin* OR evaluate) OR (measurement OR measure OR measuring) AND (Competenc* OR Competent) AND (interprofession*) AND tools]. The search strategy was then tailored to each database accordingly. Google Scholar was specifically used to search for gray and narrative literature that might have been missed in the focused search as well as to explore reference lists of relevant primary papers in the database search.
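The general pattern behind a concept-based search string can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' actual search procedure: the function name `build_query` and the grouping of terms are assumptions, and only a subset of the keywords listed above is used. Synonyms within a concept are joined with OR, and the concepts are then joined with AND.

```python
def build_query(concept_groups):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    clauses = []
    for terms in concept_groups:
        # Quote multi-word terms so a database would treat them as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of keywords taken from the search terms reported above.
concepts = [
    ["Pre-registration", "Pre-licensure"],
    ["Healthcare student"],
    ["assess", "assessment", "evaluate", "measure"],
    ["Competenc*", "Competent"],
    ["interprofession*"],
]

query = build_query(concepts)
print(query)
```

Keeping each concept in its own list makes it straightforward to tailor the string to each database, for example by swapping free-text terms for controlled vocabulary.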

2.5 Study selection

Search results were imported into Covidence® (34), an online software for review data management and screening, which automatically removed duplicates. Initial screening of the titles and abstracts was conducted by two sets of independent reviewers. Disagreements regarding paper inclusion were resolved by discussion between a third and fourth reviewer.

Full texts of included studies were then reviewed by two sets of independent reviewers. Discrepancies and conflicts were resolved by a third reviewer.

2.6 Data extraction

Two reviewers independently extracted relevant data from the included studies via Covidence for review and discussion by all authors. This information encompassed the following parts: the characteristics of studies (publication year, country, study design, sample population, and size), participant features (student professional field and level of study), and characteristics relating to the intervention, control, and outcome measures (IPE, interprofessional competency, and self/peer assessment tools). Any conflicts that arose between the reviewers were resolved by consensus.

Focused effort with significant rereading and team discussion was needed to locate studies directly relevant to the research questions. This was because significant literature was identified where students undertook self-assessment activities using published scales; however, on close examination, the student self-assessment data were used to inform tutor evaluation of the effectiveness of the IPE program or intervention rather than for the students’ personal assessment, discussion, and reflection. Examples include (35–37). Articles that used student self-assessment data purely to inform program evaluations were excluded in the review process because this review directly related to the question ‘what tools and self/peer assessment processes have been used by prelicensure healthcare students to undertake self- and/or peer assessment of interprofessional competencies in an interprofessional clinical learning context with two or more health professions working together?’ Some studies had a dual-purpose use of the student self-evaluation data—to inform both student self-evaluation and program evaluation. If data were available for student self-assessment and/or reflection, the study was identified as relevant to this review.

3 Results

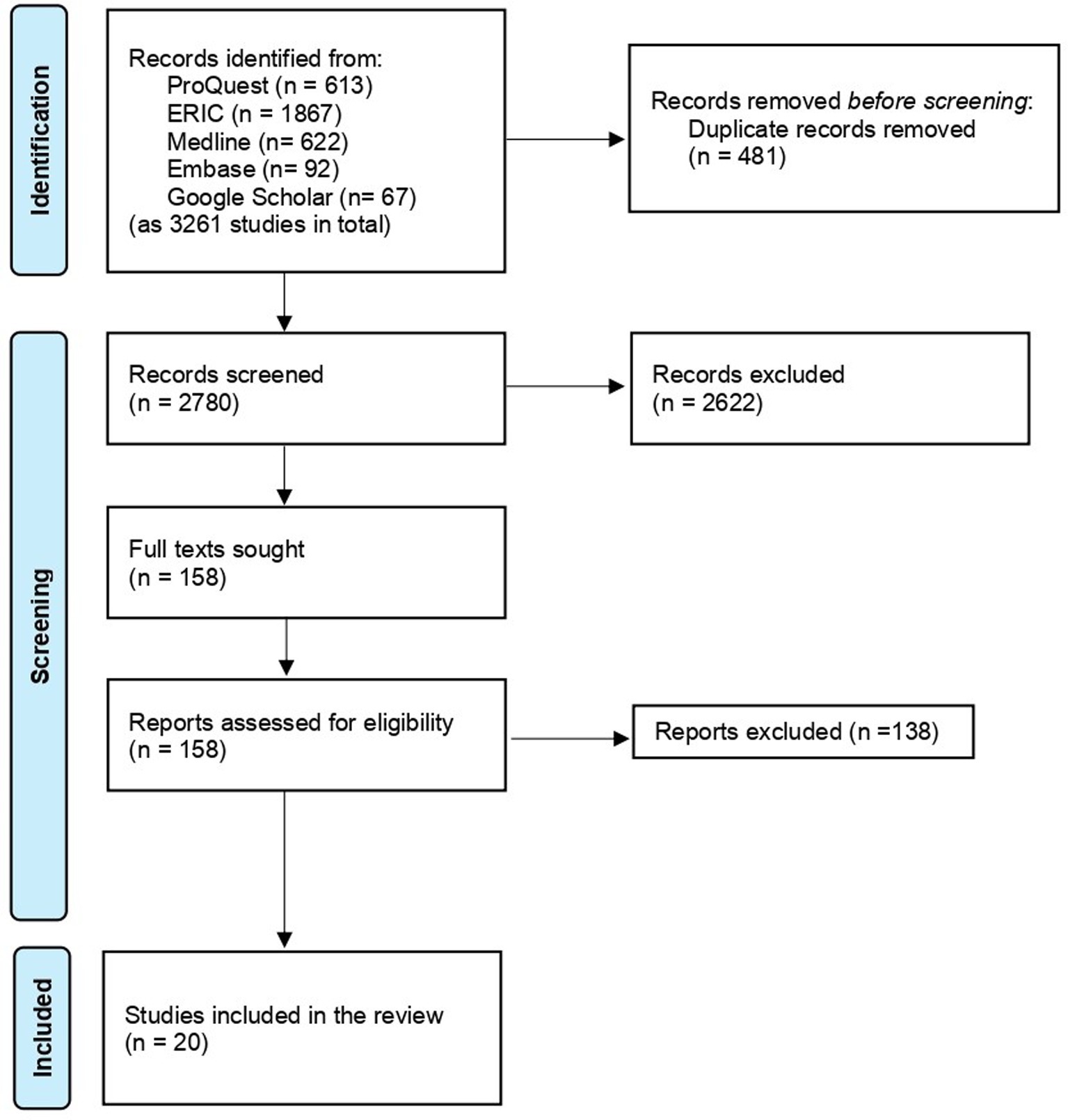

Twenty studies were identified of direct relevance to the review question (see Figure 1).

3.1 Characteristics of included studies

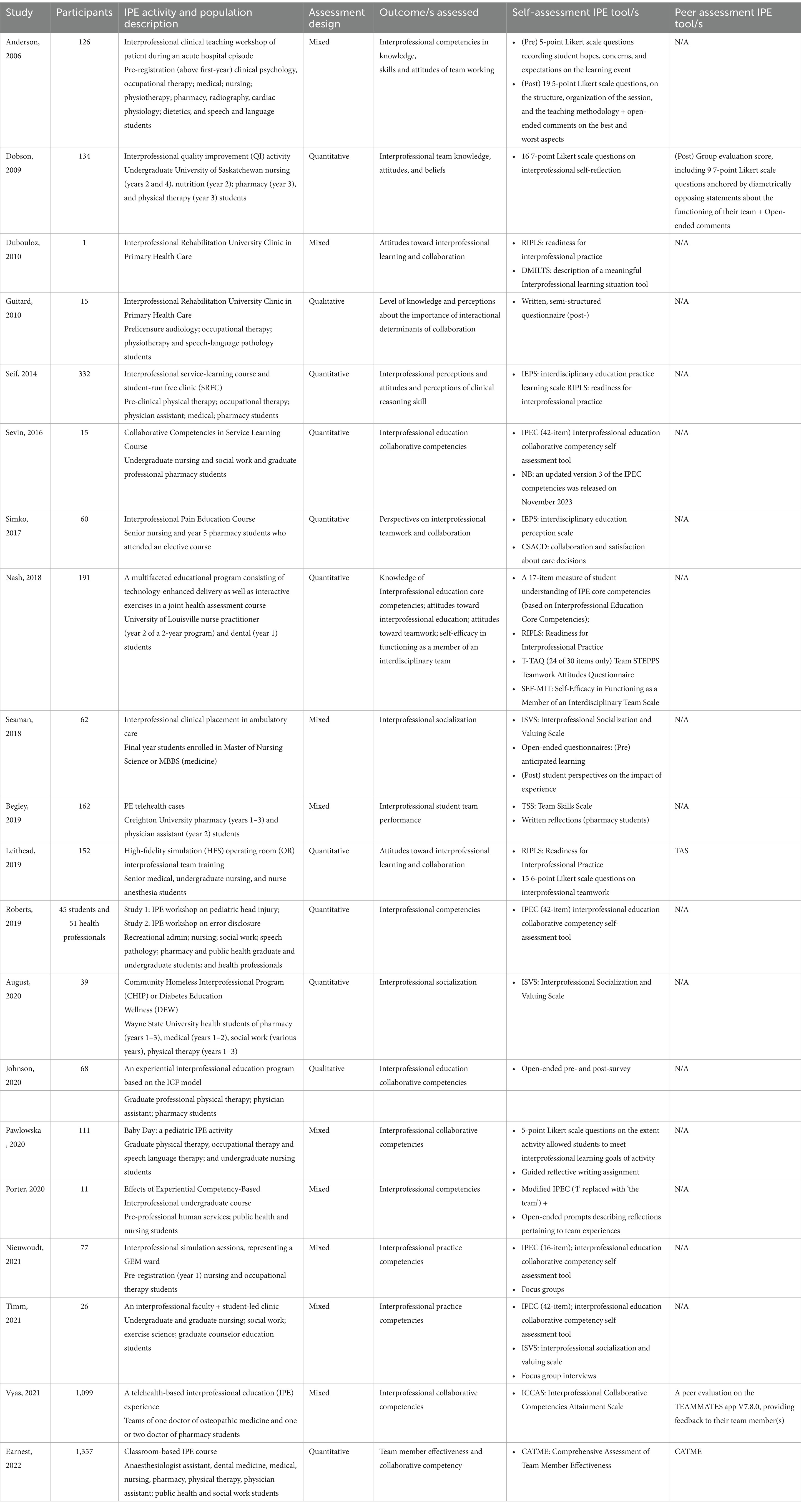

Table 2 provides a summary of the characteristics of each study selected for inclusion in this review. The included studies were published from 2009 onward, within the 25-year search timeframe, and involved quantitative, qualitative, and mixed-methods approaches. A wide range of health professions were reported in the selected studies, with nursing and pharmacy the most frequently noted. Studies originated from the United States, Canada, Australia, and the United Kingdom, with the highest number (14 or 70%) having been published in the United States.

3.2 Analysis of included studies

For the purposes of this review, assessment tools identified may have been used for either self- or peer assessment, with results having been provided to students for the purposes of learning assessment, rather than being used by educators or researchers for program or course evaluation. Table 3 lists each study and provides information about the number of participants, the intervention (IPE learning activity), the participating student population, and the specific assessment tools used. Note that where assessment was undertaken by instructors or faculty in conjunction with self-assessment or peer assessment in a given study, these tools are not listed. For example, Begley et al. (2019) also used the Creighton Interprofessional Collaborative Evaluation (C-ICE) instrument, a “25-point dichotomous tool in which the evaluator awards one point if the interprofessional team demonstrates competency in a specific area, or no point for failure to do so” (p. 477). Because this tool involves evaluator (not self- or peer) assessment, it is not listed or considered further here (38).

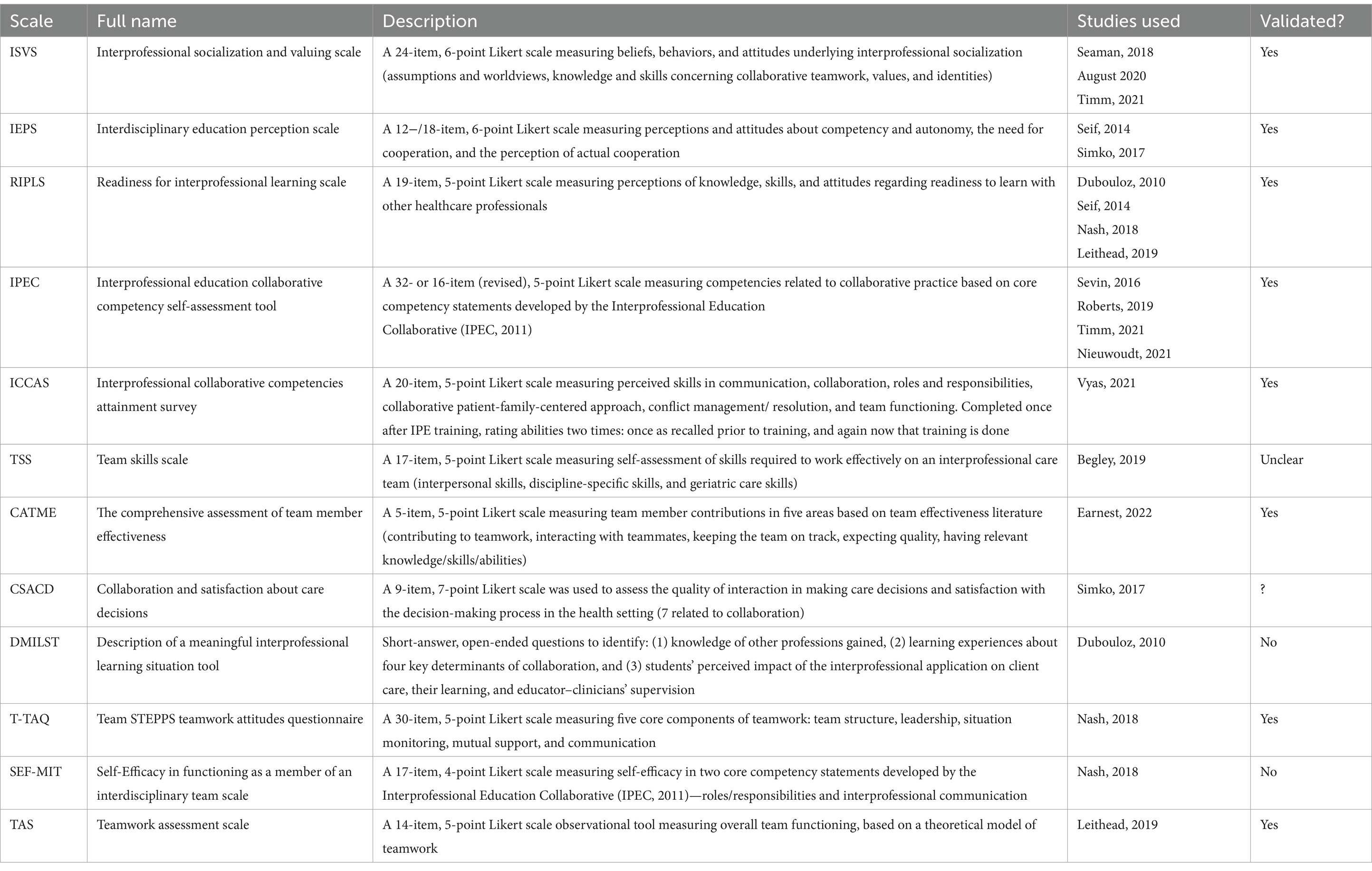

The 12 specific tools in Table 3 that have been used for self- and/or peer assessment of interprofessional competencies across the twenty included studies are shown in Table 4.

The origins of the frequently used tools can be found in the USA, which has a strong history of formally established interprofessional learning collaboratives. For example, the ISVS Scale was developed by the Minnesota-based National Centre for Interprofessional Education and Practice (39), and the IPEC scale was developed by the Washington-DC-based Interprofessional Education Collaborative. It appears that US educators have the autonomy to choose and utilize various tools or to construct their own approaches. The UK hosts CAIPE, the Centre for the Advancement of Interprofessional Practice and Education, established in 1987 to drive interprofessional practice in health (40). However, UK educational providers appear to have less autonomy as UK-based regulators mandate the actual competencies that must be addressed by each profession. We can only speculate that this may be why only one UK-based manuscript appeared in this search.

The 12 assessment tools vary in several ways. Eleven of the 12 are quantitative, Likert-scale measures; the exception is the Description of a Meaningful Interprofessional Learning Situation Tool developed by Dubouloz et al. (2010) to capture students’ perspectives qualitatively via open-ended questions. This is the only specific qualitative tool used (41); however, other studies also adopted less structured approaches to self- and peer assessment, such as the use of focus groups or written reflection tasks. Table 4 only includes the 14 studies in which formal tools were utilized. Less structured approaches were most frequently reported in mixed-methods studies, in conjunction with a more structured, quantitative approach utilizing a scaled tool (38, 42–44). Two studies adopted a solely qualitative approach, with students undertaking self-assessment via reflective written questionnaire/open-ended survey (post-test-only and pre-and-post, respectively) (45, 46).

Among quantitative approaches, the most frequently used tool was the IPEC (43, 44, 47, 48). This is a 5-point Likert scale tool based on the well-known core competency statements developed by the Interprofessional Education Collaborative (IPEC, 2011), a U.S. collaboration involving peak bodies from six health disciplines. An early 42-item scale includes 8 to 11 items for each of the four key domains in the statement (values and ethics, roles and responsibilities, interprofessional communication, and teams and teamwork), although Nieuwoudt et al. (2021) used a shortened 16-item scale and Porter et al. (2020) modified the scale to use ‘the team’ instead of ‘I’ for each of the competencies (43, 49). Note that an updated version 3 of the IPEC competency standards was released in late 2023, shortly after the conclusion of the search process associated with this manuscript (50). The updated version is available as a resource to inform future studies.

Three of the included studies used ISVS for students to self-assess attitudes, values, and beliefs about the value of interprofessional socialization (44, 51, 52). The second most used tool was the Readiness for Interprofessional Learning Scale (RIPLS), used by Ref. (41, 53–55). However, it is important to note that in each of these cases, this tool was used alongside one or more other tools for assessing competencies. In each study including RIPLS, the decision to include it is not explained. While a valid and reliable tool, RIPLS (56) was not designed to be an outcome or impact measure. It is designed to measure attitudes toward IPE before starting an IPE intervention. However, it is appropriate to include studies that have utilized the RIPLS scale on the basis that this scale measures attitudes and values as regards interprofessional educational activities. Collaborative attitudes and values, including the attitude and openness to follow leaders within a team, are important interprofessional competencies (10, 11, 57), and students’ awareness of their own situation is an important part of interprofessional learning. In considering the decision to include studies using the RIPLS scale in the findings of this search, it is important to reflect on the variance among the 12 tools highlighted in Table 4. Assessment is a multivariate process. One size does not fit all. Thus, a selection of different types of tools and processes for differing settings is both valid and useful.

In considering differences, it is also important to differentiate between the most common type of tool, which measures individual competencies (whether for oneself or one’s peers), and those which measure competencies overall, for a team. Most located studies used individual and personal scales, but there were some examples of scales or tools which measured overall team functioning, skills, or approaches. These include the CATME used in Ref. (58), which originated in engineering and involves individuals assessing self- and team-member contributions to a team, and the Teamwork Assessment Scale used in Ref. (53), which assesses team functioning in a given situation (items include ‘the team roles were distinct without ambiguity’, for example). The CASCD scale used by Ref. (59) measures perceptions of team interaction and satisfaction with decision-making and is thus also more focused on situational and team functioning than a scale of individual skills, knowledge, or experience.

Other studies used (either solely or alongside named tools) in-house constructed Likert-scale instruments not listed in Table 3 (51, 57, 60). Validation for these tools, particularly a detailed description of their psychometric properties, was typically lacking (61). Likert-scale ranked approaches were typically used in a pre- and post-design before and after the intervention, but there are also examples of a retrospective post-then-pre design, in which participants recalled prior knowledge after the fact (48), and the ICCAS used by Vyas et al. (2021) is designed to be completed only once, rating abilities after training and also as recalled previously (62). Overall, there was significant variability in the approaches to self- and peer assessment undertaken by students in these contexts and in the tools and processes used.
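The difference between a traditional pre/post design and a retrospective post-then-pre design can be illustrated with a minimal Python sketch. All ratings below are invented for illustration and are not drawn from any included study; the function name `mean_change` is likewise hypothetical. In both designs the change score is the post rating minus the baseline rating, and only the source of the baseline differs.

```python
from statistics import mean


def mean_change(baseline, post):
    """Mean per-student change on one Likert item (post minus baseline)."""
    if len(baseline) != len(post):
        raise ValueError("each student needs both a baseline and a post rating")
    return mean(after - before for before, after in zip(baseline, post))


# Hypothetical 5-point ratings for one item from five students.
pre_ratings = [3, 2, 4, 3, 3]    # collected before the IPE activity
post_ratings = [4, 4, 4, 5, 3]   # collected after the IPE activity
retro_pre = [2, 2, 3, 3, 2]      # baseline as recalled after the activity

traditional = mean_change(pre_ratings, post_ratings)
retrospective = mean_change(retro_pre, post_ratings)
print(traditional, retrospective)
```

In this invented data set the recalled baseline is lower than the rating given beforehand, so the retrospective design shows a larger gain. Whether real cohorts behave this way is an empirical question that the sketch does not answer.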

4 Discussion

Effective assessment should be designed in a multifaceted manner and include a variety of formative and summative assessment activities and continuous learner feedback with each assessment activity designed to build, test, and affirm learner capability and expand understanding. Using more than one assessment type helps give students a range of ways to demonstrate what they have learned and what still needs to be learned (63). Among the wide milieu to be learned by student health professionals, interprofessional insight and practice capabilities are increasingly important as populations age and levels of chronic and complex care priorities increase (2).

A recent review reported results of a search designed as a resource of interprofessional assessment tools used by faculty (21). The present search was designed to complement that study by locating and providing a pointer to tools and processes available for student self-assessment and peer assessment of interprofessional understanding and capability. As highlighted, the search identified studies utilizing and reporting formally developed IP self- and peer assessment tools along with other studies reporting processes such as focus groups and reflection. The benefit is the identification of a broad range of resources that can be used to engage with students and their peers and enhance IP-related learning among health professional students during their learning experiences. A significant benefit of self- and peer assessment is the extent to which these processes increase student understanding of their current capabilities and learning needs (58, 64).

4.1 Self- and peer assessment tools

Despite concerns in the literature about the objectivity and reliability of students assessing their own performance and/or that of their classmates (17, 18), peer and self-assessments have been shown to significantly contribute to the expansion of student capability and positive learning outcomes (64–66). The risk that self-assessment may be unreliable, inflated, and/or biased can be mitigated by including others (for example, peers, colleagues, and clients) in the assessment of self (67). Thus, the review has searched for examples using both self- and peer assessment tools and processes, with both noted as being complementary to each other (65).

Documented benefits of self-assessment include the growth that occurs when students learn how to assess their own competencies and/or those of their peers. This includes increased ‘deep-level’ learning, critical thinking, and problem-solving skills (66). Reported benefits also include growth in self-awareness and the transition from tutor-directed learning, to self-directed learning, and ultimately, autonomous, reflective practice (66).

Timing of assessment and the benefits of repeating assessments are important considerations. Students may rate themselves inappropriately high before their learning experience and score lower in terms of comfort or ability after the placement, once they have greater insight into their capabilities and have been provided with an opportunity to reflect (51, 68). Self-assessment has also been reported as more likely to be inflated among first-year students, with further instruction and reflection recommended to moderate over-confidence and self-bias among novice learners (69).

Aside from the use of formalized tools to facilitate self- and/or peer assessment, verbal or written reflection and engagement in focus groups provide the opportunity for students to safely contemplate and recognize their own strengths and weaknesses and, as such, are valuable aids to learning. Benefits also include reported increases in empathy, comfort in dealing with complexity, and engagement in the learning process (45, 46, 70, 71).

4.2 Assessment as a comprehensive concept

“Effective assessment is more like a scrapbook of mementos and pictures rather than a single snapshot.”

Wiggins and McTighe, 2005, p 152

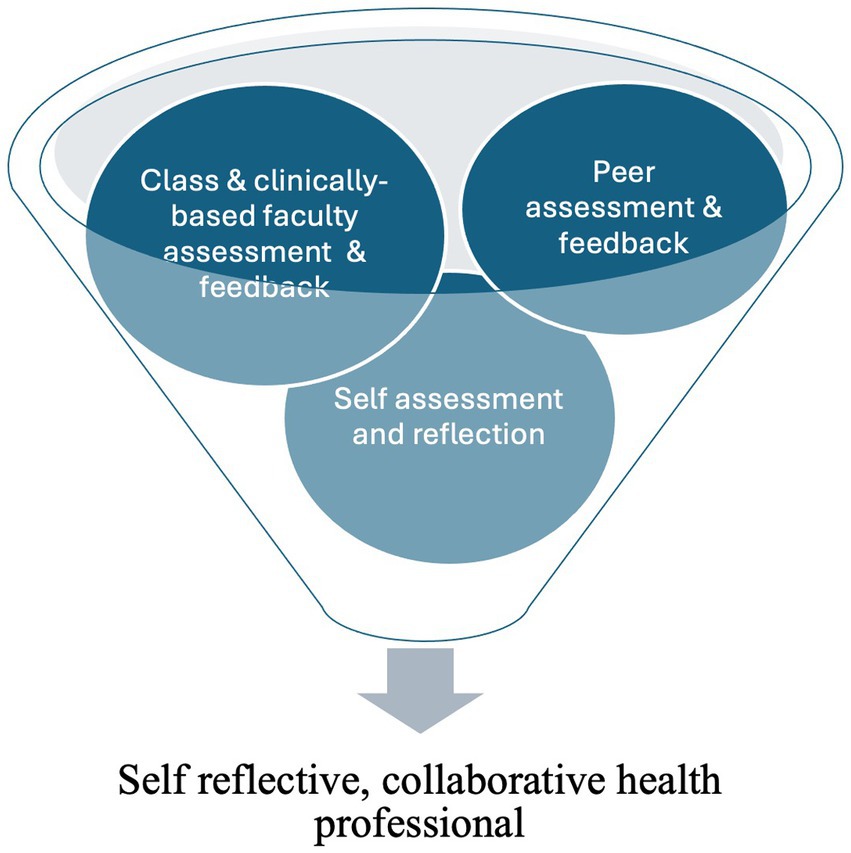

Multiple methods are needed to best capture the major aspects of knowledge and competency acquisition among student health professionals (72, 73). While the search has successfully identified self and/or peer assessment options for educators and their learners, it is important to position these within a broader suite of assessment options to maximize the development of a self-reflective health professional. Figure 2 illustrates the comprehensive nature of student assessment and the multivariate approach outlined by Wiggins and McTighe (2005), which is needed to support the development of critically thinking, self-reflective practitioners (63).

Blue et al. (2015) have noted that the lack of progress relating to the assessment of interprofessional competencies continues to create challenges for educators. Various studies conducting assessments have focused on learner attitudes toward IPE as opposed to learner IP knowledge or skill (61, 74, 75). Moreover, existing tools lack sufficient theoretical and psychometric development (61, 75). The Readiness for Interprofessional Learning Scale (56) and the Interdisciplinary Education Perception Scale (IEPS) (76) have been widely used, for both faculty (21) and student self- and/or peer assessment (55), and other tools or scales have been locally developed to meet specific institutional goals and objectives (43, 49, 74). Blue et al. (2015) and Nieuwoudt et al. (2021) found that few programs reported systematic processes for evaluating individual students’ skills and behaviors related to interprofessional collaboration. It is clear that rigorous assessment and evaluation methods, standardized and widely used tools, and longitudinal assessment from diverse contexts are needed if the field of IPE is to advance and align with the demands of changing clinical care systems.

4.3 Need for further research

More study is needed to investigate the strengths and merits of quantitative scales versus more qualitative approaches in the assessment of interprofessional competency and within the suite of currently available self- and/or peer assessment options. Scaled measures and reflective, deep-dive approaches have both been used in the literature, but little seems to have been done to reconcile them or to test the value of one over another or within mixed approaches (74). What is clear is that for the field to progress, there needs to be some consensus on which measures to use to most effectively support learning.

5 Limitations

This review focuses on studies reporting self-assessment and peer assessment processes. Findings identify considerable variance among the identified tools and processes and the ways in which they were utilized. Several studies undertook a case study approach or included small cohorts only, so results may not be comprehensive or generalizable. As a detailed description of learning outcomes and psychometric properties of the results was typically lacking, it is not possible to make evidence-based comparative comments about which of the individual tools and/or processes are the most effective aids for learning. Thus, readers are encouraged to consider the recommendations in conjunction with the combination of assessment and feedback processes available to assess interprofessional readiness, capability, and competence and aid student learning.

6 Conclusion/recommendations

This review has identified a range of self- and peer assessment tools and processes that can usefully contribute to the assessment of interprofessional competencies. Findings highlight the option of using a range of self- and/or peer assessment approaches, including formally structured tools and less structured processes, inclusive of focus groups and reflection. We recommend that the results identified within this search be used to complement tools that can be used by faculty and others within a broader mosaic of assessments designed to support learning and the development of competent, self-reflective beginner practitioners. As such, the research provides a useful resource for those seeking to effectively enhance interprofessional learning and competency attainment. Of note is the conclusion that more study is needed in this area, including greater clarity and consensus about definitions, tools, and the most appropriate measurement approach.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

SB: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. JY: Data curation, Formal analysis, Investigation, Writing – review & editing. DB: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – review & editing. IA: Data curation, Formal analysis, Investigation, Writing – review & editing. AP: Data curation, Formal analysis, Investigation, Writing – review & editing. KS: Data curation, Formal analysis, Investigation, Writing – review & editing. A-RY: Data curation, Formal analysis, Investigation, Methodology, Writing – review & editing. PA: Formal analysis, Investigation, Methodology, Writing – review & editing. PB: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Project administration, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research is supported by a Trust Waikato Community Impact Grant, Hamilton, New Zealand.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Rawlinson, C, Carron, T, Cohidon, C, Arditi, C, Hong, QN, Pluye, P, et al. An overview of reviews on Interprofessional collaboration in primary care: barriers and facilitators. Int J Integr Care. (2021) 21:32. doi: 10.5334/ijic.5589

2. Koppel, R, Wilson, C, and Humowiecki, M. Core competencies for frontline complex care providers. National Center for Complex Health and Social Needs, Camden Coalition of Healthcare Providers (2020)

3. Vachon, B, Huynh, A-T, Breton, M, Quesnel, L, Camirand, M, Leblanc, J, et al. Patients’ expectations and solutions for improving primary diabetes care. Int J Health Care Qual Assur. (2017) 30:554–67. doi: 10.1108/IJHCQA-07-2016-0106

4. World Health Organization ed. Framework for action on Interprofessional Education & Collaborative Practice. Geneva, Switzerland: World Health Organization (2010).

5. Thistlethwaite, JE, Dunston, R, and Yassine, T. The times are changing: workforce planning, new health-care models and the need for interprofessional education in Australia. J Interprof Care. (2019) 33:361–8. doi: 10.1080/13561820.2019.1612333

6. World Health Organization. Transforming and scaling up health professionals' education and training: World Health Organization guidelines. Geneva, Switzerland: World Health Organization (2013).

7. Ansa, BE, Zechariah, S, Gates, AM, Johnson, SW, Heboyan, V, and De Leo, G. Attitudes and behavior towards Interprofessional collaboration among healthcare professionals in a large Academic Medical Center. Healthcare (Basel). (2020) 8:323–337. doi: 10.3390/healthcare8030323

8. Andersen, P, Broman, P, Tokolahi, E, Yap, JR, and Brownie, S. Determining a common understanding of interprofessional competencies for pre-registration health professionals in Aotearoa New Zealand: a Delphi study. Front Med. (2023):10. doi: 10.3389/fmed.2023.1119556

9. Canadian Interprofessional Health Collaborative. A national Interprofessional competency framework. (2010). Available at: https://phabc.org/wp-content/uploads/2015/07/CIHC-National-InterprofessionalCompetency-Framework.pdf (Accessed March 01, 2024).

10. McLaney, E, Morassaei, S, Hughes, L, Davies, R, Campbell, M, and Di Prospero, L. A framework for interprofessional team collaboration in a hospital setting: advancing team competencies and behaviours. Healthc Manage Forum. (2022) 35:112–7. doi: 10.1177/08404704211063584

11. Chitsulo, CG, Chirwa, EM, and Wilson, L. Faculty knowledge and skills needs in interprofessional education among faculty at the College of Medicine and Kamuzu College of Nursing, University of Malawi. Malawi Med J. (2021) 33:30–4. doi: 10.4314/mmj.v33is.6

12. Hero, L-M, and Lindfors, E. Students’ learning experience in a multidisciplinary innovation project. Educ Train. (2019) 61:500–22. doi: 10.1108/ET-06-2018-0138

13. Kuhlmann Lüdeke, A, and Guillén Olaya, JF. Effective feedback, an essential component of all stages in medical education. Universitas Medica. (2020) 61:32–46. doi: 10.11144/Javeriana.umed61-3.feed

14. Burgess, A, van Diggele, C, Roberts, C, and Mellis, C. Feedback in the clinical setting. BMC Med Educ. (2020) 20:460. doi: 10.1186/s12909-020-02280-5

15. Archer, JC. State of the science in health professional education: effective feedback. Med Educ. (2010) 44:101–8. doi: 10.1111/j.1365-2923.2009.03546.x

16. Lu, F-I, Takahashi, SG, and Kerr, C. Myth or reality: self-assessment is central to effective curriculum in anatomical pathology graduate medical education. Acad Pathol. (2021) 8:23742895211013528. doi: 10.1177/23742895211013528

17. Ward, M, Gruppen, L, and Regehr, G. Measuring self-assessment: current state of the art. Adv Health Sci Educ. (2002) 7:63–80. doi: 10.1023/a:1014585522084

18. Yates, N, Gough, S, and Brazil, V. Self-assessment: with all its limitations, why are we still measuring and teaching it? Lessons from a scoping review. Med Teach. (2022) 44:1296–302. doi: 10.1080/0142159X.2022.2093704

19. Andrade, HL. A critical review of research on student self-assessment. Front Educ. (2019):4. doi: 10.3389/feduc.2019.00087

20. Almoghirah, H, Nazar, H, and Illing, J. Assessment tools in pre-licensure interprofessional education: a systematic review, quality appraisal and narrative synthesis. Med Educ. (2021) 55:795–807. doi: 10.1111/medu.14453

21. Brownie, S, Blanchard, D, Amankwaa, I, Broman, P, Haggie, M, Logan, C, et al. Tools for faculty assessment of interdisciplinary competencies of healthcare students: an integrative review. Front Med. (2023):10. doi: 10.3389/fmed.2023.1124264

22. Kavannagh, J, Kearns, A, and McGarry, T. The benefits and challenges of student-led clinics within an Irish context. J Pract Teach Learn. (2015) 13:58–72. doi: 10.1921/jpts.v13i2-3.858

23. Stuhlmiller, CM, and Tolchard, B. Developing a student-led health and wellbeing clinic in an underserved community: collaborative learning, health outcomes and cost savings. BMC Nurs. (2015) 14:32. doi: 10.1186/s12912-015-0083-9

24. Tokolahi, E, Broman, P, Longhurst, G, Pearce, A, Cook, C, Andersen, P, et al. Student-led clinics in Aotearoa New Zealand: a scoping review with stakeholder consultation. J Multidiscip Healthc. (2021) 14:2053–66. doi: 10.2147/JMDH.S308032

25. Frakes, K-A, Brownie, S, Davies, L, Thomas, J, Miller, M-E, and Tyack, Z. Experiences from an interprofessional student-assisted chronic disease clinic. J Interprof Care. (2014) 28:573–5. doi: 10.3109/13561820.2014.917404

26. Frakes, K-A, Brownie, S, Davies, L, Thomas, JB, Miller, M-E, and Tyack, Z. Capricornia allied health partnership (CAHP): a case study of an innovative model of care addressing chronic disease through a regional student-assisted clinic. Australian Health Rev. (2014) 38:483–6. doi: 10.1071/AH13177

27. Dennis, V, Craft, M, Bratzler, D, Yozzo, M, Bender, D, Barbee, C, et al. Evaluation of student perceptions with 2 interprofessional assessment tools-the collaborative healthcare interdisciplinary relationship planning instrument and the Interprofessional attitudes scale-following didactic and clinical learning experiences in the United States. J Educ Eval Health Profess. (2019) 16:35. doi: 10.3352/jeehp.2019.16.35

28. Iverson, L, Todd, M, Ryan Haddad, A, Packard, K, Begley, K, Doll, J, et al. The development of an instrument to evaluate interprofessional student team competency. J Interprof Care. (2018) 32:531–8. doi: 10.1080/13561820.2018.1447552

29. Peltonen, J, Leino-Kilpi, H, Heikkilä, H, Rautava, P, Tuomela, K, Siekkinen, M, et al. Instruments measuring interprofessional collaboration in healthcare - a scoping review. J Interprof Care. (2020) 34:147–61. doi: 10.1080/13561820.2019.1637336

30. Australian Skills Quality Authority. What is the difference between an assessment tool and an assessment instrument? (Clause 1.8). Available at: https://www.asqa.gov.au/faqs/what-difference-betweenassessment-tool-and-assessment-instrument-clause-18 (Accessed March 01, 2024).

31. Industry Network Training & Assessment Resources. Difference between ‘tools’ and ‘instruments’ - chapter 3 (2017). Available at: https://www.intar.com.au/resources/training_and_assessing/section_3/chapter3_developing_assessment_tools/lesson2_tools_and_instruments.htm

32. Munn, Z, Peters, MDJ, Stern, C, Tufanaru, C, McArthur, A, and Aromataris, E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. (2018) 18:143. doi: 10.1186/s12874-018-0611-x

33. Liberati, A, Altman, DG, Tetzlaff, J, Mulrow, C, Gøtzsche, PC, Ioannidis, JPA, et al. The PRISMA statement for reporting systematic reviews and Meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. (2009) 6:e1000100. doi: 10.1371/journal.pmed.1000100

34. Babineau, J. Product review: Covidence (systematic review software). J Can Health Lib Assoc. (2014) 35:68–71. doi: 10.5596/c14-016

35. Mink, J, Mitzkat, A, Mihaljevic, AL, Trierweiler-Hauke, B, Götsch, B, Schmidt, J, et al. The impact of an interprofessional training ward on the development of interprofessional competencies: study protocol of a longitudinal mixed-methods study. BMC Med Educ. (2019) 19:48. doi: 10.1186/s12909-019-1478-1

36. MacKenzie, D, Creaser, G, Sponagle, K, Gubitz, G, MacDougall, P, Blacquiere, D, et al. Best practice interprofessional stroke care collaboration and simulation: the student perspective. J Interprof Care. (2017) 31:793–6. doi: 10.1080/13561820.2017.1356272

37. Mahmood, LS, Mohammed, CA, and Gilbert, JHV. Interprofessional simulation education to enhance teamwork and communication skills among medical and nursing undergraduates using the TeamSTEPPS® framework. Med J Armed Forces India. (2021) 77:S42–8. doi: 10.1016/j.mjafi.2020.10.026

38. Begley, K, O'Brien, K, Packard, K, Castillo, S, Haddad, AR, Johnson, K, et al. Impact of Interprofessional telehealth case activities on Students' perceptions of their collaborative care abilities. Am J Pharm Educ. (2019) 83:6880. doi: 10.5688/ajpe6880

39. National Centre for Interprofessional Practice and Education. Interprofessional socialization and valuing scale (ISVS-21) (2016). Available at: https://nexusipe.org/advancing/assessmentevaluation/interprofessional-socialization-and-valuing-scale-isvs-21 (Accessed March 01, 2024).

40. The Centre for the Advancement of Interprofessional Education. The Centre for the Advancement of Interprofessional education: collaborative practice through learning together to work together (2024). Available at: https://www.caipe.org/ (Accessed March 01, 2024).

41. Dubouloz, C-J, Savard, J, Burnett, D, and Guitard, P. An Interprofessional rehabilitation University Clinic in Primary Health Care: a collaborative learning model for physical therapist students in a clinical placement. J Physical Therapy Educ. (2010) 24:19–24.

42. Pawłowska, M, Del Rossi, L, Kientz, M, McGinnis, P, and Padden-Denmead, M. Immersing students in family-centered interprofessional collaborative practice. J Interprof Care. (2020) 34:50–8. doi: 10.1080/13561820.2019.1608165

43. Nieuwoudt, L, Hutchinson, A, and Nicholson, P. Pre-registration nursing and occupational therapy students' experience of interprofessional simulation training designed to develop communication and team-work skills: a mixed methods study. Nurse Educ Pract. (2021) 53:103073. doi: 10.1016/j.nepr.2021.103073

44. Timm, JR, and Schnepper, LL. A mixed-methods evaluation of an interprofessional clinical education model serving students, faculty, and the community. J Interprof Care. (2021) 35:92–100. doi: 10.1080/13561820.2019.1710117

45. Guitard, P, Dubouloz, C-J, Metthé, L, and Brasset-Latulippe, A. Assessing Interprofessional learning during a student placement in an Interprofessional rehabilitation University Clinic in Primary Healthcare in a Canadian francophone minority context. J Res Interprofessional Pract Educ. (2010) 1:231–246.

46. Johnson, AM, Woltenberg, LN, Heinss, S, Carper, R, Taylor, S, and Kuperstein, J. Whole person health: using experiential learning and the ICF model as a tool for introductory Interprofessional collaborative practice. J Allied Health. (2020) 49:86–91.

47. Roberts, SD, Lindsey, P, and Limon, J. Assessing students’ and health professionals’ competency learning from interprofessional education collaborative workshops. J Interprof Care. (2019) 33:38–46. doi: 10.1080/13561820.2018.1513915

48. Sevin, AM, Hale, KM, Brown, NV, and McAuley, JW. Assessing Interprofessional education collaborative competencies in service-learning course. Am J Pharm Educ. (2016) 80:32. doi: 10.5688/ajpe80232

49. Porter, KJ, Nandan, M, Varagona, L, and Maguire, MB. Effect of experiential competency-based Interprofessional education on pre-professional undergraduate students: a pilot study. J Allied Health. (2020) 49:79–85.

50. Interprofessional Education Collaborative. Core competencies for Interprofessional collaborative practice. Washington, D.C. (2023).

51. Seaman, K, Saunders, R, Dugmore, H, Tobin, C, Singer, R, and Lake, F. Shifts in nursing and medical students’ attitudes, beliefs and behaviours about interprofessional work: an interprofessional placement in ambulatory care. J Clin Nurs. (2018) 27:3123–30. doi: 10.1111/jocn.14506

52. August, B, Gortney, J, and Mendez, J. Evaluating interprofessional socialization: matched student self-assessments surrounding underserved clinic participation. Curr Pharm Teach Learn. (2020):12. doi: 10.1016/j.cptl.2020.04.006

53. Leithead, J 3rd, Garbee, DD, Yu, Q, Rusnak, VV, Kiselov, VJ, Zhu, L, et al. Examining interprofessional learning perceptions among students in a simulation-based operating room team training experience. J Interprof Care. (2019) 33:26–31. doi: 10.1080/13561820.2018.1513464

54. Nash, WA, Hall, LA, Lee Ridner, S, Hayden, D, Mayfield, T, Firriolo, J, et al. Evaluation of an interprofessional education program for advanced practice nursing and dental students: the oral-systemic health connection. Nurse Educ Today. (2018) 66:25–32. doi: 10.1016/j.nedt.2018.03.021

55. Seif, G, Coker-Bolt, P, Kraft, S, Gonsalves, W, Simpson, K, and Johnson, E. The development of clinical reasoning and interprofessional behaviors: service-learning at a student-run free clinic. J Interprof Care. (2014) 28:559–64. doi: 10.3109/13561820.2014.921899

56. Parsell, G, and Bligh, J. The development of a questionnaire to assess the readiness of health care students for interprofessional learning (RIPLS). Med Educ. (1999) 33:95–100.

57. Anderson, E, Manek, N, and Davidson, A. Evaluation of a model for maximizing interprofessional education in an acute hospital. J Interprof Care. (2006) 20:182–94. doi: 10.1080/13561820600625300

58. Earnest, M, Madigosky, WS, Yamashita, T, and Hanson, JL. Validity evidence for using an online peer-assessment tool (CATME) to assess individual contributions to interprofessional student teamwork in a longitudinal team-based learning course. J Interprof Care. (2022) 36:923–31. doi: 10.1080/13561820.2022.2040962

59. Simko, LC, Rhodes, DC, McGinnis, KA, and Fiedor, J. Students' perspectives on Interprofessional teamwork before and after an Interprofessional pain education course. Am J Pharm Educ. (2017) 81:104. doi: 10.5688/ajpe816104

60. Dobson, RT, Stevenson, K, Busch, A, Scott, DJ, Henry, C, and Wall, PA. A quality improvement activity to promote interprofessional collaboration among health professions students. Am J Pharm Educ. (2009) 73:64. doi: 10.1016/S0002-9459(24)00559-X

61. Thannhauser, J, Russell-Mayhew, S, and Scott, C. Measures of interprofessional education and collaboration. J Interprof Care. (2010) 24:336–49. doi: 10.3109/13561820903442903

62. Vyas, D, Ziegler, L, and Galal, SM. A telehealth-based interprofessional education module focused on social determinants of health. Curr Pharm Teach Learn. (2021) 13:1067–72. doi: 10.1016/j.cptl.2021.06.012

63. Wiggins, G, and McTighe, J. Understanding by design. 2nd ed. Upper Saddle River, NJ: Pearson; (2005). 1–371 p.

64. New South Wales Government. Peer and self-assessment for students (2023). New South Wales Government. Available at: https://education.nsw.gov.au/teaching-and-learning/professional-learning/teacher-quality-andaccreditation/strong-start-great-teachers/refining-practice/peer-and-self-assessment-for-students (Accessed March 01, 2024).

65. Cheong, CM, Luo, N, Zhu, X, Lu, Q, and Wei, W. Self-assessment complements peer assessment for undergraduate students in an academic writing task. Assess Eval High Educ. (2023) 48:135–48. doi: 10.1080/02602938.2022.2069225

66. Kajander-Unkuri, S, Leino-Kilpi, H, Katajisto, J, Meretoja, R, Räisänen, A, Saarikoski, M, et al. Congruence between graduating nursing students’ self-assessments and mentors’ assessments of students’ nurse competence. Collegian. (2016) 23:303–12. doi: 10.1016/j.colegn.2015.06.002

67. Taylor, SN. Student self-assessment and multisource feedback assessment: exploring benefits, limitations, and remedies. J Manag Educ. (2014) 38:359–83. doi: 10.1177/1052562913488111

68. Ma, K, Cavanagh, MS, and McMaugh, A. Preservice teachers’ reflections on their teaching self-efficacy changes for the first professional experience placement. Austrl J Teach Educ. (2021) 46:62–76. doi: 10.14221/ajte.2021v46n10.4

69. Pawluk, SA, Zolezzi, M, and Rainkie, D. Comparing student self-assessments of global communication with trained faculty and standardized patient assessments. Curr Pharm Teach Learn. (2018) 10:779–84. doi: 10.1016/j.cptl.2018.03.012

70. Winkel, AF, Yingling, S, Jones, AA, and Nicholson, J. Reflection as a learning tool in graduate medical education: a systematic review. J Grad Med Educ. (2017) 9:430–9. doi: 10.4300/JGME-D-16-00500.1

71. Artioli, G, Deiana, L, De Vincenzo, F, Raucci, M, Amaducci, G, Bassi, MC, et al. Health professionals and students’ experiences of reflective writing in learning: a qualitative metasynthesis. BMC Med Educ. (2021) 21:394. doi: 10.1186/s12909-021-02831-4

73. ClinEd Australia. The role of assessment in clinical practice (2023). Available at: https://www.clinedaus.org.au/topics-category/the-role-of-assessment-in-clinical-placement-174

74. Blue, A, Chesluk, B, Conforti, L, and Holmboe, E. Assessment and evaluation in Interprofessional education: exploring the field. J Allied Health. (2015):44.

75. Kenaszchuk, C, Reeves, S, Nicholas, D, and Zwarenstein, M. Validity and reliability of a multiple-group measurement scale for interprofessional collaboration. BMC Health Serv Res. (2010) 10:83. doi: 10.1186/1472-6963-10-83

Keywords: interdisciplinary education, interdisciplinary communication, interprofessional collaboration, self-assessment, peer assessment, healthcare student

Citation: Brownie S, Yap JR, Blanchard D, Amankwaa I, Pearce A, Sampath KK, Yan A-R, Andersen P and Broman P (2024) Tools for self- or peer-assessment of interprofessional competencies of healthcare students: a scoping review. Front. Med. 11:1449715. doi: 10.3389/fmed.2024.1449715

Edited by:

Jill Thistlethwaite, University of Technology Sydney, Australia

Reviewed by:

Lindsay Iverson, Creighton University, United States

Vikki Park, Northumbria University, United Kingdom

Copyright © 2024 Brownie, Yap, Blanchard, Amankwaa, Pearce, Sampath, Yan, Andersen and Broman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sharon Brownie, sbrownie@swin.edu.au
