- 1 King Salman Center for Disability Research, Riyadh, Saudi Arabia

- 2 Department of Computer Engineering and Science, Albaha University, Al Bahah, Saudi Arabia

- 3 Deanship of E-learning and Information Technology, King Faisal University, Al-Ahsa, Saudi Arabia

- 4 Department of Chemical Engineering, College of Engineering, King Faisal University, Al-Ahsa, Saudi Arabia

- 5 Department of Health Informatics, College of Health Sciences, Saudi Electronic University, Riyadh, Saudi Arabia

- 6 Department of Computer Science, College of Computer and Information Sciences, Prince Sultan University, Riyadh, Saudi Arabia

Timely and unbiased evaluation of Autism Spectrum Disorder (ASD) is essential for providing lasting benefits to affected individuals. However, conventional ASD assessment relies heavily on subjective criteria and lacks objectivity. Recent work proposes integrating modern techniques, including artificial intelligence-based eye-tracking technology, into early ASD assessment. Current diagnostic procedures for ASD often involve specialized investigations that are time-consuming and costly, and they depend heavily on the proficiency of specialists and on the techniques employed. To address the pressing need for prompt, efficient, and precise ASD diagnosis, this study explores intelligent techniques capable of automating the classification. The study used a freely accessible dataset of 547 eye-tracking scan path images obtained from 328 typically developing children and 219 children with autism. To counter overfitting, image augmentation approaches were employed to expand the training dataset. Automated classifiers based on deep learning algorithms, specifically MobileNet, VGG19, DenseNet169, and a hybrid of MobileNet-VGG19, were developed to improve diagnostic precision and effectiveness. The MobileNet model outperformed existing systems, achieving an accuracy of 100%, while the VGG19 model achieved 92% accuracy. These findings demonstrate the potential of eye-tracking data to aid physicians in efficiently and accurately screening for autism. Moreover, the reported results suggest that deep learning approaches outperform existing event detection algorithms, achieving a level of accuracy similar to manual coding. Users and healthcare professionals can use these classifiers to improve the accuracy of ASD diagnosis.
The development of these automated classifiers based on deep learning algorithms holds promise for enhancing the diagnostic precision and effectiveness of ASD assessment, addressing the pressing need for prompt, efficient, and precise ASD diagnosis.

1 Introduction

Autism Spectrum Disorder (ASD) is a neurological condition that involves difficulties in both verbal and non-verbal communication, as well as challenges in social interaction. It is also marked by repetitive and stereotyped behaviors (1). The severity of symptoms and the impact of ASD differ from one individual to another. According to the Centers for Disease Control and Prevention (CDC), the prevalence of ASD is estimated to be 1 in 54 children. This condition affects individuals from diverse racial, ethnic, and socioeconomic backgrounds. Furthermore, the prevalence of ASD in boys is four times higher than in girls, and girls with ASD often have fewer observable symptoms than boys (2). Autism is a lifelong condition (3). Hence, it is of utmost importance to identify ASD at an early stage, since individuals who are identified with ASD during early infancy can benefit greatly from suitable therapies, leading to a favorable long-term outcome (4).

Facial expressions communicate a wealth of personal, emotional, and social information from early infancy. Even in a short interaction, people may effortlessly focus on and rapidly comprehend the intricate details of a person’s face, accurately identifying their emotional state and social situation, and frequently recalling their face later (5). Neuroimaging research has indicated that eye contact can stimulate activity in parts of the brain associated with social interaction. Additionally, studies on human development have provided evidence that infants and young children have a natural inclination to pay attention to and comprehend faces that make direct eye contact. Increasing evidence suggests that ASD is associated with atypical patterns of eye-tracking behavior (6, 7). Therefore, it is widely accepted that autism is characterized by impairments in face processing. Nevertheless, the precise nature of these differences and the relationship between atypical face processing and impaired socio-emotional function in ASD remain poorly understood.

Eye tracking, a non-invasive and straightforward measurement technique, has attracted the attention of scientists in recent years (8–11). Its use in ASD research is justified by the association between ASD and attention patterns that differ from those seen in typical development (12–15). Hence, an eye-tracking-based system that quantifies eye movements and gaze patterns should help in understanding the atypical behavior associated with persons diagnosed with ASD, as well as in distinguishing individuals with ASD from typically developing (TD) individuals. Eye tracking is also used by some computational systems to aid in the identification of mental disorders (16, 17). Eye-tracking technology is beneficial in addressing ASD, a neurodevelopmental disorder marked by challenges in social communication and repetitive behaviors. An early indication of ASD is reduced visual engagement, namely a lack of eye contact. This trait is seen in infants as early as six months old, irrespective of the cultural context in which they are raised. Eye-tracking technology is essential in diagnosing ASD through the analysis of visual patterns (18). An eye-tracking device typically comprises a high-resolution digital camera and a machine learning algorithm that accurately determines the coordinates of eye gaze while persons watch films or pictures. The eye gaze data from this technology may help tailor therapy to the social difficulties of ASD patients (19). To further understand how eye-tracking biomarkers might discriminate ASD subgroups, the effects of closely related mental disorders such as attention deficit hyperactivity disorder (ADHD), anxiety, and mood disorders should be explored. Doing so may clarify how these variables affect the ability to distinguish different groups in a medical setting. Research indicates that children with both ASD and ADHD tend to have shorter periods of focused attention on faces while looking at static, low-complexity social cues, compared to children with ASD alone and TD children (20).

Research has shown that eye-tracking data can serve as medical indicators for identifying ASD in children at an early stage (18). Biomarkers, sometimes referred to as biological markers, are quantifiable and objective signs that offer insight into a patient’s observable biological state. Bodily fluids or soft tissue biopsies are frequently used as biomarkers to assess the efficacy of treatment for a disease or medical disorder.

A crucial element of social interaction is maintaining eye contact, a skill that individuals with ASD often find challenging. Eye-tracking technology can measure how long someone maintains eye contact, as well as the frequency and direction of their eye movements. This provides measurable signs of difficulties in social interaction. Individuals with ASD may also exhibit other irregularities in visual processing, including heightened focus on specific details, sensory hypersensitivity, and difficulties with complex visual tasks. Hence, deep learning algorithms, namely MobileNet, VGG19, DenseNet169, and the hybrid of MobileNet-VGG19, were applied for the early-stage recognition of ASD. The primary contributions of this research article are as follows:

• This work introduces a new method for creating eye-tracking event detectors using a deep learning methodology.

• The MobileNet model attained an accuracy of 100% in identifying ASD, and the DenseNet169 and hybrid MobileNet-VGG19 models also showed encouraging results in differentiating persons with ASD from those without ASD using eye-tracking data.

• The proposed methodology was compared with existing systems that used the same dataset; our model achieved higher accuracy, which we attribute to a different preprocessing approach for improving the dataset.

• This work presents an artificial intelligence (AI) technique for the diagnosis of ASD. Its objective is to differentiate persons with autism from those without by using deep learning models trained on publicly accessible eye-tracking datasets. In the comparison with existing systems that used the same dataset, one of the proposed deep learning models achieved an accuracy of 100%.

2 Background

ASD can be detected by early screening techniques that use deep learning (DL) algorithms. These approaches have become more prominent because of their accuracy and their capability to handle large volumes of data. They assist experts in automating the diagnostic procedure and reducing the time spent on tests (21, 22). AI techniques are also used in the rehabilitation process to lessen symptoms of ASD. This section reviews the use of DL approaches over the past five years for diagnosing ASD with eye-tracking techniques.

Fang et al. (23) introduced a method for identifying children with ASD based on stimuli that involve the ability to follow someone’s gaze. Individuals with ASD exhibited atypical patterns of visual attention, especially while observing social settings. The authors developed a deep neural network (DNN) method to extract distinctive features and to classify children with ASD and healthy controls based on individual images.

Elbattah et al. (24) developed a machine learning (ML)-based approach to aid the diagnostic process. This approach relies on learning sequence-oriented patterns in saccadic eye movements. The key idea was to represent eye-tracking data as text documents that describe a sequence of rapid eye movements (saccades) and periods of gaze fixation. The study therefore applied natural language processing (NLP) techniques to transform the unstructured eye-tracking information.

Li et al. (25) introduced an automated evaluation framework for detecting atypical intonation patterns and stereotyped idiosyncratic phrases that are relevant to ASD. Their focus was on the linguistic and communication difficulties experienced by young children with ASD. First, the authors used the openSMILE toolkit to extract high-dimensional auditory characteristics at the sound level, with a support vector machine (SVM) backend as the baseline. They then proposed several DNN configurations and structures for modeling a shared prosody label derived directly from the audio spectrogram after the constant Q transform.

Identification and intervention for ASD have lasting effects on both children with ASD and their families, who need educational, medical, social, and economic assistance to enhance their overall well-being. Professionals face difficulties in conducting ASD assessments because of the absence of recognized biophysiological diagnostic techniques (25, 26). Therefore, the diagnosis is often determined by a thorough evaluation of behavior, using reliable and valid standardized instruments such as the Autism Diagnostic Observation Schedule (ADOS) (27) and the Autism Diagnostic Interview-Revised (ADI-R) (28). These tools, widely accepted in research, are considered the most reliable method for diagnosing ASD in clinical settings (29, 30). However, they require many materials and a significant amount of time, and they are somewhat expensive (25, 26). Furthermore, the diagnostic procedure requires skilled and knowledgeable interviewers, who have the potential to influence the process, and it involves intricate clinical procedures (25, 31). Collectively, these difficulties frequently contribute to a delayed diagnosis, which in turn delays the start of early intervention (26). Research indicates that early treatments for children with ASD before the age of five result in a much higher success rate of 67%, compared to a success rate of just 11% when interventions begin after the age of five (32).

Eye-tracking technology is regarded as a beneficial method for conducting research on ASD since it allows for the early detection of autism and its characteristics (33, 34) in a more objective and dependable manner than traditional assessments (35). The amount of eye-tracking research focused on autism has risen significantly in recent years. This increase can be attributed to improved accessibility of eye-tracking technology and the development of specialized devices and software that make recording eye-tracking data easier and more cost-effective.

Machine learning and eye-tracking devices are often used together. Data-driven machine learning draws on sophisticated mathematical learning, statistical estimation, and information theory (36, 37). This method trains a computer program to examine data and find statistical patterns (36–39). Machine learning may improve autism research by providing an unbiased and reproducible second evaluation (18), including early autism detection (40), analysis (41), behavior (16), and brain activity (17). Machine learning may also be a viable biomarker-based tool for objective ASD diagnosis (42), and it has been used to diagnose ASD in IoT systems (43). By helping children with ASD learn, assistive technology may improve their lives, an approach that is supported by studies (44).

Various studies have used artificial neural networks (ANNs) to classify cases of ASD. For example, in ref. (18), the authors investigated the integration of eye-tracking technologies with ANNs to assist in the detection of ASD. Initially, approaches that did not use neural networks were applied, and the precision achieved by this ensemble of models was adequate. Subsequently, several ANN structures were tested. According to the results, the model with a single layer of 200 neurons achieved the highest accuracy. In ref. (45), researchers examined the visual attention of children with ASD when observing human faces. They extracted semantic features using a DNN and found that, when viewing human faces, the feature maps of children with ASD differ from those of children without ASD. These feature maps are combined with CASNet features. CASNet was compared with six other deep learning-based techniques and outperformed all of them in every situation. In ref. (46), eye movement patterns were used to classify children with TD and ASD by combining CNNs with LSTMs. The CNN-LSTM extracted features from saliency maps and scan path fixation points, and a pretrained SalGAN prediction model was used to preprocess the data fed to the network. The validation accuracy of the proposed model was 74.22%.

Akter et al. (47) proposed a method that uses transfer learning to identify ASD by analyzing face features. They developed an improved facial recognition system using transfer learning, which can accurately identify individuals with ASD.

Raj and Masood (48) used several machine learning and deep learning techniques to identify ASD in children. They used three publicly available datasets obtained from the UCI Repository.

Xie et al. (49) proposed a two-stream deep learning network for the detection of visual attention in individuals with ASD. The suggested framework was built using two VGGNets that were derived from the VGG16 architecture and were similar to each other.

3 Methods

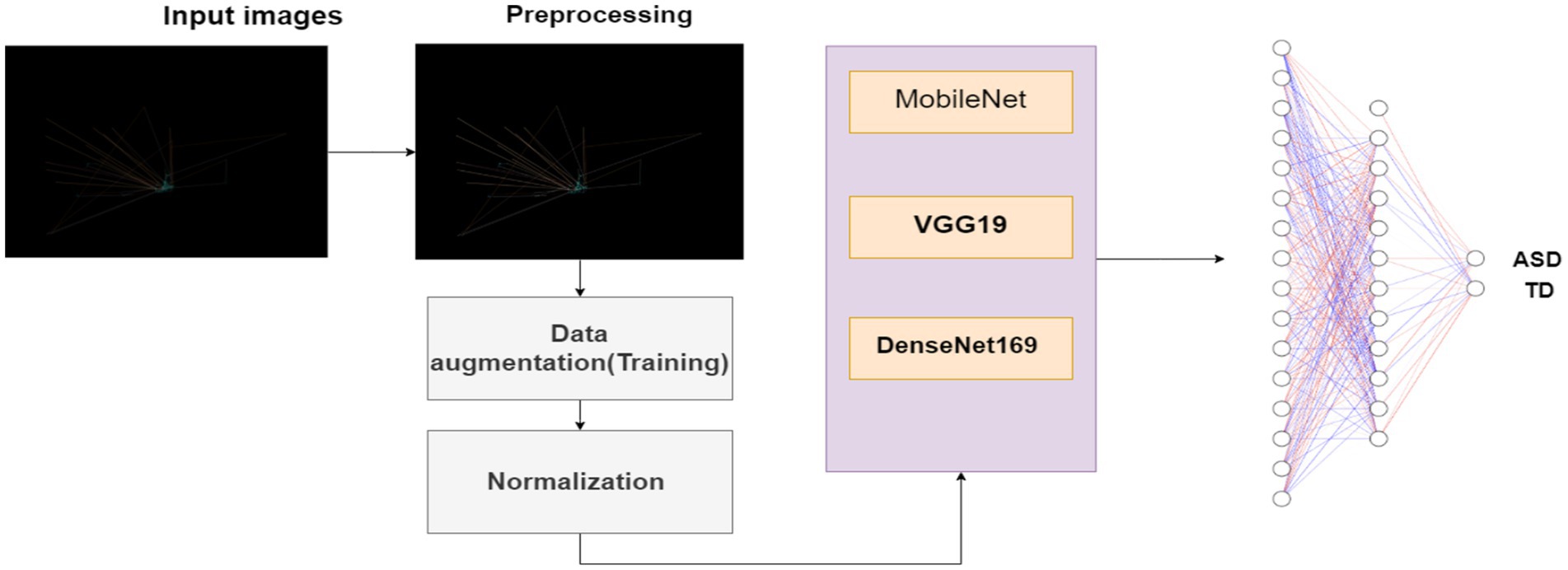

This section presents in depth the methodology used to develop an ASD detection system based on deep learning techniques that can detect ASD from features of eye-tracking images. The methodology includes dataset collection, data preprocessing, deep learning classification models, evaluation metrics, and results analysis. The framework of this methodology is shown in Figure 1.

3.1 Dataset

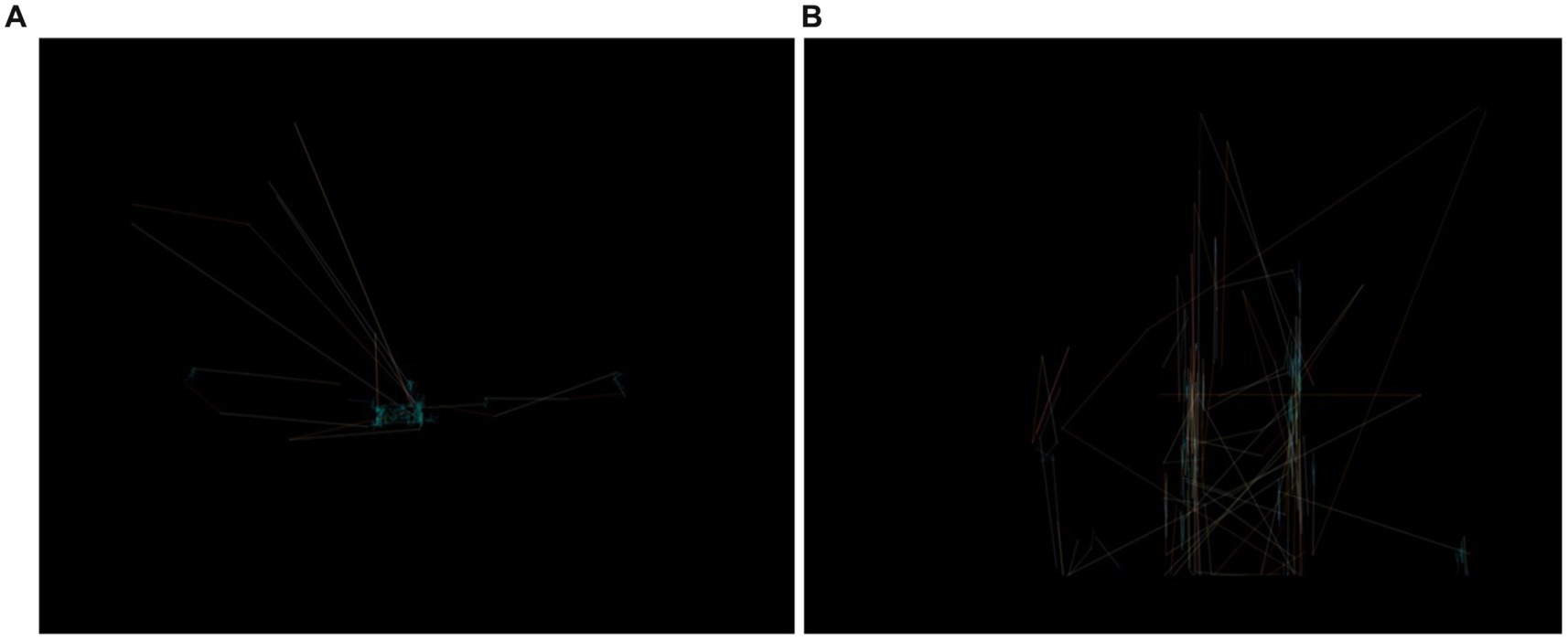

The dataset was obtained from a public repository that contains eye-tracking images. The collection presently comprises 547 images, with default image dimensions of 640 × 480. More precisely, there were 328 images for persons without ASD and 219 images for persons diagnosed with ASD. Figure 2 shows samples of the eye-tracking images that were used to examine the proposed methodology.

3.2 Data preprocessing

Data preprocessing is an important step in preparing the image dataset for training machine learning models. We applied the following preprocessing methods to ensure that the dataset is suitable for model training.

• Image Resize: The first step in data preprocessing encompasses resizing all images in the dataset to a standard size of 640 × 480 pixels. This ensures uniformity in image measurements and facilitates effective processing during model training.

• Image Enhancement: For all images in the dataset, we applied a preprocessing step that improves their resolution by 20% using the ImageEnhance module. This step aims to improve the quality and clarity of the image data.

• Vectorization: After resizing and enhancing the images, we converted them into numerical arrays using vectorization techniques. This step includes transforming each image into a multi-dimensional array of pixel values, making it compatible with computational operations and deep learning algorithms.

• Normalization: After conversion to numerical arrays, we normalized the pixel values to fall within the range of [0, 1]. Normalization ensures that the pixel values are scaled appropriately, which makes model training more stable and efficient by preventing issues related to large variations in the input image data.

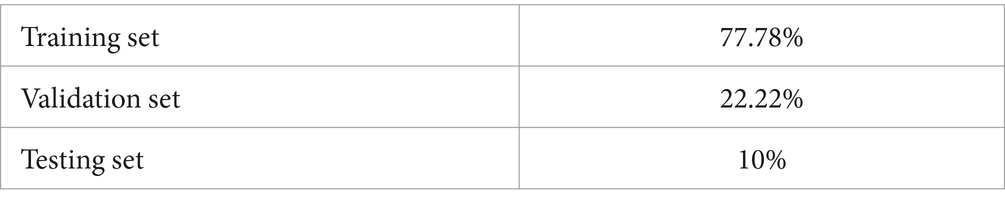

• Splitting Data: Once the images are preprocessed and converted into numerical arrays, we divide the dataset into three sets namely training, validation, and testing. This step is essential for evaluating model results, as it allows us to train the model on one subset of data, validate its performance on another subset, and finally test its generalization ability on a separate unseen subset.

• Data Augmentation: To increase the diversity and robustness of the training dataset, data augmentation techniques were applied using the ImageDataGenerator module. These techniques involve rotation, shifting, and flipping of images, introducing variations that help avoid overfitting and enhance the model’s ability to generalize to new, unseen image data.
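The preprocessing steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the function names `normalize`, `horizontal_flip`, and `stratified_split` are hypothetical, images are stood in for by nested lists of 8-bit pixel values, and the 10% validation and test fractions and the random seed are assumed values (the split actually used is the one reported in Table 6).

```python
import random

def normalize(pixels):
    """Scale 8-bit pixel values (0-255) to floats in the range [0, 1]."""
    return [[value / 255.0 for value in row] for row in pixels]

def horizontal_flip(pixels):
    """Mirror an image left to right, one of the augmentations named in the text."""
    return [list(reversed(row)) for row in pixels]

def stratified_split(labels, val_frac=0.1, test_frac=0.1, seed=0):
    """Return (train, val, test) index lists that keep the class ratio in each subset.

    val_frac, test_frac and seed are assumed values, not taken from the paper.
    """
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_frac)
        n_val = round(len(indices) * val_frac)
        test.extend(indices[:n_test])
        val.extend(indices[n_test:n_test + n_val])
        train.extend(indices[n_test + n_val:])
    return train, val, test

# Class sizes from the dataset description: 328 TD (label 0) and 219 ASD (label 1).
labels = [0] * 328 + [1] * 219
train_idx, val_idx, test_idx = stratified_split(labels)
```

Under these assumed fractions the test subset holds 33 TD and 22 ASD images. In a pipeline of this kind, augmentations such as `horizontal_flip` would be applied to the training indices only, so that validation and test images stay unmodified.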

3.3 Improving the deep learning algorithms

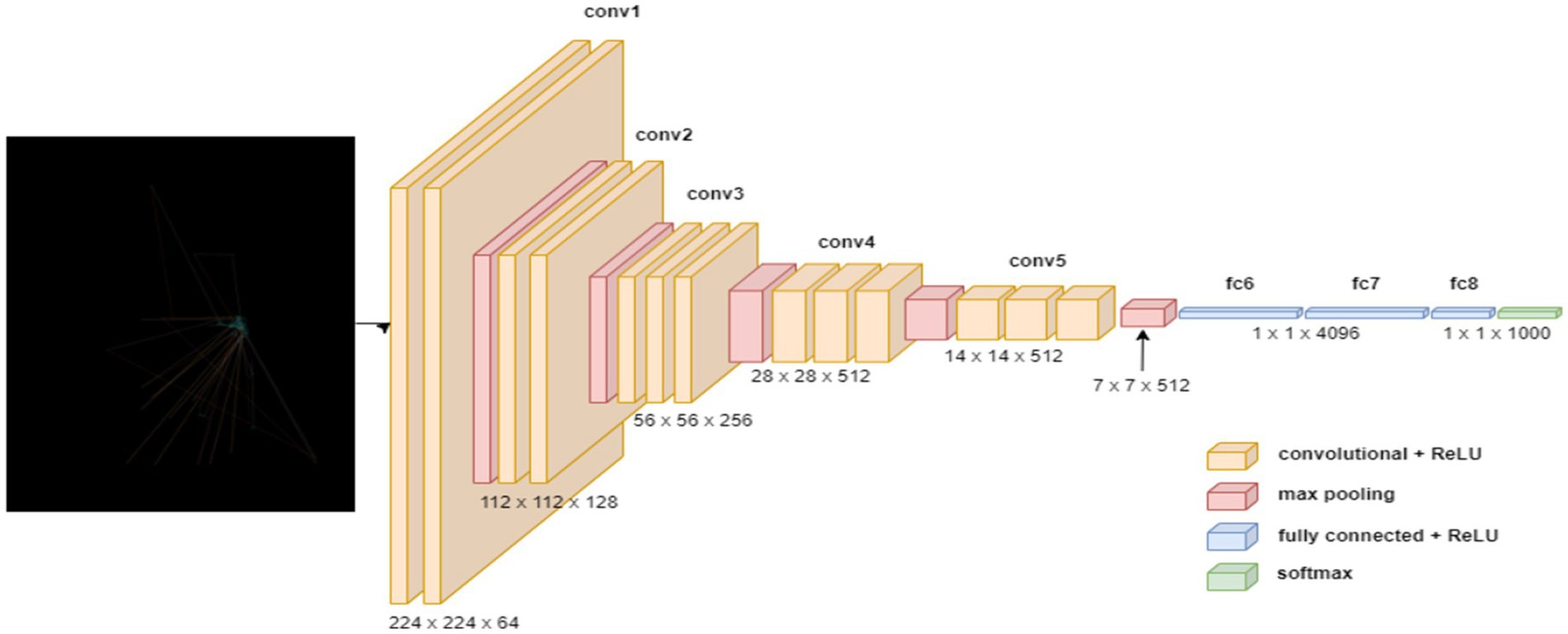

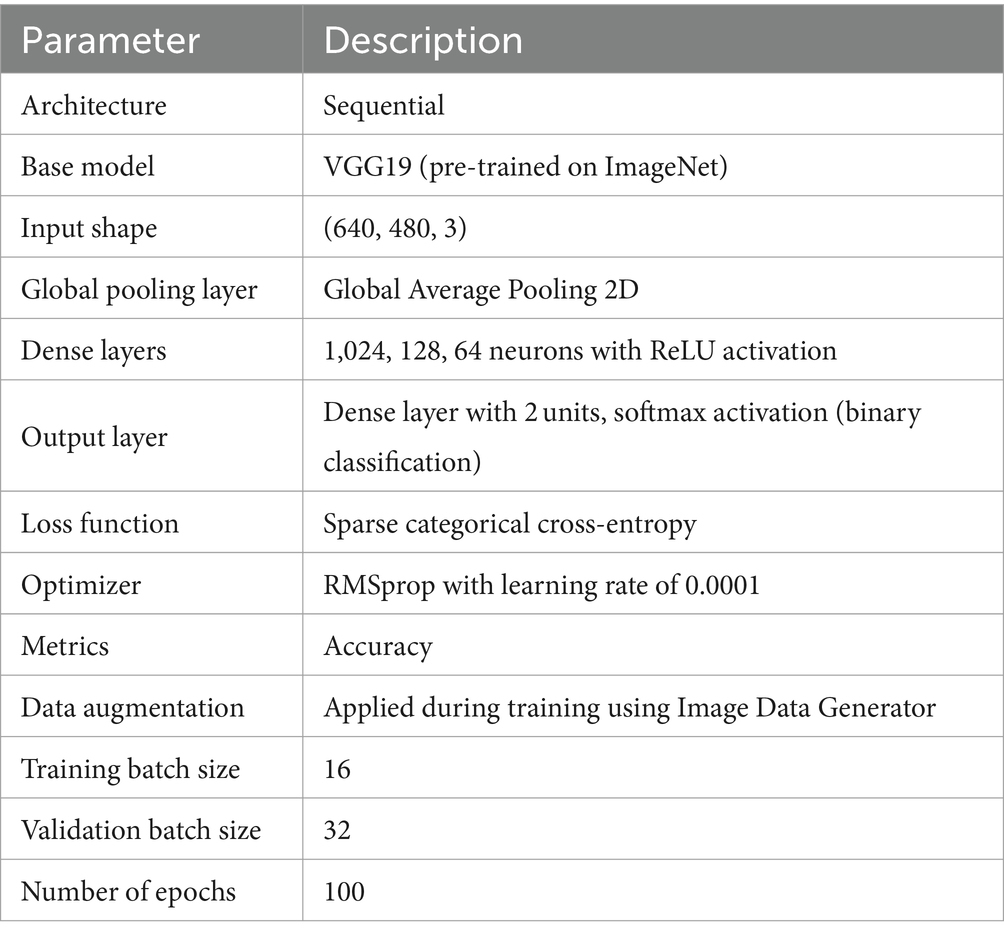

3.3.1 The VGG19 model

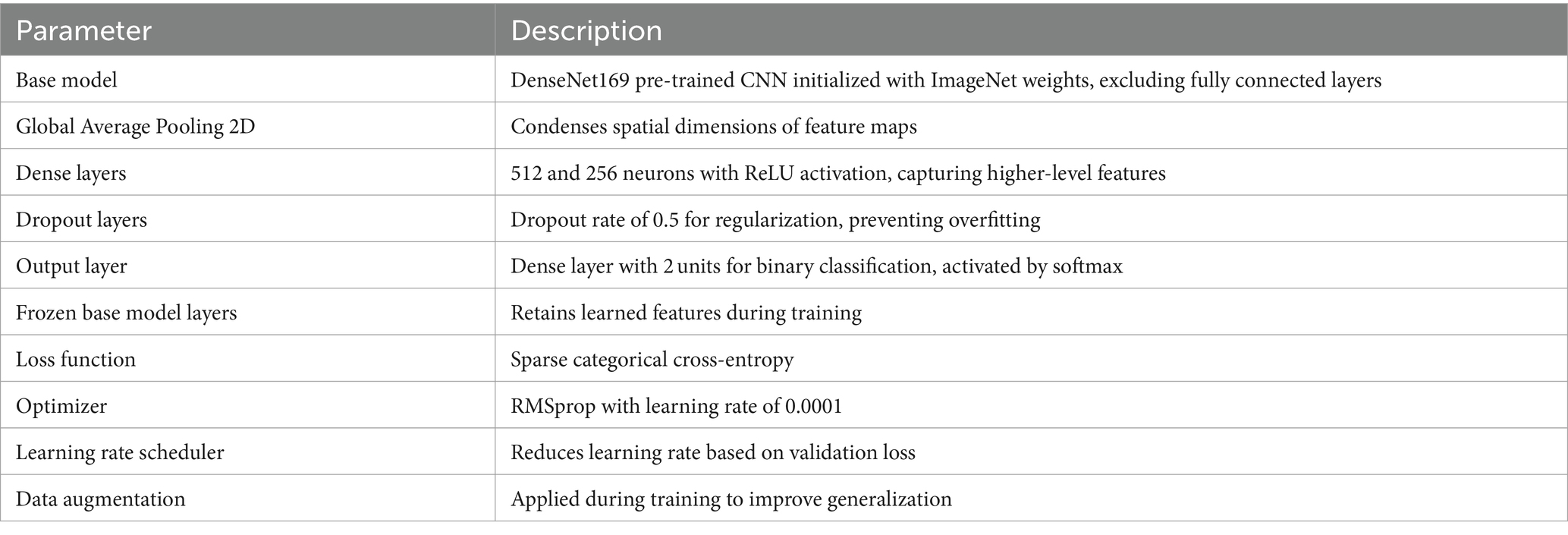

The VGG19 model (50) is used in this study within a sequential architecture for detecting ASD from eye-tracking features. The model first incorporates the pre-trained VGG19 architecture with weights initialized from the ImageNet dataset, excluding the fully connected layers and setting the input shape to match the input image size of (640, 480). Subsequently, a GlobalAveragePooling2D layer is added to obtain a condensed representation of the features extracted by VGG19. Several dense layers with 1,024, 128, and 64 neurons, each activated by the rectified linear unit (ReLU) function, are then appended to facilitate the learning of intricate patterns within the data. Lastly, a dense layer with 2 units and a softmax activation function is employed for binary classification, enabling the model to predict the probability of ASD presence. Figure 3 shows the VGG19 model structure.

The model is compiled with the sparse categorical cross-entropy loss function and the RMSprop optimizer with a learning rate of 0.0001, and data augmentation is applied throughout training to improve the model’s generalization capability. Through this architecture, the model aims to discern the presence of ASD from the provided eye-tracking features, leveraging the robustness of the VGG19 convolutional neural network. Table 1 outlines the parameters of the VGG19 model.
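To make the loss function concrete, the following sketch computes the softmax output of a 2-unit layer and the sparse categorical cross-entropy for a single sample. It is an illustration only: the logits are hypothetical values, and the class ordering (index 0 for TD, index 1 for ASD) is an assumption, since the text does not state how the labels were encoded.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_cross_entropy(probabilities, true_class):
    """Loss for one sample whose label is given as an integer class index."""
    return -math.log(probabilities[true_class])

# Hypothetical logits from the 2-unit output layer (assumed: 0 = TD, 1 = ASD).
probs = softmax([0.0, 0.0])
loss_uncertain = sparse_categorical_cross_entropy(probs, true_class=1)
loss_confident = sparse_categorical_cross_entropy(softmax([-4.0, 4.0]), true_class=1)
```

An undecided prediction (equal logits) gives a loss of ln 2, and the loss shrinks toward zero as the probability assigned to the true class approaches 1. "Sparse" refers only to the label format: the target is an integer index rather than a one-hot vector.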

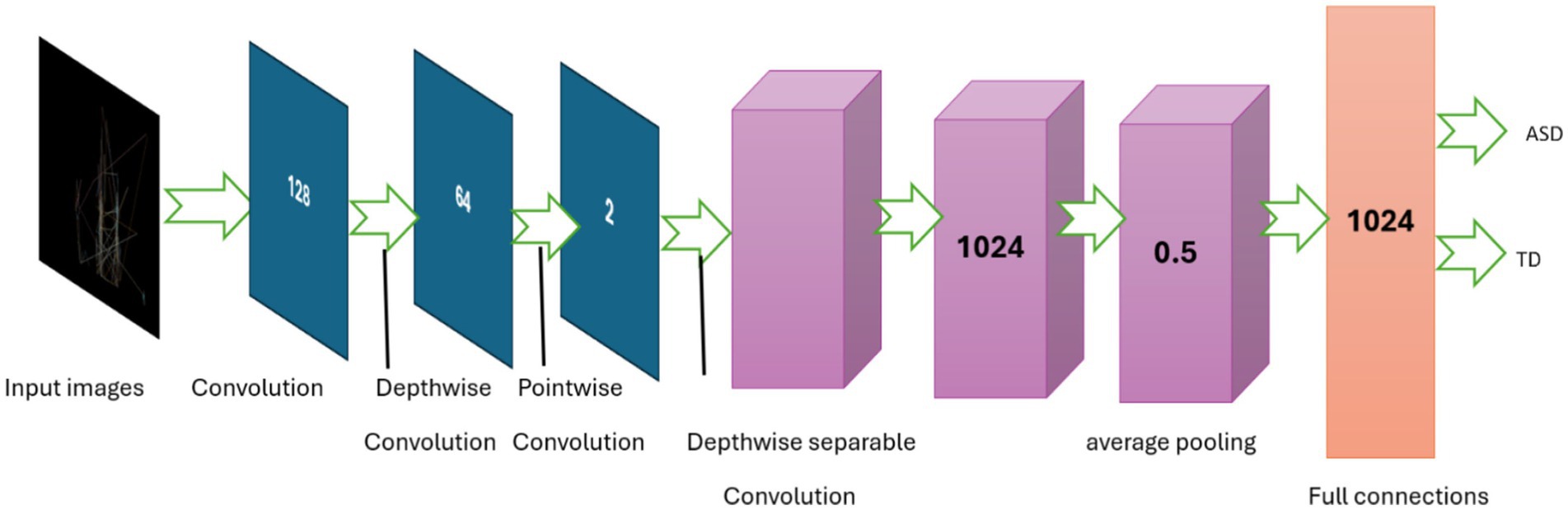

3.3.2 The MobileNet model

The MobileNet (51) model architecture has a sequential structure, which allows the neural network to be built systematically, layer by layer. The pre-trained MobileNet convolutional neural network (CNN) is used as the base model in this methodology and is initialized with representations learned from the ImageNet dataset. The fully connected layers of MobileNet are excluded to facilitate transfer learning. Following the MobileNet base model, a Global Average Pooling 2D layer collapses the spatial dimensions of the feature maps produced by the convolutional layers. The pooling layer calculates the mean value of each feature map over all spatial locations, resulting in a fixed-size vector representation of the input image, regardless of its size.
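The pooling operation described above can be illustrated with a small pure-Python sketch. The helper names and the toy feature maps are hypothetical; a real model would operate on tensors produced by the convolutional base rather than nested lists.

```python
def global_average_pooling(feature_maps):
    """Average an H x W x C nested list over H and W, giving one value per channel."""
    height = len(feature_maps)
    width = len(feature_maps[0])
    channels = len(feature_maps[0][0])
    pooled = [0.0] * channels
    for row in feature_maps:
        for cell in row:
            for c, value in enumerate(cell):
                pooled[c] += value
    return [total / (height * width) for total in pooled]

def constant_maps(height, width, channels):
    """Toy feature maps in which channel c holds the value c at every location."""
    return [[[float(c) for c in range(channels)] for _ in range(width)]
            for _ in range(height)]

# Two inputs with different spatial sizes but the same number of channels.
small = global_average_pooling(constant_maps(2, 3, 4))
large = global_average_pooling(constant_maps(5, 7, 4))
```

Both calls return a vector with one entry per channel, which is why the dense layers that follow can have a fixed input size even when the spatial resolution of the feature maps changes.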

Subsequently, several dense (fully connected) layers are added to capture more complex characteristics and perform the classification task. The dense layers are composed of 1,024, 128, and 64 neurons, respectively, each activated with the ReLU function. ReLU is selected for its capacity to introduce non-linearity, which increases the model’s capacity to represent complex patterns and the efficiency of training.

The output (classification) layer of the model consists of a dense layer with 2 units, representing the two classes for binary classification (ASD or TD). These units are activated using the softmax function, which generates a probability for each class. This architecture uses the features extracted by MobileNet for classification and fine-tunes the dense layers for the specific purpose of ASD detection using eye-tracking features. The MobileNet architecture is presented in Figure 4, and the model’s parameters are listed in Table 2.
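As a worked example of what the dense head adds on top of the frozen or pre-trained base, the sketch below counts its trainable parameters (weights plus biases) for the layer sizes given in the text. The pooled feature sizes are assumptions that follow from the published architectures (1,024 channels for MobileNet with width multiplier 1.0, 512 for VGG19); the counts cover the head only and are not taken from Tables 1 or 2.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of one fully connected layer."""
    return n_inputs * n_units + n_units

def head_params(n_features, layer_sizes=(1024, 128, 64, 2)):
    """Total trainable parameters of the dense head applied to a pooled feature vector."""
    total = 0
    previous = n_features
    for units in layer_sizes:
        total += dense_params(previous, units)
        previous = units
    return total

# Assumed pooled feature sizes: 1024 for MobileNet, 512 for VGG19.
mobilenet_head = head_params(1024)
vgg19_head = head_params(512)
```

With these assumptions the head contributes 1,189,186 parameters on MobileNet and 664,898 on VGG19; most of them sit in the first 1,024-unit layer, so the size of the pooled feature vector dominates the cost of the head.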

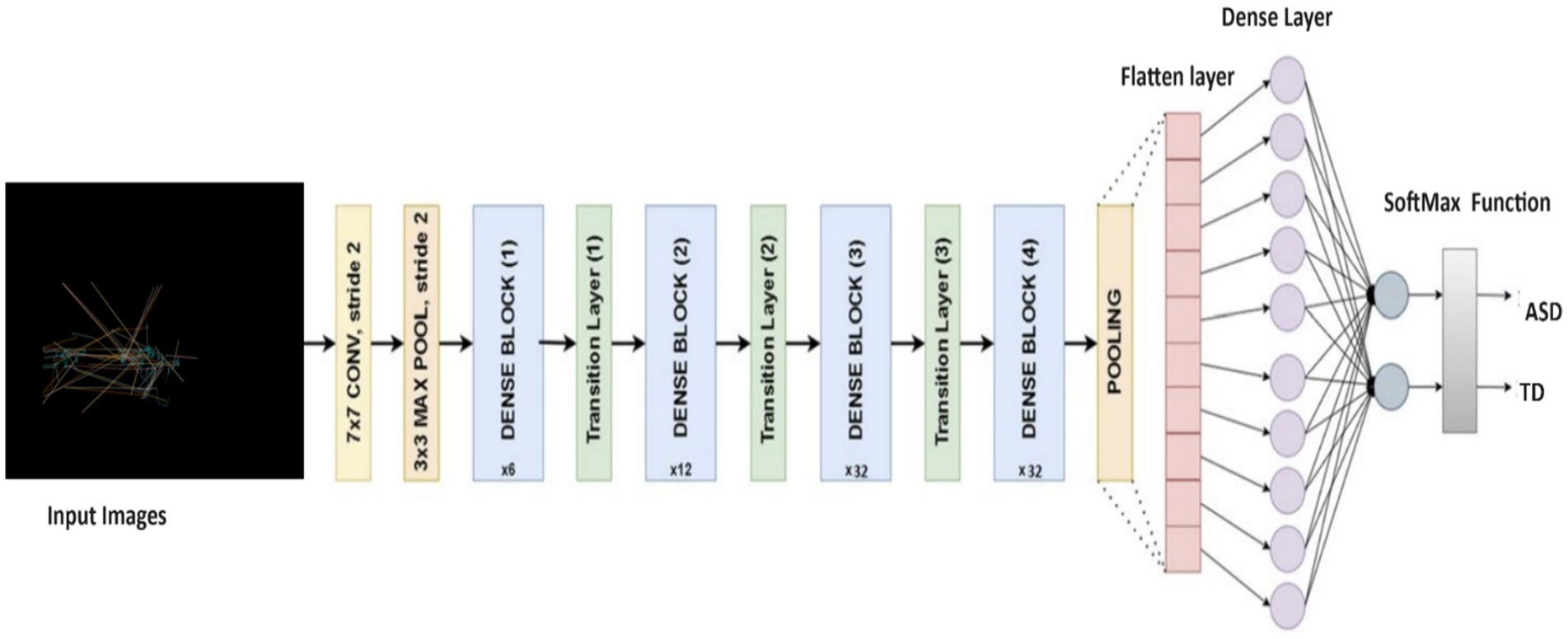

3.3.3 The DenseNet169 model

We also applied the DenseNet169 (52) model as the base model, tailored for ASD detection based on eye-tracking features. Using pre-trained weights from the ImageNet dataset, the model excludes the fully connected layers for transfer learning. A Global Average Pooling 2D layer condenses the feature maps, after which dense layers capture higher-level features. Dropout layers mitigate overfitting, and the output layer, activated by softmax, produces class probabilities. With the base model layers frozen, the model is compiled with appropriate loss and optimization functions and benefits from learning rate scheduling. Data augmentation is applied during training. Figure 5 displays the structure of the DenseNet169 model, and Table 3 outlines its parameters.
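The role of the dropout layers can be illustrated with a short sketch of inverted dropout, the variant commonly used in deep learning frameworks. The function name, the seed, and the rate of 0.5 are assumptions for illustration; the text does not state the dropout rate that was used.

```python
import random

def dropout(activations, rate, training=True, seed=None):
    """Inverted dropout: zero each unit with probability `rate` during training
    and rescale the survivors so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    rng = random.Random(seed)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# The rate 0.5 is an assumed value, not one reported in the paper.
hidden = [1.0] * 10000
dropped = dropout(hidden, rate=0.5, seed=0)
mean_after = sum(dropped) / len(dropped)
```

During training roughly half of the units are silenced and the rest are doubled, so the mean activation stays close to its original value; at inference time (`training=False`) the layer passes its input through unchanged.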

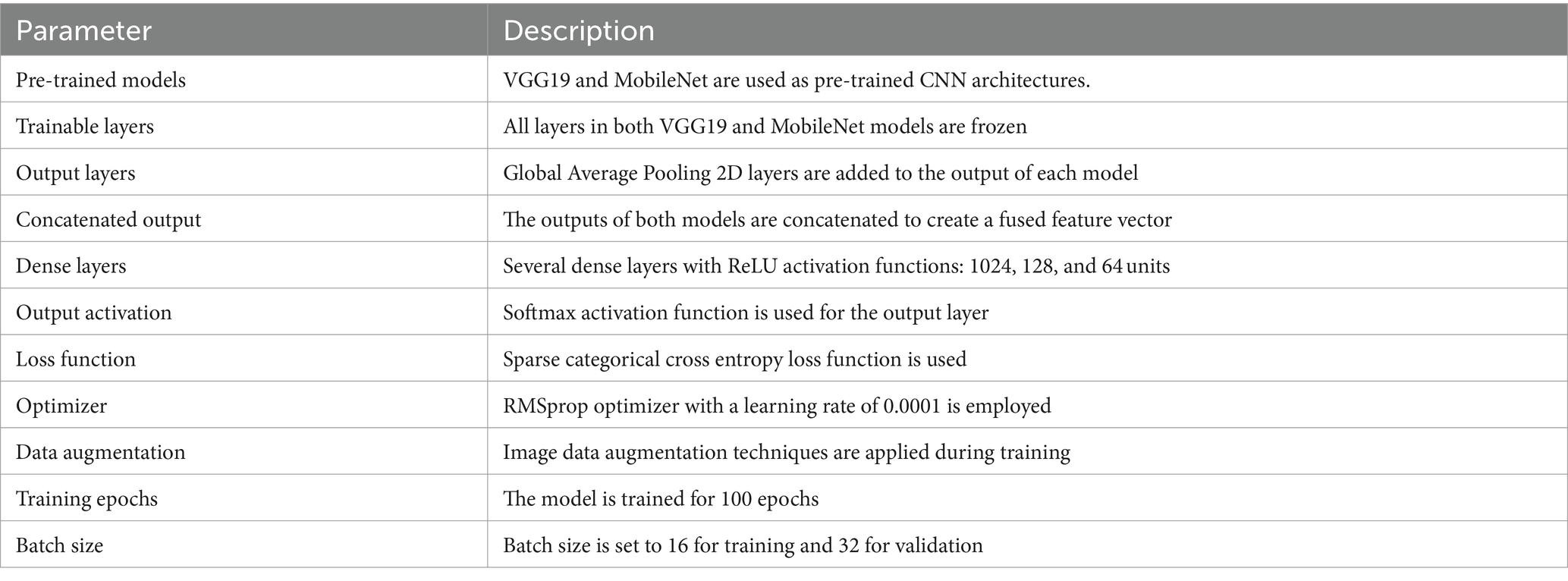

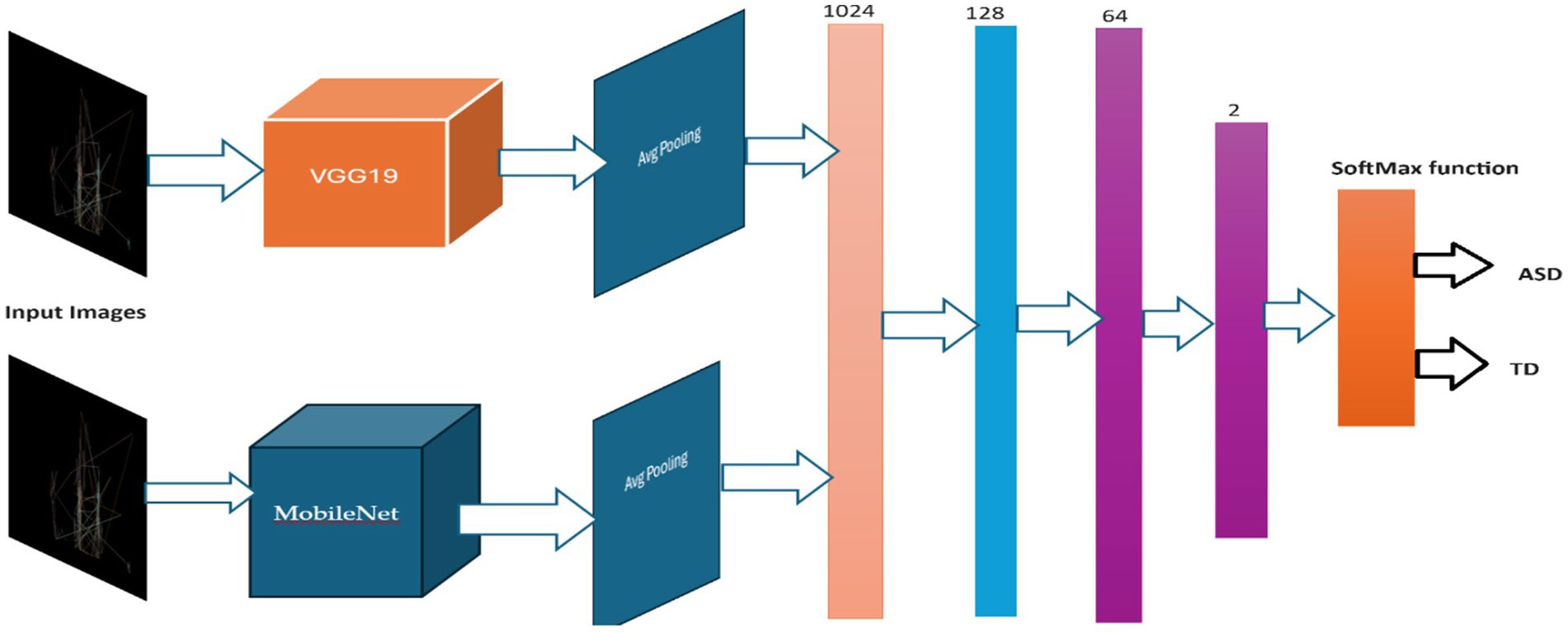

3.3.4 The hybrid model

The hybrid model combines the capacities of two established convolutional neural network (CNN) architectures, VGG19 (50) and MobileNet (51), to enhance its efficacy in recognizing ASD using eye-tracking features. The model first loads the pre-trained VGG19 and MobileNet architectures without their fully connected layers. It then freezes all of their layers to preserve the learned representations. Global Average Pooling 2D layers are subsequently applied to obtain a feature representation from the output of each model. These representations are concatenated into a single feature vector, which is then passed through several dense layers to capture complex data patterns. The model is then compiled with suitable loss and optimization functions, and data augmentation is applied during training to improve its generalization capability. By combining the features learned by VGG19 and MobileNet, this hybrid model aims to improve classification accuracy in the ASD detection task. Table 4 summarizes the parameters used in the hybrid VGG19-MobileNet model, and Figure 6 displays the structure of the hybrid model.
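The feature fusion step of the hybrid model can be sketched as follows. The helper names and the constant toy feature maps are hypothetical, the channel counts (512 for VGG19, 1,024 for MobileNet) are assumptions based on the published architectures, and the spatial sizes are arbitrary because pooling removes them.

```python
def pool(feature_maps):
    """Global average pooling over an H x W x C nested list."""
    cells = [cell for row in feature_maps for cell in row]
    return [sum(channel) / len(cells) for channel in zip(*cells)]

def constant_maps(height, width, channels, value):
    """Toy feature maps filled with a single value."""
    return [[[value] * channels for _ in range(width)] for _ in range(height)]

def fuse(vgg19_maps, mobilenet_maps):
    """Concatenate the pooled vectors of both backbones into one feature vector."""
    return pool(vgg19_maps) + pool(mobilenet_maps)

# Assumed channel counts: 512 for VGG19 and 1024 for MobileNet.
vgg19_maps = constant_maps(2, 2, 512, 1.0)
mobilenet_maps = constant_maps(3, 3, 1024, 2.0)
fused = fuse(vgg19_maps, mobilenet_maps)
```

Under these assumptions the fused vector has 512 + 1,024 = 1,536 entries, and the dense layers of the hybrid model would take this vector as input in place of the single-backbone features.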

3.4 Evaluation metrics

Assessing the testing results obtained by the proposed deep learning models, namely MobileNet, VGG19, DenseNet169, and the hybrid of MobileNet-VGG19, is crucial for gauging their effectiveness. The evaluation measures provide complementary perspectives on each model’s advantages and disadvantages. Several metrics are used to quantify performance, including accuracy, recall (sensitivity), specificity, and F1-score. These evaluation metrics, expressed by Equations (1–4), can be calculated from the confusion matrix.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

$$\text{F1-score} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$

where TP, TN, FP, and FN stand for true positives, true negatives, false positives, and false negatives, respectively.
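The four metrics named above can be computed directly from the confusion-matrix counts, as in the following sketch. The function name is hypothetical, the definitions are the standard ones, and the example counts are those reported in the text for the MobileNet and hybrid confusion matrices.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, recall (sensitivity), specificity and F1-score from confusion counts."""
    total = tp + tn + fp + fn
    f1_denominator = 2 * tp + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": 2 * tp / f1_denominator if f1_denominator else 0.0,
    }

# Counts as stated in the text for the MobileNet and hybrid confusion matrices.
mobilenet = classification_metrics(tp=22, tn=33, fp=0, fn=0)
hybrid = classification_metrics(tp=19, tn=31, fp=3, fn=2)
```

With no false positives or false negatives every metric equals 1.0, while the hybrid counts give an accuracy of 50/55, which rounds to 91%.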

4 Results

This section presents the testing results of each model for detecting ASD using eye-tracking features. Four deep learning models were evaluated: MobileNet, VGG19, DenseNet169, and the hybrid model that combines VGG19 and MobileNet.

4.1 Models’ configuration

The deep learning models for identifying ASD from eye-tracking data were trained and evaluated in a dedicated computing environment. Table 5 presents the environment of the DL models.

4.2 Splitting dataset

The dataset was segregated into three subsets: training, testing, and validation. Table 6 displays the specific division that was employed in the proposed method for diagnosing ASD.

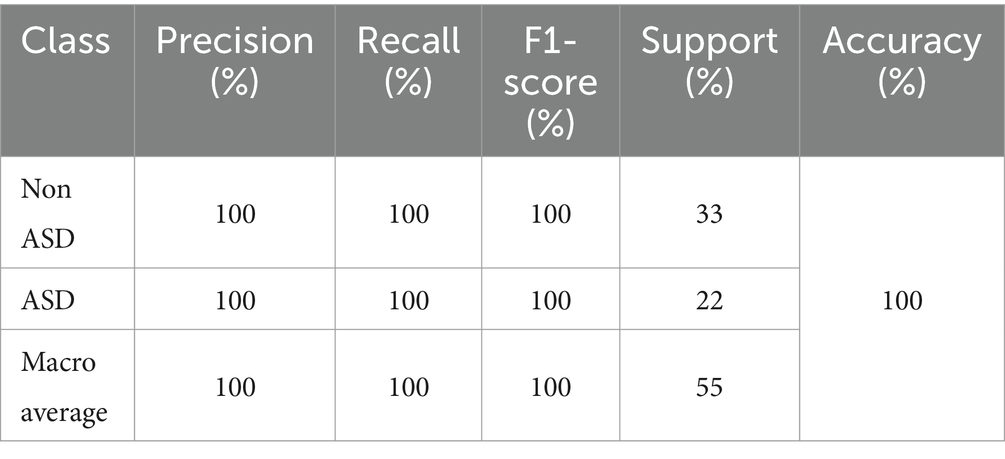

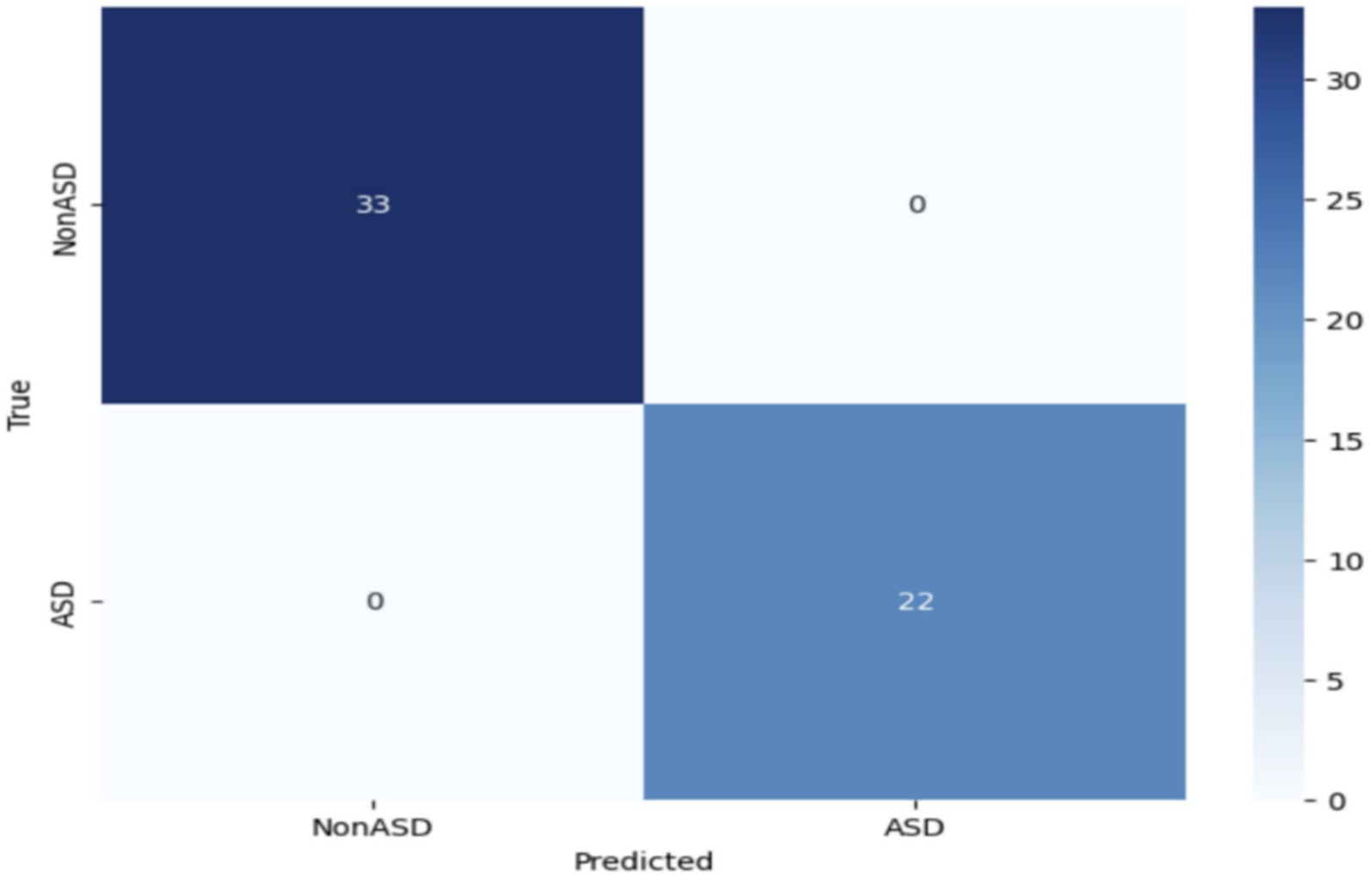

4.3 The test classification results of the MobileNet model

The MobileNet model demonstrated outstanding performance in all parameters, attaining perfect precision, recall, and F1-score for both ASD and non-ASD classes. This indicates that the model accurately categorized all cases of ASD and non-ASD without any incorrect positive or negative predictions, resulting in a remarkable overall accuracy of 100%. Table 7 presents the testing classification results of MobileNet.

The impressive performance of MobileNet underscores its efficacy in accurately recognizing instances of ASD through the utilization of eye-tracking characteristics. Figure 7 depicts the confusion matrix, which reveals that 33 images were correctly identified as true negatives (TN), 22 images were correctly classified as true positives (TP), and there were no instances of false positives (FP) or false negatives (FN). Based on the empirical data, it has been determined that the MobileNet model obtained a high level of accuracy.

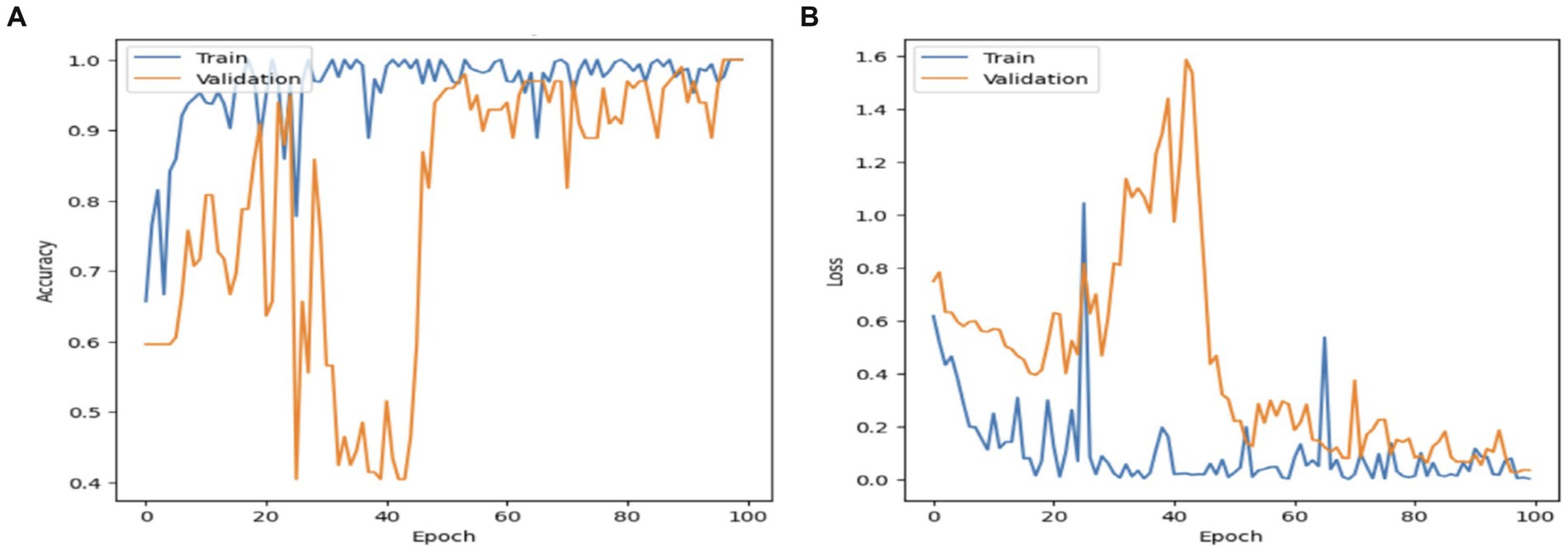

Figure 8 displays the performance of the MobileNet model. The validation accuracy increased progressively from 50% to 100%, while the training accuracy followed a smoother curve, rising from 65% to 100%. The training and validation loss of the MobileNet model declined from 1.6 to 0.0. This confirms that the MobileNet model achieved a high score.

4.4 Testing results of the VGG19 model

This subsection presents the testing classification results of the VGG19 model. Although the model achieved an accuracy of 87%, its recall, precision, and F1-score for the ASD class were noticeably lower than those for the non-ASD class. This suggests that the model performed well in categorizing individuals without ASD but had difficulty correctly identifying individuals with ASD, resulting in a greater incidence of false negatives. Table 8 summarizes the testing results of the VGG19 model.

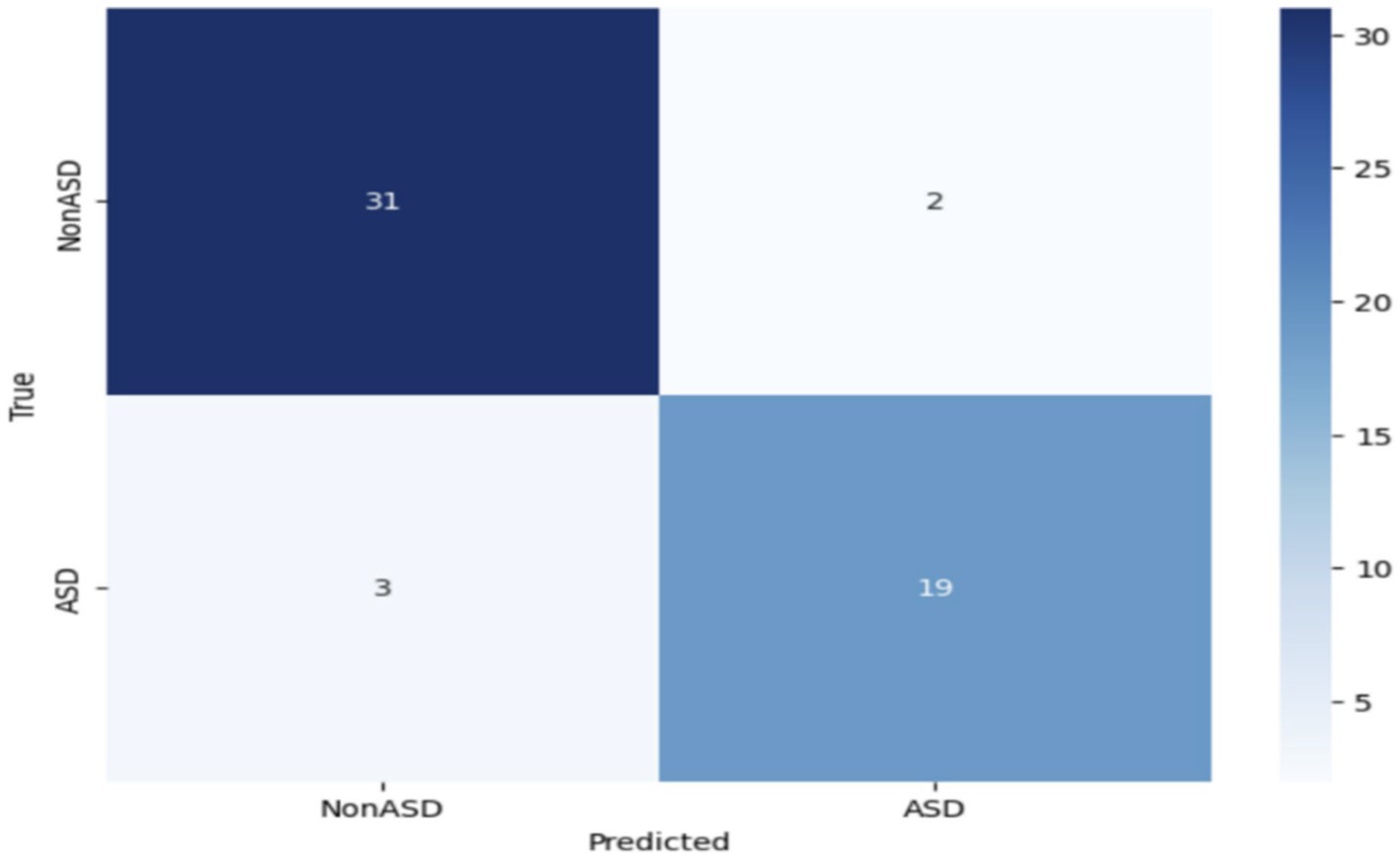

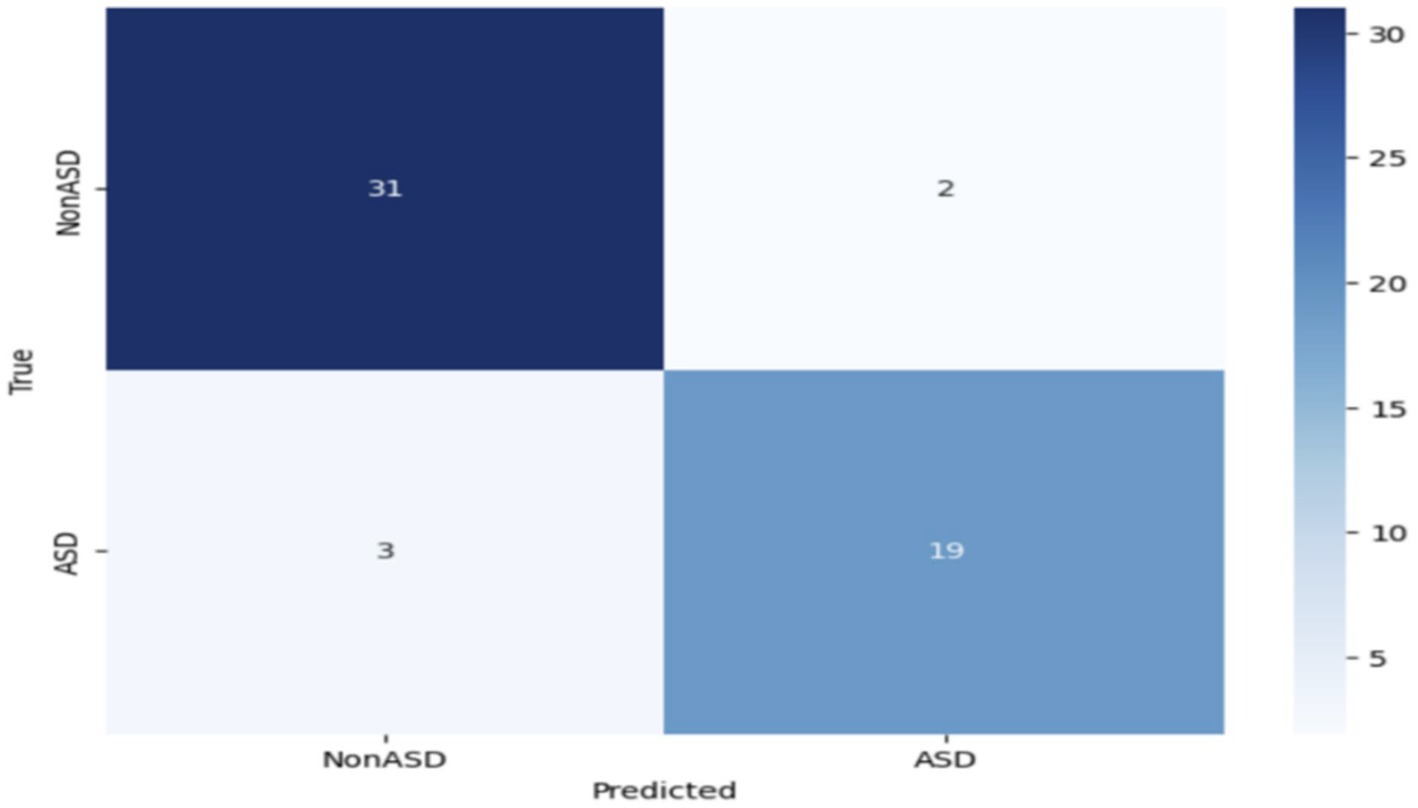

Further modification or improvement of the VGG19 design may be required to enhance its effectiveness in diagnosing ASD. Figure 9 depicts the confusion matrix of the VGG19 model used to classify ASD with the eye-tracking method. The VGG19 model correctly identified 31 images as true negatives (TN) and 19 images as true positives (TP). However, it misclassified 3 images as false positives (FP) and 2 images as false negatives (FN).

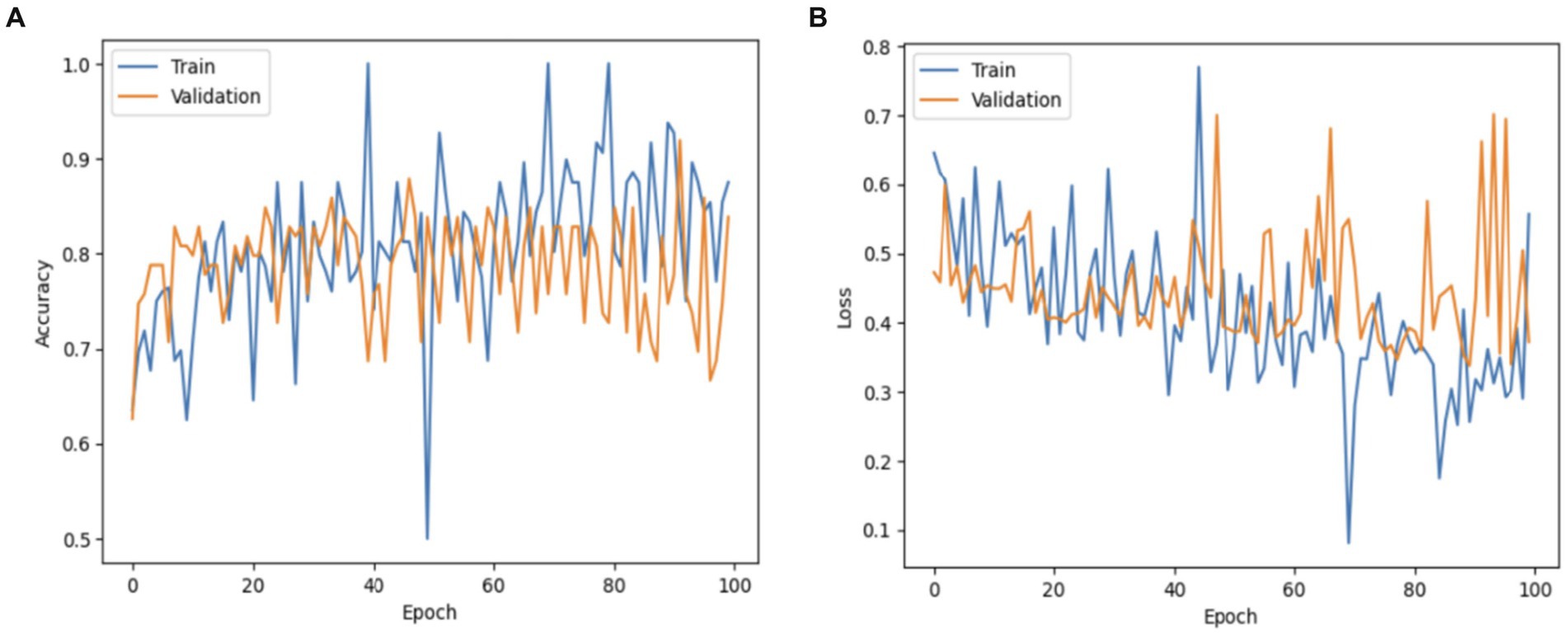

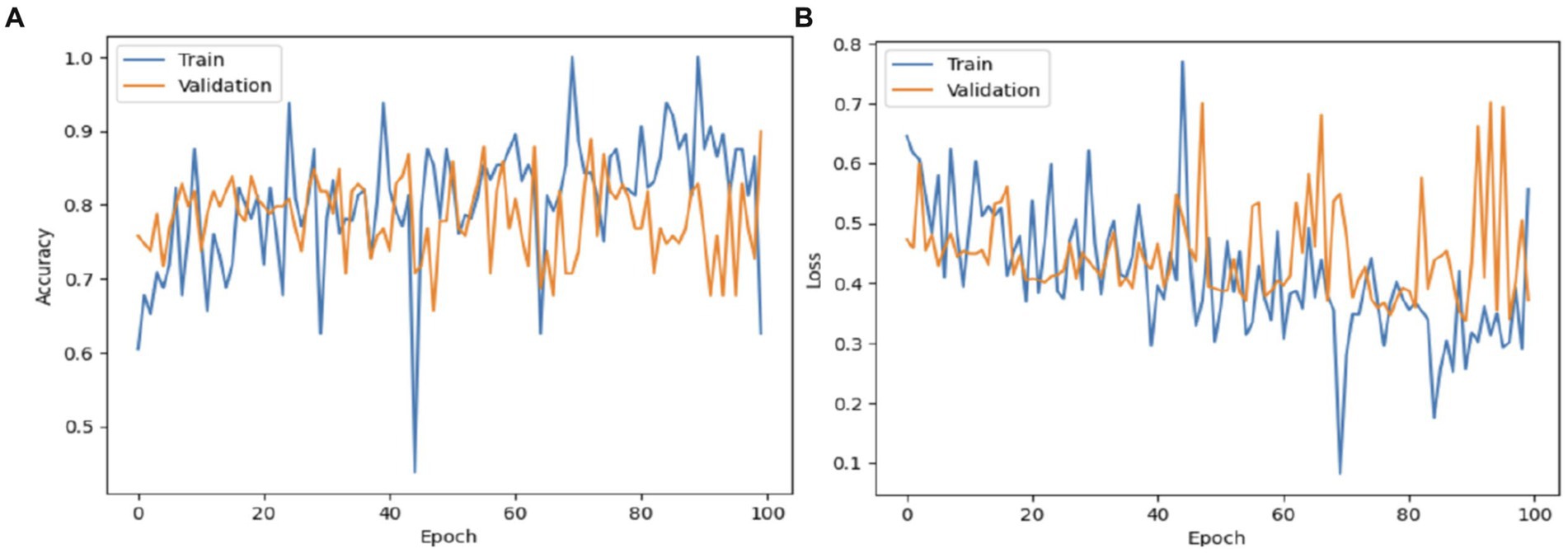

Figure 10 illustrates the process of validating and training the VGG19 model. The VGG19 model achieved a validation accuracy of 87%. The VGG19 model attained an accuracy rate of 89% in diagnosing Autism Spectrum Disorder (ASD) using the eye-tracking dataset during training. The loss of the VGG19 model decreased to 0.3.

4.5 Testing classification results of the hybrid VGG19-MobileNet model

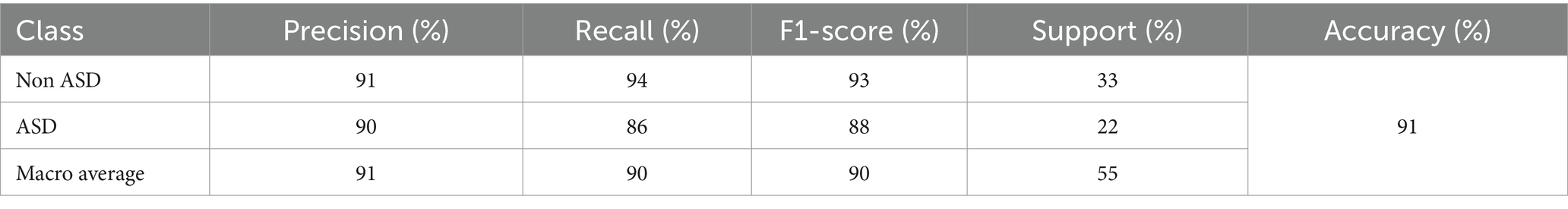

The hybrid VGG19-MobileNet model exhibited strong performance, with 91% accuracy and well-balanced precision, recall, and F1-score for both the ASD and non-ASD categories. The hybrid model successfully combined the strengths of the VGG19 and MobileNet architectures, leading to enhanced classification performance. Table 9 presents the testing classification results obtained by the hybrid VGG19-MobileNet model.

The model’s ability to accurately differentiate between cases of ASD and non-ASD highlights its potential utility in clinical settings for diagnosing ASD based on eye-tracking features. Figure 11 presents the confusion matrix of the hybrid VGG19-MobileNet model. In this hybrid model, 31 images were accurately labeled as TD and 19 images were accurately classified as ASD. The hybrid model misclassified 3 images as FP and 2 images as FN.

The performance of the VGG19-MobileNet model is depicted in Figure 12. The model obtained a validation accuracy of 91% and a training accuracy of 92%. The loss of the hybrid model decreased from 0.6 to 0.4.

4.6 Testing results of the DenseNet169 model

The DenseNet169 model attained an accuracy of 78%, exhibiting superior precision, recall, and F1-score for the non-ASD class in comparison to the ASD class. This indicates that although the model performed well in accurately categorizing those without ASD, its ability to identify individuals with ASD was comparatively less effective. Table 10 summarizes the testing classification results of the DenseNet169 model.

The elevated rate of false negatives in ASD cases highlights possible opportunities for enhancing the model’s ability to detect ASD-related characteristics. In general, although all models demonstrated potential in detecting ASD, there is a need for more improvement and optimization of model structures to boost the accuracy and precision of ASD diagnosis using eye-tracking data.

5 Discussion

Autism Spectrum Disorder (ASD) is a neurodevelopmental condition marked by enduring difficulties in social interaction, communication, and restricted or repetitive behaviors. People with ASD can display a diverse array of symptoms and levels of functioning, resulting in significant variation within the spectrum. Eye-tracking technology is the technique of observing and documenting the movement of a person’s eyes in order to examine different aspects of visual attention, perception, and cognitive processing. Eye-tracking studies in individuals with ASD commonly examine gaze fixation patterns, saccades (quick eye movements), and pupil dilation to explore disparities in visual processing and social attention between individuals with ASD and those who are typically developing.

The experimental results presented in this study demonstrate the efficacy of several convolutional neural network (CNN) models in detecting and predicting Autism Spectrum Disorder (ASD) by utilizing eye-tracking features. The classification accuracy, precision, recall, and F1-score of each model offer valuable insights into their efficacy in detecting ASD cases using eye movement patterns.

The MobileNet model exhibited outstanding performance, attaining flawless precision, recall, and F1-score for both ASD and non-ASD categories. This indicates that MobileNet correctly classified all ASD and non-ASD cases, demonstrating its potential usefulness in diagnosing ASD using eye-tracking data.

Although the VGG19 model achieved an accuracy of 87%, its precision, recall, and F1-score for the ASD class were somewhat lower, suggesting a higher occurrence of false negatives. This implies that VGG19 might have difficulties in reliably detecting cases of ASD solely based on eye movement patterns.

The DenseNet169 model attained an accuracy of 78%, exhibiting superior precision, recall, and F1-score for the non-ASD class in comparison to the ASD class. This disparity suggests possible constraints in the model’s ability to detect ASD-related eye movement characteristics, resulting in an increased occurrence of incorrect negative diagnoses for individuals with ASD.

The hybrid VGG19-MobileNet model performed strongly, reaching 91% accuracy with well-balanced precision, recall, and F1-score for both the ASD and non-ASD categories. This suggests that the hybrid model successfully utilized the advantages of both the VGG19 and MobileNet architectures to enhance ASD identification using eye-tracking features.

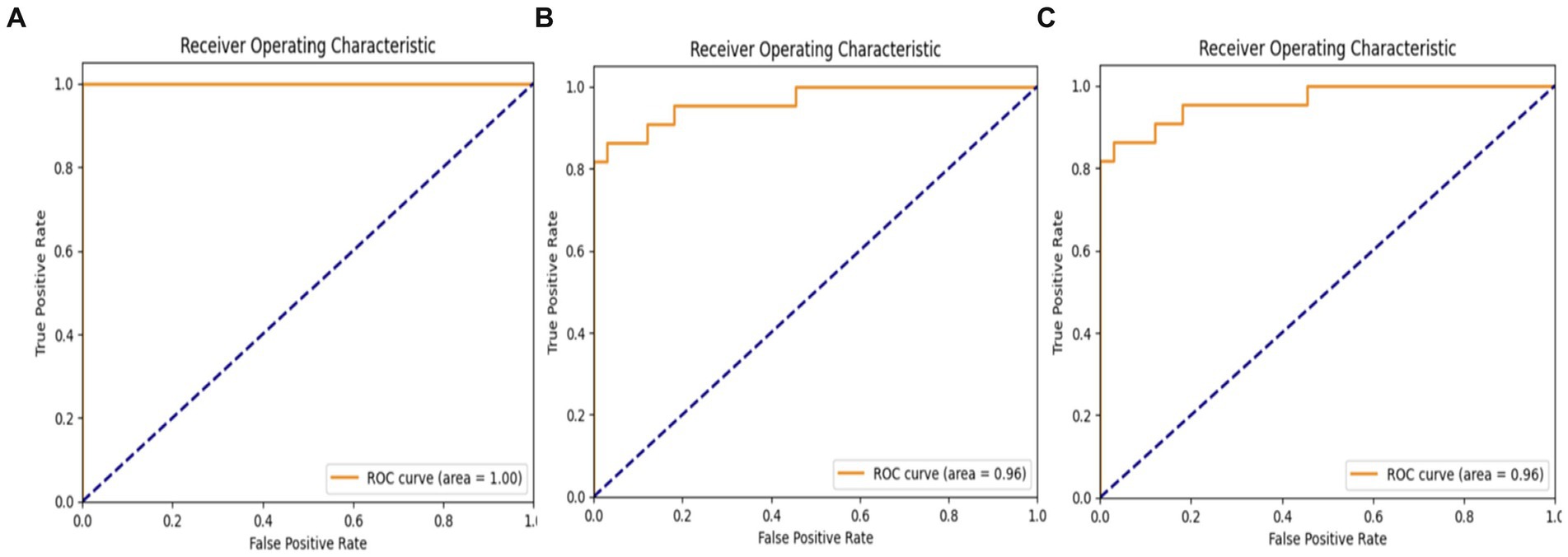

Figure 13 displays the receiver operating characteristic (ROC) results of the proposed deep learning (DL) models. The MobileNet model earned a high accuracy score of 100%, while the VGG19 and hybrid models both achieved an accuracy score of 96%.
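A ROC curve is commonly summarized by the area under the curve (AUC), which equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below illustrates that calculation in plain Python. The labels and scores are hypothetical values chosen for illustration, not data from this study, and the function `roc_auc` is not taken from the authors' code.

```python
def roc_auc(labels, scores):
    """AUC via pairwise comparison of positive and negative scores.

    A positive scored above a negative counts 1, a tie counts 0.5.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical example: 1 = ASD, 0 = TD; scores are predicted ASD probabilities.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.92, 0.81, 0.40, 0.35, 0.10, 0.55, 0.20, 0.77]

auc = roc_auc(labels, scores)
print(f"AUC = {auc:.4f}")
```

In this toy example, 15 of the 16 positive-negative pairs are ranked correctly, so the AUC is 15/16. The pairwise form is quadratic in the number of samples, which is acceptable for a test set of a few dozen images.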

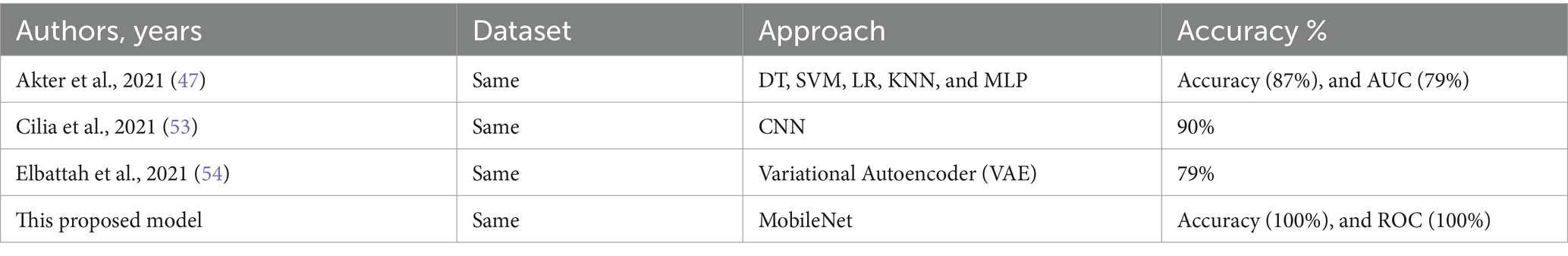

In summary, the experimental results highlight the capability of CNN models, specifically MobileNet and the hybrid VGG19-MobileNet model, to accurately detect ASD cases using eye-tracking data. However, further study is required to optimize the design of the models and increase their ability to detect eye-movement patterns associated with ASD. This will ultimately lead to better accuracy in diagnosing and treating ASD. The proposed system was compared with several existing eye-tracking systems (46–48), as shown in Table 11 and Figure 14. Our enhanced MobileNet model achieved a perfect score of 100%, surpassing all the other systems compared.

6 Conclusion

Eye tracking is a commonly used method for detecting ASD in both young children and adults. Eye-tracking research has revealed that individuals with autism have gaze patterns distinct from those of typically developing individuals. Various diagnostic procedures have been considered for the diagnosis of ASD, such as parent interviews, homogenous behavioral appraisals, and neurological examinations. Eye-tracking technology has gained significance in supporting the study and analysis of autism. This research presents a methodology that utilizes advanced deep learning algorithms, including MobileNet, VGG19, DenseNet169, and a hybrid of MobileNet-VGG19, to analyze and display the eye-tracking patterns of persons diagnosed with ASD. The study specifically focuses on children and adults in the initial phases of growth. The primary concept is to convert the movement patterns of the eye into a visual depiction, allowing image-based methods to be used in diagnosis-related tasks. The visualizations generated are freely accessible as an image collection for use by other studies seeking to explore the capabilities of eye tracking in the context of ASD. The collection consists of 547 images, with 328 images representing persons without ASD and 219 images representing those diagnosed with ASD. The MobileNet model achieved an accuracy of 100%. When the proposed methodology was compared with existing ASD models, it outperformed them.

An important avenue for future study is to expand the sample size by including a wider range of participants, with a greater number of both persons with ASD and TD individuals. By increasing the size of the sample, researchers might uncover additional patterns and subtleties in the data.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://figshare.com/articles/dataset/Visualization_of_Eye-Tracking_Scanpaths_in_Autism_Spectrum_Disorder_Image_Dataset/7073087.

Author contributions

NA: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – original draft. MA-A: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Resources, Software, Validation, Writing – original draft. MA-Y: Visualization, Writing – original draft, Writing – review & editing. NF: Conceptualization, Formal analysis, Writing – review & editing. ZK: Conceptualization, Formal analysis, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The authors extend their appreciation to the King Salman Center for Disability Research for funding this work through Research Group no. KSRG-2023-500.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Bölte, S, and Hallmayer, J. FAQs on autism, Asperger syndrome, and atypical autism answered by international experts. Cambridge, MA, USA: Hogrefe Publishing (2010).

2. CDC. (2018). Screening and diagnosis of autism spectrum disorder for healthcare providers. Available at: https://www.cdc.gov/ncbddd/autism/hcp-screening.html (Accessed April 13, 2024).

3. Maenner, MJ, Shaw, KA, and Baio, J. Prevalence of autism spectrum disorder among children aged 8 years. Atlanta, GA, USA: CDC (2020).

4. Dawson, G. Early behavioral intervention, brain plasticity, and the prevention of autism spectrum disorder. Dev Psychopathol. (2008) 20:775–803. doi: 10.1017/S0954579408000370

5. Sasson, NJ. The development of face processing in autism. J Autism Dev Disord. (2006) 36:381–94. doi: 10.1007/s10803-006-0076-3

6. Senju, A, and Johnson, MH. The eye contact effect: mechanisms and development. Trends Cogn Sci. (2009) 13:127–34. doi: 10.1016/j.tics.2008.11.009

7. Senju, A, and Johnson, MH. Atypical eye contact in autism: models, mechanisms and development. Neurosci Biobehav Rev. (2009) 33:1204–14. doi: 10.1016/j.neubiorev.2009.06.001

8. Klin, A, Jones, W, Schultz, R, Volkmar, F, and Cohen, D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. (2002) 59:809–16. doi: 10.1001/archpsyc.59.9.809

9. Falck-Ytter, T, Bolte, S, and Gredeback, G. Eye tracking in early autism research. J Neurodev Disord. (2013) 5:28. doi: 10.1186/1866-1955-5-28

10. Boraston, Z, and Blakemore, SJ. The application of eye-tracking technology in the study of autism. J Physiol. (2007) 581:893–8. doi: 10.1113/jphysiol.2007.133587

11. Black, MH, Chen, NTM, Iyer, KK, Lipp, OV, Bölte, S, Falkmer, M, et al. Mechanisms of facial emotion recognition in autism spectrum disorders: insights from eye tracking and electroencephalography. Neurosci Biobehav Rev. (2017) 80:488–515. doi: 10.1016/j.neubiorev.2017.06.016

12. Fujioka, T, Inohara, K, Okamoto, Y, Masuya, Y, Ishitobi, M, Saito, DN, et al. Gazefinder as a clinical supplementary tool for discriminating between autism spectrum disorder and typical development in male adolescents and adults. Mol Autism. (2016) 7:19. doi: 10.1186/s13229-016-0083-y

13. Klin, A, Lin, DJ, Gorrindo, P, Ramsay, G, and Jones, W. Two-year-olds with autism orient to non-social contingencies rather than biological motion. Nature. (2009) 459:257–61. doi: 10.1038/nature07868

14. Frazier, TW, Klingemier, EW, Parikh, S, Speer, L, Strauss, MS, Eng, C, et al. Development and validation of objective and quantitative eye tracking-based measures of autism risk and symptom levels. J Am Acad Child Adolesc Psychiatry. (2018) 57:858–66. doi: 10.1016/j.jaac.2018.06.023

15. Papagiannopoulou, EA, Chitty, KM, Hermens, DF, Hickie, IB, and Lagopoulos, J. A systematic review and meta-analysis of eye-tracking studies in children with autism spectrum disorders. Soc Neurosci. (2014) 9:610–32. doi: 10.1080/17470919.2014.934966

16. Zhou, Y, Yu, F, and Duong, T. Multiparametric MRI characterization and prediction in autism spectrum disorder using graph theory and machine learning. PLoS One. (2014) 9:e90405. doi: 10.1371/journal.pone.0090405

17. Minissi, ME, Giglioli, IAC, Mantovani, F, and Raya, MA. Assessment of the autism spectrum disorder based on machine learning and social visual attention: a systematic review. J Autism Dev Disord. (2021) 52:2187–202. doi: 10.1007/s10803-021-05106-5

18. Carette, R., Elbattah, M., Cilia, F., Dequen, G., Guérin, J.L., and Bosche, J. (2019). Learning to predict autism spectrum disorder based on the visual patterns of eye-tracking scanpaths. In Proceedings of the HEALTHINF, Prague, Czech Republic, 22–24 February 2019, pp. 103–112. doi: 10.5220/0007402601030112

19. Kanhirakadavath, MR, and Chandran, MSM. Investigation of eye-tracking scan path as a biomarker for autism screening using machine learning algorithms. Diagnostics. (2022) 12:518. doi: 10.3390/diagnostics12020518

20. Shic, F, Naples, AJ, Barney, EC, Chang, SA, Li, B, McAllister, T, et al. The autism biomarkers consortium for clinical trials: evaluation of a battery of candidate eye-tracking biomarkers for use in autism clinical trials. Mol Autism. (2022) 13:15. doi: 10.1186/s13229-021-00482-2

21. Haq, AU, Li, JP, Ahmad, S, Khan, S, Alshara, MA, and Alotaibi, RM. Diagnostic approach for accurate diagnosis of COVID-19 employing deep learning and transfer learning techniques through chest X-ray images clinical data in E-healthcare. Sensors. (2021) 21:8219. doi: 10.3390/s21248219

22. Ruchi, S, Singh, D, Singla, J, Rahmani, MKI, Ahmad, S, Rehman, MU, et al. Lumbar spine disease detection: enhanced CNN model with improved classification accuracy. IEEE Access. (2023) 11:141889–901. doi: 10.1109/ACCESS.2023.3342064

23. Fang, Y., Duan, H., Shi, F., Min, X., and Zhai, G. (2020). Identifying children with autism spectrum disorder based on gaze-following. In Proc. in 2020 IEEE Int. Conf. on Image Processing (ICIP). Abu Dhabi, United Arab Emirates, pp. 423–427. doi: 10.1109/ICIP40778.2020.9190831

24. Elbattah, M., Guérin, J.L., Carette, R., Cilia, F., and Dequen, G. (2020). NLP-based approach to detect autism spectrum disorder in saccadic eye movement. In Proc. in 2020 IEEE Symp. Series on Computational Intelligence (SSCI), Canberra, Australia, pp. 1581–1587.

25. Li, M, Tang, D, Zeng, J, Zhou, T, Zhu, H, Chen, B, et al. An automated assessment framework for atypical prosody and stereotyped idiosyncratic phrases related to autism spectrum disorder. Comput Speech Lang. (2019) 56:80–94. doi: 10.1016/j.csl.2018.11.002

26. Tsuchiya, KJ, Hakoshima, S, Hara, T, Ninomiya, M, Saito, M, Fujioka, T, et al. Diagnosing autism spectrum disorder without expertise: a pilot study of 5- to 17-year-old individuals using Gazefinder. Front Neurol. (2021) 11:1963. doi: 10.3389/fneur.2020.603085

27. Vu, T., Tran, H., Cho, K.W., Song, C., Lin, F., Chen, C.W., et al. (2017). Effective and efficient visual stimuli design for quantitative autism screening: an exploratory study. In Proceedings of the 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Orlando, FL, USA, 16–19 February, pp. 297–300. doi: 10.1109/BHI.2017.7897264

28. Lord, C, Rutter, M, and Le Couteur, A. Autism diagnostic interview-revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. J Autism Dev Disord. (1994) 24:659–85. doi: 10.1007/BF02172145

29. Goldstein, S, and Ozonoff, S. Assessment of autism spectrum disorder. New York, NY, USA: Guilford Publications (2018).

30. Kamp-Becker, I, Albertowski, K, Becker, J, Ghahreman, M, Langmann, A, Mingebach, T, et al. Diagnostic accuracy of the ADOS and ADOS-2 in clinical practice. Eur Child Adolesc Psychiatry. (2018) 27:1193–207. doi: 10.1007/s00787-018-1143-y

31. He, Q, Wang, Q, Wu, Y, Yi, L, and Wei, K. Automatic classification of children with autism spectrum disorder by using a computerized visual-orienting task. PsyCh J. (2021) 10:550–65. doi: 10.1002/pchj.447

32. Fenske, EC, Zalenski, S, Krantz, PJ, and McClannahan, LE. Age at intervention and treatment outcome for autistic children in a comprehensive intervention program. Anal Interv Dev Disabil. (1985) 5:49–58. doi: 10.1016/S0270-4684(85)80005-7

33. Frank, MC, Vul, E, and Saxe, R. Measuring the development of social attention using free-viewing. Infancy. (2012) 17:355–75. doi: 10.1111/j.1532-7078.2011.00086.x

34. Yaneva, V, Eraslan, S, Yesilada, Y, and Mitkov, R. Detecting high-functioning autism in adults using eye tracking and machine learning. IEEE Trans Neural Syst Rehabil Eng. (2020) 28:1254–61. doi: 10.1109/TNSRE.2020.2991675

35. Sasson, NJ, and Elison, JT. Eye tracking young children with autism. J Vis Exp. (2012) 61:3675. doi: 10.3791/3675

36. Bone, D, Goodwin, MS, Black, MP, Lee, C-C, Audhkhasi, K, and Narayanan, S. Applying machine learning to facilitate autism diagnostics: pitfalls and promises. J Autism Dev Disord. (2015) 45:1121–36. doi: 10.1007/s10803-014-2268-6

37. Poonguzhali, R, Ahmad, S, Thiruvannamalai Sivasankar, P, Anantha Babu, S, Joshi, P, Prasad Joshi, G, et al. Automated brain tumor diagnosis using deep residual U-net segmentation model. Comput Mater Contin. (2023) 74:2179–94. doi: 10.32604/cmc.2023.032816

38. Liu, W, Li, M, and Yi, L. Identifying children with autism spectrum disorder based on their face processing abnormality: a machine learning framework. Autism Res. (2016) 9:888–98. doi: 10.1002/aur.1615

39. Ahmad, S, Khan, S, Fahad AlAjmi, M, Kumar Dutta, A, Minh Dang, L, Prasad Joshi, G, et al. Deep learning enabled disease diagnosis for secure internet of medical things. Comput Mater Contin. (2022) 73:965–79. doi: 10.32604/cmc.2022.025760

40. Peral, J, Gil, D, Rotbei, S, Amador, S, Guerrero, M, and Moradi, H. A machine learning and integration based architecture for cognitive disorder detection used for early autism screening. Electronics. (2020) 9:516. doi: 10.3390/electronics9030516

41. Crippa, A, Salvatore, C, Perego, P, Forti, S, Nobile, M, Molteni, M, et al. Use of machine learning to identify children with autism and their motor abnormalities. J Autism Dev Disord. (2015) 45:2146–56. doi: 10.1007/s10803-015-2379-8

42. Alam, M.E., Kaiser, M.S., Hossain, M.S., and Andersson, K. An IoT-belief Rule Base smart system to assess autism. In Proceedings of the 2018 4th International Conference on Electrical Engineering and Information & Communication Technology (iCEEiCT), Dhaka, Bangladesh, 13–15 September 2018, pp. 672–676.

43. Hosseinzadeh, M, Koohpayehzadeh, J, Bali, AO, Rad, FA, Souri, A, Mazaherinezhad, A, et al. A review on diagnostic autism spectrum disorder approaches based on the internet of things and machine learning. J Supercomput. (2020) 77:2590–608. doi: 10.1007/s11227-020-03357-0

44. Syriopoulou-Delli, CK, and Gkiolnta, E. Review of assistive technology in the training of children with autism spectrum disorders. Int J Dev Disabil. (2020) 68:73–85. doi: 10.1080/20473869.2019.1706333

45. Duan, H, Min, X, Fang, Y, Fan, L, Yang, X, and Zhai, G. Visual attention analysis and prediction on human faces for children with autism spectrum disorder. ACM Trans Multimed Comput Commun Appl (TOMM). (2019) 15:1–23. doi: 10.1145/3337066

46. Tao, Y., and Shyu, M.L. (2019). SP-ASDNet: CNN-LSTM based ASD classification model using observer scanpaths. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July, pp. 641–646.

47. Akter, T, Ali, MH, Khan, M, Satu, M, Uddin, M, Alyami, SA, et al. Improved transfer-learning-based facial recognition framework to detect autistic children at an early stage. Brain Sci. (2021) 11:734. doi: 10.3390/brainsci11060734

48. Raj, S, and Masood, S. Analysis and detection of autism spectrum disorder using machine learning techniques. Procedia Comput Sci. (2020) 167:994–1004. doi: 10.1016/j.procs.2020.03.399

49. Xie, J., Wang, L., Webster, P., Yao, Y., Sun, J., Wang, S., et al. (2019). A two-stream end-to-end deep learning network for recognizing atypical visual attention in autism spectrum disorder. arXiv [preprint]. Available at: https://doi.org/10.48550/arXiv.1911.11393.

50. Simonyan, K., and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv [preprint]. Available at: https://doi.org/10.48550/arXiv.1409.1556

51. Howard, A.G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., et al. (2017). MobileNets: efficient convolutional neural networks for mobile vision applications. arXiv [preprint]. Available at: https://doi.org/10.48550/arXiv.1704.04861

52. Huang, G., Liu, Z., Van Der Maaten, L., and Weinberger, K.Q. (2017). Densely connected convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4700–4708.

53. Cilia, F, Carette, R, Elbattah, M, Dequen, G, Guérin, J-L, Bosche, J, et al. Computer-aided screening of autism spectrum disorder: eye-tracking study using data visualization and deep learning. JMIR Hum Factors. (2021) 8:e27706. doi: 10.2196/27706

Keywords: autism spectrum disorder, eye tracking, deep learning, VGG19, MobileNet, DenseNet169, hybrid model

Citation: Alsharif N, Al-Adhaileh MH, Al-Yaari M, Farhah N and Khan ZI (2024) Utilizing deep learning models in an intelligent eye-tracking system for autism spectrum disorder diagnosis. Front. Med. 11:1436646. doi: 10.3389/fmed.2024.1436646

Edited by:

Sultan Ahmad, Prince Sattam Bin Abdulaziz University, Saudi Arabia

Reviewed by:

Mohammed Almaiah, The University of Jordan, Jordan

Ghaida Muttashar Abdulsahib, University of Technology, Iraq

Inayat Khan, University of Engineering and Technology, Mardan, Pakistan

Copyright © 2024 Alsharif, Al-Adhaileh, Al-Yaari, Farhah and Khan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohammed Al-Yaari
