ORIGINAL RESEARCH article

Front. Med., 17 November 2023

Sec. Ophthalmology

Volume 10 - 2023 | https://doi.org/10.3389/fmed.2023.1308923

This article is part of the Research Topic: Efficient Artificial Intelligence (AI) in Ophthalmic Imaging

Background: This study aimed to develop deep learning models using macular optical coherence tomography (OCT) images to estimate axial lengths (ALs) in eyes without maculopathy.

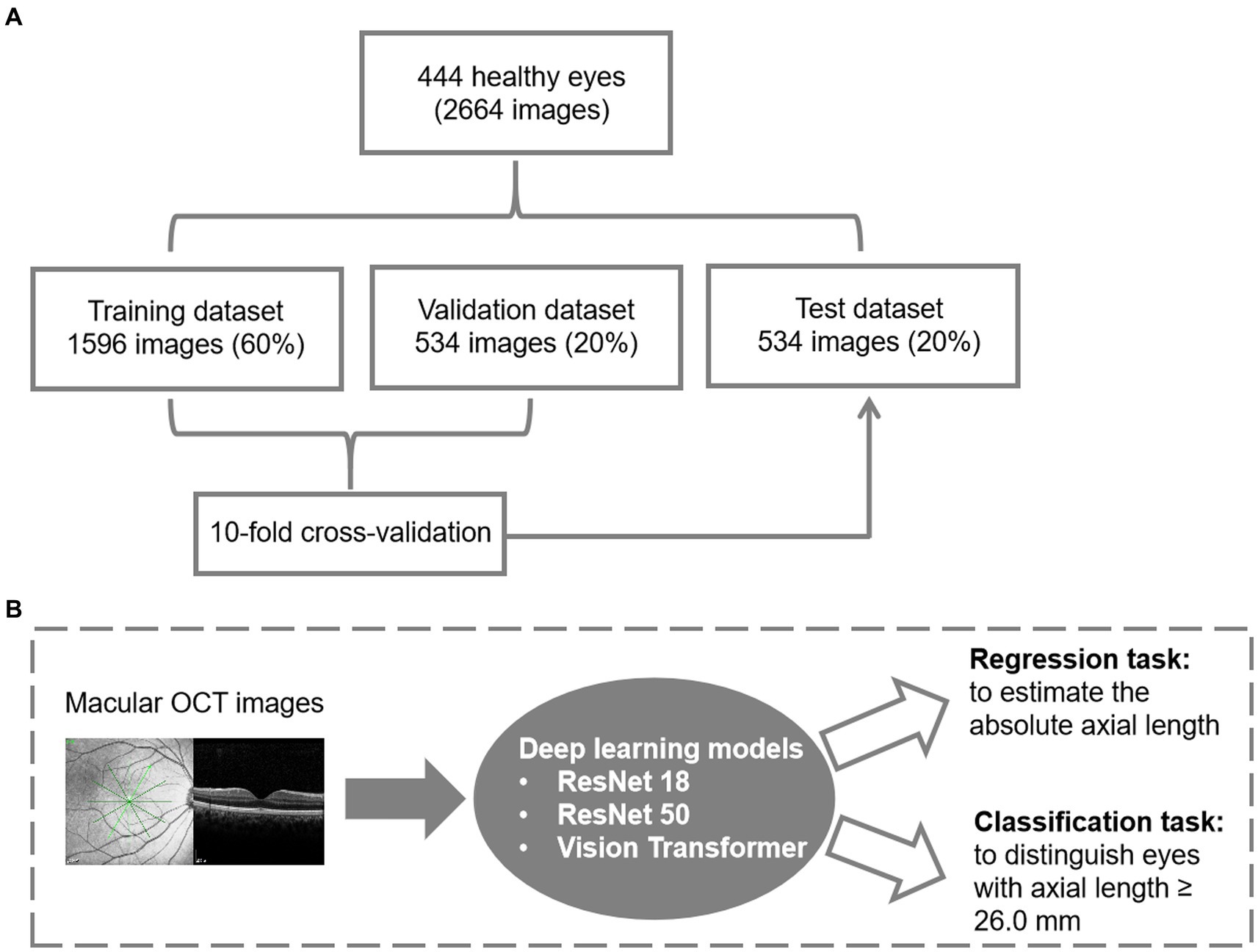

Methods: A total of 2,664 macular OCT images of 444 eyes without maculopathy, from patients who visited Beijing Hospital between March 2019 and October 2021, were included. The dataset was divided into training, validation, and testing sets at a ratio of 6:2:2. Three pre-trained models (ResNet 18, ResNet 50, and ViT) were developed for a binary classification task (AL ≥ 26 mm) and a regression task. Ten-fold cross-validation was performed, and Grad-CAM analysis was employed to visualize AL-related macular features. Additionally, retinal thickness measurements were used to predict AL with linear and logistic regression models.

Results: ResNet 50 achieved an accuracy of 0.872 (95% Confidence Interval [CI], 0.840–0.899), with a sensitivity of 0.804 (95% CI, 0.728–0.867) and a specificity of 0.895 (95% CI, 0.861–0.923). The mean absolute error for AL prediction was 0.83 mm (95% CI, 0.72–0.95 mm). The best AUC and accuracy of AL estimation using macular OCT images (0.929 and 87.2%) were superior to those obtained using retinal thickness measurements alone (0.747 and 77.8%). AL-related macular features were located at the fovea and adjacent regions.

Conclusion: OCT images can be effectively utilized for estimating AL with good performance via deep learning. The AL-related macular features exhibit a localized pattern in the macula, rather than continuous alterations throughout the entire region. These findings can lay the foundation for future research in the pathogenesis of AL-related maculopathy.

Axial length (AL) is a widely discussed parameter, significant not only for defining the eye’s refractive status but also due to its strong association with retinal and macular complications (1, 2). The excessive elongation of AL, often exceeding 26.0 mm, is the dominant cause of an increased risk of posterior segment complications, including vitreous liquefaction, choroidal atrophy, retinoschisis, macular hole, and macular choroidal neovascularization (3). These complications are vision-threatening and often result in irreversible vision damage if left untreated (4). Whether macular structure already differs among eyes with prolonged AL before maculopathy develops has remained unclear, although a few studies have reported that AL was positively associated with central retinal thickness but negatively associated with peripheral retinal thickness farther from the macula (5–8).

Artificial intelligence, specifically deep learning, has exhibited significant potential in medical imaging diagnosis and interpretation (9, 10). Deep learning allows systems to acquire predictive characteristics directly from an extensive collection of labeled images, eliminating the necessity for explicit rules or manually designed features (11). In recent research, deep learning models have been developed that demonstrate precise estimation of AL or refractive error using color fundus photographs (12–14). Additionally, Yoo et al. (15) have introduced a deep learning model that predicts uncorrected refractive error by utilizing posterior segment optical coherence tomography images, suggesting a potential association between AL and the sectional structure of the retina. Considering that a long AL is a significant risk factor for complications that can potentially impair vision, investigating the alterations in macular structure resulting from prolonged AL prior to the onset of maculopathies holds immense significance in guiding the clinical management and prognosis of patients with long AL eyes (4). However, the application of deep learning to estimate AL based on macular OCT images remains unexplored.

Gradient-weighted class activation mapping (Grad-CAM), a commonly employed approach for visualizing models, uses the gradient information that flows into the final convolutional layer of a convolutional neural network (CNN) to construct a heat map that reveals the regions most relevant to the decision-making process (16). This study aimed to assess the capability of macular OCT images to estimate ALs of eyes without maculopathy using deep learning algorithms and to visualize the cross-sectional alterations in macular structure resulting from prolonged AL using Grad-CAM.
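For reference, the standard Grad-CAM formulation can be written as below. This is the general definition of the method rather than a detail reported in this study. Here $A^k$ denotes the $k$-th feature map of the final convolutional layer, $y^c$ the model output for target $c$, $Z$ the number of spatial positions in the feature map, and $\alpha_k^c$ the resulting channel weight:

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left(\sum_{k}\alpha_k^c A^k\right)
$$

The ReLU keeps only the regions that contribute positively to the output, and the resulting map is upsampled to the input resolution to form the heat map.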

The data of this study were retrospectively collected from patients who visited the Department of Ophthalmology at Beijing Hospital between January 2019 and October 2021 and were scheduled for cataract surgery. Patients included in the study were required to be aged 18 years or older and have undergone macular OCT examination and AL measurement. Eyes with evident macular abnormalities, such as macular edema, epiretinal membrane, macular hole, macular retinoschisis, and macular neovascularization, were excluded. Furthermore, images of poor quality were also excluded. The study followed the principles of the Declaration of Helsinki and received approval from the institutional review board at Beijing Hospital. Given the retrospective nature of the study, the requirement for written informed consent was waived.

In this study, OCT scans were acquired using the Spectralis OCT device (Heidelberg Engineering, Germany). Images acquired with a stellate scan pattern centered on the fovea were selected for model development. This scan pattern comprises six scans that traverse the fovea, each spanning a length of 6 mm. Moreover, retinal thickness in various subfields was recorded using OCT. The macular region was divided into 9 subfields by employing three concentric circles centered on the fovea, with diameters of 1 mm, 3 mm, and 6 mm. The average thickness within the innermost circle defined the central retinal thickness (CRT). The inner (1–3 mm) and outer (3–6 mm) rings, designated as the parafovea and perifovea, respectively, were each subdivided into superior, nasal, inferior, and temporal subfields. AL measurements were obtained with the IOL Master 700 (Carl Zeiss, Germany).
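The nine-subfield grid described above can be illustrated with a short sketch that maps a point, given in millimetres from the foveal centre, to its subfield. The function name, the coordinate convention (+x towards the right of the en-face image, +y superior), and the assumption that nasal lies towards +x in a right eye are illustrative choices, not details taken from the study or from the OCT device software.

```python
import math


def macular_subfield(x_mm: float, y_mm: float, right_eye: bool = True) -> str:
    """Return the subfield containing a point measured from the foveal centre.

    Circles of 1, 3 and 6 mm diameter correspond to radii of 0.5, 1.5 and 3.0 mm.
    Assumed convention: +y is superior, and nasal lies towards +x in a right eye
    (towards -x in a left eye).
    """
    r = math.hypot(x_mm, y_mm)
    if r <= 0.5:
        return "central"
    if r > 3.0:
        return "outside grid"
    ring = "parafovea" if r <= 1.5 else "perifovea"

    # Quadrants are bounded by the two 45-degree diagonals.
    angle = math.degrees(math.atan2(y_mm, x_mm))  # range -180..180
    if 45 <= angle < 135:
        quadrant = "superior"
    elif -135 <= angle < -45:
        quadrant = "inferior"
    else:
        on_plus_x = -45 <= angle < 45
        quadrant = "nasal" if on_plus_x == right_eye else "temporal"
    return f"{ring} {quadrant}"


print(macular_subfield(0.2, 0.1))                    # central
print(macular_subfield(1.0, 0.0, right_eye=True))    # parafovea nasal
print(macular_subfield(1.0, 0.0, right_eye=False))   # parafovea temporal
```

Averaging the thickness values that fall into each subfield would give the nine regional measurements used later in the thickness-based regression analyses.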

Figure 1 presents the data management and the flowchart for the deep learning models in this study. Two classic CNN models, ResNet18 and ResNet50, along with a Transformer-based model, Vision Transformer (ViT), were used to model the relationship between macular OCT images and AL. A detailed description of the models is presented in Supplementary material 1. In the ViT architecture, the number of encoder blocks was reduced to 6 to prevent overfitting. The input size for the ViT was fixed at 224 × 224 to ensure a fair comparison across all models. Stochastic gradient descent (SGD) served as the optimizer for all three models. AL measurements obtained by the IOL Master 700 (Carl Zeiss, Germany) served as the ground truth for AL prediction. For a comprehensive evaluation, the prediction task was divided into a regression task and a binary classification task by adjusting the dimension of the model output. To improve accuracy and efficiency, we implemented a transfer learning strategy using models pretrained on ImageNet. The salient areas of the feature maps in the later layers of these models were visualized using the Grad-CAM interpretability method, which illustrates the contribution of each pixel to the final decision.

Figure 1. Datasets and the architecture of the deep learning model. (A) Data management for model development. (B) The flowchart for deep learning.

During training, we employed multiple data augmentation methods to enhance the models’ generalization ability. A random resized crop strategy was used to capture different parts of the image at varying scales. Horizontal flipping, color jittering, gamma transformation, and random Gaussian noise were also applied to augment the OCT training samples. Finally, the training and testing inputs were normalized to the range 0 to 1. To expand the dataset, each eye was treated as an independent case contributing 6 OCT B-scans, which yielded a dataset of 2,664 images. The data were split into training, validation, and testing sets at a ratio of 6:2:2, and the split was randomized based on the AL. The training and validation sets were then combined, and 10-fold cross-validation was conducted to demonstrate the reliability of the methods. In 10-fold cross-validation, the training instances are divided into 10 equally sized partitions with similar class distributions. Each partition is then used in turn as the test set for a classifier trained on the remaining nine partitions.
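The split arithmetic and the fold construction can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names are hypothetical, the split is assumed to be made per eye (consistent with the eye counts reported in the Results), and the class labels used for the folds are made up.

```python
import random


def split_eyes(eye_ids, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle eye identifiers and split them into train/validation/test lists."""
    ids = list(eye_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


def stratified_folds(labels, k=10, seed=0):
    """Return k lists of sample indices with similar class proportions.

    Samples of each class are shuffled and dealt to the folds in turn, so the
    per-class counts of any two folds differ by at most one.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


train, val, test = split_eyes(range(444))
print(len(train), len(val), len(test))               # 266 89 89
print(6 * len(train), 6 * len(val), 6 * len(test))   # 1596 534 534

# Hypothetical labels (1 = AL >= 26.0 mm) for the combined training and validation eyes.
labels = [1] * 90 + [0] * 265
folds = stratified_folds(labels, k=10)
held_out = folds[0]                                  # test partition for the first round
fit_on = [i for fold in folds[1:] for i in fold]     # remaining nine partitions
```

Splitting by eye rather than by image keeps all six B-scans of an eye in the same subset, which avoids near-duplicate images appearing on both sides of a split.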

The classification task used the cross-entropy loss function, and sensitivity, specificity, area under the receiver operating characteristic curve (AUC), and accuracy were calculated to evaluate performance. In the regression task, the mean absolute error (MAE) served as both the loss function and the evaluation metric. The agreement between the actual and predicted AL was assessed using the Bland–Altman plot, in which the Y-axis represents the difference between the actual and predicted ALs and the X-axis represents the average of the actual and predicted ALs. The mean difference (MD) and 95% limits of agreement (MD ± 1.96 standard deviations) were calculated to assess the agreement.
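The evaluation metrics named above can be computed as in the following sketch. The function names and the toy values are illustrative assumptions; the use of the sample standard deviation for the limits of agreement is also an assumption, since the text does not specify it.

```python
import statistics


def classification_metrics(y_true, y_pred):
    """Sensitivity, specificity and accuracy for binary labels (1 = AL >= 26.0 mm)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


def mean_absolute_error(actual, predicted):
    """Mean of |actual - predicted| in mm."""
    return statistics.mean([abs(a - p) for a, p in zip(actual, predicted)])


def bland_altman(actual, predicted):
    """Return the mean difference (MD) and the 95% limits of agreement (MD +/- 1.96 SD)."""
    diffs = [a - p for a, p in zip(actual, predicted)]
    md = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (assumed)
    return md, (md - 1.96 * sd, md + 1.96 * sd)


# Toy values in mm, made up for illustration only.
actual = [24.0, 26.5, 23.0, 27.0]
predicted = [24.5, 26.0, 23.5, 26.0]
print(mean_absolute_error(actual, predicted))   # 0.625
print(bland_altman(actual, predicted))
```

For the Bland–Altman plot itself, each point would be drawn at x = (actual + predicted) / 2 and y = actual − predicted, with horizontal lines at the MD and at the two limits of agreement.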

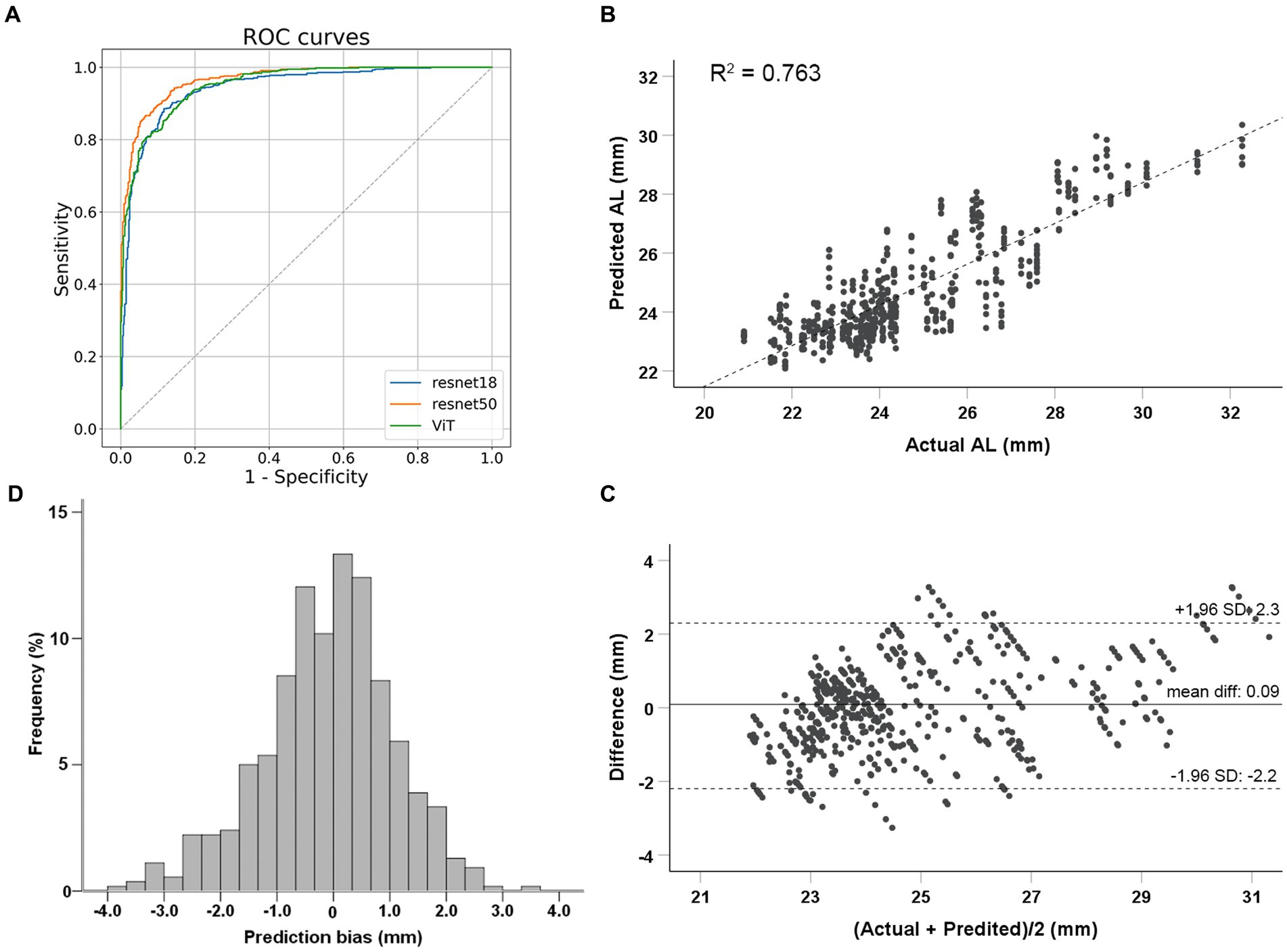

A total of 2,664 images from 444 eyes (306 patients) were included in the model development. The mean age was 69.02 ± 10.37 years. Among the 444 eyes, 113 eyes (25.5%) were highly myopic without maculopathy (AL ≥ 26.0 mm). Of these eyes, 266 (1,596 images) were used for training (60%), 89 (534 images) for validation (20%), and 89 (534 images) for testing (20%). The mean ages of the training, validation, and testing sets were 69.36 ± 10.52, 67.89 ± 10.65, and 69.21 ± 9.63 years, respectively. Demographic characteristics of each dataset are summarized in Table 1. Three models (ResNet 50, ResNet 18, and ViT) were developed for the binary classification task of distinguishing eyes with AL ≥ 26.0 mm from the others. The 10-fold cross-validation results showed robust performance and high discriminative power for all three models, as shown in Table 2. On the test dataset, ResNet 18, ResNet 50, and ViT achieved AUC (95% Confidence Interval [CI]) values of 0.918 (0.886–0.951), 0.929 (0.899–0.960), and 0.924 (0.892–0.955), respectively (Figure 2A). ResNet 50 and ResNet 18 had the same accuracy of 0.872 (95% CI, 0.840–0.899), which was the highest among the models. ResNet 50 also exhibited the highest overall performance, with a sensitivity of 0.804 (95% CI, 0.728–0.867) and a specificity of 0.895 (95% CI, 0.861–0.923). Therefore, based on the classification results, particularly the AUC and accuracy, ResNet 50 was selected for further analyses.

Figure 2. Performance evaluation of deep learning models. (A) Classification performance of deep learning models to identify eyes with axial lengths ≥26.0 mm in the test dataset. (B) Correlations between actual and predicted axial length using the ResNet 50 model. (C) Bland–Altman plots for the real and predicted axial length using ResNet 50 in test dataset. (D) Prediction bias frequency distribution.

The ResNet 50 model was employed for the regression task. The MAE for predicting AL on the test dataset was 0.83 mm (95% CI, 0.72–0.95 mm). The predicted AL and actual AL had a linear relationship with an R² of 0.763 in the ResNet 50 model (Figure 2B). Bland–Altman plots revealed a bias of 0.09 mm, with 95% limits of agreement ranging from −2.2 to 2.3 mm (Figure 2C). The absolute prediction error was less than 1 mm in 64.8% of the test dataset (Figure 2D), and the relative error was within 5% in 73.1% and within 10% in 96.5% of the test dataset.
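The proportions of predictions falling within an absolute or relative error threshold can be computed as sketched below. The function names and the toy values are hypothetical, and taking the actual AL as the denominator of the relative error is an assumption, since the text does not define it.

```python
def fraction_below_abs_error(actual, predicted, limit_mm=1.0):
    """Share of predictions whose absolute error is strictly below limit_mm."""
    hits = sum(1 for a, p in zip(actual, predicted) if abs(a - p) < limit_mm)
    return hits / len(actual)


def fraction_within_rel_error(actual, predicted, rel_limit=0.05):
    """Share of predictions whose error is within rel_limit of the actual AL."""
    hits = sum(1 for a, p in zip(actual, predicted) if abs(a - p) / a <= rel_limit)
    return hits / len(actual)


# Toy values in mm, made up for illustration only.
actual = [24.0, 26.0, 30.0, 22.0]
predicted = [24.5, 27.5, 29.0, 22.2]
print(fraction_below_abs_error(actual, predicted, 1.0))     # 0.5
print(fraction_within_rel_error(actual, predicted, 0.05))   # 0.75
print(fraction_within_rel_error(actual, predicted, 0.10))   # 1.0
```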

Grad-CAM was used to identify the regions within the original OCT images that the models relied on for their predictions. Figure 3 shows representative, correctly predicted OCT images from the test set with their corresponding Grad-CAM heat maps. The heat maps revealed that AL-related macular features exhibit a localized pattern in the macula, rather than continuous alterations throughout the entire region. Both the retina and the choroid were highlighted in the heat maps. For eyes with ALs < 26.0 mm, the CNN models predominantly relied on the curvature and shape of the fovea, whereas for eyes with ALs ≥ 26.0 mm, the models relied on the regions flanking the fovea, where the retinal curvature changes most markedly.

The macular thickness of the eyes from the test set was recorded. ROC analyses and linear regression analyses were performed to predict AL based on retinal thickness in different macular regions. The largest AUC value, 0.747, was obtained for CRT. The highest accuracy in distinguishing long AL eyes was 77.8%, achieved by using retinal thickness measurements in the perifoveal (3–6 mm) nasal quadrant (Supplementary material 2). However, both the AUC and the accuracy were lower than those of the deep learning models that used OCT images (p < 0.001). Linear regression analyses showed that the MAE values were 1.78 ± 1.25 mm and 1.57 ± 1.29 mm when using CRT and retinal thickness measurements from all nine regions to predict AL, respectively. These errors were also higher than those observed in the deep learning models (p < 0.001).
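As an illustration of the ROC analysis for a single thickness measurement, the AUC can be computed directly from its rank-based definition: the probability that a randomly chosen long-AL eye has a higher value than a randomly chosen other eye, with ties counted as one half. The function name and the toy thickness values below are hypothetical and do not come from the study data.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of positive and negative scores (ties count 0.5)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy central retinal thickness values in micrometres; 1 = AL >= 26.0 mm.
crt = [280, 265, 250, 270, 240, 255]
long_al = [1, 1, 1, 0, 0, 0]
print(round(roc_auc(crt, long_al), 3))   # 0.667
```

The pairwise form is quadratic in the number of eyes, which is acceptable for a test set of this size; a rank-sum implementation gives the same value for larger samples.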

The present study demonstrated that deep learning models using macular OCT images can accurately estimate AL and differentiate eyes with long AL. The Grad-CAM analysis revealed that the deep learning models primarily relied on the foveal and adjacent regions, as well as the subfoveal choroid, for AL estimation. This study established a significant association between AL and macular structure, demonstrating the AL-related changes in the macular structure as imaged by OCT. These findings provide a solid foundation for research on the pathogenesis of AL-related structural maculopathy.

Previous studies have developed deep learning models to estimate AL from fundus photographs. Dong et al. (12) and Jeong et al. (17) reported the use of CNN models to estimate AL based on 45-degree fundus photographs, achieving MAE values of 0.56 mm (95% CI, 0.53–0.61 mm) and 0.90 mm (95% CI, 0.85–0.91 mm), and R² values of 0.59 (95% CI, 0.50–0.65) and 0.67 (95% CI, 0.58–0.87), respectively. Oh et al. (14) developed an AL estimation model using ultra-widefield fundus photographs with an MAE of 0.74 mm (95% CI, 0.71–0.78 mm) and an R² value of 0.82 (95% CI, 0.79–0.84). However, this study represents the first attempt to estimate AL using macular B-scan OCT images via deep learning. B-scan images provide cross-sectional views of the retina, offering improved visualization of retinal layers and their integrity (18). The theoretical foundation of this study lies in using the potential alterations in macular structure associated with AL elongation to predict AL. We also excluded eyes with any maculopathy in order to investigate the changes in macular structure before the development of myopic maculopathy in eyes with long AL. In the current study, the MAE was 0.83 mm (95% CI, 0.72–0.95 mm) and the R² was 0.763 in the regression task, while the classification model achieved an accuracy of 0.872 (95% CI, 0.840–0.899) in identifying eyes with AL ≥ 26.0 mm. These findings suggest that macular structure changes in eyes with long AL occur independently of OCT-detectable myopic maculopathy, which aligns with clinical observations of a higher prevalence and a higher risk of progression of myopic maculopathy in eyes with longer AL (19, 20).

The results showed that the accuracy of AL estimation using macular OCT images (87.2%) was superior to using retinal thickness measurements alone (77.8%) in the same study sample. This can be attributed to the detailed structural information available in B-scan images (18). The Grad-CAM analysis revealed that for eyes with ALs shorter than 26.0 mm, the deep learning models primarily relied on the fovea, while for eyes with AL greater than or equal to 26.0 mm, the models showed a preference for regions mainly on either side of the fovea. These findings are consistent with a deep learning model for AL estimation using color fundus photos reported by Dong et al. (12). In their study, the heat map analysis demonstrated that eyes with ALs shorter than 26.0 mm predominantly utilized signals from the foveal region in the fundus photos, while those with AL greater than 26 mm primarily relied on signals from the extrafoveal region (12).

Clinical studies have demonstrated that eyes with high myopia, characterized by an AL exceeding 26.0 or 26.5 mm, were more likely to develop traction maculopathy, such as macular hole and maculoschisis (4, 21, 22). Furthermore, Park et al. (23) found that the development of myopic traction maculopathy was associated with the foveal curvature, which was calculated from the retinal pigment epithelium hyper-reflective line in OCT images including the fovea. Based on the visualization results obtained from our OCT-based AL estimation model, we speculate that the highlighted regions in the heat maps indicate areas where the changes in retinal curvature are most pronounced (24). In addition, our results suggested that structural changes in the macula caused by axial elongation exhibit a localized pattern, primarily concentrated at the fovea and the areas where the retinal curvature changes most, rather than displaying continuous alterations throughout the entire region. Besides the retina, the choroid in the corresponding regions was also highlighted in the heat maps. Previous studies have reported that AL was negatively associated with choroidal thickness in both young and elderly people (25, 26), indicating choroidal thinning with the elongation of AL. This association can explain the involvement of the choroid in the heat maps when predicting AL in this study. Together, these findings will be helpful for further research on the pathogenesis and prevention of AL-related structural maculopathy.

Several limitations of this study should be noted. First, the sample size is relatively small. To minimize the impact of potential sources of bias, we enrolled subjects from a single ophthalmological clinic and used images acquired with the same imaging device. Consequently, the recruitment of additional samples was constrained. Improvements in model predictive performance can be expected when more samples are gathered and analyzed. Second, due to the limited number of eyes with short AL in this study, only two groups (AL ≥ 26.0 mm or shorter) were defined in the classification model development. Nevertheless, this limitation is unlikely to undermine the overall findings, as the focus of this study was on the deep learning model’s performance in distinguishing eyes with elongated AL. Third, we excluded eyes with OCT-detectable maculopathy because our aim was to identify AL-specific macular characteristics prior to the onset of myopic maculopathy. Therefore, caution should be exercised when generalizing these findings to eyes with existing maculopathy. Lastly, the current model was developed based on macular B-scans centered on the fovea and acquired with the stellate 6-scan pattern, which scans in 6 different directions. Since OCT B-scans centered on the fovea exhibit a similar imaging pattern, it is likely that the deep learning model developed in this study would be applicable to macular OCT B-scans acquired with other patterns centered on the fovea or with OCT devices from different manufacturers. However, further research is needed to validate the generalization of the model. Additionally, this model was developed using adult eyes with a mean age of 69 years. Considering that the macula develops and axial length increases in children and teenagers, additional studies are required to develop models based on younger age groups.

This study developed a deep learning model using macular OCT images to estimate AL and identify eyes with long AL, achieving good performance. The AL-related macular features exhibit a localized pattern, primarily concentrated in the central fovea and adjacent regions, suggesting that these specific areas may serve as the initial sites for macular alterations caused by AL elongation. These findings have significant implications for further research on the pathogenesis of AL-related structural maculopathy.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the institutional review board at Beijing Hospital. The studies were conducted in accordance with the local legislation and institutional requirements. The ethics committee/institutional review board waived the requirement of written informed consent for participation from the participants or the participants’ legal guardians/next of kin because of the retrospective nature of the study.

JL: Conceptualization, Data curation, Methodology, Writing – review & editing, Investigation, Validation, Writing – original draft. HL: Conceptualization, Data curation, Investigation, Methodology, Writing – original draft. YoZ: Conceptualization, Investigation, Methodology, Writing – original draft, Software, Validation. YuZ: Conceptualization, Investigation, Methodology, Writing – original draft, Data curation. SS: Conceptualization, Data curation, Investigation, Methodology, Funding acquisition, Supervision, Writing – review & editing. XG: Conceptualization, Data curation, Methodology, Supervision, Writing – review & editing. JX: Conceptualization, Methodology, Supervision, Writing – review & editing, Investigation, Software. XY: Conceptualization, Methodology, Supervision, Writing – review & editing, Data curation, Funding acquisition.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by National High Level Hospital Clinical Research Funding (BJ-2022-104 and BJ-2020-167).

YoZ and JX are employees of Visionary Intelligence Ltd., which is a Chinese AI startup dedicated to exploring the application of AI technology in the field of healthcare, particularly focusing on ophthalmology.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2023.1308923/full#supplementary-material

1. Foster, PJ, Broadway, DC, Hayat, S, Luben, R, Dalzell, N, Bingham, S, et al. Refractive error, axial length and anterior chamber depth of the eye in British adults: the EPIC-Norfolk eye study. Br J Ophthalmol. (2010) 94:827–30. doi: 10.1136/bjo.2009.163899

2. Xiao, O, Guo, X, Wang, D, Jong, M, Lee, PY, Chen, L, et al. Distribution and severity of myopic maculopathy among highly myopic eyes. Invest Ophthalmol Vis Sci. (2018) 59:4880–5. doi: 10.1167/iovs.18-24471

3. Jonas, JB, Jonas, RA, Bikbov, MM, Wang, YX, and Panda-Jonas, S. Myopia: histology, clinical features, and potential implications for the etiology of axial elongation. Prog Retin Eye Res. (2022) 96:101156. doi: 10.1016/j.preteyeres.2022.101156

4. Ruiz-Medrano, J, Montero, JA, Flores-Moreno, I, Arias, L, García-Layana, A, and Ruiz-Moreno, JM. Myopic maculopathy: current status and proposal for a new classification and grading system (ATN). Prog Retin Eye Res. (2019) 69:80–115. doi: 10.1016/j.preteyeres.2018.10.005

5. Wong, AC, Chan, CW, and Hui, SP. Relationship of gender, body mass index, and axial length with central retinal thickness using optical coherence tomography. Eye. (2005) 19:292–7. doi: 10.1038/sj.eye.6701466

6. Wu, PC, Chen, YJ, Chen, CH, Chen, YH, Shin, SJ, Yang, HJ, et al. Assessment of macular retinal thickness and volume in normal eyes and highly myopic eyes with third-generation optical coherence tomography. Eye. (2008) 22:551–5. doi: 10.1038/sj.eye.6702789

7. Jiang, Z, Shen, M, Xie, R, Qu, J, Xue, A, and Lu, F. Interocular evaluation of axial length and retinal thickness in people with myopic anisometropia. Eye Contact Lens. (2013) 39:277–82. doi: 10.1097/ICL.0b013e318296790b

8. Jonas, JB, Xu, L, Wei, WB, Pan, Z, Yang, H, Holbach, L, et al. Retinal thickness and axial length. Invest Ophthalmol Vis Sci. (2016) 57:1791–7. doi: 10.1167/iovs.15-18529

9. Gulshan, V, Peng, L, Coram, M, Stumpe, MC, Wu, D, Narayanaswamy, A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

10. Kermany, DS, Goldbaum, M, Cai, W, Valentim, CCS, Liang, H, Baxter, SL, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. (2018) 172:1122–31.e9. doi: 10.1016/j.cell.2018.02.010

11. LeCun, Y, Bengio, Y, and Hinton, G. Deep learning. Nature. (2015) 521:436–44. doi: 10.1038/nature14539

12. Dong, L, Hu, XY, Yan, YN, Zhang, Q, Zhou, N, Shao, L, et al. Deep learning-based estimation of axial length and subfoveal choroidal thickness from color fundus photographs. Front Cell Dev Biol. (2021) 9:653692. doi: 10.3389/fcell.2021.653692

13. Zou, H, Shi, S, Yang, X, Ma, J, Fan, Q, Chen, X, et al. Identification of ocular refraction based on deep learning algorithm as a novel retinoscopy method. Biomed Eng Online. (2022) 21:87. doi: 10.1186/s12938-022-01057-9

14. Oh, R, Lee, EK, Bae, K, Park, UC, Yu, HG, and Yoon, CK. Deep learning-based prediction of axial length using ultra-widefield fundus photography. Korean J Ophthalmol. (2023) 37:95–104. doi: 10.3341/kjo.2022.0059

15. Yoo, TK, Ryu, IH, Kim, JK, and Lee, IS. Deep learning for predicting uncorrected refractive error using posterior segment optical coherence tomography images. Eye. (2022) 36:1959–65. doi: 10.1038/s41433-021-01795-5

16. Zhang, Y, Hong, D, McClement, D, Oladosu, O, Pridham, G, and Slaney, G. Grad-CAM helps interpret the deep learning models trained to classify multiple sclerosis types using clinical brain magnetic resonance imaging. J Neurosci Methods. (2021) 353:109098. doi: 10.1016/j.jneumeth.2021.109098

17. Jeong, Y, Lee, B, Han, J-H, and Oh, J. Ocular axial length prediction based on visual interpretation of retinal fundus images via deep neural network. IEEE J Selected Topics Quant Elect. (2020) 27:1–7. doi: 10.1109/JSTQE.2020.3038845

18. Sakamoto, A, Hangai, M, and Yoshimura, N. Spectral-domain optical coherence tomography with multiple B-scan averaging for enhanced imaging of retinal diseases. Ophthalmology. (2008) 115:1071–8.e7. doi: 10.1016/j.ophtha.2007.09.001

19. Fang, Y, Yokoi, T, Nagaoka, N, Shinohara, K, Onishi, Y, Ishida, T, et al. Progression of myopic maculopathy during 18-year follow-up. Ophthalmology. (2018) 125:863–77. doi: 10.1016/j.ophtha.2017.12.005

20. Hashimoto, S, Yasuda, M, Fujiwara, K, Ueda, E, Hata, J, Hirakawa, Y, et al. Association between axial length and myopic maculopathy: the Hisayama study. Ophthalmol Retina. (2019) 3:867–73. doi: 10.1016/j.oret.2019.04.023

21. Frisina, R, Gius, I, Palmieri, M, Finzi, A, Tozzi, L, and Parolini, B. Myopic traction maculopathy: diagnostic and management strategies. Clin Ophthalmol. (2020) 14:3699–708. doi: 10.2147/OPTH.S237483

22. Cheong, KX, Xu, L, Ohno-Matsui, K, Sabanayagam, C, Saw, SM, and Hoang, QV. An evidence-based review of the epidemiology of myopic traction maculopathy. Surv Ophthalmol. (2022) 67:1603–30. doi: 10.1016/j.survophthal.2022.03.007

23. Park, UC, Ma, DJ, Ghim, WH, and Yu, HG. Influence of the foveal curvature on myopic macular complications. Sci Rep. (2019) 9:16936. doi: 10.1038/s41598-019-53443-4

24. Park, SJ, Ko, T, Park, CK, Kim, YC, and Choi, IY. Deep learning model based on 3D optical coherence tomography images for the automated detection of pathologic myopia. Diagnostics. (2022) 12:742. doi: 10.3390/diagnostics12030742

25. Ikuno, Y, Kawaguchi, K, Nouchi, T, and Yasuno, Y. Choroidal thickness in healthy Japanese subjects. Invest Ophthalmol Vis Sci. (2010) 51:2173–6. doi: 10.1167/iovs.09-4383

Keywords: optical coherence tomography, axial length, artificial intelligence, deep learning, Grad-CAM

Citation: Liu J, Li H, Zhou Y, Zhang Y, Song S, Gu X, Xu J and Yu X (2023) Deep learning-based estimation of axial length using macular optical coherence tomography images. Front. Med. 10:1308923. doi: 10.3389/fmed.2023.1308923

Received: 07 October 2023; Accepted: 06 November 2023;

Published: 17 November 2023.

Edited by:

Haoyu Chen, The Chinese University of Hong Kong, China

Reviewed by:

Yi-Ting Hsieh, National Taiwan University Hospital, Taiwan

Copyright © 2023 Liu, Li, Zhou, Zhang, Song, Gu, Xu and Yu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaobing Yu
