- Pengiran Anak Puteri Rashidah Sa'adatul Bolkiah Institute of Health Sciences, Universiti Brunei Darussalam, Bandar Seri Begawan, Brunei

Introduction

Artificial intelligence, commonly abbreviated as AI, is described as the simulation of human intelligence by machines, giving machines, particularly computer systems, the ability to perform complex tasks that would normally require human reasoning, decision-making, and problem-solving. With advancements in machine learning and deep learning, we can expect to see AI integrated into even more areas of our daily lives. Indeed, AI is becoming increasingly prevalent in healthcare. It can provide personalized recommendations (1) and improve medical diagnostics, particularly in the field of medical imaging (2). At least 11 medical schools across the United States of America, Canada and the Republic of Korea have pilot AI curricula (3). Potentially, all medical schools may need to do the same to keep up with not only the changing landscape of medical education but indeed of medicine itself.

The AI chatbot ChatGPT took the world by storm in late 2022, and higher education institutions are not immune to its repercussions. Online education platforms have already started to use generative AI tools to supplement and personalize student education. Khan Academy, for example, offers the AI chatbot Khanmigo, which can be used as a personal tutor, a writing coach, and a question generator to test comprehension. It is only a matter of time before brick-and-mortar institutions need to do the same to keep up with the rapidly changing landscape of digital tools in education. Furthermore, Clark and Archibald (4) asserted that avoiding the impact of generative AI on higher education results in multi-level harms, which range from the lack of structures to ensure scholarly integrity to the stagnation and irrelevance of learning approaches in health science education.

Despite the acceptance of AI in the healthcare profession, concerns such as legal liability and accountability have been raised about its use at the crux of the profession, that is, in making clinical decisions (5). At present, it remains unclear to healthcare professionals who is responsible in the event of errors by AI in clinical decision support systems. It also appears that many would not assume accountability for results provided by AI (6). Educators can foster an ethical approach to AI during medical education to facilitate accountable and responsible AI usage by future healthcare professionals.

AI tools in medical education can encompass AI virtual environments, AI-based assessments, and adaptive e-learning systems (7). Additionally, three distinct uses of AI tools in education have been defined in a report by Baker and Smith (8), listed below:

1. Learner-oriented AI—AI technology that “students use to receive and understand new information”.

2. Instructor-oriented AI—AI technology that “can help teachers reduce their workload, gain insights about students and innovate in their classroom”.

3. Institution-oriented AI—AI technology that “helps make or inform decisions made by those managing and administrating schools or our education system as a whole”.

As healthcare education faculty members in a higher education institution, we advocate for the integration of AI tools in medical education. In this article, we focus on learner-oriented AI tools and propose guidelines for how these tools should be used by medical students.

Impact of AI use in an undergraduate medical program

With the release of ChatGPT in late 2022, efforts have been made to investigate its utility in education, as well as the utility of other generative AI tools (9–12). However, because of its capabilities, ChatGPT is easily misused, and we outline an observation from our faculty below.

At the Pengiran Anak Puteri Rashidah Sa'adatul Bolkiah (PAPRSB) Institute of Health Sciences at Universiti Brunei Darussalam, undergraduate students of the medicine program are introduced to academic writing as part of the mandatory research module in the curriculum. The module is designed to equip students with the fundamentals to carry out research in science, medicine, and health. For examination, students are expected to report findings scientifically in the form of a written manuscript and oral presentation. Manuscripts are submitted online via the Canvas learning management system and are checked for plagiarism using Turnitin (13).

AI writing was detected across several submissions, including heavy incorporation of ChatGPT content that gave rise to serious concerns of academic offense. There was evidence of citations to papers which do not exist (an AI phenomenon known as “hallucination”), and the writing was found to be “nicely summarized generally”, but the knowledge was “touched on superficially and not critically”. The lack of knowledge depth and critical thinking was also demonstrated during the oral presentation.

In summary, generative AI is a powerful tool and its usage by students is currently creating disconcerting experiences in higher education due to its impact on academic integrity.

Training students and faculty staff for ethical use of AI

Our experience above indicates that faculty teaching staff need to engage with AI in the interest of training medical students and keeping the curriculum current. Students may plagiarize essays from content generated by AI tools, compromising their critical thinking skills and scholarly integrity. To address this, universities should implement strict policies and guidelines for the ethical use of AI applications. They should foster a culture of academic integrity and equip students with the necessary skills to critically evaluate and use AI responsibly. Educators can also provide workshops or training sessions to teach students how to effectively use AI tools, balancing their use with their own unique ideas and voices. This approach promotes originality, independent thinking and accountability alongside technological advancement, and supports the interest and positive attitude that faculty teaching staff and medical students have toward AI (14).

In the aforementioned undergraduate research module, we propose guiding medical students on how to write effective ChatGPT prompts and how to use ChatGPT answers in a way that avoids ChatGPT downsides (such as hallucination and phrase inaccuracies), while maintaining academic integrity and writing standards. During the introductory briefing at the start of the module, we strongly encourage our medical educators who are presenting to remind students of two Turnitin features which detect plagiarism: the similarity score and the AI score. Medical educators and students should be aware that software programs exist to detect AI-generated text and should take measures to avoid plagiarism (15). To promote accountability, we should require students to disclose the use of AI tools in their written manuscript in a manner similar to how we disclose our use of statistical software for data analysis (15, 16).

Elsewhere in the curriculum, AI-related lectures could be introduced to first-year medical students, in which the topics cover basic understanding, benefits and risks of AI, which would allow students to become familiar with common terminology (3, 17). In modules which emphasize biomedical knowledge acquisition and retention, as well as those related to patient care, teaching staff can incorporate large language model-powered chatbots to answer common medical questions for students and patients. Chatbots can also be used by students to practice their clinical communication skills.

Apart from the integration of AI tools in the curriculum, we further propose that AI-based research projects be made available for medical students on the research project and dissertation track of the fourth year of the curriculum at the PAPRSB Institute of Health Sciences. This proposal is feasible based on collaborations between our medical school and the School of Digital Sciences on campus.

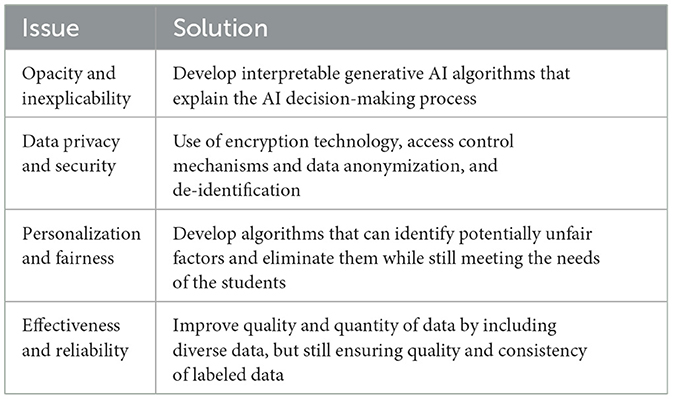

Scientists have tried to use ChatGPT to write scientific manuscripts with varying success (12, 18), while Yu and Guo (19) elaborated on issues to be resolved when using generative AI in education, which we have summarized below together with potential solutions (Table 1). Ethical issues arising from the use of AI tools such as legal liability and accountability can be discussed in the research module as well as in the medical ethics module in the second year of the curriculum.

Table 1. Ethical issues of generative AI and their potential solutions (19).

Beneficial and ethical usage of AI in medical education

Through our conversations with medical students, we can affirm the common usage of ChatGPT. AI writing tools (such as ChatGPT and QuillBot) are prevalent and readily accessible to students right now, and these can enhance research capabilities, as ChatGPT did for college-educated professionals in improving the quality and enjoyment of their writing tasks (20). For medical students, ChatGPT can be used to provide interactive environments for them to practice their communication skills with simulated patients and another tool, DALL-E, can be used to help them practice their diagnostic imaging skills (9).

The ethics of using AI tools is indeed a prevalent concern today. Medical students need to be guided to think independently and appraise critically as well as ethically despite the convenience of readily generated information. They need to be aware of and steer clear of AI-generated misinformation on biomedical knowledge and the pathophysiology of disease (3, 11), and thereby avoid AI-generated information acting as a crutch for clinical decision-making and hampering clinical reasoning abilities (17).

In summary, toward embracing AI education in the medical curriculum and in keeping the curriculum relevant to ongoing development in AI, faculty teaching staff need to rapidly adapt to the integration of AI tools and develop AI literacy by being trained in multiple AI tools for teaching and assessment.

Conclusion

AI has emerged as a powerful tool in medical education, offering unique opportunities to enhance learning, address knowledge gaps, and improve patient care. Embracing AI in medical education holds immense potential for advancing healthcare and empowering the next generation of healthcare professionals to enhance patient care, improve outcomes, and contribute to the advancement of medicine. By leveraging the capabilities of AI, medical education can become more personalized and efficient. However, careful attention must be paid to ethical considerations, technical infrastructure, and faculty training to ensure the responsible integration of AI into medical education. Medical education should include dedicated modules or courses that explore the ethical considerations of AI and emphasize the need for transparency and comprehensibility in AI systems to foster trust between students, faculty, and AI technologies.

Author contributions

INZ: Conceptualization, Writing—original draft, review, and editing. FA: Conceptualization, Writing—original draft. MAL: Conceptualization, Writing—original draft, review, and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Alkhodari M, Xiong Z, Khandoker AH, Hadjileontiadis LJ, Leeson P, Lapidaire W. The role of artificial intelligence in hypertensive disorders of pregnancy: towards personalized healthcare. Expert Rev Cardiovasc Ther. (2023) 21:531–43. doi: 10.1080/14779072.2023.2223978

2. Al-Antari MA. Artificial intelligence for medical diagnostics-existing and future AI technology! Diagnostics. (2023) 13:688. doi: 10.3390/diagnostics13040688

3. Lee J, Wu AS, Li D, Kulasegaram KM. Artificial intelligence in undergraduate medical education: a scoping review. Acad Med. (2021) 96:S62–70. doi: 10.1097/ACM.0000000000004291

4. Clark AM, Archibald MM. ChatGPT: what is it and how can nursing and health science education use it? J Adv Nurs. (2023) 79:3648–51. doi: 10.1111/jan.15643

5. Lambert SI, Madi M, Sopka S, Lenes A, Stange H, Buszello CP, et al. An integrative review on the acceptance of artificial intelligence among healthcare professionals in hospitals. NPJ Digit Med. (2023) 6:1–14. doi: 10.1038/s41746-023-00852-5

6. Yurdaisik I, Aksoy SH. Evaluation of knowledge and attitudes of radiology department workers about artificial intelligence. Ann Clin Anal Med. (2021) 12:186–90. doi: 10.4328/ACAM.20453

7. Sapci AH, Sapci HA. Artificial intelligence education and tools for medical and health informatics students: systematic review. JMIR Med Educ. (2020) 6:e19285. doi: 10.2196/19285

8. Baker T, Smith L. Educ-AI-tion Rebooted? Exploring the Future of Artificial Intelligence in Schools and Colleges. Artificial Intelligence - EdTech Innovation Fund (2019). Available online at: https://www.nesta.org.uk/report/education-rebooted/ (cited July 26, 2023).

9. Amri MM, Hisan UK. Incorporating AI tools into medical education: harnessing the benefits of ChatGPT and Dall-E. J Novel Eng Sci Technol. (2023) 2:34–9. doi: 10.56741/jnest.v2i02.315

10. Currie G, Singh C, Nelson T, Nabasenja C, Al-Hayek Y, Spuur K. ChatGPT in medical imaging higher education. Radiography. (2023) 29:792–9. doi: 10.1016/j.radi.2023.05.011

11. De Angelis L, Baglivo F, Arzilli G, Privitera GP, Ferragina P, Tozzi AE, et al. ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front Public Health. (2023) 11:1166120. doi: 10.3389/fpubh.2023.1166120

12. Hisan UK, Amri MM. ChatGPT and medical education: a double-edged sword. J Pedagogy Educ Sci. (2023) 2:71–89. doi: 10.56741/jpes.v2i01.302

13. Turnitin. AI Writing Detection Frequently Asked Questions. (2023). Available online at: https://www.turnitin.com/products/features/ai-writing-detection/faq (cited September 20, 2023).

14. Wood EA, Ange BL, Miller DD. Are we ready to integrate artificial intelligence literacy into medical school curriculum: students and faculty survey. J Med Educ Curric Dev. (2021) 8:23821205211024078. doi: 10.1177/23821205211024078

15. Vintzileos AM, Chavez MR, Romero R. A role for artificial intelligence chatbots in the writing of scientific articles. Am J Obstetr Gynecol. (2023) 229:89–90. doi: 10.1016/j.ajog.2023.03.040

16. Flanagin A, Kendall-Taylor J, Bibbins-Domingo K. Guidance for authors, peer reviewers, and editors on use of AI, language models, and Chatbots. JAMA. (2023) 330:702–3. doi: 10.1001/jama.2023.12500

17. Ngo B, Nguyen D, vanSonnenberg E. The cases for and against artificial intelligence in the medical school curriculum. Radiol Artif Intell. (2022) 4:e220074. doi: 10.1148/ryai.220074

18. Conroy G. Scientists used ChatGPT to generate an entire paper from scratch - but is it any good? Nature. (2023) 619:443–4. doi: 10.1038/d41586-023-02218-z

19. Yu H, Guo Y. Generative artificial intelligence empowers educational reform: current status, issues, and prospects. Front Educ. (2023) 8:1183162. doi: 10.3389/feduc.2023.1183162

Keywords: generative AI, medical higher education, critical thinking, ethical considerations, healthcare professionals

Citation: Alam F, Lim MA and Zulkipli IN (2023) Integrating AI in medical education: embracing ethical usage and critical understanding. Front. Med. 10:1279707. doi: 10.3389/fmed.2023.1279707

Received: 18 August 2023; Accepted: 02 October 2023;

Published: 13 October 2023.

Edited by:

Samson Maekele Tsegay, Anglia Ruskin University, United Kingdom

Reviewed by:

Rintaro Imafuku, Gifu University, Japan

Copyright © 2023 Alam, Lim and Zulkipli. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ihsan Nazurah Zulkipli, nazurah.zulkipli@ubd.edu.bn

†These authors have contributed equally to this work and share first authorship

Faiza Alam

Mei Ann Lim

Ihsan Nazurah Zulkipli