ORIGINAL RESEARCH article

Front. Med., 14 February 2023

Sec. Ophthalmology

Volume 10 - 2023 | https://doi.org/10.3389/fmed.2023.1126754

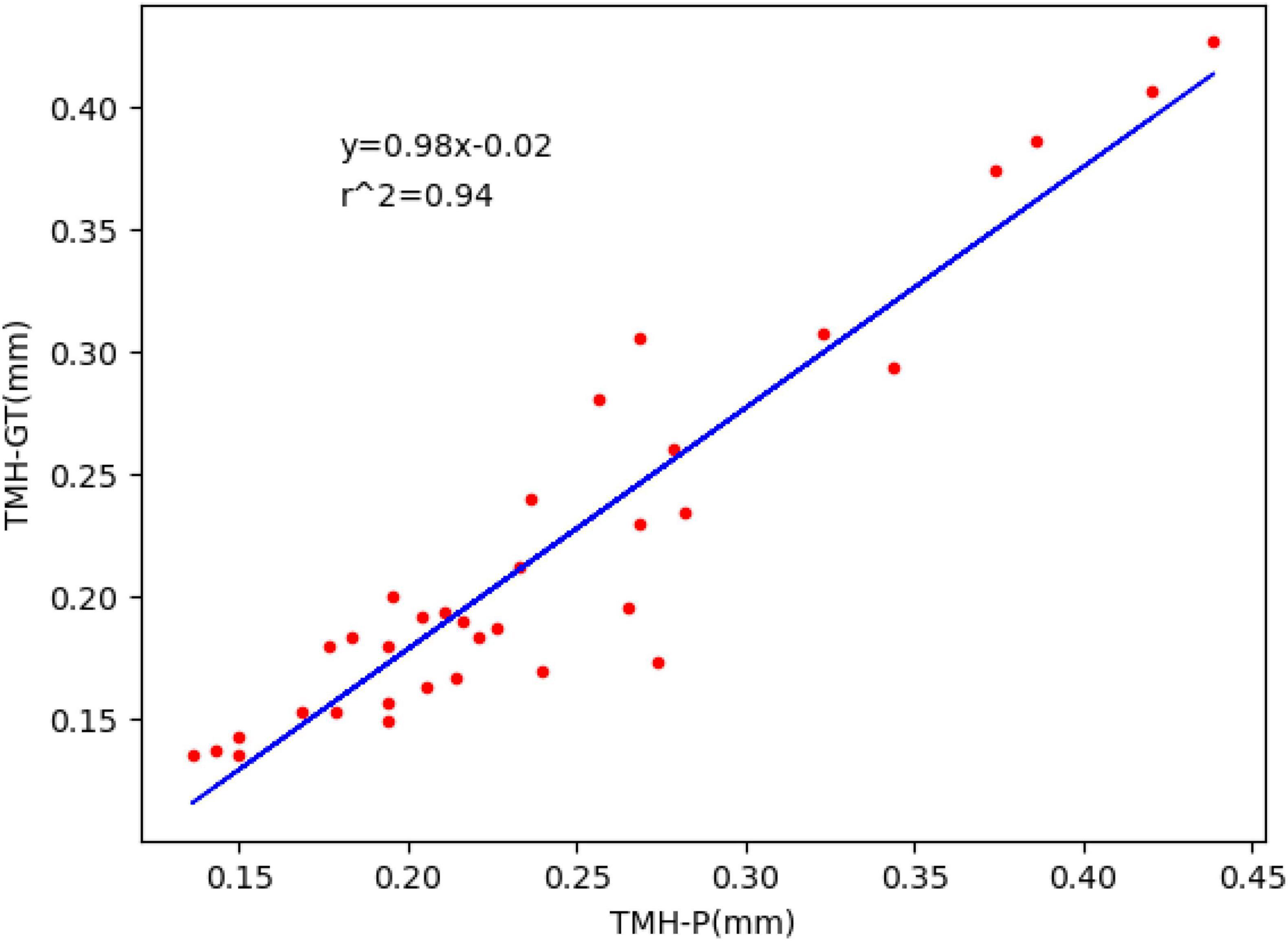

Tear meniscus height (TMH) is an important reference parameter in the diagnosis of dry eye disease. However, most traditional methods of measuring TMH are manual or semi-automatic, which makes them time consuming, laborious, and prone to subjective influence. To address these problems, we propose a segmentation algorithm based on deep learning and image processing for the automatic measurement of TMH. To segment the tear meniscus region accurately, the algorithm is built on the DeepLabv3 architecture and incorporates parts of the ResNet50, GoogleNet, and FCN networks. A total of 305 ocular surface images were used and divided into training and testing sets: the training set was used to train the network model, and the testing set was used to evaluate its performance. For tear meniscus segmentation, the mean intersection over union was 0.896, the dice coefficient was 0.884, and the sensitivity was 0.877. For segmentation of the central ring of the corneal projection ring, the mean intersection over union was 0.932, the dice coefficient was 0.926, and the sensitivity was 0.947. On these evaluation indices, the proposed segmentation model outperformed the existing models it was compared with. Finally, the TMH values of the testing set measured with the proposed method were compared with manual measurements by linear regression; the regression line was y = 0.98x − 0.02, and the coefficient of determination was r² = 0.94. The proposed method is therefore highly consistent with manual measurement, can measure TMH automatically, and can assist clinicians in the diagnosis of dry eye disease.

Dry eye disease (DED) is a multifactorial disease of the ocular surface accompanied by increased tear film osmolality and ocular surface inflammation. It causes symptoms such as visual impairment and tear film instability (1, 2) and potential damage to the ocular surface, affecting the visual function of millions of people worldwide. Traditional diagnostic methods for DED, such as Schirmer's test, tear break-up time measurement, and ocular surface staining scores, are commonly used to analyze the tear film qualitatively and quantitatively (3, 4). Tear meniscus height (TMH) can be used to assess tear volume and tear film status. The tear meniscus lies along the margins of the upper and lower eyelids and holds 75–90% of the total tear volume (5). Because the lower tear meniscus is more stable, DED analysis mainly uses lower tear meniscus indices, and this study likewise focuses on the lower tear meniscus. Previous studies have reported decreased tear meniscus parameters (TMH, tear meniscus volume, and tear meniscus dynamics) in DED patients (6–8); quantifying these parameters is therefore helpful in diagnosing DED. In current clinical practice, although the tear meniscus is imaged by non-contact ocular photography, TMH is still measured mostly manually or semi-automatically. For example, physicians must identify and outline the upper and lower edges of the tear meniscus in the image and select the TMH measurement points empirically. Such subjective assessment can lead to inconsistent results, reduced repeatability, and increased interobserver variability (9, 10), and manual measurement becomes time consuming and laborious when many images are involved.

As a crucial tear meniscus parameter, TMH has received increasing attention in recent years, and DED screening can be supported by assessing TMH. Stegmann et al. (11) assessed TMH, tear meniscus area, tear meniscus depth, and tear meniscus radius from ultra-high-resolution optical coherence tomography images using conventional image processing algorithms. In 2019, Yang et al. (12) at Peking University Third Hospital implemented automated tear meniscus segmentation and height measurement in ImageJ based on a multi-threshold segmentation algorithm and compared the ImageJ results with manual measurements. Arita et al. (13) segmented and measured the tear meniscus from interference fringes acquired with a DR-1α tear interferometer and achieved high accuracy; however, their method is not fully automated because the measurement point must be selected manually. In 2020, Stegmann et al. (14) replaced the threshold-based segmentation of (11) with a convolutional neural network and found that the deep learning algorithm ran 228 times faster than threshold segmentation. In 2021, researchers from the School of Biomedical Engineering, Department of Medicine, Shenzhen University (15) proposed a tear meniscus segmentation algorithm based on a fully convolutional neural network, measured TMH by fitting polynomials to the upper and lower edges of the tear meniscus, and compared the results with manual measurements. However, polynomial fitting of the tear meniscus edges is prone to deviation and must be repeated for every image.

This study makes two main contributions. First, taking into account existing segmentation network structures and the characteristics of ocular surface images, we built a segmentation network that accurately segments the tear meniscus region and the central ring of the corneal projection ring (CCPR). Combined with image processing, the center of the CCPR is then located and the region in which TMH is evaluated is selected; the TMH measurement procedure was refined iteratively until the final method was fixed. Ocular surface image acquisition, tear meniscus segmentation, and TMH measurement are all fully automatic and noninvasive (16, 17). Second, we compared the TMH values measured with the proposed method against those of experienced physicians to evaluate the feasibility of the method.

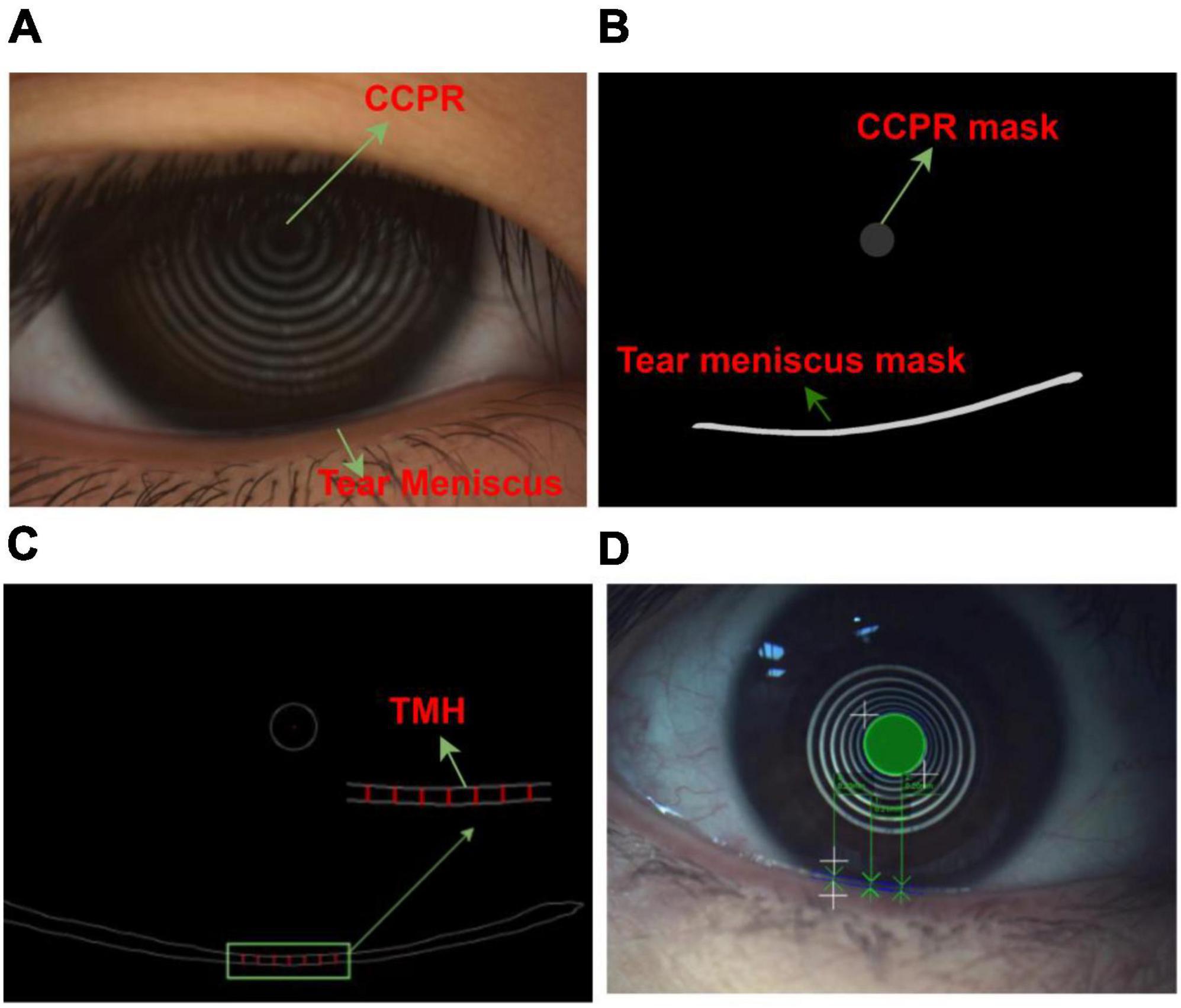

In total, 325 ocular surface images were obtained from the Shenzhen Eye Hospital. The purpose and possible outcomes of the study were explained to all data providers. All images were acquired with a Keratograph 5M (K5M): each patient placed their chin on the rest in front of the K5M, with the measured eye 100 mm from the camera, faced the camera, and remained still as instructed by the physician. Images that were unclear because the camera was out of focus or the eyes were closed were manually excluded, leaving 305 clear images for the experiment. All images were PNG files of 1,360 × 1,024 pixels, as shown in Figure 1A. The 305 images were divided into a training set of 270 images and a testing set of 35 images. The training set was used for model fitting, that is, for learning the trainable weights by gradient descent on the training error. The testing set was held out during training and used only to evaluate model performance.
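The paper does not say how the 305 images were assigned to the two sets. The following is a minimal sketch that assumes a random split with a fixed seed so that it is reproducible; the file names are hypothetical.

```python
import random

def split_dataset(names, n_test=35, seed=0):
    """Shuffle the image list reproducibly and hold out n_test images for testing."""
    rng = random.Random(seed)          # fixed seed: the same split on every run
    shuffled = list(names)
    rng.shuffle(shuffled)
    return shuffled[n_test:], shuffled[:n_test]   # (training set, testing set)

names = [f"ocular_{i:03d}.png" for i in range(305)]   # hypothetical file names
train, test = split_dataset(names)
print(len(train), len(test))   # 270 35
```

If one patient contributed several images, splitting by patient rather than by image would keep near-identical images out of the testing set.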

Figure 1. Ocular surface image data. (A) Original ocular surface image. (B) Tear meniscus region and central ring of corneal projection ring (CCPR) region segmented by the convolutional neural network. (C) Schematic of the selected TMH measurement region. (D) Schematic of physician assessment of TMH.

Network training requires each ocular surface image and its corresponding label image. All ocular surface images were labeled by a professional DED diagnostician, without a time limit, with the edge of the tear meniscus region delineated as accurately as possible. The labels were converted into binary images in which the labeled target region has pixel value 1 and the background has pixel value 0, as shown in Figure 1B. To measure TMH, the physician selected several measuring points in the tear meniscus region directly below the center of the CCPR (Figure 1C), assessed TMH at each point, and averaged these values to obtain the final TMH measurement, as shown in Figure 1D.
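As an illustration of the label conversion described above, the sketch below maps an annotation image to a 0/1 mask. It assumes, hypothetically, that annotations are stored as single-channel images in which any non-zero pixel belongs to the labeled region; the paper does not specify the storage format.

```python
def to_binary_mask(label):
    """Convert an annotation image (2-D list of ints) into a 0/1 mask.

    Assumption: any non-zero pixel belongs to the labeled target region
    (tear meniscus or CCPR); zero pixels are background.
    """
    return [[1 if value != 0 else 0 for value in row] for row in label]

label = [
    [0, 0, 0, 0],
    [0, 255, 255, 0],
    [0, 0, 128, 0],
]
mask = to_binary_mask(label)
print(mask)   # [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 1, 0]]
```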

In this study, TMH was evaluated in the following steps. (1) The original ocular surface images in the training set and their tear meniscus label masks were preprocessed and augmented, then fed into the deep convolutional neural network for training, and the parameters of the best model were saved. (2) The same preprocessing, augmentation, and training were applied to the training images and their CCPR label masks, and the parameters of the best model were saved. (3) The network weights obtained in (1) and (2) were loaded to predict the tear meniscus region and the CCPR region, respectively, in the testing-set images. (4) The center of the CCPR was located: the predicted CCPR was first fitted with a circle to obtain a more accurate center, which was then computed from the pixel coordinates of the upper-left corner and the side lengths of its bounding rectangle. (5) TMH was predicted. The main flow of tear meniscus segmentation and height measurement is shown in Figure 2.

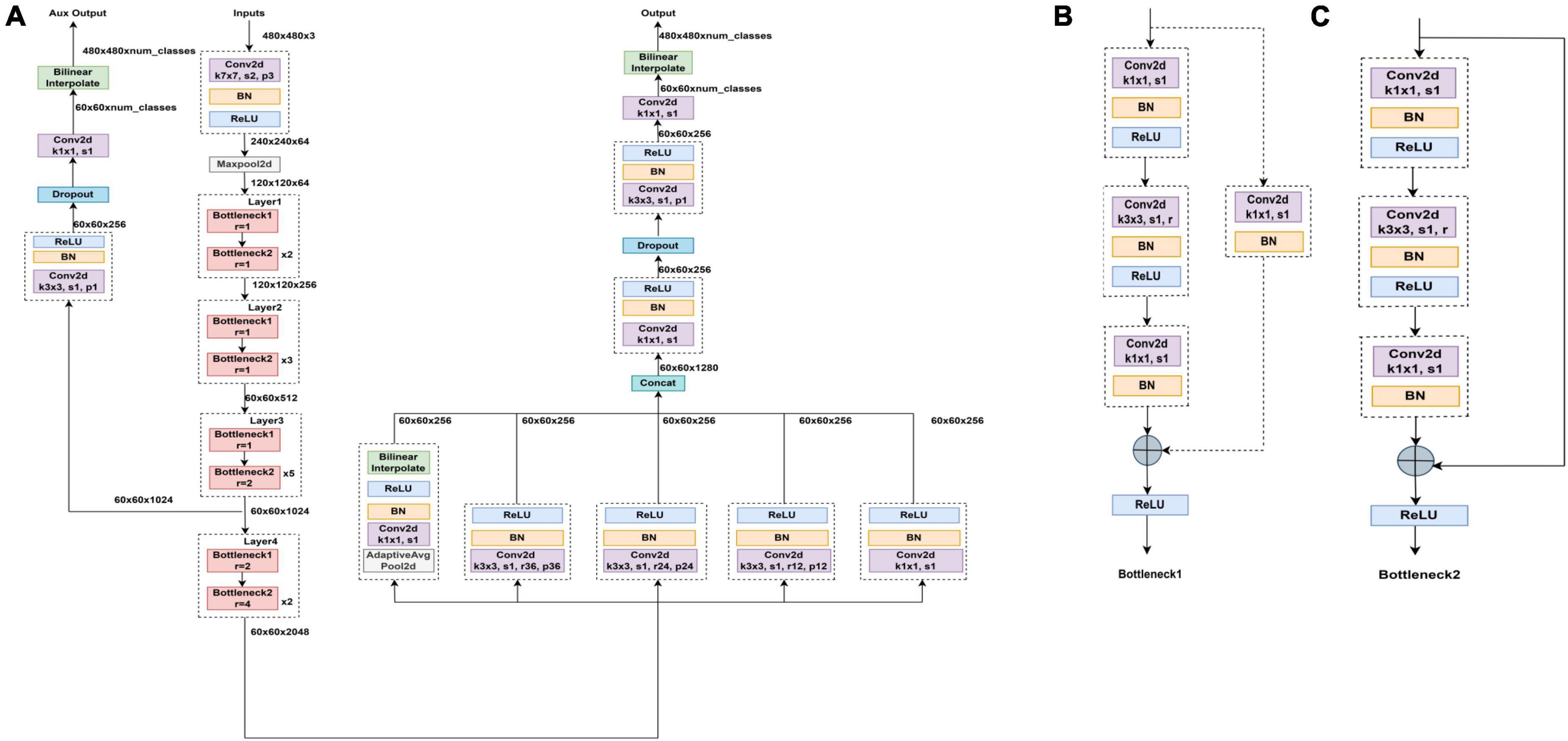

To segment the tear meniscus region, we built a deep convolutional neural network based on the DeepLabv3 (18) architecture, which was originally proposed for semantic segmentation, and adjusted it to suit this task. Segmentation proceeds through feature extraction followed by restoration of the image size; the network comprises a backbone module and an ASPP (19) module. The backbone follows ResNet50 (20), with certain modifications based on the characteristics of tear meniscus images. It consists of a 7 × 7 convolutional layer, a max pooling layer, and four blocks, each built from bottleneck1 and bottleneck2 units. Both are residual blocks composed of several convolutional layers, batch normalization layers, rectified linear units, and a shortcut branch; bottleneck1 differs from bottleneck2 in that its shortcut branch contains a 1 × 1 convolution that matches the dimensions of the main branch. Their structures are shown in Figures 3B, C, respectively. Some ordinary convolutional layers in block3 and block4 are replaced by atrous convolutional layers (21), with the dilation rates shown in Figure 3A. The ASPP module consists of five parallel branches: a 1 × 1 convolutional layer, three 3 × 3 atrous convolutional layers, and a global average pooling branch that adds global context (followed by a 1 × 1 convolutional layer and bilinear interpolation back to the input size). The outputs of the five branches are concatenated along the channel dimension and fused by a final 1 × 1 convolutional layer.
In addition, we applied the multi-grid strategy to the three parallel atrous convolutional layers and found experimentally that the best results were obtained with the multi-grid set to (1, 1, 1). The structure of the entire network is illustrated in Figure 3.
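To make the backbone's effect on resolution concrete, the following sketch traces spatial size and channel count for a 480 × 480 input through a ResNet50-style backbone. It assumes the standard ResNet50 block depths (3, 4, 6, 3) and the torchvision-style DeepLabv3 convention in which block3 and block4 keep stride 1 and use dilation instead; the paper gives its exact dilation settings only in Figure 3A, and the 256-channel ASPP branch width is also an assumption.

```python
def trace_backbone(size=480, dilate=(False, False, True, True)):
    """Trace (stage, spatial size, channels) through a ResNet50-style backbone.

    dilate[i] replaces the stride-2 downsampling of block i+1 with dilation.
    """
    trace = []
    size //= 2                                   # 7x7 conv, stride 2
    trace.append(("7x7 conv", size, 64))
    size //= 2                                   # 3x3 max pool, stride 2
    trace.append(("max pool", size, 64))
    # (name, number of bottlenecks, output channels, nominal stride)
    blocks = [("block1", 3, 256, 1), ("block2", 4, 512, 2),
              ("block3", 6, 1024, 2), ("block4", 3, 2048, 2)]
    for (name, n, ch, stride), dilated in zip(blocks, dilate):
        if stride == 2 and not dilated:
            size //= 2                           # the block's bottleneck1 downsamples
        trace.append((f"{name} ({n} bottlenecks)", size, ch))
    return trace

# ASPP: five parallel branches concatenated along channels, then a 1x1 fusion conv
ASPP_BRANCHES, BRANCH_CHANNELS = 5, 256          # branch width assumed
ASPP_CONCAT_CHANNELS = ASPP_BRANCHES * BRANCH_CHANNELS   # 1280 before the 1x1 conv

dilated = trace_backbone(480)
plain = trace_backbone(480, dilate=(False, False, False, False))
print(dilated[-1], 480 // dilated[-1][1])   # ('block4 (3 bottlenecks)', 60, 2048) 8
print(plain[-1], 480 // plain[-1][1])       # ('block4 (3 bottlenecks)', 15, 2048) 32
```

With an output stride of 8, the 60 × 60 output must be upsampled 8× to return to 480 × 480, whereas the undilated backbone would need 32×.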

Figure 3. Segmentation network structure diagram. (A) The segmentation network is composed of the ResNet50 module, the ASPP module, and upsampling. Panels (B,C) show bottleneck1 and bottleneck2, respectively, both of which are composed of several convolutional layers, batch normalization, and ReLU.

In feature extraction, pooling and convolutional layers are generally used to enlarge the receptive field, but they also shrink the feature map. Segmentation then requires upsampling to restore the feature map size, and this reduction and re-enlargement loses accuracy. Atrous convolution addresses this problem by enlarging the receptive field while preserving the feature map size. It introduces a hyperparameter called the dilation (expansion) rate, which sets the spacing between kernel elements when the kernel is applied to the data, as shown in Figure 4: (a) shows an ordinary 3 × 3 convolutional kernel, and (b) shows an atrous convolution whose kernel spans 5 × 5. The atrous kernel is larger than the ordinary kernel, but the number of kernel elements that participate in the operation does not change: only the light blue squares in the figure participate, and the white squares are filled with zeros. Atrous convolution therefore enlarges the receptive field without increasing the computational load or reducing the resolution of the feature map. The degree of expansion is controlled by the dilation factor. With dilation factor S, ordinary kernel size K0, and dilated kernel size Kc,

$$K_c = K_0 + (K_0 - 1)(S - 1)$$
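The kernel-size relation above, and the fact that dilation spreads the same number of weights over a wider span without shrinking the output, can be checked with a small 1-D sketch. It is a pure-Python illustration (a cross-correlation, as deep learning frameworks compute "convolution") with zero "same" padding, not the paper's implementation; `atrous_conv1d` is a hypothetical helper.

```python
def effective_kernel_size(k0, s):
    """Kc = K0 + (K0 - 1)(S - 1): span of a K0-tap kernel with dilation S."""
    return k0 + (k0 - 1) * (s - 1)

def atrous_conv1d(x, w, rate):
    """1-D atrous (dilated) cross-correlation with zero 'same' padding.

    The len(w) taps are spaced `rate` samples apart, so the kernel spans
    effective_kernel_size(len(w), rate) inputs but still uses len(w) weights,
    and the output has the same length as the input.
    """
    span = effective_kernel_size(len(w), rate)
    pad = (span - 1) // 2                    # assumes an odd number of taps
    xp = [0] * pad + list(x) + [0] * pad
    return [sum(w[j] * xp[i + j * rate] for j in range(len(w)))
            for i in range(len(x))]

print([effective_kernel_size(3, s) for s in (1, 2, 4)])   # [3, 5, 9]
print(atrous_conv1d([1, 2, 3, 4, 5], [1, 1, 1], rate=1))  # [3, 6, 9, 12, 9]
print(atrous_conv1d([1, 2, 3, 4, 5], [1, 1, 1], rate=2))  # [4, 6, 9, 6, 8]
```

With rate 2, each output sums inputs two positions apart, matching the 3 × 3 to 5 × 5 example in Figure 4 along one axis.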

To restore the image size, this study uses bilinear interpolation. To further improve segmentation performance, we drew on the auxiliary classifier of GoogleNet (22) and the FCN (23) structure: the output of block3 of the backbone is fed into an FCN head that serves as an auxiliary output.
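Bilinear interpolation, used here to restore the segmentation output to the input size, can be sketched in pure Python as follows. The sketch assumes the half-pixel convention of PyTorch's `F.interpolate(..., mode="bilinear", align_corners=False)`; the paper does not state which convention it used, nor how the auxiliary FCN output's loss is weighted against the main output.

```python
import math

def _source_coords(out_len, in_len):
    """Half-pixel mapping (align_corners=False): out index -> (i0, i1, weight)."""
    scale = in_len / out_len
    coords = []
    for i in range(out_len):
        src = max((i + 0.5) * scale - 0.5, 0.0)     # clamp at the first pixel
        i0 = min(int(math.floor(src)), in_len - 1)
        i1 = min(i0 + 1, in_len - 1)                 # clamp at the last pixel
        coords.append((i0, i1, src - i0))
    return coords

def bilinear_resize(img, out_h, out_w):
    """Resize a 2-D list (e.g. one channel of logits) by bilinear interpolation."""
    rows = _source_coords(out_h, len(img))
    cols = _source_coords(out_w, len(img[0]))
    out = []
    for y0, y1, fy in rows:
        out_row = []
        for x0, x1, fx in cols:
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            out_row.append(top * (1 - fy) + bottom * fy)
        out.append(out_row)
    return out

print(bilinear_resize([[0, 2]], 1, 4))   # [[0.0, 0.5, 1.5, 2.0]]
```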

Many segmentation networks are improvements on Unet (24), which has been widely used in biomedical image segmentation; it was proposed at MICCAI in 2015 and has been cited more than 4000 times. Unet has an encoder–decoder structure: convolutional and pooling layers encode the input image into feature representations, and the compressed feature maps are then upsampled. Compared with earlier segmentation networks, Unet fuses more low-level information: starting from the first convolutional layer, the output feature map of each encoder stage is copied and concatenated with the corresponding upsampled decoder features to form a new feature map. In its last layer, Unet uses a 1 × 1 convolution instead of a fully connected layer to reduce the channel dimension; this is a linear combination of information across channels at each pixel. Owing to its lightweight structure and feature concatenation, Unet plays an important role in medical image segmentation. We therefore selected Unet-series networks as comparison models to further evaluate the performance of our model.
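Two operations in the Unet description, channel-wise concatenation of skip features and the final 1 × 1 convolution, can be stated concretely. The sketch below is a pure-Python illustration on a channels × height × width nested list; it shows that a 1 × 1 convolution applies the same channel-mixing matrix at every pixel. The shapes and weights are arbitrary examples.

```python
def conv1x1(fmap, weights, bias):
    """1x1 convolution on a C_in x H x W nested list.

    weights[o][c] maps input channel c to output channel o; the same
    C_out x C_in matrix is applied to the channel vector at every pixel,
    so the layer mixes channels but has no spatial extent.
    """
    c_in, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [[[bias[o] + sum(weights[o][c] * fmap[c][y][x] for c in range(c_in))
              for x in range(w)]
             for y in range(h)]
            for o in range(len(weights))]

encoder_skip = [[[1, 2]]]              # 1 channel copied from the encoder (1 x 2 map)
decoder_up = [[[3, 4]], [[5, 6]]]      # 2 upsampled decoder channels
merged = encoder_skip + decoder_up     # concatenation along channels: 3 channels
out = conv1x1(merged, weights=[[1, 0, 0], [0, 1, 1]], bias=[0, 10])
print(out)   # [[[1, 2]], [[18, 20]]]
```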

When assessing TMH, a physician mainly evaluates the height of the tear meniscus region near the point directly below the center of the CCPR: several measurement points are selected there, and the average of the assessments is the final TMH. Because the physician must select the assessment points each time, the process is time consuming and labor intensive, and the results are susceptible to subjective factors. After consultation with experts in DED diagnosis, this study likewise averages multiple measurement points, implemented as follows. Let the pixel coordinate of the CCPR center be (x, y), a point on the upper edge of the tear meniscus be (x_i, y_i), and a point on the lower edge be (x_i', y_i'). The edge pixels satisfying |x_i − x| ≤ 100 and |x_i' − x| ≤ 100 are collected; because the tear meniscus has both an upper and a lower edge, approximately 400 pixel coordinates are obtained. Following the advice of professional physicians, an upper-edge coordinate (x_j, y_j) and its corresponding lower-edge coordinate (x_j', y_j') are selected every 30 pixels to calculate TMH. In addition, a previous study (15) showed that TMH measured in the tear meniscus region 0.5–4 mm directly below the center of the CCPR is robust and insensitive to the choice of measurement points. Therefore, seven TMH measurement points were selected in the tear meniscus region within 2 mm directly below the CCPR center, and the seven pixel heights were averaged to obtain the final TMH in pixels, as shown in Equation 2:

$$\mathrm{TMH}_{\mathrm{pixel}} = \frac{1}{7}\sum_{j=1}^{7}\left(y_j' - y_j\right) \tag{2}$$

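The point-sampling rule above (columns within ±100 pixels of the CCPR center, one every 30 pixels, giving seven points) can be sketched as follows. The edge representation, a dict from image column to edge row, is a hypothetical format chosen for the example, and skipping columns with no detected edge is an assumption the paper does not discuss. Image rows increase downward, so each height is the lower-edge row minus the upper-edge row.

```python
def tmh_pixels(upper, lower, center_x, half_width=100, step=30):
    """Mean tear meniscus height in pixels around the CCPR center column.

    upper, lower: dicts mapping image column -> row of the upper / lower
    tear meniscus edge (hypothetical format). Columns are sampled every
    `step` pixels within +/- half_width of center_x, symmetric about it.
    """
    n_side = half_width // step                                   # 3 points per side
    columns = [center_x + k * step for k in range(-n_side, n_side + 1)]   # 7 columns
    # rows grow downward, so height = lower-edge row - upper-edge row
    heights = [lower[c] - upper[c] for c in columns if c in upper and c in lower]
    if not heights:
        raise ValueError("no tear meniscus edge near the CCPR center")
    return sum(heights) / len(heights)                            # Equation 2

# Synthetic edges: the height grows by 1 px every 30 columns around x = 680
upper = {c: 800 for c in range(560, 801)}
lower = {c: 837 + (c - 680) // 30 for c in range(560, 801)}
print(tmh_pixels(upper, lower, center_x=680))   # 37.0
```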
The physical height and width of every image used in the experiment were measured and averaged; repeating this three times gave an ocular surface image height of 11.85 mm and width of 15.75 mm, which corresponds to about 86 pixels/mm in both directions (1,024/11.85 ≈ 1,360/15.75 ≈ 86). The pixel value of TMH was then converted to a height in millimeters with Equation 3, as shown in Figure 1C:

$$\mathrm{TMH}_{\mathrm{mm}} = \frac{\mathrm{TMH}_{\mathrm{pixel}}}{86} \tag{3}$$
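The scale factor in the pixel-to-millimeter conversion follows directly from the image size and the measured field of view. A short check using the values reported in the text:

```python
IMAGE_W_PX, IMAGE_H_PX = 1360, 1024       # image size in pixels
FIELD_W_MM, FIELD_H_MM = 15.75, 11.85     # measured field of view in mm

px_per_mm_h = IMAGE_W_PX / FIELD_W_MM     # horizontal scale
px_per_mm_v = IMAGE_H_PX / FIELD_H_MM     # vertical scale
PX_PER_MM = 86                            # rounded scale used for TMH

def tmh_to_mm(tmh_px, px_per_mm=PX_PER_MM):
    """Convert a TMH measured in pixels to millimeters."""
    return tmh_px / px_per_mm

print(round(px_per_mm_h, 2), round(px_per_mm_v, 2))   # 86.35 86.41
print(round(tmh_to_mm(37), 2))                        # 0.43
```

Both axes give nearly the same scale, so a single rounded factor of 86 pixels/mm introduces well under 1% error.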

Evaluation indices are key to measuring network performance; tear meniscus segmentation is of practical value only if the indices meet expectations (25). Many indices describe segmentation accuracy; commonly used ones are the intersection over union (IOU), the dice coefficient, the intraclass correlation coefficient (ICC), and sensitivity (26–28). We denote the labeled target region as A1 and the predicted target region as A2.

(1) The IOU is the ratio of the intersection to the union of the labeled and predicted regions; the closer it is to 1, the greater their overlap. IOU is calculated as

$$\mathrm{IOU} = \frac{|A_1 \cap A_2|}{|A_1 \cup A_2|}$$

(2) The dice coefficient measures how similar two samples are; the more similar they are, the closer it is to 1. It is calculated as follows:

$$\mathrm{Dice} = \frac{2|A_1 \cap A_2|}{|A_1| + |A_2|}$$

(3) ICC = (variance of interest)/(total variance) = (variance of interest)/(variance of interest + unwanted variance). The ICC can be used to evaluate agreement between measurements made by models and by observers; in this study, it is used to evaluate agreement between the TMH measured by the proposed model and by physicians. The ICC typically ranges from 0 to 1, and a value close to 1 indicates high reliability of the model.

(4) Besides IOU and the dice coefficient, network performance can be measured by sensitivity, which is defined from pixel-level counts: TP, tear meniscus/CCPR pixels correctly predicted as tear meniscus/CCPR; FP, background pixels incorrectly predicted as tear meniscus/CCPR; FN, tear meniscus/CCPR pixels incorrectly predicted as background; TN, background pixels correctly predicted as background.

Sensitivity is the proportion of the true target region that the network predicts correctly, i.e., the fraction of labeled tear meniscus/CCPR pixels recovered by the segmentation. It is calculated as follows:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$
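The overlap metrics above can all be computed from pixel counts. Below is a minimal pure-Python sketch for one binary prediction and one binary label (nested 0/1 lists); it is an illustration, not the paper's evaluation code. The paper reports a mean IOU, but its averaging convention (over images, or over classes including background) is not specified, so only per-mask values are shown. For a single mask, Dice = 2·IOU/(1 + IOU).

```python
def confusion_counts(pred, label):
    """Pixel-level TP, FP, FN, TN for two same-shaped 0/1 masks (nested lists)."""
    tp = fp = fn = tn = 0
    for p_row, l_row in zip(pred, label):
        for p, l in zip(p_row, l_row):
            if p and l:
                tp += 1          # target predicted as target
            elif p:
                fp += 1          # background predicted as target
            elif l:
                fn += 1          # target predicted as background
            else:
                tn += 1          # background predicted as background
    return tp, fp, fn, tn

def _ratio(num, den):
    # Assumption: an empty denominator (nothing to compare) counts as 1.0
    return num / den if den else 1.0

def iou(pred, label):
    tp, fp, fn, _ = confusion_counts(pred, label)
    return _ratio(tp, tp + fp + fn)            # |A1 ∩ A2| / |A1 ∪ A2|

def dice(pred, label):
    tp, fp, fn, _ = confusion_counts(pred, label)
    return _ratio(2 * tp, 2 * tp + fp + fn)    # 2|A1 ∩ A2| / (|A1| + |A2|)

def sensitivity(pred, label):
    tp, _, fn, _ = confusion_counts(pred, label)
    return _ratio(tp, tp + fn)                 # TP / (TP + FN)

label = [[1, 1, 0], [1, 0, 0]]
pred = [[1, 0, 0], [1, 1, 0]]
print(confusion_counts(pred, label))           # (2, 1, 1, 2)
print(iou(pred, label), dice(pred, label), sensitivity(pred, label))
```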

In deep learning, the optimizer determines how the network parameters are adjusted so that the loss function keeps decreasing toward a minimum. An optimizer suited to the task must be chosen; otherwise, the loss may become stuck at a poor local minimum or the network may fail to converge. In this study, stochastic gradient descent (SGD) (29) was used as the optimizer. The gradient of a function at a point is the vector pointing in the direction of steepest increase, that is, the direction along which the function value changes fastest. Let the mean-square loss function over m samples be

$$J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\left(f_\theta(x_i) - y_i\right)^2$$

where f_θ(x_i) is the network output for sample x_i, y_i is its target, and θ = (w1, w2, w3, …, wn) is the weight vector. Taking the partial derivative of J(θ) with respect to each component gives the gradient g = ∇J(θ), and the weight vector at the next step is

$$\theta_{t+1} = \theta_t - \alpha g_t$$

where θt is the current weight, θt+1 is the updated weight, and α is the learning rate, which determines the step size of each parameter update.
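The update rule can be exercised on a toy problem. The sketch fits a line y ≈ w·x + b by minibatch SGD on the mean-square loss, using the momentum (0.9) and weight decay (0.0001) values from the training settings. The momentum form follows PyTorch's `torch.optim.SGD` (v ← μv + g + λθ, then θ ← θ − αv), which is an assumption about how the paper's optimizer behaves; the learning rate and data are arbitrary. With momentum and weight decay set to 0, each step reduces to θ_{t+1} = θ_t − α g_t.

```python
import random

def sgd_fit_line(xs, ys, lr=0.02, momentum=0.9, weight_decay=1e-4,
                 epochs=300, batch_size=4, seed=0):
    """Fit y ≈ w*x + b by minibatch SGD with momentum and L2 weight decay."""
    rng = random.Random(seed)
    theta = [0.0, 0.0]                        # [w, b]
    velocity = [0.0, 0.0]
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            m = len(batch)
            # gradient g of the minibatch loss J(θ) = (1/m) Σ (w*x + b - y)^2
            g = [0.0, 0.0]
            for i in batch:
                err = theta[0] * xs[i] + theta[1] - ys[i]
                g[0] += 2 * err * xs[i] / m
                g[1] += 2 * err / m
            for k in range(2):
                d = g[k] + weight_decay * theta[k]        # add L2 weight decay
                velocity[k] = momentum * velocity[k] + d  # momentum buffer
                theta[k] -= lr * velocity[k]              # θ_{t+1} = θ_t - α v_t
    return theta

xs = [i / 10 for i in range(20)]
ys = [3 * x + 1 for x in xs]
w, b = sgd_fit_line(xs, ys)
print(round(w, 2), round(b, 2))   # close to 3 and 1
```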

The optimizer controls the direction of parameter updates, whereas the learning rate controls their size. Generally, the learning rate decreases as the number of iterations grows: a larger learning rate at the start of training lets the network adapt quickly to the training samples, and once training has progressed, a smaller learning rate fine-tunes the parameters and prevents oscillation. In this study, the learning rate was updated with a cosine annealing (30) strategy, in which it decays along a cosine curve: it first decreases slowly, then faster, and finally slowly again. The learning rate decay formula is

$$Lr_t = Lr_{\min} + \frac{1}{2}\left(Lr_{\max} - Lr_{\min}\right)\left(1 + \cos\left(\frac{N_{cur}}{N_{\max}}\pi\right)\right)$$

where Lrt is the current learning rate, Lrmax and Lrmin are the preset maximum and minimum learning rates, and Ncur and Nmax are the current and total numbers of iterations, respectively.
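Here is a minimal sketch of the cosine annealing formula evaluated per step, as described in the training settings. Lr_min is not given in the paper, so 0 is assumed, and the number of steps is hypothetical. PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min)` follows the same curve when `scheduler.step()` is called once per iteration with `T_max` set to the total number of iterations.

```python
import math

def cosine_lr(n_cur, n_max, lr_max, lr_min=0.0):
    """Lr_t = Lr_min + 0.5 * (Lr_max - Lr_min) * (1 + cos(pi * N_cur / N_max))."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * n_cur / n_max))

n_max = 1000                        # hypothetical total number of steps
schedule = [cosine_lr(t, n_max, lr_max=1e-4) for t in range(n_max + 1)]
print(schedule[0], schedule[n_max // 2], schedule[-1])   # 0.0001 5e-05 0.0
```

The curve is flat near both ends and steepest at the midpoint, which is the slow-fast-slow decay described above.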

The trained network segments the tear meniscus region and the CCPR in the ocular surface image, and TMH is evaluated from these segmentation results. First, the center of the CCPR is determined. To locate it more accurately, we applied circle fitting and hole filling to the predicted CCPR and then computed its bounding rectangle (in practice a square); the center of the CCPR follows from the pixel coordinates of the rectangle's upper-left vertex and its side length. The upper and lower edges of the tear meniscus were then obtained by edge detection.
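The center and edge extraction steps can be sketched on binary masks in pure Python. Two simplifications are assumptions: the circle fitting and hole filling are omitted (for a roughly circular ring, the bounding square gives approximately the same center), and edge detection is replaced by a column scan that takes the first and last foreground rows of the tear meniscus mask. The helper names are hypothetical.

```python
def bbox_center(mask):
    """Center (x, y) of the bounding rectangle of the foreground in a 0/1 mask."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        raise ValueError("empty mask")
    left, top = cols[0], rows[0]                      # upper-left corner
    width, height = cols[-1] - left, rows[-1] - top   # side lengths in pixels
    return left + width / 2, top + height / 2

def meniscus_edges(mask):
    """Per-column upper/lower edge rows of a 0/1 tear meniscus mask.

    Stand-in for edge detection: in each column the upper edge is the first
    foreground row and the lower edge is the last one.
    """
    upper, lower = {}, {}
    for x in range(len(mask[0])):
        ys = [y for y in range(len(mask)) if mask[y][x]]
        if ys:
            upper[x], lower[x] = ys[0], ys[-1]
    return upper, lower

ring = [[0, 0, 0, 0, 0, 0, 0],
        [0, 0, 1, 1, 1, 0, 0],
        [0, 1, 0, 0, 0, 1, 0],
        [0, 1, 0, 0, 0, 1, 0],
        [0, 1, 0, 0, 0, 1, 0],
        [0, 0, 1, 1, 1, 0, 0],
        [0, 0, 0, 0, 0, 0, 0]]
print(bbox_center(ring))          # (3.0, 3.0)

meniscus = [[0, 0, 0],
            [1, 1, 0],
            [1, 1, 1],
            [0, 1, 1]]
print(meniscus_edges(meniscus))   # ({0: 1, 1: 1, 2: 2}, {0: 2, 1: 3, 2: 3})
```

The per-column edges produced here have the same shape as the `upper`/`lower` inputs assumed by the TMH averaging sketch.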

Each ocular surface image and its mask were uniformly cropped to 480 × 480, and image contrast was enhanced by random HSV (hue, saturation, value) augmentation. Data augmentation by rotation, translation, and flipping (31, 32) was applied to improve the generalization ability of the model. Hardware for training and testing: Intel(R) Core(TM) i7-6700 CPU @ 3.40 GHz and an NVIDIA GeForce GTX 1080 GPU. Software: 64-bit Windows 10, PyCharm Community Edition 2021.3, and Python 3.6.13. All deep convolutional segmentation networks used the following settings: (1) the optimizer was SGD (momentum = 0.9, weight decay = 0.0001) with an initial learning rate of 0.0001; (2) the batch size was 4, and the maximum number of training epochs was 500; (3) the learning rate schedule was applied at every step rather than every epoch, which allows the network to be trained more effectively. After the experimental environment and initial parameters were set up, the different models were trained on the training set and used to segment the tear meniscus. The change in loss during training is shown in Figure 5. After 500 training epochs, the loss of the network built in this study had decreased fastest and fell below 0.1.
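Because the learning rate is updated every step rather than every epoch, Nmax in the cosine schedule is the total number of optimizer steps. The sketch below derives it from the stated settings. Whether the last, incomplete batch was kept (`drop_last` in PyTorch's `DataLoader`) is not stated, so both cases are shown.

```python
import math

settings = {
    "optimizer": "SGD", "momentum": 0.9, "weight_decay": 1e-4,
    "initial_lr": 1e-4, "batch_size": 4, "epochs": 500,
    "crop": (480, 480), "train_images": 270,
}

def total_steps(n_images, batch_size, epochs, drop_last=False):
    """Optimizer steps over the whole run, i.e. N_max for a per-step schedule."""
    per_epoch = n_images // batch_size if drop_last else math.ceil(n_images / batch_size)
    return per_epoch * epochs

s = settings
print(total_steps(s["train_images"], s["batch_size"], s["epochs"]))                  # 34000
print(total_steps(s["train_images"], s["batch_size"], s["epochs"], drop_last=True))  # 33500
```

With 270 training images and a batch size of 4, the last batch of each epoch holds only 2 images, which accounts for the 500-step difference.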

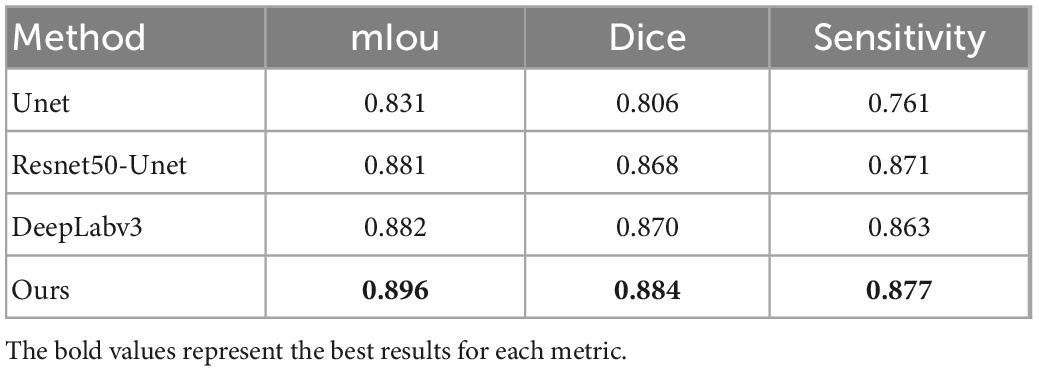

The confusion matrices of tear meniscus segmentation and CCPR segmentation by the proposed network are shown in Figures 6, 7, respectively. To evaluate the segmentation performance of the proposed network, we ran comparative experiments with the popular segmentation networks Unet, Resnet50-Unet, and DeepLabv3, evaluating each model on the tear meniscus region and the CCPR with IOU, dice coefficient, and sensitivity. The results for the tear meniscus region are summarized in Table 1: our model performed best, with a mean IOU of 0.896, dice coefficient of 0.884, and sensitivity of 0.887. The results for the CCPR are summarized in Table 2: our model again performed best, with a mean IOU of 0.932, dice coefficient of 0.926, and sensitivity of 0.947. In summary, the proposed model accurately segments the tear meniscus region and the CCPR, which supports accurate measurement of TMH.

Table 1. mIOU, dice coefficient, and sensitivity for tear meniscus region segmentation by different methods.

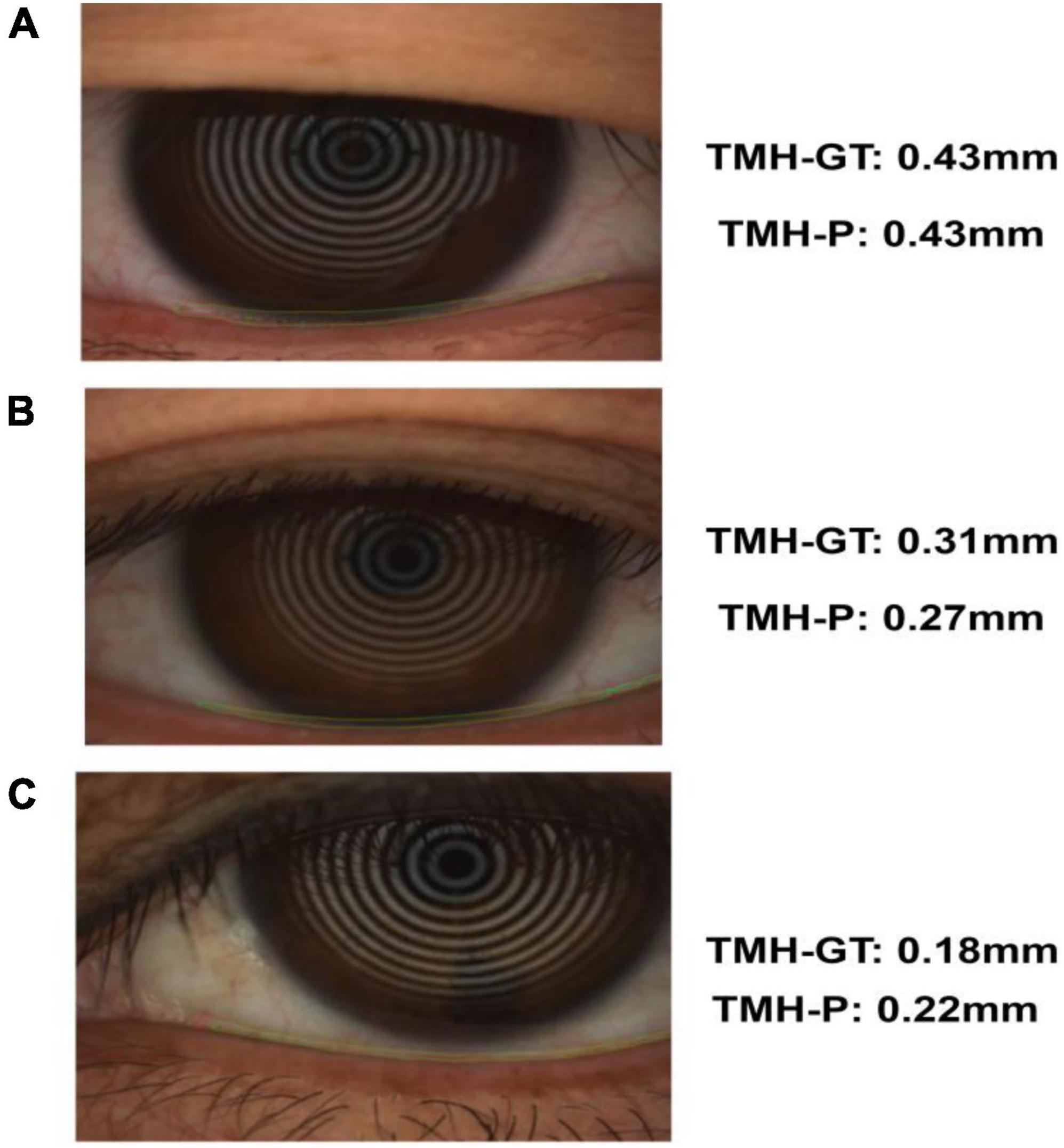

The trained segmentation network combined with image processing was used to measure TMH; Figure 8 shows the TMH predictions for three ocular surface images from the testing set. The labeled and predicted tear meniscus regions are outlined in red and green, respectively. In Figure 8A, the tear meniscus region is accurately segmented, and both the true and predicted TMH are 0.43 mm. In Figure 8B, the region is under-segmented: the true TMH is 0.31 mm and the predicted value is 0.27 mm, so the prediction underestimates TMH. In Figure 8C, the region is over-segmented: the true TMH is 0.18 mm and the predicted value is 0.22 mm, so the prediction overestimates TMH. The true and predicted values for the whole testing set were compared by linear regression (Figure 9); they were consistent, with a regression line of y = 0.98x − 0.02 and r² = 0.94. The ICC was used to evaluate the reliability of the proposed model; the ICC for TMH was 0.90, indicating good reliability.
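Both agreement statistics reported here can be sketched in pure Python: an ordinary least-squares line with its r², and a single-measure ICC from a two-way ANOVA table, in the Shrout and Fleiss forms ICC(2,1) (absolute agreement) and ICC(3,1) (consistency). The paper does not say which ICC form it used, so both are shown; the example ratings are made up for illustration and are not study data.

```python
def linear_fit(x, y):
    """Ordinary least squares y ≈ a*x + b; returns (a, b, r_squared)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx, sxy ** 2 / (sxx * syy)

def icc(ratings, form="agreement"):
    """Single-measure ICC for an n_subjects x k_raters table (two-way model).

    form="agreement" -> ICC(2,1); form="consistency" -> ICC(3,1).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(r) / k for r in ratings]
    col_means = [sum(r[j] for r in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)     # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)     # between raters
    ss_total = sum((v - grand) ** 2 for r in ratings for v in r)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    if form == "consistency":
        return (msr - mse) / (msr + (k - 1) * mse)
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)

a, b, r2 = linear_fit([1, 2, 3, 4], [3, 5, 7, 9])
print(a, b, r2)                              # 2.0 1.0 1.0
offset = [[1, 2], [2, 3], [3, 4]]            # rater 2 = rater 1 + 1 on every subject
print(icc(offset, "consistency"))            # 1.0
print(round(icc(offset, "agreement"), 4))    # 0.6667
```

A constant offset between model and physician leaves the consistency ICC at 1 but lowers the absolute-agreement ICC, which is why the form matters when reporting agreement with manual TMH.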

Figure 8. Example of tear meniscus segmentation; TMH-GT indicates the TMH of the label, and TMH-P indicates the TMH predicted in this paper. (A) Accurate segmentation; (B) under-segmentation; (C) over-segmentation.

Figure 9. Fitted linear regression of the true and predicted values of TMH; the ordinate represents the true value of TMH, the abscissa represents the predicted value of TMH, and the blue line corresponds to the regression line y = 0.98x − 0.02, r² = 0.94.

DED is one of the most common ocular diseases, affecting 5–30% of the world's population (33). Through tear film instability and potential ocular surface damage, DED causes a range of subjective symptoms and visual impairment. As its incidence rises and it affects patients' visual quality and daily life, the evaluation of visual quality in DED patients has received considerable attention. However, there are no uniform diagnostic criteria for DED; fluorescein tear break-up time (34) and the Schirmer test (35) are generally used, but these traditional methods are invasive and poorly repeatable (36–38). Studies have found that TMH is an important tear meniscus parameter whose value can distinguish normal eyes from eyes with DED (39, 40). Nonetheless, most TMH measurements are manual or semi-automatic: professionals must outline the upper and lower edges of the tear meniscus and select the measurement points, which is time consuming and laborious and yields poorly repeatable TMH values that may lead to inaccurate diagnoses. A fully automatic, noninvasive method for measuring TMH is therefore highly desirable. To this end, this study proposes a TMH measurement method that combines deep learning with image processing, in which ocular surface image acquisition, tear meniscus detection, and TMH measurement are automatic and noninvasive. The predicted TMH values were consistent with the measurements of professional physicians, indicating that the method can accurately measure TMH and assist physicians in diagnosing DED, which has clinical and practical significance.

In this study, we first acquired ocular surface images with the K5M and excluded images in which the tear meniscus region was blurred because the eyes were closed or the camera was out of focus. We then built a deep convolutional neural network to segment the tear meniscus and CCPR regions. The network has two parts: feature extraction and image size restoration. Feature extraction uses the adjusted ResNet50 and the ASPP module of DeepLabv3, and the segmentation output is restored to full size by bilinear interpolation. In addition, following the auxiliary output structure of GoogleNet and the FCN output, an auxiliary output is drawn from the feature extraction stage. Finally, circle fitting was applied to the segmented CCPR to better locate its center, the upper and lower edges of the tear meniscus were detected by edge detection to measure TMH in pixels, and the pixel values were converted to millimeters at 86 pixels/mm. For segmentation of the tear meniscus region, the model achieved a mean IOU of 0.896, dice coefficient of 0.884, and sensitivity of 0.887; for the CCPR region, it achieved a mean IOU of 0.932, dice coefficient of 0.926, and sensitivity of 0.947. The model can therefore automatically identify and accurately segment the tear meniscus and CCPR regions. When the trained network was combined with image processing to predict TMH for the testing set, linear regression of the true against the predicted values gave y = 0.98x − 0.02 (r² = 0.94).

The proposed TMH measurement method has the advantage of being noninvasive and fully automatic. The ocular surface images used to assess TMH were acquired with a K5M instrument, without touching the patient's eyes at any point. Segmentation of the tear meniscus region and measurement of TMH are performed by computer, so the method is easy to implement. Furthermore, the computational cost is low, and the physician does not need to select TMH measurement points, which eliminates the inconsistent results, poor repeatability, and interobserver variability caused by subjective assessment. The proposed method can accurately measure TMH and assist doctors in DED screening.

A limitation of this study is that the dataset is small and of uneven quality: some images were captured with the eyes closed or out of focus, so more high-quality images need to be collected. Training the network on more data should yield more accurate segmentation and therefore more accurate TMH measurements. Regarding the segmentation network, as convolutional layers deepen, the resulting feature maps have larger receptive fields: shallow layers capture texture features, whereas deep layers capture the overall information of the image. Pooling-based downsampling inevitably discards part of the edge information in the features, and this lost information cannot be recovered by upsampling alone. In contrast, U-Net retrieves edge features by concatenating encoder and decoder features, which substantially improves segmentation fineness. In future work, different networks, such as the DeepLab and U-Net series, could be combined to further improve segmentation accuracy.
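The edge-information loss described above can be illustrated with a toy NumPy example. This is a sketch under simplifying assumptions, not code from the study, and the function names are hypothetical: a one-pixel-wide edge is max-pooled and then upsampled, and the restored map no longer localizes the edge to a single row, whereas a U-Net-style skip connection concatenates the full-resolution encoder map with the upsampled decoder map so that later layers can still use the exact edge. Nearest-neighbour upsampling is used for simplicity; bilinear upsampling likewise cannot restore detail that pooling has discarded.

```python
import numpy as np


def max_pool_2x2(fmap: np.ndarray) -> np.ndarray:
    """2x2 max pooling, stride 2, on a (C, H, W) feature map with even H and W."""
    c, h, w = fmap.shape
    return fmap.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample_2x(fmap: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return fmap.repeat(2, axis=1).repeat(2, axis=2)


def skip_concat(encoder_fmap: np.ndarray, decoder_fmap: np.ndarray) -> np.ndarray:
    """U-Net-style skip connection: concatenate same-size maps along channels."""
    return np.concatenate([encoder_fmap, decoder_fmap], axis=0)


# A one-pixel-wide horizontal edge (e.g. a meniscus border) on an 8x8 map.
edge = np.zeros((1, 8, 8))
edge[0, 3, :] = 1.0

restored = upsample_2x(max_pool_2x2(edge))
print(restored[0, :, 0])  # [0. 0. 1. 1. 0. 0. 0. 0.]: edge smeared over rows 2-3
fused = skip_concat(edge, restored)
print(fused.shape)        # (2, 8, 8): channel 0 still holds the exact edge
```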

In this paper, we propose a method that automatically measures TMH by combining deep learning with image processing. The TMH values obtained with the proposed method are consistent with clinical measurements, which is of clinical significance. As algorithms continue to be developed and optimized and more high-quality datasets are acquired, the accuracy of TMH measurement is expected to improve, and the method could be used for DED screening.
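The agreement between automatic and manual TMH reported earlier (y = 0.98x − 0.02, r² = 0.94) can be quantified with an ordinary least-squares fit of predicted on manual values. The sketch below is illustrative only: the function name `agreement` is hypothetical, and the values in the usage test are synthetic, not study data.

```python
import numpy as np


def agreement(manual_mm, predicted_mm):
    """Fit predicted = slope * manual + intercept by least squares; return r^2 too."""
    x = np.asarray(manual_mm, dtype=float)
    y = np.asarray(predicted_mm, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)  # degree-1 polynomial: [slope, intercept]
    residuals = y - (slope * x + intercept)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    # For a least-squares line with intercept, 1 - SS_res/SS_tot equals Pearson r^2.
    return float(slope), float(intercept), float(1.0 - ss_res / ss_tot)
```

A slope close to 1, an intercept close to 0, and r² close to 1 together indicate that the automatic values track the manual ones without systematic scaling or offset.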

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the patients in accordance with the national legislation and institutional requirements.

CW and RH acquired, analyzed, discussed the data and drafted the manuscript. PG and PL analyzed and discussed the data. JW, WY, and XH acquired the clinical information and revised the manuscript. All authors contributed to the article and approved the submitted version.

This research was funded by Shenzhen Fund for Guangdong Provincial High-level Clinical Key Specialties (SZGSP014), Sanming Project of Medicine in Shenzhen (SZSM202011015), Shenzhen Fundamental Research Program (JCYJ20220818103207015), and the Science, Technology and Innovation Commission of Shenzhen Municipality (GJHZ20190821113607205).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Bron AJ, dePaiva CS, Chauhan SK, Bonini S, Gabison EE, Jain S, et al. TFOS DEWS II pathophysiology report. Ocul Surf. (2017) 15:438–510.

2. Koh S, Ikeda C, Fujimoto H, Oie Y, Soma T, Maeda N, et al. Regional differences in tear film stability and meibomian glands in patients with aqueous-deficient dry eye. Eye Contact Lens. (2015) 42:250–5. doi: 10.1097/ICL.0000000000000191

3. Bron A. Diagnosis of dry eye. Surv Ophthalmol. (2001) 45, Suppl. 2:S221–6. doi: 10.1016/s0039-6257(00)00201-0

4. Storås AM, Riegler MA, Grauslund J, Hammer H, Yazidi A, Halvorsen P, et al. Artificial intelligence in dry eye disease. Ocul Surf. (2022) 23:74–86.

5. Holly F. Physical chemistry of the normal and disordered tear film. Trans Ophthalmol Soc U K. (1985) 104 (Pt. 4):374–80.

6. Goto E, Matsumoto Y, Kamoi M, Endo K, Ishida R, Dogru M, et al. Tear evaporation rates in Sjögren syndrome and non-Sjögren dry eye patients. Am J Ophthalmol. (2007) 144:81–5. doi: 10.1016/j.ajo.2007.03.055

7. Chen F, Shen M, Chen W, Wang J, Li M, Yuan Y, et al. Tear meniscus volume in dry eye after punctal occlusion. Invest Ophthalmol Vis Sci. (2010) 51:1965–9. doi: 10.1167/iovs.09-4349

8. Tsubota K. Tear dynamics and dry eye. Prog Retin Eye Res. (1998) 17:565–96. doi: 10.1016/s1350-9462(98)00004-4

9. García-Montero M, Rico-Del-Viejo L, Lorente-Velázquez A, Martínez-Alberquilla I, Hernández-Verdejo J, Madrid-Costa D. Repeatability of noninvasive keratograph 5M measurements associated with contact lens wear. Eye Contact Lens. (2019) 45:377–81. doi: 10.1097/ICL.0000000000000596

10. Hao Y, Tian L, Cao K, Jie Y. Repeatability and reproducibility of SMTubeMeasurement in dry eye disease patients. J Ophthalmol. (2021) 2021:1589378. doi: 10.1155/2021/1589378

11. Stegmann H, Aranha Dos Santos V, Messner A, Unterhuber A, Schmidl D, Garhöfer G, et al. Automatic assessment of tear film and tear meniscus parameters in healthy subjects using ultrahigh-resolution optical coherence tomography. Biomed Opt Express. (2019) 10:2744–56. doi: 10.1364/BOE.10.002744

12. Yang J, Zhu X, Liu Y, Jiang X, Fu J, Ren X, et al. TMIS: a new image-based software application for the measurement of tear meniscus height. Acta Ophthalmol. (2019) 97:e973–80. doi: 10.1111/aos.14107

13. Arita R, Yabusaki K, Hirono T, Yamauchi T, Ichihashi T, Fukuoka S, et al. Automated measurement of tear meniscus height with the Kowa DR-1α tear interferometer in both healthy subjects and dry eye patients. Invest Ophthalmol Vis Sci. (2019) 60:2092–101. doi: 10.1167/iovs.18-24850

14. Stegmann H, Werkmeister R, Pfister M, Garhöfer G, Schmetterer L, dos Santos V. Deep learning segmentation for optical coherence tomography measurements of the lower tear meniscus. Biomed Opt Express. (2020) 11:1539–54. doi: 10.1364/BOE.386228

15. Deng X, Tian L, Liu Z, Zhou Y, Jie Y. A deep learning approach for the quantification of lower tear meniscus height. Biomed Signal Process Control. (2021) 68:102655.

16. Hong J, Sun X, Wei A, Cui X, Li Y, Qian T, et al. Assessment of tear film stability in dry eye with a newly developed keratograph. Cornea. (2013) 32:716–21.

17. Arriola-Villalobos P, Fernández-Vigo JI, Díaz-Valle D, Peraza-Nieves JE, Fernández-Pérez C, Benítez-Del-Castillo JM. Assessment of lower tear meniscus measurements obtained with Keratograph and agreement with Fourier-domain optical-coherence tomography. Br J Ophthalmol. (2015) 99:1120–5.

18. Chen LC, Papandreou G, Schroff F, Adam H. Rethinking atrous convolution for semantic image segmentation. arXiv [Preprint]. (2017). doi: 10.48550/arXiv.1706.05587

19. Chen LC, Papandreou G, Kokkinos I, Murphy K, Yuille AL. Semantic image segmentation with deep convolutional nets and fully connected CRFs. arXiv [Preprint]. (2016). doi: 10.48550/arXiv.1412.7062

20. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. Las Vegas, NV (2016). p. 770–8.

21. Wang P, Chen P, Yuan Y, Liu D, Huang Z, Hou X, et al. Understanding convolution for semantic segmentation. arXiv [Preprint]. (2018). doi: 10.48550/arXiv.1702.08502

22. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 2015. Boston, MA (2015). p. 1–9.

23. Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans Pattern Anal Mach Intell. (2017) 39:640–51.

24. Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. arXiv [Preprint]. (2015). doi: 10.48550/arXiv.1505.04597

25. Niedernolte B, Trunk L, Wolffsohn J, Pult H, Bandlitz S. Evaluation of tear meniscus height using different clinical methods. Clin Exp Optom. (2021) 104:583–8. doi: 10.1080/08164622.2021.1878854

26. Wan C, Shao Y, Wang C, Jing J, Yang W. A novel system for measuring Pterygium’s progress using deep learning. Front Med. (2022) 9:819971. doi: 10.3389/fmed.2022.819971

27. Wan C, Wu J, Li H, Yan Z, Wang C, Jiang Q, et al. Optimized-Unet: novel algorithm for parapapillary atrophy segmentation. Front Neurosci. (2021) 15:758887. doi: 10.3389/fnins.2021.758887

28. Wan C, Chen Y, Li H, Zheng B, Chen N, Yang W, et al. EAD-net: a novel lesion segmentation method in diabetic retinopathy using neural networks. Dis Markers. (2021) 2021:6482665. doi: 10.1155/2021/6482665

29. Zhang Z, Hu Y, Ye Q. LR-SGD: Layer-based Random SGD for Distributed Deep Learning. New York, NY: Association for Computing Machinery (2022). p. 6–11.

30. Wang Z, Hou F, Qiu Y, Ma Z, Singh S, Wang R, et al. CyclicAugment: Speech Data Random Augmentation with Cosine Annealing Scheduler for Automatic Speech Recognition. Incheon: International Speech Communication Association (2022). p. 3859–63.

31. Tang Z, Zhang X, Yang G, Zhang G, Gong Y, Zhao K, et al. Automated segmentation of retinal nonperfusion area in fluorescein angiography in retinal vein occlusion using convolutional neural networks. Am Assoc Phys Med. (2020) 2:648–58. doi: 10.1002/mp.14640

32. Xu J, Zhou Y, Wei Q, Li K, Li Z, Yu T, et al. Three-dimensional diabetic macular edema thickness maps based on fluid segmentation and fovea detection using deep learning. Int J Ophthalmol. (2022) 3:495–501. doi: 10.18240/ijo.2022.03.19

33. Smith JA, Albeitz J, Begley C, Caffery B, Nichols K, Schaumberg D, et al. The epidemiology of dry eye disease: report of the epidemiology subcommittee of the international dry eye workshop. Ocul Surf. (2007) 5:93–107.

34. Bron AJ, Smith JA, Calonge M. Methodologies to diagnose and monitor dry eye disease: report of the diagnostic methodology subcommittee of the international dry eye workshop. Ocul Surf. (2007) 5:108–52. doi: 10.1016/s1542-0124(12)70083-6

35. Phadatare SP, Momin M, Nighojkar P, Askarkar S, Singh KK. A comprehensive review on dry eye disease: diagnosis, medical management, recent developments, and future challenges. Adv Pharm. (2015) 2015:704946.

36. Nichols KK, Mitchell GL, Zadnik K. The repeatability of clinical measurements of dry eye. Cornea. (2004) 23:272–85.

37. Johnson ME, Murphy PJ. The agreement and repeatability of tear meniscus height measurement methods. Optom Vis Sci. (2005) 82:1030–7.

38. Koh S, Ikeda C, Watanabe S, Oie Y, Soma T, Watanabe H, et al. Effect of non-invasive tear stability assessment on tear meniscus height. Acta Ophthalmol. (2015) 93:e135–9.

39. Burkat CN, Lucarelli MJ. Tear meniscus level as an indicator of nasolacrimal obstruction. Ophthalmology. (2005) 112:344–8.

Keywords: tear meniscus height, dry eye disease, automatic diagnosis, deep learning, image segmentation

Citation: Wan C, Hua R, Guo P, Lin P, Wang J, Yang W and Hong X (2023) Measurement method of tear meniscus height based on deep learning. Front. Med. 10:1126754. doi: 10.3389/fmed.2023.1126754

Received: 18 December 2022; Accepted: 13 January 2023;

Published: 14 February 2023.

Edited by:

FangJun Bao, The Affiliated Eye Hospital to Wenzhou Medical University, China

Reviewed by:

Yongjin Zhou, Shenzhen University, China

Copyright © 2023 Wan, Hua, Guo, Lin, Wang, Yang and Hong. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jiantao Wang, wangjiantao65@126.com; Weihua Yang, benben0606@139.com; Xiangqian Hong, xiangqian.hong@outlook.com

†These authors have contributed equally to this work and share first authorship

