- 1Division of Data Science and Learning, Argonne National Laboratory, Lemont, IL, United States

- 2Department of Computer Science, The University of Chicago, Chicago, IL, United States

Cancer claims millions of lives yearly worldwide. While many therapies have been made available in recent years, by in large cancer remains unsolved. Exploiting computational predictive models to study and treat cancer holds great promise in improving drug development and personalized design of treatment plans, ultimately suppressing tumors, alleviating suffering, and prolonging lives of patients. A wave of recent papers demonstrates promising results in predicting cancer response to drug treatments while utilizing deep learning methods. These papers investigate diverse data representations, neural network architectures, learning methodologies, and evaluations schemes. However, deciphering promising predominant and emerging trends is difficult due to the variety of explored methods and lack of standardized framework for comparing drug response prediction models. To obtain a comprehensive landscape of deep learning methods, we conducted an extensive search and analysis of deep learning models that predict the response to single drug treatments. A total of 61 deep learning-based models have been curated, and summary plots were generated. Based on the analysis, observable patterns and prevalence of methods have been revealed. This review allows to better understand the current state of the field and identify major challenges and promising solution paths.

1. Introduction

Cancer treatment response prediction is a problem of great importance for both clinical and pharmacological research communities. Many believe it will pave the way to devising more efficient treatment protocols for individual patients and provide insights into designing novel drugs that efficiently suppress disease. Currently, however, cancer treatment remains extremely challenging, often resulting in inconsistent outcomes. For example, tumor heterogeneity contributes to differential treatment responses in patients with the same tumor type (1, 2). Nevertheless, conventional tumor type-dependent anticancer treatments such as chemotherapy often lead to suboptimal results and substantial side effects, and therefore, are notoriously regarded as one-size-fits-all therapies (3, 4). Alternatively, targeted therapies and certain immunotherapies are prescribed based on known biomarkers, observable within individual patients (5). Cancer biomarkers refer to abnormalities in omics data (genomic, transcriptomic, etc.) which can be predictive of treatment response (3). Biomarker-driven treatment plans, either standalone or in combination with chemotherapies, are the mainstream of nowadays personalized (or precision) oncology. Discovery of biomarkers and their subsequent utilization in clinical settings are attributed to advances in tumor profiling technologies and high-throughput drug screenings (3, 4).

An alternative direction to leverage large-scale screenings and high-dimensional omics data in the cancer research community is to build analytical models designed to predict the response of tumors to drug treatments. Typically, such models use tumor and drug information without explicitly specifying biomarkers (6). These models, often referred to as drug response prediction (DRP) models, can be used to prioritize treatments, explore drug repurposing, and reaffirm existing biomarkers. Artificial intelligence (AI) is the core methodology in designing DRP models, demonstrating encouraging results in retrospective evaluation analyzes with pre-clinical and clinical datasets. Many DRP models use classical machine learning (ML) and deep learning (DL), i.e., multi-layer neural networks (NNs). While DL is generally considered a subset of ML, we differentiate between the two terms, where ML is referred here to learning algorithms that do not involve the use of NNs.

Papers in this field are being published constantly, exploiting learning algorithms for DRP. To cope with increasing rate of publications, a recent special issue in Briefings in Bioinformatics was dedicated to DRP in cancer models (7). Collectively, at least 18 review-like papers have been published since 2020 in an attempt to summarize progress and challenges in the field, as well as provide discussions on promising research directions. Each paper aims to review the field from a unique perspective but certain topics substantially overlap as shown in Table 1 which lists some of the major topics and associated references. Common topics include data resources for constructing training and test datasets, prevalent data representations, ML and DL approaches for modeling drug response, and methods for evaluating the predictive performance of models.

Table 1. Categorization of topics covered in existing review papers on drug response prediction (DRP).

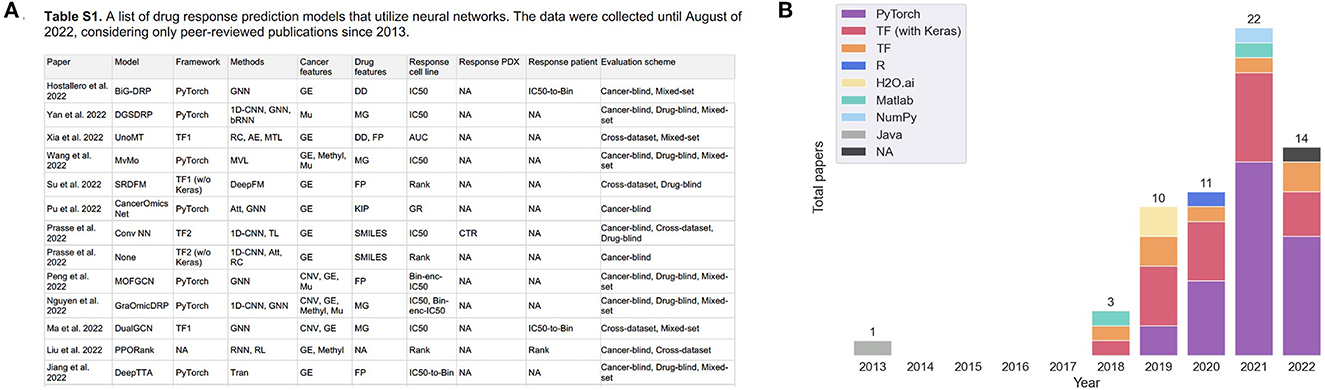

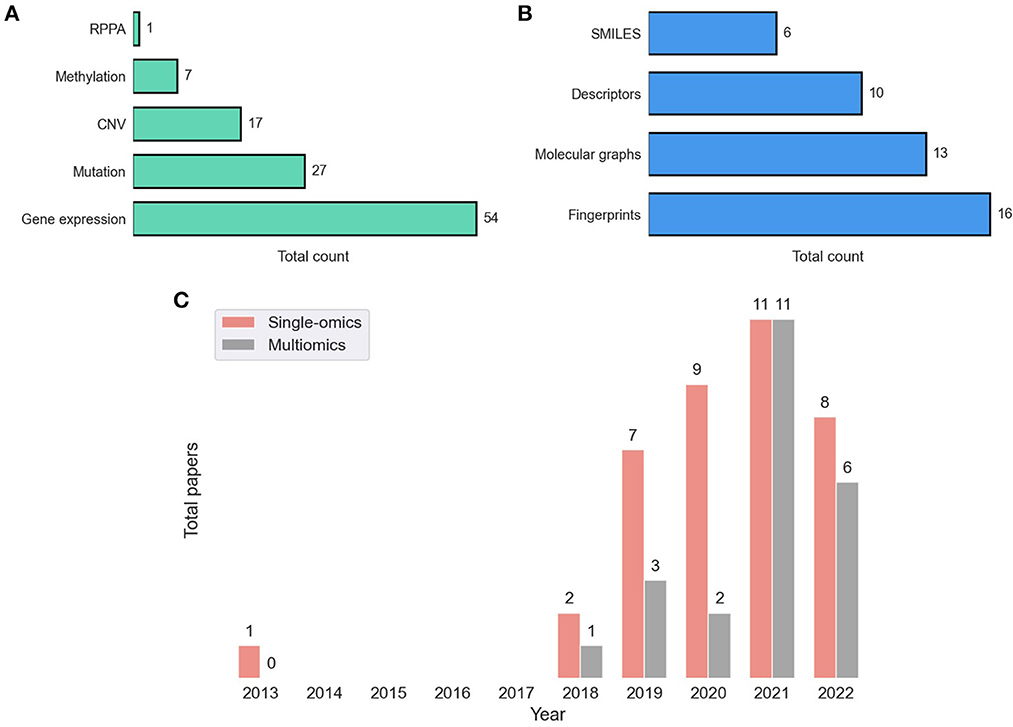

Following the revival of artificial neural networks (NNs) more than a decade ago (29), DL methods have become a promising research direction across a variety of scientific and engineering disciplines (30–33). This trend is also observed in cancer research, including the prediction of tumor response to treatments, as shown in Figure 1. In 2013, Menden et al. demonstrated that a single hidden layer NN predicts drug response with comparable performance to random forest (RF) (34). The authors used genomic and response data from the Genomics of Drug Sensitivity in Cancer (GDSC) project which was published in 2012 (35). Despite the accelerated popularity of DL and the availability of substantial screening and omics data, it was not until the emergence of open-source frameworks dedicated for building and training NNs (36–38) that DL has become an integral part of DRP research (note the gap between 2013 and 2018 in Figure 1). Owing to the abundance of omics and screening data, the availability of DL frameworks and the unmet need for precision oncology, we have been witnessing a growth of scientific publications exploring NN architectures for DRP.

Figure 1. (A) A snapshot of Supplementary Table 1 that lists peer-reviewed papers proposing monotherapy drug response prediction (DRP) models (the full list can be found in the Supplementary material). The papers have been curated to identify various properties (shown in table columns), such as deep learning (DL) methods, feature types, evaluation methods, etc., as discussed in detail in this paper. Multiple plots in this paper have been generated using the data from Supplementary Table 1 [(B), Figures 4–7C, D]. (B) Distribution of papers by year that use DL methods for DRP. The neural network models are designed using popular computational frameworks which include both proprietary and open-source software. The bar plots are color-coded by the different computational frameworks. TensorFlow/Keras and PyTorch are the most popular frameworks based on this plot. Data were collected until August 2022, considering only peer-reviewed publications.

Dozens of DL-based models have been published, exploring diverse feature representations, NN architectures, learning schemes, and evaluation methods. However, only three out of the many existing reviews explicitly focus on modeling DRP with DL (10, 11, 18). These reviews present a categorized summary of methods, citing and discussing a limited number of examples from each category. As a result, only a selective overview of methods is provided and limited aspects of published DRP models are considered, exhibiting a primary limitation of these reviews. In addition, the scope of existing reviews does not require a comprehensive search for models which could reveal predominant and emerging trends. Considering the current rate of publications, the diversity of approaches, and the limitations of existing reviews, a comprehensive review is necessary and timely.

This review is the first one to conduct a comprehensive search to include all relevant papers that utilize NNs for DRP. As of the time of completing this paper, a total of 61 peer-reviewed publications have been identified. Only models predicting response to single-drug treatments are included in the current review, excluding models that make predictions to combination therapies. We identified three major components involved in developing DRP models, including data preparation, model development, and performance analysis. These components have been used to guide the curation of papers with special focus on representation methods of drugs, cancers, and measures of treatment response, DL related methods including NN modules and learning schemes, and methods for evaluating the prediction performance. This information is summarized in Supplementary Table 1. Summary plots have been generated, revealing the prevalence of methods used in these papers. Observing the prevalence of methods will assist in revealing approaches that have been investigated in multiple studies as well as emerging methods which are rather underexplored for DRP. We believe this review would serve as a valuable reference for new and experienced researchers in this field.

Section 2 formulates the DRP as a DL problem. Sections 3–5 provide a detailed review of the drug, cancer, and response representations used in papers listed in Supplementary Table 1. Existing design choices are summarized in Sections 6.1, 6.2 in terms of fundamental NN building blocks and learning schemes, respectively. Section 7 compiles existing approaches for evaluating model performance. In Section 8, we discuss the current state of DRP field, determine primary challenges, and propose further directions.

2. Deep learning-based drug response prediction workflow

A DRP model can be represented by r = f(d, c), where f is the analytical model designed to predict the response r of cancer c to the treatment by drug d. The function f is implemented with a NN architecture in which the weights are learned through backpropagation. This formulation is for pan-cancer and multi-drug1 prediction model where both cancer and drug representations are needed to predict response. A special case is drug-specific models designed to make predictions for a drug or drug family [e.g., drugs with the same mechanism of action (MoA)] (39). These models learn from cancer features only and can be formulated as r = fD(c). Another type of models is multi-task learning models which take only cancer representations as inputs and generate multiple outputs where each output produces predictions for a specific drug. As compared to drug-specific models, the multi-task formulation enables learning from larger amounts of drug response data while exploiting common characteristics among drugs, thereby allowing to further improve generalization of the entire model (further discussed in Section 6.2.3).

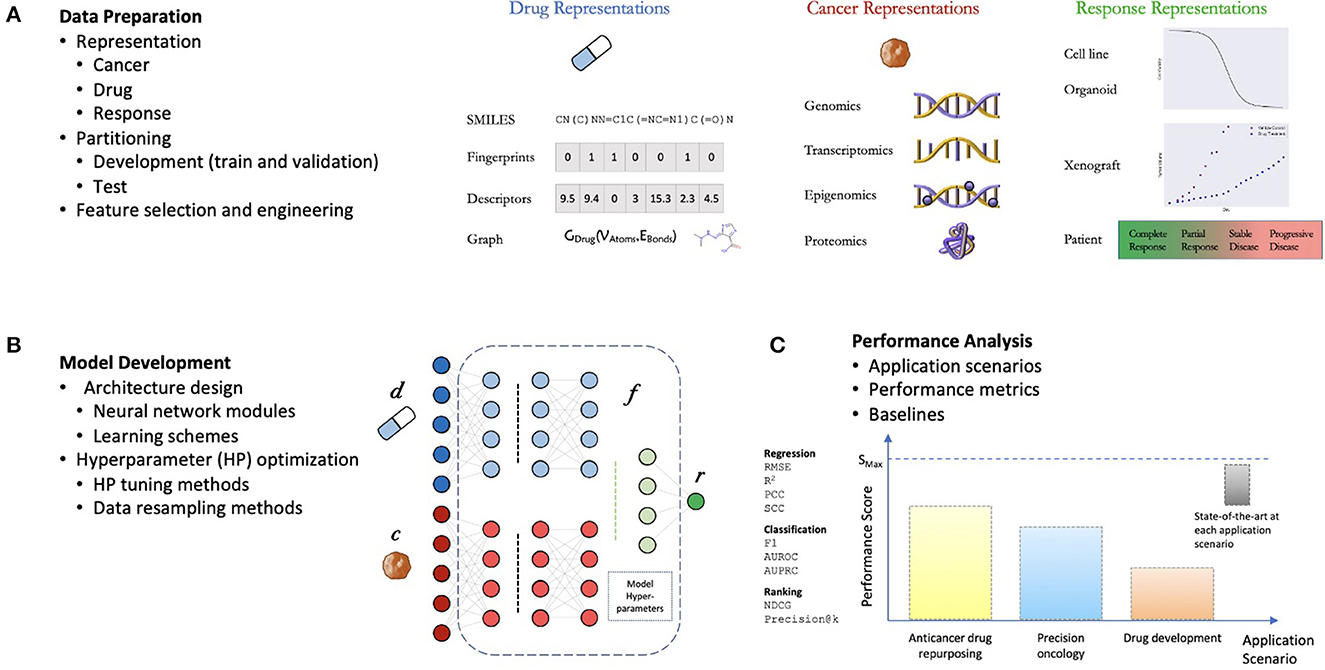

The general workflow for developing DRP models is not much different from developing supervised models for other applications (Figure 2). The challenges come in the specific details typical to predicting treatment response. The process can be divided into three components: (1) data preparation, (2) model development, and (3) performance analysis (10, 12). The choice of methods associated with each one of these components can have a significant impact on the overall workflow complexity and the potential application of the final model.

Figure 2. General components of a drug response prediction (DRP) workflow. (A) Data preparation: requires generating representations of features and treatment response, partition the dataset into development set (for training and hyperparameter (HP) tuning) and test set (for performance analysis), and any additional preprocessing such feature selection/engineering. (B) Model development: the process of generating a deep learning model which involves the design of a neural network (NN) architecture (choice of NN modules and learning schemes) and model training including HP optimization. (C) Performance analysis: assessment of prediction generalization and other metrics allowing to evaluate the utility of the DRP model for different applications in oncology such as personalized recommendation of treatments, drug repurposing, and drug development. The performance is benchmarked against one or more baseline models which should ultimately be chosen from available state-of-the-art models for the investigated application.

2.1. Data preparation

Data preparation is generally the initial step in designing a prediction model, requiring expertise in bioinformatics and statistical methods. During this step, heterogeneous data types are aggregated from multiple data sources, preprocessed, split into training and test sets, and structured to conform to an API of a DL framework. The generated drug response dataset with N samples, denoted by , includes representations for drug (d), cancer (c), and response (r). Prediction generalization is expected to improve with a larger number of training samples as demonstrated with multiple cell line datasets (40–42), normally creating preference for larger datasets when developing DL models. Recent research focusing on data-centric approaches suggests that efficient data representations and proper choice of a training set are at least as important as the dataset size for improving predictions, and further emphasize the importance of the data preprocessing step (43).

2.2. Model development

Model development refers to NN architecture design and optimization of model hyperparameters (HPs). To design NNs, developers often resort to common heuristics which rely on intuition, experimentation, and adoption of architectures from related fields. This process involves choosing the basic NN modules, the architecture, and learning schemes. Diversity of data representations for cancers (9) and drugs (21) and potential utilization of DRP models in several pre-clinical and clinical settings have led researchers to explore a wide range of DL methods.

2.3. Performance analysis

A desirable outcome of a model development workflow is a robust model that produces accurate predictions across cancers and drugs as evaluated by appropriate performance metrics. DRP models can be used in various scenarios such as personalized recommendation of treatments, exploration of drug repurposing, and assisting in development of new drugs. Therefore, both performance metrics and appropriate evaluation schemes (e.g., design of training and test sets) are critical for proper evaluation of prediction performance. The abundance of DRP papers in recent years (Figure 1) and lack of benchmark datasets (10), strongly suggest that a rigorous assessment of model performance is required where state-of-the-art baseline models serve as a point of reference.

2.4. Source and target domains

A vital characteristic that affects the entire workflow is the source domain data used to develop the DRP model, and the target domain data representing the biological domain on which the model is expected to operate. Data suitable for modeling DRP (Sections 3–5) come from multiple biological domains, such as cell lines, organoids, xenografts, and patients (9). Most models utilize data from a single domain (usually cell lines, Section 3.1, due to the abundance of response data). Certain models exploit data from a mix of domains with the goal to improve predictions in a target domain which suffers from insufficient data. Since a primary goal is to make DRP models useful in improving patient care, the biological domains can be further categorized into clinical (human patient data) and pre-clinical (cell lines, etc.). Considering this distinction, models can exploit data in different ways: train and test on preclinical (44–46), train and test on clinical, train on preclinical and test on clinical (47–49), and models that leverage both preclinical and clinical data during the training process and then test on clinical (50–54). Models that use data from mixed domains are generally more challenging to develop because they require extra steps in data preparation, advanced modeling techniques, and robust performance evaluation analysis (Section 6.2.2).

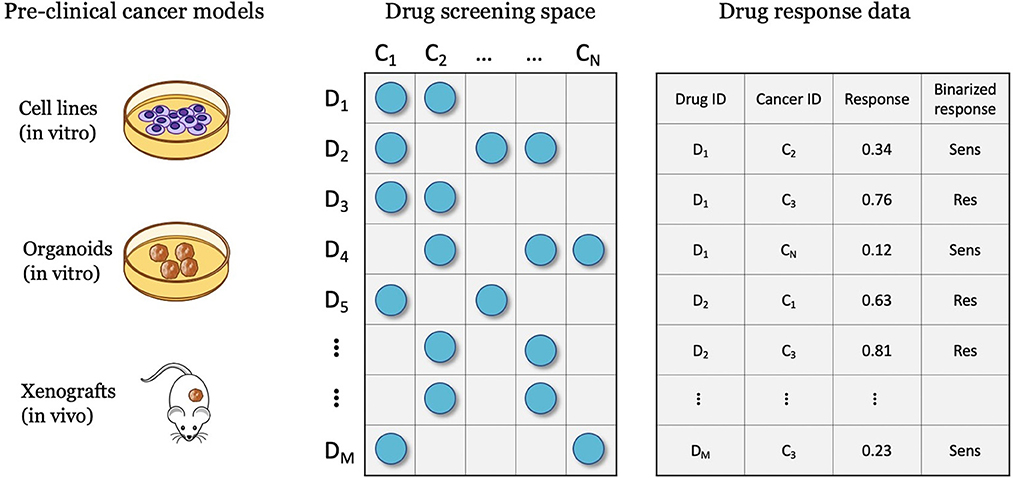

3. Representations of treatment response

Drug screening platforms enable testing the sensitivity of cancer samples in controlled lab environments, ultimately producing data for predictive modeling (Figure 3). A major objective is a discovery of potential anticancer treatments through the screening of compound libraries against diverse cancer panels. Systematic drug screening platforms have been established for in vitro cancer models such as cell lines (55–57) and organoids (58), and in vivo models such as patient-derived xenografts (PDXs) (59). An overview of preclinical cancer models and the corresponding methods for sensitivity profiling is available in a recent review article (9). Drug sensitivity data can be transformed into continuous or categorical variables, representing the treatment response. Accordingly, prediction models have been designed to solve regression, classification, and ranking problems. This section reviews methods for representing treatment response and the corresponding prediction tasks.

Figure 3. Drug screening experiments are performed with various cancer models such as cell lines, organoids, and xenografts, where cancer samples are screened against a library of drug compounds. The screening data is transformed into a drug response dataset that can be used for developing drug response prediction models, including regression, classification, and ranking.

3.1. Cell lines

Cell line studies constitute the most abundant resource of response data. High-throughput drug screenings with cell lines is performed with a compounds library, where each cell-drug combination is screened at multiple drug concentrations. The response of in vitro cells at each concentration is assessed via a cell viability assay which quantifies the surviving (viable) cells after treatment vs. the untreated control cells. Performing the experiments over a range of concentrations results in a vector of non-negative continuous dose-response values for each cell-drug pair. Those data points are summarized via a dose-response curve obtained by fitting a four parameters logistic Hill equation.

3.1.1. Continuous measures of response

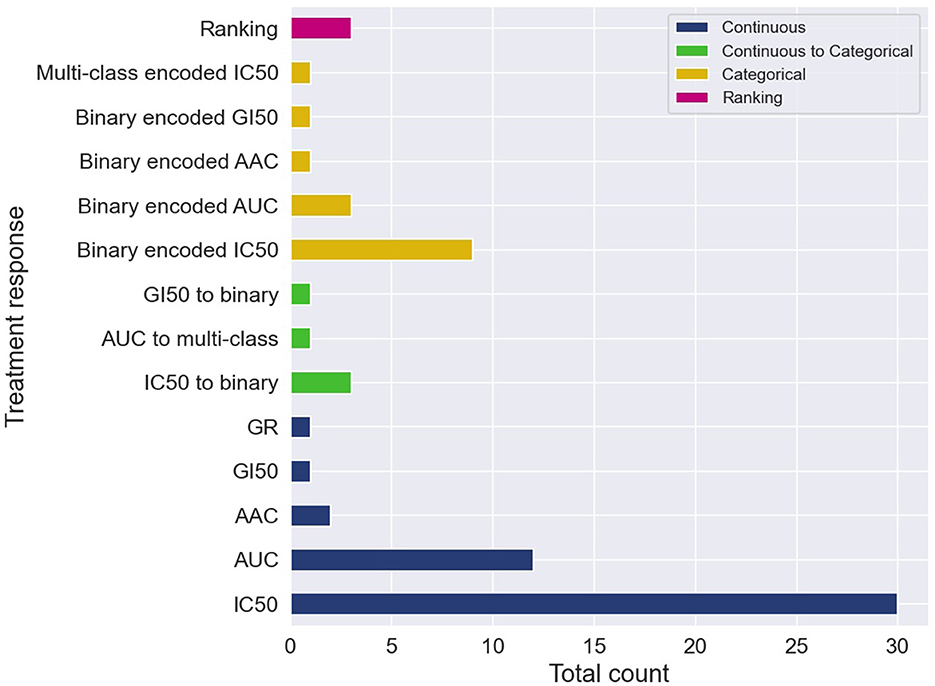

The cell line dose-dependent responses lack a direct translation into the space of in vivo cancer models. A common approach is to extract from the dose-response curve a single-value summary statistic which represents the response for a cell-drug pair. Several methods exist allowing to calculate continuous response values which serve as the prediction target with supervised regression models. The two most common metrics are IC50 and AUC, with IC50 being substantially more prevalent (Figure 4).

Figure 4. Cell-line drug response data is usually represented with continuous or categorical values. Drug response prediction (DRP) models use the different drug response representations to train regression, classification and ranking models. The histogram illustrates the prevalence of the difference representations and learning tasks. Certain papers exploit several representations of response and learning tasks, and therefore, these papers contribute more than one item to the histogram. The label categorical means that the continuous response was first categorized and then a DRP classifier was trained, while continuous to categorical means that a DRP regressor was trained and then the predicted response was categorized (in both cases, classification metrics were used for performance analysis).

Observing such predominance of IC50, an immediate question is whether IC50 exhibits substantial benefits over other measures. The half-maximal inhibitory concentration, i.e., IC50, is the concentration at which the drug reaches half of its maximal inhibition power on the fitted dose-response curve. Alternatively, measures such as AUC (area under the dose-response curve), AAC (area above the dose-response curve or activity area), and DSS (drug sensitivity score), are obtained by aggregating the cell viability values across a range of concentrations of the dose-response curve, providing what is considered a more global measure of response. Arguments in favor of these global measures are available, discussing the benefits of AUC (41, 60) and DSS (61) as opposed to IC50. In addition, empirical analyzes suggest better prediction generalization in the case of using AAC values as opposed to the alternative of using IC50 (14).

3.1.2. Categorical measures of response

Despite the prevalence of IC50 and AUC, there are arguments suggesting that continuous measures of response (blue in Figure 4) lack a straightforward interpretation in the context of decision-making purposes. Alternatively, a categorical output representing a discrete level of response such as sensitive vs. resistant is more comprehensible for humans, and thus, better supports actionable outcomes. Two primary approaches were used to produce categorical responses with DRP models.

In the more common approach, continuous responses were first categorized usually using one of the available methods such as waterfall algorithm (55, 62), LOBICO (56, 63), or a histogram-based method (64, 65). DL classifiers were trained on the transformed values to predict class probability, which is subsequently translated into a discrete response label (orange in Figure 4). The predicted probability can also be utilized as a quantitative measure of prediction uncertainty, an essential aspect in decision-making. Most models were trained to predict a binary response, representing that cancer is either sensitive or resistant to treatment. A multi-class classifier with three classes corresponding to low, intermediate, and high responsiveness, was also explored (66). The second approach is to train regression models to predict one of the continuous responses and then categorize it into two or more classes (green in Figure 4). Only a few papers explored this approach, including binary (67–70) and multi-class labels (71). This approach naturally allows assessing model performance using both regression and classification metrics, possibly offering a more robust generalization analysis.

3.1.3. Ranking

In addition to producing continuous or categorical predictions, models can be trained to learn a ranking function with drug response data. In the context of clinical precision oncology, the clinician might be interested in obtaining a ranked list of the top-k drugs that are likely to be most beneficial for the patient. In our search, we obtained three ranking models, all of which targeting personalized treatment recommendation. By framing the problem as a ranking task, the authors proposed models that learn to produce a ranked list of drugs per cell-line that are most likely to inhibit cell viability. Prasse et al. (72) transformed IC50 into drug relevance scores and derived a differentiable optimization criteria to solve the ranking problem which can be combined with different NN architectures. By combining their ranking learning method with the PaccMann architecture (73) and a fully-connected NN (FC-NN), they significantly improved the ranking performance as compared to baseline models. SRDFM used a deep factorization machine (DeepFM) with pairwise ranking approach which generates relative rankings of drugs rather than exact relevance scores (74). PPORank generates drug rankings using deep reinforcement learning which enables to sequentially improve the model as more data becomes available. SRDFM and PPORank rankings outperform non-DL models.

3.2. Patient derived xenografts and patient tumors

Whereas, cell line data serve as the primary resource for training DL models, several papers proposed methods for predicting response in PDX and patient tumors. PDX is a contemporary cancer model that was developed to better emulate human cancer in medium-scale drug screenings, providing a controlled environment for studying the disease and systematically testing treatments in pre-clinical settings. While systematic drug screenings with patients is practically impossible (6), public data containing treatment response in individual patients are available.

Tumor response in humans is obtained via a standardized evaluation framework called Response Evaluation Criteria in Solid Tumors (RECIST) (75). RECIST involves non-invasive imaging followed by evaluation of change in tumor size. Four categories used for grading tumor change include Complete Response (CR), Partial Response (PR), Progressive Disease (PD) and Stable Disease (SD). Tumor change in PDXs is evaluated by monitoring tumor volume over time using more traditional methods (e.g., calipers) as opposed to using RECIST, primarily due to cost. The main data resource for modeling drug response with PDXs, provides continuous response values calculated based on tumor volume (59). To create a counterpart to RECIST, they also set cutoff criteria allowing to transform the continuous response values into the four categories.

Because discrete response labels are available in patient and PDX datasets, DRP models are primarily designed as classifiers, where the four-label RECIST categories are transformed into sensitive (CR and PR) and resistant (PD and SD) labels. Small sample size, however, is a major challenge in developing DL models with these datasets. This issue is generally addressed by incorporating abundant cell line data and transfer learning schemes to improve predictions in PDX and patients (discussed in Section 6.2.2).

4. Cancer representations

As personalized oncology treatments rely on omics biomarkers, progress in high-throughput tissue profiling plays an important role in existing and future cancer therapies. Pharmaco-omic studies conduct multiomic profiling of cancer models and drug screening experiments (9). Advances in sequencing technologies allowed to substantially scale the experimental studies by increasing the throughput rate and reducing the cost of profiling. The algorithms for processing the raw data evolve as well, thereby providing more reliable representations of the underlying cancer biology and allowing projects to update their repositories with refined versions of existing data while utilizing improved processing techniques (e.g., DepMap portal2).

Dedicated bioinformatics pipelines for transforming raw data depend on the omic type and the profiling technology, where common steps include alignment, quantification, normalization, and quality control. Profiling at each omic level produces high-dimensional representation of cancer that can be used as features in the downstream modeling of drug response. Existing prediction models utilize features that were obtained primarily at four omic levels including genomic [mutation, copy-number variation (CNV)], transcriptomic (gene expression microarrays, RNA-Seq), epigenomic (methylation), and proteomic [Reverse Phase Protein Arrays (RPPA)] (9). Figure 5A shows the prevalence of these representations in DRP models. Many models use these omic features directly as inputs to NNs while others optimize representations via additional preprocessing with the goal of increasing predictive power (76, 77).

Figure 5. Feature representations in drug response prediction (DRP) models. Various representations can be used to represent cancers and drugs in DRP models, as described in Sections 4, 5, respectively. Each DRP model usually exploits one or multiple feature types (Supplementary Table 1 lists the feature types that each model have used). (A) The prevalence of omics (cancer) feature representations in DRP models. (B) The prevalence of drug feature representations in DRP models. (C) The distribution of papers that utilized single-omics and multiomics features in DRP models. We used Supplementary Table 1 to generate these figures, where we considered only peer-reviewed publications, collected until August 2022. CNV, copy number variations; RPPA, reverse phase protein arrays.

In 2014, the NCI-DREAM challenge was a community effort that assessed performance of various classical ML models in predicting drug response in breast cancer cell-lines (27). It was reported that for most models participated in the challenge, gene expression microarrays provided greater predictive power than other data types. Results of this challenge seem to set the tone for future research in this field as gene expression was used in approximately 90% of the models either alone or in combination with other feature types, including mutation, CNV, methylation, and RPPA. Mutation and CNV are also common but rarely used without gene expression (with only 12% of such models).

Learning from multiple omic types have shown to improve generalization, and as consequence, integration of multiomics have been a recent trend (Figure 5C). A straightforward approach is to concatenate the multiomic profiles to form a feature vector and pass it as an input to a NN model, a method commonly referred to as early integration. It has been shown, however, that late integration significantly improves predictions where the different omic profiles are passed through separate subnetworks before the integration (39, 40, 78). Yet, caveats related to data availability hinder the pertinence of multiomics as opposed to using single-omics. Not having all omics available for all samples is a common occurrence in pharmaco-omic projects. A common approach to address this issue is filtering the dataset to retain a subset of cancer samples that contain all the required omic types as well as drug response data. The multiomics data is closely related to multimodal learning which refers to prediction models that learn jointly from multiple data modalities (i.e., representations). It was demonstrated that leveraging multimodal data generally improves predictions.

5. Representations of drug compounds

Contemporary cancer treatment often involves administering therapeutic drugs to patients to inhibit or stop growth of cancer cells, destroy tumors, or boost cancer-related immune system. Drug molecules are 3-D chemical structures consisting of atoms and bonds with complex atomic interactions. Developing numerical representation of molecules is an active research area in several related disciplines, including in silico design and discovery of anticancer drugs.

Ultimately, drug representations should be able to encode essential physical and chemical properties of molecules in compact formats. Alternatively, when using these representations as drug features for DRP, the model should be able to properly ingest these features and extract information predictive of treatment response. Therefore, the inherent information encoded with numerical drug representations and the model capability to learn from that specific representation are closely related and mutually important for producing efficient DRP models.

Neural networks provide substantially more flexibility than classical ML in learning from unstructured data such as strings, images, and graphs. This flexibility facilitates a noticeable trend of exploring various drug representations as features in DL-based DRP models (21). Primary types of drug representations include SMILES, fingerprints, descriptors, and graph-based structures (Figure 5B).

5.1. SMILES

Perhaps the most common format for querying, handling and storing molecules when working with modern chemoinformatics software tools and databases is a linear notation format called SMILES (simplified molecular-input line-entry system). With this data structure, each molecule is represented with a string of symbolic characters generated by a graph traversal algorithm (21, 79). Although the use of SMILES in DRP is quite limited as compared to other applications (e.g., molecule property prediction, QSAR), this format provides several benefits.

Following common text preprocessing steps (e.g., tokenization, one-hot encoding), SMILES can be naturally used with common sequence-aware modules such as RNN (72, 73, 80) and 1-D CNN (81). Moreover, each molecule can be represented with different strings depending on the initial conditions when applying the SMILES generating algorithm (i.e., starting node of graph traversal). The resulting strings are referred to as randomized or enumerated SMILES and have been reported to improve generalization when utilized for drug augmentation (82, 83). SMILES are less prevalent in DRP models but remain a popular notation for describing molecules because it often serves as an intermediate step for generating other representations such as fingerprints, descriptors, and graph structures.

5.2. Descriptors and fingerprints

Fingerprints (FPs) and descriptors are the two most common feature types for representing drugs in DRP papers (Figure 5B). Unlike with SMILES, where the string length varies for different molecules, we can specify the same number of features for all drugs in a dataset with either FPs or descriptors. The consistent feature dimensionality across drugs makes descriptors and FPs easy to use with NNs and classical ML. With FPs, a drug is a binary vector where each value encodes presence or absence of a molecular substructure with a common vector size of 512, 1,024, or 2,048. Multiple algorithms for generating FPs are available, implemented by several chemoinformatics packages. For example, Morgan FPs refers to Extended Connectivity Fingerprints (ECFPs) generated via the Morgan algorithm. ECFP is a class of circular FPs where atom neighborhoods are numerically encoded with binary values. The open-source package RDKit provides an API for generating these FPs which are often used for DRP.3 Descriptors are a vector of continuous and discrete values representing various physical and chemical properties, usually containing hundreds or a few thousands of variables. Both open-source and proprietary software tools are available for generating descriptors, where PaDEL (84), Mordred (85), and Dragon (86) being particularly used for DRP. A systematic assessment of various features and HPs suggests that DRP with DL exhibits no significant difference when utilizing ECFP, Mordred, or Dragon representations as drug features (87).

5.3. Graph structures

Graphs are powerful representations where complex systems can be represented using nodes and edges. Recent advancements in GNNs have opened promising research directions allowing efficient learning of predictive representations from graph data (88–90), and contributing to the widespread interest in GNN among AI researchers and application domain experts (91, 92). Graph-based representations have emerged as a new trend in computational drug development and discovery (93, 94). In graph notation, each molecule is described with a unique graph of nodes and edges, where each atom (i.e., node) and each bond (i.e., edge) can be represented with multiple features characterizing physical and chemical properties. Resources and examples for constructing graphs are well documented in popular chemoinformatics toolkits, as well as software packages dedicated for GNNs (95–97).

By borrowing methodologies from related fields such as drug property prediction and drug design, developers of DRP models exploit graph molecular structures for representing drugs combined with GNN-based architectures. We observe concordant results among those papers investigating molecular graphs with GNNs, reporting superior performance of their models compared to baselines that use non-graph representations. Based on these recent results and the assumption that graphical description is arguably a more natural way to represent drugs as compared to the aforementioned alternatives, molecular graphs combined with GNNs should be a default modeling choice. However, only few papers actually report results with rigorous ablation analysis with extensive HP tuning comparing molecular graphs with other representations such as SMILES, FPs, and descriptors for the application of DRP.

6. Deep learning methods for drug response prediction

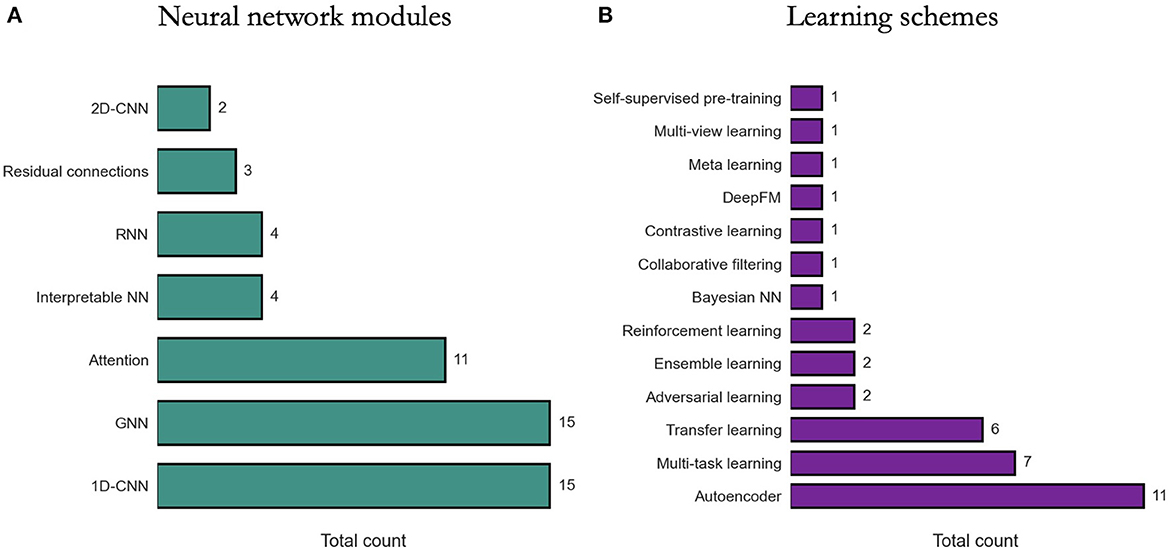

We review existing approaches for modeling DRP with DL in terms of two perspectives: (1) NN modules that are used as building blocks for constructing DRP architectures, (2) learning schemes that are used to train models with the goal to improve prediction generalization. The diversity of methods is highlighted in Figure 6.

Figure 6. Prevalence of deep learning methods in drug response prediction (DRP) papers. Various methods have been used to build DRP models which can be categorized into neural network (NN) modules (Section 6.1) and learning schemes (Section 6.2). The prevalence of NN modules and learning schemes across papers (from Supplementary Table 1) is shown, respectively, in (A, B). 1D-CNN and 2D-CNN, one- and two-dimensional convolutional NN; GNN, graph NN; DeepFM, deep factorization machine.

6.1. Neural network modules

Constructing a NN architecture requires choosing appropriate components, their properties, and the way these components are organized and connected (i.e., network topology). These components are often available as modules in DL frameworks and range from simple dense layers to more advanced structures such as attention. Certain structures, however, are not generally available as is and should be constructed manually using the available modules (e.g., residual connections). While there is an absence of rigorous methodologies for designing network topology, the choice of certain modules can be driven by the characteristics of input data.

6.1.1. Dense layers

Menden et al. (34) is a pioneering work where cell line and drug features were used to train an FC-NN with a single hidden layer to predict IC50. Recently, FC-NN models with multiple dense hidden layers have been proposed to predict response in cell lines (71) and humans (67). Several other models with only dense layers were explored, combining advanced NN attributes. MOLI utilizes triplet loss function and late integration of multiomic inputs to generalize across cancer models by training with cell lines and predicting in PDXs and patients (39). DrugOrchestra is multi-task model that jointly learns to predict drug response, drug target, and side effects (98). RefDNN explores data preprocessing techniques to generate more predictive cell line and drug representations (99). For cell line representation, multiple ElasticNets produce a vector representing drug resistance of a cell line to a set of reference drugs, while for drug representation, a structure similarity profile is computed for each input drug with respect to the set of reference drugs. Another model with late integration of multiomic data is proposed for predicting drug response and survival outcomes which also uses neighborhood component analysis for feature selection of multiomic data (69). PathDSP utilizes multi-modal data preprocessed via pathway enrichment analysis and integrated into an FC-NN for DRP (45). All the aforementioned models demonstrate promising prediction performance while utilizing dense layers only in their architectures.

6.1.2. Convolutional layers

Due to state-of-the-art performance in various applications (100) and fewer learning parameters required as compared to dense layers, 1-D CNNs are a popular choice in DRP models, particularly for processing omics data. DRP models utilizing CNNs have been published every year since 2018.

DeepIC50 is a multi-class classifier exploiting an early integration of cell line mutations and drug features (descriptors and FPs) passed to a three-layer CNN and followed by an FC-NN prediction module (66). tCNNs exploits late integration of two parallel CNN subnetworks with three layers each (101). Binary features of cell lines (mutations and CNV) and drugs (one-hot encoded SMILES) are propagated through the respective subnetworks, concatenated, and passed through an dense layer for the prediction of IC50. GraphDRP is very similar to tCNNs in terms of CNN utilization, with the primary difference being the utility of molecular graphs and GNN layers for learning drug representations. CDRScan is an ensemble of five different architectures with varying design choices (late and early integration, shallow and deep NNs, with and without dense layers) which all contain CNNs and learn to predict IC50 from mutation and FP data (102).

DeepCDR takes the design further by exploiting late integration of drug and multiomic features, including genomic mutation, gene expression, and DNA methylation, where only the mutations are passed through a CNN layers (103). Following DeepCDR, two similar models were published, GraTransDRP (104) and GraOmicDRP (105) which both use late integration of multiomics and molecular graphs for drug representation. While DeepCDR uses CNNs only for genomic features, all three subnetworks for multiomic inputs consist of CNNs in GraTransDRP and GraOmicDRP. SWNet is another model utilizing late integration of cell lines and drugs with three CNN layers in the cell-line subnetwork and one such layer in the prediction subnetwork (106).

We may gather that 1-D CNNs carry substantial benefits in the context of DRP, considering their prevalence (Figure 6). Based on our search, however, only two papers explicitly report their empirical findings assessing CNNs vs. alternative learning modules. Interestingly, both papers suggest that CNNs underperform dense layers in their respective architectures. Zhao et al. (71) reports that in a twelve-layer model predicting response from gene expression, dense layers outperform CNNs or RNNs. Manica et al. (73) present extensive analyzes exploring various architectures for encoding drug representations directly from SMILES, including CNNs, bidirectional RNNs (bRNNs), and various attention modules. Their results suggest that a combination of convolutional and attentions modules produces the most predictive model while a CNN-only encoding subnetwork performs the worst as compared to the explored variants.

With the objective to leverage 2-D CNNs, two algorithms were recently published for converting tabular data into images and utilizing CNNs for predicting drug response. Both algorithms, REFINED (76) and IGTD (77), convert gene expressions and drug descriptors into images as a preprocessing step, and then learn to predict drug response with late integration of 2-D CNN subnetworks. The papers report superior performance of proposed algorithms as compared to various baseline models.

6.1.3. Attention mechanism

Presenting PaccMann (80), Oskooei et al. were the first to report the use of attention-based NN for DRP, exploiting late feature integration with attention mechanisms incorporated both in the cell line and drug subnetworks. On the cell line path, gene expressions are encoded with self-attention producing a gene attention (GA) vector. On drug path, SMILES embeddings are combined with GA via contextual-attention, where the GA vector serves as the context. This design which was further described in Manica et al. (73) improves generalization as compared to baselines as well as facilitates interpretability via attention weights.

Attention-based designs have been further explored in recent years. CADRE is a collaborative filtering model with contextual-attention and pre-trained gene embeddings (gene2vec), designed to recommend treatments based on cell line gene expressions (107). Ablation analyzes against simpler models suggest that CADRE's attributes yield improved generalization. Moreover, the authors show how attention weights could be used to identify biomarker genes, partially addressing the notorious black-box property associated with many DL models. In AGMI, attentions are used for aggregating heterogeneous feature types, including raw multiomics as well as engineered features via protein-protein interaction (PPI), gene pathways, and gene correlations with PCC (Pearson correlation coefficient), contributing to improved generalization. Overall, papers report the predictive benefits of attentions (106–111), while a few papers also explore attention weights for interpretability (107, 110).

Transformer is an attention-based architecture that have recently been explored in DRP models for encoding drug representations (70, 104). In GraTransDRP (104), the authors propose to extend an existing model, GraOmicDRP (105), by modifying the drug subnetwork to include graph attention network (GAT), graph isomorphism network (GIN), and a graph transformer which learns from graph data. In DeepTTA (70), a transformer module encodes drug information represented as text data [ESPF substructures (112)]. Both models report significant improvement in generalization thanks to transformer modules. Transformers have shown immense success first in language and later vision applications, and expected to gain further attention from the DRP community.

6.1.4. Graph neural network layers

Designed to efficiently learn from graph data, GNNs have been extremely popular in applications where information can be represented as graphs. With at least 15 GNN-based DRP models published since 2020 (Supplementary Table 1), it is apparent that the use of GNNs with graph data becomes an emerging alternative to some of the more traditional approaches discussed above. In DRP papers, a graph, G = (V, E), is often characterized by a set of nodes V (a.k.a. vertices), a set of edges E (a.k.a. links), an adjacency matrix W which contains values representing associations between the nodes, and an attribute matrix A containing feature vectors representing node attributes (113). A primary challenge that researchers are facing when designing GNN-based models lies in how to transform components of the DRP problem into graphs. Luckily, accumulated knowledge and methodologies allow viewing biological and chemical systems as networks.

Due to substantial progress in applying GNNs to drug discovery and development (94, 95), it was relatively straightforward to adopt similar techniques to DRP. Papers propose models that convert drug SMILES into graphs and utilize modern GNN layers to encode latent drug representations (49, 103–106, 114). The common approach is constructing a unique graph per drug with nodes and edges representing atoms and bonds, respectively, where node features are the properties of each atom. The subnetworks consist of several (usually 3–5) GNN layers such as GCN and GIN.

Another approach is constructing graphs using biological information of cancer samples, where genes are the graph nodes and gene relationships are the edges. There is slightly more diversity of approaches in this space as compared to drugs partially due to the use multiomic data which allow constructing graphs with heterogeneous node attributes. The multiomics can be utilized to encode gene relationships and attributes using one or more data modalities, including correlations between genes (109, 111, 115, 116), known protein interactions (i.e., PPI) (109, 111, 117, 118) using STRING database (119), and relationships based on known gene pathways (109) using GSEA dataset (120). Recently, novel approaches have been explored such as heterogeneous graphs where both cell lines and drugs are encoded as graph nodes (108, 116, 118, 121), and a model that utilizes diverse data types for building graphs, including differential gene expressions, disease-gene association scores and kinase inhibitor profiling (111). Despite the diversity of the different approaches, most papers report superior performance as compared baseline models.

6.2. Learning schemes

Various learning schemes have been proposed to improve drug response prediction in cancer models. These techniques can be used in different configurations, usually regardless of the fundamental NN building blocks that are used to construct the architecture.

6.2.1. Autoencoders

When considering the large feature space of cancer and drug representations (Sections 4, 5) and the size of drug response datasets, the number of input features outnumbers the number of response samples which potentially leads to model overfitting (6). Learning predictive representations with high-dimensional data manifests a primary strength of multi-layer NNs. A prominent example are autoencoders (AEs) which are NNs that are trained to compress and then reconstruct data in an end-to-end unsupervised learning fashion. Given that an AE can reliably reconstruct the input data, the compressed latent representation is characterized by reduced data redundancy which improves the feature to sample ratio and can be subsequently used as inputs in downstream drug response prediction task.

The primary application of AEs in DRP models is dimensionality reduction. The compressed representation is used as input to a DRP model which is usually a NN or in some cases a classical ML model. DeepDSC reduces gene expressions from about 20,000 genes down to 500 and concatenates the downsampled data with drug FPs as inputs to a FC-NN (122). VAEN exploits variational AEs (VAEs) to learn a low-dimensional representation of gene expressions which are fed into ElasticNet (48). DEERS compresses cancer features (gene expression, mutation, and tissue type) and drugs features (kinase inhibition profiles) into 10 dimensions which are concatenated for a FC-NN (44). Dr.VAE learns pre- and post-treatment embeddings of gene expressions and leverages those embeddings for DRP (123). AutoBorutaRF combines AEs with a feature selection method followed by Boruta algorithm and RF (124). Ding et al. (125) utilized learned representations of multiple hidden layers of the encoder as input features, rather than using just the latent representation.

AEs have also been utilized with external datasets as part of model pretraining. DeepDR uses gene expressions and mutations from the TCGA database to train separate AEs for each omic type (126). The pre-trained encoders are extracted from the individual AEs, concatenated, and passed as inputs to a FC-NN. The combined model was trained to predict drug response in cell lines. Another model uses separate AEs to encode cancer and drug features (127). A GeneVAE encodes gene expressions from CCLE dataset, and a Junction-Tree VAE (JT-VAE) (128) encodes drug molecular graphs from the ZINC database. Similar to DeepDR, the pre-trained encoders are concatenated and combined for DRP.

Dimensionality reduction and model pre-training are common applications of AEs. Yet, AEs have also been utilized in less conventional ways. Xia et al. (41) proposed UnoMT, a multi-task learning (MTL) model that predicts drug response in cell lines via late-integration of multiomic features and drug representations. In addition to the primary DRP task, the model predicts several auxiliary tasks, where one of them is a decoder that reconstructs gene expressions. TUGDA exploits advanced learning schemes such as domain adaption and MTL to improve generalization in datasets with limited sample size (51). Each prediction task in the MTL corresponds to a different drug. With the goal to mitigate the influence of unreliable tasks and reduce the risk of negative transfer, a regularization AE takes the model output and reconstructs an intermediate hidden layer.

6.2.2. Transfer learning

Transfer learning refers to learning schemes designed to improve generalization performance in a target domain T by transferring knowledge acquired in a source domain S (129). The most common scenario is to transfer learned representations from a source domain with large amounts of data into a target domain which suffers from insufficient data. Transfer learning with DL have shown remarkable success in various applications (130) and have recently been applied to DRP (14, 42, 50, 51, 78, 131).

Zhu et al. proposed an ensemble transfer learning (ETL) that extends the classical transfer learning by combining predictions from multiple models, each trained on a cell-line dataset and fine-tuned on a different cell-line dataset. Considering three application scenarios, a NN with late-integration of gene expression and drug descriptors outperformed baselines in most cases, while the LightGBM showed superior performance in certain experimental settings. AITL was proposed to improve generalization across biological domains (50). The model was trained using gene expressions and drug responses from source and target domain data, where abundant cell line data serve as source and the relatively scarce data from either PDXs or patients serves as target. A primary component for accomplishing knowledge transfer is a feature extractor subnetwork that learns shared representations using source and target features, which are passed to multi-task subnetwork for response prediction of source and target samples. Ma et al. (131) proposed TCRP, a two-phase learning framework with meta-learning as a pre-training step followed by a few-shot learning for context transfer. With the goal to obtain transferable knowledge, a meta-learning is applied iteratively by training a simple NN with different subsets of cell lines. The same NN was trained to predict drug response in both phases but with different data. With a few samples from the target context, the pre-trained NN was further trained for a single iteration, demonstrating performance with patient-derived cell line (PDTC) and PDXs.

ETL and AITL can be categorized as inductive transfer learning, where labels from both the source and target data are used to improve generalization on target data (130). However, abundance of per-clinical and clinical data are not labeled, and therefore, remain unused with classical transfer learning. Velodrome is a semi-supervised model that exploits labeled cell lines and unlabeled patient data to improve out-of-distribution (OOD) generalization on datasets that remained unused during training. Generalization has been demonstrated with PDX, patient, and cell lines from non-solid tissue types. TUGDA is an MTL and domain adaptation model which relies on cell line data and advanced learning schemes to improve predictions in data-limited cancer models (51). The model takes gene expressions and learns a latent shared representation that is propagated to predict drug response in a multi-task fashion with each task corresponding to a different drug. To enable domain adaptation from cell lines to other cancer models such as PDXs and patients, adversarial learning is used where a discriminator is branched from the shared layer to classify the type of cancer model. The discriminator is employed in supervised and unsupervised steps, with source- and target domain samples, respectively.

6.2.3. Multi-task learning

As transfer learning and multi-task learning (MTL) are related approaches (129, 130), several models have integrated both methods in their DL-based DRP models (41, 50, 51). However, utilizing MTL without transfer learning is also common. DrugOrchestra is an original work where multiple heterogeneous data sources were curated to develop a multi-task model for simultaneously learning to predict drug response, drug target, and side effects while utilizing advanced weigh sharing approaches (98). SWnet proposed a gene weight layer which scales the contributions of mutations when combined with gene expressions as a multiomic input (106). In its simplest form, the weight layer is trained for the entire dataset. The authors also explored a weight matrix where each vector is determined for a single drug. Considering N drugs in the dataset, the model was trained in an MTL fashion with N prediction tasks, one task for each drug, outperforming the single weight layer configuration. Zhu et al. (118) used MTL in a pre-training step to accumulate chemical knowledge by training a GNN to predict more than a thousand biochemical properties of molecules, a strategy adopted from another work (132). The pre-trained model was integrated into the complete DRP model called TGSA.

7. Model evaluation and comparison

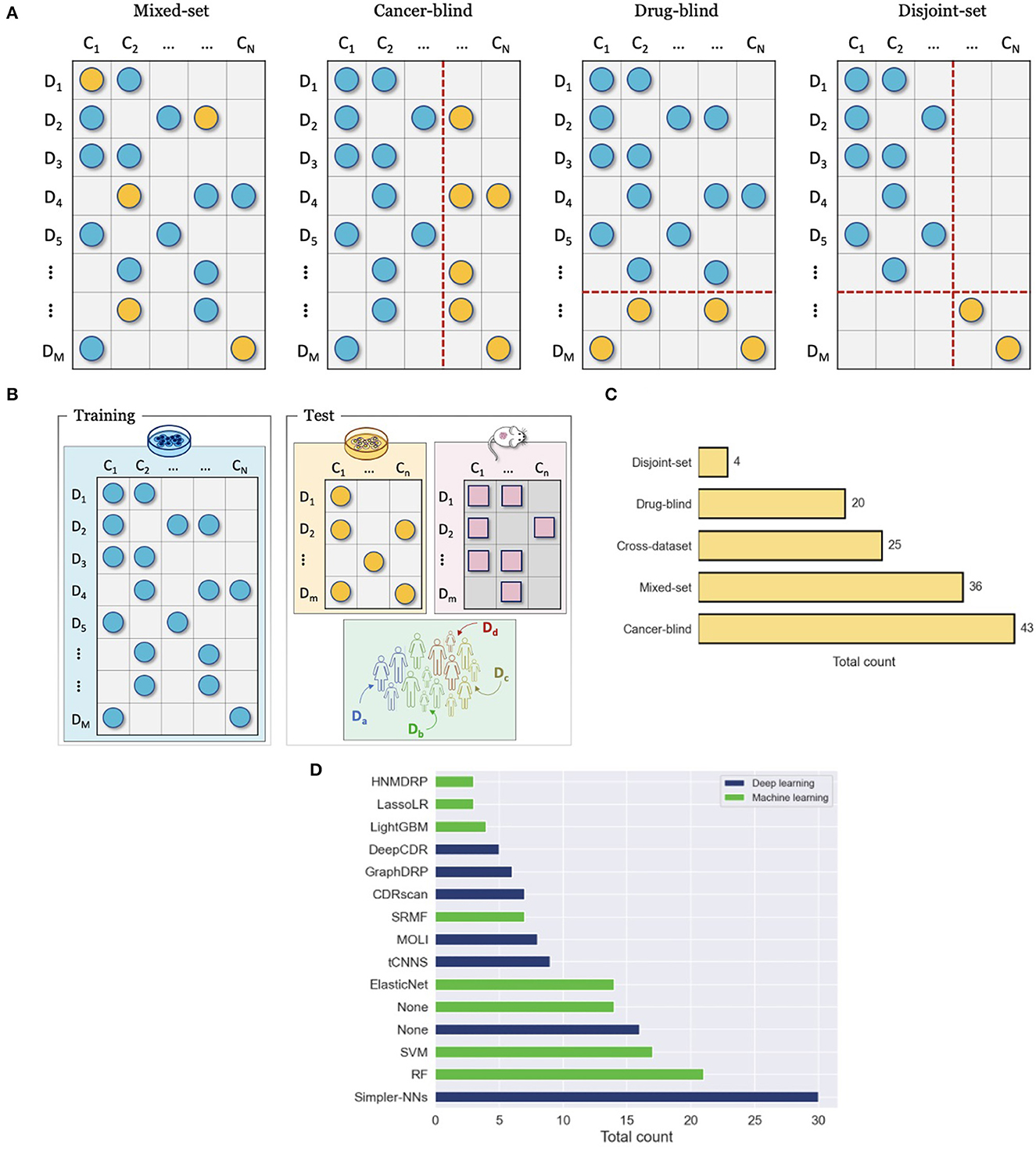

Papers proposing DRP models explore advanced approaches for data representation, NN architecture design, and learning schemes. To motivate the increasing complexity of methods, it is essential to demonstrate their utility for cancer medicine and advantages against existing approaches (i.e., baseline models). Model performance in these papers is primarily assessed via prediction generalization, i.e., prediction accuracy on a test set of unseen data samples, with several common metrics used for regression, classification, and ranking. The choice of the test set is critical as it may illustrate the potential utility of the model for a given application in cancer medicine and significantly affect the observed performance. To make a comparison between proposed and existing models compelling, consistent evaluations should be utilized in terms of drug response datasets, training and test sets, evaluation metrics, and optimization efforts (e.g., HP tuning). This section presents common evaluation approaches used for prediction generalization in terms of drug screening datasets (single or multiple datasets), data splitting strategies (train and test), and baselines models (Figure 7).

Figure 7. Evaluation and comparison of drug response prediction (DRP) models. (A) Data splitting strategies for evaluating performance with a single drug screening study include mixed-set, cancer-blind, drug-blind, and disjoint-set. (B) Cross-dataset evaluation where drug response data that is used for training and evaluation come from different studies. (C) Prevalence of common evaluation methods across studies (from Supplementary Table 1). The methods include mixed-set, cancer-blind, drug-blind, disjoint-set, and cross-dataset, as described in Sections 7.2, 7.1. (D) Histogram of the top fifteen most popular baseline models that were used to benchmark prediction performance of DRP models. The baselines are color-coded as either deep learning (DL) or classical machine learning (ML). The label Simpler-NNs refers to simpler versions of proposed models, where ablation analysis was usually conducted. The label None refers to cases where this type of baseline (i.e., ML or DL) was not used in performance analysis at all (e.g., the blue bar for None shows that 16 of the papers have not used DL-based baselines in their analysis).

7.1. Single-dataset evaluation

A single drug screening study is most commonly used to develop and assess performance of DRP models. Regardless of specific cancer models, the screening data space can be characterized by the involved drugs and cancers. The 2-D matrix in Figure 3 illustrates such a space composed of a finite number of known cancer cases and drugs, where the marked coordinates symbolize that treatment responses are available for these combinations. Considering this 2-D space, four data splitting strategies are commonly used, where each can imitate a different application scenario of drug response prediction (Figure 7A).

7.1.1. Known cancer and drugs (mixed-set)

To ensure a valid assessment of prediction generalization, training and test sets should contain unique cancer-drug pairs without overlap. The overlap may occur, however, on cancer or drug (but not both) in which case a model encounters drug or cancer features during testing that also showed up during the training phase. For example, consider two drug response samples r11 = {d1, c1} included in the training set and r12 = {d1, c2} in the test set. While the pairs are unique, the drug features of d1 show up during both training and testing. The cancers or drugs that show up in test and training set are deemed as known or seen. This splitting strategy is also known as mixed-set, where drugs and cancers are mixed in training and test sets. Randomly splitting cancer-drug pairs naturally results in a mixed-set analysis, where drug and cancer features can show up in both training and test sets. Due to the overlap on both feature dimensions, this analysis naturally leads to the highest performance, and it is also the most common and straightforward to implement. Because of the simplicity of this strategy, almost all papers include it in their analysis. An application of prediction models developed with the mixed-set validation is repurposing of known anticancer drugs for unexplored cancer conditions that have not been treated with these drugs. When considering the 2-D space of known cancer cases and drugs in a given dataset, not all cancer cases and drugs have been screened against each other. If a DRP model exhibits high generalization, it can be deployed as a virtual screening tool which helps in identifying highly sensitive cancer-drug pairs and thereby assisting in the design of experiments or treatments.

7.1.2. Unknown cancers and known drugs (cancer-blind)

When a dataset is split such that an overlap occurs only on drugs but not on cancer cases, it is said that drugs are known but the cancer cases are unknown with respect to the test set. This splitting strategy is also sometimes referred to as cancer-disjoint, cancer-blind, unseen-cancer, and a special case called leave-n-cancers-out where a certain number of cancers is excluded from the training set. Splitting a drug response dataset this way requires extra work which is, therefore, less common than random splitting but still prevalent, especially in recent years. The performance is usually worse than in the mixed-set analysis due to increased complexity in generalizing across the omics feature space of cancers. Alternatively, this type of analysis is perhaps the most adequate in simulating the utility of DRP models for the design of personalized cancer treatment. When considering a potential workflow of utilizing a DRP model for personalized cancer treatment, the patient tumor data is not expected to be included in the training set that was used for model development. However, the drug treatments would be chosen from a collection of known compounds (approved or investigational) that may have been screened against other cancer cases, the drug features of which were used to train the prediction model during model development. Models that exhibit high generalization in this scenario are considered more suitable for designing personalized cancer treatment.

7.1.3. Known cancers and unknown drugs (drug-blind)

Analogously to cancer-blind analysis, the drug-blind refers to data partitioning where an overlap between the training and test sets may occur on cancers but not on drugs. This data splitting strategy is also sometimes referred to as drug-disjoint, unseen-drugs, and a special case called leave-n-drugs-out where a certain number of drugs is excluded from the training set. Interestingly, the generalization in drug-blind analysis as evaluated by common performance metrics is significantly worse than in mixed-set and cancer-blind analysis and, in some cases, models completely fail to make effective predictions. The worse performance can be attributed to the immense chemical space of drug compounds which imposes a challenge on predictive models of learning generalizable feature embeddings with just a few hundreds of drugs that were screened in typical drug screening studies. In addition, it has been shown empirically that drug diversity contributes to a majority of response variation (41, 133), which can explain the performance drop in drug-blind analysis. Models that excel in generalizing for unknown drugs can be useful for repurposing of non-cancer therapies to cancer indications and development of novel drugs for cancer treatment. Existing drugs approved for non-cancer diseases can be repurposed for cancer treatment, thereby decreasing expenses and speeding up the time to market. A DRP model, exhibiting high performance in drug-blind scenario, can be utilized for in silico drug screening across cancer types and libraries of approved compounds.

7.1.4. Unknown cancers and drugs (disjoint-set)

By extending the drug-blind and cancer-blind analysis to both dimensions, generalization analysis can be performed where both drugs and cancers remain disjoint between training and test sets. This analysis exhibits the worst generalization performance. It is primarily used to assess the capacity of models to generalize in this challenging scenario where the application of such model in clinical or preclinical setting is not essentially obvious. Models exhibiting significantly better performance as compared to baselines could be interesting cases for further exploration of the model architecture to scenarios more relevant in pre-clinical and clinical settings.

7.2. Cross-dataset evaluation

Another validation scheme is to assess generalization across datasets, where response data for model development and model testing are derived from different drug screening studies (Figure 7B). The source dataset (DS), used for model development, and the target dataset (DT), used for evaluation, can be of the same or different cancer models. Several selected works have been summarized in Sharifi-Noghabi et al. (14), showing that cell lines constitute the primary cancer model for DS while cell lines, PDX, and patient response data are all common options for DT. Cross-dataset analysis is less prevalent as compared to single-dataset analysis. Primary challenges are related to data preparation and arise when standardizing metadata across datasets, including annotations of drugs, cancer samples, and omics features, as well as utilizing consistent data preprocessing steps for feature normalization (e.g., addressing batch effect) and computation of response metrics.

Inconsistencies in drug screening data across cell line studies, partially due to different experimental setups, are an acknowledged reality which impedes naive integration of data from multiple sources into a single dataset (62, 134). A large-scale empirical study focused on assessing generalization of DL and ML models across five cell line datasets suggests that generalization may depend on multiple attributes of DS and DT datasets, including the number of unique drugs and cell lines in DS and DT, the drug and cell line overlap between DS and DT, and the sensitivity assays used for measuring drug response. Although this type of analysis requires adequate knowledge and substantial effort in data preparation and model development, demonstrating the ability to make accurate predictions across datasets or cancer models can be essential for a DRP model to become a favorable candidate for preclinical and clinical studies.

7.3. Baselines

Proposing novel DRP models could be seen unnecessary unless it leads to better performance as compared to existing models. In predictive modeling, baseline models usually refer to models that serve as a point of reference. Figure 7D shows the number of times each model was used as a baseline across papers, where ML-based and DL-based models are color-coded to distinguish between the two types. The label None refers to cases where this type of baseline (i.e., ML or DL) was not used in performance analysis at all (e.g., the blue bar for None shows that 16 of the papers have not used DL-based baselines in their analysis).

The most popular choice for a baseline is to use a simpler version of a proposed model, where simplicity is defined in the context of a given model. For example, in the case of MOLI which advocates for multi-omics late integration with triplet loss function, simpler versions include combinations of single- instead multi-omics, early instead of late integration, and binary cross-entropy loss function instead of triplet loss function (39). Using simpler versions of a model as baselines can be both straightforward and insightful. In many cases, modifying a model to construct a baseline involves minor changes of little complexity where model attributes are replaced with simpler alternatives followed by ablation analysis demonstrating the necessity of the proposed attributes. However, the actual baselines and ablation experiments that are chosen to benchmark models are a critical aspect that affects credibility. Some DL models explore alternative data representations, such as images (76, 77) or graphs (105, 118). In these cases, ablation studies with common vector-based representations (e.g., FPS, descriptors) should be considered. Certain models incorporate structural biological information such as pathways into NN architectures with the goal to produce interpretable DRP models. With these models, a baseline may need to incorporate random pathway information to serve as a reference point to any claimed pathway interpretability, as discussed in Li et al. (135).

The second most popular baseline model is random forest (RF) presumably due to its availability, simplicity, and predictive power. Boosting algorithms such XGBoost and LightGBM are less prevalent although poses similar characteristics as RF yet faster and often more predictive. Tree-based models and boosting algorithms have shown tremendous success in various prediction tasks and data science competitions. HP tuning is often an essential step to squeeze the maximum performance from these algorithms. When these models are used as baselines in DRP papers, very little (if any) HP tuning is performed. When the focus of proposed models is on prediction performance, extensive HP tuning should be performed with the baselines the reported.

Another common approach to demonstrate model performance is to compare it with other community models. The main problem with this approach is that many papers extract reported performance scores from original publications without actually implementing the models or using the same dataset. Model performance is likely to depend on feature types and specific samples allocated for training and testing. Thus, this approach makes sense in cases where benchmark datasets have been established with clearly designated training and testing samples. However, this is not yet the case with DRP tasks. Reproducing results from papers is challenging, time-consuming, and often contradicts the reported scores (26). Alternatively, there are papers that diligently reproduce models and use these as baselines. So long as benchmark datasets have not been established, reproducing models and benchmarking with consistent datasets should be considered the standard if one decides to compare a model with another published community model.

Oftentimes, simple analysis can lead to useful insights. For example, in the same study that analyzed baselines for interpretable pathway-based architectures (135), the authors also explored a naïve baseline which simply reports the average of drug response values across all samples. While very easy to implement, this baseline performed surprisingly well. This simple and model-agnostic baseline can be very informative not only with respect to a specific model, but more generally for the entire prediction task. Despite the extra amount of work which may require the design of appropriate baselines, rigorous and comprehensive benchmark analysis is likely to establish fidelity and attract the community.

8. Discussion

8.1. Practices

Development of DRP models is a comprehensive task, success of which depends on multiple factors, such as prepossessing and representation of data, model training, and performance evaluation. Good design choices related to each component of the DRP workflow (Section 2) can positively contribute to model performance, while bad practices can result in adverse effects, leading to poor performance. Here, we provide a short summary of practices that can guide researchers in developing DRP models.

Feature scaling is a common preprocessing step, usually applied to both cancer and drug features. However, when the dataset contains omics data from multiple sources or batches, active measures should be considered for mitigating systematic differences between the batches, which otherwise are likely to bias the downstream ML analysis (51, 136, 137). Possible measures include batch-correction (51) and architectures that are specifically designed to address discrepancies in the input feature space (50, 52). Notice that models can also benefit from standardizing data in the output space. For example, in order to combine response data from multiple sources, the drug response AUC values shall be calculated based on the same dose range (41). Still in the context of in vitro drug response, there is a consensus that the global measures of response such AUC and AAC are more robust and produce more predictive models as compared to models trained with IC50 (14, 41, 61).

Due to the diversity of NN architectures and learning schemes (Sections 6.1, 6.2), it is challenging to decipher best practices for DRP model development. Yet, some consensus seems to exist regarding several design principles. Late feature integration exhibits better generalization as compared to early integration approaches (39, 40, 78). Transfer learning and domain adaption significantly improve generalization across datasets and biological cancer models (50, 51). Similar to other application domains, DL for DRP usually requires extensive tuning of HPs to boost performance and integration of early stopping during the training process to avoid model over-fitting. Further comprehensive and systematic studies are required to produce unbiased conclusions regarding DL design methodologies for DRP.

Cross-validation with a DRP model can be performed through different data partitioning schemes, each simulating a different application of the model (Section 7). For example, if authors target the application of precision oncology, then cancer-blind analysis should be considered for model evaluation (Section 7.1.2). Alternatively, if mixed-set analysis is used (Section 7.1.1), the model will produce inflated scores, exhibiting overoptimistic results in the context of precision oncology. Similarly, drug-blind analysis should be used with models targeting drug development applications which commonly leads to significantly lower performance scores as compared to mixed-set and cancer-blind analysis (Section 7.1.3). Whenever possible and regardless of the chosen evaluation scheme, extensive cross-validation should be performed with multiple data splits (e.g., k-fold cross-validation repeated several times) that would generate enough data points for statistical significance analysis and comparison with baseline models (i.e., mean and standard deviation).

The choice of adequate baseline models is critical for objectively assessing the true capabilities of proposed methods, where ablation analysis plays a central role. Claims made about the predictive power of promising data representations such as molecular graphs (Section 5.3) should be benchmarked against common alternatives such as descriptors and FPs (Section 5.2). Another reasonable baseline is a model trained on one-hot encoded labels of drugs, which utilize only drug identities while ignoring the features (133, 138). Similar one-hot encoding strategies can be applied to cancer features (138) as well as ablation analysis exploring various omics cancer representations (105). Novel NN modules that aim to better leverage DRP data should be benchmarked against alternative and generally less complex modules (e.g., attention modules vs. dense layer). For models integrating cancer pathway data, randomly generated pathways can be used to evaluate the gain obtained by leveraging the pathway information (135). Another baseline is to take the average of sample response values. While very easy to implement, this naïve baseline often exhibits surprisingly good results and can put the model performance into the appropriate perspective, allowing to control for inflated performance scores (135).

Finally, there are two more practices that could be highly beneficial for increasing visibility, but are often neglected. First, many papers finalize their performance analysis by demonstrating performance scores using various validation schemes. Instead, it would be highly beneficial to discuss how the proposed DRP model could be integrated in a larger patient care or drug development workflow and what are the primary challenges preventing these type of models from being deployed in real-world applications. Use case scenario could be highly relevant in this context. The second practice relates to software implementation. Many papers are accompanied by software and data that were used to build their models. However, the code repositories often miss certain pieces of information required to reproduce the paper results. Instead, it is recommended for papers to publish well-documented repositories with reproduce code, including data preprocessing scripts and installation instructions of the computational environment. This practice will improve the usability of models, their adoption as baselines, and visibility within the community.

8.2. Challenges

In recent years, AI approaches have taken a notable place in health care (139). Their promise of utilizing large data arrays to improve treatment protocols, aid drug development efforts, and increase diagnostic precision is attractive to the clinical research community. However, navigating a complex landscape of factors affecting clinical outcomes is a challenging task. We can see it in the number of emerging papers on the DRP problem (140). The research community put in monumental efforts to develop methods that leverage multiomics and drug data, explore the applicability of the existing methods, and bridge the gap between results on ubiquitously used cell lines and patient-derived models. However, this area is facing multiple challenges related to the lack of unified evaluation framework, generalizability, interpretability as well as challenges relating to computational representations of omic data, biological response, and drug representations.

8.2.1. Unified framework for model evaluation and comparison