
ORIGINAL RESEARCH article

Front. Med., 21 December 2022

Sec. Precision Medicine

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.980950

Yingjian Yang1,2†, Ziran Chen2†, Wei Li2, Nanrong Zeng2,3, Yingwei Guo1,2, Shicong Wang2,3, Wenxin Duan2,3, Yang Liu2,3, Huai Chen4, Xian Li4, Rongchang Chen5,6,7* and Yan Kang1,2,3,8*

Introduction: Because of persistent airflow limitation in chronic obstructive pulmonary disease (COPD), patients with COPD often experience dyspnea. As a leading symptom of COPD, dyspnea deserves special consideration in the treatment of this fragile population and in the pre-clinical health management of COPD. Based on the above, this paper proposes a multi-modal data combination strategy that combines local and global features for dyspnea identification in COPD using a multi-layer perceptron (MLP) classifier.

Methods: First, lung region images are automatically segmented from chest HRCT images, and 1,316 original lung radiomics features (OLR, 1,316) and 13,824 original 3D CNN features (O3C, 13,824) are extracted. Second, the local features, including five selected pulmonary function test (PFT) parameters (SLF, 5), 28 selected lung radiomics features (SLR, 28), and 22 selected 3D CNN features (S3C, 22), are selected from the 11 original PFT parameters (OLF, 11), the 1,316 OLR, and the 13,824 O3C, respectively, by the least absolute shrinkage and selection operator (Lasso) algorithm. Meanwhile, the global features, including two fused PFT parameters (FLF, 2), six fused lung radiomics features (FLR, 6), and 34 fused 3D CNN features (F3C, 34), are obtained by fusing the 11 OLF, 1,316 OLR, and 13,824 O3C, respectively, with the principal component analysis (PCA) algorithm. Finally, all the local and global features (SLF + FLF + SLR + FLR + S3C + F3C, 5 + 2 + 28 + 6 + 22 + 34) are combined for dyspnea identification in COPD with the MLP classifier.

Results: The proposed method comprehensively improves classification performance. The MLP classifier with all the local and global features achieves the best classification performance: 87.7% accuracy, 87.7% precision, 87.7% recall, 87.7% F1-score, and 89.3% AUC.

Discussion: Compared with single-modal data, the proposed strategy effectively improves the classification performance for dyspnea identification in COPD, providing an objective and effective tool for COPD management.

Chronic obstructive pulmonary disease (COPD) is a common lung disease characterized by persistent airflow limitation (1–3). Because of this airflow limitation, patients with COPD often experience dyspnea (4). As a leading symptom of COPD (5), dyspnea deserves special consideration in the treatment of this fragile population and in the pre-clinical health management of COPD. Furthermore, multi-modal biomedical data combination has become an active research topic for facilitating precision and/or personalized medicine (6, 7). Multi-modal data combination is therefore also crucial for the pre-clinical health management of dyspnea caused by COPD.

Pulmonary function tests (PFT) and computed tomography (CT) have become indispensable for COPD assessment and diagnosis. PFT and CT each have their own advantages in diagnosing and evaluating COPD, and they are complementary. Compared with CT, PFT is a non-invasive way to diagnose COPD from stage 0 to IV according to the Global Initiative for Chronic Obstructive Lung Disease (GOLD) criteria, which are accepted by the American Thoracic Society and the European Respiratory Society (3). Specifically, the ratio of forced expiratory volume in 1 s to forced vital capacity (FEV1/FVC) and FEV1% predicted are the gold standards for determining the COPD stage. Meanwhile, in patients with COPD, forced inspiration, particularly the forced inspiratory volume in 1 s (FIV1), yields objective information that correlates closely with subjective dyspnea ratings after bronchodilator inhalation (8). In contrast to PFT, CT images can reflect changes in the lung tissue of COPD patients, and CT has therefore been regarded as the most effective modality for characterizing and quantifying COPD (9). For example, chest CT images can reveal mild centrilobular emphysema associated with decreased exercise tolerance in smokers without airflow limitation in their PFT results (3, 10). In addition, chest CT images allow quantitative analysis of bronchial and airway disease, emphysema, and vascular abnormalities in COPD patients by measuring bronchial and vascular parameters (3). Chest CT images should therefore provide additional imaging information for dyspnea identification in COPD.

Radiomics was proposed in 2007 to extract more information from medical images using advanced feature analysis (11). However, because COPD lesions, which form the region of interest (ROI), are diffusely distributed throughout the lungs, radiomics has developed more slowly in COPD than in other lung diseases, such as lung cancer or pulmonary nodules (12). With significant progress in CT imaging technology, high-resolution CT (HRCT) has become an effective method for the quantitative analysis of COPD (3, 12). However, quantitative analysis of the bronchi and vascular blood flow is still limited by HRCT resolution. Furthermore, it is challenging to segment small airways and small blood vessels from chest HRCT images automatically, semi-automatically, or manually (13–15). In essence, COPD results from characteristic pathological changes in the lung region, including the peripheral airways, parenchyma, and vessels. Lung imaging features extracted from the whole lung region have therefore been used for COPD analysis (3, 12). Identifying dyspnea from lung region HRCT images is thus reasonable, and it avoids the difficult segmentation of small airways and vessels, which is conducive to clinical application. Besides, the value of lung radiomics features extracted from lung region HRCT images for COPD assessment has also been confirmed (16).

Radiomics features have potential applications in COPD, particularly in its diagnosis, treatment, and follow-up, and future directions for radiomics in COPD have been outlined (17). Lung radiomics features have been widely used for COPD stage classification (3, 12), COPD survival prediction (18, 19), COPD presence prediction (20), COPD exacerbations (21), COPD early decision (22), and analysis of COPD and resting heart rate (3). However, radiomics features are extracted from medical images using specific calculation equations, preset image types, and preset feature classes, which limits their forms. Meanwhile, convolutional neural networks (CNNs) for image classification have also developed rapidly (23). Features extracted from medical images with a CNN model may compensate for the limitations of radiomics features. Therefore, deep CNN features extracted from lung region HRCT images deserve attention as a means of improving classification performance and facilitating precision and/or personalized medicine.

Dyspnea, one of the main symptoms of COPD, is currently assessed with the modified British Medical Research Council (mMRC) questionnaire (24). The mMRC scale, the most common validated scale for assessing dyspnea in the daily living of COPD patients, is used to grade dyspnea (25). However, the mMRC lacks objectivity: its accuracy depends on the understanding and cooperation of the evaluator. Previous works have therefore identified dyspnea from physiological signals. For example, mild dyspnea has been detected from pairs of speech recordings with an accuracy of about 74% (26), and respiratory symptoms have been detected automatically with a low-power multi-input CNN processor, achieving an accuracy of 87.3% for dyspnea identification (27). However, dyspnea identification in COPD remains under-researched, especially in clinical applications that use multi-modal data for the pre-clinical health management of COPD.

We have summarized the advantages and disadvantages of PFT and HRCT above. Integrating the advantages of PFT parameters, lung radiomics features, and CNN features is crucial for dyspnea identification. Therefore, this paper proposes a multi-modal data combination strategy for dyspnea identification in COPD based on the multi-layer perceptron (MLP) classifier, providing an objective and effective model for dyspnea identification. Our contributions are as follows:

(1) We address the problem that the few PFT parameters are easily overwhelmed by the large number of lung radiomics features and CNN features;

(2) Inspired by CNNs, we propose a strategy that combines the local and global features of the PFT parameters, lung radiomics features, and CNN features to improve classification performance;

(3) Our combination strategy with the MLP classifier achieves the best classification performance: 87.7% accuracy, 87.7% precision, 87.7% recall, 87.7% F1-score, and 89.3% AUC, and may become an objective and effective tool for pre-clinical health management in COPD.

This section details our study cohort and methodology, including the selection flow of the 404 subjects, the dyspnea distribution of the subjects at different GOLD stages, and the framework of the proposed method.

This study was approved by the ethics committee of the National Clinical Research Center of Respiratory Diseases, China. All subjects provided written informed consent at the First Affiliated Hospital of Guangzhou Medical University before the chest high-resolution computed tomography (HRCT) scans, PFT, and mMRC assessment.

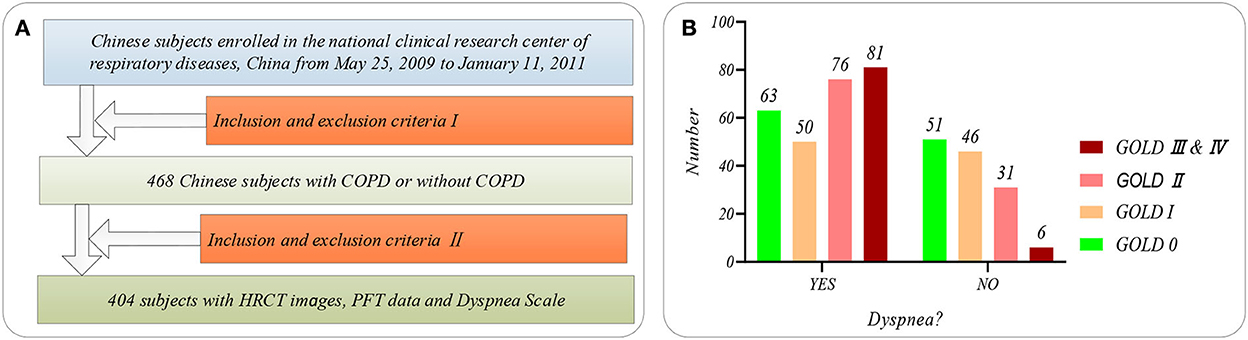

Figure 1 shows the selection flow of the 404 subjects aged 40–79 years in our study cohort and their dyspnea distribution at different GOLD stages. Figure 1A shows the selection flow. Specifically, Chinese participants were enrolled by the National Clinical Research Center of Respiratory Diseases, China, from May 25, 2009, to January 11, 2011. Four hundred sixty-eight Chinese subjects were included after strict screening by inclusion and exclusion criteria I (28); more details of criteria I can be found in our previous study (22). First, the 468 subjects underwent PFT and chest HRCT scans (TOSHIBA, tube voltage: 120 kVp, X-ray tube current: 40 mA, slice thickness: 1.0 mm) at full inspiration. Then, 404 subjects were selected from the 468 subjects by inclusion and exclusion criteria II, which require every subject to meet both of the following requirements: (1) the subject has both chest HRCT images and PFT parameters; (2) the chest HRCT scan, PFT, and mMRC assessment were performed on the same day. Healthy people commonly experience shortness of breath during strenuous exercise. Therefore, if shortness of breath occurs only during strenuous exercise (mMRC score of 0), the subject is considered to have no dyspnea; otherwise (mMRC score of 1–4), the subject is considered to have dyspnea.
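The labeling rule above is a simple threshold on the mMRC grade. A minimal sketch in Python, the language the study's pipeline uses; the function name `dyspnea_label` is hypothetical and not taken from the authors' code:

```python
def dyspnea_label(mmrc_grade: int) -> int:
    """Binary dyspnea label from an mMRC grade, following the rule in the text.

    Grade 0 (breathless only during strenuous exercise) -> 0 (no dyspnea);
    grades 1-4 -> 1 (dyspnea).
    """
    if mmrc_grade not in (0, 1, 2, 3, 4):
        raise ValueError(f"mMRC grade must be 0-4, got {mmrc_grade!r}")
    return 1 if mmrc_grade >= 1 else 0


# Hypothetical grades for five subjects
print([dyspnea_label(g) for g in [0, 1, 2, 0, 4]])  # [0, 1, 1, 0, 1]
```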

Figure 1. The subjects' selection flow diagram and dyspnea distribution in this study. (A) The subjects' selection flow diagram, including the enrollment and the inclusion and exclusion criteria I and II; (B) Dyspnea distribution of the subjects at GOLD 0-III&IV.

Figure 1B shows the dyspnea distribution of the subjects at different GOLD stages. Our study cohort includes 254 subjects with dyspnea and 150 subjects without dyspnea. The 11 PFT parameters (OLF, 11) are the diffusing capacity for carbon monoxide (DLCO, mmol/(kPa·min)), FEV1 (L), post-bronchodilator FEV1 (FEV1_AFT, L), FVC (L), post-bronchodilator FVC (FVC_AFT, L), functional residual capacity (FRC, L), inspiratory capacity (IC, L), FEV1/FVC (%), the carbon monoxide transfer coefficient corrected for alveolar volume (KCO_BP), residual volume (RV, L), and total lung capacity (TLC, L), following the ATS/ERS standard (American Thoracic Society 2005) (29). Statistical information on the PFT parameters is available in Supplementary Table S1 of the Supplementary material.

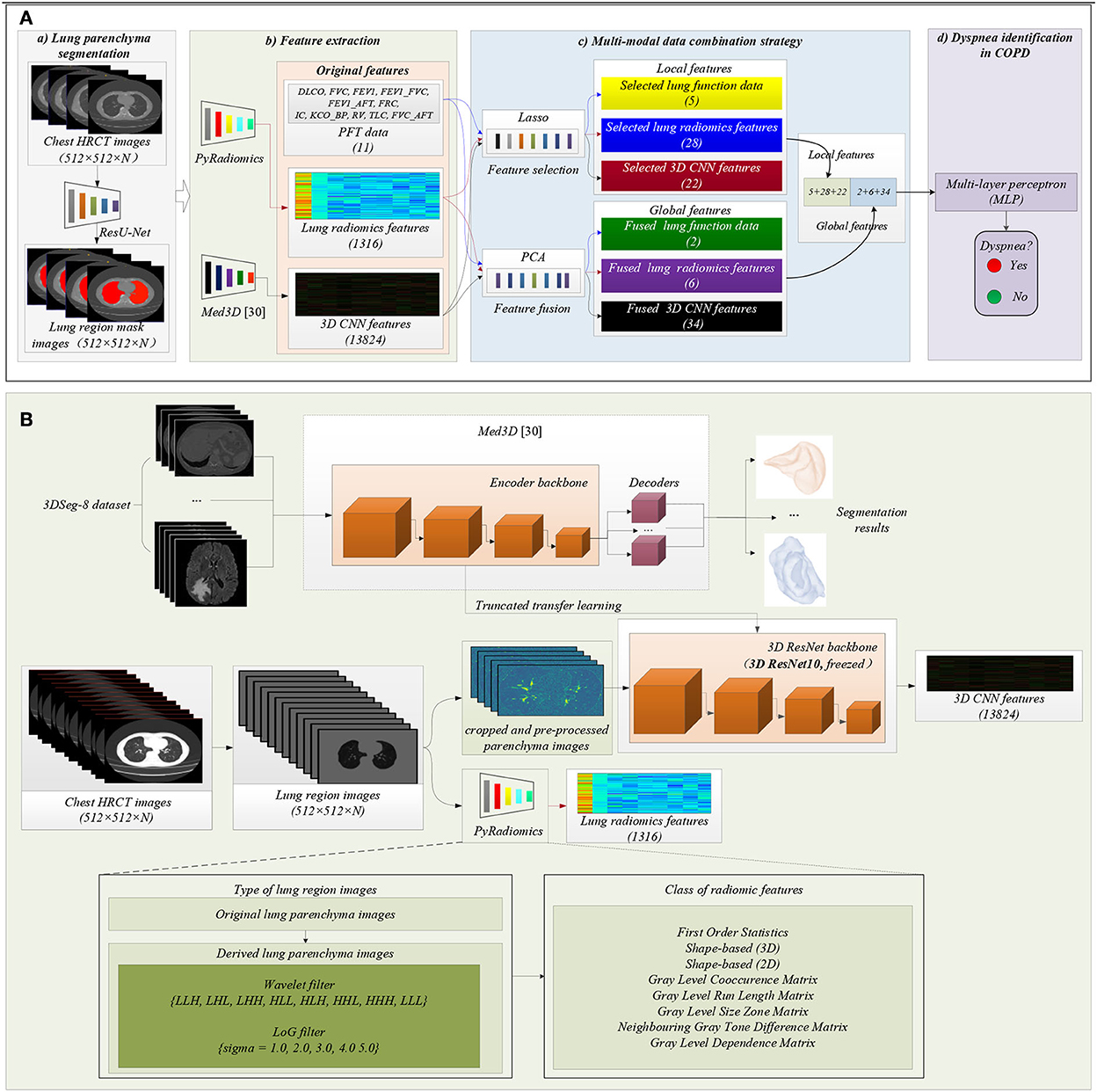

Figure 2 shows the proposed method in this study. The main idea of the proposed method in this paper is to combine PFT parameters and chest HRCT images for intelligent dyspnea identification in COPD based on machine learning (ML) classifiers.

Figure 2. The framework of the proposed method. (A) The flow chart of the proposed method: (a) Lung region (ROI) segmentation; (b) Feature extraction; (c) Multi-modal data combination strategy; (d) Dyspnea identification in COPD based on the MLP classifier; (B) Detailed process of feature extraction, including lung radiomics features and 3D CNN features.

Figure 2A(a) shows that lung region masks (shown in red) are automatically segmented from the 404 sets of chest HRCT images using a state-of-the-art ResU-Net (30). Trained on human chest CT images with different lung diseases, the ResU-Net is a robust, standard segmentation model for pathological lungs (12, 30). Its architecture has been described in detail in our previous paper (31). In addition, all 404 sets of lung region masks were checked and corrected by experienced radiologists.

Figure 2A(b) shows that two standard tools, PyRadiomics (32) and the pre-trained Med3D model (33), are used to extract the imaging features of the lung region HRCT images effectively and comprehensively. First, the 404 sets of lung region HRCT images in Hounsfield units (HU) are obtained from the lung region masks and their chest HRCT images (34). Then, lung radiomics features and 3D CNN features are extracted separately from the lung region HRCT images with PyRadiomics and the pre-trained Med3D, respectively. Finally, 1,316 original lung radiomics features (OLR, 1,316) and 13,824 original 3D CNN features (O3C, 13,824) are obtained per subject.

Figure 2B details the extraction process of the 1,316 OLR. Specifically, the lung region HRCT images in HU are filtered separately by wavelet and Laplacian of Gaussian (LoG) filters to generate derived images. Then, the 1,316 OLR are extracted from the lung region HRCT images and their derived images with PyRadiomics. Please refer to our previous studies (3, 12, 22) for a more detailed description of lung radiomics extraction. PyRadiomics is available at https://pyradiomics.readthedocs.io/en/latest/index.html, which also provides detailed explanations of the radiomics features (3).

Figure 2B also details the extraction process of the 13,824 O3C. Med3D, a heterogeneous 3D network, was designed to extract general medical 3D features by building the 3DSeg-8 dataset with diverse modalities, target organs, and pathologies (12, 33). A truncated transfer learning strategy is adopted to extract the 3D CNN features from the pre-trained Med3D, so only its encoder backbone (3D ResNet10) needs to be transferred to generate the 13,824 O3C. First, the lung region HRCT images are cropped and pre-processed with the same method as in Med3D (280 × 400 × N'). Second, the cropped and pre-processed lung images are passed through the encoder to generate CNN feature maps (512 × 35 × 50 × 75). Third, higher-order CNN feature maps (512 × 3 × 3 × 3) are obtained from these feature maps by 3D average pooling. Finally, the higher-order CNN feature maps of each subject are flattened into the 13,824 O3C (512 × 3 × 3 × 3 = 13,824).
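The pooling and flattening steps can be sketched in NumPy. The text says only "3D average pooling" from 35 × 50 × 75 to 3 × 3 × 3 and gives no kernel or stride, so this sketch assumes adaptive average pooling (the bin rule of PyTorch's `AdaptiveAvgPool3d`); the function name is hypothetical, and the demo uses 8 channels instead of 512 to stay light.

```python
import numpy as np


def adaptive_avg_pool3d(fmap: np.ndarray, out_size=(3, 3, 3)) -> np.ndarray:
    """Average-pool a (C, D, H, W) feature map to (C, *out_size).

    Bin i along an axis of length L covers [floor(i*L/o), ceil((i+1)*L/o)),
    the same bin rule as PyTorch's AdaptiveAvgPool3d, so neighbouring bins may
    share one voxel when L is not divisible by o.
    """
    def bins(length, n_out):
        # (start, stop) pairs using integer floor and ceil division
        return [(i * length // n_out, -(-(i + 1) * length // n_out)) for i in range(n_out)]

    d_bins, h_bins, w_bins = (bins(L, o) for L, o in zip(fmap.shape[1:], out_size))
    out = np.empty((fmap.shape[0], *out_size), dtype=fmap.dtype)
    for i, (d0, d1) in enumerate(d_bins):
        for j, (h0, h1) in enumerate(h_bins):
            for k, (w0, w1) in enumerate(w_bins):
                out[:, i, j, k] = fmap[:, d0:d1, h0:h1, w0:w1].mean(axis=(1, 2, 3))
    return out


rng = np.random.default_rng(0)
# 8 channels instead of Med3D's 512 keep this demo small; the spatial size follows the text.
fmap = rng.standard_normal((8, 35, 50, 75), dtype=np.float32)
pooled = adaptive_avg_pool3d(fmap)         # (8, 3, 3, 3)
features = pooled.reshape(-1)              # flattened: 8 * 27 = 216 values
print(pooled.shape, features.shape)        # (8, 3, 3, 3) (216,)
print(512 * 3 * 3 * 3)                     # 13824, the O3C length for 512 channels
```

With the full 512-channel map, the same flattening yields the 13,824-dimensional O3C vector per subject.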

Before the CNN feature maps (512 × 35 × 50 × 75) are generated, the lung region of the cropped and pre-processed lung images is normalized, and voxels outside the lung region are filled with random values drawn from a Gaussian distribution. The normalization is given by Eq. (1):

$$x' = \frac{x - \bar{x}}{\sigma} \tag{1}$$

where $x$, $\bar{x}$, and $\sigma$ are the HU value, mean, and standard deviation of the cropped and pre-processed lung images, respectively, and $x'$ is the normalized value.
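A NumPy sketch of this normalization step. Two details are our assumptions rather than statements from the text: the mean and standard deviation are computed over lung voxels only, and the background noise is standard normal, N(0, 1). The function name and toy volume are hypothetical.

```python
import numpy as np


def normalize_lung_volume(volume, lung_mask, rng=None):
    """Eq. (1) inside the lung; N(0, 1) noise outside it (both choices assumed)."""
    rng = np.random.default_rng() if rng is None else rng
    lung = np.asarray(lung_mask, dtype=bool)
    volume = np.asarray(volume, dtype=np.float64)
    lung_hu = volume[lung]
    mean, std = lung_hu.mean(), lung_hu.std()        # x-bar and sigma over lung voxels
    out = (volume - mean) / std                       # Eq. (1): x' = (x - x-bar) / sigma
    noise = rng.standard_normal(volume.shape)         # standard Gaussian noise
    out[~lung] = noise[~lung]                         # replace every non-lung voxel
    return out


rng = np.random.default_rng(0)
volume = rng.normal(-800.0, 100.0, size=(4, 8, 8))   # toy HU values, not study data
mask = np.zeros(volume.shape, dtype=bool)
mask[1:3, 2:6, 2:6] = True                            # toy "lung" region
normalized = normalize_lung_volume(volume, mask, rng)
print(normalized[mask].mean().round(6), normalized[mask].std().round(6))
```

After this step the lung voxels have zero mean and unit standard deviation, and the background carries no HU structure that the encoder could pick up.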

Figure 2A(c) details the proposed multi-modal data combination strategy. Inspired by CNNs, the strategy combines the local and global features of the PFT parameters, lung radiomics features, and 3D CNN features to improve classification performance.

The local and global features of 11 OLF, 1,316 OLR, and 13,824 O3C are available in Supplementary Tables S2–7 of Supplementary material.

First, the local features are selected from the 11 OLF, 1,316 OLR, and 13,824 O3C, respectively, by the least absolute shrinkage and selection operator (Lasso) algorithm (35), which has been shown to improve classification performance (3). The standard Python class sklearn.linear_model.LassoCV (Python 3.6) with 10-fold cross-validation is used in this paper. The selected local features comprise five selected PFT parameters (SLF, 5), 28 selected lung radiomics features (SLR, 28), and 22 selected 3D CNN features (S3C, 22). Second, the global features of the 11 OLF, 1,316 OLR, and 13,824 O3C are fused, respectively, by the principal component analysis (PCA) algorithm (a classic dimensionality-reduction algorithm), retaining the components with a cumulative contribution of 95% (36). The standard Python class sklearn.decomposition.PCA(svd_solver='auto') (Python 3.6) is used in this paper. The fused global features comprise two fused PFT parameters (FLF, 2), six fused lung radiomics features (FLR, 6), and 34 fused 3D CNN features (F3C, 34). Finally, all the local and global features (SLF + FLF + SLR + FLR + S3C + F3C, 5 + 2 + 28 + 6 + 22 + 34 = 97 features) are combined as the input variables for dyspnea identification in COPD.
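A scikit-learn sketch of the local/global construction for one modality, followed by the concatenation across modalities. Assumptions not stated in the text: the features are min-max scaled before both Lasso and PCA, a feature counts as selected when its Lasso coefficient is non-zero, and `PCA(n_components=0.95)` stands for the 95% cumulative contribution. The data are random stand-ins and the function name is hypothetical.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LassoCV
from sklearn.preprocessing import MinMaxScaler


def local_and_global(X, y, cv=10, variance=0.95):
    """Local (Lasso-selected) and global (PCA-fused) features for one modality."""
    X_scaled = MinMaxScaler().fit_transform(X)             # min-max normalization
    lasso = LassoCV(cv=cv).fit(X_scaled, y)                # 10-fold CV over the penalty
    selected = np.flatnonzero(lasso.coef_)                 # features with non-zero coefficients
    local = X_scaled[:, selected]
    pca = PCA(n_components=variance, svd_solver="auto")    # keep 95% cumulative contribution
    global_ = pca.fit_transform(X_scaled)
    return local, global_


# Synthetic stand-ins for the three modalities (not study data).
rng = np.random.default_rng(0)
n = 120
y = rng.integers(0, 2, size=n).astype(float)
modalities = {
    "PFT": rng.normal(size=(n, 11)) + 0.8 * y[:, None],
    "radiomics": rng.normal(size=(n, 60)),
    "cnn": rng.normal(size=(n, 200)),
}

blocks = []
for name, X in modalities.items():
    local, global_ = local_and_global(X, y)
    print(f"{name}: {local.shape[1]} local + {global_.shape[1]} global")
    blocks += [local, global_]                             # order: local, global per modality
combined = np.hstack(blocks)                               # classifier input
print(combined.shape)
```

Here the scaler, LassoCV, and PCA are fitted on all samples, mirroring the 404-subject matrices described in the next paragraphs; to keep a test set untouched, one would fit them on the training set only and call `transform` on the test set.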

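The mathematical expression of the Lasso algorithm (3, 12, 22, 35) is given by Equation (2):

$$\hat{\beta} = \arg\min_{\beta}\left\{\sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda\sum_{j=1}^{p}\left|\beta_j\right|\right\} \tag{2}$$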

where $x_{ij}$ is the value of the $j$th independent variable for the $i$th subject (OLF: 404 subjects × 11 features, OLR: 404 × 1,316, or O3C: 404 × 13,824) after min-max normalization (3); $y_i$ is the value of the dependent variable (with or without dyspnea); $\lambda$ is the penalty parameter ($\lambda \geq 0$); $\beta_j$ is the regression coefficient, with $\beta_0$ the intercept; $i \in [1, n]$; and $j \in [0, p]$.
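To make Eq. (2) concrete, the NumPy sketch below minimizes it by cyclic coordinate descent with soft-thresholding. This is a standard Lasso solver shown for illustration, not the authors' code. scikit-learn's Lasso also uses coordinate descent but scales the squared loss by 1/(2n), so its `alpha` corresponds to λ/(2n) here. λ is fixed rather than cross-validated, and the data are synthetic.

```python
import numpy as np


def lasso_coordinate_descent(X, y, lam, n_sweeps=200):
    """Minimise Eq. (2): sum_i (y_i - b0 - x_i . b)^2 + lam * sum_j |b_j| (b0 unpenalised)."""
    n, p = X.shape
    beta = np.zeros(p)
    b0 = y.mean()
    col_sq = (X ** 2).sum(axis=0)                          # sum_i x_ij^2 for each feature j
    for _ in range(n_sweeps):
        for j in range(p):
            if col_sq[j] == 0:                              # an all-zero column stays at 0
                continue
            r_j = y - b0 - X @ beta + X[:, j] * beta[j]     # residual without feature j
            rho = X[:, j] @ r_j
            # soft-thresholding at lam/2 (the 2 comes from differentiating the squared loss)
            beta[j] = np.sign(rho) * max(abs(rho) - lam / 2, 0.0) / col_sq[j]
        b0 = (y - X @ beta).mean()                          # update the intercept beta_0
    return b0, beta


rng = np.random.default_rng(0)
X = rng.uniform(size=(200, 10))                             # features already in [0, 1]
y = 3 * X[:, 0] - 2 * X[:, 1] + 0.05 * rng.standard_normal(200)
b0, beta = lasso_coordinate_descent(X, y, lam=5.0)
print(np.flatnonzero(beta))                                 # indices of the selected features
```

The L1 penalty sets the coefficients of uninformative features exactly to zero, which is why the non-zero entries of $\hat{\beta}$ can serve as the selected local features.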

The fusion process of PCA with the singular value decomposition (SVD) algorithm (36) is as follows. First, a feature matrix $A_{m\times n} = (a_1, a_2, a_3, \ldots, a_n)$ is constructed from the 404 subjects and their features (OLF: 404 × 11, OLR: 404 × 1,316, or O3C: 404 × 13,824). Second, $A_{m\times n}$ is decomposed by SVD (Eq. (3)), and the eigenvalues of $A^{T}A$ are obtained from its singular values. Third, the eigenvalues are normalized and ranked in descending order, and the leading eigenvalues $(\lambda_1, \lambda_2, \lambda_3, \ldots, \lambda_k)$ whose cumulative contribution reaches 95% are retained. Then, the eigenvectors corresponding to these eigenvalues ($\lambda_1 \to \xi_1, \lambda_2 \to \xi_2, \ldots, \lambda_k \to \xi_k$) are used to construct the transformation matrix $P_{k\times n} = (\xi_1, \xi_2, \xi_3, \ldots, \xi_k)^{T}$. Last, the fused features $B_{m\times k}$ are obtained from the feature matrix $A_{m\times n}$ and the transformation matrix $P_{k\times n}$ using Eq. (4).

$$A_{m\times n} = U_{m\times m}\,\Sigma_{m\times n}\,V_{n\times n}^{T} \tag{3}$$

$$B_{m\times k} = A_{m\times n}\,P_{k\times n}^{T} \tag{4}$$

where $U_{m\times m}$ and $V_{n\times n}$ are orthogonal matrices, $\Sigma_{m\times n}$ is the diagonal matrix with diagonal entries $(\sigma_1, \sigma_2, \sigma_3, \ldots)$, and $\sigma_i$ is the $i$th singular value of $A$, that is, the square root of the $i$th eigenvalue of $A^{T}A$ ($\lambda_i = \sigma_i^2$).
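A NumPy sketch of Eqs. (3) and (4) with the 95% cumulative-contribution rule. Column-centering the feature matrix before the SVD is our assumption (scikit-learn's `PCA` centers internally); the function name and the synthetic data are hypothetical. With two latent directions of unequal spread plus small noise, the 95% rule should keep two components.

```python
import numpy as np


def pca_svd_fuse(A, threshold=0.95):
    """Fuse the n columns of A (m subjects x n features) into k PCA components."""
    A_c = A - A.mean(axis=0)                               # centre each feature (assumption)
    U, s, Vt = np.linalg.svd(A_c, full_matrices=False)     # Eq. (3): A = U Sigma V^T
    eig = s ** 2                                           # eigenvalues of A^T A, descending
    ratio = eig / eig.sum()                                # normalized eigenvalues
    # smallest k whose cumulative contribution reaches the threshold
    k = min(int(np.searchsorted(np.cumsum(ratio), threshold)) + 1, len(s))
    P = Vt[:k]                                             # P (k x n): rows are xi_1 .. xi_k
    B = A_c @ P.T                                          # Eq. (4): B = A P^T, shape (m, k)
    return B, P, ratio[:k]


rng = np.random.default_rng(0)
W = np.linalg.qr(rng.normal(size=(20, 2)))[0].T            # two orthonormal directions in R^20
Z = rng.normal(size=(200, 2)) * np.array([3.0, 2.0])       # latent scores with unequal spread
A = Z @ W + 0.01 * rng.normal(size=(200, 20))              # 200 "subjects" x 20 "features"
B, P, ratio = pca_svd_fuse(A)
print(B.shape, ratio.round(3))
```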

The early perceptron was a linear model that could only handle simple binary classification and struggled with complex non-linear problems (37). Introducing hidden layers and activation functions, as in the MLP, has effectively improved its non-linear expressive ability. Currently, the MLP classifier is widely used in machine learning, pattern recognition, and other fields (38–41).

Figure 2A(d) shows that the MLP classifier based on all the local and global features is used to identify dyspnea in COPD. The standard Python class sklearn.neural_network.MLPClassifier (Python 3.6) is used, with the following parameters: hidden_layer_sizes=(256, 128, 64), activation='tanh', solver='adam', alpha=0.0001, tol=0.0005, and max_iter=1000.
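The configuration above maps directly onto scikit-learn's `MLPClassifier`. The sketch below fits it on random stand-in data with the combined feature width of 97 (5 + 2 + 28 + 6 + 22 + 34) and the 323/81 split sizes; `random_state` is our addition for reproducibility, and the data and labelling rule are invented.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Hyperparameters as listed in the text; random_state is added for reproducibility.
mlp = MLPClassifier(
    hidden_layer_sizes=(256, 128, 64),
    activation="tanh",
    solver="adam",
    alpha=0.0001,
    tol=0.0005,
    max_iter=1000,
    random_state=0,
)

# Synthetic stand-in for the 97 combined features (not study data).
rng = np.random.default_rng(0)
X_train = rng.uniform(size=(323, 97))
y_train = (X_train[:, :5].sum(axis=1) > 2.5).astype(int)   # invented labelling rule
X_test = rng.uniform(size=(81, 97))

mlp.fit(X_train, y_train)
proba = mlp.predict_proba(X_test)[:, 1]   # probability of class 1 (dyspnea), used for ROC/AUC
pred = mlp.predict(X_test)
print(mlp.n_layers_, proba.shape)
```

Because the hidden layers use tanh, inputs on a small range (such as the min-max-scaled features used for Lasso) suit this configuration; the text does not state whether the MLP inputs were scaled.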

Figure 3 shows the experimental design of this paper. Four experiments (Experiments 1–4) verify the effectiveness of the proposed method. A previous study used six classical machine learning (ML) classifiers for COPD classification (3): MLP, support vector machine (SVM) (42), random forest (RF) (43), decision tree (DT) (44), gradient boosting (GB) (45), and linear discriminant analysis (LDA) (46). In addition to these six classifiers, K-nearest neighbor (KNN) (47) and logistic regression (LR) (48) are considered to further compare dyspnea identification models. Therefore, eight classical ML classifiers (MLP, SVM, RF, KNN, DT, GB, LDA, and LR) are adopted to identify dyspnea in COPD based on different features. The definitions and parameters of the eight classifiers are available in Supplementary Table S8 of the Supplementary material.
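A scikit-learn sketch of the comparison set. The actual definitions and parameters are in Supplementary Table S8, which is not reproduced here, so apart from the MLP settings stated in the text every classifier below uses library defaults as a placeholder assumption; the function name and the synthetic data are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def make_classifiers(seed=0):
    """The eight classifier families; apart from the MLP, defaults stand in for Table S8."""
    return {
        "MLP": MLPClassifier(hidden_layer_sizes=(256, 128, 64), activation="tanh",
                             solver="adam", alpha=0.0001, tol=0.0005, max_iter=1000,
                             random_state=seed),
        "SVM": SVC(probability=True, random_state=seed),  # probability=True enables ROC/AUC
        "RF": RandomForestClassifier(random_state=seed),
        "KNN": KNeighborsClassifier(),
        "DT": DecisionTreeClassifier(random_state=seed),
        "GB": GradientBoostingClassifier(random_state=seed),
        "LDA": LinearDiscriminantAnalysis(),
        "LR": LogisticRegression(max_iter=1000),
    }


# Synthetic 404 x 97 data split 323/81 like the study cohort (not study data).
X, y = make_classification(n_samples=404, n_features=97, n_informative=10, random_state=0)
X_train, X_test, y_train, y_test = X[:323], X[323:], y[:323], y[323:]
aucs = {}
for name, clf in make_classifiers().items():
    clf.fit(X_train, y_train)
    aucs[name] = roc_auc_score(y_test, clf.predict_proba(X_test)[:, 1])
    print(f"{name}: AUC = {aucs[name]:.3f}")
```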

First, the 404 subjects in our study cohort are divided into a training set (n = 323) and a test set (n = 81). The training set contains 113 subjects with dyspnea and 210 subjects without dyspnea; the test set contains 27 subjects with dyspnea and 54 subjects without dyspnea. Then, the eight ML classifiers (standard Python implementations, Python 3.6) are trained on the training set. Last, each trained model identifies dyspnea on the test set, and its classification performance is evaluated with five metrics: accuracy, precision, recall, F1-score, and the area under the receiver operating characteristic curve (AUC).
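In every result reported in this paper, recall equals accuracy (for example, 80.2% and 80.2% for the MLP classifier with OLF). That is what support-weighted averaging of per-class recall produces, so the sketch below assumes `average="weighted"` for precision, recall, and F1 in scikit-learn; the paper does not state the averaging, and the toy labels and scores are invented.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score, roc_auc_score


def evaluate(y_true, y_pred, y_score):
    """Accuracy, weighted precision/recall/F1 (averaging assumed), and ROC AUC."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred, average="weighted"),
        "recall": recall_score(y_true, y_pred, average="weighted"),
        "f1": f1_score(y_true, y_pred, average="weighted"),
        "auc": roc_auc_score(y_true, y_score),   # y_score: predicted probability of dyspnea
    }


# Toy labels (1 = dyspnea), hard predictions, and scores, invented for illustration.
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 0, 1, 0])
y_pred = np.array([0, 0, 1, 0, 1, 0, 1, 0, 1, 0])
y_score = np.array([0.1, 0.2, 0.6, 0.3, 0.9, 0.4, 0.8, 0.2, 0.7, 0.1])
metrics = evaluate(y_true, y_pred, y_score)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Weighted recall always equals accuracy: each class's recall is weighted by that class's share of the test set, so the weighted sum counts every correctly classified subject exactly once.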

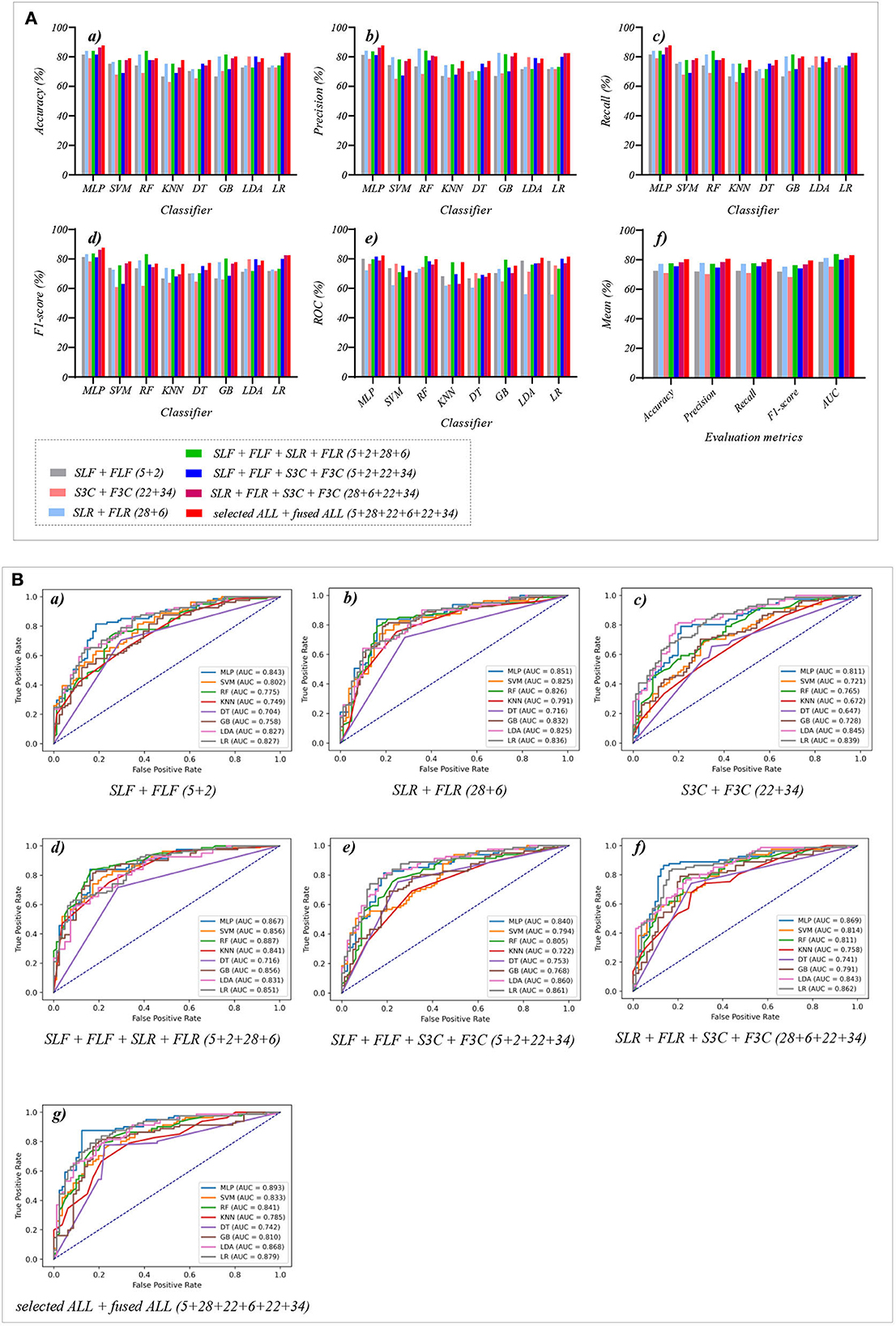

In Experiment 1, the classification performance of the eight classical ML classifiers is obtained with OLF (11), OLR (1,316), O3C (13,824), and all their combinations. In Experiment 2, the classification performance is obtained with SLF (5), SLR (28), S3C (22), and all their combinations. Similarly, in Experiment 3, it is obtained with FLF (2), FLR (6), F3C (34), and all their combinations. Finally, in Experiment 4, it is obtained with SLF + FLF (5 + 2), SLR + FLR (28 + 6), S3C + F3C (22 + 34), SLF + FLF + SLR + FLR (5 + 2 + 28 + 6), SLF + FLF + S3C + F3C (5 + 2 + 22 + 34), SLR + FLR + S3C + F3C (28 + 6 + 22 + 34), and SLF + FLF + SLR + FLR + S3C + F3C (selected ALL + fused ALL, 5 + 2 + 28 + 6 + 22 + 34).
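Each of Experiments 1–3 uses three single feature groups and their four combinations (seven feature sets), and Experiment 4 applies the same enumeration to the three local + global pairs. The helper below, whose name is hypothetical, reproduces these feature sets and their dimensions with `itertools.combinations`:

```python
from itertools import combinations
from typing import Dict, List, Tuple


def feature_sets(groups: Dict[str, int]) -> List[Tuple[str, int]]:
    """All non-empty combinations of feature groups with their total dimensions."""
    names = list(groups)
    sets = []
    for r in range(1, len(names) + 1):              # singles, then pairs, then all three
        for combo in combinations(names, r):
            sets.append((" + ".join(combo), sum(groups[g] for g in combo)))
    return sets


experiment_1 = feature_sets({"OLF": 11, "OLR": 1316, "O3C": 13824})
experiment_4 = feature_sets({"SLF + FLF": 7, "SLR + FLR": 34, "S3C + F3C": 56})
for label, dim in experiment_1 + experiment_4:
    print(f"{label} ({dim})")
```

The last Experiment 4 set is the full 97-dimensional combination used by the proposed method.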

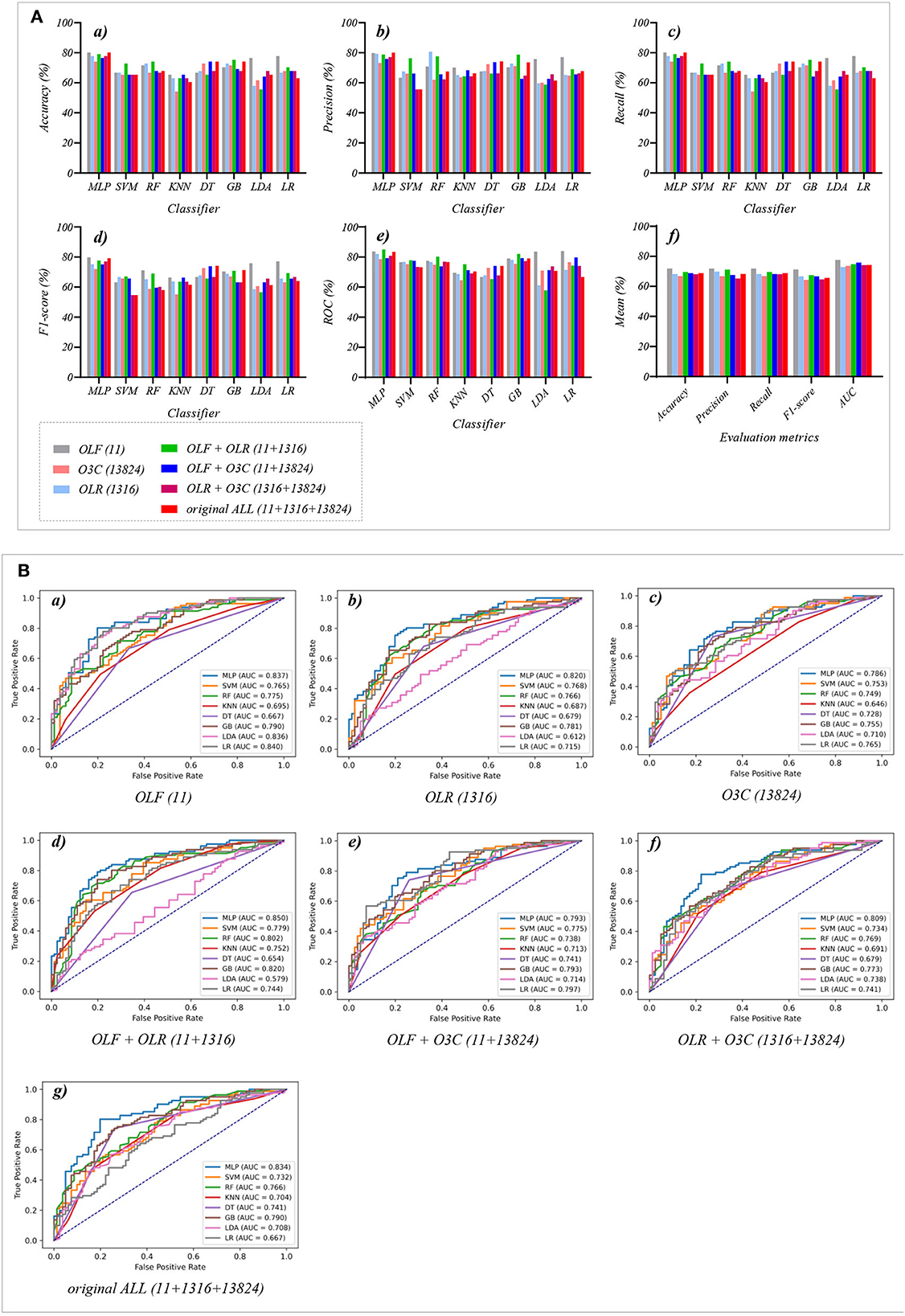

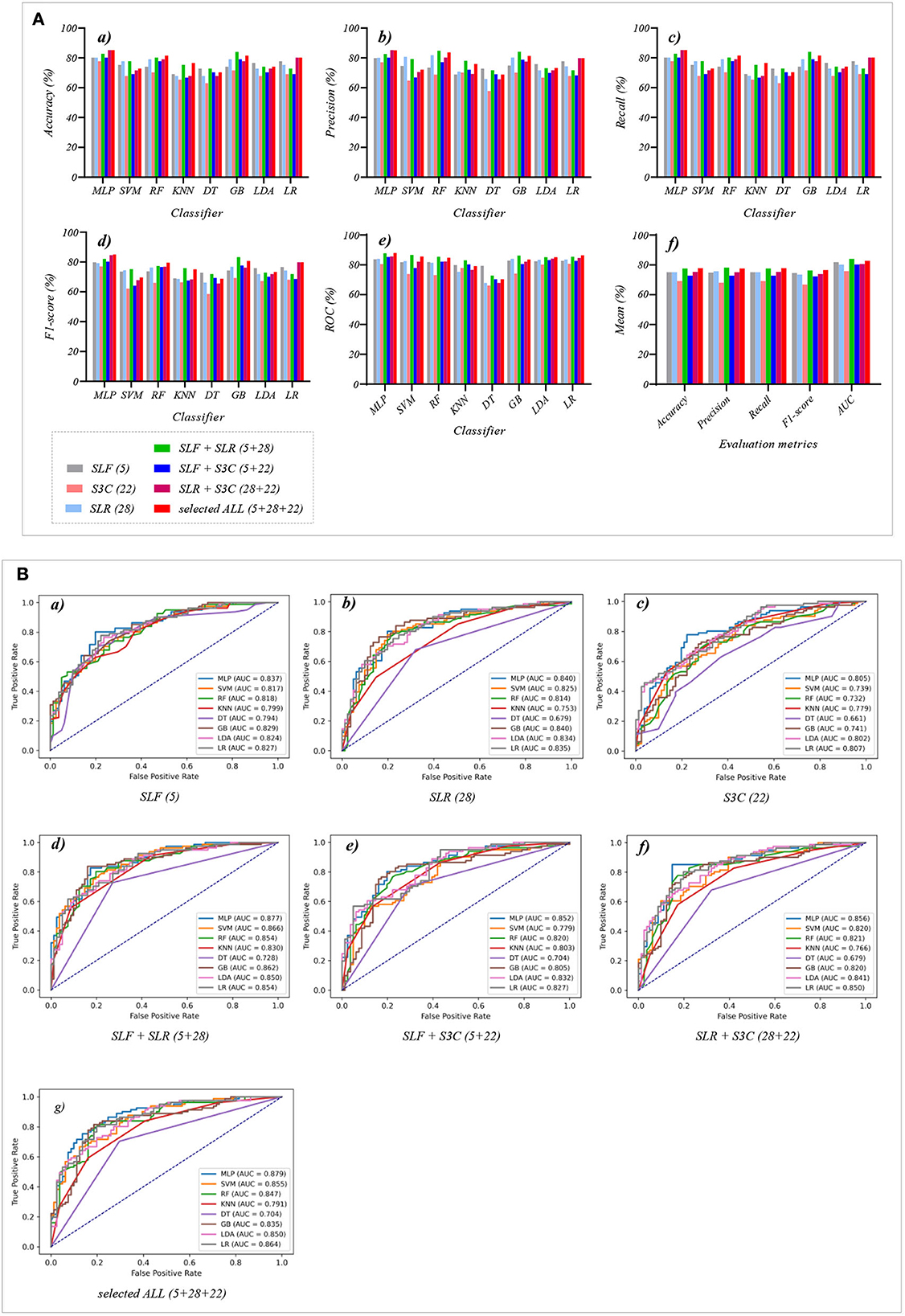

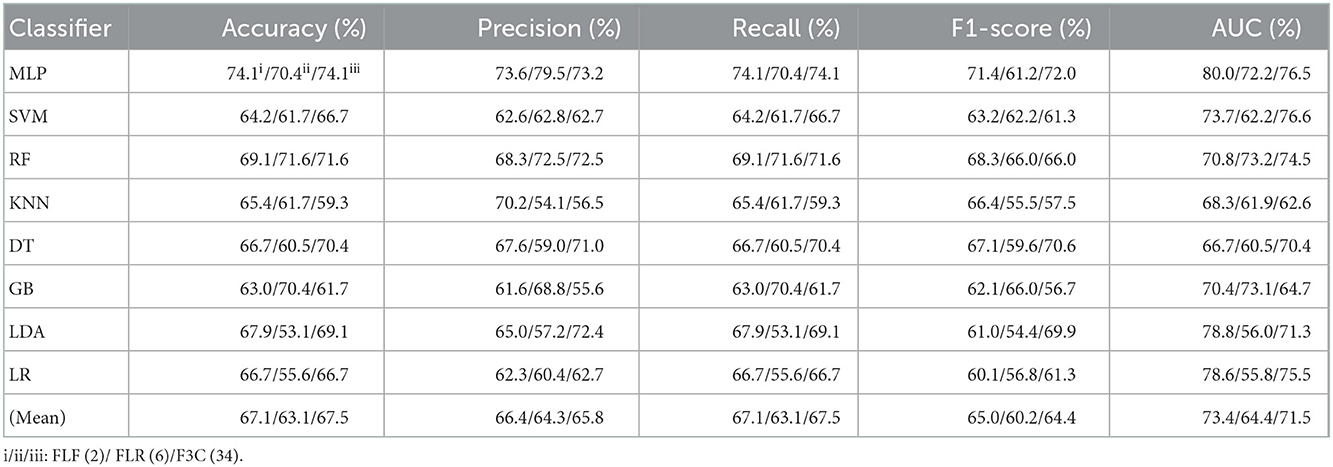

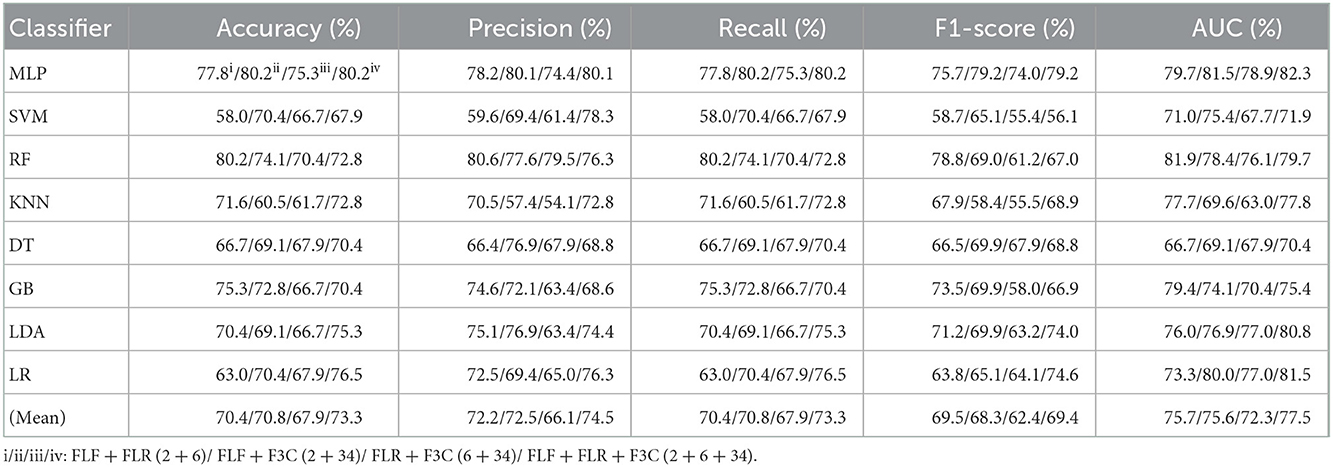

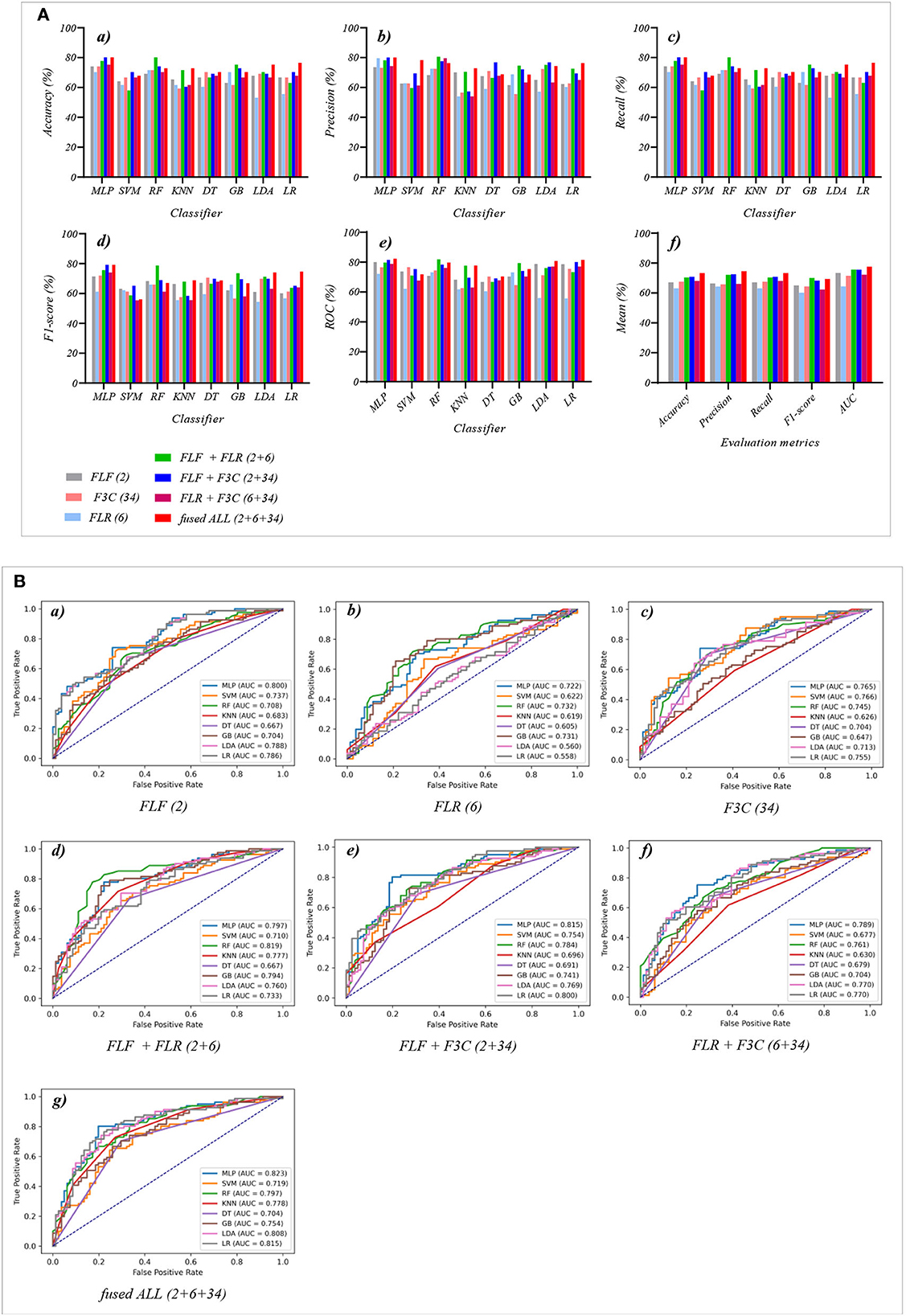

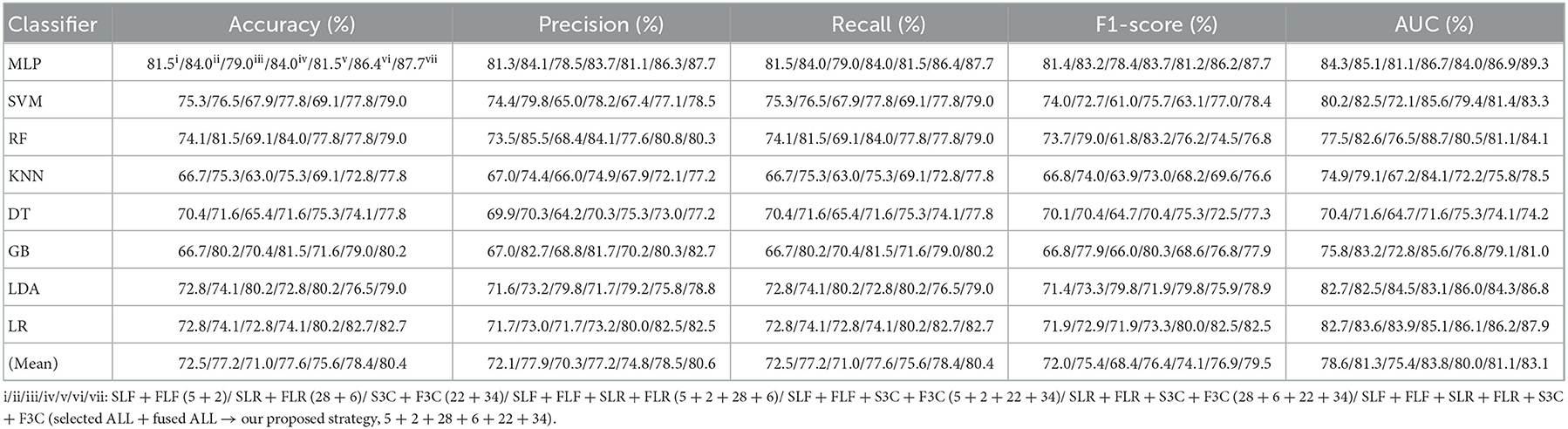

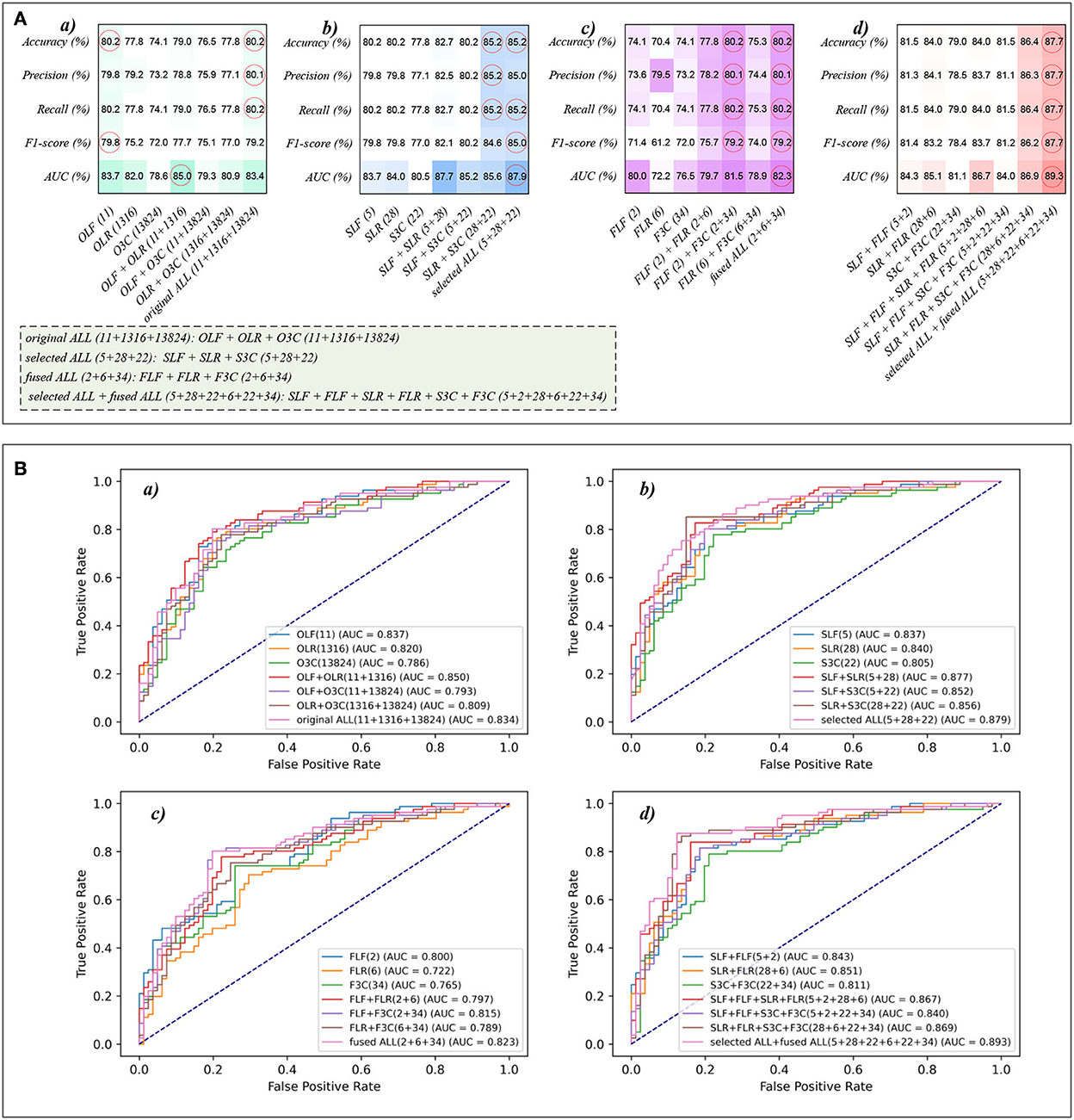

This section reports the experimental results of the eight classical ML classifiers with different features. Tables 1–7 report the evaluation metrics, and Figures 4–7 visualize these metrics, their mean values, and the receiver operating characteristic (ROC) curves. The AUC values in Tables 1–7 are calculated from the corresponding ROC curves.

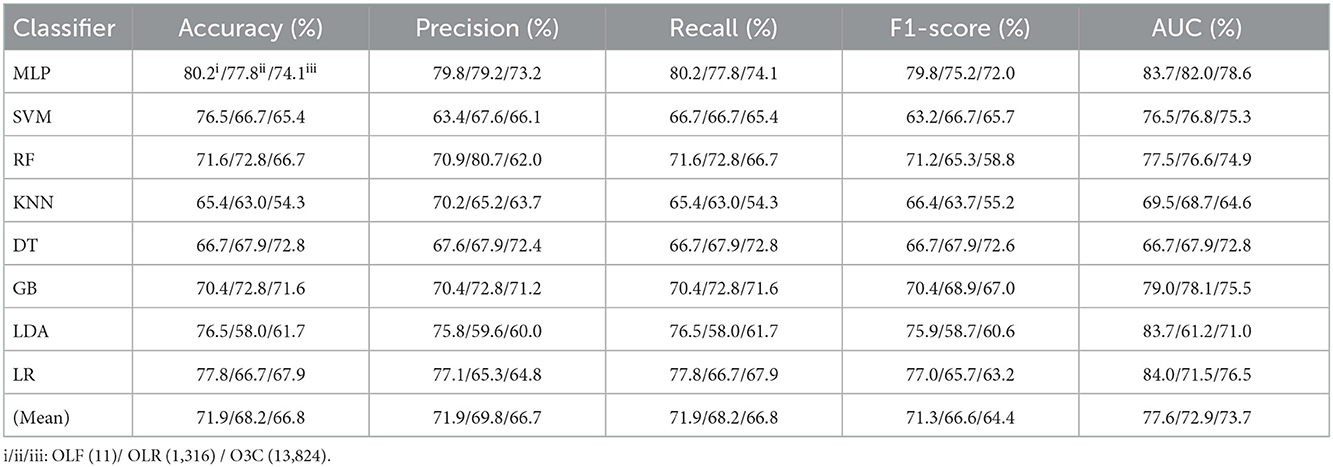

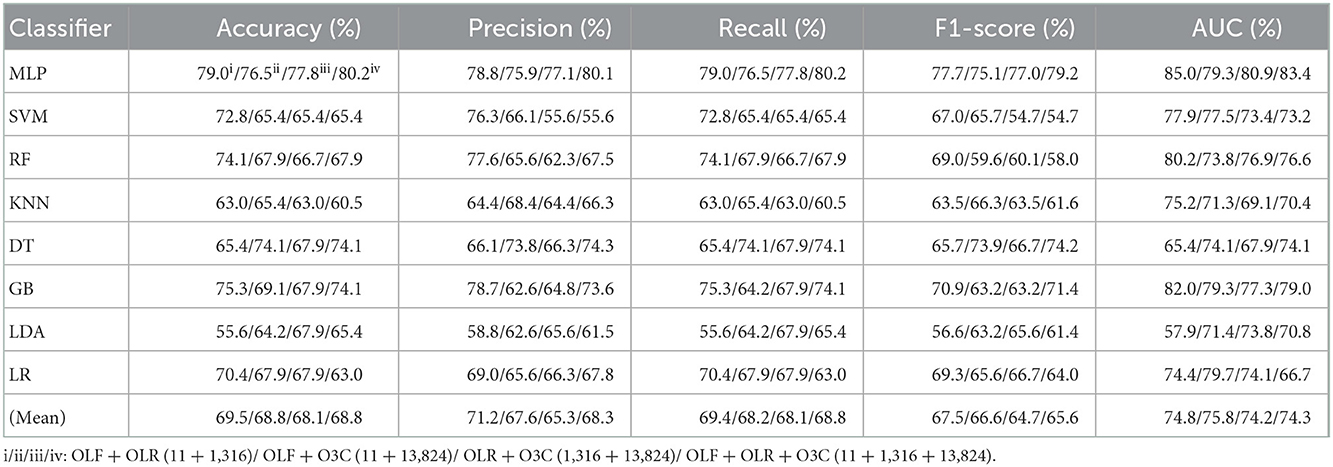

Table 1. Evaluation metrics of the different classifiers with three original features (Experiment 1) on the test set.

Figure 4. The evaluation metrics pictures and ROCs of eight classifiers with seven original classification features (three original features and four original combination features in Experiment 1). (A) The evaluation metrics pictures include (a) Accuracy, (b) Precision, (c) Recall, (d) F1-score, (e) AUC, and (f) Mean. (B) ROCs of the ML classifiers include (a) OLF (11), (b) OLR (1,316), (c) O3C (13,824), (d) OLF + OLR (11 + 1,316), (e) OLF + O3C (11 + 13,824), (f) OLR + O3C (1,316 + 13,824), (g) OLF + OLR + O3C (11 + 1,316 + 13,824).

Tables 1, 2 report the experimental results of the three original features and their combinations for the eight classical ML classifiers in Experiment 1. The three original features are OLF (11), OLR (1,316), and O3C (13,824), and their combinations are OLF + OLR (11 + 1,316), OLF + O3C (11 + 13,824), OLR + O3C (1,316 + 13,824), and OLF + OLR + O3C (original ALL, 11 + 1,316 + 13,824).

Table 2. Evaluation metrics of the different classifiers with four original combination features (Experiment 1) on the test set.

Tables 1, 2 and Figures 4A(a–e) show that the MLP classifier performs better than the other classifiers. Figure 4B shows the ROC curves of the three single original features and their combinations for the eight classical ML classifiers. Among the three original features, OLF (11) gives the best MLP performance: 80.2% accuracy, 79.8% precision, 80.2% recall, 79.8% F1-score, and 83.7% AUC. The MLP classifier performs better with OLR than with O3C, achieving 77.8% accuracy, 79.2% precision, 77.8% recall, 75.2% F1-score, and 82.0% AUC with OLR. However, the original combination features do not improve the overall classification performance compared with the single OLF (11); only the AUC of OLF + OLR is higher. Specifically, the MLP classifier with OLF + OLR (11 + 1,316) achieves the best AUC, 85.0%, with 79.0% accuracy, 78.8% precision, 79.0% recall, and 77.7% F1-score. After OLF + OLR (11 + 1,316), the MLP classifier with original ALL (11 + 1,316 + 13,824) achieves the highest AUC among the original combination features, 83.4%, with 80.2% accuracy, 80.1% precision, 80.2% recall, and 79.2% F1-score. Figure 4A(f) shows the mean evaluation metrics of all classifiers in Experiment 1; the single original feature OLF (11) gives the best mean metrics.

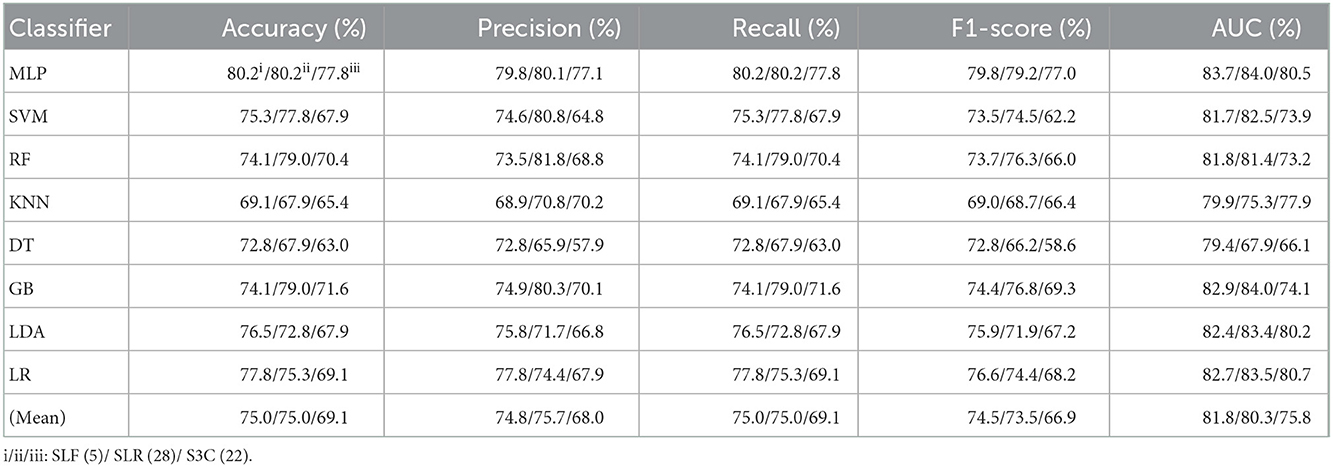

Tables 3, 4 report the experimental results of the three selected features and their combinations for the eight classical ML classifiers in Experiment 2. The three selected features are SLF (5), SLR (28), and S3C (22), and their combinations are SLF + SLR (5 + 28), SLF + S3C (5 + 22), SLR + S3C (28 + 22), and SLF + SLR + S3C (selected ALL, 5 + 28 + 22).

Table 3. Evaluation metrics of the different classifiers with three selected features (Experiment 2) on the test set.

Table 4. Evaluation metrics of the different classifiers with four selected combination features (Experiment 2) on the test set.

Table 3 and Figures 5A(a–e) show that, with each of the three single-selected features, the MLP classifier performs better than the other classifiers in Experiment 2. Figure 5B shows the ROC curves of the three single-selected features and their combinations for the eight classical ML classifiers. The MLP classifier with SLR (28) performs best, achieving 80.2% accuracy, 80.1% precision, 80.2% recall, 79.2% F1-score, and 84.0% AUC. The performance of the MLP classifier with SLF (5) is unchanged from that with OLF (11). However, the MLP classifier performs better with SLR (28) and S3C (22) than with OLR (1,316) and O3C (13,824), respectively. Specifically, SLR (28) improves accuracy by 2.4%, precision by 0.9%, recall by 2.4%, F1-score by 4.0%, and AUC by 2.0%, and S3C (22) improves accuracy by 3.7%, precision by 3.9%, recall by 3.7%, F1-score by 5.0%, and AUC by 1.9%.

Figure 5. The evaluation metrics pictures and ROCs of eight classifiers with seven selected features (three selected features and four selected combination features in Experiment 2). (A) The evaluation metrics pictures include (a) Accuracy, (b) Precision, (c) Recall, (d) F1-score, (e) AUC, and (f) Mean. (B) ROCs of the ML classifiers include (a) SLF (5), (b) SLR (28), (c) S3C (22), (d) SLF + SLR (5 + 28), (e) SLF + S3C (5 + 22), (f) SLR + S3C (28 + 22), and (g) SLF + SLR + S3C (5 + 28 + 22).

Table 4 and Figures 5A(a–e) show that the MLP classifier with the selected combination features SLF + SLR + S3C (selected ALL, 5 + 28 + 22) performs best, achieving 85.2% accuracy, 85.0% precision, 85.2% recall, 85.0% F1-score, and 87.9% AUC. With the MLP classifier, the selected combination features outperform the single-selected features: compared with the best single-selected feature, SLR (28), selected ALL improves accuracy by 5.0%, precision by 4.9%, recall by 5.0%, F1-score by 5.8%, and AUC by 3.9%. The selected combination features in Table 4 also outperform the corresponding original combination features in Table 2 with the MLP classifier. Compared with OLF + OLR (11 + 1,316), SLF + SLR (5 + 28) improves accuracy by 3.7%, precision by 3.7%, recall by 3.7%, F1-score by 4.4%, and AUC by 2.7%. Compared with OLF + O3C (11 + 13,824), SLF + S3C (5 + 22) improves accuracy by 3.7%, precision by 4.3%, recall by 3.7%, F1-score by 5.1%, and AUC by 5.9%. Compared with OLR + O3C (1,316 + 13,824), SLR + S3C (28 + 22) improves accuracy by 7.4%, precision by 8.1%, recall by 7.4%, F1-score by 7.6%, and AUC by 4.7%. Compared with original ALL (11 + 1,316 + 13,824), selected ALL (5 + 28 + 22) improves accuracy by 5.0%, precision by 4.9%, recall by 5.0%, F1-score by 5.8%, and AUC by 4.5%.

Figure 5A(f) shows the mean evaluation metrics of all classifiers in Experiment 2. The mean metrics of the ML classifiers with the selected combination features SLF + SLR (5 + 28) and selected ALL (5 + 28 + 22) are better than those with the single-selected features. In addition, the mean metrics with the selected features and their combinations are better than those with the original features and their combinations. Specifically, compared with the best mean metrics in Experiment 1, those of OLF (11) in Figure 4A(f) (71.9% mean accuracy, 71.9% mean precision, 71.9% mean recall, 71.3% mean F1-score, and 77.6% mean AUC), SLF + SLR (5 + 28) improves the mean accuracy by 5.6%, precision by 6.2%, recall by 5.6%, F1-score by 5.0%, and AUC by 6.4%, and selected ALL (5 + 28 + 22) improves them by 5.9%, 5.6%, 5.9%, 5.0%, and 5.2%, respectively.

Tables 5 and 6 report the experimental results of the three fused features and their combination features with the eight classical ML classifiers in Experiment 3. The three fused features are FLF (2), FLR (6), and F3C (34). Their combination features are FLF + FLR (2 + 6), FLF + F3C (2 + 34), FLR + F3C (6 + 34), and FLF + FLR + F3C (fused ALL, 2 + 6 + 34).
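The fused (global) features FLF (2), FLR (6), and F3C (34) are produced by PCA. This section does not say how the number of components was chosen. The Discussion describes PCA as reducing dimensionality within a certain range of information loss, so the sketch below assumes a cumulative explained-variance threshold; 0.95 is a hypothetical value. It uses power iteration with deflation in plain Python so that it runs without dependencies. In practice, scikit-learn's `PCA` with a float `n_components` in (0, 1) performs this kind of variance-based selection.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def covariance(X: Sequence[Sequence[float]]) -> Tuple[Matrix, Matrix]:
    """Return the mean-centered data and its sample covariance matrix."""
    n, d = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mu[j] for j in range(d)] for row in X]
    C = [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)] for i in range(d)]
    return Xc, C


def top_eigenpair(C: Matrix, seed: int, iters: int = 500) -> Tuple[float, List[float]]:
    """Largest eigenvalue and unit eigenvector of a symmetric PSD matrix (power iteration)."""
    d = len(C)
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(d)]
    n0 = math.sqrt(sum(x * x for x in v))
    v = [x / n0 for x in v]
    for _ in range(iters):
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0, v
        v = [x / norm for x in w]
    Cv = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
    return sum(a * b for a, b in zip(v, Cv)), v


def pca_fuse(X: Sequence[Sequence[float]], threshold: float = 0.95) -> Tuple[Matrix, int]:
    """Project X onto the fewest principal components whose cumulative explained
    variance reaches `threshold`; return (scores, number of components)."""
    Xc, C = covariance(X)
    d = len(C)
    total = sum(C[i][i] for i in range(d))  # total variance = trace of C
    components: List[List[float]] = []
    explained = 0.0
    while total > 0 and explained / total < threshold and len(components) < d:
        lam, v = top_eigenpair(C, seed=len(components))
        components.append(v)
        explained += lam
        # Deflation: remove the found direction so the next pass finds the next one.
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    scores = [[sum(a * b for a, b in zip(row, v)) for v in components] for row in Xc]
    return scores, len(components)
```

For example, points lying on the line x2 = 2·x1 need a single component. Points spread evenly in two directions need both components to reach the 0.95 threshold.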

Table 5. Evaluation metrics of the different classifiers with the three fused features (Experiment 3) on the test set.

Table 6. Evaluation metrics of the different classifiers with the four fused combination features (Experiment 3) on the test set.

Table 5 and Figures 6A(a–e) show that, with each of the three single-fused features, the MLP classifier performs better than the other classifiers in Experiment 3. Figure 6B shows the ROCs of the three single-fused features and their combination features for the eight classical ML classifiers. The MLP classifier with FLR (6) achieves the best AUC, 80.0%, with 74.1% accuracy, 73.6% precision, 74.1% recall, and 74.1% F1-score. However, the single-fused features do not improve the classification performance of the MLP classifier compared with the single original features and single-selected features (Tables 1 and 3).

Figure 6. Evaluation metric plots and ROCs of the eight classifiers with seven fused features (three fused features and four fused combination features in Experiment 3). (A) Evaluation metric plots: (a) Accuracy, (b) Precision, (c) Recall, (d) F1-score, (e) AUC, and (f) Mean. (B) ROCs of the ML classifiers with (a) FLF (2), (b) FLR (6), (c) F3C (34), (d) FLF + FLR (2 + 6), (e) FLF + F3C (2 + 34), (f) FLR + F3C (6 + 34), and (g) FLF + FLR + F3C (2 + 6 + 34).

Table 6 and Figures 6A(a–e) show that FLF + FLR + F3C (fused ALL, 2 + 6 + 34) on the MLP classifier also achieves the best AUC, 82.3%, with 80.2% accuracy, 80.1% precision, 80.2% recall, and 79.2% F1-score. Compared with the best single-fused features, FLR, on the MLP classifier, fused ALL improves accuracy by 6.1 percentage points, precision by 6.5, recall by 6.1, F1-score by 5.1, and AUC by 2.3. However, the fused combination features do not improve the classification performance of the MLP classifier compared with the original combination features and selected combination features (Tables 2 and 4).
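The improvements reported in this section are absolute differences between metric values, that is, percentage points rather than relative changes. A small helper (the name is illustrative) reproduces the fused ALL vs. FLR comparison from the MLP values stated in the text:

```python
from typing import Dict


def gains(new: Dict[str, float], base: Dict[str, float]) -> Dict[str, float]:
    """Absolute change per metric, in percentage points, rounded to one decimal."""
    return {m: round(new[m] - base[m], 1) for m in base}


# MLP classifier values stated in the text (percent).
flr = {"accuracy": 74.1, "precision": 73.6, "recall": 74.1, "f1": 74.1, "auc": 80.0}
fused_all = {"accuracy": 80.2, "precision": 80.1, "recall": 80.2, "f1": 79.2, "auc": 82.3}
print(gains(fused_all, flr))
# {'accuracy': 6.1, 'precision': 6.5, 'recall': 6.1, 'f1': 5.1, 'auc': 2.3}
```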

Figure 6A(f) shows the mean evaluation metrics of all classifiers in Experiment 3. The fused combination features FLF + FLR + F3C (2 + 6 + 34) achieve higher mean evaluation metrics than the single-fused features. However, they do not exceed the best mean evaluation metrics of OLF (11) in Figure 4A(f) or of SLF + SLR (5 + 28) and selected ALL (5 + 28 + 22) in Figure 5A(f).

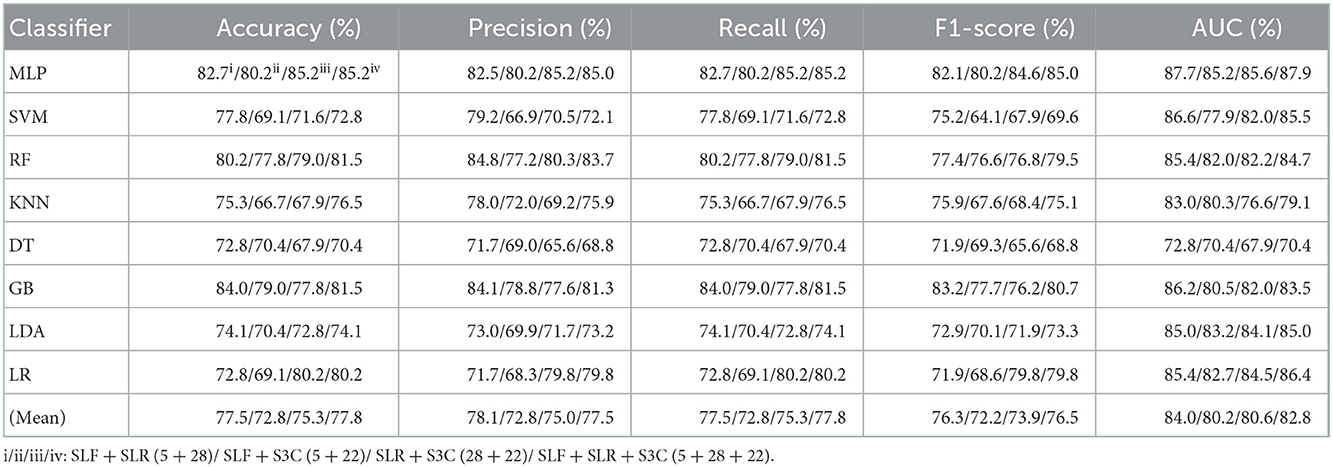

Table 7 reports the experimental results of the seven selected and fused combination features with the eight classical ML classifiers in Experiment 4. These seven combinations are SLF + FLF (5 + 2), SLR + FLR (28 + 6), S3C + F3C (22 + 34), SLF + FLF + SLR + FLR (5 + 2 + 28 + 6), SLF + FLF + S3C + F3C (5 + 2 + 22 + 34), SLR + FLR + S3C + F3C (28 + 6 + 22 + 34), and SLF + FLF + SLR + FLR + S3C + F3C (selected ALL + fused ALL, 5 + 2 + 28 + 6 + 22 + 34).
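The seven Experiment 4 feature sets are exactly the non-empty subsets of the three modalities. In each subset, every chosen modality contributes both its local (Lasso-selected) and global (PCA-fused) features. The sketch below enumerates these subsets and their dimensions from the feature counts given in the text. The dictionary layout is illustrative.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# (local, global) feature blocks per modality, with counts from the text.
BLOCKS: Dict[str, Tuple[Tuple[str, int], Tuple[str, int]]] = {
    "PFT": (("SLF", 5), ("FLF", 2)),
    "radiomics": (("SLR", 28), ("FLR", 6)),
    "3D CNN": (("S3C", 22), ("F3C", 34)),
}


def experiment4_combinations() -> List[Tuple[str, int]]:
    """Every non-empty subset of modalities, each contributing local + global features."""
    result = []
    modalities = list(BLOCKS)
    for r in range(1, len(modalities) + 1):
        for subset in combinations(modalities, r):
            parts = [block for m in subset for block in BLOCKS[m]]
            name = " + ".join(label for label, _ in parts)
            result.append((name, sum(dim for _, dim in parts)))
    return result


for name, dim in experiment4_combinations():
    print(f"{name}: {dim}")
```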

Table 7. Evaluation metrics of the different classifiers with seven selected and fused combination features (Experiment 4) on the test set.

Table 7 and Figures 7A(a–e) show that the MLP classifier with selected ALL + fused ALL (our proposed strategy, 5 + 2 + 28 + 6 + 22 + 34) performs best across Experiments 1–4. Figure 7B shows the ROCs of the seven selected and fused combination features for the eight classical ML classifiers. Specifically, the MLP classifier with selected ALL + fused ALL achieves 87.7% accuracy, 87.7% precision, 87.7% recall, 87.7% F1-score, and 89.3% AUC. Compared with the best single original features, OLF (11), on the MLP classifier in Experiment 1, our proposed strategy improves accuracy by 7.5 percentage points, precision by 7.9, recall by 7.5, F1-score by 7.9, and AUC by 5.6. Compared with the best selected combination features, selected ALL (5 + 28 + 22), in Experiment 2, it improves accuracy by 2.5, precision by 2.9, recall by 2.5, F1-score by 2.7, and AUC by 1.4. Compared with the best fused combination features, fused ALL (2 + 6 + 34), in Experiment 3, it improves accuracy by 7.5, precision by 7.6, recall by 7.5, F1-score by 8.5, and AUC by 7.0.

Figure 7. Evaluation metric plots and ROCs of the eight classifiers with seven selected and fused combination features (Experiment 4). (A) Evaluation metric plots: (a) Accuracy, (b) Precision, (c) Recall, (d) F1-score, (e) AUC, and (f) Mean. (B) ROCs of the ML classifiers with (a) SLF + FLF (5 + 2), (b) SLR + FLR (28 + 6), (c) S3C + F3C (22 + 34), (d) SLF + FLF + SLR + FLR (5 + 2 + 28 + 6), (e) SLF + FLF + S3C + F3C (5 + 2 + 22 + 34), (f) SLR + FLR + S3C + F3C (28 + 6 + 22 + 34), and (g) SLF + FLF + SLR + FLR + S3C + F3C (selected ALL + fused ALL, 5 + 2 + 28 + 6 + 22 + 34).

Figure 7A(f) shows the mean evaluation metrics of all classifiers in Experiment 4. The selected and fused combination features SLF + FLF + SLR + FLR (5 + 2 + 28 + 6) achieve the highest mean AUC, 83.8%. The mean AUC of selected ALL + fused ALL is marginally lower at 83.1%, but its other mean evaluation metrics are the highest: 80.4% accuracy, 80.6% precision, 80.4% recall, and 79.5% F1-score. These four mean metrics of selected ALL + fused ALL also exceed the best corresponding mean values in Experiments 1–3.

Because the MLP classifier performs best for dyspnea identification, Figure 8 shows the evaluation metric plots and ROCs of the MLP classifier with the different features in Experiments 1–4 (Figure 2). Figure 8A(a) shows that directly combining OLF (11), OLR (1,316), and O3C (13,824) barely improves the classification performance of the MLP classifier. In contrast, Figure 8A(b) shows that the selected combination features do improve it. Figure 8A(c) shows that, on the MLP classifier, the fused combination features outperform the single-fused features but still do not outperform the original features or their combinations. Finally, Figure 8A(d) shows that our proposed strategy, which combines the local and global features of OLF, OLR, and O3C, achieves the best classification performance on the MLP classifier: 87.7% accuracy, 87.7% precision, 87.7% recall, 87.7% F1-score, and 89.3% AUC.

Figure 8. Evaluation metric plots and ROCs of the MLP classifier with different features. (A) Evaluation metric plots of the MLP classifier in (a) Experiment 1, (b) Experiment 2, (c) Experiment 3, and (d) Experiment 4; (B) ROCs of the MLP classifier in (a) Experiment 1, (b) Experiment 2, (c) Experiment 3, and (d) Experiment 4.

This paper proposes a multi-modal data combination strategy that concatenates selected and fused PFT parameters, lung radiomics features, and 3D CNN features for dyspnea identification with the MLP classifier. This section discusses four aspects: the single original modal data, the Lasso and PCA algorithms, the proposed multi-modal data combination strategy, and the MLP classifier for dyspnea identification. Finally, we point out the limitations of this study and future directions.

Single original modal data alone makes it difficult to reach dyspnea identification performance in COPD that is satisfactory for clinical application. Compared with OLR (1,316) or O3C (13,824) extracted from chest HRCT images, the PFT parameters OLF (11) achieve the best mean evaluation metrics for dyspnea identification. This result can be explained. Unlike chest HRCT images, PFT parameters directly reflect the respiratory status of the lungs, so they identify dyspnea better than OLR (1,316) and O3C (13,824). In particular, the PFT indices FEV1 and FEV1/FVC are the criteria for COPD classification (1), so they may be major factors in dyspnea in COPD. However, because COPD patients are heterogeneous, some patients have no dyspnea even at a higher COPD stage, such as GOLD III–IV (Figure 1B). In addition, the alveolar wall structure is damaged in patients with severe COPD. This damage leads to alveolar fusion, which further reduces the area of the pulmonary vascular bed and hence the gas exchange area. The resulting ventilation/perfusion imbalance may lead to a decline in diffusion function. The mechanisms of exertional dyspnea in patients with mild COPD and low resting DLCO have been investigated (49). In COPD patients, TLC, FRC, and RV increase, while vital capacity and respiratory flow rates decrease, which may result in dyspnea (50).
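The sketch below encodes the standard GOLD spirometric grading as context for the FEV1 and FEV1/FVC criteria. A post-bronchodilator FEV1/FVC below 0.70 confirms airflow limitation, and FEV1 % predicted cut-offs of 80, 50, and 30 then assign the grade. This section does not state whether the study applied exactly these cut-offs. Because the grade depends only on spirometry, two patients with the same grade can still differ in dyspnea, which is the heterogeneity noted above.

```python
def gold_grade(fev1_fvc: float, fev1_pct_pred: float) -> str:
    """GOLD spirometric grade from post-bronchodilator FEV1/FVC and FEV1 % predicted."""
    if fev1_fvc >= 0.70:
        return "no persistent airflow limitation"
    if fev1_pct_pred >= 80:
        return "GOLD 1 (mild)"
    if fev1_pct_pred >= 50:
        return "GOLD 2 (moderate)"
    if fev1_pct_pred >= 30:
        return "GOLD 3 (severe)"
    return "GOLD 4 (very severe)"


print(gold_grade(0.55, 42.0))  # GOLD 3 (severe)
```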

The Lasso algorithm is applied separately to select SLF (5), SLR (28), and S3C (22) from OLF (11), OLR (1,316), and O3C (13,824). Meanwhile, the PCA algorithm is applied separately to fuse OLF (11), OLR (1,316), and O3C (13,824) into FLF (2), FLR (6), and F3C (34). The ML models based on SLF (5), SLR (28), and S3C (22) all achieve higher mean evaluation metrics than those based on OLF (11), OLR (1,316), and O3C (13,824), respectively. However, FLF (2) and FLR (6) perform worse than OLF (11) and OLR (1,316), respectively, while the mean evaluation metrics of F3C (34) and O3C (13,824) remain essentially unchanged. The Lasso algorithm selects identification features by fitting a linear relationship between the independent variables (OLF (11), OLR (1,316), or O3C (13,824)) and the dependent variable (dyspnea). Its L1 penalty shrinks the coefficients of uninformative features to exactly zero, which reduces the complexity of the ML classifiers and helps avoid overfitting (3). With this reduced complexity, the ML classifiers can focus on SLF (5), SLR (28), and S3C (22), which improves their performance for dyspnea identification. The PCA algorithm fuses the identification features by projecting the high-dimensional original features onto a smaller number of principal components while keeping information loss within a certain range (36). PCA therefore works better on O3C (13,824) than on OLF (11) and OLR (1,316). OLF and OLR are far lower-dimensional than O3C, so reducing their dimensionality discards some identification information. The unchanged mean evaluation metrics of F3C (34) relative to O3C (13,824) are consistent with this explanation.
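The following sketch illustrates how Lasso performs feature selection. It minimizes (1/(2n))·‖y − Xb‖² + α‖b‖₁ by cyclic coordinate descent on standardized features and keeps the features whose coefficients stay non-zero. The binary dyspnea label is treated as a numeric regression target, as scikit-learn's `Lasso` would treat it. The penalty `alpha` here is hypothetical, and this section does not say how the authors tuned it; cross-validation, as in scikit-learn's `LassoCV`, is a common choice. The function names are illustrative.

```python
from typing import List, Sequence


def soft_threshold(rho: float, alpha: float) -> float:
    """Shrink rho toward zero by alpha; values within [-alpha, alpha] become 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def standardize(X: Sequence[Sequence[float]]) -> List[List[float]]:
    """Z-score each column (population SD); constant columns become all zeros."""
    n, d = len(X), len(X[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in X]
        mu = sum(col) / n
        sd = (sum((v - mu) ** 2 for v in col) / n) ** 0.5 or 1.0
        for i in range(n):
            out[i][j] = (col[i] - mu) / sd
    return out


def lasso_select(X: Sequence[Sequence[float]], y: Sequence[float],
                 alpha: float, n_iter: int = 200) -> List[int]:
    """Indices of features with non-zero Lasso coefficients (centered y, no intercept)."""
    Xs = standardize(X)
    n, d = len(Xs), len(Xs[0])
    y_mean = sum(y) / n
    residual = [v - y_mean for v in y]  # y - X b, starting from b = 0
    beta = [0.0] * d
    for _ in range(n_iter):
        for j in range(d):
            col = [row[j] for row in Xs]
            z = sum(v * v for v in col) / n
            if z == 0.0:
                continue  # constant feature: never selected
            # Correlation of feature j with the residual that excludes feature j itself.
            rho = sum(c * (r + c * beta[j]) for c, r in zip(col, residual)) / n
            new_bj = soft_threshold(rho, alpha) / z
            delta = new_bj - beta[j]
            if delta != 0.0:
                residual = [r - c * delta for c, r in zip(col, residual)]
                beta[j] = new_bj
    return [j for j, b in enumerate(beta) if b != 0.0]


# Hypothetical toy data: feature 0 tracks the label, feature 1 is unrelated,
# and feature 2 is constant.
X = [[0, 0, 5], [0, 1, 5], [1, 0, 5], [1, 1, 5]]
y = [0, 0, 1, 1]
print(lasso_select(X, y, alpha=0.1))  # [0]
print(lasso_select(X, y, alpha=0.6))  # []
```

A larger `alpha` zeroes out more coefficients. This is how Lasso reduces 1,316 or 13,824 candidates to a few dozen, at the cost of discarding features whose individual signal falls below the penalty.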

The main problem of multi-modal data combination is that the small set of OLF (11) features is overwhelmed by the much larger OLR (1,316) and O3C (13,824) feature sets. As a result, the mean evaluation metrics of the ML models based on the original combination features do not improve. Inspired by YOLOv3-SPP (51), a CNN for object detection, we propose a multi-modal data combination strategy that combines local and global features for dyspnea identification in COPD. Given its strong performance for COPD dyspnea identification discussed above, the Lasso algorithm is used to obtain the local features, and the PCA algorithm is used to obtain the global features. Although PCA alone fails to improve identification performance, combining the local and global features improves the mean evaluation metrics. One important reason is that we select and fuse each set of original features separately and then combine the results for dyspnea identification. The local features are relevant for identifying dyspnea, but the Lasso algorithm inevitably discards other potentially useful features. The global features obtained by PCA fuse all the original features and thus compensate for this shortcoming of the local features. Furthermore, the strategy exploits the advantages of both PFT and CT: in addition to PFT parameters and lung radiomics features, deep 3D CNN features are extracted from chest HRCT images. Recombining the local and global features of the PFT parameters, lung radiomics features, and 3D CNN features finally yields good dyspnea identification performance.
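The overwhelming effect can be quantified from the feature counts given in the text. In the direct concatenation, the 11 PFT parameters make up well under 1% of 15,151 features. In the 97-dimensional local-plus-global combination, the PFT block (5 + 2) makes up about 7%. A short calculation:

```python
from typing import Dict

original = {"PFT": 11, "radiomics": 1316, "3D CNN": 13824}         # OLF, OLR, O3C
proposed = {"PFT": 5 + 2, "radiomics": 28 + 6, "3D CNN": 22 + 34}  # local + global


def shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Percentage of the concatenated feature vector contributed by each modality."""
    total = sum(counts.values())
    return {k: round(100 * v / total, 2) for k, v in counts.items()}


print(sum(original.values()), shares(original))
# 15151 {'PFT': 0.07, 'radiomics': 8.69, '3D CNN': 91.24}
print(sum(proposed.values()), shares(proposed))
# 97 {'PFT': 7.22, 'radiomics': 35.05, '3D CNN': 57.73}
```

The feature count alone does not determine how much a classifier relies on a modality. Still, the roughly hundredfold increase in the PFT share is consistent with the argument that separate selection and fusion per modality keep the PFT parameters from being drowned out.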

Eight classical ML classifiers are used for dyspnea identification in COPD. The MLP classifier performs better than the other classifiers in this paper, which suggests that the relationship between the identification features and dyspnea may be non-linear. In addition, the multi-modal features differ fundamentally from one another: OLF (11) is obtained by PFT, whereas OLR (1,316) and O3C (13,824) are extracted from chest HRCT images acquired by CT. The MLP classifier, with its strong adaptive and self-learning ability, can handle this multi-modal data combination well. Moreover, the 13,824 3D CNN features are themselves non-linear features. The MLP classifier is good at handling complex non-linear features, which is consistent with its nature and explains its superior performance (3, 37).
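The classic XOR pattern illustrates the point about non-linear relationships. No single linear decision boundary, such as those of logistic regression or LDA, can separate XOR, but a one-hidden-layer MLP can represent it. The sketch below uses hand-set weights and ReLU activations purely for illustration. The weights are not learned, and this section does not describe the study's MLP architecture or hyperparameters.

```python
from typing import List, Sequence


def relu(x: float) -> float:
    return max(0.0, x)


def mlp_forward(x: Sequence[float], W1: List[List[float]], b1: List[float],
                w2: List[float], b2: float) -> float:
    """One hidden ReLU layer followed by a single linear output unit."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2


# Hand-set (not trained) weights that make the network compute XOR.
W1 = [[1.0, 1.0], [1.0, 1.0]]
b1 = [0.0, -1.0]
w2 = [1.0, -2.0]
b2 = 0.0

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, mlp_forward(x, W1, b1, w2, b2))
# [0, 0] 0.0
# [0, 1] 1.0
# [1, 0] 1.0
# [1, 1] 0.0
```

The second hidden unit only activates when both inputs are on, and its negative output weight cancels the first unit in that case. This bend in the decision function is what a linear model lacks.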

This study also has some limitations, which point to future directions. First, the size of our study cohort precludes multi-class classification of dyspnea in COPD, which may be more meaningful for clinical COPD management. Second, dyspnea in COPD is identified only by engineering means; professional clinicians should further analyze the deeper relationship between dyspnea and the identification features from a pathophysiological point of view. Third, the classical ML classifiers are used without any improvement. Last, the measurement of PFT parameters is complex and depends on the cooperation of the examinee (52). In future work, we will adapt an improved graph neural network, the auto-metric graph neural network based on a meta-learning strategy (12, 53), for dyspnea identification. Moreover, this paper uses only chest HRCT images and PFT parameters for dyspnea identification. Other clinical information should be collected to further improve classification performance. For example, ventilation/perfusion (V'/Q') heterogeneity is a major contributor to dyspnea in COPD patients (54, 55). Besides, an mMRC score of 1 indicates a rather low level of dyspnea and may even reflect physiological breathlessness in older subjects, whereas identifying severe and very severe dyspnea in COPD may be more valuable for clinical application. Therefore, in subsequent studies, we will extend our research to reveal how dyspnea in COPD evolves with aging using a survival analysis model.

This paper proposes a multi-modal data combination strategy that combines local and global features for dyspnea identification in COPD with the MLP classifier. Specifically, the Lasso algorithm is applied separately to each set of original multi-modal data (11 original PFT parameters, 1,316 original lung radiomics features, and 13,824 original 3D CNN features) to select the local features. Meanwhile, the PCA algorithm is applied separately to each set to fuse the original data into the global features. Combining all the local and global features with the MLP classifier achieves the best classification performance: 87.7% accuracy, 87.7% precision, 87.7% recall, 87.7% F1-score, and 89.3% AUC. Compared with single-modal data, our proposed multi-modal data combination strategy effectively improves the classification performance of dyspnea identification in COPD, providing an objective and effective tool for COPD pre-clinical health management.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding authors.

The studies involving human participants were reviewed and approved by the National Clinical Research Center of China's respiratory diseases. The patients/participants provided their written informed consent to participate in this study.

Conceptualization and supervision: YK and RC. Methodology: YY, ZC, WL, and YG. Software: YY, ZC, NZ, SW, and WD. Validation: WL, YY, ZC, XL, and HC. Formal analysis: YY, ZC, YL, WL, and YG. Investigation: HC and XL. Resources: HC, RC, and XL. Data curation: RC. Writing – original draft preparation: YY and ZC. Writing – review and editing: WL, YL, and YK. Visualization: ZC, YY, YL, NZ, SW, and WD. Project administration: YK and HC. Funding acquisition: YK, WL, and HC. All authors have read and agreed to the published version of the manuscript.

This research was funded by the National Natural Science Foundation of China, Grant Number 62071311; the Stable Support Plan for Colleges and Universities in Shenzhen of China, Grant Number SZWD2021010; the Scientific Research Fund of Liaoning Province of China, Grant Number JL201919; the Natural Science Foundation of Guangdong Province of China, Grant Number 2019A1515011382; and the Special Program for Key Fields of Colleges and Universities in Guangdong Province (Biomedicine and Health) of China, Grant Number 2021ZDZX2008.

Thanks to the Department of Radiology, The First Affiliated Hospital of Guangzhou Medical University, for providing the dataset.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2022.980950/full#supplementary-material

1. Singh D, Agusti A, Anzueto A, Barnes PJ, Bourbeau J, Celli BR, et al. Global strategy for the diagnosis, management, and prevention of chronic obstructive lung disease: the GOLD science committee report 2019. Eur Respir J. (2019) 53:1900164. doi: 10.1183/13993003.00164-2019

2. Matheson MC, Bowatte G, Perret JL, Lowe AJ, Senaratna CV, Hall GL, et al. Prediction models for the development of COPD: a systematic review. Int J Chronic Obstr Pulm Dis. (2018) 13:1927–35. doi: 10.2147/COPD.S155675

3. Yang Y, Li W, Kang Y, Guo Y, Yang K, Li Q, et al. A novel lung radiomics feature for characterizing resting heart rate and COPD stage evolution based on radiomics feature combination strategy. Math Biosci Eng. (2022) 19:4145–65. doi: 10.3934/mbe.2022366

4. Redelmeier DA, Goldstein RS, Min ST, Hyland R. Spirometry and dyspnea in patients with COPD-when small differences mean little. Chest. (1996) 109:1163–8. doi: 10.1378/chest.109.5.1163

5. Ficker JH, Brückl WM. Refractory dyspnea in advanced COPD: palliative treatment with opioids. Pneumologie. (2019) 73:430–8. doi: 10.1055/s-0043-103033

6. Gardiner L, Carrieri AP, Bingham K, Macluskie G, Bunton D, McNeil M, et al. Combining explainable machine learning, demographic and multi-omic data to identify precision medicine strategies for inflammatory bowel disease. Cold Spring Harbor Laboratory Press. (2021). doi: 10.1101/2021.03.03.21252821

7. Madabhushi A, Agner S, Basavanhally A, Doyle S, Lee G. Computer-aided prognosis: Predicting patient and disease outcome via quantitative fusion of multi-scale, multi-modal data. Comput Med Imaging Graph. (2011) 35:506–14. doi: 10.1016/j.compmedimag.2011.01.008

8. Taube C, Lehnigk B, Paasch K, Kirsten DK, Jörres RA, Magnussen H. Factor analysis of changes in dyspnea and lung function parameters after bronchodilation in chronic obstructive pulmonary disease. Am J Respir Crit Care Med. (2000). doi: 10.1164/ajrccm.162.1.9909054

9. Lynch DA. Progress in imaging COPD, 2004-2014. Chronic Obstr Pulm Dis. (2014) 1:73. doi: 10.15326/jcopdf.1.1.2014.0125

10. Castaldi PJ, Estépar RSJ, Mendoza CS, Hersh CP, Laird N, Crapo JD, et al. Distinct quantitative computed tomography emphysema patterns are associated with physiology and function in smokers. Am J Respir Crit Care Med. (2013) 188:1083–90. doi: 10.1164/rccm.201305-0873OC

11. Lambin P, Rios-Velazquez E, Leijenaar R, Carvalho S, Van Stiphout RG, Granton P, et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer. (2012) 48:441–6. doi: 10.1016/j.ejca.2011.11.036

12. Yang Y, Wang S, Zeng N, Duan W, Chen Z, Liu Y, et al. Lung radiomics features selection for COPD stage classification based on auto-metric graph neural network. Diagnostics. (2022) 12:2274. doi: 10.3390/diagnostics12102274

13. Tan W, Zhou L, Li X, Yang X, Chen Y, Yang J. Automated vessel segmentation in lung CT and CTA images via deep neural networks. J X-Ray Sci Technol. 2021:1–15. doi: 10.21203/rs.3.rs-551102/v1

14. Tan W, Liu P, Li X, Xu S, Chen Y, Yang J. Segmentation of lung airways based on deep learning methods. IET Image Process. (2022) 16:1444–56. doi: 10.1049/ipr2.12423

15. Góreczny S, Haak A, Morgan GJ, Zablah J. Feasibility of airway segmentation from three-dimensional rotational angiography. Cardiol J. (2020) 27:875–8. doi: 10.5603/CJ.a2020.0136

16. Yang K, Yang Y, Kang Y, Liang Z, Wang F, Li Q, et al. The value of radiomic features in chronic obstructive pulmonary disease assessment: a prospective study. Clin Radiol. (2022) 77:e466–72. doi: 10.1016/j.crad.2022.02.015

17. Wu G, Ibrahim A, Halilaj I, Leijenaar RT, Rogers W, Gietema HA, et al. The emerging role of radiomics in COPD and lung cancer. Respiration. (2020) 99:99–107. doi: 10.1159/000505429

18. Huang L, Lin W, Xie D, Yu Y, Cao H, Liao G, et al. Development and validation of a preoperative CT-based radiomic nomogram to predict pathology invasiveness in patients with a solitary pulmonary nodule: a machine learning approach, multicenter, diagnostic study. European Radiology. 2022:1983–96. doi: 10.1007/s00330-021-08268-z

19. Au RC, Tan WC, Bourbeau J, Hogg JC, Kirby M. Impact of image pre-processing methods on computed tomography radiomics features in chronic obstructive pulmonary disease. Phys Med Biol. (2021) 66:245015. doi: 10.1088/1361-6560/ac3eac

20. Yun J, Cho YH, Lee SM, Hwang J, Lee JS, Oh Y-M, et al. Deep radiomics-based survival prediction in patients with chronic obstructive pulmonary disease. Sci Rep. (2021) 11:1–9. doi: 10.1038/s41598-021-94535-4

21. Au RC, Tan WC, Bourbeau J, Hogg JC, Kirby M. Radiomics analysis to predict presence of chronic obstructive pulmonary disease and symptoms using machine learning. In: TP121 COPD: From Cells to the Clinic. American Thoracic Society (2021). p. A4568. doi: 10.1164/ajrccm-conference.2021.203.1_MeetingAbstracts.A4568

22. Yang Y, Li W, Guo Y, Liu Y, Li Q, Yang K, et al. Early COPD risk decision for adults aged from 40 to 79 years based on lung radiomics features. Front Med. (2022) 9:845286. doi: 10.3389/fmed.2022.845286

23. Li Q, Yang Y, Guo Y, Li W, Liu Y, Liu H, et al. Performance evaluation of deep learning classification network for image features. IEEE Access. (2021) 9:9318–33. doi: 10.1109/ACCESS.2020.3048956

24. Kim S, Oh J, Kim Y-I, Ban H-J, Kwon Y-S, Oh I-J, et al. Differences in classification of COPD group using COPD assessment test (CAT) or modified Medical Research Council (mMRC) dyspnea scores: a cross-sectional analyses. BMC Pulm Med. (2013) 13:1–5. doi: 10.1186/1471-2466-13-35

25. Launois C, Barbe C, Bertin E, Nardi J, Perotin JM, Dury S, et al. The modified Medical Research Council scale for the assessment of dyspnea in daily living in obesity: a pilot study. BMC Pulm Med. (2012) 12:1–7. doi: 10.1186/1471-2466-12-61

26. Boelders S, Nallanthighal VS, Menkovski V, Härmä A. Detection of mild dyspnea from pairs of speech recordings. In: ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE (2020). p. 4102–6. doi: 10.1109/ICASSP40776.2020.9054751

27. Mazumder AN, Ren H, Rashid HA, Hosseini M, Chandrareddy V, Homayoun H, et al. Automatic detection of respiratory symptoms using a low-power multi-input CNN processor. IEEE Design & Test. (2021) 39:82–90. doi: 10.1109/MDAT.2021.3079318

28. Zhou Y, Bruijnzeel P, Mccrae C, Zheng J, Nihlen U, Zhou R, et al. Study on risk factors and phenotypes of acute exacerbations of chronic obstructive pulmonary disease in Guangzhou, China-design and baseline characteristics. J Thorac Dis. (2015) 7:720–33. doi: 10.3978/j.issn.2072-1439.2015.04.14

29. Brusasco V, Crapo R, Viegi G. Coming together: the ATS/ERS consensus on clinical pulmonary function testing. Eur Respir J. (2007) 24:11–4. doi: 10.1183/09031936.05.00034205

30. Hofmanninger J, Prayer F, Pan J, Rohrich S, Prosch H, Langs G. Automatic lung segmentation in routine imaging is a data diversity problem, not a methodology problem. Eur Radiol Exp. (2020) 4:1–13. doi: 10.1186/s41747-020-00173-2

31. Yang Y, Li Q, Guo Y, Liu Y, Li X, Guo J, et al. Lung parenchyma parameters measure of rats from pulmonary window computed tomography images based on ResU-Net model for medical respiratory researches. Math Biosci Eng. (2021) 18:4193–211. doi: 10.3934/mbe.2021210

32. Van Griethuysen JJM, Fedorov A, Parmar C, Hosny A, Aucoin N, Narayan V, et al. Computational radiomics system to decode the radiographic phenotype. Cancer Res. (2017) 77:e104–e107. doi: 10.1158/0008-5472.CAN-17-0339

33. Chen S, Ma K, Zheng Y. Med3d: Transfer learning for 3d medical image analysis. arXiv preprint arXiv:1904.00625. (2019). doi: 10.48550/arXiv.1904.00625

34. Yang Y, Guo Y, Guo J, Gao Y, Kang Y. A method of abstracting single pulmonary lobe from computed tomography pulmonary images for locating COPD. In: Proceedings of the Fourth International Conference on Biological Information and Biomedical Engineering. (2020). p. 1–6. doi: 10.1145/3403782.3403805

35. Tibshirani R. Regression shrinkage and selection via the Lasso. J R Stat Soc Series B Stat Methodol. (1996) 58:267–88. doi: 10.1111/j.2517-6161.1996.tb02080.x

36. Bro R, Smilde AK. Principal component analysis. Analytical Methods. (2014) 6:2812–31. doi: 10.1039/C3AY41907J

37. Riedmiller M, Lernen A. Multi layer perceptron. Machine Learning Lab Special Lecture, University of Freiburg. 2014:7–24.

38. Desai M, Shah M. An anatomization on breast cancer detection and diagnosis employing multi-layer perceptron neural network (MLP) and Convolutional neural network (CNN). Clinical eHealth. (2021) 4:1–11. doi: 10.1016/j.ceh.2020.11.002

39. Lorencin I, Andelić N, Španjol J, Car Z. Using multi-layer perceptron with Laplacian edge detector for bladder cancer diagnosis. Artif Intell Med. (2020) 102:101746. doi: 10.1016/j.artmed.2019.101746

40. Xu Y, Li F, Asgari A. Prediction and optimization of heating and cooling loads in a residential building based on multi-layer perceptron neural network and different optimization algorithms. Energy. (2022) 240:122692. doi: 10.1016/j.energy.2021.122692

41. Wan S, Liang Y, Zhang Y, Guizani M. Deep multi-layer perceptron classifier for behavior analysis to estimate Parkinson's disease severity using smartphones. IEEE Access. (2018) 6:36825–33. doi: 10.1109/ACCESS.2018.2851382

42. Jakkula V. Tutorial on support vector machine (svm), School of EECS, Washington State University. (2006) 37:3.

44. Safavian SR, Landgrebe D. A survey of decision tree classifier methodology. IEEE Trans Syst Man Cybern. (1991) 21:660–74. doi: 10.1109/21.97458

45. Friedman JH. Greedy function approximation: a gradient boosting machine. Ann Stat. (2001) 29:1189–232. doi: 10.1214/aos/1013203451

46. Knowles CH, Eccersley AJ, Scott SM, Walker SM, Reeves B, Lunniss PJ. Linear discriminant analysis of symptoms in patients with chronic constipation. Diseases of the Colon & Rectum. (2000). doi: 10.1016/S0016-5085(00)80553-3

47. Ramteke RJ, Khachane MY. Automatic medical image classification and abnormality detection using K-Nearest neighbour. Int J Adv Comput Res. (2012) 2:190–6.

48. LaValley MP. Logistic regression. Circulation. (2008) 117:2395–9. doi: 10.1161/CIRCULATIONAHA.106.682658

49. James M, Milne K, Neder JA, O'Donnell D. Mechanisms of exertional dyspnea in patients with mild COPD and low resting lung diffusing capacity for carbon monoxide (DLCO). Eur Respir J. (2020) 56:922. doi: 10.1183/13993003.congress-2020.922

50. Parker CM, Voduc N, Aaron SD, Webb KA, O'Donnell DE. Physiological changes during symptom recovery from moderate exacerbations of COPD. Eur Respir J. (2005) 26:420–8. doi: 10.1183/09031936.05.00136304

51. Shaotong P, Dewang L, Ziru M, Yunpeng L, Yonglin L. Location and identification of insulator and bushing based on YOLOv3-spp algorithm. In: 2021 IEEE International Conference on Electrical Engineering and Mechatronics Technology. IEEE (2021). p. 791–4. doi: 10.1109/ICEEMT52412.2021.9602798

52. Bailey KL. The importance of the assessment of pulmonary function in COPD. Med Clin North Am. (2012) 96:745–52. doi: 10.1016/j.mcna.2012.04.011

53. Song X, Mao M, Qian X. Auto-metric graph neural network based on a meta-learning strategy for the diagnosis of alzheimer's disease. IEEE J Biomed Health Inform. (2021) 25:3141–52. doi: 10.1109/JBHI.2021.3053568

54. Harutyunyan G, Harutyunyan V, Harutyunyan G, Sánchez Gimeno A, Cherkezyan A, Petrosyan S, et al. Ventilation/perfusion mismatch is not the sole reason for hypoxaemia in early stage COVID-19 patients. Eur Respir Rev. (2022) 31:210277. doi: 10.1183/16000617.0277-2021

Keywords: dyspnea identification, COPD, multi-modal data, combination strategy, PFT parameters, lung radiomics features, 3D CNN features, machine learning

Citation: Yang Y, Chen Z, Li W, Zeng N, Guo Y, Wang S, Duan W, Liu Y, Chen H, Li X, Chen R and Kang Y (2022) Multi-modal data combination strategy based on chest HRCT images and PFT parameters for intelligent dyspnea identification in COPD. Front. Med. 9:980950. doi: 10.3389/fmed.2022.980950

Received: 30 June 2022; Accepted: 06 December 2022;

Published: 21 December 2022.

Edited by:

Hyunjin Park, Sungkyunkwan University, South Korea

Reviewed by:

Christophe Delclaux, Hôpital Robert Debré, France

Copyright © 2022 Yang, Chen, Li, Zeng, Guo, Wang, Duan, Liu, Chen, Li, Chen and Kang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yan Kang, kangyan@sztu.edu.cn; Rongchang Chen, chenrc@vip.163.com

†These authors have contributed equally to this work and share first authorship

