ORIGINAL RESEARCH article

Front. Med., 22 July 2022

Sec. Intensive Care Medicine and Anesthesiology

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.920040

This article is part of the Research Topic Clinical Application of Artificial Intelligence in Emergency and Critical Care Medicine, Volume III

Background: Although a large amount of research has focused on medical image classification, few studies have focused specifically on the portable chest X-ray. This study aimed to determine the feasibility of a transfer learning method for detecting atelectasis with portable chest X-rays, and its performance in external validation, based on the analysis of a large dataset.

Methods: From the Medical Information Mart for Intensive Care Chest X-ray (MIMIC-CXR) database, 14 categories were obtained using natural language processing tags, among which 45,808 frontal chest radiographs were labeled “atelectasis” and 75,455 chest radiographs were labeled “no finding.” A total of 60,000 images were extracted, including positive images labeled “atelectasis” and positive X-ray images labeled “no finding.” The data were categorized into “normal” and “atelectasis,” which were evenly distributed and randomly divided into three cohorts (training, validation, and testing) at a ratio of about 8:1:1. This retrospective study also extracted 300 X-ray images labeled “atelectasis” or “normal” from patients in ICUs of The First Affiliated Hospital of Jinan University, which served as an external dataset for validation in this experiment. Model performance was evaluated using the area under the receiver operating characteristic curve (AUC), sensitivity, specificity, and positive predictive values derived from transfer learning training.

Results: Training on the internal training set took 105 min and 6 s. On the internal testing set, the AUC, sensitivity, specificity, and accuracy were 88.57, 75.10, 88.30, and 81.70%, respectively. On the external validation set, the AUC, sensitivity, specificity, and accuracy were 98.39, 70.70, 100, and 86.90%, respectively.

Conclusion: This study found that, when detecting atelectasis, a model obtained by transfer learning on sufficiently large data sets achieves excellent external validation and accurate localization of lesions.

Atelectasis is the most common postoperative pulmonary complication (PPC) (1) and is also the most common disease in intensive care units (ICUs). It is often accompanied by pneumothorax, pleural effusion, pulmonary edema, and other pulmonary diseases, and can require reintubation within 48 h after the complication. Portable chest radiography (2) is one of the most common non-invasive radiological tests for rapid and straightforward atelectasis detection in ICUs. The main direct signs (3) of atelectasis on chest radiographs include fissure displacement, parenchymal opacity with unbroken linear boundaries, and vascular displacement. Indirect signs (4) include ipsilateral diaphragmatic elevation, hilar displacement, cardiac shift, and mediastinal and tracheal shift; however, it is difficult to rapidly distinguish early atelectasis on chest radiographs from pleural effusion and lung consolidation, which also present with increased density. With the rapid development of deep-learning technology, the convolutional neural network (5) extracts inherent characteristics from medical image data for classification and recognition much more effectively than traditional recognition algorithms do. This has partly addressed the problem of inaccurate diagnoses that arises because the number of chest X-rays is growing faster than the number of radiologists. Previous studies have developed chest X-ray diagnostic algorithms based on deep neural networks that can diagnose 14 basic lung diseases.

The present study was the first to extract large-scale positive atelectasis data from the MIMIC-CXR-JPG database (6–8), an intensive care medical information database, in order to obtain a model with high accuracy and reliable external validation. The purpose of this study was to determine the feasibility of transfer learning methods for detecting atelectasis using portable chest X-rays based on large data sets. In addition, this study used a multi-center data set for the first time to support the early diagnosis and prediction of atelectasis on bedside portable chest radiographs, and obtained good external validation.

In this study, 14 categories that were clearly diagnosed as “positive” or “negative” were obtained from the MIMIC-CXR-JPG database (473,057 chest radiographs and 206,563 text reports) using the open-source tagging tools NegBio (9) and CheXpert (10). There were 45,808 chest radiographs labeled “atelectasis” and 75,455 chest radiographs labeled “no finding.” A total of 60,000 images were extracted, including positive images labeled “atelectasis” and positive X-ray images labeled “no finding,” and images with blank text were excluded. The data were defined as “normal” and “atelectasis” with an even distribution. The data set was then randomly divided into three sets (training, validation, and testing) at a ratio of about 8:1:1. The public MIMIC-CXR-JPG database was used as the internal testing data of this experiment.
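The sampling and splitting described above can be sketched as follows. This is a minimal illustration and not the authors' code: the function name `balanced_split`, the use of integer image identifiers, and the figure of 30,000 images per class (inferred from "60,000 images" with "an even distribution") are assumptions.

```python
import random

def balanced_split(positive_ids, negative_ids, n_per_class, ratio=(8, 1, 1), seed=0):
    """Draw n_per_class images from each class, then split each class by `ratio`.

    Hypothetical helper: returns a dict of (image_id, label) lists for the
    training, validation, and testing cohorts.
    """
    rng = random.Random(seed)
    splits = {"train": [], "val": [], "test": []}
    classes = (("atelectasis", positive_ids), ("normal", negative_ids))
    for label, ids in classes:
        chosen = rng.sample(list(ids), n_per_class)
        # Integer arithmetic avoids floating-point rounding in the split sizes.
        n_train = n_per_class * ratio[0] // sum(ratio)
        n_val = n_per_class * ratio[1] // sum(ratio)
        splits["train"] += [(i, label) for i in chosen[:n_train]]
        splits["val"] += [(i, label) for i in chosen[n_train:n_train + n_val]]
        splits["test"] += [(i, label) for i in chosen[n_train + n_val:]]
    for part in splits.values():
        rng.shuffle(part)  # mix the two classes within each cohort
    return splits

# Counts taken from the text: 45,808 "atelectasis" and 75,455 "no finding" images.
splits = balanced_split(range(45808), range(75455), n_per_class=30000)
print({name: len(part) for name, part in splits.items()})
```

Splitting each class separately keeps every cohort evenly distributed between "normal" and "atelectasis", which is what the 8:1:1 description implies.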

An external testing data set was developed in this study from primary image data of ICU patients at The First Affiliated Hospital of Jinan University. Three senior attending physicians randomly selected data from 2017–2021 for patients with a definitive diagnosis of atelectasis and for patients with no apparent abnormalities on chest radiography, and professional radiologists reviewed the labels of the randomly selected data set. After excluding images with poor patient positioning or unclear diagnoses, 300 images with complete labels were finally extracted: 150 with atelectasis and 150 without abnormalities. The flow chart of the training data creation is shown in Figure 1.

All experiments were performed using the Ubuntu 20.04 64-bit operating system. MATLAB was adopted as the programming environment for training the CNN model. The specific software and hardware configurations are listed in Table 1.

This study preprocessed both the internal and external testing cohorts. The frontal chest images from the MIMIC-CXR-JPG database had a resolution of 256 × 256 × 1 pixels, while those from The First Affiliated Hospital of Jinan University were 512 × 512 × 3 pixels. Since the images in the two data sets differed in height, width, and number of channels, the image data from the two sources had to be standardized. We achieved this by automatically cropping the chest region and resizing it to 224 × 224 × 3 pixels to match the model input resolution. The images were also randomly scaled horizontally and vertically, and cropping and shifting were applied as data augmentation (11) in order to further improve the validation and testing results.
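As a rough illustration of the standardization step, the sketch below brings images from both sources to 224 × 224 × 3 and applies a random shift. It is written in Python with NumPy rather than the MATLAB environment used in the study; the helper names, the nearest-neighbour resizing, and the zero padding are assumptions, and the automatic chest cropping and random scaling are not reproduced here.

```python
import numpy as np

def to_model_input(image, size=224):
    """Resize an H x W (x C) image to size x size x 3 by nearest-neighbour sampling."""
    if image.ndim == 2:                      # treat H x W as a single channel
        image = image[:, :, None]
    h, w, c = image.shape
    rows = np.arange(size) * h // size       # source row for each output row
    cols = np.arange(size) * w // size       # source column for each output column
    resized = image[rows][:, cols]
    if c == 1:                               # replicate grayscale into 3 channels
        resized = np.repeat(resized, 3, axis=2)
    return resized

def random_shift(image, max_shift, rng):
    """Translate the image by a random offset on both axes, padding with zeros."""
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    h, w = image.shape[:2]
    shifted = np.zeros_like(image)
    src_y = slice(max(0, -dy), h - max(0, dy))
    dst_y = slice(max(0, dy), h + min(0, dy))
    src_x = slice(max(0, -dx), w - max(0, dx))
    dst_x = slice(max(0, dx), w + min(0, dx))
    shifted[dst_y, dst_x] = image[src_y, src_x]
    return shifted

rng = np.random.default_rng(0)
internal = to_model_input(np.zeros((256, 256, 1)))   # MIMIC-CXR-JPG resolution
external = to_model_input(np.zeros((512, 512, 3)))   # external cohort resolution
augmented = random_shift(internal, max_shift=10, rng=rng)
print(internal.shape, external.shape, augmented.shape)
```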

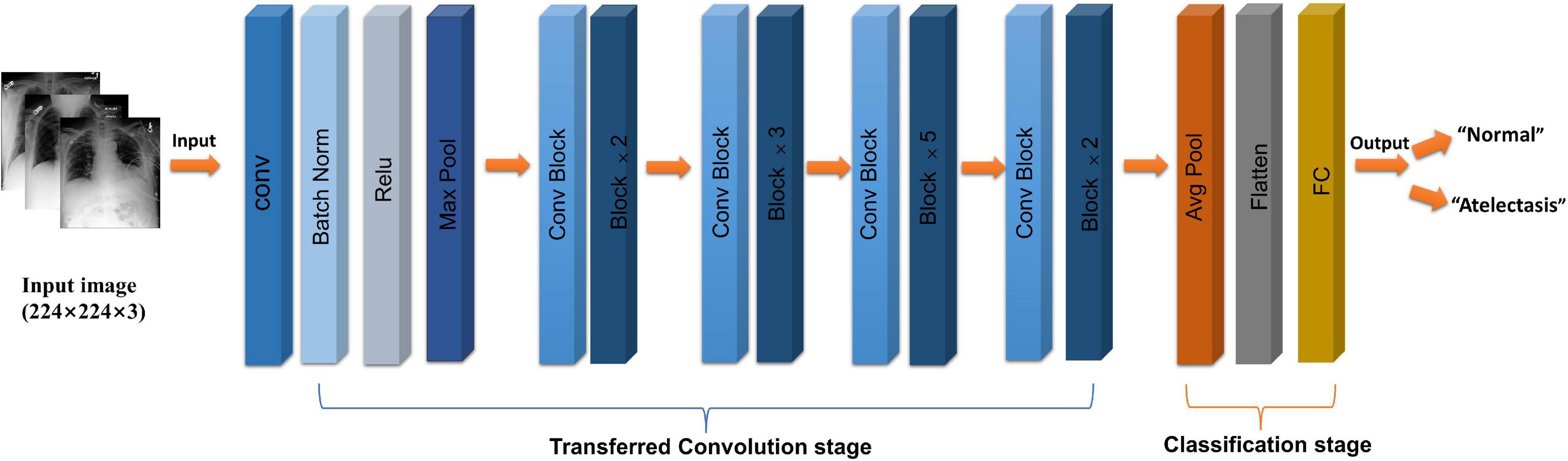

The selected study model was ResNet50 (12), a classification network that improves on VGG19 (14) by adopting residual learning. Building on existing deep networks, the residual learning framework was proposed not only to mitigate the vanishing and exploding gradient problems (13), but also to allow networks to be made deeper while avoiding the degradation of network performance. ResNet50 comprises 49 convolutional layers and one fully connected layer; it begins with a convolutional layer with a kernel size of 7 × 7 to learn more features and uses a max pooling layer for downsampling. The network has five stages and two main types of building block. Stage 0 has a simple structure and mainly preprocesses the input images, passing them through a convolutional layer, batch normalization, and a ReLU activation function (12). The next four stages are composed of bottleneck blocks and have similar structures. After successive convolution operations in the residual blocks, the number of channels in the feature maps increases; the output then passes through a flatten layer into the fully connected layer, and a SoftMax layer outputs the corresponding category probabilities. In this way, the typical features of the image are extracted automatically and a classifier is formed that divides images into “normal” and “atelectasis.” The specific network architecture is shown in Figure 2.
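The layer count quoted above can be checked with a short calculation, and the residual idea reduced to a toy function. The block counts per stage (3, 4, 6, 3) and the three convolutions per bottleneck follow the ResNet paper (12); the function names are illustrative, and the toy `residual_block` omits the ReLU that the real network applies after the addition.

```python
# Bottleneck blocks in stages 1-4 of ResNet50, as given in He et al. (12).
BLOCKS_PER_STAGE = [3, 4, 6, 3]
CONVS_PER_BOTTLENECK = 3  # 1x1, 3x3, and 1x1 convolutions

def count_layers(blocks_per_stage):
    """Return (convolutional layers, fully connected layers) for the network."""
    stem_conv = 1  # the 7 x 7 convolution in stage 0
    bottleneck_convs = sum(blocks_per_stage) * CONVS_PER_BOTTLENECK
    return stem_conv + bottleneck_convs, 1

def residual_block(x, transform):
    """Toy residual connection: output = F(x) + x, element by element."""
    return [f + xi for f, xi in zip(transform(x), x)]

convs, fcs = count_layers(BLOCKS_PER_STAGE)
print(convs, fcs, convs + fcs)

# If the learned transform F outputs zeros, the block passes its input through
# unchanged, which is why adding blocks need not degrade performance.
print(residual_block([1.0, -2.0], lambda v: [0.0] * len(v)))
```

Projection convolutions on the shortcut paths are conventionally left out of this count, which is how the total of 50 weighted layers arises.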

Figure 2. Flow chart of ResNet50 (CONV is the convolution operation, Batch Norm is batch normalization, ReLU is the activation function, and MAXPOOL and AvgPOOL are two pooling operations; the network comprises a convolution transformation stage and a classification stage).

Considering the large data set analyzed in this study, a general machine-learning method called transfer learning was used to improve the speed and performance of model learning. Transfer learning (15, 16) transfers the knowledge learned by a model in a source domain to a target domain, so that the model can learn the target domain better, faster, and more simply than a network trained from randomly initialized weights. This study used a pretrained ResNet50 network model, randomly divided the data into training (80%) and validation (20%) sets, and applied the same preprocessing operations to both. Since the chest radiography classes were imbalanced (16), we adopted random undersampling, and we used adaptive moment estimation (Adam), a momentum-based optimizer, to increase the convergence speed. Our model was trained with a learning rate of 0.0001, a mini-batch size of 64, and a maximum of 8 epochs, for a maximum of 7,200 iterations. The training lasted 105 min and 6 s. The best experimental results were obtained by fine-tuning the experimental parameters. The training progress is shown in Figure 3.
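For readers unfamiliar with Adam, a single update step can be sketched as below. Only the learning rate of 0.0001 comes from the text; the decay rates (0.9, 0.999) and epsilon (1e-8) are the commonly used defaults and are assumptions here, as are the function and variable names.

```python
import math

def adam_step(params, grads, state, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of scalar parameters.

    `state` holds the step counter "t" and the running first and second
    moment estimates "m" and "v" (one entry per parameter).
    """
    state["t"] += 1
    t = state["t"]
    updated = []
    for i, (p, g) in enumerate(zip(params, grads)):
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g        # momentum
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g    # squared gradient
        m_hat = state["m"][i] / (1 - beta1 ** t)                       # bias correction
        v_hat = state["v"][i] / (1 - beta2 ** t)
        updated.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return updated

# Toy usage: minimise f(x) = x^2, whose gradient is 2x, starting from x = 1.
params = [1.0]
state = {"t": 0, "m": [0.0], "v": [0.0]}
for _ in range(3):
    grads = [2.0 * params[0]]
    params = adam_step(params, grads, state)
print(params)
```

Because the step is normalised by the running gradient magnitude, each early update moves the parameter by roughly the learning rate regardless of the gradient scale, which is one reason Adam tends to converge quickly when fine-tuning a pretrained network.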

In the experiments, the area under the receiver operating characteristic curve (AUC) (17) and the accuracy, specificity, and sensitivity (Eqs. 1, 2, and 3 below) were used as evaluation indicators (18). A larger AUC indicates that the prediction result is closer to the actual situation, and hence better model performance. The indicators are computed from the numbers of false positives (FP), true negatives (TN), true positives (TP), and false negatives (FN). These four counts form the confusion matrix, which further helps to analyze the model performance and calculate the above evaluation values.

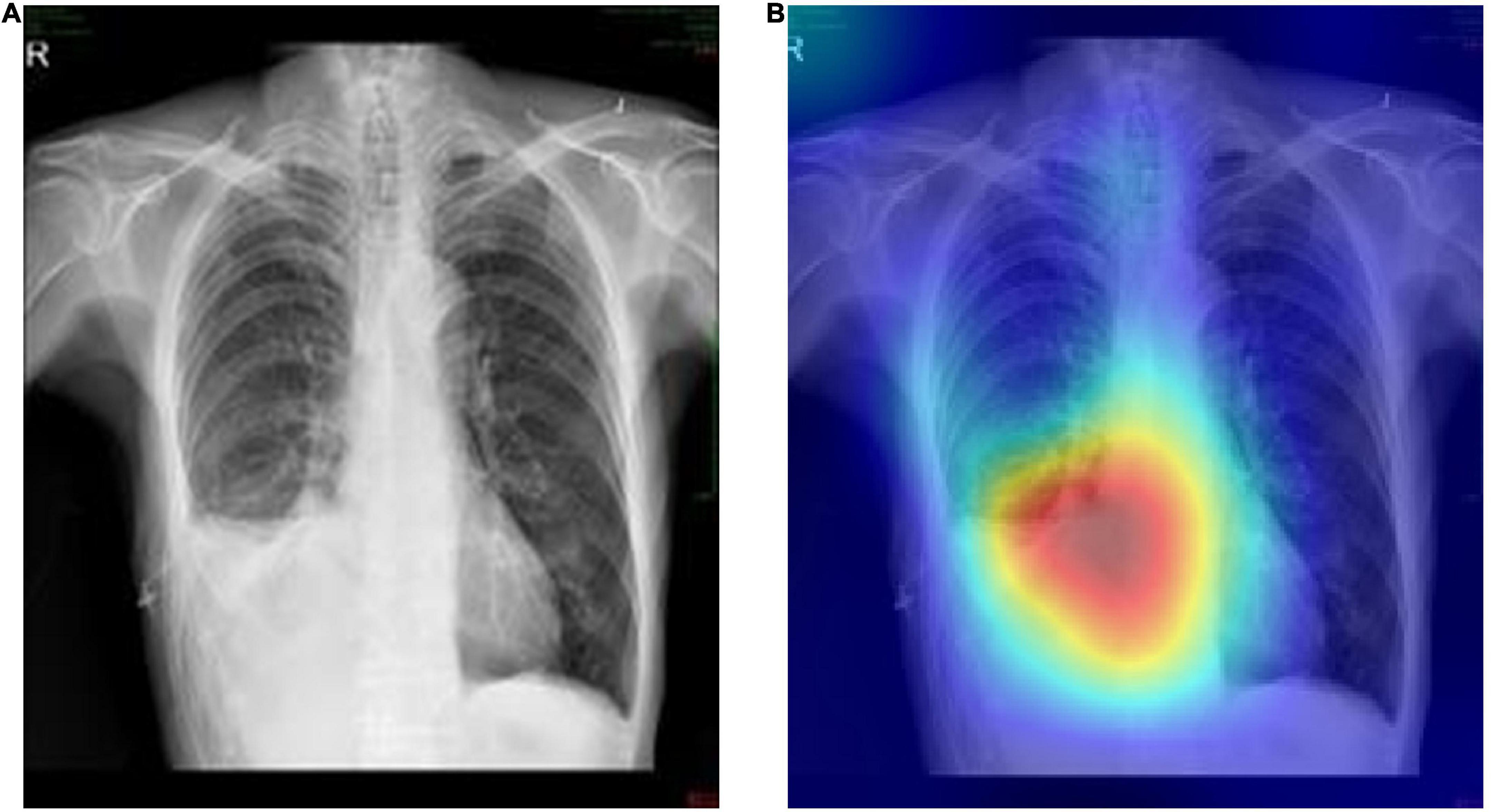
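In their standard form, the three indicators named above can be written in terms of TP, TN, FP, and FN as follows. The assignment of equation numbers follows the order in which the text lists the indicators and is an assumption.

```latex
\begin{align}
\text{Accuracy}    &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1} \\
\text{Specificity} &= \frac{TN}{TN + FP} \tag{2} \\
\text{Sensitivity} &= \frac{TP}{TP + FN} \tag{3}
\end{align}
```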

Based on the model obtained by the experimental training, Grad-CAM (19, 20) can be used to generate a gradient-weighted class activation map. The class activation map highlights the areas that are important for the classification of the image, which not only provides insight into the black-box nature of the model (16), but also helps to identify the key features extracted from images and to interpret the classification decisions of the model.
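The core Grad-CAM computation can be sketched in a few lines, assuming the feature maps of the last convolutional layer and the gradients of the class score with respect to them are already available. The sketch uses Python with NumPy rather than the MATLAB environment of the study, and the function name and array layout (channels first) are assumptions.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Compute a normalised Grad-CAM map.

    activations: array of shape (K, H, W), feature maps of the last conv layer.
    gradients:   array of shape (K, H, W), d(class score) / d(activations).
    """
    # Channel weights: global average of the gradients over the spatial axes.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps over the channel axis -> (H, W).
    cam = np.tensordot(weights, activations, axes=1)
    # ReLU keeps only regions that push the class score up.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example with two 2 x 2 feature maps.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0          # channel 0 fires top-left
acts[1, 1, 1] = 1.0          # channel 1 fires bottom-right
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
print(grad_cam(acts, grads))
```

In practice the resulting map is upsampled to the input resolution and overlaid on the radiograph as a heat map.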

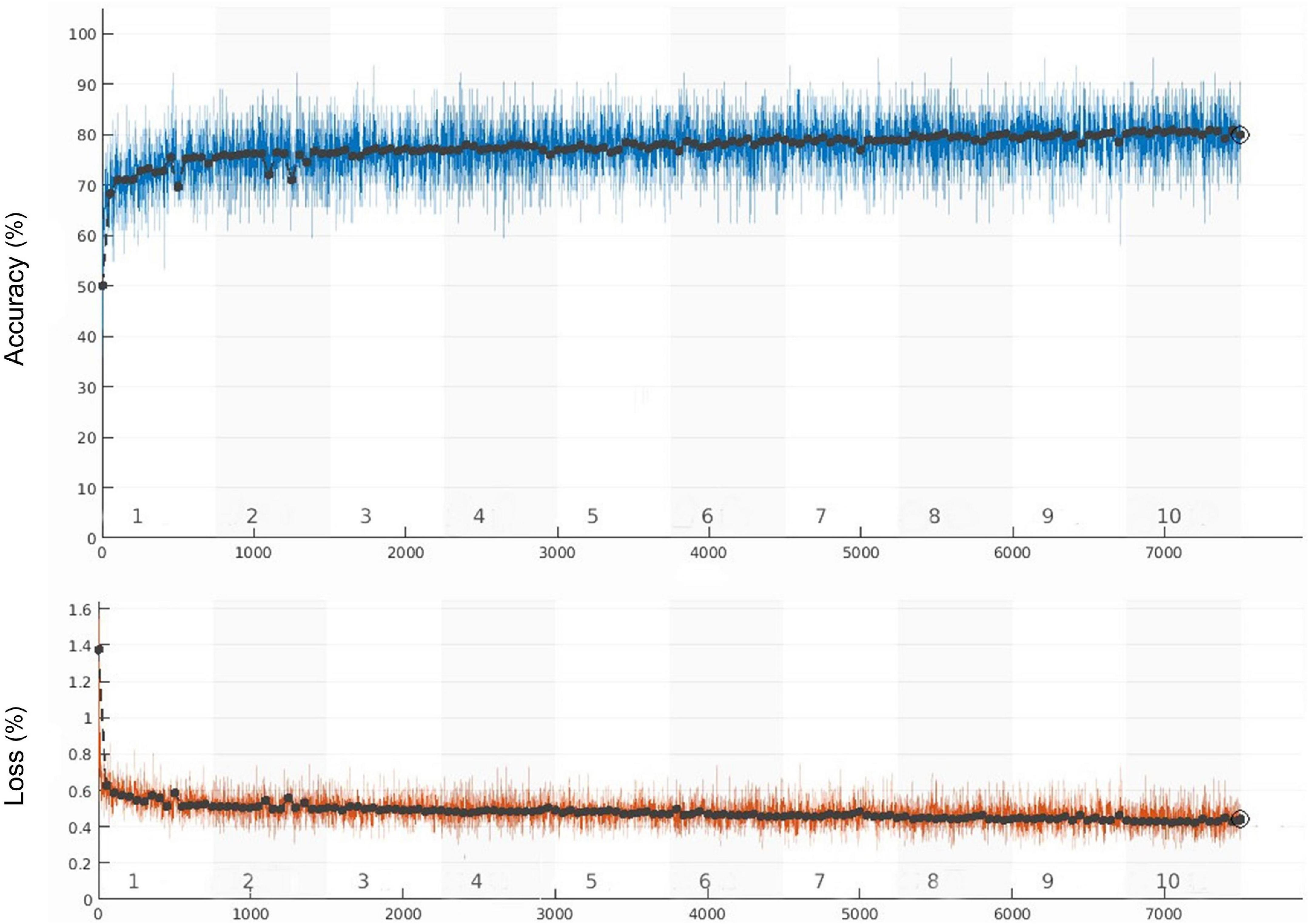

The whole training process took 105 min and 6 s. The learning curve of the model is shown in Figure 3; the validation accuracy reached 79.91%. The accuracy curve reached about 80% by the time training was completed, and the loss decreased markedly to below 40%.

Figure 3. Training accuracy and training loss curves (the black and blue curves represent the accuracy on the training set and validation set, respectively; the orange curve represents the loss).

The confusion matrices of the model on the internal testing cohort and the external validation cohort were calculated and are shown in Figure 4. Each cohort was distributed in a 2 × 2 matrix according to the true labels and the predicted results, with each square relating a predicted class to an actual class; the total data volume and predictions are shown for each class. The trained model classified the internal testing cohort with an accuracy of 81.70%, and the specificity and sensitivity were 88.30 and 75.10%, respectively. On the external validation set, the accuracy was 86.90%, and the corresponding specificity and sensitivity were 100 and 70.70%, respectively. Table 2 lists all the evaluation indexes obtained in the internal and external testing cohorts. The confusion matrices of the two cohorts provide the TPs, TNs, FPs, and FNs from which these scores were calculated. The AUC was also used as an evaluation index: the ROC curve was drawn with the FP rate on the horizontal axis and the TP rate on the vertical axis. Larger AUC values indicate that the model prediction result is closer to the actual situation. The AUCs of the internal and external testing cohorts were 88.57 and 98.39%, respectively, as shown in Figure 5.
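The relationship between the confusion matrix, the three scores, and the AUC can be sketched as follows. The counts and scores in the usage lines are made-up illustrative values, not the study's data, and the function names are assumptions. The AUC is computed through its rank interpretation (the probability that a randomly chosen positive image scores higher than a randomly chosen negative one), which equals the area under the ROC curve.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    labels: 1 for "atelectasis", 0 for "normal"; ties count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical counts for a balanced cohort of 200 images.
print(confusion_metrics(tp=75, fp=12, tn=88, fn=25))
# Hypothetical predicted probabilities for four images.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
```

When the two classes are evenly distributed, accuracy is the mean of sensitivity and specificity; the internal testing figures reported above are consistent with this, since (75.10 + 88.30) / 2 = 81.70.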

On this basis, Grad-CAM maps were drawn to interpret the predictions of the deep model. Figure 6 shows that the proposed model can accurately distinguish between normal chest radiographs and those with atelectasis. The red area on the chest radiograph heat map marks the region that was critical for the model's classification (21). Randomly generated heat maps focused on the lungs and heart.

Figure 6. Representative cases in the external testing sets. (A) Example of a true-positive case: the original chest radiograph of an ICU patient with atelectasis and pleural effusion. (B) Grad-CAM class activation heat map derived from the model's predicted classification.

Atelectasis remains a significant challenge for physicians in the diagnosis and treatment of patients under general anesthesia and in ICUs. Undiagnosed or late-diagnosed atelectasis can carry a significant mortality risk (22). From a pathological point of view (3), atelectasis mainly manifests as reversible alveolar or lobar collapse, which is generally caused by airway obstruction affecting the involved alveoli and impairs the exchange of carbon dioxide and oxygen. According to preliminary studies, almost all patients undergoing major surgery will present with some degree of atelectasis (23, 24). Typically 2–4% of elective thoracic surgeries and 20% of emergency surgeries are associated with PPCs, among which atelectasis is the most common respiratory complication. Without timely diagnosis and intervention, a series of serious and often fatal complications can occur as the disease progresses: decreased lung compliance, hypoxemia, decreased pulmonary vascular resistance, postoperative infection, diffuse alveolar injury, respiratory failure, or even death (in extreme cases). X-ray imaging has always been an essential means of diagnosing atelectasis, and portable chest X-ray in ICUs (25) is a rapid and straightforward method for its early diagnosis. This is especially true for ICU patients whose respiratory and hemodynamic parameters are within the normal range. The direct signs of atelectasis on chest radiographs (3, 4, 26) are mostly fissure displacement, parenchymal opacity, linear boundaries, and vascular displacement, among which increased density of the dysfunctional lung areas is the most obvious manifestation.
In order to help ICU doctors diagnose atelectasis early using portable chest X-rays, we established a model for detecting atelectasis on bedside chest X-rays by applying transfer learning based on the ResNet50 convolutional network. We used the explicit atelectasis data extracted from the MIMIC-CXR-JPG database as an internal testing cohort, which yielded an accuracy of 81.70%. This result was further externally validated using ICU atelectasis image data rescreened and relabeled by doctors at The First Affiliated Hospital of Jinan University, achieving an accuracy of 86.90%. The data relabeling process is likely one of the main reasons for the higher external validation accuracy in this study compared with previous studies.

Data augmentation and transfer learning were both adopted in this study to improve the accuracy of image classification and avoid overfitting. With transfer learning, the parameters of the ResNet50 model were obtained with less training while retaining high precision, despite the complexity of the deep residual network structure; this addresses the low efficiency of training on large data sets and makes training more precise. The specificity, sensitivity, accuracy, and AUC in external validation were 100.00, 70.70, 86.90, and 98.39%, respectively, indicating that the trained model was highly robust (27). Finally, the features extracted from the training images were visualized by a heat map highlighting the lung and the region near the heart. In addition, the novelty of this study lies in the application of the transfer learning method to chest X-ray atelectasis examinations and its reliable external validation.

This study had some limitations. Firstly, we used all the atelectasis image data from 2011–2017 in the MIMIC-CXR-JPG database, which is large but provides only limited patient information, such as gender, age, and diagnostic test; the clinical backgrounds of patients were unavailable. Atelectasis could therefore only be labeled according to the diagnostic test, and whether it was associated with other pulmonary complications remains to be determined. We will further attempt to establish a more practical model that incorporates clinical experience, use more diverse neural network learning algorithms and network models, and make direct comparisons with other more advanced networks, in order to classify atelectasis in more detail and provide greater assistance in early clinical intervention, diagnosis, and treatment. Secondly, the case-control design used in this study artificially increased the prevalence of atelectasis by using positive data collected from the MIMIC-CXR-JPG database and The First Affiliated Hospital of Jinan University, thus overestimating the positive predictive value compared with clinical reality (28). In addition, the internal and external validation data sets in this paper were randomly sampled and evenly distributed, which represents an idealized comparison. A cohort design will therefore be used in the future to obtain more reliable real-world labels. Thirdly, our internal and external testing cohorts were derived almost entirely from portable chest X-ray images from ICUs, and the results might not be applicable to outpatients and general patients. Moreover, the image data in the MIMIC-CXR-JPG database (6, 29) came from a database established in another country, and there were some differences in diagnostic reporting and standards.
There was also some heterogeneity in the atelectasis data obtained from The First Affiliated Hospital of Jinan University for external validation, and so the results obtained should be considered exploratory only.

In the future, we will attempt to explore more cutting-edge and optimized AI models for portable chest X-ray diagnoses of acute and severe pulmonary complications such as atelectasis, and further promote precision medicine (30, 31), to allow the application of machine learning in clinical imaging diagnosis to realize human-machine mutual assistance and true generalization.

In summary, this study found that, when detecting atelectasis, a model obtained by training with sufficiently large data sets performed better in external validation and can better help ICU doctors to diagnose atelectasis and implement interventions early.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the IRB of the First Affiliated Hospital of Jinan University. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s), and minor(s)’ legal guardian/next of kin, for the publication of any potentially identifiable images or data included in this article.

XH created the study protocol, completed the main experimental training, and wrote the first manuscript draft. BL provided clinical guidance and critically revised the manuscript. TH assisted with the study design and performed data collection. SY and WW participated in the analysis and interpretation of data. HY assisted with manuscript revision and data confirmation. JL contributed to data interpretation and manuscript revision. All authors contributed to the article and approved the submitted version.

This study was supported by the Guangdong Provincial Key Laboratory of Traditional Chinese Medicine Information (2021B1212040007).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

MIMIC-CXR, medical information mart for intensive care chest X-ray; AUC, area under the curve; ROC, receiver operating characteristic; ICUs, intensive care units; AI, artificial intelligence.

1. Restrepo RD, Braverman J. Current challenges in the recognition, prevention and treatment of perioperative pulmonary atelectasis. Expert Rev Respir Med. (2015) 9:97–107. doi: 10.1586/17476348.2015.996134

2. MacMahon H, Giger M. Portable chest radiography techniques and teleradiology. Radiol Clin North Am. (1996) 34:1–20.

4. Woodring JH, Reed JC. Radiographic manifestations of lobar atelectasis. J Thorac Imaging. (1996) 11:109–44. doi: 10.1097/00005382-199621000-00003

5. Moses DA. Deep learning applied to automatic disease detection using chest X-rays. J Med Imag Radiat Oncol. (2021) 65:498–517. doi: 10.1111/1754-9485.13273

6. Johnson AEW, Pollard TJ, Berkowitz SJ, Greenbaum NR, Lungren MP, Deng CY, et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci Data. (2019) 6:317. doi: 10.1038/s41597-019-0322-0

7. Wu WT, Li YJ, Feng AZ, Li L, Huang T, Xu AD, et al. Data mining in clinical big data: the frequently used databases, steps, and methodological models. Mil Med Res. (2021) 8:44. doi: 10.1186/s40779-021-00338-z

8. Yang J, Li Y, Liu Q, Li L, Feng A, Wang T, et al. Brief introduction of medical database and data mining technology in big data era. J Evid Based Med. (2020) 13:57–69. doi: 10.1111/jebm.12373

9. Peng Y, Wang X, Lu L, Bagheri M, Summers R, Lu Z. NegBio: a high-performance tool for negation and uncertainty detection in radiology reports. AMIA Jt Summits Transl Sci Proc. (2018) 2017:188–96.

10. Irvin J, Rajpurkar P, Ko M, Yu Y, Ciurea-Ilcus S, Chute C, et al. “CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison,” in Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Hawaii, HI (2019). p. 590–7.

11. Sirazitdinov I, Kholiavchenko M, Kuleev R, Ibragimov B. “Data Augmentation for Chest Pathologies Classification,” in Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), (Piscataway, NJ: IEEE) (2019). p. 1216–9. doi: 10.1109/ISBI.2019.8759573

12. He KM, Zhang XY, Ren SQ, Sun J. “Deep residual learning for image recognition,” in Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (Piscataway, NJ: IEEE) (2016). p. 770–8. doi: 10.1109/CVPR.2016.90

13. Abiyev RH, Ma’aitah MKS. Deep convolutional neural networks for chest diseases detection. J Healthc Eng. (2018) 2018:4168538. doi: 10.1155/2018/4168538

14. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv [Preprint] (2014). doi: 10.48550/arXiv.1409.1556

15. Yosinski J, Clune J, Bengio Y, Lipson H. How transferable are features in deep neural networks? Adv Neural Inf Process Syst. (2014) 27:3320–28.

16. Matsumoto T, Kodera S, Shinohara H, Yamaguchi T, Higashikuni Y, Kiyosue A, et al. Diagnosing heart failure from chest x-ray images using deep learning. Int. Heart J. (2020) 61:781–6. doi: 10.1536/ihj.19-714

17. Ayan E, Ünver HM. “Diagnosis of pneumonia from chest x-ray images using deep learning,” in Proceedings of the 2019 Scientific Meeting on Electrical-Electronics & Biomedical Engineering and Computer Science (EBBT), (Piscataway, NJ: IEEE) (2019). p. 1–5. doi: 10.1109/EBBT.2019.8741582

18. Majeed T, Rashid R, Ali D, Asaad A. Issues associated with deploying CNN transfer learning to detect COVID-19 from chest X-rays. Phys Eng Sci Med. (2020) 43:1289–303. doi: 10.1007/s13246-020-00934-8

19. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. (2020) 128:618–26. doi: 10.1109/ICCV.2017.74

20. Selvaraju RR, Vedantam R, Cogswell M, Parikh D, Batra D. Grad-CAM: why did you say that? Visual explanations from deep networks via gradient-based localization. arXiv [Preprint] (2016).

21. Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. In: D Fleet, T Pajdla, B Schiele, T Tuytelaars editors. Computer Vision–ECCV 2014. New York, NY: Springer Publishing (2014). doi: 10.1007/978-3-319-10590-1_53

22. Hoshikawa Y, Tochii D. Postoperative atelectasis and pneumonia after general thoracic surgery. Kyobu Geka. (2017) 70:649–55.

23. Smetana GW. Postoperative pulmonary complications: an update on risk assessment and reduction. Cleve Clin J Med. (2009) 76(Suppl. 4):S60–5. doi: 10.3949/ccjm.76.s4.10

24. Ray K, Bodenham A, Paramasivam E. Pulmonary atelectasis in anaesthesia and critical care. Continuing Educ Anaesth Critic Care Pain. (2014) 14:236–45. doi: 10.1093/bjaceaccp/mkt064

25. Tarbiat M, Bakhshaei MH, Khorshidi HR, Manafi B. Portable chest radiography immediately after post-cardiac surgery; an essential tool for the early diagnosis and treatment of atelectasis: a case report. Tanaffos. (2020) 19:418–21.

26. Hobbs BB, Hinchcliffe A, Greenspan RH. Effects of acute lobar atelectasis on pulmonary hemodynamics. Invest Radiol. (1972) 7:1–10.

27. Arle JE, Mei L, Carlson KW. Robustness in neural circuits. In: SN Makarov, GM Noetscher, A Nummenmaa editors. Brain and Human Body Modeling 2020: Computational Human Models Presented at EMBC 2019 and the BRAIN Initiative(R) 2019 Meeting. Cham: Springer Publishing (2021). p. 213–29. doi: 10.1007/978-3-030-45623-8

28. Thian YL, Ng D, Hallinan JTPD, Jagmohan P, Sia SY, Tan CH, et al. Deep learning systems for pneumothorax detection on chest radiographs: a multicenter external validation study. Radiol Artif Intell. (2021) 3:e200190. doi: 10.1148/ryai.2021200190

29. Zhao QY, Wang H, Luo JC, Luo MH, Liu LP, Yu SJ, et al. Development and validation of a machine-learning model for prediction of extubation failure in intensive care units. Front Med. (2021) 8:676343. doi: 10.3389/fmed.2021.676343

30. Lee JG, Jun S, Cho YW, Lee H, Kim GB, Seo JB, et al. Deep learning in medical imaging: general overview. Korean J Radiol. (2017) 18:570–84. doi: 10.3348/kjr.2017.18.4.570

Keywords: atelectasis, transfer learning, ResNet, artificial intelligence (AI), ICUs

Citation: Huang X, Li B, Huang T, Yuan S, Wu W, Yin H and Lyu J (2022) External validation based on transfer learning for diagnosing atelectasis using portable chest X-rays. Front. Med. 9:920040. doi: 10.3389/fmed.2022.920040

Received: 14 April 2022; Accepted: 01 July 2022;

Published: 22 July 2022.

Edited by:

Zhongheng Zhang, Sir Run Run Shaw Hospital, China

Reviewed by:

Zhi Mao, People’s Liberation Army General Hospital, China

Copyright © 2022 Huang, Li, Huang, Yuan, Wu, Yin and Lyu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Haiyan Yin, eWluaGFpeWFuMTg2N0AxMjYuY29t; Jun Lyu, bHl1anVuMjAyMEBqbnUuZWR1LmNu

†These authors have contributed equally to this work
