ORIGINAL RESEARCH article

Front. Med., 30 August 2022

Sec. Healthcare Professions Education

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.897219

This article is part of the Research Topic From Simulation to the Operating Theatre: New Insights in Translational Surgery

Objective: This paper focuses on simulator-based assessment of open surgery suturing skill. We introduce a new surgical simulator designed to collect synchronized force, motion, video and touch data during a radial suturing task adapted from the Fundamentals of Vascular Surgery (FVS) skill assessment. The synchronized data is analyzed to extract objective metrics for suturing skill assessment.

Methods: The simulator has a camera positioned underneath the suturing membrane, enabling visual tracking of the needle during suturing. Needle tracking data enables extraction of meaningful metrics related to both the process and the product of the suturing task. To better simulate surgical conditions, the height of the system and the depth of the membrane are both adjustable. Metrics for assessment of suturing skill based on force/torque, motion, and physical contact are presented. Experimental data are presented from a study comparing attending surgeons and surgery residents.

Results: Analysis shows that force metrics (absolute maximum force/torque in z-direction), motion metrics (yaw, pitch, roll), the physical contact metric, and image-enabled force metrics (orthogonal and tangential forces) are statistically significant in differentiating suturing skill between attendings and residents.

Conclusion and significance: The results suggest that this simulator and accompanying metrics could serve as a useful tool for assessing and teaching open surgery suturing skill.

Objective measures of surgical skill have remained elusive because of a lack of consensus regarding the optimal metrics. Surgical trainees are often evaluated by surgical educators using subjective rating scales that often lack precision and reproducibility. Precise quantification of the metrics that define “best surgical practices” would have potential value to certifying organizations, credentialing committees and surgical educators, in addition to providing surgeons in training with objective feedback. Quantification of a surgeon's skill has received attention in recent years due to multiple factors including: duty hour restrictions on surgical residents, limited training opportunities, a call for the reduction in medical errors, and a need for structured training (1–4). Surgical skill is important due to the direct relationship between surgical performance and clinical outcomes such as hospital readmission and complication rates (5). Surgical outcomes may be improved through training to improve skill. For this purpose, surgical simulators (capable of simulating an aspect of a surgical procedure and of assessing and/or training the subject's skill on a given task) have received special attention in recent years. One main advantage of using simulators is the ability to train surgical skills without the use of humans or animals. Another key advantage is the ability to measure skill and its progression over time. Overall, evidence suggests that surgical simulators are potentially effective in training surgical skills (6, 7).

A variety of surgical simulators have been developed to aid surgeons-in-training in the acquisition of a wide range of surgical skills. Surgical simulators range from simple devices that allow surgeons to practice suturing of synthetic materials (e.g., sponges, plastic tubes) to highly sophisticated computer-based virtual operating rooms. The most effective currently available simulators focus on minimally invasive techniques used in endovascular, laparoscopic, and robotic procedures. Simulation of open surgical techniques has historically relied on the use of animal or cadaver labs, which frequently lack objective performance metrics (8–12). There is therefore a need for more precise, objective measures of open surgical skill (13).

Traditional surgical training shares many features with other apprenticeship-based skilled trades. Promotion is often based on duration of service rather than on demonstrated achievement of specific objective performance metrics. This type of training may be highly subjective since feedback often depends on the expert surgeon's preferences and style. Further, training draws expert surgeons away from clinical responsibilities (14). Simulators were developed to address these problems and to standardize and automate assessment of a surgeon's skill. Researchers have focused on identifying objective metrics for surgical skill and on developing devices to measure these metrics. Objective metrics establish a basis for continued practice in order to achieve defined performance goals.

Many metrics for skill assessment have been presented in the literature. These metrics can be classified as force-based metrics (15–23), motion-based metrics (8, 18, 23–30) and image-based metrics (29, 31–39). Force-based metrics, such as absolute, mean, and peak forces and force volume (16–18, 23), have been most successful at distinguishing novice vs. expert performance at surgical tasks. Hand and/or surgical tool motion obtained via sensor-based kinematic data were also examined to extract motion-based metrics, which can distinguish skill level (8, 18, 28–30). Acceleration of the hand and rotation of the wrist were found to distinguish expert surgeons from novices (18, 28). In addition, hand and/or surgical tool motion obtained from external video using Artificial Intelligence (AI) were also examined to extract motion-based metrics (36–39). Total duration, path length, and number of movements were found to be important for distinguishing between attendings and medical students (37). Further, computer vision has also been used to extract image-based metrics as a means to quantify surgical skill (31, 32, 34, 35). Frischknecht et al. (31) analyzed photographs taken post-procedure to assess suturing performance. Metrics that proved most meaningful in ranking the quality of suturing included the number of stitches, stitch length, total bite size, and stitch orientation.

Suturing is a fundamental surgical skill required in a variety of operations, ranging from wound repair in trauma care to delicate vascular reconstruction in vascular surgery (40). The process of suturing can be divided into the following phases: (i) puncturing a needle into the tissue perpendicularly, (ii) driving the needle through the tissue following the curvature of the needle, (iii) exiting the tissue from an exit point, and (iv) withdrawing the needle from the tissue completely prior to tightening the suture. Learning skilled suturing is essential for novice medical practitioners and has been incorporated into most fundamental skills training curricula, for example, the Fundamentals of Laparoscopic Surgery (FLS) (6, 41, 42) and Fundamentals of Vascular Surgery (FVS) curricula (43). However, most currently available simulators for teaching suturing have been developed for minimally invasive surgery (44); only a handful of attempts have focused on open surgery (45–47). Furthermore, the majority of studies that examine suturing skill focus on product metrics, i.e., metrics based on analyzing the final results of the task. Process metrics, i.e., metrics that quantify skill by analyzing how the task was performed, provide significantly more insight for skill training and assessment than product metrics but are also more technically challenging to obtain.

To address the limitations of current surgical simulators, we have developed a suturing simulator which collects synchronized force, motion, touch, and video data as trainees perform a prespecified suturing task. Product and process metrics are extracted from these data and are used to distinguish suturing skill level. A feature of this system is that standard surgical tools (needle holder, needle with surgical thread, etc.) are used on the platform in contrast to simulators which require the use of modified surgical tools (for example needle coloring, dots for computer vision tracking, etc.). Inspired by suggestions from collaborators in vascular surgery, the system simulates suturing at various depth levels, which represent surgery inside a body cavity or at the surface. Suturing at depth is especially important in vascular surgery and requires significantly different and less intuitive hand motions as compared to suturing at the surface (48).

The suturing simulator presented here extends a preliminary version of the platform presented in Kavathekar et al. (49) and Singapogu et al. (50) that featured a single external camera, a force sensor, and a motion sensor. This paper presents the construction of the simulator, metrics based on force, motion and touch, and a study of attending and resident surgeons toward skill assessment using these metrics. The study was carried out with three main objectives: (1) to validate the simulator's capability of collecting synchronized force, motion, touch, and video data, (2) to extract metrics from data collected from a population with open surgery suturing experience, (3) to test the construct validity of the various metrics. The paper is organized as follows. Section 2 describes the simulator, experimental setup, and methods used in the study. Section 3 presents the experimental results, along with a discussion of the force- and motion-based metrics. Section 4 presents conclusions and future work.

The physical system was designed with the following main components: (a) membrane housing, and (b) height adjustable table (see Figure 1). The cylindrical membrane housing was constructed from clear acrylic and its sides were shielded externally with an aluminum sheet. Eight metal latches along the upper exterior of the membrane housing were used to secure the membrane, a material such as GoreTex®, artificial leather, or other fabric, on which suturing is performed (see Figure 2A).

Similar to the radial suturing task in the Fundamentals of Vascular Surgery (43, 51), the suture membrane (see Figure 2B) was designed such that suturing is performed in a radial and uninterrupted fashion. A circle, representing an incision, was drawn on the membrane. The circle was partitioned by radial lines into equal sections each spanning 30°, similar to a clock face. Needle entry points were marked on the radial lines. The distance of the entry mark from the incision line is based on the diameter of the needle. The marks indicated where suturing was to be performed (entry on one side, exit on the other). All membranes were made of artificial leather using a laser cutter.
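The membrane layout described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `incision_radius` and `mark_offset` are hypothetical parameters (the text says only that the offset is based on the needle diameter), and the sketch does not assume which of the two marks on a radial line is the entry mark.

```python
import math

def radial_marks(incision_radius, mark_offset, step_deg=30.0):
    """Return the two marks on each radial line of the suturing membrane.

    incision_radius and mark_offset are hypothetical inputs in the same
    length unit; the source does not give their values.
    """
    n_lines = int(round(360.0 / step_deg))
    marks = []
    for k in range(n_lines):
        theta = math.radians(k * step_deg)
        ux, uy = math.cos(theta), math.sin(theta)  # unit vector along the radial line
        r_out = incision_radius + mark_offset      # mark outside the incision circle
        r_in = incision_radius - mark_offset       # mark inside the incision circle
        marks.append({
            "angle_deg": k * step_deg,
            "outer": (r_out * ux, r_out * uy),
            "inner": (r_in * ux, r_in * uy),
        })
    return marks

# Hypothetical dimensions, chosen only for illustration.
marks = radial_marks(incision_radius=20.0, mark_offset=5.0)
print(len(marks))  # 12 radial lines, one per clock hour
```

Placing the two marks at equal distances on either side of the circle makes each stitch symmetric about the incision line, as the task requires.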

An internal camera (Firefly MV USB 2.0, Point Grey Research Inc., British Columbia, Canada) was positioned inside the membrane holder and used to record needle and thread movement from underneath the membrane. White LED strips were mounted inside the membrane housing to provide consistent lighting conditions. In addition, an external camera (C920 HD USB 2.0, Logitech International S.A., Lausanne, Switzerland) was positioned above the membrane to record the membrane and hand movement of the subjects during suturing.

A 6-axis force/torque sensor (ATI MINI 40, ATI Industrial Automation Inc., NC, USA) was placed under the housing to measure forces and torques applied to the membrane during suturing (see Figure 1). An InertiaCube4 sensor (InterSense Inc., MA, USA) was used to record hand motion during suturing (see Figure 3).

To simulate suturing in a body cavity or at the surface of the body, a transparent acrylic cylinder is positioned around the membrane holder. The vertical position of the cylinder can be adjusted to simulate suturing at different depths (see Figure 2A).

Capacitive sensing was employed to detect physical contact, i.e., touch, between the subjects' body or the surgical instrument and the cylinder. The interior and top of the cylinder were lined with flexible conductive film (Indium Tin Oxide coated plastic sheet) and aluminum foil, respectively. The conductive materials were attached to a simple capacitive sensing circuit and read using an Arduino.

The membrane housing was mounted onto an adjustable height table. This allows subjects to set the height of the platform as desired for comfort during the suturing exercise (48). Ergonomic studies of the height of operating tables show that the optimum height of the table lies between 55 cm and 100 cm from the floor up to table surface (52–54). The table for the suturing simulator was modified to permit heights between 71 cm and 99 cm.

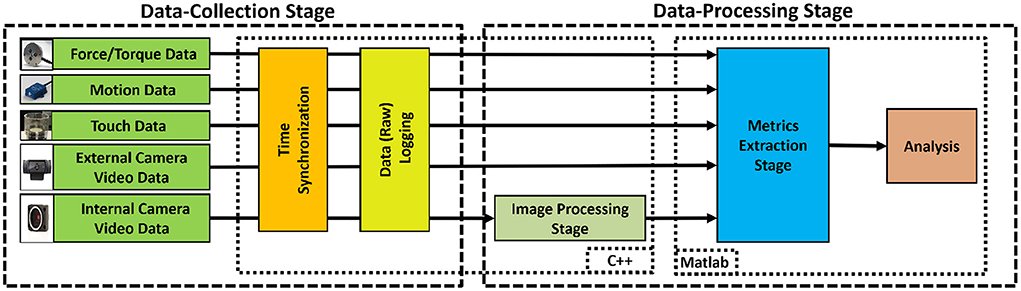

The system processes of the suturing simulator (see Figure 1) are categorized into two main stages: (i) Data Collection, and (ii) Data Processing (Figure 4). In the Data-Collection stage, the system synchronizes and logs force, motion, video, and touch data during suturing. The Data-Processing stage uses the collected data to extract metrics of suturing skill.

Figure 4. System process flow-chart, consisting of two stages. In the Data Collection Stage, raw data from multiple sensors were synchronized and logged. In the Data Processing Stage, the collected data were used to extract metrics for suturing skill.

Data were collected from the four sensing modes: force/torque, motion, video, and physical contact. Force/torque data were collected using the 6-axis force/torque sensor and logged at 1 kHz during suturing. To obtain force/torque data from the sensor, software was written using the NI-DAQ Software Development Kit (SDK). Collected force/torque data were filtered offline with a 10th-order Butterworth lowpass zero-phase filter with a cutoff frequency of 50 Hz to remove noise and smooth the data. To record hand motion, the InertiaCube4 sensor was placed on the dorsum of the subject's dominant hand as shown in Figure 3 and logged at 200 Hz during suturing. InterSense SDK was used to obtain θyaw, θpitch, and θroll measurements of the subject's wrist motion. The internal camera with FlyCapture SDK was used to record needle and suture motion from under the membrane at 60 fps. The external camera was used to record membrane and hand movement at 30 fps. An open source computer vision library (OpenCV 3.0.0) was used to capture and log the external video. For logging touch data, the Arduino capacitive sensing (55) and serial communication libraries (56) were used.

We modified the previous system to include an internal camera, enabling extraction of vision-based metrics. In the previous system, synchronization of the data stream was achieved in post-processing, whereas our current platform synchronizes data collection on a single PC using a multithreaded implementation and timestamping. The Data Collection Stage software was written in C++ using Microsoft Visual Studio 2013 (57).

During suturing, all unprocessed (raw) data is synchronized and logged. Logging allows for revisiting the raw data at any time for additional investigation and analysis. The raw data were then used in the Data-Processing stage.
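Because the sensors log at different rates (1 kHz force/torque, 200 Hz motion, 60 and 30 fps video), timestamped streams still have to be brought onto a common time base before they can be compared. The sketch below shows one simple way to do that, nearest-timestamp lookup; it is an illustrative assumption, not the platform's C++ implementation, and the function names and sample values are hypothetical.

```python
from bisect import bisect_left

def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t (timestamps sorted)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Ties go to the earlier sample.
    return values[i] if (after - t) < (t - before) else values[i - 1]

def align(reference_times, streams):
    """Sample every stream at each reference time.

    streams maps a name to a (timestamps, values) pair.
    """
    return [
        {name: nearest_sample(ts, vs, t) for name, (ts, vs) in streams.items()}
        for t in reference_times
    ]

# Hypothetical data with integer microsecond timestamps.
force_t = list(range(0, 20000, 1000))   # 1 kHz force samples
force_v = list(range(20))               # placeholder readings
motion_t = list(range(0, 20000, 5000))  # 200 Hz motion samples
motion_v = [10, 11, 12, 13]             # placeholder readings
frame_t = [0, 16667]                    # two video frames at roughly 60 fps

rows = align(frame_t, {"force": (force_t, force_v), "motion": (motion_t, motion_v)})
print(rows)
```

Integer timestamps are used here to keep comparisons exact; interpolation between neighbouring samples would be a reasonable alternative to nearest-sample lookup.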

In this stage (see Figure 4), internal video was first processed with a computer vision algorithm to obtain information about needle and thread movement (33). This information was then used to identify the individual suture cycles. Next, the collected raw data were used to extract metrics for each active suturing time.

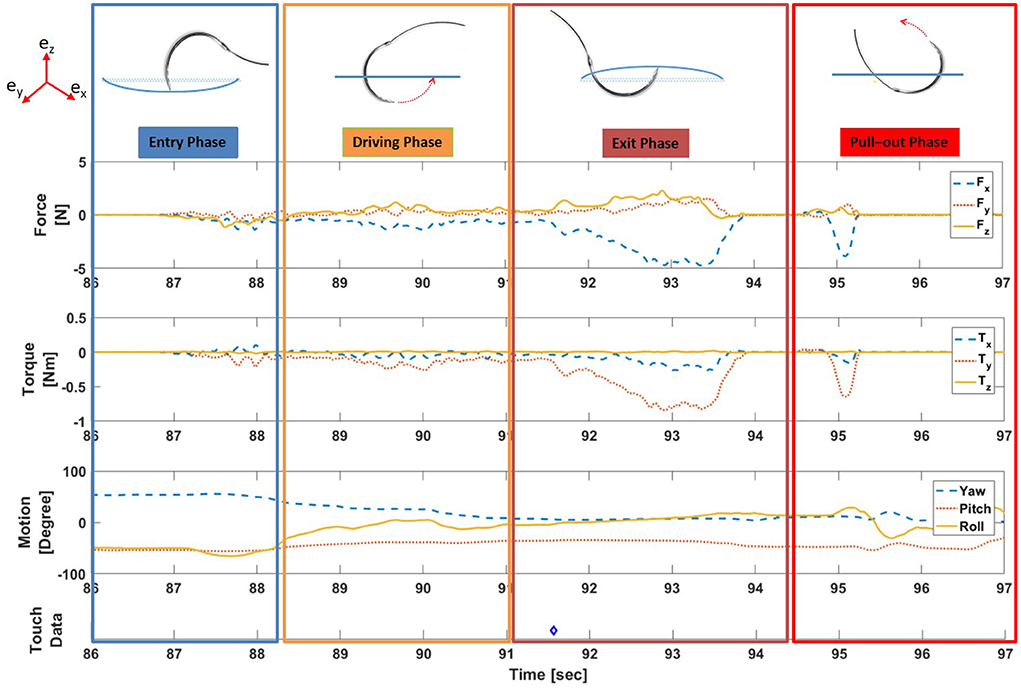

During continuous suturing, a single suture cycle can be divided into two distinct periods of time: active suturing time and idle time. Active suturing time is the time between needle entry into the membrane and complete needle removal from the membrane. Idle time is the time between the end of one active suturing time to the start of the next. In other words, active suturing is the time taken by subjects to complete one suture, whereas idle time is the time spent preparing for the next suture. Active suturing time may be further decomposed into 4 phases: a) entry phase—puncturing the needle into the tissue; b) driving phase—driving the needle along some path under the membrane; c) exit phase—exiting the needle tip from the tissue; and d) pull-out phase—pulling the needle completely from the tissue and then tightening the thread.
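The split of each suture cycle into active and idle time can be sketched as follows. This is a minimal illustration that assumes the needle entry and complete-removal times are already available (for example from the vision algorithm); the function name and the example times are hypothetical.

```python
def segment_cycles(entry_times, removal_times):
    """Split continuous suturing into active and idle durations.

    entry_times[i]   : time the needle enters the membrane for suture i
    removal_times[i] : time the needle is completely removed for suture i
    """
    if len(entry_times) != len(removal_times):
        raise ValueError("each needle entry needs a matching removal time")
    cycles = []
    for i, (t_in, t_out) in enumerate(zip(entry_times, removal_times)):
        if t_out <= t_in:
            raise ValueError("removal must come after entry")
        has_next = i + 1 < len(entry_times)
        # Idle time runs from this removal to the next entry; the last
        # suture has no following entry, so its idle time is undefined.
        idle = entry_times[i + 1] - t_out if has_next else None
        cycles.append({"active": (t_in, t_out),
                       "active_s": t_out - t_in,
                       "idle_s": idle})
    return cycles

# Hypothetical event times in seconds.
cycles = segment_cycles([2.0, 10.0, 19.5], [6.5, 15.0, 24.0])
for c in cycles:
    print(c["active_s"], c["idle_s"])
```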

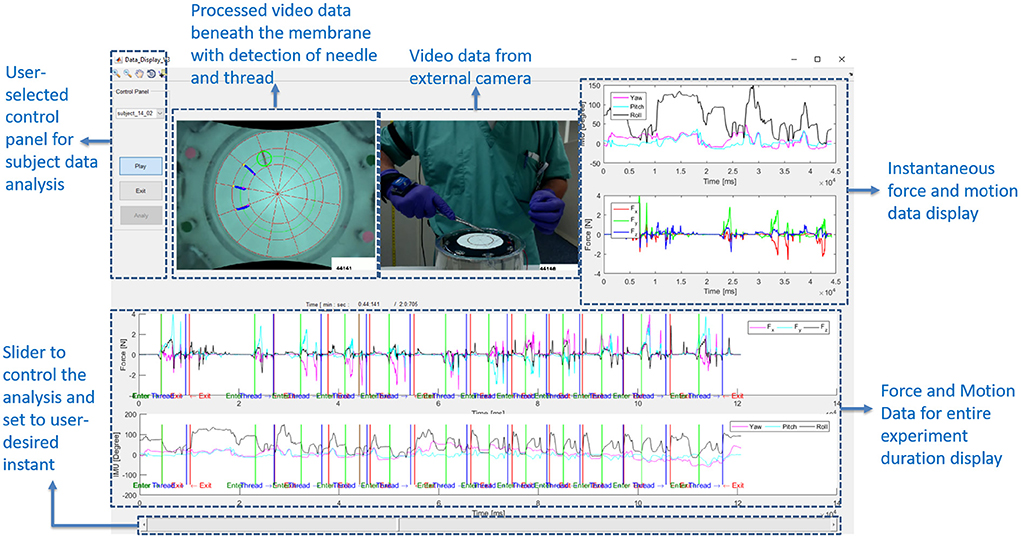

Dividing each suture cycle into distinct phases allows for context-specific interpretation of the sensor data. Needle entry and exit times obtained from the computer vision algorithm were used to extract each suture cycle for individual analysis. In addition, a Graphical User Interface (GUI) in MATLAB (Figure 5) was created to display synchronized force, motion, and touch data, as well as video from external and internal cameras. The interface also labels the needle entry, needle exit and thread entry times automatically determined by computer vision. The interface enables convenient, interactive exploration of the synchronized data. An example of synchronized data for one active suturing time with the suture sub-events identified (entry, driving, exit, and pull-out phase) is shown in Figure 6.

Figure 5. Graphical User Interface (GUI) designed to show the synchronized force and motion data, along with videos from external and internal cameras. GUI allows for convenient, interactive investigation of synchronized data.

Figure 6. Example of synchronized force, torque, motion, and touch data for one active suturing time with suture sub-events labeled. (Note: Blue diamond symbol (◇) in touch data indicates the time instance of the physical touch).

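Many of the metrics presented in this paper are computed from time series data of a scalar signal X(t) using one of the following functions:

$$\mathrm{PEAK}^{+}(X) = \max_{t} X(t) \tag{1}$$

$$\mathrm{PEAK}^{-}(X) = -\min_{t} X(t) \tag{2}$$

$$\mathrm{PP}(X) = \mathrm{PEAK}^{+}(X) + \mathrm{PEAK}^{-}(X) \tag{3}$$

$$\mathrm{INTABS}(X) = \int \lvert X(t) \rvert \, dt \tag{4}$$

(5) DER(X), a derivative-based function of X(t) calculated as in Trejos et al. (15).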

The time interval over which the maximum is taken is specified in the definition of the specific metric. Typically the time interval corresponds to one whole active suture time. Note that PEAK+(X) is the maximum value that signal X took over the time interval and PEAK-(X) is the negative of the minimum value that signal X took during the time interval. If signal X(t) is negative at some point, then PEAK-(X) can be interpreted as the magnitude of peak negative value of X(t). PP(X) is the peak-to-peak amplitude of signal X. As in Trejos et al. (15) and Horeman et al. (16), INTABS(X) is related to the impulse for a force signal X(t). This quantity will be high when X(t) is high in magnitude over a long period of time. DER(X) is the derivative of the signal X(t) calculated similar to Trejos et al. (15) and can be interpreted as the consistency of signal X(t) during the time interval.
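For a uniformly sampled signal, the peak, peak-to-peak and integral metrics described above can be sketched as below. This is a hedged illustration: the function names are hypothetical, the integral uses the trapezoidal rule as an assumed discretisation, and DER is left out because its exact form follows Trejos et al. (15) and is not specified in this text.

```python
def peak_pos(x):
    """PEAK+(X): maximum value of the signal over the interval."""
    return max(x)

def peak_neg(x):
    """PEAK-(X): negative of the minimum value over the interval."""
    return -min(x)

def peak_to_peak(x):
    """PP(X): peak-to-peak amplitude, i.e. maximum minus minimum."""
    return max(x) - min(x)

def intabs(x, dt):
    """INTABS(X): integral of |X(t)|, approximated with the trapezoidal rule."""
    mags = [abs(v) for v in x]
    return sum(0.5 * (a + b) * dt for a, b in zip(mags, mags[1:]))

# Hypothetical force samples (N) for one active suturing time, 1 kHz sampling.
fz = [0.0, 1.0, -2.0, 0.5]
print(peak_pos(fz), peak_neg(fz), peak_to_peak(fz))
print(intabs(fz, dt=0.001))
```

In practice each function would be applied to the samples that fall inside one active suturing time, once per force and torque component.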

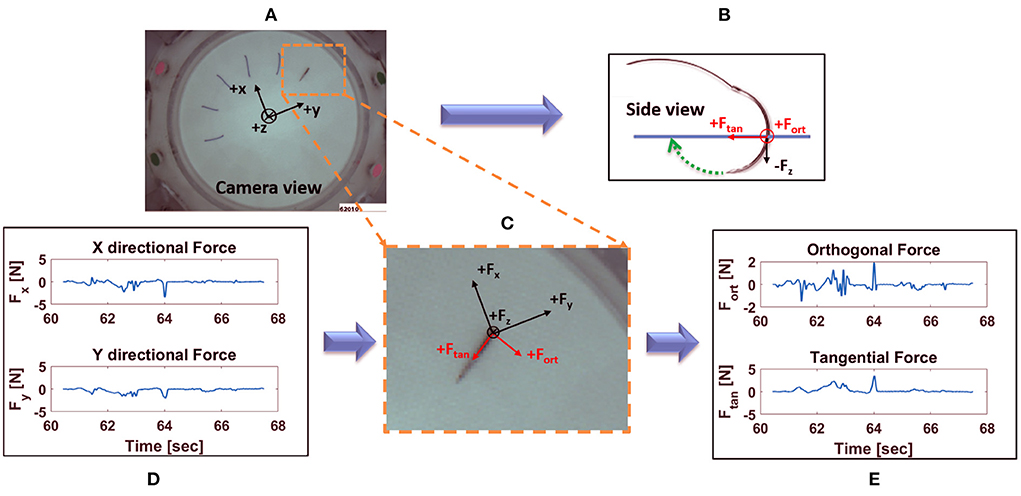

For each active suturing time, (1)–(5) were used to compute metrics based on time series for force components Fx, Fy, and Fz, and torque components Tx, Ty, and Tz. Based on the coordinate axes (shown in Figure 7), PEAK+(Fz) is the maximum force component applied upward on the membrane while PEAK-(Fz) is the maximum force component applied downward (49).

Figure 7. Decomposition of horizontal forces into forces orthogonal and tangential to stitch direction: (A) view of the needle taken from internal camera with force sensor coordinate system overlaid; (B) side view of the needle along with orthogonal and tangential force direction; (C) zoomed in view of the needle, together with the force sensor, orthogonal and tangential forces; (D) X and Y directional forces at suture location in (A), for one active suture time; (E) corresponding orthogonal and tangential forces from (A), for one active suture time.

Force applied orthogonal to the stitch direction may increase tissue tearing and should therefore be minimized. The axes of the force sensor are not generally aligned with the directions of the radial stitches, so a change of coordinates is required to determine the force components orthogonal and tangential to the stitch direction. Using the suture entry and exit points detected by computer vision (33), the suture direction at each suture location can be identified. Then, a change of coordinates can be applied to compute the force tangential to stitch direction and orthogonal to stitch direction (see Figure 7). Calculations of the orthogonal and tangential forces were achieved as follows.

Total force, $\vec{F}$, can be expressed in the vision coordinate system as:

$$\vec{F} = F_x\,\hat{e}_x + F_y\,\hat{e}_y \tag{6}$$

where Fx and Fy are the component forces in the x and y directions, respectively, as read from the force sensor, and $\hat{e}_x$ and $\hat{e}_y$ are the unit vectors in the vision coordinate frame aligned with the x- and y-axes of the force sensor, respectively. Since the coordinate system of the force sensor is constant, $\hat{e}_x$ and $\hat{e}_y$ were also constant, independent of suture location. The unit vectors $\hat{e}_x$ and $\hat{e}_y$ were precomputed based on a calibration experiment.

The same force can also be represented as

$$\vec{F} = F_o\,\hat{e}_o + F_t\,\hat{e}_t \tag{7}$$

where Fo and Ft are the component forces orthogonal and tangential to the stitch direction in the vision coordinate frame, respectively, and $\hat{e}_o$ and $\hat{e}_t$ are the corresponding unit vectors in the vision coordinate frame.

Thus, (6) and (7) can be rearranged as follows to obtain orthogonal and tangential component forces, Fo and Ft:

$$F_o = \vec{F}\cdot\hat{e}_o = F_x\,(\hat{e}_x\cdot\hat{e}_o) + F_y\,(\hat{e}_y\cdot\hat{e}_o), \qquad F_t = \vec{F}\cdot\hat{e}_t = F_x\,(\hat{e}_x\cdot\hat{e}_t) + F_y\,(\hat{e}_y\cdot\hat{e}_t) \tag{8}$$

Contrary to $\hat{e}_x$ and $\hat{e}_y$, the directions of the unit vectors $\hat{e}_o$ and $\hat{e}_t$ depend on suture location. The vectors $\hat{e}_o$ and $\hat{e}_t$ are calculated from the suture entry and exit points, whose values are obtained using the computer vision algorithm (33).

Using the aforementioned calculations, orthogonal and tangential forces for each suture location were obtained. For each active suturing time, (1)–(3) were used to compute metrics based on Fo and Ft.
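The change of coordinates can be illustrated with a short Python sketch. It assumes the entry and exit points are given in the vision frame, that the tangential direction points from entry to exit, and that the orthogonal direction is the tangential one rotated by 90° counter-clockwise; the sign convention, the function names, and the default sensor axis vectors are all assumptions made for this example (in the study the sensor axes in the vision frame come from a calibration experiment).

```python
import math

def unit(v):
    """Normalise a 2D vector to unit length."""
    n = math.hypot(v[0], v[1])
    if n == 0.0:
        raise ValueError("entry and exit points must differ")
    return (v[0] / n, v[1] / n)

def decompose(fx, fy, entry, exit_pt, ex=(1.0, 0.0), ey=(0.0, 1.0)):
    """Return (Fo, Ft): force orthogonal and tangential to the stitch.

    fx, fy  : horizontal force components read from the sensor
    entry   : needle entry point in the vision frame
    exit_pt : needle exit point in the vision frame
    ex, ey  : sensor x and y axes as unit vectors in the vision frame
              (identity defaults are an assumption for illustration)
    """
    t = unit((exit_pt[0] - entry[0], exit_pt[1] - entry[1]))  # tangential direction
    o = (-t[1], t[0])                                          # t rotated by 90 degrees
    # Total horizontal force expressed in the vision frame.
    f = (fx * ex[0] + fy * ey[0], fx * ex[1] + fy * ey[1])
    f_o = f[0] * o[0] + f[1] * o[1]  # projection onto the orthogonal direction
    f_t = f[0] * t[0] + f[1] * t[1]  # projection onto the tangential direction
    return f_o, f_t

# Hypothetical stitch from (0, 0) to (3, 4) with sensor forces Fx = 1 N, Fy = 2 N.
f_o, f_t = decompose(1.0, 2.0, entry=(0.0, 0.0), exit_pt=(3.0, 4.0))
print(f_o, f_t)
```

Since the two directions are orthonormal, the decomposition preserves the magnitude of the horizontal force, which makes a convenient sanity check.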

Metrics on total range of hand motion were extracted from IMU orientation data using (3), specifically PP(θyaw), PP(θpitch) and PP(θroll) for each active suturing time (49).

The capacitive touch sensor was used to identify and count each instance of physical contact between the subject and the top and/or internal wall of the cylinder around the membrane holder. The total number of touches (Cn) made during a suture cycle is used as a metric.
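Counting touches from a logged capacitive signal can be sketched as rising-edge detection against a threshold. The threshold value and the example readings below are hypothetical; the text does not describe how the sensor output was converted into touch events, so this is one plausible approach rather than the study's method.

```python
def count_touches(readings, threshold):
    """Count distinct touch events in a sequence of capacitive readings.

    A touch starts when the reading rises to or above the threshold and
    ends when it falls back below it, so a sustained contact counts once.
    """
    count = 0
    touching = False
    for r in readings:
        if r >= threshold and not touching:
            count += 1          # rising edge: a new touch begins
            touching = True
        elif r < threshold:
            touching = False    # contact released
    return count

# Hypothetical raw readings for one suture cycle.
readings = [10, 12, 300, 320, 15, 11, 280, 14, 290, 295, 12]
c_n = count_touches(readings, threshold=100)
print(c_n)  # 3
```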

Approval for the study was obtained from the applicable Institutional Review Board (Reference # Pro00011886). A total of 15 subjects (6 Attending Surgeons, 8 Surgery Residents and 1 Medical Student) were recruited from a local hospital to participate in the study. Informed consent was obtained from participants prior to participation. Each subject was asked to complete a questionnaire on their background and experiences. The data from 12 subjects (5 Attending Surgeons, 7 Surgery Residents) were used in analysis. The range of surgical suturing experience for attending surgeons was from 7 to 25 years, whereas the range of surgical suturing experience for residents was from 2 to 5 years. Three subjects did not meet the study criteria and were removed from analysis; 1 attending surgeon (did not meet subject pool definition, not actively practicing), 1 surgery resident (trial interruption), and 1 medical student (did not meet subject pool definition). All attendings in this study specialized in vascular surgery, except one who was a trauma surgery specialist.

Before suturing, subjects were encouraged to adjust the height of the table (Figure 1) to a comfortable level. The rationale for height adjustment was to allow users to choose a suitable height based on their individual physical characteristics and preferences. Participants were instructed to begin at 10 o'clock on the clock face and suture in a counter-clockwise fashion at each hour to complete a 12 hour cycle. At each hour, the needle is inserted at the marked location and withdrawn to make a stitch symmetric about the incision line. Subjects were instructed to perform continuous, uninterrupted suturing on the membrane using a Prolene suture needle (SH, 26 mm, 3-0) (Ethicon Inc., Somerville, NJ, USA). Subjects performed this procedure at two different membrane depths: at “surface” (i.e., 0 in. depth) and at “depth” (i.e., 4 in. depth) (Figure 2A).

Since the observed distribution of the metrics was not Gaussian (tested with the Lilliefors test), the data were analyzed using the Wilcoxon rank sum test (5% significance level) to identify which metrics showed statistically different performance between attending and resident surgeons. Each stitch was considered as a separate trial. Suturing at the surface and at depth were analyzed separately.
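For readers unfamiliar with the test, the following is a minimal sketch of a two-sided Wilcoxon rank sum test using the normal approximation with a tie correction and no continuity correction. Statistical packages may instead use an exact method for small samples, so p-values can differ slightly from those reported here. The sample values are invented for illustration and are not study data.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sum_test(a, b):
    """Return (W, z, p) for a two-sided rank sum test (normal approximation)."""
    pooled = list(a) + list(b)
    n1, n2, n = len(a), len(b), len(pooled)
    w = sum(average_ranks(pooled)[:n1])   # rank sum of the first group
    mean_w = n1 * (n + 1) / 2.0
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(c ** 3 - c for c in counts.values())
    var_w = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0.0:
        raise ValueError("all observations are tied")
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return w, z, p

# Invented per-stitch metric values for two groups.
group_a = [1.1, 1.4, 1.2, 1.6, 1.3]
group_b = [2.0, 1.9, 2.4, 1.5, 2.2, 2.1]
w, z, p = rank_sum_test(group_a, group_b)
print(w, p < 0.05)
```

Treating each stitch as a separate trial, as in the study, means the two input lists would hold one metric value per stitch for each group.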

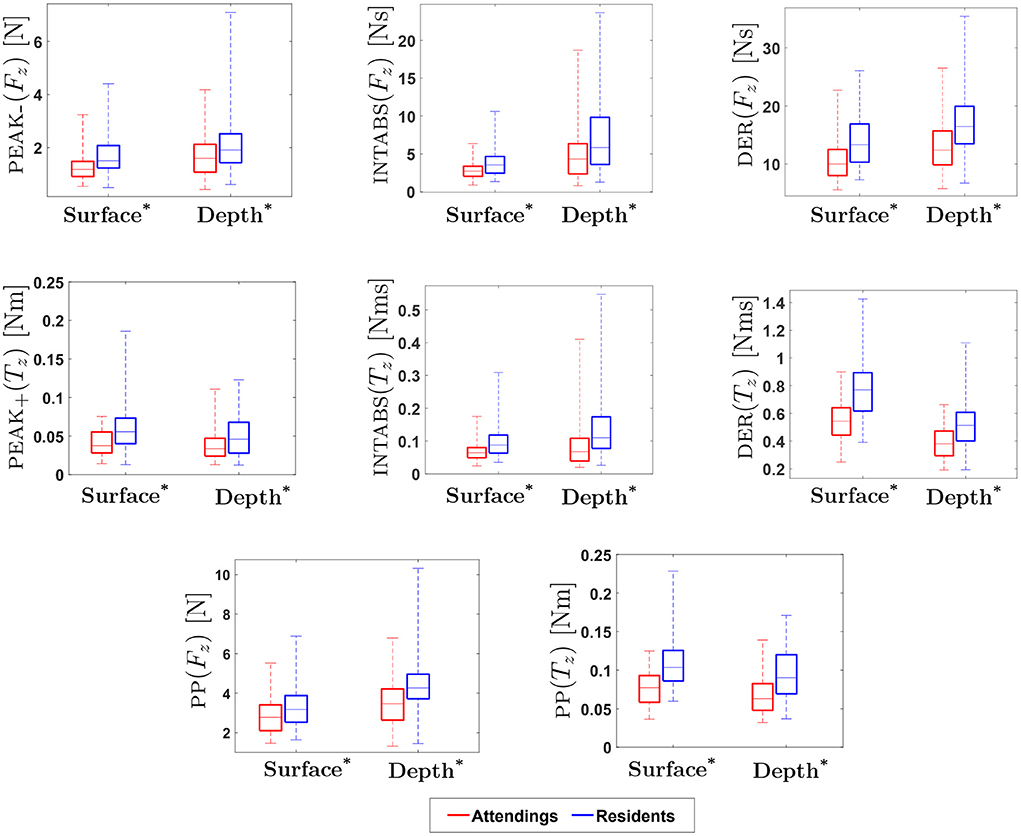

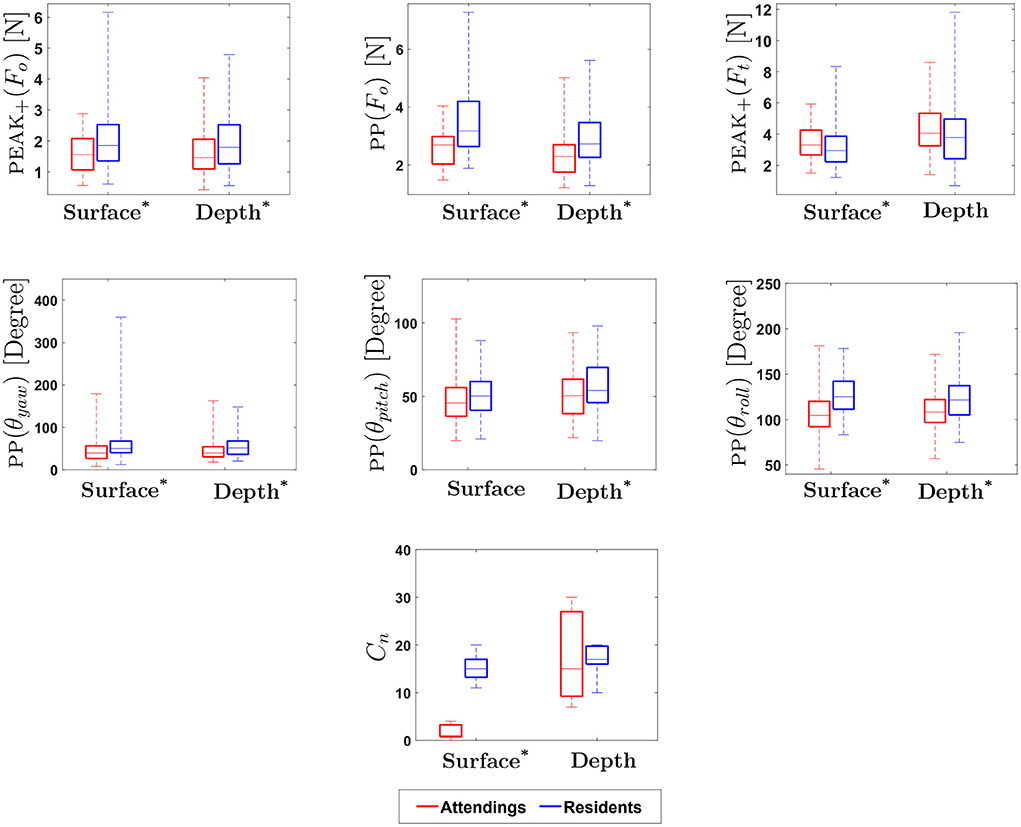

Tables 1, 2 show the p-values for statistical difference between attending and resident surgeons on various force, motion and touch metrics at surface level and at depth level. For selected metrics, Figures 8, 9 provide box plots of performance of attending and resident surgeons at surface level and at depth level. Interpretation and discussion of results are provided below.

Figure 8. Experimental Results for Force/Torque-based Metrics: * indicates statistical significance for p < 0.05. (On each box, the middle line indicates the median, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The whiskers are extended to the most extreme data points including outliers).

Figure 9. Experimental Results for Motion-based, Physical Contact and Vision-enabled Force Metrics: * indicates statistical significance for p < 0.05. (On each box, the middle line indicates the median, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The whiskers are extended to the most extreme data points including outliers).

Results for force-based metrics show that INTABS(Fx), DER(Fx), INTABS(Fy) and DER(Fy) were significantly different between attendings and residents at surface level. In addition, a statistical difference in performance between attending and resident surgeons was found for metrics PEAK-(Fz), PP(Fz), INTABS(Fz) and DER(Fz) at both depth and surface as well as for metric PEAK+(Fz) at depth. For z-directional force metrics, the medians of attendings at both surface and depth level were found to be lower as compared to residents. Similar to an earlier study in laparoscopic suturing (17), our results show that z-directional force was found to be important for distinguishing between experience levels. In contrast to z-directional forces, in our study, metrics calculated for x and y direction forces at both surface and depth level were found to be non-significant.

Similarly, results for torque-based metrics show that INTABS(Tx), DER(Tx), INTABS(Ty) and DER(Ty) were significantly different between attendings and residents at surface level. In particular, results for torque-based metrics show that z directional torques (PEAK+(Tz), PEAK-(Tz), PP(Tz), INTABS(Tz) and DER(Tz)) were significantly different between attendings and residents, at both the depth and surface level. The z-axis is vertical, so Tz is associated with forces orthogonal to the z-axis applied with a non-zero moment arm. Given the radial suturing pattern, that means Tz is most closely associated with forces orthogonal to the stitch direction. This motivates direct measurement of the orthogonal force Fo, as explained in 2.1.4.2.

Results show that the metrics obtained from orthogonal force (Fo) were statistically different between attendings and residents at both surface and depth levels (see Table 2). In addition, tangential force (Ft) metrics were significantly different between attendings and residents at surface, with the exception of PP(Ft). Orthogonal forces applied by attendings were lower than those applied by residents, whereas tangential forces applied by attendings were higher.

In Horeman et al. (17), subjects made parallel sutures aligned with the y axis of the force sensor. It was observed that the maximum absolute forces in x and y directions were important for distinguishing between experience levels. Since the stitch direction was unchanged, x and y force directions were always orthogonal and tangential to the stitch direction, respectively. The study presented here uses a radial suture membrane with stitches in 12 different directions (see Figure 2B). This radial membrane is based on the one used in FVS training and is intended to test the subject's dexterity and preparedness for vascular anastomosis. Since the force sensor was fixed in place, x and y force directions were not generally aligned with stitch direction. Even though x and y directional force metrics were not found to be statistically significant in our study, measurements of forces in x and y directions are required to calculate orthogonal and tangential forces. Reinterpreting the x and y force axes from Horeman et al. (17) as orthogonal and tangential to stitch direction, the present study supports the view that orthogonal forces, and to a lesser extent tangential forces, are important for distinguishing skilled performance.

Previous studies suggest that there is a significant difference in hand movement between expert and novice surgeons during suturing. The rotation of the wrist, indicated by θroll, was previously found to be particularly useful in assessment of suturing skill (17, 18). In the present study, similar to earlier studies, the total range of hand movement for PP(θyaw) and PP(θroll) at both surface and depth, and for PP(θpitch) at depth were found to be statistically significant in differentiating attendings from residents. This suggests that yaw, pitch and roll might be useful for assessment of suturing skill.

Results for yaw, pitch, and roll show that the total range of hand movement by attendings is consistently lower than that of residents, regardless of depth. In Dubrowski et al. (18) and Horeman et al. (17), it was found that experts use greater wrist rotation during suturing. In contrast, our results show that attendings use less wrist rotation. This may be explained by the fact that the majority of attendings in this study were experts in the field of vascular surgery. Due to the intricate nature of this type of surgery, it may be reasonable to assume that significant wrist rotation is not necessary in achieving accurate suturing during the surgical procedure. Also, pitch was found to be statistically significant, but only at depth, possibly because hand motion is more complicated when a subject sutures at depth. Moreover, during the experiments, it was observed that inexperienced participants tend to reposition the needle holder more often while suturing at depth. The complexity of hand movement during suturing deserves further investigation, specifically for suturing at depth, an essential aspect of vascular suturing.

We examined the number of times subjects made physical contact with the platform at both surface and depth conditions. Results indicate that the total number of physical touches (Cn) on surface level for attendings was significantly lower than for residents, whereas there was no statistical difference between attendings and residents at depth. It should be noted that suturing at depth was introduced to mimic more realistic surgical conditions; however, feedback from attendings after the experiment revealed that requiring a surgeon to suture accurately without touching the top and/or the walls of the cylinder was an overly restrictive constraint. In fact, in certain conditions during surgery, surgeons strategically use boundaries of body cavities, for instance, to augment their forces during suturing.

In this paper, we presented a suturing simulator with the capability of collecting synchronized force, motion, touch, and video data to allow for the assessment of suturing skill in open surgery. Data collected from the simulator during suturing allowed for the extraction of metrics for quantifying suturing skill between different levels of trainees. Force-based, torque-based, motion-based, and physical contact metrics were presented. Combining force data with computer vision information yielded vision-enabled force metrics, specifically for forces orthogonal and tangential to the stitch direction, which provide deeper insight into suturing performance. Also, the vision algorithm aided in the identification of suture events and the segmentation of corresponding sensor data.

Experimental data collected from both attendings and residents were presented, and the metrics were used to compare the performance of the two groups. Analysis shows that force metrics (force and torque in the z-direction), motion metrics (yaw, pitch, roll), the physical contact metric, and image-enabled metrics (orthogonal and tangential forces) were statistically significant in differentiating suturing skill between attendings and residents. These results demonstrate the feasibility of distinguishing fine skill differences between attendings and residents, rather than only the coarser differences between experienced and completely inexperienced personnel.

Limitations and Future Work: One key limitation of the current study is the small sample size. Consequently, while the results indicate the feasibility of the methods and metrics used, one cannot draw generalizable conclusions regarding suturing skill assessment from these results alone. Furthermore, the pool of attending surgeons in the study consisted mostly of vascular surgeons. We are currently performing a large-scale study of suturing skill assessment using the simulator with a wider range of surgical specialties. We hope that future work along these lines will enable the development of training methodologies to accelerate skill acquisition.

The datasets presented in this article are not readily available because of restrictions on data access placed by the relevant IRB. Requests to access the datasets should be directed to am9zZXBoQGNsZW1zb24uZWR1.

The studies involving human participants were reviewed and approved by Clemson University Institutional Review Board. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

IK contributed to the system design, data collection, data analysis and interpretation, and drafting and revising of the manuscript. JE contributed to the conception of the design and experiment design. RG contributed to the conception of the design, system design, data analysis and interpretation, and writing and revising the manuscript. RS contributed to the conception of the design, experiment design, data collection, and writing and revising the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by the National Institutes of Health (NIH) Grant No. 5R01HL146843.

The authors would like to thank all those who helped facilitate data collection and all participants in the study. The authors would also like to thank Mr. Naren Nagarajan for his contributions to this research.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Tang B, Hanna G, Cuschieri A. Analysis of errors enacted by surgical trainees during skills training courses. Surgery. (2005) 138:14–20. doi: 10.1016/j.surg.2005.02.014

2. Joice P, Hanna G, Cuschieri A. Errors enacted during endoscopic surgery–a human reliability analysis. Appl Ergon. (1998) 29:409–14. doi: 10.1016/S0003-6870(98)00016-7

3. Archer SB, Brown DW, Smith CD, Branum GD, Hunter JG. Bile duct injury during laparoscopic cholecystectomy: results of a national survey. Ann Surg. (2001) 234:549. doi: 10.1097/00000658-200110000-00014

4. Makary MA, Daniel M. Medical error–the third leading cause of death in the US. BMJ. (2016) 353:i2139. doi: 10.1136/bmj.i2139

5. Birkmeyer JD, Finks JF, O'Reilly A, Oerline M, Carlin AM, Nunn AR, et al. Surgical skill and complication rates after bariatric surgery. N Engl J Med. (2013) 369:1434–42. doi: 10.1056/NEJMsa1300625

6. McCluney A, Vassiliou M, Kaneva P, Cao J, Stanbridge D, Feldman L, et al. FLS simulator performance predicts intraoperative laparoscopic skill. Surg Endosc. (2007) 21:1991–5. doi: 10.1007/s00464-007-9451-1

7. Stefanidis D, Scerbo MW, Montero PN, Acker CE, Smith WD. Simulator training to automaticity leads to improved skill transfer compared with traditional proficiency-based training: a randomized controlled trial. Ann Surg. (2012) 255:30–37. doi: 10.1097/SLA.0b013e318220ef31

8. Reiley CE, Lin HC, Yuh DD, Hager GD. Review of methods for objective surgical skill evaluation. Surg Endosc. (2011) 25:356–66. doi: 10.1007/s00464-010-1190-z

9. Yiasemidou M, Gkaragkani E, Glassman D, Biyani C. Cadaveric simulation: a review of reviews. Ir J Med Sci. (2018) 187:827–33. doi: 10.1007/s11845-017-1704-y

10. Kovacs G, Levitan R, Sandeski R. Clinical cadavers as a simulation resource for procedural learning. AEM Educ Train. (2018) 2:239–47. doi: 10.1002/aet2.10103

11. Agha RA, Fowler AJ. The role and validity of surgical simulation. Int Surg. (2015) 100:350–7. doi: 10.9738/INTSURG-D-14-00004.1

12. Costello DM, Huntington I, Burke G, Farrugia B, O'Connor AJ, Costello AJ, et al. A review of simulation training and new 3D computer-generated synthetic organs for robotic surgery education. J Robotic Surg. (2021) 16:749–63. doi: 10.1007/s11701-021-01302-8

13. Figert PL, Park AE, Witzke DB, Schwartz RW. Transfer of training in acquiring laparoscopic skills. J Am Coll Surg. (2001) 193:533–7. doi: 10.1016/S1072-7515(01)01069-9

14. Zia A, Sharma Y, Bettadapura V, Sarin EL, Clements MA, Essa I. Automated assessment of surgical skills using frequency analysis. In: Medical image computing and computer-assisted intervention. Cham: Springer (2015). p. 430–38.

15. Trejos AL, Patel RV, Malthaner RA, Schlachta CM. Development of force-based metrics for skills assessment in minimally invasive surgery. Surg Endosc. (2014) 28:2106–19. doi: 10.1007/s00464-014-3442-9

16. Horeman T, Rodrigues SP, Jansen FW, Dankelman J, van den Dobbelsteen JJ. Force parameters for skills assessment in laparoscopy. IEEE Trans Haptics. (2012) 5:312–22. doi: 10.1109/TOH.2011.60

17. Horeman T, Rodrigues SP, Jansen FW, Dankelman J, van den Dobbelsteen JJ. Force measurement platform for training and assessment of laparoscopic skills. Surg Endosc. (2010) 24:3102–8. doi: 10.1007/s00464-010-1096-9

18. Dubrowski A, Sidhu R, Park J, Carnahan H. Quantification of motion characteristics and forces applied to tissues during suturing. Am J Surg. (2005) 190:131–6. doi: 10.1016/j.amjsurg.2005.04.006

19. Kil I, Singapogu RB, Groff RE. Needle entry angle & force: vision-enabled force-based metrics to assess surgical suturing skill. In: 2019 International Symposium on Medical Robotics (ISMR). Atlanta, GA: IEEE (2019). p. 1–6.

20. Rosen J, Hannaford B, Richards CG, Sinanan MN. Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills. IEEE Trans Biomed Eng. (2001) 48:579–91. doi: 10.1109/10.918597

21. Brown JD, O'Brien CE, Leung SC, Dumon KR, Lee DI, Kuchenbecker KJ. Using contact forces and robot arm accelerations to automatically rate surgeon skill at peg transfer. IEEE Trans Biomed Eng. (2016) 64:2263–75. doi: 10.1109/TBME.2016.2634861

22. Poursartip B, LeBel ME, Patel RV, Naish MD, Trejos AL. Analysis of energy-based metrics for laparoscopic skills assessment. IEEE Trans Biomed Eng. (2018) 65:1532–42. doi: 10.1109/TBME.2017.2706499

23. Horeman T, Dankelman J, Jansen FW, van den Dobbelsteen JJ. Assessment of laparoscopic skills based on force and motion parameters. IEEE Trans Biomed Eng. (2014) 61:805–13. doi: 10.1109/TBME.2013.2290052

24. Rosen J, Brown JD, Chang L, Sinanan MN, Hannaford B. Generalized approach for modeling minimally invasive surgery as a stochastic process using a discrete Markov model. IEEE Trans Biomed Eng. (2006) 53:399–413. doi: 10.1109/TBME.2005.869771

25. Loukas C, Georgiou E. Multivariate autoregressive modeling of hand kinematics for laparoscopic skills assessment of surgical trainees. IEEE Trans Biomed Eng. (2011) 58:3289–97. doi: 10.1109/TBME.2011.2167324

26. Estrada S, Duran C, Schulz D, Bismuth J, Byrne MD, O'Malley MK. Smoothness of surgical tool tip motion correlates to skill in endovascular tasks. IEEE Trans Human-Mach Syst. (2016) 46:647–59. doi: 10.1109/THMS.2016.2545247

27. Yamaguchi S, Yoshida D, Kenmotsu H, Yasunaga T, Konishi K, Ieiri S, et al. Objective assessment of laparoscopic suturing skills using a motion-tracking system. Surg Endosc. (2011) 25:771–5. doi: 10.1007/s00464-010-1251-3

28. Sánchez A, Rodríguez O, Sánchez R, Benítez G, Pena R, Salamo O, et al. Laparoscopic surgery skills evaluation: analysis based on accelerometers. J Soc Lapa Surg. (2014) 18:e2014.00234. doi: 10.4293/JSLS.2014.00234

29. Dosis A, Aggarwal R, Bello F, Moorthy K, Munz Y, Gillies D, et al. Synchronized video and motion analysis for the assessment of procedures in the operating theater. Arch Surg. (2005) 140:293–9. doi: 10.1001/archsurg.140.3.293

30. Goldbraikh A, Volk T, Pugh CM, Laufer S. Using open surgery simulation kinematic data for tool and gesture recognition. Int J Comput Assist Radiol Surg. (2022) 17:965–79. doi: 10.1007/s11548-022-02615-1

31. Frischknecht AC, Kasten SJ, Hamstra SJ, Perkins NC, Gillespie RB, Armstrong TJ, et al. The objective assessment of experts' and novices' suturing skills using an image analysis program. Acad Med. (2013) 88:260–4. doi: 10.1097/ACM.0b013e31827c3411

32. Islam G, Kahol K, Ferrara J, Gray R. Development of computer vision algorithm for surgical skill assessment. In: International Conference on Ambient Media and Systems. Berlin: Springer (2011). p. 44–51.

33. Kil I, Jagannathan A, Singapogu RB, Groff RE. Development of computer vision algorithm towards assessment of suturing skill. In: 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI). Orlando, FL: IEEE (2017). p. 29–32.

34. Islam G, Kahol K, Li B. Development of a computer vision application for surgical skill training and assessment. In: Bio-information system, Proceedings and Applications. Aalborg (2013). p. 191.

35. Islam G, Kahol K, Li B, Smith M, Patel VL. Affordable, web-based surgical skill training and evaluation tool. J Biomed Inform. (2016) 59:102–14. doi: 10.1016/j.jbi.2015.11.002

36. Lavanchy JL, Zindel J, Kirtac K, Twick I, Hosgor E, Candinas D, et al. Automation of surgical skill assessment using a three-stage machine learning algorithm. Sci Rep. (2021) 11:1–9. doi: 10.1038/s41598-021-84295-6

37. Goldbraikh A, D'Angelo AL, Pugh CM, Laufer S. Video-based fully automatic assessment of open surgery suturing skills. Int J Comput Assist Radiol Surg. (2022) 17:437–48. doi: 10.1007/s11548-022-02559-6

38. Zhang M, Cheng X, Copeland D, Desai A, Guan MY, Brat GA, et al. Using computer vision to automate hand detection and tracking of surgeon movements in videos of open surgery. In: AMIA Annual Symposium Proceedings, Vol. 2020. American Medical Informatics Association (2020). p. 1373. Available online at: https://amia.org/education-events/amia-2020-virtual-annual-symposium

39. Jin A, Yeung S, Jopling J, Krause J, Azagury D, Milstein A, et al. Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. In: 2018 IEEE Winter Conference on Applications of Computer Vision (WACV). Lake Tahoe, NV: IEEE (2018). p. 691–9.

41. Fried GM. FLS assessment of competency using simulated laparoscopic tasks. J Gastrointest Surg. (2008) 12:210–2. doi: 10.1007/s11605-007-0355-0

42. Fried GM, Feldman LS, Vassiliou MC, Fraser SA, Stanbridge D, Ghitulescu G, et al. Proving the value of simulation in laparoscopic surgery. Ann Surg. (2004) 240:518. doi: 10.1097/01.sla.0000136941.46529.56

43. Mitchell EL, Arora S, Moneta GL, Kret MR, Dargon PT, Landry GJ, et al. A systematic review of assessment of skill acquisition and operative competency in vascular surgical training. J Vasc Surg. (2014) 59:1440–55. doi: 10.1016/j.jvs.2014.02.018

44. Gallagher AG, O'Sullivan GC. Fundamentals of Surgical Simulation: Principles and Practice. London: SSBM (2011).

45. O'Toole RV, Playter RR, Krummel TM, Blank WC, Cornelius NH, Roberts WR, et al. Measuring and developing suturing technique with a virtual reality surgical simulator. J Am Coll Surg. (1999) 189:114–27. doi: 10.1016/S1072-7515(99)00076-9

46. Eckert M, Cuadrado D, Steele S, Brown T, Beekley A, Martin M. The changing face of the general surgeon: national and local trends in resident operative experience. Am J Surg. (2010) 199:652–6. doi: 10.1016/j.amjsurg.2010.01.012

47. Davies J, Khatib M, Bello F. Open surgical simulation–a review. J Surg Educ. (2013) 70:618–27. doi: 10.1016/j.jsurg.2013.04.007

48. Kil I, Groff RE, Singapogu RB. Surgical suturing with depth constraints: image-based metrics to assess skill. IEEE Int Conf Eng Med Biol Soc. (2018) 2018:4146–9. doi: 10.1109/EMBC.2018.8513266

49. Kavathekar T, Kil I, Groff RE, Burg TC, Eidt JF, Singapogu RB. Towards quantifying surgical suturing skill with force, motion and image sensor data. In: 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI). Orlando, FL: IEEE (2017). p. 169–72.

50. Singapogu RB, Kavathekar T, Eidt JF, Groff RE, Burg TC. A novel platform for assessment of surgical suturing skill: preliminary results. In: MMVR. Los Angeles, CA (2016). p. 375–8.

51. Sheahan MG, Bismuth J, Lee JT, Shames ML, Sheahan C, Rigberg DA, et al. SS25. The fundamentals of vascular surgery: establishing the metrics of essential skills in vascular surgery trainees. J Vasc Surg. (2015) 6:111S-12S. doi: 10.1016/j.jvs.2015.04.217

52. Berquer R, Smith W, Davis S. An ergonomic study of the optimum operating table height for laparoscopic surgery. Surg Endosc. (2002) 16:416–21. doi: 10.1007/s00464-001-8190-y

53. Van Veelen M, Kazemier G, Koopman J, Goossens R, Meijer D. Assessment of the ergonomically optimal operating surface height for laparoscopic surgery. J Laparoendosc Adv Surg Tech. (2002) 12:47–52. doi: 10.1089/109264202753486920

54. Manasnayakorn S, Cuschieri A, Hanna GB. Ergonomic assessment of optimum operating table height for hand-assisted laparoscopic surgery. Surg Endosc. (2009) 23:783–9. doi: 10.1007/s00464-008-0068-9

55. Badger P. Capacitive Sensing Library. (2017). Available online at: https://playground.arduino.cc/Main/CapacitiveSensor/ (accessed on January 29, 2017).

56. Arduino and C++ (for Windows) (2017). Available online at: https://playground.arduino.cc/Interfacing/CPPWindows (accessed on January 31, 2017).

Keywords: medical simulator, objective metrics, sensor informatics, suturing, skill assessment

Citation: Kil I, Eidt JF, Groff RE and Singapogu RB (2022) Assessment of open surgery suturing skill: Simulator platform, force-based, and motion-based metrics. Front. Med. 9:897219. doi: 10.3389/fmed.2022.897219

Received: 15 March 2022; Accepted: 05 August 2022;

Published: 30 August 2022.

Edited by:

Mario Ganau, Oxford University Hospitals NHS Trust, United Kingdom

Copyright © 2022 Kil, Eidt, Groff and Singapogu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ravikiran B. Singapogu, am9zZXBoQGNsZW1zb24uZWR1
