ORIGINAL RESEARCH article

Front. Med., 28 June 2022

Sec. Healthcare Professions Education

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.896822

Background: How to evaluate clinical educators is an important question in faculty development. The issue of who is best placed to evaluate their performance is also critical. However, the whos and the hows of clinical educator evaluation may differ across cultures. This study aims to understand what comprises suitable evaluation criteria, and who is best placed to undertake the evaluation of clinical educators in medicine, within an East Asian culture: specifically Taiwan.

Methods: An 84-item web-based questionnaire was created based on a literature review and medical education experts' opinions, focusing on potential raters (i.e., who) and domains (i.e., what) for evaluating clinical educators. Using purposive sampling, we sent 500 questionnaires to clinical educators, residents, Post-Graduate Year Trainees (PGYs), Year-4–6/Year-7 medical students (M4–6/M7), and nurses.

Results: We received 258 responses (response rate 52%). All groups except nurses chose “teaching ability” as the most important domain. This contrasts with research from Western contexts that highlights role modeling, leadership, and enthusiasm. The clinical educators and nurses chose the same top five items in the “personal qualities” domain, but different items in the “assessment ability” and “curriculum planning” domains. The best-fit rater groups for evaluating clinical educators were educators themselves and PGYs.

Conclusions: There may well be specific suitable domains and populations for evaluating clinical educators' competence in East Asian cultural contexts. Further research in these contexts is required to examine how far these findings extend.

Teaching effectiveness and faculty development are central to healthcare professional excellence (1, 2). Indeed, clinical educators are asked to play numerous roles in relation to their students. For example, Harden and Crosby identify twelve roles of clinical teachers in medical education across six areas: the information provider in the lecture, the role model on the clinical job, the facilitator as a mentor, the assessor of learners, the curriculum planner, and the resource developer (3). Thus, in medical education, clinical educators focus not only on educating students in medical knowledge, but also on learners' learning state, living conditions, and emotional state. Such psychosocial support includes counseling, friendship, acceptance, confirmation, and role modeling (4, 5). Because of these multiple functions, adequately evaluating clinical educators' performance is a challenging and complex task.

In 2010, a systematic review of over 30 years of research on questionnaires for evaluating clinical educators identified 54 manuscripts describing 32 different instruments, predominantly from Western (mainly USA) cultures (6). The authors identified the four most common foci for the evaluation of clinical educators, which were (in order of frequency): teacher (i.e., mapping onto the information provider in Harden and Crosby's work) and supporter (i.e., Harden and Crosby's mentoring role); role model; and feedback provider (i.e., relating to aspects of Harden and Crosby's assessor role). Planner and assessor roles were less common across instruments/items (featuring in 18 and 5 instruments, respectively). The majority of manuscripts cited literature around the concept of good clinical teaching, but none offered a clear description of what makes a good clinical educator. Furthermore, the authors highlight that doctors' competencies “as communicators, collaborators, health advocates, and managers” are missing elements across these evaluation tools (6). More recently, however, there have been attempts within the health professions education literature to develop clinical educator evaluation tools that variously cover aspects such as: teaching skills (7, 8), establishing learning climates (9), curriculum planning (10), communicating with learners (9), providing feedback (7, 8), assessing learners (9, 10), promoting self-directed learning (7, 10), demonstrating educational goals (10), showing responsibility (10), integrating learning into the clinical setting (9, 10), and personal support (7). Many of these abilities are reflected in the medical competencies described by the Canadian Medical Education Directives for Specialists (CanMEDS) framework, so it is reasonable to expect good clinical educators to act as role models of these competencies (6, 8).

In their review of the literature, Fluit et al. (6) identified medical students as the most common raters of clinical educators (56% of the time). Indeed, some tools have been specifically developed for this purpose (11–13). It is easy to understand how medical students can be thought of as being the most suitable candidates for this task given that they are the recipients of education, and can observe the process directly. However, the power relationship between students and educators may interfere with the evaluation process. The crux of the issue is that educators have the power to fail students. As such, students may be reluctant to offend their educators as it might put them at risk of failure (14–18).

In addition to students, program coordinators also commonly evaluate clinical educators (19). While it is reasonable that program coordinators might score the clinical educators they assign, they may not have many opportunities to observe clinical educators' performances directly. In addition to students and program coordinators, clinical educators have been evaluated by their peers (2, 20, 21). Fellow educators may be able to observe one another during clinical teaching, or gather feedback about other educators from students. Finally, self-evaluation, a reflective process, has also been considered in several educator evaluation studies (2, 20–22).

Irrespective of who is being evaluated and who undertakes the evaluation, the specific culture in which evaluation occurs is important. Here we focus on national culture (rather than local or organizational cultures). In East Asian cultures, such as Taiwan, China, Hong Kong, and Singapore, students are brought up to respect education and their educators (23–26). This affects not only students' evaluation of their clinical educators, but also the evaluation of educators by others who are not afforded such reverence (e.g., program coordinators). As such, this general reverence for educators is likely to affect whether and how students, and other groups, might evaluate them. Furthermore, in certain contexts and cultures, competition between clinical educators may create a conflict of interest (27). Indeed, the literature on clinical educator evaluation in medicine appears so far to come predominantly from a Western perspective, where educators' accountability to students has been high on the agenda for a number of years, leading to a variety of evaluation contexts and processes (28–30). Given these differing foci between East Asian and Western perspectives, understanding whether Taiwanese students' evaluation of their clinical educators differs from that of Western students would be of great interest, particularly given the sharp rise in the internationalization of education in general (31) and of healthcare professionals more specifically (32).

Previous research has examined evaluation systems for assessing the teaching quality of clinical educators (1, 7). However, there is no single agreed source or tool for evaluating all aspects of the educator's role: observing educators' effectiveness across different aspects of their role, by a variety of raters, might be a more appropriate way to achieve a holistic view of performance (21, 33). Further, by integrating various subjective observations, we can begin to form a relatively objective evaluation. The aim of this study is to identify suitable evaluation domains (what) and appropriate raters (who) for evaluating clinical educators' competence in the context of medical education in an East Asian culture: specifically Taiwan.

The study was conducted in the largest teaching hospital in Taiwan: a 3,000-bed Medical Center in the north of the country. During the study period, the institution had approximately 920 clinical educators, 600 residents, 130 postgraduate year (PGY) physicians, 200 year-7 medical students (M7), 150 year-4, year-5, and year-6 medical students (M4–6), and at least 3,000 nurses.

This study was approved by the Chang Gung Medical Foundation Institutional Review Board (REF: 103-1928B, anonymised to protect participants). Participants consented and were notified that they had the right to withdraw from the study at any time. They were also informed that they could omit any questions they did not want to respond to without consequences to their studies or work.

A purposive sampling technique was used, with the number of invitations stratified by staffing ratios at the hospital. We invited a total of 500 participants by sending questionnaires to 184 clinical educators, 120 residents, 26 PGYs, 40 M7, 30 M4–6, and 100 nurses at the study institution, all of whom were expected to have experience of providing, witnessing, or receiving clinical education in a hospital setting. Participants were invited by e-mail and asked to complete the questionnaire online.
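The invitation counts can be checked against the approximate staffing figures given for the study setting. The minimal Python sketch below uses only those reported numbers (the dictionary keys are illustrative labels) to compute the fraction of each group that was invited. Because the nurse headcount is given only as "at least 3,000", the nurse fraction is an upper bound.

```python
# Approximate staffing figures and invitation counts, as reported in the text.
# The nurse headcount is "at least 3,000", so the nurse fraction is an upper bound.
STAFF = {
    "clinical educators": 920,
    "residents": 600,
    "PGYs": 130,
    "M7": 200,
    "M4-6": 150,
    "nurses": 3000,
}
INVITED = {
    "clinical educators": 184,
    "residents": 120,
    "PGYs": 26,
    "M7": 40,
    "M4-6": 30,
    "nurses": 100,
}


def sampling_fractions(staff, invited):
    """Return the invited/staff ratio for each group."""
    return {group: invited[group] / staff[group] for group in staff}


if __name__ == "__main__":
    fractions = sampling_fractions(STAFF, INVITED)
    for group, frac in fractions.items():
        print(f"{group:<20} {INVITED[group]:>4} / {STAFF[group]:>5} = {frac:.1%}")
    print("total invited:", sum(INVITED.values()))
```

With these figures, each medical group works out at 20% of its headcount, whereas the nurse invitations amount to at most about 3.3%, so the same ratio does not appear to have been applied to nurses.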

A quantitative cross-sectional survey design was used. The research questions (RQs) of this study were:

(RQ1) Which evaluation domains for the role of clinical educator do different stakeholder groups prioritize?

(RQ2) Which study population(s) do different stakeholder groups identify as being most suitable for the evaluation of clinical educators?

Drawing on our review of the literature (including 1, 4, 5, 14, 21, 34–36) and our understanding of the educational environment of the institution, we itemized potential raters (i.e., who) and potential domains (i.e., what) for evaluating clinical educators. Five healthcare professional experts at the study institution (three physician educators, one nursing educator, and one questionnaire development expert) participated in a Delphi procedure (37, 38) to refine and validate the questionnaire content. They exchanged their ideas anonymously by e-mail, with the exchange organized by a research assistant. After four rounds of written discussion, this process resulted in the 84-item web-based questionnaire that we used to gather participants' opinions.

The questionnaire was divided into two parts. The first part, comprising 70 items across five domains, focused on what should be evaluated: teaching ability (16 items) (34, 39–43), assessment ability (12 items) (44–46), personal qualities (16 items) (4, 5, 40, 43, 47, 48), interpersonal relationships (14 items) (1, 2, 36, 43), and curriculum planning (12 items) (1, 22, 43, 49). For each item in each domain, participants rated their agreement with the statement “this item is suitable to be evaluated.” The second part, comprising 10 items, focused on who is best placed to be the rater. The potential rater groups, identified by evaluation experts at the study institution, were: clinical educator self-evaluation, clinical educators' peers, PGYs, residents, medical students (M7 and M4–6), nurses, education-associated administrative staff, outpatient services staff, and the clinical educators' supervisors. In this part, participants rated how well placed each potential rater group was to evaluate clinical educators. To avoid a neutral option, both parts used the same 4-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree). The anonymous questionnaire also collected participants' age, gender, occupation, and years of learning and teaching experience.
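The structure of Part 1 can be expressed as data, which makes the item counts easy to verify (they sum to the stated 70 items) and shows one way a domain score and ranking could be derived. This is a sketch under an assumption: the article does not state how item ratings were aggregated, so averaging a respondent's 1–4 ratings within each domain is hypothetical, as are the function names `domain_means` and `rank_domains` and the example responses.

```python
from statistics import mean

# Item counts per domain in Part 1, as reported in the text.
DOMAIN_ITEMS = {
    "teaching ability": 16,
    "assessment ability": 12,
    "personal qualities": 16,
    "interpersonal relationships": 14,
    "curriculum planning": 12,
}
# 4-point Likert scale with no neutral midpoint.
LIKERT = {1: "strongly disagree", 2: "disagree", 3: "agree", 4: "strongly agree"}


def domain_means(ratings):
    """Average one respondent's 1-4 item ratings within each domain.

    ASSUMPTION: averaging items is illustrative; the article does not
    specify its scoring rule.
    """
    result = {}
    for domain, scores in ratings.items():
        if len(scores) != DOMAIN_ITEMS[domain]:
            raise ValueError(f"{domain}: expected {DOMAIN_ITEMS[domain]} items, got {len(scores)}")
        if any(s not in LIKERT for s in scores):
            raise ValueError(f"{domain}: scores must be integers from 1 to 4")
        result[domain] = mean(scores)
    return result


def rank_domains(means):
    """Return domain names ordered from highest to lowest mean score."""
    return sorted(means, key=means.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical respondent, for illustration only (not study data).
    example = {d: [3] * n for d, n in DOMAIN_ITEMS.items()}
    example["teaching ability"] = [4] * 16
    example["assessment ability"] = [2] * 6 + [3] * 6
    means = domain_means(example)
    print(means)
    print(rank_domains(means))
```

Validating item counts and the allowed scale values up front keeps malformed responses from silently shifting a domain mean.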

The quantitative responses were analyzed using SPSS 19.0 for Windows. Descriptive statistics are presented as means ± standard deviations. Differences among groups were examined using one-way analysis of variance (ANOVA), with Scheffé's test for post hoc comparisons; the significance level was set at p < 0.05.
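The analysis itself was run in SPSS. As an illustration of the same textbook procedure, the sketch below implements a one-way ANOVA and Scheffé pairwise post hoc comparisons in standard-library Python, computing F-distribution tail probabilities through the regularized incomplete beta function (continued-fraction evaluation). The function names and the three small groups of scores in the usage block are hypothetical, not study data, and the sketch is not guaranteed to match SPSS in every edge case (for example, it does not guard against zero within-group variance).

```python
import math
from itertools import combinations
from statistics import mean


def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f, d1, d2):
    """Upper-tail probability P(F > f) for an F(d1, d2) distribution."""
    if f <= 0.0:
        return 1.0
    return reg_inc_beta(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def one_way_anova(groups):
    """Return (F, df_between, df_within, p, ms_within) for a list of samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [mean(g) for g in groups]
    grand = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_b, df_w = k - 1, n_total - k
    ms_w = ss_within / df_w
    f = (ss_between / df_b) / ms_w
    return f, df_b, df_w, f_sf(f, df_b, df_w), ms_w


def scheffe_pairwise(groups, labels):
    """Scheffé post hoc p-values for every pair of groups.

    The pairwise statistic F_ij is referred to (k - 1) * F(k - 1, N - k),
    i.e. p = P(F(k - 1, N - k) > F_ij / (k - 1)).
    """
    _, df_b, df_w, _, ms_w = one_way_anova(groups)
    results = {}
    for i, j in combinations(range(len(groups)), 2):
        diff = mean(groups[i]) - mean(groups[j])
        f_ij = diff ** 2 / (ms_w * (1 / len(groups[i]) + 1 / len(groups[j])))
        results[(labels[i], labels[j])] = f_sf(f_ij / df_b, df_b, df_w)
    return results


if __name__ == "__main__":
    # Hypothetical 1-4 Likert scores for three small groups (not study data).
    groups = [[1, 2, 3], [2, 3, 4], [2, 3, 4]]
    f, df_b, df_w, p, _ = one_way_anova(groups)
    print(f"F({df_b}, {df_w}) = {f:.3f}, p = {p:.4f}")
    for pair, p_pair in scheffe_pairwise(groups, ["A", "B", "C"]).items():
        print(pair, f"p = {p_pair:.4f}")
```

Referring each pairwise statistic, divided by k - 1, to the same F(k - 1, N - k) distribution as the omnibus test is what makes Scheffé's procedure conservative for simple pairwise contrasts.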

Two hundred and fifty-eight individuals completed the questionnaire (response rate 52%). Respondents were predominantly male (n = 177, 69%; female n = 81, 31%), with a mean ± SD age of 28 ± 7 years. The breakdown by group was: clinical educators (n = 63, 24%), residents (n = 87, 34%), PGYs (n = 20, 8%), M7 (n = 31, 12%), M4–6 (n = 26, 10%), and nurses (n = 31, 12%). The M4–6 group had the highest response rate (87%) and the nurse group the lowest (31%; Table 1).
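The reported response rates follow directly from the invitation counts in the sampling description and the completed questionnaires per group. This short Python sketch (group labels and the function name are illustrative) recomputes the overall rate, each group's response rate, and each group's share of respondents.

```python
# Invitations (from the sampling description) and completed questionnaires
# (from the results), per respondent group.
INVITED = {"clinical educators": 184, "residents": 120, "PGYs": 26,
           "M7": 40, "M4-6": 30, "nurses": 100}
RESPONDED = {"clinical educators": 63, "residents": 87, "PGYs": 20,
             "M7": 31, "M4-6": 26, "nurses": 31}


def response_summary(invited, responded):
    """Return the overall response rate and per-group rate and share of respondents."""
    total_resp = sum(responded.values())
    overall = total_resp / sum(invited.values())
    per_group = {
        g: {"rate": responded[g] / invited[g], "share": responded[g] / total_resp}
        for g in invited
    }
    return overall, per_group


if __name__ == "__main__":
    overall, per_group = response_summary(INVITED, RESPONDED)
    print(f"overall response rate: {overall:.1%}")
    for g, s in per_group.items():
        print(f"{g:<20} rate {s['rate']:.0%}  share {s['share']:.0%}")
```

Run as a script, it gives an overall rate of 51.6% (reported as 52%), the highest group rate for M4–6 (about 87%) and the lowest for nurses (31%), with clinical educators next lowest at about 34%.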

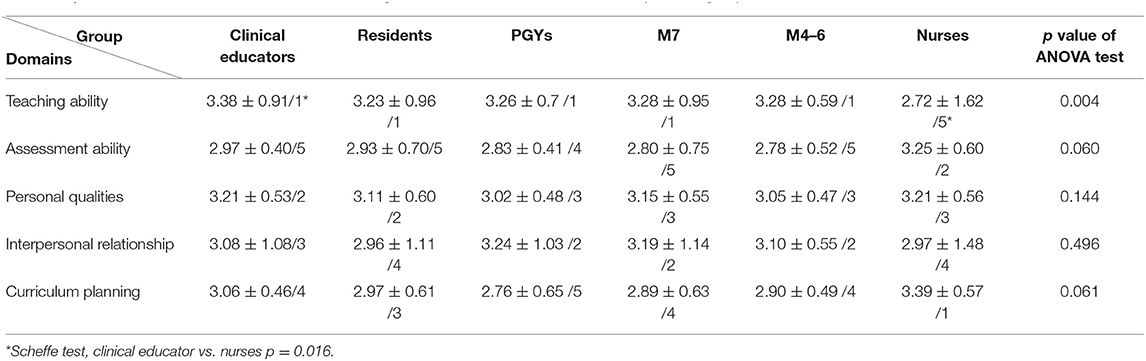

All respondent groups except nurses agreed that teaching ability was the most important domain. A one-way ANOVA of teaching ability scores across the groups showed a significant difference [F (92) = 0.838, p = 0.004], and the post hoc Scheffé test showed a significant difference between the clinical educator and nurse groups for this domain (p = 0.016). The clinical educator, resident, M7, and M4–6 groups chose assessment ability as the least important domain. The M7 and M4–6 groups ranked the five domains in an identical order, and the clinical educator and resident groups ranked three of the five domains identically. The PGY group ranked curriculum planning as the least important domain, whereas the nurse group ranked it as the most important (Table 2).

Table 2. Mean±SD/Rank of the domains for evaluating clinical educators across different respondent groups.

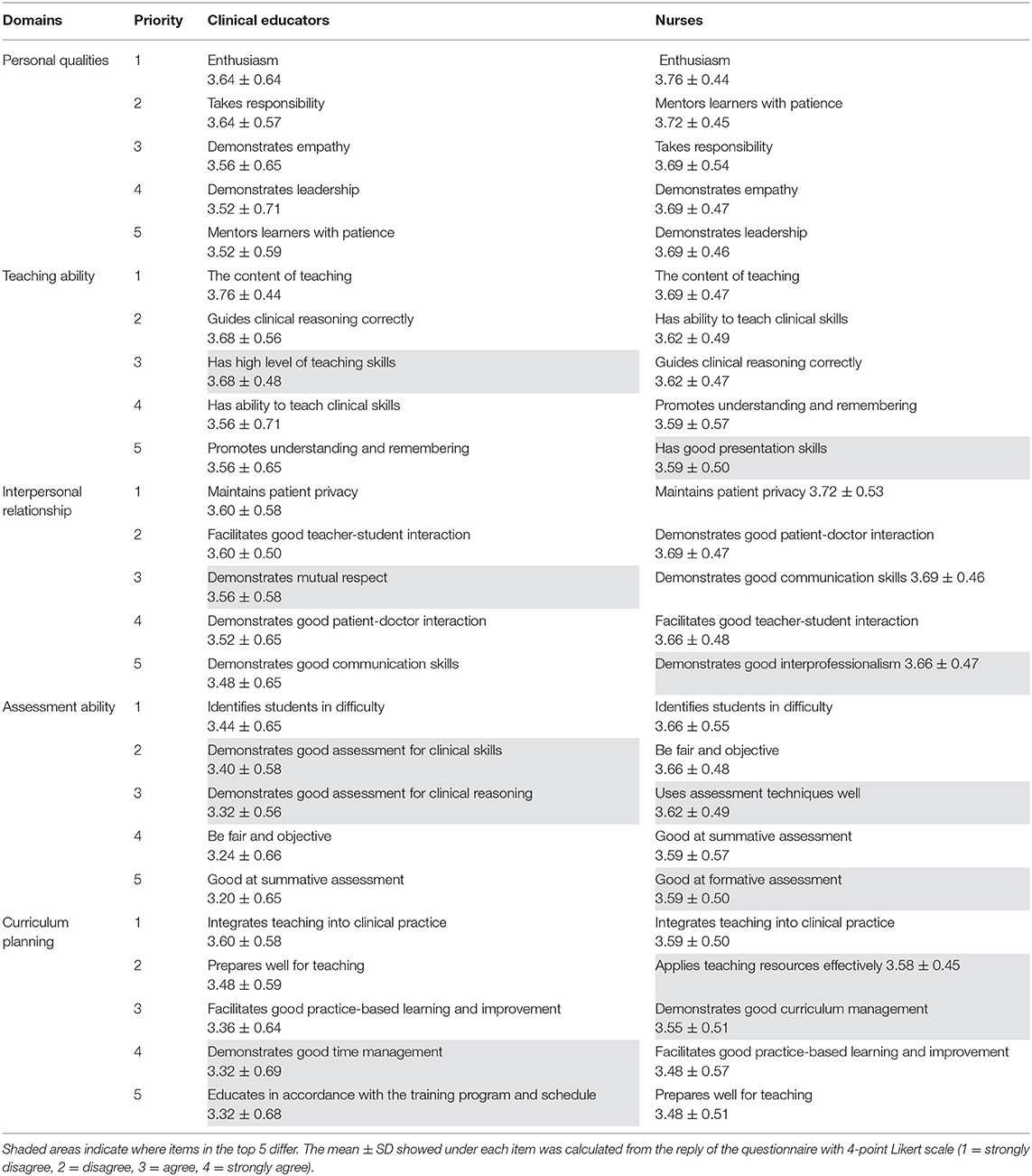

We undertook an in-depth comparison between the clinical educator and nurse groups because of the stark differences in their initial ranking of domains. Comparing the top five ranked items in each domain for these two groups, both groups selected the same item as their number one priority in every domain (Table 3). Additionally, for the domain of personal qualities, both groups selected the same top five items, albeit in a slightly different order. For the domains of teaching ability and interpersonal relationships, four of the five items in each group's top five were identical. Interestingly, within interpersonal relationships, only the nurse group prioritized demonstrates good interprofessionalism, whereas only the clinical educator group prioritized demonstrates mutual respect (between educators and learners). For the domain of assessment ability, the nurse group prioritized uses assessment techniques well and good at formative assessment, whereas the clinical educator group focused on demonstrates good assessment for clinical skills and clinical reasoning. For the domain of curriculum planning, the nurse group prioritized applies teaching resources effectively and demonstrates good curriculum management, whereas the clinical educator group focused more on demonstrates good time management and educates in accordance with the training program and schedule.

Table 3. Comparison of the top 5 items prioritized by clinical educator and nurse groups across all domains.

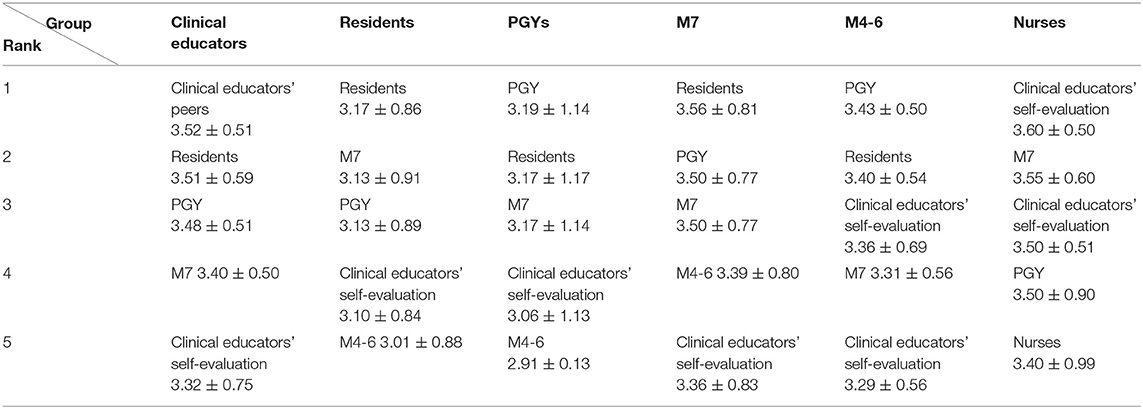

We now turn our attention to the who question: namely, which stakeholder groups respondents identify as most appropriate to undertake the evaluation of clinical educators. Across the participant groups, the rater sources featuring in the top five were clinical educators' supervisors, clinical educators themselves (i.e., self-evaluation), clinical educators' peers, residents, PGYs, M7, M4–6, and nurses. All six participant groups placed self-evaluation by clinical educators and PGYs among their top five rater sources (Table 4).
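The article does not spell out how each group's top five rater sources were selected; ranking the ten sources by mean score is one plausible reading. Under that assumption, the minimal Python sketch below picks each respondent group's top five and intersects them to find the sources shared by every group. The scores in `HYPOTHETICAL_SCORES` are invented placeholders to exercise the code, not values from Table 4, and the function names are illustrative.

```python
# Hypothetical mean ratings (1-4 scale) for two respondent groups.
# These numbers are invented for illustration only; they are NOT study data.
HYPOTHETICAL_SCORES = {
    "group X": {"self-evaluation": 3.5, "peers": 3.4, "supervisors": 3.3,
                "residents": 3.2, "PGYs": 3.1, "M7": 3.0, "M4-6": 2.9,
                "nurses": 2.8, "administrative staff": 2.5, "outpatient staff": 2.4},
    "group Y": {"self-evaluation": 3.6, "PGYs": 3.5, "nurses": 3.4, "M7": 3.3,
                "supervisors": 3.2, "peers": 3.1, "residents": 3.0, "M4-6": 2.9,
                "administrative staff": 2.6, "outpatient staff": 2.5},
}


def top_k(mean_scores, k=5):
    """Return the k sources with the highest mean score; ties are broken alphabetically."""
    ranked = sorted(mean_scores.items(), key=lambda item: (-item[1], item[0]))
    return [source for source, _ in ranked[:k]]


def common_top_k(scores_by_group, k=5):
    """Return the sources that appear in every group's top-k list."""
    top_sets = [set(top_k(scores, k)) for scores in scores_by_group.values()]
    return set.intersection(*top_sets) if top_sets else set()


if __name__ == "__main__":
    for group, scores in HYPOTHETICAL_SCORES.items():
        print(group, top_k(scores))
    print("shared by all groups:", sorted(common_top_k(HYPOTHETICAL_SCORES)))
```

An explicit tie-break keeps the top-five selection deterministic when two sources share the same mean.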

Table 4. The top five stakeholder groups (of 10) rated by respondent groups for evaluating clinical educators.

This study investigated suitable content and raters for evaluating doctors in their roles as clinical educators. In terms of content, Fluit et al. (6) identified role model, teacher, feedback provider, and supporter as the most common aspects of existing tools, with assessor and planner roles being less common. In our study, we identified the roles of assessor and planner as key factors. With the current international movement toward competency-based training programmes, from which East Asia is no exception, the ability to assess the development of students' and trainees' knowledge, skills, and practice is becoming a crucial component of the clinical educator's role. Clinical educators' ability to plan learning activities in busy clinical environments, creating and protecting opportunities for learners to carry out relevant clinical activities, provides a necessary structure for educators and learners. As such, planning is an important part of the clinical educator's role, and one on which educators can be evaluated.

We found that clinical educators and medical learners prioritized educators' skills over other aspects of the clinical teaching role, including personal qualities (e.g., enthusiasm, leadership, empathy), which the learners often ranked below interpersonal relationships. Indeed, both clinical educators and medical learners in this study focused on the “learning process” and the “learning outcome” when thinking about educator evaluation. This contrasts with studies from Western cultural contexts, which have emphasized clinical educators as role models, with personal qualities such as leadership and enthusiasm as central components (50–52), and is more consistent with the results of studies that focus on teaching effectiveness (20, 35).

Our findings show that both clinical educators and learners ranked the five domains in a similar order, with teaching ability as the highest priority and assessment ability as the lowest (or very low). However, we believe that each group's rationale for its ranking might differ. For the educators, the low ranking of assessment ability might reflect the relatively low confidence that clinical educators have in their assessment role (53). Indeed, clinical educators sometimes pass underperforming students (known as failing to fail): a difficult process tied up with educators' beliefs around self-efficacy (i.e., whether they have the ability to fail the student), their own knowledge and skills (e.g., whether they taught the student correct information, and in an appropriate way), and organizational constraints (i.e., the consequences of failing students for the organization, the program, or fellow educators) (53–56). Furthermore, the process of assessment and feedback can sometimes have a negative emotional impact on the educator (15). These aspects of assessment might lead clinical educators to rate this domain as the lowest priority. For students, the rationale for rating assessment ability as the lowest priority may be linked to assessment anxiety. This is supported by a recent systematic review of assessment and psychological distress among medical students, which found that, irrespective of the type of assessment or the level of the learner, assessment overwhelmingly evokes stress or anxiety (57). We might therefore expect students to be reluctant to prioritize this aspect of their teachers' role.

In Stein et al.'s study of teaching effectiveness (20), different health professions prioritized different aspects of effective teaching according to their own professional culture. We see a similar pattern here: respondents in the nurse group ranked the clinical educator domains differently from respondents in the medical educator and learner groups, most notably for curriculum planning, assessment ability, and teaching ability, which nurses ranked #1, #2, and #5, respectively, and the other groups ranked #3–5, #4–5, and #1, respectively. This stark difference might reflect differences in professional culture and focus, alongside professional distance. In the Taiwanese culture in which this study was conducted, nurse education is highly prioritized, and nurse education research includes a high proportion of evaluations of teaching and curriculum innovations (58–61). This may explain why nurses ranked curriculum planning as their number one domain. That nurses prioritize assessment more than clinical educators and learners do is likely due to their being far removed from the direct assessor-assessee relationship (62–64): when nurses assess learners, they do so as part of a group, so the responsibility for passing or failing them does not fall primarily on their shoulders.

We undertook an additional comparison between the clinical educator and nurse groups because of their stark differences in opinion about the importance of the domains for clinical educator evaluation. Both groups selected enthusiasm as their #1 item within the personal qualities domain, in addition to selecting the same top five items for this domain. This suggests that there are more similarities than differences between the two groups. Our finding is consistent with several previous studies of both physicians (2, 22) and nurses (43), which identified enthusiasm as an important attribute of an effective teacher.

Where differences occurred between the clinical educator and nurse groups, these were mainly in the domains of assessment ability and curriculum planning; furthermore, only the nurse group prioritized the item demonstrates good interprofessionalism within the domain of interpersonal relationships. This latter finding on the differing priority given to interprofessional cooperation echoes undergraduate research finding that medical students hold more negative attitudes toward interprofessional communication and collaboration, and that these attitudes persist over time despite interprofessional learning experiences (65–67). Furthermore, in everyday clinical practice and workplace learning, interprofessional hierarchies, roles, and conflicts come into play, leading healthcare practitioners and learners to experience interprofessional dilemmas (68); notably, because of the power asymmetry between nurses and doctors, it is nurses who tend to experience greater distress as a result. It is therefore unsurprising that nurses might prioritize interprofessionalism.

Finally, our data showed that self-evaluation and PGYs were the two most common sources identified for the evaluation of clinical educators. The importance of self-evaluation in clinical educator evaluation has also been identified in previous studies (2, 20, 22). The Johari window (69), a technique that helps people understand the relationship between how they see themselves and how others see them, highlights the importance of self-evaluation. Triangulating evaluations from learners, peers, and educators themselves (10, 65) should be considered in the design of future clinical educator evaluation systems.

As with any study, there are some limitations. Our study was conducted at a single site, with a relatively low response rate in relation to the entire target population and with response rates that varied across respondent groups. We had relatively high response rates from medical learners (residents, PGYs, and medical students), with the lowest response rates from clinical educators and nurses. Given that learners are on the receiving end of clinical educators' teaching, and are presently the main stakeholder group undertaking clinical educators' evaluation, they may have been more motivated to respond to invitations to complete a questionnaire about clinical educator evaluation. On the other hand, in East Asian cultures, educators hold a relatively high social status, and educator-centered (rather than learner-centered) education may still persist (14). The relatively low response rate from the educators themselves might therefore reflect an unwillingness to be evaluated. Furthermore, owing to the male-dominated composition of clinical educators and medical students in Taiwan, around 70% of our participants were male. Taken together, caution is warranted when extrapolating these findings to other settings.

The evaluation of clinical educators is a critical, complicated, and difficult task. The purposes of educator evaluation are to provide learning opportunities for educators, to evaluate how well educators are able to assist their students' learning, to help educators enhance their teaching abilities, and to reward excellent educators. The ultimate goal of educator evaluation is to improve educational effectiveness. In this study, we used a questionnaire survey to identify suitable evaluation domains and rater sources for the evaluation of clinical educators. We found that most participant groups listed teaching ability as their first priority, which differs from research findings from Western contexts. Within each domain, the respondent groups showed both similarities and differences in the items they prioritized, reflecting their opinions on clinical educator evaluation according to their experiences. Regarding who is best placed to evaluate clinical educators, the best-fit rater groups were educators themselves and PGYs. This study gathered information on the similarities and differences in opinion among stakeholder groups in a single East Asian context. Future studies could build on these results to create a comprehensive evaluation system for clinical educators in other East Asian contexts.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by Chang Gung Medical Foundation Institutional Review Board. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

C-CJ: design of the work, drafting, and revising. L-SO: analysis and interpretation of data. H-MT: analysis and interpretation of data and revising. Y-PC: collecting data. J-RL: analyzing data. LM: analysis and interpretation of data, revising, and final approval for publishing. All authors contributed to the article and approved the submitted version.

This study was supported by grants from the Chang Gung Medical Research Fund (CDRPG3D0021), Department of Medicine of Chang Gung Memorial Hospital, and Chang Gung Medical Education Research Center (CGMERC).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2022.896822/full#supplementary-material

1. Sutkin G, Wagner E, Harris I, Schiffer R. What makes a good clinical teacher in medicine? A review of the literature. Acad Med. (2008) 83:452–66. doi: 10.1097/ACM.0b013e31816bee61

2. Singh S, Pai DR, Sinha NK, Kaur A, Soe HH, Barua A. Qualities of an effective teacher: what do medical teachers think? BMC Med Educ. (2013) 13:128. doi: 10.1186/1472-6920-13-128

3. Harden RM, Crosby J. AMEE Guide No 20: The good teacher is more than a lecturer-the twelve roles of the teacher. Med Teach. (2000) 22:334–47. doi: 10.1080/014215900409429

4. Burgess A, Goulston K, Oates K. Role modelling of clinical tutors: a focus group study among medical students. BMC Med Educ. (2015) 15:17. doi: 10.1186/s12909-015-0303-8

5. Passi V, Johnson S, Peile E, Wright S, Hafferty F, Johnson N. Doctor role modelling in medical education: BEME Guide No. 27. Med Teach. (2013) 35:e1422–36. doi: 10.3109/0142159X.2013.806982

6. Fluit C, Bolhuis S, Grol R, Laan R, Wensing M. Assessing the quality of clinical teachers: a systematic review of content and quality of questionnaires for assessing clinical teachers. J Gen Intern Med. (2010) 25:1337–45. doi: 10.1007/s11606-010-1458-y

7. Fluit C, Bolhuis S, Grol R, Ham M, Feskens R, Laan R, et al. Evaluation and feedback for effective clinical teaching in postgraduate medical education: validation of an assessment instrument incorporating the CanMEDS roles. Med Teach. (2012) 34:893–901. doi: 10.3109/0142159X.2012.699114

8. Nation JG, Carmichael E, Fidler H, Violato C. The development of an instrument to assess clinical teaching with linkage to CanMEDS roles: a psychometric analysis. Med Teach. (2011) 33:e290–6. doi: 10.3109/0142159X.2011.565825

9. Arah OA, Hoekstra JB, Bos AP, Lombarts KM. New tools for systematic evaluation of teaching qualities of medical faculty: results of an ongoing multi-center survey. PLoS ONE. (2011) 6:e25983. doi: 10.1371/journal.pone.0025983

10. Rink JE. Measuring teacher effectiveness in physical education. Res Q Exerc Sport. (2013) 84:407–18. doi: 10.1080/02701367.2013.844018

11. Beckman TJ, Lee MC, Rohren CH, Pankratz VS. Evaluating an instrument for the peer review of inpatient teaching. Med Teach. (2003) 25:131–5. doi: 10.1080/0142159031000092508

12. Dreiling K, Montano D, Poinstingl H, Müller T, Schiekirka-Schwake S, Anders S, et al. Evaluation in undergraduate medical education: Conceptualizing and validating a novel questionnaire for assessing the quality of bedside teaching. Med Teach. (2017) 39:820–7. doi: 10.1080/0142159X.2017.1324136

13. Ansari AA, Arekat MR, Salem AH. Validating the modified system for evaluation of teaching qualities: a teaching quality assessment instrument. Adv Med Educ Pract. (2018) 9:881–6. doi: 10.2147/AMEP.S181094

14. Al-Wassia R, Hamed O, Al-Wassia H, Alafari R, Jamjoom R. Cultural challenges to implementation of formative assessment in Saudi Arabia: an exploratory study. Med Teach. (2015) 37:S9–19. doi: 10.3109/0142159X.2015.1006601

15. Dennis AA, Foy MJ, Monrouxe LV, Rees CE. Exploring trainer and trainee emotional talk in narratives about workplace-based feedback processes. Adv Health Sci Educ Theory Pract. (2018) 23:75–93. doi: 10.1007/s10459-017-9775-0

16. Johansson J, Skeff K, Stratos G. Clinical teaching improvement: the transportability of the Stanford faculty development program. Med Teach. (2009) 31:e377–82. doi: 10.1080/01421590802638055

17. Monrouxe LV, Rees CE. Healthcare Professionalism: Improving Practice Through Reflections on Workplace Dilemmas. John Wiley & Sons (2017).

18. Monrouxe LV, Rees CE, Endacott R, Ternan E. ‘Even now it makes me angry': health care students' professionalism dilemma narratives. Med Educ. (2014) 48:502–17. doi: 10.1111/medu.12377

19. DeStephano CC, Crawford KA, Jashi M, Wold JL. Providing 360-degree multisource feedback to nurse educators in the country of Georgia: a formative evaluation of acceptability. J Contin Educ Nurs. (2014) 45:278–84. doi: 10.3928/00220124-20140528-03

20. Stein SM, Fujisaki BS, Davis SE. What does effective teaching look like? Profession-centric perceptions of effective teaching in pharmacy and nursing education. Health Interprof Pract. (2011) 1:eP1003. doi: 10.7772/2159-1253.1007

21. Snell L, Tallett S, Haist S, Hays R, Norcini J, Prince K, et al. A review of the evaluation of clinical teaching: new perspectives and challenges. Med Educ. (2000) 34:862–70. doi: 10.1046/j.1365-2923.2000.00754.x

22. Duvivier RJ, van Dalen J, van der Vleuten CP, Scherpbier AJ. Teacher perceptions of desired qualities, competencies and strategies for clinical skills teachers. Med Teach. (2009) 31:634–41. doi: 10.1080/01421590802578228

23. Salili F. Accepting personal responsibility for learning. In: Watkins D, Biggs JB, editors. The Chinese Learner: Cultural, Psychological and Contextual Influences. Hong Kong: CERC (1996).

24. Phuong-Mai N, Terlouw C, Pilot A. Cooperative learning vs. Confucian heritage culture's collectivism: confrontation to reveal some cultural conflicts and mismatch. Asia Eur J. (2005) 3:403–19. doi: 10.1007/s10308-005-0008-4

25. Tan C. Teacher-directed and learner-engaged: exploring a Confucian conception of education. Ethics Educ. (2016) 10:302–12. doi: 10.1080/17449642.2015.1101229

26. Woo J-G. Educational relationship in the Analects of Confucius. In: Reichenbach R, Kwak D-J, editors. Confucian Perspectives on Learning and Self-Transformation: International and Cross-Disciplinary Approaches. Cham: Springer International Publishing (2020). p. 45–62.

27. Booth A, Fan E, Meng X, Zhang D. Gender differences in willingness to compete: the role of culture and institutions. Econ J. (2018) 129:734–64. doi: 10.1111/ecoj.12583

28. Bandaranayake RC. Utilization of feedback from student evaluation of teachers. Med Educ. (1978) 12:314–20. doi: 10.1111/j.1365-2923.1978.tb00357.x

29. Molloy E, Boud D. Seeking a different angle on feedback in clinical education: the learner as seeker, judge and user of performance information. Med Educ. (2013) 47:227–9. doi: 10.1111/medu.12116

30. Neumann L. Student evaluation of instruction. A comparison of medicine and engineering. Med Educ. (1982) 16:121–6. doi: 10.1111/j.1365-2923.1982.tb01070.x

31. Institute of International Education. International Student Enrollment Trends, 1948/49-2020/21. Open Doors Report on International Educational Exchange (2021). Available online at: https://opendoorsdata.org/data/international-students/enrollment-trends/

32. ACW-W-HSCWS-13350. Expansion of Undergraduate Medical Education: A Consultation on How to Maximise the Benefits From the Increases in Medical Student Numbers. Department of Health (2017). Available online at: https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/600835/Medical_expansion_rev_A.pdf

33. Bracken DW, Timmreck CW, Fleenor JW, Summers L. 360 feedback from another angle. Hum Resour Manage. (2001) 40:3–20. doi: 10.1002/hrm.4012

34. Irby DM. What clinical teachers in medicine need to know. Acad Med. (1994) 69:333–42. doi: 10.1097/00001888-199405000-00003

35. Parsell G, Bligh J. Recent perspectives on clinical teaching. Med Educ. (2001) 35:409–14. doi: 10.1046/j.1365-2923.2001.00900.x

36. Stenfors-Hayes T, Hult H, Dahlgren LO. What does it mean to be a good teacher and clinical supervisor in medical education? Adv Health Sci Educ Theory Pract. (2011) 16:197–210. doi: 10.1007/s10459-010-9255-2

37. Keeney S, Hasson F, McKenna HP. A critical review of the Delphi technique as a research methodology for nursing. Int J Nurs Stud. (2001) 38:195–200. doi: 10.1016/S0020-7489(00)00044-4

38. Palisano RJ, Rosenbaum P, Bartlett D, Livingston MH. Content validity of the expanded and revised gross motor function classification system. Dev Med Child Neurol. (2008) 50:744–50. doi: 10.1111/j.1469-8749.2008.03089.x

39. Althouse LA, Stritter FT, Steiner BD. Attitudes and approaches of influential role models in clinical education. Adv Health Sci Educ Theory Pract. (1999) 4:111–22. doi: 10.1023/A:1009768526142

40. Elzubeir MA, Rizk DE. Identifying characteristics that students, interns and residents look for in their role models. Med Educ. (2001) 35:272–7. doi: 10.1046/j.1365-2923.2001.00870.x

41. Lombarts KM, Heineman MJ, Arah OA. Good clinical teachers likely to be specialist role models: results from a multicenter cross-sectional survey. PLoS ONE. (2010) 5:e15202. doi: 10.1371/journal.pone.0015202

42. Morrison EH, Hitchcock MA, Harthill M, Boker JR, Masunaga H. The on-line clinical teaching perception inventory: a “snapshot” of medical teachers. Fam Med. (2005) 37:48–53.

43. Tang FI, Chou SM, Chiang HH. Students' perceptions of effective and ineffective clinical instructors. J Nurs Educ. (2005) 44:187–92. doi: 10.3928/01484834-20050401-09

44. Blatt B, Greenberg L. A multi-level assessment of a program to teach medical students to teach. Adv Health Sci Educ Theory Pract. (2007) 12:7–18. doi: 10.1007/s10459-005-3053-2

45. Chang YC, Lee CH, Chen CK, Liao CH, Ng CJ, Chen JC, et al. Exploring the influence of gender, seniority and specialty on paper and computer-based feedback provision during mini-CEX assessments in a busy emergency department. Adv Health Sci Educ Theory Pract. (2017) 22:57–67. doi: 10.1007/s10459-016-9682-9

46. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. (1990) 65:S63–7. doi: 10.1097/00001888-199009000-00045

47. Weissmann PF, Branch WT, Gracey CF, Haidet P, Frankel RM. Role modeling humanistic behavior: learning bedside manner from the experts. Acad Med. (2006) 81:661–7. doi: 10.1097/01.ACM.0000232423.81299.fe

48. Wright S. Examining what residents look for in their role models. Acad Med. (1996) 71:290–2. doi: 10.1097/00001888-199603000-00024

49. Ginsburg H. The challenge of formative assessment in mathematics education: children's minds, teachers' minds. Human Devel. (2009) 52:109–28. doi: 10.1159/000202729

50. Irby DM. Clinical teaching and the clinical teacher. J Med Educ. (1986) 61:35–45. doi: 10.1097/00001888-198609000-00005

51. Jochemsen-van der Leeuw HG, van Dijk N, van Etten-Jamaludin FS, Wieringa-de Waard M. The attributes of the clinical trainer as a role model: a systematic review. Acad Med. (2013) 88:26–34. doi: 10.1097/ACM.0b013e318276d070

52. Wright SM, Carrese JA. Excellence in role modelling: insight and perspectives from the pros. CMAJ. (2002) 167:638–43.

53. Cleland JA, Knight LV, Rees CE, Tracey S, Bond CM. Is it me or is it them? Factors that influence the passing of underperforming students. Med Educ. (2008) 42:800–9. doi: 10.1111/j.1365-2923.2008.03113.x

54. Monrouxe L, Rees C, Lewis N, Cleland J. Medical educators' social acts of explaining passing underperformance in students: a qualitative study. Adv Health Sci Educ Theory Pract. (2011) 16:239–52. doi: 10.1007/s10459-010-9259-y

55. Rees CE, Knight LV, Cleland JA. Medical educators' metaphoric talk about their assessment relationships with students: ‘you don't want to sort of be the one who sticks the knife in them'. Assess Eval High Educ. (2009) 34:455–67. doi: 10.1080/02602930802071098

56. Luhanga FL, Larocque S, MacEwan L, Gwekwerere YN, Danyluk P. Exploring the issue of failure to fail in professional education programs: a multidisciplinary study. J Univ Teach Learn Pract. (2014) 11. doi: 10.53761/1.11.2.3

57. Lyndon MP, Strom JM, Alyami HM, Yu TC, Wilson NC, Singh PP, et al. The relationship between academic assessment and psychological distress among medical students: a systematic review. Perspect Med Educ. (2014) 3:405–18. doi: 10.1007/s40037-014-0148-6

58. Lee-Hsieh J, O'Brien A, Liu CY, Cheng SF, Lee YW, Kao YH. The development and validation of the Clinical Teaching Behavior Inventory (CTBI-23): Nurse preceptors' and new graduate nurses' perceptions of precepting. Nurse Educ Today. (2016) 38:107–14. doi: 10.1016/j.nedt.2015.12.005

59. Chang S, Huang C, Chen S, Liao Y, Lin C, Wang H. Evaluation of a critical appraisal program for clinical nurses: a controlled before-and-after study. J Contin Educ Nurs. (2013) 44:43–8. doi: 10.3928/00220124-20121101-51

60. Feng JY, Chang YT, Chang HY, Erdley WS, Lin CH, Chang YJ. Systematic review of effectiveness of situated e-learning on medical and nursing education. Worldviews Evid Based Nurs. (2013) 10:174–83. doi: 10.1111/wvn.12005

61. Hung HY, Huang YF, Tsai JJ, Chang YJ. Current state of evidence-based practice education for undergraduate nursing students in Taiwan: a questionnaire study. Nurse Educ Today. (2015) 35:1262–7. doi: 10.1016/j.nedt.2015.05.001

62. Leung KK, Wang WD, Chen YY. Multi-source evaluation of interpersonal and communication skills of family medicine residents. Adv Health Sci Educ Theory Pract. (2012) 17:717–26. doi: 10.1007/s10459-011-9345-9

63. Tiao MM, Huang LT, Huang YH, Tang KS, Chen CJ. Multisource feedback analysis of pediatric outpatient teaching. BMC Med Educ. (2013) 13:145. doi: 10.1186/1472-6920-13-145

64. Yang YY, Lee FY, Hsu HC, Huang CC, Chen JW, Cheng HM, et al. Assessment of first-year post-graduate residents: usefulness of multiple tools. J Chin Med Assoc. (2011) 74:531–8. doi: 10.1016/j.jcma.2011.10.002

65. Delunas LR, Rouse S. Nursing and medical student attitudes about communication and collaboration before and after an interprofessional education experience. Nurs Educ Perspect. (2014) 35:100–5. doi: 10.5480/11-716.1

66. Hansson A, Foldevi M, Mattsson B. Medical students' attitudes toward collaboration between doctors and nurses - a comparison between two Swedish universities. J Interprof Care. (2010) 24:242–50. doi: 10.3109/13561820903163439

67. Wilhelmsson M, Ponzer S, Dahlgren LO, Timpka T, Faresjo T. Are female students in general and nursing students more ready for teamwork and interprofessional collaboration in healthcare? BMC Med Educ. (2011) 11:15. doi: 10.1186/1472-6920-11-15

68. Monrouxe L, Rees C. Professionalism Dilemmas across Professional Cultures. In: Monrouxe LV, Rees CE, editors. Healthcare Professionalism: Improving Practice through Reflections on Workplace Dilemmas. Chichester: Wiley Blackwell (2017). p. 207–26.

Keywords: multisource feedback, faculty assessment, faculty development, clinical educator, multisource evaluation

Citation: Jenq C-C, Ou L-S, Tseng H-M, Chao Y-P, Lin J-R and Monrouxe LV (2022) Evaluating Clinical Educators' Competence in an East Asian Context: Who Values What? Front. Med. 9:896822. doi: 10.3389/fmed.2022.896822

Received: 15 March 2022; Accepted: 07 June 2022;

Published: 28 June 2022.

Edited by:

Marie Amitani, Kagoshima University, Japan

Reviewed by:

Eleanor Beck, University of Wollongong, Australia

Copyright © 2022 Jenq, Ou, Tseng, Chao, Lin and Monrouxe. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lynn V. Monrouxe, lynn.monrouxe@sydney.edu.au

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
