- 1 Taizhou Hospital of Zhejiang Province Affiliated to Wenzhou Medical University, Linhai, China

- 2 Key Laboratory of Minimally Invasive Techniques and Rapid Rehabilitation of Digestive System Tumor of Zhejiang Province, Taizhou Hospital Affiliated to Wenzhou Medical University, Linhai, China

- 3 Department of Gastroenterology, Taizhou Hospital of Zhejiang Province Affiliated to Wenzhou Medical University, Linhai, China

- 4 Institute of Digestive Disease, Taizhou Hospital of Zhejiang Province Affiliated to Wenzhou Medical University, Linhai, China

- 5 Health Management Center, Taizhou Hospital of Zhejiang Province Affiliated to Wenzhou Medical University, Linhai, China

- 6 Taizhou Hospital, Zhejiang University, Linhai, China

- 7 Department of Gastroenterology, Renmin Hospital of Wuhan University, Wuhan, China

- 8 Taizhou Hospital of Zhejiang Province, Shaoxing University, Linhai, China

Convolutional neural networks, a branch of artificial intelligence (AI), show great potential in image recognition, and AI-assisted endoscopy can improve the detection rate of early gastric cancer. The 5-year survival rate for advanced gastric cancer is less than 30%, while the 5-year survival rate for early gastric cancer is more than 90%. Therefore, earlier screening for gastric cancer can lead to a better prognosis. However, the detection rate of early gastric cancer in China has been extremely low due to many factors, such as the absence of obvious symptoms, the difficulty of identifying lesions with the naked eye, and a lack of experience among endoscopists. The introduction of artificial intelligence can help mitigate these shortcomings and greatly improve the accuracy of screening. According to relevant reports, the sensitivity and accuracy of artificial intelligence based on deep convolutional neural networks are better than those of endoscopists, and evaluations also take less time, which can greatly reduce the burden on endoscopists. In addition, artificial intelligence can monitor the endoscopist’s examination in real time and provide feedback, helping to standardize the procedure. AI has also shown great potential in training novice endoscopists. As AI technology matures, it has the potential to improve the detection rate of early gastric cancer in China and reduce gastric cancer-related mortality.

Introduction

Gastric cancer (GC) is the fifth most common malignant tumor and the third leading cause of cancer-related death in the world (1, 2). Gastric cancer is also the second leading cause of cancer deaths in China, with a standardized 5-year survival rate of only 27.4% (3). According to related research, there were approximately 1 million newly diagnosed gastric cancer cases in 2008, 47% of which were in China, and China accounted for half of global gastric cancer deaths (4, 5). Notably, however, the 5-year survival rate of early gastric cancer (EGC) is over 90%, much higher than that of advanced gastric cancer (AGC) (30%) (6–8). Therefore, improving the endoscopic detection rate of EGC is essential for reducing the mortality, labor loss, and tumor treatment cost caused by GC (9).

The diagnosis of EGC depends on the ability of endoscopists to adequately analyze endoscopic images, a skill cultivated through extensive training over a long period (10). The diagnostic level of EGC has gradually improved in China with the establishment and improvement of many endoscopic centers, but the rate of endoscopic diagnosis differs among regions. Areas with better economic and medical development have better equipment and training systems, whereas facilities in remote areas tend to have insufficient training in endoscopy technology and a lack of experienced endoscopists (11). Therefore, it is necessary to improve the detection rate of EGC under endoscopy with instrument-assisted diagnostic tools, especially in areas where there is a shortage of endoscopists.

With the rapid development of computer science and technology, artificial intelligence (AI) technology is maturing, allowing it to be used to improve accuracy in a variety of medical situations (12). The number of endoscopists in China is insufficient at present, and they are primarily concentrated in tertiary hospitals. Most community hospitals lack the proper equipment for endoscopy, and even where they do have the equipment, operators are lacking. Community hospitals are therefore unable to receive diverted patients, resulting in a heavy burden on endoscopists in tertiary hospitals. Under this massive workload, endoscopists struggle to accurately identify lesions, and EGC is even more difficult to detect. To resolve this situation, attention has been focused on the feasibility of applying AI technology to endoscopy (11).

Among AI technologies, neural networks, represented by convolutional neural networks, have demonstrated remarkable progress, achieving performance comparable to or even surpassing that of human beings in the field of image recognition. AI is not affected by subjectivity, fatigue, experience, or other factors. It performs medical image-assisted diagnoses well and has a high lesion recognition rate. In addition, its learning ability is continuous and improves with increasing exposure to training data. AI has shown great potential in endoscopy, including in screening for EGC (12).

Analysis of Recent Trends in the Literature on Artificial Intelligence and Early Gastric Cancer

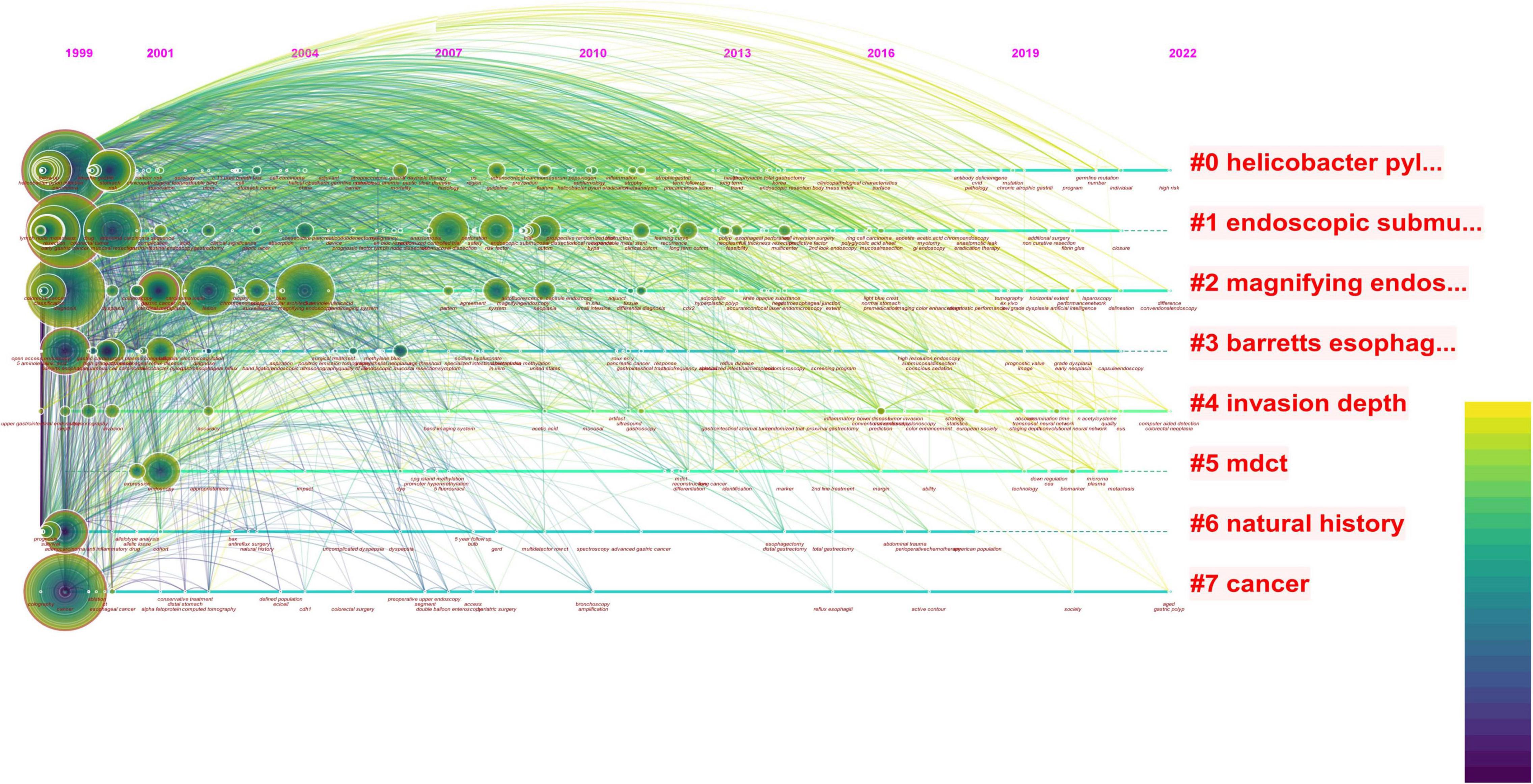

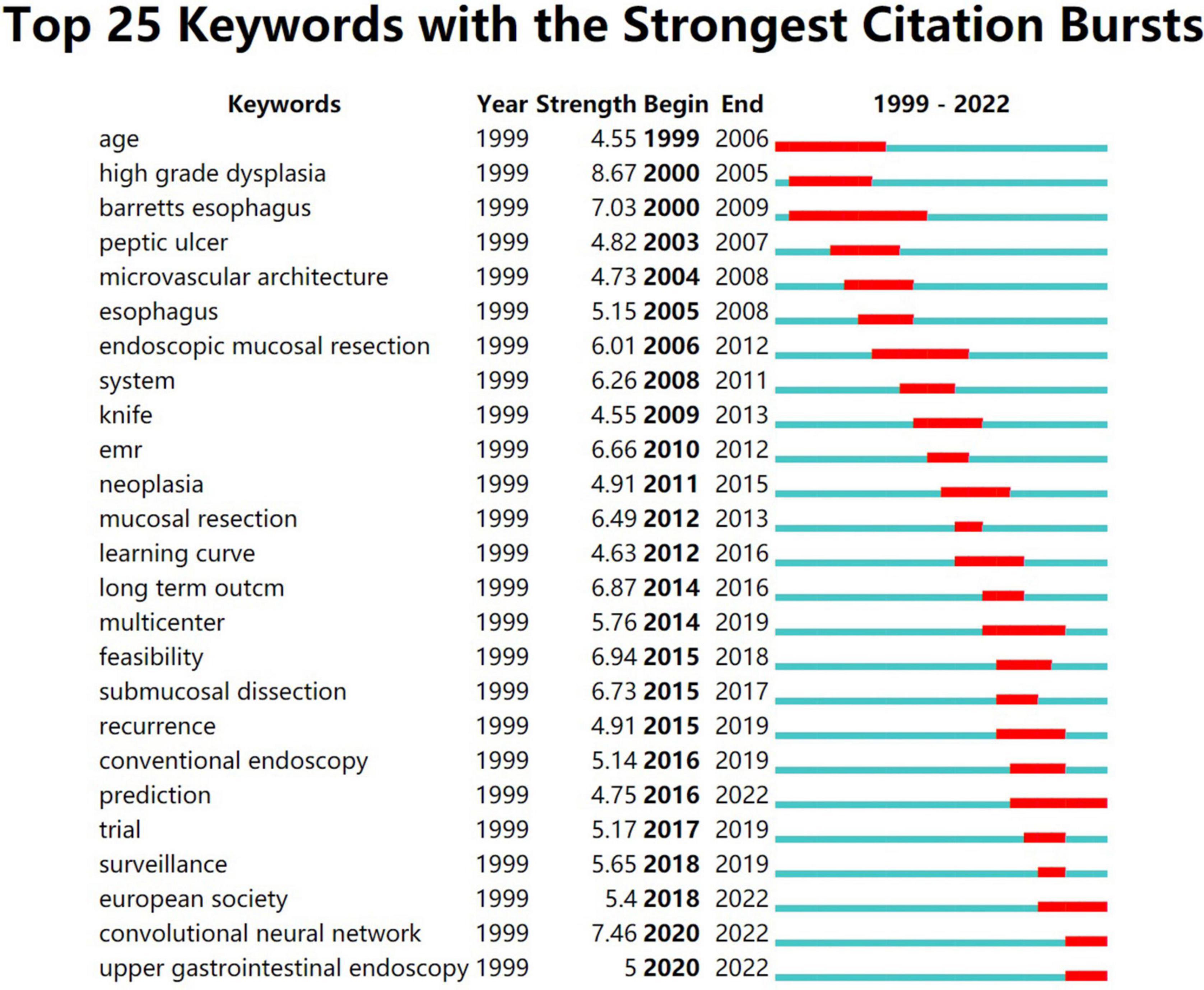

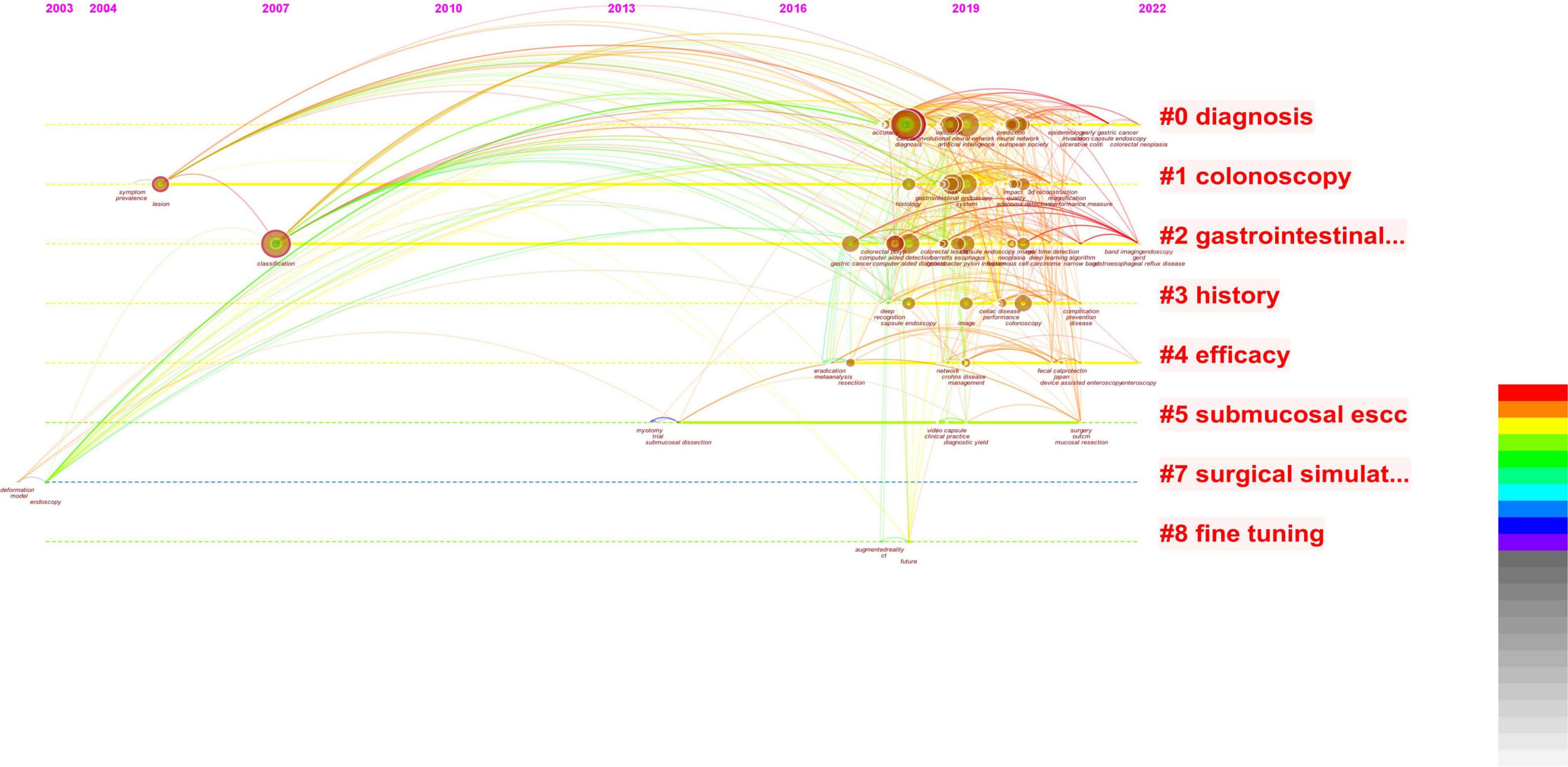

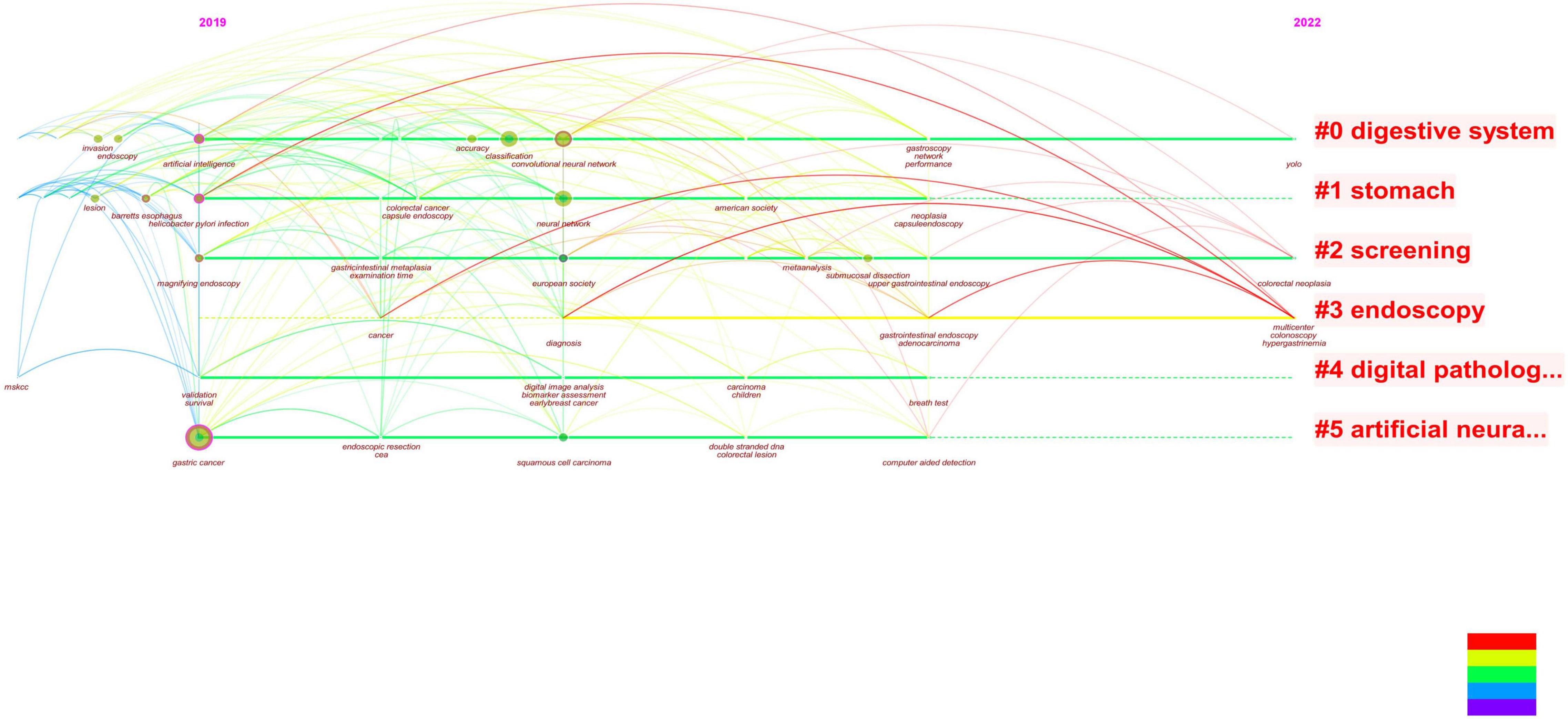

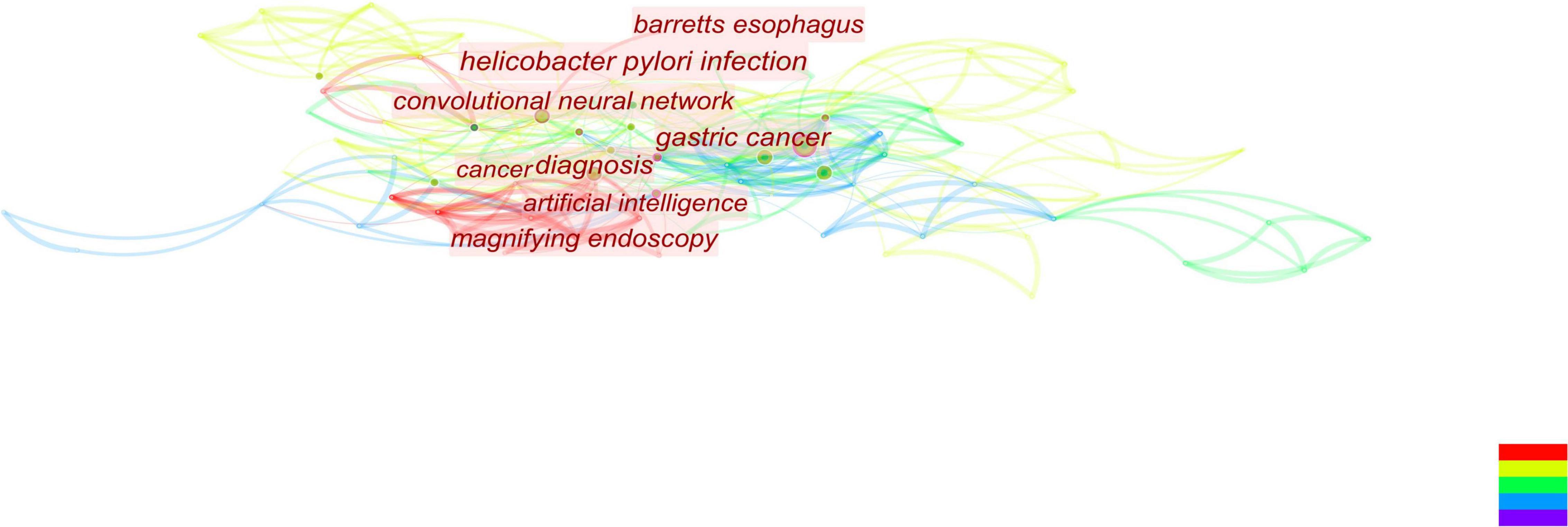

We analyzed current research trends in AI, EGC, and endoscopy by searching relevant topics in the Web of Science core database. The results of the analysis are presented as CiteSpace visualizations. In our search concerning endoscopy and EGC, we found 1,664 related articles, and 1,625 were used in the final analysis. In our search concerning endoscopy and AI, we found 392 related articles, and 354 were used in the final analysis. In our search concerning AI and EGC, we found 67 relevant articles, and 58 were used in the final analysis. We then combined these three search terms to perform retrieval again and found 55 relevant articles, and 40 were used in the final analysis. We analyzed the topics related to endoscopy and EGC, obtaining three figures (Figures 1–3). On analyzing these three figures, we found that the studies on endoscopy and EGC were mainly concentrated between 1999 and 2010, without much research or attention focused on these topics in the last decade. In line with Figure 3, we also found that various endoscopic operation techniques have been attracting increasing attention in recent years. In addition, we found that convolutional neural networks have received a lot of attention in the last 3 years.

Figure 1. The red font in the figure represents the keywords with the highest frequency in the included literature, the circle represents the articles published in that year, the size represents the number of articles published, and the color of the line represents the year. The reports on endoscopy and EGC were mainly concentrated between 1999 and 2010, with less and less relevant literature published in this field after that point. In the last decade, the topic of combining endoscopy with EGC has no longer been a research topic of interest.

Figure 2. The closer the font is to the center of the figure, the more attention is paid. In addition, the size of the circle indicates the number of relevant publications. The top-down color indicates the year. The diagnosis gets the most attention, followed by the various digestive diseases that surround the diagnosis.

Figure 3. This figure shows the 25 keywords with the highest frequency in the literature and the attention paid to these keywords over time. It’s not hard to see that convolutional neural networks are beginning to attract attention.

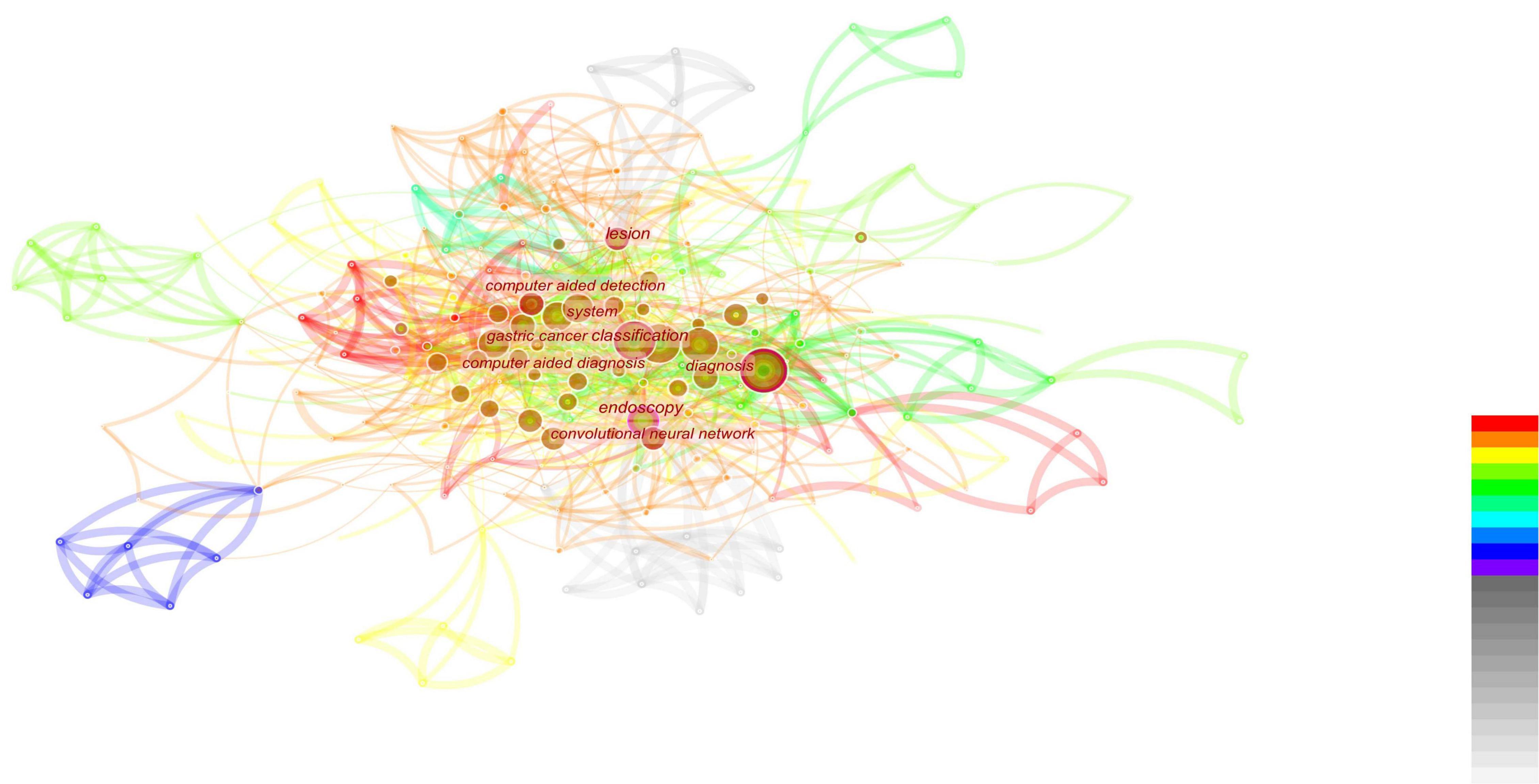

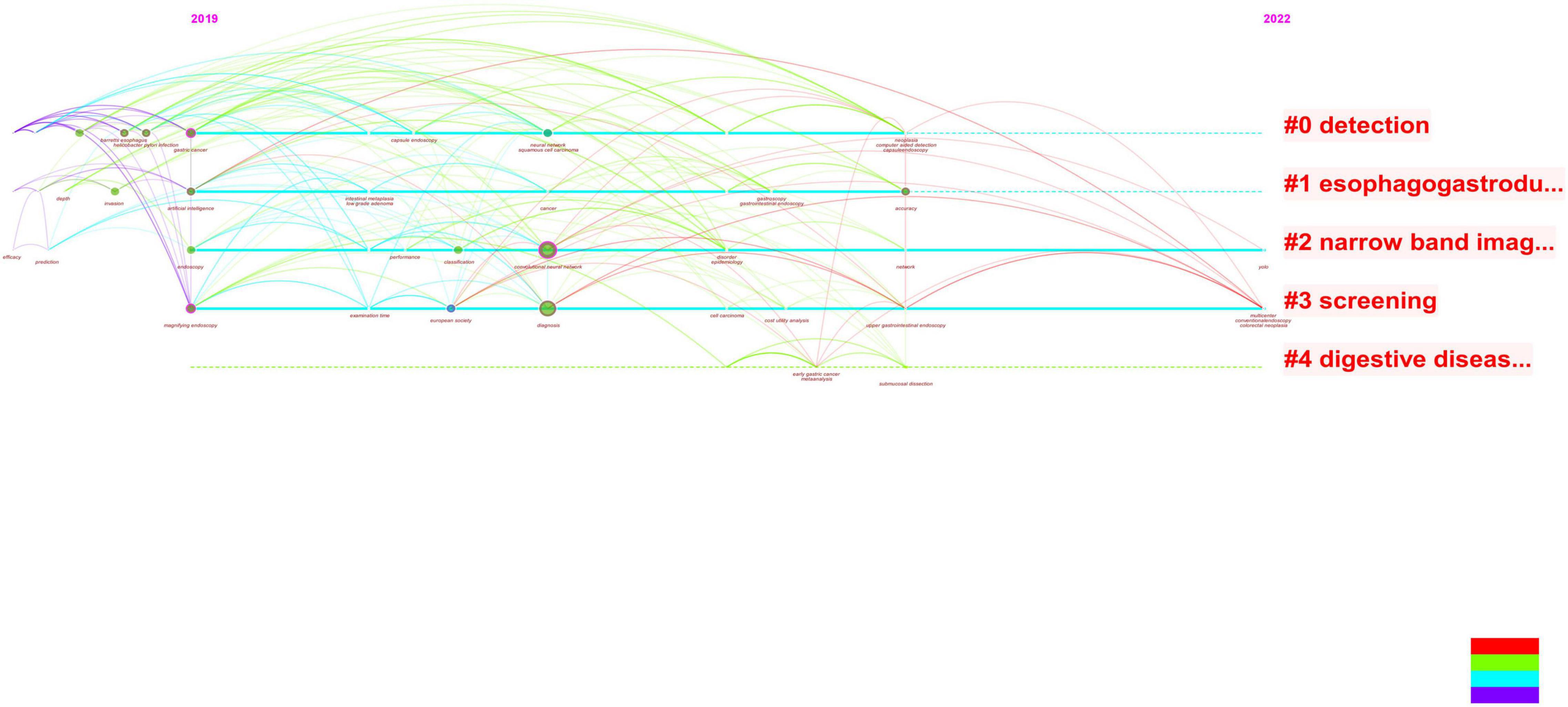

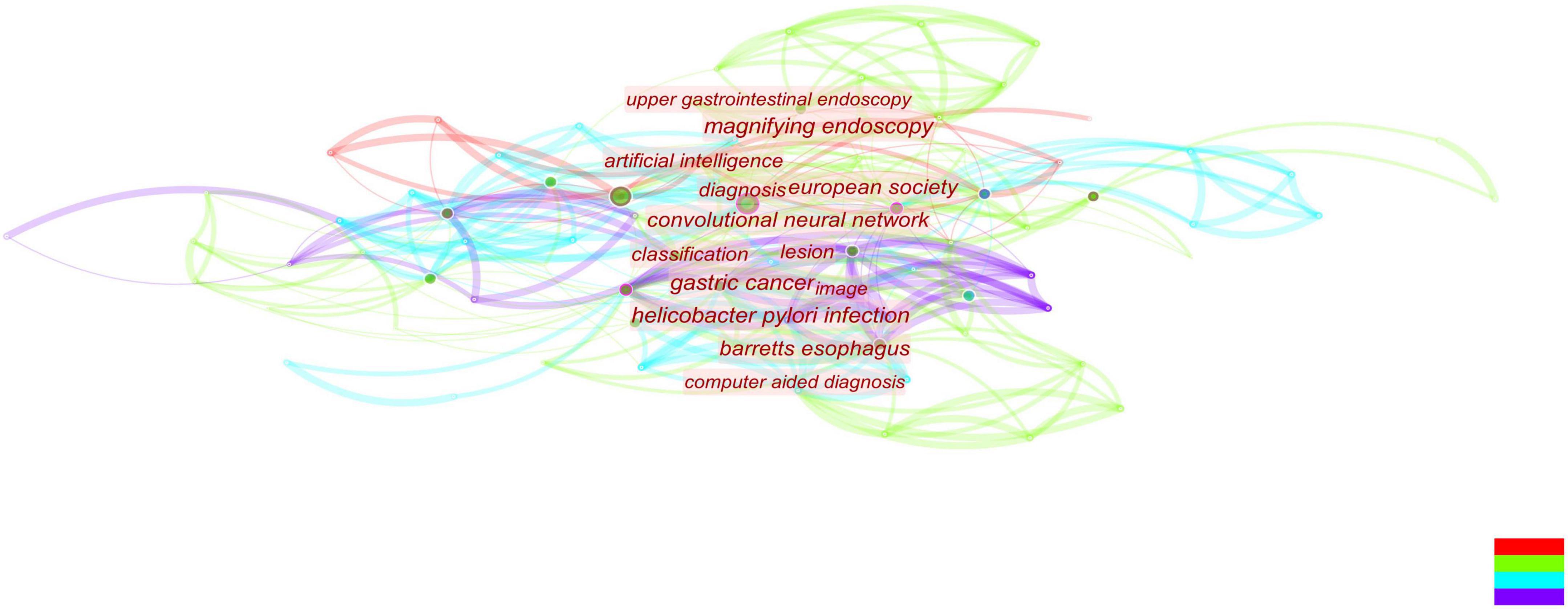

We next analyzed the literature on endoscopy and AI and obtained two similar figures (Figures 4, 5). By combining these two pictures, we found that the combination of AI and endoscopy has been a hot topic in the past 3 years, specifically for the detection of early cancer. We then searched for related literature on AI and EGC as well as the combination of these three topics and obtained four figures (Figures 6–9). Based on our analysis of these four figures and in combination with previous findings, we concluded that the application of AI to endoscopy in order to detect EGC remains a hot research topic, although relevant studies are lacking, so further new findings are awaited. By analyzing the existing literature, we also found that current research is focusing on convolutional neural networks and screening.

Figure 4. Based on combined images, the studies related to AI are concentrated in the last 3 years, and there is an obvious growth trend.

Figure 5. This figure is based on the literature analysis of artificial intelligence and endoscopy. It can be seen that the research hotspots under this topic are the classification of gastric cancer and computer-aided examination and diagnosis. At the same time, convolutional neural networks also appear in hot spots, indicating that convolutional neural networks are showing an increasing trend in the application of artificial intelligence.

Figure 6. The figure shows that the literature combining early gastric cancer and artificial intelligence has appeared only in recent years. Additionally, this year’s studies focused on endoscopic screening.

Figure 7. According to this figure, we can see that the convolutional neural network is currently attracting a lot of attention and is closely associated with gastric cancer.

Figure 8. It can be seen from the revised figure that the studies combining early gastric cancer, artificial intelligence, and endoscopy have been published in the last 3 years, and the main research hotspots in 2022 are focused on screening, i.e., applying artificial intelligence to endoscopy to screen for early gastric cancer.

Figure 9. Combined with literature on early gastric cancer, artificial intelligence, and endoscopy, convolutional neural networks occupy the center and become an absolute research hotspot.

This review will focus on these three aspects: convolutional neural networks, the dilemmas associated with EGC screening, and the feasibility of applying AI to GC screening.

The Technology of Artificial Intelligence

Machine learning and deep learning are considered two sub-technologies of AI (13). Deep learning can be used for prediction and judgment (14, 15). Machine learning can automatically improve computer algorithms through experience and use data or past experience to optimize the performance standards of computer programs (13). Both of these are the most commonly used technologies to build AI models (16).

An artificial neural network (ANN) is a supervised learning model whose structure is very similar to that of neurons in the human central nervous system (17, 18). Neurons, each acting as a computational unit, are joined to create a network. When data enter the input layer, they travel through a series of hidden layers before reaching the output layer (18). Before ANNs can be utilized, they must first be trained, which entails splitting data into “training sets” that are used to fit the network and “test sets” that assess the ANN’s ability to predict the intended output (19, 20).
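The split into training and test sets described above can be illustrated with a minimal sketch. The function name `train_test_split`, the 80/20 ratio, and the `(image_id, label)` sample format are assumptions made for illustration only; they are not taken from the cited studies.

```python
import random

def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle labeled samples and split them into training and test sets."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(samples)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    # The first n_test shuffled samples are held out for testing.
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical labeled data: (image_id, label) pairs, 1 = lesion, 0 = normal.
samples = [(f"img_{i:03d}", i % 2) for i in range(10)]
train_set, test_set = train_test_split(samples, test_fraction=0.2, seed=42)
print(len(train_set), len(test_set))
```

Keeping the test set completely separate from training is what allows it to estimate how well the network generalizes to images it has not seen.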

To meet the need for increased performance, more and more complex neural networks are developed, resulting in the concept of deep learning. Deep learning works by progressively extracting higher-level features from raw input using multi-level structures (21). A deep neural network (DNN) is derived from an ANN and consists of multiple successive filters that can automatically detect and extract important features of input data (22, 23). To improve performance, a large amount of labeled training data is required, which involves a combination of deep learning and reinforcement learning.

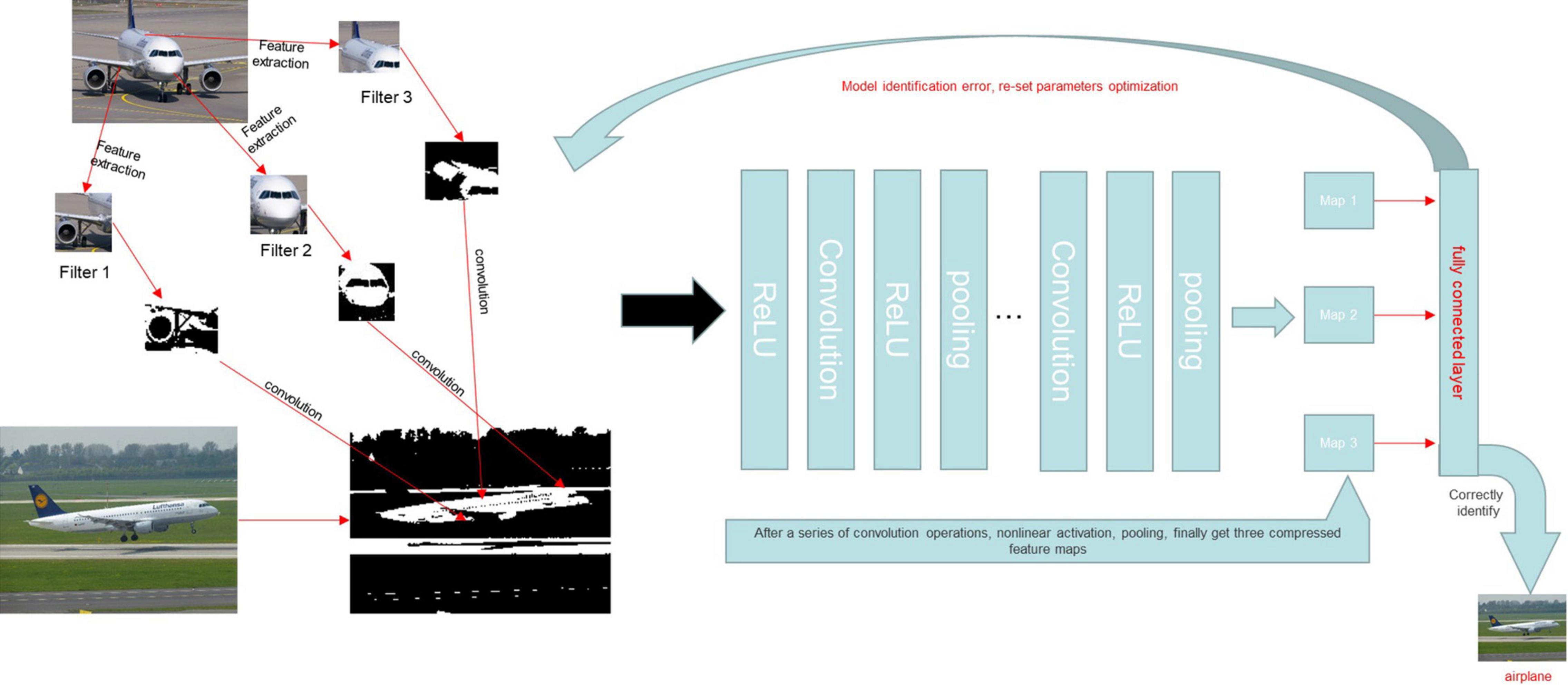

At present, the most widely used and effective network is the convolutional neural network (CNN). It has shown great potential in many fields, such as pathological analyses, computed tomography, and magnetic resonance imaging analyses (23–28). A CNN is a feedforward multi-layer network in which the information flow is unidirectional, i.e., from input to output, and each layer uses a set of convolution kernels to perform multiple transformations in the process of information flow (14). Through this process, information characteristics are extracted. A CNN model mainly includes a convolution layer, pooling layer, and fully connected layer. A novel network model is created based on the CNN model by merging multi-layer convolution and multi-layer pooling, which can increase network accuracy (22). A traditional CNN is mainly composed of two parts: the multi-component convolution layer and the classification layer. The convolution layer’s primary job is to extract features from the input data. For example, when the input is an image and the observed item is an abstract entity, the convolution layer extracts the abstract and valuable texture elements from the image and sends them to the classification layer, which is primarily responsible for classifying the input image (29, 30). Furthermore, because a CNN uses convolution with a weight-sharing scheme, the number of network parameters required by a CNN is dramatically decreased compared to fully connected networks with the same number of network layers, thereby reducing the risk of over-fitting. A CNN may seem profound and complex to outsiders, but its working mode is briefly expressed in Figure 10. CNNs are currently being used to solve a variety of computer vision challenges, including image classification, object detection, and image synthesis (31).
This model imitates the recognition and processing of images by the human brain, making the processing of image information faster and more accurate. At the same time, with the continuous iteration and update of the technology, more types of images can be identified, including medical examination images such as imaging findings, pathological images, and endoscopic images (32). In one study, a deep CNN was trained using 129,450 skin photos covering 2,032 distinct skin diseases (33). The model was then tested against 21 board-certified dermatologists and performed on par with them at distinguishing keratinocyte cancer from benign seborrheic keratosis, as well as malignant melanoma from benign nevus (33). This example reflects the great potential of CNN-based AI in the field of image recognition.
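The effect of weight sharing on the number of parameters can be made concrete with a small calculation. The layer sizes below (a 32 × 32 input with 3 channels, 16 output channels, and a 3 × 3 kernel) are hypothetical values chosen only for illustration.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Parameters of a convolution layer: one shared kernel per channel pair, plus biases."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels

def dense_params(height, width, in_channels, out_channels):
    """Parameters of a fully connected layer mapping the same input to a same-sized output."""
    n_in = height * width * in_channels
    n_out = height * width * out_channels
    return n_in * n_out + n_out

# Hypothetical layer: 32x32 input, 3 input channels, 16 output channels, 3x3 kernel.
conv = conv_params(kernel_size=3, in_channels=3, out_channels=16)
dense = dense_params(height=32, width=32, in_channels=3, out_channels=16)
print(conv, dense)  # 448 50348032
```

Because the same kernel weights are reused at every image position, the convolution layer's parameter count does not depend on the image size, whereas the fully connected layer's count grows with the square of the number of pixels.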

Figure 10. A CNN model was trained to identify whether or not the content of a given picture was an airplane. We assumed that the characteristics of the aircraft were the tail, engine, and fuselage and set these characteristics as the convolution kernels. The image was then converted into a matrix that a computer could recognize. The feature matrix of the sample was obtained by a convolution operation between the convolution kernel and the sample image. A non-linear activation function was then applied to the feature matrix to improve the sparsity of the network and reduce the interdependence of parameters. The pooling layer was used to reduce the dimension of the feature matrix, compress the image features, remove the redundant information, and reduce the amount of calculation. Finally, the fully connected layer mapped the computed features to the sample label space as a one-dimensional vector to obtain the complete image features. After completing the above steps, we also established an error function to determine the accuracy of the output. The convolution kernel parameters were adjusted to reduce the error and obtain the actual features of aircraft images.
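The forward pass described in Figure 10 (convolution, non-linear activation, pooling, flattening, a fully connected layer, and an error function) can be sketched in plain Python. This is an illustrative toy under stated assumptions, not the model from the figure: the 5 × 5 image, the hand-set edge kernel, the choice of ReLU and max pooling, the dense weights, and the squared-error function are all hypothetical, and the training step that adjusts the kernel is omitted.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, the 'convolution' used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(fmap):
    """Non-linear activation: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling, which shrinks the feature matrix."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]

def flatten(fmap):
    """Turn the pooled feature matrix into a one-dimensional vector."""
    return [v for row in fmap for v in row]

def dense(vector, weights, bias):
    """Single-output fully connected layer: weighted sum plus bias."""
    return sum(v * w for v, w in zip(vector, weights)) + bias

# Toy 5x5 image containing a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # hand-set vertical-edge detector (hypothetical)

pooled = max_pool(relu(conv2d(image, kernel)))
features = flatten(pooled)
score = dense(features, weights=[0.5, 0.0, 0.5, 0.0], bias=-1.0)
error = (score - 1.0) ** 2  # squared error against a target label of 1
print(pooled, features, score, error)
```

In an actual CNN the kernel and dense weights would not be set by hand; they would be adjusted repeatedly to reduce the error over many labeled images.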

If AI can be successfully combined with various clinical examinations, it will greatly improve clinical practice. However, at present this is a brand-new field, so further exploration and experimentation are necessary. In recent years, there have been numerous attempts to integrate AI into various medical fields, including endoscopy. The potential utility of this approach in GC screening is discussed below.

The Dilemma of Screening for Early Gastric Cancer

Early gastric cancer is difficult to detect, as the early symptoms of GC are not obvious, and some patients do not actually show any early symptoms, while elderly people tend to avoid visiting the hospital for regular examinations (34). Patients who wait for obvious symptoms to visit a doctor often present with advanced GC, missing the optimum treatment window.

At present, only two nations have government-funded GC screening programs: Japan and South Korea (35). Despite the high prevalence of GC, the mortality-to-incidence ratio is low in these countries (0.43 in Japan and 0.35 in Korea), indicating the value of population-based screening in high-risk locations (4, 35). Another study, which examined whether or not early detection of GC reduced medical expenditures, found that the cost of treating GC rises considerably with the stage of the disease. Early identification of GC followed by endoscopic submucosal dissection (ESD) can thus significantly reduce GC treatment costs, indicating that early identification of GC is critical for reducing medical expenditures (34).

However, despite the country’s relatively high incidence of GC, China still lacks a countrywide screening program, and the only way to identify EGC is through opportunistic screening (36). A domestic study established a mathematical model to analyze the long-term population impact of an endoscopic screening program on the disease burden of GC patients in China. The model indicated that 5.53–4.64 million cases and 740,000–5.42 million deaths could be prevented over 30 years with different screening coverage and frequency (37). It is therefore necessary to carry out large-scale screening, and China must step up its efforts in EGC screening.

Endoscopy is the most effective diagnostic method for gastric cancer and can improve the detection rate of EGC (38). Despite the ongoing progress of endoscopic imaging technology, which has improved the detection rate of EGC, there remains a high rate of missed diagnoses, as the ultimate result of endoscopy largely depends on the endoscopist, and both their experience and operation approach will affect this outcome. Studies have shown that a diagnosis was missed in up to 10% of patients who had recently undergone endoscopy. Meanwhile, in a recent randomized clinical trial in Japan, the sensitivity for detecting GC was only 75% (39), indicating that the detection of EGC still has room for improvement.

Some studies have shown that the sensitivity of GC detection can be increased by training endoscopists to improve their operational skills and ability to identify lesions (9). In addition, in today’s clinical setting, each endoscopist must perform the same set of procedures on a large number of patients and identify lesions that are difficult to identify with the naked eye in a large number of endoscopic images. This is difficult for any endoscopist, even an experienced one, resulting in a risk of subjective mistakes (40). Prolonged endoscopy has been shown to cause endoscopists to lose focus, reducing the quality of the examination and perhaps leading to a false-negative diagnosis. According to 10 studies involving 3,787 patients undergoing upper gastrointestinal (GI) endoscopy, 11.3% of upper GI tumors had been missed at endoscopy up to 3 years before they were ultimately diagnosed (41). Missed diagnoses also depend on the type and location of GC and are more frequent among endoscopists with under 10 years of experience than among more experienced individuals. The physical and mental condition of the endoscopist who performs the procedure also strongly influences the rate of missed diagnoses.

Because of the uneven distribution of population and medical resources in China, the endoscopy situation in China is more serious than that in some developed countries. The huge workload may result in China’s missed diagnosis rate being significantly higher than theirs. According to a 2013 census of digestive endoscopy practitioners in China, there is a huge shortfall in the number of endoscopists. Moreover, their technical level is uneven, and doctors in economically developed cities have more medical resources and opportunities for intensive training than those in less developed cities. According to the census data, the number of digestive endoscopy physicians per million people in 20 provinces and cities is lower than the national average of 19. There are now only 30,000 endoscopists in China, despite a demand for endoscopy in the hundreds of millions. Such a big gap has left endoscopists in China with a huge workload, forcing them to reduce the examination time per patient in order to improve efficiency and relieve the pressure of work caused by a labor shortage. Studies have shown that the duration set aside for endoscopy and the rate of disease detection are positively correlated (42). Shorter examination times therefore mean a higher rate of missed diagnoses.

In addition, compared with other developed countries, the development rates of digestive endoscopy diagnoses and treatment technology in China are still quite low, and standardized training of endoscopists has not yet matured, resulting in a disparity of technical skills among endoscopists across the country. Operator factors significantly influence the outcome of endoscopy. Compared to other nations, China’s current condition has rendered the stability and sensitivity of endoscopy unreliable. In general, there are three dilemmas facing GC screening in China: (1) there is still a big gap between the development of digestive endoscopy technology in China and that of foreign countries and a systematic and standardized training system has not been implemented; (2) there is a serious shortage of endoscopists in China that is unable to meet the current demand for endoscopy in China; and (3) the accuracy of endoscopy cannot be guaranteed.

Artificial Intelligence in Screening of Early Gastric Cancer

Endoscopic screening for EGC is a difficult and time-consuming procedure, but it should not be taken lightly, as each missed diagnosis may cause patients to lose out on the most effective therapy option. Early identification and treatment remain the most effective approach to GC. Endoscopists must therefore thoroughly examine each patient. However, humans are not machines, and long-term endoscopic operation can impair endoscopists’ discriminating capacity and impact the examination quality. The involvement of nurses can increase the rate of lesion identification and the quality of endoscopy by acting as a second observer during the procedure (43). As AI technology advances, it will be possible for AI to be involved in endoscopic examinations as a third observer.

Furthermore, an increasing number of studies have shown that trained CNNs can swiftly identify lesions with an accuracy equivalent to that of endoscopists. A research team from Japan created a CNN-based system using a training set of 13,584 gastroscopic images of GC. The overall sensitivity of the model reached 92.2%, and it took only 47 s to examine 2,296 test images (44). The CNN accurately detected images of invasive GC, and its detection rate for lesions above 6 mm in diameter was 98.6%. A similar study, also from Japan, described training a CNN model in a similar way, and the model recognized each image in just 4 ms (45). These studies show that AI can quickly and accurately identify lesions. If this approach can be applied to the clinical setting, it will reduce the pressure on endoscopists.

The Japan Cancer Research Foundation conducted a study comparing the speed and accuracy of endoscopic image recognition by artificial intelligence and endoscopists. Researchers trained a CNN-based model with 13,584 endoscopy images and compared it with 67 endoscopists in identifying 2,940 images from 140 cases (46). The AI was able to recognize each image in about 40 s, while the endoscopists took about 220 times longer to recognize each endoscopic image. On comparing the sensitivity, specificity, and positive and negative predictive values, the AI specificity and positive predictive value were found to be lower than those of endoscopists, while the other two values were higher than those of endoscopists (46). Although the AI model in this trial was able to determine whether or not GC was present in the images, the location and extent of the tumors in the images were not assessed in detail. The number of endoscopists used for the comparison was also small. However, the CNN was compared with several experienced endoscopists who made their evaluations under the same conditions, so the experimental data obtained were still convincing. We have every reason to believe that by increasing the amount of data even further, the identification ability of AI can be rendered extremely close to that of actual endoscopists or even comparable to that of experienced endoscopists.

A similar experiment was carried out in the Department of Gastroenterology at the People’s Hospital of Wuhan University in China. The team validated an AI system of their own design using 200 endoscopic pictures. Its accuracy, sensitivity, and specificity for identifying EGC were 92.5, 94, and 91%, respectively, compared to 89.7, 93.9, and 87.3% for experienced endoscopists (47). The Department of Gastroenterology, Peking University People’s Hospital also trained their own CNN model and obtained similar results (48). Both experiments compared a self-trained AI model with endoscopists. However, the AI used in these experiments shared common limitations, as it was only able to recognize static endoscopic images, and only a small number of endoscopists were involved. If more endoscopists had been included in the comparison, its reliability would have been higher. In addition, data from a single hospital were used in the above tests to train and analyze the model, and there was no strict quality control of the endoscopic images. The same problem also appeared in the experiment comparing AI and endoscopists conducted by Drum Tower Hospital affiliated with Nanjing University Medical School. The results showed that AI was superior to endoscopists with regard to accuracy (85.1–91.2%), sensitivity (85.9–95.5%), and specificity (81.7–90.3%). However, that study also had an innovative element: it tested the ability of intern endoscopists to identify lesions when assisted by artificial intelligence and compared their performance with that of experts. The sensitivity of interns increased from 82.7 to 94.7%, and their performance was comparable to that of specialists (sensitivity: 94.7 vs. 97.4%) (49).
A comparative experiment was also conducted in the Sun Yat-sen University Cancer Center, in which endoscopists were innovatively divided into three levels: expert endoscopist (10 years of endoscopy experience), competent endoscopist (5 years of endoscopy experience), and trainee endoscopist (2 years of endoscopy experience); their evaluations were then compared with AI. Experimental data showed that the diagnostic sensitivity of AI was similar to that of endoscopy experts (0.942 and 0.945). The positive predictive value for experts was 0.932, while that for AI was 0.814. In terms of the negative predictive value, AI was slightly better than the expert endoscopist (0.980) and higher than the competent endoscopist (0.951). AI also demonstrated superior capability to trainees, although the positive predictive value was similar between the two. The advantage of this experiment is that the samples were obtained from multiple hospitals, which reduces the error potentially caused by using samples from a single hospital. At the same time, the quality of the endoscopic images was strictly controlled. Each endoscopic image was manually marked by two experienced endoscopists, and any images that did not meet the requirements were eliminated. However, that study also had limitations, such as only using white-light images. The AI’s training and external validation sets were also obtained retrospectively, which may have led to a certain degree of selection bias. Finally, this experiment did not use a specific method to process images obtained at different positions in the same series of videos, which may have caused some inheritance bias (50).
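The measures used to compare AI with endoscopists in the studies above (sensitivity, specificity, positive and negative predictive values, and accuracy) all derive from the four cells of a confusion matrix. The sketch below shows the standard definitions; the counts passed in are hypothetical and do not come from any of the cited studies.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard diagnostic measures from confusion-matrix counts.

    tp/fn: cancer images classified as positive/negative;
    tn/fp: non-cancer images classified as negative/positive.
    """
    if min(tp + fn, tn + fp, tp + fp, tn + fn) == 0:
        raise ValueError("each metric needs a non-zero denominator")
    return {
        "sensitivity": tp / (tp + fn),  # share of cancers that are detected
        "specificity": tn / (tn + fp),  # share of non-cancers correctly cleared
        "ppv": tp / (tp + fp),          # share of positive calls that are cancer
        "npv": tn / (tn + fn),          # share of negative calls that are not cancer
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Hypothetical counts for illustration only.
metrics = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

A classifier tuned to miss very few cancers tends to produce more false positives, which is consistent with the pattern reported above of AI showing high sensitivity and negative predictive value but a lower positive predictive value than experts.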

Despite the limitations, that experiment and each of the others described above had their own innovations. At the same time, there are many similar retrospective experiments, all of which have verified the utility of AI in lesion identification and demonstrated the great potential of AI in endoscopy. Based on the above findings, we believe that AI can quickly identify lesions with accuracy, greatly reducing the current burden on endoscopists.

Furthermore, there are many other aspects to AI that bear highlighting. For example, the AI system developed by the People’s Hospital of Wuhan University was able to divide gastroscopic images into 26 anatomical areas with an accuracy of 65.9%, which was comparable to the rate of 63.8% for experienced endoscopists, and reduced the rate of missed sites by 15% in a comprehensive randomized controlled trial (47). In addition, the authors found that using an AI system in routine endoscopy can dramatically minimize the number of missed locations. As a result, the use of AI is expected to reduce the number of cases of GC missed due to incomplete endoscopic examination (51).

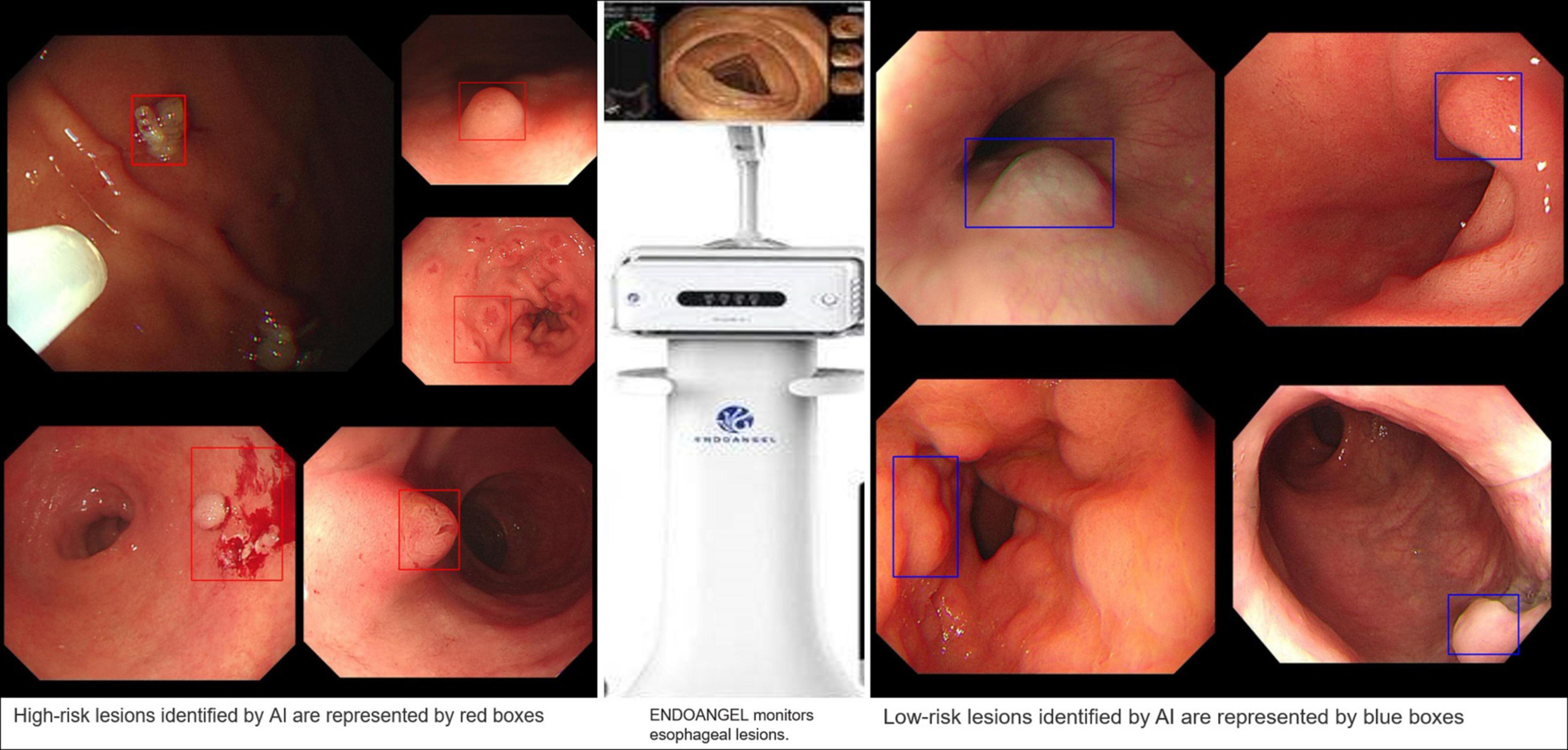

A recently reported successful case of applying AI to clinical practice is “EndoAngel.” This is a CNN-based AI system for quality control and auxiliary diagnosis in digestive endoscopy that can effectively monitor blind areas during GI imaging, assist in the detection of suspicious lesions in real time, improve the quality of endoscopy, and improve the detection rate of GI tumor lesions; in addition, it is equipped with a scoring training system for upper and lower gastrointestinal endoscopy. It is a fully functional AI product integrating quality control and auxiliary diagnostic functions. The People’s Hospital of Wuhan University has cooperated with 13 other hospitals to conduct a functional verification study of the EndoAngel for the early diagnosis of GC. The EndoAngel has a 92% diagnostic accuracy in EGC, and its main working mode is shown in Figure 11.

Figure 11. EndoAngel-assisted endoscopy. The image on the left shows high-risk lesions, and the image on the right shows low-risk lesions. During endoscopy, AI automatically identified and evaluated the lesion. If it detected a high-risk lesion, a red prompt box appeared, while a blue prompt box appeared for low-risk lesions. The prompt box not only helps the endoscopist quickly identify the lesion but also helps doctors carry out an accurate sampling biopsy.

The technical skill level of endoscopists is uneven at present, and the operation is not sufficiently standardized, affecting the quality of endoscopy. The ADM system, based on a CNN and developed by the People’s Hospital of Wuhan University, is intended to provide the following statistical quality indicators: colonoscopy time, cecal intubation rate (CIR), adequate bowel preparation rate, polyp detection rate (PDR), adenoma detection rate (ADR), gastroscopy time, and gastric precancerous condition (GPC) detection rate. The system may also simultaneously analyze the quality of each endoscopy and provide rapid feedback to the operator. Controlled experiments verified that the detection rate of precancerous lesions increased in the group with AI feedback (from 3 to 7%) and, to a lesser extent, in the control group (from 3.5 to 3.9%) (11). These findings suggest that quality management of endoscopy operations can significantly increase the screening rate. Furthermore, AI can not only serve as a quality control system to supervise endoscopists’ performance but also participate in the standardized training of endoscopists and reduce the endoscopist training time.
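Several of the quality indicators listed above are simple proportions over a set of procedures. The sketch below computes CIR, PDR, and ADR using their usual definitions (the share of colonoscopies that reach the cecum, find at least one polyp, or find at least one adenoma). It is not the ADM system’s implementation; the `Colonoscopy` record and the example data are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Colonoscopy:
    """Hypothetical per-procedure record used only for this illustration."""
    reached_cecum: bool
    polyps_found: int
    adenomas_found: int

def rate(procedures, predicate):
    """Fraction of procedures for which the predicate holds."""
    if not procedures:
        raise ValueError("at least one procedure is required")
    return sum(1 for p in procedures if predicate(p)) / len(procedures)

# Hypothetical records for four procedures.
records = [
    Colonoscopy(reached_cecum=True, polyps_found=2, adenomas_found=1),
    Colonoscopy(reached_cecum=True, polyps_found=0, adenomas_found=0),
    Colonoscopy(reached_cecum=False, polyps_found=1, adenomas_found=0),
    Colonoscopy(reached_cecum=True, polyps_found=1, adenomas_found=1),
]

cir = rate(records, lambda p: p.reached_cecum)       # cecal intubation rate
pdr = rate(records, lambda p: p.polyps_found > 0)    # polyp detection rate
adr = rate(records, lambda p: p.adenomas_found > 0)  # adenoma detection rate
print(f"CIR={cir:.2f} PDR={pdr:.2f} ADR={adr:.2f}")
```

Computing these rates per endoscopist and per time period is one way such indicators can be fed back to operators.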

Endoscopy cannot yet be performed on a large scale in China. One reason is a lack of the necessary equipment in community hospitals; another, and the main one, is the scarcity of endoscopists. Endoscopists are in short supply in China and are concentrated in major hospitals, so even if rural hospitals had similar equipment, no one would be available to operate it. The advent of AI appears to be a game-changer. In terms of the sensitivity and accuracy of inspections, current CNN-based AI models appear to perform on a par with professional endoscopists (52). If AI were introduced to community hospitals in China, it would be equivalent to having an experienced endoscopist in each hospital, and large-scale screening for EGC would become possible.

Conclusion

Thus far, retrospective trials of AI screening for early gastric cancer have yielded promising results. Lesions are identified with a precision and accuracy equivalent to those of endoscopists. If AI were employed in EGC screening, it could significantly improve the poor detection rate of EGC in China (53). Furthermore, AI, which is still being developed, can aid in training endoscopists, making endoscopy training in China more uniform. However, although AI has demonstrated significant potential in EGC screening, such clinical trials remain uncommon, and much research is still needed before AI can be widely used for this purpose.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the study, and approved it for publication.

Funding

This study was supported in part by the Medical Health Science and Technology Project of Zhejiang Province (2021PY083), the Program of Taizhou Science and Technology Grant (20ywb29 and 1901ky18), the Major Research Program of Taizhou Enze Medical Center Grant (19EZZDA2), the Open Project Program of the Key Laboratory of Minimally Invasive Techniques and Rapid Rehabilitation of Digestive System Tumor of Zhejiang Province (21SZDSYS01 and 21SZDSYS09), and the Key Technology R&D Program of Zhejiang Province (2019C03040).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2018) 68:394–424. doi: 10.3322/caac.21492

2. Feng F, Liu J, Wang F, Zheng G, Wang Q, Liu S, et al. Prognostic value of differentiation status in gastric cancer. BMC Cancer. (2018) 18:865. doi: 10.1186/s12885-018-4780-0

3. Gao K, Wu J. National trend of gastric cancer mortality in China (2003-2015): a population-based study. Cancer Commun. (2019) 39:24. doi: 10.1186/s40880-019-0372-x

4. Shen L, Shan YS, Hu HM, Price TJ, Sirohi B, Yeh KH, et al. Management of gastric cancer in Asia: resource-stratified guidelines. Lancet Oncol. (2013) 14:e535–47. doi: 10.1016/s1470-2045(13)70436-4

5. Young E, Philpott H, Singh R. Endoscopic diagnosis and treatment of gastric dysplasia and early cancer: current evidence and what the future may hold. World J Gastroenterol. (2021) 27:5126–51. doi: 10.3748/wjg.v27.i31.5126

6. Katai H, Ishikawa T, Akazawa K, Isobe Y, Miyashiro I, Oda I, et al. Five-year survival analysis of surgically resected gastric cancer cases in Japan: a retrospective analysis of more than 100,000 patients from the nationwide registry of the Japanese gastric cancer association (2001-2007). Gastric Cancer. (2018) 21:144–54. doi: 10.1007/s10120-017-0716-7

7. Sano T, Coit DG, Kim HH, Roviello F, Kassab P, Wittekind C, et al. Proposal of a new stage grouping of gastric cancer for TNM classification: international gastric cancer association staging project. Gastric Cancer. (2017) 20:217–25. doi: 10.1007/s10120-016-0601-9

8. Kikuchi S, Katada N, Sakuramoto S, Kobayashi N, Shimao H, Watanabe M, et al. Survival after surgical treatment of early gastric cancer: surgical techniques and long-term survival. Langenbecks Arch Surg. (2004) 389:69–74. doi: 10.1007/s00423-004-0462-2

9. Zhang Q, Chen ZY, Chen CD, Liu T, Tang XW, Ren YT, et al. Training in early gastric cancer diagnosis improves the detection rate of early gastric cancer: an observational study in China. Medicine (Baltimore). (2015) 94:e384. doi: 10.1097/MD.0000000000000384

10. Wu L, Shang R, Sharma P, Zhou W, Liu J, Yao L, et al. Effect of a deep learning-based system on the miss rate of gastric neoplasms during upper gastrointestinal endoscopy: a single-centre, tandem, randomised controlled trial. Lancet Gastroenterol Hepatol. (2021) 6:700–8. doi: 10.1016/s2468-1253(21)00216-8

11. Yao L, Liu J, Wu L, Zhang L, Hu X, Liu J, et al. A gastrointestinal endoscopy quality control system incorporated with deep learning improved endoscopist performance in a pretest and post-test trial. Clin Transl Gastroenterol. (2021) 12:e00366. doi: 10.14309/ctg.0000000000000366

12. Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. (2019) 25:1666–83. doi: 10.3748/wjg.v25.i14.1666

13. Deo RC. Machine learning in medicine. Circulation. (2015) 132:1920–30. doi: 10.1161/CIRCULATIONAHA.115.001593

16. Anirvan P, Meher D, Singh SP. Artificial intelligence in gastrointestinal endoscopy in a resource-constrained setting: a reality check. Euroasian J Hepatogastroenterol. (2020) 10:92–7. doi: 10.5005/jp-journals-10018-1322

17. Bai L, Liu X, Zheng Q, Kong M, Zhang X, Hu R, et al. M2-like macrophages in the fibrotic liver protect mice against lethal insults through conferring apoptosis resistance to hepatocytes. Sci Rep. (2017) 7:10518. doi: 10.1038/s41598-017-11303-z

18. Zou J, Han Y, So SS. Overview of artificial neural networks. In: Livingstone DJ editor. Artificial Neural Networks: Methods and Applications. (Totowa, NJ: Humana Press) (2009). p. 14–22. doi: 10.1007/978-1-60327-101-1_2

19. Dhillon A, Verma GK. Convolutional neural network: a review of models, methodologies and applications to object detection. Prog Artif Intell. (2019) 9:85–112. doi: 10.1007/s13748-019-00203-0

20. Kim P. Convolutional Neural Network. MATLAB Deep Learning. New York, NY: Apress (2017). p. 121–47.

21. Ahmad J, Farman H, Jan Z. Deep Learning Methods and Applications. Deep Learning: Convergence to Big Data Analytics. Cham: Springer Briefs in Computer Science (2019). p. 31–42.

22. Badrinarayanan V, Kendall A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell. (2017) 39:2481–95. doi: 10.1109/TPAMI.2016.2644615

23. Philbrick KA, Yoshida K, Inoue D, Akkus Z, Kline TL, Weston AD, et al. What does deep learning see? insights from a classifier trained to predict contrast enhancement phase from CT images. AJR Am J Roentgenol. (2018) 211:1184–93. doi: 10.2214/AJR.18.20331

24. Sarigul M, Ozyildirim BM, Avci M. Differential convolutional neural network. Neural Netw. (2019) 116:279–87. doi: 10.1016/j.neunet.2019.04.025

25. Nguyen DT, Lee MB, Pham TD, Batchuluun G, Arsalan M, Park KR. Enhanced image-based endoscopic pathological site classification using an ensemble of deep learning models. Sensors (Basel). (2020) 20:5982. doi: 10.3390/s20215982

26. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer. (2018) 18:500–10. doi: 10.1038/s41568-018-0016-5

27. Acharya UR, Oh SL, Hagiwara Y, Tan JH, Adam M, Gertych A, et al. A deep convolutional neural network model to classify heartbeats. Comput Biol Med. (2017) 89:389–96. doi: 10.1016/j.compbiomed.2017.08.022

28. Liu W, Zhang M, Zhang Y, Liao Y, Huang Q, Chang S, et al. Real-time multilead convolutional neural network for myocardial infarction detection. IEEE J Biomed Health Inform. (2018) 22:1434–44. doi: 10.1109/JBHI.2017.2771768

29. Sun ML, Song ZJ, Jiang XH, Pan J, Pang YW. Learning pooling for convolutional neural network. Neurocomputing. (2017) 224:96–104. doi: 10.1016/j.neucom.2016.10.049

30. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. (2019) 25:44–56. doi: 10.1038/s41591-018-0300-7

31. Zhang Y, Liu Y, Sun P, Yan H, Zhao XL, Zhang L. IFCNN: a general image fusion framework based on convolutional neural network. Inf Fusion. (2020) 54:99–118. doi: 10.1016/j.inffus.2019.07.011

32. Chan HP, Samala RK, Hadjiiski LM, Zhou C. Deep learning in medical image analysis. Adv Exp Med Biol. (2020) 1213:3–21. doi: 10.1007/978-3-030-33128-3_1

33. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542:115–8. doi: 10.1038/nature21056

34. Kim JH, Kim SS, Lee JH, Jung DH, Cheung DY, Chung WC, et al. Early detection is important to reduce the economic burden of gastric cancer. J Gastric Cancer. (2018) 18:82–9. doi: 10.5230/jgc.2018.18.e7

35. Pasechnikov V, Chukov S, Fedorov E, Kikuste I, Leja M. Gastric cancer: prevention, screening and early diagnosis. World J Gastroenterol. (2014) 20:13842–62. doi: 10.3748/wjg.v20.i38.13842

36. Leung WK, Wu MS, Kakugawa Y, Kim JJ, Yeoh KG, Goh KL, et al. Screening for gastric cancer in Asia: current evidence and practice. Lancet Oncol. (2008) 9:279–87. doi: 10.1016/s1470-2045(08)70072-x

37. Shen M, Xia R, Luo Z, Zeng H, Wei W, Zhuang G, et al. The long-term population impact of endoscopic screening programmes on disease burdens of gastric cancer in China: a mathematical modelling study. J Theor Biol. (2020) 484:109996. doi: 10.1016/j.jtbi.2019.109996

38. Ezoe Y, Muto M, Uedo N, Doyama H, Yao K, Oda I, et al. Magnifying narrowband imaging is more accurate than conventional white-light imaging in diagnosis of gastric mucosal cancer. Gastroenterology. (2011) 141:2017–2025.e3. doi: 10.1053/j.gastro.2011.08.007

39. Yoshida N, Doyama H, Yano T, Horimatsu T, Uedo N, Yamamoto Y, et al. Early gastric cancer detection in high-risk patients: a multicentre randomised controlled trial on the effect of second-generation narrow band imaging. Gut. (2021) 70:67–75. doi: 10.1136/gutjnl-2019-319631

40. Lee JH, Kim YJ, Kim YW, Park S, Choi YI, Kim YJ, et al. Spotting malignancies from gastric endoscopic images using deep learning. Surg Endosc. (2019) 33:3790–7. doi: 10.1007/s00464-019-06677-2

41. Almadi MA, Sewitch M, Barkun AN, Martel M, Joseph L. Adenoma detection rates decline with increasing procedural hours in an endoscopist’s workload. Can J Gastroenterol Hepatol. (2015) 29:304–8. doi: 10.1155/2015/789038

42. Gong D, Wu L, Zhang J, Mu G, Shen L, Liu J, et al. Detection of colorectal adenomas with a real-time computer-aided system (ENDOANGEL): a randomised controlled study. Lancet Gastroenterol Hepatol. (2020) 5:352–61. doi: 10.1016/s2468-1253(19)30413-3

43. Ahmad OF, Soares AS, Mazomenos E, Brandao P, Vega R, Seward E, et al. Artificial intelligence and computer-aided diagnosis in colonoscopy: current evidence and future directions. Lancet Gastroenterol Hepatol. (2019) 4:71–80. doi: 10.1016/s2468-1253(18)30282-6

44. Hirasawa T, Aoyama K, Tanimoto T, Ishihara S, Shichijo S, Ozawa T, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer. (2018) 21:653–60. doi: 10.1007/s10120-018-0793-2

45. Sakai Y, Takemoto S, Hori K, Nishimura M, Ikematsu H, Yano T, et al. Automatic detection of early gastric cancer in endoscopic images using a transferring convolutional neural network. Annu Int Conf IEEE Eng Med Biol Soc. (2018) 2018:4138–41. doi: 10.1109/EMBC.2018.8513274

46. Ikenoyama Y, Hirasawa T, Ishioka M, Namikawa K, Yoshimizu S, Horiuchi Y, et al. Detecting early gastric cancer: comparison between the diagnostic ability of convolutional neural networks and endoscopists. Dig Endosc. (2021) 33:141–50. doi: 10.1111/den.13688

47. Wu L, Zhou W, Wan X, Zhang J, Shen L, Hu S, et al. A deep neural network improves endoscopic detection of early gastric cancer without blind spots. Endoscopy. (2019) 51:522–31. doi: 10.1055/a-0855-3532

48. Zhang L, Zhang Y, Wang L, Wang J, Liu Y. Diagnosis of gastric lesions through a deep convolutional neural network. Dig Endosc. (2021) 33:788–96. doi: 10.1111/den.13844

49. Tang D, Wang L, Ling T, Lv Y, Ni M, Zhan Q, et al. Development and validation of a real-time artificial intelligence-assisted system for detecting early gastric cancer: a multicentre retrospective diagnostic study. EBioMedicine. (2020) 62:103146. doi: 10.1016/j.ebiom.2020.103146

50. Luo H, Xu G, Li C, He L, Luo L, Wang Z, et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: a multicentre, case-control, diagnostic study. Lancet Oncol. (2019) 20:1645–54. doi: 10.1016/s1470-2045(19)30637-0

51. Chen D, Wu L, Li Y, Zhang J, Liu J, Huang L, et al. Comparing blind spots of unsedated ultrafine, sedated, and unsedated conventional gastroscopy with and without artificial intelligence: a prospective, single-blind, 3-parallel-group, randomized, single-center trial. Gastrointest Endosc. (2020) 91:332–339.e3. doi: 10.1016/j.gie.2019.09.016

52. Guo XM, Zhao HY, Shi ZY, Wang Y, Jin ML. [Application and progress of convolutional neural network-based pathological diagnosis of gastric cancer]. Sichuan Da Xue Xue Bao Yi Xue Ban. (2021) 52:166–9. doi: 10.12182/20210360501

Keywords: artificial intelligence, early gastric cancer, screening, improving, application

Citation: Fu X-y, Mao X-l, Chen Y-h, You N-n, Song Y-q, Zhang L-h, Cai Y, Ye X-n, Ye L-p and Li S-w (2022) The Feasibility of Applying Artificial Intelligence to Gastrointestinal Endoscopy to Improve the Detection Rate of Early Gastric Cancer Screening. Front. Med. 9:886853. doi: 10.3389/fmed.2022.886853

Received: 01 March 2022; Accepted: 06 April 2022;

Published: 16 May 2022.

Edited by:

Tony Tham, Ulster Hospital, United Kingdom

Reviewed by:

Zhen Li, Shandong University, China

Rabindra Watson, Cedars-Sinai Medical Center, United States

Copyright © 2022 Fu, Mao, Chen, You, Song, Zhang, Cai, Ye, Ye and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Li-ping Ye, yelp@enzemed.com; Shao-wei Li, li_shaowei81@hotmail.com

†These authors have contributed equally to this work

Xin-yu Fu1†
