ORIGINAL RESEARCH article

Front. Med., 31 May 2022

Sec. Gastroenterology

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.852553

This article is part of the Research Topic: Artificial Intelligence in Endoscopy

Shuijiao Chen1,2,3†, Shuang Lu1†, Yingxin Tang4†, Dechun Wang5, Xinzi Sun5, Jun Yi1,2,3, Benyuan Liu5, Yu Cao5, Yongheng Chen6* and Xiaowei Liu1,2,3*

Background and Aims: Recent studies have shown that artificial intelligence-based computer-aided detection systems possess great potential in reducing the heterogeneous performance of doctors during endoscopy. However, most existing studies are based on high-quality static images available in open-source databases with relatively small data volumes, and, hence, are not applicable for routine clinical practice. This research aims to integrate multiple deep learning algorithms and develop a system (DeFrame) that can be used to accurately detect intestinal polyps in real time during clinical endoscopy.

Methods: A total of 681 colonoscopy videos were collected for retrospective analysis at Xiangya Hospital of Central South University from June 2019 to June 2020. To train the machine learning (ML)-based system, 6,833 images were extracted from 48 collected videos, and 1,544 images were collected from public datasets. The DeFrame system was further validated with two datasets, consisting of 24,486 images extracted from 176 collected videos and 12,283 images extracted from 259 collected videos. The remaining 198 collected full-length videos were used for the final test of the system. The measurement metrics were sensitivity and specificity in validation dataset 1, precision, recall and F1 score in validation dataset 2, and the overall performance when tested in the complete video perspective.

Results: In validation dataset 1, the DeFrame system detected intestinal polyps with a sensitivity of 79.54% and a specificity of 95.83%. In validation dataset 2, the recall and precision of the system for polyp detection were 95.43 and 92.12%, respectively. When tested using full colonoscopy videos, the system achieved a recall of 100% and a precision of 80.80%.

Conclusion: We have developed a fast, accurate, and reliable DeFrame system for detecting polyps, which, to some extent, is feasible for use in routine clinical practice.

Colorectal cancer (CRC) is one of the most common malignancies and the fourth leading cause of cancer-related mortality worldwide (1, 2). Adenomatous polyps, precancerous lesions, will eventually progress to CRC without proper timely intervention. Colonoscopy, as a tool to effectively detect and resect polyps, has played an essential role in preventing the development of CRC. Therefore, it is considered to be the gold standard method for CRC screening (3).

However, some polyps are still missed during this gold standard procedure (4). Detection depends on various factors, such as the endoscopist’s experience, bowel preparation (5), intestinal mucosal exposure (6), and imaging equipment (7, 8). Approximately one-fourth of adenomatous polyps are reported to be missed in clinical practice (9), and missed lesions are the main cause of interval CRC (10, 11). Several indicators have been established to improve the quality of colonoscopy and its performance, among which the adenoma detection rate (ADR) is well known as an independent indicator because of its negative correlation with CRC-related mortality (12–14).

In recent years, with the continuous development of artificial intelligence (AI), especially the emergence of deep learning and convolutional neural networks (CNNs) that can automatically extract features and recognize images, image recognition has seen continual breakthroughs and has gradually approached human-level performance. For this reason, many researchers have been attracted to developing and optimizing AI-assisted polyp detection systems (15–19). However, most current studies are based on public and small- to medium-sized private datasets, and the data volume is not large enough for adequate deep learning. In addition, most of these studies use only one kind of deep learning algorithm, which has not been validated in a clinical setting. Furthermore, most of these studies use only the traditional parameters (specificity and sensitivity) to assess the system’s performance. In our opinion, other indicators should also be included to gain a deeper understanding of a system’s contribution in a clinical setting. In this study, we developed a machine learning (ML)-based polyp detection system, named DeFrame. Compared with other existing polyp detection systems, the DeFrame system integrates two deep learning (DL)-based algorithms for polyp detection and a fuzzy image filtering module to obtain a more accurate output. In addition, to simulate its application in a clinical setting, we tested the DeFrame system with full videos generated during routine endoscopy; in this test, the system achieved a recall of 100% and a precision of 80.80%. To our knowledge, the database we used to develop the DeFrame system is the largest thus far; it combines multiple open-source datasets with self-built image and video datasets. Finally, we carefully evaluated the system’s performance with both image and target measurement parameters.

We retrospectively collected full colonoscopy videos for 824 patients who received a colonoscopy between June 2019 and June 2020 in the endoscopy rooms of the Gastroenterology Department, Xiangya Hospital, Central South University. According to the patient inclusion and exclusion criteria, 681 videos were included for further studies. A group of experienced endoscopists (with at least 2 years of experience) from Xiangya Hospital identified all the polyps on the videos and annotated the time of appearance and disappearance and the size and location of each polyp. If a polyp was removed during the colonoscopy and sent for histology, the corresponding pathology report was collected and reviewed for accurate diagnosis by another pathologist from Xiangya Hospital. A video clip containing the polyp from the time of its appearance to the time of its disappearance was then cut and saved to extract images containing diversified artifacts and polyps.

The inclusion criteria were adults aged 18 years and older undergoing a nonemergent colonoscopy.

The exclusion criteria were as follows: (1) familial multiple polyposis or inflammatory bowel disease; (2) contraindications to biopsy; (3) personal history of CRC or CRC-related surgery; and (4) presence of submucosal lesions.

All colonoscopies were performed using a high-definition endoscope (Olympus CV70/CV260) and recorded using high-definition recorders (HDTVs). All the collected videos were taken in white light, noniodine staining, and nonmagnification mode.

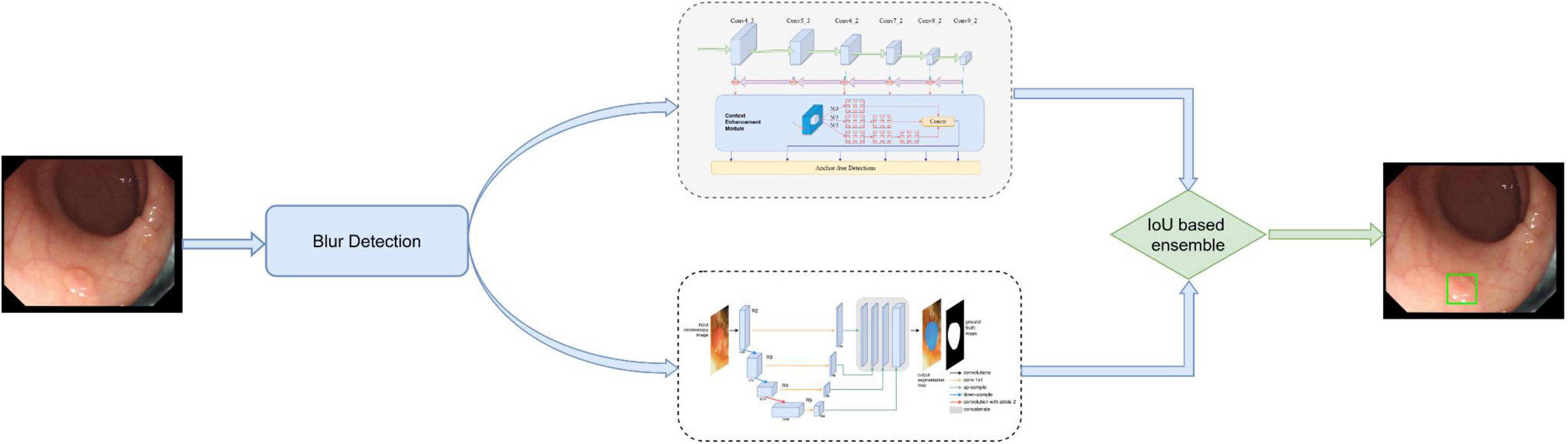

The initial DeFrame system was constructed using images obtained from public databases. This system integrates three algorithms (Algorithms A, B, and C) and a fusion component. Algorithm A is used to determine whether the image is blurred (20). Algorithms B (based on AFP-Net) (21) and C (based on dilated U-Net) (22) are both polyp detection modules. The primary work process is as follows: single-frame images from colonoscopy are used as inputs; blurred images are filtered out by Algorithm A; polyps are detected by Algorithms B and C simultaneously; and the two outputs are combined by the fusion component. The specific process is shown in Figure 1. For training, Algorithm C was trained on two NVIDIA GeForce GTX 1080 Ti GPUs with an initial learning rate of 1e-5, while Algorithm B was trained on four NVIDIA GeForce RTX 2080 Ti GPUs with an initial learning rate of 1e-3.

Figure 1. The architecture of the developed system, which consists of blur detection, polyp detection, and fusion modules. Polyp detection is performed by two algorithms (Algorithm B, based on AFP-Net, and Algorithm C, based on dilated U-Net). Data flow from left to right: each image is first checked by the blur detection module (Algorithm A) and passed to the polyp detection module only if it is clear. The output is then displayed with a bounding box on the CADe monitor.
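The data flow in Figure 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not specify how the fusion component combines the two detectors, so the agreement rule below (keep a box from Algorithm B only when a box from Algorithm C overlaps it) is hypothetical, as are the function names, the box format, and the 0.5 overlap threshold.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), an assumed box format


@dataclass
class FrameResult:
    blurred: bool
    boxes: List[Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse(boxes_b: List[Box], boxes_c: List[Box], iou_thr: float = 0.5) -> List[Box]:
    """Hypothetical fusion rule: keep a detector-B box only if detector C agrees."""
    return [b for b in boxes_b if any(iou(b, c) >= iou_thr for c in boxes_c)]


def process_frame(
    frame: object,
    is_blurred: Callable[[object], bool],      # stands in for Algorithm A
    detect_b: Callable[[object], List[Box]],   # stands in for Algorithm B
    detect_c: Callable[[object], List[Box]],   # stands in for Algorithm C
) -> FrameResult:
    # Blurred frames are filtered out before any polyp detection runs.
    if is_blurred(frame):
        return FrameResult(blurred=True, boxes=[])
    return FrameResult(blurred=False, boxes=fuse(detect_b(frame), detect_c(frame)))
```

An agreement rule of this kind trades some sensitivity for fewer false positives, which is the direction of the trade-off the authors describe, but the actual fusion logic may differ.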

The training datasets consisted of 1,544 images from public databases and 6,833 images extracted from self-collected videos. All images from the public polyp datasets (CVC-Clinic DB (23), ETIS-Larib polyp DB (24), GIANA Polyp Detection/Segmentation (25), and CVC-Colon DB (26)) have at least one polyp. The images were extracted from 48 videos we collected from June 2019 to July 2019, and 81.00% of the images contained at least one polyp. Validation set 1 included 24,486 images extracted from 176 videos collected between July 2019 and September 2019, and 42.60% of images contained at least one polyp. Validation set 2 included 12,283 images extracted from 259 videos collected between September 2019 and December 2019, and 84.90% of the images contained at least one polyp. Validation set 2 was built to validate the system’s ability for polyp localization and accurate polyp image segmentation. Validation set 3 included 198 full videos collected from December 2019 to January 2020, serving as the test set. A total of 344 polyps were found in validation set 3. The specific composition of each dataset is shown in Table 1.
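As a quick consistency check, the split described above can be tabulated and summed. The sketch below uses only the counts reported in the text; the dictionary layout and variable names are illustrative.

```python
# Video and image counts as reported in the text (validation set 3 is video-only).
splits = {
    "training": {"videos": 48, "images": 6833},
    "validation_1": {"videos": 176, "images": 24486},
    "validation_2": {"videos": 259, "images": 12283},
    "validation_3": {"videos": 198, "images": None},
}
PUBLIC_TRAINING_IMAGES = 1544  # images taken from the public polyp datasets

total_videos = sum(s["videos"] for s in splits.values())
training_images = splits["training"]["images"] + PUBLIC_TRAINING_IMAGES

print(total_videos)     # 681, matching the number of included videos
print(training_images)  # 8377 training images in total
```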

In this study, we evaluated the performance of the DeFrame system in terms of both images and polyps. The former includes the following metrics: sensitivity and specificity, the results of which were demonstrated in validation set 1; the latter includes recall, precision, and F1 score, the results of which were demonstrated in validation set 2. The overall performance was assessed from the video perspective with validation set 3.

The sample sizes of the validation datasets were chosen to be large enough to support statistically meaningful results. The calculation proceeded as follows: first, the typical sample size formula, $ss = Z^{2}\,p\,(1-p)/c^{2}$, was used to calculate the initial sample size with a z-value of 1.96 (the value for α = 0.05), p of 0.90, and c of 0.01, which gave a minimum sample size of 3,457. To further refine the sample size, we also reviewed recent papers on the development and validation of deep learning-based polyp detection systems and found that the number of images they used ranged from 8,000 to 30,000. Therefore, we set the sample size to 24,486 and 12,283 for validation datasets 1 and 2, respectively.
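The initial sample size can be reproduced with a short calculation. The sketch assumes the standard proportion-based formula ss = Z² · p · (1 − p) / c², which yields the reported minimum of 3,457 for the stated parameters; the function name is illustrative.

```python
def sample_size(z: float, p: float, c: float) -> float:
    """Sample size for estimating a proportion p within margin c at z-score z."""
    return (z ** 2) * p * (1 - p) / (c ** 2)


n = sample_size(z=1.96, p=0.90, c=0.01)
print(round(n, 2))  # about 3457.44, reported in the text as 3,457
```

Both validation datasets (24,486 and 12,283 images) exceed this minimum by a wide margin.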

Python scripts were used to calculate the evaluation metrics in this study.
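The authors' scripts are not included in the article. A minimal sketch of how such metrics are typically computed from confusion-matrix counts is given below; the function names are illustrative, and the final line derives an F1 score from the recall and precision reported for validation set 2 rather than quoting it from the article's tables.

```python
def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def sensitivity(tp: int, fn: int) -> float:
    """Share of polyp-containing images that were flagged (same as recall)."""
    return safe_div(tp, tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of polyp-free images that were correctly left unflagged."""
    return safe_div(tn, tn + fp)


def precision(tp: int, fp: int) -> float:
    """Share of detections that correspond to a real polyp."""
    return safe_div(tp, tp + fp)


def f1_score(prec: float, rec: float) -> float:
    """Harmonic mean of precision and recall."""
    return safe_div(2 * prec * rec, prec + rec)


# F1 implied by the reported precision (92.12%) and recall (95.43%).
f1 = f1_score(0.9212, 0.9543)
print(f"{f1:.4f}")
```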

A total of 681 eligible subjects were enrolled, among which 463 were male (68.00%). The median age was 65 years, and the interquartile range (IQR) was 56–75. A video recording of the entire colonoscopy was obtained for each subject, and in total, 1,240 polyps were found in 681 videos (Figure 2).

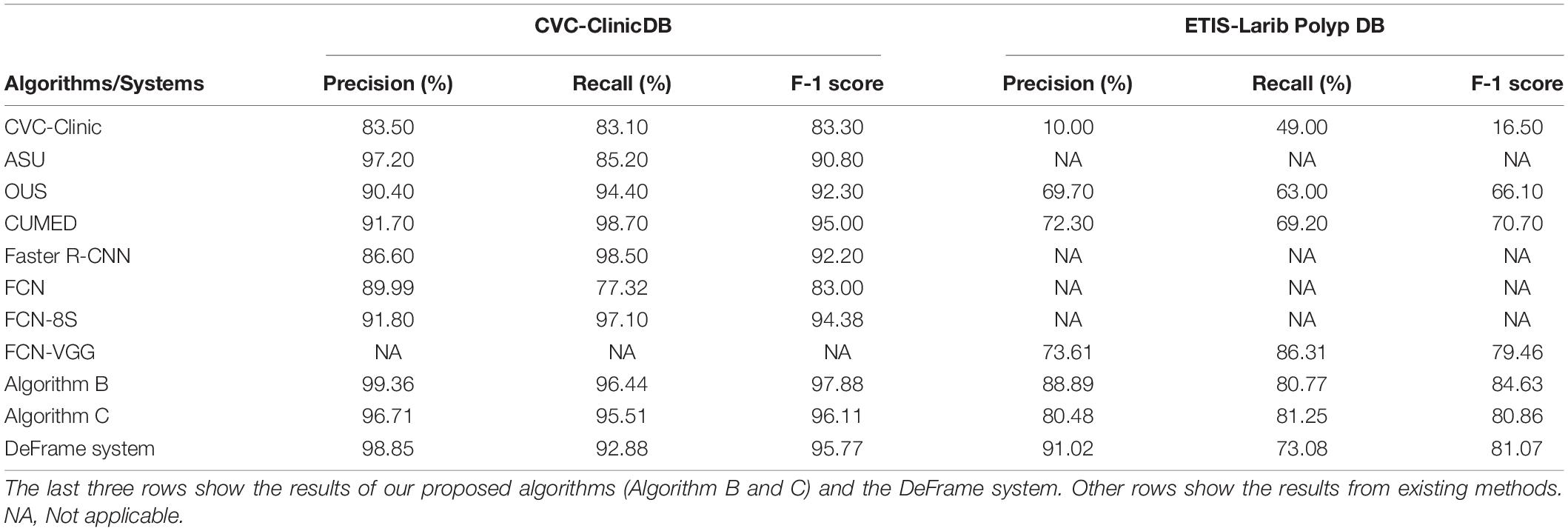

When trained and tested in two public polyp datasets (CVC-Clinic DB and ETIS-Larib Polyp DB), the algorithms (including B and C) and DeFrame system we developed outperformed all other algorithms reported in the literature for polyp detection. The specific values for each algorithm are shown in Table 2.

Table 2. Performance comparison of different polyp detection algorithms and systems on two public datasets.

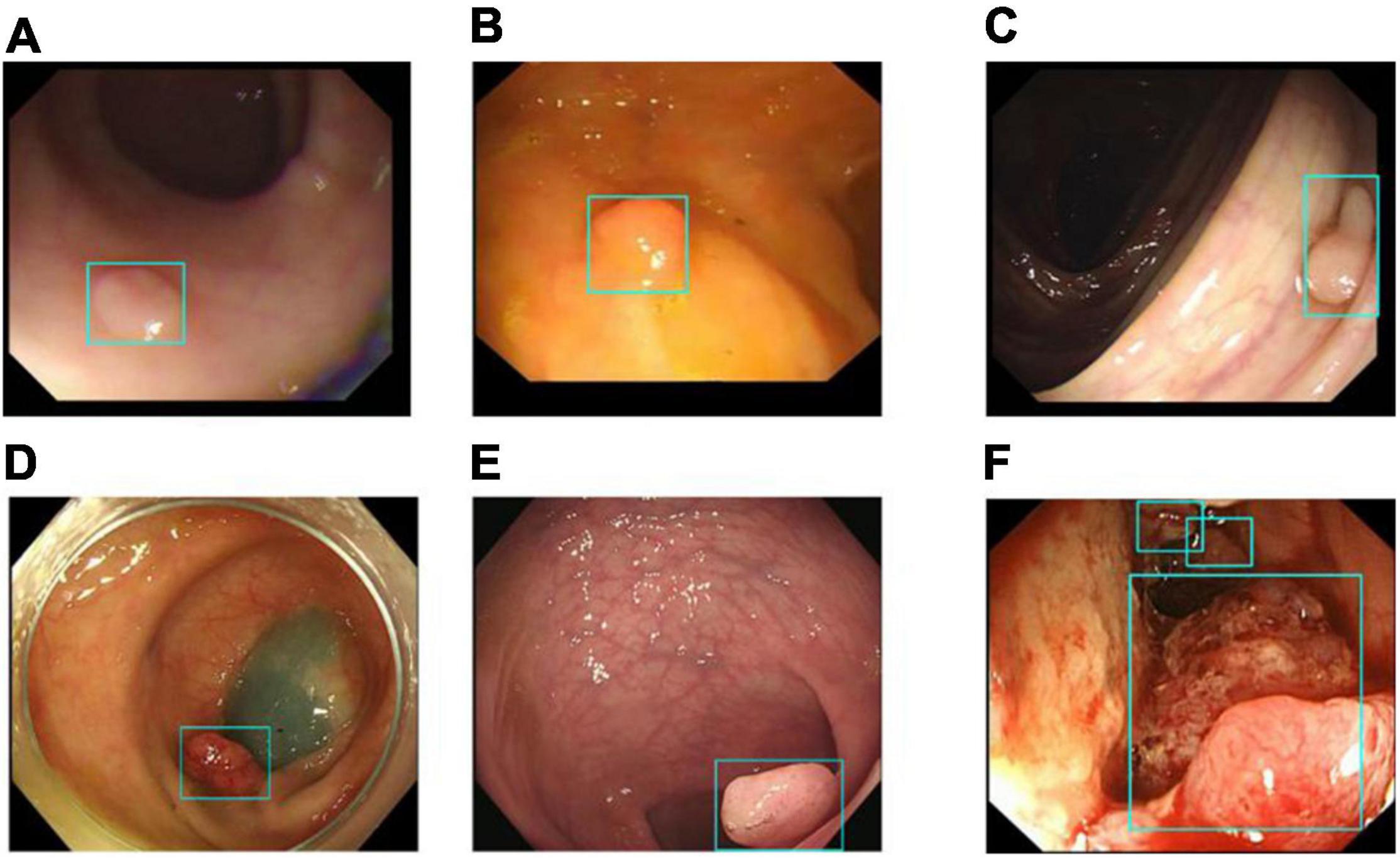

Image classification refers to the DeFrame system’s ability to automatically distinguish whether the image contains polyps. The results of colonoscopy image classification in validation set 1 are shown in Table 3. The sensitivity was 79.54%, and the specificity was 95.83%. In addition, the system can identify polyps with various histological features and sizes in different locations. The output results for the DeFrame system to correctly distinguish the images are shown in Figure 3.

Figure 3. Images (A–F) showing that the DeFrame system generates the correct output, regardless of location and morphology. Specifically, light-blue boxes are used to indicate where the polyps are detected. (A) A small and flat hyperplastic polyp in the sigmoid colon; (B) an isochromatic and small inflammatory polyp in the sigmoid colon; (C) an adenomatous polyp in the ascending colon, (D) an adenomatous polyp in the descending colon, (E) an adenomatous polyp in the rectum; (F) multiple carcinomatous polyps in the sigmoid colon.

We used validation set 2 to evaluate the DeFrame system performance in polyp localization and segmentation (i.e., identifying and segmenting every polyp in one image). The target detection results are shown in Table 4.

Recall is defined as the number of ground-truth polyp regions that overlap a predicted region divided by the total number of ground-truth polyp regions, which is numerically equivalent to sensitivity. Precision is defined as the number of predicted regions that overlap a ground-truth polyp region divided by the total number of predicted regions. The F1 score is the harmonic mean of precision and recall. In validation set 2, the recall of this system was 95.43%, and the precision was 92.12%.
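Region-level recall and precision can be illustrated with a small matching routine. The article does not state the overlap criterion, so the sketch assumes axis-aligned bounding boxes, an intersection-over-union (IoU) threshold of 0.5, and greedy one-to-one matching; all of these, and the function names, are assumptions made for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), an assumed box format


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def region_metrics(pred: List[Box], truth: List[Box], thr: float = 0.5):
    """Greedily match each predicted box to at most one unmatched ground-truth box."""
    matched = set()
    true_pos = 0
    for p in pred:
        best_idx, best_iou = None, thr
        for i, g in enumerate(truth):
            if i in matched:
                continue
            overlap = box_iou(p, g)
            if overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx is not None:
            matched.add(best_idx)
            true_pos += 1
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(truth) if truth else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical image with two annotated polyps and three predicted boxes.
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
pred = [(0, 0, 10, 10), (100, 100, 110, 110), (21, 21, 30, 30)]
precision, recall, f1 = region_metrics(pred, truth)
```

In this made-up example both polyps are found (recall 1.0), while one of the three predictions is a false positive (precision 2/3).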

The DeFrame system was tested using validation set 3 for 198 videos. The results show that the DeFrame system achieves a recall of 100% and precision of 80.80%, which suggests that the system can be used to consistently detect all polyps marked by the endoscopist with a relatively low false-positive rate. The test results for the DeFrame system are shown in Table 5.

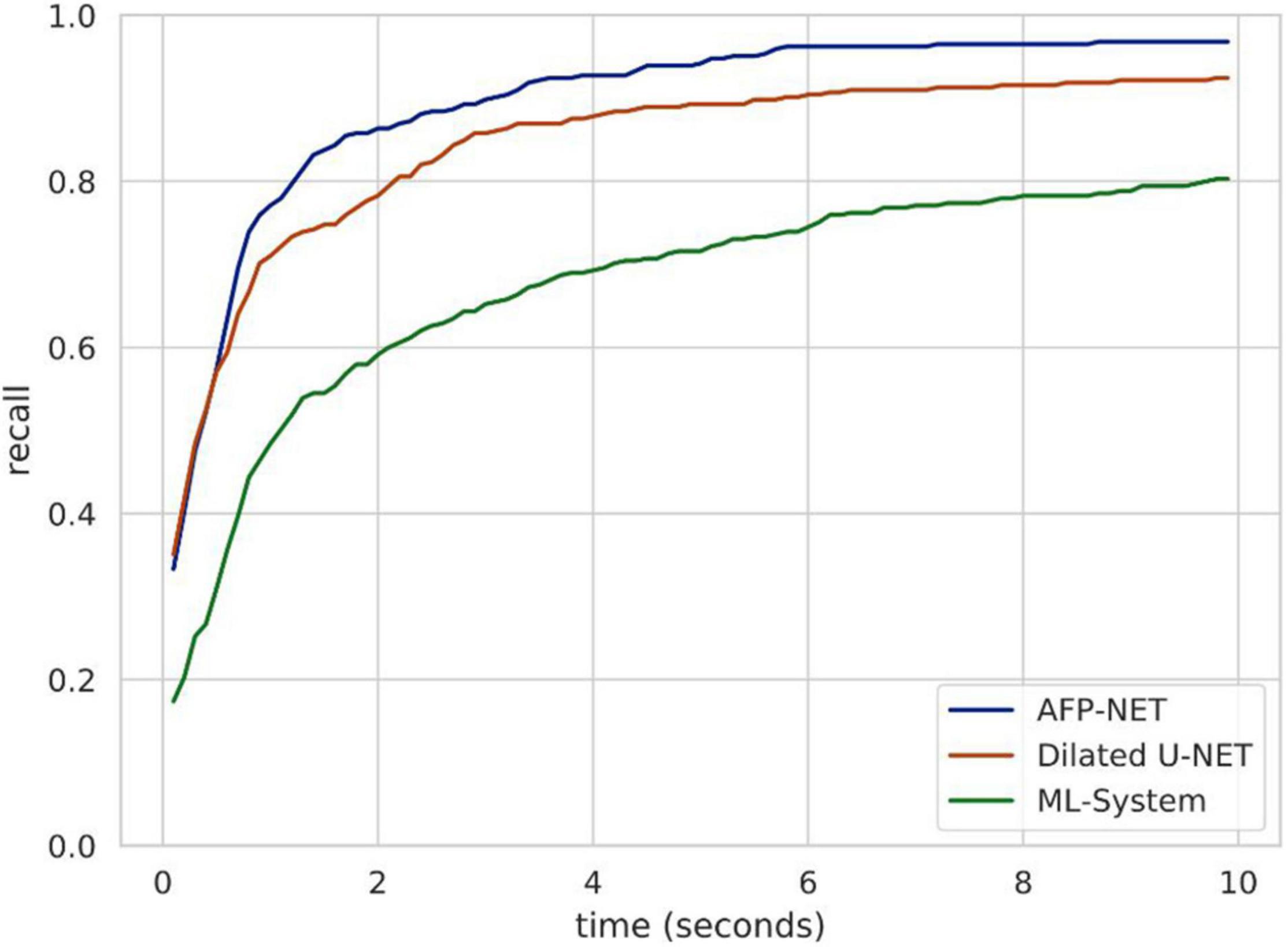

In clinical practice, a polyp should be detected as soon as possible after it appears in the field of view. We therefore used validation set 3 to evaluate the polyp detection speed of our system. Algorithm B detected more than 84.62% of polyps within the first 2 s after they appeared in the field of view, while the entire system detected more than 80.38% of polyps within the first 10 s (Figure 4). The system ran at approximately 23 frames per second, achieving real-time detection that meets the needs of clinical practice.

Figure 4. Speed analysis of polyp identification by the DeFrame system from a full-length video perspective. Recall is shown as a function of the time each model takes to find a polyp. Algorithm B (based on AFP-Net) detects more than 84% of polyps within the first 2 s after they appear in the field of view, while the entire system detects more than 80% of polyps within the first 10 s.
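A recall-versus-time curve of the kind shown in Figure 4 can be computed from per-polyp detection latencies. The sketch below uses made-up latencies (seconds from a polyp's appearance to its first detection, with `None` for polyps never detected); only the frame rate of 23 frames per second comes from the text.

```python
from typing import List, Optional


def recall_within(latencies: List[Optional[float]], t: float) -> float:
    """Fraction of polyps first detected within t seconds of appearing."""
    if not latencies:
        return 0.0
    hits = sum(1 for d in latencies if d is not None and d <= t)
    return hits / len(latencies)


# Hypothetical latencies for five polyps; None means the polyp was never detected.
latencies = [0.2, 0.5, 1.5, 3.0, None]
recall_2s = recall_within(latencies, 2.0)    # 3 of 5 polyps
recall_10s = recall_within(latencies, 10.0)  # 4 of 5 polyps

# Per-frame time budget implied by the reported ~23 frames per second.
FPS = 23
frame_budget_ms = 1000 / FPS
print(f"{frame_budget_ms:.1f} ms per frame")
```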

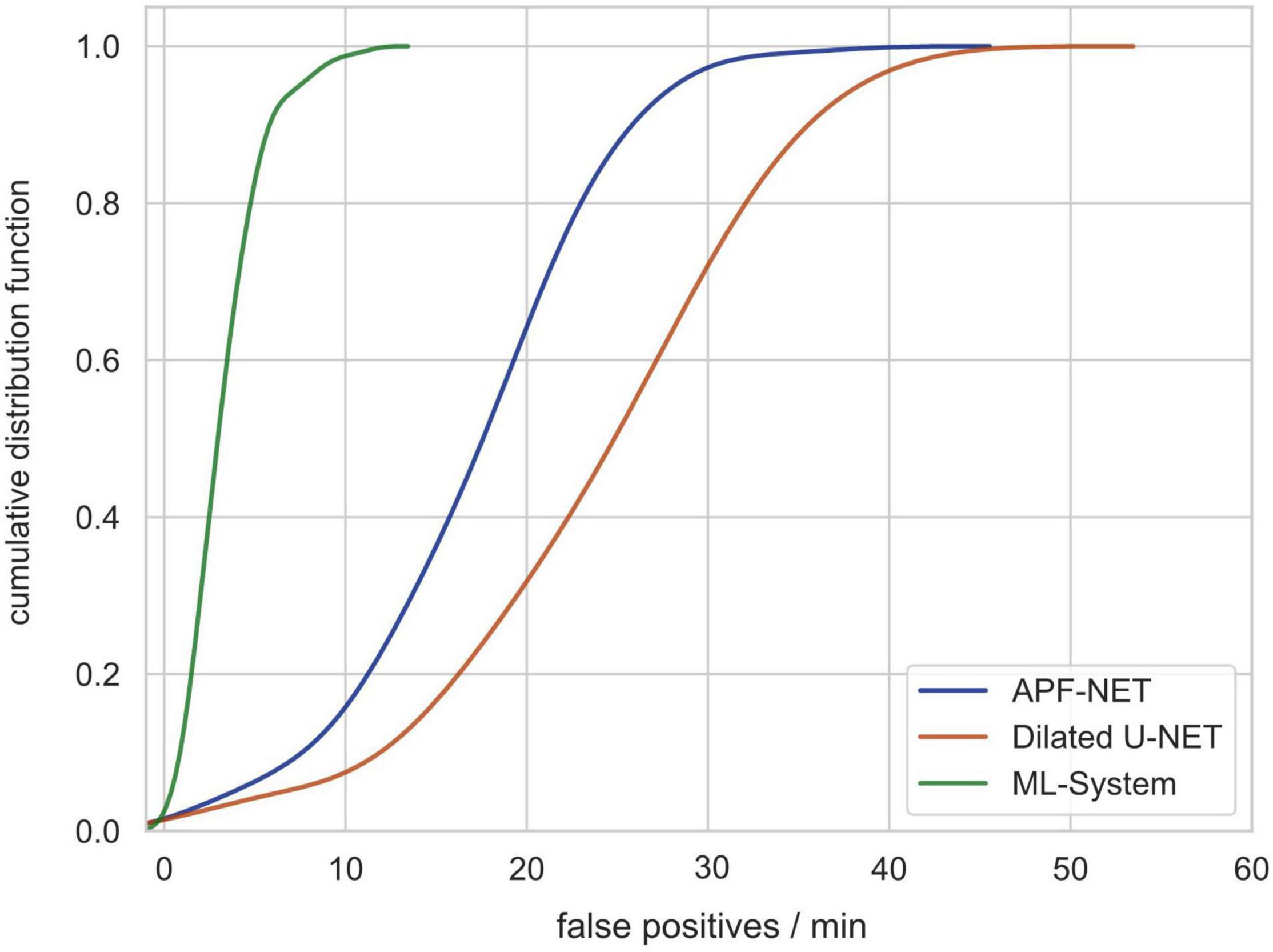

Previous DL-based polyp detection systems are not applicable in clinical practice partially because computer-aided diagnosis (CAD) systems can produce many false-positive results. For this reason, we used video clips without polyps from validation set 3 to test the number of false-positives generated by our system (video length varies from less than 5 min to more than 30 min). As shown in Figure 5, the cumulative distribution function (CDF) of the number of false-positives generated per minute per video shows that the system can avoid most false-positives.

Figure 5. The cumulative distribution function (CDF) of the number of false-positives generated per minute per video showing that the system can avoid most false-positives.
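The quantity plotted in Figure 5 can be sketched as follows: each polyp-free video contributes one false-positive rate (false positives divided by duration in minutes), and the empirical CDF is taken over those rates. The video counts and durations below are invented for illustration and are not data from the study.

```python
from typing import List, Tuple


def fp_per_minute(fp_count: int, duration_s: float) -> float:
    """False positives per minute for one video."""
    return fp_count / (duration_s / 60.0)


def empirical_cdf(values: List[float]) -> List[Tuple[float, float]]:
    """Return (value, fraction of videos with a rate <= value) in ascending order."""
    ordered = sorted(values)
    n = len(ordered)
    return [(x, (i + 1) / n) for i, x in enumerate(ordered)]


# Hypothetical (false-positive count, duration in seconds) pairs for four videos.
videos = [(3, 300), (0, 600), (12, 1800), (10, 600)]
rates = [fp_per_minute(count, dur) for count, dur in videos]
cdf = empirical_cdf(rates)

for rate, frac in cdf:
    print(f"{rate:.2f} FP/min -> {frac:.2f}")
```

A curve that rises steeply near zero means that most videos produce few false positives per minute.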

As the gold standard for CRC screening, colonoscopy has made a remarkable contribution to reducing CRC-related mortality because suspected malignant polyps can be treated directly during the procedure. However, as previously mentioned, approximately one-quarter of polyps are still missed in clinical practice today. In addition, the long training time required and the high entry barrier for the procedure have resulted in a severe shortage of endoscopists and a heavy workload for them. Recently, with the development of artificial intelligence, the emergence of endoscopic CAD systems has helped in making clinical decisions and, to a certain extent, reduced human bias during colonoscopy, thus reducing the polyp miss rate (27). In this study, we constructed a polyp detection system (DeFrame) that can detect polyps of various morphologies and locations in colonoscopy videos generated during clinical practice with good working performance.

Most polyp detection algorithms used in previous studies have been developed based on public datasets, and the sample sizes of the training and validation sets have been relatively small. For example, in two widely used public datasets (CVC-Clinic DB and ETIS-Larib polyp DB), the number of polyps was 33 and 111, respectively. In addition, polyps in images obtained from open-source databases are generally easy to detect against a clean background (23, 24). However, during clinical colonoscopy, most of the images are blurred with various degrees of artifacts (e.g., liquid, food residue, bubbles, reflections, mirrors, etc.), so the detection performance of these algorithms decreases significantly when they are applied to clinical practice. In this study, we built a polyp image database with images extracted from endoscopic video clips and a video database of full-length videos. To the best of our knowledge, both databases are larger than any other existing database, which supports the accuracy of the system's detection performance to some extent.

Most previous studies have been based on the use of a single algorithm, so combining sensitivity, specificity, and real-time recognition has been difficult, which has resulted in a significant proportion of false-positives (17, 18, 21, 28). Consistent with this, in our previous study, we developed two convolutional neural network (CNN)-based polyp detection models. Additionally, we found that the false-positive rates of the two models are different. Therefore, in this study, we integrated the fuzzy recognition algorithm with two different polyp detection algorithms. We found that a slight decrease in sensitivity can significantly reduce the number of false-positives and increase specificity after integration. The number of images and videos we used for this study is sufficient to produce statistically meaningful results. To the best of our knowledge, the polyp detection system (DeFrame) we have built is the first to integrate multiple deep learning-based algorithms.

As mentioned above, our polyp detection module can be subdivided into two different polyp detection algorithms and the system itself. Our DeFrame system works better when trained and tested on the same two public databases than other current polyp detection algorithms.

The traditional measures of specificity and sensitivity do reflect the system’s performance, but they have certain flaws. In clinical practice, multiple polyps can appear within a single field of view (i.e., in a single image). Image-level measures count such an image as correctly classified as soon as one polyp is detected, even if other polyps in the same image are missed, which results in an inaccurate assessment of the system’s performance. Therefore, in this study, we evaluated the system’s performance at both the image and polyp levels to better understand the system’s role in clinical care.

Additionally, we must admit that this study has some limitations. Since the DeFrame system was only trained, validated, and tested with colon images and videos, further investigation is needed to determine whether it can effectively detect polyps in areas other than the colon. In addition, we used the original video recordings to simulate clinical practice, but the clinical setting is variable, and we need prospective studies to evaluate how well the system performs in clinical practice. Finally, the endoscopic devices produced by different manufacturers vary in terms of light source or resolution, so external validation is needed to assess the system’s adaptability. A possible next step would be to incorporate AI pathology techniques to assist in predicting polyp pathology outcomes to differentiate benign and malignant polyps under endoscopy (29, 30).

We developed a fast, accurate and reliable DeFrame system for detecting polyps, which, to some extent, is feasible for use in routine clinical practice. However, further investigation is needed to determine whether the system can improve the ADR in clinical practice, subsequently reducing the incidence of CRC and CRC-related mortality.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

This study is in accordance with the Helsinki Declaration and has been approved by the Ethics Committee of Xiangya Hospital of Central South University (No. 201812543). Written informed consent was not required because the participants would not be identified from the colonoscopy videos.

XL, YC, and BL designed the experiments. SC, SL, DW, and XS collected the video recording data and analyzed the data. SC, SL, JY, and YHC assembled the collected data. SL, YHC, and YC wrote and revised the manuscript. All authors read and approved the final manuscript.

The present study was financially supported by the Natural Science Foundation of China (Nos. 81570537, 81974074, and 82172654) and Hunan Provincial Science and Technology Department (2018RS3026 and 2021RC4012).

YT was employed by the company HighWise Medical Technology Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We thank the pathologists from the Pathology Department of Xiangya Hospital, especially Qiongqiong He, for histopathology support.

1. Torre LA, Bray F, Siegel RL, Ferlay J, Lortet-Tieulent J, Jemal A. Global cancer statistics, 2012. CA Cancer J Clin. (2015) 65:87–108. doi: 10.3322/caac.21262

2. Siegel R, Naishadham D, Jemal A. Cancer statistics, 2013. CA Cancer J Clin. (2013) 63:11–30. doi: 10.3322/caac.21166

3. Zauber AG, Winawer SJ, O’Brien MJ, Lansdorp-Vogelaar I, van Ballegooijen M, Hankey BF, et al. Colonoscopic polypectomy and long-term prevention of colorectal-cancer deaths. N Engl J Med. (2012) 366:687–96. doi: 10.1056/nejmoa1100370

4. van Rijn JC, Reitsma JB, Stoker J, Bossuyt PM, van Deventer SJ, Dekker E. Polyp miss rate determined by tandem colonoscopy: a systematic review. Am J Gastroenterol. (2006) 101:343–50. doi: 10.1111/j.1572-0241.2006.00390.x

5. Horton N, Garber A, Hasson H, Lopez R, Burke CA. Impact of single- vs. split-dose low-volume bowel preparations on bowel movement kinetics, patient inconvenience, and polyp detection: a prospective trial. Am J Gastroenterol. (2016) 111:1330–7. doi: 10.1038/ajg.2016.273

6. Adler A, Aminalai A, Aschenbeck J, Drossel R, Mayr M, Scheel M, et al. Latest generation, wide-angle, high-definition colonoscopes increase adenoma detection rate. Clin Gastroenterol Hepatol. (2012) 10:155–9. doi: 10.1016/j.cgh.2011.10.026

7. Bisschops R, East JE, Hassan C, Hazewinkel Y, Kamiński MF, Neumann H, et al. Advanced imaging for detection and differentiation of colorectal neoplasia: European society of gastrointestinal endoscopy (ESGE) guideline – update 2019. Endoscopy. (2019) 51:1155–79. doi: 10.1055/a-1031-7657

8. Facciorusso A, Del Prete V, Buccino RV, Della Valle N, Nacchiero MC, Monica F, et al. Comparative efficacy of colonoscope distal attachment devices in increasing rates of adenoma detection: a network meta-analysis. Clin Gastroenterol Hepatol. (2018) 16:1209–1219.e9. doi: 10.1016/j.cgh.2017.11.007

9. Zhao S, Wang S, Pan P, Xia T, Chang X, Yang X, et al. Magnitude, risk factors, and factors associated with adenoma miss rate of tandem colonoscopy: a systematic review and meta-analysis. Gastroenterology. (2019) 156:1661–1674.e11. doi: 10.1053/j.gastro.2019.01.260

10. Pohl H, Robertson DJ. Colorectal cancers detected after colonoscopy frequently result from missed lesions. Clin Gastroenterol Hepatol. (2010) 8:858–64. doi: 10.1016/j.cgh.2010.06.028

11. Rex DK, Eid E. Considerations regarding the present and future roles of colonoscopy in colorectal cancer prevention. Clin Gastroenterol Hepatol. (2008) 6:506–14. doi: 10.1016/j.cgh.2008.02.025

12. Corley DA, Jensen CD, Marks AR, Zhao WK, Lee JK, Doubeni CA, et al. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. (2014) 370:1298–306.

13. Kaminski MF, Regula J, Kraszewska E, Polkowski M, Wojciechowska U, Didkowska J, et al. Quality indicators for colonoscopy and the risk of interval cancer. N Engl J Med. (2010) 362:1795–803. doi: 10.1056/nejmoa0907667

14. Kaminski MF, Wieszczy P, Rupinski M, Wojciechowska U, Didkowska J, Kraszewska E, et al. Increased rate of adenoma detection associates with reduced risk of colorectal cancer and death. Gastroenterology. (2017) 153:98–105. doi: 10.1053/j.gastro.2017.04.006

15. Mo X, Tao K, Wang Q, Wang G. An efficient approach for polyps detection in endoscopic videos based on faster R-CNN. In: Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR). Piscataway, NJ: IEEE (2018). p. 3929–34.

16. Sornapudi S, Meng F, Yi S. Region-based automated localization of colonoscopy and wireless capsule endoscopy polyps. Appl Sci. (2019) 9:2404. doi: 10.3390/app9122404

17. Wang P, Xiao X, Glissen Brown JR, Berzin TM, Tu M, Xiong F, et al. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat Biomed Eng. (2018) 2:741–8. doi: 10.1038/s41551-018-0301-3

18. Lee JY, Jeong J, Song EM, Ha C, Lee HJ, Koo JE, et al. Real-time detection of colon polyps during colonoscopy using deep learning: systematic validation with four independent datasets. Sci Rep. (2020) 10:8379. doi: 10.1038/s41598-020-65387-1

19. Poon CCY, Jiang Y, Zhang R, Lo WWY, Cheung MSH, Yu R, et al. AI-doscopist: a real-time deep-learning-based algorithm for localising polyps in colonoscopy videos with edge computing devices. NPJ Digit Med. (2020) 3:73. doi: 10.1038/s41746-020-0281-z

20. Zhang C, Zhang N, Wang D, Cao Y, Liu B. Artifact detection in endoscopic video with deep convolutional neural networks. In: Proceedings of the 2020 Second International Conference on Transdisciplinary AI (TransAI). Piscataway, NJ: IEEE (2020). p. 1–8.

21. Wang D, Zhang N, Sun X, Zhang P, Zhang C, Cao Y, et al. AFP-net: realtime anchor-free polyp detection in colonoscopy. arXiv. (2019). [Preprint]. doi: 10.48550/arXiv.1909.02477

22. Sun X, Zhang P, Wang D, Cao Y, Liu B. Colorectal polyp segmentation by U-net with dilation convolution. In: Proceedings of the 2019 18th IEEE International Conference on Machine Learning and Applications (ICMLA). Piscataway, NJ: IEEE (2019). p. 851–8.

23. Bernal J, Sánchez FJ, Fernández-Esparrach G, Gil D, Rodríguez C, Vilariño F. WM-DOVA maps for accurate polyp highlighting in colonoscopy: validation vs. saliency maps from physicians. Comput Med Imaging Graph. (2015) 43:99–111. doi: 10.1016/j.compmedimag.2015.02.007

24. Silva J, Histace A, Romain O, Dray X, Granado B. Toward embedded detection of polyps in WCE images for early diagnosis of colorectal cancer. Int J Comput Assist Radiol Surg. (2014) 9:283–93. doi: 10.1007/s11548-013-0926-3

25. Vázquez D, Bernal J, Sánchez FJ, Fernández-Esparrach G, López AM, Romero A, et al. A benchmark for endoluminal scene segmentation of colonoscopy images. J Healthc Eng. (2017) 2017:1–9. doi: 10.1155/2017/4037190

26. Bernal J, Sánchez J, Vilarino F. Towards automatic polyp detection with a polyp appearance model. Pattern Recognit. (2012) 45:3166–82. doi: 10.1016/j.patcog.2012.03.002

27. Wang P, Berzin TM, Glissen Brown JR, Bharadwaj S, Becq A, Xiao X, et al. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut. (2019) 68:1813–9. doi: 10.1136/gutjnl-2018-317500

28. Zhang P, Sun X, Wang D, Wang X, Cao Y, Liu B. An efficient spatial-temporal polyp detection Framework for colonoscopy video. In: Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence. Piscataway, NJ: IEEE (2019). p. 1252–9.

29. Chen PJ, Lin MC, Lai MJ, Lin JC, Lu HH, Tseng VS. Accurate classification of diminutive colorectal polyps using computer-aided analysis. Gastroenterology. (2018) 154:568–75. doi: 10.1053/j.gastro.2017.10.010

30. Byrne MF, Chapados N, Soudan F, Oertel C, Linares Pérez M, Kelly R, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut. (2019) 68:94–100. doi: 10.1136/gutjnl-2017-314547

Keywords: artificial intelligence, convolutional neural networks, deep learning, colonoscopy, computer-aided detection

Citation: Chen S, Lu S, Tang Y, Wang D, Sun X, Yi J, Liu B, Cao Y, Chen Y and Liu X (2022) A Machine Learning-Based System for Real-Time Polyp Detection (DeFrame): A Retrospective Study. Front. Med. 9:852553. doi: 10.3389/fmed.2022.852553

Received: 11 January 2022; Accepted: 20 April 2022;

Published: 31 May 2022.

Edited by: Benjamin Walter, Ulm University Medical Center, Germany

Reviewed by: Jin Chai, Third Military Medical University, China

Copyright © 2022 Chen, Lu, Tang, Wang, Sun, Yi, Liu, Cao, Chen and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongheng Chen, yonghenc@163.com; Xiaowei Liu, liuxw@csu.edu.cn

†These authors have contributed equally to this work and share first authorship
