ORIGINAL RESEARCH article

Front. Med., 23 February 2022

Sec. Infectious Diseases: Pathogenesis and Therapy

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.846525

Syunsuke Yamanaka1*, Koji Morikawa2, Hiroyuki Azuma3, Maki Yamanaka4, Yoshimitsu Shimada5, Toru Wada6, Hideyuki Matano7, Naoki Yamada1, Osamu Yamamura8, Hiroyuki Hayashi1

Background: Early prediction of oxygen therapy in patients with coronavirus disease 2019 (COVID-19) is vital for triage. Several machine-learning prognostic models for COVID-19 are currently available. However, external validation of these models has rarely been performed. Therefore, most reported predictive performance is optimistic and has a high risk of bias. This study aimed to develop and validate a model that predicts oxygen therapy needs in the early stages of COVID-19 using a sizable multicenter dataset.

Methods: This multicenter retrospective study included consecutive hospitalized patients with COVID-19 confirmed by reverse transcription-polymerase chain reaction in 11 medical institutions in Fukui, Japan. We developed and validated seven machine-learning models (e.g., penalized logistic regression model) using routinely collected data (e.g., demographics, simple blood test). The primary outcome was the need for oxygen therapy (≥1 L/min or SpO2 ≤ 94%) during hospitalization. We evaluated model performance on the test set (a randomly selected 20% of the data, used for internal validation) with c-statistics, calibration slope, and association measures (e.g., sensitivity). Among these seven models, the machine-learning model that showed the best performance was re-evaluated using an external dataset. We compared model performance against the A-DROP criteria (a modified version of CURB-65) as the conventional method.

Results: Of the 396 patients with COVID-19 included for model development, 102 patients (26%) required oxygen therapy during hospitalization. In internal validation, all machine-learning models except the k-nearest neighbor model had a higher discrimination ability than the A-DROP criteria (P < 0.01). XGBoost had the highest c-statistic in the internal validation (0.92 vs. 0.69 in A-DROP criteria; P < 0.001). In external validation with a temporally independent dataset of 728 patients (106 patients [15%] required oxygen therapy), the XGBoost model had a higher c-statistic (0.88 vs. 0.69 in A-DROP criteria; P < 0.001).

Conclusions: Machine-learning models outperformed the conventional A-DROP criteria in predicting the need for oxygen therapy in the early stages of COVID-19.

The novel coronavirus disease 2019 (COVID-19), first reported in the Hubei province of the People's Republic of China in December 2019, has become an urgent threat to global health. Many countries faced an emergency despite significant public and private efforts to delay disease spread (1). The exponential increase in COVID-19 incidence has led to a significant demand for medical services, resulting in a shortage of medical resources (2, 3). Since it is challenging to hospitalize all patients with COVID-19 in most countries, careful observation outside the hospital is standard for asymptomatic patients and patients with mild symptoms (4). However, some patients who are asymptomatic or have mild symptoms of COVID-19 at the first medical visit develop severe pneumonia during observation (5, 6). Although there is no definitive antiviral medication against COVID-19, treatments such as oxygen therapy, antiviral medication, steroids, and supportive care have become the standard of care and have been effective (7, 8). Nevertheless, preventable deaths occur because of the lack of adequate prediction of patient prognosis at the first medical visit and the lack of close follow-up (9). Some of these patients could have been saved if the need for oxygen therapy had been correctly predicted on the first visit (10). Therefore, accurate medical triage for asymptomatic patients and patients with mild symptoms in the early stage of COVID-19 is essential to decrease the incidence of death and allocate limited medical resources appropriately during the observation period (11). In previous studies, artificial intelligence, such as machine learning, has shown better predictive capabilities than traditional statistical methods (12). However, most published models have not been externally validated with calibration plots, resulting in a high risk of bias (13–15).
In addition, few studies have included most patients who were hospitalized, even if the patients were asymptomatic, and have observed their progress in detail. Therefore, the performance of the reported models might be optimistic and highly biased (12, 16).

Moreover, many studies did not include a follow-up period after the end of the study. As a result, the need for oxygen and other outcomes may have been underestimated, because the outcome of some patients may have occurred after the end of the study. To meet the quality standards of the PROBAST (prediction model risk of bias assessment tool) guidelines and the TRIPOD (transparent reporting of a multivariable prediction model for individual prognosis or diagnosis) reporting guidelines (13, 17), this study aimed to develop and validate a prognostic machine-learning model that accurately predicts the need for oxygen therapy in the early stages of COVID-19 using external datasets from multiple institutions and to compare its predictive performance with traditional approaches, such as the A-DROP criteria (consisting of age of ≥70 years in men or ≥75 years in women, blood urea nitrogen of ≥21 mg/dL or dehydration, oxyhemoglobin saturation measured by pulse oximetry of ≤ 90% or partial oxygen pressure in the arterial blood of ≤ 60 mmHg, confusion, and systolic blood pressure of ≤ 90 mmHg) (18).
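The five A-DROP items listed above can be expressed as a simple count. The following is a minimal sketch rather than the scoring code used in the study: the `Patient` fields and the function name are hypothetical, and it assumes the score is the number of items met (0 to 5).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    # Hypothetical field names chosen for illustration only.
    age: int
    sex: str                        # "M" or "F"
    bun_mg_dl: float                # blood urea nitrogen
    dehydrated: bool
    spo2_percent: Optional[float]   # pulse oximetry, if measured
    pao2_mmhg: Optional[float]      # arterial oxygen pressure, if measured
    confused: bool
    systolic_bp_mmhg: float


def a_drop_score(p: Patient) -> int:
    """Count how many of the five A-DROP items are met (assumed 0-5 scale)."""
    age_item = p.age >= 70 if p.sex == "M" else p.age >= 75
    dehydration_item = p.bun_mg_dl >= 21 or p.dehydrated
    respiration_item = (
        (p.spo2_percent is not None and p.spo2_percent <= 90)
        or (p.pao2_mmhg is not None and p.pao2_mmhg <= 60)
    )
    orientation_item = p.confused
    pressure_item = p.systolic_bp_mmhg <= 90
    return sum([age_item, dehydration_item, respiration_item,
                orientation_item, pressure_item])


# A 72-year-old man with elevated blood urea nitrogen meets two items.
example = Patient(age=72, sex="M", bun_mg_dl=25.0, dehydrated=False,
                  spo2_percent=96.0, pao2_mmhg=None, confused=False,
                  systolic_bp_mmhg=120.0)
example_score = a_drop_score(example)
```

Because patients with SpO2 below 95% at diagnosis were excluded from this cohort, the respiration item would rarely be met at the first visit, which limits how much a score of this kind can vary in mildly ill patients.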

This multicenter retrospective cohort study analyzed patients with COVID-19 in 11 academic and community hospitals in different geographic regions across Fukui, Japan. The 11 medical institutions included two level-I and eight level-II equivalent trauma centers and a temporal medical institution. The mean annual hospitalizations are 160,000 and 90,000 in two level-I and seven level-II-equivalent trauma centers, respectively. Supplementary Table 1 and Supplementary Figure 1 show the size, location, and number of full-time physicians, hospital beds, annual outpatients, annual patients transported by ambulances, and annual hospitalized patients. The ethics review board of the University of Fukui Hospital and each participating medical institution approved the present study (approval number in the University of Fukui Hospital: 20200120). In addition, the waiver of informed consent before data collection was approved by the institutional review board of each participating medical institution. All procedures were performed according to the principles of the Declaration of Helsinki.

For model development and internal validation, we retrospectively studied all consecutive patients admitted to participating medical facilities with COVID-19 confirmed by reverse transcription-polymerase chain reaction (RT-PCR) using a pharyngeal swab test. Since the number of patients with COVID-19 in Fukui during the study period was relatively small, most patients diagnosed with COVID-19 were hospitalized and few were kept at home (<5% of all patients with COVID-19 in Fukui). We lent pulse oximeters to home care patients, instructed them on how to take measurements, and followed up with daily phone calls to check their condition. In this study, only a small number of patients were initially judged to have mild disease and followed up at home; hence, we could collect data closer to the actual situation.

We obtained electronic medical data on the early stage of COVID-19 in patients admitted to 10 medical institutions (all except Ota Hospital) from July 1, 2020 to March 31, 2021 to develop prediction models and perform internal validation. This study defines medical data regarding the early stage of COVID-19 as data within 48 h of PCR confirmation or initial diagnosis. After admission to the hospital, antivirals, corticosteroids, and herbal medicines were administered by the attending physician according to the standardized methods of each medical institution.

In addition, for temporal and external validation, we retrospectively examined all consecutive patients with COVID-19 admitted to the University of Fukui Hospital, Fukui Prefectural Hospital, Japanese Red Cross Fukui Hospital, Fukui-ken Saiseikai Hospital, and Ota Hospital from April 1, 2021 to September 30, 2021.

Exclusion criteria were patients aged <17 years and pregnant patients. The present study aimed to develop and validate a machine-learning model to identify COVID-19 patients who are mildly ill at the time of initial diagnosis and who require oxygen therapy during the course of their illness. We also excluded patients with transcutaneous oxygen saturation (SpO2) <95%, patients receiving oxygen therapy (>1 L O2/min) before admission, and patients with no recorded SpO2 at the time of initial diagnosis.

We performed a post-discharge telephone follow-up survey on all patients 2–4 weeks after discharge to determine if any patient's condition worsened after discharge.

The outcome of interest was the need for oxygen therapy, which indicates disease progression. The outcome was assessed between the time of diagnosis by PCR-positive confirmation of COVID-19 and the time of discharge from the hospital after negative confirmation by PCR. Patients were considered to have needed oxygen therapy if they met any of the following conditions: (1) SpO2 of ≤ 94% at least once during hospitalization; (2) at least one oxygen administration of 1 L/min or more during hospitalization; (3) admission to the ICU; (4) intubation; or (5) discharge due to death.
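The outcome definition above amounts to a logical OR over five conditions. A minimal sketch follows, assuming that meeting any single condition is sufficient; the function and argument names are hypothetical and do not come from the study's code.

```python
def needed_oxygen_therapy(min_spo2_percent: float,
                          max_o2_flow_l_min: float,
                          icu_admission: bool,
                          intubated: bool,
                          died: bool) -> bool:
    """Return True if any of the five outcome conditions was met.

    Assumption: the conditions are combined with OR, i.e. one is enough.
    """
    return (
        min_spo2_percent <= 94        # (1) lowest SpO2 during hospitalization
        or max_o2_flow_l_min >= 1.0   # (2) oxygen given at 1 L/min or more
        or icu_admission              # (3) admission to the ICU
        or intubated                  # (4) intubation
        or died                       # (5) discharge due to death
    )


# A patient whose SpO2 dipped to 93% once counts as an event
# even if no supplemental oxygen was ever given.
desaturation_only = needed_oxygen_therapy(93.0, 0.0, False, False, False)
uneventful_course = needed_oxygen_therapy(97.0, 0.0, False, False, False)
```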

From the electronic medical records of 11 medical institutions, we extracted the following routine data in the early stages of COVID-19: patient demographics [age, sex, smoking history, alcohol, height, body weight, body mass index, and comorbidities (myocardial infarction, congestive heart failure, peripheral vascular disease, cerebrovascular disease, chronic obstructive pulmonary disease, bronchial asthma, chronic kidney disease, hypertension, diabetes mellitus, and malignancy)], symptoms (fever, fatigue, sore throat, headache, rhinorrhea, arthralgia, diarrhea, loss of smell, dyspnea, muscle ache, loss of taste, disturbance of consciousness, and conjunctival hyperemia), vital signs (systolic and diastolic blood pressure and oxygen saturation), Glasgow coma scale, complete blood count (white blood cells, lymphocytes, and platelets), coagulation profile (prothrombin time, activated partial thromboplastin time, fibrinogen, and D-dimer), biochemistry (sodium, potassium, albumin, blood urea nitrogen, creatinine, lactate dehydrogenase, aspartate aminotransferase, and alanine aminotransferase), C-reactive protein, and imaging (chest X-ray or chest computed tomography). Radiology specialists or internal medicine specialists reviewed all imaging examinations and classified them into three categories: bilateral pneumonia, unilateral pneumonia, and no pneumonia. We also recorded the medications and treatment plans after admission and outcomes.

We calculated summary statistics to describe the characteristics of the patients and their clinical course. After multiple imputation using random forests (19) (Supplementary Table 2 shows the missing rate for predictors), we preprocessed predictors, including one-hot encoding (i.e., creation of dummy variables), normalization, and standardization. In the training set (80% random sample) with all available predictors, six machine-learning models were developed for the outcome: (1) penalized logistic regression (20), (2) random forest (21), (3) support vector machine (22), (4) k-nearest neighbor (23), (5) XGBoost (24), and (6) multilayer perceptron (25). We then selected the one machine-learning model that achieved the highest prediction performance among the six models. With this best-performing model, we calculated the importance of the variables that improved the c-statistic and selected the top eight variables that improved the model performance for future practical usability. Another reason we developed the model with eight variables was to meet the PROBAST standard, which requires a large data set with at least 10 events per candidate variable for model development and at least 100 events for external validation (16). We performed stratified three-fold cross-validation to determine the optimal hyperparameters with the highest c-statistic [i.e., the area under the receiver operating characteristic (ROC) curve]. We used the A-DROP criteria, a modified version of CURB-65, as the reference model (26).
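Stratified cross-validation keeps the proportion of events roughly equal in every fold, which matters when only about a quarter of patients have the outcome. The sketch below illustrates the idea with the standard library only; it is not the study's code, and the function name, seed, and toy labels are assumptions made for illustration.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=3, seed=0):
    """Assign sample indices to folds so each fold keeps the class balance."""
    rng = random.Random(seed)
    indices_by_class = defaultdict(list)
    for index, label in enumerate(labels):
        indices_by_class[label].append(index)

    folds = [[] for _ in range(n_folds)]
    for indices in indices_by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin into the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


# Toy labels: 30 events and 90 non-events (25% event rate).
toy_labels = [1] * 30 + [0] * 90
toy_folds = stratified_folds(toy_labels, n_folds=3, seed=42)
events_per_fold = [sum(toy_labels[i] for i in fold) for fold in toy_folds]
```

In each cross-validation round, one fold serves as the validation part and the other two as the training part; the hyperparameter setting with the highest mean c-statistic across rounds would then be kept.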

We measured the performance of the reference model (the A-DROP criteria) and each machine-learning model on the test set (the remaining 20% random sample) for internal validation. We estimated the c-statistic for each model and examined the following metrics: sensitivity, specificity, positive and negative predictive values, and positive and negative likelihood ratios. Using the Youden method on the ROC curve, we determined the cutoff threshold for the predicted probabilities to identify the best-performing model (27). We also examined the calibration plots of the best-performing model for the outcome.
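The metrics named above can be computed directly from labels and predicted probabilities. The following is a minimal standard-library sketch, not the study's implementation: the function names and the toy data are hypothetical, and the c-statistic is computed as the proportion of concordant event/non-event pairs, with ties counted as one half.

```python
def c_statistic(y_true, y_score):
    """Probability that a random event scores higher than a random non-event."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    concordant = 0.0
    for p in pos:
        for n in neg:
            concordant += 1.0 if p > n else 0.5 if p == n else 0.0
    return concordant / (len(pos) * len(neg))


def confusion_counts(y_true, y_score, threshold):
    """Return (tp, fp, tn, fn) when scores >= threshold are called positive."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, y_score):
        if s >= threshold:
            tp, fp = tp + (y == 1), fp + (y == 0)
        else:
            tn, fn = tn + (y == 0), fn + (y == 1)
    return tp, fp, tn, fn


def youden_threshold(y_true, y_score):
    """Pick the cutoff maximizing J = sensitivity + specificity - 1.

    Assumes both classes are present in y_true.
    """
    best_threshold, best_j = None, -1.0
    for t in sorted(set(y_score)):
        tp, fp, tn, fn = confusion_counts(y_true, y_score, t)
        j = tp / (tp + fn) + tn / (tn + fp) - 1.0
        if j > best_j:
            best_threshold, best_j = t, j
    return best_threshold, best_j


def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, predictive values, and likelihood ratios."""
    sens, spec = tp / (tp + fn), tn / (tn + fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": tp / (tp + fp) if tp + fp else float("nan"),
        "npv": tn / (tn + fn) if tn + fn else float("nan"),
        "lr_positive": sens / (1 - spec) if spec < 1 else float("inf"),
        "lr_negative": (1 - sens) / spec if spec > 0 else float("inf"),
    }


# Toy example: eight patients, three of whom needed oxygen.
toy_y = [0, 0, 0, 0, 1, 0, 1, 1]
toy_p = [0.1, 0.2, 0.3, 0.4, 0.35, 0.6, 0.7, 0.9]
toy_c = c_statistic(toy_y, toy_p)
toy_cutoff, toy_j = youden_threshold(toy_y, toy_p)
toy_metrics = diagnostic_metrics(*confusion_counts(toy_y, toy_p, toy_cutoff))
```

The Youden cutoff weights sensitivity and specificity equally; a triage tool that prioritizes not missing deteriorating patients might instead fix a minimum sensitivity and accept lower specificity.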

For temporal external validation, we collected electronic medical record information from five hospitals. Of all the available variables, we used the filter method to extract the top eight variables that had 20% or fewer missing values and contributed the most to improving the c-statistic (28). We also calculated the c-statistic for the best machine-learning model with the eight variables and examined the relevant metrics (e.g., sensitivity), calibration plots, slope, intercept, and coefficient of determination using the temporally independent dataset.

A two-sided P-value of <0.05 was considered statistically significant. Data were analyzed using Python (version 3.7.3) and R (version 3.6.2).

A total of 489 patients with COVID-19 admitted to one of the 11 participating medical institutions were recorded for model development and internal validation during the 9-month study period. Of these, we excluded 28 patients aged <18 years, 14 patients with no SpO2 data at the first visit, and 51 patients who required oxygen administration of >1 L or SpO2 of <95% at the time of admission. The remaining 396 patients were included in the analytic cohort for model development.

For external validation, 855 patients were admitted to one of the five participating medical institutions during the 4 months. Of these, we excluded 90 patients under the age of 18 and 37 patients who required oxygen at the time of admission. The remaining 728 patients were included in the analytic cohort for external validation.
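The cohort sizes follow from the exclusion counts stated above. The short check below simply reproduces that arithmetic; the variable names are illustrative.

```python
# Development and internal validation cohort.
development_screened = 489
development_excluded = {
    "aged <18 years": 28,
    "no SpO2 data at first visit": 14,
    "oxygen >1 L or SpO2 <95% at admission": 51,
}
development_cohort = development_screened - sum(development_excluded.values())

# External validation cohort.
external_screened = 855
external_excluded = {
    "aged <18 years": 90,
    "oxygen required at admission": 37,
}
external_cohort = external_screened - sum(external_excluded.values())

total_analyzed = development_cohort + external_cohort
```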

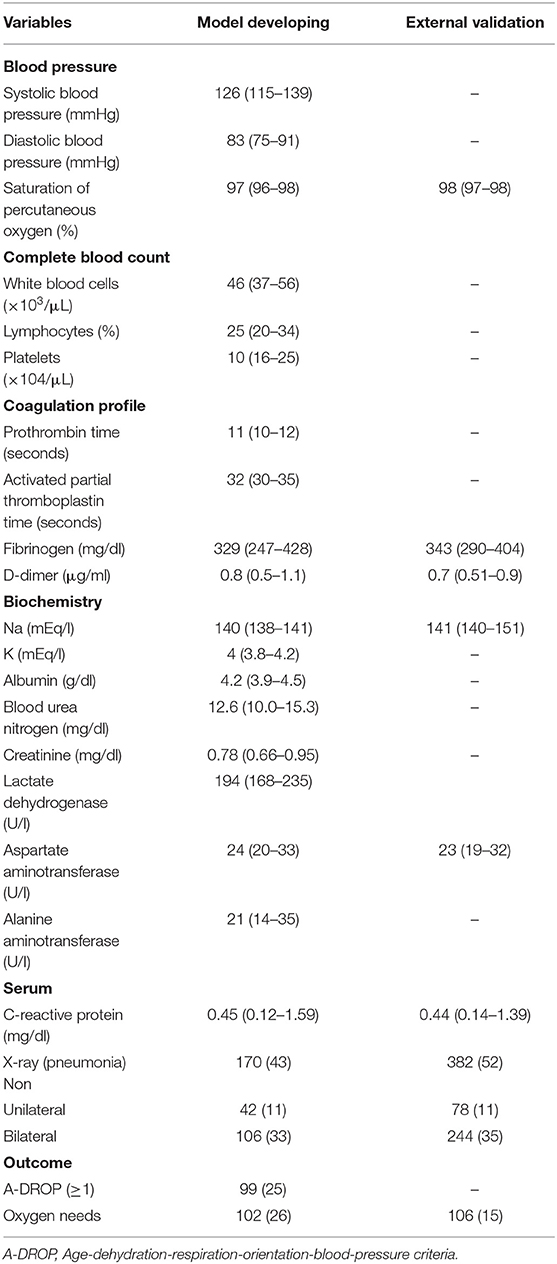

Characteristics of the patients with COVID-19 on admission are shown in Table 1. Table 2 shows the patients' vital signs, laboratory findings, and imaging findings on admission, and their outcomes.

Table 2. Blood pressure, percutaneous oxygen saturation, laboratory findings, X-ray on admission, and outcome of the patients with COVID-19.

The median age was 54 years [interquartile range (IQR), 34–70], and 48% of the participants were female. Overall, 102 (26%) patients in the model development dataset and 106 (15%) patients in the external validation dataset needed oxygen therapy. A post-discharge telephone follow-up survey was performed on all patients 2–4 weeks after discharge (≥95% capture rate), and no patients were readmitted.

Table 3 summarizes the prediction performance of the A-DROP criteria (reference) and eight machine-learning models. Compared with the A-DROP criteria, the discrimination performance of the machine-learning models was significantly greater (P < 0.05), except for the k-nearest neighbor model. Among the six machine-learning models using all available variables, XGBoost had the highest c-statistic (0.89; 95% confidence interval [CI], 0.79–0.96), with a sensitivity of 0.83 (95% CI, 0.75–0.91), specificity of 0.90 (95% CI, 0.84–0.96), positive predictive value of 0.71 (95% CI, 0.61–0.81), and negative predictive value of 0.95 (95% CI, 0.90–0.99). With the filter method for variable selection, we selected the following eight important variables: hypertension, age, any comorbidity, aspartate aminotransferase, lactate dehydrogenase, SpO2 at the first visit, pneumonia, and C-reactive protein.

The prediction performance of the XGBoost model using the eight variables is presented in Table 3. Compared with the A-DROP criteria, the discrimination performance of the XGBoost model using the top eight variables was significantly greater (P < 0.001).

In internal validation, compared with the six machine-learning models using all available variables, the XGBoost model using the eight variables had the highest c-statistic (0.92; 95% CI, 0.86–0.98 vs. 0.69 in A-DROP criteria; P < 0.001), with a sensitivity of 0.94 (95% CI, 0.89–0.99), specificity of 0.69 (95% CI, 0.59–0.79), positive predictive value of 0.47 (95% CI, 0.36–0.59), and negative predictive value of 0.98 (95% CI, 0.94–1.00).

In external validation using an independent dataset of 728 patients, the XGBoost model using the eight variables had a higher c-statistic than the A-DROP criteria (0.88; 95% CI, 0.81–0.95 vs. 0.69 in A-DROP criteria; P < 0.001; Figure 1), with a sensitivity of 0.64 (95% CI, 0.55–0.71), specificity of 0.93 (95% CI, 0.88–0.97), positive predictive value of 0.61 (95% CI, 0.53–0.68), and negative predictive value of 0.93 (95% CI, 0.89–0.97). SpO2 at the first visit was the most important feature, followed by age, lactate dehydrogenase, and aspartate aminotransferase levels (Table 4).
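Predictive values depend on sensitivity, specificity, and the prevalence of the outcome, so the reported external validation figures can be cross-checked against one another. The sketch below applies Bayes' rule to the rounded values reported for the external cohort (sensitivity 0.64, specificity 0.93, 106 events among 728 patients); the function name is hypothetical. It gives a positive predictive value of about 0.61 and a negative predictive value of about 0.94, in line with the positive predictive value of 0.61 reported here and the negative predictive value of 0.93 cited in the Discussion, allowing for rounding of the inputs.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple:
    """Return (PPV, NPV) from sensitivity, specificity, and prevalence."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Rounded figures reported for the external validation cohort.
external_prevalence = 106 / 728
external_ppv, external_npv = predictive_values(0.64, 0.93, external_prevalence)
```

The same function shows why the negative predictive value is high in this setting: with an event rate of roughly 15%, most patients classified as low risk are true negatives even at moderate sensitivity.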

Figure 2 shows the calibration plots of the XGBoost model using the eight variables for predicting the outcome in external validation. A positive relationship was observed between the predicted and actual risks in the calibration plots. The slope, intercept, and coefficient of determination of the calibration were 1.132, −0.202, and 0.949 for external validation, respectively. The model-predicted probabilities for external validation almost matched the observed probabilities.
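One common way to summarize a calibration plot with a slope, an intercept, and a coefficient of determination is to group patients by predicted risk and fit a straight line through the points (mean predicted risk, observed event rate). The sketch below illustrates that approach under stated assumptions: the text does not specify how the calibration line was fitted, so the equal-size binning, the number of bins, and the function name are all hypothetical.

```python
def calibration_line(y_true, y_prob, n_bins=5):
    """Fit observed event rate against mean predicted risk across bins.

    Returns (slope, intercept, r_squared) of an ordinary least-squares line.
    """
    pairs = sorted(zip(y_prob, y_true))
    bin_size = len(pairs) / n_bins
    xs, ys = [], []
    for b in range(n_bins):
        chunk = pairs[round(b * bin_size):round((b + 1) * bin_size)]
        if chunk:
            xs.append(sum(p for p, _ in chunk) / len(chunk))  # mean predicted
            ys.append(sum(y for _, y in chunk) / len(chunk))  # observed rate

    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if len(xs) < 2 or sxx == 0:
        raise ValueError("need at least two bins with distinct predicted risk")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy) if syy > 0 else float("nan")
    return slope, intercept, r_squared


# Synthetic, perfectly calibrated data: in each group of 10 patients with
# predicted risk p, exactly 10 * p patients have the event.
synthetic_prob, synthetic_true = [], []
for risk, n_events in [(0.1, 1), (0.3, 3), (0.5, 5), (0.7, 7), (0.9, 9)]:
    synthetic_prob += [risk] * 10
    synthetic_true += [1] * n_events + [0] * (10 - n_events)
fit_slope, fit_intercept, fit_r2 = calibration_line(synthetic_true,
                                                    synthetic_prob)
```

For a perfectly calibrated model the fitted line has a slope near 1 and an intercept near 0; a slope above 1 indicates that observed risk rises faster than predicted risk across the bins.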

In this study, we analyzed multicenter, retrospective data from 1,124 patients (396 for model development and internal validation, 728 for external validation) with COVID-19 and applied machine-learning models to predict the need for oxygen therapy during the course of the infection. Most machine-learning models showed better discriminative performance than the traditional approach (i.e., the A-DROP criteria). In addition, these machine-learning models achieved a high negative predictive value in predicting outcomes, which may help us perform safer triage. To the best of our knowledge, this is the first study to investigate the performance of modern machine-learning models in predicting the need for oxygen therapy in early-stage COVID-19 using a large multicenter dataset in Japan in line with the PROBAST standard.

It is challenging to admit all patients with COVID-19 in the current pandemic due to a lack of medical resources. The WHO guidelines recommend home treatment for patients with mild COVID-19 symptoms (29). Hence, the importance of accurately predicting oxygen needs in the early stage of COVID-19 has been emphasized, and many machine learning-based prognostic models have been developed (5, 6). However, most of their reported prediction ability may be optimistic because of poor reporting that did not follow PROBAST and the lack of external validation (12, 16). In addition, since these studies looked only at hospitalized patients and not at patients considered mildly ill in the early stages of COVID-19 who were kept home without careful observation, they do not comprise consecutive patient data, resulting in significant selection bias (12, 16, 30). The present study examined consecutive patients admitted to 11 participating medical institutions, and few patients were kept home. We followed the PROBAST standard, which recommends an extensive data set with at least 10 events per candidate variable for model development and at least 100 events for external validation to reduce bias (31).
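The two sample-size rules of thumb mentioned above can be checked against the event counts reported in the Results (102 events in the development cohort, 106 in the external cohort) and the eight variables in the final model. This is a small illustrative calculation with hypothetical names; it counts only the eight selected variables, as the text does, not every candidate predictor screened before selection.

```python
def events_per_variable(n_events: int, n_variables: int) -> float:
    """Number of outcome events available per model variable."""
    return n_events / n_variables


development_events = 102      # patients needing oxygen, development cohort
external_events = 106         # patients needing oxygen, external cohort
final_model_variables = 8     # variables in the final XGBoost model

epv = events_per_variable(development_events, final_model_variables)
meets_development_rule = epv >= 10              # at least 10 events/variable
meets_external_rule = external_events >= 100    # at least 100 events
```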

The XGBoost model with the eight variables, validated with an external dataset in the present study, showed a negative predictive value of 0.93 (95% CI, 0.89–0.97). The model could contribute to a safer selection of low-risk patients at the initial diagnosis of COVID-19 and their safe management in home care under careful observation. In addition, accurate early prediction of oxygen requirements has several important implications in medical practice. For example, early identification of the potential risk of severe disease allows healthcare providers to develop individualized and optimal management strategies, prepare for hospitalization, and plan more careful follow-up.

Although the machine-learning models achieved good predictive power, their performance remained imperfect. This can be explained, at least in part, by the limited set of predictors (e.g., the experience of healthcare professionals was not captured) and measurement errors in the data.

Furthermore, the A-DROP criteria are presumed to be simpler and easier to use. Although there is a known trade-off between the simplicity of concise models, such as the A-DROP or CURB-65 criteria, and the complexity of machine-learning models, modern machine-learning models have advantages in the era of health information technology, such as web-based applications, automated data entry through speech recognition, natural language processing, continuous model refinement through sequential extraction of electronic medical records, and reinforcement learning (32, 33). Our findings and the recent advent of machine-learning approaches collectively support cautious optimism that machine learning, as an assistive technology, may enhance the clinician's ability to predict patient outcomes in the early stage of COVID-19. We have already implemented this model as a web-based application and plan to use it to triage future COVID-19 patients in Fukui Prefecture.

This study has several limitations. First, the current model is designed to predict disease progression to the stage in which oxygen therapy is required. Hence, the present study did not attempt to predict progression to a more severe stage requiring intubation or extracorporeal membrane oxygenation. However, in the external validation cohort, four patients were intubated and three of them died, and the model classified all of them as high risk, missing none. Second, although the current study was conducted with a geographically diverse patient population in Fukui Prefecture, our model may not generalize to other practice settings. Therefore, this model needs to be revalidated in other parts of Japan and outside Japan. Third, the model should be revalidated as new COVID-19 variants emerge. In general, any such model should be re-evaluated regularly with new data to maintain its prediction performance. However, we developed the model mainly from patients infected with the alpha variant of COVID-19, and it performed well in external validation, which primarily consisted of patients infected with the delta variant. In other words, the model retained its predictive ability when applied to patients infected with a different variant. The model predicts severity from the host response, such as CRP, rather than from the virus itself. Therefore, we assume that if the patient's response to a new variant of COVID-19 is correctly reflected in blood data and radiographs, the model may accurately predict the patient's severity. Finally, machine-learning models share a common limitation in terms of interpretability.

In conclusion, based on multicenter retrospective data from 1,124 patients, we developed machine-learning models to predict the need for oxygen therapy during the course of COVID-19 at its early stages. We found that, compared to conventional approaches such as the A-DROP criteria, the machine-learning models had a higher ability to predict oxygen therapy.

The datasets presented in this article are not readily available because the ethics review boards of the participating hospitals did not grant permission to provide the dataset to publishers. Requests to access the datasets should be directed to Syunsuke Yamanaka, yamanakasyunsuke@yahoo.co.jp.

The studies involving human participants were reviewed and approved by the Ethics Review Board of the University of Fukui Hospital. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

SY, HA, MY, YS, TW, HM, NY, OY, and HH contributed to the conception and design of the study and to organizing the database. SY and KM performed the statistical analysis. SY wrote the first draft of the manuscript. All authors contributed to manuscript revision and read and approved the submitted version.

This study was financially supported by the health prevention division of the Fukui Prefecture.

KM was employed by Connect Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The authors acknowledge Hiroyuki Suto, MD, Ph.D. (Fukui Katsuyama General Hospital) and Manabu Kimura, MD (Nakamura Hospital) at the study hospitals for their assistance with this project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2022.846525/full#supplementary-material

1. Arabi YM, Murthy S, Webb S. COVID-19: a novel coronavirus and a novel challenge for critical care. Intensive Care Med. (2020) 46:833–6. doi: 10.1007/s00134-020-05955-1

2. Grasselli G, Pesenti A, Cecconi M. Critical care utilization for the COVID-19 outbreak in Lombardy, Italy: early experience and forecast during an emergency response. JAMA. (2020) 323:1545–6. doi: 10.1001/jama.2020.4031

3. Remuzzi A, Remuzzi G. COVID-19 and Italy: what next? Lancet. (2020) 395:1225–8. doi: 10.1016/S0140-6736(20)30627-9

4. Gao Z, Xu Y, Sun C, Wang X, Guo Y, Qiu S, et al. A systematic review of asymptomatic infections with COVID-19. J Microbiol Immunol Infect. (2021) 54:12–6. doi: 10.1016/j.jmii.2020.05.001

5. Tabata S, Imai K, Kawano S, Ikeda M, Kodama T, Miyoshi K, et al. Clinical characteristics of COVID-19 in 104 people with SARS-CoV-2 infection on the Diamond Princess cruise ship: a retrospective analysis. Lancet Infect Dis. (2020) 20:1043–50. doi: 10.1016/S1473-3099(20)30482-5

6. Zhou F, Yu T, Du R, Fan G, Liu Y, Liu Z, et al. Clinical course and risk factors for mortality of adult inpatients with COVID-19 in Wuhan, China: a retrospective cohort study. Lancet. (2020) 395:1054–62. doi: 10.1016/S0140-6736(20)30566-3

7. Cui Y, Sun Y, Sun J, Liang H, Ding X, Sun X, et al. Efficacy and safety of corticosteroid use in coronavirus disease 2019 (COVID-19): a systematic review and meta-analysis. Infect Dis Ther. (2021) 10:2447–63. doi: 10.1007/s40121-021-00518-3

8. Nangaku M, Kadowaki T, Yotsuyanagi H, Ohmagari N, Egi M, Sasaki J, et al. The Japanese Medical Science Federation COVID-19 Expert Opinion English Version. JMA J. (2021) 4:148–62. doi: 10.31662/jmaj.2021-0002

9. Branas CC, Rundle A, Pei S, Yang W, Carr BG, Sims S, et al. Flattening the curve before it flattens us: hospital critical care capacity limits and mortality from novel coronavirus (SARS-CoV2) cases in US counties. medRxiv. (2020). doi: 10.1101/2020.04.01.20049759

10. Sanders JM, Monogue ML, Jodlowski TZ, Cutrell JB. Pharmacologic treatments for coronavirus disease 2019 (COVID-19): a review. JAMA. (2020) 323:1824–36. doi: 10.1001/jama.2020.6019

11. Lee YH, Hong CM, Kim DH, Lee TH, Lee J. Clinical course of asymptomatic and mildly symptomatic patients with coronavirus disease admitted to community treatment centers, South Korea. Emerg Infect Dis. (2020) 26:2346–52. doi: 10.3201/eid2610.201620

12. Shamsoddin E. Can medical practitioners rely on prediction models for COVID-19? A systematic review. Evid Based Dent. (2020) 21:84–6. doi: 10.1038/s41432-020-0115-5

13. Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS, et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med. (2019) 170:51–8. doi: 10.7326/M18-1376

14. Najafabadi ZAH, Ramspek CL, Dekker FW, Heus P, Hooft L, Moons KGM, et al. TRIPOD statement: a preliminary pre-post analysis of reporting and methods of prediction models. BMJ Open. (2020) 10:e041537. doi: 10.1136/bmjopen-2020-041537

15. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ. (2015) 350:g7594. doi: 10.1136/bmj.g7594

16. Wynants L, Van Calster B, Collins GS, Riley RD, Heinze G, Schuit E, et al. Prediction models for diagnosis and prognosis of covid-19: systematic review and critical appraisal. BMJ. (2020) 369:m1328. doi: 10.1136/bmj.m1328

17. Moons KG, Altman DG, Reitsma JB, Ioannidis JP, Macaskill P, Steyerberg EW, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. (2015) 162:W1–W73. doi: 10.7326/M14-0698

18. Miyashita N, Matsushima T, Oka M. The JRS guidelines for the management of community-acquired pneumonia in adults: an update and new recommendations. Intern Med. (2006) 45:419–28. doi: 10.2169/internalmedicine.45.1691

19. Stekhoven DJ. Nonparametric Missing Value Imputation Using Random Forest. R package version 1.4 (2012).

20. Warton DI. Penalized normal likelihood and ridge regularization of correlation and covariance matrices. J Am Stat Assoc. (2008) 103:340–9. doi: 10.1198/016214508000000021

21. Svetnik V, Liaw A, Tong C, Culberson JC, Sheridan RP, Feuston BP. Random forest: a classification and regression tool for compound classification and QSAR modeling. J Chem Inf Comput Sci. (2003) 43:1947–58. doi: 10.1021/ci034160g

22. Suykens JA, Vandewalle J. Least squares support vector machine classifiers. Neural Process Lett. (1999) 9:293–300. doi: 10.1023/A:1018628609742

23. Bay SD. Nearest neighbor classification from multiple feature subsets. Intell Data Anal. (1999) 3:191–209. doi: 10.1016/S1088-467X(99)00018-9

24. Chen T, He T, Benesty M, Khotilovich V, Tang Y. Xgboost: Extreme Gradient Boosting. R package version 0.4-2. 1-4 (2015).

25. Pal S, Mitra S. Multilayer perceptron, fuzzy sets, and classification. IEEE Trans Neural Netw. (1992) 3:683–97. doi: 10.1109/72.159058

26. Shindo Y, Sato S, Maruyama E, Ohashi T, Ogawa M, Imaizumi K, et al. Comparison of severity scoring systems A-DROP and CURB-65 for community-acquired pneumonia. Respirology. (2008) 13:731–5. doi: 10.1111/j.1440-1843.2008.01329.x

27. Tolga T, Jiju A. The assessment of quality in medical diagnostic tests: a comparison of ROC/Youden and Taguchi methods. Int J Health Care Qual Assur. (2000) 13:300–7. doi: 10.1108/09526860010378744

28. Guyon I, Elisseeff A. An introduction to variable and feature selection. J Mach Learn Res. (2003) 3:1157–82. doi: 10.1162/153244303322753616

29. World Health Organization. Home Care for Patients With COVID-19 Presenting With Mild Symptoms and Management of Their Contacts: Interim Guidance (2020). Available online at: https://www.who.int/publications/i/item/home-care-for-patients-with-suspected-novel-coronavirus-(ncov)-infection-presenting-with-mild-symptoms-and-management-of-contacts (accessed March 17, 2020).

30. Luo M, Liu J, Jiang W, Yue S, Liu H, Wei S. IL-6 and CD8+ T cell counts combined are an early predictor of in-hospital mortality of patients with COVID-19. JCI Insight. (2020) 5:e139024. doi: 10.1172/jci.insight.139024

31. Collins GS, Ogundimu EO, Altman DG. Sample size considerations for the external validation of a multivariable prognostic model: a resampling study. Stat Med. (2016) 35:214–26. doi: 10.1002/sim.6787

32. Freeman MB. Method and Apparatus for Automated Data Entry. Google Patents (2001). Available online at: https://patents.justia.com/patent/6299063 (accessed January 14, 2022).

Keywords: COVID-19, machine learning, prognostic model, medical triage, multicenter, PROBAST, TRIPOD

Citation: Yamanaka S, Morikawa K, Azuma H, Yamanaka M, Shimada Y, Wada T, Matano H, Yamada N, Yamamura O and Hayashi H (2022) Machine-Learning Approaches for Predicting the Need of Oxygen Therapy in Early-Stage COVID-19 in Japan: Multicenter Retrospective Observational Study. Front. Med. 9:846525. doi: 10.3389/fmed.2022.846525

Received: 31 December 2021; Accepted: 27 January 2022;

Published: 23 February 2022.

Edited by:

Longxiang Su, Peking Union Medical College Hospital (CAMS), China

Reviewed by:

Carmen Silvia Valente Barbas, University of São Paulo, Brazil

Copyright © 2022 Yamanaka, Morikawa, Azuma, Yamanaka, Shimada, Wada, Matano, Yamada, Yamamura and Hayashi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Syunsuke Yamanaka, yamanakasyunsuke@yahoo.co.jp
