- 1School of Information Engineering, Huzhou University, Huzhou, China

- 2Zhejiang Province Key Laboratory of Smart Management and Application of Modern Agricultural Resources, Huzhou University, Huzhou, China

- 3The Affiliated Eye Hospital of Nanjing Medical University, Nanjing, China

- 4Department of Ophthalmology, Shanghai Changzheng Hospital, Huangpu, China

- 5Huzhou Traditional Chinese Medicine Hospital Affiliated to Zhejiang University of Traditional Chinese Medicine, Huzhou, China

Purpose: A six-category model of common retinal diseases is proposed to help primary medical institutions in the preliminary screening of the five common retinal diseases.

Methods: A total of 2,400 fundus images of the normal fundus and five common retinal diseases were provided by a cooperating hospital. Two six-category deep learning models for common retinal diseases, based on EfficientNet-B4 and ResNet50, were trained. The results of these six-category models were compared with those of a five-category ResNet50 model from our previous study. The models were tested on 1,315 fundus images, and the diagnoses of the two six-category models were compared with the clinical diagnoses. The main evaluation indicators were sensitivity, specificity, F1-score, area under the curve (AUC), 95% confidence interval, kappa, and accuracy; the receiver operating characteristic (ROC) curves of the two six-category models were also compared.

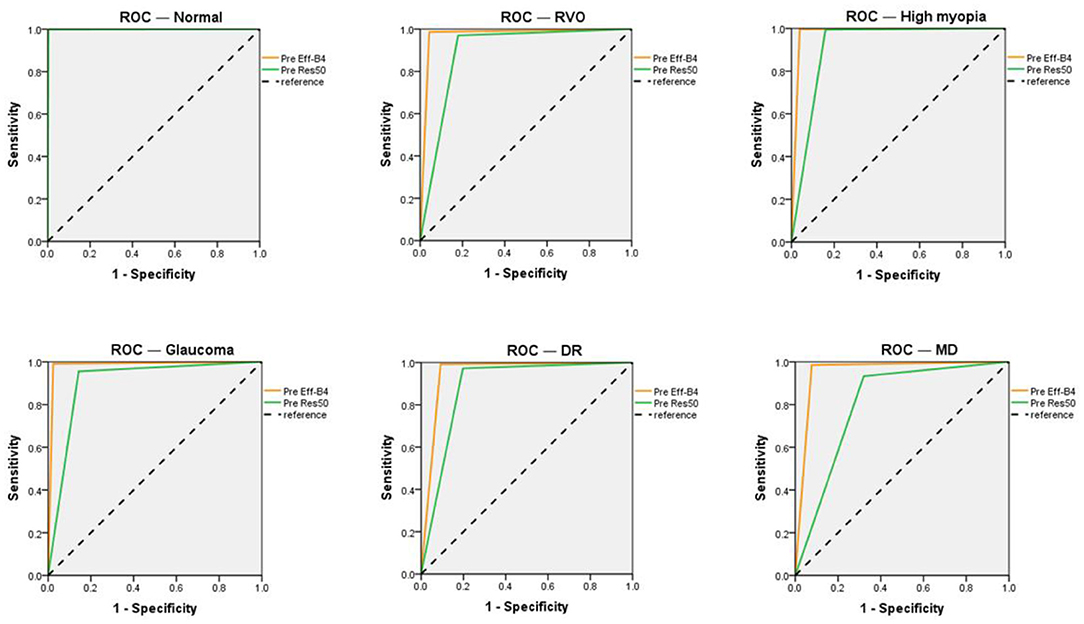

Results: The diagnostic accuracy of the EfficientNet-B4 model was 95.59%, and the kappa value was 94.61%, indicating high diagnostic consistency. The AUCs for the normal fundus and the five retinal diseases were all above 0.95. The sensitivity, specificity, and F1-score were 100, 99.9, and 99.83%, respectively, for normal fundus images; 95.68, 98.61, and 93.09% for RVO; 96.1, 99.6, and 97.37% for high myopia; 97.62, 99.07, and 94.62% for glaucoma; 90.76, 99.16, and 93.3% for DR; and 92.27, 98.5, and 91.51% for MD.

Conclusion: A six-category model of common retinal diseases was designed based on the EfficientNet-B4 model. It can diagnose the normal fundus and five common retinal diseases from fundus images, helping primary care doctors screen for common retinal diseases and give suitable recommendations. Timely referral can improve the efficiency of eye disease diagnosis in rural areas and avoid delays in treatment.

Introduction

Common retinal diseases include retinal vein occlusion (RVO), high myopia, glaucoma, diabetic retinopathy (DR), and macular degeneration (MD) (1–5). DR and MD are high-incidence fundus diseases in China. According to statistics, patients with fundus diseases account for 54.7% of all blind patients in China. More than three million people suffer from fundus diseases, and more than two-thirds of them are at risk of blindness every year. Ophthalmologists obtain fundus images of these five diseases with a non-mydriatic color fundus camera and make a diagnosis by reading and interpreting the images (6). At present, China's rural areas have inefficient transportation, poor medical conditions, and few professional ophthalmologists. Hence, patients with ophthalmopathy often seek treatment at city hospitals only after the disease has already progressed, which can delay the best available treatment and cause serious consequences for the patient.

A six-category model consisting of the normal retina and five common retinal diseases was designed to help patients with ophthalmopathy. This may be useful in rural areas for the preliminary diagnosis, accurate classification, and timely referral of retinal diseases.

In recent years, traditional machine learning methods based on feature extraction have become common for diagnosing ophthalmologic diseases: the pertinent features of a disease are manually selected and then classified with machine learning (7–13). Deep learning uses convolutional neural networks to extract image features automatically and has obtained satisfactory results in ophthalmology (14–23). Many researchers have used deep learning to diagnose retinal diseases from fundus images.

Nagasato et al. (24) compared machine learning and deep learning for detecting branch RVO on ultra-wide-field fundus images and found that deep learning had higher sensitivity and specificity. Li et al. (20) used convolutional neural networks to build a system that screens for vision-threatening conditions in patients with high myopia from macular optical coherence tomography (OCT) images; the system achieved a high area under the curve (AUC), sensitivity, and specificity. Ahn et al. (25) trained a new neural network model on fundus photographs that can detect both early and advanced glaucoma with a high AUC. Gulshan et al. (26) at Google trained a deep learning model to detect DR from fundus images and grade it automatically; they obtained satisfactory results and carried out clinical trials with patient follow-up. Yim et al. (21) combined three-dimensional OCT images with the corresponding automatically generated tissue maps to build an artificial intelligence model that predicts conversion to exudative age-related macular degeneration in the fellow eye of patients already diagnosed with the disease in one eye. A few studies have focused on screening for multiple diseases simultaneously. Zheng et al. (15) used 2,000 fundus images to design a five-category model of common retinal diseases based on ResNet50; the model could diagnose common retinal diseases other than MD. Cen et al. (27) used deep neural networks to identify 39 fundus diseases and conditions requiring referral to higher-level care; although satisfactory results were achieved, a very large amount of training data was required.

In a previous study, our team used ResNet50 to create a five-category model covering the normal fundus and four common fundus diseases (RVO, high myopia, glaucoma, and DR) (15), with an AUC above 0.92 and a kappa value of 89.33%. MD was not included in that study because of its complicated features and different subtypes. Because MD is a common retinal disease, however, our team included it in the new classification model used in this study.

This study designed a six-category model for common retinal diseases based on the EfficientNet model to detect the normal fundus and five common retinal diseases from fundus images. The model can support the initial diagnosis of common retinal diseases for patients with ophthalmopathy in rural areas and facilitate their prompt referral.

Materials and Methods

Data Source

The images used in this study were obtained from the Intelligent Ophthalmology Database of the Ophthalmology Hospital of Nanjing Medical University. These images were acquired with various types of non-mydriatic fundus cameras. This study used the EfficientNet model to train a six-category model for common fundus diseases. A total of 2,400 fundus images were used as training data: 400 images of the normal fundus and 400 images for each of the five retinal diseases. A total of 1,315 fundus images were used as test data. There were no restrictions on the sex or age of the patients whose fundus images were taken. The patients' personal information was removed before the fundus images were delivered to the researchers; therefore, demographic information about the patients was not available for this study.

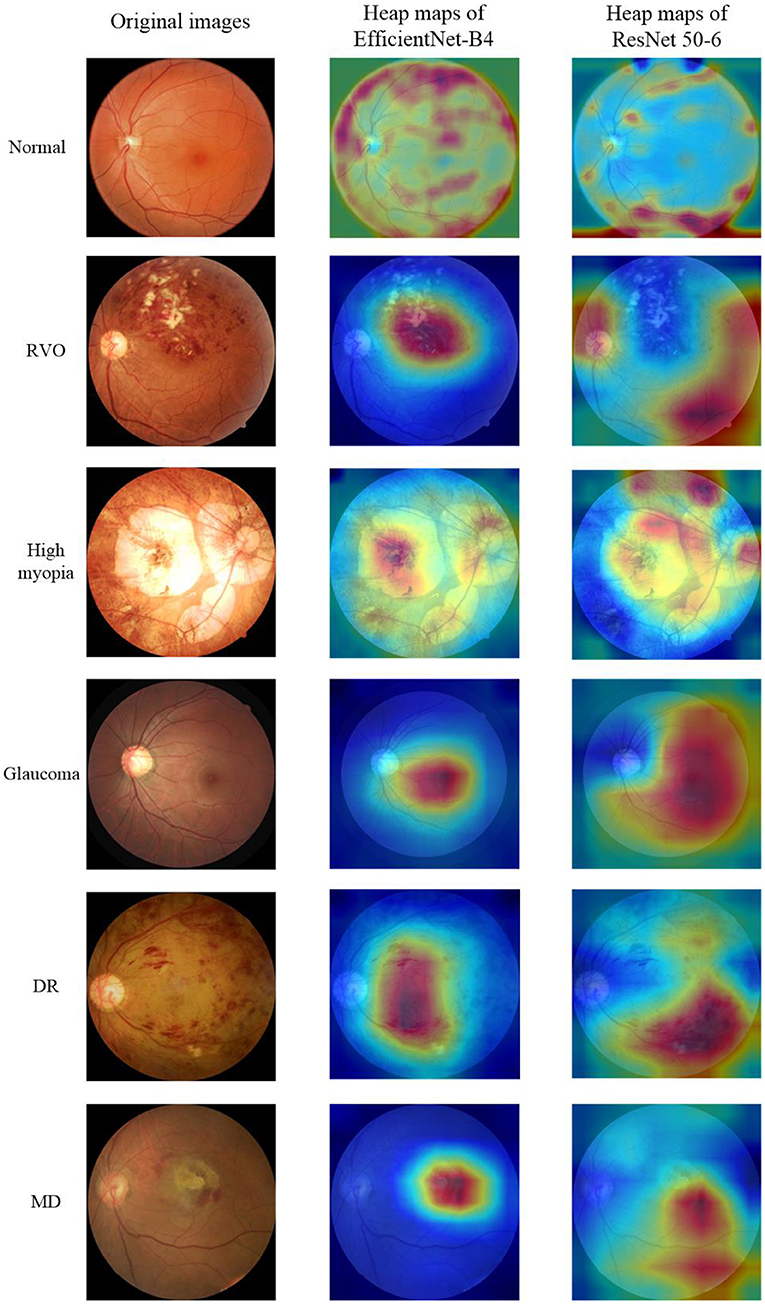

The fundus images provided by the cooperating hospital were of high quality. Each image came with its clinical diagnosis, which was regarded as the diagnosis of the expert ophthalmologist. Two other experienced ophthalmologists independently diagnosed the fundus images. If the two ophthalmologists gave the same diagnosis, it was taken as the final diagnosis; if their diagnoses differed, the expert ophthalmologist assessed the fundus image and gave the final diagnosis. Each fundus image showed only one disease; none contained multiple retinal diseases. Thus, each image was labeled either as normal or with one of the five common retinal diseases (RVO, high myopia, glaucoma, DR, or MD). The normal fundus image and the fundus images of the five common retinal diseases are shown in the first column of Figure 1.
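The labeling rule described above (two independent graders, with the expert ophthalmologist deciding disagreements, and exactly one label per image) can be sketched in a few lines of Python. This is an illustration only: the function and constant names are hypothetical, and the study does not publish any labeling code.

```python
from typing import Optional

# Hypothetical label set: the six categories used in this study.
CLASSES = ("normal", "RVO", "high myopia", "glaucoma", "DR", "MD")


def _check(label: str) -> str:
    """Reject anything outside the six study categories (one label per image)."""
    if label not in CLASSES:
        raise ValueError(f"unknown label: {label!r}")
    return label


def reference_label(grader_a: str, grader_b: str, expert: Optional[str] = None) -> str:
    """Illustrative reference-standard label for one fundus image.

    If the two ophthalmologists agree, their shared diagnosis is final;
    otherwise the expert ophthalmologist's diagnosis decides.
    """
    if _check(grader_a) == _check(grader_b):
        return grader_a
    if expert is None:
        raise ValueError("graders disagree: an expert adjudication label is required")
    return _check(expert)


if __name__ == "__main__":
    print(reference_label("DR", "DR"))               # agreement -> DR
    print(reference_label("DR", "MD", expert="MD"))  # disagreement -> expert decides: MD
```

Returning a single string, rather than a set, mirrors the single-label design of the dataset.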

Model Training

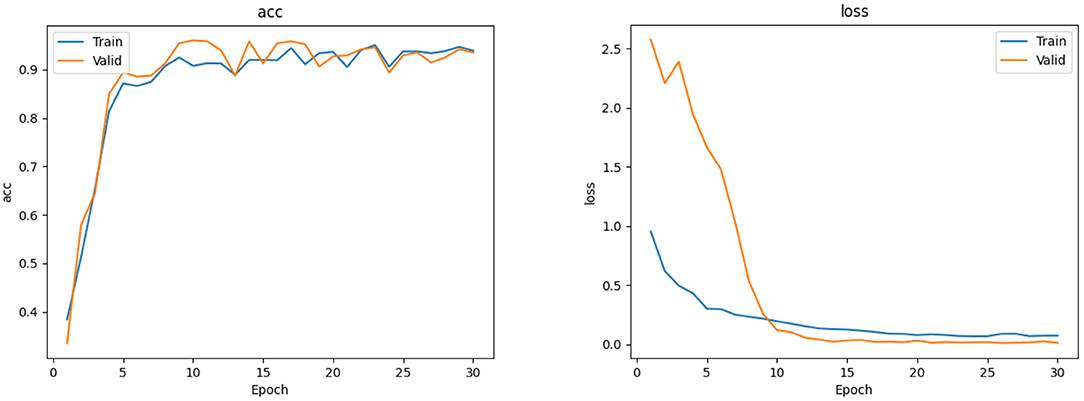

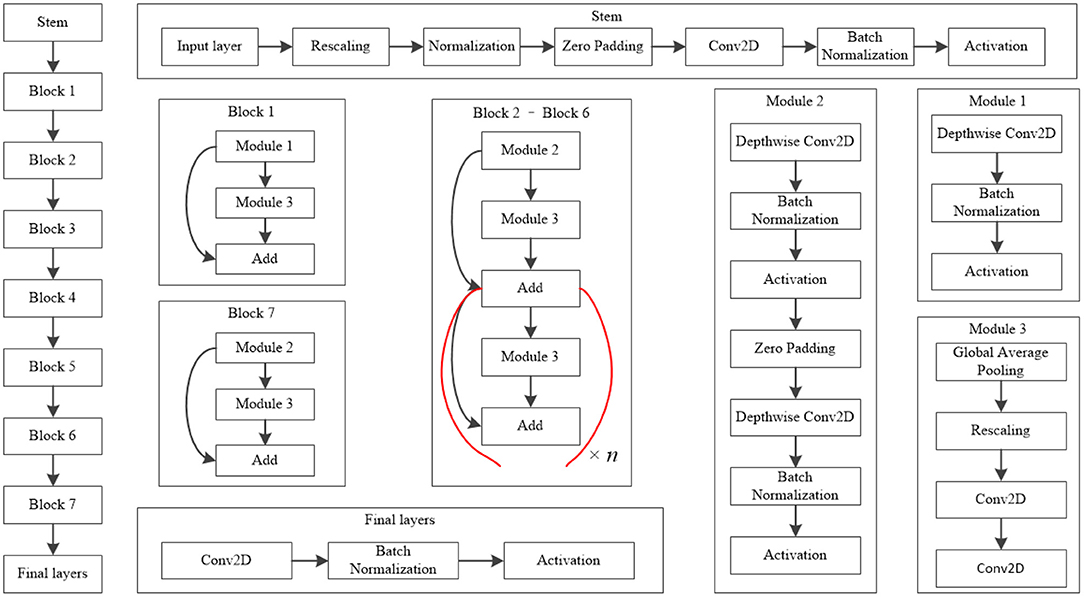

The EfficientNet-B4 model (28) was used to classify the normal fundus and the five common retinal diseases from fundus images. EfficientNet was proposed by Google. EfficientNet-B0 serves as the baseline network, and its depth, width, and resolution are scaled jointly to obtain the larger models, giving eight models with different parameters, from EfficientNet-B0 to EfficientNet-B7. EfficientNet-B4 is mainly composed of one stem, seven blocks, and one final layer. The seven blocks are built mainly from modules 1, 2, and 3, each of which consists mainly of convolutional, pooling, and activation layers. The model structure and learning curves of EfficientNet-B4 are shown in Figures 2 and 3, respectively.
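For reference, the compound scaling rule of Tan and Le (28), which derives EfficientNet-B1 to B7 from B0, can be written as follows. Here φ is a user-chosen compound coefficient, and α, β, γ are the constants reported in (28) from a small grid search on B0. This study does not state the input resolution it used; the released B4 configuration (width multiplier 1.4, depth multiplier 1.8, 380 × 380 input in the reference implementation) is rounded rather than an exact power of these constants.

$$
\text{depth: } d = \alpha^{\phi}, \qquad \text{width: } w = \beta^{\phi}, \qquad \text{resolution: } r = \gamma^{\phi}
$$

$$
\text{s.t. } \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1, \qquad \alpha = 1.2,\ \beta = 1.1,\ \gamma = 1.15
$$

Because the computational cost of a convolutional network grows roughly with d · w² · r², each unit increase in φ approximately doubles it (1.2 × 1.1² × 1.15² ≈ 1.92).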

Figure 2. Model structure of EfficientNet-B4. For Blocks 2–6, the values of n in this figure are 2, 2, 4, 4, and 6, respectively.

Other classic deep learning classification models include VGG16 (29) and ResNet50, whose basic network structure mainly consists of convolutional, pooling, and fully connected layers. Our team previously used ResNet50 to train a five-category intelligent auxiliary diagnosis model for common retinal diseases (the normal fundus and four common retinal diseases, excluding MD) (15). Hence, in this study, a ResNet50 model was also trained to classify the normal fundus and the five common retinal diseases, and its results were compared with those of the EfficientNet-B4 model.

To build the six-category model of common retinal diseases, only the output layer was changed to six categories; the original network structure of EfficientNet-B4 was otherwise left unchanged during training. Parameters pretrained on the ImageNet Large Scale Visual Recognition Challenge dataset (30) were transferred to the six-category model as its initial parameters to improve its initial performance. The model was then trained iteratively on the 2,400 fundus images to obtain the optimal weights, yielding the final six-category model of common retinal diseases.

Statistical Analysis

SPSS version 22.0 was used for statistical analysis. The receiver operating characteristic (ROC) curve was used to analyze the diagnostic performance of the models, and the kappa value was used to test the consistency between the expert's and the models' diagnoses. A kappa value of 0.61–0.80 indicated substantial consistency, and a value >0.80 indicated high consistency. The sensitivity, specificity, F1-score, AUC, 95% confidence interval, and other indicators were calculated for the normal fundus and each of the five common retinal diseases. AUC values were interpreted as follows: >0.85, high; 0.7–0.85, average; and 0.5–0.7, poor.
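The kappa statistic and the interpretation bands above can be made concrete with a short Python sketch; it is illustrative and is not the SPSS procedure the authors used. Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance from the row and column totals: κ = (p_o - p_e) / (1 - p_e). The example uses the EfficientNet-B4 test-set totals from the Results. Two inputs are derived rather than quoted: the agreement count of 1,257 is back-calculated from the reported 95.59% accuracy, and the MD prediction count of 184 is the value that makes the model's predictions sum to 1,315 and that reproduces the reported MD specificity and F1-score. Function names are hypothetical.

```python
# Illustrative sketch; not the authors' SPSS analysis.
from typing import Sequence


def cohen_kappa(n_agree: int, expert_totals: Sequence[int], model_totals: Sequence[int]) -> float:
    """Cohen's kappa from the diagonal sum and the marginals of a confusion matrix."""
    n = sum(expert_totals)
    if n != sum(model_totals):
        raise ValueError("expert and model totals must both sum to N")
    p_o = n_agree / n                                                     # observed agreement
    p_e = sum(e * m for e, m in zip(expert_totals, model_totals)) / n**2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


def kappa_band(k: float) -> str:
    """Bands as defined in this study (values below 0.61 are not named there)."""
    if k > 0.80:
        return "high"
    if k >= 0.61:
        return "substantial"
    return "below 0.61"


def auc_band(auc: float) -> str:
    """Bands as defined in this study; 'high' requires > 0.85, 0.70 counts as 'average'."""
    if auc > 0.85:
        return "high"
    if auc >= 0.70:
        return "average"
    if auc >= 0.50:
        return "poor"
    return "below chance"


if __name__ == "__main__":
    expert = [300, 162, 308, 126, 238, 181]  # normal, RVO, high myopia, glaucoma, DR, MD
    model = [301, 171, 300, 134, 225, 184]   # EfficientNet-B4 predictions
    k = cohen_kappa(1257, expert, model)
    print(f"{k:.4f}", kappa_band(k))  # 0.9461 high
```

Only the diagonal sum and the marginals are needed, so kappa can be checked from the counts reported in the Results without the full confusion matrix.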

Results

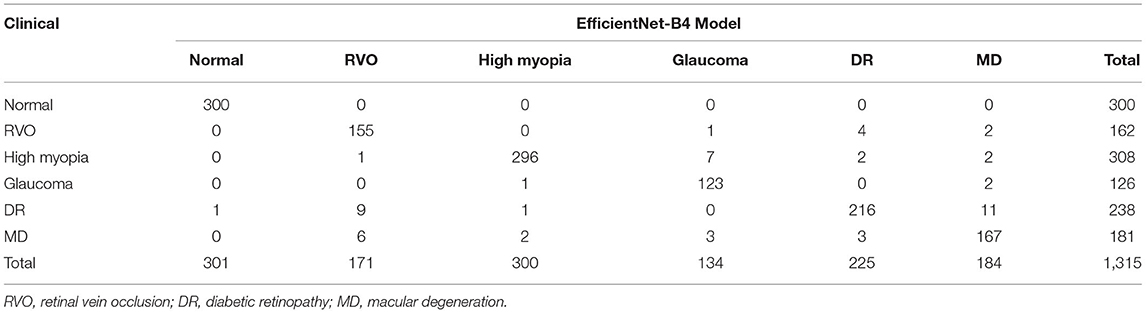

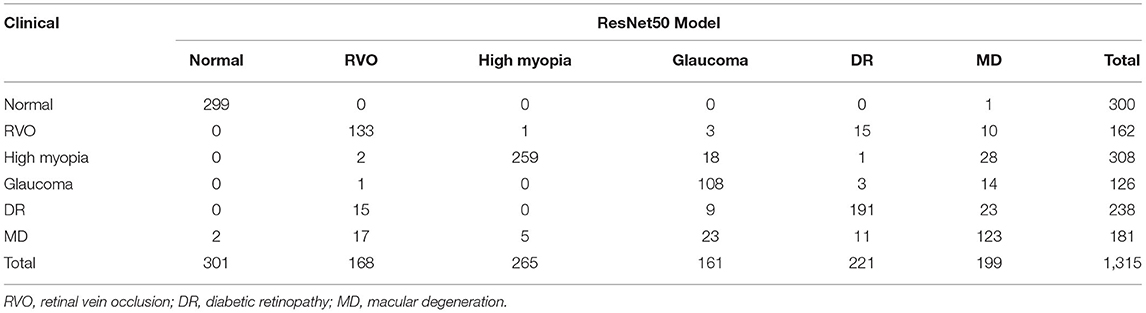

The six-category models of common retinal diseases were tested on 1,315 fundus images. The expert ophthalmologist diagnosed 300 images as normal, 162 as RVO, 308 as high myopia, 126 as glaucoma, 238 as DR, and 181 as MD. The EfficientNet-B4 six-category model diagnosed 301 images as normal, 171 as RVO, 300 as high myopia, 134 as glaucoma, 225 as DR, and 184 as MD. The ResNet50 six-category model diagnosed 301 images as normal, 168 as RVO, 265 as high myopia, 161 as glaucoma, 221 as DR, and 199 as MD. The results of the EfficientNet-B4 and ResNet50 models are shown in Tables 1 and 2, respectively.
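The per-class indicators are one-vs-rest statistics: for each class, every other class counts as negative. The Python sketch below shows how sensitivity, specificity, and F1-score follow from three counts per class. It is a sketch under stated assumptions: the true-positive counts are back-calculated from the reported EfficientNet-B4 sensitivities (sensitivity × expert count) rather than read from Tables 1 and 2, and the MD prediction count is taken as 184, the value that makes the model's predictions sum to 1,315. With these inputs the sketch reproduces the sensitivity, specificity, F1-score, and accuracy values reported in the abstract. `Counts` and `one_vs_rest` are hypothetical names.

```python
# Sketch with back-calculated true-positive counts; not taken from the study's tables.
from typing import NamedTuple, Tuple


class Counts(NamedTuple):
    n_true: int  # images the expert assigned to this class
    n_pred: int  # images the model assigned to this class
    tp: int      # images both assigned to this class


def one_vs_rest(c: Counts, n_total: int) -> Tuple[float, float, float]:
    """Sensitivity, specificity, and F1-score for one class vs. all others."""
    fn = c.n_true - c.tp
    fp = c.n_pred - c.tp
    tn = n_total - c.tp - fn - fp
    sensitivity = c.tp / (c.tp + fn)
    specificity = tn / (tn + fp)
    f1 = 2 * c.tp / (2 * c.tp + fp + fn)  # algebraically equal to 2PR / (P + R)
    return sensitivity, specificity, f1


# EfficientNet-B4 on the 1,315-image test set (tp back-calculated, see lead-in).
EFFNET_B4 = {
    "normal": Counts(300, 301, 300),
    "RVO": Counts(162, 171, 155),
    "high myopia": Counts(308, 300, 296),
    "glaucoma": Counts(126, 134, 123),
    "DR": Counts(238, 225, 216),
    "MD": Counts(181, 184, 167),
}
N = 1315

if __name__ == "__main__":
    for name, c in EFFNET_B4.items():
        sens, spec, f1 = one_vs_rest(c, N)
        print(f"{name:12s} sens={sens:.2%} spec={spec:.2%} F1={f1:.2%}")
    accuracy = sum(c.tp for c in EFFNET_B4.values()) / N
    print(f"accuracy={accuracy:.2%}")  # accuracy=95.59%
```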

Compared with the expert diagnoses, the EfficientNet-B4 six-category model had >95% sensitivity for RVO, high myopia, and glaucoma and >90% sensitivity for DR and MD. Its specificity for the five retinal diseases was approximately 99%, all AUCs were above 0.95, and the kappa value was 94.61%, indicating high consistency with the expert. The ResNet50 six-category model (ResNet50-6) had >80% sensitivity for RVO, high myopia, glaucoma, and DR, but its sensitivity for MD was only 67.96%. Its specificity for the five retinal diseases was >93%, all AUCs were above 0.80, and the kappa value was 81.31%, which also indicates high consistency. The ResNet50 five-category model (ResNet50-5) is the five-category intelligent auxiliary diagnosis model of common retinal diseases previously developed by our team (15). For normal fundus images, all indicators of the three models reached 99%. The evaluation results of the three models are shown in Table 3.

The EfficientNet-B4 and ResNet50-6 models are six-category models, whereas the ResNet50-5 model is a five-category model for common retinal diseases. The EfficientNet-B4 model outperformed both the ResNet50-6 and ResNet50-5 models in sensitivity and specificity for diagnosing RVO, high myopia, glaucoma, and DR. The ResNet50-5 model diagnosed RVO, high myopia, glaucoma, and DR more accurately than the ResNet50-6 model. Figure 3 shows the accuracy and loss curves of EfficientNet-B4. Figure 4 compares the ROC curves of the EfficientNet-B4 and ResNet50-6 models for the normal fundus and the five common retinal diseases.

Figure 4. ROC curves of the EfficientNet-B4 and ResNet50-6 models for the normal fundus and the five common retinal diseases.
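The study does not describe how the per-class ROC curves and AUCs were computed. A common approach for a multi-class model treats each class one-vs-rest, scoring every image by the model's predicted probability for that class. The AUC then equals the probability that a randomly chosen positive image scores higher than a randomly chosen negative one (the Mann-Whitney statistic). The Python sketch below computes it directly from that definition; the labels and scores are toy values for illustration, not study data, and `one_vs_rest_auc` is a hypothetical name.

```python
# Sketch of a one-vs-rest AUC; toy data only.
from typing import Sequence


def one_vs_rest_auc(y_true: Sequence[str], scores: Sequence[float], positive: str) -> float:
    """AUC for one class vs. the rest, as the Mann-Whitney pairwise win rate.

    scores[i] is the model's score (e.g. softmax probability) that image i
    belongs to `positive`. Ties between a positive and a negative count 0.5.
    """
    pos = [s for y, s in zip(y_true, scores) if y == positive]
    neg = [s for y, s in zip(y_true, scores) if y != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative image")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = ["MD", "MD", "MD", "DR", "normal", "glaucoma"]
    md_scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
    print(round(one_vs_rest_auc(labels, md_scores, "MD"), 4))  # 0.8889
```

The pairwise loop is quadratic in the number of images, which is fine for illustration; rank-based formulas give the same value more efficiently on large test sets.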

This study used Grad-CAM (31) to generate heat maps for the EfficientNet-B4 and ResNet50-6 models, shown in the second and third columns of Figure 1, respectively. The focus areas highlighted by the EfficientNet-B4 heat maps are more accurate than those highlighted by the ResNet50-6 heat maps. In this study, when the same algorithm was used to generate the heat maps, the model with better evaluation metrics produced more accurately localized heat maps.
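Grad-CAM (31) weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the target class score, sums the weighted maps, and keeps only positive values. The NumPy sketch below shows that core computation. It assumes the activations and gradients have already been extracted for one image and one class (in practice via framework hooks); the layer choice and the upsampling of the map to fundus-image size are not specified in the study and are omitted. `grad_cam` is a hypothetical name.

```python
# Sketch of the Grad-CAM weighting step; inputs are assumed to be pre-extracted.
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map for one image and one target class.

    activations: feature maps A^k of the chosen conv layer, shape (K, H, W).
    gradients:   d(class score)/dA^k for the same layer, shape (K, H, W).
    Returns an (H, W) map scaled to [0, 1] (all zeros if no positive evidence).
    """
    if activations.shape != gradients.shape or activations.ndim != 3:
        raise ValueError("expected two arrays of identical shape (K, H, W)")
    alpha = gradients.mean(axis=(1, 2))                  # one weight per channel
    cam = np.tensordot(alpha, activations, axes=(0, 0))  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                           # ReLU: keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


if __name__ == "__main__":
    acts = np.zeros((2, 2, 2))
    acts[0] = [[1, 2], [3, 4]]
    acts[1] = [[5, 5], [5, 5]]
    grads = np.zeros_like(acts)
    grads[0] = 1.0  # only channel 0 influences the class score
    print(grad_cam(acts, grads))
```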

Discussion

In 1998, LeCun et al. (32) proposed the LeNet-5 model, which used a convolutional neural network to recognize handwritten digits. Their study laid the foundation for the basic convolutional neural network (CNN) architecture of convolutional, pooling, and fully connected layers. Since 2012, deep learning has developed rapidly. The AlexNet (33), VGG, GoogLeNet (34), and ResNet models achieved the best results in image classification or object detection in the ImageNet Large Scale Visual Recognition Challenge. In 2019, Google researchers proposed the EfficientNet model: the MnasNet (35) architecture search method was first used to design EfficientNet-B0, which served as the baseline network for EfficientNet-B1 to B7, and the network depth, width, and resolution were scaled up in the succeeding versions. In this study, the EfficientNet model was selected to classify the normal fundus and the five common retinal diseases from fundus images. Compared with the other models, EfficientNet extracted image features more effectively, which improved classification and diagnosis.

Tables 1 and 2 show that the EfficientNet-B4 model had better diagnostic ability than the ResNet50-6 model. One likely reason is the complexity of fundus images: focus areas are inconsistent, and the characteristics of different retinal diseases vary. ResNet50 has 50 layers, whereas EfficientNet-B4 is deeper, so it can extract deeper features of the fundus image through operations such as convolution. These deep features can help increase the accuracy of the model's diagnosis. However, both models performed relatively poorly on MD. Because of its complicated manifestations in fundus images, MD was frequently misdiagnosed as other diseases; the models had difficulty distinguishing MD from other types of macular lesions.

Our team previously proposed a five-category model based on ResNet50 for normal fundus images and four common retinal diseases; MD was not included in that study. The results for the shared retinal diseases were compared with those of that five-category model, and the ResNet50-5 results in Table 3 come from it. Adding a new category makes each target harder for the model to identify and may therefore decrease classification accuracy. In particular, the features of other retinal diseases may overlap with those of MD, which makes MD harder to diagnose and increases the probability that MD is misdiagnosed as another retinal disease.

Other researchers have also studied multi-class classification of fundus diseases. Karthikeyan et al. (36) used deep learning to detect 12 major retinal complications, with a validation accuracy of 92.99%. Wang et al. (37) used the EfficientNet model for multi-label classification and achieved an accuracy of 92%. The accuracy of the EfficientNet-B4 model in this study was 95.59%, but each image had only a single label (normal or one of the five common fundus diseases). Images of other fundus diseases would usually be classified into one of these five disease categories and would then need to be re-diagnosed by a doctor.

Tables 1–3 show that both six-category models could reliably identify normal fundus images; normal images were rarely diagnosed with retinal disease. For each model, only one or two images with retinal disease were diagnosed as normal, and these misdiagnoses occurred mainly in DR and MD images. In the typical diagnostic workflow, a doctor confirms the result after the preliminary diagnosis by the classification model. Because DR and MD lesions are relatively apparent, even non-ophthalmologists can judge them well after basic training. Therefore, missed diagnoses of these two retinal diseases should be greatly reduced after the doctor's confirmation.

The six-category model for common fundus diseases based on EfficientNet-B4 had high sensitivity and specificity for diagnosing the normal fundus and five common retinal diseases. Therefore, it may be suitable for the preliminary diagnosis of common retinal diseases in primary hospitals, where it may help increase diagnostic accuracy and support early detection, diagnosis, treatment, and referral. However, the model also has some shortcomings; for example, its sensitivity for DR and MD was lower than for the other retinal diseases. Its diagnostic performance could be further improved by increasing the number of training images.

Data Availability Statement

The raw data supporting the conclusions of this article will be available from the corresponding author on reasonable request.

Author Contributions

SZ and BZ wrote the manuscript. CW, MW, and WY reviewed the manuscript. BL and QJ trained the model. RW and QC collected and labeled the data. All authors issued final approval for the version to be submitted.

Funding

This research was supported by the National Natural Science Foundation of China (No. 61906066), the Natural Science Foundation of Zhejiang Province (No. LQ18F020002), the Science and Technology Planning Project of Huzhou Municipality (No. 2016YZ02), the Nanjing Enterprise Expert Team Project, the Medical Science and Technology Development Project Fund of Nanjing (Grant No. YKK21262), and the Teaching Reform Research Project of Zhejiang Province (jg20190446).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank Editage (www.editage.cn) for English language editing.

References

1. Hogarty DT, Mackey DA, Hewitt AW. Current state and future prospects of artificial intelligence in ophthalmology: a review. Clin Experiment Ophthalmol. (2019) 47:128–39. doi: 10.1111/ceo.13381

2. Yang WH, Zheng B, Wu MN, Zhu SJ, Fei FQ, Weng M, et al. An evaluation system of fundus photograph-based intelligent diagnostic technology for diabetic retinopathy and applicability for research. Diabetes Ther. (2019) 10:1811–22. doi: 10.1007/s13300-019-0652-0

3. Armstrong GW, Lorch AC. A (eye): a review of current applications of artificial intelligence and machine learning in ophthalmology. Int Ophthalmol Clin. (2020) 60:57–71. doi: 10.1097/IIO.0000000000000298

4. Nagasato D, Tabuchi H, Masumoto H, Enno H, Ishitobi N, Kameoka M, et al. Automated detection of a nonperfusion area caused by retinal vein occlusion in optical coherence tomography angiography images using deep learning. PLoS ONE. (2019) 14:1–14. doi: 10.1371/journal.pone.0223965

5. Tan TE, Anees A, Chen C, Li SH, Xu XX, Li ZX, et al. Retinal photograph-based deep learning algorithms for myopia and a blockchain platform to facilitate artificial intelligence medical research: a retrospective multicohort study. Lancet Digit Health. (2021) 3:e317–29. doi: 10.1016/S2589-7500(21)00055-8

6. Walter T, Massin P, Erginay A, Ordonez R, Jeulin C, Klein JC. Automatic detection of microaneurysms in color fundus images. Med Image Anal. (2007) 11:555–66. doi: 10.1016/j.media.2007.05.001

7. Hosoda Y, Miyake M, Yamashiro K, Ooto S, Takahashi A, Oishi A, et al. Deep phenotype unsupervised machine learning revealed the significance of pachychoroid features in etiology and visual prognosis of age-related macular degeneration. Sci Rep. (2020) 10:1–13. doi: 10.1038/s41598-020-75451-5

8. Xu J, Yang W, Wan C, Shen J. Weakly supervised detection of central serous chorioretinopathy based on local binary patterns and discrete wavelet transform. Comput Biol Med. (2020) 127:104056. doi: 10.1016/j.compbiomed.2020.104056

9. Chowdhury AR, Banerjee S. Detection of abnormalities of retina due to diabetic retinopathy and age related macular degeneration using SVM. Sci J Circuits Syst Signal Process. (2016) 5:1–7. doi: 10.11648/j.cssp.20160501.11

10. Rajan A, Ramesh GP. Automated early detection of glaucoma in wavelet domain using optical coherence tomography images. Biosci Biotechnol Res Asia. (2015) 12:2821–8. doi: 10.13005/bbra/1966

11. Zhang H, Chen Z, Chi Z, Fu H. Hierarchical local binary pattern for branch retinal vein occlusion recognition with fluorescein angiography images. Electron Lett. (2014) 50:1902–4. doi: 10.1049/el.2014.2854

12. Liu J, Wong DWK, Lim JH, Tan NM, Zhang Z, Li H, et al. Detection of pathological myopia by PAMELA with texture-based features through an SVM approach. J Healthc Eng. (2010) 1:1–11. doi: 10.1260/2040-2295.1.1.1

13. Bizios D, Heijl A, Hougaard JL, Bengtsson B. Machine learning classifiers for glaucoma diagnosis based on classification of retinal nerve fibre layer thickness parameters measured by Stratus OCT. Acta Ophthalmol. (2010) 88:44–52. doi: 10.1111/j.1755-3768.2009.01784.x

14. Fu H, Li F, Sun X, Cao X, Liao J, Orlando JI, et al. AGE challenge: angle closure glaucoma evaluation in anterior segment optical coherence tomography. Med Image Anal. (2020) 66:101798. doi: 10.1016/j.media.2020.101798

15. Zheng B, Jiang Q, Lu B, He K, Wu MN, Hao XL, et al. Five-category intelligent auxiliary diagnosis model of common fundus diseases based on fundus images. Transl Vis Sci Technol. (2021) 10:1–10. doi: 10.1167/tvst.10.7.20

16. Xu J, Wan C, Yang W, Zheng B, Yan Z, Shen J. A novel multi-modal fundus image fusion method for guiding the laser surgery of central serous chorioretinopathy. Math Biosci Eng. (2021) 18:4797–816. doi: 10.3934/mbe.2021244

17. Zheng B, Liu Y, He K, Wu M, Jin L, Jiang Q, et al. Research on an intelligent lightweight-assisted pterygium diagnosis model based on anterior segment images. Dis Markers. (2021) 2021:1–8. doi: 10.1155/2021/7651462

18. Zhang H, Niu K, Xiong Y, Yang W, He Z, Song H. Automatic cataract grading methods based on deep learning. Comput Methods Programs Biomed. (2019) 182:104978. doi: 10.1016/j.cmpb.2019.07.006

19. Tang Z, Zhang X, Yang G, Zhang G, Gong Y, Zhao K, et al. Automated segmentation of retinal nonperfusion area in fluorescein angiography in retinal vein occlusion using convolutional neural networks. Med Phys. (2021) 48:648–58. doi: 10.1002/mp.14640

20. Li Y, Feng W, Zhao X, Liu B, Zhang Y, Chi W, et al. Development and validation of a deep learning system to screen vision-threatening conditions in high myopia using optical coherence tomography images. Br J Ophthalmol. (2020) 0:1–7. doi: 10.1136/bjophthalmol-2020-317825

21. Yim J, Chopra R, Spitz T, Winkens J, Obika A, Kelly C, et al. Predicting conversion to wet age-related macular degeneration using deep learning. Nat Med. (2020) 26:892–9. doi: 10.1038/s41591-020-0867-7

22. Yan Q, Weeks DE, Xin H, Swaroop A, Chew EY, Huang H, et al. Deep-learning-based prediction of late age-related macular degeneration progression. Nat Mach Intell. (2020) 2:141–50. doi: 10.1038/s42256-020-0154-9

23. Orlando JI, Fu H, Breda JB, Keer K, Bathula DR, Rinto AD, et al. REFUGE challenge: a unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Med Image Anal. (2020) 59:101570. doi: 10.1016/j.media.2019.101570

24. Nagasato D, Tabuchi H, Ohsugi H, Masumoto H, Enno H, Ishitobi N, et al. Deep-learning classifier with ultrawide-field fundus ophthalmoscopy for detecting branch retinal vein occlusion. Int J Ophthalmol. (2019) 12:94–99. doi: 10.18240/ijo.2019.01.15

25. Ahn JM, Kim S, Ahn KS, Cho SH, Lee KB, Kim US. A deep learning model for the detection of both advanced and early glaucoma using fundus photography. PLoS ONE. (2018) 13:1–8. doi: 10.1371/journal.pone.0207982

26. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

27. Cen LP, Ji J, Lin JW, Ju ST, Lin HJ, Li TP, et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat Commun. (2021) 12:1–13. doi: 10.1038/s41467-021-25138-w

28. Tan M, Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning. Long Beach, CA: PMLR (2019). p. 6105–14.

29. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014). Available online at: https://arxiv.org/abs/1409.1556.pdf

30. Deng J, Dong W, Socher R, Li L-J, Li K, Li F-F. ImageNet: a large-scale hierarchical image database. In: IEEE Conference on Computer Vision and Pattern Recognition. Miami, FL. (2009). p. 248–55. doi: 10.1109/CVPR.2009.5206848

31. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision. Venice. (2017). p. 618–26.

32. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE. (1998) 86:2278–324. doi: 10.1109/5.726791

33. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst. (2012) 25:1097–105. doi: 10.1145/3065386

34. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. Boston, MA. (2015). p. 1–9.

35. Tan M, Chen B, Pang R, Vasudevan V, Sandler M, Howard A, et al. MnasNet: platform-aware neural architecture search for mobile. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, CA. (2019). p. 2820–8.

36. Karthikeyan S, Kumar PS, Madhusudan RJ, Sundaramoorthy SK, Namboori PKK. Detection of multi-class retinal diseases using artificial intelligence: an expeditious learning using deep CNN with minimal data. Biomed Pharmacol J. (2019) 12:1577–86. doi: 10.13005/bpj/1788

Keywords: fundus, retinal diseases, computer simulation, vision screening, optical imaging

Citation: Zhu S, Lu B, Wang C, Wu M, Zheng B, Jiang Q, Wei R, Cao Q and Yang W (2022) Screening of Common Retinal Diseases Using Six-Category Models Based on EfficientNet. Front. Med. 9:808402. doi: 10.3389/fmed.2022.808402

Received: 03 November 2021; Accepted: 12 January 2022;

Published: 23 February 2022.

Edited by:

Shaochong Zhang, Shenzhen Eye Hospital, China

Reviewed by:

Carl-Magnus Svensson, Leibniz Institute for Natural Product Research and Infection Biology, Germany

Yalin Zheng, University of Liverpool, United Kingdom

João Almeida, Federal University of Maranhão, Brazil

Copyright © 2022 Zhu, Lu, Wang, Wu, Zheng, Jiang, Wei, Cao and Yang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qin Jiang, jqin710@vip.sina.com; Ruili Wei, ruiliwei@126.com; Weihua Yang, benben0606@139.com

†These authors have contributed equally to this work and share first authorship

Shaojun Zhu1,2†

Maonian Wu

Bo Zheng

Ruili Wei

Weihua Yang