ORIGINAL RESEARCH article

Front. Med., 13 January 2022

Sec. Precision Medicine

Volume 8 - 2021 | https://doi.org/10.3389/fmed.2021.810995

This article is part of the Research Topic: Intelligent Analysis of Biomedical Imaging Data for Precision Medicine

Background: Multiparametric magnetic resonance imaging (mpMRI) plays an important role in the diagnosis of prostate cancer (PCa) in the current clinical setting. However, the performance of mpMRI usually varies based on the experience of the radiologists at different levels; thus, the demand for MRI interpretation warrants further analysis. In this study, we developed a deep learning (DL) model to improve PCa diagnostic ability using mpMRI and whole-mount histopathology data.

Methods: A total of 739 patients, including 466 with PCa and 273 without PCa, were enrolled from January 2017 to December 2019. The mpMRI (T2 weighted imaging, diffusion weighted imaging, and apparent diffusion coefficient sequences) data were randomly divided into training (n = 659) and validation (n = 80) datasets. Using whole-mount histopathology as the reference, a DL model comprising independent segmentation and classification networks was developed to extract the gland and PCa area for PCa diagnosis. The area under the curve (AUC) was used to evaluate the performance of the prostate classification network. The proposed DL model was subsequently used in clinical practice (independent test dataset; n = 200), and the PCa detection/diagnostic performance of the DL model and of radiologists at different levels was evaluated based on sensitivity, specificity, precision, and accuracy.

Results: The AUC of the prostate classification network was 0.871 in the validation dataset, and it reached 0.797 using the DL model in the test dataset. Furthermore, the sensitivity, specificity, precision, and accuracy of the DL model for diagnosing PCa in the test dataset were 0.710, 0.690, 0.696, and 0.700, respectively. For the junior radiologist without and with DL model assistance, these values were 0.590, 0.700, 0.663, and 0.645 vs. 0.790, 0.720, 0.738, and 0.755, respectively. For the senior radiologist, the values were 0.690, 0.770, 0.750, and 0.730 vs. 0.810, 0.840, 0.835, and 0.825, respectively. The diagnostic performance values of the radiologists with DL model assistance were significantly higher than those without assistance (P < 0.05).

Conclusion: The diagnostic performance of the DL model is higher than that of junior radiologists, and the model can improve PCa diagnostic accuracy for both junior and senior radiologists.

Prostate cancer (PCa) is a major public health problem, representing the most common cancer type and the second highest cancer mortality among men in western countries (1). Multiparametric magnetic resonance imaging (mpMRI) plays an important role in diagnosis, targeted puncture guidance, and prognosis assessment of PCa in the current clinical setting (2). However, the performance of mpMRI usually varies based on the experience of radiologists at different levels (3), and the demand for MRI interpretation is ever-increasing. A convolutional neural network (CNN) approach, which can surpass human performance in natural image analysis, is anticipated to enhance computer-assisted diagnosis in prostate MRI (4, 5).

The CNN-based deep learning (DL) method revolutionizes and reshapes the existing work pattern. Diffusion weighted imaging (DWI), apparent diffusion coefficient (ADC), and T2-weighted imaging (T2WI) sequences are probably the most important and practical components of clinical prostate MRI examinations (6, 7). Several previous studies on DL involved a PCa diagnosis using only one or two of the above sequences and thus cannot be directly compared with clinical performance (8, 9).

Some studies focused on DL models with MRI data labeling based on biopsy locations that were determined by radiologists (10), which could result in inaccurate labeling. Whole-mount tissue sections, in which the entire cross-section of tissue from the gross section is mounted to the slide, provide pathologists with a good overview that facilitates the identification of tumor foci (11–13). Prostate specimen whole-mount sectioning provides significantly better anatomical registration for PCa than mpMRI alone. Herein, we propose that radiologists label PCa lesions on the MRI images using whole-mount histopathology images as a reference to increase the accuracy of the labels.

In this study, a DL method was proposed to automatically perform prostate gland segmentation, classification, and regional segmentation of PCa lesions, and its diagnostic efficiency was subsequently compared with that of radiologists at different levels in clinical practice.

This retrospective study was approved by the Ethics Institution of Nanjing Drum Tower Hospital, and informed consent was waived since T2WI, DWI, and ADC sequences are part of the routine protocols for prostate MRI scans.

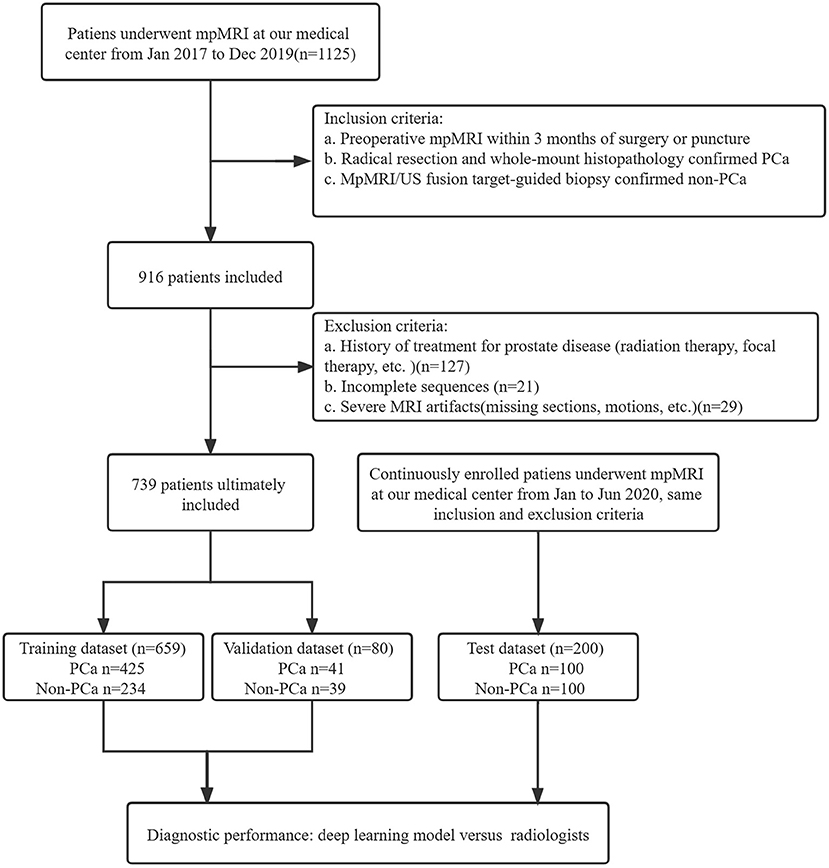

A total of 1,125 patients who underwent prostate mpMRI between January 2017 and December 2019 were enrolled in the study. The inclusion criteria were as follows: (a) preoperative mpMRI within 3 months of surgery or puncture, (b) radical resection and whole-mount histopathology-confirmed PCa, and (c) mpMRI/ultrasonography (US) fusion target-guided biopsy or surgery confirmed non-PCa. Patients without PCa were defined as those with a negative biopsy or surgery result. The exclusion criteria were (a) a history of treatment for prostate disease (radiation therapy, focal therapy, etc.), (b) incomplete imaging sequences, (c) severe MRI artifacts (missing sections, motion, etc.), and (d) unavailable whole-mount histopathology. All MRI scans were reviewed in consensus by two radiologists, a junior radiologist (5 years of experience) and a senior radiologist (10 years of experience) specializing in genitourinary imaging. A total of 739 patients, including 466 patients with PCa and 273 patients without PCa, were included for training and validation of the model. The independent test dataset was consecutively collected from January 2020 to June 2020 with the same inclusion and exclusion criteria as mentioned above. A flowchart of the patient selection is shown in Figure 1.

Figure 1. Study flowchart of patient selection. PSA, prostate-specific antigen; mpMRI, multiparametric MRI; US, ultrasound.

Patients were scanned using two 3.0 T MRI scanners (uMR770; United Imaging, Shanghai, China and Ingenia; Philips Healthcare, Best, the Netherlands) with the same sequences and standard phased array surface coils according to the European Society of Urogenital Radiology guidelines. T1WI, T2WI, DWI and ADC sequences were acquired. Detailed parameters for transverse DWI (b-values of 50, 1,000, and 1,500 s/mm²) were as follows: repetition time (TR), 5,100 ms; echo time (TE), 80 ms; field of view, 26 × 22 cm; and thickness, 3 mm. Low b-value images were acquired at 50 s/mm² to avoid perfusion effects at a b-value of 0 s/mm². ADC maps were calculated from the b-value (1,500 s/mm²) using the scanner software. T2WI, DWI (b-value of 1,500 s/mm²), and ADC (b-value of 1,500 s/mm²) sequences were used in this study.
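The text does not describe how the scanner software derives the ADC map. As a rough illustration only, the sketch below uses the common two-point mono-exponential model, S(b) = S0 · exp(−b · ADC), and assumes the low (50 s/mm²) and high (1,500 s/mm²) b-value images are the two inputs. The function name and the example voxel are hypothetical.

```python
import math

def adc_two_point(s_low, s_high, b_low=50.0, b_high=1500.0):
    """Two-point mono-exponential ADC estimate in mm^2/s.

    Assumes S(b) = S0 * exp(-b * ADC), which gives
    ADC = ln(S_low / S_high) / (b_high - b_low).
    The choice of b-values is an assumption, not taken from the paper.
    """
    if s_low <= 0 or s_high <= 0:
        raise ValueError("signal intensities must be positive")
    return math.log(s_low / s_high) / (b_high - b_low)

# Hypothetical voxel whose signal is simulated with a known ADC of 1.0e-3 mm^2/s.
true_adc = 1.0e-3
s0 = 1000.0
s_low = s0 * math.exp(-50.0 * true_adc)
s_high = s0 * math.exp(-1500.0 * true_adc)
estimated_adc = adc_two_point(s_low, s_high)
print(estimated_adc)
```

Because the simulated signal follows the model exactly, the estimate recovers the ADC used to generate it, up to floating-point error.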

All the cases were confirmed by mpMRI/US fusion-guided targeted biopsy, and patients with PCa were further confirmed by radical resection and whole-mount histopathology. All the biopsies were conducted using an MRI-transrectal ultrasound (TRUS) image registration system (Esaote® and RVS®). Whole-mount specimens were sliced from the apex to the base at 3-mm intervals following prostatectomy. All the specimens were examined by two independent urological pathologists.

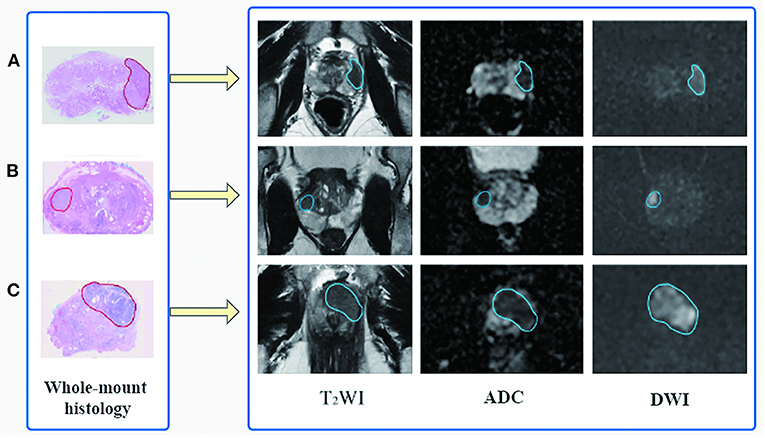

Based on the whole-mount histopathology images, the prostate gland and all the cancer regions on T2WI, DWI, and ADC sequences were labeled by two radiologists (with 5 and 10 years of experience, respectively) under the supervision of a more senior radiologist (with 15 years of experience) using the open-source software ITK-SNAP (http://www.simpleitk.org, version 3.8.0). The workflow is illustrated in Figure 2.

Figure 2. Flowchart of region of interest delineation for prostate cancer lesion. All the prostate cancer lesions were manually labeled on the magnetic resonance images using whole-mount histopathology as a reference. Representative cases of prostate cancer in different zone distributions: (A) the lesion is in the left peripheral zone, (B) in the right peripheral and transition zone, and (C) in the transition zone and anterior fibromuscular stroma.

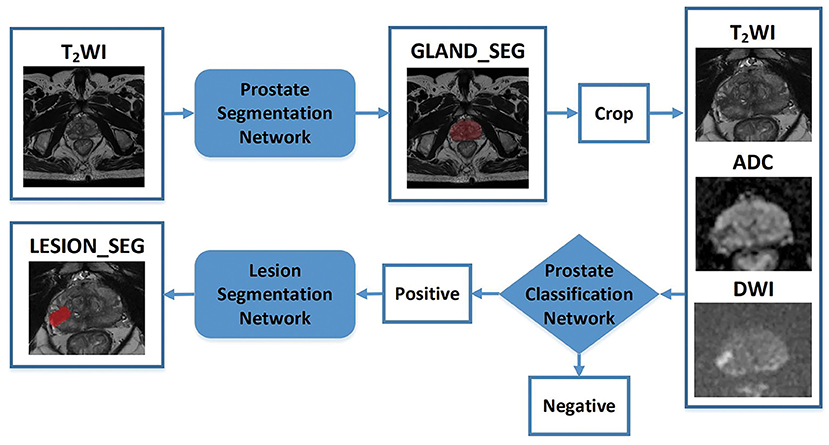

A CNN was constructed for prostate gland segmentation, classification, and cancer region segmentation/detection tasks. The model structure is illustrated in Figure 3.

Figure 3. Flowchart of the study. The blocks highlighted in blue (prostate gland segmentation network, prostate cancer classification network, prostate cancer segmentation/detection network) denote the network models used in our study. “Crop” represents a fixed-size region of interest (ROI) used to crop the prostate gland according to the result of the prostate gland segmentation network. The cropped ROIs of ADC and DWI are registered to the cropped ROI of the T2-weighted imaging (T2WI), and the three cropped ROIs are then fed into the prostate cancer classification network. “Positive” represents a positive output of the classification network; in that case, the cropped ROIs are fed into the prostate cancer segmentation network to obtain the lesion region. “Negative” represents a negative output of the classification network; in that case, the cropped ROIs are not fed into the prostate cancer segmentation network.

First, a prostate gland segmentation network based on the T2WI sequence was implemented to obtain a mask of the gland. The mask was subsequently cropped to obtain three image patches including the gland on T2WI, DWI, and ADC sequences. Second, a prostate classification network based on the image patches from the T2WI, DWI, and ADC sequences was used to determine whether the gland had PCa lesion(s). If the gland was abnormal, a PCa segmentation network was used to obtain the lesion region. It is worth noting that the T2WI, DWI, and ADC patches were obtained based on the prostate gland segmentation results and were of fixed and similar sizes, including the gland.
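The control flow of this cascade can be sketched in a few lines. The sketch below is not the authors' implementation: the three networks are passed in as placeholder callables, images are plain nested lists, the three sequences are assumed to be already registered to each other, and the names `crop_box`, `crop`, and `diagnose` are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Image = List[List[float]]  # one 2D slice, row-major
Mask = List[List[int]]     # binary mask with the same shape as the image
Box = Tuple[int, int, int, int]

def crop_box(mask: Mask, size: int) -> Box:
    """Fixed-size square box centred on the gland mask, clamped to the image."""
    height, width = len(mask), len(mask[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds image size")
    rows = [r for r in range(height) if any(mask[r])]
    cols = [c for c in range(width) if any(mask[r][c] for r in range(height))]
    if not rows:
        raise ValueError("empty gland mask")
    centre_r = (rows[0] + rows[-1]) // 2
    centre_c = (cols[0] + cols[-1]) // 2
    top = min(max(centre_r - size // 2, 0), height - size)
    left = min(max(centre_c - size // 2, 0), width - size)
    return top, left, top + size, left + size

def crop(image: Image, box: Box) -> Image:
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]

def diagnose(
    t2wi: Image,
    dwi: Image,
    adc: Image,
    segment_gland: Callable[[Image], Mask],
    classify: Callable[[Dict[str, Image]], bool],
    segment_lesion: Callable[[Dict[str, Image]], Mask],
    size: int,
) -> Tuple[bool, Optional[Mask]]:
    """Gland segmentation on T2WI, then crop, classify, and segment if positive."""
    box = crop_box(segment_gland(t2wi), size)
    patches = {"T2WI": crop(t2wi, box), "DWI": crop(dwi, box), "ADC": crop(adc, box)}
    if not classify(patches):
        return False, None  # negative glands skip the lesion network
    return True, segment_lesion(patches)

# Toy 6 x 6 example with stand-in "networks".
gland = [[1 if 2 <= r <= 3 and 2 <= c <= 4 else 0 for c in range(6)] for r in range(6)]
blank = [[0.0] * 6 for _ in range(6)]
positive, lesion = diagnose(
    blank, blank, blank,
    segment_gland=lambda image: gland,
    classify=lambda patches: True,
    segment_lesion=lambda patches: [[0] * 4 for _ in range(4)],
    size=4,
)
```

Passing the networks as callables keeps the routing logic separate from the models, so the same function applies whether a gland is classified as positive or negative.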

The prostate gland segmentation network was based on V-Net (14), as shown in Supplementary Figure 1. The classification network was based on dense convolutional network (DenseNet) (15), which was used to determine whether the gland was normal. DenseNet connects each layer to every other layer in a feed-forward fashion. The feature maps of all the preceding layers were used as inputs for each layer, and their feature maps were used as inputs to all the subsequent layers. Prostate cancer lesion segmentation was also performed based on the image patches of T2WI, DWI, and ADC sequences. To obtain a more accurate cancer region, the Up-Block in V-Net was changed to an Up SE-Block, which adds a squeeze-and-excitation operation following two convolutions, as shown in Supplementary Figure 2.
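To make the squeeze-and-excitation step concrete, the following pure-Python sketch applies it to a tiny feature map, following the general formulation of Hu et al. (24): global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, and channel-wise rescaling. It is not the Up SE-Block itself; the weights, shapes, and function names are made-up illustrations.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width

def squeeze(features: FeatureMap) -> List[float]:
    """Global average pooling: one scalar per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]

def dense(vector, weights, biases):
    """Fully connected layer; weights[j][i] maps input i to output j."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]

def excite(z, w1, b1, w2, b2) -> List[float]:
    """Bottleneck MLP (ReLU, then sigmoid) giving one gate in (0, 1) per channel."""
    hidden = [max(0.0, h) for h in dense(z, w1, b1)]
    return [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w2, b2)]

def se_block(features: FeatureMap, w1, b1, w2, b2) -> FeatureMap:
    """Rescale every channel by its learned gate."""
    gates = excite(squeeze(features), w1, b1, w2, b2)
    return [[[g * v for v in row] for row in ch] for ch, g in zip(features, gates)]

# Two channels of size 2 x 2, reduced to one hidden unit (hypothetical weights).
features = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
w1, b1 = [[1.0, 1.0]], [0.0]
w2, b2 = [[0.0], [1.0]], [0.0, 0.0]
rescaled = se_block(features, w1, b1, w2, b2)
```

In this toy case the first channel receives a gate of exactly 0.5 (sigmoid of 0) and the second a gate close to 1, so the block suppresses one channel relative to the other.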

In this study, all the networks were implemented using the PyTorch framework and Python 3.7. All the learning computations were performed on a Tesla V100 DGXS GPU with 32 GB of memory. The adaptive moment estimation (Adam) algorithm was applied to optimize the parameters of the prostate gland segmentation network; the training dataset was randomly shuffled, and a batch size of four was selected. The stochastic gradient descent (SGD) algorithm was applied to optimize the parameters of the PCa classification network (15); the training dataset was randomly shuffled, and a batch size of 12 was selected. Finally, the Adam algorithm was applied again to optimize the parameters of the PCa region segmentation network; the training dataset was randomly shuffled, and a batch size of four was selected. During the training process for the prostate gland and cancer segmentation networks, the Dice loss was adopted, and the network weights were updated using the Adam optimizer with an initial learning rate of 0.0001. During the training process for the classification network, the cross-entropy loss was adopted, and the network weights were updated using SGD with an initial learning rate of 0.1.
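For readers unfamiliar with the two loss functions, a minimal sketch follows. The exact variants used in training are not specified in the text, so this assumes a common soft Dice form (1 minus the Dice coefficient, with a small smoothing term) and binary cross-entropy over predicted probabilities. The function names and the `eps` values are illustrative assumptions.

```python
import math

def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss over flattened voxels: 1 - 2*sum(p*t) / (sum(p) + sum(t))."""
    intersection = sum(p * t for p, t in zip(pred, target))
    denominator = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)

perfect = dice_loss([1.0, 1.0, 0.0, 0.0], [1, 1, 0, 0])   # complete overlap
disjoint = dice_loss([1.0, 0.0], [0, 1])                   # no overlap
coin_flip = binary_cross_entropy([0.5, 0.5], [1, 0])       # uninformative classifier
```

Under this convention a Dice loss near 0 means near-complete overlap between the predicted and reference masks, and a loss near 1 means almost none.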

The T2WI, DWI, and ADC images were imported from the DICOM format into ITK-SNAP (version 3.8.0). The MR images with and without DL delineations were independently reviewed by two radiologists, a junior radiologist (5 years of experience) and a senior radiologist (10 years of experience) specializing in genitourinary imaging, who were blinded to the pathological results. PI-RADS v2.1 (16) recommendations were used by the junior and senior radiologists to evaluate the PCa likelihood of suspicious areas on mpMRI for each patient (17), and the results were divided into PCa (PI-RADS score 4–5 cases and some PI-RADS score 3 cases) and non-PCa. For PI-RADS score 3 cases in particular, the final diagnosis was made by another radiologist with 20 years of experience in genitourinary imaging.
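The reader decision rule described above can be written as a small function. This is only a sketch of the stated rule; the function name and the `adjudicated_pca` argument (standing in for the third radiologist's decision on score 3 cases) are hypothetical, and treating scores 1 and 2 as non-PCa is implied rather than stated in the text.

```python
from typing import Optional

def reader_call(pirads: int, adjudicated_pca: Optional[bool] = None) -> bool:
    """Map a PI-RADS v2.1 score to a binary PCa / non-PCa call.

    Scores 4-5 count as PCa; score 3 defers to an adjudicating
    radiologist; scores 1-2 are assumed to count as non-PCa.
    """
    if pirads not in (1, 2, 3, 4, 5):
        raise ValueError("PI-RADS score must be an integer from 1 to 5")
    if pirads >= 4:
        return True
    if pirads == 3:
        if adjudicated_pca is None:
            raise ValueError("score 3 requires an adjudicated decision")
        return adjudicated_pca
    return False

calls = [reader_call(5), reader_call(3, adjudicated_pca=True), reader_call(2)]
```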

Continuous variables are described using mean ± standard deviation, while categorical variables are described using frequency and ratio. The chi-square test was used for the sample size and location distribution. The DL model was verified using the validation and test datasets. The Dice loss was used to evaluate the performance of prostate gland and PCa lesion segmentation networks. The cross-entropy loss and AUC were used to evaluate the performance of the classification networks. Furthermore, the sensitivity, specificity, precision and accuracy were used to evaluate the diagnostic performance of the model in clinical application.
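As a reminder of what the AUC measures, the sketch below computes it directly from its rank interpretation: the probability that a randomly chosen PCa case receives a higher score than a randomly chosen non-PCa case, with ties counted as one half. The scores and labels are invented for illustration and are not study data.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))

# Hypothetical classifier outputs: three PCa cases and three non-PCa cases.
example_scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
example_labels = [1, 1, 1, 0, 0, 0]
example_auc = auc(example_scores, example_labels)
```

Here eight of the nine positive/negative pairs are ranked correctly, so the AUC is 8/9. The pairwise form is quadratic in the number of cases, which is adequate for datasets of the size used here.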

Patient demographic data and characteristics of the training, validation, and test datasets are shown in Table 1. There were no significant differences in the patient age or total prostate-specific antigen (PSA) values among the three groups. In the training dataset, there were 500 pathologically proven cancer lesions, with 315 lesions in the peripheral zone (PZ), 146 in the transitional zone (TZ), 3 in the anterior fibromuscular stroma (AFS), and 36 in the mixed region. In the validation dataset, there were 59 pathologically proven cancer lesions, with 42 lesions in the PZ, 10 in the TZ, 0 in the AFS, and 7 in the mixed region. In the test dataset, there were 127 pathologically proven cancer lesions, with 78 lesions in the PZ, 38 in the TZ, 1 in the AFS, and 10 in the mixed region.

The prostate gland segmentation network was trained for 700 epochs, by which point the Dice loss had converged to 0.068; the convergence graph is shown in Supplementary Figure 3. The prostate classification model was trained for 330 epochs, and the cross-entropy loss converged to 0.120; the convergence graph is shown in Supplementary Figure 4. The PCa segmentation model was trained for 240 epochs, by which point the loss had converged to 0.167; the convergence graph is shown in Supplementary Figure 5.

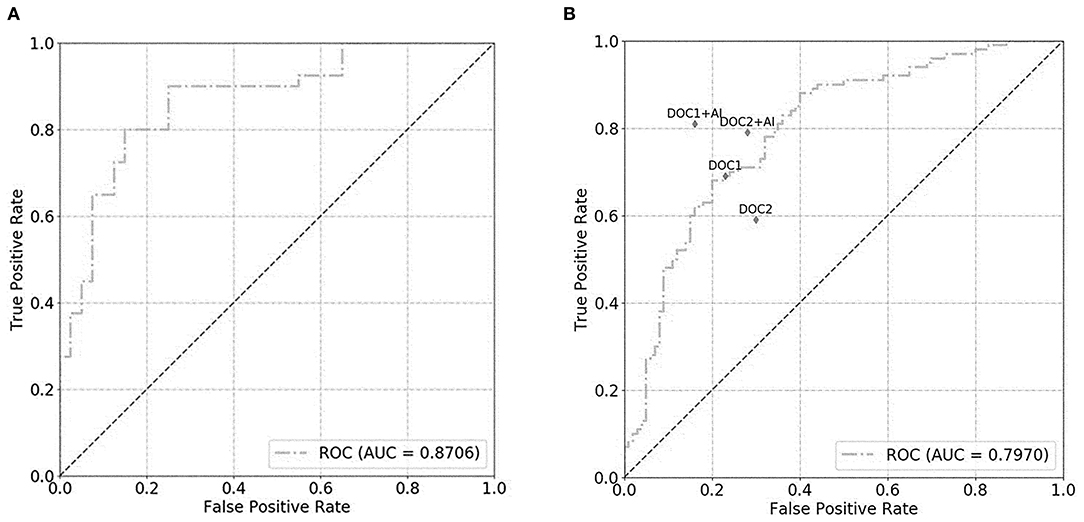

For the prostate gland automatic segmentation efficacy, the Dice loss values converged to 0.076, and the convergence graph is shown in Supplementary Figure 3. For the prostate automatic classification efficacy, the cross-entropy loss converged to 0.224, and the convergence graph is shown in Supplementary Figure 4. The AUC value for the prostate classification network was 0.871 (Figure 4A). For the prostate cancer automatic segmentation efficacy, the Dice loss values converged to 0.484, as shown in Supplementary Figure 5.

Figure 4. The graph shows the receiver operating characteristic (ROC) curve for prostate classification network performance. The ROC curves for validation set (A) and test set (B) show area under the curve (AUC) of 0.871 and 0.797, respectively. DOC1, senior radiologist; DOC2, junior radiologist.

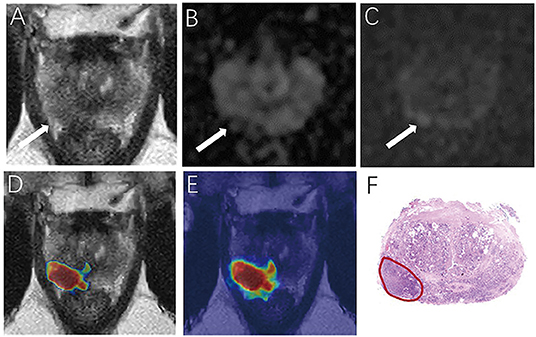

For the prostate automatic classification efficacy, the cross-entropy loss converged to 0.236. The AUC value for the prostate classification network was 0.797 in the test dataset (Figure 4B). Table 2 shows the evaluation of the model's diagnostic efficiency in practical applications based on the sensitivity, specificity, precision, and accuracy, with values of 0.710, 0.690, 0.696, and 0.700, respectively. For the junior radiologist without and with DL model assistance, these values were 0.590, 0.700, 0.663, and 0.645 vs. 0.790, 0.720, 0.738, and 0.755, respectively. For the senior radiologist, the values were 0.690, 0.770, 0.750, and 0.730 vs. 0.810, 0.840, 0.835, and 0.825, respectively. The values obtained with DL model assistance for radiologists were significantly higher than those without assistance (P < 0.05). Figure 5 shows a representative PCa example of radiologist-negative but DL model positive.
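The four reported measures follow directly from a 2 × 2 confusion matrix. The sketch below shows the arithmetic; the counts are an assumption (100 PCa and 100 non-PCa patients in the 200-patient test dataset), since the per-class split is not given in the text. These counts were chosen because they reproduce the values reported for the DL model.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, precision, and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),              # detected PCa / all PCa
        "specificity": tn / (tn + fp),              # correct non-PCa / all non-PCa
        "precision": tp / (tp + fp),                # true PCa / all positive calls
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Assumed counts (not stated in the paper): 100 PCa and 100 non-PCa patients.
dl_model = diagnostic_metrics(tp=71, fp=31, tn=69, fn=29)
junior_unassisted = diagnostic_metrics(tp=59, fp=30, tn=70, fn=41)

print({name: round(value, 3) for name, value in dl_model.items()})
print({name: round(value, 3) for name, value in junior_unassisted.items()})
```

With these assumed counts, the rounded results match the reported 0.710, 0.690, 0.696, and 0.700 for the DL model and 0.590, 0.700, 0.663, and 0.645 for the unassisted junior radiologist.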

Figure 5. Representative prostate cancer (PCa) example that was negative for the radiologists (A–C) and positive for the deep learning (DL) model (D,E). Images show a case of DL model segmentation in a 60-year-old patient in the test set with a prostate-specific antigen (PSA) level of 5.59 ng/mL. Axial T2-weighted image (A) shows an ill-defined area of slightly low signal in the right peripheral zone (arrow), with slightly restricted diffusion on the apparent diffusion coefficient (ADC) map (B). (C) Diffusion weighted imaging (DWI) (b-value 1,500 s/mm²) shows slightly increased signal in this region, with obvious conspicuity over the normal background signal; this lesion would be PI-RADS score 3 on magnetic resonance imaging (MRI). (D,E) show overlapping areas between the DL-detected PCa region and the genuine cancer location. The overlapping areas are colored in red. The software ITK-SNAP was used to open the probability map and MR images at the same time. Using the software, the probability map was displayed as a jet-type color map and overlaid on the T2-weighted imaging (T2WI) to obtain (E); the window width and window level of the probability map were adjusted to 0.5 and 0.75, respectively, to display the probability map of the detected cancer area, which was overlaid on the image to obtain (D).

We proposed a DL model for improving the diagnosis of PCa using mpMRI and whole-mount histopathology-referenced delineations. The diagnostic ability of the DL model was higher than that of a junior radiologist, and the model can improve PCa diagnostic accuracy for both junior and senior radiologists in clinical practice.

MpMRI plays an important role in the diagnostic workflow of patients with suspected PCa (18). DWI, ADC, and T2WI are probably the most important sequences in the detection, identification, and staging of PCa (19–21), and the dynamic contrast-enhanced (DCE) sequence offers limited added value compared to T2WI + ADC + DWI (22). According to PI-RADS v2.1, the DCE sequence is helpful only for score 3 lesions in the PZ (7). A previous study also observed that for DWI score 3 lesions in the PZ of biopsy-negative patients, the DCE sequence added no significant value in identifying PCa (13). We therefore proposed a DL model based on DWI, ADC, and T2WI sequences without contrast medium injection. Furthermore, some previous DL studies used only one or two of the above sequences and thus cannot be directly compared with clinical performance (8, 9).

The use of whole-mount histopathological specimens is a strong reference standard. Moreover, Padhani et al. (23) suggested that training datasets with spatially well-correlated histopathologic validation should be used. Our previous studies confirmed that whole-mount sections can be used as a reference to obtain a highly accurate prostate lesion label on prostate mpMRI (13). We therefore labeled the PCa lesions on MR images using whole-mount histopathology images as references. Furthermore, the DL model was developed based on the classic V-Net and DenseNet networks; the integration of SE blocks also helped improve model accuracy and performance (24). In our study, the DL model was used to extract the gland and PCa areas and to identify PCa, with histopathology as the gold standard. The AUC value was 0.797 for the prostate classification network in the test dataset, and the accuracy of PCa detection/diagnosis was 0.700, which is higher than that reported in several studies. For example, Ishioka et al. (25) proposed a CNN deep learning model with AUCs of 0.645 and 0.636 in two validation sets, respectively. Moreover, our independent test dataset was imported without gland or lesion labeling in order to evaluate the model in real clinical work scenarios. The average PSA level of the non-PCa group in the test dataset was 9.1 ± 6.4 ng/ml. This was somewhat high because all the patients were confirmed by targeted biopsy or resection to have prostatitis, hyperplasia, or other benign prostate diseases; thus, the differential diagnosis could be challenging (26).

Castillo et al. (27) systematically reviewed the performance of machine learning applications in PCa classification based on MRI and found that only one of the 27 publications reviewed compared the performance of radiologists with and without DL model assistance. They argued that evaluation should be performed in a real clinical setting, since the ultimate goal of these models is to assist radiologists in diagnosis. Seetharaman et al. (28) developed the SPCNet model, which accurately detected aggressive PCa. In our study, we evaluated the DL model in an independent test dataset to assess its clinical application value and to compare it with junior and senior radiologists. The DL model showed higher accuracy than the junior radiologist in diagnosing PCa and slightly lower accuracy than the senior radiologist. Furthermore, the DL model improved PCa diagnostic accuracy for both junior and senior radiologists. This is similar to the findings of Cao et al. (29), who presented a DL algorithm (FocalNet) that achieved slightly but not significantly lower PCa diagnosis performance than genitourinary radiologists. Additionally, some studies demonstrated that the diagnostic accuracy for prostate cancer using PI-RADS was 71.0–83.5%, which is similar to our results, but PI-RADS performance usually varies with the experience of radiologists at different levels (30, 31).

Currently, most DL models are not fully automated diagnosis systems; rather, they are adjunct tools that aid radiologists in reading prostate mpMRI results. Kotter et al. (32) determined that new DL technology would not threaten a radiologist's career but rather help strengthen his or her diagnostic ability. In summary, our proposed DL model can improve PCa diagnostic performance for both senior and junior radiologists, indicating that DL assistance can potentially improve the clinical workflow.

There are several limitations to this study. First, all the patients were recruited from a single center. This may have negatively affected the performance of the model because larger and more diverse patient groups improve the generalizability of classification algorithms. Second, the study was retrospective, and clinical data and traditional image parameters were not used. Future studies should focus on multicenter data, biomarkers, and optimized algorithms to produce more reliable models for improving diagnosis, staging, and recurrence prediction of PCa. Finally, all the patients were scanned using two 3.0 T MRI scanners in this study. The DL model may not perform as well on images from different machines or from scanners with a lower magnetic field strength.

In this study, we proposed an automated DL model for the segmentation and detection of PCa based on mpMRI and whole-mount histopathology-referenced delineations. The diagnostic performance of the DL model is higher than that of a junior radiologist, and the model could improve diagnostic accuracy for both junior and senior radiologists and could be applied to the training of young radiologists.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and this retrospective study was approved by the Ethics Institution of Nanjing Drum Tower Hospital. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

DL and XH acquired and analyzed data and drafted the manuscript. HY and SL analyzed and explained the imaging data. JG and QZ acquired the clinical information and revised the manuscript. HG and BZ designed the study and revised the manuscript. All authors contributed to the article and approved the submitted version.

HY and SL were employed by the company Shanghai United Imaging Intelligence Co.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We sincerely thank Prof. Yao Fu and Department of Pathology in Nanjing Drum Tower Hospital for retrieval of pathological data of patients in this study.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2021.810995/full#supplementary-material

1. Siegel RL, Miller KD, Jemal A. Cancer statistics, 2020. CA Cancer J Clin. (2020) 70:7–30. doi: 10.3322/caac.21590

2. Verma S, Choyke PL, Eberhardt SC, Oto A, Tempany CM, Turkbey B, et al. The current state of MR imaging-targeted biopsy techniques for detection of prostate cancer. Radiology. (2017) 285:343–56. doi: 10.1148/radiol.2017161684

3. Stabile A, Giganti F, Rosenkrantz AB, Taneja SS, Villeirs G, Gill IS, et al. Multiparametric MRI for prostate cancer diagnosis: current status and future directions. Nat Rev Urol. (2020) 17:41–61. doi: 10.1038/s41585-019-0212-4

4. Suarez-Ibarrola R, Hein S, Reis G, Gratzke C, Miernik A. Current and future applications of machine and deep learning in urology: a review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J Urol. (2020) 38:2329–47. doi: 10.1007/s00345-019-03000-5

5. Syer T, Mehta P, Antonelli M, Mallett S, Atkinson D, Ourselin S, et al. Artificial intelligence compared to radiologists for the initial diagnosis of prostate cancer on magnetic resonance imaging: a systematic review and recommendations for future studies. Cancers (Basel). (2021) 13:3318. doi: 10.3390/cancers13133318

6. Tamada T, Kido A, Yamamoto A, Takeuchi M, Miyaji Y, Moriya T, et al. Comparison of biparametric and multiparametric MRI for clinically significant prostate cancer detection with PI-RADS version 2.1. J Magn Reson Imaging. (2021) 53:283–91. doi: 10.1002/jmri.27283

7. Rudolph MM, Baur ADJ, Cash H, Haas M, Mahjoub S, Hartenstein A, et al. Diagnostic performance of PI-RADS version 2.1 compared to version 2.0 for detection of peripheral and transition zone prostate cancer. Sci Rep. (2020) 10:15982. doi: 10.1038/s41598-020-72544-z

8. Alkadi R, Taher F, El-Baz A, Werghi N. A deep learning-based approach for the detection and localization of prostate cancer in T2 magnetic resonance images. J Digit Imaging. (2019) 32:793–807. doi: 10.1007/s10278-018-0160-1

9. Clark T, Zhang J, Baig S, Wong A, Haider MA, Khalvati F. Fully automated segmentation of prostate whole gland and transition zone in diffusion-weighted MRI using convolutional neural networks. J Med Imaging (Bellingham). (2017) 4:041307. doi: 10.1117/1.JMI.4.4.041307

10. Yoo S, Gujrathi I, Haider MA, Khalvati F. Prostate cancer detection using deep convolutional neural networks. Sci Rep. (2019) 9:19518. doi: 10.1038/s41598-019-55972-4

11. Ito K, Furuta A, Kido A, Teramoto Y, Akamatsu S, Terada N, et al. Detectability of prostate cancer in different parts of the gland with 3-Tesla multiparametric magnetic resonance imaging: correlation with whole-mount histopathology. Int J Clin Oncol. (2020) 25:732–40. doi: 10.1007/s10147-019-01587-8

12. Gao J, Zhang C, Zhang Q, Fu Y, Zhao X, Chen M, et al. Diagnostic performance of 68Ga-PSMA PET/CT for identification of aggressive cribriform morphology in prostate cancer with whole-mount sections. Eur J Nucl Med Mol Imaging. (2019) 46:1531–41. doi: 10.1007/s00259-019-04320-9

13. Wang B, Gao J, Zhang Q, Zhang C, Liu G, Wei W, et al. Investigating the equivalent performance of biparametric compared to multiparametric MRI in detection of clinically significant prostate cancer. Abdom Radiol (NY). (2020) 45:547–55. doi: 10.1007/s00261-019-02281-z

14. Zhu Y, Wei R, Gao G, Ding L, Zhang X, Wang X, et al. Fully automatic segmentation on prostate MR images based on cascaded fully convolution network. J Magn Reson Imaging. (2019) 49:1149–56. doi: 10.1002/jmri.26337

15. Huang G, Liu Z, Pleiss G, Van Der Maaten L, Weinberger K. Convolutional networks with dense connectivity. IEEE Trans Pattern Anal Mach Intell. (2019). doi: 10.1109/TPAMI.2019.2918284

16. Turkbey B, Rosenkrantz AB, Haider MA, Padhani AR, Villeirs G, Macura KJ, et al. Prostate imaging reporting and data system version 2.1: 2019 update of prostate imaging reporting and data system version 2. Eur Urol. (2019) 76:340–51. doi: 10.1016/j.eururo.2019.02.033

17. Lo GC, Margolis DJA. Prostate MRI with PI-RADS v2.1: initial detection and active surveillance. Abdom Radiol (NY). (2020) 45:2133–42. doi: 10.1007/s00261-019-02346-z

18. Barrett T, Rajesh A, Rosenkrantz AB, Choyke PL, Turkbey B. PI-RADS version 2.1: one small step for prostate MRI. Clin Radiol. (2019) 74:841–52. doi: 10.1016/j.crad.2019.05.019

19. Xu L, Zhang G, Shi B, Liu Y, Zou T, Yan W, et al. Comparison of biparametric and multiparametric MRI in the diagnosis of prostate cancer. Cancer Imaging. (2019) 19:90. doi: 10.1186/s40644-019-0274-9

20. Zawaideh JP, Sala E, Shaida N, Koo B, Warren AY, Carmisciano, et al. Diagnostic accuracy of biparametric versus multiparametric prostate MRI: assessment of contrast benefit in clinical practice. Eur Radiol. (2020) 30:4039–49. doi: 10.1007/s00330-020-06782-0

21. Christophe C, Montagne S, Bourrelier S, Roupret M, Barret E, Rozet F, et al. Prostate cancer local staging using biparametric MRI: assessment and comparison with multiparametric MRI. Eur J Radiol. (2020) 132:109350. doi: 10.1016/j.ejrad.2020.109350

22. Vargas HA, Hötker AM, Goldman DA, Moskowitz CS, Gondo T, Matsumoto K, et al. Updated prostate imaging reporting and data system (PIRADS v2) recommendations for the detection of clinically significant prostate cancer using multiparametric MRI: critical evaluation using whole-mount pathology as standard of reference. Eur Radiol. (2016) 26:1606–12. doi: 10.1007/s00330-015-4015-6

23. Padhani AR, Turkbey B. Detecting prostate cancer with deep learning for MRI: a small step forward. Radiology. (2019) 293:618–9. doi: 10.1148/radiol.2019192012

24. Hu J, Shen L, Albanie S, Sun G, Wu E. Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell. (2020) 42:2011–23. doi: 10.1109/TPAMI.2019.2913372

25. Ishioka J, Matsuoka Y, Uehara S, Yasuda Y, Kijima T, Yoshida S, et al. Computer-aided diagnosis of prostate cancer on magnetic resonance imaging using a convolutional neural network algorithm. BJU Int. (2018) 122:411–7. doi: 10.1111/bju.14397

26. Cuocolo R, Cipullo MB, Stanzione A, Ugga L, Romeo V, Radice L, et al. Machine learning applications in prostate cancer magnetic resonance imaging. Eur Radiol Exp. (2019) 3:35. doi: 10.1186/s41747-019-0109-2

27. Castillo TJMC, Arif M, Niessen WJ, Schoots IG, Veenland JF. Automated classification of significant prostate cancer on MRI: a systematic review on the performance of machine learning applications. Cancers (Basel). (2020) 12:1606. doi: 10.3390/cancers12061606

28. Seetharaman A, Bhattacharya I, Chen LC, Kunder CA, Shao W, Soerensen SJC, et al. Automated detection of aggressive and indolent prostate cancer on magnetic resonance imaging. Med Phys. (2021) 48:2960–72. doi: 10.1002/mp.14855

29. Cao R, Mohammadian Bajgiran A, Afshari Mirak S, Shakeri S, Zhong X, Enzmann D, et al. Joint Prostate Cancer Detection and Gleason Score Prediction in mp-MRI via FocalNet. IEEE Trans Med Imaging. (2019) 38:2496–506. doi: 10.1109/TMI.2019.2901928

30. Mussi TC, Yamauchi FI, Tridente CF, Tachibana A, Tonso VM, Recchimuzzi DR, et al. Interobserver agreement and positivity of PI-RADS version 2 among radiologists with different levels of experience. Acad Radiol. (2019) 26:1017–22. doi: 10.1016/j.acra.2018.08.013

31. Xu L, Zhang G, Zhang D, Zhang X, Bai X, Yan W, et al. Comparison of PI-RADS version 2.1 and PI-RADS version 2 regarding interreader variability and diagnostic accuracy for transition zone prostate cancer. Abdom Radiol. (2020) 45:4133–41. doi: 10.1007/s00261-020-02738-6

Keywords: prostate cancer, deep learning, magnetic resonance imaging, segmentation, detection

Citation: Li D, Han X, Gao J, Zhang Q, Yang H, Liao S, Guo H and Zhang B (2022) Deep Learning in Prostate Cancer Diagnosis Using Multiparametric Magnetic Resonance Imaging With Whole-Mount Histopathology Referenced Delineations. Front. Med. 8:810995. doi: 10.3389/fmed.2021.810995

Received: 08 November 2021; Accepted: 16 December 2021;

Published: 13 January 2022.

Edited by:

Kuanquan Wang, Harbin Institute of Technology, China

Reviewed by:

Xiaohu Li, First Affiliated Hospital of Anhui Medical University, China

Copyright © 2022 Li, Han, Gao, Zhang, Yang, Liao, Guo and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bing Zhang, zhangbing_nanjing@nju.edu.cn

†These authors have contributed equally to this work
