- Department of Ultrasound, Shengjing Hospital of China Medical University, Shenyang, China

The application of artificial intelligence (AI) technology to medical imaging has resulted in great breakthroughs. Given the unique position of ultrasound (US) in prenatal screening, research on AI in prenatal US has practical significance: its application to prenatal US diagnosis can improve work efficiency, provide quantitative assessments, standardize measurements, improve diagnostic accuracy, and automate image quality control. This review provides an overview of recent studies that have applied AI technology to prenatal US diagnosis and explains the challenges encountered in these applications.

Introduction

Ultrasonography is convenient, low-cost, real-time, and non-invasive, and it has become the most widely used imaging modality. Antenatal ultrasound (US) examination, as the most important imaging method used during pregnancy, can assess fetal growth and detect birth defects, helping the fetus to receive timely and effective treatment before or after delivery. For malformations with a poor prognosis, timely termination of a pregnancy could reduce the rate of births with severe birth defects. However, this time-consuming process depends to a large extent on the doctor's experience and the available equipment. Moreover, it imposes a heavy workload in practice.

Artificial intelligence (AI) (1) refers to a system's ability to interpret external data, learn from such data, and use that learning to achieve specific goals through flexible adaptation. Machine learning (ML), a field gaining considerable attention in AI, is a powerful set of computational tools that trains models on descriptive patterns obtained from human inference rules. However, a major problem facing ML is that feature selection relies heavily on statistical insights and domain knowledge, a limitation that prompted the development of deep learning. As a branch of ML, deep learning takes advantage of convolutional neural networks, one of the most powerful methods for image analysis, which can achieve high performance with limited training samples and even permit more abstract feature definitions. Consequently, it is often used for image pattern recognition and classification.

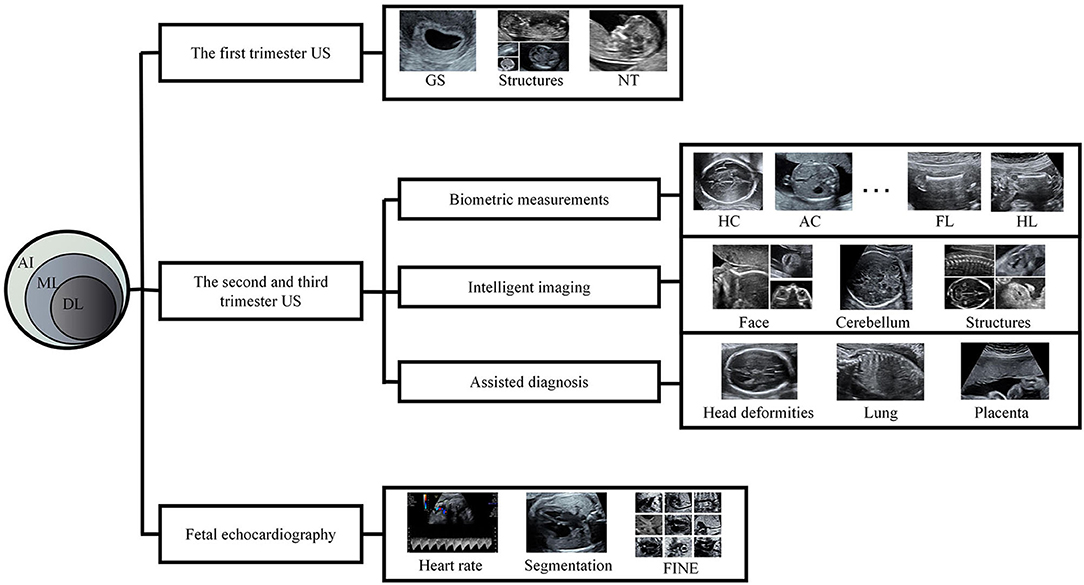

There has been much research on AI in radiology (2–5), and AI-assisted diagnosis has also become a research hotspot in the US field. Some groups have succeeded in the intelligent US diagnosis of liver, thyroid, and breast diseases (6–9). However, AI in prenatal US diagnosis is still in its infancy, though there have been breakthroughs in measurement, imaging, and diagnosis. Such applications are of great significance; not only do they improve efficiency, but they also compensate for the inexperience and skill deficiencies of some examiners. In this review, we introduce recent literature on the application of AI in prenatal US diagnosis (Figure 1).

Figure 1. A schematic diagram of this review. AI, artificial intelligence; ML, machine learning; DL, deep learning; US, ultrasound; GS, gestational sac; NT, nuchal translucency; HC, head circumference; AC, abdominal circumference; FL, femur length; HL, humerus length; FINE, fetal intelligent navigation echocardiography.

AI in the First Trimester US

Gestational Sac

The gestational sac (GS) is the first important structure observed by US in pregnancy. The mean gestational sac diameter can be used to roughly estimate the gestational age (GA). Zhang et al. (10) designed an automatic solution to select the standardized biometric plane of the GS and perform measurements during routine US examinations. The quantitative and qualitative analysis results showed the robustness, efficiency, and accuracy of the proposed method. Although this study was restricted to normal gestations within 7 weeks, it is expected to facilitate the clinical workflow with further extension and validation. Yang et al. (11) established a fully automatic framework that could simultaneously perform the semantic segmentation of multiple anatomical structures, including the fetus, GS, and placenta, in prenatal volumetric US. Extensively verified on large in-house datasets, the method demonstrated superior segmentation results, good agreement with expert measurements, and high consistency against scanning variations.
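As a rough illustration of how a mean sac diameter relates to GA, the sketch below averages three orthogonal sac diameters and applies a commonly quoted rule of thumb (GA in days is approximately the mean sac diameter in millimeters plus 30). This is an assumption made for illustration only and is not the method of Zhang et al. (10); the function names and sample diameters are hypothetical.

```python
def mean_sac_diameter_mm(length_mm: float, width_mm: float, height_mm: float) -> float:
    """Average of three orthogonal gestational sac diameters, in millimeters."""
    return (length_mm + width_mm + height_mm) / 3.0


def estimate_ga_days(msd_mm: float) -> float:
    """Rule-of-thumb estimate (assumption): GA in days ~ mean sac diameter in mm + 30."""
    return msd_mm + 30.0


if __name__ == "__main__":
    # Hypothetical diameters, not taken from any cited study.
    msd = mean_sac_diameter_mm(12.0, 10.0, 8.0)
    print(f"MSD = {msd:.1f} mm, estimated GA = {estimate_ga_days(msd):.0f} days")
```

Such a rule gives only a coarse estimate for early pregnancy, which is consistent with the text describing it as a rough estimate.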

Fetal Biometry Assessment

The automation of image-based assessments of fetal anatomies in the first trimester remains a rarely studied and arduous challenge. Ryou et al. (12) developed an intelligent image analysis method to visualize the key fetal anatomy and automate biometry in the first trimester. With this approach, all sonographers needed to do was acquire a three-dimensional US (3DUS) scan following a simple standard acquisition guideline. The method could then perform semantic segmentation of the whole fetus and extract the biometric planes of the head, abdomen, and limbs for anatomical assessment. However, its qualitative analysis results for the limbs were relatively poor owing to a low detection rate.

Nuchal Translucency

NT is a fluid-filled region under the skin of the posterior neck of a fetus, which appears sonographically as an anechogenic area. A fetus with increased NT thickness has a higher risk of congenital heart disease, chromosomal abnormalities, and intrauterine fetal death. The NT measurement has proven to be a crucial parameter in prenatal screening.

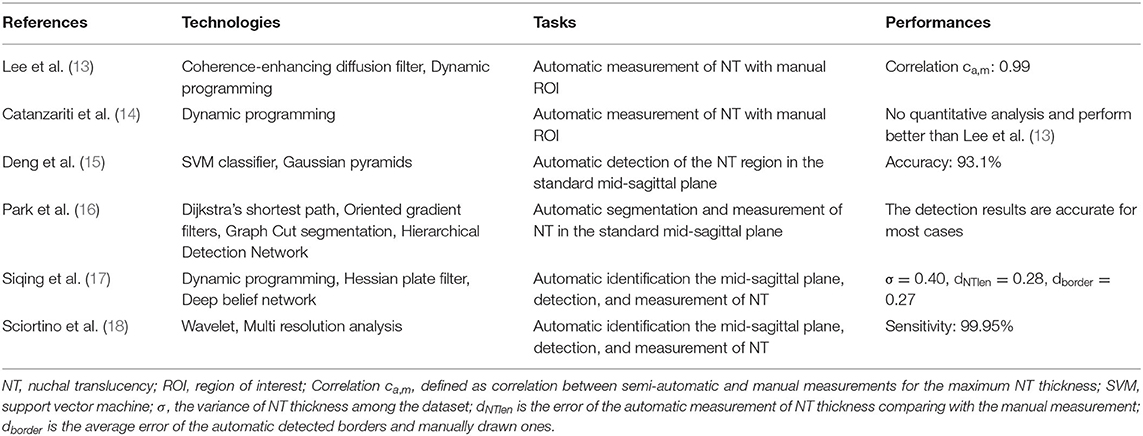

NT thickness needs to be measured in the standard fetal mid-sagittal plane. On account of the low signal-to-noise ratio of ultrasonic data, the relatively short fetal crown-rump length, and fetal activity in early pregnancy, this plane is hard to obtain, making intelligent NT measurement a challenge. In recent years, with the joint efforts of multidisciplinary experts, many breakthroughs have been made (Table 1).

Initially, the thickness of NT could be measured by manual selection of the region of interest (13, 14), and semi-automatic approaches were proven to produce reliable measurements compared to traditional manual methods. Later, experts made attempts to automatically identify and measure NT in mid-sagittal section images (15, 16), and the NT detection results were accurate in most cases. Following studies of the automatic detection of the fetal sagittal plane in US (19, 20), researchers developed sufficiently accurate methods (17, 18) for the automatic recognition of the standard NT plane as well as the measurement of NT thickness.

AI in the Second and Third Trimester US

It is in the mid-trimester that sonographers can evaluate fetal growth and detect dysplasia with greater sensitivity. The second-trimester scan, combined with third-trimester checks, can better detect congenital abnormalities and even predict postnatal outcomes.

Biometric Measurement

Standardized measurements are an indispensable part of prenatal US, which play a role in dating pregnancies and detecting potential abnormalities, but the process remains a highly repetitive one. Automation assists in reducing the time needed for routine tasks, allowing more time to analyze additional scan planes for diagnosis. Moreover, automatic measurements can reduce operator bias and contribute to improved quality control.

Fetal Head

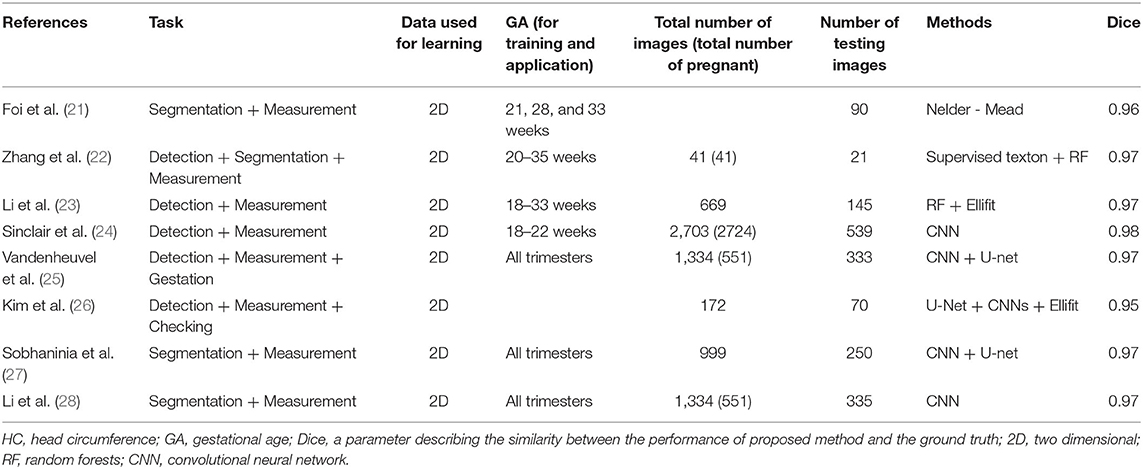

A number of AI-based methods have been developed for head circumference (HC) measurement (21–28), the studies of which are summarized in Table 2.

Factors such as fetal abnormalities, low contrast, speckle noise, boundary occlusion, or other artifacts may affect intelligent detection and measurement; such conditions have been accounted for in several studies (22, 24, 26, 28). Approaches with plane verification may obtain more accurate results. For example, Kim et al. (26) assessed the transthalamic plane on the basis of the cavum septum pellucidum, the V-shaped ambient cistern, and the cerebellum. Van den Heuvel et al. (25) combined their intelligent method with the Hadlock curve, allowing the GA to be determined automatically from the measurements. However, the determination was unreliable for the third trimester.

Some medical devices have been successfully equipped with software for intelligent processing (29–31). With such computer assistance, basic planes can be extracted and biometric measurements of the fetal head can be obtained automatically from 3DUS. These computer-assisted systems have proven to be reliable in measuring HC. With further optimization, such tools show great promise in improving workflow efficiency.
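Several of the cited HC methods rely on fitting an ellipse to the skull contour (for example, the fast ellipse fitting of reference 23). Once an ellipse is available, the circumference follows from its two axes. The sketch below uses Ramanujan's approximation for the perimeter of an ellipse; the function names and the choice of this particular approximation are assumptions for illustration and are not taken from any of the cited studies.

```python
import math


def ellipse_circumference(semi_major: float, semi_minor: float) -> float:
    """Ramanujan's approximation to the perimeter of an ellipse."""
    a, b = semi_major, semi_minor
    return math.pi * (3.0 * (a + b) - math.sqrt((3.0 * a + b) * (a + 3.0 * b)))


def head_circumference_mm(long_axis_mm: float, short_axis_mm: float) -> float:
    """HC from the full axis lengths of a fitted ellipse (hypothetical inputs)."""
    return ellipse_circumference(long_axis_mm / 2.0, short_axis_mm / 2.0)


if __name__ == "__main__":
    # Hypothetical axis lengths of a fitted ellipse, in millimeters.
    print(f"HC = {head_circumference_mm(110.0, 90.0):.1f} mm")
```

For a circle (equal axes) the approximation reduces exactly to 2πr, which gives a quick sanity check on the implementation.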

Fetal Abdomen

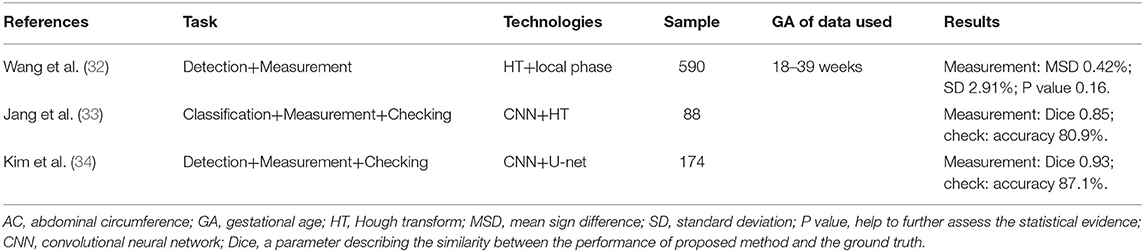

The low contrast between the fetal abdomen and the surrounding environment, its irregular shape, and the high variability of images all serve to make intelligent study of the abdominal circumference (AC) a difficult task. Several original studies (32–34) published on the subject are summarized in Table 3.

The novelty of the method introduced by Kim et al. (34) was the use of the spine position as a navigation marker to determine the final plane for AC measurement. In this way, the influence of interference (such as a regional lack of amniotic fluid, acoustic shadows, or certain anatomical structures) could be reduced. Moreover, checking the standard plane further improves the stability and accuracy of AC measurements. This intelligent approach significantly outperforms the conventional method (33), although it would be desirable to integrate its multiple learning frameworks into a single framework.

Fetal Long Bone

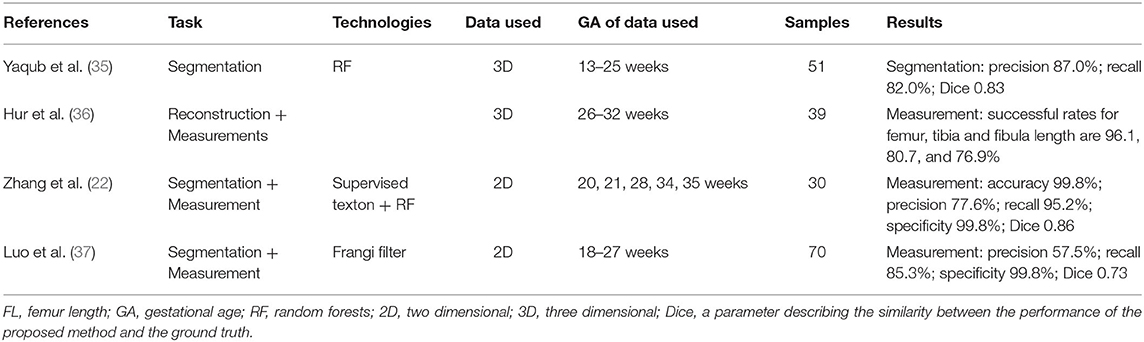

The position and posture of a fetus vary, which complicates studies of fetal long bones. There has been some progress regarding the segmentation and measurement of the fetal femur (22, 35–37) (Table 4). Most of the methods can be divided into several parts, including the determination of regions of interest, image processing, identification of femoral features, and measurement of lengths or volumes. Moreover, the models can obtain accuracy similar to that of manual measurements.
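The final measurement step of such a pipeline can be sketched as follows, assuming a binary segmentation mask of the femur is already available from the earlier steps. The mask format, the function name, and the use of the longest point-to-point distance as the length are all hypothetical simplifications and do not reproduce any of the cited methods.

```python
import math
from itertools import combinations


def femur_length_mm(mask, pixel_spacing_mm):
    """Longest distance between any two foreground pixels, scaled to millimeters.

    mask: 2D list of 0/1 values (a hypothetical segmentation output).
    pixel_spacing_mm: physical size of one pixel, assumed isotropic.
    """
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if len(points) < 2:
        return 0.0
    # Brute-force search over all pixel pairs; adequate for a small sketch.
    longest = max(math.dist(p, q) for p, q in combinations(points, 2))
    return longest * pixel_spacing_mm


if __name__ == "__main__":
    toy_mask = [
        [0, 0, 0, 0, 0, 0],
        [0, 1, 1, 1, 1, 1],
        [0, 0, 0, 0, 0, 0],
    ]
    print(femur_length_mm(toy_mask, pixel_spacing_mm=0.5))
```

The distance is taken between pixel centers, so it slightly underestimates the true extent; a practical system would also handle anisotropic spacing and curved bones.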

Hur et al. (36) conducted a prospective study to evaluate the performance of a 3DUS system, five-dimensional long bone (5DLB), in detecting the lower limb long bone. They found this intelligent tool to be reproducible and comparable with conventional two-dimensional (2D) and manual 3D techniques for fetal long bone measurements. As the study demonstrates, the new technique streamlines the process of reconstructing lower limb long bone images and performing fetal biometry.

Multiple Structures

With the advances in ML, professionals were not satisfied by focusing on a single anatomical structure alone and started exploring measurements of multiple structures. Carneiro et al. (38, 39) proposed a novel method for the rapid detection and measurement of fetal anatomical structures. The system was, on average, close to the accuracy of experts in terms of the segmentation and obstetric measurements of HC, AC, femur length, etc. The approach dealt with humerus length and crown-rump length measurements for the first time. Moreover, the framework was further optimized for an intelligent application—that is, syngo Auto OB measurements algorithm—and demonstrated acceptable performance when integrated with the clinical workflow (40).

Intelligent Imaging

Accurate acquisition of fetal standard planes with key anatomical structures is crucial for obstetric examination and diagnosis. However, the standard plane acquisition is a labor-intensive task and requires an operator equipped with a thorough knowledge of fetal anatomy. Therefore, automatic methods are in high demand to alleviate the workload and boost examination efficiency.

The fetal face is one of the key points of a prenatal US scan, and conventional 2DUS is still the gold standard for the examination. Automatic solutions (41, 42) for the recognition of the fetal facial standard plane (FFSP) have been presented using different models. They can classify input images into the axial plane, coronal plane, sagittal plane, and non-FFSP. Lei et al. (41) used manually annotated features from consecutive US images to train their intelligent system. In another study (42), a method was proposed that uses deep convolutional neural network architectures to learn feature representations directly from raw data, without any manually designed features. Representations discovered by deep convolutional neural networks are more robust and sophisticated than standard hand-crafted features, facilitating better classification results.

Assessment of the fetal cerebellar volume is important for evaluating fetal growth or diagnosing nervous system deformities. However, the irregular shape of the cerebellum and strong ultrasound image artifacts complicate the task without manual intervention. AI in prenatal US examination has achieved the automatic localization (43) and segmentation (44) of the fetal cerebellum in 3DUS with reasonable accuracy.

Owing to the development of computer technology, the emergence of medical AI tools has made it possible to automatically detect the genital organ (45) and kidney (46) with an accuracy of more than 80%. Moreover, the intelligent imaging of multiple different fetal structures has also become a reality based on convolutional neural networks (CNNs). Chen et al. (47) presented a general framework for the automatic identification of four fetal standard planes, including the abdominal, face axial, and four-chamber view standard planes, from US videos. Extensive experiments were conducted to corroborate the efficacy of the framework on the standard plane detection problem. Baumgartner et al. (48) proposed a novel method to automatically detect thirteen fetal standard views including the brain, lips, kidneys, etc. Moreover, it could localize the target structures via a bounding box. The experimental data suggested that the proposed network could achieve excellent results for real-time annotation of 2DUS frames. Sridar et al. (49) introduced and assessed a method to automatically classify fourteen different fetal structures using 2DUS images. After verification, there was good agreement between the ground truth and the proposed method. The architecture was capable of predicting images without US scanner overlays with a mean accuracy of 92%. Although these studies focused only on pregnant women at 18–22 weeks of GA, they initiated new ideas for prenatal US studies.

Assisted Diagnosis

Diagnosis of fetal abnormalities in US is a highly subjective process. Research into computer-aided diagnosis (CAD) tools could therefore help doctors make more objective and quantitative decisions. However, applying ML to abnormal cases is a complex problem in itself and requires sufficient training data; currently, there are only a few studies.

Central Nervous System Abnormalities of Fetus

Sahli et al. (50) proposed a learning framework for the automatic diagnosis of microcephaly and dolichocephaly using intelligent measurements of the fetal head. Test results showed that this method could detect and diagnose such abnormalities quickly and accurately, but it focused only on size and shape, excluding internal structures.

Building on previous research, Xie et al. (51) added specific abnormal cases to the exploration of CAD. The study included a total of 29,419 images from 12,780 pregnancies, containing cases of common central nervous system abnormalities confirmed by follow-up care, such as ventriculomegaly, microcephalus, holoprosencephaly, etc. In addition to recognizing and classifying normal and abnormal US images of the fetal brain, their method could also visualize lesions and interpret results using heat maps. After testing, the overall accuracy for classification reached 96.31%, and the probability of heat map localization was 86.27%. This study confirmed the feasibility of the CAD of encephalic abnormalities. It also laid the foundation for further study of the diagnosis and differential diagnosis of fetal intracranial malformations. Xie et al. (52) proposed a similar CAD approach for the differential diagnosis of five common fetal brain abnormalities. The algorithms, however, need further refinement for diagnosis assistance and the reduction of false negatives.

Fetal Lung Maturation

Neonatal respiratory morbidity (NRM) is the leading cause of mortality and morbidity associated with prematurity, and its risk can be assessed through fetal lung maturity (FLM). Traditional clinical options for FLM estimation are either the direct use of GA as a proxy FLM estimator or amniocentesis, an invasive laboratory test. In recent years, the feasibility of evaluating the degree of fetal lung maturation from US images has been preliminarily validated (53, 54). Moreover, specialists have made attempts to quantitatively analyze FLM by means of ML (55, 56). Such automated and non-invasive methods can predict NRM with a performance similar to that reported for tests based on amniotic fluid analysis and much greater than that of GA alone. The intelligent evaluation technique needs further study and is a promising technique to assist clinical diagnosis in the future.

Placenta

US classification of placental maturity is a key part of placental function evaluation. At present, determining the placental stage depends mainly on the observation and empirical analysis of clinicians. The emergence of automatic detection provides an alternative for the evaluation that would reduce differences between subjective evaluations, improving and verifying the diagnoses.

With the development of computer techniques, some intelligent grading methods have been developed (57, 58), but the analysis results are not good enough for practical application owing to fewer discriminative features, sample methods for classification, etc. The approach of Lin (59) included several algorithms for extracting different characteristics and a process for selecting multiple relevant characteristics. The performances of different feature extraction methods were compared in the article, which confirmed that the proposed method outperformed previous studies, providing more accurate staging results. Consequently, it exhibited better clinical value. In other studies (60, 61), the use of dense sampling contributes to better discriminability as more placental image samples are captured. They share the same classifier and descriptor but have different encoding methods. Lei et al. (61) achieved clear superiority in encoding accuracy. Intelligent evaluation methods could be further optimized by adding more advanced algorithms or synthesizing other information such as blood flow.

AI in Fetal Echocardiography

Congenital heart disease (CHD) is one of the most common birth defects. Fetal echocardiography, as the preferred choice for diagnostic screening and prognosis evaluation, is getting increased attention. However, this examination is resource limited, and the diagnostic accuracy depends on the skills of the screening operators. According to a multicenter study done in China, despite the specificity of fetal echocardiography being 99.8%, the sensitivity was just 33.9% (62). AI research is expected to improve the detection rate of fetal heart abnormalities with US.
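The gap between the specificity and sensitivity figures above is easier to interpret with their definitions written out. The sketch below computes both from confusion-matrix counts; the counts are hypothetical, chosen only to show a screening test with high specificity and low sensitivity, and are not data from the multicenter study (62).

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of affected fetuses that the examination detects."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of unaffected fetuses that the examination correctly clears."""
    return true_neg / (true_neg + false_pos)


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    tp, fn = 30, 70      # affected cases: detected vs. missed
    tn, fp = 990, 10     # unaffected cases: cleared vs. flagged
    print(f"sensitivity = {sensitivity(tp, fn):.1%}")
    print(f"specificity = {specificity(tn, fp):.1%}")
```

A test with this profile rarely raises false alarms but misses most affected cases, which is why improving the detection rate is the stated goal of AI research in this area.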

There have been intelligent studies that focused on the heart rate (63), heart segmentation (64, 65), and congenital heart disease diagnosis (66) of the fetus. Yeo et al. have been studying fetal intelligent navigation echocardiography (FINE) for many years. FINE is a novel method for visualization of the standard fetal echocardiography views and application of “intelligent navigation” technology (67, 68). It can obtain nine standard fetal echocardiography views (such as the four chamber, aortic arch, and three vessels and trachea views) in normal hearts (67), display abnormal anatomy and Doppler flow characteristics (69), and detect complex CHD (70–72). Other researchers have also performed several studies related to FINE (73–77). The results have shown its potential value in fetal heart evaluations and its usefulness in screening for congenital defects such as double-outlet right ventricle and D-transposition of the great arteries. Moreover, FINE has been integrated into the commercial application 5D Heart (78, 79), which is considered to be a promising tool for cardiac screening and diagnostics in a clinical setting. The exploration of FINE aims to simplify the examination of fetal hearts, reduce operator dependency, and improve the index of suspicion of CHD.

Discussion

AI is expected to change medical practices in ways we remain unaware of. The latest ultrasound machines are already equipped with intelligent applications that can perform intelligent measurement based on the standard section obtained by the sonographer. Some ultrasound instruments can perform intelligent imaging of the fetal face. Intelligent antenatal US could improve work efficiency, provide more consistent and quantitative results, and contribute to effective malformation diagnoses. Moreover, AI-based prenatal US promises to improve the quality control of clinical work, ease the imbalance of medical resources, and shorten the training cycle of young doctors.

CAD in prenatal US is just beginning, and the irregular movement of the fetus and the complexity of fetal malformations pose significant challenges. To date, most research has been conducted with 2DUS and has focused primarily on algorithmic performance rather than clinical utility, with fewer studies exploring intelligent imaging and diagnosis than biometric measurements.

In terms of diagnosis, comprehensive models need to integrate diagnostic imaging and clinical data. However, in reality, not all cases are easy to diagnose with only one clear malformation, and some malformations may be too subtle. Single abnormal images are too limited for recognition and diagnosis, and the data available for training cannot cover all fetal abnormalities. Moreover, the data used usually come from the same centers, which limits robustness and makes the experimental data too narrow for generalized clinical applications.

It should be clear that machine learning is a powerful tool for clinical assistance, but it is not realistic to conduct independent diagnosis without the supervision of sonographers. At the same time, because the acquisition of raw data still relies on sonographers, sonographer errors will also affect the AI diagnosis results. In addition, how to integrate intelligent diagnosis with traditional sonographic data is another question that needs to be answered. Furthermore, when designing or applying an algorithm, the false positive and false negative rates need to be considered based on real-world requirements. Finally, the ethical issues related to AI products also need to be considered. All of the above need to be discussed seriously by experts in various fields to pave the way to better health care.

Conclusion

Multidisciplinary integration is a general trend. There are high expectations of AI applications for innovative healthcare solutions. In this article, we present this emerging technology in the context of US in obstetrics. Despite the advances, there are still many research gaps in this field. AI will continue to be used to optimize prenatal US scans and is expected to provide superior antenatal service in the near future.

Author Contributions

LC had the idea and designed this review. FH drafted the manuscript and prepared the figures and tables. FH, LC, YW, YX, and YZ revised the paper. All the authors read and agreed to the submission of the final version of the manuscript.

Funding

This study was supported by the National Natural Science Foundation of China (Grant Nos. 81600258 and 81802540), and the 345 Talent Project of Shengjing Hospital.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

AC, Abdominal circumference; AI, Artificial intelligence; CAD, Computer-aided diagnosis; CHD, Congenital heart disease; FFSP, Fetal facial standard plane; FINE, Fetal intelligent navigation echocardiography; FLM, Fetal lung maturity; GA, Gestational age; GS, Gestational sac; HC, Head circumference; NRM, Neonatal respiratory morbidity; NT, Nuchal translucency; ML, Machine learning; 2D, Two-dimensional; 2DUS, Two-dimensional ultrasound; 3D, Three-dimensional; 3DUS, Three-dimensional ultrasound; US, Ultrasound.

References

1. Kaplan A, Haenlein M. Siri, Siri, in my hand: who's the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus Horiz. (2019) 62:15–25. doi: 10.1016/j.bushor.2018.08.004

2. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H. Artificial intelligence in radiology. Nat Rev Cancer. (2018) 18:500–10. doi: 10.1038/s41568-018-0016-5

3. Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. (2019) 69:127–57. doi: 10.3322/caac.21552

4. Stib MT, Vasquez J, Dong MP, Kim YH, Subzwari SS, Triedman HJ, et al. Detecting large vessel occlusion at multiphase CT angiography by using a deep convolutional neural network. Radiology. (2020) 297:640–9. doi: 10.1148/radiol.2020200334

5. Wang H, Wang L, Lee EH, Zheng J, Zhang W, Halabi S, et al. Decoding COVID-19 pneumonia: comparison of deep learning and radiomics CT image signatures. Eur J Nucl Med Mol Imaging. (2021) 48:1478–86. doi: 10.1007/s00259-020-05075-4

6. Choi YJ, Baek JH, Park HS, Shim WH, Kim TY, Shong YK, et al. A computer-aided diagnosis system using artificial intelligence for the diagnosis and characterization of thyroid nodules on ultrasound: initial clinical assessment. Thyroid. (2017) 27:546–52. doi: 10.1089/thy.2016.0372

7. Nishida N, Kudo M. Artificial intelligence in medical imaging and its application in sonography for the management of liver tumor. Front Oncol. (2020) 10:594580. doi: 10.3389/fonc.2020.594580

8. Liang X, Yu J, Liao J, Chen Z. Convolutional neural network for breast and thyroid nodules diagnosis in ultrasound imaging. Biomed Res Int. (2020) 2020:1763803. doi: 10.1155/2020/1763803

9. Lei YM, Yin M, Yu MH, Yu J, Zeng SE, Lv WZ, et al. Artificial intelligence in medical imaging of the breast. Front Oncol. (2021) 11:600557. doi: 10.3389/fonc.2021.600557

10. Zhang L, Chen S, Chin CT, Wang T, Li S. Intelligent scanning: automated standard plane selection and biometric measurement of early gestational sac in routine ultrasound examination. Med Phys. (2012) 39:5015–27. doi: 10.1118/1.4736415

11. Yang X, Yu L, Li S, Wen H, Luo D, Bian C, et al. Towards automated semantic segmentation in prenatal volumetric ultrasound. IEEE Trans Med Imaging. (2019) 38:180–93. doi: 10.1109/TMI.2018.2858779

12. Ryou H, Yaqub M, Cavallaro A, Papageorghiou AT, Alison Noble J. Automated 3D ultrasound image analysis for first trimester assessment of fetal health. Phys Med Biol. (2019) 64:185010. doi: 10.1088/1361-6560/ab3ad1

13. Lee YB, Kim MJ, Kim MH. Robust border enhancement and detection for measurement of fetal nuchal translucency in ultrasound images. Med Biol Eng Comput. (2007) 45:1143–52. doi: 10.1007/s11517-007-0225-7

14. Catanzariti E, Fusco G, Isgro F, Masecchia S, Prevete R, Santoro M. A semi-automated method for the measurement of the fetal nuchal translucency in ultrasound images. In: Conference of Image Analysis and Processing – ICIAP 2009. Vietri sul Mare (2009). p. 613–22. doi: 10.1007/978-3-642-04146-4_66

15. Deng Y, Wang Y, Chen P, Yu J. A hierarchical model for automatic nuchal translucency detection from ultrasound images. Comput Biol Med. (2012) 42:706–13. doi: 10.1016/j.compbiomed.2012.04.002

16. Park J, Sofka M, Lee S, Kim D, Zhou SK. Automatic nuchal translucency measurement from ultrasonography. Med Image Comput Comput Assist Interv. (2013) 16:243–50. doi: 10.1007/978-3-642-40760-4_31

17. Siqing N, Jinhua Y, Ping C, Yuanyuan W, Yi G, Jian Qiu Z. Automatic measurement of fetal nuchal translucency from three-dimensional ultrasound data. Annu Int Conf IEEE Eng Med Biol Soc. (2017) 2017:3417–20. doi: 10.1109/EMBC.2017.8037590

18. Sciortino G, Tegolo D, Valenti C. Automatic detection and measurement of nuchal translucency. Comput Biol Med. (2017) 82:12–20. doi: 10.1016/j.compbiomed.2017.01.008

19. Nie S, Yu J, Chen P, Wang Y, Zhang JQ. Automatic detection of standard sagittal plane in the first trimester of pregnancy using 3-D ultrasound data. Ultrasound Med Biol. (2017) 43:286–300. doi: 10.1016/j.ultrasmedbio.2016.08.034

20. Sciortino G, Orlandi E, Valenti C, Tegolo D. Wavelet analysis and neural network classifiers to detect mid-sagittal sections for nuchal translucency measurement. Image Anal Stereol. (2016) 35:105–15. doi: 10.5566/ias.1352

21. Foi A, Maggioni M, Pepe A, Rueda S, Noble JA, Papageorghiou AT, et al. Difference of Gaussians revolved along elliptical paths for ultrasound fetal head segmentation. Comput Med Imaging Graph. (2014) 38:774–84. doi: 10.1016/j.compmedimag.2014.09.006

22. Zhang L, Ye X, Lambrou T, Duan W, Allinson N, Dudley NJ, et al. A supervised texton based approach for automatic segmentation and measurement of the fetal head and femur in 2D ultrasound images. Phys Med Biol. (2016) 61:1095–115. doi: 10.1088/0031-9155/61/3/1095

23. Li J, Wang Y, Lei B, Cheng JZ, Qin J, Wang T, et al. Automatic fetal head circumference measurement in ultrasound using random forest and fast ellipse fitting. IEEE J Biomed Health Inform. (2018) 22:215–23. doi: 10.1109/JBHI.2017.2703890

24. Sinclair M, Baumgartner CF, Matthew J, Bai W, Martinez JC, Li Y, et al. Human-level performance on automatic head biometrics in fetal ultrasound using fully convolutional neural networks. Annu Int Conf IEEE Eng Med Biol Soc. (2018) 2018:714–7. doi: 10.1109/EMBC.2018.8512278

25. van den Heuvel TLA, Petros H, Santini S, de Korte CL, van Ginneken B. Automated fetal head detection and circumference estimation from free-hand ultrasound sweeps using deep learning in resource-limited countries. Ultrasound Med Biol. (2019) 45:773–85. doi: 10.1016/j.ultrasmedbio.2018.09.015

26. Kim HP, Lee SM, Kwon JY, Park Y, Kim KC, Seo JK. Automatic evaluation of fetal head biometry from ultrasound images using machine learning. Physiol Meas. (2019) 40:065009. doi: 10.1088/1361-6579/ab21ac

27. Sobhaninia Z, Rafiei S, Emami A, Karimi N, Najarian K, Samavi S, et al. Fetal ultrasound image segmentation for measuring biometric parameters using multi-task deep learning. Annu Int Conf IEEE Eng Med Biol Soc. (2019) 2019:6545–8. doi: 10.1109/EMBC.2019.8856981

28. Li P, Zhao H, Liu P, Cao F. Automated measurement network for accurate segmentation and parameter modification in fetal head ultrasound images. Med Biol Eng Comput. (2020) 58:2879–92. doi: 10.1007/s11517-020-02242-5

29. Rizzo G, Aiello E, Pietrolucci ME, Arduini D. The feasibility of using 5D CNS software in obtaining standard fetal head measurements from volumes acquired by three-dimensional ultrasonography: comparison with two-dimensional ultrasound. J Matern Fetal Neonatal Med. (2016) 29:2217–22. doi: 10.3109/14767058.2015.1081891

30. Ambroise Grandjean G, Hossu G, Bertholdt C, Noble P, Morel O, Grangé G. Artificial intelligence assistance for fetal head biometry: assessment of automated measurement software. Diagn Interv Imaging. (2018) 99:709–16. doi: 10.1016/j.diii.2018.08.001

31. Pluym ID, Afshar Y, Holliman K, Kwan L, Bolagani A, Mok T, et al. Accuracy of three-dimensional automated ultrasound imaging of biometric measurements of the fetal brain. Ultrasound Obstet Gynecol. (2020). doi: 10.1016/j.ajog.2019.11.1047

32. Wang W, Qin J, Zhu L, Ni D, Chui YP, Heng PA. Detection and measurement of fetal abdominal contour in ultrasound images via local phase information and iterative randomized Hough transform. Biomed Mater Eng. (2014) 24:1261–7. doi: 10.3233/BME-130928

33. Jang J, Park Y, Kim B, Lee SM, Kwon JY, Seo JK. Automatic estimation of fetal abdominal circumference from ultrasound images. IEEE J Biomed Health Inform. (2018) 22:1512–20. doi: 10.1109/JBHI.2017.2776116

34. Kim B, Kim KC, Park Y, Kwon JY, Jang J, Seo JK. Machine-learning-based automatic identification of fetal abdominal circumference from ultrasound images. Physiol Meas. (2018) 39:105007. doi: 10.1088/1361-6579/aae255

35. Yaqub M, Javaid MK, Cooper C, Noble JA. Investigation of the role of feature selection and weighted voting in random forests for 3-D volumetric segmentation. IEEE Trans Med Imaging. (2014) 33:258–71. doi: 10.1109/TMI.2013.2284025

36. Hur H, Kim YH, Cho HY, Park YW, Won HS, Lee MY, et al. Feasibility of three-dimensional reconstruction and automated measurement of fetal long bones using 5D long bone. Obstet Gynecol Sci. (2015) 58:268–76. doi: 10.5468/ogs.2015.58.4.268

37. Luo N, Li J, Zhou CL, Zheng JZ, Ni D. Automatic measurement of fetal femur length in ultrasound image. J Shenzhen Univ Sci Eng. (2017) 34:421–7. doi: 10.3724/SP.J.1249.2017.04421

38. Carneiro G, Georgescu B, Good S, Comaniciu D. Automatic fetal measurements in ultrasound using constrained probabilistic boosting tree. Med Image Comput Comput Assist Interv. (2007) 10:571–9. doi: 10.1007/978-3-540-75759-7_69

39. Carneiro G, Georgescu B, Good S, Comaniciu D. Detection and measurement of fetal anatomies from ultrasound images using a constrained probabilistic boosting tree. IEEE Trans Med Imaging. (2008) 27:1342–55. doi: 10.1109/TMI.2008.928917

40. Pashaj S, Merz E, Petrela E. Automated ultrasonographic measurement of basic fetal growth parameters. Ultraschall Med. (2013) 34:137–44. doi: 10.1055/s-0032-1325465

41. Lei B, Tan EL, Chen S, Zhuo L, Li S, Ni D, et al. Automatic recognition of fetal facial standard plane in ultrasound image via fisher vector. PLoS ONE. (2015) 10:e0121838. doi: 10.1371/journal.pone.0121838

42. Yu Z, Tan EL, Ni D, Qin J, Chen S, Li S, et al. A deep convolutional neural network-based framework for automatic fetal facial standard plane recognition. IEEE J Biomed Health Inform. (2018) 22:874–85. doi: 10.1109/JBHI.2017.2705031

43. Liu X, Yu J, Wang Y, Chen P. Automatic localization of the fetal cerebellum on 3D ultrasound volumes. Med Phys. (2013) 40:112902. doi: 10.1118/1.4824058

44. Gutierrez Becker B, Arambula Cosio F, Guzman Huerta ME, Benavides-Serralde JA. Automatic segmentation of the cerebellum of fetuses on 3D ultrasound images, using a 3D point distribution model. Annu Int Conf IEEE Eng Med Biol Soc. (2010) 2010:4731–4. doi: 10.1109/IEMBS.2010.5626624

45. Tang S, Chen SP. A fast automatic recognition and location algorithm for fetal genital organs in ultrasound images. J Zhejiang Univ Sci B. (2009) 10:648–58. doi: 10.1631/jzus.B0930162

46. Meenakshi S, Suganthi M, Sureshkumar P. Segmentation and boundary detection of fetal kidney images in second and third trimesters using kernel-based fuzzy clustering. J Med Syst. (2019) 43:203. doi: 10.1007/s10916-019-1324-3

47. Chen H, Wu L, Dou Q, Qin J, Li S, Cheng JZ, et al. Ultrasound standard plane detection using a composite neural network framework. IEEE Trans Cybern. (2017) 47:1576–86. doi: 10.1109/TCYB.2017.2685080

48. Baumgartner CF, Kamnitsas K, Matthew J, Fletcher TP, Smith S, Koch LM, et al. SonoNet: real-time detection and localisation of fetal standard scan planes in freehand ultrasound. IEEE Trans Med Imaging. (2017) 36:2204–15. doi: 10.1109/TMI.2017.2712367

49. Sridar P, Kumar A, Quinton A, Nanan R, Kim J, Krishnakumar R. Decision fusion-based fetal ultrasound image plane classification using convolutional neural networks. Ultrasound Med Biol. (2019) 45:1259–73. doi: 10.1016/j.ultrasmedbio.2018.11.016

50. Sahli H, Mouelhi A, Ben Slama A, Sayadi M, Rachdi R. Supervised classification approach of biometric measures for automatic fetal defect screening in head ultrasound images. J Med Eng Technol. (2019) 43:279–86. doi: 10.1080/03091902.2019.1653389

51. Xie HN, Wang N, He M, Zhang LH, Cai HM, Xian JB, et al. Using deep-learning algorithms to classify fetal brain ultrasound images as normal or abnormal. Ultrasound Obstet Gynecol. (2020) 56:579–87. doi: 10.1002/uog.21967

52. Xie B, Lei T, Wang N, Cai H, Xian J, He M, et al. Computer-aided diagnosis for fetal brain ultrasound images using deep convolutional neural networks. Int J Comput Assist Radiol Surg. (2020) 15:1303–12. doi: 10.1007/s11548-020-02182-3

53. Prakash KN, Ramakrishnan AG, Suresh S, Chow TW. Fetal lung maturity analysis using ultrasound image features. IEEE Trans Inf Technol Biomed. (2002) 6:38–45. doi: 10.1109/4233.992160

54. Tekesin I, Anderer G, Hellmeyer L, Stein W, Kühnert M, Schmidt S. Assessment of fetal lung development by quantitative ultrasonic tissue characterization: a methodical study. Prenat Diagn. (2004) 24:671–6. doi: 10.1002/pd.951

55. Bonet-Carne E, Palacio M, Cobo T, Perez-Moreno A, Lopez M, Piraquive JP, et al. Quantitative ultrasound texture analysis of fetal lungs to predict neonatal respiratory morbidity. Ultrasound Obstet Gynecol. (2015) 45:427–33. doi: 10.1002/uog.13441

56. Burgos-Artizzu XP, Perez-Moreno Á, Coronado-Gutierrez D, Gratacos E, Palacio M. Evaluation of an improved tool for non-invasive prediction of neonatal respiratory morbidity based on fully automated fetal lung ultrasound analysis. Sci Rep. (2019) 9:1950. doi: 10.1038/s41598-019-38576-w

57. Liu Z, Chang C, Ma X, Wang YY, Wang WQ. Primary study on automatic ultrasonic grading of placenta. Chin J Ultrasonograph. (2000) 19–21. doi: 10.3760/j.issn:1004-4477.2000.12.006

58. Chen CY, Su HW, Pai SH, Hsieh CW, Jong TL, Hsu CS, et al. Evaluation of placental maturity by the sonographic textures. Arch Gynecol Obstet. (2011) 284:13–8. doi: 10.1007/s00404-010-1555-5

59. Lin SL. Methods for automatic grading of placental maturity under B-ultrasound images. Chin Sci Technol Inf. (2011) 184–5. doi: 10.3969/j.issn.1001-8972.2011.11.118

60. Li X, Yao Y, Ni D, Chen S, Li S, Lei B, et al. Automatic staging of placental maturity based on dense descriptor. Biomed Mater Eng. (2014) 24:2821–9. doi: 10.3233/BME-141100

61. Lei B, Yao Y, Chen S, Li S, Li W, Ni D, et al. Discriminative learning for automatic staging of placental maturity via multi-layer fisher vector. Sci Rep. (2015) 5:12818. doi: 10.1038/srep12818

62. Chu C, Yan Y, Ren Y, Li X, Gui Y. Prenatal diagnosis of congenital heart diseases by fetal echocardiography in second trimester: a Chinese multicenter study. Acta Obstet Gynecol Scand. (2017) 96:454–63. doi: 10.1111/aogs.13085

63. Maraci MA, Bridge CP, Napolitano R, Papageorghiou A, Noble JA. A framework for analysis of linear ultrasound videos to detect fetal presentation and heartbeat. Med Image Anal. (2017) 37:22–36. doi: 10.1016/j.media.2017.01.003

64. Yu L, Guo Y, Wang Y, Yu J, Chen P. Segmentation of fetal left ventricle in echocardiographic sequences based on dynamic convolutional neural networks. IEEE Trans Biomed Eng. (2017) 64:1886–95. doi: 10.1109/TBME.2016.2628401

65. Femina MA, Raajagopalan SP. Anatomical structure segmentation from early fetal ultrasound sequences using global pollination CAT swarm optimizer-based Chan-Vese model. Med Biol Eng Comput. (2019) 57:1763–82. doi: 10.1007/s11517-019-01991-2

66. Arnaout R, Curran L, Zhao Y, Levine JC, Chinn E, Moon-Grady AJ. An ensemble of neural networks provides expert-level prenatal detection of complex congenital heart disease. Nat Med. (2021) 27:882–91. doi: 10.1038/s41591-021-01342-5

67. Yeo L, Romero R. Fetal intelligent navigation echocardiography (FINE): a novel method for rapid, simple, and automatic examination of the fetal heart. Ultrasound Obstet Gynecol. (2013) 42:268–84. doi: 10.1002/uog.12563

68. Yeo L, Romero R. Intelligent navigation to improve obstetrical sonography. Ultrasound Obstet Gynecol. (2016) 47:403–9. doi: 10.1002/uog.12562

69. Yeo L, Romero R. Color and power Doppler combined with fetal intelligent navigation echocardiography (FINE) to evaluate the fetal heart. Ultrasound Obstet Gynecol. (2017) 50:476–91. doi: 10.1002/uog.17522

70. Yeo L, Luewan S, Markush D, Gill N, Romero R. Prenatal diagnosis of dextrocardia with complex congenital heart disease using fetal intelligent navigation echocardiography (FINE) and a literature review. Fetal Diagn Ther. (2018) 43:304–16. doi: 10.1159/000468929

71. Yeo L, Luewan S, Romero R. Fetal intelligent navigation echocardiography (FINE) detects 98% of congenital heart disease. J Ultrasound Med. (2018) 37:2577–93. doi: 10.1002/jum.14616

72. Yeo L, Markush D, Romero R. Prenatal diagnosis of tetralogy of Fallot with pulmonary atresia using: fetal intelligent navigation echocardiography (FINE). J Matern Fetal Neonatal Med. (2019) 32:3699–702. doi: 10.1080/14767058.2018.1484088

73. Veronese P, Bogana G, Cerutti A, Yeo L, Romero R, Gervasi MT, et al. Prospective study of the use of fetal intelligent navigation echocardiography (FINE) to obtain standard fetal echocardiography views. Fetal Diagn Ther. (2017) 41:89–99. doi: 10.1159/000446982

74. Gembicki M, Hartge DR, Dracopoulos C, Weichert J. Semiautomatic fetal intelligent navigation echocardiography has the potential to aid cardiac evaluations even in less experienced hands. J Ultrasound Med. (2020) 39:301–9. doi: 10.1002/jum.15105

75. Huang C, Zhao BW, Chen R, Pang HS, Pan M, Peng XH, et al. Is fetal intelligent navigation echocardiography helpful in screening for d-transposition of the great arteries? J Ultrasound Med. (2020) 39:775–84. doi: 10.1002/jum.15157

76. Gembicki M, Hartge DR, Fernandes T, Weichert J. Feasibility of semiautomatic fetal intelligent navigation echocardiography for different fetal spine positions: a matter of “time”? J Ultrasound Med. (2021) 40:91–100. doi: 10.1002/jum.15379

77. Ma M, Li Y, Chen R, Huang C, Mao Y, Zhao B. Diagnostic performance of fetal intelligent navigation echocardiography (FINE) in fetuses with double-outlet right ventricle (DORV). Int J Cardiovasc Imaging. (2020) 36:2165–72. doi: 10.1007/s10554-020-01932-3

78. Hu WY, Zhou JH, Tao XY, Li SY, Wang B, Zhao BW. Novel foetal echocardiographic image processing software (5D Heart) improves the display of key diagnostic elements in foetal echocardiography. BMC Med Imaging. (2020) 20:33. doi: 10.1186/s12880-020-00429-8

Keywords: ultrasound, artificial intelligence, prenatal diagnosis, fetus, medical imaging

Citation: He F, Wang Y, Xiu Y, Zhang Y and Chen L (2021) Artificial Intelligence in Prenatal Ultrasound Diagnosis. Front. Med. 8:729978. doi: 10.3389/fmed.2021.729978

Received: 24 June 2021; Accepted: 29 November 2021; Published: 16 December 2021.

Edited by:

Patrice Mathevet, Centre Hospitalier Universitaire Vaudois (CHUV), Switzerland

Reviewed by:

Süleyman Cansun Demir, Çukurova University, Turkey

Cécile Guenot, Centre Hospitalier Universitaire Vaudois (CHUV), Switzerland

Copyright © 2021 He, Wang, Xiu, Zhang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lizhu Chen
