- Ophthalmology Unit, A.O.U. City of Health and Science of Turin, Department of Surgical Sciences, University of Turin, Turin, Italy

Artificial intelligence (AI) is a subset of computer science dealing with the development and training of algorithms that try to replicate human intelligence. We report a clinical overview of the basic principles of AI that are fundamental to appreciating its application to ophthalmology practice. Here, we review the most common eye diseases, focusing on some of the potential challenges and limitations emerging with the development and application of this new technology into ophthalmology.

Introduction

In the near future, the number of patients suffering from eye diseases is expected to increase dramatically due to aging of the population. In such a scenario, early recognition and correct management of eye diseases are the main objectives to preserve vision and enhance quality of life. Deep integration of artificial intelligence (AI) in ophthalmology may help to achieve this aim, having the potential to speed up the diagnostic process and to reduce the human resources required. AI is a subset of computer science that deals with using computers to develop algorithms that try to simulate human intelligence.

The concept of AI was first introduced in 1956 (1). Since then, the field has made remarkable progress to the point that it has been defined as “the fourth industrial revolution in mankind's history” (2).

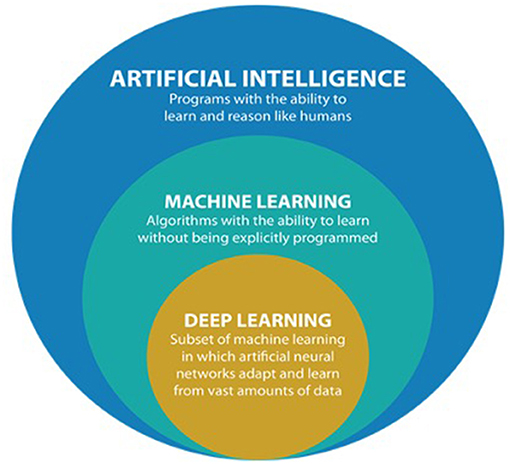

The terms artificial intelligence, machine learning, and deep learning (DL) have been used at times as synonyms; however, it is important to distinguish the three (Figure 1).

Figure 1. Comprehensive overview of artificial intelligence (AI) and its subfields (from https://datacatchup.com/artificial-intelligence-machine-learning-and-deep-learning/).

Artificial intelligence is the most general term, referring to the “development of computer systems able to perform tasks by mimicking human intelligence, such as visual perception, decision making, and voice recognition” (3). Machine learning, which emerged in the 1980s, refers to a subfield of AI that allows computers to improve at performing tasks with experience or to “learn on their own without being explicitly programmed” (4).

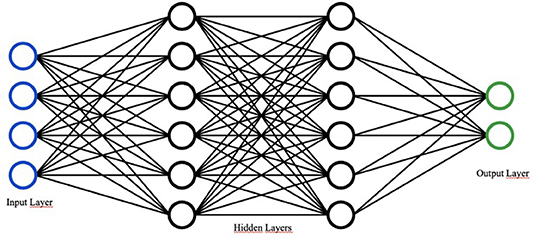

Finally, deep learning refers to a “subfield of machine learning composed of algorithms that use a cascade of multilayered artificial neural networks for feature extraction and transformation” (5, 6). The term “deep” refers to the many deep hidden layers in its neural network: the benefit of having more layers of analysis is the ability to analyze more complicated inputs, including entire images. In other words, DL uses representation learning methods with multiple levels of abstraction to elaborate and process input data and generate outputs without the need for manual feature engineering, automatically recognizing the intricate structures embedded in high-dimensional data (7) (Figure 2).

Figure 2. Basic design of a neural network. Adapted from “Network.svg” by Victor C. Zhou (https://victorzhou.com/series/neural-networks-from-scratch/).
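The layered structure described above can be illustrated with a minimal sketch of a forward pass through a tiny fully connected network. The weights, biases, layer sizes, and the choice of a sigmoid activation are hypothetical values picked for illustration only; they do not come from any of the reviewed studies, and clinical DL systems use far larger networks with learned parameters.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: weighted sum per neuron, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each layer in turn (input -> hidden -> output)."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical toy network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
toy_layers = [
    ([[0.0, 1.0], [0.0, 1.0]], [0.0, 0.0]),  # hidden layer weights and biases
    ([[0.0, 1.0]], [0.0]),                   # output layer weights and biases
]
output = forward([2.0, 3.0], toy_layers)
print(output)
```

Each additional hidden layer re-combines the outputs of the previous one, which is what allows deeper networks to represent more complicated inputs.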

The entire field of healthcare has been revolutionized by the application of AI to the current clinical workflow, including in the analysis of breast histopathology specimens (8), skin cancer classification (9), cardiovascular risk prediction (10), and lung cancer detection (11). This expanding research inspired numerous studies of AI application also to ophthalmology, leading to the development of advanced AI algorithms together with multiple accessible datasets such as EyePACS (12), Messidor (12), and Kaggle's dataset (13).

Deep learning has been largely reported to be capable of achieving automated screening and diagnosis of common vision-threatening diseases, such as diabetic retinopathy (DR), glaucoma, age-related macular degeneration (AMD), and retinopathy of prematurity (ROP).

Further integration of DL into ophthalmology clinical practice is expected to innovate and improve the current disease and management process, including an earlier detection and hopefully better disease outcomes.

Materials and Methods

The aim of this review was to provide a descriptive analysis of the current and most clinically relevant applications of AI in the various fields of ophthalmology. A literature search was conducted by two independent investigators (FR and GB), from the earliest available year of indexing until February 28, 2021. Two databases were used during the literature search: MEDLINE and Scopus. The following terms were connected using the Boolean operators “and,” “or,” “and/or”: “Artificial Intelligence,” “Ophthalmology,” “Diabetic Retinopathy,” “Age Related Macular Degeneration,” “Retinal Detachment,” “Retinal Vein Occlusion,” “Cataract,” “Keratoconus,” “Glaucoma,” “Pediatric Ophthalmology,” “Retinopathy of Prematurity,” “Teleophthalmology,” “Eyelid Tumors,” “Exophthalmos,” and “Strabismus.” The terms were searched as “MeSH terms” and as “All fields” terms. No limitations were placed on the keyword searches. Full articles or abstracts that were written in English were included. Only articles published in peer-reviewed journals were selected in this review. The investigators screened the search results and selected the most recent and noteworthy publications with an impact on clinical practice. In particular, the inclusion criteria were: a clear methodology of algorithm development and training, a high number of images and/or data used for training the DL algorithm, and high rates of disease prediction/detection in terms of sensitivity, specificity, and area under the receiver operating characteristic curve (AUC).
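Because sensitivity, specificity, and AUC recur throughout the results, the following minimal sketch shows how the three quantities can be computed from a set of graded eyes. The labels, scores, and the 0.5 decision threshold are invented for illustration; the AUC is computed as the probability that a randomly chosen diseased eye receives a higher score than a randomly chosen healthy eye, which is one standard way to obtain it.

```python
def sensitivity_specificity(labels, predictions):
    """Return (sensitivity, specificity) for binary labels and predictions."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    tn = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(labels, scores):
    """AUC as the fraction of (diseased, healthy) pairs ranked correctly.

    Ties between a diseased and a healthy score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical data: 1 = disease present, 0 = disease absent.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]  # algorithm output per eye
predictions = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

sens, spec = sensitivity_specificity(labels, predictions)
area = auc(labels, scores)
print(sens, spec, area)
```

Sensitivity and specificity depend on the chosen threshold, whereas the AUC summarizes performance across all thresholds, which is why studies often report all three.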

Data extracted from each selected paper included: the first author of the study, year, time frame, study design, location, follow-up time, number of eyes enrolled, demographic features (mean age, gender, and ethnicity), ophthalmological pathology under investigation (DR, AMD, retinal detachment, retinal vein occlusion, cataract, keratoconus, glaucoma, ROP, pediatric cataract, strabismus, myopia, and teleophthalmology), and AI characteristics (imaging type, disease definition, sensitivity, specificity, and accuracy).

Results

Study Selection

The review included a total of 69 studies, of which one study was about exophthalmos (13), three about strabismus (14–16), two studies about eyelid tumors (17, 18), three about keratoconus (19–21), seven about cataracts (22–28), three about pediatric cataracts (29–31), one about myopia (32), nine about glaucoma (33–41), nine studies were about DR (11, 34, 39, 42–47), nine about AMD (34, 48–55), two about retinal detachment (56, 57), one about retinal vein occlusion (58), 12 about ROP (59–70), and seven were about teleophthalmology (71–77).

Exophthalmos

One of the most common causes of exophthalmos is thyroid-associated ophthalmopathy (TAO). Salvi et al. (14) developed a model to evaluate the disease classification and prediction of progression. They considered a group of 246 patients with absent, minimal, or inactive TAO and 152 patients with progressive and/or active TAO. The research collected variations of 13 clinical eye signs. The neural network they used obtained a concordance with clinical assessment of 67%.

Strabismus

Lu et al. (15) used a convolutional neural network (CNN) together with facial photos to detect abnormal position of the eye. This could be beneficial in telemedical evaluation and screening. On the contrary, for in-office evaluation, the CNN could be applied to eye-tracking data (16) or to retinal birefringence scanning (17).

Eyelid Tumors

Wang et al. (18), using a DL (VGG16) that contained the parameters learnt from ImageNet2014, developed a protocol to classify eyelid tumors by distinguishing digital pathological slides that have either malignant melanoma or non-malignant melanoma at the small patch level. They used 79 formalin-fixed paraffin-embedded pathological slides from 73 patients, divided into 55 non-malignant melanoma slides from 55 patients and 24 malignant slides from 18 patients cut into patches. The validation consisted of 142,104 patches from 79 slides, with 61,031 non-malignant patches from 55 slides and 81,073 malignant patches from 24 slides. The AUC for the algorithm was 0.989.

Tan et al. (19) developed a model to predict the complexity of reconstructive surgery after periocular basal cell carcinoma excision. The three predictive variables were preoperative assessment of complexity, surgical delays, and tumor size. They obtained an AUC of 0.853.

Keratoconus

The most important diagnostic imaging techniques for keratoconus include corneal topography with a Placido disc-based imaging system (Orbscan, Bausch & Lomb, Bridgewater, NJ, USA), anterior segment optical coherence tomography (AS-OCT), and three-dimensional (3D) tomographic imaging, such as Scheimpflug (Pentacam, Oculus, Lynnwood, WA, USA). On this basis, Yousefi et al. developed an unsupervised machine learning algorithm for the grading of keratoconus using 3,156 AS-OCT images of keratoconus from grade 0 to grade 4. It showed a sensitivity of 97.7% and a specificity of 94.1% (20). In 2019, Kamiya et al. reported higher sensitivity (99.1%) and specificity (98.4%) for keratoconus grading with a CNN that used 304 AS-OCT images of keratoconus from grade 0 to grade 4 (21). Finally, Lavric and Valentin developed a CNN trained using 1,350 healthy eye and 1,350 keratoconus eye topographies, with a validation set of 150 eyes, that showed an accuracy of 99.3% (78).

Cataract

AI technology has been applied to various aspects of cataract care, in both clinical and surgical management, from diagnosing cataracts to optimizing the biometry for intraocular lens (IOL) power calculation.

The clinical classification of cataracts includes nuclear sclerotic, cortical, and posterior subcapsular. These are usually diagnosed by slit lamp microscopy and/or photography. Cataract is graded on clinical scales such as the Lens Opacities Classification System III (22). One of the first AI systems for evaluating nuclear cataracts was described by Li et al. in 2009. Their system had a success rate of 95% (23). Xu et al., in 2013, evaluated an automatic grading method of nuclear cataracts from slit lamp lens images using group sparsity regression, obtaining a mean absolute error of 0.336 (24). In 2015, Gao et al. (25) used 5,378 slit lamp photographs to develop an algorithm to grade nuclear cataracts, obtaining an accuracy of 70.7%. In a recent large-scale study, Wu et al. (26), in China, used DL via residual neural network (ResNet) to establish a three-step sequential AI algorithm for the diagnosis of cataracts. This algorithm was trained with 37,638 slit lamp photographs in order to differentiate cataract and IOL from normal lens (AUC > 0.99) and to detect referable cataracts (AUC > 0.91).

With the increasing use of retinal imaging, other researchers have also explored the use of color fundus photographs for the development of an automated cataract evaluation system, potentially leveraging retinal imaging as an opportunistic screening tool for cataracts as well. Dong et al. (27) developed an AI algorithm with a combination of machine learning and DL using 5,495 fundus images. The goal was to describe a classification of the “visibility” of fundus images to report four classes of cataract severity (normal, mild, moderate, and severe). The accuracy was 94.07%. Zhang et al., in 2017, proposed a system to classify cataracts, obtaining an accuracy of 93.52% (28). Li et al., in 2018, published an article reporting accuracies of 97.2 and 87.7%, respectively, for detecting and grading tasks (29). Ran et al. (30) proposed a six-level cataract grading based on the feature datasets generated by a deep convolutional neural network (DCNN). Xu et al. (31) have developed CNN-based algorithms, AlexNet and VisualDN, with the purpose of diagnosing and grading cataracts, gaining an accuracy of 86.2% using 8,030 fundus images. Pratap and Kokil, in 2019, trained a CNN for automatic cataract classification, obtaining an accuracy of 92.91% (79). Zhang et al. (32) showed a higher accuracy of 93% in the detection and grading of cataracts using 1,352 fundus images.

Currently, the choice of an IOL power calculation formula remains unstandardized and at the discretion of the surgeon. Ocular parameters such as axial length and keratometry are important factors when determining the applicability of each formula. In 2015, with the introduction of a new IOL calculation concept, the “Ladas super formula” (80), the method of IOL calculation changed radically. Previous generations of IOL formulas were developed as 2D algorithms. This new methodology was derived by extracting features from respective “ideal parts” of old formulas (Hoffer Q, Holladay-1, Holladay-1 with Koch adjustment, Haigis, and SRK/T, with the exclusion of the Barrett Universal II and Barrett Toric formulas) and plotting them into a 3D surface. The super formula may serve as a solution for calculating IOL power in eyes with typical and atypical parameters such as axial length, corneal power, and anterior chamber depth. The concept of three-dimensionality is to develop a way to compare one or more formulas, allowing for the evaluation of areas of clinical agreements and disagreements between multiple formulas (81). Recently, Kane et al. have demonstrated a Hill-RBF (radial basis function) method using a large dataset with adaptive learning to calculate the refractive output. For a given eye, it relies on adequate numbers of eyes of similar dimensions to provide an accurate prediction (82).

Pediatric Cataract

Pediatric cataract is a more variable disease than cataract developing in adults. Moreover, slit lamp examination and cataract visualization can be challenging because they depend on the child's compliance. CC-Cruiser (33–35) is a cloud-based system that can automatically identify cataracts from slit lamp images, grade them, and suggest treatment. Although this approach could lead to a higher patient satisfaction due to its rapid evaluation, it is characterized by a significantly lower performance in diagnosing cataracts and in recommending treatment than that of experts (34).

High Myopia

Children at risk of high myopia could benefit from taking low-dose atropine to stop or slow down myopic progression (36); however, determining for which children this therapy should be prescribed can be challenging (37). For this reason, Lin et al. (37) tried to predict high-grade myopia progression in children using a clinical measure, showing good predictive performance for up to 8 years in the future. This approach could represent a better guide to prophylactic treatment.

Glaucoma

The main difficulty in detecting and treating glaucoma consists in its being asymptomatic at the early stages (38). In this scenario, AI can be helpful in detecting the glaucomatous disc, interpreting the visual field tests, and forecasting clinical outcomes (39).

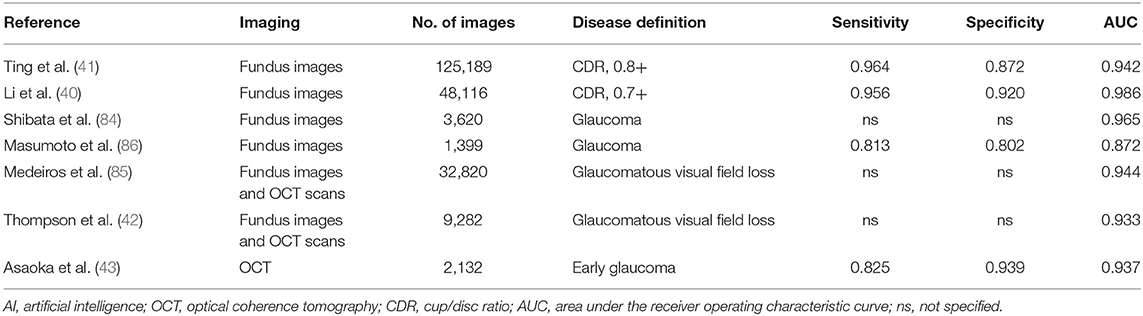

Given the dissimilarity in optic disc anatomy, identifying the glaucomatous optic nerve head (ONH) can be difficult at the early stages of the disease. Moreover, it was shown that, even among experts, agreement on the detection of ONH damage is modest (83). The difference in identifying the glaucomatous disc on fundus photographs is magnified by variations in the image capturing device, mydriasis state, focus, and exposure. Given that, AI can integrate different sources of data and help in defining ONH damage. Some investigators have trained DL algorithms to detect a cup/disc ratio (CDR) at or above a certain threshold (either a CDR of 0.7 or 0.8) with AUC ≥ 0.942 (40). Ting et al., in 2017, developed an algorithm through a dataset of retinal images for the detection of DR, glaucoma, and AMD on a multiethnic population. In particular, for glaucoma detection, their algorithm presented an AUC of 0.942, sensitivity of 96.4%, and specificity of 87.2% (41). Using a different approach, other investigators defined the glaucoma status by linking other data with the optic disc photograph, obtaining remarkably good results (AUC ≥ 0.872) (42, 84–86). Moreover, Asaoka et al. applied DL to OCT images, obtaining an even higher AUC (0.937) than did other machine learning methods (43). Finally, Medeiros et al. used an innovative approach, training a DL algorithm from OCT scans to predict retinal nerve fiber layer (RNFL) thickness on fundus photos, with a high correlation of prediction of 0.83 and an AUC of 0.944 (85) (Table 1).

Visual fields, unlike fundus photographs and OCT scans, represent the functional assay of the visual pathway. Although visual field testing is a fundamental exam in clinical evaluation, current algorithms applied to visual fields neither detect subtle loss in a regional manner nor differentiate glaucomatous defects from non-glaucomatous defects and artifacts (39). Moreover, the current computerized packages do not decompose visual field data into patterns of loss. Visual field loss patterns are due to compromised retinal nerve fibers projecting to specific areas of the optic disc. Recently, Elze et al. (44) have developed an unsupervised algorithm “employing a corner learning strategy called archetypal analysis to quantitatively classify the regional patterns of loss without the potential bias of clinical experience”.

Archetypal analysis provides a regional stratification of the visual fields together with the coefficients weighting each pattern loss. Furthermore, implementation of AI algorithms to visual field testing could also assist clinicians in tracking the visual field progression with more accuracy (45). Finally, in more recent years, AI has been used to forecast glaucoma progression using Kalman filtering. This technique could lead to the generation of a personalized disease prediction based on different sources of data, which can help clinicians in the decision-making process (46, 47).
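To make the idea of Kalman-filter forecasting more concrete, the sketch below tracks a single, hypothetical visual field index over successive visits with a two-state filter (current level and rate of change per visit) and then extrapolates it. This is an illustrative toy under stated assumptions, not the model used in the cited studies (46, 47): the measurement values, the noise settings (`r`, `q_level`, `q_rate`), the diffuse starting covariance, and the function name `kalman_trend_forecast` are all invented here.

```python
def kalman_trend_forecast(measurements, horizon, dt=1.0,
                          r=1.0, q_level=1e-3, q_rate=1e-4):
    """Filter noisy visit-by-visit measurements and forecast `horizon` visits ahead.

    State: (level, rate). The level moves by rate * dt between visits.
    r is the assumed measurement noise variance; q_level and q_rate are the
    assumed process noise variances. Returns (forecast, estimated_rate).
    """
    level, rate = 0.0, 0.0
    # Start with a large (diffuse) covariance: little prior knowledge.
    p00, p01, p10, p11 = 100.0, 0.0, 0.0, 100.0

    for i, z in enumerate(measurements):
        if i > 0:
            # Predict: advance the state and its uncertainty by one visit.
            level = level + dt * rate
            n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + q_level
            n01 = p01 + dt * p11
            n10 = p10 + dt * p11
            n11 = p11 + q_rate
            p00, p01, p10, p11 = n00, n01, n10, n11

        # Update: blend the prediction with the new measurement of the level.
        s = p00 + r                    # innovation variance
        k0, k1 = p00 / s, p10 / s      # Kalman gains for level and rate
        residual = z - level
        level += k0 * residual
        rate += k1 * residual
        p00, p01, p10, p11 = (
            (1 - k0) * p00,
            (1 - k0) * p01,
            p10 - k1 * p00,
            p11 - k1 * p01,
        )

    return level + horizon * dt * rate, rate


# Hypothetical index declining by 0.5 units per visit from a start of -2.0.
visits = [-2.0 - 0.5 * t for t in range(10)]
forecast, estimated_rate = kalman_trend_forecast(visits, horizon=2)
print(forecast, estimated_rate)
```

In this toy setting the filter recovers the rate of decline and projects it forward; a clinically useful model would combine several data sources and would need validation on real patient trajectories.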

Diabetic Retinopathy

Several studies have implemented DL algorithms for the diagnosis of microaneurysms, hemorrhages, hard exudates, and cotton wool spots among patients with DR. DL algorithms for the detection of DR have recently been reported to have a higher sensitivity than does manual detection by ophthalmologists (48). However, more studies are needed to confirm this finding (12).

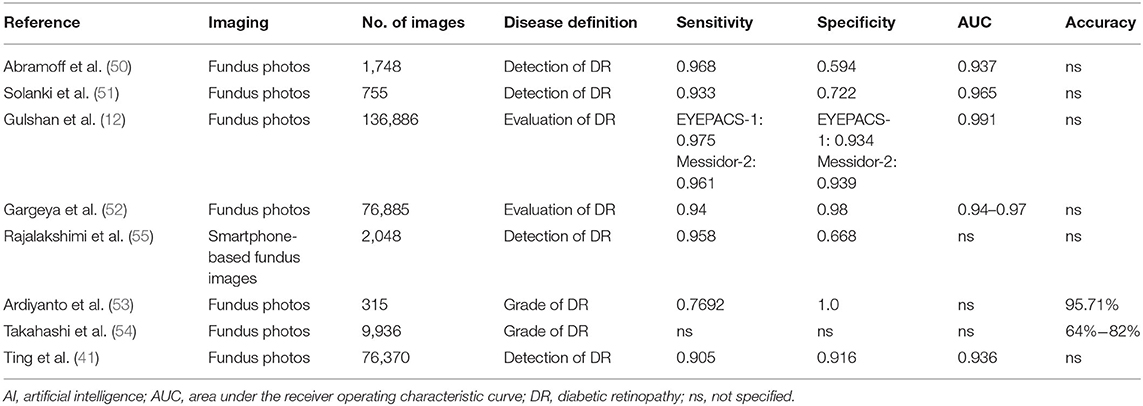

The accuracy of AI depends on access to good training datasets. Gulshan et al. (12) evaluated the accuracy of a two-dataset system in the detection of DR from fundus photographs: the EyePACS-1 dataset, composed of 9,963 images from 4,997 patients, and the MESSIDOR-2 dataset, consisting of 1,748 images from 874 people. One of the earliest studies on the automated detection of DR from color fundus photographs was by Abramoff et al. in 2008 (49). It was a retrospective analysis done with non-mydriatic images that was able to detect referable DR with 84% sensitivity and 64% specificity. In 2013 (50), a study reporting the results of the Iowa Detection Program found a higher sensitivity of 96.8% and a lower specificity of 59.4%. Another study published in 2015, using EyeArt AI software trained with the MESSIDOR-2 dataset, demonstrated a sensitivity of 93.3% and a specificity of 72.2% in diagnosing DR (51). Later, in 2016, Gulshan et al. developed an algorithm trained with 128,175 macula-centered retinal images obtained from EyePACS and MESSIDOR-2 and reported sensitivity values of 97.5 and 96.1% and specificities of 93.4 and 93.3%, respectively (12).

Gargeya and Leng (52) focused on the identification of mild non-proliferative DR. They showed a sensitivity of 94% and a specificity of 98% for referable DR with EyePACS.

As reported above, Ting et al. developed an algorithm through a dataset of retinal images for the detection of DR, glaucoma, and AMD on a multiethnic population. This DL system evaluated 76,370 retinal images with a sensitivity of 90.5% and a specificity of 91.6% for DR (41). Ardiyanto et al. (53) developed an algorithm for DR grading trained with 315 fundus images, with an accuracy of 95.71%, a sensitivity of 76.92%, and a specificity of 100%. Takahashi et al. (54) proposed a novel AI disease staging system with the ability to grade DR involving retinal areas not typically visualized on fundoscopy. They obtained an algorithm able to grade DR with 9,939 fundus images with an accuracy of 64–82%. Finally, Rajalakshimi et al. (55) showed the possibility of a smartphone-based fundus image diagnosis of DR with a sensitivity of 95.8% and a specificity of 66.8% (Table 2).

Age-Related Macular Degeneration

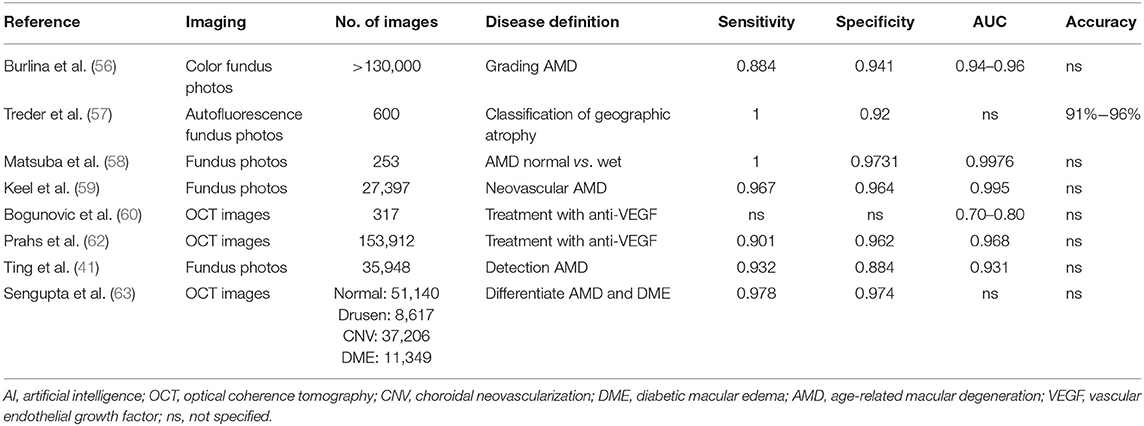

Several studies have used fundus photographs in the diagnosis of AMD. Burlina et al. (56) focused on the automated grading of AMD. From color fundus images, they evaluated the classification between absence/early AMD and intermediate/advanced AMD, with an accuracy of 0.94–0.96. Treder et al. (57) focused on a DL-based detection and classification of geographic atrophy using a DCNN classifier through autofluorescence fundus photos. They obtained an accuracy of 91–96%. Another study (58) used a deep, unspecified CNN to classify between normal and wet AMD images, with a sensitivity of 100%, a specificity of 97.31%, and an accuracy of 99.76%. Two hundred fifty-three fundus photos for training and 111 for validation were used. Keel et al. (59) developed a DL algorithm for the detection of neovascular AMD using color fundus photographs, with a sensitivity of 96.7%, a specificity of 96.4%, and an accuracy of 99.5%. Ting et al. (41) showed an AUC for their algorithm of 0.931, a sensitivity of 93.2%, and a specificity of 88.4% when compared to manual efforts by ophthalmologists.

Concerning OCT, Bogunovic et al. developed a data-driven model to predict the progression risk in intermediate AMD (60). Another study in 2017 developed an algorithm to predict anti-vascular endothelial growth factor (VEGF) treatment requirements in neovascular AMD. The authors used quantitative OCT scan features to classify the need for injections over 20 months into high (more than 15), medium (between 6 and 15), and low (<6) groups and used the OCT images of 317 patients as a dataset for training and validation (61). An accuracy of 70–80% was achieved for treatment requirement evaluation. Treder et al., in 2017, established a model able to automatically detect exudative AMD from spectral domain OCT (SD-OCT) (57). Prahs et al. (62) developed an OCT-based DL algorithm for the evaluation of treatment indication with anti-VEGF. This research included a deep, unsupervised CNN to compare the system prediction injection requirement to actual injection administration within 21 days. This kind of AI used more than 150,000 OCT line scans for training and 5,358 for validation, with an AUC of 96.8%, a sensitivity of 90.1%, and a specificity of 96.2%. Sengupta et al. (63) used OCT images with the aim of differentiating between AMD and diabetic macular edema, obtaining a sensitivity of 97.8% and a specificity of 97.4% (Table 3).
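The three anti-VEGF treatment requirement groups described above (high: more than 15 injections; medium: between 6 and 15; low: fewer than 6, over 20 months) can be written as a small labeling function. This sketch only encodes the target categories that such a classifier would be trained to predict; it assumes that the boundary values 6 and 15 both fall in the medium group, and the function name is hypothetical.

```python
def injection_requirement_group(n_injections):
    """Map an injection count over the observation period to a requirement group."""
    if n_injections < 0:
        raise ValueError("injection count cannot be negative")
    if n_injections > 15:
        return "high"
    if n_injections >= 6:  # assumption: 6 and 15 are both counted as medium
        return "medium"
    return "low"


# Hypothetical injection counts for five patients.
groups = [injection_requirement_group(n) for n in (3, 6, 10, 15, 16)]
print(groups)
```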

Retinal Detachment

Ohsugi et al. (64) used fundus ophthalmoscopy to detect retinal detachment, with 329 fundus photos for training and 82 for validation, obtaining a sensitivity of 97.6.7%, a specificity of 96.5%, and an accuracy of 99.6%. Li et al. (65) developed a deep, unsupervised CNN to detect retinal detachment and macula-on status through 7,323 fundus photos for training and 1,556 for validation. The sensitivity values were 96.1 and 93.8%, the specificities were 99.6% and 90.9%, and the AUCs were 0.989 and 0.975, respectively.

Retinal Vein Occlusion

One of the most important studies on retinal vein occlusion was that by Zhao et al. (66), who used a CNN together with patch-based and image-based vote methods to identify the fundus image of branch retinal vein occlusion automatically. They achieved an accuracy of 97%.

Retinopathy of Prematurity

The most significant progress in the pediatric application of AI concerns ROP. Automation derived from AI application could not only improve screening and objective assessment but also cause less stress and pain for infants undergoing examination compared with indirect ophthalmoscopy (67). Different studies have focused on the determination of the vessel tortuosity and width via fundus images, creating tools like Vessel Finder (68), Vessel Map (69), ROP tool (70), Retinal Image Multiscale Analysis (RISA) (71, 87), and Computer-Aided Image Analysis of the Retina (CAIAR) (72, 73). Vessel measurements were used as a feature for various predictive models of diseases. Finally, recent approaches to ROP are mostly based on CNNs, which take fundus images as inputs and do not require manual intervention. Systems like that of Worral and Wilson (74), the i-ROP-DL (75, 76), and DeepROP (77) have demonstrated agreement with expert opinions and better disease detection than that by some experts (76, 77).

Teleophthalmology and Screening

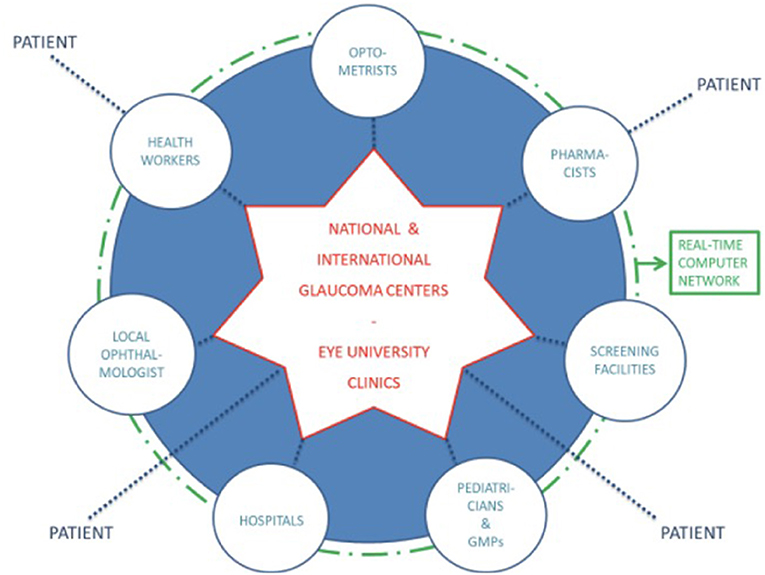

Telemedicine is defined as “the use of medical information exchanged from one site to another via electronic communications to improve a patient's health status” (88). Telemedicine can facilitate a larger distribution of healthcare to distant areas where there is a lack of health workers, can reduce the waiting times, and improve acute management of patients, even in remote regions. A possible example of the application of telemedicine to glaucoma management is represented by the “hub and spoke pre-hospital model of glaucoma: the hubs correspond to optometrists, pharmacists, pediatricians, general medical practitioners (GMPs), the local ophthalmologist, health workers, screening facilities, and hospitals, while the spoke is represented by the national and international glaucoma centers and the Eye University Clinics” (89) (Figure 3). This model of healthcare improves and expands clinical services to remote regions (the so-called spoke) with consultations between patients and specialists based in referral centers (the so-called hub) (90).

Figure 3. The hub and spoke model of glaucoma (89).

However, given the global burden of eye diseases and the progressive shortage of healthcare workers, telemedicine solutions that decentralize consultations may not be sufficient.

In such a scenario, AI technology should be combined with telemedicine, enabling clinicians to receive automatically acquired and screened health parameters from patients and to visit them remotely. Especially during the coronavirus disease 2019 (COVID-19) pandemic era, the implementation of teleophthalmology could reduce the infection risk in the healthcare setting, enabling remote triaging before arrival at the hospital in order to avoid unnecessary visits and exposure risks, as already done by multiple centers across the world (91–94).

Furthermore, the DL algorithm applied to retinal fundus images could be useful as a broad-based visual impairment screening tool, as reported by Tham et al. (95). This approach could lead to a more rapid referral of patients with visual impairments to tertiary eye centers.

Another step forward in improving traditional clinic visits might be further accelerated by the application of AI to home devices used for monitoring parameters such as visual acuity, visual fields, and intraocular pressure (96–99). Although further studies need to be conducted before the mass adoption of these devices, they provide assurance of the possibility to utilize teleophthalmology from home in the future.

Discussion

In this review, we described the main applications of AI to ophthalmology, underlining many aspects of evolution and future improvements related to this technology.

Several other reviews on AI in ophthalmology have been published (100); however, they are more focused on specific diseases such as DR or AMD (101–103). Although these articles are exhaustive and are in-depth reviews, the aim of our work was to create a more comprehensive clinical overview of the current applications of AI in ophthalmology, giving the clinicians a practical summary of current evidence for AI.

DL algorithms reached high thresholds of accuracy, sensitivity, and specificity for some of the most common vision-threatening diseases, with the highest level of evidence regarding DR, AMD, and glaucoma. Furthermore, some studies on AI have focused on pediatric ophthalmology, with the aim of helping clinicians in overcoming common practical limitations due to pediatric patients' compliance.

However, in such a scenario of optimistic acceptance of this new technology, it should be highlighted that DL has also given rise to challenges and some controversy.

Firstly, DL algorithms are poorly explainable in ophthalmology terms. This is the so-called black box phenomenon and could eventually lead to a reduced acceptance of this technology by clinicians (104, 105). A black box suggests a lack of understanding of the decision-making process of the algorithm that gives a certain output. Several techniques are used to mitigate this phenomenon, such as the “occlusion test, in which a blank area is systemically moved across the entire image and the largest drop in predictive probability represents the specific area of highest importance for the algorithm” (106), or “saliency maps (heat maps) generation techniques such as activation mapping, which, again, highlights areas of importance for classification decisions within an image” (107). Despite the progress made, in some cases (108), the visualization method highlighted non-traditional areas of diagnostic interest, and it is uncertain how to consider the features identified by saliency analysis of those regions (109).
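The occlusion test quoted above can be sketched in a few lines. In this illustration a blank patch is slid across a small grayscale image, and the drop in the predicted probability is recorded for each patch position. The `toy_predict` function is a hypothetical stand-in for a trained classifier (it simply averages the four central pixels of a 4 × 4 image), and the image, patch size, and fill value are likewise invented.

```python
def occlusion_map(image, predict, patch=2, fill=0.0):
    """Return the drop in predicted probability for every patch position.

    image is a list of rows of pixel intensities; predict maps an image to a
    probability. drops[top][left] is the drop when the patch's top-left
    corner sits at (top, left).
    """
    height, width = len(image), len(image[0])
    baseline = predict(image)
    drops = []
    for top in range(height - patch + 1):
        row = []
        for left in range(width - patch + 1):
            occluded = [r[:] for r in image]  # copy so the original is untouched
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    occluded[y][x] = fill     # blank out the patch
            row.append(baseline - predict(occluded))
        drops.append(row)
    return drops


def toy_predict(image):
    """Hypothetical model: probability = mean intensity of the central 2x2 region."""
    centre = [image[y][x] for y in (1, 2) for x in (1, 2)]
    return sum(centre) / len(centre)


image = [[1.0] * 4 for _ in range(4)]  # hypothetical uniform 4x4 image
drops = occlusion_map(image, toy_predict)

# The patch position with the largest drop marks the most important region.
largest_drop, most_important = max(
    (drops[t][l], (t, l))
    for t in range(len(drops))
    for l in range(len(drops[0]))
)
print(most_important, largest_drop)
```

Here the largest drop occurs when the patch covers the central region, the only area the toy model looks at. With a real network, the same procedure produces a heat map of the regions that most influence the output.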

External validation of algorithms represents the second challenge. Although many DL algorithms have been developed based on publicly available datasets, there are some concerns about how well these will perform in a “real-world” clinical practice setting (109, 110). When adopted in clinical practice, these algorithms may have a diminished performance due to variabilities in several aspects, such as in the imaging quality, lighting conditions, and the different dilation protocols.

Another area of controversy is the presence of bias in the datasets for training algorithms. Biases in the training data used for developing AI algorithms not only may weaken the external applicability but may also amplify preexisting biases (109). Some solutions to recognize potential biases and limit unwanted outcomes could be to rebalance the training dataset if a certain subgroup is underrepresented or to select a training dataset with diverse patient populations. An example of an adequate training dataset on different populations is that used by Ting et al. (41), in which their algorithm for detecting diabetic retinopathy was validated on different ethnic groups.
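One simple way to rebalance a training dataset when a subgroup is underrepresented is random oversampling, sketched below. The record structure, the `group` field, and the group sizes are hypothetical. Note that oversampling only repeats existing examples and adds no new information, so it complements rather than replaces the collection of data from diverse populations.

```python
import random
from collections import Counter


def oversample(records, group_key, seed=0):
    """Duplicate randomly chosen records until every group matches the largest."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    by_group = {}
    for record in records:
        by_group.setdefault(record[group_key], []).append(record)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw extra copies (with replacement) for underrepresented groups.
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced


# Hypothetical dataset: 8 images from group A, only 2 from group B.
records = [{"id": i, "group": "A"} for i in range(8)]
records += [{"id": i, "group": "B"} for i in range(8, 10)]

balanced = oversample(records, "group")
counts = Counter(record["group"] for record in balanced)
print(counts)
```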

Lastly, it is worth noting the legal implications of using DL algorithms in clinical practice. In fact, if a machine thinks similarly to a human ophthalmologist who can make errors, “who is responsible to bear the legal consequences of an undesirable outcome due to an erroneous prediction made by an artificial intelligence algorithm? These are such complex medical legal issues that have yet to be settled” (109, 111).

Conclusions

In conclusion, we discussed the main fields for the application of AI into ophthalmology practice. The use of AI algorithms should be seen as a tool to assist clinicians, not to replace them. AI could speed up some processes, reduce the workload for clinicians, and minimize diagnostic errors due to inappropriate data integration. AI is able to extract features from complex and different imaging modalities, enabling the discovery of new biomarkers to expand our current knowledge of diseases. This could lead to introducing into clinical practice new automatically detected diagnostic parameters or to developing new treatments for eye diseases. Challenges related to the implementation of these technologies remain, including algorithm validation, patient acceptance, and the education and training of health providers. However, physicians should continue to adapt to the fast-changing models of care delivery, collaborating more with teams of engineers, data scientists, and technology experts in order to achieve high-quality standards for research and interdisciplinary clinical practice.

Author Contributions

RN, GB, PM, and FR contributed to manuscript drafting, literature review, and final approval of the review. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the Dartmouth summer research project on artificial intelligence. AI Mag. (2006) 27:12–4. doi: 10.1609/aimag.v27i4.1904

2. World Economic Forum. The Fourth Industrial Revolution: What it Means, How to Respond. (2016). Available online at: https://www.weforum.org/agenda/2016/01/the-fourth-industrial-revolution-what-it-means-and-how-to-respond/ (accessed August 18, 2018).

3. Rahimy E. Deep learning applications in ophthalmology. Curr Opin Ophthalmol. (2018) 29:254–260. doi: 10.1097/ICU.0000000000000470

4. Puget JF. What is Machine Learning? (2020). Available online at: https://www.ibm.com/cloud/learn/machine-learning

5. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal. (2017) 42:60–88.

6. Shen D, Wu G, Suk H. Deep learning in medical image analysis. Annu Rev Biomed Eng. (2017) 19:221–48. doi: 10.1146/annurev-bioeng-071516-044442

8. Bejnordi BE, Zuidhof G, Balkenhol M, Hermsen M, Bult P, van Ginneken B, et al. Context-aware stacked convolutional neural networks for classification of breast carcinomas in whole-slide histopathology images. J Med Imaging (Bellingham). (2017) 4:044504. doi: 10.1117/1.JMI.4.4.044504

9. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542:115–8. doi: 10.1038/nature21056

10. Weng SF, Reps J, Kai J, Garibaldi JM, Qureshi N. Can machine-learning improve cardiovascular risk prediction using routine clinical data? PLoS ONE. (2017) 12:e0174944. doi: 10.1371/journal.pone.0174944

11. van Ginneken B. Fifty years of computer analysis in chest imaging: rule-based, machine learning, deep learning. Radiol Phys Technol. (2017) 10:23–32. doi: 10.1007/s12194-017-0394-5

12. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

13. Quellec G, Charrière K, Boudi Y, Cochener B, Lamard M. Deep image mining for diabetic retinopathy screening. Med Image Anal. (2017) 39:178–93. doi: 10.1016/j.media.2017.04.012

14. Salvi M, Dazzi D, Pellistri I, Neri F. Prediction of the progression of thyroid-associated ophthalmopathy at first ophthalmologic examination: use of a neural network. Thyroid. (2002) 12:233–6. doi: 10.1089/105072502753600197

15. Lu J, Fan Z, Zheng C, Feng J, Huang L, Li W, et al. Automated strabismus detection for telemedicine applications. arXiv. (2018).

16. Chen Z, Fu H, Lo W-L, Chi Z. Strabismus recognition using eye-tracking data and convolutional neural networks. J Healthc Eng. (2018) 2018:7692198. doi: 10.1155/2018/7692198

17. Gramatikov BI. Detecting central fixation by means of artificial neural networks in a pediatric vision screener using retinal birefringence scanning. Biomed Eng Online. (2017) 16:52. doi: 10.1186/s12938-017-0339-6

18. Wang L, Ding L, Liu Z, Sun L, Chen L, Jia R, et al. Automated identification of malignancy in whole-slide pathological images: identification of eyelid malignant melanoma in gigapixel pathological slides using deep learning. Br J Ophthalmol. (2020) 104:318–23. doi: 10.1136/bjophthalmol-2018-313706

19. Tan E, Lin F, Sheck L, Salmon P, Ng S. A practical decision-tree model to predict complexity of reconstructive surgery after periocular basal cell carcinoma excision. J Eur Acad Dermatology Venereol. (2017) 31:717–23. doi: 10.1111/jdv.14012

20. Yousefi S, Yousefi E, Takahashi H, Hayashi T, Tampo H, Inoda S, et al. Keratoconus severity identification using unsupervised machine learning. PLoS ONE. (2018) 13:e0205998. doi: 10.1371/journal.pone.0205998

21. Kamiya K, Ayatsuka Y, Kato Y, Fujimura F, Takahashi M, Shoji N, et al. Keratoconus detection using deep learning of colour-coded maps with anterior segment optical coherence tomography: a diagnostic accuracy study. BMJ Open. (2019) 9:e031313. doi: 10.1136/bmjopen-2019-031313

22. Chylack LTJ, Wolfe JK, Singer DM, Leske MC, Bullimore MA, Bailey IL, et al. The lens opacities classification system III. The longitudinal study of cataract study group. Arch Ophthalmol. (1993) 111:831–36. doi: 10.1001/archopht.1993.01090060119035

23. Li H, Lim JH, Liu J, Wong DWK, Tan NM, Lu S, et al. An automatic diagnosis system of nuclear cataract using slit-lamp images. Annu Int Conf IEEE Eng Med Biol Soc. (2009) 2009:3693–6. doi: 10.1109/IEMBS.2009.5334735

24. Xu Y, Gao X, Lin S, Wong DWK, Liu J, Xu D, et al. Automatic grading of nuclear cataracts from slit-lamp lens images using group sparsity regression. Med Image Comput Comput Assist Interv. (2013) 16(Pt 2):468–75. doi: 10.1007/978-3-642-40763-5_58

25. Gao X, Lin S, Wong TY. Automatic feature learning to grade nuclear cataracts based on deep learning. IEEE Trans Biomed Eng. (2015) 62:2693–701. doi: 10.1109/TBME.2015.2444389

26. Wu X, Huang Y, Liu Z, Lai W, Long E, Zhang K, et al. Universal artificial intelligence platform for collaborative management of cataracts. Br J Ophthalmol. (2019) 103:1553–60. doi: 10.1136/bjophthalmol-2019-314729

27. Dong Y, Zhang Q, Qiao Z, Yang J. Classification of cataract fundus image based on deep learning. In: 2017 IEEE International Conference on Imaging Systems and Techniques (IST). Beijing: IEEE (2017). p. 1–5.

28. Zhang L, Li J, Zhang I, Han H, Liu B, Yang J, et al. Automatic cataract detection and grading using deep convolutional neural network. In: 2017 IEEE 14th International Conference on Networking, Sensing and Control (ICNSC). Calabria: IEEE (2017).

29. Li J, Xu X, Guan Y, Imran A, Liu B, Zhang L, et al. Automatic cataract diagnosis by image-based interpretability. In: 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Vol. 2018. Miyazaki: IEEE (2019). p. 3964–9.

30. Ran J, Niu K, He Z, Zhang H, Song H. Cataract detection and grading based on combination of deep convolutional neural network and random forests. In: 2018 International Conference on Network Infrastructure and Digital Content (IC-NIDC). Guiyang: IEEE (2018). p. 155–9.

31. Xu X, Zhang L, Li J, Guan Y, Zhang L. A Hybrid Global-Local Representation CNN Model for Automatic Cataract Grading. IEEE J Biomed Heal Informatics. (2020) 24:556–67. doi: 10.1109/JBHI.2019.2914690

32. Zhang H, Niu K, Xiong Y, Yang W, He Z, Song H. Automatic cataract grading methods based on deep learning. Comput Methods Programs Biomed. (2019) 182:104978. doi: 10.1016/j.cmpb.2019.07.006

33. Long E, Lin H, Liu Z, Wu X, Wang L, Jiang J, et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat Biomed Eng. (2017) 1:24. doi: 10.1038/s41551-016-0024

34. Lin H, Li R, Liu Z, Chen J, Yang Y, Chen H, et al. Diagnostic efficacy and therapeutic decision-making capacity of an artificial intelligence platform for childhood cataracts in eye clinics: a multicentre randomized controlled trial. EClinicalMedicine. (2019) 9:52–9. doi: 10.1016/j.eclinm.2019.03.001

35. Liu X, Jiang J, Zhang K, Long E, Cui J, Zhu M, et al. Localization and diagnosis framework for pediatric cataracts based on slit-lamp images using deep features of a convolutional neural network. PLoS ONE. (2017) 12:e0168606. doi: 10.1371/journal.pone.0168606

36. Clark TY, Clark RA. Atropine 0.01% eyedrops significantly reduce the progression of childhood myopia. J Ocul Pharmacol. (2015) 31:541–5. doi: 10.1089/jop.2015.0043

37. Lin H, Long E, Ding X, Diao H, Chen Z, Liu R, et al. Prediction of myopia development among Chinese school-aged children using refraction data from electronic medical records: a retrospective, multicentre machine learning study. PLoS Med. (2018) 15:e1002674. doi: 10.1371/journal.pmed.1002674

38. Harwerth RS, Carter-Dawson L, Shen F, Smith EL 3rd, Crawford ML. Ganglion cell losses underlying visual field defects from experimental glaucoma. Invest Ophthalmol Vis Sci. (1999) 40:2242–50.

39. Mayro EL, Wang M, Elze T, Pasquale LR. The impact of artificial intelligence in the diagnosis and management of glaucoma. Eye. (2020) 34:1–11. doi: 10.1038/s41433-019-0577-x

40. Li Z, He Y, Keel S, Meng W, Chang RT, He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. (2018) 125:1199–206. doi: 10.1016/j.ophtha.2018.01.023

41. Ting DSW, Cheung CYL, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. (2017) 318:2211–23. doi: 10.1001/jama.2017.18152

42. Thompson AC, Jammal AA, Medeiros FA. A deep learning algorithm to quantify neuroretinal rim loss from optic disc photographs. Am J Ophthalmol. (2019) 201:9–18. doi: 10.1016/j.ajo.2019.01.011

43. Asaoka R, Murata H, Hirasawa K, Fujino Y, Matsuura M, Miki A, et al. Using deep learning and transfer learning to accurately diagnose early-onset glaucoma from macular optical coherence tomography images. Am J Ophthalmol. (2019) 198:136–45. doi: 10.1016/j.ajo.2018.10.007

44. Elze T, Pasquale LR, Shen LQ, Chen TC, Wiggs JL, Bex PJ. Patterns of functional vision loss in glaucoma determined with archetypal analysis. J R Soc Interface. (2015) 12:20141118. doi: 10.1098/rsif.2014.1118

45. Wang M, Shen LQ, Pasquale LR, Petrakos P, Formica S, Boland MV, et al. An artificial intelligence approach to detect visual field progression in glaucoma based on spatial pattern analysis. Invest Ophthalmol Vis Sci. (2019) 60:365–75. doi: 10.1167/iovs.18-25568

46. Garcia GP. Accuracy of kalman filtering in forecasting visual field and intraocular pressure trajectory in patients with ocular hypertension. JAMA Ophthalmol. (2019) 137:1416–23. doi: 10.1001/jamaophthalmol.2019.4190

47. Kazemian P, Lavieri MS, Van Oyen MP, Andrews C, Stein JD. Personalized prediction of glaucoma progression under different target intraocular pressure levels using filtered forecasting methods. Ophthalmology. (2018) 125:569–77. doi: 10.1016/j.ophtha.2017.10.033

48. Krause J, Gulshan V, Rahimy E, Karth P, Widner K, Corrado GS, et al. Grader variability and the importance of reference standards for evaluating machine learning models for diabetic retinopathy. Ophthalmology. (2018) 125:1264–72. doi: 10.1016/j.ophtha.2018.01.034

49. Abramoff MD, Niemeijer M, Suttorp-Schulten MSA, Viergever MA, Russell SR, van Ginneken B. Evaluation of a system for automatic detection of diabetic retinopathy from color fundus photographs in a large population of patients with diabetes. Diabetes Care. (2008) 31:193–98. doi: 10.2337/dc07-1312

50. Abràmoff MD, Folk JC, Han DP, Walker JD, Williams DF, Russell SR, et al. Automated analysis of retinal images for detection of referable diabetic retinopathy. JAMA Ophthalmol. (2013) 131:351–7. doi: 10.1001/jamaophthalmol.2013.1743

51. Solanki K, Ramachandra C, Bhat S, Bhaskaranand M, Nittala MG, Sadda SR. EyeArt: automated, high-throughput, image analysis for diabetic retinopathy screening. Invest Ophthalmol Vis Sci. (2015) 56:1429.

52. Gargeya R, Leng T. Automated Identification of Diabetic Retinopathy Using Deep Learning. Ophthalmology. (2017) 124:962–969. doi: 10.1016/j.ophtha.2017.02.008

53. Ardiyanto I, Nugroho HA, Buana RLB. Deep learning-based Diabetic Retinopathy assessment on embedded system. Annu Int Conf IEEE Eng Med Biol Soc. (2017) 2017:1760–3. doi: 10.1109/EMBC.2017.8037184

54. Takahashi H, Tampo H, Arai Y, Inoue Y, Kawashima H. Applying artificial intelligence to disease staging: deep learning for improved staging of diabetic retinopathy. PLoS ONE. (2017) 12:e0179790. doi: 10.1371/journal.pone.0179790

55. Rajalakshmi R, Subashini R, Anjana RM, Mohan V. Automated diabetic retinopathy detection in smartphone-based fundus photography using artificial intelligence. Eye (Lond). (2018) 32:1138–44. doi: 10.1038/s41433-018-0064-9

56. Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. (2017) 135:1170–6. doi: 10.1001/jamaophthalmol.2017.3782

57. Treder M, Lauermann JL, Eter N. Automated detection of exudative age-related macular degeneration in spectral domain optical coherence tomography using deep learning. Graefes Arch Clin Exp Ophthalmol. (2018) 256:259–65. doi: 10.1007/s00417-017-3850-3

58. Matsuba S, Tabuchi H, Ohsugi H, Enno H, Ishitobi N, Masumoto H, et al. Accuracy of ultra-wide-field fundus ophthalmoscopy-assisted deep learning, a machine-learning technology, for detecting age-related macular degeneration. Int Ophthalmol. (2019) 39:1269–75. doi: 10.1007/s10792-018-0940-0

59. Keel S, Li Z, Scheetz J, Robman L, Phung J, Makeyeva G, et al. Development and validation of a deep-learning algorithm for the detection of neovascular age-related macular degeneration from colour fundus photographs. Clin Experiment Ophthalmol. (2019) 47:1009–18. doi: 10.1111/ceo.13575

60. Bogunovic H, Montuoro A, Baratsits M, Karantonis MG, Waldstein SM, Schlanitz F, et al. Machine learning of the progression of intermediate age-related macular degeneration based on OCT imaging. Invest Ophthalmol Vis Sci. (2017) 58:BIO141–BIO150. doi: 10.1167/iovs.17-21789

61. Bogunovic H, Montuoro A, Baratsits M, Karantonis MG, Waldstein SM, Schlanitz F, et al. Prediction of Anti-VEGF Treatment Requirements in Neovascular AMD Using a Machine Learning Approach. Invest Ophthalmol Vis Sci. (2017) 58:3240–48. doi: 10.1167/iovs.16-21053

62. Prahs P, Radeck V, Mayer C, Cvetkov Y, Cvetkova N, Helbig H, et al. OCT-based deep learning algorithm for the evaluation of treatment indication with anti-vascular endothelial growth factor medications. Graefes Arch Clin Exp Ophthalmol. (2018) 256:91–98. doi: 10.1007/s00417-017-3839-y

63. Sengupta S, Singh A, Leopold HA, Gulati T, Lakshminarayanan V. Ophthalmic diagnosis using deep learning with fundus images - A critical review. Artif Intell Med. (2020) 102:101758. doi: 10.1016/j.artmed.2019.101758

64. Ohsugi H, Tabuchi H, Enno H, Ishitobi N. Accuracy of deep learning, a machine-learning technology, using ultra-wide-field fundus ophthalmoscopy for detecting rhegmatogenous retinal detachment. Sci Rep. (2017) 7:9425. doi: 10.1038/s41598-017-09891-x

65. Li Z, Guo C, Nie D, Lin D, Zhu Y, Chen C, et al. Deep learning for detecting retinal detachment and discerning macular status using ultra-widefield fundus images. Commun Biol. (2020) 3:15. doi: 10.1038/s42003-019-0730-x

66. Zhao R, Chen Z, Chi Z. Convolutional neural networks for branch retinal vein occlusion recognition? In: 2015 IEEE International Conference on Information and Automation, ICIA 2015 - In Conjunction with 2015 IEEE International Conference on Automation and Logistics. Lijiang: IEEE (2015). p. 1633–6. doi: 10.1109/ICInfA.2015.7279547

67. Moral-Pumarega MT, Caserío-Carbonero S, De-La-Cruz-Bértolo J, Tejada-Palacios P, Lora-Pablos D, Pallás-Alonso C. Pain and stress assessment after retinopathy of prematurity screening examination: indirect ophthalmoscopy versus digital retinal imaging. BMC Pediatr. (2012) 12:132. doi: 10.1186/1471-2431-12-132

68. Heneghan C, Flynn J, O'Keefe M, Cahill M. Characterization of changes in blood vessel width and tortuosity in retinopathy of prematurity using image analysis. Med Image Anal. (2002) 6:407–429. doi: 10.1016/S1361-8415(02)00058-0

69. Rabinowitz MP, Grunwald JE, Karp KA, Quinn GE, Ying G-S, Mills MD. Progression to severe retinopathy predicted by retinal vessel diameter between 31 and 34 weeks of postconception age. Arch Ophthalmol. (2007) 125:1495–500. doi: 10.1001/archopht.125.11.1495

70. Wallace DK, Zhao Z, Freedman SF. A pilot study using ‘ROPtool' to quantify plus disease in retinopathy of prematurity. J AAPOS. (2007) 11:381–7. doi: 10.1016/j.jaapos.2007.04.008

71. Swanson C, Cocker KD, Parker KH, Moseley MJ, Fielder AR. Semiautomated computer analysis of vessel growth in preterm infants without and with ROP. Br J Ophthalmol. (2003) 87:1474–77. doi: 10.1136/bjo.87.12.1474

72. Shah DN, Wilson CM, Ying GS, Karp KA, Fielder AR, Ng J, et al. Semiautomated digital image analysis of posterior pole vessels in retinopathy of prematurity. J AAPOS. (2009) 13:504–6. doi: 10.1016/j.jaapos.2009.06.007

73. Wilson CM, Cocker KD, Moseley MJ, Paterson C, Clay ST, Schulenburg WE, et al. Computerized analysis of retinal vessel width and tortuosity in premature infants. Invest Ophthalmol Vis Sci. (2008) 49:3577–85. doi: 10.1167/iovs.07-1353

74. Worrall DE, Wilson CM, Brostow G. Automated retinopathy of prematurity case detection with convolutional neural networks. In: LABELS/DLMIA@MICCAI. Cham: Springer (2016). p. 68–76. doi: 10.1007/978-3-319-46976-8_8

75. Redd TK, Campbell JP, Brown JM, Kim SJ, Ostmo S, Chan RVP, et al. Evaluation of a deep learning image assessment system for detecting severe retinopathy of prematurity. Br J Ophthalmol. (2018). doi: 10.1136/bjophthalmol-2018-313156. [Epub ahead of print].

76. Brown JM, Campbell JP, Beers A, Chang K, Ostmo S, Chan RVP, et al. Automated diagnosis of plus disease in retinopathy of prematurity using deep convolutional neural networks. JAMA Ophthalmol. (2018) 136:803–10. doi: 10.1001/jamaophthalmol.2018.1934

77. Wang J, Ju R, Chen Y, Zhang L, Hu J, Wu Y, et al. Automated retinopathy of prematurity screening using deep neural networks. EBioMedicine. (2018) 35:361–8. doi: 10.1016/j.ebiom.2018.08.033

78. Lavric A, Valentin P. KeratoDetect: keratoconus detection algorithm using convolutional neural networks. Comput Intell Neurosci. (2019) 2019:8162567. doi: 10.1155/2019/8162567

79. Pratap T, Kokil P. Computer-aided diagnosis of cataract using deep transfer learning. Biomed Signal Process Control. (2019) 53:101533. doi: 10.1016/j.bspc.2019.04.010

80. Ladas JG, Siddiqui AA, Devgan U, Jun AS. A 3-D ‘Super surface' combining modern intraocular lens formulas to generate a ‘super formula' and maximize accuracy. JAMA Ophthalmol. (2015) 133:1431–436. doi: 10.1001/jamaophthalmol.2015.3832

81. Siddiqui AA, Ladas JG, Lee JK. Artificial intelligence in cornea, refractive, and cataract surgery. Curr Opin Ophthalmol. (2020) 31:253–60. doi: 10.1097/ICU.0000000000000673

82. Connell BJ, Kane JX. Comparison of the Kane formula with existing formulas for intraocular lens power selection. BMJ Open Ophthalmol. (2019) 4:1–6. doi: 10.1136/bmjophth-2018-000251

83. Varma R, Steinmann WC, Scott IU. Expert agreement in evaluating the optic disc for glaucoma. Ophthalmology. (1992) 99:215–21. doi: 10.1016/S0161-6420(92)31990-6

84. Shibata N, Tanito M, Mitsuhashi K, Fujino Y, Matsuura M, Murata H, et al. Development of a deep residual learning algorithm to screen for glaucoma from fundus photography. Sci Rep. (2018) 8:14665. doi: 10.1038/s41598-018-33013-w

85. Medeiros FA, Jammal AA, Thompson AC. From machine to machine: an OCT-trained deep learning algorithm for objective quantification of glaucomatous damage in fundus photographs. Ophthalmology. (2019) 126:513–21. doi: 10.1016/j.ophtha.2018.12.033

86. Masumoto H, Tabuchi H, Nakakura S, Ishitobi N, Miki M, Enno H. Deep-learning classifier with an ultrawide-field scanning laser ophthalmoscope detects glaucoma visual field severity. J Glaucoma. (2018) 27:647–52. doi: 10.1097/IJG.0000000000000988

87. Gelman R, Jiang L, Du YE, Martinez-Perez ME, Flynn JT, Chiang MF. Plus disease in retinopathy of prematurity: pilot study of computer-based and expert diagnosis. J AAPOS. (2007) 11:532–40. doi: 10.1016/j.jaapos.2007.09.005

88. Telemedicine glossary Virginia. American Telemedicine Association. (2018). Available online at: https://www.americantelemed.org/resource/

89. Nuzzi R, Marolo P, Nuzzi A. The hub-and-spoke management of glaucoma. Front Neurosci. (2020) 14:180. doi: 10.3389/fnins.2020.00180

90. Gunasekeran DV, Tham YC, Ting DSW, Tan GSW, Wong TY. Digital health during COVID-19: lessons from operationalising new models of care in ophthalmology. Lancet Digit Heal. (2021) 3:e124–34. doi: 10.1016/S2589-7500(20)30287-9

91. Hollander JE, Carr BG. Virtually perfect? Telemedicine for Covid-19. N Engl J Med. (2020) 382:1679–81. doi: 10.1056/NEJMp2003539

92. Ting DSW, Lin H, Ruamviboonsuk P, Wong TY, Sim DA. Artificial intelligence, the internet of things, and virtual clinics: ophthalmology at the digital translation forefront. Lancet Digit Heal. (2020) 2:e8–e9. doi: 10.1016/S2589-7500(19)30217-1

93. Gunasekeran DV, Tseng RMWW, Tham YC, Wong TY. Applications of digital health for public health responses to COVID-19: a systematic scoping review of artificial intelligence, telehealth and related technologies. npj Digit Med. (2021) 4:36–41. doi: 10.1038/s41746-021-00412-9

94. Son J, Shin JY, Kim HD, Jung KH, Park KH, Park S. Development and Validation of Deep Learning Models for Screening Multiple Abnormal Findings in Retinal Fundus Images. Ophthalmology. (2020) 127:85–94. doi: 10.1016/j.ophtha.2019.05.029

95. Tham YC, Anees A, Zhang L, Goh JHL, Rim TH, Nusinovici S, et al. Referral for disease-related visual impairment using retinal photograph-based deep learning: a proof-of-concept, model development study. Lancet Digit Heal. (2021) 3:e29–e40. doi: 10.1016/S2589-7500(20)30271-5

96. Ittoop SM, SooHoo JR, Seibold LK, Mansouri K, Kahook MY. Systematic review of current devices for 24-h intraocular pressure monitoring. Adv Ther. (2016) 33:1679–90. doi: 10.1007/s12325-016-0388-4

97. Anderson AJ, Bedggood PA, George Kong YX, Martin KR, Vingrys AJ. Can home monitoring allow earlier detection of rapid visual field progression in glaucoma? Ophthalmology. (2017) 124:1735–42. doi: 10.1016/j.ophtha.2017.06.028

98. Ciuffreda KJ, Rosenfield M. Evaluation of the SVOne: a handheld, smartphone-based autorefractor. Optom Vis Sci. (2015) 92:1133–9. doi: 10.1097/OPX.0000000000000726

99. Wisse RPL, Muijzer MB, Cassano F, Godefrooij DA, Prevoo YFDM, Soeters N. Validation of an independent web-based tool for measuring visual acuity and refractive error (the manifest versus online refractive evaluation trial): prospective open-label noninferiority clinical trial. J Med Internet Res. (2019) 21:e14808. doi: 10.2196/14808

100. Armstrong GW, Lorch AC. A(eye): a review of current applications of artificial intelligence and machine learning in ophthalmology. Int Ophthalmol Clin. (2020) 60:57–71. doi: 10.1097/IIO.0000000000000298

101. Nielsen KB, Lautrup ML, Andersen JKH, Savarimuthu TR, Grauslund J. Deep Learning–Based Algorithms in Screening of Diabetic Retinopathy: A Systematic Review of Diagnostic Performance. Ophthalmol Retin. (2019) 3:294–304. doi: 10.1016/j.oret.2018.10.014

102. Islam MM, Yang HC, Poly TN, Jian WS, Jack CYL. Deep learning algorithms for detection of diabetic retinopathy in retinal fundus photographs: A systematic review and meta-analysis. Comput Methods Programs Biomed. (2020) 191:105320. doi: 10.1016/j.cmpb.2020.105320

103. Tan JH, Bhandary SV, Sivaprasad S, Hagiwara Y, Bagchi A, Raghavendra U, et al. Age-related Macular Degeneration detection using deep convolutional neural network. Futur Gener Comput Syst. (2018) 87:127–35. doi: 10.1016/j.future.2018.05.001

104. Maddox TM, Rumsfeld JS, Payne PRO. Questions for Artificial Intelligence in Health Care. JAMA. (2019) 321:31–32. doi: 10.1001/jama.2018.18932

105. Stead WW. Clinical implications and challenges of artificial intelligence and deep learning. JAMA. (2018) 320:1107–8. doi: 10.1001/jama.2018.11029

106. Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. In: Fleet D, Pajdla T, Schiele B, Tuytelaars T, editors. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 8689 LNCS (PART 1). Cham: Springer (2014). p. 818–33.

107. Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning Deep Features for Discriminative Localization. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV: IEEE (2016). p. 2921–9.

108. Keel S, Wu J, Lee PY, Scheetz J, He M. Visualizing deep learning models for the detection of referable diabetic retinopathy and glaucoma. JAMA Ophthalmol. (2019) 137:288–92. doi: 10.1001/jamaophthalmol.2018.6035

109. Liu TYA, Bressler NM. Controversies in artificial intelligence. Curr Opin Ophthalmol. (2020) 31:324–8. doi: 10.1097/ICU.0000000000000694

110. Kanagasingam Y, Xiao D, Vignarajan J, Preetham A, Tay-Kearney M-L, Mehrotra A. Evaluation of artificial intelligence-based grading of diabetic retinopathy in primary care. JAMA Netw Open. (2018) 1:e182665. doi: 10.1001/jamanetworkopen.2018.2665

Keywords: artificial intelligence, machine learning, deep learning, neural network, teleophthalmology, ophthalmology

Citation: Nuzzi R, Boscia G, Marolo P and Ricardi F (2021) The Impact of Artificial Intelligence and Deep Learning in Eye Diseases: A Review. Front. Med. 8:710329. doi: 10.3389/fmed.2021.710329

Received: 15 May 2021; Accepted: 23 July 2021;

Published: 30 August 2021.

Edited by:

Haotian Lin, Sun Yat-sen University, China

Reviewed by:

Yih Chung Tham, Singapore Eye Research Institute (SERI), Singapore

Gilbert Lim, Singapore Eye Research Institute (SERI), Singapore

Copyright © 2021 Nuzzi, Boscia, Marolo and Ricardi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Raffaele Nuzzi, prof.nuzzi_raffaele@hotmail.it
