- Life Sciences R&D Hub, Digital and Computational Pathology, Philips, Belfast, United Kingdom

There has been exponential growth in the application of AI in health and in pathology. This is resulting in the innovation of deep learning technologies that are specifically aimed at cellular imaging and practical applications that could transform diagnostic pathology. This paper reviews the different approaches to deep learning in pathology, the public grand challenges that have driven this innovation and a range of emerging applications in pathology. The translation of AI into clinical practice will require applications to be embedded seamlessly within digital pathology workflows, driving an integrated approach to diagnostics and providing pathologists with new tools that accelerate workflow, improve diagnostic consistency, and reduce errors. The clearance of digital pathology for primary diagnosis in the US by some manufacturers provides the platform on which to deliver practical AI. AI and computational pathology will continue to mature as researchers, clinicians, industry, regulatory organizations and patient advocacy groups work together to innovate and deliver new technologies to health care providers: technologies which are better, faster, cheaper, more precise, and safe.

Introduction

Artificial Intelligence (AI), along with its sub-disciplines of Machine Learning (ML) and Deep Learning (DL), is emerging as a key technology in healthcare with the potential to change lives and improve patient outcomes in many areas of medicine. Healthcare AI projects in particular have attracted greater investment than those in any other sector of the global economy (1). An estimated $2.1 billion was invested in AI-related products in 2018, and this is estimated to rise to $36.1 billion by 2025 (2).

The innovation opportunities offered by AI have been discussed extensively in the medical literature (3). Underpinned by the ability to learn salient features from large volumes of healthcare data, an AI system can potentially assist clinicians by interpreting diagnostic, prognostic and therapeutic data from very large patient populations, providing real-time guidance on risk, clinical care options and outcome, and in addition providing up-to-date medical information from journals, textbooks, and clinical practices to inform proper patient care (4). By combining access to such extensive knowledge, an AI system can help to reduce diagnostic and therapeutic errors that are inevitable in conventional human clinical practice.

AI systems are being researched widely in healthcare applications, where they are being trained not just from one data modality but from multivariate data (5) generated across multiple clinical activities, including imaging, genomics, diagnosis, and treatment assignment, where associations between subject features and outcomes can be learned. Big data is the ammunition for the development of AI applications. The increasing availability of enormous datasets, curated within and across healthcare organizations, will drive the development of robust and generalizable AI apps in health. Currently the largest data sets come from diagnostic imaging (comprising CT, CAT, MRI, and MRA), and this has tended to be the focus of AI development in medicine.

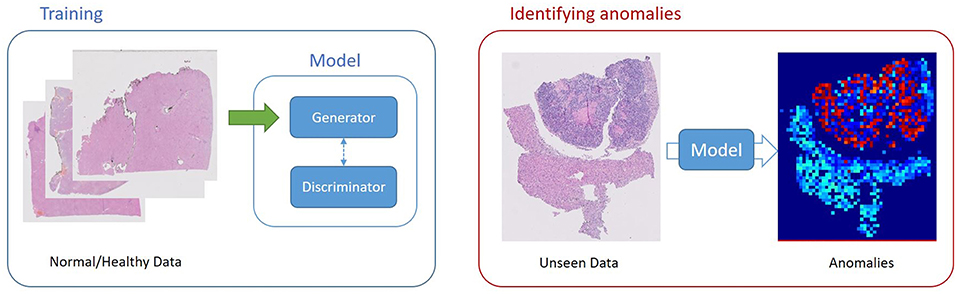

The development of AI applications has been wide-ranging. AI apps are being researched and developed in health care for tasks ranging from emergency call assessment of myocardial risk (6) to blood test analysis (7) to drug discovery (8). In parallel, the FDA has been increasingly clearing AI medical applications for clinical use. These are summarized in Table 1.

While there is considerable promise in AI technologies in health, there are some challenges ahead. Generalizing AI sufficiently to achieve full automation in the diagnostic/clinical pathway will be extremely difficult. The medico-legal issues around accountability and liability in decisions made or supported by machines will be hard to resolve, the regulatory issues for manufacturers of instruments capable of AI will be challenging, and the need to demonstrate reproducibility and accuracy on large populations of patients that contain outliers and non-representative individuals may cause difficulties for AI development (9). Diagnosis and treatment planning are inherently non-linear, complex processes, requiring creativity and problem-solving skills that demand complex interactions with multiple other medical disciplines. This will be difficult to achieve using AI. However, for well-defined domains that contribute to that diagnostic value chain, AI can clearly be transformative. Even with the advent of new AI, computers are unlikely to replace the diagnostic role of clinicians in the near future. Nevertheless, there is a growing acceptance of AI systems, with 61% of people suggesting that AI will make the world a better place (10).

Pathology is also now recognized as a strong candidate for AI development, principally in the field of cancer diagnosis and tissue biomarker analytics. This has been driven primarily by the development of whole slide imaging (WSI) platforms and digital pathology. Here, the generation of high-resolution digital images, each of which carries high volumes of data capturing the complex patterns that are critical to the diagnosis of disease, provides a fertile opportunity to apply AI for improved detection of disease. This paper sets out to review the recent applications of AI in pathology, highlighting the benefits and the pitfalls.

Digital Pathology and AI: A Perfect Storm

With the advent of high throughput scanning devices and WSI systems, capable of digitally capturing the entire content of resection, biopsy and cytological preparations from glass slides at diagnostic resolution, researchers can now use these content rich digital assets to develop imaging tools for discovery and diagnosis. The advantages of quantitative pathology imaging have been known for many years. By extracting quantitative data from the images using automated segmentation and pixel analysis, diagnostic patterns and visual clues can be better defined, driving improved reproducibility and consistency in diagnostic classification. Image analysis also allows the identification of sub-visual clues, potentially revealing new signatures of disease that are derived from the pixel information but not visible to the naked eye.

The advances in high throughput scanning devices in pathology have been astounding. In 2017, the FDA cleared the use of the first WSI system for primary diagnostics (11). Digitization of pathology can enable pathologists to transform their entire workflow in a busy diagnostic laboratory: integrating digital scanners with laboratory IT systems, handling and dispatching digital slides to pathology staff inside and outside an organization, manually reviewing digital slides on-screen rather than using a microscope, and reporting cases in an entirely digital workspace. Digital review has been shown in the largest pivotal trial of digital pathology in the US to be non-inferior to conventional diagnosis by microscopy (12). With the right infrastructure and implementation, it has been shown to introduce significant savings in pathologists' time in busy AP laboratories (13).

The digital transformation of pathology is expected to grow dramatically over the next few years, with increasing numbers of laboratories moving to high throughput digital scanning to support diagnostic practice. The real drivers for this include (i) an acute shortage of pathologists in many countries (14, 15), (ii) aging populations driving up pathology workloads (16), (iii) increased cancer screening programs resulting in increased workloads, (iv) increasing complexity of pathology tests driving up the time taken per case, and (v) the need for pathology laboratories to outsource expertise (15, 16).

These same drivers are also accelerating the development of AI to support the diagnostic challenges that face pathologists today. By layering AI applications into digital workflows, additional improvements in efficiency can potentially be achieved, in terms of both turn-around times and patient outcomes through improved detection and reproducibility.

Recent reports from a number of professional pathology organizations have highlighted the potential that digital pathology and AI could bring to the discipline to address the current workforce, workload, and complexity challenges (16). The number of academic publications in pathology AI has increased exponentially, with over 1,000 registered in PubMed in 2018. In the last 18 months, in excess of $100M has been invested in start-ups in pathology AI with a focus on building practical AI applications for diagnostics. In addition, governments are recognizing the opportunity that AI can bring to pathology. In the UK, a £1.3 billion investment has been announced to help detect diseases earlier through the use of artificial intelligence as part of the government's second Life Sciences Sector Deal (17). Pathology AI has been highlighted as a specific opportunity in the UK, and $65M of investment has now been committed to pathology and radiology AI R&D through a major Innovate UK initiative which has engaged industry and clinical sites across the UK (18).

This exciting and growing ecosystem of AI development in pathology is expected to drive major improvements in pathology AI over the next few years. This will require continued innovation in AI technologies and their effective application on large annotated image data lakes as these develop in tandem with the adoption of digital pathology in diagnostic labs worldwide.

The convergence of advanced technologies, regulatory approval for digital pathology, digital transformation of pathology, adoption of digital pathology in diagnostic practice, AI innovation, and funding to accelerate pathology AI discovery represents a perfect storm for the real transformation of pathology as a discipline.

Deep Learning Methods in Pathology: A Rapidly Developing Domain

Given the widespread application of Artificial Intelligence (AI) based methods in computational pathology as illustrated in the previous section, it is worthwhile considering the current State of the Art in Deep Learning and the potential evolution of the technologies in the future. Although many of these are developed and proved in areas other than computational pathology, or indeed biomedical imaging, the field is moving forward apace, and many potential improvements will also have the capability of being used within computational pathology.

Network Architectures

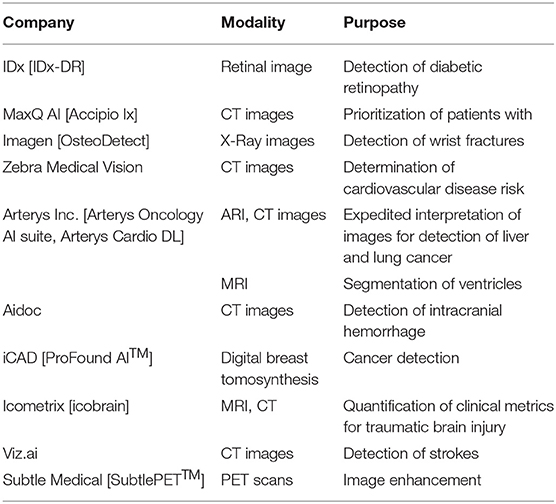

The majority of efforts to date have focused on the development of neural network architectures in order to enhance the performance of different computational pathology tasks. U-Net has been commonly used in several applications (19–22). It relies on the strong use of data augmentation to use the available annotated samples more efficiently (Figure 1). It became popular because it can be trained end-to-end from very few images and nevertheless outperformed prior methods (based on a sliding-window convolutional network) on the ISBI challenge for segmentation of neuronal structures in electron microscopic stacks.

Figure 1. U-Net architecture for semantic segmentation, comprising encoder (downsampling), and decoder (upsampling) sections, and showing the skip connections between layers (in yellow).
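The encoder, decoder, and skip connections in Figure 1 can be made concrete with a shape-level sketch. This is a minimal illustration rather than the published U-Net: random 1x1 channel mixing stands in for the learned 3x3 convolutions, and the function names, channel counts, and network depth are assumptions chosen for brevity.

```python
import numpy as np

def conv1x1(x, out_channels, rng):
    """Stand-in for a learned convolution: random 1x1 channel mixing plus ReLU."""
    weights = rng.standard_normal((x.shape[-1], out_channels)) * 0.1
    return np.maximum(x @ weights, 0.0)

def downsample(x):
    """2x2 max pooling on an (H, W, C) array with even H and W."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def upsample(x):
    """Nearest-neighbour 2x upsampling on an (H, W, C) array."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def tiny_unet(image, n_classes=2, seed=0):
    rng = np.random.default_rng(seed)
    # Encoder: compute features, keep them for the skip connection, then pool.
    enc1 = conv1x1(image, 8, rng)
    enc2 = conv1x1(downsample(enc1), 16, rng)
    bottleneck = conv1x1(downsample(enc2), 32, rng)
    # Decoder: upsample, concatenate the matching encoder features, mix channels.
    dec2 = conv1x1(np.concatenate([upsample(bottleneck), enc2], axis=-1), 16, rng)
    dec1 = conv1x1(np.concatenate([upsample(dec2), enc1], axis=-1), 8, rng)
    # Per-pixel class scores at the input resolution.
    return dec1 @ (rng.standard_normal((8, n_classes)) * 0.1)

# A random array stands in for a 64x64 RGB image patch.
logits = tiny_unet(np.random.default_rng(1).random((64, 64, 3)))
```

The concatenation steps are the skip connections: they hand high-resolution encoder features to the decoder, so the output keeps the spatial size of the input while still drawing on coarse context from the bottleneck.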

Recently, a deep learning network called MVPNet used multiple viewing paths for magnification-invariant diagnosis in breast cancer (23). MVPNet has significantly fewer parameters than standard deep learning models with comparable performance, and it combines and processes local and global features simultaneously for effective diagnosis. A ResNet-based deep learning network (101 layers deep) was adopted in another work because of its high efficiency and stable network structure (24). The method proved useful in discriminating breast cancer metastases with different pathologic stages from digital breast histopathological images.

A hybrid model was proposed for breast cancer classification from histopathological images (25). The model combines the strength of several convolutional neural networks (CNN) (i.e., Inception, Residual, and Recurrent networks). The final model provided superior performance compared against existing approaches for breast cancer recognition.

Motivated by the zoom-in operation of a pathologist using a digital microscope, RAZN (Reinforced Auto-Zoom Net) learns a policy network to decide whether zooming is required in a given region of interest (26). Because the zoom-in action is selective, RAZN is robust to unbalanced and noisy ground truth labels and can efficiently reduce overfitting. RAZN outperformed both single-scale and multiscale baseline approaches, achieving better accuracy at low inference cost.

Generative Adversarial Networks

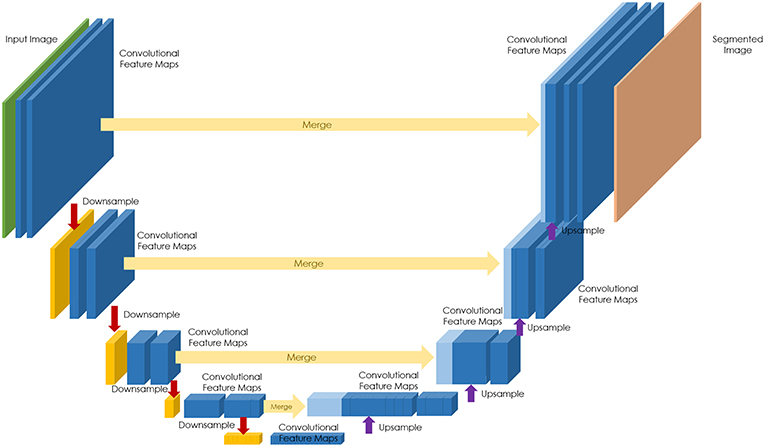

Generative adversarial networks (GANs) are deep neural network architectures comprised of two networks (generator and discriminator), opposing one against the other (thus the “adversarial”) (Figure 2). GANs were introduced by Ian Goodfellow in 2014 (27) and have found their way into several applications in pathology.

Figure 2. Generative adversarial networks (GANs) are deep neural network architectures comprised of two networks (generator and discriminator), opposing one against the other (thus the “adversarial”). The generator takes in random numbers and returns an image. This generated image is fed into the discriminator alongside a stream of images taken from the actual, ground-truth dataset.
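The opposition between the two networks can be expressed through their loss functions. The sketch below assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the discriminator outputs are hypothetical values, and no network is actually trained.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: real images should score 1, generated images 0."""
    return float(-np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps)))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: generated images should score 1."""
    return float(-np.mean(np.log(d_fake + eps)))

# Hypothetical discriminator outputs (probability that an image is real).
d_real = np.array([0.9, 0.8, 0.95])   # scores for ground-truth images
d_fake = np.array([0.1, 0.3, 0.2])    # scores for generated images

print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

With these values the discriminator is winning: its loss is low, while the generator loss is high because its images are easily identified as generated. Training alternates updates so that each network improves against the other.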

For instance, color variations arising from various factors pose obstacles to digitized histological diagnosis. Shaban et al. (28) proposed to overcome this problem by developing a stain normalization methodology based on CycleGAN, which is a GAN that uses two generators and two discriminators (29). They reported that the method significantly outperformed the state of the art. Also, Lahiani et al. (30) used CycleGANs to virtually generate FAP-CK from Ki67-CD8 stained tissue images.

In order to create deep learning models that are robust to the typical color variations seen in staining of slides, another approach is to extensively augment the training data with respect to color variation to cause the models to learn color-invariant features (31). Recently, generative adversarial approaches (32, 33) have been proposed to learn to compose domain-specific transformations for data augmentation. By training a generative sequence model over the specified transformation functions using reinforcement learning in a GAN-like framework, the model is able to generate realistic transformed data points which are useful for data augmentation.
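As an illustration of color augmentation, the sketch below perturbs each RGB channel with a random gain and offset. It is a deliberately simple stand-in and not the scheme used in (31); the function name and the perturbation ranges are assumptions.

```python
import numpy as np

def augment_color(image, rng, max_gain=0.1, max_shift=0.05):
    """Randomly rescale and shift each RGB channel of a float image in [0, 1]."""
    gain = 1.0 + rng.uniform(-max_gain, max_gain, size=3)
    shift = rng.uniform(-max_shift, max_shift, size=3)
    return np.clip(image * gain + shift, 0.0, 1.0)

rng = np.random.default_rng(0)
patch = rng.random((32, 32, 3))   # random values stand in for an H&E patch
augmented = [augment_color(patch, rng) for _ in range(4)]
```

Each call produces a differently tinted copy of the same patch, so a model trained on these copies sees the same tissue structure under several color conditions.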

Unsupervised Learning

Most deep learning methods require large annotated training datasets that are specific to a particular problem domain. Such large datasets are difficult to acquire for histopathology data where visual characteristics differ between different tissue types, besides the need for precise annotations.

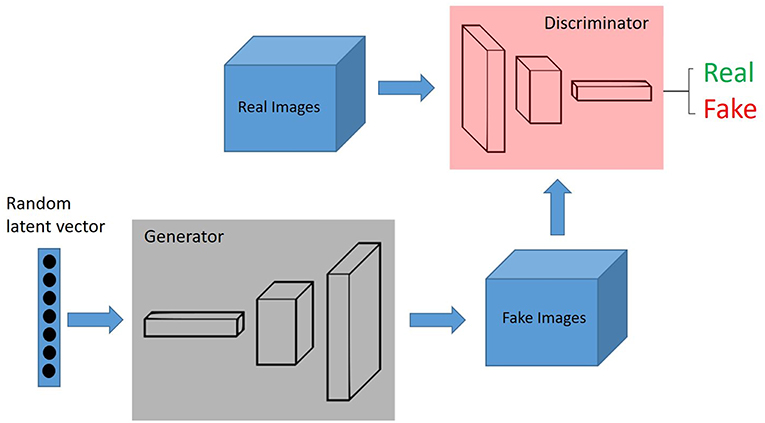

Schlegl et al. (34) built an unsupervised learning approach to identify anomalies in imaging data as candidates for markers. The deep convolutional GAN learns a manifold of normal anatomical variability and is accompanied by a novel anomaly scoring scheme based on the mapping from image space to a latent space. Applied to new data, the model labels anomalies and scores image patches according to their fit to the learned distribution.

In the context of domain adaptation, Xia et al. (35) proposed a new framework for the classification of histopathology data with limited training datasets. The approach utilizes CNNs to learn the low-level, shared image representations of the characteristics of tissues in histopathology images, and then optimizes this shared representation for more specific tissue types (Figure 3).

Figure 3. Unsupervised learning: an unsupervised anomaly detection framework. Generative adversarial training is performed on healthy data and testing is performed on unseen data.
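The idea of scoring a patch by how well it fits a manifold learned from healthy data can be sketched with a toy model. Here a random linear map is a hypothetical stand-in for a trained GAN generator, and a least-squares solve replaces the iterative search for a latent code, so this illustrates only the residual-based scoring principle and not the scheme of Schlegl et al. (34).

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim, patch_dim = 4, 64
# Hypothetical linear "generator": a stand-in for a trained GAN generator.
G = rng.standard_normal((patch_dim, latent_dim))

def anomaly_score(patch):
    """Map the patch to the latent space, regenerate it, and measure the residual."""
    z, *_ = np.linalg.lstsq(G, patch, rcond=None)   # best-fitting latent code
    return float(np.abs(patch - G @ z).sum())        # L1 reconstruction residual

# A patch the generator can reproduce, and the same patch pushed off the manifold.
normal_patch = G @ rng.standard_normal(latent_dim)
abnormal_patch = normal_patch + rng.standard_normal(patch_dim)

print(anomaly_score(normal_patch), anomaly_score(abnormal_patch))
```

A patch that the generator can reproduce receives a score near zero, whereas a patch containing structure outside the learned distribution leaves a large residual.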

The number, variation, and interoperability of deep learning networks will continue to grow as the field evolves. Pathology as a discipline, and the technology available to apply deep learning modalities, must be able to adapt to these innovations to ensure the benefits for tissue imaging are fully experienced. This poses challenges in the regulatory environment, where algorithms need to be locked down to ensure evaluation, consistency, and repeatability, and for the pace at which new algorithms can be taken to market. New approaches to regulatory governance need to be developed to ensure that patients benefit from the rapid deployment of the latest technologies, but in a safe way. The FDA and other regulatory authorities are exploring this with novel schemes that can accelerate new technologies to market (36).

Testing the Ecosystem: Open Competitions in Computational Pathology and AI

One driving force behind innovation in computational pathology has been the increase of so-called “Grand Challenges.” These are open, public competitions aimed at addressing key use cases within the domain of computational pathology and typically provide data sets and annotations to allow competitors to develop algorithms, and test data and criteria against which those algorithms may be benchmarked and compared.

One of the earliest challenges in histopathology was held in 2010 at the International Conference for Pattern Recognition (ICPR) (37), which posed two problems: (i) counting lymphocytes on images of H&E stained slides of breast cancer, and (ii) counting centroblasts on images of H&E stained slides of follicular lymphoma. These two problems are still pressing issues, as lymphocytic infiltration strongly correlates with breast cancer recurrence, and histological grading of follicular lymphoma is based on the number of centroblasts. Twenty-three groups registered for this challenge, but only five teams submitted results, with variable performance.

The next grand challenge in histopathology was held in 2012 by the same ICPR conference group and focused only on mitosis detection in breast cancer histological images (38). Mitotic count is an important parameter in breast cancer grading. However, consistency, reproducibility, and agreement on mitotic count for the same slide can vary widely among pathologists. Detection of mitosis is a very challenging task since mitoses are small objects with a large variety of shape configurations. Different types of images were provided, so that the contestants could analyze classical images of H&E stained slides as well as images acquired with a 10-band multispectral microscope, which might be more discriminating for the detection of mitosis. In contrast to the previous ICPR challenge, 129 teams registered for the contest and 17 teams submitted their results, showing an increasing interest in automatic cell detection in general, and mitotic cell detection in particular. This was the first histopathology challenge where a deep learning max-pooling CNN clearly outperformed other methods based on handcrafted features, and it paved the way for future use of CNNs (39).

The winner of the ICPR 2012 pathology grand challenge was also the winner of the following year's grand challenge, Assessment of Mitosis Detection Algorithms 2013 (AMIDA13), held at the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2013) (40). However, the AMIDA13 data set was much larger and more challenging than that of ICPR 2012, with many ambiguous cases and frequently encountered problems such as imperfect slide staining. More than 89 research groups (universities and companies) registered, of which 14 submitted results. The best performing method was the first system to achieve an accuracy on the order of inter-observer variability.

At the ICPR grand challenge in 2014, the objectives of the contest were to analyze breast cancer H&E stained biopsies in order to detect mitosis and also to evaluate the score of nuclear atypia (41). The nuclear atypia score is a value (1, 2, or 3) corresponding to low, moderate, or strong nuclear atypia, respectively, and is an important factor in breast cancer grading, as it gives an indication of the aggressiveness of the cancer. The winning mitosis detection algorithm was a fast deep cascaded CNN composed of two different CNNs: a coarse retrieval model to identify potential mitosis candidates and a fine discrimination model (42). Compared with state-of-the-art methods on previous grand challenge data sets, the winning system achieved comparable or better results at roughly 60 times the speed.
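The speed advantage of a cascade comes from running the expensive model only on candidates that survive a cheap first pass. The sketch below illustrates that control flow with toy scoring functions; the thresholds, the candidate representation, and both scoring rules are hypothetical and are not taken from (42).

```python
def cascade_detect(candidates, coarse_score, fine_score,
                   coarse_threshold=0.3, fine_threshold=0.5):
    """Run the cheap model on everything and the expensive model only on survivors."""
    shortlisted = [c for c in candidates if coarse_score(c) >= coarse_threshold]
    detections = [c for c in shortlisted if fine_score(c) >= fine_threshold]
    return detections, len(shortlisted)

# Toy stand-ins: each candidate is a (mean_intensity, texture) pair.
coarse = lambda c: 1.0 - c[0]           # dark regions are suspicious
fine = lambda c: (1.0 - c[0]) * c[1]    # they must also be strongly textured
candidates = [(0.9, 0.2), (0.2, 0.9), (0.3, 0.1), (0.8, 0.9)]

detections, n_fine_calls = cascade_detect(candidates, coarse, fine)
print(detections, n_fine_calls)
```

In this toy run only two of the four candidates reach the fine model. On a whole image, where the vast majority of locations contain no mitosis, the same filtering is what keeps the cost of the second network small.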

In 2015, the organizers of the International Symposium in Applied Bioimaging held a grand challenge (43) and presented a new H&E stained breast cancer biopsy dataset with the goal of automatic classification of histology images into one of four classes: normal tissue, benign lesion, in situ carcinoma, or invasive carcinoma. Once again, CNNs were highly successful (44) and achieved performance similar or superior to state-of-the-art methods, even though a smaller and more challenging dataset was used. The main contributions of the winning system were image normalization based on optical density, patch augmentation and normalization, and training SVMs on features extracted by a CNN.
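Normalization based on optical density starts from the Beer-Lambert relation, which converts transmitted light intensity into a quantity proportional to the amount of absorbing stain. The sketch below shows only that conversion, assuming 8-bit RGB input and a white background of 255; the function name is illustrative and the remaining steps of the winning system's normalization are not reproduced.

```python
import numpy as np

def rgb_to_optical_density(rgb, background=255.0):
    """Beer-Lambert transform: OD = -log10(I / I0), computed per channel."""
    intensity = np.clip(rgb.astype(np.float64), 1.0, background)  # avoid log(0)
    return -np.log10(intensity / background)

pixels = np.array([[255, 255, 255],                 # unstained background
                   [25, 25, 25]], dtype=np.uint8)   # strongly absorbing pixel
od = rgb_to_optical_density(pixels)
print(od)
```

Background pixels map to an optical density of zero, and darker pixels map to larger values. Working in this space makes stain contributions approximately additive, which is why it is a common starting point for stain normalization.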

MICCAI 2015 presented a new grand challenge in histopathology, on gland segmentation in H&E stained slides of colorectal adenocarcinoma biopsies, one of the most common forms of colon cancer. An overview of the challenge along with evaluation results from top performing methods has been summarized (45). The same team that won the ICPR 2014 grand challenge also provided the winning CNN system for MICCAI 2015, but with fundamental differences between the systems. The novel deep contour-aware network (46) architecture consisted of two parts, a down-sampling path and an up-sampling path, closely resembling the well-known and popular U-Net architecture (20), which won the IEEE International Symposium on Biomedical Imaging (ISBI) cell tracking challenge in the same year and was also conditionally accepted and published at MICCAI 2015.

The organizers of MICCAI 2016 presented the TUmor Proliferation Assessment Challenge (TUPAC 2016) (47) with a very clear and testing objective: predicting mitotic scores (1, 2, or 3) of nuclear atypia in images of breast cancer H&E stained slides, one of the ICPR 2014 goals. However, the main difference from previous conferences was that contestants had to analyze whole slide images (WSI) instead of regions of interest manually selected by pathologists. The challenge was based on a very large dataset called The Cancer Genome Atlas (TCGA) (48, 49) that also included genomic information, so the contestants had an additional objective of predicting PAM50 gene expression scores. The system that won both tasks (50) performed image preprocessing (tissue detection with Otsu thresholding and stain normalization) and ROI detection based on cell density, followed by feature extraction using a hard-negative mined ResNet (51) architecture, the output of which was then used as input to an SVM.
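Otsu thresholding, mentioned above for tissue detection, picks the gray level that best separates a bimodal histogram by maximizing the between-class variance. The following is a minimal sketch of the method on a synthetic thumbnail; the image, its intensity values, and the variable names are assumptions, and the winning system's actual preprocessing may differ.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the 8-bit threshold that maximises between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)
    weight_low = np.cumsum(prob)               # fraction of pixels <= t
    moment_low = np.cumsum(prob * levels)      # cumulative first moment
    mean_total = moment_low[-1]
    weight_high = 1.0 - weight_low
    valid = (weight_low > 0) & (weight_high > 0)
    between = np.zeros(256)
    between[valid] = ((mean_total * weight_low[valid] - moment_low[valid]) ** 2
                      / (weight_low[valid] * weight_high[valid]))
    return int(np.argmax(between))

rng = np.random.default_rng(0)
# Synthetic grayscale thumbnail: bright glass background with a darker tissue block.
thumb = rng.normal(230, 5, size=(100, 100))
thumb[30:70, 30:70] = rng.normal(120, 10, size=(40, 40))
thumb = np.clip(thumb, 0, 255).astype(np.uint8)

t = otsu_threshold(thumb)
tissue_mask = thumb <= t   # tissue is darker than the glass background
```

Running this on a low-resolution thumbnail of a slide yields a mask that restricts later, more expensive analysis to regions that actually contain tissue.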

The organizers of ISBI 2016 also presented a grand challenge based on WSI: the Cancer Metastases in Lymph Nodes Challenge 2016, or CAMELYON16 (52). The main objective was to assess the performance of automated deep learning algorithms at detecting metastases in H&E stained tissue sections of lymph nodes with breast cancer and compare it with diagnoses from (i) a panel of 11 pathologists with a time constraint and (ii) one pathologist without any time constraint. Performance assessment was done on two main tasks, (i) metastasis identification and (ii) WSI classification as either containing or lacking metastases. The winning team submitted a CNN system that performed image preprocessing first (tissue detection and WSI normalization) and relied on a pre-trained 22-layer GoogLeNet architecture (53) to identify metastatic regions for the first task of the challenge. They then applied post-processing and extracted features that were used to train a random forest classifier for the second task of the challenge. The winning system performed better than the panel of 11 pathologists with a time constraint and had results comparable to those of the one pathologist without any time constraint.

Building upon the success of CAMELYON16, ISBI 2017 introduced CAMELYON17 (53), the grand challenge with the largest publicly available histopathology dataset, totaling 1399 WSI and around 3 terabytes (54). The main objective changed slightly, from individual WSI analysis to patient-level analysis (i.e., combining the assessment of multiple lymph node slides into one outcome). The winning system came from the same team that won TUPAC 2016 and was based on an ensemble of three pre-trained ResNet-101 networks, each of them further optimized with different patch augmentation techniques for the CAMELYON17 dataset.
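Moving from slide-level to patient-level analysis means combining two kinds of evidence: the outputs of several models for one slide, and the results for several slides from one patient. The sketch below uses plain averaging for the ensemble and an "any slide positive" rule for the patient; both rules and all probabilities are simplifying assumptions and do not reproduce the challenge's actual patient-level outcome or the winning team's method.

```python
import numpy as np

def ensemble_slide_probability(model_probs):
    """Average the metastasis probabilities that several models give one slide."""
    return float(np.mean(model_probs))

def patient_level_call(slides, threshold=0.5):
    """Flag a patient as positive if any of their slides is called positive."""
    slide_probs = [ensemble_slide_probability(p) for p in slides]
    return max(slide_probs) >= threshold, slide_probs

# Hypothetical outputs of three models for each of a patient's lymph node slides.
patient_slides = [[0.10, 0.05, 0.20], [0.70, 0.90, 0.80], [0.30, 0.20, 0.10]]
is_positive, per_slide = patient_level_call(patient_slides)
print(is_positive, per_slide)
```

The second slide drives the patient-level call here, which reflects the clinical asymmetry of the task: a single involved node is enough to change the assessment.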

ISBI 2017 also introduced a grand challenge for Tissue Microarray (TMA) analysis in thyroid cancer diagnosis (55). The main objective of this challenge was predicting clinical diagnosis results based on patient background information, but also on H&E stained TMAs as well as immunohistochemical (IHC) TMAs.

MICCAI 2018 presented three different challenges that used histopathology images from H&E stained biopsies. Two of them took place within the workshop for Computational Precision Medicine: (1) Combined Radiology and Pathology Brain Tumor Classification and (2) Digital Pathology Nuclei Segmentation. The first one focused on classifying low-grade from high-grade brain tumors based on a combination of radiology and pathology images, while the second one focused on nuclei segmentation in pathology images acquired from low-grade and high-grade brain tumors. The third challenge was the Multi-organ nuclei segmentation (MoNuSeg) challenge and was based on a public dataset (56) containing 30 images and around 22,000 nuclear boundary annotations from multiple organs.

In 2018, the widely used competition website Kaggle opened submissions for the Data Science Bowl, with the main objective of segmenting nuclei in microscopy images acquired under different conditions and from different organs. The dataset included both H&E stained biopsies and fluorescence images. The winning system, selected from more than 700 submissions, was an ensemble of four very deep CNNs, trained using heavy augmentation techniques, with a complex post-processing step involving watershed segmentation, extraction of morphological features, and training of gradient boosted trees.

Another challenge that took place in 2018 was the Grand Challenge on BreAst Cancer Histology (BACH) (57), held at the International Conference on Image Analysis and Recognition (ICIAR 2018). This challenge was a follow-up to Bioimaging 2015, and its purpose was classification at the slide level and pixel level of H&E stained breast histology images into four classes: normal, benign, in situ carcinoma and invasive carcinoma. The winning system of both tasks (58) was based on the Inception-ResNet-v2 architecture (59), improved by a modified hard negative mining technique.
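Hard negative mining focuses retraining on the negative examples that the current model most confidently gets wrong. The sketch below shows the basic selection step only; the scores, labels, and function name are hypothetical, and the modification used by the winning system (58) is not reproduced.

```python
import numpy as np

def mine_hard_negatives(scores, labels, k):
    """Return indices of the k negative samples the model scores most like positives."""
    negative_idx = np.flatnonzero(labels == 0)
    order = np.argsort(scores[negative_idx])[::-1]   # highest score first
    return negative_idx[order[:k]]

# Hypothetical tumor probabilities for eight patches and their true labels.
scores = np.array([0.95, 0.10, 0.80, 0.40, 0.05, 0.70, 0.30, 0.90])
labels = np.array([1, 0, 0, 0, 0, 1, 0, 0])

hard = mine_hard_negatives(scores, labels, k=2)
print(hard)
```

The selected patches are then added to, or up-weighted in, the next round of training. In histopathology, where normal tissue vastly outnumbers tumor, this keeps the model from being dominated by easy negatives.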

Going forward in 2019, at least three challenges have been announced, showing the massive interest that exists in the online communities for solving complex pathology problems. Kaggle's Data Science Bowl 2019 aims at identifying metastatic tissue in histopathologic scans of lymph node sections, building on the huge success and massive dataset of the CAMELYON challenges. The 2019 SPIE Medical Imaging Conference will hold the BreastPathQ challenge, with the main purpose of quantifying tumor patch cellularity from WSI of breast cancer H&E stained slides. ISBI 2019 will also hold another challenge in Automatic Cancer Detection and Classification in Whole-slide Lung Histopathology.

Selected Applications of AI in Pathology

Prostate Cancer

Prostate cancer is the second most common cancer in men in the USA and the most common cause of cancer death in men in the UK, with around 175,000 new cases per year in the US (60) and 47,200 new cases per year in the UK (61), with 9.6 million deaths globally from the disease. Histopathological assessments, using needle core biopsies and surgical resection, play an important role in the diagnosis of prostate cancer. Current interpretation of the histopathology images includes the detection of tumor patterns, Gleason grading (62), and the combination of prominent grades into a Gleason score, which is critical in determining the clinical outcome. The higher the Gleason grade and the more prominently that pattern is seen in biopsies, the more aggressive the cancer and the more likely it is that the disease has already metastasized. Gleason grading is not only time-consuming, but also prone to intra- and inter-observer variation (63, 64). Tissue and cellular imaging have for a long time been proposed as a quantitative tool in the assessment of cancer grade in the prostate. However, this has been limited by the technology and the precision of the imaging algorithms. More recently, several research teams have proposed to use AI technologies for the automated analysis of prostate cancer as a means to precisely detect prostate cancer patterns in tissue sections and also to objectively grade the disease.
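The combination of prominent grades into a Gleason score can be written out explicitly. The sketch assumes the conventional definition, in which the score is the sum of the most prevalent (primary) and second most prevalent (secondary) pattern grades; the function names are illustrative.

```python
def gleason_score(primary, secondary):
    """Sum of the most prevalent and second most prevalent pattern grades."""
    for grade in (primary, secondary):
        if grade not in (1, 2, 3, 4, 5):
            raise ValueError("Gleason pattern grades range from 1 to 5")
    return primary + secondary

def describe(primary, secondary):
    """Report the score together with the order of the two patterns."""
    return f"Gleason {primary}+{secondary}={gleason_score(primary, secondary)}"

print(describe(3, 4))
print(describe(4, 3))
```

Both calls yield a score of 7, but the dominant pattern differs. This is why reports keep the order of the two grades, and why separating 3+4 from 4+3 is a meaningful test for automated grading systems.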

With regard to tumor detection in prostate tissues, Litjens et al. (65) used a convolutional auto-encoder for tumor detection in H&E stained biopsy specimens. Substantial gains in efficiency were possible by using CNNs to exclude tumor-negative slides from further human analysis, showing the potential to reduce the workload for pathologists. Bulten et al. (66) developed an algorithm for automated segmentation of epithelial tissue in prostatectomy slides using a CNN. The generated segmentation can be used to highlight regions of interest for pathologists and to improve cancer annotations.

While tumor detection is largely a binary decision on the presence or absence of invasive cancer in tissue biopsies, Gleason grading represents a complex gradation of patterns that reflect the differentiation, and so the severity, of the cancer. Such is the complexity of the image patterns seen that reliable and consistent interpretation is challenging and prone to disagreement and potential diagnostic error. Jiménez-del-Toro et al. developed an automated approach using patch selection and a CNN to detect regions of interest in WSIs where relevant visual information can be sampled to detect high-grade Gleason grades (67). They achieved an accuracy of 78% on an unseen data set, with particular success in classifying Gleason Grades 7–8.

A number of groups have used a generically trained CNN for analyzing prostate biopsies and classifying the images into benign tissue and different Gleason grades (68, 69). The proposed algorithm benefited from combining visually driven features extracted by the human eye with those derived by a deep neural network (69). Importantly, this work was able to differentiate between Gleason 3+4 and 4+3 slides with 75% accuracy. The algorithm was designed to run on whole slide images, conceptually allowing the technology to be used in clinical practice.

One group (70) presented a deep learning approach for automated Gleason grading of prostate cancer tissue microarrays with H&E staining. The study shows promising results regarding the applicability of deep learning based solutions toward more objective and reproducible prostate cancer grading, especially for cases with heterogeneous Gleason patterns.

Nagpal et al. (71) presented a deep learning system for Gleason grading in whole-slide images of prostatectomies. The system goes beyond the current Gleason system to more finely characterize and quantitate tumor morphology, providing opportunities for refinement of the Gleason system itself. This opens the opportunity to build new approaches to tissue interpretation that are not based simply on measuring what pathologists recognize in the tissue today, but on creating new signatures of disease that radically transform the approach to diagnosis and have stronger correlation with clinical outcome.

A compositional multi-instance learning approach has also been developed which encodes images of nuclei through a CNN, then predicts the presence of metastasis from sets of encoded nuclei (72). The system has the ability to predict the risk of metastatic prostate cancer at diagnosis.
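
The idea of predicting from a set of encoded nuclei can be illustrated with a generic multi-instance sketch. This is not the compositional model of (72): mean pooling, the logistic output, and the names `pool_bag` and `predict_bag` are assumptions for illustration, and the embedding vectors stand in for CNN outputs.

```python
import math


def pool_bag(embeddings):
    """Average a bag of per-nucleus embedding vectors into one slide-level vector.

    Mean pooling is order-independent, so the result does not depend on the
    order in which nuclei were detected (an assumed design choice).
    """
    if not embeddings:
        raise ValueError("a bag must contain at least one embedding")
    dim = len(embeddings[0])
    return [sum(vec[i] for vec in embeddings) / len(embeddings) for i in range(dim)]


def predict_bag(embeddings, weights, bias):
    """Return a probability-like score for the whole bag (hypothetical classifier)."""
    pooled = pool_bag(embeddings)
    score = sum(w * x for w, x in zip(weights, pooled)) + bias
    return 1.0 / (1.0 + math.exp(-score))


# Toy bag of three 2-dimensional "nucleus" embeddings (made-up values).
bag = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
risk = predict_bag(bag, weights=[0.5, -0.25], bias=0.0)
```

Because the pooled vector is an average, bags of different sizes map to vectors of the same dimension, which is what lets a single classifier handle slides with different numbers of nuclei.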

In conclusion, AI and deep learning techniques can play an important role in prostate cancer analysis, diagnosis and prognosis. They could also be used to quickly analyze huge clinical trial databases to extract relevant cases. Although the above techniques have focused on the use of H&E stained images, techniques that use immunohistochemistry might be of more interest when researching the efficacy of drugs or the expression of genes.

Metastasis Detection in Breast Cancer

The problem of identifying metastases in lymph nodes within the context of breast cancer is an important part of staging such cancers, but has been found to be a challenging task for pathologists, with one study showing a change of classification in up to 24% of cases after subsequent review (73). The applicability of AI-based techniques to assist pathologists with this task has been addressed by a number of open competitions such as the CAMELYON series discussed earlier (52–54, 59, 74), and the results from those challenges have shown discriminative performance comparable to that of pathologists in the particular task of detecting lymph node metastases in H&E-stained tissue sections (52). There have been a number of subsequent studies in metastasis detection (31, 75, 76). The work by Liu et al. (31) is particularly interesting as it showed superiority for algorithm-assisted pathologist detection of metastases over detection by pathologist or algorithm in isolation. The potential benefits of AI in this use case are yet to be studied in a clinical trial, but the work of Bejnordi et al. (52) and Liu et al. (31) indicates the potential for AI to assist pathologists in making difficult clinical decisions, and to improve the quality and consistency of such decisions.

Ki67 Scoring

The Ki67 antigen is a nuclear protein strictly associated with cell proliferation. This makes it a perfect cellular biomarker for determining the growth fraction of any given cell population, which has particular value in cancer research, where cell proliferation is a strong marker of tumor growth and patient prognosis. The fraction of Ki67 positive tumor cells (Ki67 labeling index, i.e., Ki67 LI) is often correlated with the clinical course of the disease (77). In breast cancer research there has been a massive international multicenter collaboration toward the validation of a standard Ki67 scoring protocol (78–80) as well as showing the prognostic value of an automated Ki67 protocol compared to manual or visual scoring (81, 82). In prostate cancer research, Ki67 has been validated as a biomarker for overall survival (83) and disease-free survival in a large meta-analysis (84), but a standard scoring process is still missing (85).

Some authors have shown significant agreement between their automated Ki67 LI and the average of two pathologists' Ki67 LI estimates (86). Their model is based on image preprocessing (color space transformation), image clustering with k-means, and cell segmentation and counting using global thresholding, mathematical morphology and connected component analysis.
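
The last steps of such a pipeline (global thresholding, connected component analysis, and the labeling index itself) can be sketched on tiny hand-made intensity grids. This is not the implementation of (86): the cutoff of 128, the 4-connectivity, the function names and the assumption that one connected region equals one nucleus are all illustrative, and the color transformation, k-means clustering and morphology steps are omitted.

```python
from collections import deque


def threshold(channel, cutoff):
    """Global threshold: True where the stain intensity reaches the cutoff."""
    return [[value >= cutoff for value in row] for row in channel]


def count_components(mask):
    """Count 4-connected foreground regions in a binary grid (list of lists)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            count += 1  # new region found; flood-fill it so it is counted once
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


def ki67_labeling_index(positive_channel, all_nuclei_channel, cutoff=128):
    """Ki67 LI = Ki67-positive tumor nuclei / all tumor nuclei (as a fraction)."""
    positive = count_components(threshold(positive_channel, cutoff))
    total = count_components(threshold(all_nuclei_channel, cutoff))
    if total == 0:
        raise ValueError("no nuclei detected")
    return positive / total
```

In practice touching nuclei would merge into a single region, which is one reason the published pipeline includes mathematical morphology before counting.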

Some have chosen to treat the cell counting task as a regression problem (87). They modified a very deep ResNet with 152 layers to output a spatial density prediction, evaluated it on three datasets, including a Ki67 stained dataset, and compared their approach to three state-of-the-art models, obtaining superior performance. The same authors were also the first to combine deep learning with compressed sensing for cell detection (88). The essential idea of their method was to employ random projections to encode the output space (cell segmentation masks) to a compressed vector of fixed dimension indicating the cell centers. Afterwards, the CNN regresses this compressed vector from the input pixels. They achieved the highest or at least top-3 performance in terms of F1-score, compared with other state-of-the-art methods on seven mainstream datasets, including the one from (87).
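
To make the regression framing concrete, the sketch below builds a density map from annotated cell centers and recovers the cell count by summing it. In (87) a network predicts such a map from pixels; here only the target is constructed. The Gaussian kernel, the value of `sigma`, and the function names are assumptions and are not taken from (87).

```python
import math


def density_map(centers, height, width, sigma=1.5):
    """Place one unit-mass Gaussian blob at each annotated (row, col) center.

    Each blob is normalized over the image so that it contributes exactly one
    to the sum of the map, regardless of where the cell lies.
    """
    grid = [[0.0] * width for _ in range(height)]
    for cy, cx in centers:
        kernel = [
            [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
             for x in range(width)]
            for y in range(height)
        ]
        mass = sum(map(sum, kernel))
        for y in range(height):
            for x in range(width):
                grid[y][x] += kernel[y][x] / mass
    return grid


def count_from_density(grid):
    """The estimated cell count is the integral (sum) of the density map."""
    return sum(map(sum, grid))


cells = [(5, 5), (10, 20), (15, 8)]
estimated = count_from_density(density_map(cells, height=24, width=32))
```

Summing a predicted map rather than detecting each cell individually means overlapping cells still contribute to the count, which is the usual motivation for this formulation.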

A novel deep learning technique based on hypercolumn descriptors of VGG16 for cell classification in Ki67 images has been proposed, called Simultaneous Detection and Cell Segmentation (DeepSDCS) (89). They extracted hypercolumn descriptors to form an activation vector from specific layers to capture features at different granularity. These features were then propagated using a stochastic gradient descent optimizer to yield the detection of the nuclei and the final cell segmentations. Subsequently, seeds generated from cell segmentation were propagated to a spatially constrained CNN for the classification of the cells into stromal, lymphocyte, Ki67-positive cancer cell, and Ki67-negative cancer cell. They validated its accuracy in the context of a large-scale clinical trial of estrogen-receptor-positive breast cancer. They achieved staggering accuracies of 99% and 89% on two separate test sets of Ki67 stained breast cancer dataset comprising biopsy and whole-slide images.

A model has been proposed for gastroenteropancreatic neuroendocrine neoplasms (GEP-NEN) based on three parts: (1) a robust cell counting and boundary delineation algorithm that is designed to localize both tumor and non-tumor cells, (2) an online sparse dictionary learning method, and (3) an automated framework that is used to differentiate tumor from non-tumor cells and then immunopositive from immunonegative tumor cells (90). They report similar performance to pathologists' manual annotations.

Other authors have shown the improved performance of a modified CNN model over classical image processing methods for robust cell detection in GEP-NEN, testing their algorithm on 3 data sets, including Ki67 and H&E stained images (91, 92).

Deep Learning for Immuno-Histochemistry Applications Including PD-L1

IHC image analysis provides an accurate means for quantitatively estimating disease related protein expression, thereby reducing inter- and intra-observer variation and improving scoring reproducibility. For this reason, accurate approximation of staining in IHC images for diagnostics has long been an important aspect of IHC-based computational pathology. Many commercial and open source solutions are available that allow IHC analysis evaluation for research and discovery purposes (25).

A variety of challenges exist in IHC analytics. Shariff et al. provide a review of the problems faced in the domain of IHC image analysis along with solutions and techniques used in the area (93). Subsequently, several image processing and machine learning based approaches have been proposed, providing different levels of accuracy (94–96). The work of Lejeune et al. (97) is significant as they perform automated analysis for quantification of proteins for different nuclear (Ki67, p53), cytoplasmic (TIA-1, CD68) and membrane markers (CD4, CD8, CD56, HLA-Dr). Techniques used involve extracting contrast features in combination with spatial filtering followed by color segmentation with the help of an HSI histogram-based model. Range-based filtering is followed by automatic counting using measurement data and statistics (97). Similarly, Humphries et al. use image processing to detect stained tumor cells in order to understand the role of PD-L1 in predicting outcome of breast cancer treatment (98). Also, Zehntner et al. propose a novel technique for automatic image segmentation using the blue to red channel ratio and subsequently use different thresholds on B/R and the green channel to acquire chromogen positive areas. The results show a high level of concordance with manual segmentation (99).
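
A channel-ratio segmentation of the kind described for (99) can be sketched as a per-pixel rule. The thresholds (`max_blue_red_ratio`, `max_green`), their direction and the function names are assumptions, chosen only so that a brown, DAB-like pixel passes and a blue, hematoxylin-like pixel does not; they are not the values or the exact rule used in (99).

```python
def chromogen_mask(pixels, max_blue_red_ratio=0.8, max_green=150):
    """Mark pixels as chromogen positive using the B/R ratio and green channel.

    pixels: rows of (red, green, blue) tuples with 8-bit values.
    A pixel is positive when blue/red is low (brownish) and green is not
    bright (excludes pale background). Both cutoffs are hypothetical.
    """
    mask = []
    for row in pixels:
        mask_row = []
        for red, green, blue in row:
            ratio = blue / red if red > 0 else float("inf")
            mask_row.append(ratio <= max_blue_red_ratio and green <= max_green)
        mask.append(mask_row)
    return mask


def positive_area_fraction(mask):
    """Fraction of pixels flagged as chromogen positive."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("empty image")
    return sum(sum(row) for row in mask) / total


tile = [
    [(150, 90, 60), (80, 80, 160)],     # brown stain, blue counterstain
    [(240, 240, 240), (160, 100, 70)],  # white background, brown stain
]
fraction = positive_area_fraction(chromogen_mask(tile))
```

Fixed cutoffs like these are sensitive to staining and scanner differences, so any real use would need them tuned and checked against manual segmentation.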

Recently, traditional image processing and machine learning techniques have been shown to be less powerful and efficient than deep learning techniques (100–102). Chen and Chefd'Hotel propose a CNN-based technique for automatic detection of immune cells in IHC images. A very important aspect of the work is that sparse color un-mixing is used to preprocess the image into different biologically meaningful color channels (103). Lahiani et al. trained a unified segmentation model with a color deconvolution segment added to the network architecture. High accuracy results are obtained with substantial improvement in generalization. The added deconvolution segment layer learns to differentiate stain channels for different types of stains (104). Garcia et al. used CNNs for detection of lymphocytes in IHC images and have used augmentation to increase the data for analysis. The technique works on single-cell as well as multiple-cell images (105).

One biomarker of recent interest has been PD-L1 expression, which is used as a companion or complementary marker to stratify patients who may benefit from checkpoint inhibitor therapy in a number of cancer types (106). The quantification of this biomarker is made more difficult by the non-specific staining of areas other than tumor cell membranes, in particular macrophages, lymphocytes, necrotic and stromal regions. These factors can, in particular, cause challenges when scoring around the 1% threshold used for second-line treatment of NSCLC with pembrolizumab (107). Automated imaging based on deep learning of the cell types and the expression profiles can significantly underpin the quantitative interpretation of PD-L1 expression (Figure 4). The work by Humphries et al. (107) describes the use of image processing to quantify PD-L1 expression and showed reasonable concordance with scores from trained pathologists for adenocarcinoma and squamous cell carcinomas in lung. Another recent study (108) applied deep learning to determination of the PD-L1 Tumor Proportion Score (TPS) in NSCLC needle biopsies, showing strong concordance between the algorithmic estimation of TPS and pathologist visual scores.

Figure 4. PD-L1 imaging in lung cancer. Deep learning can be used to identify and distinguish positive | negative tumor cells and positive | negative inflammatory cells.
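
Once cells have been classified into the four groups shown in Figure 4, the Tumor Proportion Score reduces to simple arithmetic: the percentage of tumor cells that are PD-L1 positive, with inflammatory cells excluded from both numerator and denominator. The sketch below assumes per-cell labels are already available from some classifier; the label strings and function names are hypothetical, and the 1% default cutoff is the threshold mentioned in the text.

```python
from collections import Counter

TUMOR_POSITIVE = "tumor_positive"
TUMOR_NEGATIVE = "tumor_negative"
IMMUNE_POSITIVE = "immune_positive"
IMMUNE_NEGATIVE = "immune_negative"


def tumor_proportion_score(cell_labels):
    """Return TPS in percent: PD-L1 positive tumor cells / all tumor cells.

    Inflammatory (immune) cells are ignored, even when they stain positive.
    """
    counts = Counter(cell_labels)
    tumor_total = counts[TUMOR_POSITIVE] + counts[TUMOR_NEGATIVE]
    if tumor_total == 0:
        raise ValueError("no tumor cells found; TPS is undefined")
    return 100.0 * counts[TUMOR_POSITIVE] / tumor_total


def meets_cutoff(tps_percent, cutoff_percent=1.0):
    """Compare a TPS value with a clinical cutoff (1% by default)."""
    return tps_percent >= cutoff_percent


labels = ([TUMOR_POSITIVE] * 3 + [TUMOR_NEGATIVE] * 197
          + [IMMUNE_POSITIVE] * 50 + [IMMUNE_NEGATIVE] * 30)
tps = tumor_proportion_score(labels)
```

With so few positive tumor cells near the cutoff, misclassifying a handful of stained macrophages as tumor cells would change the outcome, which is why separating the cell types matters for scoring around 1%.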

Genetic Mutation Prediction

Recently, some have used deep CNNs to predict whether or not SPOP was mutated in prostate cancer, given only the digital whole slide after standard H&E staining (109). Moreover, quantitative features learned from patient genetics and histology have been used for content-based image retrieval, finding similar patients for a given patient where the histology appears to share the same genetic driver of disease i.e., SPOP mutation, and finding similar patients for a given patient that does not have that driver mutation. This is extremely beneficial as mutations in SPOP lead to a type of prostate tumor thought to be involved in about 15% of all prostate cancers (110).

Within the same context, Coudray et al. (111) trained a network to predict the ten most commonly mutated genes in lung adenocarcinoma (LUAD). Also, Kim et al. (112) used deep convolutional neural networks to predict the presence of mutated BRAF or NRAS in melanoma histopathology images. The findings from these studies suggest that deep learning models can assist pathologists in the detection of cancer subtype or gene mutations and therefore have the potential to become integrated into clinical decision making.

Tumor Detection for Molecular Analysis

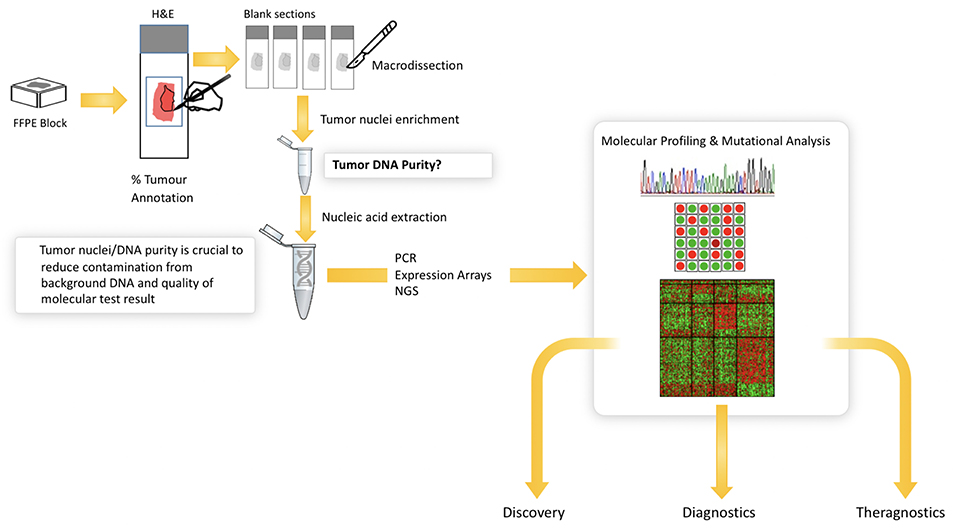

The increasing number of molecular tests for specific mutations in solid tumors has significantly improved our ability to identify new patient cohorts that can be selectively treated. EGFR mutational analysis in lung cancer, KRAS in colorectal cancer and BRAF in melanoma all represent examples of mutational tests that are routinely performed on appropriate patients with these cancers. Similarly, multigene panels are increasingly being used to better profile patients for targeted therapy, and next generation sequencing is routinely performed for solid tumor analytics and is now becoming the standard of care in many institutions. In all of these settings, histopathological review of the H&E tissue section prior to molecular analysis is critical (Figure 5). This is due to the heterogeneity that exists in most tissue samples where clarity over the cellular content is critical to ensuring the quality of the molecular test. Manual mark-up of the tumor in the tissue section is often carried out to support macrodissection, aimed at enriching the tumor DNA. Here, the molecular test is carried out on tumor tissue scraped from the FFPE, H&E tissue section. Also, given the heterogeneity of solid tumor tissue samples and the multiple tumor and non-tumor cells that exist in a sample, the pathologist must routinely assess the % of tumor cells to again ensure that there is sufficient tumor DNA in the assay and that the background noise from non-tumor cells does not impact on the test result. Most tests have a % tumor threshold below which the test is not recommended.

Figure 5. The current workflow in molecular research and diagnostics. Solid tumor analysis is commonly derived from an FFPE block, with an H&E tissue section used as a guide for tumor content (far left). The figure shows the need for annotation and macrodissection and the importance of tumor purity from FFPE samples for molecular profiling. Digital pathology can automate the annotation and measurement of tumor cells in H&E, providing a more objective, reliable platform for molecular pathology.

The challenge is that the interobserver variation in the assessment of percentage of tumor is considerable (113–116), where differences can range between 20% and 80% and where the risk of false negative molecular tests, due to imprecise understanding of sample quality, could impact on patient care.

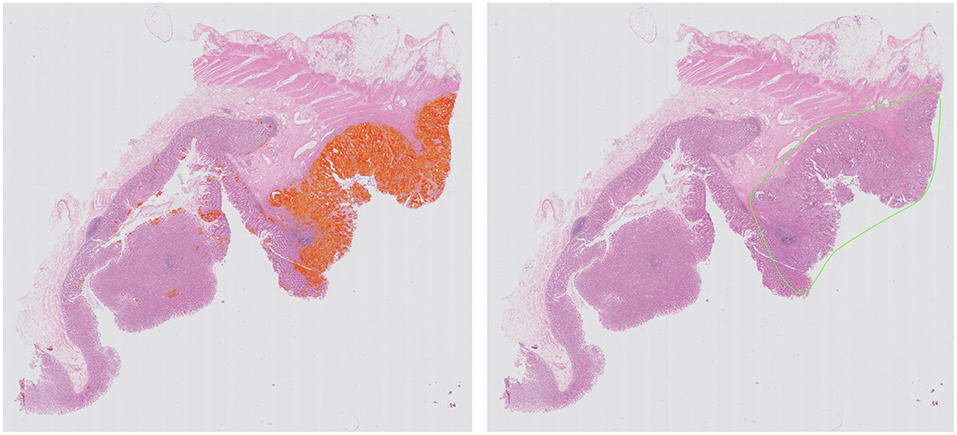

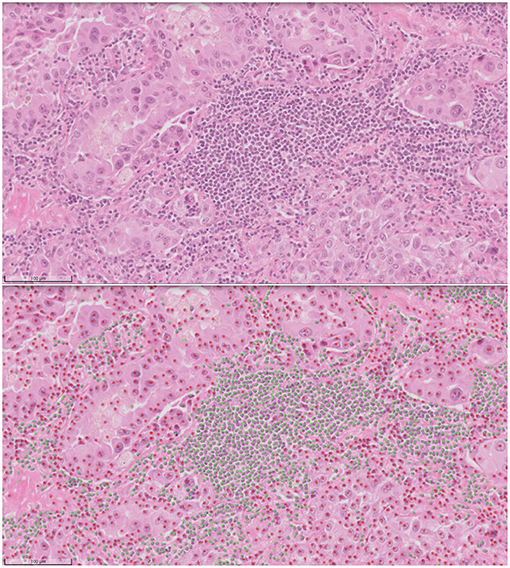

Computational pathology and image analytics have been used to develop a solution for automated analysis and annotation of H&E tissue samples, identifying the boundary of the tumor and precisely measuring tissue cellularity and tumor cell content. TissueMark (footnote 1), developed by PathXL Ltd and subsequently by Philips, has been described in the literature (113). Designed specifically to support molecular pathology laboratories, it has shown high levels of performance in lung cancer. The algorithms have now been expanded to automatically identify tumor in colorectal, melanoma, breast, and prostate tissue sections. Trained on large datasets across multiple laboratories and using deep learning technology, the solution can drive automation of macrodissection and quantitative analysis of % tumor, providing an objective tissue quality evaluation for molecular pathology in solid tumors (Figures 6, 7).

Figure 6. Automated identification of colorectal tumor in H&E tissue samples using deep learning networks, showing heatmap of tumor regions (Left) and automatically generated macrodissection boundary (Right) with a product called TissueMark (footnote 1).

Figure 7. Automated analysis of cellular content in H&E using deep learning in TissueMark (footnote 1). Here tumor (red) and non-tumor cells (green) can be distinguished, annotated for visual inspection and counted to reach more precise quantitative measures of % tumor across entire whole slide H&E scans in lung, colon, melanoma, breast, and prostate tissue sections.
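
The effect of macrodissection on tumor purity can be illustrated with a small calculation. The sketch assumes each detected cell carries two flags (tumor or not, inside the macrodissection boundary or not); the data layout, the function name and the 30% assay threshold are hypothetical, since actual thresholds are assay-specific and are not stated here.

```python
def percent_tumor(cells, region_only=False):
    """Percentage of tumor cells among the selected cells.

    cells: iterable of (is_tumor, inside_boundary) boolean pairs.
    region_only: when True, count only cells inside the macrodissection boundary.
    """
    selected = [is_tumor for is_tumor, inside in cells if inside or not region_only]
    if not selected:
        raise ValueError("no cells selected")
    return 100.0 * sum(selected) / len(selected)


# Made-up section: a tumor-rich marked region surrounded by mostly normal tissue.
cells = (
    [(True, True)] * 100      # tumor cells inside the boundary
    + [(False, True)] * 20    # non-tumor cells inside the boundary
    + [(True, False)] * 10    # tumor cells outside the boundary
    + [(False, False)] * 370  # non-tumor cells outside the boundary
)

ASSAY_MIN_PERCENT_TUMOR = 30.0  # hypothetical threshold for illustration

whole_section = percent_tumor(cells)
dissected = percent_tumor(cells, region_only=True)
passes_without_dissection = whole_section >= ASSAY_MIN_PERCENT_TUMOR
passes_with_dissection = dissected >= ASSAY_MIN_PERCENT_TUMOR
```

In this toy section the whole slide falls below the assumed threshold while the dissected region is well above it, which is the enrichment that the mark-up is meant to achieve.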

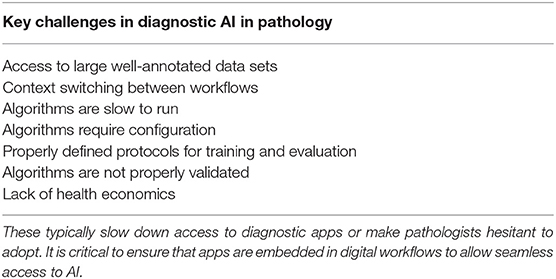

Challenges With Computational Pathology as a Diagnostic Tool

As can be seen from this review, there has been considerable research on AI and deep learning across many pathological problems. Indeed, a review in PubMed shows an almost exponential growth in publications in pathology AI over the last 5 years. Unfortunately, as is typical, this has not been mirrored by a similar growth in diagnostic practice and the translation of research to clinical diagnostics.

Some of the reasons for this are shown in Table 2. A key requirement for technology translation is the need to embed AI within the diagnostic workflow, to ensure that the pathologist can easily access AI applications for diagnostics. With the first approval to use digital pathology for primary diagnostics in the US and increasing adoption of digital WSI scanners, image management systems and workflows in digital diagnostic practice, this presents the ideal platform on which to build AI applications. Here AI should be embedded seamlessly within the diagnostic workflow, where the pathologist can review digital slides manually for conventional diagnostic assessment but also access new visual and quantitative data generated from computational pathology imaging. Computational pathology should not represent an extra step, the need to load new software or a switch in context, but should be practically invisible, operating in the background while generating valuable insights into tissue analytics that are not currently available.

Table 2. List of the key challenges that face the translation of computational pathology into clinical practice.

In designing algorithms, it is critical to ensure that algorithms execute quickly to avoid slowing the diagnostic process. This requires optimal processing hardware to be in place to manage analytical requests made by the pathologist within the viewing software. Better still is to have the images completely analyzed at the time of scanning and to allow all of the relevant image analysis data to be available to the pathologist at the time of review. This includes the use of computational pathology to dispatch digital slides to the correct pathologist, prioritize cases for review, and request extra sections/stains before pathological review. This requires considerable processing capacity available at the time of scanning, with pixels being analyzed as they are created on the scanner. However, bringing intelligence to pathology workflow in this way will potentially drive further efficiencies in pathology, accelerate turnaround times and improve the precision of diagnosis.

Finally, as stated previously, translation into clinical practice and adoption by pathologists requires algorithms trained and validated on large patient cohorts and sample numbers, across multiple laboratories. Many academic studies are restricted to small sample sets from a single laboratory. The preparation of pathology specimens has long been recognized as a problem which can challenge the robustness of computational pathology algorithms (106, 107), and this continues to be a problem for the larger data sets required for deep learning. While approaches such as augmentation and/or color normalization have been used successfully in training such algorithms (98, 108), adequately representing inter-laboratory variations in the training data will also give confidence that algorithms are not “over-trained” to perform well on the characteristics of only one lab (preparation/staining). However, for the validation of such algorithms for wider usage, it is absolutely necessary to gather data from as wide a variety of laboratories as possible, in order to mitigate the risk that what appears to be an accurate algorithm may not have the broad applicability required of a clinical algorithm. No guidelines are yet available on the numbers of annotations, images and laboratories that are needed to capture the variation that is seen in the real world, and statistical studies will be needed to determine this properly for each application.
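
One way to probe whether an algorithm is tied to the characteristics of a single laboratory is to hold out all samples from one laboratory at a time and evaluate on those. The sketch below only generates such splits; the scheme, the function name and the sample identifiers are assumptions for illustration and are not a validation protocol prescribed in this review.

```python
from collections import defaultdict


def leave_one_lab_out(samples):
    """Yield (held_out_lab, train_ids, test_ids) for each laboratory in turn.

    samples: iterable of (sample_id, lab_name) pairs. Every sample from the
    held-out lab goes to the test set, so no lab contributes to both sets.
    """
    by_lab = defaultdict(list)
    for sample_id, lab in samples:
        by_lab[lab].append(sample_id)
    for held_out in sorted(by_lab):
        train_ids = [sid for lab in sorted(by_lab) if lab != held_out
                     for sid in by_lab[lab]]
        yield held_out, train_ids, list(by_lab[held_out])


# Hypothetical slide identifiers from three laboratories.
slides = [("s1", "lab_A"), ("s2", "lab_A"), ("s3", "lab_B"), ("s4", "lab_C")]
for lab, train_ids, test_ids in leave_one_lab_out(slides):
    print(lab, "train:", train_ids, "test:", test_ids)
```

A large drop in performance on a held-out laboratory relative to a random split would suggest the model has learned preparation or staining characteristics rather than the underlying morphology.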

Given the inherent variation that exists in staining patterns from lab to lab, generalizing these algorithms will require a step change in the size and spread of samples from multiple laboratories. This is now driving the need for multinational data-lakes with large volumes of WSI in pathology and high quality annotations for AI training and validation from multiple laboratories. A number of initiatives are already underway to achieve this. In the UK, a large multi-million pound grant has been provided by the government Innovate UK programme to several clinical networks to support the construction of pathology data lakes for AI innovation. This is supported by a number of large industry partners to provide the infrastructure to support this initiative. This will provide a robust data environment for the development of reliable IVD-ready applications. Industry has to work within a very tightly regulated environment and satisfy regulatory authorities of efficacy and safety through comprehensive clinical studies, before releasing a product with clinical claims. While this is costly and time consuming, and will inevitably delay the introduction of computational pathology for clinical practice, it is a critical step and will ensure that AI applications undergo significant testing to ensure they are safe in the hands of professionals.

Finally, there is nervousness by some that AI will replace skills, resulting in fewer jobs for pathologists and this will drive resistance. While AI will inevitably result in the automation of some common tasks in diagnostic pathology, the vast majority of applications will benefit from combined human-machine intelligence. Pathologists are excellent at assessing tissue pathology in the context of multiple clinico-pathological data across a broad range of diseases—some of which occur together. AI currently works best in well-defined domains, but brings quantitative insights to that domain, overcoming the issues of standardization. Doing this automatically can increase the speed of tissue assessment and provide pathologists with critical data on the tissue patterns. It is this hybrid approach of computer-aided decision support that is likely to drive the adoption and success of AI where the pathologist and machine working in tandem bring the biggest benefits. In these settings, some have proposed that Intelligence Augmentation (IA) is a better term than AI to describe how computational pathology will drive improvements in diagnostic pathology.

Conclusion

Computational pathology and the application of AI for tissue analytics are growing at a tremendous rate and have the potential to transform pathology with applications that accelerate workflow, improve diagnostics, and improve the clinical outcome of patients. There is a large gap between current research studies and those necessary to deliver safe and reliable AI to the pathology community. As the demands of clinical AI become better understood, we will see this gap narrow. The field of pathology AI is still young and will continue to mature as researchers, clinicians, industry, regulatory organizations, and patient advocacy groups work together to innovate and deliver new technologies to health care providers: technologies which are better, faster, cheaper, more precise, and safe.

Author Contributions

All authors have written various sections of this review. All have deep experience in the application of AI and deep learning in pathology applications, working in the Philips image analysis hub.

Conflict of Interest

The authors are employees of Royal Philips, Digital and Computational Pathology. The opinions expressed in this presentation are solely those of the author or presenters, and do not necessarily reflect those of Philips. The information presented herein is not specific to any product of Philips or their intended uses. The information contained herein does not constitute, and should not be construed as, any promotion of Philips products or company policies.

Footnotes

1. TissueMark is not intended for diagnosis, monitoring or therapeutic purposes or in any other manner for regular medical practice. PathXL is the legal manufacturer and is a Philips company.

References

1. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA. (2013) 309:1351–2. doi: 10.1001/jama.2013.393

2. Bresnick J. Artificial Intelligence in Healthcare Spending to Hit $36B. (2018). Available online at: https://healthitanalytics.com/news/artificial-intelligence-in-healthcare-spending-to-hit-36b (accessed March 31, 2019).

3. Buch VH, Ahmed I, Maruthappu M. Artificial intelligence in medicine: current trends and future possibilities. Br J Gen Pract. (2018) 68:143–4. doi: 10.3399/bjgp18X695213

4. Ker J, Wang L, Rao J, Lim T. Deep learning applications in medical image analysis. IEEE Access. (2018) 6:9375–89. doi: 10.1109/ACCESS.2017.2788044

5. MacEwen C. Artificial Intelligence in Healthcare. (2019). Available online at: https://www.aomrc.org.uk/wp-content/uploads/2019/01/Artificial_intelligence_in_healthcare_0119.pdf (accessed March 31, 2019).

6. Tsay D, Patterson C. From machine learning to artificial intelligence applications in cardiac care. Circulation. (2018) 138:2569–75. doi: 10.1161/CIRCULATIONAHA.118.031734

7. Gunčar G, Kukar M, Notar M, Brvar M, Cernelč P, Notar M, et al. An application of machine learning to haematological diagnosis. Sci Rep. (2018) 8:411. doi: 10.1038/s41598-017-18564-8

8. Agrawal P. Artificial intelligence in drug discovery and development. J Pharmacovigil. (2018) 6:e173. doi: 10.4172/2329-6887.1000e173

9. Bresnick J. Arguing the Pros and Cons of Artificial Intelligence in Healthcare. (2018). Available online at: https://healthitanalytics.com/news/arguing-the-pros-and-cons-of-artificial-intelligence-in-healthcare (accessed March 31, 2019).

10. Segars S. AI Today, AI Tomorrow. Awareness, Acceptance and Anticipation of AI: A Global Consumer Perspective. (2018). Available online at: https://pages.arm.com/rs/312-SAX-488/images/arm-ai-survey-report.pdf (accessed March 31, 2019).

11. FDA. Press Announcements - FDA Allows Marketing of First Whole Slide Imaging System for Digital Pathology. (2017). Available online at: https://www.fda.gov/newsevents/newsroom/pressannouncements/ucm552742.htm (accessed March 31, 2019).

12. Mukhopadhyay S, Feldman MD, Abels E, Ashfaq R, Beltaifa S, Cacciabeve NG, et al. Whole slide imaging versus microscopy for primary diagnosis in surgical pathology: a multicenter blinded randomized noninferiority study of 1992 cases (Pivotal Study). Am J Surg Pathol. (2018) 42:39–52. doi: 10.1097/PAS.0000000000000948

13. Baidoshvili A, Bucur A, van Leeuwen J, van der Laak J, Kluin P, van Diest PJ. Evaluating the benefits of digital pathology implementation: time savings in laboratory logistics. Histopathology. (2018) 73:784–94. doi: 10.1111/his.13691

14. Robboy SJ, Gupta S, Crawford JM, Cohen MB, Karcher DS, Leonard DGB, et al. The pathologist workforce in the United States II. An interactive modeling tool for analyzing future qualitative and quantitative staffing demands for services. Arch Pathol Lab Med. (2015) 139:1413–30. doi: 10.5858/arpa.2014-0559-OA

15. Royal College of Pathologists. Meeting Pathology Demand Histopathology Workforce Census. (2018). Available online at: https://www.rcpath.org/uploads/assets/952a934d-2ec3-48c9-a8e6e00fcdca700f/meeting-pathology-demand-histopathology-workforce-census-2018.pdf (accessed March 31, 2019).

16. Cancer Research UK. Testing Times to Come? An Evaluation of Pathology Capacity Across the UK. (2016). Available online at: www.cancerresearchuk.org (accessed March 31, 2019).

17. Hardaker A. UK AI Investment Hits $1.3bn as Government Invests in Skills. (2019). Available online at: https://www.businesscloud.co.uk/news/uk-ai-investment-hits-13bn-as-governement-invests-in-skills (accessed March 31, 2019).

18. Leontina P. UK invests $65M to set up five new AI digital pathology and imaging centers. MobiHealthNews. (2018). Available online at: https://www.mobihealthnews.com/content/uk-invests-65m-set-five-new-ai-digital-pathology-and-imaging-centers (accessed March 31, 2019).

19. Falk T, Mai D, Bensch R, Çiçek Ö, Abdulkadir A, Marrakchi Y, et al. U-Net: deep learning for cell counting, detection, and morphometry. Nat Methods. (2019) 16:67–70. doi: 10.1038/s41592-018-0261-2

20. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. (2015). Available online at: http://arxiv.org/abs/1505.04597 (accessed March 31, 2019).

21. Alom MZ, Yakopcic C, Taha TM, Asari VK. Nuclei segmentation with recurrent residual convolutional neural networks based U-Net (R2U-Net). In: IEEE National Aerospace and Electronics Conference. (2018). Available online at: https://www.semanticscholar.org/paper/Nuclei-Segmentation-with-Recurrent-Residual-Neural-Alom-Yakopcic/d6785c954cc5562838a57e185e99d0496b5fd5a2 (accessed March 31, 2019).

22. Oda H, Roth HR, Chiba K, Sokolić J, Kitasaka T, Oda M, et al. BESNet: boundary-enhanced segmentation of cells in histopathological images. In: Medical Image Computing and Computer Assisted Intervention. (2018). p. 228–36. Available online at: http://link.springer.com/10.1007/978-3-030-00934-2_26 (accessed March 31, 2019).

23. Jonnalagedda P, Schmolze D, Bhanu B. MVPNets: multi-viewing path deep learning neural networks for magnification invariant diagnosis in breast cancer. In: 2018 IEEE 18th International Conference on Bioinformatics and Bioengineering (BIBE). (2018). p. 189–94. Available online at: https://ieeexplore.ieee.org/document/8567483/ (accessed March 31, 2019).

24. Xiao K, Wang Z, Xu T, Wan T. A Deep Learning Method for Detecting and Classifying Breast Cancer Metastases in Lymph Nodes on Histopathological Images. (2017). Available online at: https://www.semanticscholar.org/paper/A-DEEP-LEARNING-METHOD-FOR-DETECTING-AND-BREAST-IN-Xiao-Wang/72ed2f4b2b464e36f85c70dcf660f4bb9468c64c (accessed March 31, 2019).

25. Hamilton P, O'Reilly P, Bankhead P, Abels E, Salto-Tellez M. Digital and computational pathology for biomarker discovery. In: Badve S, Kumar G, editors. Predictive Biomarkers in Oncology. Cham: Springer International Publishing (2019). p. 87–105.

26. Dong N, Kampffmeyer M, Liang X, Wang Z, Dai W, Xing EP. Reinforced auto-zoom net: towards accurate and fast breast cancer segmentation in whole-slide images. In: Stoyanov D, et al., editors. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. (2018). Available online at: http://arxiv.org/abs/1807.11113 doi: 10.1007/978-3-030-00889-5_36 (accessed March 31, 2019).

27. Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative Adversarial Networks. (2014). Available online at: http://arxiv.org/abs/1406.2661 (accessed April 1, 2019).

28. Shaban MT, Baur C, Navab N, Albarqouni S. StainGAN: stain style transfer for digital histological images. In: IEEE 16th International Symposium on Biomedical Imaging (ISBI). (2018). Available online at: http://arxiv.org/abs/1804.01601 (accessed April 1, 2019).

29. Zhu J-Y, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: IEEE International Conference on Computer Vision (ICCV). (2017). Available online at: http://arxiv.org/abs/1703.10593 (accessed April 1, 2019).

30. Lahiani A, Gildenblat J, Klaman I, Albarqouni S, Navab N, Klaiman E. Virtualization of tissue staining in digital pathology using an unsupervised deep learning approach. In: Digital Pathology 15th European Congress, ECDP. (2019). Available online at: http://arxiv.org/abs/1810.06415 (accessed April 1, 2019).

31. Liu Y, Gadepalli K, Norouzi M, Dahl GE, Kohlberger T, Boyko A, et al. Detecting Cancer Metastases on Gigapixel Pathology Images. (2017). Available online at: http://arxiv.org/abs/1703.02442 (accessed April 1, 2019).

32. Ratner AJ, Ehrenberg HR, Hussain Z, Dunnmon J, Ré C. Learning to Compose Domain-Specific Transformations for Data Augmentation. Advances in Neural Information Processing Systems 30. (NIPS) (2017). Available online at: http://arxiv.org/abs/1709.01643 (accessed April 1, 2019).

33. Cubuk ED, Zoph B, Mane D, Vasudevan V, Le QV. AutoAugment: learning augmentation policies from data. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition. (2019). Available online at: http://arxiv.org/abs/1805.09501 (accessed April 1, 2019).

34. Schlegl T, Seeböck P, Waldstein SM, Schmidt-Erfurth U, Langs G. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In: Information Processing in Medical Imaging. (2017). Available online at: http://arxiv.org/abs/1703.05921 (accessed April 1, 2019).

35. Xia T, Kumar A, Feng D, Kim J. Patch-level tumor classification in digital histopathology images with domain adapted deep learning. Conf Proc IEEE Eng Med Biol Soc. (2018) 2018:644–7. doi: 10.1109/EMBC.2018.8512353

36. Center for Devices and Radiological Health. How to Study and Market Your Device - Breakthrough Devices Program. (2018). Available online at: https://www.fda.gov/medicaldevices/deviceregulationandguidance/howtomarketyourdevice/ucm441467.htm (accessed April 1, 2019).

37. Gurcan MN, Madabhushi A, Rajpoot N. Pattern recognition in histopathological images: an ICPR 2010 contest. In: Ünay D, Çataltepe Z, Aksoy S, editors. Recognizing Patterns in Signals, Speech, Images and Videos. Berlin; Heidelberg: Springer (2010). p. 226–34.

38. Roux L, Racoceanu D, Loménie N, Kulikova M, Irshad H, Klossa J, et al. Mitosis detection in breast cancer histological images: an ICPR 2012 contest. J Pathol Inform. (2013) 4:8. doi: 10.4103/2153-3539.112693

39. Cireşan DC, Giusti A, Gambardella LM, Schmidhuber J. Mitosis detection in breast cancer histology images with deep neural networks. In: Mori K, Sakuma I, Sato Y, Barillot C, Navab N, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2013. Berlin; Heidelberg: Springer (2013). p. 411–8.

40. Veta M, van Diest PJ, Willems SM, Wang H, Madabhushi A, Cruz-Roa A, et al. Assessment of algorithms for mitosis detection in breast cancer histopathology images. Med Image Anal. (2015) 20:237–48. doi: 10.1016/j.media.2014.11.010

41. Roux L. Detection of mitosis and evaluation of nuclear atypia score in breast cancer histological images. In: 22nd International Conference on Pattern Recognition 2014. MITOS-ATYPIA Contest. (2014). Available online at: http://ludo17.free.fr/mitos_atypia_2014/icpr2014_MitosAtypia_DataDescription.pdf

42. Chen H, Dou Q, Wang X, Qin J, Heng P-A. Mitosis detection in breast cancer histology images via deep cascaded networks. In: Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence. AAAI Press (2016). p. 1160–6. Available online at: http://dl.acm.org/citation.cfm?id=3015812.3015984

43. Pego AAP. Grand Challenge: Bioimaging 2015. (2015). Available online at: http://www.bioimaging2015.ineb.up.pt/challenge_overview.html (accessed April 1, 2019).

44. Araújo T, Aresta G, Castro E, Rouco J, Aguiar P, Eloy C, et al. Classification of breast cancer histology images using Convolutional Neural Networks. PLoS ONE. (2017) 12:e0177544. doi: 10.1371/journal.pone.0177544

45. Sirinukunwattana K, Pluim JPW, Chen H, Qi X, Heng P-A, Guo YB, et al. Gland segmentation in colon histology images: the GLAS challenge contest. Med Image Anal. (2017) 35:489–502. doi: 10.1016/j.media.2016.08.008

46. Chen H, Qi X, Yu L, Dou Q, Qin J, Heng P-A. DCAN: deep contour-aware networks for object instance segmentation from histology images. Med Image Anal. (2017) 36:135–46. doi: 10.1016/j.media.2016.11.004

47. Veta M, Heng YJ, Stathonikos N, Bejnordi BE, Beca F, Wollmann T, et al. Predicting breast tumor proliferation from whole-slide images: the TUPAC16 challenge. Med Image Anal. (2019) 54:111–21. doi: 10.1016/j.media.2019.05.008

48. Cancer Genome Atlas Network, Daniel C, Fulton RS, McLellan MD, Schmidt H, Kalicki-Veizer J, et al. Comprehensive molecular portraits of human breast tumours. Nature. (2012) 490:61–70. doi: 10.1038/nature11412

49. Heng YJ, Lester SC, Tse GM, Factor RE, Allison KH, Collins LC, et al. The molecular basis of breast cancer pathological phenotypes. J Pathol. (2017) 241:375–91. doi: 10.1002/path.4847

50. Paeng K, Hwang S, Park S, Kim M. A Unified Framework for Tumor Proliferation Score Prediction in Breast Histopathology. (2016). Available online at: http://arxiv.org/abs/1612.07180 (accessed April 1, 2019).

51. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition. (2015). Available online at: http://arxiv.org/abs/1512.03385 (accessed April 1, 2019).

52. Ehteshami Bejnordi B, Veta M, Johannes van Diest P, van Ginneken B, Karssemeijer N, Litjens G, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA. (2017) 318:2199–210. doi: 10.1001/jama.2017.14580

53. Bandi P, Geessink O, Manson Q, Van Dijk M, Balkenhol M, Hermsen M, et al. From detection of individual metastases to classification of lymph node status at the patient level: the CAMELYON17 challenge. IEEE Trans Med Imaging. (2019) 38:550–60. doi: 10.1109/TMI.2018.2867350

54. Litjens G, Bandi P, Ehteshami Bejnordi B, Geessink O, Balkenhol M, Bult P, et al. 1399 H&E-stained sentinel lymph node sections of breast cancer patients: the CAMELYON dataset. Gigascience. (2018) 7:giy065. doi: 10.1093/gigascience/giy065

55. Wang C-W, Lee Y-C, Calista E, Zhou F, Zhu H, Suzuki R, et al. A benchmark for comparing precision medicine methods in thyroid cancer diagnosis using tissue microarrays. Bioinformatics. (2018) 34:1767–73. doi: 10.1093/bioinformatics/btx838

56. Kumar N, Verma R, Sharma S, Bhargava S, Vahadane A, Sethi A. A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans Med Imaging. (2017) 36:1550–60. doi: 10.1109/TMI.2017.2677499

57. Aresta G, Araújo T, Kwok S, Chennamsetty SS, Safwan M, Alex V, et al. BACH: grand challenge on breast cancer histology images. In: ICIAR. (2018). Available online at: http://arxiv.org/abs/1808.04277 (accessed April 1, 2019).

58. Kwok S. Multiclass classification of breast cancer in whole-slide images. In: Campilho A, Karray F, ter Haar Romeny B, editors. Image Analysis and Recognition. ICIAR 2018. Cham: Springer (2018). p. 931–40.

59. Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: Computer Vision and Pattern Recognition. (2014). Available online at: http://arxiv.org/abs/1409.4842 (accessed April 1, 2019).

60. American Cancer Society. Key Statistics for Prostate Cancer and Prostate Cancer Facts. (2018). Available online at: https://www.cancer.org/cancer/prostate-cancer/about/key-statistics.html (accessed April 1, 2019).

61. CRUK. Prostate Cancer Statistics. Cancer Research UK. (2018). Available online at: https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/prostate-cancer (accessed April 1, 2019).

62. Epstein JI, Egevad L, Amin MB, Delahunt B, Srigley JR, Humphrey PA, et al. The 2014 International Society of Urological Pathology (ISUP) consensus conference on Gleason grading of prostatic carcinoma. Am J Surg Pathol. (2015) 40:1. doi: 10.1097/PAS.0000000000000530

63. Singh R, Gosavi A, Agashe S, Sulhyan K. Interobserver reproducibility of Gleason grading of prostatic adenocarcinoma among general pathologists. Indian J Cancer. (2011) 48:488. doi: 10.4103/0019-509X.92277

64. McKenney JK, Simko J, Bonham M, True LD, Troyer D, Hawley S, et al. The potential impact of reproducibility of Gleason grading in men with early stage prostate cancer managed by active surveillance: a multi-institutional study. J Urol. (2011) 186:465–9. doi: 10.1016/j.juro.2011.03.115

65. Litjens G, Sánchez CI, Timofeeva N, Hermsen M, Nagtegaal I, Kovacs I, et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci Rep. (2016) 6:26286. doi: 10.1038/srep26286

66. Bulten W, Litjens GJS, Hulsbergen-van de Kaa CA, van der Laak J. Automated segmentation of epithelial tissue in prostatectomy slides using deep learning. In: Proceedings Medical Imaging 2018: Digital Pathology. (2018). p. 27. Available online at: https://www.spiedigitallibrary.org/conference-proceedings-of-spie/10581/2292872/Automated-segmentation-of-epithelial-tissue-in-prostatectomy-slides-using-deep/10.1117/12.2292872.full (accessed April 1, 2019).

67. Jiménez del Toro O, Atzori M, Otálora S, Andersson M, Eurén K, Hedlund M, et al. Convolutional neural networks for an automatic classification of prostate tissue slides with high-grade Gleason score. In: Proceedings Medical Imaging 2017: Digital Pathology. (2017). p. 10140. Available online at: http://proceedings.spiedigitallibrary.org/proceeding.aspx?doi=10.1117/12.2255710 (accessed April 1, 2019).

68. Kallen H, Molin J, Heyden A, Lundstrom C, Astrom K. Towards grading Gleason score using generically trained deep convolutional neural networks. In: 2016 IEEE 13th Int Symp on Biomed Im (ISBI). (2016). p. 1163–7. Available online at: http://ieeexplore.ieee.org/document/7493473/ (accessed April 1, 2019).

69. Zhou N, Fedorov A, Fennessy FM, Kikinis R, Gao Y. Large scale digital prostate pathology image analysis combining feature extraction and deep neural network. CoRR. (2017). Available online at: https://www.semanticscholar.org/paper/Large-scale-digital-prostate-pathology-image-and-Zhou-Fedorov/5faf07decd896237a82b89e4e4fd42739a3eea1b (accessed April 1, 2019).

70. Arvaniti E, Fricker KS, Moret M, Rupp N, Hermanns T, Fankhauser C, et al. Automated Gleason grading of prostate cancer tissue microarrays via deep learning. Sci Rep. (2018) 8:12054. doi: 10.1038/s41598-018-30535-1

71. Nagpal K, Foote D, Liu Y, Chen PH, Wulczyn E, Tan F, et al. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digit Med. (2019) 2:48. doi: 10.1038/s41746-019-0112-2

72. Ing N, Tomczak JM, Miller E, Garraway IP, Welling M, Knudsen BS, et al. A deep multiple instance model to predict prostate cancer metastasis from nuclear morphology. In: Conference on Medical Imaging with Deep Learning. Amsterdam (2018).

73. Vestjens JHMJ, Pepels MJ, de Boer M, Borm GF, van Deurzen CHM, van Diest PJ, et al. Relevant impact of central pathology review on nodal classification in individual breast cancer patients. Ann Oncol. (2012) 23:2561–6. doi: 10.1093/annonc/mds072

74. Wang D, Khosla A, Gargeya R, Irshad H, Beck AH. Deep Learning for Identifying Metastatic Breast Cancer. (2016). Available online at: http://arxiv.org/abs/1606.05718 (accessed April 1, 2019).

75. Guo Z, Liu H, Ni H, Wang X, Su M, Guo W, et al. A fast and refined cancer regions segmentation framework in whole-slide breast pathological images. Sci Rep. (2019) 9:882. doi: 10.1038/s41598-018-37492-9

76. Steiner DF, MacDonald R, Liu Y, Truszkowski P, Hipp JD, Gammage C, et al. Impact of deep learning assistance on the histopathologic review of lymph nodes for metastatic breast cancer. Am J Surg Pathol. (2018) 42:1636–46. doi: 10.1097/PAS.0000000000001151

77. Scholzen T, Gerdes J. The Ki-67 protein: from the known and the unknown. J Cell Physiol. (2000) 182:311–22. doi: 10.1002/(SICI)1097-4652(200003)182:3<311::AID-JCP1>3.0.CO;2-9

78. Polley M-YC, Leung SCY, McShane LM, Gao D, Hugh JC, Mastropasqua MG, et al. An international Ki67 reproducibility study. J Natl Cancer Inst. (2013) 105:1897–906. doi: 10.1093/jnci/djt306

79. Polley M-YC, Leung SCY, Gao D, Mastropasqua MG, Zabaglo LA, Bartlett JMS, et al. An international study to increase concordance in Ki67 scoring. Mod Pathol. (2015) 28:778–86. doi: 10.1038/modpathol.2015.38

80. Leung SCY, Nielsen TO, Zabaglo L, Arun I, Badve SS, Bane AL, et al. Analytical validation of a standardized scoring protocol for Ki67: phase 3 of an international multicenter collaboration. NPJ Breast Cancer. (2016) 2:16014. doi: 10.1038/npjbcancer.2016.14

81. Abubakar M, Orr N, Daley F, Coulson P, Ali HR, Blows F, et al. Prognostic value of automated KI67 scoring in breast cancer: a centralised evaluation of 8088 patients from 10 study groups. Breast Cancer Res. (2016) 18:104. doi: 10.1186/s13058-016-0765-6

82. Klauschen F, Wienert S, Schmitt WD, Loibl S, Gerber B, Blohmer J-U, et al. Standardized Ki67 diagnostics using automated scoring–clinical validation in the GeparTrio breast cancer study. Clin Cancer Res. (2015) 21:3651–7. doi: 10.1158/1078-0432.CCR-14-1283

83. Berney DM, Gopalan A, Kudahetti S, Fisher G, Ambroisine L, Foster CS, et al. Ki-67 and outcome in clinically localised prostate cancer: analysis of conservatively treated prostate cancer patients from the Trans-Atlantic Prostate Group study. Br J Cancer. (2009) 100:888–93. doi: 10.1038/sj.bjc.6604951

84. Berlin A, Castro-Mesta JF, Rodriguez-Romo L, Hernandez-Barajas D, González-Guerrero JF, Rodríguez-Fernández IA, et al. Prognostic role of Ki-67 score in localized prostate cancer: A systematic review and meta-analysis. Urol Oncol Semin Orig Investig. (2017) 35:499–506. doi: 10.1016/j.urolonc.2017.05.004

85. Fantony JJ, Howard LE, Csizmadi I, Armstrong AJ, Lark AL, Galet C, et al. Is Ki67 prognostic for aggressive prostate cancer? A multicenter real-world study. Biomark Med. (2018) 12:727–36. doi: 10.2217/bmm-2017-0322

86. Al-Lahham HZ, Alomari RS, Hiary H, Chaudhary V. Automating proliferation rate estimation from Ki-67 histology images. In: van Ginneken B, Novak CL, editors. The International Society for Optical Engineering. (2012). p. 83152A. Available online at: http://proceedings.spiedigitallibrary.org/proceeding.aspx?doi=10.1117/12.911009 (accessed April 1, 2019).

87. Xue Y, Ray N, Hugh J, Bigras G. Cell counting by regression using convolutional neural network. In: European Conference on Computer Vision. Amsterdam (2016). p. 274–90.

88. Xue Y, Ray N. Cell Detection in Microscopy Images with Deep Convolutional Neural Network and Compressed Sensing. (2017). Available online at: http://arxiv.org/abs/1708.03307 (accessed April 1, 2019).

89. Narayanan PL, Raza SEA, Dodson A, Gusterson B, Dowsett M, Yuan Y. DeepSDCS: dissecting cancer proliferation heterogeneity in Ki67 digital whole slide images. In: MIDL. (2018). Available online at: http://arxiv.org/abs/1806.10850 (accessed April 1, 2019).

90. Xing F, Su H, Neltner J, Yang L. Automatic Ki-67 counting using robust cell detection and online dictionary learning. IEEE Trans Biomed Eng. (2014) 61:859–70. doi: 10.1109/TBME.2013.2291703

91. Xie Y, Xing F, Kong X, Su H, Yang L. Beyond classification: structured regression for robust cell detection using convolutional neural network. Med Image Comput Comput Assist Interv. (2015) 9351:358–65. doi: 10.1007/978-3-319-24574-4_43

92. Xie Y, Kong X, Xing F, Liu F, Su H, Yang L. Deep voting: a robust approach toward nucleus localization in microscopy images. Med Image Comp and Comp Assisted Interv. (2015) 9351:374–82. doi: 10.1007/978-3-319-24574-4_45