
ORIGINAL RESEARCH article

Front. Mar. Sci., 11 April 2024

Sec. Ocean Observation

Volume 11 - 2024 | https://doi.org/10.3389/fmars.2024.1378817

This article is part of the Research Topic Deep Learning for Marine Science, volume II.

Underwater images suffer from severe color attenuation and contrast reduction due to the poor and complex lighting conditions in water. Most mainstream deep learning methods require extensive paired underwater training data, which leads to complex network structures, long training times, and high computational cost. To address this issue, this paper proposes a novel Zero-Reference Parameter Estimation Network (Zero-UAE) model for the adaptive enhancement of underwater images. Based on the principle of light attenuation curves, an underwater adaptive curve model is designed to eliminate uneven underwater illumination and color bias. A lightweight parameter estimation network is designed to estimate the dynamic parameters of the underwater adaptive curve model. A tailored set of non-reference loss functions is developed for underwater scenarios to fine-tune underwater images and enhance the network’s generalization capability. These functions implicitly control the learning preferences of the network and effectively address color bias and uneven illumination in underwater images without additional datasets. The proposed method was evaluated on three widely used real-world underwater image enhancement datasets. Experimental results demonstrate that the method adaptively enhances underwater images and yields competitive performance compared with other state-of-the-art methods. Moreover, the Zero-UAE model requires only 17K parameters, minimizing the hardware requirements for underwater detection tasks. Furthermore, the adaptive enhancement capability of the Zero-UAE model offers a new solution for processing images under extreme underwater conditions, thus contributing to the advancement of underwater autonomous monitoring and ocean exploration technologies.

Because particulate matter in water absorbs and scatters light, underwater observation tasks based on optical vision face enormous challenges. Underwater images inevitably suffer from quality degradation caused by wavelength- and distance-dependent attenuation and scattering (Akkaynak et al., 2017). Typically, light propagating through water undergoes selective attenuation that results in varying degrees of color deviation. Red light, with its longer wavelength, is absorbed more strongly than green and blue light and therefore attenuates fastest. Conversely, blue-green light attenuates most slowly, so most underwater images appear in blue-green tones (Kocak et al., 2008). In this environment, it is critical to identify effective solutions for improving the visual quality of underwater images and for better understanding the underwater world.

Given the challenges faced by underwater optical imaging, Synthetic Aperture Sonar (SAS) imaging technology based on sound waves may offer some solutions (Zhang et al., 2021; Yang, 2023). Unlike optical imaging, SAS exploits the propagation characteristics of sound waves in water to penetrate particles and acquire high-resolution underwater images. Because sound waves are not affected by light absorption and scattering, SAS avoids the quality degradation encountered in optical imaging. However, the resolution of SAS imaging is typically influenced by properties of the propagation medium such as water temperature, salinity, and flow velocity. SAS imaging also often requires complex signal and data processing techniques and dedicated hardware, potentially increasing system cost and complexity (Abu and Diamant, 2023). Therefore, despite the significant advantages of SAS imaging in underwater observation, this study focuses on the processing and analysis of underwater optical images, with the aim of exploring effective methods for improving their visual quality and thereby enhancing our understanding of the underwater environment.

Furthermore, when conducting underwater observation tasks, selecting lightweight equipment is crucial for enhancing maneuverability and flexibility while reducing complexity and cost. Lightweight devices may incur slight performance degradation, a trade-off that must be balanced to execute tasks effectively. In this context, lightweight methods for processing underwater images become particularly important, as they can enhance the visual quality of underwater images in real time and contribute to a more accurate understanding of the underwater environment.

To obtain underwater images of higher visual quality, methods based on physical models can, to some extent, address the aforementioned issues (Zhuang, 2021). In the field of underwater image enhancement, physics-based methods (Chiang and Chen, 2011; Drews et al., 2016; Li et al., 2016; Berman et al., 2017; Zhuang et al., 2021) focus on accurately estimating medium transmission. Using the estimated medium transmittance, uniform background light, and other critical underwater imaging parameters, these methods invert the physical model of underwater imaging to obtain clear images. Image restoration methods such as UDCP (Drews et al., 2016) thus treat improving image quality as an inverse imaging problem. By contrast, non-physical methods, including histogram equalization (HE) (Frei, 1977) and contrast-limited adaptive histogram equalization (CLAHE) (Zuiderveld, 1994), adjust pixel values to enhance specific image qualities such as color, contrast, and brightness. Although methods based on physical models can perform satisfactorily in certain scenarios, they typically produce unstable and sensitive results in challenging underwater scenes. There are two reasons for this: 1) estimating multiple underwater imaging parameters is intricate for traditional methods, and 2) the assumed underwater imaging models often do not hold well in practice.

In recent years, significant progress has been made in underwater image enhancement using deep learning (Cai et al., 2018; Li et al., 2020b). Wang et al. (2021), Huang et al. (2022), and Lai et al. (2022) showed that convolutional neural network (CNN)-based enhancement algorithms perform well on underwater images, producing enhanced images with improved contrast and color reproduction. Xiao et al. (2022) introduced a CNN-based enhancement framework for underwater images that automatically determines optimal enhancement parameters, producing high-quality images at low computational cost; this method achieved state-of-the-art performance compared with prior work. However, most of these methods rely on paired data for supervised training, and although some unsupervised methods do not require paired data, they still require unpaired reference data. Unfortunately, collecting paired data is costly, and images generated by simulation algorithms differ from real data, which lowers the generalization capability of the network. Unlike these methods, the proposed deep learning-based method has a distinctive advantage: it is zero-reference. Its training process requires no paired or unpaired data, in stark contrast to existing CNN- and GAN-based methods that rely on such data.

Inspired by Zero-DCE (Guo et al., 2020), this paper designs an underwater curve model that applies the concept of zero-reference learning to underwater scenarios. A new deep learning method, Zero-UAE, based on a zero-reference parameter estimation network, is proposed for the adaptive enhancement of underwater images. The method does not use an end-to-end network model because such a model is much more complex than parameter estimation, and when only a small number of non-reference samples are available, an end-to-end model often fails to train to the expected quality. To better achieve a lightweight, zero-reference design while ensuring robustness, the network that estimates the adaptive restoration parameters should be as simple as possible. Unlike Zero-DCE, which is trained with multi-sequence datasets, this method cannot rely on such datasets for guidance because of the complexity of the underwater environment; it uses only a limited number of underwater images. Zero-UAE adaptively enhances the brightness and contrast of images while restoring natural colors and details. The results show that even when trained with zero reference, Zero-UAE remains competitive with state-of-the-art methods that require paired or unpaired data. The contributions of this method can be summarized as follows:

1. A zero-reference underwater adaptive enhancement parameter estimation network is proposed that does not rely on paired or unpaired data, thereby reducing the risk of overfitting. The network is shown to be robust under various complex underwater conditions.

2. A set of non-reference loss functions is designed, including the specifically crafted underwater color adaptive correction loss function proposed in this paper. Through their collaborative action, these loss functions effectively facilitate the adaptive enhancement of degraded images in complex underwater scenes while ensuring pixel consistency.

3. Zero-UAE achieves state-of-the-art performance on several recent benchmarks, both in terms of visual quality and quantitative metrics.

Furthermore, the Zero-UAE method performs excellently in underwater survey tasks involving various marine life, seabed debris, corals, and sand, without incurring a significant computational burden. Owing to its small model size, it can be trained in just 30 minutes and then processes images in real time. This offers a more convenient option for devices in underwater observation tasks.

The rest of this paper is organized as follows. Section II reviews related work on underwater image enhancement. Section III introduces the proposed method. Section IV presents the qualitative and quantitative experiments. Section V concludes the paper.

Underwater image enhancement is generally categorized into two major groups: traditional methods and deep learning methods. Traditional methods are further divided into non-physical model-based methods and physical model-based methods.

Non-physical model-based methods directly modify pixel values to improve image quality without the constraints of physical models. Ancuti et al. (2012) proposed a fusion-based method that applies a multiscale fusion strategy to versions of an image that have undergone color correction and contrast enhancement. Ancuti et al. (2017) and Ghani and Isa (2015) introduced contrast enhancement methods that align with the Rayleigh distribution in RGB color space. Another line of work builds on Retinex theory; for example, Fu et al. (2014) convert color-corrected images into the CIELab color space and enhance the L channel using Retinex. Methods based on physical models treat underwater image enhancement as an inverse problem, introducing various priors and models of underwater image formation. Among these, the most notable is the Jaffe-McGlamery underwater image model (McGlamery, 1980; Jaffe, 1990).

In recent years, deep learning methods have been widely applied in the field of underwater image processing, primarily focusing on acquiring training datasets and the generalization capability of convolutional models. These methods mainly include methods based on Convolutional Neural Networks (CNN) and Generative Adversarial Networks (GAN).

Li et al. (2020a) introduced the Underwater Image Enhancement Convolutional Neural Network (UWCNN), which reconstructs clear underwater images directly using underwater scene priors without estimating model parameters. Qi et al. (2022) proposed a novel underwater image enhancement network (SGUIE-Net) that addresses color distortion and detail blurring by incorporating semantic information and region-wise enhancement feature learning. Wang et al. (2021) proposed an underwater image enhancement convolutional neural network (UICE2-Net) that uses two color spaces; it was the first deep learning-based method to use the HSV color space for underwater image enhancement.

Guo et al. (2019) proposed a multiscale dense generative adversarial network (GAN) for underwater image enhancement, employing multiscale dense residual blocks in the generator to improve performance and retain finer details. They used spectral normalization to stabilize discriminator training and designed a non-saturating GAN loss function to constrain training. Cao et al. (2018) used two neural networks to estimate background light and scene depth separately for restoring underwater images, improving their color information. Fabbri et al. (2018) improved the GAN loss function and trained on a paired underwater image dataset generated with CycleGAN to obtain enhanced images with better color. Li et al. (2017) proposed an unsupervised GAN (WaterGAN) that takes in-air images and depth pairs as input to generate synthetic underwater images, and then introduced a color correction network that takes original unlabeled underwater images as input and outputs restored underwater images. Wang et al. (2019) introduced an unsupervised GAN (UWGAN) based on an improved underwater imaging model for generating realistic underwater images from in-air images and depth maps; they further used U-Net for color restoration and dehazing training on a synthetic underwater dataset. Islam et al. (2020b) proposed FUnIE-GAN, a conditional GAN for fast, real-time underwater image enhancement aimed at improving visual perception, and also contributed the EUVP dataset, which contains paired and unpaired underwater images.
Wang et al. (2023) proposed a GAN with multi-scale and attention mechanisms that introduces multi-scale dilated convolution and directs the network’s focus towards important features, thus reducing interference from redundant feature information.

Huang et al. (2023) introduced a zero-reference deep network, designed on the basis of the classical haze image formation principle, to explore zero-reference learning for underwater image enhancement. Xie et al. (2023) proposed a zero-shot dehazing network that further improved the level adjustment method by combining it with automatic contrast enhancement.

Currently, many deep learning-based underwater image enhancement methods rely on supervised learning with paired training data generated by simulation. However, this approach faces several challenges. First, supervised learning requires a substantial amount of paired data, and in the deep-sea environment the difficulty and cost of obtaining real paired data make this approach impractical. Second, owing to the complexity of the deep-sea environment, simulated image pairs may not fully capture the diversity and details of real scenes, which impairs the network’s generalization ability. Some unsupervised methods do not require paired data, but they still need unpaired data for training. Despite the efforts of zero-shot underwater image enhancement methods to improve image quality, the deep-sea environment presents unique challenges: lighting conditions, water quality variations, and the diversity of underwater objects all make training models difficult.

Therefore, this paper proposes Zero-UAE, a novel lightweight adaptive enhancement method for underwater images. In contrast to existing deep learning-based underwater image enhancement methods, it has the following distinctive characteristics: 1) It adopts a zero-reference learning strategy, eliminating the need for paired and unpaired data. 2) It designs an underwater adaptive curve model based on the principle of light attenuation curves to eliminate uneven underwater illumination and color distortion. 3) It employs a non-end-to-end network structure that acquires low-level features through skip connections and can handle most underwater scenes. 4) It devises a set of non-reference loss functions for underwater images that reinforce their pixel structure and improve their visual quality compared with other methods.

Typically, collecting enough paired data in underwater scenes is costly, and simulated underwater images differ from real ones. Consequently, supervised underwater image enhancement methods that rely on paired datasets are limited by relatively poor generalization, additional artifacts, and color shifts. Although unsupervised underwater image enhancement does not require paired datasets, it still requires carefully selected unpaired training data. Recognizing the difficulty of obtaining sufficient image samples and paired/unpaired images in certain underwater scenarios, this paper proposes an underwater image adaptive enhancement framework based on Zero-UAE. Unlike other deep learning methods, the proposed method does not rely on any reference images during training. Additionally, to adapt to the unique characteristics of the deep-sea environment, this study devises a lightweight network architecture and employs non-reference loss functions tailored to underwater scenes to enhance the network’s generalization capability. The objective is to make deep learning more practical and effective for underwater image processing.

The proposed Zero-UAE framework, shown in Figure 1, relies solely on pixel features from a limited number of non-reference underwater samples. Image enhancement is achieved through a straightforward mapping by underwater adaptive enhancement curves (UAE curves). The crucial component of the framework is UAE-Net (Underwater Adaptive Enhancement Parameter Estimation Network), which estimates the best-fitting UAE curve parameters for a given input image. The framework then applies these curves iteratively, mapping every pixel in the input RGB channels to generate the enhanced image. The key components of Zero-UAE, namely UAE curves, UAE-Net, and the non-reference loss functions, are detailed in the following sections.

Inspired by the curve adjustment feature in photo editing software and the Zero-DCE method proposed by Guo et al. (2020), this study presents a curve model suitable for adaptive underwater image enhancement. The curve adjustment method automatically maps degraded underwater images to normal underwater images, and the parameter maps estimated by the network depend entirely on the input image. A differentiable curve model for underwater parameter mapping must meet two requirements: 1) each pixel value of the enhanced underwater image should lie within the normalized range [0,1] to avoid information loss, which can lead to severe color bias; and 2) the curve should be monotonic to preserve the differences between neighboring pixels. To meet these requirements, a quadratic curve similar to that used in Zero-DCE was adopted, which can be represented as:

where x represents the pixel coordinates, and UAE(I(x);β) denotes the enhanced version of the given input I(x). β ∈ [−1,1] is a trainable curve parameter, learned by the underwater adaptive enhancement parameter estimation network, that adjusts the magnitude of the UAE curve and controls the level of enhancement. To better preserve color information, the curve is applied separately to each of the three RGB channels. Moreover, the UAE curve defined in Equation (1) can be applied iteratively for more versatile adjustment, adapting to complex underwater conditions. This can be expressed as:
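Equation (1) itself is not reproduced in this text. As a minimal sketch, assuming the UAE curve takes the same quadratic form as the light-enhancement curve of Zero-DCE, UAE(I(x); β) = I(x) + β·I(x)·(1 − I(x)), the function below applies one curve step to a normalized intensity. The name `uae_curve` is hypothetical. Under this assumed form, the curve fixes the endpoints 0 and 1 and has derivative 1 + β(1 − 2I(x)) ≥ 0 for β ∈ [−1, 1], which is how it would satisfy the normalization and monotonicity requirements stated above.

```python
def uae_curve(x: float, beta: float) -> float:
    """One step of an assumed Zero-DCE-style quadratic curve.

    x    -- normalized pixel intensity in [0, 1]
    beta -- curve parameter in [-1, 1]
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be normalized to [0, 1]")
    if not -1.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [-1, 1]")
    return x + beta * x * (1.0 - x)


if __name__ == "__main__":
    grid = [i / 100 for i in range(101)]
    for beta in (-1.0, -0.5, 0.0, 0.5, 1.0):
        out = [uae_curve(x, beta) for x in grid]
        # Normalization: every output stays inside [0, 1].
        assert all(0.0 <= y <= 1.0 for y in out)
        # Monotonicity: the ordering of input intensities is preserved.
        assert all(a <= b for a, b in zip(out, out[1:]))
    print(uae_curve(0.5, 1.0))  # 0.75: mid-tones brightened
```

Positive β brightens mid-tones and negative β darkens them, while the endpoints never move, so no intensity is clipped.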

where n is the number of iterations, which controls the curvature. n is set to 8, which handles most cases satisfactorily. Iteration gives the curve more flexibility to adapt to color variations and brightness differences in underwater images. However, because β is applied to all pixels, such a global adjustment may cause local over-enhancement or under-enhancement. To further improve the processing of underwater images, this study replaces β with a pixel-wise parameter map δ, referred to as the underwater color adaptive recovery map: each pixel of the input image has its own curve with a best-fitting value of δ that adjusts its dynamic range. Consequently, Equation (2) can be rewritten as Equation (3):

where δ is a parameter map of the same size as the given image. Here, pixels in a local region are assumed to have the same intensity (and therefore the same adjustment curves), so neighboring pixels in the output still preserve their monotonic relations. This pixel-wise higher-order curve not only adapts better to underwater conditions but also satisfies the goals of normalization, monotonicity, and simplicity.
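Equations (2) and (3) are not reproduced in this text. Assuming, as in Zero-DCE, that each iteration applies the quadratic step LE_k(x) = LE_{k−1}(x) + δ_k(x)·LE_{k−1}(x)·(1 − LE_{k−1}(x)), the sketch below applies n pixel-wise parameter maps to one color channel, one map per iteration, and then processes the three RGB channels independently. The helper names and the nested-list image layout are illustrative choices, not the authors' code; in UAE-Net the maps would come from the network's Tanh output (n = 8, so 24 maps for an RGB image).

```python
from typing import List

Channel = List[List[float]]  # H x W intensities, normalized to [0, 1]


def apply_pixelwise_curves(channel: Channel, delta_maps: List[Channel]) -> Channel:
    """Apply LE_k = LE_{k-1} + d_k * LE_{k-1} * (1 - LE_{k-1}) for k = 1..n.

    Each delta map has the same H x W size as the channel, so every pixel
    follows its own curve (assumed Zero-DCE-style form).
    """
    out = [row[:] for row in channel]
    for delta in delta_maps:
        if len(delta) != len(out) or any(len(d) != len(r) for d, r in zip(delta, out)):
            raise ValueError("each delta map must match the channel size")
        out = [
            [v + d * v * (1.0 - v) for v, d in zip(row, drow)]
            for row, drow in zip(out, delta)
        ]
    return out


def enhance_rgb(image: List[Channel], delta_maps: List[List[Channel]]) -> List[Channel]:
    """image = [R, G, B]; delta_maps[c] holds the n maps for channel c."""
    if len(image) != 3 or len(delta_maps) != 3:
        raise ValueError("expected three channels and three sets of maps")
    return [apply_pixelwise_curves(ch, maps) for ch, maps in zip(image, delta_maps)]


if __name__ == "__main__":
    n = 8
    red = [[0.1, 0.2], [0.3, 0.4]]  # attenuated red channel
    green = [[0.5, 0.5], [0.5, 0.5]]
    blue = [[0.7, 0.6], [0.8, 0.6]]
    boost = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(n)]     # brighten red
    keep = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(n)]      # leave green unchanged
    damp = [[[-0.2, -0.2], [-0.2, -0.2]] for _ in range(n)]  # slightly darken blue
    r, g, b = enhance_rgb([red, green, blue], [boost, keep, damp])
    print(r)  # red values rise towards the green/blue level
```

Because each map is pixel-wise, dark regions can receive a stronger boost than bright ones within the same channel, which a single global β cannot do.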

An example of the pixel-wise curve parameter maps is shown in Figure 2, which illustrates the curve parameter maps for the three channels of the input image together with the resulting image. The best-fitting parameter maps accurately reflect changes across different regions, showing that pixel-wise curve mapping adapts to underwater images and effectively reveals details in each region.

To learn the relationship between input images and their best-fitting underwater adaptive enhancement curves, this paper transforms the underwater image enhancement task into the estimation of curve parameters rather than performing end-to-end mapping directly. End-to-end models are much more complex than parameter estimation, and when trained on only a small number of samples without reference data, they often fall short of expectations. To better achieve lightweight and zero-reference characteristics, the parameter estimation network should be as simple as possible. Therefore, this paper designs the Underwater Adaptive Enhancement Parameter Estimation Network (UAE-Net), shown in Figure 3. The network takes an underwater image as input and outputs a set of pixel-level curve parameter maps for the higher-order curves. It consists of three traditional convolutional layers and four depth-wise separable convolutional layers. The first two layers each contain 32 convolutional kernels of size 3×3 with a stride of 1 and use the LeakyReLU activation function; the third layer comprises 32 kernels of size 1×1 with a stride of 1, also using LeakyReLU. To capture high-level color features across a wide range of degraded underwater images while maintaining the relationship between neighboring pixels, the fourth and fifth layers (depth-wise separable convolutions) both take features from the third layer and include a GroupNorm layer. Skip connections introduce features from shallow convolutional layers to provide rich low-level information. The final convolutional layer is followed by a Tanh activation function and generates parameter maps for 8 iterations (n = 8), where each iteration produces three curve parameter maps, one for each of the three channels.
Notably, UAE-Net has only 17,699 trainable parameters and requires 1.15 billion floating-point operations (FLOPs) for an input image of size 256×256×3. More detailed network resource information is provided in Table 1. The network is therefore lightweight enough to be deployed on computationally limited devices such as underwater exploration robots.
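The parameter savings of depth-wise separable convolution can be checked with simple arithmetic. The sketch below counts weights and biases for a standard k×k convolution and for a depth-wise (one k×k filter per input channel) plus point-wise (1×1) pair. The 32-channel, 3×3 layer is an illustrative choice matching the kernel counts above; the exact wiring of UAE-Net (skip connections, GroupNorm, output layer) is not specified in enough detail here to reproduce its 17,699-parameter total, and the helper names are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Weights (+ biases) of a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def depthwise_separable_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Depth-wise k x k conv (one filter per input channel), then a 1 x 1 point-wise conv."""
    depthwise = c_in * k * k + (c_in if bias else 0)
    pointwise = conv_params(c_in, c_out, 1, bias)
    return depthwise + pointwise


if __name__ == "__main__":
    std = conv_params(32, 32, 3)                 # 9,248
    sep = depthwise_separable_params(32, 32, 3)  # 1,376
    print(std, sep, round(std / sep, 1))         # 9248 1376 6.7
```

For this layer size the separable version needs roughly 6.7 times fewer parameters, which illustrates why such layers suit a network intended for embedded hardware.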

To achieve zero-reference learning in UAE-Net, a set of differentiable non-reference loss functions was designed to train the network, aimed at adapting to the unique characteristics of underwater images for effective network training. This series of loss functions not only serves training purposes but also implicitly evaluates the quality of image enhancement. An underwater color adaptive recovery loss is employed to restore image colors, correcting potential color biases in the enhanced image and establishing relationships among the three adjustment channels. Additionally, an illumination smoothness loss is introduced to maintain a monotonic relationship between adjacent pixels, coupled with exposure control loss for effective exposure level management. Such a multi-loss strategy aids in comprehensively considering various aspects of image quality, enhancing the network’s performance in underwater environments.

Drawing inspiration from the underwater image fusion algorithm of Babu et al. (2023), which combines histogram stretching, contrast enhancement, and color balancing, we design an underwater color adaptive correction loss L_uac, expressed as Equation (4):

In this context, τ represents the enhanced image, while R, G, and B correspond to the values of its three channels. The smaller the underwater color adaptive correction loss, the closer the average values of the RGB components are to each other, and the closer the colors of the output image are to those of the real world.
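Equation (4) is not reproduced in this text. As a minimal sketch, assuming L_uac penalizes the squared differences between the mean values of the R, G, and B channels of the enhanced image τ (the same pairwise form as the color constancy loss in Zero-DCE), it could be computed as below. The actual loss may weight or combine the terms differently, and the function names are hypothetical.

```python
from itertools import combinations
from typing import List

Channel = List[List[float]]  # H x W values of one color channel


def channel_mean(ch: Channel) -> float:
    total = sum(sum(row) for row in ch)
    count = sum(len(row) for row in ch)
    return total / count


def color_correction_loss(tau: List[Channel]) -> float:
    """Sum over (R,G), (R,B), (G,B) of the squared difference of channel means."""
    if len(tau) != 3:
        raise ValueError("expected an RGB image as [R, G, B]")
    means = [channel_mean(ch) for ch in tau]
    return sum((a - b) ** 2 for a, b in combinations(means, 2))


if __name__ == "__main__":
    blue_green = [[[0.2] * 4] * 4, [[0.6] * 4] * 4, [[0.8] * 4] * 4]
    neutral = [[[0.5] * 4] * 4 for _ in range(3)]
    print(color_correction_loss(blue_green))  # about 0.56: strong color cast
    print(color_correction_loss(neutral))     # 0.0: balanced channels
```

A typical blue-green underwater frame, with a weak red mean, yields a large value, so minimizing this term pushes the curves to lift the attenuated red channel.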

To control exposure levels and mitigate underexposed or overexposed areas, this study employs an exposure control loss, L_exp, which measures the distance between the average intensity of local regions and a well-exposed reference level E. Following existing practice (Mertens et al., 2007, 2009), E is set as a gray level in the RGB color space; here, E = 0.43. Based on experimental results and performance evaluations, the patch size used to compute local intensities is set to 32×32. The loss L_exp can be expressed as Equation (5):

where M represents the number of non-overlapping local regions of size 32×32, and Y is the average intensity value of a local region in the enhanced image.
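Equation (5) is not reproduced here. A minimal sketch, assuming the Zero-DCE form L_exp = (1/M) Σ_k |Y_k − E|, where Y_k is the mean intensity (averaged over R, G, and B) of the k-th non-overlapping patch. The defaults follow the values stated above (32×32 patches, E = 0.43); requiring the image size to be a multiple of the patch size is a simplifying assumption of this sketch, and the function name is hypothetical.

```python
from typing import List

Channel = List[List[float]]


def exposure_loss(image: List[Channel], patch: int = 32, e: float = 0.43) -> float:
    """Mean absolute distance between patch intensities and the exposure level e."""
    h, w = len(image[0]), len(image[0][0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    # Per-pixel intensity: average of the color channels.
    gray = [[sum(ch[i][j] for ch in image) / len(image) for j in range(w)] for i in range(h)]
    distances = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            block = [gray[i][j] for i in range(top, top + patch) for j in range(left, left + patch)]
            y_k = sum(block) / len(block)  # average intensity of this region
            distances.append(abs(y_k - e))
    return sum(distances) / len(distances)  # average over the M regions


if __name__ == "__main__":
    dark = [[[0.1] * 64 for _ in range(64)] for _ in range(3)]
    print(exposure_loss(dark))  # about 0.33: under-exposed
```

Patches far from E in either direction are penalized, so the same term discourages both under-exposed and over-exposed regions.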

To maintain the monotonic relationships between adjacent pixels, an illumination smoothness loss is applied to each curve parameter map δ. The illumination smoothness loss L_TVδ can be expressed as Equation (6):

where N is the number of iterations, and ∇x and ∇y represent the horizontal and vertical gradient operations, respectively.
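Equation (6) is not reproduced here. The sketch below is a common total-variation-style illumination smoothness term, assuming squared forward differences of each parameter map δ_n^c along the horizontal and vertical directions, averaged over pixels and divided by the number of iterations N. Zero-DCE writes this term as (|∇x δ| + |∇y δ|)², so the exact form used in this paper may differ; the function name is hypothetical.

```python
from typing import List

Map = List[List[float]]  # H x W curve parameter map


def smoothness_loss(delta_maps: List[List[Map]]) -> float:
    """delta_maps[n][c] is the parameter map of iteration n for color channel c."""
    n_iter = len(delta_maps)
    if n_iter == 0:
        raise ValueError("need at least one iteration")
    total = 0.0
    for per_channel in delta_maps:
        for m in per_channel:
            h, w = len(m), len(m[0])
            # Squared forward differences (discrete gradients) in x and y.
            dx = [(m[i][j + 1] - m[i][j]) ** 2 for i in range(h) for j in range(w - 1)]
            dy = [(m[i + 1][j] - m[i][j]) ** 2 for i in range(h - 1) for j in range(w)]
            total += (sum(dx) / len(dx) if dx else 0.0) + (sum(dy) / len(dy) if dy else 0.0)
    return total / n_iter


if __name__ == "__main__":
    flat = [[[[0.3] * 8 for _ in range(8)] for _ in range(3)] for _ in range(8)]
    checker = [[[[(i + j) % 2 * 0.5 for j in range(8)] for i in range(8)] for _ in range(3)] for _ in range(8)]
    print(smoothness_loss(flat), smoothness_loss(checker))  # 0.0 1.5
```

Smooth parameter maps cost nothing, while abrupt pixel-to-pixel changes in δ are penalized, which is what keeps neighboring pixels on similar curves.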

The spatial consistency loss L_spa encourages spatial coherence of the enhanced image by preserving the differences between neighboring regions of the input image and its enhanced version. It can be expressed as Equation (7):

where K is the number of local regions, and Ω(i) represents the four neighboring regions (top, bottom, left, right) centered at region i. Y and I denote the average intensity values of a local region in the enhanced image and the input image, respectively. The size of the local region is empirically set to 4×4; the loss was found to be stable for other region sizes.
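Equation (7) is not reproduced here. A minimal sketch, assuming the Zero-DCE form L_spa = (1/K) Σ_i Σ_{j∈Ω(i)} (|Y_i − Y_j| − |I_i − I_j|)², computed on 4×4 region means of single-channel intensity images. Regions on the image border simply have fewer neighbors in this sketch, a boundary choice made here for simplicity, and the helper names are hypothetical.

```python
from typing import List

Gray = List[List[float]]  # H x W average intensity (e.g. mean of R, G, B)


def region_means(img: Gray, size: int) -> Gray:
    h, w = len(img), len(img[0])
    if h % size or w % size:
        raise ValueError("image size must be a multiple of the region size")
    return [
        [sum(img[i][j] for i in range(r, r + size) for j in range(c, c + size)) / (size * size)
         for c in range(0, w, size)]
        for r in range(0, h, size)
    ]


def spatial_consistency_loss(enhanced: Gray, original: Gray, size: int = 4) -> float:
    y = region_means(enhanced, size)  # Y: region means of the enhanced image
    x = region_means(original, size)  # I: region means of the input image
    rows, cols = len(y), len(y[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):  # top, bottom, left, right
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    total += (abs(y[r][c] - y[rr][cc]) - abs(x[r][c] - x[rr][cc])) ** 2
    return total / (rows * cols)  # average over the K regions


if __name__ == "__main__":
    original = [[0.0] * 4 + [1.0] * 4 for _ in range(4)]  # dark left region, bright right region
    brighter = [[v * 0.5 + 0.5 for v in row] for row in original]  # contrast halved
    print(spatial_consistency_loss(original, original))  # 0.0
    print(spatial_consistency_loss(brighter, original))  # 0.25
```

Uniformly shifting brightness leaves the loss unchanged, but flattening the contrast between neighboring regions raises it, which is how this term protects edges and structure during enhancement.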

The total loss can be expressed as Equation (8):

where the weighting coefficients balance the contributions of the individual losses.

In order to enhance the network’s generalization performance, underwater images of various degradation types are incorporated into the training set. Specifically, 1000 images from the SUIM dataset (Islam et al., 2020a) and 800 underwater images from the NUICNet dataset (Cao et al., 2020) are selected for training. The number of iterations is set to 100. The experiment is implemented using the PyTorch framework, and the training images are resized to 256 × 256 × 3. The Adam optimizer is used with default parameters and a fixed learning rate of 1e-4. The experimental environment includes an NVIDIA GeForce RTX 3080Ti GPU, 32GB RAM, and an AMD Ryzen 7-5800X CPU.

Several underwater image processing algorithms were compared, including two traditional methods, three supervised methods, and one similar unsupervised method: the underwater depth estimation and image restoration method (UDCP) of Drews et al. (2016), the underwater image restoration method based on image blurriness and light absorption (IBLA) of Peng and Cosman (2017), the underwater image enhancement network (UWCNN) of Li et al. (2020a), the fast underwater image enhancement model for improved visual perception (FUnIEGAN) of Islam et al. (2020b), the medium transmission-guided multi-color space embedding method (Ucolor) of Li et al. (2021), and the unsupervised underwater image restoration method (UDNet) of Saleh et al. (2022).

To evaluate the effectiveness of the proposed method under different criteria, 100 underwater photographs were selected from the RUIE dataset (Liu et al., 2020). Several non-reference image quality assessment metrics were employed: the Underwater Image Quality Measure (UIQM) (Panetta et al., 2015), the Multi-Scale Image Quality Transformer (MUSIQ) (Ke et al., 2021), and the Natural Image Quality Evaluator (NIQE) (Mittal et al., 2012). Higher UIQM and MUSIQ values indicate better performance, whereas lower NIQE values indicate better performance. In the comparative experiments, the proposed method achieved the best UIQM and NIQE scores, 5.2196 and 3.3951, respectively, and remained competitive on MUSIQ.

Table 2 presents the average quantitative results on the RUIE dataset, where red indicates the best result and green the second best. An upward arrow denotes that higher values are better, while a downward arrow denotes that lower values are better. Ucolor and UDNet achieve the second-best results on UIQM and NIQE, respectively, whereas the proposed method achieves the best results on these metrics across the entire dataset. Notably, unlike the other deep learning methods, the proposed method does not use any reference images during training. Overall, extensive experiments on benchmark datasets demonstrate that the proposed method outperforms current state-of-the-art methods both subjectively and objectively, showcasing the potential of zero-reference image enhancement in underwater applications.

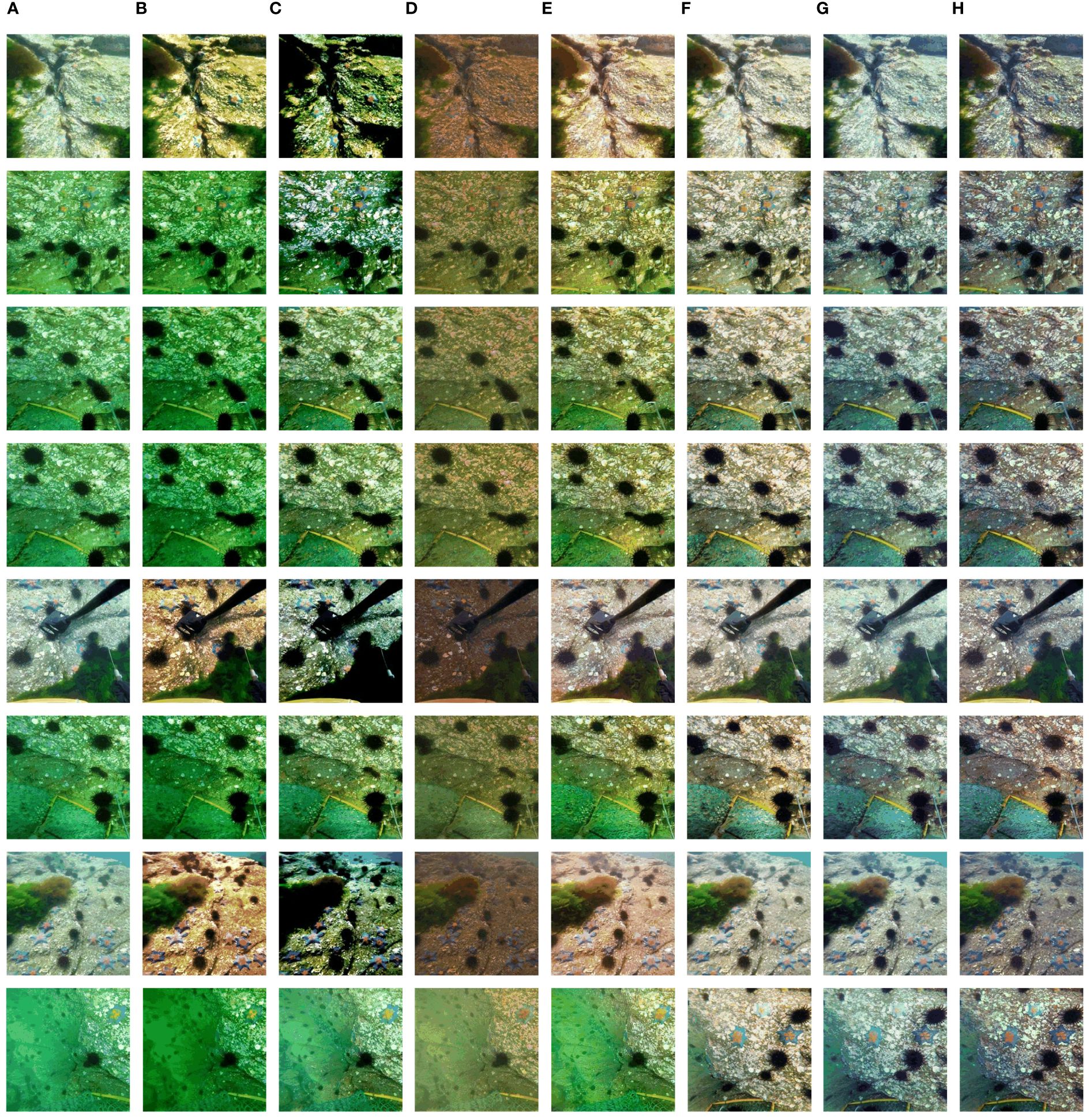

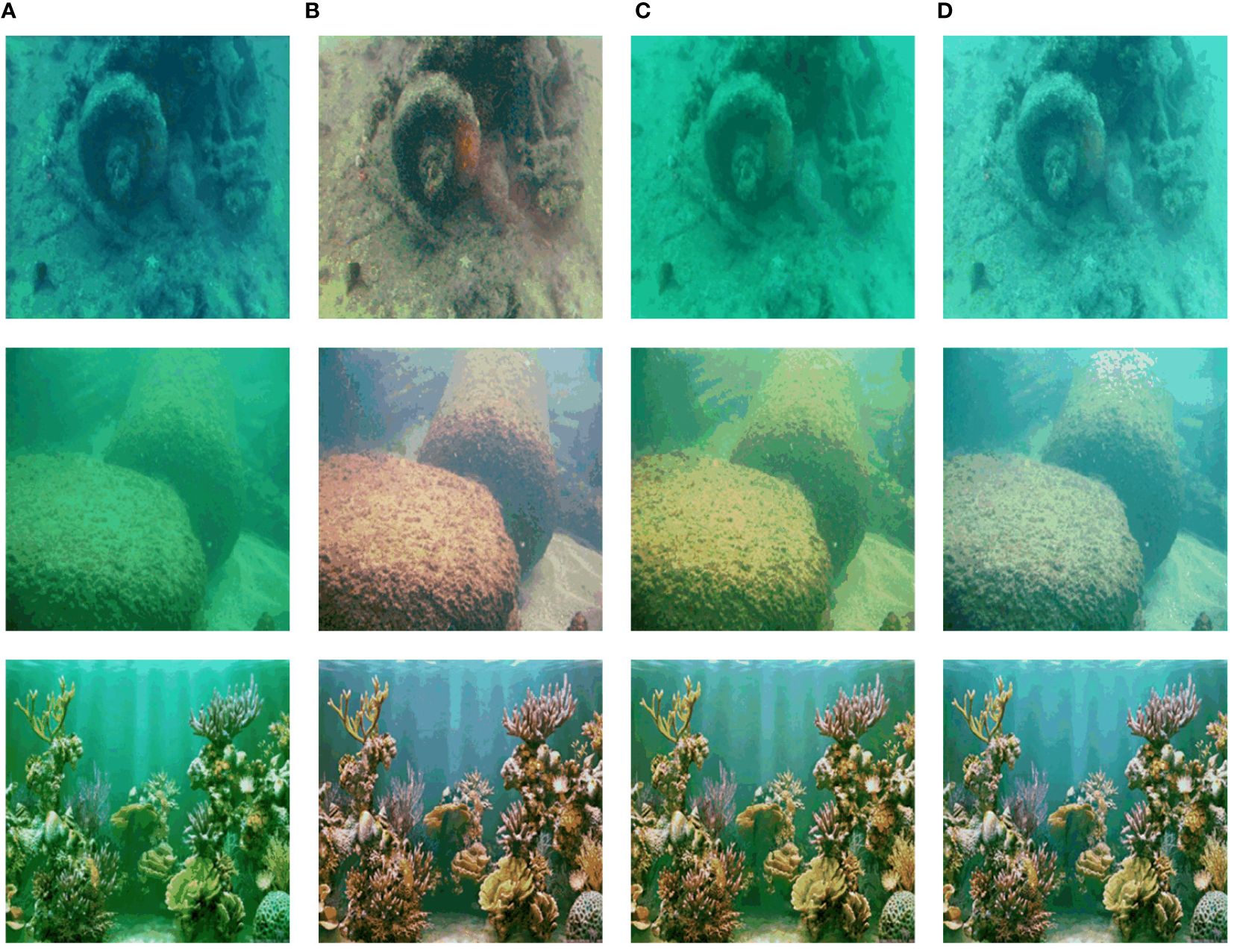

As shown in Figure 4, UDCP performs poorly in enhancing image brightness and color. The IBLA method is not entirely effective, exhibiting blurring and color bias. UWCNN and FUnIE-GAN perform poorly on this dataset: although they make some progress in color adjustment, they cannot completely resolve color distortion, and they show shortcomings in improving overall image brightness. Although Ucolor increases brightness, it cannot fully rectify color distortion. The unsupervised scheme UDNet also fails to completely eliminate color bias. In contrast, the proposed method demonstrates outstanding performance in color restoration, contrast enhancement, and brightness improvement.

Figure 4 Comparison on the RUIE dataset. (A) Original image. (B) UDCP (Drews et al., 2016). (C) IBLA (Peng and Cosman, 2017). (D) UWCNN (Li et al., 2020a). (E) FUnIE-GAN (Islam et al., 2020b). (F) Ucolor (Li et al., 2021). (G) UDNet (Saleh et al., 2022). (H) Proposed.

To assess the quantitative performance of the proposed method on the UIEB dataset (Li et al., 2019), 800 underwater images were selected and evaluated with the non-reference metrics UIQM, NIQE, and MUSIQ. In the comparative experiments, the proposed method performed best on NIQE and MUSIQ, scoring 4.4538 and 49.8793, respectively.

Table 3 presents the average UIQM, NIQE, and MUSIQ scores on the UIEB dataset. The proposed algorithm achieved the best or second-best results on most images. Although UDNet, a similar unsupervised scheme, produced acceptable results on some images, the proposed method obtained the best average NIQE and MUSIQ scores over the entire dataset.

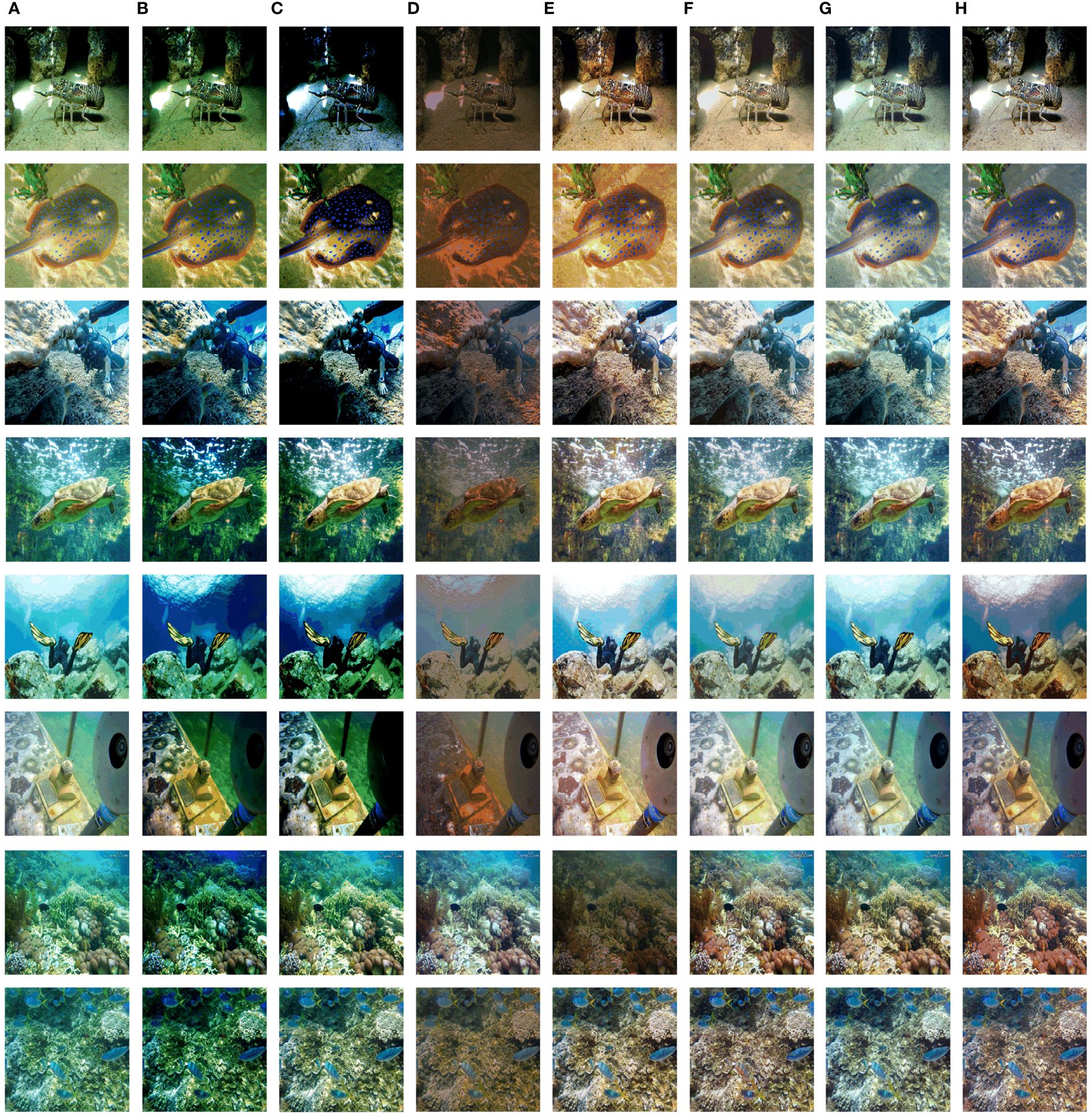

As Figure 5 shows, the existing methods exhibit different shortcomings. UDCP fails to effectively eliminate color cast, while IBLA introduces brightness distortion in certain images. Although UWCNN, Ucolor, and UDNet show some ability to remove haze and blur, color casts persist in some images, and UWCNN also suffers from insufficient brightness. FUnIE-GAN restores color in most images but struggles with specific ones, leaving a grayish tone. In contrast, the proposed method performs better in color restoration and contrast enhancement, and particularly in target restoration and brightness improvement.

Figure 5 Comparison on the UIEB dataset. (A) Original image. (B) UDCP (Drews et al., 2016). (C) IBLA (Peng and Cosman, 2017). (D) UWCNN (Li et al., 2020a). (E) FUnIE-GAN (Islam et al., 2020b). (F) Ucolor (Li et al., 2021). (G) UDNet (Saleh et al., 2022). (H) Proposed.

To validate the proposed method across multiple benchmarks, experiments were also performed on the U45 dataset and evaluated with the non-reference metrics UIQM, MUSIQ, and NIQE. In the comparative experiments, the proposed method performed best on NIQE and MUSIQ, scoring 4.4738 and 47.1163, respectively.

Table 4 presents the average quantitative results on the U45 dataset. The proposed method achieves the best NIQE and MUSIQ scores on this dataset, while the traditional method UDCP and the learning-based method Ucolor achieve second-best results.

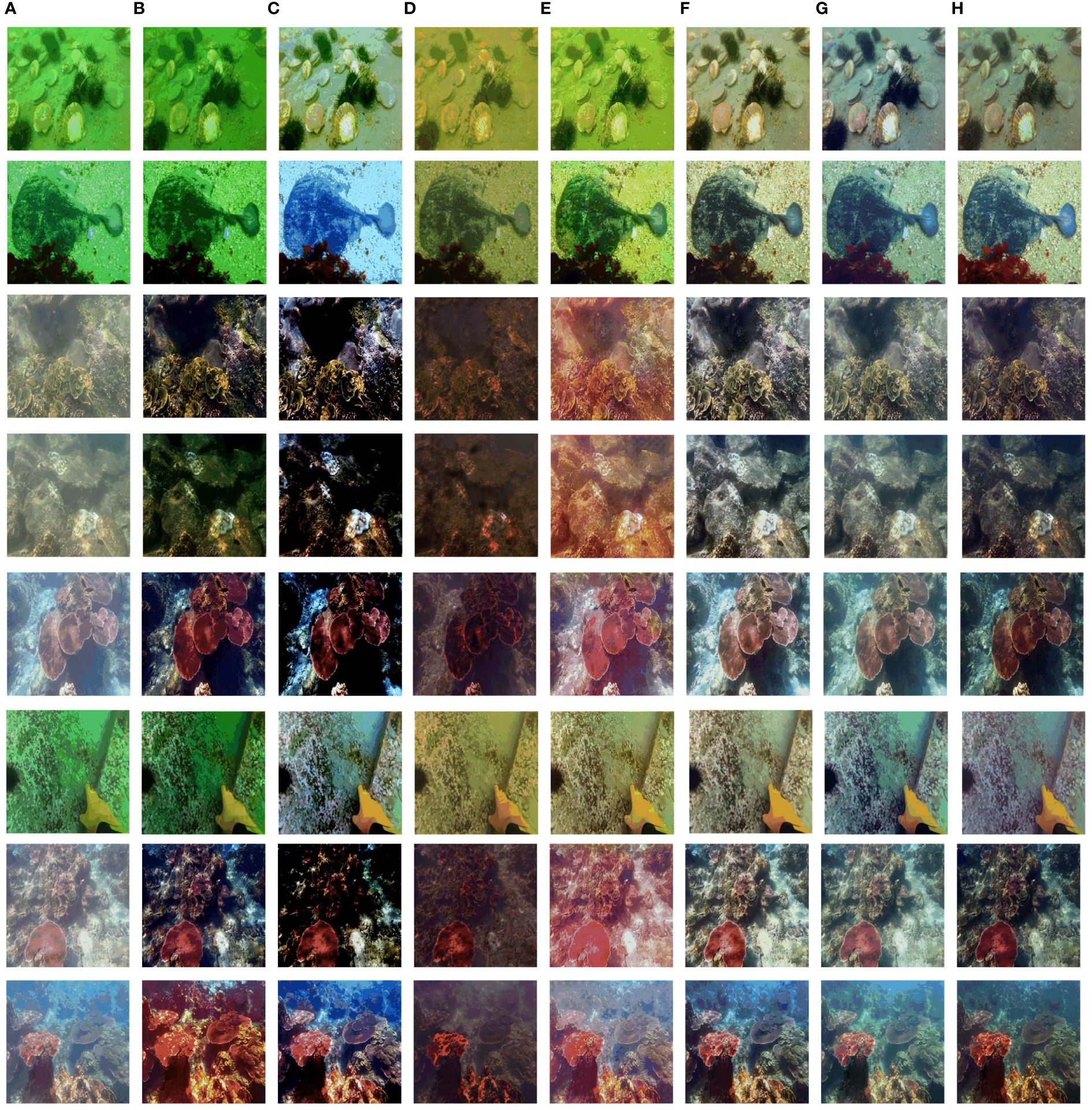

As shown in Figure 6, UDCP performs poorly in enhancing image brightness and color. IBLA suffers from blurring and color bias, although it is not entirely ineffective. On the U45 dataset, both UWCNN and FUnIE-GAN show problems such as oversaturation and uneven brightness. Although Ucolor and UDNet adjust saturation to some extent, they do not fully correct color distortion in underwater images. In contrast, the proposed method performs well in color restoration, brightness enhancement, and contrast improvement.

Figure 6 Comparison on the U45 dataset. (A) Original image. (B) UDCP (Drews et al., 2016). (C) IBLA (Peng and Cosman, 2017). (D) UWCNN (Li et al., 2020a). (E) FUnIE-GAN (Islam et al., 2020b). (F) Ucolor (Li et al., 2021). (G) UDNet (Saleh et al., 2022). (H) Proposed.

To analyze the proposed method in more detail, extensive ablation studies were performed to examine the contribution of each component of the Zero-UAE framework, focusing on the loss functions and the training dataset.

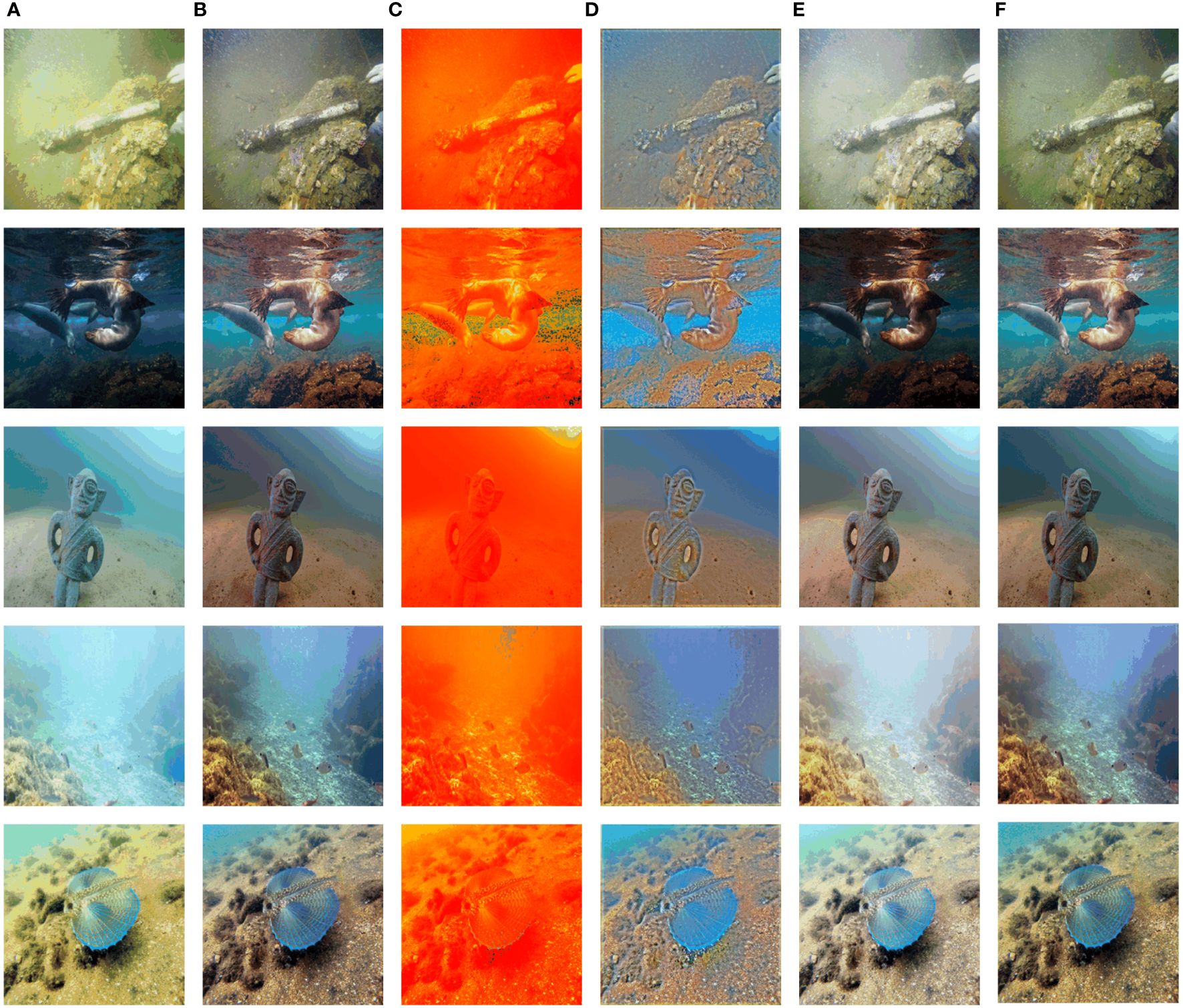

The outcomes produced by various combinations of loss functions are depicted in Figure 7, where “w/o” denotes “without.”

Figure 7 Ablation study of the loss functions (underwater color adaptive correction loss Luac, illumination smoothness loss Ltvδ, exposure control loss Lexp, and spatial consistency loss Lspa). (A) Input. (B) Total loss. (C) w/o Luac. (D) w/o Ltvδ. (E) w/o Lexp. (F) w/o Lspa.

To compare the effect of each loss on network training directly, only the network outputs are shown. Without the underwater color adaptive correction loss Luac, the blue-green tone of underwater images is not completely removed, which can cause color bias such as over-enhancement of the surrounding underwater environment. Without the illumination smoothness loss Ltvδ, the correlation between adjacent regions is weakened, producing noticeable artifacts and unevenly lit areas. Without the exposure control loss Lexp, underwater images may be overexposed. Without the spatial consistency loss Lspa, underwater images may suffer from insufficient contrast and saturation. These losses therefore complement each other, and together they yield the best color restoration and haze removal.
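The exact Zero-UAE formulations of Lexp and Ltvδ are not restated in this section. As an illustration only, the sketch below follows the corresponding losses of Zero-DCE (Guo et al., 2020): an exposure term that pulls the mean intensity of each 16×16 patch toward a well-exposedness level E = 0.6, and a total-variation style smoothness term on the estimated parameter maps. The patch size, E, the use of squared neighbor differences, and the function names are assumptions borrowed from or modeled on Zero-DCE; Zero-UAE's settings may differ.

```python
import numpy as np


def exposure_control_loss(img: np.ndarray, patch: int = 16, target: float = 0.6) -> float:
    """Mean |Y_k - E| over non-overlapping patch x patch regions of the channel-averaged image.

    img is an (H, W, 3) array in [0, 1]; target is the well-exposedness level E.
    """
    y = img.mean(axis=-1)
    h = (y.shape[0] // patch) * patch
    w = (y.shape[1] // patch) * patch
    # Reshape so that axes 1 and 3 index the pixels inside each patch.
    blocks = y[:h, :w].reshape(h // patch, patch, w // patch, patch)
    region_means = blocks.mean(axis=(1, 3))
    return float(np.abs(region_means - target).mean())


def illumination_smoothness_loss(params: np.ndarray) -> float:
    """Total-variation style penalty on (H, W, C) parameter maps: mean squared neighbor differences."""
    d_vert = params[1:, :, :] - params[:-1, :, :]
    d_horz = params[:, 1:, :] - params[:, :-1, :]
    return float((d_vert**2).mean() + (d_horz**2).mean())
```

Dropping the smoothness term lets neighboring parameters vary freely, which is consistent with the artifacts described for the “w/o Ltvδ” setting.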

Table 5 presents the average quantitative results of the ablation study on UIEB, from which we observe the following: 1) The stability of our zero-shot framework depends mainly on Ltvδ and Luac; removing either one significantly degrades restoration performance. 2) Neither Lexp nor Lspa is indispensable for stabilizing network training. Lexp helps control complex underwater lighting, while Lspa enhances image contrast. Visual inspection shows favorable results, and although including Lspa slightly lowers the evaluation metrics, it does not noticeably affect overall perceptual quality. 3) Each loss contributes to underwater image restoration in its own role, and combining all of them gives the best overall performance.
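The spatial consistency loss and the “w/o” ablation settings can be sketched in the same spirit, again following Zero-DCE (Guo et al., 2020) rather than the exact Zero-UAE definition: the image is averaged over 4×4 regions, and the loss penalizes any change in the absolute intensity difference between neighboring regions from input to output. Boundary handling varies between implementations; this sketch compares only interior neighbor pairs. The `total_loss` helper and its `drop` argument are hypothetical, and its weights would have to be supplied by the user.

```python
from typing import Dict, Iterable

import numpy as np


def region_means(img: np.ndarray, size: int = 4) -> np.ndarray:
    """Average intensity of non-overlapping size x size regions of an (H, W, 3) image."""
    y = img.mean(axis=-1)
    h = (y.shape[0] // size) * size
    w = (y.shape[1] // size) * size
    return y[:h, :w].reshape(h // size, size, w // size, size).mean(axis=(1, 3))


def spatial_consistency_loss(enhanced: np.ndarray, original: np.ndarray, size: int = 4) -> float:
    """Penalize changes in the intensity difference between neighboring regions."""
    e = region_means(enhanced, size)
    o = region_means(original, size)
    total = 0.0
    for axis in (0, 1):  # vertical, then horizontal neighbor pairs
        d_e = np.abs(np.diff(e, axis=axis))
        d_o = np.abs(np.diff(o, axis=axis))
        total += float(((d_e - d_o) ** 2).sum())
    # Summing over the four neighbors of every region visits each pair twice, hence the factor 2.
    return 2.0 * total / e.size


def total_loss(terms: Dict[str, float], weights: Dict[str, float], drop: Iterable[str] = ()) -> float:
    """Weighted sum of loss terms; names in drop are left out, as in a “w/o” ablation run."""
    dropped = set(drop)
    return sum(weights[name] * value for name, value in terms.items() if name not in dropped)
```

A uniform brightness shift leaves neighbor differences unchanged and costs nothing under this loss, whereas stretching contrast does, which illustrates why Lspa and Lexp pull in different directions.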

To test the impact of the training dataset, Zero-UAE was retrained on different datasets. Figure 8 shows (A) input images from the UIEB dataset (Li et al., 2019), (B) results of Zero-UAE trained on the original training data, (C) results after retraining on 3,700 underwater images from the EUVP dataset (Islam et al., 2020b), and (D) results after retraining on 2,000 unlabeled underwater images from the HICID dataset (Han et al., 2022). As Figures 8C, D show, after switching to these datasets Zero-UAE no longer completely eliminates the color bias of underwater images; for instance, a bluish input image yields an output that retains the bluish tint. These results support the rationale for, and necessity of, the current training dataset.

Figure 8 Ablation study of the training data. (A) Input (Li et al., 2019). (B) Results of the proposed method. (C) Trained on the EUVP dataset. (D) Trained on the HICID dataset.

To evaluate the efficiency of the proposed model, the average test runtimes of different methods were compared, which indicates how practical each method is for real-world applications. Test images were taken from the UIEB dataset at a resolution of 256×256, and runtimes were measured on a computer equipped with an NVIDIA RTX 3080 Ti GPU and an AMD Ryzen 7 5800X CPU. The average runtimes are listed in Table 6, where “RT” denotes the runtime per image; NIQE and MUSIQ scores are also given for reference. Zero-UAE is slightly faster than FUnIE-GAN and UDNet, and several other methods have relatively longer runtimes because they require complex inference for each image. Overall, the proposed method achieves the best metric scores with the shortest runtime.
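Average runtime per image depends on how it is measured. The following is a minimal timing sketch, assuming the model is a Python callable applied to one image at a time; it excludes a few warm-up calls from the measurement. The `average_runtime` helper and the brightness-gain stand-in model are hypothetical and are not the benchmarking code behind Table 6. When timing GPU models, execution is asynchronous, so the device should be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before the clock is read.

```python
import time
from typing import Callable, Sequence

import numpy as np


def average_runtime(model: Callable[[np.ndarray], np.ndarray],
                    images: Sequence[np.ndarray],
                    warmup: int = 3) -> float:
    """Mean wall-clock seconds per image, excluding a few warm-up calls."""
    if len(images) == 0:
        raise ValueError("at least one image is required")
    # Warm-up calls absorb one-time costs such as memory allocation and lazy initialization.
    for img in images[:warmup]:
        model(img)
    start = time.perf_counter()
    for img in images:
        model(img)
    return (time.perf_counter() - start) / len(images)


def brighten(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for an enhancement network: a simple brightness gain."""
    return np.clip(1.2 * x, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch = [rng.random((256, 256, 3)) for _ in range(10)]
    print(f"RT: {average_runtime(brighten, batch) * 1000:.3f} ms/image")
```

Using `time.perf_counter` rather than `time.time` gives a monotonic, high-resolution clock suited to short intervals.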

This paper presents Zero-UAE, a novel lightweight zero-reference deep network for underwater image enhancement that requires neither paired nor unpaired reference data. The image enhancement problem is transformed into the task of estimating the parameters of a curve model mapping, and a set of differentiable non-reference loss functions designed for underwater scenes guides network training. The method adaptively compensates image color and brightness to improve visual quality, and unlike most deep learning methods it needs no reference images during training. Under zero-reference training, Zero-UAE achieves satisfactory visual results in brightness, color, and contrast across diverse underwater environments. Extensive experiments on multiple benchmarks demonstrate that the proposed method outperforms state-of-the-art methods in both qualitative and quantitative evaluations. These advantages make it valuable for practical applications such as real-time processing on underwater robots in marine exploration.
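The curve-mapping idea can be illustrated with the light-enhancement curve of Zero-DCE (Guo et al., 2020), LE(x) = x + αx(1 − x), applied iteratively with per-pixel parameter maps predicted by a network. The sketch below implements that Zero-DCE curve, not the underwater adaptive curve model of Zero-UAE; it only shows how estimated parameter maps become a pixel-wise, channel-wise mapping. The function name and the red-channel example are hypothetical.

```python
import numpy as np


def apply_quadratic_curves(img: np.ndarray, alphas: np.ndarray) -> np.ndarray:
    """Apply LE(x) = x + a * x * (1 - x) once per parameter map in alphas.

    img:    (H, W, 3) array with values in [0, 1]
    alphas: (n_iter, H, W, 3) per-pixel, per-channel parameters in [-1, 1]
    For x in [0, 1] and a in [-1, 1], each step keeps the output in [0, 1].
    """
    x = img.astype(np.float64)
    for a in alphas:
        x = x + a * x * (1.0 - x)
    return x


if __name__ == "__main__":
    image = np.full((2, 2, 3), 0.5)
    maps = np.zeros((1, 2, 2, 3))
    maps[..., 0] = 1.0  # hypothetical: boost only the red channel
    print(apply_quadratic_curves(image, maps)[0, 0])  # red 0.75, green and blue 0.5
```

Because each channel has its own maps, such a curve can raise the strongly attenuated red channel more than the blue and green ones, which is the kind of adaptive compensation the conclusion describes.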

In future work, we aim to improve the generalization of the zero-reference network in both underwater sonar and underwater optical image processing tasks. We plan to further refine the loss functions to strengthen color restoration and contrast uniformity in challenging underwater scenes. We also intend to explore integrating additional datasets and other models to better preserve low-level features, thereby increasing the network’s applicability in real-world underwater environments and contributing to underwater autonomous detection tasks.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

TL: Conceptualization, Data curation, Investigation, Methodology, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. KZ: Funding acquisition, Resources, Supervision, Writing – review & editing. XW: Data curation, Investigation, Resources, Writing – review & editing. WS: Data curation, Investigation, Resources, Writing – review & editing. HW: Investigation, Supervision, Validation, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Natural Science Foundation Program of Liaoning (Grant No. 2022-KF-18-04) and the Science Research Project under grant agreement No. LJKZ-0731, which are funded by the Educational Department of Liaoning Province.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abu A., Diamant R. (2023). Underwater object classification combining SAS and transferred optical-to-SAS Imagery. Pattern Recognit. 144, 109868. doi: 10.1016/j.patcog.2023.109868

Akkaynak D., Treibitz T., Shlesinger T., Loya Y., Tamir R., Iluz D. (2017). “What is the space of attenuation coefficients in underwater computer vision?,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vol. 1. 4931–4940 (CVPR 2017).

Ancuti C. O., Ancuti C., De Vleeschouwer C., Bekaert P. (2017). Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 27, 379–393. doi: 10.1109/TIP.83

Ancuti C., Ancuti C. O., Haber T., Bekaert P. (2012). “Enhancing underwater images and videos by fusion,” in Proceedings of the 2012 IEEE conference on computer vision and pattern recognition (CVPR). 81–88 (IEEE).

Babu S. R., Reddy S. L., Dogiparthi T. S., Syambabu M. (2023). Using a fusion algorithm for underwater image enhancement, colour balancing, contrast optimisation, and histogram stretching. Int. J. Res. Appl. Sci. Eng. Technol. 11. doi: 10.22214/ijraset.2023.50504

Berman D., Treibitz T., Avidan S. (2017). “Diving into haze-lines: Color restoration of underwater images,” in Proc. British Machine Vision Conference (BMVC), Vol. 1. 2.

Cai J., Gu S., Zhang L. (2018). Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans. Image Process. 27, 2049–2062. doi: 10.1109/TIP.2018.2794218

Cao K., Peng Y.-T., Cosman P. C. (2018). “Underwater image restoration using deep networks to estimate background light and scene depth,” in 2018 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI). 1–4 (IEEE).

Cao X., Rong S., Liu Y., Li T., Wang Q., He B. (2020). NUICNet: Non-uniform illumination correction for underwater image using fully convolutional network. IEEE Access 8, 109989–110002. doi: 10.1109/Access.6287639

Chiang J. Y., Chen Y.-C. (2011). Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 21, 1756–1769. doi: 10.1109/TIP.2011.2179666

Drews P. L., Nascimento E. R., Botelho S. S., Campos M. F. M. (2016). Underwater depth estimation and image restoration based on single images. IEEE Comput. Graphics Appl. 36, 24–35. doi: 10.1109/MCG.38

Fabbri C., Islam M. J., Sattar J. (2018). “Enhancing underwater imagery using generative adversarial networks,” in 2018 IEEE international conference on robotics and automation (ICRA). 7159–7165 (IEEE).

Frei W. (1977). Image enhancement by histogram hyperbolization. Comput. Graphics Image Process. 6, 286–294. doi: 10.1016/S0146-664X(77)80030-0

Fu X., Zhuang P., Huang Y., Liao Y., Zhang X.-P., Ding X. (2014). “A Retinex-based enhancing approach for single underwater image,” in 2014 IEEE International Conference on Image Processing (ICIP). 4572–4576 (IEEE).

Ghani A. S. A., Isa N. A. M. (2015). Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 27, 219–230. doi: 10.1016/j.asoc.2014.11.020

Guo C., Li C., Guo J., Loy C. C., Hou J., Kwong S., et al. (2020). “Zero-reference deep curve estimation for low-light image enhancement,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 1780–1789 (CVPR).

Guo Y., Li H., Zhuang P. (2019). Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J. Oceanic Eng. 45, 862–870. doi: 10.1109/JOE.48

Han J., Shoeiby M., Malthus T., Botha E., Anstee J., Anwar S., et al. (2022). Underwater image restoration via contrastive learning and a real-world dataset. Remote Sens. 14 (17), 4297. doi: 10.3390/rs14174297

Huang Z., Li J., Hua Z. (2022). Underwater image enhancement via LBP-based attention residual network. IET Image Process. 16, 158–175. doi: 10.1049/ipr2.12341

Huang Y., Yuan F., Xiao F., Lu J., Cheng E. (2023). Underwater image enhancement based on zero-reference deep network. IEEE J. Oceanic Eng. 48 (3), 903–924. doi: 10.1109/JOE.2023.3245686

Islam M. J., Edge C., Xiao Y., Luo P., Mehtaz M., Morse C., et al. (2020a). “Semantic segmentation of underwater imagery: Dataset and benchmark,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 1769–1776 (IEEE). doi: 10.1109/IROS45743.2020

Islam M. J., Xia Y., Sattar J. (2020b). Fast underwater image enhancement for improved visual perception. IEEE Robotics Automation Lett. 5, 3227–3234. doi: 10.1109/LSP.2016.

Jaffe J. S. (1990). Computer modeling and the design of optimal underwater imaging systems. IEEE J. Oceanic Eng. 15, 101–111. doi: 10.1109/48.50695

Ke J., Wang Q., Wang Y., Milanfar P., Yang F. (2021). “MUSIQ: Multi-scale image quality transformer,” in Proceedings of the IEEE/CVF International Conference on Computer Vision. 5148–5157 (ICCV 2021).

Kocak D. M., Dalgleish F. R., Caimi F. M., Schechner Y. Y. (2008). A focus on recent developments and trends in underwater imaging. Mar. Technol. Soc. J. 42, 52. doi: 10.4031/002533208786861209

Lai Y., Zhou Z., Su B., Xuanyuan Z., Tang J., Yan J., et al. (2022). Single underwater image enhancement based on differential attenuation compensation. Front. Mar. Sci. 9, 1047053. doi: 10.3389/fmars.2022.1047053

Li C., Anwar S., Hou J., Cong R., Guo C., Ren W. (2021). Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 30, 4985–5000. doi: 10.1109/TIP.2021.3076367

Li C., Anwar S., Porikli F. (2020a). Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 98, 107038. doi: 10.1016/j.patcog.2019.107038

Li C., Cong R., Kwong S., Hou J., Fu H., Zhu G., et al. (2020b). ASIF-Net: Attention steered interweave fusion network for RGB-D salient object detection. IEEE Trans. Cybern. 51, 88–100. doi: 10.1109/TCYB.6221036

Li C., Guo J., Chen S., Tang Y., Pang Y., Wang J. (2016). “Underwater image restoration based on minimum information loss principle and optical properties of underwater imaging,” in 2016 IEEE International Conference on Image Processing (ICIP). 1993–1997 (IEEE).

Li C., Guo C., Ren W., Cong R., Hou J., Kwong S., et al. (2019). An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389. doi: 10.1109/TIP.83

Li J., Skinner K. A., Eustice R. M., Johnson-Roberson M. (2017). WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robotics Automation Lett. 3, 387–394. doi: 10.1109/LRA.2017.2730363

Liu R., Fan X., Zhu M., Hou M., Luo Z. (2020). Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Trans. Circuits Syst. Video Technol. 30, 4861–4875. doi: 10.1109/TCSVT.76

McGlamery B. (1980). “A computer model for underwater camera systems,” in Ocean Optics VI, vol. 208. (SPIE), 221–231.

Mertens T., Kautz J., Van Reeth F. (2007). “Exposure fusion,” in 15th Pacific Conference on Computer Graphics and Applications (PG’07). 382–390 (IEEE).

Mertens T., Kautz J., Van Reeth F. (2009). “Exposure fusion: A simple and practical alternative to high dynamic range photography,” in Computer graphics forum, vol. 28. (Wiley Online Library), 161–171.

Mittal A., Soundararajan R., Bovik A. C. (2012). Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 20, 209–212. doi: 10.1109/LSP.2012.2227726

Panetta K., Gao C., Agaian S. (2015). Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41, 541–551. doi: 10.1109/JOE.2015.2469915

Peng Y.-T., Cosman P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 26, 1579–1594. doi: 10.1109/TIP.2017.2663846

Qi Q., Li K., Zheng H., Gao X., Hou G., Sun K. (2022). SGUIE-Net: Semantic attention guided underwater image enhancement with multi-scale perception. IEEE Trans. Image Process. 31, 6816–6830. doi: 10.1109/TIP.2022.3216208

Saleh A., Sheaves M., Jerry D., Azghadi M. R. (2022). Adaptive uncertainty distribution in deep learning for unsupervised underwater image enhancement. arXiv preprint arXiv:2212.08983. doi: 10.48550/arxiv.2212.08983

Wang Y., Guo J., Gao H., Yue H. (2021). UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Process. Image Commun. 96, 116250. doi: 10.1016/j.image.2021.116250

Wang Z., Zhao L., Zhong T., Jia Y., Cui Y. (2023). Generative adversarial networks with multi-scale and attention mechanisms for underwater image enhancement. Front. Mar. Sci. 10, 1226024. doi: 10.3389/fmars.2023.1226024

Wang N., Zhou Y., Han F., Zhu H., Yao J. (2019). UWGAN: Underwater GAN for real-world underwater color restoration and dehazing. arXiv preprint arXiv:1912.10269. doi: 10.48550/arXiv.1912.10269

Xiao Z., Han Y., Rahardja S., Ma Y. (2022). USLN: A statistically guided lightweight network for underwater image enhancement via dual-statistic white balance and multi-color space stretch. arXiv preprint arXiv:2209.02221. doi: 10.48550/ARXIV.2209.02221

Xie Q., Gao X., Liu Z., Huang H. (2023). Underwater image enhancement based on zero-shot learning and level adjustment. Heliyon 9 (4), e14442. doi: 10.1016/j.heliyon.2023.e14442

Yang P. (2023). An imaging algorithm for high-resolution imaging sonar system. Multimedia Tools Appl. 31957–31973. doi: 10.1007/s11042-023-16757-0

Zhang X., Wu H., Sun H., Ying W. (2021). Multireceiver SAS imagery based on monostatic conversion. IEEE J. Selected Topics Appl. Earth Observations Remote Sens. 14, 10835–10853. doi: 10.1109/JSTARS.2021.3121405

Zhuang P. (2021). “Retinex underwater image enhancement with multiorder gradient priors,” in 2021 IEEE International Conference on Image Processing (ICIP). 1709–1713 (IEEE).

Zhuang P., Li C., Wu J. (2021). Bayesian Retinex underwater image enhancement. Eng. Appl. Artif. Intell. 101, 104171. doi: 10.1016/j.engappai.2021.104171

Keywords: underwater image enhancement, zero-reference, parameter estimation network, loss functions, lightweight

Citation: Liu T, Zhu K, Wang X, Song W and Wang H (2024) Lightweight underwater image adaptive enhancement based on zero-reference parameter estimation network. Front. Mar. Sci. 11:1378817. doi: 10.3389/fmars.2024.1378817

Received: 30 January 2024; Accepted: 22 March 2024;

Published: 11 April 2024.

Edited by:

Jie Nie, Ocean University of China, China

Copyright © 2024 Liu, Zhu, Wang, Song and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kaiyan Zhu, zhukaiyan@dlou.edu.cn
