ORIGINAL RESEARCH article

Front. Mar. Sci. , 01 September 2023

Sec. Ocean Observation

Volume 10 - 2023 | https://doi.org/10.3389/fmars.2023.1249351

This article is part of the Research Topic Best Practices in Ocean Observing

High-quality underwater images are used to extract information for a variety of purposes, including habitat characterization, species monitoring, and behavioral analysis. However, because of non-uniform illumination and equipment limitations, these images often suffer from local over- or underexposure. Conventional methods cannot fully correct for this, and the dark area artifacts generated while enhancing a low-light image cannot be readily fixed. Therefore, we describe a low-illumination underwater image enhancement method based on non-uniform illumination correction and adaptive artifact elimination. First, to eliminate the influence of non-uniform illumination, an illumination equalization algorithm based on non-linear guided filtering corrects the non-uniform bright and dark regions of underwater images, and the dark channel prior algorithm and the contrast-limited adaptive histogram equalization algorithm are introduced to prevent excessive enhancement and the generation of dark regions. Second, to adaptively eliminate the dark area artifacts generated during enhancement, an adaptive multi-scale Retinex with color restoration (MSRCR) color fidelity algorithm is proposed to improve the color of the image. Third, the gray world white balance algorithm is used to adjust the color distortion caused by the attenuation of light. Finally, a multi-scale Retinex model parameter estimation algorithm is proposed to obtain the illumination and reflection components of the image, from which the enhanced image is obtained according to the Retinex model. The results show that the proposed method is superior to other algorithms in contrast, color restoration, and comprehensive effect, and improves low-illumination image enhancement technology.

With the rapid development of the economy and society, the demand for resources keeps growing, and the increasing consumption of land resources has forced people to turn their attention to the ocean. The development and utilization of marine resources has therefore become a common concern of the international community. Underwater images are an important source of marine information, and high-quality underwater images are key to the development and utilization of marine resources (Li et al., 2023). Because of the limitations of the environment and equipment, underwater images are often taken under extremely bright artificial light or in extremely dark environments, which leads to local over- or underexposure of the captured images, especially on the deep seabed. Water and suspended particles absorb and scatter light, so underwater images obtained under uneven ambient light are often less than ideal (Yu et al., 2022). Conventional methods mostly target low-light images whose overall contrast is low. For underwater images taken under uneven lighting, and especially for the halo artifacts produced while enhancing low-light images, conventional methods often fall short, which leads to unnatural colors and loss of detail in the enhanced images and seriously hinders the recognition of information in regions of interest (Ning et al., 2023). Therefore, how to enhance the dark areas of a non-uniform low-light underwater image, suppress the overexposed bright areas, and improve the global contrast of the image has become the focus of underwater image enhancement. Research on this enhancement technology has wide application value and theoretical significance. It supports tasks such as target detection, underwater scientific research, and hydrological data measurement in a non-uniform low-light marine environment, which improves the efficiency of marine resource development and utilization and provides a theoretical reference for low-light image enhancement technology.

At present, many scholars have carried out research on low-light underwater image enhancement methods. Among them, the simplest and most intuitive method is histogram equalization (HE) (Dhal et al., 2021). This method can evenly distribute the pixels of the image, expand the dynamic range of the image pixels, and improve the contrast of the low-light image. Based on this technical principle, many scholars have extended it, such as CLAHE, AEPHE, ESIHE, DHE (Abdullah-Al-Wadud et al., 2007), and CLDQHE (Huang et al., 2021). Although these methods improve the contrast of low-light images, they do not pay attention to the actual illumination conditions. At the same time, more noise is introduced in the process of improving the contrast of low-light images. The second is a low-light image enhancement method based on image fusion. Commonly, there are non-linear transformation methods based on image pixel-level operations. This method often needs to rely on non-linear transformation functions. Common non-linear transformation functions include gamma correction, S-type transfer function, and logarithmic transfer function. Huang et al. (2016) proposed an adaptive gamma correction (AGCCH) algorithm based on cumulative histogram. The author incorporated the cumulative histogram or cumulative sub-histogram into the weighted distribution to improve the contrast of local pixels. This method performs well in brightness enhancement and detail retention. Srinivas and Bhandari (2020) proposed a low-light image enhancement algorithm with adaptive S-shaped transfer function. The author combined the adaptive S-shaped transfer function (ASTF) with the Laplace operator to obtain color and contrast-enhanced images. Huang et al. (2012) used gamma correction and redistribution of brightness pixels to improve the brightness of low-illumination images. This method can effectively enhance the contrast of the image and reduce the complexity of calculation. 
Kansal and Tripathi (2019) combined adaptive gamma correction with discrete cosine transform (DCT) to enhance the contrast of the image. Ying et al. (2017) proposed a multi-exposure fusion algorithm based on the human visual system (HVS). The author synthesized the multi-exposure image through the camera response model and fused the input image and the synthesized image through the weight matrix to get the enhancement result. This method can avoid over-enhancement of the image. Fu et al. (2016) proposed a fusion-based image enhancement algorithm in weak light. The author combined different technical advantages to adjust the illumination components of the image and compensated the adjusted illumination to the reflectivity to obtain an enhanced image. This method can adapt to different lighting environments. This kind of image enhancement method mainly performs pixel-level operations on low-light images and has achieved certain results. However, the parameters of the non-linear transformation function are difficult to determine, which makes the enhancement effect uneven. In practical applications, the fusion-based method is often limited due to the lack of fusion sources. The third method is low-light image enhancement based on Retinex model (Land, 1986). Retinex theory is a color perception model based on human vision. It regards an image as composed of illumination component and reflection component, in which the illumination component represents the gap between low light and normal light, and the reflection component is an inherent property of an object, which does not change with the change in lighting conditions and has color constancy. Therefore, the enhancement of low-light images can be achieved by estimating the illumination components of Retinex model. 
Compared with other methods, Retinex-based algorithms have better effects in enhancing low-light images, including classic single-scale Retinex (SSR) (Jobson et al., 1997a) algorithm and multi-scale Retinex (MSR) (Jobson et al., 1997b) algorithm. The classic Retinex algorithm often considers the use of surround function for illumination estimation, but it is difficult to have a good compromise between dynamic range compression and color constancy in this way, and it will also introduce halo artifacts into the enhanced image. In order to solve these problems, many scholars have improved the Retinex theory, mainly improving the illumination map and reflection map of Retinex model. Fu et al. (2015) proposed an illumination and reflection component estimation algorithm based on linear domain. The author uses the linear domain model to represent the prior information so that the estimated illumination and reflection components have better performance, and the enhanced low-light image has a visually pleasing effect. Huang et al. (2018) proposed an image enhancement algorithm based on reflectivity-based small-frame regularization. This method can estimate the illumination and reflectivity while maintaining the image details and has the advantages of detail preservation and contrast enhancement. Li et al. (2018) proposed a robust Retinex model. In this model, the author considers the influence of noise and improves the robustness of the model. Hao et al. (2020) proposed a low-light image enhancement method based on semi-decoupled decomposition. The author realized Retinex image decomposition in an effective semi-decoupled way, which eliminated the noise of the image, improved the visibility of the image, and maintained its visual naturalness. However, these methods enhance low-light images by eliminating illumination, resulting in the loss of image information, and inefficient image decomposition will introduce various dark area artifacts into the enhanced image.

The above research is mainly applied to the low light environment on land and has achieved certain results. However, the underwater environment is complex, water and suspended particles have absorption and scattering effects on light, and images taken in non-uniform illumination environments often have local overexposure or insufficient exposure. Especially in the process of enhancing low-light images, halo artifacts will be generated, resulting in unnatural image color and information loss. Therefore, a low-illumination underwater image enhancement method based on non-uniform illumination correction and adaptive artifact elimination is proposed. It is mainly composed of non-uniform illumination correction module, adaptive artifact elimination module, and multi-scale fusion module. First, in order to eliminate the influence of non-uniform illumination on underwater images, a non-linear guided filtering illumination equalization algorithm is designed to correct the non-uniform bright and dark areas of underwater images, and the dark channel prior theory and contrast-limited adaptive histogram equalization (CLAHE) algorithm are introduced to prevent the over-enhancement of images and the generation of dark areas. Then, aiming at the halo artifacts easily produced in the enhancement process, an adaptive multi-scale Retinex color fidelity algorithm with color restore (MSRCR) is proposed to improve the color of the image, and then, the gray world white balance algorithm is used to adjust the color distortion caused by the attenuation of light. Finally, we propose a multi-scale Retinex model parameter estimation algorithm to estimate the illumination component and reflection component and then obtain the enhanced underwater image according to Retinex model. The main contributions of this paper are summarized as follows:

(1) A low-illumination underwater image enhancement method based on non-uniform illumination correction and adaptive artifact elimination is proposed. It can effectively correct the influence of non-uniform low illumination on underwater images and eliminate halo artifacts of underwater images, which can provide theoretical reference for low-illumination image enhancement technology.

(2) A non-linear guided filtering illumination equalization algorithm is designed to correct the non-uniform bright and dark regions of the underwater image so that the processed image pixel distribution has a wider dynamic range, and the overall brightness and contrast of the image are greatly improved.

(3) An adaptive MSRCR color fidelity algorithm is proposed to improve the color of the image and ensure that the image will not appear artifacts and color distortion.

(4) A multi-scale Retinex model parameter estimation algorithm is proposed to accurately estimate the illumination component and the reflection component. The corrected illumination component eliminates the influence of non-uniform illumination to a certain extent, and the corrected reflection component retains more color information.

The structure of this paper is as follows: in Section 2, the main ideas and theoretical basis of our proposed method are elaborated in detail. In Section 3, the research results are analyzed and discussed from three aspects: qualitative, quantitative, and application test. In Section 4, the work of this paper is summarized.

The low-illumination underwater image enhancement method proposed in this paper is mainly composed of non-uniform illumination correction, artifact adaptive elimination, and multi-scale image fusion module. In the non-uniform illumination correction module, an illumination equalization algorithm based on non-linear guided filtering is designed to correct the non-uniform bright and dark regions of the image, and the dark channel prior theory and CLAHE algorithm are introduced to prevent the over-enhancement and dark region generation of low-illumination underwater images. In the artifact adaptive elimination module, an adaptive MSRCR color fidelity algorithm is proposed to improve the color of the image, and then, the gray world white balance algorithm is used to adjust the image color distortion caused by light attenuation. In the multi-scale image fusion module, a multi-scale Retinex model parameter estimation algorithm is proposed to obtain the illumination component and the reflection component. It is divided into two parts. The first part is to obtain the corrected illumination component by multi-scale fusion of the images processed by non-uniform illumination correction, dark channel prior, and CLAHE algorithm. The second part is the multi-scale fusion of the images processed by the initial reflection component, the adaptive artifact elimination, and the gray world white balance algorithm, and the corrected reflection component is obtained. Finally, the enhanced clear image is obtained by the Retinex model. Figure 1 is the flow chart of the method proposed in this paper. We will introduce each part in detail below.

According to the Retinex theory, the original image can be regarded as composed of illumination component and reflection component. The expression is as follows:

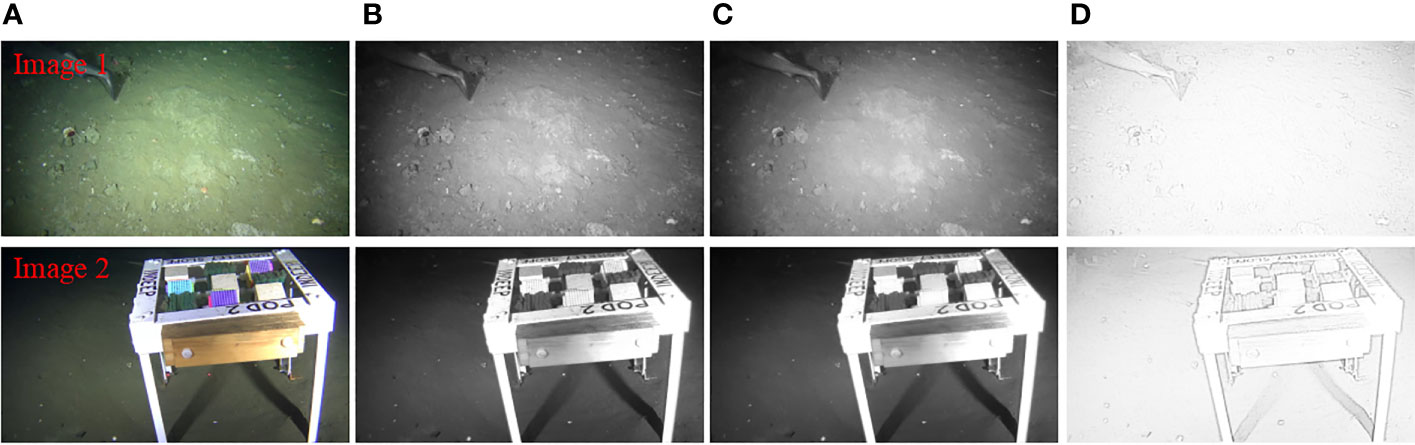

where is the illumination component, which reflects the brightness change in the scene and is related to the naturalness of the image, and directly determines the dynamic range of pixels in the image. is a reflection component, which reflects the essential information of an object and the physical properties of its surface, and has color constancy. Therefore, Retinex algorithm can be used to estimate the illumination component and reflection component of low-illumination images, so as to obtain images with normal contrast. However, when Retinex algorithm is used to enhance low-light images, color fading and noise usually occur, and at the same time, artifacts appear, especially in the underwater environment with uneven illumination, which is more obvious. In order to eliminate the influence of non-uniform illumination on underwater images, a non-linear guided filtering illumination equalization algorithm is designed to correct the non-uniform bright and dark areas of low-light images, in which the original image is shown in Figure 2A. The original image is the result of non-uniform light irradiation. The contrast in the middle of the image is high, and the contrast in the edge part is low, and it is blurred, which gives people a poor visual experience. Because H, S, and V in the HSV color space are independent of each other, direct operation on the V component will not affect the color information of underwater images. We convert the original image from the RGB color space to the HSV color space, and its expression is:

Figure 2 The process of non-uniform illumination correction module. Panel (A) is an underwater original image; panel (B) is the V component in the HSV color space; panel (C) is the image refined by guided filtering; panel (D) is an image processed by a non-linear guided filtering illumination equalization algorithm.

where R, G, and B are red, green, and blue channels in the RGB color space, and H, S, and V are hue, saturation, and brightness channels in the HSV color space. The V component is shown in Figure 2B, which contains the brightness information of the image and is independent of the other two components, so enhancing the V component will not change the original color of the image. Therefore, we use guided filtering (Yan et al., 2022) to operate on the V component to obtain the initial illumination component, which solves the problems of color fading and noise caused by uneven illumination and better protects the edge gradient information of the original image. Guided filtering is an anisotropic adaptive weight filter. Assuming that the input image is , the output image is , and the guide image is , there is a local linear relationship between the output image and the guide image in the filtering window, and its formula is as follows:

where is noise, and and are average coefficients of local linear functions. is the filtering window, which is a rectangular window with pixel as the center and as the radius. At the same time, in order to ensure that the guided filtering can smoothen the image while still having good edge retention ability, the value of the window radius is 3. When the input image and the guide image are equal, the guide filter can be used as an edge-preserving filter, and and can be obtained at this time, as shown below:

where is the variance of the window image , is the average of the input image in the filtering window , and is the regularization parameter, which aims to prevent from being too large. Experimental research shows that when takes a small value, it has a good enhancement effect in the environment of low visibility, weak target scene energy, and unclear details, so the regularization parameter in this paper takes a value of 10−6. As shown in Figure 2C, the illumination image processed by guided filtering can better preserve the edge information of the image and reduce the influence of noise. However, for under- or overexposed underwater images, there will also be over-enhancement, and it is difficult to achieve satisfactory results. Therefore, an illumination equalization algorithm based on non-linear guided filtering is proposed. Due to the influence of non-uniform illumination, the illumination component of the image obtained by guided filtering will appear in the over- and under-exposure areas and cannot be adaptively eliminated. Therefore, we use Weber-Fechner’s law for adaptive brightness correction. This method can adaptively adjust the parameters of the enhancement function according to the distribution profile of the illumination component in the image, thereby effectively eliminating the influence of uneven illumination on the underwater image. According to Weber-Fechner’s law, there is a logarithmic linear relationship between the subjective brightness perception and the objective brightness perception of the human eye, that is:

where is the subjective brightness perception, is the objective brightness perception, and are constants, and is the logarithmic transformation. In order to avoid increasing the calculation amount due to logarithmic operation and at the same time to avoid excessive enhancement of the image, we use a simple function to fit the curve of the above formula, namely:

where is the adjustment coefficient, and the smaller the value of , the greater the adjustment range. In this paper, according to the average value of the saturated component image , the value can be obtained. Therefore, , is the average value of , and is the sum of image pixels. As shown in Figure 2D, the V component processed by the illumination equalization algorithm of non-linear guided filtering has a wider dynamic range, the overall brightness and contrast of the adjusted image are greatly improved, and the influence of non-uniform illumination is also effectively eliminated.
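As a rough illustration of the edge-preserving step, the following sketch implements the self-guided case (guide equal to input) in plain Python on a small grayscale grid. The function names `box_mean` and `self_guided_filter` are ours, the clipped-window border handling is an assumption, and the final averaging of the coefficients follows the standard guided filter formulation rather than anything stated in this paper.

```python
def box_mean(img, r):
    """Mean over each pixel's (2r+1)x(2r+1) window, clipped at the border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def self_guided_filter(p, r=3, eps=1e-6):
    """Guided filter with guide == input (assumed setup for the V channel)."""
    h, w = len(p), len(p[0])
    mean_p = box_mean(p, r)
    mean_pp = box_mean([[v * v for v in row] for row in p], r)
    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Window variance; clamp tiny negative values from rounding.
            var = max(mean_pp[y][x] - mean_p[y][x] ** 2, 0.0)
            a[y][x] = var / (var + eps)                 # a_k
            b[y][x] = (1.0 - a[y][x]) * mean_p[y][x]    # b_k
    # Average the coefficients of all windows covering each pixel.
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return [
        [mean_a[y][x] * p[y][x] + mean_b[y][x] for x in range(w)]
        for y in range(h)
    ]
```

With a small `eps`, windows that straddle an edge get a coefficient close to 1 and the edge survives; with a large `eps`, the coefficient falls toward 0 and the output tends to a local mean.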

To handle the non-uniformly illuminated areas of the image more naturally, we introduce the dark channel prior algorithm to process the initial illumination component, and further eliminate the influence of non-uniform illumination on the underwater image by calculating the dark channel weight map of the initial illumination component. Processing with the dark channel prior greatly weakens the overexposed areas of the image and also prevents over-enhancement of the low-illumination image and the generation of dark areas. Dong et al. (2010) found that a low-light image after inversion resembles a foggy image, which indicates that the dark channel prior is also suitable for processing low-light images. The dark channel can be expressed as:

$$J^{\mathrm{dark}}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{R,G,B\}} J^{c}(y) \right)$$

where $J^{c}$ represents color channel $c$ of the input image, $\Omega(x)$ represents the neighborhood centered at pixel $x$, $\min_{y \in \Omega(x)}$ represents the minimum filter, and $\min_{c}$ represents the minimum value over the three color channels R, G, and B. These operations inevitably introduce noise. Therefore, following CLAHE, we divide the image into blocks and obtain the cumulative distribution function of each block; sharp peaks of the gray-level histogram are clipped, and the clipped pixel counts are redistributed evenly across the histogram. The resulting image is used, together with the two preceding results, to estimate the corrected illumination component.
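The dark channel computation can be sketched in a few lines. This is a minimal illustration, assuming an image stored as nested lists of `(r, g, b)` tuples in [0, 1]; the function names and the clipped-window border handling are ours, and the inversion helper only mirrors the observation attributed to Dong et al. (2010).

```python
def dark_channel(rgb, radius=1):
    """Per-pixel minimum over R, G, B followed by a minimum filter."""
    h, w = len(rgb), len(rgb[0])
    channel_min = [[min(px) for px in row] for row in rgb]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Minimum over the (2*radius+1)^2 neighbourhood, clipped at borders.
            dark[y][x] = min(
                channel_min[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return dark


def invert(rgb):
    """Invert a [0, 1] image so that a low-light scene resembles a hazy one."""
    return [[tuple(1.0 - c for c in px) for px in row] for row in rgb]
```

A larger `radius` spreads each dark pixel over a wider neighbourhood, which is why the raw dark channel usually looks blocky before any refinement.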

Since the reflection component retains the color information of the image and has color constancy, color fidelity is extremely important. However, in the complex underwater environment, with the attenuation of light, the underwater image has the problem of color fading, which leads to the color distortion of the underwater image and the generation of artifacts. Therefore, we propose an adaptive MSRCR color fidelity algorithm to adaptively eliminate the dark area of the underwater image and preserve its color. We first introduce the color recovery parameter to adjust the three-channel ratio of the underwater image, so as to effectively solve the problem of color distortion in the original underwater image. The calculation formula is as follows:

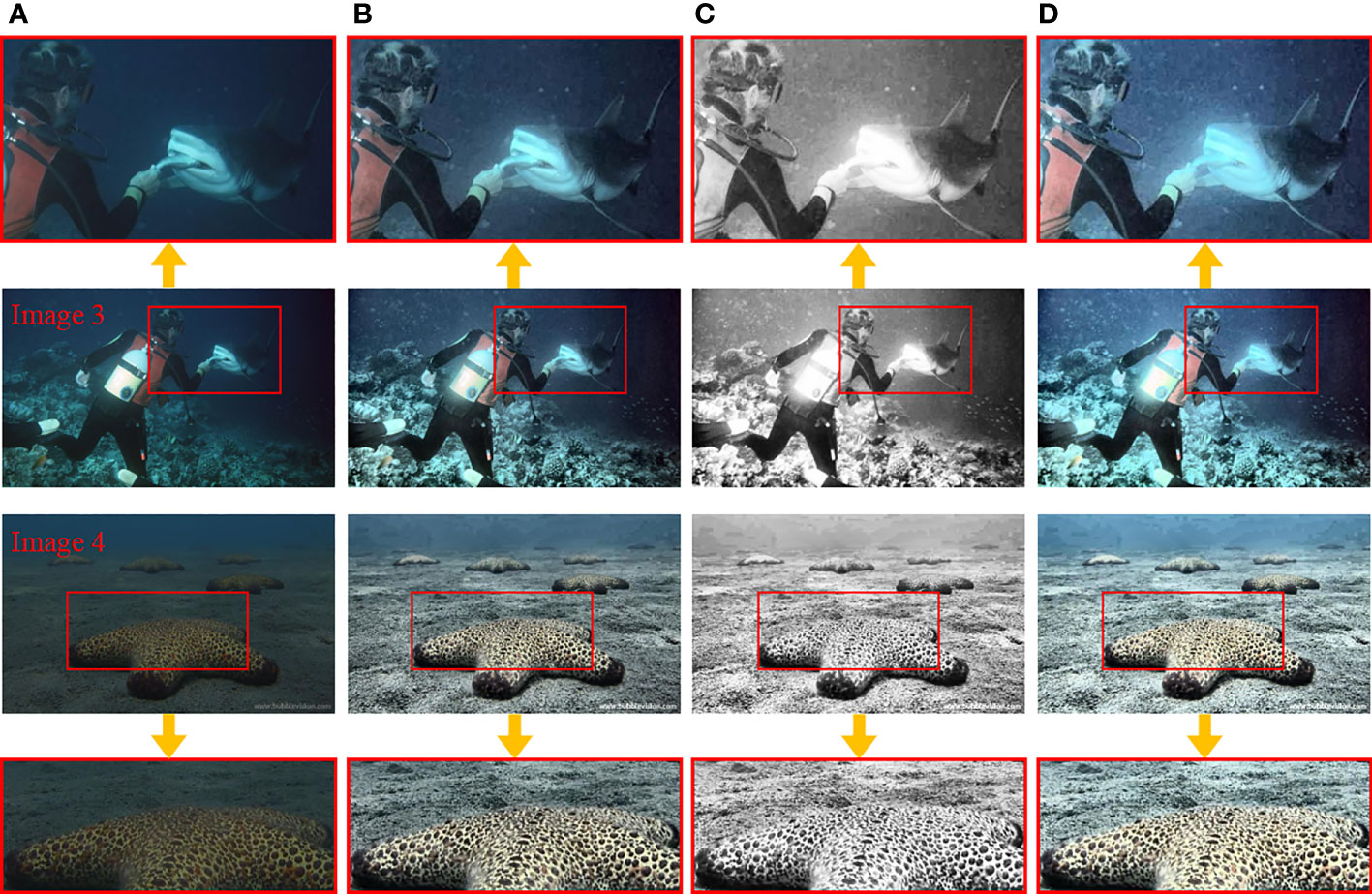

where is the input image; is the color recovery parameter of the th channel of the image, which is mainly used to adjust the proportional relationship between different color channels. is the number of scales, usually ; is the weighted coefficient of the th scale; is a Gaussian filter function; and is the th channel component of the input image. and are empirical parameters. We refer to the literature (Li et al., 2021). Usually, takes 46, and takes 125; is the number of channels of the image, so takes 3. Figure 3A is the original underwater image. As shown in Figure 3B, the above operations can improve the color information in the underwater image, but there will also be local edge detail blurring and artifacts. Therefore, we propose an adaptive MSRCR color fidelity algorithm. For blurred low-light images, most of its pixels are clustered in dark areas, so it is necessary to operate on the pixels in these dark areas. First, we transform Figure 3B into HSV space and improve the detail blur and artifacts of the image by dynamically adjusting the probability density of its V components. By calculating the probability density of each intensity level of the underwater image, the intensity change of the image is adaptively represented, and the formula is as follows:

Figure 3 The process of artifacts adaptive elimination module. Panel (A) is an underwater original image; panel (B) is the result of MSRCR algorithm; panel (C) is the V component processed by an adaptive MSRCR color fidelity algorithm; panel (D) is the image converted to RGB space.

where is the weighted probability density distribution; is the adjustment parameter, which is set to 0.3 according to experience. It is mainly used to slightly modify the statistical histogram and reduce the generation of unfavorable factors. is the probability density of image intensity , is the maximum probability density, and is the minimum probability density. , is the number of pixels in the image intensity , and is the total number of pixels in the image. The cumulative probability density distribution can be obtained as follows:

Finally, the gamma parameter correction of each pixel intensity can be expressed as:

As shown in Figure 3C, after correction by adaptive MSRCR color fidelity algorithm, the dark region of its V component can be processed smoothly, and the color information of the image is preserved. Figure 3D is an image converted to RGB space. It can be seen that the dark area of the image is eliminated to some extent, and the color distortion is corrected.
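The weighted-distribution gamma correction applied to the V component can be sketched as a lookup table over intensity levels. This is a minimal illustration under our own assumptions: 8-bit integer intensities, the hypothetical name `weighted_gamma_lut`, an explicit rule that level 0 maps to 0, and a clamp that keeps the exponent non-negative despite rounding.

```python
def weighted_gamma_lut(levels, lam=0.3, l_max=255):
    """Build T(l) = l_max * (l / l_max) ** (1 - cdf_w(l)) for l = 0..l_max."""
    hist = [0] * (l_max + 1)
    for v in levels:
        hist[v] += 1
    n = len(levels)
    pdf = [count / n for count in hist]
    pdf_min, pdf_max = min(pdf), max(pdf)
    if pdf_max == pdf_min:
        # Perfectly flat histogram: nothing to reshape, return identity.
        return [float(l) for l in range(l_max + 1)]
    # Weighted distribution: compresses the gap between rare and common levels.
    pdf_w = [pdf_max * ((p - pdf_min) / (pdf_max - pdf_min)) ** lam for p in pdf]
    total_w = sum(pdf_w)
    lut, running = [], 0.0
    for l in range(l_max + 1):
        running += pdf_w[l]
        gamma = max(0.0, 1.0 - running / total_w)  # clamp rounding error
        lut.append(l_max * (l / l_max) ** gamma if l > 0 else 0.0)
    return lut


# Example: a mostly dark V channel, flattened to a list of intensities.
v_channel = [10] * 80 + [50] * 15 + [200] * 5
lut = weighted_gamma_lut(v_channel)
corrected = [lut[v] for v in v_channel]
```

Because the exponent never exceeds 1, every level is mapped to a value at least as bright as the original, and levels that sit above a large share of the weighted histogram mass receive the strongest lift.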

To adjust the color distribution of the reflected image, we process it with the gray world white balance algorithm (Buchsbaum, 1980), which restores the true color of the underwater image. The algorithm assumes that, under a standard light source, the average gray values of the R, G, and B channels of a color image are equal. Based on this assumption, the color distribution of the light source can be estimated from the average gray value of each color channel. The overall average gray value is divided by the average value of each channel to obtain the weight of that channel, and the value of each channel is then multiplied by its weight to obtain the adjusted value. The expression is:

$$K_R = \frac{\bar{K}}{\bar{R}}, \quad K_G = \frac{\bar{K}}{\bar{G}}, \quad K_B = \frac{\bar{K}}{\bar{B}}, \qquad \bar{K} = \frac{\bar{R} + \bar{G} + \bar{B}}{3}$$

$$R' = K_R R, \quad G' = K_G G, \quad B' = K_B B$$

where $K_R$, $K_G$, and $K_B$ are the weights of the red, green, and blue channels, respectively; $\bar{R}$, $\bar{G}$, and $\bar{B}$ are the average values of the red, green, and blue channels, respectively; $R$, $G$, and $B$ are the values of the three channels; and $R'$, $G'$, and $B'$ are the adjusted gray values of the three channels. The image processed by the adaptive MSRCR color fidelity algorithm, the original reflection component of the image, and the image processed by the gray world white balance algorithm are the three inputs from which the corrected reflection component is later fused.
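The gray world adjustment is short enough to show in full. The sketch below assumes a flat list of `(r, g, b)` tuples; the name `gray_world` and the fallback gain of 1.0 for an all-zero channel are our choices, not part of the paper.

```python
def gray_world(pixels):
    """Scale each channel so that all three channel means become equal."""
    n = len(pixels)
    means = [sum(px[c] for px in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # Gain per channel: overall mean divided by the channel mean.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(px[c] * gains[c] for c in range(3)) for px in pixels]


# Example: a blue-green cast typical of attenuated red light.
cast = [(0.10, 0.40, 0.50), (0.20, 0.60, 0.70), (0.05, 0.30, 0.45)]
balanced = gray_world(cast)
```

The gains can push values above the display range for strongly attenuated channels, so a practical pipeline would clip or rescale afterwards.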

To accurately obtain the illumination component and reflection component of the Retinex model, a multi-scale Retinex model parameter estimation algorithm is proposed. First, the three images obtained in the non-uniform illumination correction module (the outputs of illumination equalization, the dark channel prior, and CLAHE) are used as the inputs of the multi-scale image fusion module. The brightness weight, color weight, and average weight of each input image are calculated; the Laplacian pyramid of each input image and the Gaussian pyramid of each corresponding weight map are then computed; and the corrected illumination component is obtained by multi-scale fusion. In parallel, the three images from the artifact adaptive elimination module (the initial reflection component, the adaptive MSRCR result, and the gray world white balance result) are used as inputs, and the Laplacian contrast weight, dark channel weight, exposure weight, and saturation weight of each input image are calculated (Fu et al., 2016). The Laplacian pyramid of each input image and the Gaussian pyramid of each weight map are then computed, and multi-scale fusion yields the corrected reflection component. Finally, the enhanced underwater image is obtained from the corrected illumination and reflection components according to the Retinex model.

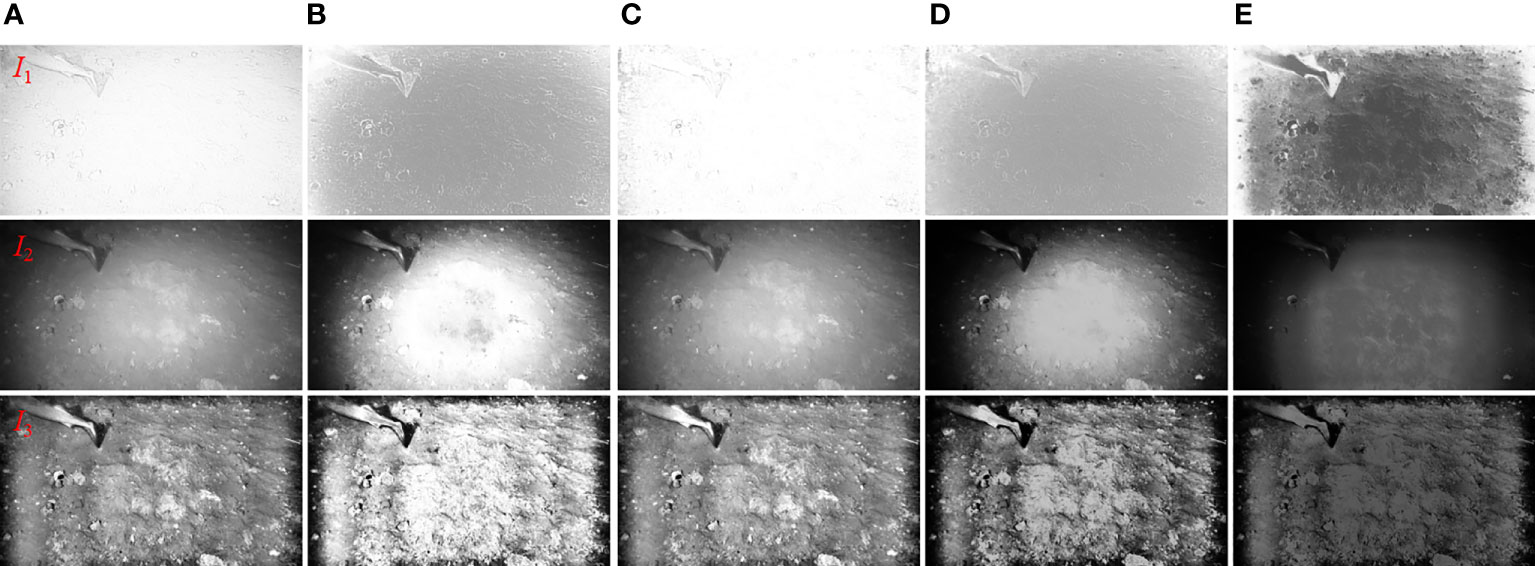

The image processed by the non-uniform illumination module is shown in Figure 4A. In order to accurately obtain the illumination component of the Retinex model, the selection of the input image weight map is particularly important. However, it is difficult to ensure that artifacts will not be introduced through simple pixel fusion. Therefore, we use a variety of weight maps in the fusion process so that pixels with high weight values can be displayed more. Brightness weight map is to distribute the pixel values in the areas with higher or lower brightness in the image evenly, so as to achieve the balance between color and contrast. The brightness weight diagram is shown in Figure 4B. However, for the non-uniform lighting environment, its weight value is not enough to enhance the underwater image, which has certain limitations. In order to solve this problem, we introduce chroma weight and control the image saturation gain by adjusting the ratio of input image to output image in color, as shown in Figure 4C. In order to keep the balance of illumination components in brightness and chroma, the fusion weights of brightness weight and chroma weight are calculated, and the fusion weight diagram is shown in Figure 4D. Finally, the three weight maps are summed and normalized to get the regularized weight, and the weight map is shown in Figure 4E.

Figure 4 The process of illumination component estimation in the multi-scale fusion module. (A) The image processed by the non-uniform illumination correction module; panel (B) is the brightness weight; panel (C) is the color degree weight; panel (D) is the fusion weight; panel (E) is the regularization weight.

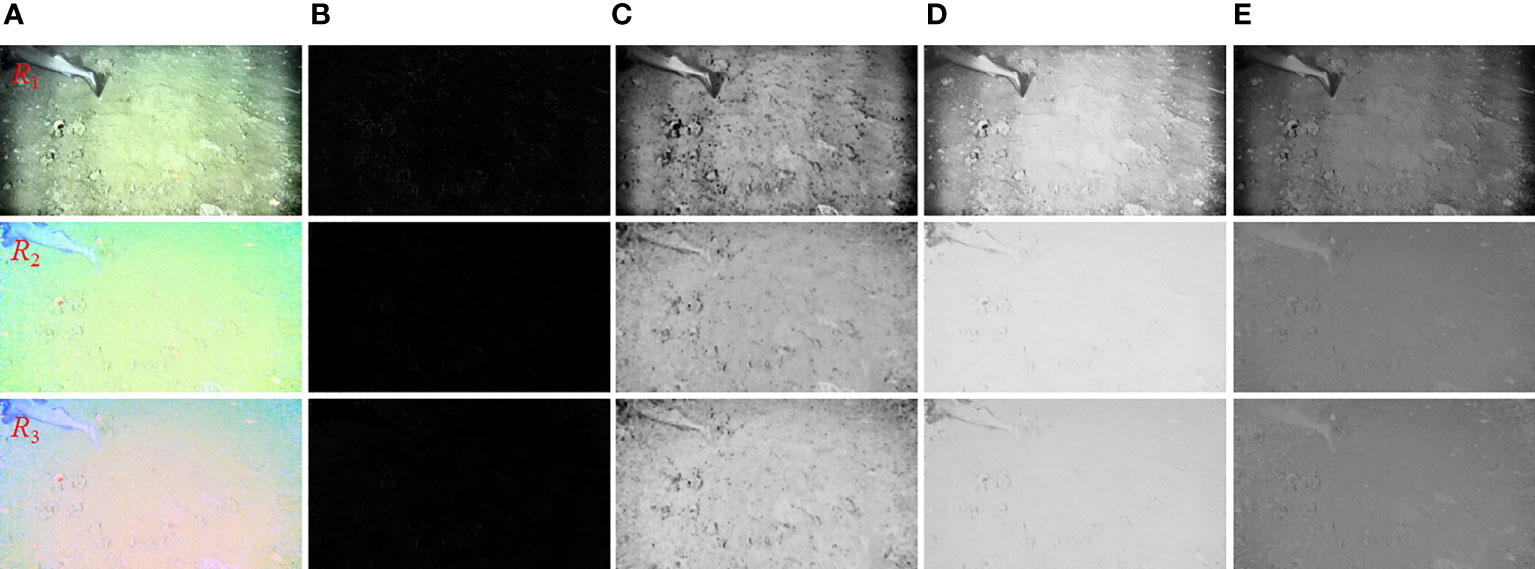

Figure 5 The process of reflection component estimation in the multi-scale fusion module. (A) The image processed by the artifact adaptive elimination module; panel (B) is Laplace weight; panel (C) is the dark channel weight; panel (D) is the exposure weight; and (E) is the saturation weight.

The image processed by the artifact adaptive elimination module is shown in Figure 5A. To preserve the edge and detail information of the original image to a greater extent, the Laplacian contrast weight is introduced, which improves the contrast of the whole picture; its weight map is shown in Figure 5B. To handle the unevenly illuminated areas of the underwater image more naturally, we also introduce the dark channel weight and the exposure weight, which weight the pixels with high or low brightness to improve the brightness of the image and further smooth uneven pixels. The dark channel weight map is shown in Figure 5C. The exposure weight can be expressed as:

$$W_E(x,y) = \exp\left( -\frac{\left( I_k(x,y) - 0.5 \right)^2}{2\sigma^2} \right)$$

where $I_k(x,y)$ is the value of the input image at pixel position $(x,y)$. Since the normalized natural brightness of image pixels is usually close to the average value of 0.5, the average brightness is set to 0.5, and the standard deviation $\sigma$ is set to 0.25. The exposure weight is shown in Figure 5D. The saturation weight adjusts the highly saturated regions of the image, which makes the fusion algorithm in this paper better suited to chroma information. The saturation weight is expressed as follows:

$$W_S = \sqrt{\frac{1}{3}\left[ (R_k - Y_k)^2 + (G_k - Y_k)^2 + (B_k - Y_k)^2 \right]}$$

where $W_S$ is the saturation weight; $R_k$, $G_k$, and $B_k$ are the R, G, and B channel images of the input image, respectively; $Y_k$ is the brightness; and $k$ denotes the $k$th input image. The saturation weight map is shown in Figure 5E.

Finally, the normalized weight $\bar{W}_k$ is decomposed with a Gaussian pyramid $G_l\{\bar{W}_k\}$, the input image $I_k$ is decomposed into a Laplacian pyramid $L_l\{I_k\}$, and the Laplacian input and the Gaussian normalized weight are fused at each pyramid level. The fused illumination component and reflection component are then obtained by summing. The expression is

$$F_l(x,y) = \sum_{k=1}^{K} G_l\{\bar{W}_k(x,y)\}\, L_l\{I_k(x,y)\}$$

where $F_l$ represents the fused image at level $l$, $l$ represents the pyramid level, and $K$ represents the number of input images.
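As an illustration of the pyramid fusion step, the following NumPy sketch blends single-channel inputs by multiplying the Gaussian pyramid of each normalized weight with the Laplacian pyramid of the corresponding input and then collapsing the result. The 5-tap binomial blur, the nearest-neighbour upsampling, the default of three levels, and all function names are assumptions chosen to keep the sketch short; the article does not specify these details.

```python
import numpy as np

KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def blur(img):
    """Separable 5-tap binomial blur with edge replication."""
    h, w = img.shape
    padded = np.pad(img, 2, mode="edge")
    rows = sum(KERNEL[i] * padded[:, i:i + w] for i in range(5))
    return sum(KERNEL[i] * rows[i:i + h, :] for i in range(5))

def down(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]

def up(img, shape):
    """Upsample by pixel repetition to `shape`, then blur."""
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return blur(big[:shape[0], :shape[1]])

def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    lap = [g[i] - up(g[i + 1], g[i].shape) for i in range(levels - 1)]
    lap.append(g[-1])  # coarsest level keeps the low-pass residual
    return lap

def fuse(inputs, weights, levels=3):
    """Fuse 2-D inputs with per-pixel weights via pyramid blending."""
    total = sum(weights) + 1e-12  # normalize so weights sum to ~1 per pixel
    fused = None
    for img, w in zip(inputs, weights):
        gw = gaussian_pyramid(w / total, levels)
        li = laplacian_pyramid(img, levels)
        contrib = [g * l for g, l in zip(gw, li)]
        fused = contrib if fused is None else [f + c for f, c in zip(fused, contrib)]
    out = fused[-1]
    for level in reversed(fused[:-1]):  # collapse from coarse to fine
        out = level + up(out, level.shape)
    return out

# Example: fusing two different inputs with random positive weights.
rng = np.random.default_rng(0)
a, b = rng.random((16, 12)), rng.random((16, 12))
wa, wb = rng.random((16, 12)) + 0.1, rng.random((16, 12)) + 0.1
result = fuse([a, b], [wa, wb])
```

Because the weights are smoothed at each level before they multiply the band-pass detail, sharp transitions in the weight maps do not produce visible seams in the fused result, which is the usual motivation for fusing in the pyramid domain rather than per pixel.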

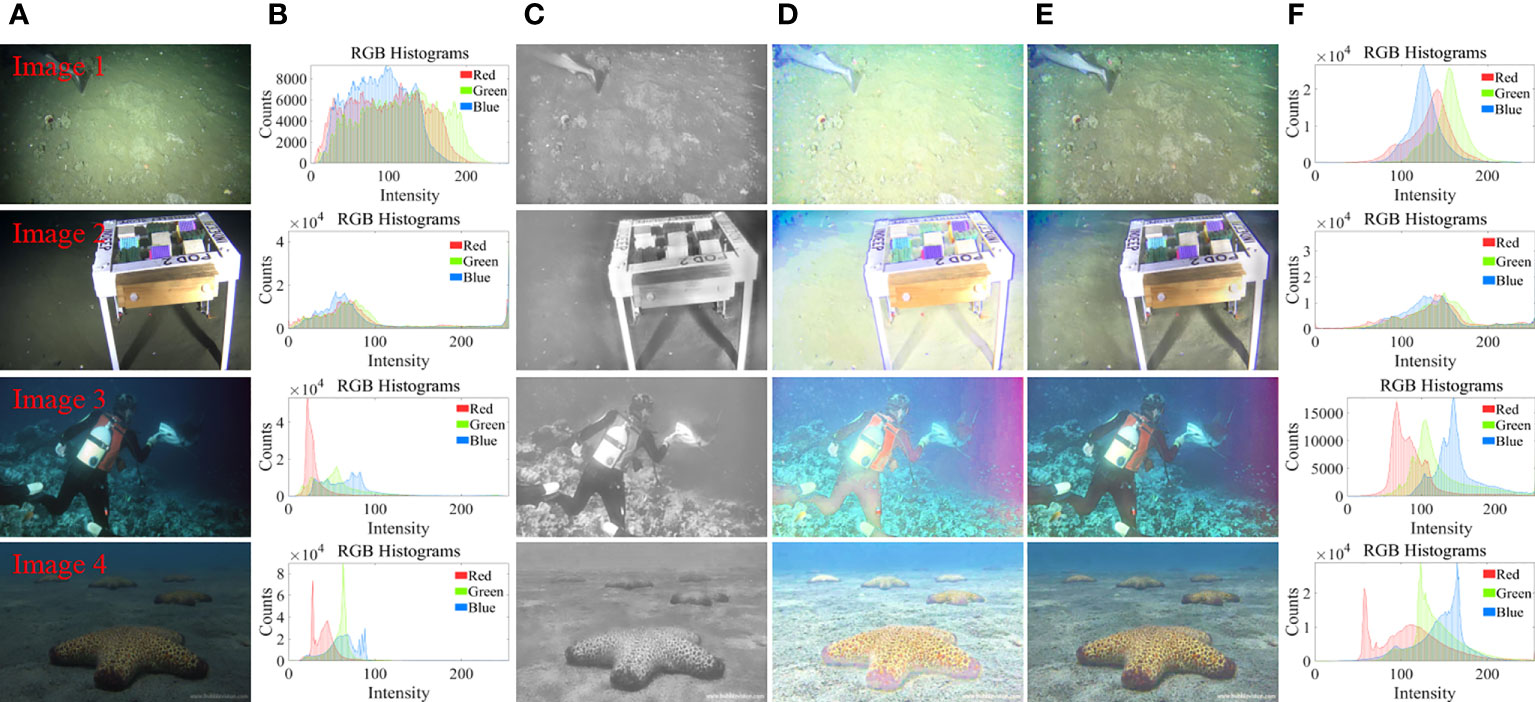

Using the modified illumination component and reflection component, the enhanced clear underwater image is obtained according to the Retinex model, as shown in Figure 6. Figure 6E shows that the proposed method effectively corrects non-uniform low-light underwater images. Comparing Figures 6B and 6F shows that the enhanced underwater image has a wider and more uniform RGB histogram distribution. As shown in Figure 6C, the corrected illumination component eliminates the influence of non-uniform illumination to a certain extent. As shown in Figure 6D, the modified reflection component retains the color information of the image and keeps the image free of artifacts and color distortion.

Figure 6 The processing results of the multi-scale Retinex model parameter estimation module. Panel (A) is an underwater original image; (B) RGB histogram of the original underwater image; panel (C) is the corrected illumination component; panel (D) is the corrected reflection component; panel (E) is the enhanced image of the method proposed in this paper; panel (F) is the RGB histogram of the enhanced image.

To verify the effectiveness and scalability of the proposed method, we conducted qualitative and quantitative comparisons and application tests. To show the advantages of the proposed method more comprehensively, we compare it with existing classical underwater image enhancement techniques: the UDCP (Drews et al., 2013), IBLA (Peng and Cosman, 2017), and WCID (Jayasree et al., 2014) algorithms based on physical models; the BIMEF (Ying et al., 2017) and FWE (Fu et al., 2016) algorithms based on image fusion; and the SDD (Hao et al., 2020) algorithm based on Retinex theory. The enhancement effect of each method is then evaluated qualitatively and quantitatively. Finally, we use saliency detection (SOD) and the scale invariant feature transform (SIFT) for application testing to verify the scalability of the proposed method. All the underwater images in this paper come from the OCEANDARK (Porto Marques et al., 2019) and UIEBD (Li et al., 2019) datasets, which provide real underwater scenes, including images with non-uniform illumination and dark area artifacts. We randomly select representative images from them for the experiments. To ensure a fair comparison among the different algorithms, all experiments are carried out in Matlab R2018b on a Windows 10 PC with an Intel(R) Core(TM) i7-9700 CPU at 3.00 GHz.

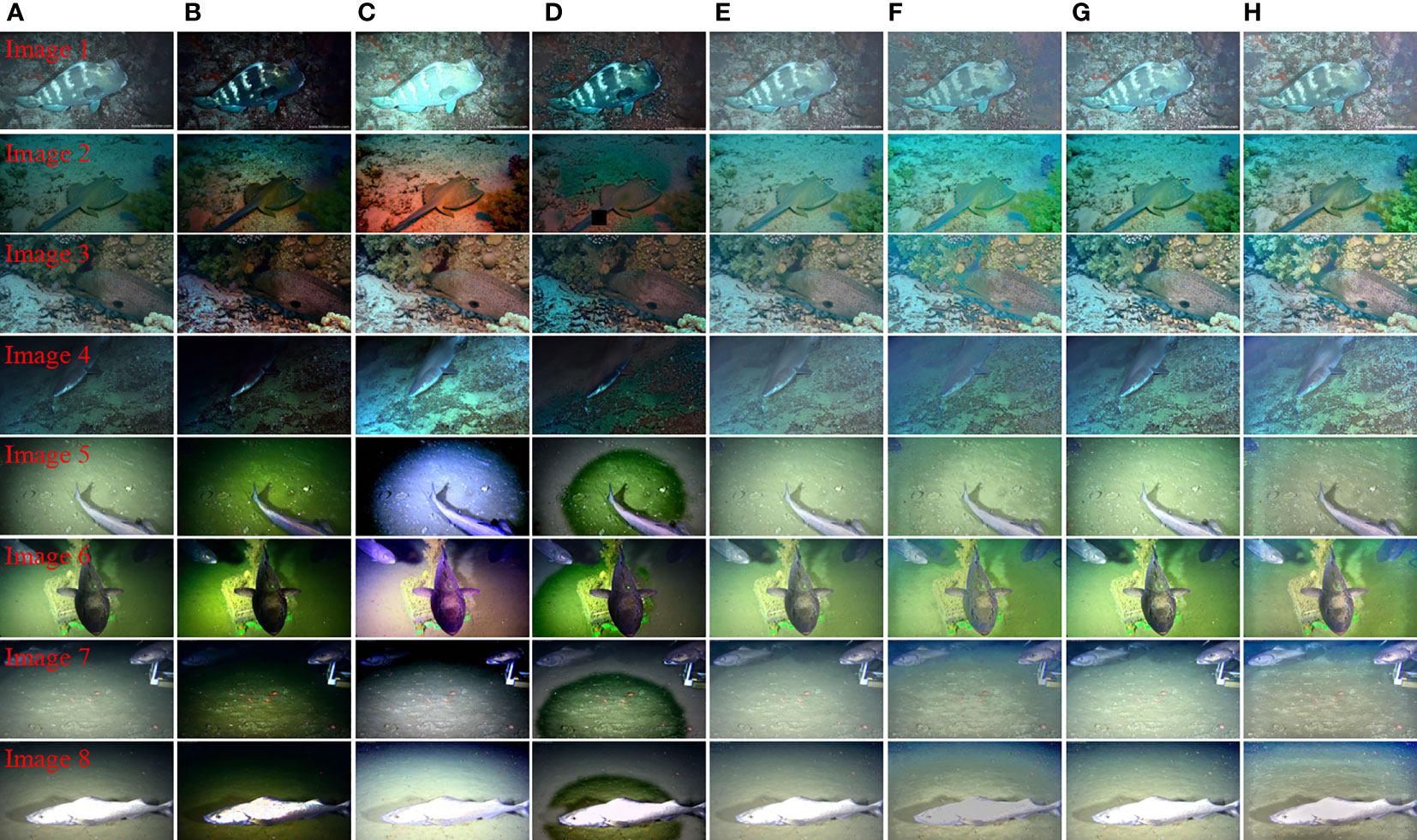

To verify that the method restores underwater images captured under non-uniform and low illumination, we tested underwater low-light images with non-uniform illumination and dark area artifacts. We compared the results with those of the existing classical underwater image enhancement techniques and analyzed each method in terms of contrast, visibility, and color restoration. Owing to space limitations, only some of the experimental results are shown. The qualitative comparison under non-uniform illumination and dark area artifacts is shown in Figure 7. Figure 7A shows the original underwater images, which include images with non-uniform illumination and dark area artifacts. Figure 7B shows the results of the UDCP algorithm: the contrast of all images is lower than that of the originals, a red color deviation is introduced in Image3, and a green color deviation is introduced in Image5 and Image6, indicating that the algorithm does not accurately estimate the background light of the underwater image. Figure 7C shows the results of the IBLA algorithm: the contrast of all images is improved, but every image exhibits some degree of color deviation, and Image1 and Image8 are overexposed, indicating that the algorithm cannot accurately estimate the scene transmittance for non-uniformly illuminated underwater images. Figure 7D shows the results of the WCID algorithm: artifacts appear in Image5–Image8, and the visibility of all images is low. Figures 7E–G show the results of the BIMEF, FWE, and SDD algorithms, respectively. These three algorithms perform better on non-uniform low-light underwater images: the overall contrast is greatly improved, no color deviation is introduced, and visibility is good. However, they do not effectively restore the color of the image, and dark area artifacts remain at the image edges. Figure 7H shows the results of the proposed method. Compared with the other algorithms, the contrast of all images is greatly improved, with no over-bright or over-dark areas; the contrast in the edge regions is also greatly improved, and the dark area artifacts are eliminated in all images. This shows that the proposed method can effectively process underwater low-light images, correct non-uniform bright and dark regions, and avoid artifacts and color distortion. Compared with the other six algorithms, the proposed method generalizes well and is better suited to underwater environments with non-uniform low illumination and dark area artifacts.

Figure 7 Qualitative comparison results of different algorithms in non-uniform illumination and dark area artifact environments. Panel (A) is an underwater original image; panel (B) is the processing result of UDCP algorithm; panel (C) is the processing result of IBLA algorithm; panel (D) is the processing result of WCID algorithm; panel (E) is the processing result of BIMEF algorithm; panel (F) is the processing result of FWE algorithm; panel (G) is the processing result of SDD algorithm; panel (H) is the processing result of the method proposed in this paper.

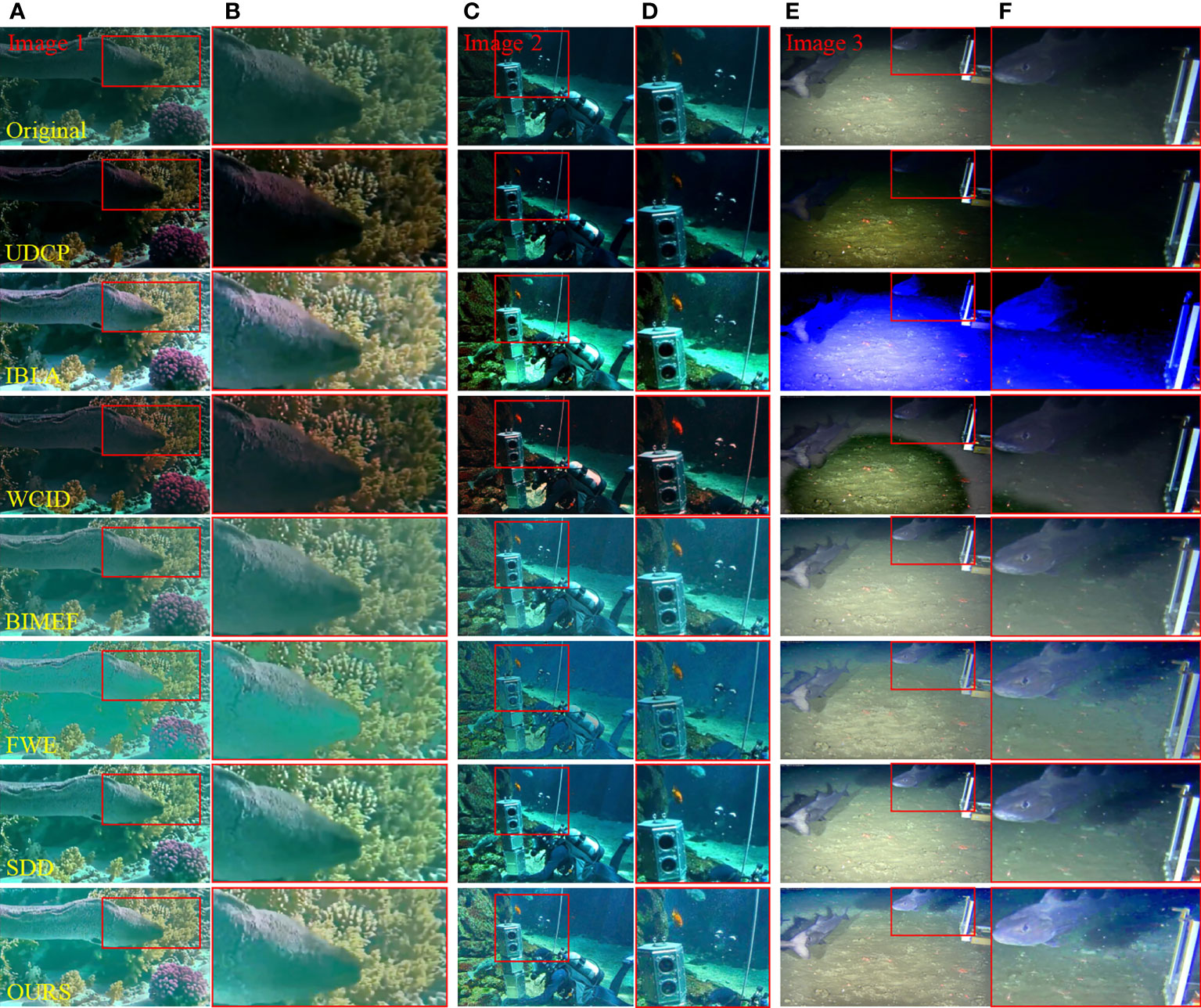

Figure 8 compares the detail enhancement ability of the different algorithms in scenes with non-uniform illumination and dark area artifacts. From top to bottom, the rows show the original underwater image and the results of the UDCP, IBLA, WCID, BIMEF, FWE, and SDD algorithms and of the proposed method. The magnified details show that images processed by the UDCP algorithm have very low contrast and low visibility. Images processed by the IBLA algorithm exhibit a color cast; in particular, a serious blue cast is introduced in Image3. Images processed by the WCID algorithm have halo artifacts. The images processed by the BIMEF, FWE, and SDD algorithms are better: these three algorithms correct the non-uniform low-light image to a certain extent, but not thoroughly, the color recovery is insufficient, and the dark areas at the image edges are not well restored. Images processed by the proposed method have better contrast and visibility and clearer detail texture, which again indicates that the proposed method performs better in non-uniformly illuminated and low-illumination underwater environments.

Figure 8 Comparison of the detail enhancement ability of different algorithms in non-uniform illumination and dark area artifact scenes. Panels (A), (C), and (E) are three underwater images in different scenes. Panels (B), (D), and (F) are the enlarged details in the red box on the left.

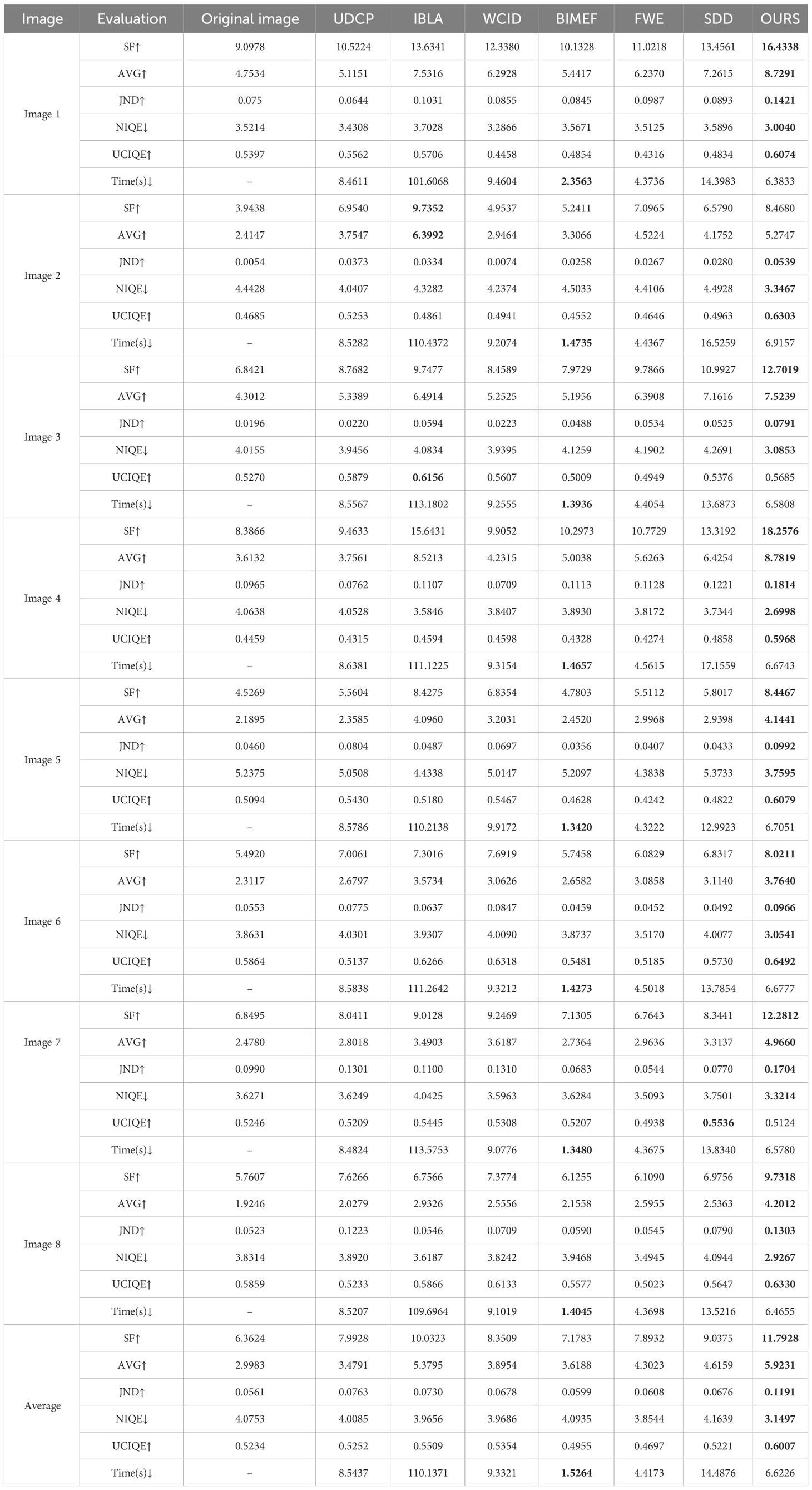

The qualitative comparison shows that the proposed method enhances images well in both unevenly illuminated and low-illumination scenes. However, subjective impressions differ between observers, and so may the conclusions. Therefore, to avoid the bias of qualitative comparison, we objectively evaluate the restoration effects of the different algorithms on underwater images in terms of color restoration, contrast, and comprehensive effect. The evaluation mainly uses the spatial frequency (SF), average gradient (AVG), effective perception rate (JND), image naturalness evaluation (NIQE), underwater color image quality evaluation (UCIQE) (Zhan et al., 2017), and the running time of the algorithm. Spatial frequency (SF) is used to evaluate the color restoration effect of underwater images; the larger the value, the richer the color of the enhanced image. It can be defined as:

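$$SF = \sqrt{RF^2 + CF^2}, \quad RF = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left[f(i,j) - f(i,j-1)\right]^2}, \quad CF = \sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left[f(i,j) - f(i-1,j)\right]^2}$$

where $RF$ and $CF$ are the row and column frequencies, $M$ and $N$ represent the width and height of the image, and $f(i,j)$ represents the pixel value at point $(i,j)$ in the image.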
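For illustration, spatial frequency in its usual row-frequency and column-frequency form can be computed with a few lines of NumPy. This is a sketch under the assumption that the metric is evaluated on a single-channel (grayscale) array; the function name `spatial_frequency` is hypothetical.

```python
import numpy as np

def spatial_frequency(gray):
    """Spatial frequency of a 2-D array: sqrt(RF^2 + CF^2)."""
    gray = np.asarray(gray, dtype=np.float64)
    rows, cols = gray.shape
    horiz = gray[:, 1:] - gray[:, :-1]   # differences between horizontal neighbours
    vert = gray[1:, :] - gray[:-1, :]    # differences between vertical neighbours
    rf = np.sqrt((horiz ** 2).sum() / (rows * cols))
    cf = np.sqrt((vert ** 2).sum() / (rows * cols))
    return np.sqrt(rf ** 2 + cf ** 2)

# A flat image has zero spatial frequency; vertical stripes do not.
flat = np.full((4, 4), 0.3)
stripes = np.array([[0.0, 1.0], [0.0, 1.0]])
sf_flat = spatial_frequency(flat)
sf_stripes = spatial_frequency(stripes)
```

Larger values indicate more pixel-to-pixel variation, so the score rises with added detail but also with noise, which is worth keeping in mind when comparing enhancement methods.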

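Average gradient (AVG) is used to characterize the clarity of an image. The higher the value, the clearer the image. With $M$ and $N$ denoting the image width and height and $f(i,j)$ the pixel value at point $(i,j)$, the average gradient can be described as:

$$AVG = \frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\left[f(i+1,j) - f(i,j)\right]^2 + \left[f(i,j+1) - f(i,j)\right]^2}{2}}$$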
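A minimal NumPy sketch of the average gradient follows, assuming the common forward-difference definition evaluated on a single-channel array; the function name `average_gradient` is hypothetical and the article's exact discretization may differ.

```python
import numpy as np

def average_gradient(gray):
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (M-1) x (N-1) interior grid."""
    gray = np.asarray(gray, dtype=np.float64)
    dx = gray[:-1, 1:] - gray[:-1, :-1]   # forward difference along columns
    dy = gray[1:, :-1] - gray[:-1, :-1]   # forward difference along rows
    return np.sqrt((dx ** 2 + dy ** 2) / 2.0).mean()

# A horizontal ramp with unit step has dx = 1 and dy = 0 everywhere.
ramp = np.tile(np.arange(4.0), (3, 1))
ag_ramp = average_gradient(ramp)
ag_flat = average_gradient(np.zeros((3, 3)))
```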

Effective perception rate (JND) evaluates the enhancement effect based on local average brightness and local spatial frequency; the greater the value, the better the enhancement effect. Image naturalness evaluation (NIQE) characterizes the naturalness of an image; the smaller the value, the more natural the image and the more it is in line with human visual experience. The underwater color image quality evaluation (UCIQE) linearly combines the chromaticity, saturation, and contrast of the image and is mainly used to quantify the image degradation caused by uneven illumination, color deviation, blur, and low contrast in underwater images. The calculation formula of the index is:

$$UCIQE = c_1 \sigma_c + c_2\, con_l + c_3\, \mu_s$$

where $\sigma_c$ represents the standard deviation of chromaticity, $con_l$ represents the contrast of brightness, and $\mu_s$ represents the average saturation. $c_1$, $c_2$, and $c_3$ are weight coefficients, which are set to 0.4680, 0.2745, and 0.2576, respectively. Owing to space limitations, only some of the experimental results are shown. Eight representative non-uniform illumination and low-illumination underwater images were randomly selected from the OCEANDARK and UIEBD datasets. For each image, we calculated SF, AVG, JND, NIQE, UCIQE, and the running time of each algorithm, and compared them to evaluate the advantages and disadvantages of each algorithm. Table 1 lists the quantitative comparison results for the non-uniform illumination and low-illumination underwater images. The bold values in the table are the optimal values; '↑' indicates that a larger index value is better, and '↓' indicates that a smaller index value is better. As can be seen from Table 1, the proposed method is superior to the other six classical algorithms in SF, AVG, JND, NIQE, and UCIQE, which shows that the enhanced image has more natural color recovery, good fidelity, high clarity, more information, and better visual effect. However, the running time of the proposed method is longer than that of the BIMEF and FWE algorithms; that is, its processing speed is moderate, which makes it suitable for applications with low real-time requirements. Taken together, the qualitative and quantitative comparisons show that the proposed method adapts well to non-uniformly illuminated and low-illumination underwater environments: it corrects the influence of non-uniform illumination on the image, effectively restores the brightness and color of the underwater image, and adaptively eliminates image artifacts.
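The linear combination behind UCIQE can be sketched as follows. The weights 0.4680, 0.2745, and 0.2576 are those given in the text; everything else is an assumption of this sketch: the inputs are taken to be CIELab channels already scaled by the caller, the luminance contrast is taken as the spread between the 1st and 99th percentiles, and saturation is taken as chroma divided by the root of squared chroma plus squared luminance. Published implementations differ in these scaling conventions, so absolute values from this sketch are not comparable with those in Table 1.

```python
import numpy as np

def uciqe(lum, a, b, c1=0.4680, c2=0.2745, c3=0.2576):
    """UCIQE-style score from pre-scaled Lab channels (arrays of equal shape)."""
    lum = np.asarray(lum, dtype=np.float64)
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    chroma = np.sqrt(a ** 2 + b ** 2)
    sigma_c = chroma.std()                                   # chroma standard deviation
    con_l = np.percentile(lum, 99) - np.percentile(lum, 1)   # luminance contrast
    denom = np.sqrt(chroma ** 2 + lum ** 2)
    saturation = np.divide(chroma, denom,
                           out=np.zeros_like(chroma), where=denom > 0)
    mu_s = saturation.mean()                                 # average saturation
    return c1 * sigma_c + c2 * con_l + c3 * mu_s

# A luminance ramp with no colour contributes only through the contrast term.
ramp = np.linspace(0.0, 1.0, 101)
zeros = np.zeros_like(ramp)
score_ramp = uciqe(ramp, zeros, zeros)
```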

Table 1 Quantitative comparison results of non-uniform illumination and low-illumination underwater images.

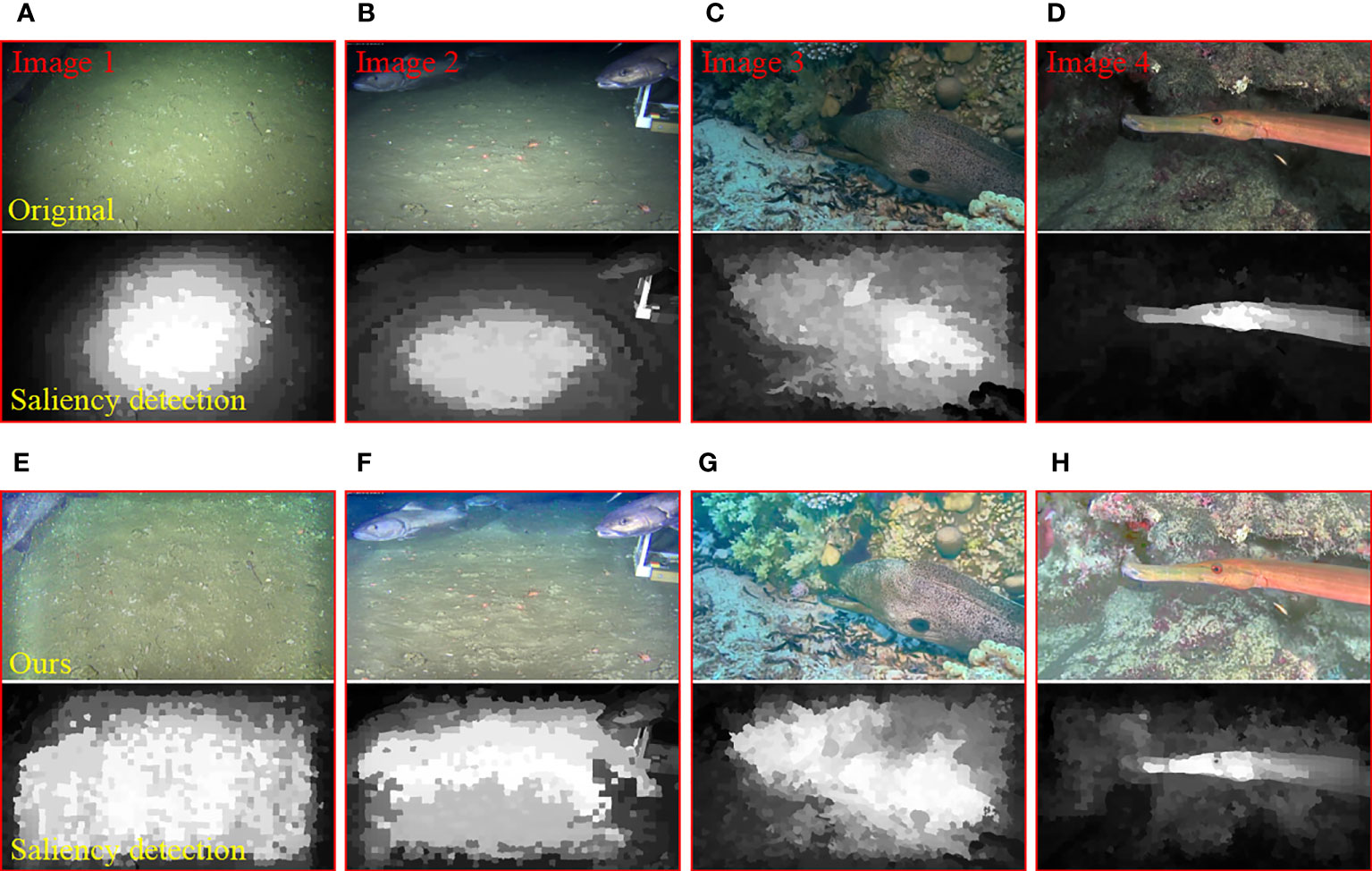

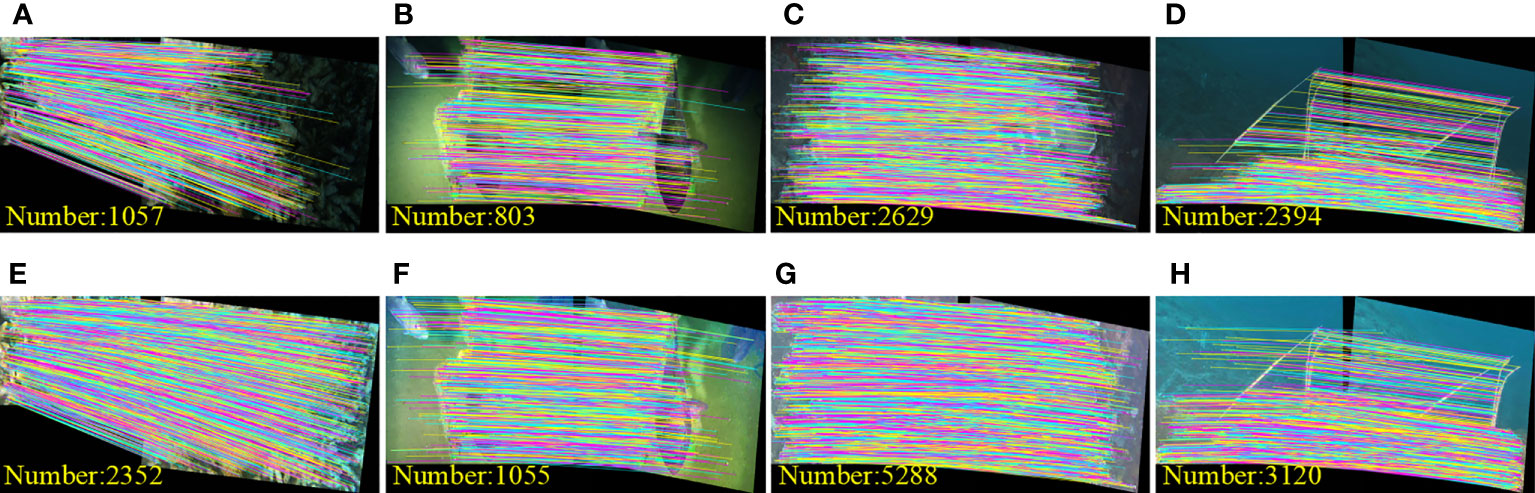

To further verify the scalability of the proposed method, we use saliency detection (SOD) (Wu et al., 2018) and the scale invariant feature transform (SIFT) (Zhang, 2020) to test the underwater images before and after enhancement. The more obvious the saliency of the image or the greater the number of feature matching points, the clearer the texture of the image and the better the processing performance of the algorithm. The test results are shown in Figures 9 and 10. Figure 9 shows the saliency detection results: the restored image has better saliency than the original image. Figure 10 shows the feature matching results: the restored image has more feature matching points than the original image, indicating higher clarity and a better restoration effect. Therefore, the proposed method recovers more texture details in unevenly illuminated and low-illumination scenes and significantly improves image clarity. This shows that the method is well suited to underwater environments with uneven and low illumination and can provide a theoretical reference for low-illumination image enhancement technology. Underwater acoustic images, such as synthetic aperture sonar images (Zhang et al., 2021), are also an important means of obtaining ocean information. The proposed method was developed mainly for underwater optical images and has achieved certain results, but it cannot be applied to underwater acoustic images. Therefore, in follow-up work, we will also focus on underwater acoustic image enhancement.

Figure 9 Results of saliency detection. Panels (A–D) are the original underwater image and its saliency detection results; panels (E–H) are the enhanced images and their saliency detection results.

Figure 10 The test results of feature matching. Panels (A–D) are the feature matching test results of the underwater original image; panels (E–H) are the test results of feature matching for enhanced images.

A low-illumination underwater image enhancement method based on non-uniform illumination correction and adaptive artifact elimination is proposed. It effectively corrects the influence of non-uniform low illumination on underwater images, eliminates their dark area artifacts, and can provide a theoretical reference for low-illumination image enhancement technology. The main conclusions are as follows. A non-linear guided filtering illumination equalization algorithm is designed to correct the uneven bright and dark areas of underwater images so that the pixel distribution of the processed images has a wider dynamic range. An adaptive MSRCR color fidelity algorithm is proposed to improve the color of the image and keep it free of artifacts and color distortion. A multi-scale Retinex model parameter estimation algorithm is proposed to accurately estimate the illumination component and reflection component. The corrected illumination component eliminates the influence of uneven illumination to a certain extent, and the corrected reflection component retains more color information. The results of the qualitative and quantitative comparisons and the application tests show that the proposed method adapts widely to non-uniform low-light underwater scenes and has important practical value in the field of underwater image processing. However, such methods still have shortcomings, as they often ignore the physical characteristics of underwater optical propagation. Therefore, in future work, we will further adjust the structure of the algorithm to adapt to underwater environments with color cast. We will also focus on the running speed of the algorithm and on underwater acoustic image enhancement.

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Conceptualization, YN and Y-DP. Methodology, YN and JY. Software, YN and Y-PJ. Validation, YN and Y-PJ. Formal analysis, Y-DP and Y-PJ. Investigation, YN. Data curation, YN. Writing—original draft preparation, YN and Y-DP. Writing—review and editing, YN and Y-DP. Visualization, JY. Supervision, Y-DP. Project administration, Y-DP and Y-PJ. All authors contributed to the article and approved the submitted version.

This work was supported by the Special Project for the Construction of Innovative Provinces in Hunan (Grant No. 2020GK1021), the Construction Project for Innovative Provinces in Hunan (Grant No. 2020SK2025), and the National Key Research and Development Program of China (Grant No. 2022YFC2805904).

The authors would like to thank the editor and the reviewers for their valuable comments.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdullah-Al-Wadud M., Kabir M. H., Dewan M. A. A., Chae O. (2007). A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consumer. Electron. 53 (2), 593–600. doi: 10.1109/TCE.2007.381734

Buchsbaum G. (1980). A spatial processor model for object colour perception. J. Franklin. Inst. 310 (1), 1–26. doi: 10.1016/0016-0032(80)90058-7

Dhal K. G., Das A., Ray S., Gálvez J., Das S. (2021). Histogram equalization variants as optimization problems: a review. Arch. Comput. Methods Eng. 28, 1471–1496. doi: 10.1007/s11831-020-09425-1

Dong X., Pang Y., Wen J. (2010). “Fast efficient algorithm for enhancement of low lighting video,” in ACM SIGGRAPH 2010 posters, 1–1. doi: 10.1145/1836845.1836920

Drews P., Nascimento E., Moraes F., Botelho S., Campos M. (2013). “Transmission estimation in underwater single images,” in Proceedings of the IEEE international conference on computer vision workshops, 825–830. doi: 10.1109/ICCVW.2013.113

Fu X., Liao Y., Zeng D., Huang Y., Zhang X. P., Ding X. (2015). A probabilistic method for image enhancement with simultaneous illumination and reflectance estimation. IEEE Trans. Image. Process. 24 (12), 4965–4977. doi: 10.1109/TIP.2015.2474701

Fu X., Zeng D., Huang Y., Liao Y., Ding X., Paisley J. (2016). A fusion-based enhancing method for weakly illuminated images. Signal Process. 129, 82–96. doi: 10.1016/j.sigpro.2016.05.031

Hao S., Han X., Guo Y., Xu X., Wang M. (2020). Low-light image enhancement with semi-decoupled decomposition. IEEE Trans. Multimedia. 22 (12), 3025–3038. doi: 10.1109/TMM.2020.2969790

Huang S. C., Cheng F. C., Chiu Y. S. (2012). Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image. Process. 22 (3), 1032–1041. doi: 10.1109/TIP.2012.2226047

Huang Z., Huang L., Li Q., Zhang T., Sang N. (2018). Framelet regularization for uneven intensity correction of color images with illumination and reflectance estimation. Neurocomputing 314, 154–168. doi: 10.1016/j.neucom.2018.06.063

Huang Z., Wang Z., Zhang J., Li Q., Shi Y. (2021). Image enhancement with the preservation of brightness and structures by employing contrast limited dynamic quadri-histogram equalization. Optik 226, 165877. doi: 10.1016/j.ijleo.2020.165877

Huang Z., Zhang T., Li Q., Fang H. (2016). Adaptive gamma correction based on cumulative histogram for enhancing near-infrared images. Infrared. Phys. Technol. 79, 205–215. doi: 10.1016/j.infrared.2016.11.001

Jayasree M. S., Thavaseelan G., Scholar P. G. (2014). Underwater color image enhancement using wavelength compensation and dehazing. Int. J. Comput. Sci. Eng. Commun. 2 (3), 389–393.

Jobson D. J., Rahman Z. U., Woodell G. A. (1997a). Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 6 (3), 451–462. doi: 10.1109/83.557356

Jobson D. J., Rahman Z. U., Woodell G. A. (1997b). A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6 (7), 965–976. doi: 10.1109/83.597272

Kansal S., Tripathi R. K. (2019). Adaptive gamma correction for contrast enhancement of remote sensing images. Multimedia. Tools Appl. 78, 25241–25258. doi: 10.1007/s11042-019-07744-5

Land E. H. (1986). An alternative technique for the computation of the designator in the retinex theory of color vision. Proc. Natl. Acad. Sci. 83 (10), 3078–3080. doi: 10.1073/pnas.83.10.3078

Li C., Guo C., Ren W., Cong R., Hou J., Kwong S., et al. (2019). An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image. Process. 29, 4376–4389. doi: 10.1109/TIP.2019.2955241

Li M., Liu J., Yang W., Sun X., Guo Z. (2018). Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image. Process. 27 (6), 2828–2841. doi: 10.1109/TIP.2018.2810539

Li Y., Ruan R., Mi Z., Shen X., Gao T., Fu X. (2023). An underwater image restoration based on global polarization effects of underwater scene. Optics. Lasers. Eng. 165, 107550. doi: 10.1016/j.optlaseng.2023.107550

Li Z., Zheng X., Bhanu B., Long S., Zhang Q., Huang Z. (2021). “Fast region-adaptive defogging and enhancement for outdoor images containing sky,” in 2020 25th international conference on pattern recognition (ICPR) (IEEE), 8267–8274. doi: 10.1109/ICPR48806.2021.9412595

Ning Y., Jin Y. P., Peng Y. D., Yan J. (2023). Underwater image restoration based on adaptive parameter optimization of the physical model. Optics. Express. 31 (13), 21172–21191. doi: 10.1364/OE.492293

Peng Y. T., Cosman P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image. Process. 26 (4), 1579–1594. doi: 10.1109/TIP.2017.2663846

Porto Marques T., Branzan Albu A., Hoeberechts M. (2019). A contrast-guided approach for the enhancement of low-lighting underwater images. J. Imaging 5 (10), 79. doi: 10.3390/jimaging5100079

Srinivas K., Bhandari A. K. (2020). Low light image enhancement with adaptive sigmoid transfer function. IET. Image. Process. 14 (4), 668–678. doi: 10.1049/iet-ipr.2019.0781

Wu X., Ma X., Zhang J., Wang A., Jin Z. (2018). “Salient object detection via deformed smoothness constraint,” in 2018 25th IEEE international conference on image processing (ICIP) (IEEE), 2815–2819. doi: 10.1109/ICIP.2018.8451169

Yan M., Qin D., Zhang G., Zheng P., Bai J., Ma L. (2022). Nighttime image stitching method based on guided filtering enhancement. Entropy 24 (9), 1267. doi: 10.3390/e24091267

Ying Z., Li G., Gao W. (2017). A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv. preprint. arXiv:1711.00591. doi: 10.48550/arXiv.1711.00591

Yu Z., Xie Y., Yu X., Zheng B. (2022). Lighting the darkness in the sea: A deep learning model for underwater image enhancement. Front. Mar. Sci. 9. doi: 10.3389/fmars.2022.921492

Zhan K., Shi J., Teng J., Li Q., Wang M., Lu F. (2017). Linking synaptic computation for image enhancement. Neurocomputing 238, 1–12. doi: 10.1016/j.neucom.2017.01.031

Zhang W. (2020). Combination of SIFT and Canny edge detection for registration between SAR and optical images. IEEE Geosci. Remote Sens. Lett. 19, 1–5. doi: 10.1109/LGRS.2020.3043025

Keywords: underwater image enhancement, non-uniform illumination, artifact, guided filtering, Retinex model

Citation: Ning Y, Jin Y-P, Peng Y-D and Yan J (2023) Low-illumination underwater image enhancement based on non-uniform illumination correction and adaptive artifact elimination. Front. Mar. Sci. 10:1249351. doi: 10.3389/fmars.2023.1249351

Received: 28 June 2023; Accepted: 11 August 2023;

Published: 01 September 2023.

Edited by:

Rachel Przeslawski, NSW Government, Australia

Reviewed by:

Zhenghua Huang, Wuhan Institute of Technology, China

Copyright © 2023 Ning, Jin, Peng and Yan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: You-Duo Peng
