- 1 New South Wales Department of Primary Industries – Fisheries, Huskisson, Australia

- 2 Institute for Marine and Antarctic Studies, University of Tasmania, Hobart, TAS, Australia

- 3 Oceans, Reefs, Coasts and the Antarctic Branch, Canberra, Australia

- 4 Data61, Commonwealth Scientific and Industrial Research Organisation (CSIRO), Hobart, TAS, Australia

- 5 The University of Western Australia (UWA) Oceans Institute and School of Biological Sciences, The University of Western Australia, Perth, WA, Australia

- 6 FourBridges, Port Angeles, WA, United States

- 7 Integrated Marine Observing System, University of Tasmania, Hobart, TAS, Australia

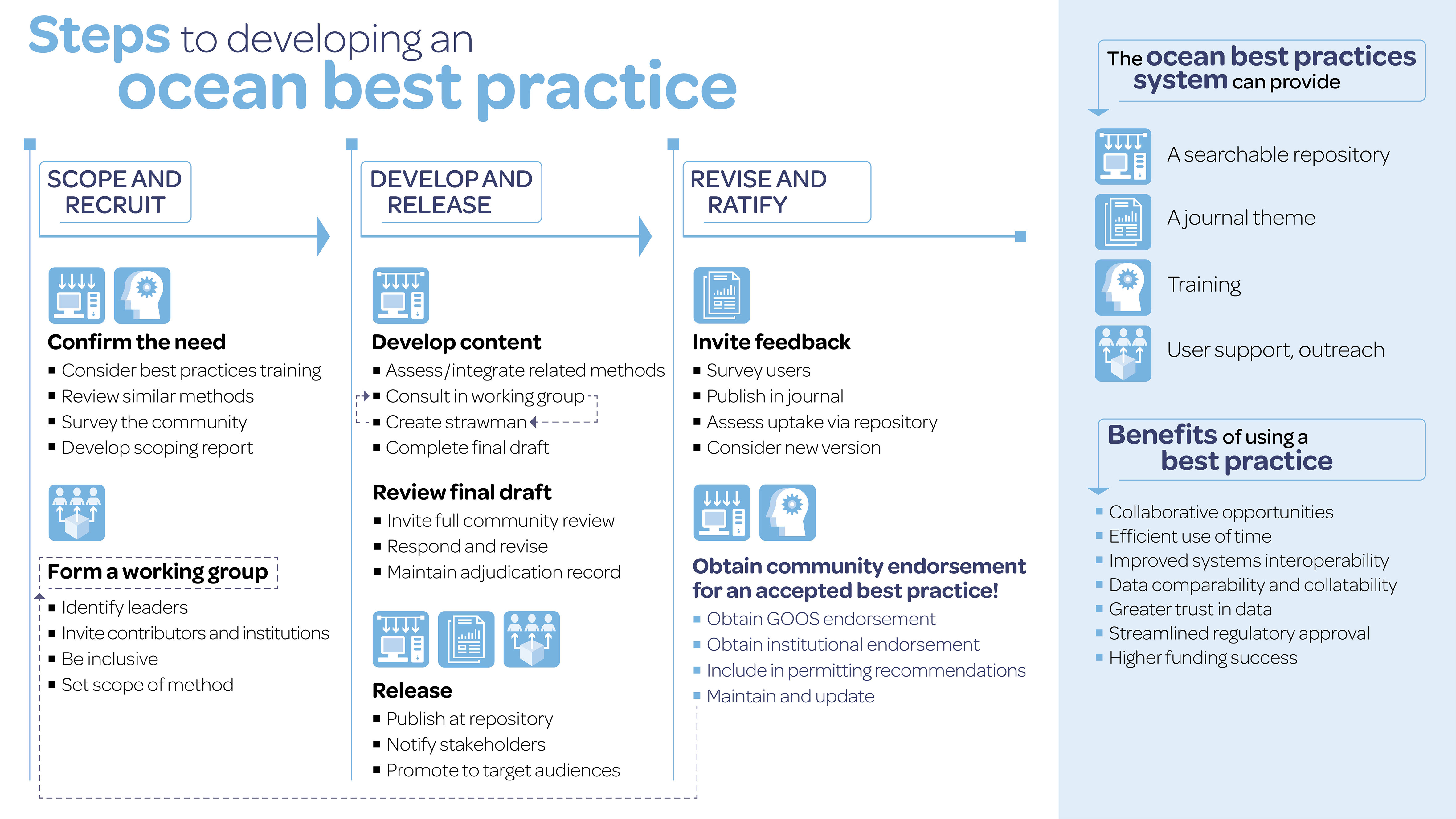

Since 2012, there has been a surge in the number of marine science publications that use the term ‘best practice’, yet the term is not often defined, nor is the process behind the best practice development described. Importantly, a ‘best practice’ is more than a documented practice that an individual or institution uses and considers good. This article describes a rigorous process to develop an ocean best practice using examples from a case study from Australia in which a suite of nine standard operating procedures were released in 2018 and have since become national best practices. The process to develop a best practice includes three phases: 1) scope and recruit, 2) develop and release, and 3) revise and ratify. Each phase includes 2-3 steps and associated actions that are supported by the Ocean Best Practices System (www.oceanbestpractices.org). The Australian case study differs from many other practices, which only use the second phase (develop and release). In this article, we emphasize the value of the other phases to ensure a practice is truly a ‘best practice’. These phases also have other benefits, including higher uptake of a practice stemming from a sense of shared ownership (from scope and recruit phase) and currency and accuracy (from revise and ratify phase). Although the process described in this paper may be challenging and time-consuming, it optimizes the chance to develop a true best practice that is a) fit-for-purpose with clearly defined scope; b) representative and inclusive of potential users; c) accurate and effective, reflecting emerging technologies and programs; and d) supported and adopted by users.

Introduction

An ocean best practice is a “methodology that has repeatedly produced superior results relative to other methodologies with the same objective; to be fully elevated to a best practice, a promising method will have been adopted and employed by multiple organizations” (Pearlman et al., 2019). Best practices can include manuals, guides, protocols and standard operating procedures, but they all share a common goal to improve the quality, consistency, and effectiveness of data acquisition and accessibility. They include some or all aspects of the ocean observation value chain, from planning through to derived products. Using ocean best practices has many benefits including more collaborative opportunities, efficient use of time, improved systems operability, data comparability and interoperability, greater trust in data, streamlined regulatory approvals, and higher funding success (e.g. Pensieri et al., 2016; Rivest et al., 2016; Walker et al., 2021).

The systematic development and use of ocean best practices is supported by the Ocean Best Practices System (OBPS) (Pearlman et al., 2019) (www.oceanbestpractices.org). The centerpiece of this international program is the OBPS Repository, a searchable and secure interface that allows the submission and retrieval of all ocean practices, including those fully elevated to best practices (Hörstmann et al., 2021). Other facets of the OBPS include a journal research theme, a UN Ocean Decade program (Ocean Practices), training and capacity development, and various task teams focused on discrete projects or disciplines (e.g. -omics).

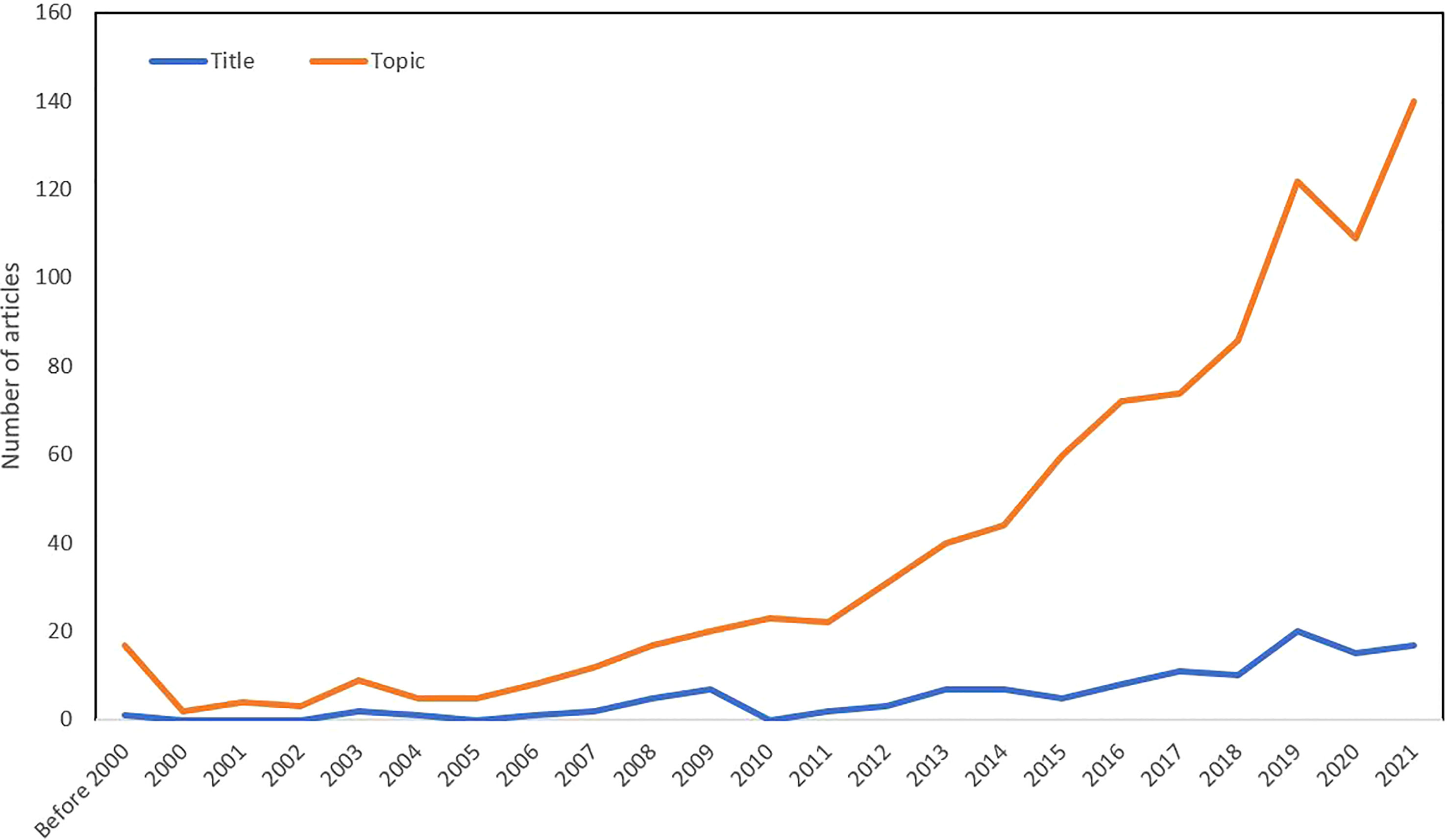

Since 2012, there has been a surge in the number of marine science publications that use the term ‘best practice’ in their title or topic (Figure 1), yet the term is not often defined, nor is the process behind the practice development described. It is necessary to clearly articulate how ocean best practices are developed to ensure consistency and meaning when one refers to a ‘best practice’.

Figure 1 Number of articles published using the term ‘best practice(s)’ in the title or topic as determined from a search on Web of Science and filtering by disciplines (oceanography, marine freshwater biology).

Importantly, a ‘best practice’ is more than a documented practice that an individual or institution uses and considers good. This article aims to describe a rigorous process to develop an ocean best practice using examples from a case study from Australia. Although we focus on an Australian case study, there are of course other practices that have been developed using a similar approach (e.g. QARTOD Quality Assurance of Real Time Oceanographic Data in Bushnell et al. (2019), Monitoring and Regulation of Marine Aquaculture in Read et al. (2001)). The case study used here is the Field Manuals for Marine Sampling to Monitor Australian Waters, a suite of nine standard operating procedures (referred to henceforth as Australian SOPs) released in 2018, which have since become national best practices for marine survey design, multibeam echosounders, autonomous underwater vehicles (AUVs), benthic and pelagic baited remote underwater video systems (BRUVs), towed imagery, sleds and trawls, grabs and box corers, and remotely operated vehicles (ROVs). This project is described in more detail in Przeslawski et al. (2019b) and at https://marine-sampling-field-manual.github.io. More than 136 individuals from 53 agencies contributed to the Australian SOPs, and the process underpinning their development highlights the broader challenges and benefits of developing best practices.

As presented below, the process to develop a best practice includes three phases: 1) scope and recruit, 2) develop and release, and 3) revise and ratify; this article is similarly structured. Each phase includes several steps and actions that are supported by the Ocean Best Practices System (Figure 2, www.oceanbestpractices.org). The Australian case study differs from many other practices, which only use the second phase (develop and release). This paper will emphasize the value of the other phases to ensure a practice is truly a ‘best practice’.

Figure 2 The three phases and associated steps required to develop an ocean best practice. Each step is linked to icons showing the relevant part of the Ocean Best Practices System that provides support.

Phase 1: Scope and recruit

The first step to develop a best practice is to confirm the need, including identifying potential users. This may involve an audit of existing practices, workshops or questionnaires, which may reveal similar practices or multiple practices that could be converged. No one wants to go through this whole process only to find that it has already been done by someone else or will only have a handful of users! It may also be useful to identify the drivers for a proposed best practice, which may include the need for consistent reporting or for metrics to be linked to integrated monitoring (Essential Ocean Variables in Muller-Karger et al. (2018)). For the Australian SOPs, a questionnaire was released to the Australian marine science community to identify user needs and gauge interest in contributing to a working group. Similar methods were also reviewed by searching scientific databases. This was done before the full development of the Intergovernmental Oceanographic Commission’s OBPS Repository (www.oceanbestpractices.org), which now provides a useful tool for such a search (Samuel et al., 2021). Results from the review and questionnaire were then compiled in a scoping report to identify potential knowledge gaps and to document this first step (Przeslawski et al., 2019a).

After the need for a best practice has been confirmed, a working group and leader(s) should be formed. The working group leader should have expertise on the topic and have the time and ability to organize people and make decisions about potentially contentious issues. Before assembling the working group, the leader must set the scope of the best practice document to manage expectations. A best practice cannot address everything; it may need to be limited to a particular instrument, environment, or metric. The logistics and format of the working group will vary among regions and communities, and there is no ideal number of participants. The Australian SOPs had varying working group sizes, ranging from eight members (sled and trawl SOP) to 48 members (multibeam SOP). For this step, the overriding principle is inclusivity, and all major potential contributors and institutions should be invited to participate. Invitations can be extended individually or through newsletters, workshops or other public fora. In many ways, this is the most important step to set a future best practice up for success. The more perspectives a working group includes, the stronger the best practice will be, and the more ownership and uptake will eventuate.

Phase 2: Develop and release

This second phase is the one that will be most familiar to many researchers, as it is indispensable in the development of any practice, let alone a best practice. The working group develops content by first applying the learnings from the first phase to decide if and how to integrate related methods into their practice. The working group will then undertake several iterations of consultations and draft methods (referred to as a strawman in Figure 2) until they have a final draft. There may be disagreements during this iterative process. In these cases, the majority or working group lead should decide which method to use. More formal decision-making processes may also be explored (e.g. Loizidou et al., 2013). Importantly, these choices should be clearly documented and justified in the final draft. For the Australian SOPs, where there were disagreements about including a particular step, the step was often included but clearly marked as recommended, not essential. At other times, the working groups developed more innovative ways to integrate seemingly disparate recommendations. For example, in the grab and box corer SOP, working group members advocated for different sieve sizes (1000, 500, 300 µm). Ultimately, the SOP puts forward the 500 µm sieve size as the consistent national standard, but states that if ‘individual survey objectives require a finer mesh size … or comparison with datasets from larger mesh size … [the user can] layer the sieves and process samples separately so that the recommended standard of 500 µm is still followed and data are comparable.’
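The layered-sieve compromise can be illustrated with a short sketch. It assumes that each sieve layer is processed and counted separately, and that anything retained on a coarser layer would also have been retained by a single 500 µm sieve. The counts and the function name `count_at_mesh` are hypothetical and are not taken from the SOP.

```python
# Hypothetical counts of animals retained on each layer of a stacked sieve set.
# Keys are mesh sizes in micrometres; the numbers are invented for illustration.
retained = {1000: 12, 500: 30, 300: 45}

STANDARD_MESH_UM = 500  # the national standard mesh size named in the SOP


def count_at_mesh(retained_by_mesh, mesh_um):
    """Reconstruct the count a single sieve of `mesh_um` would have retained.

    Assumption: material caught on any layer at least as coarse as `mesh_um`
    would also have been caught by a single sieve of that mesh size.
    """
    return sum(n for mesh, n in retained_by_mesh.items() if mesh >= mesh_um)


standard_count = count_at_mesh(retained, STANDARD_MESH_UM)  # 1000 + 500 layers
fine_count = count_at_mesh(retained, 300)                   # all three layers
coarse_count = count_at_mesh(retained, 1000)                # coarsest layer only
print(standard_count, fine_count, coarse_count)
```

Under these assumptions, a survey that needs the finer 300 µm fraction can still report a 500 µm-equivalent count for comparison with other datasets.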

After the working group has agreed on a final version, the next step is a formal review process. This can either be done through submission to a journal via their peer-review process (e.g. Langlois et al., 2020; Mantovani et al., 2020; Parks et al., 2022) or managed through the working group via an external review process. It is insufficient for working group members alone to review the practice. If the practice is likely to be used by a large number of users, we also recommend the working group solicit input more broadly through a consultation period or online questionnaire that invites feedback from potential users. The working group will then respond to these reviews and revise the method, documenting decisions and responses to feedback in an adjudication record, with major points addressed in the revised version of the method. The Australian SOPs were each externally reviewed by at least two experts in the field, with some of these reviewers providing such valuable input that they were included as authors in the subsequent version.

After revision, the practice is ready to be released, if this has not already been done through a scientific journal’s peer-review process. The process of appropriately documenting a best practice is described in Hörstmann et al. (2021). The way a practice is released depends on resourcing and user preferences and can include traditional formats such as institutional reports or scientific journal articles, as well as more modern formats such as apps, webpages or videos. The first version of the Australian SOPs was released as static pdfs, but the second version also included an online GitHub portal (https://introduction-field-manual.github.io). This digital delivery system was chosen due to the following benefits:

• The practices are easily accessible in online or pdf formats, increasing the flexibility of user experiences and needs.

• The online system readily reflects minor corrections by harvesting through the source document maintained on an online file repository, in this case Google Docs.

• Updates and version control are easier to manage through permissions on GitHub and Google Docs.

• Analytics are easily generated to track downloads, which can then be incorporated into impact assessments.

• The online system has more flexibility to embed imagery and other media and to maintain links to external resources.

If a working group does not have the capability to host a practice, they can upload the practice directly to the Ocean Best Practices Repository, during which it will also be assigned a DOI. Regardless of the way in which a practice is released, the metadata should be submitted to the Ocean Best Practices Repository to make a new practice findable to the international community. Importantly, after a practice is released and listed in the OBPS Repository, the working group should notify key stakeholders and promote it to target audiences using newsletters, social media, or conference and workshop presentations.

Phase 3: Revise and ratify

Most ocean practices are considered complete after they are released, but a final phase (revise and ratify) is required to elevate them to a ‘best practice’. Once a practice is released, the working group should invite feedback from the community to understand how and why the practice is being used and to identify any necessary revisions. When considering the definition of a best practice, this step is particularly important because it shows that the practice is ‘adopted and employed by multiple organizations’ (Pearlman et al., 2019). When the Australian SOPs were first released, contact details and a simple web-form were shared for users to offer suggestions or report errors; these were compiled over the following year. During this same period, we also assessed uptake through analytics generated by the OBPS Repository and Google Analytics. These and other measures were compiled in a report on impact and outcomes of the Australian SOPs (Przeslawski et al., 2021).

Based on feedback and uptake, it may be appropriate to revise the practice. If this is the case, the working group is reformed, and the second phase starts again (Figure 2). Revisions can be minor to reflect recent studies, technological advances, or new digital infrastructure; or they can be more substantial in which case another review period is warranted. An example of the latter is the Australian multibeam SOP that was first released in 2018 at the same time as a very similar practice from AusSeabed, an emerging seabed mapping initiative. During Phase 3 of the Australian SOPs, we noted the similarity between the two practices, and a partnership between the two programs yielded a converged second version that was released in 2020 (Picard et al., 2020). Although often desirable, convergence or extensive revision of best practices could potentially result in changes in the way in which data are collected such that data are no longer consistently comparable over time. This must be considered during best practices revisions, and if major method changes are proposed, a window of overlap between the old and new methods could facilitate comparisons between them to map interoperability.
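One way to picture the window of overlap is with paired observations taken by both methods at the same sites. The sketch below assumes a single multiplicative factor is enough to relate the two methods, which is a strong simplification; a real comparison may need a regression or other statistical model. All values and the function name `ratio_factor` are hypothetical.

```python
from statistics import mean

# Hypothetical paired observations from the same sites during the overlap window.
old_method = [10.0, 20.0, 30.0, 40.0]
new_method = [12.0, 24.0, 36.0, 48.0]


def ratio_factor(old, new):
    """Mean per-site ratio of new-method to old-method values.

    Assumption: the two methods differ by a constant multiplicative factor.
    """
    if len(old) != len(new):
        raise ValueError("paired observations must have equal length")
    if any(o == 0 for o in old):
        raise ValueError("old-method values must be non-zero")
    return mean(n / o for o, n in zip(old, new))


factor = ratio_factor(old_method, new_method)

# Express historical (old-method) values on the new method's scale.
converted = [o * factor for o in old_method]
print(factor, converted)
```

If the per-site ratios vary widely, that variation is itself a signal that the old and new data may not be directly comparable.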

Once a practice has been released, the working group should seek community endorsement from broader discipline representatives or end users so that the practice can become a true best practice. The Global Ocean Observing System (GOOS) offers an international endorsement process for best practices (Hermes, 2020) which details several criteria including a rigorous community review process (see Phase 2). At the time of writing, this is the only established endorsement process for an international ocean best practice. Other endorsements may come from institutions and permitting agencies who may recommend or require your method be used in ocean activity permissions. The endorsement process for the Australian SOPs varies among the numerous best practices. For example, the BRUV SOP (Langlois et al., 2020) received GOOS endorsement, the multibeam SOP is used by many entities collaborating with the AusSeabed program (e.g. Geoscience Australia, CSIRO, Australian Hydrographic Office, Australian State governments and universities), and all the SOPs are used by the Australian Federal Government (Parks Australia – Marine Parks Branch) as recommendations in permitting and requirements in contracts.

The final step is to maintain and update what is now a best practice. This is particularly relevant for those practices incorporating emerging technologies, developing data infrastructure, or tentative workflows. For example, the -omics methods often used in biomolecular ocean observing and research are rapidly evolving and may require more frequent updates (Saito et al., 2019; Samuel et al., 2021). Periodic reviews of the practice will help identify outdated information that can be corrected with a new version. For long-term maintenance, particularly of lengthy best practices or compendiums of best practices such as the Australian SOPs, an oversight committee will be useful.
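The three phases and their steps can be summarized as a simple checklist. This is only an illustrative sketch: the step labels are paraphrased from the text above rather than copied from Figure 2, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    done: bool = False


@dataclass
class Phase:
    name: str
    steps: list = field(default_factory=list)

    def complete(self):
        """A phase is complete only when every one of its steps is done."""
        return all(step.done for step in self.steps)


def build_process():
    """Return the three phases with step labels paraphrased from the text."""
    return [
        Phase("Scope and recruit", [
            Step("Confirm the need"),
            Step("Form working group and set scope"),
        ]),
        Phase("Develop and release", [
            Step("Develop content"),
            Step("Formal review"),
            Step("Release and list in repository"),
        ]),
        Phase("Revise and ratify", [
            Step("Gather feedback and revise"),
            Step("Seek endorsement"),
            Step("Maintain and update"),
        ]),
    ]


def all_phases_complete(phases):
    """True only if every phase, not just develop and release, is complete."""
    return all(phase.complete() for phase in phases)


# Example: a practice that has only been developed and released.
process = build_process()
for step in process[1].steps:
    step.done = True
print(all_phases_complete(process))  # False: phases 1 and 3 are still open
```

The example mirrors the point made above: completing only the develop and release phase yields a released practice, not yet a best practice.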

Challenges

The process to develop a best practice takes time and effort, and it has numerous challenges. Some of these relate to content, such as ensuring the method is fit-for-purpose and within scope, but arguably the more significant challenges relate to people and institutional resistance. Because of the highly collaborative nature of this process, there may be many people and institutions involved, thus posing issues related to following deadlines with numerous time-poor colleagues, reaching consensus when two groups disagree on key aspects, and balancing flexibility to help ensure uptake with prescriptiveness to help ensure data comparability.

Although the process described in this paper may be challenging and time-consuming, it optimizes the chance to develop a true best practice that is:

• Fit-for-purpose with clearly defined scope;

• Representative and inclusive of potential users;

• Accurate and effective, reflecting emerging technologies and programs; and

• Supported and adopted by users.

Conclusions

This paper illustrates the three major phases and accompanying steps to develop an ocean best practice, providing associated examples from a case study from Australia. Many practices are only developed with a single phase (develop and release), and we accentuate the importance of the other two phases (scope and recruit, revise and ratify) which are required for a true best practice. These phases also have other benefits, including higher uptake of a practice stemming from a sense of shared ownership (from scope and recruit phase) and currency and accuracy (from revise and ratify phase). Importantly, the approach documented here is highly collaborative and iterative, with the final step linking to earlier steps and potentially yielding subsequent versions (Figure 2). This will ensure a long lifespan of a best practice.

An additional benefit of the Australian SOP approach was the development of multiple related ocean practices at the same time. These SOPs are often used concurrently to characterize the geomorphology and ecology of a marine area. The impact of the SOPs and their value to users would have been diminished if they had progressed separately in an uncoordinated manner over different timeframes.

At a national scale, the Australian SOPs have been used to ensure consistency in data acquisition and data quality, such that data are interoperable at national scales. This allows scientists, managers and policymakers in Australia to 1) meet state and federal goals for integrated monitoring (e.g. National Marine Science Plan in Hedge et al. (2022), New South Wales Marine Integrated Monitoring Program in Aither (2022)), 2) report on internationally agreed metrics (Miloslavich et al., 2018; Muller-Karger et al., 2018) at local to national scales, and 3) adopt a best practice-based approach to monitoring the vast network of Australian Marine Parks that will ideally spread into many other applications that are comparatively unguided and unregulated regarding standards for data collection and management (e.g. naval mapping, offshore energy exploration and development). Industry can then adopt such best practices to improve environmental assessments and regulation (e.g. offshore wind in North Sea in Abramic et al. (2022)).

At a global scale with the United Nations Decade of Ocean Science for Sustainable Development, it is imperative that we continue developing, using, and maintaining the currency of existing best practices to ensure that the science we undertake locally or regionally can complement that undertaken elsewhere (Pearlman et al., 2021). This will contribute to improving our understanding of our global ocean and the changes it faces, which in turn will assist other ocean stakeholders in policy and management to make science-driven decisions.

Author's note

The OBPS has developed a course on ‘Ocean Best Practices’ through the Ocean Teacher Global Academy. This short course includes four modules and provides an overview of the importance and relevance of using and sharing Ocean Best Practices and Standards, as well as on how to create, submit, share and search for Best Practices in the Ocean Best Practices System (OBPS) repository. This course is intended for University students, researchers and ocean practitioners in general. It does not provide a certificate, and can be accessed with free registration here: https://classroom.oceanteacher.org/enrol/index.php?id=737. The second module of this course is a video tutorial called ‘Creating a Best Practice’ that complements this paper.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Funding

This work was undertaken for the Marine and Coastal Hub, a collaborative partnership supported through funding from the Australian Government’s National Environmental Science Program (NESP).

Acknowledgments

The authors are grateful to the many contributors to the Field Manuals for Marine Sampling to Monitor Australian Waters, as listed here https://introduction-field-manual.github.io/collaborators. Louise Bell and Bryony Bennett designed Figure 2, and members of the Ocean Best Practices Steering Group provided input to it. AC and KP publish with the permission of the Chief Executive Officer, Geoscience Australia.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abramic A., Cordero-Penin V., Haroun R. (2022). Environmental impact assessment framework for offshore wind energy developments based on the marine good environmental status. Environ. Impact Assess. Rev. 97, 106862. doi: 10.1016/j.eiar.2022.106862

Aither (2022). Integrated monitoring and evaluation framework for the marine integrated monitoring program (NSW Government). Available at: https://www.marine.nsw.gov.au/marine-estate-programs/marine-integrated-monitoring-program

Bushnell M., Waldmann C., Seitz S., Buckley E., Tamburri M., Hermes J., et al. (2019). Quality assurance of oceanographic observations: Standards and guidance adopted by an international partnership. Front. Mar. Sci. 6. doi: 10.3389/fmars.2019.00706

Hörstmann C., Buttigieg P. L., Simpson P., Pearlman J., Waite A. M. (2021). Perspectives on documenting methods to create ocean best practices. Front. Mar. Sci. 7. doi: 10.3389/fmars.2020.556234

Hedge P., Souter D., Jordan A., Trebilco R., Ward T., Van Ruth P., et al. (2022). Establishing and supporting a national marine baselines and monitoring program: Advice from the marine baselines and monitoring working group (Prepared for Australia’s National Marine Science Committee). Available at: https://www.marinescience.net.au/workinggroups

Hermes J. (2020). GOOS best practice endorsement process. version 1 (Paris: Global Ocean Observing System).

Langlois T., Goetze J., Bond T., Monk J., Abesamis R. A., Asher J., et al. (2020). A field and video annotation guide for baited remote underwater stereo-video surveys of demersal fish assemblages. Methods Ecol. Evol. 11, 1401–1409. doi: 10.1111/2041-210X.13470

Loizidou X. I., Loizides M. I., Orthodoxou D. L. (2013). A novel best practices approach: the MARLISCO case. Mar. Poll. Bull. 88, 118–128. doi: 10.1016/j.marpolbul.2014.09.015

Mantovani C., Corgnati L., Horstmann J., Rubio A., Reyes E., Quentin C., et al. (2020). Best practices on high frequency radar deployment and operation for ocean current measurement. Front. Mar. Sci. 7. doi: 10.3389/fmars.2020.00210

Miloslavich P., Bax N. J., Simmons S. E., Klein E., Appeltans W., Aburto-Oropeza O., et al. (2018). Essential ocean variables for global sustained observations of biodiversity and ecosystem changes. Global Change Biol. 24, 2416–2433. doi: 10.1111/gcb.14108

Muller-Karger F. E., Miloslavich P., Bax N. J., Simmons S., Costello M. J., Pinto I. S., et al. (2018). Advancing marine biological observations and data requirements of the complementary essential ocean variables (EOVs) and essential biodiversity variables (EBVs) frameworks. Front. Mar. Sci. 5. doi: 10.3389/fmars.2018.00211

Parks J., Bringas F., Cowley R., Hanstein C., Krummel L., Sprintall J., et al. (2022). XBT operational best practices for quality assurance. Front. Mar. Sci. 9. doi: 10.3389/fmars.2022.991760

Pearlman J., Bushnell M., Coppola L., Karstensen J., Buttigieg P. L., Pearlman F., et al. (2019). Evolving and sustaining ocean best practices and standards for the next decade. Front. Mar. Sci. 6. doi: 10.3389/fmars.2019.00277

Pearlman J., Buttigieg P. L., Bushnell M., Delgado C., Hermes J., Heslop E., et al. (2021). Evolving and sustaining ocean best practices to enable interoperability in the UN decade of ocean science for sustainable development. Front. Mar. Sci. 8. doi: 10.3389/fmars.2021.619685

Pensieri S., Bozzano R., Schiano M. E., Ntoumas M., Potiris E., Frangoulis C., et al. (2016). Methods and best practice to intercompare dissolved oxygen sensors and Fluorometers/Turbidimeters for oceanographic applications. Sensors 16:702. doi: 10.3390/s16050702

Picard K., Austine K., Bergersen N., Cullen R., Dando N., Donohue D., et al. (2020). Australian Multibeam guidelines, version 2 (Canberra: Geoscience Australia).

Przeslawski R., Bodrossy L., Carroll A., Cheal A., Depczynski M., Foster S., et al. (2019a). Scoping of new field manuals for marine sampling in Australian waters. report to the national environmental science programme (Marine Biodiversity Hub). Available at: https://www.nespmarine.edu.au/document/scoping-new-field-manuals-marine-sampling-australian-waters

Przeslawski R., Foster S., Langlois T., Gibbons B., Monk J. (2021). Impact and outcomes of marine sampling best practices (Canberra: NESP Marine Biodiversity Hub). Available at: https://www.nespmarine.edu.au/document/scoping-new-field-manuals-marine-sampling-australian-waters.

Przeslawski R., Foster S., Monk J., Barrett N., Bouchet P., Carroll A., et al. (2019b). A suite of field manuals for marine sampling to monitor Australian waters. Front. Mar. Sci. 6. doi: 10.3389/fmars.2019.00177

Read P. A., Fernandes T. F., Miller K. L. (2001). The derivation of scientific guidelines for best environmental practice for the monitoring and regulation of marine aquaculture in Europe. J. Appl. Ichthyol 17, 146–152. doi: 10.1046/j.1439-0426.2001.00311.x

Rivest E. B., O’brien M., Kapsenberg L., Gotschalk C. C., Blanchette C. A., Hoshijima U., et al. (2016). Beyond the benchtop and the benthos: Dataset management planning and design for time series of ocean carbonate chemistry associated with durafet (R)-based pH sensors. Ecol. Inf. 36, 209–220. doi: 10.1016/j.ecoinf.2016.08.005

Saito M. A., Bertrand E. M., Duffy M. E., Gaylord D. A., Held N. A., Hervey W. J., et al. (2019). Progress and challenges in ocean metaproteomics and proposed best practices for data sharing. J. Proteome Res. 18, 1461–1476. doi: 10.1021/acs.jproteome.8b00761

Samuel R. M., Meyer R., Buttigieg P. L., Davies N., Jeffery N. W., Meyer C., et al. (2021). Toward a global public repository of community protocols to encourage best practices in biomolecular ocean observing and research. Front. Mar. Sci. 8. doi: 10.3389/fmars.2021.758694

Keywords: monitoring, FAIR (findable, accessible, interoperable, and reusable) principles, standard operating procedure (SOP), marine protected areas

Citation: Przeslawski R, Barrett N, Carroll A, Foster S, Gibbons B, Jordan A, Monk J, Langlois T, Lara-Lopez A, Pearlman J, Picard K, Pini-Fitzsimmons J, van Ruth P and Williams J (2023) Developing an ocean best practice: A case study of marine sampling practices from Australia. Front. Mar. Sci. 10:1173075. doi: 10.3389/fmars.2023.1173075

Received: 24 February 2023; Accepted: 27 March 2023;

Published: 11 April 2023.

Edited by:

Roberta Congestri, University of Rome Tor Vergata, Italy

Reviewed by:

George Petihakis, Hellenic Centre for Marine Research (HCMR), Greece

Gianluca Persichetti, National Research Council (CNR), Italy

Copyright © 2023 Przeslawski, Barrett, Carroll, Foster, Gibbons, Jordan, Monk, Langlois, Lara-Lopez, Pearlman, Picard, Pini-Fitzsimmons, van Ruth and Williams. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rachel Przeslawski, rachel.przeslawski@dpi.nsw.gov.au

Rachel Przeslawski, Neville Barrett, Andrew Carroll, Scott Foster, Brooke Gibbons, Alan Jordan², Jacquomo Monk, Tim Langlois, Ana Lara-Lopez, Jay Pearlman, Kim Picard, Joni Pini-Fitzsimmons, Paul van Ruth, Joel Williams