- 1School of Information and Control Engineering, Qingdao University of Technology, Qingdao, China

- 2Civil Aviation Logistics Technology Co., Ltd, Chengdu, China

- 3Institute of Oceanographic Instrumentation, Qilu University of Technology (Shandong Academy of Sciences), Qingdao, China

- 4School of Optoelectronic Engineering, Xidian University, Xi’an, China

Blurring and color distortion are significant issues in underwater optical imaging, caused by light absorption and scattering impacts in the water medium. This hinders our ability to accurately perceive underwater imagery. Initially, we merge two images and enhance both the brightness and contrast of the secondary images. We also adjust their weights to ensure minimal effects on the image fusion process, particularly on edges, colors, and contrast. To avoid sharp weighting transitions leading to ghost images of low-frequency components, we then propose and use a multi-scale fusion method when reconstructing the images. This method effectively reduces scattering and blurring impacts of water, fixes color distortion, and improves underwater image contrast. The experimental results demonstrate that the image fusion method proposed in this paper effectively improves the fidelity of underwater images in terms of sharpness and color, outperforming the latest underwater imaging methods by comparison in PSNR, Gradient, Entropy, Chroma, AG, UCIQE and UIQM. Moreover, this method positively impacts our visual perception and enhances the quality of the underwater imagery presented.

1 Introduction

The ocean holds vast resources and is considered a new continent to be exploited by mankind. However, rapid population growth, depletion of land resources, and deterioration of the natural environment have increased the importance of both exploiting and protecting marine resources. In this context, ocean information acquisition, transmission, and processing theory and technology play a critical role in the rational exploitation and utilization of ocean resources. Underwater images are a key source of ocean data and a useful visualization tool for observing the ocean.

However, compared to the air medium, the attenuation coefficient of light beam propagation in the water medium is much larger, leading to poor underwater imaging quality (Yang et al., 2019). The scattering of light by water and suspended particles also reduces image contrast, resulting in blurred images and poor visibility. Additionally, the attenuation characteristics in water vary with wavelengths of light, with red light being the most attenuated, losing its energy after a distance of 4-5 meters. This makes underwater images more likely to have a bluish or greenish appearance.

These factors collectively limit the quality of underwater imaging, posing significant practical and scientific challenges in the application of underwater images in marine military, marine environmental protection, and marine engineering. Therefore, it is essential to develop effective techniques and technologies to improve underwater imaging quality and overcome these limitations.

The motivation for designing the multi-scale fusion mechanism in underwater image enhancement is to deal with the unique challenges that arise when imaging underwater environments. In particular, underwater images often suffer from severe noise, low contrast, and color distortion, which can reduce visibility and make it difficult to distinguish between different objects in the scene.

One approach to addressing these challenges is to use image enhancement techniques that adjust the brightness, contrast, and color balance of the underwater images. However, standard image enhancement techniques may not be effective in underwater environments due to the complex nature of the underwater light field and the scattering and absorption of light by water and suspended particles.

To overcome these challenges, researchers have developed multi-scale fusion mechanisms that combine information from different scales in the image to improve the overall image quality. This approach involves breaking down the image into different scales and processing each scale separately before fusing the results back together.

By using this multi-scale approach, the low-level features of the image can be enhanced at the pixel level, while the high-level features, such as edges and boundaries, can be preserved to maintain the overall structure of the image. This allows for better visibility and the ability to distinguish between different objects in the scene, making it easier to interpret underwater images for scientific, commercial, and military applications.

With the advancement and maturation of image processing and computer vision technologies (Sahu et al., 2014), many scientists are paying more attention to using these technologies as post-processing steps to enhance the visual quality of underwater images to meet the needs of both human visual characteristics and machine recognition (Guo et al., 2017). Jiang et al. make efforts in both subjective and objective aspects to fully understand the true performance of underwater image enhancement algorithms (Jiang et al., 2022). Image enhancement is a widely-used technique that can be used to improve the quality of underwater photographs by primarily increasing image contrast and correcting color distortion (Wang et al., 2019).

Typical enhancement methods used in this field include histogram equalization (HE) (Hummel, 1977; Pisano et al., 1998), generalized unsharp mask (GUM), and fusion using a monochromatic model (Ancuti et al., 2012). Iqbal et al. developed the Integrated Color Model (ICM) algorithm (Iqbal et al., 2007) and an Unsupervised Colour Correction Method (UCM) for underwater image enhancement (Iqbal et al., 2010). Abdul Ghani et al. employed the Rayleigh distribution function to redistribute the input image (Abdul Ghani and Mat Isa, 2015). Huang et al. proposed the RGHS model to enhance image information entropy (Huang et al., 2018). To solve blurriness and color degradation issues, Zhou et al. developed a restoration method based on a backscatter pixel prior and color cast removal, starting from the physical mechanism of underwater image degradation (Zhou et al., 2022). Peng et al. proposed a depth estimation method for underwater scenes based on image blurriness and light absorption (IBLA), which can be used in the image formation model (IFM) to restore and enhance underwater images (Peng and Cosman, 2017).

For underwater image restoration, a common approach is to analyze the effective degradation model of the underwater imaging mechanism and determine the model parameters based on prior knowledge (Chang et al., 2018). For image defogging, the Dark Channel Prior (DCP) has attracted attention due to the similarity between outdoor and underwater images (Ancuti et al., 2020). Drews-Jr provided a method of Underwater Dark Channel Prior (UDCP) (Drews-Jr et al., 2013) that only considers the G and B channels to produce underwater DCP without taking into account the red channel.

Deep learning methods have gradually become a research hot spot with the progress of artificial intelligence technology, for example in the visual recognition and detection of aquatic animals (Li et al., 2023). Chen et al. constructed a real-time adaptive underwater image restoration method called the GAN-based restoration scheme (GAN-RS) (Chen et al., 2019). Yu et al. developed an underwater image restoration network using an underwater image dataset to simulate the relevant imaging model (Yu et al., 2019). Sun et al. also developed a framework for underwater image enhancement that employs a Markov Decision Process (MDP) for reinforcement learning (Sun et al., 2022). Wang et al. proposed a one-stage CNN detector-based benthonic organism detection (OSCD-BOD) scheme that outperforms typical approaches (Chen et al., 2021). They then summarized the architectures and algorithms of deep learning techniques for marine object recognition (Wang et al., 2022), especially for marine organisms (Wang et al., 2023). Li et al. proposed the first comparative learning framework for underwater image enhancement that goes beyond training with a single reference, namely Underwater Image Enhancement via Comparative Learning (CLUIE-Net), which learns from multiple candidate enhancement references (Li et al., 2022). To address the challenges of degraded underwater images, Zhou et al. proposed a novel cross domain enhancement network (CVE-Net) that uses high-efficiency feature alignment to better utilize neighboring features (Zhou et al., 2023b). They pointed out that most existing deep learning methods use a single-input end-to-end network structure, which leads to a single form and content of the extracted features, and presented a multi-feature underwater image enhancement method via an embedded fusion mechanism (MFEF) (Zhou et al., 2023a). To boost the performance of data-driven approaches, Qi et al. proposed a novel underwater image enhancement network called Semantic Attention Guided Underwater Image Enhancement (SGUIE-Net), which introduces semantic information as high-level guidance across different images that share common semantic regions (Qi et al., 2022).

We summarize our main contributions as follows:

(1) We propose a fusion framework for underwater image enhancement. This framework provides a basis for enhancing underwater images captured in different scenes.

(2) We propose a multi-scale weighted method, which employs the white balance method to obtain initial enhancement images as references in real conditions and then filters the compensated images.

(3) We apply Contrast-Limited Adaptive Histogram Equalization (CLAHE) (Pisano et al., 1998) to the L channel of the LAB model within this framework, which reduces time costs and improves efficiency compared to global image equalization.

The following parts are organized as below: Section 2 illustrates the presented method’s structure, including color compensation, initial enhancement, image equalization, contrast enhancement, and image fusion. Next, Section 3 explains our experiment and compares the results to those of other methods. Finally, we summarize our method and references, and then discuss its prospects for the future in Section 4.

2 Materials and methods

From the standpoint of image fusion, two different technologies are used on the underwater degraded image to obtain a new image with color brightness and contrast enhancement. The weight maps of the two images are determined and a high-quality image is obtained by weighted fusion.

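The original degraded underwater image, based on the Jaffe-McGlamery model, can be expressed in its commonly used simplified form as follows:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)} \qquad (1)$$

where $I(x)$ represents the original image taken underwater, $J(x)$ the undegraded scene, $t(x)$ the transmissivity, $d(x)$ the distance between the observer and the object, $\beta$ the attenuation coefficient, and $A$ the color vector of the background light.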
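To make the roles of transmissivity, distance, and attenuation concrete, the following minimal sketch simulates a degraded image under the assumption that the simplified model reads I = J·t + A·(1 − t) with t = exp(−β·d). The function name `degrade`, the per-channel attenuation values, and the ambient colour are illustrative assumptions, not values from this paper.

```python
import numpy as np

def degrade(scene, distance, beta, ambient):
    """Simulate I = J*t + A*(1 - t) with t = exp(-beta*d), per colour channel.

    All names and parameter values are illustrative assumptions.
    """
    scene = np.asarray(scene, dtype=np.float64)        # J, shape (H, W, 3), values in [0, 1]
    distance = np.asarray(distance, dtype=np.float64)  # d, shape (H, W), in metres
    beta = np.asarray(beta, dtype=np.float64)          # attenuation per channel, shape (3,)
    ambient = np.asarray(ambient, dtype=np.float64)    # A, background light colour, shape (3,)
    t = np.exp(-distance[..., None] * beta)            # transmissivity, shape (H, W, 3)
    return scene * t + ambient * (1.0 - t)

# Hypothetical coefficients: red is attenuated much more strongly than green and blue.
beta_rgb = [0.6, 0.1, 0.15]
ambient_rgb = [0.1, 0.5, 0.6]
scene = np.full((2, 2, 3), 0.8)
far = degrade(scene, np.full((2, 2), 5.0), beta_rgb, ambient_rgb)
```

With these assumed coefficients, the red channel of `far` is almost entirely replaced by the ambient colour at 5 m, which mirrors the bluish or greenish appearance described in the Introduction.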

2.1 Color compensation and initial enhancement

2.1.1 Color channel compensation

Several studies on underwater images have shown that green light attenuates less than red and blue light when propagating underwater and, as a result, the water body, as well as most scenes captured underwater, is typically blue-green in appearance. Red and blue dual-channel colour compensation is used to solve the colour cast (Ancuti et al., 2020), and the compensated red and blue channels $I_{rc}$ and $I_{bc}$ are obtained as:

$$I_{rc}(x) = I_r(x) + \alpha\,(\bar{I}_g - \bar{I}_r)\,\bigl(1 - I_r(x)\bigr)\,I_g(x) \qquad (2)$$

$$I_{bc}(x) = I_b(x) + \alpha\,(\bar{I}_g - \bar{I}_b)\,\bigl(1 - I_b(x)\bigr)\,I_g(x) \qquad (3)$$

where $I_r$, $I_g$ and $I_b$ represent the red, green and blue colour channels of the initial image $I$, each channel being in the range (0,1) after normalization by the upper limit of its dynamic range, and $\bar{I}_r$, $\bar{I}_g$ and $\bar{I}_b$ denote the averages of those channels over the whole image. $\alpha$ is the compensation parameter, and tests show that $\alpha = 1$ is suitable for a variety of lighting conditions and acquisition settings.

For underwater scenes with limited distortion, the Gray World white-balance algorithm achieves good visual performance. In this paper, the Gray World method is applied to calculate the white-balanced image, and the final colour-corrected result is obtained after compensating for the loss in both the red and blue channels.
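The two steps of this subsection can be sketched as follows. This is a minimal illustration, assuming the green-channel-based compensation form used by Ancuti et al. and the textbook Gray World rule (each channel scaled so that its mean equals the overall mean); the function names are hypothetical and the code is not the authors' implementation.

```python
import numpy as np

def compensate_red_blue(img, alpha=1.0):
    """Compensate the red and blue channels using the green channel.

    img is a float RGB image in [0, 1]; alpha is the compensation parameter.
    """
    img = np.asarray(img, dtype=np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    mean_r, mean_g, mean_b = r.mean(), g.mean(), b.mean()
    # Pixels with low red (or blue) and strong green receive the largest correction.
    r_c = r + alpha * (mean_g - mean_r) * (1.0 - r) * g
    b_c = b + alpha * (mean_g - mean_b) * (1.0 - b) * g
    return np.clip(np.stack([r_c, g, b_c], axis=-1), 0.0, 1.0)

def gray_world(img):
    """Scale each channel so that its mean matches the mean over all channels."""
    img = np.asarray(img, dtype=np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gains, 0.0, 1.0)

# Usage on a synthetic green-tinted patch (values chosen only for illustration).
patch = np.zeros((4, 4, 3))
patch[..., 0], patch[..., 1], patch[..., 2] = 0.1, 0.5, 0.3
balanced = gray_world(compensate_red_blue(patch, alpha=1.0))
```

Compensating before white balancing keeps the Gray World gains moderate: without it, a nearly empty red channel would be multiplied by a very large gain, which tends to amplify noise and produce reddish artefacts.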

2.1.2 Homomorphic filtering

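Owing to the limited lighting of underwater imaging systems, the illumination on the imaging target is uneven, which degrades imaging quality. Homomorphic filtering is therefore applied to the compensated image (Jiao and Xu, 2010). The image is first decomposed into the product of an illumination component and a reflection component, which is then logarithmically transformed as follows:

$$f(x,y) = i(x,y)\,r(x,y) \qquad (4)$$

$$\ln f(x,y) = \ln i(x,y) + \ln r(x,y) \qquad (5)$$

where $f(x,y)$ is the image, $i(x,y)$ refers to the illumination component, corresponding to low-frequency information, and $r(x,y)$ represents the reflection component, corresponding to high-frequency information. The Fourier transform is then applied to equation (5):

$$F(u,v) = I(u,v) + R(u,v) \qquad (6)$$

where $F(u,v)$, $I(u,v)$ and $R(u,v)$ are the Fourier transforms of $\ln f(x,y)$, $\ln i(x,y)$ and $\ln r(x,y)$, respectively.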

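In the frequency domain, the different frequency parts of the underwater image are processed with a Gaussian filter $H(u,v)$, whose transfer function is given below:

$$H(u,v) = (\gamma_H - \gamma_L)\left[1 - e^{-c\,D^2(u,v)/D_0^2}\right] + \gamma_L \qquad (7)$$

where $\gamma_H$ is the gain of the enhanced high-frequency part, $\gamma_L$ is the gain of the reduced low-frequency part, $c$ is a constant that controls the steepness of the transition, $D(u,v)$ is the distance between the frequency $(u,v)$ and the centre of the spectrum, and $D_0$ is the cut-off value of $D(u,v)$. The brightness range is compressed to even out the illumination and improve image contrast, and the homomorphic filter can appropriately separate the different components. The spectrum $F(u,v)$ of the log-transformed image is then multiplied by $H(u,v)$ as follows:

$$S(u,v) = H(u,v)\,F(u,v) \qquad (8)$$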

The output of homomorphic filter is further performed by the inverse Fourier transform and exponential transformation. The final result is finally given as follows:

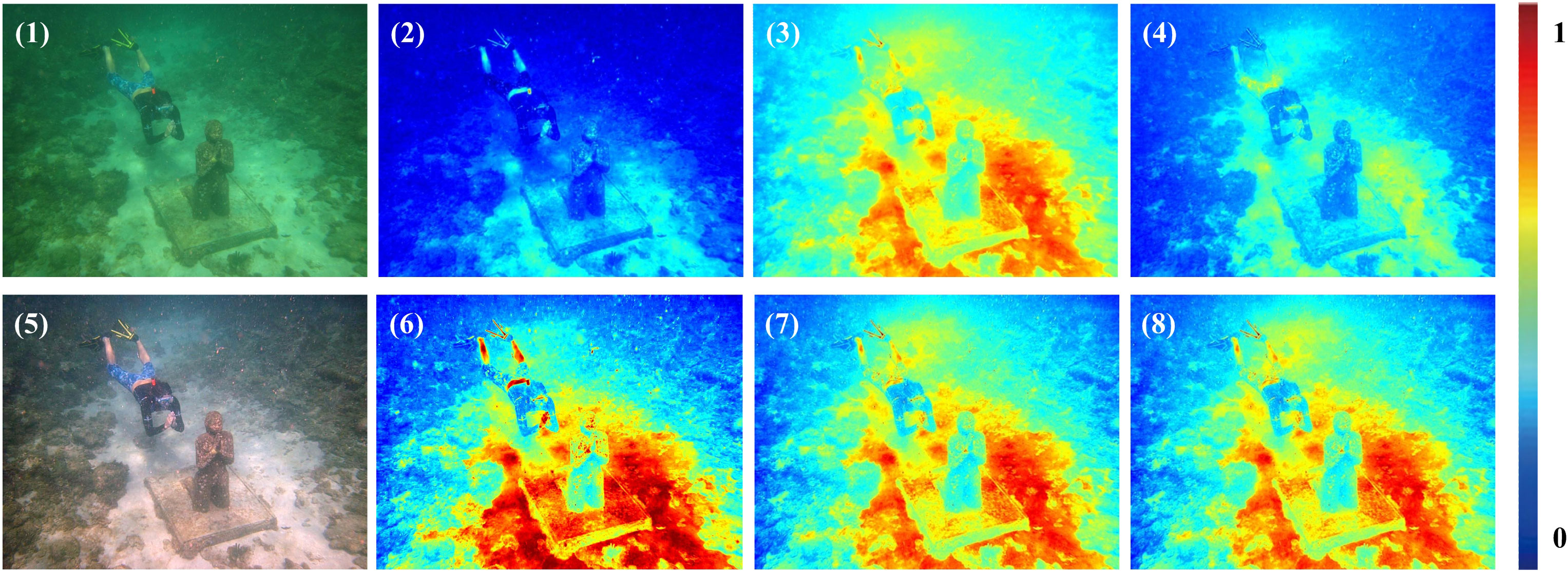

where represents the result obtained by inverse Fourier transform, is direct illumination component and is reflection component. Figure 1 shows the preliminary enhanced image, which was generated using color compensation and homomorphic filtering. When comparing the two-colour images before and after processing, it is clear that colours in three channels of the image are more balanced by using the method described in this paper.

Figure 1 Comparison of original images with preliminary enhancement images. (1) Original; (2) Channel R; (3) Channel G; (4) Channel B; (5) Colour compensation and homomorphic filtering; (6) Channel R after preliminary enhancement; (7) Channel G after preliminary enhancement; (8) Channel B after preliminary enhancement.
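The homomorphic filtering chain (logarithm, Fourier transform, high-frequency emphasis, inverse transform, exponential) can be sketched for a single channel as below. This is a minimal sketch that assumes the textbook Gaussian high-frequency-emphasis transfer function; the parameter names `gamma_h`, `gamma_l`, `c`, `d0` and their default values are assumptions rather than the settings used in this paper, and `log1p`/`expm1` are used instead of the plain logarithm and exponential to avoid taking the logarithm of zero.

```python
import numpy as np

def homomorphic_filter(channel, gamma_h=1.5, gamma_l=0.5, c=1.0, d0=30.0):
    """Apply a Gaussian high-frequency-emphasis homomorphic filter to a 2-D channel."""
    channel = np.asarray(channel, dtype=np.float64)
    rows, cols = channel.shape
    log_img = np.log1p(channel)                         # ln(1 + f), safe for f = 0
    spectrum = np.fft.fftshift(np.fft.fft2(log_img))    # zero frequency moved to the centre
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    dist2 = u[:, None] ** 2 + v[None, :] ** 2           # squared distance to the spectrum centre
    # Gain gamma_l at the centre (illumination), rising towards gamma_h (reflection).
    h = (gamma_h - gamma_l) * (1.0 - np.exp(-c * dist2 / d0 ** 2)) + gamma_l
    filtered = np.fft.ifft2(np.fft.ifftshift(h * spectrum)).real
    return np.expm1(filtered)                           # undo the log1p transform

# A perfectly flat channel only contains the zero-frequency term,
# so it is simply compressed by the low-frequency gain.
flat = np.full((8, 8), 0.5)
compressed = homomorphic_filter(flat, gamma_h=1.5, gamma_l=0.5)
```

In practice the output is usually rescaled to the display range afterwards, since attenuating the low frequencies lowers the overall brightness.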

2.2 Image equalization and contrast enhancement

2.2.1 Gamma correction

After color compensation, the white balance algorithm is used to process the original/preliminary enhanced image. The goal of this step is to improve image quality by reducing color shifts caused by excessive illumination. However, because the underwater image is often brighter after color compensation and homomorphic filtering, we convert the preliminary enhanced image into HSV space and apply gamma correction to enlarge the contrast between bright and dark areas. The gamma correction is set as follows:

$$s = c\,r^{\gamma} \qquad (10)$$

where $r$ and $s$ are the normalized input and output gray values, $c$ is a scaling constant, and $\gamma$ is the correction exponent.

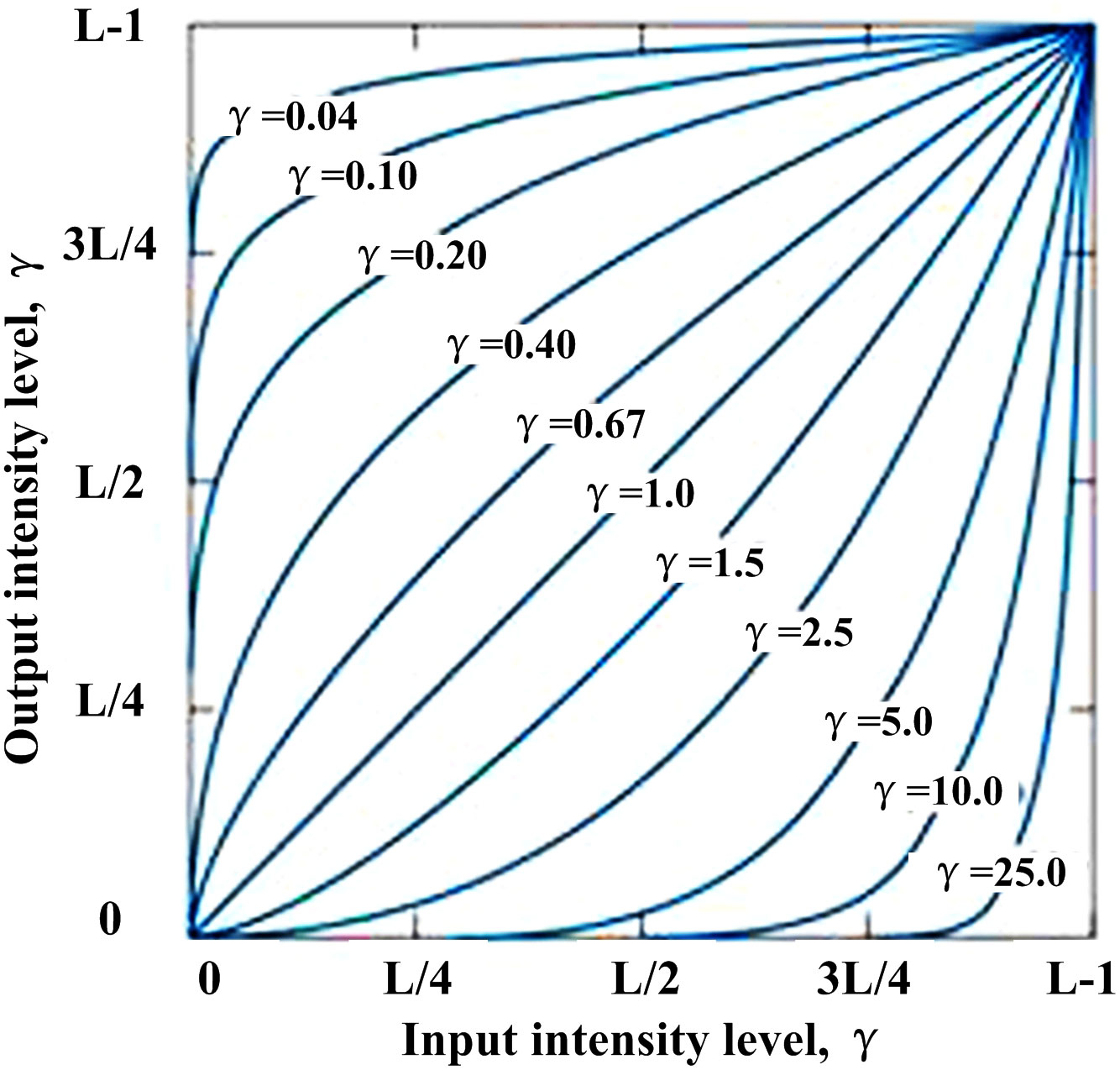

Figure 2 depicts the gamma correction curve. When $\gamma < 1$, the dynamic range of low gray values expands and the image's overall gray value increases. When $\gamma > 1$, the dynamic range of low gray values shrinks while that of high gray values expands. Selecting a value greater than 1 can correct the global contrast in high-brightness underwater images; $\gamma = 2.2$ is used in our case.
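As an illustration of this step, the sketch below applies the power law to the HSV value channel, which is an assumption, since the text does not state which HSV channel is corrected. It relies on the fact that scaling the value channel while keeping hue and saturation fixed is equivalent to scaling R, G and B by the same factor, so no explicit colour-space conversion is needed. The function name is hypothetical.

```python
import numpy as np

def gamma_correct_value(img, gamma=2.2):
    """Apply V -> V**gamma to the HSV value channel of a float RGB image in [0, 1].

    Hue and saturation are unchanged because all three channels of a pixel
    are multiplied by the same factor V**(gamma - 1).
    """
    img = np.asarray(img, dtype=np.float64)
    value = img.max(axis=-1, keepdims=True)      # HSV value channel V = max(R, G, B)
    value_safe = np.maximum(value, 1e-6)         # avoids 0 ** negative for gamma < 1
    return img * value_safe ** (gamma - 1.0)

# A bright pixel is darkened while its channel ratios are preserved.
pixel = np.array([[[0.5, 0.25, 0.25]]])
corrected = gamma_correct_value(pixel, gamma=2.2)
```

With $\gamma = 2.2$ the mid-gray value 0.5 maps to about 0.22, which shows how an exponent above 1 pulls down an over-bright image and leaves more headroom for contrast in the highlights.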

2.2.2 Contrast limited adaptive histogram equalization

The gray values of most underwater images are low and, therefore, their histogram distribution tends to be narrow. CLAHE can be used to modify the histogram distribution of an underwater image, thereby correcting colour bias and improving image contrast to some extent (Pisano et al., 1998). In this paper, histogram equalization is performed in LAB space, i.e. the contrast of the L component is enhanced separately. L stands for brightness in the LAB model, while A and B stand for colour. Enhancing the L channel separately avoids affecting the colour components of the image.

With local histogram equalization, the image is divided into several sub-blocks; in this paper, it is divided into a limited number of non-overlapping sub-blocks. The gray values of the pixels are then calculated using bilinear interpolation to remove the block effect in image reconstruction.
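The per-block operation at the heart of CLAHE can be sketched as follows. This is a simplified illustration of the clip-limited equalization of one sub-block only: it assumes the L channel has been scaled to 8-bit integers, redistributes the clipped excess in a single pass, and leaves out the tiling and the bilinear interpolation between neighbouring blocks. The function name and the `clip_limit` default are assumptions.

```python
import numpy as np

def clipped_equalization_map(tile, clip_limit=2.0, bins=256):
    """Return the clip-limited equalization lookup table of one uint8 sub-block."""
    hist = np.bincount(tile.ravel(), minlength=bins).astype(np.float64)
    limit = clip_limit * tile.size / bins              # maximum count allowed per bin
    excess = np.sum(np.maximum(hist - limit, 0.0))     # counts cut off above the limit
    hist = np.minimum(hist, limit) + excess / bins     # clip, then spread the excess evenly
    cdf = np.cumsum(hist)
    return np.round((bins - 1) * cdf / cdf[-1]).astype(np.uint8)

# A sub-block whose gray values occupy only the narrow range 100..109.
tile = np.repeat(np.arange(100, 110, dtype=np.uint8), 10).reshape(10, 10)
lut = clipped_equalization_map(tile, clip_limit=2.0)
equalized = lut[tile]                                  # apply the mapping to the sub-block
```

The clip limit bounds the slope of the mapping, so the narrow range is stretched without the noise amplification of unconstrained equalization. Complete implementations, such as `cv2.createCLAHE` in OpenCV, additionally interpolate the mappings of neighbouring blocks for every pixel.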

2.3 Image fusion by weight

2.3.1 Define the weight of fusion

After obtaining the two fusion input images, we calculate a weight map for each input that highlights regions with high contrast and changes in edge texture. Brightness, local contrast, and saturation of the image are the primary considerations in the selection of the weight maps in this paper.

To maintain consistency in local image contrast, the brightness weight is applied to assess the exposure degree of the pixels, giving higher weights to well-exposed pixels. Because the mean natural brightness of an image pixel is typically close to 0.5, the mean experimental brightness is set to 0.5 and its standard deviation to 0.25 (Ancuti and Ancuti, 2013), so that the brightness weight $W_E$ of image $I$ is:

$$W_E(x,y) = \exp\left(-\frac{\bigl(I_g(x,y) - 0.5\bigr)^2}{2 \times 0.25^2}\right) \qquad (11)$$

where $I_g$ represents the normalized image in grayscale.

The local contrast weight $W_{LC}$ relates each pixel to the average value of its neighboring pixels. By using local contrast weighting, we can draw attention to the transition areas between the light and dark parts. The local contrast weight of the input image is given as follows:

$$W_{LC}(x,y) = \left\| I^k - I^k_{\omega_{hc}} \right\| \qquad (12)$$

where $I^k$ refers to the brightness channel of input image $k$ and $I^k_{\omega_{hc}}$ represents its low-pass part. The low-pass filter uses a separable 5×5 binomial kernel ($\frac{1}{16}[1, 4, 6, 4, 1]$) with a cut-off frequency $\omega_{hc} = \pi/2.75$. The binomial kernel is very similar to the Gaussian kernel and is easy to calculate.

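We define the saturation weight $W_{Sat}$ of an input image, which makes the fused image evenly saturated by adapting to the highly saturated areas of the input image, expressed as below:

$$W_{Sat} = \sqrt{\tfrac{1}{3}\left[(R - L)^2 + (G - L)^2 + (B - L)^2\right]} \qquad (13)$$

where $R$, $G$ and $B$ represent the red, green and blue channels, respectively, and $L$ is the luminance of the pixel. The normalized weight $\bar{W}^k$ of each input $k$ is then calculated by normalizing the combined brightness, local contrast, and saturation weights $W^k$ over the $K$ inputs:

$$\bar{W}^k = \frac{W^k}{\sum_{k'=1}^{K} W^{k'}} \qquad (14)$$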
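The three weight maps and their normalization can be sketched as follows. This is a minimal sketch under stated assumptions: luminance is taken as the mean of the three channels, the three maps are combined by summation (the text does not specify how they are aggregated), and a small `eps` keeps the normalization well defined where all weights vanish. All function names are hypothetical.

```python
import numpy as np

BINOMIAL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def lowpass(channel):
    """Separable 5x5 binomial blur of a 2-D array with replicated borders."""
    height, width = channel.shape
    padded = np.pad(channel, 2, mode="edge")
    horiz = sum(k * padded[:, i:i + width] for i, k in enumerate(BINOMIAL))
    return sum(k * horiz[i:i + height, :] for i, k in enumerate(BINOMIAL))

def weight_map(img, sigma=0.25):
    """Sum of brightness, local contrast and saturation weights of a float RGB image."""
    img = np.asarray(img, dtype=np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    lum = (r + g + b) / 3.0                                   # assumed luminance
    w_bright = np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))
    w_contrast = np.abs(lum - lowpass(lum))                   # pixel vs. neighbourhood average
    w_sat = np.sqrt(((r - lum) ** 2 + (g - lum) ** 2 + (b - lum) ** 2) / 3.0)
    return w_bright + w_contrast + w_sat                      # summation is an assumption

def normalize_weights(weight_maps, eps=1e-6):
    """Normalize the weight maps so that they sum to one at every pixel."""
    stacked = np.stack(weight_maps) + eps
    return stacked / stacked.sum(axis=0)

rng = np.random.default_rng(1)
inputs = [rng.random((16, 16, 3)), rng.random((16, 16, 3))]
normalized = normalize_weights([weight_map(im) for im in inputs])
```

Because the normalized maps sum to one at every pixel, the fused result stays within the intensity range of the inputs.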

2.3.2 Multi-scale fusion

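The most intuitive approach is to add the two weighted images directly, but this leads to significant halos. Therefore, the experiment in this paper uses the multi-scale fusion technique (Ancuti and Ancuti, 2013), which is developed from classic multi-scale fusion. The fusion is computed as follows:

$$R^l(x,y) = \sum_{k=1}^{K} G^l\{\bar{W}^k(x,y)\}\; L^l\{I^k(x,y)\} \qquad (15)$$

where $N$ is the number of pyramid decomposition layers, $K$ is the number of fused images, $\bar{W}^k$ is the normalized weight, $G^l\{\cdot\}$ and $L^l\{\cdot\}$ are the Gaussian and Laplacian pyramid decompositions, and $l = 1, \dots, N$ is the layer of the image pyramid; $N = 5$ and $K = 2$ in this experiment. The output is obtained by summing the contributions of all layers:

$$F(x,y) = \sum_{l=1}^{N} U\bigl(R^l(x,y)\bigr) \qquad (16)$$

where $F$ denotes the final output image and $U$ denotes up-sampling to the original image size.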
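The multi-scale fusion can be sketched with plain NumPy as below. This is a minimal sketch, assuming the classic scheme in which each Laplacian level of an input is multiplied by the matching Gaussian level of its normalized weight map and the fused pyramid is collapsed from coarse to fine; the 5-tap binomial kernel, the border handling, and all function names are illustrative choices rather than the authors' implementation.

```python
import numpy as np

KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0

def blur(img):
    """Separable binomial blur over the first two axes, with replicated borders."""
    height, width = img.shape[:2]
    pad = ((2, 2), (2, 2)) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img, pad, mode="edge")
    horiz = sum(k * padded[:, i:i + width] for i, k in enumerate(KERNEL))
    return sum(k * horiz[i:i + height] for i, k in enumerate(KERNEL))

def down(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]

def up(img, shape):
    """Insert zeros between samples, then blur (the gain 4 restores the mean)."""
    out = np.zeros(tuple(shape[:2]) + img.shape[2:], dtype=np.float64)
    out[::2, ::2] = img
    return 4.0 * blur(out)

def gaussian_pyramid(img, levels):
    pyramid = [np.asarray(img, dtype=np.float64)]
    for _ in range(levels - 1):
        pyramid.append(down(pyramid[-1]))
    return pyramid

def laplacian_pyramid(img, levels):
    gauss = gaussian_pyramid(img, levels)
    bands = [gauss[i] - up(gauss[i + 1], gauss[i].shape) for i in range(levels - 1)]
    return bands + [gauss[-1]]                 # the coarsest level keeps the low-pass residual

def fuse(images, weights, levels=5):
    """Fuse RGB images (H, W, 3) using per-pixel normalized weights (H, W)."""
    fused = None
    for img, weight in zip(images, weights):
        bands = laplacian_pyramid(img, levels)
        gains = gaussian_pyramid(weight, levels)
        terms = [g[..., None] * b for g, b in zip(gains, bands)]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    out = fused[-1]
    for band in reversed(fused[:-1]):          # collapse from coarse to fine
        out = band + up(out, band.shape)
    return out

rng = np.random.default_rng(0)
first, second = rng.random((32, 32, 3)), rng.random((32, 32, 3))
weight = rng.random((32, 32))
result = fuse([first, second], [weight, 1.0 - weight], levels=5)
```

Smoothing the weights with a Gaussian pyramid at each scale is what suppresses the halos: abrupt weight transitions only affect the fine detail bands, while the coarse bands are blended with correspondingly smooth weights.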

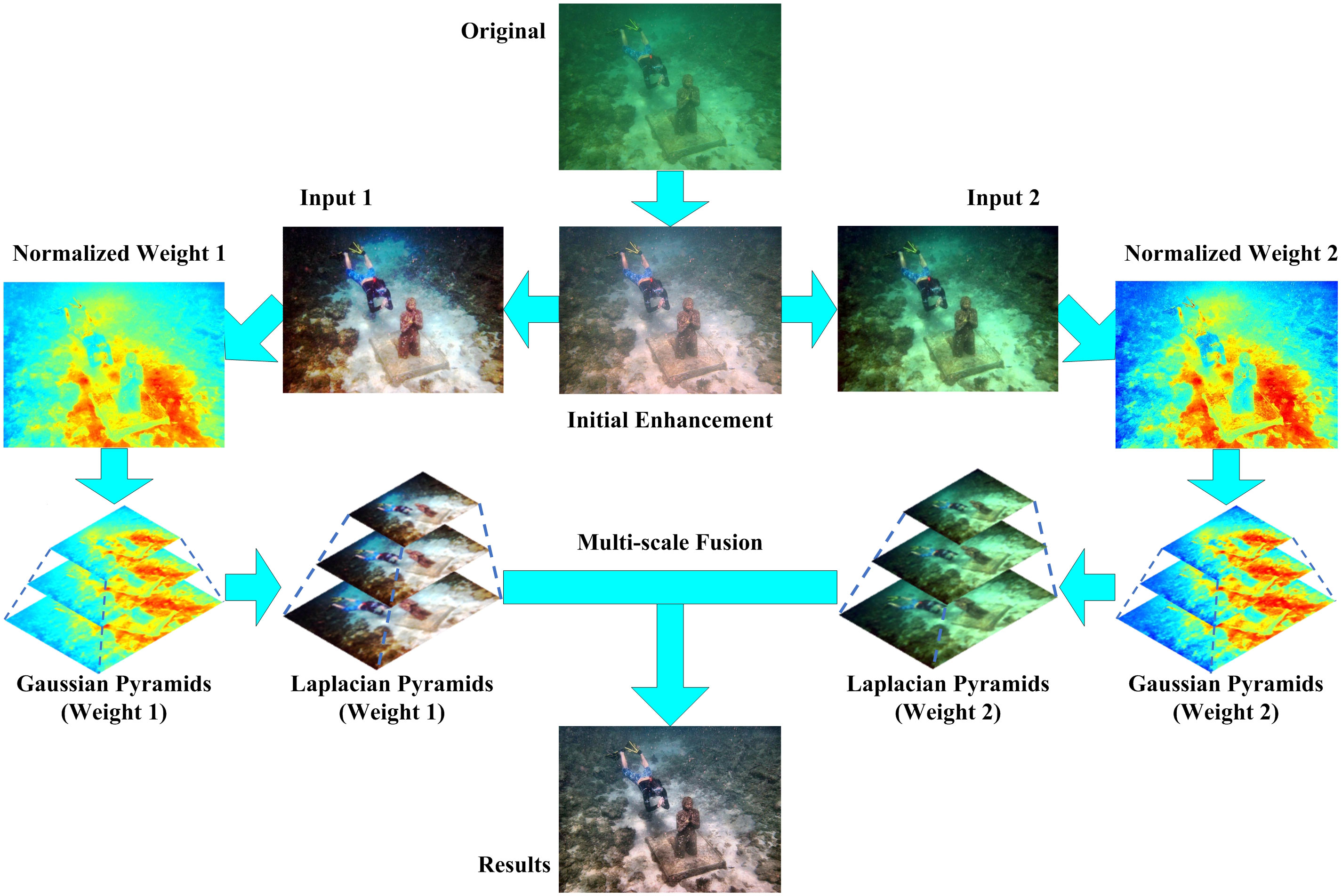

2.4 Our methodology

This paper proposes a multi-scale fusion-based underwater image enhancement algorithm. Figure 3 depicts the algorithm flow. Colour compensation and colour cast correction are performed on the underwater image in shallow water, and the compensated image is then subjected to homomorphic filtering to complete the preliminary enhancement. To obtain the two fusion input images, the enhanced image is subjected to gamma correction and to CLAHE equalization, respectively. Finally, to achieve the goals of attenuation compensation and contrast and definition improvement, a multi-scale fusion algorithm is used.

The method established in this study outperforms existing underwater image enhancement methods in subjective visual effects and objective evaluation indicators in an experimental comparison of various types of underwater images in shallow water.

3 Experimental results and discussion

In this section, we compare the enhancement method proposed in this article with existing algorithms by processing multiple underwater images and summarizing their visual effects and objective image quality evaluations in order to verify its effectiveness. With reference to our previous work, the experiments with the previous algorithms (Pisano et al., 1998; Iqbal et al., 2010; Drews-Jr et al., 2013; Abdul Ghani and Mat Isa, 2015; Panetta et al., 2015; Yang and Sowmya, 2015; Peng and Cosman, 2017; Huang et al., 2018; Chen et al., 2019) are organized in three parts.

3.1 Image enhancement visual effect comparison (dataset 1)

The experimental results are shown in Figure 4. The results of Rayleigh (Abdul Ghani and Mat Isa, 2015) show varying degrees of colour restoration, but with overcompensation in the red channel and loss of several local details. The images from RGHS and GAN-RS show poor quality, with excessive compensation and partial colour casts (Huang et al., 2018; Chen et al., 2019). CLAHE and RGHS improve the image contrast significantly (Pisano et al., 1998; Huang et al., 2018), but their colour restoration effect is still poor. In terms of image enhancement and colour restoration, UDCP does not outperform the other methods (Drews-Jr et al., 2013). In contrast, the experimental method described in this paper demonstrates the best performance on a variety of underwater images, correcting colour bias more effectively, improving contrast, and preserving image details.

Figure 4 The results comparison of underwater images from different methods. (1) The original; (2) CLAHE; (3) Rayleigh; (4) RGHS; (5) UDCP; (6) GAN-RS; (7) Our method.

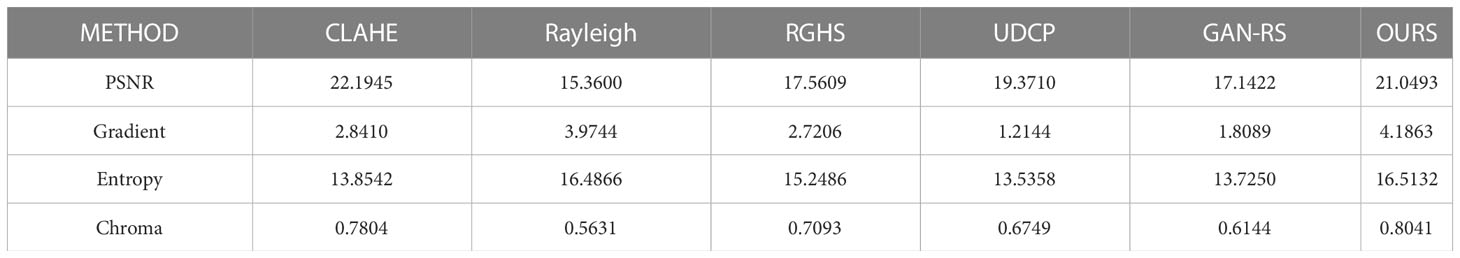

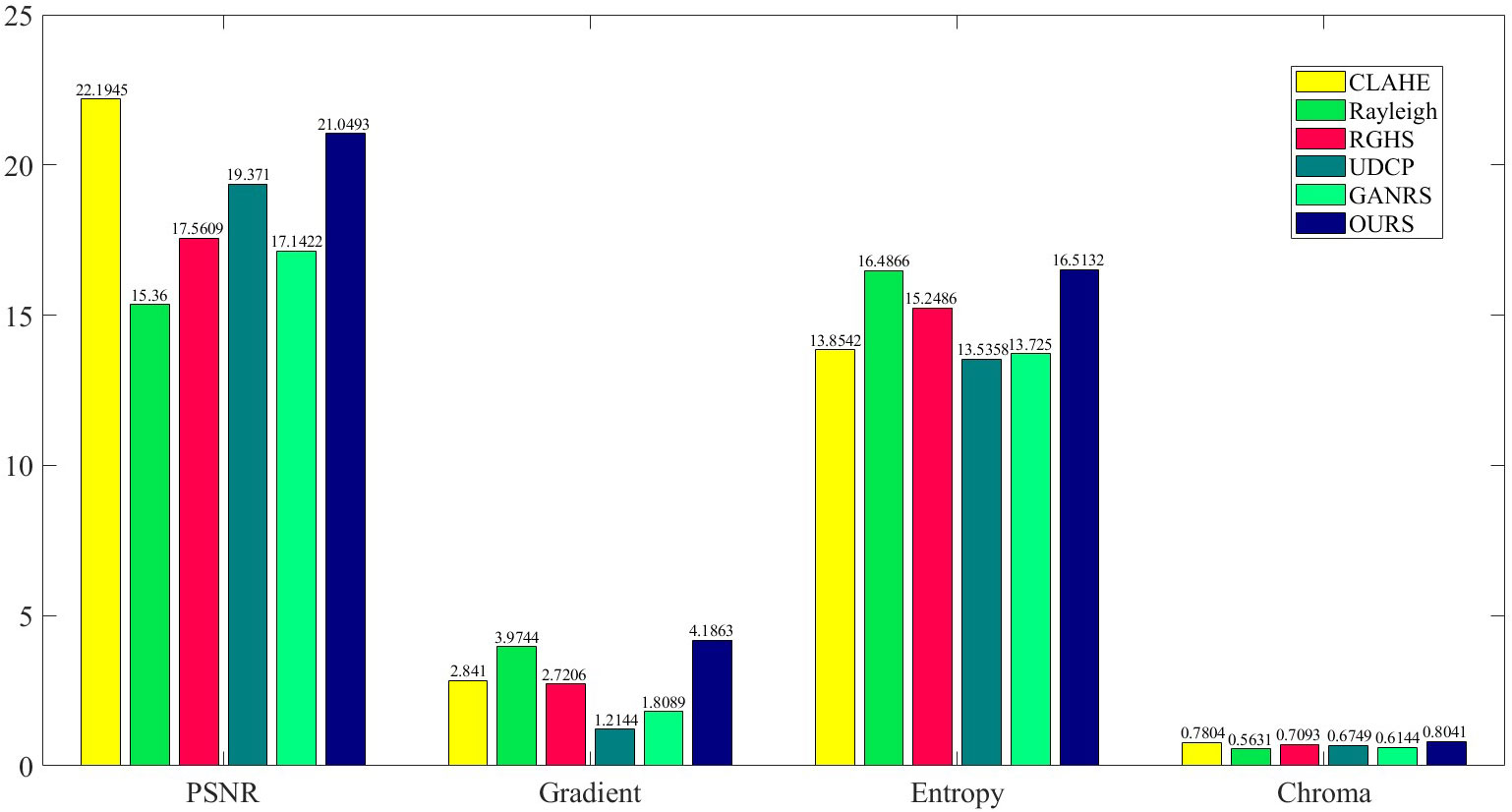

To evaluate the results of the various algorithms, this article uses several traditional objective image quality indicators: peak signal-to-noise ratio (PSNR), average gradient (Average Gradient), information entropy (Entropy), and tone (Chroma). A higher PSNR value indicates that the algorithm introduces less noise and retains more valuable image information. The average gradient reflects the contrast of small details in the image, and the information entropy indicates the average amount of information it contains. The tone summarizes the color of the entire image.

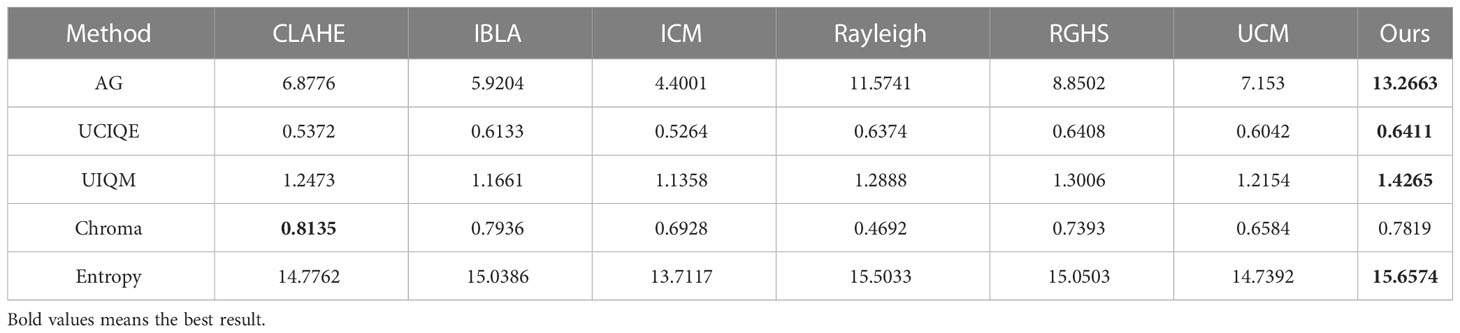

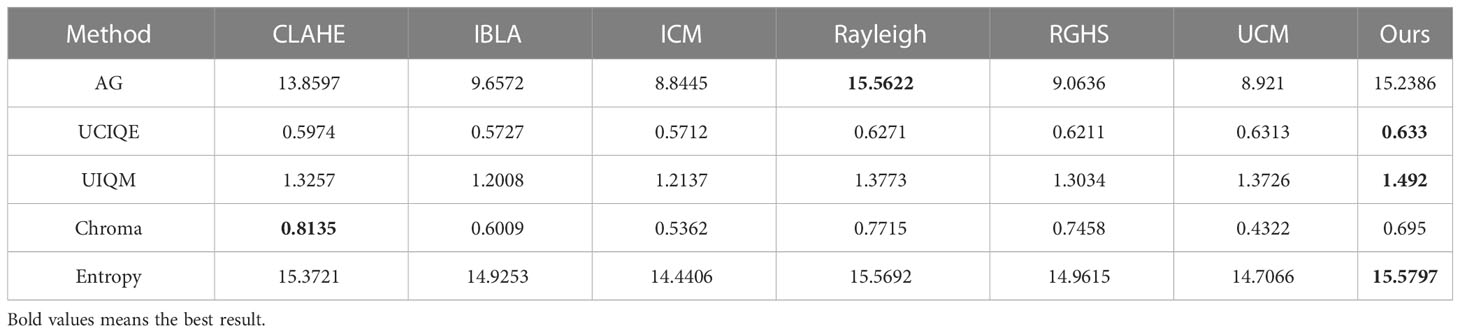

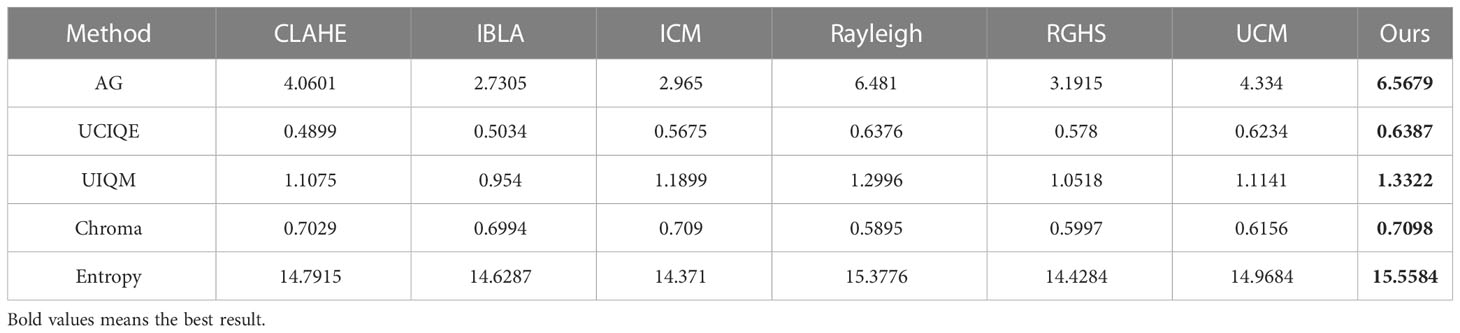

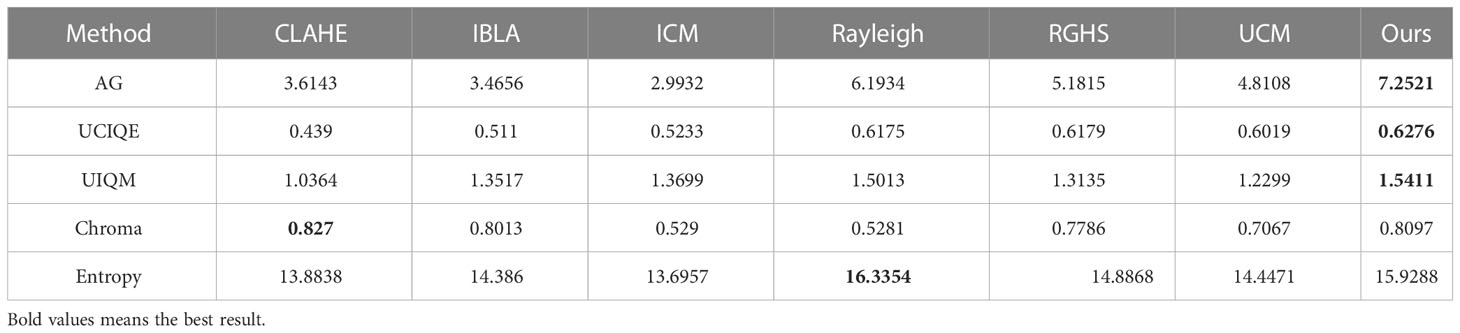

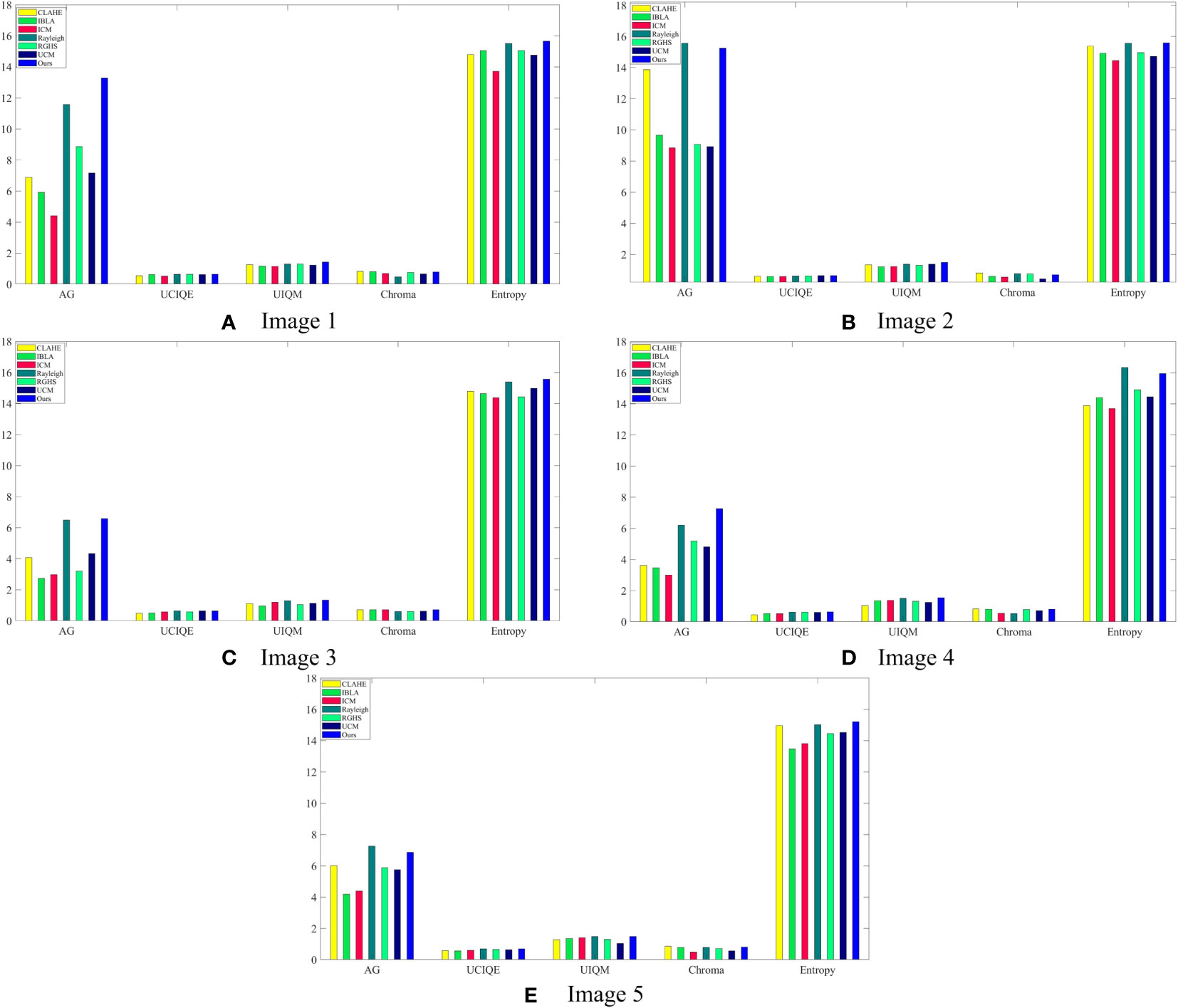
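For reference, three of the indicators above can be computed as sketched below. The sketch uses the standard textbook definitions (PSNR from the mean squared error against a reference image, average gradient from forward differences, Shannon entropy of the 8-bit gray-level histogram); these are assumptions and may differ in detail, such as normalization or the choice of reference image, from the exact formulas used in this paper.

```python
import numpy as np

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    diff = np.asarray(reference, np.float64) - np.asarray(test, np.float64)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

def average_gradient(gray):
    """Mean magnitude of the horizontal and vertical forward differences."""
    gray = np.asarray(gray, dtype=np.float64)
    dx = gray[:-1, 1:] - gray[:-1, :-1]
    dy = gray[1:, :-1] - gray[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def entropy(gray, bins=256):
    """Shannon entropy (in bits) of the gray-level histogram of a uint8 image."""
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=bins)
    prob = hist[hist > 0] / hist.sum()
    return float(-np.sum(prob * np.log2(prob)))

# An image with two equally frequent gray levels carries exactly one bit per pixel.
two_level = np.array([[0, 255], [0, 255]], dtype=np.uint8)
bits = entropy(two_level)
```

Higher values of all three indicators are read as better in the comparison tables: less introduced noise, sharper small details, and richer information content, respectively.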

The above quality indicators are used to evaluate the results of each algorithm after performing comparative experiments on the four contrast images mentioned above. Table 1 shows the mean values of each algorithm's enhancement results for multiple images on the various evaluation indicators. According to the results, as seen in Figure 5, the images processed by the algorithm in this paper contain less introduced noise, retain more effective image information, and show better processing performance than those of the other algorithms. This also indicates that the algorithm presented in this paper has high practical application value and can meet the requirements in this field.

3.2 Image enhancement visual effect comparison (dataset 2)

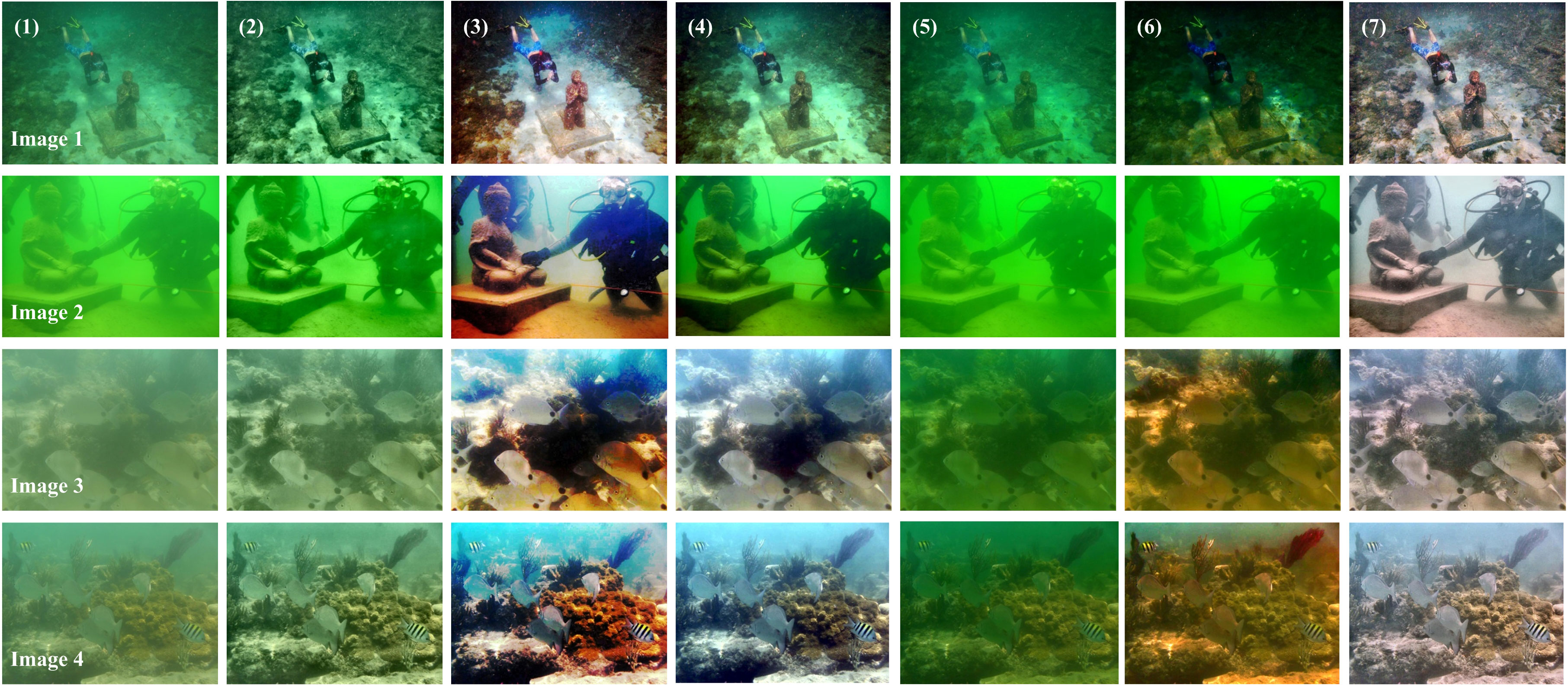

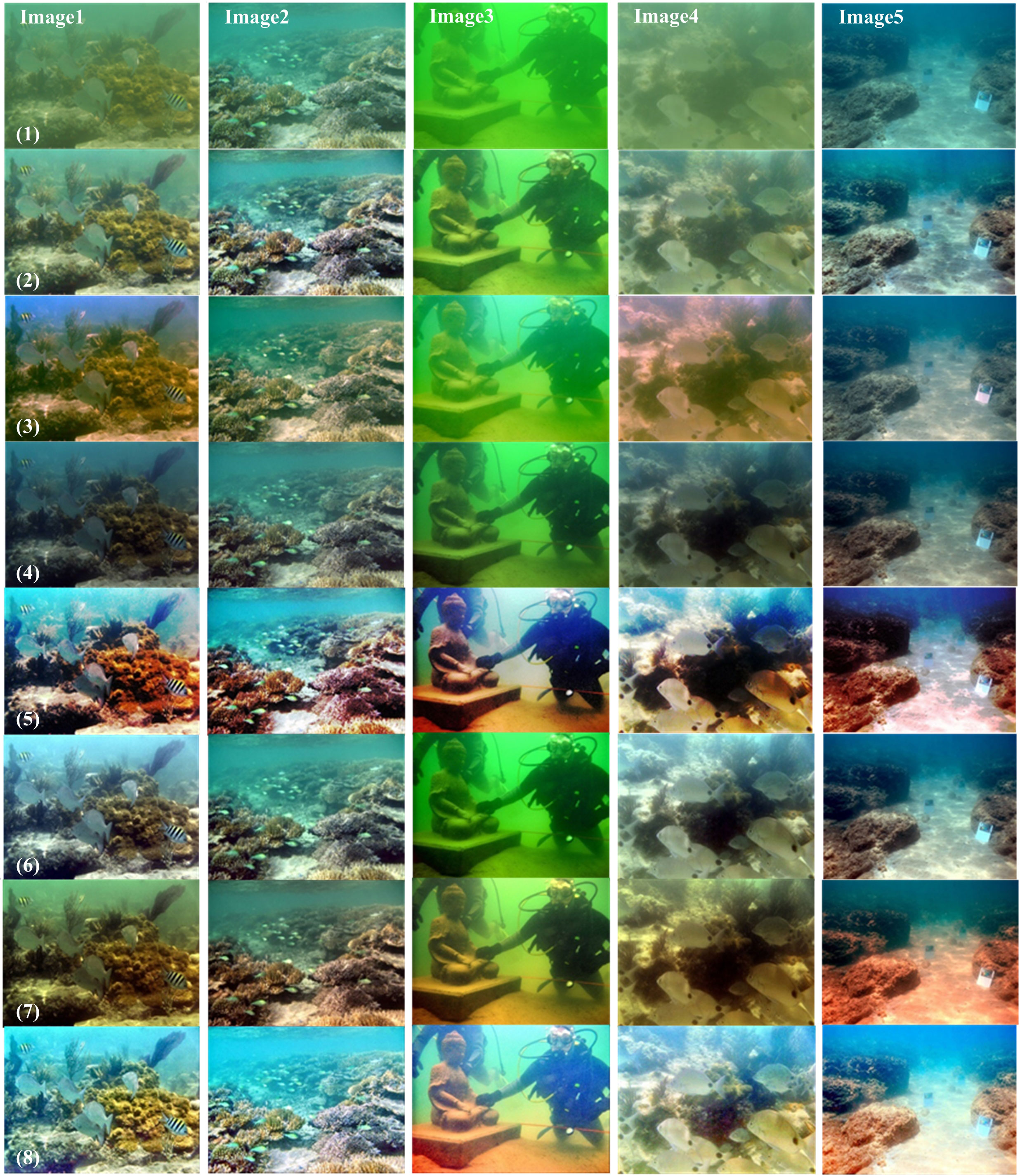

As shown in Figure 6, the experimental results indicate that the previous six algorithms achieved different degrees of color restoration, but could not simultaneously achieve good enhancement effects. The Rayleigh algorithm led to excessive compensation in the red channel and the loss of many local details, while the ICM and UCM algorithms did not perform well in color restoration of green-tinted images, resulting in overcompensation and color deviation. Although CLAHE and RGHS algorithms significantly increased the image contrast, their color restoration effects still need to be improved. However, the experimental method in this paper achieved excellent results for multiple underwater images, which could more effectively correct color deviation, significantly improve contrast, and retain image details.

Figure 6 The results comparison of underwater images from different sources. (1) The original; (2) CLAHE; (3) IBLA; (4) ICM; (5) Rayleigh; (6) RGHS; (7) UCM; (8) Ours.

The average values of the algorithmically enhanced results of multiple images over different evaluation metrics are shown in Table 2, such as AG (Average Gradient), UCIQE (Underwater Color Image Quality Evaluation metric) (Yang and Sowmya, 2015), UIQM (Underwater Image Quality Measures) (Panetta et al., 2015), tone (Chroma) and Entropy. The bold data within the tables represent the maximum value of the column data.

Comparing various underwater image processing methods with the algorithm proposed in this paper, as seen in Figure 7, the images processed by our method obtained the maximum UCIQE and UIQM values, which demonstrates that the algorithm performs well in enhancing degraded underwater images. Our method also shows significant superiority in terms of average gradient and entropy. In terms of image chroma, the proposed algorithm performs well in enhancing green-tinted degraded underwater images. These experimental results indicate that the proposed fusion algorithm has excellent performance in enhancing underwater images.

3.3 Potential applications

Underwater image preprocessing is used to create high-quality underwater images for use in other applications. The feature matching test in this paper is performed using the SIFT operator (Lowe, 2004). We find the correspondences between two sets of similar underwater images under the same experimental conditions, compare the number of matched feature points before and after image processing, and thereby verify the effectiveness of the algorithm in practical applications.

Figure 8 depicts the results of feature point matching and comparison. The comparison shows that the number of accurately matched feature points increases after applying this method. It can therefore be inferred that the quality of the fused image is significantly improved, which better meets the requirements of subsequent recognition tasks and shows high application value.

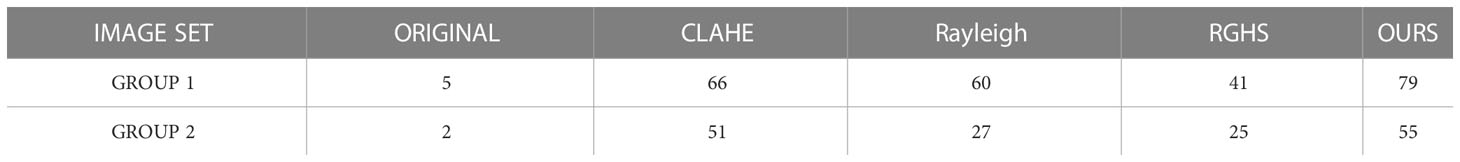

According to the application test results, the images processed by our method perform well in the feature extraction process. The experiment also applied feature point matching to images processed by the various enhancement algorithms; Table 3 presents the results of this comparison. The number of matched feature points for images processed by the method in this study is higher than that of the other methods, indicating that it performs better in real-world applications.

4 Conclusions

Images captured in offshore waters often suffer from low contrast, uneven colors, and varying degrees of blur. To address these issues, we propose a new fusion algorithm that employs color compensation, homomorphic filtering, and L-channel histogram equalization technology to enhance the visual quality of underwater images in shallow sea water through multi-scale fusion processing.

Our algorithm significantly improves the visibility of underwater images in a variety of shallow sea scenes, enhancing color restoration and sharpening effects as shown in subjective image visual effect demonstrations.

Experimental comparison tests showed that utilizing our method for image preprocessing significantly enhances the quality of relevant underwater vision tasks. However, it should be noted that the proposed method can lead to overcompensation and correction of colors. In future work, we aim to improve the color restoration and further enhance underwater image quality.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://li-chongyi.github.io/proj_benchmark.html.

Author contributions

HZ proposed the main framework of the underwater image enhancement method and its process, and revised the manuscript. LG assisted in completing the process and experiments, and drafted the manuscript. XL assisted with data analysis and drawing. FL assisted in improving the framework to obtain better results. JY assisted in revising the manuscript to make it more fluent.

Funding

This research was supported by the Key research projects of Qingdao Science and Technology Plan (Grant Number: 22-3-3-hygg-30-hy), the Natural Science Foundation of Shandong Province (Grant Number: ZR2022ZD38), and Basic Research Projects of Qilu University of Technology (Grant Number: 2022PX053).

Acknowledgments

The authors want to thank reviewers for their suggestions in our revision of manuscripts.

Conflict of interest

LG was employed by Civil Aviation Logistics Technology Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdul Ghani A. S., Mat Isa N. A. (2015). Enhancement of low quality underwater image through integrated global and local contrast correction. Appl. Soft Computing 37, 332–344. doi: 10.1016/j.asoc.2015.08.033

Ancuti C. O., Ancuti C. (2013). Single image dehazing by multi-scale fusion. IEEE Trans. Image Processing 22, 3271–3282. doi: 10.1109/TIP.2013.2262284

Ancuti C., Ancuti C. O., Haber T., Bekaert P. (2012). “Enhancing underwater images and videos by fusion,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition. (IEEE: Providence, RI, USA), 81–88. doi: 10.1109/CVPR.2012.6247661

Ancuti C. O., Ancuti C., Vleeschouwer C. D., Sbert M. (2020). Color channel compensation (3C): a fundamental pre-processing step for image enhancement. IEEE Trans. Image Processing 29, 2653–2665. doi: 10.1109/TIP.2019.2951304

Chang H., Cheng C. Y., Sung C. C. (2018). Single underwater image restoration based on depth estimation and transmission compensation. IEEE J. Oceanic Eng. 44, 1–20. doi: 10.1109/JOE.2018.2865045

Chen T., Wang N., Wang R., Zhao H., Zhang G. (2021). One-stage CNN detector-based benthonic organisms detection with limited training dataset. Neural Networks 144, 247–259. doi: 10.1016/j.neunet.2021.08.014

Chen X., Yu J., Kong S., Wu Z., Fang X., Wen L. (2019). Towards real-time advancement of underwater visual quality with GAN. IEEE Trans. Ind. Electronics 66, 9350–9359. doi: 10.1109/TIE.2019.2893840

Drews-Jr P., do Nascimento E., Moraes F., Botelho S., Campos M. (2013). “Transmission estimation in underwater single images,” in International Conference on Computer Vision - Workshop on Underwater Vision. (IEEE:Sydney, NSW, Australia), 825–830.

Guo J. C., Li C. Y., Guo C. L., Chen S. J. (2017). Research progress of underwater image enhancement and restoration methods. J. Image Graphics 22, 0273–0287. doi: 10.11834/jig.20170301

Huang D., Wang Y., Song W., Sequeira J., Mavromatis S. (2018). “Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition,” in 24th International Conference on Multimedia Modeling - MMM2018. (MultiMedia Modeling: Bangkok, Thailand).

Hummel R. (1977). Image enhancement by histogram transformation. Comput. Graphics Image Processing 6, 184–195. doi: 10.1016/S0146-664X(77)80011-7

Iqbal K., Odetayo M., James A., Salam R. A., Talib A. Z. H. (2010). “Enhancing the low quality images using unsupervised colour correction method,” in 2010 IEEE International Conference on Systems, Man and Cybernetics. (IEEE:Istanbul, Turkey), 1703–1709.

Iqbal K., Salam R. A., Osman A., Talib A. Z. (2007). Underwater image enhancement using an integrated colour model. IAENG Int. J. Comput. Sci. 34, 239–244.

Jiang Q., Gu Y., Li C., Cong R., Shao F. (2022). Underwater image enhancement quality evaluation: benchmark database and objective metric. IEEE Trans. Circuits Syst. Video Technol. 32 (9), 5959–5974. doi: 10.1109/TCSVT.2022.3164918

Jiao Z., Xu B. (2010). Color image illumination compensation based on HSV transform and homomorphic filtering. Comput. Eng. Applications 46, 142–144. doi: 10.3778/j.issn.1002-8331.2010.30.042

Li K., Wu L., Qi Q., Liu W., Gao X., Zhou L., et al. (2022). Beyond single reference for training: underwater image enhancement via comparative learning. IEEE Trans. Circuits Syst. Video Technol. doi: 10.1109/TCSVT.2022.3225376

Li J., Xu W., Deng L., Xiao Y., Han Z., Zheng H. (2023). Deep learning for visual recognition and detection of aquatic animals: a review. Rev. Aquacul. 15 (2), 409–433. doi: 10.1111/raq.12726

Lowe D. G. (2004). Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vision 60, 91–110. doi: 10.1023/B:VISI.0000029664.99615.94

Panetta K., Gao C., Agaian S. (2015). Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41 (3), 541–551. doi: 10.1109/JOE.2015.2469915

Peng Y. T., Cosman P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Processing 26 (4), 1579–1594. doi: 10.1109/TIP.2017.2663846

Pisano E. D., Zong S., Hemminger B. M., DeLuca M., Johnston R. E., Muller K., et al. (1998). Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digital Imaging 11, 193–200. doi: 10.1007/BF03178082

Qi Q., Li K., Zheng H., Gao X., Hou G., Sun K. (2022). SGUIE-net: semantic attention guided underwater image enhancement with multi-scale perception. IEEE Trans. Image Processing 31, 6816–6830. doi: 10.1109/TIP.2022.3216208

Sahu P., Gupta N., Sharma N. (2014). A survey on underwater image enhancement techniques. Int. J. Comput. Applications 87, 19–23. doi: 10.5120/15268-3743

Sun S., Wang H., Zhang H., Li M., Xiang M., Luo C., et al. (2022). Underwater image enhancement with reinforcement learning. IEEE J. Oceanic Eng., 1–13. doi: 10.1109/JOE.2022.3152519

Wang N., Chen T., Liu S., Wang R., Karimi H. R., Lin Y. (2023). Deep learning-based visual detection of marine organisms: a survey. Neurocomputing 532, 1–32. doi: 10.1016/j.neucom.2023.02.018

Wang Y., Song W., Fortino G., Qi L.-Z., Zhang W., Liotta A. (2019). An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access 7, 140233–140251. doi: 10.1109/ACCESS.2019.2932130

Wang N., Wang Y., Er M. J. (2022). Review on deep learning techniques for marine object recognition: architectures and algorithms. Control Eng. Practice 118, 104458. doi: 10.1016/j.conengprac.2020.104458

Yang M., Hu J., Li C., Rohde G., Du Y., Hu K. (2019). An in-depth survey of underwater image enhancement and restoration. IEEE Access 7, 123638–123657. doi: 10.1109/ACCESS.2019.2932611

Yang M., Sowmya A. (2015). An underwater color image quality evaluation metric. IEEE Trans. Image Processing 24, 6062–6071. doi: 10.1109/TIP.2015.2491020

Yu X., Qu Y., Hong M. (2019). Underwater-GAN: underwater image restoration via conditional generative adversarial network. Lecture Notes Comput. Sci. 11188, 66–75. doi: 10.1007/978-3-030-05792-3_7

Zhou J., Sun J., Zhang W., Lin Z. (2023a). Multi-view underwater image enhancement method via embedded fusion mechanism. Eng. Appl. Artif. Intelligence 121, 105946. doi: 10.1016/j.engappai.2023.105946

Zhou J., Yang T., Chu W., Zhang W. (2022). Underwater image restoration via backscatter pixel prior and color compensation. Eng. Appl. Artif. Intelligence 111, 104785. doi: 10.1016/j.engappai.2022.104785

Keywords: underwater optical imaging, multi-scale weight, image enhancement, image fusion, homomorphic filtering

Citation: Zhang H, Gong L, Li X, Liu F and Yin J (2023) An underwater imaging method of enhancement via multi-scale weighted fusion. Front. Mar. Sci. 10:1150593. doi: 10.3389/fmars.2023.1150593

Received: 24 January 2023; Accepted: 26 April 2023;

Published: 18 May 2023.

Edited by:

Xuemin Cheng, Tsinghua University, China

Copyright © 2023 Zhang, Gong, Li, Liu and Yin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hao Zhang, zhanghao@qut.edu.cn
