- 1 Faculty of Electrical Engineering and Computer Science, Ningbo University, Ningbo, China

- 2 Key Laboratory of Aquaculture Biotechnology, Chinese Ministry of Education, Ningbo University, Ningbo, China

- 3 Collaborative Innovation Center for Zhejiang Marine High-Efficiency and Healthy Aquaculture, Ningbo, China

- 4 Marine Economic Research Center, Dong Hai Strategic Research Institute, Ningbo University, Ningbo, China

The swimming crab Portunus trituberculatus is an economically important crab species in the coastal areas of China. In this study, an individual re-identification (ReID) method for P. trituberculatus based on a Pyramidal Feature Fusion Model (PFFM) was proposed. This method took the carapace texture of P. trituberculatus as a “biological fingerprint” and extracted both global and local carapace texture features to identify individuals. Furthermore, the method utilized a weight adaptive module to improve ReID accuracy for P. trituberculatus individuals with an incomplete carapace. To make the extracted features more discriminative, triplet loss was adopted during model training. Three experiments, i.e., the effect of the pyramidal model, P. trituberculatus feature analysis, and comparisons to the State-of-the-Arts, were carried out to evaluate PFFM performance. The mean average precision (mAP) and Rank-1 values of the proposed method reached 93.2% and 93% in the left half occlusion case, and 71.8% and 75.4% in the upper half occlusion case. These experiments verified the effectiveness and robustness of the proposed method.

Introduction

The swimming crab (Portunus trituberculatus) is an economically important marine crab on the coast of China. Its production peaked at 561,000 tons in 2017 (FAO, 2020). In recent years, with the rapid development of the industry, quality and safety issues of P. trituberculatus have raised many concerns, such as heavy metal residue in the edible tissues of the swimming crab (Barath Kumar et al., 2019; Yu et al., 2020; Bao et al., 2021; Yang et al., 2021). A tracing system can recall a product in question in time and detect counterfeit products, which helps address such food safety issues. Therefore, it is essential to establish a P. trituberculatus tracing system. In terms of tracing technology, there are a number of Internet of Things (IoT)-based tracking and tracing infrastructures, such as Radio Frequency Identification (RFID) and Quick Response (QR) codes (Landt, 2005), which are primarily targeted at product identification. However, RFID tags and QR codes are easily damaged during transportation. In contrast, an image can be captured conveniently, and a re-identification (ReID) method based on image processing can identify the product without a physical label. Thus, image-based ReID has become a trend in product identification and tracing.

Traditional individual tracing methods have some limitations (Violino et al., 2019). For example, biological identification is time-consuming, chemical identification is not applicable on a massive scale dataset, and information identification cannot recognize artificially forged samples. All the methods above are complicated, and feature extraction cost is high on large-scale applications. In contrast, the State-of-the-Art artificial intelligence (AI)-based methods can overcome these challenges. AI has been applied to simplify the feature extraction process in the biometrics field (Hansen et al., 2018; Marsot et al., 2020). Specifically, AI-based methods mainly identify objects according to image features. There are many methods, including Histogram of Oriented Gradient (HOG) (Dalal and Triggs, 2005), Local Binary Pattern (LBP) (Ojala et al., 2002), and deep network. These methods can extract features such as bumps, grooves, ridges, and irregular spots on the carapace of P. trituberculatus. The extracted features seem like the “biological fingerprint” of P. trituberculatus, which provides initial features for object ReID.

In terms of ReID, the traditional ReID is mainly applied to pedestrian re-identification. The initial visual features are used to represent pedestrian (Bak et al., 2010; Oreifej et al., 2010; Jüngling et al., 2011). To measure the effect of ReID methods, Relative Distance Comparison (RDC) (Zheng et al., 2012) was proposed based on PRDC (Zheng et al., 2011). RDC utilized the AdaBoost mechanism to reduce the dependence of model training on labeled samples.

With the development of deep learning, many scholars introduced deep learning into ReID and focused on part-based methods. Deeply learned representations have a high discriminative ability, especially when aggregated from deeply learned part features. In 2018, the Part-based Convolutional Baseline (PCB) model (Sun et al., 2018) was proposed. The PCB model divides a pedestrian image into separate blocks to extract fine-grained features and achieved promising results, which showed that the part-based approach is effective for ReID. Meanwhile, a more detailed part-based method (Fu et al., 2019), which combined the divided part features into an individual “biological fingerprint,” was proposed. This method performed better than PCB, and part-based strategies such as the multiple granularity network (MGN) (Wang et al., 2018) and the pyramidal model (Zheng et al., 2019) further improved ReID accuracy. Triplet loss (Schroff et al., 2015) is another way to deal with the ReID task and has since been widely used in ReID. In this study, on top of the part-based methods, triplet loss was adopted to minimize the feature distance between P. trituberculatus individuals with the same identification (ID) and maximize the feature distance between individuals with different IDs.

In the field of biological ReID, the deep convolutional neural network (DCNN) is an efficient deep learning method that extracts features to solve ReID problems (Hansen et al., 2018) in a low-cost and scalable way (Marsot et al., 2020). However, deep learning requires large amounts of labeled pictures to train the DCNN model (Ferreira et al., 2020). Korschens and Denzler (2019) introduced an elephant dataset for elephant ReID. The dataset contained 276 elephant individuals and provided a baseline approach for elephant ReID, which used the You Only Look Once (YOLO) (Redmon et al., 2016) detector to recognize elephant individuals. In 2020, a ReID method for Southern Rock Lobster (SRL) based on convolutional neural networks (CNNs) (Vo et al., 2020) was proposed. The lobster ReID method used a contrastive loss function to distinguish lobsters based on carapace images, which showed that the choice of loss function also contributes to ReID. In addition, the standard cross-entropy loss was combined with a pairwise Kullback-Leibler (KL) divergence loss to explicitly enforce consistent, semantically constrained deep representations, and this combination showed competitive results on the Wild ReID task (Shukla et al., 2019). In terms of part-based methods, a part-pose guided model was proposed for tiger ReID (Liu et al., 2019). The model consisted of two part branches and a full branch. The part branches were used as regulators to constrain the feature training of the full branch on original tiger images. Part-based methods have thus proven to be efficient in the biological field.

There are many approaches using machine learning and computer vision technology to identify individuals. All the individual ReID methods need to design a computer vision model according to the individual surface characteristics. To identify P. trituberculatus individuals, a pyramidal feature fusion model (PFFM) method was developed according to the carapace characteristics of P. trituberculatus. The PFFM could extract P. trituberculatus features on local and global perspectives and effectively match P. trituberculatus individuals. This study aims to develop a method to extract image features for P. trituberculatus individual identification. The extracted image feature is treated as a product label, by which the crab in question can be retrieved and traced. Furthermore, the proposed method could also be potentially applied to reidentify other crabs with apparent characteristics on the carapace.

Materials and Methods

Experimental Animal

We collected 211 adult P. trituberculatus from a crab farm in Ningbo in March 2020. The appendages of the adult P. trituberculatus were intact. The average body weight of the experimental crab was 318.90 ± 38.07 g (mean ± SD), the full carapace width was 16.73 ± 0.75 cm, and the length was 8.65 ± 0.41 cm. After numbering the P. trituberculatus, the carapaces were pictured with a mobile phone (Huawei P30 rear camera).

Experimental Design

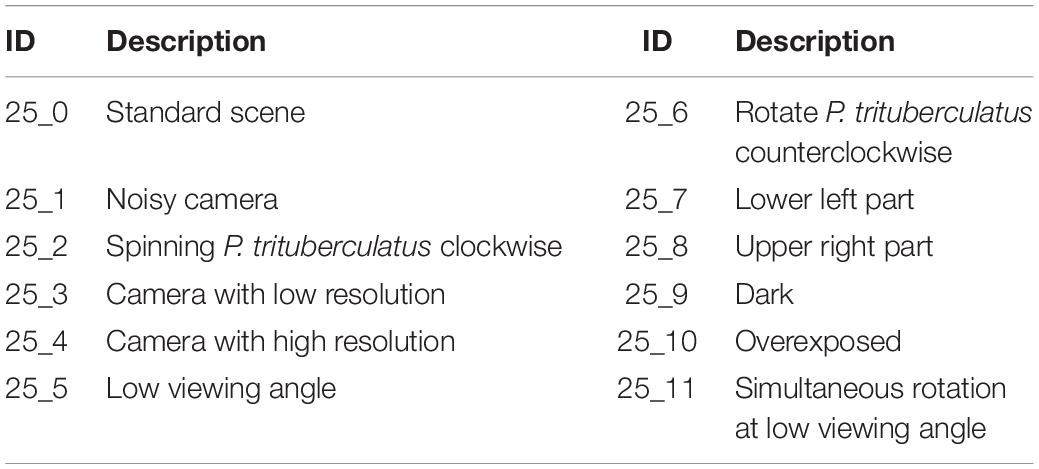

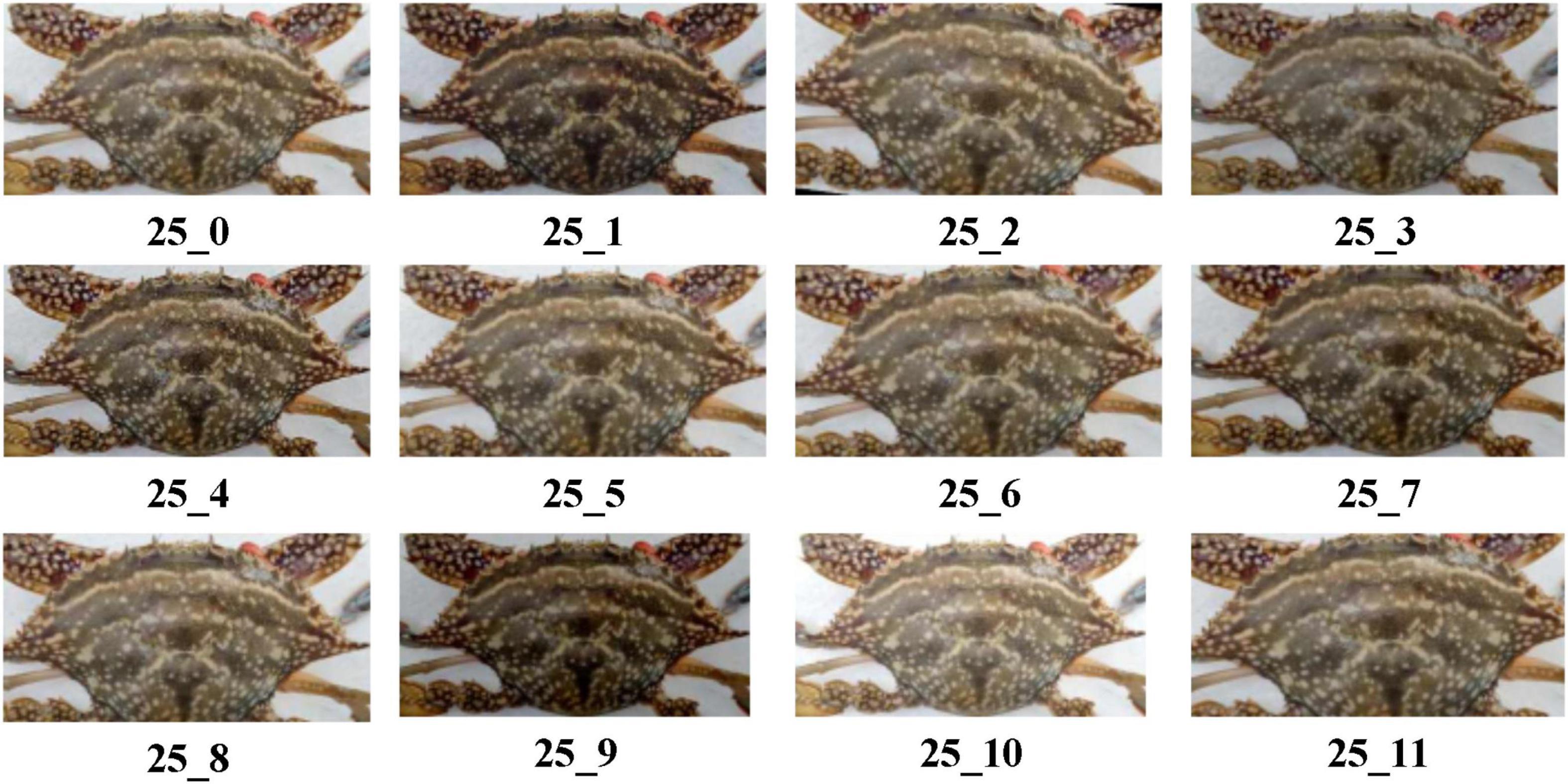

The P. trituberculatus were numbered from 1 to 211, and the carapace images were used to compose a dataset named crab-back-211. The carapace pictures were taken in multiple scenes to augment the diversity of dataset crab-back-211. The description of the scenes is shown in Table 1. In Table 1, “25” represents the ID of P. trituberculatus in crab-back-211, and “0–11” are the IDs of 12 scenes. In the 12 scenes, ID 0 is a standard scene without any processing. By diversity augmentation, the crab-back-211 was expanded to 2,532 images. Figure 1 shows the carapace images of the 25th P. trituberculatus in 0–11 scenes, where 25_0 is the standard scene.

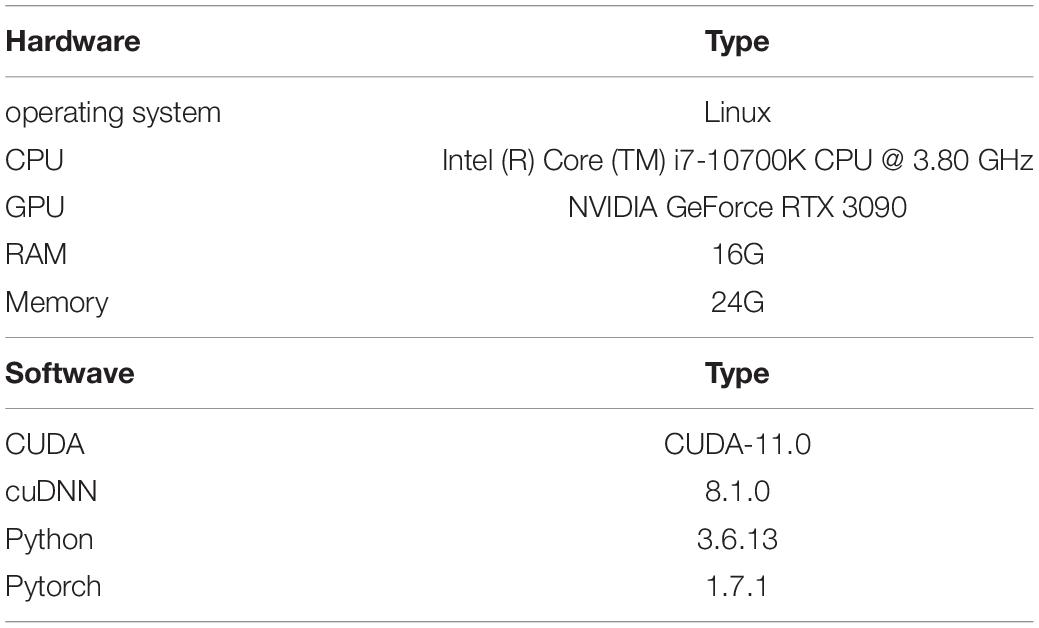

We divided the crab-back-211 into the training set and test set, including 154 crabs and 57 crabs, respectively. The training set was used to train ReID model, while the test set was used to evaluate the performance of the trained model. The training set contained 154 P. trituberculatus with a total of 1,848 images, and the test set had 57 P. trituberculatus with a total of 684 images. The test set consisted of a query set and a gallery set. P. trituberculatus ReID aimed at matching images of a specified P. trituberculatus in gallery set, given a query image in the query set. The correct matches could be found by the similarities of carapace features obtained by Euclidean distance. First, carapace features of P. trituberculatus in query or gallery set were obtained by pyramid-based ReID (PR). Then, all the similarities of carapace features between query images and gallery images could be obtained by Euclidean distances. By the similarities, the match of a query image was found from the gallery images. In this experiment, 57 query images were selected as the query set to find the correct match across 627 gallery images in the gallery set. Table 2 shows the configuration of the experiment platform.
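The matching step described above can be sketched as a nearest-neighbour search over feature vectors. This is a minimal illustration, assuming the features are already extracted as NumPy arrays; the function name `rank_gallery` and the toy feature values are hypothetical and stand in for real PFFM outputs.

```python
import numpy as np

def rank_gallery(query_feats, gallery_feats):
    """Return gallery indices sorted from nearest to farthest for each query."""
    # Pairwise Euclidean distances, shape (num_query, num_gallery).
    diff = query_feats[:, None, :] - gallery_feats[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return np.argsort(dist, axis=1), dist

# Toy 2-D features (hypothetical values, not taken from the paper).
gallery = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
gallery_ids = np.array([7, 7, 9])
query = np.array([[0.9, 1.2]])

order, dist = rank_gallery(query, gallery)
best_match_id = gallery_ids[order[0, 0]]  # ID of the closest gallery image
```

In the experiment, the same ranking would be computed for each of the 57 query images against the 627 gallery images.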

We tested the proposed method on crab-back-211. The experiment consisted of two stages, namely, the model training stage and the model inference stage.

Model Training

Resnet18, Resnet50, and Resnet101 were used as backbone models. These models were pretrained on ImageNet (Krizhevsky et al., 2012). Random horizontal flipping and cropping were adopted to augment the carapace images during data preparation. ID loss and triplet loss were combined into a global objective function for model training, with weights of 0.3 and 0.7, respectively. The margin of the triplet loss was set to 0.2. The proposed PFFM was trained for 60 epochs. During training, each mini-batch contained 60 images of 15 P. trituberculatus sampled from crab-back-211, with four images per individual. We used the Adam optimizer with an initial learning rate of $10^{-5}$ and reduced the learning rate by a factor of 0.1 at epoch 30. The scale of input images was 382 × 128.
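The training settings above can be summarized in a small sketch. The function names are hypothetical, and treating epochs as zero-indexed with the decay applied from epoch 30 onward is an assumption; the text only states that the rate is reduced at 30.

```python
def learning_rate(epoch, base_lr=1e-5, decay_epoch=30, factor=0.1):
    """Step schedule: base_lr before decay_epoch, base_lr * factor from then on."""
    return base_lr * factor if epoch >= decay_epoch else base_lr

def total_loss(id_loss, triplet_loss, w_id=0.3, w_triplet=0.7):
    """Weighted sum of ID loss and triplet loss, using the weights given in the text."""
    return w_id * id_loss + w_triplet * triplet_loss

# Mini-batch composition: 15 individuals with 4 images each.
BATCH_IDS = 15
IMAGES_PER_ID = 4
BATCH_SIZE = BATCH_IDS * IMAGES_PER_ID
```

Sampling several images per individual in every mini-batch ensures that each anchor has at least one positive sample available for the triplet loss.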

Model Inference

In this stage, 57 query images were selected as the query set to find the matches across 627 gallery images. Three experiments were designed to evaluate the matching accuracy of PFFM: the effect of the pyramidal model, P. trituberculatus feature analysis, and comparisons to the State-of-the-Arts.

(1) Effect of pyramidal model: the effect of PFFM and the effect of the pyramidal structure size on performance were empirically studied.

(2) Portunus trituberculatus features analysis: the Euclidean distance between the query images and gallery images was visualized to show the discrimination of the extracted features.

(3) Comparisons to the State-of-the-Arts: The PFFM and other ReID methods were compared.

All three experiments utilized mAP (Zheng et al., 2015) and cumulative match characteristics (CMC) at rank-1 and rank-5 as evaluation indicators.
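As an illustration of these indicators, the sketch below computes mAP and CMC rank-k from a query-to-gallery distance matrix. It is a simplified version, assuming every gallery image is a valid candidate for every query (no camera or scene filtering); the function name `evaluate` and the toy distances are hypothetical.

```python
import numpy as np

def evaluate(dist, query_ids, gallery_ids, ranks=(1, 5)):
    """Compute mAP and CMC rank-k from a (num_query, num_gallery) distance matrix."""
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)
    aps, first_hits = [], []
    for q in range(dist.shape[0]):
        order = np.argsort(dist[q])                    # nearest gallery image first
        matches = gallery_ids[order] == query_ids[q]
        hit_positions = np.flatnonzero(matches)        # 0-based ranks of correct matches
        if hit_positions.size == 0:
            continue                                   # query has no match in the gallery
        # Precision at each correct match, averaged over all correct matches.
        precisions = np.arange(1, hit_positions.size + 1) / (hit_positions + 1)
        aps.append(precisions.mean())
        first_hits.append(hit_positions[0])
    first_hits = np.array(first_hits)
    cmc = {k: float((first_hits < k).mean()) for k in ranks}
    return float(np.mean(aps)), cmc

# Toy example: 2 queries, 3 gallery images (hypothetical distances).
dist = np.array([[0.1, 0.9, 0.4],
                 [0.2, 0.8, 0.3]])
m_ap, cmc = evaluate(dist, query_ids=[1, 2], gallery_ids=[1, 2, 1])
```

Rank-k counts a query as correct when its first true match appears within the top k results, whereas mAP also rewards ranking all true matches early.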

Portunus trituberculatus ReID Algorithm

For P. trituberculatus ReID, the traditional ReID method takes the whole P. trituberculatus carapace image as input and extracts a single feature for the whole image. This extracted feature represents the P. trituberculatus individual. By the feature similarity between query images and gallery images, the matching result of a query image can be found in the gallery set. However, these traditional methods overemphasize the global features of the P. trituberculatus individual and overlook less prominent detailed features. As a result, they could fail to distinguish similar P. trituberculatus individuals. Therefore, many studies consider global and local features together and focus on the contribution of key local areas to the whole feature. To extract local features, an image should be divided into fixed parts. For example, in the field of pedestrian ReID, a pedestrian image is divided into three fixed parts, namely, head, upper body, and lower body, and a local feature is extracted from each part. In terms of part-based methods, different part-based frameworks were adopted to improve the performance of ReID (Sun et al., 2018; Fu et al., 2019). The critical point of part-based methods is to align the divided fixed parts.

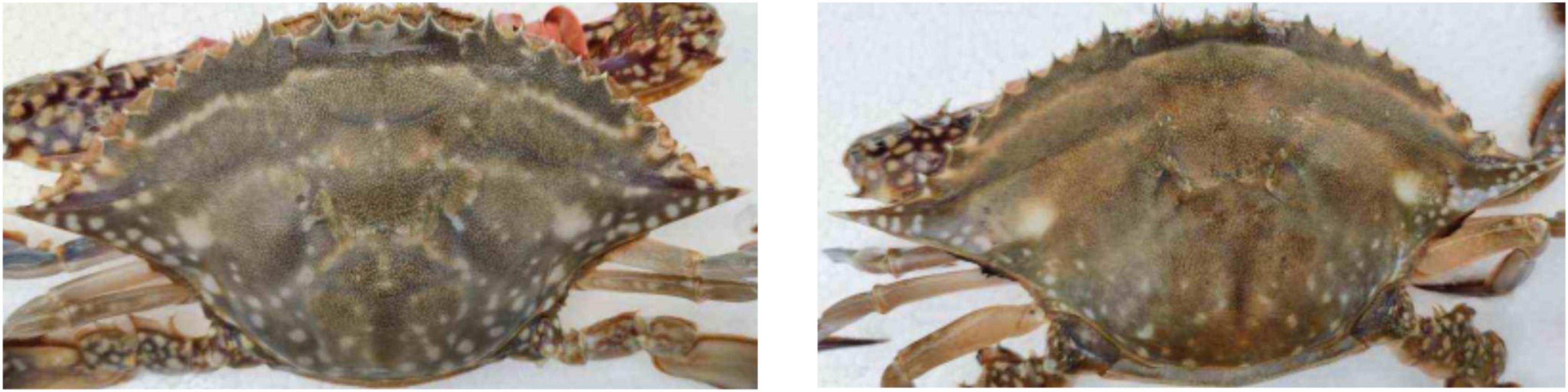

For P. trituberculatus, there were many carapace characteristics, such as protrusions, grooves, ridges, and irregular spots. Figure 2 shows two P. trituberculatus carapaces. The carapace texture and spots have significant individual discrimination. Therefore, we focused on the local texture and spot characteristics of P. trituberculatus carapaces to improve ReID accuracy.

Pyramid-Based ReID

Pyramid-based ReID captured local features by dividing the original features into 18 aligned groups to strengthen the role of detailed features. Previous part-based methods, such as PCB, used several part-level features to achieve ReID. However, these methods did not consider the continuity between separate parts. We proposed a pyramid-based ReID with multilevel features based on PCB to focus on the continuity between separate parts and enhance detailed features. This multilevel framework not only avoided the problem of “feature separation” caused by part division but also smoothly merged the local and global features. In addition, a combination of ID loss and triplet loss was used to train the ReID model and strengthen the feature discrimination between P. trituberculatus individuals.

(1) Part-based strategy

To strengthen the local detailed features on the carapace of P. trituberculatus, we divided the carapace image into fixed separate parts. Based on the shape of the carapace, many carapace division strategies could be designed. Figure 3 shows a six-square grid division plan on a carapace image, in which the six fixed parts are numbered 1–6. The part-based strategy raises two problems: (a) how to weigh the contribution of the divided parts to the whole feature and (b) how to solve the “feature separation” problem on the separate parts. Thus, the PFFM model, which could deeply fuse the features of the separate carapace parts, was proposed.

(2) Pyramid-based strategy

Figure 3. Six-square grids plan on P. trituberculatus. The numbers in the picture indicate the divided parts.

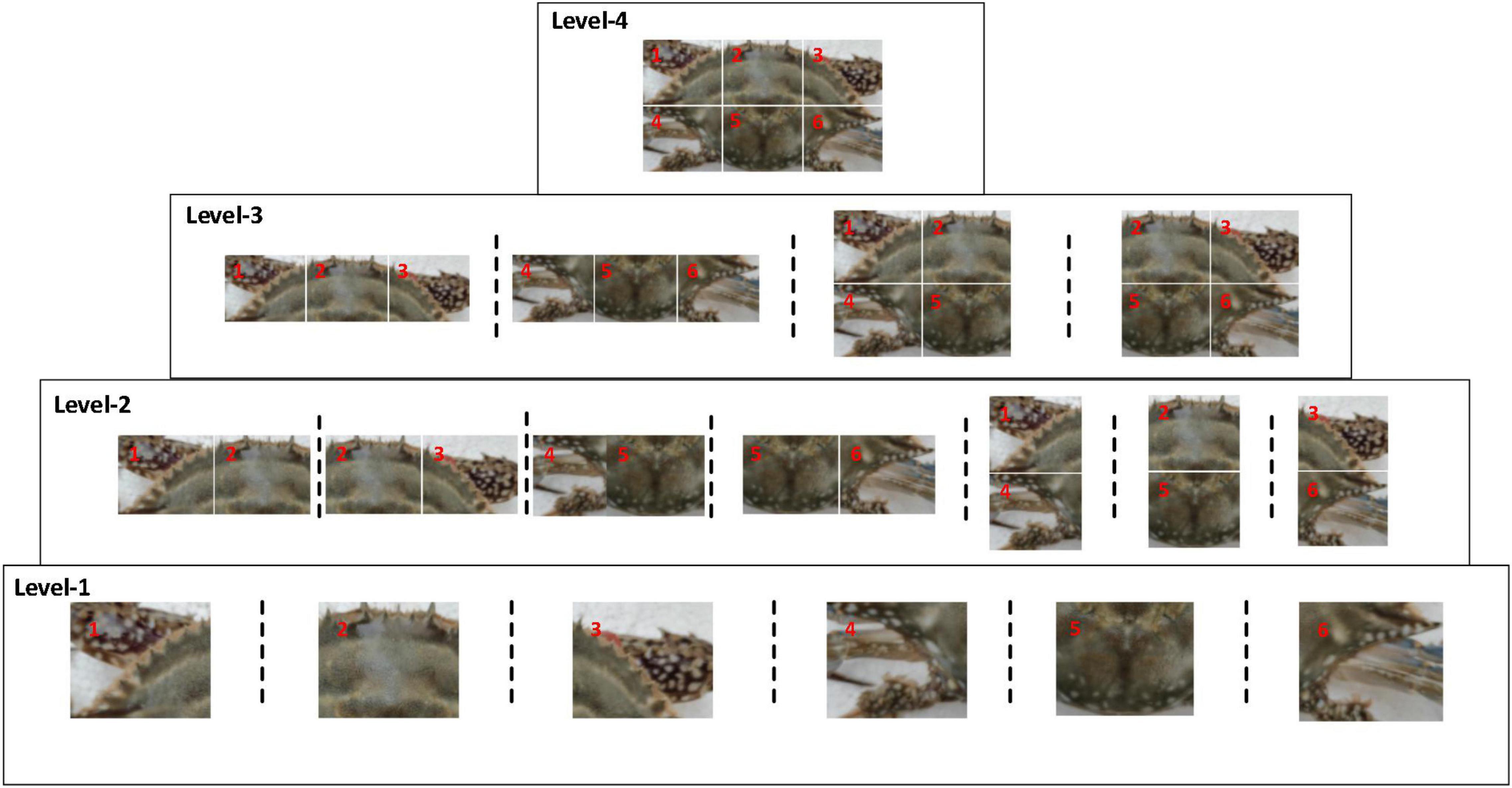

We designed a pyramid-based strategy to fuse the local features obtained by the part-based strategy. As shown in Figure 4, the pyramid-based strategy combined the six fixed parts in Figure 3 into 18 groups. For instance, the group (1, 2, 4, 5) was composed of part-1, part-2, part-4, and part-5. The 18 groups were arranged in four levels, forming a feature pyramid. In level-1, the six separate parts formed six basic groups. Level-2 contained seven groups composed from the six basic groups, and level-3 contained four groups composed from the seven groups in level-2. Level-4 contained a single group, the whole carapace image, which represented the global feature. Each level corresponded to one granularity of feature extraction, so the four levels extracted carapace features at multiple granularities. Therefore, this pyramid-based strategy extracted both global and local features and also provided a strategy for integrating them.
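One way to arrive at 18 groups is to take every contiguous rectangular block of cells in the 2 × 3 grid. The sketch below enumerates these blocks under two assumptions that the text does not state explicitly: the parts are numbered in row-major order (1–3 on the top row, 4–6 on the bottom row), and the groups in Figure 4 are exactly the rectangular blocks. Under these assumptions the counts agree with the level sizes given above if level-3 is taken to hold the three-cell and four-cell blocks.

```python
from itertools import combinations

def grid_groups(rows=2, cols=3):
    """List every axis-aligned rectangular block of cells in a rows x cols grid.

    Cells are numbered 1..rows*cols in row-major order (assumed numbering).
    """
    groups = []
    for r0, r1 in combinations(range(rows + 1), 2):       # top and bottom edges
        for c0, c1 in combinations(range(cols + 1), 2):   # left and right edges
            cells = tuple(r * cols + c + 1
                          for r in range(r0, r1)
                          for c in range(c0, c1))
            groups.append(cells)
    return sorted(groups, key=lambda g: (len(g), g))

groups = grid_groups(2, 3)

# Bucket the groups by how many parts they contain.
by_size = {}
for g in groups:
    by_size.setdefault(len(g), []).append(g)
```

With this enumeration, `groups` contains 18 entries, including the example group (1, 2, 4, 5). The same function applied to a 2 × 4 grid yields 30 blocks, which illustrates why a finer grid leads to a larger model.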

(3) Pyramidal Feature Fusion Model

Figure 4. Pyramid-based strategy. There are 18 groups in the four levels, covering the whole P. trituberculatus carapace characteristics locally and globally.

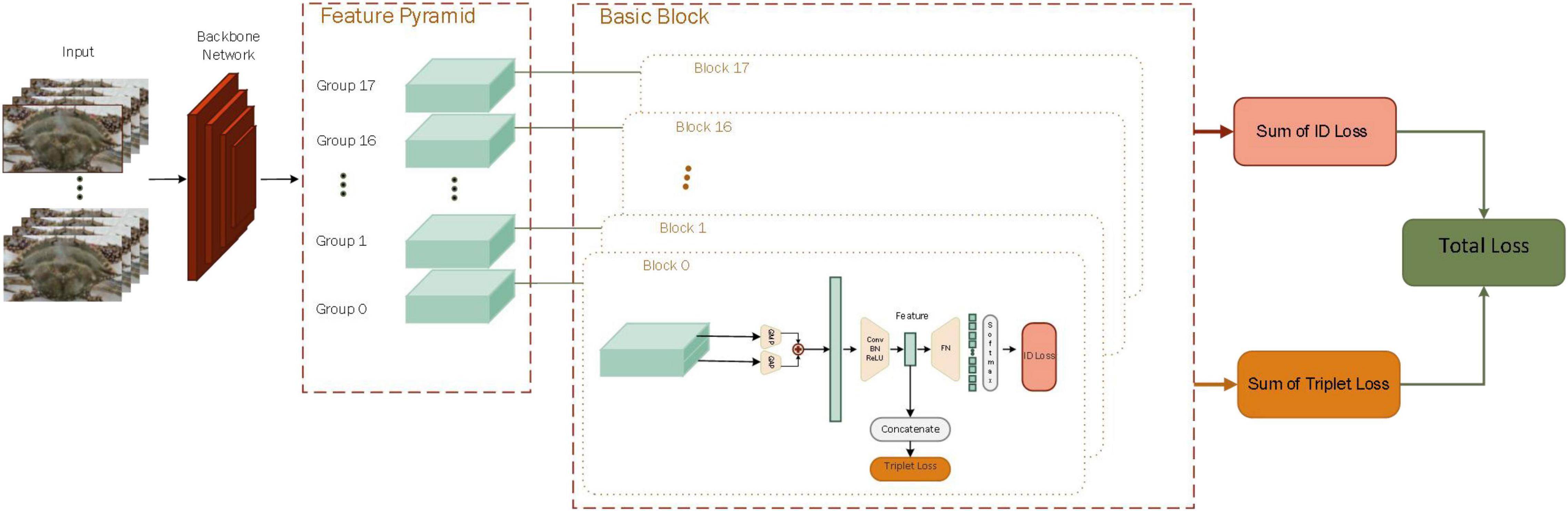

Figure 5 shows the architecture of the PFFM model. PFFM model was mainly composed of feature extraction backbone, pyramid-based module, and basic convolution block module. Each module is described in the following sections.

Figure 5. The architecture of pyramidal feature fusion model (PFFM) model. It is composed of backbone, pyramid, and basic block.

Backbone

Backbone was a network for feature extraction. The objective of the backbone was to extract original features and feed the features into the next. ResNet framework (He et al., 2016) is an effective backbone with a strong feature extraction ability on image processing, such as object classification and segmentation. Therefore, we used ResNet to extract original features on P. trituberculatus carapace. Furthermore, many backbone networks, such as Resnet-50 and Resnet-101, can also be used as the basic network, and these backbones were compared with each other in the experiments.

Feature Pyramid

To extract the global and local features of P. trituberculatus, a part-based strategy was used to divide the original features into six fixed parts as shown in Figure 3, after backbone. In Figure 5, the input of the feature pyramid was the original features extracted by the backbone, and then 18 groups in 4 levels by the six fixed parts could be obtained by the pyramid-based strategy.

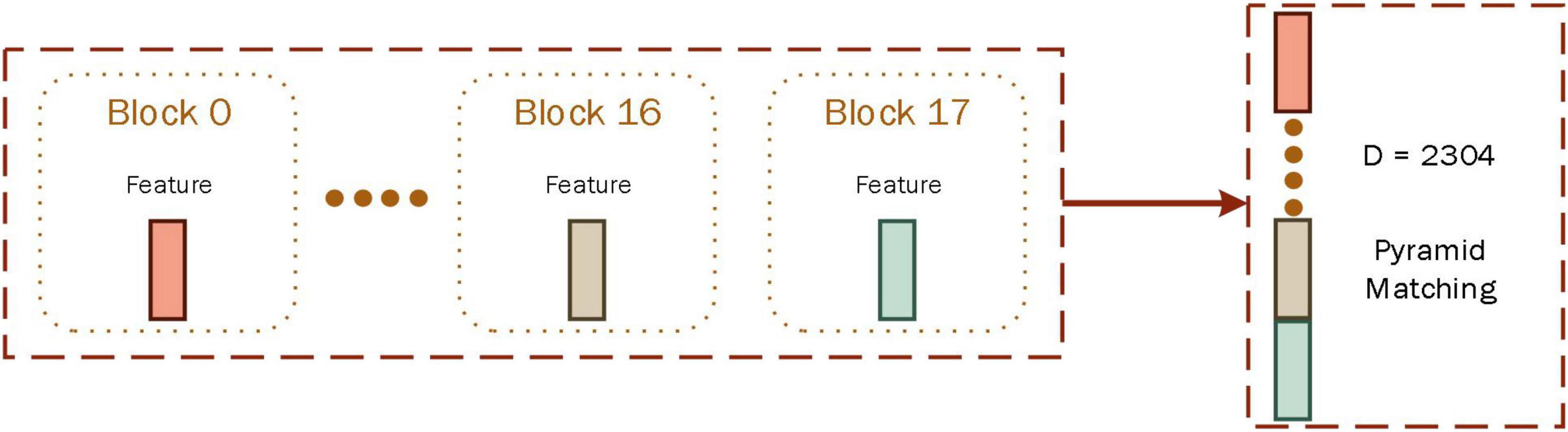

Basic Block

The basic block used in this study is shown in Figure 5 and includes pooling, convolutional, batch normalization (BN), rectified linear unit (ReLU), fully connected (FC), and softmax layers. The basic block module contained 18 blocks, one for each of the 18 group features produced by the feature pyramid. In each block, global average pooling (GAP) and global maximum pooling (GMP) were used to capture the characteristics of different channels, such as protrusions, grooves, ridges, and irregular spots. The two pooled features were then added channel by channel into a single vector, which was passed through a convolution layer followed by BN and ReLU activation. The features from the 18 blocks were concatenated, as shown in Figure 6. ID loss and triplet loss were adopted to train the PFFM model so that the overall features could capture subtle differences between individuals. In the inference stage, the concatenated feature was used to identify P. trituberculatus.
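The pooling and concatenation steps can be illustrated with a short NumPy sketch. It covers only the GAP + GMP sum and the final concatenation; the convolution, BN, ReLU, FC, and softmax layers are omitted. The feature map size, the three example regions, and the function name `pool_group` are hypothetical choices for illustration.

```python
import numpy as np

def pool_group(feature_map, rows, cols):
    """Sum of GAP and GMP over one spatial region of a (C, H, W) feature map."""
    region = feature_map[:, rows, cols]
    gap = region.mean(axis=(1, 2))   # global average pooling, one value per channel
    gmp = region.max(axis=(1, 2))    # global max pooling, one value per channel
    return gap + gmp                 # channel-wise sum of the two pooled vectors

rng = np.random.default_rng(0)
fmap = rng.standard_normal((4, 4, 6))   # hypothetical backbone output: C=4, H=4, W=6

# Three example regions on a 2 x 3 grid with 2 x 2 cells:
# a single part, a four-part block, and the whole map.
regions = [
    (slice(0, 2), slice(0, 2)),
    (slice(0, 4), slice(0, 4)),
    (slice(0, 4), slice(0, 6)),
]
descriptor = np.concatenate([pool_group(fmap, r, c) for r, c in regions])
```

In the full model, the same operation would be applied to all 18 groups, so the concatenated descriptor would contain 18 per-group vectors.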

Figure 6. Feature integration module. Features in different blocks are fused, and the fused feature could be used to identify P. trituberculatus.

Triplet Loss

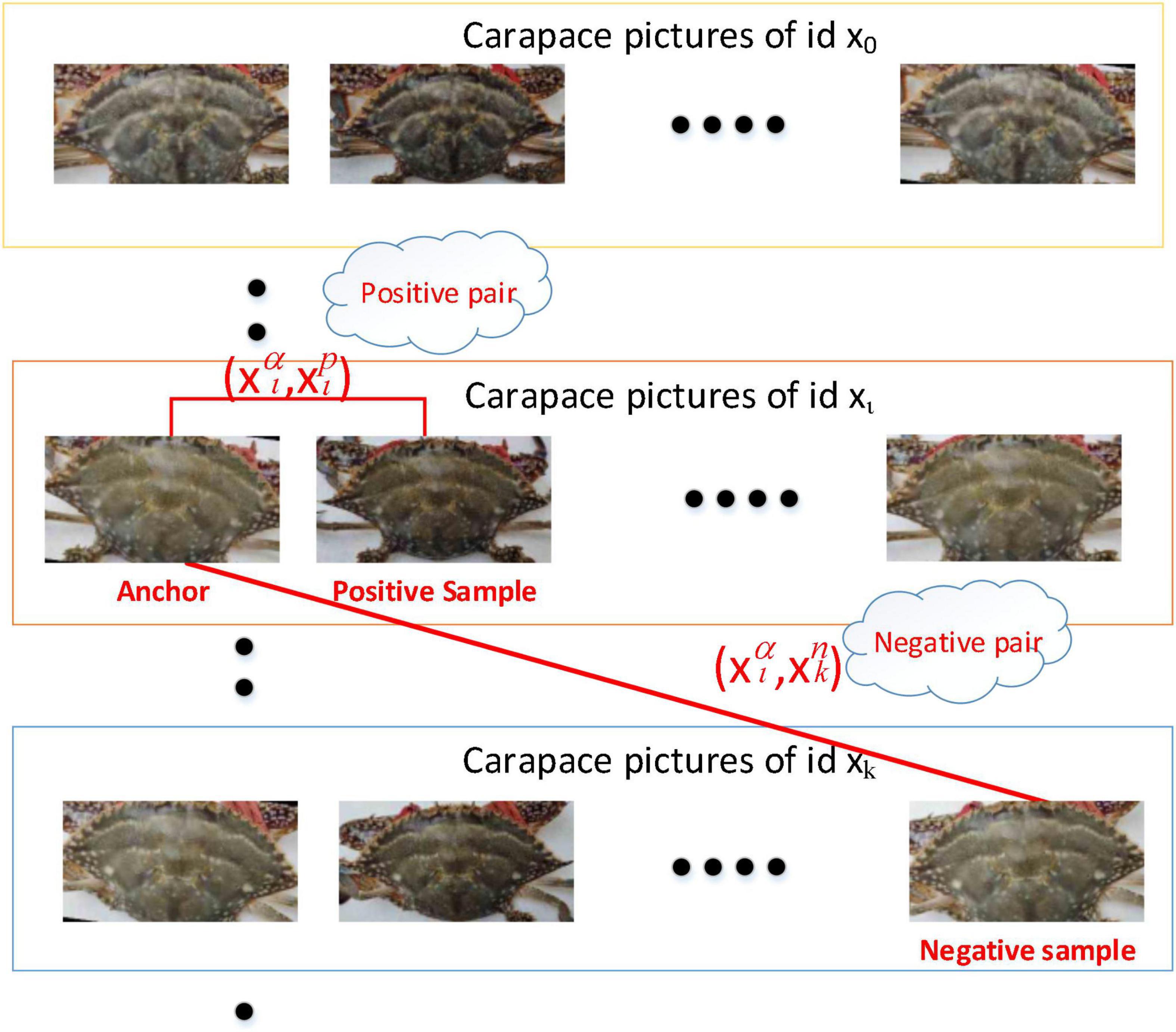

When triplet loss for model training was used, each P. trituberculatus individual was selected as an anchor. For each anchor, the sample with the same ID and the lowest feature similarity to the anchor was selected as a positive sample. Conversely, the sample with the different ID and the highest feature similarity was selected as a negative sample. By using the above process, the selected three samples (i.e., anchor sample, positive sample, and negative sample) formed a triplet tuple. Figure 7 shows the positive and negative sample selection processes.

Figure 7. Example of triplet loss, where the lines represent the positive sample pair and the negative sample pair. The similarity is measured by Euclidean distance.

As shown in Figure 7, each P. trituberculatus was photographed in different scenes. $x^j$ represents the pictures of P. trituberculatus $j$, where $x_i^j$ is the picture of P. trituberculatus $j$ in scene $i$. When $x_a^i$ was selected as the anchor sample, $x_p^i$ was a sample of ID $i$. If $x_p^i$ had the lowest similarity with $x_a^i$, $x_p^i$ was selected as the positive sample. As shown in Figure 7, the ID of $x_n^k$ was $k$ ($k \neq i$), and if $x_n^k$ had the highest similarity with $x_a^i$, $x_n^k$ was selected as the negative sample. The selected $(x_a^i, x_p^i, x_n^k)$ composed a triplet tuple. The tuple composed of the anchor and the positive sample, denoted as $(x_a^i, x_p^i)$, was the positive sample pair, while the tuple composed of the anchor and the negative sample, denoted as $(x_a^i, x_n^k)$, was the negative sample pair. The purpose of triplet loss was to minimize the feature distance of the positive sample pair in each scene and maximize the feature distance of the negative sample pair. Triplet loss was expressed in the following:

$$L_{triplet} = \max\left(\left\|f(x_a^i) - f(x_p^i)\right\| - \left\|f(x_a^i) - f(x_n^k)\right\| + \alpha,\ 0\right)$$

where $f(\cdot)$ is the feature extraction function, $\|\cdot\|$ is the Euclidean distance function, $f(x_a^i)$, $f(x_p^i)$, and $f(x_n^k)$ denote the features of anchor sample $x_a^i$, positive sample $x_p^i$, and negative sample $x_n^k$, respectively, and $\alpha$ is a hyperparameter, whose value here is 0.2.
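The hard-sample selection and the margin described in this section can be sketched as a batch-hard triplet loss. This is a minimal NumPy illustration, not the training code used in the study: the unsquared Euclidean distance, the averaging over anchors, and the function name `batch_hard_triplet_loss` are assumptions.

```python
import numpy as np

def batch_hard_triplet_loss(feats, ids, margin=0.2):
    """Hinge-style triplet loss with the hardest positive and negative per anchor."""
    feats = np.asarray(feats, dtype=float)
    ids = np.asarray(ids)
    diff = feats[:, None, :] - feats[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))         # pairwise Euclidean distances
    same = ids[:, None] == ids[None, :]
    # Hardest positive: same ID, largest distance (lowest similarity).
    hardest_pos = np.where(same, dist, -np.inf).max(axis=1)
    # Hardest negative: different ID, smallest distance (highest similarity).
    hardest_neg = np.where(~same, dist, np.inf).min(axis=1)
    return float(np.maximum(hardest_pos - hardest_neg + margin, 0.0).mean())

ids = [1, 1, 2, 2]
# Well-separated identities: the margin is already satisfied.
loss_far = batch_hard_triplet_loss([[0, 0], [0, 1], [3, 0], [3, 1]], ids)
# Overlapping identities: every anchor violates the margin.
loss_close = batch_hard_triplet_loss([[0, 0], [0, 1], [0.5, 0], [0.5, 1]], ids)
```

The loss is zero once each anchor is closer to its farthest same-ID sample than to its nearest different-ID sample by at least the margin, which is the condition the training process drives toward.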

Results

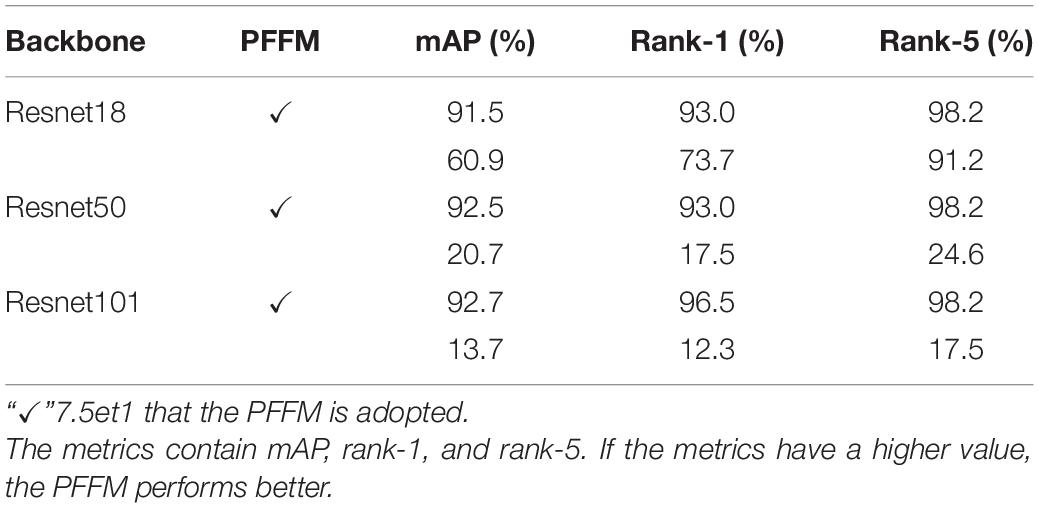

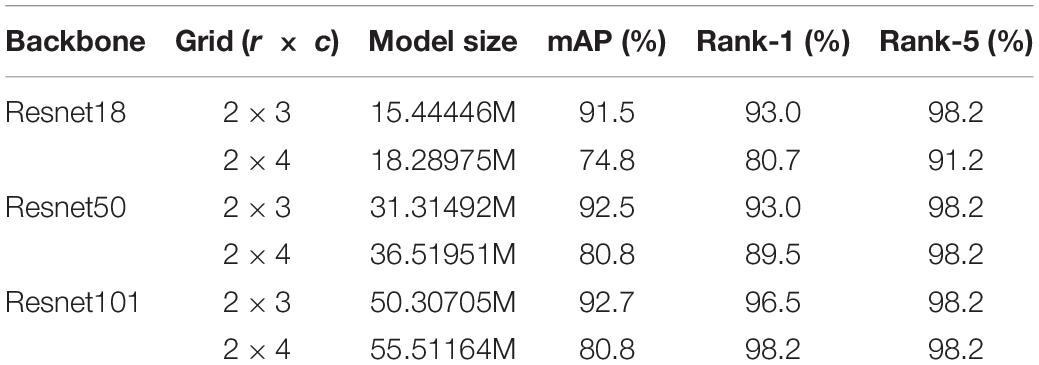

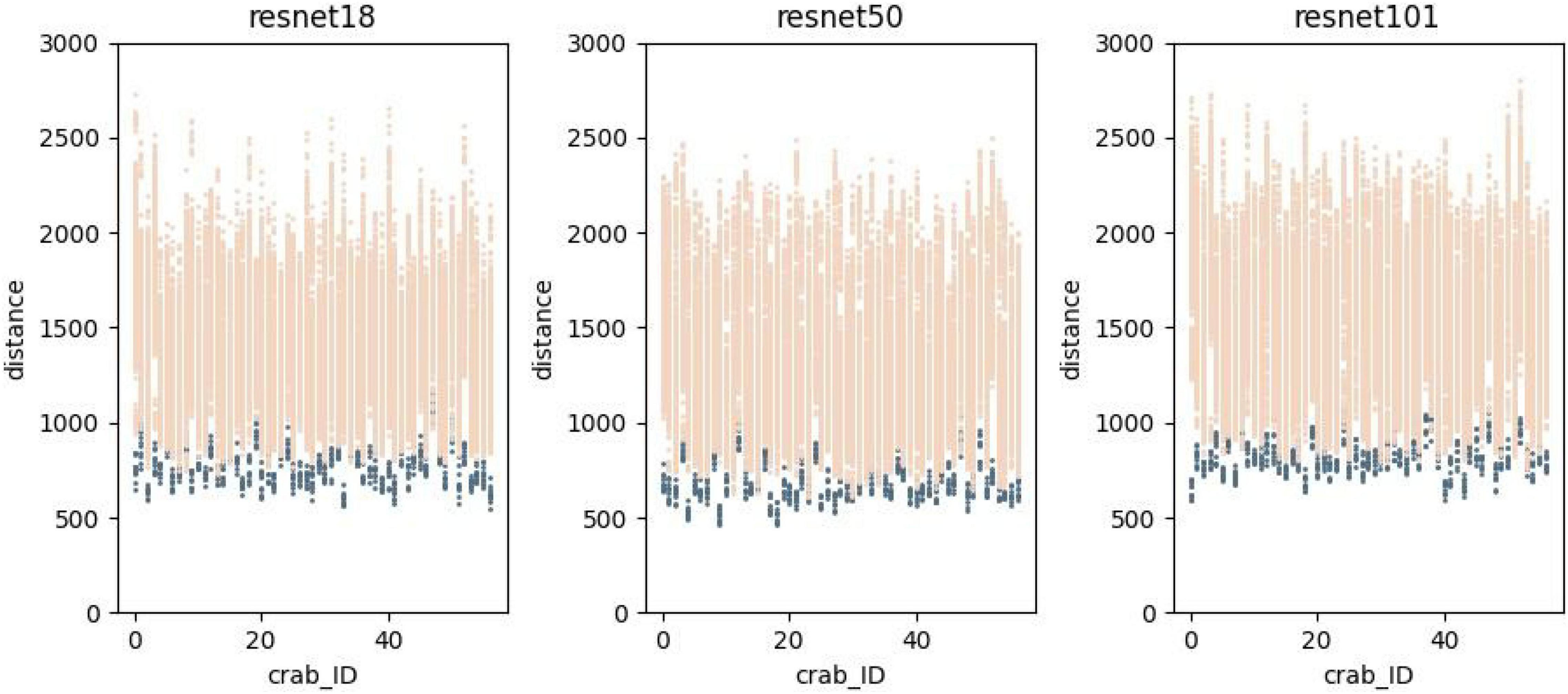

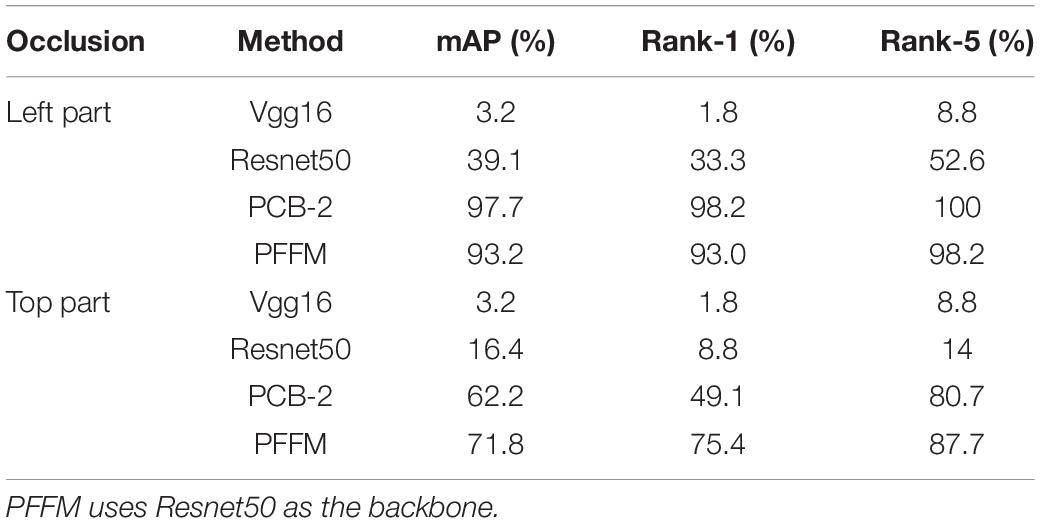

Table 3 shows the evaluation of PFFM using different backbones (i.e., Resnet18, Resnet50, and Resnet101). Table 4 shows the performance of PFFM with different division strategies, such as grid 2×3 and grid 2×4. Figure 8 visualizes the discrimination of the P. trituberculatus features by PFFM with different backbones (i.e., Resnet18, Resnet50, and Resnet101). Table 5 compares the proposed PFFM with the State-of-the-Arts on crab-back-211.

Table 4. The performance of pyramidal feature fusion model (PFFM) with different division strategies.

Figure 8. The feature distance of P. trituberculatus in the query set to the other images in the gallery set.

Table 5. The performance of Vgg16, Resnet50, PCB-2, and pyramidal feature fusion model (PFFM) on crab-back-211, where PCB-2 represents that feature is divided into 2 horizontal blocks using PCB.

For each backbone, the features of P. trituberculatus with the same ID are closer together, while the features of P. trituberculatus with different IDs are farther apart.

Discussion

Effect of Pyramidal Feature Fusion Model

(1) The benefit of PFFM

The purpose of this experiment was to verify the effectiveness of PFFM. This experiment used three backbones (i.e., Resnet18, Resnet50, and Resnet101) to extract the original image features and formed six comparison methods: Resnet18, Resnet18-pyramid, Resnet50, Resnet50-pyramid, Resnet101, and Resnet101-pyramid. Resnet18-pyramid, Resnet50-pyramid, and Resnet101-pyramid adopted the proposed PFFM as the ReID model. To verify the robustness of each method, we occluded the left half of the images in the query set and ran the six comparison methods on the retained right half to find the ReID target in the gallery set. The experimental results are shown in Table 3. The methods using PFFM, i.e., Resnet18-pyramid, Resnet50-pyramid, and Resnet101-pyramid, performed better than those not using PFFM. The mAP and rank-1 of Resnet18-pyramid increased by 39.6% and 19.3%, respectively, compared with Resnet18. Among these comparison methods, the methods using PFFM performed best on mAP and rank-1.

The Resnet18, Resnet50, and Resnet101 models mainly used global features of P. trituberculatus for ReID. When the query set was occluded, a large global feature deviation would occur, which led to a decrease in mAP, rank-1, and rank-5. The proposed PFFM utilized local features to compensate for global features, to alleviate the global feature deviation problem, and to strengthen its robustness. This experiment also showed that PFFM with Resnet101 as backbone performed better than other comparison methods.

(2) Comparison of part division strategy

To determine the optimal division strategy of PFFM, this experiment compared two division strategies, i.e., grid 2 × 3 and grid 2 × 4. Three backbones (i.e., Resnet18, Resnet50, and Resnet101) were used to extract the original image features, and the results obtained using grid 2 × 3 and grid 2 × 4 are shown in Table 4. The model with grid 2 × 4 had more parameters than the model with grid 2 × 3, and the model with grid 2 × 3 also achieved higher accuracy. For grid 2 × 4, the divided parts were too small to maintain the continuity of local features, which could reduce ReID accuracy and increase the burden of model training. This experiment indicated that the optimal division of PFFM was grid 2 × 3.

Portunus trituberculatus Feature Analysis

To verify the compatibility of the PFFM, this experiment combined PFFM with different backbones and analyzed the feature distances of P. trituberculatus. The feature distances between samples with the same ID and between samples with different IDs were calculated. Figure 8 shows the feature distance distributions obtained with Resnet18, Resnet50, and Resnet101. In Figure 8, the x-axis indicates the P. trituberculatus IDs, and the y-axis indicates the feature distance between P. trituberculatus samples. The dark scatter points represent the feature distances among samples with the same ID, and the light scatter points represent the feature distances among samples with different IDs. The dark points are mainly distributed at the bottom, indicating that the distances among samples with the same ID were small. The distances among samples with different IDs were greater and fluctuated widely. In other words, samples with the same ID were close together, while samples with different IDs were farther apart. In addition, the PFFM provided a high discriminative ability, especially when aggregated from fixed part features. The discriminative features reflected the specificity of each P. trituberculatus, which made them suitable for P. trituberculatus ReID. This experiment showed that the PFFM achieved good results on crab-back-211 with various backbones, so the proposed PFFM was compatible with different backbones for P. trituberculatus ReID.

Comparisons to the State-of-the-Arts

This experiment compared PFFM with other methods on crab-back-211. Table 5 shows the comparison results. In this experiment, we occluded the left or upper half of the images in the query set to test the robustness of these methods. The occlusion was used to simulate difficult scenes encountered in practice. We selected Vgg16, Resnet50, and PCB as comparison methods. In a ReID model, the backbone, such as Vgg16 or Resnet50, is the preceding stage that generates the original features. The ReID model then extracts features of P. trituberculatus from the original features, and the Euclidean distance between the extracted features is used to identify P. trituberculatus individuals. For example, PCB was a ReID model that divided the original feature into two horizontal blocks for training and inference. The proposed PFFM used Resnet50 as the backbone in this experiment. Table 5 shows that the mAPs of Vgg16 and Resnet50 were much lower than those of PCB and PFFM, which implied that the models using a part-based strategy were more robust. Therefore, a method that only considered global features could not accurately identify P. trituberculatus with an incomplete carapace. The mAP of PFFM in this experiment was 9.6% higher than that of PCB in the upper half occlusion case, while the rank-1 was 26.3% higher and the rank-5 was 7% higher. Therefore, the proposed PFFM was more robust and could effectively identify P. trituberculatus individuals with an incomplete carapace.
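The occlusion of query images can be simulated with a few lines of NumPy. The text does not specify how the occluded region was filled, so setting the pixels to zero is an assumption, as are the function name `occlude` and the toy image size.

```python
import numpy as np

def occlude(image, region="left"):
    """Return a copy of an (H, W, C) image with one half set to zero."""
    out = image.copy()
    h, w = out.shape[:2]
    if region == "left":
        out[:, : w // 2] = 0      # blank out the left half
    elif region == "upper":
        out[: h // 2, :] = 0      # blank out the upper half
    else:
        raise ValueError("region must be 'left' or 'upper'")
    return out

# Toy 4 x 6 RGB image with all pixel values equal to 1.
img = np.ones((4, 6, 3), dtype=np.uint8)
left_occluded = occlude(img, "left")
upper_occluded = occlude(img, "upper")
```

Only the query images would be occluded in this way; the gallery images stay intact, so a model has to match a partial carapace against complete ones.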

Conclusion

In this study, a part-based PFFM model for P. trituberculatus ReID was designed. This model divided and merged the original features obtained by the backbone, extracting global and multilevel local features of P. trituberculatus. The proposed PFFM utilized local features to compensate for global features, which alleviated the global feature deviation problem and strengthened its robustness. In the model training process, ID loss and triplet loss were adopted to minimize the feature distance between P. trituberculatus individuals with the same ID and maximize the feature distance between P. trituberculatus individuals with different IDs. The experimental results showed that the PFFM had better performance when resnet50 was used as a backbone, and the best division strategy was grid 2 × 3. The PFFM maintained a high ReID accuracy for P. trituberculatus in the incomplete carapace case. However, we discussed only adult P. trituberculatus ReID. The carapace characteristics of P. trituberculatus could change morphologically over the growth cycle, and the proposed PFFM method is sensitive to the shape of the carapace. Therefore, the PFFM method is currently insufficient for P. trituberculatus across the growth cycle. In future research, the preparation of adequate training data is essential, and the application of PFFM to other animal ReID situations can be investigated.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

KZ: methodology, data curation, visualization, and writing—original draft. YX and CS: conceptualization, writing—review and editing, project administration, and funding acquisition. ZX: resources, and review and editing. ZR: resources. All authors contributed to the article and approved the submitted version.

Funding

This study was supported by the National Key Research and Development Program of China (Project No. 2019YFD0901000), Special Research Funding from the Marine Biotechnology and Marine Engineering Discipline Group in Ningbo University (No. 422004582), the National Natural Science Foundation of China (Grant Nos. 41776164 and 31972783), the Natural Science Foundation of Ningbo (2019A610424), 2025 Technological Innovation for Ningbo (2019B10010), the National Key R&D Program of China (2018YFD0901304), the Ministry of Agriculture of China and China Agriculture Research System (No. CARS48), K. C. Wong Magna Fund in Ningbo University and the Scientific Research Foundation of Graduate School of Ningbo University (IF2020145), the Natural Science Foundation of Zhejiang Province (Grant No. LY22F020001), and the 3315 Plan Foundation of Ningbo (Grant No. 2019B-18-G).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bak, S., Corvee, E., Bremond, F., and Thonnat, M. (2010). “Person re-identification using haar-based and dcd-based signature,” in Proceedings of the 2010 7th IEEE International Conference on Advanced Video and Signal Based Surveillance (Piscataway, NJ: IEEE). doi: 10.1109/AVSS.2010.68

Bao, C., Cai, Q., Ying, X., Zhu, Y., Ding, Y., and Murk, T. A. J. (2021). Health risk assessment of arsenic and some heavy metals in the edible crab (Portunus trituberculatus) collected from Hangzhou Bay, China. Mar. Pollut. Bull. 173(Pt A):113007. doi: 10.1016/j.marpolbul.2021.113007

Barath Kumar, S., Padhi, R. K., and Satpathy, K. K. (2019). Trace metal distribution in crab organs and human health risk assessment on consumption of crabs collected from coastal water of South East coast of India. Mar. Pollut. Bull. 141, 273–282. doi: 10.1016/j.marpolbul.2019.02.022

Dalal, N., and Triggs, B. (2005). “Histograms of oriented gradients for human detection,” in Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), Vol. 1 (Piscataway, NJ: IEEE), 886–893.

Ferreira, A. C., Silva, L. R., Renna, F., Brandl, H. B., Renoult, J. P., Farine, D. R., et al. (2020). Deep learning-based methods for individual recognition in small birds. Methods Ecol. Evol. 11, 1072–1085. doi: 10.1111/2041-210X.13436

Fu, Y., Wei, Y., Zhou, Y., Shi, H., Huang, G., Wang, X., et al. (2019). Horizontal pyramid matching for person re-identification. Proc. AAAI Conf. Artif. Intell. 33, 8295–8302. doi: 10.1609/aaai.v33i01.33018295

Hansen, M. F., Smith, M. L., Smith, L. N., Salter, M. G., Baxter, E. M., Farish, M., et al. (2018). Towards on-farm pig face recognition using convolutional neural networks. Comput. Ind. 98, 145–152. doi: 10.1016/j.compind.2018.02.016

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, 770–778. doi: 10.1109/CVPR.2016.90

Jüngling, K., Bodensteiner, C., and Arens, M. (2011). “Person re-identification in multi-camera networks,” in Proceedings of the CVPR 2011 Workshops (Colorado Springs, CO: IEEE). doi: 10.1109/CVPRW.2011.5981771

Korschens, M., and Denzler, J. (2019). “Elpephants: a fine-grained dataset for elephant re-identification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul. doi: 10.1109/ICCVW.2019.00035

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105.

Liu, C., Zhang, R., and Guo, L. (2019). “Part-pose guided amur tiger re-identification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul. doi: 10.1109/ICCVW.2019.00042

Marsot, M., Mei, J., Shan, X., Ye, L., Feng, P., Yan, X., et al. (2020). An adaptive pig face recognition approach using Convolutional Neural Networks. Comput. Electron. Agric. 173:105386. doi: 10.1016/j.compag.2020.105386

Ojala, T., Pietikainen, M., and Maenpaa, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Machine Intell. 24, 971–987. doi: 10.1109/tpami.2002.1017623

Oreifej, O., Mehran, R., and Shah, M. (2010). “Human identity recognition in aerial images,” in Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (San Francisco, CA: IEEE). doi: 10.1109/CVPR.2010.5540147

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A. (2016). “You only look once: unified, real-time object detection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, 779–788. doi: 10.1109/CVPR.2016.91

Schroff, F., Kalenichenko, D., and Philbin, J. (2015). “Facenet: a unified embedding for face recognition and clustering,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA. doi: 10.1109/CVPR.2015.7298682

Shukla, A., Singh Cheema, G., Gao, P., Onda, S., Anshumaan, D., Anand, S., et al. (2019). “A hybrid approach to tiger re-identification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul. doi: 10.1109/ICCVW.2019.00039

Sun, Y., Zheng, L., Yang, Y., Tian, Q., and Wang, S. (2018). “Beyond part models: person retrieval with refined part pooling (and a strong convolutional baseline),” in Proceedings of the European Conference on Computer Vision (ECCV) (Cham: Springer).

Violino, S., Antonucci, F., Pallottino, F., Cecchini, C., Figorilli, S., and Costa, C. (2019). Food traceability: a term map analysis basic review. Eur. Food Res. Technol. 245, 2089–2099. doi: 10.1007/s00217-019-03321-0

Vo, S. A., Scanlan, J., Turner, P., and Ollington, R. (2020). Convolutional neural networks for individual identification in the southern rock lobster supply chain. Food Control 118:107419. doi: 10.1016/j.foodcont.2020.107419

Wang, G., Yuan, Y., Chen, X., Li, J., and Zhou, X. (2018). “Learning discriminative features with multiple granularities for person re-identification,” in Proceedings of the 26th ACM International Conference on Multimedia, Seoul. doi: 10.1145/3240508.3240552

Yang, L., Wang, D., Xin, C., Wang, L., Ren, X., Guo, M., et al. (2021). An analysis of the heavy element distribution in edible tissues of the swimming crab (Portunus trituberculatus) from Shandong Province, China and its human consumption risk. Mar. Pollut. Bull. 169:112473. doi: 10.1016/j.marpolbul.2021.112473

Yu, D., Peng, X., Ji, C., Li, F., and Wu, H. (2020). Metal pollution and its biological effects in swimming crab Portunus trituberculatus by NMR-based metabolomics. Mar. Pollut. Bull. 15:111307. doi: 10.1016/j.marpolbul.2020.111307

Zheng, F., Deng, C., Sun, X., Jiang, X., Guo, X., Yu, Z., et al. (2019). “Pyramidal person re-identification via multi-loss dynamic training,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA. doi: 10.1109/CVPR.2019.00871

Zheng, L., Shen, L., Tian, L., Wang, S., Wang, J., and Tian, Q. (2015). “Scalable person re-identification: a benchmark,” in Proceedings of the IEEE International Conference on Computer Vision, Santiago, 1116–1124. doi: 10.1109/ICCV.2015.133

Zheng, W.-S., Gong, S., and Xiang, T. (2011). “Person re-identification by probabilistic relative distance comparison,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2011 (Colorado Springs, CO: IEEE). doi: 10.1109/CVPR.2011.5995598

Keywords: re-identification, deep learning, triplet loss, swimming crab, individual recognition

Citation: Zhang K, Xin Y, Shi C, Xie Z and Ren Z (2022) A Pyramidal Feature Fusion Model on Swimming Crab Portunus trituberculatus Re-identification. Front. Mar. Sci. 9:845112. doi: 10.3389/fmars.2022.845112

Received: 29 December 2021; Accepted: 08 February 2022; Published: 22 March 2022.

Edited by:

Ping Liu, Yellow Sea Fisheries Research Institute (CAFS), China

Copyright © 2022 Zhang, Xin, Shi, Xie and Ren. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yu Xin, xinyu@nbu.edu.cn; Ce Shi, shice3210@126.com
