- Ocean Networks Canada, University of Victoria, Victoria, BC, Canada

The advent of large-scale cabled ocean observatories brought about the need to handle large amounts of ocean-based data, continuously recorded at a high sampling rate over many years and made accessible in near-real time to the ocean science community and the public. Ocean Networks Canada (ONC) commenced installing and operating two regional cabled observatories on Canada’s Pacific Coast, VENUS inshore and NEPTUNE offshore in the 2000s, and later expanded to include observatories in the Atlantic and Arctic in the 2010s. The first data streams from the cabled instrument nodes started flowing in February 2006. This paper describes Oceans 2.0 and Oceans 3.0, the comprehensive Data Management and Archival System that ONC developed to capture all data and associated metadata into an ever-expanding dynamic database. Oceans 2.0 was the name for this software system from 2006–2021; in 2022, ONC revised this name to Oceans 3.0, reflecting the system’s many new and planned capabilities aligning with Web 3.0 concepts. Oceans 3.0 comprises both tools to manage the data acquisition and archival of all instrumental assets managed by ONC as well as end-user tools to discover, process, visualize and download the data. 
Oceans 3.0 rests upon ten foundational pillars: (1) A robust and stable system architecture to serve as the backbone within a context of constant technological progress and evolving needs of the operators and end users; (2) a data acquisition and archival framework for infrastructure management and data recording, including instrument drivers and parsers to capture all data and observatory actions, alongside task management options and support for data versioning; (3) a metadata system tracking all the details necessary to archive Findable, Accessible, Interoperable and Reusable (FAIR) data from all scientific and non-scientific sensors; (4) a data Quality Assurance and Quality Control lifecycle with a consistent workflow and automated testing to detect instrument, data and network issues; (5) a data product pipeline ensuring the data are served in a wide variety of standard formats; (6) data discovery and access tools, both generalized and use-specific, allowing users to find and access data of interest; (7) an Application Programming Interface that enables scripted data discovery and access; (8) capabilities for customized and interactive data handling such as annotating videos or ingesting individual campaign-based data sets; (9) a system for generating persistent data identifiers and data citations, which supports interoperability with external data repositories; (10) capabilities to automatically detect and react to emergent events such as earthquakes. With a growing database and advancing technological capabilities, Oceans 3.0 is evolving toward a future in which the old paradigm of downloading packaged data files transitions to the new paradigm of cloud-based environments for data discovery, processing, analysis, and exchange.

Introduction

About Ocean Networks Canada

Ocean Networks Canada (ONC), a University of Victoria initiative, operates world-class cabled ocean observatories in the northeast Pacific, Arctic and Atlantic Ocean basins for the advancement of science and the benefit of Canada. With an operational design life of more than 25 years, the Ocean Networks Canada infrastructure collects and provides essential data required to address pressing scientific and policy issues. The innovative cabled infrastructure supplies continuous power and Internet connectivity to a broad suite of subsea instruments from coastal to deep-ocean environments. These observatories are supplemented by sensors installed on ferries, autonomous gliders and moorings, coastal radars, and other instrument technologies. Data acquired through these systems are provided freely and in near real time, from thousands of instruments distributed across some of the most diverse ocean environments found anywhere on Earth.

As one of the original Major Science Initiatives (MSI) funded by the Canada Foundation for Innovation (CFI), Ocean Networks Canada is a national research facility hosted and owned by the University of Victoria. The total investments to build and operate the ocean observatories exceed $350M to date.

Ocean Networks Canada is among the vanguard of organizations advancing ocean intelligence, as the data, data products, and services from ONC physical and digital infrastructure support research by a growing cohort of scientists across diverse sectors and disciplines (see Supplementary Figure 43), inform policy decisions, provide a platform for Canadian industry to test and develop instruments and respond to events, and transform ocean technology and infrastructure into new knowledge that positions Canada at the forefront of the field.

Purpose of This Paper

This paper serves several purposes. First, an end-to-end description of data acquisition, processing, storage and product generation systems is provided to help scientific users better understand how ONC manages and serves data. This knowledge will help the researcher gain confidence in reliability and reproducibility of ONC data, while supporting needs to describe data provenance for scientific applications. The goal is to provide a citable reference for the ocean scientist.

Secondly, this paper is intended as a general reference for the overall Oceans 2.0/3.0 software framework, which will be of interest to those working in the areas of scientific data management systems and oceanographic data repositories. This paper does not delve deeply into the specifics of code, but rather provides a broad overview of the many platforms and capabilities comprising Oceans 2.0/3.0.

Motivations for a Data Management System

Decades of experience with expensive scientific observatories (both space-based, e.g., the Hubble Space Telescope, and terrestrial, e.g., large seismic arrays in several countries) have demonstrated the value of maintaining well-curated data archives. An observing system that costs on the order of 10⁸ to 10¹⁰ dollars to design, implement and operate for any number of years must ensure its legacy – typically the data it collects – remains available for the longest possible time; doing so enables verification and reproducibility of results, and can often lead to new, unexpected discoveries. The long-term scientific productivity of projects like the Voyager probes (still producing data 44 years after they were launched) and Hubble (18,000+ scientific papers with 900,000+ citations) is attributable in no small part to the efforts made from the early design phase to include an associated data management and archiving system (Pirenne et al., 1993).

The large, real-time, high time-resolution ocean observatories pioneered by Ocean Networks Canada’s VENUS and NEPTUNE initiatives have similar long-term requirements for data management. From the early days, it quickly became apparent to the promoters of these initiatives that they could not be justified from either a science perspective (need for observations spanning decades) or a financial responsibility perspective (investment well into the $10⁸ range) without a robust companion data management system.

Genesis of the System

With the need for a data management system clearly established in the early stages, the promoters of VENUS and NEPTUNE commissioned studies to assess needs, including the expected data types that the ocean observing systems would produce, together with design considerations and indications of an overall architecture. One such study was performed by the National Research Council’s Canadian Astronomy Data Centre (CADC) in 2004. CADC had, at the time, over 15 years of experience in dealing with research data from a variety of astronomical telescopes, both space-based and terrestrial, and with their curation, processing and visualization.

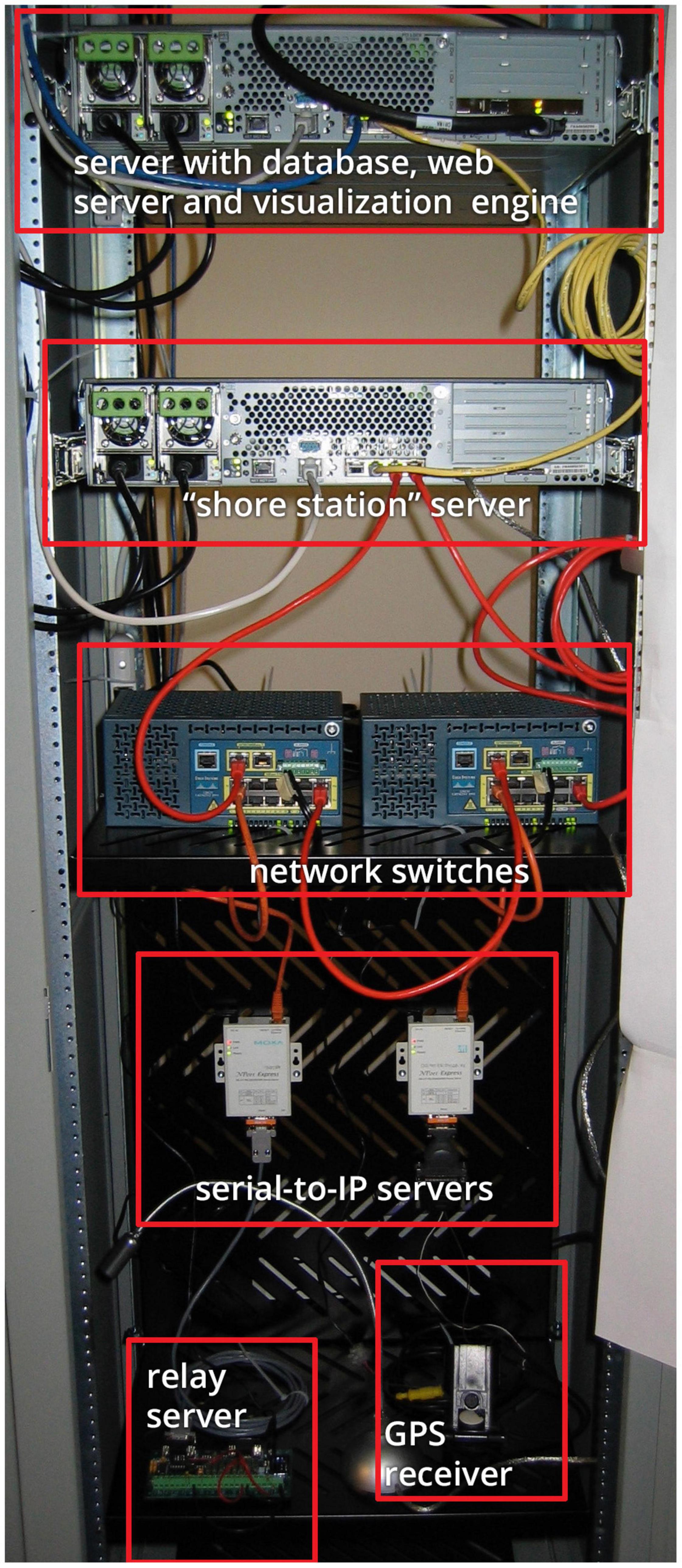

Toward the end of 2004, with the first staff in place, a prototype Data Management and Archiving System (DMAS) was developed to demonstrate the data acquisition, registration of new data and their archival. Simple but representative instruments were connected to the system, as shown in Figure 1.

Figure 1. View of Prototype DMAS, implemented in a single equipment rack. At top is the initial concept of the “data center,” a server running the data repository, consisting of a database, web server and visualization software. Second from top is a server running the “shore station” with “drivers” that implement the communication protocols of each instrument, parsing and pre-processing. In the middle is network equipment that implements the tree topology of the infrastructure: switches representing connections within the network. Second from bottom are a pair of serial-to-IP terminal servers that interface to the “instruments” at the bottom of the image, in this example, including a relay server and a GPS receiver. This high-level architecture is still in place today, with multiple “shore stations” and hundreds of instruments supported.

The structure defined and tested through the prototype led to the development of an architecture that satisfied the eight requirements (Supplementary Table 1) of the nascent ocean data management system; its key structural elements remain in place today.

Following the prototype, an interim DMAS was developed to support the first of the VENUS arrays in Saanich Inlet on Vancouver Island, which went operational in February 2006. The key elements (including data center and shore station) were maintained, as developers focused on implementing code to interface with the various instruments deployed. Initially, the Sybase relational database management system was chosen as the metadata database. A file management system called AD, in use at the CADC and at the European Southern Observatory, was implemented to host the data records from each instrument, split into 24-h data segments.

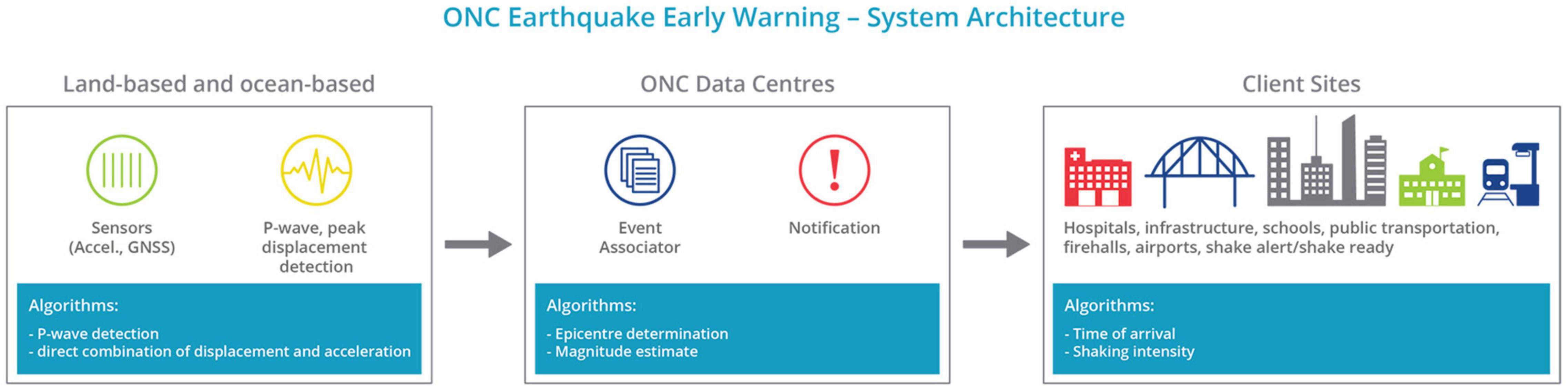

The interim DMAS rapidly evolved into a full-fledged system to support the second VENUS array (2008) and the NEPTUNE sensor network in 2010. Today, the system continues to grow and adapt, supporting an ever-expanding array of instruments and data types, and the significant combination of data products that can be derived from them. The flexibility and extensibility of the system have enabled expansion to support multiple communication technologies, and to collect data from many different locations, including the harshest deep-ocean and Arctic environments. The system also supports an increasingly diverse array of applications including an earthquake early warning system and a planned neutrino observatory. In 2012, DMAS was renamed Oceans 2.0, reflecting Web 2.0 concepts of user contribution and participation, as described by Murugesan (2007) (see section “User-Contributed Content”).

Features

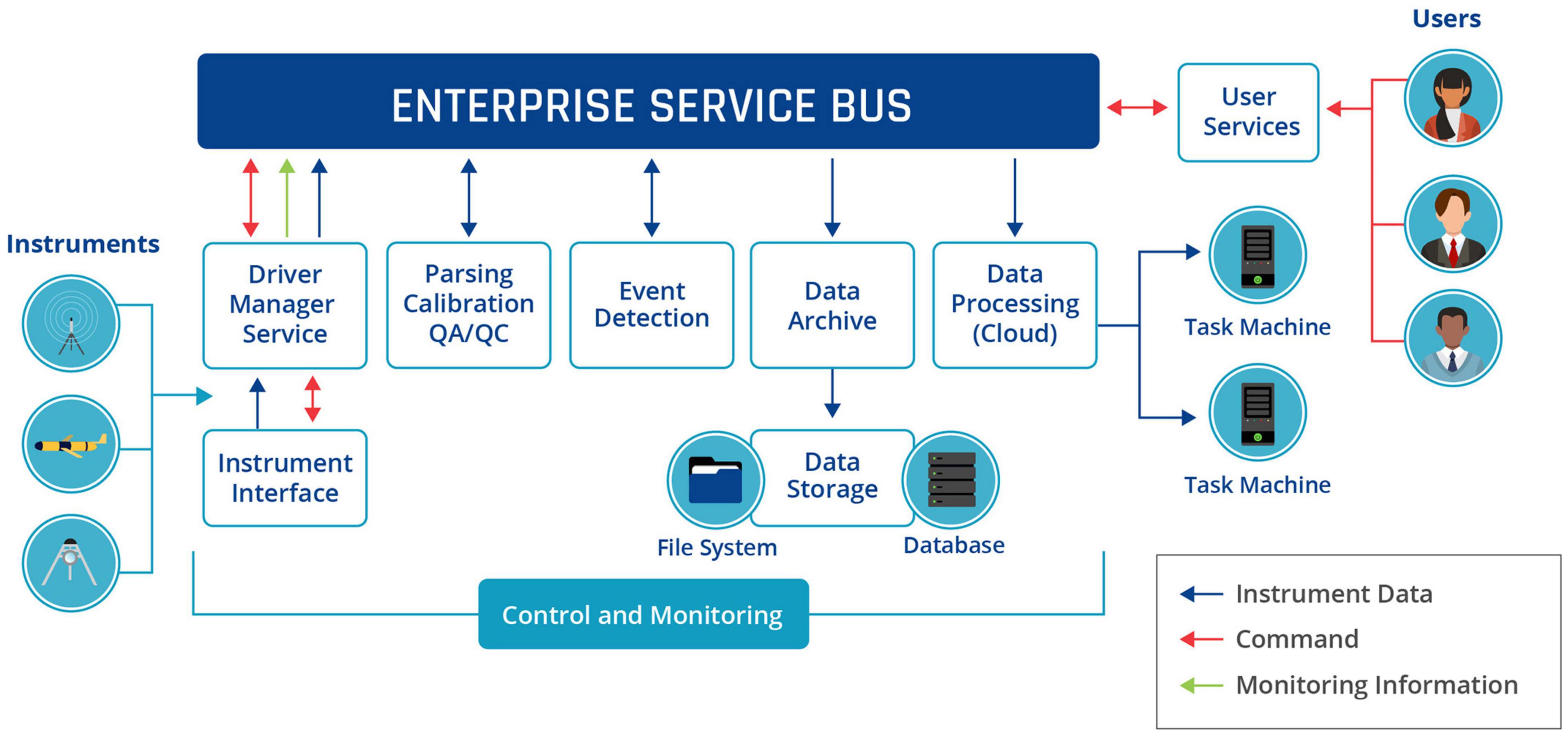

The features of Oceans 2.0 were implemented to address the key top-level requirements identified in Supplementary Table 1. Ocean Networks Canada designed a system structure and topology (illustrated in Figure 2) able to support any number of sensors, instruments, sites and networks, modeled on the tree structure used by Internet Protocol (IP) networks (Rose and McCloghrie, 1990).

Figure 2. Overview of the Oceans 2.0/3.0 Data Management and Archiving System (DMAS) structure, where a number of interconnected modules perform specific activities and pass their results to an Enterprise Service Bus for other subscribing modules to take on and process further.

In ensuing years, the efforts of the Oceans 2.0 team consisted primarily in implementing:

• support for additional instrument types;

• new data products, i.e., packaging of data into containers that satisfy international or industry standards;

• improvements of visualization methods for the various data types (from time-series plots to hydrophone spectra to combined views of environmental sensors data next to video streams);

• dedicated tools to help users not only view data but describe or annotate these data streams (SeaTube, Digital Fishers);

• dedicated applications to allow the automated contribution of field data measured by trained individuals anywhere around the world (Community Fishers);

• the ability to generate and associate Digital Object Identifiers to datasets, with tracking of their reprocessing history and versioning;

• an integrated observatory management system that includes full instrument preparation workflow, real-time monitoring and control of the infrastructure and full instrument metadata management, including the complete history of the instruments throughout their lifetime at ONC.

At the time of this writing, Oceans 3.0 supported:

• 9400 active sensors producing data;

• 930+ instruments producing data daily;

• 299 unique file-based data products;

• 8600 pre-generated plots produced daily;

• 2550 average daily data requests;

• 430 GB average volume of uncompressed data archived per day;

• 1.2 PB total uncompressed volume of archived data.

The rest of this review explores Oceans 2.0/3.0 features in depth.

Architecture

Planning for Renewal

Ocean Networks Canada observatories are research infrastructures intended to last at least 25 years. This includes both the physical as well as the digital components. Given the pace of technology evolution, the design and operational plans must account for different time scales/lifetimes of various components so that they can be replaced as needed to retain currency with the state of the art, while providing continuity of service. Typical operational lifetimes for various technology elements are listed in Supplementary Table 2; these correspond to replacement cycles anticipated in the ongoing maintenance and renewal of the ONC’s research infrastructures.

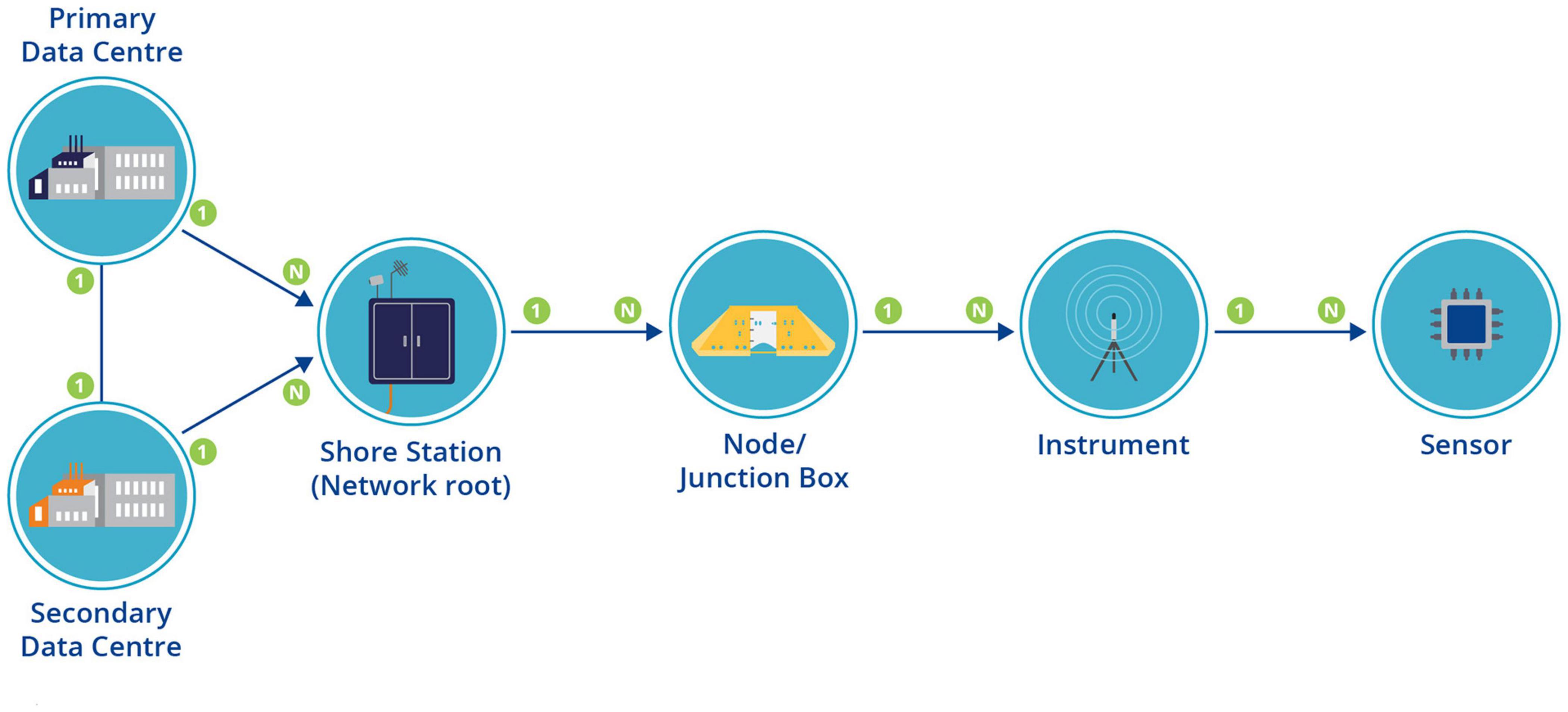

At its core, Oceans 3.0, the digital component of the Ocean Networks Canada research infrastructure, is a comprehensive management system for sensor networks. As a centrally managed infrastructure, its overall structure is hierarchical and tree-like, modeled after the Internet Protocol (IP) structure. As briefly presented in the introduction, it can be depicted in an entity-relationship diagram as illustrated by Figure 3.

Figure 3. ONC infrastructure represented by hierarchical entity-relationships extending from data center to sensor. The primary and secondary data centers support n shore stations, which in turn connect n nodes or junction boxes. Each junction box may have n connected instruments, with each instrument comprising n sensors.
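The hierarchy in Figure 3 can be sketched as a simple tree. The following is a minimal illustration only, not the Oceans 3.0 data model: the `Entity` class, the `kind` labels and all entity names are hypothetical and chosen purely to exercise the structure.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Entity:
    """One level of the hierarchy: data center, shore station, node, instrument or sensor."""
    kind: str
    name: str
    children: List["Entity"] = field(default_factory=list)

    def add(self, child: "Entity") -> "Entity":
        """Attach a child entity and return it so that calls can be chained."""
        self.children.append(child)
        return child

    def walk(self, kind: str) -> Iterator["Entity"]:
        """Yield this entity and every descendant of the requested kind, depth first."""
        if self.kind == kind:
            yield self
        for child in self.children:
            yield from child.walk(kind)


# Hypothetical names, used only to build a small example tree.
data_center = Entity("data_center", "primary")
station = data_center.add(Entity("shore_station", "station-A"))
node = station.add(Entity("node", "junction-box-1"))
ctd = node.add(Entity("instrument", "ctd-01"))
ctd.add(Entity("sensor", "temperature"))
ctd.add(Entity("sensor", "conductivity"))
camera = node.add(Entity("instrument", "camera-01"))
camera.add(Entity("sensor", "pan_angle"))

sensor_names = [sensor.name for sensor in data_center.walk("sensor")]
print(sensor_names)
```

Because every level uses the same one-to-many relationship, a single recursive traversal is enough to list all sensors below any data center, shore station or node.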

Network

Ocean Networks Canada operates a collection of sensor networks, distributed across a vast geography, nearly extending from pole to pole, with systems in the Arctic as well as one being prepared for deployment in Antarctica as of this writing, and systems on both the Pacific and Atlantic coasts of Canada. The sensor networks and all the key elements of ONC data centers are integrated in a Class A private network (rooted at the non-routable IPv4 address 10.x.x.x). Interconnections between the distributed segments of the network are performed over virtual private networks (VPNs) that integrate a variety of Internet service provision methods ranging from cabled terrestrial, to wireless, and to satellite.
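As a small illustration of the addressing scheme, the check below tests whether an address falls inside the 10.0.0.0/8 private block using Python's standard `ipaddress` module. The function name and the sample addresses are assumptions made for this sketch; the actual ONC subnet layout is not described here.

```python
import ipaddress

# The private "Class A" block reserved for non-routable use.
PRIVATE_CLASS_A = ipaddress.ip_network("10.0.0.0/8")


def in_private_class_a(address: str) -> bool:
    """Return True if the address lies inside 10.0.0.0/8."""
    return ipaddress.ip_address(address) in PRIVATE_CLASS_A


# Sample addresses are invented for illustration.
for candidate in ("10.20.30.40", "192.168.1.10", "11.0.0.1"):
    print(candidate, in_private_class_a(candidate))
```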

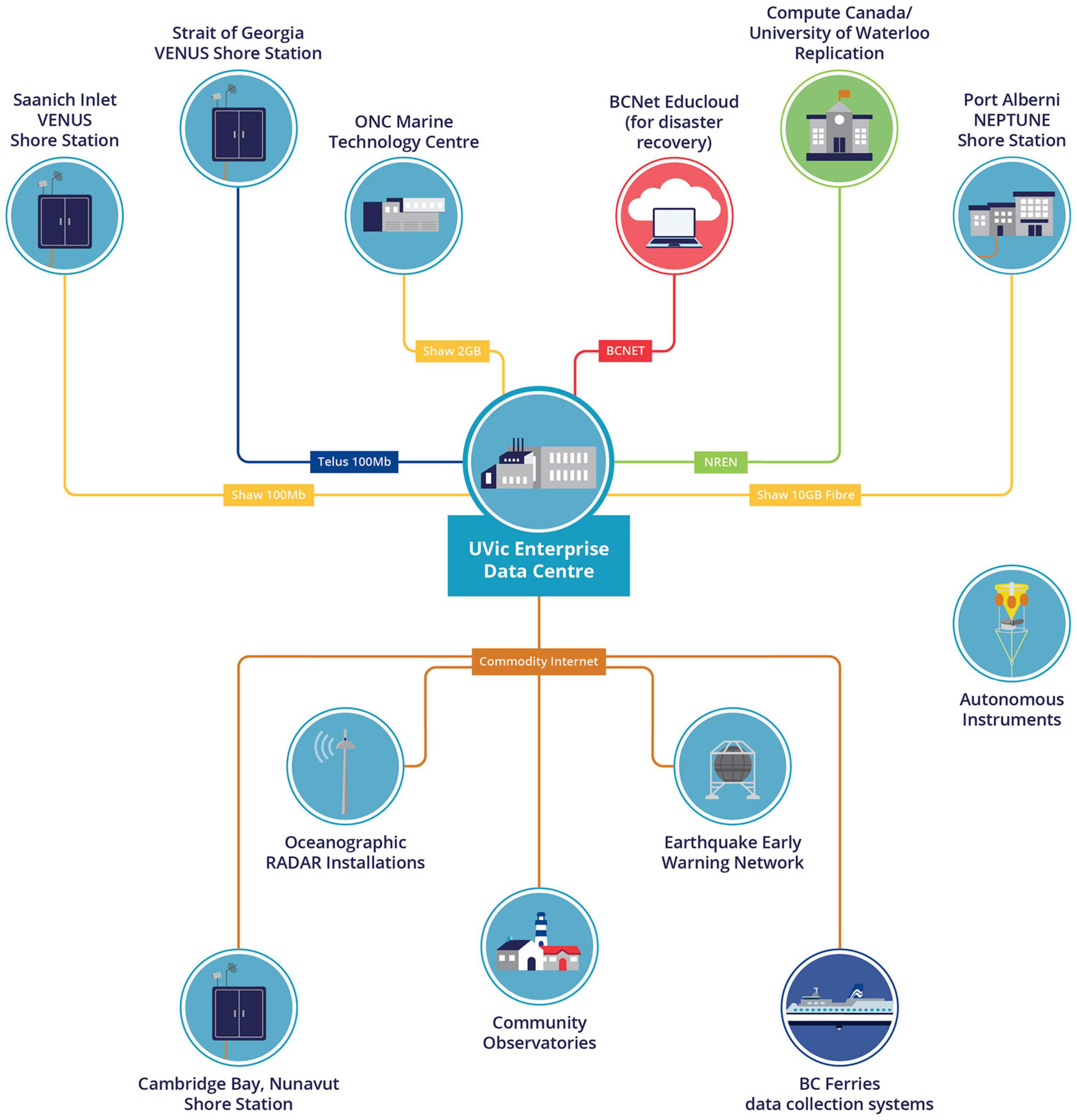

Ocean Networks Canada operates three data centers: the primary data center is located at the University of Victoria, in British Columbia, while secondary data centers (described in section “Business Continuity and Disaster Recovery”) are housed in the interior of British Columbia and in Ontario. The backup data centers provide an important safeguard in the event of disruptions caused by a potential major seismic event on Canada’s West Coast.

Ocean Networks Canada operates multiple shore stations, which provide a focal point and a root for their local subnet. The shore stations host equipment for communication with individual instruments, typically (but not always) located underwater. The overall configuration of shore stations, data centers and network connections is illustrated by Figure 4.

Figure 4. ONC networks connecting shore-based network nodes to the University of Victoria Enterprise Data Centre.

Since ONC’s infrastructure is essentially an extension of the Internet underwater, Internet Protocol (IP) access is extended as far as possible toward the sensor endpoints. For legacy serial instruments, terminal servers located in junction boxes translate the serial protocol to make their data available over IP. The terminal servers are configured to act as servers, while software drivers interacting with instruments act as clients for the purpose of the socket connection.
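The client and server roles described above can be sketched with plain TCP sockets. This is a self-contained toy, not ONC driver code: the stand-in terminal server runs on the local loopback interface, and the record format `T=8.213,C=3.412` is invented for the example.

```python
import socket
import threading


def fake_terminal_server(listener: socket.socket, payload: bytes) -> None:
    """Stand-in for a serial-to-IP terminal server: accept one client and relay one record."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(payload)


def read_one_record(host: str, port: int, timeout: float = 5.0) -> str:
    """Driver side: connect as a TCP client and read one newline-terminated record."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="ascii", newline="\n") as stream:
            return stream.readline().rstrip("\r\n")


# The terminal server listens; port 0 lets the operating system pick a free port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

server = threading.Thread(
    target=fake_terminal_server,
    args=(listener, b"T=8.213,C=3.412\r\n"),
    daemon=True,
)
server.start()

# The driver initiates the connection, as described in the text.
reading = read_one_record("127.0.0.1", port)
server.join(timeout=5.0)
listener.close()
print(reading)
```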

Timing

An integral aspect of Ocean Networks Canada facility design, and a key enabler of multi- and trans-disciplinary research, is the ability to coordinate observations between completely different observing systems (such as satellites and in situ sensors). This is only possible if a single, very accurate clock signal is available to synchronize all the readings from all instruments.

Ocean Networks Canada’s largest observing infrastructure (the NEPTUNE observatory) is equipped with three GPS clocks that follow the IEEE 1588 Precision Time Protocol and can be inter-compared to ensure provision of the most accurate absolute time signal to all instruments underwater. All readings from all instruments are time-stamped at the shore station, and that time is used if the instrument cannot autonomously synchronize its internal clock with the shore station master clocks.

A single time reference allows the researcher to make direct comparisons between events seen in distinct data streams, for example, camera video and temperature readings, or the collation of data from seismic devices across the network to derive an earthquake epicenter. Additionally, the system enables secondary clocks to provide a higher accuracy time signal for specific local experiments, such as a planned neutrino observatory that will require nanosecond-level local timing.

Data Storage Formats

Resisting trends to build an archive in which datasets are stored in short-lived formats, or to choose one format among competing standards, ONC system architects decided to remain agnostic with respect to formats and select for internal storage those most appropriate for the given application. However, data downloads always respect users’ choices. For example, Oceans 3.0 delivers the same data to users, whether formatted as Comma-Separated Values (CSV), in a MATLAB table or in NetCDF. To enable this, Oceans 3.0 performs format conversions on the fly when generating products from internally stored data. This averts the possibility of being locked into specific stored formats that could be deprecated after a few years, requiring costly internal conversions. ONC believes this approach has been a beneficial best practice, both for data managers and users, thanks to its flexibility.
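On-the-fly conversion of this kind can be sketched as a registry of formatter functions applied to the same internal records at request time. The sketch below is an assumption-laden illustration: the record layout and function names are hypothetical, and JSON is used as a standard-library stand-in for formats such as MATLAB or NetCDF, which would need third-party libraries.

```python
import csv
import io
import json
from typing import Callable, Dict, List

Record = Dict[str, object]


def to_csv(records: List[Record]) -> str:
    """Serialize records as CSV, taking column names from the first record."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records: List[Record]) -> str:
    """Serialize records as a JSON array."""
    return json.dumps(records, indent=2)


# Supporting a new output format means registering one more function here;
# the stored records themselves never change.
FORMATTERS: Dict[str, Callable[[List[Record]], str]] = {"csv": to_csv, "json": to_json}


def generate_product(records: List[Record], fmt: str) -> str:
    """Convert internally stored records to the format the user asked for."""
    if fmt not in FORMATTERS:
        raise ValueError(f"unsupported format: {fmt}")
    return FORMATTERS[fmt](records)


# Hypothetical internal records.
records = [
    {"time": "2021-07-01T00:00:00Z", "temperature_c": 8.21},
    {"time": "2021-07-01T00:00:01Z", "temperature_c": 8.22},
]
print(generate_product(records, "csv"))
```

Keeping the converters separate from storage is what lets a deprecated output format be dropped, or a new one added, without touching the archive.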

Hardware and Software Technologies

A variety of hardware platforms, software systems and technologies are combined to host and operate Oceans 3.0, including storage and database systems, virtualization infrastructure, and physical machines for specialized applications.

Ocean Networks Canada’s main storage system is a NetApp FAS8200 NAS (Network Attached Storage) with 1.5 PB of available storage as of July 2021. This system hosts the Oceans 3.0 Archive Data file server and archives. In addition, it hosts the Oceans 3.0 web server and all of ONC’s virtual machines and associated file systems as well as ONC’s supporting software systems used for system monitoring and graphing, issue tracking, documentation (including extensive details on data models, software requirements, design, etc.), and content management. These main data holdings are replicated in two back-up locations, as described in section “Business Continuity and Disaster Recovery.” At the time of writing, an additional layer of replication was in the process of transitioning from Tivoli Storage Manager to Google Cloud.

Two main database systems support Oceans 3.0: Postgres and Cassandra. The Postgres database, which stores Oceans 3.0 metadata, is instantiated as a read/write master in the University of Victoria Enterprise Data Centre (UVic EDC), with read-only replicas at the UVic EDC and in the BCNet Educloud. The Cassandra NoSQL database, which stores Oceans 3.0 scalar data and other readings, is implemented as a 16-node cluster in the UVic EDC, with each datapoint replicated three times across the cluster. A backup Cassandra instance is implemented on BCNet Educloud across 12 nodes.

Ocean Networks Canada’s virtualization infrastructure supporting all Oceans 3.0 software development and production platforms runs on 21 physical servers, supporting over 170 virtual machines.

Seven dedicated task machines are also in operation, performing all of the computation and rendering for Oceans 3.0 data product generation. At the time of writing, ONC was in the process of shifting from CPU-based to GPU-based platforms for data product generation, with work underway to partially implement these within Compute Canada’s cloud environment.

Instrument driver software runs on driver machines located in all ONC shore stations. These driver machines are operated as a redundant pair of machines, with the backup configured as a warm standby. The drivers running on these systems connect to oceanographic instruments, retrieve raw data and feed these data into the upstream components of the Oceans 3.0 data acquisition and archival system.

Oceans 3.0 runs on Gemini servers under the CentOS Linux operating system (Gemini is an open-source lightweight application-level server supporting the Open Services Gateway initiative (OSGi) and encapsulating the ubiquitous open-source Tomcat server). Some of the main software systems used to operate Oceans 3.0 include the ActiveMQ messaging service (for transferring data, scheduling jobs and handling communications among Oceans 3.0 computing components), Zenoss (for network monitoring), Prometheus, Graphite and Grafana (for metrics and monitoring), Graylog and Splunk (for log file aggregation) and Wowza (for video recording and streaming).

Business Continuity and Disaster Recovery

Because ONC shore stations and data center are all located in seismically active areas, ONC operates two disaster recovery locations, one in Educloud hosted in Kamloops, British Columbia (a location far removed from the coastal seismic hazard zone) and a second in Compute Canada hosted at the University of Waterloo, Ontario, Canada.

The disaster recovery location at the University of Waterloo maintains an exact copy of all archived instrument and sensor data. Data are copied daily and periodically checked for consistency. At the time of this writing, the replication and consistency verification processes were being revamped to accommodate the large volume of archived data, in excess of 10 TB and 2 million files per month. A third replica of archived data was formerly maintained on tape backups. Due to the rising costs of operating a tape library, ONC decided to transition the third replica to Google Cloud in Montréal.
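One common way to verify that a replica matches its source is to compare per-file checksums. The sketch below assumes SHA-256 digests and a simple directory walk; the paper does not state which verification method ONC uses, so the function names and the approach are illustrative only.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def checksum(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def manifest(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to root) to its checksum."""
    return {
        p.relative_to(root).as_posix(): checksum(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_inconsistencies(primary: Path, replica: Path) -> List[str]:
    """List files that are missing on either side or whose contents differ."""
    a, b = manifest(primary), manifest(replica)
    return sorted(name for name in a.keys() | b.keys() if a.get(name) != b.get(name))


# Demonstration with throwaway directories and invented file contents.
with tempfile.TemporaryDirectory() as tmp:
    primary, replica = Path(tmp, "primary"), Path(tmp, "replica")
    primary.mkdir()
    replica.mkdir()
    (primary / "a.log").write_bytes(b"reading 1\n")
    (replica / "a.log").write_bytes(b"reading 1\n")
    (primary / "b.log").write_bytes(b"reading 2\n")
    (replica / "b.log").write_bytes(b"reading 2 (corrupted)\n")
    (primary / "c.log").write_bytes(b"reading 3\n")  # never copied to the replica
    mismatches = find_inconsistencies(primary, replica)

print(mismatches)
```

At millions of files per month, a production process would likely store manifests rather than recompute them on every pass; this sketch recomputes them for simplicity.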

The Educloud disaster recovery location runs all the software required to start Oceans 3.0 in the event of a major disaster impacting the UVic data center. This includes database replicas (Cassandra and Postgres) and virtual machines. Aside from Oceans 3.0, this also includes development, documentation and monitoring systems required to maintain and operate Oceans 3.0.

User Management and Access Restrictions

Since Oceans 3.0 was designed not only for providing access to the data produced by instruments on the networks but also for managing and controlling those instruments, a user management scheme was integrated into the design of Oceans 3.0. Permission schemes for individuals and groups, as well as functions that require group authorization, have been implemented and offer the full range of authentication/permissions features. The operation of a specific instrument, for example an underwater camera, assigns permissions to one implicit group and two explicit groups for managing the various operational aspects. The implicit group’s permissions are restricted to merely viewing what the camera is seeing. One of the explicit groups allows its members to operate the camera (e.g., illuminate the lights, move the pan and tilt), whereas members of the other explicit group are allowed to change the observing program schedule.
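The camera example can be expressed as a small group-based permission check. The group names, user names and `Action` values below are hypothetical; the sketch only illustrates the idea of one implicit group (everyone) plus two explicit groups.

```python
from enum import Enum
from typing import Dict, Set


class Action(Enum):
    VIEW = "view"          # watch what the camera is seeing
    OPERATE = "operate"    # lights, pan and tilt
    SCHEDULE = "schedule"  # change the observing program schedule


# The implicit group applies to every user; the other two are explicit groups.
GROUP_ACTIONS: Dict[str, Set[Action]] = {
    "everyone": {Action.VIEW},
    "camera_operators": {Action.OPERATE},
    "camera_schedulers": {Action.SCHEDULE},
}

# Hypothetical explicit memberships.
MEMBERSHIP: Dict[str, Set[str]] = {
    "alice": {"camera_operators"},
    "bob": {"camera_schedulers"},
}


def is_allowed(user: str, action: Action) -> bool:
    """A user may act if any of their groups, including the implicit one, grants the action."""
    groups = {"everyone"} | MEMBERSHIP.get(user, set())
    return any(action in GROUP_ACTIONS[group] for group in groups)


print(is_allowed("alice", Action.OPERATE), is_allowed("guest", Action.OPERATE))
```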

Login is not required for simply browsing or accessing data; an anonymous use mode was implemented, which does not provide any access to specific features or assistance with data requests that may have gone awry. Login is required for users wanting to contribute content to the system, for instance to add annotations to data streams such as hydrophone audio or video recordings; this login requirement enables traceability, reporting by source, and helps prevent system abuse.

Login is also required for access to restricted data. Whereas the vast majority of the data are available immediately to users without any restrictions, in some specific cases restrictions are applied for sensitive data (more on this in section “Metadata”). This occurs when Oceans 3.0 is the repository of another organization’s data, governed by data agreements that stipulate either limiting access to a specific group or restricting access for a proprietary period lasting from minutes to years. These restrictions are applied broadly by device, or specifically by data product and time for specific users and groups. Data restrictions are adhered to throughout Oceans 3.0, including programmatic access, interactive data access and all downloads. Data access restrictions are also configurable in the user management system of Oceans 3.0.
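A device-level restriction with an optional proprietary period might be evaluated along the following lines. This is a sketch under stated assumptions: the `Restriction` fields, the rule that data open up once the period has elapsed, and all identifiers are hypothetical, not the Oceans 3.0 implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Restriction:
    device_id: str
    allowed_groups: FrozenSet[str]
    # None means the data stay restricted to the allowed groups indefinitely.
    proprietary_period: Optional[timedelta]


def can_access(
    restriction: Restriction,
    device_id: str,
    sample_time: datetime,
    user_groups: FrozenSet[str],
    now: datetime,
) -> bool:
    """Decide whether a sample is visible to a user under one device-level restriction."""
    if device_id != restriction.device_id:
        return True   # restriction does not apply to this device
    if user_groups & restriction.allowed_groups:
        return True   # user belongs to a group named in the data agreement
    if restriction.proprietary_period is None:
        return False  # restricted with no expiry
    return now - sample_time >= restriction.proprietary_period


# Hypothetical agreement: partner data are embargoed for 30 days.
rule = Restriction("partner-buoy-1", frozenset({"partner_staff"}), timedelta(days=30))
now = datetime(2021, 7, 31, tzinfo=timezone.utc)
recent = datetime(2021, 7, 20, tzinfo=timezone.utc)
old = datetime(2021, 6, 1, tzinfo=timezone.utc)
print(can_access(rule, "partner-buoy-1", recent, frozenset(), now))
print(can_access(rule, "partner-buoy-1", old, frozenset(), now))
```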

Data Acquisition and Archival

The Oceans 3.0 data acquisition and archival system ingests readings from oceanographic instruments (referred to as devices), and stores them in database and file system archives. This highly automated pipeline is implemented by an interconnected set of software drivers, messaging queues, parsers, calibrators, Quality Assurance/Quality Control (QA/QC) tests, event detectors and archival routines.

Real Time Acquisition

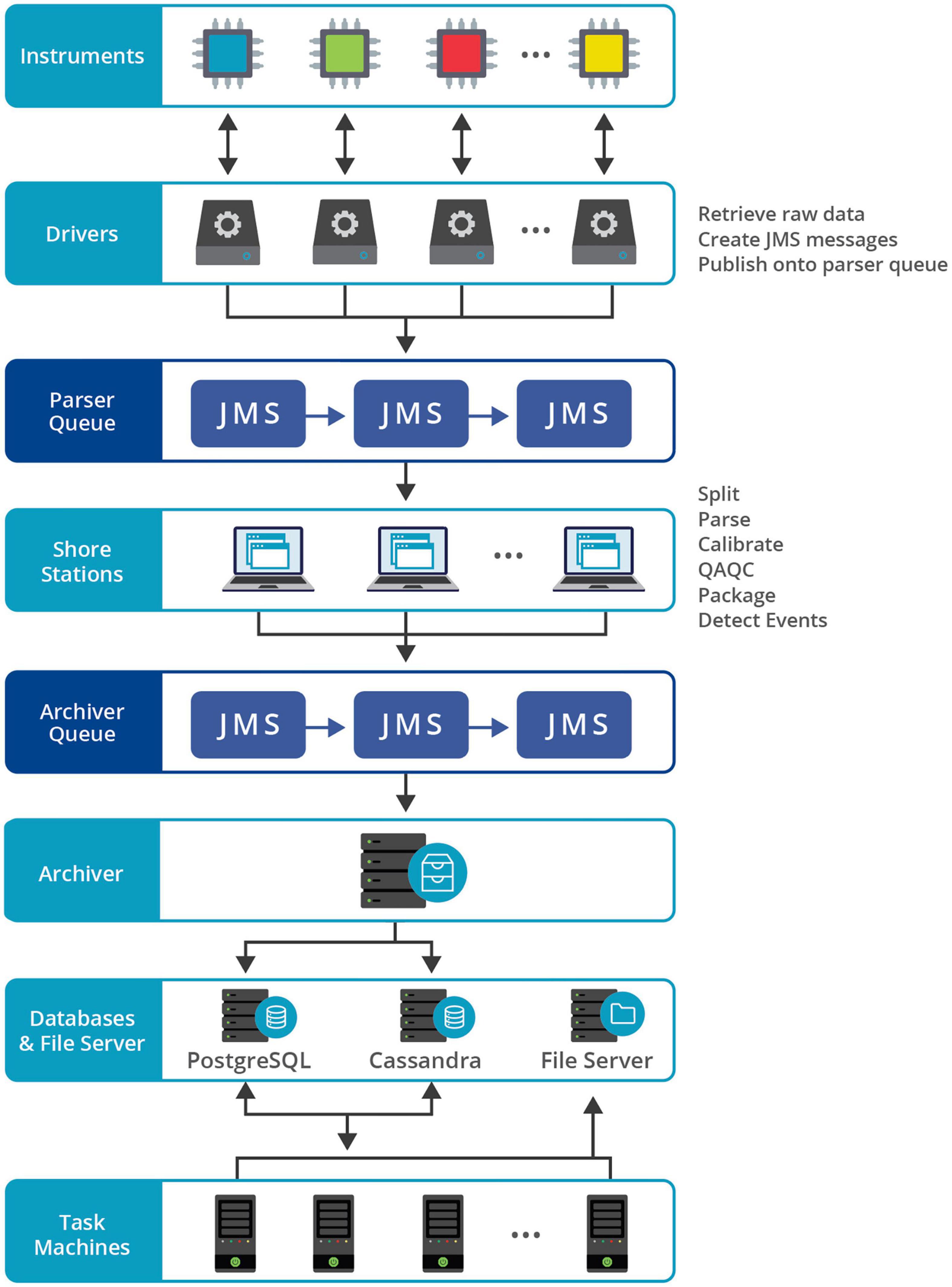

Real time and near-real time data acquisition is handled by a series of systems and processes extending from instruments to database and file servers, as illustrated in Figure 5.

Step 1: Acquiring Data Readings

Programs that control and communicate directly with devices are called drivers within Oceans 3.0 nomenclature. The primary function of each driver is to acquire real time data from the device; they are designed to be as simple as possible for completion of this function. Drivers typically support a subset of the functions available on the target device, usually only commands related to configuring the device and retrieving data.

Data collected by drivers are published as Java Message Service (JMS) messages. Drivers are run on Java Virtual Machines (JVMs); there can be multiple drivers on one JVM. Typically, ONC spawns one JVM per physical machine, and it is common practice to run multiple such machines at a particular physical network location.

Within Oceans 3.0, network connections between drivers and devices are always handled through a Transmission Control Protocol (TCP) connection. Even serial data streams are converted into TCP format for network transmission. Different protocols are used for different devices, depending on configurations. Oceans 3.0 supports TCP, UDP, HTTP and SSH network connections.

Step 2: Publishing Onto the Parser Queue

Oceans 3.0 uses a publish and subscribe model for handling the JMS messages. These messages are published onto the parser queue. The JMS messaging standard is advantageous because of built-in failsafes, which ensure that any published message will reach its subscriber, even in the event of a lost connection or outage.

Oceans 3.0 employs the ActiveMQ implementation of JMS, which includes robust handshaking protocols and intermediary data backup. These messages are retrieved from the parser queue by the shore station for processing.
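The delivery guarantee described above can be illustrated with a toy in-memory queue that keeps every message until the subscriber has handled it successfully. This is not the JMS or ActiveMQ API: the `DurableQueue` class and its methods are invented for this sketch, and a real broker would persist messages to disk as well.

```python
import itertools
from collections import OrderedDict
from typing import Callable, List


class DurableQueue:
    """Toy queue: a message is removed only after the handler returns without error."""

    def __init__(self) -> None:
        self._pending: "OrderedDict[int, bytes]" = OrderedDict()
        self._ids = itertools.count(1)

    def publish(self, body: bytes) -> int:
        """Store a message and return its identifier."""
        message_id = next(self._ids)
        self._pending[message_id] = body
        return message_id

    def deliver(self, handler: Callable[[bytes], None]) -> int:
        """Offer pending messages in order; stop at the first failure to preserve ordering."""
        delivered = 0
        for message_id in list(self._pending):
            try:
                handler(self._pending[message_id])
            except ConnectionError:
                break  # keep this and all later messages for the next attempt
            del self._pending[message_id]
            delivered += 1
        return delivered

    def __len__(self) -> int:
        return len(self._pending)


parser_queue = DurableQueue()
for line in (b"reading-1", b"reading-2", b"reading-3"):
    parser_queue.publish(line)

received: List[bytes] = []
outage = {"active": True}


def shore_station(body: bytes) -> None:
    """Hypothetical subscriber that is unreachable while the outage lasts."""
    if outage["active"]:
        raise ConnectionError("subscriber unreachable")
    received.append(body)


first_attempt = parser_queue.deliver(shore_station)   # during the outage
outage["active"] = False
second_attempt = parser_queue.deliver(shore_station)  # after recovery
print(first_attempt, second_attempt, received)
```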

Step 3: Processing by the Shore Station

The Oceans 3.0 Shore Stations are not physical facilities, but rather JVMs running in the same physical location as the driver JVMs. These programs process JMS messages sequentially, performing a number of operations along the way:

1. Splitting the raw data into components, such as device IDs or sub messages;

2. Parsing raw data and converting values into readings, configurations and complex data structures;

3. Calibrating parsed data, applying/converting units of measure, calculating derived quantities (e.g., salinity which is derived from other parameters);

4. QA/QC operations, such as checking for data out of bounds and flagging suspect data;

5. Packaging all of these elements into a new JMS containing the raw data along with parsed values, corrected values, derived values and QA/QC flags; and

6. Event detection, which can be any of a number of automated operations, depending on specific data values or ranges (e.g., sending an email).
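The six operations above can be sketched as one function applied to each incoming message. Everything specific in this example is an assumption made for illustration: the `device|name=value` message layout, the calibration offset, the range limits, the flag values and the alert threshold. Derived quantities such as salinity are omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical calibration offsets, range limits and alert thresholds.
OFFSETS = {"temperature_c": 0.05}
RANGE_LIMITS = {"temperature_c": (-2.0, 40.0)}
ALERT_ABOVE = {"temperature_c": 30.0}
FLAG_PASS, FLAG_FAIL = 1, 4  # illustrative flag values


@dataclass
class Package:
    device_id: str
    raw: str
    parsed: Dict[str, float]
    calibrated: Dict[str, float]
    flags: Dict[str, int]


def process(message: str) -> Tuple[Package, List[str]]:
    # 1. Split the raw message into a device ID and a payload.
    device_id, payload = message.split("|", 1)

    # 2. Parse "name=value" pairs into numeric readings.
    parsed: Dict[str, float] = {}
    for item in payload.split(","):
        name, value = item.split("=", 1)
        parsed[name.strip()] = float(value)

    # 3. Calibrate by applying a per-sensor offset.
    calibrated = {n: round(v + OFFSETS.get(n, 0.0), 6) for n, v in parsed.items()}

    # 4. QA/QC: flag values that fall outside the configured bounds.
    flags: Dict[str, int] = {}
    for name, value in calibrated.items():
        low, high = RANGE_LIMITS.get(name, (float("-inf"), float("inf")))
        flags[name] = FLAG_PASS if low <= value <= high else FLAG_FAIL

    # 5. Package raw, parsed and calibrated values together with their flags.
    package = Package(device_id, message, parsed, calibrated, flags)

    # 6. Event detection: report readings above an alert threshold.
    events = [
        f"{device_id}: {name} above {limit}"
        for name, limit in ALERT_ABOVE.items()
        if calibrated.get(name, float("-inf")) > limit
    ]
    return package, events


package, events = process("ctd-01|temperature_c=8.2")
print(package.calibrated, package.flags, events)
```

In the real system the event would trigger an action such as sending an email; here it is only returned as a string.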

Step 4: Publishing Onto the Archiver Queue

Finished JMS messages produced by the shore station are then published to the archiver queue, which is another instance of the ActiveMQ messaging service. This queue serves the same function as the parser queue, holding incoming messages, and allowing them to be picked up sequentially by the archiver, which subscribes to this queue.

Step 5: Archival

Although multiple shore stations are implemented within the Oceans 3.0 cyber infrastructure, there is currently only one archiver machine, which is another JVM running at the University of Victoria Enterprise Data Centre. The role of the archiver is to ensure all incoming data are stored in their proper storage systems. In the event that this system becomes overloaded or experiences malfunctions, the data remain in the MQ system until they can be safely archived. There is a manual process to re-ingest data affected by failures and errors in the archiver (and the parsers on the shore stations). A new queue management and configuration system is being implemented in early 2022 which will allow for multiple archiver instances.

The incoming data, including raw data, sensor data and QA/QC flags, are stored in different systems, depending on the type of data. Currently, Oceans 3.0 supports the following storage systems:

• Postgres – an open-source SQL database, used for QA/QC flags in particular and all other metadata and data not stored in Cassandra;

• Cassandra – a NoSQL database, used to store parsed scalar sample values, complex readings, and as an accumulator for raw data prior to its writing into raw data files (Cassandra is used here instead of Postgres to more effectively handle and scale to the data throughput);

• Archive Directory (AD) – a file store, used to archive one concatenated file daily for each device.

Task Machine and Scheduled Jobs

The above steps comprise the end-to-end process of real time data acquisition, but some additional processing steps are handled by task machines at the end of this acquisition pipeline. Task machines incorporate a scheduler system, which runs thousands of jobs daily. One important scheduled job is the daily job, which runs every day after midnight UTC. This routine pulls all raw files recorded during the past day from the Cassandra database and writes them as one log file per device into the Archive Directory. These log files retain not only the data records, but also the commands and responses between the driver and the device. These log files are therefore an extremely valuable resource for troubleshooting and provenance. Another scheduled job pulls scalar data from Cassandra and generates 15-min averaged data values that are then stored back into Cassandra as quarter (hour) scalar data; the quarter scalar readings help improve performance when generating on-the-fly plots and other data products.
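The quarter-hour averaging job can be sketched as a binning step followed by a mean per bin. Labeling each bin by its start time is an assumption of this sketch, as are the function names and sample values; the paper does not specify how the averaged readings are time-stamped.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import fmean
from typing import Dict, Iterable, List, Tuple


def quarter_hour_start(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its 15-minute bin."""
    return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)


def quarter_hour_means(samples: Iterable[Tuple[datetime, float]]) -> Dict[datetime, float]:
    """Average scalar samples within each 15-minute bin, keyed by bin start time."""
    bins: Dict[datetime, List[float]] = defaultdict(list)
    for ts, value in samples:
        bins[quarter_hour_start(ts)].append(value)
    return {start: fmean(values) for start, values in sorted(bins.items())}


# Invented samples straddling a bin boundary.
utc = timezone.utc
samples = [
    (datetime(2021, 7, 1, 0, 0, 10, tzinfo=utc), 1.0),
    (datetime(2021, 7, 1, 0, 14, 59, tzinfo=utc), 3.0),
    (datetime(2021, 7, 1, 0, 15, 0, tzinfo=utc), 10.0),
]
averages = quarter_hour_means(samples)
for start, mean in averages.items():
    print(start.isoformat(), mean)
```

Pre-computing these averages trades a small amount of storage for much faster plotting over long time ranges, since a plot spanning months can read one value per 15 minutes instead of every raw sample.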

Other Acquisition Methods

Aside from the real time acquisition described above, Oceans 3.0 supports other types of acquisition for different data collection regimes.

3rd Party Data Push

For some systems, such as buoys operated by partner institutions, acquired data can be pushed directly to the Active MQ parser queue without passing through a driver. Additionally, some data are acquired via web services and sent directly to the archiver queue; this is the method for ship Automatic Identification System (AIS) data.

Store and Forward Acquisition

The store and forward model is used in situations where data are stored on an external device and forwarded to the Oceans 3.0 system periodically. Some examples of this are scheduled jobs that access external ftp or mail servers and upload the data. Some of these scheduled jobs also read the acquired files and push data directly onto the Active MQ parser queue, while all the files acquired this way are archived in the file system. Some independent drivers (not part of Oceans 3.0) also push files over the secure network to the file archiving scheduled jobs, where they can be tagged for post-processing by the data product pipeline.

Autonomous Systems

A variation on this model is used for data from autonomous systems, such as battery-powered moorings, which collect data over an extended period of time until the instruments are recovered and their raw data then ingested and processed. In these cases, the data are retrieved from the instrument at recovery or in situ following procedures outlined by the instrument manufacturers. These raw files are verified, renamed to ONC’s file naming conventions, and uploaded to the archive. In cases where data parsing is intended, scripts are executed to convert the raw manufacturer files into daily log files that mimic those produced from the driver-operated instruments. Once these log files are archived, the files are added into a parser queue to follow steps 2–5 above, in similar fashion to real time data acquisition.

Device Control

Co-located Active Acoustic Devices

In some cases, multiple active acoustic devices, such as echosounders, Acoustic Doppler Current Profilers or sonars, are located in close enough proximity that signal interference could be problematic. For example, with two co-located sonars, the drivers for these sonars coordinate their timing by interlacing the acoustic pings from each sonar. This is done by using the ping of one of the sonars as a signal for the second sonar to perform its ping following a predefined delay. Supplementary Figure 1 illustrates this as a simplified timing diagram.

This solution is in use for several of ONC’s co-located sonar devices. It can be used for pairings of co-located devices provided there is enough time between pings for each sonar to perform a ping and the secondary sonar can be operated in a poll mode.
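The interlacing scheme and its timing constraint can be sketched as follows. This is an illustrative model only: the function and parameter names are hypothetical, and the real drivers react to actual ping events rather than computing a schedule in advance.

```python
def interlaced_schedule(primary_period_s, delay_s, secondary_ping_s, n_cycles):
    """Return (time, sonar) events for two interlaced sonars.

    The primary sonar pings every `primary_period_s` seconds; each primary
    ping triggers the polled secondary sonar after `delay_s` seconds.
    Raises ValueError if the secondary ping could not finish before the
    next primary ping, i.e. there is not enough time between pings.
    """
    if delay_s <= 0 or secondary_ping_s <= 0:
        raise ValueError("delay and ping duration must be positive")
    if delay_s + secondary_ping_s > primary_period_s:
        raise ValueError("not enough time between primary pings")
    events = []
    for k in range(n_cycles):
        t0 = k * primary_period_s
        events.append((t0, "primary"))
        events.append((t0 + delay_s, "secondary"))
    return events
```

For example, `interlaced_schedule(2.0, 0.5, 1.0, 2)` places the secondary pings at 0.5 s and 2.5 s, between primary pings at 0 s and 2 s.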

Camera Control and Acquisition

Camera Systems

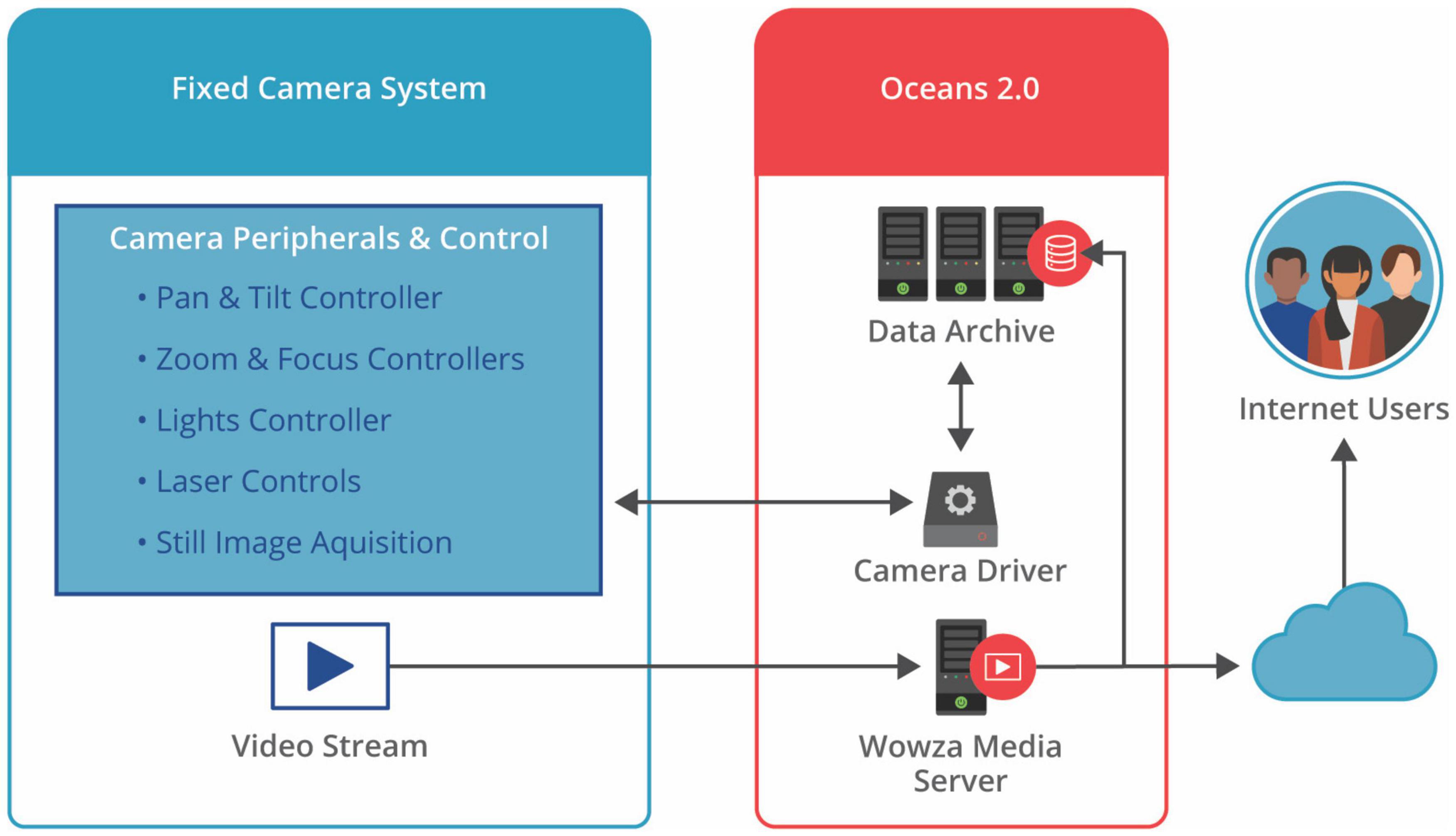

Camera systems consist of a camera, lights and in some cases a pan/tilt device and/or set of lasers. Oceans 3.0 supports multiple manufacturers and models of each of these components. Camera operations include handling the video stream and controlling the various camera and peripheral settings. Figure 6 illustrates the system for data acquisition, camera control and distribution of the video stream.

Figure 6. Control, data acquisition and stream distribution system for a fixed-location camera on the ONC network.

Video Streaming Server

A Wowza streaming video server is used to stream video from all cameras (Wowza Streaming Engine, 2022). Video is streamed in whatever format the camera supports and the video server maintains only one stream per camera. All Oceans 3.0 web pages that display streaming video from the cameras are connected to the Wowza streaming engine, which provides video streams in a standard format and resolution. This streaming server technology is compatible with the networks, cameras and servers used by ONC; at the time of adoption by ONC in 2008 it also had the advantage of being one of the only alternatives to the proprietary Adobe Flash format.

The video streaming server also writes each video stream to the AD file system. For deep sea camera systems, writing the stream is usually controlled by the status of the camera’s lights; since there is almost no ambient light in the deep ocean, the stream is only written when the lights are illuminated.

Camera Driver

A camera driver is a type of driver as outlined in the above section Acquiring Data Readings. This driver contains additional capabilities to control various functions on the camera system such as zoom, focus, lights, pan/tilt, lasers, etc. Drivers transmit commands to the camera system and obtain telemetry and status information from the system. For some cameras, there is also a capability to record high-resolution still images, which are transmitted through the driver to the Oceans 3.0 data acquisition framework. Camera drivers implement a common set of camera commands that are the same for all camera systems regardless of the manufacturer or model (see the section “Common Interfaces” below).

Infrastructure Management Tools

Device Console

The Oceans 3.0 Device Console (Supplementary Figure 2) provides a real time display of instrument connectivity. This application serves as the command-and-control center for the observatory systems team, and is vital for the maintenance and troubleshooting of instruments. Using the Device Console, ONC system operators can start and stop instrument drivers. All users can obtain real-time summaries of any networked instrument’s current status, uptime and last archived file; in addition, interactive quick plots of sensor readings and links to Device Details are provided.

Junction Box View

Junction boxes are an integral component of the subsea infrastructure, as they distribute power and communications to connected individual scientific instruments. Within the ONC infrastructure, many different types of junction boxes are deployed, each customized for its specific needs; some junction boxes are designed to serve very basic functions, while others are quite sophisticated, integrating many dozens of sensors and control systems. The Junction Box View tab in the Device Console (Supplementary Figure 3) allows observatory operators to monitor electrical conditions for each junction box port and connected device as well as activate and deactivate instruments via the port on/off buttons. This common interface provides a standardized means of monitoring and controlling a wide range of instruments connected to a wide variety of junction boxes.

SeaScript

SeaScript is a scripting language developed by Ocean Networks Canada that enables control of device behavior through a script. This tool serves as an engine for creating and executing scripts containing commands for sets of devices in order to accommodate complex experiments. Some use cases for SeaScript include remote execution of profiling system casts and operation of pre-defined recording routines for seafloor video cameras.

The SeaScript commands and comments in Supplementary Figure 4 are part of a camera control sequence to control lights, pan/tilt, and camera settings then record an image. Such scripts can be scheduled to run periodically, for example every 4 h.

SeaScript allows scientific users to readily understand and easily customize the behavior of drivers. This is particularly useful in situations where the data acquisition routine is not clear from the outset and iterative refinements are required by the users to obtain the most meaningful results. Iterative software improvements include on-going support for new instrumentation and functions; for example, a new video camera was recently added that can be configured via SeaScript to record in 4K resolution.

Common Interfaces

Oceans 3.0 supports common interfaces for different devices of a given category, such as different camera models. By abstracting controls specific to individual makes and models to derive a generalized set of commands, the task of viewing and controlling different instruments is greatly simplified. At the time of this writing, 11 different camera model types were active on the ONC network, all supported by the same common control interface. Two contrasting examples are shown in Supplementary Figure 5.

Task Management

At any time, there may be hundreds of unscheduled and scheduled jobs running on Oceans 3.0 task machines. Unscheduled jobs typically process requests made by Oceans 3.0 users for specific data products, but also include reprocessing jobs initiated by ONC data stewards. Scheduled jobs are automated processes such as file generation and transfers that are part of ongoing operations. Jobs can also be batched and run as a consecutive set of tasks.

The Task Management interface (Supplementary Figure 6) allows specialists to see which jobs are queued, running, canceled, completed, or aborted with errors.

The Task Management interface also allows operators to define and edit specific tasks, such as an automated routine to illuminate a camera’s lights and record video for a period of time before turning the lights off again. Supplementary Figure 7 shows the Task Definition tab with task number 216, which runs a scheduled SeaScript job on the camera at the Folger Pinnacle location. The actual SeaScript is also shown in the lower part of this figure. Not all scheduled tasks are SeaScript routines; they also perform functions such as downloading files from an FTP site or generating 15-min averaged data and writing the values into the database.

Data Versioning

Data versioning is a necessary aspect of data management, which facilitates corrections or enhancements to datasets. Corrections may be required when fixes are made to calibration formulae, parsers, data processing algorithms, or other metadata that influence the resulting data products. Enhancement examples include adding more derived variables or improvements to data visualization parameters. On occasion, instruments send data in an unexpected format that breaks down-stream processes; once mitigating measures are identified and incorporated, it is sometimes possible to regain this segment of the time series through reprocessing.

The specific tools and procedures used vary depending on what part of the data product processing pipeline is affected. While there has always been some traceability of these events in the Oceans 3.0 database records and code versioning, there was limited ability to fully reconstruct and communicate the events pertaining to a particular dataset. Recognizing that dataset provenance is extremely important for reproducibility and to be able to apply versioning updates for dataset persistent identifiers, new infrastructure referred to as the batch system was developed in 2020.

In this revised system, batches are defined to encapsulate the triggers that initiate versioning, the versioning tasks themselves, and the relevant DataCite DOI updates (see Persistent Identifiers and Data Citation section). A free-text field also allows data stewards to describe the reason and scope for the change. Triggers include items like calibration formula changes and parser updates. Versioning tasks include reprocessing the raw data (essentially redoing the Real Time Data Acquisition Steps 2 to 5 described above), re-generating derived data products, and file uploads (to fill gaps or replace faulty files). Once the tasks are complete, a new DOI is generated such that the new and previous DataCite DOIs are associated with one another using the “IsPreviousVersionOf” and “IsNewVersionOf” relationships.
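The reciprocal version links can be sketched as related-identifier entries in the form used by the DataCite Metadata Schema, which spells the relation types `IsPreviousVersionOf` and `IsNewVersionOf`. The helper name and the DOIs in the usage note are hypothetical placeholders; the sketch only builds the metadata fragments and does not call any DataCite service.

```python
def version_links(previous_doi, new_doi):
    """Build reciprocal DataCite relatedIdentifier entries for two versions.

    Returns a dict keyed by the DOI whose metadata record should carry
    the entry, so that each version points at the other.
    """
    def link(target, relation):
        return {
            "relatedIdentifier": target,
            "relatedIdentifierType": "DOI",
            "relationType": relation,
        }

    return {
        # The old record states that it is the previous version of the new DOI.
        previous_doi: link(new_doi, "IsPreviousVersionOf"),
        # The new record states that it is the new version of the old DOI.
        new_doi: link(previous_doi, "IsNewVersionOf"),
    }
```

Calling `version_links("10.1234/example.v1", "10.1234/example.v2")` yields one entry for each record, so a reader arriving at either DOI can navigate to the other version.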

This dataset versioning provenance information is communicated to end-users via the dataset landing page (as shown in Supplementary Figure 8).

This versioning approach is consistent with recommendations from the Research Data Alliance (RDA) Data Citation Working Group (Rauber et al., 2015) and the RDA Data Versioning Working Group (Principles 1, 5, and 6 of Klump et al., 2021). As new standards and best practices emerge from the research data community, ONC will continue to improve these frameworks. More information on this topic is also provided in section Persistent Identifiers and Data Citation.

Metadata

Ocean Networks Canada maintains a wealth of metadata and documentation to support the available datasets in Oceans 3.0. Metadata, often defined as data about data, provide users with the necessary information to discover, acquire and use data confidently and correctly. Metadata are also integral to the maintenance of ONC sensor networks.

Standardized metadata are provided to users in ISO 19115 (International Standards Organization, 2014) and DataCite metadata records, while more comprehensive content is available throughout the Oceans 3.0 data portal. An example snippet from an ISO 19115 XML metadata file is shown in Supplementary Figure 9; an example interface displaying metadata associated with a device is shown in Supplementary Figure 10. These metadata include details about the instrument life cycle events such as deployments, recoveries, maintenance, calibrations, configuration changes and more. All metadata records are maintained with the aid of the workflow tool described in the following section. In addition to instrument metadata, Oceans 3.0 maintains metadata describing a wide variety of entities, including non-instrument infrastructure, instrument platforms, expeditions, missions and remotely operated vehicle dives, etc.

Documentation for each instrument, including manuals, calibration sheets and photos, is curated in a content management system, and can be provided to data users upon request. For instrument deployments conducted through Remotely Operated Vehicle (ROV) operations, the annotated video is publicly accessible via Oceans 3.0’s SeaTube interface (see section “Data Discovery and Access”). This feature allows users to visually contextualize the environment in which an instrument is placed. ONC also maintains a transaction history of changes to any metadata, including details of who made the change and at what time. In 2020–2021, ONC implemented a more robust system for tracking data versioning changes, such as reprocessing or file fixes. These data versioning metadata are now provided in the dataset landing page. Dataset versioning prior to the allocation of DOIs is mostly traceable in ONC’s database, although not currently exposed to end-users. As of 2021, a maintenance history of changes was being implemented into the ISO 19115 metadata records.

Controlled Vocabularies

To efficiently serve Ocean Networks Canada’s large, interdisciplinary user community, it is important to follow widely accepted and consistent conventions when describing data. Controlled vocabularies, such as those maintained on the vocabulary server provided by the Natural Environment Research Council (NERC), define a common language for referencing variables and instruments. The NERC Vocabulary Server (National Oceanography Centre, 2021) provides access to lists of standardized terms that cover a broad spectrum of disciplines of relevance to the oceanographic and wider community. All of the vocabularies are fully versioned and a permanent record is kept of all changes. By referencing controlled vocabularies, ONC can be confident that its use of terms adheres to the current standards of active controlled vocabularies.

Vocabularies were selected from the NERC Vocabulary Server that paired with concepts used by the Oceans 3.0 data management system, including device type, device category, and units of measure. Once a controlled vocabulary was selected, terms from Oceans 3.0 were manually mapped to corresponding terms in the vocabulary. These mappings are stored in ONC’s relational database, which simplifies management and maintenance of the controlled vocabularies. Implemented mappings include the SeaVoX Device Catalogue, SeaDataNet Device Categories, British Oceanographic Data Centre Data Storage Units, Climate and Forecasting Standard Names, IOOS categories, and Global Change Master Directory Keywords controlled vocabularies. Mapped terms, together with the controlled vocabulary from which each is drawn, are returned with search results to help users determine fitness for use of the data. Not every concept in Oceans 3.0 maps to a term in one of the selected vocabularies, in which case a null is returned with the search results. However, by adopting multiple vocabularies ONC minimizes gaps in the description of data.
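The lookup behaviour described above, including the null returned for unmapped concepts, can be sketched with an in-memory table standing in for the relational database. The table layout, the function name, and the mapped term strings are placeholders invented for illustration; they are not actual vocabulary entries or ONC database structures.

```python
# Hypothetical mapping table: (concept type, Oceans 3.0 term) -> {vocabulary: term}.
# The mapped values are placeholders, not real vocabulary identifiers.
MAPPINGS = {
    ("device_category", "CTD"): {
        "SeaDataNet Device Categories": "placeholder-term-A",
        "Global Change Master Directory Keywords": "placeholder-term-B",
    },
}


def lookup_terms(concept_type, onc_term, vocabularies):
    """Return the mapped term for each requested vocabulary.

    A vocabulary with no mapping for the concept yields None, mirroring
    the null returned with search results.
    """
    mapped = MAPPINGS.get((concept_type, onc_term), {})
    return {vocab: mapped.get(vocab) for vocab in vocabularies}
```

Querying several vocabularies at once shows why adopting more than one reduces gaps: a concept missing from one vocabulary may still be described by another.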

Metadata Formats

Just as oceanographic data need to be provided in common and interoperable formats, so too do the metadata. Oceans 3.0 conforms to the ISO 19115-1:2014 Geographic Information Metadata schema to deliver metadata accompanying data search results.

There were several motivations to adopt ISO 19115. Developed by the International Organization for Standardization (ISO), the schema is well maintained with an active and engaged user community. The standard has been adopted by other organizations in the field of study, such as the National Oceanic and Atmospheric Administration, and is used by repositories that ONC contributes to, such as the Polar Data Catalogue. Additionally, the XML format of ISO 19115 ensures the metadata are machine readable, allowing users to easily parse documentation.

Extensive crosswalks have mapped concepts in Oceans 3.0 to relevant fields in the ISO 19115 schema. Mappings consider how metadata terms are defined in the main standard as well as how terms have been implemented by other organizations and the North American Profile of ISO 19115. The result is an ONC-tailored metadata profile that expands on the minimum mandatory requirements of ISO 19115. Doing so maximizes interoperability and provides users with the details they need to use the data obtained through Oceans 3.0.

Abiding by Principles and Standards

Ocean Networks Canada became a member of the International Science Council World Data System in 2014. This body, in partnership with the Data Seal of Approval (DSA), launched the CoreTrustSeal organization in 2017. CoreTrustSeal is an international community-based, non-governmental and non-profit organization promoting sustainable and trustworthy data infrastructures. CoreTrustSeal offers data repository certification based on conformance with an agreed set of requirements covering aspects such as data security, continuity of access, confidentiality, data integrity, discovery and identification. As of 2021, ONC was in the process of preparing for recertification under CoreTrustSeal.

In developing ONC’s data management policies, careful attention has been paid to several seminal principles, including FAIR, TRUST, OCAP™, and CARE.

FAIR Principles

In 2016, the ‘FAIR Guiding Principles for scientific data management and stewardship’ were published, offering guidelines to improve the Findability, Accessibility, Interoperability, and Reuse of digital assets (Wilkinson et al., 2016). The Findable principle implies that data and metadata should be easy to find for both humans and computers. The Accessible principle ensures that once data are found, there are open processes for accessing them. Interoperability relates to the ability to integrate data from different sources as well as across different applications for analysis, storage and processing. Reusability is the ultimate goal, ensuring data are well-described so that they can be replicated or combined in different settings.

Ocean Networks Canada has strived to implement the FAIR principles within Oceans 3.0, although not all previous versions of data can always be accessed. In some situations when data are reprocessed, the older version becomes unavailable, but at minimum all associated metadata are preserved.

TRUST Principles

In 2020, Lin et al. published the TRUST guiding principles for demonstrating the trustworthiness of a digital repository, including Transparency, Responsibility, User Focus, Sustainability, and Technology. The TRUST principles recognize that providing “FAIR data whilst preserving them over time requires trustworthy digital repositories with sustainable governance and organizational frameworks, reliable infrastructure, and comprehensive policies supporting community-agreed practices” (Lin et al., 2020). Transparency calls for repositories to enable publicly accessible verification of specific repository services and data holdings. The Responsibility guideline requires repositories to ensure the authenticity and integrity of data holdings as well as the reliability and persistence of their services. User Focus ensures that data management norms and expectations of target user communities are met. Sustainability is a reminder that services should be sustained and data holdings preserved for the long term. Technology refers to the infrastructure and capabilities implemented to support secure, persistent and reliable services. As part of ongoing efforts to maintain CoreTrustSeal certification, ONC strives to abide by TRUST principles as foundational for implementation of the Oceans 3.0 data repository.

OCAP™ Principles

In 2014, the OCAP™ principles, originally introduced in 2002, were refined and updated by The First Nations Information Governance Centre (2014). These principles and values are reflective of Indigenous Peoples’ world view of jurisdiction and collective rights. They include Ownership, Control, Access and Possession. Ownership states that a community owns information collectively, and that ownership is distinct from stewardship. The Control principle asserts that Indigenous Peoples must have control over how their data are collected, used, disclosed and destroyed. The Access principle requires that Indigenous Peoples will have ongoing access to their data, while also having the right to make decisions regarding who can access these data. Possession describes the mechanism for Indigenous Peoples to assert and protect ownership of their data.

CARE Principles

In 2020, Carroll et al. published the CARE Principles for Indigenous Data Governance, in recognition that “ongoing processes of colonization of Indigenous Peoples and globalization of Western ideas, values, and lifestyles have resulted in epistemicide, the suppression and co-optation of Indigenous knowledges and data systems” (Carroll et al., 2020). The CARE principles seek to balance the FAIR principles for open data against respect for “Indigenous use of Indigenous data for Indigenous pursuits.” The CARE Principles include Collective benefit, Authority to control, Responsibility and Ethics. Collective benefit supports Indigenous creation/use/reuse of data for policy decisions and evaluation of services in ways that reflect community values. Authority to control affirms Indigenous Peoples’ rights to determine Indigenous data governance protocols and be actively involved in stewardship decisions. The Responsibility principle acknowledges the importance of nurturing respectful relationships with Indigenous Peoples from whom the data originate, while the Ethics principle recognizes that Indigenous Peoples’ rights and wellbeing should be the focus across data ecosystems and throughout data lifecycles.

As ONC upholds Indigenous partnerships for hosting environmental data, the data policy implementation plan and practices are informed by the CARE and OCAP™ Principles. ONC data stewards have completed training courses on OCAP™ and participated in the Portage Network’s Sensitive Data Expert Group (n.d.), which works to develop practical guidance and tools for the management of sensitive research data. The team is developing plans to increase Indigenous data support through means such as integrating notices and labels relating to traditional knowledge and biocultural holdings. ONC actively participates in Indigenous data governance events and continues to evolve practices and implementations within Oceans 3.0 accordingly.

Data Restrictions

Most data within the Oceans 3.0 repository are provided under the Creative Commons CC-BY 4.0 license, which means these holdings are open and free for anyone to use (Creative Commons, 2021). However, for some datasets, ONC maintains agreements with the relevant data partners to clarify the data restriction details, with follow-on support for providing access to designated users within the contractual time frame of the data agreement. Even in the case of restricted data, metadata remain accessible. Embargoes may be established in some cases for the entire dataset, specific subsets, or most recent data (e.g., last 4 h). ONC’s data access interfaces and services are generally designed to show the existence of datasets, even if access to the datasets requires specific permissions. Requests to access any restricted datasets are evaluated on a case-by-case basis.
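An embargo on the most recent data can be thought of as a time cutoff applied at access time. The following sketch assumes a simple model in which a user either holds permission for the dataset or does not; the function name, parameters, and the 4-h default (taken from the example above) are illustrative and do not describe ONC's actual access-control implementation.

```python
from datetime import datetime, timedelta, timezone


def visible_samples(samples, now, embargo=timedelta(hours=4), has_permission=False):
    """Return the (timestamp, value) pairs a user may access.

    Users without permission see only samples older than the embargo
    window; designated users with permission see everything.
    """
    if has_permission:
        return list(samples)
    cutoff = now - embargo
    return [(ts, value) for ts, value in samples if ts <= cutoff]
```

In this model the dataset itself stays discoverable; only the readings inside the embargo window are withheld from users who lack permission.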

Within the Oceans 3.0 framework, support has been implemented to handle requirements for access to, use and sharing of Indigenous datasets, which are defined by data agreements with providers.

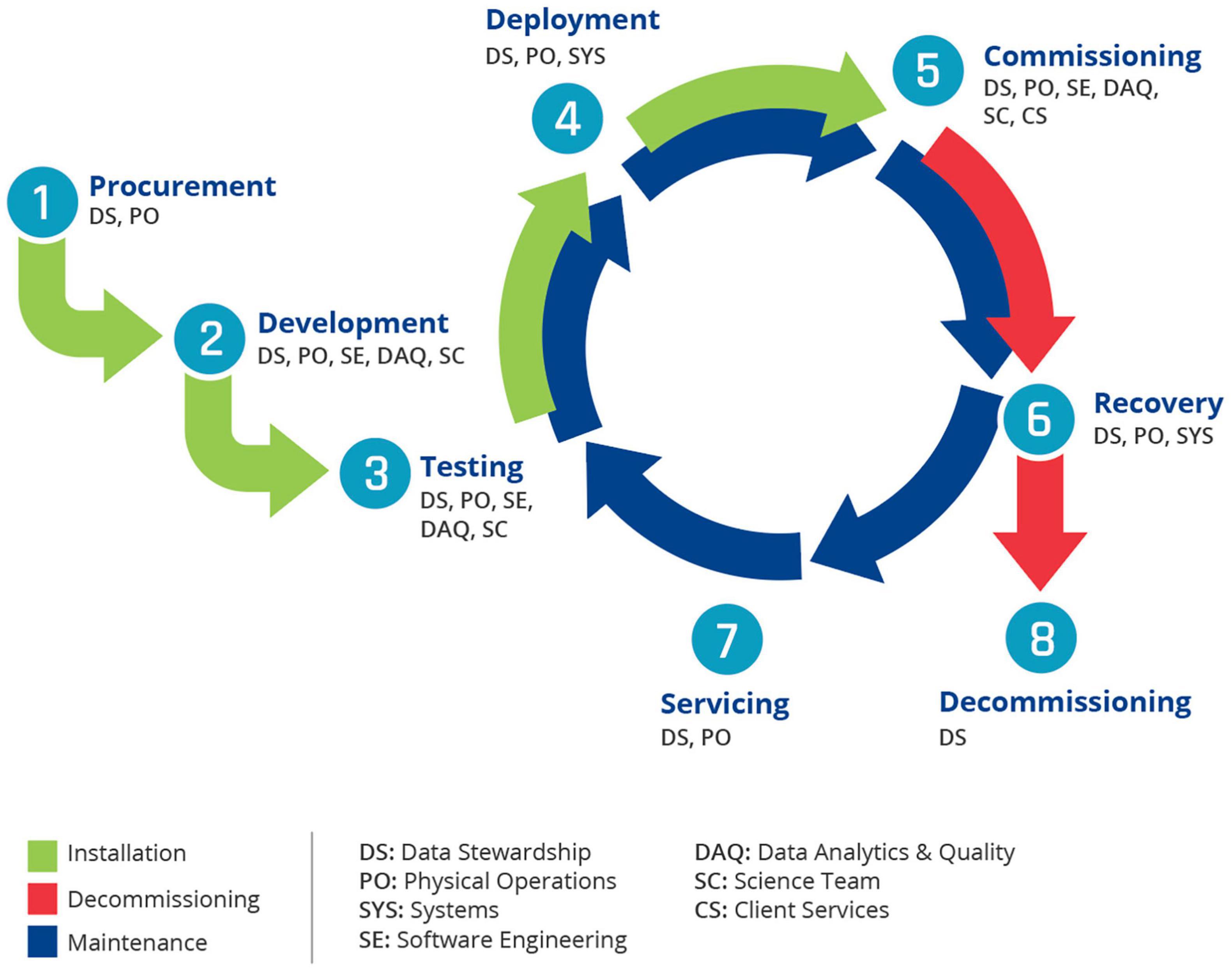

Quality Assurance Lifecycle, Workflow and Testing

Quality Assurance/Quality Control Model

Ocean Networks Canada has developed and implemented a comprehensive process-oriented quality assurance (QA) model in combination with a product-oriented data quality control (QC) model. This QA/QC model systematically intercepts and examines the instrument and data streams at various stages with the objective of minimizing human and/or systematic errors, thus ensuring a high-quality data workflow (see Figure 7). ONC’s QA/QC methodology specifically addresses the QA/QC needs of a long-term dataset by ensuring data quality consistency within a single dataset and simultaneously among a collection of datasets at each site.

The following QA/QC stages monitor the performance of measurement systems, which eventually contributes to scheduling maintenance expeditions and calibrations of the instrument platforms. These processes are complementary to research and development of improved and new monitoring technologies.

Pre-deployment Testing

This stage includes all data/metadata QA/QC checks performed during pre-deployment testing for an instrument up to actual deployment.

Post-deployment Commissioning

This stage includes all data/metadata QA/QC checks from actual deployment to commissioning of the data from an instrument as good or compromised.

Automated Quality Testing

This stage includes all data QA/QC-related checks, real-time or delayed, performed via automated quality control procedures while the instrument is deployed.

Manual Quality Control Methods

This stage includes all data QA/QC checks performed via systematic manual data assessments and annotation routines.

Post-recovery Tests

This stage includes all post-calibration checks performed during post-recovery and servicing of an instrument.

Data Quality Assurance

Data quality assurance (QA) processes are preventive measures implemented to minimize issues and inaccuracies in the data streams, thus reducing the need for corrective measures to improve data quality. The ONC data QA component includes processes to ensure that the instrument sensor network protocols are appropriately developed and observed. Examples of QA processes currently in place include periodic manual data review by ONC data specialists, inclusion of data assessment annotations and the completion of end-to-end workflow tasks.

Manual Data Assessment Annotations

Quality assurance on the quality-controlled data is accomplished by performing periodic manual data quality reviews followed by modification to the existing data quality flags as required. In addition, ONC data specialists add manual data assessment annotations of devices, sensors and other observatory components, reporting events or conditions that may affect the quality of ONC data. Such information includes instrument commissioning, sensor failures, changes in instrument calibration, and explanations for data gaps. Effort has gone into developing user-friendly interfaces and tools to facilitate annotation entry by data specialists and to effectively link the annotations through the time domain with corresponding data. External users can conveniently access and download the annotations through the Annotation Search tool and various links provided in the ONC data download interface.

Workflow Processes

By using an end-to-end workflow with systematic methodologies and processes, ONC ensures that the necessary pre-conditions for high-quality data are met. A workflow-process user interface facilitates the integration of knowledge among various teams within the ONC organization where teams work together to ensure that instruments are well-documented and provide the highest quality data possible.

Since 2013, ONC has employed an in-house software tool (shown in Supplementary Figure 11) that facilitates task management for all the network instruments affected in a given expedition or program (Jenkyns et al., 2013). Its development was motivated by the necessity to ensure all instruments are properly managed during a busy expedition season that requires input from domains of expertise distributed throughout the organization. Its design and implementation also establish records of events in an instrument’s life cycle, and track ONC processes governing deployments, maintenance and recoveries.

Data Quality Control

Data quality control (QC) is a product-oriented process to identify and flag suspect data after they have been generated. QC includes both automated and manual procedures to test whether data meet necessary quality requirements. QC of ONC data includes three components. The first component evaluates real-time data automatically before data are parsed into the database. The second component evaluates near-real time or archived data using automatic delayed-mode testing. The third component is manual review, where an expert inspects the data for quality issues. The three components are discussed in more detail below.

Automatic Real Time Tests

Real time automated data qualification determines the initial validity of data prior to archival in the ONC database. The QA/QC test model follows conventions listed in the Argo quality control manual (Wong et al., 2021) with additional tests developed at ONC. Qualifying the data prior to archival ensures that every reading with a QA/QC test has an associated QA/QC value.

The QA/QC architecture supports two types of automatic real-time QC tests: single-sensor range tests and dual-sensor relational tests. These tests are designed to catch instrument failures and erroneous data at regional or site-specific range values derived from various sources depending on test level (defined in the following section). In addition, quality flags are propagated to dependent or derived sensor data streams to ensure derived data are adequately quality controlled as well. Example listings of automatic real time tests are shown in Supplementary Figure 12; details of a range test for a fluorometer are shown in Supplementary Figure 13.
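A single-sensor range test and the propagation of flags to a derived value can be sketched as below. The flag values 1 (good) and 4 (bad) follow the Argo-style convention and are assumed here, as is the rule that a derived value inherits the worst flag among its inputs; the function names and the limits in the usage note are hypothetical.

```python
GOOD = 1  # assumed Argo-style flag value for good data
BAD = 4   # assumed Argo-style flag value for bad data


def range_flag(value, lower, upper):
    """Single-sensor range test: GOOD inside [lower, upper], else BAD."""
    return GOOD if lower <= value <= upper else BAD


def propagate_flags(*input_flags):
    """Flag for a derived reading: the worst (highest) flag of its inputs.

    Assumes flags are ordered so that a larger number means lower quality,
    which holds for the 1-4 range used in this sketch.
    """
    return max(input_flags)
```

For instance, if a temperature reading passes its range test but the conductivity reading fails, `propagate_flags(range_flag(8.2, -2.0, 35.0), range_flag(-1.0, 0.0, 7.0))` flags the derived salinity as bad.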

Automated Delayed-Mode Testing

Automated delayed-mode testing includes checks on data that can be applied in near real time or batch processed at set intervals. These tests require consecutive data where the central value is compared with surrounding values to determine its validity. The QA/QC test model supports tests such as spike detection and gradient steepness.
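The comparison of a central value with its neighbours can be illustrated with the spike and gradient statistics in the form given in the Argo quality control manual. Whether ONC uses exactly these formulas is an assumption, as are the flag values (0 for untested, 1 for good, 4 for bad), the thresholds, and the function names.

```python
NOT_TESTED, GOOD, BAD = 0, 1, 4  # assumed flag values


def spike_statistic(prev, centre, nxt):
    """Argo-style spike statistic: |V2 - (V3 + V1)/2| - |(V3 - V1)/2|."""
    return abs(centre - (nxt + prev) / 2) - abs((nxt - prev) / 2)


def gradient_statistic(prev, centre, nxt):
    """Argo-style gradient statistic: |V2 - (V3 + V1)/2|."""
    return abs(centre - (nxt + prev) / 2)


def flag_series(values, threshold, statistic=spike_statistic):
    """Flag each interior point whose statistic exceeds `threshold`.

    The first and last points have no neighbour on one side, so they
    are left as NOT_TESTED.
    """
    flags = [NOT_TESTED] * len(values)
    for i in range(1, len(values) - 1):
        stat = statistic(values[i - 1], values[i], values[i + 1])
        flags[i] = BAD if stat > threshold else GOOD
    return flags
```

With a threshold of 2, the series `[10, 10, 15, 10, 10]` has its isolated central point flagged as bad, whereas in `[10, 15, 15, 10]` neither of the two consecutive outliers exceeds the threshold, because each has an equally anomalous neighbour.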

Manual Tests

Automated QC is a first pass at quality control, the results of which may contain both false positives and false negatives. For this reason, ONC data specialists conduct daily manual tests, by which all real time data are visually reviewed. In situations where data specialists notice issues with data visually, they isolate such data segments and perform an in-depth review to confirm whether automatic QA/QC tests were able to capture the instances and flag the data accordingly. If not, data specialists perform appropriate manual corrections to auto QA/QC flags.

One situation requiring manual review, in which bad data points are flagged as good, arises with the automated Spike Test. When applied as an automatic test, it can capture only a single erroneous point. However, multiple erroneous data points may follow the initial instance. Such points can only be identified and flagged appropriately via manual review of the data.

Another situation requiring manual review and flagging is the identification of potential drift in the data. In general, automatic QA/QC tests, which are applied to single data points or very short segments of real time data, are unable to capture longer-term errors introduced gradually into the data by sensor drift. This can only be addressed by data specialists periodically reviewing long-term historical data visually to identify potential drift. Such data are flagged manually by ONC data specialists.

On occasion, in-depth reviews require consultation with ONC staff scientists to discuss potential natural events that may produce outliers. An example might be erroneously flagged data indicating presence of an unusual event, such as a marine heat wave or hypoxia intrusion. After consultation to confirm anomalies reflect actual events, data that may have been automatically flagged as “2 – probably correct” (see Table 1) could be reverted to “1 – good data.” As with all other manual QA/QC flagging, such changes are performed in delayed mode.

Manual QA/QC tests essentially follow the test criteria applied by auto QA/QC tests. The test criteria are developed by ONC data specialists through analysis of long-term data from specific sites and regions. Significant weight is given to the skill of the data specialist to capture potential issues visually. ONC data specialists are subject matter experts on a variety of instrumentation and use their experience and knowledge to determine manual QA/QC flags that are not easily captured by automatic tests. These can include comparison with co-located instrumentation, drift analysis, seasonal events, stuck point values, and other tests. The underlying data stream used to derive the auto (and/or manual) tests will be validated against physical samples or shipboard and ROV cast data as and when they become available. However, availability of such data is limited.

Many problems are identified and corrected by the manual test process, including adjustment of automated QC test parameters. Within the ONC Quality Control terminology, manual QA/QC tests are considered major tests (defined in the next section).

Major Tests

A major test sets gross limits on the incoming data such as instrument manufacturer’s specifications or climatological values. Failure of this test level is considered major and it is recommended that the flagged data should not be used. Specific tests that belong to this category include instrument-specific comparisons (against value ranges specified by the manufacturer for each physical sensor on an instrument) and regional-level tests (based on climatological values for a region and depth).

Minor Tests

Minor tests are based on local statistics derived from historical ONC data. If a minor test generates failures, the data are considered suspect and require further investigation by the user to decide whether or not to include these data in their analyses. Specific tests that belong to this category include single-sensor tests (compared against historical ranges for a specific site and station) and dual-sensor tests (utilizing two different sensors on the same instrument to catch dropouts and other sensor-specific errors).

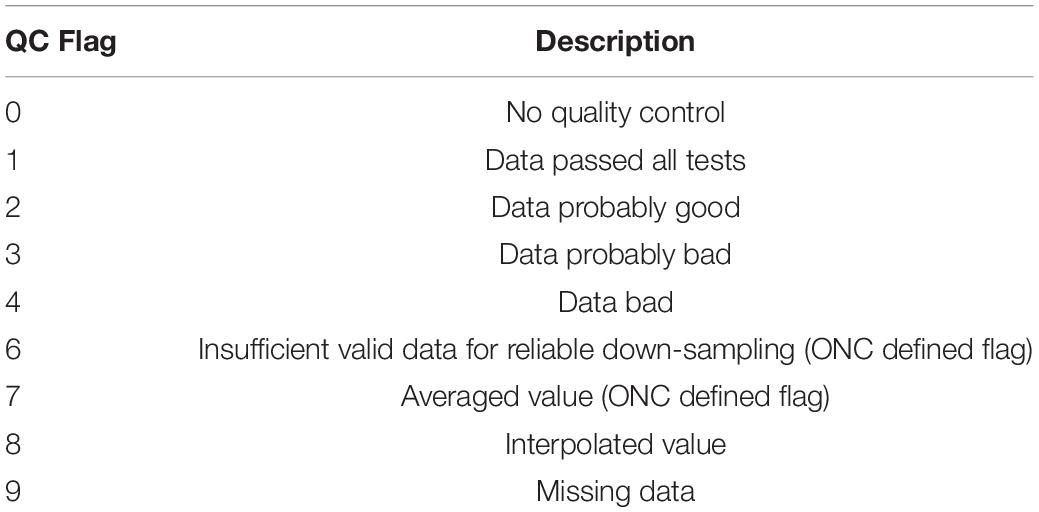

Quality Control Flags

Quality information for individual measurements is conveyed by integrating the results from multiple types of test evaluations. The overall quality of the data is shown by integer indicators, or flags, which are standardized across all ONC data and are based on the Argo quality control flagging system (Wong et al., 2021), as well as including some ONC-defined flags (Table 1).

Overall quality flags are used to demarcate data values that fail one or more QC tests. This is achieved by subjecting the data to various levels of testing that generate a QC vector containing the output for each test. The final quality control flag is then determined as follows.

• If all tests achieve pass status, the final output flag assigned is 1 (Data passed all tests).

• If all major tests pass but one or more minor tests fail, the final output flag assigned is 2 (Data probably good). In cases where the Temperature-Conductivity tests fail, the assigned output flag is 3 (Data probably bad).

• If failed status is reported on major tests, the final flag is 4 (Data bad).
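The rules above can be sketched as a small Python function that reduces a QC vector to a single flag. The `QCResult` type, the test names and the precedence between the rules (a major failure outranks a Temperature-Conductivity failure, which outranks other minor failures) are assumptions for illustration, not the ONC implementation.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence


@dataclass(frozen=True)
class QCResult:
    """One entry of the QC vector (hypothetical structure)."""
    name: str     # test identifier, e.g. "site_range"
    level: str    # "major" or "minor"
    passed: bool


def final_flag(results: Sequence[QCResult],
               tc_tests: FrozenSet[str] = frozenset({"temperature_conductivity"})
               ) -> int:
    """Reduce a QC vector to one overall quality flag (1, 2, 3 or 4)."""
    if not results:
        raise ValueError("no QC tests were applied to this reading")
    # Any failed major test marks the reading as bad.
    if any(r.level == "major" and not r.passed for r in results):
        return 4
    failed_minor = [r for r in results if r.level == "minor" and not r.passed]
    # Assumed precedence: a failed Temperature-Conductivity test yields flag 3.
    if any(r.name in tc_tests for r in failed_minor):
        return 3
    if failed_minor:
        return 2
    return 1


# Example QC vector: manufacturer range passed, site-specific range failed
example_vector = [
    QCResult("manufacturer_range", "major", True),
    QCResult("site_range", "minor", False),
]
example_flag = final_flag(example_vector)
```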

In addition to using flags as quality indicators, the ONC flagging systems also provide information about how the data were processed, with flag 7 for averaging and flag 8 for filling gaps via interpolation. Note that averaged and interpolated data exclusively use clean data (all values have QC flag 1). Users can determine the type of tests that have been applied to the data downloads by referring to the Data Quality Information section in the accompanying metadata file.
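A minimal sketch of the averaging case, assuming a simple arithmetic mean over one averaging window, is given below. The function name and the choice to return no value when a window contains no clean readings are assumptions.

```python
from typing import Optional, Sequence, Tuple

FLAG_GOOD = 1      # data passed all tests
FLAG_AVERAGED = 7  # processing flag for averaged values


def average_clean(values: Sequence[float], flags: Sequence[int]
                  ) -> Optional[Tuple[float, int]]:
    """Average only the readings flagged 1 within one averaging window.

    Returns (mean, 7), or None when the window holds no clean readings
    (an assumed convention; the source does not specify this case).
    """
    if len(values) != len(flags):
        raise ValueError("values and flags must have the same length")
    clean = [v for v, f in zip(values, flags) if f == FLAG_GOOD]
    if not clean:
        return None
    return sum(clean) / len(clean), FLAG_AVERAGED


# Hypothetical window: the third reading failed a major test and is excluded
window_mean = average_clean([10.0, 12.0, 99.0], [1, 1, 4])
```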

Quality Assurance/Quality Control Implementation Tools

Within the ONC data acquisition and delivery model, QA and QC procedures are applied at various stages as data flow from sensors to the end user. Various Oceans 3.0 tools and web interfaces have been developed to make it easy to handle this information and link it to the data stream. These tools are continuously improved and remain a work in progress. Both automatic and delayed-mode QA/QC tests are managed through a custom-designed QA/QC interface, which allows data specialists to search, display and filter test results for sensors and instruments.

Maintaining historical information over the lifespan of every ONC instrument is indispensable for delivering quality data. To serve this purpose, the design architecture of all the ONC tools related to data QA/QC ensures that all historical information pertaining to a device is accessible via a single link.

Ocean Networks Canada Quality Assurance/Quality Control Data Delivery Policies

Ocean Networks Canada delivers data to the end users in clean and raw data products or via web services that include QA/QC flags. For clean data products, all compromised data resulting from QA/QC assessments are removed and replaced with NaN (Not a Number) values. Raw data products deliver raw data (unmanipulated, preprocessed) with corresponding data assessment flags in separate columns. Data delivered via web services return the QA/QC flag values, but the onus is on the user to use the flags appropriately. Since there is a risk that real and potentially important phenomena will be ignored in fully automated QC models, the ONC data delivery policy emphasizes the need to maintain the raw unmanipulated data and offer the option of downloading raw data to the end user. Great care is also taken to ensure that valid data are not removed and that all QA/QC processing steps are well documented.
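The difference between the two product types can be sketched in Python as follows. Which flag values count as compromised is not stated here, so the default set of flags 3 and 4 is an assumption, as are the function names.

```python
import math
from typing import FrozenSet, List, Sequence, Tuple


def to_clean_product(values: Sequence[float], flags: Sequence[int],
                     compromised: FrozenSet[int] = frozenset({3, 4})
                     ) -> List[float]:
    """Replace readings whose flag is in `compromised` with NaN.

    The default set {3, 4} is an illustrative assumption.
    """
    if len(values) != len(flags):
        raise ValueError("values and flags must have the same length")
    return [math.nan if f in compromised else v for v, f in zip(values, flags)]


def to_raw_product(values: Sequence[float], flags: Sequence[int]
                   ) -> List[Tuple[float, int]]:
    """Keep every reading untouched and carry its flag in a separate column."""
    if len(values) != len(flags):
        raise ValueError("values and flags must have the same length")
    return list(zip(values, flags))


# Hypothetical readings: the second one failed a major test
clean = to_clean_product([8.1, 42.0, 8.3], [1, 4, 2])
raw = to_raw_product([8.1, 42.0, 8.3], [1, 4, 2])
```

The raw form keeps the flagged reading, so a user who suspects that it reflects a real event can still examine it.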

Data reliability is based, in part, on the capacity to reproduce data products. To this end, ONC data QA/QC model developers have carefully considered ways to preserve the original data in its raw form so that subsequent procedures performed on the data may be reproduced. Here, metadata act as a resource, holding valuable information about all QC procedures performed on the data (i.e., raw data, qualifier flags added, problematic data removed or corrected and gaps filled). Also included is all necessary information used to generate the data, such as the source file used, data-rejection criteria, gap-filling method, and model parameters. This information enables the data user to carefully scrutinize the data and determine whether data processing methods used by ONC are appropriate for their specific applications. Further, facilitating the review of uncorrected data through the ONC data distribution model helps end users perform their own quality analysis and identify real phenomena that may not be apparent in the corrected data.

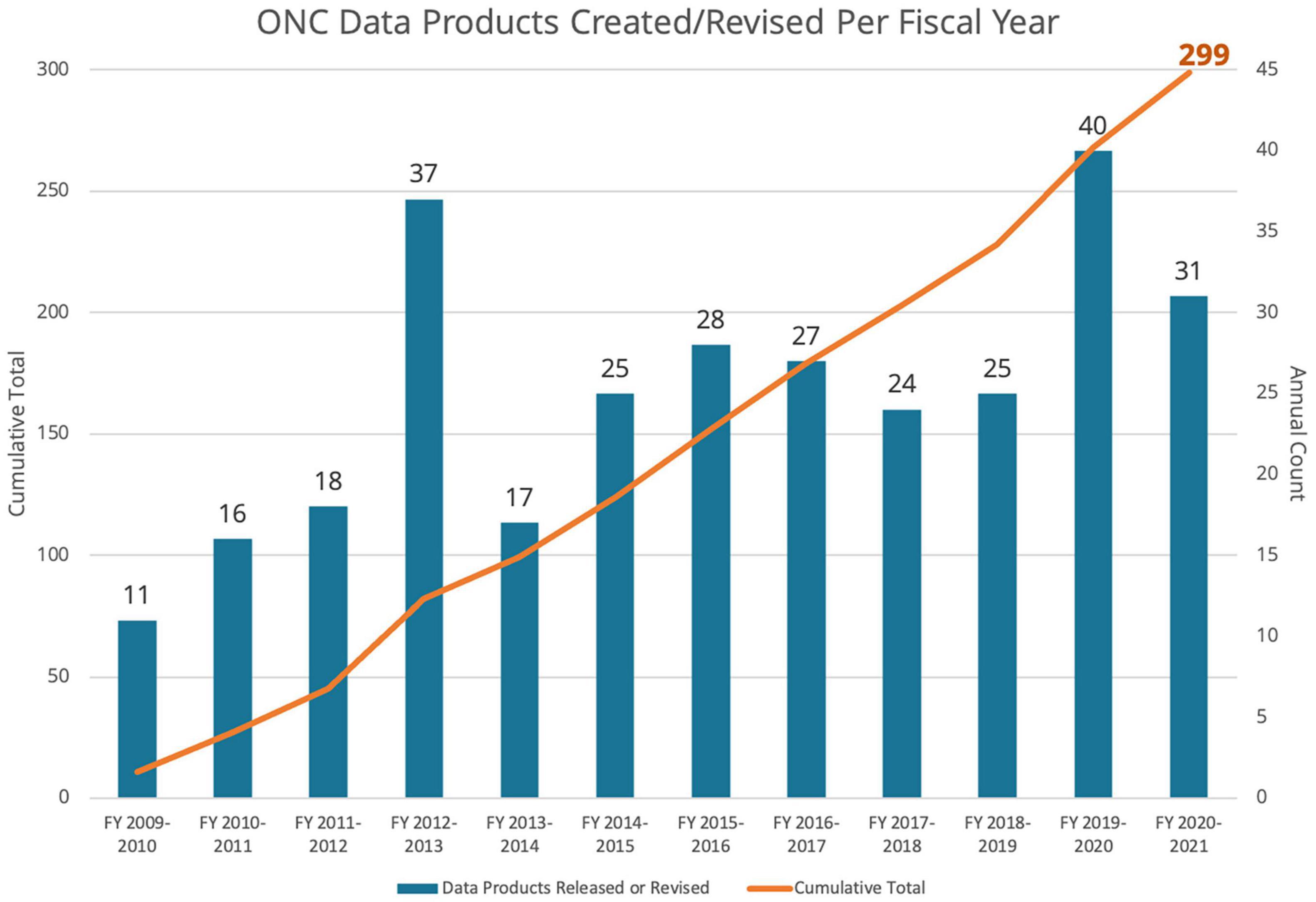

Data Product Pipeline

As of Spring 2021, 299 distinct file-based data products were available for download through Oceans 2.0 (this total does not include data available via web services and interactive portals). Over the 2009–2021 time period, an average of 25 data products were created or revised annually, as shown in Figure 8. Data products are maintained in perpetuity, allowing for reproducibility, particularly via DOIs. This includes the ability to reproduce any historical version of a data product, particularly the more value-added and processed data products that are continually improved over time.

Figure 8. Graph of ONC data products created and revised per fiscal year (blue bars) and cumulative total (orange line), 2009–2021.

Data Products

Examples of data products include numerous forms of data plots, primarily in image formats, and many data file formats including self-describing and standard-adhering NetCDF formats, convenient MAT (MATLAB) files, accessible CSV files, manufacturer formats and raw data. These products are generated by Java or MATLAB codebases. Device manufacturers generally write their supporting software for standalone operation, usually so that a laptop PC can connect to the device, configure it and download the data. To integrate with the network, ONC drivers emulate the device interaction and acquisition functions of the manufacturer’s software, while ONC data products reproduce the initial manufacturer’s product, including calibration, configuration and any metadata, as if the device were operated in the usual way, albeit continuously, with no limitations on power, data transfer and storage. No two device types are the same, even those produced by the same manufacturer. Support from the manufacturers has been very beneficial in the effort to integrate the hundreds of devices and data products to date. In general, for each device type, Oceans 3.0 offers at least one visualization product and the manufacturer’s file product. Additional formats, including specialty products with increasing levels of refinement, are developed in response to user requests.

Data products are generated primarily on-demand, when requests are received from either the Oceans 3.0 web applications or the Application Programming Interface (API). As of July 2021, over 8600 graphical data visualizations were also pre-generated daily via scheduled jobs.

Depending on the data type, the data product processing pipeline converts device-specific source files, generic raw log files and/or parsed scalar data (from the database) into finished data products. Device-specific source files are usually acquired via file transfer (FTP, email, etc.). Generic raw log files include device output intermixed with logged commands and device response codes, as acquired by ONC device driver software. Some log files are stored in hex format, others in ASCII. As described in section “Data Acquisition and Archival”, incoming raw log files may be parsed into the scalar data system. Data products generated from the scalar data system have a device-independent format and options, while complex data products are generally specific to the device type. Consider the Teledyne Acoustic Doppler Current Profilers (ADCPs) as an explanatory example. These ADCPs produce data via ONC device drivers that are stored as raw log files. Live incoming ADCP data are parsed in real time, producing scalar sensor data for temperature, tilt, and other state-of-health internal sensors, while the acoustic data are too complex to express as a single reading per unit of time. Instead, the data product pipeline processes the manufacturer format RDI files via a scheduled task; these are then stored in the file archive as an intermediary product and used to produce on-demand data products such as plots, MAT and NetCDF data products. If users request near real time complex data, most of the data product generation code is able to read raw live data directly from the Cassandra database, producing any normally pre-processed, intermediary formats on-demand. This mode is slower for processing large amounts of data, but it provides access to near real time data. The product generation code is also able to fill in any missing pre-processed data products on-demand. By using a combination of pre-processed stored formats and on-demand generation, the data product pipeline is optimized for both long time series and near real time data access.
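The combination of stored intermediary products and on-demand generation can be pictured with the Python sketch below. The dictionary standing in for the file archive, the per-day keys and the `generate_from_raw` callback are hypothetical stand-ins rather than Oceans 3.0 interfaces.

```python
from typing import Callable, Dict, Mapping, Sequence


def load_intermediates(days: Sequence[str],
                       archive: Mapping[str, str],
                       generate_from_raw: Callable[[str], str]
                       ) -> Dict[str, str]:
    """Collect one intermediary product per requested day.

    Archived products are reused when present; any missing day (for
    example, today's still-incoming data) is generated from raw data.
    """
    products: Dict[str, str] = {}
    for day in days:
        if day in archive:
            products[day] = archive[day]            # fast path: pre-processed
        else:
            products[day] = generate_from_raw(day)  # slower: on-demand
    return products


# Hypothetical archive holding one pre-processed day
file_archive = {"2021-07-01": "archived:2021-07-01"}
result = load_intermediates(
    ["2021-07-01", "2021-07-02"],
    file_archive,
    generate_from_raw=lambda day: f"generated:{day}",
)
```

In this picture, a long time series request is served mostly from the fast path, while only the most recent or missing intervals incur the slower on-demand generation.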

Device-specific, manufacturer complex formats are necessary to support the diverse and numerous devices ONC operates. However, parsing some data into scalar sensors has many advantages over complex data products. Instruments with scalar sensors produce single values over time, such as temperature or pressure readings. As described in detail in previous sections of this paper, incoming data streams are parsed, calibrated, quality controlled and stored within one of the Oceans 3.0 production databases, Postgres or Cassandra (Oceans 3.0 can be configured to use either or both of these database systems). The development of data products, visualization and interactive portals such as Plotting Utility (described in section “Data Discovery and Access”) is much easier when drawing from standardized, database-stored scalar data.

In addition to what ONC classifies as scalar and complex data products, some value-added and processed data products combine these using data from the same or different source instruments. An example is the processed radiometer data product which combines the complex array data with scalar depth values acquired by a separate instrument to reproduce a manufacturer format file for easier processing. All products are available alongside the raw data and all formats. The processing steps for all data products are described in online data product documentation (ONC Data Products Wiki, n.d.).

MATLAB-based ONC data parsing and data product generation routines are provided to interested researchers upon request. Future plans for Oceans 3.0 include the publication of citable and persistently identified data product generation routines, which will advance efforts to support replicability by providing open-source code that can be run independently.

Long-Term Time Series