- ¹School of Biological, Earth and Environmental Sciences, Environmental Research Institute, University College Cork, Cork, Ireland

- ²Green Rebel Marine, Crosshaven Boatyard, Crosshaven, Ireland

- ³Escola de Artes, Ciências e Humanidades, Universidade de São Paulo, São Paulo, Brazil

- ⁴Irish Centre for Research in Applied Geosciences, Marine and Renewable Energy Institute, University College Cork, Cork, Ireland

Cold-water coral (CWC) reefs are complex structural habitats that are considered biodiversity “hotspots” in deep-sea environments and are subject to several climate and anthropogenic threats. As three-dimensional structural habitats, there is a need for robust and accessible technologies to enable more accurate reef assessments. Photogrammetry derived from remotely operated vehicle video data is an effective and non-destructive method that creates high-resolution reconstructions of CWC habitats. Here, three classification workflows [Multiscale Geometrical Classification (MGC), Colour and Geometrical Classification (CGC), and Object-Based Image Analysis (OBIA)] are presented and applied to photogrammetric reconstructions of CWC habitats in the Porcupine Bank Canyon, NE Atlantic. In total, six point clouds, orthomosaics, and digital elevation models, generated from structure-from-motion photogrammetry, are used to evaluate each classification workflow. Our results show that 3D Multiscale Geometrical Classification outperforms the Colour and Geometrical Classification method. However, each method has advantages for specific applications pertinent to the wider marine scientific community. Results suggest that advancing from commonly employed 2D image analysis techniques to 3D photogrammetric classification methods is advantageous and provides a more realistic representation of CWC habitat composition.

Introduction

Azooxanthellate scleractinian corals, such as Lophelia pertusa (synonymised to Desmophyllum pertusum in Addamo et al., 2016) and Madrepora oculata, are recognised by their three-dimensional branching morphology and framework building capacity (Mortensen et al., 1995; Roberts, 2002; Jonsson et al., 2004; Costello et al., 2005; Wheeler et al., 2005a, 2007b; Gass and Roberts, 2006; Guinan et al., 2009). In suitable environmental conditions, these cold-water coral (CWC) species can form structural habitats such as small coral patches (Wilson, 1979a), reefs (Masson et al., 2003; Roberts et al., 2006b; Victorero et al., 2016; Lim et al., 2018), and giant carbonate mounds (Hovland and Thomsen, 1997; Mienis et al., 2006; Wheeler et al., 2007a; Freiwald et al., 2011; Huvenne et al., 2011) that can reach up to 400 m above the seabed. The presence of reef-forming CWC colonies has been documented in a range of settings, from fjords (Fosså et al., 2006) and continental shelves and slopes (Wilson, 1979b; Mortensen et al., 1995; Wheeler et al., 2005c; Leverette and Metaxas, 2006; Mienis et al., 2006) to seamounts and submarine canyons (Huvenne et al., 2011; Appah et al., 2020), throughout the North Atlantic, Indian, and Pacific oceans and the Mediterranean Sea (de Mol et al., 2005; Freiwald and Roberts, 2005; Wheeler et al., 2007a, b; Roberts et al., 2009; Freiwald et al., 2011; Lim et al., 2018).

Cold-water coral environments are commonly considered marine biodiversity “hotspots” as they can harbour increased biodiversity and biomass relative to their surrounding areas (Jonsson et al., 2004; Wheeler et al., 2007a; Fanelli et al., 2017). Despite being among the world’s most important reservoirs of marine biodiversity (Freiwald et al., 2011), CWC reefs are also susceptible to environmental changes and threats such as temperature and salinity changes, as well as anthropogenic activities (Roberts et al., 2006a; Wheeler et al., 2007a; Orejas et al., 2009; Huvenne et al., 2016), e.g., bottom trawling (Wheeler et al., 2005b), mining (Savini et al., 2014), and oil and gas exploration (Roberts, 2002; Gass and Roberts, 2006; Barbosa et al., 2019). Several studies have affirmed that CWC reefs are declining rapidly in response to high rates of environmental change (Lim et al., 2018; Boolukos et al., 2019) and ocean acidification (Turley et al., 2007; Findlay et al., 2013). Consequently, there is a need for CWC reef assessments that quantify variations in temperature, salinity, food supply, and growth rates combined with measurements of structural complexity and biodiversity. It is therefore essential to understand these environments and to assign priority areas for protection (Ferrari et al., 2018; Appah et al., 2020).

Three-dimensional structures enhance small-scale spatial variability and play a major role in species biodiversity and nutrient cycling (Graham and Nash, 2013; Pizarro et al., 2017; Lim et al., 2018). The use of multibeam echosounders (MBES) can provide sub-metre pixel resolution bathymetric coverages of submarine canyons (Huvenne et al., 2011; Robert et al., 2017) and CWC environments (De Clippele et al., 2017; Lim et al., 2017). However, there is a lack of studies at a centimetric resolution (King et al., 2018; Anelli et al., 2019; Price et al., 2019) that reveal the complexity of coral frameworks. The analysis of these environments usually relies on 1D or 2D estimates of coral cover and distribution that can potentially disregard important changes in reef habitats as they may not integrate accurate vertical or volumetric information (Courtney et al., 2007; Goatley and Bellwood, 2011; House et al., 2018). Therefore, there is an increasing demand for the development of novel techniques for measuring coral reef environments in 3D (Burns et al., 2015a, b; House et al., 2018; Fukunaga et al., 2019). This demand has been addressed by novel mapping techniques such as structure-from-motion (SfM) photogrammetry (Cocito et al., 2003; Burns et al., 2015b; Storlazzi et al., 2016; Robert et al., 2017; Price et al., 2019), which is becoming progressively more common since the introduction of remotely operated vehicles (ROVs) (Kwasnitschka et al., 2013; Lim et al., 2020). Increasing access to computer processing power, high-resolution digital imagery, and recent developments in image processing software have led to a considerably higher number of studies utilising photogrammetry for seabed habitat mapping (Storlazzi et al., 2016; Pizarro et al., 2017; Hopkinson et al., 2020).
SfM photogrammetry is considered a time- and cost-effective method for seabed mapping that allows high-resolution environment reconstruction (Burns et al., 2015a, b; Storlazzi et al., 2016; Robert et al., 2017; House et al., 2018). SfM utilises multiple overlapping images at various angles to reconstruct 3D models of complex scenes. To this end, SfM uses a scale-invariant feature transform (SIFT) algorithm to extract corresponding image features from a set of images captured sequentially along the camera transect (Lowe, 1999). The features matched across overlapping images can be used to reconstruct 3D point cloud models of the photographed surface or scene (Carrivick et al., 2016). Moreover, the use of ROV video data has a number of benefits when compared to traditional sampling methods given that it is non-destructive and can have a wide spatial coverage (Guinan et al., 2009; Bennecke et al., 2016; De Clippele et al., 2017; Young, 2017).

The increase of data derived from SfM mapping has led to the necessity for new tools and techniques to aid time-effective and high-quality analysis of large areas (Storlazzi et al., 2016; Pizarro et al., 2017; Young et al., 2018; Marre et al., 2019). As technology advances, datasets are also becoming larger which, in turn, leads to a need for automated processing with faster, more precise, and accurate classification outputs (Brodu and Lague, 2012; Weinmann et al., 2015). Currently, this need is being addressed by integrating machine learning (ML) with mapping techniques to achieve automated meaningful pattern detection from multi-thematic datasets. ML has been widely used in remote sensing (Pal, 2005; Mountrakis et al., 2011), archaeology (Menna et al., 2018; Lambers et al., 2019), and to predict fish abundances (Young, 2018). Studies have shown optimal results on the application of ML for satellite, aerial, and terrestrial imagery (Wang et al., 2015; Pirotti and Tonion, 2019). Classification studies using LiDAR data performed in Walton et al. (2016) and Weidner et al. (2019) are also good examples. However, there is still a scientific gap between ML and marine surveying for seabed classification due to the costly computational nature of ML methods and the time-intensive annotation of marine datasets which usually requires expert knowledge (Shihavuddin et al., 2013; Marburg and Bigham, 2016; Hopkinson et al., 2020). This gap is emphasised when we consider the use of 3D data. Even though existing ML models such as neural networks (NNs) have shown promising results on 3D reconstructions of single objects, there is still room for improvement for the classification of complex 3D scenes (Weinmann et al., 2015; Roynard et al., 2018), especially in the case of marine habitats (Gómez-Ríos et al., 2018; Hopkinson et al., 2020).
Challenges related to the complexity derived from variability of point density, non-uniform point structure, and size of the dataset still cause difficulties when developing and applying new methodologies for 3D point cloud classification (Weinmann et al., 2013). In the specific case of coral reefs, difficulties in detecting coral shape, colour, and texture have also been reported (Gómez-Ríos et al., 2018; King et al., 2018; Hopkinson et al., 2020), especially as corals tend to occur in colonies and can have similar features.

In this study, we assess three different image classification techniques embedded in image analysis software and evaluate both the performance and results when using 3D data. We also compare their resource requirements and information outputs. The usability and the computing power required to process and analyse data were also taken into account. In a wider scenario, this study aims to show novel applications of ML for seabed mapping of submarine canyons and CWC reefs. Furthermore, we provide a classification workflow created for these environments and evaluate the limitations and advantages of using 3D data in comparison to 2D. For the first time, the techniques were applied to the CWC reefs in the Porcupine Bank Canyon (PBC), NE Atlantic. As such, this paper contributes to the wider scientific community using existing image processing software for 3D classification of deep-sea environments.

Materials and Methods

Three classification workflows applied to underwater photogrammetric reconstructions of CWC habitats in the PBC are analysed. The methods range from a relatively straightforward appraisal to ones of increased complexity in terms of computational requirements and user knowledge. Herein, we describe data acquisition, processing, and the workflow of the applied methods.

Study Area

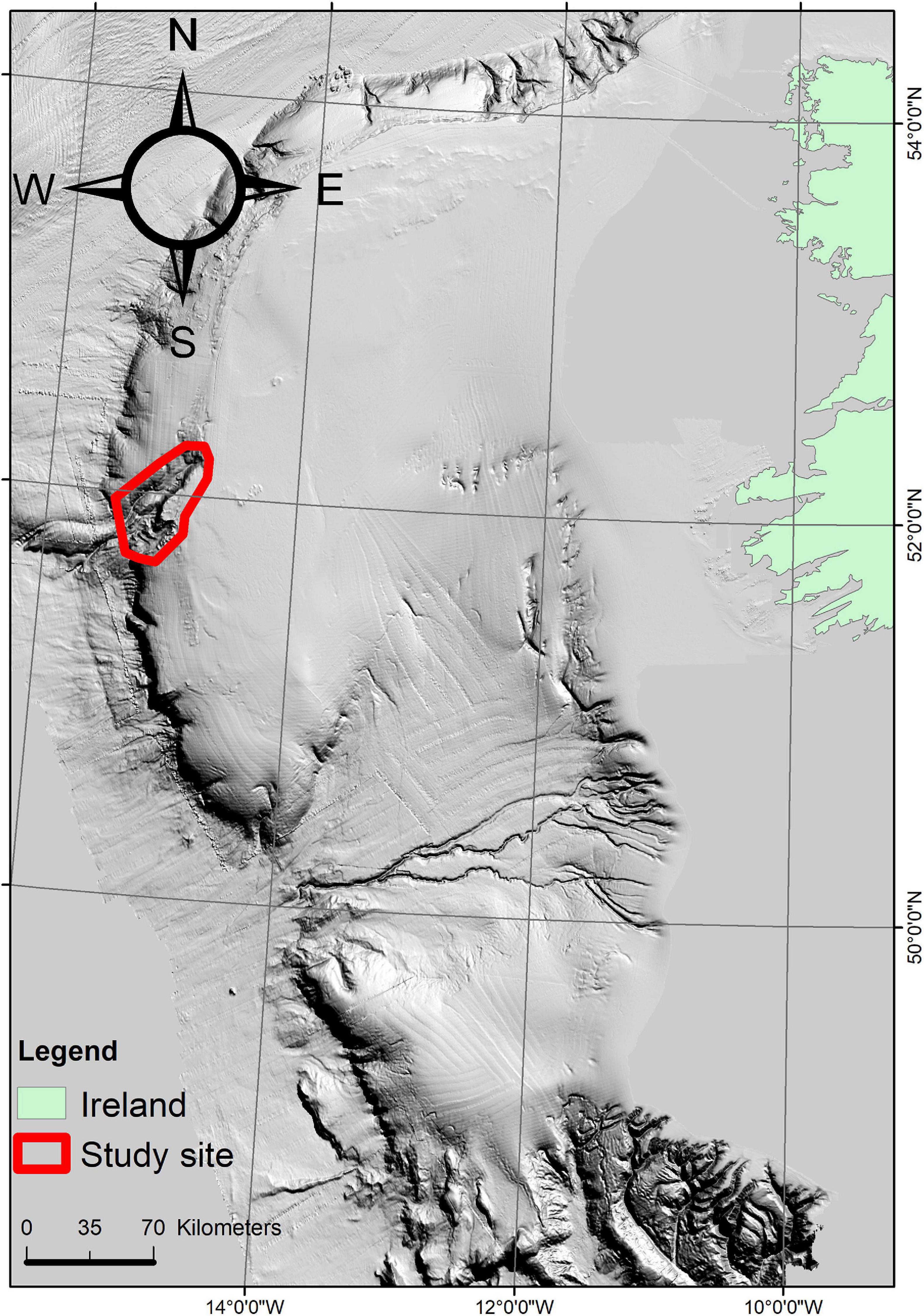

Submarine canyons offer a variety of CWC habitats including vertical habitats (Huvenne et al., 2011; De Clippele et al., 2017; Robert et al., 2017). The three methodologies presented herein were applied to CWC habitats in the PBC, located approximately 300 km southwest of Ireland (Figure 1). The canyon is located between the Porcupine Seabight to the southeast and the Rockall Trough to the west (Wheeler et al., 2005a). Measuring 63 km in length, the PBC is one of the largest submarine canyons on Ireland’s western margin. Since 2016, the PBC has been designated as a special area of conservation (SAC) (n°003001) by the European Union Habitats Directive (2016), and therefore no fishing or other exploratory activities are allowed in the area.

Figure 1. Study site (in red)—The Porcupine Bank Canyon study area on the Irish continental margin west of Ireland.

The PBC is a tectonically controlled (Shannon, 1991), asymmetrical canyon with two branching heads separated by a ridge and exiting separately into the Rockall Trough (Appah et al., 2020; Lim et al., 2020). A steep, ∼700 m high, cliff face exists at the southeast margin of the canyon with exposed bedrock. This bedrock contrasts with the sediment-dominant slope on the northwest margin that extends to the canyon base (Dorschel et al., 2010; Appah et al., 2020; Lim et al., 2020). Giant carbonate mounds of up to 100 m high (Wheeler et al., 2005a) are concentrated along the edges of the canyons or associated with fault systems existing around the canyon head, leading to escarpments of up to 60 m high (Mazzini et al., 2012).

The PBC is influenced by strong currents along the mound tops and flanks, water column stratification, enhanced bottom currents, and upwelling (Mazzini et al., 2012). Unprecedented current speeds of 114 cm s⁻¹ have been recorded within the PBC, which is the highest current speed ever recorded in a CWC habitat (Lim et al., 2020). Data from conductivity-temperature-depth (CTD) measurements show that the region is mainly influenced by the Eastern North Atlantic Water (ENAW), which flows northwards down to 800 m water depth (Lim et al., 2020), with the Labrador Sea Water (LSW) below 1100 m depth (Appah et al., 2020). Mediterranean Outflow Water (MOW) also flows through the canyon between 800 and 1100 m water depth (Appah et al., 2020; Lim et al., 2020). It is suggested that current regimes influence the distribution of benthic fauna throughout the canyon and that CWC habitats in the PBC can tolerate considerably high current speeds (Lim et al., 2020). High biodiversity, including actively growing and well-developed coral colonies, is found at depths of 600–1000 m where the ENAW and MOW occur, while poorly developed coral colonies were related to the LSW (Appah et al., 2020). The main framework-forming CWC in the canyon is L. pertusa (syn. D. pertusum), and the other most common species were the black corals Stichopathes cf. abyssicola and Leiopathes glaberrima and the sponges Aphrocallistes beatrix and Hexadella dedritifera (Appah et al., 2020).

Video Survey and Data Collection

The video data used in this survey were acquired during research cruises CE19008 (Lim et al., 2019b) and CE19014 (Lim et al., 2019a) from 13th to 23rd of May 2019 and 25th to 31st of July 2019, respectively. Video data were collected using the Holland 1 ROV, although the methodologies compared in this paper could be applied to towed-camera or diver surveys. The ROV is equipped with 11 camera systems of which two were used as data sources for analysis in this paper: an HDTV camera (HD Insite mini-Zeus with HD SDI fibre output), and a Kongsberg OE 14–208 digital stills camera. Two deep-sea power lasers spaced at 10 cm were used for scaling. Positioning data were recorded with a Sonardyne Ranger 2 ultra short baseline (USBL) beacon and corrected by an IXBlue Doppler velocity logger (DVL) (Lim et al., 2020). ROV video data were acquired at a height of ∼2 m above the seabed with a survey speed of <0.2 knots at locations in the PBC. High-definition video data (1080p) were acquired at 50 fps and stored as *.mov files. The areas selected for reconstruction were based on the distribution and variety of CWC habitats such as small individual coral colonies, coral colonies on rock outcrops, coral gardens, and mounds.

3D Reconstruction Using Structure-From-Motion (SfM) Photogrammetry

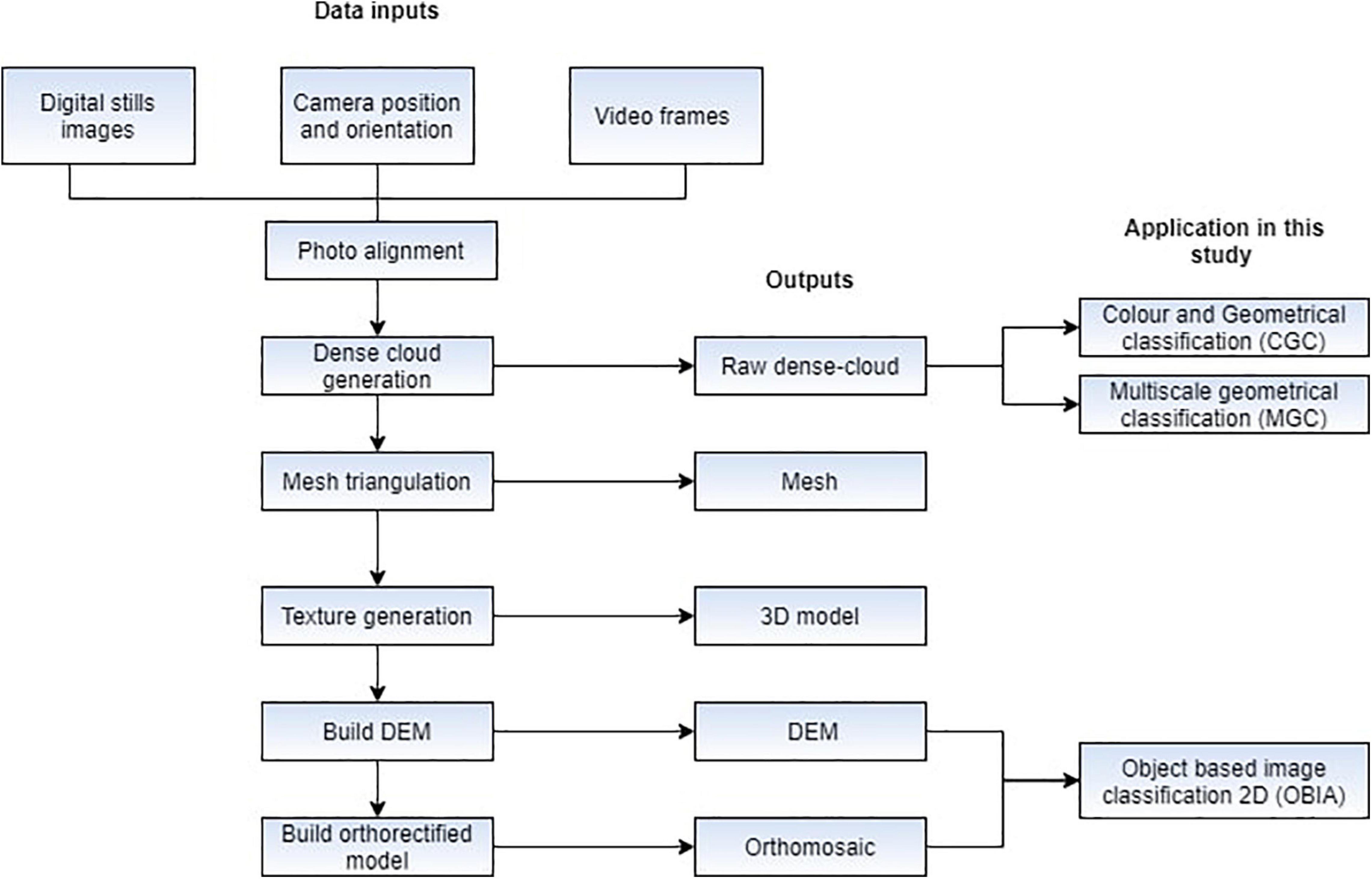

Remotely operated vehicle video data, digital stills, and camera positioning information were used to produce the 3D reconstructions in this study. One frame per second was extracted from the raw video data with Blender (version 2.78). The frames were imported into Agisoft Metashape Professional v1.6, and each frame was georeferenced with its relative USBL positioning data. The lasers from the HD camera were utilised to scale features during the reconstruction process. The workflow for model rendering was carried out as detailed in Agisoft (2019) using an Intel I7 hexa core, 16 GB of RAM, and NVIDIA GTX1070 (8 GB) graphics card. The overall workflow and data outputs are shown in Figure 2. Dense clouds were used in method 1–MGC [section “Method 1–Multiscale Geometrical Classification (MGC)”] and method 2–CGC [section “Method 2–Colour and Geometrical Classification (CGC)”], while the orthomosaics were used for method 3–OBIA [section “Method 3–2D Object-Based Image Analysis (OBIA)”].

Figure 2. Workflow for 3D model reconstruction and applications of each output data within this study.
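The frame sampling and laser scaling described above reduce to simple arithmetic, sketched below. This is an illustration only and not the Blender or Agisoft Metashape workflow used in the study: the function names and the laser dot pixel coordinates are hypothetical, and the only values taken from the text are the 50 fps video rate, the one frame per second extraction rate, and the 10 cm laser spacing.

```python
import math


def frames_to_extract(n_frames, video_fps=50, target_fps=1):
    """Indices of the frames kept when subsampling a video to target_fps."""
    step = round(video_fps / target_fps)
    return list(range(0, n_frames, step))


def metres_per_pixel(laser_a_px, laser_b_px, laser_spacing_m=0.10):
    """Image scale from the pixel positions of two parallel laser dots."""
    pixel_distance = math.dist(laser_a_px, laser_b_px)
    return laser_spacing_m / pixel_distance


# Ten seconds of 50 fps video: every 50th frame is kept.
indices = frames_to_extract(n_frames=500)

# Hypothetical laser dot positions (pixels), 200 px apart in the image.
scale = metres_per_pixel((860.0, 540.0), (1060.0, 540.0))
```

In this toy case the lasers appear 200 pixels apart, so one pixel corresponds to 0.5 mm at the distance of the laser dots.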

Classification Methods

Method 1–Multiscale Geometrical Classification (MGC)

An MGC approach was utilised in this study to perform a binary classification of our 3D CWC reef reconstructions. We utilised Canupo (Brodu and Lague, 2012), a support vector machine (SVM) classification algorithm implemented in the open-source software CloudCompare (Girardeau-Montaut, 2011). This method was chosen due to its solid workflow for classification of point clouds applicable to natural environments (Brodu and Lague, 2012). As this technique uses dimensionality (computation of vector dispersion for each point relative to a neighbourhood of points) as a parameter for classification, it provides flexibility when applied to data derived from different sources as geometrical measurements are not dependent on the instrument used (Brodu and Lague, 2012). Therefore, these SVM-based classifiers can be reutilised by other users with point cloud data derived from different sources, e.g., terrestrial or airborne laser scanning. Another reason for choosing a dimensionality-based classifier is the limited separability of RGB component spectral signatures in underwater photogrammetry.

The multiscale classification technique used here computes the degree to which each neighbourhood of points can be described as one-, two-, or three-dimensional by identifying the principal components of the point coordinates in the given neighbourhood (Brodu and Lague, 2012). This method is defined as a multiscale classification because it calculates these statistics for each core point in the scene within spherical neighbourhoods of different sizes, referred to as scale parameters. As such, this method generates a feature vector that can distinguish between semantic objects (Maxwell et al., 2018; Weidner et al., 2019), such as coral and bedrock. The computation of neighbourhoods defined by each scale gives the classifier a multi-scale refining property (Brodu and Lague, 2012; Weidner et al., 2019). The final product of this process is defined herein as a multiscale classifier that is applied to the test dataset. Here, the neighbourhood sizes were chosen to include a range from coral frameworks to differing rock sizes so that small-scale objects would also be captured in large-scale sizes.
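The dimensionality descriptor can be illustrated with a short NumPy sketch. It is not the Canupo implementation: the function names are hypothetical, the scale is assumed to be the diameter of the spherical neighbourhood, and the synthetic "seabed-like" plane and "coral-like" volume are made-up test data. For each scale, the eigenvalues of the neighbourhood covariance matrix are normalised to proportions; one dominant proportion suggests a line, two similar proportions a surface, and three similar proportions a volume.

```python
import numpy as np


def dimensionality_at_scale(points, core_point, scale):
    """Eigenvalue proportions (p1 >= p2 >= p3) of the neighbourhood PCA.

    Assumption: the neighbourhood is a sphere of diameter `scale`
    centred on `core_point`.
    """
    dist = np.linalg.norm(points - core_point, axis=1)
    neighbours = points[dist <= scale / 2.0]
    if len(neighbours) < 3:
        return np.full(3, np.nan)  # too few points to estimate a shape
    cov = np.cov(neighbours, rowvar=False)
    eigvals = np.linalg.eigvalsh(cov)[::-1]  # descending order
    eigvals = np.clip(eigvals, 0.0, None)    # remove tiny negative round-off
    return eigvals / eigvals.sum()


def multiscale_features(points, core_point, scales):
    """Concatenate the eigenvalue proportions computed at every scale."""
    return np.concatenate(
        [dimensionality_at_scale(points, core_point, s) for s in scales]
    )


rng = np.random.default_rng(0)
# Synthetic flat patch (2D-like) and synthetic volumetric cloud (3D-like).
plane = np.column_stack([rng.uniform(-1, 1, (20000, 2)), np.zeros(20000)])
blob = rng.uniform(-1, 1, (20000, 3))

scales = (0.5, 1.0)
plane_features = multiscale_features(plane, np.zeros(3), scales)
blob_features = multiscale_features(blob, np.zeros(3), scales)
```

For the flat patch the third proportion is close to zero at both scales, whereas for the volumetric cloud all three proportions are of similar size. A classifier such as an SVM can then be trained on these per-scale vectors.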

As this technique is a semi-automated classification that employs a probabilistic approach, it is essential to build classifiers based on samples of features of interest (i.e., live and dead corals) from a training dataset. Entire dense clouds were manually segmented into objects of interest and divided into two classes: “coral” and “seabed.” The class “coral” consisted of hard and soft coral colonies and frameworks. The class “seabed” consisted of the remainder, i.e., seafloor and other benthic organisms (e.g., coral rubble, echinoderms, and sponges). The segmentation process was repeated on each axis (X, Y, and Z) to avoid single view bias.

Scale parameters used for the multiscale descriptors were based on the variance of object size within the scene. Ten initial scales with steps of 0.1 or 0.005 were chosen based on an empirical analysis of the data, combining the evaluation of features to be identified with a trial-and-error approach. The maximum number of core points (MNCP) value is the number of randomly sub-sampled points that will be used for computations on the scene data. The higher the MNCP value, the greater the number of computations. Thus, an increase in the number of scales and core points was directly related to the ability to discriminate and the processing power required to train the classifier. The performance of each classifier was quantified by the Balanced Accuracy (ba) value, which is defined by the equation:

$$ba = \frac{ac_1 + ac_2}{2}$$

where each class accuracy (ac1 and ac2) is defined as ac1 = tcc1/(tcc1 + fcc2) and ac2 = tcc2/(tcc2 + fcc1) (tcc1–truly classified class 1, tcc2–truly classified class 2, fcc1–falsely classified class 1, and fcc2–falsely classified class 2). For each sample, the classifier assigns a distance, d, from the separation line of the classifier and the measure of separability is calculated by the Fisher Discriminant Ratio (fdr) described in Sergios and Konstantinos (2008), which is defined as:

$$fdr = \frac{(\mu_2 - \mu_1)^2}{\nu_1 + \nu_2}$$

where μ1 and μ2 are the means and ν1 and ν2 the variances of the aforementioned distance d for classes 1 and 2, respectively. The fdr is used to assess the class separability, i.e., how well the classes are separated. Therefore, a high ba value indicates that the trained classifier has a good recognition performance, whereas a high fdr indicates that the classes are well separated in a plane of maximal separability. Classifier quality increases with both ba and fdr: the higher the values, the better the classifier can identify and classify objects into two classes. Further details can be found in Brodu and Lague (2012).
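As a worked illustration of the two quality measures, the sketch below assumes that ba is the mean of the two class accuracies and that fdr = (μ2 − μ1)² / (ν1 + ν2), following Brodu and Lague (2012). The counts and distances are invented, the function names are hypothetical, and the use of the population variance (rather than the sample variance) is an assumption.

```python
from statistics import mean, pvariance


def balanced_accuracy(tcc1, fcc1, tcc2, fcc2):
    """Mean of the per-class accuracies ac1 and ac2."""
    ac1 = tcc1 / (tcc1 + fcc2)
    ac2 = tcc2 / (tcc2 + fcc1)
    return (ac1 + ac2) / 2


def fisher_discriminant_ratio(d_class1, d_class2):
    """Separability of two classes from their signed distances d."""
    mu1, mu2 = mean(d_class1), mean(d_class2)
    # Assumption: population variance; a sample variance could be used instead.
    v1, v2 = pvariance(d_class1), pvariance(d_class2)
    return (mu2 - mu1) ** 2 / (v1 + v2)


# Invented counts: ac1 = 90/100 and ac2 = 80/100.
ba = balanced_accuracy(tcc1=90, fcc1=20, tcc2=80, fcc2=10)

# Invented signed distances from the separation line for each class.
fdr = fisher_discriminant_ratio([-2.0, -1.0, -3.0], [2.0, 3.0, 1.0])
```

With these numbers ba is 0.85, and the two distance distributions have means of −2 and 2 with a variance of 2/3 each, so fdr is 16 / (4/3) = 12.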

The dataset consisted of eight dense clouds that were split into training and testing sets. The training set was used for training the classifier, which was then applied to the unseen testing set. Each classifier was trained with a combination of segments from a single dense cloud or two different dense clouds, referred to here as source clouds. The testing dataset was composed of the remainder of the dataset after excluding the dense clouds used to train the classifier. The classifiers with the highest ba and fdr values were applied to the testing dataset to evaluate their robustness and reproducibility, i.e., their ability to be applied to analogous environments. Initially, no confidence threshold was set for the classification. Therefore, all points were classified as either coral or seabed. After a visual inspection of preliminary results, a confidence threshold was set to arbitrary values of 0.5 or 0.9 and the classifier was executed again. The confidence threshold allows class labels to be assigned only if the results exceed that value; otherwise, the point is left unclassified. If more than 30% of the points were left unclassified, the classifier was retrained with a different number of scales and core points (Supplementary Figure 1). This limit was set to ensure classification quality in a trial-and-error approach (Weidner et al., 2019). Hence, higher values will result in less generalisation and more complex decision boundaries (Maxwell et al., 2018). Studies of terrestrial point clouds for rock slope classification have chosen the confidence threshold based on up to 15% of unclassified points (Weidner et al., 2019). A limit of 30% unclassified points was chosen for this study due to the point cloud density differences and the classes of objects to be addressed.
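The thresholding and retraining rule can be sketched as follows. This is a simplified illustration and not CloudCompare's internal logic: it assumes that each point carries a probability of belonging to the coral class, that confidence is the larger of the two class probabilities, and that a label is assigned when the confidence reaches the threshold. The function names and probability values are hypothetical.

```python
def apply_confidence_threshold(coral_probabilities, threshold):
    """Label each point, leaving low-confidence points unclassified."""
    labels = []
    for p in coral_probabilities:
        confidence = max(p, 1.0 - p)  # confidence in the more likely class
        if confidence < threshold:
            labels.append("unclassified")
        else:
            labels.append("coral" if p >= 0.5 else "seabed")
    return labels


def needs_retraining(labels, max_unclassified_fraction=0.30):
    """True when more than 30% of the points were left unclassified."""
    unclassified = labels.count("unclassified") / len(labels)
    return unclassified > max_unclassified_fraction


# Invented per-point coral probabilities for ten points.
probabilities = [0.97, 0.55, 0.08, 0.62, 0.95, 0.02, 0.85, 0.40, 0.99, 0.05]

labels_05 = apply_confidence_threshold(probabilities, threshold=0.5)
labels_09 = apply_confidence_threshold(probabilities, threshold=0.9)
retrain_05 = needs_retraining(labels_05)
retrain_09 = needs_retraining(labels_09)
```

Under these assumptions a threshold of 0.5 labels every point, which matches running the classifier without a threshold, while a threshold of 0.9 leaves four of the ten points unclassified and would trigger retraining.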

Method 2–Colour and Geometrical Classification (CGC)

The second classification workflow is based on the use of colour and geometrical feature information following the work of Becker et al. (2018), which has shown satisfactory results for ground classification of point clouds from unmanned aerial vehicle (UAV) surveys (Klápště et al., 2018).

The use of geometrical features for semantic classification has brought positive results in several terrestrial data studies (Weinmann et al., 2015; Hackel et al., 2016). In addition to geometrical features, the use of colour information in the classification process of point clouds provides a significant increase in prediction accuracy (Lichti, 2005; Becker et al., 2018). For underwater photogrammetry, the use of colour has been advised as a way to include important image spectral information (Beijbom et al., 2012; Bryson et al., 2013, 2015, 2016). However, its importance is questionable as there are interactions between the colour spectrum and water column, e.g., the red colour channel is attenuated with distance from camera (Carlevaris-Bianco et al., 2010; Beijbom et al., 2012; Bryson et al., 2013).

Here, the same training set of dense point clouds was classified using the supervised multiclass classification algorithm implemented in Agisoft Metashape (Supplementary Figure 2). This automatic multiclass classification approach associates geometric and colour features that are fed into the Gradient Boosted Tree (GBT) algorithm to predict the class of each point in the point cloud. GBT utilises colour features computed from the colour values of each point and the average colour values of its neighbouring points.

Geometrical features used in the algorithm were previously presented in Becker et al. (2018). For each point, its neighbouring points are computed and the set is used to build a local 3D structure covariance tensor which summarises the predominant direction of the slope gradients in the neighbourhood of a point. The eigenvalues and corresponding eigenvectors are used to compute the local geometric features, for instance, omnivariance, eigentropy, anisotropy, planarity, linearity, surface variation, verticality, and scatter. These features, which originate from the 3D covariance matrix of nearest neighbours of each point, can be used to describe the local 3D structure and dimensionality (Weinmann et al., 2013). Further information about the algorithm can be found in Becker et al. (2018). This technique provides a supervised classification which is pre-trained using terrestrial datasets. The dense clouds were classified with the GBT classifier into ground (seabed) and low vegetation (corals).
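The eigenvalue-based features listed above can be sketched with NumPy. The formulas below follow definitions commonly used in the point cloud literature (e.g., Weinmann et al., 2013) and are an assumption here: the exact formulas and neighbourhood search used inside Agisoft Metashape are not given in the text. The function name and the synthetic, nearly horizontal patch are hypothetical.

```python
import numpy as np


def eigen_features(neighbours):
    """Local geometric features from the 3D covariance of a neighbourhood."""
    cov = np.cov(neighbours, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)       # ascending eigenvalues
    eigvals = np.clip(eigvals, 1e-12, None)      # guard against log(0)
    l3, l2, l1 = eigvals / eigvals.sum()         # l1 >= l2 >= l3, sum to 1
    normal = eigvecs[:, 0]                       # direction of least variance
    return {
        "linearity": float((l1 - l2) / l1),
        "planarity": float((l2 - l3) / l1),
        "scatter": float(l3 / l1),
        "omnivariance": float((l1 * l2 * l3) ** (1.0 / 3.0)),
        "anisotropy": float((l1 - l3) / l1),
        "eigentropy": float(-sum(l * np.log(l) for l in (l1, l2, l3))),
        "surface_variation": float(l3 / (l1 + l2 + l3)),
        "verticality": float(1.0 - abs(normal[2])),
    }


rng = np.random.default_rng(1)
# Synthetic, nearly horizontal patch: wide in X and Y, almost flat in Z.
patch = np.column_stack(
    [rng.uniform(-1, 1, (500, 2)), rng.normal(0.0, 0.001, 500)]
)
features = eigen_features(patch)
```

For this flat patch, planarity is high while linearity, scatter, and verticality are low. A branching coral framework would be expected to give higher scatter, which is the kind of contrast a tree-based classifier can exploit alongside colour.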

Method 3–2D Object-Based Image Analysis (OBIA)

As a baseline method, object image classification was utilised to analyse the range of information that 2D data classification can provide in comparison to the 3D data. Object-based analysis techniques have been widely applied across different remote sensing areas, especially for marine studies (Conti et al., 2019; Lim et al., 2020), aerial imagery (Zhang et al., 2010), and land cover mapping (Benz et al., 2004).

For this classification method, the georeferenced orthomosaics, DEM, and slope from the training set were used. Slope was derived from the DEM in the ArcGIS Spatial Analyst toolbox. Slope, DEM, and the orthomosaics derived from the point cloud processing were imported into eCognition Developer (Trimble Germany GmbH, 2019) and segmented using the multi-resolution segmentation algorithm (Benz et al., 2004) at a pixel level using different layer weights for RGB, DEM, and slope layers. This segmentation approach merges pixels of similar values into objects based on a relative homogeneity criterion. The homogeneity criterion measures how homogeneous an image object is in relation to itself and it is calculated as a combination of spectral and shape criteria (Trimble, 2018). The shape ratio determines to what extent the shape influences the segmentation compared to colour. The compactness is a weighting value that affects the compactness of the objects in relation to smoothness created during the segmentation. These ratios are obtained by calculating primary object features, shape, and colour, with heterogeneity calculations, i.e., standard deviation (Benz et al., 2004). Layer weight values control the emphasis given to colour and shape during the heterogeneity calculation (Koop et al., 2021) as it increases the weight of a layer based on the heterogeneity. The weight parameters adapt the heterogeneity definition to the application in order to get suitable segmentation output for image data (Benz et al., 2004). The layer weight values used were chosen following Lim et al. (2020). The scale parameter is considered the most effective parameter (Benz et al., 2004; Kavzoglu and Yildiz, 2014) and is used to control the average image object size in relation to the whole scene: the higher the value, the larger the objects. Scale parameters, shape, and compactness thresholds were set for each model individually, following a trial-and-error approach.
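For readers without access to ArcGIS, the derivation of a slope layer from a DEM can be approximated with NumPy. This is a simplified sketch that uses central finite differences through `np.gradient`; it is not the Spatial Analyst algorithm, which works on a 3 × 3 cell neighbourhood, so values may differ slightly on rough terrain. The function name, the cell size, and the synthetic DEM are hypothetical.

```python
import numpy as np


def slope_degrees(dem, cell_size):
    """Slope angle (degrees) of each DEM cell from finite differences."""
    # np.gradient returns the derivative along rows (Y) and then columns (X).
    dz_dy, dz_dx = np.gradient(dem, cell_size)
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))


# Synthetic DEM: elevation rises 0.5 m per 0.5 m cell along X, a 45° ramp.
dem = np.tile(np.arange(5) * 0.5, (4, 1))
slope = slope_degrees(dem, cell_size=0.5)
```

The resulting array has the same shape as the DEM and could be stacked with the RGB and DEM layers as an additional input band for segmentation.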

After the segmentation, each model was manually classified by an expert. The simplified process is defined in the workflow in Supplementary Figure 3.

Ground Truthing

To assess classification performance, dense cloud datasets were manually annotated. These points were then compared to the classification outputs from the MGC and CGC methods. Classification accuracy was calculated in Python with the ML library Scikit-learn (Pedregosa et al., 2011).

The accuracy score was calculated by summing the true positives and true negatives of all classes and dividing by the total number of annotated points (true positives, false positives, true negatives, and false negatives). The balanced accuracy was calculated as the arithmetic mean of sensitivity (true positive rate) and specificity (true negative rate) of each class. These metrics were chosen because they take into account the class imbalance, i.e., classes do not have the same number of samples, which is typical of seabed imagery datasets. Failure to account for class imbalance can artificially inflate the apparent performance of classifiers (Akbani et al., 2004; Brodersen et al., 2010).
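The effect of class imbalance on these two scores can be shown with a toy example in plain Python. The labels are invented (7 coral and 93 seabed points, loosely mirroring the low coral percentages typical of seabed data) and the function names are hypothetical. In Scikit-learn the equivalent calls would be `accuracy_score` and `balanced_accuracy_score`.

```python
def confusion_counts(actual, predicted, positive="coral"):
    """True/false positives and negatives for a binary labelling."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    tn = sum(a != positive and p != positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of all annotated points that were labelled correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def balanced_accuracy(tp, tn, fp, fn):
    """Arithmetic mean of sensitivity and specificity."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Invented, imbalanced ground truth: 7 coral points and 93 seabed points.
actual = ["coral"] * 7 + ["seabed"] * 93
# A trivial classifier that labels every point as seabed.
predicted = ["seabed"] * 100

counts = confusion_counts(actual, predicted)
acc = accuracy(*counts)
bal_acc = balanced_accuracy(*counts)
```

The trivial classifier reaches an accuracy of 0.93 without detecting a single coral point, whereas its balanced accuracy is only 0.5, which is why the balanced score is the more informative of the two for imbalanced seabed datasets.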

Results

3D Reconstructions

A total of eight 3D reconstructions were produced using 3681 images. Dense clouds were composed of a total of 165,356,594 points. The average reconstruction length was 19.73 m and depths ranged from 595 to 1001 m, with average depth of 732.57 m. Mean total error (i.e., root-mean-square error for X, Y, and Z coordinates for all the cameras) was 13.015. Continuous video acquisition along the ROV transects was not regularly possible because of variations in ROV height and speed. High current speeds at the PBC and the presence of particles in suspension in water column (marine snow) also impacted the ROV video transect and consequently the video quality in some areas. Low-quality data were rejected prior to reconstruction. Despite the presence of a few gaps in the surface, the reconstructions showed medium scale features (>10 cm) such as coral branches, coral rubble, and some benthic species with distinction. However, fine scale features (<5 cm) such as individual coral polyps and encrusted algae were not easily visible.

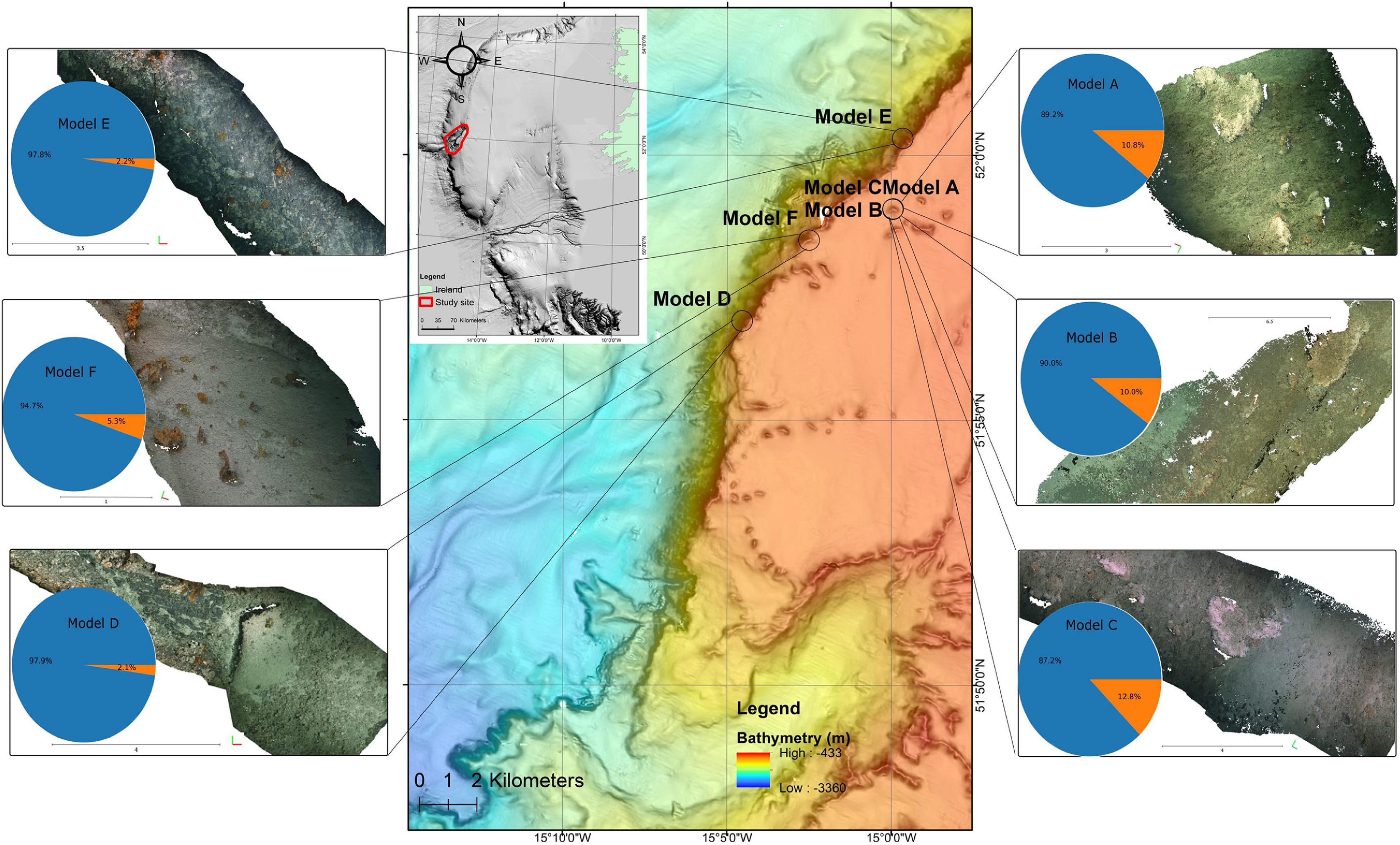

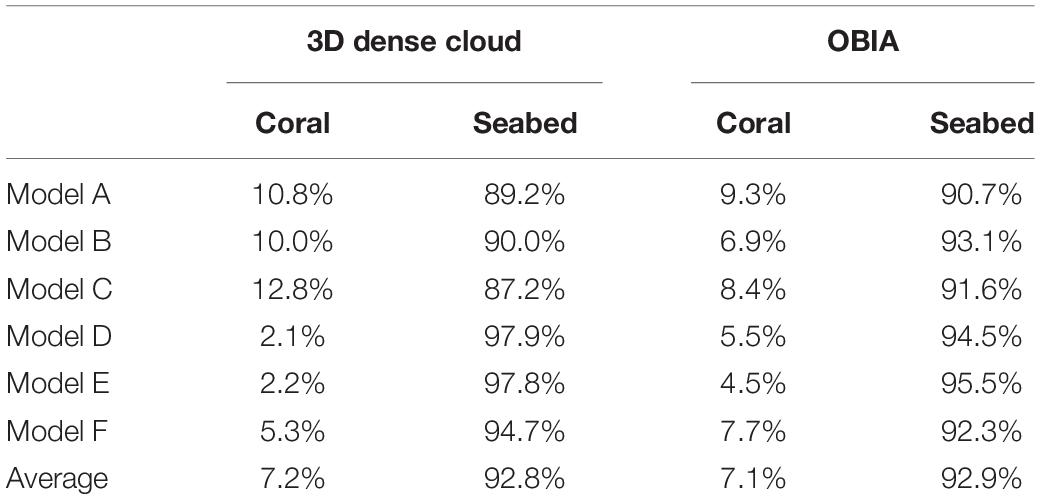

Coral and Seabed Distribution in the Porcupine Bank Canyon

The dense clouds were manually annotated by an expert and segmented into classes: coral and seabed. The percentage distribution of the coral and seabed samples from the annotated test set showed an average of 7.19% coral and 92.81% seabed. Models A, B, and C, located on the upper part of the PBC, showed higher percentages of coral (>10%) and sediment-dominated facies with dropstones (Figure 3). Models D, E, and F, located on the canyon flank ridge, showed lower percentages of coral (<5%), predominance of bedrock with occasional sediment-dominated facies in areas proximal to the eastern side of the flank (Model F; Figure 3).

Figure 3. Map showing the location of the Porcupine Bank Canyon on the Irish continental shelf and the location of each SfM reconstructed dense cloud produced in this study and its respective class distribution with manual annotation. Blue represents seabed and orange represents coral.

Multiscale Geometrical Classification (MGC)

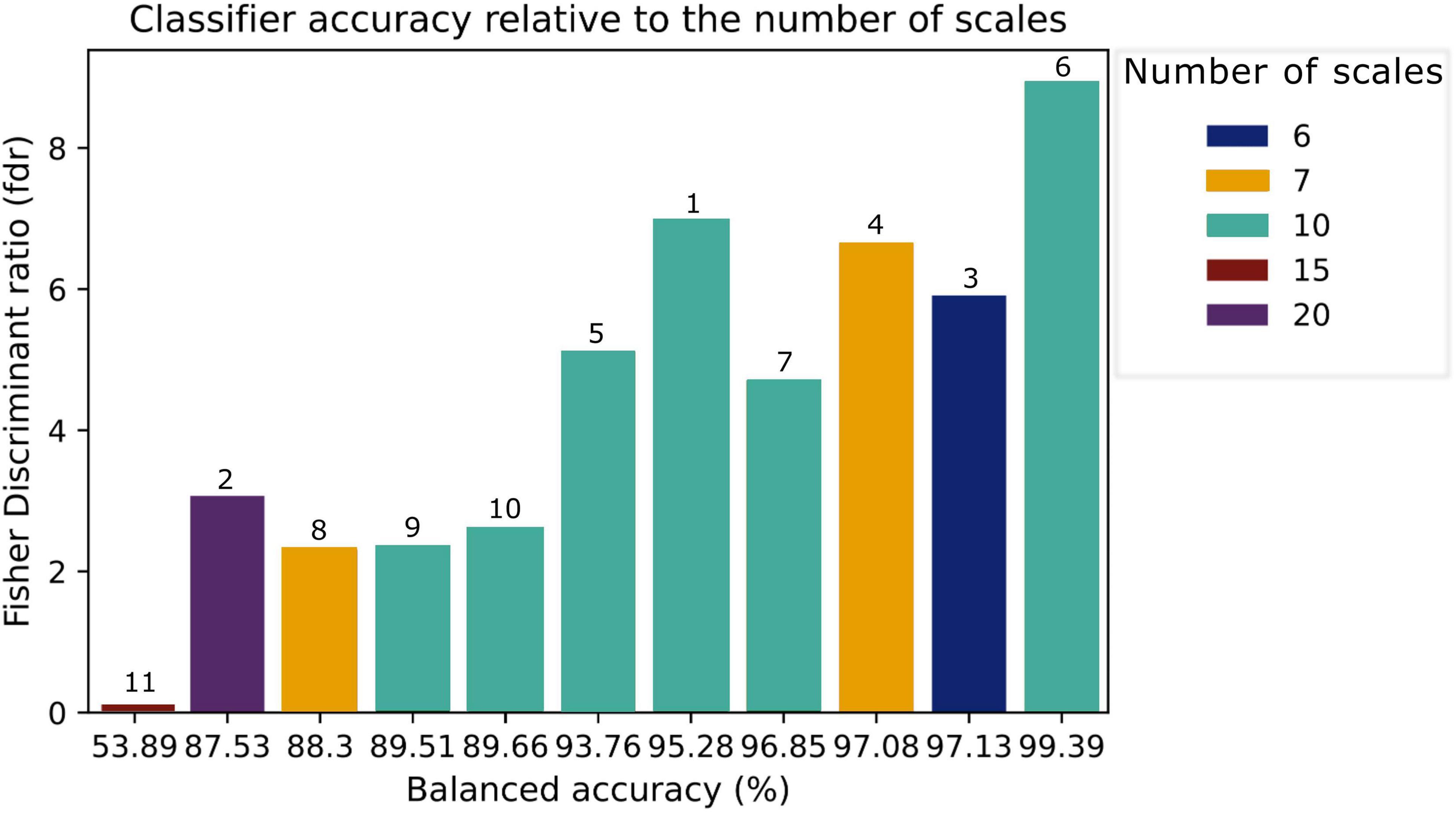

A total of 11 SVM classifiers were built based on different combinations of annotated samples from the training dataset (Part 1 of Supplementary Figure 1). Overall, classifier training results had an average ba of 89.85% and an average fdr of 4.27. Classifier 6 presented the best ba and fdr, with values of 99.8% and 8.98, respectively (Figure 4). The training was performed with 20,000 core points and 10 scales with a minimum of 0.1, maximum of 1, and step of 0.2. Classifiers trained with classes from two different source clouds, and hence different environments, presented higher ba and fdr values than classifiers trained with a single source cloud.

Figure 4. Classifier accuracy in relation to the number of scales. The classifier ID is placed on the top of each bar. In the MGC method, scale is defined as the neighbourhood size over which the classifier computes each metric.

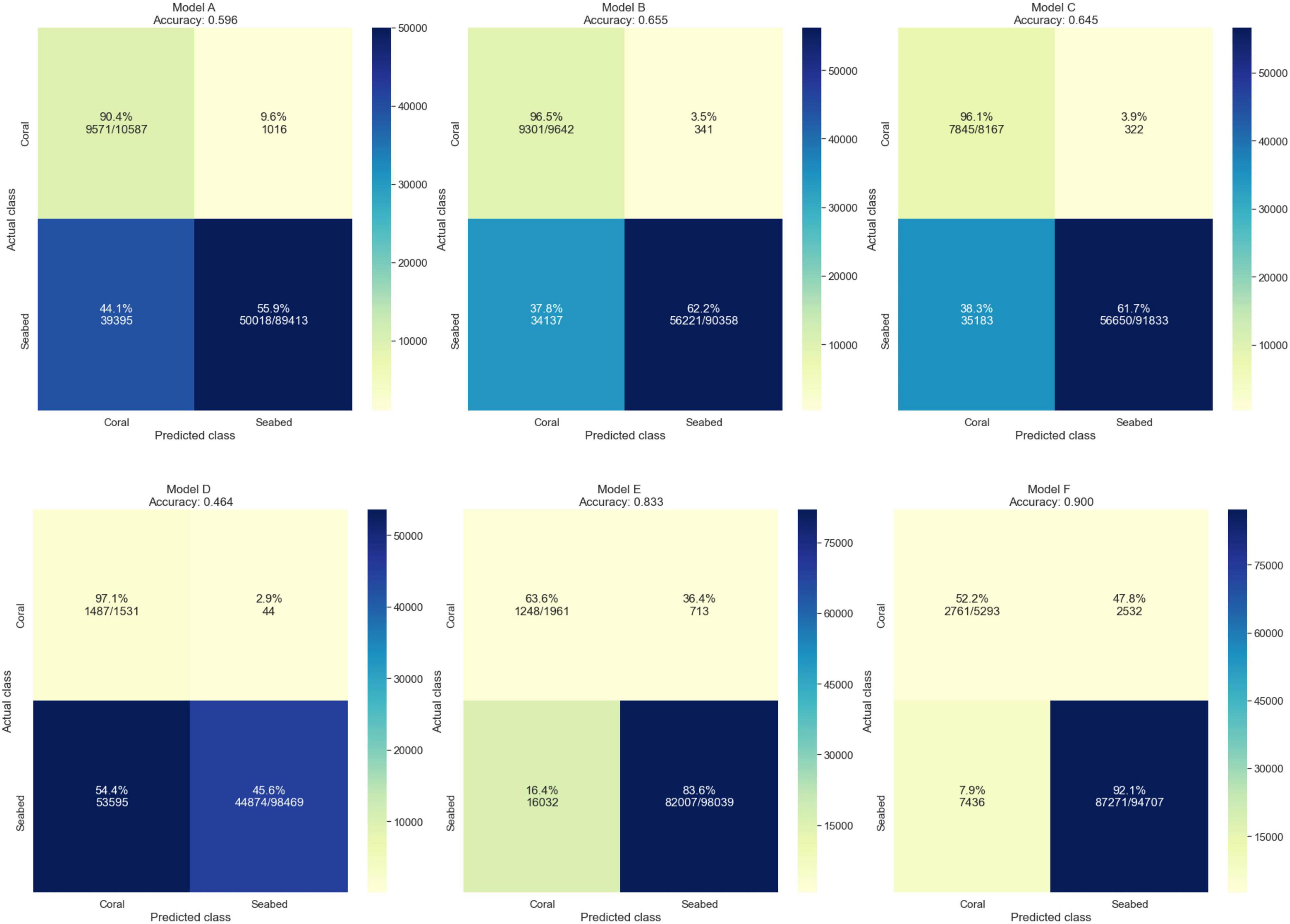

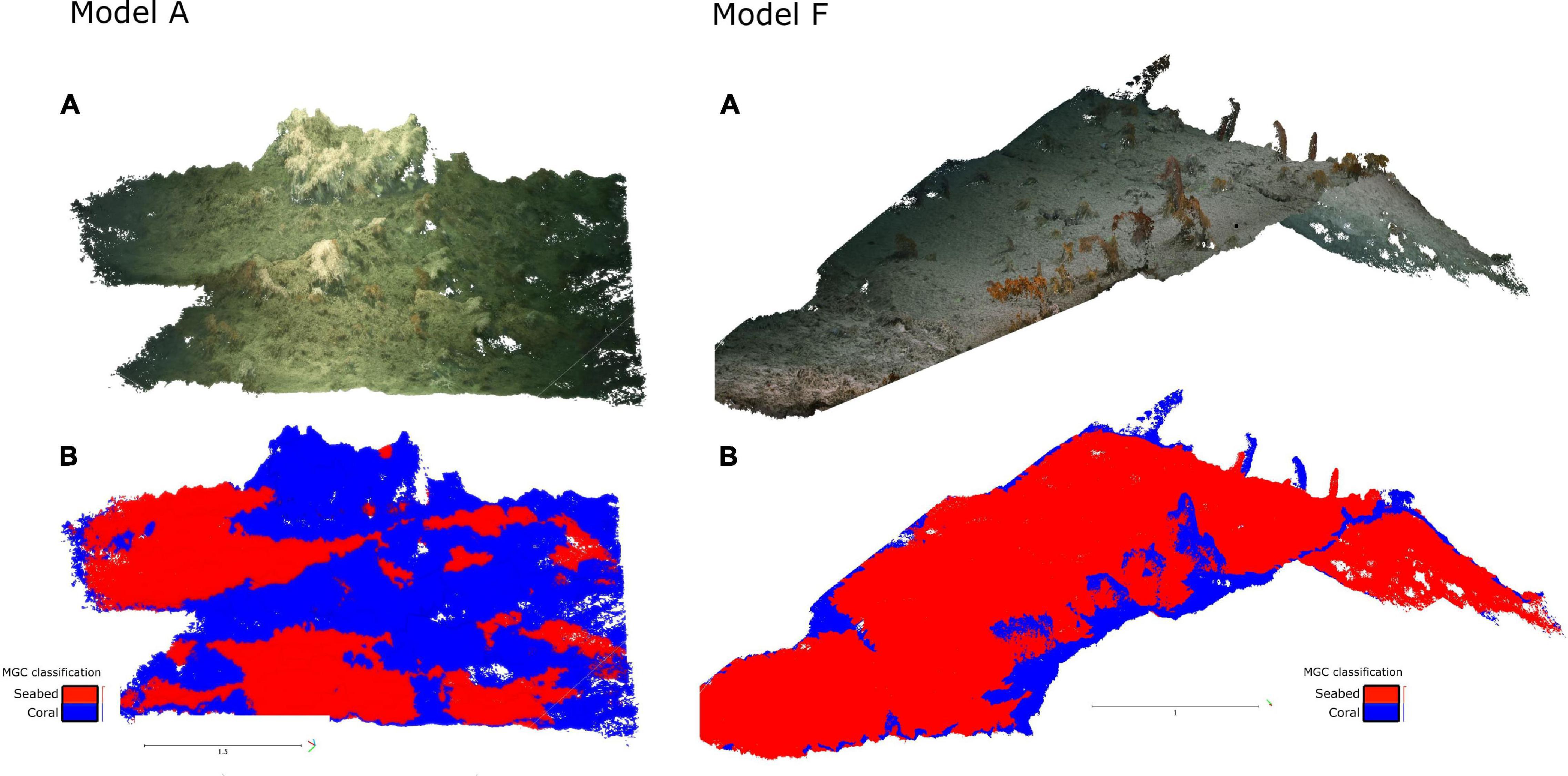

The classifier that presented the best performance (classifier 6 on Figure 4) was applied to the testing dataset (see Part 2 of Supplementary Figure 1). As this classifier was trained with two different source clouds, these were excluded from the test set, which was composed of the remainder of the dataset, i.e., six manually annotated dense clouds. Average accuracy and balanced accuracy scores were 68.2 and 74.7%, respectively. Two models presented accuracy scores above 80% and two models above 60% (Figure 5). Models which presented an accuracy score above 80% shared a similar coral distribution pattern. This pattern is represented by the vertical elongation of the coral branches through the Z-axis, i.e., height information, which can be accurately determined using 3D information (Figure 6-Model F).
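The two scores reported above can be derived from a binary confusion matrix. The sketch below assumes the standard definitions: accuracy is the fraction of correctly labelled points, and balanced accuracy is the mean of the per-class recalls (Brodersen et al., 2010). Function names and the label lists are hypothetical and the numbers are not study data.

```python
def confusion_counts(actual, predicted, positive="coral"):
    """Count true/false positives and negatives for a two-class problem."""
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        else:
            if p == positive:
                fp += 1
            else:
                tn += 1
    return tp, fn, fp, tn


def accuracy(tp, fn, fp, tn):
    """Fraction of all points that received the correct label."""
    return (tp + tn) / (tp + fn + fp + tn)


def balanced_accuracy(tp, fn, fp, tn):
    """Mean of the recall on the positive class and on the negative class."""
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


# Illustrative labels: 10 coral points (6 recovered), 90 seabed points (81 recovered).
actual = ["coral"] * 10 + ["seabed"] * 90
predicted = ["coral"] * 6 + ["seabed"] * 4 + ["seabed"] * 81 + ["coral"] * 9
counts = confusion_counts(actual, predicted)
acc = accuracy(*counts)
ba = balanced_accuracy(*counts)
```

Because balanced accuracy weights both classes equally, it is less sensitive than plain accuracy to the dominance of seabed points in the test set.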

Figure 5. Confusion matrices representing the MGC classification results for each dense cloud reconstruction. Confusion matrices show the accuracy score and the relationship between the referenced data and the classification. The “Actual class” on the y-axis refers to the manually annotated data, whereas the “Predicted class” on the x-axis relates to the classification output. The main diagonal of the matrices lists the correctly classified percentage of points per class. The colour scale bar on the right of each confusion matrix represents the number of points.

Figure 6. Results of classification using the MGC method. Model F: (A) dense cloud and (B) classification output, for a model with a predominance of black corals (Leiopathes sp.). Model A: (A) dense cloud and (B) classification output, for a model with a predominance of coral rubble patterns. In both models, red is seabed and blue is coral.

Colour and Geometrical Classification (CGC)

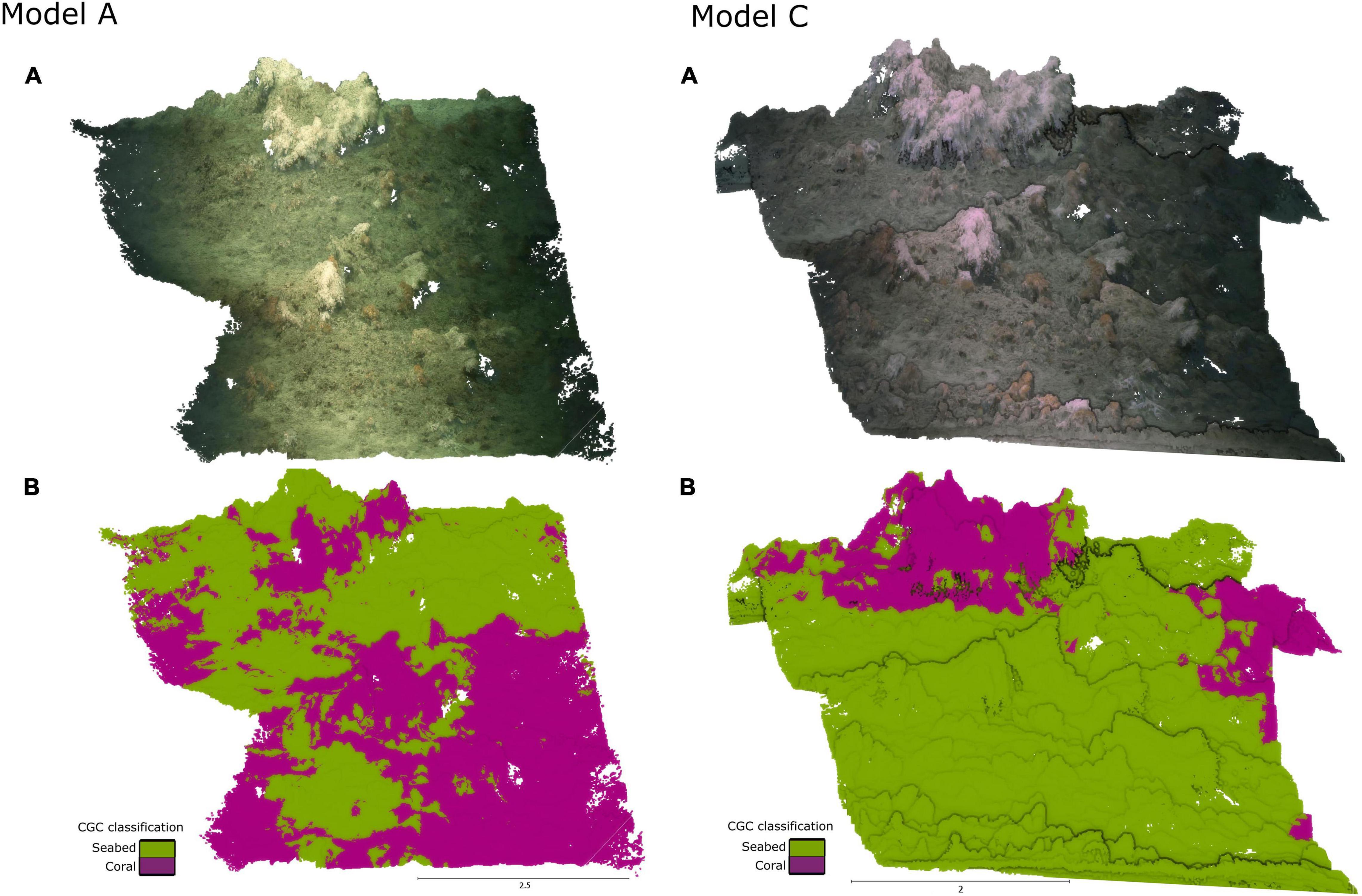

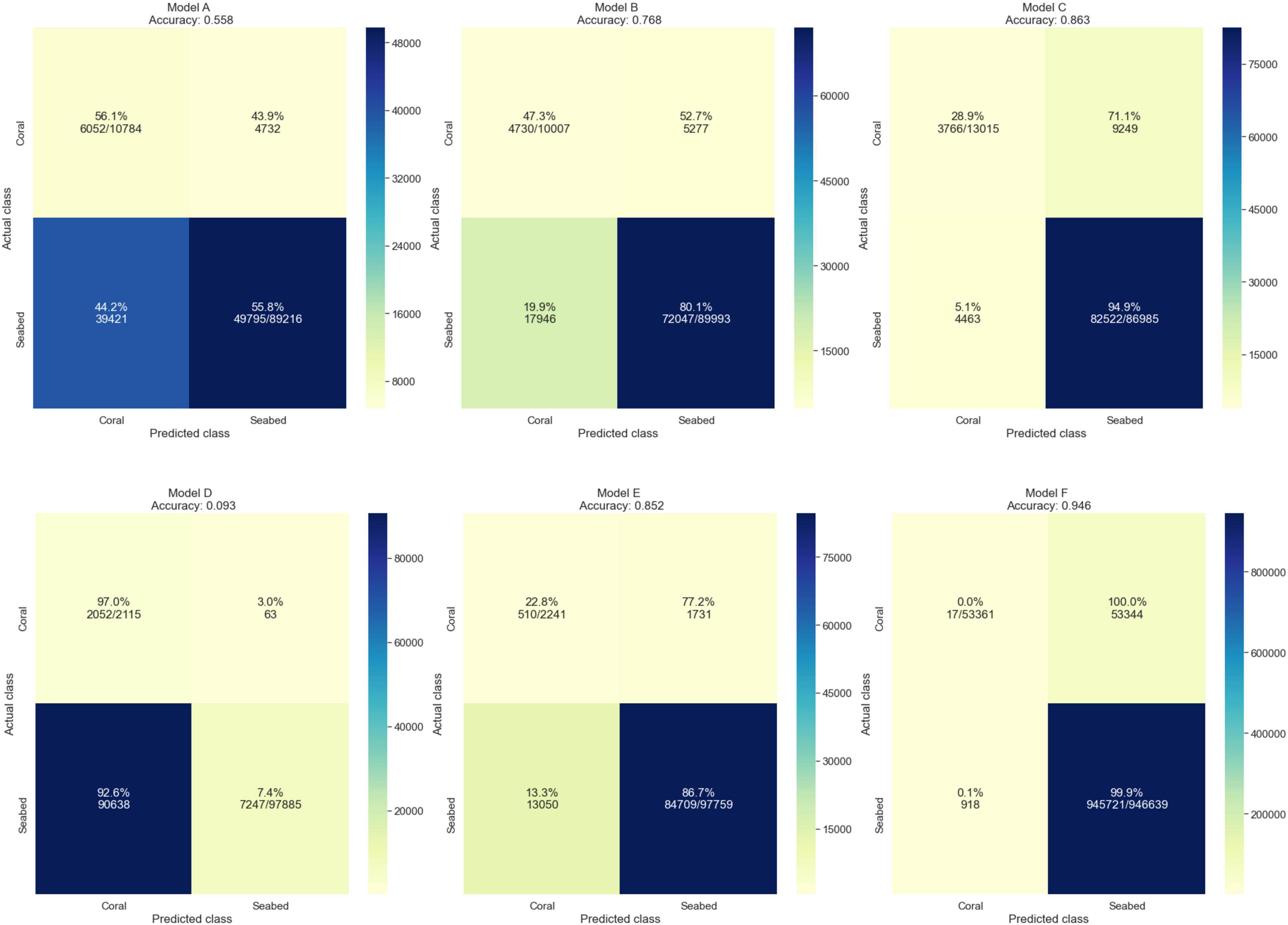

The average classification accuracy score using the colour and geometrical classification method was approximately 67.9% with an average balanced accuracy of 58.1%. The classification output resulting from this method is shown in Figure 7. From the six models analysed on our testing dataset, two models presented accuracy scores below 60%. The remainder presented accuracy scores above 70%, ranging from 75 to 95% (Figure 8).

Figure 7. Results of classification using CGC method—(A) Dense cloud and (B) classification output with CGC method.

Figure 8. Confusion matrices representing the CGC classification results for each dense cloud reconstruction. The “Actual class” on the y-axis refers to the manually annotated data, whereas the “Predicted class” on the x-axis relates to the classification output. The main diagonal of the matrices lists the correctly classified percentage of points per class. The colour scale bar on the right of each confusion matrix represents the number of points.

Object-Based Image Classification (OBIA)

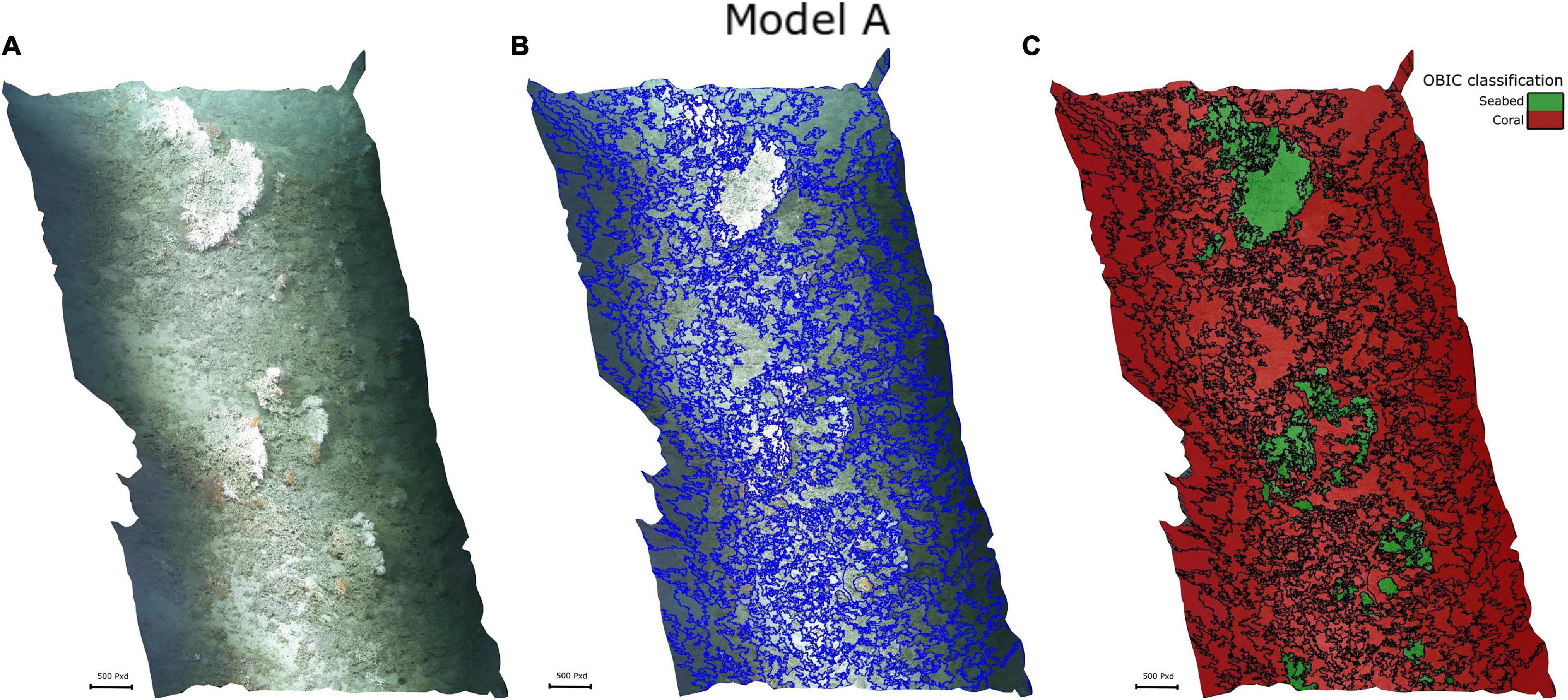

The OBIA method was performed on the orthomosaics, respective DEMs, and calculated slopes of the same dataset. The average classification accuracy was approximately 100%. This result is to be expected because all orthomosaics were manually classified (Figure 9) and an adequate manual classification, when repeated by an expert, is expected to have the same outcome.
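For readers unfamiliar with DEM-derived slope, the sketch below shows one way a slope raster can be calculated from a gridded DEM. It uses simple central differences, which is a swapped-in simplification: the source does not state which slope algorithm was used, and many GIS packages use other kernels (e.g., Horn's method). The function name, the grid, and the cell size are illustrative assumptions.

```python
import math


def slope_degrees(dem, cell_size):
    """Slope in degrees for the interior cells of a DEM given as a list of rows.

    Border cells are left as None because central differences need
    neighbours on both sides.
    """
    rows, cols = len(dem), len(dem[0])
    slopes = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2.0 * cell_size)
            dz_dy = (dem[r + 1][c] - dem[r - 1][c]) / (2.0 * cell_size)
            slopes[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return slopes


# Illustrative 4 x 4 DEM: a ramp rising 1 m per 1 m cell along the x direction.
ramp = [[float(c) for c in range(4)] for _ in range(4)]
ramp_slopes = slope_degrees(ramp, cell_size=1.0)
```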

Figure 9. Results of classification using OBIA method—(A) Orthomosaic, (B) output of the multi-resolution automated segmentation, and (C) manual classification.

Discussion

This study compares classification methods of 3D point clouds and 2D images. The workflow involved annotation of datasets, training of classifiers (MGC method), evaluation of the classification output, and the analysis of 3D and 2D-derived information from CWC environments. Studies have shown that overall accuracy is widely used for both OBIA and pixel-based classification accuracy assessments (Ye et al., 2018). Recent developments in accuracy assessment techniques have indicated redundancies in metrics such as standard Kappa indices (Foody, 1992; Pontius and Millones, 2011; Ye et al., 2018; Verma et al., 2020). A Kappa score (Cohen, 1960) compares the observed accuracy with the accuracy expected by chance; its use for assessing a classification map is therefore considered questionable (Foody, 2008; Pontius and Millones, 2011). Although there is substantial discussion on the appropriate metrics for image classification (Congalton, 1991; Banko, 1998; Foody, 2008; Ye et al., 2018), the confusion matrices presented here are a neutral representation of the true positives and true negatives of the classification.

The class distribution of the test data showed a greater abundance of corals in areas located on the eastern canyon flank. Reconstructions from sites located proximal to the canyon axis, towards the west, presented fewer coral features than sites located on the eastern canyon flank, as also described in previous studies (Appah et al., 2020; Lim et al., 2020). Appah et al. (2020) show that the mean percentage coverage of benthic taxa is twice as high on the flank as in adjacent areas, such as north towards the canyon head and the southern part of the canyon. Lim et al. (2020) provide a high-resolution habitat suitability correlation with current speeds, photogrammetry, and coral distribution in the PBC, showing that the variation in coral habitats does not follow a specific pattern, for example, from south to north. This result was also observed in reconstructions from areas located proximal to the canyon flank ridge (models D, E, and F), where the percentage of coral did not present any major increase or decrease following the north–south trend.

Classification Results

The ba and fdr ratios obtained for the MGC method indicate that classifiers were influenced by the diversity of point sources used during the training process. Classifiers that were trained with two different dense clouds showed higher ba and fdr than classifiers trained with a single dense cloud. This suggests that training with different datasets, including those with habitat variability (e.g., different ratios of coral, seabed, and seabed facies), has a positive impact on classifier performance, as also seen by Mountrakis et al. (2011), Brodu and Lague (2012), and Weidner et al. (2019) in terrestrial data studies. Interestingly, classifier accuracy results (ba and fdr ratios) showed that increasing the number of scales did not directly affect the quality of the classifier, as increasing the number of scales past a certain point did not necessarily lead to an increase in accuracy (see Figure 4). Thus, incorporating a large number of scales to build a classifier that addresses a variety of seabed features did not improve classifier performance, and it can increase the computational complexity required to train the classifier. The combination of scales that allows the maximum separability between two classes is constructed automatically and, as such, it can tend to overfit. SVM model overfitting can happen by maximising the margin and minimising the training error, which is typical not only of SVMs but also of kernel-based functions in general (Mountrakis et al., 2011).
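The role of scale can be illustrated with a minimal sketch of a multiscale dimensionality descriptor in the spirit of Brodu and Lague (2012). For each scale, the points within that distance of a core point are collected, and the eigenvalues of their covariance matrix are normalised to sum to 1: values near (1, 0, 0) indicate a line-like neighbourhood, (0.5, 0.5, 0) a plane-like one, and (1/3, 1/3, 1/3) a volume-like one. This is an illustration under stated assumptions, not the implementation used in this study: the function names are hypothetical, scale is treated as a search radius, and the neighbour search is brute force.

```python
import math


def covariance3(points):
    """3 x 3 covariance matrix of a list of (x, y, z) points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov


def sym_eigenvalues3(a):
    """Eigenvalues (descending) of a symmetric 3 x 3 matrix, trigonometric form."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    if p1 == 0.0:  # already diagonal
        return sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
    p = math.sqrt((sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1) / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
           - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
           + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    phi = math.acos(max(-1.0, min(1.0, det / 2.0))) / 3.0
    e1 = q + 2.0 * p * math.cos(phi)
    e3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    return [e1, 3.0 * q - e1 - e3, e3]


def multiscale_dimensionality(cloud, core_point, scales):
    """Normalised eigenvalue triplet per scale (None if too few neighbours)."""
    features = []
    for radius in scales:
        nbrs = [p for p in cloud if math.dist(p, core_point) <= radius]
        if len(nbrs) < 3:
            features.append(None)
            continue
        eig = [max(e, 0.0) for e in sym_eigenvalues3(covariance3(nbrs))]
        total = sum(eig)
        features.append(tuple(e / total for e in eig) if total > 0 else None)
    return features


# Illustrative clouds: a diagonal line and a flat patch, both through the origin.
line = [(t * 0.05, t * 0.05, 0.0) for t in range(-20, 21)]
patch = [(i * 0.1, j * 0.1, 0.0) for i in range(-5, 6) for j in range(-5, 6)]
line_features = multiscale_dimensionality(line, (0.0, 0.0, 0.0), [0.2, 0.5])
patch_features = multiscale_dimensionality(patch, (0.0, 0.0, 0.0), [0.35])
```

Each additional scale adds a further triplet to the feature vector of every core point, which is one way to see why a larger number of scales raises the training cost without guaranteeing better class separation.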

Dense clouds that shared a similar coral distribution pattern, such as individual coral colonies with high vertical elongation and low presence of coral rubble as shown in Model F (Figure 6), had high accuracy scores (>80%). In contrast, models with lower accuracy results (<60%) (Figure 6-Model A) originated from areas with less defined feature boundaries such as coral rubble.

The colour and geometrical classification algorithm applied in the CGC method did not show any definite patterns concerning the structural complexity of the environment. Even though the classification outputs showed that coral colonies and patches tended to be misclassified in non-flat areas, the classifier behaved differently when applied to dense-cloud reconstructions of similar environments (Figure 7). In previous studies, the algorithm performed well for the detection of buildings and roads, but it misclassified vegetation and ground, especially in datasets containing hills and non-flat surfaces (Becker et al., 2018), meaning that terrain variations could have influenced object detection. Performance results showed that, although accuracy scores were >60% for the majority of the models, the visual assessment of the predicted output was not in accordance with these high values, as the classification results did not clearly reflect the real objects. This suggests that the high accuracy scores may have been derived from the class imbalance in the test dataset, given that the seabed class is far more dominant than the coral class. Thus, the balanced accuracy provided a better representation of the overall performance. These results may also reflect the similarity of RGB spectral signatures as previously mentioned here and discussed in Lichti (2005), Beijbom et al. (2012), and Hopkinson et al. (2020). It is also possible that the performance of the CGC classifier would improve if there were an intermediary step that allowed training with seafloor and coral data within Agisoft Metashape. Nevertheless, the minimal user input required for this method, since pre-training is not necessary, makes it suitable for fast identification of seabed distribution.
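The effect of class imbalance can be made concrete with a short worked calculation. It is a hypothetical case, not a result of this study: consider a classifier that labels every one of the $N$ test points as seabed, applied to a test set with the average class distribution reported in the Results (7.19% coral, 92.81% seabed), and take coral as the positive class.

$$
\text{accuracy} = \frac{TP + TN}{N} = \frac{0 + 0.9281\,N}{N} = 92.81\%,
\qquad
\text{ba} = \frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right) = \frac{1}{2}\,(0 + 1) = 50\%
$$

Such a classifier detects no coral at all yet scores above 90% accuracy, whereas its balanced accuracy is no better than chance.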

In relation to the OBIA method, the automated segmentation performed better in small-scale orthomosaics (<4 m) where corals and rubble were easily distinguishable. In large-scale models (>8 m), the segmentation tended to under- or over-segment, resulting in poor differentiation between coral and seabed classes, specifically between coral patches and coral rubble (Figure 9). A benefit of object-based techniques applied to classification tasks is the automatic segmentation process, which in the workflow shown herein (Supplementary Figure 3) can be faster and more accurate than manual segmentation for annotation of datasets. However, although orthomosaics and DEMs provide height information that is useful for larger scale models, they can be limiting for high-resolution analyses. Conversely, 3D metrics derived from vector dispersion and triangulation in dense clouds provide more detailed information for characterising individual coral colonies and benthic species (Fukunaga and Burns, 2020). Thus, the use of 2D metrics for detailed habitat analysis can lead to a lack of discernment when detecting seabed features, e.g., coral branches, coral rubble, and sand ripples, as also noted by Hopkinson et al. (2020).

Comparison Within 3D Classifications

Accuracy results (Table 1) suggest that models behaved similarly when 3D methods are used (MGC and CGC). Models that obtained an accuracy score of >60% with the MGC method also obtained a comparable result for the CGC method. Occasionally, MGC tended to ignore coral, while CGC tended to overclassify such objects. Both CGC and MGC appeared to be susceptible to object occlusion and canopy effects created by objects. This occlusion was recognised by a classification pattern occurring not only on the object itself (e.g., coral) but also on the shade it created, producing an elongated pattern behind the object consistent with its shadow (Figures 6, 7).

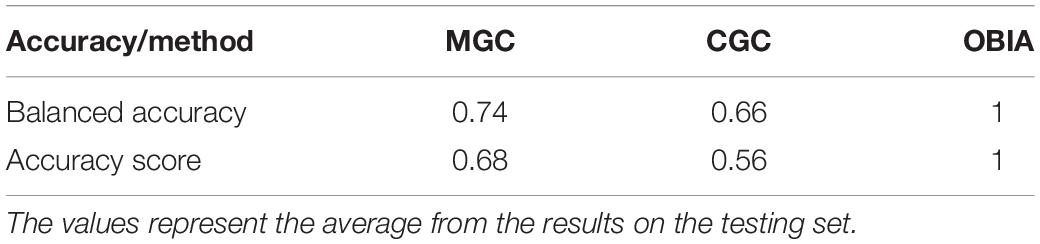

Table 1. Accuracy metrics for Method 1–Multiscale Geometrical Classification (MGC), Method 2–Colour and Geometrical Classification (CGC), and Method 3–Object-based Image Classification (OBIA).

In support of this observation, challenges related to the partial occlusion of objects and lighting artefacts have been addressed in other studies (Singh et al., 2004; Gracias and Negahdaripour, 2005). Lighting artefacts such as light scattering, colour shifts, and blurring related to the data acquisition can be considered a bottleneck that affects the overall model resolution and hence the classification output (Bryson et al., 2015, 2016, 2017). This difficulty can be addressed with image enhancement methods, e.g., texture delighting and colour filtering, that can diminish object occlusion artefacts (Bryson et al., 2015). In the overall outputs, the canopy effect pattern was more evident in the CGC method. Furthermore, dense cloud models with low RGB variability, and hence similar RGB values for coral and seabed, resulted in slightly different classification outputs, with the CGC method not being able to recognise seabed as compared to the MGC method. As previously mentioned, the classifier in the CGC method also seemed to take into consideration terrain surface variations. Conversely, the geometrical approach and the resistance to shadow effects of the MGC provided a degree of variability and heterogeneity in the class characteristics. As such, MGC appears more suitable for the classification of CWC because (a) it addressed coral colonies and coral patches more accurately, (b) it was able to identify seabed coverage in all 3D reconstructions, and (c) it can be reapplied to classify similar coral reef environments. As a preliminary study, the results shown herein provide important insights towards the advancement of 3D classification as an accessible and informative approach.

Cost and Data-Loss Related to Representing 3D Objects as 2D

With the exception of the CGC method, all methods required similar amounts of data processing, which was mainly allocated to segmentation, labelling, and parameter-tuning, e.g., manual segmentation and labelling in MGC and manual classification in the OBIA method. Even though the visual classification output did not fully delineate areas where coral rubble and gradational boundaries were present, the CGC method provided more accurate results when applied to models where objects have well-defined boundaries and sharp edges such as man-made objects (Becker et al., 2018). Conversely, the MGC method is more appropriate for complex scenes with high environmental variability (Brodu and Lague, 2012). One important aspect that should be considered in the MGC application is the amount of training data required to train the classifier. As with most classification methods, training data size and availability should be evaluated prior to choosing the methodology, as they directly affect the performance of the classifier (Lu and Weng, 2007; Maxwell et al., 2018; Zurowietz et al., 2018).

Similarly, the abundance of coral rubble and octocorals must be considered when choosing the classification method as they are subject to underwater colour and intensity distortions (Beijbom et al., 2012; Bryson et al., 2013). Such features present similar values within the intensity range and tend to exhibit wavelength attenuation when reconstructed (Bryson et al., 2013). Attention is drawn to coral rubble features as they present undefined boundaries, which hinder their detection. Previous studies have also highlighted the difficulties of automatically classifying coral rubble in images (Beijbom et al., 2012) and 3D models (Hopkinson et al., 2020). Coral rubble forms when coral framework is exposed for extended periods, leading to abrasion and bioerosion (Titschack et al., 2015). High proportions of coral rubble may be indicative of high current speeds in the area (Lim et al., 2020). In contrast, the segmentation utilised in the OBIA method successfully distinguished coral rubble from sediment. This observation agrees with previous studies that have shown that orthomosaics can be useful for high-resolution habitat mapping of large areas (Lim et al., 2017; Conti et al., 2019). Although coral rubble was not included as a class in our framework, this observation can be useful for future studies.

Representing 3D objects in a 2D space may lead to data bias due to misrepresentation of the object in the feature space and the use of metrics that disregard its multidimensionality. In this study, two manual annotation schemes were used to provide a baseline and ground truth for accuracy calculation. Annotations were performed by an expert on dense clouds (3D) and orthomosaics for each 3D reconstruction. Table 2 shows the percentage distribution of classes of each model under each manual annotation scheme: 3D dense cloud annotation and OBIA manual classification.

Table 2. Percentage of class distribution results from the manual annotation for each habitat and each class in 2D and 3D.

Class distribution results for each method (Table 2) show that the percentage of the coral class is higher in the 3D dense-cloud annotation than in the OBIA method in 50% of the models. When such variation happens, there is a difference of up to 4.45% in the percentage of coral, with a mean of 3.02%. Conversely, in models where the percentage of the coral class is higher in the OBIA method, the difference is only up to 3.39%, with a mean of 2.75%.

The average class distribution for the 3D dense cloud was 7.2% coral and 92.8% seabed as opposed to 7.1% coral and 92.9% seabed in the OBIA method. The average of the difference between class distributions was 0.2%. These results suggest that using 2D methods to represent objects that are naturally 3D structures can shift estimated class percentages by at least the order of a tenth of a percentage point. Although these values may not appear significant in the overall scheme, they have the potential to affect studies whose aims are derived from habitat mapping at a sub-centimetre resolution, and to have a more significant impact when applied over large areas.

Scleractinian corals are naturally vertically oriented features that, when mapped using 2D metrics, may make only a small contribution to the percentage coverage. The 3D branching framework of CWC can increase sediment baffling within reefs or around colonies by offering resistance to currents, for example (Mienis et al., 2019; Lim et al., 2020). However, the vertical structure of corals would be taken into account in overall biomass estimates if mapped in 3D. Furthermore, calculation of biomass considering all aspects of the environment is extremely relevant to understanding coral reef metabolism and overall environmental dynamics (McKinnon et al., 2011; Burns et al., 2015a, 2019; Price et al., 2019; Hopkinson et al., 2020). In comparison to 2D metrics, the use of multiscale dimensionality features that describe the local geometry of each point in relation to the entire scene makes 3D classification more suitable for the analysis of real complex scenarios at higher resolutions. Thus, advancing from commonly employed 2D image analysis techniques to 3D methods could provide more realistic representations of coral reefs and submarine environments (Fisher et al., 2007; Anelli et al., 2019).
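The underestimation of vertical structures by planar metrics can be illustrated with a small geometric sketch. It compares the true 3D area of mesh triangles with the area of their projection onto the horizontal (XY) plane, which is what a 2D orthomosaic effectively measures. The function names and coordinates are hypothetical and are not derived from the reconstructions in this study.

```python
import math


def triangle_area_3d(a, b, c):
    """Area of a triangle from three (x, y, z) vertices via the cross product."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def triangle_area_planar(a, b, c):
    """Area of the same triangle after projection onto the XY plane."""
    flat = [(p[0], p[1], 0.0) for p in (a, b, c)]
    return triangle_area_3d(*flat)


# Illustrative faces: one tilted at 45 degrees and one fully vertical.
tilted = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 1.0))
vertical = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0))
tilted_3d, tilted_2d = triangle_area_3d(*tilted), triangle_area_planar(*tilted)
vertical_3d, vertical_2d = triangle_area_3d(*vertical), triangle_area_planar(*vertical)
```

In this toy case the vertical face has a real surface area but no planar footprint at all, which mirrors how upright coral branches can contribute little to 2D percentage cover.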

Main Advantages and Disadvantages of the 3D Workflows Identified Within This Study

Three-dimensional reconstructions provide rich, non-destructive ecological and structural habitat information (Burns et al., 2015b; Figueira et al., 2015; Pizarro et al., 2017; Price et al., 2019), serving as a valuable tool for monitoring growth rates and assessing impacts of environmental disturbances (Bennecke et al., 2016; Marre et al., 2019). The use of SfM can also increase versatility and repeatability of reef surveys (Storlazzi et al., 2016; Bayley et al., 2019; Lim et al., 2020) as it can provide accurate quantifications for habitat coverage as well as coral orientation analyses (Lim et al., 2020). The 3D reconstructions produced in this study can complement recent studies (e.g., Appah et al., 2020; Lim et al., 2020) by providing an object of comparison for spatio-temporal changes in the PBC. The workflows applied herein yield the identification and quantification of CWC distribution at high resolutions.

In contrast, monitoring seabed habitats through 3D reconstruction requires centimetric to millimetric resolutions and corresponding accuracies (Marre et al., 2019). High-resolution 3D models require significant data resources (storage and processing power) (Bayley and Mogg, 2020; Hopkinson et al., 2020; Mohamed et al., 2020). Therefore, it is important to highlight the constraints associated with manipulating 3D data. In many cases, it is necessary to develop sub-sampling processes to analyse large batches of data without compromising data resolution. The computer resources and methods available to manipulate and analyse 3D data from marine environments at larger scales could be further improved (Bryson et al., 2017; Robert et al., 2017). The 3D-based workflows described herein demonstrate that most off-the-shelf algorithms need to be adapted for seabed classification and mapping.
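One common sub-sampling strategy is voxel-grid thinning, sketched below under stated assumptions: the cloud is divided into cubic cells and each occupied cell is replaced by the centroid of its points. This is an illustrative example rather than the procedure used in this study, and the function name and voxel size are hypothetical. The voxel size should be chosen below the scale of the smallest feature of interest, since detail finer than one voxel is lost.

```python
import math
from collections import defaultdict


def voxel_subsample(points, voxel_size):
    """Return one centroid per occupied voxel of edge length voxel_size."""
    buckets = defaultdict(list)
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in buckets.values()]


# Illustrative cloud: two points share a 10 cm voxel, the third lies in another.
cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.50, 0.50, 0.50)]
thinned = voxel_subsample(cloud, voxel_size=0.1)
```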

The use of SfM for seabed mapping requires consideration of a number of variables to determine the feasibility and accuracy of each study (Burns et al., 2015a; Bayley and Mogg, 2020). For example, environmental conditions such as visibility, swell variations, changes in camera altitude, and ROV speed can affect the survey design and hence the video quality (Mohamed et al., 2018; Anelli et al., 2019; Marre et al., 2019). Factors related to HD video acquisition and processing such as camera position, lens, light attenuation, calibration, image overlap, and software options can affect the results of 3D reconstructions (Marre et al., 2019; Rossi et al., 2020). Furthermore, high-resolution reconstruction of models can take up to 12 h of processing and a considerable amount of HD video footage (Robert et al., 2017). A regular laptop computer may struggle to process the resulting models, which can be over 10 GB in size (Robert et al., 2017).

Conclusion

Cold-water corals significantly contribute to deep-sea biodiversity due to their 3D structure and reef-building capacity. Submarine canyons act as conduits for sediments, nutrients, and organic matter supporting high biomass communities (Nittrouer and Wright, 1994; Puig and Palanques, 1998; Harris and Whiteway, 2011). There is an increasing demand for new methods able to efficiently capture fine-scale changes in these environments. SfM can contribute to more precise structural analysis of CWC habitats while also providing grounds for temporal and volumetric change detection in CWC reefs. This study describes three classification methods applied to CWC reefs within the PBC SAC in the North East Atlantic. The workflows described provide an original and not yet applied methodology for the classification of 3D reconstructed marine environments at the PBC. The dataset consisted of 3D reconstructed point clouds, respective orthomosaics, DEMs, and associated terrain variables of CWC environments. The classification workflows designed for 3D point clouds showed a similar accuracy, even though visual results had different outputs and had a different level of robustness. The balanced accuracy and accuracy scores averaged 67.2% for the 3D methods. The study defines methodologies that are compatible with off-the-shelf commercial software with high-resolution data. Furthermore, the execution of the methods was fast and appeared suitable for the wider deep-sea research community who have access to the SfM point cloud data. Executing more complex frameworks is possible at the expense of computation power and time resources. Future research should involve the application of unsupervised learning with use of geometrical features and application of other ML algorithms for supervised learning. The use of more robust classification methods and higher resolution 3D reconstructions will aid the inclusion of more classes, especially of objects with irregular contour boundaries.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

All authors contributed to the conceptualisation, writing, review, and editing of the manuscript, and read and agreed to the published version of the manuscript. LO and AL: methodology, investigation, data curation, and project administration. LO: formal analysis, writing—original draft preparation, and visualisation. AL and AW: resources. AL, LC, and AW: supervision. AL, AW, and LO: funding acquisition.

Funding

This research was funded by Science Foundation Ireland, grant number: 16/IA/4528 and Irish Research Council, grant number: GOIPG/2020/1659. The APC was funded by Science Foundation Ireland. AL was supported by the European Union’s Horizon 2020 Research and Innovation Program “iAtlantic” project, grant number: 818123. LC was supported by São Paulo Research Foundation (Fundação de Amparo à Pesquisa do Estado de São Paulo – FAPESP), grant number: 2017/19649-8.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Science Foundation Ireland, Geological Survey Ireland, and the Marine Institute, who funded this work through MMMonKey_Pro (grant number 16/IA/4528), and the Irish Research Council for funding the first author’s Ph.D. research project ASMaT (grant number: GOIPG/2020/1659). Special thanks go to the Marine Institute for funding the ship time on RV Celtic Explorer under the 2018 and 2019 Ship Time Program of the National Development scheme, and to the shipboard party of RV Celtic Explorer and ROV Holland 1 for their support with data acquisition.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmars.2021.640713/full#supplementary-material

References

Addamo, A. M., Vertino, A., Stolarski, J., García-Jiménez, R., Taviani, M., and Machordom, A. (2016). Merging scleractinian genera: the overwhelming genetic similarity between solitary Desmophyllum and colonial Lophelia. BMC Evol. Biol. 16:1–17. doi: 10.1186/s12862-016-0654-8

Agisoft (2019). Agisoft Metashape User Manual. 160. Available online at: https://www.agisoft.com/pdf/metashape-pro_1_5_en.pdf (accessed March 12, 2020).

Akbani, R., Kwek, S., and Japkowicz, N. (2004). “Applying support vector machines to imbalanced datasets,” in Proceedings of the 15th European Conference on Machine Learning, Berlin, 39–50.

Anelli, M., Julitta, T., Fallati, L., Galli, P., Rossini, M., and Colombo, R. (2019). Towards new applications of underwater photogrammetry for investigating coral reef morphology and habitat complexity in the Myeik Archipelago, Myanmar. Geocarto Int. 34, 459–472. doi: 10.1080/10106049.2017.1408703

Appah, J. K. M., Lim, A., Harris, K., O’Riordan, R., O’Reilly, L., and Wheeler, A. J. (2020). Are non-reef habitats as important to benthic diversity and composition as coral reef and rubble habitats in submarine canyons? Analysis of controls on benthic megafauna distribution in the porcupine bank Canyon, NE Atlantic. Front. Mar. Sci. 7:831. doi: 10.3389/fmars.2020.571820

Banko, G. (1998). A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data and of Methods Including Remote Sensing Data in Forest Inventory. International Institute for Applied Systems Analysis, Interim Report IT-98-081. Laxenburg: International Institute for Applied Systems Analysis.

Barbosa, R. V., Davies, A. J., and Sumida, P. Y. G. (2019). Habitat suitability and environmental niche comparison of cold-water coral species along the Brazilian continental margin. Deep Sea Res. Part I 155:103147. doi: 10.1016/j.dsr.2019.103147

Bayley, D. T. I., and Mogg, A. O. M. (2020). A protocol for the large-scale analysis of reefs using structure from motion photogrammetry. Methods Ecol. Evolut. 11, 1410–1420. doi: 10.1111/2041-210x.13476

Bayley, D. T. I., Mogg, A. O. M., Koldewey, H., and Purvis, A. (2019). Capturing complexity: field-testing the use of “structure from motion” derived virtual models to replicate standard measures of reef physical structure. PeerJ 2019:6540. doi: 10.7717/peerj.6540

Becker, C., Rosinskaya, E., Häni, N., d’Angelo, E., and Strecha, C. (2018). Classification of aerial photogrammetric 3D point clouds. Photogramm. Eng. Remote Sens. 84, 287–295. doi: 10.14358/PERS.84.5.287

Beijbom, O., Edmunds, P. J., Kline, D. I., Mitchell, B. G., and Kriegman, D. (2012). “Automated annotation of coral reef survey images,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, 1170–1177. doi: 10.1109/CVPR.2012.6247798

Bennecke, S., Kwasnitschka, T., Metaxas, A., and Dullo, W. C. (2016). In situ growth rates of deep-water octocorals determined from 3D photogrammetric reconstructions. Coral Reefs 35, 1227–1239. doi: 10.1007/s00338-016-1471-7

Benz, U. C., Hofmann, P., Willhauck, G., Lingenfelder, I., and Heynen, M. (2004). Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 58, 239–258. doi: 10.1016/j.isprsjprs.2003.10.002

Boolukos, C. M., Lim, A., O’Riordan, R. M., and Wheeler, A. J. (2019). Cold-water corals in decline – A temporal (4 year) species abundance and biodiversity appraisal of complete photomosaiced cold-water coral reef on the Irish Margin. Deep Sea Res. Part I Oceanogr. Res Pap. 146, 44–54. doi: 10.1016/j.dsr.2019.03.004

Brodersen, K. H., Ong, C. S., Stephan, K. E., and Buhmann, J. M. (2010). “The balanced accuracy and its posterior distribution,” in Proceedings of the International Conference on Pattern Recognition, Istanbul, 3121–3124. doi: 10.1109/ICPR.2010.764

Brodu, N., and Lague, D. (2012). 3D terrestrial LiDAR data classification of complex natural scenes using a multi-scale dimensionality criterion: applications in geomorphology. ISPRS J. Photogramm. Remote Sens. 68, 121–134. doi: 10.1016/j.isprsjprs.2012.01.006

Bryson, M., Ferrari, R., Figueira, W., Pizarro, O., Madin, J., Williams, S., et al. (2017). Characterization of measurement errors using structure-from-motion and photogrammetry to measure marine habitat structural complexity. Ecol. Evol. 7, 5669–5681. doi: 10.1002/ece3.3127

Bryson, M., Johnson-Roberson, M., Pizarro, O., and Williams, S. (2016). “Colour-Consistent structure-from-motion models using underwater imagery,” in Proceedings of the Robotics: Science and System, Sydney, NSW. doi: 10.15607/rss.2012.viii.005

Bryson, M., Johnson-Roberson, M., Pizarro, O., and Williams, S. B. (2013). Colour-consistent structure-from-motion models using underwater imagery. Robotics 8, 33–40. doi: 10.7551/mitpress/9816.003.0010

Bryson, M., Johnson-Roberson, M., Pizarro, O., and Williams, S. B. (2015). True color correction of autonomous underwater vehicle imagery. J. Field Robot. 33, 1–17. doi: 10.1002/rob.21638

Burns, J. H. R., Delparte, D., Gates, R. D., and Takabayashi, M. (2015a). Integrating structure-from-motion photogrammetry with geospatial software as a novel technique for quantifying 3D ecological characteristics of coral reefs. PeerJ 2015:1077. doi: 10.7717/peerj.1077

Burns, J. H. R., Delparte, D., Gates, R. D., and Takabayashi, M. (2015b). Utilizing underwater three-dimensional modeling to enhance ecological and biological studies of coral reefs. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 40, 61–66. doi: 10.5194/isprsarchives-XL-5-W5-61-2015

Burns, J. H. R., Fukunaga, A., Pascoe, K. H., Runyan, A., Craig, B. K., Talbot, J., et al. (2019). 3D habitat complexity of coral reefs in the northwestern hawaiian islands is driven by coral assemblage structure. ISPRS Annal. Photogramm. Remote Sens. Spatial Inf. Sci. 42, 61–67. doi: 10.5194/isprs-archives-XLII-2-W10-61-2019

Carlevaris-Bianco, N., Mohan, A., and Eustice, R. M. (2010). Initial results in underwater single image dehazing. MTS/IEEE Seattle OCEANS 2010, 1–8. doi: 10.1109/OCEANS.2010.5664428

Carrivick, J. L., Smith, M. W., and Quincey, D. J. (2016). Structure from Motion in the Geosciences. Hoboken, NJ: Wiley Blackwell.

Cocito, S., Sgorbini, S., Peirano, A., and Valle, M. (2003). 3-D reconstruction of biological objects using underwater video technique and image processing. J. Exp. Mar. Biol. Ecol. 297, 57–70. doi: 10.1016/S0022-0981(03)00369-1

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educ. Psychol. Measur. 20, 37–46. doi: 10.1177/001316446002000104

Congalton, R. G. (1991). A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 37, 35–46. doi: 10.1016/0034-4257(91)90048-B

Conti, L. A., Lim, A., and Wheeler, A. J. (2019). High resolution mapping of a cold water coral mound. Sci. Rep. 9:1016. doi: 10.1038/s41598-018-37725-x

Costello, M. J., McCrea, M., Freiwald, A., Lundälv, T., Jonsson, L., Bett, B. J., et al. (2005). “Role of cold-water Lophelia pertusa coral reefs as fish habitat in the NE Atlantic,” in Cold-Water Corals and Ecosystems, eds A. Freiwald and J. M. Roberts (Berlin: Springer), 771–805. doi: 10.1007/3-540-27673-4_41

Courtney, L. A., Fisher, W. S., Raimondo, S., Oliver, L. M., and Davis, W. P. (2007). Estimating 3-dimensional colony surface area of field corals. J. Exp. Mar. Biol. Ecol. 351, 234–242. doi: 10.1016/j.jembe.2007.06.021

De Clippele, L. H., Gafeira, J., Robert, K., Hennige, S., Lavaleye, M. S., Duineveld, G. C. A., et al. (2017). Using novel acoustic and visual mapping tools to predict the small-scale spatial distribution of live biogenic reef framework in cold-water coral habitats. Coral Reefs 36, 255–268. doi: 10.1007/s00338-016-1519-8

de Mol, B., Henriet, J.-P., and Canals, M. (2005). Development of coral banks in Porcupine Seabight: do they have Mediterranean ancestors? Cold Water Corals Ecosyst. 5, 515–533. doi: 10.1007/3-540-27673-4_26

Dorschel, B., Wheeler, A. J., Monteys, X., and Verbruggen, K. (2010). Atlas of the Deep-Water Seabed: Ireland. Dordrecht: Springer Netherlands. doi: 10.1007/978-90-481-9376-9

European Union Habitats Directive (2016). European Union Habitats (Porcupine Bank Canyon Special Area of Conservation 003001) Regulations 2016. Available online at: http://www.irishstatutebook.ie/eli/2016/si/106/made/en/pdf (accessed October 25, 2020).

Fanelli, E., Delbono, I., Ivaldi, R., Pratellesi, M., Cocito, S., and Peirano, A. (2017). Cold-water coral Madrepora oculata in the eastern Ligurian Sea (NW Mediterranean): historical and recent findings. Aquat. Conserv. Mar. Freshw. Ecosyst. 27, 965–975. doi: 10.1002/aqc.2751

Ferrari, R., Marzinelli, E. M., Ayroza, C. R., Jordan, A., Figueira, W. F., Byrne, M., et al. (2018). Large-scale assessment of benthic communities across multiple marine protected areas using an autonomous underwater vehicle. PLoS One 13:e0193711. doi: 10.1371/journal.pone.0193711

Figueira, W., Ferrari, R., Weatherby, E., Porter, A., Hawes, S., and Byrne, M. (2015). Accuracy and precision of habitat structural complexity metrics derived from underwater photogrammetry. Remote Sens. 7, 16883–16900. doi: 10.3390/rs71215859

Findlay, H. S., Artioli, Y., Moreno Navas, J., Hennige, S. J., Wicks, L. C., Huvenne, V. A. I., et al. (2013). Tidal downwelling and implications for the carbon biogeochemistry of cold-water corals in relation to future ocean acidification and warming. Glob. Change Biol. 19, 2708–2719. doi: 10.1111/gcb.12256

Fisher, W. S., Davis, W. P., Quarles, R. L., Patrick, J., Campbell, J. G., Harris, P. S., et al. (2007). Characterizing coral condition using estimates of three-dimensional colony surface area. Environ. Monitor. Assess. 125, 347–360. doi: 10.1007/s10661-006-9527-8

Foody, G. M. (1992). On the compensation for chance agreement in image classification accuracy assessment. Photogramm. Eng. Remote Sens. 58, 1459–1460.

Foody, G. M. (2008). Harshness in image classification accuracy assessment. Int. J. Remote Sens. 29, 3137–3158. doi: 10.1080/01431160701442120

Fosså, J. H., Lindberg, B., Christensen, O., Lundälv, T., Svellingen, I., Mortensen, P. B., et al. (2006). “Mapping of Lophelia reefs in Norway: experiences and survey methods,” in Cold-Water Corals and Ecosystems, eds A. Freiwald and J. M. Roberts (Heidelberg: Springer-Verlag), 359–391. doi: 10.1007/3-540-27673-4_18

Freiwald, A., Fosså, J. H., Grehan, A., Koslow, T., and Roberts, J. M. (2011). Cold-water Coral Reefs: Out of Sight – no Longer out of Mind. UNEP-WCMC Biodiversity Series. Cambridge: World Conservation Monitoring Centre. doi: 10.5962/bhl.title.45025

Freiwald, A., and Roberts, J. M. (2005). Cold-Water Corals and Ecosystems. Berlin: Springer. doi: 10.1007/3-540-27673-4

Fukunaga, A., and Burns, J. H. R. (2020). Metrics of coral reef structural complexity extracted from 3D mesh models and digital elevation models. Remote Sens. 12:2676. doi: 10.3390/RS12172676

Fukunaga, A., Burns, J. H. R., Craig, B. K., and Kosaki, R. K. (2019). Integrating three-dimensional benthic habitat characterization techniques into ecological monitoring of coral reefs. J. Mar. Sci. Eng. 7:27. doi: 10.3390/jmse7020027

Gass, S. E., and Roberts, J. M. (2006). The occurrence of the cold-water coral Lophelia pertusa (Scleractinia) on oil and gas platforms in the North Sea: colony growth, recruitment and environmental controls on distribution. Mar. Pollut. Bull. 52, 549–559. doi: 10.1016/j.marpolbul.2005.10.002

Goatley, C. H. R., and Bellwood, D. R. (2011). The roles of dimensionality, canopies and complexity in ecosystem monitoring. PLoS One 6:e0027307. doi: 10.1371/journal.pone.0027307

Gómez-Ríos, A., Tabik, S., Luengo, J., and Shihavuddin, A. S. M. (2018). Towards highly accurate coral texture images classification using deep convolutional neural networks and data augmentation. Exp. Syst. Appl. 118, 315–328. doi: 10.1016/j.eswa.2018.10.010

Gracias, N., and Negahdaripour, S. (2005). Underwater mosaic creation using video sequences from different altitudes. Proc. MTS/IEEE OCEANS 2005:e1639933. doi: 10.1109/OCEANS.2005.1639933

Graham, N. A. J., and Nash, K. L. (2013). The importance of structural complexity in coral reef ecosystems. Coral Reefs 32, 315–326. doi: 10.1007/s00338-012-0984-y

Guinan, J., Grehan, A. J., Dolan, M. F. J., and Brown, C. (2009). Quantifying relationships between video observations of cold-water coral cover and seafloor features in Rockall Trough, west of Ireland. Mar. Ecol. Prog. Ser. 375, 125–138. doi: 10.3354/meps07739

Hackel, T., Wegner, J. D., and Schindler, K. (2016). Fast semantic segmentation of 3D point clouds with strongly varying density. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 3, 177–184. doi: 10.5194/isprs-annals-III-3-177-2016

Harris, P. T., and Whiteway, T. (2011). Global distribution of large submarine canyons: geomorphic differences between active and passive continental margins. Mar. Geol. 285, 69–86. doi: 10.1016/j.margeo.2011.05.008

Hopkinson, B. M., King, A. C., Owen, D. P., Johnson-Roberson, M., Long, M. H., and Bhandarkar, S. M. (2020). Automated classification of three-dimensional reconstructions of coral reefs using convolutional neural networks. PLoS One 15:e0230671. doi: 10.1371/journal.pone.0230671

House, J. E., Brambilla, V., Bidaut, L. M., Christie, A. P., Pizarro, O., Madin, J. S., et al. (2018). Moving to 3D: relationships between coral planar area, surface area and volume. PeerJ 6:e4280. doi: 10.7717/peerj.4280

Hovland, M., and Thomsen, E. (1997). Cold-water corals – Are they hydrocarbon seep related? Mar. Geol. 137, 159–164. doi: 10.1016/S0025-3227(96)00086-2

Huvenne, V. A. I., Bett, B. J., Masson, D. G., le Bas, T. P., and Wheeler, A. J. (2016). Effectiveness of a deep-sea cold-water coral Marine Protected Area, following eight years of fisheries closure. Biol. Conserv. 200, 60–69. doi: 10.1016/j.biocon.2016.05.030

Huvenne, V. A. I., Tyler, P. A., Masson, D. G., Fisher, E. H., Hauton, C., Hühnerbach, V., et al. (2011). A picture on the wall: innovative mapping reveals cold-water coral refuge in submarine canyon. PLoS One 6:e0028755. doi: 10.1371/journal.pone.0028755

Jonsson, L. G., Nilsson, P. G., Floruta, F., and Lundälv, T. (2004). Distributional patterns of macro- and megafauna associated with a reef of the cold-water coral Lophelia pertusa on the Swedish west coast. Mar. Ecol. Prog. Ser. 284, 163–171. doi: 10.3354/meps284163

Kavzoglu, T., and Yildiz, M. (2014). Parameter-based performance analysis of object-based image analysis using aerial and Quikbird-2 images. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 7, 31–37. doi: 10.5194/isprsannals-ii-7-31-2014

King, A., Bhandarkar, S. M., and Hopkinson, B. M. (2018). “A comparison of deep learning methods for semantic segmentation of coral reef survey images,” in Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), (Salt Lake City, UT: IEEE), 1475–14758. doi: 10.1109/CVPRW.2018.00188

Klápště, P., Urban, R., and Moudrý, V. (2018). “Ground classification of UAV image-based point clouds through different algorithms: open source vs commercial software,” in Proceedings of the 6th International Conference on Small Unmanned Aerial Systems for Environmental Research, Split, 15–18.

Koop, L., Snellen, M., and Simons, D. G. (2021). An object-based image analysis approach using bathymetry and bathymetric derivatives to classify the seafloor. Geosciences 11:45. doi: 10.3390/geosciences11020045

Kwasnitschka, T., Hansteen, T. H., Devey, C. W., and Kutterolf, S. (2013). Doing fieldwork on the seafloor: photogrammetric techniques to yield 3D visual models from ROV video. Comput. Geosci. 52, 218–226. doi: 10.1016/j.cageo.2012.10.008

Lambers, K., Verschoof-van der Vaart, W. B., and Bourgeois, Q. P. J. (2019). Integrating remote sensing, machine learning, and citizen science in Dutch archaeological prospection. Remote Sens. 11, 1–20. doi: 10.3390/rs11070794

Leverette, T. L., and Metaxas, A. (2006). “Predicting habitat for two species of deep-water coral on the Canadian Atlantic continental shelf and slope,” in Cold-Water Corals and Ecosystems, eds A. Freiwald and J. M. Roberts (Berlin: Springer-Verlag), 467–479. doi: 10.1007/3-540-27673-4_23

Lichti, D. D. (2005). Spectral filtering and classification of terrestrial laser scanner point clouds. Photogramm. Rec. 20, 218–240. doi: 10.1111/j.1477-9730.2005.00321.x

Lim, A., Huvenne, V. A. I., Vertino, A., Spezzaferri, S., and Wheeler, A. J. (2018). New insights on coral mound development from groundtruthed high-resolution ROV-mounted multibeam imaging. Mar. Geol. 403, 225–237. doi: 10.1016/j.margeo.2018.06.006

Lim, A., O’Reilly, L., Summer, G., Harris, K., Oliveira, L., O’Hanlon, Z., et al. (2019a). Monitoring Changes in Submarine Canyon Coral Habitats - Leg 2 (MoCha_Scan I), survey (CE19014) of the Porcupine Bank Canyon, Cruise Report. Cork, Ireland. Marine Institute. doi: 10.5281/zenodo.3819565

Lim, A., O’Reilly, L., Summer, G., Harris, K., Shine, A., Harman, L., et al. (2019b). Monitoring Changes in Submarine Canyon Coral Habitats - Leg 1 (MoCha_Scan I), survey (CE19008) of the Porcupine Bank Canyon, Cruise Report. Cork, Ireland. Marine Institute. doi: 10.5281/zenodo.3699111

Lim, A., Wheeler, A. J., and Arnaubec, A. (2017). High-resolution facies zonation within a cold-water coral mound: the case of the Piddington Mound, Porcupine Seabight, NE Atlantic. Mar. Geol. 390, 120–130. doi: 10.1016/j.margeo.2017.06.009

Lim, A., Wheeler, A. J., Price, D. M., O’Reilly, L., Harris, K., and Conti, L. (2020). Influence of benthic currents on cold-water coral habitats: a combined benthic monitoring and 3D photogrammetric investigation. Sci. Rep. 10:19433. doi: 10.1038/s41598-020-76446-y

Lowe, D. G. (1999). “Object recognition from local scale-invariant features,” in The Proceedings of the Seventh IEEE International Conference on Computer Vision, 1150–1157. doi: 10.1109/ICCV.1999.790410

Lu, D., and Weng, Q. (2007). A survey of image classification methods and techniques for improving classification performance. Int. J. Rem. Sens. 28, 823–870. doi: 10.1080/01431160600746456

Marburg, A., and Bigham, K. (2016). “Deep learning for benthic fauna identification,” in Proceedings of the OCEANS 2016 MTS/IEEE Monterey, OCE 2016, Monterey, CA. doi: 10.1109/OCEANS.2016.7761146

Marre, G., Holon, F., Luque, S., Boissery, P., and Deter, J. (2019). Monitoring marine habitats with photogrammetry: a cost-effective, accurate, precise and high-resolution reconstruction method. Front. Mar. Sci. 6:276. doi: 10.3389/fmars.2019.00276

Masson, D. G., Bett, B. J., Billett, D. S. M., Jacobs, C. L., Wheeler, A. J., and Wynn, R. B. (2003). The origin of deep-water, coral-topped mounds in the northern Rockall Trough, Northeast Atlantic. Mar. Geol. 194, 159–180. doi: 10.1016/S0025-3227(02)00704-1

Maxwell, A. E., Warner, T. A., and Fang, F. (2018). Implementation of machine-learning classification in remote sensing: an applied review. Int. J. Remote Sens. 39, 2784–2817. doi: 10.1080/01431161.2018.1433343

Mazzini, A., Akhmetzhanov, A., Monteys, X., and Ivanov, M. (2012). The Porcupine Bank Canyon coral mounds: oceanographic and topographic steering of deep-water carbonate mound development and associated phosphatic deposition. Geo Mar. Lett. 32, 205–225. doi: 10.1007/s00367-011-0257-8

McKinnon, D., He, H., Upcroft, B., and Smith, R. N. (2011). Towards Automated and In-Situ, Near-Real Time 3-D Reconstruction of Coral Reef Environments. Waikoloa, HI, USA.

Menna, F., Agrafiotis, P., and Georgopoulos, A. (2018). State of the art and applications in archaeological underwater 3D recording and mapping. J. Cult. Herit. 33, 231–248. doi: 10.1016/j.culher.2018.02.017

Mienis, F., Bouma, T., Witbaard, R., van Oevelen, D., and Duineveld, G. (2019). Experimental assessment of the effects of coldwater coral patches on water flow. Mar. Ecol. Prog. Ser. 609, 101–117. doi: 10.3354/meps12815

Mienis, F., van Weering, T., de Haas, H., de Stigter, H., Huvenne, V., and Wheeler, A. (2006). Carbonate mound development at the SW Rockall Trough margin based on high resolution TOBI and seismic recording. Mar. Geol. 233, 1–19. doi: 10.1016/j.margeo.2006.08.003

Mohamed, H., Nadaoka, K., and Nakamura, T. (2018). Assessment of machine learning algorithms for automatic benthic cover monitoring and mapping using towed underwater video camera and high-resolution satellite images. Remote Sens. 10:773. doi: 10.3390/rs10050773

Mohamed, H., Nadaoka, K., and Nakamura, T. (2020). Towards benthic habitat 3D mapping using machine learning algorithms and structures from motion photogrammetry. Remote Sens. 12:127. doi: 10.3390/rs12010127