- 1 Industrial Engineering, University of Windsor, Windsor, ON, Canada

- 2 Mechanical, Automotive and Materials Engineering, University of Windsor, Windsor, ON, Canada

Leveraging Computer-Aided Design (CAD) and Manufacturing (CAM) tools with advanced Industry 4.0 (I4.0) technologies presents numerous opportunities for industries to optimize processes, improve efficiency, and reduce costs. While certain sectors have achieved success in this effort, others, including agriculture, are still in the early stages of implementation. The focus of this research paper is to explore the potential of I4.0 technologies and CAD/CAM tools in the development of pick and place solutions for harvesting produce. Key technologies driving this include Internet of Things (IoT), machine learning (ML), deep learning (DL), robotics, additive manufacturing (AM), and simulation. Robots are often utilized as the main mechanism for harvesting operations. AM rapid prototyping strategies assist with designing specialty end-effectors and grippers. ML and DL algorithms allow for real-time object and obstacle detection. A comprehensive review of the literature is presented with a summary of the recent state-of-the-art I4.0 solutions in agricultural harvesting and current challenges/barriers to I4.0 adoption and integration with CAD/CAM tools and processes. A framework has also been developed to facilitate future CAD/CAM research and development for agricultural harvesting in the era of I4.0.

1 Introduction

Industry 4.0 technologies are revolutionizing the agricultural sector into a new era of data-driven practices that are transforming the way crops are produced, harvested, and distributed. This transformation is paramount given that by 2050 the world’s population is estimated to reach 10 billion, necessitating a 70% increase in food production (De Clercq et al., 2018). Internet of Things (IoT), artificial intelligence (AI), advanced robotics, simulation, machine learning (ML), deep learning (DL), and additive manufacturing (AM) are examples of the transformative technologies driving this shift. Their implementation across the sector’s value chain has the potential to enhance competitiveness by increasing yields, improving input efficiency, and reducing financial and time expenses from human mistakes (Javaid et al., 2022).

A representative value chain for agriculture consisting of pre-production, production, processing, distribution, retail, and final consumption stages is shown in Figure 1. The pre-production process involves suppliers of seeds, fertilizers, pesticides, and other inputs or services (Paunov and Satorra, 2019). Activities within the production process include sowing, irrigation, pest management, fertilization, and harvesting. Following this, the processing stage converts the crops into consumer-ready states by means of cleaning, sorting, grading, and/or slicing. The final product is then packaged or canned, stored, and distributed to retail stores, grocery markets, and supermarkets. Lastly, it is purchased by the consumer for end use. The primary subject of this review is the application of I4.0 technologies within the production stage, with a particular focus on harvesting.

Harvesting is a labour-intensive task, and the agricultural industry is currently experiencing a severe labour crisis. A 2019 report from the Government of Canada estimated that 13% of fruits and vegetables grown are either left unharvested or discarded post-harvest because they were picked past their prime (Canada, 2019; VCMI, 2019). Other countries are facing similar labour struggles, such as Japan, New Zealand, the Netherlands, Ireland, Spain, and the United States (Ryan, 2023). Recent research efforts have aimed at addressing these issues by exploring automation strategies and new methods for smart farming using I4.0 technologies. The development of IoT-based monitoring systems, robotic harvesting solutions, and advanced object-recognition algorithms is promoting new ways to avoid produce loss, increase crop yields, and optimize resources (Liu et al., 2021).

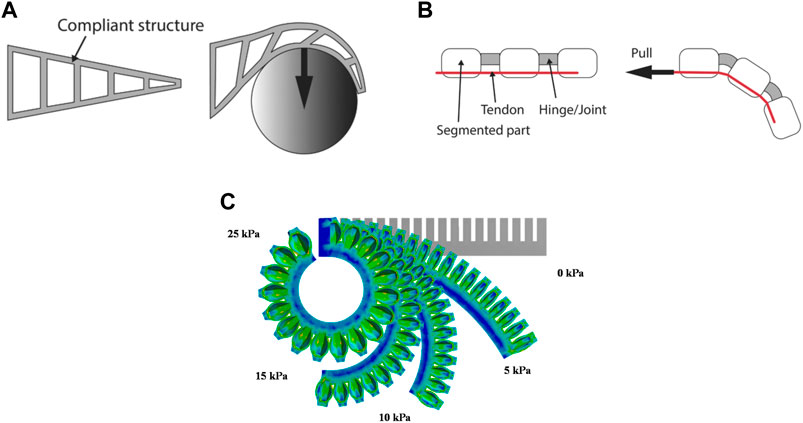

Computer-aided design (CAD) and manufacturing (CAM) play a vital role in the success and optimization of this emerging agricultural era. The complexity of the harvesting environment poses unique challenges for automation which prevents the direct translation of solutions from other domains. This stems from the variability of the produce (size, shape, colour), crop objects (leaves, stems), and the crop environment (Bac et al., 2014). Leveraging advanced CAD and CAM tools presents opportunities to reverse engineer agricultural environments to effectively work with this variability. For instance, CAD tools support the design of specialty compliant end-of-arm tooling (EOAT) to grasp and manipulate different crop types. Additive manufacturing (AM) enables rapid prototyping for testing and validation of EOAT and promotes complexity within their designs. Compliant mechanisms mitigate uncertainty by conforming to objects of various geometries via compliant materials and structures (Rus and Tolley, 2015). Elastomers are an extensively used compliant material due to their ability to sustain large strains without permanent deformation (Shintake et al., 2018). Common actuation methods for compliant mechanisms (Figure 2) include contact-driven, cable-driven, and fluid-driven. These solutions can be readily realized using AM processes to build the EOAT directly, or by exploiting rapid tooling strategies to fabricate molds, and using over-molding strategies to embed elements (Odhner et al., 2014).

FIGURE 2. Actuation methods of compliant mechanisms (A) contact-driven from © 2018 Shintake et al. (2018). Published by WILEY-VCH Verlag GmbH & Co. KGaA, Weinheim. Licensed under CC BY-NC-ND 4.0. doi: 10.1002/adma.201707035 (B) cable-driven from © 2018 Shintake et al. (2018). Published by WILEY-VCH Verlag GmbH & Co. KGaA, Weinheim. Licensed under CC BY-NC-ND 4.0. doi: 10.1002/adma.201707035 (C) fluid-driven from © 2018 by Hu et al. (2018). Licensee MDPI, Basel, Switzerland. Licensed under CC BY. doi: 10.3390/robotics7020024.

Further, CAD facilitates the design of robotic rigid-body structures and other auxiliary components to ensure safe navigation in the specified crop environment. Robust simulation models capable of capturing the environmental variability can assist with testing, validation, and optimization of solutions prior to commercialization. Moreover, integrating IoT networks and robotic harvesters presents opportunities for improved harvest decisions, trajectory planning, and ideal time to harvest, all of which represent methods leveraged by CAM systems.

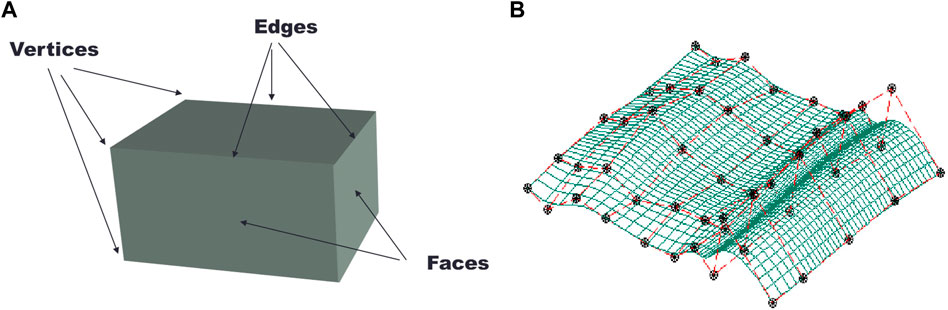

There are several CAD modeling approaches that can be explored to effectively represent the objects within a complex crop environment. The classic contemporary approach is to use boundary representation (B-rep) models (Figure 3A) with a constructive solid geometry history tree. Euler operators, rules for identifying loops, and parametric curves and surfaces (spline, Bézier, non-uniform rational B-splines (NURBS)) are standard tools for providing sophisticated design solutions (Figure 3B).

FIGURE 3. (A) B-rep model, which is constructed from vertices, edges, and faces, and (B) NURBS surface with UV flow lines and selected control points.
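Euler operators are useful because they edit a B-rep while keeping its topology valid. As a minimal sketch of what "valid" means here, the snippet below checks the Euler-Poincaré relation V − E + F − R = 2(S − G), where R counts inner loops (rings) in faces, S shells, and G the genus. The class and function names are hypothetical and only meant to illustrate the bookkeeping; a real CAD kernel tracks far more than entity counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BRepCounts:
    """Topological entity counts of a B-rep solid (illustrative names)."""
    vertices: int
    edges: int
    faces: int
    inner_loops: int = 0   # rings: loops that bound a hole inside a face
    shells: int = 1        # connected boundary surfaces
    genus: int = 0         # number of through-holes (handles)


def satisfies_euler_poincare(c: BRepCounts) -> bool:
    """Return True when V - E + F - R == 2 * (S - G)."""
    lhs = c.vertices - c.edges + c.faces - c.inner_loops
    return lhs == 2 * (c.shells - c.genus)


# A plain cube: 8 vertices, 12 edges, 6 faces.
cube = BRepCounts(vertices=8, edges=12, faces=6)

# A block with a square through-hole: 16 vertices, 24 edges, 10 faces,
# one inner loop on each of the two pierced faces, and genus 1.
block_with_hole = BRepCounts(16, 24, 10, inner_loops=2, genus=1)

# A cube that has lost an edge no longer describes a closed solid.
broken = BRepCounts(vertices=8, edges=11, faces=6)

for name, counts in [("cube", cube), ("block_with_hole", block_with_hole), ("broken", broken)]:
    print(name, satisfies_euler_poincare(counts))
```

A check of this kind is necessary but not sufficient: a model can satisfy the count relation and still be geometrically invalid, for example through self-intersection.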

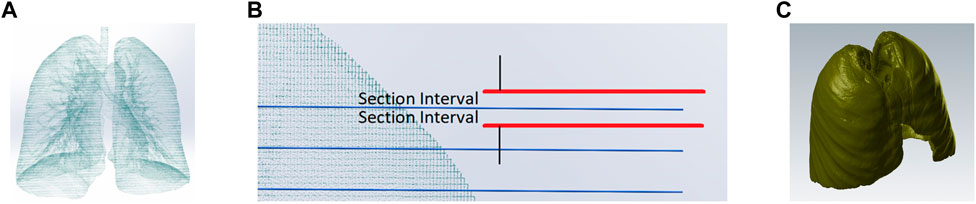

The present tools allow for effective design of mechanical components and complex surfaces or solid models but for complex ‘random’ shapes, decomposition models are applied. Volumetric representation techniques offer realistic 3D depictions of objects, incorporating depth and other geometric features that are difficult to capture in 2D-based representations. Common approaches include point clouds (Figure 4), voxel grids, octrees, and signed distance fields (SDFs). 3D point clouds, when sampled uniformly, offer the ability to preserve original geometric information without any discretization (Guo et al., 2021).

FIGURE 4. (A) Point cloud data for a lung, (B) slicing interval used to select points to extract a spline curve, and (C) an editable CAD model. (Kokab and Urbanic, 2019).

3D point cloud crop models have been generated directly from Light Detection and Ranging (LiDAR) systems or obtained from Unmanned Aerial Vehicles (UAVs), multispectral, and thermal imagery using photogrammetry software or other computer vision algorithms (Khanna et al., 2015; Comba et al., 2019). Point cloud to mesh to surface modeling is a standard approach for reverse engineering (Várady et al., 1997). Point cloud to solid model activities have occurred for reverse engineering (Urbanic and Elmaraghy, 2008) and developing bio-medical models (Kokab and Urbanic, 2019).

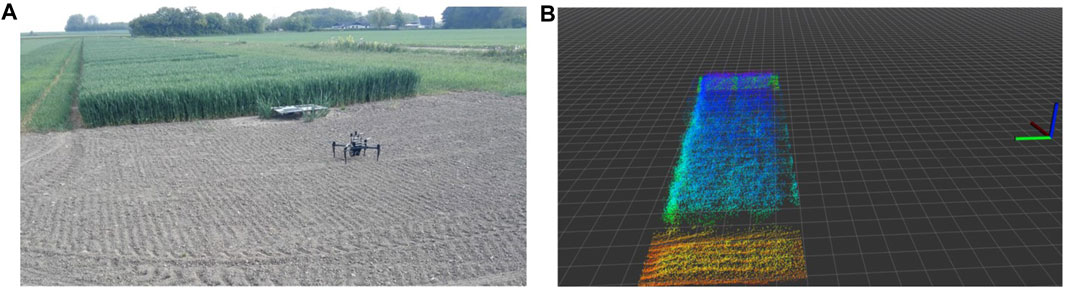

Irregular 3D point cloud data is often transformed into regular data formats, such as 3D voxel grids, for downstream analysis in deep learning architectures (Charles et al., 2017) and other analytical techniques. Voxelization of point clouds discretizes the data, forming grids in 3D space where each voxel defines individual values. Christiansen et al. (2017) developed a model of this type for a winter wheat field to assist with crop height estimations (Figure 5). However, large voxel grids can require significant computational and memory resources. Octrees offer improved memory efficiency through their hierarchical structure. With this method, each voxel can be recursively subdivided into eight child voxels, and only voxels that are occupied are initialized, whereas uninitialized voxels may represent empty or unknown space (Hornung et al., 2013).

FIGURE 5. Winter wheat field (A) experimental representation and (B) voxel-grid representation. From © 2017 Christiansen et al. (2017). Licensee MDPI, Basel, Switzerland. Licensed under CC BY. doi: 10.3390/s17122703.
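The voxelization step described above can be sketched in a few lines. The example below is a simplified illustration using only the Python standard library, not the pipeline used by Christiansen et al. (2017): it bins points into a sparse voxel grid and then reads a crude per-column height from the highest occupied voxel. The function names, the sample coordinates, and the 0.1 m voxel size are all assumptions made for the example.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Counter:
    """Count the points falling in each voxel; only occupied voxels are stored."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    grid: Counter = Counter()
    for x, y, z in points:
        index: VoxelIndex = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        grid[index] += 1
    return grid


def column_heights(grid: Counter, voxel_size: float) -> Dict[Tuple[int, int], float]:
    """Height of the top face of the highest occupied voxel in each (i, j) column."""
    heights: Dict[Tuple[int, int], float] = {}
    for i, j, k in grid:
        top = (k + 1) * voxel_size
        heights[(i, j)] = max(heights.get((i, j), top), top)
    return heights


# Made-up points in metres; z is height above ground.
cloud = [
    (0.02, 0.03, 0.15),
    (0.04, 0.01, 0.12),
    (0.03, 0.02, 0.31),
    (0.26, 0.05, 0.07),
]
grid = voxelize(cloud, voxel_size=0.1)
heights = column_heights(grid, voxel_size=0.1)
print(dict(grid))
print(heights)
```

Storing the grid as a dictionary keyed by voxel index keeps memory proportional to the number of occupied voxels, which is the same motivation that leads to octrees for larger scenes.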

SDFs are also a more efficient form of voxel-based representation that specifies the distance from any point in 3D space to the boundary of an object (Frisken and Perry, 2006). A sign is designated to each point relative to the boundary, where a negative sign is attributed to points within the boundary and a positive sign to points outside of the boundary (Jones et al., 2006). Two common specialized forms include Euclidean Signed Distance Fields (ESDFs) and Truncated Signed Distance Fields (TSDFs). ESDFs (Figure 6) contain the Euclidean distance to the nearest occupied voxel for every voxel, whereas TSDFs incorporate a short truncation radius surrounding the boundary, allowing for more efficient construction and noise filtering (Oleynikova et al., 2017). These model types have been applied in agriculture. For example, an octree-based map in the form of TSDF for a sweet pepper environment was developed by Marangoz et al. (2022) to estimate produce shapes.

FIGURE 6. Explicit boundary representation (left) and implicit boundary representation via Euclidean SDF (right). Reproduced with permission. Peelar et al. (2019). Copyright 2019, CAD Solutions.
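To make the sign convention and the truncation idea concrete, the following sketch evaluates the signed distance to a sphere (a stand-in for a roughly spherical fruit) and clamps it to a truncation band. This is an illustrative example under stated assumptions, not the formulation of any cited work: the 4 cm radius, the 2 cm truncation distance, and the function names are hypothetical, and practical TSDF implementations typically also store per-voxel weights.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]


def sphere_sdf(p: Point, center: Point, radius: float) -> float:
    """Signed Euclidean distance to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius


def truncate(distance: float, truncation: float) -> float:
    """Clamp a signed distance to [-truncation, +truncation], as a TSDF would store it."""
    return max(-truncation, min(truncation, distance))


center, radius = (0.0, 0.0, 0.0), 0.04                  # hypothetical 4 cm fruit
inside = sphere_sdf((0.01, 0.0, 0.0), center, radius)   # negative: point is inside
surface = sphere_sdf((0.04, 0.0, 0.0), center, radius)  # approximately zero
outside = sphere_sdf((0.10, 0.0, 0.0), center, radius)  # positive: point is outside

# Far from the surface, the TSDF only records that the point is "at least 2 cm away".
tsdf_outside = truncate(outside, truncation=0.02)
tsdf_inside = truncate(inside, truncation=0.02)
print(inside, surface, outside, tsdf_outside, tsdf_inside)
```

Because values beyond the truncation band carry no extra information, only voxels near the surface need to be updated when new sensor data arrives, which is one reason TSDFs are cheaper to build than full ESDFs.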

Volumetric representations of crops and their environment can become large and complex. Simplified representations can be achieved by skeletonizing volumetric data. Voronoi skeleton models (VSKs) based on the Voronoi diagrams (VDs) from boundary line segments of a shape preserve both its geometrical and topological information (Langer et al., 2019). Ogniewicz and Ilg (1992) applied this approach with thresholding to assign residual values to each VD boundary that indicated its importance to the overall skeleton of 2D shapes. This is useful for complex objects that exhibit a large number of skeleton branches. The concept of VSKs has also been exercised for 3D shapes (Näf et al., 1997; Hisada et al., 2001). Modelling agricultural environments and crop objects using VSKs has not been extensively researched. Considering clustered crops with relatively simple geometries, such as mushrooms, blueberries, or grapes, VSK representation models have the potential to infer boundaries between the individual clustered objects. Solutions that incorporate this representation type should be developed in the agricultural domain to validate its applicability.

This review presents the current state of the art for the major I4.0 technologies employed in agricultural harvesting applications from studies published from 2019 to the present day. Technologies include Internet of Things, artificial intelligence, machine learning, deep learning, and advanced robotics. Applications of CAD/CAM tools and their integration with I4.0-based solutions will also be discussed.

2 Internet of Things

Internet of Things (IoT) is a network of physical devices and technologies that facilitate data collection, communication, and remote sensing and control (McKinsey and Company, 2022). As described by Elijah et al. (2018), its architecture can be classified into four general components: (1) IoT devices; (2) communication technology; (3) Internet; and (4) data storage and processing. The IoT devices are responsible for collecting data in real time and include sensors, unmanned aerial vehicles (UAVs), ground robotics, and other appliances. Communication technologies enable data exchange between the IoT devices and the data storage and analytics components. These use either wired or wireless mediums. In agriculture, the most commonly used wireless communication technologies include cellular, 6LoWPAN (IPv6 over Low power Wireless Personal Area Network), ZigBee, Bluetooth, RFID (Radio Frequency Identification), Wi-Fi, and LoRaWAN (Shafi et al., 2019). An experimental comparison study indicated that LoRaWAN systems would be best suited to agricultural applications since they demonstrated greater longevity than ZigBee and Wi-Fi systems (Sadowski and Spachos, 2020). The internet serves as the connective foundation of IoT systems and facilitates remote access to the data. Extensive data collection requires the use of storage methodologies, such as clouds, and advanced algorithms for processing (Elijah et al., 2018). Forms of big data analytics are often employed.

The four components can be further broken down into layers to promote communication, management, and analytical capabilities. For example, Shi et al. (2019) presented a 5-layer IoT structure in agriculture containing a perception (physical devices), network (wired or wireless communication mediums), middleware (data aggregation), common platform (data storage and analytics), and application layer (management platforms and systems).

Agricultural crop growth and ideal harvest times are significantly influenced by environmental parameters, such as temperature, humidity, CO2 levels, sunlight, and water levels (Sishodia et al., 2020; Rodríguez et al., 2021). IoT-based systems enable real-time monitoring of these parameters to assist farm management and operation. Several studies have focused on the development of complete IoT-based systems for protected and open-field crop environments. Many indicated more efficient use of inputs (Zamora-Izquierdo et al., 2019; Rodríguez-Robles et al., 2020), the potential to remotely control environmental conditions (Thilakarathne et al., 2023) and the ability to enhance farm management practices (Mekala and Viswanathan, 2019; Rodríguez et al., 2021) based on the system’s data-driven reports. Limitations concerning power supply, stable connections, and extensibility were highlighted by Thilakarathne et al. (2023). Currently, all these systems are in a prototype phase. However, other sources have presented IoT-based systems that have achieved a commercial product stage. For instance, Croptracker (Croptracker, 2023), CropX (CropX inc, 2022) and Semios (Semios, 2023), are commercially available solutions.

Monitoring individual crop characteristics throughout growth can support the subsequent planning of actions performed by autonomous robotic solutions (Kierdorf et al., 2023), including establishing harvest priorities. Real-time machine learning classification and growth prediction models are methods that provide robotic solutions with knowledge regarding the current and future state of a crop. Several studies have utilized IoT devices to create time-series datasets that can be used as input to growth prediction models. For example, Kierdorf et al. (2023) created a time series UAV-based image dataset of cauliflower growth characteristics including developmental stage and size. Weyler et al. (2021) collected images of beet plants throughout a cultivation period via ground robot and monitored phenotypic traits for growth stage classification.

Both time-series datasets were recorded in open-field environments for crops where a top-down view is sufficient for capturing the necessary phenotypic data. However, this collection method may be impractical for crops grown in protected environments given the space constraints and direction of growth. Thus, different strategies will need to be explored to ensure valid data is collected in these situations. CAD/CAM tools have the potential to assist with exploring new strategies for many harvesting applications. Modelling IoT devices and the crop structure in CAD will allow for testing various collection strategies within a simulated environment prior to fabrication. A study by Iqbal et al. (2020) demonstrated this approach by creating 3D CAD models of cotton crops and of their LiDAR-based robot designed to collect phenotypic data. They created a Gazebo simulation environment of the cotton field to test the identification capabilities of multiple LiDAR configurations from 3D point cloud data, which was converted into voxel grids, for navigation in the crop rows. Another study used Gazebo to model a sweet pepper environment with a UR5e robot arm to evaluate the accuracy of their fruit shape estimation approach based on superellipsoids (Marangoz et al., 2022). Their simulation was integrated with an octree-based truncated signed distance field to map the images collected by the robot’s RGB camera.

3 Artificial intelligence

IoT-based systems involve data collection at high velocity, volume, value, variety, and veracity, which are the 5 V’s that define Big Data. To effectively process and analyze this data in agriculture, a variety of tools and techniques have been explored. Artificial intelligence-based tools, such as Machine Learning (ML) and Deep Learning (DL) are among the most used. Other methods include cloud computing and edge computing. ML and DL play a vital role in object detection and localization and crop yield mapping. Integrating IoT networks with these algorithms allows for data-driven performance and decision-making.

3.1 Machine learning

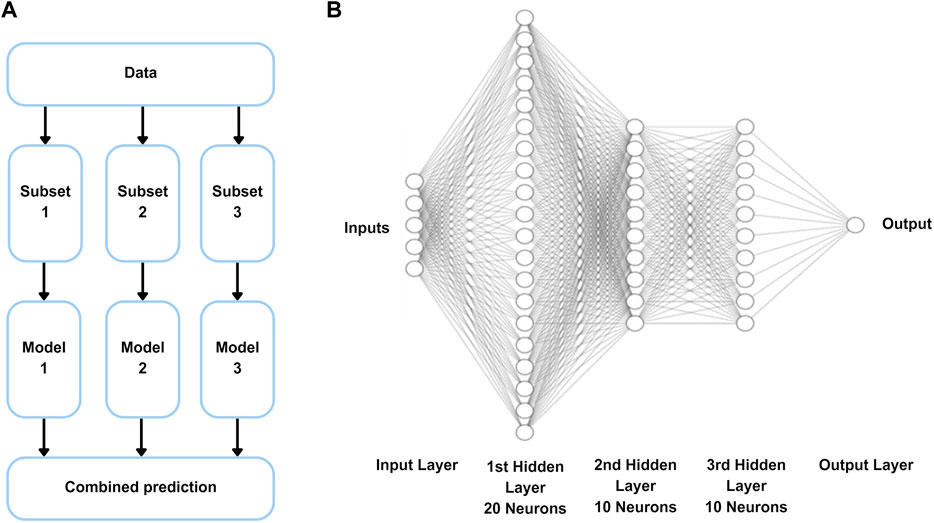

Machine learning techniques are categorized into three core learning methods: supervised learning, unsupervised learning, and reinforcement learning (Jordan and Mitchell, 2015). In supervised learning systems, a model is trained using a labelled dataset and forms predictions from the learned mapping. Forms of this approach include decision trees (DT), random forest (RF), support vector machines (SVM), artificial neural networks (ANN), and Bayesian classifiers (Praveen Kumar et al., 2019). DTs are very sensitive to changes in input data and are prone to overfitting. RF models (Figure 7A) offer more robust solutions in comparison. Random subsets of input data are used to form multiple DTs, where the combined final prediction follows a majority rule or takes an average of the individual predictions. A representative feed-forward back propagation ANN structure is presented in Figure 7B, consisting of three hidden layers between the input and output layers. The back propagation aspect of ANNs promotes feedback learning to improve predictive performance of the model. This adaptive nature makes them more suitable for use in agricultural environments since recent inputs can enhance model structure and performance.
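The random forest aggregation step described above (bootstrap subsets, then a majority rule or an average) can be sketched as follows. This is a minimal illustration using the Python standard library and it deliberately omits tree training; the individual tree outputs are made-up values, and all function names are hypothetical.

```python
import random
from collections import Counter
from statistics import mean
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def bootstrap_sample(rows: Sequence[T], rng: random.Random) -> List[T]:
    """Draw len(rows) items with replacement; each tree would train on one such sample."""
    return [rng.choice(rows) for _ in rows]


def majority_vote(labels: Sequence[str]) -> str:
    """Classification: return the label predicted by the most trees.

    Ties are resolved in favour of the label encountered first.
    """
    return Counter(labels).most_common(1)[0][0]


def average_prediction(values: Sequence[float]) -> float:
    """Regression: return the mean of the individual tree predictions."""
    return mean(values)


# Hypothetical outputs of five classification trees and three regression trees.
tree_labels = ["ripe", "unripe", "ripe", "ripe", "unripe"]
tree_yields = [4.0, 5.0, 6.0]

sample = bootstrap_sample(list(range(10)), random.Random(0))
print(majority_vote(tree_labels))
print(average_prediction(tree_yields))
```

Because each tree sees a different resampled subset, a change in a few input rows affects only some of the trees, which is why the combined prediction is less sensitive than a single DT.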

Unsupervised learning models are trained using unlabelled data and employ algorithms such as k-means clustering, hierarchical clustering, and fuzzy clustering. Clustering techniques are highly sensitive to outliers in the input data. In comparison to supervised learning approaches, these techniques are not as suitable for agricultural environments since the inherent variability of these environments presents a higher potential for outliers in the data. Reinforcement learning algorithms, such as Q-learning and Markov decision processes, take actions and learn from trial and error via training datasets composed of supervised and unsupervised learning (Jordan and Mitchell, 2015).
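The outlier sensitivity of clustering noted above can be seen in a small example. The sketch below runs a basic k-means (Lloyd's algorithm) on one-dimensional data with fixed starting centroids; the measurements are invented for illustration and the function name is hypothetical.

```python
from typing import List, Sequence


def kmeans_1d(values: Sequence[float], centroids: Sequence[float], max_iter: int = 100) -> List[float]:
    """Lloyd's algorithm on scalar data, starting from the given centroids."""
    centers = [float(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: each value joins the cluster of its nearest centroid.
        clusters: List[List[float]] = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        updated = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
        if updated == centers:
            break
        centers = updated
    return centers


measurements = [1, 2, 3, 10, 11, 12]   # two well-separated groups (made-up data)
clean = kmeans_1d(measurements, centroids=[1, 12])
with_outlier = kmeans_1d(measurements + [100], centroids=[1, 12])

print(clean)         # [2.0, 11.0]
print(with_outlier)  # [6.5, 100.0]
```

A single extreme value captures one centroid entirely, and the two genuine groups are merged into one cluster. Data from crop environments would therefore likely need outlier filtering before clustering of this kind is applied.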

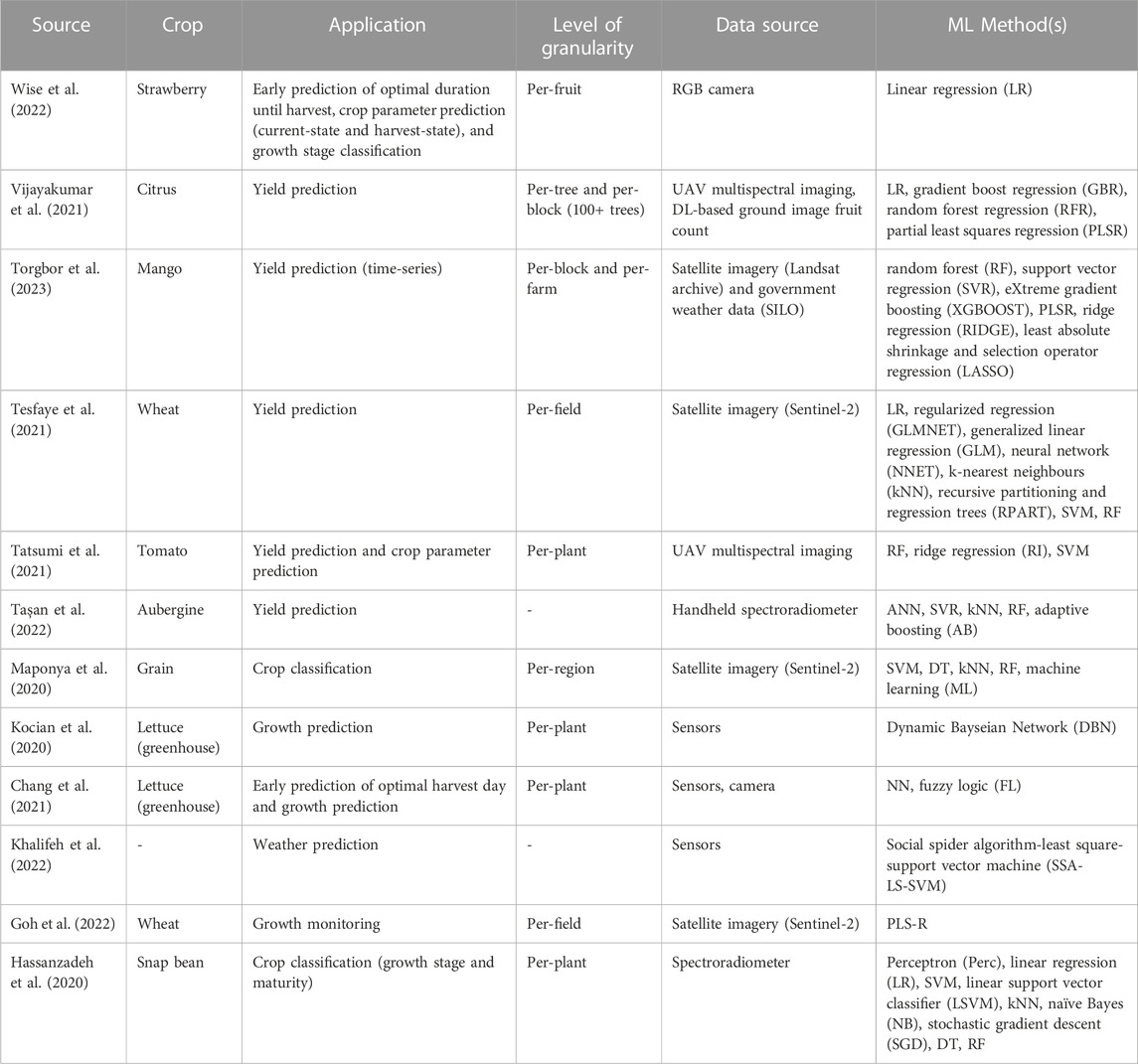

In agricultural harvesting applications, studies have utilized ML algorithms for crop yield predictions, crop growth stage classification and monitoring, optimal duration until harvest predictions and weather prediction/forecasting as shown in Table 1. However, this is not a mature area of research.

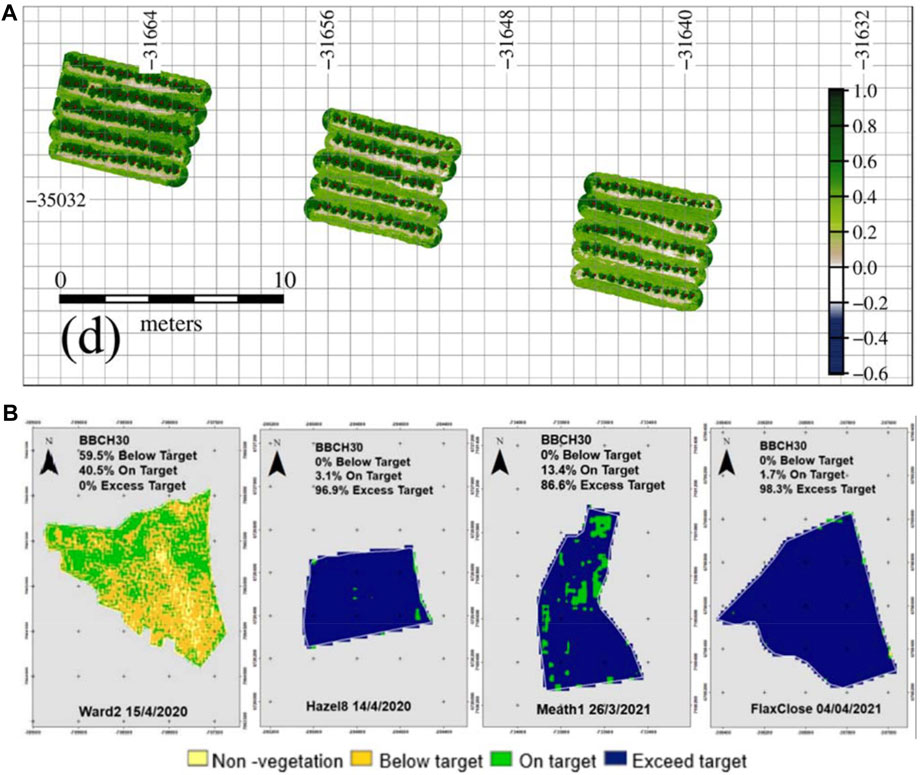

Supervised learning approaches are the most commonly employed, and yield prediction models are the most common use case. Estimating yield prior to harvest allows for accurate and timely planning (Taşan et al., 2022), which promotes efficient use of harvesting robots. Satellite and UAV imagery combined with ML methods have created accurate and detailed yield maps for crops grown in open fields (Maponya et al., 2020). UAV imagery (Figure 8A) offers superior granularity when compared to satellite imagery (Figure 8B), making it possible to estimate yields at the per-plant level as demonstrated in the work by Tatsumi et al. (2021). The majority of these models derived vegetation indices (VIs), such as leaf area index (LAI), biomass, and evapotranspiration (ET), from the images since these are indicative parameters of crop development (Kocian et al., 2020) that assist with determining crop yields (Tesfaye et al., 2021). However, this presents significant challenges for crops grown in open-field environments since poor lighting conditions from cloud coverage and shadows reduce image quality, which can lead to critical growth stage gaps in the datasets (Tesfaye et al., 2021). This is also a challenge for open-field crop models that classify growth states and predict maturity levels or optimal durations until harvest. Implementing cloud restoration algorithms to restore data gaps was examined by Tesfaye et al. (2021), which demonstrated an increased yield prediction accuracy.

FIGURE 8. Maps for yield estimation (A) Tomato green normalized difference vegetation index (GNDVI) from UAV multispectral imagery from Tatsumi et al. (2021). Licensed under CC BY 4.0. doi: 10.1186/s13007-021-00761-2 and (B) winter wheat field green area indices (GAI) from Sentinel-2 satellite imagery from Goh et al. (2022). Licensed under CC BY-NC-ND 4.0. doi: 10.1016/j.jag.2022.103124.
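The GNDVI shown in panel (A) is computed per pixel from the near-infrared and green reflectance bands as (NIR − Green) / (NIR + Green). The sketch below applies this standard formula to a tiny 2 × 2 grid; the reflectance values are invented for illustration, the function names are hypothetical, and the code is not taken from Tatsumi et al. (2021).

```python
from typing import List, Optional

Band = List[List[float]]


def gndvi(nir: float, green: float) -> Optional[float]:
    """Green NDVI for one pixel; None when both reflectances are zero (no signal)."""
    total = nir + green
    if total == 0:
        return None
    return (nir - green) / total


def gndvi_map(nir_band: Band, green_band: Band) -> List[List[Optional[float]]]:
    """Apply the index pixel by pixel to two equally sized reflectance grids."""
    return [
        [gndvi(n, g) for n, g in zip(nir_row, green_row)]
        for nir_row, green_row in zip(nir_band, green_band)
    ]


# Made-up reflectance values in the range 0..1.
nir = [[0.50, 0.40], [0.0, 0.30]]
green = [[0.10, 0.20], [0.0, 0.30]]
index_map = gndvi_map(nir, green)
print(index_map)
```

Pixels with no valid signal, for example under cloud cover, are returned as `None` here rather than as zero, so that gaps remain distinguishable from genuinely low index values.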

Predicting growth stages, time of maturity and associated time of harvest for a given crop enables efficient scheduling of automated harvesters (Wise et al., 2022). As a result of the limitations for image quality in open-field environments, these model types have been developed for crops grown in protected environments, including strawberries (Wise et al., 2022) and lettuce (Kocian et al., 2020; Chang et al., 2021). Currently, all solutions in Table 1 are in a prototype or conceptual phase.

There are no works that have combined the mapping feature of yield models with the crop growth classification and maturity prediction models. Future research should address this gap to represent these two attributes in detailed CAD models. The framework in Section 7 is designed to facilitate the development of such models using CAD/CAM tools. It is also important to consider the scale of the model and the corresponding computational and memory resources required. With a model of this nature, geometric traits of crops can be predicted and verified throughout the growth cycle. Further, ideal harvest times can be estimated, which will assist with scheduling robotic harvesters.

3.2 Deep learning

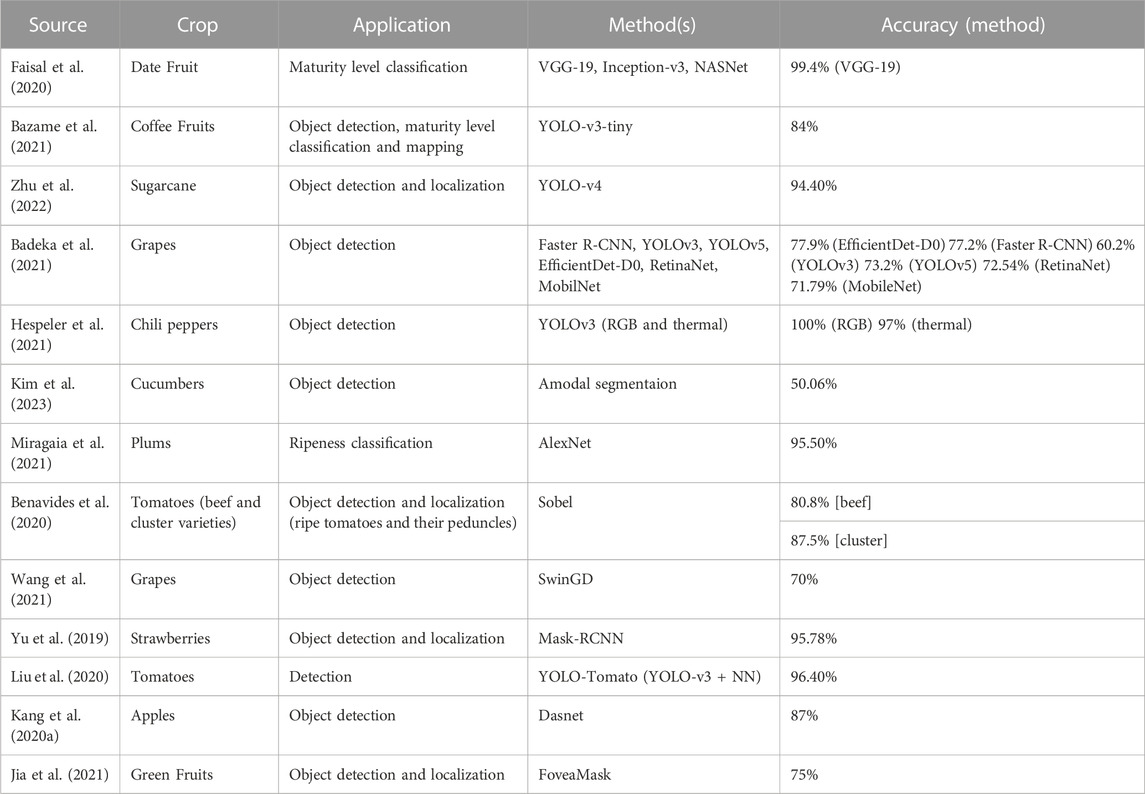

Deep learning computer vision algorithms are being used to detect, localize, and classify crops in real time for robotic harvesting applications. Crop environments present complex conditions for these tasks, which stem from various crop geometries, orientations, maturity levels, illumination, and occlusions. DL-based techniques have demonstrated higher-level feature learning and detection accuracies in comparison to traditional ML-based techniques, which makes them more applicable in complex environments (Badeka et al., 2021; Jia et al., 2021).

Current DL-based vision solutions for robotic harvesting are summarized in Table 2. Versions of YOLO (You-Only-Look-Once) were most commonly utilized as they demonstrate high detection accuracy with quick processing times. However, these algorithms are most successful in relatively simple environments where the crop density is low, lighting is uniform, and there are few to no occlusions (Badeka et al., 2021; Bazame et al., 2021). Other solutions have developed unique algorithms to improve detection capabilities, such as Dasnet (Kang et al., 2020a) for apples, and FoveaMask (Jia et al., 2021) for green fruits.

Several advanced DL-based techniques have been explored to limit the influence of occlusions from overlapping crops, leaves, stems, and other objects. It is important to address this issue since retaking images from various viewpoints will increase cycle times. General instance segmentation models, such as Mask-RCNN (Mask Region-Based Convolutional Neural Network), are common choices in agricultural computer vision systems for addressing occlusions. In Yu et al. (2019), ripe strawberry fruits were successfully detected and picking points were localized with a 95.78% accuracy using Mask-RCNN. The solution indicated effective detection in situations with varying illumination, multi-fruit adhesion, overlapping fruits, and occlusions. However, the processing time was slow and large errors were observed in cases of unsymmetrical fruits. Amodal segmentation, a one-stage model, was used by Kim et al. (2023) to predict the blocked regions of cucumbers with an accuracy of 50.06%. This model was significantly slower than Mask-RCNN models and other general instance segmentation models. Liu et al. (2020) developed YOLO-Tomato, a YOLOv3 and circular bounding box-based model that is robust to illumination and occlusion conditions. Performance loss was indicated in severe occlusion cases; however, the authors stated that this is not a vital issue since harvesting robots alternate between detection and picking. Thus, hidden crops will appear after the front ones have been removed. However, this reasoning does not apply in cases where ripe crops are hidden behind unripe crops, since the unripe crops will not be removed. Hespeler et al. (2021) utilized thermal imaging for the harvesting of chili peppers, which improved the detection accuracy in environments with variant lighting and heavy occlusion from leaves or overlapping peppers. This method outperformed both YOLO and Mask-RCNN algorithms with respect to detection accuracy.
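The argument about alternating detection and picking, and its limitation when ripe crops sit behind unripe ones, can be illustrated with a toy simulation. This is a deliberately simplified model and not part of any cited system: each line of sight is assumed to be a front-to-back list of crops, only the front crop is detectable, and the `harvest` function name and the scene are hypothetical.

```python
from typing import List, Tuple


def harvest(lines_of_sight: List[List[str]]) -> Tuple[int, int]:
    """Alternate detection and picking until no visible ripe crop remains.

    Each inner list orders crops from front (index 0) to back along one camera ray.
    Returns (ripe crops picked, ripe crops left hidden behind unripe ones).
    """
    remaining = [list(line) for line in lines_of_sight]
    picked = 0
    progress = True
    while progress:
        progress = False
        for line in remaining:
            # Detection step: only the front crop of each line is visible.
            if line and line[0] == "ripe":
                line.pop(0)   # Picking step: removal exposes the crop behind it.
                picked += 1
                progress = True
    stranded = sum(line.count("ripe") for line in remaining)
    return picked, stranded


scene = [
    ["ripe", "ripe"],            # hidden ripe crop appears after the first pick
    ["unripe", "ripe"],          # ripe crop stays occluded by an unripe one
    ["ripe", "unripe", "ripe"],  # first crop is picked, the last one is stranded
]
result = harvest(scene)
print(result)  # (3, 2)
```

In this toy scene, three ripe crops are harvested and two remain unreachable, which is the case where a prior 3D map or an additional viewpoint would be needed.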

It is important to note that all these computer vision solutions for agricultural harvesting are based on real-time performance. Their implementation in crop environments requires the ability to accurately detect, localize, and classify crops despite occlusions and varying illumination conditions. However, the solutions that incorporated these parameters all demonstrated slower processing times compared to traditional DL methods. As a result of these limitations, all are currently in a prototype phase. Thus, it is essential to explore alternative approaches to improve robotic harvesting performance. Future research should focus on reverse engineering the environment and utilizing CAD and CAM tools to create 3D crop maps prior to harvest, as this may offer better performance in situations with occlusions from crop overlapping, other objects in the environment, and varying illumination conditions.

In the study by Kang et al. (2020b), an octree-based 3D representation of the crop environment for a robotic apple harvester was created, which included the locations and orientations of the fruit and obstacles (branches) in the scene. The positional information from the model was integrated with a central control algorithm to compute the trajectory path and the proper grasping pose during picking. This reverse engineered model operated in real time and only incorporated objects within the working view of the camera. There is potential to expand this methodology to develop environmental models that incorporate predictions for maturity levels, physical growth characteristics, and other geometric properties for the entire working area. By leveraging forms of in-process techniques and a priori knowledge, the influence of environmental complexities and the dependency on real-time performance can be reduced. Furthermore, integrating DL-based 3D crop maps with ML growth prediction and maturity models can support downstream robotic design, end-effector design, trajectory planning, and optimal harvest time decisions.
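As a rough illustration of how an octree can hold labelled scene content such as fruit and branches, the sketch below implements a sparse octree in which child nodes are created only along the path to an inserted point. It is a minimal example under stated assumptions and does not reproduce the implementation of Kang et al. (2020b); the class name, labels, coordinates, and fixed depth of 3 are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]


def octant_of(p: Point, center: Point) -> int:
    """Octant index with one bit per axis, set when p lies on the positive side."""
    return sum(1 << axis for axis in range(3) if p[axis] >= center[axis])


@dataclass
class OctreeNode:
    center: Point
    half_size: float
    children: Dict[int, "OctreeNode"] = field(default_factory=dict)  # occupied octants only
    label: Optional[str] = None   # set on leaves, e.g. "fruit" or "branch"

    def insert(self, p: Point, label: str, depth: int) -> None:
        """Descend `depth` levels, creating child nodes only along the path to p."""
        if depth == 0:
            self.label = label
            return
        octant = octant_of(p, self.center)
        if octant not in self.children:
            quarter = self.half_size / 2
            child_center = tuple(
                c + quarter if (octant >> axis) & 1 else c - quarter
                for axis, c in enumerate(self.center)
            )
            self.children[octant] = OctreeNode(child_center, quarter)
        self.children[octant].insert(p, label, depth - 1)

    def query(self, p: Point) -> Optional[str]:
        """Return the leaf label at p, or None for space that was never initialized."""
        if not self.children:
            return self.label
        child = self.children.get(octant_of(p, self.center))
        return child.query(p) if child else None

    def count_nodes(self) -> int:
        return 1 + sum(child.count_nodes() for child in self.children.values())


# A 2 m cube centred at the origin, subdivided three levels deep on demand.
root = OctreeNode(center=(0.0, 0.0, 0.0), half_size=1.0)
root.insert((0.6, 0.6, 0.6), "fruit", depth=3)
root.insert((-0.3, 0.2, 0.7), "branch", depth=3)

print(root.query((0.6, 0.6, 0.6)))     # fruit
print(root.query((-0.9, -0.9, -0.9)))  # None
print(root.count_nodes())              # 7
```

Only 7 nodes are allocated here, whereas a fully populated tree of the same depth would contain 585; a planner could query such a map for obstacle labels along a candidate approach path before committing to a grasp.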

4 Advanced robotics

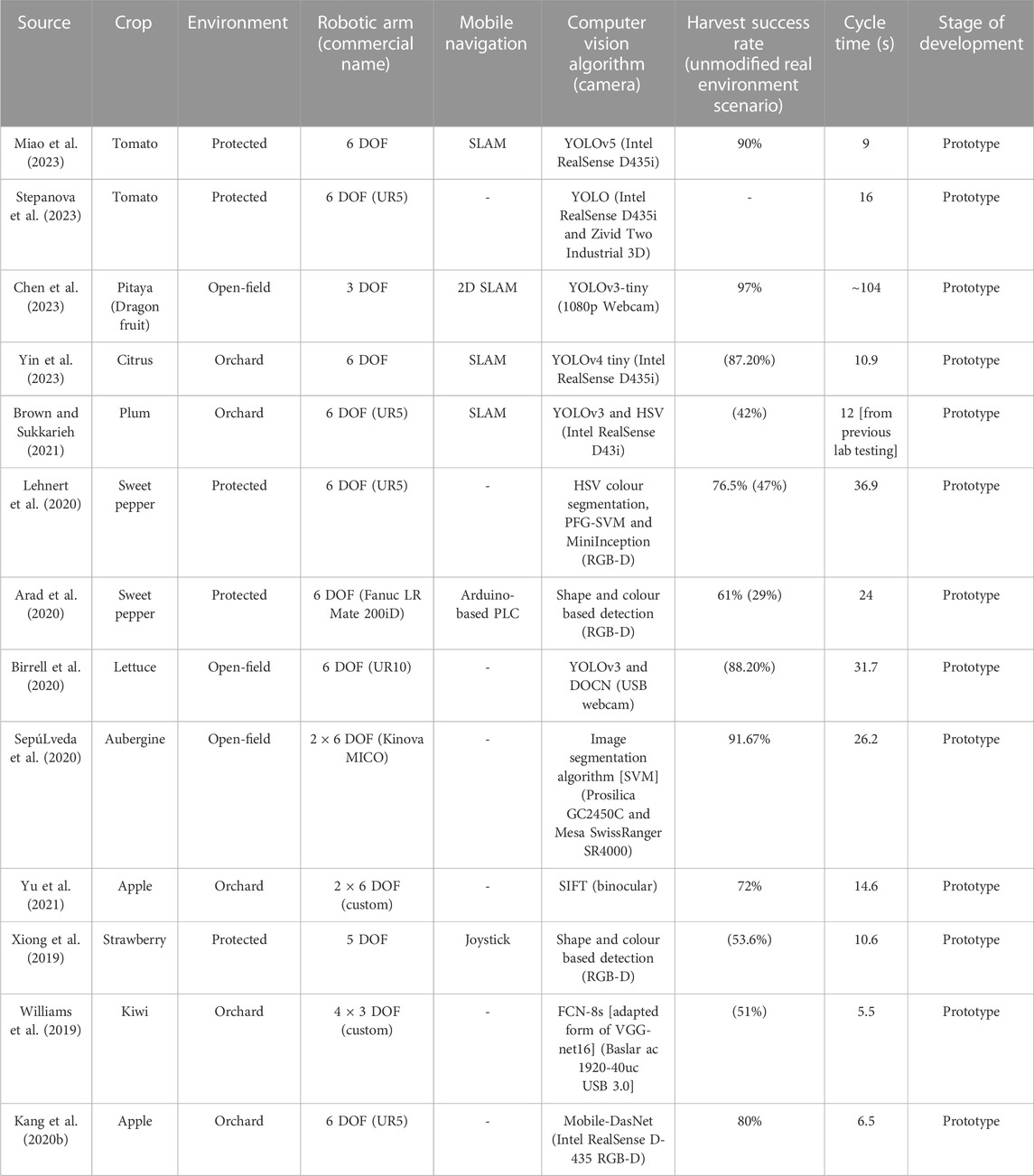

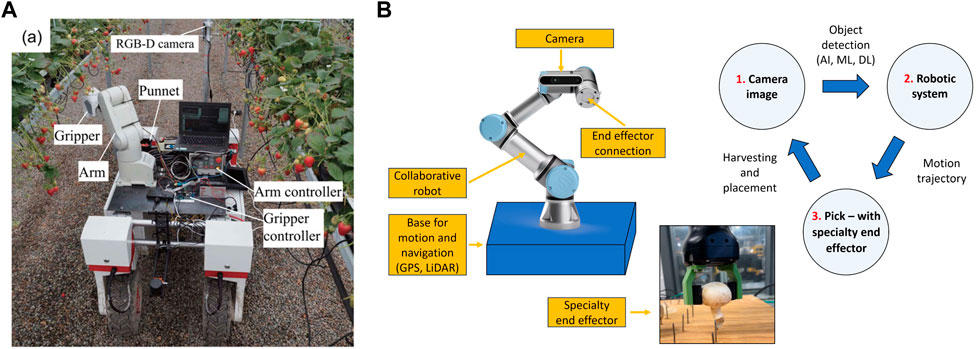

Automated robotic solutions are being developed to perform agricultural harvesting pick and place tasks (Figure 9). Recent research efforts have focused on addressing particular technical elements, including computer vision systems for object detection and localization, motion and trajectory planning algorithms, and EOAT design. An overview of current solutions that incorporate all these aspects is shown in Table 3.

FIGURE 9. (A) Automated robotic harvesting solution for strawberries from Xiong et al. (2019). Licensed under CC BY-NC-ND 4.0. doi: 10.1002/rob.21889. (B) schematic of automated harvesting solutions.

Notably, a considerable number of studies have concentrated on crops grown in protected environments (tomatoes, strawberries, sweet peppers). Protected environments offer diffused lighting, shelter from adverse weather, and level terrains, which are advantageous to automated harvesting. However, difficulties with real-time mobile navigation, and object detection, localization, and grasping remain a barrier to commercial adoption. To date, many of these solutions are in the prototype stage with very few stating near commercial readiness. Other companies have presented solutions that have achieved commercial product trials, including strawberry harvesting robots (Advanced Farm Technologies, 2023; Agrobot, n.d.), apple harvesting robots (Advanced Farm Technologies, 2023), and tomato harvesting robots (Ridder and MetoMotion, n.d.; Panasonic, 2018).

Few solutions have incorporated a form of autonomous navigation in the harvesting environment. Simultaneous Localization and Mapping (SLAM) is the most applied algorithm, allowing the harvesting units to map out their environment and localize in real time. SLAM maps are constructed using point cloud data (Yin et al., 2023). Solutions for crops grown in unprotected environments were more likely to incorporate this element than those in protected environments. For example, a robot designed for citrus fruits (Yin et al., 2023) was tested in a large field environment with a multisensory fusion SLAM algorithm and demonstrated a localization and sensing performance suitable for harvest. Similarly, a plum harvesting robot (Brown and Sukkarieh, 2021) used a SLAM camera for global robot tracking to assist with creating crop density maps.

Variations of the YOLO algorithm were most used for object detection and localization across robotic solutions. YOLO is a one-stage CNN (Convolutional Neural Network) that has fast detection and high accuracy performance in real time, but struggles with detecting small objects (Badeka et al., 2021). As mentioned in the previous section, occlusions in the natural growing environment are one of the largest limitations to the accuracy of object detection and localization in computer vision algorithms. Occlusions not only lower detection accuracy but can also increase the harvesting cycle time from algorithm processing or the need for additional image viewpoints. Many solutions leveraged modified crop environments during testing, where obstacles causing occlusions, such as leaves, stems, or overlapping crops, were removed prior to harvest. This demonstrated much higher harvesting success rates for sweet peppers (Arad et al., 2020). As mentioned previously, algorithms that predict object position and orientation, such as Mask-RCNN, have been developed to address this issue. Furthermore, dual-arm robotic solutions have also been explored to reduce the challenges that arise from occlusions. In the work by Sepúlveda et al. (2020), one robotic arm was dedicated to removing the objects causing occlusions while the other proceeded with the harvesting actions of aubergines. This method demonstrated longer cycle times but had higher productivity in terms of the number of fruits harvested over a given period.

A specialty end-effector was designed for each robotic solution since the crops varied with respect to their shape, size, and environment. When working with crops in dense environments or highly clustered crops, the design of the end-effector becomes more difficult due to the increased risk of damage to the crop itself or the surrounding objects during picking. Many of the end-effector designs demonstrated high success rates when the crops were accurately detected and localized. Most challenges for end-effector performance arose due to incorrect positioning from neighbouring crops or obstacles, inability to access the crop due to rigid and large designs, and slow cycle times from heavy weight. For example, in Miao et al. (2023), the gripper design was too rigid and large, which made picking tomato stems challenging if they were either angled or short. A similar issue was noted by Brown and Sukkarieh (2021), where the oversized soft gripper design caused issues with plum picking and concerns with longevity resulting from damage to the silicone material. Difficulties in positioning were observed by Arad et al. (2020) and Yin et al. (2023) when obstacles and neighbouring fruit blocked the end-effector from reaching its intended picking position. Weight is also a crucial factor as it can significantly impact the cycle time and risk damaging the crop during harvest, which was highlighted in a solution for lettuce (Birrell et al., 2020). The heavy weight of the end-effector caused picking to be the rate-limiting step, and a high damage rate was also observed.

CAD/CAM tools play a critical role in the design and development of specialty end-effectors for robotic harvesting. Simulations allow for testing and validating grasping and picking strategies, material selection, and mechanical design. For example, Fan et al. (2021) investigated multiple apple grasping principles and picking patterns by developing 3D branch-stem-fruit models and conducting finite element simulations in ABAQUS to compute and compare separation forces. An underactuated broccoli gripper design was validated using ADAMS (Automated Dynamic Analysis of Mechanical Systems) software that measured applied contact forces (Xu et al., 2023). ADAMS software was also used by Mu et al. (2020) to validate trajectory motions of the bionic fingers in a kiwifruit robotic end-effector design. Simulation is also being used to explore best practices for robotic harvesting solutions (Van De Walker et al., 2021) with software such as V-REP, Gazebo, ARGoS, and Webots (Iqbal et al., 2020).

Future work should focus on compliant mechanism design for robotic harvesting end-of-arm tooling for crops that are harvested by gripping. Contact-driven, cable-driven, and fluid-driven actuation methods are suitable for use in agricultural environments. Silicone rubbers are common choices for gripper fabrication in this domain, but are limited by their susceptibility to damage from surrounding objects in crop environments. Leveraging AM to fabricate compliant structures allows for rapid prototyping and customization. For example, novel fluid-driven soft robotic fingers for apple harvesting were designed and realized via 3D printing (Wang et al., 2023). Coupling designs with sensor technologies can provide the feedback necessary for subsequent optimization and should also be further explored.

5 Summary

I4.0 technologies and CAD/CAM tools have been shown to support automated, data-driven solutions for harvesting agricultural crops, but this is not yet a mature field. Studies coupling IoT networks with machine learning algorithms indicate that improved yields, growth predictability, and weather forecasting are achievable, offering new perspectives on designing and managing the agricultural space. However, most solutions remain in a prototype or conceptual phase, as several challenges and barriers prevent successful operation in real crop environments (Section 6).

CAD/CAM tools have been leveraged using the standard design packages available. Future research should be directed at integrating novel CAD/CAM design tools, such as voxelized representations and simulation solutions that include the mechanical and physical properties of plants, leaves, and similar structures, with existing I4.0-based solutions to form a completely integrated solution targeting the unique challenges in the agricultural domain. Such tools can facilitate harvest decisions at the granular and systems levels, as discussed in the framework presented in Section 7.

6 Challenges and barriers to the adoption of I4.0 technologies and CAD/CAM tools

For widespread implementation of advanced I4.0 technologies and CAD/CAM tools across agricultural harvesting operations, there are several challenges and barriers that first need to be addressed. These originate from the several uncertainties within the dynamic crop environment, data sufficiency, and other technical aspects.

Throughout growth, crop parameters such as size, shape, colour, position, and orientation can change considerably. Additionally, these parameters are significantly different between crop types. This product variability creates difficulties for computer vision system operation, tool design and implementation, process standardization, and CAD modelling and simulation. For example, fruits and vegetables that are green in colour when mature often share a resemblance in hue to surrounding plant objects, such as vines, leaves, and stems. These scenarios hinder the ability of computer vision algorithms to detect and localize the product. Neighbouring objects and overlapping crops also influence vision system performance as well as end-effector design and implementation. These obstacles can cause damage to end-effectors and limit their ability to access the product. Non-homogeneous properties of crops pose a challenge for capturing the true mechanical characteristics in 3D models and simulations. For instance, sections of a tomato crop vine may be more rigid than others, although current finite element analysis methods would represent a 3D model of this as a uniform rigid body.
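The hue-similarity problem can be illustrated with a short sketch that compares the hue angle of two colours using Python's standard `colorsys` module. The RGB triples below are hypothetical sample colours chosen for illustration, not measured values from any crop.

```python
import colorsys


def hue_degrees(rgb):
    """Hue angle in degrees (0-360) of an 8-bit (R, G, B) colour."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, _s, _v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0


def hue_gap(rgb_a, rgb_b):
    """Smallest angular difference between two hues, accounting for wrap-around."""
    diff = abs(hue_degrees(rgb_a) - hue_degrees(rgb_b)) % 360.0
    return min(diff, 360.0 - diff)


# Hypothetical sample colours, not measured values.
green_pepper = (60, 140, 50)
leaf = (50, 120, 40)
red_tomato = (200, 30, 30)

print(hue_gap(green_pepper, leaf))  # small gap: hard to separate by hue alone
print(hue_gap(red_tomato, leaf))    # large gap: hue is a useful cue
```

When the gap between produce and foliage is only a few degrees, colour thresholding alone is unlikely to separate them, which is consistent with the reliance on shape-aware and learned detectors in the reviewed solutions.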

It is also important to note that the extent of data required to adopt these advanced technologies may be difficult to consolidate. As mentioned previously, crop environments are dynamic and variable. To develop representative and robust computerized models, the inherent unpredictability needs to be captured. This will require sufficient data for continuous model updating and processing. As a result, model history tree structures will become large and complicated and will likely require significant memory capacity and computational resources. Another challenge is ensuring the compatibility of the models with downstream analytical algorithms. Depending on the representation type, conversions may be necessary. For instance, raw 3D point clouds are unordered and irregular, which makes them difficult inputs for many deep learning architectures, so they are often converted into regular volumetric forms such as voxel grids. Methods to convert between data types for deep learning have been developed. One example is NVIDIA’s Kaolin library, which provides a PyTorch API to work with several forms of 3D representations (NVIDIA Corporation, 2020). This library includes an expanding collection of GPU-optimized operations for quick conversions between CAD representation types, data loading, volumetric acceleration data structures, and many other techniques.
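As an illustration of the kind of conversion described above, the sketch below bins a small point cloud into a sparse voxel grid using only the Python standard library. It is a simplified stand-in and does not use or reproduce the Kaolin API; the function name `voxelize`, the voxel size, and the sample coordinates are assumptions made for this example.

```python
import math


def voxelize(points, voxel_size):
    """Map 3D points to the set of occupied voxel indices on a regular grid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    occupied = set()
    for x, y, z in points:
        # floor() keeps points with negative coordinates in the correct cell.
        occupied.add((math.floor(x / voxel_size),
                      math.floor(y / voxel_size),
                      math.floor(z / voxel_size)))
    return occupied


# Hypothetical point cloud in metres; the first two points share a voxel.
cloud = [(0.01, 0.02, 0.00), (0.04, 0.01, 0.03), (0.12, 0.02, 0.00), (-0.01, 0.00, 0.00)]
grid = voxelize(cloud, voxel_size=0.05)
print(sorted(grid))
```

Storing only occupied indices keeps memory proportional to the number of filled voxels rather than the full grid volume, which matters given the memory demands noted above. A dense array would be needed before feeding the grid to a typical 3D convolutional network.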

7 Framework

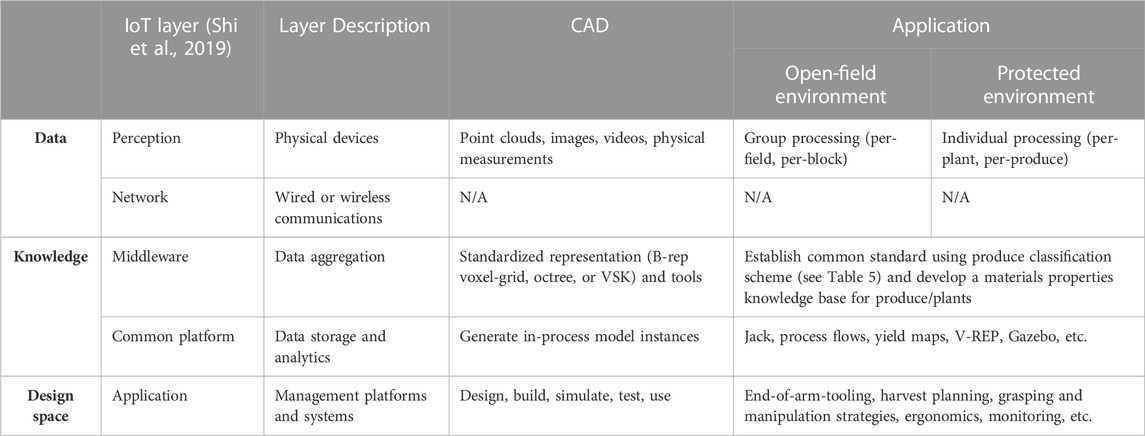

A framework based on the IoT structure from Shi et al. (2019) has been developed to facilitate future CAD/CAM research and development for agricultural harvesting in the era of I4.0 (Tables 4, 5).

At the perception layer, data collected from physical IoT devices exhibit various forms of CAD representations. In agricultural systems, 3D point clouds from LiDAR systems, images from UAVs, or physical measurements from sensor networks are all examples of data that can be collected in real time. The granularity of this data depends on the environment type. Obtaining data per-produce will be difficult in open-field environments since the operational scale is significantly larger in comparison to protected environments. It is recommended that a group-processing methodology (per-field or per-block) be applied to collect data in these environments; this can be achieved using satellite imagery or UAVs.
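A group-processing step of this kind can be sketched as a simple aggregation of individual readings into per-block summaries. The block identifiers and index values below are hypothetical placeholders, and the choice of the mean as the summary statistic is an assumption for illustration only.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_block(readings):
    """Average a measurement per block from (block_id, value) pairs."""
    groups = defaultdict(list)
    for block_id, value in readings:
        groups[block_id].append(value)
    return {block_id: mean(values) for block_id, values in groups.items()}


# Hypothetical vegetation-index samples tagged with the field block they fall in.
readings = [("A1", 0.62), ("A1", 0.58), ("B3", 0.71)]
print(aggregate_by_block(readings))
```

The same pattern applies whether the readings come from satellite pixels, UAV image tiles, or ground sensors, provided each reading can be assigned to a block.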

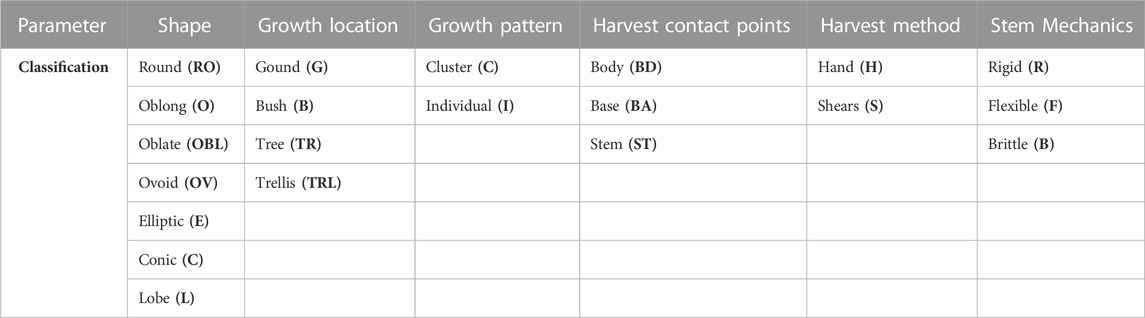

To develop effective models for agricultural harvesting activities, it is important to aggregate this data into a standardized CAD representation. This is similar to the function of the middleware layer in an IoT system. By classifying produce according to key parameters (Table 5), appropriate representations can be established. For example, produce that is round, has a clustered growth pattern, and is harvested by contacting the body, such as mushrooms and blueberries, may be best represented using VSKs or SDFs to preserve the individual object boundaries in cases of overlap. In contrast, produce that grows individually and is harvested by cutting a rigid stem, such as sweet peppers or citrus fruits, may be best represented using 3D voxel grids or octrees, since their object boundaries are more easily identifiable. The representation type will also depend on the growth location of the produce and the associated objects that will be incorporated in the model, such as branches, leaves, and vines.
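The two examples above can be encoded as a small rule-based lookup. This is only a sketch of the reasoning in the text: the attribute names and string labels are assumptions introduced here, and the rules cover just the two cases described rather than the full classification in Table 5.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Produce:
    name: str
    shape: str            # e.g. "round" (hypothetical label)
    growth_pattern: str   # "cluster" or "individual" (hypothetical labels)
    harvest_method: str   # "contact_body" or "cut_stem" (hypothetical labels)


def suggest_representations(produce):
    """Return candidate CAD representations for the two cases described in the text."""
    if (produce.shape == "round"
            and produce.growth_pattern == "cluster"
            and produce.harvest_method == "contact_body"):
        # Overlapping objects: keep individual boundaries distinct.
        return ["VSK", "SDF"]
    if produce.growth_pattern == "individual" and produce.harvest_method == "cut_stem":
        # Boundaries are easy to identify: occupancy-style grids suffice.
        return ["voxel grid", "octree"]
    # Not covered by the two examples; needs case-by-case assessment.
    return []


mushroom = Produce("mushroom", "round", "cluster", "contact_body")
pepper = Produce("sweet pepper", "blocky", "individual", "cut_stem")
print(suggest_representations(mushroom))
print(suggest_representations(pepper))
```

Returning an empty list for unmatched produce keeps the sketch from implying a recommendation where the text gives none. Growth location and surrounding objects would add further rules in practice.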

The aggregated data from IoT systems are stored and analyzed in a common platform. For CAD applications, the CAD software represents this platform. Instance models can be developed and applied for a variety of harvesting activities, such as Siemens Jack (Siemens Industry Software, 2011) for ergonomic analysis, AnyLogic (The AnyLogic Company, n.d.) for process flows, and Gazebo (Open Source Robotics Foundation, Inc., n.d.) for robotic harvesting strategies.

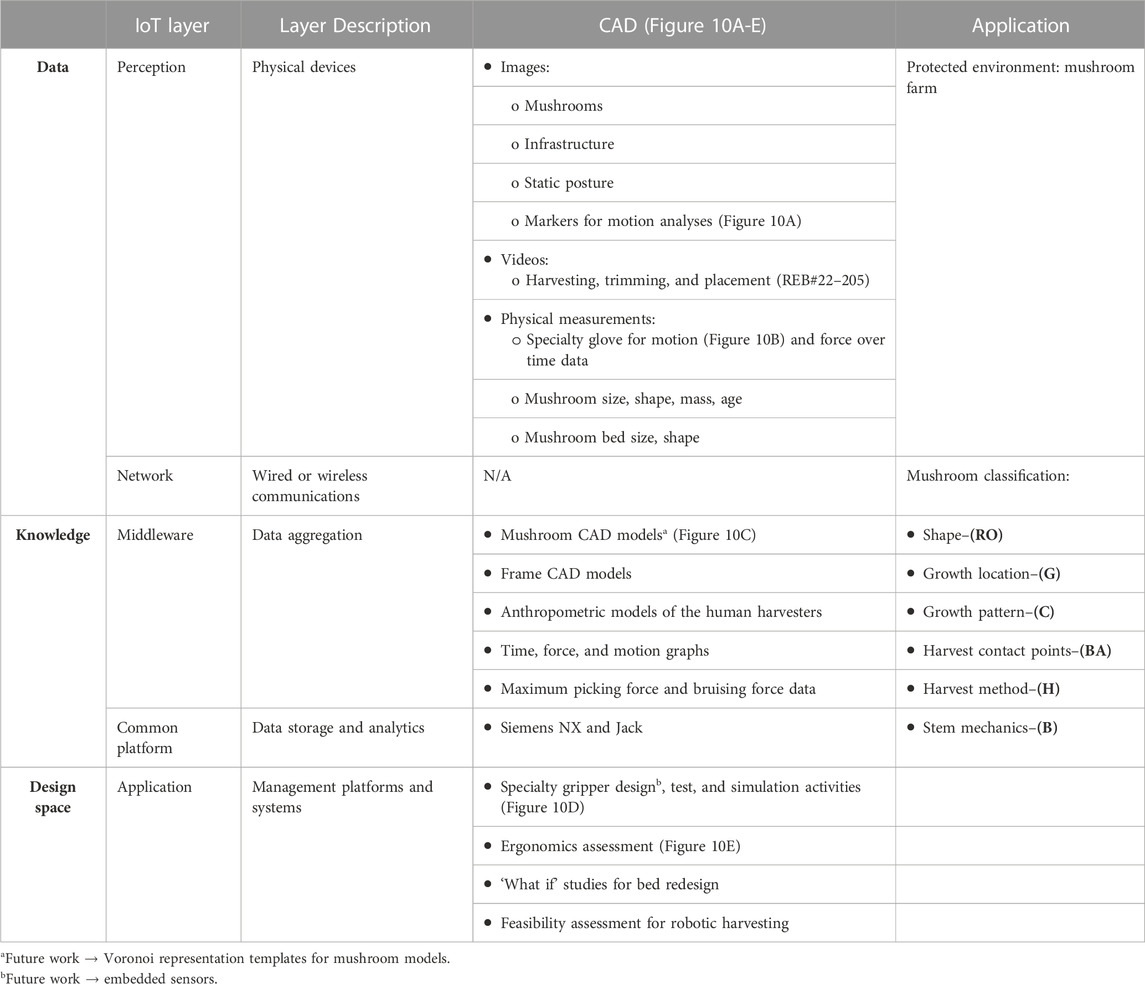

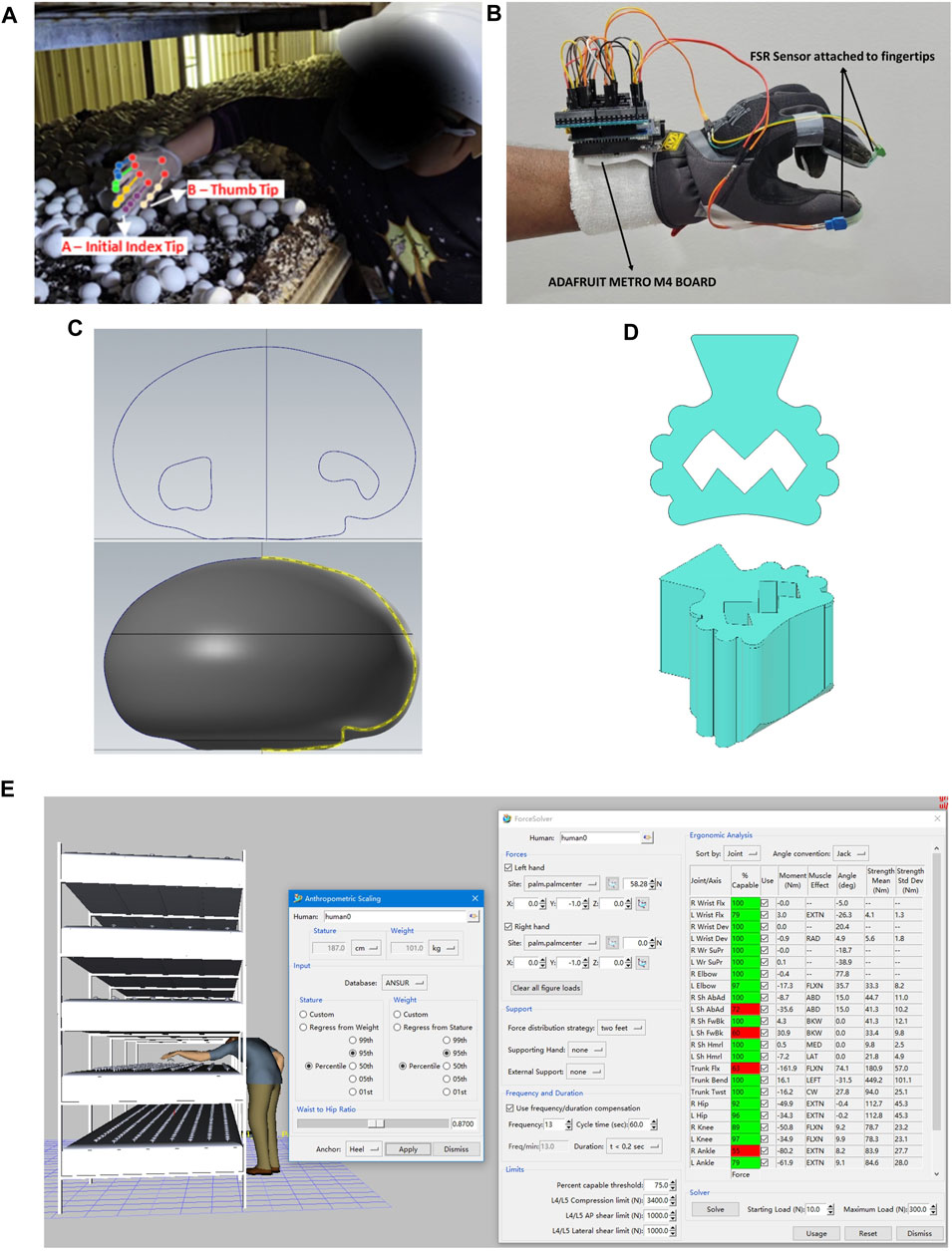

Preliminary research in the mushroom industry has been selected as a case study to highlight the CAD/CAM framework and is demonstrated in Table 6.

Images, videos, and physical measurements were collected at the per-produce level in a commercial farm environment (Figures 10A, B). From this data, CAD models of mushrooms (Figure 10C) and their growth environment (frames) were developed in Siemens NX software (NX software Siemens Software, n.d.). Harvesting time, force, and motion graphs were produced, and maximum picking and bruising forces were established. Specialty grippers for mushroom harvesting (Figure 10D) were designed, simulated, and tested. These were also used to perform feasibility assessments for robotic harvesting. Ergonomic assessments and ‘what if’ studies were conducted for various harvesting scenarios using Siemens Jack software with anthropometric human harvester models (Figure 10E). This conceptual model featured mushroom objects that are uniform in size, shape, location, and orientation. In the real environment, mushrooms have varying geometric and physical parameters, and growth is clustered. Although useful for analyzing ergonomics in select static harvesting situations, this model is not a true representation of a growing mushroom environment.

FIGURE 10. (A) markers for mushroom picking motion analysis (B) force measurement glove (C) CAD model of mushroom (D) CAD models of specialty gripper design (E) Siemens Jack anthropometric model for ergonomic analysis.

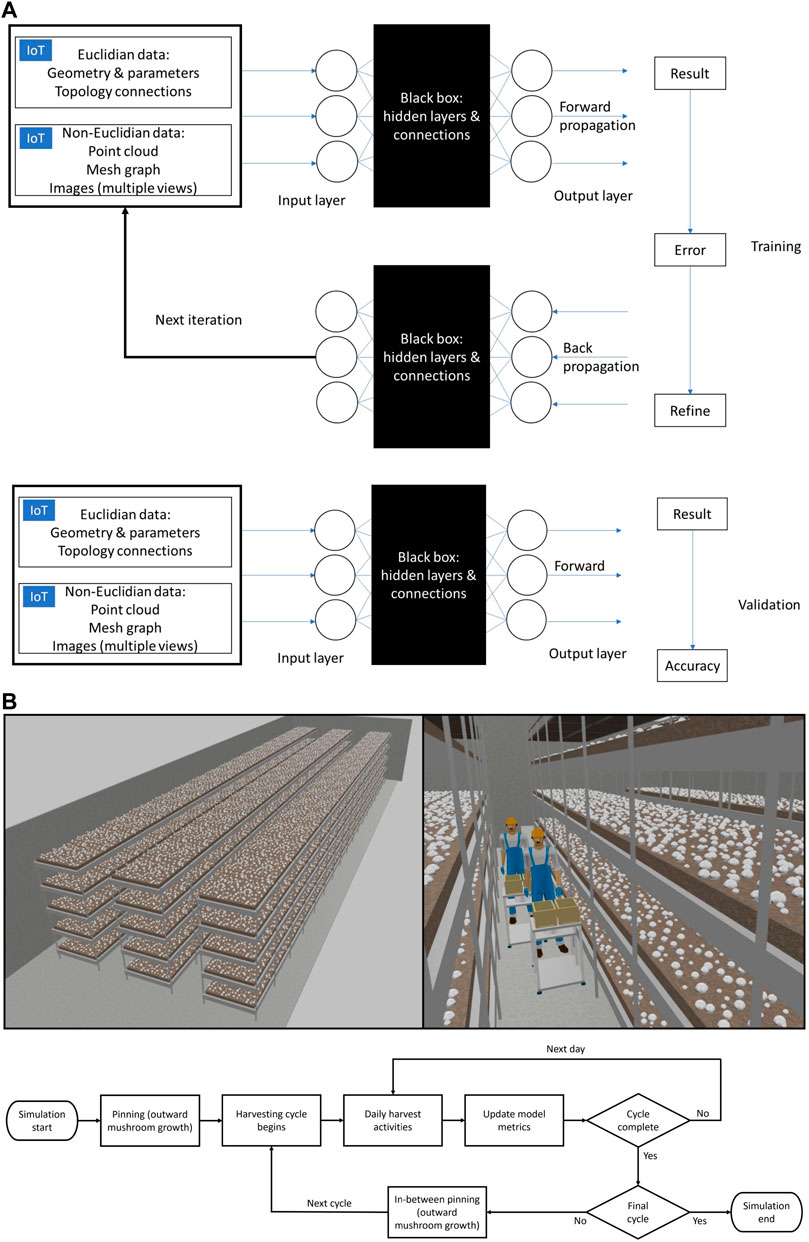

Future research should focus on establishing model instances throughout a harvest cycle with standardized representations based on real-time data collected by IoT devices. An ANN approach, in which image data is collected along with representative geometry (Euclidean or non-Euclidean) input data (Figure 11A), can be used to develop accurate CAD models (SDF or voxel based) from baseline instances of different shapes. This would facilitate adaptable end-effector designs. At the systems level, aggregate modeling that combines discrete-event and agent-based simulation (The AnyLogic Company, n.d.) can be developed using more realistic scenarios, ranging from crop management (Figure 11B) to detailed harvesting activities such as robotic trajectories.

FIGURE 11. (A) ANN structure for training and validation for produce and plant features (B) AnyLogic model of mushroom harvesting at the systems level.
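To give a sense of what an SDF-based or voxel-based model instance might look like, the sketch below represents two overlapping produce items as spheres, evaluates a signed distance field for their union, and samples that field onto a voxel grid. Spheres are used purely as an assumed baseline shape; the radii, spacing, and function names are hypothetical and do not come from the case study.

```python
import math


def sphere_sdf(point, centre, radius):
    """Signed distance from a point to a sphere surface (negative inside)."""
    return math.dist(point, centre) - radius


def union_sdf(point, spheres):
    """Union of spheres via the minimum distance (exact outside, a bound inside)."""
    return min(sphere_sdf(point, centre, radius) for centre, radius in spheres)


def sdf_to_voxels(spheres, lo, hi, step):
    """Sample the SDF at voxel centres in the cube [lo, hi]^3 and keep those inside."""
    n = int(round((hi - lo) / step))
    inside = set()
    for i in range(n):
        for j in range(n):
            for k in range(n):
                centre = (lo + (i + 0.5) * step,
                          lo + (j + 0.5) * step,
                          lo + (k + 0.5) * step)
                if union_sdf(centre, spheres) <= 0.0:
                    inside.add((i, j, k))
    return inside


# Two hypothetical overlapping caps, dimensions in millimetres.
caps = [((0.0, 0.0, 0.0), 20.0), ((30.0, 0.0, 0.0), 20.0)]
print(union_sdf((15.0, 0.0, 0.0), caps))   # inside the overlap region
print(len(sdf_to_voxels(caps, -20.0, 50.0, 10.0)))
```

Because each sphere keeps its own distance function, the individual boundaries remain recoverable even where the objects overlap, whereas the sampled voxel grid only records combined occupancy.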

Responses to compression forces (deflection, bruising, etc.) (Recchia et al., 2023) and other mechanical and physical properties need to be determined experimentally to provide a baseline for effective downstream modeling and simulation, whether machining or finite element based. As the knowledge base for a harvest type develops, design improvements to the automation can be implemented throughout the growth cycle.

8 Conclusion

In order to ensure that food production will meet the demand of a rapidly growing global population, agricultural harvesting systems need to transform their existing practices. I4.0 technologies are driving more efficient use of inputs, remote environmental control in greenhouses, automated harvesting, and enhanced farm management practices. Several solutions that utilized these technologies, including Internet of Things, machine learning, deep learning and advanced robotics, were highlighted throughout this review. CAD and CAM tools supported the development of 3D crop environment models to simulate, test, and validate harvesting strategies, trajectory plans, and grasping poses. CAD tools also facilitated the design of robotic end-of-arm tooling, rigid-body, and other auxiliary components. Very few solutions have achieved a commercial product state. Complexities within crop environments, data sufficiency, and memory and computational demands are barriers to their successful operation in actual farming systems. CAD models that represent in-process crop states throughout a harvesting cycle should be explored. Integrating this model type with I4.0 technologies can promote data-driven harvesting practices to improve system performance.

Author contributions

AR: Writing–original draft, Writing–review and editing. JU: Supervision, Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Acknowledgments

The authors would like to acknowledge the fourth year Industrial Engineering capstone team at the University of Windsor for their work in developing an ergonomic simulation for mushroom harvesting.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Advanced Farms Technologies. Apple harvester. Adv. Farm. Available at: https://advanced.farm/technology/apple-harvester/ (Accessed August 5, 2023).

Advanced Farms Technologies. Strawberry harvester. Adv. Farm. Available at: https://advanced.farm/technology/strawberry-harvester/ (Accessed August 5, 2023).

Agrobot. Robotic harvesters | agrobot. Agrobot. Agric. Robots. Available at: https://www.agrobot.com/e-series (Accessed August 5, 2023).

Arad, B., Balendonck, J., Barth, R., Ben-Shahar, O., Edan, Y., Hellström, T., et al. (2020). Development of a sweet pepper harvesting robot. J. Field Robotics 37, 1027–1039. doi:10.1002/rob.21937

Bac, C. W., van Henten, E. J., Hemming, J., and Edan, Y. (2014). Harvesting robots for high-value crops: state-of-the-art review and challenges ahead. J. Field Robot. 31, 888–911. doi:10.1002/rob.21525

Badeka, E., Kalampokas, T., Vrochidou, E., Tziridis, K., Papakostas, G. A., Pachidis, T. P., et al. (2021). Vision-based vineyard trunk detection and its integration into a grapes harvesting robot. IJMERR, 374–385. doi:10.18178/ijmerr.10.7.374-385

Bazame, H. C., Molin, J. P., Althoff, D., and Martello, M. (2021). Detection, classification, and mapping of coffee fruits during harvest with computer vision. Comput. Electron. Agric. 183, 106066. doi:10.1016/j.compag.2021.106066

Benavides, M., Cantón-Garbín, M., Sánchez-Molina, J. A., and Rodríguez, F. (2020). Automatic tomato and peduncle location system based on computer vision for use in robotized harvesting. Appl. Sci. 10, 5887. doi:10.3390/app10175887

Birrell, S., Hughes, J., Cai, J. Y., and Iida, F. (2020). A field-tested robotic harvesting system for iceberg lettuce. J. Field Robotics 37, 225–245. doi:10.1002/rob.21888

Brown, J., and Sukkarieh, S. (2021). Design and evaluation of a modular robotic plum harvesting system utilizing soft components. J. Field Robotics 38, 289–306. doi:10.1002/rob.21987

Canada, E. (2019). Taking stock: reducing food loss and waste in Canada. Available at: https://www.canada.ca/en/environment-climate-change/services/managing-reducing-waste/food-loss-waste/taking-stock.html (Accessed July 29, 2023).

Chang, C.-L., Chung, S.-C., Fu, W.-L., and Huang, C.-C. (2021). Artificial intelligence approaches to predict growth, harvest day, and quality of lettuce (Lactuca sativa L.) in a IoT-enabled greenhouse system. Biosyst. Eng. 212, 77–105. doi:10.1016/j.biosystemseng.2021.09.015

Charles, R. Q., Su, H., Kaichun, M., and Guibas, L. J. (2017). “PointNet: deep learning on point sets for 3D classification and segmentation,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21-26 July 2017, 77–85. doi:10.1109/CVPR.2017.16

Chen, L.-B., Huang, X.-R., and Chen, W.-H. (2023). Design and implementation of an artificial intelligence of things-based autonomous mobile robot system for pitaya harvesting. IEEE Sensors J. 23, 13220–13235. doi:10.1109/JSEN.2023.3270844

Christiansen, M. P., Laursen, M. S., Jørgensen, R. N., Skovsen, S., and Gislum, R. (2017). Designing and testing a UAV mapping system for agricultural field surveying. Sensors 17, 2703. doi:10.3390/s17122703

Comba, L., Biglia, A., Aimonino, D. R., Barge, P., Tortia, C., and Gay, P. (2019). “2D and 3D data fusion for crop monitoring in precision agriculture,” in IEEE international workshop on metrology for agriculture and forestry (China: MetroAgriFor), 62–67. doi:10.1109/MetroAgriFor.2019.8909219

Croptracker (2023). Croptracker - farm management software. Available at: https://www.croptracker.com/product/farm-management-software.html (Accessed August 1, 2023).

CropX inc (2022). CropX agronomic farm management system. CropX. Available at: https://cropx.com/cropx-system/ (Accessed August 1, 2023).

De Clercq, M., Vats, A., and Biel, A. (2018). Agriculture 4.0 – the future of farming technology. Available at: https://www.oliverwyman.com/our-expertise/insights/2018/feb/agriculture-4-0--the-future-of-farming-technology.html (Accessed March 28, 2023).

Elijah, O., Rahman, T. A., Orikumhi, I., Leow, C. Y., and Hindia, M. N. (2018). An overview of internet of things (IoT) and data analytics in agriculture: benefits and challenges. IEEE Internet Things J. 5, 3758–3773. doi:10.1109/JIOT.2018.2844296

Faisal, M., Alsulaiman, M., Arafah, M., and Mekhtiche, M. A. (2020). IHDS: intelligent harvesting decision system for date fruit based on maturity stage using deep learning and computer vision. IEEE Access 8, 167985–167997. doi:10.1109/ACCESS.2020.3023894

Fan, P., Yan, B., Wang, M., Lei, X., Liu, Z., and Yang, F. (2021). Three-finger grasp planning and experimental analysis of picking patterns for robotic apple harvesting. Comput. Electron. Agric. 188, 106353. doi:10.1016/j.compag.2021.106353

Frisken, S. F., and Perry, R. N. (2006). Designing with distance fields. Mitsubishi Electr. Res. Laboratories. Available at: https://www.merl.com/publications/docs/TR2006-054.pdf (Accessed August 13, 2023).

Goh, B.-B., King, P., Whetton, R. L., Sattari, S. Z., and Holden, N. M. (2022). Monitoring winter wheat growth performance at sub-field scale using multitemporal Sentinel-2 imagery. Int. J. Appl. Earth Observations Geoinformation 115, 103124. doi:10.1016/j.jag.2022.103124

Guo, Y., Wang, H., Hu, Q., Liu, H., Liu, L., and Bennamoun, M. (2021). Deep learning for 3D point clouds: a survey. IEEE Trans. Pattern Anal. Mach. Intell. 43, 4338–4364. doi:10.1109/TPAMI.2020.3005434

Hassanzadeh, A., Murphy, S. P., Pethybridge, S. J., and Van Aardt, J. (2020). Growth stage classification and harvest scheduling of snap bean using hyperspectral sensing: a greenhouse study. Remote Sens. 12, 3809. doi:10.3390/rs12223809

Hespeler, S. C., Nemati, H., and Dehghan-Niri, E. (2021). Non-destructive thermal imaging for object detection via advanced deep learning for robotic inspection and harvesting of chili peppers. Artif. Intell. Agric. 5, 102–117. doi:10.1016/j.aiia.2021.05.003

Hisada, M., Belyaev, A., and Kunii, T. (2001). A 3D voronoi-based skeleton and associated surface features. 89–96. doi:10.1109/PCCGA.2001.962861

Hornung, A., Wurm, K. M., Bennewitz, M., Stachniss, C., and Burgard, W. (2013). OctoMap: an efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 34, 189–206. doi:10.1007/s10514-012-9321-0

Hu, W., Mutlu, R., Li, W., and Alici, G. (2018). A structural optimisation method for a soft pneumatic actuator. Robotics 7, 24. doi:10.3390/robotics7020024

Iqbal, J., Xu, R., Sun, S., and Li, C. (2020). Simulation of an autonomous mobile robot for LiDAR-based in-field phenotyping and navigation. Robotics 9, 46. doi:10.3390/robotics9020046

Javaid, M., Haleem, A., Singh, R. P., and Suman, R. (2022). Enhancing smart farming through the applications of Agriculture 4.0 technologies. Int. J. Intelligent Netw. 3, 150–164. doi:10.1016/j.ijin.2022.09.004

Jia, W., Zhang, Z., Shao, W., Hou, S., Ji, Z., Liu, G., et al. (2021). FoveaMask: a fast and accurate deep learning model for green fruit instance segmentation. Comput. Electron. Agric. 191, 106488. doi:10.1016/j.compag.2021.106488

Jones, M. W., Baerentzen, J. A., and Sramek, M. (2006). 3D distance fields: a survey of techniques and applications. IEEE Trans. Vis. Comput. Graph. 12, 581–599. doi:10.1109/TVCG.2006.56

Jordan, M. I., and Mitchell, T. M. (2015). Machine learning: trends, perspectives, and prospects. Science 349, 255–260. doi:10.1126/science.aaa8415

Kang, H., Zhou, H., and Chen, C. (2020a). Visual perception and modeling for autonomous apple harvesting. IEEE Access 8, 62151–62163. doi:10.1109/ACCESS.2020.2984556

Kang, H., Zhou, H., Wang, X., and Chen, C. (2020b). Real-time fruit recognition and grasping estimation for robotic apple harvesting. Sensors 20, 5670. doi:10.3390/s20195670

Khalifeh, A. F., AlQammaz, A. Y., Abualigah, L., Khasawneh, A. M., and Darabkh, K. A. (2022). A machine learning-based weather prediction model and its application on smart irrigation. J. Intelligent Fuzzy Syst. 43, 1835–1842. doi:10.3233/JIFS-219284

Khanna, R., Möller, M., Pfeifer, J., Liebisch, F., Walter, A., and Siegwart, R. (2015). Beyond point clouds - 3D mapping and field parameter measurements using UAVs.

Kierdorf, J., Junker-Frohn, L. V., Delaney, M., Olave, M. D., Burkart, A., Jaenicke, H., et al. (2023). GrowliFlower: an image time-series dataset for GROWth analysis of cauLIFLOWER. J. Field Robotics 40, 173–192. doi:10.1002/rob.22122

Kim, S., Hong, S.-J., Ryu, J., Kim, E., Lee, C.-H., and Kim, G. (2023). Application of amodal segmentation on cucumber segmentation and occlusion recovery. Comput. Electron. Agric. 210, 107847. doi:10.1016/j.compag.2023.107847

Kocian, A., Massa, D., Cannazzaro, S., Incrocci, L., Di Lonardo, S., Milazzo, P., et al. (2020). Dynamic Bayesian network for crop growth prediction in greenhouses. Comput. Electron. Agric. 169, 105167. doi:10.1016/j.compag.2019.105167

Kokab, H. S., and Urbanic, R. J. (2019). Extracting of cross section profiles from complex point cloud data sets. IFAC-PapersOnLine 52, 346–351. doi:10.1016/j.ifacol.2019.10.055

Langer, M., Gabdulkhakova, A., and Kropatsch, W. G. (2019). “Non-centered Voronoi skeletons,” in Discrete geometry for computer imagery lecture notes in computer science. Editors M. Couprie, J. Cousty, Y. Kenmochi, and N. Mustafa (Cham: Springer International Publishing), 355–366. doi:10.1007/978-3-030-14085-4_28

Lehnert, C., McCool, C., Sa, I., and Perez, T. (2020). Performance improvements of a sweet pepper harvesting robot in protected cropping environments. J. Field Robotics 37, 1197–1223. doi:10.1002/rob.21973

Liu, G., Nouaze, J. C., Touko Mbouembe, P. L., and Kim, J. H. (2020). YOLO-tomato: a robust algorithm for tomato detection based on YOLOv3. Sensors 20, 2145. doi:10.3390/s20072145

Liu, Y., Ma, X., Shu, L., Hancke, G. P., and Abu-Mahfouz, A. M. (2021). From industry 4.0 to agriculture 4.0: current status, enabling technologies, and research challenges. IEEE Trans. Industrial Inf. 17, 4322–4334. doi:10.1109/TII.2020.3003910

Maponya, M. G., van Niekerk, A., and Mashimbye, Z. E. (2020). Pre-harvest classification of crop types using a Sentinel-2 time-series and machine learning. Comput. Electron. Agric. 169, 105164. doi:10.1016/j.compag.2019.105164

Marangoz, S., Zaenker, T., Menon, R., and Bennewitz, M. (2022). “Fruit mapping with shape completion for autonomous crop monitoring,” in IEEE 18th International Conference on Automation Science and Engineering (CASE), USA, 20-24 Aug. 2022 (IEEE), 471–476. doi:10.1109/CASE49997.2022.9926466

McKinsey and Company (2022). What is IoT: the internet of things explained | McKinsey. Available at: https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-the-internet-of-things (Accessed August 13, 2023).

Mekala, M. S., and Viswanathan, P. (2019). CLAY-MIST: IoT-cloud enabled CMM index for smart agriculture monitoring system. Measurement 134, 236–244. doi:10.1016/j.measurement.2018.10.072

Miao, Z., Yu, X., Li, N., Zhang, Z., He, C., Li, Z., et al. (2023). Efficient tomato harvesting robot based on image processing and deep learning. Precis. Agric. 24, 254–287. doi:10.1007/s11119-022-09944-w

Miragaia, R., Chávez, F., Díaz, J., Vivas, A., Prieto, M. H., and Moñino, M. J. (2021). Plum ripeness analysis in real environments using deep learning with convolutional neural networks. Agronomy 11, 2353. doi:10.3390/agronomy11112353

Mu, L., Cui, G., Liu, Y., Cui, Y., Fu, L., and Gejima, Y. (2020). Design and simulation of an integrated end-effector for picking kiwifruit by robot. Inf. Process. Agric. 7, 58–71. doi:10.1016/j.inpa.2019.05.004

Näf, M., Székely, G., Kikinis, R., Shenton, M. E., and Kübler, O. (1997). 3D Voronoi skeletons and their usage for the characterization and recognition of 3D organ shape. Comput. Vis. Image Underst. 66, 147–161. doi:10.1006/cviu.1997.0610

NVIDIA Corporation (2020). Kaolin suite of tools. NVIDIA Dev. Available at: https://developer.nvidia.com/kaolin (Accessed August 13, 2023).

NX software Siemens Software. Siemens digital industries software. Available at: https://plm.sw.siemens.com/en-US/nx/ (Accessed August 23, 2023).

Odhner, L. U., Jentoft, L. P., Claffee, M. R., Corson, N., Tenzer, Y., Ma, R. R., et al. (2014). A compliant, underactuated hand for robust manipulation. Int. J. Robotics Res. 33, 736–752. doi:10.1177/0278364913514466

Ogniewicz, R., and Ilg, M. (1992). “Voronoi skeletons: theory and applications,” in Proceedings 1992 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (USA), 63–69. doi:10.1109/CVPR.1992.223226

Oleynikova, H., Taylor, Z., Fehr, M., Siegwart, R., and Nieto, J. (2017). Voxblox: incremental 3D euclidean signed distance fields for on-board MAV planning. IEEE/RSJ Int. Conf. Intelligent Robots Syst. (IROS), 1366. doi:10.1109/IROS.2017.8202315

Open Source Robotics Foundation, Inc. Gazebo. Available at: https://gazebosim.org/home (Accessed August 17, 2023).

Panasonic (2018). Introducing AI-equipped tomato harvesting robots to farms may help to create Jobs | business solutions | products & solutions | feature story. Panasonic Newsroom Global. Available at: https://news.panasonic.com/global/stories/814 (Accessed August 5, 2023).

Paunov, C., and Satorra, S. (2019). How are digital technologies changing innovation? doi:10.1787/67bbcafe-en

Peelar, S., Urbanic, J., Hedrick, R., and Rueda, L. (2019). Real-time visualization of bead based additive manufacturing toolpaths using implicit boundary representations. CAD&A 16, 904–922. doi:10.14733/cadaps.2019.904-922

Praveen Kumar, D., Amgoth, T., and Annavarapu, C. S. R. (2019). Machine learning algorithms for wireless sensor networks: a survey. Inf. Fusion 49, 1–25. doi:10.1016/j.inffus.2018.09.013

Recchia, A., Strelkova, D., Urbanic, J., Kim, E., Anwar, A., Murugan, A. S., et al. (2023). A prototype pick and place solution for harvesting white button mushrooms using a collaborative robot. Robot. Rep. 1 (1), 67–81. doi:10.1089/rorep.2023.0016

Ridder and MetoMotion. GRoW tomato harvesting robot | for picking, collecting and boxing your tomatoes. Available at: https://grow.ridder.com (Accessed August 5, 2023).

Rodríguez, J. P., Montoya-Munoz, A. I., Rodriguez-Pabon, C., Hoyos, J., and Corrales, J. C. (2021). IoT-Agro: a smart farming system to Colombian coffee farms. Comput. Electron. Agric. 190, 106442. doi:10.1016/j.compag.2021.106442

Rodríguez-Robles, J., Martin, Á., Martin, S., Ruipérez-Valiente, J. A., and Castro, M. (2020). Autonomous sensor network for rural agriculture environments, low cost, and energy self-charge. Sustainability 12, 5913. doi:10.3390/su12155913

Rus, D., and Tolley, M. T. (2015). Design, fabrication and control of soft robots. Nature 521, 467–475. doi:10.1038/nature14543

Ryan, M. (2023). Labour and skills shortages in the agro-food sector. Paris: OECD. doi:10.1787/ed758aab-en

Sadowski, S., and Spachos, P. (2020). Wireless technologies for smart agricultural monitoring using internet of things devices with energy harvesting capabilities. Comput. Electron. Agric. 172, 105338. doi:10.1016/j.compag.2020.105338

Semios (2023). Discover our crop management solutions. Semios. Available at: https://semios.com/solutions/ (Accessed August 1, 2023).

Sepúlveda, D., Fernández, R., Navas, E., Armada, M., and González-De-Santos, P. (2020). Robotic aubergine harvesting using dual-arm manipulation. IEEE Access 8, 121889–121904. doi:10.1109/ACCESS.2020.3006919

Shafi, U., Mumtaz, R., García-Nieto, J., Hassan, S. A., Zaidi, S. A. R., and Iqbal, N. (2019). Precision agriculture techniques and practices: from considerations to applications. Sensors 19, 3796. doi:10.3390/s19173796

Shi, X., An, X., Zhao, Q., Liu, H., Xia, L., Sun, X., et al. (2019). State-of-the-Art internet of things in protected agriculture. Sensors 19, 1833. doi:10.3390/s19081833

Shintake, J., Cacucciolo, V., Floreano, D., and Shea, H. (2018). Soft robotic grippers. Adv. Mater. 30, 1707035. doi:10.1002/adma.201707035

Siemens Industry Software (2011). Siemens industry software. Available at: https://www.plm.automation.siemens.com/media/store/en_us/4917_tcm1023-4952_tcm29-1992.pdf (Accessed August 12, 2023).

Sishodia, R. P., Ray, R. L., and Singh, S. K. (2020). Applications of remote sensing in precision agriculture: a review. Remote Sens. 12, 3136. doi:10.3390/rs12193136

Stepanova, A., Pham, H., Panthi, N., Zourmand, A., Christophe, F., Laakkonen, M.-P., et al. (2023). Harvesting tomatoes with a Robot: an evaluation of Computer-Vision capabilities. IEEE Int. Conf. Aut. Robot Syst. Compet. (ICARSC), 63–68. doi:10.1109/ICARSC58346.2023.10129601

Taşan, S., Cemek, B., Taşan, M., and Cantürk, A. (2022). Estimation of eggplant yield with machine learning methods using spectral vegetation indices. Comput. Electron. Agric. 202, 107367. doi:10.1016/j.compag.2022.107367

Tatsumi, K., Igarashi, N., and Mengxue, X. (2021). Prediction of plant-level tomato biomass and yield using machine learning with unmanned aerial vehicle imagery. Plant Methods 17, 77. doi:10.1186/s13007-021-00761-2

Tesfaye, A. A., Osgood, D., and Aweke, B. G. (2021). Combining machine learning, space-time cloud restoration and phenology for farm-level wheat yield prediction. Artif. Intell. Agric. 5, 208–222. doi:10.1016/j.aiia.2021.10.002

The AnyLogic Company. AnyLogic. Available at: https://www.anylogic.com/features/ (Accessed August 17, 2023).

Thilakarathne, N. N., Bakar, M. S. A., Abas, P. E., and Yassin, H. (2023). Towards making the fields talks: a real-time cloud enabled IoT crop management platform for smart agriculture. Front. Plant Sci. 13, 1030168. doi:10.3389/fpls.2022.1030168

Torgbor, B. A., Rahman, M. M., Brinkhoff, J., Sinha, P., and Robson, A. (2023). Integrating remote sensing and weather variables for mango yield prediction using a machine learning approach. Remote Sens. 15, 3075. doi:10.3390/rs15123075

Urbanic, R. J., and Elmaraghy, W. H. (2008). Design recovery of internal and external features for mechanical components. Virtual Phys. Prototyp. 3, 61–83. doi:10.1080/17452750802078698

Várady, T., Martin, R. R., and Cox, J. (1997). Reverse engineering of geometric models—an introduction. Computer-Aided Des. 29, 255–268. doi:10.1016/S0010-4485(96)00054-1

Vijayakumar, V., Costa, L., and Ampatzidis, Y. (2021). “Prediction of citrus yield with AI using ground-based fruit detection and UAV imagery,” in 2021 ASABE annual international virtual meeting (St. Joseph, MI: American Society of Agricultural and Biological Engineers). doi:10.13031/aim.202100493

Wang, J., Zhang, Z., Luo, L., Zhu, W., Chen, J., and Wang, W. (2021). SwinGD: a robust grape bunch detection model based on swin transformer in complex vineyard environment. Horticulturae 7, 492. doi:10.3390/horticulturae7110492

Wang, X., Kang, H., Zhou, H., Au, W., Wang, M. Y., and Chen, C. (2023). Development and evaluation of a robust soft robotic gripper for apple harvesting. Comput. Electron. Agric. 204, 107552. doi:10.1016/j.compag.2022.107552

Weyler, J., Milioto, A., Falck, T., Behley, J., and Stachniss, C. (2021). Joint plant instance detection and leaf count estimation for in-field plant phenotyping. IEEE Robotics Automation Lett. 6, 3599–3606. doi:10.1109/LRA.2021.3060712

Williams, H. A. M., Jones, M. H., Nejati, M., Seabright, M. J., Bell, J., Penhall, N. D., et al. (2019). Robotic kiwifruit harvesting using machine vision, convolutional neural networks, and robotic arms. Biosyst. Eng. 181, 140–156. doi:10.1016/j.biosystemseng.2019.03.007

Wise, K., Wedding, T., and Selby-Pham, J. (2022). Application of automated image colour analyses for the early-prediction of strawberry development and quality. Sci. Hortic. 304, 111316. doi:10.1016/j.scienta.2022.111316

Xiong, Y., Peng, C., Grimstad, L., From, P. J., and Isler, V. (2019). Development and field evaluation of a strawberry harvesting robot with a cable-driven gripper. Comput. Electron. Agric. 157, 392–402. doi:10.1016/j.compag.2019.01.009

Xu, H., Yu, G., Niu, C., Zhao, X., Wang, Y., and Chen, Y. (2023). Design and experiment of an underactuated broccoli-picking manipulator. Agriculture 13, 848. doi:10.3390/agriculture13040848

Yin, H., Sun, Q., Ren, X., Guo, J., Yang, Y., Wei, Y., et al. (2023). Development, integration, and field evaluation of an autonomous citrus-harvesting robot. J. Field Robotics 40, 1363–1387. doi:10.1002/rob.22178

Yu, X., Fan, Z., Wang, X., Wan, H., Wang, P., Zeng, X., et al. (2021). A lab-customized autonomous humanoid apple harvesting robot. Comput. Electr. Eng. 96, 107459. doi:10.1016/j.compeleceng.2021.107459

Yu, Y., Zhang, K., Yang, L., and Zhang, D. (2019). Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN. Comput. Electron. Agric. 163, 104846. doi:10.1016/j.compag.2019.06.001

Zamora-Izquierdo, M. A., Santa, J., Martínez, J. A., Martínez, V., and Skarmeta, A. F. (2019). Smart farming IoT platform based on edge and cloud computing. Biosyst. Eng. 177, 4–17. doi:10.1016/j.biosystemseng.2018.10.014

Keywords: internet of things, industry 4.0, CAD, CAM, produce harvesting, automated harvesting

Citation: Recchia A and Urbanic J (2023) Leveraging I4.0 smart methodologies for developing solutions for harvesting produce. Front. Manuf. Technol. 3:1282843. doi: 10.3389/fmtec.2023.1282843

Received: 24 August 2023; Accepted: 29 November 2023; Published: 15 December 2023.

Edited by:

Weidong Li, Coventry University, United Kingdom

Reviewed by:

Roberto Simoni, Federal University of Santa Catarina, Brazil

Antonio Carlos Valdiero, Federal University of Santa Catarina, Brazil

Copyright © 2023 Recchia and Urbanic. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jill Urbanic, jurbanic@uwindsor.ca
