- ¹Department of Applied Language Studies, Nebrija University, Madrid, Spain

- ²Nebrija Research Center in Cognition, Nebrija University, Madrid, Spain

- ³Department of Language and Culture (ISK), UiT The Arctic University of Norway, Tromsø, Norway

- ⁴Department of Modern Languages and Classics, The University of Alabama, Tuscaloosa, AL, United States

- ⁵Department of World Languages and Cultures, The University of Tennessee, Knoxville, Knoxville, TN, United States

- ⁶Department of World Languages and Cultures, Northern Illinois University, DeKalb, IL, United States

The multidimensional nature of bilingualism demands ecologically valid and inclusive research methods that can capture its dynamism and diversity. This is particularly relevant when assessing language proficiency in minoritized and racialized communities, including heritage speakers (HSs). Motivated by a paradigm shift in bilingualism research, the present study joined current efforts to establish best practices for assessing language proficiency among bilingual individuals accurately and consistently, promoting ecological validity and inclusivity. Specifically, we examined the reliability and validity of objective and subjective proficiency assessments ubiquitously used in second language (L2) and bilingualism research to assess Spanish proficiency, within a sample of HSs of Spanish in the United States (US). We also sought to understand the relationships between these proficiency assessments and a subset of heritage language (HL) experience factors. To our knowledge, this is the first study to examine the reliability and validity of these proficiency assessments and their relationship with HL experience factors with HSs of Spanish in the US in a multidimensional way. Forty-three HSs of Spanish completed the Bilingual Language Profile questionnaire, which included self-reports of proficiency and information about HL experience, and two objective proficiency assessments: a lexical decision task, namely the Lextale-Esp, and a vocabulary and grammar task, often referred to as the “Modified DELE”. Our findings revealed high internal consistency for both objective proficiency assessments and medium correlations between them, supporting their reliability and validity. However, our results also revealed inconsistent relationships between subjective proficiency assessments and HL experience factors. These findings underscore the dynamic interplay between these HSs' objective and subjective proficiency, and HL experiences and use across different contexts. 
Additionally, they highlight the limitations of relying on any single proficiency assessment, aligning with previous research that emphasizes the need for multidimensional proficiency assessments and language experience factors to capture the dynamic and diverse nature of bilingualism. By critically evaluating the reliability and validity of existing objective and subjective proficiency assessments alongside HL experience factors, our study aims to shed light on the best practices of assessing language proficiency among bilingual individuals, specifically HSs of Spanish in the US, in an ecologically valid and inclusive manner.

1 Introduction

Bilingualism, characterized by regular engagement with two (or more) languages in daily life, regardless of level of proficiency in each language, is a subject of profound academic interest due to its influence on multiple dimensions of the human experience, including identity, language development, communication, sociocultural engagement, and neuro/psychological functioning (e.g., Birdsong, 2014; Dewaele et al., 2003; Grosjean, 2010). Within this landscape, heritage language (HL) bilingualism occupies a distinct and significant place. It is a complex and dynamic phenomenon that reflects the experiences of individuals who grow up speaking a native language, their HL, that differs from the dominant language of their wider societal context.

Heritage speakers (HSs) constitute a unique group of bilinguals. Although these speakers acquire their HL early and naturalistically, they often navigate a sociolinguistic landscape characterized by challenges. These include reduced linguistic input (especially written input in academic contexts) and fewer opportunities to meaningfully engage with, use, or be formally trained in their HL (e.g., Flores, 2015; Rothman and Treffers-Daller, 2014; Valdés, 2005). Additionally, HLs are frequently marginalized within broader societal contexts, facing systematic neglect in educational, governmental, and cultural domains. This marginalization is deeply intertwined with raciolinguistic ideologies that both reflect and reinforce societal hierarchies based on race and language (e.g., Rosa and Flores, 2017; Zou and Cheryan, 2017). For example, within the United States (US), HSs of Spanish often encounter policies and practices that prioritize English proficiency and use, sometimes to the detriment of their HL development. This can manifest in educational settings where English-only instruction predominates, limiting opportunities for HL development and contributing to potential loss over time (e.g., Beaudrie and Fairclough, 2012; Christoffersen, 2019; Flores and García, 2017; García and Solorza, 2021; Kelly, 2018; Lee and Wright, 2014; Leeman, 2015; Leeman and Martínez, 2007; Sánchez-Muñoz, 2016). Furthermore, societal attitudes toward Spanish and bilingualism are mixed, with some segments of society viewing Spanish and/or bilingualism as an asset, while others may perceive it negatively (e.g., Achugar and Pessoa, 2009; Barrett et al., 2023; Fuller and Leeman, 2020; Surrain and Luk, 2023). Additionally, the availability of educational opportunities for HSs of Spanish may vary depending on factors such as geographic location, socioeconomic status, and access to resources, leading to disparities in outcomes (e.g., Bohman et al., 2010; Paradis, 2023; Rothman, 2009). 
Navigating this complex sociolinguistic landscape presents unique challenges for HSs of Spanish, as they strive to maintain their HL and bicultural identity while also adapting to the linguistic and cultural hegemonic norms of their environment (e.g., Holguín Mendoza et al., 2023; Pascual y Cabo and Prada, 2018).

We acknowledge that, as researchers, we have an obligation to contribute knowledge that can help address these challenges to promote linguistic diversity, cultural preservation, and equitable educational opportunities for HSs of Spanish in the US (e.g., Flores, 2020; Flores and Rosa, 2015, 2023; Flores and Schissel, 2014; García et al., 2021).

For researchers and practitioners working with HSs, assessing language proficiency takes on a multifaceted character and involves considering cultural identity, communication, and sociolinguistic engagement (e.g., Pascual y Cabo and Prada, 2015; Valdés, 2005). However, many tasks utilized to evaluate language proficiency rely on standardized assessments based on monolingual benchmarks, prioritizing prescriptive linguistic norms and language usage (e.g., Bachman and Palmer, 1996; Bayram et al., 2021b; Cummins, 2013). While these proficiency assessments can offer useful data for the purposes of HL bilingualism research, they also present limitations in capturing the rich diversity and dynamic nature of bilingual experiences and the sociocultural and linguistic abilities of bilingual individuals in a holistic way. Moreover, the exclusive use of such tasks can inadvertently perpetuate negative stereotypes and disregard the sociocultural dimensions inherent in bilingualism, which are a core part of HSs' lived experiences (Flores and Rosa, 2015; Ortega, 2020).

Recognizing these limitations, there has been a notable paradigm shift in bilingualism research to establish best practices for assessing language proficiency among bilingual individuals while promoting ecological validity and inclusivity (e.g., De Bruin, 2019; López et al., 2023). Within this context, ecological validity refers to the extent to which research findings about bilingualism apply to real-world bilingual settings, such that results can be generalized to everyday bilingual experiences beyond the controlled conditions of a research laboratory. Inclusivity ensures that bilingual individuals' diverse experiences and backgrounds are accurately represented and respected in research. An integral part of this shift acknowledges that bilingual individuals are not simply two monolinguals in one person; instead, bilingualism is viewed as multifaceted and dynamic, including unique phenomena such as code-switching and translanguaging, where speakers fluidly alternate between languages across conversations and/or contexts. These practices, inherent to the bilingual experience, reflect the adaptive nature of bilingualism across diverse contexts of language use, contexts which are essential to understanding the full breadth of bilingual realities. To advance this understanding, researchers have begun to propose and incorporate new methodologies that better capture the diverse and dynamic language proficiencies and experiences of bilingual individuals. By prioritizing ecological validity and inclusivity, these new approaches aim to reflect the complex nature and dynamics of bilingualism more accurately, making research findings more relevant and applicable to real-world settings (e.g., Ali, 2023; Bayram et al., 2019, 2021a; Cacoullos and Travis, 2018; Grosjean, 1989, 2010; Gullifer et al., 2021; Higby et al., 2023; Leivada et al., 2023; Prada, 2021, 2022; Rothman et al., 2023; Toribio and Duran, 2018).

Despite this shift, there remains a lack of consensus in the field regarding which proficiency assessments best capture the multifaceted nature of bilingualism in an accurate and consistent way, especially for HSs. Furthermore, the wide variety of proficiency measurements used across studies limits the generalizability of results and complicates cross-study comparability, thereby hindering the advancement of knowledge in the field (Olson, 2023a). Thus, the first step to creating best practices for assessing proficiency in HSs is to better examine and understand these various proficiency assessments, both their reliability and validity. Key aspects, such as internal consistency within reliability, and construct and ecological validity within overall validity, play crucial roles in this process. Note that reliability does not tell us the specific nature of what is being measured—only that the measurement is consistent across items. In contrast, construct validity focuses on determining whether the test accurately measures the intended concept or construct, while ecological validity examines how well the test results apply to real-world contexts (Brown, 2013; Crocker and Algina, 1986; Kline, 2013; Tavakol and Dennick, 2011). These aspects can be evaluated by assessing the internal consistency of the test items to ensure reliability, examining how well the test relates to other assessments designed to measure the same construct, which supports construct validity, and by assessing how well the results reflect real-life situations, which is crucial for ecological validity. Through these comprehensive examinations, researchers can better identify reliable and valid proficiency assessments.
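As an illustration of the two quantitative checks described above, the following minimal Python sketch computes Cronbach's alpha (a common index of internal consistency) and a Pearson correlation between two sets of scores (one common way to examine whether two assessments tap the same construct). The function names and the toy data are hypothetical and are not taken from the present study; the sketch assumes complete item-level data with no missing responses.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants-by-items score matrix.

    `scores` is a list of rows, one per participant; each row holds that
    participant's score on every item (all rows the same length).
    """
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose: one tuple per item
    item_vars = [variance(col) for col in items]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical toy data: 4 participants x 3 dichotomous items.
toy_items = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
alpha = cronbach_alpha(toy_items)

# Hypothetical total scores on two different assessments.
task_a = [10, 14, 19, 25]
task_b = [31, 35, 44, 52]
r = pearson_r(task_a, task_b)
```

Note that a high alpha indicates only that items covary consistently; as stated above, it does not by itself show what the items measure, which is why the correlation with a second assessment is examined separately.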

Enhancing the robustness of bilingualism research depends significantly on using the most reliable and valid methodologies, including proficiency assessments. This approach facilitates knowledge development in the field, especially if researchers can converge on a smaller set of the most robust measures, which are then used consistently across studies. As Olson (2023a) states, “given the important role that proficiency plays in the field, and notably in the comparability of results across multiple studies, proficiency assessment remains a key methodological consideration” (p. 7). Thus, by attending to these aspects of reliability and validity, researchers can ensure that their work contributes to a more reliable and valid understanding of bilingual proficiency, advancing the field in a more ecologically valid and inclusive way.

Our study aimed to contribute to this effort by examining the reliability and validity of a set of objective and subjective proficiency assessments focusing on a specific group of bilinguals: HSs of Spanish in the US. Specifically, we investigated the reliability and validity of objective and subjective proficiency assessments that are widely used in L2 and bilingualism research. The two objective assessments were a lexical decision task, the Lextale-Esp (Izura et al., 2014), and a vocabulary-grammar task often called the “Modified DELE” (Montrul and Ionin, 2012). The subjective assessments were derived from proficiency self-reports in the Bilingual Language Profile questionnaire (BLP; Birdsong et al., 2012). Our goal was to examine these tasks within a sub-group of college-educated HSs of Spanish, who were not the target population for which the assessments were developed.

Additionally, as part of our assessment of ecological validity, we sought to understand the relationships between these proficiency assessments and particular HL experience factors, including years of exposure to Spanish, years of Spanish schooling, and the social diversity of bilingual language use, as assessed by language entropy (following Gullifer and Titone, 2018). This multidimensional approach aimed to shed light on the effectiveness of these assessments in capturing and characterizing the dynamic and diverse nature of HSs' proficiency and experiences and of HL bilingualism in an ecologically valid and inclusive way. To our knowledge, this is the first study to examine the reliability and validity—specifically internal consistency, construct validity, and ecological validity—of these proficiency assessments and their relationship with HL experience factors with HSs of Spanish in the US.
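To make the notion of language entropy concrete, the sketch below computes Shannon entropy over the proportions of language use reported for a single communicative context, which is the general form of the measure associated with Gullifer and Titone (2018). The function name, the input format, and the example proportions are assumptions made for illustration; they do not reproduce the exact questionnaire items or preprocessing used in the present study.

```python
from math import log2


def language_entropy(usage):
    """Shannon entropy (in bits) of language use within one context.

    `usage` is a list of non-negative amounts of use, one per language
    (e.g., proportions or rating-derived weights). Values are normalized
    to sum to 1 before the entropy is computed.
    """
    total = sum(usage)
    if total <= 0:
        raise ValueError("usage must contain at least one positive value")
    probs = [u / total for u in usage]
    # Languages with zero use contribute nothing to the sum.
    return sum(-p * log2(p) for p in probs if p > 0)


# Hypothetical contexts for a Spanish-English bilingual:
home = language_entropy([0.5, 0.5])   # balanced use of both languages
work = language_entropy([1.0, 0.0])   # only one language used
```

With two languages, the value ranges from 0 (a single language is used in that context) to 1 (both languages are used equally), so higher entropy reflects a more integrated, socially diverse pattern of language use.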

2 Background

To enhance our ability to characterize the Spanish proficiency of HSs in the US in an ecologically valid and inclusive manner, it is crucial to disentangle the different concepts and terms that are used in the field to describe and evaluate the receptive and productive linguistic proficiency of HSs. In this section, we begin by unpacking the concept of proficiency, a term that is ubiquitous in the literature but often used without a clear operationalization. Next, we review studies that have explored interactions between bilingual language proficiency and exposure, comparing objective and subjective assessments of proficiency across different modalities and bilingual populations. Finally, we review previous research that has provided insights into the reliability and validity of the objective and subjective proficiency assessments examined in our study.

A recent review of proficiency assessment methods in bilingualism research (Olson, 2023a) traces the evolution of definitions of the term proficiency. Such definitions begin with notions of general competence in a language (e.g., Thomas, 1994) and expand to communicative competence in different sociocultural contexts (e.g., Canale and Swain, 1980; Hymes, 1972). Other definitions conceptualize this construct as (at least) two-dimensional, composed of a linguistic knowledge dimension (e.g., morphosyntactic, lexical) and a language skills dimension (reading, writing, speaking, listening) (e.g., Carroll and Freedle, 1972). More recent conceptualizations merge linguistic knowledge, language abilities or skills, and communicative competence into multidimensional models (e.g., Hulstijn, 2015; Hyltenstam, 2016). These models ultimately converge in the notion that proficiency is a combination of skills and knowledge that allow speakers to comprehend and produce language successfully (Olson, 2023a).

Several different types of assessment methods have been used to characterize proficiency in bilinguals, including (a) standardized language-specific tests such as the TOEFL, (b) self-ratings, (c) area-specific tests (e.g., vocabulary tests, picture-naming tasks, etc.), (d) multiple component tests (e.g., an elicited imitation task), (e) holistic assessments such as the Oral Proficiency Interview, or (f) characterization based on curricular level (Olson, 2023a). Each of these approaches has its theoretical or practical justification but also has specific methodological limitations [as discussed by Menke and Malovrh (2021); Olson (2023a)]. Assessments of proficiency among bilinguals can be (and are) used for different purposes in research, including to examine as a variable of interest, to characterize (e.g., “intermediate level”), to group, and/or to exclude participants, as well as to make cross-study comparisons (Olson, 2023a). Thus, they are critical to examine from a methodological viewpoint. As revealed by Surrain and Luk (2019), there is a tendency to oversimplify the construct of proficiency based on a single metric. This oversimplification can lead to the categorization or assignment of potentially misleading labels to bilingual speakers, overlooking the multidimensional nature of bilingual language proficiency and the diverse factors that contribute to it.

A number of studies have explored relationships between objective and subjective proficiency assessments to evaluate and characterize different aspects of language proficiency among diverse bilingual populations with varying results. For instance, Gollan et al. (2012) investigated language dominance among Spanish-English bilinguals, including young and older adults. Their study examined the Multilingual Naming Test (MINT) and the Boston Naming Test (BNT), both picture-naming tasks, as objective measures, and proficiency interviews and subjective self-reports of language proficiency as subjective measures. The results revealed that while self-ratings of proficiency and proficiency interviews generally aligned well with the results of the MINT in determining language dominance, the BNT often classified participants as more English-dominant than other assessments. This discrepancy is particularly significant because it highlights a key issue in bilingual language assessment: the potential for tasks originally designed for monolingual speakers, like the BNT, to misrepresent the abilities of bilingual individuals. Specifically, the BNT appeared to underestimate proficiency in Spanish, suggesting that such tools may not be fully reliable for assessing language proficiency in bilingual populations. Furthermore, the study found that a substantial portion of participants—up to 60%—performed better on tasks involving their self-reported non-dominant language. This finding suggests that bilinguals may possess a higher level of proficiency in their non-dominant language than they perceive or that certain tasks may be more sensitive to different aspects of language proficiency in dominant vs. non-dominant languages.

Similarly, Sheng et al. (2014) explored the relationship between subjective and objective assessments of language dominance among Mandarin-English bilinguals, employing similar assessments as those used by Gollan et al. (2012), such as the MINT and self-reported proficiency. Their findings echoed the earlier study in that discrepancies existed between self-reports and objective assessments. Specifically, self-ratings of language dominance did not always align with the results of the MINT, suggesting that self-perceptions of language abilities can be influenced by factors other than actual proficiency, such as cultural attitudes or confidence levels in using a particular language. A key finding from the study by Sheng et al. (2014) was that the degree of convergence or divergence between subjective and objective assessments could vary depending on the language pair and the context in which the languages are used. For instance, Mandarin-English bilinguals who used both languages regularly in different domains (e.g., Mandarin at home, English at work) were more likely to have self-ratings that diverged from their MINT results. The results of these studies highlight the importance of using multiple, carefully chosen tasks to assess bilingual proficiency comprehensively. The observed differences between subjective assessments, like self-ratings, and certain objective assessments underscore the need to use assessment methods that accurately reflect and characterize bilingual individuals' dynamic and diverse linguistic abilities. This complexity underscores that a single approach may not suffice, and a more multidimensional strategy, which integrates both subjective experiences and objective linguistic abilities, is crucial for a more accurate representation of bilingual proficiency.

Other studies have focused on the role of language experience factors in influencing the reliability and validity of proficiency assessments. Tomoschuk et al. (2019) examined the relationship between self-ratings and picture-naming tasks across Spanish-English and Chinese-English bilinguals with varying acquisition backgrounds. Their findings showed discrepancies between self-ratings and picture-naming results across different language groups, with some individuals rating their proficiency higher or lower than what was reflected in their performance on the objective task. These discrepancies suggest that individual biases or differing interpretations may influence subjective assessments, while objective assessments, such as picture-naming tasks, were more reliable indicators of proficiency. Additionally, their results underscored the importance of considering language experience factors, including the amount and context of language exposure, as these were found to impact the reliability of both subjective and objective assessments significantly. Relatedly, Gullifer and Titone (2020) explored bilingual language proficiency among French-English bilinguals (with varying experience backgrounds) using a combination of objective and subjective assessments. Objective assessments included picture-naming ability and verbal fluency tests, while subjective assessments encompassed self-reports. Their study investigated how factors such as timing and amount of HL exposure influence proficiency outcomes across different communicative contexts. The findings revealed nuanced patterns in language exposure and proficiency, indicating that subjective assessments can sometimes provide more accurate insights than expected, particularly for assessing L2 proficiency. 
Specifically, the study highlighted how language exposure across various communicative contexts exhibited distinct but interrelated patterns that contributed to a more comprehensive self-assessment of L2 proficiency compared to L1 proficiency.

Additionally, Gehebe et al. (2023) used both objective (ACTFL and DIALANG standardized proficiency tests) and subjective proficiency assessments (self-rated proficiency and Can-Do statements) among young adult bilinguals with varying levels of exposure to English as their L2 (and one of over a dozen different non-English languages as their L1). Their findings revealed that proficiency assessment outcomes varied based on exposure levels to the L2 and domains of language proficiency, revealing the impact of language exposure on proficiency assessments. In their study, participants with higher English exposure demonstrated more consistent proficiency outcomes across subjective and objective assessments, supporting the validity of standardized assessments in capturing proficiency differences among this group. Yet, subjective assessments provided insights into self-perceptions and confidence in language use, complementing the quantitative data of standardized tests. Hržica et al. (2024) added another layer of complexity by examining the relationship between self-assessment of language proficiency and objective assessments of lexical diversity and syntactic complexity among bilingual HSs of Italian in Croatia. Their study specifically focused on a diglossic community, where individuals regularly navigate between a standard language (Italian) and a regional dialect (Istrovenetian) in different contexts. The findings revealed an intricate interplay between objective and subjective language proficiency assessments, indicating that although subjective assessments can provide valuable insights, they do not always fully align with objective language proficiency assessments. Specifically, the results of their study revealed that self-assessment scores were generally higher for the standard language compared to the regional dialect, reflecting the different social statuses and usage contexts of the two language varieties. 
However, objective assessments, such as lexical diversity and syntactic complexity, often painted a different picture, sometimes showing higher proficiency for the regional dialect, particularly in spoken contexts.

Taken together, these findings highlight the importance of considering language experience factors when assessing bilingual proficiency. A balanced approach that integrates both objective and subjective assessments is essential for capturing the full scope of bilingual language proficiency, particularly in individuals with diverse linguistic backgrounds. The complexity of bilingualism underscores the need to evaluate proficiency within multiple socio-experiential contexts. This multidimensional approach, supported by previous studies, allows for a more accurate and dynamic understanding of bilingual proficiency. While subjective assessments provide valuable insights, they may not fully capture the intricate relationship between language experience and proficiency, making it crucial to complement them with objective assessments.

Finally, it is worth noting that some studies have found self-ratings to be highly correlated with other well-documented, production-oriented, objective assessments of proficiency in bilinguals, supporting their validity. For example, robust correlations have been found with both the Elicited Imitation Task (EIT) and Simulated Oral Proficiency Interview (SOPI) for L2 learners (Bowden, 2016), and with the EIT for L2 learners and HSs (Faretta-Stutenberg et al., 2023). These findings suggest that self-ratings can serve as more reliable indicators of proficiency when aligned with certain oral and production-oriented tasks. However, it is essential to recognize that the effectiveness of self-ratings may vary depending on the specific tasks and contexts, highlighting the multifaceted nature of language proficiency and the need to consider task-specific characteristics in assessments.

These studies underscore the complexity of assessing bilingual language proficiency due to the interplay between objective and subjective assessments and varying language experience factors. Specifically, they highlight how language proficiency assessments can yield inconsistent or variable results, often influenced by different factors such as language exposure, socio-cultural contexts, and individual perceptions. This variability points to the need for a multidimensional language proficiency assessment approach that captures the full spectrum of bilingual language abilities and experiences.

Our study aimed to contribute to this line of work, highlighting the need for a multidimensional approach to proficiency assessment by focusing specifically on the Spanish proficiency of HSs in the US. As noted above, we explored the reliability and validity of both objective and subjective assessments to assess HL proficiency and examined how these assessments correlate with various HL experience factors. To achieve this, we begin with a detailed analysis of the objective assessments employed in our study, followed by a discussion of relevant prior research examining their development and validation.

2.1 Objective assessments of language proficiency

In the context of our study, we define objective proficiency assessments as a type of language assessment designed to quantify, track, or categorize an individual's language abilities in a systematic manner (Olson, 2023a). Such assessments are used to evaluate bilingual individuals' proficiency across their different languages. They include standardized tests developed by language assessment organizations or researchers, as well as tasks designed by researchers specifically for the purposes of their own studies, and they may focus on one or more domains, such as oral, written, receptive, productive, lexical, and/or grammatical proficiency. Below, we describe the objective assessments used in our study and discuss relevant prior research examining these assessments.

2.1.1 Lexical decision task: Lextale-Esp

The Lexical Test for Advanced Learners of English (LexTALE) was initially developed by Lemhöfer and Broersma (2012) to be a practical and quick (about 5 min) objective tool to assess L2 English vocabulary knowledge. It is intended as a potential proxy to assess overall language proficiency by estimating an individual's vocabulary size. The task uses word frequency as the basic criterion for establishing varying difficulty levels across the proficiency continuum. That is, certain high-frequency words were selected so that they are known by even L2 learners on the lower end of the proficiency spectrum, while other low-frequency words were selected as they would be known only by L2 learners at the higher end. Specifically, participants are presented with a list of words (e.g., scornful) and English-like non-words (e.g., mensible) and are asked to identify whether each is an existing English word or not. The LexTALE evaluates participants' performance through signal detection theory approaches by considering participants' accurate identification of words and non-words, erroneous identification of a non-word as a word (i.e., false alarms), and failure to recognize a word (i.e., miss rate).¹ There is ample support for the task's reliability and validity as an estimate of vocabulary size, knowledge, and processing speed. This evidence comes from correlations with individual differences in language processing abilities across various tasks, including studies on reaction time dynamics on masked priming tasks (Andrews and Hersch, 2010), written word identification strategies (Chateau and Jared, 2000), word-recognition speed and lexical-decision task accuracy (Diependaele et al., 2013), and performance on lexical decision tasks (Yap et al., 2008), among others. 
It has also been shown to have small to medium (all correlation strengths following Plonsky and Oswald, 2014) sized correlations with English proficiency assessments including the TOEIC and Quick Placement Test (Lemhöfer and Broersma, 2012). However, recent findings by Puig-Mayenco et al. (2023) suggest a more nuanced consideration of the LexTALE's applicability. In their study, they critically evaluated the LexTALE's validity as an assessment of global L2 proficiency across learners of English with varying proficiency levels, originating from different L1 backgrounds (Spanish and Chinese) by conducting a partial replication of the work by Lemhöfer and Broersma (2012). The results of their study revealed that the LexTALE, while offering valuable insights into vocabulary size, knowledge, and processing speed, shows only low-to-moderate correlations with a standardized assessment of English global proficiency, such as the Quick Placement Test. These findings underscore the fact that the LexTALE's applicability is not straightforward, as its correlations with other proficiency assessments seem to be inconsistent.
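The response categories described above for the LexTALE (correct identifications, false alarms, and misses) can be tallied as in the following minimal Python sketch. The function name and input format are hypothetical. The summary score shown, the mean of the percentage of words and the percentage of non-words answered correctly, is one commonly used way of scoring yes/no vocabulary tasks of this kind; it is given here as an assumption and may not match the scoring procedure applied in any particular study.

```python
def score_yes_no_task(responses):
    """Tally signal-detection categories for a yes/no lexical decision task.

    `responses` is a list of (is_word, said_yes) pairs of booleans:
    `is_word` marks whether the item is a real word, and `said_yes`
    marks whether the participant judged it to be a word.
    """
    hits = sum(1 for is_word, yes in responses if is_word and yes)
    misses = sum(1 for is_word, yes in responses if is_word and not yes)
    false_alarms = sum(1 for is_word, yes in responses if not is_word and yes)
    correct_rejections = sum(
        1 for is_word, yes in responses if not is_word and not yes
    )

    n_words = hits + misses
    n_nonwords = false_alarms + correct_rejections
    if n_words == 0 or n_nonwords == 0:
        raise ValueError("need at least one word and one non-word item")

    word_acc = hits / n_words
    nonword_acc = correct_rejections / n_nonwords
    return {
        "hit_rate": word_acc,
        "miss_rate": misses / n_words,
        "false_alarm_rate": false_alarms / n_nonwords,
        # Averaging the two accuracies weights words and non-words equally,
        # even when the task contains unequal numbers of each.
        "averaged_pct_correct": 100 * (word_acc + nonword_acc) / 2,
    }


# Hypothetical responses: 4 words (3 accepted) and 2 non-words (1 accepted).
example = score_yes_no_task([
    (True, True), (True, True), (True, True), (True, False),
    (False, True), (False, False),
])
```

Averaging the two accuracies, rather than taking raw percent correct, penalizes a bias toward answering "yes" to every item, since such a strategy yields perfect word accuracy but zero non-word accuracy.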

Mirroring the English version, a Spanish version of the task (Lextale-Esp; Izura et al., 2014) was developed to address the growing need for efficient and objective tools to assess Spanish language proficiency among bilingual populations, including HSs (e.g., Hao et al., 2024; Luque et al., 2023). Based on the design and purpose of the original LexTALE, the Lextale-Esp also evaluates vocabulary knowledge by estimating an individual's vocabulary size to gauge overall L2 proficiency. The Lextale-Esp uses a range of words, which appear to be influenced by Peninsular Spanish, selected from the Subtlex-Esp database (Cuetos et al., 2011). This database is based on word frequencies from subtitles of movies and TV shows screened between 1990 and 2009. As in the English version, the intended goal was to include items ranging from very high-frequency words, likely known by even beginning L2 learners, to very low-frequency words, likely known only by highly proficient native speakers.

Regarding its validity, the Lextale-Esp has been shown to be a valuable tool for assessing vocabulary knowledge as a proxy for overall language proficiency across different Spanish-speaking bilingual populations. Specifically, a study conducted by Ferré and Brysbaert (2017) supported the discriminative power of the Lextale-Esp in assessing Spanish vocabulary size and processing speed within highly proficient Catalan-Spanish bilinguals with varying degrees of language dominance. The findings showed that the two participant groups performed differently on the Lextale-Esp, with the Spanish-dominant group displaying significantly higher scores than the Catalan-dominant group. Thus, these findings provide evidence supporting the Lextale-Esp's validity in capturing variability in vocabulary knowledge among highly proficient bilinguals. Further validation efforts for the Lextale-Esp come from Bermúdez-Margaretto and Brysbaert (2022), who explored translation efficiency in language assessments. Participants in the study were L1 Spanish-dominant adults who identified as bilingual speakers in 26 different languages. The goal of their study was twofold: first, to develop new assessment methods that more accurately reflect vocabulary knowledge by emphasizing meaning recognition rather than form, following the work of Vermeiren et al. (2022); and second, to explore the broader question of convergent validity, which involved assessing the extent to which their newly developed vocabulary test and the already established Lextale-Esp measured the same construct of vocabulary knowledge. Findings revealed medium-sized correlations between the Lextale-Esp and their vocabulary test, suggesting that both assessments tap into the same construct to a significant degree. These results suggest that the Lextale-Esp is specifically suited to assessing overall vocabulary knowledge.
Overall, these findings support the validity and reliability of the Lextale-Esp as an objective assessment of vocabulary knowledge among bilingual individuals.

Despite the growing body of research supporting the Lextale-Esp's use across different linguistic contexts, there remains a critical need to specifically investigate its reliability and validity within the domain of HL bilingualism, especially regarding its potential to tap into individual language abilities and overall proficiency more broadly in an ecologically valid and inclusive way. Additionally, it is important to recognize that the LexTale-Esp appears to be heavily influenced by Peninsular Spanish norms, especially the low-frequency items. This poses challenges in terms of ecological validity and inclusivity, particularly for HSs of Spanish in the US given their potential lack of familiarity with this particular variety of Spanish. Such unfamiliarity could negatively impact their score on the task, potentially leading to a mischaracterization of their Spanish proficiency.

2.1.2 Spanish vocabulary and grammar task, also known as the “modified DELE”

A written Spanish vocabulary and grammar task widely used as a proficiency assessment in L2 and HL research is often referred to as the “Modified DELE”. The task was in fact compiled from two sources in the 1990s by Montrul and Bruhn de Garavito (Hoot, 2020). Its first published use was in Duffield and White (1999), as a measure for grouping adult L2 Spanish learners by proficiency level. They described the task as being comprised of:

sections from standardized Spanish as a second language proficiency tests, namely the reading/vocabulary section of the MLA Cooperative Foreign Language Test (Educational Testing Service, Princeton, NJ) and a cloze test from the Diploma de Español como Lengua Extranjera (DELE) (Embajada de España, Washington, DC) (p. 139)2.

The test consists of 50 multiple-choice fill-in-the-blank items in two sections. The first section–the reading/vocabulary section–contains 30 separate sentences with a blank in each one, with all items and choices targeting vocabulary knowledge. The second section–the cloze section–consists of a multi-paragraph reading passage with 20 blanks, with 10 items targeting vocabulary and 10 items targeting grammar knowledge (4 related to tense/aspect/mood, 4 related to prepositions, and 2 related to relatives and conjunctions). (See Section 3.2.1.1 for examples from each section and Note 2 for a link to the full test). Scoring usually consists of the total number of correct responses out of 50. Duffield and White (1999) proposed score ranges to categorize L2 Spanish proficiency levels as follows: 37–50 for “advanced”, 25–36 for “intermediate”, and 0–25 for “low”. Montrul and Slabakova (2003) subsequently used the task with both L1 and L2 Spanish speakers and defined a score range of 45–50 for “near-native” proficiency (as 45 was the minimum score in the L1 group). It should also be noted that some researchers have at least one additional version of the task in circulation (Hoot, 2020). In that version, both sections differ from the original test but follow the same format.

To our knowledge, the first study to employ the task with HSs was Montrul (2005). Investigating the impact of early linguistic exposure on language development, she examined adult L2 and HSs of Spanish. Interestingly, Montrul (2005) noted that the “test might not be entirely suitable to predict the linguistic performance of heritage speakers or early bilinguals” (p. 237), given that this measure invites participants to make explicit grammatical and vocabulary judgments (which may not align with the implicit linguistic competencies inherent to such speakers; Carreira and Potowski, 2011). Nonetheless, this task (in particular, the one available on the UCLA National Heritage Language Resource Center (NHLRC) website) has been widely adopted as a proficiency assessment and as a means of cross-study comparison in L2 and HL bilingualism research (e.g., Faretta-Stutenberg and Morgan-Short, 2018; Sánchez Walker and Montrul, 2020; Solon et al., 2022; Torres, 2018; among many others). The task has often been passed down from researcher to researcher and is available publicly, as mentioned, facilitating the task's adoption in research.

Although few studies have attempted to validate this proficiency assessment, such studies have so far provided support for the test's internal reliability and external validity in L2 and HS samples. In particular, Montrul et al. (2008) and Montrul and Ionin (2012) explored these aspects among L2 learners and HSs. They found the internal reliability of the task to be moderate (Brown, 2013), as evidenced by a Cronbach's alpha (α) coefficient of 0.827. This suggests that the test items were reliable and uniform for the two samples. Regarding validity, these studies examined correlations between scores on this task and performance on other measures of linguistic knowledge, including judgment accuracy for gender agreement and verb tense. The positive correlations observed (r = 0.807 for the HS group and r = 0.653 for the L2 group) provide some support for the construct validity of the task for these samples. Additionally, a recent study by Solon et al. (2022) with L2 speakers and HSs revealed significant correlations between this task and other validated language proficiency assessments, such as the EIT, which is often utilized to assess Spanish oral proficiency (e.g., Faretta-Stutenberg et al., 2023; Kostromitina and Plonsky, 2022; Solon et al., 2022; see Bowden, 2016 for a validation study of the Spanish EIT).

Given this test's common use in the L2/HL fields, together with the limited evidence regarding its relationship with other proficiency assessments and experience factors, especially for HSs of Spanish, additional research examining this task is warranted. As such, this test was examined in the current study. However, given that (1) the test is only partly from the DELE (and not a current version at that) and (2) the task requires sentence- and paragraph-level reading comprehension, with questions that target vocabulary (40 questions) along with some grammar knowledge (10 questions), we here refer to the task as a Spanish Vocabulary and Grammar Test (VGT).3

2.2 Subjective assessments of language proficiency

In the context of our study, we define subjective proficiency assessments as an approach for evaluating an individual's language proficiency that emphasizes subjective personal perceptions rather than objective metrics. These assessments rely on individuals' self-reports of their own individual language abilities, often elicited through surveys, interviews, or expert feedback (Olson, 2023a). Unlike objective assessments, subjective assessments explore personal views on language abilities, incorporating factors such as confidence, comfort level, and self-rated proficiency across language domains (the most predominantly used; Gertken et al., 2014). While subjective assessments might initially seem less precise compared to objective assessments, the use of Likert scales to quantify these subjective evaluations facilitates a systematic analysis of individuals' perceptions, thereby transforming subjective ratings into structured, quantifiable data. However, it is crucial to recognize that, despite our ability to quantify subjective assessments, the resulting data from subjective proficiency assessments can still be influenced by biases, socio-cultural and political factors, and variability in individual self-awareness (e.g., De Bruin, 2019; Hulstijn, 2012). This underscores the need for careful, contextualized interpretation of these assessments, considering the diverse factors that may impact individuals' subjective perceptions of their language proficiency.

2.2.1 Bilingual language profile questionnaire

The Bilingual Language Profile (BLP; Birdsong et al., 2012) was developed as a succinct and accessible self-report tool for assessing language dominance across a bilingual's languages (see Treffers-Daller, 2019) and for building a general bilingual profile. It provides a continuous (and composite) dominance score, alongside a general profile of bilinguals' language history, use, attitudes, and proficiency.

Since its development, the BLP has been used across different areas of bilingualism research, including but not limited to research on language processing, language acquisition and psycho/neurolinguistics (e.g., Amengual and Chamorro, 2016; Kubota et al., 2023; Poarch et al., 2019). The availability of the BLP in multiple languages, coupled with its ease of use and open access, has likely contributed to its broad adoption, making it a widely used measure of bilingual language use, experience, and proficiency, including for HSs (Solís-Barroso and Stefanich, 2019). As of February 21, 2024, the BLP had been cited 197 times, according to Google Scholar, highlighting its widespread recognition and impact in the academic community. Several studies have provided positive evidence supporting its construct validity as well as its concurrent validity and test-retest reliability (see Dass et al., 2024; Gertken et al., 2014; Mallonee Gertken, 2013; Olson, 2023b; Solís-Barroso and Stefanich, 2019).

However, the BLP's reliance on self-reported data introduces the potential for subjective bias(es). This can lead participants to either overestimate or underestimate their language proficiency. Such underestimation is a notable concern among HSs, as highlighted by Bayram et al. (2021b). This underscores the importance of interpreting BLP results with caution and, where feasible, integrating objective measures to support (and enhance) the available self-reported data.

2.2.2 Language entropy

In their 2020 study, Gullifer and Titone proposed a novel approach to examining bilingualism through the lens of language entropy. Language entropy is defined as a metric for estimating the diversity of language use in social contexts, particularly focusing on the various contexts in which bilinguals engage with their languages. According to Gullifer and Titone, language entropy can serve as a relevant tool for understanding and quantifying individual differences in how bilinguals navigate their different linguistic environments, by exploring the extent to which bilingual individuals engage in environments that require the use of both languages simultaneously (i.e., dual language contexts) vs. those that are more segregated, relying on a single language mode (i.e., compartmentalized language contexts). This construct of language entropy as defined by Gullifer and Titone is particularly relevant when considered alongside theoretical and empirical findings, such as the adaptive control hypothesis (ACH; Abutalebi and Green, 2016; Green and Abutalebi, 2013). The ACH suggests that how bilinguals use their languages across different social settings—their interactional context—plays a significant role in shaping how they represent, access, and control these languages. According to this hypothesis, bilinguals who frequently navigate dual language contexts (integrated bilinguals) face distinct language and executive control demands compared to those who engage with their languages in more compartmentalized, single-language settings. By quantifying the social diversity of language use through language entropy, researchers can gain quantifiable estimations of how bilinguals manage and navigate multiple linguistic systems and the factors that influence language choice, language switching, and language adaptation in bilingual contexts.

The construct of language entropy is starting to gather significant attention in studying bilingualism thanks to its relationships with the neural, cognitive, and social dynamics of bilingual language proficiency and use. For instance, Sulpizio et al. (2020) investigated the impact of bilingual experience—considering factors such as age of acquisition, proficiency, and language entropy—on the functional connectivity within and between language and executive control networks in the brain. They found that higher language entropy, indicating more diverse and integrated language use, was associated with enhanced connectivity in these networks. Building on this, Li et al. (2021) explored the relationship between bilingual language entropy and executive function. Their findings revealed that greater diversity of language use across social contexts, as assessed by language entropy, seemed to be associated with enhanced brain network specialization and segregation in brain networks associated with executive control. Additionally, Kałamała et al. (2023) introduced a novel psychometric network modeling approach to capture the complexity of bilingual experience, focusing on language entropy and language mixing as key indicators. Their study suggests that bilingualism is an emergent phenomenon shaped by the interplay of language acquisition background, skills, and usage practices. Finally, Wagner et al. (2023) critically examined how contextual factors influence the effects of language entropy on cognitive performance, comparing bilingual contexts in Toronto and Montréal, with results suggesting that language entropy can vary significantly based on environmental/societal factors that influence language use. Collectively, these studies underscore the critical role of language entropy in understanding the complex interplay between the social diversity of language use, neural and psycho/sociocognitive function, and sociolinguistic contexts.

Language entropy is also intimately connected to code-switching, a bilingual practice often associated with HS populations. An individual speaker's tendency to seamlessly alternate between languages goes hand-in-hand with higher language entropy. Although code-switching is frequently maligned as a sign of “disfluency” among “non-proficient” bilinguals, in reality, more dense switching (i.e., intra-sentential code-switching) is associated with higher proficiency in both languages (Bullock and Toribio, 2009). Code-switching is a form of linguistic flexibility that can be seen as a sign of the vitality of the minority language in a community (Gardner-Chloros, 2009) in that it supports identity formation while also being tied to language proficiency. As such, examining language entropy is crucial for understanding the linguistic behavior of HSs of Spanish in the US, as it provides insights into how they integrate Spanish and English in their daily lives, which in turn influences the intergenerational transmission of the HL as well as the diverse ways in which they develop their proficiency in it. In the current study, we calculated language entropy using questions from the BLP (see details in the Methods Section).

2.3 Study goals and research questions

Consequently, the goals of our study were three-fold:

1. Reliability: First, we evaluated the reliability of the objective proficiency assessments (Lextale-Esp, VGT) by examining their internal consistency. As mentioned above, internal consistency reliability refers to the degree to which different items within a specific assessment yield consistent results (Cronbach, 1951). By analyzing the internal consistency of these items, we aimed to provide evidence that these assessments offer a stable and reliable measure of Spanish proficiency.

2. Validity: Second, we evaluated the validity of these assessments by examining their relationships with each other and with the subjective proficiency assessments. This aspect of the study focused specifically on construct validity, which refers to the extent to which these assessments accurately measure the construct of language proficiency in Spanish as an HL among HSs of Spanish in the US (Messick, 1995). By analyzing these relationships, we aimed to provide evidence as to whether the assessments reflect the Spanish proficiency constructs they are intended to evaluate.

3. Validity in Context: Finally, we investigated how objective and subjective proficiency assessments were related to different HL experience factors, specifically years of exposure to Spanish, years of Spanish schooling, and language entropy (following Gullifer and Titone, 2020). This aspect of the study addresses both construct validity and ecological validity. Construct validity, in this context, pertains to whether these assessments accurately capture different dimensions of Spanish proficiency, while ecological validity concerns how well performance on these tasks reflects real-world language use and experience (Bronfenbrenner, 1977). By exploring these relationships, we aimed to understand how these proficiency assessments relate to real HL experiences and usage patterns in everyday life.

These goals guided the formulation of the following research questions (RQs):

RQ1 (Reliability): What is the internal consistency of the selected objective proficiency assessments for this sample of HSs of Spanish?

With regard to RQ1, we hypothesized that the selected objective proficiency assessments would demonstrate high internal consistency within this sample of HSs of Spanish. As noted above, internal consistency is crucial for establishing the reliability of these proficiency assessments (Cronbach, 1951). Our hypothesis was based on previous research indicating high internal consistency reliability for these measures across diverse populations (e.g., Izura et al., 2014; Montrul et al., 2008). However, to our knowledge, this has not yet been investigated specifically in our population of HSs. Therefore, we aimed to examine whether similar reliability would be observed in assessing Spanish proficiency for this sample of HSs of Spanish.
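To make the notion of internal consistency concrete, the following is a minimal sketch of Cronbach's alpha for a matrix of item scores (one row per participant, one column per item). It is illustrative only: the function name `cronbach_alpha` and the toy data are hypothetical, and the sketch is not the analysis code used in the study.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a participants-by-items score matrix.

    item_scores: list of rows, one per participant; each row holds one
    numeric score per item (e.g., 1 = correct, 0 = incorrect).
    """
    n_items = len(item_scores[0])
    # Variance of each individual item across participants.
    item_vars = [
        pvariance([row[j] for row in item_scores]) for j in range(n_items)
    ]
    # Variance of participants' total scores.
    total_var = pvariance([sum(row) for row in item_scores])
    # alpha = k / (k - 1) * (1 - sum of item variances / total variance)
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical toy data: 5 participants, 4 dichotomous items.
toy = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
print(round(cronbach_alpha(toy), 3))
```

Because the same variance estimator is used in the numerator and the denominator, choosing the population or the sample variance does not change the resulting coefficient.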

RQ2 (Validity): How do the selected objective and subjective proficiency assessments relate to one another for this sample of HSs of Spanish?

With regard to RQ2, we hypothesized finding variable relationships among the assessments, reflecting aspects of construct validity. As noted above, construct validity examines whether these assessments accurately measure the intended constructs of Spanish proficiency and how these constructs interrelate (Messick, 1995). Prior research has shown that objective and subjective proficiency assessments can be related, but the strength and nature of these relationships may vary (e.g., Gullifer and Titone, 2020; Tomoschuk et al., 2019). Specifically, we predicted that the objective assessments would be significantly correlated, reflecting their shared focus on vocabulary knowledge and prior evidence of their intercorrelations (e.g., Bermúdez-Margaretto and Brysbaert, 2022). However, as these intercorrelations have not been investigated specifically in HSs, we aimed to examine whether similar patterns would emerge for this sample of HSs of Spanish. Additionally, we drew on evidence supporting the external validity of the VGT as a proficiency assessment (e.g., Montrul and Ionin, 2012). However, we also predicted that the relationships between the objective and subjective proficiency assessments would be more variable than the relationships among the objective assessments themselves, due to (a) subjective assessments' potential to be influenced by individual biases or differing subjective interpretations (Tomoschuk et al., 2019) and (b) HSs' frequently reported underestimation of their HL abilities and overall HL proficiency compared to their objectively measured HL proficiency (e.g., Bayram et al., 2021b). 
Consequently, while both types of proficiency assessments may demonstrate construct validity, we hypothesized that the objective measures would provide a more consistent and reliable reflection of Spanish proficiency, with stronger and more consistent relationships observed among the objective assessments as compared to those between the objective and subjective assessments.
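As an illustration of how the strength of a relationship between two assessments can be quantified and labeled, the sketch below computes Pearson's r and maps it onto field-specific benchmarks. Two assumptions should be noted: this section does not state which correlation coefficient was used, so Pearson's r is assumed here; and the thresholds (0.25 small, 0.40 medium, 0.60 large) are the benchmarks for correlations as we recall them from Plonsky and Oswald (2014) and should be checked against that source. The function names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def strength_label(r):
    """Label |r| using assumed benchmarks: 0.25 small, 0.40 medium, 0.60 large."""
    size = abs(r)
    if size >= 0.60:
        return "large"
    if size >= 0.40:
        return "medium"
    if size >= 0.25:
        return "small"
    return "below small"


# Hypothetical scores for five participants on two assessments.
vgt_total = [32, 41, 28, 45, 37]
lextale_penalized = [12, 30, 8, 41, 20]
r = pearson_r(vgt_total, lextale_penalized)
print(round(r, 2), strength_label(r))
```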

RQ3 (Validity in Context): Do the selected objective and subjective proficiency assessments correlate similarly with each HL experience factor, namely years of exposure to Spanish, years of Spanish schooling, and social diversity of HL use (i.e., language entropy)? Answering this question helps to determine whether these HL experience factors are equally influential for capturing dimensions of Spanish proficiency and reflecting real-world HL use among Spanish HSs.

With regard to RQ3, we hypothesized that the selected objective and subjective proficiency assessments would be differentially related to the investigated HL experience factors, reflecting both construct and ecological validity. Previous research suggests that language experience factors, such as the amount and social diversity of L2 use and exposure (e.g., language entropy), are critical in shaping proficiency outcomes, with subjective assessments potentially being more sensitive to context-dependent language experiences. However, as demonstrated by the findings of Gehebe et al. (2023) and Gullifer et al. (2021), these effects may vary depending on the context (i.e., where, when, and how bilinguals' languages are used) and type of proficiency measure used (i.e., objective vs subjective proficiency assessments focused on specific language abilities). Although these studies do not directly compare objective and subjective measures, they suggest that different facets of language experience might influence each assessment type uniquely. Therefore, we expected that objective and subjective assessments of Spanish proficiency would not pattern uniformly but rather would tap into distinct components of the HL experience factors, with subjective assessments potentially capturing more context-sensitive, real-world language usage.

3 Methods

3.1 Participants

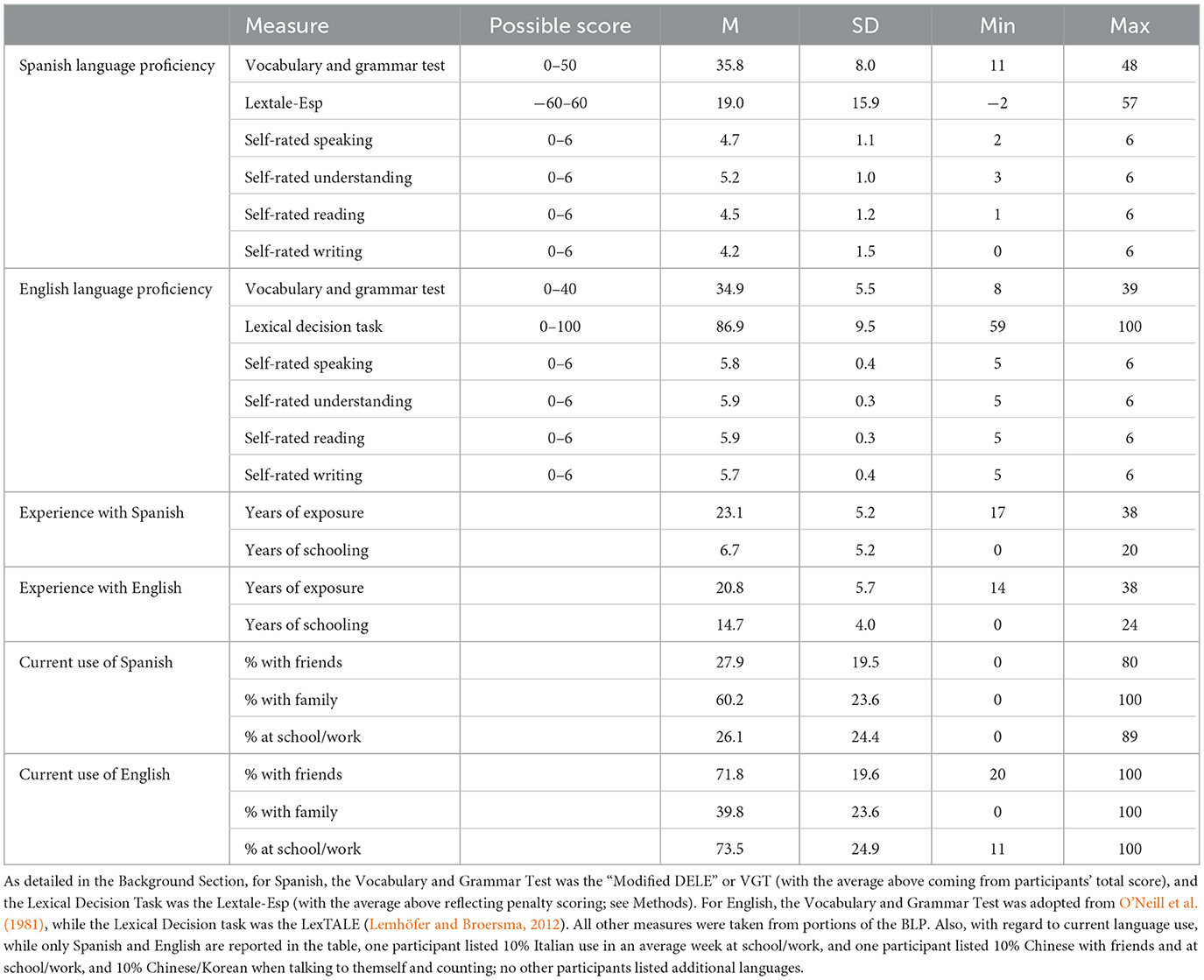

A total of 45 Spanish-English HSs, with ages ranging from 18 to 38 years (M = 23.46; SD = 5.13), were recruited through multiple avenues, including undergraduate Spanish courses as well as personal contacts, leading to a somewhat diverse set of profiles not only in terms of prior language experience and proficiency but also in their current exposure to and use of Spanish. Participants were classified as heritage speakers of Spanish if their age of onset for Spanish exposure was before age 6, based on research indicating that early exposure to the heritage language, typically before school age, is crucial for its maintenance and development. This cutoff aligns with findings from Benmamoun et al. (2013), who suggest age 6 as a reasonable cutoff for early bilinguals, and Silva-Corvalán (2014), who emphasizes that exposure by age 5 allows for substantial meaningful exposure and interaction with the HL before formal schooling in the majority language. The average age of onset for exposure to Spanish among participants was 0.33 years (SD = 0.98). Participants' use of Spanish and English (discussed below) and their language dominance were assessed using the BLP (Birdsong et al., 2012). For language dominance, the group averaged 32.1 out of a possible range of ±218 (SD = 30.8; range −44 to 94), where greater positive values indicate more English dominance, and greater negative values indicate more Spanish dominance. Overall, the group leaned toward English dominance, but scores varied widely, with some Spanish-dominant participants among the group. See Table 1 for a summary of participants' language proficiency (as measured by objective and subjective assessments), experience, and use of both Spanish and English. This information related to the HL will be addressed again in more detail in the Results Section.

Participants reported their gender identities and cultural/ethnic backgrounds via free-response questions. Most participants identified as female (N = 35; 77.8%); eight participants identified as male (17.8%), one participant identified as trans masculine (2.2%), and one participant chose not to disclose gender identity (2.2%). For cultural/ethnic background, participants could include as many different identifiers as they wished. The most common response included the term Mexican (N = 18; 40.0%). Other common identifiers included Hispanic (N = 10; 22.2%) and Latinx/o/a (N = 9; 20.0%). Less commonly reported identities (1–2 participants each) were: Bolivian, Colombian, New Mexican, Peruvian, Puertorriqueña/German, Salvadoran, Texan/Tejana. Additionally, one individual responded multi-racial, one responded unique, and three participants chose not to answer this question.

3.2 Materials

Given the methodological scope of this study, as mentioned, our assessments fall into three broad categories:

1. Objective assessments of Spanish proficiency: These assessments consist of widely used tasks that quantify vocabulary and/or grammar knowledge and have been used as proxies to assess Spanish proficiency.

2. Subjective self-assessments of Spanish proficiency: These assessments offer a subjective and personal perspective on one's Spanish abilities across various language domains.

3. HL experience factors: These self-reported data (i.e., years of Spanish exposure, years of Spanish schooling, and language usage data, used to calculate language entropy) tap into various aspects of the depth and nature of each individual's engagement with Spanish.

3.2.1 Objective assessments of Spanish proficiency

To critically examine how Spanish proficiency is often measured, two objective, quantitative assessments (rather than one) were employed. These two tasks were chosen for a larger project (Koronkiewicz, 2023) due to their widespread usage in the fields of L2 acquisition and bilingualism research as proxies for characterizing Spanish language proficiency.

3.2.1.1 Spanish vocabulary and grammar task

The first objective measure used was the 50-item written, multiple-choice Spanish VGT, consisting of two sections. As described above, the first section comprises 30 sentence-level items, for which selecting the correct answer depends on understanding the sentence and completing it with a semantically appropriate word or phrase, for example (see footnote for translations4):

Al oír del accidente de su buen amigo, Paco se puso______.

a. alegre b. fatigado c. hambriento d. desconsolado

The second section (20 items) is a multi-paragraph, fill-in-the-blank passage. Multiple-choice options for each blank are oriented toward vocabulary/semantics (10 questions) and prescriptively correct grammar (10 questions). Note that this section is representative of Peninsular Spanish language and culture, as shown through its focus on a Catalan artist and the use of some particular verbal morphology. For example (see footnote for translation):

Hoy se inaugura en Palma de Mallorca la Fundación [Pilar] y Joan Miró, en el mismo lugar en donde el artista vivió sus últimos treinta y cinco años. El sueño de Joan Miró se ha______ (1). Los fondos donados a la ciudad por el pintor y su esposa en 1981 permitieron que el sueño se______ (2)...

1. a. cumplido b. completado c. terminado

2. a. inició b. iniciara c. iniciaba

In the present analyses, we include three different score calculations from the VGT. The first is simply the total number of correct responses from 0 to 50, which is the most commonly reported score in previous research. However, given the qualitative differences between the two sections, we also separated the calculations for each section (i.e., just the sentence-level vocabulary-oriented responses [from 0 to 30], and just the paragraph-level vocabulary- and grammar-oriented responses [from 0 to 20]).
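The three VGT score calculations can be sketched as follows. This is a minimal illustration, assuming that responses are stored in test order, so that the first 30 entries belong to the sentence-level section and the last 20 to the passage section; the function name `vgt_scores` and the data layout are hypothetical.

```python
def vgt_scores(responses, answer_key):
    """Return the total and per-section VGT scores.

    responses, answer_key: lists of 50 answer choices (e.g., "a"-"d"),
    assumed to be ordered as items 1-30 (sentence-level section)
    followed by items 31-50 (passage section).
    """
    correct = [resp == key for resp, key in zip(responses, answer_key)]
    return {
        "total": sum(correct),              # 0 to 50
        "sentence_section": sum(correct[:30]),  # 0 to 30
        "passage_section": sum(correct[30:]),   # 0 to 20
    }


# Hypothetical participant: 25 of 30 and 10 of 20 items correct.
key = ["a"] * 50
answers = ["a"] * 25 + ["b"] * 5 + ["a"] * 10 + ["b"] * 10
print(vgt_scores(answers, key))
```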

3.2.1.2 Lextale-Esp

The second objective assessment of Spanish proficiency was the Lextale-Esp lexical decision task (Izura et al., 2014). As mentioned above, the task includes 90 items that are either Spanish words (e.g., pellizcar ‘to pinch’; n = 60) or Spanish-like non-words (e.g., terzo; n = 30), and the participant is asked to simply select Sí or No for each item to indicate if it is a word or not.

For the analysis, we include three different calculations. The first is simply the total of correct answers from 0 to 90. The second is the calculation recommended by the authors of the Lextale-Esp where there is a penalty for “guessing behavior”. This is calculated as the total of correct words minus two times the total of incorrect non-words (e.g., if a participant responded Sí to terzo), with a range of possible scores from −60 to 60. Finally, we also included a d-prime (d′) score, which is a standardized measure following signal detection theory that accounts for response bias in a participant's ability to discriminate words from non-words (Macmillan and Creelman, 1996); specifically, scores can range from −4.65 to 4.65, where a score of zero reflects chance-level discrimination ability.
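The three Lextale-Esp calculations can be sketched as follows. The total and the penalized score follow the description above directly. For d′, the sketch makes one assumption that is not stated in the text: hit and false-alarm rates are clipped to the interval [0.01, 0.99] before being converted to z-scores, a common convention that happens to yield the stated range of roughly −4.65 to 4.65. The function name `lextale_esp_scores` is hypothetical.

```python
from statistics import NormalDist


def lextale_esp_scores(word_yes, nonword_yes, n_words=60, n_nonwords=30,
                       clip=0.01):
    """Return (total correct, penalized score, d-prime).

    word_yes: number of real words answered "Sí" (hits).
    nonword_yes: number of non-words answered "Sí" (false alarms).
    clip: assumed bound that keeps rates away from 0 and 1.
    """
    # Correct answers: accepted words plus rejected non-words (0 to 90).
    total_correct = word_yes + (n_nonwords - nonword_yes)
    # Guessing penalty: accepted words minus twice the accepted
    # non-words (-60 to 60).
    penalized = word_yes - 2 * nonword_yes
    # d-prime: z(hit rate) - z(false-alarm rate), with clipped rates.
    hit_rate = min(max(word_yes / n_words, clip), 1 - clip)
    fa_rate = min(max(nonword_yes / n_nonwords, clip), 1 - clip)
    z = NormalDist().inv_cdf
    d_prime = z(hit_rate) - z(fa_rate)
    return total_correct, penalized, d_prime


# Hypothetical participant: accepts 50 words and 5 non-words.
print(lextale_esp_scores(50, 5))
```

A participant who answers "Sí" to every item obtains a penalized score of 60 − 2 × 30 = 0 and a d′ of zero, which illustrates how both corrections neutralize indiscriminate acceptance.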

3.2.2 Subjective assessments of Spanish proficiency

The subjective assessments of Spanish proficiency were self-reported language skill ratings, which were collected as part of the BLP. The questionnaire asks participants how well they speak, understand, read, and write Spanish (and, separately, English), with a 7-point Likert scale from not well (0) to very well (6) for each question. For the analysis, we include three different calculations. The first is a composite score (“Total”) that averages the four questions about Spanish (i.e., the four skills). The second and third scores average together productive (i.e., speaking, writing) and receptive (i.e., understanding, reading) Spanish abilities separately. We wanted to examine productive and receptive Spanish abilities separately given the receptive nature of the objective proficiency assessments used here, which might be expected to pattern together.
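The three self-rating scores amount to simple averages of the four BLP skill ratings. A minimal sketch, with a hypothetical function name and hypothetical ratings on the 0 to 6 scale:

```python
from statistics import mean


def self_rating_scores(speak, understand, read, write):
    """Average the four 0-6 self-ratings into the three scores described."""
    return {
        "total": mean([speak, understand, read, write]),
        "productive": mean([speak, write]),      # speaking, writing
        "receptive": mean([understand, read]),   # understanding, reading
    }


# Hypothetical participant with stronger receptive than productive ratings.
print(self_rating_scores(speak=4, understand=6, read=5, write=3))
```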

3.2.2.1 Heritage language experience factors

Three HL experience factors were also derived from self-reported data gathered from participants' BLP responses. First, we calculated years of Spanish exposure based on participant responses to the following question: At what age did you start learning the following languages?, subtracting their reported age at first exposure to Spanish from the participants' current age. For years of Spanish schooling, we utilized the participant responses to the question, How many years of classes (grammar, history, math, etc.) have you had in the following languages (primary school through university)?

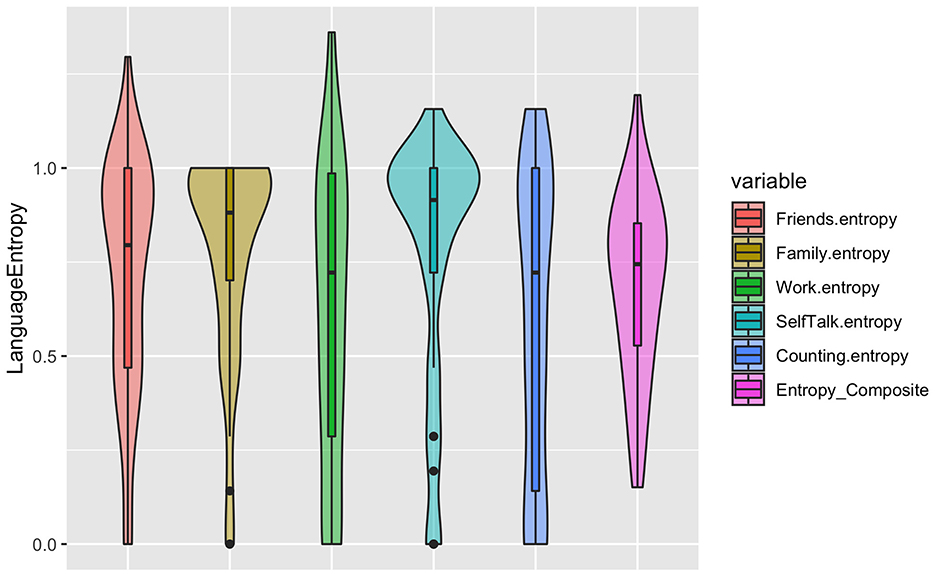

Third, we examined language entropy as an HL experience-related factor, following the methodology of Gullifer and Titone (2020). As mentioned above, language entropy assesses the dynamics of an individual's language use across different sociolinguistic contexts, indicating the degree to which their languages are used in a compartmentalized or integrated manner. We used data from the BLP (Birdsong et al., 2012) to calculate language entropy, where participants reported the percentage of time in an average week that they use each language in five different contexts: at school/work, with friends, with family, when talking to themselves, and when counting. These percentages were converted into proportions for each context, which we then used with the languageEntropy package in R (Gullifer and Titone, 2018) to calculate an entropy score for each context.

The languageEntropy package calculates entropy based on Shannon entropy (Shannon, 1948), a concept from information theory originally developed to estimate the unpredictability or diversity in a system of possible outcomes. In information theory, entropy provides a measure of how “spread out” or “integrated” different elements are within a system. In this context, Gullifer and Titone (2020) adapted Shannon entropy to estimate the diversity in bi/multilingual language use, where the entropy score for each context reflects the proportion and dynamics of language use across social settings. Specifically, the languageEntropy package uses the formula $H = -\sum_{i=1}^{n} p_i \log_2(p_i)$, where $H$ represents the entropy score for a given context, $p_i$ denotes the proportion of time that language $i$ is used within that context, and the summation is taken over all $n$ languages used in that context. The logarithm is taken in base 2, which is why equal use of two languages yields a score of 1. The formula works as follows: for each language used within a context (e.g., English, Spanish), we calculate the proportion of time the participant uses that language and multiply it by the logarithm of that proportion. This product $p_i \log_2(p_i)$ is calculated for each language, and the results are then summed. Because the logarithm of a proportion is never positive, the negative sign in front of the summation ensures that the entropy value is non-negative (a language that is never used contributes zero). Thus, the resulting entropy score reflects the diversity of language use within each context.

A score of 0 indicates complete compartmentalization, where only one language is used within a context (e.g., 100% use of English and 0% use of Spanish in a particular setting). For instance, a participant who reports using only English in some contexts (e.g., school/work and with friends) and only Spanish in other contexts (e.g., family and self-talk) would have an entropy score of 0 in each of those contexts, reflecting complete compartmentalization across different social contexts. In contrast, a score of 1 indicates complete integration, where both languages are used equally within a context (e.g., 50% English and 50% Spanish in a given context). A participant who reports using each language 50% of the time across all contexts (such as school/work, with friends, and with family) would obtain an entropy score of 1 in each context, showing full integration.

To generate an overall measure of language integration across contexts, we computed a composite entropy score by averaging the individual entropy scores across all contexts. This composite score provides a single, interpretable metric that represents the participant's overall level of language integration or compartmentalization in daily life. It is important to note that for multilingual experiences involving more than two languages, the entropy calculation adapts by incorporating each language's proportion of use in the formula. For instance, if a participant uses three languages (e.g., English, Spanish, and French) in a context, each language's proportion of use is included in the calculation. The maximum entropy score increases as more languages are used, reflecting a greater diversity of language use. For a trilingual context, the maximum entropy score becomes approximately 1.585 (the base-2 logarithm of 3), rather than 1, allowing the measure to capture multilingual dynamics effectively. For a more detailed description of entropy calculations and their theoretical basis in the context of bi/multilingualism research, see Gullifer and Titone (2020).5
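The per-context and composite calculations described above can be sketched in a few lines of Python. This is an illustration of the formula only, not the languageEntropy R package used in the study; the function names and the example context labels are assumptions.

```python
import math


def language_entropy(usage):
    """Shannon entropy (base 2) of language use within one context.

    usage: one non-negative number per language (proportions or percentages);
    values are normalised so that they sum to 1.
    """
    total = sum(usage)
    if total <= 0:
        raise ValueError("At least one language must have non-zero use.")
    entropy = 0.0
    for value in usage:
        p = value / total
        if p > 0:  # by convention, a language that is never used adds 0
            entropy -= p * math.log2(p)
    return entropy


def composite_entropy(contexts):
    """Average the per-context entropy scores (hypothetical helper)."""
    scores = [language_entropy(usage) for usage in contexts.values()]
    return sum(scores) / len(scores)


# Hypothetical participant: [English %, Spanish %] in each context.
contexts = {
    "school/work": [100, 0],   # fully compartmentalized
    "family": [50, 50],        # fully integrated
}
print(language_entropy(contexts["school/work"]))  # 0.0
print(language_entropy(contexts["family"]))       # 1.0
print(composite_entropy(contexts))                # 0.5
```

With three equally used languages, the same function returns the base-2 logarithm of 3 (approximately 1.585), the trilingual maximum noted above.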

3.3 Procedure

As mentioned, the data under analysis here came from a larger project. This larger project included three study sessions that were completed on different days. All relevant data for the present study come from the first two sessions, completed independently by study participants via Qualtrics surveys (Qualtrics, Provo, UT).

In the first session (~10–20 min), participants completed the Lextale-Esp (Izura et al., 2014), followed by the English LexTALE (Lemhöfer and Broersma, 2012). They then answered 12 questions targeting language exposure and acquisition and language mixing experience and attitudes. Those 12 questions were used to categorize participants as late L2 learners or HSs of Spanish, as both were targeted in recruitment efforts for the larger project. Any participant who indicated that they learned both languages from a young age and had a parent or primary caregiver who primarily used Spanish with them growing up was categorized as an HS and was included in the current dataset.

The second session (~45–60 min) included a series of acceptability judgment tasks for code-switched sentences, Spanish-only sentences, and English-only sentences (none of which are analyzed here; see Koronkiewicz, 2023, for details). Between the judgment tasks, participants completed the (Spanish) VGT and an English proficiency measure (O'Neill et al., 1981) parallel to the VGT. Finally, participants completed basic demographic questions and the BLP (Birdsong et al., 2012), both in English.

Participants were compensated with a $20 Amazon.com eGift Card for their time completing the first two sessions of the study. Informed consent was also obtained from all participants before each study session.

4 Results

4.1 Descriptive results

First, we present a general overview of participants' data from the different assessments of Spanish proficiency, both objective and subjective. The descriptive statistics in Table 2 summarize the average scores obtained for the three proficiency assessments. Recall that each assessment has three score types, which either comprise the total score and subsets of the measure (as in the case of the VGT and self-ratings) or employ distinct score calculations (as in the case of the Lextale-Esp). See Section 3.1 and Table 1 for a comprehensive reporting of participant characteristics, including demographics and educational background.

Table 2. Descriptive statistics for objective and subjective language proficiency assessments, broken down by score types.

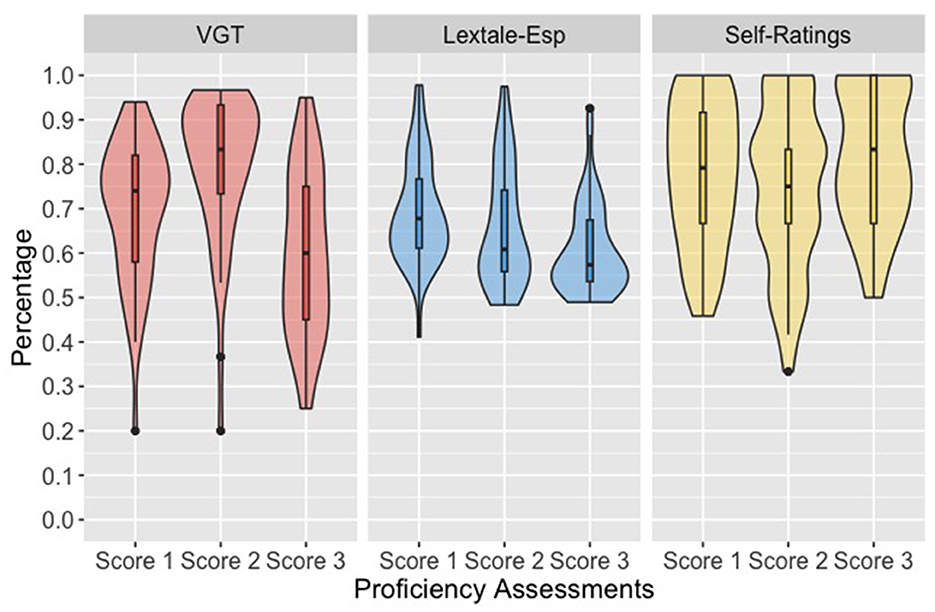

Because many of the score types have different ranges of possible values, mean values cannot be compared directly across assessments. Thus, Figure 1 illustrates average performance on each proficiency assessment as a percentage of the maximum for each score type, facilitating a more meaningful comparison. Overall, the scores for the different assessments were relatively similar, with average scores falling between approximately 60% and 80% of their respective maximum scores. Descriptively, participants performed the lowest on Score 3 of the VGT (i.e., the paragraph-level section that requires reading comprehension generally as well as specific vocabulary and grammar knowledge), receiving on average 11.9 out of 20 points (59.5%). Meanwhile, participants performed the highest on Score 3 of the self-ratings (i.e., receptive skills), averaging 4.9 out of 6 (81.7%). The VGT showed the most variability among the proficiency assessments, with a difference of 19.8 percentage points between Score 2 and Score 3 (i.e., the sentence-level vocabulary-focused questions and the paragraph-level vocabulary and grammar-focused questions, respectively).

Figure 1. Overall mean performance on objective and subjective proficiency assessments as a percentage. This figure presents violin plots depicting the distribution of percentage scores (y-axis) across the different proficiency assessments (x-axis). Specifically, VGT scores are represented in red, Lextale-Esp in blue, and Self-Ratings in yellow. Recall that each assessment method is divided into three score types: VGT: Score 1 (overall score), Score 2 (sentence-level vocabulary score), Score 3 (paragraph-level vocabulary and grammar score); Lextale-Esp: Score 1 (standard scoring), Score 2 (penalty-based scoring), Score 3 (d-prime scoring); Self-Ratings: Score 1 (overall score), Score 2 (productive skills score), Score 3 (receptive skills score). The width of each violin plot corresponds to the frequency of data points at different percentage levels, and the black lines inside the violins indicate the interquartile range and median of the scores.

Data analyses were conducted using R version 4.2.2 (2022-10-31) within RStudio version 2022.12.0+353. Several R packages were used for data manipulation, analysis, and visualization: tidyverse for manipulating data sets, ggplot2 for creating graphics, psych for calculating effect sizes and measures of internal consistency, stats for correcting p values, and languageEntropy for calculating language entropy scores. To assess the strength and direction of the relationships examined in RQ2 and RQ3, we used the non-parametric Spearman's correlation, as the data were not normally distributed and some variables were ordinal rather than continuous. All correlation sizes were interpreted following Plonsky and Oswald (2014), and p values associated with correlations were corrected with the Benjamini-Hochberg (BH) procedure, using the p.adjust() function in the stats package in R, to control the false discovery rate across multiple statistical tests (Benjamini and Hochberg, 1995). The BH correction was chosen due to the characteristics of our study: the correction does not require strict independence of tests, and our study was exploratory in nature, so the procedure provides a balanced approach to controlling both Type I and Type II errors (i.e., false positives and false negatives, respectively).
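For readers unfamiliar with these two procedures, the following Python sketch shows how Spearman's correlation (Pearson's correlation computed on average ranks) and the BH adjustment operate. It is an illustration only, not the R code used in the study: the function names and the example values are hypothetical, and the computation of the p values themselves is omitted.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def bh_adjust(p_values):
    """Benjamini-Hochberg adjusted p values, returned in the input order."""
    n = len(p_values)
    order = sorted(range(n), key=lambda i: p_values[i], reverse=True)
    adjusted = [0.0] * n
    running_min = 1.0
    for position, index in enumerate(order):
        rank = n - position  # rank of this p value in ascending order
        running_min = min(running_min, p_values[index] * n / rank)
        adjusted[index] = running_min
    return adjusted


# Hypothetical scores for five participants and four hypothetical raw p values.
print(spearman_rho([10, 20, 20, 30, 45], [3, 4, 5, 5, 6]))
print(bh_adjust([0.01, 0.04, 0.03, 0.005]))
```

The adjustment works from the largest p value downward and carries a running minimum, so that adjusted values never reverse the ordering of the raw p values.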

4.2 RQ1 (reliability): what is the internal consistency of the selected objective proficiency assessments for this sample of HSs of Spanish?

To address RQ1, pertaining to whether the objective proficiency assessments employed in this study were reliable (i.e., internally consistent) for our sample, we calculated Cronbach's alpha for the Lextale-Esp and each portion of the VGT using the alpha function from the psych package in R. The 95% confidence intervals (CIs) for Cronbach's alpha were calculated using the Duhachek method (Duhachek and Iacobucci, 2004). To interpret the levels of Cronbach's alpha, we followed the guidelines provided by Brown (2013).
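As an illustration of the statistic itself, Cronbach's alpha can be computed from a participants-by-items score matrix as $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $k$ is the number of items, $s_i^2$ is the variance of item $i$, and $s_T^2$ is the variance of participants' total scores. The Python sketch below implements this standard formula with hypothetical data; it is not the psych::alpha routine used in the study and does not compute the Duhachek confidence intervals.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of participants, each a list of item scores."""
    k = len(rows[0])  # number of items
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical binary item scores (1 = correct) for four participants.
responses = [
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
print(cronbach_alpha(responses))
```

Alpha approaches 1 when items covary strongly, so that the variance of the total score far exceeds the sum of the item variances, and it falls toward 0 when items are unrelated.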

The overall reliability results were as follows: For the Lextale-Esp, Cronbach's alpha indicated high internal consistency at 0.88, 95% CI [0.82, 0.93]. The sentence-level portion of the VGT showed a Cronbach's alpha of 0.87, 95% CI [0.82, 0.92], indicating high internal consistency. Additionally, the paragraph-level portion of the VGT revealed a Cronbach's alpha of 0.68, 95% CI [0.55, 0.82], indicating moderate internal consistency.

In summary, the Lextale-Esp and the sentence-level portion of the VGT demonstrated high internal consistency, indicating that their items were highly intercorrelated and reliably measured the same construct. The paragraph-level portion of the VGT showed lower (moderate) internal consistency, indicating that its items were reasonably consistent in measuring the same construct for this sample, but less so than the other objective assessments under investigation.

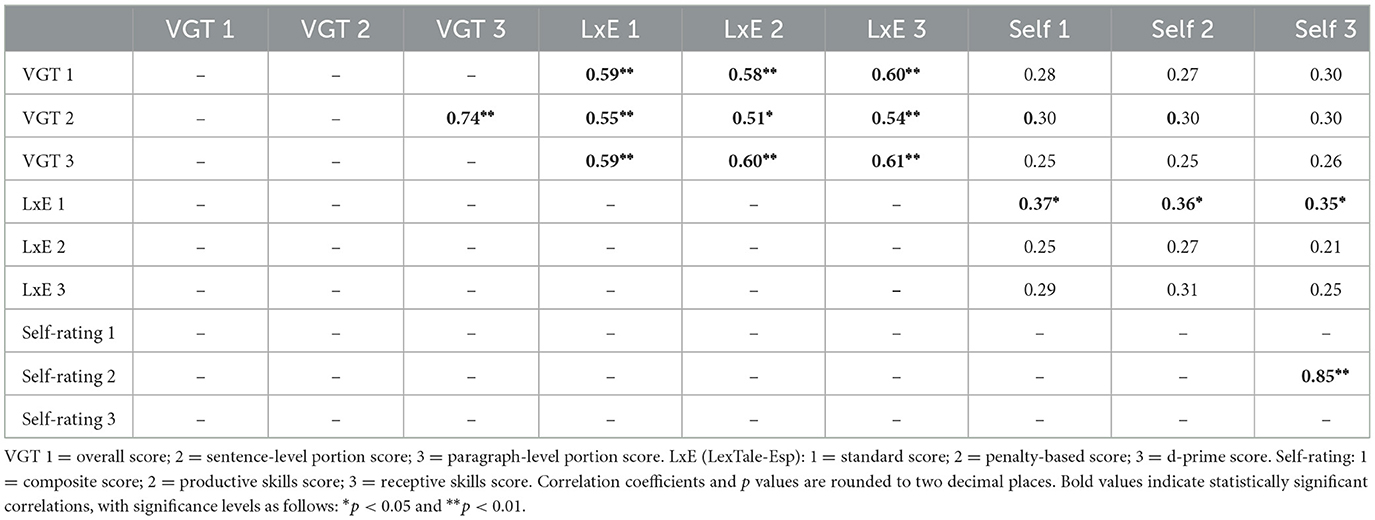

4.3 RQ2 (validity): how do the selected objective and subjective proficiency assessments relate to one another for this sample of HSs of Spanish?

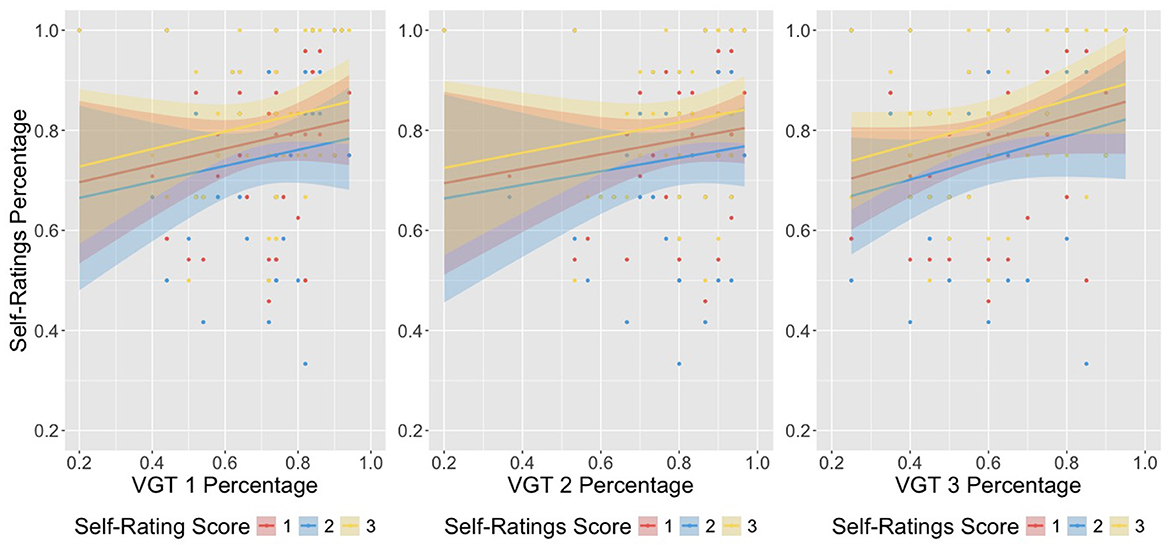

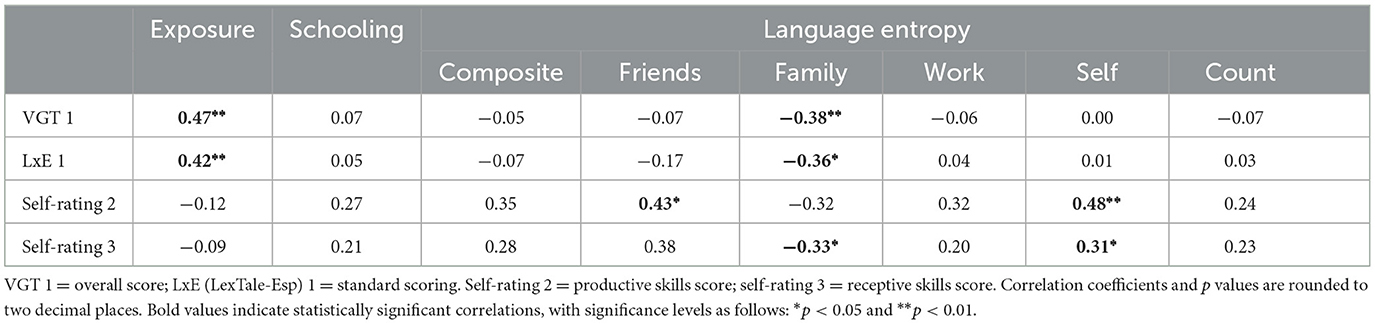

A summary of the correlations used to assess the relationships between objective and subjective proficiency assessments is provided in Table 3. We also provide scatter plots to illustrate these relationships in Figures 2–4, visualized as a percentage of the maximum for each score type.

Figure 2. Scatter plots illustrating the relationship between VGT scores and Lextale-Esp scores. The scatter plots display the percentage scores on the VGT (x-axis) against the percentage scores on the Lextale-Esp (y-axis) for each score type. Recall that VGT 1 refers to the overall score, VGT 2 includes only the sentence-level vocabulary score, and VGT 3 includes only the paragraph-level vocabulary and grammar score. The color-coded lines and points represent the different Lextale-Esp scoring methods: standard scoring (red), penalty-based scoring (blue), and d-prime scoring (yellow). The shaded areas around the lines represent the 95% confidence intervals for the linear regression fit.

Figure 3. Scatter plots illustrating the relationship between VGT scores and Self-Ratings scores. The scatter plots display the percentage scores on the VGT (x-axis) against the percentage scores on the self-ratings (y-axis) for each score type. Recall that VGT 1 refers to the overall score, VGT 2 includes only the sentence-level vocabulary score, and VGT 3 includes only the paragraph-level vocabulary and grammar score. The color-coded lines and points represent the different self-rating scores: composite score (red), productive skills score (blue), and receptive skills score (yellow). The shaded areas around the lines represent the 95% confidence intervals for the linear regression fit.

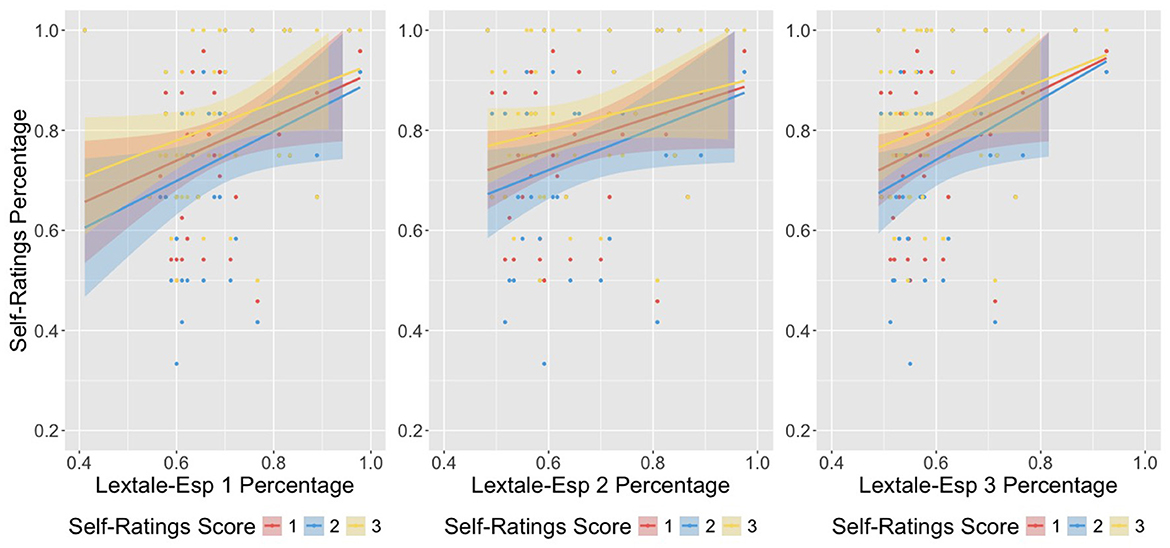

Figure 4. Scatter plots illustrating the relationship between Lextale-Esp scores and Self-Ratings scores. The scatter plots display the percentage scores on the Lextale-Esp (x-axis) against the percentage scores on the self-ratings (y-axis) for each score type. Lextale-Esp 1 refers to the standard scoring method, Lextale-Esp 2 refers to the penalty-based scoring method, and Lextale-Esp 3 refers to the d-prime scoring method. The color-coded lines and points represent the different self-rating scores: composite score (red), productive skills score (blue), and receptive skills score (yellow). The shaded areas around the lines represent the 95% confidence intervals for the linear regression fit.