REVIEW article

Front. Lang. Sci., 28 January 2025

Sec. Language Processing

Volume 3 - 2024 | https://doi.org/10.3389/flang.2024.1380990

Various linguistic models have been developed to systematize language processes and provide a structured framework for understanding the complex network of language production and reception. However, these models have often been developed in isolation from neurolinguistic research, which continues to provide new insights into the mental processes involved in language production and comprehension. Conversely, neurolinguists often neglect the potential benefits of incorporating contemporary linguistic models into their research, although these models could help interpret specific findings and make complex concepts more accessible to readers. This paper evaluates the utility of Jackendoff's Parallel Architecture as a generic framework for explaining language acquisition. It also explores the potential for incorporating neurolinguistic findings by mapping its components onto specific neural structures, functions, and processes within the brain. To this end, we reviewed findings from a range of neurolinguistic studies on language acquisition and tested how their results could be represented using the Parallel Architecture. Our results indicate that the framework is generally well-suited to illustrate many language processes and to explain how language systems are built. However, to increase its explanatory power, it would be beneficial to add other linguistic and non-linguistic structures, or to signal that there is the option of adding such structures (e.g., prosody or pragmatics) for explaining the processes of initiating language acquisition or non-typical language acquisition. It is also possible to focus on fewer structures to show very specific interactions or zoom in on chosen structures and substructures to outline processes in more detail. 
Since the Parallel Architecture is a framework of linguistic structures for modeling language processes rather than a model of specific linguistic processes per se, it is open to new connections and elements, and therefore open to adaptations and extensions as indicated by new findings in neuro- or psycholinguistics.

Language processing is a remarkable feat performed by a complex neurological system in which different brain regions are responsible for different linguistic sub-processes. The swift and seamless interaction between these regions is essential for humans to successfully acquire language, as well as to understand and produce a wide range of communicative messages (Price, 2010). Because of this inherent complexity, a specific lesion in the brain does not necessarily result in a corresponding impairment. This is because the human language system is intricate enough to find workarounds that allow individuals with lesions to listen and speak without discernible problems (Poeppel and Hickok, 2004; Poeppel et al., 2012; as seen in cases of Broca's aphasia and lesions in Broca's area).

Linguistic models must therefore meet the challenge of not only representing successful language processing, but also elucidating the underlying learning processes and potential obstacles. They should also be applicable across different languages. If these criteria are met, linguistic models can serve as invaluable tools for explaining language processes in a comprehensible way and can form the basis for discussion or for developing empirical tests to verify or falsify predictions.

This paper focuses on Jackendoff's (2002) Parallel Architecture—a generic framework of language. Unlike other cognitive frameworks, such as Levelt's Blueprint of a Speaker (Levelt, 1989), which map actual language processing, the Parallel Architecture comprises a framework of the linguistic components in the human mind. This construction provides a unified representation of three higher order structures. Each of these structures is composed of various specialized intra- and interrelated substructures, which are connected by interfaces. This framework thus holds promise for explaining (neuro)linguistic processes in that the structures can be located in brain areas that have been shown to be involved in language processing. In the following sections, we will first provide an overview of the framework itself (Section 2). We will then test whether neurolinguistic findings on successful and impaired language acquisition can be represented by the framework (Section 3). In Section 4, we will discuss findings in neurolinguistics that call for adaptations to the framework to strengthen its power for systematically explaining neurological language processes. Section 5 summarizes our findings.

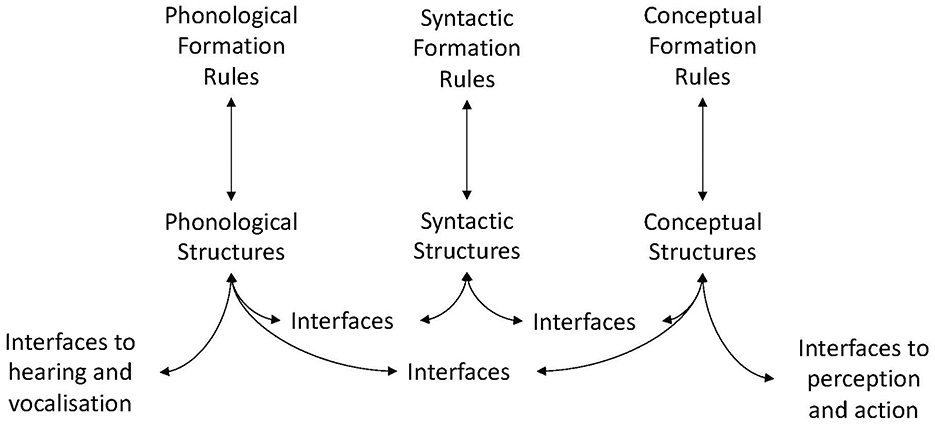

Jackendoff's (2002) Parallel Architecture is intended to be a “unified theory of the mental representations involved in the language faculty and their interactions with the mind as a whole” (Jackendoff, 2023, p. 1). The theory proposes that language consists of three independent generative components that form a mental network: the phonological, the syntactic, and the conceptual structures (Figure 1). These structures are interconnected by interfaces that facilitate the exchange of structure-relevant information. While the content of the phonological and syntactic structures is language dependent, the conceptual structures are proposed to be language independent (Jackendoff, 2003, 1996). Furthermore, the framework includes links to extralinguistic faculties: the conceptual structures are linked to perception and action, while the phonological structures are linked to hearing and vocalization (Jackendoff and Audring, 2020).

Figure 1. The Parallel Architecture (after Jackendoff, 2002, p. 125/2010, 3).

The phonological structures are responsible for processing speech sounds (phones) and for generating or analyzing strings of speech sounds, as well as syllabic, prosodic, and morphophonological forms of expression. Syntactic structures handle the analysis and construction of sentences by organizing phrases composed of word forms (e.g., nouns, adjectives, prepositions, or verbs) and syntactic features (e.g., 3rd person singular, present tense). The conceptual structures mentally encode the meaning of a word, phrase or sentence and serve as “the form (or one of the forms) in which human thought is couched” (Jackendoff, 2010, p. 7).

Neither phonological, syntactic nor conceptual structures in the framework are proposed to play a dominant or superior role in language processing, and many processes can occur in parallel, increasing the efficiency of language use and communication. Crucial to successful language processing are the bidirectional interfaces between structures. They transfer only the type of information between structures that is relevant to the target structures. For example, the distinction between a rhetorical question and an informational question primarily concerns the conceptual and phonological structures. The syntax of the question, such as Could you help me? remains the same for both a rhetorical and an informational question, but the intended meaning is conveyed solely through the application of specific prosodic rules that transform a question into a request for information or action (cf. Mauchand et al., 2019). The structures are therefore closely linked to all types and modalities of information stored in long-term memory (LTM). External factors, including communication partners and the linguistic environment, also play an active role, and are crucial for speakers to (re)act appropriately (but see Section 4 for a discussion of prosodic elements).

Jackendoff's underlying beliefs about how conceptual structures are constructed are controversial. Unlike Fodor's Representational Model of Mind (e.g., Fodor, 1985), Jackendoff's approach denies a necessary intentional relation between words and the objects in the world that they denote. Gross (2005), for example, criticizes this approach as “relying on philosophical assumptions in tension with a properly ‘naturalistic' attitude” (251), arguing, among other things, that the very fact of thinking about things shows that there is intentionality. In what follows, we outline in more detail how we understand Jackendoff's idea of conceptual structures and why we believe that it offers high potential for explaining neural language processes.

Whereas in the past (e.g., Jackendoff, 1983, 1987) conceptual structures were proposed to consist of “conceptual constituents, each of which belongs to one of a small set of major categories such as Thing, Event, State, Place, Path, Property, and Amount” (Jackendoff, 1987, p. 357), conceptual structures are now understood to consist of substructures that also include information such as manner or distances, functions, features, and “many aspects of meaning [that] are conveyed through coercion, ellipsis, and constructional meaning” (Jackendoff, 2019, p. 86). This knowledge is stored in modular cognitive systems, each of which is proposed to consist of independent but interconnected levels similar to the linguistic system. The spatial structures that provide parts of the information for the conceptual structures include, for example, the visual representations of a concept in three-dimensional shapes that allow us to mentally create the back of a person standing in front of us, as proposed by Marr and Nishihara (1978). In addition, spatial structures store and make available haptic, proprioceptive and motor information. The conceptual structures abstract and subsume these different types of information (and others, such as experiences or attitudes) into a particular word that denotes either a specific object, types of objects, an action or an abstract idea.

The framework's philosophical approach to conceptual semantics is mentalistic, in the sense that words are not seen as necessarily referring to objects in the world, and that it is not possible to objectively determine that a word or sentence concept is true by the conditions of the world, but that it is the “conditions in speakers' conceptualizations of the world” (Jackendoff, 2019, p. 89, emphasis in original) that determine the truth of the meaning of a word, a phrase or a sentence. Each person's understanding of the meaning of words is closely linked to their individual knowledge of the world, their attitudes and experiences, and how these things are processed in their minds. These internal representations of the world are the basis on which humans judge the truth of expressions. Individual experiences of aboutness, the meanings of words and expressions, and combinations of words are thus mental constructs that cannot be unambiguously judged as right or wrong in general (as we can do with the syntax of a sentence); rather, knowledge of the meaning of words and sentences is strongly intertwined with a person's knowledge of the world (Jackendoff, 2023):

The meaning of a word or a sentence is not a free-standing object in the world […]. Rather, the meaning of a word is to be regarded as a mental structure stored in a speaker's mind, linked in long-term memory to the structures that encode the word's pronunciation and its syntactic properties. The meaning of a sentence is likewise to be regarded as a mental structure, constructed in a speaker's mind in some systematic way from the meanings of its components. (Jackendoff, 2019, p. 88)

This is not to say that there are no commonalities in the understanding of words by different speakers. If we consider Labov's (1973) experiment on the semantics of the word cup, we see that “the salient (or default) meaning of cup is of a drinking vessel that is an impermeable oblate hemispheroid (a squashed half sphere)” (Allan, 2020, p. 126), so that we can expect to be served a particular type of drink in a vessel when we order a cup of tea in an English tea room. However, there were pictures in Labov's test where some of the participants agreed that a vessel with specific height-width ratios was a cup, but this evaluation changed depending on whether a handle was added: more participants stated that the picture showed a cup when a handle was present than when it was missing (Labov, 1973). For people living in Japan and China, however, this characteristic would not be an indicator, since cups in their cultures usually do not have handles and have a different common height-width ratio than cups in England (Allan, 2020). In other words, although there is a common social agreement on some parts of the semantics of the word cup, there are individual differences in the mental concept of this word.

Because the language system and the meaning of words are learned in a particular community with shared experiences of the world, the semantics of words are often quite homogeneous, enabling people to communicate with each other. If the meaning of a word is not the same in two speakers, but only similar (e.g., if an English person asks for a cup of tea and a Japanese person gives them tea in a cup without a handle), the English person will tolerate the vessel as a cup because they will still be able to drink tea. In other instances, e.g., in a conversation about fluent writing between a writing researcher and a person who is interested in literature, an exchange about the different understandings of the term by the expert and the non-expert might become necessary, because for writing researchers fluent writing means fast execution of the motor tasks (such as typing), i.e., a characteristic of quantity (Van Waes et al., 2021), whereas fluent writing in everyday communication is usually understood as a writer's ability to produce a text that reads well, i.e., a characteristic of quality. Since writing research has also shown that fluent production often leads to texts of higher quality (e.g., Baaijen and Galbraith, 2018), this difference in understanding might, however, even go unnoticed during the communication.

The framework's denial of aboutness in our understanding thus takes account of the fact that we do not have an objective view on the world but that visual, auditory, or olfactory information is computed inside our minds based on the fragments of information that we perceive and analyse. As we will see below, this understanding of conceptual structures is mirrored in neurolinguistic studies.

In the framework, the mental lexicon is understood as a “multidimensional continuum of stored structures” (Jackendoff, 2009, p. 108), with words at one end and general rules at the other. The word sing, for example, would consist of the phonological information /sɪŋ/, the syntactic information [VP V1] and the semantic information that sing denotes the ACTION of PRODUCING A MELODY WITH YOUR VOICE. More specific semantic information then depends on the co-text; e.g., it would activate specific sound memories if one knew the ACTOR of the ACTION of singing one is referring to, or depending on whether the singing takes place in an opera setting or at a karaoke party. Unusual linguistic elements, idioms, and fixed expressions (e.g., For pity's sake!) are also stored as single units in the lexicon. Similarly, meaningful constructions such as SOUND+MOTION verb phrases (e.g., The girl sang around the corner, Jackendoff, 2002, p. 392, 2007, p. 69) are treated as holistic units.
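The idea of a lexical entry as a linked triple can be sketched as a small data structure. This is only an illustration under our own assumptions: the class name `LexicalEntry`, the field names, and the placeholder gloss for the idiom are hypothetical and are not part of Jackendoff's formalism; only the values for sing are taken from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LexicalEntry:
    """One stored item: linked phonological, syntactic and conceptual information."""
    phonology: str  # phonological structure, here simply a string
    syntax: str     # syntactic structure, here simply a bracketed label
    concept: str    # placeholder label standing in for the conceptual structure


# Hypothetical mini-lexicon: a word and a fixed expression share one storage format.
LEXICON = [
    LexicalEntry("/sɪŋ/", "[VP V1]", "ACTION: PRODUCING A MELODY WITH YOUR VOICE"),
    # The syntax and concept labels of the idiom are invented placeholders.
    LexicalEntry("for pity's sake", "(fixed expression)", "(idiomatic meaning)"),
]


def entries_by_phonology(form: str) -> list[LexicalEntry]:
    """Return every stored entry whose phonological side matches the given form."""
    return [entry for entry in LEXICON if entry.phonology == form]
```

Looking up `entries_by_phonology("/sɪŋ/")` returns the single entry for sing together with its syntactic and conceptual information, which mirrors the idea that activating one structure gives access to the linked ones.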

However, not all possible word forms are stored in LTM; instead, speakers construct words online using productive and semi-productive rules. Productive rules are used, for example, in regular word formations, such as adding -ly to adjectives to form adverbs (Jackendoff, 2007). In irregular cases, complete word forms are stored in the lexicon, and the application of productive rules is blocked [e.g., good→goodly [blocked] → well]. This procedure extends to irregular declension forms in languages such as German, where the schemes for vowel changes in declension are stored as semi-productive rules in LTM (e.g., Ball→Bälle in the plural). Semi-productive rules are not actively used to decline an unknown word, but they help us to work out the likely meaning of similar forms of unfamiliar words. The boundary between grammar and lexicon is thus blurred, as lexical items “establish the correspondence of certain syntactic constituents with phonological and conceptual structures” (Jackendoff, 2002, p. 131). This lexicalised grammatical pattern replaces traditional grammar rules and is actively acquired by language learners through trial and error in natural settings¹, and it is this understanding of language, among other aspects of the framework, that positions the approach as a valuable tool for explaining language processes.
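The interplay of a productive rule and blocking by a stored form can be illustrated with a minimal sketch. The function name `adverb_of` and the dictionary `STORED_ADVERBS` are hypothetical; the sketch ignores spelling adjustments and semi-productive rules and is not meant as a model of actual processing.

```python
# Hypothetical store of irregular forms; each stored form blocks the productive rule.
STORED_ADVERBS = {"good": "well"}


def adverb_of(adjective: str) -> str:
    """Return a stored irregular adverb if one exists, else apply the productive -ly rule."""
    if adjective in STORED_ADVERBS:
        # Blocking: the stored form takes precedence, so "goodly" is never built.
        return STORED_ADVERBS[adjective]
    # Productive rule applied online: adjective + -ly.
    return adjective + "ly"
```

Under these assumptions, `adverb_of("quick")` is built by rule, whereas `adverb_of("good")` is retrieved from storage.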

The specific aim of this paper is then to test how the concepts and mechanisms of Jackendoff's Parallel Architecture can be mapped on specific neural regions, pathways, and processes in the brain. Roughly speaking, the central mechanisms of phonological structures would be implemented in brain areas such as the superior temporal gyrus, which is involved in the processing of speech sounds; those of syntactic structures in areas such as Broca's area, which is crucial for syntactic processing and language production; and those of conceptual structures in the anterior temporal lobe, which is involved in semantic processing and the integration of conceptual knowledge. The neural pathways between these structures, for example, the arcuate fasciculus between Broca's and Wernicke's areas, are then represented by the interfaces. This also applies to the neural pathways between, for example, Broca's area and the cortical networks involved in the production of spoken words. Since the conceptual structures are proposed to be connected to other complex cognitive systems in the mind, a dense network of neural pathways from very different areas of the brain connected to the central monitor would be the neurolinguistic image of these structures.

As Jackendoff (2023, p. 3) notes, “there is still a lot to be learned” about the conceptual structures. This openness provides a degree of flexibility in the framework to incorporate new findings in (neuro)linguistics. It can include empirical findings that support the embodiment of semantic information (e.g., that using the word “writing” activates areas in the brain responsible for controlling the muscles needed for writing, cf. Johnson, 2018) as well as those that support the hub-and-spoke theory (cf. Lambon Ralph et al., 2017), which proposes that individual experiences (both verbal and non-verbal) construct the concepts behind a word, and that the multimodal components of these concepts are stored in modality-specific areas in the brain, which are then mediated by a central hub in the anterior temporal lobes. This could then be seen as the central location that regulates the processes within the conceptual structures.

In the following sections, we will first test the possibilities of integrating findings from neurolinguistics into the Parallel Architecture (Section 3), then discuss problems in doing so, and suggest adaptations or additions to the framework (Section 4). For the purposes of congruity, we will focus on examples from language acquisition studies.

The ability to effortlessly use oral sounds for complex communication is a uniquely human trait. The underlying neurological system is extraordinarily complex, suggesting the presence of a pre-existing, widely distributed network of language processing structures in the human brain. Indeed, Perani et al. (2011) found that the neural basis for language learning is not only present in newborns but also very active. The researchers analyzed functional and structural data from 2-day-old babies when they were exposed to language. They found that all the brain areas involved in language processing in children and adults were already active in the babies. However, the activation was more bilateral, and normal speech activated the right auditory cortex more than the left in the newborns. The brain areas that are mainly responsible for language processing in adults were largely devoid of content in the infants. These areas were then continuously enriched with information from the language input of the infant's environment, and after a few months, it could be seen that Wernicke's area became more prominent in filtering the acoustic input for information relevant to building the language-specific neural networks. Menn et al. (2023) studied the acquisition of speech sounds in children using deconvolution EEG modeling. They also found that neural responses to native phonemes increase gradually. Children first acquire longer time intervals, which are often articulated with a distinct prosody in adults' child talk, thus supporting infants' processing abilities. Short-lived phonemes are then added step by step.
Thus, prerequisite language structures (e.g., for detecting prosody) and their inherent mechanisms allow children to acquire language without explicit instruction, contributing to the wide range of acquisition successes, but also to the difficulties and language impairments observed after lesions in different brain regions (Lewis et al., 2015; Turken and Dronkers, 2011; Warlaumont et al., 2013).

The Parallel Architecture would locate the mechanisms that filter the phonemes from the input in the phonological structures. Since the learning steps are similar for children with different L1s and depend on the length of the phonetic intervals (long intervals are learned more easily than short ones; cf. Warlaumont et al., 2013), it is important to bear in mind that working memory is an essential part of language processing (and is therefore proposed to be explicitly included in the framework, Figure 9).

Studies of word learning show that children often acquire vocabulary by making a connection between objects they see and words they hear. For example, Clerkin et al. (2017) conducted a study in which children were equipped with a head camera that recorded the infants' views of objects in their environment. The authors analyzed the objects that were in the children's gaze and the naming of the objects. In this egocentric scenario, the frequency with which objects were in view was a significant indicator of the words the children learned. The surrounding objects, which the children also perceived when looking at the target object, might have functioned as a “desirable difficulty” (7), in that the learning system needed to set up a category bundle that on the one hand allowed the children to recognize different variations of a specific object (e.g., different types of balls) but on the other hand avoided overgeneralizing all round objects (e.g., an apple) as balls. Although articulating the appropriate word for the object starts at a later age than correctly recognizing the objects and identifying them as the tool to play with, children are already able to recognize words and associate them with the correct object when each of the elements is frequently uttered in their environment. This is also true for common word combinations (e.g., clap your hands vs. take your hands; Skarabela et al., 2021).

Translating these findings into the Parallel Architecture, one can say that the three language structures initially contain only the mechanisms that filter the diverse input. These determine which acoustic input is relevant to language development and which phonetic input is related to perceived objects and people. This means that initially it is the phonological structures in combination with visual and haptic input filtered through the conceptual structures that play the main role in initiating the development of the cognitive language structures that enable people to verbally communicate their (non-linguistic) wishes, needs and thoughts. The relationship between concepts and phonological forms in the framework can then be illustrated as in Figure 2 with the bilingual German-English representation of Auto/car. The phonological structures are very different in the two languages, but for a bilingual person both phonological forms refer to a means of transport that (typically) has four tires, a steering wheel, runs on petrol (or diesel or electricity), typically has a boot, a roof and a bonnet, is made mainly of steel and aluminum, that its exhaust has a certain smell and that it makes certain noises. This information is stored independently of language in the brain areas specialized in processing it (occipital lobe for vision, auditory cortex for acoustic information, etc.).

It is important to note that the conceptual structures in the framework are considered to be much more complex than those shown in the figure, encompassing different car models, sensory experiences, sounds, functions, memories and more. Given their association with limbic regions (e.g., Gálvez-García et al., 2020; Horoufchin et al., 2018; Pellicano et al., 2009), the conceptual structures are also connected to these motor language-external conceptual systems via interfaces. All this information is activated in the bilingual speaker by both words. This does not mean that each of the languages that a bilingual person knows has a word that conveys the conceptual structures which a person has in their mind. For example, a German-English bilingual person will be faced with the problem that the word castle incorporates the semantics of two German words: Burg for a fortified building in which a royal/aristocratic family and their people live (who are then often knights or soldiers), similar but not exactly like a fortress, which would be Festung in German. Castle also stands for the concept of the German Schloss, which is a non-fortified, decorative building for the royal/aristocratic family and their people (who are then more likely to be butlers, cooks, secretaries, etc.), leaving many L1 German tourists rather puzzled when they stand in front of Windsor Castle (Schloss Windsor) which looks more like a Burg than like a Schloss.

The existence of semantic networks and the Parallel Architecture's proposition that the semantics of a word is stored in different specialized modules in our brains are supported by neuropsychological evidence (e.g., González et al., 2006). Neuroimaging shows that each word consists of a neural network with different cortical topographies (Grisoni et al., 2024). The concreteness or abstractness of a concept also influences the space of neural representation (Hultén et al., 2021). Controlling instances are then responsible for processing this information (Whitney et al., 2012).

Tests by Beckage et al. (2011), for example, highlight the importance of these networks for language acquisition. The authors compared the semantic networks of toddlers (15–36 months) with age-typical and age-atypical vocabulary sizes. Using a co-occurrence statistic of words in a normative language learning environment (CHILDES corpus) for semantic relatedness, they analyzed the conceptual structures of the words known by the different groups. They found that there was a relationship between vocabulary size and semantic networks in that the networks of children with small vocabularies had less connectivity, fewer clusters and less global access. These problems in building small worlds in the semantic network then led to problems in language acquisition. Bohlman et al. (2023) showed that children whose left and right hemispheres are not, or not properly, connected due to agenesis of the corpus callosum (ACC) acquire vocabulary more slowly and less successfully than children without ACC. Jackendoff's framework would explain this delay in learning words by the learners not seeing the need for a word if the concept is not set up. The fact that these problems with word learning might be closely related to an overload in working memory due to the difficulty of learning the differences between the concepts of cat and dog, for example, would then call for an addition of interfaces to the working memory or for its inclusion as an active part of the framework, as was done in Section 4 in Figure 9.
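To make the notion of a co-occurrence-based semantic network more concrete, the following sketch links two known words whenever they appear in the same utterance and then computes the average number of links per word. It is a simplified illustration only: the utterances and vocabularies are invented, the function names are hypothetical, and the measure is a crude stand-in that does not reproduce the statistics used by Beckage et al. (2011).

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence_network(utterances, vocabulary):
    """Link two known words whenever they occur in the same utterance."""
    neighbours = defaultdict(set)
    for utterance in utterances:
        known = sorted(set(utterance.split()) & vocabulary)
        for first, second in combinations(known, 2):
            neighbours[first].add(second)
            neighbours[second].add(first)
    # Words without any link are kept as isolated nodes.
    return {word: set(neighbours[word]) for word in vocabulary}


def mean_degree(network):
    """Average number of links per word, used here as a crude connectivity measure."""
    if not network:
        return 0.0
    return sum(len(links) for links in network.values()) / len(network)


# Invented toy input standing in for child-directed speech.
UTTERANCES = [
    "the dog chased the cat",
    "the cat drank milk",
    "the dog ate the bone",
    "drink your milk",
]

larger_network = cooccurrence_network(UTTERANCES, {"dog", "cat", "milk", "bone"})
smaller_network = cooccurrence_network(UTTERANCES, {"dog", "milk"})
```

In this toy setting, the network built from the larger vocabulary has a higher mean degree than the one built from the smaller vocabulary, in which the two known words never co-occur and therefore remain unconnected.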

In order to organize the complex conceptual content, conceptual structures require mechanisms that facilitate systematic and efficient storage (Lakoff, 1982, 1987). Taxonomic and thematic relations are therefore derived from experiences with different objects and words and help children to build this organization. Taxonomic relations reflect similarities between concepts, while thematic relations define how objects/concepts relate to each other, often based on word co-occurrences or associations with events and scenes [e.g., plate—spoon (theme: eating); Chou et al., 2019; Landrigan and Mirman, 2018]. Different brain regions are involved in the generation of these representations: the left temporo-parietal junction, posterior temporal areas and inferior frontal gyrus are active during thematic relation judgments, whereas taxonomic distinctions involve the left anterior temporal lobe, bilateral visual areas and the precuneus (Lewis et al., 2015; Patterson et al., 2007).

In the Parallel Architecture, it is proposed that these differences in categorization require different types of rules for extracting relevant content and generating conceptual structures, thus providing interfaces to different non-linguistic cognitive regions (see Figure 3).

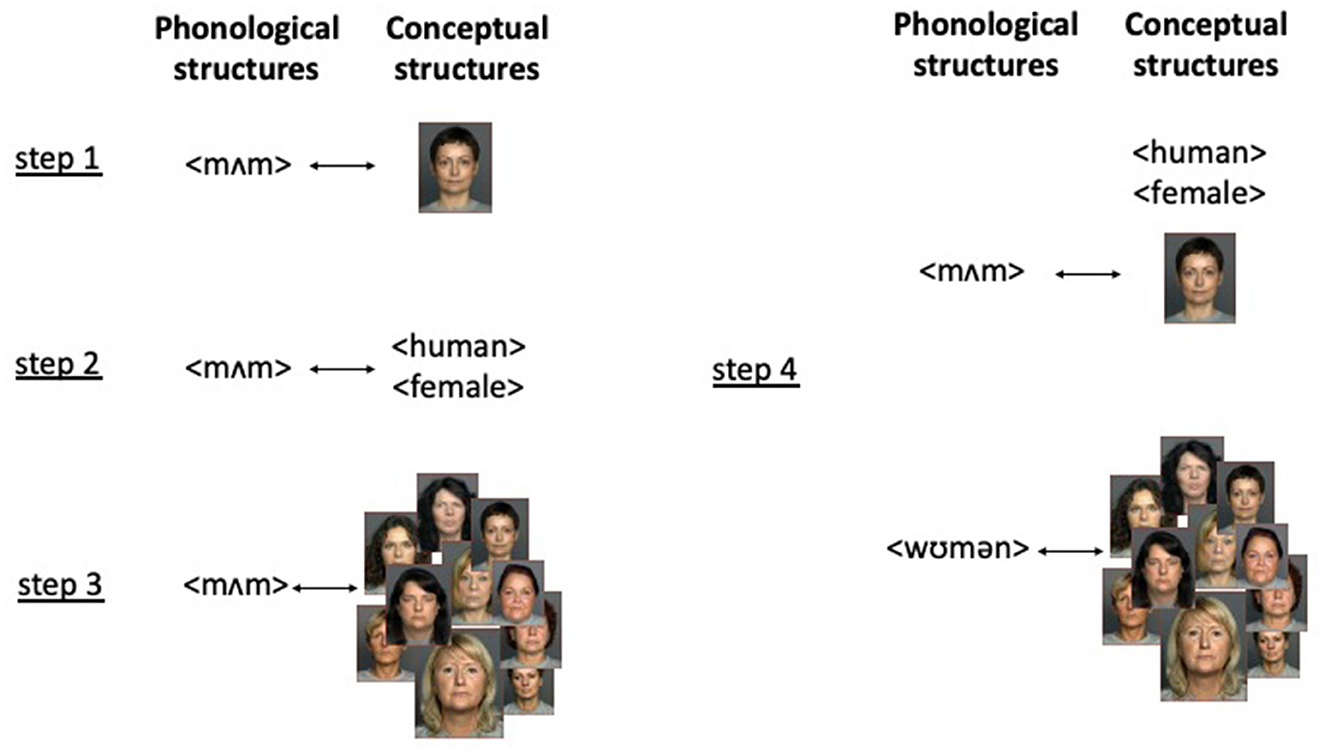

The learning steps underlying taxonomic classification can be modeled as shown in Figure 4, using the example of mum and woman. Children first learn a word by exposure to a specific example (labeled as TOKEN in Jackendoff, 1983). They extract conceptual content and apply it to other similar entities, gradually extending the use of the word to refer to women with similar characteristics. By analyzing feedback and observing how these women are referred to (e.g., by their mothers), children learn that some women belong to the category FAMILY, while others are categorized as FRIENDS or STRANGERS but all fall under the broader category of FEMALE PERSON, leading toddlers to call many adult female persons Mum if these persons display biologically and/or socially typical characteristics of the female type. The precise boundaries of what makes a TOKEN belong to a TYPE can vary, leading to different object classifications between individuals or even for the same individual in different contexts, depending on factors such as perceptual and experiential influences on decision-making processes (Evans et al., 2007; Labov, 1973; Jackendoff, 1983). For example, depending on the family situation, the token referred to by the word mum might belong not only to the classification FAMILY but also to the category FRIENDS, while for others mum might belong to the category STRANGER because they have never met their mother.

Figure 4. Creation of TYPE-TOKEN relation of mum and woman. The pictures are provided in the database on faces by the Max Planck Institute for Human Development, Center for Lifespan Psychology, Berlin, Germany.
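The TYPE-TOKEN relation described for mum and woman can also be written down as a small lookup structure. The sketch below is an assumption-laden illustration: the dictionaries `BROADER` and `TOKEN_TYPES`, the token neighbour, and the function `belongs_to` are hypothetical, and the category assignments represent just one possible learner.

```python
# Hypothetical taxonomy: each category points to its broader category.
BROADER = {
    "FAMILY": "FEMALE PERSON",
    "FRIENDS": "FEMALE PERSON",
    "STRANGERS": "FEMALE PERSON",
}

# One learner's TOKEN-to-TYPE assignments (invented; they differ between individuals).
TOKEN_TYPES = {
    "mum": {"FAMILY"},
    "neighbour": {"FRIENDS"},
}


def belongs_to(token: str, category: str) -> bool:
    """Check whether a TOKEN falls under a category directly or via a broader TYPE."""
    for current in TOKEN_TYPES.get(token, set()):
        while current is not None:
            if current == category:
                return True
            current = BROADER.get(current)  # move up one level, None at the top
    return False
```

For this learner, mum falls under FAMILY and, via the broader category, under FEMALE PERSON, but not under FRIENDS; changing the entries in `TOKEN_TYPES` models the individual differences discussed above.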

The formation of categories and concepts is supported by a rich linguistic environment in that, for example, showing children objects and naming them and their characteristic features leads to more successful recognition of 3D caricatures of objects that were new or familiar to them (Smith, 2003). Similar results have been found in studies on how AI develops new concepts. Neural network studies can provide valuable insights into language acquisition because AI's learning processes can be studied more discretely, and scientists can adjust the learning conditions in different experiments, allowing them to test hypotheses while reducing the influence of extraneous factors (Portelance and Jasbi, 2024). Schwartz et al. (2022) analyzed the human method of acquiring concepts and their phonological representations with the help of AI. They tested whether few-shot learning of new concepts, either by presenting visual images to AI or by presenting the images and labeling them (neither of which had worked well in the past), could be improved by adding verbal semantic information about the object in the training process, similar to how infants learn new words. The results showed that AI, like humans, indeed benefits from a verbal description of objects to learn the semantics of words (Portelance et al., 2024).

The enhanced learning process is the effect of language and vision providing complementary information that helps to develop concepts. In the theory of the Parallel Architecture, this is the effect of the frequency of co-occurrence of words in communication about different TOKENS belonging to the same TYPE. The phonology of the descriptive words that frequently co-occur with the concept will activate their own concepts. These, in turn, highlight those elements of the conceptual network of each TOKEN that are common to different TOKENS, leading language learners to extract the TYPEs. Language is thus important not only for expressing concepts or for activating concepts in reception, but also for developing them. Although in an individual the majority of stored concepts, e.g., of HOUSE, are located in modules that are language independent, as suggested in the Parallel Architecture (e.g., Jackendoff, 2010), language still plays an important role in their construction.
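The extraction of a TYPE from several TOKENS described above can be illustrated with a small sketch. It is only a toy model under strong assumptions: each TOKEN is reduced to a set of descriptive words that co-occurred with it, and a TYPE is taken to be the set of features shared by a given proportion of the TOKENS. The function name `extract_type`, the `threshold` parameter, and the example features are hypothetical and are not part of the Parallel Architecture.

```python
from collections import Counter


def extract_type(tokens, threshold=1.0):
    """Return the features that occur with at least `threshold` of the TOKENS.

    `tokens` maps a TOKEN label to the set of descriptive words that
    co-occurred with it (a hypothetical simplification of conceptual content).
    """
    counts = Counter(
        feature for features in tokens.values() for feature in set(features)
    )
    n_tokens = len(tokens)
    return {f for f, c in counts.items() if c / n_tokens >= threshold}


# Hypothetical descriptive words heard in connection with three TOKENS
tokens = {
    "mum": {"adult", "female", "person", "family"},
    "neighbour": {"adult", "female", "person", "friend"},
    "shop_assistant": {"adult", "female", "person", "stranger"},
}

woman_type = extract_type(tokens)
print(sorted(woman_type))  # ['adult', 'female', 'person']
```

Features such as family, friend, or stranger are not shared by all TOKENS and therefore remain properties of individual TOKENS rather than of the extracted TYPE.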

While conceptual and phonological structures and their interfaces serve toddlers well in the acquisition of vocabulary, the mechanisms within syntactic structures begin early by scanning audio input for syntactic cues. Friederici et al. (2011), for example, tested 4-month-old infants' event-related potentials in response to violations of syntactic expectations in an unfamiliar language. German infants were familiarized with correct Italian four-word sentences that contained rule-based non-adjacent dependencies between two elements, i.e., between an auxiliary verb and a verb suffix indicating the continuous form. Both the auxiliary form and the suffix are realized in phonological syllables. The children listened to the sentences in four short sessions of 3.3 min each, for a total of 13.2 min. After the first session there was no effect on the event-related potential, but after the fourth session there were clear responses. Thus, it can be said that even at this early age, the children successfully stored phonological associations and related them to syntactic patterns. These processes can be modeled in the Parallel Architecture as a cooperation between the phonological and the syntactic structures via the interfaces. The methods in the phonological structures identify phonological regularities and store them as a rule. This phonological rule is then (later) related to the syntactic structures of the continuous form, and to the conceptual structures indicating that a process is going on at the moment of speaking, or that it is about a temporary action that is repeated regularly (cf. Weist et al., 2004, for neural interfaces between syntax and cognition).

Perani et al. (2011) provide visual evidence of the increasing connectivity between phonology and syntax in a diffusion tensor imaging study. They examined the structural connectivity of newborns, focusing on the fiber tracts that connect language-relevant brain areas in adults. The authors found that fiber tracts connect the ventral part of the inferior frontal gyrus to the temporal cortex shortly after birth, but that dorsal fiber tracts form later in life. This means that the dorsal pathway connecting the temporal cortex to Broca's area is not present from birth, nor has syntactic processing yet begun. In the language model, these fiber tracts would be translated into the interfaces in the Parallel Architecture that are responsible for establishing the connections and interactions between the phonological and the syntactic structures. While at the beginning of a human's life, no content is stored in the syntactic structures, the extracted phonological rules will activate an interface to the syntactic structures where, e.g., the rule for generating the regular past tense is extracted and stored. Since Perani et al.'s analysis suggests primarily one-way interfaces, Figure 5 illustrates the relationship between syntactic and phonological structures in regular past tense generation and comprehension, making two one-way interfaces out of the proposed two-way interfaces in the framework.

Figure 6 then outlines how the acquisition of correct regular and irregular past tense verb forms can be conceptualized in the framework using eat as an example. Initially, the interfaces between phonological and conceptual structures are the key structures for accurate performance, and the phonological structures of ate are associated with the information that it denotes the action of eating which took place in the past (BEFORE), but so are the phonological structures of talked, hugged, or kissed. As vocabulary grows, however, it becomes inefficient to store all possible phonological forms in declarative memory. Productive rules for generating regular forms are extracted and stored in the procedural memory in the syntactic structures. Interfaces are established between the procedural memories of conceptual and syntactic structures, and between syntactic structures and phonological structures, and children form all past tense forms by adding the -ed ending to the infinitive form. Feedback from the environment then leads to the blocking of the productive rule for the regular verb forms in words like eat, prompting the storage of the correct irregular form in phonological long-term memory (Ullman, 2004; Earle and Ullman, 2021).
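The three learning steps described above (rote storage, rule extraction with overgeneralization, and blocking of the rule after feedback) can be sketched as a toy model. This is a minimal illustration under stated assumptions, not an implementation of the declarative/procedural model: the class `PastTenseLearner` and its method names are hypothetical, and the rule simply appends -ed without any spelling adjustments.

```python
class PastTenseLearner:
    """Toy model of past tense acquisition with a blockable -ed rule."""

    def __init__(self):
        self.stored = {}             # declarative memory: verb -> past form
        self.rule_extracted = False  # procedural memory: productive -ed rule
        self.blocked = set()         # verbs for which feedback blocked the rule

    def hear(self, verb, past_form):
        """Store a heard past tense form as a whole (rote learning)."""
        self.stored[verb] = past_form

    def extract_rule(self):
        """Switch to the productive rule once vocabulary has grown."""
        self.rule_extracted = True

    def receive_feedback(self, verb, correct_past):
        """Block the rule for this verb and store the irregular form."""
        self.blocked.add(verb)
        self.stored[verb] = correct_past

    def past(self, verb):
        if not self.rule_extracted or verb in self.blocked:
            return self.stored.get(verb)  # retrieval from stored forms
        return verb + "ed"                # simplified productive rule


learner = PastTenseLearner()
learner.hear("eat", "ate")
learner.hear("talk", "talked")
print(learner.past("eat"))   # ate (stored form)

learner.extract_rule()
print(learner.past("eat"))   # eated (overgeneralization)
print(learner.past("jump"))  # jumped (novel verb, formed by rule)

learner.receive_feedback("eat", "ate")
print(learner.past("eat"))   # ate (rule blocked, irregular form retrieved)
```

The sketch keeps retrieval and rule application in separate attributes to mirror the distinction between declarative and procedural memory drawn in the text.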

Similar learning processes apply to the development of rules for sentence generation and analysis. These include word order, the integration of clauses, the syntactic implications of certain semantic choices, and the syntactic consequences of certain words. For example, the German conjunction weil (because) requires the verb to be placed at the end of the causal clause (weil er gern lachte—because he liked to laugh), which categorizes the word sequence as a subclause. On the other hand, the word denn (also because) requires the verb to be placed immediately after the noun (denn er lachte gern), which grammatically characterizes the word sequence as a main clause, although semantically it is still a subclause: it will not stand alone but needs another main clause to accompany it.

It is these existing word-dependent syntactic structures that support Jackendoff's concept of the lexicon as an interface between linguistic structures (Jackendoff, 2002, 2010; Jackendoff and Audring, 2020). The view of words as initiators of syntactic learning is also supported by studies in neural network modeling, which have shown that word categories (e.g., noun vs. verb) can be established when generic distributional statistical learning is applied to sequences of words (cf. Frank et al., 2019). Recurrent neural networks control how and which information is relevant for syntactic categorization as gating mechanisms analyse and compare old and new inputs to decide on which information should be stored and which should be forgotten or rewritten (Pannitto and Herbelot, 2022). In the Parallel Architecture, these mechanisms are located in the linguistic structures: the phonological input is processed in the phonological structure. The cognitive and the syntactic structures extract the parts of the information that are relevant for developing and installing syntactic rules. In English, the phonological structures would inform the syntactic structures that the word table is often co-occurring with determiners (a or the), which is an indicator for table being a syntactic noun. The cognitive structures would identify NON-LIVING as a characteristic of table, and this information would then tell the syntactic structures that table is a neuter noun.
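The distributional cue mentioned above, namely that a word such as table frequently follows a determiner, can be made concrete with a small counting sketch. It is a deliberately simple illustration and not the method used in the cited neural network studies: the function `determiner_ratio`, the determiner list, and the four-sentence corpus are assumptions made for this example, and only the immediately preceding word is considered.

```python
from collections import Counter

DETERMINERS = {"a", "the"}


def determiner_ratio(sentences):
    """For each non-determiner word, return the share of its occurrences
    that directly follow a determiner."""
    follows_det = Counter()
    total = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair every word with its predecessor (None for the first word)
        for prev, word in zip([None] + words, words):
            if word in DETERMINERS:
                continue
            total[word] += 1
            if prev in DETERMINERS:
                follows_det[word] += 1
    return {word: follows_det[word] / total[word] for word in total}


# Hypothetical input heard by a learner
corpus = [
    "the table is big",
    "she cleaned a table",
    "they eat at the table",
    "they eat soup",
]

ratios = determiner_ratio(corpus)
print(ratios["table"])  # 1.0
print(ratios["eat"])    # 0.0
```

A high ratio would count as evidence for the category noun in this toy setting; a more realistic model would also have to handle adjectives that intervene between determiner and noun.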

With the development of syntactic structures, a consistent sequence of learning processes can be discerned. Diehl (1999) found that, regardless of the method used to learn German syntax (implicitly or explicitly), individuals progress through learning steps in the same order when establishing the syntactic rules. For learning sentence structures, these stages are (1) main clauses: subject-verb, (2) coordinated main clauses, W-questions, and yes-no-questions, (3) distancing of verb phrases, (4) subclauses, and (5) inversion. The order of acquisition reflects the need to convey increasingly complex conceptual structures efficiently, along with the visible development of mechanisms and elements in the linguistic structures. The contents within these structures may vary among individuals due to different inputs, environments, and the complexity of ideas to be communicated. However, the general structures remain consistent if the learners' brains are intact. The first step, using the correct regular word order in simple sentences, may not even require additional syntactic rules; it may be guided by semantics. The default sequence of ACTOR, ACTION, and OBJECT in sentences can make an additional syntactic rule unnecessary (Figure 7).

Santi and Grodzinsky's (2010) fMRI findings on relative clause processing in healthy adults further support this notion. The authors tested the effect of two different dimensions of sentence complexity on Broca's area, depending on (1) the position of the relative clause in the sentence (in the middle or at the end) and (2) whether the relative clause was a subject or an object relative clause. The fMRI images show that both factors influenced the changes in posterior Broca's area. However, the type of relative clause was the only factor that caused a syntactic adaptation in anterior Broca's area. The first adaptation thus shows the effect of different complexities of syntactic processing (space between reference and referent and/or reversion of subject and object), the second exclusively the effect of the syntactic role of the pronoun. This difference could thus result from the activation of a mechanism that tells the conceptual structure that the pronoun is the OBJECT/RECEIVER of the ACTION, despite its initial position (cf. Santi et al., 2015).

This effect can be modeled by the Parallel Architecture (Figure 8): in the first sentence, John is the man who loves Linda, the relative clause has the syntactic and semantic form of subject = /ACTOR/, verb = /ACTION/, and object = /RECIPIENT/, which is the easiest to process and is acquired in the first step of sentence learning. In the second sentence, John is the man who Linda loves, who will first be interpreted as a pronoun introducing a subject relative clause. As there is no verb but another noun following, the syntactic (and semantic) analysis needs to be adapted, and the second noun becomes the subject while the pronoun becomes the object of the clause. The syntactic inversion also activates changes in the semantic interpretation: whereas in the first sentence who refers to the /ACTOR/ of the relative clause, in the second sentence, this interpretation must be blocked and changed to /RECIPIENT/.
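The reanalysis step for the two relative clauses can be sketched as a simple role assignment procedure. This is a toy illustration under strong assumptions and not a parser: the function `assign_roles`, the category labels, and the hand-annotated input are hypothetical, and only the two clause patterns discussed here are covered.

```python
def assign_roles(relative_clause):
    """Assign ACTOR/RECIPIENT roles in a relative clause.

    `relative_clause` is a list of (word, category) pairs that starts with
    the relative pronoun. Returns the role mapping and a flag indicating
    whether the default interpretation had to be revised.
    """
    (pronoun, _), *rest = relative_clause
    # Default interpretation: the clause-initial pronoun is the subject/ACTOR
    roles = {pronoun: "ACTOR"}
    reanalysed = False

    next_word, next_category = rest[0]
    if next_category == "noun":
        # A noun follows instead of a verb: block the default reading,
        # the noun becomes the ACTOR and the pronoun the RECIPIENT
        roles[pronoun] = "RECIPIENT"
        roles[next_word] = "ACTOR"
        reanalysed = True
    else:
        # A verb follows: the default reading holds and a later noun
        # is the RECIPIENT
        for word, category in rest[1:]:
            if category == "noun":
                roles[word] = "RECIPIENT"
    return roles, reanalysed


subject_rc = [("who", "pronoun"), ("loves", "verb"), ("Linda", "noun")]
object_rc = [("who", "pronoun"), ("Linda", "noun"), ("loves", "verb")]

print(assign_roles(subject_rc))  # ({'who': 'ACTOR', 'Linda': 'RECIPIENT'}, False)
print(assign_roles(object_rc))   # ({'who': 'RECIPIENT', 'Linda': 'ACTOR'}, True)
```

The returned flag marks the additional processing step that distinguishes the object relative clause from the subject relative clause in this simplified account.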

As we can see in the implementation of neurolinguistic findings on language learning processes, the Parallel Architecture in its current form already provides a framework that is well-equipped to systematically describe a variety of neurolinguistic processes in language acquisition. However, it would be useful to integrate structures and interfaces into the framework that have been shown to be important in neuroimaging studies of language processing—or to make clear where language modules could be added to explain e.g., non-verbal or written language processes. In the following, we will outline our ideas in more detail.

A first and simple step in adapting the framework to the implementation of neurolinguistic findings would be to change the single two-way interfaces to two one-way interfaces since it has been found that the flow of neural information between brain structures is often unidirectional (Perani et al., 2011, see Figure 6). This update would also make it easier to outline scenarios in which reception and production capabilities diverge due to language impairment.

In addition, studies of speech perception show that it is useful to link the phonological structures to more cognitive perceptual systems than just hearing and vocalization. Visual input can be a crucial factor for all language users when following communication in a noisy environment. Listeners often rely on a combination of lip-reading and comparing the assumptions of likely pronounced phonemes with words that would fit the context of the communication. A prominent example of the effects of this interaction is the McGurk effect (McGurk and MacDonald, 1976; MacDonald, 2018), where auditory input (e.g., ba) and visual input of lip movements (e.g., ga) are incongruent, and the listener fuses visual and auditory representations to perceive a completely different syllable (in this case, da). Studies of people who perceive this effect and of those who do not, but “hear” the uttered syllable, have shown that there is a difference in connectivity of the left superior temporal gyrus, where audiovisual integration is processed and where the auditory and visual cortices interact (Nath and Beauchamp, 2012). It would therefore be reasonable to add interfaces between phonological and visual structures.

Since declarative and procedural memory are crucial for language processing, as shown above for the acquisition of regular and irregular forms, the explicit inclusion of both in the structures may also help to explain findings on atypical language development.

Furthermore, we would propose to explicitly include executive function as a crucial element in the framework. Executive function is involved in the control of thoughts, actions and emotions. It is embedded in the prefrontal cortex and includes different skills such as attentional control, working memory, and behavioral inhibition, which are crucial for processing language in a contextually appropriate form (Gooch et al., 2016). It thus plays a central role in guiding both learning processes and the coordination of perception and production. Summarizing the results of correlational and experimental studies, Müller et al. (2009) emphasize that not only does the executive function affect language, but that language can in turn affect the development of executive function, for example, by helping to direct the attention of learners and listeners. Language is also crucial for training the executive function to work effectively in more complex situations, which, in turn, supports language learning as a next step. Executive control is also crucial in bilingual language production. Bilingual brains show different activity than those of monolinguals, and again bilinguality has a strengthening effect on specific areas in the control systems (Coderre et al., 2016). Thus, when executive function is integrated into the Parallel Architecture (Figure 9), the interfaces between executive function and the language system (in the oval) are bidirectional.

In addition to these changes and additions to the framework, we would also suggest that it is important to remember that there are more notions of language than phonology, syntax and semantics. As noted in the study by Perani et al. (2011, see above), the structures and mechanisms responsible for analyzing auditory input, evaluating it and extracting rules are active from birth, initiating the development of phonological processing in Wernicke's area, which would correspond to the phonological structures in the Parallel Architecture. However, the first linguistic activities precede the activation of linguistic structures in the left hemisphere (cf. Steinhauer et al., 1999). These vocal activities are related to prosody. For example, in their study of the crying patterns of newborns in Germany and France, Mampe et al. (2009) found that the language newborns were exposed to before birth influenced their crying patterns. Whereas French babies' cries have an ascending melodic contour, German babies' cries show a falling contour. The babies also adapt the intensity of their cries to the melodies. This means that premature babies adapt their vocal muscles to the prosodic characteristics of their environment even before the left hemisphere, which is dominant for language understanding and production, has taken over the lead in language analysis.

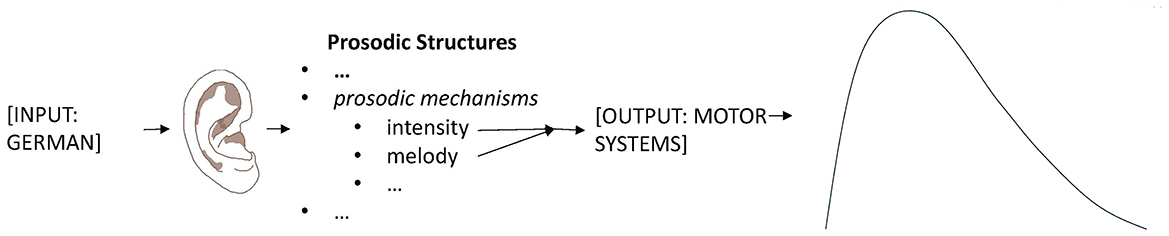

In the Parallel Architecture, prosody is included in the phonological structures, but since we can see that there are distinct brain areas involved in performing phonological and prosodic tasks, integrating prosodic structures as separate structures in the framework would help to explain a variety of language learning and processing activities that are crucial for language acquisition and for understanding a speaker's intended message. In prosodic structures, infants recognize intensity and melody and the relevant information of the audio input, and the patterns identified here are then transferred not only to the phonological structures, but also to conceptual structures. Mampe et al.'s (2009) findings on the differences in crying patterns between German and French infants would then be modeled within the Parallel Architecture as shown in Figure 10.

Figure 10. Adding the structure of prosody for implementing the findings of Mampe et al. (2009) in the architecture.

Prosodic mechanisms are not only crucial for establishing crying patterns and initiating phoneme and word learning (Wermke et al., 2007). Babies also filter out elements such as pauses and melodies, which are critical for sentence comprehension and formation. Gervain and Werker (2013) showed that characteristic prosodic cues differentiate word order (verb-object vs. object-verb) even in 7-month-old infants. To explain these prosodic-syntactic rules and interfaces, consider the illustration in Figure 11: auditory information is analyzed within prosodic structures to identify prosodic patterns, which in turn provide information about the sentence proposition. Männel and Friederici (2011) showed in ERP studies with 21-month-old, 3-year-old, and 6-year-old children that participants in speech groups older than 2 years showed a similar response to intonational phrase boundaries as adults who analyse sentence structures. However, before sentence and phrase knowledge is sufficiently developed, children rely on lower-level prosodic cues to analyse speech input. These prosodic elements and their associated rules are then transferred to syntactic structures where they contribute to the development of passive and active syntactic competence.

Other language structures that could be added to the framework are pragmatic structures. The fact that babies adapt their crying to their environment is already driven by the need to communicate physical and emotional states such as hunger, pain or fear, in order to get others to act and feed them, hug them or change their nappies (Choliz et al., 2012). So we can see that there is a pragmatic reason for developing a language system. The Parallel Architecture proposes that pragmatics is part of the conceptual structures. However, one can see that pragmatics is not independent of the language a speaker uses, as is assumed for semantics in the framework, but that it depends on language and culture, and that speakers have to adapt their language, for example, to the context in which they are using it (e.g., academic communication vs. communication on a football pitch) or to geographical and cultural differences. Jay and Janschewitz (2008) also showed that first language (L1) and foreign language (FL) speakers of English rated the impact of swear words differently, i.e., the FL speakers did not rate the swear words as being as offensive as the L1 speakers did. In terms of neural networks, Márquez-García et al. (2023) conducted a study of the neural communication patterns of children with Autism Spectrum Disorder (ASD), who have difficulty filtering out context and social situation cues that are critical for successful understanding and participation in social interactions. The authors compared the MRI scans of the ASD group performing semantic and pragmatic language tasks with the scans of typically developing (TD) peers. They found that the neural communication patterns of the ASD children differed from those of the TD children. It was suggested that these different neural networks might be used to compensate for disrupted conventional brain networks. As semantic processing did not show these effects, the results would support our proposal to add pragmatic structures to the framework.

As can be seen in Figures 10 and 11, the general construction of the Parallel Architecture as a set of generative components connected to each other via interfaces makes the framework very flexible in terms of adding structures (or leaving them out, depending on what one wants to show). Breuer (2015) has already shown that the openness of the framework allows users to include, for example, more languages by adding phonological and syntactic structures for each language, and adding interfaces between the individual structures in each and across languages (the conceptual structures remaining unique).

Orthographic structures can also be added. Jackendoff and Audring (2020, p. 252) introduce an orthographic structure in The Texture of the Lexicon. This structure consists of two dimensions: the dimension of spelling rules that “define a repertoire of graphemes” (p. 252) and the dimension of the “realization of graphemes in visible form.” The structure would then be linked to the phonological structures. However, this would only work in an alphabetic language where the graphemes represent the phonemes, which is only true in shallow orthographies like Latin or (mostly) Dutch; in languages with deep orthographies like English, graphemes often do not consistently represent specific phonemes (and in a logographic language they do not do so at all; Bar-Kochva and Breznitz, 2012). Cao et al. (2015) conducted an fMRI study with Chinese and English participants and found that (besides commonalities in both languages) the left inferior parietal lobe and the left superior temporal gyrus were mainly involved in the development of reading competence in English. In Chinese, it was the right middle occipital gyrus that showed higher activity, suggesting that the brain adapts its processes and processing locations to the specific demands of the orthographic system. Orthographic structures would also need interfaces with the conceptual structures (night denotes a different concept than knight, adjectives denoting a language are written with capital letters in English) and the syntactic structures (words can be represented by a single sign in logographic languages; sentences are separated by a full stop, although in oral conversation a stop is not always found in pronunciation; in German, nouns are written with capital letters at the beginning, although this is not acoustically realized), and these structures may also vary in multilingual speakers/writers. Orthographic structures are then associated with different motor areas for the manual control of different forms of writing (as would also be the case for sign language). These structures are located in both the left and the right hemisphere, as was shown by Zuk et al.'s (2018) study, which found that musical training activates regions bilaterally and thereby supports children with dyslexia.

In summary, the Parallel Architecture provides a compelling framework for modeling the multifaceted dynamics of language processing. It postulates three distinct structures: the phonological and syntactic structures tailored to language, and the language-independent conceptual structures. However, this framework does not view these structures as isolated compartments in which all information is contained and processed. Instead, it portrays them as intricate networks of productive and semi-productive rules, fixed elements and control centres. These control centres act as orchestrators, activating the functions of these structures through various interfaces. Incorporating findings from neurolinguistics into the framework, the phonological and syntactic decision-making control centres could be located in Wernicke's and Broca's areas, respectively, while the anterior temporal lobe (ATL) and spokes in the left and right hemispheres could be posited as the neurological basis for conceptual structures (cf. Chiou and Lambon Ralph, 2019; Patterson and Lambon Ralph, 2016; Lambon Ralph, 2014).

The description of neurolinguistic findings on language acquisition using the Parallel Architecture has shown that the framework has great potential for representing the complex landscape of language and language processing. By making active use of its construction as a “box of language building blocks,” one can select the components that are relevant for representing the neural processes of language processing, add new ones and omit others that are not involved in the specific processes one wishes to explain. The flexibility of the architecture and its modularity promise the possibility of integrating new findings in neurolinguistics. The framework is thus a powerful tool for modeling not only language acquisition, but also typical language processing and language deficits in a schematic and flexible way.

EB: Conceptualization, Writing – original draft. FB: Conceptualization, Writing – review & editing. AP: Conceptualization, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

We used DeepL Write for an initial linguistic improvement before handing the text over to a colleague with English as an L1 for final revision.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^Although the model labels this knowledge as rules, they actually have rather the form of constructions (cf. e.g., Langacker, 1987, 1991, 2009). In order to go along with the wording used by Jackendoff, we will stay with the term rule, however.

Allan, K. (2020). “On cups,” in Dynamics of Language Changes: Looking Within and Across Languages, ed. K. Allan (Singapore: Springer), 125–137.

Baaijen, M., and Galbraith, D. (2018). Discovery through writing: relationships with writing processes and text quality. Cogn. Instr. 36, 199–223. doi: 10.1080/07370008.2018.1456431

Bar-Kochva, I., and Breznitz, Z. (2012). Does the reading of different orthographies produce distinct brain activity patterns? An ERP study. PLoS ONE 7, 1–8. doi: 10.1371/journal.pone.0036030

Beckage, N., Smith, L., and Hills, T. (2011). Small worlds and semantic network growth in typical and late talkers. PLoS ONE 6:e19348. doi: 10.1371/journal.pone.0019348

Bohlman, E., Turner, J., Haisley, L. D., Hantzch, L., Botteron, K. N., Dager, S., et al. (2023). Language development in infants and toddlers (12 to 24 months) with agenesis of the corpus callosum. J. Int. Neuropsychol. Soc. 29, 404–405. doi: 10.1017/S1355617723005386

Breuer, E. O. (2015). L1 versus FL: Fluency, Errors and Revision Processes in Foreign Language Academic Writing. Frankfurt a.M.: Peter Lang.

Cao, F., Brennan, C., and Booth, J. R. (2015). The brain adapts to orthography with experience: evidence from English and Chinese. Dev. Sci. 18, 785–798. doi: 10.1111/desc.12245

Chiou, R., and Lambon Ralph, M. A. (2019). Unveiling the dynamic interplay between the hub- and spoke-components of the brain's semantic system and its impact on human behaviour. NeuroImage 199, 114–126. doi: 10.1016/j.neuroimage.2019.05.059

Choliz, M., Fernández-Abascal, E. G., and Martinez-Sánchez, F. (2012). Infant crying: pattern of weeping, recognition of emotion and affective reactions in observers. Spanish J. Psychol. 15, 978–988. doi: 10.5209/rev_SJOP.2012.v15.n3.39389

Chou, T.-L., Wong, C.-H., Chen, S.-Y., Fan, L.-Y., and Booth, J. R. (2019). Developmental changes of association strength and categorical relatedness on semantic processing in the brain. Brain Lang. 189, 10–19. doi: 10.1016/j.bandl.2018.12.006

Clerkin, E. M., Hart, E., Rehg, J. M., Yu, C., and Smith, L. B. (2017). Real-world visual statistics and infants' first-learned object names. Phil. Trans. R. Soc. 372, 1–9. doi: 10.1098/rstb.2016.0055

Coderre, E. L., Smith, J. F., van Heuven, W. J. B., and Horowitz, B. (2016). The functional overlap of executive control and language processing in bilinguals. Bilingualism 19, 471–488. doi: 10.1017/S1366728915000188

Diehl, E. (1999). Schulischer Grammatikerwerb unter der Lupe: Das Genfer DIGS-Projekt. Bulletin suisse de linguistique appliquée 70, 7–26.

Earle, F. S., and Ullman, M. T. (2021). Deficits of learning in procedural memory and consolidation in declarative memory in adults with developmental language disorder. J. Speech Lang. Hear. Res. 64, 531–541. doi: 10.1044/2020_JSLHR-20-00292

Evans, V., Bergen, B. K., and Zinken, J. (2007). “The cognitive linguistics enterprise: an overview,” in The Cognitive Linguistics Reader, eds. V. Evans, B. K. Bergen, and J. Zinken (London: Equinox), 2–36.

Fodor, J. A. (1985). Fodor's guide to mental representation: the intelligent Auntie's Vade-Mecum. Mind 94, 76–100. doi: 10.1093/mind/XCIV.373.76

Frank, S., Monaghan, P., and Tsoukala, C. (2019). “Neural network models of language acquisition and processing,” in Human Language: From Genes and Brains to Behavior, ed. P. Hagoort (Cambridge, MA: MIT Press), 277–293.

Friederici, A. D., Mueller, J. L., and Oberecker, R. (2011). Precursors to natural grammar learning: preliminary evidence from 4-month-old infants. PLoS ONE 6, 1–7. doi: 10.1371/journal.pone.0017920

Gálvez-García, G., Aldunate, N., Bascour-Sandoval, C., Martínez-Molina, A., Peña, J., and Barramuño, M. (2020). Muscle activation in semantic processing: An electromyography approach. Biol. Psychol. 152:107881.

Gervain, J., and Werker, J. F. (2013). Prosody cues word order in 7-month-old bilingual infants. Nat. Commun. 4, 1–6. doi: 10.1038/ncomms2430

González, J., Barros-Loscertales, A., Pulvermüller, F., Meseguer, V., Sanjuán, A., Belloch, V., et al. (2006). Reading cinnamon activates olfactory brain regions. NeuroImage 32, 906–912. doi: 10.1016/j.neuroimage.2006.03.037

Gooch, D., Thompson, P., Nash, H. M., Snowling, M. J., and Hulme, C. (2016). The development of executive function and language skills in the early school years. J. Child Psychol. Psychiat. 57, 180–187. doi: 10.1111/jcpp.12458

Grisoni, L., Boux, I. P., and Pulvermüller, F. (2024). Predictive brain activity shows congruent semantic specificity in language comprehension and production. J. Neurosci. 44:e1723232023. doi: 10.1523/JNEUROSCI.1723-23.2023

Gross, S. (2005). The nature of semantics: on Jackendoff's arguments. Linguist. Rev. 22, 249–270. doi: 10.1515/tlir.2005.22.2-4.249

Horoufchin, H., Bzdok, D., Buccino, G., Borghi, A. M., and Binkofski, F. (2018). Action and object words are differentially anchored in the sensory motor system – A perspective on cognitive embodiment. Sci. Rep. 8, 1–11. doi: 10.1038/s41598-018-24475-z

Hultén, A., van Vliet, M., Kivisaari, S., Lammi, L., Lindh-Knuutila, T., Faisal, A., et al. (2021). The neural representation of abstract words may arise through grounding word meaning in language. Hum. Brain Mapp. 42, 4973–4984.

Jackendoff, R. (1987). “X-bar semantics,” in Proceedings of the Annual Meeting of the Berkeley Linguistics Society, eds. J. Aske, N. Beery, L. Michaelis, and H. Filip (Berkeley, CA: Berkeley Linguistics Society), 355–365.

Jackendoff, R. (1996). Conceptual semantics and cognitive linguistics. Cogn. Linguist. 7, 93–129. doi: 10.1515/cogl.1996.7.1.93

Jackendoff, R. (2003). Précis of foundations of language: brain, meaning, grammar, evolution. Behav. Brain Sci. 26, 651–707. doi: 10.1017/S0140525X03000153

Jackendoff, R. (2007). Linguistics in cognitive science: the state of the art. Linguist. Rev. 24, 347–401. doi: 10.1515/TLR.2007.014

Jackendoff, R. (2009). “The parallel architecture and its place in cognitive science,” in The Oxford Handbook of Linguistic Analysis, eds. B. Heine and H. Narrog (Oxford: Oxford University Press), 583–606.

Jackendoff, R. (2010). Meaning and the Lexicon: The Parallel Architecture 1975–2010. Oxford: Oxford University Press.

Jackendoff, R. (2019). “Conceptual semantics,” in Semantics: Theories, eds. C. Maienborn, K. von Heusinger, and P. Portner (Berlin: de Gruyter), 86–113.

Jackendoff, R. (2023). The parallel architecture in language and elsewhere. Top. Cogn. Sci. 2023, 1–10. doi: 10.1111/tops.12698

Jackendoff, R., and Audring, J. (2020). The Texture of the Lexicon: Relational Morphology and the Parallel Architecture. Oxford: Oxford University Press.

Jay, T., and Janschewitz, K. (2008). The pragmatics of swearing. J. Politen. Res. 4, 267–288. doi: 10.1515/JPLR.2008.013

Johnson, M. (2018). “The embodiment of language,” in The Oxford Handbook of 4E Cognition, eds. A. Newen, L. de Bruin, and S. Gallagher (Oxford: Oxford University Press), 623–640.

Labov, W. (1973). “The boundaries of words and their meanings,” in New Ways of Analyzing Variation in English, eds. C. J. Bailey and R. W. Shuy (Washington, DC: Georgetown University Press), 340–373.

Lakoff, G. (1987). Women, Fire, and Dangerous Things: What Categories Reveal about the Mind. Chicago, IL; London: The University of Chicago Press.

Lambon Ralph, M. A. (2014). Neurocognitive insights on conceptual knowledge and its breakdown. Philos. Trans. R. Soc. Lond. B Biol. Sci. 369:20120392. doi: 10.1098/rstb.2012.0392

Lambon Ralph, M. A., Jefferies, E., Patterson, K., and Rogers, T. T. (2017). The neural and computational bases of semantic cognition. Nat. Rev. Neurosci. 18, 42–55. doi: 10.1038/nrn.2016.150

Landrigan, J.-F., and Mirman, D. (2018). The cost of switching between taxonomic and thematic semantics. Mem. Cogn. 46, 191–203. doi: 10.3758/s13421-017-0757-5

Langacker, R. W. (1991). Concept, Image, and Symbol: The Cognitive Basis of Grammar. Berlin/New York, NY: Mouton de Gruyter.

Langacker, R. W. (2009). Investigations in Cognitive Grammar. Berlin/New York, NY: Mouton de Gruyter.

Lewis, G. A., Poeppel, D., and Murphy, G. L. (2015). The neural bases of taxonomic and thematic conceptual relations: an MEG study. Neuropsychologia 68, 176–189. doi: 10.1016/j.neuropsychologia.2015.01.011

MacDonald, J. (2018). Hearing lips and seeing voices: the origins and development of the 'McGurk effect' and reflections on audio-visual speech perception over the last 40 years. Multisens. Res. 31, 7–18. doi: 10.1163/22134808-00002548

Mampe, B., Friederici, A. D., Christophe, A., and Wermke, K. (2009). Newborns' cry melody is shaped by their native language. Curr. Biol. 19, 1994–1997. doi: 10.1016/j.cub.2009.09.064

Männel, C., and Friederici, A. D. (2011). Intonational phrase structure processing at different stages of syntax acquisition: ERP studies in 2-, 3-, and 6-year-old children. Dev. Sci. 14, 786–798. doi: 10.1111/j.1467-7687.2010.01025.x

Márquez-García, A. V., Ng, B. K., Iarocci, G., Moreno, S., Vakorin, V. A., and Doesburg, S. M. (2023). Atypical associations between functional connectivity during pragmatic and semantic language processing and cognitive abilities in children with autism. Brain Sci. 13, 1–22. doi: 10.3390/brainsci13101448

Marr, D., and Nishihara, H. K. (1978). Representation and recognition of the spatial organization of three-dimensional shapes. Proc. Royal Soc. B 200, 269–294. doi: 10.1098/rspb.1978.0020

Mauchand, M., Vergis, N., and Pell, M. D. (2019). Irony, prosody, and social impressions of affective stance. Discour. Process. 57, 141–157. doi: 10.1080/0163853X.2019.1581588

McGurk, H., and MacDonald, J. (1976). Hearing lips and seeing voices. Nature 264, 746–748. doi: 10.1038/264746a0

Menn, K. H., Männel, C., and Meyer, L. (2023). Phonological acquisition depends on the timing of speech sounds: deconvolution EEG modeling across the first five years. Sci. Adv. 9, 1–8. doi: 10.1126/sciadv.adh2560

Müller, U., Jacques, S., Brocki, K., and Zelazo, P. D. (2009). “The executive functions of language in preschool children,” in Private Speech, Executive Functioning, and the Development of Verbal Self-Regulation, eds. A. Winsler, C. Fernyhough, and I. Montero (Cambridge: Cambridge University Press), 53–68.

Nath, A. R., and Beauchamp, M. S. (2012). A neural basis for interindividual differences in the McGurk effect: a multisensory speech illusion. NeuroImage 59, 781–787. doi: 10.1016/j.neuroimage.2011.07.024

Pannitto, L., and Herbelot, A. (2022). Can recurrent neural networks validate usage-based theories of grammar acquisition? Front. Psychol. 13:e741321. doi: 10.3389/fpsyg.2022.741321

Patterson, K., and Lambon Ralph, M. A. (2016). The hub-and-spoke hypothesis of semantic memory. Neurobiol. Lang. 4, 765–775. doi: 10.1016/B978-0-12-407794-2.00061-4

Patterson, K., Nestor, P. J., and Rogers, T. T. (2007). Where do you know what you know? The representation of semantic knowledge in the human brain. Nat. Rev. Neurosci. 8, 976–988. doi: 10.1038/nrn2277

Pellicano, A., Lugli, L., Baroni, G., and Nicoletti, R. (2009). The Simon effect with conventional signals: a time-course analysis. Exp. Psychol. 56, 219–227. doi: 10.1027/1618-3169.56.4.219

Perani, D., Saccuman, M. C., Scifo, P., Anwander, A., Spada, D., Baldoli, C., et al. (2011). Neural language networks at birth. Proc. Natl. Acad. Sci. U. S. A. 108, 16056–16061. doi: 10.1073/pnas.1102991108

Poeppel, D., Emmorey, K., Hickok, G., and Pylkkänen, L. (2012). Towards a new neurobiology of language. J. Neurosci. 32, 14125–14131. doi: 10.1523/JNEUROSCI.3244-12.2012

Poeppel, D., and Hickok, G. (2004). Introduction: towards a new functional anatomy of language. Cognition 92, 1–12. doi: 10.1016/j.cognition.2003.11.001

Portelance, E., Frank, M. C., and Jurafsky, D. (2024). Learning the meanings of function words from grounded language using a visual question answering model. Cogn. Sci. 48:e13448. doi: 10.1111/cogs.13448

Portelance, E., and Jasbi, M. (2024). The roles of neural networks in language acquisition. Lang. Linguist. Compass 18:e70001. doi: 10.1111/lnc3.70001

Price, C. J. (2010). The anatomy of language: a review of 100 fMRI studies published in 2009. Ann. N. Y. Acad. Sci. 1191, 62–88. doi: 10.1111/j.1749-6632.2010.05444.x

Santi, A., Friederici, A., Makuuchi, M., and Grodzinsky, Y. (2015). An fMRI study dissociating distance measures computed by Broca's area in movement processing: clause boundary vs. identity. Front. Psychol. 6:654. doi: 10.3389/fpsyg.2015.00654

Santi, A., and Grodzinsky, Y. (2010). fMRI adaptation dissociates syntactic complexity dimensions. NeuroImage 51, 1285–1293. doi: 10.1016/j.neuroimage.2010.03.034

Schwartz, E., Karlinsky, L., Feris, R., Giryes, R., and Bronstein, A. (2022). Baby steps towards few-shot learning with multiple semantics. Pat. Recogn. Lett. 160, 142–147. doi: 10.1016/j.patrec.2022.06.012

Skarabela, B., Ota, M., O'Connor, R., and Arnon, I. (2021). “Clap your hands” or “take your hands”? One-year-olds distinguish between frequent and infrequent multiword phrases. Cognition 211:104612. doi: 10.1016/j.cognition.2021.104612

Smith, L. (2003). Learning to recognise objects. Psychol. Sci. 14, 244–250. doi: 10.1111/1467-9280.03439

Steinhauer, K., Alter, K., and Friederici, A. D. (1999). Brain potentials indicate immediate use of prosodic cues in natural speech processing. Nat. Neurosci. 2, 191–199. doi: 10.1038/5757

Turken, A. U., and Dronkers, N. F. (2011). The neural architecture of the language comprehension network: converging evidence from lesion and connectivity analyses. Front. Syst. Neurosci. 5:1. doi: 10.3389/fnsys.2011.00001

Ullman, M. T. (2004). Contributions of memory circuits to language: the declarative/procedural model. Cognition 92, 231–270. doi: 10.1016/j.cognition.2003.10.008

Van Waes, L., Leijten, M., Roeser, J., Olive, T., and Grabowski, J. (2021). Measuring and assessing typing skills in writing research. J. Writ. Res. 13, 107–153. doi: 10.17239/jowr-2021.13.01.04

Warlaumont, A. S., Westermann, G., Buder, E. H., and Oller, D. K. (2013). Pre-speech motor learning in a neural network using reinforcement. Neural Netw. 38, 64–75. doi: 10.1016/j.neunet.2012.11.012

Weist, R., Pawlak, A., and Carapella, J. (2004). Syntactic-semantic interface in the acquisition of verb morphology. J. Child Lang. 31, 31–60. doi: 10.1017/S0305000903005920

Wermke, K., Leising, D., and Stellzig-Eisenhauer, A. (2007). Relation of melody complexity in infants' cries to language outcome in the second year of life: a longitudinal study. Clin. Linguist. Phonet. 21, 961–973. doi: 10.1080/02699200701659243

Whitney, C., Kirk, M., O'Sullivan, J., Lambon Ralph, M. A., and Jefferies, E. (2012). Executive semantic processing is underpinned by a large-scale neural network: revealing the contribution of left prefrontal, posterior temporal, and parietal cortex to controlled retrieval and selection using TMS. J. Cogn. Neurosci. 24, 133–147. doi: 10.1162/jocn_a_00123

Keywords: Parallel Architecture, review, language model, cognitive linguistics, neurolinguistics, language acquisition, neurological implementation

Citation: Breuer EO, Binkofski FC and Pellicano A (2025) The Parallel Architecture—application and explanatory power for neurolinguistic research. Front. Lang. Sci. 3:1380990. doi: 10.3389/flang.2024.1380990

Received: 02 February 2024; Accepted: 27 November 2024; Published: 28 January 2025.

Edited by: José Antonio Hinojosa, Complutense University of Madrid, Spain

Reviewed by: Dalia Elleuch, University of Sfax, Tunisia

Copyright © 2025 Breuer, Binkofski and Pellicano. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ferdinand Christoph Binkofski, f.binkofski@fz-juelich.de

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
