
MINI REVIEW article

Front. Integr. Neurosci., 09 May 2012

Volume 6 - 2012 | https://doi.org/10.3389/fnint.2012.00018

This article is part of the Research Topic Cognitive Functions of the Posterior Parietal Cortex.

The primate brain is adept at rapidly grouping items and events into functional classes, or categories, in order to recognize the significance of stimuli and guide behavior. Higher cognitive functions have traditionally been considered the domain of frontal areas. However, increasing evidence suggests that parietal cortex is also involved in categorical and associative processes. Previous work showed that parietal cortex is highly involved in spatial processing, attention, and saccadic eye movement planning, and more recent studies have found decision-making signals in the lateral intraparietal area (LIP). We recently found that LIP reflects learned categories for multiple types of visual stimuli. Additionally, a comparison of categorization signals in parietal and frontal areas found stronger and earlier categorization signals in parietal cortex, arguing that, in trained animals, parietal abstract association or category signals are unlikely to arise via feedback from prefrontal cortex (PFC).

Parietal cortex was historically considered “association cortex” because it appeared to integrate sensory information to generate perceptions of the external world and guide body movements. Anatomical, physiological, and lesion data suggested that parietal cortex is well positioned to associate and adapt sensory information into a form that is useful for guiding behavior. Humans with lesions in the inferior parietal lobule do not experience basic sensory deficits, such as blindness or loss of somatosensation, but rather have more complex symptoms, including deficits in attention, movement planning, and spatial orientation (Critchley, 1953; Mountcastle et al., 1975).

Studies have pinpointed several brain areas that are involved in visual learning and categorization. Of these, the lateral intraparietal area (LIP) is of particular interest because it is reciprocally connected with both early visual areas and areas associated with higher cognition, and is thus in an optimal position to integrate inputs from both. In this review, we focus on recent work in macaques that highlights LIP's role in categorization.

Decades of work have documented robust modulation of parietal subdivision LIP by spatial attention and saccadic eye movements (Andersen and Buneo, 2002; Goldberg et al., 2006). Shadlen and colleagues have argued further that LIP encodes perceptual decisions in an “intentional framework” embedded within the motor-planning system (Shadlen et al., 2008). In these studies, subjects used saccadic eye movements to report their decisions, and signals related to decisions and eye movements were observed in LIP. However, it is unclear how a decision system based on planning specific motor responses could be extended to explain more abstract decisions that do not necessarily result in specific and predictable motor responses (Freedman and Assad, 2011).

While most work on LIP has focused on its role in spatial processing, LIP neurons also show selectivity for various stimulus attributes during both passive-viewing and more complex behavioral paradigms. For example, LIP neurons respond selectively to the directions of moving random-dot stimuli (Fanini and Assad, 2009). LIP neurons also respond selectively to static, two-dimensional shape stimuli during passive viewing (Sereno and Maunsell, 1998; Janssen et al., 2008) and during a delayed match-to-sample task (Sereno and Maunsell, 1998; Sereno and Amador, 2006). In these studies, the different stimuli were all presented within the neurons' receptive fields (RFs), so differences in the responses to them could not be explained by LIP's spatial selectivity.

Visual-feature selectivity in LIP has been shown to change depending on the features that are relevant for solving a behavioral task. For example, LIP neurons are selective for color when colored cues are used to direct saccadic eye movements (Toth and Assad, 2002). In this study, monkeys were trained such that in alternating blocks of trials, either the color or location of a stimulus determined the direction of an upcoming saccade. When color was relevant, neurons were often color selective. In contrast, the same neurons showed much less color selectivity when cue location (but not color) was relevant for saccade planning. Moreover, when color was relevant for directing the saccade, the animal could not predict the upcoming saccade direction. Thus, color selectivity was not an artifact of saccade planning or spatial selectivity. This suggests that LIP can encode arbitrary stimulus properties not simply when they are important for guiding an action, but also when they are relevant to solving a task.

Posterior parietal (including LIP and 7a) neurons may also encode the “rules” that dictate how the animals should link stimuli to responses. Stoet and Snyder trained monkeys on a task-switching paradigm in which the animals alternated between two stimulus-response mappings (Stoet and Snyder, 2004). A pre-trial task cue instructed animals to discriminate either the color or orientation of a subsequent test stimulus to generate an appropriate response. Neurons in areas of posterior parietal cortex, including LIP, were selective for the task rules even before the test stimuli were turned on. These studies show that LIP activity reflects cognitive signals that are not related to spatial encoding; moreover, they suggest that LIP activity reflects changes in behavioral demands.

These experiments showed that LIP is involved in functions beyond spatial processing and raise the possibility that LIP plays a general role in cognitive processing. A strong test for the presence of abstract cognitive signals is whether LIP neurons represent categories. Categorization is a fundamental cognitive ability that assigns meaning to stimuli. Stimuli in the same category may be physically dissimilar, while stimuli in different categories may be physically similar. For instance, a wheel and a clock may look alike, but serve different functions. Categorical signals have been observed in prefrontal cortex (PFC) when monkeys learned to categorize morphed visual stimuli as “cats” or “dogs” (Freedman et al., 2001). In contrast, neurons in inferior temporal (IT) cortex showed very weak category encoding, but were strongly selective for the features of visual stimuli (Freedman et al., 2003).

Freedman and Assad (2006) asked whether direction selectivity in LIP is plastic depending on the category rule used to solve the task. Two monkeys performed a delayed-match-to-category task, in which they learned to group 360° of motion directions into two 180°-wide categories. The stimuli were patches of high-coherence random-dot movies. Animals were presented with a sample and a test stimulus separated by a delay period. If the sample and test directions belonged to the same category, animals released a touch-bar to receive a reward. Because the sample and test categories were chosen randomly on each trial, animals could not predict during the sample and delay periods whether they would need to release the touch-bar or continue holding it in response to the upcoming test stimulus.
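The category rule and match decision described above can be sketched in a few lines. This is an illustration under stated assumptions, not the task code used in the study: directions are given in degrees, the boundary angle is a hypothetical parameter, and the two categories are taken to be the two 180° arcs on either side of the boundary line.

```python
import random

def category_of(direction_deg: float, boundary_deg: float) -> int:
    """Return 0 or 1 for a motion direction, given a category boundary.

    Assumed convention: the 180-degree arc running counterclockwise from
    boundary_deg is category 0; the opposite 180-degree arc is category 1.
    """
    offset = (direction_deg - boundary_deg) % 360.0  # always in [0, 360)
    return 0 if offset < 180.0 else 1

def is_match(sample_deg: float, test_deg: float, boundary_deg: float) -> bool:
    """A 'match' trial (release the touch-bar) when both stimuli share a category."""
    return category_of(sample_deg, boundary_deg) == category_of(test_deg, boundary_deg)

def simulate_trial(directions, boundary_deg, rng):
    """Draw sample and test independently, so the correct response cannot be
    predicted until the test stimulus appears."""
    sample = rng.choice(directions)
    test = rng.choice(directions)
    return sample, test, is_match(sample, test, boundary_deg)

if __name__ == "__main__":
    rng = random.Random(0)
    print(simulate_trial([0, 90, 180, 270], boundary_deg=45.0, rng=rng))
```

With a hypothetical boundary at 45°, directions of 90° and 180° share a category (a match), while 90° and 270° do not. Calling `is_match(90, 180, 135)` instead returns `False`, showing how retraining on a new boundary relabels the same physical stimuli without changing them.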

After the animals were proficient in the direction categorization task, LIP activity was recorded during task performance. Sample and test stimuli were placed in neurons' RFs in order to elicit strong visual responses. Neuronal activity reflected the learned motion categories: individual neurons tended to show smaller differences in firing rate within categories and larger differences in firing rate between categories. This effect was present during stimulus presentation and during the subsequent delay period, when no stimulus was present in the RF. The animals were then retrained on a new category boundary over the course of several weeks, and a second population of neurons was recorded. After the monkeys learned the second boundary, LIP selectivity had “shifted” dramatically away from the previous category boundary and reflected the new one. Thus, LIP activity changes to reflect the learned category membership of visual stimuli. PFC neurons likewise showed shifting representations following retraining (Freedman et al., 2001) and differential activity when identical stimuli were classified according to different rules (Roy et al., 2010).
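One simple way to quantify the "smaller differences within, larger differences between" pattern is an index contrasting the mean between-category difference (BCD) with the mean within-category difference (WCD) in a neuron's direction-averaged firing rates. The sketch below assumes mean rates per direction have already been computed; the function name and the example rates are hypothetical, and the published analyses may have compared only particular direction pairs or time windows.

```python
from itertools import combinations

def category_index(mean_rates, categories):
    """(BCD - WCD) / (BCD + WCD), ranging from -1 to 1.

    mean_rates: dict mapping direction (deg) -> mean firing rate (spikes/s)
    categories: dict mapping direction (deg) -> category label
    Assumes each category contains at least two directions.
    """
    within, between = [], []
    for a, b in combinations(sorted(mean_rates), 2):
        diff = abs(mean_rates[a] - mean_rates[b])
        # Sort each pairwise difference into the within- or between-category pool.
        (within if categories[a] == categories[b] else between).append(diff)
    wcd = sum(within) / len(within)
    bcd = sum(between) / len(between)
    if bcd + wcd == 0:
        return 0.0  # flat responses: no selectivity of either kind
    return (bcd - wcd) / (bcd + wcd)

# Made-up rates for a neuron that responds strongly to one half of the circle.
rates = {0: 20, 60: 22, 120: 21, 180: 5, 240: 6, 300: 4}
cats_a = {0: 0, 60: 0, 120: 0, 180: 1, 240: 1, 300: 1}  # boundary near 150/330 deg
cats_b = {0: 0, 60: 0, 300: 0, 120: 1, 180: 1, 240: 1}  # boundary rotated to 90/270 deg
print(category_index(rates, cats_a))  # about 0.85
print(category_index(rates, cats_b))  # about -0.10
```

The same firing rates give a strongly positive index under one boundary and a slightly negative one under a rotated boundary, which is why category selectivity is always defined relative to the boundary the animal has learned.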

In contrast, neurons in the middle temporal area (MT), which is directly interconnected with LIP, were little affected by category training. MT contains a preponderance of neurons that are selective for motion direction (Born and Bradley, 2005), and nearly all of the recorded MT neurons were also highly direction selective in the direction categorization task. The preferred directions of individual MT neurons were distributed almost uniformly in the direction categorization task and thus did not reflect the category boundary or category membership of the motion stimuli (Freedman and Assad, 2006). Because motion category selectivity was absent in area MT but present in LIP, an intriguing possibility is that directional signals in MT are transformed into more abstract categorical representations in LIP. This could occur via plasticity within the hierarchy of parietal cortical processing or even in the direct interconnections between MT and LIP.
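As a purely illustrative toy model (not a model proposed in the studies reviewed here), the sketch below shows one way a population of MT-like units with uniformly distributed preferred directions could be read out to produce a category signal: each hypothetical MT unit receives a weight of +1 or -1 depending on which side of the boundary its preferred direction falls. The von Mises tuning shape, `kappa`, the peak rate, the population size, and the ±1 weights are all assumptions.

```python
import math

def mt_response(direction_deg, preferred_deg, kappa=2.0, peak=50.0):
    """Hypothetical von Mises-shaped direction tuning curve (spikes/s)."""
    delta = math.radians(direction_deg - preferred_deg)
    return peak * math.exp(kappa * (math.cos(delta) - 1.0))

def category_readout(direction_deg, boundary_deg=45.0, n_mt=36):
    """Weighted sum over a uniformly tuned MT-like population.

    Weights are +1 for units whose preferred direction lies in category A
    (the 180-deg arc counterclockwise from the boundary) and -1 otherwise.
    Positive output signals category A; negative output signals category B.
    """
    step = 360.0 / n_mt
    total = 0.0
    for i in range(n_mt):
        pref = boundary_deg + step * (i + 0.5)  # offset so no unit sits on the boundary
        weight = 1.0 if (pref - boundary_deg) % 360.0 < 180.0 else -1.0
        total += weight * mt_response(direction_deg, pref)
    return total
```

In this toy model, moving `boundary_deg` changes only the readout weights while the MT tuning curves stay fixed, which is one concrete way to picture the idea that learning could act on the MT-to-LIP transformation rather than on MT tuning itself.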

Freedman and Assad examined how LIP's categorical signals interact with spatial signals by varying the position of the motion stimuli relative to the RF of the neuron under study (Freedman and Assad, 2009). Not surprisingly, LIP neurons were strongly modulated by whether stimuli were presented inside or outside their RFs: nearly all LIP neurons showed much lower activity when the stimuli fell outside their RFs. However, many LIP neurons still showed modulation by the direction categories despite their weak firing rates, suggesting that LIP categorization signals are largely independent of (orthogonal to) spatial signals. Open questions include how spatial signals in LIP (e.g., signals related to attention or eye movements) are multiplexed with non-spatial signals, and how both kinds of signals are “read out” from LIP by downstream brain areas.

LIP activity flexibly changes with the demands of a direction categorization task, but does this flexibility extend to other visual stimuli? Selectivity for learned direction categories may be a special case, because the continuous, native parametric tuning for direction in parietal neurons may provide a “scaffold” upon which the categorization signals emerge (Ferrera and Grinband, 2006). In fact, visual-motion patterns were chosen for that study because LIP neurons were known to respond to such stimuli. Alternatively, LIP may reflect learned associations between other visual stimulus attributes besides direction. This would suggest that LIP plays a more general role in encoding learned associations between visual stimuli, much like that ascribed to frontal cortical areas such as the lateral PFC (Miller et al., 2002; Cromer et al., 2010). Since LIP has been shown to respond selectively to non-spatial visual stimuli, such as color (Toth and Assad, 2002) and shape (Sereno and Maunsell, 1998), LIP may also encode associations between such diverse stimulus features.

To examine the generality of learned associations in parietal cortex, Fitzgerald and colleagues asked whether LIP neurons reflect arbitrary associations between pairs of visual shapes (Fitzgerald et al., 2011). Animals learned to associate pairs of static, two-dimensional shape stimuli in a delayed pair association task. The shapes were paired arbitrarily, and different pairing schemes were used for the two animals in the study. Finding shape pair selectivity in LIP would provide evidence that associative representations are a general property of LIP neurons, and are not specific to particular stimulus attributes such as direction.

Pair-association learning tasks have been used extensively to study neurons in the ventral visual stream, particularly by Miyashita and colleagues. For example, Sakai and Miyashita described IT neurons that are activated specifically by one pair of shapes, which had been associated with one another over the course of long-term training, during the sample and/or delay intervals of a delayed pair association task (Sakai and Miyashita, 1991). Further work described pair-association effects in perirhinal cortex (Naya et al., 2003), hippocampus (Wirth et al., 2003; Yanike et al., 2004), PFC (Rainer et al., 1999), and there is some evidence for associative effects in MT (Schlack and Albright, 2007).

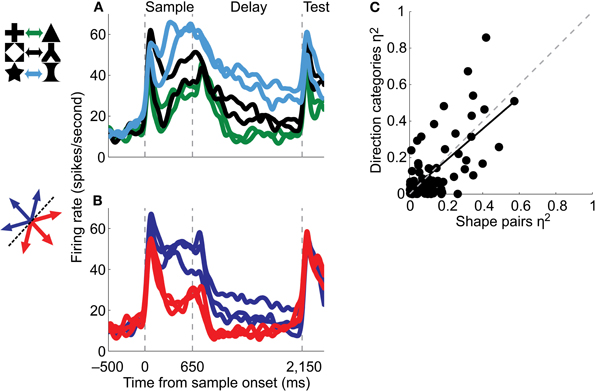

After monkeys were well-trained on the shape pair associations, Fitzgerald et al. (2011) recorded from LIP as animals performed the task. A majority of LIP neurons reflected the learned shape-shape associations, such that the neurons showed more similar activity for shapes that had been associated with one another and distinct activity for the non-associated shapes (Figure 1A). These results provide evidence that LIP neurons can encode associations for broad classes of visual stimuli and that LIP may play a general role in forming visual associations. Whether associative signals in LIP might be observed for stimuli from other sensory modalities (e.g., audition or somatosensation) remains unknown.

Figure 1. Single neurons reflect both shape-shape associations and motion direction categories [Fitzgerald et al. (2011)]. (A) The activity of a single LIP neuron as a monkey associated six shapes into three pairs in a delayed-match-to-pair task. The average neuronal activity evoked by each sample shape is plotted, and same-color traces correspond to associated pairs of shapes. (B) The same neuron was recorded while the animal performed a delayed-match-to-category task. Average activity evoked by each sample motion direction is shown, and same-color traces correspond to directions in the same category. (C) Association or category strength, as measured by explained variance (η²) for direction categories versus shape pairs, during the first half of the delay period for all neurons tested in both tasks. The solid line is a regression fit, and the dashed line has a slope of 1.
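The η² measure in panel C is the proportion of a neuron's trial-to-trial firing-rate variance explained by category (or pair) membership, that is, the between-group sum of squares divided by the total sum of squares. A minimal sketch of that computation, assuming single-trial firing rates from a fixed analysis window have already been extracted; the function name and example data are hypothetical, and the published analysis may have included further steps such as statistical testing.

```python
def eta_squared(rates_by_group):
    """eta^2 = SS_between / SS_total for single-trial firing rates.

    rates_by_group: dict mapping a group label (a category or a shape pair)
    to a list of single-trial firing rates (spikes/s).
    """
    all_rates = [r for rates in rates_by_group.values() for r in rates]
    grand_mean = sum(all_rates) / len(all_rates)
    ss_total = sum((r - grand_mean) ** 2 for r in all_rates)
    # Each group contributes (n_trials * squared deviation of its mean from the grand mean).
    ss_between = sum(
        len(rates) * (sum(rates) / len(rates) - grand_mean) ** 2
        for rates in rates_by_group.values()
    )
    return ss_between / ss_total if ss_total > 0 else 0.0

trials = {"category 1": [20, 22, 21, 19], "category 2": [5, 6, 4, 5]}
print(eta_squared(trials))  # about 0.986: group membership explains most variance
```

Because η² is a proportion of variance, it can be compared across the shape-pair and direction-category tasks for the same neuron, which is what the scatter plot in panel C does.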

While LIP activity can reflect learned associations between shapes as well as motion categories, a second question is whether individual LIP neurons encode associations for both shapes and motion, or rather only encode associations for particular classes of visual stimuli. The question is germane because LIP receives broad inputs from other visual cortical areas (Blatt et al., 1990; Lewis and van Essen, 2000), and inputs from the dorsal and ventral visual streams—which are considered specialized for spatial and object processing, respectively (Mishkin et al., 1983)—are anatomically segregated along the dorsal-ventral axis of LIP (Lewis and van Essen, 2000). The segregated pattern of visual input to LIP might suggest that individual LIP neurons are specialized for forming associations for either shapes or directions, but not both. Such specialization would suggest that LIP's involvement in categorization is limited, and that the information represented in LIP alone is not sufficient for solving abstract categorization tasks. If instead individual LIP neurons can form associations for both stimulus types, this would reinforce the notion that LIP neurons are capable of forming broad associations for a wide range of visual stimuli. This might suggest that LIP can encode the outcome of any task in which the animal must arrive at a discrete outcome or decision—e.g., “category one” versus “category two” or “pair A” versus “pair C” (Freedman and Assad, 2011). This would be particularly interesting because it would potentially link associative or categorical representations in LIP with discrete decision-related activity in LIP that has been described by Shadlen and colleagues (Gold and Shadlen, 2007).

To examine the generality of associative representations in LIP, monkeys were trained to alternate between blocks of the shape pair-association and direction categorization tasks. LIP neurons that were selective for the shape pair associations also tended to be selective for the direction categories (Figures 1A,B), and there was a positive correlation between the strength of associative encoding in the two tasks (Figure 1C). This argues that single LIP neurons may encode associations between different types of visual stimuli, and supports the hypothesis that LIP neurons are modulated whenever animals must determine a discrete outcome or decision. The hypothesis is further supported by a study that dissociated perceptual decisions from the direction of the saccades used to report them and found that decision signals were encoded independently of the eye movement (Bennur and Gold, 2011). Thus, LIP may generally encode categorical decisions and associations independently of spatial or motor planning.

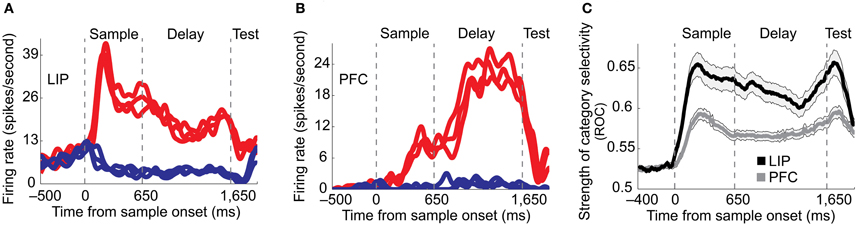

PFC neurons have been shown to reflect the category membership of visual shapes for one (Freedman et al., 2001) or two categorization rules (Cromer et al., 2010; Roy et al., 2010). However, the relationship between category signals in PFC and LIP had been unclear, as the two areas had not been directly compared. One possibility is that visual categories are computed in PFC, which is often considered the executive, decision-making center of the brain, and then sent to LIP via top-down connections. To directly assess the roles of these two areas, Swaminathan and Freedman (2012) recorded from single neurons in LIP and PFC during the motion direction categorization task. In this experiment, LIP showed stronger and more reliable category encoding than PFC. Moreover, category signals appeared with a shorter latency in LIP than in PFC (Figure 2), and LIP's stronger categorization signals were robust even after adjusting for differences in the strength of firing rate and category selectivity between the two brain areas. This finding argues that categorical signals in LIP during this task are unlikely to be driven by PFC, and raises the possibility that LIP or another brain area may be a source for category signals observed in PFC.

Figure 2. Comparison of LIP and PFC in a motion direction categorization task [Swaminathan and Freedman (2012)]. (A–B) Examples of category-selective neurons in LIP (A) and PFC (B). Single neurons in both areas displayed binary-like category selectivity during the motion direction categorization task. Same-color traces correspond to directions in the same category. (C) Category selectivity, measured by receiver operating characteristic (ROC) analysis, was stronger and appeared with a shorter latency in LIP (black) compared to PFC (dark gray). The shaded area around the solid traces indicates the s.e.m.
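Panel C summarizes selectivity with ROC analysis and compares latencies. A minimal sketch of both ingredients, assuming spikes have already been binned into single-trial firing rates: the area under the ROC curve (AUC) separating the two categories' firing-rate distributions, and a simple latency rule. The fixed threshold of 0.7 and the three-consecutive-bin criterion are illustrative assumptions, not necessarily the criteria used by Swaminathan and Freedman (2012).

```python
def roc_auc(rates_a, rates_b):
    """Area under the ROC curve for discriminating two sets of firing rates.

    Equals the probability that a random trial from rates_a exceeds a random
    trial from rates_b, counting ties as 0.5 (the Mann-Whitney U statistic
    divided by len(rates_a) * len(rates_b)).
    """
    wins = 0.0
    for a in rates_a:
        for b in rates_b:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(rates_a) * len(rates_b))

def selectivity_latency(binned_a, binned_b, bin_ms, threshold=0.7, n_consecutive=3):
    """Onset time (ms) of the first run of n_consecutive selective bins, or None.

    binned_a / binned_b: per time bin, a list of single-trial rates for each
    category. max(auc, 1 - auc) ignores which category the neuron prefers.
    """
    run = 0
    for i, (a, b) in enumerate(zip(binned_a, binned_b)):
        auc = roc_auc(a, b)
        if max(auc, 1.0 - auc) >= threshold:
            run += 1
            if run == n_consecutive:
                return (i - n_consecutive + 1) * bin_ms  # start of the run
        else:
            run = 0
    return None
```

Requiring several consecutive selective bins is a common way to avoid reporting a spuriously early latency from a single noisy bin; a full analysis would typically replace the fixed threshold with a statistical criterion.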

The finding that PFC showed weaker and longer-latency category signals than LIP during the direction categorization task (Swaminathan and Freedman, 2012) places important constraints on the neural circuitry underlying the categorization process. An appealing hypothesis that arises from the comparison of MT, LIP, and PFC is that motion direction encoding in MT may be transformed into category encoding in LIP via learning-dependent changes in the direct synaptic connections between the two areas. However, a key consideration is that the direct corticocortical connection between MT and LIP is only one pathway by which information can propagate between these two areas. For example, MT and LIP are both interconnected with motion-sensitive regions such as the medial superior temporal (MST) and ventral intraparietal (VIP) areas (Lewis and van Essen, 2000). LIP and PFC are also interconnected with the medial intraparietal area and with parietal area 7a, in which several recent studies have found category-related neuronal signals (Merchant et al., 2011; Goodwin et al., 2012). While anatomical studies have demonstrated the interconnections between these areas, their relative positions in the information-processing hierarchy are poorly understood. Categorical signals have also been observed in the frontal eye fields (Ferrera et al., 2009) and in a network of other regions, including sensory cortex, motor cortex, the medial temporal lobe, and basal ganglia (Seger and Miller, 2010).

In the categorization studies described above, neuronal activity was examined only after the monkeys were fully trained on the categorization or pair-association tasks. As a result, much less is known about the roles of LIP and PFC during the learning process itself. While LIP showed more reliable and shorter-latency category effects than PFC after learning was complete, PFC might be more involved in the initial category-learning process, with strong category signals emerging in LIP only late in learning, once the categories are highly familiar. Alternatively, LIP might be more directly involved than PFC both during category learning and after learning is complete. This possibility is consistent with the findings that LIP neurons reflect dynamic stimulus-response mappings (Toth and Assad, 2002) and dynamically changing task rules (Stoet and Snyder, 2004). Further, LIP activity was more strongly coupled than PFC activity to the monkeys' trial-by-trial classifications of ambiguous stimuli (Swaminathan and Freedman, 2012). A key question for future work is the role of parietal cortex in the category-learning process, particularly in comparison with PFC, in which category representations have been shown to arise in parallel with learning (Antzoulatos and Miller, 2011). If category selectivity appears with a shorter latency in PFC than in LIP during category learning, this would suggest that PFC has a critical role in category learning and that LIP becomes involved only once subjects are experts at the task. Alternatively, if LIP shows category encoding earlier than PFC during learning, it would indicate that LIP is also strongly involved in the category-learning process.

Together, the studies described here represent progress toward understanding the neuronal mechanisms underlying the learning and recognition of visual associations and categories. The brain-wide circuit underlying categorization is likely to include a large network of brain areas. However, recent work suggests that the parietal cortex, and LIP in particular, is more involved in encoding abstract associative and categorical factors than its traditionally ascribed role in visual-spatial processing might suggest.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank John Assad for helpful discussions, support, and comments on an early version of this manuscript.

Andersen, R. A., and Buneo, C. A. (2002). Intentional maps in posterior parietal cortex. Annu. Rev. Neurosci. 25, 189–220.

Antzoulatos, E. G., and Miller, E. K. (2011). Differences between neural activity in prefrontal cortex and striatum during learning of novel, abstract categories. Neuron 71, 243–249.

Bennur, S., and Gold, J. I. (2011). Distinct representations of a perceptual decision and the associated oculomotor plan in the monkey lateral intraparietal area. J. Neurosci. 31, 913–921.

Blatt, G. J., Andersen, R. A., and Stoner, G. R. (1990). Visual receptive field organization and cortico-cortical connections of the lateral intraparietal area (area LIP) in the macaque. J. Comp. Neurol. 299, 421–445.

Born, R. T., and Bradley, D. C. (2005). Structure and function of visual area MT. Annu. Rev. Neurosci. 28, 157–189.

Cromer, J. A., Roy, J. E., and Miller, E. K. (2010). Representation of multiple, independent categories in the primate prefrontal cortex. Neuron 66, 796–807.

Fanini, A., and Assad, J. A. (2009). Direction selectivity of neurons in the macaque lateral intraparietal area. J. Neurophysiol. 101, 289–305.

Ferrera, V. P., and Grinband, J. (2006). Walk the line: parietal neurons respect category boundaries. Nat. Neurosci. 9, 1207–1208.

Ferrera, V. P., Yanike, M., and Cassanello, C. (2009). Frontal eye field neurons signal changes in decision criteria. Nat. Neurosci. 12, 1458–1462.

Fitzgerald, J. K., Freedman, D. J., and Assad, J. A. (2011). Generalized associative representations in parietal cortex. Nat. Neurosci. 14, 1075–1079.

Freedman, D. J., and Assad, J. A. (2006). Experience-dependent representation of visual categories in parietal cortex. Nature 443, 85–88.

Freedman, D. J., and Assad, J. A. (2009). Distinct encoding of spatial and nonspatial visual information in parietal cortex. J. Neurosci. 29, 5671–5680.

Freedman, D. J., and Assad, J. A. (2011). A proposed common neural mechanism for categorization and perceptual decisions. Nat. Neurosci. 14, 143–146.

Freedman, D. J., Riesenhuber, M., Poggio, T., and Miller, E. K. (2001). Categorical representation of visual stimuli in the primate prefrontal cortex. Science 291, 312–316.

Freedman, D. J., Riesenhuber, M., Poggio, T., and Miller, E. K. (2003). A comparison of primate prefrontal and inferior temporal cortices during visual categorization. J. Neurosci. 23, 5235–5246.

Gold, J. I., and Shadlen, M. N. (2007). The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574.

Goldberg, M. E., Bisley, J. W., Powell, K. D., and Gottlieb, J. (2006). Saccades, salience and attention: the role of the lateral intraparietal area in visual behavior. Prog. Brain Res. 155, 157–175.

Goodwin, S. J., Blackman, R. K., Sakellaridi, S., and Chafee, M. V. (2012). Executive control over cognition: stronger and earlier rule-based modulation of spatial category signals in prefrontal cortex relative to parietal cortex. J. Neurosci. 32, 3499–3515.

Janssen, P., Srivastava, S., Ombelet, S., and Orban, G. A. (2008). Coding of shape and position in macaque lateral intraparietal area. J. Neurosci. 28, 6679–6690.

Lewis, J. W., and van Essen, D. C. (2000). Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J. Comp. Neurol. 428, 112–137.

Merchant, H., Crowe, D. A., Robertson, M. S., Fortes, A. F., and Georgopoulos, A. P. (2011). Top-down spatial categorization signal from prefrontal to posterior parietal cortex in the primate. Front. Syst. Neurosci. 5:69. doi: 10.3389/fnsys.2011.00069

Miller, E. K., Freedman, D. J., and Wallis, J. D. (2002). The prefrontal cortex: categories, concepts and cognition. Philos. Trans. R. Soc. Lond. B Biol. Sci. 357, 1123–1136.

Mishkin, M., Ungerleider, L. G., and Macko, K. A. (1983). Object vision and spatial vision: two cortical pathways. Trends Neurosci. 6, 414–417.

Mountcastle, V. B., Lynch, J. C., Georgopoulos, A., Sakata, H., and Acuna, C. (1975). Posterior parietal association cortex of the monkey: command functions for operations within extrapersonal space. J. Neurophysiol. 38, 871–908.

Naya, Y., Yoshida, M., Takeda, M., Fujimichi, R., and Miyashita, Y. (2003). Delay-period activities in two subdivisions of monkey inferotemporal cortex during pair association memory task. Eur. J. Neurosci. 18, 2915–2918.

Rainer, G., Rao, S. C., and Miller, E. K. (1999). Prospective coding for objects in primate prefrontal cortex. J. Neurosci. 19, 5493–5505.

Roy, J. E., Riesenhuber, M., Poggio, T., and Miller, E. K. (2010). Prefrontal cortex activity during flexible categorization. J. Neurosci. 30, 8519–8528.

Sakai, K., and Miyashita, Y. (1991). Neural organization for the long-term memory of paired associates. Nature 354, 152–155.

Schlack, A., and Albright, T. D. (2007). Remembering visual motion: neural correlates of associative plasticity and motion recall in cortical area MT. Neuron 53, 881–890.

Seger, C. A., and Miller, E. K. (2010). Category learning in the brain. Annu. Rev. Neurosci. 33, 203–219.

Sereno, A. B., and Amador, S. C. (2006). Attention and memory-related responses of neurons in the lateral intraparietal area during spatial and shape-delayed match-to-sample tasks. J. Neurophysiol. 95, 1078–1098.

Sereno, A. B., and Maunsell, J. H. (1998). Shape selectivity in primate lateral intraparietal cortex. Nature 395, 500–503.

Shadlen, M. N., Kiani, R., Hanks, T. D., and Churchland, A. K. (2008). “Neurobiology of decision making: an intentional framework,” in Better than Conscious? Decision Making, the Human Mind, and Implications for Institutions, eds C. Engel and W. Singer (Cambridge, MA: MIT Press), 71–101.

Stoet, G., and Snyder, L. H. (2004). Single neurons in posterior parietal cortex of monkeys encode cognitive set. Neuron 42, 1003–1012.

Swaminathan, S. K., and Freedman, D. J. (2012). Preferential encoding of visual categories in parietal cortex compared with prefrontal cortex. Nat. Neurosci. 15, 315–320.

Toth, L. J., and Assad, J. A. (2002). Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature 415, 165–168.

Wirth, S., Yanike, M., Frank, L. M., Smith, A. C., Brown, E. N., and Suzuki, W. A. (2003). Single neurons in the monkey hippocampus and learning of new associations. Science 300, 1578–1581.

Keywords: neuroscience, categorization, learning, parietal cortex, LIP, frontal cortex, electrophysiology

Citation: Fitzgerald JK, Swaminathan SK and Freedman DJ (2012) Visual categorization and the parietal cortex. Front. Integr. Neurosci. 6:18. doi: 10.3389/fnint.2012.00018

Received: 05 February 2012; Accepted: 23 April 2012;

Published online: 09 May 2012.

Edited by:

Christos Constantinidis, Wake Forest University, USA

Reviewed by:

Lawrence H. Snyder, Washington University School of Medicine, USA

Copyright: © 2012 Fitzgerald, Swaminathan and Freedman. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: David J. Freedman, Department of Neurobiology, The University of Chicago, 947 E. 58th St., MC0926, Ab310, Chicago, IL 60637, USA. e-mail: dfreedman@uchicago.edu

†Present address: Laboratory of Integrative Brain Function, The Rockefeller University, New York, NY, USA.

