- Department of Mechanical Engineering, Tufts University, Medford, MA, United States

Navigating the future of autonomous vehicles (AVs) brings promise and peril. This paper zeroes in on Tesla’s innovative yet sometimes controversial approach to AVs, spotlighting the intersection of human cognition, vehicle automation, and safety. Amid the excitement of rapid tech advancements, we highlight the risks of over-reliance and potential misperceptions fueled by marketing overreach. Introducing the “Quick Car Scorecard,” we offer a solution to empower consumers in deciphering AV usability, bridging tech specs with real-world needs. As AVs steer our future, it is crucial to prioritize human life and responsible innovation. The journey to automation demands not just speed, but utmost caution and clarity.

1 Introduction

As the automotive industry develops autonomous vehicles and incorporates them into its fleets, the driver experience has become a pressing issue that must be re-examined. Although semi-autonomous vehicles, which blend human and machine control, were originally intended to improve safety and transportation reliability, their rise has led to unforeseen consequences, particularly in the realm of human factors design. All too often, companies ignore or deprioritize human factors, while drivers ignore the safety requirements (e.g., keeping their eyes on the road and their hands on the wheel) put in place to protect them.

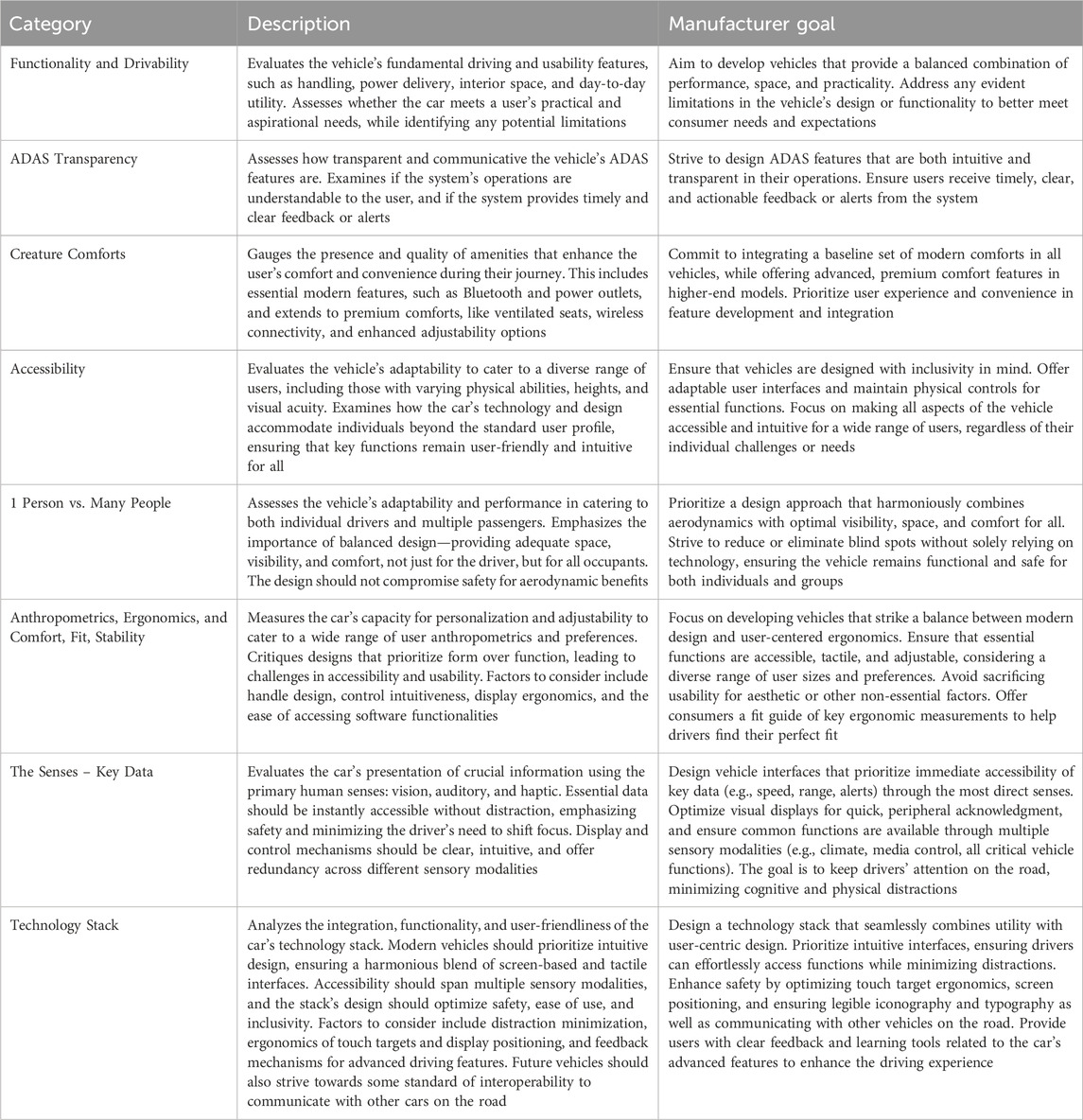

Today, “autonomous vehicles” (AVs) is a catch-all term for a growing class of vehicles that use driverless technologies, thereby allowing drivers to disengage from the process of driving. Within this paper, “semi-autonomous vehicles” (sometimes called automated vehicles) refers to cars that still have drivers but include some autonomous technologies. Many manufacturers currently sit somewhere between Level 2 and Level 3 self-driving (see Figure 1), but because there is no consistent validation, any single manufacturer's claims about its autonomous driving capabilities should still be subject to scrutiny. At the start of 2023, Mercedes announced that it would soon sell cars in the US capable of Level 3 driving, the first of their kind, allowing drivers to safely disengage from driving under certain conditions (Motavalli, 2023).

Figure 1. Levels of automation (Choksey and Wardlaw, 2021).

When it comes to AV technology in the United States and beyond, Tesla is usually at the forefront of media coverage and has become a focus of societal attention. Tesla catapulted to fame most notably after the success of the Model S in the early 2010s¹. The company pushed the automotive industry forward by offering cars with advanced hardware and software that, as described for its future cars, would “get faster, smarter, and better as time passes. The car gets better as you sleep. When you wake up, it is like driving a new car” (DeBord, 2015). Since Tesla's inception, its modus operandi has been simplification through advanced software.

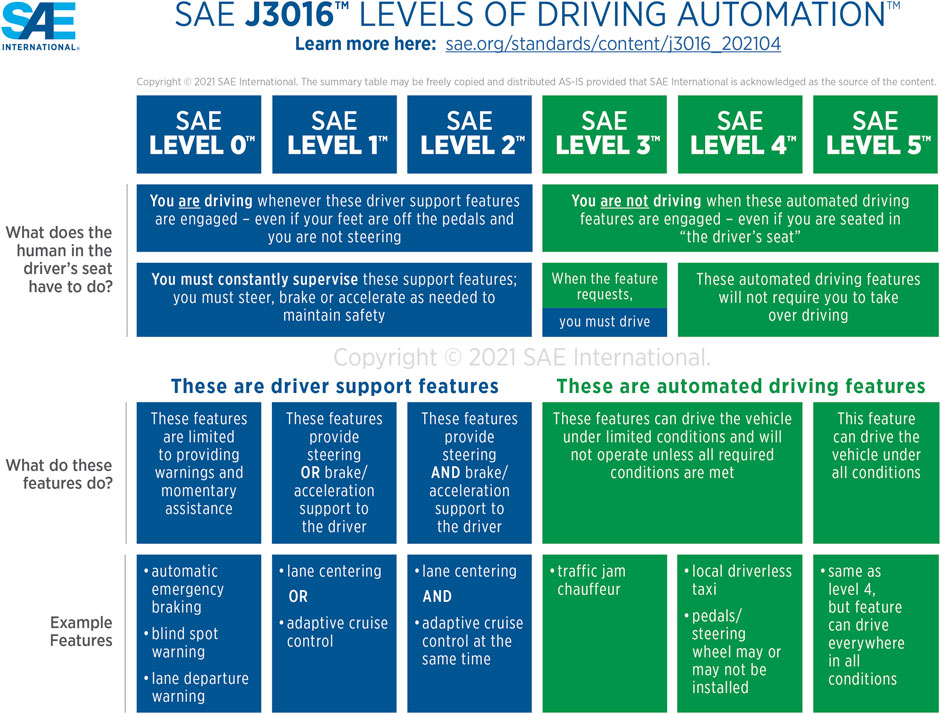

Successive updates to the original Tesla Model 3 interior reveal a subtle but concerning trend toward minimalism at the expense of usability (see Figure 2). The interior now relies heavily on minimalism, centered on the iconic landscape touchscreen in the middle of the dashboard. This touchscreen serves as the car's hub, controlling most of its critical functions while also offering the modern conveniences of an internet-connected tablet (e.g., watching movies, playing games). In the most recent refresh as of this writing, the number of physical buttons, control stalks, and switches, a staple of older car design, has greatly diminished, and the locations and mechanisms of many functions have changed. For example, as visible in Figure 2, the windshield-wiper and turn-signal stalks of the 2017 Tesla Model 3 were replaced by buttons on the steering wheel in the 2024 Model 3 “Highland.” This change has already drawn significant criticism, especially because buttons mounted on the wheel change orientation as the driver turns it while steering; one interviewee even suggested that they would be tempted not to signal at all (Levin, 2023). While many users like the apparent simplicity of a large touchscreen, the lack of physical, tactile controls is one of the safety hazards explored in this holistic, heuristic review of Tesla's mass-market electric vehicles (EVs). As the dominant EV automaker in the US (Johnson, 2022), Tesla, along with others, is setting a potentially dangerous precedent for the entire auto industry. In any industry, the market leader shapes consumer perception of industry trends (Spalding, 2013); in this case, Tesla has prioritized futuristic design aesthetics over core automotive tenets such as safety, practicality, and usability.
This is further compounded by Tesla's frequent use of its end-user population as unsuspecting test subjects² for the latest software experiences (Marcetic, 2023) and by its practice of patching software after users expose issues (Elon Musk, 2023). From a research ethics perspective, Tesla should take greater steps to transparently share intelligible information with end users about how software updates will meaningfully affect their experience.

Figure 2. Comparison highlighting the changes in certain steering wheel and stalk controls between the original Model 3 and the most recent update (“Highland”). (A): Tesla Model 3 (c. 2017) with visible stalks beside the steering wheel (circled) (Jurvetson, 2017). (B): Tesla Model 3 “Highland” with stalks removed (Zlatev, 2023).

This paper intends to serve as a call to action during a pivotal juncture in the evolution of future automobile technology. Specifically, it builds upon the analysis of Tesla’s role as a pioneering force in the realm of AVs, with a distinct focus on the principles of human factors engineering. Within this context, the paper delves into the intricate landscape of human factors design for AVs, drawing upon a wealth of research in psychology and human factors engineering spanning several decades. Additionally, it introduces an innovative approach, namely, the “Quick Car Scorecard,” which empowers users to swiftly assess a vehicle’s usability by posing a set of straightforward questions grounded in human factors considerations. This multifaceted exploration underscores the urgency of incorporating human-centric design principles as we navigate the future of automotive technology.

2 Literature review

After a comprehensive review of the published literature, this paper investigates three primary questions aimed at improving future automotive technology and driver experiences. First, what cognitive psychology principles are involved in user experience with semi-autonomous vehicles? Second, what existing literature is available on how Teslas:

1. Compare to their AV competitors (with a focus on the EV sector)?

2. Fare when it comes to human factors concerns associated with these technologies?

3. Skirt or push boundaries in relation to other systems (such as laws, policies, or infrastructure) in the U.S. and raise new questions about technology?

Third, what are the public perspectives and opinions regarding Teslas, particularly in relation to the integration of self-driving technology into new vehicles?

The authors aim to shed light on, and provide a balanced set of viewpoints regarding, the current state of knowledge and public sentiment concerning Teslas and AVs, with a particular emphasis on human factors considerations and societal attitudes towards self-driving technology in modern vehicles.

2.1 How cognition and autonomy overlap

2.1.1 The complications inherent in human-automation interactions

With vehicles of any kind, safety is of paramount importance. Human factors engineering is a discipline focused on, among other things, understanding how to design with safety in mind³. Viewed through this lens, a substantial body of research shows that current autonomous and semi-autonomous vehicles suffer from human factors issues. A 2018 study by Robson Forensic examined whether AVs were specifically designed with human cognition in mind, revealing that individuals using autonomous capabilities not only require a significant amount of time to detect problems with automation but also experience considerable delays in understanding those problems once detected (Robson Forensic, 2018). Moreover, the introduction of automation tends to worsen situational awareness, leading to increased reaction times. This decline in situational awareness can be attributed to factors such as “complacency” and distraction, which tend to rise when drivers rely heavily on automation (Robson Forensic, 2018). The presence of automation⁴ also requires additional steps before a driver can address an issue, including re-engaging, detecting, identifying, and responding, which can further delay and complicate the management of autonomous systems (Robson Forensic, 2018). Other research has shown that individuals in vehicles equipped with autonomous systems are more likely to engage in secondary tasks that demand greater attention, ultimately diminishing their focus on driving (Llaneras et al., 2013). Supporting this, a 2021 MIT study using glance data suggested that drivers using Tesla Autopilot focused more on non-driving tasks and less on the road than during manual driving (Morando et al., 2021).
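The takeover sequence described above is additive: each step the driver must complete after automation hands back control adds time during which the vehicle keeps moving. The Python sketch below makes that arithmetic explicit. It is illustrative only; the class and function names are hypothetical, and the stage durations in the example are placeholders, not values reported by Robson Forensic (2018) or any other study.

```python
from dataclasses import dataclass


@dataclass
class TakeoverStages:
    """Time (seconds) spent in each step of a takeover after automation hands
    back control: re-engage, detect, identify, respond.

    Values passed in are hypothetical placeholders for illustration,
    not measurements from any study cited in this paper.
    """
    reengage_s: float
    detect_s: float
    identify_s: float
    respond_s: float

    def total_s(self) -> float:
        # The stages happen in sequence, so their durations simply add up.
        return self.reengage_s + self.detect_s + self.identify_s + self.respond_s


def distance_during_takeover_m(speed_kmh: float, stages: TakeoverStages) -> float:
    """Distance (metres) the vehicle covers at constant speed before the
    driver's response is complete."""
    speed_m_per_s = speed_kmh / 3.6  # convert km/h to m/s
    return speed_m_per_s * stages.total_s()


if __name__ == "__main__":
    # Hypothetical comparison: an attentive manual driver needs no
    # re-engagement step, while a driver relying on automation does.
    manual = TakeoverStages(reengage_s=0.0, detect_s=0.75, identify_s=0.5, respond_s=0.75)
    automated = TakeoverStages(reengage_s=2.0, detect_s=1.5, identify_s=1.0, respond_s=0.75)
    for label, stages in (("manual", manual), ("automated", automated)):
        d = distance_during_takeover_m(100.0, stages)
        print(f"{label}: {stages.total_s():.2f} s, {d:.1f} m at 100 km/h")
```

Because the stages are sequential, any added step, such as re-engaging, lengthens the total and, at highway speed, the distance travelled before the driver responds.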

2.1.2 Balancing cognitive load while driving

Even without autonomy, monotony in driving has long been studied as a contributor to lowered driver awareness. The phenomenon of “highway hypnosis” (Williams, 2022) has been theorized and documented in the literature for decades, with some authors even suggesting that the concept dates back as far as 1921 (Williams and Shor, 1970). The idea behind highway hypnosis is that drivers may enter a trance-like state on monotonous roads, characterized by reduced awareness and automatic driving behavior (Cerezuela et al., 2004). Wertheim's hypothesis that highway hypnosis is connected to visual predictability has been supported by research finding that drivers became drowsier on highways after a long period of driving (Cerezuela et al., 2004). This literature points to a potential human factors problem: how to maintain alertness when a driver's main task is to monitor the road and a screen without necessarily receiving varied visual stimuli. The problem is further exacerbated during this period of semi-automation because many vehicles, Tesla included, recommend (or require) engaging Autopilot only on long, straight roads such as highways, which is the perfect setup for automation-induced highway hypnosis.

On the other hand, research has also shown that drivers can face too high a cognitive load while driving, lowering their driving capability. Engström et al. examined the cognitive control hypothesis, which holds that “cognitive load selectively impairs driving sub-tasks that rely on cognitive control but leaves automatic performance unaffected” (Engström et al., 2017). Their research found that performance on tasks depending on cognitive control, specifically those that were unfamiliar or subject to variation, consistently declined under cognitive load, whereas performance on thoroughly practiced tasks remained unaltered or even improved. Together with the highway hypnosis literature, this suggests that there is a “sweet spot” for cognitive demand while driving: a system must provide enough stimulus that the driver does not “check out” while avoiding overloading the driver with unfamiliar cues.

2.1.3 Existing guidelines for AV design

Highly reliable resources exist on the human factors of in-vehicle interfaces. The “Human Factors Design Guidance for Driver-Vehicle Interfaces” (2016) is a federal repository of best practices covering a wide array of vehicle features. This comprehensive document encompasses design guidance, message attributes, visual interfaces, auditory interfaces, haptic interfaces, driver input systems, and system integration, among other critical aspects (Campbell et al., 2016). Each section provides detailed guidance, accompanied by extensive discussion and a delineation of potential design challenges. Additionally, there are federal reports addressing human factors in related systems, including guidance recommendations for aviation systems (Ahlstrom, 2016; Dodd et al., 2022; Cardosi et al., 2021). One of these reports examines essential facets of well-founded automation in aviation, substantiating its claims with thorough human factors research (Cardosi et al., 2021).

For touchscreens, there are also valuable resources outlining best practices. One example is ESA Automation's 2017 whitepaper on touchscreen design guidelines, which offers detailed recommendations on sizing, physical characteristics, touch target and icon dimensions, and color (ESA Automation, 2017). However, these examples do not address the specific human-centric design requirements unique to self-driving automobiles. Self-driving vehicles, particularly those equipped with touchscreens, require a solid foundation in human factors and psychological research, yet the industry is still relatively nascent, and research in this area is evolving, with noticeable gaps. It remains uncertain whether companies heavily reliant on automation have fully embraced guidelines like these in their designs. Nevertheless, the existence of reports like those referenced above underscores the ongoing relevance of driver-vehicle interface research, even research from a decade ago. The authors of this paper propose that, as AV research advances, it would be advantageous to develop an equally comprehensive catalog of requirements, along with a certification process, for automation and touchscreen interfaces in semi-autonomous vehicles across various levels of autonomy.

2.2 Tesla usability and human-machine interaction

2.2.1 Tesla and concerns with software updates

In the last few years, Tesla has faced criticism for its lack of responsible human factors testing prior to releasing new features. A thorough analysis found that its vehicles posed hazards to drivers, particularly when Autopilot spontaneously disengaged in dangerous situations with little warning (Gillmore and Tenhundfeld, 2020). These findings indicate that Tesla has introduced potentially risky updates without conducting comprehensive testing (Gillmore and Tenhundfeld, 2020). The issue is plainly visible in a recent crash in San Francisco, in which a Tesla using the new “Full Self-Driving” mode braked unexpectedly under a bridge, resulting in an eight-car pileup (FOX NEWS, 2023). Additionally, the National Highway Traffic Safety Administration's complaint pages for the 2021 Tesla Model S contain many mentions of “phantom braking,” in which the vehicle brakes for no apparent reason, as well as complaints of Autopilot disengaging in particular geographic locations (National Highway Traffic Safety Administration, 2021).

Tesla is not the only AV company currently struggling with inadequate autonomous programming. Self-driving cars simply are not “human enough” yet, as demonstrated by a recent incident in which a Cruise vehicle with no driver inside got stuck in wet concrete in California (Tangermann, 2022). Such scenarios emphasize that the computers running these systems lack the fluid reasoning and improvisational skills that humans naturally possess, at least for now. Until computers develop more advanced adaptive thinking, manufacturers must remember that humans are still integral to these systems; if human and machine fail as a team, the consequences can be deadly for people both inside and outside the vehicle.

2.2.2 Tesla and commitment to large touchscreen dashboards

The number of brands transitioning to touchscreens might imply that human factors concerns have been thoroughly considered and mitigated. However, despite developers' push for tech-heavy interiors, many drivers are unsatisfied with these interfaces, prompting some brands, including Porsche, to return to buttons (Gitlin, 2023). Given that physical buttons have benefited from decades of redesign and optimization, it should come as no surprise that human factors research has found several noteworthy issues with the new touchscreen interfaces. The literature reveals several advantages of physical buttons: they do not require visual guidance as touchscreens do (Gillmore and Tenhundfeld, 2020), they provide haptic feedback (Pitts et al., 2012), and they can be distinguished from one another by shape, size, and mode of operation (e.g., pressing or turning). In addition, research shows that buttons outperform touchscreens in driver reaction time and successful operation (Gitlin, 2022).

Nevertheless, given the increasing focus on touchscreens in vehicles despite these criticisms, researchers have begun to investigate best practices for their design. In a driving-simulator study, Rümelin and Butz found that direct-touch buttons (as opposed to remote-controlled ones) resulted in faster task completion, but that drivers could not interact blindly in most cases. The researchers emphasized the benefits of physical components around the screen to aid user orientation and suggested that “position-independent gestures” (such as swiping down anywhere on the screen to pause music) could reduce visual distraction. They also found that users might have been more confident had they received “constant feedback while performing the gesture” (Rümelin and Butz, 2013).

2.2.3 Tesla and design at the cost of usability

Tesla's market strategy emphasizes pushing the boundaries of the automobile industry and embracing a futuristic aesthetic. However, this pursuit of aesthetics sometimes comes at the expense of usability. The 2021 introduction of a “yoke” style steering wheel is a prime example of Tesla changing car design for the sake of aesthetics without sufficient human factors research beforehand (Guerster, 2021). Consumer Reports released a review of the Tesla yoke that identified difficulty making turns, difficulty retaining grip, missed signals, and accidental button presses as potential concerns (Barry, 2021a). In replacing the steering wheel with the yoke, Tesla also replaced the turn-signal and windshield-wiper stalks with buttons on the yoke, which Consumer Reports suggested could contribute to confusion and mistakes in high-stress moments, such as during a rainstorm or just before an accident (Barry, 2021a). These concerns were echoed by actual drivers in numerous complaints submitted to the National Highway Traffic Safety Administration (NHTSA), in which consumers described problems with the updated steering wheel design, particularly the unconventional placement of the horn button in the moments leading up to accidents (National Highway Traffic Safety Administration, 2021). Driver dissatisfaction with the human factors of the yoke was confirmed two years after its initial launch, when a replacement steering wheel released by Tesla sold out within a week (Hurd, 2023).

2.2.4 Autopilot: trust vs. misguided dependence

Beyond human factors concerns in the cabin environment, criticisms that autonomous settings encourage distraction are well founded. Even though Tesla requires drivers to supervise the system when Autopilot is on, drivers are not always discouraged from breaking the rules. There are numerous examples of drivers disengaging entirely from the autonomous system, such as sleeping with it engaged (ABC News, 2019; WSJ Exclusive, 2023). Yet even when the conditions for distraction are created by the manufacturer's design, precedent holds the driver at fault, as in the case of a Tesla driver who was arrested for riding in the back seat of their car (Quintana, 2021).

This type of behavior, handing over complete control to the Autopilot system, raises questions of trust and transparency between drivers and their cars. While distraction is a concern with autonomy, drivers who do not want to “check out” must also be aware of their vehicle's decision-making, which has prompted research into increasing trust through transparency. Transparency entails making the inner workings and processes of a technology understandable and accessible to users. In semi-autonomous vehicles, transparency also appears to increase user trust and performance, with the caveat that too many additional cues may increase mental workload (Helldin, 2014). A 2020 study found that people's trust in automated vehicles is influenced by the information they receive before and during the initial stages of driving, and that trust tends to recover after take-overs or malfunctions if the system subsequently operates without errors (Kraus et al., 2020). To prevent temporary losses of trust, it is crucial to communicate system limitations transparently by building transparency into tutorials and into the design of human-machine interaction in AVs (Kraus et al., 2020). This area of research has become so significant that some researchers have proposed a method for determining transparency in Level 2 AVs (Liu et al., 2022), and others have explored augmented reality as a way to increase transparency in future vehicles (Detjen et al., 2021). Transparency must also be considered from a balanced perspective: drivers should fully understand not only what their vehicles can do but also what they cannot do, to avoid the kind of complacency described above, where drivers disengage from their partially autonomous systems. In short, manufacturers should use transparency to help drivers let their cars do what they do well, and to know when to step in as necessary.

2.3 Tesla and innovation

While it is important to acknowledge the existence of human factors issues in Teslas, it is equally worth highlighting the brand's notable features that bring significant benefits to users. Tesla is renowned for its commitment to technological innovation, constantly pushing the boundaries of automobile design. This dedication is evident in its consistent introduction of new features such as personalization and easy user profiles, which provide enhanced convenience thanks to Tesla's high level of digitization (Gillmore and Tenhundfeld, 2020). Tesla pioneered frequent “over-the-air” (OTA) updates, which have remained fairly consistent compared with the efforts of other manufacturers attempting the same strategy (Doll, 2022). In a recent interview, Ford CEO James Farley Jr. explained that part of the reason other manufacturers have not been able to deliver OTA updates the way Tesla has is that they outsource their electronics and controls, whereas Tesla develops its own and therefore owns them. As a result, Tesla can test and push updates more easily. The interview also noted that other companies are now attempting this style of design, which has “never ever” been done before (Stumpf, 2023), a clear example of Tesla leading the curve on new technology.

Beyond its notable features, Tesla should be commended for focusing on more affordable EVs despite having started as a luxury car brand. Without Tesla 1) building its expansive charging network and 2) offering “lower-priced” cars with better feature sets than high-end cars, the EV revolution would very likely not be as far along as it is today (Barry, 2022). Tesla has earned recognition for carving out a prominent position within a well-established industry, notably by pioneering cutting-edge technologies to drive progress (Stringham et al., 2022).

Moreover, Tesla has built a brand identity characterized by real-time experimentation with products, and sometimes with users. This approach has not only allowed the company to stand out but has also been instrumental in its success as the market leader in EVs, with a 67.8% market share across its four models according to Electrek, surpassing competitors by a significant margin (Johnson, 2022).

3 Exploring the paradigms of design in modern cars

3.1 Autonomous vehicles and attention/distraction

In the AV industry, the duality of attention and distraction is a pervasive debate. How should manufacturers deal with the limitations of current technology? How can drivers cope with unexpected changes to their mental model when the vehicle disengages and control switches between autonomous and human-controlled modes?

One of the main goals of self-driving cars is to alleviate the cognitive burden and stress placed on drivers on the road. However, attention and distraction will remain unavoidable topics in the AV industry until Level 5 autonomy is realized. For example, older Tesla vehicles still require the driver to apply a small amount of torque to the wheel as an engagement check, and only 2021 and newer releases include driver-facing cameras to monitor attentiveness. This creates a potentially problematic (and documented) handover moment in which the vehicle needs to hand control to the driver, but the driver may not be ready, or the vehicle may not realize that it needs to hand over control (Posada, 2022).

Additionally, the mental state required of the driver in autonomous mode differs vastly from that required in an emergency, with little room for error. Determining the balance of control between the human and the autonomous system during this transitional period is key. As will be discussed in Section 3.5, Tesla vehicles in Autopilot mode have crashed multiple times when drivers were not monitoring the system or their environment. Drivers can form poor partnerships with the vehicle if the system is insufficiently transparent about its capabilities and limitations, if the driver has an incorrect mental model of the system, or if the driver becomes bored because they are not mentally engaged in the task. Due to the limits of human cognition, people simply are not good at monitoring tasks; computers, on the other hand, excel at monitoring. While the technology is not yet fully reliable, there may be an opportunity to design autonomous systems that serve as a backup for drivers who become incapacitated, rather than relying on people, who have been shown to be poor monitors, to take over. Systems that support incapacitated drivers could help address fatigue-related accidents and close an accessibility gap for people with medical conditions.

3.2 The speed of innovation and version control

Beyond the ongoing issues of attention and distraction that drivers and manufacturers must consider, the rapidly evolving world of automotive technology has given rise to a host of additional uncertainties, as new innovations are tested and integrated into vehicles at an unprecedented pace. One notable example is Tesla's approach to software updates, which has been known to push the boundaries of safety and to enlist its drivers as test subjects for new technology, what some may call “real-world” testing. Tesla is certainly more committed to frequent OTA updates than its competitors⁵, but this raises questions about safety and about how well informed end users are regarding Tesla's frequent changes to their vehicles. While many other companies release updates to the public at a slower cadence (often after testing), Tesla has been known to treat the public as test subjects, with new updates causing issues such as wildly incorrect mileage estimates, dead batteries, and disabled features (Kisengo, 2023; Johnson, 2023). Rolling out new software should instead follow a thorough process, beginning with software certification for the level of autonomy at which the car operates. For the sake of software stability, every new release should go through certification, regression testing, and validation of results before deployment.
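The release process proposed above (certification for the operating level of autonomy, regression testing, and validation of results) can be expressed as a simple gate that blocks deployment until every check passes. The Python sketch below is hypothetical: the `ReleaseCandidate` record, its field names, and the helper functions are assumptions made for illustration, not an existing Tesla, industry, or regulatory process.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseCandidate:
    """A hypothetical software release awaiting approval.

    Field names are illustrative assumptions; no manufacturer or regulator
    is known to use this exact record.
    """
    version: str
    autonomy_level: int                                      # SAE level the release operates at
    certified_levels: set[int] = field(default_factory=set)  # levels it has been certified for
    regression_failures: int = 0                             # tests that regressed vs. the prior release
    validation_passed: bool = False                          # results validated before deployment


def release_blockers(rc: ReleaseCandidate) -> list[str]:
    """Return every reason the release must not ship yet (empty list = clear)."""
    blockers = []
    # 1. Certification for the level of autonomy the car operates with.
    if rc.autonomy_level not in rc.certified_levels:
        blockers.append(f"not certified for Level {rc.autonomy_level}")
    # 2. Regression testing against the previous release.
    if rc.regression_failures > 0:
        blockers.append(f"{rc.regression_failures} regression test(s) failing")
    # 3. Validation of results before implementation.
    if not rc.validation_passed:
        blockers.append("validation of results incomplete")
    return blockers


def may_deploy(rc: ReleaseCandidate) -> bool:
    """A release may deploy only when no blockers remain."""
    return not release_blockers(rc)


if __name__ == "__main__":
    candidate = ReleaseCandidate("hypothetical-1.0", autonomy_level=2,
                                 certified_levels={2}, regression_failures=1)
    print(may_deploy(candidate), release_blockers(candidate))
```

Returning the full list of blockers, rather than a single yes/no, mirrors the paper's point that end users and reviewers benefit from knowing exactly why a change is or is not ready.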

Despite these concerns, Tesla has continued to forge ahead with its ambitious plans, which have already led to significant changes in car design and functionality, most notably the elimination of nearly all physical buttons in the car. Across car brands in general, consumer pushback on the button issue has been sizable, to the point that two large manufacturers, Hyundai and Porsche, have said on the record that they will keep physical buttons in their cars going forward. Hyundai is keeping dials and knobs, and its VP of Design was recently quoted as saying “this is because the move to digital screens is often more dangerous, as it often requires multiple steps and means drivers have to take their eyes off the road to see where they need to press” (Ottley, 2023). In the luxury segment, Porsche, after some experimentation with more digital displays, is returning to analog controls in 2024, according to its head of user experience design, who noted that the company learned a lot from recent customer feedback and market surveys (Gitlin, 2023). Beyond buttons, other design trends adopted by Tesla and others warrant re-evaluation, namely changes to the steering wheel and design choices that place form over function. For example, the yoke steering wheel was billed as a major improvement that would let drivers see the screens in front of them more easily while still offering easy control of the vehicle (Guerster, 2021), but, as discussed, the design came with many unforeseen usability issues and criticisms.

The concerns surrounding Tesla's Autopilot systems are so significant that the National Highway Traffic Safety Administration (NHTSA) is currently investigating Tesla crashes in the United States, specifically to analyze the potential dangers of autonomous systems on the road. In a recent legal deposition, Ashok Elluswamy, director of Autopilot Software at Tesla, stated that he was unaware of any human factors engineers on the Autopilot team and admitted to being unfamiliar with the concept of perception-reaction time. This statement contradicts Elluswamy's earlier assertion during the same deposition that any attentive individual in the vehicle would readily detect and override any system malfunction (Superior Court of the State, 2022). The issue extends across Tesla more broadly: the company frequently claims that its models rely on working with a human driver to maintain safety without demonstrating sufficient human factors research to support that claim.

Furthermore, Tesla's approach to introducing new technology in its cars is bold: rather than releasing changes in installments that let users become familiar with them gradually, Tesla introduces many at once. This is true of subtle hardware changes but, more importantly, of major software changes. New features are packaged into a single software update, requiring users to learn and adapt to them on their own time. This approach is redefining driver habits, as users are expected to acclimate to interface and design changes without any formal training or guidance. While individual new features may be exciting and useful, combining many new systems and gadgets for the sake of a futuristic, aesthetically pleasing interior can exceed what some users are able to absorb at once. This all-at-once approach could put users at risk, as they may not be fully prepared to handle the changes and could become distracted while trying to figure out how to use them together.

While innovation and progress are essential for advancing the automotive industry, it is important to consider the impact of these changes on end users and ensure that they have the knowledge and skills to use the new technology safely. Tesla may need to reconsider its current approach and find a balance between innovation and user education to ensure the safety of drivers and passengers.

3.3 User-centric communication in autonomy systems

Until the industry achieves Level 5 self-driving capabilities, transparency while the vehicle is driving itself is crucial for semi-autonomous vehicles, forming an essential foundation for a successful vehicle-human partnership6. One of the most significant concerns surrounding autonomous driving is the possibility of a system failure that requires a swift handover of control to the driver. Such situations pose serious safety risks, as they demand that the driver immediately identify the issue and respond quickly while re-engaging with the environment.

A critical aspect of addressing these risks is the implementation of human-in-the-loop (HITL) design. HITL design ensures that the driver remains an integral part of the driving process, even when the vehicle is operating autonomously. This design philosophy emphasizes continuous driver engagement and situational awareness, providing timely and relevant information to the driver about the vehicle’s actions and the surrounding environment.

Situational awareness is essential for maintaining safety and effectiveness in semi-autonomous driving. It involves the driver’s ability to perceive, comprehend, and project the status and dynamics of the driving environment. HITL design supports situational awareness by ensuring that drivers are always informed about the vehicle’s operational status, upcoming maneuvers, and any potential hazards. This approach helps drivers to remain prepared to take control when necessary, reducing the likelihood of accidents during handovers.

Conversely, there also remains the problem of “phantom braking” and other false alarms (National Highway Traffic Safety Administration, 2021), which can lead drivers to disbelieve their vehicle’s warnings and put themselves at risk (Barry, 2021b). Such occurrences not only undermine user confidence in the Autopilot feature but also risk desensitizing drivers to sudden braking. This habituation could lead to nonchalance in a genuinely hazardous situation, compromising overall road safety. Greater transparency about how Tesla addresses and mitigates these issues could help reassure drivers and establish trust in the Autopilot system, fostering a safer driving experience.

Moreover, transparency supports a sense of responsibility among drivers. When users are aware of the reasons behind the vehicle’s actions, they can feel more in control of, and accountable for, their driving experience. Understanding the decision-making process of the car encourages drivers to remain attentive and ready to intervene if necessary, reducing overreliance on the autonomous features. By educating customers about the car’s capabilities and limitations, Tesla can empower drivers to make informed decisions and actively engage in the driving process.

Additionally, as we move toward greater automation, it is also important that consumers and researchers can easily understand the systems at work, even if they are not drivers in the cabin. A concerning aspect of Tesla’s information management is that it can be difficult to find which features each model includes in a given year, especially for hardware changes. This is particularly pertinent given that the interior of the same model can change significantly from year to year, such as pivoting the central touchscreen from vertical to horizontal in the Model S between 2021 and 2022 (Clavey, 2021) or removing the turn-signal stalks in the new Model 3 (Levin, 2023). The website “Not a Tesla App” has detailed documentation for software updates (Not a Tesla App, 2022), but there is a noteworthy lack of transparent information on the physical components of each model.

Overall, transparency around Tesla vehicles is vital for instilling confidence, promoting responsible driving behavior, and enhancing road safety. By incorporating human-in-the-loop design principles and maintaining driver situational awareness, Tesla can ensure that drivers remain engaged and informed. This approach not only addresses immediate safety concerns but also fosters a safer and more user-centric driving experience for the future.

3.4 Aligning company goals with user needs – a reflection on prioritizing safety

It is important for any automobile company developing self-driving technologies to balance its marketing messages with a responsibility to promote safe driving practices. In the case of Tesla, there are some discrepancies in this approach. By promoting its vehicles as a way to entertain oneself [e.g., the TV shows and video games displayed prominently on the dashboard screen on the company’s Model S website page (Tesla. Tesla, 2022)], Tesla may be inadvertently encouraging drivers to engage in these distracting and dangerous activities while driving. While technology can certainly enhance the driving experience and make it more enjoyable, it should never be used in a way that compromises safety. All automotive manufacturers should take care to ensure that their advertising and messaging do not encourage dangerous behavior on the road. There are several ways in which Tesla could better balance its marketing messages (and improve its safety systems)7 with promoting safe driving practices today. Some suggestions based on these issues are to:

1. Emphasize the importance of driver attention. While Tesla’s Autopilot and Full Self-Driving features offer significant benefits, it is important for the company to remind drivers that they still need to maintain attention and control of the vehicle. Tesla could make this message a more prominent part of their marketing materials, both on their website and in their advertisements.

2. Encourage responsible use of technology. Tesla could also promote responsible use of technology in its marketing messages. This could include reminding drivers to use hands-free features responsibly or to avoid engaging in distracting activities while behind the wheel. It may also include reminding users of the system’s limitations and revising marketing terms, such as “autopilot,” where they may overstate what the system can do.

3. Highlight safety features. Tesla could place a greater emphasis on the safety features built into their vehicles. This could include promoting features like automatic emergency braking, lane departure warnings, and blind spot detection, which can help to prevent accidents.

4. Use real-world scenarios. Tesla could also use real-world scenarios in their marketing materials to demonstrate the importance of safe driving practices. For example, they could show a video of a distracted driver narrowly avoiding an accident, followed by a reminder to always stay focused on the road.

Additionally, Tesla’s brand image centers on futuristic designs and vehicles tailored to tech-savvy, dexterous, and mentally agile individuals. Some of the main arguments supporting AVs include the prevention of accidents caused by human error and the provision of transportation options for individuals lacking independent mobility, such as elderly and disabled drivers (Greig, 2021). However, given their potential for dangerous driving conditions when human error occurs, a demonstrated lack of human factors testing prior to releases, and the high cognitive load they demand, Tesla vehicles are not setting precedents that effectively address these long-term goals. By providing a platform geared toward technological immersion rather than driver usability, Tesla may lay the groundwork for inattentiveness and miss the goal of inclusivity.

3.5 Liability, legislation, and regulation

Safety and liability are closely related, especially with technology. At the time of writing, there have been several noteworthy cases in which a driver was found to be at fault for an accident that occurred while a vehicle was in an automated driving mode. The person monitoring Uber’s self-driving test vehicle in the first self-driving accident with a fatality was found by the National Transportation Safety Board (NTSB) to be at fault due to distraction and has pleaded guilty to endangerment (ABC News. ABC News, 2022). More recently, a driver sued Tesla in 2020 after her Tesla hit a curb and the airbag deployed, causing serious injuries to her face. In April 2023, the jury found that the company was not to blame because Tesla had marketed its vehicles as requiring driver attention (Roy et al., 2023a). One juror even stated that Teslas are not “self-driving car(s),” which contributed to the jury’s decision (Roy et al., 2023b). An additional ongoing case8 concerns felony manslaughter charges against a driver whose Tesla, operating in Autopilot mode, killed two people (ABC News. ABC News, 2023). These cases are not the only ones of their kind, but they underscore a precedent in which responsibility for Autopilot falls on the driver’s shoulders.

On the other hand, recent findings suggest that Tesla’s marketing may have contributed to inattention by using language that implies full autonomy, even though the technology is not capable of it. In fact, the attorney in the aforementioned case involving two fatalities has said that he will argue that Tesla markets its vehicles as self-driving (ABC News. ABC News, 2023). In 2021, senators recommended a federal probe into Tesla’s possibly false advertising (Isidore, 2021). At the time of writing, five police officers have an ongoing lawsuit against Tesla for “exaggerat[ing] the actual capabilities of autopilot,” related to an accident in which a Model X on Autopilot crashed into the officers (Lenihan, 2023). Another ongoing NHTSA probe is examining Autopilot crashes in which drivers were totally disengaged at the time of the accident, including Tesla’s driver alert strategy (Shepardson, 2023). The disconnect between Tesla’s FSD (Full Self-Driving) advertising and the system’s actual capabilities has also drawn scrutiny from larger authorities: the state of California took aim at Tesla with legislation blocking the use of the term “full self-driving” for its cars (California, 2022), and that same year a Tesla in Autopilot mode caused an 8-car pileup in San Francisco. Even more convincingly, Tesla recently recalled over 350,000 cars equipped with FSD due to issues with its performance around intersections and with speed limits (Press, 2023).

There is currently no established framework for assigning liability in accidents involving partial autonomy. The cases mentioned above, and others like them, demonstrate a complexity that is difficult to parse legally, especially since autonomous systems are not homogeneous and often involve varying levels of human intervention (Yosha, 2022a). As with many technological advancements, the systems in AVs are evolving faster than the law can keep up. There are many dimensions to consider in response to this question, with common variables including human risk tolerance, technology capabilities, moral quandaries, traffic laws, and more (Di et al., 2020). In her analysis of Di, Chen, and Talley’s paper, Holly Evarts highlights the importance of a liability policy in avoiding moral hazards for human drivers and in helping AV manufacturers balance traffic safety against production expenses. Evarts suggests that government subsidies that reduce AV manufacturers’ production costs could serve as a significant incentive to produce AVs that surpass human drivers’ capabilities, leading to improved traffic safety and efficiency. Conversely, without proper regulation or subsidies, AV manufacturers may prioritize profit over the well-being of the traffic system, potentially harming overall traffic management (Evarts, 2020).

Manufacturers of autonomous vehicles argue that accidents involving their vehicles should not be their fault, as the responsibility lies with the driver who is supposed to be monitoring the vehicle’s performance and taking control if necessary (Yosha, 2022b). On the other hand, there is an argument that manufacturers should be held liable for accidents involving their vehicles, as they are responsible for designing and testing the technology that is supposed to operate safely on the roads. In this view, the manufacturers should be held responsible for any defects or malfunctions in the technology that lead to accidents, regardless of whether the driver was supposed to be monitoring the vehicle’s performance or not.

Another perspective is that both the manufacturers of AVs and the drivers should share responsibility for accidents involving these vehicles (GriffithLaw. GriffithLaw, 2022). In this view, manufacturers should be responsible for ensuring that their vehicles are safe and reliable, while the drivers should be responsible for monitoring the vehicle’s performance and taking control if necessary.

Assigning liability for an accident involving AVs based on the level of autonomy in use at the time of the accident is a plausible approach. Figure 1 (above) shows the Society of Automotive Engineers’ (SAE) classification system for AVs, which ranges from Level 0 (no automation) to Level 5 (full automation). At the lower levels of autonomy (Levels 0–2), the driver is expected to be actively engaged in the driving task, and responsibility for any accidents would typically fall on the driver. At the higher levels of autonomy (Levels 3–5), the vehicle assumes greater responsibility for the driving task, and the driver’s role becomes more passive. It is therefore reasonable to argue that at higher levels of autonomy, responsibility for accidents may shift toward the vehicle’s technology and away from the driver. However, this would require careful consideration of the specific circumstances of each accident and an assessment of how much control the driver had over the vehicle at the time. The approach is further complicated by the fact that, despite the simplicity of the SAE matrix, no certifying authority validates the levels that manufacturers claim.
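To make the level-based heuristic concrete, the Python sketch below encodes it as a simple lookup. It is a hypothetical illustration, not a legal rule or an established framework: the enum member names follow the level names in SAE J3016, while `SAELevel` and `presumed_responsibility` are our own illustrative names. The function returns only a starting presumption (the driver at Levels 0–2, shifting toward the vehicle’s technology at Levels 3–5), which would still require the case-by-case assessment described above.

```python
# Hypothetical sketch of the level-based liability heuristic discussed above.
# It returns a starting presumption only; it is not a legal standard, and
# every real case would still need review of who had control at the time.
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 driving automation levels (member names follow the standard)."""
    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_DRIVING_AUTOMATION = 2
    CONDITIONAL_DRIVING_AUTOMATION = 3
    HIGH_DRIVING_AUTOMATION = 4
    FULL_DRIVING_AUTOMATION = 5


def presumed_responsibility(level: int) -> str:
    """Map an automation level to the default presumption described in the text."""
    level = SAELevel(level)  # raises ValueError for anything outside 0-5
    if level <= SAELevel.PARTIAL_DRIVING_AUTOMATION:
        # Levels 0-2: the driver is expected to be actively engaged.
        return "driver"
    # Levels 3-5: the vehicle assumes more of the driving task.
    return "shifts toward vehicle technology"


for lvl in SAELevel:
    print(f"Level {lvl.value} ({lvl.name}): {presumed_responsibility(lvl)}")
```

Because no certifying authority validates claimed levels, the input to a mapping like this may itself be contested, which is part of why the text cautions that such a scheme cannot replace careful review of each accident.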

Ultimately, the question of who should be at fault for accidents involving AVs will need to be addressed through continued improvements to legislation, new regulation, and certification around common definitions of autonomy (such as the SAE matrix), along with a consensus among stakeholders in the automotive industry, government agencies, and the general public (Yosha, 2022a). In the meantime, we must also teach and test the next generation of drivers on self-driving and autonomous technology. Self-driving technology is largely ignored in DMV driving tests and general driver’s education, and it is certainly not a required part of obtaining a license, even for drivers whose cars have such technology.

Without careful consideration of the precedents they are establishing, Tesla and other AV manufacturers run the risk of causing long-term harm to users by promoting hazardous design standards or further widening the accessibility gap. It is essential for any brand to think critically about the implications of its choices to ensure the safety and inclusivity of AVs in the future. As long as Tesla maintains its brand strength relative to other EV and AV manufacturers, it is of paramount importance that it prioritize safety and the other components of building a reliable car and a trustworthy brand. All AV manufacturers must also focus not only on designing the best car but also on understanding more deeply how to build a machine that functions symbiotically in a world with vehicles from dozens of manufacturers, many of which will simply not contain AV technology.

3.6 Proactive design vs. reactive design

The three-point seatbelt that we rely on today was designed in the 1950s by Volvo in response to numerous deaths and serious injuries caused by the inadequate design of the previous system (Bell, 2021). The Chernobyl nuclear power plant disaster led to the adoption of far stronger regulations that have since helped prevent accidents of this magnitude (Whelehon, 2021). Instances like these illustrate the tendency to treat human factors engineering as a failure and risk analysis tool, so much so that safety rules are often said to be “written in blood” (Whelehon, 2021). However, learning by failure should not be considered an acceptable human factors strategy for the millions of vehicles with autonomous technology that are hitting the roads.

Although human factors research after an accident is critical, there has also been discussion of incorporating “Safety-II” into the human factors equation (Hollnagel et al., 2015). Safety-I, the traditional approach, focuses predominantly on the parts of a system that fail, often identifying “human error” as the cause of an accident. Safety-II, on the other hand, takes the stance that the majority of scenarios go as designed, with human flexibility as a potential reason for success. A compelling example of Safety-II in action can be seen in aviation, where pilot adaptability has often averted disaster. In 2009, Captain Chesley “Sully” Sullenberger successfully landed US Airways Flight 1549 on the Hudson River after both engines failed, thanks to his quick thinking and training (Britannica, 2022). This incident, and many others like it, underscores how understanding and supporting human flexibility can lead to positive outcomes even in critical situations.

Safety-II can work together with Safety-I: practitioners first examine the many successful outcomes and then, if an accident occurs, examine what went wrong. The underlying point, that anticipating errors rather than operating reactively is an important part of human factors engineering, has a clear place in AV development. Investigating the factors that contribute to successful interactions among Tesla drivers can provide valuable insights for designing safer and more user-friendly autonomous systems. For example, by analyzing cases where Tesla’s Autopilot system successfully helped drivers avoid collisions, we can identify the features that contributed to these successes and enhance them. Proactive design might also involve rigorous simulation testing to foresee potential failure modes and address them before they occur in the real world. By learning from both failures and successes, and by emphasizing proactive over reactive strategies, human factors engineering can lead to the development of safer and more reliable autonomous vehicles.

4 Heuristics proposal and the Quick Car Scorecard

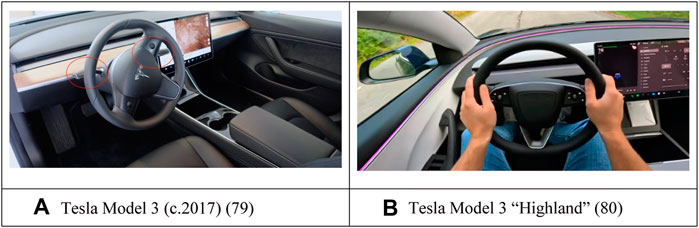

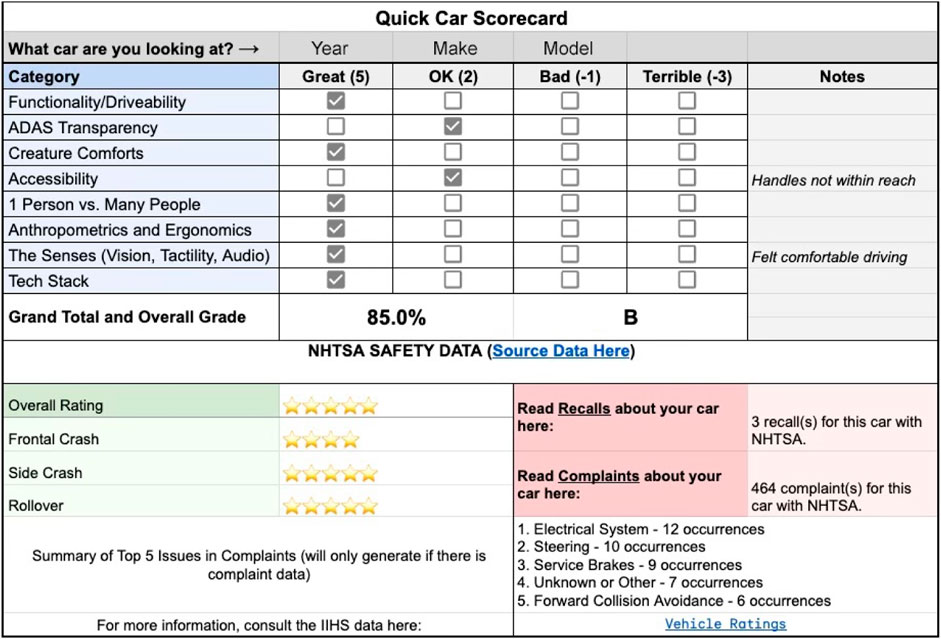

There are numerous principles that those who design, test, and purchase cars should be aware of. Many consumers, however, choose their vehicles after conducting personal research, which often includes watching or reading reviews, receiving recommendations, test driving, and more. In response to the evolving complexity of AVs, which can resemble computers on wheels, we offer a new approach to meeting the needs of diverse users when purchasing a vehicle. We call this approach the “Quick Car Scorecard”; it combines elements of human factors, UX, and convenience. We propose a scorecard as shown in Figure 3 below, with categories and definitions in Table 1.

Figure 3. The Quick Car Scorecard with NHTSA data and ChatGPT 4 integration for summarizing complaint data. Example car (with model year, make, then model) and ratings shown, with example consumer notes.

4.1 Using the Quick Car Scorecard

The Quick Car Scorecard is designed to help both HF practitioners and buyers evaluate the usability of vehicles. The following is an overview of the design and how it works in practice:

1. Define Categories: The scorecard includes categories such as functionality and drivability, ADAS transparency, creature comforts, accessibility and more (see Figure 3; Table 1).

2. Evaluate Each Category: Users rate each category based on their personal experience or another data source related to the category.

3. Calculate the Usability Score: The theoretical scorecard uses a series of formulas to calculate an overall usability score. Essentially, each category is worth a standard number of points, and worse ratings reduce a car’s usability score.
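The exact formulas behind the scorecard are not reproduced in this section, so the Python sketch below only illustrates the idea in step 3 under stated assumptions. It uses the eight category names from Section 4.1.1, but the 1–5 rating scale, the equal 10-point weight per category, and the equal deduction for each step below the top rating are all hypothetical choices of ours, as are the names `category_points` and `usability_score`.

```python
# A minimal, hypothetical sketch of a Quick Car Scorecard calculation.
# Assumptions (not the scorecard's actual formulas): each category is rated
# on a 1-5 scale, every category carries the same 10-point weight, and each
# step below the top rating deducts an equal share of those points.

CATEGORIES = (
    "Functionality and Drivability",
    "ADAS Transparency",
    "Creature Comforts",
    "Accessibility",
    "1 Person vs. Many People",
    "Anthropometrics, Ergonomics, and Comfort",
    "The Senses – Key Data",
    "Technology Stack",
)

POINTS_PER_CATEGORY = 10.0     # hypothetical weight per category
MIN_RATING, MAX_RATING = 1, 5  # hypothetical rating scale


def category_points(rating: int) -> float:
    """Points kept for one category; each step below MAX_RATING deducts points."""
    if not MIN_RATING <= rating <= MAX_RATING:
        raise ValueError(f"rating must be between {MIN_RATING} and {MAX_RATING}")
    deduction_per_step = POINTS_PER_CATEGORY / (MAX_RATING - MIN_RATING)
    return POINTS_PER_CATEGORY - (MAX_RATING - rating) * deduction_per_step


def usability_score(ratings: dict[str, int]) -> float:
    """Overall score as a percentage of the maximum possible points."""
    missing = set(CATEGORIES) - set(ratings)
    if missing:
        raise ValueError(f"unrated categories: {sorted(missing)}")
    earned = sum(category_points(ratings[c]) for c in CATEGORIES)
    return 100.0 * earned / (POINTS_PER_CATEGORY * len(CATEGORIES))
```

Under these assumptions, a car rated 5 in every category except a 2 for ADAS Transparency keeps 2.5 of that category’s 10 points, earns 72.5 of 80 possible points, and scores 90.625%. Unequal weights (for example, more points for ADAS Transparency when a buyer relies heavily on driver assistance) would be a straightforward extension.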

4.1.1 Example application

Consider an example in which the Quick Car Scorecard is used to evaluate the usability of an AV. In Figure 3, the vehicle was rated across the following categories:

• Functionality and Drivability: Evaluates the vehicle’s fundamental driving and usability features, such as handling, power delivery, interior space, and day-to-day utility.

• ADAS Transparency: Assesses how transparent and communicative the vehicle’s ADAS features are.

• Creature Comforts: Gauges the presence and quality of amenities that enhance the user’s comfort and convenience during their journey.

• Accessibility: Evaluates the vehicle’s adaptability to cater to a diverse range of users, including those with varying physical abilities, heights, and visual acuity.

• 1 Person vs. Many People: Assesses the vehicle’s adaptability and performance in catering to both individual drivers and multiple passengers.

• Anthropometrics, Ergonomics, and Comfort: Measures the car’s capacity for personalization and adjustability to cater to a wide range of user anthropometrics and preferences.

• The Senses – Key Data: Evaluates the car’s presentation of crucial information using the primary human senses: visual, auditory, and haptic.

• Technology Stack: Analyzes the integration, functionality, and user-friendliness of the car’s technology stack.

The Quick Car Scorecard, as a theoretical tool, offers a standardized way to evaluate the usability of AVs, making it easier for consumers and practitioners to make informed decisions. By adopting such tools, the automotive industry can move towards creating vehicles that are not only technologically advanced but also user-friendly and safe for all. Table 1 breaks down the thought process behind the categories we used and offers a rough description and a specific goal for manufacturers to improve their offerings.

5 Driving innovation forward. Safely

Before summarizing our findings and recommendations, it is important to acknowledge and engage with some of the main counterarguments that challenge the perspectives offered thus far. The following subsections address noteworthy counterpoints surrounding the complex issues in AVs and their design.

5.1 Counterargument: drivers bear ultimate responsibility because they are expected to monitor the technology and agree to do so

While it is true that drivers are being held responsible for accidents involving AVs, we argue that this should not absolve manufacturers like Tesla of their responsibilities. These companies should prioritize design and safety, ensuring that consumers can accurately assess their vehicles’ capabilities. The current state of technology, where drivers are expected to monitor the road while being tempted to relax, raises ethical questions about placing potentially dangerous automation in untrained hands. Furthermore, comparing autonomous and human-driven vehicles is not straightforward, as humans often have physical and cognitive limitations that interfere with the expected level of awareness. Since autonomous systems are still in the early stages of development, premature adoption without substantial safety measures and regulations could lead to unforeseen issues. Therefore, a more cautious approach that balances the benefits of automation with its potential hazards is required now.

5.2 Counterargument: private competition will lead to innovation and improvement over time

While the argument for private competition as a catalyst for innovation has merit, it is also important to acknowledge its shortfalls. Competition can drive progress quickly, but it can also push features into the market without appropriate safety measures. The development of any new technology cannot ignore the moral, ethical, and social considerations of liability, accountability, and impact on public perception in favor of speed. As laid out in this paper, Tesla’s approach of frequently introducing new features through over-the-air (OTA) updates and yearly hardware changes has sometimes resulted in unforeseen issues and user confusion or harm. A well-balanced regulatory framework is needed to complement competition by providing guidelines and standards that align innovation with safety, and recent investigations by bodies like NHTSA underscore the necessity of such oversight. Regulation, together with manufacturer commitments to prioritize safety, can prevent reckless innovation and create a foundation for private competition to develop these technologies responsibly. While the potential of fully autonomous vehicles is exciting, it is important to ensure that the technology is genuinely prepared for complex real-world scenarios without compromising safety.

5.3 Counterargument: Teslas are safe and autonomous vehicles have so far killed far fewer people than humans do on average

Tesla often promotes its safety awards on its website (Tesla, 2022) and in its reports (Tesla. Tesla, 2021), which include detailed accounts of its models’ five-star NHTSA ratings. This focus demonstrates real concern for, and success in, protecting passengers physically in the event of an accident. This side of safety, however, does not necessarily address the impact of errors on others who happen to be caught in an accident due to a malfunction in the human-machine system, or the human factors concerns raised in this paper. Additionally, while it is true that AVs, including Tesla cars, have shown promise in reducing accident-related fatalities compared to human drivers (Wang, 2022; National Highway Traffic Safety Administration, 2022), humanity and regulatory agencies must take a more nuanced view of safety in this context. While machines with advanced logic, computer vision, and machine learning models are inherently more consistent in following traffic rules, they can still make critical errors, especially in uncommon and complex scenarios. If a technological error occurs and the driver is not engaged with the environment, the chances of a serious accident increase. These rare but significant failures can pose unique risks, especially when it comes to setting precedents for irresponsible design. Safety should not be solely about minimizing fatalities; it should also focus on implementing good human factors practices and design controls, and on maximizing successes, in the early stages of a new technology. If companies are tempted to cut corners or use drivers as test subjects for software, they risk undermining important ethical obligations to the public.

Human-machine interaction plays a crucial role in how these systems are used, and these systems should be carefully designed to minimize the risk of user confusion and distraction while accounting for the human element that remains constant in the system.

5.4 Future research

Though AVs are a pervasive topic in today’s news, there are many uncertainties about how the cars of the future will work together to make the world safer and how legislation will affect car design. This paper is intended to serve as a springboard for improving many aspects of future AVs, specifically focused on applying human factors principles to make cars safer for drivers/passengers and for those around the cars (pedestrians, cyclists, cars with less advanced technology, etc.). The Quick Car Scorecard is an example of a tool that may help consumers quickly identify cars on metrics that matter to them both at the time of purchase and over future years of ownership.

Moving forward, it would be beneficial to see more research aimed at addressing these uncertainties and enhancing the understanding of AVs’ role in the future. This includes investigating the feasibility of the Quick Car Scorecard as a consumer tool, delving into heuristics specific to AV design, and examining the implications of emerging technologies, particularly FSD and the principles that could guide its beta phase. Additionally, the outcome of the ongoing NHTSA probe into Tesla’s marketing strategies, which is not yet known, may shape litigation and liability in the future.

There could also be merit in the notion that AVs may be better suited to monitoring a driver than the reverse, and research on that concept could be valuable. Furthermore, it may be worthwhile to investigate the potential for short-term prioritization of vehicle-to-vehicle or vehicle-to-infrastructure communication to enhance accident prevention as we develop these technologies (NTSB, 2023). Lastly, it would be interesting to see research into improving accessibility for individuals who are not tech-savvy but can benefit significantly from transportation independence, ensuring inclusivity and addressing their specific needs in the AV landscape.

5.5 Navigating the road to autonomous safety

The future of AVs holds immense potential, but it is crucial to approach it with careful consideration and a focus on human factors. Rather than perceiving autonomy as a one-size-fits-all solution, it should be viewed as a teammate that works alongside human drivers to solve problems (Rix, 2022). This perspective shift can lead to the design of systems that are more user-friendly and conducive to human-machine collaboration. Manufacturers can design with transparency and personalization in mind to accommodate individual preferences and needs, ultimately enhancing user satisfaction and trust in autonomous systems for a broader group of users.

The role of human factors specialists in the safe and effective deployment of AVs is critical, yet it has clearly been overlooked in the development of features found on a variety of modern automobiles. Crashes involving AVs should be thoroughly analyzed by these experts to understand the underlying causes and identify areas for improvement (Robson Forensic, 2018). In-depth investigations can reveal lessons from incidents and help rectify design flaws quickly. However, failure analysis is reactive by default. A more nuanced Safety-II approach should lead to better understanding and consideration of the numerous human-machine interactions that take place in these types of vehicles. Leveraging decades of psychological and human factors research in human-machine interaction allows human factors professionals to work with other designers and engineers to proactively set these systems up for success.

The authors of this paper fully support the advancement of autonomous technology - and of Tesla as a manufacturer - but it is precisely because of this support that we emphasize the need for responsible development and testing prior to releases, especially for market leaders with significant sway. By analyzing9 and improving upon the human factors heuristics for AV design, we can ensure that these vehicles fulfill their promise of enhancing road safety while minimizing risks, maximizing performance, and making transportation safer for all.

6 Conclusion

This paper includes a comprehensive literature review exploring issues with human cognition and vehicle automation, usability and human factors concerns specific to Tesla, and the evolving legal landscape surrounding AVs. This background information, along with the subsequent analysis, sheds light on the ongoing debate surrounding attention and distraction in AVs, including the fine line between reaping the benefits of automation and the dangers of over-reliance. The research presented in the literature review and analysis discusses the importance of transparency in vehicle decision-making to bolster user trust, as understanding a vehicle’s actions and motives can encourage responsible driver behavior and preparedness. However, the amount of information displayed must be balanced to avoid cognitive overload for the user.

A large component of our discussion is Tesla's fast-paced approach to releasing new technology without necessarily testing its usability thoroughly beforehand. This analysis underscores the need for manufacturers to balance marketing with safety, using responsible marketing language that accurately portrays system capabilities. In this context, proactive user-centered design is paramount, especially given that Tesla and other AV manufacturers are setting precedents for the future. These design choices can have lasting consequences, so it is crucial to think ahead by using the human factors research and resources at our disposal to innovate thoughtfully and deliberately.

Furthermore, the paper includes a list of noteworthy legal incidents involving Tesla, with a focus on precedent-setting for liability and regulations. As it stands, the driver is often liable if they were not monitoring as expected when an accident occurred. We highlight the challenge of accountability when human attention wavers due to cognitive limits and vehicle interface design. The paper concludes by summarizing key human factors heuristics for AVs and introducing the Quick Car Scorecard as an example of a tool to help buyers make informed decisions about the usability of their cars. This scorecard uses eight main features of vehicle design to bring the user into the discussion of usability and to provide them with the information necessary to choose the vehicle that best suits their needs.

As we transition from traditional vehicles to autonomous systems, it is essential to maintain vigilance and demand high standards from designers - for the sake of people both inside the vehicle and outside it. The combination of robotic driving and unpredictable human behavior creates potentially dangerous situations that standard driving tests may not adequately consider. While fully autonomous vehicles may offer a safer future - and are an exciting technology for us to look forward to - it is vital to acknowledge and address the current limitations of the technology. We want to emphasize the importance of responsible development, human factors research, and proactive design when creating these vehicles, while allowing the flexibility to innovate in this industry. The preservation of human life should always be a guiding principle in the development of AVs, and designers should be held accountable for adhering to this fundamental value.

Author contributions

KB: Writing–review and editing, Writing–original draft, Methodology, Investigation, Conceptualization. DP: Writing–review and editing, Writing–original draft, Software, Methodology, Investigation, Data curation, Conceptualization.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. The Tufts University Tisch Library paid the publication fee for this article with the Tisch Open Access Fund.

Acknowledgments

The authors would like to thank our friends, family, and others in the Tufts community who inspired this paper. Additionally, ChatGPT (GPT-4 and September 2023 version), using the API, temperature: 1, and additional prompt keywords including: “human factors,” “UX professional,” “automotive research,” and “academic,” was used for light revisions and refinement of ideas throughout the paper.

Conflict of interest

KB declares that although they are employed by the Department of Transportation, the paper was created independently, using solely their personal time and resources. KB confirms that there are no financial or personal interests that could bias the findings or conclusions presented in the paper.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

The views expressed in this paper are based solely on our research and analysis, and do not represent the official stance or policies of the DOT.

Footnotes

¹ For a full timeline of Tesla's rise to the top, this article is suggested reading.

² Tesla often releases detailed but hard-to-read software update notes, so drivers may not fully understand what has changed between software versions.

³ On the other hand, ignoring human factors can allow deviance to become normalized in design; this should be avoided at all costs, since it can lead to fatal outcomes. Read more here.

⁴ For additional research on the complications that arise when automation overlaps with human processing, see Anderson (2020).

⁵ All available Tesla software release notes, maintained by the Tesla community, can be read here. Although the notes appear transparent, they are highly technical, which could prevent people from fully understanding the changes to their cars. It would be more helpful for Tesla to explain changes at a level accessible to non-engineers. In general, if a manufacturer makes a software change that affects vehicle operation, this should be made explicitly clear to the end user.

⁶ Tesla has employed scoring systems before releasing self-driving features to customers, a clever way of gamifying and rewarding good behavior, even if the result was the release to end users of a system that could lead to worse behavior down the road.

⁷ A simple logic check on the driver-seat weight sensor could prevent a driver from engaging Autopilot and then leaving the driver's seat. In fact, the car could pull over in such a situation and disable Autopilot for a period of time. Recently, Tesla implemented a new solution that uses its interior-facing camera. Read more here.

⁸ For more about the case, see here.

⁹ For additional research on the impact of a future with AVs, see Fagnant and Kockelman (2015).
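Footnote 7 proposes a simple logic check on a driver-seat weight sensor. As a rough illustration of what such logic might look like, the Python sketch below refuses to engage driver assistance when the seat reads empty and, if the seat empties while the system is engaged, first warns and then requests a pull-over and a temporary lockout. The class and method names, the 30 kg occupancy threshold, the 2 s grace period, and the 10-minute lockout are hypothetical assumptions chosen for illustration; this is not Tesla's implementation or any real vehicle API.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Action(Enum):
    NONE = auto()                   # seat occupied, or assistance not engaged
    WARN = auto()                   # seat reads empty, still within grace period
    PULL_OVER_AND_DISABLE = auto()  # seat empty too long: stop and lock out


@dataclass
class SeatInterlock:
    """Hypothetical driver-seat occupancy interlock (illustrative sketch only)."""
    min_occupied_kg: float = 30.0  # assumed weight threshold for "seat occupied"
    grace_s: float = 2.0           # assumed tolerance for momentary weight shifts
    lockout_s: float = 600.0       # assumed lockout after a violation (10 min)
    locked_until: float = 0.0      # time before which engagement is refused
    empty_since: Optional[float] = None  # when the seat first read empty

    def _occupied(self, seat_kg: float) -> bool:
        return seat_kg >= self.min_occupied_kg

    def can_engage(self, seat_kg: float, now: float) -> bool:
        """Allow engagement only with an occupied seat and no active lockout."""
        return self._occupied(seat_kg) and now >= self.locked_until

    def update(self, engaged: bool, seat_kg: float, now: float) -> Action:
        """Call periodically while driving; returns the action to take."""
        if not engaged or self._occupied(seat_kg):
            self.empty_since = None  # reset the empty-seat timer
            return Action.NONE
        if self.empty_since is None:
            self.empty_since = now   # seat has just started reading empty
        if now - self.empty_since >= self.grace_s:
            # Seat empty beyond the grace period: request pull-over and lock out.
            self.locked_until = now + self.lockout_s
            self.empty_since = None
            return Action.PULL_OVER_AND_DISABLE
        return Action.WARN


if __name__ == "__main__":
    interlock = SeatInterlock()
    print(interlock.can_engage(seat_kg=75.0, now=0.0))    # True
    print(interlock.update(True, seat_kg=0.0, now=10.0))  # Action.WARN
    print(interlock.update(True, seat_kg=0.0, now=12.5))  # Action.PULL_OVER_AND_DISABLE
    print(interlock.can_engage(seat_kg=75.0, now=100.0))  # False (locked out)
```

The grace period is a design choice intended to keep momentary weight shifts, such as those caused by bumps in the road, from triggering a pull-over; a real system could combine this signal with others, such as the interior-facing camera mentioned in footnote 7.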

References

ABC News (2019). Tesla driver caught asleep at the wheel in Bay Area. Available at: https://abc7news.com/tesla-sleeping-driver-auotpilot-autopilot/5611307/ (Accessed April 6, 2023).

ABC News (2022). The backup driver in the 1st death by a fully autonomous car pleads guilty to endangerment. Available at: https://abcnews.go.com/Business/wireStory/backup-driver-1st-death-fully-autonomous-car-pleads-101777744 (Accessed September 19, 2023).

ABC News (2023). As a criminal case against a Tesla driver wraps up, legal and ethical questions on Autopilot endure. Available at: https://abcnews.go.com/Business/wireStory/criminal-case-tesla-driver-ends-legal-ethical-questions-102274826 (Accessed September 19, 2023).

Ahlstrom, V. (2016). FAA human factors (ANG-E25) 2016-12-human-factors-design-standard. Available at: https://hf.tc.faa.gov/publications/2016-12-human-factors-design-standard/ (Accessed August 12, 2023).

Anderson, M. (2020). Human factors 101 the ironies of automation. Available at: https://humanfactors101.com/2020/05/24/the-ironies-of-automation/.

Barry, K. (2021a). Tesla’s new steering yoke shows little benefit and potential safety pitfalls. Available at: https://www.consumerreports.org/cars-driving/tesla-steering-yoke-little-benefit-potential-safety-pitfalls-a1034204120/.

Barry, K. (2021b). Tesla remotely recalls full self-driving software for “phantom braking” problem. Available at: https://www.consumerreports.org/car-recalls-defects/tesla-recall-full-self-driving-software-phantom-braking-a5626328806/.

Barry, K. (2022). Consumer reports. Available at: https://www.consumerreports.org/cars/hybrids-evs/how-the-tesla-model-s-changed-the-world-a7291465820/.

Bell, D. (2021). Volvo's gift to the world, modern seat belts have saved millions of lives. Available at: https://www.forbes.com/sites/douglasbell/2019/08/13/60-years-of-seatbelts-volvos-great-gift-to-the-world/ (Accessed May 31, 2024).

Britannica (2022). US Airways flight 1549 | description, pilot, and facts | britannica. Available at: https://www.britannica.com/topic/US-Airways-Flight-1549-incident (Accessed September 26, 2023).

California (2022). New California law effectively bans Tesla from advertising its cars as Full Self-Driving. Available at: https://www.sfchronicle.com/tech/article/new-california-law-effectively-bans-tesla-from-17672908.php (Accessed March 13, 2024).

Campbell, J. L., Brown, J. L., Graving, J. S., Richard, C. M., Lichty, M. G., Sanquist, T., et al. (2016). Human factors design guidance.

Cardosi, K., Zuschlag, M., Lennertz, T., and John, A. (2021). Updating the 2016 FAA human factors design standard (HF-STD-001B): automation, workstation design, and information management. Volpe National Transportation Systems Center (U.S.). Available at: https://rosap.ntl.bts.gov/view/dot/62419.

Cerezuela, G. P., Tejero, P., Chóliz, M., Chisvert, M., and Monteagudo, M. J. (2004). Wertheim’s hypothesis on ‘highway hypnosis’: empirical evidence from a study on motorway and conventional road driving. Accid. Analysis Prev. 36 (6), 1045–1054. doi:10.1016/j.aap.2004.02.002

Choksey, J. S., and Wardlaw, C. (2021). Levels of autonomous driving, explained. J.D. Power. Available at: https://www.jdpower.com/cars/shopping-guides/levels-of-autonomous-driving-explained (Accessed August 2, 2023).

Clavey, W. (2021). Inside Tesla's redesigned Model S: closer look at the new 2022 interior. TractionLife.com. Available at: https://tractionlife.com/2022-tesla-model-s-interior-features-and-changes/.

DeBord, M. (2015). Elon Musk: it will be “impossible to run out of range” in a Tesla, self-driving features coming in 2015. Available at: https://www.businessinsider.com/here-comes-elon-musks-announcement-about-ending-tesla-range-anxiety-2015-3.

Detjen, H., Salini, M., Kronenberger, J., Geisler, S., and Schneegass, S. (2021). “Towards transparent behavior of automated vehicles: design and evaluation of HUD concepts to support system predictability through motion intent communication,” in Proceedings of the 23rd international conference on mobile human-computer interaction (New York, NY, USA: Association for Computing Machinery), 1–12. Available at: https://dl.acm.org/doi/10.1145/3447526.3472041.

Di, X., Chen, X., and Talley, E. (2020). Liability design for autonomous vehicles and human-driven vehicles: a hierarchical game-theoretic approach. Transp. Res. Part C Emerg. Technol. 118, 102710. doi:10.1016/j.trc.2020.102710

Dodd, S., Lubold, N., Bui, B., and Finseth, T. (2022). Touch user interfaces in air traffic control: final guidelines report with recommended updates for the FAA Human Factors Design Standard (HFDS) [FAA HF-STD-001B]. Honeywell Aerospace Engineering Advanced Technology. Available at: https://rosap.ntl.bts.gov/view/dot/61043.

Doll, S. E. (2022). Over-the-air updates: how does each EV automaker compare? Available at: https://electrek.co/2022/06/07/over-the-air-updates-how-does-each-ev-automaker-compare/ (Accessed July 10, 2023).

Elon Musk (2023). Elon Musk reacts to viral sleeping Tesla driver video. Available at: https://www.youtube.com/watch?v=Lk6tQ4Jj1kM (Accessed August 2, 2023).

Engström, J., Markkula, G., Victor, T., and Merat, N. (2017). Effects of cognitive load on driving performance: the cognitive control hypothesis. Hum. Factors 59 (5), 734–764. doi:10.1177/0018720817690639

ESA Automation (2017). ESA automation. Available at: https://www.esa-automation.com/wp-content/uploads/2017/10/04_Guidelines-for-designing-touch-screen-user-interfaces-.pdf (Accessed September 18, 2023).

Evarts, H. (2020). Who's liable? The AV or the human driver? Available at: https://www.engineering.columbia.edu/news/liability-human-autonomous-vehicle (Accessed August 3, 2023).

Fagnant, D. J., and Kockelman, K. (2015). Preparing a nation for autonomous vehicles: opportunities, barriers and policy recommendations. Transp. Res. Part A Policy Pract. 77, 167–181. doi:10.1016/j.tra.2015.04.003

FOX NEWS (2023). Surveillance video shows moment Tesla S brakes on Bay Bridge before 8-car pileup. Available at: https://www.ktvu.com/news/surveillance-video-shows-moment-tesla-s-brakes-on-bay-bridge-before-8-car-pileup.

Gillmore, S. C., and Tenhundfeld, N. L. (2020). The good, the bad, and the ugly: evaluating Tesla’s human factors in the wild west of self-driving cars. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 64 (1), 67–71. doi:10.1177/1071181320641020

Gitlin, J. M. (2022). Buttons beat touchscreens in cars, and now there's data to prove it. Ars Technica. Available at: https://arstechnica.com/cars/2022/08/yes-touchscreens-really-are-worse-than-buttons-in-cars-study-finds/ (Accessed May 19, 2023).

Gitlin, J. M. (2023). Buttons are back at Porsche as we see the 2024 Cayenne interior. Ars Technica. Available at: https://arstechnica.com/cars/2023/03/buttons-are-back-at-porsche-as-we-see-the-2024-cayenne-interior/ (Accessed April 6, 2023).

Greig, J. (2021). How autonomous vehicles could help the elderly and disabled in the near future. Available at: https://www.techrepublic.com/article/how-autonomous-vehicles-could-help-the-elderly-and-disabled-in-the-near-future/ (Accessed May 25, 2023).

GriffithLaw (2022). Who can be sued when a driverless car causes an accident? Available at: https://www.griffithinjurylaw.com/blog/automated-cars-may-affect-liability-in-crash-injury-lawsuits.cfm (Accessed August 9, 2023).

Guerster, J. (2021). Human factors research suggests Tesla’s yoke steering wheel misses one key feature: speed variable control. LinkedIn. Available at: https://www.linkedin.com/pulse/human-factors-research-suggests-teslas-new-yoke-wheel-jon-guerster/.

Helldin, T. (2014). Transparency for future semi-automated systems: effects of transparency on operator performance, workload and trust. Available at: https://urn.kb.se/resolve?urn=urn:nbn:se:his:diva-10164 (Accessed August 5, 2023).

Hollnagel, E., Wears, R. L., and Braithwaite, J. (2015). From safety-I to safety-II: a white paper. Available at: https://psnet.ahrq.gov/issue/safety-i-safety-ii-white-paper (Accessed October 1, 2023).