
ORIGINAL RESEARCH article

Front. ICT, 17 November 2016

Sec. Virtual Environments

Volume 3 - 2016 | https://doi.org/10.3389/fict.2016.00029

This article is part of the Research Topic Virtual and Augmented Reality for Education and Training.

In this paper, we present a new framework for analyzing and designing virtual reality (VR) techniques. This framework is based on two concepts: system fidelity (i.e., the degree to which real-world experiences are reproduced by a system) and memory (i.e., the formation and activation of perceptual, cognitive, and motor networks of neurons). The premise of the framework is to manipulate an aspect of system fidelity in order to assist a stage of memory. We call it the Altered-Fidelity Framework for Enhancing Cognition and Training (AFFECT). AFFECT provides nine categories of approaches to altering system fidelity to positively affect learning or training. These categories are based on the intersections of three aspects of system fidelity (interaction fidelity, scenario fidelity, and display fidelity) and three stages of memory (encoding, implicit retrieval, and explicit retrieval). In addition to discussing the details of our new framework, we show how AFFECT can be used as a tool for analyzing and categorizing VR techniques designed to facilitate learning or training. We also demonstrate how AFFECT can be used as a design space for creating new VR techniques intended for educational and training systems.

Virtual reality (VR) is a complex field that uses cutting-edge technologies to deliver experiences that are realistic due to high-fidelity sensory stimuli and natural interactions. Its underlying simulations allow those experiences to be controllable, flexible, and repeatable, which is ideal for learning and training purposes. As a result, numerous educational and training VR systems have been developed and investigated by researchers. However, VR has not been more widely adopted because it is more expensive than conventional educational methods and less realistic than real-world training exercises. Hence, VR has primarily been adopted in a limited number of fields, including aviation, health care, mining, and the military.

Nearly all educational and training VR systems are developed to emulate the real world with a high degree of fidelity (Bowman and McMahan, 2007). But this approach is costly, given the expense of high-fidelity technologies (e.g., full-body tracking systems) and of developing high-fidelity simulations (e.g., detailed graphical models, realistic physics, and plausible artificial intelligence). Additionally, this approach is flawed, as current systems nearly always fail to provide all of the visual, auditory, haptic, olfactory, and vestibular cues that most real-world tasks involve. Flight simulators are one of the few examples in which this approach has succeeded (Lee, 2005). In light of these limitations, it is not surprising that VR’s success has been primarily restricted to fields in which real-world learning or training exercises are expensive, difficult to arrange, or could result in death.

While much research is focused on overcoming VR’s limitations in realism, we suggest that researchers should take a new approach to developing educational and training VR systems. Specifically, we advocate leveraging the virtualness (i.e., the artificial aspects) of VR to provide educational and training solutions that are more effective than current VR approaches, and possibly more effective than conventional educational methods and real-world exercises. For example, consider a technique in which, after the trainee makes a mistake, the training scenario is fast-forwarded to the resulting consequence and then rewound to the decision point just before the error. This technique would convey to the trainee the cause-and-effect relationship between the mistake and its consequence, in addition to allowing the trainee to correct the mistake. We refer to this technique as “causation rewinding” (see Case Study #4: Aircraft Evacuation Serious Game for an example within the literature).
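To make the mechanics of causation rewinding concrete, the following Python sketch records a snapshot of the scenario state at every simulation tick, fast-forwards past a mistake to reveal its consequence, and then restores the snapshot taken at the decision point. It is a minimal illustration under stated assumptions, not an implementation from the literature: the class `TrainingSim`, the function `causation_rewind`, the `"skip_checklist"` mistake, and the rule that an uncorrected hazard escalates every tick are all hypothetical.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class TrainingSim:
    """Hypothetical training scenario whose state advances one tick at a time."""
    state: dict
    history: list = field(default_factory=list)  # history[k] is the state at tick k

    def step(self, action=None):
        # Snapshot the current state before advancing so it can be restored later.
        self.history.append(copy.deepcopy(self.state))
        self.state["tick"] += 1
        if action == "skip_checklist":      # hypothetical trainee mistake
            self.state["hazard"] += 1
        elif self.state["hazard"] > 0:      # assumed: an uncorrected mistake escalates each tick
            self.state["hazard"] += 1

    def fast_forward(self, ticks):
        """Run the scenario ahead with no trainee input and return the resulting state."""
        for _ in range(ticks):
            self.step()
        return copy.deepcopy(self.state)

    def rewind_to(self, tick):
        """Restore the snapshot recorded at `tick` and discard everything after it."""
        self.state = self.history[tick]
        del self.history[tick:]


def causation_rewind(sim, decision_tick, consequence_ticks):
    """Show the consequence of a mistake, then return the trainee to the decision point."""
    consequence = sim.fast_forward(consequence_ticks)
    sim.rewind_to(decision_tick)
    return consequence


# Usage: the trainee makes a mistake at the decision point (tick 1).
sim = TrainingSim({"tick": 0, "hazard": 0})
sim.step()                          # a safe tick
decision_tick = sim.state["tick"]   # the decision point
sim.step("skip_checklist")          # the mistake
outcome = causation_rewind(sim, decision_tick, consequence_ticks=3)
print(outcome)    # {'tick': 5, 'hazard': 4}
print(sim.state)  # {'tick': 1, 'hazard': 0}
```

Storing full snapshots keeps rewinding trivial but costs memory in proportion to scenario length; a production system might instead store periodic keyframes or replay deterministic inputs.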

To facilitate our advocacy for leveraging the virtualness of VR for educational and training purposes, we have developed a new framework for analyzing and designing VR techniques. This framework is based on two previously unpaired axes: system fidelity and memory. System fidelity is the degree to which real-world experiences are reproduced by a system (McMahan et al., 2012); it concerns how realistic a VR system or experience is. Memory refers to the formation and activation of perceptual, cognitive, and motor networks of neurons (Fuster, 1997). It essentially concerns the encoding, storage, and retrieval of information within the brain.

The point of our new framework is to consider how system fidelity can be altered to positively affect memory capabilities. In other words, it concerns how the virtualness of VR can be leveraged to improve learning or training. We refer to our new framework as the Altered-Fidelity Framework for Enhancing Cognition and Training (AFFECT).

In this paper, we present the details of AFFECT and its two axes. We describe how three aspects of a system (its interaction fidelity, scenario fidelity, and display fidelity) contribute to its overall fidelity. We also discuss the human memory system and the specifics of encoding, implicit memory retrieval, and explicit memory retrieval. We then provide four case studies to show how AFFECT can be used as an analysis tool. Finally, we demonstrate how AFFECT can be used as a new design space for creating VR techniques intended for educational and training purposes.

In this section, we briefly review three types of frameworks and taxonomies that are relevant to AFFECT – learning-centric frameworks, technology-based learning frameworks, and VR-based learning frameworks.

The most well-known framework focused on learning is Bloom’s taxonomy. Bloom et al. (1956) presented a hierarchical taxonomy of educational goals to describe the stages of cognitive-based learning. The categories of the taxonomy originally included knowledge, comprehension, application, analysis, synthesis, and evaluation, with each building on the prior categories. Krathwohl (2002) later revised the categories of the taxonomy to reflect learning-centric activities, as opposed to educational objectives. The new categories, starting with the foundation, are remember (i.e., recall facts and concepts), understand (i.e., explain ideas or concepts), apply (i.e., use information in new ways), analyze (i.e., draw connections among ideas), evaluate (i.e., justify a stance or decision), and create (i.e., produce new content). See Figure 1 for a visual representation of the taxonomy.

A popular framework focused on learning motor skills is the taxonomy developed by Dave (1970). Like Bloom’s taxonomy, Dave’s taxonomy consists of categories of objectives that depend on the previous categories as foundations. Starting from the base, the taxonomy includes imitation (i.e., copying the actions of someone else), manipulation (i.e., performing actions from instructions), precision (i.e., executing a skill reliably), articulation (i.e., adapting and integrating skills to new situations), and naturalization (i.e., automated and unconscious execution of a task). Naturalization is particularly relevant to our later discussion of implicit retrieval in Section “Implicit Retrieval.”

There are many other learning-centric frameworks, but an in-depth review of them is outside the scope of this paper. However, we recommend that readers interested in affective skills, which deal with emotions and behaviors, read about the affective domain described by Krathwohl et al. (1967). That framework is highly relevant to VR systems designed to train interpersonal skills.

There have also been numerous frameworks concerned with how technology affects learning. We focus our review on two of them, chosen because we felt they were applicable to VR systems as well as to general technologies.

In their framework for understanding courseware, Mayes and Fowler (1999) explain that conceptual learning consists of three stages (conceptualization, construction, and application) and that educational technologies can be designed to facilitate these stages. Conceptualization refers to the initial introduction and internalization of a concept. Construction builds upon conceptualization and refers to the process of performing meaningful tasks in order to elaborate upon and combine concepts. Finally, application is the process of applying concepts in new contexts. Mayes and Fowler (1999) also describe how each type of educational technology, specifically courseware, should be designed to support one of these stages. Primary courseware consists of technologies designed to introduce learners to new concepts. Secondary courseware provides a task-based approach to learning through technologies. Finally, tertiary courseware should be designed to allow learners to apply the targeted concepts to new contexts. With regard to VR systems, examples of primary, secondary, and tertiary courseware include tutorials, practice modules, and open worlds, respectively.

Edelson (2001) presents a similar framework that he refers to as the Learning-for-Use model. This model characterizes the development of usable understanding as a three-step process consisting of motivation, knowledge construction, and knowledge refinement. Motivation involves the learner recognizing the need for new knowledge. Once a learner is motivated, knowledge construction can occur, which is the internalization of new knowledge. The third stage, knowledge refinement, occurs through the process of organizing and connecting knowledge structures. Edelson (2001) recommends that learning technologies be designed to facilitate the three stages of the Learning-for-Use model. In particular, technologies for motivation should allow learners to experience demand and curiosity. Knowledge-construction technologies should enable learners to observe and receive communication. Finally, technologies for knowledge refinement should provide opportunities for learners to apply and reflect upon their knowledge.

Comparing the frameworks of Mayes and Fowler (1999) and Edelson (2001), we can draw several parallels. Mayes and Fowler’s stage of conceptualization is synonymous with Edelson’s knowledge construction. Additionally, Edelson’s knowledge refinement can be viewed as the combination of Mayes and Fowler’s stages of construction and application. Hence, Edelson’s stage of motivation is the most notable difference between the two frameworks. Because Mayes and Fowler’s framework divides knowledge refinement into two distinct processes and is therefore the finer-grained of the two, we suggest that it be altered to include Edelson’s additional stage of motivation. As a result, we can view learning as taking place in four stages: motivation, conceptualization, construction, and application. The implication for VR systems is that learning should be properly motivated, whether in tutorials or completely new simulations.
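The correspondence between the two frameworks can be written out explicitly. The Python sketch below, whose names are hypothetical, encodes each of Edelson’s stages as the Mayes and Fowler stages it corresponds to and derives the four-stage sequence by keeping the finer-grained stages wherever they exist and falling back to Edelson’s stage where no counterpart exists.

```python
# Stage names as given in the text.
MAYES_FOWLER = ["conceptualization", "construction", "application"]
EDELSON = ["motivation", "knowledge construction", "knowledge refinement"]

# Each Edelson stage mapped to the Mayes and Fowler stages it corresponds to.
EDELSON_TO_MAYES_FOWLER = {
    "motivation": [],                                         # no counterpart
    "knowledge construction": ["conceptualization"],          # synonymous stages
    "knowledge refinement": ["construction", "application"],  # split into two
}

# Sanity check: every Mayes and Fowler stage is covered exactly once, in order.
assert [s for v in EDELSON_TO_MAYES_FOWLER.values() for s in v] == MAYES_FOWLER


def merged_stages():
    """Walk Edelson's sequence, substituting Mayes and Fowler's finer stages where they exist."""
    stages = []
    for stage in EDELSON:
        counterparts = EDELSON_TO_MAYES_FOWLER[stage]
        stages.extend(counterparts if counterparts else [stage])
    return stages


print(merged_stages())
# ['motivation', 'conceptualization', 'construction', 'application']
```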

There are far fewer frameworks concerned with how VR technologies affect learning. There are many principles and guidelines for designing educational and training VR systems scattered throughout the literature. However, we are specifically concerned with frameworks that can be used for analyzing, categorizing, and designing new VR techniques. Here, we describe the ones we found in the literature.

Lee et al. (2010) present a VR-centric framework that represents how VR features can impact learning. The researchers identify representational fidelity (i.e., the realism of the simulation) and immediacy of control (i.e., the ability to smoothly navigate the virtual environment and manipulate objects) as the features of VR that can be decided by system designers. They describe how these features in turn affect the interaction experience (i.e., usability), which consists of perceived usefulness and perceived ease of use. Then, they discuss the learning experience as consisting of multiple psychological factors, including presence, motivation, cognitive benefits, control and active learning, and reflective thinking. Lee et al. (2010) explain that both the VR features and the interaction experience affect these psychological factors, in addition to the psychological factors being influenced by student characteristics, such as spatial abilities and learning styles. Finally, the psychological factors ultimately determine the outcomes of performance achievement, perceived learning, and satisfaction.

Chen et al. (2004) present a learning-centric framework that guides how a VR system should be developed around the learning experience. Their framework begins with a macro-strategy and learning objectives, which are determined by the targeted types of learning (i.e., labels, verbal information, intellectual skills, or cognitive strategies). Once the learning objectives have been decided, an integrative goal is necessary to combine the learning objectives into a comprehensive and purposeful activity. The system designer then needs to identify an “enterprise scenario” that consists of the problem context, the problem representation, and the problem manipulation space. The final step of the macro-strategy is to determine how to provide tools that support the enterprise scenario. Tools can include behavioral modeling, cognitive modeling, coaching, and scaffolding. Once the macro-strategy is complete, Chen et al. (2004) explain that principles of multimedia design (Mayer, 2002) should be incorporated into the outcomes of the macro-strategy, as a micro-strategy.

The frameworks of Lee et al. (2010) and Chen et al. (2004) take opposing approaches to designing new VR techniques for learning purposes. The framework of Lee et al. (2010) focuses on how the features of the VR system can be altered to ultimately influence learning outcomes. The framework of Chen et al. (2004) begins with the desired learning outcomes and builds a VR system around them. As we will demonstrate later in this paper, either approach can be taken with our new framework, as it focuses on the intersections of system fidelity and memory.

In this section, we present our new framework for analyzing and designing VR techniques for enhancing cognition, education, and training. Our new framework is based on two axes: system fidelity and memory. Again, system fidelity is the degree to which real-world experiences are reproduced by a system (McMahan et al., 2012), while memory refers to the formation and activation of perceptual, cognitive, and motor networks of neurons (Fuster, 1997). We discuss both system fidelity and memory in detail within the following sections; however, the point of the framework is to consider how system fidelity can be altered to positively affect memory capabilities. For example, in Section “Case Study #3: Pinball Game Simulator,” we describe how the fidelity of a system’s interactions can be altered to improve motor memory. Because the framework alters fidelity to enhance cognition and training, we refer to it as the Altered-Fidelity Framework for Enhancing Cognition and Training (AFFECT).
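Because AFFECT crosses three aspects of system fidelity with three stages of memory, its nine categories can be treated as the cells of a grid. The Python sketch below is one possible encoding, assuming each technique is recorded as a (fidelity aspect, memory stage) pair; the catalog and its entry names are hypothetical. The pinball entry follows the Case Study #3 description of altering interaction fidelity to improve motor memory, a form of implicit (procedural) memory; the colored assembly pieces entry is our illustrative placement, not a classification stated in the text. Querying by fidelity aspect mirrors the feature-first approach of Lee et al. (2010), querying by memory stage mirrors the outcome-first approach of Chen et al. (2004), and empty cells point to unexplored regions of the design space.

```python
from enum import Enum
from itertools import product


class Fidelity(Enum):
    INTERACTION = "interaction fidelity"
    SCENARIO = "scenario fidelity"
    DISPLAY = "display fidelity"


class MemoryStage(Enum):
    ENCODING = "encoding"
    IMPLICIT_RETRIEVAL = "implicit retrieval"
    EXPLICIT_RETRIEVAL = "explicit retrieval"


# The nine AFFECT categories: every pairing of a fidelity aspect with a memory stage.
CATEGORIES = list(product(Fidelity, MemoryStage))

# Hypothetical catalog: technique -> (fidelity aspect altered, memory stage assisted).
CATALOG = {
    "pinball_altered_interaction": (Fidelity.INTERACTION, MemoryStage.IMPLICIT_RETRIEVAL),
    "colored_assembly_pieces": (Fidelity.SCENARIO, MemoryStage.ENCODING),  # illustrative placement
}


def techniques_for(fidelity=None, stage=None):
    """List catalogued techniques matching the given axis values (None matches anything)."""
    return sorted(
        name
        for name, (f, s) in CATALOG.items()
        if (fidelity is None or f is fidelity) and (stage is None or s is stage)
    )


def empty_categories():
    """Return the AFFECT cells that no catalogued technique occupies yet."""
    occupied = set(CATALOG.values())
    return [cell for cell in CATEGORIES if cell not in occupied]


print(len(CATEGORIES))                                # 9
print(techniques_for(fidelity=Fidelity.INTERACTION))  # ['pinball_altered_interaction']
print(techniques_for(stage=MemoryStage.ENCODING))     # ['colored_assembly_pieces']
print(len(empty_categories()))                        # 7
```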

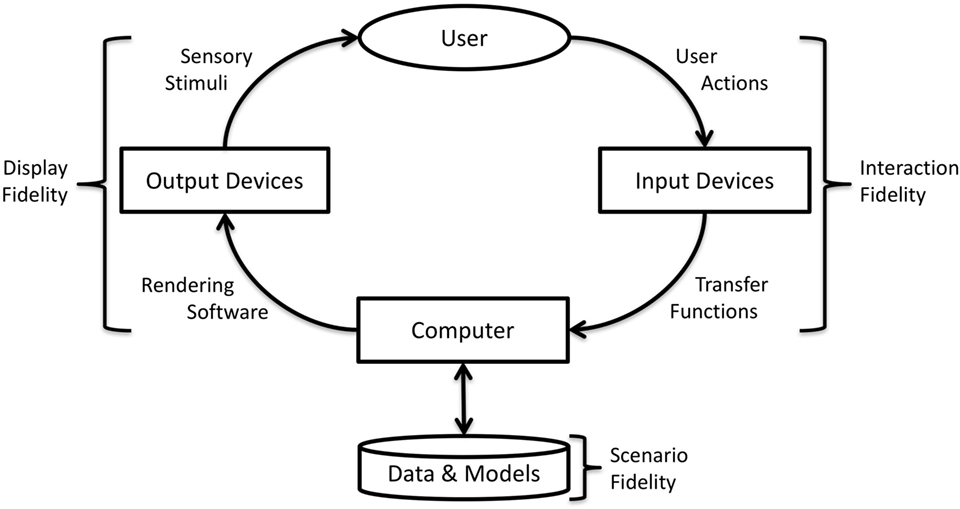

In order to understand the concepts and details of system fidelity, we must first consider the flow of information that occurs when a user interacts with a system. Every interaction begins with a user action that either directly manipulates or is captured by an input device through sensing technology. A transfer function translates the input device’s data into a meaningful operation or event that the system processes (Hinckley et al., 2004). Data and models underlying the simulation help to determine the outcomes of this operation or event. Rendering software then translates the current state of the simulation into data signals that are displayed by the output devices. The sensory stimuli produced by the output devices are then perceived by the user, who determines whether the desired interaction was accomplished. This flow of information is depicted by the User-System Loop in Figure 2.
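The loop can be sketched as a chain of functions, one per stage. The Python sketch below is a hypothetical one-dimensional model: the 1 cm tracker resolution, the position-only simulation, and the text string standing in for a sensory stimulus are all assumptions. Its comments mark which stages correspond to the interaction, scenario, and display aspects of system fidelity indicated in Figure 2.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UserSystemLoop:
    """Hypothetical one-dimensional model of the User-System Loop."""
    sense: Callable[[float], float]            # input device: physical action -> raw reading
    transfer: Callable[[float], float]         # transfer function: reading -> system event
    simulate: Callable[[float, float], float]  # data and models: (state, event) -> new state
    render: Callable[[float], str]             # rendering and output: state -> sensory stimulus
    state: float = 0.0

    def cycle(self, physical_action: float) -> str:
        reading = self.sense(physical_action)          # interaction fidelity
        event = self.transfer(reading)                 # interaction fidelity
        self.state = self.simulate(self.state, event)  # scenario fidelity
        return self.render(self.state)                 # display fidelity; perceived by the user


loop = UserSystemLoop(
    sense=lambda meters: round(meters, 2),   # assumed tracker with 1 cm resolution
    transfer=lambda step: step,              # isomorphic: 1 physical m = 1 virtual m
    simulate=lambda position, step: position + step,
    render=lambda position: f"camera at {position:.2f} m",
)
first = loop.cycle(0.504)
second = loop.cycle(0.25)
print(first)   # camera at 0.50 m
print(second)  # camera at 0.75 m
```

Replacing only the `transfer` function (for example, with a scaled mapping) alters interaction fidelity while leaving the simulation and rendering untouched, which is the kind of targeted alteration the framework is meant to categorize.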

Figure 2. The User-System Loop depicts the flow of information when a user interacts with a system. The three aspects of system fidelity are also indicated.

Considering the User-System Loop, system fidelity as a whole can be divided into three phases or types of fidelity (Ragan et al., 2015). First, interaction fidelity is the degree to which real-world actions are reproduced in an interactive system (McMahan et al., 2012). Second, scenario fidelity is the degree to which rules, behaviors, and environment properties are reproduced in a simulation, as compared to the real world (Ragan et al., 2015). Finally, display fidelity is the degree to which real-world sensory stimuli are reproduced by a system (McMahan et al., 2012). We use these three types of fidelity to categorize techniques along the system fidelity axis of our new framework.

Interaction fidelity describes how realistically users move their bodies to interact with a system, the input devices that sense or are manipulated by those movements, and the interpretation of input data as meaningful system events. As an example comparison, head tracking affords a higher degree of interaction fidelity to real-world walking than a walking-in-place technique (Usoh et al., 1999). With head tracking, users physically walk, producing motions that match actual human gait, and their physical head movements are directly translated into the same virtual camera movements. In contrast, walking-in-place techniques translate physical marching gestures meant to emulate walking into virtual steps forward. Head tracking thus allows real-world walking to be reproduced within a VR system, while walking-in-place techniques provide only a limited imitation.

Within our framework, interaction fidelity serves as one of the system fidelity categories. There are three key aspects to consider when classifying a VR technique or system under the interaction fidelity category: whether the technique purposefully alters how users must move their bodies to interact, whether the system is implemented with a specialized input device, and whether the technique translates the input data in a non-isomorphic (i.e., not a one-to-one mapping) manner. The underwater arc-welding training system developed by Wang et al. (2009) is an example of both altering how users move and using a specialized input device. The researchers restricted the movements of users and the angle of the welding rod during training by using a force feedback device and employing the concept of haptic guidance (Feygin et al., 2002). Researchers have also leveraged interaction fidelity by using non-isomorphic transfer functions (i.e., scaled-linear and non-linear mapping functions). For instance, Le Ngoc and Kalawsky (2013) used amplified head rotations to make flight simulation training on single-monitor systems comparable to training on multiple-monitor systems, despite the smaller field of view.
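The difference between isomorphic and non-isomorphic transfer functions is easiest to see for head rotation. The Python sketch below compares a one-to-one mapping, a scaled-linear mapping, and one possible non-linear mapping from physical to virtual yaw. The gain of 2.0, the 45° physical range, and the quadratic curve are illustrative assumptions; they are not the parameters used by Le Ngoc and Kalawsky (2013).

```python
import math


def isomorphic(yaw_deg: float) -> float:
    """One-to-one mapping: virtual yaw equals physical yaw."""
    return yaw_deg


def scaled_linear(yaw_deg: float, gain: float = 2.0) -> float:
    """Amplify physical yaw by a constant gain (assumed value), clamped to +/-180 degrees."""
    return max(-180.0, min(180.0, gain * yaw_deg))


def nonlinear(yaw_deg: float, max_physical: float = 45.0,
              max_virtual: float = 180.0, exponent: float = 2.0) -> float:
    """Map [-max_physical, max_physical] onto [-max_virtual, max_virtual] along a power curve."""
    fraction = min(abs(yaw_deg), max_physical) / max_physical  # 0 at center, 1 at edge
    return math.copysign(max_virtual * fraction ** exponent, yaw_deg)


for yaw in (10.0, 22.5, 45.0):
    print(yaw, isomorphic(yaw), scaled_linear(yaw), round(nonlinear(yaw), 2))
# 10.0 10.0 20.0 8.89
# 22.5 22.5 45.0 45.0
# 45.0 45.0 90.0 180.0
```

The quadratic curve keeps small head movements finer than one-to-one near the center and amplifies them strongly near the edge of the physical range, so a user facing a single monitor could still turn the virtual view a full 180°.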

Scenario fidelity concerns the models and attributes underlying the simulation’s physics, time, artificial intelligence, and virtual environment. Most game engines provide these simulation features through simple physics, time representations, autonomous agent behaviors, and interfaces for designing the virtual environment (Lewis and Jacobson, 2002). However, for more-realistic simulations, specialized engines must often be developed. For example, consider the scenario of filling a virtual glass with virtual water. Artists often rely on particle systems to emulate such 3D fluid effects, but particle effects cannot match the realistic appearance and behavior of fluids simulated by solving the equations of fluid motion (Crane et al., 2007).

As one of the system fidelity categories, scenario fidelity helps to classify those techniques and systems that implement specialized physics engines, alter time, employ particular behaviors for virtual agents, or manipulate the conventional properties of the virtual environment and its objects. Several specialized implementations of physics have been investigated for various educational and training purposes, including bone dissection (Agus et al., 2003), wildfire spread (Sherman et al., 2007), and medical palpation (Ullrich and Kuhlen, 2012). Researchers have also investigated how to manipulate the perception of time within simulations (Schatzschneider et al., 2016). Artificial intelligence, virtual agents, and crowd simulations have also been a focus in developing education and training systems. For example, dynamic and interactive crowds for evacuation simulations have been developed using psychological models for more-realistic crowd behaviors (Kim et al., 2012). Finally, virtual environment and object properties have also been manipulated for educational purposes. Oren et al. (2012) found that adding unique colors to virtual assembly pieces allowed VR users to better train for an assembly task than users who trained with the real-world pieces, which were all the same color.

Finally, display fidelity brings to consideration the rendering algorithms, output devices, and sensory stimuli produced in a system. It is synonymous with immersion, understood as an objective quality of a VR system (Bowman and McMahan, 2007). For example, a high-quality HMD with a wide field of view, head tracking, and a high refresh rate provides a higher degree of display fidelity and immersion than a simple desktop monitor (assuming the same content is provided to both using the same rendering algorithm).

As the final system fidelity category, display fidelity helps to classify those techniques that purposefully alter the sensory stimuli produced by an education or training system. For example, cartoon and sketch-based rendering algorithms have been shown to elicit greater negative emotions (e.g., anger, fear, and guilt) than a more-realistic rendering algorithm when users interact with a rapidly deteriorating virtual patient (Volonte et al., 2016). In some cases, display fidelity is altered by providing additional sensory stimuli beyond conventional visuals and audio. For example, Tang et al. (2014) developed a tactile sleeve device consisting of multiple linear resonant actuators to provide the sensation of being touched during autism interventions.

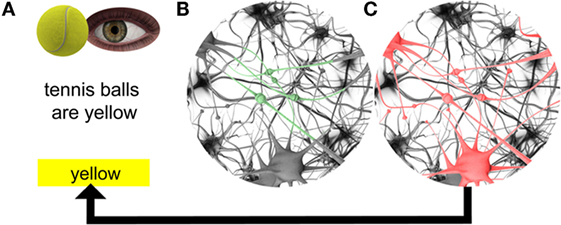

When it comes to the process of memory formation, there are three critical stages – encoding, storage, and retrieval (Smith, 1980). Encoding is the process of learning new information by translating perceived stimuli into understandable constructs and interpreting those constructs in the context of prior memories (Tulving and Thomson, 1973). Storage refers to the formation and maintenance of memory networks consisting of interconnected neurons that are formed by associations (Fuster, 1997). Finally, retrieval is the reactivation of those memory networks to complete the act of remembering the information stored in them (Fuster, 1997). See Figure 3 for a graphical depiction of this process.

Figure 3. The three stages of memory. (A) Encoding involves the development of new concepts (tennis balls are yellow) from perceived stimuli (the tennis ball) and prior memories (the color yellow). (B) Storage involves the formation and maintenance of neuron-based memory networks. (C) Retrieval involves the activation of the neuron-based memory networks.

Of the three stages, encoding and retrieval are the only stages that can be assisted to improve memory. The storage stage is obviously important; information never stored can never be retrieved (Tulving and Thomson, 1973). However, storage is completely internal to the brain and the biological processes that oversee the formation of neuron networks. Hence, for our new framework, we only focus on the encoding and retrieval aspects of memory.

Furthermore, we have decided to distinguish between the retrieval of implicit and explicit memory. Implicit retrieval, or implicit memory, is the activation of a memory network in the absence of conscious recollection (Graf and Schacter, 1985). For example, the act of typing the word “memory” for an experienced typist involves implicit retrieval. The typist does not consciously think about pressing the individual keys (“m,” “e,” “m,” “o,” “r,” and “y”), but executes the subtasks unconsciously. On the other hand, explicit retrieval or memory is the intentional activation of a memory network with conscious effort (Graf and Schacter, 1985). In the following sections, we discuss encoding and these concepts of retrieval in further detail.

Encoding is the essential first step to memory formation. If information is not encoded, it cannot be retrieved later on. There are several factors that impact encoding, including attention, distinctiveness, organization, and specificity.

Attention is the process of determining what to encode by focusing on it (Chun and Turk-Browne, 2007). It involves allocating cognitive resources to selected stimuli and activated memory networks. Unselected stimuli are not encoded. Hence, the underlying selection process is crucial when there are distractions (i.e., other stimuli and activations competing with key task information for the attentional resources). Additionally, it is important to note that established memories can affect this selection process and guide what is attended to (Chun and Turk-Browne, 2007). For example, when reading the news, a reader’s attention may be drawn away from the current sentence to a song playing on a nearby radio if it is the reader’s favorite song.

Distinctiveness is the quality of encoded information being distinguished as unique, which enhances the discriminability of that information during retrieval (Hunt, 2003). There are two types of distinctiveness: primary and secondary (Schmidt, 1991). Primary distinctiveness is the quality of encoded information being different compared to other recent stimuli. For example, a red pen will be distinctive in a cup of blue pens (assuming the user is not colorblind). Some researchers attribute the effectiveness of primary distinctiveness to increased attention and processing, though others attribute it to storage and retrieval (McDaniel and Geraci, 2006). On the other hand, secondary distinctiveness is the quality of encoded information being different compared to established memories. In the prior example, the blue pens would have secondary distinctiveness if the cup were normally filled with black pens. Both types of distinctiveness during encoding have been shown to improve retrieval, though the effectiveness of primary distinctiveness depends on how the information is distinguished from other recent stimuli (McDaniel and Geraci, 2006).

Organization is the active process of encoding information in relation to other stimuli or established memories (Hunt and McDaniel, 1993). There are several approaches to relational encoding. One approach is elaboration, which is the process of relating a concept with prior knowledge (Klein and Kihlstrom, 1986). For example, a person may attempt to remember a new acquaintance’s name (e.g., “George”) by activating memory networks with the same name (e.g., “George Washington,” “George Lucas”) or even adding to those networks (e.g., “This George is not as charismatic as George Clooney”). Another approach to organization is chunking, which involves grouping and encoding information into larger units already present in established memory (Tulving and Craik, 2000). For instance, when given a US phone number, we remember three things – the area code, the prefix, and the line number – rather than 10 individual digits. Categorization, the process of grouping similar concepts according to some semantic criterion, is also an organization approach (Klein and Kihlstrom, 1986). For example, the reader can categorize elaboration, chunking, and categorization under the concept of organization to better remember the various aspects that affect encoding.
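In the phone number example, chunking amounts to regrouping ten items into three familiar units. The Python sketch below performs that regrouping for a 10-digit US number in the area code, prefix, and line number pattern the text describes; the function name and the sample number are hypothetical, and the code illustrates only the grouping, not the cognitive process itself.

```python
def chunk_us_phone_number(number: str) -> tuple:
    """Regroup a 10-digit US phone number into (area code, prefix, line number)."""
    digits = "".join(ch for ch in number if ch.isdigit())
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}")
    return digits[:3], digits[3:6], digits[6:]


chunks = chunk_us_phone_number("212-555-0142")  # hypothetical number
print(chunks)  # ('212', '555', '0142')
print(len(chunks), "chunks instead of", sum(len(c) for c in chunks), "digits")
# 3 chunks instead of 10 digits
```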

Specificity, commonly known as the encoding specificity principle, is the idea that cues used later on for retrieval must be part of the original encoding process (Tulving and Thomson, 1973). This aspect of encoding supports the notion that studying for a test in the same room where the test will take place should improve test performance. In particular, there are two concepts of memory that rely on specificity. First, context-dependent memory refers to the improved retrieval of information when the environmental context of retrieval matches the context in which the information was encoded (Smith, 1994), as in the test room example. The second concept is state-dependent memory, in which retrieval of information is improved when the individual is in the same state of consciousness as he or she was when the information was encoded. State-dependent memory can naturally occur with moods (Weingartner et al., 1977) or be induced pharmacologically (McGaugh, 1973).

Considering these four aspects of encoding, there are some important considerations to make with regard to our framework. First, as technique designers, we can positively influence the encoding process. We can influence attention by omitting or suppressing distractions. We can affect primary distinctiveness by creating contrast between more-important elements of information and non-essential ones. We can promote organization through interactions that allow the user to categorize or sort virtual objects, whether those objects are concrete representations or symbols for abstract concepts. We can also enable specificity by placing the user, physically or virtually, in the same environment as the one in which the user is expected to retrieve the encoded information. Because encoding only has to occur once to enable retrieval, these techniques can be used for tutorial-style simulations that are designed as introductory lessons and not intended for repeated use.

However, the second important consideration is the realization that we can influence only some aspects of encoding, and only to certain degrees. After all, encoding is an internal cognitive process that is ultimately under the control of the user, not the designer. For instance, though we can reduce potential distractions, nothing prevents the user’s memory from drawing his or her attention away from the important aspects of a simulated task (e.g., how to use a wrench to complete an assembly) to personal memories (e.g., the wrench looks like one often used by the user’s deceased father). Similarly, secondary distinctiveness depends on the user’s previous memories and whether the key information presented is unique given his or her prior experiences. While we can design techniques to teach organization strategies, the employment of those strategies is at the discretion of the user. Finally, we may be able to enable specificity through context-dependent environments, but inducing particular states of consciousness is difficult without potentially harming or negatively affecting the user.

Our final important consideration concerns specificity. This aspect of encoding has been debated within the memory research community, as findings have been inconsistent with regard to the benefits of context-dependent memory and state-dependent memory. Increasing specificity has been found to improve retrieval, have no effect on retrieval, or even decrease retrieval performance (Nairne, 2002). The authors have encountered this latter negative effect of specificity in their own prior research on context-dependent memory via olfactory cues (Howell et al., 2015). Considering such results, Nairne (2002) has argued that specificity has a correlational effect with retrieval performance, as opposed to a causal effect. In other words, increasing specificity is not guaranteed to improve retrieval. We still generally consider specificity to be a good design guideline for education and training systems. After all, it is more intuitive to train surgical motor skills in an operating room environment than in a mineshaft environment. However, we warn against technique designers relying solely on increased specificity to enhance cognition and training.

As explained above, implicit retrieval or memory is the unconscious retrieval of stored information. Implicit memory is synonymous with procedural memory (Roediger, 1990), which contains stored information for how to accomplish routine actions (Tulving, 1985). As such, implicit or procedural memory underlies motor skills, priming, classical conditioning, and possibly other forms of learning (Roediger, 1990). This is why many commonly cited examples of implicit memory involve motor skills, such as typing on a keyboard, driving a car, or riding a bicycle. The term motor memory is often used to refer to this form of procedural memory (Korman et al., 2007).

Priming is another form of implicit memory in which exposure to a stimulus influences the response to a subsequent stimulus (Tulving et al., 1982). Consider the phrases “Italian pants” and “Polish shoes.” Now, consider “Hang pants” and “Polish shoes.” In the first set of stimuli, most readers likely think of footwear from Poland after being primed to think of pants from Italy. However, in the second set of stimuli, many readers probably imagine the act of polishing and shining shoes given the prime of putting a pair of pants on a hanger.

Classical conditioning occurs when two stimuli are repeatedly paired, and an unconscious response to the second stimulus is eventually elicited by the presentation of the first stimulus alone (Mauk et al., 1986). The first stimulus is often referred to as the conditioned stimulus (CS), while the second is called the unconditioned stimulus (US). The implicit response to the US is known as the unconditioned response (UR); once conditioning has occurred and the response is elicited by the CS alone, it is called the conditioned response (CR). An example of classical conditioning would be to pair the sound of a bell (the CS) with the presentation of food (the US) for a dog. Due to the food, the dog involuntarily salivates (the UR). Eventually, after repeated pairings, the dog will salivate in response to the sound of the bell (the CR), without the presentation of the food.

In our framework, we classify techniques under the implicit retrieval category if they promote motor memory, apply priming, or employ classical conditioning. It is important to note that techniques intended to establish motor memory or classical conditioning require repeated use to be effective, as both aspects of implicit memory are developed through repetition (Roediger, 1990). Hence, these techniques are best suited for practice-oriented simulations that are designed to be repeatedly used. While priming may be accomplished without repetition by leveraging common associations (Tulving et al., 1982), it can fail if a user’s memory does not have those associations due to different past experiences or cultural background.
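The point that conditioning is built up through repetition can be made concrete with the Rescorla-Wagner model. This is a standard learning rule from the conditioning literature; it is not part of AFFECT and is not used by the works cited here, so the sketch below is illustrative only. It tracks the associative strength V of the CS, which closes a fixed fraction of the remaining gap to its asymptote λ on every CS–US pairing. The parameter values are arbitrary assumptions.

```python
def rescorla_wagner(pairings: int, alpha: float = 0.3, beta: float = 1.0,
                    lam: float = 1.0, v0: float = 0.0) -> list[float]:
    """Associative strength of the CS after each CS-US pairing.

    Rescorla-Wagner update: V <- V + alpha * beta * (lam - V), where alpha
    and beta are salience/learning-rate parameters of the CS and US, and lam
    is the maximum associative strength the US can support. The default
    values are arbitrary, illustrative assumptions.
    """
    v = v0
    history = []
    for _ in range(pairings):
        v += alpha * beta * (lam - v)  # learning is driven by prediction error
        history.append(v)
    return history


if __name__ == "__main__":
    for trial, strength in enumerate(rescorla_wagner(8), start=1):
        print(f"pairing {trial}: V = {strength:.3f}")
```

With these values, V is 0.3 after one pairing and about 0.83 after five. It approaches λ but never exceeds it. A single pairing therefore leaves the CS only weakly associated, which is consistent with the recommendation that such techniques suit repeated practice.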

Unlike implicit retrieval, explicit retrieval of memories requires conscious effort. This includes many types of memory tasks, from reminiscing about your high school graduation, to remembering what you ate yesterday for lunch, to recalling who is the President of the United States. Explicit memory is also referred to as declarative memory (Squire, 1986). Furthermore, it can be broken down into two categories of memories – episodic memory and semantic memory (Roediger, 1990).

Episodic memory stores and facilitates the retrieval of information concerning past episodes or events experienced (Johnson, 2010). Reminiscing about your high school graduation and remembering what you ate yesterday for lunch are examples of episodic memory. Perceptual events can be stored in episodic memory solely in terms of their perceptible stimuli and attributes; however, retrieval of this information for inspection can change the episodic memory (Tulving, 1972). In particular, false memories can arise due to reconstructive processes filling in missing elements while remembering, which frequently leads to errors (Roediger and McDermott, 1995). This can lead to remembering events quite differently from the way they happened or remembering events that never occurred.

Semantic memory stores and facilitates the retrieval of information concerning facts and relationships (Johnson, 2010). Recalling who is the President of the United States or what the word “semantic” means are examples of semantic memory. Semantic memory does not contain perceptual information like episodic memory does. Instead, semantic memory stores cognitive constructs translated from perceptual stimuli (Tulving, 1972). As such, semantic memory reflects our understood knowledge of the external world. Semantic memory is also less susceptible to being changed upon retrieval and inspection (Tulving, 1972).

We classify techniques in our framework under the explicit retrieval category if they are primarily concerned with the storage and retrieval of episodic and semantic information. Examples of episodic techniques include those focused on producing emotional stimuli for later retrieval, such as fear arousal (Chittaro et al., 2014), and those focused on providing a second-party perspective of an event, such as virtual body ownership (Kilteni et al., 2013). Examples of semantic techniques include those focused on conveying factual information, such as the formula to calculate the volume of a pyramid (Lai et al., 2016), and those focused on conveying associations, such as what defects to look for when inspecting a haul truck part (McMahan et al., 2008).

With the fundamentals of AFFECT defined in the previous section, we now describe how the framework can be used as a taxonomy and analysis tool. Note that taxonomies are useful for classifying items into categories for the purpose of better understanding the items and the relationships among them (Bowman et al., 2005). We then present four case studies of previously developed systems, analyze and categorize their techniques designed for enhancing cognition, education, and training, and discuss the effectiveness of the techniques, if known.

When using AFFECT to classify a technique, we employ the following checklists to categorize the technique within the system fidelity and memory axes:

• Are the intended user actions purposefully not realistic?

Yes: classify under Interaction Fidelity.

No: continue.

• Do the input devices purposefully alter the user actions?

Yes: classify under Interaction Fidelity.

No: continue.

• Are the transfer functions purposefully non-isomorphic?

Yes: classify under Interaction Fidelity.

No: continue.

• Does the simulation purposefully use specialized physics?

Yes: classify under Scenario Fidelity.

No: continue.

• Does the simulation purposefully use specialized time?

Yes: classify under Scenario Fidelity.

No: continue.

• Does the simulation purposefully use specialized agent behaviors?

Yes: classify under Scenario Fidelity.

No: continue.

• Does the simulation purposefully use an unrealistic environment or objects?

Yes: classify under Scenario Fidelity.

No: continue.

• Are the rendering algorithms purposefully not realistic?

Yes: classify under Display Fidelity.

No: continue.

• Do the output devices purposefully provide additional sensory stimuli?

Yes: classify under Display Fidelity.

No: classify under Display Fidelity.

• Does the technique purposefully promote attention?

Yes: classify under Encoding.

No: continue.

• Does the technique purposefully promote distinctiveness?

Yes: classify under Encoding.

No: continue.

• Does the technique purposefully promote organization?

Yes: classify under Encoding.

No: continue.

• Does the technique purposefully rely on specificity?

Yes: classify under Encoding.

No: continue.

• Does the technique purposefully promote motor memory?

Yes: classify under Implicit Retrieval.

No: continue.

• Does the technique purposefully rely on priming?

Yes: classify under Implicit Retrieval.

No: continue.

• Does the technique purposefully promote classical conditioning?

Yes: classify under Implicit Retrieval.

No: continue.

• Does the technique purposefully promote episodic memory?

Yes: classify under Explicit Retrieval.

No: continue.

• Does the technique purposefully promote semantic memory?

Yes: classify under Explicit Retrieval.

No: classify under Explicit Retrieval.

Note the end of the system fidelity axis checklist. If a technique does not alter system fidelity in any of the previously checked ways, we classify the technique under display fidelity by default. We made this decision for two reasons. First, the checklist must have closure and be complete. Otherwise, some techniques would never be classified within the system fidelity axis. Second, at this point in the checklist, the only remaining techniques will be conventional systems that do not alter fidelity and instead attempt to maintain high levels of specificity (see Encoding). Because specificity is an aspect of the encoding process, which involves processing perceived sensory stimuli, we felt that these techniques were best categorized under display fidelity, which directly concerns the sensory stimuli produced by a system.

Also note the end of the memory axis checklist. If a technique is not classified by the end of this checklist, we categorize the technique under explicit retrieval by default. However, unlike the system fidelity axis checklist, a technique should never reach the end of the memory axis checklist. Because implicit memory and explicit memory encompass the entire memory system (Roediger, 1990), every technique not classified under encoding will fall into one of these two categories. Given the natures of implicit and explicit memory, if it is difficult to judge which retrieval category is most appropriate, the technique is most likely relevant to explicit memory.
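Because both checklists are ordered first-match procedures with a default at the end, they can be written as two small functions. The following is a minimal sketch that assumes each checklist question has been answered as a yes/no flag. The field and function names are hypothetical labels chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technique:
    """Answers to the AFFECT checklist questions for one technique.

    Each field stands for one "Does/Are ... purposefully ...?" question.
    The field names are hypothetical labels chosen for this sketch.
    """
    # System fidelity axis
    unrealistic_actions: bool = False
    input_alters_actions: bool = False
    nonisomorphic_transfer: bool = False
    specialized_physics: bool = False
    specialized_time: bool = False
    specialized_agents: bool = False
    unrealistic_environment: bool = False
    unrealistic_rendering: bool = False
    additional_stimuli: bool = False
    # Memory axis
    attention: bool = False
    distinctiveness: bool = False
    organization: bool = False
    specificity: bool = False
    motor_memory: bool = False
    priming: bool = False
    classical_conditioning: bool = False
    episodic: bool = False
    semantic: bool = False


def classify_fidelity(t: Technique) -> str:
    # Questions are asked in checklist order; the first "Yes" wins.
    if t.unrealistic_actions or t.input_alters_actions or t.nonisomorphic_transfer:
        return "Interaction Fidelity"
    if (t.specialized_physics or t.specialized_time
            or t.specialized_agents or t.unrealistic_environment):
        return "Scenario Fidelity"
    # The rendering and output-device questions lead here, and so does the
    # default, so unrealistic_rendering and additional_stimuli never change
    # the result. They are kept only to mirror the checklist.
    return "Display Fidelity"


def classify_memory(t: Technique) -> str:
    if t.attention or t.distinctiveness or t.organization or t.specificity:
        return "Encoding"
    if t.motor_memory or t.priming or t.classical_conditioning:
        return "Implicit Retrieval"
    # Episodic or semantic memory, plus the default at the end of the list.
    return "Explicit Retrieval"


def classify(t: Technique) -> tuple[str, str]:
    return classify_fidelity(t), classify_memory(t)


if __name__ == "__main__":
    haptic_props = Technique(additional_stimuli=True, motor_memory=True)
    environmental_cues = Technique(specificity=True)
    print(classify(haptic_props))        # ('Display Fidelity', 'Implicit Retrieval')
    print(classify(environmental_cues))  # ('Display Fidelity', 'Encoding')
```

The second usage case shows the display fidelity default at work. A technique that alters no aspect of fidelity still receives a fidelity category.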

Now, we present four case studies of previously developed education and training systems. We specifically selected a range of case studies in terms of our framework’s two axes and in terms of the systems’ educational or training purposes. For each case study, we describe the system and its purpose, analyze and categorize its notable techniques, and then discuss their effectiveness.

The Minimally Invasive Surgery Trainer – VR (MIST VR) was specifically designed to provide a more-objective assessment tool for laparoscopic training, which was missing from traditional laparoscopic box trainers (Wilson et al., 1997). The system consisted of two laparoscopic instruments held in gimbals that tracked the motions of the instruments through potentiometers. The real-world movements of the instruments were directly translated to the simulation to control two virtual laparoscopic instruments within an accurately scaled operating volume, which could be viewed on a computer monitor. The system also included a foot pedal for simulating diathermy (i.e., the use of high heat to destroy organic material and to cauterize blood vessels).

The MIST VR simulation had multiple modes, including a tutorial, training tasks, assessment tasks, and playback. Six tasks of progressive complexity were designed to simulate some of the basic maneuvers performed during a laparoscopic cholecystectomy. Accuracy, errors, and time to completion were recorded during the tasks.

The tasks included: (1) grasping a virtual sphere and placing it in a virtual box, (2) transferring the sphere between the virtual instruments before placing it in the box, (3) grasping alternate segments of a virtual pipe, (4) grasping the sphere and precisely touching it with the tip of the other instrument multiple times, (5) using one instrument and the pedal to diathermy three virtual plates away, and (6) using one instrument to diathermy the plates away while holding the sphere in a virtual box with the other instrument (Grantcharov et al., 2004). These abstract tasks were intentionally designed to avoid distracting surgeons with the (fallible) appearance of virtual organs (Wilson et al., 1997).

The first notable technique employed by the MIST VR system was the use of actual laparoscopic instruments to provide the “look and feel” of performing a real-world laparoscopic cholecystectomy.

In terms of system fidelity, these physical instruments clearly increased interaction fidelity but did not purposefully alter it by producing unrealistic user actions or forcing certain user actions. Additionally, MIST VR employed isomorphic transfer functions to provide direct manipulation of the virtual instruments. Because the instruments were physical devices, they also clearly did not alter the simulation. However, the instruments did purposefully serve to provide additional sensory stimuli in terms of tactile and kinesthetic cues. Hence, we classify them under display fidelity.

In terms of memory, these instruments were not intended to promote attention, distinctiveness, or organization. While they did increase specificity through the tactile and kinesthetic cues, they did not rely solely on encoding those cues. Instead, the system was designed to allow trainees to use those cues in real time to improve their motor skills. Specifically, the instruments were used to promote motor memory. Hence, we categorize the instruments for implicit retrieval.

We refer to the laparoscopic instruments as “haptic props.” “Haptic” denotes the additional tactile and kinesthetic sensory stimuli provided by the instruments. We adopted the term “props” from theatrical productions, in which it refers to an object used on stage by the actors during a performance. If we consider simulations analogous to theatrical performances, then the laparoscopic instruments are objects used by the trainees during a performance.

In a study conducted by Grantcharov et al. (2004), surgeons who trained on the MIST VR system demonstrated significantly greater improvement in real-world operating room performance than surgeons who did not receive VR training. In particular, VR trainees performed significantly faster, with fewer errors, and with better movements. These results indicate that the haptic props were successful in promoting motor memory.

The second notable technique employed by the MIST VR system was the use of abstracted objects instead of virtual organs in order to avoid distracting the surgeons.

In terms of interaction fidelity, these objects did not purposefully produce unrealistic user actions. In fact, they were designed to practice the basic maneuvers involved in laparoscopic cholecystectomies. However, the simulation purposefully used these unrealistic objects, with regard to laparoscopic cholecystectomies, to decrease distractions. Hence, we categorize them under scenario fidelity. In terms of memory, these abstracted objects were clearly intended to promote attention by eliminating the distraction of virtual organs. Therefore, we classify the abstracted objects as encoding.

According to Wilson et al. (1997), the appearance of virtual organs has been shown not to enhance training. This suggests that the abstracted objects should be sufficient for training, especially if they do reduce distractions due to unrealistic organs. However, research conducted by Moore et al. (2008) indicates that experienced laparoscopic surgeons performed worse than medical students when using the MIST VR system. The researchers suggested that one explanation for these results could be the lack of contextual feedback. Hence, the efficacy of the abstracted objects is questionable.

Vincent et al. (2008) developed an immersive VR system for teaching mass casualty triage skills. The system consisted of an HMD, stereo earphones, and three electromagnetic sensors for tracking the head and both hands. The researchers also employed a previously developed command system based on body poses and gestures (Sherstyuk et al., 2007). This command system allowed the trainees to interact with the simulation, such as raising the left hand overhead to summon a virtual equipment tray or picking up a virtual instrument by selecting it with the right hand.

The system’s simulation consisted of three triage scenarios and each of those consisted of five patients with various injuries. Vincent et al. (2008) purposely designed the scenarios to occur in a dark room to avoid potential distractors, such as police sirens, helicopter lights, and alarms. Trainees examined the virtual patients and used the command system to engage virtual instruments and supplies. Upon completing each examination, trainees assigned each patient to a triage category, indicated the main injury, and selected the appropriate intervention from a dropdown list. The trainees were then automatically transported to the next patient without needing to navigate. After completing each triage scenario, the trainees removed the HMD and watched a short video demonstrating an expert medical professional’s approach to triage within the VR scenario.

The most obvious technique employed by the triage VR system is the use of gestural commands. Vincent et al. (2008) claimed that the command system and HMD made their system fully immersive. Furthermore, they cited prior research that indicated fully immersed students gain more knowledge from a simulation than students who interacted with a mouse and computer screen (Gutiérrez et al., 2007).

In terms of system fidelity, the gestural commands were not as realistic as 3D interaction techniques that employ isomorphic transfer functions. Instead, the gestures substituted realistic kinematics and kinetics with symbolic movements. Hence, we classify the gestural commands under the interaction fidelity category.

In terms of memory, the system’s gestural commands were not intended to promote attention, distinctiveness, or organization. Additionally, the commands did not rely on specificity. Hence, we rule out categorizing the gestures as encoding. Because the gestures were symbolic and did not employ isomorphic mappings, they did not promote motor memory. They also did not rely on priming or promote classical conditioning. Hence, the gestures did not affect implicit retrieval. However, the gestural commands did promote semantic memory. In order to learn and effectively use each gesture, trainees were required to learn what the corresponding triage action would be and understand when it should be taken. Hence, we classify the gestural commands under explicit retrieval. Additionally, we refer to the gestural commands as “semantic gestures” because they promoted semantic memory.

In a study evaluating the effectiveness of their VR teaching system, Vincent et al. (2008) found that novice learners demonstrated improved triage intervention scores and reported higher levels of self-efficacy. These benefits could be partially due to the use of semantic gestures. However, the researchers suggested that the improvements were possibly due to increased familiarity with the gestural commands. Furthermore, the improvements were possibly due to the short videos shown after each VR scenario, as opposed to the scenarios themselves. Hence, it is difficult to judge the effectiveness of using semantic gestures.

The second interesting technique employed by the researchers was purposefully designing the scenarios to occur in a simplified dark room to avoid more-realistic distractions, such as police sirens and helicopter lights.

In terms of system fidelity, the simplified environment did not produce unrealistic interactions or otherwise alter interaction fidelity. However, the simplified environment was not realistic, as the researchers pointed out. Hence, we classify the simplified environment technique under scenario fidelity. In terms of memory, the simplified environment was intended to promote attention by eliminating realistic distractions. So, we categorize the simplified environment under encoding.

As discussed above, there were many confounds in the study conducted by the researchers. Therefore, it is impossible to judge the effectiveness of the simplified environment technique. Further research is needed to better understand the efficacy of such a technique.

The final interesting technique developed by Vincent et al. (2008) was automatically transporting the trainee to the next patient after each intervention was completed.

In terms of interaction fidelity, the automatic transportation technique eliminated realistic user actions by not requiring the trainee to navigate. Hence, the automated interaction is an interaction fidelity technique. With regard to memory, it was not explicitly stated, but the authors likely employed the technique to promote attention to the important aspects of triage, as opposed to navigating between patients. Hence, we classify the automated interaction under encoding.

As with the other techniques, we cannot speak to the effectiveness of the automatic transportation feature. However, we do believe the idea of eliminating mundane tasks through automated interactions is promising, and such techniques should be further researched.

Milot et al. (2010) developed a pinball game simulator to investigate the effects of haptic guidance and error amplification on training motor skills. The concept of haptic guidance is to use force feedback to guide the user’s movements in order to demonstrate a desired movement trajectory to the motor system for later imitation. The concept of error amplification is to use force feedback to artificially increase performance errors that occur when imitating a target movement trajectory. This technique is based on prior research studies that suggest that the motor system detects kinematic errors in one trial and proportionally corrects them in subsequent trials (Thoroughman and Shadmehr, 2000; Fine and Thoroughman, 2007).
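The two force-feedback concepts and the trial-by-trial correction they rely on can be illustrated with a deliberately simplified model. The following is a minimal sketch and not the controller of Milot et al. (2010). It assumes a one-dimensional task and spring-like forces. It also assumes that the learner removes a fixed fraction of the *perceived* error on each trial, and that error amplification simply scales that perceived error by a gain. All function and parameter names are hypothetical.

```python
def guidance_force(position: float, target: float, stiffness: float) -> float:
    """Haptic guidance: a spring-like force pulling toward the desired trajectory."""
    return stiffness * (target - position)


def amplification_force(position: float, target: float, stiffness: float) -> float:
    """Error amplification: a force pushing further away from the desired trajectory."""
    return stiffness * (position - target)


def simulate_adaptation(initial_error: float, learning_rate: float,
                        error_gain: float, trials: int) -> list[float]:
    """Errors over trials when each trial corrects in proportion to the perceived error.

    error_gain = 1 models unaltered feedback; error_gain > 1 models error
    amplification. The update error <- (1 - learning_rate * error_gain) * error
    converges only while 0 < learning_rate * error_gain < 2.
    """
    errors = []
    error = initial_error
    for _ in range(trials):
        errors.append(error)
        perceived = error_gain * error       # what the motor system "sees"
        error -= learning_rate * perceived   # proportional correction next trial
    return errors


if __name__ == "__main__":
    baseline = simulate_adaptation(10.0, 0.2, 1.0, 5)
    amplified = simulate_adaptation(10.0, 0.2, 2.0, 5)
    print([round(e, 3) for e in baseline])   # [10.0, 8.0, 6.4, 5.12, 4.096]
    print([round(e, 3) for e in amplified])  # [10.0, 6.0, 3.6, 2.16, 1.296]
```

In this toy model, amplification speeds up correction only up to a point. If the product of the learning rate and the gain exceeds 2, the corrections overshoot and diverge.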

In order to evaluate the effects of haptic guidance and error amplification, Milot et al. (2010) developed a computerized pinball game consisting of five bumper targets (shown one at a time), a virtual pinball, and a virtual flipper. The game was viewed via a computer monitor. To provide force feedback, the researchers used a timing-assistive plastic pinball exercise robot (TAPPER). The TAPPER consisted of a forearm brace mounted on a frame, a freely rotating hand brace connected to a pneumatic cylinder, and a button that would be pressed by the user’s fingers when the hand or robot rotated the hand brace. Pressing the button would activate the flipper in the pinball game. The researchers used the TAPPER to implement both haptic guidance and error amplification.

With regard to system fidelity, the haptic guidance technique clearly altered the user’s actions by using force feedback to guide the user’s movements along a desired trajectory. Hence, it is an interaction fidelity technique. In terms of memory, the theory behind haptic guidance is that it promotes motor memory by demonstrating desired movement trajectories. Therefore, it is an implicit retrieval technique.

In their study, Milot et al. (2010) found that training with the haptic guidance technique significantly reduced errors with regard to pressing the button to activate the virtual flipper on time. In comparison to the error amplification technique, they found that haptic guidance was more beneficial for a subset of less-skilled players. These results, along with previous ones (Feygin et al., 2002), indicate that haptic guidance is a viable training technique for certain motor skills.

Like haptic guidance, the error amplification technique altered the user’s actions. Therefore, we classify it under interaction fidelity. Also similar to haptic guidance, the theory behind error amplification is that it promotes motor memory by allowing the motor system to correct kinematic errors faster. Hence, it too is an implicit retrieval technique.

Milot et al. (2010) also found that training with the error amplification technique significantly reduced timing errors. In contrast to haptic guidance, their results indicated that a subset of more-skilled players benefited more from error amplification. Hence, the error amplification technique should be considered as a viable motor-memory training technique.

Chittaro (2012) developed a serious game to allow people to experience realistic aircraft evacuation scenarios and learn more about passenger safety on aircraft. In particular, the game focused on an emergency-landing scenario in which the player must complete multiple tasks to successfully evacuate the virtual aircraft. The tasks included locating the nearest exits prior to the emergency, maintaining a brace position during landing, avoiding grabbing luggage during the evacuation, reaching the nearest exit, locating an alternate exit when the nearest one is inaccessible, avoiding pushing and fighting with other passengers, crawling below smoke, and jumping on the exit slides.

In addition to developing the learning tasks above, Chittaro (2012) designed his serious game to include several features specifically to enhance the learning process. First, he included realistic sounds (e.g., shouting from other passengers) and visuals (e.g., fire and smoke) to provide a more-realistic experience. Second, he chose to omit the portrayal of the character’s harm (e.g., no sounds of the character dying by suffocation) in order to avoid making the virtual experience too emotionally intense. Instead, textual information was used to convey consequences. Third, Chittaro (2012) altered the simulation’s time by returning the player to the part of the game in which a wrong decision was made, but only after the textual consequence of that decision was reached. Finally, to make the serious game accessible to users who do not play video games, Chittaro (2012) mapped available actions to keys and provided an onscreen legend. Additionally, he constrained the paths of the virtual character during navigation to simplify interaction.

Chittaro (2012) included the realistic sounds and visuals to ensure that the training environment cues better matched the context of a real-world emergency landing. In terms of system fidelity, these realistic cues did not affect the user’s actions or require any special features of the simulation. Additionally, this technique did not require unrealistic rendering algorithms or involve specialized output devices to provide additional sensory stimuli. Hence, we classify these environmental cues by default under display fidelity, as explained above. With regard to memory, environmental cues are a clear example of relying on specificity. Therefore, they are an encoding technique.

In his study, Chittaro (2012) found that playing the serious game significantly increased users’ knowledge regarding aircraft evacuations and their reported levels of self-efficacy. However, because he used questionnaires for his assessments, those learning benefits should not be attributed to the environmental cues, which rely on specificity of the retrieval context. As discussed in Section “Encoding,” specificity is believed to have only a correlational effect on retrieval, not a causal one.

By omitting the realistic portrayal of the character’s harm to avoid making the learning experience too emotionally intense, Chittaro (2012) was essentially choosing to suppress emotional stimuli that may distract the learner. Like environmental cues, these suppressed cues did not affect the user’s actions, did not require any special simulation features, and did not involve special rendering algorithms or output devices. Hence, we categorize them by default as a display fidelity technique. With regard to memory, the cues promoted attention by suppressing emotional stimuli that may distract the learner. Therefore, we classify them under encoding.

In later research, Chittaro et al. (2014) compared suppressed cues to portrayals of character harm to determine the effects of fear arousal on learning. The researchers found that the suppressed cues did indeed result in lower levels of self-reported fear and skin conductance. However, they also found that the emotionally intense cues resulted in a significantly greater increase in knowledge than the suppressed cues. Hence, suppressed cues, at least fear-based ones, may not be an effective technique for enhancing education and training.

We refer to Chittaro’s feature that altered the simulation’s time to convey cause-and-effect relationships as “causation rewinding.” This approach is clearly a scenario fidelity technique, as it purposefully uses a specialized concept of time. With regard to memory, the technique does not intentionally promote attention, distinctiveness, or organization, and it does not rely on specificity. Causation rewinding also does not promote motor memory, priming, or classical conditioning. Instead, it purposefully promotes semantic memory by conveying the relationship between the cause (the wrong decision) and the effect (the consequence). Hence, it is an explicit memory technique.
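The following is a minimal sketch of how causation rewinding might be structured in a simulation loop. It is not Chittaro’s implementation. It assumes that the scenario state fits in a plain dictionary and that each decision point maps the available choices to a flag (wrong or not) and a consequence text. The class name, method name, and example outcomes are hypothetical.

```python
import copy


class CausationRewinder:
    """Hypothetical sketch of causation rewinding.

    After a wrong choice, the consequence (effect) is shown first, and only
    then is the scenario restored to the decision point (cause).
    """

    def __init__(self, initial_state: dict):
        self.state = initial_state
        self.shown_consequences: list[tuple[str, str]] = []

    def decide(self, choice: str, outcomes: dict[str, tuple[bool, str]]) -> bool:
        checkpoint = copy.deepcopy(self.state)  # snapshot just before the decision
        is_wrong, consequence = outcomes[choice]
        self.state = {**self.state, "last_choice": choice}
        if is_wrong:
            # Let the player see where the wrong decision leads...
            self.shown_consequences.append((choice, consequence))
            # ...then rewind simulation time to the moment before it.
            self.state = checkpoint
            return False
        return True


if __name__ == "__main__":
    luggage = {
        "grab luggage": (True, "The aisle is blocked and you miss the exit."),
        "leave luggage": (False, "You reach the exit."),
    }
    game = CausationRewinder({"phase": "evacuation"})
    print(game.decide("grab luggage", luggage))   # False
    print(game.state)                             # {'phase': 'evacuation'}
    print(game.decide("leave luggage", luggage))  # True
```

Showing the consequence before restoring the checkpoint is the design choice that pairs cause with effect. Rewinding immediately would skip the effect the technique is meant to convey.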

While Chittaro (2012) found that playing the serious game significantly increased users’ knowledge and reported levels of self efficacy, there is no discussion of what role the causation rewinding technique may have played in those benefits. Hence, further research is needed to determine the effectiveness of using the technique to improve explicit knowledge of cause-and-effect relationships.

By mapping available actions to keys (with a visible legend) and constraining the paths for navigation tasks, Chittaro (2012) was essentially simplifying the interactions required to use the serious game. Because these simplified interactions purposefully do not require realistic user movements, we classify the approach under interaction fidelity. Furthermore, in terms of memory, the techniques purposefully promote attention by eliminating interface distractions, so that players can focus on the important aspects of evacuating an aircraft, such as how to brace for landing and how to navigate the aisle. Hence, we classify them under encoding.

As with causation rewinding, there is no discussion of the potential effects of the simplified interactions. However, for education and training systems focused on semantic knowledge, we consider this approach to be a viable technique, though further research is needed to confirm this.

In Table 1, we present a summary of the techniques that we analyzed using our new framework. Next to each technique, we provide a symbol representing the effectiveness of the technique to enhance cognition, learning, and training. The plus symbol (+) indicates that the technique has been demonstrated to have positive effects on the targeted aspect of memory. The minus symbol (−) indicates that the technique has been demonstrated to have negative effects. The plus-minus symbol (±) indicates conflicting results have been reported regarding the effectiveness of the technique. Finally, the question mark (?) indicates that the efficacy of the technique is unknown.

Considering Table 1 as an overview, we gain some interesting insights into how system fidelity may affect memory (and, in turn, learning and training). First, altering interaction fidelity can positively affect implicit memory, particularly motor memory. Milot et al. (2010) demonstrated that both haptic guidance and error amplification positively affect motor training for a pinball game simulation. Second, altering display fidelity may also have a positive effect on motor memory, as seen with MIST VR’s haptic props approach (Wilson et al., 1997). Third, altering display fidelity may not benefit the encoding process. Chittaro et al. (2014) demonstrated that suppressed cues could have a negative effect on increasing knowledge during training. Prior research indicates that environmental cues can actually have a negative effect on encoding (Howell et al., 2015), though Nairne (2002) contends that the effects of such cues are merely correlational and not causal. Finally, the table suggests that much remains to be learned about the other intersections of system fidelity and memory. Reviewing more literature involving educational and training systems could partially address this. However, because many systems employ and confound multiple techniques at once, such literature-based research may be futile. Hence, we suggest that the community begin investigating these intersections through controlled studies and evaluations.

As Bowman et al. (2005) discuss, taxonomies can also serve as design spaces for system developers. In this section, we demonstrate how AFFECT can serve as a design space by using it to devise new techniques that fill intersections of Table 1 for which we found no examples in the prior literature. Specifically, we present the concepts of two new VR techniques: Action Rewinding and Uncommon Percepts.
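Treating AFFECT as a design space means enumerating its nine fidelity-by-memory intersections and looking for those with no known technique. A minimal Python sketch follows. `empty_cells` is a hypothetical helper. `CATALOG` is an illustrative, partial catalog that contains only the techniques named in the preceding discussion, placed in the cells that discussion implies; it is not a transcription of Table 1.

```python
from itertools import product

# The two AFFECT axes; their Cartesian product gives the nine categories.
FIDELITY = ("interaction", "scenario", "display")
MEMORY = ("encoding", "implicit retrieval", "explicit retrieval")

# Illustrative, partial catalog (NOT Table 1): technique -> (fidelity, memory).
CATALOG = {
    "haptic guidance": ("interaction", "implicit retrieval"),
    "error amplification": ("interaction", "implicit retrieval"),
    "haptic props": ("display", "implicit retrieval"),
    "suppressed cues": ("display", "encoding"),
}


def empty_cells(catalog: dict[str, tuple[str, str]]) -> list[tuple[str, str]]:
    """Return the (fidelity, memory) intersections with no cataloged technique."""
    filled = set(catalog.values())
    return [cell for cell in product(FIDELITY, MEMORY) if cell not in filled]


for fidelity, memory in empty_cells(CATALOG):
    print(f"{fidelity} fidelity x {memory}")
```

With this partial catalog, both the scenario fidelity by implicit retrieval cell and the display fidelity by explicit retrieval cell come back empty. These are the cells targeted by Action Rewinding and Uncommon Percepts, respectively.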

For our first demonstration of AFFECT being used as a design space, we discuss the process of designing a new technique that alters scenario fidelity to improve implicit retrieval.

Before considering how to alter scenario fidelity, we should determine how we want the technique to affect implicit retrieval. Looking at the memory axis checklist at the beginning of Section “As an Analysis Tool,” we can choose to target motor memory, priming, or classical conditioning. To keep this example simple, we will focus on motor memory. We should also have a specific motor task in mind. We will consider the game of billiards and the task of stroking the cue stick to make a shot.

Now, we must decide how to alter scenario fidelity to promote motor memory for our motor task. Consulting the system fidelity axis checklist (see Section “As an Analysis Tool”), we can use specialized physics, time, or agent behaviors to alter scenario fidelity. Alternatively, we can use an unrealistic environment or objects. Before selecting an approach, we need to consider each option in terms of motor memory and our task of playing billiards.

Obviously, specialized physics would be necessary to accurately represent and portray the forces involved in stroking the stick, striking the cue ball, and the ball collisions that ensue. We would refer to such a technique as “action physics” to indicate that it merely recreates the physics required to simulate a particular action with a high degree of fidelity. However, we will continue with our example in order to design a more-advanced technique.

So, how can time be altered to promote motor memory in this example? Readers who have spent a fair amount of time playing billiards will probably agree that a time-consuming aspect of learning the motor skills involved is positioning the balls to practice particular shots. To further complicate the learning task, it can be difficult to repeatedly position the balls in exactly the same locations, and small differences in placement change the nuances of a shot. These issues provide an opportunity to improve the learning of shooting skills by altering time. Specifically, after each shot, we can rewind the simulation to the moment before the cue ball was struck, which perfectly resets the positions of all the balls and allows consecutive performances of the motor task (as opposed to interrupting the shooting task with the task of repositioning balls). We call this technique “action rewinding” to indicate that the technique rewinds the simulation to allow an action to be retaken.
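Action rewinding can be sketched as a checkpoint-and-restore mechanism: snapshot the table state just before the cue ball is struck, let the shot play out, then restore the snapshot. The Python sketch below assumes a toy state model (`Ball`, `Table`, and `ActionRewinder` are hypothetical names) with placeholder straight-line motion standing in for real billiards physics. An actual simulator would also need to checkpoint spin, angular velocity, and any other physics-engine state.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Ball:
    name: str
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


@dataclass
class Table:
    balls: list[Ball] = field(default_factory=list)

    def step(self, dt: float) -> None:
        # Placeholder kinematics only: no friction, spin, or collisions.
        for ball in self.balls:
            ball.x += ball.vx * dt
            ball.y += ball.vy * dt


class ActionRewinder:
    """Checkpoint the table just before a shot and restore it on demand."""

    def __init__(self, table: Table) -> None:
        self.table = table
        self._checkpoint = None  # list of Ball copies once a shot is marked

    def mark_before_shot(self) -> None:
        # Deep copy so motion during the shot cannot mutate the checkpoint.
        self._checkpoint = copy.deepcopy(self.table.balls)

    def rewind(self) -> None:
        if self._checkpoint is None:
            raise RuntimeError("mark_before_shot() must be called first")
        # Restore a fresh copy so one checkpoint supports repeated retakes.
        self.table.balls = copy.deepcopy(self._checkpoint)


# Usage: take a shot, then rewind to retake it from identical positions.
table = Table([Ball("cue", 0.0, 0.0), Ball("eight", 1.0, 0.5)])
rewinder = ActionRewinder(table)
rewinder.mark_before_shot()
table.balls[0].vx = 2.0          # strike the cue ball
for _ in range(10):
    table.step(0.05)
rewinder.rewind()                # cue ball is back at its marked position, at rest
```

Restoring a deep copy, rather than the checkpoint object itself, keeps the checkpoint intact so the same shot can be retaken any number of times.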

For our second design example, we discuss the process of designing a technique that alters display fidelity to improve explicit retrieval.

In deciding how to affect explicit retrieval, we must choose to target either episodic memory or semantic memory. For this example, we will focus on episodic memory to differentiate the new technique from the prior examples (i.e., semantic gestures and causation rewinding). Again, we should have a specific learning task in mind. Because episodic memory concerns past experiences, we will focus on providing simulated, uncommon experiences for the purpose of enriching and informing future decisions. For example, if we simulate the experience of conducting a surgery, the episodic memories that form from that simulated experience should help in making future decisions regarding whether or not to become a surgeon.

Now, we must decide how to alter display fidelity to promote such an episodic memory. Consulting the system fidelity axis checklist, we can purposefully use unrealistic rendering algorithms or provide additional sensory stimuli with specialized output devices. Alternatively, we could simply develop a generic system with common degrees of interaction, scenario, and display fidelity, which would be classified as a display fidelity technique by default. However, that would not serve the purpose of this design example. To keep this example simple, we will alter display fidelity by providing additional sensory stimuli.

For the additional sensory stimuli, we will use an olfactory display to provide smells. But how can smells be used to create a useful episodic memory of conducting a surgery? For anyone who has observed a surgery, the smell of burnt flesh is an unusual and unforgettable scent associated with being in the operating room. Therefore, we could use an olfactory display to emit an odor similar to burnt flesh to better simulate the experience of conducting a surgery and to promote a more-realistic episodic memory. Such a memory may even convince some users not to become surgeons!

Note that we refer to this technique as an “uncommon percept” because of the uncommon experience of conducting a surgical procedure, not the unusual burnt flesh odor associated with it. Additionally, we use the term “percept” instead of “cue” because we are not referring to sensory stimuli available during encoding; rather, we are targeting episodic memory. As discussed in Section “Explicit Retrieval,” episodic memories can consist solely of perceptual events, such as the presence of an unpleasant odor.
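One way to realize an uncommon percept is to drive the olfactory display from simulation events, so that the odor accompanies the moment at which it would occur in a real operating room. The Python sketch below is hypothetical throughout. The event name, the scent channel, and the `emit` callback stand in for whatever interface a particular olfactory display exposes. The minimum interval between emissions is our assumption, intended to keep odors from accumulating in the room.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class UncommonPercept:
    """Emit a scent when a triggering simulation event occurs (hypothetical)."""

    trigger_event: str              # hypothetical event name, e.g. "cautery_contact"
    scent: str                      # hypothetical scent channel, e.g. "burnt_tissue"
    min_interval_s: float           # assumed minimum gap between emissions
    emit: Callable[[str], None]     # stand-in for an olfactory display driver call
    _last_emit_s: float = field(default=float("-inf"), init=False)

    def on_event(self, event: str, now_s: float) -> bool:
        """Return True if the scent was emitted for this event."""
        if event != self.trigger_event:
            return False
        if now_s - self._last_emit_s < self.min_interval_s:
            return False  # too soon after the last emission; skip it
        self.emit(self.scent)
        self._last_emit_s = now_s
        return True


# Usage with a stub driver that just records emissions.
emitted = []
percept = UncommonPercept("cautery_contact", "burnt_tissue", 5.0, emitted.append)
percept.on_event("cautery_contact", 0.0)   # emits
percept.on_event("cautery_contact", 2.0)   # suppressed by the interval
percept.on_event("cautery_contact", 6.0)   # emits again
```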

In this paper, we have presented a novel framework for analyzing, categorizing, and designing VR techniques intended for educational and training purposes. AFFECT consists of nine categories that represent the intersections of two axes: system fidelity and memory. We have described how system fidelity can be altered by manipulating the VR system’s interaction fidelity, scenario fidelity, or display fidelity. We have also described the stages of memory and specifically focused on encoding, implicit retrieval, and explicit retrieval. We have presented four case studies to demonstrate how AFFECT can be used as a taxonomy to analyze and classify VR techniques. From these case studies, we were able to gain some interesting insights into how the aspects of system fidelity affect the various stages of memory, learning, and training. Finally, we showed how AFFECT could be used as a design space for ideating novel VR techniques for educational and training purposes.

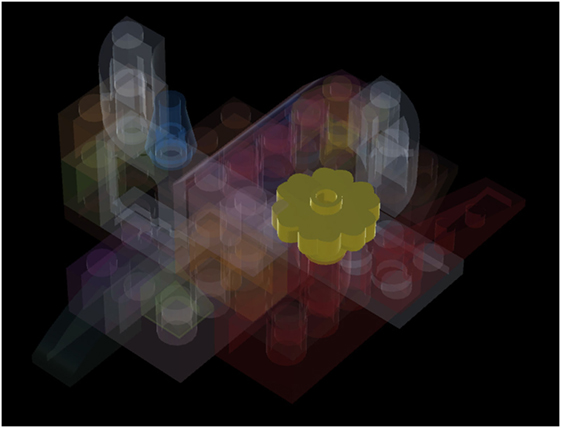

Our next steps are to use AFFECT as a design space to systematically explore the effects of system fidelity on learning and training. We have already begun this research, starting with the intersection of display fidelity and encoding. A promising technique that we have preliminarily explored is to use unrealistic rendering algorithms to promote the distinctiveness of key information. We refer to this technique as “distinctive cues.” Figure 4 shows a version of the technique in which a key object is made distinctive by rendering the surrounding objects with a semi-transparent shader. We are currently in the process of planning a controlled study to evaluate the efficacy of the technique.

Figure 4. The “distinctive cues” technique is intended to make a key object distinctive by rendering the surrounding objects with less realism.
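The semi-transparent variant of distinctive cues shown in Figure 4 can be approximated by leaving the key object opaque and lowering the alpha of everything else. The Python sketch below operates on a hypothetical `SceneObject` record rather than a real engine's materials; the 0.3 alpha and the object names are illustrative assumptions. Because alpha blending is order dependent, the sketch also returns a conventional draw order: opaque objects first, then transparent objects from farthest to nearest.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    rgba: tuple[float, float, float, float]


def apply_distinctive_cue(objects: list[SceneObject], key_name: str,
                          surround_alpha: float = 0.3) -> None:
    """Keep the key object opaque; make every other object semi-transparent."""
    if not 0.0 <= surround_alpha <= 1.0:
        raise ValueError("surround_alpha must be in [0, 1]")
    for obj in objects:
        r, g, b, _ = obj.rgba
        alpha = 1.0 if obj.name == key_name else surround_alpha
        obj.rgba = (r, g, b, alpha)


def draw_order(objects: list[SceneObject],
               depth: dict[str, float]) -> list[SceneObject]:
    """Opaque objects first, then transparent ones sorted far-to-near for blending."""
    opaque = [o for o in objects if o.rgba[3] >= 1.0]
    transparent = [o for o in objects if o.rgba[3] < 1.0]
    transparent.sort(key=lambda o: depth[o.name], reverse=True)
    return opaque + transparent


# Usage with hypothetical objects and camera distances.
scene = [
    SceneObject("shelf", (0.5, 0.5, 0.5, 1.0)),
    SceneObject("valve", (1.0, 0.0, 0.0, 1.0)),   # the key object
    SceneObject("pipe", (0.2, 0.2, 0.2, 1.0)),
]
apply_distinctive_cue(scene, "valve")
order = draw_order(scene, {"shelf": 2.0, "valve": 3.0, "pipe": 5.0})
```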

RM developed an initial version of the framework. RM and NH refined the framework together. RM and NH wrote and revised the paper.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This material is based on work supported by the National Science Foundation under Grant No. 1552344 – “CAREER: Leveraging the Virtualness of Virtual Reality for More-Effective Training.”

Agus, M., Giachetti, A., Gobbetti, E., Zanetti, G., and Zorcolo, A. (2003). Real-time haptic and visual simulation of bone dissection. Presence 12, 110–122. doi:10.1162/105474603763835378

Bloom, B., Engelhart, M., Furst, E., Hill, W., and Krathwohl, D. (1956). “Taxonomy of educational objectives: the classification of educational goals,” in Handbook I: Cognitive Domain. New York, Toronto: Longmans, Green.

Bowman, D. A., Kruijff, E., LaViola, J. J. Jr., and Poupyrev, I. (2005). 3D User Interfaces: Theory and Practice. Boston: Addison-Wesley.

Bowman, D. A., and McMahan, R. P. (2007). Virtual reality: how much immersion is enough? IEEE Comput. 40, 36–43. doi:10.1109/MC.2007.257

Chen, C. J., Toh, S. C., and Fauzy, W. M. (2004). The theoretical framework for designing desktop virtual reality-based learning environments. J. Interact. Learn. Res. 15, 147–167.

Chittaro, L. (2012). “Passengers’ safety in aircraft evacuations: employing serious games to educate and persuade,” in Persuasive Technology. Design for Health and Safety: 7th International Conference, PERSUASIVE 2012, Linköping, Sweden, June 6-8, 2012. Proceedings, eds M. Bang and E. Ragnemalm (Berlin: Springer), 215–226.

Chittaro, L., Buttussi, F., and Zangrando, N. (2014). “Desktop virtual reality for emergency preparedness: user evaluation of an aircraft ditching experience under different fear arousal conditions,” in 20th ACM Symposium on Virtual Reality Software and Technology (VRST) (New York, NY, USA: ACM), 141–150.

Chun, M. M., and Turk-Browne, N. B. (2007). Interactions between attention and memory. Curr. Opin. Neurobiol. 17, 177–184. doi:10.1016/j.conb.2007.03.005

Crane, K., Llamas, I., and Tariq, S. (2007). “Real-time simulation and rendering of 3D fluids,” in GPU Gems 3, ed. H. Nguyen (Boston: Addison-Wesley Professional), 633–675.

Dave, R. H. (1970). “Psychomotor levels,” in Developing and Writing Educational Objectives, ed. R. J. Armstrong (Tucson, AZ: Educational Innovators Press), 33–34.

Edelson, D. C. (2001). Learning-for-use: a framework for the design of technology-supported inquiry activities. J. Res. Sci. Teach. 38, 355–385. doi:10.1002/1098-2736(200103)38:3<355::AID-TEA1010>3.3.CO;2-D

Feygin, D., Keehner, M., and Tendick, F. (2002). “Haptic guidance: experimental evaluation of a haptic training method for a perceptual motor skill,” in IEEE Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems (HAPTICS), Orlando, FL.

Fine, M. S., and Thoroughman, K. A. (2007). Trial-by-trial transformation of error into sensorimotor adaptation changes with environmental dynamics. J. Neurophysiol. 98, 1392–1404. doi:10.1152/jn.00196.2007

Fuster, J. M. (1997). Network memory. Trends Neurosci. 20, 451–459. doi:10.1016/S0166-2236(97)01128-4

Graf, P., and Schacter, D. L. (1985). Implicit and explicit memory for new associations in normal and amnesic subjects. J. Exp. Psychol. Learn. Mem. Cogn. 11, 501–518. doi:10.1037/0278-7393.11.3.501

Grantcharov, T. P., Kristiansen, V. B., Bendix, J., Bardram, L., Rosenberg, J., and Funch-Jensen, P. (2004). Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br. J. Surg. 91, 146–150. doi:10.1002/bjs.4407

Gutiérrez, F., Pierce, J., Vergara, V. M., Coulter, R., Saland, L., Caudell, T. P., et al. (2007). “The effect of degree of immersion upon learning performance in virtual reality simulations for medical education,” in Medicine Meets Virtual Reality 15: In Vivo, In Vitro, In Silico: Designing the Next in Medicine, eds J. D. Westwood, R. S. Haluck, H. M. Hoffman, G. T. Mogel, R. Phillips, R. A. Robb, and K. G. Vosburgh (Amsterdam: IOS Press), 155–160.

Hinckley, K., Jacob, R., and Ware, C. (2004). “Input/output devices and interaction techniques,” in CRC Computer Science and Engineering Handbook. Boca Raton, FL: CRC Press.

Howell, M. J., Herrera, N. S., Moore, A. G., and McMahan, R. P. (2015). A reproducible olfactory display for exploring olfaction in immersive media experiences. Multimed. Tools Appl. 75, 12311. doi:10.1007/s11042-015-2971-0

Hunt, R. R. (2003). Two contributions of distinctive processing to accurate memory. J. Mem. Lang. 48, 811–825. doi:10.1016/S0749-596X(03)00018-4

Hunt, R. R., and McDaniel, M. A. (1993). The enigma of organization and distinctiveness. J. Mem. Lang. 32, 421–445. doi:10.1006/jmla.1993.1023

Johnson, J. (2010). Designing with the Mind in Mind: Simple Guide to Understanding User Interface Design Guidelines. San Francisco: Morgan Kaufmann.

Kilteni, K., Bergstrom, I., and Slater, M. (2013). Drumming in immersive virtual reality: the body shapes the way we play. IEEE Trans. Vis. Comput. Graph. 19, 597–605. doi:10.1109/TVCG.2013.29

Kim, S., Guy, S. J., Manocha, D., and Lin, M. C. (2012). “Interactive simulation of dynamic crowd behaviors using general adaptation syndrome theory,” in ACM Symposium on Interactive 3D Graphics and Games (i3D), New York.

Klein, S. B., and Kihlstrom, J. F. (1986). Elaboration, organization, and the self-reference effect in memory. J. Exp. Psychol. Gen. 115, 26–38. doi:10.1037/0096-3445.115.1.26

Korman, M., Doyon, J., Doljansky, J., Carrier, J., Dagan, Y., and Karni, A. (2007). Daytime sleep condenses the time course of motor memory consolidation. Nat. Neurosci. 10, 1206–1213. doi:10.1038/nn1959

Krathwohl, D. R., Bloom, B. S., and Masia, B. B. (1967). Taxonomy of Educational Objectives, the Classification of Educational Goals: Handbook II: Affective Domain. New York: David McKay Company.

Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: an overview. Theory Pract. 41, 212–218. doi:10.1207/s15430421tip4104_2

Lai, C., McMahan, R. P., Kitagawa, M., and Connolly, I. (2016). “Geometry explorer: facilitating geometry education with virtual reality,” in Virtual, Augmented and Mixed Reality: 8th International Conference, VAMR 2016, Held as Part of HCI International 2016, Toronto, Canada, July 17-22, 2016. Proceedings, eds S. Lackey and R. Shumaker (Switzerland: Springer International Publishing), 702–713.

Le Ngoc, L., and Kalawsky, R. S. (2013). “Evaluating usability of amplified head rotations on base-to-final turn for flight simulation training devices,” in IEEE Virtual Reality Conference (VR), Lake Buena Vista, FL.