- Wearable Computer Laboratory, Advanced Computing Research Centre, School of Information Technology and Mathematical Sciences, University of South Australia, Mawson Lakes, SA, Australia

This paper presents design guidelines and recommendations for developing cursor manipulation interaction devices to be employed in a wearable context. The work presented in this paper is the culmination of three usability studies designed to understand commercially available pointing (cursor manipulation) devices suitable for use in a wearable context. The guidelines and recommendations are grounded in experimental and qualitative evidence derived from these studies and are intended to inform the design of future wearable input devices. In addition to guiding the design process, the guidelines and recommendations may also help users of wearable computing devices select a suitable wearable input device. The synthesis of results derived from the usability studies provides insights pertaining to the choice and usability of the devices in a wearable context. That is, the guidelines form a checklist that may be utilized as a point of comparison when choosing between the different input devices available for wearable interaction.

Introduction

This paper presents design guidelines for developing cursor manipulation human–computer interaction devices to be employed in a wearable context. Input modalities and interaction techniques suitable for wearable computers are well-researched areas. Despite this, how to seamlessly and appropriately interact with a wearable computer is still a challenging, multifaceted problem that requires attention. Appropriately and effectively interacting with a wearable was a documented issue and challenge at the advent of wearable computers, and although much research has been undertaken in this area, it remains for the most part an unsolved problem that is vital to wearable computing. Although researchers have made significant advancements in the many aspects of wearable computing (Thomas, 2012), input techniques continue to be a key challenge (Chen et al., 2013). Interacting in a wearable context brings with it challenges, such as appropriateness of interaction modality or technique, having to divide attention between interacting with the wearable and performing a simultaneous or primary task (Starner, 2002a,b), the practicalities of device size, weight, and unobtrusiveness (Rekimoto, 2001; Starner, 2001), suitable access time (Ashbrook et al., 2008; Starner, 2013), and the social consequences and implications of doing so (Feiner, 1999; Toney et al., 2003). Although interacting with a wearable computer poses challenges not relevant to its desktop counterpart, the requirements to enter textual data and control the wearable in the way of command entry remain the same. With desktop systems, this is typically performed by the keyboard and mouse, respectively. The challenge is to find suitable input modalities and interaction techniques for wearable computers.
The primary task being undertaken by a user while interacting with the wearable computer adds further complexity as one or both hands may be occupied while the user is performing the task, the attention requirements may vary greatly and the task or user’s context may exclude particular forms of input.

Appropriate input modalities are fundamental to wearable interaction. Research in the field has explored hands-free input, such as gesture and speech recognition (Cleveland and McNinch, 1999; Broun and Campbell, 2001; Starner, 2002a,b; Lyons et al., 2004). Starner et al. (2000) developed an infrared vision system in the form of a pendant to detect hand gestures performed in front of the body, which were used to control home automation systems. SixthSense (Mistry and Maes, 2009; Mistry et al., 2009) uses a camera and projector that can be either attached to a hat or combined in a pendant and worn on the body. The system tracks the user’s index fingers and thumb (using colored markers), enabling in-air hand gestures. GestureWrist (Rekimoto, 2001) is a wrist-worn device that recognizes hand gestures by measuring arm shape changes and forearm movements. Other researchers have also explored the use of wrist-worn interfaces (Feldman et al., 2005; Jungsoo et al., 2007; Lee et al., 2011; Kim et al., 2012; Zhang and Harrison, 2015) that recognize hand gestures, while BackHand (Lin et al., 2015) uses the back of the hand (via strain gauge sensors) to recognize hand gestures. GesturePad (Rekimoto, 2001) is a module that can be attached to the inside of clothes, allowing gesturing on the outside of the garment. PocketTouch (Saponas et al., 2011) allows through-garment, eyes-free gesture control and text input. Hambone (Deyle et al., 2007) is a bio-acoustic gesture interface that uses sensors placed on either side of the wrist. Sound information from hand movements is transmitted via bone conduction, allowing the mapping of gestures to control an application. Other researchers have also explored the use of muscle–computer interfaces (Saponas et al., 2009, 2010; Huang et al., 2015; Lopes et al., 2015).
The Myo is a commercially available, gesture-controlled armband using electromyography (EMG) sensors and a 9-axis inertial measurement unit (IMU), which senses motion, orientation, and rotation of the forearm. Haque et al. (2015) use the Myo armband for pointing and clicking interaction (although it was implemented for large displays, the authors state head-worn displays as an application for future work). Skinput (Harrison et al., 2010), OmniTouch (Harrison et al., 2011), SenSkin (Ogata et al., 2013), and Weigel et al. (2014) explore using the skin as an input surface. Serrano et al. (2014) explore the use of hand-to-face gestural input as a means to interact with head-worn displays. Glove-based systems (Lehikoinen and Roykkee, 2001; Piekarski and Thomas, 2003; Kim et al., 2005; Witt et al., 2006; Bajer et al., 2012) are also used in an endeavor to enable hands-free interaction in that the user is not required to hold a physical device. The Peregrine Glove is a commercially available device that consists of 18 touch points and 2 contact pads that allow for user-programmable actions. Researchers have also explored textile interfaces where electronics are integrated/woven into the fabric of garments for wearable interaction, for example, Pinstripe (Karrer et al., 2011) and the embroidered jog wheel (Zeagler et al., 2012). An emphasis of the wearable research community has also been to explore implicit means of obtaining input, such as context awareness (Krause et al., 2006; Kobayashi et al., 2011; Watanabe et al., 2012) and activity recognition (Stiefmeier et al., 2006; Ward et al., 2006; Lukowicz et al., 2010; Muehlbauer et al., 2011; Gordon et al., 2012).

Although the above modalities are advantageous in that they facilitate hands-free or hands-limited interaction with a wearable computer, they are not without their own individual limitations. The imprecise nature (Moore, 2013) of speech recognition software, users’ language abilities (Witt and Morales Kluge, 2008; Shrawankar and Thakare, 2011; Hermansky, 2013), changes in voice tone (Hansen and Varadarajan, 2009; Boril and Hansen, 2010), and environmental demands affect voice recognition levels (Cleveland and McNinch, 1999; Mitra et al., 2012). Shneiderman (2000) states that speech interferes with cognitive tasks, such as problem solving and memory recall. There are also privacy issues surrounding the speaking of information and social implications to vocalizing commands (Shneiderman, 2000; Starner, 2002a,b). Likewise, social context and social acceptability (Rico and Brewster, 2010a,b) must be taken into consideration when gesturing (Montero et al., 2010; Rico and Brewster, 2010a,b; Profita et al., 2013). Subtle gestures may be triggered falsely or accidentally as a result of the user’s normal movement (Kohlsdorf et al., 2011). Mid-air gesturing may be prone to fatigue (Hincapie-Ramos et al., 2014). With social conventions in mind, Dobbelstein et al. (2015) explore the belt as an unobtrusive wearable input device for use with head-worn displays that facilitates subtle, easily accessible, and interruptible interaction. Although the user is not required to hold a physical device with glove-based systems, having extra material surround the hand may hinder the task, for example, by reducing tactile feeling or interfering with reaching into tight spaces. Glove-based systems may also interfere with ordinary social interaction (Rekimoto, 2001), for example, shaking hands. While textile interfaces provide controls that are always with the wearer, they may suffer from accidental touches or bumps that unintentionally activate a command (Holleis et al., 2008; Komor et al., 2009).

Wrist-based interfaces have been explored by researchers, for example, Facet (Lyons et al., 2012), WatchIt (Perrault et al., 2013). Although wrist-based interfaces provide a way of facilitating fast, easy access time, they pose their own unique challenges; the small screen size has implications for usability (Baudisch and Chu, 2009; Oakley and Lee, 2014; Xiao et al., 2014) (for example, readability, input, etc.); there are limitations placed on interactions (Xiao et al., 2014); there are privacy issues surrounding the displaying of personal information visible for all to see; both hands are effectively occupied when interacting with the device (one that has the device placed on the wrist and the other required to interact with it); and using two hands to interact with the device may be difficult while walking (Zucco et al., 2006). However, wrist-based interfaces are advantageous in that both hands are free when the user stops using it, and the user is not required to put away a device (Ashbrook et al., 2011).

Researchers have also explored small, subtle form factors that provide always available, easily accessible, and interruptible input in the form of ring-based or finger-worn devices, for example, iRing (Ogata et al., 2012), Nenya (Ashbrook et al., 2011), Magic Finger (Yang et al., 2012), and CyclopsRing (Chan et al., 2015). Typically, these devices still require the use of one or both hands while performing the interaction and are often restrictive in the amount of input provided. Similarly, voice and gesturing input are typically restricted to a limited set of commands and are typically not usable without specifically tailoring applications for their use. Gesturing systems may also require extra or special sensing equipment (Mistry and Maes, 2009; Mistry et al., 2009; Harrison et al., 2011). Typically, these input modalities map directly to specific actions rather than providing a continuous signal that can be used for general-purpose direct manipulation (Chen et al., 2013). In addition, the above modalities typically couple the task and the device tightly and do not necessarily provide general-purpose interaction.

There are many head-worn displays emerging on the market. For example, Epson has released the Moverio BT-200, a binocular head-worn see-through display that is tethered to a small handheld controller. The Moverio BT-300 release is imminent. The Moverio BT-200 head-worn display enables interaction through the use of a touchpad situated on the top of the controller (along with function keys, such as home, menu, back, and so on). Microsoft has released the HoloLens, an untethered, binocular head-worn see-through display. The HoloLens uses gaze to move the cursor, gesture for interaction, and voice commands for control. The HoloLens also provides a handheld clicker for use during extended interactive sessions, which provides selection, scrolling, holding, and double-clicking interaction. Meta is working toward releasing see-through augmented reality 3D binocular eyeglasses called the META 2. The eyeglasses can be connected to a small pocket computer that comes bundled with the glasses. Interaction with META eyeglasses is via finger and hand gestures performed in mid-air and in front of the body. Among the most notable is Google Glass, a wearable computer running Android with a monocular optical head-worn display. Users interact with Google Glass using voice commands and a touchpad running along the arm of the device. As an alternative to using voice or gesture to control Google Glass, Google has released MyGlass. MyGlass is an application that allows the user to touch, swipe, or tap her mobile phone in order to provide input to Glass. However, researchers have mentioned the possible difficulty of interacting with a touch screen device while wearing a head-worn display (Kato and Yanagihara, 2013). Lucero et al. (2013) draw attention to the unsolved problem of the nature of interaction with head-worn displays (i.e., interactive glasses) and highlight the need for research to explore open questions, such as how input is provided to head-worn displays/glasses, and to determine what the appropriate and suitable interaction metaphors are for head-worn displays.

Given the diversity of wearable devices available and that wearable computing is a relatively new discipline, there does not yet exist a standardized way of interacting with a wearable computer, in contrast to desktop computers where a keyboard and mouse are the standard devices. In many cases, however, the requirements for textual data and command entry/control techniques remain the same. Symbolic entry, which is the entering of alphanumeric data, may be performed by keyboards suitable for use in a wearable context (i.e., wrist-worn or handheld) and via speech recognition (e.g., speech input similar to that available in Google Glass). Command entry/control techniques may be performed via voice commands (e.g., through speech input as utilized in Google Glass) and gestures (e.g., available in Google Glass and Moverio BT-200 by using the touchpad, and in META with finger/hands performed in mid-air and in front of the body). Although these devices are compelling examples of wearable computing, they will typically require the development of specialized software designed to operate specifically for the device and its provided interaction technique(s). The interaction techniques typically provide discrete input that may be directly mapped to a specific action and, as a result, do not provide general-purpose input. That is, existing applications may not be able to run on these devices easily as the interaction does not necessarily map to the existing software; in which case, one will have to re-write or modify the software so that it is suitable for use on the wearable device. Depending upon the software, the mapping of the available input technique to a specific command may not always be possible.

The yet-to-be-released META provides mounting evidence of the ability to create powerful computing devices that are bundled into small, body-worn form factors suitable for mobile computing. In light of this, it is possible to run current desktop applications on wearable systems (similar to the META) that provide powerful pocket-sized computing and display output via socially acceptable head-worn displays/eyeglasses. As a result, there will be a need for general-purpose input, such as that provided by means of cursor control. The prolific use of graphical user interfaces found on desktop computers typically requires the use of a pointing device in order to interact with the system. A pointing device, such as a desktop computer mouse, provides the user with the ability to perform on-screen, two-dimensional cursor control. Users who wish to run their desktop applications on their wearable computers (Tokoro et al., 2009) will likewise require the use of a suitable pointing device given that the interaction metaphor remains the same (for example, normal desktop command entry, workers with checklists and manuals, selecting music from a library, controlling social media). Without the ability to fabricate specialized pointing devices suitable for use with wearable computers, users will, therefore, need to turn their attention to commercially available input devices. The input devices must be suitable for use with wearable computers and, as previously stated, provide general-purpose input. A mechanism for continuous pointing suitable for use with wearable computing is vital (Oakley et al., 2008; Chen et al., 2013) as various forms of wearable computing are adopted.

Interest in input devices and interaction techniques suitable for use with head-worn displays is growing, as evidenced by the following investigations that have designed input devices with the intention of being used with a head-worn display: uTrack (Chen et al., 2013), FingerPad (Chan et al., 2013), PACMAN UI (Kato and Yanagihara, 2013), and MAGIC pointing (Jalaliniya et al., 2015). With the release of wearable glasses-based displays that are smaller, less obtrusive, and targeted toward the consumer market, research into suitable, subtle, and socially acceptable interaction with head-worn displays is crucial. There is growing interest in the necessity to understand appropriate input devices and interaction with head-worn displays, as evidenced by Lucero et al. (2013); the authors investigate interaction with glasses (utilizing the Moverio BT in their study). Serrano et al. (2014) explore hand-to-face gestural input as a means of interacting with head-worn displays (utilizing the Moverio BT in their studies).

Although the input modalities presented thus far are compelling in their application to wearable computing, they typically require specialized hardware and/or software to operate. The modalities mostly provide discrete input that may be directly mapped to a specific action. Also, the form factor of the device may be tailored to suit a particular task. Without the ability to implement specialized hardware and/or software, users need to turn their attention to commercially available devices as a means to provide suitable wearable interaction. Users may also wish to run current applications on a wearable device. To this end, the requirements for textual data and command entry remain the same as a desktop system where this is typically performed by the keyboard and mouse, respectively. In light of this, users instead need to make use of commercially available input devices that can be used without having to be constructed or having to install specialized software. The input devices must be suitable for use with wearable computers and provide general-purpose input. To this end, we are interested in the commercially available devices that are capable of performing general-purpose interaction via cursor control and for use with a head-worn display. Although natural user interfaces (gestural interaction, etc.) are an emerging area for wearables and some may allow for cursor control interaction, we restrict our exploration to commercially available input devices suitable for use with wearable computers and those devices specifically implemented to perform cursor manipulation and control.

The devices we have chosen can typically be grouped into the following four form factors: isometric joysticks, handheld trackballs, body-mounted trackpads, and gyroscopic devices. The four commercially available pointing devices that have been identified within these groupings are as follows: handheld trackball (Figure 1), touchpad (Figure 2), gyroscopic mouse (Figure 3), and Twiddler2 mouse (Figure 4). The pointing devices chosen use different technologies and are, therefore, representative of the four main interaction methods used to provide cursor control and command entry. Although a variety of models and form factors of the devices may be available, they operate in a similar fashion. All four devices enable the user to perform general-purpose input (i.e., cursor control) and can be used in place of a desktop mouse. The devices were chosen for the following reasons: their suitability for use with wearable computers; their use was noted in the wearable research literature (Starner et al., 1996; Feiner et al., 1997; Rhodes, 1997, 2003; Baillot et al., 2001; Lukowicz et al., 2001; Nakamura et al., 2001; Piekarski and Thomas, 2001; Bristow et al., 2002; Livingston et al., 2002; Krum et al., 2003; Lehikoinen and Roykkee, 2003; Lyons, 2003; Lyons et al., 2003; Baber et al., 2005; Pingel and Clarke, 2005); and they are representative of the commercial devices available for cursor control and command entry. Additionally, the devices provide general-purpose interaction suitable for use with wearable computers and are readily available as commercial off-the-shelf products. All four devices provide the user with the ability to perform general cursor movement, object selection, and object drag and drop, and all four devices may be used with either the left or right hand.

We are interested in deriving guidelines from formal evaluations of commercially available pointing devices suitable for use with wearable computers (while wearing a head-worn display). We have evaluated the pointing devices via three comparative user studies (Zucco et al., 2005, 2006, 2009) in order to determine their effectiveness in traditional computing tasks (selection, drag and drop, and menu selection tasks) while wearing a computer and a head-worn binocular display. From the qualitative and quantitative findings of these studies, we have derived a set of design guidelines. These guidelines and recommendations are designed to provide insight and direction when designing pointing devices suitable for use with wearable computing systems. While some of these guidelines may not be surprising, they are derived from the empirical and qualitative evidence of the wearable studies and, therefore, provide a foundation upon which to guide the design of wearable pointing devices. Although the guidelines are specific to the design of wearable pointing devices, where appropriate, they may be generalized to influence and guide the design of future wearable input devices more broadly.

The key contributions of this paper are twofold:

1. A set of explicit guidelines and recommendations to inform the design of future wearable pointing devices. The goal is to better inform designers and developers of particular features that should be considered during the creation of new wearable input devices.

These guidelines encompass the following:

a. the effectiveness of the devices for cursor manipulation tasks,

b. the user’s comfort when using the devices, and

c. considerations when performing concurrent tasks.

The guidelines and recommendations also serve to assist users in selecting a pointing device that is suitable for use in a wearable context.

2. A summary/synthesis of findings from the series of usability studies. The summary provides valuable information pertaining to the pointing devices and may assist in guiding and informing users when choosing between pointing devices suitable for use in a wearable context.

The next section describes the relevant literature pertaining to wearable guidelines, usability studies, and input devices. Following this, we present a summary of the findings from our systematic usability studies evaluating the commercially available pointing devices. Following the synthesis of findings, we present and describe the guidelines and recommendations. The presentation of guidelines is followed by a discussion concerning the guidelines along with concluding remarks.

Background

The following section explores relevant knowledge in the areas of design guidelines, usability studies, and input devices for wearable computers.

Design Guidelines

The design of wearable computers and accompanying peripherals is an important field of investigation. Despite this, very little work has been published concerning design guidelines for wearable computers that are grounded in experimental evidence. A notable contribution to the field is the work of Gemperle et al. (1998) examining dynamic wearability, which explores the comfortable and unobtrusive placement of wearable forms on the body in a way that does not interfere with movement. The design guidelines presented by Gemperle et al. (1998) are derived from their exploration of the physical shape of wearable computing devices and pertain to the creation of wearable forms in relation to the human body. The work of Gemperle et al. (1998) produced design guidelines for the on-body placement of wearable forms. In contrast, the guidelines derived from this body of work inform the design of wearable pointing devices and serve to assist users in selecting a pointing device suitable for use in a wearable context.

Input Devices for Pointing Interaction

Pointing is fundamental to most human–computer interactions (Oakley et al., 2008; Lucero et al., 2013). A mechanism for continuous pointing suitable for use with wearable computing is vital (Oakley et al., 2008; Chen et al., 2013) as various forms of wearable computing systems are adopted. In addition to the aforementioned commercially available pointing devices, researchers have also explored different means by which to perform command entry and cursor control in a wearable system. Manipulating a two degree of freedom device to control an on-screen cursor is an important design issue for wearable computing input devices.

Rosenberg (1998) explores the use of bioelectric signals generated by muscles in order to control an onscreen pointer. Using electromyogram (EMG) data of four forearm muscles responsible for moving the wrist, the Biofeedback Pointer allows the control of a pointer via hand movements (up, down, left, right). The intensity of the wrist movement and, therefore, generated muscle activity is responsible for controlling the velocity of the pointer. In a user study where three participants carried out point and select tasks, the Biofeedback Pointer was found to perform an average of 14% as well as the standard mouse it was being compared to.
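The intensity-to-velocity idea described above can be illustrated with a minimal sketch. This is not Rosenberg's implementation: the normalized activation values, the `gain` of 400 px/s, the `rest_threshold`, and the function name `pointer_velocity` are all assumptions chosen only to show how stronger muscle activity in one of four directions might translate into a faster pointer.

```python
from typing import Dict, Tuple

# Hypothetical unit vectors for the four wrist movements (screen y grows downward).
DIRECTIONS: Dict[str, Tuple[int, int]] = {
    "up": (0, -1),
    "down": (0, 1),
    "left": (-1, 0),
    "right": (1, 0),
}


def pointer_velocity(activation: Dict[str, float],
                     gain: float = 400.0,
                     rest_threshold: float = 0.05) -> Tuple[float, float]:
    """Map normalized muscle activation (0..1 per direction) to a velocity in px/s.

    The gain and threshold are illustrative assumptions, not values from the paper.
    """
    vx = vy = 0.0
    for name, (dx, dy) in DIRECTIONS.items():
        level = activation.get(name, 0.0)
        if level < rest_threshold:
            # Treat very low activation as resting-muscle noise and ignore it.
            continue
        vx += dx * gain * level
        vy += dy * gain * level
    return vx, vy
```

Under these assumptions, a half-strength "right" activation yields half the maximum rightward speed, and activation below the resting threshold leaves the pointer still.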

An acceleration sensing glove (ASG) was developed by Perng et al. (1999) using six 2-axis accelerometers with a wrist-mounted controller, RF transmitter, and battery pack. Five accelerometers were placed on the fingertips and one on the back of the hand. The accelerometer placed on the back of the hand operated as a tilt-motion detector moving an on-screen pointer. The thumb, index, and middle fingers were used to perform mouse clicks by curling the fingers. The glove could also be used to recognize gestures.

SCURRY (Kim et al., 2005) is another example of a glove-like device worn on the hand and is composed of a base module (housing two gyroscopes) and ring modules that can be worn on up to four fingers (housing two acceleration sensors each). The base module provides cursor movement and the ring modules are designed to recognize finger clicks. When a ring module is worn on the index finger, SCURRY performs as a pointing device. SCURRY was evaluated against a Gyration optical mouse and was found to perform similarly.

FeelTip (Hwang et al., 2005) is a wrist-mounted device (worn like a watch) consisting of an optical sensor (Agilent ADNS-2620) that is placed just below a transparent plate with tip for tactile feedback. Moving a finger (index or thumb) over the tip moves the mouse cursor and pressing on the tip in turn presses on the switch (that is located under the optical sensor) and triggers a left mouse click. The authors evaluate the device against an analog joystick across four tasks, pointing, 1D menu selection, 2D map navigation, and text entry. The FeelTip performed better than the joystick for the menu and map navigation tasks. For the pointing task over the 5 days, the average throughput was 2.63 bps for FeelTip and 2.27 bps for the joystick.
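Throughput figures in bits per second (bps), such as those quoted above, are commonly computed from Fitts' law. The sketch below uses the Shannon formulation of the index of difficulty; whether the cited studies used this exact formulation (or an effective-width variant) is not stated here, so it should be read as an assumption. The function names and the example distances are hypothetical.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def throughput(distance: float, width: float, movement_time_s: float) -> float:
    """Throughput in bits per second for a single distance/width condition."""
    return index_of_difficulty(distance, width) / movement_time_s
```

For example, a target 100 units wide at a distance of 700 units has an index of difficulty of 3 bits; reaching it in 1.5 s corresponds to a throughput of 2 bps.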

FingerMouse (De La Hamette and Troster, 2006) is a small (43 mm × 18 mm), battery-powered visual input system that uses two cameras to track the user’s hand when it is placed in front of the cameras. By tracking the in-air movements of the user’s hand, the FingerMouse can control an on-screen mouse pointer. The device can be worn attached to the user’s chest or belt.

Oakley et al. (2008) evaluated a pointing method using an inertial sensor pack. The authors compare the performance of the pack when held in the hand, mounted on the back of the hand, and on the wrist. The results reveal that the held positions performed better than the wrist. Although exact throughput values are not reported, it appears from the graph that the hand positions were just over 3 bps, while the wrist performed just under.

Tokoro et al. (2009) explored a method for pointing by attaching two accelerometers to the user’s body, for example, to the thumbs or elbows. Cursor movement and target selection were achieved by the intersection of the two axes as a result of accelerometer positioning. In a comparative study, the authors found that the trackball, joystick, and optical mouse performed better than the proposed accelerometer technique. Horie et al. (2012) build on this work by evaluating the placement of the accelerometers on different body parts (i.e., index finger, elbow, shin, little finger, back of hand, foot, thumb and middle finger on one hand, wrist and head and hand) and under different conditions (i.e., standing with both hands occupied, standing with one hand occupied, and sitting with both hands occupied). The authors also included a Wii remote and a trackball in the evaluation. Selection is achieved by maintaining the cursor over a target for 1 second for the accelerometer conditions and the Wii remote. Results show a stabilizing of performance on the third or fourth day for all conditions with the exception of the shin and wrist (taking longer to stabilize) and the trackball (stabilizing after 1 day). The authors found that the trackball and Wii remote performed better than the accelerometer conditions. However, the authors conclude that any of the proposed conditions may be used for pointing and are advantageous because the user does not have to hold a device in order to use the accelerometer method.
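Dwell-based selection of the kind described above (holding the cursor over a target for 1 second) can be sketched as follows. This is an illustrative reconstruction, not the authors' code: the sample format, the rectangle representation, and the name `dwell_select_time` are assumptions, and leaving the target is assumed to reset the dwell timer.

```python
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]        # (timestamp in seconds, x, y)
Rect = Tuple[float, float, float, float]   # (left, top, width, height)


def inside(rect: Rect, x: float, y: float) -> bool:
    """Return True if the point (x, y) lies within the rectangle."""
    left, top, width, height = rect
    return left <= x <= left + width and top <= y <= top + height


def dwell_select_time(samples: Iterable[Sample],
                      target: Rect,
                      dwell_s: float = 1.0) -> Optional[float]:
    """Return the timestamp at which the dwell completes, or None if it never does."""
    entered_at: Optional[float] = None
    for t, x, y in samples:
        if inside(target, x, y):
            if entered_at is None:
                entered_at = t           # cursor has just entered the target
            if t - entered_at >= dwell_s:
                return t                 # cursor stayed long enough: select
        else:
            entered_at = None            # leaving the target resets the timer
    return None
```

A dwell threshold trades speed for robustness: a longer `dwell_s` reduces accidental selections but adds a fixed delay to every selection.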

Calvo et al. (2012) developed a pointing device called the G3, which is designed to be used by United States Air Force dismounted soldiers. The G3 consists of a tactical glove that has a gyroscopic sensor, two buttons, and a microcontroller attached to the index finger, the side of the index finger, and the back of the glove, respectively. The gyroscopic sensor (attached to the tip of the index finger) is used for cursor movement; however, the cursor will only move if one of the buttons is being held down, which is intended to avoid inadvertent cursor movement. The other button performs a left-click. The authors evaluated the G3 against the touchpad and trackpoint, which are embedded into the General Dynamics operational small wearable computer (MR-1 GD2000). The authors report that the G3 performed with a throughput of 3.13 bps (SD = 0.22), movement time of 1024.43 (SD = 54.90), and an error rate of 15.51 (SD = 1.12). Movement time units do not appear to be reported by the authors. The touchpad performed with a throughput of 2.46 bps (SD = 0.15), movement time of 1329.13 (SD = 85.93), and an error rate of 3.29 (SD = 0.77). The trackpoint performed with a throughput of 1.49 bps (SD = 0.07), a movement time of 1997.64 (SD = 98.28), and an error rate of 11.05 (SD = 1.44). The G3 had a higher throughput and performed faster than its two counterparts but less accurately.
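The clutch behavior described for the G3, where sensor motion moves the cursor only while a button is held, can be sketched in a few lines. This is a hypothetical illustration rather than the G3 firmware: the function name `apply_motion`, the pixel deltas (assumed to be already derived from the gyroscope readings), and the 1920 × 1080 screen bounds are all assumptions.

```python
from typing import Tuple

Point = Tuple[int, int]


def apply_motion(cursor: Point,
                 delta: Point,
                 clutch_held: bool,
                 bounds: Point = (1920, 1080)) -> Point:
    """Move the cursor by delta only while the clutch button is held.

    The result is clamped to the (assumed) screen bounds.
    """
    if not clutch_held:
        # Clutch released: ignore sensor motion to avoid inadvertent movement.
        return cursor
    x = min(max(cursor[0] + delta[0], 0), bounds[0] - 1)
    y = min(max(cursor[1] + delta[1], 0), bounds[1] - 1)
    return (x, y)
```

With the clutch released, hand motion made while walking or performing another task leaves the cursor where it is; the cost is that every deliberate movement requires an additional button press.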

Attaching a magnet to the back of the thumb and two magnetometers to the back of the ring finger, Chen et al. present uTrack (Chen et al., 2013), a 3D input system that can be used for pointing. Users move the thumb across the fingers in order to control an onscreen cursor. In an evaluation performed while participants were seated in front of a monitor, the authors found that participants were able to use uTrack for cursor control tasks and the technique was well received.

FingerPad (Chan et al., 2013) is a nail-mounted device that allows the index finger to behave as a touchpad. The system is implemented via magnetic tracking by mounting a magnetic sensing plate and a neodymium magnet to the index finger and thumb, respectively. When the fingers are brought together in a pinching motion, the index finger becomes the touchpad and the thumb may be used for interactions, such as pointing, menu selections, and stroke gestures. The authors evaluated the device via a point and select user study measuring trial completion time and error rate. Participants were asked to move an on-screen cursor to a target and select it using either a thumb flick gesture or a click technique with the opposite hand. Users evaluated the system while sitting at a desk and walking on a treadmill. Interestingly, the authors did not find a significant difference between the walking and seated completion times; however, they noted a significant difference in error rate between the walking and seated conditions when the target size was below 2.5 mm. The flick gesture required for selecting targets performed significantly slower than the click method in the walking condition only. Across both movement conditions, the target size of 1.2 mm performed significantly slower than the 5 and 10 mm target sizes, while the 0.6 mm target size performed slower and with more errors than the other target sizes (1.2, 2.5, 5, and 10 mm). NailO (Kao et al., 2015), a device mounted to the fingernail, facilitates subtle gestural input. The authors state that future work may extend the device to act as a touchpad.

Kato and Yanagihara (2013) introduce PACMAN UI, a vision-based input interface also designed to be used as an input technique suitable for use with a head-worn display. Users form a fist and position the index finger and thumb to form the letter C (i.e., a Pacman shape). Doing so exposes the middle finger, which is used to control an onscreen cursor. Moving the middle finger causes the onscreen cursor to move accordingly. Users click by pinching the index finger and thumb together. An empirical user study comparing the PACMAN UI to a wireless mouse found the PACMAN UI to be 44% faster than the wireless mouse.

Jalaliniya et al. (2015) explore the combination of head and eye movements for hands-free mouse pointer manipulation for use with head-worn displays. A prototype was developed using Google Glass. Inertial sensors built into Glass were used to track head movements and an additional external infrared camera was positioned under the display to detect eye gaze. Eye gaze is used to position the cursor in the vicinity of the target and head movements are used to zero in on the target. The authors evaluated the composite interaction technique against the use of head movements alone in a target selection task (target sizes of 30 and 70 pixels and target distances of 100 and 280 pixels). Users were required to point to the targets using either a combination of head and eye movements or head movements alone; targets were then selected by tapping on the Glass touchpad. The composite technique was found to be significantly faster across both target sizes at distances of 280 pixels. Interestingly, error rate was not significantly different between pointing techniques.

In an earlier study, Jalaliniya et al. (2014) evaluated the use of gaze tracking, head tracking, and a commercially available handheld finger mouse for pointing within a head-worn display. A target selection task displayed on a head-worn display required participants to select targets (60, 80, and 100 pixels in size) using gaze-pointing, head-pointing, and mouse-pointing to manipulate the pointer. Experimental results determined that eye-pointing was significantly faster than head-pointing or mouse-pointing; however, it was less accurate than the other two (although not significantly so). Participants reported a preference for head-pointing.

Several projects have identified the need for wearable menu interaction and have explored and incorporated the use of menus in the wearable computer interface; however, this has typically involved the use of a dedicated input device in order to interact with the menus presented onscreen. The VuMan (Akella et al., 1992; Smailagic and Siewiorek, 1993, 1996; Bass et al., 1997; Smailagic et al., 1998) series of wearable computers and their descendants are examples of the use of dedicated input devices to interact with on-screen objects. Cross et al. (2005) implemented a pie menu interface and used a three-button input device (up, down, and select) for interaction. Blasko and Feiner (2002, 2003) use a touchpad device separated into four strips, each of which corresponds to a finger of the hand. The onscreen menu is designed to be used specifically with the device and each strip corresponds to a menu. The user glides their finger along the vertical strip to highlight the corresponding menu on screen. KeyMenu (Lyons et al., 2003) is a pop-up hierarchical menu that uses the Twiddler input device to provide a one-to-one mapping between buttons on the keyboard and the menu items presented on the screen.

Evaluation of Wearable Pointing Devices

A review of wearable literature reveals that there have been a limited number of empirical studies dedicated exclusively to investigating the usability of pointing devices for wearable computers. Section “Input Devices for Pointing Interaction” presented novel devices by which to perform command entry and cursor control in a wearable system using specialized hardware and/or software. Typically, the usability of those novel devices was evaluated via formal or informal user evaluations and, in some cases, the devices were also compared against commercially available devices. In addition to the description of the novel devices, Section “Input Devices for Pointing Interaction” also presented the results of their evaluation where reported by the researchers. The studies presented in this section, however, describe the research undertaken that focuses on the evaluation of commercial pointing devices for use with wearable computers.

Chamberlain and Kalawsky (2004) evaluated participants performing selection tasks with two pointing systems (touch screen with stylus and off-table mouse) while wearing a wearable computer. Participants were required to don a wearable computer system (Xybernaut MA IV) housed in a vest with a fold-down touch screen and had a 5 min training session to familiarize themselves with the input devices. Participants did not wear a head-worn display; however, they were evaluated while wearing a wearable computer in stationary and walking conditions. For the walking condition, participants were required to walk around six cones placed at 1-m intervals in a figure-eight motion. Interestingly, the authors chose to evaluate two different interaction methods: the stylus does not use an onscreen cursor, whereas the off-table mouse does. In light of this, the results are not surprising. The authors conclude that the stylus was the fastest device (918.3 ms faster than the off-table mouse in the standing condition and 963.7 ms faster than the off-table mouse in the walking condition) and had the lowest cognitive workload (evaluated via NASA-TLX). The mouse took 145.9 ms longer to use while walking than while standing and the stylus took 101 ms longer to use while walking than while standing; however, these findings were not statistically significant. The off-table mouse had the lower error rate (the stylus resulting in 24.2 and 19.7 more errors in the standing and walking conditions, respectively). Interestingly, the off-table mouse produced fewer errors in the walking condition than in the standing condition. Although workload rating values (NASA-TLX) are not specified by the authors, they do state that there was a significantly higher overall workload for the off-table mouse. The physical, temporal, effort, and performance sub-scales were significantly different.
Participants were observed slowing down in order to select the targets and navigate around the cones in the walking condition. The off-table mouse used in the study appears to be the same design as the trackball used in our studies (Zucco et al., 2005, 2006, 2009).

Witt and Morales Kluge (2008) evaluated three wearable input devices used to perform menu selection tasks in aircraft maintenance. The study evaluated both domain experts and laymen performing short and long menu navigation tasks. The input devices consisted of a pointing device (handheld trackball), a data glove for gesture recognition, and speech recognition. For short menu navigation tasks, laymen performed fastest with the trackball, followed by speech, then the data glove (a statistically significant difference was found between the trackball and data glove only). Domain experts performed comparably fast with the trackball and data glove, followed by speech (however, no significant differences were found across the three devices). For long menu navigation tasks, both laymen and domain experts performed fastest with the trackball, followed by the data glove, then speech (however, no significant difference was found for laymen, and no significant difference between the trackball and data glove was found for domain experts). Overall, the trackball outperformed the other devices. However, qualitative data indicated that domain experts preferred the hands-free nature of the data glove. Speech interaction was found to be difficult by domain experts, who exhibited a wide range of language abilities.

Summary of Experiments

This section presents a summary of the results from a formal, systematic series of usability studies we performed that evaluated commercially available pointing devices for use with wearable computers. The design guidelines for wearable pointing devices are based in part on the results and observations of these studies. The studies were motivated by the need to address the existing gap in knowledge. The studies were undertaken while the participants employed a wearable computer and a binocular head-worn display. The first study in the series (Zucco et al., 2005) evaluated the pointing devices (gyroscopic mouse, trackball, and Twiddler2) performing selection tasks. Selection of targets is performed by positioning the cursor on the target and clicking the select button on the pointing device. The second study (Zucco et al., 2006) extended the selection study by evaluating the devices when performing a more complex task, that is, drag and drop. Performing a drag and drop operation requires the user to press the button on the pointing device to select the target, move the cursor (while keeping the button pressed), and release the button once the object is repositioned on the destination target. The complexity of the first study is also extended by the introduction of a walking condition in addition to a stationary condition. The third study (Zucco et al., 2009) evaluated the pointing devices performing menu selection tasks. Menu selection is one of the most common forms of command entry on modern computers. The menu structures evaluated in the study consisted of two levels: eight top-level menu items and sub-menus containing eight menu items for each top-level item. Menu selection was modeled on typical menu interaction found in standard GUI applications. A sub-menu was activated by clicking on its parent item. The target menu item was selected by clicking on the desired item.
Menu selection introduces additional complexity because precise mouse movements are required to steer to sub-menu items.

Ranking of Pointing Devices

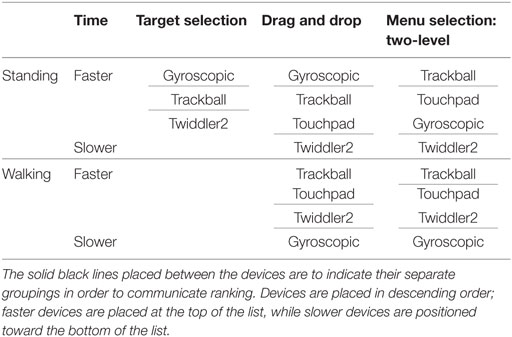

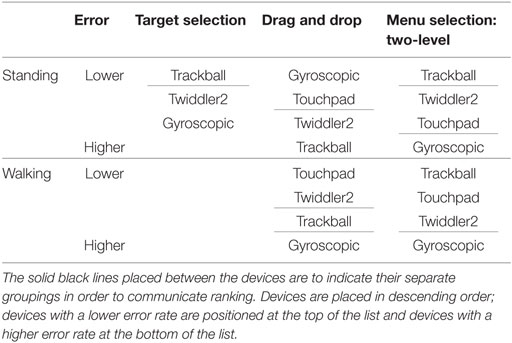

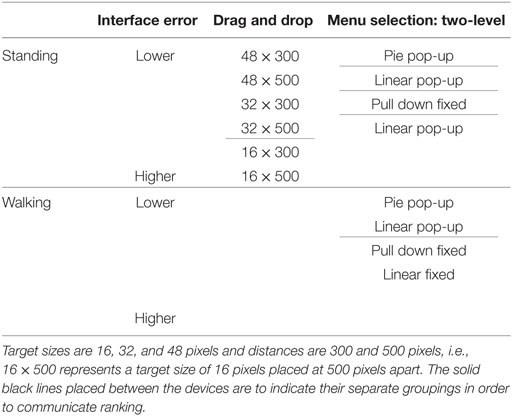

The series of studies undertaken (Zucco et al., 2005, 2006, 2009) reveal that there is a measurable difference between the pointing devices and that a ranking can be established pertaining to the use of the devices in a wearable context. Tables 1 and 2 provide a visual summary of pointing device performance for each of the three interaction tasks evaluated. The tables are broken up into standing and walking mobility conditions and the solid black lines placed between the devices are to indicate their separate groupings in order to communicate ranking.

Target Selection User Study

The results of the target selection study presented in Zucco et al. (2005) found that the gyroscopic mouse facilitated the fastest selection of targets. The trackball ranked second, with the Twiddler2 performing the slowest for target selection tasks. The trackball was significantly more accurate than the other devices. The Twiddler2 and gyroscopic mouse ranked comparably second for accuracy (no significant difference was found between this pair).

Drag and Drop User Study

As described in Zucco et al. (2006), the gyroscopic mouse was most efficient at dragging and dropping targets while stationary. The trackball and touchpad ranked comparably second, while the Twiddler2 performed drag and drop operations the slowest. The gyroscopic mouse and touchpad dragged and dropped targets most accurately, while the Twiddler2 and trackball were the least accurate (a significant difference was found between the gyroscopic mouse and trackball only for missed drop locations; no other significant difference was noted). The total number of errors in a task is made up of missed target selections and missed drop locations. Qualitative evidence suggested that users found the trackball susceptible to dropping the target being dragged, resulting in missed drop location errors. To further understand the source of errors, error analysis was separated into missed target selections and missed drop locations.

Interacting while walking highlighted the trackball and the touchpad as performing comparably the fastest, the Twiddler2 ranking second, while the slowest device was found to be the gyroscopic mouse. The Twiddler2 and touchpad were most accurate at dragging and dropping targets in the walking experiment, the trackball was the next most accurate, and the gyroscopic mouse was least accurate (no significant difference was found between the Twiddler2 and touchpad; in addition, no significant difference was reported between the Twiddler2 and trackball for missed drop locations).

Menu Selection Study

The results derived from the two-level menu selection study presented in Zucco et al. (2009) identified that two-level menus were selected the fastest when using the trackball while standing. The touchpad and gyroscopic mouse resulted in comparably the second fastest times, while the Twiddler2 performed the least efficiently (a significant difference was found between the trackball and gyroscopic mouse, and trackball and Twiddler2 pairs). The trackball led to the most accurate selection of menu items, followed by the Twiddler2 and touchpad performing comparably second. The gyroscopic mouse suffered the highest error rate (a significant difference was found when comparing the gyroscopic mouse with the trackball and the Twiddler2).

While walking, the trackball was the fastest device, followed by the touchpad and Twiddler2 ranked comparably second (no significant difference between this pair), with the gyroscopic mouse placed last. The trackball performed with the fewest errors, followed by the touchpad and the Twiddler2. The gyroscopic mouse performed with significantly more errors than the other devices.

Pointing Device Task and Mobility Suitability

As a result of the studies, we found that certain devices are better suited to certain task(s) and/or mobility condition(s). In the selection study (Zucco et al., 2005), the gyroscopic mouse was the fastest, least accurate device. In the drag and drop study (Zucco et al., 2006), the gyroscopic mouse was the fastest and most accurate device while stationary. Although performing a drag and drop operation is a more complex task than target selection, it is interesting to note that the drag and drop findings correlate to the results of the selection study. The results obtained from the two studies seemingly make the gyroscopic mouse a suitable choice when both interaction models (selection and drag and drop) are required (and the user is to remain stationary while using the device). However, the results obtained from the third user study evaluating menu selection (Zucco et al., 2009) suggest that the gyroscopic mouse is a less suitable choice when precise menu selection is needed, such as the steering necessary for the linear pop-up menu. The gyroscopic mouse seems to favor coarse mouse movements; therefore, it may be a suitable choice if used in conjunction with interface objects that allow for coarse mouse movements, for example, a pie menu. The gyroscopic mouse was comparably placed second when performing menu selection tasks.

The gyroscopic mouse is not suitable for use while walking as it was found to be the slowest and least accurate device while used in this condition (for both drag and drop and menu studies). Participants were noticed to slow down considerably while walking with the gyroscopic mouse. This is unsurprising given that the accelerometer technology in the gyroscopic mouse is sensitive to vibration noise, making the device very difficult to use while walking.

The Twiddler2 reported the slowest times and one of the second lowest error rates for all tasks while stationary. While walking, the Twiddler2 was found to perform with the second fastest time for drag and drop tasks and one of the second fastest times for menu selection tasks. The Twiddler2 performed with one of the lower error rates in both walking tasks (drag and drop and menu selection studies). Some participants reported difficulties with the trackpoint stating that it was difficult to “get right” as it was necessary to coordinate moving the thumb off of the trackpoint before pressing a button on the front of the device. However, some participants reported that cursor movement was relatively stable when walking. In light of this, the Twiddler2 may be a preferred choice when stable and accurate use is a requirement over speed of use.

The trackball was the fastest and most accurate device while stationary when performing menu selection tasks, and was the second fastest device in the selection and drag and drop tasks. The trackball reported the lowest error rate performing target selection tasks; however, it was one of the most error-prone devices when performing drag and drop tasks. The touchpad reported one of the second fastest times when performing drag and drop and menu selection tasks. In the drag and drop study, the trackball and touchpad were comparably the fastest devices while walking. These findings along with similar findings in the menu selection study seemingly make the trackball or touchpad a suitable choice for use in a wearable context over all the interaction models (selection, drag and drop, and menu selection), and over both mobility conditions (standing and walking).

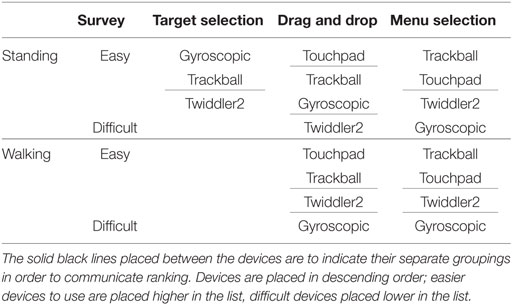

Users’ Preference of Pointing Device

The studies undertaken (Zucco et al., 2005, 2006, 2009) confirm that users have preferences among pointing devices. Table 3 provides a visual summary of users’ preferred pointing devices, ascertained by means of a survey for each of the usability studies. Across all studies except the target selection study, participants preferred the touchpad and trackball over the other two devices. In the selection study, participants preferred the gyroscopic mouse and the trackball.

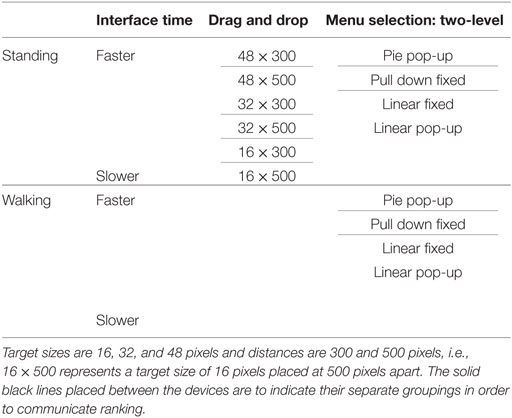

Wearable User Interface Components

The results obtained from the drag and drop and menu selection studies also provide valuable insights into the interface components that are efficient and effective within a wearable context. Tables 4 and 5 provide a visual summary of the performance of interface components (menu items, and target size and distance combinations) for the drag and drop and menu selection studies. The tables are broken up into standing and walking mobility conditions and the solid black lines placed between the interface components indicate their separate groupings in order to communicate ranking.

Drag and Drop

The results derived from the drag and drop study found that target sizes of 48 pixels placed at a distance of 300 pixels apart are dragged and dropped significantly faster than any other combination, followed by size and distance combinations of 32 × 300 pixels and 48 × 500 pixels performing comparably as the next fastest. The third and fourth times were reported by target and distance combinations of 32 × 500 pixels and 16 × 300 pixels, respectively. Finally, target sizes of 16 pixels placed at a distance of 500 pixels apart were dragged and dropped the slowest. As evidenced, it takes longer to complete a task as the difficulty of the task (i.e., index of difficulty) increases (Fitts, 1954, 1992; MacKenzie, 1995), that is, either by decreasing the size of the target and/or increasing the distance between the targets. Targets that are larger and are closer together can be dragged and dropped faster than targets that are smaller and further away from each other. Target sizes of 16 pixels placed at either 300 pixels or 500 pixels apart were more error prone than the other size and distance combinations.
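
The relationship between the reported ranking and the index of difficulty can be checked with a short worked example. The sketch below assumes the Shannon formulation of the index of difficulty (MacKenzie, 1995), ID = log2(D / W + 1), with the target size taken as the width W and the distance as D, both in pixels. The text above does not state which formulation was used in the study, so the formulation and the function name `index_of_difficulty` are assumptions made for illustration.

```python
import math


def index_of_difficulty(distance_px: float, width_px: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits (assumed here)."""
    return math.log2(distance_px / width_px + 1)


# (target size, distance) combinations, listed in the reported order
# from fastest to slowest drag and drop time.
combos = [(48, 300), (32, 300), (48, 500), (32, 500), (16, 300), (16, 500)]

# Sort the same combinations by increasing index of difficulty.
ranked = sorted(combos, key=lambda c: index_of_difficulty(c[1], c[0]))

for size, distance in ranked:
    bits = index_of_difficulty(distance, size)
    print(f"{size} x {distance} pixels: ID = {bits:.2f} bits")
```

Under this assumption, sorting by index of difficulty reproduces the reported ordering of completion times, from about 2.9 bits for the 48 × 300 combination to about 5.0 bits for the 16 × 500 combination. The two combinations reported as performing comparably (32 × 300 and 48 × 500) also have the closest index of difficulty values.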

Menu Selection

The results derived from the menu selection study found that, for two-level menus, pie pop-up menus were selected the fastest when standing, followed by the pull down fixed ranked second, and the linear fixed and the linear pop-up menus ranked third performing comparably (no significant difference could be identified for this pair). The pie pop-up menu performed with the fewest errors, followed by the linear pop-up, pull down fixed, and lastly the linear fixed (all differences were significant). While walking, two-level menus were selected the fastest with the pie pop-up, followed by the pull down fixed ranked second, and the linear fixed and the linear pop-up menus ranked third performing comparably (no significant difference could be identified for this pair). The pie pop-up and linear pop-up menus performed comparably and with significantly fewer errors than the pull down fixed and linear fixed menus (also performing comparably). The fastest menu over all the devices in a wearable context and across both mobility conditions is the pie pop-up, while the slowest are the linear fixed and the linear pop-up menus. The precise steering necessary to select a sub-menu in the linear pop-up makes it a poor choice while walking.

Top-Level (Single-Level) Menu Selection

Analysis of the results derived from the menu selection study found that, for single-level (top-level) menus while standing and walking, the pull down menu outperformed the other menu structures. The linear fixed and the pie pop-up performed comparably second (no significant difference was found for this pair), and the linear pop-up was the slowest of the menu structures (in addition, the pie pop-up and linear pop-up were not significantly different in the standing condition only). In the standing condition, the pull down fixed and the linear fixed menus performed with fewer errors than the pie pop-up and linear pop-up menus (although no significance was found for any of the pairs in the standing condition). The menu structures for the walking condition can be ordered as follows: linear pop-up, pull down fixed, linear fixed, pie pop-up (a significant difference found between the linear pop-up and pie pop-up pair only).

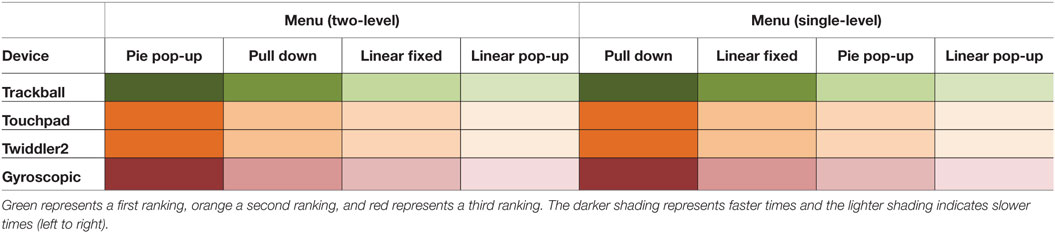

Combined Devices and User Interface Components

Combinations of pointing devices and user interface components are summarized in Table 6 (only studies reporting an interaction between pointing devices and interface objects are presented). Menu structures are summarized over both movement conditions (standing and walking). Green shading represents a first ranking, orange represents being placed second, and red depicts a third ranking. The darker shading indicates faster times and the lighter shading portrays slower times (typically moving left to right).

As depicted in the summary presented in Table 6, the trackball is seemingly the best choice of device for use with any of the menu structures. The pie pop-up menu (two-level) and the pull down menu (single-level) are the best choice of interface components for use with any of the pointing devices in a wearable context.

The combination of the trackball and the pie pop-up menu allows the fastest selection time for two-level menus over both movement conditions. Performing with the next best times, a pull down menu may be a suitable choice in the case where a pie menu is not appropriate as the interaction technique. Teamed with the touchpad, the pull down menu reported the second fastest time (after the trackball). The trackball teamed with the pull down menu enables the fastest selection time for single-level menus over both movement conditions. The linear fixed or pie pop-up menus could form a suitable choice in the case where a pull down menu is not appropriate as the interaction technique for single-level menus. Teamed with the touchpad, the linear fixed and pie pop-up single-level menus reported the second fastest times (after the trackball).

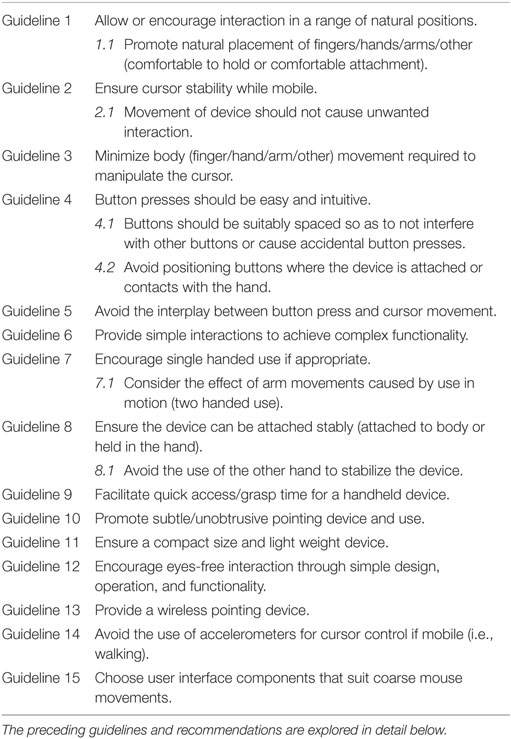

Guidelines and Recommendations

This section provides general guidelines and recommendations to help guide the design and selection of pointing devices suitable for use with wearable computers. These recommendations are derived from the detailed and specific qualitative feedback in addition to the quantitative findings from the three studies summarized in this paper (evaluating pointing devices within a wearable context). These guidelines and recommendations are designed to provide insight and direction when designing pointing devices suitable for use with wearable computers. While some of these guidelines may not be surprising, they are derived from the empirical and qualitative evidence of the three wearable studies and, therefore, provide a foundation upon which to guide the design of wearable pointing devices. Although the guidelines are specific to the design of wearable pointing devices, where appropriate, they may be generalized to influence and guide the design of wearable input devices more broadly. The qualitative evidence was obtained from participants’ responses to the following questions: (1) Were there any aspects of the (device) that made moving the cursor and/or pressing the button to (perform the task) difficult? (2) What did you like about using the (device)? (3) What did you dislike about using the (device)? and (4) Any other comments?

Guideline 1: Allow or Encourage Interaction in a Range of Natural Positions

Allow or encourage interaction with a pointing device in a range of positions. Some participants liked that the Twiddler2 could be used in a range of positions, stating that “when arm was tired you could drop arm to side” and “could hold my hand by my side and operate it fine, the other devices had to be held up in front of me.” With the trackball, participants were also “able to have hand anywhere and just use the thumb to move,” and noted that it “can be used in any arm position” and “could drop device to side.” Qualitative comments suggest that forcing the hand or arm into specific positions while operating the device should be avoided. A device that can be operated while the hand is held in numerous positions is favorable. This may contribute to reducing the fatigue and effort associated with operating a device in forced positions. For example, some participants found that when using the gyroscopic mouse there was “no relaxing of the hand during usage,” and that “the hand has to remain in a certain (not relaxed) position all the time.” Some users also found this to be true of the touchpad, reporting “both hands are in air, without any rest,” and “having to hold up both arms to operate it.”

In a similar vein, a pointing device should allow natural placement of fingers/hands/arms/other (guideline 1.1) while in use and be comfortable to hold. Natural placement refers to the natural (normal/typical) and comfortable positioning of the body in order to minimize the amount of strain placed on muscles and ligaments. Comments from participants highlighted the importance of this by reporting that the gyroscopic mouse “fits nicely into the hand.” Likewise, highlighting the importance, albeit with unfavorable comments, some participants reported that the Twiddler2 resulted in “awkward finger positions,” and that the “finger didn’t naturally align with the A or E buttons.” In addition, the Twiddler2 was “hard to reach around and click properly,” given that the “buttons are in a difficult position.” A user found that “holding arm in the same position can be strenuous” while using the touchpad. Although the Twiddler2 could be used in a range of positions, some participants found it to be “not comfortable,” “the design of the mouse does not seem ergonomic – making it difficult to hold and operate,” “it was uncomfortable to hold,” and “no comfortable way of holding the mouse. No comfortable way of pressing the button.” Comfort should also be considered in the case of devices attached to the body (guideline 1.1). A comment from a participant suggested he/she disliked “the strap” on the Twiddler2, with another participant stating “how the strap was attached made a difference to the systems accuracy and usability.” This may suggest, as Dunne and Smyth (2007) state, that when attention is drawn to the discomfort (un-wearability) caused by a device, that this in turn impacts negatively upon the cognitive capabilities of the user. The guidelines by Gemperle et al. (1998) also support the need for comfortable attachment systems.

Guideline 2: Ensure Cursor Stability while Mobile

Given that the pointing device may be in use while mobile and stationary, it is important that the mechanics of the device are designed so that user movement (e.g., caused by walking) does not affect cursor movement. The cursor should not involuntarily move as a result of the user’s movement. The gyroscopic mouse suffered the most in this regard, with some users commenting that “body movement interferes with mouse movement,” and that the device “moved when you did making it impossible to use when walking.” Walking also affected the trackball, with some participants finding that the “ball moves during walking when not desired sometimes.” However, the Twiddler2 was found to be “stable during body movement,” with “stable/consistent mouse motion during both the walking and stationary experiments.” Some participants found that “walking made very little difference to operation” of the touchpad, while others found that “walking movement made my hands shake making the cursor move.” However, a probable cause of this may be the interplay of the arms resulting from use in motion (discussed in guideline 7.1) rather than the mechanics of the touchpad.

This concept is also true for the movement of a device (e.g., in the hand or on the body), as the device movement should not cause unwanted interaction (guideline 2.1), i.e., involuntary cursor movement or accidental button press. A user of the trackball noted: “if I held the trackball strangely the cursor would move by itself.” The Twiddler3 (consumer launch, May 2015) makes use of a slight delay of <0.5 ms from the time that the user first presses the Nav Stick and when the cursor moves, in an attempt to avoid inadvertent cursor movement caused by accidental bumping of the Nav Stick.
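
The idea of delaying cursor movement until an input has been held for a short time can be sketched as a simple filter. This is an illustrative sketch only and does not describe the Twiddler3 firmware; the class name `ActivationDelayFilter`, its interface, and the rule that releasing the stick resets the timer are assumptions. The delay is left as a parameter rather than fixed to a particular value.

```python
from typing import Optional, Tuple


class ActivationDelayFilter:
    """Hypothetical filter: suppress cursor motion until the stick has been
    deflected continuously for at least `delay_s` seconds."""

    def __init__(self, delay_s: float):
        self.delay_s = delay_s
        self._deflected_since: Optional[float] = None

    def update(self, t: float, dx: float, dy: float) -> Tuple[float, float]:
        """t: timestamp in seconds; dx, dy: raw stick deflection.

        Returns the (dx, dy) that should be applied to the cursor.
        """
        if dx == 0 and dy == 0:
            # Stick released: reset, so the next touch must wait again.
            self._deflected_since = None
            return (0.0, 0.0)
        if self._deflected_since is None:
            self._deflected_since = t
        if t - self._deflected_since < self.delay_s:
            # Too brief to count as intentional: treat as an accidental bump.
            return (0.0, 0.0)
        return (dx, dy)
```

With such a filter, a brief knock of the stick produces no cursor movement, while a sustained press passes through unchanged after the delay. The trade-off is a small amount of added latency at the start of every intentional movement.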

Guideline 3: Minimize Body (Finger/Hand/Arm/Other) Movement Required to Manipulate the Cursor

Minimize movement (fingers/hands/arms/other) required to control/move the cursor on the screen. A participant commented that the “small size of the touchpad overtime requires multiple strokes to move the cursor.” Likewise, the trackball required that “when the distance was large, it requires repositioning thumb on ball for a second movement,” “the repeated thumb movement to get the cursor from one side of the screen to the other, the other devices really only needed one movement.” Some participants favored the Twiddler2 as “the thumb movement is better than the trackball – one gesture as opposed to a couple.” Some participants noted that the gyroscopic mouse required “too much arm movement,” “requires large wrist/arm movements” and “too much arm/wrist motion for simple tasks.”

Guideline 4: Button Press Should Be Easy and Intuitive

Button press should be easy and simple and not increase the effort or force required to operate the device. Some users reported that “the button is required to be pressed a little harder than I would have liked” while using the trackball. Likewise, users of the Twiddler2 reported that “a more sensitive button would be suitable,” and that “the button was hard to press.” However, some participants reported that the “button was easy to push” on the gyroscopic mouse.

Buttons should be suitably spaced so as not to interfere with other buttons or cause accidental button presses (guideline 4.1). Selection buttons should be positioned for easy and intuitive activation. Some users found that “fingers are slightly cramped on the buttons” and “the buttons were too close” on the Twiddler2, reporting that “many times I pressed the buttons accidently,” that it was “hard to hold and had to be careful to press the right button,” and that there are “too many buttons that can accidently be pressed.”

In addition, avoid positioning buttons where the device is attached to or in contact with the hand (guideline 4.2). Such placement appears to cause users to expend extra effort to avoid inadvertently registering a mouse click. For example, the “finger has to hold device and press the button simultaneously” when using the trackball. Some users found that “securing the device with a single finger and avoiding excessive pressure from activating the button was a minor learning challenge,” and reported “not being able to rest my index finger on the trigger button without fear of clicking.”

Guideline 5: Avoid the Interplay between Button Press and Cursor Movement

Be mindful of the interplay between button press and cursor movement, and vice versa. Pressing the button should not affect the position of the cursor on screen; that is, a button press should not cause the cursor to move involuntarily. Likewise, moving the cursor should not result in an unwanted button press. Participants reported issues of this nature across all of the devices. For example, users of the gyroscopic mouse reported “the highly sensitive nature that even a click for the mouse moves the cursor away from the dot” and that “releasing the trigger often caused the mouse to move slightly.” When using the trackball, “occasionally when pressing the button I would inadvertently move the mouse just off the target.” Likewise for the Twiddler2: “clicking button and mouse at the same time – would often move the mouse unintentionally,” and “clicking a button on one side pushes it against my thumb, making the pointer move off the target.” The touchpad appeared to suffer the least from this, although one participant reported that the “cursor moves when tapping,” and another that “when walking, it’s easy to accidentally click – tap.”
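One possible software mitigation, which was not evaluated in the studies reported here and is offered only as an illustrative assumption, is to discard cursor motion that arrives within a short window after a button event. The names `ClickFreeze`, `on_button`, `on_motion`, and `window_s` below are hypothetical.

```python
class ClickFreeze:
    """Drop cursor deltas that arrive within `window_s` seconds after a button event."""

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s    # hypothetical value; tune per device
        self._last_button_t = None  # time of the most recent press or release

    def on_button(self, t: float) -> None:
        """Record a button press or release at time t (seconds)."""
        self._last_button_t = t

    def on_motion(self, t: float, dx: float, dy: float):
        """Return the cursor delta to apply for motion reported at time t."""
        if self._last_button_t is not None:
            elapsed = t - self._last_button_t
            if 0 <= elapsed <= self.window_s:
                return (0.0, 0.0)  # motion is likely a side effect of the click
        return (dx, dy)
```

This sketch only suppresses motion that follows a press or release. Motion that precedes the click, such as the cursor drifting while a trigger is being squeezed, would need a different approach, for example a mechanical redesign of the button.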

Guideline 6: Provide Simple Interactions to Achieve Complex Functionality

Avoid requiring the user to engage multiple fingers at the same time; this may be especially true for handheld devices. Holding the select button down while moving the cursor proved to be challenging in a wearable context. Comments in this regard stated that the gyroscopic mouse required “too many buttons to press at once,” that “sending the message to your finger then to your thumb can be quite different and can take a bit of getting used to,” and that “having to keep a button pressed while moving the cursor, I sometimes got confused between having to drag and having to move the cursor”; one participant disliked “coordinating – holding two buttons while moving it around.” Having to coordinate two simultaneous actions may have contributed to the high workload rating of the gyroscopic mouse. Likewise, some participants reported that they “sometimes found it difficult to keep finger on the trigger while moving the ball” while using the trackball. The Twiddler2 received comments such as “hard to press E key and walk and drag mouse,” “difficult to press button and move cursor at same time (device is a little too big),” and “found it difficult to maintain pressure on the A key while using the thumb to move the cursor.” Likewise for the touchpad: “trying to hold the button and drag can be rather annoying as it was rather uncomfortable.” In light of this, designs should allow for simple interactions that achieve complex actions, for example, the use of only one button to achieve a drag and drop operation. The D key on the Twiddler2 emulates a left mouse button being held down and, as a result, does not need to be held down while moving a target. A comment in favor of this reported that the Twiddler2 “was the easiest to use as I didn’t have to think about it while I was moving the cursor.” A participant suggested of the gyroscopic mouse, “the button to turn it on and off would possibly be better using a switch so you don’t have to keep your hand clenched.”
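The single-button drag described above can be sketched as a simple toggle. This is an illustration only and not the Twiddler2's actual key handling; the class name `DragLock`, the event strings, and the assumption that a second press of the same key releases the emulated button are all hypothetical.

```python
class DragLock:
    """Turn single presses of a lock key into a held left-button state."""

    def __init__(self) -> None:
        self.button_down = False  # state of the emulated left mouse button

    def on_lock_key(self) -> str:
        """Toggle the emulated left button and return the event to send to the host."""
        self.button_down = not self.button_down
        return "left_down" if self.button_down else "left_up"
```

With this arrangement, a drag becomes three sequential actions (press the key once, move the cursor, press the key again) rather than two simultaneous ones, which is the kind of simplification this guideline recommends.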

Guideline 7: Encourage Single Handed Use if Appropriate

If appropriate, discourage the use of both hands while interacting with a pointing device. It is recommended that one hand be free at all times in order to interact with the user’s environment. An indicative comment pertaining to the trackball is “you’d probably be able to do other things whilst it’s on your finger.” The touchpad, however, requires that “two hands are occupied, but if you can afford that, no problems.” In addition, it is recommended that a pointing device be attached to the body in a way that facilitates hands-free interaction with the user’s environment when the device is not in use. This allows for quick access time, as reported by Ashbrook et al. (2008), and the user is not forced to put the device away (holstering) while it is not in use. A participant stated that the touchpad was “at hand without being in hand,” and “does not get in the way when not in use.”

Where a two-handed interaction design is appropriate, consider the effect of dual arm movements caused by use in motion, i.e., walking (guideline 7.1). Some users of the touchpad identified this, finding that the “normal arm motion while walking makes it awkward to use,” and “both arms moving while walking meant sometimes finger left the surface.”

Guideline 8: Ensure the Device Can Be Attached Stably (Attached to Body or Held in the Hand)

If the device is to be attached to or placed on the body, it is important that interaction with the device does not cause it to move or slip in any way. Similarly, if the device is to be held in the hand, interaction with the device should feel stable, well placed in the hand, and free from movement or chance of slipping. Pressing the button or moving the cursor should not cause the device to move in the hand so as to cause the user to have to reposition it. Users may feel they lack control over the device if it moves around during use or if it appears to be loosely attached to the body. Some users reported that the trackball “moved around when trying to use it” and that “moving cursor was difficult to get used to because it’s not very stable.” Similarly, for the Twiddler2 it was “tricky to get the strap adjustment perfect,” the device was “very un-firm fitting,” “pressing buttons is difficult if device isn’t strapped in completely,” and users “have to get the right grip otherwise the force of pressing the button can move the whole device.” Use of the trackball required users “to remove my thumb from the ball rest it on the side of the device to stabilize it while I pressed the trigger.” The “trackball device is not stable enough for precise control if held only one-handed” and “needed left hand to support right hand while using it.”

However, one should avoid the use of the “free” hand in order to stabilize the device (guideline 8.1). If the device is attached to the body, or held in the hand, it is important that the user does not feel the need to use the free hand to stabilize the device. Otherwise, the device would be considered a two-handed device that does not allow for one hand to be free to interact with the environment (as suggested in guideline 7). For example, some participants reported that when using the Twiddler2 they “have to hold it with my left hand to stabilize the use of the device.”

Guideline 9: Facilitate Quick Access/Grasp Time for a Handheld Device

If the device is to be held in the hand, the design should allow for quick access/grasp time. Ideally, the design would allow for some form of on-body placement (similar to a mobile phone pouch) or for repositioning the device on the body while it is not in use. In this case, the design should facilitate quick access/grasp when engaging with the device and, likewise, quick return when it is not in use. Ashbrook et al. (2008) found that a device placed on the wrist afforded the fastest access time, followed by a device placed in a pocket, while devices holstered on the hip had the slowest access time. Rekimoto (2001) suggests allowing the user to quickly change between holding the device and putting it away should hands-free operation not be supported. Likewise, Lyons and Profita (2014) suggest that device placement should allow for ease of access, use, and return. Placing or positioning the device on the body should not inadvertently cause device activation (that is, involuntary button press or cursor movement).

Guideline 10: Promote Subtle/Unobtrusive Pointing Device and Use

A wearable device should be subtle and unobtrusive, and should encourage use in a public environment. A comment highlighted this in relation to the trackball: “the size was great if say this type of tech was used in public then it would be easy to pull out without it feeling like you are pulling out a large amount of wires and hardware.” That is, input devices should have a low social weight (Toney et al., 2002, 2003). Suggesting the importance of public and socially aware use, one participant reported that the touchpad is “not very stylish.” Resulting from their work on designing for wearability, Gemperle et al. (1998) also recommend unobtrusive placement as an important consideration, in addition to keeping esthetics in mind. Likewise, Rekimoto (2001) suggests that a wearable device should be as unobtrusive and natural as possible in order to be adopted for everyday use. Discreet, private, and subtle interaction has also been emphasized in recent work (Chan et al., 2013; Lucero et al., 2013) as important in allowing users to act as naturally as possible in a public setting.

Guideline 11: Ensure a Compact Size and Light Weight Device

The device should be sized to fit the hand of the general user population, or should provide a means of adjustment in order to fit a variety of hand sizes. The size and weight of the device should be such that it does not cause extra muscle fatigue or strain while in use. The trackball was viewed favorably for being “compact, non-intrusive,” and “small.” However, the Twiddler2 was described as a “device a little large to control and hold,” a “bulky controller,” and one that “didn’t suit my hand at all, it felt very awkward to use.” Devices should be appropriately weighted so as to avoid the muscle fatigue or strain caused by heavy devices. Some participants preferred the “light weight” nature of the trackball device. Gemperle et al. (1998) also found size and weight to be an important consideration.

Guideline 12: Encourage Eyes-Free Interaction Through Simple Design, Operation, and Functionality

Given that users may be mobile while wearing a head-worn display, the device should demand minimal visual attention; that is, users should not be forced to look at the device while it is in use. The device should be simple to operate and provide appropriate tactile feedback so that users may focus on the task within the wearable domain and minimize looking at the device. Exemplar comments pertaining to the trackball device were: “nice and simple. Comfortable,” “easy trigger style click button,” and “the trigger button is easy and comfortable to use.” Komor et al. (2009) suggest that in a mobile setting, the user should be able to expend little to no visual attention in order to access and use an interface.

Guideline 13: Provide a Wireless Pointing Device

Given a wearable/mobile context, input devices are recommended to be wireless so that cords do not interfere with the user’s environment or task. The wireless nature of the gyroscopic mouse received favorable comments from participants. In contrast, “the wire sometimes got in the way” when using the trackball, and similarly, when using the Twiddler2, the “cable got in the way sometimes.” One participant disliked the Twiddler2 because “it was connected via wires.” The use of wireless communication also supports guideline 10, as a more subtle physical device can be designed.

Guideline 14: Avoid the Use of Accelerometers for Cursor Control if Mobile (i.e., Walking)

Accelerometer technology should be avoided as a means of controlling the cursor while walking. The accelerometer is extremely sensitive to vibration noise caused by walking, which makes the device very difficult to use while mobile. As noted in the comments for the gyroscopic mouse, “I had to stop each time I wanted the device to work – very slow walking pace!,” “very difficult to control the cursor while walking,” and “any movement causes the mouse to react – making the system unusable.”

Guideline 15: Choose User Interface Components that Suit Coarse Mouse Movements