ORIGINAL RESEARCH article

Front. Hum. Neurosci., 07 April 2025

Sec. Brain-Computer Interfaces

Volume 19 - 2025 | https://doi.org/10.3389/fnhum.2025.1386275

This article is part of the Research Topic Forward and Inverse Solvers in Multi-Modal Electric and Magnetic Brain Imaging: Theory, Implementation, and Application

Sanjay Ghosh1,2, Chang Cai3*, Ali Hashemi4, Yijing Gao1, Stefan Haufe4, Kensuke Sekihara5, Ashish Raj1 and Srikantan S. Nagarajan1*

Introduction: Electromagnetic brain imaging is the reconstruction of brain activity from non-invasive recordings, namely electroencephalography (EEG) and magnetoencephalography (MEG), and from invasive intracranial recordings such as electrocorticography (ECoG), intracranial electroencephalography (iEEG), and stereo-electroencephalography (sEEG). These modalities are widely used to study the function of the human brain. Efficient reconstruction of the electrophysiological activity of neurons from EEG/MEG measurements is important for neuroscience research and clinical applications. An enduring challenge in this field is the accurate inference of brain signals of interest while accounting for all sources of noise that contribute to the sensor measurements. The statistical characteristics of the noise play a crucial role in the success of the brain source recovery process, which can be formulated as a sparse regression problem.

Method: In this study, we assume that the dominant environmental and biological sources of noise, which have high spatial correlations across sensors, can be expressed as a structured noise model based on variational Bayesian factor analysis. To the best of our knowledge, no existing algorithm has addressed the brain source estimation problem with such structured noise. We propose a robust empirical Bayesian framework for iteratively estimating the brain source activity and the statistics of the structured noise. In particular, we perform inference of the variational Bayesian factor analysis (VBFA) noise model iteratively in conjunction with source reconstruction.

Results: To demonstrate the effectiveness of the proposed algorithm, we perform experiments on both simulated and real datasets. Our algorithm achieves superior performance compared with several existing benchmark algorithms.

Discussion: A key aspect of our algorithm is that we do not require any additional baseline measurements to estimate the noise covariance from the sensor data under scenarios such as resting state analysis, and other use cases wherein a noise or artifactual source occurs only in the active period but not in the baseline period (e.g., neuro-modulatory stimulation artifacts and speech movements).

Electromagnetic brain imaging is an effective technique used intensively to understand the neural mechanisms underlying the complex human brain and behavior, and it is important for both neuroscience research and clinical applications (Phillips et al., 1997; He et al., 2018). In particular, electroencephalography (EEG) and magnetoencephalography (MEG) are two widely used techniques that provide non-invasive recordings of the electrical activity of the brain by sensing its remote electric and magnetic fields, respectively (Baillet et al., 2001). Electromagnetic brain imaging requires solving an ill-posed inverse problem to reconstruct brain activity (at cortical brain sources) from non-invasive EEG/MEG recordings. In particular, it is crucial to determine both the spatial location and the temporal dynamics of neurophysiological activity. In tomographic EEG/MEG source localization pipelines, a current dipole source is assumed at each voxel inside the brain. As a result, the number of potential brain source locations (thousands of voxels) is typically much larger than the number of sensors (just a few hundred). In addition, several types of noise (such as environmental interference and sensor noise) inevitably affect EEG/MEG signals (Michel and He, 2019; Edelman et al., 2015). Reconstructing brain source activity accurately from scalp EEG/MEG measurements is therefore a challenging task, which motivates the use of sophisticated mathematical or neurophysiological priors on both the brain signals and the noise statistics to improve the recovery of brain activity.

Forward models are integral to inverse modeling algorithms in electromagnetic brain imaging (Riaz et al., 2023). The primary current density is mathematically defined as a vector field on a continuum (a volume or a surface, depending on the assumptions made about the source space). The electric scalar field or magnetic vector field produced by the primary current density is then described by the quasi-static equations of electromagnetism. The brain and surrounding tissues are discretized so that these equations can be solved numerically. The forward problem is to compute the electric or magnetic fields given the location and timing of brain source activity; conversely, recovering the location and timing of the primary current sources is essentially the Cauchy inverse problem of electromagnetism (Riaz et al., 2023).

Several methods have been introduced over the past decades to solve the inverse problem of brain source imaging. Common inverse solvers for EEG/MEG source imaging can be broadly classified into three categories: model-based dipole fitting, dipole scanning methods, and distributed whole-brain source imaging methods (Baillet et al., 2001; Cai et al., 2018; Hosseini et al., 2018; Cai et al., 2023). Dipole fitting methods approximate brain activity with a small number of equivalent current dipoles (Scherg, 1990). These classical methods achieve good solutions when the source activity is relatively simple, consisting of only one or two dipoles. In addition, it is practically challenging for dipole fitting methods to determine the true number of current dipoles to be estimated. Dipole scanning methods, also referred to as spatial filtering or beamforming methods, estimate the time course at every candidate location while suppressing interference from activity at the other candidate source locations (Van Veen et al., 1997; Zumer et al., 2006; Cai et al., 2023). Examples of scanning techniques are minimum-variance adaptive beamforming (Robinson and Rose, 1992; Sekihara and Scholz, 1996) as well as several variants of adaptive beamformers (Sekihara and Nagarajan, 2008). The fidelity of such brain signal estimates is affected by many factors, such as the signal-to-noise ratio (SNR), source correlations, and the number of time samples. In particular, the reconstruction performance of beamforming methods can be significantly compromised if the brain sources are highly correlated, although recent Bayesian extensions overcome this limitation (Cai et al., 2023).
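
For concreteness, the unit-gain LCMV spatial filter of Van Veen et al. (1997) can be sketched as below for fixed-orientation sources. This is a minimal illustration, not the implementation used in this study: the lead field and data are random stand-ins, the function names are hypothetical, and the data covariance R is assumed to be well conditioned (practical pipelines usually regularize it).

```python
import numpy as np

def lcmv_weights(R, L):
    """Unit-gain LCMV filters for fixed-orientation sources.

    R : (m, m) sensor data covariance (assumed positive definite)
    L : (m, n) lead field, one column l_i per candidate source
    Returns W (m, n); column i is w_i = R^{-1} l_i / (l_i^T R^{-1} l_i).
    """
    Rinv_L = np.linalg.solve(R, L)               # R^{-1} l_i for every column
    denom = np.einsum("ij,ij->j", L, Rinv_L)     # l_i^T R^{-1} l_i
    return Rinv_L / denom                        # enforces w_i^T l_i = 1

def lcmv_power(R, L):
    """Scanned source power 1 / (l_i^T R^{-1} l_i) at each candidate location."""
    return 1.0 / np.einsum("ij,ij->j", L, np.linalg.solve(R, L))

# Toy example with random stand-ins for the lead field and sensor data
rng = np.random.default_rng(0)
m, n, K = 8, 20, 500
L = rng.standard_normal((m, n))
Y = rng.standard_normal((m, K))
R = Y @ Y.T / K
W = lcmv_weights(R, L)
power = lcmv_power(R, L)
source_ts = W.T @ Y                              # (n, K) estimated time courses
```

Each filter passes its own location with unit gain while minimizing output power; when two sources are strongly correlated, minimizing power partially cancels them, which is the weakness noted above.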

Distributed whole-brain source imaging methods do not require prior knowledge of the number of sources (Wipf and Nagarajan, 2009). These methods approximate the primary electrical current density by discretizing the whole brain volume, assuming a dipolar current source at each voxel. The task is then to estimate the amplitudes (and orientations) of the sources by minimizing a cost function (He et al., 2011). Some form of prior constraint or regularizer is used to obtain a unique and neurophysiologically meaningful solution (Ioannides et al., 1990). The minimum-norm estimation algorithm (MNE) (Hämäläinen and Ilmoniemi, 1994) minimizes the L2 norm of the solution, favoring smaller overall power of the brain activity. Variants of MNE include weighted MNE (wMNE) (Dale and Sereno, 1993), low-resolution brain electromagnetic tomography (LORETA) (Pascual-Marqui et al., 1994), and standardized LORETA (sLORETA) (Pascual-Marqui et al., 2002). However, L2-norm minimization methods produce diffuse estimates that lack sufficient resolution to localize and distinguish multiple sources. To overcome this limitation, algorithms based on L1-norm minimization (Ding and He, 2008; Liu et al., 2022) and on sparsity-inducing norms arising from empirical Bayesian inference (referred to as sparse Bayesian learning, SBL) (Wipf et al., 2010) have been developed. Friston et al. (2008) introduced a sparse solution for distributed sources, of the sort enforced by equivalent current dipole (ECD) models. These sparsity-based source reconstruction algorithms can be derived within a Bayesian framework (Wipf et al., 2010; Liu et al., 2019; Oikonomou and Kompatsiaris, 2020; Liu et al., 2020; Cai et al., 2021; Hashemi et al., 2021b, 2022; Ghosh et al., 2023; Cai et al., 2023). We argue that these Bayesian techniques are the most efficient because they estimate the model hyperparameters directly from the data using hierarchical algorithms.
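
As a minimal sketch of the L2-norm approach, assuming Tikhonov regularization with a hypothetical parameter `lam`, the MNE solution can be computed in sensor space as below. The lead field and data are random stand-ins and the value of `lam` is purely illustrative.

```python
import numpy as np

def minimum_norm_estimate(L, Y, lam):
    """Regularized minimum-norm estimate X = L^T (L L^T + lam I)^{-1} Y.

    Equivalent to argmin_X ||Y - L X||_F^2 + lam ||X||_F^2, but needs only an
    m x m solve, which is cheap because m (sensors) << n (voxels).
    """
    m = L.shape[0]
    G = L @ L.T + lam * np.eye(m)
    return L.T @ np.linalg.solve(G, Y)

rng = np.random.default_rng(1)
L = rng.standard_normal((10, 200))   # 10 sensors, 200 voxels (stand-in lead field)
Y = rng.standard_normal((10, 50))    # 50 time samples
X_mne = minimum_norm_estimate(L, Y, lam=0.1)
```

Because the quadratic penalty spreads energy over every voxel, the estimate is generally non-zero almost everywhere, which is the diffuseness that motivates the sparse alternatives discussed in this paragraph.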
Importantly, the Champagne algorithm (Wipf et al., 2010) is derived in an empirical Bayesian fashion and incorporates deep theoretical ideas about sparse source recovery from noisy, constrained measurements. Inspired by its promising performance, several researchers have attempted to improve upon the Champagne algorithm. One potential direction of improvement is to accurately model noise that exhibits structured precision parameters (Liu et al., 2020).

The key challenge in electromagnetic brain imaging is the accurate inference of brain signals of interest while accounting for all sources of noise that contribute to the sensor measurements. The statistical characteristics of the noise in the sensor data play a crucial role in the success of sparse source recovery, and in particular in the working of Bayesian algorithms for electromagnetic brain imaging. Existing studies have considered noise covariance matrices with either diagonal (Wipf et al., 2010; Cai et al., 2019) or full structure (Hashemi et al., 2022). In this study, we consider another type of realistic noise whose covariance can be characterized by a structured matrix. This type of noise arises when there are just a few active sources of environmental noise, each of which may be picked up by multiple MEG/EEG sensors with high spatial correlations. A key aspect of our noise estimation algorithm is that it does not require any additional baseline measurements to estimate the noise covariance from the sensor data, for example in resting-state analysis and in other use cases wherein a noise or artifactual source occurs only in the active period but not in the baseline period (e.g., neuromodulatory stimulation artifacts and speech movements). To the best of our knowledge, no existing algorithm has addressed the brain source estimation problem with a structured noise covariance.

The main contributions of this paper are as follows:

1. We introduce a novel robust empirical Bayesian framework for electromagnetic brain imaging under the structured noise covariance assumption. In particular, we perform inference of the variational Bayesian factor analysis (VBFA) noise model iteratively in conjunction with source reconstruction. This yields a tractable algorithm for iteratively estimating the noise covariance and the brain source activity. We found the proposed algorithm to be robust to initialization and computationally efficient.

2. The proposed algorithm does not require any additional baseline measurements to estimate noise covariance from sensor data. We note that this is not the case for many of the existing algorithms for electromagnetic brain imaging.

3. We perform extensive experiments to demonstrate the effectiveness of the proposed electromagnetic brain imaging algorithm on both simulated and real datasets. In particular, we quantify the accuracy of source localization and of source time-course estimation for simulated brain noise with a structured covariance matrix. We show that the new algorithm achieves competitive performance with respect to benchmark methods on both synthetic and real MEG data and is able to resolve distinct and functionally relevant brain areas.

The remainder of this paper is organized as follows. In Section 2.1, we introduce the generative model of the inverse problem; Table 1 summarizes the variables and definitions used in Section 2. The proposed Bayesian formulation, along with a brief account of existing Bayesian frameworks, is presented in Sections 2.2 and 2.3. We then present experiments applying our approach to synthetic (Section 3) and real MEG data (Section 4), where we also compare the proposed algorithm with baseline and state-of-the-art methods for electromagnetic brain imaging. Finally, we discuss implications and future directions in Section 5.

In the typical electromagnetic brain imaging problem setup, brain activity is modeled by a number of electric current dipoles, where the location, orientation, and magnitude of each dipole collectively determine the signal observed at the EEG/MEG sensors. The position of the dipoles within the brain contains valuable information on brain function, which is used in clinical applications and cognitive neuroscience studies (Leahy et al., 1998; Gross, 2019). The inverse problem of estimating the locations and moments of the current dipoles from the recorded EEG/MEG signals is ill-posed.

The forward model, describing the EEG/MEG measurements as a function of the brain sources, is given by

$$\mathbf{y}_k = L\mathbf{x}_k + \mathbf{z}_k, \qquad (1)$$

where $\mathbf{y}_k \in \mathbb{R}^{m}$ is the sensor measurement at time point k and m is the number of sensors. Moreover, $\mathbf{x}_k \in \mathbb{R}^{n}$ is the activity of the brain sources at time point k, and n is the number of voxels. The whole time series {y1, y2, …, yK} is collectively denoted y, and {x1, x2, …, xK} is collectively denoted x. The lead-field matrix is given by $L \in \mathbb{R}^{m \times n}$, whose columns reflect the sensor responses induced by unit current sources. In the simulations, we assume a pre-defined orientation (e.g., the normal constraint) for the local lead field at each voxel, so that it reduces to an m × 1 vector (Sekihara and Nagarajan, 2015). For real data, however, we use a three-column lead field for each voxel and estimate the source time series at the orientation of maximum power at each voxel. Furthermore, $\mathbf{z}_k \in \mathbb{R}^{m}$ refers to additive noise in the measurements not arising from brain sources. We assume that zk is drawn from a zero-mean multivariate Gaussian distribution with precision matrix Λ, that is, with covariance Λ−1. Here, noise refers to any background interference, including biological and environmental sources outside the span of the lead field, as well as sensor noise. We assume that the EEG/MEG measurements are collected for spontaneous brain activity (i.e., resting state), so separate recording windows capturing noise-only activity might not be available. Noise-only recordings may also be unavailable in task-induced contrastive experimental designs where noise or artifact signals are present only in the active condition but not in the baseline. One example is a paradigm involving active movement, such as speaking, in which any artifacts caused by the movement are present in the post-movement period; the pre-movement baseline therefore cannot be used to estimate the noise statistics.
Therefore, here, we jointly infer both source estimate and noise statistics from the same data segment as described below.
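
To make the generative model concrete, the snippet below simulates Equation 1 with a low-rank-plus-diagonal noise covariance, i.e., a few interference sources picked up by many sensors plus independent sensor noise. All sizes, the random lead field, and the noise parameters are hypothetical choices for illustration, not the simulation settings of this study.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, K, q = 32, 500, 480, 3   # sensors, voxels, time points, noise factors (illustrative)

L = rng.standard_normal((m, n))              # stand-in lead field (a real one comes from a head model)
X = np.zeros((n, K))
active = rng.choice(n, size=5, replace=False)
X[active] = rng.standard_normal((5, K))      # only a few voxels are active

# Structured noise: q interference sources seen by many sensors + independent sensor noise
A = rng.standard_normal((m, q))              # spatial patterns of the interference sources
d = rng.uniform(0.1, 0.5, size=m)            # per-sensor noise variances
noise_cov = A @ A.T + np.diag(d)             # Lambda^{-1}: low rank plus diagonal
Z = rng.multivariate_normal(np.zeros(m), noise_cov, size=K).T   # (m, K)

Y = L @ X + Z                                # y_k = L x_k + z_k for k = 1..K
Lambda = np.linalg.inv(noise_cov)            # noise precision matrix
```

Because the same few columns of A contribute to every sensor, the off-diagonal entries of the noise covariance are large, which is exactly the spatially correlated interference a diagonal noise model cannot capture.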

Given prior distributions of sources and noise, the generative model in Equation 1 becomes a probabilistic model. We assume a zero-mean Gaussian prior with diagonal covariance Φ = diag(ϕ) for the underlying source distribution. In other words, $p(\mathbf{x}_k) = \mathcal{N}(\mathbf{x}_k \mid \mathbf{0}, \Phi)$, where the diagonal of Φ contains n distinct unknown variances associated with the n brain sources.

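We propose to solve the inverse problem within the Bayesian learning framework. Modeling independent sources through a zero-mean Gaussian prior with a diagonal covariance matrix leads to sparse source distributions; that is, at the optimum, many of the estimated source variances are zero. The goal is to find the maximum a posteriori (MAP) solution for xk. The posterior probability p(xk|yk) can be derived using Bayes' theorem (Sekihara and Nagarajan, 2015):

$$p(\mathbf{x}_k \mid \mathbf{y}_k) = \frac{p(\mathbf{y}_k \mid \mathbf{x}_k)\, p(\mathbf{x}_k)}{p(\mathbf{y}_k)},$$

where $p(\mathbf{y}_k \mid \mathbf{x}_k) = \mathcal{N}(\mathbf{y}_k \mid L\mathbf{x}_k, \Lambda^{-1})$ and $p(\mathbf{x}_k) = \mathcal{N}(\mathbf{x}_k \mid \mathbf{0}, \Phi)$. In this case, it is straightforward to show that the posterior probability density p(xk|yk) is also Gaussian. Suppose the posterior probability takes the following form:

$$p(\mathbf{x}_k \mid \mathbf{y}_k) = \mathcal{N}\left(\mathbf{x}_k \mid \bar{\mathbf{x}}_k, \Gamma^{-1}\right),$$

where $\bar{\mathbf{x}}_k$ is the posterior mean and Γ is the posterior precision matrix. Adapting the derivation in Sekihara and Nagarajan (2015, Section B.3, pp. 233–235), we obtain

$$\Gamma = \Phi^{-1} + L^{\top}\Lambda L, \qquad \bar{\mathbf{x}}_k = \Gamma^{-1}L^{\top}\Lambda\,\mathbf{y}_k = \Phi L^{\top}\left(\Lambda^{-1} + L\Phi L^{\top}\right)^{-1}\mathbf{y}_k.$$
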
Note that both Φ and Λ are needed to compute $\bar{\mathbf{x}}_k$. Since neither is known, we initialize them and repeat the following three steps until convergence. At the (l + 1)-th iteration:

1. Estimate $\bar{\mathbf{x}}_k^{(l+1)}$, assuming known Λ(l) and Φ(l).

2. Estimate Φ(l+1), assuming known $\bar{\mathbf{x}}_k^{(l+1)}$ and Λ(l).

3. Estimate Λ(l+1), assuming known $\bar{\mathbf{x}}_k^{(l+1)}$ and Φ(l+1).
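
Step 1 relies on the posterior mean. The sketch below, assuming the Gaussian prior p(x_k) = N(0, Φ) and likelihood N(L x_k, Λ⁻¹), computes it in sensor space, x̄_k = Φ Lᵀ (Λ⁻¹ + L Φ Lᵀ)⁻¹ y_k, and in source space, x̄_k = Γ⁻¹ Lᵀ Λ y_k with Γ = Φ⁻¹ + Lᵀ Λ L, and checks that the two agree. Function names and toy sizes are hypothetical.

```python
import numpy as np

def posterior_mean_sensor_space(L, phi, Lambda, Y):
    """x_bar = Phi L^T (Lambda^{-1} + L Phi L^T)^{-1} y for every column y of Y (m x m solve)."""
    Sigma_y = np.linalg.inv(Lambda) + (L * phi) @ L.T      # model data covariance
    return (phi[:, None] * L.T) @ np.linalg.solve(Sigma_y, Y)

def posterior_mean_source_space(L, phi, Lambda, Y):
    """x_bar = Gamma^{-1} L^T Lambda y with Gamma = Phi^{-1} + L^T Lambda L (n x n solve)."""
    Gamma = np.diag(1.0 / phi) + L.T @ Lambda @ L          # posterior precision
    return np.linalg.solve(Gamma, L.T @ Lambda @ Y)

rng = np.random.default_rng(0)
m, n, K = 6, 15, 4
L = rng.standard_normal((m, n))
phi = rng.uniform(0.5, 2.0, size=n)        # diagonal of Phi (all positive here)
B = rng.standard_normal((m, m))
Lambda = B @ B.T + m * np.eye(m)           # an arbitrary SPD noise precision
Y = rng.standard_normal((m, K))            # columns are y_1, ..., y_K
X1 = posterior_mean_sensor_space(L, phi, Lambda, Y)
X2 = posterior_mean_source_space(L, phi, Lambda, Y)
```

The sensor-space form is the practical one, since m is a few hundred while n is several thousand; the source-space form also breaks down once some ϕ_i reach zero, which sparse learning deliberately allows.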

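We estimate Φ in the (l + 1)-th iteration by maximizing the following cost function, defined as the logarithm of the marginal likelihood p(y|Φ):

$$\mathcal{F}(\Phi) = \log p(\mathbf{y} \mid \Phi) = -\frac{K}{2}\log\left|\Sigma_y\right| - \frac{1}{2}\sum_{k=1}^{K}\mathbf{y}_k^{\top}\Sigma_y^{-1}\mathbf{y}_k + \text{const}, \qquad (4)$$

where the model data covariance is

$$\Sigma_y = \Lambda^{-1} + L\Phi L^{\top}.$$

Then, similar to Champagne (Wipf et al., 2010; Cai et al., 2019), we use convex bounding on the cost function (Equation 4). Maximizing $\mathcal{F}$ is equivalent to minimizing $\log|\Sigma_y| + \frac{1}{K}\sum_{k}\mathbf{y}_k^{\top}\Sigma_y^{-1}\mathbf{y}_k$, which is upper-bounded by

$$\mathcal{L}(\Phi, \mathbf{x}, g) = \sum_{i=1}^{n} g_i\phi_i - g_0 + \frac{1}{K}\sum_{k=1}^{K}\left[(\mathbf{y}_k - L\mathbf{x}_k)^{\top}\Lambda(\mathbf{y}_k - L\mathbf{x}_k) + \mathbf{x}_k^{\top}\Phi^{-1}\mathbf{x}_k\right],$$

where g = diag(g1, g2, ⋯ , gn) and g0 are auxiliary variables. Setting the derivatives of $\mathcal{L}$ with respect to ϕi and gi to zero yields the update rules

$$g_i = \mathbf{l}_i^{\top}\Sigma_y^{-1}\mathbf{l}_i, \qquad \phi_i^{(l+1)} = \sqrt{\frac{\frac{1}{K}\sum_{k=1}^{K}\bar{x}_{ik}^{2}}{g_i}},$$

where $\mathbf{l}_i$ is the i-th column of L and $\bar{x}_{ik}$ is the i-th element of $\bar{\mathbf{x}}_k$. The update of Φ is then $\Phi^{(l+1)} = \mathrm{diag}\left(\phi_1^{(l+1)}, \ldots, \phi_n^{(l+1)}\right)$.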
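
A minimal sketch of steps 1 and 2 with the noise covariance held fixed, assuming the fixed-orientation convex-bounding update ϕ_i = sqrt(mean_k x̄_ik² / g_i) with g_i = l_iᵀ Σ_y⁻¹ l_i. Because this update is a majorization-minimization step, the negative log marginal likelihood should not increase between iterations, which the usage example tracks. The toy data and function names are hypothetical, and the noise step (step 3) is deliberately left out.

```python
import numpy as np

def champagne_cost(L, phi, noise_cov, Y):
    """log|Sigma_y| + (1/K) sum_k y_k^T Sigma_y^{-1} y_k, i.e. -(2/K) log p(y|Phi) up to a constant."""
    Sigma_y = noise_cov + (L * phi) @ L.T
    _, logdet = np.linalg.slogdet(Sigma_y)
    return logdet + np.sum(Y * np.linalg.solve(Sigma_y, Y)) / Y.shape[1]

def champagne_step(L, phi, noise_cov, Y):
    """One convex-bounding update of phi with the noise covariance held fixed."""
    Sigma_y = noise_cov + (L * phi) @ L.T
    X_bar = (phi[:, None] * L.T) @ np.linalg.solve(Sigma_y, Y)   # step 1: posterior mean
    g = np.einsum("ij,ij->j", L, np.linalg.solve(Sigma_y, L))    # g_i = l_i^T Sigma_y^{-1} l_i
    mean_power = np.mean(X_bar ** 2, axis=1)                     # (1/K) sum_k x_bar_ik^2
    return np.sqrt(mean_power / g), X_bar                        # step 2: new phi

rng = np.random.default_rng(0)
m, n, K = 12, 40, 100
L = rng.standard_normal((m, n))
X_true = np.zeros((n, K))
X_true[[3, 17]] = rng.standard_normal((2, K))                    # two active sources
noise_cov = 0.1 * np.eye(m)                                      # Lambda^{-1}, fixed here
Y = L @ X_true + rng.multivariate_normal(np.zeros(m), noise_cov, size=K).T

phi = np.ones(n)
costs = [champagne_cost(L, phi, noise_cov, Y)]
for _ in range(50):
    phi, X_bar = champagne_step(L, phi, noise_cov, Y)
    costs.append(champagne_cost(L, phi, noise_cov, Y))
```

Source variances of inactive voxels shrink toward zero over the iterations, which is how the sparsity described earlier emerges without an explicit L1 penalty.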

To estimate Λ, we perform inference on a variational Bayesian factor analysis model of the residual noise at the l-th iteration (Nagarajan et al., 2007):

$$\mathbf{z}_k = \mathbf{y}_k - L\bar{\mathbf{x}}_k, \qquad k = 1, \ldots, K,$$

where zk is the residual noise at the k-th time point. We note that estimating a multivariate Gaussian noise process becomes intractable if, at the same time, the source covariance is non-diagonal and non-sparse and the noise covariance is full rank. The structured noise assumption enables accurate estimation with variational Bayesian factor analysis, which is robust to small data sizes and to the specification of the underlying factor dimension. Our aim is the joint estimation of a diagonal, sparse source covariance and a structured noise covariance. Specifically, we perform a factor analysis-based decomposition of zk as follows:

$$\mathbf{z}_k = A\mathbf{u}_k + \boldsymbol{\varepsilon},$$

where A ∈ ℝm×q is a mixing matrix, uk is a q-dimensional column vector of factors, and ε is modeling noise. Note that we drop the iteration index l to simplify notation.

We further assume the prior probability distribution of the factor uk to be a zero-mean Gaussian whose precision matrix is the identity matrix:

$$p(\mathbf{u}_k) = \mathcal{N}(\mathbf{u}_k \mid \mathbf{0}, I).$$

We define the j-th row of the mixing matrix A as the column vector aj, such that

$$A = [\mathbf{a}_1, \mathbf{a}_2, \ldots, \mathbf{a}_m]^{\top}.$$

We assume the prior distribution of aj to be

$$p(\mathbf{a}_j) = \mathcal{N}\left(\mathbf{a}_j \mid \mathbf{0}, (\sigma_j\alpha)^{-1}\right),$$

where σj denotes the j-th diagonal element of the modeling noise precision matrix Σ, and α = diag(α1, α2, …, αq) is a diagonal matrix. It can further be shown that the posterior probability distribution is Gaussian:

$$p(\mathbf{a}_j \mid \mathbf{z}) = \mathcal{N}\left(\mathbf{a}_j \mid \bar{\mathbf{a}}_j, (\sigma_j\Psi)^{-1}\right),$$

where z = [z1, ⋯ , zK] is the residual signal of K time points, and $\bar{\mathbf{a}}_j$ and σjΨ are the mean and precision matrix of the posterior distribution.

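As stated above, the prior probability distribution of the factor uk is a zero-mean Gaussian with identity precision matrix, $p(\mathbf{u}_k) = \mathcal{N}(\mathbf{u}_k \mid \mathbf{0}, I)$. The factor activity u = [u1, ⋯ , uK] is assumed to be independent across time. Thus, the joint prior distribution has the form

$$p(\mathbf{u}) = \prod_{k=1}^{K}\mathcal{N}(\mathbf{u}_k \mid \mathbf{0}, I),$$

where I is the identity matrix of size q × q. The modeling noise ε is assumed to be zero-mean Gaussian:

$$p(\boldsymbol{\varepsilon}) = \mathcal{N}\left(\boldsymbol{\varepsilon} \mid \mathbf{0}, \Omega^{-1}\right),$$

where Ω is a diagonal precision matrix. One can then show that the posterior distribution p(uk|zk) is also Gaussian, which we write as

$$p(\mathbf{u}_k \mid \mathbf{z}_k) = \mathcal{N}\left(\mathbf{u}_k \mid \bar{\mathbf{u}}_k, \Sigma_u^{-1}\right),$$

where $\bar{\mathbf{u}}_k$ and Σu are the mean and precision, respectively.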

By using the variational Bayesian expectation maximization (EM) algorithm, it can be derived that

The hyper-parameters α and Ω can then be updated as follows:

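Finally, we iterate these updates until the free energy converges, and the covariance matrix of the structured noise, computed using only the signal of interest, is given by

$$\Lambda^{-1} = \bar{A}\bar{A}^{\top} + \Omega^{-1},$$

where $\bar{A} = [\bar{\mathbf{a}}_1, \ldots, \bar{\mathbf{a}}_m]^{\top}$ is the posterior mean of the mixing matrix.
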
We refer to the proposed brain source imaging method as structured noise Champagne (SNC). Its key novelty is the way the covariance of the residual noise is estimated within each iteration.
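
The noise step can be illustrated with a simpler stand-in. The sketch below fits a maximum-likelihood factor analysis model z_k = A u_k + ε with the EM algorithm and returns the structured covariance A Aᵀ + Ω⁻¹. It is not the variational Bayesian factor analysis used by SNC: there are no priors on A, no α hyperparameters, and the number of factors q is fixed in advance. Function names, the PCA initialization, and the toy sizes are hypothetical choices.

```python
import numpy as np

def factor_analysis_em(Z, q, n_iter=300):
    """Maximum-likelihood factor analysis z_k = A u_k + eps, fitted by EM.

    Z : (m, K) zero-mean residual data; q : number of factors.
    Returns the mixing matrix A (m, q) and the diagonal noise precision omega (m,).
    """
    m, K = Z.shape
    S = Z @ Z.T / K                                   # sample covariance of the residual
    evals, evecs = np.linalg.eigh(S)                  # ascending eigenvalues
    A = evecs[:, -q:] * np.sqrt(evals[-q:])           # PCA initialization
    omega = 1.0 / np.diag(S)                          # diagonal of Omega
    I_q = np.eye(q)
    for _ in range(n_iter):
        # E-step: posterior precision of u_k and the map z_k -> E[u_k | z_k]
        Sigma_u = I_q + A.T @ (omega[:, None] * A)
        B = np.linalg.solve(Sigma_u, A.T * omega)     # (q, m): E[u_k | z_k] = B z_k
        Euu = np.linalg.inv(Sigma_u) + B @ S @ B.T    # (1/K) sum_k E[u_k u_k^T]
        # M-step: update the mixing matrix and the diagonal noise precision
        A = S @ B.T @ np.linalg.inv(Euu)
        omega = 1.0 / np.maximum(np.diag(S - A @ B @ S), 1e-10)
    return A, omega

def structured_noise_cov(A, omega):
    """Structured covariance A A^T + Omega^{-1} (low rank plus diagonal)."""
    return A @ A.T + np.diag(1.0 / omega)

# Toy check: residuals drawn from a known low-rank-plus-diagonal covariance
rng = np.random.default_rng(1)
m, q, K = 10, 2, 20000
A_true = rng.standard_normal((m, q))
C_true = A_true @ A_true.T + np.diag(rng.uniform(0.2, 0.6, size=m))
Z = rng.multivariate_normal(np.zeros(m), C_true, size=K).T
A_hat, omega_hat = factor_analysis_em(Z, q)
C_hat = structured_noise_cov(A_hat, omega_hat)
rel_err = np.linalg.norm(C_hat - C_true) / np.linalg.norm(C_true)
```

Only the product A Aᵀ is identifiable (A is determined up to a rotation of the factors), so the comparison is made on the covariance rather than on A itself. In a full SNC-style loop, Z would be the residual y_k − L x̄_k from the current source estimate.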

In this section, we focus on experiments with simulated data. In particular, we follow a standard protocol from the literature for simulating MEG source signals. We combine this with simulated structured noise of which the precision matrix has low rank. More details are provided in Section 3.3.

The performance of brain source reconstruction is evaluated using free-response receiver operating characteristics (FROC) (Cai et al., 2021). FROC measures the probability of detecting a true source in an image vs. the expected number of false-positive detections per image. We further compute the A′ metric, which is the area under the FROC curve (Owen et al., 2012; Snodgrass and Corwin, 1988). The A′ metric is computed from the hit rate (hr) of correctly detecting the active sources and the false-positive rate (fr). We define the hit rate as the number of hits for dipolar sources divided by the true number of dipolar sources in the brain. A dipolar source is considered hit when the recovered signal power exceeds a certain threshold. First, the voxels localized by each algorithm that are included in the calculation of hit rates are defined as voxels that are (i) at least 1% of the maximum activation of the localization result and (ii) within the largest 10% of all voxels in the brain. Within this subset of voxels, we test whether each voxel is among the ten nearest voxels to a true source. If the estimated activity of a particular voxel lies within this neighborhood of a true source, that source is labeled as a “hit.” We also define the false-positive rate (fr) as the number of potential false-positive dipolar sources divided by the total number of false dipolar sources. A larger A′ value indicates a higher hit rate relative to the false-positive rate. The expression for the A′ metric (Owen et al., 2012) is given by:

$$A' = \begin{cases} \dfrac{1}{2} + \dfrac{(hr - fr)(1 + hr - fr)}{4\,hr\,(1 - fr)}, & hr \ge fr,\\[2ex] \dfrac{1}{2} - \dfrac{(fr - hr)(1 + fr - hr)}{4\,fr\,(1 - hr)}, & hr < fr. \end{cases}$$

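The A′ expression of Snodgrass and Corwin (1988) translates directly into code. The function name is hypothetical, and the inputs are assumed to be rates already computed with the hit and false-positive rules described above.

```python
def a_prime(hr, fr):
    """A' (Snodgrass and Corwin, 1988) from a hit rate and a false-positive rate in [0, 1]."""
    if not (0.0 <= hr <= 1.0 and 0.0 <= fr <= 1.0):
        raise ValueError("hr and fr must lie in [0, 1]")
    if hr == fr:
        return 0.5                                  # chance-level detection
    if hr > fr:
        return 0.5 + (hr - fr) * (1.0 + hr - fr) / (4.0 * hr * (1.0 - fr))
    return 0.5 - (fr - hr) * (1.0 + fr - hr) / (4.0 * fr * (1.0 - hr))

perfect = a_prime(1.0, 0.0)     # every source hit, no false positives
typical = a_prime(0.8, 0.2)
```

Handling hr == fr separately avoids a division by zero at hr = fr = 0 and returns the chance value of 0.5.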
In our experiments, we also study the accuracy of the time-course reconstructions, measured by the correlation coefficient between the seed and estimated source time courses for each hit. The overall performance, covering both the accuracy of localization and the reconstruction of time courses, is computed by combining A′ and the mean correlation coefficient. The aggregated performance (AP) is defined as in Cai et al. (2019):

AP values lie in [0, 1], and a higher AP indicates better overall performance in source localization and time-course reconstruction.

We compare our method structured noise Champagne (SNC) with the following existing source localization methods:

1. Minimum current estimate (MCE) (Matsuura and Okabe, 1995),

2. Standardized low-resolution brain electromagnetic tomography (sLORETA) (Pascual-Marqui et al., 2002),

3. Linearly constrained minimum variance (LCMV) (Van Veen et al., 1997),

4. Noise learning Champagne (NLC) (Cai et al., 2021).

We apply these existing methods to data generated under the targeted structured noise model. For experiments with real data, we first estimate the noise variance using the variational Bayesian factor analysis (VBFA) algorithm (Nagarajan et al., 2007) and then use the original Champagne algorithm (Wipf et al., 2010) to estimate the locations of active brain sources. This result would set an upper bound on the performance of Champagne with noise learning when baseline data are not available for real data.

We generate source signal data by simulating dipole sources with a fixed orientation. Damped sinusoidal oscillations with frequencies sampled randomly between 1 and 75 Hz are created as voxel source time courses. The time-courses are then projected to the sensors using the lead-field matrix generated by the forward model. We consider 271 MEG sensors and a single-shell spherical model (Hallez et al., 2007) implemented in SPM12 (http://www.fil.ion.ucl.ac.uk/spm) at the default spatial resolution of 8,196 voxels corresponding approximately to a 5-mm inter-voxel spacing. We simulated 480 samples for which sampling frequency is 1200 Hz and signal duration is 0.8 s.
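
A minimal sketch of the source simulation, assuming exponentially damped sine waves whose decay rates and phases are drawn at random (the text does not specify them). The 271 × 5 matrix is a random stand-in for the lead-field columns of the active voxels, not an SPM12 lead field, and the sampling settings simply reuse the values quoted above.

```python
import numpy as np

fs = 1200.0                    # sampling frequency (Hz)
n_samples = 480
t = np.arange(n_samples) / fs  # time axis in seconds
rng = np.random.default_rng(0)

def damped_sinusoid(freq_hz, decay_per_s, phase, t):
    """exp(-decay * t) * sin(2*pi*f*t + phase); decay and phase are illustrative choices."""
    return np.exp(-decay_per_s * t) * np.sin(2.0 * np.pi * freq_hz * t + phase)

n_sources = 5
freqs = rng.uniform(1.0, 75.0, size=n_sources)          # frequencies drawn from 1-75 Hz
decays = rng.uniform(1.0, 10.0, size=n_sources)         # hypothetical decay rates (1/s)
phases = rng.uniform(0.0, 2.0 * np.pi, size=n_sources)
S = np.stack([damped_sinusoid(f, a, p, t) for f, a, p in zip(freqs, decays, phases)])

L_active = rng.standard_normal((271, n_sources))        # stand-in for the active lead-field columns
B = L_active @ S                                        # noise-free sensor signals, (271, 480)
```

Projecting only the active columns is equivalent to multiplying the full lead field by a source matrix that is zero everywhere else.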

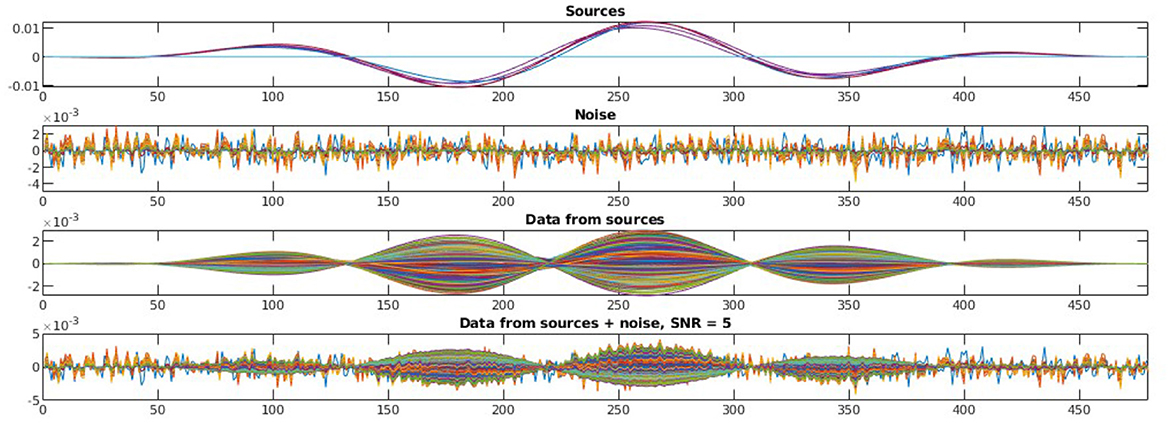

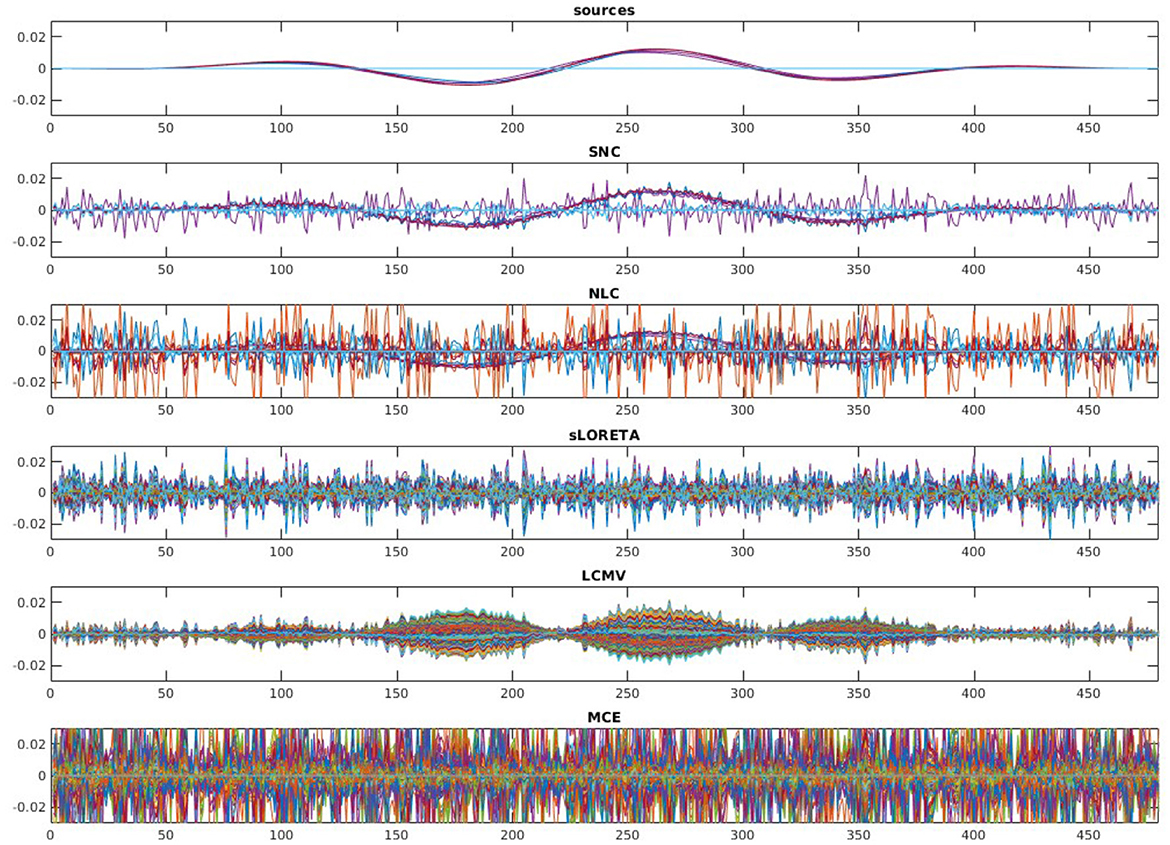

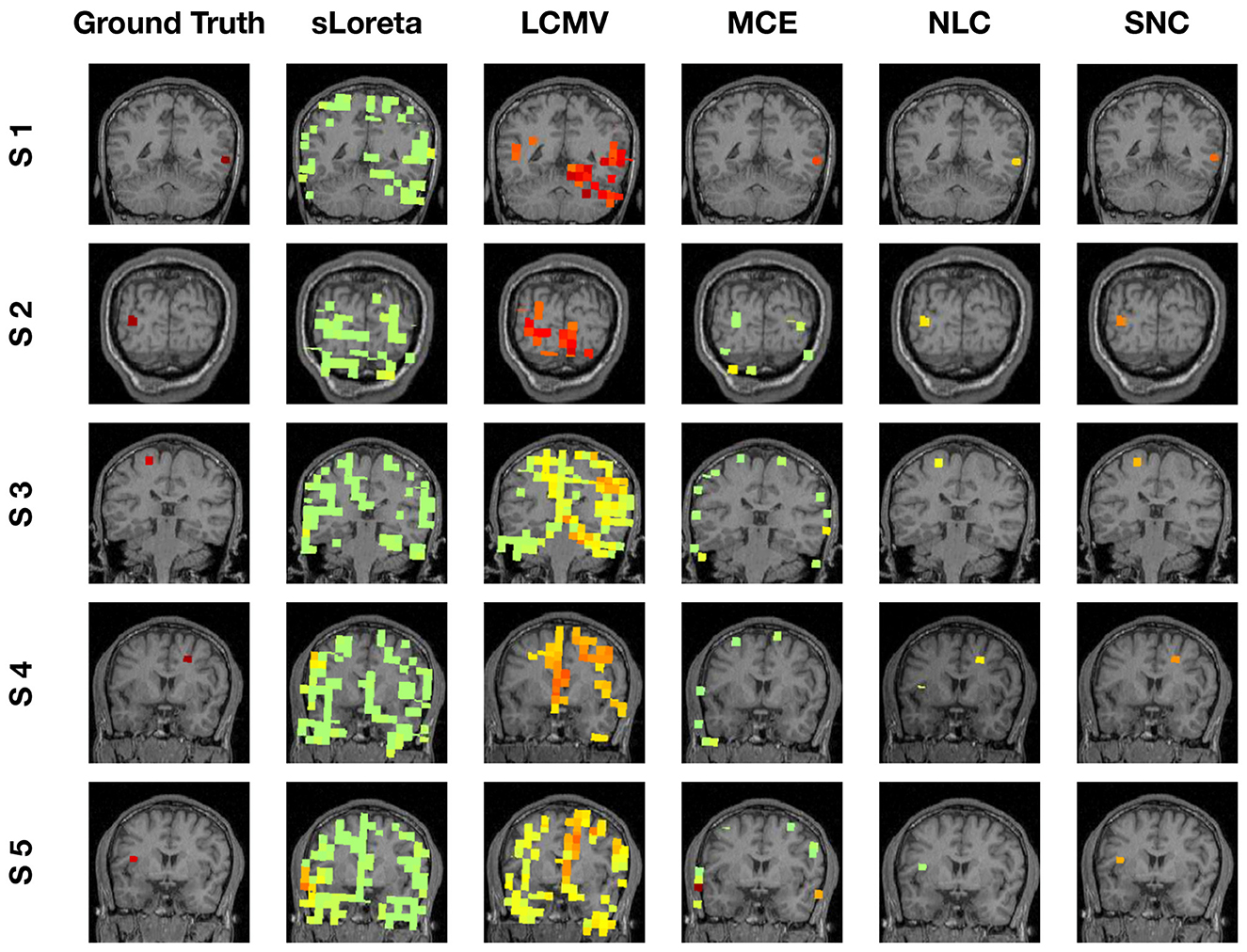

To evaluate the robustness of the proposed method, we draw the noise from real brain noise consisting of actual resting-state sensor recordings collected from ten human subjects, presumed to contain only spontaneous brain activity and sensor noise. The signal-to-noise ratio (SNR) and the correlations between voxel time courses are varied to examine algorithm performance; both are defined as in Owen et al. (2012). We show an example of time-course reconstruction using our proposed method in Figures 1, 2. The top plot in Figure 1 shows the simulated ground-truth time courses of the five active sources. It is followed by the noise and by the time courses at the MEG sensors before the noise is added. Finally, the bottom plot in Figure 1 shows the measured signal at the MEG sensors with additive noise of 5 dB. The time series reconstructed by our method SNC are shown in Figure 2, together with the reconstructions obtained using NLC (Cai et al., 2021), sLORETA (Pascual-Marqui et al., 2002), LCMV (Van Veen et al., 1997), and MCE (Matsuura and Okabe, 1995). Notably, SNC reconstructs the time series best. For all simulations, the inter-source correlation coefficient was fixed at 0.99 and the SNR was fixed at 3 dB. To highlight source localization by all five methods, Figure 3 shows the power of the reconstructed time series at each voxel alongside the ground truth, summarizing the localization performance of the methods in the simulation experiment. Our method SNC localizes the brain sources most accurately.
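
The exact SNR definition follows Owen et al. (2012). As an illustration only, the sketch below assumes the common power-ratio definition SNR = 10 log10(‖B‖²_F / ‖cZ‖²_F), where B is the noise-free sensor signal and Z the noise, and rescales Z to reach a target SNR. The function name and the random stand-in data are hypothetical.

```python
import numpy as np

def add_noise_at_snr(B, Z, snr_db):
    """Scale noise Z so that 10*log10(||B||_F^2 / ||c Z||_F^2) = snr_db and return (B + c Z, c).

    The Frobenius-norm power ratio is an assumed SNR definition for illustration.
    """
    c = np.linalg.norm(B) / (np.linalg.norm(Z) * 10.0 ** (snr_db / 20.0))
    return B + c * Z, c

rng = np.random.default_rng(0)
B = rng.standard_normal((271, 480))     # stand-in noise-free sensor signal
Z = rng.standard_normal((271, 480))     # stand-in noise (e.g., resting-state recordings)
Y, c = add_noise_at_snr(B, Z, snr_db=3.0)
achieved = 10 * np.log10(np.sum(B ** 2) / np.sum((c * Z) ** 2))
```

Scaling the noise rather than the signal keeps the simulated source amplitudes fixed across SNR conditions.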

Figure 1. Simulated MEG signal, structured noise, and measurement from MEG sensors. In this experiment, we have five brain sources as we see on the top plot. The second plot shows the noise generated as per our model of structured noise. The third plot presents the measured signal at the MEG sensor resulting only from the active brain source time-series—Lx in Equation 1. Finally, the time-series at the bottom is the one captured at MEG sensors resulting from both active sources and noise—y in Equation 1. For all these, we show 480 time-points.

Figure 2. Reconstruction results on the simulated MEG measurement shown in Figure 1. Recall that we have five brain sources in this experiment. A good brain source imaging method should recover the brain source signals while also suppressing the noise. Notice that, for the considered structured noise model, noise learning Champagne (NLC) and our method structured noise Champagne (SNC) mitigate the noise more successfully than the other methods. Between them, SNC produces cleaner brain source time series. In this experiment, standardized low-resolution brain electromagnetic tomography (sLORETA), linearly constrained minimum variance (LCMV), and minimum current estimate (MCE) fail to achieve satisfactory reconstruction.

Figure 3. Localization results of simulation experiment (visualization of brain sources power). In this experiment, we simulated five brain sources: S1, S2, S3, S4, and S5. For each brain source, we display the localization by all five methods. Notice that our method SNC is able to localize the sources most accurately.

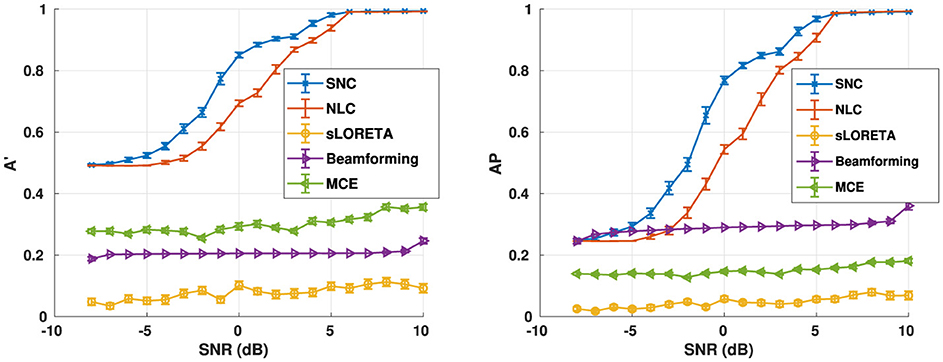

We also evaluate algorithm performance as a function of SNR, as shown in Figure 4. The reconstruction performance is evaluated for five randomly seeded dipolar sources with an inter-source correlation coefficient of 0.99. The simulations were performed at SNRs from –8 dB to 10 dB at a step of 1 dB. Both metrics suggest that our method structured noise Champagne (SNC) is able to localize the active brain sources more accurately than the existing methods.

Figure 4. Aggregate performance in simulations for varying noise levels, with the number of noise factors m = 40. In this experiment, we use five active brain sources in our simulation, and the structured noise is drawn from $\mathcal{N}(\mathbf{0}, \Lambda^{-1})$. Our method uses knowledge of this structured noise model to reconstruct the voxel-level time series. Notice that our method structured noise Champagne (SNC) localizes the active brain sources more accurately than every existing method.

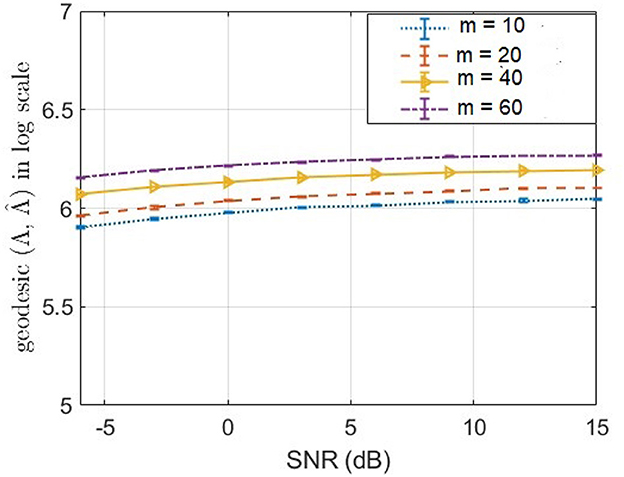

Our method structured noise Champagne (SNC) differs from the original Champagne algorithm in the noise precision update step. In the original Champagne algorithm, Λ is learned from available baseline or control measurements (Wipf et al., 2010). In contrast, SNC updates its estimate of a structured noise covariance without baseline measurements. In Figure 5, we demonstrate how well the structured noise is reconstructed. In particular, we compute the geodesic distance (Venkatesh et al., 2020) between the true structured noise precision and the one reconstructed by SNC, and we plot this distance across a range of signal-to-noise ratios (SNR) for different ranks. SNC estimates the structured noise fairly consistently across SNR values. However, we also found empirically that there is still scope for improvement in the estimated noise precision, which we will address with new technical innovations in future research.
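
Figure 5 uses the geodesic distance of Venkatesh et al. (2020). Assuming this is the usual affine-invariant Riemannian distance between symmetric positive-definite matrices, d(P, Q) = ‖log(P^(-1/2) Q P^(-1/2))‖_F, it can be computed from the eigenvalues of P^(-1/2) Q P^(-1/2) as sketched below. The function name and the random test matrices are hypothetical.

```python
import numpy as np

def spd_geodesic_distance(P, Q):
    """Affine-invariant distance ||log(P^{-1/2} Q P^{-1/2})||_F for SPD matrices P, Q."""
    w, V = np.linalg.eigh(P)
    P_inv_sqrt = (V / np.sqrt(w)) @ V.T             # P^{-1/2}
    M = P_inv_sqrt @ Q @ P_inv_sqrt                 # SPD, so its eigenvalues are positive
    lam = np.linalg.eigvalsh((M + M.T) / 2.0)       # symmetrize against round-off
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))

rng = np.random.default_rng(0)
G = rng.standard_normal((5, 5))
P = G @ G.T + 5 * np.eye(5)          # e.g., true noise precision
H = rng.standard_normal((5, 5))
Q = H @ H.T + 5 * np.eye(5)          # e.g., estimated noise precision
d = spd_geodesic_distance(P, Q)
```

Unlike a Frobenius-norm difference, this distance is invariant to congruence transforms (d(XPXᵀ, XQXᵀ) = d(P, Q) for invertible X), so it does not depend on the overall scaling of the sensor data.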

Figure 5. Geodesic distance (Venkatesh et al., 2020) between true and estimated precision matrices of structured noise for different numbers of noise factors m.

Real MEG data were acquired in the Biomagnetic Imaging Laboratory at the University of California, San Francisco (UCSF) with a CTF Omega 2000 whole-head MEG system from VSM MedTech (Coquitlam, BC, Canada) at a 1,200 Hz sampling rate. Formal consent was obtained from each participant for the use of their data in research studies. All study protocols were approved by the Committee for Human Research at UCSF. The lead field for each subject was calculated in NUTMEG (Hinkley et al., 2020) using a single-sphere head model and an 8-mm voxel grid corresponding to 5,300 voxels. Each lead-field column was normalized to unit norm. The MEG data were digitally filtered from 1 to 45 Hz to remove artifacts and DC offset. In addition, trials with clear artifacts or visible noise in the MEG sensors exceeding 10 pT fluctuations were excluded prior to source localization analysis. We evaluated our newly introduced brain source imaging method SNC on one real MEG auditory evoked field (AEF) dataset (Cai et al., 2021).
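
The preprocessing steps described above can be sketched as follows. The filter type and order (a zero-phase fourth-order Butterworth band-pass), the peak-to-peak reading of the 10 pT criterion, the function names, and the toy data are assumptions for illustration; they are not taken from the NUTMEG pipeline.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(data, fs=1200.0, low=1.0, high=45.0, order=4):
    """Zero-phase Butterworth band-pass along the last (time) axis."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)

def reject_trials(trials, threshold=10e-12):
    """Drop trials whose peak-to-peak amplitude on any sensor reaches threshold (10 pT, in tesla)."""
    ptp = trials.max(axis=-1) - trials.min(axis=-1)   # (n_trials, n_sensors)
    keep = np.all(ptp < threshold, axis=1)
    return trials[keep], keep

def normalize_leadfield(L):
    """Scale each lead-field column to unit Euclidean norm."""
    return L / np.linalg.norm(L, axis=0, keepdims=True)

rng = np.random.default_rng(0)
fs = 1200.0
t = np.arange(600) / fs
trials = 1e-13 * rng.standard_normal((20, 10, 600))     # (trials, sensors, samples) in tesla
trials[3, 2] += 2e-11 * np.sin(2 * np.pi * 10.0 * t)    # one trial with a large 10 Hz artifact
clean, keep = reject_trials(bandpass(trials, fs=fs))
L_norm = normalize_leadfield(rng.standard_normal((10, 300)))
```

Filtering before the amplitude check means slow drifts and DC offsets do not trigger rejection, while large in-band artifacts still do.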

In this section, we discuss the neural source localization performance of our method structured noise Champagne (SNC) on auditory evoked fields (AEF) in MEG data. AEF are often characterized as a function of latency, scalp topography, and the perceptual/cognitive process (Godey et al., 2001). Source localization of AEF from MEG measurements is a potential alternative for studying human brain function (Teale et al., 1996). In all experiments with AEF data, the neural responses were elicited during passive listening to binaural tones (600 ms duration, 1 kHz carrier frequency, 40 dB SL). AEF were analyzed in the post-stimulus window from +50 ms to +150 ms.

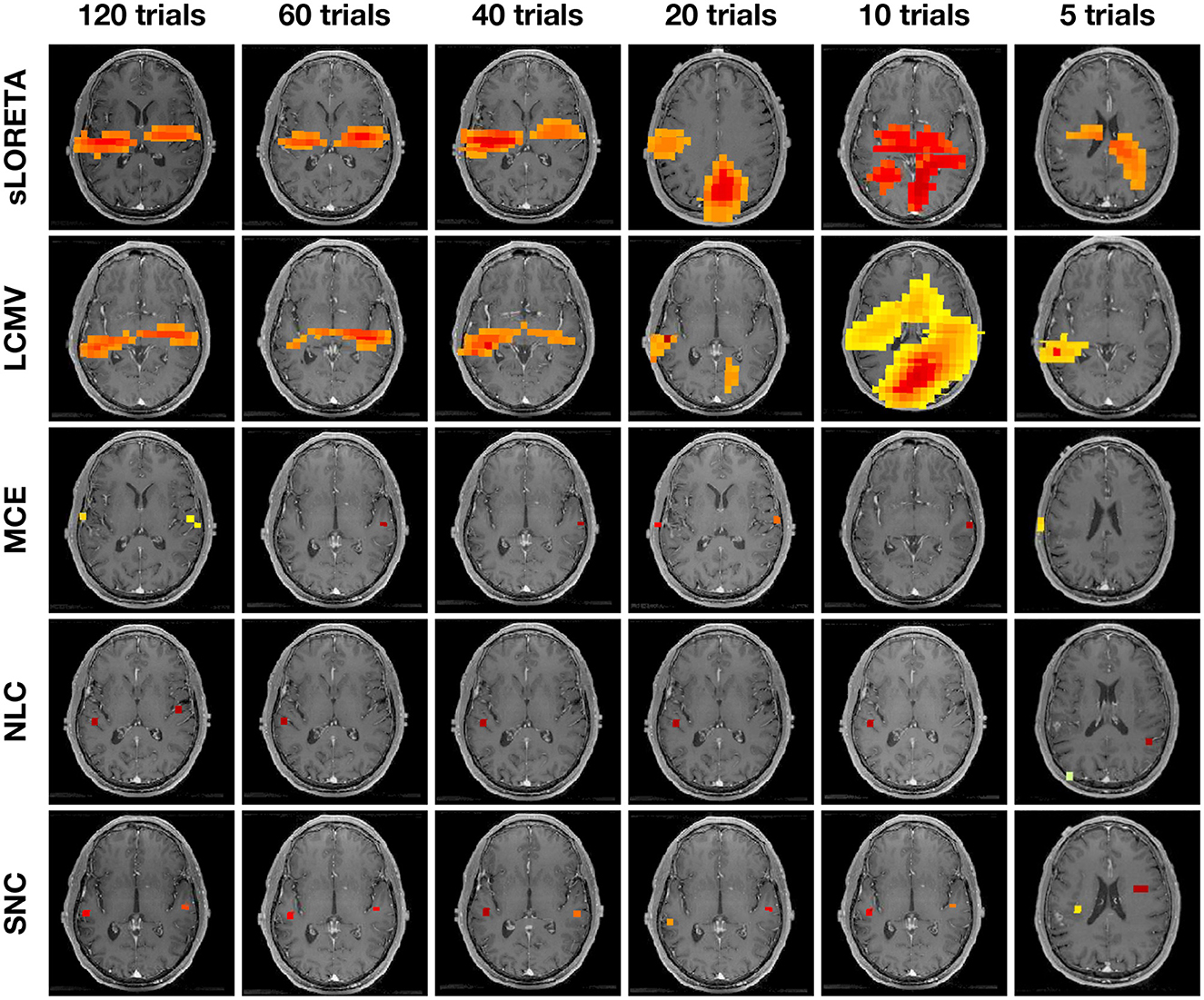

Figure 6 shows AEF localization results as a function of the number of trials for a single representative subject. We compare our results with those of sLORETA, LCMV, MCE, and NLC. For each algorithm, the power at each voxel around the M100 peak is plotted. SNC localizes the expected bilateral activation with focal reconstructions in all trial settings. Specifically, the activity localizes to Heschl's gyrus in the temporal lobe, the characteristic location of the primary auditory cortex. NLC also localizes the bilateral auditory activity, but the reconstruction shrinks on one side. The other algorithms are less robust than SNC: MCE localization is biased toward the edge of the head, whereas sLORETA and LCMV produce several areas of spurious activity. We further note that the LCMV beamformer is at a disadvantage in this structured noise scenario because of its well-described weakness with temporally correlated sources, such as those in the bilateral auditory cortices during AEFs.
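One way to compute a voxel power map "around the M100 peak" is sketched below. The sketch assumes that the peak latency is taken from the sensor root-mean-square (global field power) of the averaged data within the analysis window, and that power is averaged over ±10 ms around that peak. Both choices, and the function name, are hypothetical rather than taken from the article.

```python
import numpy as np


def m100_power_map(evoked: np.ndarray, source_ts: np.ndarray,
                   half_width: int = 12) -> np.ndarray:
    """Mean squared source amplitude per voxel around the M100 peak.

    evoked:     (n_sensors, n_times) averaged sensor data in the analysis window
    source_ts:  (n_voxels, n_times) reconstructed source time series, same samples
    half_width: samples on each side of the peak; 12 samples = 10 ms at 1,200 Hz
                (a hypothetical choice)
    """
    gfp = np.sqrt(np.mean(evoked ** 2, axis=0))  # sensor RMS at each time sample
    peak = int(np.argmax(gfp))                   # sample of the largest deflection
    lo = max(0, peak - half_width)
    hi = min(evoked.shape[1], peak + half_width + 1)
    return np.mean(source_ts[:, lo:hi] ** 2, axis=1)
```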

Figure 6. Auditory evoked field (AEF) localization results vs. number of trials for one representative subject using standardized low-resolution brain electromagnetic tomography (sLORETA), linearly constrained minimum variance (LCMV), minimum current estimate (MCE), noise learning Champagne (NLC), and our method, structured noise Champagne (SNC). NLC localizes the bilateral auditory activity, whereas MCE localization is biased toward the edge of the head. Both sLORETA and LCMV produce some areas of spurious brain activity.

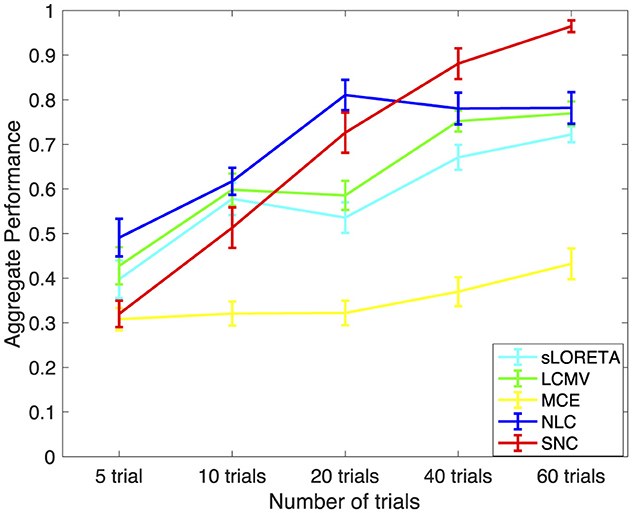

In Figure 7, we present the performance of sLORETA, LCMV, MCE, NLC, and SNC for AEF localization as a function of the number of trials for one subject. Error bars show the standard error. Trials were randomly drawn from the approximately 120 trials available for each subject, with the number of trials ranging from 5 to 60, and each condition was repeated over 30 times per subject. The brain activity estimated from approximately 120 trials serves as the ground truth. In general, performance improves with the number of trials for all algorithms. All algorithms perform similarly with fewer than 10 trials, but NLC and SNC perform better with more than 20 trials. Importantly, with more than 40 trials, SNC outperforms all other methods in localizing the active brain sources.
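The resampling protocol above can be sketched as follows: random subsets of 5 to 60 trials are drawn from about 120, the draw is repeated many times, and each estimate is scored against a reference computed from all trials. The `localize` and `score` callables are hypothetical placeholders. `localize` stands for any inverse method (for example, averaging the subset and running SNC), and `score` stands for whatever performance measure is used. The correlation in the usage lines is only a stand-in, not the article's aggregate metric.

```python
import numpy as np


def resampling_curve(trials, localize, score, trial_counts=(5, 10, 20, 40, 60),
                     n_repeats=30, seed=0):
    """Mean and standard error of a localization score vs. number of trials.

    trials:   (n_trials, n_sensors, n_samples) epochs from one subject
    localize: hypothetical callable mapping a trial subset to a voxel power map
    score:    hypothetical callable comparing an estimate with the reference map
    """
    rng = np.random.default_rng(seed)
    n_total = trials.shape[0]
    reference = localize(trials)  # "ground truth" estimated from all trials
    means, sems = [], []
    for k in trial_counts:
        scores = np.array([
            score(localize(trials[rng.choice(n_total, size=k, replace=False)]), reference)
            for _ in range(n_repeats)
        ])
        means.append(scores.mean())
        sems.append(scores.std(ddof=1) / np.sqrt(n_repeats))  # standard error
    return np.array(means), np.array(sems)


# Usage with stand-in callables (not the article's method or metric):
def power_of_average(subset):
    return (subset.mean(axis=0) ** 2).ravel()


def correlation(a, b):
    return float(np.corrcoef(a, b)[0, 1])


rng = np.random.default_rng(1)
signal = rng.standard_normal((10, 50))
noisy_trials = signal + 2.0 * rng.standard_normal((120, 10, 50))
means, sems = resampling_curve(noisy_trials, power_of_average, correlation)
```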

Figure 7. Aggregate performance vs. number of trials for auditory evoked field (AEF) data using standardized low-resolution brain electromagnetic tomography (sLORETA), linearly constrained minimum variance (LCMV), minimum current estimate (MCE), noise learning Champagne (NLC), and our method, structured noise Champagne (SNC). SNC achieves the best aggregate performance beyond 40 trials. As expected, performance also improves with the number of trials.

This study offers an efficient way to estimate the contribution of noise to the sensors without additional “baseline” or “control” data, while preserving robust reconstruction of complex brain source activity. The noise estimation in our algorithm is based on the principled idea of estimating noise statistics from the model residuals at each iteration of the alternating minimization in the Champagne algorithm. The key noise-learning step exploits the fact that the residual noise at each iteration exhibits structured precision statistics. The proposed algorithm can handle a variety of brain source configurations under high noise and interference without additional baseline measurements, a situation that commonly arises in analyses such as those of resting-state data. In this context, we note several noise and interference reduction strategies for EEG/MEG signals: signal space separation (SSS) (Taulu et al., 2005), signal-space projection (SSP) (Uusitalo and Ilmoniemi, 1997), and dual signal subspace projection (DSSP) (Sekihara et al., 2016). These are standard preprocessing techniques in the EEG/MEG analysis pipeline, designed specifically to mitigate noise and interference from the sensors and external sources. However, none of them is designed to reconstruct voxel-level time series. In contrast, SNC estimates the time series at each voxel from the sensor data.
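The alternating scheme described above can be sketched as follows: sources are updated under the current noise model, and the noise model is then refitted to the residuals. This is a simplified illustration, not the authors' SNC implementation. The source step uses the Champagne convex-bounding update for scalar sources. The noise step, however, swaps in plain maximum-likelihood factor analysis (EM) fitted to the residual covariance in place of variational Bayesian factor analysis. The sketch also ignores the posterior source covariance and starts from an arbitrary initial noise guess. Function names are hypothetical.

```python
import numpy as np


def fa_fit(C, m, n_iter=50, floor=1e-8, seed=0):
    """ML factor analysis of a covariance, C ~ A A^T + diag(psi), via EM.

    A plain EM stand-in for the variational Bayesian factor analysis used by SNC.
    """
    rng = np.random.default_rng(seed)
    M = C.shape[0]
    A = 0.1 * rng.standard_normal((M, m))               # factor loadings
    psi = np.maximum(np.diag(C), floor)                 # diagonal noise variances
    for _ in range(n_iter):
        S = A @ A.T + np.diag(psi)
        beta = np.linalg.solve(S, A).T                  # A^T S^{-1}, shape (m, M)
        Ezz = np.eye(m) - beta @ A + beta @ C @ beta.T  # average E[z z^T]
        A = C @ beta.T @ np.linalg.inv(Ezz)             # M-step for the loadings
        psi = np.maximum(np.diag(C - A @ beta @ C), floor)  # M-step for psi
    return A, psi


def snc_sketch(Y, L, m=2, n_iter=30, eps=1e-12):
    """Alternate Champagne-style source updates with residual noise refits.

    Y: (M, T) sensor data; L: (M, N) lead field with one column per voxel.
    Returns posterior-mean sources X (N, T), source variances gamma (N,),
    and the fitted low-rank-plus-diagonal noise covariance (M, M).
    """
    M, N = L.shape
    T = Y.shape[1]
    scale = np.trace(Y @ Y.T / T) / M
    noise_cov = 0.1 * scale * np.eye(M)                 # arbitrary initial guess
    floor = 1e-6 * scale                                # keeps psi strictly positive
    gamma = np.ones(N)
    for _ in range(n_iter):
        Sigma_y = noise_cov + (L * gamma) @ L.T         # noise + L Gamma L^T
        Sy_inv_L = np.linalg.solve(Sigma_y, L)          # Sigma_y^{-1} L
        X = gamma[:, None] * (Sy_inv_L.T @ Y)           # Gamma L^T Sigma_y^{-1} Y
        z = np.einsum("ij,ij->j", L, Sy_inv_L)          # l_i^T Sigma_y^{-1} l_i
        gamma = np.sqrt(np.mean(X ** 2, axis=1) / np.maximum(z, eps))  # convex bound
        R = Y - L @ X                                   # model residual
        A, psi = fa_fit(R @ R.T / T, m, floor=floor)    # refit structured noise
        noise_cov = A @ A.T + np.diag(psi)
    return X, gamma, noise_cov
```

Because the fitted noise covariance is always low rank plus a strictly positive diagonal, the model data covariance stays invertible at every iteration, so no baseline recording is needed to keep the source update well posed.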

Our experimental results demonstrate that the proposed source imaging method offers significant theoretical and empirical advantages over existing benchmark algorithms when the noise covariance cannot be accurately determined in advance. In simulations, we specifically examined the performance of noise learning for complex source configurations with highly correlated time courses and high levels of noise and interference. These simulations show that our method outperforms the classical Champagne algorithm (Nagarajan et al., 2007) run with an incorrect noise covariance, achieving a higher aggregate performance score than this and other existing benchmark methods (Matsuura and Okabe, 1995; Pascual-Marqui, 2002; Van Veen et al., 1997; Cai et al., 2021). Data-driven approaches, that is, artificial neural network (ANN)-based inverse solutions, are also receiving increasing interest in the literature (Razorenova et al., 2020; Sun et al., 2020; Hecker et al., 2021; Liang et al., 2023). An interesting future extension would be to explore our proposed noise learning scheme within these recent artificial intelligence techniques.

To the best of our understanding, the improved performance of this algorithm arises from its efficient estimation of the noise statistics via factor analysis of the residual component. Moreover, SNC is robust even when initialized with incorrect noise values. Most importantly, the proposed method robustly localizes brain activity with a few trials, or even a single trial, in the AEF dataset. This is a significant advancement in electromagnetic brain imaging. We argue that it may cut the duration of data collection by up to 10-fold. This reduction in scan time is particularly important in studies involving children with autism, patients with dementia, or any other participants who have difficulty tolerating long periods of data collection. In summary, our proposed method offers significant advantages over many existing benchmark algorithms for electromagnetic brain source imaging.

Finally, we discuss some trade-offs in the current algorithm. Here, we restrict the source covariance estimate to be diagonal, which promotes sparsity of the brain sources (Hashemi et al., 2021a), and our convex bounding cost function guarantees convergence (Wipf et al., 2011; Wipf and Nagarajan, 2009). The assumption that the noise covariance has a structured, low-dimensional form enables accurate estimation with variational Bayesian factor analysis methods, which are robust to small data sizes and to the specification of the underlying factor dimension (Nagarajan et al., 2006, 2007). However, a joint signal and noise estimation problem in which both the source and noise covariances are non-diagonal and non-sparse can become intractable (Hashemi et al., 2020, 2021a). We hope to examine this problem in future work.
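One practical benefit of a low-rank-plus-diagonal noise model is computational. Assume the noise covariance has the factor-analysis form $A A^\top + \Psi$ with $\Psi = \mathrm{diag}(\psi)$ and $m$ factors. The Woodbury identity $(\Psi + A A^\top)^{-1} = \Psi^{-1} - \Psi^{-1} A (I_m + A^\top \Psi^{-1} A)^{-1} A^\top \Psi^{-1}$ then reduces its inversion to an $m \times m$ solve. The sketch below (hypothetical helper name) illustrates this. A fully unstructured covariance offers no such shortcut.

```python
import numpy as np


def lowrank_plus_diag_inverse(A: np.ndarray, psi: np.ndarray) -> np.ndarray:
    """Invert S = A A^T + diag(psi) with the Woodbury identity.

    A: (M, m) factor loadings; psi: (M,) strictly positive diagonal variances.
    Only an m x m system is solved, instead of an M x M one.
    """
    Pinv_A = A / psi[:, None]                 # diag(psi)^{-1} A, shape (M, m)
    core = np.eye(A.shape[1]) + A.T @ Pinv_A  # I + A^T diag(psi)^{-1} A, (m, m)
    return np.diag(1.0 / psi) - Pinv_A @ np.linalg.solve(core, Pinv_A.T)


rng = np.random.default_rng(0)
A = rng.standard_normal((50, 3))
psi = rng.uniform(0.5, 2.0, size=50)
S = A @ A.T + np.diag(psi)
print(np.allclose(lowrank_plus_diag_inverse(A, psi), np.linalg.inv(S)))  # True
```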

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by the UCSF Human Research Protection Program Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

SG: Formal analysis, Methodology, Software, Validation, Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Visualization. CC: Formal analysis, Methodology, Software, Validation, Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Visualization. AH: Formal analysis, Methodology, Software, Validation, Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Visualization. YG: Formal analysis, Methodology, Software, Validation, Writing – original draft, Writing – review & editing, Conceptualization, Data curation, Visualization. SH: Conceptualization, Funding acquisition, Investigation, Supervision, Writing – original draft, Writing – review & editing, Methodology, Project administration, Resources. KS: Conceptualization, Funding acquisition, Investigation, Supervision, Writing – original draft, Writing – review & editing, Methodology, Project administration, Resources. AR: Conceptualization, Funding acquisition, Investigation, Supervision, Writing – original draft, Writing – review & editing, Methodology, Project administration, Resources. SN: Conceptualization, Funding acquisition, Investigation, Supervision, Writing – original draft, Writing – review & editing, Methodology, Project administration, Resources.

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the National Institutes of Health grants: R01DC013979 (SN), R01NS066654 (SN), R01NS64060 (SN), P50DC019900 (SN), National Science Foundation Grant BCS-1262297 (SN), and the Knowledge Innovation Program of Wuhan-Shuguang Project 2023010201010124, National Natural Science Foundation of China under Grant 62277023 and financially supported by self-determined research funds of CCNU from the colleges' basic research and operation of MOE under Grant CCNU24JCPT037; and an industry contract from Ricoh MEG USA Inc. Ricoh MEG USA Inc. was not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication.

We would like to thank all the members of the Biomagnetic Imaging Laboratory for their invaluable contributions to the MEG data acquisition and data curation.

SN is a founding advisory board member of HippoClinic Inc. and is a consultant to MEGIN Inc. He has previously served on the advisory board of Rune Labs, and is also the recipient of an industry contract from Ricoh MEG USA Inc. KS was employed by Signal Analysis Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Baillet, S., Mosher, J. C., and Leahy, R. M. (2001). Electromagnetic brain mapping. IEEE Signal Process. Mag. 18, 14–30. doi: 10.1109/79.962275

Cai, C., Diwakar, M., Chen, D., Sekihara, K., and Nagarajan, S. S. (2019). Robust empirical Bayesian reconstruction of distributed sources for electromagnetic brain imaging. IEEE Trans. Med. Imaging 39, 567–577. doi: 10.1109/TMI.2019.2932290

Cai, C., Hashemi, A., Diwakar, M., Haufe, S., Sekihara, K., and Nagarajan, S. S. (2021). Robust estimation of noise for electromagnetic brain imaging with the Champagne algorithm. Neuroimage 225:117411. doi: 10.1016/j.neuroimage.2020.117411

Cai, C., Long, Y., Ghosh, S., Hashemi, A., Gao, Y., Diwakar, M., et al. (2023). Bayesian adaptive beamformer for robust electromagnetic brain imaging of correlated sources in high spatial resolution. IEEE Trans. Med. Imaging 42, 2502–2512. doi: 10.1109/TMI.2023.3256963

Cai, C., Sekihara, K., and Nagarajan, S. S. (2018). Hierarchical multiscale Bayesian algorithm for robust MEG/EEG source reconstruction. Neuroimage 183, 698–715. doi: 10.1016/j.neuroimage.2018.07.056

Dale, A. M., and Sereno, M. I. (1993). Improved localization of cortical activity by combining EEG and MEG with MRI cortical surface reconstruction: a linear approach. J. Cogn. Neurosci. 5, 162–176. doi: 10.1162/jocn.1993.5.2.162

Delorme, A., Palmer, J., Onton, J., Oostenveld, R., and Makeig, S. (2012). Independent EEG sources are dipolar. PLoS ONE 7:e30135. doi: 10.1371/journal.pone.0030135

Ding, L., and He, B. (2008). Sparse source imaging in electroencephalography with accurate field modeling. Hum. Brain Mapp. 29, 1053–1067. doi: 10.1002/hbm.20448

Edelman, B. J., Johnson, N., Sohrabpour, A., Tong, S., Thakor, N., and He, B. (2015). Systems neuroengineering: understanding and interacting with the brain. Engineering 1, 292–308. doi: 10.15302/J-ENG-2015078

Friston, K., Harrison, L., Daunizeau, J., Kiebel, S., Phillips, C., Trujillo-Barreto, N., et al. (2008). Multiple sparse priors for the M/EEG inverse problem. Neuroimage 39, 1104–1120. doi: 10.1016/j.neuroimage.2007.09.048

Ghosh, S., Cai, C., Gao, Y., Hashemi, A., Haufe, S., Sekihara, K., et al. (2023). “Bayesian inference for brain source imaging with joint estimation of structured low-rank noise,” in IEEE International Symposium on Biomedical Imaging (ISBI), 1–5. doi: 10.1109/ISBI53787.2023.10230330

Godey, B., Schwartz, D., De Graaf, J., Chauvel, P., and Liegeois-Chauvel, C. (2001). Neuromagnetic source localization of auditory evoked fields and intracerebral evoked potentials: a comparison of data in the same patients. Clin. Neurophysiol. 112, 1850–1859. doi: 10.1016/S1388-2457(01)00636-8

Gross, J. (2019). Magnetoencephalography in cognitive neuroscience: a primer. Neuron 104, 189–204. doi: 10.1016/j.neuron.2019.07.001

Hallez, H., Vanrumste, B., Grech, R., Muscat, J., De Clercq, W., Vergult, A., et al. (2007). Review on solving the forward problem in EEG source analysis. J. Neuroeng. Rehabil. 4:46. doi: 10.1186/1743-0003-4-46

Hämäläinen, M. S., and Ilmoniemi, R. J. (1994). Interpreting magnetic fields of the brain: minimum norm estimates. Med. Biol. Eng. Comput. 32, 35–42. doi: 10.1007/BF02512476

Hashemi, A., Cai, C., Gao, Y., Ghosh, S., Müller, K.-R., Nagarajan, S. S., et al. (2022). Joint learning of full-structure noise in hierarchical Bayesian regression models. IEEE Trans. Med. Imaging 43, 610–624. doi: 10.1109/TMI.2022.3224085

Hashemi, A., Cai, C., Kutyniok, G., Müller, K.-R., Nagarajan, S., and Haufe, S. (2020). Unification of sparse Bayesian learning algorithms for electromagnetic brain imaging with the majorization minimization framework. bioRxiv. doi: 10.1101/2020.08.10.243774

Hashemi, A., Cai, C., Müller, K.-R., Nagarajan, S., and Haufe, S. (2021a). Joint learning of full-structure noise in hierarchical Bayesian regression models. bioRxiv. doi: 10.1101/2021.11.28.470264

Hashemi, A., Gao, Y., Cai, C., Ghosh, S., Müller, K.-R., Nagarajan, S., et al. (2021b). “Efficient hierarchical Bayesian inference for spatio-temporal regression models in neuroimaging,” in Advances in Neural Information Processing Systems, 24855–24870.

He, B., Sohrabpour, A., Brown, E., and Liu, Z. (2018). Electrophysiological source imaging: a noninvasive window to brain dynamics. Annu. Rev. Biomed. Eng. 20, 171–196. doi: 10.1146/annurev-bioeng-062117-120853

He, B., Yang, L., Wilke, C., and Yuan, H. (2011). Electrophysiological imaging of brain activity and connectivity-challenges and opportunities. IEEE Trans. Biomed. Eng. 58, 1918–1931. doi: 10.1109/TBME.2011.2139210

Hecker, L., Rupprecht, R., Tebartz Van Elst, L., and Kornmeier, J. (2021). ConvDip: a convolutional neural network for better EEG source imaging. Front. Neurosci. 15:569918. doi: 10.3389/fnins.2021.569918

Hinkley, L. B., Dale, C. L., Cai, C., Zumer, J., Dalal, S., Findlay, A., et al. (2020). NUTMEG: open source software for M/EEG source reconstruction. Front. Neurosci. 14:710. doi: 10.3389/fnins.2020.00710

Hosseini, S. A. H., Sohrabpour, A., Akçakaya, M., and He, B. (2018). Electromagnetic brain source imaging by means of a robust minimum variance beamformer. IEEE Trans. Biomed. Eng. 65, 2365–2374. doi: 10.1109/TBME.2018.2859204

Ioannides, A. A., Bolton, J. P., and Clarke, C. (1990). Continuous probabilistic solutions to the biomagnetic inverse problem. Inverse Probl. 6:523. doi: 10.1088/0266-5611/6/4/005

Leahy, R., Mosher, J., Spencer, M., Huang, M., and Lewine, J. (1998). A study of dipole localization accuracy for MEG and EEG using a human skull phantom. Electroencephalogr. Clin. Neurophysiol. 107, 159–173. doi: 10.1016/S0013-4694(98)00057-1

Liang, J., Yu, Z. L., Gu, Z., and Li, Y. (2023). Electromagnetic source imaging with a combination of sparse Bayesian learning and deep neural network. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 2338–2348. doi: 10.1109/TNSRE.2023.3251420

Liu, F., Wan, G., Semenov, Y. R., and Purdon, P. L. (2022). “Extended electrophysiological source imaging with spatial graph filters,” in International Conference on Medical Image Computing and Computer-Assisted Intervention (Springer), 99–109. doi: 10.1007/978-3-031-16431-6_10

Liu, F., Wang, L., Lou, Y., Li, R.-C., and Purdon, P. L. (2020). Probabilistic structure learning for EEG/MEG source imaging with hierarchical graph priors. IEEE Trans. Med. Imaging 40, 321–334. doi: 10.1109/TMI.2020.3025608

Liu, K., Yu, Z. L., Wu, W., Gu, Z., Zhang, J., Cen, L., et al. (2019). Bayesian electromagnetic spatio-temporal imaging of extended sources based on matrix factorization. IEEE Trans. Biomed. Eng. 66, 2457–2469. doi: 10.1109/TBME.2018.2890291

Matsuura, K., and Okabe, Y. (1995). Selective minimum-norm solution of the biomagnetic inverse problem. IEEE Trans. Biomed. Eng. 42, 608–615. doi: 10.1109/10.387200

Michel, C. M., and He, B. (2019). EEG source localization. Handb. Clin. Neurol. 160, 85–101. doi: 10.1016/B978-0-444-64032-1.00006-0

Mosher, J. C., Lewis, P. S., and Leahy, R. M. (1992). Multiple dipole modeling and localization from spatio-temporal MEG data. IEEE Trans. Biomed. Eng. 39, 541–557. doi: 10.1109/10.141192

Nagarajan, S. S., Attias, H. T., Hild, K. E., and Sekihara, K. (2006). A graphical model for estimating stimulus-evoked brain responses from magnetoencephalography data with large background brain activity. Neuroimage 30, 400–416. doi: 10.1016/j.neuroimage.2005.09.055

Nagarajan, S. S., Attias, H. T., Hild, K. E., and Sekihara, K. (2007). A probabilistic algorithm for robust interference suppression in bioelectromagnetic sensor data. Stat. Med. 26, 3886–3910. doi: 10.1002/sim.2941

Oikonomou, V. P., and Kompatsiaris, I. (2020). A novel Bayesian approach for EEG source localization. Comput. Intell. Neurosci. 2020:8837954. doi: 10.1155/2020/8837954

Owen, J. P., Wipf, D. P., Attias, H. T., Sekihara, K., and Nagarajan, S. S. (2012). Performance evaluation of the Champagne source reconstruction algorithm on simulated and real M/EEG data. Neuroimage 60, 305–323. doi: 10.1016/j.neuroimage.2011.12.027

Pascual-Marqui, R. D. (2002). Standardized low-resolution brain electromagnetic tomography (sLORETA): technical details. Methods Find. Exp. Clin. Pharmacol. 24, 5–12. Available online at: https://www.institutpsychoneuro.com/wp-content/uploads/2015/10/sLORETA2002.pdf

Pascual-Marqui, R. D., Michel, C. M., and Lehmann, D. (1994). Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. Int. J. Psychophysiol. 18, 49–65. doi: 10.1016/0167-8760(84)90014-X

Phillips, J. W., Leahy, R. M., Mosher, J. C., and Timsari, B. (1997). Imaging neural activity using MEG and EEG. IEEE Eng. Med. Biol. Mag. 16, 34–42. doi: 10.1109/51.585515

Razorenova, A., Yavich, N., Malovichko, M., Fedorov, M., Koshev, N., and Dylov, D. V. (2020). “Deep learning for non-invasive cortical potential imaging,” in International Workshop on Machine Learning in Clinical Neuroimaging (Springer), 45–55. doi: 10.1007/978-3-030-66843-3_5

Riaz, U., Razzaq, F. A., Areces-Gonzalez, A., Piastra, M. C., Vega, M. L. B., Paz-Linares, D., et al. (2023). Automatic quality control of the numerical accuracy of EEG lead fields. Neuroimage 273:120091. doi: 10.1016/j.neuroimage.2023.120091

Robinson, S. E., and Rose, D. F. (1992). “Current source image estimation by spatially filtered MEG,” in Biomagnetism Clinical Aspects, eds. M. Hoke, et al. (New York: Elsevier Science Publishers), 761–765.

Scherg, M. (1990). Fundamentals of dipole source potential analysis. Auditory evoked magnetic fields and electric potentials. Adv. Audiol. 6:25.

Sekihara, K., Kawabata, Y., Ushio, S., Sumiya, S., Kawabata, S., Adachi, Y., et al. (2016). Dual signal subspace projection (DSSP): a novel algorithm for removing large interference in biomagnetic measurements. J. Neural Eng. 13:036007. doi: 10.1088/1741-2560/13/3/036007

Sekihara, K., and Nagarajan, S. S. (2008). Adaptive Spatial Filters for Electromagnetic Brain Imaging. Berlin, Heidelberg: Springer-Verlag.

Sekihara, K., and Nagarajan, S. S. (2015). Electromagnetic Brain Imaging: a Bayesian Perspective. Cham: Springer. doi: 10.1007/978-3-319-14947-9

Sekihara, K., and Scholz, B. (1996). “Generalized Wiener estimation of three-dimensional current distribution from biomagnetic measurements,” in Biomag 96: Proceedings of the Tenth International Conference on Biomagnetism, eds. C. J. Aine, et al. (New York: Springer-Verlag), 338–341. doi: 10.1007/978-1-4612-1260-7_82

Snodgrass, J. G., and Corwin, J. (1988). Pragmatics of measuring recognition memory: applications to dementia and amnesia. J. Exper. Psychol. 117:34. doi: 10.1037//0096-3445.117.1.34

Sun, R., Sohrabpour, A., Ye, S., and He, B. (2020). SIFNet: electromagnetic source imaging framework using deep neural networks. bioRxiv, 2020–05. doi: 10.1101/2020.05.11.089185

Taulu, S., Simola, J., and Kajola, M. (2005). Applications of the signal space separation method. IEEE Trans. Signal Process. 53, 3359–3372. doi: 10.1109/TSP.2005.853302

Teale, P., Goldstein, L., Reite, M., Sheeder, J., Richardson, D., Edrich, J., et al. (1996). Reproducibility of MEG auditory evoked field source localizations in normal human subjects using a seven-channel gradiometer. IEEE Trans. Biomed. Eng. 43, 967–969. doi: 10.1109/10.532131

Uusitalo, M., and Ilmoniemi, R. (1997). Signal-space projection method for separating MEG or EEG into components. Med. Biol. Eng. Comput. 35, 135–140. doi: 10.1007/BF02534144

Van Veen, B. D., Van Drongelen, W., Yuchtman, M., and Suzuki, A. (1997). Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 44, 867–880. doi: 10.1109/10.623056

Venkatesh, M., Jaja, J., and Pessoa, L. (2020). Comparing functional connectivity matrices: a geometry-aware approach applied to participant identification. Neuroimage 207:116398. doi: 10.1016/j.neuroimage.2019.116398

Wipf, D., and Nagarajan, S. (2009). A unified Bayesian framework for MEG/EEG source imaging. Neuroimage 44, 947–966. doi: 10.1016/j.neuroimage.2008.02.059

Wipf, D. P., Owen, J. P., Attias, H. T., Sekihara, K., and Nagarajan, S. S. (2010). Robust Bayesian estimation of the location, orientation, and time course of multiple correlated neural sources using MEG. Neuroimage 49, 641–655. doi: 10.1016/j.neuroimage.2009.06.083

Wipf, D. P., Rao, B. D., and Nagarajan, S. (2011). Latent variable Bayesian models for promoting sparsity. IEEE Trans. Inf. Theory 57, 6236–6255. doi: 10.1109/TIT.2011.2162174

Zumer, J., Attias, H., Sekihara, K., and Nagarajan, S. (2006). “A probabilistic algorithm integrating source localization and noise suppression of MEG and EEG data,” in Advances in Neural Information Processing Systems, 19. doi: 10.7551/mitpress/7503.003.0208

Recall that the posterior probability density $p(x_k \mid y_k)$ is assumed to be Gaussian. Furthermore, the exponent of this Gaussian distribution is given by:

where ≃ stands for the terms that do not contain $x_k$.

We also know the prior $p(x_k)$ and the likelihood $p(y_k \mid x_k)$. Hence, the posterior $p(x_k \mid y_k)$ can be derived using Bayes' rule in Equation 2. The exponent of the posterior $p(x_k \mid y_k)$ then takes the form:

where ≃ again stands for the terms that do not contain $x_k$.

Comparing the quadratic terms in $x_k$ on the right-hand sides of Equations 18 and 19:

Similarly, comparing the linear terms in $x_k$ on the right-hand sides of Equations 18 and 19:

Furthermore, using the matrix inversion formula of Equation (C.92) in Sekihara and Nagarajan (2015), Equation 21 can be rewritten as:
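For reference, this algebra can be checked numerically. The sketch assumes the standard linear Gaussian model $y_k = L x_k + \varepsilon_k$ with source prior $\mathcal{N}(0, \Gamma)$ and Gaussian noise covariance $\Sigma_\varepsilon$. These symbol names are assumptions and may differ from the article's notation. Completing the square gives the posterior precision $\Sigma_x^{-1} = \Gamma^{-1} + L^\top \Sigma_\varepsilon^{-1} L$ and the mean $\bar{x}_k = \Sigma_x L^\top \Sigma_\varepsilon^{-1} y_k$. The matrix inversion lemma turns the mean into the sensor-space form $\bar{x}_k = \Gamma L^\top (\Sigma_\varepsilon + L \Gamma L^\top)^{-1} y_k$. The code below confirms that both forms agree on random inputs.

```python
import numpy as np


def posterior_mean_information_form(L, Gamma, Sigma_eps, y):
    """x_bar = (Gamma^{-1} + L^T Sigma_eps^{-1} L)^{-1} L^T Sigma_eps^{-1} y."""
    Se_inv_L = np.linalg.solve(Sigma_eps, L)         # Sigma_eps^{-1} L
    precision = np.linalg.inv(Gamma) + L.T @ Se_inv_L
    return np.linalg.solve(precision, Se_inv_L.T @ y)


def posterior_mean_covariance_form(L, Gamma, Sigma_eps, y):
    """x_bar = Gamma L^T (Sigma_eps + L Gamma L^T)^{-1} y (sensor-space form)."""
    Sigma_y = Sigma_eps + L @ Gamma @ L.T
    return Gamma @ L.T @ np.linalg.solve(Sigma_y, y)


rng = np.random.default_rng(0)
M, N = 8, 25                                         # fewer sensors than voxels
L = rng.standard_normal((M, N))
Gamma = np.diag(rng.uniform(0.1, 2.0, size=N))       # diagonal source covariance
B = rng.standard_normal((M, 2))
Sigma_eps = B @ B.T + np.diag(rng.uniform(0.5, 1.0, size=M))  # structured noise
y = rng.standard_normal(M)
print(np.allclose(posterior_mean_information_form(L, Gamma, Sigma_eps, y),
                  posterior_mean_covariance_form(L, Gamma, Sigma_eps, y)))  # True
```

The sensor-space form needs only an M × M solve, which is the cheaper choice in MEG/EEG, where sensors are far fewer than voxels.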

Keywords: electromagnetic brain imaging, magnetoencephalography (MEG), brain source reconstruction, Bayesian inference, structured noise learning, factor analysis

Citation: Ghosh S, Cai C, Hashemi A, Gao Y, Haufe S, Sekihara K, Raj A and Nagarajan SS (2025) Structured noise champagne: an empirical Bayesian algorithm for electromagnetic brain imaging with structured noise. Front. Hum. Neurosci. 19:1386275. doi: 10.3389/fnhum.2025.1386275

Received: 14 February 2024; Accepted: 11 March 2025;

Published: 07 April 2025.

Edited by:

Takfarinas Medani, University of Southern California, United States

Reviewed by:

Johannes Vorwerk, UMIT TIROL - Private University for Health Sciences and Health Technology, Austria

Copyright © 2025 Ghosh, Cai, Hashemi, Gao, Haufe, Sekihara, Raj and Nagarajan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chang Cai, cchangenergy@gmail.com; Srikantan S. Nagarajan, sri@ucsf.edu
