- 1 Department of Brain and Behavioral Sciences, University of Pavia, Pavia, Italy

- 2 Psychology Department, University of Milano-Bicocca, Milan, Italy

- 3 Inter-University Laboratory of Human Movement Biology, Univ Lyon, University Claude Bernard Lyon 1, Villeurbanne, France

- 4 Centre for Research on Brain, Language and Music (CRBLM), Montreal, QC, Canada

- 5 Psychology Department, University of Montreal, Montreal, QC, Canada

- 6 International Laboratory for Brain, Music and Sound Research (BRAMS), Montreal, QC, Canada

Introduction: Music making is a process by which humans across cultures come together to create patterns of sounds that are aesthetically pleasing. What remains unclear is how this aesthetic outcome affects the sensorimotor interaction between participants.

Method: Here we approach this question using an interpersonal sensorimotor synchronization paradigm to test whether the quality of a jointly created chord (consonant vs. dissonant) affects movement coordination. We recruited non-musician participants in dyads to perform a dyadic synchronization-continuation task (dSCT): on each trial, participants first synchronized their movements to a metronome (synchronization phase) and then continued tapping together at the same tempo without the metronome (continuation phase). Each tap produced a note, and participants heard both their own note and their partner's, thus creating a chord that was either consonant (Perf5 or Maj6) or dissonant (Min2 or Maj2). On each trial, participants also rated the pleasure they felt in creating the sounds together. Additionally, they completed questionnaires about social closeness to the other participant, musical reward sensitivity, and musical training.

Results: Results showed that participants' taps were closer in time when they jointly created consonant (high pleasure) vs. dissonant (low pleasure) chords, and that pleasure experienced by the dyad in each trial predicted interpersonal synchronization. However, consonance did not affect individual synchronization with the metronome or individual tapping when the metronome was discontinued. The effect of consonance on synchronization was greater in dyads who reported feeling less close prior to the task.

Discussion: Together, these results highlight the role of consonance in shaping the temporal coordination of our actions with others. More broadly, this work shows that the aesthetic outcome of what we create together affects joint behaviors.

1 Introduction

Human cultures across the globe engage in music making: people come together and sing, play flutes, or beat drums to create aesthetically pleasing sounds. This process involves interpersonal synchronization, which entails the coordination of actions, emotions, thoughts, and even physiological rhythms among two or more people (Bernieri and Rosenthal, 1991; Ackerman and Bargh, 2010; Palumbo et al., 2017). To achieve such coordination, individuals must understand each other's intentions, adapt to different environments, take others' perspectives, and make quick decisions to synchronize effectively (Hasson et al., 2004; D'Ausilio et al., 2015; Sacheli et al., 2018). What remains unclear is the extent to which the aesthetic quality of what is jointly created affects this coordination of action.

Joint music-making provides a unique channel to study humans' ability to precisely synchronize movements in time (Repp, 2005; Repp and Su, 2013; Keller et al., 2014; Abalde et al., 2024). When playing together, musicians must adapt their production of tone sequences based on auditory information from themselves and their partners in order to synchronize effectively (Goebl and Palmer, 2009; Wing et al., 2014). Rhythmic interpersonal coordination can be measured by calculating the asynchrony between the onsets of sounds that are supposed to occur simultaneously in a piece. While some studies explored temporally precise rhythmic interpersonal coordination during naturalistic, expressive ensemble performance (Keller and Appel, 2010; Ragert et al., 2013; Keller et al., 2014; Colley et al., 2018; Laroche et al., 2022; Proksch et al., 2022), most relevant research has been conducted using sensorimotor synchronization tasks, in which participants perform simple movements such as finger taps (Mates et al., 1992; Konvalinka et al., 2009, 2010; Nowicki et al., 2013; Schultz and Palmer, 2019). These tasks allow researchers to manipulate various conditions, providing insights into social and prosocial behaviors, as well as into synchronization and cooperation processes (Konvalinka et al., 2010; D'Ausilio et al., 2015). Indeed, rhythmic joint action can be affected by factors related to musical expression (Keller, 2013), including tempo (Rasch, 1988; Konvalinka et al., 2010), metrical structure (Large et al., 2002; Keller and Repp, 2005; Snyder et al., 2006; Rankin et al., 2009), intensity (Goodman, 2002), and timbre (Ternström and Karna, 2002; Ternström, 2003; Sundberg, 2006). Intonation, the accurate control of pitch, is crucial for achieving consonance in music ensembles (Keller, 2013), and selective adjustments in intonation are fundamental to achieving harmonic consonance in the overall sound (Papiotis et al., 2011, 2012).
However, research on interpersonal synchronization has primarily focused on the temporal aspects of coordination, neglecting the potential influence of the aesthetic quality of the joint outcome (e.g., Konvalinka et al., 2010; D'Ausilio et al., 2015).

One principal aesthetic dimension of music is consonance. Building on the work of Helmholtz (1913), Terhardt (1984) identified sensory consonance and harmony as the two main roots of consonance. The former operates at the level of auditory sensation and is linked to frequency relations, while the latter relies on pitch relationships and involves a more sophisticated cognitive process. Consonance has been investigated in the literature from different perspectives, including arithmetical, psychoacoustic, neurophysiological, and cultural ones (for a comprehensive review, see Di Stefano et al., 2022). However, because the evidence is contrasting, there is still no general consensus on what governs consonance in music (Di Stefano et al., 2022). Nonetheless, consonant sounds are generally perceived as pleasant and dissonant sounds as unpleasant (Trainor et al., 2002; Bendor and Wang, 2005; Di Stefano et al., 2022). This preference for consonance is observed in infants and appears to be a universal trait (Vos and Troost, 1989; Zentner and Kagan, 1998; Trainor et al., 2002; Masataka, 2006; Fritz et al., 2009).

We conjecture that the aesthetic quality of the joint outcome, particularly its consonance, might affect performance during sensorimotor tasks. One reason for this is the overlap in the neural underpinnings of consonance processing and joint motor action. Specifically, Minati et al. (2009) found that consonant sounds elicited activation in, among other regions, the right premotor cortex and inferior parietal lobe. These brain regions are also implicated in auditory-motor integration at the individual level (Chen et al., 2008; Giovannelli et al., 2014; Lega et al., 2016; Siman-Tov et al., 2022) and in understanding others' action intentions (Ortigue et al., 2010). Based on this neural overlap, one might hypothesize that consonance influences joint action, as investigated in our study. Additionally, research with adults has shown that rules are learned more easily when conveyed through consonant rather than dissonant intervals, suggesting that consonance can benefit higher-level cognitive abilities (Crespo-Bojorque and Toro, 2016; Di Stefano et al., 2022). To our knowledge, Komeilipoor et al. (2015) is the only study to have investigated the role of consonance in a sensorimotor synchronization task. They had individual participants perform sliding finger movements to a metronome consisting of a consonant or dissonant chord. Consonance did not affect movement synchronization while the metronome was present. Puzzlingly, however, when the metronome was removed and participants were asked to continue moving at the same tempo, they did so less precisely and with greater variability in the dissonant (vs. consonant) condition. This result suggests that consonance can affect individual sensorimotor timing.
However, in that study participants did not take part in creating the sound: consonance was driven by an external stimulus beyond their control. Thus, it remains unclear whether sensorimotor synchronization is affected by the aesthetic quality of an individually or jointly created outcome.

The aim of this study was to test whether the consonance of a jointly created chord affects the synchronization of movements between participants. We reasoned that when participants tap together and each creates a sound, forming a chord, their movements would be more tightly synchronized if the chord is consonant. We expected that this effect might arise from mutual adaptation of movement (Konvalinka et al., 2010; Nowicki et al., 2013; Van Der Steen and Keller, 2013; Keller et al., 2014; Uccelli et al., 2023), as well as from processing advantages for consonant and aesthetically pleasant chords (Bones et al., 2014; Tabas et al., 2019). Following this line of reasoning, we might also expect individuals who are more sensitive to the aesthetic outcome to exhibit a greater difference between consonant and dissonant sounds. As a proxy for sensitivity to the aesthetic outcome, we used the extended Barcelona Music Reward Questionnaire (eBMRQ; Cardona et al., 2022). The original version of this questionnaire (BMRQ; Mas-Herrero et al., 2014) correlates with the aesthetics facet of the “Openness to Experience” domain of the NEO-PI-R (Costa and McCrae, 1992), indicating that people with higher aesthetic sensitivity to art and beauty tend to have higher BMRQ scores. Indeed, some studies have employed the BMRQ to investigate aesthetic reward sensitivity in the music domain and beyond (Mas-Herrero et al., 2018; Hernández et al., 2019; Witek et al., 2023). Given this questionnaire's relevance for assessing aesthetic sensitivity, we used it to explore our hypothesis that those who are more sensitive to the aesthetic outcome may show a greater effect of consonance. Additionally, we might expect individuals who are socially closer to show a larger consonance effect. Our reasoning is that socially close individuals are likely to perceive themselves as members of the same group.
Thus, the outcomes of a joint action should matter more to them than if they belonged to different groups. From an evolutionary perspective, members of the same group may interact more frequently with each other than with outsiders (“shadow of the future” effects; Axelrod, 1984). Hence, we expect that the greater the social closeness between individuals, the more they will care about the quality of their joint outcomes, resulting in a stronger impact of consonance on their interpersonal synchronization.

When testing the effect of the joint outcome on rhythmic interpersonal synchronization, it is important to take into account not only the intrinsic acoustic properties of the auditory stimulus (Goodman, 2002; Ternström and Karna, 2002; Ternström, 2003; Sundberg, 2006), but also social and psychological factors (Keller et al., 2014) and individual expertise. For instance, musical expertise is known to promote proficiency in action–effect anticipation, leading to smaller asynchronies in interpersonal tapping tasks (Aschersleben and Prinz, 1995; Aschersleben, 2002; Keller and Koch, 2008; Vuust et al., 2009; Pecenka and Keller, 2011; Schultz and Palmer, 2019), as well as to maintaining the metronome rate more consistently in the presence of other feedback (Schultz and Palmer, 2019). Social skills and personality traits, such as social competence and empathy, also affect coordination timing. For example, children with higher social skills synchronize better in dyadic drumming tasks (Kleinspehn, 2008), whereas autistic traits are linked to deficits in interpersonal motor coordination (Curioni et al., 2017) and to synchronization difficulties (Kasten et al., 2023). Investigating the effect of consonance on these aspects could provide deeper insights into how aesthetically pleasant stimuli influence motor coordination in a population with varying levels of social skills and autistic traits. Additionally, differences between partners in spontaneous rhythm production (e.g., speech, gait, and dance) influence synchrony in joint tasks, with smaller differences in spontaneous production rates predicting greater synchrony (Zamm et al., 2016; Palmer et al., 2019; Tranchant et al., 2022).

In the present study, non-musician participants performed a dyadic synchronization-continuation task (dSCT), in which they first synchronized their movements to a metronome together (synchronization phase) and then continued tapping at the same tempo without the metronome (continuation phase). Each participant heard the auditory feedback (notes) from themselves and their partner, and we varied these sounds so that the chord they jointly created was either consonant or dissonant. If the consonance of the joint outcome and the subjective pleasure derived from it affect how paired participants synchronize their movements, we expect consonance and the subjective pleasure of each chord to influence the precision of tapping coordination in both the synchronization and continuation phases (aim 1). To rule out that this effect simply reflects an overall effect on synchronization, we also tested whether each participant's individual synchronization to the metronome was affected by consonance (aim 2). Further, we reasoned that if the aesthetic quality of the jointly produced chord affects movement, this effect should be stronger in those who are more sensitive to the aesthetic quality of music, i.e., more sensitive to musical reward (aim 3). We also hypothesized that dyads who feel socially closer would show a greater effect of consonance on synchronization (aim 4). Finally, to confirm the validity of our measure of interpersonal synchronization, we tested whether participants with greater musical training achieve, as expected, greater synchronization.

2 Method

2.1 Participants

Forty-two volunteers took part in the study (mean age = 23.64 ± 3.20 years; 21 men and 21 women). Participants were pseudorandomly divided into 21 dyads, ensuring they were unfamiliar with their assigned partner prior to the experiment. The dyads included seven male-male, seven female-female, and seven mixed-gender dyads (as done in Nowicki et al., 2013). All participants were neurologically healthy and did not report any hearing impairments. Most of them were right-handed (N = 38), while 4 were left-handed. Participants were non-musicians, defined as having received < 2 years of formal or informal musical training, assessed using the Musical Training subscale of the Gold-MSI questionnaire (Müllensiefen et al., 2014). The experimental protocol was approved by the local ethics committee of the University of Pavia (Ethical Committee Prot. # 132/23) and participants were treated in accordance with the Declaration of Helsinki.

2.2 Materials

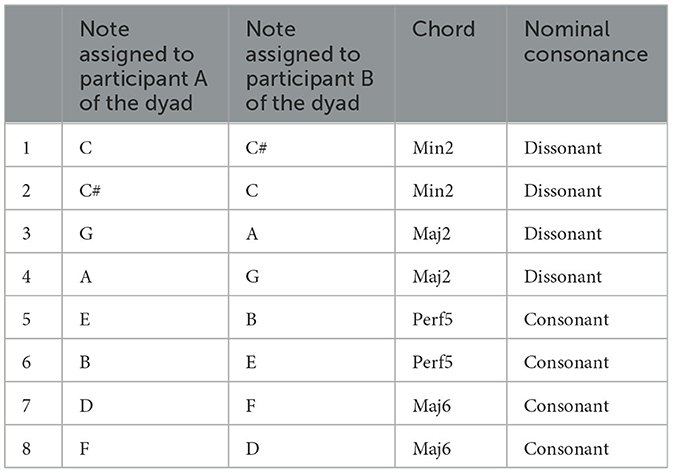

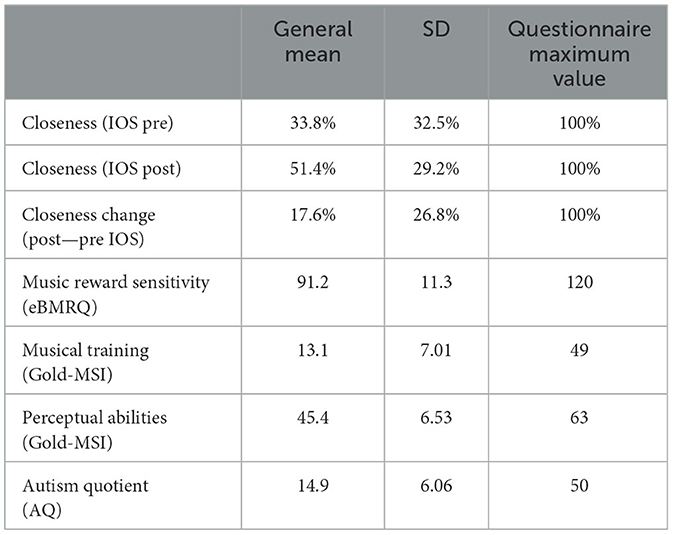

The whole experimental procedure is charted in Figure 1A. For the dyadic synchronization task, participants were positioned facing each other on opposite sides of a table, with a panel placed in the center to block their view of the other participant during the task (Figure 1B). Each participant tapped on a computer keyboard placed in front of them, and both keyboards were connected to the same computer. During the task, one participant pressed the A key on one keyboard while the other pressed the L key on the other keyboard. To deliver the auditory feedback and to ensure that participants could not hear the sounds produced by pressing the keys, participants wore noise-canceling earphones (see Figure 1B). Taps from the two keyboards were recorded by a custom Python interface built on Pygame (a set of Python bindings to the Simple DirectMedia Layer, SDL), which also generated the feedback sounds, the metronome click, and the final gong indicating the end of each trial (see Figure 1D). The metronome sound was a 30 ms woodblock sound file included by default in the Teensy Python interface (Van Vugt, 2020; see also Schultz and Van Vugt, 2016), while the duration of the tap feedback sound was 150, 200, or 400 ms (held constant within each trial). Each participant received auditory feedback in the form of one of eight distinct tones, synthesized as pure sine waves with a 5 ms linear fade-in and one of the following frequencies: C (261 Hz), C# (277.18 Hz), E (329.63 Hz), F (349.23 Hz), G (392 Hz), A (440 Hz), B (493.88 Hz), and D (587.33 Hz). During the dyadic synchronization-continuation task (see details below), each participant heard the auditory feedback from themselves and from their partner, thus creating a chord that was either consonant (Perf5 or Maj6) or dissonant (Min2 or Maj2). We use “chord” here to refer to two notes played simultaneously, as shown in Table 1 and Figure 1C.
The selection of these chords was based on previous studies (Krumhansl and Cuddy, 2010; McDermott et al., 2010), in which Perf5 and Maj6 chords received high pleasure ratings, whereas Min2 and Maj2 chords received low ones. These results were obtained both when participants rated chords from very unpleasant to very pleasant (McDermott et al., 2010) and when they indicated how well a tone completed an unfinished scale, such as how well the note C concluded the ascending scale C-D-E-F-G-A-B (from very badly to very well; Krumhansl and Cuddy, 2010). The onsets of each participant's taps, as well as the metronome timings, were written to a file for offline analysis (see Van Vugt, 2020).
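The feedback tones and their intervals can be sketched with the Python standard library alone. Assumptions not stated in the text: a 44.1 kHz sample rate, unit peak amplitude, and equal-weight mixing of the two notes to represent an idealized, perfectly simultaneous tap pair. `interval_semitones` is a hypothetical helper that rounds the equal-tempered distance between two frequencies; with the frequencies listed above, it places C (261 Hz) and G (392 Hz) 7 semitones apart (a perfect fifth) and C and C# (277.18 Hz) 1 semitone apart (a minor second). Which note pairs formed each chord is given in Table 1, which is not reproduced here.

```python
import math

SAMPLE_RATE = 44100  # assumption: the sample rate is not reported in the text


def sine_tone(freq_hz, duration_ms, fade_ms=5.0, sr=SAMPLE_RATE):
    """Pure sine tone with a linear fade-in over the first fade_ms milliseconds."""
    n_samples = int(round(sr * duration_ms / 1000))
    n_fade = min(int(round(sr * fade_ms / 1000)), n_samples)
    samples = []
    for i in range(n_samples):
        value = math.sin(2 * math.pi * freq_hz * i / sr)
        if i < n_fade:
            value *= i / n_fade  # linear ramp from 0 toward full amplitude
        samples.append(value)
    return samples


def mix(tone_a, tone_b):
    """Average two tones sample by sample: an idealized, perfectly simultaneous tap pair."""
    return [(a + b) / 2 for a, b in zip(tone_a, tone_b)]


def interval_semitones(f_low, f_high):
    """Equal-tempered interval size between two frequencies, rounded to whole semitones."""
    return round(12 * math.log2(f_high / f_low))


# Interval names used in the text, keyed by their size in semitones.
INTERVAL_NAMES = {1: "Min2", 2: "Maj2", 7: "Perf5", 9: "Maj6"}

if __name__ == "__main__":
    chord = mix(sine_tone(261.0, 150), sine_tone(392.0, 150))
    print(len(chord), INTERVAL_NAMES[interval_semitones(261.0, 392.0)])
```

Mixing by averaging keeps the summed signal within [-1, 1]; how the original interface actually combined the two participants' sounds is not described.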

Figure 1. (A) Flow chart of the procedure. IOS, Inclusion of Other in the Self scale, administered once before (pre) and once after (post) the dSCT; Individual-SCT, individual synchronization-continuation task; dSCT, dyadic synchronization-continuation task; eBMRQ, extended Barcelona Music Reward Questionnaire; Gold-MSI, Goldsmiths Musical Sophistication Index; AQ, Autism Quotient Questionnaire. The assessment of inter-individual differences is outlined in gray, and the assessment of pitch discrimination and synchronization abilities with a dashed line. (B) Top view of the experimental setup. Participants sat facing each other on opposite sides of the table, with a central panel preventing them from seeing each other; they wore noise-canceling earphones. Participant A of the dyad (in blue) taps on the “A” key of one keyboard, while participant B (in yellow) taps on the “L” key of the other. Keyboards and earphones were connected to the same computer. (C) The four musical chords used in the experiment, divided into consonant and dissonant ones. (D) Structure of the dyadic synchronization-continuation task (dSCT). Red dots indicate metronome clicks, which are discontinued in the continuation phase, while blue (Participant A) and yellow (Participant B) dots indicate participants' taps. A gong (depicted in the top right corner) indicates the end of each trial. As shown in the bottom right corner, time differences between each participant's tap and the closest metronome click (Δt = tap A/B – metronome) were calculated for individual timing in the synchronization phase, while time differences between the closest taps of participants A and B were computed for interpersonal synchronization (Δt = |tap A – tap B|) in both the synchronization and continuation phases.

2.3 Procedure

After arriving in the lab, participants responded to questionnaires, underwent perceptual testing, and performed individual and joint tapping tasks, as illustrated in Figure 1A. In total, the procedure lasted 2 h.

2.3.1 Perceived social closeness

Participants indicated their perceived social closeness to the other member of the dyad using the Inclusion of Other in the Self scale (IOS; Aron et al., 1992), implemented via the jsPsych plugin (see Kinley and Van Vugt, 2023), by adjusting the amount of overlap between two circles (see example in Figure 1A), with greater overlap indicating greater perceived closeness. The scale was administered both before (pre) and after (post) the collaborative tasks (see Figure 1A). We were primarily interested in the effects of pre-existing closeness, uncontaminated by changes that might result from the experiment, on interpersonal synchronization and on the consonance effects; the post measure additionally allowed us to examine the change in closeness between the two time points. To mitigate social desirability bias, participants completed the scale privately on both occasions, so that their responses remained undisclosed to their partners.

2.3.2 Assessment of pitch discrimination and synchronization abilities

We reasoned that two prerequisites for adequately performing the dyadic synchronization-continuation task (dSCT) are (i) the perceptual ability to discriminate between the chords used in the experiment and (ii) the ability to synchronize movements with the metronome. Thus, prior to the dSCT, participants performed two preliminary tasks evaluating pitch discrimination (pitch discrimination perceptual test) and sensorimotor synchronization abilities (individual synchronization-continuation task, SCT; see Figure 1A). The pitch discrimination perceptual test assessed whether participants could accurately distinguish between the chords used in the dSCT. Participants listened to a total of 10 chord pairings built from the chords listed in Table 1: 6 pairings of different chords and 4 pairings of identical chords. For each pair, participants indicated whether the two chords were identical or different by pressing either the A or L key on the keyboard (counterbalanced across participants). OpenSesame (Mathôt et al., 2012) was used for stimulus presentation and data collection. The individual sensorimotor synchronization abilities of each member of the dyad were assessed with an individual-SCT. In this task, participants were instructed to synchronize their tapping with the metronome (synchronization phase), starting at the fifth click, using their dominant hand on the assigned key (A or L, counterbalanced across participants). After this phase, consisting of 20 metronome clicks, the metronome stopped, and participants were told to continue tapping at the same tempo for a duration equivalent to 20 clicks (continuation phase). At each tap, participants received auditory feedback in the form of an A note (440 Hz). Each trial concluded with the sound of a gong.
Participants completed a total of nine trials, obtained by crossing three metronome tempi (inter-onset intervals, IOI: 450, 550, or 650 ms) with three auditory feedback durations (150, 200, and 400 ms) and presented in random order.
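The trial structure of the two preliminary tasks can be made concrete with a short Python sketch. It assumes that the 10 pitch-test pairings were built from the four interval types (Perf5, Maj6, Min2, Maj2), which is consistent with the reported counts (C(4, 2) = 6 pairs of different chords plus 4 pairs of identical chords), and that each tempo-by-feedback combination of the individual-SCT appeared exactly once in random order. Function names and the seed argument are illustrative, not taken from the authors' code.

```python
import itertools
import random

# The four interval types named in the text (Table 1 itself is not reproduced here).
INTERVALS = ["Perf5", "Maj6", "Min2", "Maj2"]
TEMPI_IOI_MS = [450, 550, 650]  # metronome inter-onset intervals
FEEDBACK_MS = [150, 200, 400]   # tap feedback durations


def pitch_test_pairs():
    """6 pairs of different chords plus 4 pairs of identical chords (10 in total)."""
    different = list(itertools.combinations(INTERVALS, 2))
    same = [(chord, chord) for chord in INTERVALS]
    return different + same


def individual_sct_trials(seed=None):
    """All 3 x 3 tempo-by-feedback combinations, each presented once in random order."""
    trials = list(itertools.product(TEMPI_IOI_MS, FEEDBACK_MS))
    random.Random(seed).shuffle(trials)
    return trials


def metronome_onsets(ioi_ms, n_clicks=20):
    """Click onsets (ms, relative to the first click) of the 20-click synchronization phase."""
    return [k * ioi_ms for k in range(n_clicks)]


if __name__ == "__main__":
    print(len(pitch_test_pairs()), individual_sct_trials(seed=1)[:3])
```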

2.3.3 Dyadic synchronization-continuation task

The materials and procedure of this task were similar to those of the individual-SCT. Participants were instructed not only to synchronize their taps with the metronome, as in the individual-SCT, but also to align their taps with each other. While tapping, participants received auditory feedback (i.e., a note) from both themselves and their partner. If they tapped simultaneously, they jointly created a chord, which could be either consonant or dissonant (see Figure 1C). Depending on the note assigned to each participant in each trial, the dyad could create a total of eight different chords (see Table 1). These instructions were chosen so that they applied equally to the synchronization and continuation phases. The dyads completed 72 trials, obtained by crossing three metronome IOIs (450, 550, or 650 ms), three auditory feedback durations (150, 200, and 400 ms), and eight chords (see Table 1) and presented in random order. A break was offered after half of the trials. At the end of each trial, participants rated how much they liked the chord they had produced together on that trial (subjective ratings of pleasure) on a scale from 1 to 10. We instructed participants to treat this range as spanning from very unpleasant to highly pleasant sounds, to use the entire rating scale, and to rate independently of how well they had synchronized with their partner. To give their ratings, participants pointed with their hand to the chosen number on a paper sheet hidden from the other's view (Figure 1B).
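The 72-trial dSCT design is the full crossing of 3 tempi × 3 feedback durations × 8 chords. The sketch below builds and shuffles it and splits it at the halfway break. Because Table 1 is not reproduced here, the chords are hypothetical placeholder IDs, and the seed argument is an illustrative choice rather than part of the reported procedure.

```python
import itertools
import random

TEMPI_IOI_MS = [450, 550, 650]  # metronome inter-onset intervals
FEEDBACK_MS = [150, 200, 400]   # tap feedback durations
# Hypothetical placeholder IDs for the eight chords listed in Table 1.
CHORD_IDS = [f"chord_{k}" for k in range(1, 9)]


def dsct_blocks(seed=None):
    """Return the 72 crossed trials in random order, split into two halves around the break."""
    trials = list(itertools.product(TEMPI_IOI_MS, FEEDBACK_MS, CHORD_IDS))
    random.Random(seed).shuffle(trials)
    half = len(trials) // 2
    return trials[:half], trials[half:]


if __name__ == "__main__":
    first_half, second_half = dsct_blocks(seed=0)
    print(len(first_half), len(second_half), first_half[0])
```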

2.3.4 Individual spontaneous tapping rate

Each participant performed a spontaneous tapping task to assess their individual spontaneous tapping rate without a pacing stimulus (see Figure 1A). Participants A and B of each dyad performed this task separately: each was asked to tap as regularly as possible for about 1 min at a comfortable, self-chosen pace (Wing and Kristofferson, 1973; Hammerschmidt et al., 2021; Pfordresher et al., 2021), while the other participant waited. This test allowed us to control for spontaneous tapping rates in the joint synchronization tasks (see Zamm et al., 2016; Tranchant et al., 2022). Since this analysis was not directly relevant to our aims, the results are reported in Supplementary Section 4.
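The text does not specify how the spontaneous tapping rate was summarized (details are in Supplementary Section 4). As an illustration only, assuming the rate is taken as the mean inter-tap interval and that the dyad-level quantity of interest is the absolute difference between the partners' rates (the kind of difference in spontaneous production rates referred to in the Introduction), it could be computed as follows. Both function names are hypothetical.

```python
import statistics


def spontaneous_rate_ms(tap_times_ms):
    """Mean inter-tap interval (ms) of an unpaced tapping sequence (needs at least two taps)."""
    taps = sorted(tap_times_ms)
    itis = [later - earlier for earlier, later in zip(taps, taps[1:])]
    return statistics.mean(itis)


def dyad_rate_difference(taps_a, taps_b):
    """Absolute difference between the two partners' spontaneous rates (ms)."""
    return abs(spontaneous_rate_ms(taps_a) - spontaneous_rate_ms(taps_b))


if __name__ == "__main__":
    print(dyad_rate_difference([0, 600, 1200, 1800], [0, 500, 1000]))
```

A median ITI would be a reasonable, more outlier-robust alternative; which summary the authors used is not stated here.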

2.3.5 Music reward sensitivity

Furthermore, participants completed the extended version of the Barcelona Music Reward Questionnaire (eBMRQ; Cardona et al., 2022) to measure their music reward sensitivity. This questionnaire consists of 24 items, divided into six subscales: Music Seeking, Emotion Evocation, Mood Regulation, Sensorimotor, Social, and Musical Absorption, with four items per subscale. Each item (e.g., “When I share music with someone, I feel a special connection with that person.”) requires responses on a 5-point Likert scale, ranging from “completely disagree” to “completely agree.”

2.3.6 Musical training and perceptual abilities

Then, each participant filled out the Musical Training and Perceptual Abilities subscales of the Goldsmiths Musical Sophistication Index (Gold-MSI; Müllensiefen et al., 2014) to evaluate the influence of musical expertise on interpersonal tapping abilities. The Musical Training subscale comprises 7 items, such as “I engaged in regular, daily practice of a musical instrument (including voice) for N years,” where N is chosen from a 7-point scale for each item (e.g., 0, 1, 2, 3, 4–5, 6–9, 10+). Responses are scored from 1 to 7 according to the position of the chosen number of years on the scale (e.g., 0 years is scored as 1, 1 year as 2, 2 years as 3, and so on, up to 10+ years scored as 7). The Perceptual Abilities subscale includes 9 items (e.g., “I am able to judge whether someone is a good singer or not”) that require responses on a 7-point Likert scale ranging from “totally disagree” to “totally agree.”
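The years-to-score mapping described above (0, 1, 2, 3, 4–5, 6–9, 10+ scored 1 to 7) can be written as a small function. This is a sketch covering only the years-based response format quoted in the text, assuming whole numbers of years; the scoring keys of the subscale's other items are not described here and are not implemented.

```python
def years_item_score(years):
    """Map a number of years onto the 7-point scale: 0, 1, 2, 3, 4-5, 6-9, 10+ -> 1..7."""
    if years < 0:
        raise ValueError("years must be non-negative")
    if years >= 10:
        return 7
    if years >= 6:
        return 6
    if years >= 4:
        return 5
    return int(years) + 1  # 0 -> 1, 1 -> 2, 2 -> 3, 3 -> 4


if __name__ == "__main__":
    print([years_item_score(y) for y in (0, 1, 2, 3, 4, 5, 6, 9, 10, 15)])
```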

2.3.7 Autism

Lastly, participants completed the Autism Quotient Questionnaire (AQ; Ashwood et al., 2016) to investigate the influence of autistic-like traits (Tryfon et al., 2017; Bloch et al., 2019; Granner-Shuman et al., 2021; Carnevali et al., 2024) on sensorimotor synchronization abilities. This 50-item questionnaire offers four response options, ranging from “totally agree” to “totally disagree.” For some items, points are given for disagreeing (e.g., “I prefer to do things with others rather than on my own”), while for others, points are given for agreeing (e.g., “I prefer to do things the same way over and over again”).
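A minimal scoring sketch, assuming the conventional binary AQ scoring in which an item earns 1 point when the response falls on the scored side (either agree option for agree-scored items, either disagree option for disagree-scored items) and 0 otherwise. The numeric response codes and the two intermediate labels are assumptions; only the two example items quoted above come from the text, and the full item key is not reproduced.

```python
# Assumed numeric coding of the four response options (labels 2 and 3 are assumptions).
RESPONSE_CODES = {1: "totally agree", 2: "slightly agree", 3: "slightly disagree", 4: "totally disagree"}


def aq_item_point(response, scored_direction):
    """1 point if the response lies on the scored side ('agree' or 'disagree'), else 0."""
    if response not in RESPONSE_CODES:
        raise ValueError("response must be coded 1-4")
    if scored_direction not in ("agree", "disagree"):
        raise ValueError("scored_direction must be 'agree' or 'disagree'")
    agrees = response <= 2
    return int(agrees == (scored_direction == "agree"))


def aq_total(responses, scored_directions):
    """Sum of item points; one scoring direction is required per response."""
    if len(responses) != len(scored_directions):
        raise ValueError("one scoring direction is needed per response")
    return sum(aq_item_point(r, d) for r, d in zip(responses, scored_directions))


if __name__ == "__main__":
    # The two example items quoted in the text: the first is disagree-scored, the second agree-scored.
    print(aq_total([3, 1], ["disagree", "agree"]))
```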

2.4 Data analysis

2.4.1 Assessment of pitch discrimination and synchronization abilities

In the pitch discrimination perceptual test, we computed the mean and standard deviation of correct responses (out of ten) to verify participants' ability to distinguish the sounds. This task also enabled us to screen participants for amusia (Peretz et al., 2002; Liu et al., 2017; Whiteford and Oxenham, 2017). Participants gave a mean of 8.95 correct responses (SD = 1.03). All participants performed above chance level (5 correct responses), and a one-sample t-test against μ = 5 was significant [t(41) = 24.75, p < 0.001], confirming an adequate ability to distinguish between the chosen chords. We then analyzed the individual synchronization-continuation task (SCT) as follows: we computed the signed timing difference (in ms) between each tap and the nearest metronome click and aggregated these differences within each of the nine trials for each participant to obtain the mean and SD (in ms). Across participants, the distributions of these means and SDs had the following parameters: SkewnessM = 0.08, KurtosisM = 3.72; SkewnessSD = 0.84, KurtosisSD = 2.42. Mean values ranged from −181.90 ms to 225.81 ms. On this basis, we concluded that all participants had normal proficiency in both pitch discrimination and sensorimotor synchronization, and we retained the entire sample for further analysis.
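The one-sample test against chance can be checked from the summary statistics alone, using t = (x̄ − μ0)/(s/√n) with n − 1 degrees of freedom. Plugging in the rounded values above (x̄ = 8.95, s = 1.03, n = 42, μ0 = 5) gives t ≈ 24.85, close to the reported 24.75; the small gap presumably reflects rounding of the mean and SD. The sketch also includes moment-based skewness and kurtosis. We assume the non-excess kurtosis definition (about 3 for normal data), which matches the scale of the values reported in this section, but the exact estimator used by the authors is not stated.

```python
import math


def one_sample_t(mean, sd, n, mu0):
    """t statistic for H0: mu = mu0 from summary statistics (df = n - 1)."""
    return (mean - mu0) / (sd / math.sqrt(n))


def _central_moment(x, order):
    m = sum(x) / len(x)
    return sum((v - m) ** order for v in x) / len(x)


def moment_skewness(x):
    """Moment-based skewness m3 / m2^1.5 (0 for symmetric data)."""
    m2 = _central_moment(x, 2)
    if m2 == 0:
        raise ValueError("skewness is undefined for constant data")
    return _central_moment(x, 3) / m2 ** 1.5


def moment_kurtosis(x):
    """Non-excess (Pearson) kurtosis m4 / m2^2, approximately 3 for normal data."""
    m2 = _central_moment(x, 2)
    if m2 == 0:
        raise ValueError("kurtosis is undefined for constant data")
    return _central_moment(x, 4) / m2 ** 2


if __name__ == "__main__":
    print(round(one_sample_t(8.95, 1.03, 42, 5), 2))
```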

2.4.2 Dyadic synchronization-continuation task

We analyzed the subjective ratings of pleasure using a within-participants ANOVA with consonance of the chords (two levels: consonant vs. dissonant), auditory feedback duration (three levels: 150, 200, and 400 ms), and metronome tempo (three levels of IOI: 450, 550, and 650 ms) as factors, to assess whether consonant chords were rated higher than dissonant ones (see Krumhansl and Cuddy, 2010; McDermott et al., 2010) and whether pleasure ratings differed with sound duration and metronome tempo. We then analyzed the tapping data, examining interpersonal synchronization and individual tapping precision in both the synchronization and continuation phases. For interpersonal synchronization, we analyzed both phases in the same way, by measuring the time difference between the taps of the two participants, and included “Task Phase” as a factor in the ANOVA model. For individual tapping precision, the two phases required different measures: in the synchronization phase, we analyzed the time difference between each participant's taps and the metronome, whereas in the continuation phase, when the metronome was discontinued, we examined the Inter-Tap Intervals (ITIs). We therefore fitted separate ANOVA models for the two phases. Specifically, when investigating interpersonal synchronization, we first excluded a few trials (n = 6, 0.39% of all trials) in which, due to a technical glitch, only one participant's taps were recorded. Then, we paired each tap from participant A with the tap from participant B that was closest in time and calculated the absolute time difference (in ms) between the two taps (Δt = |tap A – tap B|; see Figure 1D).
We excluded taps produced after the end-of-trial sound and, to avoid incorrect tap matching (e.g., when participant B started tapping later than participant A, so that the dyad had not yet begun synchronizing), we also removed absolute differences greater than 80% of the metronome IOI. This criterion excluded 1.05% of matched pairs. We then aggregated these absolute tap differences within each of the 72 trials for each dyad to obtain the mean absolute tap time difference (in ms), mean(Δt), separately for the synchronization and continuation phases, where Δt is the time difference defined above. The distribution of these means departed considerably from normality (Skewness = 2.36, Kurtosis = 10.73), so we applied a logarithmic transformation (transformed scores: Skewness = 0.55, Kurtosis = 3.17). These log-transformed trial means were then averaged across the trials of the same dyad, separately for each task phase, tempo, auditory feedback duration, and consonance condition (36 values per dyad). After this preprocessing, we performed a within-dyads ANOVA on the log-transformed means with consonance of the chords (two levels: consonant vs. dissonant), tempo (three levels of metronome IOI: 450, 550, and 650 ms), auditory feedback duration (three levels: 150, 200, and 400 ms), and task phase (two levels: synchronization vs. continuation) as factors.
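The per-trial interpersonal measure can be sketched in Python as follows: each of participant A's taps is paired with participant B's nearest tap, taps after the end-of-trial gong are dropped, pairs differing by more than 80% of the IOI are discarded, and the trial score is the log of the mean absolute difference. Assumptions: tap times are in ms on a shared clock, the trial end is the gong onset, and the logarithm is natural (the base is not stated). Function names are hypothetical.

```python
import math
import statistics
from bisect import bisect_left


def nearest(sorted_times, t):
    """Element of a non-empty sorted list that is closest in time to t."""
    i = bisect_left(sorted_times, t)
    candidates = sorted_times[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda c: abs(c - t))


def interpersonal_asynchronies(taps_a, taps_b, ioi_ms, trial_end_ms, max_fraction=0.8):
    """|tap A - nearest tap B| (ms) for each of A's taps, after the exclusion rules."""
    a = sorted(t for t in taps_a if t <= trial_end_ms)  # drop taps after the gong
    b = sorted(t for t in taps_b if t <= trial_end_ms)
    if not a or not b:
        return []  # e.g. only one participant's taps recorded: no usable pairs
    diffs = (abs(t - nearest(b, t)) for t in a)
    return [d for d in diffs if d <= max_fraction * ioi_ms]  # likely mismatches removed


def log_mean_asynchrony(taps_a, taps_b, ioi_ms, trial_end_ms):
    """Natural log of the trial's mean absolute asynchrony, or None if it is undefined."""
    diffs = interpersonal_asynchronies(taps_a, taps_b, ioi_ms, trial_end_ms)
    if not diffs:
        return None
    mean_diff = statistics.mean(diffs)
    return math.log(mean_diff) if mean_diff > 0 else None


if __name__ == "__main__":
    taps_a = [0, 500, 1000, 1500]
    taps_b = [20, 480, 1030, 1950]  # the last pair (450 ms apart) exceeds 0.8 * 500 ms
    print(interpersonal_asynchronies(taps_a, taps_b, 500, 2000))
```

The per-trial values would then be averaged within each dyad and condition before entering the ANOVA, as described above.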

To examine individual tapping precision and determine whether it is affected by consonance, in the synchronization phase we analyzed each participant's timing deviation from the metronome. We matched each tap with the closest-in-time metronome click and calculated the signed time difference (in ms) between the participant's tap and that click (Δt = tap A/B – metronome; see Figure 1D), so that negative values indicate taps preceding the click. We then calculated the mean and variability of these signed differences within each trial, as mean(Δt) and sd(Δt). Because these measures include negative values and their distributions did not depart markedly from normality (means: skewness = −0.17, kurtosis = 3.39; SDs: skewness = 1.09, kurtosis = 2.95), we did not log-transform them, and they are reported on their original scale. The within-trial means and SDs were then averaged across the trials of each participant, separately for each combination of conditions (18 values per participant). We thus performed two within-participant ANOVAs, with the mean and the variability (SD) of signed time differences as dependent variables and the same factors described above, except for task phase. For the continuation phase, in which the metronome was discontinued, we analyzed the effect of consonance on the ITIs. We calculated ITIs between consecutive taps of the same participant and then averaged them across trials to obtain the mean and standard deviation of ITIs (18 values per participant). We thus performed two within-participant ANOVAs with mean ITI and ITI standard deviation (in ms) as dependent variables and the same set of factors.
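The per-trial measures of individual tapping precision can be sketched in the same way. This is an illustrative sketch with hypothetical function names, not the authors' code; it assumes metronome click onsets and one participant's tap onsets (in ms) for a single trial phase, matches each tap to its nearest click as described above, and computes the SD with the n − 1 denominator, as R's `sd()` does.

```python
from bisect import bisect_left
from statistics import mean, stdev


def signed_asynchronies(taps, clicks):
    """Signed differences tap - nearest metronome click (ms).

    Negative values mean the tap preceded the click.
    """
    clicks = sorted(clicks)
    result = []
    for t in taps:
        i = bisect_left(clicks, t)
        candidates = clicks[max(i - 1, 0):i + 1]
        nearest_click = min(candidates, key=lambda c: abs(c - t))
        result.append(t - nearest_click)
    return result


def inter_tap_intervals(taps):
    """Intervals (ms) between consecutive taps of the same participant."""
    taps = sorted(taps)
    return [later - earlier for earlier, later in zip(taps, taps[1:])]


def mean_and_sd(values):
    """Per-trial mean and sample SD (n - 1 denominator, like R's sd())."""
    return mean(values), stdev(values)
```

The per-trial values returned by `mean_and_sd` would then be averaged across the trials of each condition, mirroring the aggregation used for interpersonal synchronization.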

All ANOVA models were fitted using the ez package (Lawrence, 2016) in R (R Core Team, 2023). Post-hoc comparisons were computed with the rstatix package (Kassambara, 2019) using the Holm correction. Following the recommendation of Bakeman (2005), we report generalized eta squared (η²G; Olejnik and Algina, 2003) as the effect size.
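For readers unfamiliar with the Holm correction applied to the post-hoc comparisons, the step-down adjustment can be sketched as below. This is an illustrative re-implementation, assuming the standard Holm procedure (the same rule applied by R's `p.adjust(method = "holm")`), not the rstatix code itself: with m tests sorted by ascending p-value, the i-th smallest p-value is multiplied by m − i + 1, a running maximum keeps the adjusted values monotone, and results are capped at 1.

```python
def holm_adjust(pvalues):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # ascending p-values
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # rank 0 (smallest p) is multiplied by m, the largest by 1
        adj = min(1.0, (m - rank) * pvalues[i])
        running_max = max(running_max, adj)  # enforce monotonicity
        adjusted[i] = running_max
    return adjusted
```

For example, raw p-values of 0.01, 0.04, and 0.03 become 0.03, 0.06, and 0.06: the second and third comparisons share an adjusted value because of the running maximum.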

Lastly, independently of the consonant/dissonant properties of the auditory stimuli, we investigated whether dyadic pleasure influences how participants synchronize their movements (the relationship between pleasure and interpersonal synchronization). For each dyad, we took all trials and computed a regression slope between pleasure (the average of participant A's and participant B's ratings on each trial) and the mean absolute tap difference (in log ms). We then tested whether these per-dyad slopes differed significantly from zero at the group level using a one-sample t-test.
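The per-dyad slope analysis can be sketched as follows, assuming an ordinary least-squares fit of the trial-wise log asynchrony on the dyad's mean pleasure rating, followed by a one-sample t-test on the slopes. Function names are hypothetical and this is not the authors' code. Only the t statistic and its degrees of freedom are computed here; a p-value would then be taken from a t distribution with n − 1 degrees of freedom (for example with `scipy.stats.ttest_1samp`). Because a lower log asynchrony means tighter synchronization, the sign of the slope has to be read with that convention in mind.

```python
from math import sqrt
from statistics import mean, stdev


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def dyad_pleasure_slope(pleasure_a, pleasure_b, log_asynchrony):
    """Slope of trial-wise log asynchrony on the dyad's mean pleasure rating."""
    dyad_pleasure = [(a + b) / 2 for a, b in zip(pleasure_a, pleasure_b)]
    return ols_slope(dyad_pleasure, log_asynchrony)


def one_sample_t(values, mu=0.0):
    """t statistic and degrees of freedom for H0: population mean == mu."""
    n = len(values)
    t = (mean(values) - mu) / (stdev(values) / sqrt(n))
    return t, n - 1
```

In use, `dyad_pleasure_slope` would be called once per dyad (over its 72 trials), and the list of slopes passed to `one_sample_t`.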

2.4.3 Assessment of psychological constructs

We calculated the difference between post-experimental and pre-experimental closeness (IOS) scores for each dyad, yielding a change in closeness rating; for clarity, IOS scores are expressed as percentages. To examine whether the effect of consonance correlates with closeness or musical reward sensitivity, we first averaged the mean absolute tap differences (in log ms) separately over consonant and dissonant trials for each dyad, and then estimated each dyad's consonance effect by subtracting the mean dissonant score from the mean consonant score. We then computed the correlations of this dyadic consonance effect with the dyad-summed musical reward and closeness scores (two separate correlations).
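The dyad-level consonance effect and its correlation with questionnaire scores can be sketched as follows. This is a minimal illustration with hypothetical names, assuming Pearson correlation (the default method of R's `cor.test`) and one value per dyad for each variable; it is not the authors' code. With asynchrony in log ms, a negative consonance effect means that the dyad tapped more closely together on consonant than on dissonant trials.

```python
from math import sqrt
from statistics import mean


def consonance_effect(consonant_log_means, dissonant_log_means):
    """Mean consonant minus mean dissonant asynchrony (log ms) for one dyad."""
    return mean(consonant_log_means) - mean(dissonant_log_means)


def dyad_sums(scores_a, scores_b):
    """Sum the questionnaire scores of participants A and B, dyad by dyad."""
    return [a + b for a, b in zip(scores_a, scores_b)]


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)
```

The same `dyad_sums` and `pearson_r` helpers would also cover the exploratory correlations between dyadic questionnaire sums and overall interpersonal synchronization.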

Additionally, we conducted an exploratory analysis of whether dyadic differences in social factors (i.e., social closeness and autistic traits), musical experience, and musical reward sensitivity correlate with interpersonal synchronization. We summed the scores of participants A and B on each questionnaire (IOS pre, eBMRQ, AQ, Musical Training, and Perceptual Abilities) and correlated these dyadic sums with the mean absolute tap difference (in log ms) aggregated for each dyad. Correlation analyses were performed using the stats package in R (R Core Team, 2023).

3 Results

3.1 Dyadic synchronization-continuation task

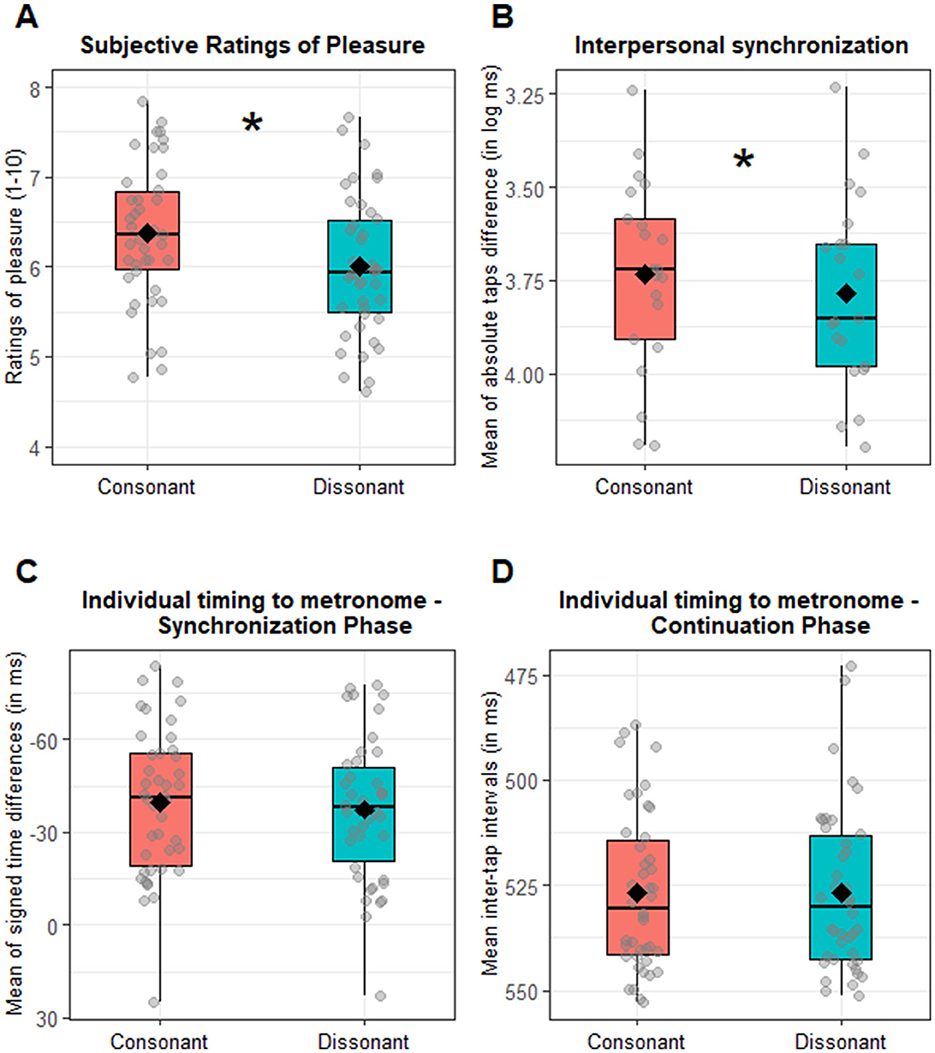

3.1.1 Subjective rating of pleasure

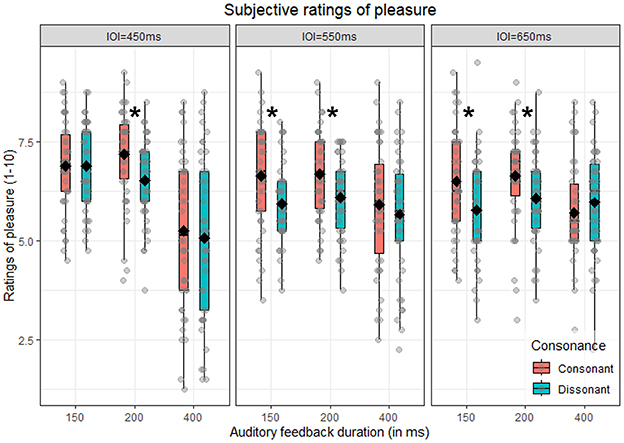

The main aim of this analysis was to confirm that subjective pleasure is predicted by consonance. Additionally, we investigated whether this effect interacted with feedback duration and metronome IOI. We therefore performed a repeated-measures ANOVA with consonance, auditory feedback duration, and metronome IOI as within-subjects factors. As expected, we found a significant main effect of consonance [F(1, 41) = 7.61, p = 0.009, η²G = 0.02]: consonant chords were rated significantly higher (M = 6.37, SD = 1.50) than dissonant ones (M = 6.00, SD = 1.39), as illustrated in Figure 4A. This effect was qualified by a significant three-way interaction of consonance × metronome IOI × auditory feedback duration [F(4, 164) = 2.77, p = 0.029, η²G = 0.01]. To explore this interaction, we compared consonant and dissonant pleasure ratings separately for each combination of metronome IOI and auditory feedback duration. Consonant chords were rated higher than dissonant ones at IOI = 550 ms [t(41) = 2.97, p = 0.028] and IOI = 650 ms [t(41) = 3.80, p = 0.004] with the 150 ms auditory feedback duration, and at all metronome IOIs with the 200 ms auditory feedback duration [450 ms: t(41) = 3.50, p = 0.008; 550 ms: t(41) = 3.03, p = 0.028; 650 ms: t(41) = 3.05, p = 0.028], as illustrated in Figure 2. In sum, consonant trials were overall associated with higher pleasure (main effect of consonance), although the difference was not significant in every combination of metronome tempo and auditory feedback duration (interaction). Since the main interest of this analysis was the effect of consonance on subjective pleasure, the interactions that do not involve consonance are reported in Supplementary Section 1.

Figure 2. Subjective rating of pleasure. Boxplot of the subjective ratings of pleasure as a function of auditory feedback duration (150, 200, and 400 ms) and consonance (consonant vs. dissonant), split by metronome IOI (450, 550, and 650 ms). Diamonds indicate the mean for each condition, while dots refer to individual participants' mean pleasure ratings for that specific combination of conditions. Asterisks indicate a significant consonant vs. dissonant difference in that specific combination of conditions.

3.1.2 Interpersonal synchronization (dSCT)

To test whether consonance affected interpersonal synchronization (aim 1), we performed an ANOVA on interpersonal synchronization (mean absolute tap difference in log ms), which revealed a significant main effect of consonance [F(1, 20) = 7.99, p = 0.010, η²G = 0.01]. Crucially, participants synchronized better with each other when they produced a consonant chord (3.73 log ms, 48.55 ms) than a dissonant one (3.78 log ms, 51.89 ms), as illustrated in Figure 4B. None of the interactions with consonance were significant (all Fs < 2.37, ps > 0.107). Since the main interest of our study was the effect of consonance, all other main and interaction effects that do not involve consonance are reported in Supplementary Section 2.

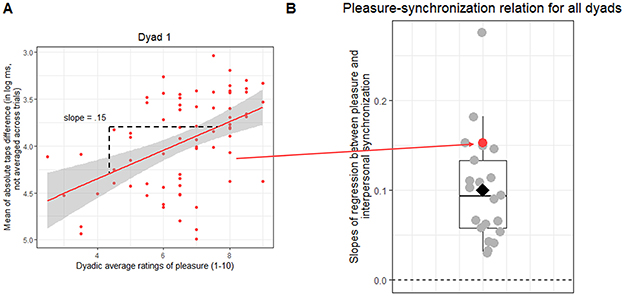

3.1.3 Pleasure and interpersonal synchronization

When analyzing the relationship between dyadic pleasure ratings and interpersonal synchronization (aim 1), we found a positive slope in all dyads (mean = 0.10, SD = 0.06), indicating that the higher the pleasure, the better the interpersonal synchronization. The slopes differed significantly from zero at the group level [t(20) = 7.78, p < 0.001; Figure 3B]. We conclude that, independently of the metronome IOI and the auditory feedback duration, dyads tended to tap more closely together on trials that they rated as more pleasant (see Figures 3A, B).

Figure 3. Pleasure and interpersonal synchronization relationship for all dyads. (A) Shows one example dyad. Each dot corresponds to one of the 72 trials. The red line indicates the regression model fit, predicting the mean of absolute taps difference (in log ms, not averaged per trial) by dyadic average ratings of pleasure (slope = 0.15), and the shaded area its standard error. Note that the y axis is inverted with higher values suggesting better interpersonal synchrony. The slope was extracted for group analysis. This analysis was repeated for all dyads individually. (B) Shows the slopes for all the dyads as dots. The red dot indicates the slope for the dyad shown in (A); the diamond indicates the slopes' mean. Higher values indicate steeper slope lines.

3.1.4 Individual tapping precision (dSCT)

To test whether consonance affected individual timing relative to the metronome (aim 2), we performed an ANOVA on the mean signed time differences (in ms) in the synchronization phase. No significant main effect of consonance emerged [F(1, 41) = 2.42, p = 0.127, η²G = 0.003; consonant: M = −39.53 ms; dissonant: M = −37.37 ms], as shown in Figure 4C, and none of the interactions with consonance reached significance (all Fs < 1.65, ps > 0.164). The same analysis on the variability (SD) of signed time differences (in ms) again showed no significant main effect of consonance [F(1, 41) = 3.32, p = 0.076, η²G = 0.003; consonant: SD = 63.47 ms; dissonant: SD = 61.06 ms], and none of the interactions with consonance reached significance (all Fs < 1.61, ps > 0.173). Since the main interest of our study was the effect of consonance, the interaction effects not involving consonance are reported in Supplementary Section 3A.

In the continuation phase, the ANOVA on the mean inter-tap interval (ITI) revealed no significant main effect of consonance [F(1, 41) = 0.07, p = 0.789, η²G < 0.001; consonant: M = 525.72 ms; dissonant: M = 525.94 ms]; see Figure 4D. The interaction between auditory feedback duration and consonance was significant [F(2, 82) = 3.83, p = 0.026, η²G = 0.01]. However, corrected post-hoc comparisons of consonant vs. dissonant trials within each auditory feedback duration revealed no significant differences [all ts(41) < 2.15, ps > 0.114; Supplementary Section 3B, Supplementary Figure 3B]. No other interactions with consonance were significant (all Fs < 1.62, ps > 0.173). For ITI variability (SD), the ANOVA revealed only a trend toward a consonance effect [F(1, 41) = 3.81, p = 0.058, η²G = 0.003; consonant: SD = 30.88 ms; dissonant: SD = 32.30 ms]. 
None of the interactions with consonance reached significance (all Fs < 1.47, ps > 0.213). Since the main interest of our study was the effect of consonance, the interaction effects not involving consonance are reported in Supplementary Section 3B. In sum, consonance had no significant overall effect on individual tapping performance, either in synchronization with the metronome or in continued tapping without it.

Figure 4. Consonance effect. (A) Boxplot of the subjective ratings of pleasure as a function of consonance (consonant vs. dissonant). Dots refer to the individual participants' mean rating of pleasure for each condition. (B) Boxplot of the consonance effect on interpersonal synchronization (measured as the log-transformed mean absolute tap difference). Diamonds indicate the overall consonant and dissonant means, while dots refer to the dyadic mean absolute tap difference for each condition. Note that the y axis is inverted so that higher values indicate better interpersonal synchrony. (C) Boxplot of the consonance effect on individual tapping precision (tap timing relative to the metronome) in the synchronization phase (measured as the mean signed time difference in ms). Diamonds indicate the overall consonant and dissonant means, while dots refer to the individual mean signed difference for each condition. Zero indicates perfect synchronization with the metronome. (D) Boxplot of the consonance effect on individual tapping precision in the continuation phase (measured as mean inter-tap interval, mean ITI). Diamonds indicate the consonant and dissonant mean ITIs, while dots refer to the individual mean ITI for each condition. Asterisks indicate a significant difference between the consonant and dissonant conditions.

3.2 Assessment of psychological constructs

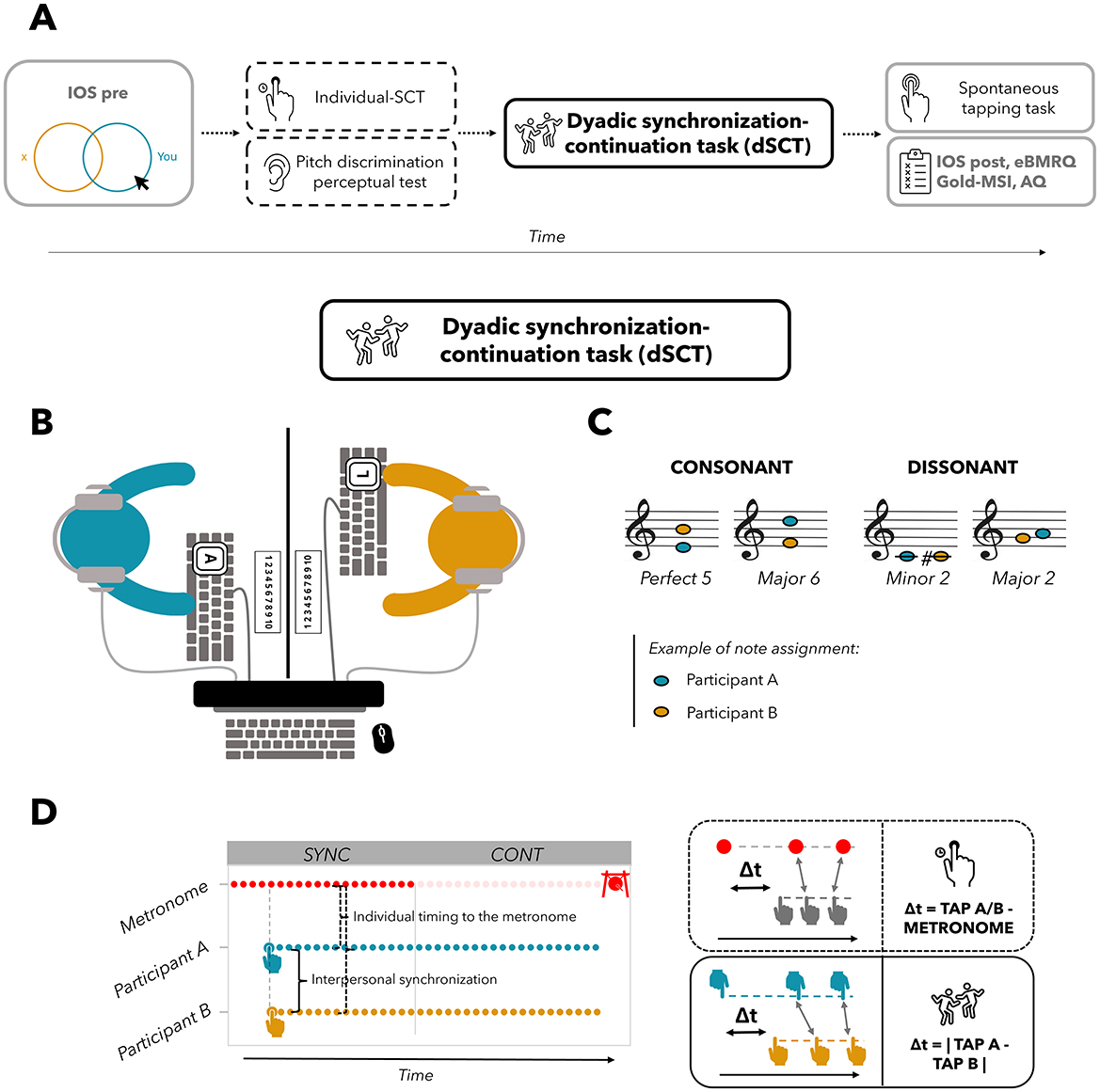

Table 2 reports the overall mean, SD, and maximum value for each questionnaire. IOS values, expressed as percentages, were significantly higher after the experiment than before [t(41) = −4.26, p < 0.001], indicating increased perceived closeness after the experiment.

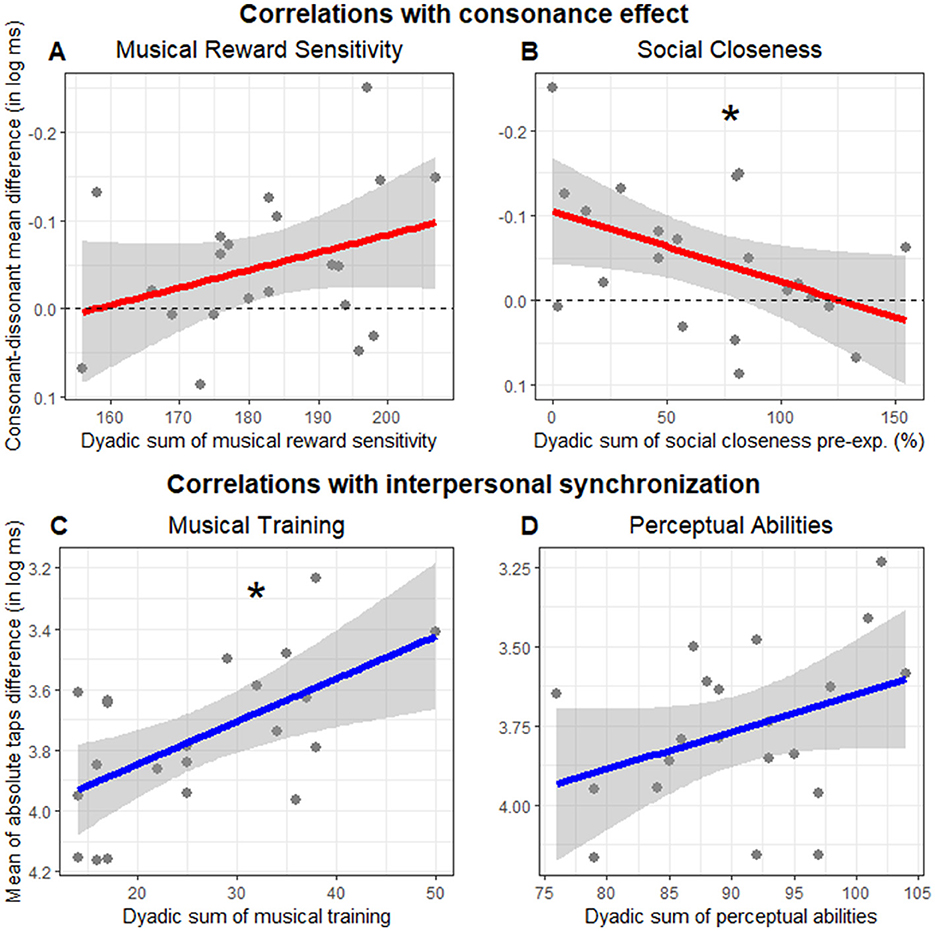

3.2.1 Inter-dyadic differences on consonance effect

To investigate the roles of musical reward sensitivity (aim 3) and perceived closeness (aim 4) in the consonance effect, we correlated the consonance effect (calculated for each dyad as interpersonal synchronization in consonant minus dissonant trials) with both the dyadic eBMRQ sum and the dyadic IOS pre sum. Dyadic musical reward sensitivity did not significantly correlate with the consonance effect (r = −0.33, p = 0.139; Figure 5A). Perceived closeness before the experiment (IOS pre), which is uncontaminated by changes that might occur as a result of the experiment, significantly correlated with the consonance effect (r = 0.46, p = 0.038), but in the direction opposite to our hypothesis: the higher the perceived closeness before the experiment, the smaller the effect of consonance during the dSCT (Figure 5B). Since we had no hypotheses about the relationships between the consonance effect and the other questionnaire scores, these are reported in Supplementary Section 6A.

Figure 5. Correlation with consonance effect (A, B) and interpersonal synchronization (C, D). (A, B) Depict the consonance effect (measured as interpersonal synchronization in the consonant minus the dissonant trials, in log ms) by dyadic sum of (A) music reward sensitivity (eBMRQ) and (B) social closeness before the experimental session (IOS pre, expressed in percentage). Higher points suggest higher consonant effect. Dashed lines indicate values with no consonance-dissonance difference. (C, D) Represent the interpersonal synchronization (measured as the mean of absolute taps difference in log ms) as a function of the dyadic sum of (C) musical training and (D) perceptual abilities. Each point indicates the mean of absolute taps difference for each dyad. Note that the y axis is inverted with higher values suggesting better interpersonal synchrony. Lines indicate the regression model fit and the shaded area its standard error. Asterisks indicate significant correlations.

3.2.2 Inter-dyadic differences on interpersonal synchronization

We performed an exploratory correlation analysis of the relationships between the dyadic sum of each questionnaire score and interpersonal synchronization (mean absolute tap difference in log ms). Only the dyadic musical training sum correlated significantly with interpersonal synchronization (r = −0.59, p = 0.005; Figure 5C): the higher the dyad's summed musical training, the better their interpersonal synchronization. In contrast, the correlation with perceptual abilities was not significant (r = −0.36, p = 0.105; Figure 5D). Neither social factors (IOS pre: r = −0.04, p = 0.864; AQ: r = −0.27, p = 0.232) nor the dyadic eBMRQ sum (r = −0.19, p = 0.415) correlated significantly with interpersonal synchronization. All correlations between dyadic questionnaire sums and interpersonal synchronization are reported in Supplementary Section 6B.

4 Discussion

The present study investigated whether the quality of a joint outcome can shape the dynamics of interpersonal movement synchronization. Paired non-musician participants performed a dyadic synchronization–continuation task (dSCT). Each participant heard the auditory feedback from both themselves and their partner, thus creating a chord that was either consonant (Perf5 or Maj6) or dissonant (Min2 or Maj2). Interpersonal synchronization accuracy was higher when participants produced consonant chords together (high pleasure) than when they produced dissonant ones (low pleasure). Since the consonant and dissonant conditions (varied within dyads) differed only in pitch content, with auditory feedback duration and metronome tempo matched across conditions, we argue that the observed differences in interpersonal sensorimotor timing are driven by the consonance created by the dyad. Supporting this finding, the dyad's subjective rating of pleasure from the chord they produced together predicted interpersonal synchronization on a per-trial basis. Therefore, both an objective intrinsic property of the auditory stimulus (i.e., consonance) and a subjective measure of pleasure in the joint outcome significantly influence how participants synchronize their movements with each other, affecting the temporal coordination of their actions. Interestingly, the effect of consonance was stronger for dyads that reported feeling less close at the beginning of the experiment. Finally, we corroborate previous findings by demonstrating a significant effect of musical training (even in non-musician participants) on interpersonal synchronization (Pecenka and Keller, 2011), supporting the validity of our measure for assessing tapping production abilities in an interpersonal context. 
Together these findings suggest that the pleasantness of the joint auditory outcomes positively influences the accuracy of interpersonal synchronization, highlighting the importance of perceptual and aesthetic emotional factors in collaborative motor tasks.

Our findings indicate a significant relationship between the acoustic properties of the joint outcome and interpersonal synchronization (aim 1). The greater dyadic synchronization accuracy for consonant than for dissonant chords suggests that the sensory-driven quality of what we produce together directly influences interpersonal motor coordination. Indeed, in our case, predictions about the quality of the joint outcome were driven purely by incoming perceptual information, since participants did not know the chords in advance; this rules out top-down expectations or strategic influences on their behavior. These results can be interpreted in light of the processing advantages of consonance over dissonance (Bones et al., 2014; Tabas et al., 2019; for a review, see Di Stefano et al., 2022). At the neural level, consonant and dissonant stimuli have been shown to be processed differently at both subcortical (Fishman et al., 2001; McKinney et al., 2001; Tramo et al., 2001; Bidelman and Krishnan, 2009) and cortical levels (Itoh et al., 2003, 2010; Bidelman and Grall, 2014) of the auditory system. These neurobiological studies have demonstrated that consonance processing begins early in the human auditory cortex and that additional neural resources are recruited to encode and discriminate dissonant chords compared to consonant ones (Tervaniemi et al., 2011; Virtala et al., 2013; Crespo-Bojorque et al., 2018). Interestingly, this distinctive activation pattern is observed in both humans and monkeys, suggesting a shared evolutionary trait (Fishman et al., 2001; Kadia and Wang, 2003; Bendor and Wang, 2005). The advantages of consonance extend beyond perceptual processing to higher-level cognitive abilities and motor performance. Crespo-Bojorque and Toro (2016) found that learning stimulus-response association rules is facilitated when the rules are conveyed through consonant rather than dissonant intervals, while Komeilipoor et al. (2015) demonstrated that individual movement performance is less variable and more precise following exposure to a consonant rather than a dissonant metronome. Our results align with these findings, showing that creating consonance together affects how we synchronize our movements with partners, and thereby extend previous research by highlighting the social impact of consonance. Minati et al. (2009) also observed strong right-hemisphere activation (including premotor cortex and inferior parietal lobe) in response to consonant sounds. These brain regions are part of the dorsal auditory stream, which integrates auditory and motor information (Rauschecker, 2011; Lega et al., 2016). This pathway is particularly active in the right hemisphere during both rhythm perception (Chen et al., 2008; Siman-Tov et al., 2022) and production (Giovannelli et al., 2014). Moreover, other brain areas activated by consonant sounds, such as the orbitofrontal cortex, amygdala, and anterior cingulate gyrus (Dellacherie et al., 2009; Omigie et al., 2015), coincide with regions engaged in social behavior during interpersonal tasks (Beer et al., 2006; Cacioppo et al., 2014; see also aim 4). Taken together, this neural overlap between areas involved in consonance processing and interpersonal interaction supports the picture emerging from our study that these processes are linked.

Building on the link between consonance and enhanced synchronization established above, our findings underscore the role of subjective pleasure in shaping interpersonal coordination. The observation that dyads' self-reported pleasure in the joint outcome predicts synchronization accuracy on a trial-by-trial basis highlights the interplay between perception and aesthetic pleasure in motor control. Previous studies have shown that negative interpersonal perception disrupts mutual motor adjustments while improving synchronization, as reflected in less movement correction and lower variability (Sacheli et al., 2012). This suggests that partners who report a negative interpersonal bond execute a cooperative task more individually, adapting less to each other's motor behavior. Similarly, recent studies that experimentally manipulated emotional states (positive, negative, or neutral) showed that individuals induced to feel positive emotions, as opposed to negative emotions or a neutral state, maintained behavioral synchrony with other group members for longer (Smykovskyi et al., 2022), whereas inducing negative emotions significantly reduced both the time spent in synchrony and the level of synchronization (Smykovskyi et al., 2024). We speculate that a similar mechanism may be at work in the present study: the positive affective experience plausibly generated by consonant chords may promote more precise interpersonal movement coordination (i.e., mutual adaptation) without necessarily improving the precision of individual performance (i.e., individual synchronization with the metronome). Following this reasoning, we might hypothesize that consonance elicits a pleasant affective experience, which in turn mediates its effect on interpersonal motor coordination. 
Indeed, we showed that consonant chords received higher pleasure ratings than dissonant ones, in line with a host of prior studies (Koelsch et al., 2006; Sammler et al., 2007; Krumhansl and Cuddy, 2010; McDermott et al., 2010; Komeilipoor et al., 2015). However, the present design does not allow us to definitively disentangle the specific contributions of low-level perceptual features (consonance) and higher-level aesthetic experiences to the observed effects. Future studies employing more complex musical stimuli are needed to test the selective contribution of these factors and to further explore the causal relationship between pleasure and interpersonal synchronization. Indeed, although consonant chords were rated higher than dissonant ones, the pleasure evoked by single chords composed of pure tones is limited, as also suggested by the low variability of the pleasure ratings. Future studies could use full-fledged musical stimuli, which presumably evoke more intense experiences of pleasure (see Blood and Zatorre, 2001; Salimpoor et al., 2011).

Interestingly, consonance affected synchronization between individuals but not individual tapping metrics, suggesting that the effect of consonance is primarily social in nature (aim 2). This result may seem to contradict Komeilipoor et al. (2015), who showed that individual motor synchronization performance, once the metronome was discontinued, was less precise and more variable in the dissonant (vs. consonant) condition. In our study, consonance effects on individual tapping in the continuation phase did not reach significance, and, despite the significant interaction between consonance and auditory feedback duration, no consonant vs. dissonant differences emerged at any feedback duration. Likewise, consonance did not affect individual synchronization with the metronome in the synchronization phase. One possible explanation is that our participants were explicitly instructed to synchronize with each other, emphasizing the interpersonal aspect over individual synchronization, whereas in Komeilipoor et al. (2015) participants were tested alone and instructed to synchronize with a metronome. Previous studies have shown that when people engage in joint actions, top-down rule-based mechanisms can regulate bottom-up sensory-driven processes (Konvalinka et al., 2010). Specifically, when participants are instructed to perform a joint action, they mutually and continuously adapt their tap intervals, employing a “mutual adaptation” strategy (Konvalinka et al., 2010; Nowicki et al., 2013; Van Der Steen and Keller, 2013; Keller et al., 2014; Uccelli et al., 2023). Furthermore, in Komeilipoor et al. (2015), consonance was not generated by the participants' movements but was delivered by an external stimulus beyond their control, a crucial difference that may also help explain the divergent outcomes.

When investigating whether reward sensitivity modulates the consonance effect, we anticipated that individuals more sensitive to aesthetic outcomes would show a more pronounced difference between consonant and dissonant sounds (aim 3). Although the correlation was not significant, its direction was in line with our expectations. Future research could examine this relationship further, particularly which stages of the interaction between joint outcome and interpersonal synchronization are most influenced by reward sensitivity (e.g., consonance, pleasantness, or beauty in general).

Our results indicate that the effect of consonance has a social component, as it was significantly modulated by the quality of the dyadic relationship prior to the experiment (aim 4). The direction of this relation was opposite to what we had hypothesized: the impact of consonance on interpersonal synchronization was greater in dyads that reported feeling less close before the task. We do not have a definitive explanation for this finding, and given that it contradicts our hypothesis, further confirmatory experiments are needed to establish whether it is robust. We might nonetheless speculate on a potential underlying mechanism: individuals who already feel closer may rely less on their joint outcomes to guide their behavior, because prior closeness buffers the need for a pleasurable outcome. By analogy, close friends may feel more at ease having tough (not pleasurable) conversations because of the strength of their social bond. A limitation of this explanation is that participants in our study were specifically recruited not to know each other beforehand, so their level of closeness was necessarily limited. Individuals with less close interpersonal relationships may benefit more from positive external stimuli, such as consonant and pleasant interactions, to improve their emotional state and sense of connectedness (Lee et al., 2013; Taruffi and Koelsch, 2014; Schäfer et al., 2020). While the bidirectional relationship between perceived closeness and interpersonal synchronization has been previously established (Hove and Risen, 2009; Basile et al., 2022; Hu et al., 2022; Bégel et al., 2024), our results raise the possibility that this relationship could be mediated by the aesthetic experience of what is created together. 
Future studies should further explore these interactions and their causal direction, such as by manipulating the quality of the dyadic relationship and examining the effect of consonance on dyadic synchronization tasks.

5 Conclusion

In conclusion, our study demonstrates that the aesthetic quality of collaboratively produced sounds significantly influences the precision of interpersonal motor synchronization. These findings build on previous research examining factors such as tempo, timbre, and intensity in rhythmic joint actions, and highlight the importance of considering aesthetic and consonant elements in collaborative motor tasks. These results may also prove valuable from a clinical perspective. If consonant musical pitch intervals enhance movement synchronization more effectively than dissonant intervals, future research could leverage these stimuli in the treatment of neurological and psychiatric disorders. By promoting the joint creation of pleasant sounds and synchronized movements, such techniques could improve movement performance in patients with sensorimotor deficits, such as Parkinson's disease (Rodger et al., 2014; Komeilipoor et al., 2015). Additionally, since patients with schizophrenia often exhibit reduced synchronous behavior, impaired movements and gestures, and social-affective disorders, pitch-based interpersonal synchronization tasks could help improve movement synchronization, foster feelings of closeness, and enhance social interactions (Varlet et al., 2012; Lavelle et al., 2014; Raffard et al., 2015; Dean et al., 2021).

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://osf.io/y2aeh/?view_only=9e5991a23b414881a39cc678e7678ba9.

Ethics statement

The studies involving humans were approved by the Ethical Committee of the University of Pavia (Prot. # 132/23). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

GL: Data curation, Formal analysis, Investigation, Visualization, Writing – original draft. LS: Conceptualization, Methodology, Writing – review & editing. C-EB: Conceptualization, Methodology, Writing – review & editing. CL: Conceptualization, Methodology, Project administration, Supervision, Writing – original draft, Writing – review & editing. FV: Conceptualization, Data curation, Formal analysis, Funding acquisition, Methodology, Project administration, Software, Supervision, Visualization, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by FRQNT Research Support for New Academics (#316243) to FV, NSERC Discovery (RGPIN-2022-04362) to FV, and by Bial Foundation Grant (241/2020) to CL.

Acknowledgments

We would like to thank all the participants for lending their time to participate in this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2024.1472632/full#supplementary-material

References

Abalde, S. F., Rigby, A., Keller, P. E., and Novembre, G. (2024). A framework for joint music making: behavioral findings, neural processes, and computational models. Neurosci. Biobehav. Rev. 2024:105816. doi: 10.1016/j.neubiorev.2024.105816

Ackerman, J. M., and Bargh, J. A. (2010). “Two to tango: automatic social coordination and the role of felt effort,” in Effortless Attention, ed. B. Bruya (The MIT Press), 335–372.

Aron, A., Aron, E. N., and Smollan, D. (1992). Inclusion of Other in the Self Scale and the structure of interpersonal closeness. J. Personal. Soc. Psychol. 63, 596–612. doi: 10.1037/0022-3514.63.4.596

Aschersleben, G. (2002). Temporal control of movements in sensorimotor synchronization. Brain Cogn. 48, 66–79. doi: 10.1006/brcg.2001.1304

Aschersleben, G., and Prinz, W. (1995). Synchronizing actions with events: the role of sensory information. Percept. Psychophys. 57, 305–317. doi: 10.3758/BF03213056

Ashwood, K. L., Gillan, N., Horder, J., Hayward, H., Woodhouse, E., McEwen, F. S., et al. (2016). Predicting the diagnosis of autism in adults using the Autism-Spectrum Quotient (AQ) questionnaire. Psychol. Med. 46, 2595–2604. doi: 10.1017/S0033291716001082

Bakeman, R. (2005). Recommended effect size statistics for repeated measures designs. Behav. Res. Methods 37, 379–384. doi: 10.3758/BF03192707

Basile, C., Lecce, S., and Van Vugt, F. T. (2022). Synchrony during online encounters affects social affiliation and theory of mind but not empathy. Front. Psychol. 13:886639. doi: 10.3389/fpsyg.2022.886639

Beer, J. S., John, O. P., Scabini, D., and Knight, R. T. (2006). Orbitofrontal cortex and social behavior: integrating self-monitoring and emotion-cognition interactions. J. Cogn. Neurosci. 18, 871–879. doi: 10.1162/jocn.2006.18.6.871

Bégel, V., Demos, A. P., and Palmer, C. (2024). Duet synchronization interventions affect social interactions. Sci. Rep. 14:9930. doi: 10.1038/s41598-024-60485-w

Bendor, D., and Wang, X. (2005). The neuronal representation of pitch in primate auditory cortex. Nature 436, 1161–1165. doi: 10.1038/nature03867

Bernieri, F. J., and Rosenthal, R. (1991). “Interpersonal coordination: behavior matching and interactional synchrony,” in Fundamentals of Nonverbal Behavior, eds. R. S. Feldman and B. Rimé (New York, NY: Cambridge University Press), 401–432.

Bidelman, G. M., and Grall, J. (2014). Functional organization for musical consonance and tonal pitch hierarchy in human auditory cortex. NeuroImage 101, 204–214. doi: 10.1016/j.neuroimage.2014.07.005

Bidelman, G. M., and Krishnan, A. (2009). Neural correlates of consonance, dissonance, and the hierarchy of musical pitch in the human brainstem. J. Neurosci. 29, 13165–13171. doi: 10.1523/JNEUROSCI.3900-09.2009

Bloch, C., Vogeley, K., Georgescu, A. L., and Falter-Wagner, C. M. (2019). INTRApersonal synchrony as constituent of INTERpersonal synchrony and its relevance for autism spectrum disorder. Front. Robot. AI 6:73. doi: 10.3389/frobt.2019.00073

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U. S. A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Bones, O., Hopkins, K., Krishnan, A., and Plack, C. J. (2014). Phase locked neural activity in the human brainstem predicts preference for musical consonance. Neuropsychologia 58, 23–32. doi: 10.1016/j.neuropsychologia.2014.03.011

Cacioppo, S., Zhou, H., Monteleone, G., Majka, E. A., Quinn, K. A., Ball, A. B., et al. (2014). You are in sync with me: neural correlates of interpersonal synchrony with a partner. Neuroscience 277, 842–858. doi: 10.1016/j.neuroscience.2014.07.051

Cardona, G., Ferreri, L., Lorenzo-Seva, U., Russo, F. A., and Rodriguez-Fornells, A. (2022). The forgotten role of absorption in music reward. Ann. N. Y. Acad. Sci. 1514, 142–154. doi: 10.1111/nyas.14790

Carnevali, L., Valori, I., Mason, G., Altoè, G., and Farroni, T. (2024). Interpersonal motor synchrony in autism: a systematic review and meta-analysis. Front. Psychiatry 15:1355068. doi: 10.3389/fpsyt.2024.1355068

Chen, J. L., Penhune, V. B., and Zatorre, R. J. (2008). Listening to musical rhythms recruits motor regions of the brain. Cerebr. Cortex 18, 2844–2854. doi: 10.1093/cercor/bhn042

Colley, I. D., Varlet, M., MacRitchie, J., and Keller, P. E. (2018). The influence of visual cues on temporal anticipation and movement synchronization with musical sequences. Acta Psychol. 191, 190–200. doi: 10.1016/j.actpsy.2018.09.014

Costa, P. T., and McCrae, R. R. (1992). Normal personality assessment in clinical practice: the NEO Personality Inventory. Psychol. Assess. 4, 5–13. doi: 10.1037/1040-3590.4.1.5

Crespo-Bojorque, P., Monte-Ordoño, J., and Toro, J. M. (2018). Early neural responses underlie advantages for consonance over dissonance. Neuropsychologia 117, 188–198. doi: 10.1016/j.neuropsychologia.2018.06.005

Crespo-Bojorque, P., and Toro, J. M. (2016). Processing advantages for consonance: a comparison between rats (Rattus norvegicus) and humans (Homo sapiens). J. Comparat. Psychol. 130, 97–108. doi: 10.1037/com0000027

Curioni, A., Minio-Paluello, I., Sacheli, L. M., Candidi, M., and Aglioti, S. M. (2017). Autistic traits affect interpersonal motor coordination by modulating strategic use of role-based behavior. Mol. Aut. 8:23. doi: 10.1186/s13229-017-0141-0

D'Ausilio, A., Novembre, G., Fadiga, L., and Keller, P. E. (2015). What can music tell us about social interaction? Trends Cogn. Sci. 19, 111–114. doi: 10.1016/j.tics.2015.01.005

Dean, D. J., Scott, J., and Park, S. (2021). Interpersonal coordination in schizophrenia: a scoping review of the literature. Schizophr. Bullet. 47, 1544–1556. doi: 10.1093/schbul/sbab072

Dellacherie, D., Pfeuty, M., Hasboun, D., Lefèvre, J., Hugueville, L., Schwartz, D. P., et al. (2009). The birth of musical emotion: a depth electrode case study in a human subject with epilepsy. Ann. N. Y. Acad. Sci. 1169, 336–341. doi: 10.1111/j.1749-6632.2009.04870.x

Di Stefano, N., Vuust, P., and Brattico, E. (2022). Consonance and dissonance perception. A critical review of the historical sources, multidisciplinary findings, and main hypotheses. Phys. Life Rev. 43, 273–304. doi: 10.1016/j.plrev.2022.10.004

Fishman, Y. I., Volkov, I. O., Noh, M. D., Garell, P. C., Bakken, H., Arezzo, J. C., et al. (2001). Consonance and dissonance of musical chords: neural correlates in auditory cortex of monkeys and humans. J. Neurophysiol. 86, 2761–2788.

Fritz, T., Jentschke, S., Gosselin, N., Sammler, D., Peretz, I., Turner, R., et al. (2009). Universal recognition of three basic emotions in music. Curr. Biol. 19, 573–576. doi: 10.1016/j.cub.2009.02.058

Giovannelli, F., Innocenti, I., Rossi, S., Borgheresi, A., Ragazzoni, A., Zaccara, G., et al. (2014). Role of the dorsal premotor cortex in rhythmic auditory-motor entrainment: a perturbational approach by rTMS. Cerebr. Cortex 24, 1009–1016. doi: 10.1093/cercor/bhs386

Goebl, W., and Palmer, C. (2009). Synchronization of timing and motion among performing musicians. Music Percept. 26, 427–438. doi: 10.1525/mp.2009.26.5.427

Goodman, E. (2002). “Ensemble performance,” in Musical Performance: A Guide to Understanding, ed. J. Rink (Cambridge: Cambridge University Press), 153–167.

Granner-Shuman, M., Dahan, A., Yozevitch, R., and Gvirts Problovski, H. Z. (2021). The association among autistic traits, interactional synchrony and typical pattern of motor planning and execution in neurotypical individuals. Symmetry 13:1034. doi: 10.3390/sym13061034

Hammerschmidt, D., Frieler, K., and Wöllner, C. (2021). Spontaneous motor tempo: investigating psychological, chronobiological, and demographic factors in a large-scale online tapping experiment. Front. Psychol. 12:677201. doi: 10.3389/fpsyg.2021.677201

Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., and Malach, R. (2004). Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640. doi: 10.1126/science.1089506

Helmholtz, H. L. F. (1913). Die Lehre von den Tonempfindungen als physiologische Grundlage für die Theorie der Musik. F. Vieweg, Braunschweig (1863), 6th Edn (E. Terhardt, Trans.). Royal College of Physicians in Edinburgh.

Hernández, M., Palomar-García, M.-Á., Nohales-Nieto, B., Olcina-Sempere, G., Villar-Rodríguez, E., Pastor, R., et al. (2019). Separate contribution of striatum volume and pitch discrimination to individual differences in music reward. Psychol. Sci. 30, 1352–1361. doi: 10.1177/0956797619859339

Hove, M. J., and Risen, J. L. (2009). It's all in the timing: interpersonal synchrony increases affiliation. Soc. Cogn. 27, 949–960. doi: 10.1521/soco.2009.27.6.949

Hu, Y., Cheng, X., Pan, Y., and Hu, Y. (2022). The intrapersonal and interpersonal consequences of interpersonal synchrony. Acta Psychol. 224:103513. doi: 10.1016/j.actpsy.2022.103513

Itoh, K., Suwazono, S., and Nakada, T. (2003). Cortical processing of musical consonance: an evoked potential study. NeuroReport 14, 2303–2306. doi: 10.1097/00001756-200312190-00003

Itoh, K., Suwazono, S., and Nakada, T. (2010). Central auditory processing of noncontextual consonance in music: an evoked potential study. J. Acoust. Soc. Am. 128, 3781–3787. doi: 10.1121/1.3500685

Kadia, S. C., and Wang, X. (2003). Spectral integration in A1 of awake primates: neurons with single- and multipeaked tuning characteristics. J. Neurophysiol. 89, 1603–1622. doi: 10.1152/jn.00271.2001

Kasten, K., Jacoby, N., and Ahissar, M. (2023). Poor synchronization yet adequate tempo-keeping in adults with autism. Aut. Res. 16, 1161–1173. doi: 10.1002/aur.2926

Keller, P. E. (2013). “Musical ensemble performance: a theoretical framework and empirical findings on interpersonal coordination,” in Proceedings of the Fourth International Symposium on Performance Science (ISPS 2013), 28–31 August 2013 (Vienna), 271–285.

Keller, P. E., and Appel, M. (2010). Individual differences, auditory imagery, and the coordination of body movements and sounds in musical ensembles. Music Percept. 28, 27–46. doi: 10.1525/mp.2010.28.1.27

Keller, P. E., and Koch, I. (2008). Action planning in sequential skills: relations to music performance. Quart. J. Exp. Psychol. 61, 275–291. doi: 10.1080/17470210601160864

Keller, P. E., Novembre, G., and Hove, M. J. (2014). Rhythm in joint action: psychological and neurophysiological mechanisms for real-time interpersonal coordination. Phil. Trans. R. Soc. B 369:20130394. doi: 10.1098/rstb.2013.0394

Keller, P. E., and Repp, B. H. (2005). Staying offbeat: sensorimotor syncopation with structured and unstructured auditory sequences. Psychol. Res. 69, 292–309. doi: 10.1007/s00426-004-0182-9

Kinley, I., and Van Vugt, F. T. (2023). A jsPsych Plugin for the Inclusion of Other in the Self (IOS) Scale. doi: 10.31234/osf.io/u429f

Kleinspehn, A. (2008). Goal-Directed Interpersonal Action Synchronization Across the Lifespan: a Dyadic Drumming Study.

Koelsch, S., Fritz, T., von Cramon, D. Y., Müller, K., and Friederici, A. D. (2006). Investigating emotion with music: an fMRI study. Hum. Brain Map. 27, 239–250. doi: 10.1002/hbm.20180

Komeilipoor, N., Rodger, M. W. M., Craig, C. M., and Cesari, P. (2015). (Dis-)Harmony in movement: effects of musical dissonance on movement timing and form. Exp. Brain Res. 233, 1585–1595. doi: 10.1007/s00221-015-4233-9

Konvalinka, I., Vuust, P., Roepstorff, A., and Frith, C. D. (2009). A coupled oscillator model of interactive tapping. Available at: https://jyx.jyu.fi/handle/123456789/20884 (accessed July 13, 2024).

Konvalinka, I., Vuust, P., Roepstorff, A., and Frith, C. D. (2010). Follow you, follow me: continuous mutual prediction and adaptation in joint tapping. Quart. J. Exp. Psychol. 63, 2220–2230. doi: 10.1080/17470218.2010.497843

Krumhansl, C. L., and Cuddy, L. L. (2010). “A theory of tonal hierarchies in music,” in Music Perception, eds. M. Riess Jones, R. R. Fay, and A. N. Popper (New York, NY: Springer New York), 51–87.

Large, E. W., Fink, P., and Kelso, J. A. S. (2002). Tracking simple and complex sequences. Psychol. Res. 66, 3–17. doi: 10.1007/s004260100069

Laroche, J., Tomassini, A., Volpe, G., Camurri, A., Fadiga, L., and D'Ausilio, A. (2022). Interpersonal sensorimotor communication shapes intrapersonal coordination in a musical ensemble. Front. Hum. Neurosci. 16:899676. doi: 10.3389/fnhum.2022.899676

Lavelle, M., Healey, P. G. T., and McCabe, R. (2014). Nonverbal behavior during face-to-face social interaction in schizophrenia: a review. J. Nerv. Mental Dis. 202, 47–54. doi: 10.1097/NMD.0000000000000031

Lawrence, M. A. (2016). ez: Easy Analysis and Visualization of Factorial Experiments. Available at: https://cran.r-project.org/web/packages/ez/index.html (accessed July 14, 2024).

Lee, C. J., Andrade, E. B., and Palmer, S. E. (2013). Interpersonal relationships and preferences for mood-congruency in aesthetic experiences. J. Consum. Res. 40, 382–391. doi: 10.1086/670609

Lega, C., Stephan, M. A., Zatorre, R. J., and Penhune, V. (2016). Testing the role of dorsal premotor cortex in auditory-motor association learning using transcranical magnetic stimulation (TMS). PLoS ONE 11:e0163380. doi: 10.1371/journal.pone.0163380

Liu, F., Jiang, C., Francart, T., Chan, A. H. D., and Wong, P. C. M. (2017). Perceptual learning of pitch direction in congenital amusia. Music Percept. 34, 335–351. doi: 10.1525/mp.2017.34.3.335

Masataka, N. (2006). Preference for consonance over dissonance by hearing newborns of deaf parents and of hearing parents. Dev. Sci. 9, 46–50. doi: 10.1111/j.1467-7687.2005.00462.x