- 1Department of Psychology, University of Fribourg, Fribourg, Switzerland

- 2Department of Psychology, Tufts University, Medford, MA, United States

The human brain is sensitive to threat-related information even when we are not aware of this information. For example, fearful faces attract gaze in the absence of visual awareness. Moreover, information in different sensory modalities interacts in the absence of awareness; for example, the detection of suppressed visual stimuli is facilitated by simultaneously presented congruent sounds or tactile stimuli. Here, we combined these two lines of research and investigated whether threat-related sounds could facilitate visual processing of threat-related images suppressed from awareness such that they attract eye gaze. We suppressed threat-related images of cars and neutral images of human hands from visual awareness using continuous flash suppression and tracked observers’ eye movements while presenting congruent or incongruent sounds (finger snapping and car engine sounds). Indeed, threat-related car sounds guided the eyes toward suppressed car images: participants looked longer at the hidden car images than at any other part of the display. In contrast, neither congruent nor incongruent sounds had a significant effect on eye responses to suppressed finger images. Overall, our results suggest that semantically congruent sounds modulate eye movements to images suppressed from awareness only in a danger-related context, highlighting the prioritisation of eye responses to threat-related stimuli in the absence of visual awareness.

Introduction

Detecting threats in the environment is a key survival function that our brain has to fulfill. The human visual system demonstrates a remarkable efficiency in processing signals of potential threat, even when those signals are not consciously perceived (for reviews see Öhman et al., 2007; Tamietto and De Gelder, 2010; Hedger et al., 2015; Diano et al., 2017; Bertini and Làdavas, 2021). For example, subliminally primed images signaling danger evoke heightened social avoidance (Wyer and Calvini, 2011). Angry faces suppressed from awareness modulate likeability ratings of neutral items (Almeida et al., 2013). Suppressed fearful faces evoke larger skin conductance responses (Lapate et al., 2014) and break into awareness faster (Yang et al., 2007; Hedger et al., 2015). Fearful faces located in the blind field of hemianopic patients facilitate discrimination of simple visual stimuli presented in their intact visual field (Bertini et al., 2019). Unaware visual processing of threat-related images, such as fearful faces, is subserved by subcortical structures such as the amygdala (Jiang and He, 2006; Tamietto and De Gelder, 2010; Méndez-Bértolo et al., 2016; Wang et al., 2023), the superior colliculus and pulvinar (LeDoux, 1998; Morris et al., 1998; Whalen et al., 1998; Bertini et al., 2018), and the visual cortices (Pessoa and Adolphs, 2010).

Human oculomotion studies provide insights into how aware and unaware visual stimuli influence actions (e.g., Rothkirch et al., 2012; Glasser and Tadin, 2014; Spering and Carrasco, 2015). Under aware viewing conditions, fearful faces and body postures elicit faster saccadic orienting responses than neutral stimuli (Bannerman et al., 2009). Moreover, oculomotor actions are differentially guided by fearful faces even in the absence of visual awareness. That is, even when fearful faces are entirely suppressed from visual awareness using continuous flash suppression (CFS, Tsuchiya and Koch, 2005), the eyes are attracted toward fearful faces, and repulsed away from angry faces (Vetter et al., 2019). Given the important role of the superior colliculus in controlling eye gaze (Munoz and Everling, 2004) and relaying sensory information (Stein and Meredith, 1993; LeDoux, 1998), it is possible that the superior colliculus, together with the amygdala, mediates the convergence of unconscious threat processing and reflexive oculomotor responses.

In a sensory-rich world, threat is conveyed through multiple modalities. Not only do sounds arrive faster in the brain than visual signals; threat-related sounds in particular also evoke arousal (Burley et al., 2017), capture attention (Zhao et al., 2019; Gerdes et al., 2021), and alert the brain via the brain stem reflex (Juslin and Västfjäll, 2008). The amygdala and the auditory cortex respond interactively to aversive sounds and their perceived unpleasantness (Kumar et al., 2012; for a review, see Frühholz et al., 2016). The primary auditory cortex encodes and predicts threat from fear-conditioned sounds (Staib and Bach, 2018). Further, the superior colliculus is a key mediator for eye responses to sounds, for example, saccadic orienting (see Hu and Vetter, 2024, for a review). However, few studies have tested how threat-related cross-modal stimuli influence eye movements. Responding to threat under natural conditions usually involves sensory processing of concurrent visual and auditory cues. Sounds, in particular, can be heard from all directions and travel around obstacles. Thus, sounds might allow individuals to detect potential threats that are not immediately visible, such as an animal behind a bush. Here, we tested how the eyes respond to threat by using ecologically valid, multimodal stimuli.

Integrating information provided through the different senses improves perception. For example, audiovisual stimulus pairs are detected faster (Miller, 1986) and localized with higher precision (Alais and Burr, 2004) than the same stimuli presented unimodally. Multisensory integration is guided by causal inference: the degree of integration depends on the probability that the signals from the different senses share a common origin (Körding et al., 2007; Badde et al., 2021; Shams and Beierholm, 2022). Accordingly, semantically congruent information in different modalities is more likely to be integrated than incongruent information (Dolan et al., 2001; Doehrmann and Naumer, 2008; Noppeney et al., 2008). Although multisensory integration per se requires awareness of the integrated information (Palmer and Ramsey, 2012; Montoya and Badde, 2023), aware information in one modality can facilitate the breakthrough into awareness for suppressed, congruent information presented in another modality (Mudrik et al., 2014). For example, congruent sounds can facilitate the detection and identification of visual stimuli suppressed from awareness (Chen and Spence, 2010; Conrad et al., 2010; Chen et al., 2011; Alsius and Munhall, 2013; Lunghi et al., 2014; Aller et al., 2015; Lee et al., 2015; Delong and Noppeney, 2021) as do congruent haptic stimuli (Lunghi et al., 2010; Hense et al., 2019). This facilitation potentially relies on an established association between the visual and auditory signals (Faivre et al., 2014). Consistently, cross-modal facilitation in the absence of awareness depends on semantic congruency: Chen and Spence (2010) paired backward masked images with naturalistic sounds, including threat-related sounds such as gunshots, helicopter and motor engine sounds. When the sounds were semantically congruent to the masked image, naming the object depicted in the image was facilitated; in turn, naming the object was compromised when sounds were incongruent. 
Similarly, semantically congruent sounds (car engine or bird singing), compared to irrelevant sounds, prolonged the perceptual dominance of the corresponding image under binocular rivalry conditions (Chen et al., 2011). Interestingly, an overall, sound-independent perceptual dominance of car over bird images emerged in this study. Given that cars can have a threatening character, this dominance of car images ties nicely with previous results showing that threat-related visual images break more easily into awareness.

The present study was designed to probe whether the influence of threat-related images suppressed from awareness on oculomotor actions is modulated by congruent, threat-related sounds. As in a previous study, we combined CFS with eye tracking (Vetter et al., 2019) and measured observers’ eye movements to suppressed images of a car (threat-related) or human hands (not threat-related) while either sounds of a car engine or finger snapping (or no sound) were simultaneously presented. In contrast to previous studies measuring enhanced visual detection or faster break-through of unaware threat-related images into awareness, we tracked the eyes to directly examine the influence of sounds on actions toward suppressed images. By focusing on oculomotor responses, which the participant was not aware of, we controlled for potential confounds (Stein et al., 2011) such as faster button presses in the presence of fear-inducing sounds (Globisch et al., 1999) or fear-inducing images (Bannerman et al., 2009) and thus can directly link changes in eye gaze behavior to changes in visual stimulus awareness.

We chose car stimuli as those have been shown to gain preferential access to awareness just as other fear-related stimuli (Chen et al., 2011) and are associated with threat-related, semantically congruent sounds such as roaring engines. We chose hand stimuli based on evidence showing that the brain distinguishes between human-related stimuli and inanimate stimuli, such as human hands (Bracci et al., 2018) and vehicles (DiCarlo et al., 2012), with specific brain pathways and regions dedicated to the processing of these categories (Caramazza and Shelton, 1998; Kriegeskorte et al., 2008; Grill-Spector and Weiner, 2014; Sha et al., 2015; Bracci and Op De Beeck, 2023). Also, human and inanimate sound categories induce differential representations in visual cortex even in the absence of visual stimulation (Vetter et al., 2014). Thus, given that human hand and inanimate car images are treated distinctively by the brain and have a differential relationship to threat, we predicted that the eyes are guided differentially by these images when they are suppressed from visual awareness. Given that threat-related signals are prioritized in unaware processing, we hypothesized that congruent car sounds may affect eye gaze to unseen car images.

Materials and methods

Participants

Twenty participants (17 females, mean age 23.5 years) were included in the final analysis. We determined the sample size based on simulations of our statistical model (see Cary et al., 2024 for an example). Data of three additional participants were excluded from all analyses; for one of them the continuous flash suppression worked only in 37% of trials resulting in sparse eye data (<75%). Two of them performed clearly above chance level (38 and 47% correct responses with chance being at 25%) when indicating the location of the suppressed stimulus despite reporting to not have seen the stimulus. All participants took part in exchange for payment, indicated normal or corrected-to-normal vision and normal hearing, and signed an informed consent form prior to the start of the experiment. The experiment was approved by the Psychology Ethics Committee of the University of Fribourg.

Apparatus and stimuli

Participants positioned their head on a chin and forehead rest to stabilize head position and viewed a monitor (ROG Strix XG258Q Series Gaming LCD Monitor, 60 Hz, 1,280 × 1,024 resolution) through a mirror stereoscope (ScreenScope, ASC Scientific, Carlsbad, United States). The distance between the stereoscope and the chin rest ranged from 10 to 13 cm; the position of the stereoscope was adjusted for each participant to ensure good stereo fusion while allowing the eye-tracker to catch the eye from underneath the stereoscope. The monitor was placed at a distance of 100 cm from the participant (the target image subtending 1.97° × 2.92° of visual angle).
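The relation between viewing distance, physical stimulus size, and visual angle used above can be made explicit with a short sketch. Only the 100 cm viewing distance and the 1.97° × 2.92° target size come from the text; the physical sizes computed below are implied values under a simple flat-screen geometry (ignoring the stereoscope optics), not reported measurements, and the function names are hypothetical.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_from_angle_cm(angle_deg, distance_cm):
    """Inverse relation: physical size (cm) for a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 100.0  # from the text

# Implied on-screen target size (assumption: simple geometry, no mirror optics).
width_cm = size_from_angle_cm(1.97, VIEWING_DISTANCE_CM)
height_cm = size_from_angle_cm(2.92, VIEWING_DISTANCE_CM)

print(f"implied target size: {width_cm:.2f} cm x {height_cm:.2f} cm")
```

Under these assumptions, the target would measure roughly 3.4 cm by 5.1 cm on the screen.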

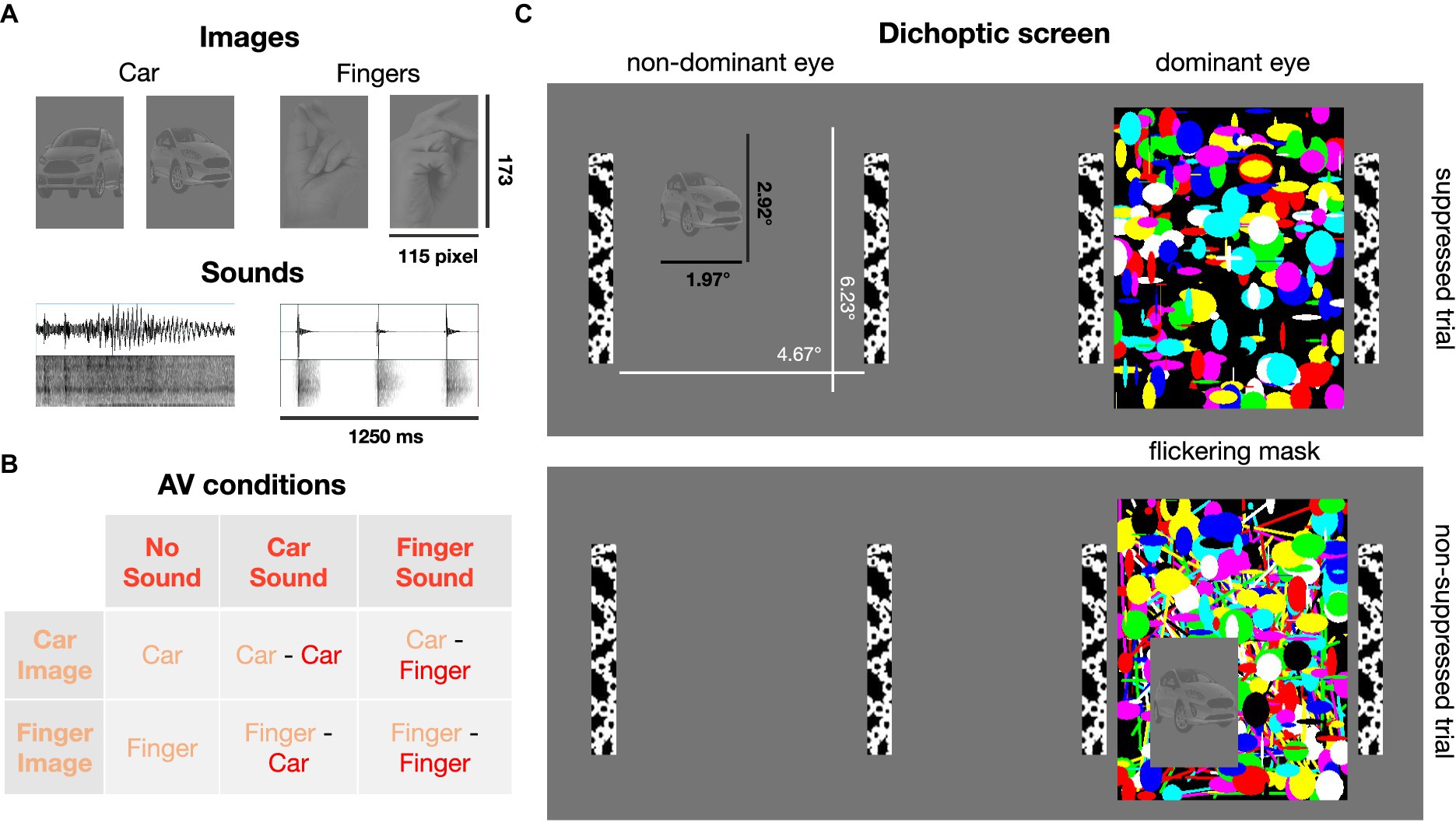

Twelve static images (six of snapping fingers, six of cars) were selected as visual stimuli. The angle from which the hands and cars were shown varied across images (Supplementary Figure S1). Raw images were processed in Adobe Photoshop (version 23.2.2). The images were first rendered achromatic, the background was removed and replaced with a mid-gray (RGB 128, 128, 128) layer, and the contrast level of the foreground containing the object was reduced. Images were cut to a size of 115 by 173 pixel. All image foregrounds were equated for overall luminance (most frequent luminance in the foregrounds) and contrast (i.e., the standard deviation of the luminance distribution) using the SHINE toolbox (Willenbockel et al., 2010) in MATLAB.

To create mask images, randomly chosen shapes of various colors were overlaid (Figure 1), and, importantly, each set of masks was created anew for each trial. Thus, no two mask images were ever the same, which rules out that systematic mask features influenced eye movements. Mask images changed at a frequency of 30 Hz (Sterzer et al., 2008; Alsius and Munhall, 2013).
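The mask-generation logic described above can be illustrated with a minimal sketch. It is not the code used in the experiment (which ran in MATLAB with the Cogent toolbox). The shape types, number of shapes per mask, size ranges, and normalized coordinates are all assumptions made for illustration; only the 30 Hz mask rate, the 60 Hz monitor refresh rate, and the per-trial regeneration of masks come from the text.

```python
import math
import random


def make_mask(rng, n_shapes=80):
    """One mask: a list of randomly placed, randomly colored shapes.

    Coordinates and sizes are in normalized units (0..1) of the stimulus
    region; n_shapes and the size range are hypothetical choices.
    """
    return [
        {
            "kind": rng.choice(["rect", "ellipse"]),
            "center": (rng.random(), rng.random()),
            "size": (rng.uniform(0.05, 0.3), rng.uniform(0.05, 0.3)),
            "rgb": tuple(rng.randrange(256) for _ in range(3)),
        }
        for _ in range(n_shapes)
    ]


def make_trial_masks(rng, duration_ms, rate_hz=30):
    """Fresh set of masks for one trial, one mask per 1/rate_hz seconds."""
    n_masks = math.ceil(duration_ms * rate_hz / 1000)
    return [make_mask(rng) for _ in range(n_masks)]


rng = random.Random(1)
# 250 ms fade-in + 1,000 ms full contrast + 200 ms extra mask time
masks = make_trial_masks(rng, duration_ms=1450)

# On a 60 Hz monitor, a 30 Hz mask rate means each mask stays on
# screen for two refresh cycles.
refreshes_per_mask = 60 // 30
```

Calling `make_trial_masks` once per trial mirrors the point made in the text: because masks are never reused, no fixed mask feature can systematically attract gaze.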

Figure 1. Visual and auditory stimuli. (A) Low-contrast images of cars and human hands (2 exemplars shown here, 6 exemplars per image category were used in total) were presented, typically, to the non-dominant eye. Car engine or finger snapping sounds were delivered concurrently with image presentation. (B) Six combinations of image and sound categories were tested. (C) Dichoptic screen viewed through a stereoscope. A high contrast flickering mask was presented to the dominant eye. Suppression from awareness with continuous flash suppression was achieved by presenting the car or finger image to the other, non-dominant eye (82% of trials). In the non-suppressed control condition (18% of trials), the image was presented on top of the flickering mask and thus clearly visible.

The screen was divided into two stimulus regions projected to the left and right eye, respectively, by means of the stereoscope. To help fuse the two images, black and white bars framed the stimulus region for each eye (4.67° × 6.23°; Figure 1). Target images were randomly displayed in one of the four quadrants of one stimulus region. In typical suppression trials, the mask was presented to the dominant eye and the low contrast target images were presented to the non-dominant eye to achieve optimal suppression. In the remaining trials, the image was displayed on top of the mask presented to the dominant eye, so that the finger/car image was clearly visible. These non-suppressed, conscious trials were introduced as a positive control, to ensure that participants paid attention and reported the image’s position and content correctly when it was clearly visible. Eye dominance was determined with a hole-in-card test (Miles, 1930) at the beginning of the study for each participant and verified after participants had been familiarized with the experiment (Ding et al., 2018). Six participants exhibited left eye dominance.

Twelve sound files (see Supplementary material, six different sounds of a person snapping their fingers and six different sounds of a starting car engine or an approaching car) were cut to a duration of 1,250 ms and normalized for sound pressure level in Audacity (version 3.2.1). Audio tracks were faded in and out for 25 ms, to avoid abrupt on- and offset. Sounds were delivered diotically through over-ear headphones (Sennheiser HD 418) and sound volume was adjusted to be at a comfortable level for each participant.

Gaze locations of the non-dominant eye were recorded monocularly at 1,000 Hz using an EyeLink 1000 (SR Research, Ottawa, Canada). To map eye positions onto screen coordinates, 5-point calibration procedures were performed at the beginning of the experiment, before the beginning of a new block, and when participants repeatedly failed to maintain fixation during the initial fixation period of a trial (Figure 2).

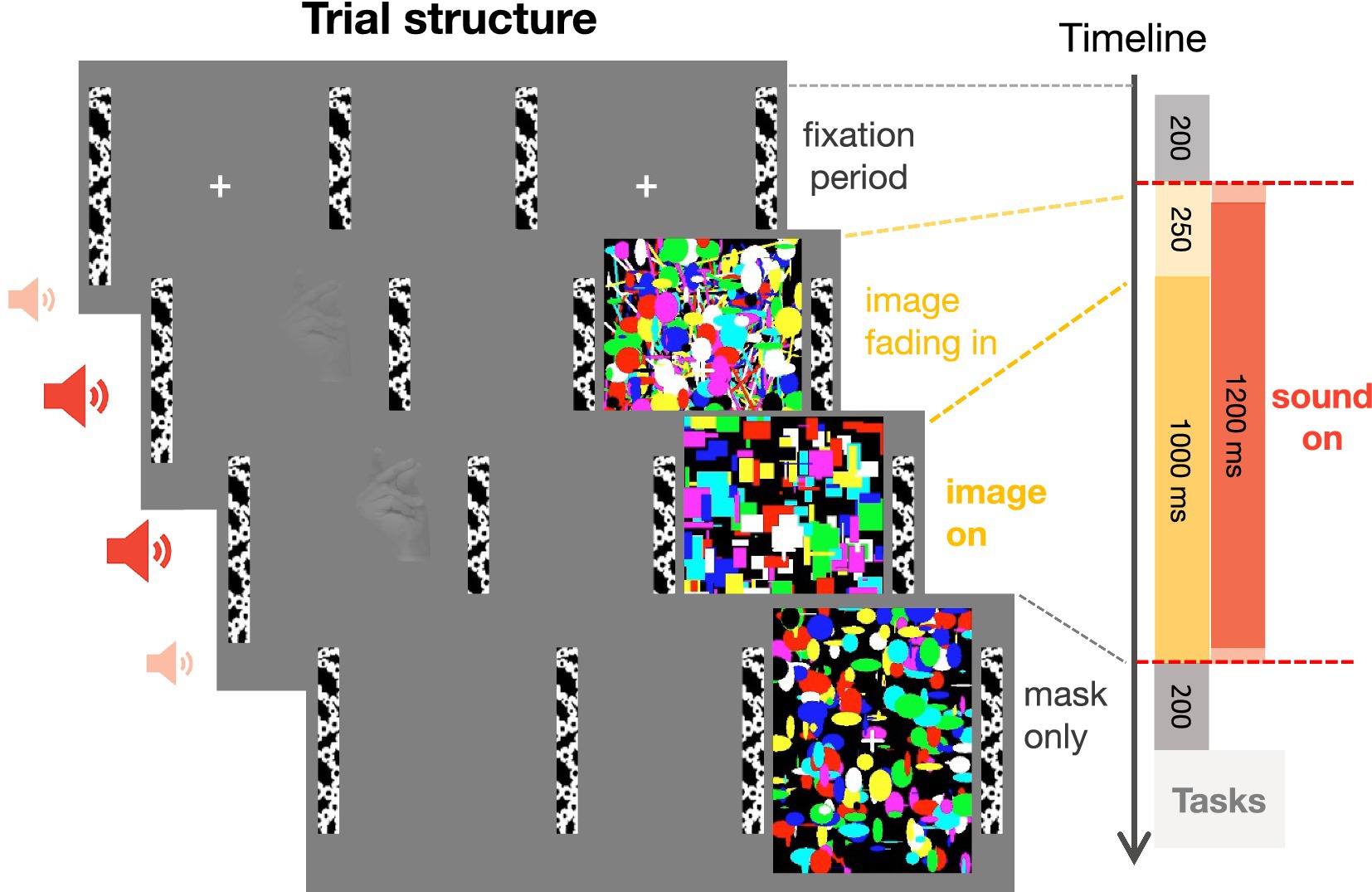

Figure 2. Trial structure. After a mandatory fixation period of 200 ms, an image of a car or a hand was gradually faded in for 250 ms and presented for 1,000 ms to the non-dominant eye while a flickering mask was continuously presented to the dominant eye. Simultaneously with the onset of the visual image, a sound lasting for the whole duration of image presentation was presented (except in the no sound condition). The mask was displayed for an additional 200 ms to prevent aftereffects of the target image. After stimulus presentation, participants indicated the location and category of the target image, guessing if the image was successfully suppressed, and rated the visibility of the target image.

Images and sounds were presented via MATLAB (R2019b) using the Cogent toolbox (Wellcome Department of Imaging Neuroscience, University College London).

Tasks

Participants were instructed to detect any image behind the flickering mask. After each trial, participants answered three questions about the (suppressed) visual stimulus (non-speeded). First, they indicated the quadrant in which they thought the image had been presented (four-alternative forced-choice). They responded by pressing one of four keys on a conventional keyboard. Two ways of quadrant to key assignments were used and counterbalanced across participants. Second, participants indicated the image category (car or fingers, two-alternative forced-choice), again by pressing one of two assigned keys on the keyboard. Third, participants rated the visibility of the target image as either ‘not seen at all,’ ‘brief glimpse,’ ‘almost clear,’ or ‘very clear’ (Sandberg et al., 2010). Each of the three questions was sequentially displayed on the screen. The order of position and categorization tasks was counterbalanced across participants; the visibility rating was always prompted last. The first two tasks served as objective measures of awareness. Participants’ performance allowed us to determine whether participants were able to localize and identify the images above chance level. The visibility rating served as subjective measure of awareness. Participants were instructed to make a guess in the first two tasks if they did not see the target image and to ignore the sounds.

Procedure

Each trial started with a mandatory fixation period of 200 ms (Figure 2), i.e., the trial only began after participants maintained fixation of a centrally presented fixation cross for at least 200 ms. After the fixation period, the flickering mask started. The target image was gradually faded in for 250 ms, to avoid abrupt image onset and to achieve more effective suppression (Tsuchiya et al., 2006). The sound started directly after the fixation period and lasted for 1,250 ms. The target image was displayed at full contrast for another 1,000 ms before it disappeared. The mask was displayed for an extra 200 ms to prevent afterimages of the target stimulus. There was a break every 22 trials (~2.5 min), allowing participants to rest their eyes.
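The trial timeline just described can be written down as a small timing sketch. Time zero is taken as the end of the fixation period (mask and sound onset). The linear shape of the fade-in ramp is an assumption, since the text only states that the image was faded in gradually, and "full contrast" here means the image's final (low) contrast level, expressed as 1.0. Function names are hypothetical.

```python
FADE_IN_MS = 250      # gradual fade-in of the target image
FULL_MS = 1000        # target shown at its final contrast
MASK_EXTRA_MS = 200   # mask continues after target offset
SOUND_MS = 1250       # sound duration, starting at t = 0


def target_contrast(t_ms):
    """Relative target contrast (0..1) at time t_ms after mask onset.

    Assumption: the fade-in is a linear ramp.
    """
    if t_ms < 0 or t_ms >= FADE_IN_MS + FULL_MS:
        return 0.0
    if t_ms < FADE_IN_MS:
        return t_ms / FADE_IN_MS
    return 1.0


def mask_on(t_ms):
    """True while the flickering mask is displayed."""
    return 0 <= t_ms < FADE_IN_MS + FULL_MS + MASK_EXTRA_MS


def sound_on(t_ms):
    """True while the sound is playing (not applicable in no-sound trials)."""
    return 0 <= t_ms < SOUND_MS
```

With these constants, the sound spans exactly the fade-in plus the full-contrast period, and the mask outlasts the target by 200 ms.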

The experiment comprised 528 trials divided into six blocks of 88 trials, administered in two sessions on two separate days. In 432 trials (82% of total trials), the image was presented to the non-dominant eye and the flickering mask to the dominant eye to achieve suppression of the target image from awareness. In 96 trials (18%), the image was displayed on top of the mask presented to the dominant eye, so that the finger/car image was clearly visible. Trial type (suppressed or non-suppressed), image position (upper left, upper right, lower left, lower right), image content (fingers, car), and sound content (fingers, car, no sound) were presented in randomized order.
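The trial counts above are consistent with a fully crossed design, which the following sketch makes explicit. It assumes that every combination of image position, image content, and sound content occurred equally often (18 repetitions per cell in suppressed trials, 4 in non-suppressed trials) and that the order was shuffled across the whole experiment. Neither detail is stated in the text, so both are illustrative assumptions, as are the function and field names.

```python
import itertools
import random

POSITIONS = ("upper left", "upper right", "lower left", "lower right")
IMAGES = ("fingers", "car")
SOUNDS = ("fingers", "car", "no sound")


def build_trials(rng, reps_suppressed=18, reps_visible=4):
    """Build a shuffled trial list from a fully crossed 4 x 2 x 3 design."""
    trials = []
    for position, image, sound in itertools.product(POSITIONS, IMAGES, SOUNDS):
        for suppressed, reps in ((True, reps_suppressed), (False, reps_visible)):
            trials.extend(
                {
                    "position": position,
                    "image": image,
                    "sound": sound,
                    "suppressed": suppressed,
                }
                for _ in range(reps)
            )
    rng.shuffle(trials)
    return trials


trials = build_trials(random.Random(0))
# Six blocks of 88 trials each
blocks = [trials[i:i + 88] for i in range(0, len(trials), 88)]
```

The arithmetic checks out: 24 cells × 18 repetitions gives 432 suppressed trials, and 24 × 4 gives 96 non-suppressed trials, 528 in total.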

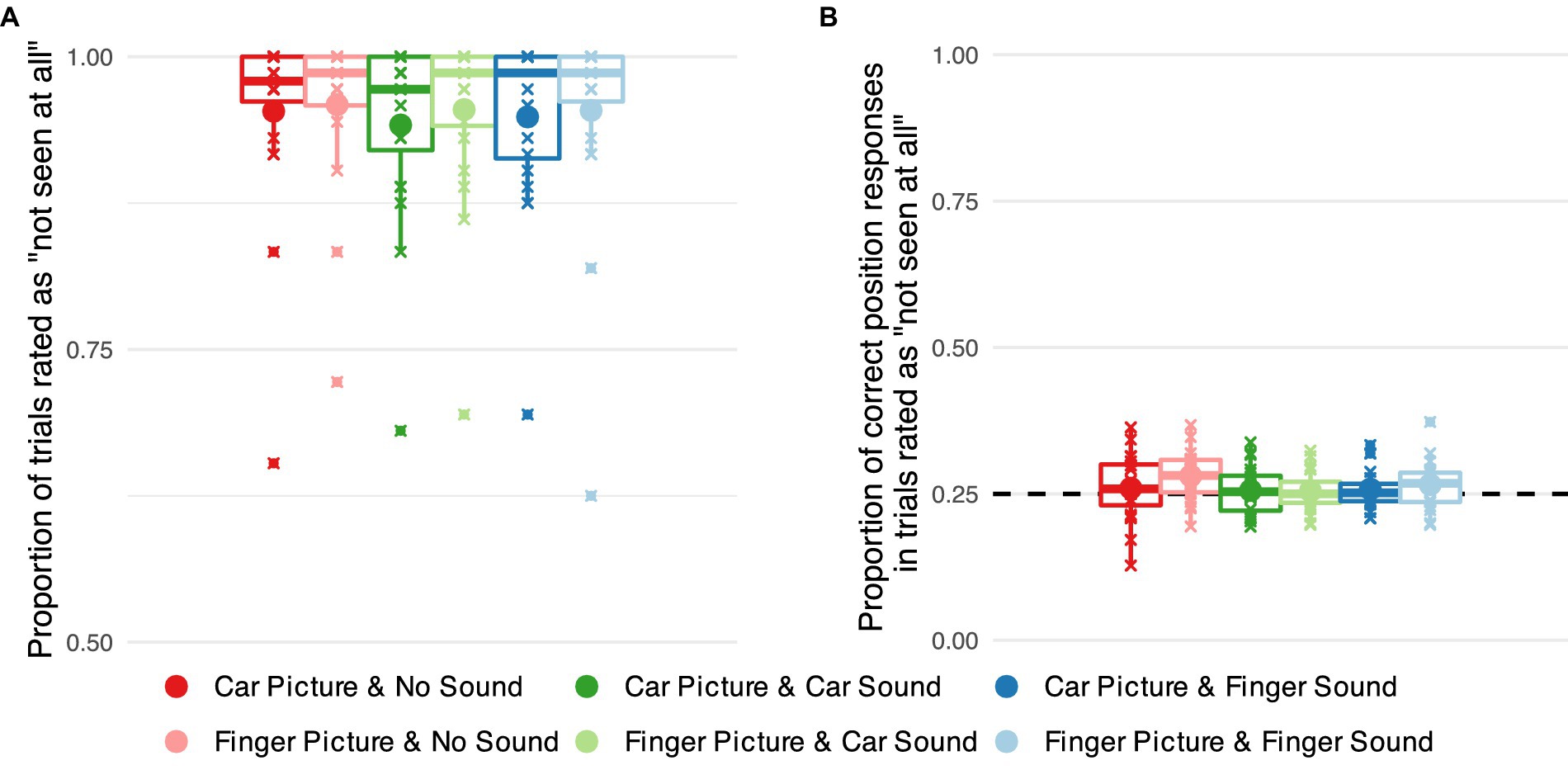

Data analysis – behavior

The suppressive effects of continuous flash suppression vary inter- and intra-individually (Hesselmann et al., 2016). We employed subjective and objective measures to ensure that only trials in which the image was indeed fully suppressed from awareness were included in the analysis. First, only trials with a subjective visibility rating of zero (‘not seen at all’) were included in the analysis (70 out of 88 trials per condition on average, see Figure 3A for breakthrough rates). Second, to validate these subjective judgments, participants’ average accuracy in the position task was calculated for these subjectively invisible trials. Data from the categorization task were not analyzed further, as an inspection of the results indicated that many participants reported the identity of the sound when the image was successfully suppressed (Supplementary Figure S2).

Figure 3. Objective and subjective measures of awareness of target images suppressed from visual awareness using continuous flash suppression. (A) Proportion of successfully suppressed trials, i.e., trials rated as ‘not seen at all’ separately for each combination of visual target stimulus category (car vs. finger) and sound category (no sound vs. car sound vs. finger snapping sound). Small markers show subject-level averages, large markers group averages. (B) Proportions of correct responses in the position task in successfully suppressed trials for each of the six stimulus categories. The dashed line indicates chance level performance.

To analyze whether the success of continuous flash suppression differed across stimulus categories, we recoded the subjective visibility ratings into a binary variable (‘not seen at all’ vs. all other ratings). We conducted a hierarchical logistic regression with predictors image type and sound type, their interaction, and participant-level intercepts to check whether this binary indicator of suppression varied across stimulus categories.
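The recoding step can be sketched as follows. The example only shows how the four-level visibility rating is collapsed into a binary suppression indicator and summarized per image-by-sound condition; it does not fit the hierarchical logistic regression itself. The trial tuples at the bottom are made-up toy values for demonstration, not data from the study, and the function names are hypothetical.

```python
from collections import defaultdict

RATINGS = ("not seen at all", "brief glimpse", "almost clear", "very clear")


def is_suppressed(rating):
    """Binary recoding: True only for the lowest visibility rating."""
    if rating not in RATINGS:
        raise ValueError(f"unknown rating: {rating!r}")
    return rating == "not seen at all"


def suppression_rate(trials):
    """Proportion of suppressed trials per (image, sound) condition."""
    counts = defaultdict(lambda: [0, 0])  # condition -> [n_suppressed, n_total]
    for image, sound, rating in trials:
        cell = counts[(image, sound)]
        cell[0] += is_suppressed(rating)
        cell[1] += 1
    return {cond: n_supp / n for cond, (n_supp, n) in counts.items()}


# Toy input (illustrative values only)
toy_trials = [
    ("car", "car", "not seen at all"),
    ("car", "car", "brief glimpse"),
    ("fingers", "no sound", "not seen at all"),
    ("fingers", "no sound", "not seen at all"),
]
rates = suppression_rate(toy_trials)
```

The binary indicator produced by `is_suppressed` is the kind of outcome variable that a logistic model with participant-level intercepts would take as input.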

We moreover checked the accuracy of participants’ responses in the localization task in trials for which they indicated not having seen the target image. We conducted a hierarchical logistic regression on response accuracy with predictors image type and sound type, their interaction, and participant-level intercepts. We additionally compared performance in each category against chance level by calculating planned contrasts against 0.25 (the proportion correct expected from guessing among four quadrants).

Data analysis – dwell time

To quantify the effects of the different visual and auditory stimuli on gaze, we analyzed the distribution of fixations across the stimulus region. Trials in which less than 25% of the recorded gaze positions were located inside the stimulus field (for example, because the participant repeatedly blinked during the trial) were excluded from this analysis (<1% of trials).

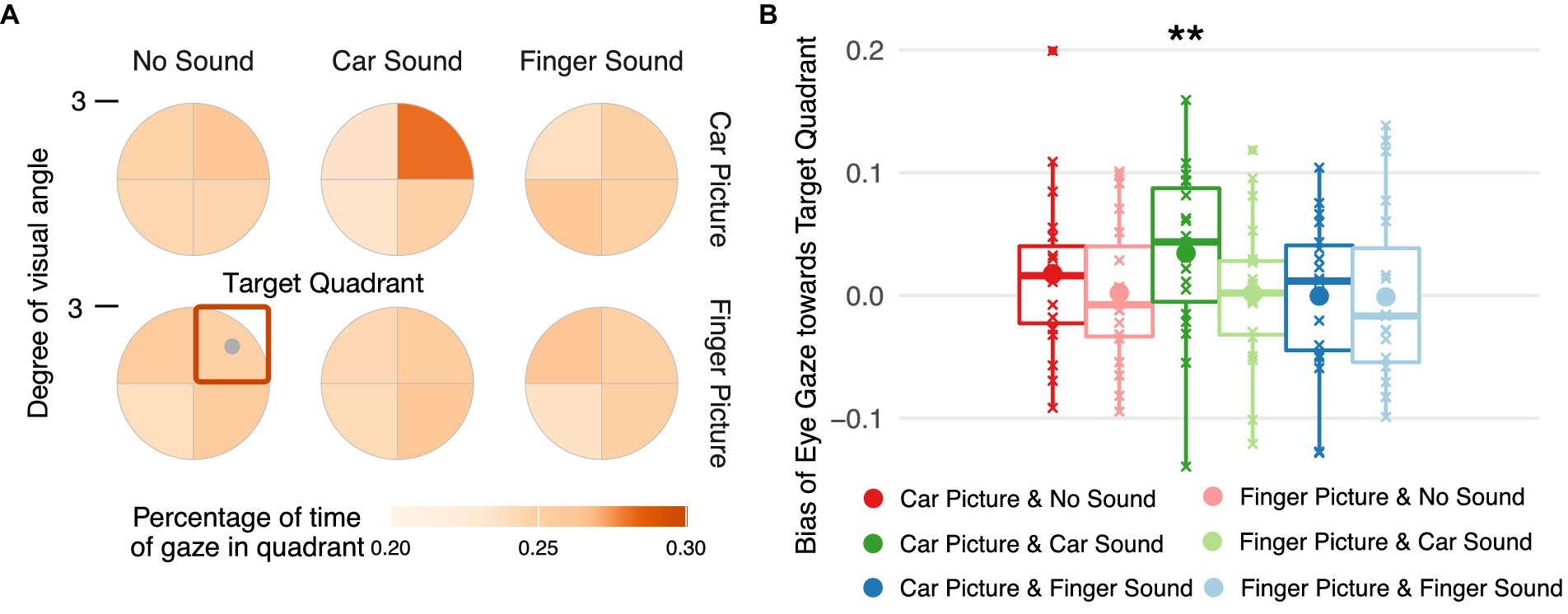

Gaze positions were transformed into polar coordinates and discretized by dividing the stimulus region into four wedges corresponding to the four quadrants in which a target image could have been displayed. To allow averaging of trials across the four possible target image locations, the coordinates were rotated so that the target image was in the upper-right quadrant. For each target quadrant, we calculated the relative dwell time, i.e., the percentage of time gaze rested in that quadrant relative to the total number of valid eye position samples in the period between 500 ms after the image was fully on and the end of the trial. To statistically analyze the eyes’ responses to the image, the average percentage of time for which gaze rested in one of the three non-target quadrants was subtracted from the percentage for the target quadrant, giving a dwell time bias score. A bias score of 0 indicates that a participant looked with equal probability at the target quadrant as at the other three quadrants on average; a positive score indicates a bias toward looking at the target image even though it was likely suppressed from awareness. A linear mixed model with predictors image type and sound type, their interaction, and participant-level intercepts was fitted to the dwell time bias scores. Bias scores for each condition were contrasted against 0 to test for significant biases toward the target image.
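The computation of the dwell time bias score can be illustrated with a minimal sketch. It assumes gaze samples are given as (x, y) offsets from the center of the stimulus region with y pointing upward, that quadrants are indexed counterclockwise starting from the upper right, and that samples have already been restricted to the analysis window. These conventions and the function names are assumptions for illustration. The score is expressed as a proportion rather than a percentage.

```python
import math


def quadrant_index(x, y):
    """Quadrant from the polar angle: 0 = upper right, 1 = upper left,
    2 = lower left, 3 = lower right (counterclockwise)."""
    theta = math.atan2(y, x) % (2 * math.pi)
    return int(theta // (math.pi / 2)) % 4


def dwell_bias(samples, target_quadrant):
    """Dwell time in the target quadrant minus the mean dwell time
    in the three non-target quadrants (both as proportions)."""
    counts = [0, 0, 0, 0]
    for x, y in samples:
        # Rotate in steps of 90 degrees so the target lands in quadrant 0.
        rotated = (quadrant_index(x, y) - target_quadrant) % 4
        counts[rotated] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no valid gaze samples")
    props = [c / total for c in counts]
    return props[0] - sum(props[1:]) / 3


# Gaze spread evenly over all four quadrants: no bias.
even = [(1, 1), (-1, 1), (-1, -1), (1, -1)]
# Half of the samples in the target (upper-right) quadrant: positive bias.
biased = [(1, 1), (1, 1), (-1, 1), (-1, -1)]
```

For the `biased` example, the target quadrant holds 50% of the samples and the other three hold about 16.7% on average, giving a bias of about 0.33. A bias of 0 corresponds to gaze that does not favor the target quadrant.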

Results

First, we examined the proportion of trials that were rated as ‘not seen.’ When the target image was presented to the non-dominant eye, the vast majority of trials were rated as ‘not seen’ (about 95% of trials on average, see Figure 3A), indicating successful suppression. There was a marginal effect of image category (car vs. finger) on the proportion of trials rated as not seen, χ²(1) = 3.564, p = 0.059, with the car images being rated slightly less often as not seen. No significant effect of sound, χ²(2) = 2.371, p = 0.306, and no significant interaction between image and sound, χ²(2) = 0.557, p = 0.757, emerged.

Second, we analyzed localization accuracy in the position task for trials in which the target image was rated as ‘not seen’ (Figure 3B). The analysis showed no significant effect of image category, χ²(1) = 0.852, p = 0.356, of sound condition, χ²(2) = 1.591, p = 0.452, or of their interaction, χ²(2) = 0.599, p = 0.742. Planned contrasts against chance level, i.e., 25% correct responses, showed no statistically significant deviations from chance performance in any category.

In sum, our behavioral measures demonstrate that the target images were successfully suppressed from visual awareness in our experimental set-up. That is, in the vast majority of trials, the images were rated as invisible, and participants were unable to localize the images they rated as ‘not seen’.

Finally, we inspected gaze positions during the stimulation period and statistically analyzed a dwell time bias score reflecting the percentage of time participants’ gaze rested on the target quadrant with respect to the other three quadrants. Only one of the experimental conditions was associated with a significant bias in dwell time (Figure 4A), namely car images paired with car sounds, i.e., the eyes remained longer in the quadrant with a car image than in the other three quadrants when a car sound was played during the image presentation, [0.009, 0.057], t(144) = 2.594, p = 0.005 (Figure 4B). No significant bias in dwell times emerged for any other condition (see Supplementary Table S1 for all estimated parameters, their 95% highest posterior density intervals, and a regions of practical equivalence analysis confirming the results of the frequentist contrast evaluation).

Figure 4. Spatial distribution of eye gaze during stimulus presentation. (A) Percentage of time gaze rested in each of the four spatial quadrants during the time interval starting 500 ms after full onset of the target image and ending at trial offset. Gaze coordinates were aligned such that the target center (gray dot) is in the upper right quadrant (orange square). (B) Dwell time bias toward the target quadrant per target image and sound condition. The bias in gaze position over time was quantified by subtracting the mean percentage of time the gaze rested in one of the three non-target quadrants from the percentage of time gaze rested on the target quadrant.

Discussion

Threat-related information is prioritized during perceptual processing and guides oculomotor actions even in the absence of awareness (Bannerman et al., 2009; Rothkirch et al., 2012; Vetter et al., 2019). Yet, it remains unclear whether this special link between threat-related information and the oculomotor system is limited to visually conveyed information. In this study, we investigated how sounds influence observers’ eye movements to threat-related images when these images were fully suppressed from visual awareness. Suppression from visual awareness was determined based on subjective visibility ratings and verified for each observer using objective measures. After each trial, participants reported the location and category of the image and rated its visibility on a categorical scale (‘not seen at all,’ ‘brief glimpse,’ ‘almost clear,’ ‘very clear’). We analyzed only those trials in which subjective visibility was zero (Figure 3A), and only data from participants whose objective localization performance across trials with subjectively zero visibility was at chance level (Figure 3B). Critically, across those trials in which images were fully suppressed from visual awareness, we determined using eye tracking how the eyes reacted to threat-related car images compared to non-threat related images of human hands when they were paired with congruent, incongruent, or no sounds. Semantically congruent car sounds indeed guided eye movements toward suppressed threat-related car images even when participants were subjectively and objectively unaware of these images. Images by themselves, i.e., images presented without sounds did not attract the eyes in a significant manner. Thus, image content or low-level visual image features (e.g., contrast or spatial frequency) alone did not guide eye gaze. 
Also, the observed effect is not a mere audio-visual co-occurrence effect; instead, it is specific to the combination of threat-related car sounds with car images, driving the eyes closer to car images during full suppression from awareness.

Our findings highlight once more the efficiency of vision and action responding to threats below the threshold of sensory awareness (Yang et al., 2007; Chen et al., 2011; Almeida et al., 2013; Hedger et al., 2015; Bertini et al., 2019). However, in contrast to the majority of previous studies showing threat-related effects with emotional face images (fearful or angry), our study demonstrates that threats conferred by inanimate objects and in different modalities can guide action. The eye gaze effect being stronger for car images when presented concurrently with car sounds resembles the preferential breakthrough of car images in binocular rivalry (with and without sounds, Chen et al., 2011). The ability to quickly detect and respond to potential threats confers a survival advantage (Öhman et al., 2001) and thus might have evolutionary roots. Here we show that the threat associated with an approaching car can guide the eyes toward car images even in the entire absence of visual awareness. Indeed, in modern real-life situations, cars and car sounds are often linked to potential danger (Bradley and Lang, 2017), triggering heightened attention and rapid, automatic responses. Hence, the link revealed here between the threat posed by cars and oculomotor actions might suggest an adaptation of an evolutionarily rooted brain mechanism to modern-day threats.

Furthermore, our results suggest cross-modal enhancement of threat-related images suppressed from visual awareness. Gaze rested more frequently on “unseen” car images exclusively when congruent car sounds were played. Previously, we found that threat-related emotional faces (without sounds) guided eye gaze in the absence of awareness (Vetter et al., 2019). Here, we extended these findings to threat-related objects in a multisensory context, indicating cross-modal facilitation of eye gaze guidance in the absence of awareness. In turn, this extends the range of effects of cross-modal facilitation of suppressed visual information from explicit perceptual reports to the unaware guidance of oculomotor actions. Previous studies reported that congruent auditory (Chen and Spence, 2010; Chen et al., 2011; Alsius and Munhall, 2013; Lunghi et al., 2014; Lee et al., 2015; Delong and Noppeney, 2021) and tactile (Lunghi et al., 2010; Hense et al., 2019; Liaw et al., 2022) information facilitates the breakthrough of suppressed visual information into awareness. Here, we find the same enhancing effect of congruent auditory information on suppressed visual information; however, the effect shows in unaware eye movements, and thus in unaware actions.

This unaware multisensory effect on eye gaze could be supported by the fast subcortical retinocollicular pathway (Spering and Carrasco, 2015). The superior colliculus (SC) receives inputs from the retinal ganglion cells and the auditory cortex (Benavidez et al., 2021; Liu et al., 2022), and may contribute to gaze changes toward car images in response to sounds. The SC is known for its multimodal function in sensory integration that facilitates neural and oculomotor responses. Multisensory integration effects are strongest when weak unisensory stimuli are combined (Meredith and Stein, 1986), especially when recording from SC neurons (Stein et al., 2020). It is thus likely that in our study the congruent sounds elicited heightened representations of the suppressed, low contrast car image not only in visual areas but also in the SC, and thus facilitated eye movements. That this crossmodal effect is specific to threat-related stimuli, in our case the car, could be accounted for by the SC-pulvinar-amygdala circuit (LeDoux, 1998; Rafal et al., 2015; Kragel et al., 2021; Kang et al., 2022). For example, activation of the colliculo-amygdalar circuitry is important for rodent freezing behavior following fear-evoking visually approaching stimuli (Wei et al., 2015). The amygdala is also closely linked to the inferior colliculus (the acoustic relay center) via the thalamus (LeDoux et al., 1990). As sounds increase in intensity, thus conveying warning, the amygdala is activated alongside the posterior temporal sulcus within the auditory cortex (Bach et al., 2008). Therefore, the observed sound effects on eye gaze to suppressed car images could potentially be explained by a coordinated subcortical system involving the colliculi and amygdala (Jiang and He, 2006; Méndez-Bértolo et al., 2016; Wang et al., 2023). In addition to these subcortical structures, early sensory cortices of all sensory modalities are involved in threat processing (Li and Keil, 2023). For example, auditory cortex encodes the emotional valence of sounds during threat assessment (Concina et al., 2019). Thus, in addition to processing along the subcortical route, visual, auditory and multisensory cortices might have played an important role in processing threat-related stimulus content in the absence of awareness.

In the present study, we focused on the comparison of eye responses to suppressed threat-related car and neutral hand images and sounds. Future studies could test the generalizability of our findings to other types of threat-related naturalistic stimuli, e.g., using standardized databases of affective stimuli (e.g., Balsamo et al., 2020). Also, given a general positivity bias (e.g., Fairfield et al., 2022) and an attentional bias toward positive natural sounds (e.g., Wang et al., 2022), future studies could test how positively valenced stimuli modulate eye movements in the absence of visual awareness.

Conclusion

Our results show that threat conferred by car images and engine sounds attracts eye gaze even in the complete absence of visual awareness. Thus, multisensory enhancement of threat-related unaware visual representations leads to specific actions, potentially mediated by subcortical brain circuits responsible for eye movement control and multisensory interaction.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Psychology Ethics Committee, University of Fribourg. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JH: Conceptualization, Data curation, Formal analysis, Investigation, Visualization, Writing – original draft, Writing – review & editing. SB: Conceptualization, Data curation, Formal analysis, Supervision, Visualization, Writing – original draft, Writing – review & editing. PV: Conceptualization, Funding acquisition, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by a PRIMA grant (PR00P1_185918/1) from the Swiss National Science Foundation to PV.

Acknowledgments

We thank Jülide Bayhan for help with data collection.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2024.1441915/full#supplementary-material

References

Alais, D., and Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262. doi: 10.1016/j.cub.2004.01.029

Aller, M., Giani, A., Conrad, V., Watanabe, M., and Noppeney, U. (2015). A spatially collocated sound thrusts a flash into awareness. Front. Integr. Neurosci. 9:16. doi: 10.3389/fnint.2015.00016

Almeida, J., Pajtas, P. E., Mahon, B. Z., Nakayama, K., and Caramazza, A. (2013). Affect of the unconscious: visually suppressed angry faces modulate our decisions. Cogn. Affect. Behav. Neurosci. 13, 94–101. doi: 10.3758/s13415-012-0133-7

Alsius, A., and Munhall, K. G. (2013). Detection of audiovisual speech correspondences without visual awareness. Psychol. Sci. 24, 423–431. doi: 10.1177/0956797612457378

Bach, D. R., Schachinger, H., Neuhoff, J. G., Esposito, F., Salle, F. D., Lehmann, C., et al. (2008). Rising sound intensity: an intrinsic warning cue activating the amygdala. Cereb. Cortex 18, 145–150. doi: 10.1093/cercor/bhm040

Badde, S., Hong, F., and Landy, M. S. (2021). Causal inference and the evolution of opposite neurons. Proc. Natl. Acad. Sci. 118:e2112686118. doi: 10.1073/pnas.2112686118

Balsamo, M., Carlucci, L., Padulo, C., Perfetti, B., and Fairfield, B. (2020). A bottom-up validation of the IAPS, GAPED, and NAPS affective picture databases: differential effects on behavioral performance. Front. Psychol. 11:2187. doi: 10.3389/fpsyg.2020.02187

Bannerman, R. L., Milders, M., De Gelder, B., and Sahraie, A. (2009). Orienting to threat: faster localization of fearful facial expressions and body postures revealed by saccadic eye movements. Proc. R. Soc. B Biol. Sci. 276, 1635–1641. doi: 10.1098/rspb.2008.1744

Benavidez, N. L., Bienkowski, M. S., Zhu, M., Garcia, L. H., Fayzullina, M., Gao, L., et al. (2021). Organization of the inputs and outputs of the mouse superior colliculus. Nat. Commun. 12:4004. doi: 10.1038/s41467-021-24241-2

Bertini, C., Cecere, R., and Làdavas, E. (2019). Unseen fearful faces facilitate visual discrimination in the intact field. Neuropsychologia 128, 58–64. doi: 10.1016/j.neuropsychologia.2017.07.029

Bertini, C., and Làdavas, E. (2021). Fear-related signals are prioritised in visual, somatosensory and spatial systems. Neuropsychologia 150:107698. doi: 10.1016/j.neuropsychologia.2020.107698

Bertini, C., Pietrelli, M., Braghittoni, D., and Làdavas, E. (2018). Pulvinar lesions disrupt fear-related implicit visual processing in hemianopic patients. Front. Psychol. 9:2329. doi: 10.3389/fpsyg.2018.02329

Bracci, S., Caramazza, A., and Peelen, M. V. (2018). View-invariant representation of hand postures in the human lateral occipitotemporal cortex. NeuroImage 181, 446–452. doi: 10.1016/j.neuroimage.2018.07.001

Bracci, S., and Op De Beeck, H. P. (2023). Understanding human object vision: a picture is worth a thousand representations. Annu. Rev. Psychol. 74, 113–135. doi: 10.1146/annurev-psych-032720-041031

Bradley, M. M., and Lang, P. J. (2017). “International affective picture system” In: Encyclopedia of personality and individual differences. eds. V. Zeigler-Hill and T. K. Shackelford (Springer, Cham: Springer International Publishing), 1–4. doi: 10.1007/978-3-319-28099-8_42-1

Burley, D. T., Gray, N. S., and Snowden, R. J. (2017). As far as the eye can see: relationship between psychopathic traits and pupil response to affective stimuli. PLoS One 12:e0167436. doi: 10.1371/journal.pone.0167436

Caramazza, A., and Shelton, J. R. (1998). Domain-specific knowledge systems in the brain: the animate-inanimate distinction. J. Cogn. Neurosci. 10, 1–34. doi: 10.1162/089892998563752

Cary, E., Lahdesmaki, I., and Badde, S. (2024). Audiovisual simultaneity windows reflect temporal sensory uncertainty. Psychon. Bull. Rev. doi: 10.3758/s13423-024-02478-4

Chen, Y.-C., and Spence, C. (2010). When hearing the bark helps to identify the dog: semantically-congruent sounds modulate the identification of masked pictures. Cognition 114, 389–404. doi: 10.1016/j.cognition.2009.10.012

Chen, Y.-C., Yeh, S.-L., and Spence, C. (2011). Crossmodal constraints on human perceptual awareness: auditory semantic modulation of binocular rivalry. Front. Psychol. 2:212. doi: 10.3389/fpsyg.2011.00212

Concina, G., Renna, A., Grosso, A., and Sacchetti, B. (2019). The auditory cortex and the emotional valence of sounds. Neurosci. Biobehav. Rev. 98, 256–264. doi: 10.1016/j.neubiorev.2019.01.018

Conrad, V., Bartels, A., Kleiner, M., and Noppeney, U. (2010). Audiovisual interactions in binocular rivalry. J. Vis. 10:27. doi: 10.1167/10.10.27

Delong, P., and Noppeney, U. (2021). Semantic and spatial congruency mould audiovisual integration depending on perceptual awareness. Sci. Rep. 11:10832. doi: 10.1038/s41598-021-90183-w

Diano, M., Celeghin, A., Bagnis, A., and Tamietto, M. (2017). Amygdala response to emotional stimuli without awareness: facts and interpretations. Front. Psychol. 7:2029. doi: 10.3389/fpsyg.2016.02029

DiCarlo, J. J., Zoccolan, D., and Rust, N. C. (2012). How does the brain solve visual object recognition? Neuron 73, 415–434. doi: 10.1016/j.neuron.2012.01.010

Ding, Y., Naber, M., Gayet, S., Van Der Stigchel, S., and Paffen, C. L. E. (2018). Assessing the generalizability of eye dominance across binocular rivalry, onset rivalry, and continuous flash suppression. J. Vis. 18:6. doi: 10.1167/18.6.6

Doehrmann, O., and Naumer, M. J. (2008). Semantics and the multisensory brain: how meaning modulates processes of audio-visual integration. Brain Res. 1242, 136–150. doi: 10.1016/j.brainres.2008.03.071

Dolan, R. J., Morris, J. S., and De Gelder, B. (2001). Crossmodal binding of fear in voice and face. Proc. Natl. Acad. Sci. 98, 10006–10010. doi: 10.1073/pnas.171288598

Fairfield, B., Padulo, C., Bortolotti, A., Perfetti, B., Mammarella, N., and Balsamo, M. (2022). Do older and younger adults prefer the positive or avoid the negative? Brain Sci. 12:393. doi: 10.3390/brainsci12030393

Faivre, N., Mudrik, L., Schwartz, N., and Koch, C. (2014). Multisensory integration in complete unawareness: evidence from audiovisual congruency priming. Psychol. Sci. 25, 2006–2016. doi: 10.1177/0956797614547916

Frühholz, S., Trost, W., and Kotz, S. A. (2016). The sound of emotions—towards a unifying neural network perspective of affective sound processing. Neurosci. Biobehav. Rev. 68, 96–110. doi: 10.1016/j.neubiorev.2016.05.002

Gerdes, A. B. M., Alpers, G. W., Braun, H., Köhler, S., Nowak, U., and Treiber, L. (2021). Emotional sounds guide visual attention to emotional pictures: an eye-tracking study with audio-visual stimuli. Emotion 21, 679–692. doi: 10.1037/emo0000729

Glasser, D. M., and Tadin, D. (2014). Modularity in the motion system: independent oculomotor and perceptual processing of brief moving stimuli. J. Vis. 14:28. doi: 10.1167/14.3.28

Globisch, J., Hamm, A. O., Esteves, F., and Öhman, A. (1999). Fear appears fast: temporal course of startle reflex potentiation in animal fearful subjects. Psychophysiology 36, 66–75. doi: 10.1017/S0048577299970634

Grill-Spector, K., and Weiner, K. S. (2014). The functional architecture of the ventral temporal cortex and its role in categorization. Nat. Rev. Neurosci. 15, 536–548. doi: 10.1038/nrn3747

Hedger, N., Adams, W. J., and Garner, M. (2015). Fearful faces have a sensory advantage in the competition for awareness. J. Exp. Psychol. Hum. Percept. Perform. 41, 1748–1757. doi: 10.1037/xhp0000127

Hense, M., Badde, S., and Röder, B. (2019). Tactile motion biases visual motion perception in binocular rivalry. Atten. Percept. Psychophys. 81, 1715–1724. doi: 10.3758/s13414-019-01692-w

Hesselmann, G., Darcy, N., Ludwig, K., and Sterzer, P. (2016). Priming in a shape task but not in a category task under continuous flash suppression. J. Vis. 16:17. doi: 10.1167/16.3.17

Hu, J., and Vetter, P. (2024). How the eyes respond to sounds. Ann. N. Y. Acad. Sci. 1532, 18–36. doi: 10.1111/nyas.15093

Jiang, Y., and He, S. (2006). Cortical responses to invisible faces: dissociating subsystems for facial-information processing. Curr. Biol. 16, 2023–2029. doi: 10.1016/j.cub.2006.08.084

Juslin, P. N., and Västfjäll, D. (2008). Emotional responses to music: the need to consider underlying mechanisms. Behav. Brain Sci. 31, 559–575. doi: 10.1017/S0140525X08005293

Kang, S. J., Liu, S., Ye, M., Kim, D.-I., Pao, G. M., Copits, B. A., et al. (2022). A central alarm system that gates multi-sensory innate threat cues to the amygdala. Cell Rep. 40:111222. doi: 10.1016/j.celrep.2022.111222

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., and Shams, L. (2007). Causal inference in multisensory perception. PLoS One 2:e943. doi: 10.1371/journal.pone.0000943

Kragel, P. A., Čeko, M., Theriault, J., Chen, D., Satpute, A. B., Wald, L. W., et al. (2021). A human colliculus-pulvinar-amygdala pathway encodes negative emotion. Neuron 109, 2404–2412.e5. doi: 10.1016/j.neuron.2021.06.001

Kriegeskorte, N., Mur, M., Ruff, D. A., Kiani, R., Bodurka, J., Esteky, H., et al. (2008). Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron 60, 1126–1141. doi: 10.1016/j.neuron.2008.10.043

Kumar, S., Von Kriegstein, K., Friston, K., and Griffiths, T. D. (2012). Features versus feelings: dissociable representations of the acoustic features and valence of aversive sounds. J. Neurosci. 32, 14184–14192. doi: 10.1523/JNEUROSCI.1759-12.2012

Lapate, R. C., Rokers, B., Li, T., and Davidson, R. J. (2014). Non-conscious emotional activation colors first impressions: a regulatory role for conscious awareness. Psychol. Sci. 25, 349–357. doi: 10.1177/0956797613503175

LeDoux, J. E. (1998). The emotional brain: the mysterious underpinnings of emotional life (1st. Touchstone ed) New York: Simon & Schuster.

LeDoux, J. E., Farb, C., and Ruggiero, D. (1990). Topographic organization of neurons in the acoustic thalamus that project to the amygdala. J. Neurosci. 10, 1043–1054. doi: 10.1523/JNEUROSCI.10-04-01043.1990

Lee, M., Blake, R., Kim, S., and Kim, C.-Y. (2015). Melodic sound enhances visual awareness of congruent musical notes, but only if you can read music. Proc. Natl. Acad. Sci. 112, 8493–8498. doi: 10.1073/pnas.1509529112

Li, W., and Keil, A. (2023). Sensing fear: fast and precise threat evaluation in human sensory cortex. Trends Cogn. Sci. 27, 341–352. doi: 10.1016/j.tics.2023.01.001

Liaw, G. J., Kim, S., and Alais, D. (2022). Direction-selective modulation of visual motion rivalry by collocated tactile motion. Atten. Percept. Psychophys. 84, 899–914. doi: 10.3758/s13414-022-02453-y

Liu, X., Huang, H., Snutch, T. P., Cao, P., Wang, L., and Wang, F. (2022). The superior colliculus: cell types, connectivity, and behavior. Neurosci. Bull. 38, 1519–1540. doi: 10.1007/s12264-022-00858-1

Lunghi, C., Binda, P., and Morrone, M. C. (2010). Touch disambiguates rivalrous perception at early stages of visual analysis. Curr. Biol. 20, R143–R144. doi: 10.1016/j.cub.2009.12.015

Lunghi, C., Morrone, M. C., and Alais, D. (2014). Auditory and tactile signals combine to influence vision during binocular rivalry. J. Neurosci. 34, 784–792. doi: 10.1523/JNEUROSCI.2732-13.2014

Méndez-Bértolo, C., Moratti, S., Toledano, R., Lopez-Sosa, F., Martínez-Alvarez, R., Mah, Y. H., et al. (2016). A fast pathway for fear in human amygdala. Nat. Neurosci. 19, 1041–1049. doi: 10.1038/nn.4324

Meredith, M. A., and Stein, B. E. (1986). Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J. Neurophysiol. 56, 640–662. doi: 10.1152/jn.1986.56.3.640

Miles, W. R. (1930). Ocular dominance in human adults. J. Gen. Psychol. 3, 412–430. doi: 10.1080/00221309.1930.9918218

Miller, J. (1986). Timecourse of coactivation in bimodal divided attention. Percept. Psychophys. 40, 331–343. doi: 10.3758/BF03203025

Montoya, S., and Badde, S. (2023). Only visible flicker helps flutter: tactile-visual integration breaks in the absence of visual awareness. Cognition 238:105528. doi: 10.1016/j.cognition.2023.105528

Morris, J. S., Öhman, A., and Dolan, R. J. (1998). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470. doi: 10.1038/30976

Mudrik, L., Faivre, N., and Koch, C. (2014). Information integration without awareness. Trends Cogn. Sci. 18, 488–496. doi: 10.1016/j.tics.2014.04.009

Munoz, D. P., and Everling, S. (2004). Look away: the anti-saccade task and the voluntary control of eye movement. Nat. Rev. Neurosci. 5, 218–228. doi: 10.1038/nrn1345

Noppeney, U., Josephs, O., Hocking, J., Price, C. J., and Friston, K. J. (2008). The effect of prior visual information on recognition of speech and sounds. Cereb. Cortex 18, 598–609. doi: 10.1093/cercor/bhm091

Öhman, A., Carlsson, K., Lundqvist, D., and Ingvar, M. (2007). On the unconscious subcortical origin of human fear. Physiol. Behav. 92, 180–185. doi: 10.1016/j.physbeh.2007.05.057

Öhman, A., Flykt, A., and Esteves, F. (2001). Emotion drives attention: detecting the snake in the grass. J. Exp. Psychol. Gen. 130, 466–478. doi: 10.1037/0096-3445.130.3.466

Palmer, T. D., and Ramsey, A. K. (2012). The function of consciousness in multisensory integration. Cognition 125, 353–364. doi: 10.1016/j.cognition.2012.08.003

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–782. doi: 10.1038/nrn2920

Rafal, R. D., Koller, K., Bultitude, J. H., Mullins, P., Ward, R., Mitchell, A. S., et al. (2015). Connectivity between the superior colliculus and the amygdala in humans and macaque monkeys: virtual dissection with probabilistic DTI tractography. J. Neurophysiol. 114, 1947–1962. doi: 10.1152/jn.01016.2014

Rothkirch, M., Stein, T., Sekutowicz, M., and Sterzer, P. (2012). A direct oculomotor correlate of unconscious visual processing. Curr. Biol. 22, R514–R515. doi: 10.1016/j.cub.2012.04.046

Sandberg, K., Timmermans, B., Overgaard, M., and Cleeremans, A. (2010). Measuring consciousness: is one measure better than the other? Conscious. Cogn. 19, 1069–1078. doi: 10.1016/j.concog.2009.12.013

Sha, L., Haxby, J. V., Abdi, H., Guntupalli, J. S., Oosterhof, N. N., Halchenko, Y. O., et al. (2015). The animacy continuum in the human ventral vision pathway. J. Cogn. Neurosci. 27, 665–678. doi: 10.1162/jocn_a_00733

Shams, L., and Beierholm, U. (2022). Bayesian causal inference: a unifying neuroscience theory. Neurosci. Biobehav. Rev. 137:104619. doi: 10.1016/j.neubiorev.2022.104619

Spering, M., and Carrasco, M. (2015). Acting without seeing: eye movements reveal visual processing without awareness. Trends Neurosci. 38, 247–258. doi: 10.1016/j.tins.2015.02.002

Staib, M., and Bach, D. R. (2018). Stimulus-invariant auditory cortex threat encoding during fear conditioning with simple and complex sounds. NeuroImage 166, 276–284. doi: 10.1016/j.neuroimage.2017.11.009

Stein, T., Hebart, M. N., and Sterzer, P. (2011). Breaking continuous flash suppression: a new measure of unconscious processing during interocular suppression? Front. Hum. Neurosci. 5:167. doi: 10.3389/fnhum.2011.00167

Stein, B. E., Stanford, T. R., and Rowland, B. A. (2020). Multisensory integration and the Society for Neuroscience: then and now. J. Neurosci. 40, 3–11. doi: 10.1523/JNEUROSCI.0737-19.2019

Sterzer, P., Haynes, J. D., and Rees, G. (2008). Fine-scale activity patterns in high-level visual areas encode the category of invisible objects. J. Vis. 8, 10.1–10.12. doi: 10.1167/8.15.10

Tamietto, M., and De Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709. doi: 10.1038/nrn2889

Tsuchiya, N., and Koch, C. (2005). Continuous flash suppression reduces negative afterimages. Nat. Neurosci. 8, 1096–1101. doi: 10.1038/nn1500

Tsuchiya, N., Koch, C., Gilroy, L. A., and Blake, R. (2006). Depth of interocular suppression associated with continuous flash suppression, flash suppression, and binocular rivalry. J. Vis. 6:6. doi: 10.1167/6.10.6

Vetter, P., Badde, S., Phelps, E. A., and Carrasco, M. (2019). Emotional faces guide the eyes in the absence of awareness. eLife 8:e43467. doi: 10.7554/eLife.43467

Vetter, P., Smith, F. W., and Muckli, L. (2014). Decoding sound and imagery content in early visual cortex. Curr. Biol. 24, 1256–1262. doi: 10.1016/j.cub.2014.04.020

Wang, Y., Luo, L., Chen, G., Luan, G., Wang, X., Wang, Q., et al. (2023). Rapid processing of invisible fearful faces in the human amygdala. J. Neurosci. 43, 1405–1413. doi: 10.1523/JNEUROSCI.1294-22.2022

Wang, Y., Tang, Z., Zhang, X., and Yang, L. (2022). Auditory and cross-modal attentional bias toward positive natural sounds: behavioral and ERP evidence. Front. Hum. Neurosci. 16:949655. doi: 10.3389/fnhum.2022.949655

Wei, P., Liu, N., Zhang, Z., Liu, X., Tang, Y., He, X., et al. (2015). Processing of visually evoked innate fear by a non-canonical thalamic pathway. Nat. Commun. 6:6756. doi: 10.1038/ncomms7756

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., and Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998

Willenbockel, V., Sadr, J., Fiset, D., Horne, G. O., Gosselin, F., and Tanaka, J. W. (2010). Controlling low-level image properties: the SHINE toolbox. Behav. Res. Methods 42, 671–684. doi: 10.3758/BRM.42.3.671

Wyer, N. A., and Calvini, G. (2011). Don’t sit so close to me: unconsciously elicited affect automatically provokes social avoidance. Emotion 11, 1230–1234. doi: 10.1037/a0023981

Yang, E., Zald, D. H., and Blake, R. (2007). Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion 7, 882–886. doi: 10.1037/1528-3542.7.4.882

Keywords: continuous flash suppression, eye movements, sounds, visual awareness, threat, multisensory interaction, superior colliculus, amygdala

Citation: Hu J, Badde S and Vetter P (2024) Auditory guidance of eye movements toward threat-related images in the absence of visual awareness. Front. Hum. Neurosci. 18:1441915. doi: 10.3389/fnhum.2024.1441915

Edited by:

Frouke Nienke Boonstra, Royal Dutch Visio, Netherlands

Reviewed by:

Jason J. Braithwaite, Lancaster University, United Kingdom

Alessandro Bortolotti, University of Studies G. d’Annunzio Chieti and Pescara, Italy

Copyright © 2024 Hu, Badde and Vetter. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Junchao Hu; Petra Vetter

†These authors have contributed equally to this work
