
BRIEF RESEARCH REPORT article

Front. Hum. Neurosci., 14 September 2023

Sec. Speech and Language

Volume 17 - 2023 | https://doi.org/10.3389/fnhum.2023.1122480

This article is part of the Research Topic Neural correlates of connected speech indices in acquired neurological disorders.

Yina M. Quique1, G. Nike Gnanateja2*, Michael Walsh Dickey3,4, William S. Evans3, Bharath Chandrasekaran4,5*

Introduction: People with aphasia have been shown to benefit from rhythmic elements for language production during aphasia rehabilitation. However, it is unknown whether rhythmic processing is associated with such benefits. Cortical tracking of the speech envelope (CTenv) may provide a measure of encoding of speech rhythmic properties and serve as a predictor of candidacy for rhythm-based aphasia interventions.

Methods: Electroencephalography was used to capture electrophysiological responses while Spanish speakers with aphasia (n = 9) listened to a continuous speech narrative (audiobook). The Temporal Response Function was used to estimate CTenv in the delta (associated with word- and phrase-level properties), theta (syllable-level properties), and alpha bands (attention-related properties). CTenv estimates were used to predict aphasia severity, performance in rhythmic perception and production tasks, and treatment response in a sentence-level rhythm-based intervention.

Results: CTenv in delta and theta, but not alpha, predicted aphasia severity. CTenv in the delta, theta, and alpha bands did not predict performance in rhythmic perception or production tasks. There was some evidence that CTenv in theta, but not in alpha or delta, could predict sentence-level learning in aphasia.

Conclusion: CTenv of syllable-level properties was relatively preserved in individuals with less language impairment. In contrast, encoding of word- and phrase-level properties appeared relatively impaired, with higher CTenv at this level predictive of more severe language impairments. The relationship between CTenv and treatment response to sentence-level rhythm-based interventions needs further investigation.

Aphasia is a communication disorder resulting from damage to brain regions that support language. Due to their communication impairments, people with aphasia (PWA) experience social isolation, loss of autonomy, and other consequences that affect their quality of life (Cruice et al., 2006; Hilari, 2011). Intervention approaches leveraging rhythmic aspects of speech and music have been shown to be beneficial in improving language production in PWA (e.g., Stahl et al., 2011, 2013). However, little is known about PWA’s encoding of speech rhythmic properties and their connection to language impairments, rhythmic perception/production, and treatment response to rhythm-based interventions.

The amplitude envelope conveys rhythmic cues that are important for speech perception (Rosen, 1992; Shannon et al., 1995). The neural processing of these cues in continuous speech can be assessed by examining cortical tracking using electroencephalography (EEG). Specifically, cortical tracking of the speech envelope (CTenv) can serve as a proxy for the neural processing of rhythmic properties in continuous speech, but it has not previously been examined in post-stroke aphasia. CTenv refers specifically to the tracking of acoustic/linguistic properties of the speech signal by low-frequency cortical activity in the M/EEG (Ding and Simon, 2014). This cortical activity reflects the activity of neuronal populations (Lakatos et al., 2019; Gnanateja et al., 2022) that is essential for processing rhythm cues in speech and music (Peelle et al., 2013; Doelling et al., 2019; Poeppel and Assaneo, 2020; Gnanateja et al., 2022). CTenv could thus open a window into PWA’s encoding of speech rhythmic properties, which could shed light on treatment response to rhythm-based interventions.

CTenv has been examined in the delta, theta, and alpha bands of neural oscillations. The delta band (~1–4 Hz) aligns with word and phrase boundaries and prosodic cues in connected speech, and has been associated with higher-order language processing (Ghitza, 2017; Teoh et al., 2019). The theta band (~4–8 Hz) broadly corresponds to the syllabic rate (5–8 Hz) found in multiple languages (Ding et al., 2017) and is key in segmenting auditory information into syllabic chunks (Ghitza, 2013; Kösem and van Wassenhove, 2016; Poeppel and Assaneo, 2020). Activity in this band has been reported to be stronger for intelligible speech (Peelle et al., 2013) than for unintelligible speech (Pefkou et al., 2017), or equal for both types of stimuli (Howard and Poeppel, 2010). The alpha band (~8–12 Hz) is associated with attentional processes (Obleser and Weisz, 2012; Wöstmann et al., 2017) and effortful listening (Paul et al., 2021).

CTenv in these bands has been used to investigate neurophysiological mechanisms underlying speech, language, and music processing in neurotypical populations (e.g., Doelling et al., 2014), including in Spanish speakers (e.g., Assaneo et al., 2019; Pérez et al., 2019; Lizarazu et al., 2021). These neurophysiological mechanisms could inform our understanding of how the encoding of rhythmic properties of speech is affected by aphasia and how it may influence treatment response to rhythm-based interventions. Therefore, the current study examines the association between CTenv and aphasia severity, rhythmic perception/production abilities, and treatment response to a sentence-level rhythm-based intervention in post-stroke aphasia.

Recent evidence from primary progressive aphasia (PPA; Dial et al., 2021) demonstrated increased CTenv in theta, but not delta, compared to neurotypical controls, implying that people with PPA may rely more on acoustic properties during continuous speech processing. To date, this is one of the few studies to investigate CTenv in adult neurogenic language disorders (see also De Clercq et al., 2023; Kries et al., 2023). Thus, the association between CTenv and language impairments, as measured by aphasia severity, has, to our knowledge, yet to be examined.

CTenv may also be associated with rhythmic perception and production measures in individuals with aphasia. Some PWA show difficulties in rhythmic perception tasks (Zipse et al., 2014; Sihvonen et al., 2016), such as identifying whether two excerpts with rhythmic variations are the same or different. Others show more difficulty than controls in rhythmic production tasks, such as continuing a rhythmic pattern after its offset, although some PWA show preserved abilities in synchronizing to a given rhythm (Zipse et al., 2014). Importantly, a better understanding of rhythmic perception and production in aphasia can guide language treatment candidacy, especially for rhythm-based interventions. For example, Curtis et al. (2020) reported that PWA with better rhythmic abilities were more likely to benefit from an additional rhythmic cue (hand tapping) during melodic intonation therapy. Similarly, Stefaniak et al. (2021) found a correlation between the perception of rhythmic patterns and picture description fluency in PWA. Examining the association between rhythmic measures and CTenv could therefore help explain rhythmic performance in aphasia in ways that support decisions about treatment candidacy and predictions of treatment response.

Rhythmic elements have been successfully employed in aphasia rehabilitation. For example, Stahl et al. (2011) examined how PWA learned lyrics under three conditions (melodic intoning, rhythmic speech, and a control condition) and reported a comparable degree of benefit in the melodic and rhythmic conditions. A follow-up study by Stahl et al. (2013) showed improved sentence learning using singing and rhythmic therapy. Also, simultaneous production of audiovisual speech, which depends on the synchronization of speech rhythmic properties between a patient and clinician, has resulted in improved sentence learning in PWA (Fridriksson et al., 2012, 2015). This is consistent with previous work in neurotypical individuals showing that audiovisual speech enhances CTenv and improves speech perception (Chandrasekaran et al., 2009; Crosse et al., 2015). However, it is currently unknown whether the neural encoding of rhythmic properties in connected speech (as reflected in CTenv) is predictive of treatment response in this type of rhythm-based intervention. The current study, therefore, provides a preliminary examination of the relationship between CTenv and treatment response in the rhythm-based treatment described by Quique et al. (2022).

In short, the purpose of this study was threefold. First, we examined CTenv in Spanish-speaking PWA and assessed the extent to which CTenv in the delta, theta, and alpha bands was associated with aphasia severity. Second, we examined whether CTenv in those bands was related to behavioral measures of rhythmic perception and production in PWA. Third, we assessed whether CTenv in those bands predicted treatment response to the sentence-level rhythm-based intervention previously reported by Quique et al. (2022).

Nine Spanish-speaking PWA were recruited from an outpatient neurology institute in Medellín, Colombia. These participants were a subset of the Quique et al. (2022) participants, selected because they lived in Medellín, where the necessary EEG instrumentation was located. Inclusion criteria were aphasia after a single left-hemisphere cerebrovascular accident confirmed by neurology referral, a Western Aphasia Battery aphasia quotient between 20 and 85 (WAB-AQ, score range: [0–100]; Kertesz, 1982; Kertesz and Pascual-Leone, 2000), time post-onset greater than 4 months, and Spanish as a native language. Exclusion criteria were other neurological conditions that could potentially affect speech or cognition, significant premorbid psychiatric history, and severe apraxia of speech. Participant characteristics are shown in Table 1. All participants completed a rhythm-based intervention to learn scripted sentences after their EEG assessment (Quique et al., 2022); thus, CTenv was assessed at one timepoint, before the rhythm-based intervention. All participants were able to hear pure tones at 40 dB HL at 0.5, 1, 2, and 4 kHz on an audiometric screening test. This study was approved by the institutional review board at the University of Pittsburgh and by the local partner institution in Colombia (Neuromedica).

Quique et al. (2022) investigated the extent to which unison production of speech could support PWA in learning scripted sentences (i.e., formulaic sentences used in everyday communication). Because unison production of speech depends on rhythmic properties, it was hypothesized that highlighting these properties could facilitate learning of scripted sentences. Specifically, Quique and colleagues used rhythmic cues during unison production of speech in three conditions: (a) digitally added beats aligned with word stress (stress-aligned condition); (b) digitally added beats equally spaced across the sentence (metronomic condition); and (c) sentences presented without added beats (control condition). PWA learned a set of 30 scripted sentences over five training sessions distributed over 2 weeks. As shown in Table 1, participants were between 1 and 3.5 years post-onset of aphasia. PWA demonstrated learning of scripted sentences over time across conditions. Furthermore, both rhythm-enhanced conditions produced a larger treatment response (i.e., improved sentence-level learning) than the control condition.

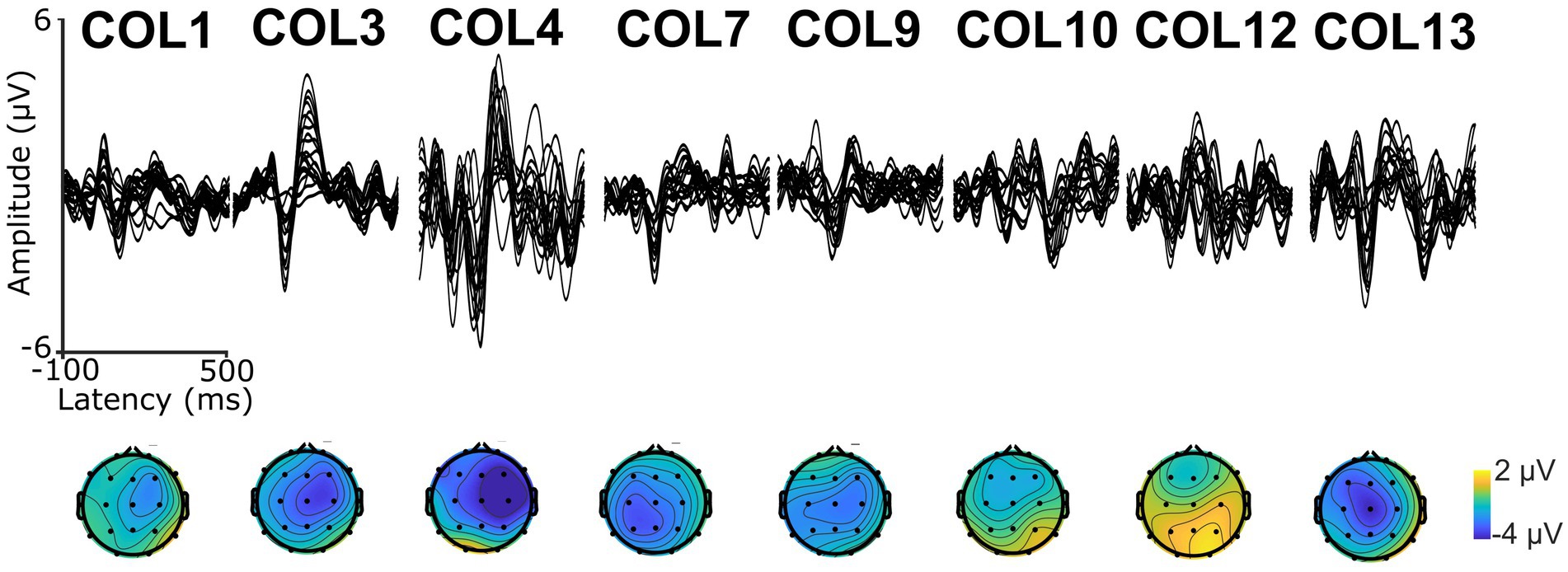

A 9-min excerpt of a Spanish adaptation of the audiobook “Who was Albert Einstein” (Brallier, 2002) was used to assess CTenv. The excerpt was recorded by a male native speaker of Spanish. It was divided into approximately 1-min segments, each beginning and ending with complete sentences to preserve the storyline. To ensure attention, participants answered a comprehension question after each segment, as previously done in PPA (Dial et al., 2021). See Supplementary material for an example of a story segment. The envelope modulation spectrum of the excerpt peaked at 6.3 Hz, which is close to the syllable rate (Obleser and Weisz, 2012; Ghitza, 2013; Kösem and van Wassenhove, 2016); see the modulation spectrum in Supplementary Figure S1. In addition, to validate the recording of auditory electrophysiological responses and ensure that auditory stimuli reached the auditory cortex, we recorded Auditory Long Latency Responses (ALLRs; Swink and Stuart, 2012; Reetzke et al., 2021; see Figure 1). For this purpose, we used a 1,000-Hz tone sinusoidally amplitude-modulated at 6 Hz and an unmodulated 1,000-Hz pure tone. Both tones were 1 s in duration and were repeated 100 times, with an inter-stimulus interval of 1 s.
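The ALLR stimuli can be made concrete with a short Python (NumPy/SciPy) sketch. It synthesizes the 1-s, 1,000-Hz tones with and without 6-Hz sinusoidal amplitude modulation, lays out 100 repetitions with a 1-s silent inter-stimulus interval, and recovers the modulation rate from the largest peak of the Hilbert-envelope spectrum, the same kind of measure as the 6.3-Hz envelope peak reported for the audiobook. The 44.1-kHz audio sampling rate and the 100% modulation depth are assumptions not given in the text, and all helper names are hypothetical.

```python
import numpy as np
from scipy.signal import hilbert

FS = 44_100         # assumed audio sampling rate (Hz); not reported in the text
CARRIER_HZ = 1_000  # carrier frequency of both tones
MOD_HZ = 6          # sinusoidal amplitude-modulation rate
DUR_S = 1.0         # tone duration
ISI_S = 1.0         # silent inter-stimulus interval
N_REPS = 100        # repetitions per tone


def make_tones(fs=FS):
    """Return (modulated, unmodulated) 1-s tones at the carrier frequency."""
    t = np.arange(int(DUR_S * fs)) / fs
    carrier = np.sin(2 * np.pi * CARRIER_HZ * t)
    # Assumed 100% modulation depth: the envelope swings between 0 and 1.
    envelope = 0.5 * (1 + np.sin(2 * np.pi * MOD_HZ * t))
    return envelope * carrier, carrier


def make_train(tone, fs=FS, n_reps=N_REPS, isi_s=ISI_S):
    """Repeat tone n_reps times, each copy followed by isi_s of silence."""
    one_trial = np.concatenate([tone, np.zeros(int(isi_s * fs))])
    return np.tile(one_trial, n_reps)


def envelope_peak_hz(signal, fs=FS):
    """Frequency (Hz) of the largest peak in the Hilbert-envelope spectrum."""
    env = np.abs(hilbert(signal))
    spectrum = np.abs(np.fft.rfft(env - env.mean()))  # remove DC first
    freqs = np.fft.rfftfreq(env.size, d=1 / fs)
    return float(freqs[np.argmax(spectrum)])


modulated, unmodulated = make_tones()
train = make_train(modulated)
print(train.size / FS, envelope_peak_hz(modulated))
```

The printed values are the train duration in seconds and the recovered modulation rate; for the unmodulated tone the envelope is flat, so its spectrum has no meaningful peak.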

Figure 1. Auditory Long Latency Responses (ALLRs, top) and scalp topography (bottom) of the first negative wave (N1) in each participant.

A USB audio interface (Behringer U-Phoria UMC404HD) was used to present the auditory stimuli. EEG was recorded using a Nihon Kohden 32-channel clinical EEG system. Nineteen electrodes were placed on the scalp following the international 10–20 system (Klem et al., 1999), along with two mastoid electrodes. The online reference was placed at Cz. Electrode impedance was under 5 kΩ for most electrodes and did not exceed 20 kΩ for any electrode. The sampling rate for EEG recording was 5,000 Hz. The data were re-referenced offline to the average of both mastoids. To align the EEG to stimulus onset, the analog audio signal from one of the audio interface outputs was plugged directly into one of the leads on the EEG amplifier (with appropriate attenuation). Further, because the EEG system was used for clinical purposes, ALLRs were recorded to verify that the instrumentation was adequate; specifically, we checked that it could record reliable ALLRs in the 50–250 ms latency range that exceeded the pre-stimulus baseline and had a morphology and topography consistent with ALLRs.
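The onset alignment and ALLR check described above can be sketched in Python as follows. Because the audio is recorded on an EEG lead, audio and EEG share one sampling rate, so detected onset samples index the EEG directly. This is a minimal illustration for a single channel, assuming a fixed amplitude threshold for onsets and a hypothetical pass criterion (peak in the 50–250 ms window larger than three times the pre-stimulus standard deviation); neither the threshold nor the criterion comes from the study, and the function names are hypothetical.

```python
import numpy as np


def detect_onsets(audio, fs, threshold, min_gap_s=0.5):
    """Sample indices where |audio| crosses threshold after >= min_gap_s below it.

    Hypothetical helper: a gap shorter than the 1-s inter-stimulus interval
    but longer than within-tone amplitude troughs separates stimuli.
    """
    above = np.flatnonzero(np.abs(audio) > threshold)
    if above.size == 0:
        return above
    new_stim = np.diff(above) > int(min_gap_s * fs)
    return np.concatenate([above[:1], above[1:][new_stim]])


def average_erp(eeg, onsets, fs, tmin=-0.1, tmax=0.5):
    """Baseline-corrected average of single-channel epochs (tmin must be < 0)."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = np.stack([eeg[o + start:o + stop] for o in onsets
                       if o + start >= 0 and o + stop <= eeg.size])
    # Subtract each epoch's mean over the pre-stimulus samples.
    epochs = epochs - epochs[:, :-start].mean(axis=1, keepdims=True)
    times = np.arange(start, stop) / fs
    return times, epochs.mean(axis=0)


def allr_present(times, erp, window=(0.05, 0.25), factor=3.0):
    """Assumed criterion: peak |ERP| in the window exceeds factor x baseline SD."""
    baseline = erp[times < 0]
    in_window = erp[(times >= window[0]) & (times <= window[1])]
    return bool(np.max(np.abs(in_window)) > factor * np.std(baseline))
```

With the amplifier recording both signals, `detect_onsets(audio_lead, 5000, threshold)` would return sample indices usable for epoching; visual inspection of morphology and topography, as described in the text, is not captured by this numeric check.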

Participants were first presented with the ALLR stimuli and then listened to the audiobook. Stimuli were presented binaurally with insert earphones (ER-3A; Etymotic Research) at 84 dB SPL (Becker and Reinvang, 2007; Robson et al., 2014) using PsychoPy (Peirce et al., 2019). During ALLR recording, participants were instructed to listen to the tones without any additional task. During the audiobook, participants were instructed to listen to the story and answer the multiple-choice comprehension questions. They were also asked to avoid extraneous movements and to look at a fixation cross on the computer screen.

Preprocessing was performed using EEGLAB (Delorme and Makeig, 2004) in MATLAB (MathWorks Inc., 2019). To reduce data size and improve computational efficiency, we first down-sampled the data to 128 Hz. Then, we applied minimum-phase non-causal windowed-sinc FIR filters: a high-pass filter with a cut-off frequency of 1 Hz (filter order 846) and a low-pass filter with a cut-off frequency of 15 Hz (filter order 212). Filtered data were then re-referenced to the two mastoid channels (Di Liberto et al., 2015; O’Sullivan et al., 2017). Large movement artifacts were suppressed using Artifact Subspace Reconstruction (ASR; Mullen et al., 2013; Plechawska-Wojcik et al., 2019). After ASR, the data were segmented into epochs from −5 s to 70 s relative to the onset of each 1-min segment, resulting in 9 epochs. Independent Component Analysis (ICA) was performed on the epoched data to remove eye-movement and muscle artifacts (Jung et al., 2001). The independent components were inspected visually for time course, topography, and spectrum and were removed if they corresponded to ocular or muscular activity.
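A minimal Python sketch of the first preprocessing steps (down-sampling, FIR filtering, mastoid re-referencing, and epoching) may help readers reproduce the order of operations outside EEGLAB. It assumes a channels × samples NumPy array and is not the EEGLAB pipeline: polyphase resampling, Hamming-windowed sinc filters from `scipy.signal.firwin`, and a zero-phase (delay-compensated, linear-phase) application are our substitutions, and ASR and ICA are omitted. The conversion from 5,000 Hz to 128 Hz is the rational factor 16/625.

```python
import numpy as np
from scipy.signal import firwin, resample_poly

FS_RAW, FS_NEW = 5000, 128  # 128 / 5000 = 16 / 625


def downsample(eeg):
    """Polyphase resampling from 5,000 Hz to 128 Hz along the last axis."""
    return resample_poly(eeg, up=16, down=625, axis=-1)


def fir_zero_phase(eeg, cutoff_hz, order, fs, highpass):
    """Hamming-windowed sinc FIR applied without net phase shift.

    An even order gives order + 1 (odd) symmetric taps; np.convolve with
    mode="same" then centres the output, cancelling the group delay.
    Signals must be longer than the filter.
    """
    taps = firwin(order + 1, cutoff_hz, fs=fs, pass_zero=not highpass)
    return np.apply_along_axis(np.convolve, -1, eeg, taps, mode="same")


def rereference_to_mastoids(eeg, mastoid_rows):
    """Subtract the mean of the two mastoid channels from every channel."""
    return eeg - eeg[mastoid_rows].mean(axis=0, keepdims=True)


def epoch(eeg, onsets, fs, tmin=-5.0, tmax=70.0):
    """Cut channels x samples data into trials x channels x samples epochs."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    return np.stack([eeg[:, o + start:o + stop] for o in onsets])


def preprocess(raw, mastoid_rows, onsets_raw):
    """Down-sample, filter (1 Hz high-pass, 15 Hz low-pass), re-reference, epoch."""
    x = downsample(raw)
    x = fir_zero_phase(x, 1.0, 846, FS_NEW, highpass=True)
    x = fir_zero_phase(x, 15.0, 212, FS_NEW, highpass=False)
    x = rereference_to_mastoids(x, mastoid_rows)
    onsets = [int(round(o * FS_NEW / FS_RAW)) for o in onsets_raw]  # raw -> 128 Hz samples
    return epoch(x, onsets, FS_NEW)
```

Onsets are passed in raw (5,000 Hz) samples, for example from the audio lead, and converted to the down-sampled rate before epoching.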

To extract the theta and alpha bands, the EEG data were further filtered using third-order Butterworth filters: theta (4–8 Hz) and alpha (8–15 Hz). For the delta band, however, a different preprocessing procedure with a lower high-pass cut-off was followed. The raw EEG data were filtered using windowed-sinc filters (high-pass cut-off 0.75 Hz, order 846; low-pass cut-off 15 Hz, order 212) and subjected to the same preprocessing steps up to ICA. The ICA weights derived from the 1–15 Hz filtered data were then copied over to the 0.75–15 Hz filtered data (Barbieri et al., 2021), and the components with artifacts were rejected. This was done to ensure a good ICA decomposition, as high-pass filtering at 1 Hz provides better stationarity in the EEG (Winkler et al., 2015). Finally, to extract the delta band, these data were low-pass filtered at 4 Hz with a third-order Butterworth filter, which resulted in an effective 0.75–4 Hz band. The magnitude responses and characteristics of the filters used to derive the three EEG bands are shown in Supplementary Figure S2.
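The band extraction can be sketched in Python with SciPy's Butterworth design, using the third-order filters and cut-offs given above. Whether the filters were applied forward only or forward-backward is not stated, so zero-phase application with `sosfiltfilt` is an assumption of this sketch (it squares the magnitude response). The delta filter is meant to be applied to the separately preprocessed 0.75–15 Hz data.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 128  # Hz, after down-sampling

# Third-order Butterworth designs with the cut-offs given in the text.
# Zero-phase application (sosfiltfilt) is an assumption of this sketch.
BAND_FILTERS = {
    # delta: low-pass applied to the separately preprocessed 0.75-15 Hz data
    "delta": butter(3, 4.0, btype="lowpass", fs=FS, output="sos"),
    "theta": butter(3, [4.0, 8.0], btype="bandpass", fs=FS, output="sos"),
    "alpha": butter(3, [8.0, 15.0], btype="bandpass", fs=FS, output="sos"),
}


def extract_band(eeg, band):
    """Filter channels x samples EEG (or a 1-D signal) into one analysis band."""
    return sosfiltfilt(BAND_FILTERS[band], eeg, axis=-1)


# Usage: a 6-Hz sinusoid should survive the theta filter but not the alpha filter.
t = np.arange(20 * FS) / FS
probe = np.sin(2 * np.pi * 6.0 * t)
for band in ("delta", "theta", "alpha"):
    core = extract_band(probe, band)[FS * 5:FS * 15]  # ignore filter edges
    print(band, round(float(np.max(np.abs(core))), 2))
```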

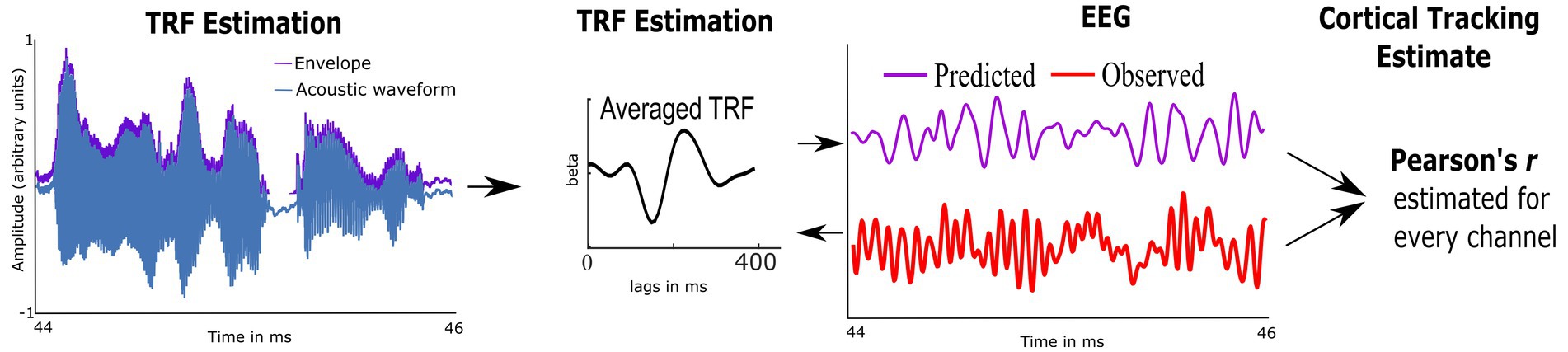

Preprocessed EEG was analyzed using custom routines in MATLAB and the multivariate Temporal Response Function toolbox (mTRF; Crosse et al., 2016). We used forward mTRF models to estimate how well the temporal envelope predicted EEG activity, which provided an individual measure of CTenv (McHaney et al., 2021). First, a multiband Hilbert transform was used to estimate the temporal envelope of each 1-min speech segment. Second, using the preprocessed EEG data and the temporal envelopes, the mTRFs were estimated via regularized linear ridge regression (see Figure 2 and Supplementary Figure S3), with lags ranging from −100 ms to 650 ms. To reduce overfitting and obtain the best model fit across electrodes and participants, the regularization parameter was optimized over the range 20 to 220 using leave-one-out (k-fold) cross-validation (Crosse et al., 2016). Model fit was assessed using Pearson’s correlation between the predicted EEG (stimulus envelope convolved with the mTRFs) and the observed EEG; this correlation was taken as the CTenv estimate. To test the robustness of the CTenv estimates, we compared them against random CTenv estimates generated from one hundred mismatched permutations of EEG responses and audiobook envelopes. This comparison tests whether cortical activity is specifically associated with the auditory input in the speech envelope rather than reflecting a chance response. Because CTenv can be affected by cortical lesions and the resulting volume-conducted electrical fields at the electrodes, the CTenv values of the five electrodes with the highest CTenv in each participant were averaged to obtain one metric of cortical tracking.
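The forward-model logic can be illustrated with a compact NumPy sketch; it is not the mTRF toolbox. It builds a time-lagged design matrix over −100 to 650 ms at 128 Hz, fits ridge regression W = (XᵀX + λI)⁻¹XᵀY, scores held-out segments with per-channel Pearson correlation under leave-one-segment-out cross-validation, picks one λ shared across channels, and averages the five best electrodes. Assumptions: envelopes are already extracted and resampled to 128 Hz, envelopes and EEG are zero-mean (no intercept), the regularizer is the identity matrix, and the λ grid in the usage example is arbitrary rather than the study's. The data in the usage example are synthetic.

```python
import numpy as np

FS = 128
# Integer sample lags spanning -100 ms to 650 ms (floor/ceil rounding assumed).
LAGS = np.arange(int(np.floor(-0.100 * FS)), int(np.ceil(0.650 * FS)) + 1)


def lag_matrix(env, lags=LAGS):
    """X[t, k] = env[t - lags[k]], zero-padded where the shift runs off the edge."""
    n = env.size
    X = np.zeros((n, lags.size))
    for k, lag in enumerate(lags):
        if lag >= 0:
            X[lag:, k] = env[:n - lag]
        else:
            X[:lag, k] = env[-lag:]
    return X


def pearson_per_channel(pred, obs):
    """Pearson r between predicted and observed EEG for each column (channel)."""
    pred = pred - pred.mean(axis=0)
    obs = obs - obs.mean(axis=0)
    return (pred * obs).sum(axis=0) / np.sqrt((pred ** 2).sum(axis=0) * (obs ** 2).sum(axis=0))


def loo_ctenv(envs, eegs, lambdas):
    """Leave-one-segment-out ridge TRF; returns (best lambda, per-channel r).

    envs: list of 1-D envelopes; eegs: list of samples x channels arrays,
    one pair per 1-min segment, both sampled at FS.
    """
    Xs = [lag_matrix(e) for e in envs]
    XtX = [X.T @ X for X in Xs]
    XtY = [X.T @ Y for X, Y in zip(Xs, eegs)]
    eye = np.eye(LAGS.size)
    scores = []                                   # lambdas x channels
    for lam in lambdas:
        fold_r = []
        for test in range(len(Xs)):
            train = [i for i in range(len(Xs)) if i != test]
            A = sum(XtX[i] for i in train) + lam * eye
            W = np.linalg.solve(A, sum(XtY[i] for i in train))  # lags x channels
            fold_r.append(pearson_per_channel(Xs[test] @ W, eegs[test]))
        scores.append(np.mean(fold_r, axis=0))
    scores = np.asarray(scores)
    best = int(np.argmax(scores.mean(axis=1)))    # one lambda shared by all channels
    return lambdas[best], scores[best]


def top_k_ctenv(r_per_channel, k=5):
    """Average CTenv of the k electrodes with the highest values."""
    return float(np.sort(r_per_channel)[-k:].mean())


# Usage with synthetic data (not study data): 9 segments, 6 channels whose
# responses are scaled copies of one kernel plus unit-variance noise.
rng = np.random.default_rng(0)
kernel = np.exp(-0.5 * ((LAGS - 13) / 3.0) ** 2)   # response peaking near 100 ms
gains = np.array([1.0, 0.8, 0.6, 0.4, 0.2, 0.0])
envs = [rng.normal(size=3000) for _ in range(9)]
eegs = [np.outer(lag_matrix(e) @ kernel, gains) + rng.normal(size=(3000, 6)) for e in envs]
lam, r = loo_ctenv(envs, eegs, lambdas=2.0 ** np.arange(0, 21, 4))
print(lam, np.round(r, 2), round(top_k_ctenv(r), 2))
```

Precomputing XᵀX and XᵀY per segment means each fold only sums small matrices, which keeps the cross-validation loop cheap even with many λ values.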

Figure 2. Schematic representation of the Temporal Response Function (TRF) used to estimate cortical tracking of the speech envelope (CTenv; mapping of the speech envelope to the neural data). A linear kernel mapping the speech envelope of the audiobook onto the EEG is estimated using ridge regression. The cross-validated model fit is estimated using Pearson’s correlation between the predicted and observed EEG, which serves as the metric of CTenv.

To characterize language performance, participants were given the Spanish adaptation of the Western Aphasia Battery (WAB; Kertesz, 1982; Kertesz and Pascual-Leone, 2000). To characterize rhythmic perception and production, participants were given the computerized adaptive Beat Alignment Test (CA-BAT; Harrison and Müllensiefen, 2018) and the Synchronization with the Beat of Music task from the Beat Alignment Test (BAT-sync; Iversen and Patel, 2008). In the CA-BAT, participants listen to musical excerpts presented twice: once with superimposed beats in synchrony with the excerpt and once with beats either too fast or too slow relative to the excerpt. Participants indicate in which of the two presentations the beats were “on the beat” with the excerpt. In the BAT-sync, participants listen and tap with their left hand to the beat of 12 musical excerpts in various musical styles. The BAT-sync provides a measure of synchronization (ranging between 0 and 1) based on the correlation between intertap means (average tapping intervals for each of the 12 excerpts) and the tactus levels of the underlying beat of each excerpt (i.e., the most likely tapping tempos).
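The BAT-sync score described above (a correlation between per-excerpt mean inter-tap intervals and tactus periods) can be sketched in Python. This follows the prose description only: how the original scoring bounds the score to the 0–1 range or handles missing taps is not specified here, so the sketch simply returns Pearson's r, and the function names and example values are hypothetical.

```python
import numpy as np


def mean_intertap_interval(tap_times_s):
    """Mean interval (s) between successive taps within one excerpt."""
    return float(np.mean(np.diff(np.sort(np.asarray(tap_times_s, dtype=float)))))


def bat_sync_score(taps_per_excerpt, tactus_periods_s):
    """Pearson r between per-excerpt mean inter-tap intervals and tactus periods."""
    itis = np.array([mean_intertap_interval(taps) for taps in taps_per_excerpt])
    return float(np.corrcoef(itis, np.asarray(tactus_periods_s, dtype=float))[0, 1])


# Usage with made-up values: a tapper who follows each tactus exactly.
tactus = [0.50, 0.60, 0.45, 0.70]                  # seconds per beat (hypothetical)
taps = [np.arange(12) * p for p in tactus]
print(bat_sync_score(taps, tactus))
```

A tapper who tracks tempo changes across excerpts scores near 1, whereas tapping at tempos unrelated to the tactus drives the correlation toward 0 or below.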

Statistical analyses were performed in RStudio (RStudio Team, 2016; R Core Team, 2020). We used robust Bayesian estimation to assess whether the observed CTenv differed from an empirical chance distribution (see Kruschke, 2013). To examine the three study aims, we implemented Bayesian generalized linear regression models using Stan (Carpenter et al., 2017; Stan Development Team, 2020) with the R package brms (Bürkner, 2018). This modeling approach is advantageous for the exploratory nature of the current study because it characterizes uncertainty in the estimates of interest through credible intervals (Kruschke and Liddell, 2018), rather than p-values, and quantifies whether the data support hypothesized effects or null findings.

The association between CTenv and aphasia severity was assessed using WAB-AQ as the dependent variable and CTenv estimates in the alpha, delta, and theta bands as predictors. The association between CTenv and rhythmic perception/production measures was assessed using two models, one for each rhythmic measure (CA-BAT and BAT-sync), with CTenv estimates in the alpha, delta, and theta bands as predictors. The association between CTenv and treatment response to the rhythm-based intervention from Quique et al. (2022) was assessed using mixed-effects models to take advantage of the available trial-level treatment data. Specifically, trial-level word accuracy (i.e., the proportion of correct words out of the total number of words in each sentence at each session) was used as the dependent variable. Fixed effects were session, CTenv, and their interaction. Random effects included random intercepts for participants and items and a random slope of session for participants. Models were run separately for the rhythm-enhanced and control conditions. Details about each model can be found in the Supplementary materials.
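For readers who prefer equations, the models described above can be written roughly as follows. This is a sketch, not the authors' specification: the Gaussian likelihood for WAB-AQ, the binomial likelihood with a logit link for word accuracy (suggested by the Results describing effects in terms of odds), and the covariance structure of the random effects are assumptions, and priors are omitted. Here j indexes participants, i items (sentences), s sessions, n_i is the number of words in sentence i, y_ijs is the number of correct words, and b ∈ {δ, θ, α} is the band entered in a given treatment model. The rhythm perception/production models (Aim 2) have the same form as Aim 1 with CA-BAT or BAT-sync as the outcome.

```latex
\begin{aligned}
\text{Aim 1: } & \mathrm{AQ}_j = \beta_0 + \beta_1\,\mathrm{CT}^{\delta}_j + \beta_2\,\mathrm{CT}^{\theta}_j
  + \beta_3\,\mathrm{CT}^{\alpha}_j + \varepsilon_j, \qquad \varepsilon_j \sim \mathcal{N}(0, \sigma^2) \\
\text{Aim 3: } & y_{ijs} \sim \mathrm{Binomial}(n_i,\, p_{ijs}) \\
 & \operatorname{logit}(p_{ijs}) = \beta_0 + \beta_1\,\mathrm{session}_s + \beta_2\,\mathrm{CT}^{(b)}_j
   + \beta_3\,\mathrm{session}_s\,\mathrm{CT}^{(b)}_j + u_{0j} + u_{1j}\,\mathrm{session}_s + v_{0i} \\
 & (u_{0j},\, u_{1j})^{\top} \sim \mathcal{N}(\mathbf{0}, \Sigma_u), \qquad v_{0i} \sim \mathcal{N}(0, \sigma_v^2)
\end{aligned}
```

In this form, β₂ is the CTenv main effect and β₃ the CTenv × session interaction that the treatment-response results interpret.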

Nine PWA were enrolled and completed the study. All participants showed ALLR peaks, indicating that (1) the EEG setup was appropriate for recording neural responses evoked by auditory signals, and (2) the auditory neural centers in all participants received afferent input regarding sound onset information. See Figure 1 for a depiction of ALLRs per participant. Thus, we assessed CTenv in all participants. The strength of the CTenv estimates was consistent with previous reports (Di Liberto et al., 2015). Based on 90% credible intervals, the observed CTenv estimates were reliably higher than the randomly generated CTenv estimates across bands and participants (β = −0.03, CI: −0.03, −0.02), indicating an association between cortical processing and a specific auditory input (i.e., the speech envelope). See Figure 3 for a depiction of observed versus random CTenv measures per participant and band.
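The observed-versus-random comparison rests on a null distribution built from mismatched envelope/EEG pairs. The Python sketch below shows that construction with 100 mismatched pairings. Two simplifications should be flagged: `score_fn` stands in for the full cross-validated TRF pipeline (a zero-lag Pearson correlation is used as a placeholder), and the comparison uses a plain empirical p-value rather than the robust Bayesian estimation used in the study. Function names are hypothetical.

```python
import numpy as np


def pearson(a, b):
    """Zero-lag Pearson correlation (placeholder for a full CTenv estimate)."""
    return float(np.corrcoef(a, b)[0, 1])


def mismatched_null(envs, eegs, score_fn=pearson, n_perm=100, seed=0):
    """Scores for envelope/EEG pairs taken from different segments."""
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for p in range(n_perm):
        i, j = rng.choice(len(envs), size=2, replace=False)  # envelope i, EEG j != i
        m = min(envs[i].size, eegs[j].size)                   # trim to common length
        null[p] = score_fn(envs[i][:m], eegs[j][:m])
    return null


def empirical_p(observed, null):
    """Share of null scores at least as large as the observed score (+1 corrected)."""
    return (np.sum(null >= observed) + 1) / (null.size + 1)
```

Because every null pair combines a segment's envelope with EEG recorded during a different segment, any shared structure that is not stimulus-specific (for example, generic spectral properties) is preserved in the null, which is what makes the comparison informative.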

Figure 3. Observed CTenv (blue; averaged across the five best electrodes) and CTenv of the null/random model (red; based on 100 random pairings of the speech envelope and EEG) in each participant for the delta, theta, and alpha bands. Error bars represent 95% confidence intervals of the chance distribution. The scalp topography of CTenv estimates (Pearson’s correlation) in the delta, theta, and alpha bands is shown at the bottom of each panel for each participant.

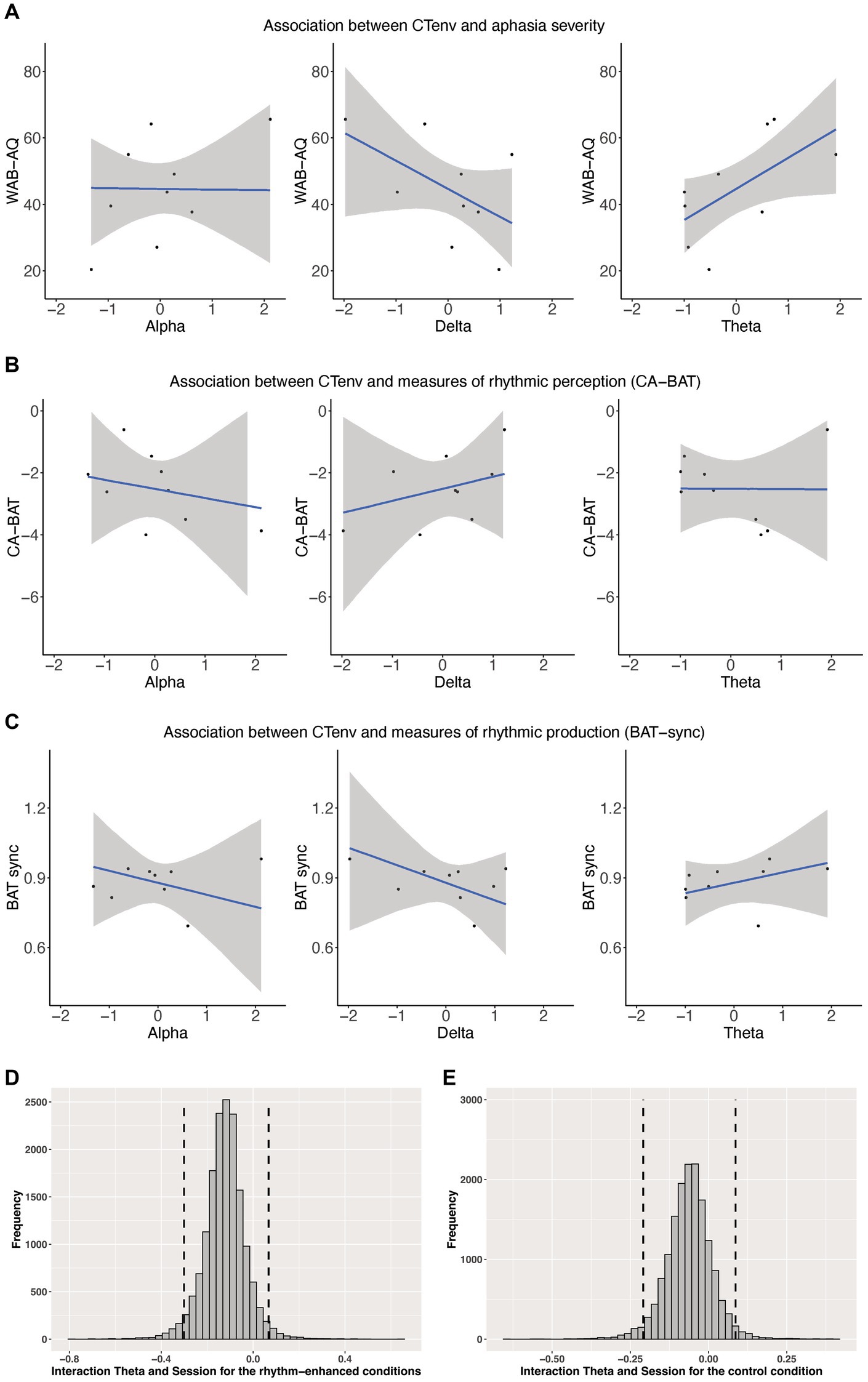

We assessed whether CTenv measures could be used to predict aphasia severity. CTenv in the theta band was a reliable predictor of aphasia severity (β = 9.07, CI: 1.94, 15.29), suggesting that increased tracking in this band is associated with higher WAB-AQ (less impairment). The credible interval for CTenv in the delta band included zero (β = −8.06, CI: −16.27, 1.48), so this effect did not meet the criterion we defined for a robust effect, but 93% of its posterior distribution was below zero. This provides weak but positive evidence that increased tracking in the delta band could be associated with lower WAB-AQ (more impairment). CTenv in the alpha band was not a reliable predictor of aphasia severity (β = 0.07, CI: −8.26, 9.25). See Figure 4 and Supplementary Figure S4 in our Supplementary materials.
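Statements such as "the credible interval included zero, but 93% of the posterior distribution was below zero" are two different summaries of the same posterior draws. The Python sketch below computes both from an array of draws. An equal-tailed (quantile) 90% interval is assumed here; the figures report highest density intervals, which can differ from equal-tailed intervals for skewed posteriors. The usage draws are simulated and are not the study's posterior.

```python
import numpy as np


def posterior_summary(draws, mass=0.90):
    """Equal-tailed credible interval, zero-exclusion flag, and P(effect < 0)."""
    draws = np.asarray(draws, dtype=float)
    tail = (1.0 - mass) / 2.0
    lo, hi = np.quantile(draws, [tail, 1.0 - tail])
    return {
        "ci": (float(lo), float(hi)),
        "excludes_zero": bool(lo > 0 or hi < 0),
        "p_below_zero": float(np.mean(draws < 0)),
    }


# Usage with simulated draws (illustrative only, not the study's posterior):
rng = np.random.default_rng(42)
print(posterior_summary(rng.normal(-8.0, 5.0, size=4000)))
```

An effect can therefore fail the interval criterion while most of its posterior mass still lies on one side of zero, which is the situation described for delta-band CTenv.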

Figure 4. (A) Scatterplots of the association between CTenv and aphasia severity. (B) Scatterplots of the association between CTenv and CA-BAT. (C) Scatterplots of the association between CTenv and BAT-sync. Black dots = raw data. Blue line = estimated slope from the models reported in Supplementary Tables S1–S3. Gray area = 90% model uncertainty intervals. (D,E) Posterior distributions of the interaction effects between theta-band CTenv and session for the rhythm-enhanced and control conditions. Dashed lines mark the 90% highest density intervals (HDIs) of the posterior distributions.

CTenv did not reliably predict CA-BAT performance (delta: β = 0.39, CI: −0.84, 1.63; theta: β = −0.01, CI: −0.91, 0.87; alpha: β = −0.29, CI: −1.53, 0.99). Similarly, CTenv did not reliably predict BAT-sync performance (delta: β = −0.07, CI: −0.20, 0.06; theta: β = 0.04, CI: −0.04, 0.13; alpha: β = −0.05, CI: −0.18, 0.08). These results indicate little association between rhythm perception/production (as measured by CA-BAT and BAT-sync) and CTenv in PWA. See Figure 4 and Supplementary Figures S5 and S6 in our Supplementary materials.

CTenv in the theta band showed a reliable main effect for the rhythm-enhanced conditions (β = 1.06, CI: 0.48, 1.66) and a similar effect for the control condition (β = 0.80, CI: 0.01, 1.59). Thus, the odds of producing a correct word at the midpoint of treatment were greater in people with higher theta-band CTenv. Consistent with Quique et al. (2022), this subgroup of their participants also showed a reliable main effect of session in all models across conditions and bands (all estimates were positive, and the CIs excluded zero), indicating a positive treatment response over time. Although the credible intervals for the interaction between theta-band CTenv and session included zero for both the rhythm-enhanced (β = −0.12, CI: −0.27, 0.02) and control conditions (β = −0.06, CI: −0.18, 0.05), the posterior distributions showed indications of a negative effect. Specifically, 93% of the posterior distribution was below zero for the rhythm-enhanced conditions and 83% for the control condition (see Figure 4). This result provides weak but positive evidence that higher CTenv in theta may be associated with a reduced treatment response to the sentence-level rhythm-based intervention. There were no other main effects or interaction effects for CTenv in the alpha or delta bands (CIs included zero, with no substantial portion of the posterior distribution suggesting meaningful effects). See Supplementary materials for the complete output of all models (Supplementary Figures S7–S16).
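To make the odds interpretation concrete, the Python sketch below converts the reported theta-band main effects into odds ratios and into the change in probability of a correct word from a reference value. It assumes the coefficients are on the log-odds scale (consistent with the odds wording above) and refer to a one-unit increase in CTenv as entered in the model; the predictor scaling is not stated in this section, and the 0.50 reference probability is purely hypothetical.

```python
import math


def odds_ratio(beta_log_odds):
    """Multiplicative change in odds for a one-unit increase in the predictor."""
    return math.exp(beta_log_odds)


def shift_probability(p_reference, beta_log_odds):
    """Probability after adding beta to the log-odds of a reference probability."""
    logit = math.log(p_reference / (1.0 - p_reference)) + beta_log_odds
    return 1.0 / (1.0 + math.exp(-logit))


# Theta-band main effects reported above; the 0.50 reference probability is hypothetical.
for condition, beta in (("rhythm-enhanced", 1.06), ("control", 0.80)):
    print(f"{condition}: OR = {odds_ratio(beta):.2f}, "
          f"P(correct) 0.50 -> {shift_probability(0.50, beta):.2f}")
```

Because the logit is nonlinear, the same odds ratio translates into a smaller change in probability when baseline accuracy is already near 0 or 1.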

The current study examined CTenv in Spanish speakers with aphasia and its association with language impairments, behavioral measures of rhythmic perception/production, and treatment response to a rhythm-based intervention. CTenv in the theta band was associated with language impairments in our cohort of Spanish speakers with stroke-induced aphasia. We also found some evidence that CTenv in the delta band may be associated with language impairments. These preliminary findings may suggest that cortical activity in the theta and delta bands, which have been shown to be essential for speech processing in neurotypical individuals (Poeppel and Assaneo, 2020), could be affected by acquired brain lesions.

The relationship between language impairments and CTenv in the delta and theta bands showed intriguing patterns. Higher CTenv in the delta band was likely associated with more-severe language impairments, whereas higher CTenv in the theta band was associated with less-severe language impairments. Although this opposite directionality could indicate an inverse relationship between delta and theta rhythms that compensates for language impairments in aphasia, our results do not provide conclusive evidence. The association between higher CTenv in the delta band and more severe language impairments could reflect a compensatory mechanism to support language comprehension. Increased CTenv together with decreased speech comprehension has been reported in older adults, suggesting that increased tracking could indicate altered speech processing abilities (e.g., Decruy et al., 2019). McHaney et al. (2021) reported an association between increased delta tracking and speech-in-noise comprehension in older adults (for speech in noise compared to quiet). However, other studies suggest that increased CTenv may instead reflect an imbalance between excitatory and inhibitory brain processes or the recruitment of additional brain regions for processing information (e.g., Brodbeck et al., 2018; Zan et al., 2020).

The association between CTenv in the theta band and language impairments could suggest that the higher the CTenv, the better PWA can segment syllabic information (Teng et al., 2018). However, increased tracking in the theta band has been observed in individuals with logopenic PPA, who typically show phonological processing deficits, compared to neurotypical older adults (Dial et al., 2021). Dial et al. (2021) suggested that increased tracking in PPA could result from a shift of function from atrophic temporoparietal cortex to spared brain regions in both hemispheres; it could be further affected by increased functional connectivity in the temporal cortices and hypersynchrony in the frontal cortex (Ranasinghe et al., 2017). Such a shift of function could also occur after brain damage due to stroke, although the pathology differs from PPA. Further, Etard and Reichenbach (2019) reported that increased tracking in the theta band was associated with perceived speech clarity in young adults. Given the lack of evidence on CTenv in aphasia or other acquired neurological disorders, these interpretations need to be investigated in future studies. Elucidating the exact mechanism driving the relationship between cortical tracking and aphasia severity will require large-scale studies across a wide range of aphasia severities using spatially and temporally precise neuroimaging approaches.

None of the CTenv bands were reliable predictors of the behavioral measures of rhythm perception or production (i.e., CA-BAT and BAT-sync); this lack of association is inconsistent with previous findings. For example, Nozaradan et al. (2011) found that in neurotypical participants, beat perception was associated with a sustained periodic response in the EEG spectrum. Similarly, Nozaradan et al. (2013) showed links between neural responses to rhythmic sound patterns and tapping to the beat. Further, Colling et al. (2017) showed that CTenv and beat synchronization to a metronome were affected in individuals with dyslexia. Although we did not record EEG during the behavioral rhythm perception/production tasks, an association would have been expected given previous evidence. Thus, more research is needed to understand associations between CTenv and measures of rhythm perception/production in acquired neurological disorders.

The preliminary interaction effect between CTenv in theta and session suggests that there may be an association between CTenv and treatment response to a sentence-level rhythm-based intervention, but this conclusion requires additional examination and replication. Rhythm-based interventions have been recommended for language disorders such as dyslexia (e.g., Goswami, 2011; Thomson et al., 2013), which has been linked to concomitant rhythmic difficulties (e.g., Wolff, 2002; Flaugnacco et al., 2014; Ladányi et al., 2020). Similarly, individuals with aphasia have shown rhythm processing deficits (Zipse et al., 2014; Sihvonen et al., 2016) but have also benefited from rhythm-based interventions (e.g., Stahl et al., 2011, 2013; Quique et al., 2022). Further research can examine whether the encoding of speech rhythmic properties could support neurophysiologically informed decisions about the choice of intervention for individuals with aphasia.

The current study had several limitations. First, the sample of PWA was small; reproducing our findings with larger samples is needed to provide robust evidence. Second, few observations were available for modeling each variable (e.g., for aphasia severity and rhythm perception/production, we had one observation per participant). Thus, replicating these findings in larger samples with multiple observations per participant is necessary. Third, because of the cortical lesions in our participants with aphasia, we did not select electrodes of interest a priori; instead, we averaged CTenv across the five electrodes with the highest values. Future studies should acquire anatomical data and perform source-level analyses to avoid confounds from volume conduction artifacts introduced by the brain lesion. Relatedly, anatomical changes in the cortex after stroke may influence CTenv, but we did not have lesion data for these participants; such data are needed to investigate the role of lesion size and location in CTenv in aphasia. Fourth, our results do not provide a clear mechanistic explanation for the direction of the relationship between CTenv and aphasia severity; future studies are needed to understand the underlying neurophysiological mechanisms. Fifth, CTenv was assessed at only one timepoint (before the rhythm-based intervention). Future studies measuring such neural metrics before and after intervention could provide objective neurophysiological evidence to guide interventions, predict outcomes, and elucidate the mechanisms of treatment-related improvement. Overall, CTenv in the theta band was associated with language impairments. CTenv in theta may also be associated with treatment response to sentence-level rhythm-based interventions, but further research is needed to provide robust evidence.
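The electrode-selection step mentioned in the limitations (averaging CTenv over the five electrodes with the highest values, rather than using a priori electrodes of interest) can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes per-electrode CTenv values for one participant and one frequency band have already been computed (e.g., as prediction accuracies from a TRF model), and the helper name `top_k_electrode_mean` and the example values are hypothetical.

```python
from typing import Dict


def top_k_electrode_mean(ctenv_by_electrode: Dict[str, float], k: int = 5) -> float:
    """Average CTenv over the k electrodes with the highest values.

    Hypothetical helper: assumes `ctenv_by_electrode` maps electrode labels
    (e.g., 10-20 system names) to one participant's CTenv estimate in a
    single frequency band (delta, theta, or alpha).
    """
    if k <= 0:
        raise ValueError("k must be positive")
    if len(ctenv_by_electrode) < k:
        raise ValueError("fewer electrodes than k")
    # Sort CTenv values in descending order and keep the k largest.
    top_values = sorted(ctenv_by_electrode.values(), reverse=True)[:k]
    return sum(top_values) / k


# Illustrative, made-up theta-band CTenv values for one participant.
example = {"Fz": 0.04, "Cz": 0.07, "Pz": 0.02, "F3": 0.05,
           "F4": 0.06, "C3": 0.03, "C4": 0.08, "T7": 0.01}
print(round(top_k_electrode_mean(example), 3))  # prints 0.06
```

One design consideration: because the electrodes are chosen using the same values that are then averaged, this summary is optimistically biased relative to an a priori electrode set, which is one reason source-level analyses with anatomical data are preferable when lesion data are available.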

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were reviewed and approved by the Institutional Review Board at the University of Pittsburgh and by Neuromedica, the local partner institution in Colombia. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Data collection, analysis, and background literature review were conducted by YQ, GNG, WE, and MD. Data analyses and interpretation were conducted by YQ and GNG. Manuscript preparation was conducted by YQ, GNG, WE, MD, and BC. All authors contributed to the article and approved the submitted version.

This research project was funded by the Council of Academic Programs in Communication Sciences and Disorders (CAPCSD) Ph.D. Scholarship, the ASHFoundation New Century Scholars Doctoral Scholarship, and the Doctoral Student Award from the University of Pittsburgh School of Health and Rehabilitation Sciences to YQ. Funding to BC was provided by National Institute on Deafness and Other Communication Disorders award R01DC013315 and Northwestern University. Funding to GNG was provided by the Vice-Chancellors Graduate Research Education funds.

Sincere thanks to Sara Gonzalez for her work on data collection. Special acknowledgment to Sergio Cabrera, Vanessa Benjumea, and Neuromedica’s team. We are also profoundly grateful to our participants and their families in Medellin.

The authors declare that the research was conducted without any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2023.1122480/full#supplementary-material

Assaneo, M. F., Rimmele, J. M., Orpella, J., Ripollés, P., de Diego-Balaguer, R., and Poeppel, D. (2019). The lateralization of speech-brain coupling is differentially modulated by intrinsic auditory and top-down mechanisms. Front. Integr. Neurosci. 13, 1–11. doi: 10.3389/fnint.2019.00028

Barbieri, E., Litcofsky, K. A., Walenski, M., Chiappetta, B., Mesulam, M.-M., and Thompson, C. K. (2021). Online sentence processing impairments in agrammatic and logopenic primary progressive aphasia: evidence from ERP. Neuropsychologia 151:107728. doi: 10.1016/j.neuropsychologia.2020.107728

Becker, F., and Reinvang, I. (2007). Successful syllable detection in aphasia despite processing impairments as revealed by event-related potentials. Behav. Brain Funct. 3:6. doi: 10.1186/1744-9081-3-6

Brodbeck, C., Presacco, A., Anderson, S., and Simon, J. Z. (2018). Over-representation of speech in older adults originates from early response in higher order auditory cortex. Acta Acustica united with Acustica 104, 774–777. doi: 10.3813/aaa.919221

Bürkner, P.-C. (2018). Advanced Bayesian multilevel modeling with the R package brms. R J. 10, 395–411. doi: 10.32614/rj-2018-017

Carpenter, B., Gelman, A., Hoffman, M. D., Lee, D., Goodrich, B., Betancourt, M., et al. (2017). Stan: a probabilistic programming language. J. Stat. Softw. 76, 1–34. doi: 10.18637/jss.v076.i01

Chandrasekaran, C., Trubanova, A., Stillittano, S., Caplier, A., and Ghazanfar, A. A. (2009). The natural statistics of audiovisual speech. PLoS Comput. Biol. 5:e1000436. doi: 10.1371/journal.pcbi.1000436

Colling, L. J., Noble, H. L., and Goswami, U. (2017). Neural entrainment and sensorimotor synchronization to the beat in children with developmental dyslexia: an EEG study. Front. Neurosci. 11:360. doi: 10.3389/fnins.2017.00360

Crosse, M. J., Butler, J. S., and Lalor, E. C. (2015). Congruent visual speech enhances cortical entrainment to continuous auditory speech in noise-free conditions. J. Neurosci. 35, 14195–14204. doi: 10.1523/jneurosci.1829-15.2015

Crosse, M. J., Di Liberto, G. M., Bednar, A., and Lalor, E. C. (2016). The multivariate temporal response function (mTRF) toolbox: a MATLAB toolbox for relating neural signals to continuous stimuli. Front. Hum. Neurosci. 10:604. doi: 10.3389/fnhum.2016.00604

Cruice, M., Worrall, L., and Hickson, L. (2006). Perspectives of quality of life by people with aphasia and their family: suggestions for successful living. Top. Stroke Rehabil. 13, 14–24. doi: 10.1310/4jw5-7vg8-g6x3-1qvj

Curtis, S., Nicholas, M. L., Pittmann, R., and Zipse, L. (2020). Tap your hand if you feel the beat: differential effects of tapping in melodic intonation therapy. Aphasiology 34, 580–602. doi: 10.1080/02687038.2019.1621983

De Clercq, P., Kries, J., Mehraram, R., Vanthornhout, J., Francart, T., and Vandermosten, M. (2023). Detecting post-stroke aphasia using EEG-based neural envelope tracking of natural speech. medRxiv [Preprint]. doi: 10.1101/2023.03.14.23287194

Decruy, L., Vanthornhout, J., and Francart, T. (2019). Evidence for enhanced neural tracking of the speech envelope underlying age-related speech-in-noise difficulties. J. Neurophysiol. 122, 601–615. doi: 10.1152/jn.00687.2018

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Di Liberto, G. M., O'Sullivan, J. A., and Lalor, E. C. (2015). Low-frequency cortical entrainment to speech reflects phoneme-level processing. Curr. Biol. 25, 2457–2465. doi: 10.1016/j.cub.2015.08.030

Dial, H. R., Gnanateja, G. N., Tessmer, R. S., Gorno-Tempini, M. L., Chandrasekaran, B., and Henry, M. L. (2021). Cortical tracking of the speech envelope in Logopenic variant primary progressive aphasia. Front. Hum. Neurosci. 14:597694. doi: 10.3389/fnhum.2020.597694

Ding, N., Patel, A. D., Chen, L., Butler, H., Luo, C., and Poeppel, D. (2017). Temporal modulations in speech and music. Neurosci. Biobehav. Rev. 81, 181–187. doi: 10.1016/j.neubiorev.2017.02.011

Ding, N., and Simon, J. Z. (2014). Cortical entrainment to continuous speech: functional roles and interpretations. Front. Hum. Neurosci. 8:311. doi: 10.3389/fnhum.2014.00311

Doelling, K. B., Arnal, L. H., Ghitza, O., and Poeppel, D. (2014). Acoustic landmarks drive delta-theta oscillations to enable speech comprehension by facilitating perceptual parsing. NeuroImage 85, 761–768. doi: 10.1016/j.neuroimage.2013.06.035

Doelling, K. B., Assaneo, M. F., Bevilacqua, D., Pesaran, B., and Poeppel, D. (2019). An oscillator model better predicts cortical entrainment to music. Proc. Natl. Acad. Sci. 116:10113. doi: 10.1073/pnas.1816414116

Etard, O., and Reichenbach, T. (2019). Neural speech tracking in the Theta and in the Delta frequency band differentially encode clarity and comprehension of speech in noise. J. Neurosci. 39:5750. doi: 10.1523/JNEUROSCI.1828-18.2019

Flaugnacco, E., Lopez, L., Terribili, C., Zoia, S., Buda, S., Tilli, S., et al. (2014). Rhythm perception and production predict reading abilities in developmental dyslexia. Front. Hum. Neurosci. 8:392. doi: 10.3389/fnhum.2014.00392

Fridriksson, J., Basilakos, A., Hickok, G., Bonilha, L., and Rorden, C. (2015). Speech entrainment compensates for Broca's area damage. Cortex 69, 68–75. doi: 10.1016/j.cortex.2015.04.013

Fridriksson, J., Hubbard, H. I., Hudspeth, S. G., Holland, A. L., Bonilha, L., Fromm, D., et al. (2012). Speech entrainment enables patients with Broca's aphasia to produce fluent speech. Brain 135, 3815–3829. doi: 10.1093/brain/aws301

Ghitza, O. (2013). The theta-syllable: a unit of speech information defined by cortical function. Front. Psychol. 4:138. doi: 10.3389/fpsyg.2013.00138

Ghitza, O. (2017). Acoustic-driven delta rhythms as prosodic markers. Lang. Cogn. Neurosci. 32, 545–561. doi: 10.1080/23273798.2016.1232419

Gnanateja, G. N., Devaraju, D. S., Heyne, M., Quique, Y. M., Sitek, K. R., Tardif, M. C., et al. (2022). On the role of neural oscillations across timescales in speech and music processing. Front. Comput. Neurosci. 16:872093. doi: 10.3389/fncom.2022.872093

Goswami, U. (2011). A temporal sampling framework for developmental dyslexia. Trends Cogn. Sci. 15, 3–10. doi: 10.1016/j.tics.2010.10.001

Harrison, P. M. C., and Müllensiefen, D. (2018). Development and validation of the computerised adaptive beat alignment test (CA-BAT). Sci. Rep. 8:12395. doi: 10.1038/s41598-018-30318-8

Hilari, K. (2011). The impact of stroke: are people with aphasia different to those without? Disabil. Rehabil. 33, 211–218. doi: 10.3109/09638288.2010.508829

Howard, M. F., and Poeppel, D. (2010). Discrimination of speech stimuli based on neuronal response phase patterns depends on acoustics but not comprehension. J. Neurophysiol. 104, 2500–2511. doi: 10.1152/jn.00251.2010

Iversen, J., and Patel, A. (2008). “The beat alignment test (BAT): surveying beat processing abilities in the general population,” in: 10th international conference on music perception and cognition (ICMPC 10), eds. K. I. Miyazaki, Y. Hiraga, M. Adachi, Y. Nakajima, and M. Tsuzaki.

Jung, T. P., Makeig, S., McKeown, M. J., Bell, A. J., Lee, T. W., and Sejnowski, T. J. (2001). Imaging brain dynamics using independent component analysis. Proc. IEEE 89, 1107–1122. doi: 10.1109/5.939827

Kertesz, A. (1982). Western aphasia battery test manual. San Antonio, TX: The Psychological Corporation.

Kertesz, A., and Pascual-Leone, Á. (2000). Batería de afasias Western (The western aphasia battery en versión y adaptación castellana). Spain: Nau llibres.

Klem, G., Lüders, H., Jasper, H., and Elger, C. (1999). The ten-twenty electrode system of the international federation. The International Federation of Clinical Neurophysiology. Electroencephalogr. Clin. Neurophysiol. Suppl. 52, 3–6.

Kösem, A., and van Wassenhove, V. (2016). Distinct contributions of low- and high-frequency neural oscillations to speech comprehension. Lang. Cogn. Neurosci. 32, 536–544. doi: 10.1080/23273798.2016.1238495

Kries, J., De Clercq, P., Gillis, M., Vanthornhout, J., Lemmens, R., Francart, T., et al. (2023). Exploring neural tracking of acoustic and linguistic speech representations in individuals with post-stroke aphasia. bioRxiv [Preprint]. doi: 10.1101/2023.03.01.530707

Kruschke, J. K. (2013). Bayesian estimation supersedes the t test. J. Exp. Psychol. Gen. 142, 573–603. doi: 10.1037/a0029146

Kruschke, J. K., and Liddell, T. M. (2018). The Bayesian new statistics: hypothesis testing, estimation, meta-analysis, and power analysis from a Bayesian perspective. Psychon. Bull. Rev. 25, 178–206. doi: 10.3758/s13423-016-1221-4

Ladányi, E., Persici, V., Fiveash, A., Tillmann, B., and Gordon, R. L. (2020). Is atypical rhythm a risk factor for developmental speech and language disorders? WIREs Cogn. Sci. 11:e1528. doi: 10.1002/wcs.1528

Lakatos, P., Gross, J., and Thut, G. (2019). A new unifying account of the roles of neuronal entrainment. Curr. Biol. 29, R890–R905. doi: 10.1016/j.cub.2019.07.075

Lizarazu, M., Carreiras, M., Bourguignon, M., Zarraga, A., and Molinaro, N. (2021). Language proficiency entails tuning cortical activity to second language speech. Cereb. Cortex 31, 3820–3831. doi: 10.1093/cercor/bhab051

MathWorks Inc. (2019). MATLAB (R2019a). Natick, Massachusetts: The MathWorks Inc. Available at: https://www.mathworks.com

McHaney, J. R., Gnanateja, G. N., Smayda, K. E., Zinszer, B. D., and Chandrasekaran, B. (2021). Cortical tracking of speech in Delta band relates to individual differences in speech in noise comprehension in older adults. Ear Hear. 42, 343–354. doi: 10.1097/AUD.0000000000000923

Mullen, T., Kothe, C., Chi, Y. M., Ojeda, A., Kerth, T., Makeig, S., et al. (2013). “Real-time modeling and 3D visualization of source dynamics and connectivity using wearable EEG”, in: 2013 35th annual international conference of the IEEE engineering in medicine and biology society (EMBC), 2184–2187.

Nozaradan, S., Peretz, I., Missal, M., and Mouraux, A. (2011). Tagging the neuronal entrainment to beat and meter. J. Neurosci. 31:10234. doi: 10.1523/JNEUROSCI.0411-11.2011

Nozaradan, S., Zerouali, Y., Peretz, I., and Mouraux, A. (2013). Capturing with EEG the neural entrainment and coupling underlying sensorimotor synchronization to the beat. Cereb. Cortex 25, 736–747. doi: 10.1093/cercor/bht261

O’Sullivan, J., Chen, Z., Herrero, J., McKhann, G. M., Sheth, S. A., Mehta, A. D., et al. (2017). Neural decoding of attentional selection in multi-speaker environments without access to clean sources. J. Neural Eng. 14:056001. doi: 10.1088/1741-2552/aa7ab4

Obleser, J., and Weisz, N. (2012). Suppressed alpha oscillations predict intelligibility of speech and its acoustic details. Cereb. Cortex 22, 2466–2477. doi: 10.1093/cercor/bhr325

Paul, B. T., Chen, J., Le, T., Lin, V., and Dimitrijevic, A. (2021). Cortical alpha oscillations in cochlear implant users reflect subjective listening effort during speech-in-noise perception. PLoS One 16:e0254162. doi: 10.1371/journal.pone.0254162

Peelle, J. E., Gross, J., and Davis, M. H. (2013). Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cereb. Cortex 23, 1378–1387. doi: 10.1093/cercor/bhs118

Pefkou, M., Arnal, L. H., Fontolan, L., and Giraud, A. L. (2017). Theta-band and beta-band neural activity reflects independent syllable tracking and comprehension of time-compressed speech. J. Neurosci. 37, 7930–7938. doi: 10.1523/JNEUROSCI.2882-16.2017

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., et al. (2019). PsychoPy2: experiments in behavior made easy. Behav. Res. Methods 51, 195–203. doi: 10.3758/s13428-018-01193-y

Pérez, A., Dumas, G., Karadag, M., and Duñabeitia, J. A. (2019). Differential brain-to-brain entrainment while speaking and listening in native and foreign languages. Cortex 111, 303–315. doi: 10.1016/j.cortex.2018.11.026

Plechawska-Wojcik, M., Kaczorowska, M., and Zapala, D. (2019). “The artifact subspace reconstruction (ASR) for EEG signal correction. A comparative study,” in: Information systems architecture and technology: Proceedings of 39th international conference on information systems architecture and technology – ISAT 2018, eds. J. Świątek, L. Borzemski, and Z. Wilimowska (United States: Springer International Publishing), 125–135.

Poeppel, D., and Assaneo, M. F. (2020). Speech rhythms and their neural foundations. Nat. Rev. Neurosci. 21, 322–334. doi: 10.1038/s41583-020-0304-4

Quique, Y. M., Evans, W. S., Ortega-Llebaría, M., Zipse, L., and Dickey, M. W. (2022). Get in sync: active ingredients and patient profiles in scripted-sentence learning in Spanish speakers with aphasia. J. Speech Lang. Hear. Res. 65, 1478–1493. doi: 10.1044/2021_jslhr-21-00060

R Core Team (2020). R: A language and environment for statistical computing. 3.6.3 Edn. (Vienna, Austria: R Foundation for Statistical Computing).

Ranasinghe, K. G., Hinkley, L. B., Beagle, A. J., Mizuiri, D., Honma, S. M., Welch, A. E., et al. (2017). Distinct spatiotemporal patterns of neuronal functional connectivity in primary progressive aphasia variants. Brain 140, 2737–2751. doi: 10.1093/brain/awx217

Reetzke, R., Gnanateja, G. N., and Chandrasekaran, B. (2021). Neural tracking of the speech envelope is differentially modulated by attention and language experience. Brain Lang. 213:104891. doi: 10.1016/j.bandl.2020.104891

Robson, H., Cloutman, L., Keidel, J. L., Sage, K., Drakesmith, M., and Welbourne, S. (2014). Mismatch negativity (MMN) reveals inefficient auditory ventral stream function in chronic auditory comprehension impairments. Cortex 59, 113–125. doi: 10.1016/j.cortex.2014.07.009

Rosen, S. (1992). Temporal information in speech: acoustic, auditory and linguistic aspects. Philos. Trans. R. Soc. Lond. 336, 367–373. doi: 10.1098/rstb.1992.0070

RStudio Team (2016). “RStudio: Integrated development environment for R.” 1.1.456 ed (Boston, MA: RStudio, Inc.).

Shannon, R. V., Zeng, F.-G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science 270, 303–304. doi: 10.1126/science.270.5234.303

Sihvonen, A. J., Ripollés, P., Leo, V., Rodríguez-Fornells, A., Soinila, S., and Särkämö, T. (2016). Neural basis of acquired Amusia and its recovery after stroke. J. Neurosci. 36, 8872–8881. doi: 10.1523/JNEUROSCI.0709-16.2016

Stahl, B., Henseler, I., Turner, R., Geyer, S., and Kotz, S. A. (2013). How to engage the right brain hemisphere in aphasics without even singing: evidence for two paths of speech recovery. Front. Hum. Neurosci. 7:35. doi: 10.3389/fnhum.2013.00035

Stahl, B., Kotz, S. A., Henseler, I., Turner, R., and Geyer, S. (2011). Rhythm in disguise: why singing may not hold the key to recovery from aphasia. Brain 134, 3083–3093. doi: 10.1093/brain/awr240

Stefaniak, J. D., Lambon Ralph, M. A., De Dios Perez, B., Griffiths, T. D., and Grube, M. (2021). Auditory beat perception is related to speech output fluency in post-stroke aphasia. Sci. Rep. 11:3168. doi: 10.1038/s41598-021-82809-w

Swink, S., and Stuart, A. (2012). Auditory long latency responses to tonal and speech stimuli. J. Speech Lang. Hear. Res. 55, 447–459. doi: 10.1044/1092-4388(2011/10-0364)

Teng, X., Tian, X., Doelling, K., and Poeppel, D. (2018). Theta band oscillations reflect more than entrainment: behavioral and neural evidence demonstrates an active chunking process. Eur. J. Neurosci. 48, 2770–2782. doi: 10.1111/ejn.13742

Teoh, E. S., Cappelloni, M. S., and Lalor, E. C. (2019). Prosodic pitch processing is represented in delta-band EEG and is dissociable from the cortical tracking of other acoustic and phonetic features. Eur. J. Neurosci. 50, 3831–3842. doi: 10.1111/ejn.14510

Thomson, J. M., Leong, V., and Goswami, U. (2013). Auditory processing interventions and developmental dyslexia: a comparison of phonemic and rhythmic approaches. Read. Writ. 26, 139–161. doi: 10.1007/s11145-012-9359-6

Winkler, I., Debener, S., Müller, K. R., and Tangermann, M. (2015). “On the influence of high-pass filtering on ICA-based artifact reduction in EEG-ERP,” in: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC), 4101–4105.

Wolff, P. H. (2002). Timing precision and rhythm in developmental dyslexia. Read. Writ. 15, 179–206. doi: 10.1023/A:1013880723925

Wöstmann, M., Lim, S.-J., and Obleser, J. (2017). The human neural alpha response to speech is a proxy of attentional control. Cereb. Cortex 27, 3307–3317. doi: 10.1093/cercor/bhx074

Zan, P., Presacco, A., Anderson, S., and Simon, J. Z. (2020). Exaggerated cortical representation of speech in older listeners: mutual information analysis. J. Neurophysiol. 124, 1152–1164. doi: 10.1152/jn.00002.2020

Keywords: aphasia (language), rhythm, cortical tracking of speech, EEG, Spanish

Citation: Quique YM, Gnanateja GN, Dickey MW, Evans WS and Chandrasekaran B (2023) Examining cortical tracking of the speech envelope in post-stroke aphasia. Front. Hum. Neurosci. 17:1122480. doi: 10.3389/fnhum.2023.1122480

Received: 13 December 2022; Accepted: 28 August 2023;

Published: 14 September 2023.

Edited by:

Matthew Walenski, East Carolina University, United States

Copyright © 2023 Quique, Gnanateja, Dickey, Evans and Chandrasekaran. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: G. Nike Gnanateja, nikegnanateja@gmail.com; Bharath Chandrasekaran, bchandra@northwestern.edu
