ORIGINAL RESEARCH article

Front. Hum. Neurosci., 10 June 2022

Sec. Brain-Computer Interfaces

Volume 16 - 2022 | https://doi.org/10.3389/fnhum.2022.867377

This article is part of the Research Topic Artificial Intelligence in Brain-Computer Interfaces and Neuroimaging for Neuromodulation and Neurofeedback.

Hands-free interfaces are essential for people with limited mobility to interact with biomedical or electronic devices. However, there are not enough sensing platforms that can quickly tailor the interface to these users. Thus, this article proposes a sensing platform that patients with mobility impairments can use to manipulate electronic devices, thereby increasing their independence. A new sensing scheme is developed that uses three hands-free signals as inputs: voice commands, head movements, and eye gestures. These signals are obtained with non-invasive sensors: a microphone for the speech commands, an accelerometer to detect inertial head movements, and an infrared oculography sensor to register eye gestures. The signals are processed and received as the user's commands by an output unit, which provides several communication ports for sending control signals to other devices. The interaction methods are intuitive and could extend the possibilities for people with disabilities to manipulate local or remote digital systems. As a case study, two volunteers with severe disabilities used the sensing platform to steer a power wheelchair. Participants performed 15 common skills for wheelchair users, and their capacities were evaluated according to a standard test. Using the head control, volunteers A and B obtained 93.3 and 86.6%, respectively; using the voice control, they obtained 63.3 and 66.6%, respectively. These results show that the end users achieved high performance, completing most of the skills with the head movement interface. In contrast, the users were not able to perform most of the skills using voice control. These results provide valuable information for tailoring the sensing platform to the end user's needs.

The main purpose behind the concept of Electronic Aids for Daily Living, as presented by Little (2010), is to help persons with disabilities operate electronic appliances. Other similar concepts have appeared in recent years, such as alternative computer access, environmental control systems, communication aids, and power mobility, all closely related to the same idea of giving more independence to individuals with diseases or physical limitations. As indicated in Cowan (2020), these systems allow people with disabilities to control devices in four categories: comfort (remote control of temperature, windows, curtains, and lights), communications (hands-free telephone, augmentative and alternative communication), home security (access to alarms or door locks), and leisure (television or music appliances).

Studies have shown positive effects of alternative control systems for users with disabilities, including improvements in quality of life, autonomy, and personal security (Rigby et al., 2011; Myburg et al., 2017). Consequently, much research has been devoted to hands-free and alternative input methods for Human Machine Interfaces (HMIs). For instance, Brose et al. (2010) presented emerging technologies for interacting with assistive systems, such as three-dimensional joysticks, chin and head controls, computer vision to interpret the user's facial gestures, voice recognition, control by eye gaze and head movements, electroencephalographic (EEG) signals, and Brain-Computer Interfaces (BCI).

The proposed interfaces are commonly based on the patient's remaining abilities. For instance, head movement detection has been used as an input method: Chang et al. (2020) developed an application that activates phonetic systems for verbal communication in Chinese, using computer vision to capture head-motion features and distinguish between nine gestures. A Human Computer Interface (HCI) for controlling a computer, based on head movements detected by a Microsoft Kinect, was presented in Martins et al. (2015). Šumak et al. (2019) implemented facial-expression detection for navigating and executing basic commands on the computer using the Emotiv Epoc+ device. Lu and Zhou (2019) implemented an application that manipulates the computer's cursor for typing and drawing, based on electromyography (EMG) signals for detecting five facial movements. Another alternative for implementing hands-free interfaces is based on eye tracking and gaze estimation. Lee et al. (2021) presented an algorithm to estimate the user's gaze point on the visual plane, which uses a head-mounted camera and is described as less invasive and more comfortable for the user. Furthermore, Milanizadeh and Safaie (2020) implemented a control based on electrooculography (EOG) signals for maneuvering a quadcopter in four directions (up, down, left, and right), and EOG applications for activating smart home appliances have been developed (Akanto et al., 2020; Molleapaza-Huanaco et al., 2020). Klaib et al. (2021) summarize several eye tracking techniques and related research using modern approaches such as machine learning (ML), the Internet of Things (IoT), and cloud computing, and Pathirana et al. (2022) present a deep learning approach to gaze estimation that is reported to perform robustly in unconstrained environments.
Finally, voice commands have been used as an intuitive input method to control systems (Boucher et al., 2013). More recently, new systems have incorporated techniques based on Artificial Neural Networks (ANNs); for example, Anh and Bien (2021) use voice recognition to control a robotic manipulator arm. In addition, Loukatos et al. (2021) present a voice interface to remotely trigger farming actions or query the values of process parameters.

In addition, multimodal schemes have been used to increase the number of possible instructions beyond those achievable with a single input. For instance, a multimodal HMI that incorporates EOG, EMG, and EEG signals was developed for controlling a robotic hand (Zhang et al., 2019). Papadakis Ktistakis and Bourbakis (2018) propose using voice commands, body posture recognition, and pressure sensors to steer a robotic nurse. Sarkar et al. (2016) implemented a multimodal scheme based on the user's wrist movements and voice commands to manipulate the computer's cursor and execute instructions. Also, Hosni et al. (2019) combine EEG and EOG to control a virtual keyboard for “eye typing.”

On the other hand, some long-standing disorders disconnect the person's brain from the body (paralysis); as a consequence, it is difficult to take advantage of remaining abilities such as speech or body movements. In such cases, BCIs have been developed to detect the user's intentions; for example, Song et al. (2020) used the P300 component of the EEG to control an upper-limb assistive robot. Similarly, Zhang et al. (2017) developed a brain-machine interface to command a semi-autonomous intelligent robot for a drinking task. Hochberg et al. (2012) demonstrated the feasibility of controlling a robotic arm's actions with signals obtained from neurons. Moreover, Fukuma et al. (2018) decoded two types of hand movements (grasp and open) from real-time magnetoencephalography (MEG) to control a robotic hand. In addition, Woo et al. (2021) presented further BCI applications, such as those developed for controlling wheelchairs, computers, exoskeletons, drones, web browsers, cleaning robots, games, and simulators, among other systems.

Although important contributions have been made, environmental control technology must address other issues: fitting the user's abilities; being harmless, friendly, and non-invasive; being easy to maintain and low-cost; and complying with medical standards so as to be useful in real-world environments. The control interface must meet the end user's expectations and preferences in order to be successful; otherwise, the application will become an obstacle and will be abandoned (Cowan et al., 2012). As an example, Lovato and Piper (2019) report that, because voice input is available on some electronic devices, children can search the internet once they are able to speak clearly, even before they have learned to read and write. Nowadays, devices such as smart speakers (Amazon's Alexa, Apple's Siri) and voice search services (Google, YouTube) have demonstrated that speech commands are a natural way to interact with computers. For these reasons, the community is calling for biomedical engineering research to develop more attractive Human Machine Interfaces (HMIs) that make it easier for persons with disabilities to interact with assistive devices or everyday home appliances. The expectations for and evolution of interfaces are discussed in Karpov and Yusupov (2018), who highlight other important aspects of present HMIs: intuitiveness, ergonomics, friendliness, reliability, efficacy, universality, and multimodality.

Power wheelchairs have traditionally been a target for implementing and testing alternative interfaces. Urdiales (2012) reviews different options for steering a wheelchair: conventional joysticks, video game joysticks, tongue switches, touchscreens, early speech recognition modules, electromyography (EMG), eye tracking based on electrooculographic (EOG) signals, and BCI. Another review of input technologies for steering a wheelchair is presented by Leaman and La (2017), who summarize several methods based on biological signals, BCI, computer vision, game controllers, haptic feedback, touch, and voice. Although there are several proposals for alternative inputs, only a few of them have been evaluated with end users to identify the real challenges and opportunities. According to the review by Bigras et al. (2020), the Wheelchair Skills Training Program (Dalhousie University, 2022) presents appropriate tasks for assessing power wheelchair skills; its protocol is available and its scoring scales are well established. For that reason, Boucher et al. (2013) adapted 15 skills from the Wheelchair Skills Test (WST) into the Robotics Wheelchair Test Skills (RWTS) to evaluate a smart wheelchair prototype. That study involved nine active wheelchair users with years of driving experience, who used voice commands to request automated actions from the system in order to complete the tasks. Anwer et al. (2020) also involved healthy users in evaluating eye and voice controls for steering a wheelchair; however, only five skill tasks from the WST were used.

This article presents a modular system with different sensing methods for hands-free input. Moreover, the proposed platform includes hardware with several options for connecting to other electronic devices. Unlike other systems, this sensing platform is designed to be expanded with new interfaces and adapted to different environmental control applications. As a first application, the platform has been tested for power mobility, and the evaluation followed a standard protocol involving volunteers with severe mobility problems who were not wheelchair users.

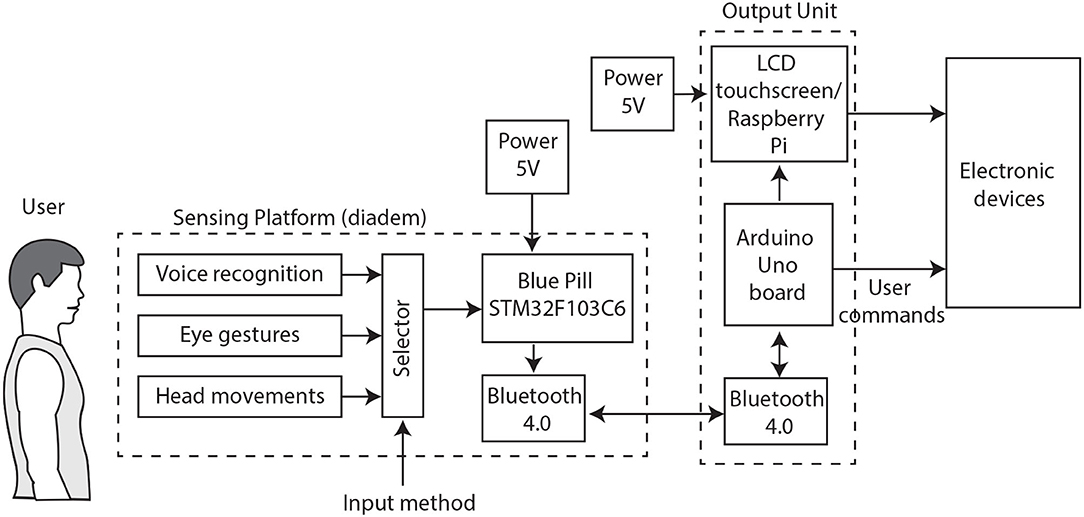

A general diagram of the system is shown in Figure 1, with its two main blocks: the Sensing Platform (SP) and the Output Unit (OU). The SP offers three input modes (voice recognition, head movements, and eye gestures), only one of which can be selected at a time. A pair of Bluetooth modules, configured as “slave” and “master,” respectively, transfer data between the SP and the OU over serial communication. The OU processes the received data to identify the user's commands and generates control actions for electronic devices. The hardware and other components of both the SP and the OU are described in the next sections.

Figure 1. The block diagram illustrates the two main sections: the Sensing Platform or diadem and the Output Unit.

The input modules are connected to a Blue Pill development board based on the STMicroelectronics 32-bit microcontroller STM32F103C6 (STMicroelectronics, 2022), which has an ARM Cortex-M3 core operating at 72 MHz. This microcontroller is programmed to acquire data from the selected input, process the information, and send it using the UART protocol. Bluetooth 4.0 modules configured at 9,600 baud are used for serial communication; these modules use low-energy technology and support high data transfer rates. A micro-USB connector powers the Blue Pill, as shown in Figure 1, and the 5 V DC supply is provided by a lightweight portable charger. This power bank has a capacity of 26,800 mAh and several standard ports for power input and output.
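
As a rough sizing aid, the time needed to send a short string over the 9,600-baud serial link can be estimated from the UART frame length. The sketch below assumes the common 8N1 framing (one start bit, eight data bits, no parity, one stop bit, i.e., 10 bits per byte) and uses a hypothetical angle string; neither the framing nor the message format is reported for this system.

```python
def uart_transfer_time(n_bytes: int, baud: int = 9600, bits_per_frame: int = 10) -> float:
    """Return the seconds needed to send n_bytes over a UART link.

    bits_per_frame=10 assumes 8N1 framing (start + 8 data + stop bits).
    Inter-byte gaps and Bluetooth radio latency are ignored.
    """
    if n_bytes < 0 or baud <= 0 or bits_per_frame <= 0:
        raise ValueError("n_bytes must be >= 0 and baud/bits_per_frame > 0")
    return n_bytes * bits_per_frame / baud


# Hypothetical 12-byte message carrying two head-tilt angles
message = b"X:-12,Y:35\r\n"
print(f"{uart_transfer_time(len(message)) * 1000:.1f} ms")
```

Under these assumptions the link carries about 960 bytes per second, so a short command string takes on the order of 10 ms on the wire, before any radio latency is added.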

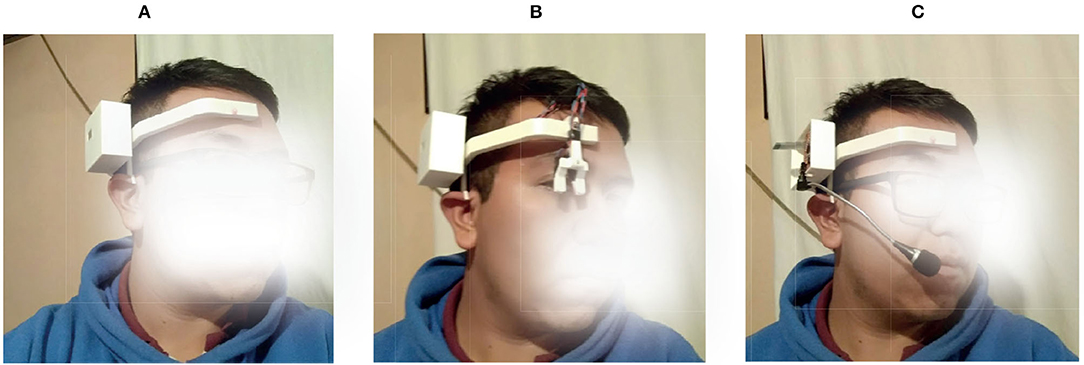

A head-mounted wearable device (diadem) was designed to carry the electronic components for the hands-free interfaces. A SolidWorks model was created first, as illustrated in Figure 2. The wearable device is assembled from five parts: the front and back pieces, the circuit box container, the ear rest piece, and the IR sensor holder. With this scheme, one interface can be reconfigured without replacing the complete diadem. Finally, the design was printed in a solid material for use during prototype testing. The next sections describe the electronic modules used for the interfaces.

The first interface uses an Elechouse Voice Recognition V3 module (Elechouse, 2022), an integrated speech recognition board. This module can store 80 different voice commands and recognize seven of them at the same time, with 99% accuracy under ideal conditions. It includes an analog input, a 3.5 mm connector for a mono-channel microphone, and the small board also provides serial port pins for easy control as well as I2C pins. Before use, the voice module must be trained with clear voice commands or even sounds, which accommodates users with limited speech or difficulty speaking clearly. Moreover, no specific language needs to be configured. Serial-port control of the module relies on code libraries available for the Arduino UNO shown in Figure 1. For that reason, a serial communication path must be established between the Elechouse board and the Arduino through the Bluetooth modules and the STM32F103C6 ports, as depicted in Figure 3.

The second interface uses an MPU6050 sensor, which integrates a 3-axis accelerometer and a 3-axis gyroscope on the same board. The acceleration data, with 16-bit resolution, are transmitted to the STM32F103C6 via the I2C protocol. The acceleration measurements are used to calculate the inclination angles when users tilt their heads in four directions: forward/backward, corresponding to the x-axis, and left/right, corresponding to the y-axis, as illustrated in Figure 4. To compute the inclination angles about both the x- and y-axes, the following relations were implemented in code:

where θx and θy are the inclination angles, ranging from −90 to 90°, and accelx, accely, and accelz are the acceleration values obtained from the sensor. Finally, a string with the movement angles is transmitted to the OU via the Bluetooth connection. Other devices, such as mobile phones, tablets, or computers, could also be linked over Bluetooth to receive this data string.
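
The tilt computation can be sketched with the standard static-accelerometer relations θx = atan2(accelx, √(accely² + accelz²)) and θy = atan2(accely, √(accelx² + accelz²)), which yield angles in the −90 to 90° range stated above. This form is an assumption (a common choice, not necessarily the exact expressions in the firmware), and which angle corresponds to forward/backward depends on how the sensor is mounted on the diadem.

```python
import math


def tilt_angles(accel_x: float, accel_y: float, accel_z: float) -> tuple[float, float]:
    """Return (theta_x, theta_y) in degrees, each within [-90, 90].

    Assumed standard static-tilt relations. Raw MPU6050 counts can be passed
    directly because the sensitivity (LSB per g) cancels in each ratio.
    """
    # atan2 with a non-negative second argument stays within [-90, 90] degrees
    theta_x = math.degrees(math.atan2(accel_x, math.hypot(accel_y, accel_z)))
    theta_y = math.degrees(math.atan2(accel_y, math.hypot(accel_x, accel_z)))
    return theta_x, theta_y


# Level sensor: gravity entirely on z (16384 counts = 1 g at the +/-2 g range)
print(tilt_angles(0, 0, 16384))
```

Because only ratios of accelerations are used, the result does not depend on the selected full-scale range, but it is only valid while the head is roughly static (linear accelerations from the wheelchair would perturb it).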

Finally, the third interface is based on ocular movement detection for identifying eye gestures. The proposed circuit uses two QTR-1A reflectance sensors, which are small and lightweight and carry an infrared LED and phototransistor pair. These sensors are typically used for edge detection and line following; for eye movement detection, however, the emitter sends light to the eye, which is reflected from the frontal surface of the eyeball back to the phototransistor, as illustrated in the block diagram in Figure 5. The sensor produces an analog voltage output between 0 and 5 V as a function of the reflected light. This signal is read by two pins of the STM32F103C6 configured as Analog-to-Digital Converter (ADC) inputs with 12-bit resolution. Additional functions deployed in the microcontroller smooth the digitized signal and remove its DC component (DC blocker). In addition, an algorithm based on Artificial Neural Networks (ANNs) was implemented to determine the user's gaze direction and eye movements.

The OU receives data from the SP, as presented in Figure 1. This information is processed by the Arduino UNO board (Arduino, 2022), which incorporates an 8-bit ATmega328P microcontroller operating at 16 MHz. The code running on this processor includes libraries to easily control the Voice Recognition module, functions developed to identify commands from the other interfaces, and functions to generate outputs for controlling electronic devices. In addition, the OU includes an LCD touchscreen driven by a Raspberry Pi 4 Model B running Raspbian OS. The Raspberry Pi 4 was released with a 1.5 GHz 64-bit quad-core ARM Cortex-A72 processor; connectivity through 802.11ac Wi-Fi, Gigabit Ethernet, and Bluetooth 5; two USB 3.0 ports, two USB 2.0 ports, and two micro-HDMI ports supporting 4K at 60 Hz. The touchscreen displays a Graphical User Interface (GUI) developed in Python. The GUI presents text strings from the information received by the Arduino UNO, such as the readings from the interfaces, the identified voice commands, and the output values. Finally, the Raspberry Pi requires a 5 V DC power supply, which is described in Section 3.1 as part of the implementation study.

Several pins on the Arduino can be configured as basic communication peripherals (Universal Asynchronous Receiver-Transmitter (UART), Inter-Integrated Circuit (I2C), and Serial Peripheral Interface (SPI)), and 14 general-purpose pins can be used as digital inputs/outputs or as Pulse Width Modulation (PWM) outputs. The UART also allows the Arduino to communicate with computers via the USB port. The Raspberry Pi, in turn, has a 40-pin header with 26 General Purpose Input Output (GPIO) pins plus power (3.3 and 5 V) and ground pins. These GPIO pins enable the Raspberry Pi to communicate with external devices. Besides acting as simple digital inputs and outputs, GPIO pins can be configured for other functions: PWM, I2C, SPI, and UART.

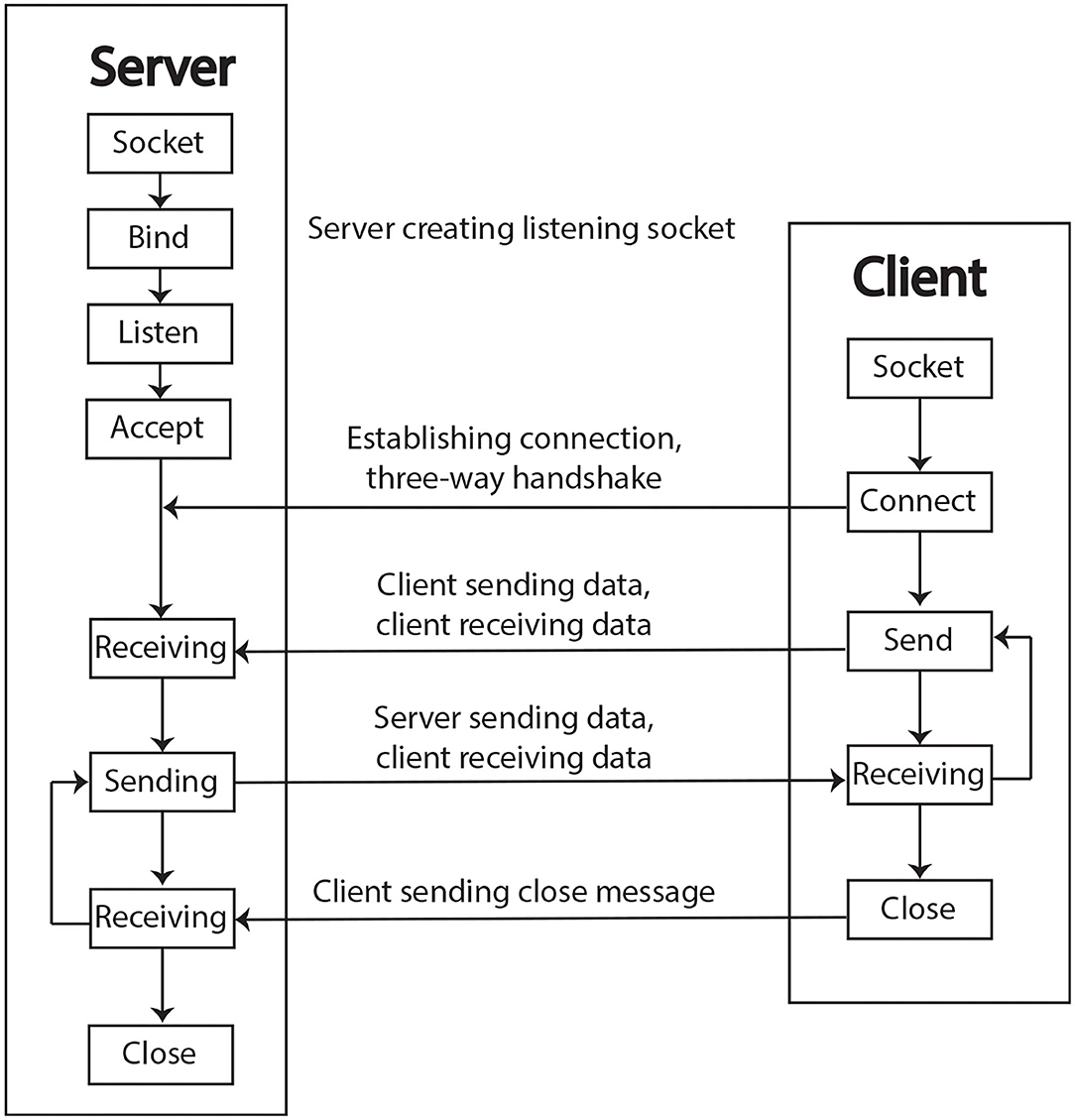

With the growth of technology for smart environments and interconnected devices relying on the Internet of Things (IoT), the Raspberry Pi can act as a “node” on a network to send and receive data. Python socket programming enables programs to send and receive data at any time over the internet or a local network (Python, 2022). Python's socket module provides a socket class: one socket listens on a particular port at an IP address, while the other reaches out to form a connection, following a client-server scheme.

The process to build socket objects in Python is as follows: import the socket library, create a socket object with the proper parameters, connect to an IP address and port (or a URL), send data, receive data, and finally close the connection. This process is depicted in the flow diagram in Figure 6. When a socket object is created, the default protocol is the Transmission Control Protocol (TCP), chosen for its reliability, as it ensures successful delivery of the data packets. The Internet Protocol (IP), in turn, identifies every device on the internet and allows data to be sent from one device to another. Nowadays, the TCP/IP model is the default method of communication on the internet. As a consequence, networking and sockets expand the possibilities for developing remote control applications.
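
The socket flow described above (create, connect, send, receive, close) can be sketched with Python's standard socket module. The example runs a one-shot TCP server in a background thread on the loopback address and sends it a wheelchair-style command; the function names, the “ACK” reply format, and the use of localhost are assumptions for illustration, not the protocol used by the Output Unit.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """Server side: accept one client, read its command, reply with an ACK."""
    conn, _addr = server.accept()
    with conn:
        command = conn.recv(1024)
        conn.sendall(b"ACK " + command)


def send_command(host: str, port: int, command: str) -> str:
    """Client side: create, connect, send, receive, close (TCP stream socket)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
        client.connect((host, port))
        client.sendall(command.encode("utf-8"))
        reply = client.recv(1024)
    return reply.decode("utf-8")


def demo(command: str = "forward") -> str:
    """Run a listening socket and a client in one process on localhost."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server,))
        worker.start()
        reply = send_command("127.0.0.1", port, command)
        worker.join()
    return reply


print(demo("forward"))
```

For real remote control, a message delimiter or length prefix would be needed, because a TCP `recv` call may return only part of what was sent.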

Figure 6. The block diagram of the socket flow connection taken from Python (2022).

Consequently, the OU offers a wide range of communication options for controlling smart home applications and networked devices, including those following recent IoT trends. The output functionality is defined by the code programmed in the open-source Arduino Software (IDE) or with the Raspberry Pi libraries available for Python. The GPIO pins and the Arduino UNO peripherals can be used to communicate with external devices such as computers, robots, electric appliances, home sensors, motors, alarms, and lights, as illustrated in Figure 7.

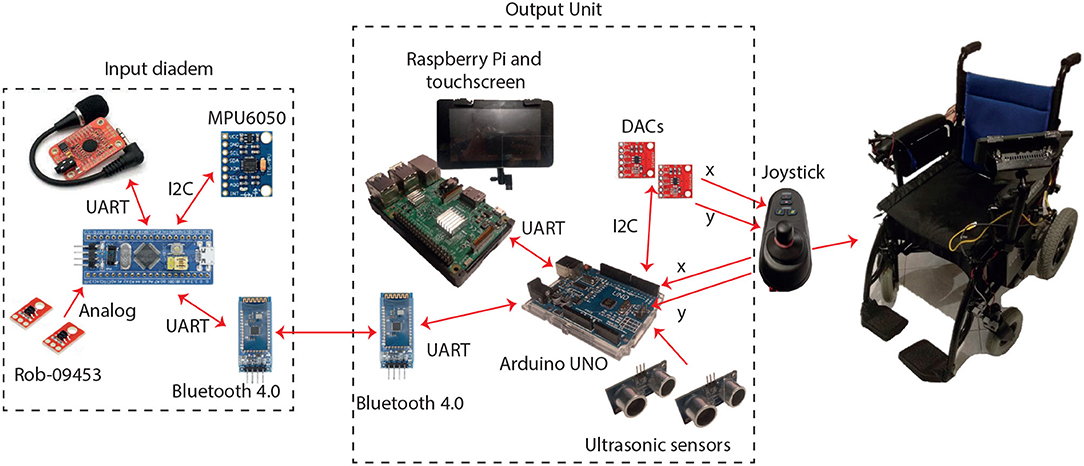

Commercial power wheelchairs incorporate a conventional joystick for steering; however, some people with severe disabilities find it difficult to use because of their physical limitations. Consequently, smart wheelchair research explores alternative interface technologies that are hands-free and non-invasive (Urdiales, 2012; Leaman and La, 2017). To address this issue, a commercial wheelchair was modified to incorporate the SP and the OU. This electric wheelchair is powered by two 24 V/20 Ah batteries, providing 8 h of autonomy under normal conditions, as indicated on the distributor's website (Powercar, 2022).

The default interface is a hand-operated joystick, which includes a 5 V DC USB-A output that is used to power the Raspberry Pi in the OU. The joystick also provides two analog voltages, from 0 to 5 V, to control the wheelchair movements; these are replaced by the outputs of two MCP4725 Digital-to-Analog Converters (DACs), which communicate with the Arduino via the I2C protocol. In addition, an anti-collision module with ultrasonic sensors was installed on the wheelchair: two sensors are placed on the front of the wheelchair to detect nearby objects within a 1.2-m range. The installed hardware and the communication protocols are shown in Figure 8.
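
Replacing a joystick voltage with a DAC output comes down to converting the desired voltage into a 12-bit MCP4725 code and sending it over I2C. The sketch below illustrates that arithmetic in Python (the actual firmware runs on the Arduino); it assumes the DAC is supplied from 5 V, since the MCP4725 uses its supply as the reference (Vout = Vdd·code/4096), and builds the two-byte “fast mode” write payload without performing any I2C transfer. Function names are hypothetical.

```python
def voltage_to_dac_code(volts: float, vdd: float = 5.0) -> int:
    """Convert a target output voltage to a 12-bit MCP4725 code.

    Vout = Vdd * code / 4096, so code = Vout * 4096 / Vdd, clamped to 0..4095.
    vdd = 5.0 V is an assumed supply/reference voltage.
    """
    code = round(volts * 4096 / vdd)
    return max(0, min(4095, code))


def fast_write_bytes(code: int) -> bytes:
    """Build the two data bytes of an MCP4725 fast-mode write.

    Byte 1: C2=C1=0 (fast mode), PD1=PD0=0 (normal operation), bits D11..D8.
    Byte 2: bits D7..D0.
    """
    if not 0 <= code <= 4095:
        raise ValueError("code must fit in 12 bits")
    return bytes([(code >> 8) & 0x0F, code & 0xFF])


neutral = voltage_to_dac_code(2.5)  # joystick centre position
print(neutral, fast_write_bytes(neutral).hex())
```

With a 5 V reference, the 2.5 V neutral joystick voltage maps to code 2048, and the full 5 V is clamped to the largest code, 4095.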

Figure 8. The standard power wheelchair and the components of the Sensing Platform and the Output Unit.

Figure 9 presents the printed diadem for the SP and its configuration for the hands-free interfaces. To successfully control the power wheelchair with the proposed system, several considerations were made as described next.

Figure 9. Different modules could be changed for the interface operation of the diadem. (A) Head interface, (B) Voice interface, (C) Eyes interface.

The speech module was trained to recognize eight intuitive voice commands for controlling the wheelchair: “stop,” “move,” “go,” “run,” “forward,” “backward,” “left,” and “right.” These commands are classified into “attention” and “orientation” categories, because the user might need either continuous or discrete movements. Specifically, an attention command must be followed by an orientation command: e.g., saying “move” + “right” turns the wheelchair right for 2 s, whereas “run” + “forward” produces a continuous forward movement until another command is received. The commands are summarized and described in Table 1.
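
The attention/orientation pairing can be sketched as a small decoder running on the Output Unit side (shown in Python for illustration, whereas the OU firmware runs on the Arduino). The 2 s duration for “move” and the continuous behavior of “run” come from the examples above; treating “stop” as an immediate command and ignoring unpaired orientation words are assumptions, and “go” is left out because its exact behavior is specified in Table 1. The class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Attention words and the movement duration they imply, in seconds.
# None means "continuous until the next command" (as for "run").
ATTENTION = {"move": 2.0, "run": None}
ORIENTATION = {"forward", "backward", "left", "right"}


@dataclass(frozen=True)
class Action:
    direction: str               # an orientation word, or "stop"
    duration_s: Optional[float]  # None = continuous


class VoiceCommandDecoder:
    """Pairs an attention word with the orientation word that follows it."""

    def __init__(self) -> None:
        self.pending: Optional[str] = None

    def feed(self, word: str) -> Optional[Action]:
        word = word.strip().lower()
        if word == "stop":                # assumed: stop acts immediately
            self.pending = None
            return Action("stop", None)
        if word in ATTENTION:             # remember the attention word
            self.pending = word
            return None
        if word in ORIENTATION and self.pending is not None:
            action = Action(word, ATTENTION[self.pending])
            self.pending = None           # each attention word is used once
            return action
        return None                       # unpaired or unknown words are ignored


decoder = VoiceCommandDecoder()
for spoken in ["move", "right", "run", "forward", "stop"]:
    print(spoken, "->", decoder.feed(spoken))
```

Requiring an attention word before every orientation word means that a misrecognized isolated direction word does not move the chair, which is one plausible safety motivation for the two-word scheme.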

Another alternative is to use two-axis head movements to control the wheelchair; however, because the MPU6050 is very sensitive, slight head movements are detected as changes in the inclination angles and may cause involuntary wheelchair movements. For safety, a cubic function was implemented to map the inclination angles to the wheelchair operating voltages, as presented in Figure 10. The cubic function was chosen because it has a nearly flat, quasi-linear zone in the middle of the curve; consequently, the wheelchair remains stationary in this middle “dead zone” and starts moving smoothly when the inclination angles reach the rising part of the curve. Users therefore have to tilt their heads beyond a threshold angle to start moving the wheelchair. The mathematical relations for mapping the inclination angles to analog voltages are presented next.

where θx and θy are the head inclination angles, from −90 to 90°; voltageX and voltageY are the control output voltages, between 0 and 5 V; m and n are slope factors for adjusting the flat zone of the function; r shifts the quasi-linear zone along the x-axis; s is a scale factor; and 2.5 V is the reference voltage. By changing these values, the movement response can be adjusted to the user's needs.
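
The dead-zone behavior of a cubic mapping can be illustrated with a simplified, hypothetical single-axis form, V = 2.5 + s·(k·(θ − r))³, clamped to the 0 to 5 V range. Here the gain k stands in for the slope factors m and n; this is an assumed form for illustration, not the authors' exact relation.

```python
def cubic_map(theta_deg: float, k: float = 1 / 30, r: float = 0.0, s: float = 2.5,
              v_ref: float = 2.5, v_min: float = 0.0, v_max: float = 5.0) -> float:
    """Map a head inclination angle (degrees) to a control voltage.

    Hypothetical form: v = v_ref + s * (k * (theta - r))**3, clamped to [v_min, v_max].
    k acts as a slope factor (smaller k widens the dead zone), r shifts the
    curve along the angle axis, s scales the output, v_ref = 2.5 V is neutral.
    """
    v = v_ref + s * (k * (theta_deg - r)) ** 3
    return min(max(v, v_min), v_max)


for angle in (0, 5, 10, 20, 30, 45):
    print(f"{angle:>3} deg -> {cubic_map(angle):.3f} V")
```

With these illustrative defaults, a 5° tilt moves the output only about 0.01 V away from neutral, while a 30° tilt reaches the 5 V limit, which reproduces the “small movements are ignored, deliberate tilts steer” behavior described above.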

Finally, Table 2 summarizes the operation of the head movement interface.

To configure this recognition module, a virtual instrument was used to capture samples from both QTR-1A sensors during four eye gestures: “open,” “close,” “left,” and “right.” The analog signal was sampled at 50 Hz over a 1.5-s window (75 samples per sensor). Filtering is another part of the acquisition process: after digitization, the signal passes through digital filters that remove the noise and the DC component. First, an Exponential Moving Average (EMA) is applied; it is a first-order infinite impulse response filter that smooths the signal without using much memory. This filter was implemented with the recursive exponential relation presented next:

where x(n) is the input value and y(n) is the filter output at time step n, y(n − 1) is the previous output, and w is the weighting factor.
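
A minimal sketch of the EMA smoothing step, assuming the common form y(n) = w·x(n) + (1 − w)·y(n − 1) with 0 < w ≤ 1: a larger w tracks the input more closely, a smaller w smooths more. Initializing the previous output with the first sample is also an assumption, made to avoid a start-up transient; the function name is hypothetical.

```python
from typing import Iterable, List


def ema_filter(samples: Iterable[float], w: float = 0.2) -> List[float]:
    """Exponential moving average: y(n) = w*x(n) + (1 - w)*y(n-1).

    y(-1) is initialised to the first sample (assumption), so the output
    starts at the input level instead of ramping up from zero.
    """
    if not 0.0 < w <= 1.0:
        raise ValueError("w must be in (0, 1]")
    out: List[float] = []
    y_prev = None
    for x in samples:
        if y_prev is None:
            y_prev = float(x)
        y = w * x + (1.0 - w) * y_prev
        out.append(y)
        y_prev = y
    return out


print(ema_filter([0, 10, 10, 10], w=0.5))  # [0.0, 5.0, 7.5, 8.75]
```

Only the previous output has to be stored, which is why this filter suits a small microcontroller.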

Additionally, a DC blocker filter was used. The DC blocker is a first-order Infinite Impulse Response (IIR) filter described by the following recursive equation:

where x(n) is the input value and y(n) is the filter output at time step n, x(n − 1) and y(n − 1) are the previous input and output values, and α determines the corner frequency.
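
A minimal sketch of the DC blocker, assuming the standard first-order form y(n) = x(n) − x(n − 1) + α·y(n − 1) with α slightly below 1. For α close to 1, the −3 dB corner frequency is approximately (1 − α)·fs/(2π), so at the 50 Hz sampling rate mentioned above, α = 0.995 would place it near 0.04 Hz; this α value is illustrative, not the one used in the firmware, and the function name is hypothetical.

```python
from typing import Iterable, List


def dc_blocker(samples: Iterable[float], alpha: float = 0.995) -> List[float]:
    """First-order DC blocker: y(n) = x(n) - x(n-1) + alpha*y(n-1).

    x(-1) is initialised to the first sample and y(-1) to 0 (assumption),
    so a constant input produces zero output from the start.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be in (0, 1)")
    out: List[float] = []
    x_prev = None
    y_prev = 0.0
    for x in samples:
        if x_prev is None:
            x_prev = float(x)
        y = x - x_prev + alpha * y_prev
        out.append(y)
        x_prev, y_prev = x, y
    return out


print(dc_blocker([0, 1, 1, 1], alpha=0.5))  # [0.0, 1.0, 0.5, 0.25]
```

The differencing term removes any constant offset (such as ambient infrared level), while the feedback term keeps slow eye movements from being attenuated too aggressively.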

To create the database, the acquisition process was repeated 200 times to capture signals from the user making the left, right, open, and close eye gestures, with 50 repetitions for each gesture. Figure 11 shows representative signals for the four eye gestures.

The collected data were saved as text files and then used as the training database for the ANN. The implemented ANN has a two-layer architecture with 10 neurons in the first layer and four neurons in the second layer. The architecture was selected heuristically, because a small topology that met the classification requirements was needed. The MATLAB Neural Network Pattern Recognition app (Mathworks, 2022) was used to create and train the ANN, and functions for both the ANN and the filters were generated for deployment on the Blue Pill. Finally, the embedded system uses a sequence of recognized eye gestures to control the wheelchair movements, as indicated in Table 3.
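
Inference with such a network reduces to two small matrix-vector products. The sketch below shows a forward pass for a two-layer pattern-recognition network with 10 hidden neurons and four outputs; it assumes a hyperbolic-tangent hidden layer and a softmax output layer (the usual defaults of MATLAB pattern-recognition networks) and omits the input normalization MATLAB normally applies. The weights, input length, and gesture order are hypothetical placeholders for the trained values.

```python
import math
from typing import List, Sequence

# Hypothetical class order; the real order depends on how targets were encoded.
GESTURES = ("open", "close", "left", "right")


def forward(x: Sequence[float],
            w1: Sequence[Sequence[float]], b1: Sequence[float],
            w2: Sequence[Sequence[float]], b2: Sequence[float]) -> List[float]:
    """Two-layer forward pass: tanh hidden layer, softmax output layer."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(w2, b2)]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(x: Sequence[float],
             w1: Sequence[Sequence[float]], b1: Sequence[float],
             w2: Sequence[Sequence[float]], b2: Sequence[float]) -> str:
    """Return the gesture label with the highest output probability."""
    probs = forward(x, w1, b1, w2, b2)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GESTURES[best]
```

On the Blue Pill, the same computation would be written in C with the trained weight matrices (10 × inputs and 4 × 10) stored as constant arrays.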

The Wheelchair Skills Test (WST) (Dalhousie University, 2022) is a repeatable protocol for evaluating a well-defined set of tasks relevant to all wheelchair users. Specifically, 15 skills from WST v. 4.3.1 were selected and tested in public spaces at Tecnológico de Monterrey Campus Ciudad de Mexico (ITESM-CCM) using the head movement and voice recognition interfaces. The selected skills are listed in Table 4. The eye-gesture interface could not be evaluated, because environmental conditions varied considerably across the different test scenarios.

As described in the WST protocol, each skill is scored as “2” (pass) if the task was completed safely and without difficulty, “1” (pass with difficulty) if the task was completed with collisions, excessive time, or extra effort, and “0” (fail) if the task was aborted because it was considered unsafe. The Total Capacity is obtained by dividing the sum of the individual scores by twice the number of skills. For this case, the following equation was used:

$$\text{Total Capacity} = \frac{\sum_{i=1}^{15} s_i}{2 \times 15} \times 100\%$$

where $s_i$ is the score of skill $i$.
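
The Total Capacity formula can be checked with a short calculation. The score vector below is hypothetical (it is not taken from Table 5); it only shows that, with 15 skills, the maximum is 30 points, so a total of 28 points corresponds to 93.3%.

```python
from typing import Sequence


def total_capacity(scores: Sequence[int]) -> float:
    """WST-style Total Capacity in percent: sum of scores / (2 * number of skills)."""
    if not scores:
        raise ValueError("at least one skill score is required")
    if any(s not in (0, 1, 2) for s in scores):
        raise ValueError("each skill is scored 0 (fail), 1 (pass with difficulty) or 2 (pass)")
    return 100.0 * sum(scores) / (2 * len(scores))


# Hypothetical example: 13 skills passed (2) and 2 passed with difficulty (1)
example = [2] * 13 + [1] * 2
print(f"{total_capacity(example):.1f}%")  # 28 / 30 points
```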

Two volunteers with severe disabilities were recruited to validate the interfaces. Volunteer A is a 45-year-old male with spastic paraplegia caused by a spinal cord injury; he has no upper limbs, but he is able to speak and move his head without any problem. Volunteer B is a 19-year-old male with quadriplegia who is able to speak clearly, but his head movements are somewhat restricted because of damage to his spine. Both volunteers read the informed consent and authorized their participation. The trials were approved by the Ethics Committee of the Tecnológico de Monterrey under registration number 13CI19039138.

The obstacles and scenarios described in the WST were adapted to the available conditions on the Campus, as presented in Figure 12. In addition, for safety, foam materials were used to recreate some test scenarios such as corridors and walls. Over 2 days, 3-h sessions were planned for each participant to steer the wheelchair using the SP. The first step was to become familiar with the SP, so each volunteer experimented with the interfaces in an open space. After that, the participant was asked to complete the 15 proposed skills in a single attempt.

Figure 12. Available scenarios for the WST. (A) Turn 90°, (B) get through the door, (C) avoid obstacles, (D) ascend/descend the small ramp, (E) ascend/descend the curved ramp, (F) roll across a side slope, (G) roll 100 m, (H) roll forward/backward, (I) turn 180°/maneuver sideways.

Using the head movement interface, both volunteers completed most of the tasks without any problems. The Total Skills Capacity was 93.3% for volunteer A and 86.6% for volunteer B. Using the voice command interface, both volunteers obtained similar results, as they experienced the same limitations in steering the wheelchair: the Total Skills Capacity was 63.3% for volunteer A and 66.6% for volunteer B. The individual scores are presented in Table 5.

Volunteers scored total capacities of 93.3 and 86.6% by using the head control; meanwhile, 63.3 and 66.6% were scored with the voice control. According to the results, both volunteers achieved well most of the skills by using head control. On the contrary, by using the voice module they experimented with problems to complete some tasks. The obtained scores for the head control test are closer to 100%; however, both volunteers experienced more problems commanding with the voice control. For comparison, the average capacity obtained by wheelchair users in Boucher et al. (2013) was 100 and 94.8%, by using a standard joystick and the vocal interface, respectively. The low scores shown in Table 5 indicate problems with controlling the wheelchair by voice commands; however, the volunteers achieved to complete most of the skills by themselves, not by using an automated system as indicated in Boucher et al. (2013). Indeed, automation is very helpful, but volunteers expressed satisfaction to be in charge and completing the task without any help from the system. In particular, tetraplegia has imposed a wide range of limitations and restrictions for Volunteer B, but he was able to increase their independent mobility using the SP. As indicated in Rigby et al. (2011), a key goal is to enable the patient's autonomy in activities that provide meaning purpose and enjoyment in their daily lives. The Volunteers expressed considerable expectations related to the use of alternative interfaces in their daily activities. Furthermore, the scores by using the head movements interface are promising and represent a high-level of control and independence for tetraplegics or persons with atrophied limbs. Volunteers agreed that the proposed input was easy to understand but training will be important for being more confident with the technology. Besides, it is important to consider parameter adjustments according to the user's needs, speed, and rhythm. 
In particular, volunteer A proposed modifying the head movements interface because he felt more comfortable tilting his head down instead of up, while volunteer B preferred the opposite configuration.
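The opposite preferences of the two volunteers suggest that the tilt-to-command mapping should be a per-user parameter. The sketch below is hypothetical: the `HeadProfile` structure, the sign convention for pitch and roll (assumed to be angles estimated from the accelerometer), the dead-zone threshold, and the command names are illustrative assumptions, not the platform's firmware.

```python
from dataclasses import dataclass


@dataclass
class HeadProfile:
    """Hypothetical per-user settings for the head movements interface."""
    forward_on_tilt_down: bool = False  # True: tilting the head down means "forward"
    dead_zone_deg: float = 10.0         # tilts smaller than this are ignored


def command_from_tilt(pitch_deg: float, roll_deg: float, profile: HeadProfile) -> str:
    """Map head pitch/roll angles (degrees) to a discrete wheelchair command.

    Assumed convention: positive pitch = head tilted up, positive roll = tilted right.
    """
    # Flip pitch so that the user's preferred tilt direction always means forward.
    pitch = -pitch_deg if profile.forward_on_tilt_down else pitch_deg
    # Small movements inside the dead zone produce no motion.
    if max(abs(pitch), abs(roll_deg)) < profile.dead_zone_deg:
        return "STOP"
    # Otherwise the dominant axis decides the command.
    if abs(pitch) >= abs(roll_deg):
        return "FORWARD" if pitch > 0 else "BACKWARD"
    return "RIGHT" if roll_deg > 0 else "LEFT"


if __name__ == "__main__":
    user_a = HeadProfile(forward_on_tilt_down=True)   # prefers tilting down
    user_b = HeadProfile(forward_on_tilt_down=False)  # prefers tilting up
    print(command_from_tilt(-20.0, 0.0, user_a))  # FORWARD
    print(command_from_tilt(-20.0, 0.0, user_b))  # BACKWARD
```

Keeping the preference and the dead zone in a profile, rather than hard-coding them, is one way to accommodate the parameter adjustments for each user's needs, speed, and rhythm mentioned above.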

The WST helped to collect valuable information about the interaction between users and interfaces, and it revealed opportunity areas for enhancing the proposed system. The WST is an important standard for training and assessing wheelchair skills (Bigras et al., 2020), and applying it with end users and specialized rehabilitation counselors helps to improve the functionality of the sensing platform. Although the scenarios were adapted to the campus conditions, the test was challenging for the volunteers, and the scores obtained add to the growing positive evidence for the practical application of the sensing platform. This evaluation also contributes to validating the end users' persistence in enhancing their quality of life (Myburg et al., 2017).

The voice control method presented problems because the module was unable to recognize commands in time. In addition, it is sometimes difficult for users to issue accurate, clear vocal instructions while the wheelchair is moving, as they feel under pressure. The voice control is also limited to a few instructions. To be more effective, more commands should be trained and configured, and new functions should be implemented in the Output Unit. Meanwhile, special training is recommended for using the head as an input method in order to develop more efficient control; nevertheless, this input method proved very intuitive for the users. The eye tracking interface is still being characterized before it can be evaluated by end users, with attention to ambient lighting, the emitter-detector response, and the continuous use of infrared, as eyes tend to become dry and fatigued (Singh and Singh, 2012). It will be important to extend the testing protocol to the eye gestures interface to collect more information from end users for better customization.

Despite the limited number of participants, target users were enrolled to validate the sensing platform in a power mobility application. In fact, both volunteers were non-wheelchair users with no experience steering a power wheelchair and with considerable difficulty controlling one by conventional methods. Conducting trials with participants with severe disabilities was challenging, as they require several precautions to keep them safe and can attend fewer work sessions than healthy subjects. Nevertheless, including end users in the study has many advantages, even if the sample is small. For this reason, the results obtained should be treated as guidance for future studies. Furthermore, testing and validation with end users are lacking in similar proposals (Machangpa and Chingtham, 2018; Silva et al., 2018; Umchid et al., 2018).

Finally, the Raspberry Pi included in this design is limited to receiving data and showing it on the touchscreen. There are further possibilities for connecting to other applications, but at present the voice module depends on libraries available for the Arduino. Therefore, a new version is being developed that embeds the whole system in the Raspberry Pi, including outputs for new applications.

As the proposed design is highly modular, the implementation could be transferred to other assistive technologies, such as a robotic arm, or used to control electronic devices in the user's environment. As indicated, customization is very important; however, it is fundamental to consider other conditions of the patient, such as mental capacities, logic and verbal skills, emotions (tolerance, frustration), and physical condition (strength, head and neck mobility). A key goal is to use the same platform for different applications: once the user feels fully accustomed to the sensing platform for steering the wheelchair, the next step is to adapt the same interface to command other devices, such as home appliances. Therefore, connectivity with other services and systems becomes a fundamental topic for hands-free interfaces. Further, there are new expectations for eye tracking applications (Trabulsi et al., 2021) and new trends, e.g., the use of the Facial Action Coding System, in combination with eye tracking, for emotion recognition (Clark et al., 2020).
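The modularity argument, that one set of hands-free inputs can drive different outputs, can be illustrated with a small dispatch layer that decouples input modules from output targets. This is a hypothetical sketch: the `OutputTarget`, `Wheelchair`, `HomeAppliance`, and `Dispatcher` classes, the command strings, and the run-time target switching are assumptions for illustration, not a description of the platform's Output Unit.

```python
from typing import Protocol


class OutputTarget(Protocol):
    """Any device the platform could command (hypothetical interface)."""

    def handle(self, command: str) -> str: ...


class Wheelchair:
    def handle(self, command: str) -> str:
        return f"wheelchair:{command}"


class HomeAppliance:
    def __init__(self, name: str) -> None:
        self.name = name

    def handle(self, command: str) -> str:
        # Hypothetical reuse of the driving commands as on/off switches.
        action = {"FORWARD": "ON", "BACKWARD": "OFF"}.get(command, "IGNORE")
        return f"{self.name}:{action}"


class Dispatcher:
    """Routes commands from any input module (voice, head, eyes) to the active target."""

    def __init__(self, target: OutputTarget) -> None:
        self.target = target
        self.log: list[tuple[str, str]] = []  # (input source, command) history

    def switch_target(self, target: OutputTarget) -> None:
        self.target = target

    def on_command(self, source: str, command: str) -> str:
        self.log.append((source, command))
        return self.target.handle(command)


if __name__ == "__main__":
    d = Dispatcher(Wheelchair())
    print(d.on_command("head", "FORWARD"))   # wheelchair:FORWARD
    d.switch_target(HomeAppliance("lamp"))
    print(d.on_command("voice", "FORWARD"))  # lamp:ON
```

Because the input modules only emit command strings, adding a new output device in this sketch means adding one class with a `handle` method, without touching the voice, head, or eye interfaces.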

More interfaces should be included in the sensing platform to accommodate other users. The proposed system supports patients with remaining abilities such as emitting vocal commands or sounds and controlling head or eye movements. Moreover, as long as the patient retains some ability to consciously execute basic tasks, there is an opportunity to adapt a sensing input to satisfy basic needs, such as drinking water (Hochberg et al., 2012) or typing messages (Peters et al., 2020). Because some diseases are progressive, adapting systems to the patient's evolving needs is a challenge. However, there are opportunities even for patients with disorders of consciousness, as interfaces could be designed to differentiate minimally conscious states from vegetative states (Lech et al., 2019).

A modular system was presented for sensing multiple inputs to command a smart wheelchair. The implemented alternative inputs allowed users with severe disabilities to regain independence during the WST. The tests with end users also provided important feedback for customization and revealed other opportunity areas for the system. Moreover, the hardware employed offers multiple possibilities for connectivity to other electronic devices and for exploring smart environments.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

The studies involving human participants were reviewed and approved by the Ethics Committee of the Tecnológico de Monterrey under registration number 13CI19039138. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

MR, PP, and AM conceived the idea of the smart wheelchair. MR and PP carried out the testing with volunteers. MR wrote the first draft of the manuscript. PP and AM wrote other sections of the manuscript. All authors reviewed the submitted version of the manuscript.

This study was supported by a scholarship award from Tecnológico de Monterrey Campus Ciudad de México and a scholarship for living expenses from CONACYT.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Akanto, T. R., Ahmed, F., and Mollah, M. N. (2020). “A simplistic approach to design a prototype of smart home for the activation of home appliances based on Electrooculography (EOG),” in 2020 2nd International Conference on Advanced Information and Communication Technology (Dhaka: ICAICT), 235–239.

Anh, M. N., and Bien, D. X. (2021). Voice recognition and inverse kinematics control for a redundant manipulator based on a multilayer artificial intelligence network. J. Robot. 2021:e5805232. doi: 10.1155/2021/5805232

Anwer, S., Waris, A., Sultan, H., Butt, S. I., Zafar, M. H., Sarwar, M., et al. (2020). Eye and voice-controlled human machine interface system for wheelchairs using image gradient approach. Sensors 20:5510. doi: 10.3390/s20195510

Arduino (2022). UNO R3 ∣ Arduino Documentation. Available online at: https://docs.arduino.cc/hardware/uno-rev3 (accessed January 2, 2022)

Bigras, C., Owonuwa, D. D., Miller, W. C., and Archambault, P. S. (2020). A scoping review of powered wheelchair driving tasks and performance-based outcomes. Disabil. Rehabil. Assist. Technol. 15, 76–91. doi: 10.1080/17483107.2018.1527957

Boucher, P., Atrash, A., Kelouwani, S., Honoré, W., Nguyen, H., Villemure, J., et al. (2013). Design and validation of an intelligent wheelchair towards a clinically-functional outcome. J. NeuroEng. Rehabil. 10:58. doi: 10.1186/1743-0003-10-58

Brose, S. W., Weber, D. J., Salatin, B. A., Grindle, G. G., Wang, H., Vazquez, J. J., et al. (2010). The role of assistive robotics in the lives of persons with disability. Am. J. Phys. Med. Rehabil. 89, 509–521. doi: 10.1097/PHM.0b013e3181cf569b

Chang, C.-M., Lin, C.-S., Chen, W.-C., Chen, C.-T., and Hsu, Y.-L. (2020). Development and application of a human-machine interface using head control and flexible numeric tables for the severely disabled. Appl. Sci. 10:7005. doi: 10.3390/app10197005

Clark, E. A., Kessinger, J., Duncan, S. E., Bell, M. A., Lahne, J., Gallagher, D. L., et al. (2020). The facial action coding system for characterization of human affective response to consumer product-based stimuli: a systematic review. Front. Psychol. 11:920. doi: 10.3389/fpsyg.2020.00920

Cowan, D. (2020). “Electronic assistive technology,” in Clinical Engineering, 2nd Edn, eds A. Taktak, P. S. Ganney, D. Long, and R. G. Axell (Birmingham, AL: Academic Press), 437–471. doi: 10.1016/B978-0-08-102694-6

Cowan, R. E., Fregly, B. J., Boninger, M. L., Chan, L., Rodgers, M. M., and Reinkensmeyer, D. J. (2012). Recent trends in assistive technology for mobility. J. NeuroEng. Rehabil. 9:20. doi: 10.1186/1743-0003-9-20

Dalhousie University. (2022). Wheelchair Skills Program (WSP). Available online at: https://wheelchairskillsprogram.ca/en/ (accessed April 6, 2022).

Elechouse (2022). Speak Recognition, Voice Recognition Module V3 : Elechouse, Arduino Play House. Available online at: https://www.elechouse.com (accessed January 31, 2022).

Fukuma, R., Yanagisawa, T., Yokoi, H., Hirata, M., Yoshimine, T., Saitoh, Y., et al. (2018). Training in use of brain-machine interface-controlled robotic hand improves accuracy decoding two types of hand movements. Front. Neurosci. 12:478. doi: 10.3389/fnins.2018.00478

Hochberg, L. R., Bacher, D., Jarosiewicz, B., Masse, N. Y., Simeral, J. D., Vogel, J., et al. (2012). Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485, 372–375. doi: 10.1038/nature11076

Hosni, S. M., Shedeed, H. A., Mabrouk, M. S., and Tolba, M. F. (2019). EEG-EOG based virtual keyboard: toward hybrid brain computer interface. Neuroinformatics 17, 323–341. doi: 10.1007/s12021-018-9402-0

Karpov, A. A., and Yusupov, R. M. (2018). Multimodal interfaces of human-computer interaction. Herald Russ. Acad. Sci. 88, 67–74. doi: 10.1134/S1019331618010094

Klaib, A. F., Alsrehin, N. O., Melhem, W. Y., Bashtawi, H. O., and Magableh, A. A. (2021). Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies. Expert Syst. Appl. 166:114037. doi: 10.1016/j.eswa.2020.114037

Leaman, J., and La, H. M. (2017). A comprehensive review of smart wheelchairs: past, present, and future. IEEE Trans. Hum. Mach. Syst. 47, 486–499. doi: 10.1109/THMS.2017.2706727

Lech, M., Kucewicz, M. T., and Czyżewski, A. (2019). Human computer interface for tracking eye movements improves assessment and diagnosis of patients with acquired brain injuries. Front. Neurol. 10:6. doi: 10.3389/fneur.2019.00006

Lee, K.-F., Chen, Y.-L., Yu, C.-W., Jen, C.-L., Chin, K.-Y., Hung, C.-W., et al. (2021). Eye-wearable head-mounted tracking and gaze estimation interactive machine system for human-machine interface. J. Low Freq. Noise Vibrat. Active Control 40, 18–38. doi: 10.1177/1461348419875047

Little, R. (2010). Electronic aids for daily living. Phys. Med. Rehabil. Clin. North Am. 21, 33–42. doi: 10.1016/j.pmr.2009.07.008

Loukatos, D., Fragkos, A., and Arvanitis, K. G. (2021). “Exploiting voice recognition techniques to provide farm and greenhouse monitoring for elderly or disabled farmers, over Wi-Fi and LoRa interfaces,” in Bio-Economy and Agri-Production, eds D. Bochtis, C. Achillas, G. Banias, and M. Lampridi (Athens: Academic Press), 247–263. doi: 10.1016/B978-0-12-819774-5.00015-1

Lovato, S. B., and Piper, A. M. (2019). Young children and voice search: what we know from human-computer interaction research. Front. Psychol. 10:8. doi: 10.3389/fpsyg.2019.00008

Lu, Z., and Zhou, P. (2019). Hands-free human-computer interface based on facial myoelectric pattern recognition. Front. Neurol. 10:444. doi: 10.3389/fneur.2019.00444

Machangpa, J. W., and Chingtham, T. S. (2018). Head gesture controlled wheelchair for quadriplegic patients. Proc. Comput. Sci. 132, 342–351. doi: 10.1016/j.procs.2018.05.189

Martins, J. M. S., Rodrigues, J. M. F., and Martins, J. A. C. (2015). Low-cost natural interface based on head movements. Proc. Comput. Sci. 67, 312–321. doi: 10.1016/j.procs.2015.09.275

Mathworks (2022). Neural Net Pattern Recognition—MATLAB—MathWorks América Latina. Available online at: https://la.mathworks.com/help/deeplearning/ref/neuralnetpatternrecognition-app.html (accessed April 4, 2022).

Milanizadeh, S., and Safaie, J. (2020). EOG-based HCI system for quadcopter navigation. IEEE Trans. Instrum. Measure. 69, 8992–8999. doi: 10.1109/TIM.2020.3001411

Molleapaza-Huanaco, J., Charca-Morocco, H., Juarez-Chavez, B., Equiño-Quispe, R., Talavera-Suarez, J., and Mayhua-López, E. (2020). “IoT platform based on EOG to monitor and control a smart home environment for patients with motor disabilities,” in 2020 13th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (Chengdu: CISP-BMEI), 784–789.

Myburg, M., Allan, E., Nalder, E., Schuurs, S., and Amsters, D. (2017). Environmental control systems—the experiences of people with spinal cord injury and the implications for prescribers. Disabil. Rehabil. Assist. Technol. 12, 128–136. doi: 10.3109/17483107.2015.1099748

Papadakis Ktistakis, I., and Bourbakis, N. (2018). “A multimodal human-machine interaction scheme for an intelligent robotic nurse,” in 2018 IEEE 30th International Conference on Tools With Artificial Intelligence (Volos: IEEE), 749–756.

Pathirana, P., Senarath, S., Meedeniya, D., and Jayarathna, S. (2022). Eye gaze estimation: a survey on deep learning-based approaches. Expert Syst. Appl. 199:116894. doi: 10.1016/j.eswa.2022.116894

Peters, B., Bedrick, S., Dudy, S., Eddy, B., Higger, M., Kinsella, M., et al. (2020). SSVEP BCI and eye tracking use by individuals with late-stage ALS and visual impairments. Front. Hum. Neurosci. 14:595890. doi: 10.3389/fnhum.2020.595890

Powercar (2022). Silla de Baterías Powerchairj Powercar. Available online at: https://www.powercar.com.mx/silla-de-baterias (accessed April 4, 2022).

Real Python (2022). Socket Programming in Python (Guide)—Real Python. Available online at: https://realpython.com/pythonsockets/ (accessed April 4, 2022).

Rigby, P., Ryan, S. E., and Campbell, K. A. (2011). Electronic aids to daily living and quality of life for persons with tetraplegia. Disabil. Rehabil. Assist. Technol. 6, 260–267. doi: 10.3109/17483107.2010.522678

Sarkar, M., Haider, M. Z., Chowdhury, D., and Rabbi, G. (2016). “An Android based human computer interactive system with motion recognition and voice command activation,” in 2016 5th International Conference on Informatics, Electronics and Vision (Dhaka), 170–175.

Silva, Y. M. L. R., Simões, W. C. S. S., Naves, E. L. M., Bastos Filho, T. F., and De Lucena, V. F. (2018). Teleoperation training environment for new users of electric powered wheelchairs based on multiple driving methods. IEEE Access 6, 55099–55111.

Singh, H., and Singh, J. (2012). Human eye tracking and related issues: a review. Int. J. Sci. Res. Publ. 2, 1–9. Available online at: http://www.ijsrp.org/research-paper-0912.php?rp=P09146

Song, Y., Cai, S., Yang, L., Li, G., Wu, W., and Xie, L. (2020). A practical EEG-based human-machine interface to online control an upper-limb assist robot. Front. Neurorobot. 14:32. doi: 10.3389/fnbot.2020.00032

STMicroelectronics (2022). STM32F103C6 - Mainstream Performance Line, Arm Cortex-M3 MCU With 32 Kbytes of Flash memory, 72 MHz CPU, Motor Control, USB and CAN. Available online at: https://www.st.com/en/microcontrollers-microprocessors/stm32f103c6.html (accessed April 1, 2022).

Šumak, B., Špindler, M., Debeljak, M., Heričko, M., and Pušnik, M. (2019). An empirical evaluation of a hands-free computer interaction for users with motor disabilities. J. Biomed. Inform. 96:103249. doi: 10.1016/j.jbi.2019.103249

Trabulsi, J., Norouzi, K., Suurmets, S., Storm, M., and Ramsøy, T. Z. (2021). Optimizing fixation filters for eye-tracking on small screens. Front. Neurosci. 15:578439. doi: 10.3389/fnins.2021.578439

Umchid, S., Limhaprasert, P., Chumsoongnern, S., Petthong, T., and Leeudomwong, T. (2018). “Voice controlled automatic wheel chair,” in 2018 11th Biomedical Engineering International Conference (Chiang Mai), 1–5. doi: 10.1109/BMEiCON.2018.8609955

Urdiales, C. (2012). “A Dummy's guide to assistive navigation devices,” in Collaborative Assistive Robot for Mobility Enhancement (CARMEN): The Bare Necessities: Assisted Wheelchair Navigation and Beyond, ed C. Urdiales (Berlin; Heidelberg: Springer), 19–39.

Woo, S., Lee, J., Kim, H., Chun, S., Lee, D., Gwon, D., et al. (2021). An open source-based BCI application for virtual world tour and its usability evaluation. Front. Hum. Neurosci. 15:647839. doi: 10.3389/fnhum.2021.647839

Zhang, J., Wang, B., Zhang, C., Xiao, Y., and Wang, M. Y. (2019). An EEG/EMG/EOG-based multimodal human-machine interface to real-time control of a soft robot hand. Front. Neurorobot. 13:7. doi: 10.3389/fnbot.2019.00007

Keywords: human-machine interface, speech control, head movements, eyes gestures, assistive technology, disabled people, multi platform

Citation: Rojas M, Ponce P and Molina A (2022) Development of a Sensing Platform Based on Hands-Free Interfaces for Controlling Electronic Devices. Front. Hum. Neurosci. 16:867377. doi: 10.3389/fnhum.2022.867377

Received: 01 February 2022; Accepted: 04 May 2022;

Published: 10 June 2022.

Edited by:

Hiram Ponce, Universidad Panamericana, Mexico

Reviewed by:

Sadik Kamel Gharghan, Middle Technical University, Iraq

Copyright © 2022 Rojas, Ponce and Molina. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mario Rojas, mario.rojas@tec.mx
