
MINI REVIEW article

Front. Hum. Neurosci., 27 January 2022

Sec. Cognitive Neuroscience

Volume 16 - 2022 | https://doi.org/10.3389/fnhum.2022.813684

This article is part of the Research Topic “Shared responses and individual differences in the human brain during naturalistic stimulations”.

Movies and narratives are increasingly utilized as stimuli in functional magnetic resonance imaging (fMRI), magnetoencephalography (MEG), and electroencephalography (EEG) studies. Emotional reactions of subjects, what they pay attention to, what they memorize, and their cognitive interpretations are all examples of inner experiences that can differ between subjects while they watch movies or listen to narratives inside the scanner. Here, we review literature indicating that behavioral measures of inner experiences play an integral role in this new research paradigm by guiding neuroimaging analysis. We review behavioral methods that have been developed to sample inner experiences while subjects watch movies or listen to narratives. We also review approaches that allow for joint analyses of the behaviorally sampled inner experiences and neuroimaging data. We suggest that building neurophenomenological frameworks holds potential for elucidating the interrelationships between inner experiences and their neural underpinnings. Finally, we tentatively suggest that recent developments in machine learning approaches may pave the way for inferring different classes of inner experiences directly from neuroimaging data, thus potentially complementing behavioral self-reports.

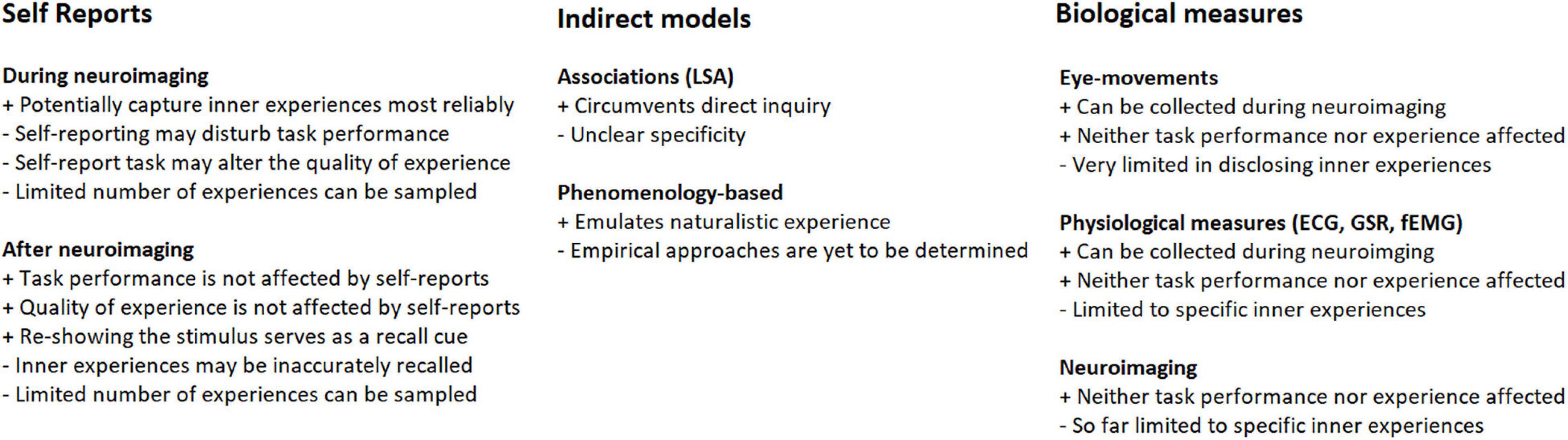

Movies and narratives are increasingly used as stimuli in functional magnetic resonance imaging (fMRI), electroencephalography (EEG), and magnetoencephalography (MEG) studies (Bartels and Zeki, 2004; Hasson et al., 2004; Dmochowski et al., 2012; Lankinen et al., 2014) (for a recent review, see Jaaskelainen et al., 2021). Such media-based stimuli are especially advantageous in that they allow for elicitation of a range of ecologically valid emotional and cognitive states in experimental subjects that would be difficult to achieve using traditional neuroimaging paradigms. This makes it possible to study the neural mechanisms underlying these states, one of the key questions in cognitive neuroscience. The elicitation of genuine, strong emotions is one good example of where movies and narratives are more effective than other types of stimuli (Westermann et al., 1996).

It is, however, essential to take into account inter-subject variability in how media-based stimuli are experienced. For example, a movie clip that evokes positive emotions in one subject can be experienced negatively by another. Similarly, the cognitive states and memories evoked by a narrative can differ substantially between subjects. Inter-subject variability in the elicited emotional and cognitive states can be utilized in the analysis of their neural basis. For example, if mental imagery is elicited in only some subjects while they listen to an audiobook, and if only those subjects exhibit activity in visual cortical areas, one can infer that visual cortical areas are involved in mental imagery.

It is worth mentioning that throughout the history of experimental psychology, two competing schools of thought have disagreed over whether inner experiences can be consciously and reliably accessed by experimental subjects. For a recent account of this debate, and for arguments in favor of the validity of self-reports of subjective experience, see Hurlburt et al. (2017).

A host of methods have been developed to assess variability in inner experiences while subjects watch movies or listen to narratives, and to use this variability to guide neuroimaging data analyses (see Figure 1). Here, we review some of the most important of these approaches.

Figure 1. Summary illustration of the different types of approaches that are available for sampling inner experiences. Potential strengths and limitations are indicated with + and – signs.

Post-experiment questionnaires can be used to quantify which aspects of movies or narratives subjects memorized during neuroimaging. They provide a good example of how to quantify variability in how movies or narratives are experienced, and the information they provide can then be used to guide neuroimaging data analyses. In one study, higher inter-subject correlation (ISC) of hippocampal hemodynamic activity, determined by calculating voxel-wise correlations between all pairs of subjects (Hasson et al., 2004; Kauppi et al., 2010; Pajula et al., 2012), was associated with better memory for movie contents 3 weeks later (Hasson et al., 2008). In another study, ISC of EEG activity correlated with subsequent memory for movie clip content (Cohen and Parra, 2016). In a third example of this approach, subjects watched emotionally aversive vs. neutral movie clips during positron emission tomography; their memory for events in the clips 3 weeks after the scan correlated with activity levels in the amygdala and orbitofrontal areas (Cahill et al., 1996). Assessing what subjects have memorized of a stimulus is straightforward with post-neuroimaging questionnaires or recounts. Assessing how subjects emotionally experienced media-based stimuli during neuroimaging is trickier; we review these approaches below.
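
The pairwise ISC computation mentioned above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming preprocessed data already arranged as a hypothetical `(n_subjects, n_timepoints, n_voxels)` array; it omits the statistical testing (e.g., permutation-based null distributions) that typically accompanies published ISC analyses.

```python
import itertools

import numpy as np


def pairwise_isc(data):
    """Voxel-wise inter-subject correlation (ISC) averaged over all subject pairs.

    data: array of shape (n_subjects, n_timepoints, n_voxels); this layout is an
    assumption of the sketch. Returns an array of shape (n_voxels,).
    """
    n_subj = data.shape[0]
    # z-score each subject's voxel time series over time; the mean of the
    # element-wise product of two z-scored series equals their Pearson r
    z = (data - data.mean(axis=1, keepdims=True)) / data.std(axis=1, keepdims=True)
    pair_r = [
        (z[i] * z[j]).mean(axis=0)  # Pearson r per voxel for subjects i and j
        for i, j in itertools.combinations(range(n_subj), 2)
    ]
    return np.mean(pair_r, axis=0)


# Synthetic example: voxel 0 carries a shared, stimulus-locked signal; voxel 1 is noise
rng = np.random.default_rng(0)
shared = rng.standard_normal(200)
data = rng.standard_normal((5, 200, 2))
data[:, :, 0] += 2 * shared
isc = pairwise_isc(data)
print(isc)  # voxel 0 should show a much higher ISC than voxel 1
```

Per-subject ISC values (e.g., each subject's mean correlation with the others) could then be related to that subject's questionnaire-based memory score.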

Movies and narratives are a powerful tool for neuroimaging studies of emotions because of their capability to induce robust emotions. Since emotional experiences can be highly variable across individual subjects, we argue that it is essential to ask subjects about their experiences to inform the neuroimaging data analysis. The simplest approach is to ask the subjects afterward (Jones and Fox, 1992; Cahill et al., 1996; Aftanas et al., 1998; Jaaskelainen et al., 2008; Nummenmaa et al., 2012, 2014; Raz et al., 2012). In one study, subjects rated their experienced emotional valence and arousal continuously during two separate re-showings of the movie clips after the neuroimaging session, and the ratings were then used to inform the neuroimaging data analyses. ISC of brain activity in the default-mode and dorsal attention networks was observed to co-vary with experienced valence and arousal, respectively (Nummenmaa et al., 2012). Obtaining continuous ratings for more than two classes of emotional experiences would be highly taxing for subjects, and even a single continuous rating is strenuous if the movie or narrative is long. To circumvent this problem, we advise obtaining ratings of experienced categorical emotions as non-continuous variables (Jones and Fox, 1992).
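
The link between continuous ratings and ISC can be illustrated with a sliding-window ISC of one region, compared against a window-averaged group rating. This is one possible way of setting up such an analysis, not necessarily the exact pipeline of Nummenmaa et al. (2012); the window length, the region time series, and the rating vector below are all hypothetical.

```python
import numpy as np


def sliding_window_isc(roi_ts, win):
    """Mean pairwise ISC of one region's time series within sliding windows.

    roi_ts: array of shape (n_subjects, n_timepoints); win: window length in samples.
    Returns an array of length n_timepoints - win + 1.
    """
    n_subj, n_time = roi_ts.shape
    iu = np.triu_indices(n_subj, k=1)  # each subject pair counted once
    out = np.empty(n_time - win + 1)
    for t in range(n_time - win + 1):
        r = np.corrcoef(roi_ts[:, t:t + win])  # subject-by-subject correlations
        out[t] = r[iu].mean()
    return out


def window_mean(x, win):
    """Average a rating time series over the same sliding windows."""
    return np.convolve(x, np.ones(win) / win, mode="valid")


rng = np.random.default_rng(1)
n_subj, n_time, win = 8, 400, 30
valence = np.sin(np.linspace(0, 4 * np.pi, n_time)) + 1  # hypothetical group rating
shared = rng.standard_normal(n_time)
roi = valence * shared + rng.standard_normal((n_subj, n_time))  # coupling scales with valence
dyn_isc = sliding_window_isc(roi, win)
r = np.corrcoef(dyn_isc, window_mean(valence, win))[0, 1]
print(r)
```

Because neighboring windows overlap, successive values are strongly autocorrelated, so such a correlation should not be tested with standard parametric p-values; a permutation scheme that preserves temporal structure is a safer choice.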

In addition to emotional experiences per se, one can obtain self-reports of specific aspects of emotional processing, such as emotion regulation. Success in regulating emotions while watching anger-eliciting movie clips has been observed to correlate with greater involvement of the ventromedial prefrontal cortex (VMPFC) in the emotion regulation network (Jacob et al., 2018). Frontal EEG activity has been associated with better emotion regulation during emotion induction (Nitschke et al., 2004; Dennis and Solomon, 2010). The degree of self-reported suspense has also been successfully used in fMRI data analyses (Metz-Lutz et al., 2010; Hsu et al., 2014). As a word of caution, the chances of obtaining reliable ratings are lower for emotion categories that are more ambiguous and open to interpretation (e.g., social emotions). When asking subjects to self-report such categories, we advise paying careful attention to how subjects are instructed and running pilot experiments.
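
As a concrete example of a frontal EEG measure, the sketch below computes a frontal alpha asymmetry index, ln(right alpha power) minus ln(left alpha power), which is common in the affective EEG literature. It is offered as an illustration only, assuming two frontal channels (e.g., F4 and F3) and an 8–13 Hz alpha band; the cited studies may have used different measures and preprocessing.

```python
import numpy as np


def band_power(x, fs, lo=8.0, hi=13.0):
    """Mean Hann-windowed periodogram power of x between lo and hi Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= lo) & (freqs <= hi)
    return spectrum[in_band].mean()


def frontal_alpha_asymmetry(left, right, fs):
    """ln(right alpha power) - ln(left alpha power).

    Alpha power is conventionally treated as inversely related to cortical
    activity, so positive values are read as relatively greater left-frontal activity.
    """
    return np.log(band_power(right, fs)) - np.log(band_power(left, fs))


# Synthetic 10-s recording at 250 Hz with stronger 10 Hz alpha over the right channel
fs = 250
t = np.arange(2500) / fs
rng = np.random.default_rng(2)
f3 = 1.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
f4 = 2.0 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
faa = frontal_alpha_asymmetry(f3, f4, fs)
print(faa)  # should be close to ln(4), since right alpha amplitude is twice the left
```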

For experienced humorousness, sadness, emotional valence, and emotional arousal, post-neuroimaging recall closely matches what is experienced when movie clips are watched for the first time during neuroimaging. This holds at least when subjects re-view the movie clips as memory cues for recalling what they experienced during the first viewing in the scanner (Hutcherson et al., 2005; Raz et al., 2012; Jaaskelainen et al., 2016). Further studies are needed to determine for which emotional and other experiences post-neuroimaging self-reports are accurate enough [e.g., using our published method (Jaaskelainen et al., 2016)]. Where post-scan emotion ratings do not seem reliable, emotional experiences can instead be sampled during neuroimaging, as briefly discussed below.
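
One generic way to check whether post-scan ratings track what was experienced in the scanner is to correlate, subject by subject, an online rating time series with a retrospective one collected while re-viewing the same clips. The sketch below is a minimal illustration under that assumption; it is not the published validation method of Jaaskelainen et al. (2016), and both input arrays are hypothetical.

```python
import numpy as np


def rating_agreement(online, retro):
    """Per-subject Pearson r between online and retrospective rating time series.

    online, retro: arrays of shape (n_subjects, n_samples), assumed to be resampled
    to a common time base. Returns (per-subject r values, group mean r), where the
    group mean is computed in Fisher z space.
    """
    r = np.array([np.corrcoef(a, b)[0, 1] for a, b in zip(online, retro)])
    # clip to avoid infinite z values when two series are (almost) identical
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))
    return r, float(np.tanh(z.mean()))


rng = np.random.default_rng(3)
online = rng.standard_normal((10, 120))  # synthetic in-scanner rating traces
faithful = online + 0.5 * rng.standard_normal(online.shape)  # recall close to online
unrelated = rng.standard_normal((10, 120))  # recall unrelated to online
print(rating_agreement(online, faithful)[1])  # high agreement
print(rating_agreement(online, unrelated)[1])  # agreement near zero
```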

In addition to post-scan self-reports of emotional experiences, paradigms have been developed in which subjects continuously rate their emotions while viewing emotional movie clips in the scanner (Hutcherson et al., 2005). This approach potentially captures emotional experiences more accurately than recall-based self-reporting, as experiences might differ between the first and second viewing of the emotional clips. Supporting this concern, at least for some inner experiences there appear to be mismatches between retrospective reports and moment-to-moment experiences sampled in the scanner (Hurlburt et al., 2015). On the other hand, the ongoing rating task might itself alter emotional responses, because it places additional demands on attention.

There are findings showing that brain activity patterns elicited during passive viewing of emotional movie clips differ from those elicited while subjects rate their emotions during viewing of the clips in the fMRI scanner (Hutcherson et al., 2005). Given this, we recommend quantifying emotional experiences by asking subjects after the neuroimaging session how they experienced the media-based stimuli while in the scanner. We advise using the stimuli as recall cues when obtaining the ratings, and validating the post-scan ratings with separate behavioral experiments (Jaaskelainen et al., 2016). In addition to emotions, media-based stimuli elicit cognitive states such as mental imagery or mentalization. Below, we review behavioral methods with which such inner experiences can be quantified.

Beyond experienced emotions, methods have been developed to assess other inner experiences during neuroimaging, such as mental imagery elicited by movies and narratives. These are called experience-sampling methods. One recent experience-sampling approach involved re-playing a narrative in segments to subjects after the neuroimaging session. After each segment, the subjects had 20–30 s to produce a list of words best describing what had been on their minds while they heard that segment in the fMRI scanner. Similarities between the word lists were then estimated with latent semantic analysis (LSA), and representational similarity analysis (RSA; Kriegeskorte et al., 2008) was used to relate these between-subject similarities to between-subject similarities of voxel-wise fMRI activity as assessed by ISC (Saalasti et al., 2019). Such word lists could also be probed for, e.g., the occurrence of emotional words to estimate emotional reactions. Multidimensional scaling of manually rated similarities provides an alternative to automated algorithms such as LSA: volunteers rate pairwise similarities between word lists, e.g., on a visual analog scale (Bisanz et al., 1978).
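
The word-list pipeline can be sketched as follows: a small LSA space from a singular value decomposition of a term-by-document count matrix, cosine similarities between subjects' word lists, and an RSA step that rank-correlates that similarity matrix with a subject-by-subject ISC matrix for one voxel. This is a simplified sketch under stated assumptions (raw counts, an LSA space fitted on the lists themselves, hypothetical word lists and voxel data), not the implementation used by Saalasti et al. (2019).

```python
import numpy as np


def lsa_similarity(word_lists, k=2):
    """Cosine similarity between word lists in a k-dimensional LSA space.

    Simplification: the LSA space is fitted on the word lists themselves, using raw
    counts; in practice it is usually trained on a large corpus with term weighting.
    """
    vocab = sorted({w for words in word_lists for w in words})
    index = {w: i for i, w in enumerate(vocab)}
    counts = np.zeros((len(vocab), len(word_lists)))  # term-by-document matrix
    for d, words in enumerate(word_lists):
        for w in words:
            counts[index[w], d] += 1
    _, s, vt = np.linalg.svd(counts, full_matrices=False)
    docs = (s[:k, None] * vt[:k]).T  # document coordinates, shape (n_lists, k)
    docs /= np.linalg.norm(docs, axis=1, keepdims=True)
    return docs @ docs.T


def average_ranks(x):
    """Ranks of x (0-based), with tied values given their average rank."""
    ranks = np.empty(len(x))
    ranks[np.argsort(x, kind="mergesort")] = np.arange(len(x))
    for v in np.unique(x):
        tied = x == v
        ranks[tied] = ranks[tied].mean()
    return ranks


def rsa(sim_a, sim_b):
    """Spearman correlation between the off-diagonal upper triangles of two matrices."""
    iu = np.triu_indices_from(sim_a, k=1)
    return np.corrcoef(average_ranks(sim_a[iu]), average_ranks(sim_b[iu]))[0, 1]


# Hypothetical word lists from four listeners, and one voxel's time series per listener
word_lists = [
    ["forest", "tree", "walk"],
    ["tree", "forest", "leaves"],
    ["city", "car", "street"],
    ["street", "car", "noise"],
]
rng = np.random.default_rng(4)
theme_a, theme_b = rng.standard_normal((2, 300))
voxel = np.vstack([theme_a, theme_a, theme_b, theme_b]) * 2 + rng.standard_normal((4, 300))
word_sim = lsa_similarity(word_lists)
brain_sim = np.corrcoef(voxel)  # subject-by-subject ISC matrix for this voxel
print(rsa(word_sim, brain_sim))
```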

Experience sampling has also been used with other types of task paradigms. In one study, subjects heard intermittent beeps during resting-state fMRI; each beep prompted them to describe, by answering specific questions, their thoughts and feelings just before the beep (Hurlburt et al., 2015). In another study, differences in inner experiences sampled with post-scan self-reports predicted differences in resting-state functional connectivity (Gorgolewski et al., 2014). This approach used a questionnaire that asked subjects to agree or disagree with given statements probing, e.g., the presence of social cognition, thinking about the present vs. the past, and thinking in words vs. images. Subjects whose thoughts were rich in imagery showed differential connectivity of visual areas (Gorgolewski et al., 2014).
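
A bare-bones version of relating questionnaire scores to resting-state connectivity is sketched below: compute one connectivity value per subject (here, the Fisher z-transformed correlation between two region-of-interest time series) and correlate it with a questionnaire score across subjects. The regions, the imagery score, and the simulated data are hypothetical, and this is not the analysis pipeline of Gorgolewski et al. (2014).

```python
import numpy as np


def roi_connectivity(ts_a, ts_b):
    """Fisher z-transformed Pearson correlation between two ROI time series."""
    return np.arctanh(np.corrcoef(ts_a, ts_b)[0, 1])


def brain_behavior_r(connectivity, scores):
    """Across-subject Pearson r between connectivity values and questionnaire scores."""
    return np.corrcoef(connectivity, scores)[0, 1]


# Synthetic data: coupling between a hypothetical visual ROI and a second ROI
# grows with each subject's (hypothetical) imagery score
rng = np.random.default_rng(5)
imagery = np.linspace(0, 10, 20)
conn = []
for score in imagery:
    visual = rng.standard_normal(300)
    other = (0.2 + 0.05 * score) * visual + rng.standard_normal(300)
    conn.append(roi_connectivity(visual, other))
print(brain_behavior_r(np.array(conn), imagery))
```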

Notably, there can be differences between online and post-scan experience sampling (Hurlburt et al., 2015). The challenge of sampling subjective experience without affecting the experience itself is well known in studies on consciousness (Block, 2005; Dehaene et al., 2006): how can we know whether one has a conscious experience without resorting to cognitive functions such as attention, memory, or inner speech? This is an intrinsic limitation in cognitive science, namely how to sample experience without altering its neural underpinnings. We discuss this below.

Self-report methods have potential caveats. First, because of the limits of consciousness, it is poorly understood to what extent humans can be aware of what is on their minds. While we may not be fully self-aware, it can be argued that with proper experience-sampling methods a good deal of the inner workings of our minds can be uncovered (Hurlburt et al., 2017). Importantly, unlike research with animal models, human research can make use of self-reports. Thus, further development of experience-sampling methods has the potential to broaden the scope of findings that can be obtained exclusively in human studies. To obtain reliable and valid ratings, it must be explained very clearly to subjects what they are to rate. For example, inter-rater reliability was lower when subjects rated goal-directed movements in movie clips than when they rated the presence of faces (Lahnakoski et al., 2012).
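
Inter-rater reliability of continuous ratings can be quantified in several ways. The sketch below uses one simple option, assumed here for illustration: correlating each rater's time series with the average of the remaining raters. The simulated "faces" and "goal-directed movements" ratings are hypothetical and only mimic the qualitative contrast reported by Lahnakoski et al. (2012); the measure used in that study may differ.

```python
import numpy as np


def leave_one_out_reliability(ratings):
    """Correlate each rater's time series with the mean of all other raters.

    ratings: array of shape (n_raters, n_samples). Returns one Pearson r per rater.
    """
    n = ratings.shape[0]
    total = ratings.sum(axis=0)
    return np.array([
        np.corrcoef(ratings[i], (total - ratings[i]) / (n - 1))[0, 1]
        for i in range(n)
    ])


rng = np.random.default_rng(6)
feature = rng.standard_normal(300)  # hypothetical "true" time course of a stimulus feature
clear = feature + 0.3 * rng.standard_normal((6, 300))  # e.g., presence of faces
ambiguous = feature + 2.0 * rng.standard_normal((6, 300))  # e.g., goal-directed movements
print(leave_one_out_reliability(clear).mean(), leave_one_out_reliability(ambiguous).mean())
```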

Another limitation of self-reports is that they take time. For example, to obtain continuous valence and arousal ratings for emotional movie clips shown during fMRI, the clips had to be played twice to the subjects after the scanning session, once for assessing valence and once for assessing arousal (Nummenmaa et al., 2012). In practice, the time a single subject can be expected to participate in an experiment is limited to a few hours, and thus the number of variables that can be sampled is very limited.
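
The time constraint can be made concrete with simple arithmetic. The sketch assumes, hypothetically, a 2-hour post-scan session, a 10-minute overhead for instructions and breaks, and one full re-showing per continuously rated dimension, as in the valence/arousal example above; the numbers are illustrative, not taken from any study.

```python
def rating_passes_that_fit(stimulus_min, budget_min, overhead_min=10.0):
    """Number of full re-showings of a stimulus that fit into a post-scan session.

    Each continuously rated dimension (e.g., valence, arousal) needs one complete
    re-showing. overhead_min is a hypothetical allowance for instructions and breaks.
    """
    return int((budget_min - overhead_min) // stimulus_min)


print(rating_passes_that_fit(20, 120))  # 20-min clip set, 2-h session -> 5
print(rating_passes_that_fit(90, 120))  # 90-min feature film, 2-h session -> 1
```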

A third limitation of self-reports is that they easily alter the experience of movies and narratives if obtained during neuroimaging, whereas they can be less reliable if obtained after scanning (Hurlburt et al., 2015). In practice, however, re-presenting the stimulus after scanning seems to offer a powerful memory cue for retrospective self-reports of how the stimulus was experienced. This has been empirically shown for ratings of humorousness and of certain emotions elicited by movie clips (Raz et al., 2012; Jaaskelainen et al., 2016); however, the assumption needs to be validated for other types of self-reported experiences.

Other types of measures can be obtained to either replace or complement verbal self-reports. Subjects can, for instance, be asked to paint body maps of the feelings related to their emotional experience (Nummenmaa et al., 2013), which may circumvent potential confounds that arise when subjects are asked to verbally categorize their emotions. Besides self-reports, emotions can be estimated from physiological data (e.g., galvanic skin response and heart-rate measures; Wang et al., 2018) and from facial muscle activity (Jonghwa and Ande, 2008; Jerritta et al., 2014). Notably, these measures can be recorded simultaneously with fMRI.

Recording of eye movements provides yet another complementary behavioral measure. In one study, variations in eye movements failed to predict variations in brain activity beyond the early visual areas (Lahnakoski et al., 2014). Other studies have noted that visual representations in hierarchically higher visual areas are relatively independent of eye movements (Lu et al., 2016; Nishimoto et al., 2017). However, there are findings demonstrating a coupling between eye movements and hippocampal activity (Hannula and Ranganath, 2009), suggesting that eye movements can offer indirect information about the inner workings of the human mind. In the context of eye-movement recordings, variations in pupil size can be taken as an additional measure of arousal (Wang et al., 2018). During movie viewing, however, this measure is easily confounded by constantly changing luminance levels, which need to be taken into account. Pupil size has also been linked to processing load, although we did not obtain significant results on this in one of our studies (Smirnov et al., 2014). Thus, more studies are needed to determine how eye-movement recordings can enhance our understanding of how subjects experience media-based stimuli.
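
A simple first step for the luminance confound is to regress luminance out of the pupil trace. The sketch below does this with ordinary least squares on simulated luminance and arousal signals; the linear, zero-lag model is an assumption of this illustration, and more careful analyses may need to model the delayed, nonlinear pupillary light response.

```python
import numpy as np


def luminance_corrected_pupil(pupil, luminance):
    """Pupil size with a linear fit to screen luminance regressed out.

    Assumes a linear, zero-lag relation between luminance and pupil size, which is a
    simplification: the pupillary light response is delayed and nonlinear.
    """
    X = np.column_stack([np.ones_like(luminance), luminance])  # intercept + luminance
    beta, *_ = np.linalg.lstsq(X, pupil, rcond=None)
    return pupil - X @ beta


rng = np.random.default_rng(7)
luminance = rng.standard_normal(1000)  # hypothetical per-sample screen luminance
arousal = rng.standard_normal(1000)  # hypothetical arousal-related component
pupil = -3.0 * luminance + 0.5 * arousal + 0.1 * rng.standard_normal(1000)
resid = luminance_corrected_pupil(pupil, luminance)
print(np.corrcoef(pupil, arousal)[0, 1], np.corrcoef(resid, arousal)[0, 1])
```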

One potential way to circumvent these caveats and to optimize the synergy between experience sampling and neuroimaging was proposed over two decades ago by Francisco Varela (Varela, 1996; Rodriguez et al., 1999), who coined the term “neurophenomenology.” Phenomenology is the philosophical study of the structures of experience and consciousness from the first-person perspective (Gallagher and Zahavi, 2020). Varela was inspired by the pioneering works of the phenomenologists Merleau-Ponty (1978) and Husserl (1999). Following up on previous attempts to bridge the gap between neural activity and subjective experience (the so-called “hard problem of consciousness”; Chalmers, 1995), Varela reframed the gap by methodologically integrating the two realms, merging the statistical power of quantitative neuroimaging parameters with experiential first-person descriptions. However, the neurophenomenological approach has thus far not been systematically implemented in empirical research (Berkovich-Ohana et al., 2020).

Movies and narratives provide an excellent opportunity for this purpose. While early neuroimaging tended to rely on dichotomous and oversimplified approaches, the paradigm shift toward media-based stimuli in neuroscience has emphasized the need for a neurophenomenological approach that can accommodate the complex and multifaceted features of natural phenomena. At the theoretical level, phenomenological representations and concepts can accommodate and clarify neural mechanisms and processes, as instantiated in the recent Graded Empathy Framework (Levy and Bader, 2020). This neurophenomenological model of empathy builds on phenomenological analyses revealing graded, parametric levels of empathic experience, which align straightforwardly with neural mechanisms observed in experiments with movie and narrative stimuli. More generally, movies and narratives often evoke multiple levels of neural processes, and phenomenological analyses can describe these processes from a naturalistic and experiential point of view. In this way, neurophenomenology introduces a new dimension to neuroscience, one that extends self-report measures. Thus, neurophenomenological approaches may further advance neuroimaging research with media-based stimuli.

Another intriguing, yet completely different possibility is emerging for estimating experienced emotions from distributed brain activity patterns. Specifically, it seems possible to classify basic emotions such as anger, fear, sadness, or happiness from fMRI data, in many instances well above chance level, using machine-learning algorithms applied to multi-voxel activity patterns (Kragel and LaBar, 2015; Saarimäki et al., 2016). This has recently also been demonstrated for social emotions such as pride and love (Saarimäki et al., 2018). In addition, brain activity accompanying sadness differs between two types of sadness-inducing movies, one also involving sympathy and the other also involving hate (Raz et al., 2012). Emotions can also be classified from EEG data, with accuracies as high as 80% (Shuang et al., 2016; Yano and Suyama, 2016; Özerdem and Polat, 2017). EEG also suffices for estimating emotional valence, i.e., whether the emotional state is negative or positive (Costa et al., 2006; Zhao et al., 2018). Notably, a recent study tracked emotional brain activity states over time during resting-state fMRI; the results suggested transitions between emotional states every 5–15 s (Kragel et al., 2021).
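
To make multi-voxel pattern classification concrete, the sketch below implements one simple classifier, a correlation-based nearest-centroid rule with leave-one-out cross-validation, on simulated patterns. It is not necessarily the algorithm used in the cited studies; the category names, pattern sizes, and noise levels are hypothetical.

```python
import numpy as np


def loo_correlation_classifier(patterns, labels):
    """Leave-one-out accuracy of a correlation-based nearest-centroid classifier.

    patterns: (n_samples, n_voxels) activity patterns; labels: (n_samples,) categories.
    Each held-out pattern is assigned the category whose mean training pattern
    it correlates with most strongly.
    """
    labels = np.asarray(labels)
    classes = np.unique(labels)
    correct = 0
    for i in range(len(labels)):
        train = np.arange(len(labels)) != i  # every sample except the held-out one
        centroids = [patterns[train & (labels == c)].mean(axis=0) for c in classes]
        r = [np.corrcoef(patterns[i], cen)[0, 1] for cen in centroids]
        correct += int(classes[int(np.argmax(r))] == labels[i])
    return correct / len(labels)


rng = np.random.default_rng(8)
emotions = np.array(["anger", "fear", "sadness", "happiness"])
prototypes = rng.standard_normal((4, 50))  # one hypothetical pattern per emotion
idx = np.repeat(np.arange(4), 10)  # 10 simulated trials per emotion
patterns = prototypes[idx] + rng.standard_normal((40, 50))
acc = loo_correlation_classifier(patterns, emotions[idx])
print(acc)  # chance level is 0.25 for four categories
```

With real fMRI data, cross-validation is usually done across runs or subjects rather than single samples, because temporally adjacent samples are not independent and leave-one-sample-out estimates can be optimistic.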

Taken together, these results suggest that it is possible in principle to assess the emotional states that a given subject experienced based on brain activity recorded while watching movies or listening to narratives. Although at this point these approaches are tentative leads rather than readily usable tools, they might help circumvent one of the major challenges in cognitive science: they could allow experiences to be sampled as they take place, without altering their neural underpinnings through intrusive prompts for self-reports and without the potential loss of validity that comes with asking subjects afterward. These approaches should be further developed and validated in future studies.
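
If a decoder is applied volume by volume, the resulting sequence of state labels can be summarized by how long each state persists before a transition, which is one way to express transition rates such as the 5–15 s interval reported by Kragel et al. (2021). The sketch below computes such dwell times; the labels and the 2-s repetition time are hypothetical, and it is not the procedure of that study.

```python
import numpy as np


def dwell_times(states, tr):
    """Durations in seconds of consecutive runs of the same decoded state.

    states: 1-D sequence of per-volume state labels; tr: repetition time in seconds.
    """
    states = np.asarray(states)
    starts = np.flatnonzero(states[1:] != states[:-1]) + 1  # first volume of each new run
    bounds = np.concatenate(([0], starts, [len(states)]))
    return np.diff(bounds) * tr


decoded = ["neutral"] * 3 + ["fear"] * 5 + ["neutral"] * 2  # hypothetical labels
print(dwell_times(decoded, tr=2.0).tolist())  # [6.0, 10.0, 4.0]
```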

Detailed annotations of movies and narratives (e.g., presence of people, social interactions, movements), together with the recorded brain activity, provide only part of the key to understanding the neural mechanisms underlying the emotional and cognitive states elicited by media-based stimuli. To get the complete picture, the inner experiences of experimental subjects also need to be estimated. Historically, there have been two opposing schools of thought, one arguing for the usability of introspection (i.e., self-reports) as a method and the other arguing that introspection is not useful. Several approaches have been developed to tap into different aspects of inner experiences, such as memorization of events, emotional experiences, thoughts, and mental imagery. Post-scan self-reports appear to be valid for some classes of experiences, such as experiences of humorousness and certain emotions, whereas for some other inner experiences online sampling seems to be more accurate. In neuroimaging studies that use movies and narratives as stimuli, introspective self-reports can capture essential information that helps guide the neuroimaging data analysis. We foresee that further development of such methods will open important new avenues in cognitive neuroscience. Finally, machine learning approaches that allow for classification and tracking of emotional (and possibly other mental) states based on neuroimaging data may in the future yield information complementary to that provided by self-reports.

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

IJ, VK, and AS were supported by the International Laboratory of Social Neurobiology ICN HSE RF Government Grant Ag. No. 075-15-2019-1930. JA was supported by the National Institutes of Health (Grant Nos. R01DC016915, R01DC016765, and R01DC017991). JL was supported by the Academy of Finland (Grant No. 328674).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aftanas, L. I., Lotova, N. V., Koshkarov, V. I., and Popov, S. A. (1998). Non-linear dynamical coupling between different brain areas during evoked emotions: an EEG investigation. Biol. Psychol. 48, 121–138. doi: 10.1016/s0301-0511(98)00015-5

Bartels, A., and Zeki, S. (2004). Functional brain mapping during free viewing of natural scenes. Hum. Brain Mapp. 21, 75–85. doi: 10.1002/hbm.10153

Berkovich-Ohana, A., Dor-Ziderman, Y., Trautwein, F.-M., Schweitzer, Y., Nave, O., Fulder, S., et al. (2020). The hitchhiker’s guide to neurophenomenology - the case of studying self boundaries with meditators. Front. Psychol. 11:1680. doi: 10.3389/fpsyg.2020.01680

Bisanz, G. L., LaPorte, R. E., Vesonder, G. T., and Voss, J. F. (1978). On the representation of prose: new dimensions. J. Verbal Learn. Verbal Behav. 17, 337–357. doi: 10.1016/s0022-5371(78)90219-0

Cahill, L., Haier, R. J., Fallon, J., Alkire, M. T., Tang, C., Keator, D., et al. (1996). Amygdala activity at encoding correlated with long-term, free recall of emotional information. Proc. Natl. Acad. Sci. U.S.A. 93, 8016–8021. doi: 10.1073/pnas.93.15.8016

Cohen, S. S., and Parra, L. C. (2016). Memorable audiovisual narratives synchronize sensory and supramodal neural responses. eNeuro 3:ENEURO.0203-16.2016. doi: 10.1523/ENEURO.0203-16.2016

Costa, T., Rognoni, E., and Galati, D. (2006). EEG phase synchronization during emotional response to positive and negative film stimuli. Neurosci. Lett. 406, 159–164. doi: 10.1016/j.neulet.2006.06.039

Dehaene, S., Changeux, J. P., Naccache, L., Sackur, J., and Sergent, C. (2006). Conscious, preconscious, and subliminal processing: a testable taxonomy. Trends Cogn. Sci. 10, 204–211. doi: 10.1016/j.tics.2006.03.007

Dennis, T. A., and Solomon, B. (2010). Frontal EEG and emotion regulation: electrocortical activity in response to emotional film clips is associated with reduced mood induction and attention interference effects. Biol. Psychol. 85, 456–464. doi: 10.1016/j.biopsycho.2010.09.008

Dmochowski, J. P., Sajda, P., Dias, J., and Parra, L. C. (2012). Correlated components of ongoing EEG point to emotionally laden attention - a possible marker of engagement? Front. Hum. Neurosci. 6:112. doi: 10.3389/fnhum.2012.00112

Gallagher, S., and Zahavi, D. (2020). The Phenomenological Mind, 3rd Edn. Oxfordshire: Taylor and Francis.

Gorgolewski, K. J., Lurie, D., Urchs, S., Kipping, J. A., Craddock, R. C., Milham, M. P., et al. (2014). A correspondence between individual differences in the brain’s intrinsic functional architecture and the content and form of self-generated thoughts. PLoS One 9:e97176. doi: 10.1371/journal.pone.0097176

Hannula, D., and Ranganath, C. (2009). The eyes have it: hippocampal activity predicts expression of memory in eye movements. Neuron 63, 592–599. doi: 10.1016/j.neuron.2009.08.025

Hasson, U., Furman, O., Clark, D., Dudai, Y., and Davachi, L. (2008). Enhanced intersubject correlations during movie viewing correlate with successful episodic encoding. Neuron 57, 452–462. doi: 10.1016/j.neuron.2007.12.009

Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., and Malach, R. (2004). Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640. doi: 10.1126/science.1089506

Hsu, C. T., Conrad, M., and Jacobs, A. M. (2014). Fiction feelings in Harry Potter: haemodynamic response in the mid-cingulate cortex correlates with immersive reading experience. Neuroreport 25, 1356–1361. doi: 10.1097/WNR.0000000000000272

Hurlburt, R. T., Alderson-Day, B., Fernyhough, C., and Kühn, S. (2015). What goes on in the resting-state? A qualitative glimpse into resting-state experience in the scanner. Front. Psychol. 6:1535. doi: 10.3389/fpsyg.2015.01535

Hurlburt, R. T., Alderson-Day, B., Fernyhough, C., and Kühn, S. (2017). Can inner experience be apprehended in high fidelity? Examining brain activation and experience from multiple perspectives. Front. Psychol. 8:43. doi: 10.3389/fpsyg.2017.00043

Hutcherson, C. A., Goldin, P. R., Ochsner, K. N., Gabrieli, J. D., Feldman Barrett, L., and Gross, J. J. (2005). Attention and emotion: does rating emotion alter neural responses to amusing and sad films? Neuroimage 27, 656–668. doi: 10.1016/j.neuroimage.2005.04.028

Jaaskelainen, I. P., Koskentalo, K., Balk, M. H., Autti, T., Kauramäki, J., Pomren, C., et al. (2008). Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. Open Neuroimag. J. 2, 14–19. doi: 10.2174/1874440000802010014

Jaaskelainen, I. P., Pajula, J., Tohka, J., Lee, H.-J., Kuo, W.-J., and Lin, F.-H. (2016). Brain hemodynamic activity during viewing and re-viewing of comedy movies explained by experienced humor. Sci. Rep. 6:27741. doi: 10.1038/srep27741

Jaaskelainen, I. P., Sams, M., Glerean, E., and Ahveninen, J. (2021). Movies and narratives as naturalistic stimuli in neuroimaging. Neuroimage 224:117445. doi: 10.1016/j.neuroimage.2020.117445

Jacob, Y., Gilam, G., Lin, T., Raz, G., and Hendler, T. (2018). Anger modulates influence hierarchies within and between emotional reactivity and regulation networks. Front. Behav. Neurosci. 12:60. doi: 10.3389/fnbeh.2018.00060

Jerritta, S., Murugappan, M., Wan, K., and Yaacob, S. (2014). Emotion recognition from facial EMG signals using higher order statistics and principal component analysis. J. Chin. Inst. Eng. 37, 385–394. doi: 10.1080/02533839.2013.799946

Jones, N. A., and Fox, N. A. (1992). Electroencephalogram asymmetry during emotionally evocative films and its relation to positive and negative affectivity. Brain Cogn. 20, 280–299. doi: 10.1016/0278-2626(92)90021-d

Jonghwa, K., and Ande, E. (2008). Emotion recognition based on physiological changes in music listening. IEEE Trans. Pattern. Anal. Mach. Intell. 30, 2067–2083. doi: 10.1109/TPAMI.2008.26

Kauppi, J. P., Jaaskelainen, I. P., Sams, M., and Tohka, J. (2010). Inter-subject correlation of brain hemodynamic responses during watching a movie: localization in space and frequency. Front. Neuroinform. 4:5. doi: 10.3389/fninf.2010.00005

Kragel, P. A., Hariri, A. R., and LaBar, K. S. (2021). The temporal dynamics of spontaneous emotional brain states and their implications for mental health. J. Cogn. Neurosci. doi: 10.1162/jocn_a_01787 [Epub ahead of print].

Kragel, P. A., and LaBar, K. S. (2015). Multivariate neural biomarkers of emotional states are categorically distinct. Soc. Cogn. Affect. Neurosci. 10, 1437–1448. doi: 10.1093/scan/nsv032

Kriegeskorte, N., Mur, M., and Bandettini, P. (2008). Representational similarity analysis - connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2:4. doi: 10.3389/neuro.06.004.2008

Lahnakoski, J. M., Glerean, E., Jaaskelainen, I. P., Hyönä, J., Hari, R., Sams, M., et al. (2014). Synchronous brain activity across individuals underlies shared psychological perspectives. Neuroimage 100, 316–324. doi: 10.1016/j.neuroimage.2014.06.022

Lahnakoski, J. M., Glerean, E., Salmi, J., Jaaskelainen, I. P., Sams, M., Hari, R., et al. (2012). Naturalistic fMRI mapping reveals superior temporal sulcus as the hub for distributed brain network for social perception. Front. Hum. Neurosci. 6:233. doi: 10.3389/fnhum.2012.00233

Lankinen, K., Saari, J., Hari, R., and Koskinen, M. (2014). Intersubject consistency of cortical MEG signals during movie viewing. Neuroimage 92, 217–224. doi: 10.1016/j.neuroimage.2014.02.004

Levy, J., and Bader, O. (2020). Graded empathy: a neuro-phenomenological hypothesis. Front. Psychiatry 11:554848. doi: 10.3389/fpsyt.2020.554848

Lu, K. H., Hung, S. C., Wen, H., Marussich, L., and Liu, Z. (2016). Influences of high-level features, gaze, and scene transitions on the reliability of BOLD responses to natural movie stimuli. PLoS One 11:e0161797. doi: 10.1371/journal.pone.0161797

Metz-Lutz, M. N., Bressan, Y., Heider, N., and Otzenberger, H. (2010). What physiological changes and cerebral traces tell us about adhesion to fiction during theater-watching? Front. Hum. Neurosci. 4:59. doi: 10.3389/fnhum.2010.00059

Nishimoto, S., Huth, A. G., Bilenko, N. Y., and Gallant, J. L. (2017). Eye movement-invariant representations in the human visual system. J. Vis. 17:11. doi: 10.1167/17.1.11

Nitschke, J. B., Heller, W., Etienne, M. A., and Miller, G. A. (2004). Prefrontal cortex activity differentiates processes affecting memory in depression. Biol. Psychol. 67, 125–143. doi: 10.1016/j.biopsycho.2004.03.004

Nummenmaa, L., Glerean, E., Hari, R., and Hietanen, J. K. (2013). Bodily maps of emotions. Proc. Natl. Acad. Sci. U.S.A. 111, 646–651.

Nummenmaa, L., Glerean, E., Viinikainen, M., Jaaskelainen, I. P., Hari, R., and Sams, M. (2012). Emotions promote social interaction by synchronizing brain activity across individuals. Proc. Natl. Acad. Sci. U.S.A. 109, 9599–9604.

Nummenmaa, L., Saarimäki, H., Glerean, E., Gotsopoulos, A., Jaaskelainen, I. P., Hari, R., et al. (2014). Emotional speech synchronizes brains across listeners and engages large-scale dynamic brain networks. Neuroimage 102, 498–509.

Özerdem, M. S., and Polat, H. (2017). Emotion recognition based on EEG features in movie clips with channel selection. Brain Inform. 4, 241–252.

Pajula, J., Kauppi, J. P., and Tohka, J. (2012). Inter-subject correlation in fMRI: method validation against stimulus-model based analysis. PLoS One 7:e41196. doi: 10.1371/journal.pone.0041196

Raz, G., Winetraub, Y., Jacob, Y., Kinreich, S., Maron-Katz, A., Shaham, G., et al. (2012). Portraying emotions at their unfolding: a multilayered approach for probing dynamics of neural networks. Neuroimage 60, 1448–1461.

Rodriguez, E., George, N., Lachaux, J. P., Martinerie, J., Renault, B., and Varela, F. J. (1999). Perception’s shadow: long-distance synchronization of human brain activity. Nature 397, 430–433.

Saalasti, S., Alho, J., Bar, M., Glerean, E., Honkela, T., Kauppila, M., et al. (2019). Inferior parietal lobule and early visual areas support elicitation of individualized meanings during narrative listening. Brain Behav. 9:e01288.

Saarimäki, H., Ejtehadian, L. F., Glerean, E., Jaaskelainen, I. P., Vuilleumier, P., Sams, M., et al. (2018). Distributed affective space represents multiple emotion categories across the brain. Soc. Cogn. Affect. Neurosci. 13, 471–482.

Saarimäki, H., Gotsopoulos, A., Jaaskelainen, I. P., Lampinen, J., Vuilleumier, P., Hari, R., et al. (2016). Discrete neural signatures of basic emotions. Cereb. Cortex 26, 2563–2573.

Shuang, L., Jingling, T., Xu, M., Yang, J., Qi, H., and Ming, D. (2016). Improve the generalization of emotional classifiers across time by using training samples from different days. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2016, 841–844.

Smirnov, D., Glerean, E., Lahnakoski, J. M., Salmi, J., Jaaskelainen, I. P., Sams, M., et al. (2014). Fronto-parietal network supports context-dependent speech comprehension. Neuropsychologia 63, 293–303.

Varela, F. J. (1996). Neurophenomenology: a methodological remedy for the hard problem. J. Conscious. Stud. 3, 330–349.

Wang, C.-A., Baird, T., Huang, J., Coutinho, J. D., Brien, D. C., and Munoz, D. P. (2018). Arousal effects on pupil size, heart rate, and skin conductance in an emotional face task. Front. Neurol. 9:1029. doi: 10.3389/fneur.2018.01029

Westermann, R., Spies, K., Stahl, G., and Hesse, F. W. (1996). Relative effectiveness and validity of mood induction procedures: a meta-analysis. Eur. J. Soc. Psychol. 26, 557–580.

Yano, K., and Suyama, T. (2016). A novel fixed low-rank constrained EEG spatial filter estimation with application to movie-induced emotion recognition. Comput. Intell. Neurosci. 2016:6734720.

Keywords: naturalistic stimuli, memory, attention, emotion, social cognition, language, movies, narratives

Citation: Jääskeläinen IP, Ahveninen J, Klucharev V, Shestakova AN and Levy J (2022) Behavioral Experience-Sampling Methods in Neuroimaging Studies With Movie and Narrative Stimuli. Front. Hum. Neurosci. 16:813684. doi: 10.3389/fnhum.2022.813684

Received: 12 November 2021; Accepted: 06 January 2022;

Published: 27 January 2022.

Edited by:

Klaus Kessler, Aston University, United Kingdom

Reviewed by:

Robert A. Seymour, University College London, United Kingdom

Copyright © 2022 Jääskeläinen, Ahveninen, Klucharev, Shestakova and Levy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Iiro P. Jääskeläinen, iiro.jaaskelainen@aalto.fi

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
