- ¹Department of Psychiatry, Stanford University School of Medicine, Stanford, CA, United States

- ²Neosensory, Palo Alto, CA, United States

Haptic devices use the sense of touch to transmit information to the nervous system. As an example, a sound-to-touch device processes auditory information and sends it to the brain via patterns of vibration on the skin for people who have lost hearing. We here summarize the current directions of such research and draw upon examples in industry and academia. Such devices can be used for sensory substitution (replacing a lost sense, such as hearing or vision), sensory expansion (widening an existing sensory experience, such as detecting electromagnetic radiation outside the visible light spectrum), and sensory addition (providing a novel sense, such as magnetoreception). We review the relevant literature, the current status, and possible directions for the future of sensory manipulation using non-invasive haptic devices.

Introduction

The endeavor of getting information to the brain via unusual channels has a long history. We here concentrate on non-invasive devices that use haptics, or the sense of touch. In recent years, as computing technology has advanced, many haptic-based devices have been developed. We categorize these devices into three groups based on their function: sensory substitution, sensory expansion, and sensory addition.

The key to understanding the success of haptics is remembering that the brain does not directly hear or see the world. Instead, the neural language consists of electrochemical signals in neurons, which build some representation of the outside world. The brain's neural networks take in signals from sensory inputs and extract informationally relevant patterns. The brain strives to adjust to whatever information it receives and works to extract what it can. As long as the data reflects some relevant feature of the outside world, the brain works to decode it (Eagleman, 2020). In this sense, the brain can be viewed as a general-purpose computing device: it absorbs the available signals and works to determine how to make optimal use of them.

Sensory substitution

Decades ago, researchers realized that the brain's ability to interpret different kinds of incoming information implied that one might be able to get one sensory channel to carry another's information (Bach-y-Rita et al., 1969). In a surprising demonstration, Bach-y-Rita et al. placed blind volunteers in a reconfigured dental chair in which a grid of four hundred Teflon tips could be extended and retracted by mechanical solenoids. Over the blind participant a camera was mounted on a tripod. The video stream of the camera was converted into a poking of the tips against the volunteer's back. Objects were passed in front of the camera while blind participants in the chair paid careful attention to the feelings in their backs. Over days of training, they became better at identifying the objects by their feel. The blind subjects learned to distinguish horizontal from vertical from diagonal lines, and more advanced users could learn to distinguish simple objects and even faces—simply by the tactile sensations on their back. Bach-y-Rita's findings suggested that information from the skin can be interpreted as readily (if with lower resolution) as information coming from the eyes, and this demonstration opened the floodgates of sensory substitution (Hatwell et al., 2003; Poirier et al., 2007; Bubic et al., 2010; Novich and Eagleman, 2015; Macpherson, 2018).

The technique improved when Bach-y-Rita and his collaborators allowed the blind user to point the camera, using his own volition to control where the “eye” looked (Bach-y-Rita, 1972, 2004). This verified the hypothesis that sensory input is best learned when one can interact with the world. Letting users control the camera closed the loop between muscle output and sensory input (Hurley and Noë, 2003; Noe, 2004). Perception emerges not from a passive input, but instead as a result of actively exploring the environment and matching particular actions to specific changes in sensory inputs. Whether by moving extraocular muscles (as in the case of sighted people) or arm muscles (Bach-y-Rita's participants), the neural architecture of the brain strives to figure out how the output maps to subsequent input (Eagleman, 2020).

The subjective experience for the users was that objects captured by the camera were felt to be located at a distance instead of on the skin of the back (Bach-y-Rita et al., 2003; Nagel et al., 2005). In other words, it was something like vision: instead of stimulating the photoreceptors, the information stimulated touch receptors on the skin, resulting in a functionally similar experience.

Although Bach-y-Rita's vision-to-touch system was the first to seize the public imagination, it was not the first attempt at sensory substitution. In the early 1960s, Polish researchers had passed visual information via touch, building a system of vibratory motors mounted on a helmet that “drew” the images on the head through vibrations (the Elektroftalm; Starkiewicz and Kuliszewski, 1963). Blind participants were able to navigate specially prepared rooms that were painted to enhance the contrast of door frames and furniture edges. Unfortunately, the device was heavy and would get hot during use, and thus was not market-ready, but the proof of principle was there.

These unexpected approaches worked because inputs to the brain (such as photons at the eyes, air compression waves at the ears, pressure on the skin) are all converted into electrical signals. As long as the incoming spikes carry information that represents something important about the outside world, the brain will attempt to interpret it.

In the 1990s, Bach-y-Rita et al. sought ways to go smaller than the dental chair. They developed a small device called the BrainPort (Bach-y-Rita et al., 2005; Nau et al., 2015; Stronks et al., 2016). A camera is attached to the forehead of a blind person, and a small grid of electrodes is placed on the tongue. The “Tongue Display Unit” of the BrainPort uses a grid of stimulators over three square centimeters. The electrodes deliver small shocks that correlate with the position of pixels, feeling something like Pop Rocks candy in the mouth. Bright pixels are encoded by strong stimulation at the corresponding points on the tongue, gray by medium stimulation, and darkness by no stimulation. The BrainPort gives the capacity to distinguish visual items with a visual acuity that equates to about 20/800 vision (Sampaio et al., 2001). While users report that they first perceive the tongue stimulation as unidentifiable edges and shapes, they eventually learn to recognize the stimulation at a deeper level, allowing them to discern qualities such as distance, shape, direction of movement, and size (Stronks et al., 2016).
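The brightness-to-stimulation encoding described above can be illustrated with a minimal sketch. It is not the BrainPort's actual processing: the grid size, the two brightness thresholds, and the function names `downsample` and `to_stimulation` are assumptions chosen only for illustration.

```python
def downsample(image, rows, cols):
    """Average-pool a 2D brightness image (values 0-255) down to rows x cols.

    Assumes the image has at least `rows` rows and `cols` columns.
    """
    height, width = len(image), len(image[0])
    grid = []
    for r in range(rows):
        r0, r1 = r * height // rows, (r + 1) * height // rows
        row = []
        for c in range(cols):
            c0, c1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def to_stimulation(grid, dark_max=85, gray_max=170):
    """Map brightness to 0 (no stimulation), 1 (medium), or 2 (strong).

    The thresholds are illustrative assumptions, not published values.
    """
    def level(value):
        if value <= dark_max:
            return 0
        if value <= gray_max:
            return 1
        return 2

    return [[level(value) for value in row] for row in grid]


# A 4x4 test image: dark on the left, bright on the right.
image = [[0, 0, 255, 255] for _ in range(4)]
pattern = to_stimulation(downsample(image, 2, 2))
```

In this sketch, each cell of `pattern` stands for one electrode, so a bright region of the camera image becomes strong stimulation at the corresponding point of the grid.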

The tongue provides an excellent brain-machine interface because it is densely packed with touch receptors (Bach-y-Rita et al., 1969; Bach-y-Rita, 2004). When brain imaging is performed on trained subjects (blind or sighted), the motion of electrotactile shocks across the tongue activates the MT+ area of the visual cortex, an area which is normally involved in visual motion (Merabet et al., 2009; Amedi et al., 2010; Matteau et al., 2010).

Of particular interest is the subjective experience. The blind participant Roger Behm describes the experience of the BrainPort:

Last year, when I was up here for the first time, we were doing stuff on the table, in the kitchen. And I got kind of... a little emotional, because it's 33 years since I've seen before. And I could reach out and see the different-sized balls. I mean I visually see them. I could reach out and grab them—not grope or feel for them—pick them up, and see the cup, and raise my hand and drop it right in the cup (Bains, 2007).

Tactile input can work on many locations on the body. For example, the Forehead Retina System converts a video stream into a small grid of touch on the forehead (Kajimoto et al., 2006). Another device hosts a grid of vibrotactile actuators on the abdomen, which use intensity to represent distance to the nearest surfaces. Researchers used this device to demonstrate that blind participants' walking trajectories are not preplanned, but instead emerge dynamically as the tactile information streams in (Lobo et al., 2017, 2018).

As 5% of the world has disabling hearing loss, researchers have recently sought to build sensory substitution for the deaf (Novich and Eagleman, 2015). Assimilating advances in high-performance computing into a sound-to-touch sensory-substitution device worn under the shirt, Novich and Eagleman (2015) built a vest that captured sound around the user and mapped it onto vibratory motors on the skin, allowing users to feel the sonic world around them. The theory was to transfer the function of the inner ear (breaking sounds into different frequencies and sending the data to the brain) to the skin.

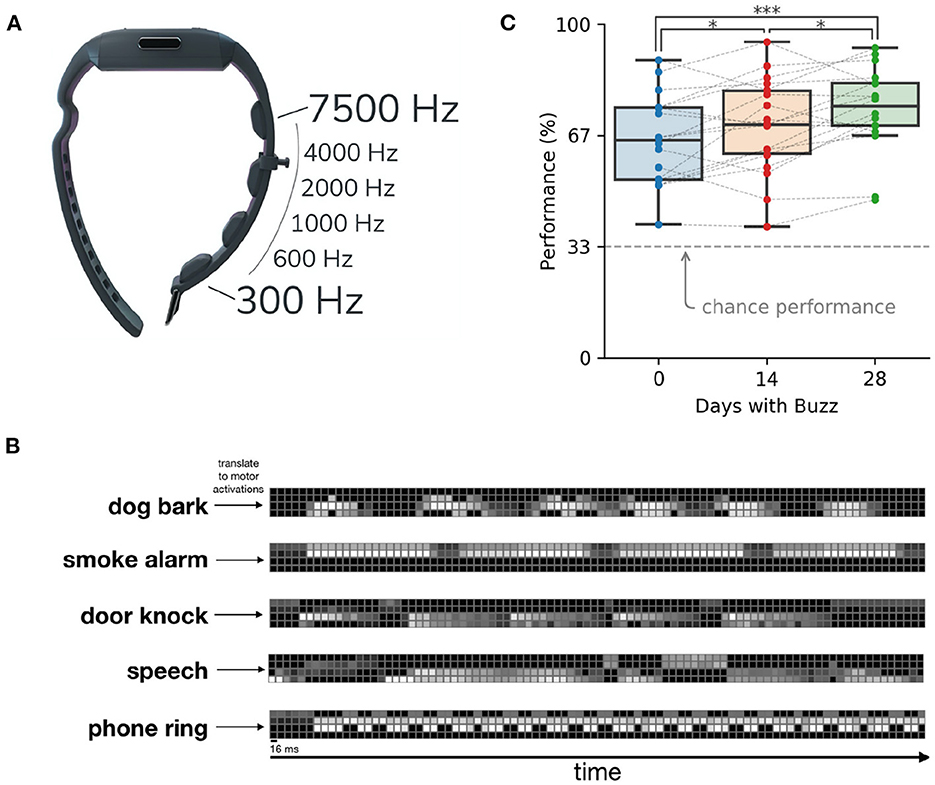

Does the skin have enough bandwidth to transmit all the information of sound? After all, the cochlea is an exquisitely specialized structure for capturing sound frequencies with high fidelity, while the skin is focused on other measures and has poor spatial resolution. Conveying a cochlear level of information through the skin would require several thousand vibrotactile motors, too many to fit on a person. However, by compressing the speech information, just a few motors suffice (Koffler et al., 2015; Novich and Eagleman, 2015). Such technology can be designed in many different form factors, such as a chest strap for children and a wristband with vibratory motors (Figure 1).

Figure 1. A sensory substitution wristband for deafness (the Neosensory Buzz). (A) Four vibratory motors in a wristband transfer information about sound levels in different frequency bands. (B) Sample sounds translated into patterns of vibration on the motors of the wristband. Brighter colors represent higher intensity of the motor. (C) Participant performance on sound identification improves through time. Data reprinted from Perrotta et al. (2021).
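As a rough illustration of the idea in panel (A), the sketch below splits one short audio frame into four frequency bands and converts the energy in each band into a relative motor intensity. The band edges, the frame length, and the peak normalization are assumptions made for this example; the wristband's actual algorithm is not described here.

```python
import cmath
import math


def band_levels(frame, sample_rate, edges):
    """Return one relative intensity (0..1) per frequency band.

    `edges` lists band boundaries in Hz; band b covers [edges[b], edges[b+1]).
    A naive discrete Fourier transform is used to keep the sketch dependency-free.
    """
    n = len(frame)
    energies = [0.0] * (len(edges) - 1)
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame))
        power = abs(coeff) ** 2
        for b in range(len(edges) - 1):
            if edges[b] <= freq < edges[b + 1]:
                energies[b] += power
                break
    peak = max(energies)
    if peak == 0:
        return [0.0] * len(energies)
    # Normalize so the loudest band drives its motor at full intensity.
    return [e / peak for e in energies]


SAMPLE_RATE = 8000                    # Hz, assumed
EDGES = [0, 500, 1500, 2500, 4000]    # four hypothetical bands, one per motor
tone = [math.sin(2 * math.pi * 1000 * i / SAMPLE_RATE) for i in range(256)]
levels = band_levels(tone, SAMPLE_RATE, EDGES)
```

For the 1,000 Hz test tone, nearly all of the energy falls in the second band, so only the second motor would vibrate strongly in this sketch.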

On the first day of wearing the wristband, users are at the very least able to use the vibrations as cues that a noise is happening. Users quickly learn to use the vibrations to differentiate sounds, such as a dog barking, a faucet running, a doorbell ringing, or someone calling their name. A few days into wearing the wristband, users report that the conscious perception of the vibrations fades into the background but still aids them in knowing what sounds are nearby. Users' ability to differentiate patterns of vibrations improves over time. Figure 1C shows performance scores over time on a three-alternative forced choice paradigm, where the three choices were picked at random from a list of 14 environmental sounds. One environmental sound was presented as vibrations on the wristband and the user had to choose which sound they felt (Perrotta et al., 2021).
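The structure of the three-alternative forced choice task can be sketched as follows. The sound labels are hypothetical placeholders, since the 14 sounds are not listed here, and the helper names `make_trial` and `score` are our own. With three options per trial, guessing yields an expected score of one third.

```python
import random

# Hypothetical placeholder labels; the 14 environmental sounds are not listed in the text.
SOUNDS = [f"sound_{i:02d}" for i in range(1, 15)]


def make_trial(sounds, rng):
    """Draw three distinct candidate sounds and pick one of them as the target."""
    choices = rng.sample(sounds, 3)
    target = rng.choice(choices)
    return target, choices


def score(responses):
    """Fraction correct, given a list of (target, answer) pairs."""
    return sum(target == answer for target, answer in responses) / len(responses)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
target, choices = make_trial(SOUNDS, rng)
```

In a real session, the target would be played as vibrations and the participant's answer recorded alongside it before calling `score`.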

Moreover, after several months users develop what appears to be a direct subjective experience of external sound. After 6 months, one user reported that he no longer has a sensation of buzzing followed by an interpretation of those vibrations, but instead, “I perceive the sound in my head” (personal interview). This was a subjective report and qualia are not possible to verify; nonetheless we found the claim sufficiently interesting to note here.

The idea of converting sound into touch is not new (Traunmüller, 1980; Cholewiak and Sherrick, 1986; Weisenberger et al., 1991; Summers and Gratton, 1995; Galvin et al., 2001; Reed and Delhorne, 2005). In 1923, Robert Gault, a psychologist at Northwestern University, heard about a deaf and blind ten-year-old girl who claimed to be able to feel sound through her fingertips. Skeptical, he ran experiments. He stopped up her ears and wrapped her head in a woolen blanket (and verified on his graduate student that this prevented the ability to hear). She put her finger against the diaphragm of a “portophone” (a long hollow tube), and Gault sat in a closet and spoke through it. Her only means of understanding what he was saying was the vibrations on her fingertip. He reports,

After each sentence or question was completed her blanket was raised and she repeated to the assistant what had been said with but a few unimportant variations.... I believe we have here a satisfactory demonstration that she interprets the human voice through vibrations against her fingers.

Gault mentions that his colleague has succeeded at communicating words through a thirteen-foot-long glass tube. A trained participant, with stopped-up ears, could put his palm against the end of the tube and identify words that were spoken into the other end. With these sorts of observations, researchers have attempted to make sound-to-touch devices, but until recent decades the machinery was too large and computationally weak to make for a practical device.

Similarly, the Tadoma method, developed in the 1930s, allows people who are deaf and blind to understand the speech of another person by placing a hand over the face and neck of the speaker. The thumb rests lightly on the lips and the fingers fan out to cover the neck and cheek, allowing detection of moving lips, vibrating vocal cords, and air coming out of the nostrils. Thousands of deaf and blind children have been taught this method and have obtained proficiency at understanding language almost to the point of those with hearing, all through touch (Alcorn, 1945).

In the 1970s, deaf inventor Dimitri Kanevsky developed a two-channel vibrotactile device in which one channel captures the envelope of low frequencies and the other that of high frequencies. Two vibratory motors sit on the wrists. By the 1980s, similar inventions in Sweden and the United States were proliferating. The problem was that all these devices were too large, with too few motors (typically just one) to make an impact. Due to computational limitations in previous years, earlier attempts at sound-to-touch substitution relied on band-pass filtering audio and playing this output to the skin over vibrating solenoids. The solenoids operated at a fixed frequency of less than half the bandwidth of some of these band-passed channels, leading to aliasing noise. Further, multichannel versions of these devices were limited in the number of actuators due to battery size and capacity constraints. With modern computation, the desired mathematical transforms can be performed in real time, at little expense, and without the need of custom integrated circuits, and the whole device can be made as an inexpensive, wearable computing platform.

A wrist-worn sound-to-touch sensory substitution device was recently shown in brain imaging to induce activity in both somatosensory and auditory regions, demonstrating that the brain rapidly recruits existing auditory processing areas to aid in the understanding of the touch (Malone et al., 2021).

There are cost advantages to a sensory substitution approach. Cochlear implants typically cost around $100,000 for implantation (Turchetti et al., 2011). In contrast, haptic technologies can address hearing loss for some hundreds of dollars. Implants also require an invasive surgery, while a vibrating wristband is merely strapped on like a watch.

There are many reasons to take advantage of the system of touch. For example, people with prosthetic legs have difficulty learning how to walk with their new prosthetics because of a lack of proprioception. To allow participants to understand the position of an artificial limb, other sensory devices can channel the information. For example, research has shown improvements in stair descent for lower limb amputees using haptic sensory substitution devices (Sie et al., 2017), and haptic sensory substitution devices have also been created for providing sensory feedback for upper limb prosthetics (Cipriani et al., 2012; Antfolk et al., 2013; Rombokas et al., 2013). Some sensory substitution devices for upper limb amputees use electrotactile stimulation instead of vibrotactile stimulation, targeting different receptors in the skin (Saleh et al., 2018).

This same technique can be used for a person with a real leg that has lost sensation—as happens in Parkinson's disease or peripheral neuropathy. In unpublished internal experiments, we have successfully piloted a solution that used sensors in a sock to measure motion and pressure and fed the data into the vibrating wristband. By this technique, a person understands where her foot is, whether her weight is on it, and whether the surface she's standing on is even. A recent systematic review synthesizing the findings of nine randomized controlled trials showed that sensory substitution devices are effective in improving balance measures of neurological patient populations (Lynch and Monaghan, 2021).

Touch can also be used to address problems with balance. This has been done with the BrainPort tongue display (Tyler et al., 2003; Danilov et al., 2007), in which head orientation was fed to the tongue grid. When the head was straight up, the electrical stimulation was felt in the middle of the tongue grid; when the head tilted forward, the electrical signal moved toward the tip of the tongue; when the head tilted back, the stimulation moved toward the rear; side-to-side tilts were encoded by left and right movement of the electrical signal. In this way, a person who had lost all sense of which way her head was oriented could feel the answer on her tongue. Of note, the benefits persisted even after the device was taken off. Users' brains figured out how to take residual vestibular signals as well as existing visual and proprioceptive signals and strengthen them with the guidance of the helmet. After several months of using the helmet, many participants were able to reduce the frequency of their usage.
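The position encoding described above (center for upright, tip for forward tilt, rear for backward tilt, left and right for sideways tilt) can be sketched as a simple mapping from head angles to a grid cell. The 9 × 9 grid, the 30° saturation angle, the sign conventions, and the function name `tilt_to_grid` are assumptions for illustration only.

```python
def tilt_to_grid(pitch_deg, roll_deg, rows=9, cols=9, max_tilt_deg=30.0):
    """Return the (row, col) of the active electrode for a given head tilt.

    Assumed conventions: positive pitch = head tilted forward, positive roll =
    head tilted right; row 0 is the tip of the tongue and the last row is the rear.
    """
    def scale(angle, size):
        # Clamp the angle, then map [-max_tilt, +max_tilt] onto [0, size - 1].
        clamped = max(-max_tilt_deg, min(max_tilt_deg, angle))
        fraction = (clamped + max_tilt_deg) / (2 * max_tilt_deg)
        return round(fraction * (size - 1))

    row = (rows - 1) - scale(pitch_deg, rows)  # forward tilt moves toward the tip
    col = scale(roll_deg, cols)                # right tilt moves toward the right
    return row, col


upright = tilt_to_grid(0, 0)      # center of the grid
forward = tilt_to_grid(30, 0)     # tip of the tongue
backward = tilt_to_grid(-45, 0)   # clamped to the rear edge
```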

We have developed a similar experimental system that is currently unpublished but bears mentioning for illustration purposes. In a balance study underway at Stanford University, we use a vibratory wristband in combination with a 9-axis inertial measurement unit (IMU) that is clipped to the collar of a user. The IMU outputs an absolute rotation relative to a given origin, which is set as the device's position when the user is standing upright. The pitch and roll of the rotation are mapped to vibrations on the wristband to provide additional balance information to the user's brain: the more one tilts away from upright, the higher the amplitude of vibrations one feels on the wrist. The direction of the tilt (positive or negative pitch or roll) is mapped to the vibration location on the wrist. Results of this approach will be published in the future.
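A minimal sketch of this kind of mapping is given below, assuming a four-motor wristband, a linear amplitude ramp that saturates at 20° of tilt, and an arbitrary assignment of tilt directions to motors. None of these parameters are taken from the study; they are placeholders for illustration.

```python
def tilt_to_motors(pitch_deg, roll_deg, max_tilt_deg=20.0):
    """Return four motor amplitudes in [0, 1] for a given pitch and roll.

    Assumed motor layout: index 0 = positive pitch, 1 = negative pitch,
    2 = positive roll, 3 = negative roll.
    """
    def amplitude(angle):
        # The farther from upright, the stronger the vibration (capped at 1.0).
        return min(abs(angle) / max_tilt_deg, 1.0)

    motors = [0.0, 0.0, 0.0, 0.0]
    motors[0 if pitch_deg >= 0 else 1] = amplitude(pitch_deg)
    motors[2 if roll_deg >= 0 else 3] = amplitude(roll_deg)
    return motors


upright = tilt_to_motors(0, 0)           # no vibration when standing straight
leaning = tilt_to_motors(10, 0)          # half amplitude on the positive-pitch motor
far_back_right = tilt_to_motors(-40, 5)  # saturated pitch, mild roll
```

In practice one might add a small dead zone around upright so that normal postural sway does not produce constant vibration; that choice is left out of the sketch.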

Beyond the five basic senses, more complex senses can be aided with sensory substitution devices. People with autism spectrum disorder often have a decreased ability to detect emotion in others; thus, in one preliminary project, machine learning algorithms classify the emotional states detected in speech and communicate these emotional states to the brain via vibrations on the wrist. Currently, the algorithm detects how closely someone's speech matches seven different emotions (neutral, surprise, disgust, happiness, sadness, fear, and anger) and communicates that to the wearer (ValenceVibrations.com).

Sensory substitution opens new opportunities to compensate for sensory loss. However, similar devices can move past compensation and instead build on top of normal senses—we call these devices sensory expansion devices. Instead of filling in gaps for someone with a sensory deficit, these expand the unhindered senses to be better, wider, or faster.

Sensory expansion

Many examples of sensory expansion have been demonstrated in animals. For example, mice and monkeys can be moved from color-blindness to color vision by genetically engineering photoreceptors to contain human photopigment (Jacobs et al., 2007; Mancuso et al., 2009). The research team injected a virus containing the red-detecting opsin gene behind the retina. After 20 weeks of practice, the monkeys could use the color vision to discriminate previously indistinguishable colors.

In a less invasive example, we created a sensory expansion device by connecting a vibrating wristband to a near-infrared sensor and an ultraviolet sensor. Although our eyes capture only visible light, the frequencies of the electromagnetic spectrum adjacent to visible light are in fact visible to a variety of animals. For instance, honeybees can see ultraviolet patterns on flowers (Silberglied, 1979). By capturing the intensity of light in these ranges and mapping those intensities to vibrations, a user can pick up on information in these invisible light regions without gene editing or retinal implants. In this way, a wrist-worn device can expand vision beyond its natural capabilities. One of us (DME) wore an infrared bolometer connected to a haptic wristband and was able to easily detect infrared cameras in the darkness (Eagleman, 2020).

To illustrate the breadth of possibilities, it bears mention that we have performed an unpublished preliminary experiment with blindness. Using lidar (light detection and ranging), we tracked the position of every moving object in an office space—in this case, humans moving around. We connected the data from the lidar sensors to our vibrating vest, such that the vest vibrated to tell the wearer if they were approaching an obstacle like a wall or chair, where there were people nearby, and what direction they should move to most quickly reach a target destination. We tested this sensory expansion device with a blind participant. He wore the vest and could feel the location of objects and people around him as well as the quickest path to a desired destination (such as a conference room). Interestingly, there was no learning curve: he immediately understood how to use the vibrations to navigate without colliding into objects or people. Although sensory substitution devices can fill the gap left by vision impairment, this device did more than that—it offered an expanded, 360° sense of space. A sighted person could also wear this device to expand their sense of space, allowing them to know what objects or people are behind them. Because this device does more than alleviate a sensory loss, it is an example of sensory expansion.

Haptic sensory expansion is not limited to vision. Devices from hearing aids to the Buzz can reach beyond the normal hearing scale—for example, into the ultrasonic range (as heard by cats or bats), or the infrasonic (as heard by elephants) (Wolbring, 2013).

The sense of smell can also benefit from sensory expansion. To illustrate an unpublished possibility, imagine converting the data from an array of molecular detectors into haptic signals. While this is unproven, the goal should be clear: for a person to access a new depth of odor detection, beyond the natural sensory acuity of human smell.

One can also detect temperature via sensory expansion. In preliminary experiments, participants use an array of mid-wavelength infrared sensors to detect the temperature of nearby objects and translate the data to vibrations on the wrist. The wearer learns to interpret the vibrations as a sense of temperature, but one that does not stop at the skin—instead, their sense of temperature has expanded to include objects in the surrounding environment.

For the purpose of illustrating the breadth of possibilities, we note that the sense of internal signals in the body, such as one's own blood sugar levels, can be expanded by combining readily obtainable technologies. For example, continuous glucose monitoring devices allow users to look at their level of blood sugar at any point; however, the user still must pull out a cell phone to consult an app. By connecting a haptic device to a continuous glucose monitoring device, one could create a sensory expansion device that allows users to have continuous access to their blood sugar levels without having to visually attend to a screen.

More broadly, one could also make a device to expand one's sense of a partner's wellbeing. By connecting sensors that detect a partner's breathing rate, temperature, galvanic skin response, heart rate, and more, a haptic device could expand the user's information flow to allow them to feel their partner's internal signals. Interestingly, this sense need not be limited by proximity: the user can sense how their partner is feeling even from across the country, so long as they have an internet connection.

An important question for any haptic sensory device is whether the user is gaining a new sensory experience or is instead consciously processing the incoming haptic information. In the former case, the subjective experience of a temperature sensing wristband would be similar to the subjective experience of touching a hot stove (without needing to touch or be near the surface), while the latter case would be closer to feeling an alert on the wrist that warns of a hot surface. As mentioned above, previous work has shown evidence for a direct subjective experience of a new sense, whether from brain imaging or through subjective questionnaires (Bach-y-Rita et al., 2003; Nagel et al., 2005). Until this investigation is done on each new sensory device, it is unknown whether the device is providing a new sense or rather a signal that can be consciously perceived as touch.

While these devices all expand on one existing sense or another, other devices can go further, representing entirely new senses. These devices form the third group: sensory addition.

Sensory addition

Due to the brain's remarkable flexibility, there is the possibility of leveraging entirely new data streams directly into perception (Hawkins and Blakeslee, 2007; Eagleman, 2015).

One increasingly common example is the implantation of small neodymium magnets into the fingertips. By this method, “biohackers” can haptically feel magnetic fields. The magnets tug when exposed to electromagnetic fields, and the nearby touch nerves register this. Information normally invisible to humans is now streamed to the brain via the sensory nerves from the fingers. People report that detecting electromagnetic fields (e.g., from a power transformer) is like touching an invisible bubble, one with a shape that can be assessed by moving one's hand around (Dvorsky, 2012). A world is detectable that previously was not: palpable shapes live around microwave ovens, computer fans, speakers, and subway power transformers.

Can haptic devices achieve the same outcome without implanting magnets into the fingertips? One developer created a sensory addition device using a haptic wristband that translates electromagnetic fields into vibrations (details of the project at neosensory.com/developers). Not only is such an approach less invasive, it is also more customizable. Instead of just feeling the presence of an electromagnetic field, this device decomposes the frequency of an alternating current signal and presents the intensity of different parts of the spectrum via different vibrating motors. Thus, an electrician can add this new sense to their perception, knowing the frequency and intensity of electric signals flowing through live wires.

What if you could detect not only the magnetic field around objects but also the one around the planet—as many animal species do? Researchers at Osnabrück University devised a belt called the feelSpace to allow humans to tap into that signal. The belt is ringed with vibratory motors, and the motor pointed to the north buzzes. As you turn your body, you always feel the buzzing in the direction of magnetic north.
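The core logic of such a belt can be sketched in a few lines: given the wearer's compass heading, select the motor that currently faces north. The number of motors, their numbering (motor 0 at the front of the body, indices increasing clockwise when viewed from above), and the function name `north_motor` are assumptions for this illustration, not details of the feelSpace design.

```python
def north_motor(heading_deg, n_motors=16):
    """Return the index of the belt motor closest to magnetic north.

    `heading_deg` is the direction the wearer faces, measured clockwise
    from magnetic north (0 = north, 90 = east).
    """
    # Direction of north relative to the body, clockwise from straight ahead.
    bearing_to_north = (-heading_deg) % 360.0
    spacing = 360.0 / n_motors
    return round(bearing_to_north / spacing) % n_motors


facing_north = north_motor(0)    # front motor
facing_east = north_motor(90)    # north is on the wearer's left
facing_south = north_motor(180)  # north is directly behind
```

As the wearer turns, the returned index sweeps around the belt in the opposite direction, so the buzzing stays fixed in world coordinates.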

At first, it feels like buzzing, but over time it becomes spatial information: a feeling that north is there (Kaspar et al., 2014). Over several weeks, the belt changes how people navigate: their orientation improves, they develop new strategies, they gain a higher awareness of the relationship between different places. The environment seems more ordered. Relationships between places can be easily remembered.

As one subject described the experience, “The orientation in the cities was interesting. After coming back, I could retrieve the relative orientation of all places, rooms and buildings, even if I did not pay attention while I was actually there” (Nagel et al., 2005). Instead of thinking about a sequence of cues, they thought about the situation globally. Another user describes how it felt: “It was different from mere tactile stimulation, because the belt mediated a spatial feeling.... I was intuitively aware of the direction of my home or of my office.” In other words, his experience is not of sensory substitution, nor is it sensory expansion (making your sight or hearing better). Instead, it's a sensory addition. It's a new kind of human experience. The user goes on:

During the first 2 weeks, I had to concentrate on it; afterwards, it was intuitive. I could even imagine the arrangement of places and rooms where I sometimes stay. Interestingly, when I take off the belt at night I still feel the vibration: When I turn to the other side, the vibration is moving too—this is a fascinating feeling! (Nagel et al., 2005).

After users take off the feelSpace belt, they often report that they continue having a better sense of orientation for some time. In other words, the effect persists even without wearing the device. As with the balance helmet, weak internal signals can get strengthened when an external device confirms them. (Note that one won't have to wear a belt for long: researchers have recently developed a thin electronic skin, essentially a little sticker on the hand, that indicates north; see Bermúdez et al., 2018).

Other projects tackle tasks that require a great deal of cognitive load. For example, a modern aircraft cockpit is packed with visual instruments. With a sensory addition device, a pilot can feel the high-dimensional stream of data instead of having to read all the data visually. The North Atlantic Treaty Organization (NATO) released a report on haptic devices developed to help pilots navigate in low-visibility settings (Van Erp and Self, 2008). Similarly, Fellah and Guiatni (2019) developed a haptic sensory substitution device to give pilots access to the turn rate angle, climb angle, and flight control warning messages via vibrations.

As a related example, researchers at our laboratory are piloting a system to allow doctors to sense the vitals of a patient without having to visually consult a variety of monitors. This device connects a haptic wristband to an array of sensors that measure body temperature, blood oxygen saturation, heart rate, and heart rate variability. Future work will optimize how these data streams are presented to the doctor and with what resolution. Both psychophysical testing and understanding user needs will shape this optimized mapping (for example, how much resolution can a doctor learn to feel in the haptic signal, and what is the smallest change in blood pressure that should be discernible via the device?).

Finally, it is worth asking whether haptic devices are optimal for sending data streams to the brain. After all, one could leverage a higher-resolution sense, such as vision or audition, or perhaps use multiple sensory modalities. This is an open question for the future; however, haptics is advantageous due simply to the fact that vision and hearing are necessary for so many daily tasks. Skin is a high-bandwidth, mostly unused information channel—and therefore its almost-total availability makes it an attractive target for new data streams.

Conclusion

We have reviewed some of the projects and possibilities of non-invasive, haptic devices for passing new data streams to the brain. The chronic rewiring of the brain gives it tremendous flexibility: it dynamically reconfigures itself to absorb and interact with data. As a result, electrical grids can come to feed visual information via the tongue, vibratory motors can feed hearing via the skin, and cell phones can feed video streams via the ears. Beyond sensory substitution, such devices can be used to endow the brain with new capacities, as we see with sensory expansion (extending the limits of an already-existing sense) and sensory addition (using new data streams to create new senses). Haptic devices have moved rapidly from computer-laden cabled devices to wireless wearables, and this progress, more than any change in the fundamental science, will increase their usage and study.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by Solutions IRB. The patients/participants provided their written informed consent to participate in this study.

Author contributions

DE wrote the manuscript. MP engineered many of the devices described. Both authors contributed to the article and approved the submitted version.

Conflict of interest

DE and MP were employed by Neosensory.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alcorn, S. (1945). Development of the Tadoma method for the deaf-blind. J Except Children. 77, 247–57. doi: 10.1177/001440294501100407

Amedi, A., Raz, N., Azulay, H., Malach, R., and Zohary, E. (2010). Cortical activity during tactile exploration of objects in blind and sighted humans. Restorat Neurol Neurosci. 28, 143–156. doi: 10.3233/RNN-2010-0503

Antfolk, C., D'Alonzo, M., Rosén, B., Lundborg, G., Sebelius, F., and Cipriani, C. (2013). Sensory feedback in upper limb prosthetics. Expert Rev. Med. Dev. 10, 45–54. doi: 10.1586/erd.12.68

Bach-y-Rita, P. (2004). Tactile sensory substitution studies. Ann New York Acad Sci. 1013, 83–91. doi: 10.1196/annals.1305.006

Bach-y-Rita, P., Collins, C. C., Saunders, F. A., White, B., and Scadden, L. (1969). Vision substitution by tactile image projection. Nature. 221, 963–964. doi: 10.1038/221963a0

Bach-y-Rita, P., Danilov, Y., Tyler, M. E., and Grimm, R. J. (2005). Late Human Brain Plasticity: Vestibular Substitution with a Tongue BrainPort Human-Machine Interface. Intellectica. Revue de l'Association Pour La Recherche Cognitive. doi: 10.3406/intel.2005.1362

Bach-y-Rita, P., Tyler, M. E., and Kaczmarek, K. A. (2003). Seeing with the Brain. Int J Hum Comput Interact. 15, 285–295. doi: 10.1207/S15327590IJHC1502_6

Bains, S. (2007). Mixed Feelings. Wired. Conde Nast. Available online at: https://www.wired.com/2007/04/esp/

Bermúdez, G. S. C., Fuchs, H., Bischoff, L., Fassbender, J., and Makarov, D. (2018). Electronic-skin compasses for geomagnetic field-driven artificial magnetoreception and interactive electronics. Nat. Electron. 1, 589–95. doi: 10.1038/s41928-018-0161-6

Bubic, A., Striem-Amit, E., and Amedi, A. (2010). Large-Scale Brain Plasticity Following Blindness and the Use of Sensory Substitution Devices. Multisensory Object Perception in the Primate Brain. Berlin: Springer. doi: 10.1007/978-1-4419-5615-6_18

Cholewiak, R. W., and Sherrick, C. E. (1986). Tracking skill of a deaf person with long-term tactile aid experience: a case study. J. Rehabilitat. Res. Develop. 23, 20–26.

Cipriani, C., D'Alonzo, M., and Carrozza, M. C. (2012). A miniature vibrotactile sensory substitution device for multifingered hand prosthetics. IEEE Trans. Bio-Med. Eng. 59, 400–408. doi: 10.1109/TBME.2011.2173342

Danilov, Y. P., Tyler, M. E., Skinner, K. L., Hogle, R. A., and Bach-y-Rita, P. (2007). Efficacy of electrotactile vestibular substitution in patients with peripheral and central vestibular loss. J. Vestibular Res. Equilib. Orient. 17, 119–130. doi: 10.3233/VES-2007-172-307

Dvorsky, G. (2012). What Does the Future Have in Store for Radical Body Modification? Gizmodo. Available online at: https://gizmodo.com/what-does-the-future-have-in-store-for-radical-body-mod-5944883 (accessed September 20, 2012).

Eagleman, D. (2015). “Can We Create New Senses for Humans?” TED. Available online at: https://www.ted.com/talks/david_eagleman_can_we_create_new_senses_for_humans?language=en

Fellah, K., and Guiatni, M. (2019). Tactile display design for flight envelope protection and situational awareness. IEEE Trans. Haptics 12, 87–98. doi: 10.1109/TOH.2018.2865302

Galvin, K. L., Ginis, J., Cowan, R. S. C., Blamey, P. J., and Clark, G. M. (2001). A comparison of a new prototype Tickle Talker™ with the Tactaid 7. Aust. New Zealand J. Audiol. 23, 18–36. doi: 10.1375/audi.23.1.18.31095

Hatwell, Y., Streri, A., and Gentaz, E. (2003). Touching for Knowing: Cognitive Psychology of Haptic Manual Perception. Amsterdam: John Benjamins Publishing. doi: 10.1075/aicr.53

Hawkins, J., and Blakeslee, S. (2007). On Intelligence: How a New Understanding of the Brain Will Lead to the Creation of Truly Intelligent Machines. New York, NY: Macmillan.

Hurley, S., and Noë, A. (2003). Neural plasticity and consciousness: reply to block. Trends Cognit. Sci. 7, 342. doi: 10.1016/S1364-6613(03)00165-7

Jacobs, G. H., Williams, G. A., Cahill, H., and Nathans, J. (2007). Emergence of novel color vision in mice engineered to express a human cone photopigment. Science 315, 1723–1725. doi: 10.1126/science.1138838

Kajimoto, H., Kanno, Y., and Tachi, S. (2006). “Forehead electro-tactile display for vision substitution,” in Proc. EuroHaptics, 11. Citeseer.

Kaspar, K., König, S., Schwandt, J., and König, P. (2014). The experience of new sensorimotor contingencies by sensory augmentation. Consciousness Cognit. 28, 47–63. doi: 10.1016/j.concog.2014.06.006

Koffler, T., Ushakov, K., and Avraham, K. B. (2015). Genetics of hearing loss: syndromic. Otolaryngol Clin North Am. 48, 1041–1061. doi: 10.1016/j.otc.2015.07.007

Lobo, L., Higueras-Herbada, A., Travieso, D., Jacobs, D. M., Rodger, M., and Craig, C. M. (2017). “Sensory substitution and walking toward targets: an experiment with blind participants,” in Studies in Perception and Action XIV: Nineteenth International Conference on Perception and Action (Milton Park: Psychology Press). p. 35.

Lobo, L., Travieso, D., Jacobs, D. M., Rodger, M., and Craig, C. M. (2018). Sensory substitution: using a vibrotactile device to orient and walk to targets. J. Exp. Psychol. Appl. 24, 108–124. doi: 10.1037/xap0000154

Lynch, P., and Monaghan, K. (2021). Effects of sensory substituted functional training on balance, gait, and functional performance in neurological patient populations: a systematic review and meta-analysis. Heliyon 7, e08007. doi: 10.1016/j.heliyon.2021.e08007

Macpherson, F. (2018). Sensory Substitution and Augmentation: An Introduction. Sensory Substitution and Augmentation. doi: 10.5871/bacad/9780197266441.003.0001

Malone, P. S., Eberhardt, S. P., Auer, E. T., Klein, R., Bernstein, L. E., and Riesenhuber, M. (2021). Neural Basis of Learning to Perceive Speech through Touch Using an Acoustic-to-Vibrotactile Speech Sensory Substitution. bioRxiv. doi: 10.1101/2021.10.24.465610

Mancuso, K., Hauswirth, W. W., Li, Q., Connor, T. B., Kuchenbecker, J. A., Mauck, M. C., et al. (2009). Gene therapy for red-green colour blindness in adult primates. Nature 461, 784–787. doi: 10.1038/nature08401

Matteau, I., Kupers, R., Ricciardi, E., Pietrini, P., and Ptito, M. (2010). Beyond visual, aural and haptic movement perception: hMT+ Is activated by electrotactile motion stimulation of the tongue in sighted and in congenitally blind individuals. Brain Res. Bull. 82, 264–270. doi: 10.1016/j.brainresbull.2010.05.001

Merabet, L. B., Battelli, L., Obretenova, S., Maguire, S., Meijer, P., and Pascual-Leone, A. (2009). Functional recruitment of visual cortex for sound encoded object identification in the blind. Neuroreport 20, 132–138. doi: 10.1097/WNR.0b013e32832104dc

Nagel, S. K., Carl, C., Kringe, T., Märtin, R., and König, P. (2005). Beyond sensory substitution–learning the sixth sense. J. Neural Eng. 2, R13–26. doi: 10.1088/1741-2560/2/4/R02

Nau, A. C., Pintar, C., Arnoldussen, A., and Fisher, C. (2015). Acquisition of visual perception in blind adults using the brainport artificial vision device. Am. J. Occup. Therapy 69, 1–8. doi: 10.5014/ajot.2015.011809

Novich, S. D., and Eagleman, D. M. (2015). Using space and time to encode vibrotactile information: toward an estimate of the skin's achievable throughput. Exp. Brain Res. 233, 2777–88. doi: 10.1007/s00221-015-4346-1

Perrotta, M. V., Asgeirsdottir, T., and Eagleman, D. M. (2021). Deciphering sounds through patterns of vibration on the skin. Neuroscience 458, 77–86. doi: 10.1016/j.neuroscience.2021.01.008

Poirier, C., Volder, A. G. D., and Scheiber, C. (2007). What neuroimaging tells us about sensory substitution. Neurosci. Biobehav. Rev. 31, 1064–70. doi: 10.1016/j.neubiorev.2007.05.010

Reed, C. M., and Delhorne, L. A. (2005). Reception of environmental sounds through cochlear implants. Ear Hear. 26, 48–61. doi: 10.1097/00003446-200502000-00005

Rombokas, E., Stepp, C. E., Chang, C., Malhotra, M., and Matsuoka, Y. (2013). Vibrotactile sensory substitution for electromyographic control of object manipulation. IEEE Trans. Bio-Med. Eng. 60, 2226–2232. doi: 10.1109/TBME.2013.2252174

Saleh, M., Ibrahim, A., Ansovini, F., Mohanna, Y., and Valle, M. (2018). “Wearable system for sensory substitution for prosthetics,” in 2018 New Generation of CAS (NGCAS). p. 110–13. doi: 10.1109/NGCAS.2018.8572173

Sampaio, E., Maris, S., and Bach-y-Rita, P. (2001). Brain plasticity: 'visual' acuity of blind persons via the tongue. Brain Res. 908, 204–207. doi: 10.1016/S0006-8993(01)02667-1

Sie, A., Realmuto, J., and Rombokas, E. (2017). “A lower limb prosthesis haptic feedback system for stair descent,” in 2017 Design of Medical Devices Conference. American Society of Mechanical Engineers Digital Collection. doi: 10.1115/DMD2017-3409

Silberglied, R. E. (1979). Communication in the ultraviolet. Ann. Rev. Ecol. Syst. 10, 373–398. doi: 10.1146/annurev.es.10.110179.002105

Starkiewicz, W., and Kuliszewski, T. (1963). The 80-channel elektroftalm. Proc. Int. Congress Technol. Blindness 1, 157.

Stronks, H. C., Mitchell, E. B., Nau, A. C., and Barnes, N. (2016). Visual task performance in the blind with the brainport v100 vision aid. Expert Rev. Med. Dev. 13, 919–931. doi: 10.1080/17434440.2016.1237287

Summers, I. R., and Gratton, D. A. (1995). Choice of speech features for tactile presentation to the profoundly deaf. IEEE Trans. Rehabilitat. Eng. 3, 117–121. doi: 10.1109/86.372901

Traunmüller, H. (1980). The sentiphone: a tactual speech communication aid. J. Commun. Disorders 13, 183–193. doi: 10.1016/0021-9924(80)90035-0

Turchetti, G., Bellelli, S., Palla, I., and Forli, F. (2011). Systematic review of the scientific literature on the economic evaluation of cochlear implants in paediatric patients. Acta Otorhinolaryngol. Ital. 31, 311–318.

Tyler, M., Danilov, Y., and Bach-Y-Rita, P. (2003). Closing an open-loop control system: vestibular substitution through the tongue. J. Integrat. Neurosci. 2, 159–164. doi: 10.1142/S0219635203000263

Van Erp, J. B., and Self, B. P. (2008). Tactile Displays for Orientation, Navigation and Communication in Air, Sea and Land Environments. North Atlantic Treaty Organisation, Research and Technology Organisation.

Weisenberger, J. M., Craig, J. C., and Abbott, G. D. (1991). Evaluation of a principal-components tactile aid for the hearing-impaired. J. Acoust. Soc. Am. 90, 1944–1957. doi: 10.1121/1.401674

Keywords: senses, sensory substitution devices, hearing loss, haptics, sound

Citation: Eagleman DM and Perrotta MV (2023) The future of sensory substitution, addition, and expansion via haptic devices. Front. Hum. Neurosci. 16:1055546. doi: 10.3389/fnhum.2022.1055546

Received: 27 September 2022; Accepted: 23 December 2022; Published: 13 January 2023.

Edited by:

Davide Valeriani, Google, United States

Reviewed by:

Andreas Kalckert, University of Skövde, Sweden

Copyright © 2023 Eagleman and Perrotta. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: David M. Eagleman, davideagleman@stanford.edu
