- Centre for Human Psychopharmacology, Swinburne University of Technology, Hawthorn, VIC, Australia

The magnocellular system has been implicated in the rapid processing of facial emotions, such as fear. Of the various anatomical possibilities, the retino-colliculo-pulvinar route to the amygdala is currently favored. However, it is not clear whether and when amygdala arousal activates the primary visual cortex (V1). Non-linear visual evoked potentials provide a well-accepted technique for examining temporal processing in the magnocellular and parvocellular pathways in the visual cortex. Here, we investigated the relationship between facial emotion processing and the separable magnocellular (K2.1) and parvocellular (K2.2) components of the second-order non-linear multifocal visual evoked potential responses recorded from the occipital scalp (Oz). Stimuli comprised pseudorandom brightening/darkening of fearful, happy, or neutral faces (or no face), with surround patches decorrelated from the central face-bearing patch. For the central patch, the spatial contrast of the faces was 30%, while the temporal contrast of the per-pixel brightening/darkening was uniformly 10% or 70%. In 14 neurotypical young adults, we found a significant interaction between emotion and contrast in the magnocellularly driven K2.1 peak amplitudes, with greater K2.1 amplitudes for fearful (vs. happy) faces in the 70% temporal contrast condition. Taken together, our findings suggest that facial emotional information is present in early V1 processing as conveyed by the M pathway, and that this pathway is more strongly activated by fearful than by happy and neutral faces. An explanation is offered in terms of the contest between feedback and response gain modulation models.

Introduction

The magnocellular (M) visual system has been implicated in the rapid processing of salient facial emotions, such as fear, because it provides the main neural drive into the rapid colliculo-pulvinar route to the amygdala (Morris et al., 2001; Vuilleumier et al., 2003; de Gelder et al., 2011; Rafal et al., 2015; Méndez-Bértolo et al., 2016). The M pathway is a rapidly conducting neural stream providing motion and spatial localization information, as well as transient attention (Laycock et al., 2008). It has high gain for luminance contrast and, relative to the parvocellular (P) pathway, responds better to high temporal and low spatial frequency stimulation. The P visual system operates in parallel with the M system; however, it is less sensitive to luminance contrast, is chromatically (red/green) sensitive, and prefers low temporal and high spatial frequency stimulation. The P system is also considered to conduct more slowly, and it does not appear to contribute directly to the collicular pathway (Livingstone and Hubel, 1988; Merigan and Maunsell, 1993).

Human evidence for the subcortical “low road” route (LeDoux, 1996) for emotional processing derives from functional magnetic resonance imaging (fMRI; Morris et al., 2001; Vuilleumier et al., 2003; Sabatinelli et al., 2009; de Gelder et al., 2011; Kleinhans et al., 2011; Rafal et al., 2015; Méndez-Bértolo et al., 2016), diffusion imaging (Tamietto et al., 2012; Rafal et al., 2015), magnetoencephalography (MEG; McFadyen et al., 2017), and computational modeling (Rudrauf et al., 2008; Garvert et al., 2014). Supporting the notion of rapid subcortical input to the amygdala, studies have estimated the synaptic integration time of the subcortical pathway (80–90 ms) to be shorter than that of the cortical visual pathway (145–170 ms; Morris et al., 1999; Öhman, 2005; Garvert et al., 2014; Silverstein and Ingvar, 2015; McFadyen et al., 2017). Furthermore, the superior colliculus receives predominantly M inputs (Leventhal et al., 1985; Burr et al., 1994; Márkus et al., 2009).

Recently, these findings were confirmed electrocorticographically: M-biased, low spatial frequency fearful faces evoked early activity in the lateral amygdala 75 ms after stimulus onset (Méndez-Bértolo et al., 2016). Additionally, several studies have reported shorter-latency and larger P100 responses to low spatial frequency fearful faces than to neutral faces (Pourtois et al., 2005; Vlamings et al., 2009), with a recent study by Burt et al. (2017) pointing to a specific M contribution. Taken together, these findings make the rapid colliculo-pulvinar-amygdala pathway the dominant hypothesis for the early facilitation of salient visual information processing (Öhman, 2005).

Critically, however, many of these studies focus only on how salient visual information reaches the amygdala, and not on what happens afterwards. There is considerable evidence suggesting a relationship, or re-entry, between activity in the amygdala and the primary visual cortex (V1; Morris et al., 1998; Sabatinelli et al., 2009) via the M pathway. The separation of M and P projections remains intact from the retinal ganglion cells to V1 (Nassi and Callaway, 2009), with the M pathway terminating primarily in layer 4Cα of V1 and the P pathway terminating primarily in layer 4Cβ (Fitzpatrick et al., 1985). However, little is known about whether facial emotional stimuli reach V1 via M or P inputs, or with what timing. Moreover, direct inputs from the geniculo-cortical stream have receptive fields too small to code for a whole face. Hence, inputs to the occipital cortex from other regions that can code faces, and particularly facial emotion, are required.

It is possible to discriminate temporal M and P contributions to V1 with nonlinear multifocal visual evoked potentials (VEPs; Baseler and Sutter, 1997; Klistorner et al., 1997; Jackson et al., 2013; Hugrass et al., 2018). In multifocal VEP experiments, multiple patches of light are flashed according to mutually decorrelated pseudorandom binary sequences. Not only does this method allow simultaneous recording across the visual field, but it also permits analysis of higher-order temporal nonlinearities through Wiener kernel decomposition (Sutter and Tran, 1992). The first-order (K1) kernel response measures the overall impulse response function of the neural system. The K2.1 response (first slice of the second-order kernel) measures the nonlinearity (neural recovery) over one video frame, while K2.2 (second slice) measures the recovery over two video frames (Sutter, 2000). Klistorner et al. (1997) proposed that the K2.1 response reflects M pathway activity because of its high contrast gain and saturating contrast response function. In contrast, the main component (N95-P130) of the K2.2 response is thought to reflect P functioning, as that waveform has low contrast gain and a non-saturating contrast response function (Klistorner et al., 1997). However, the notion of isolating M and P contributions to cortical processing has been questioned. Skottun (2013) suggested that the M signal cannot be isolated by high temporal frequencies because temporal filtering occurs between the lateral geniculate nucleus and V1, with primate single-cell studies showing a reduction in temporal frequency cutoff of around 10 Hz (Hawken et al., 1996). Further, Skottun (2014) proposed that attributing VEP responses to the M and P systems on the basis of contrast-response properties is problematic because of the mixing of inputs. In response, we argue that non-zero higher-order Wiener kernels of the VEP exist precisely because of such cortical filtering.
Thus, the M and P nonlinear contributions to the VEP are heavily weighted to the first and second slices of the second-order response, respectively (Klistorner et al., 1997; Jackson et al., 2013), on the basis of contrast gain, contrast response functions, and peak latencies, and hence are readily separable. This identification has been supported by recent studies of individual differences in behavior and physiology, which demonstrated correlations between psychophysical flicker fusion frequencies and K2.1 peak amplitudes from the multifocal VEP (Brown et al., 2018). Here, we address the question of whether different emotional states affect the nonlinear structure of occipitally generated evoked responses. Any variation in response to emotional salience likely relates to functional connections from emotion-parsing regions, such as the amygdala, to the visual cortex.

Whether facial emotional stimuli reach V1 via M or P inputs has not been examined with human non-linear multifocal VEP recordings. Thus, the current study aimed to use this well-validated technique to evaluate whether emotional stimuli such as fearful, happy, and neutral faces affect the early cortical (V1) M and P signatures.

Materials and Methods

Participants

Fourteen participants (nine males, five females; mean age = 24 years, SD = 3.65 years) gave written informed consent and participated in the experiment at Swinburne University of Technology, Melbourne, Australia. The first author was included in the sample. All participants had normal or corrected-to-normal visual acuity and no neurological conditions. The study was approved by the Swinburne Human Research Ethics Committee and conducted in accordance with the code of ethics of the Declaration of Helsinki.

Visual Stimuli

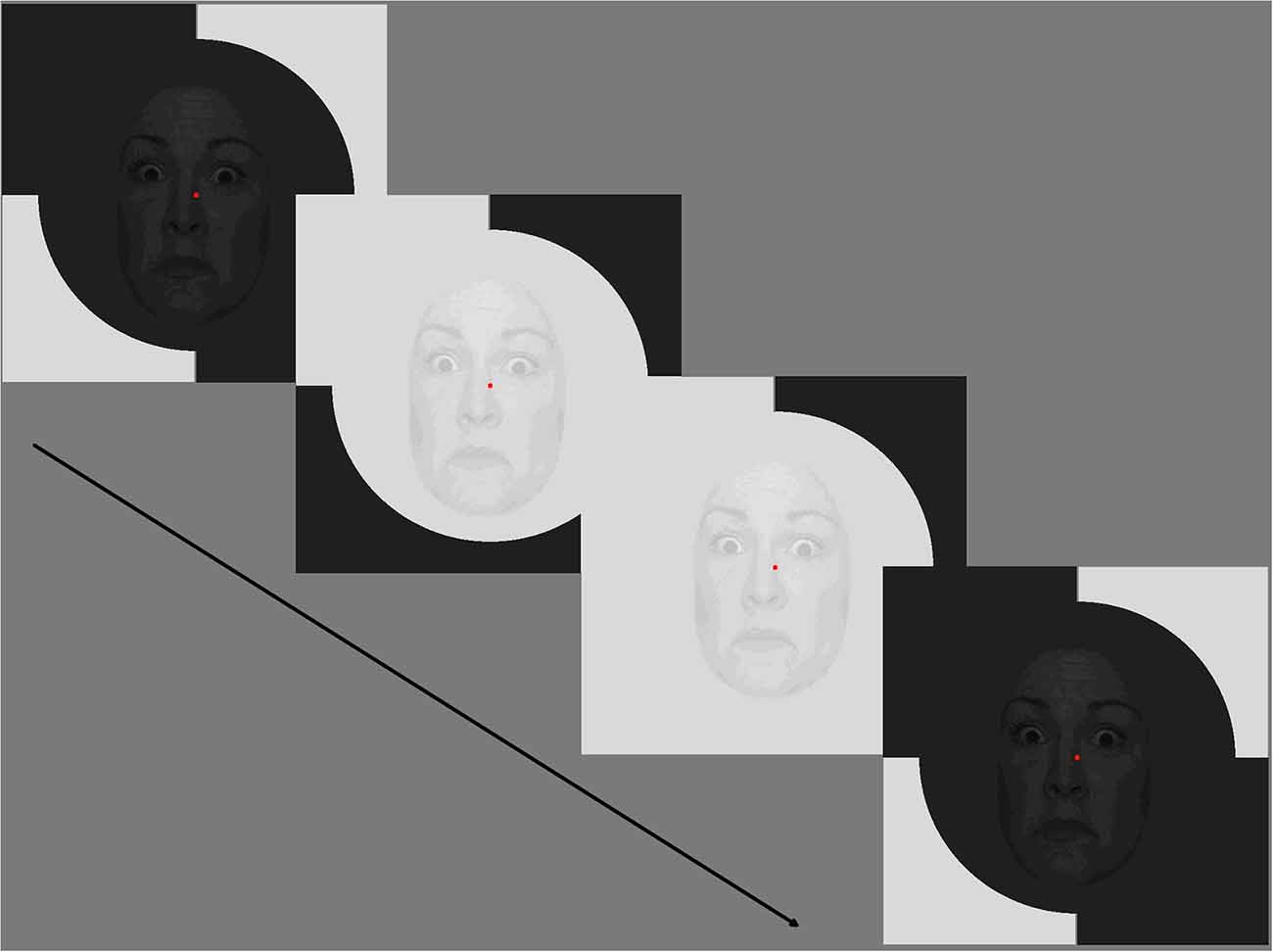

The achromatic stimuli were presented on a 60 Hz LCD monitor (ViewSonic) with linearised color output (measured with a ColorCal II) at a viewing distance of 70 cm. The 9-patch multifocal dartboard was created using VPixx software (version 3.21), with a 5.4° diameter central patch and two outer rings of four patches (21.2° and 48° diameter; Hugrass et al., 2018). The luminance of each patch fluctuated between two levels under the control of a pseudorandom binary m-sequence (m = 14), modulated at the video frame rate of 60 Hz. All participants completed eight VEP recordings at two temporal luminance contrasts (10% and 70% Michelson) for the outer patches, with an overall mean screen luminance of 65 cd/m². Importantly, unlike previous multifocal VEP studies (Sutherland and Crewther, 2010; Jackson et al., 2013; Crewther et al., 2015, 2016; Burt et al., 2017; Hugrass et al., 2018) that used a diffuse central patch, fearful, happy, or neutral faces (or no face) from the NimStim Face Set (Tottenham et al., 2009) were superimposed on the luminance fluctuation of the central patch. The spatial (Michelson) contrast of the central patch was either 30% (face) or 0% (no face). Thus, each pixel of this central image underwent a pseudorandom binary sequence of increases and decreases in luminance (Figure 1).
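The relation between a Michelson contrast and the two luminance levels a patch alternates between can be made concrete with a little arithmetic. The sketch below assumes a linearised display and that each patch alternates symmetrically about the 65 cd/m² mean reported above; `michelson_levels` and `michelson_contrast` are illustrative helper names, not part of the VPixx software.

```python
def michelson_contrast(l_max: float, l_min: float) -> float:
    """Michelson contrast C = (L_max - L_min) / (L_max + L_min)."""
    return (l_max - l_min) / (l_max + l_min)


def michelson_levels(mean_luminance: float, contrast: float) -> tuple:
    """Bright and dark levels with the mean L0 = (L_max + L_min) / 2 held fixed.

    Solving the Michelson definition for a fixed mean gives
    L_max = L0 * (1 + C) and L_min = L0 * (1 - C).
    """
    if not 0.0 <= contrast <= 1.0:
        raise ValueError("Michelson contrast must lie in [0, 1]")
    return mean_luminance * (1 + contrast), mean_luminance * (1 - contrast)


MEAN_LUMINANCE = 65.0  # cd/m^2, mean screen luminance reported in the text

for c in (0.10, 0.70):
    bright, dark = michelson_levels(MEAN_LUMINANCE, c)
    print(f"{c:.0%}: bright {bright:.1f} cd/m^2, dark {dark:.1f} cd/m^2")
# 10%: bright 71.5 cd/m^2, dark 58.5 cd/m^2
# 70%: bright 110.5 cd/m^2, dark 19.5 cd/m^2
```

For the face-bearing central patch, the same scaling would presumably apply pixel by pixel, with each pixel's static luminance taking the place of the mean; that is our assumption about the implementation, not a detail stated in the text.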

Figure 1. Example of a fearful condition with 70% temporal modulation. Stimuli comprised pseudorandom brightening/darkening of fearful, happy, or neutral faces (or no face), with surround patches decorrelated from the central face-bearing patch. For the central patch, the spatial contrast of the faces was 30%, while the temporal contrast of the per-pixel luminance increment/decrement was 10% or 70%. Note that for each condition (happy, fearful, neutral), the faces of different actors changed every second but maintained the same emotional expression. Consent was obtained for the use of NimStim stimuli.

Stimuli comprised pseudorandom brightening/darkening of fearful, happy, or neutral faces (or no face), with surround patches decorrelated from the central face-bearing patch. For the central patch, the spatial contrast of the faces was 30%, while the temporal contrast of the per-pixel brightening/darkening was 10% or 70% (Klistorner et al., 1997; Jackson et al., 2013; Brown et al., 2018; Hugrass et al., 2018).

With m-sequences, responses to all stimulus patches can be recovered from a single recording: each patch is driven by the same binary sequence with a different starting point (a rotation), which fully decorrelates the patches (Sutter, 2000). For this experiment, we analyzed only the responses to the central patch. Separate recordings were made for the happy, neutral, fearful, and no face conditions at each temporal contrast. For each experimental condition, the m-sequence was split into four recording segments of approximately 1 min each, with the recordings lasting 32 min in total for the eight conditions. Participants were instructed to maintain strict fixation on the central patch during the recordings and to rest their eyes between recordings.
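The decorrelation-by-rotation idea can be sketched in a few lines. This is a minimal illustration, assuming a Fibonacci-style linear recurrence over GF(2) with the feedback polynomial x^14 + x^13 + x^12 + x^2 + 1 taken from standard maximal-length tables; the study does not state which polynomial or which rotation offsets VPixx used, so both are hypothetical here. For a maximal-length sequence of length N, the ±1 correlation of the sequence with itself is N, and with any non-zero rotation of itself it is −1, which is why the patches can be treated as independent.

```python
from typing import List, Sequence


def m_sequence(order: int, taps: Sequence[int]) -> List[int]:
    """One period (2**order - 1 frames) of a binary m-sequence.

    `taps` are the lower exponents t of the feedback polynomial
    x**order + sum(x**t), so the bits obey a[k + order] = XOR of a[k + t].
    """
    seq = [1] + [0] * (order - 1)  # any non-zero seed yields a rotation of the same sequence
    while len(seq) < 2 ** order - 1:
        k = len(seq) - order
        bit = 0
        for t in taps:
            bit ^= seq[k + t]
        seq.append(bit)
    return seq


def sequence_period(order: int, taps: Sequence[int]) -> int:
    """Steps until the shift-register state returns to the seed (2**order - 1 if maximal)."""
    start = tuple([1] + [0] * (order - 1))
    state = list(start)
    for step in range(1, 2 ** order + 1):
        bit = 0
        for t in taps:
            bit ^= state[t]
        state = state[1:] + [bit]
        if tuple(state) == start:
            return step
    raise ValueError("state did not return to the seed; check the taps")


def rotate(seq: Sequence[int], shift: int) -> List[int]:
    """The same sequence started `shift` frames later (wrapping around)."""
    return list(seq[shift:]) + list(seq[:shift])


def correlation(a: Sequence[int], b: Sequence[int]) -> int:
    """Sum of products after mapping bits 1 -> +1 (brighten) and 0 -> -1 (darken)."""
    return sum((2 * x - 1) * (2 * y - 1) for x, y in zip(a, b))


ORDER = 14               # m = 14, as in the text: 16383 frames per cycle
TAPS = (13, 12, 2, 0)    # x^14 + x^13 + x^12 + x^2 + 1 (assumed polynomial)
N_PATCHES = 9            # central patch plus two rings of four

base = m_sequence(ORDER, TAPS)
offset = len(base) // N_PATCHES  # hypothetical spacing of the start points
patches = [rotate(base, p * offset) for p in range(N_PATCHES)]

print(len(base), sequence_period(ORDER, TAPS))
print(correlation(patches[0], patches[0]))  # zero-lag correlation
print([correlation(patches[0], patches[p]) for p in range(1, N_PATCHES)])
```

Because the cross-correlation between rotated copies is −1 rather than exactly zero, the residual coupling between patches is about 1/16383 of the zero-lag value, which is negligible in practice.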

Non-linear VEP Recording and Analysis

Non-linear achromatic multifocal VEPs were recorded using a 64-channel Quickcap and Scan 4.5 acquisition software (Neuroscan, Compumedics). Electrode site Fz served as ground and linked mastoid electrodes were used as a reference (Burt et al., 2017; Hugrass et al., 2018). EOG was monitored by positioning electrodes above and below the left eye.

EEG data were processed using Brainstorm (Tadel et al., 2011). EEG data were band-pass filtered (0.1–40 Hz), and signal space projection was applied to remove eye-blink artifacts. Custom Matlab/Brainstorm scripts were written for the multifocal VEP analyses to extract the K1, K2.1, and K2.2 kernel responses for the central patch. K1 is the difference between the responses following light and dark frames of the patch. K2.1 measures neural recovery over one frame by comparing responses when a transition did or did not occur between consecutive frames. Similarly, K2.2 measures neural recovery over two frames, with an interleaving frame of either polarity (see Klistorner et al., 1997; Sutter, 2000 for in-depth descriptions of the kernels).
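The kernel definitions above amount to cross-correlating the recorded response with ±1 weight sequences derived from the stimulus. The sketch below illustrates this under stated assumptions: the response is sampled at an integer number of samples per frame (`SPF`, hypothetical), a brightening frame is coded +1 and a darkening frame −1, and the weights are x[n] for K1, x[n]·x[n−1] for K2.1, and x[n]·x[n−2] for K2.2, in the spirit of Sutter's formulation. Scaling and sign conventions differ between software packages, and this is not the authors' Matlab/Brainstorm code. A synthetic "system" with a linear term and a one-frame recovery term shows that K1 and K2.1 recover their respective components while K2.2 stays near zero.

```python
from typing import List, Sequence


def m_sequence(order: int, taps: Sequence[int]) -> List[int]:
    """One period of a binary m-sequence obeying a[k + order] = XOR of a[k + t] for t in taps."""
    seq = [1] + [0] * (order - 1)
    while len(seq) < 2 ** order - 1:
        k = len(seq) - order
        bit = 0
        for t in taps:
            bit ^= seq[k + t]
        seq.append(bit)
    return seq


def kernel_weights(bits: Sequence[int], kind: str) -> List[int]:
    """+/-1 weights for K1, K2.1 or K2.2; indices wrap because the m-sequence is periodic."""
    x = [1 if b else -1 for b in bits]  # assumption: 1 = brightening (+1), 0 = darkening (-1)
    if kind == "K1":
        return x
    if kind == "K2.1":  # +1 if successive frames match, -1 if a transition occurred
        return [x[i] * x[i - 1] for i in range(len(x))]
    if kind == "K2.2":  # frames two apart; the interleaving frame may have either polarity
        return [x[i] * x[i - 2] for i in range(len(x))]
    raise ValueError(f"unknown kernel {kind!r}")


def extract_kernel(response: Sequence[float], weights: Sequence[int],
                   samples_per_frame: int, n_lags: int) -> List[float]:
    """Cross-correlate one cycle of a periodic response with a +/-1 weight sequence."""
    n_frames = len(weights)
    total = n_frames * samples_per_frame
    if len(response) != total:
        raise ValueError("response must span exactly one m-sequence cycle")
    return [
        sum(w * response[(f * samples_per_frame + lag) % total]
            for f, w in enumerate(weights)) / n_frames
        for lag in range(n_lags)
    ]


# Synthetic check (all values hypothetical): the simulated output is a linear term
# driven by frame polarity plus a recovery term driven by frame-to-frame transitions.
SPF = 4                          # samples per video frame
bits = m_sequence(7, (6, 0))     # x^7 + x^6 + 1: a 127-frame m-sequence
h_linear = [0.0, 1.0, 0.5, 0.0]
h_recovery = [0.0, 0.0, -0.8, 0.3]
x = kernel_weights(bits, "K1")
y = kernel_weights(bits, "K2.1")
response = [0.0] * (len(bits) * SPF)
for f in range(len(bits)):
    for j in range(SPF):
        response[f * SPF + j] += x[f] * h_linear[j] + y[f] * h_recovery[j]

k1 = extract_kernel(response, x, SPF, SPF)
k21 = extract_kernel(response, y, SPF, SPF)
k22 = extract_kernel(response, kernel_weights(bits, "K2.2"), SPF, SPF)
print("K1  ", [round(v, 3) for v in k1])   # close to h_linear
print("K2.1", [round(v, 3) for v in k21])  # close to h_recovery
print("K2.2", [round(v, 3) for v in k22])  # close to zero
```

The synthetic impulse responses are confined to a single frame so that recovery is exact up to terms of order 1/N; with real, longer-lasting VEPs, the degree of kernel overlap depends on the particular m-sequence used.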

For each participant, the electrode with the highest-amplitude responses was selected for group-level averages; this was Oz for all participants. Peak amplitudes and latencies of the K1, K2.1, and K2.2 kernels were identified using Igor Pro 8.03 (Wavemetrics, Lake Oswego), with latency windows for peak identification established from the grand mean averages. Values were then exported to SPSS (Version 20, IBM). To control for amplitude outliers, a Winsorizing approach (Hastings et al., 1947; Dixon, 1960) was applied, limiting extreme values to the 5th and 95th percentiles. This adjusted a small number of cases across the eight conditions (FE, fearful; HA, happy; NE, neutral) for the K2.1N60-P90 amplitudes (FE70%: 2 cases; HA10%: 1 case) and the K2.1N103-P127 amplitudes (FE70%: 1 case; HA70%: 2 cases; HA10%: 1 case; NE70%: 1 case). The Winsorized values were used for the linear mixed-effects modeling and for the mean values shown in the figures below. To allow for multiple comparisons, an alpha value of 0.006 was used for all follow-up pairwise comparisons (based on the eight stimulus conditions: FE10%, HA10%, NE10%, NoForm10%, FE70%, HA70%, NE70%, NoForm70%), and 99% confidence intervals were used for comparisons of marginal means associated with significant interactions.
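A minimal sketch of the outlier control and the follow-up alpha level. It assumes percentile cut-offs computed by linear interpolation between order statistics (Python's `statistics.quantiles` with `method="inclusive"`); SPSS offers several percentile definitions, so cut-offs may differ slightly from those used in the study. The alpha of 0.006 appears consistent with dividing 0.05 by the eight stimulus conditions, which is our reading rather than a stated procedure. The amplitudes below are made-up values for illustration only.

```python
import statistics
from typing import List, Sequence


def winsorize(values: Sequence[float], lower_pct: int = 5, upper_pct: int = 95) -> List[float]:
    """Clip values to the given (integer) percentiles, keeping the sample size unchanged."""
    if len(values) < 2:
        raise ValueError("need at least two values")
    cuts = statistics.quantiles(values, n=100, method="inclusive")  # cuts[k - 1] = k-th percentile
    low, high = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(v, low), high) for v in values]


N_CONDITIONS = 8  # FE, HA, NE, NoForm at 10% and 70% temporal contrast
alpha_followup = 0.05 / N_CONDITIONS
print(alpha_followup)  # 0.00625, reported as 0.006

# Hypothetical K2.1 amplitudes (arbitrary units) for 14 participants in one condition.
amplitudes = [4.1, 3.8, 5.0, 4.4, 3.9, 4.7, 12.5, 4.2, 3.6, 4.9, 4.0, 4.3, 0.4, 4.6]
print(winsorize(amplitudes))
```

Unlike trimming, Winsorizing keeps every participant in the model, which matters with a sample of 14.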

Results

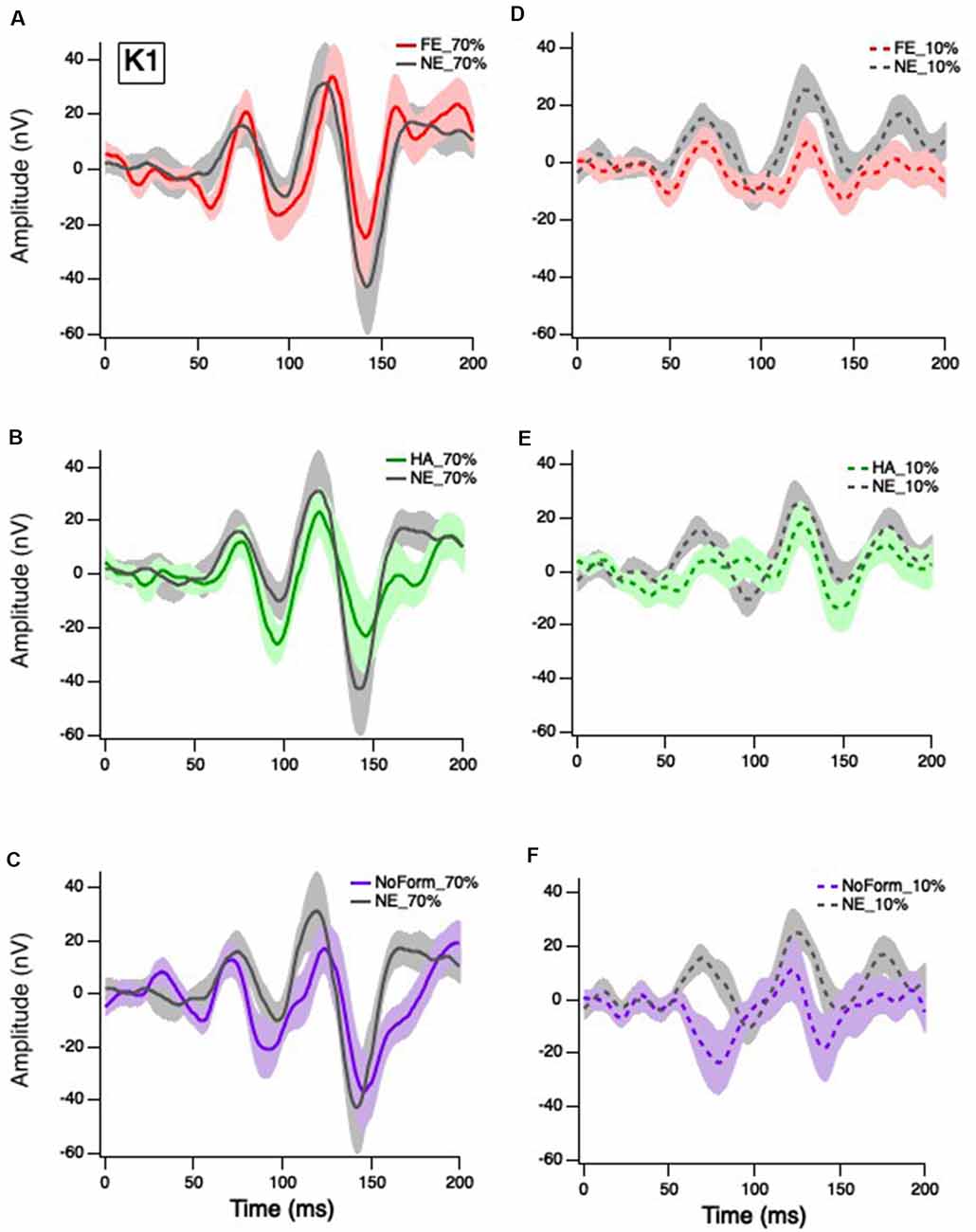

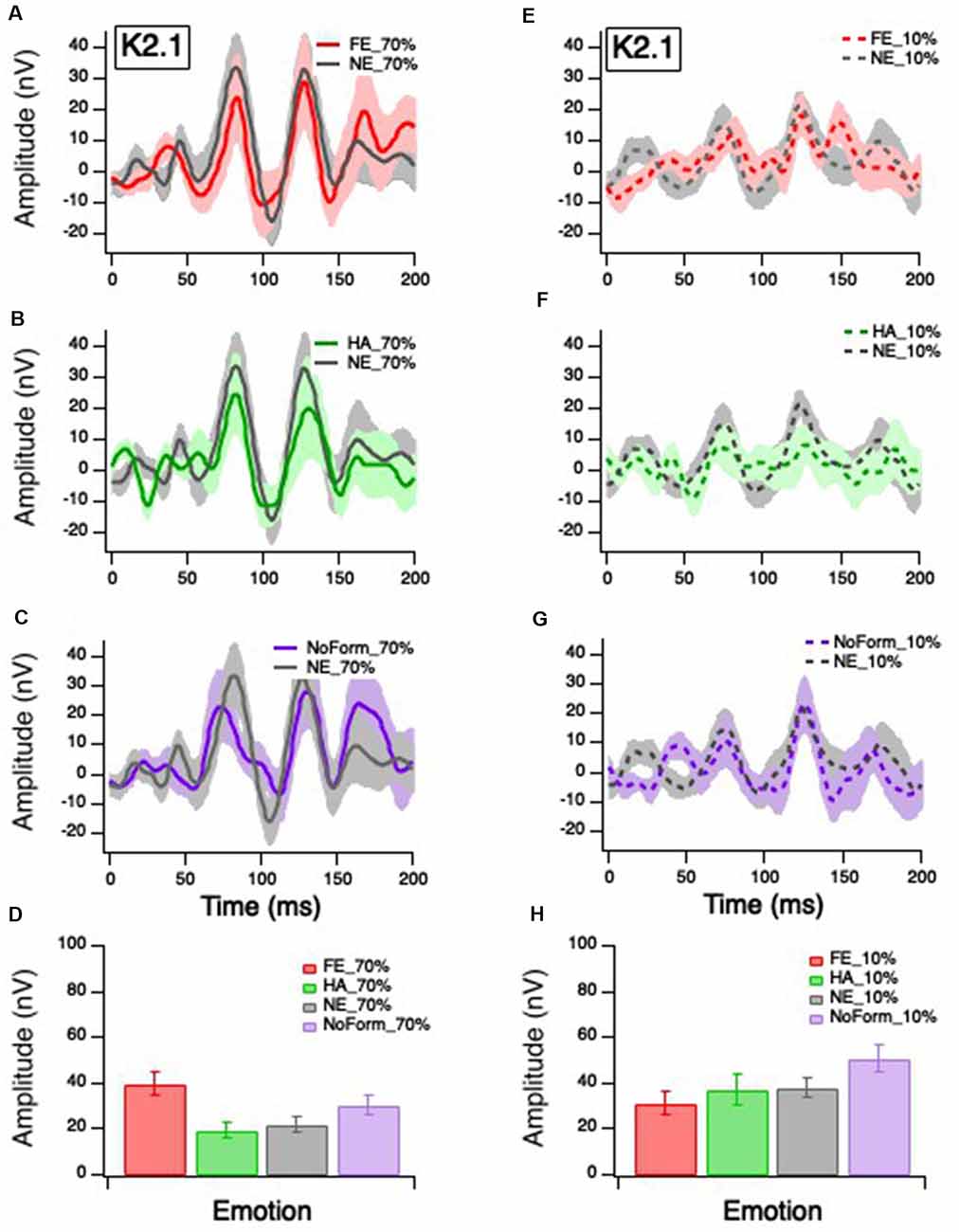

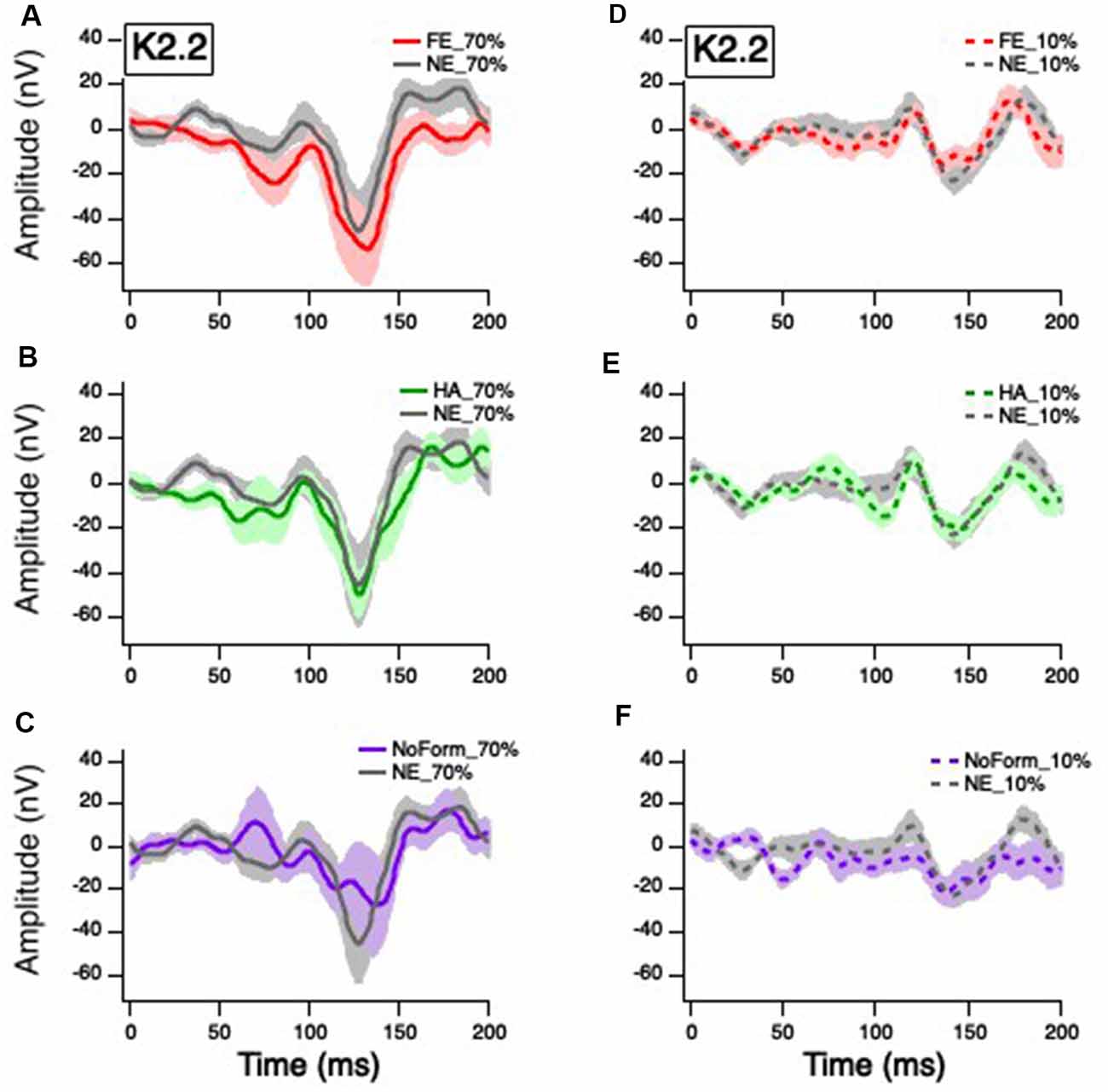

Grand averages for the K1, K2.1, and K2.2 responses were calculated for all experimental conditions (happy, fearful, and neutral facial expressions and no form, at low and high temporal contrasts) and are presented in Figures 2–4, respectively. As expected, the amplitudes of the cortically recorded VEP responses varied with contrast across all kernels (Klistorner et al., 1997). Separate linear mixed-effects models were computed to investigate the effects of emotion (fear, happy, neutral, no form) and temporal contrast (10%, 70%) on the early and late peak amplitudes of the K1 (N58-P80; N94-P118), K2.1 (N60-P90; N103-P127), and K2.2 (N85-P104; N119-P157) responses. Time windows for peak estimation were established to account for individual differences across conditions. Some departures from the data of Klistorner et al. (1997), Jackson et al. (2013), and Hugrass et al. (2018) are apparent, due to differences in stimulus frame rate and reference/ground location (mastoid/Fz vs. Fz/mastoid).
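Peak-to-trough amplitudes such as K2.1N60-P90 can be measured by locating the trough in one latency window and the peak in the next. The sketch below assumes a waveform sampled at 1 ms resolution and uses hypothetical windows of 45–75 ms and 75–105 ms around the nominal N60 and P90 latencies; the study's actual windows were set from the grand mean averages and are not reproduced here, and the synthetic waveform merely stands in for a real kernel response.

```python
import math
from typing import Sequence, Tuple


def extremum_in_window(times_ms: Sequence[float], values: Sequence[float],
                       start_ms: float, end_ms: float, find_max: bool) -> Tuple[float, float]:
    """Latency and value of the largest (or smallest) sample within [start_ms, end_ms]."""
    idx = [i for i, t in enumerate(times_ms) if start_ms <= t <= end_ms]
    if not idx:
        raise ValueError("no samples fall inside the window")
    pick = max if find_max else min
    best = pick(idx, key=lambda i: values[i])
    return times_ms[best], values[best]


def peak_to_trough(times_ms, values, trough_window, peak_window):
    """Amplitude (peak minus trough) and the two latencies for an N-then-P component."""
    n_lat, n_val = extremum_in_window(times_ms, values, *trough_window, find_max=False)
    p_lat, p_val = extremum_in_window(times_ms, values, *peak_window, find_max=True)
    return p_val - n_val, n_lat, p_lat


# Synthetic K2.1-like waveform at 1 ms resolution (hypothetical shape and units).
times = list(range(0, 200))
wave = [-2.0 * math.exp(-((t - 60) / 8) ** 2) + 3.0 * math.exp(-((t - 90) / 10) ** 2)
        for t in times]

# Hypothetical windows around the nominal N60 and P90 latencies.
amplitude, n60, p90 = peak_to_trough(times, wave, (45, 75), (75, 105))
print(f"N at {n60} ms, P at {p90} ms, peak-to-trough {amplitude:.2f}")
# N at 60 ms, P at 90 ms, peak-to-trough 5.00
```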

Figure 2. Grand mean average K1 responses. Solid red, green, and purple lines correspond to the averaged waveforms for the 70% temporal contrast conditions with (A) fearful, (B) happy, and (C) no form stimuli superimposed on the central patch, respectively, all plotted against neutral (gray). Dashed red, green, and purple lines correspond to the averaged waveforms for the 10% temporal contrast conditions with (D) fearful, (E) happy, and (F) no form stimuli superimposed on the central patch, respectively, all plotted against neutral (gray).

Figure 3. Grand mean average K2.1 responses. Solid red, green, and purple lines correspond to the averaged waveforms for the 70% temporal contrast conditions with (A) fearful, (B) happy, and (C) no form stimuli superimposed on the central patch, respectively, all plotted against neutral (gray). Dashed red, green, and purple lines correspond to the averaged waveforms for the 10% temporal contrast conditions with (D) fearful, (E) happy, and (F) no form stimuli superimposed on the central patch, respectively, all plotted against neutral (gray). Mean peak amplitude values of K2.1N60-P90 for the 70% and 10% temporal contrast conditions across all emotions are shown in (G,H), respectively, to illustrate the significant emotion by contrast interaction.

Figure 4. Grand mean average K2.2 responses. Solid red, green, gray, and purple lines correspond to the averaged waveforms for the 70% temporal contrast conditions with (A) fearful, (B) happy, neutral, and (C) no form stimuli superimposed on the central patch, respectively. Dashed red, green, gray, and purple lines correspond to the averaged waveforms for the 10% temporal contrast conditions with (D) fearful, (E) happy, neutral stimuli, and (F) no form superimposed on the central patch, respectively.

K1 Amplitude

Klistorner et al. (1997) suggested that the first-order response (K1) is produced by complex interactions between the M and P pathways. Separate linear mixed-model analyses of early and late K1 peak-to-trough amplitudes showed no significant main effects of emotion, K1N58-P80: F(3,27) = 1.202, p = 0.328; K1N94-P118: F(3,27) = 0.748, p = 0.535; nor were there any significant emotion by contrast interactions, K1N58-P80: F(2,53) = 0.139, p = 0.870; K1N94-P118: F(2,55) = 0.444, p = 0.644. As expected, there was a significant main effect of contrast on K1, but only for the earlier peak amplitudes, with greater responses at 70% (Figures 2A–C) than at 10% temporal contrast (Figures 2D–F), K1N58-P80: F(1,62) = 7.895, p = 0.007. In summary, short-latency K1 peak amplitudes are greater when the central patch is modulated at high contrast, but they are not affected by facial emotion.

K2.1 Amplitude

Klistorner et al. (1997) and Jackson et al. (2013) suggest that the K2.1N60-P90 waveform is of M pathway origin, based on contrast gain, contrast saturation, and peak latencies. Figure 3 illustrates the K2.1 waveforms for 70% temporal contrast (Figures 3A–C) and 10% temporal contrast (Figures 3D–F). In Figure 3H, the mean amplitude for the 10% no form condition appears larger than for the face conditions, suggesting that including a face in the central stimulus patch had some effect.

The linear mixed-model analysis showed a significant main effect of contrast on K2.1N60-P90 amplitude, F(1,85) = 10.688, p = 0.002, but no significant main effect of emotion, F(3,46) = 2.26, p = 0.094. There was a significant interaction between emotion and contrast, F(3,41) = 4.823, p = 0.030, with the greatest amplitude for fearful faces in the 70% temporal contrast condition (Figure 3G) and the greatest amplitude for no form in the 10% temporal contrast condition (Figure 3H). To ensure that the no form condition did not induce spurious effects, we fitted a separate post hoc linear mixed-effects model excluding the no form condition and found a significant main effect of contrast, F(1,63) = 5.399, p = 0.023, and a significant emotion by contrast interaction, F(2,52) = 4.951, p = 0.011.

No significant main effects or interactions were found for the later K2.1 peaks (K2.1N103-P127: p > 0.05).

K2.2 Amplitude

Jackson et al. (2013) indicated that the small early K2.2N85-P104 peak is also of M origin. The linear mixed-effects model showed no significant main effect of contrast on the K2.2N85-P104 amplitude, F(1,48) = 1.025, p = 0.316. There was, however, a significant main effect of emotion, F(3,41) = 7.012, p = 0.001, with greater amplitude for the no form condition than for happy (Mdiff = −20.876, p = 0.002) and neutral (Mdiff = −20.290, p = 0.004) faces. There was no significant emotion by contrast interaction, F(3,41) = 1.813, p = 0.160.

The second peak, K2.2N119-P157, is thought to be of P origin (Jackson et al., 2013). Figure 4 shows a greater K2.2N119-P157 amplitude at 70% temporal contrast (Figures 4A–C) than at 10% temporal contrast (Figures 4D–F), a larger contrast difference than that seen for K2.1 (Figure 3). Accordingly, the linear mixed-effects model produced a significant main effect of contrast, F(1,66) = 40.251, p < 0.001. There was no significant main effect of emotion, F(3,39) = 0.109, p = 0.954, or interaction between contrast and emotion, F(3,39) = 0.015, p = 0.997. Overall, these results suggest that any emotional effect on the occipital VEP is of M and not P origin.

Discussion

Nonlinear multifocal VEP recordings of the visual cortex have become perhaps the best available method for measuring human M and P temporal processing (Baseler and Sutter, 1997; Klistorner et al., 1997; Jackson et al., 2013; Brown et al., 2018; Hugrass et al., 2018). These studies typically examine M and P responses to flashing unstructured patches with a range of temporal contrasts, although Baseler and Sutter (1997) used contrast-reversing checkerboards. However, no study to date has extended this technique to controlled luminance fluctuation of emotional faces, in which, despite the random flicker, a clear percept of facial emotion is possible.

Considering that the M and P pathways are known to be contrast saturating and non-saturating, respectively (Kaplan et al., 1990; Klistorner et al., 1997; Jackson et al., 2013), it was not surprising that we found minimal overall K2.1 response differences between 10% and 70% temporal contrast, but a greater difference for the K2.2N119-P157 waveforms. Although the overall appearance of the kernel waveforms diverged somewhat from previous publications, this can be partly explained by our electrical reference/ground choice (linked-mastoid reference, Fz ground) rather than the Fz reference with an aural ground used by Klistorner et al. (1997) and Jackson et al. (2013). Another possible explanation for variation in response amplitudes relates to the presence or absence of a facial percept. The presence of a percept implies higher-order visual processing that may result in feedback to area V1 (Fang et al., 2008). Also, the facial stimuli are likely to activate orientation-selective receptive fields of neurons in area V1 that the no form stimuli are less likely to stimulate, with differences in latency and waveform (Crewther and Crewther, 2010).

Based on the popular notion that the M pathway feeds into the colliculo-pulvinar-amygdala route for rapid emotional processing, we asked whether emotional content would have any effect on early occipital kernel responses. Interestingly, at the 70% temporal contrast level, fearful faces produced greater K2.1 amplitudes than happy faces (which produced the smallest K2.1 amplitude) and neutral faces. This aligns with previous reports of stronger and faster amygdala activation to fearful than to neutral faces (Öhman, 2005; Adolphs, 2008; Garvert et al., 2014; Méndez-Bértolo et al., 2016) and of enhanced early visual cortical ERPs to emotional faces (Vlamings et al., 2009; Burt et al., 2017). Before the current study, little was known about the functional anatomy by which facial emotional information reaches V1, or with what timing. The current study provides evidence that emotional information is present in the earliest evoked responses recorded from V1 and is conveyed through the M pathway. The recent literature on the normalization model of attention (Reynolds and Heeger, 2009; Herrmann et al., 2010; Zhang et al., 2016) also needs to be considered; in this model, the firing rates of cortical neurons depend on the extent of the attentional field. Specifically, both negative and positive emotional faces have been found to increase V1 activity relative to neutral faces, but negative emotions narrow the attention field in V1, whereas positive emotions broaden it (Zhang et al., 2016). These studies introduce the notion of response gain as an attentional effect.

Emotional salience acts similarly to attention, with neural theories invoking response gain modulation of the pulvinar by amygdalar activity (Williams et al., 2004; van den Bulk et al., 2014). Previous studies have found the pulvinar to be crucial in gating and controlling information outflow from V1 (Purushothaman et al., 2012). Some studies (Vlamings et al., 2009; Attar et al., 2010; Burt et al., 2017) have found that amygdala-related contrast response gain effects for fearful expressions increase hMT and early extrastriate cortical responses (i.e., the P100), which could explain why the M component, which should be saturated at 70% contrast, is altered by emotional expression. Moreover, primate data are supportive, showing fast-conducting projections from the inferior pulvinar to the middle temporal area (MT; Warner et al., 2010; Kwan et al., 2019). Yet while there is evidence of strong pulvinar-amygdala input, there is little evidence of a direct amygdala-pulvinar feedback pathway, and the absence of such a pathway makes very rapid changes in visual processing harder to explain. However, transmission modulation of the pulvinar by the amygdala through verified projections onto the thalamic reticular nucleus (TRN; Zikopoulos and Barbas, 2012), acting as an “emotional attention” mechanism (John et al., 2016), is highly plausible. This idea is further strengthened by evidence that optogenetic manipulation of amygdala activity produces strong contrast gain effects (Aizenberg et al., 2019).

Cortico-cortical feedback of emotional parsing by the amygdala to the visual cortex is an alternative mechanism that demands exploration. The amygdala possesses myriad cortical connections, including with the extrastriate and insular cortices (Jenkins et al., 2017). Another candidate feedback pathway involves the orbitofrontal cortex (OFC), which receives amygdala projections, feeds information back to V1, and plays a role in further evaluation of salient information. Kveraga et al. (2007) reported that M information is projected rapidly and early (~130 ms) to the OFC. Furthermore, analyses of effective connectivity using dynamic causal modeling showed that M-biased stimuli significantly activated pathways from the occipital visual cortex to the OFC (Kveraga et al., 2007). However, these multisynaptic pathways likely conduct more slowly to the striate cortex and hence are less likely to contribute to the early K2.1 VEP component.

The biological and social significance of the human face as a shape also needs to be considered when interpreting our results. Previous studies have reported that faces capture attention more efficiently than non-face stimuli (Theeuwes and Van der Stigchel, 2006; Langton et al., 2008; Devue et al., 2009). For example, Langton et al. (2008) found that participants were slower to search an array of objects for a target butterfly when an irrelevant face appeared in the array. This demonstrates that even when a non-face object is the target of a goal-directed search, a face still captures attention over other stimuli. However, electrophysiologically, Thierry et al. (2007) found that, for pictures of faces and cars, it was not the stimulus category that evoked a greater N170 amplitude but rather within-category variability, such as the position, angle, and size of the stimuli. Nevertheless, the differences in K2.1 response amplitude among the fearful, happy, neutral, and no form conditions provide strong evidence for an emotional effect, since the three face conditions share the same basic face shape. Future research should consider implementing other non-face emotional stimuli to address the question of stimulus specificity.

Taken together, we were able to detect responses to emotional faces in early V1 processing via nonlinear multifocal VEPs over the occipital cortex, implying differential early visual processing of emotional faces within the M pathway inputs to V1. In particular, fearful faces at 70% temporal contrast produced a greater M pathway nonlinearity than did happy or neutral faces. Further exploration of putative feedback and response gain modulation models will be needed to fully explain the observed VEP differences.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Swinburne Human Research Ethics Committee, Swinburne University of Technology. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

EM created the experimental design, performed testing and data collection, analyzed the data, and wrote the manuscript. DC contributed to stimulus creation and manuscript editing. Both authors contributed equally to interpreting the results.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Prof. Denny Meyer for her statistical advice.


References

Adolphs, R. (2008). Fear, faces, and the human amygdala. Curr. Opin. Neurobiol. 18, 166–172. doi: 10.1016/j.conb.2008.06.006

Aizenberg, M., Rolón-Martínez, S., Pham, T., Rao, W., Haas, J. S., and Geffen, M. N. (2019). Projection from the amygdala to the thalamic reticular nucleus amplifies cortical sound responses. Cell Rep. 28, 605.e4–615.e4. doi: 10.1016/j.celrep.2019.06.050

Attar, C. I., Müller, M. M., Andersen, S. K., Büchel, C., and Rose, M. (2010). Emotional processing in a salient motion context: integration of motion and emotion in both V5/hMT+ and the amygdala. J. Neurosci. 30, 5204–5210. doi: 10.1523/JNEUROSCI.5029-09.2010

Baseler, H. A., and Sutter, E. E. (1997). M and P components of the VEP and their visual field distribution. Vision Res. 37, 675–690. doi: 10.1016/s0042-6989(96)00209-x

Brown, A., Corner, M., Crewther, D. P., and Crewther, S. G. (2018). Human flicker fusion correlates with physiological measures of magnocellular neural efficiency. Front. Hum. Neurosci. 12:176. doi: 10.3389/fnhum.2018.00176

Burr, D. C., Morrone, M. C., and Ross, J. (1994). Selective suppression of the magnocellular visual pathway during saccadic eye movements. Nature 371, 511–513. doi: 10.1038/371511a0

Burt, A., Hugrass, L., Frith-Belvedere, T., and Crewther, D. (2017). Insensitivity to fearful emotion for early ERP components in high autistic tendency is associated with lower magnocellular efficiency. Front. Hum. Neurosci. 11:495. doi: 10.3389/fnhum.2017.00495

Crewther, D. P., Brown, A., and Hugrass, L. (2016). Temporal structure of human magnetic evoked fields. Exp. Brain Res. 234, 1987–1995. doi: 10.1007/s00221-016-4601-0

Crewther, D. P., and Crewther, S. G. (2010). Different temporal structure for form versus surface cortical color systems—evidence from chromatic non-linear VEP. PLoS One 5:e15266. doi: 10.1371/journal.pone.0015266

Crewther, D. P., Crewther, D., Bevan, S., Goodale, M. A., and Crewther, S. G. (2015). Greater magnocellular saccadic suppression in high versus low autistic tendency suggests a causal path to local perceptual style. R. Soc. Open Sci. 2:150226. doi: 10.1098/rsos.150226

de Gelder, B., van Honk, J., and Tamietto, M. (2011). Emotion in the brain: of low roads, high roads and roads less travelled. Nat. Rev. Neurosci. 12, 425–425. doi: 10.1038/nrn2920-c1

Devue, C., Laloyaux, C., Feyers, D., Theeuwes, J., and Brédart, S. (2009). Do pictures of faces, and which ones, capture attention in the inattentional-blindness paradigm? Perception 38, 552–568. doi: 10.1068/p6049

Dixon, W. J. (1960). Simplified estimation from censored normal samples. Ann. Math. Stat. 31, 385–391. doi: 10.1214/aoms/1177705900

Fang, F., Kersten, D., and Murray, S. O. (2008). Perceptual grouping and inverse fMRI activity patterns in human visual cortex. J. Vis. 8:2. doi: 10.1167/8.7.2

Fitzpatrick, D., Lund, J., and Blasdel, G. (1985). Intrinsic connections of macaque striate cortex: afferent and efferent connections of lamina 4C. J. Neurosci. 5, 3329–3349. doi: 10.1523/JNEUROSCI.05-12-03329.1985

Garvert, M. M., Friston, K. J., Dolan, R. J., and Garrido, M. I. (2014). Subcortical amygdala pathways enable rapid face processing. NeuroImage 102, 309–316. doi: 10.1016/j.neuroimage.2014.07.047

Hastings, C. Jr., Mosteller, F., Tukey, J. W., and Winsor, C. P. (1947). Low moments for small samples: a comparative study of order statistics. Ann. Math. Stat. 18, 413–426. doi: 10.1214/aoms/1177730388

Hawken, M. J., Shapley, R. M., and Grosof, D. H. (1996). Temporal-frequency selectivity in monkey visual cortex. Vis. Neurosci. 13, 477–492. doi: 10.1017/s0952523800008154

Herrmann, K., Montaser-Kouhsari, L., Carrasco, M., and Heeger, D. J. (2010). When size matters: attention affects performance by contrast or response gain. Nat. Neurosci. 13, 1554–1559. doi: 10.1038/nn.2669

Hugrass, L., Verhellen, T., Morrall-Earney, E., Mallon, C., and Crewther, D. P. (2018). The effects of red surrounds on visual magnocellular and parvocellular cortical processing and perception. J. Vis. 18:8. doi: 10.1167/18.4.8

Jackson, B. L., Blackwood, E. M., Blum, J., Carruthers, S. P., Nemorin, S., Pryor, B. A., et al. (2013). Magno- and parvocellular contrast responses in varying degrees of autistic trait. PLoS One 8:e66797. doi: 10.1371/journal.pone.0066797

Jenkins, L. M., Stange, J. P., Barba, A., DelDonno, S. R., Kling, L. R., Briceño, E. M., et al. (2017). Integrated cross-network connectivity of amygdala, insula, and subgenual cingulate associated with facial emotion perception in healthy controls and remitted major depressive disorder. Cogn. Affect. Behav. Neurosci. 17, 1242–1254. doi: 10.3758/s13415-017-0547-3

John, Y. J., Zikopoulos, B., Bullock, D., and Barbas, H. (2016). The emotional gatekeeper: a computational model of attentional selection and suppression through the pathway from the amygdala to the inhibitory thalamic reticular nucleus. PLoS Comput. Biol. 12:e1004722. doi: 10.1371/journal.pcbi.1004722

Kaplan, E., Lee, B. B., and Shapley, R. M. (1990). New views of primate retinal function. Prog. Ret. Res. 9, 273–336. doi: 10.1016/0278-4327(90)90009-7

Kleinhans, N. M., Richards, T., Johnson, L. C., Weaver, K. E., Greenson, J., Dawson, G., et al. (2011). FMRI evidence of neural abnormalities in the subcortical face processing system in ASD. NeuroImage 54, 697–704. doi: 10.1016/j.neuroimage.2010.07.037

Klistorner, A., Crewther, D. P., and Crewther, S. G. (1997). Separate magnocellular and parvocellular contributions from temporal analysis of the multifocal VEP. Vision Res. 37, 2161–2169. doi: 10.1016/s0042-6989(97)00003-5

Kveraga, K., Boshyan, J., and Bar, M. (2007). Magnocellular projections as the trigger of top-down facilitation in recognition. J. Neurosci. 27, 13232–13240. doi: 10.1523/JNEUROSCI.3481-07.2007

Kwan, W. C., Mundinano, I.-C., de Souza, M. J., Lee, S. C. S., Martin, P. R., Grünert, U., et al. (2019). Unravelling the subcortical and retinal circuitry of the primate inferior pulvinar. J. Comp. Neurol. 527, 558–576. doi: 10.1002/cne.24387

Langton, S. R. H., Law, A. S., Burton, A. M., and Schweinberger, S. R. (2008). Attention capture by faces. Cognition 107, 330–342. doi: 10.1016/j.cognition.2007.07.012

Laycock, R., Crewther, D., and Crewther, S. (2008). The advantage in being magnocellular: a few more remarks on attention and the magnocellular system. Neurosci. Biobehav. Rev. 32, 1409–1415. doi: 10.1016/j.neubiorev.2008.04.008

Leventhal, A. G., Rodieck, R. W., and Dreher, B. (1985). Central projections of cat retinal ganglion cells. J. Comp. Neurol. 237, 216–226. doi: 10.1002/cne.902370206

Livingstone, M., and Hubel, D. (1988). Segregation of form, color, movement and depth: anatomy, physiology, and perception. Science 240, 740–749. doi: 10.1126/science.3283936

Márkus, Z., Berényi, A., Paróczy, Z., Wypych, M., Waleszczyk, W. J., Benedek, G., et al. (2009). Spatial and temporal visual properties of the neurons in the intermediate layers of the superior colliculus. Neurosci. Lett. 454, 76–80. doi: 10.1016/j.neulet.2009.02.063

McFadyen, J., Mermillod, M., Mattingley, J. B., Halász, V., and Garrido, M. I. (2017). A rapid subcortical amygdala route for faces irrespective of spatial frequency and emotion. J. Neurosci. 37, 3864–3874. doi: 10.1523/JNEUROSCI.3525-16.2017

Méndez-Bértolo, C., Moratti, S., Toledano, R., Lopez-Sosa, F., Martínez-Alvarez, R., Mah, Y. H., et al. (2016). A fast pathway for fear in human amygdala. Nat. Neurosci. 19, 1041–1049. doi: 10.1038/nn.4324

Merigan, W. H., and Maunsell, J. H. R. (1993). How parallel are the primate visual pathways? Annu. Rev. Neurosci. 16, 369–402. doi: 10.1146/annurev.ne.16.030193.002101

Morris, J. S., de Gelder, B., Weiskrantz, L., and Dolan, R. J. (2001). Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain 124, 1241–1252. doi: 10.1093/brain/124.6.1241

Morris, J. S., Friston, K. J., Büchel, C., Young, A. W., Calder, A. J., and Dolan, R. J. (1998). A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121, 47–57. doi: 10.1093/brain/121.1.47

Morris, J. S., Öhman, A., and Dolan, R. J. (1999). A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. U S A 96, 1680–1685. doi: 10.1073/pnas.96.4.1680

Nassi, J. J., and Callaway, E. M. (2009). Parallel processing strategies of the primate visual system. Nat. Rev. Neurosci. 10, 360–372. doi: 10.1038/nrn2619

Öhman, A. (2005). The role of the amygdala in human fear: automatic detection of threat. Psychoneuroendocrinology 30, 953–958. doi: 10.1016/j.psyneuen.2005.03.019

Pourtois, G., Dan, E. S., Grandjean, D., Sander, D., and Vuilleumier, P. (2005). Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum. Brain Mapp. 26, 65–79. doi: 10.1002/hbm.20130

Purushothaman, G., Marion, R., Li, K., and Casagrande, V. A. (2012). Gating and control of primary visual cortex by pulvinar. Nat. Neurosci. 15, 905–912. doi: 10.1038/nn.3106

Rafal, R. D., Koller, K., Bultitude, J. H., Mullins, P., Ward, R., Mitchell, A. S., et al. (2015). Connectivity between the superior colliculus and the amygdala in humans and macaque monkeys: virtual dissection with probabilistic DTI tractography. J. Neurophysiol. 114, 1947–1962. doi: 10.1152/jn.01016.2014

Reynolds, J. H., and Heeger, D. J. (2009). The normalization model of attention. Neuron 61, 168–185. doi: 10.1016/j.neuron.2009.01.002

Rudrauf, D., David, O., Lachaux, J.-P., Kovach, C. K., Martinerie, J., Renault, B., et al. (2008). Rapid interactions between the ventral visual stream and emotion-related structures rely on a two-pathway architecture. J. Neurosci. 28, 2793–2803. doi: 10.1523/JNEUROSCI.3476-07.2008

Sabatinelli, D., Lang, P. J., Bradley, M. M., Costa, V. D., and Keil, A. (2009). The timing of emotional discrimination in human amygdala and ventral visual cortex. J. Neurosci. 29, 14864–14868. doi: 10.1523/JNEUROSCI.3278-09.2009

Silverstein, D. N., and Ingvar, M. (2015). A multi-pathway hypothesis for human visual fear signaling. Front. Syst. Neurosci. 9:101. doi: 10.3389/fnsys.2015.00101

Skottun, B. C. (2013). On using very high temporal frequencies to isolate magnocellular contributions to psychophysical tasks. Neuropsychologia 51, 1556–1560. doi: 10.1016/j.neuropsychologia.2013.05.009

Skottun, B. C. (2014). A few observations on linking VEP responses to the magno- and parvocellular systems by way of contrast-response functions. Int. J. Psychophysiol. 91, 147–154. doi: 10.1016/j.ijpsycho.2014.01.005

Sutherland, A., and Crewther, D. P. (2010). Magnocellular visual evoked potential delay with high autism spectrum quotient yields a neural mechanism for altered perception. Brain 133, 2089–2097. doi: 10.1093/brain/awq122

Sutter, E. (2000). The interpretation of multifocal binary kernels. Doc. Ophthalmol. 100, 49–75. doi: 10.1023/a:1002702917233

Sutter, E. E., and Tran, D. (1992). The field topography of ERG components in man—I. The photopic luminance response. Vision Res. 32, 433–446. doi: 10.1016/0042-6989(92)90235-b

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., and Leahy, R. M. (2011). Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011:879716. doi: 10.1155/2011/879716

Tamietto, M., Pullens, P., de Gelder, B., Weiskrantz, L., and Goebel, R. (2012). Subcortical connections to human amygdala and changes following destruction of the visual cortex. Curr. Biol. 22, 1449–1455. doi: 10.1016/j.cub.2012.06.006

Theeuwes, J., and Van der Stigchel, S. (2006). Faces capture attention: evidence from inhibition of return. Vis. Cogn. 13, 657–665. doi: 10.1080/13506280500410949

Thierry, G., Martin, C. D., Downing, P. E., and Pegna, A. J. (2007). Is the N170 sensitive to the human face or to several intertwined perceptual and conceptual factors? Nat. Neurosci. 10, 802–803. doi: 10.1038/nn0707-802

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249. doi: 10.1016/j.psychres.2008.05.006

van den Bulk, B. G., Meens, P. H. F., van Lang, N. D. J., de Voogd, E. L., van der Wee, N. J. A., Rombouts, S. A. R. B., et al. (2014). Amygdala activation during emotional face processing in adolescents with affective disorders: the role of underlying depression and anxiety symptoms. Front. Hum. Neurosci. 8:393. doi: 10.3389/fnhum.2014.00393

Vlamings, P. H. J. M., Goffaux, V., and Kemner, C. (2009). Is the early modulation of brain activity by fearful facial expressions primarily mediated by coarse low spatial frequency information? J. Vis. 9:12. doi: 10.1167/9.5.12

Vuilleumier, P., Armony, J. L., Driver, J., and Dolan, R. J. (2003). Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat. Neurosci. 6, 624–631. doi: 10.1038/nn1057

Warner, C. E., Goldshmit, Y., and Bourne, J. A. (2010). Retinal afferents synapse with relay cells targeting the middle temporal area in the pulvinar and lateral geniculate nuclei. Front. Neuroanat. 4:8. doi: 10.3389/neuro.05.008.2010

Williams, M. A., Morris, A. P., McGlone, F., Abbott, D. F., and Mattingley, J. B. (2004). Amygdala responses to fearful and happy facial expressions under conditions of binocular suppression. J. Neurosci. 24, 2898–2904. doi: 10.1523/JNEUROSCI.4977-03.2004

Zhang, X., Japee, S., Safiullah, Z., Mlynaryk, N., and Ungerleider, L. G. (2016). A normalization framework for emotional attention. PLoS Biol. 14:e1002578. doi: 10.1371/journal.pbio.1002578

Keywords: magnocellular, non-linear VEP, emotion, contrast, V1

Citation: Mu E and Crewther D (2020) Occipital Magnocellular VEP Non-linearities Show a Short Latency Interaction Between Contrast and Facial Emotion. Front. Hum. Neurosci. 14:268. doi: 10.3389/fnhum.2020.00268

Received: 09 December 2019; Accepted: 15 June 2020;

Published: 09 July 2020.

Edited by:

Teri Lawton, Perception Dynamics Institute (PDI), United States

Reviewed by:

Caterina Bertini, University of Bologna, Italy

John Frederick Stein, University of Oxford, United Kingdom

John F. Shelley-Tremblay, University of South Alabama, United States

Copyright © 2020 Mu and Crewther. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Eveline Mu, emu@swin.edu.au