- 1Artificial Intelligence Research Center, Department of Information Technology and Human Factors, National Institute of Advanced Industrial Science and Technology (AIST), Tokyo, Japan

- 2Graduate School of Media and Governance, Keio University, Kanagawa, Japan

Electroencephalogram (EEG)-based brain-computer interfaces (BCIs) can potentially enable people to non-invasively and directly communicate with others using brain activities. Artifacts generated from body activities (e.g., eyeblinks and teeth clenches) often contaminate EEGs and make EEG-based classification/identification hard. Although independent component analysis (ICA) is the gold-standard technique for attenuating the effects of such contamination, the estimated independent components are still mixed with artifactual and neuronal information because ICA relies only on the independence assumption. The same problem occurs when using independent vector analysis (IVA), an extended ICA method. To solve this problem, we designed an independent low-rank matrix analysis (ILRMA)-based automatic artifact reduction technique that clearly models sources from observations under the independence assumption and a low-rank nature in the frequency domain. For automatic artifact reduction, we combined the signal separation technique with an independent component classifier for EEGs named ICLabel. To assess the comparative efficiency of the proposed method, the discriminabilities of artifact-reduced EEGs using ICA, IVA, and ILRMA were determined using an open-access EEG dataset named OpenBMI, which contains EEG data obtained through three BCI paradigms [motor-imagery (MI), event-related potential (ERP), and steady-state visual evoked potential (SSVEP)]. BCI performances were obtained using these three paradigms after applying artifact reduction techniques, and the results suggested that our proposed method has the potential to achieve higher discriminability than ICA and IVA for BCIs. In addition, artifact reduction using the ILRMA approach clearly improved (by over 70%) the averaged BCI performances using artifact-reduced data sufficiently for most needs of the BCI community. The extension of ICA families to supervised separation that leaves the discriminative ability would further improve the usability of BCIs for real-life environments in which artifacts frequently contaminate EEGs.

1. Introduction

An electroencephalogram (EEG)-based brain–computer interface (BCI) is a well-established technology that enables communicating with others without performing actual body movements by finding specific brain activity patterns from EEGs and converting these into predefined commands (Wolpaw et al., 2002). Several paradigms are used for eliciting robust time-independent or -dependent potentials in EEGs, such as motor imagery (MI) (Pfurtscheller and Da Silva, 1999), event-related potential (ERP) (Squires et al., 1976), and steady-state visual evoked potential (SSVEP) (Regan, 1966). Along with these paradigms, developments in machine-learning-based classifiers/identifiers have contributed to improvements in finding specific (elicited) patterns. These paradigms, in combination with signal processing modules and emerging technologies (e.g., wearable sensing, mobile computing, and virtual/augmented reality), have attracted increasing attention in many domains, such as medicine and robotics for real-world applications (Wang et al., 2018; Ogino et al., 2019; Vourvopoulos et al., 2019).

Artifact potentials must be reduced in all EEGs in order to realize robust real-world BCI applications because strong artifact contamination effects can easily reduce BCI performances (Kanoga et al., 2019b). During EEG measurements in real environments, biological artifacts like muscular and ocular ones cannot be avoided because it is difficult for people to voluntarily control the number of their artifact-generating activities. For example, healthy adult males blink ~20 times per minute (i.e., once every ~3 s) to maintain the moisture of their eyes (Karson, 1983). Most BCI paradigms provide visual stimuli or cues for eliciting specific neuronal patterns, and the abovementioned artifacts could contaminate the resulting EEGs. Further, muscular and ocular artifacts will contaminate all EEGs as long as the scalp has some conductivity. These unavoidable artifacts have high-amplitude electrical potentials and overlapping frequency characteristics compared to EEGs (Halliday et al., 1998; Hagemann and Naumann, 2001); thus, contamination by such artifacts makes EEG-based classification/identification hard. These contamination effects can be attenuated by increasing the distance from the source (Kanoga et al., 2016).

For denoising the contamination effects in EEG analysis, the well-known and powerful blind source separation (BSS) technique based on independent component analysis (ICA) has been widely used for the last 20 years (Jung et al., 2000). Usually, artifact reduction involves three steps: (1) training a demixing matrix, (2) identifying the types of separated independent components (ICs), and (3) remixing EEGs by using only neuronal ICs and an inverse demixing matrix. To improve both the computational cost and the accuracy of training a demixing matrix, many ICA algorithms, such as fast ICA (Hyvärinen and Oja, 1997), second-order blind interference (SOBI) (Belouchrani et al., 1997), and information maximization (infomax) ICA (Bell and Sejnowski, 1995), have been proposed. The SOBI and infomax ICA algorithms are used most commonly for EEG signal processing (Choi et al., 2005; Urigüen and Garcia-Zapirain, 2015). For example, EEGLAB, an enormous interactive toolbox for EEG analysis, implements the infomax ICA algorithm (Delorme and Makeig, 2004). Recently, ICLabel (Pion-Tonachini et al., 2019), an automatic IC classifier, has been integrated into the EEGLAB toolbox for online streaming EEG data. Overall, an ICA-based approach remains the gold-standard for artifact reduction.

While real-world EEG-based BCI applications are being developed steadily, ICA-based source estimation still poses some problems. ICA algorithms comprehensively minimize the reconstruction error with a linear combination for an entire sequence of trials. However, this approach overestimates sources for representing the latent waveform of an observation; thus, the estimation leads to oversubtraction or spectral distortion of the EEG power (Wallstrom et al., 2004; Castellanos and Makarov, 2006). Proposing a more rigorous representation of estimated sources than ICA is a major challenge in EEG signal processing for constructing effective classifiers/identifiers.

To represent meaningful waveforms from an observation, we focused on the recurrent properties of an artifactual waveform over trials. Biological artifacts are based on a person's organ structure. An organ system reproducibly and unconsciously activates a non-cerebral source (e.g., eyeball) in the same manner and generates similar electrical potentials (e.g., electrooculogram signals); therefore, person-specific artifacts share a few basic functions for representing the waveforms and can be considered low-rank matrices comprising multiple short time segments. This study represents and removes such waveforms using an independent low-rank matrix analysis (ILRMA) that finds a low-rank non-negative matrix based on statistical independence (Kitamura et al., 2016). To improve its usability for EEG analysis, we used ICLabel for artifact reduction. We investigated the discriminabilities of artifact-reduced EEGs obtained by different methods by using OpenBMI, an open-access EEG dataset that contains EEG data, through the three abovementioned BCI paradigms (MI, ERP, and SSVEP). The BCI performances with these three paradigms after applying artifact reduction techniques were obtained. The results suggest that the proposed method can potentially achieve higher discriminability than ICA for BCIs.

2. Artifact Reduction Techniques

2.1. Mixing and Demixing of EEGs

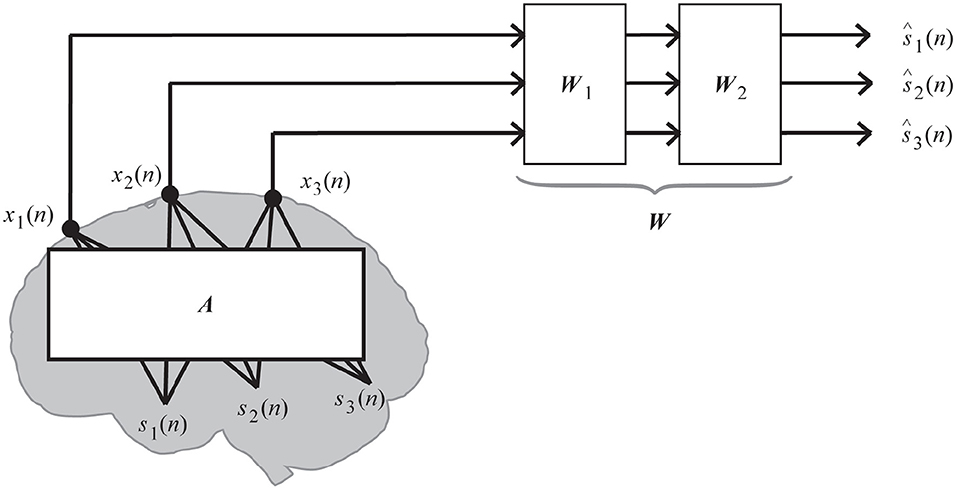

A typical approach to artifact reduction in EEG observations is based on the following assumption: P-channel EEG observations are overdetermined/determined and linear combinations of unknown cerebral Q sources including artifactual and neuronal ones and white noises. Neuronal cells have limited propagation because the cortical connectivity is highly weighted toward short (<500 μm) connections (Budd and Kisvárday, 2001). Thus, the electrical potentials of neuronal activities spread through a contiguous cortical region with a high attenuation penalty in proportion with the distance from the sources (Arieli et al., 1996; Onton and Makeig, 2006). By matching the underlying dynamics of the generation and propagation of EEG potentials, the aforementioned assumption can be represented as

where is the EEG observation at the nth sampling point (1 ≤ n ≤ N), is the unknown source, A is the P × Q full-rank unknown mixing matrix, and is the additive zero-mean noise (T indicates the transpose).

Here, we have only an artifact-(un)contaminated EEG observation matrix X = [x(1), …, x(N)] ∈ ℝP×N. Because source estimation through the inverse process is evidently intractable, four additional assumptions are made (James and Hesse, 2004; Vigario and Oja, 2008): (1) the noise is spatially uncorrelated with the observed data (𝔼[As(n)d(n)] = 0, where 𝔼[·] is the expectation operator); (2) the noise is temporally uncorrelated (𝔼[d(n)d(n + τ)] = 0, where τ is the lag time (∀τ > 0)); (3) the number of sources is equal to or lower than the number of observations (P ≥ Q); and (4) the mixing matrix A does not change over time. Under these assumptions, we can simultaneously estimate both the source matrix and the demixing matrix W(= A−1) ∈ ℝQ×P to blindly separate the observations into artifactual/neuronal sources:

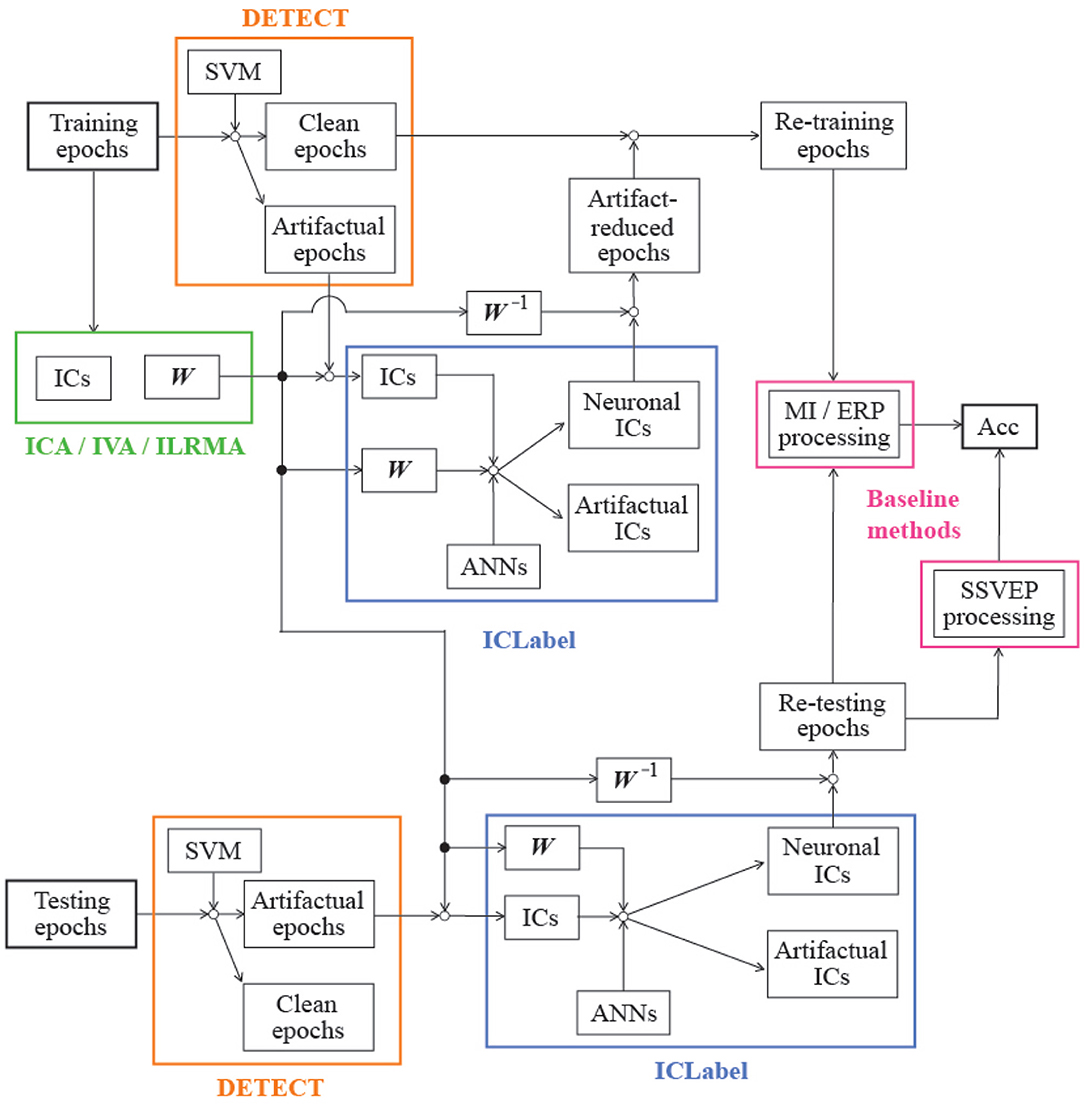

The linear mixing and demixing of EEGs shown in Figure 1 accounts for the comprehensive demixing matrix W(= W1W2) because signal separation algorithms sometimes first decorrelate the data by W1 and then demix them by W2, which is originally learned from the algorithm. In this study, we decorrelated the data before applying a matrix factorization technique; thus, the following representation of W has the same meaning as W2 in Figure 1.

Figure 1. Linear mixing and demixing of EEGs (Kanoga and Mitsukura, 2017).

In practice, artifact reduction requires three stages of processing: (1) decomposing the input matrix; (2) identifying whether the decomposed component is artifactual or neuronal; and (3) reconstructing the artifact-reduced signals using only neuronal components. In this case, we assumed that the EEG observations are labeled signals; the dimensionality of the sources and observations is the same (the value of P and Q is 20, 32, or 10 for MI, ERP, or SSVEP paradigm. The value depends on the number of selected channels in the BCI paradigm. More detailed information is described in sections 3.1, 3.2, and 3.3). In addition, we decomposed datasets using three BSS methods for ICA families: ICA, independent vector analysis (IVA), and ILRMA. Then, the decomposed components were automatically identified using the ICLabel function in the EEGLAB toolbox proposed by Pion-Tonachini et al. (2019). Based on the labels, artifact-reduced signals were linearly reconstructed.

Although ICA algorithms handle time-series data, IVA and ILRMA algorithms approximate a bin-wise instantaneous mixture model in a short-time Fourier transform (STFT) domain. An EEG time series is transformed into a sequence of complex-valued signals by using STFT with a 50% overlapped 1-s Hamming window. Thus, the observations and sources in each time-frequency slot are described as and , where a couple of (i, j) defines the ith frequency bin and jth time frame over STFT (1 ≤ i ≤ I and 1 ≤ j ≤ J). IVA and ILRMA algorithms assume the following mixing system:

where is a frequency-wise mixing matrix (aiq is the steering vector for the qth source, and H indicates the Hermitian transpose). In this paper, we set the value of time length to 1 s because some frequency-domain artifact reduction techniques translate EEG data into STFT domain based on 1-s windows (Kanoga and Mitsukura, 2014; Mohammadpour and Rahmani, 2017) and ILRMA showed its high separation accuracy for 1-s time length data (Kitamura et al., 2017). This mixing system is the rank-1 spatial model (Duong et al., 2010); thus, the relationships between observations and sources can be represented:

where is the STFT of the estimated signals and is the demixing matrix. Note that the demixing matrices for IVA, ILRMA, and ICA have different dimensionalities because of the differences in the domain used (IVA and ILRMA: W ∈ ℝQ×P×I, ICA: W ∈ ℝQ×P).

2.2. Matrix Factorization Techniques

2.2.1. Independent Component Analysis

ICA is the most famous classical method for separating multichannel EEG observations x(n) into statistically independent sources based on an estimated demixing matrix W (Jung et al., 2000; Delorme et al., 2007). The sources can be said to be statistically independent when the following relationship holds:

where and are the joint and the marginal probability distribution of the sources, respectively. Thus, ICA algorithms optimize the demixing matrix W by minimizing the dependence between these distributions. This study applied the extended infomax ICA algorithm implemented by Lee et al. (1999) using the runica function in EEGLAB to the observations. In this algorithm, the dependence in the distributions is represented as the mutual information (Kullback–Leibler distribution) between the estimated sources and observations :

where

By applying the relationship to Equation (7), Equation (6) can be rewritten as a cost function for optimizing the demixing matrix:

The entropy of given observations is a constant. In addition, a gradient update rule based on the natural gradient (Amari, 1998) with learning rate η is used to solve the optimization problem:

where

In every iteration, the distribution of the estimated source for the score function is chosen from the super-Gaussian or sub-Gaussian based on the sign of the fourth cumulant of each source , where Mk is the kth moment ().

In a real environment, the expectation operator 𝔼[·] is the expected value of the empirical distribution (the sample average of the variable).

2.2.2. Independent Vector Analysis

IVA is an extension of the ICA algorithm to multivariate components (vectorized signals) (Hiroe, 2006; Kim et al., 2006b). Like ICA algorithms, IVA defines the dependence between joint probability distributions and marginal probability products using the Kullback–Leibler divergence; however, it introduces a vector density model that has a variance dependency within a source vector. This study applied the natural-gradient-based IVA algorithm implemented by Kim et al. (2006b) based on the ivabss function from an open-access toolbox available on Github (https://github.com/teradepth/iva).

Two conditions are assumed: (1) elements of a source vector are mutually independent of those of other source vectors; and (2) within a source vector, the elements are highly dependent on each other. Based on these assumptions, the cost function for multivariate random variables to separate the components from the observations can be written as

The cost function preserves the inherent dependency within each source vector, though it removes the dependency between different source vectors.

By differentiating the object function with respect to the coefficients of demixing matrices Wi and using the natural gradient, we can derive a gradient update rule as

where

where a⋆ denotes the complex conjugate of a. Among a number of possible function forms, one of the simplest and most effective score functions is given as follows:

To define an optimal form of the function , which has dependency within a source vector, IVA algorithms introduce a vector density model as a scale mixture of a Gaussian distribution with a fixed mean and a variable variance (Kim et al., 2006a):

where α is a normalization term, μiq and Σiq are respectively the mean vector and covariance matrix of the qth source signal in the ith frequency bin, and a† is the conjugate transpose of a.

2.2.3. Independent Low-Rank Matrix Analysis

The ILRMA method unifies IVA and non-negative matrix factorization (Lee and Seung, 1999; Sawada et al., 2013) by considering the determined situation (P = Q) and a linear time-invariant mixing system (Kitamura et al., 2016). This study applied the ILRMA algorithm implemented by Kitamura et al. (2016) using the ILRMA function from an open-access toolbox available on Github (https://github.com/d-kitamura/ILRMA). The algorithm estimates both the demixing matrix Wi and the STFT of the estimated signals yij by approximately decomposing into the non-negative elements tik and vkj of the basis matrix and the activation matrix with a latent variable zqk of the partitioning function Z, which indicates whether or not the kth basis (1 ≤ k ≤ K) belongs to the qth source. For the decomposition, the ILRMA algorithm has the following cost function:

where . The cost function finds a low-rank time-frequency structure for sources using the first and second terms in Equation (18) and maximizes the statistical independence between sources using the second and third terms in Equation (18).

In this algorithm, the demixing matrix Wi can be efficiently updated through iterative projection based on the auxiliary function technique (Ono, 2011):

where rijq is the estimated variance of each source under the complex Gaussian distribution and eq, a unit vector in which the qth element is equal to unity. These update rules have been reported to be faster and more stable than conventional update rules (e.g., natural gradient). After the update, the separated signal yij is also updated:

In addition, the basis matrix Tq, activation matrix Vq, and partitioning function Z can be updated by the majorization-minimization algorithm (Hunter and Lange, 2000):

Finally, the estimated source model is represented as

Note that the demixing matrix Wi and the estimated variance rijq are normalized at each iteration to avoid the risk of diverging as follows:

The number of bases for all sources K and number of iterations were set to J/10 and 200, respectively.

2.3. Component Identification

For identifying the estimated ICs obtained from ICA, IVA, and ILRMA, we used the ICLabel (https://github.com/sccn/ICLabel) classifier proposed by Pion-Tonachini et al. (2019) (freely available as a package in EEGLAB; Delorme and Makeig, 2004; Delorme et al., 2011). This classifier uses three artificial neural networks (ANNs) (specifically, two “Classifier” networks and one “Generator” network): (1) a convolutional neural network (CNN) optimized by an unweighted cross-entropy loss, (2) a CNN optimized by a weighted cross-entropy loss and neuronal IC classification errors, and (3) a semi-supervised learning generative adversarial network (SSGAN) (Odena, 2016; Salimans et al., 2016). The ICLabel classifier inputs, architectures, and training paradigms are described in detail in Appendices B and E in Pion-Tonachini et al. (2019). By using these three ANNs and the three IC features, the ICLabel classifier classified unlabeled EEG ICs into seven categories: (1) brain, (2) muscle, (3) eye, (4) heart, (5) line noise, (6) channel noise, and (7) others. In this study, because of the property of the epoch identification method described in section 4, we removed ICs whose labels were “muscle” or “eye.” Currently, the ICLabel classifier has been trained using 6352 EEG recordings in storage drives collected over the past 15 years at Swartz Center for Computational Neuroscience (SCCN) at UC San Diego.

To accurately identify IC labels using the ICLabel classifier, three discriminable features were calculated from each IC: (1) 32 × 32 pixel scalp topography using the topoplot function in EEGLAB, (2) median power spectral densities (PSDs) from 1 to 50 Hz using a variation of the Welch method (Welch, 1967), and (3) the autocorrelation function. The scalp topographies and PSDs were scaled such that each had a maximum absolute value of 0.99. Further, the autocorrelation vectors were normalized such that the zero-lag value was 0.99. Note that the estimated mixing matrix (W−1), channel locations in 3D space, and channel labels were required to generate the scalp topographies. We collected information about the channel locations in 3D space from the sample_locs folder in EEGLAB toolbox. In addition, the estimated demixing matrix has a 3D structure except for ICA; thus, the corresponding frequency band (e.g., 8–30 Hz) was extracted from whole frequency bins, and the summation was computed to transform the matrix into a 2D structure. Furthermore, the matrix was scaled by the number of extracted frequency bins.

2.4. Signal Reconstruction

By performing the identification process using ICLabel, labels are obtained for the estimated ICs. If the label is “eye” or “muscle,” all components of the artifactual IC are set to zeros. Based on the modified sources, artifact-reduced EEG signals in EEG observations were reconstructed using the inverse linear demixing process in ICA. While applying IVA and ILRMA, the sources were translated into frequency components. Thus, all frequency components of the artifactual ICs were set to zeros and translated into time-series data by the inverse STFT. Then, the artifact-reduced EEG signals were reconstructed, and the inverse ICA linear demixing process was performed.

3. Materials and Baseline Methods

To assess the discriminability of artifact-reduced EEGs by ICA, IVA, and ILRMA, we downloaded an open-access EEG dataset published by Lee et al. (2019) from the webpage http://gigadb.org/dataset/view/id/100542/File_page. The EEG data were recorded using 62 electrodes according to the International 10-20 system using BrainAmp (Brain Products; Munich, Germany) with a sampling rate of 1,000 Hz. In the analysis procedures, we commonly downsampled all EEG data to 100 Hz. The reference and ground channels were nasion and AFz, respectively. The impedance of the EEG electrodes was maintained below 10 kΩ. Participants were instructed to comfortably sit in a chair with armrests ~60 cm in front of a 21-inch LCD monitor (refresh rate: 60 Hz; resolution: 1, 600 × 1, 200). In addition, they were asked to relax their muscles and minimize their eye and muscle movements during the BCI paradigms. Before beginning the experiments, five kinds of 10-s artifact-contaminated EEG data were measured: (1) eye blinking, (2) repetitive horizontal eye movements, (3) repetitive vertical eye movements, (4) teeth clenching, and (5) flexing both arms.

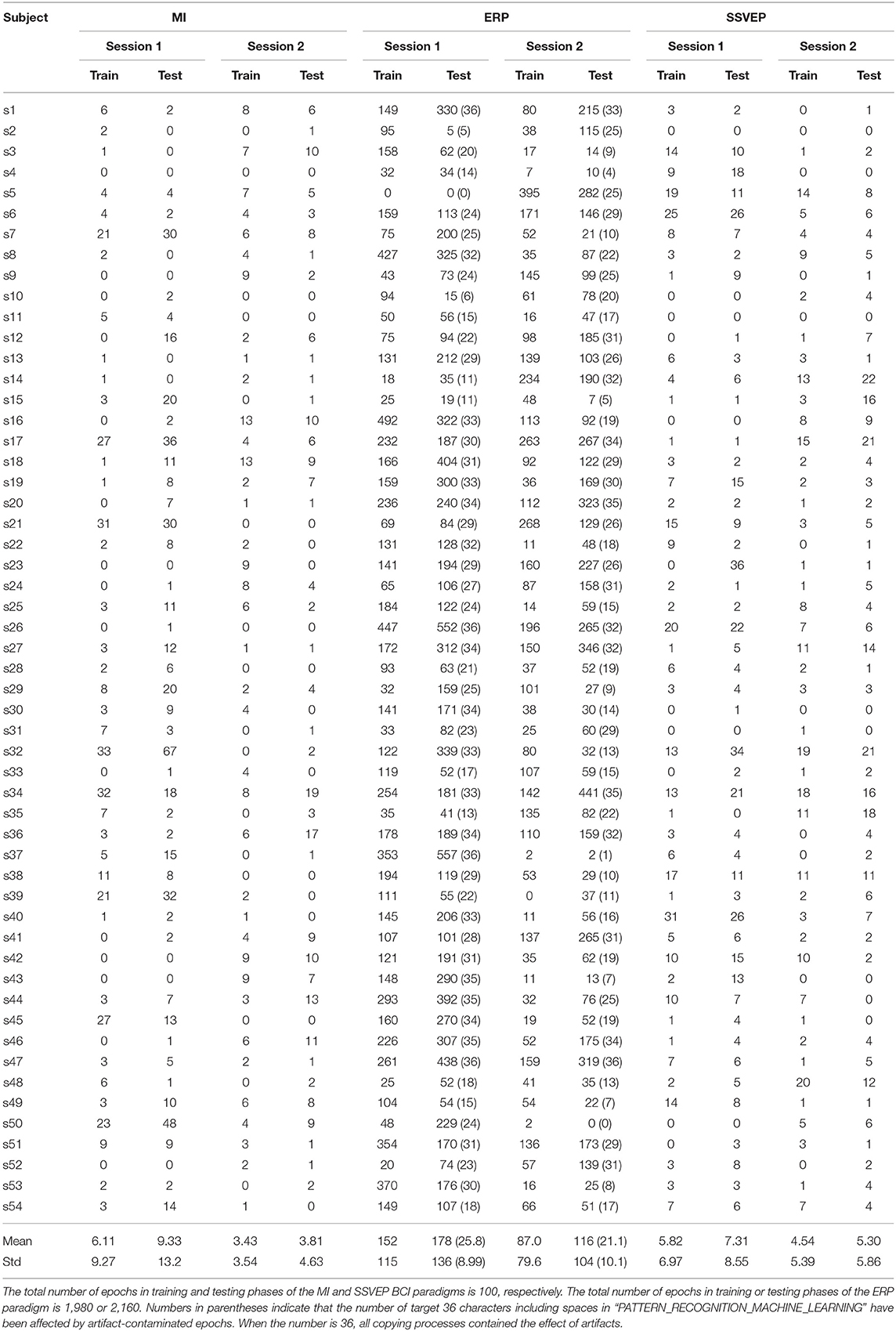

The dataset has the following three properties: (1) a large number of subjects (54 healthy participants; 29 males and 25 females; age: 24–35 years), (2) multiple sessions (two sessions on different days), and (3) multiple paradigms (a binary-class MI, a 36-symbol ERP, and a four-target-frequency SSVEP). Each session consisted of training and testing phases. All BCI paradigms were developed based on the OpenBMI toolbox (Lee et al., 2016) and Psychtoolbox (Brainard, 1997). We used this dataset because (1) EEGs in the three BCI paradigms were collected from the same participants, (2) each paradigm was conducted for 2 days, and (3) baseline analysis methods based on Matlab functions in the OpenBMI toolbox (https://github.com/PatternRecognition/OpenBMI) are available. A single dataset having all these properties is very important for fairly comparing algorithms to reveal general performances with intra- and inter-subject/paradigm variabilities in BCI research. To verify the change in the discrimination accuracy with artifact-reduced EEGs, the baseline analysis methods, including feature extraction and classification algorithms for each paradigm described in Lee et al. (2019), were used. Each paradigm and processing stream are described in detail in the following subsections.

3.1. MI Paradigm and Processing

The MI paradigm was designed based on a well-established protocol (Pfurtscheller and Neuper, 2001): a training/testing phase had 100 trials with 50 right and 50 left hand motion imagery tasks resulting in binary classification. Each trial lasted 13 ± 1.5 s. In the first 3 s, a black fixation cross appeared at the center of the monitor. After the preparation time, the participant imagined a right or left grasping motion for 4 s depending on whether a right arrow or left arrow was displayed, respectively, and then remained in the resting state for 6 ± 1.5 s.

In the MI paradigm, 20 electrodes in the motor cortex region (FC-5/3/1/2/4/6, C-5/3/1/z/2/4/6, and CP-5/3/1/z/2/4/6) were selected. The EEG data of the selected channels were band-pass filtered between 8 and 30 Hz through a fifth order Butterworth filter and further segmented into 2.5-s epochs, which are data segments of 1.0 to 3.5 s after the cue onsets (Pfurtscheller and Neuper, 2001; Fazli et al., 2009). We applied the filter bank common spatial pattern (FBCSP) to the epochs, which has been widely used in MI-based BCIs to maximize the discrimination of the binary class (Ang et al., 2008). A subset of the top and bottom two rows from the projection matrix was used for calculating log-variance features. Based on the features, linear discriminant analysis (LDA) classifiers were constructed and used.

3.2. ERP Paradigm and Processing

The ERP paradigm was designed based on a typical row-column speller system with random-set presentation (Yeom et al., 2014) and face stimuli (Kaufmann et al., 2011). The six rows and six columns were configured with 36 symbols (alphabets A to Z, numerals 1 to 9, and underscore “_”). Each trial sequence lasted 19.5 s. In the first 4.5 s, a target character was highlighted for attracting the participant's attention. After the preparation time, all rows and columns were flashed one by one (12 stimulus flashes) for 13 s and then remained in the resting state for 2 s. The stimulus-time interval was set to 80 ms and the interstimulus interval (ISI) to 135 ms. The highlighted target character was estimated based on data of five sequences (i.e., 60 flashes). In the training phase, participants were asked to copy the 33 characters including spaces in “NEURAL_NETWORKS_AND_DEEP_LEARNING” by gazing at the target character on the monitor, resulting in 1980 trials and binary classification (target or non-target character). In the testing phase, participants tried to copy the 36 characters including spaces in “PATTERN_RECOGNITION_MACHINE_LEARNING,” resulting in 2,160 trials.

In the ERP paradigm, 32 electrodes (Fp-1/2, F-7/3/z/4/8, FC-5/1/2/6, T-7/8, C-3/z/4, TP-9/10, CP-5/1/2/6, P-7/3/z/4/8, PO-9/10, and O-1/z/2) were selected. The EEG data of the selected channels were band-pass filtered between 0.5 and 40 Hz through a fifth order Butterworth filter and then baseline-corrected by subtracting the average amplitudes of the prestimulus within an interval of 200 ms with respect to the stimulus onset. In addition, 0.8-s epochs after the onset were extracted for analysis. From the epochs, the mean amplitudes (MAs) over eight non-overlapping samples were calculated as the 320-dimensional subject-dependent spatio-temporal features (10 dimensions, 32 channels). Based on the features, LDA classifiers were constructed and used.

3.3. SSVEP Paradigm and Processing

The SSVEP paradigm was designed based on general requirements for SSVEP-based BCIs that run over four specific commands (Parini et al., 2009). Four flickers at 5.45, 6.67, 8.57, and 12 Hz were displayed at four positions (down, right, left, and up) on a monitor. Each target frequency was presented 25 times for both the training and the testing phases, resulting in four target identification problems. Each trial lasted 10 s. In the first 4 s, the participant gazed in the box where the target was highlighted (not flickering) in a different color, and the target flicker was then presented for 4 s with an ISI of 6 s to induce the target SSVEP.

In the SSVEP paradigm, 10 electrodes in the occipital region (P-7/3/z/4/8, PO-9/10, and O-1/z/2) were selected. The EEG data of the selected channels were segmented into 2-s epochs with respect to the stimulus onset. We applied multichannel canonical correlation analysis (CCA) (Lin et al., 2006) for identifying the target frequency index by calculating the correlation values between the input data and the prepared sinusoidal templates of the corresponding frequencies (5.45, 6.67, 8.57, and 12 Hz). Although this identification process does not need training data owing to the use of an unsupervised classifier, only data from the testing phase were used for evaluating the BCI performance.

4. Assessments

In this study, we assumed that artifact-reduced epochs are correctly classified if the artifact reduction technique effectively reduced artifactual effects from artifact-contaminated epochs. However, we do not know how many epochs of the aforementioned paradigms were contaminated by artifacts because the open-access EEG dataset does not provide such information. Empirically, it is difficult to completely avoid the generation of biological artifacts during EEG paradigms. Thus, we expected that some epochs were contaminated by some artifacts during each BCI paradigm. To identify the type of epoch (not artifact-contaminated or artifact-contaminated), we applied the detection of events in continuous time (DETECT) epoch identification method proposed by Lawhern et al. (2013); this method requires training data with clean and artifactual label information to make a multiclass SVM model (https://github.com/VisLab/detect). Usually, training data has a short time length (e.g., less than 5 s). Thus, in this study, 10 1-s no artifact-contaminated EEG data detected based on a manual inspection and extracted from the training phase of each BCI paradigm as “clean” epochs and five types of 10-s EEG data contaminated by artifacts, such as eye blinking, horizontal/vertical eye movements, teeth clenching, and flexing both arms, were prepared as “artifactual” epochs because these are well-known to generate ocular/muscular artifacts during EEG measurements. For training an SVM model, each 10-s-length artifactual data was separated into 10 1-s-length data without overlapping. Based on the 60 1-s epochs (10 1-s epochs × 6 classes), a 6-class SVM model was constructed. Note that segmented epochs of the BCI paradigms have different time length (i.e., MI: 2.5, ERP: 0.8, and SSVEP: 2.0 s). To apply DETECT based on a processing strategy for 1-s-length data, we extracted first 1-s data from the “clean” epochs if the target BCI paradigm was MI or SSVEP. For the epochs of the ERP paradigm, 0.2-s data before the stimulus onsets were concatenated to the “clean” epochs. Then, autoregressive features were extracted from the epochs to construct a multiclass SVM classifier of each BCI paradigm. The classifier and hard thresholding for the estimated artifactual class (certainty value obtained using the DETECT toolbox was over 0.5 or not) finally identified each epoch as being clean or artifact contaminated. Table 1 lists the identification results. In all paradigms, data recorded in session 2 (day 2) had less artifactual data than data recorded in session 1 (day 1). In the MI and ERP paradigms, the number of artifactual data recorded in day 2 was significantly lower than data recorded in day 1 (p = 0.001, 0.009 for MI and ERP paradigms in t-test). However, in the SSVEP paradigm, the number in day 2 was not significantly lower (p = 0.172 in t-test). Note that we identify the epoch is neuronal unless a certainty value of all classes exceeded the hard threshold; thus, the thresholding process found an explicit artifactual class over 6 class labels. If the certainty values distributed throughout all classes and no one did not exceed the threshold, this modest identification method can not find artifact-contaminated epochs. In Table 1, there was an outlier: subject 5 in the session 1 of ERP paradigm had no artifact-contaminated epoch. This phenomenon might be caused by the above-mentioned reason.

Table 1. Number of artifact-contaminated epochs in training and testing phases of each BCI paradigm and subject.

Through the epoch identification process, artifact-contaminated epochs in both the training and the testing phases were detected. In the training phase, we also assumed that artifact-reduced epochs contribute to the construction of an effective classifier if the artifact reduction technique effectively reduced artifactual effects from the artifact-contaminated epochs. Therefore, the demixing matrix W was first trained by using all epochs in the training phase, and artifactual ICs were then removed from the artifact-contaminated epochs using the artifact reduction process described in sections 2.2, 2.3, and 2.4. After artifact reduction, the clean and artifact-reduced epochs in the training phase were applied to the baseline analysis methods described in section 3. For performance evaluation, the artifact-contaminated epochs in the testing phase were used to compute the classification accuracy of the artifact-reduced epochs:

where Ncorrect is the number of correct predictions, and Ntotal is the total number of artifact-contaminated epochs in the testing phase, as listed in Table 1, when the BCI paradigm was MI or SSVEP. Note that ERP data requires an averaging process for finding obvious feature waveforms (e.g., N200 and P300), and the averaged waveform relates to the classification performance. In other words, we cannot calculate the classification accuracy for each artifact-contaminated epoch in the paradigm. The numbers in parentheses in Table 1 indicate the number of characters affected by artifacts (Ntotal), which is directly related to the assessment results. Figure 2 shows the block diagram of the assessment procedure used in this study.

A three-way repeated measures analysis of variances (ANOVAs) was applied to the classification accuracy to explore the effect of the two sessions, three BCI paradigms, and three artifact reduction methods. In addition, artifact-reduced signals obtained using ICA, IVA, and ILRMA and that were reconstructed from muscular or ocular artifact-contaminated signals were visualized to qualitatively investigate the artifact reduction performances.

5. Results

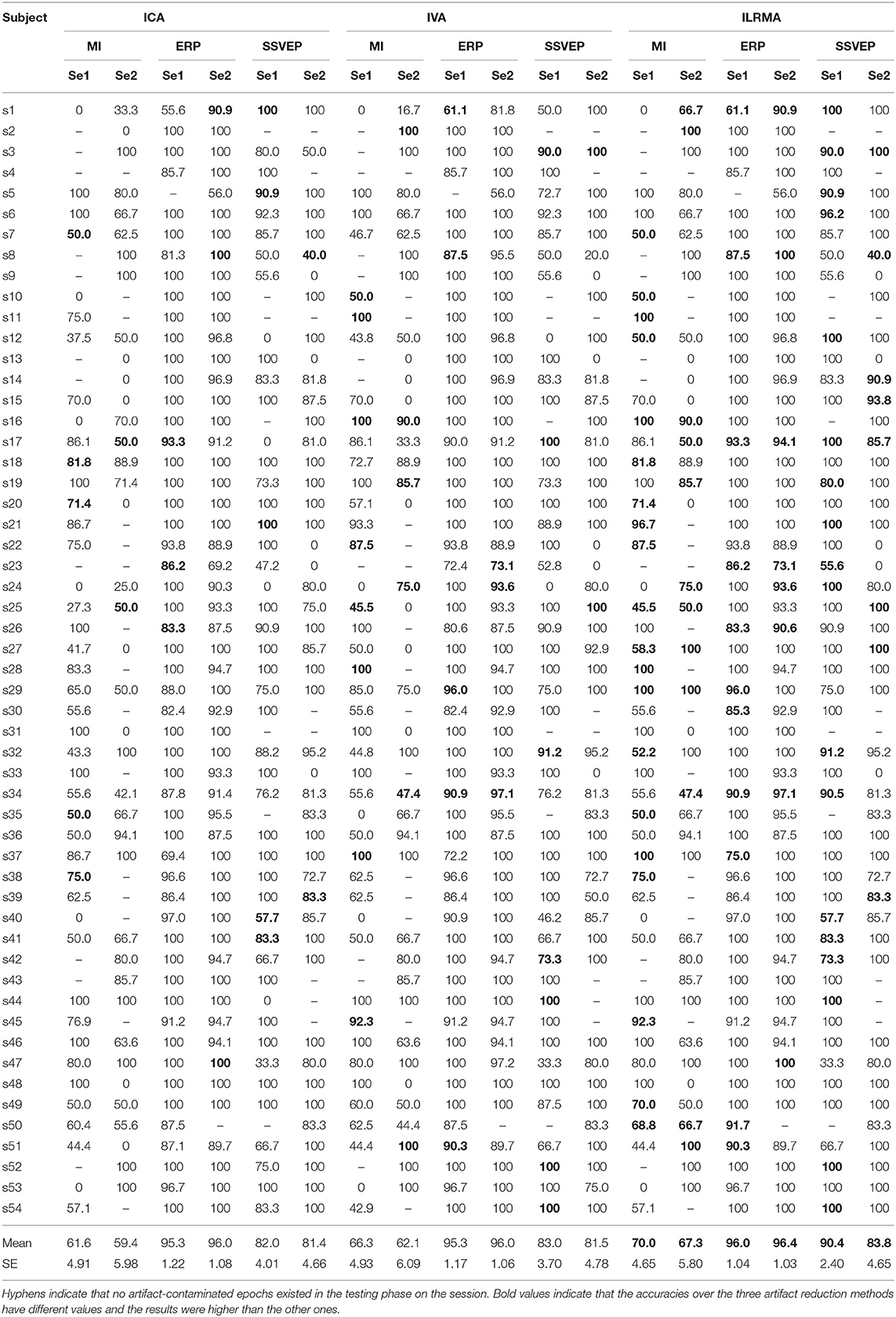

5.1. BCI Performance Before/After Applying Artifact Reduction Technique

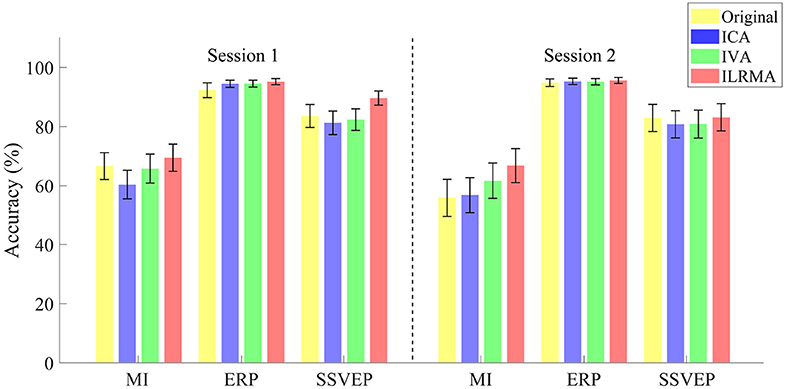

Table 2 lists the classification accuracies for all subjects, sessions, paradigms, and artifact reduction methods. In addition, Figure 3 shows the averaged classification accuracies over all subjects. A three-way repeated measures ANOVA using the classification accuracies of all subjects showed the significant main effects of the BCI paradigms [F(2, 829) = 113.09, p < 0.001] and artifact reduction methods [F(2, 829) = 3.05, p = 0.048]; however, it did not show any significant main effect of the sessions [F(1, 829) = 1.29, p = 0.256]. There were no interaction effects among them. post-hoc analysis using Tukey test revealed that the ICA and ILRMA results had a significant difference (p = 0.039).

Table 2. Classification accuracies for all subjects, sessions, paradigms, and artifact reduction methods.

Figure 3. Averaged classification accuracies over all subjects for sessions, paradigms, and artifact reduction methods. Original indicates the results of using all data without artifact reduction method.

The classification accuracies obtained using ILRMA in all cases were always equal to or higher than the higher accuracy of using ICA or IVA (see Table 2). In particular, ILRMA improved the discriminability of artifact-reduced data for 31 subjects in session 1 and 24 subjects in session 2. When there was a difference in the artifact reduction performance, we highlighted the superior results in bold in the table. The averaged accuracy of using ILRMA in all BCI paradigms was also equal to or higher than that of using ICA and IVA (Figure 3). Interestingly, in some cases, artifact-contaminated data showed higher BCI performance than ICA and IVA. However, ILRMA always showed equal or higher performance compared to artifact-contaminated situations. For these results, ILRMA salvaged effective components for solving the classification problem from artifact-contaminated signals in the MI and SSVEP paradigms. Conversely, in the ERP paradigm, ICA was sufficient to remove the artifactual components and achieved almost 100% accuracy.

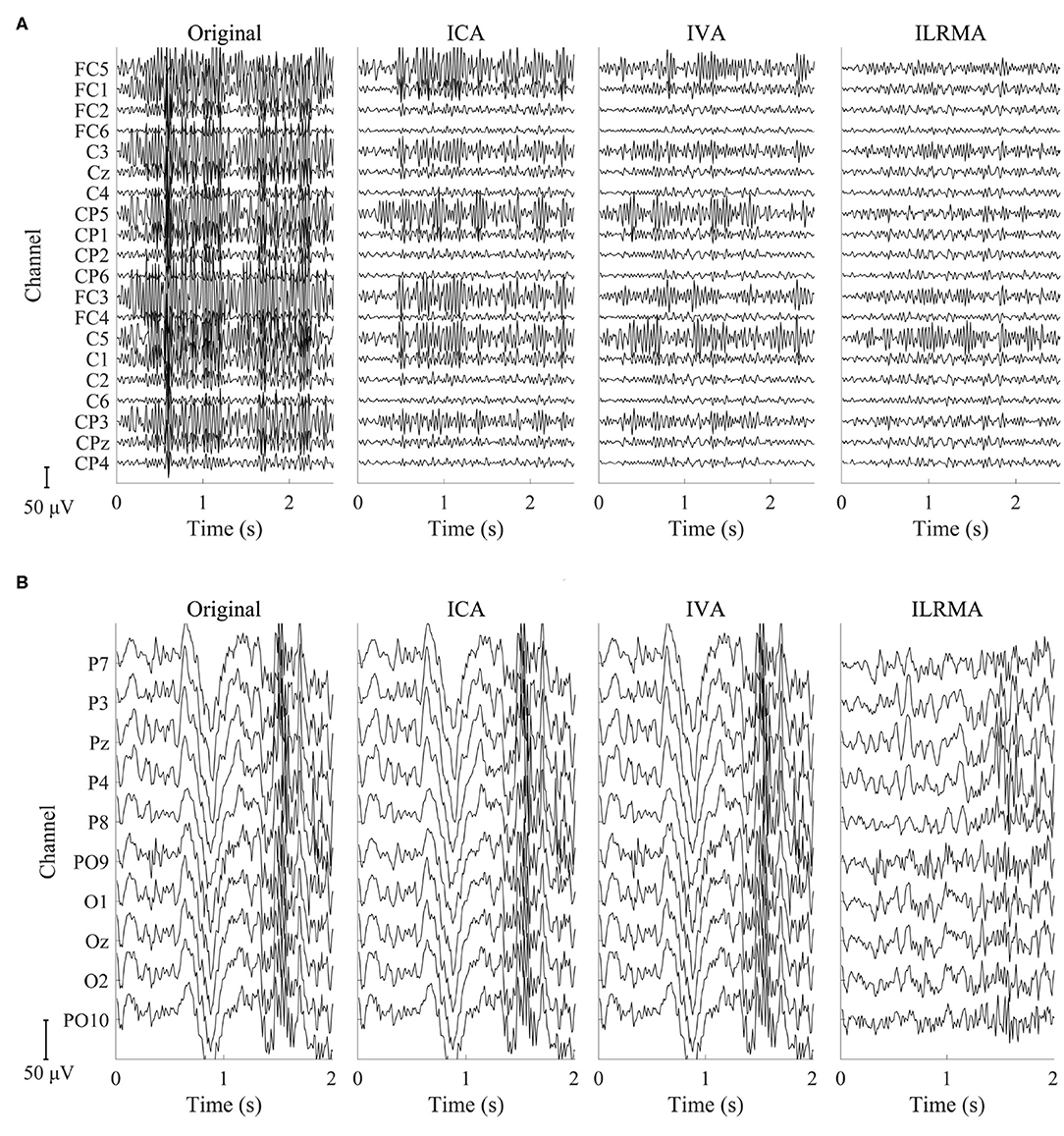

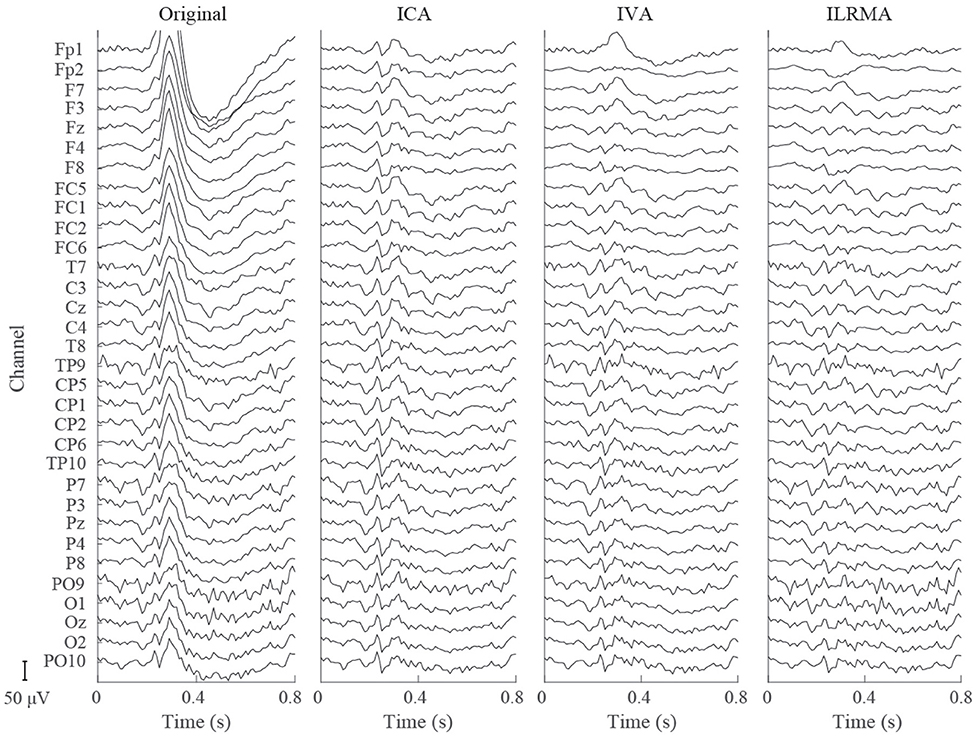

5.2. Representation of Original and Artifact-Reduced Signals

Figures 4, 5 show artifact-contaminated EEG epochs and artifact-reduced EEG epochs obtained using ICA, IVA, and ILRMA in the MI, ERP, and SSVEP paradigms. They were qualitatively indicated that ILRMA could better remove artifact effects compared to ICA and IVA. In addition, the task-independent components were removed by ILRMA to leave characteristic features in each paradigm (e.g., event-related desynchronization caused by motor imagery and evoked potential by steady-state visual stimulus) instead of the attenuating power of all frequency components. This resulted in improvements in these BCI performances.

Figure 4. Artifact-contaminated EEG epoch and artifact-reduced EEG epochs estimated by ICA, IVA, and ILRMA in the (A) MI paradigm and (B) SSVEP paradigm.

Figure 5. Artifact-contaminated EEG epoch and artifact-reduced EEG epochs estimated by ICA, IVA, and ILRMA in the ERP paradigm.

6. Discussion

6.1. Automatic Processing Architecture

ICA-based artifact reduction techniques have been widely used in the field of EEG signal processing because of their powerful signal separation accuracy, simplicity (low computational cost), and ease of use (Delorme et al., 2007; Dimigen, 2019; Jiang et al., 2019). The techniques for limiting ocular and muscular artifacts (Chen et al., 2019; Tian et al., 2020) other than the ICA family are useful if they are integrated in a cascade-type processing module, which can automatically identify the type of artifact contained in the EEG observation. A simple filtering (linear combination) approach such as ICA, which multiplies the demixing matrix W as a filter, is faster and user-friendly. IVA and ILRMA use this property and can sufficiently cope with online processing as long as they can learn the demixing matrix. In addition, these algorithms can benefit from the ICLabel classifier (Pion-Tonachini et al., 2019) for IC identification to realize an automatic artifact reduction method. Thus, the ILRMA-based artifact reduction technique (1) has higher accuracy than ICA, (2) has low computational cost (equivalent to ICA) in an online process, (3) is a changeable module for the ICA decomposition function, and (4) can simultaneously remove multiple types of artifacts. Note that the ICLabel classifier is expected to be updated frequently in the future. Although the EEGLAB toolbox keeps track of updates in the run_ICL function, the label assignment results may change depending on the updates. In this study, we selected the “default” version for IC identification.

6.2. Efficacy of Artifact Reduction for BCIs

Researchers who propose original artifact reduction techniques for BCIs should describe not only the signal quality but also the discriminative performance of the extracted components to demonstrate their efficacy in BCIs. The performances of proposed artifact reduction techniques in most previous studies were evaluated and ranked based on a metric (e.g., signal-to-noise ratio and correlation) that indicates how the signal quality of the estimated neuronal sources was preserved (Islam et al., 2016). We do not know the original (true) neuronal sources of EEG observations; thus, synthetic data whose pseudo-neuronal/pseudo-artifactual sources and mixing process are known were usually used to calculate the metric (Chen et al., 2019; Mucarquer et al., 2019). After the quantified evaluation of signal quality in the estimated sources through the proposed artifact reduction technique, the separation ability for real data is qualitatively shown (Blum et al., 2019; Kanoga et al., 2019a). However, the evaluation of the discriminative performance of the remaining sources (extracted components) is not a major/standard quantitative one in this field. To implement an artifact reduction technique into the BCI framework, it is more important to know which aspect of the technique is the most crucial to the classification/identification performance. In this study, we demonstrated improved MI- and SSVEP-based BCI performances using our proposed technique, which represents common and recurrent properties of artifactual waveforms into trials over all classes in low-rank bases and automatically removes them. Except for session 2 in the MI-based BCI performance, our proposed method showed over 70% averaged accuracies (Table 2), which is required for satisfactory BCI control (Sellers et al., 2006). When we consider the time latency during an MI period and change the starting time point of MI features from fixed to flexible by using time window selection algorithm such as correlation-based time window selection (Feng et al., 2018), the average performance of the MI-based BCI might be improved. Furthermore, using other kind of feature extraction method such as sparse FBCSP (Zhang et al., 2015) is one of good solution to improve the MI-based BCI performance because the method automatically chose the filter bands with superior accuracies compared with FBCSP. In the case of the ERP-based BCI, ICA was already effective enough. Therefore, the superiority of ILRMA could not be confirmed; however, its performance is equivalent to that of ICA. Although this paper did not present the quantitative signal quality of the estimated neuronal sources because we did not prepare synthetic data to avoid the artificial bias of neuronal characteristics (all EEG observations have unclear individual differences such as amplitudes and latencies, so we could not easily predict the features and generate pseudo/synthetic data), the classification/identification results obtained with three well-known BCI paradigms should be helpful information for practitioners and implementers.

6.3. Limitations

The results obtained using the DETECT toolbox were treated as the grand truth. However, the muscular label reflected the characteristics of “clenching” and “flexing both arms.” Other types of muscular artifacts, such as “changing head direction,” may not be extracted as artifactual epochs. In addition, the “100% accuracy” was sometimes calculated using only one testing epoch although other accuracies were calculated using more epochs (e.g., 20). The comparison of artifact reduction techniques was fair because the number of artifact-contaminated epochs was the same over the factor. However, the comparisons of BCI paradigms and multiday effects were not fair: each factor has different numbers of artifact-contaminated epochs. Evaluating the techniques as fairly as possible by using the DETECT toolbox is very difficult. For solving this problem, an artifact-contaminated EEG dataset with multiple types of intensity-manipulated artifacts is required in this research field to enable rapid developments in artifact reduction techniques for BCIs.

6.4. Future Works

Further improvement of ILRMA-based artifact reduction techniques is expected through the introduction of an identification algorithm for decomposed frequency components and a soft-threshold-like wavelet-enhanced ICA (Castellanos and Makarov, 2006). Despite the fact that ILRMA decomposes the STFT of the original signals up to each frequency bin, our automatic processing architecture reconstructs artifact-reduced signals by replacing artifactual source(s) with zeros (replacing entire frequency bins with zeros) to adapt the ICLabel classifier, which needs time-series ICs. In other words, a lot of neural information is lost in the reduction step. Signal reconstruction should be made more sophisticated by considering the effective frequency band adjusted to the BCI paradigm.

Moreover, we need further investigations of artifact reduction methods in practical situations such as using wearable devices that have small number of channels (in an extreme case, the number of channels is only one) for EEG measurements. In such situation, the performance of artifact reduction techniques will change and might be decreased. Recent studies attempt to propose a generic artifact removal algorithm (Chen et al., 2019). Unlike the time-domain algorithm, frequency-domain methods (i.e., IVA and ILRMA) can separate single-channel data if the differences in data-driven spectral basis functions can be learned well (Kanoga and Mitsukura, 2014; Kanoga et al., 2019a). Thus, we will investigate our proposed algorithm in practical situations and extend it as a generic and user-friendly algorithm for reducing artifacts from EEG data.

The ICA family, including IVA and ILRMA, represents EEG observations through linear combinations of sources based on a time-invariant demixing matrix; the trained demixing matrix may sometimes cause instability through inter-/intrasubject variabilities. By integrating with a transfer learning algorithm (Pan and Yang, 2009; Tan et al., 2018), relearning from the general filter (demixing matrix) to the user-specific filter according to the data while performing online processing could potentially reduce the variability and provide more convenient and practical BCIs.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: http://gigadb.org/dataset/view/id/100542/File_page/1.

Author Contributions

SK obtained the initial idea for this study. All authors contributed to the planning and design of the study. SK and TH analyzed the data and interpreted the results. SK compiled the first draft of the article. All authors participated in revisions to finalize the draft of the manuscript.

Funding

This research was supported in part by the New Energy and Industrial Technology Development Organization (NEDO), Japan.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Amari, S.-I. (1998). Natural gradient works efficiently in learning. Neural Comput. 10, 251–276. doi: 10.1162/089976698300017746

Ang, K. K., Chin, Z. Y., Zhang, H., and Guan, C. (2008). “Filter bank common spatial pattern (FBCSP) in brain-computer interface,” in 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence) (Hong Kong: IEEE), 2390–2397.

Arieli, A., Sterkin, A., Grinvald, A., and Aertsen, A. (1996). Dynamics of ongoing activity: Explanation of the large variability in evoked cortical responses. Science 273, 1868–1871. doi: 10.1126/science.273.5283.1868

Bell, A. J., and Sejnowski, T. J. (1995). An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7, 1129–1159. doi: 10.1162/neco.1995.7.6.1129

Belouchrani, A., Abed-Meraim, K., Cardoso, J.-F., and Moulines, E. (1997). A blind source separation technique using second-order statistics. IEEE Trans. Signal Process. 45, 434–444. doi: 10.1109/78.554307

Blum, S., Jacobsen, N., Bleichner, M. G., and Debener, S. (2019). A Riemannian modification of artifact subspace reconstruction for EEG artifact handling. Front. Hum. Neurosci. 13:141. doi: 10.3389/fnhum.2019.00141

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vis. 10, 433–436. doi: 10.1163/156856897X00357

Budd, J. M., and Kisvárday, Z. F. (2001). Local lateral connectivity of inhibitory clutch cells in layer 4 of cat visual cortex (area 17). Exp. Brain Res. 140, 245–250. doi: 10.1007/s002210100817

Castellanos, N. P., and Makarov, V. A. (2006). Recovering EEG brain signals: artifact suppression with wavelet enhanced independent component analysis. J. Neurosci. Methods 158, 300–312. doi: 10.1016/j.jneumeth.2006.05.033

Chen, X., Liu, Q., Tao, W., Li, L., Lee, S., Liua, A., et al. (2019). ReMAE: a user-friendly toolbox for removing muscle artifacts from EEG. IEEE Trans. Instrum. Meas. 69, 2105–2119. doi: 10.1109/TIM.2019.2920186

Choi, S., Cichocki, A., Park, H.-M., and Lee, S.-Y. (2005). Blind source separation and independent component analysis: a review. Neural Inform. Process. Lett. Rev. 6, 1–57.

Delorme, A., and Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Delorme, A., Mullen, T., Kothe, C., Acar, Z. A., Bigdely-Shamlo, N., Vankov, A., et al. (2011). EEGLAB, SIFT, NFT, BCILAB, and ERICA: New tools for advanced EEG processing. Comput. Intell. Neurosci. 2011:10. doi: 10.1155/2011/130714

Delorme, A., Sejnowski, T., and Makeig, S. (2007). Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage 34, 1443–1449. doi: 10.1016/j.neuroimage.2006.11.004

Dimigen, O. (2019). Optimizing the ICA-based removal of ocular EEG artifacts from free viewing experiments. Neuroimage 207:116117. doi: 10.1016/j.neuroimage.2019.116117

Duong, N. Q., Vincent, E., and Gribonval, R. (2010). Under-determined reverberant audio source separation using a full-rank spatial covariance model. IEEE Trans. Audio Speech Lang. Process. 18, 1830–1840. doi: 10.1109/TASL.2010.2050716

Fazli, S., Popescu, F., Danóczy, M., Blankertz, B., Müller, K.-R., and Grozea, C. (2009). Subject-independent mental state classification in single trials. Neural Netw. 22, 1305–1312. doi: 10.1016/j.neunet.2009.06.003

Feng, J., Yin, E., Jin, J., Saab, R., Daly, I., Wang, X., et al. (2018). Towards correlation-based time window selection method for motor imagery BCIs. Neural Netw. 102, 87–95. doi: 10.1016/j.neunet.2018.02.011

Hagemann, D., and Naumann, E. (2001). The effects of ocular artifacts on (lateralized) broadband power in the EEG. Clin. Neurophysiol. 112, 215–231. doi: 10.1016/S1388-2457(00)00541-1

Halliday, D. M., Conway, B. A., Farmer, S. F., and Rosenberg, J. R. (1998). Using electroencephalography to study functional coupling between cortical activity and electromyograms during voluntary contractions in humans. Neurosci. Lett. 241, 5–8. doi: 10.1016/S0304-3940(97)00964-6

Hiroe, A. (2006). “Solution of permutation problem in frequency domain ICA, using multivariate probability density functions,” in International Conference on Independent Component Analysis and Signal Separation (Charleston: Springer), 601–608. doi: 10.1007/11679363_75

Hunter, D. R., and Lange, K. (2000). Quantile regression via an mm algorithm. J. Comput. Graph. Stat. 9, 60–77. doi: 10.1080/10618600.2000.10474866

Hyvärinen, A., and Oja, E. (1997). A fast fixed-point algorithm for independent component analysis. Neural Comput. 9, 1483–1492. doi: 10.1162/neco.1997.9.7.1483

Islam, M. K., Rastegarnia, A., and Yang, Z. (2016). Methods for artifact detection and removal from scalp EEG: a review. Neurophysiol. Clin. 46, 287–305. doi: 10.1016/j.neucli.2016.07.002

James, C. J., and Hesse, C. W. (2004). Independent component analysis for biomedical signals. Physiol. Meas. 26:R15. doi: 10.1088/0967-3334/26/1/R02

Jiang, X., Bian, G.-B., and Tian, Z. (2019). Removal of artifacts from EEG signals: a review. Sensors 19:987. doi: 10.3390/s19050987

Jung, T.-P., Makeig, S., Humphries, C., Lee, T.-W., McKeown, M. J., Iragui, V., et al. (2000). Removing electroencephalographic artifacts by blind source separation. Psychophysiology 37, 163–178. doi: 10.1111/1469-8986.3720163

Kanoga, S., Kanemura, A., and Asoh, H. (2019a). Multi-scale dictionary learning for ocular artifact reduction from single-channel electroencephalograms. Neurocomputing 347, 240–250. doi: 10.1016/j.neucom.2019.02.060

Kanoga, S., and Mitsukura, Y. (2014). Eye-blink artifact reduction using 2-step nonnegative matrix factorization for single-channel electroencephalographic signals. J. Signal Process. 18, 251–257. doi: 10.2299/jsp.18.251

Kanoga, S., and Mitsukura, Y. (2017). “Review of artifact rejection methods for electroencephalographic systems,” in Electroencephalography 69, 69–89. doi: 10.5772/68023

Kanoga, S., Nakanishi, M., and Mitsukura, Y. (2016). Assessing the effects of voluntary and involuntary eyeblinks in independent components of electroencephalogram. Neurocomputing 193, 20–32. doi: 10.1016/j.neucom.2016.01.057

Kanoga, S., Nakanishi, M., Murai, A., Tada, M., and Kanemura, A. (2019b). Robustness analysis of decoding SSVEPs in humans with head movements using a moving visual flicker. J. Neural Eng. 17:016009. doi: 10.1088/1741-2552/ab5760

Karson, C. N. (1983). Spontaneous eye-blink rates and dopaminergic systems. Brain 106, 643–653. doi: 10.1093/brain/106.3.643

Kaufmann, T., Schulz, S., Grünzinger, C., and Kübler, A. (2011). Flashing characters with famous faces improves ERP-based brain-computer interface performance. J. Neural Eng. 8:056016. doi: 10.1088/1741-2560/8/5/056016

Kim, T., Attias, H. T., Lee, S.-Y., and Lee, T.-W. (2006a). Blind source separation exploiting higher-order frequency dependencies. IEEE Trans. Audio Speech Lang. Process. 15, 70–79. doi: 10.1109/TASL.2006.872618

Kim, T., Lee, I., and Lee, T.-W. (2006b). “Independent vector analysis: definition and algorithms,” in 2006 Fortieth Asilomar Conference on Signals, Systems and Computers (Pacific Grove, CA: IEEE), 1393–1396. doi: 10.1109/ACSSC.2006.354986

Kitamura, D., Ono, N., and Saruwatari, H. (2017). “Experimental analysis of optimal window length for independent low-rank matrix analysis,” in 2017 25th European Signal Processing Conference (EUSIPCO) (Kos: IEEE), 1170–1174. doi: 10.23919/EUSIPCO.2017.8081392

Kitamura, D., Ono, N., Sawada, H., Kameoka, H., and Saruwatari, H. (2016). Determined blind source separation unifying independent vector analysis and nonnegative matrix factorization. IEEE/ACM Trans. Audio Speech Lang. Process. 24, 1622–1637. doi: 10.1109/TASLP.2016.2577880

Lawhern, V., Hairston, W. D., and Robbins, K. (2013). Detect: A MATLAB toolbox for event detection and identification in time series, with applications to artifact detection in EEG signals. PLoS ONE 8:e62944. doi: 10.1371/journal.pone.0062944

Lee, D. D., and Seung, H. S. (1999). Learning the parts of objects by non-negative matrix factorization. Nature 401:788. doi: 10.1038/44565

Lee, M.-H., Fazli, S., Kim, K.-T., and Lee, S.-W. (2016). “Development of an open source platform for brain-machine interface: OpenBMI,” in 2016 4th International Winter Conference on Brain-Computer Interface (BCI) (Yongpyong: IEEE), 1–2. doi: 10.1109/IWW-BCI.2016.7457440

Lee, M.-H., Kwon, O.-Y., Kim, Y.-J., Kim, H.-K., Lee, Y.-E., Williamson, J., et al. (2019). EEG dataset and OpenBMI toolbox for three BCI paradigms: an investigation into BCI illiteracy. GigaScience 8:giz002. doi: 10.1093/gigascience/giz002

Lee, T.-W., Girolami, M., and Sejnowski, T. J. (1999). Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Comput. 11, 417–441. doi: 10.1162/089976699300016719

Lin, Z., Zhang, C., Wu, W., and Gao, X. (2006). Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans. Biomed. Eng. 53, 2610–2614. doi: 10.1109/TBME.2006.886577

Mohammadpour, M., and Rahmani, V. (2017). “A hidden Markov model-based approach to removing EEG artifact,” in 2017 5th Iranian Joint Congress on Fuzzy and Intelligent Systems (CFIS) (Qazvin: IEEE), 46–49. doi: 10.1109/CFIS.2017.8003655

Mucarquer, J. A., Prado, P., Escobar, M.-J., El-Deredy, W., and Zañartu, M. (2019). Improving EEG muscle artifact removal with an EMG array. IEEE Trans. Instrum. Meas. 69, 815–824. doi: 10.1109/TIM.2019.2906967

Odena, A. (2016). Semi-supervised learning with generative adversarial networks. arXiv preprint arXiv:1606.01583.

Ogino, M., Kanoga, S., Muto, M., and Mitsukura, Y. (2019). Analysis of prefrontal single-channel EEG data for portable auditory ERP-based brain-computer interfaces. Front. Hum. Neurosci. 13:250. doi: 10.3389/fnhum.2019.00250

Ono, N. (2011). “Stable and fast update rules for independent vector analysis based on auxiliary function technique,” in 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA) (New Paltz, NY: IEEE), 189–192. doi: 10.1109/ASPAA.2011.6082320

Onton, J., and Makeig, S. (2006). Information-based modeling of event-related brain dynamics. Prog. Brain Res. 159, 99–120. doi: 10.1016/S0079-6123(06)59007-7

Pan, S. J., and Yang, Q. (2009). A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345–1359. doi: 10.1109/TKDE.2009.191

Parini, S., Maggi, L., Turconi, A. C., and Andreoni, G. (2009). A robust and self-paced BCI system based on a four class SSVEP paradigm: algorithms and protocols for a high-transfer-rate direct brain communication. Comput. Intell. Neurosci. 2009:864564. doi: 10.1155/2009/864564

Pfurtscheller, G., and Da Silva, F. L. (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857. doi: 10.1016/S1388-2457(99)00141-8

Pfurtscheller, G., and Neuper, C. (2001). Motor imagery and direct brain-computer communication. Proc. IEEE 89, 1123–1134. doi: 10.1109/5.939829

Pion-Tonachini, L., Kreutz-Delgado, K., and Makeig, S. (2019). ICLabel: an automated electroencephalographic independent component classifier, dataset, and website. Neuroimage 198, 181–197. doi: 10.1016/j.neuroimage.2019.05.026

Regan, D. (1966). Some characteristics of average steady-state and transient responses evoked by modulated light. Electroencephalogr. Clin. Neurophysiol. 20, 238–248. doi: 10.1016/0013-4694(66)90088-5

Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., and Chen, X. (2016). “Improved techniques for training GANs,” in Advances in Neural Information Processing Systems (Barcelona), 2234–2242.

Sawada, H., Kameoka, H., Araki, S., and Ueda, N. (2013). Multichannel extensions of non-negative matrix factorization with complex-valued data. IEEE Trans. Audio Speech Lang. Process. 21, 971–982. doi: 10.1109/TASL.2013.2239990

Sellers, E. W., Krusienski, D. J., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2006). A P300 event-related potential brain-computer interface (BCI): the effects of matrix size and inter stimulus interval on performance. Biol. Psychol. 73, 242–252. doi: 10.1016/j.biopsycho.2006.04.007

Squires, K. C., Wickens, C., Squires, N. K., and Donchin, E. (1976). The effect of stimulus sequence on the waveform of the cortical event-related potential. Science 193, 1142–1146. doi: 10.1126/science.959831

Tan, C., Sun, F., Kong, T., Zhang, W., Yang, C., and Liu, C. (2018). “A survey on deep transfer learning,” in International Conference on Artificial Neural Networks (Rhodes: Springer), 270–279. doi: 10.1007/978-3-030-01424-7_27

Tian, Z., Ling, B. W.-K., Zhou, X., Lam, R. W.-K., and Teo, K.-L. (2020). Suppressing the spikes in electroencephalogram via an iterative joint singular spectrum analysis and low-rank decomposition approach. Sensors 20:341. doi: 10.3390/s20020341

Urigüen, J. A., and Garcia-Zapirain, B. (2015). EEG artifact removal—state-of-the-art and guidelines. J. Neural Eng. 12:031001. doi: 10.1088/1741-2560/12/3/031001

Vigario, R., and Oja, E. (2008). BSS and ICA in neuroinformatics: from current practices to open challenges. IEEE Rev. Biomed. Eng. 1, 50–61. doi: 10.1109/RBME.2008.2008244

Vourvopoulos, A. T., Jorge, C., Abreu, R., Figueiredo, P., Fernandes, J.-C., and Bermúdez i Badia, S. (2019). Efficacy and brain imaging correlates of an immersive motor imagery BCI-driven VR system for upper limb motor rehabilitation: a clinical case report. Front. Hum. Neurosci. 13:244. doi: 10.3389/fnhum.2019.00244

Wallstrom, G. L., Kass, R. E., Miller, A., Cohn, J. F., and Fox, N. A. (2004). Automatic correction of ocular artifacts in the EEG: a comparison of regression-based and component-based methods. Int. J. Psychophysiol. 53, 105–119. doi: 10.1016/j.ijpsycho.2004.03.007

Wang, M., Li, R., Zhang, R., Li, G., and Zhang, D. (2018). A wearable SSVEP-based BCI system for quadcopter control using head-mounted device. IEEE Access 6, 26789–26798. doi: 10.1109/ACCESS.2018.2825378

Welch, P. (1967). The use of fast Fourier transform for the estimation of power spectra: a method based on time averaging over short, modified periodograms. IEEE Trans. Audio Electroacoust. 15, 70–73. doi: 10.1109/TAU.1967.1161901

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Yeom, S.-K., Fazli, S., Müller, K.-R., and Lee, S.-W. (2014). An efficient ERP-based brain-computer interface using random set presentation and face familiarity. PLoS ONE 9:e111157. doi: 10.1371/journal.pone.0111157

Keywords: electroencephalogram, brain–computer interface, independent component analysis, artifact reduction, independent low-rank matrix analysis

Citation: Kanoga S, Hoshino T and Asoh H (2020) Independent Low-Rank Matrix Analysis-Based Automatic Artifact Reduction Technique Applied to Three BCI Paradigms. Front. Hum. Neurosci. 14:173. doi: 10.3389/fnhum.2020.00173

Received: 25 February 2020; Accepted: 20 April 2020;

Published: 09 June 2020.

Edited by:

Tzyy-Ping Jung, University of California, San Diego, United StatesReviewed by:

Erwei Yin, China Astronaut Research and Training Center, ChinaDong Ming, Tianjin University, China

Copyright © 2020 Kanoga, Hoshino and Asoh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Suguru Kanoga, cy5rYW5vdWdhQGFpc3QuZ28uanA=

Suguru Kanoga

Suguru Kanoga Takayuki Hoshino

Takayuki Hoshino Hideki Asoh1

Hideki Asoh1