ORIGINAL RESEARCH article

Front. Hum. Neurosci., 04 May 2018

Sec. Cognitive Neuroscience

Volume 12 - 2018 | https://doi.org/10.3389/fnhum.2018.00186

Qianru Xu1,2, Elisa M. Ruohonen2, Chaoxiong Ye1,2, Xueqiao Li2, Kairi Kreegipuu3, Gabor Stefanics4,5, Wenbo Luo1,6* and Piia Astikainen2

It is not known to what extent the automatic encoding and change detection of peripherally presented facial emotion is altered in dysphoria. The negative bias in automatic face processing in particular has rarely been studied. We used magnetoencephalography (MEG) to record automatic brain responses to happy and sad faces in dysphoric (Beck’s Depression Inventory ≥ 13) and control participants. Stimuli were presented in a passive oddball condition, which allowed potential negative bias in dysphoria at different stages of face processing (M100, M170, and M300) and alterations of change detection (visual mismatch negativity, vMMN) to be investigated. The magnetic counterpart of the vMMN was elicited at all stages of face processing, indexing automatic deviance detection in facial emotions. The M170 amplitude was modulated by emotion, response amplitudes being larger for sad faces than happy faces. Group differences were found for the M300, and they were indexed by two different interaction effects. At the left occipital region of interest, the dysphoric group had larger amplitudes for sad than happy deviant faces, reflecting negative bias in deviance detection, which was not found in the control group. On the other hand, the dysphoric group showed no vMMN to changes in facial emotions, while the vMMN was observed in the control group at the right occipital region of interest. Our results indicate that there is a negative bias in automatic visual deviance detection, but also a general change detection deficit in dysphoria.

Depression is a common and easily recurring disorder. Decades ago, Beck (1976) suggested that negatively biased information processing plays a role in the development and maintenance of depression. According to his theory, a dysphoric mood is maintained through attention and memory functions biased toward negative information, and these cognitive biases also expose individuals to recurrent depression (Beck, 1967, 1976).

Previous empirical studies have indeed demonstrated a negative bias in attention and memory functions in depression (Ridout et al., 2003, 2009; Linden et al., 2011; for reviews see, Mathews and Macleod, 2005; Browning et al., 2010; Delle-Vigne et al., 2014). Depressed participants have a pronounced bias toward negative stimuli as well as toward sad faces (Gotlib et al., 2004; Dai and Feng, 2012; Bistricky et al., 2014).

Brain responses, such as electroencephalography (EEG) and magnetoencephalography (MEG) responses, allow face processing to be studied in a temporally accurate manner. Previous studies have demonstrated that different evoked EEG/MEG responses reflect different stages of face perception (Bourke et al., 2010; Luo et al., 2010; Delle-Vigne et al., 2014). P1 (or P100) in event-related potentials (ERPs) and its magnetic counterpart M100 are thought to reflect the encoding of low-level stimulus features and are also modulated by emotional expressions (e.g., Batty and Taylor, 2003; Susac et al., 2010; Dai and Feng, 2012). P1 is also affected by depression: sad faces elicited greater responses than neutral and happy faces in the depressed group reflecting an attentive negative bias in depression (Dai and Feng, 2012). The following N170 component in ERPs and the magnetic M170 both index the structural encoding of faces (Bentin et al., 1996; Liu et al., 2002). This component has also shown emotional modulation in some studies (for positive results, see e.g., Batty and Taylor, 2003; Miyoshi et al., 2004; Japee et al., 2009; Wronka and Walentowska, 2011; and for negative results, see, e.g., Eimer and Holmes, 2002; Herrmann et al., 2002; Eimer et al., 2003; Holmes et al., 2003). In addition, depression alters the N170/M170: some ERP studies have found a smaller N170 response in depressed participants than in healthy controls (Dai and Feng, 2012), while others have found no such effects (Maurage et al., 2008; Foti et al., 2010; Jaworska et al., 2012). Negative bias has been reported as a higher N170 amplitude for sad faces relative to happy and neutral faces in depressed participants (Chen et al., 2014; Zhao et al., 2015). 
The P2 component (also labeled as P250), a positive polarity ERP response approximately at 200–320 ms in the temporo-occipital region, follows the N170 and reflects the encoding of emotional information (Zhao and Li, 2006; Stefanics et al., 2012; Da Silva et al., 2016). It also has a counterpart in MEG responses, sometimes labeled M220 (e.g., Itier et al., 2006; Schweinberger et al., 2007; Bayle and Taylor, 2010). In an ERP study with an emotional face intensity judgment task, depressed participants showed larger P2 amplitude for sad faces than happy and neutral ones, reflecting a negative bias at the attentive level, which was not found in the control group (Dai and Feng, 2012).

Although the negative bias in depression is well documented in settings involving sustained attention (for reviews see, Mathews and Macleod, 2005; Browning et al., 2010; Delle-Vigne et al., 2014), few studies have focused on automatic processing of emotional stimuli in depressed participants. Since our adaptive behavior relies largely on preattentive cognition (Näätänen et al., 2010), it is important to investigate emotional face processing at the preattentive level in healthy and dysphoric participants.

Studies based on the electrophysiological brain response called visual mismatch negativity (vMMN), a visual counterpart of the auditory MMN (Näätänen et al., 1978), have demonstrated that automatic change detection is altered in depression (Chang et al., 2010; Qiu et al., 2011; for a review, see Kremláček et al., 2016). vMMN is an ERP component elicited by rare “deviant” stimuli among repetitive “standard” stimuli over posterior electrode sites approximately at 100–200 ms post stimulus but also in a later latency range, up to 400 ms after the stimulus onset (e.g., Czigler et al., 2006; Astikainen et al., 2008; Stefanics et al., 2012, 2018; for a review, see Stefanics et al., 2014).

Related to depression, three studies have investigated the vMMN to changes in basic visual features (Chang et al., 2011; Qiu et al., 2011; Maekawa et al., 2013; for a review, see Kremláček et al., 2016), and one to changes in facial emotions (Chang et al., 2010). In the study by Chang et al. (2010), centrally presented schematic faces were applied as stimuli (neutral faces as standard stimuli and happy and sad faces as deviant stimuli). The results showed that the early vMMN (reflecting mainly modulation of the N170 component) was reduced compared to the control group and the late vMMN (reflecting mainly modulation in P2 component) was absent in the depression group. This study thus demonstrated no negative bias, but a general deficit in the cortical change detection of facial expressions. Since in this study neutral faces were always applied as standard stimuli and emotional faces as deviant stimuli, it is unclear whether the modulations in ERPs were due to facial emotion processing as such or due to change detection in facial emotions. This applies to nearly all vMMN studies with facial expressions as the changing feature, as visual and face-sensitive components are known to be modulated by emotional expression (e.g., Batty and Taylor, 2003). This problem is particularly difficult when a neutral standard face and an emotional deviant face are used in the oddball condition, as the exogenous responses are the greatest to emotional faces (e.g., Batty and Taylor, 2003). This problem can be solved by using only emotional faces in the oddball condition and analyzing the vMMN as a difference between the responses to the same facial emotion (e.g., a happy face) presented as deviant and standard stimuli (see Stefanics et al., 2012). This analysis method allows the vMMN, which reflects change detection, to be separated from the emotional modulation of visual and face-sensitive components.

In the present study, we investigated automatic face processing and change detection in emotional faces in two groups of participants: those with depressive symptoms (Beck’s Depression Inventory ≥ 13; here referred to as the dysphoric group) and gender- and age-matched never-depressed control participants. The stimuli and procedure were similar to those reported previously by Stefanics et al. (2012), but instead of happy and fearful faces, we applied happy and sad faces. We chose happy and sad faces since impairments in the processing of both have been associated with depression in previous studies (happy faces: Fu et al., 2007; sad faces: e.g., Bradley et al., 1997; Gollan et al., 2008), and because these facial emotions make it possible to study mood-congruent negative bias in depression. We recorded MEG, which provides excellent temporal resolution and relatively good spatial resolution; in addition, its signal is less disturbed by the skull and scalp than the EEG signal (for a review, see Baillet, 2017).

Importantly, during the stimulus presentation the participants conducted a task related to stimuli presented in the center of the screen, while at the same time, emotional faces were presented in the periphery. In most of the previous studies of unattended face processing, face stimuli have been presented in the center of the visual field (e.g., Zhao and Li, 2006; Astikainen and Hietanen, 2009), as well as in the study where depression and control groups were compared (Chang et al., 2010). Centrally presented pictures might be difficult to ignore, and in real life, we also acquire information from our visual periphery. We hypothesize that rare changes in facial emotions presented in the peripheral vision in a condition in which participants ignore the stimuli will result in amplitude modulations in responses corresponding to the vMMN. We expect that the experimental manipulation of the stimulus probability will elicit the vMMN in three time windows reflecting the three stages of facial information processing. This hypothesis is based on previous ERP studies applying the oddball condition in which amplitude modulations in P100, N170, and P2 have been found (Zhao and Li, 2006; Astikainen and Hietanen, 2009; Chang et al., 2010; Susac et al., 2010; Stefanics et al., 2012). In addition to stimulus probability effects, modulations by facial emotions are expected in the MEG counterparts of N170 and P2 (Miyoshi et al., 2004; Zhao and Li, 2006; Japee et al., 2009; Chen et al., 2014; Hinojosa et al., 2015). However, it is not clear if the first processing stage, M100, can be expected to be different in amplitude for sad and happy faces. In ERP studies, the corresponding P1 component has been modulated in amplitude for happy and fearful faces (Luo et al., 2010; Stefanics et al., 2012), but there are no previous studies contrasting sad and happy face processing in a stimulus condition comparable to the present study.
Importantly, based on prior studies we expect that a group difference can be found for vMMN at the time windows for P1, N170, and P2, but it might not be specific to sad or happy faces (Chang et al., 2010). A depression-related negative bias, larger responses to sad than happy faces specifically in the dysphoric group, is also expected. Studies that have used attended stimulus conditions suggest that the negative bias is present in the two later processing stages (i.e., N170 and P2, Dai and Feng, 2012; Chen et al., 2014; Zhao et al., 2015; Dai et al., 2016; also, for a negative bias in P1, see Dai and Feng, 2012).

Thirteen healthy participants (control group) and ten participants with self-reported depressive symptoms (dysphoric group) volunteered for the study. The participants were recruited via email lists and notice board announcements at the University of Jyväskylä and with an announcement in a local newspaper. Inclusion criteria for all participants were age between 18 and 45 years, right-handedness, normal or corrected-to-normal vision, and no self-reported neurological disorders. Inclusion criteria for the participants in the dysphoric group were self-reported symptoms of depression (a score of 13 or more on the BDI-II) or a recent depression diagnosis. The exclusion criteria for all participants were self-reported anamnesis of any psychiatric disorders other than depression or anxiety in the dysphoric group (such as bipolar disorder or schizophrenia) and current or previous abuse of alcohol or drugs.

All but one of the participants in the dysphoric group reported having a diagnosis of depression given by a medical doctor in Finland. According to their self-reported diagnoses, one participant had mild depression (F32.0), four had moderate depression (F32.1), one had severe depression (F32.2), two had recurrent depression with moderate episode (F33.1), and one did not remember which depression diagnosis was given. One participant reported having a comorbid anxiety disorder, one reported a previous anxiety disorder diagnosis, and one reported a previous anxiety disorder combined with an eating disorder. They were included in the study because comorbidity with anxiety is high among depressed individuals. Because in some cases the diagnosis had been given more than 1 year ago, the current symptom level was assessed prior to the experiment with Beck’s Depression Inventory (BDI-II, Beck et al., 1996).

According to the BDI-II manual, the following normative cutoffs are recommended for the interpretation of BDI-II scores: 0–13 points = minimal depression, 14–19 points = mild depression, 20–28 points = moderate depression, and 29–63 points = severe depression (Beck et al., 1996). Based on these cut-off values, there were four participants with mild depression, four participants with moderate depression, and two participants with severe depression. The BDI-II scores and demographics are reported in Table 1. Written informed consent was obtained from the participants before their participation. The experiment was carried out in accordance with the Declaration of Helsinki. The ethical committee of the University of Jyväskylä approved the research protocol.
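The cutoff ranges quoted above can be expressed as a small lookup. This is a minimal sketch of the ranges as stated in the text; the function name `bdi_ii_category` is hypothetical and is not part of the study's materials.

```python
def bdi_ii_category(score: int) -> str:
    """Map a BDI-II total score (0-63) to the severity label quoted in the text.

    Ranges as stated above: 0-13 minimal, 14-19 mild, 20-28 moderate, 29-63 severe.
    """
    if not 0 <= score <= 63:
        raise ValueError("BDI-II total scores range from 0 to 63")
    if score <= 13:
        return "minimal"
    if score <= 19:
        return "mild"
    if score <= 28:
        return "moderate"
    return "severe"


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    for s in (5, 14, 22, 35):
        print(s, bdi_ii_category(s))
```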

The visual stimuli were black and white photographs (3.7° wide × 4.9° tall) of 10 different models (five males and five females) from Pictures of Facial Affect (Ekman and Friesen, 1976). Stimuli were presented on a dark-gray background screen at a viewing distance of 100 cm. Each trial consisted of four face stimuli randomly presented at four fixed locations at the corners of an imaginary square (eccentricity, 5.37°) and a fixation cross in the center of the screen. The four faces were presented at the same time, each face showing the same emotion (either happy or sad). On each panel, two male and two female faces were presented. The duration of each stimulus was 200 ms.

An oddball condition was applied in which an inter-stimulus interval (ISI) randomly varied from 450 to 650 ms (offset to onset). The experiment consisted of four stimulus blocks in which frequent (90%; standard) stimuli were randomly interspersed with rare (10%; deviant) stimuli. In two experimental blocks, sad faces were presented frequently as standard stimuli, while happy faces were presented rarely as deviant stimuli. In the other two blocks, happy faces were presented as standard stimuli and sad faces were presented as deviant stimuli. Each block contained 450 standard stimuli and 50 deviant stimuli, and the order of the four blocks was randomized across participants.
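To make the block structure concrete, the following sketch generates one oddball block with 450 standards, 50 deviants, and an ISI drawn uniformly between 450 and 650 ms. It is an illustration only: the text does not state whether additional sequencing constraints were used (for example, a minimum number of standards between deviants) or how the ISI was distributed, so a plain shuffle and a uniform distribution are assumed here. The function name and parameters are hypothetical.

```python
import random


def make_oddball_block(n_standard=450, n_deviant=50,
                       isi_range_ms=(450.0, 650.0), seed=None):
    """Return a list of (stimulus_type, isi_ms) tuples for one block.

    Assumptions (not stated in the source): deviants are placed by a plain
    shuffle, and the offset-to-onset ISI is uniform within isi_range_ms.
    """
    rng = random.Random(seed)
    labels = ["standard"] * n_standard + ["deviant"] * n_deviant
    rng.shuffle(labels)
    return [(label, rng.uniform(*isi_range_ms)) for label in labels]


if __name__ == "__main__":
    block = make_oddball_block(seed=1)
    n_dev = sum(1 for label, _ in block if label == "deviant")
    print(len(block), "trials,", n_dev, "deviants")
```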

The participants’ task was to fixate on the cross in the center of the screen, ignore the emotional faces, and respond by pressing a button as soon as possible when they detected a change in the cross. The change was a lengthening of either the horizontal or the vertical line of the cross, occurring at a rate of 11 changes per minute. Face and cross changes never co-occurred.

The visually evoked magnetic fields were recorded with a 306-channel whole-head system (Elekta Neuromag Oy, Helsinki, Finland) consisting of 204 planar gradiometers and 102 magnetometers in a magnetically shielded room at the MEG Laboratory, University of Jyväskylä. The empty room activity was recorded for 2 min before and after the experiment to estimate intrinsic noise levels. It was confirmed that all the magnetic materials that may distort the measurement had been removed from participants before the experiment. The locations of three anatomical landmarks (the nasion and left and right preauricular points) and five Head Position Indicator coils (HPI-coils, two on the forehead, two behind the ears, and one on the crown), as well as a number of additional points on the head were determined with an Isotrak 3D digitizer (PolhemusTM, United States) before the experiment started. During the recording, participants were instructed to sit in a chair with their head inside the helmet-shaped magnetometer and their hands on a table. The vertical electro-oculogram (EOG) was recorded with bipolar electrodes, one above and one below the right eye. The horizontal EOG was recorded with bipolar electrodes placed lateral to the outer canthi of the eyes.

First, the spatiotemporal signal space separation (tSSS) method (Taulu et al., 2005; Supek and Aine, 2014) in the MaxFilter software (Elekta-Neuromag) was used to remove the external interference from the MEG data. The MaxFilter software was also applied for head movement correction and transforming the head origin to the same position for each participant. Then, the MEG data were analyzed using the Brainstorm software (Tadel et al., 2011). Recordings were filtered offline by a band-pass filter between 0.1 and 40 Hz. To avoid potential artifacts, epochs with values exceeding ±200 μV in EOG channels were rejected from the analysis. Next, eye blink and heartbeat artifacts were identified based on EOG and electrocardiographic (ECG) channels using a signal-space projection (SSP) method (Uusitalo and Ilmoniemi, 1997) and removed from the data. To compare the results more directly with the previous ERP studies and to the results of Stefanics et al. (2012) in particular, data from magnetometers were analyzed. The data were segmented into epochs from 200 ms before to 600 ms after the stimulus onset and baseline corrected to the 200 ms pre-stimulus period. Trials were averaged separately for happy standard, sad standard, happy deviant, and sad deviant stimuli for each participant, the number of accepted trials being 651 (SD = 13.57), 639 (SD = 23.45), 83 (SD = 3.18), and 81 (SD = 4.69), respectively. The percentages of accepted trials for happy and sad deviants, and happy and sad standards were 83% (SD = 3.18%), 81% (SD = 4.69%), 72% (SD = 1.51%), and 71% (SD = 2.61%), respectively. There were no group differences in the number of accepted trials (all p-values > 0.34).
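The baseline correction step can be sketched for a single sensor and a single epoch as follows. This is a plain-Python illustration, not the Brainstorm implementation; the function name, the sample times, and the toy values are assumptions, and the sampling rate is not given in the text.

```python
def baseline_correct(epoch, times_ms, baseline=(-200.0, 0.0)):
    """Subtract the mean of the pre-stimulus samples from every sample.

    epoch    : signal values for one sensor and one trial
    times_ms : sample times relative to stimulus onset (same length as epoch)
    baseline : half-open interval [start, end) in ms used as the baseline
    """
    start, end = baseline
    base = [v for v, t in zip(epoch, times_ms) if start <= t < end]
    if not base:
        raise ValueError("no samples fall inside the baseline interval")
    mean = sum(base) / len(base)
    return [v - mean for v in epoch]


if __name__ == "__main__":
    # Toy epoch with four samples; real epochs span -200 to 600 ms.
    times = [-200.0, -100.0, 0.0, 100.0]
    values = [1.0, 3.0, 5.0, 7.0]
    print(baseline_correct(values, times))
```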

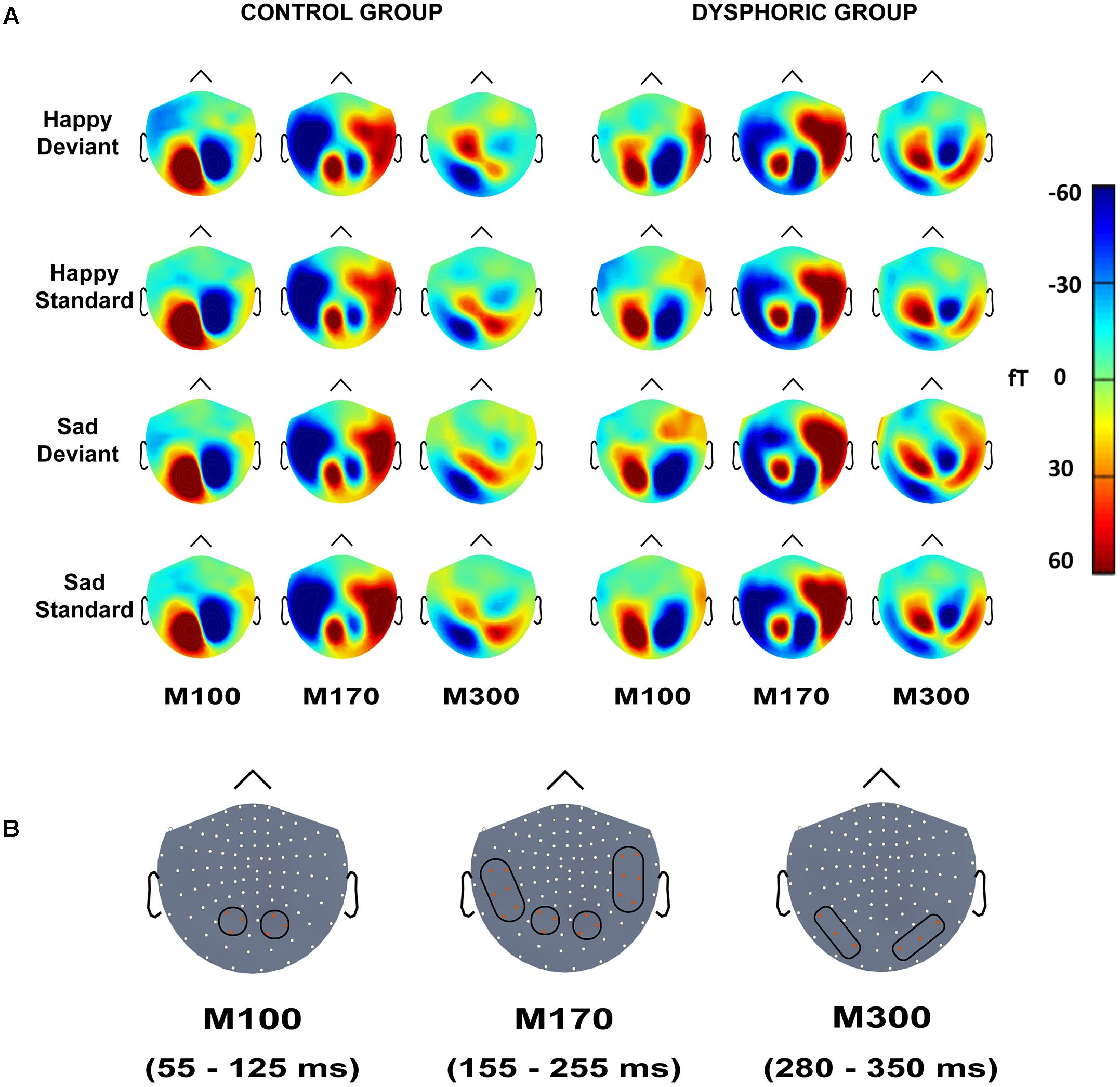

The peak amplitude values for each participant, separately for each stimulus type and emotion, were measured in three time windows: 55–125 ms, 155–255 ms, and 280–350 ms post-stimulus, corresponding to the three major responses, M100, M170, and M300, found from the grand-averaged data (Figures 1–3). Based on prior findings (Peyk et al., 2008; Taylor et al., 2011; Stefanics et al., 2012), we defined two (M100, M300) or four (M170) regions of interest (ROIs) for the peak amplitude analysis for each response (Figure 3). For M100, the peak amplitudes were averaged across sensors at bilateral occipital regions (Left ROI: MEG1911, MEG1921, MEG2041; Right ROI: MEG2311, MEG2321, MEG2341). For M170, the peak amplitudes were averaged across sensors at bilateral temporal and occipital sites (Left temporal ROI: MEG1511, MEG1521, MEG1611, MEG1641, MEG1721, MEG0241; Right temporal ROI: MEG1321, MEG1331, MEG1441, MEG2421, MEG2611, MEG2641; Left occipital ROI: MEG1911, MEG1921, MEG2041; Right occipital ROI: MEG2311, MEG2321, MEG2341). For M300, the peak amplitude values were averaged across sensors at occipital sites (Left ROI: MEG1721, MEG1731, MEG1931; Right ROI: MEG2331, MEG2511, MEG2521). In addition to peak amplitudes, the peak latencies were also measured for each component from the same sensors as used in the amplitude analysis. Since two participants’ data did not show M300 responses (one in the control group and another in the dysphoric group), they were excluded from the analysis for this response.
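A minimal sketch of the ROI peak measurement is given below. It assumes that the peak is the sample with the largest absolute value inside the time window, that the sign is kept, and that per-sensor peaks are then averaged across the ROI. The text does not spell out these details, so they are labeled assumptions, as are the function names and the toy data.

```python
def peak_in_window(signal, times_ms, window):
    """Return (amplitude, latency_ms) of the largest-magnitude sample in window."""
    lo, hi = window
    candidates = [(v, t) for v, t in zip(signal, times_ms) if lo <= t <= hi]
    if not candidates:
        raise ValueError("no samples fall inside the time window")
    return max(candidates, key=lambda vt: abs(vt[0]))


def roi_peak_amplitude(sensor_signals, times_ms, window):
    """Average the per-sensor peak amplitudes across the sensors of one ROI.

    sensor_signals : dict mapping sensor name -> averaged evoked response
    """
    peaks = [peak_in_window(sig, times_ms, window)[0]
             for sig in sensor_signals.values()]
    return sum(peaks) / len(peaks)


if __name__ == "__main__":
    # Toy values for two of the left occipital M100 sensors named in the text.
    times = [50, 85, 120, 200]
    roi = {"MEG1911": [1.0, 4.0, 2.0, 9.0],
           "MEG1921": [0.0, -6.0, 1.0, 3.0]}
    print(roi_peak_amplitude(roi, times, (55, 125)))
```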

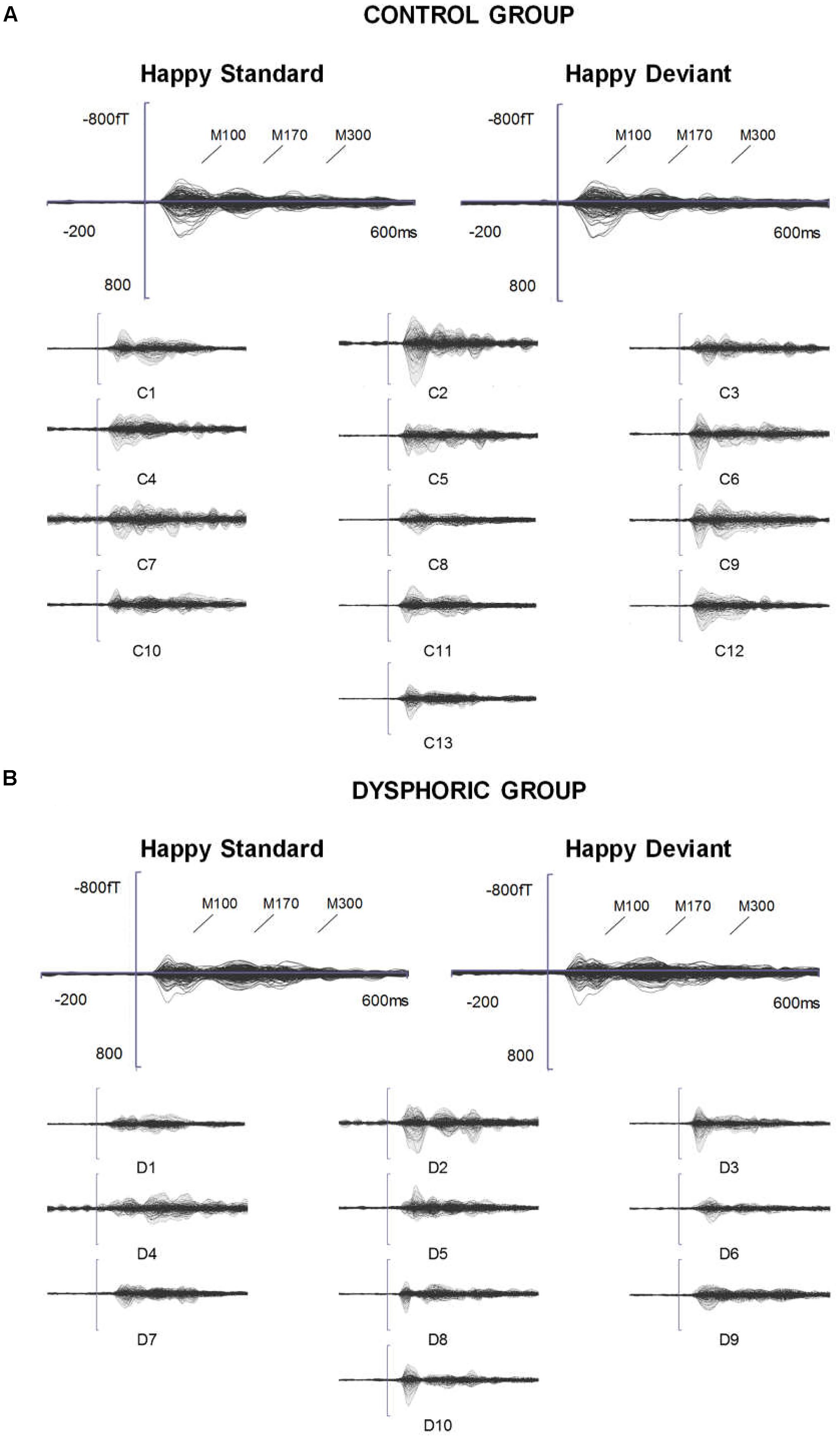

FIGURE 1. ERFs reflecting three stages of face processing (M100, M170, and M300). (A) Butterfly view of the grand-averaged responses to happy standard and happy deviant faces in the control group (top) and individual participants’ responses to happy standard faces (C1–C13). (B) Butterfly view of the grand-averaged responses to happy standard and happy deviant faces in the dysphoric group (top) and individual participants’ responses to happy standard faces (D1–D10). It should be noted that signals from both magnetometers and gradiometers are included here. For visualization purposes, the gradiometer values are multiplied by 0.04 because of the different units for magnetometers (T) and gradiometers (T/m).

For the behavioral task, the analysis included hit rate and false alarm calculations. The hit rate was calculated as the ratio between button presses in a 100–2,000 ms interval after the event and the actual number of cross-changes. The false alarm rate was calculated as the ratio between button presses that were not preceded by a cross-change in a 100–2,000 ms interval before the event and the actual number of cross-changes.
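The two behavioral measures can be sketched as follows. This is one reading of the definitions above, under stated assumptions: a cross-change counts as a hit if at least one button press falls 100–2,000 ms after it, and a press counts as a false alarm if no cross-change occurred 100–2,000 ms before it. Both counts are divided by the number of cross-changes. The function name and the example timings are hypothetical.

```python
def behavioral_rates(change_times_ms, press_times_ms, window=(100, 2000)):
    """Return (hit_rate, false_alarm_rate) for the cross-change task."""
    lo, hi = window

    def in_window(change, press):
        # True if the press follows the change by lo..hi ms.
        return lo <= press - change <= hi

    hits = sum(
        1 for change in change_times_ms
        if any(in_window(change, press) for press in press_times_ms)
    )
    false_alarms = sum(
        1 for press in press_times_ms
        if not any(in_window(change, press) for change in change_times_ms)
    )
    n_changes = len(change_times_ms)
    if n_changes == 0:
        raise ValueError("at least one cross-change is required")
    return hits / n_changes, false_alarms / n_changes


if __name__ == "__main__":
    changes = [1000, 6000, 12000, 18000]   # hypothetical cross-change onsets (ms)
    presses = [1400, 6350, 9000, 18500]    # hypothetical button presses (ms)
    print(behavioral_rates(changes, presses))
```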

Repeated-measures analysis of variance (ANOVA) was used to analyze the reaction time and accuracy (hit rate and false alarm) for the cross-change task. A within-subjects factor Stimulus Block (Sad vs. Happy standard) and a between-subjects factor Group (Control vs. Dysphoric) were applied.

Peak amplitudes and peak latencies separately at different ROIs in three time windows were analyzed with a three-way repeated-measures ANOVA with within-subjects factors Stimulus Type (Standard vs. Deviant) and Emotion (Sad vs. Happy), and a between-subjects factor Group (Control vs. Dysphoric).

In addition, because the visual inspection of the topographic maps of the M300 showed that there might be differences in the lateralization of the responses in dysphoric and control groups, we further studied this possibility using the lateralization index. First, all peak values from the right hemisphere ROI were multiplied by -1 to correct for the polarity difference (also see Morel et al., 2009; Ulloa et al., 2014). Then, using these rectified response values, the lateralization index was calculated for responses to each stimulus type (Happy Deviant, Happy Standard, Sad Deviant, Sad Standard) as follows: Lateralization index = (Left – Right)/(Left + Right). A three-way repeated-measures ANOVA with the within-subjects factors Stimulus Type (Standard vs. Deviant) and Emotion (Sad vs. Happy), and the between-subjects factor Group (Control vs. Dysphoric) was applied.
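The lateralization index described above can be written out directly. The sketch below applies the sign flip to the right-hemisphere peak and then computes (Left – Right)/(Left + Right); the function name and the example values are hypothetical.

```python
def lateralization_index(left_peak, right_peak):
    """Compute (Left - Right) / (Left + Right) after rectifying the right peak.

    The right-hemisphere value is multiplied by -1 first, as described in the
    text, to correct for the polarity difference between hemispheres.
    """
    right_rectified = -1.0 * right_peak
    denominator = left_peak + right_rectified
    if denominator == 0:
        raise ZeroDivisionError("left and rectified right peaks sum to zero")
    return (left_peak - right_rectified) / denominator


if __name__ == "__main__":
    # Hypothetical peak values in fT: left 60, right -40 (opposite polarity).
    print(lateralization_index(60.0, -40.0))
```

With this definition, positive values indicate a larger left-hemisphere response and negative values a larger right-hemisphere response.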

In addition, possible differences in the lateralization of the M300 response were investigated separately for happy and sad, as well as for deviant and standard stimulus responses with repeated-measures ANOVAs. Furthermore, because a small sample size can limit the possibility of observing existing significant differences in multi-way ANOVAs, we also compared lateralization indexes separately for the happy (Deviant happy – Standard happy) and sad (Deviant sad – Standard sad) vMMN between the groups with independent samples t-tests (bootstrapping method with 1,000 permutations; Supplementary Materials).

For all significant ANOVA results, post hoc analyses were conducted using two-tailed paired t-tests to compare the differences involving within-subjects factors and using independent-samples t-tests for between-subjects comparisons, both with a bootstrapping method using 1,000 permutations (Good, 2005).

For all analyses, effect sizes are reported as partial eta squared (ηp²) for ANOVAs and as Cohen’s d for t-tests. Cohen’s d was computed using pooled standard deviations (Cohen, 1988). In addition, we conducted the Bayes factor analysis to estimate whether the null results in post hoc analyses were observed by chance (Rouder et al., 2009). The Bayes Factor (BF10) provides an odds ratio for the alternative/null hypotheses (values < 1 favor the null hypothesis and values > 1 favor the alternative hypothesis). For example, a BF10 of 0.5 would indicate that the null hypothesis is two times more likely than the alternative hypothesis.
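As an illustration of the two effect sizes, the sketch below uses the standard relation between an F statistic and partial eta squared, F·df_effect / (F·df_effect + df_error), and the pooled-standard-deviation form of Cohen’s d. Assuming the ANOVA effect size reported in this article is partial eta squared, the relation reproduces the values given with the F statistics in the Results (for example, F(1,21) = 30.22 gives 0.59). The function names and the toy samples are hypothetical.

```python
import math
import statistics


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_d_pooled(sample_a, sample_b):
    """Cohen's d using the pooled standard deviation of two samples."""
    n_a, n_b = len(sample_a), len(sample_b)
    var_a = statistics.variance(sample_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(sample_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b)
                          / (n_a + n_b - 2))
    return (statistics.mean(sample_a) - statistics.mean(sample_b)) / pooled_sd


if __name__ == "__main__":
    print(round(partial_eta_squared(30.22, 1, 21), 2))
    # Toy samples, for illustration only.
    print(cohens_d_pooled([2.0, 4.0, 6.0], [1.0, 3.0, 5.0]))
```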

Whenever a significant interaction effect with the factor Group was found, two-tailed Pearson correlation coefficients were used to evaluate the correlation between the BDI-II score and the brain response. Bootstrap estimates of correlation were performed with 1,000 permutations.
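A bootstrap estimate of a Pearson correlation can be sketched as below. The text does not describe the exact procedure, so this example assumes a percentile bootstrap in which participant pairs are resampled with replacement 1,000 times. The function names, the seed, and the toy data are hypothetical and are not the study's values.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_r_ci(x, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for Pearson's r."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue  # r is undefined when a resample is constant
        estimates.append(pearson_r(xs, ys))
    estimates.sort()
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


if __name__ == "__main__":
    # Toy questionnaire scores and response amplitudes, for illustration only.
    scores = [14, 17, 20, 23, 26, 30, 33]
    amplitudes = [5.0, 7.5, 6.0, 9.0, 11.0, 10.5, 14.0]
    print(pearson_r(scores, amplitudes), bootstrap_r_ci(scores, amplitudes))
```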

The significance level was set to p < 0.05 for all tests.

For the reaction time and accuracy, neither significant main effects nor interaction effects were found (all p-values > 0.17). The mean reaction times were 384 ms (SD = 53) and 394 ms (SD = 69) for happy and sad standard stimulus blocks, respectively, and the mean reaction time for the whole experiment was 386 ms (SD = 61). The hit rate for blocks with happy faces as the standard stimuli was 98.86% (SD = 0.02), and 98.74% (SD = 0.02) for blocks with sad standard faces. The mean hit rate was 98.79% (SD = 0.02) for the whole experiment. The mean false alarm rate was below 1% for both happy and sad standard stimulus blocks, and the mean for the whole experiment was 0.96% (SD = 0.01). The mean reaction times were 380 ms (SD = 16) and 393 ms (SD = 27) for the control and dysphoric groups, respectively. The hit rate was above 98% for both groups (M = 99.1%, SD = 0.02 for the control group; M = 98.4%, SD = 0.02 for the dysphoric group). The mean false alarm rates were 0.978% (SD = 0.01) for the control group and 0.931% (SD = 0.004) for the dysphoric group.

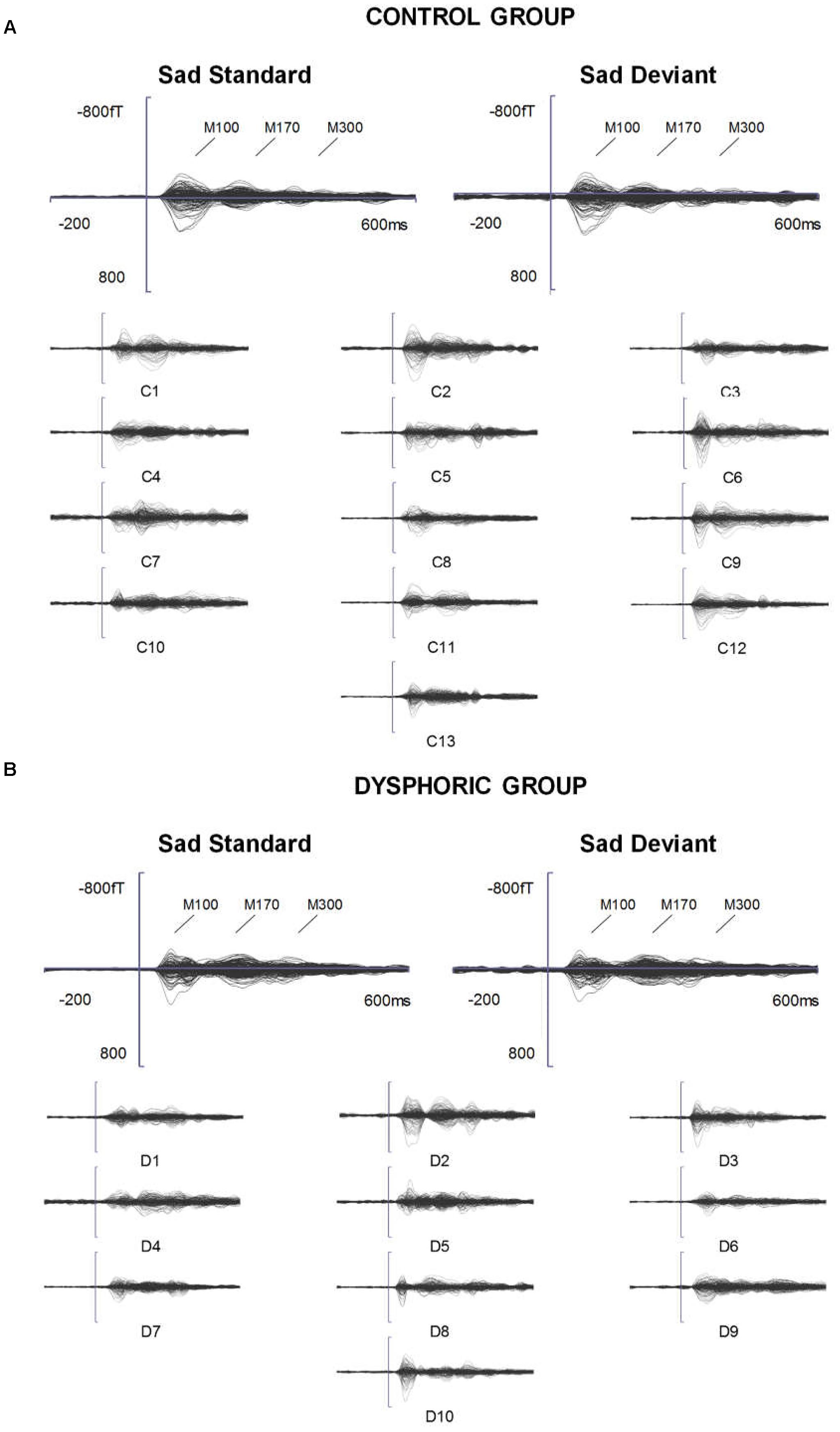

The grand-averaged evoked fields showed characteristic M100, M170, and M300 responses for both happy and sad faces presented as standard and deviant stimuli (Figures 1, 2). Butterfly views of the standard and deviant responses and each individual participant’s responses (for the standards only) are shown separately for happy (Figure 1) and sad stimuli (Figure 2) for the control and dysphoric groups. The topographic maps for each response type are shown in Figure 3A, and the ROIs for each response are shown in Figure 3B.

FIGURE 2. ERFs reflecting three stages of face processing (M100, M170, and M300). (A) Butterfly view of the grand-averaged responses to sad standard and sad deviant faces in the control group (top) and individual participants’ responses to sad standard faces (C1–C13). (B) Butterfly view of the grand-averaged responses to sad standard and sad deviant faces in the dysphoric group (top) and individual participants’ responses to sad standard faces (D1–D10). It should be noted that signals from both magnetometers and gradiometers are included here. For visualization purposes, the gradiometer values are multiplied by 0.04 because of the different units for magnetometers (T) and gradiometers (T/m).

FIGURE 3. (A) Topographic maps of grand-averaged magnetic fields for happy deviant, happy standard, sad deviant, and sad standard faces in the control (left) and dysphoric groups (right) for the M100, M170, and M300 shown here at 85, 205, and 315 ms after stimulus onset, respectively. (B) Magnetometer sensors and the regions of interest used for analyses are marked with black frames for M100, M170, and M300. Each participant’s peak values were extracted from the time windows of 55–125 ms, 155–255 ms, and 280–350 ms after stimulus onset for M100, M170, and M300, respectively.

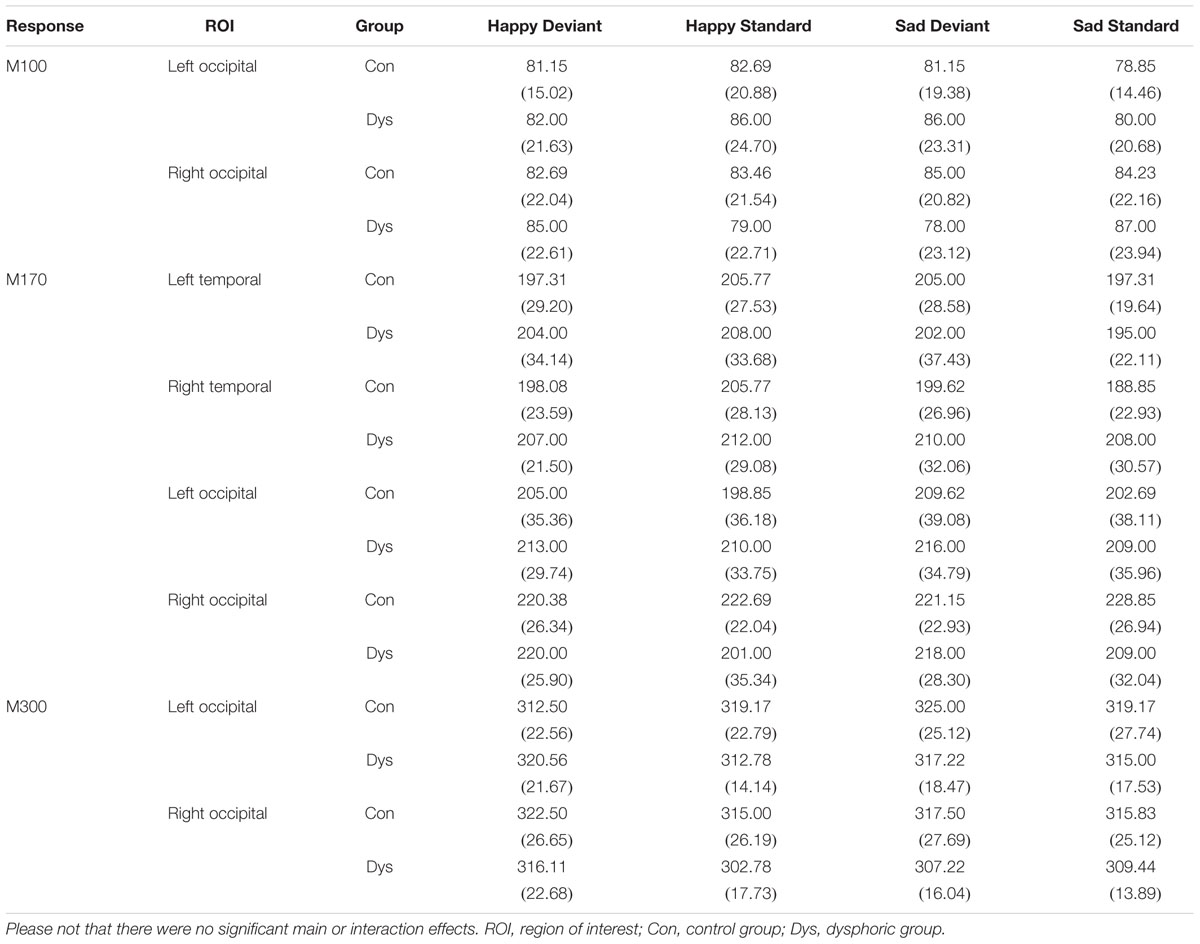

The response latencies are reported in Table 2. There were no significant main effects or interaction effects in response latencies.

TABLE 2. Mean peak latency (ms) and standard deviation (in parentheses) for each response and ROI in the control and dysphoric groups.

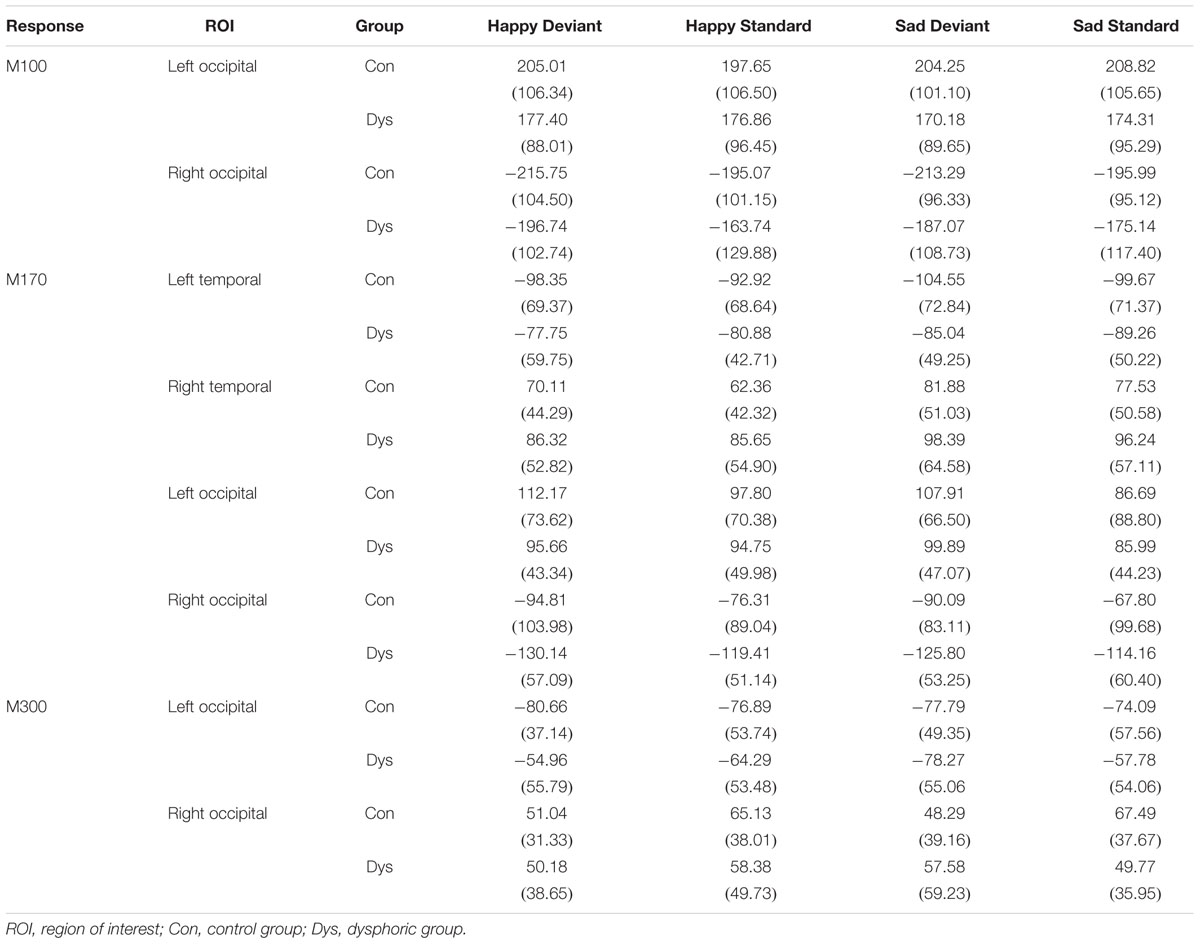

The peak amplitude values are reported in Table 3. Next, the results of the amplitude analyses are reported separately for each component.

TABLE 3. Peak amplitude values (fT) and standard deviation (in parentheses) for each response and ROI in the control and dysphoric groups.

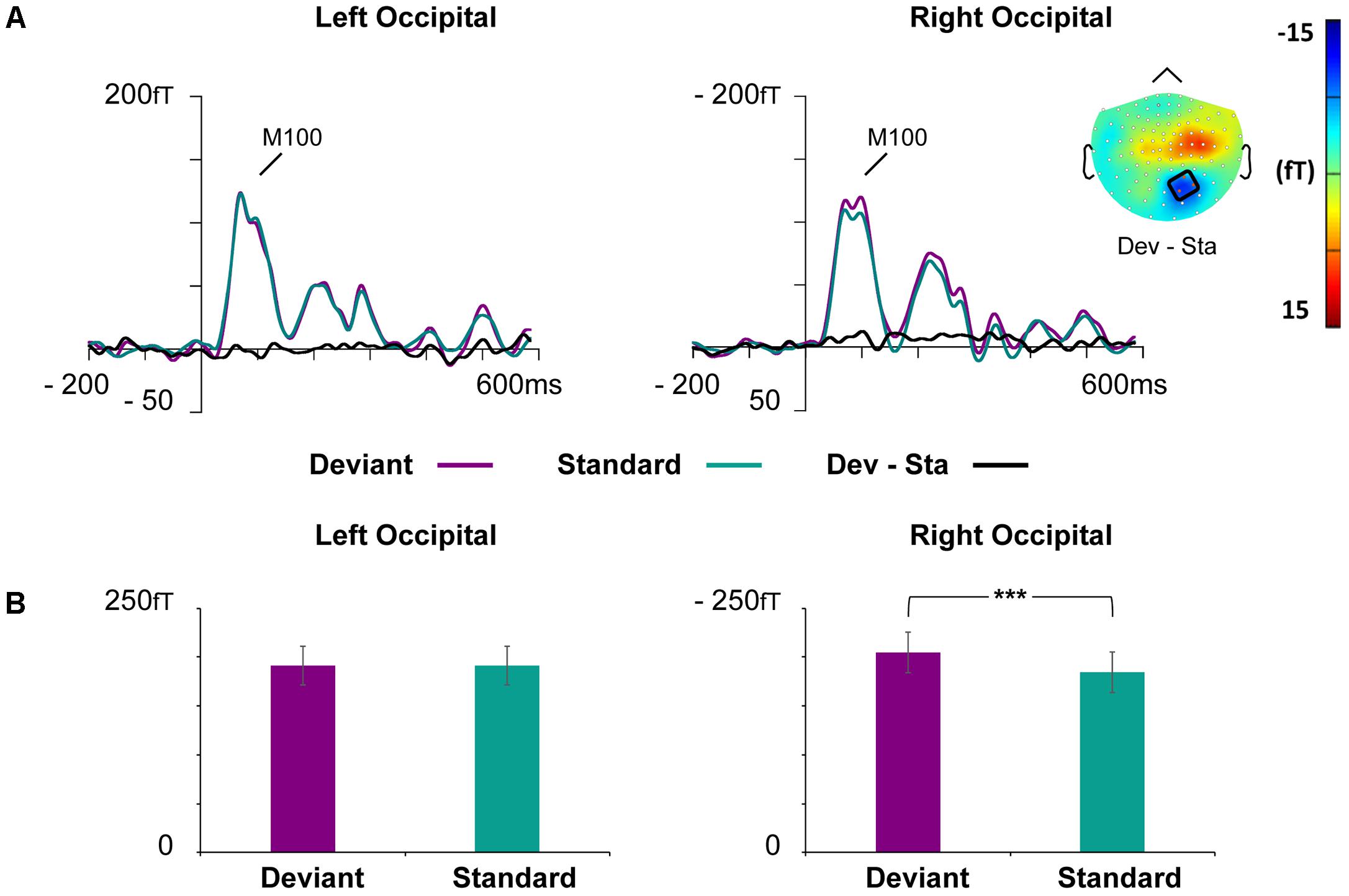

Waveforms of the event-related magnetic fields (ERFs) showed a strong M100 response peaking approximately at 85 ms after the stimulus onset on bilateral occipital regions (Figure 4).

FIGURE 4. Grand-averaged ERFs demonstrating the M100. (A) Waveforms of evoked magnetic fields to the standard and deviant stimuli and deviant minus standard differential response at the left and right occipital ROIs. The topography of the vMMN (deviant – standard) depicted as the mean value of activity 55–125 ms after stimulus onset. (B) Bar graph for the M100 peak value with standard errors to deviant and standard faces at the left and right occipital ROIs. Asterisks mark a significant difference at ∗∗∗p < 0.001.

At the left occipital ROI, neither main effects nor interaction effects were found (all p-values > 0.226).

At the right occipital ROI, there was a significant main effect of Stimulus Type, F(1,21) = 30.22, p < 0.001, ηp² = 0.59, indicating larger ERF amplitudes for the deviant faces than standard faces. The other main effects and all interaction effects were non-significant (all p-values > 0.342).

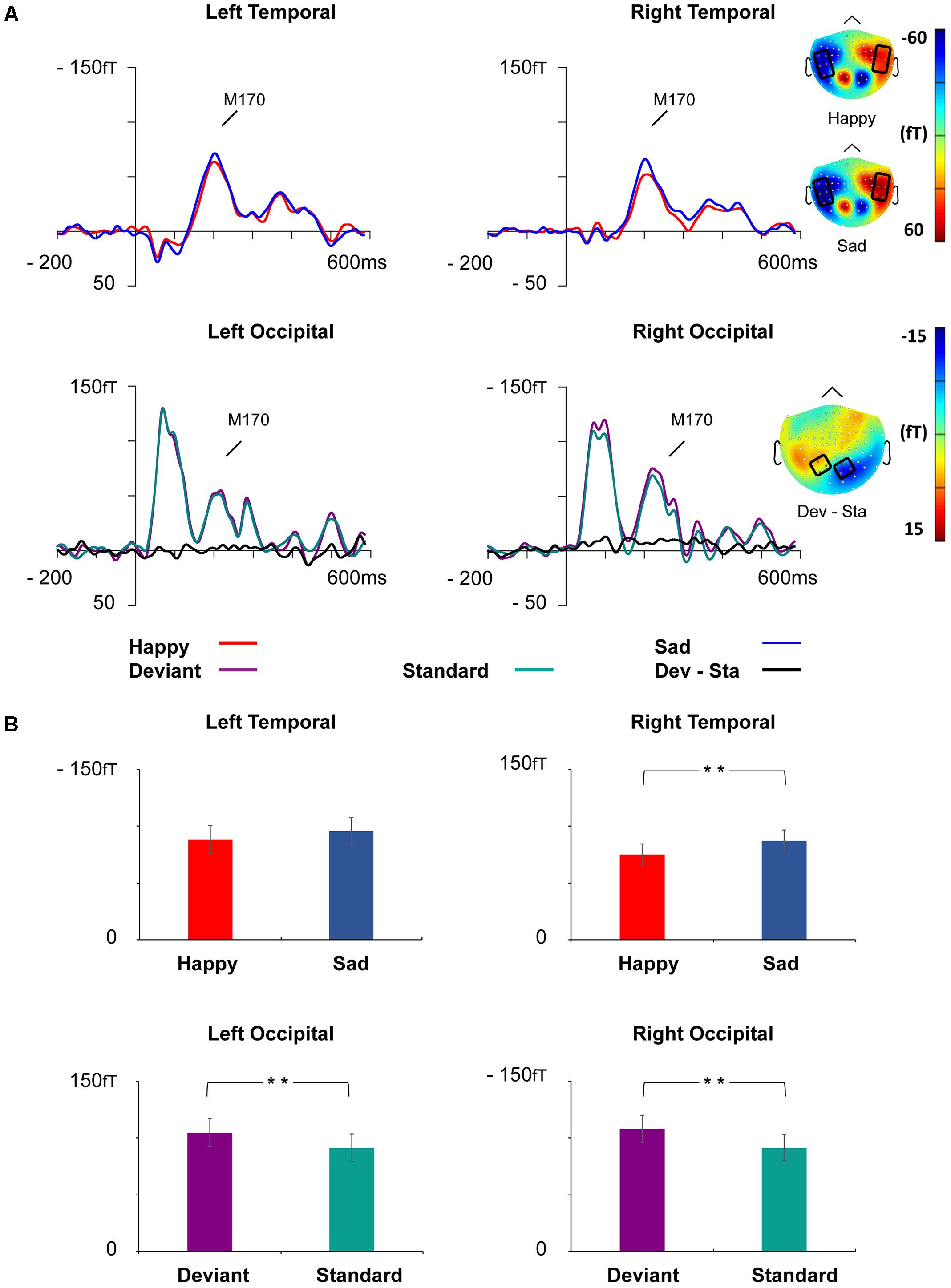

Event-related magnetic field waveforms showed a strong M170 response peaking approximately 205 ms after the stimulus onset in the bilateral temporo-occipital regions (Figure 5).

FIGURE 5. Grand-averaged ERFs demonstrating the M170 response. (A) Upper: Waveforms of ERFs to happy and sad faces at right and left temporal ROIs and corresponding topographic maps. Lower: Waveforms of ERFs to standard and deviant faces at the left and right occipital ROIs and the topography of the vMMN (deviant – standard). Topographic maps are depicted as the mean value of activity 155–255 ms after stimulus onset. (B) Bar graph for the M170 peak amplitude values with standard errors to happy and sad faces (upper) and deviant and standard stimuli (lower) at the left and right temporal and occipital ROIs, respectively. Asterisks mark significant differences at ∗∗p < 0.01.

At the left temporal ROI, there was a marginally significant main effect for Emotion, F(1,21) = 3.46, p = 0.077, ηp² = 0.14, reflecting more activity for sad than happy faces. Other main effects and interaction effects were non-significant (all p-values > 0.261).

At the right temporal ROI, a main effect of Emotion was observed, F(1,21) = 8.52, p = 0.008, ηp² = 0.29, wherein sad faces induced larger amplitudes than happy faces. Neither other main effects nor any of the interaction effects were significant (all p-values > 0.353).

At the left occipital ROI, a main effect of Stimulus Type was found, F(1,21) = 9.29, p = 0.006, ηp² = 0.31, reflecting larger activity for deviant faces than standard faces. Other main effects and interaction effects were non-significant (all p-values > 0.223).

At the right occipital ROI, a main effect of Stimulus Type was found, F(1,21) = 12.81, p = 0.002, ηp² = 0.38, reflecting larger activity for deviant faces than standard faces. Other main effects and interaction effects were non-significant (all p-values > 0.288).

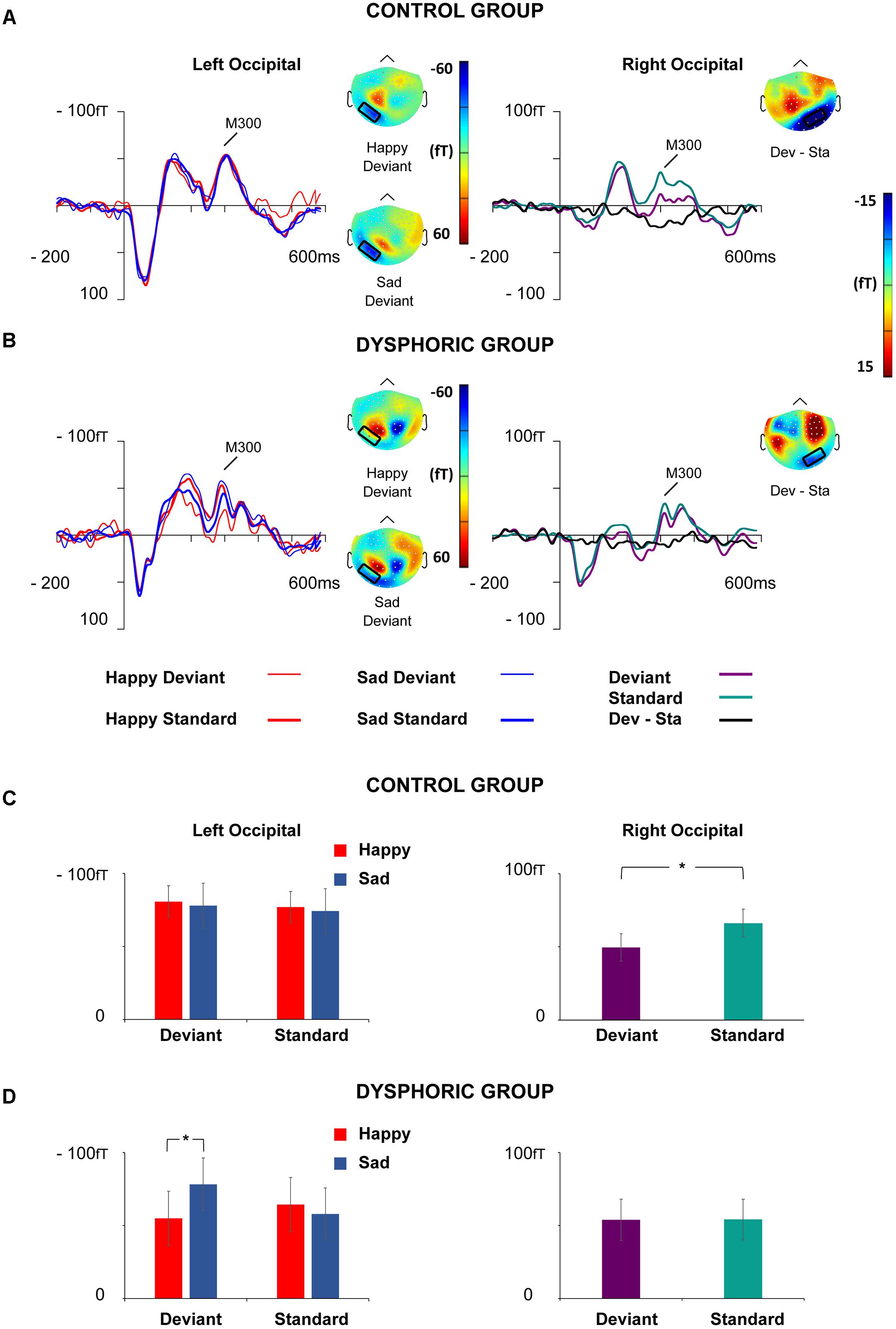

The waveforms of the ERF demonstrated a bipolar M300 activity over the bilateral occipital ROI peaking approximately 315 ms after the stimulus onset (Figure 6).

FIGURE 6. Grand-averaged ERFs for the M300 response. Waveforms of ERFs at the left and right occipital ROIs in the control (A) and dysphoric group (B). Topographic maps of Happy and Sad Deviant (Left), and the vMMN (Deviant – Standard) responses (Right) are depicted as the mean value of activity 280–350 ms after stimulus onset. Bar graphs for the M300 peak value with standard errors at left and right occipital ROIs in the control (C) and dysphoric group (D). Note that in the left hemisphere there was an interaction effect of Emotion × Stimulus Type × Group, which showed that sad deviant faces induced more activity than happy deviant faces in the dysphoric group but not in the control group. In the right hemisphere, an interaction effect of Stimulus Type × Group was found, indicating that the vMMN was elicited in the control but not in the dysphoric group. Asterisk marks significant differences at ∗p < 0.05.

At the left occipital ROI, a significant interaction effect of Emotion × Stimulus Type, F(1,19) = 4.48, p = 0.048, ηp² = 0.19, a marginally significant interaction effect of Emotion × Group, F(1,19) = 4.34, p = 0.051, ηp² = 0.186, and a significant interaction effect of Emotion × Stimulus Type × Group, F(1,19) = 4.52, p = 0.047, ηp² = 0.19, were found. The other main effects and interaction effects were non-significant (all p-values > 0.315).

Post hoc tests for the Emotion × Stimulus Type interaction did not show any significant differences for any of the comparisons (all p-values > 0.128, all BF10s < 0.67).

Post hoc tests for Emotion × Group interaction showed that sad faces induced larger activity than happy faces in the dysphoric group, t(8) = 3.27, p = 0.030, CI 95% [3.72, 13.34], d = 0.16, BF10 = 5.72, but there were no differences between the responses to sad and happy faces in the control group, t(12) = 0.67, p = 0.513, CI 95% [-11.14, 4.85], d = 0.06, BF10 = 0.35. No differences between the groups were found in happy or sad face responses (all p-values > 0.395, all BF10s < 0.53).

Post hoc tests for the three-way interaction showed that no differences were found between the groups in amplitudes to any of the stimulus types per se (Happy Deviant, Happy Standard, Sad Deviant, Sad Standard; all p-values > 0.244, all BF10s < 0.68), or in the vMMN responses (Happy Deviant – Happy Standard, Sad Deviant – Sad Standard; all p-values > 0.225, all BF10s < 0.67). Thus, we split the data by group and ran a two-way repeated-measures ANOVA with Stimulus Type (Standard vs. Deviant) × Emotion (Sad vs. Happy) in each group separately. There were neither significant main effects nor interaction effects in the control group (all p-values > 0.517). In the dysphoric group, an interaction effect of Emotion × Stimulus Type was found, F(1,8) = 6.87, p = 0.031, ηp² = 0.46. Amplitude values for sad deviant faces were larger than for happy deviant faces, t(8) = 4.91, p = 0.011, CI 95% [15.18, 32.98], d = 0.38, BF10 = 35.9. Further, a marginally significant difference was found, reflecting larger amplitude values for sad deviant faces than for sad standard faces in the dysphoric group, t(8) = 2.09, p = 0.085, CI 95% [-38.45, -1.19], d = 0.38, BF10 = 1.42. No other significant results between responses to different stimulus type pairs were found in the dysphoric group (all p-values > 0.168, all BF10s < 0.76). There was also a main effect of Emotion in the dysphoric group, F(1,8) = 10.67, p = 0.011, ηp² = 0.57, reflecting more activity for sad faces than happy faces.

At the right occipital ROI, a main effect of Stimulus Type, F(1,19) = 5.40, p = 0.031, ηp² = 0.22, and an interaction effect of Stimulus Type × Group, F(1,19) = 5.15, p = 0.035, ηp² = 0.21, were found. The other main effects and interaction effects were non-significant (all p-values > 0.328).

At the whole-group level, the responses were smaller in amplitude for deviant faces than for standard faces. Post hoc analysis for the Stimulus Type × Group interaction revealed that the groups did not differ in any of the stimulus responses as such (all p-values > 0.476, all BF10s < 0.48). However, in the control group responses to deviant faces were smaller in amplitude than those to standard faces, t(11) = 2.87, p = 0.020, CI 95% [-28.14, -5.97], d = 0.49, BF10 = 4.23. There was no such difference in the dysphoric group, t(8) = 0.06, p = 0.949, CI 95% [-6.29, 6.02], d = 0.005, BF10 = 0.32. In addition, a group difference was found in the vMMN amplitude (deviant – standard differential response), t(19) = 2.27, p = 0.031, CI 95% [-28.86, -4.01], d = 1.05, BF10 = 2.14, reflecting a larger vMMN amplitude in the control than in the dysphoric group.

At the whole-group level, the correlations between BDI-II scores and M300 response amplitudes were non-significant for all stimulus types at the left (all p-values > 0.062) and right occipital ROIs (all p-values > 0.438). The same applied to the correlations calculated separately for the dysphoric group (at the left ROI, all p-values > 0.107 and at the right ROI, all p-values > 0.299).

The analysis of the lateralization index revealed neither main effects nor interaction effects (all p-values > 0.107).

The main goal of the present study was to examine the emotional encoding and automatic change detection of peripherally presented facial emotions in dysphoria. MEG recordings showed prominent M100, M170, and M300 components to emotional faces. All of the components were modulated by the presentation rate of the stimulus (deviant vs. standard), corresponding to the results of the previous studies conducted on healthy participants (Zhao and Li, 2006; Astikainen and Hietanen, 2009; Susac et al., 2010; Stefanics et al., 2012). M170 was also modulated by emotion, responses being larger for sad than happy faces. M300 showed both a negative bias and impaired change detection in dysphoria.

The negative bias in the dysphoric group, which was demonstrated as a relative difference in M300 amplitude for sad and happy faces in comparison to the control group, seems to be associated with change detection, as the deviant stimulus responses, but not the standard stimulus responses, were larger for sad than happy faces in the dysphoric group. This is a novel finding: previous studies using the oddball condition in depressed participants have not separately investigated responsiveness to standard and deviant stimuli but have used the differential response (deviant – standard) in their analysis (Chang et al., 2010, 2011; Qiu et al., 2011). In general, our finding of the negative bias in emotional face processing extends the previous findings involving attended stimulus conditions (Bistricky et al., 2014; Chen et al., 2014; Zhao et al., 2015; Dai et al., 2016) to an ignore condition. However, the negative bias in the present study was not found in the first processing stages (M100 and M170), as it was in the studies applying an attentive condition in depressed participants (Dai and Feng, 2012; Chen et al., 2014; Zhao et al., 2015). In a prior study with dysphoric participants, an elevated P3 ERP component to sad target faces reflected negative bias in attentive face processing in previously depressed participants, but no differences were found in the earlier N2 component in comparison to never depressed participants (Bistricky et al., 2014). Future studies are needed to investigate whether the discrepancy between our results and previous studies with depressed participants (Dai and Feng, 2012; Chen et al., 2014; Zhao et al., 2015) is related to different participant groups (depressed vs. dysphoric) or to the amount of attention directed toward stimuli (ignore vs. attend condition).

The finding that control participants, but not dysphoric participants, showed the vMMN in the right occipital region indicates that, in addition to the negative bias, there is a deficit in change detection in general in dysphoria. Our results thus reveal that a dysphoric state affects not only attended change detection in facial emotions (e.g., Bistricky et al., 2014; Chen et al., 2014), but also the automatic change detection of emotional faces in one’s visual periphery. This finding of a vMMN deficit in dysphoria at the latency window of the M300 is also in line with a previous ERP study applying an ignore condition and reporting that the late vMMN (at 220–320 ms, reflecting the modulation of P2) was observed in the control group but was absent in the depression group (Chang et al., 2010). However, since in that study standard faces were neutral and deviant faces emotional, the effects of emotional processing and deviance detection cannot be distinguished. Here, we calculated the vMMN as a differential response between the responses to the same stimulus presented as deviant and standard. Our results showing the decreased vMMN amplitude at the latency of M300 in the dysphoric group, relative to the control group, indicate that it is specifically the change detection that is impaired in participants with depression symptoms.
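The same-stimulus vMMN calculation described above can be illustrated with a minimal sketch. It subtracts the response to an emotion presented as a standard from the response to the same emotion presented as a deviant, then averages the difference over the 280–350 ms window used for the M300 topographies. The waveforms are made-up numbers in arbitrary units, the function names are illustrative, and the window mean is a simplification (the reported statistics were based on peak values).

```python
def vmmn(deviant, standard):
    """Point-by-point differential response (deviant - standard)."""
    return [d - s for d, s in zip(deviant, standard)]


def mean_in_window(times_ms, waveform, start_ms, end_ms):
    """Mean amplitude over samples with start_ms <= t <= end_ms."""
    samples = [a for t, a in zip(times_ms, waveform) if start_ms <= t <= end_ms]
    return sum(samples) / len(samples)


# Hypothetical averaged waveforms sampled every 10 ms from 250 to 380 ms.
times_ms = list(range(250, 390, 10))
sad_standard = [10.0 for _ in times_ms]
# The deviant response is 2 units smaller inside the 280-350 ms window only.
sad_deviant = [8.0 if 280 <= t <= 350 else 10.0 for t in times_ms]

# Both responses come from the same emotion (sad), so the difference
# isolates stimulus rarity rather than emotional content.
sad_vmmn = vmmn(sad_deviant, sad_standard)
m300_vmmn = mean_in_window(times_ms, sad_vmmn, 280, 350)
print(m300_vmmn)
```

Because the deviant and the standard are physically identical in this subtraction, any non-zero difference cannot be attributed to the emotional content of the face, which is the point of contrast with designs that pair neutral standards with emotional deviants.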

Here, we did not find group differences in the vMMN related to earlier processing stages. A previous vMMN study conducted on depressed participants reported a larger vMMN at the latency of N170 in the control than in the depression group, and also a larger vMMN amplitude to sad than happy deviant faces (Chang et al., 2010). However, again, it is unclear whether these findings reflect emotional encoding or deviance detection, as in that study standard faces were neutral and deviant faces emotional, and the vMMN was calculated as a difference between responses to these.

Our finding of the altered emotional vMMN in dysphoria is in line with prior results in schizophrenia, a psychiatric disorder with known deficits in emotion processing, in which diminished automatic brain responses to emotional faces in patients were reported (Csukly et al., 2013). The vMMN was also suggested as an indicator of affective reactivity in autism spectrum disorder, given that vMMN responses to emotional faces showed a correlation with Autism Spectrum Quotient (AQ) scores (Gayle et al., 2012). Here, we did not find correlations between the M300 amplitude and BDI-II scores within the dysphoric group. The lack of correlations can be interpreted as indicating that the alterations observed in M300 reflect more trait- than state-dependent factors of depression. However, the lack of correlation can also be explained by the small sample size.

We also investigated the possible lateralization effect for the occipital M300 because visual inspection of the topographical maps showed some differences between the groups in lateralization for this component. However, none of the analyses revealed differences in lateralization between the groups. There were no clear lateralization differences in M300 in sad and happy face processing at the whole-group level either. Some previous studies have reported that the vMMN to emotional faces has a right hemisphere dominance (Gayle et al., 2012; Li et al., 2012; Stefanics et al., 2012), while others have not found it (Kovarski et al., 2017), but these findings have been related to earlier face processing stages.

Besides the findings related to dysphoria, there were findings related to automatic change detection and emotion processing that apply to the whole participant group. All investigated components (M100, M170, and M300) were modulated by stimulus rarity, likely reflecting the vMMN response. In the previous EEG and MEG studies, the vMMN has been elicited at the earliest processing stage, i.e., in the P1 time window (Susac et al., 2010; Stefanics et al., 2012) but also at the latency of N170 and the later P2 component (Zhao and Li, 2006; Astikainen and Hietanen, 2009; Chang et al., 2010). It should be noted that it is unclear whether the vMMN to emotional faces is a separate component from the visual and face-related components (i.e., P1, N170, and P2) or whether the vMMN is the amplitude modulation of these canonical components. To our knowledge, only one previous study has directly addressed this question. In that study, independent component analysis (ICA) and two stimulus conditions varying the probability of the emotional faces were used to separate vMMN and N170 components (Astikainen et al., 2013). The ICA revealed two components within the relevant 100–200 ms latency range. One component, conforming to N170, differed between the emotional and neutral faces, but not as a function of the stimulus probability, and the other, conforming to vMMN, was also modulated by the stimulus probability. However, neither in that study (Astikainen et al., 2013) nor in other previous studies has the functional independence of the vMMN from P1/M100 or P2/M300 responses been investigated.

Here, emotional modulation was found at the second stage (M170) of face processing, as in several previous studies (Batty and Taylor, 2003; Eger et al., 2003; Williams et al., 2006; Zhao and Li, 2006; Blau et al., 2007; Leppänen et al., 2007; Schyns et al., 2007; Japee et al., 2009; Wronka and Walentowska, 2011; Astikainen et al., 2013; Chen et al., 2014). The emotional modulation of M170 was observed at the right temporal ROI, which corresponds to previous findings (Williams et al., 2006; Japee et al., 2009; Wronka and Walentowska, 2011). In our study, sad faces induced a greater M170 response than happy faces, while previous ERP studies have not found a difference between the N170 amplitude for happy and sad faces (Batty and Taylor, 2003; Hendriks et al., 2007; Chai et al., 2012). It is notable, however, that in the present study the involvement of dysphoric participants might explain the difference in the results compared to previous studies conducted only on healthy participants (Batty and Taylor, 2003; Hendriks et al., 2007; Chai et al., 2012).

The present study has some limitations. First, our analysis was carried out in sensor space rather than in source space. Due to the lack of individual structural magnetic resonance images (MRIs), we restricted our analysis to the sensor level. We selected the ROIs for the analysis based on the topographies in the control group, which served as a reference group for the comparison with the dysphoric group. Future studies should investigate potential differences in the sources of brain responses to emotional faces, especially those for M300, between depressed and control participants. In addition, the relatively small sample size warrants a replication of the study with larger participant groups. It is possible that some existing effects were not observable with the current small sample size. It is also worth mentioning that the dysphoric group had depressive symptoms during the measurement, and nearly all of them had a diagnosis of depression. However, the diagnoses were not confirmed at the beginning of the study.

The present study was not designed to determine whether the mechanism underlying the vMMN is related to the detection of regularity violations (“genuine vMMN,” Kimura, 2012; Stefanics et al., 2014) or whether it reflects only different levels of neural adaptation in neural populations responding to standard and deviant stimuli (neural adaptation). The most common way to investigate the underlying neural mechanism has been to apply a control condition in which the level of neural adaptation is the same as for the deviant stimulus in the oddball condition, but where no regularity exists (an equiprobable condition, Jacobsen and Schröger, 2001). This control condition has not yet been applied in vMMN studies using facial expressions as a changing feature (some studies have used an equiprobable condition, but the probability of the oddball deviant and control stimulus in the equiprobable condition has been different; Li et al., 2012; Astikainen et al., 2013; Kovarski et al., 2017). Other vMMN studies have used a proper equiprobable condition, and they have demonstrated a genuine vMMN (e.g., for orientation changes, see Astikainen et al., 2008; Kimura et al., 2009). This is an aspect that should be studied in the context of emotional face processing as well. In one study (Kimura et al., 2012), however, the stimulus condition applied allowed neural responses to regularity violations to be observed. Namely, an immediate repetition of an emotional expression was presented as a deviant stimulus violating the pattern of constantly changing expressions (fearful and happy). The vMMN reflecting the detection of the regularity violations was elicited at 280 ms and 350 ms after the stimulus onset for the fearful and the happy faces, respectively. It is thus possible that in our study the differential responses at the two first stages also reflect the neural adaptation to repeatedly presented standard stimuli rather than the genuine vMMN.
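The logic of a proper equiprobable control can be sketched as follows. In the oddball sequence the deviant occurs with some low probability. In the control sequence the same stimulus occurs with that same probability, but among several equally probable stimuli, so no standard is repeated often enough to establish a regularity. The deviant probability (0.1), the trial count, and the number of control stimuli are hypothetical values chosen for illustration and are not the parameters of the present study. Sequencing constraints used in real experiments, such as avoiding consecutive deviants, are omitted.

```python
import random
from collections import Counter


def oddball_sequence(n_trials, deviant_prob, rng):
    """Shuffled sequence with a rare deviant among frequent standards."""
    n_deviants = round(n_trials * deviant_prob)
    sequence = ["deviant"] * n_deviants + ["standard"] * (n_trials - n_deviants)
    rng.shuffle(sequence)
    return sequence


def equiprobable_sequence(n_trials, n_stimuli, rng):
    """Shuffled sequence in which every stimulus is equally probable."""
    per_stimulus = n_trials // n_stimuli
    sequence = [f"stim_{i}" for i in range(n_stimuli) for _ in range(per_stimulus)]
    rng.shuffle(sequence)
    return sequence


rng = random.Random(0)   # fixed seed so the sketch is reproducible
n_trials = 500           # hypothetical
deviant_prob = 0.1       # hypothetical

oddball = oddball_sequence(n_trials, deviant_prob, rng)
# Ten stimuli at p = 0.1 each; "stim_0" stands in for the stimulus that
# serves as the deviant in the oddball sequence.
control = equiprobable_sequence(n_trials, n_stimuli=10, rng=rng)

p_deviant = Counter(oddball)["deviant"] / n_trials
p_control = Counter(control)["stim_0"] / n_trials
print(p_deviant, p_control)
```

With matched probabilities, a deviant-minus-control difference is less readily explained by differential adaptation alone, whereas a mismatch in probabilities (as in the facial-expression studies cited above) leaves that explanation open.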

In sum, the present results show that there is a negative bias in dysphoria toward rare sad faces, extending the findings of an attentive negative bias in depression to automatic face processing. The results also demonstrate impaired automatic change detection in emotional faces in dysphoria. These findings related to automatic face processing might have significant behavioral relevance that affects, for instance, real-life social interactions.

PA and GS conceived and designed the experiments. ER, XL, and KK performed the data acquisition. QX analyzed the data. ER, XL, and CY contributed to the data analysis. PA, QX, CY, KK, GS, and WL interpreted the data. PA and QX drafted the manuscript. All the authors revised and approved the manuscript.

This work was supported by grants from the National Natural Science Foundation of China (NSFC 31371033) to WL. KK was supported by the Institutional Research Grant IUT02-13 from Estonian Research Council.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer JK declared a past co-authorship with several of the authors GS, KK, and PA to the handling Editor.

The authors thank Veera Alhainen, Toni Hartikainen, Pyry Heikkinen, Iiris Kyläheiko, and Annukka Niemelä for their help in data collection; Dr. Simo Monto for providing technical help with the MEG measurements; and Weiyong Xu, Tiantian Yang, and Yongjie Zhu for their help in the data analysis.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnhum.2018.00186/full#supplementary-material

Astikainen, P., Cong, F., Ristaniemi, T., and Hietanen, J. K. (2013). Event-related potentials to unattended changes in facial expressions: detection of regularity violations or encoding of emotions? Front. Hum. Neurosci. 7:557. doi: 10.3389/fnhum.2013.00557

Astikainen, P., and Hietanen, J. K. (2009). Event-related potentials to task-irrelevant changes in facial expressions. Behav. Brain Funct. 5:30. doi: 10.1186/1744-9081-5-30

Astikainen, P., Lillstrang, E., and Ruusuvirta, T. (2008). Visual mismatch negativity for changes in orientation - A sensory memory-dependent response. Eur. J. Neurosci. 28, 2319–2324. doi: 10.1111/j.1460-9568.2008.06510.x

Baillet, S. (2017). Magnetoencephalography for brain electrophysiology and imaging. Nat. Neurosci. 20, 327–339. doi: 10.1038/nn.4504

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Cogn. Brain Res. 17, 613–620. doi: 10.1016/S0926-6410(03)00174-5

Bayle, D. J., and Taylor, M. J. (2010). Attention inhibition of early cortical activation to fearful faces. Brain Res. 1313, 113–123. doi: 10.1016/j.brainres.2009.11.060

Beck, A. T. (1967). Depression: Clinical, Experimental, and Theoretical Aspects. Philadelphia, PA: University of Pennsylvania Press.

Beck, A. T. (1976). Cognitive Therapy and the Emotional Disorders. Oxford: International Universities Press.

Beck, A. T., Steer, R. A., and Brown, G. K. (1996). Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation 490–498.

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Bistricky, S. L., Atchley, R. A., Ingram, R., and O’Hare, A. (2014). Biased processing of sad faces: an ERP marker candidate for depression susceptibility. Cogn. Emot. 28, 470–492. doi: 10.1080/02699931.2013.837815

Blau, V. C., Maurer, U., Tottenham, N., and McCandliss, B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3:7. doi: 10.1186/1744-9081-3-7

Bourke, C., Douglas, K., and Porter, R. (2010). Processing of facial emotion expression in major depression: a review. Aust. N. Z. J. Psychiatry 44, 681–696. doi: 10.3109/00048674.2010.496359

Bradley, B. P., Mogg, K., and Lee, S. C. (1997). Attentional biases for negative information in induced and naturally occurring dysphoria. Behav. Res. Ther. 35, 911–927. doi: 10.1016/S0005-7967(97)00053-3

Browning, M., Holmes, E. A., and Harmer, C. J. (2010). The modification of attentional bias to emotional information: a review of the techniques, mechanisms, and relevance to emotional disorders. Cogn. Affect. Behav. Neurosci. 10, 8–20. doi: 10.3758/CABN.10.1.8

Chai, H., Chen, W. Z., Zhu, J., Xu, Y., Lou, L., Yang, T., et al. (2012). Processing of facial expressions of emotions in healthy volunteers: an exploration with event-related potentials and personality traits. Neurophysiol. Clin. 42, 369–375. doi: 10.1016/j.neucli.2012.04.087

Chang, Y., Xu, J., Shi, N., Pang, X., Zhang, B., and Cai, Z. (2011). Dysfunction of preattentive visual information processing among patients with major depressive disorder. Biol. Psychiatry 69, 742–747. doi: 10.1016/j.biopsych.2010.12.024

Chang, Y., Xu, J., Shi, N., Zhang, B., and Zhao, L. (2010). Dysfunction of processing task-irrelevant emotional faces in major depressive disorder patients revealed by expression-related visual MMN. Neurosci. Lett. 472, 33–37. doi: 10.1016/j.neulet.2010.01.050

Chen, J., Ma, W., Zhang, Y., Wu, X., Wei, D., Liu, G., et al. (2014). Distinct facial processing related negative cognitive bias in first-episode and recurrent major depression: evidence from the N170 ERP component. PLoS One 9:e0119894. doi: 10.1371/journal.pone.0109176

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences, 2nd Edn. Hillsdale, NJ: Lawrence Erlbaum Associates.

Csukly, G., Stefanics, G., Komlósi, S., Czigler, I., and Czobor, P. (2013). Emotion-related visual mismatch responses in schizophrenia: impairments and correlations with emotion recognition. PLoS One 8:e75444. doi: 10.1371/journal.pone.0075444

Czigler, I., Weisz, J., and Winkler, I. (2006). ERPs and deviance detection: visual mismatch negativity to repeated visual stimuli. Neurosci. Lett. 401, 178–182. doi: 10.1016/j.neulet.2006.03.018

Dai, Q., and Feng, Z. (2012). More excited for negative facial expressions in depression: evidence from an event-related potential study. Clin. Neurophysiol. 123, 2172–2179. doi: 10.1016/j.clinph.2012.04.018

Dai, Q., Wei, J., Shu, X., and Feng, Z. (2016). Negativity bias for sad faces in depression: an event-related potential study. Clin. Neurophysiol. 127, 3552–3560. doi: 10.1016/j.clinph.2016.10.003

Da Silva, E. B., Crager, K., and Puce, A. (2016). On dissociating the neural time course of the processing of positive emotions. Neuropsychologia 83, 123–137. doi: 10.1016/j.neuropsychologia.2015.12.001

Delle-Vigne, D., Wang, W., Kornreich, C., Verbanck, P., and Campanella, S. (2014). Emotional facial expression processing in depression: data from behavioral and event-related potential studies. Neurophysiol. Clin. 44, 169–187. doi: 10.1016/j.neucli.2014.03.003

Eger, E., Jedynak, A., Iwaki, T., and Skrandies, W. (2003). Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia 41, 808–817. doi: 10.1016/S0028-3932(02)00287-7

Eimer, M., and Holmes, A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431. doi: 10.1097/00001756-200203250-00013

Eimer, M., Holmes, A., and McGlone, F. P. (2003). The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn. Affect. Behav. Neurosci. 3, 97–110. doi: 10.3758/CABN.3.2.97

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo Alto CA: Consulting Psychologists Press.

Foti, D., Olvet, D. M., Klein, D. N., and Hajcak, G. (2010). Reduced electrocortical response to threatening faces in major depressive disorder. Depress. Anxiety 27, 813–820. doi: 10.1002/da.20712

Fu, C. H., Williams, S. C., Brammer, M. J., Suckling, J., Kim, J., Cleare, A. J., et al. (2007). Neural responses to happy facial expressions in major depression following antidepressant treatment. Am. J. Psychiatry 164, 599–607. doi: 10.1176/ajp.2007.164.4.599

Gayle, L. C., Gal, D. E., and Kieffaber, P. D. (2012). Measuring affective reactivity in individuals with autism spectrum personality traits using the visual mismatch negativity event-related brain potential. Front. Hum. Neurosci. 6:334. doi: 10.3389/fnhum.2012.00334

Gollan, J. K., Pane, H. T., McCloskey, M. S., and Coccaro, E. F. (2008). Identifying differences in biased affective information processing in major depression. Psychiatry Res. 159, 18–24. doi: 10.1016/j.psychres.2007.06.011

Good, P. (2005). Permutation, Parametric, and Bootstrap Tests of Hypotheses, 3rd Edn. New York, NY: Springer.

Gotlib, I. H., Krasnoperova, E., Yue, D. N., and Joormann, J. (2004). Attentional biases for negative interpersonal stimuli in clinical depression. J. Abnorm. Psychol. 113, 127–135. doi: 10.1037/0021-843X.113.1.127

Hendriks, M. C., van Boxtel, G. J., and Vingerhoets, A. J. (2007). An event-related potential study on the early processing of crying faces. Neuroreport 18, 631–634. doi: 10.1097/WNR.0b013e3280bad8c7

Herrmann, M. J., Aranda, D., Ellgring, H., Mueller, T. J., Strik, W. K., Heidrich, A., et al. (2002). Face-specific event-related potential in humans is independent from facial expression. Int. J. Psychophysiol. 45, 241–244. doi: 10.1016/S0167-8760(02)00033-8

Hinojosa, J. A., Mercado, F., and Carretié, L. (2015). N170 sensitivity to facial expression: a meta-analysis. Neurosci. Biobehav. Rev. 55, 498–509. doi: 10.1016/j.neubiorev.2015.06.002

Holmes, A., Vuilleumier, P., and Eimer, M. (2003). The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Cogn. Brain Res. 16, 174–184. doi: 10.1016/S0926-6410(02)00268-9

Itier, R. J., Herdman, A. T., George, N., Cheyne, D., and Taylor, M. J. (2006). Inversion and contrast-reversal effects on face processing assessed by MEG. Brain Res. 1115, 108–120. doi: 10.1016/j.brainres.2006.07.072

Jacobsen, T., and Schröger, E. (2001). Is there pre-attentive memory-based comparison of pitch? Psychophysiology 38, 723–727. doi: 10.1017/S0048577201000993

Japee, S., Crocker, L., Carver, F., Pessoa, L., and Ungerleider, L. G. (2009). Individual differences in valence modulation of face-selective m170 response. Emotion 9, 59–69. doi: 10.1037/a0014487

Jaworska, N., Blier, P., Fusee, W., and Knott, V. (2012). The temporal electrocortical profile of emotive facial processing in depressed males and females and healthy controls. J. Affect. Disord. 136, 1072–1081. doi: 10.1109/TMI.2012.2196707

Kimura, M. (2012). Visual mismatch negativity and unintentional temporal-context-based prediction in vision. Int. J. Psychophysiol. 83, 144–155. doi: 10.1016/j.ijpsycho.2011.11.010

Kimura, M., Katayama, J. I., Ohira, H., and Schröger, E. (2009). Visual mismatch negativity: new evidence from the equiprobable paradigm. Psychophysiology 46, 402–409. doi: 10.1111/j.1469-8986.2008.00767

Kimura, M., Kondo, H., Ohira, H., and Schröger, E. (2012). Unintentional temporal context-based prediction of emotional faces: an electrophysiological study. Cereb. Cortex 22, 1774–1785. doi: 10.1093/cercor/bhr244

Kovarski, K., Latinus, M., Charpentier, J., Cléry, H., Roux, S., Houy-Durand, E., et al. (2017). Facial expression related vMMN: disentangling emotional from neutral change detection. Front. Hum. Neurosci. 11:18. doi: 10.3389/fnhum.2017.00018

Kremláček, J., Kreegipuu, K., Tales, A., Astikainen, P., Põldver, N., Näätänen, R., et al. (2016). Visual mismatch negativity (vMMN): a review and meta-analysis of studies in psychiatric and neurological disorders. Cortex 80, 76–112. doi: 10.1016/j.cortex.2016.03.017

Leppänen, J. M., Kauppinen, P., Peltola, M. J., and Hietanen, J. K. (2007). Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Res. 1166, 103–109. doi: 10.1016/j.brainres.2007.06.060

Li, X., Lu, Y., Sun, G., Gao, L., and Zhao, L. (2012). Visual mismatch negativity elicited by facial expressions: new evidence from the equiprobable paradigm. Behav. Brain Funct. 8:7. doi: 10.1186/1744-9081-8-7

Linden, S. C., Jackson, M. C., Subramanian, L., Healy, D., and Linden, D. (2011). Sad benefit in face working memory: an emotional bias of melancholic depression. J. Affect. Disord. 135, 251–257. doi: 10.1016/j.jad.2011.08.002

Liu, J., Harris, A., and Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916. doi: 10.1038/nn909

Luo, W., Feng, W., He, W., Wang, N., and Luo, Y. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49, 1857–1867. doi: 10.1016/j.neuroimage.2009.09.018

Maekawa, T., Katsuki, S., Kishimoto, J., Onitsuka, T., Ogata, K., Yamasaki, T., et al. (2013). Altered visual information processing systems in bipolar disorder: evidence from visual MMN and P3. Front. Hum. Neurosci. 7:403. doi: 10.3389/fnhum.2013.00403

Mathews, A., and Macleod, C. M. (2005). Cognitive vulnerability to emotional disorders. Annu. Rev. Clin. Psychol. 1, 167–195. doi: 10.1146/annurev.clinpsy.1.102803.143916

Maurage, P., Campanella, S., Philippot, P., de Timary, P., Constant, E., Gauthier, S., et al. (2008). Alcoholism leads to early perceptive alterations, independently of comorbid depressed state: an ERP study. Neurophysiol. Clin. 38, 83–97. doi: 10.1016/j.neucli.2008.02.001

Miyoshi, M., Katayama, J., and Morotomi, T. (2004). Face-specific N170 component is modulated by facial expressional change. Neuroreport 15, 911–914. doi: 10.1097/00001756-200404090-00035

Morel, S., Ponz, A., Mercier, M., Vuilleumier, P., and George, N. (2009). EEG-MEG evidence for early differential repetition effects for fearful, happy and neutral faces. Brain Res. 1254, 84–98. doi: 10.1016/j.brainres.2008.11.079

Näätänen, R., Astikainen, P., Ruusuvirta, T., and Huotilainen, M. (2010). Automatic auditory intelligence: an expression of the sensory–cognitive core of cognitive processes. Brain Res. Rev. 64, 123–136. doi: 10.1016/j.brainresrev.2010.03.001

Näätänen, R., Gaillard, A. W., and Mäntysalo, S. (1978). Early selective-attention effect on evoked potential reinterpreted. Acta psychol. 42, 313–329. doi: 10.1016/0001-6918(78)90006-9

Peyk, P., Schupp, H. T., Elbert, T., and Junghöfer, M. (2008). Emotion processing in the visual brain: a MEG analysis. Brain Topogr. 20, 205–215. doi: 10.1007/s10548-008-0052-7

Qiu, X., Yang, X., Qiao, Z., Wang, L., Ning, N., Shi, J., et al. (2011). Impairment in processing visual information at the pre-attentive stage in patients with a major depressive disorder: a visual mismatch negativity study. Neurosci. Lett. 491, 53–57. doi: 10.1016/j.neulet.2011.01.006

Ridout, N., Astell, A., Reid, I., Glen, T., and O’Carroll, R. (2003). Memory bias for emotional facial expressions in major depression. Cogn. Emot. 17, 101–122. doi: 10.1080/02699930302272

Ridout, N., Noreen, A., and Johal, J. (2009). Memory for emotional faces in naturally occurring dysphoria and induced negative mood. Behav. Res. Ther. 47, 851–860. doi: 10.1016/j.brat.2009.06.013

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., and Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychon. Bull. Rev. 16, 225–237. doi: 10.3758/PBR.16.2.225

Schweinberger, S. R., Kaufmann, J. M., Moratti, S., Keil, A., and Burton, A. M. (2007). Brain responses to repetitions of human and animal faces, inverted faces, and objects - An MEG study. Brain Res. 1184, 226–233. doi: 10.1016/j.brainres.2007.09.079

Schyns, P. G., Petro, L. S., and Smith, M. L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Curr. Biol. 17, 1580–1585. doi: 10.1016/j.cub.2007.08.048

Stefanics, G., Csukly, G., Komlósi, S., Czobor, P., and Czigler, I. (2012). Processing of unattended facial emotions: a visual mismatch negativity study. Neuroimage 59, 3042–3049. doi: 10.1016/j.neuroimage.2011.10.041

Stefanics, G., Heinzle, J., Horváth, A. A., and Stephan, K. E. (2018). Visual mismatch and predictive coding: a computational single-trial ERP study. J. Neurosci. 38, 4020–4030. doi: 10.1523/JNEUROSCI.3365-17.2018

Stefanics, G., Kremláček, J., and Czigler, I. (2014). Visual mismatch negativity: a predictive coding view. Front. Hum. Neurosci. 8:666. doi: 10.3389/fnhum.2014.00666

Supek, S., and Aine, C. J. (2014). Magnetoencephalography: From Signals to Dynamic Cortical Networks. Heidelberg: Springer. doi: 10.1007/978-3-642-33045-2

Susac, A., Ilmoniemi, R. J., Pihko, E., Ranken, D., and Supek, S. (2010). Early cortical responses are sensitive to changes in face stimuli. Brain Res. 1346, 155–164. doi: 10.1016/j.brainres.2010.05.049

Tadel, F., Baillet, S., Mosher, J. C., Pantazis, D., and Leahy, R. M. (2011). Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011:879716. doi: 10.1155/2011/879716

Taulu, S., Simola, J., and Kajola, M. (2005). Applications of the signal space separation method. IEEE Trans. Signal Process. 53, 3359–3372. doi: 10.1109/TSP.2005.853302

Taylor, M. J., Bayless, S. J., Mills, T., and Pang, E. W. (2011). Recognising upright and inverted faces: MEG source localisation. Brain Res. 1381, 167–174. doi: 10.1016/j.brainres.2010.12.083

Ulloa, J. L., Puce, A., Hugueville, L., and George, N. (2014). Sustained neural activity to gaze and emotion perception in dynamic social scenes. Soc. Cogn. Affect. Neurosci. 9, 350–357. doi: 10.1093/scan/nss141

Uusitalo, M. A., and Ilmoniemi, R. J. (1997). Signal-space projection method for separating MEG or EEG into components. Med. Biol. Eng. Comput. 35, 135–140. doi: 10.1007/BF02534144

Williams, L. M., Palmer, D., Liddell, B. J., Song, L., and Gordon, E. (2006). The “when” and “where” of perceiving signals of threat versus non-threat. Neuroimage 31, 458–467. doi: 10.1016/j.neuroimage.2005.12.009

Wronka, E., and Walentowska, W. (2011). Attention modulates emotional expression processing. Psychophysiology 48, 1047–1056. doi: 10.1111/j.1469-8986.2011.01180.x

Zhao, L., and Li, J. (2006). Visual mismatch negativity elicited by facial expressions under non-attentional condition. Neurosci. Lett. 410, 126–131. doi: 10.1016/j.neulet.2006.09.081

Keywords: automatic, change detection, dysphoria, emotional faces, magnetoencephalography

Citation: Xu Q, Ruohonen EM, Ye C, Li X, Kreegipuu K, Stefanics G, Luo W and Astikainen P (2018) Automatic Processing of Changes in Facial Emotions in Dysphoria: A Magnetoencephalography Study. Front. Hum. Neurosci. 12:186. doi: 10.3389/fnhum.2018.00186

Received: 27 November 2017; Accepted: 17 April 2018; Published: 04 May 2018.

Edited by: Xiaolin Zhou, Peking University, China

Reviewed by: Jan Kremláček, Charles University, Czechia

Copyright © 2018 Xu, Ruohonen, Ye, Li, Kreegipuu, Stefanics, Luo and Astikainen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wenbo Luo, luowb@lnnu.edu.cn

